Onetime IRL callout: If you’re in SF, join Dylan Patel (aka “that semianalysis guy” who wrote the GPU Rich/Poor essay) for a special live Latent Space event tomorrow. Our first convo was one of last year’s top referenced eps.

As hinted last year, HuggingFace/BigCode has finally released StarCoder v2 and The Stack v2. Full technical report here.

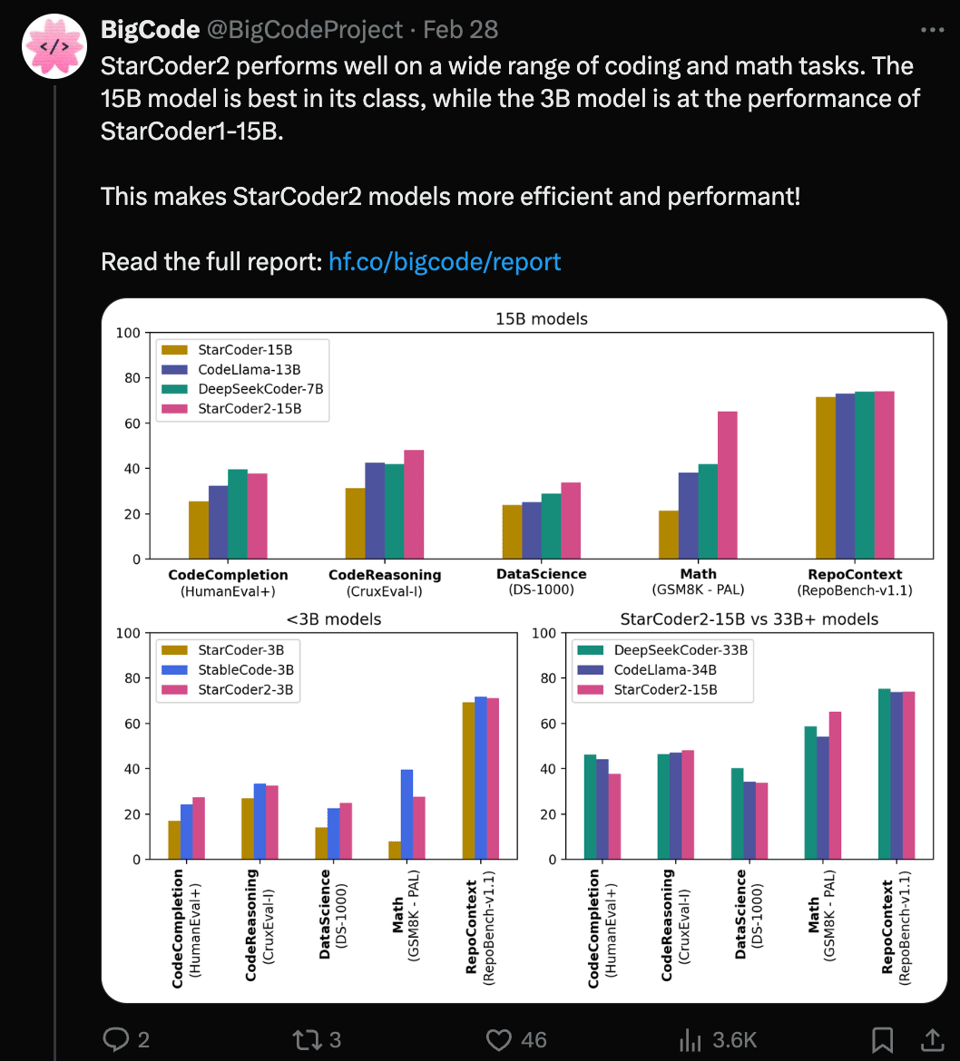

StarCoder 2: SOTA for size (3B and 15B)

StarCoder2-15B model is a 15B parameter model trained on 600+ programming languages from The Stack v2, with opt-out requests excluded. The model uses Grouped Query Attention, a context window of 16,384 tokens with a sliding window attention of 4,096 tokens, and was trained using the Fill-in-the-Middle objective on 4+ trillion tokens.
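To make the sliding window attention part concrete, here is a minimal sketch of the attention mask it implies. It uses toy sizes (8 tokens, window of 4) in place of the 16,384 / 4,096 figures above, and it assumes the window counts the current token; real implementations may differ on that convention. The function names are ours, not BigCode's.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j] == True when query position i may attend to key position j."""
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            causal = j <= i             # never look at future tokens
            in_window = i - j < window  # only the most recent `window` tokens (assumed to include i)
            row.append(causal and in_window)
        mask.append(row)
    return mask


def attended_positions(mask: list[list[bool]], i: int) -> list[int]:
    """List the key positions that query position i is allowed to see."""
    return [j for j, allowed in enumerate(mask[i]) if allowed]


mask = sliding_window_causal_mask(seq_len=8, window=4)
print(attended_positions(mask, 2))  # an early token still sees everything before it
print(attended_positions(mask, 7))  # a later token sees only the trailing window
```

The point of the window is that per-token attention cost stops growing once the sequence is longer than the window, while stacked layers can still pass information across longer distances.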

Since it was only just released, best source on evals is BigCode for now:

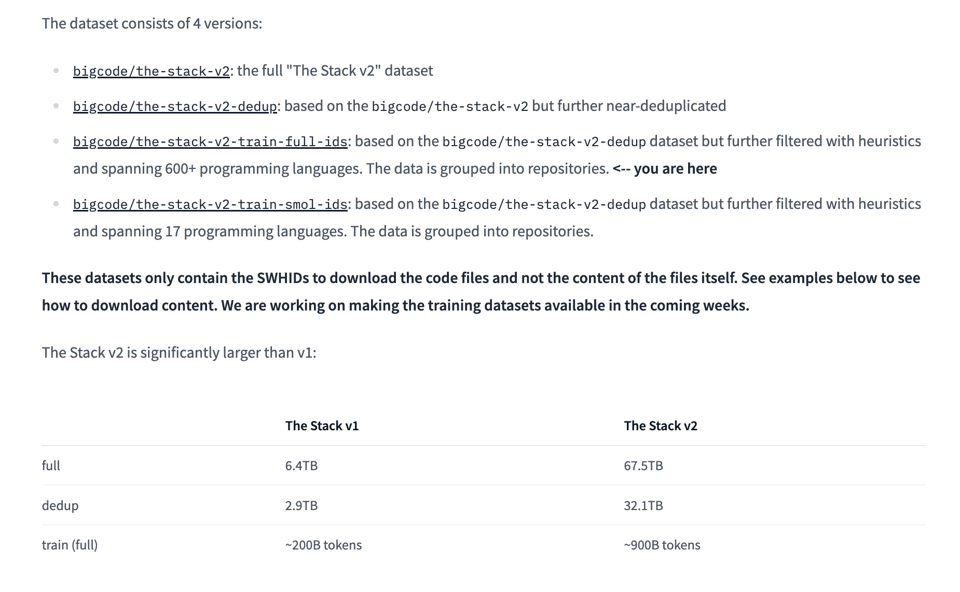

The Stack v2: 10x bigger raw, and 4.5x bigger deduped (900B Tokens)

We are experimenting with removing Table of Contents as many people reported it wasn’t as helpful as hoped. Let us know if you miss the TOCs, or they’ll be gone permanently.

AI Twitter Summary

AI and Machine Learning Discussions

- François Chollet remarks on the nature of LLMs, emphasizing that output mirrors the training data, capturing human thought patterns.

- Sedielem shares extensive thoughts on diffusion distillation, inviting community feedback on the blog post.

- François Chollet differentiates between current AI capabilities and true intelligence, focusing on the efficiency of skill acquisition.

- Stas Bekman raises concerns about the ML community’s dependency on a single hub for accessing weight copies, suggesting the need for a backup hub.

Executive Shifts and Leadership

- Saranormous highlights leadership change at $SNOW, welcoming @RamaswmySridhar as the new CEO and applauding his technical and leadership expertise.

Technology Industry Updates

- DeepLearningAI rounds up this week’s AI stories, including Gemini 1.5 Pro’s rough week, Groq chips’ impact on AI processing speed, and a discussion on version management in AI development by @AndrewYNg.

- KevinAFischer celebrates his feature in Tech Crunch as an early user of the Shader app by @shaderapp and @daryasesitskaya.

Innovation and Technical Insights

- Andrew N Carr discusses the potential of fitting 120B parameter models on consumer GPUs as per the 1.58 Bit paper, emphasizing breakthroughs in VRAM efficiency.

- Erhartford highlights a real-time EMO lip sync model, suggesting its integration for innovative applications.
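For a rough sense of the VRAM claim in the 1.58 Bit item above, the arithmetic can be sketched as follows. This counts weight storage only and uses the information-theoretic cost of a ternary value (log2 of 3 bits); practical packing schemes, activations, and the KV cache would add to it, so treat the numbers as a lower bound rather than a hardware requirement.

```python
import math


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Weight storage only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 120e9
ternary_bits = math.log2(3)  # about 1.58 bits to encode one of {-1, 0, +1}

fp16_gb = weight_memory_gb(params, 16)
ternary_gb = weight_memory_gb(params, ternary_bits)

print(f"fp16 weights:    {fp16_gb:.1f} GB")
print(f"ternary weights: {ternary_gb:.1f} GB")
```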

Memes/Humor

- C_valenzuelab draws a humorous analogy, stating that “airplanes didn’t disrupt the bicycle market”.

- KevinAFischer jokes about the economics of using LLMs, poking fun at the current state of AI development.

- KevinAFischer makes a light-hearted comment about ideas being ahead of their time.

Miscellaneous Observations

- Margaret Mitchell questions diversity in news coverage of the Gemini fiasco - 2806 impressions

- Kevin Fischer humorously touches on repeating himself - 732 impressions

- Zach talks about the need for fair tax rates for the wealthy - 492 impressions

AI Development and Infrastructure

- abacaj mentions the need for backing up weights following an HF outage - 1558 impressions

- Together Compute announces the launch of OLMo-7B-Instruct API from @allen_ai - 334 impressions

- A discussion on the ternary BitNet paper’s potential to revolutionize model scalability - 42 impressions

AI Twitter Narrative

The technical and engineer-oriented Twitter ecosystem has been buzzing with significant discussions spanning AI, blockchain, leadership transitions in tech, and some light-hearted humor.

Regarding AI and Machine Learning, François Chollet’s reflection on LLMs as mirrors to our inputs, alongside Sedielem’s deep dive into diffusion distillation, underscore critical thinking about the essence and future of AI technologies. Reinforcing the importance of diversified safeguarding of machine learning models, Stas Bekman’s proposition for a secondary hub for model weights has caught the community’s attention, highlighting the community’s resilience in facing practical challenges.

In the leadership and innovation arena, the leadership transition at $SNOW garnered significant engagement, reflecting the continuous evolution and admiration for leadership within tech organizations.

Humor and memes remain a vital part of the discourse, with tweets like Cristóbal Valenzuela’s observation about the non-competition between airplanes and bicycles bringing a light-hearted perspective to innovation and disruption.

On various miscellaneous observations, Margaret Mitchell’s call for more diverse perspectives in tech reporting highlights the importance of inclusivity and varied viewpoints in shaping our understanding of tech events.

Lastly, discussions around AI development and infrastructure saw practical considerations taking the forefront, as noted by abacaj’s preparation for possible future outages by backing up model weights. This operational resilience mirrors the broader strategic resilience seen across the technical and engineering community.

PART 0: Summary of Summaries of Summaries

ChatGPT Model Evaluations and Data Integrity on TheBloke Discord

- Detailed ChatGPT Model Comparisons: Members critically evaluated ChatGPT models, including GPT-4, Mixtral, and Miqu, focusing on API reliability and comparative performance. Specific concerns were raised about training data contamination from other AI outputs, potentially degrading model quality and reliability.

Technological Innovations and AI Deployment on Mistral Discord

- NVIDIA RAG Technical Limitations: NVIDIA's demo, showcasing retrieval-augmented generation (RAG), was critiqued for its 1024 token context limit and response coherence issues. The critique extended to NVIDIA's implementation choices, including the use of LangChain for RAG's reference architecture, hinting at broader discussions on optimizing AI model architectures for better performance.

Qualcomm's Open Source AI Models on LM Studio Discord

- Qualcomm's Contribution to AI Development: Qualcomm released 80 open source AI models on Hugging Face, targeting diverse applications in vision, audio, and speech technologies. Notable models include "QVision" for image processing, "QSpeech" for audio recognition, and "QAudio" for enhanced sound analysis. These models represent Qualcomm's push towards enriching the AI development ecosystem, offering tools for researchers and developers to innovate in machine learning applications across various domains. The release was aimed at fostering advancements in AI modeling and development, specifically enhancing capabilities in vision and audio processing, as well as speech recognition tasks.

These updated summaries provide a more focused view on the specific areas of interest and discussion within the respective Discord communities. They highlight the depth of technical scrutiny applied to AI models, the identification of performance limitations and potential improvements in AI technologies, and the specific contributions of Qualcomm to the open-source AI landscape, underlining the continuous evolution and collaborative nature of AI research and development.

PART 1: High level Discord summaries

TheBloke Discord Summary

- Spam Alert in General Chat: Users reported a spam incident involving @kquant, with Discord’s spam detection system flagging his activity after he contacted over 100 people with identical messages.

- ChatGPT Variants Under Scrutiny: Diverse experiences with ChatGPT models were discussed, including GPT-4’s API reliability and comparisons with Mixtral or Miqu models. Concerns were raised over training data contamination from other AI outputs, potentially compromising quality.

- Mixed Results in Model Mergers: Dialogue highlighted the uncertainty in model merging outcomes, emphasizing the role of luck and model compatibility. Merging tactics such as spherical linear interpolation (slerp) or concatenation were suggested in the specialized channels.

- Innovative Roleplay with LLMs: Techniques to enhance character consistency in role-play involve using detailed backstories and traits for LLMs. Specific models like Miqu and Mixtral were favored for these tasks, though longer context length could reduce coherence.

- Pacing AI Training and Fine-tuning: Users exchanged training tips, including using Perplexity AI and efficient methods like QLoRA to curb hardware demand. The importance of validation and deduplication was stressed, alongside managing model generalization and hallucination.
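For readers who have not met slerp outside of graphics, here is a minimal sketch of the interpolation mentioned in the model merging item above, applied to two flat weight vectors. How merging tools apply it in practice (per tensor, per layer, with what `t`) is not covered by the discussion, so the usage here is an assumption.

```python
import math


def slerp(a: list[float], b: list[float], t: float, eps: float = 1e-8) -> list[float]:
    """Spherical linear interpolation between vectors a and b at fraction t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Angle between the two vectors, clamped for numerical safety.
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b + eps)))
    omega = math.acos(cos_omega)
    if abs(math.sin(omega)) < eps:
        # Nearly parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / math.sin(omega)
    weight_b = math.sin(t * omega) / math.sin(omega)
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


merged = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
print(merged)
```

Unlike a straight average, the midpoint of two orthogonal unit vectors keeps unit length here, which is the usual argument for preferring slerp when the direction of a weight vector matters more than its magnitude.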

Links to consider:

- For looking into detailed personalities and character backstories in AI role-play, one might explore the strategy explanations and datasets at Hugging Face.

- Searching for efficient training techniques could lead AI engineers to MAX’s announcement about their platform aimed at democratizing AI development via an optimized infrastructure, detailed in their Developer Edition Preview blog post here.

Mistral Discord Summary

- NVIDIA’s Demo Faces Criticism for RAG Implementation: The NVIDIA “Chat with RTX” demo showcasing retrieval-augmented generation (RAG) faced criticism for limiting context size to 1024 tokens and issues with coherent responses. Discussions hinted at concerns with NVIDIA’s use of LangChain in RAG’s reference architecture.

- Mistral AI Discussions Span Licensing to Open Weights and Hardware Requirements: Conversations touched on Mistral AI’s use of Meta’s LLaMa model, anticipation for future open weight models following Mistral-7B, and hardware requirements for running larger models, like Mistral 8x7B, which may need at least 100GB of VRAM. Users considered the use of services like Together.AI for deployment assistance.

- Model Quantization and Deployment Discussions Highlight Constraints: Technical discussions included constraining Mistral-7B to specific document responses, the stateless nature of language models, and the limitations of quantized models. Quantization reducing parameter counts for Mistral-7B and the necessity for large VRAM for full precision models were underscored.

- Mistral Platform Intricacies and Function Calling Discussed: Users shared experiences and obstacles with Mistral function calls and reported on the necessity for specific message role orders. Some referred to the use of tools like Mechanician for better integration with Mistral AI.

- Educational Tools and the Potential of Specialized Models: One user showcased an app for teaching economics using Mistral and GPT-4 AI models, while discussions touched on the specialized training of models for tasks like JavaScript optimization. An expressed need for improved hiring strategies within the AI industry surfaced among chats.

The conversations reveal technical discernment among the users, highlighting both enthusiasm for AI’s advancements and practical discussions on AI model limitations and ideal deployment scenarios.

OpenAI Discord Summary

- Loader Showdown: lm studio vs oobabooga and Jan dot ai: lm studio was criticized for requiring manual GUI interaction to kickstart the API, making it a non-viable option for automated website applications, prompting engineers to suggest alternatives oobabooga and Jan dot ai for more seamless automation.

- AI Moderation and OpenAI Feedback: A message removed in a discussion about Copilot AI due to automod censorship led to suggestions to report to Discord mods and submit feedback directly through OpenAI’s Chat model feedback form, with community members discussing the extent of moderation rules.

- Mistral’s Power and Regulation Query: The Mistral model, known for its powerful, uncensored outputs, was compared to GPT-4, resulting in a conversation about the impact of European AI regulation on such models. A related YouTube video was shared, illustrating how to run Mistral and its implications.

- Advancing Chatbot Performance: Enhancing GPT-3.5-Turbo for chatbot applications sparked a debate on achieving performance on par with GPT-4, with users discussing fine-tuning techniques and suggesting utilizing actual data and common use cases for improvement.

- AI Certification vs. Real-world Application: For those seeking AI specialization, the community highlighted the primacy of hands-on projects over certifications, recommending learning resources such as courses by Andrew Ng and Andrej Karpathy, available on YouTube.

LM Studio Discord Summary

Model Compatibility Queries Spark GPU Discussions: Engineers engaged in detailed explorations of LLMs, such as Deepseek Coder 6.7B and StarCoder2-15B, and their compatibility with Nvidia RTX 40 series GPUs, discussing optimization strategies for GPUs like disabling certain features on Windows 11. A focus on finding the best-fitting models for hardware specifications was observed, underlined by the launch news of StarCoder2 and The Stack v2, with mentions of LM Studio’s compatibility issues, especially on legacy hardware like the GTX 650.

Hugging Face Outage Disrupts Model Access: An outage at Hugging Face caused network errors for members trying to download models, affecting their ability to search for models within LM Studio.

Qualcomm Unveils 80 Open Source Models: Qualcomm released 80 open source AI models on Hugging Face, targeting vision, audio, and speech applications, potentially enriching the landscape for AI modeling and development.

LLM Functionality Expansions: Users exchanged insights on enhancing functionalities within LM Studio, such as implementing an accurate PDF chatbot with Llama2 70B Q4 LLM, seeking guidance on adding image recognition features with models like PsiPi/liuhaotian_llava-v1.5-13b-GGUF/, and expressing desires for simplified processes in downloading vision adapter models.

Hardware Hubris and Hopes: Discussions thrived around user experiences with hardware, from reminiscing about older GPUs to sharing frustrations over misrepresented specs in an e-commerce setting. One user advised optimizations for Windows 11, while TinyCorp announced a new hardware offering, TinyBox, found here. There was also speculation about the potential for Nvidia Nvlink / SLI in model training compared to inference tasks.

HuggingFace Discord Summary

- Cosmopedia’s Grand Release: Cosmopedia was announced, a sizable synthetic dataset with over 25B tokens and 30M files, constructed by Mixtral. It is aimed at serving various AI research needs, with the release information accessible through this LinkedIn post.

- Hugging Face Updates Galore: The `huggingface_hub` library has a new release, 0.21.0, with several improvements, and YOLOv9 made its debut on the platform, now compatible with Transformers.js as per the discussions and platforms like Hugging Face spaces and huggingface.co/models.

- DSPy Grows Closer to Production: Exploration of DSPy and Gorilla OpenFunctions v2 is underway to transition from Gradio prototypes to production versions. The tools promise enhanced client onboarding processes for foundation models without prompting, and the discussions and resources can be found in repositories like stanfordnlp/dspy on GitHub.

- BitNet Bares Its Teeth: A new 1-bit Large Language Model, BitNet b1.58, said to preserve performance with impressive efficiency metrics, was discussed, with its research available via this arXiv paper.

- Inference Challenges and Solutions: In the field of text inference, an AI professional ran into issues when trying to deploy the text generation inference repository on a CPU-less and non-CUDA system. This highlights typical environmental constraints encountered in AI model deployment.

LAION Discord Summary

- AI’s Ideogram Stirs Interest: Engineers discussed the release of a new AI model from Ideogram, drawing comparisons with Stable Diffusion and shedding light on speculated quality matters pertaining to unseen Imagen samples. A user shared a prompt result that sparked a debate on its prompt adherence and aesthetics.

- Integration of T5 XXL and CLIP in SD3 Discussed: There have been discussions around the potential integration of T5 XXL and CLIP models into Stable Diffusion 3 (SD3), with participants expecting advancements in both the precision and the aesthetics of upcoming generative models.

- Concerns Over AI-Generated Art: A legal discussion unfolded concerning AI-generated art and copyright laws, referencing a verdict from China and an article on copyright safety for generative AI, highlighting uncertainty in the space and varied industry responses to DMCA requests.

- Spiking Neural Networks Back in Vogue?: Some members considered the potential resurgence of spiking neural networks with advanced techniques like time dithering to improve precision, reflecting on historical and current research approaches.

- State-of-the-Art Icon Generation Model Released: A new AI icon generation model has been released on Hugging Face, developed with a personal funding of $2,000 and touted to create low-noise icons at 256px, although scale limitations were acknowledged by its creator.

Nous Research AI Discord Summary

- Emoji Storytelling on GPT-5’s No-show: Community members used a sequence of emojis to express sentiments about GPT-5’s absence, oscillating between salutes, skulls, and tears, while revering GPT iterations up to the mythical GPT-9.

- Dell’s Dual Connection Monitors and Docks Intrigue Engineers: A YouTube review of Dell’s new 5K monitor and the Dell Thunderbolt Dock WD22TB4 piqued interest for their capabilities to connect multiple machines, with eBay as the suggested source for purchases.

- 1-bit LLMs Unveiled with BitNet b1.58: The arXiv paper revealed BitNet b1.58 as a 1-bit LLM with performance on par with full-precision models, highlighting it as a cost-effective innovation alongside a mention of Nicholas Carlini’s LLM benchmark.

- Exploring Alternative Low-Cost LLMs and Fine-Tuning Practices: Users discussed alternatives to GPT-4, the effect of small training dataset sizes, and the potential use of Direct Preference Optimization (DPO) to improve model responses.

- Cutting-Edge Research and New Genomic Model Debut: Stanford’s release of HyenaDNA, a genomic sequence model, alongside surprising MMLU scores from CausalLM, and resources on interpretability in AI, such as Representation Engineering and tokenization strategies, were the hot topics of discussion.

Latent Space Discord Summary

- Noam Shazeer on Coding Style: @swyxio highlighted Noam Shazeer’s first blog post on coding style and shape suffixes, which may interest developers who are keen on naming conventions.

- AI in Customer Service: Enthusiasm was expressed around data indicating that LLMs can match human performance in customer service, potentially handling two-thirds of customer service queries, suggesting a pivot in how customer interactions are managed.

- Learning with Matryoshka Embeddings: Members discussed the innovative “Matryoshka Representation Learning” paper and its applications in LLM embeddings with adaptive dimensions, with potential benefits for compute and storage efficiency.

- MRL Embeddings Event: An announcement for an upcoming event by <@206404469263433728> where the authors of the MRL embeddings paper will attend was made, providing an opportunity for deep discussions on representation learning in the #1107320650961518663 channel.

- Representation Engineering Session: @ivanleomk signaled an educational session on Representation Engineering 101 with <@796917146000424970>, indicating a chance to learn and query about engineering effective data representations in the #1107320650961518663 channel.
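The "adaptive dimensions" idea in the Matryoshka item above boils down to a very small operation at query time: keep a prefix of the embedding and re-normalize it. A minimal sketch follows, with made-up 4-dimensional vectors; it only pays off when the embedding model was trained with the Matryoshka objective, which this snippet does not check.

```python
import math


def truncate_embedding(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` coordinates and re-normalize to unit length."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head
    return [x / norm for x in head]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity for vectors that are already unit length."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical embeddings; the values are illustrative only.
doc = [3.0, 4.0, 1.0, 2.0]
query = [3.0, 4.0, -2.0, 1.0]

small_doc = truncate_embedding(doc, 2)
small_query = truncate_embedding(query, 2)
print(small_doc, cosine(small_doc, small_query))
```

Storing only the truncated prefix is where the compute and storage savings come from: a half-length vector costs half the index space and half the dot-product work.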

Perplexity AI Discord Summary

- Rabbit R1 Activation Assistance: User @mithrilman encountered a non-clickable email link issue when trying to activate the Rabbit R1 promo. @icelavaman suggested using the email link and reaching out to support.

- Podcast Identity Confirmation: Confusion arose around podcasts using the name “Perplexity AI,” leading @icelavaman to clarify with the official podcast link, while @ok.alex speculated that the name might be used without authorization for attention or financial gain.

- Comparing AI Model Capabilities: Users explored the strengths and weaknesses of various AI models like Experimental, GPT-4 Turbo, Claude, and Mistral. There was notably divided opinion regarding Mistral’s effectiveness for code queries.

- Brainstorming Perplexity AI Improvements: Suggestions for Perplexity AI included exporting thread responses, a feature currently missing but considered for future updates. Issues also included the absence of file upload options and confusion over product name changes.

- Model Performance Nostalgia and API Errors: Discussions touched upon glitches in text generation and fond memories of pplx-70b being superior to sonar models. @jeffworthington faced challenges with OpenAPI definitions, suggesting the current documentation might be outdated.

Links shared:

- Official Perplexity AI podcasts: Discover Daily by Perplexity and Perplexity AI.

- Getting started with Perplexity’s API: pplx-api documentation.

Eleuther Discord Summary

- Foundation Model Development Cheatsheet Unveiled: A new resource titled The Foundation Model Development Cheatsheet has been released to aid open model developers, featuring contributions from EleutherAI, MIT, AI2, Hugging Face, among others, and focusing on often overlooked yet crucial aspects such as dataset documentation and licensing. The cheatsheet can be accessed as a PDF paper or an interactive website, with additional information in their blog post and Twitter thread.

- Scaling Laws and Model Training Discussions Heat Up: Discourse ranges from inquiries about cross-attention SSM models, stable video diffusion training, and the nuances of lm-evaluation-harness, to the status of EleutherAI’s Pythia model, and an abstract on a 1-bit Large Language Model (LLM). Notable references include a blog post on Multiple Choice Normalization in LM Evaluation and the research paper on the Era of 1-bit LLMs.

- From Open-Sourced Models To Maze Solving Diffusion Models: The research channel showcased discussions on a variety of AI topics, from open-sourced models and pretraining token-to-model size ratios to diffusion models trained to solve mazes, prompting engineering transfer studies, and the practical challenges of sub 8-bit quantization. Key resources shared include a Stable LM 2 1.6B Technical Report, and a tweet on training diffusion models to solve mazes by François Fleuret.

- Neox Query for Slurm Compatibility: User @muwnd sought recommendations on running Neox with Slurm and its compatibility with containers. It was highlighted that Neox’s infrastructure does not make assumptions about the user’s setup, and a slurm script may be needed for multinode execution.

- Interpretability Techniques and Norms Explored: Conversations in the interpretability channel delved into matrix norms and products, RMSNorm layer applications, decoding using tuned lenses, and the proper understanding of matrix norm terminology. For example, the Frobenius norm is the Euclidean norm when the matrix is flattened, while the “2-norm” is the spectral norm or top singular value.

- Tweaks for LM Eval Harness and Multilingual Upgrades: Enhancements to the LM Eval harness for chat templates were shared, along with news that higher-quality translations for the Multilingual Lambada have been contributed by @946388490579484732 and will be included in the evaluation harness. These datasets are made available on Hugging Face.
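The norm terminology from the interpretability item above is easy to check numerically. The sketch below computes the Frobenius norm as the Euclidean norm of the flattened matrix, and estimates the spectral norm (top singular value) with plain power iteration. The power iteration is our choice for a dependency-free example, not something taken from the discussion, and it assumes the all-ones starting vector is not orthogonal to the top singular direction.

```python
import math


def frobenius_norm(m: list[list[float]]) -> float:
    """Euclidean norm of the matrix entries when flattened into one vector."""
    return math.sqrt(sum(x * x for row in m for x in row))


def spectral_norm(m: list[list[float]], iters: int = 200) -> float:
    """Estimate the top singular value via power iteration on M^T M."""
    n_rows, n_cols = len(m), len(m[0])
    v = [1.0] * n_cols  # assumed not orthogonal to the top right-singular vector
    for _ in range(iters):
        u = [sum(m[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]  # u = M v
        w = [sum(m[i][j] * u[i] for i in range(n_rows)) for j in range(n_cols)]  # w = M^T u
        norm_w = math.sqrt(sum(x * x for x in w))
        if norm_w == 0.0:
            return 0.0
        v = [x / norm_w for x in w]
    u = [sum(m[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
    return math.sqrt(sum(x * x for x in u))  # ||M v|| for the converged unit vector v


matrix = [[3.0, 0.0], [0.0, 4.0]]
print(frobenius_norm(matrix), spectral_norm(matrix))
```

For this diagonal matrix the two norms differ (5 versus 4), which is the distinction the channel was drawing: the Frobenius norm sums over all singular values, the 2-norm only reports the largest.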

LangChain AI Discord Summary

- Confidence in LangChain.js: @ritanshoo raised a question regarding confidence score checks when utilizing LangChain.js for RAG. While an immediate answer was not provided, users were referred to the LangChain documentation for in-depth guidance.

- Integration Queries for LangChain: Technical discussions highlighted the possibilities of memory addition to LCEL and effective language integration with LangChain in an Azure-hosted environment. Users were advised to consult official documentation or seek community assistance for specific integration issues.

- ToolException Workarounds Explored: @abinandan sought advice on retrying a tool after a `ToolException` occurs with a custom tool. The community pointed to LangChain GitHub discussions and issues for potential solutions.

- LangServe Execution Quirks: @thatdc reported missing intermediate step details when using langserve, as opposed to direct invocation from the agent class. They identified a potential glitch in the `RemoteRunnable` requiring a workaround.

- Summoning Python Template Alchemists: @tigermusk sought assistance creating a Python template similar to the one available on Smith LangChain Chat JSON Hub, sparking discussions on template generation.

- “LangChain in your Pocket” Celebrated: @mehulgupta7991 announced their book “LangChain in your Pocket,” recently featuring in Google’s Best books on LangChain, highlighting resources for LangChain enthusiasts.

- Beta Testing for AI Voice Chat App: Pablo, an AI Voice Chat app that integrates multiple LLMs and provides voice support without typing, called for beta testers. Engineers were invited to join the team behind this app, leveraging LangChain technology, with an offer for free AI credits.

- AI Stock Analysis Chatbot Creation Explained: A video tutorial was shared by @tarikkaoutar, demonstrating the construction of an AI stock analysis chatbot using LangGraph, Function call, and YahooFinance, catering to engineers interested in multi-agent systems.

- Groq’s Hardware Reveal Generates Buzz: An introduction to Groq’s breakthrough Language Processing Unit (LPU) suitable for LLMs captivated tech enthusiasts, conveyed through a YouTube showcase shared by @datasciencebasics.

(Note: The above summary integrates topics and resources from various channels within the Discord guild, focusing on points of interest most relevant to an engineer audience looking for technical documentation, coding integration, and advancement in AI hardware and applications.)

OpenAccess AI Collective (axolotl) Discord Summary

- Jupyter Configuration Chaos: Users reported issues with Jupyter notebooks, highlighting error messages concerning extension links and a “Bad config encountered during initialization” without a conclusive solution in the discussion.

- BitNet b1.58 Breakthroughs: An arXiv paper introduced BitNet b1.58, a 1-bit LLM that matches the performance of full-precision models, heralding significant cost-efficiency with an innovative architecture.

- Sophia Speeds Past Adam: The Sophia optimizer, claimed to be twice as fast as Adam algorithms, was shared alongside its implementation code, sparking interest in its efficiency for optimization methods in AI models.

- DropBP Drops Layers for Efficiency: A study presented Dropping Backward Propagation (DropBP), a method that can potentially reduce computational cost in neural network training by skipping layers during backward propagation without significantly affecting accuracy.

- Scandinavian Showdown: Mistral vs. ChatGPT 3.5: A user, le_mess, reported that their 7B Mistral model rivaled ChatGPT 3.5 in performance for Danish language tasks, using an iterative synthetic data approach for progressive training through 30 iterations and initial human curation.
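Since BitNet b1.58 keeps coming up, a sketch of what "1.58-bit" weights look like may help. As we read the paper, each weight is scaled by the mean absolute value of the tensor and then rounded and clipped to one of {-1, 0, +1}. The snippet below shows that step on a toy list of weights; it is an illustration of the quantization idea only, not the training recipe, and the sample values are made up.

```python
def absmean_ternary(weights: list[float], eps: float = 1e-8) -> tuple[list[int], float]:
    """Quantize weights to {-1, 0, +1} using the mean absolute value as the scale."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    # Scale, round to the nearest integer, then clip into the ternary range.
    quantized = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return quantized, gamma


# Illustrative weights chosen so no scaled value sits on a rounding tie.
weights = [0.9, -0.05, 0.4, -1.2, 0.1, -0.35]
quantized, gamma = absmean_ternary(weights)
print(quantized, gamma)
```

With only three possible values per weight, matrix multiplication reduces to additions and subtractions, which is where the claimed cost-efficiency comes from.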

LlamaIndex Discord Summary

- Groq’s Integration Powers Up LlamaIndex: The Groq LPU now supports LlamaIndex, including `llama2` and `Mixtral` models, aimed at improving Large Language Model (LLM) generation with a comprehensive cookbook guide provided for streamlining application workflows.

- LlamaIndex Services Expand and Optimize: LlamaParse reported significant usage leading to a usage cap increase and updates towards uncapped self-serve usage, while a new strategy using LLMs for alpha parameter adjustment in hybrid search has been shared in this insight. Plus, a RAG architecture combining structured and unstructured data by @ClickHouseDB has been highlighted, which can be read about here.

- Technical Insights and Clarifications Heat Up LlamaIndex Discussions: Indexing the latest LlamaIndex docs is in consideration with mendable mentioned as a tool for docs, while @cheesyfishes comments on an anticipated refactor of `CallbackHandler` in Golang. A combination of FlagEmbeddingReranker with CohereReranker was identified as a tactic despite the absence of comparison metrics, and @cheesyfishes explained that while LlamaIndex serves data to LLMs, Langchain is a more encompassing library.

- Model Behaviors Questioned Within AI Community: There’s a discussion about model decay with @.sysfor noting degrading outputs from their models and @cheesyfishes reinforcing that models do not decay but input issues can affect performance. The concern extends to fine-tuned models underperforming when compared to baseline models.
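For context on the alpha parameter in the hybrid search item above: in the common formulation, alpha is the weight on the dense (vector) score and one minus alpha is the weight on the sparse (keyword) score. The sketch below assumes that convention and assumes both scores are already normalized to the range 0 to 1; libraries differ on both points, and the LLM-driven choice of alpha described in the linked insight is not reproduced here. All names and scores are hypothetical.

```python
def hybrid_score(dense_score: float, sparse_score: float, alpha: float) -> float:
    """Blend a dense and a sparse relevance score; alpha=1.0 means dense only (assumed convention)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * dense_score + (1.0 - alpha) * sparse_score


def rank(docs: dict[str, tuple[float, float]], alpha: float) -> list[str]:
    """Order document ids by blended score, best first."""
    return sorted(docs, key=lambda d: hybrid_score(*docs[d], alpha), reverse=True)


# Hypothetical (dense, sparse) scores for two documents.
docs = {"a": (0.9, 0.1), "b": (0.2, 0.8)}
print(rank(docs, alpha=0.8))  # leans on semantic similarity
print(rank(docs, alpha=0.2))  # leans on keyword match
```

The same two documents swap places as alpha moves, which is why picking alpha per query (rather than globally) is an attractive thing to hand to an LLM.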

OpenRouter (Alex Atallah) Discord Summary

- Claude Encounters a Conversational Hiccup: Claude models from Anthropic were reported to have an error with chats having more than 8 alternating messages. The problem was acknowledged by @louisgv with a promise of an upcoming fix.

- Turn Taking Tweaks for OpenRouter: @alexatallah suggested a workaround for Claude’s prompt errors involving changing the initial assistant message to a system message. Development is ongoing to better handle conversations initiated by the assistant.

- OpenRouter’s Rate Limit Relay: When asked about rate limits for article generation, @alexatallah clarified that individually assigned API keys for OpenRouter users would have separate limits, presumably allowing adequate collective throughput.

- Mistral’s Suspected Caching Unearthed: Users noticed repeat prompt responses from Mistral models suggesting caching might be at play. @alexatallah confirmed the possibility of query caching in Mistral’s API.

- Prepaid Payment Puzzles for OpenRouter: @fakeleiikun raised a question about the acceptance of prepaid cards through OpenRouter, and @louisgv responded with possible issues tied to Stripe’s fraud prevention mechanisms, indicating mixed support.
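The turn-taking workaround above (changing an initial assistant message to a system message) can be done client-side before a request is sent. A minimal sketch, assuming the familiar chat format of a list of dicts with `role` and `content` keys; the helper name is ours and this is not OpenRouter's own code.

```python
def promote_leading_assistant(messages: list[dict]) -> list[dict]:
    """Return a copy of the conversation where a leading assistant turn becomes a system turn."""
    copied = [dict(m) for m in messages]  # avoid mutating the caller's list
    if copied and copied[0].get("role") == "assistant":
        copied[0]["role"] = "system"
    return copied


conversation = [
    {"role": "assistant", "content": "Hi, I am your travel guide. Where to?"},
    {"role": "user", "content": "Lisbon, for three days."},
]
fixed = promote_leading_assistant(conversation)
print([m["role"] for m in fixed])
```

The content of the opening turn is preserved; only its role changes, so the model still sees the greeting as context while the conversation now starts with a user turn.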

CUDA MODE Discord Summary

- Benchmarking Bounties: @hdcharles_74684 improved a benchmark script for Triton kernels, which may outperform cuBLAS in specific scenarios such as batch sizes greater than 1, pertinent to applications like sdxl-fast. In light of potential Triton optimizations, focusing on technologies such as Torch.compile could address bottlenecks when handling batch size of 2.

- Triton Turmoil and Triumphs: Users encountered debugging issues with Triton versions 3.0.0 and 2.2.0; a workaround involved setting the `TRITON_INTERPRET` environment variable. Moreover, stability concerns were voiced regarding Triton’s unpredictable segfaults compared to CUDA, prompting a request for comparative examples to understand the inconsistencies.

- FP8 Intrinsics Intact: In response to a query based on a tweet, @zippika clarified that FP8 intrinsics are still documented in the CUDA math API docs, noting that FP8 is primarily a data format and not universally applied for compute operations.

- Compiler Conundrums: In the realm of deep learning, skepticism was expressed about the usefulness of polyhedral compilation for optimizing sharding. This ties into the broader discussion about defining cost functions, the complexity of mapping DL programs to hardware, and whether top AI institutions are tackling these optimization challenges.

- Ring Attention Riddles: A comparison was proposed for validating the correctness and performance of Ring Attention implementations, as potential bugs were noted in the backward pass, and GPU compatibility issues surfaced. User @iron_bound suggested there may be breakage in the implementation per commit history analysis, stressing the need for careful code review and debugging.
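On the Triton debugging workaround above: the discussion does not spell out how the variable was set, but the usual pattern, as far as we know, is to set `TRITON_INTERPRET=1` before Triton is imported so that kernels run in the Python interpreter and can be stepped through with ordinary tools. A minimal sketch, with the import guarded so it also runs on machines without Triton installed:

```python
import os

# Set the flag before importing Triton (and before any @triton.jit kernel is defined),
# since the setting is assumed to be read when kernels are created.
os.environ["TRITON_INTERPRET"] = "1"

try:
    import triton  # noqa: F401
    triton_available = True
except Exception:
    # Triton is optional for this sketch; any import failure just means
    # there is nothing to debug on this machine.
    triton_available = False

print("TRITON_INTERPRET =", os.environ["TRITON_INTERPRET"])
print("Triton importable:", triton_available)
```

The same effect can be had from the shell by prefixing the launch command with the variable, which avoids touching the script at all.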

Interconnects (Nathan Lambert) Discord Summary

- European Independence and Open-Weight Ambitions: Arthur Mensch emphasized the commitment to open-weight models, specifically mentioning 1.5k H100s, and highlighted a reselling deal with Microsoft. Le Chat and Mistral Large are attracting attention on La Plateforme and Azure, showing growth and a quick development approach. Here are the details.

- StarCoder2 Breaks New Ground: The Stack v2, featuring over 900B tokens, is the powerhouse behind StarCoder2, which flaunts a 16k token context and is trained on more than 4T tokens. It represents a robust addition to the coding AI community with fully open code, data, and models. Explore StarCoder2.

- Meta’s Upcoming Llama 3: A report from Reuters indicates that Meta is gearing up to launch Llama 3 in July, signaling a potential shake-up in the AI language model landscape. The Information provided additional details on this forthcoming release. Further information available here.

- DeepMind CEO’s Insights Captivate Nathan: Nathan Lambert tuned into a podcast featuring Demis Hassabis of Google DeepMind, covering topics such as superhuman AI scaling, AlphaZero combining with LLMs, and the intricacies of AI governance. These insights are accessible on various platforms including YouTube and Spotify.

- Open AI and Personal Perspectives: The conversation between Nathan and Mike Lambert touched on the nature and importance of open AI and the differing thought models when compared to platforms like Twitter. Additionally, Mike Lambert, associated with Anthropic, expressed a preference to engage in dialogues personally rather than as a company representative.

LLM Perf Enthusiasts AI Discord Summary

- A Buzz for Benchmarking Automation: Engineers @ampdot and @dare.ai are keen on exploring automated benchmark scripts, with the latter tagging another user for a possible update on such a tool.

- Springtime Hopes for Llama 3: @res6969 predicts a spring release for Llama 3, yet hints that the timeline could stretch, while @potrock is hopeful for last-minute updates, particularly intrigued by the potential integration of Gemini ring attention.

- The Testing Time Dilemma: @jeffreyw128 voices the challenge of time investment needed for comprehensive testing of new LLMs, aiming for an adequate “vibe check” on each model.

- ChatGPT Search Speculation Surfaces: Rumors of an impending OpenAI update to ChatGPT’s web search features were mentioned by @jeffreyw128, with @res6969 seeking more reliable OpenAI intel and curious about resources for deploying codeinterpreter in production.

DiscoResearch Discord Summary

- DiscoLM Template Usage Critical: @bjoernp underscored the significance of utilizing the DiscoLM template for proper chat context tokenization, pointing to the chat templating documentation on Hugging Face as a crucial resource.

- Chunking Code Struggles with llamaindex: @sebastian.bodza encountered severe issues with the llamaindex chunker for code, which is outputting one-liners despite the `chunk_lines` setting, suggesting a bug or a need for tool adjustments.

- Pushing the Boundaries of German AI: @johannhartmann is working on a German RAG model using Deutsche Telekom’s data, seeking advice on enhancing the German-speaking Mistral 7b model reliability, while @philipmay delved into generating negative samples for RAG datasets by instructing models to fabricate incorrect answers.

- German Language Models Battleground: A debate emerged over whether Goliath or DiscoLM-120b is more adept at German language tasks, with @philipmay and @johannhartmann weighing in; @philipmay posted the Goliath model card on Hugging Face for further inspection.

- Benchmarking German Prompts and Models: @crispstrobe revealed that EQ-Bench now includes German prompts, with the GPT-4-1106-preview model leading in performance, and provided a GitHub pull request link; they mentioned translation scripts being part of the benchmarks, effectively translated by ChatGPT-4-turbo.
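One way to sanity-check a code chunker that returns one-liners is to compare it against a plain fixed-size baseline. The sketch below is such a baseline in pure Python. It is not llamaindex’s implementation; the function name and the `overlap` parameter are hypothetical, and only the `chunk_lines` name is borrowed from the report above.

```python
def chunk_by_lines(source: str, chunk_lines: int = 40, overlap: int = 5) -> list[str]:
    """Split source code into chunks of at most `chunk_lines` lines each."""
    if chunk_lines <= 0:
        raise ValueError("chunk_lines must be positive")
    if not 0 <= overlap < chunk_lines:
        raise ValueError("overlap must be in [0, chunk_lines)")

    lines = source.splitlines()
    step = chunk_lines - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(lines), step):
        chunks.append("\n".join(lines[start:start + chunk_lines]))
        if start + chunk_lines >= len(lines):
            break  # the last window already reached the end of the file
    return chunks


sample = "\n".join(f"line {i}" for i in range(10))
for chunk in chunk_by_lines(sample, chunk_lines=4, overlap=1):
    print(repr(chunk))
```

If a real chunker yields far more, far shorter chunks than this baseline on the same file, that points to the setting being ignored rather than to the input.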

Datasette - LLM (@SimonW) Discord Summary

- JSON Judo Techniques Remain Hazy: @dbreunig verbalized the common challenge of dealing with noisy JSON responses, though specifics on the cleanup techniques or functions were not disclosed.

- Silencing Claude’s Small Talk: @justinpinkney recommended using initial characters like `<rewrite>` based on Anthropic’s documentation to circumvent Claude’s default lead-in phrases such as “Sure here’s a…”.

- Brevity Battle with Claude: @derekpwillis experimented with several strategies for attaining shorter outputs from Claude, including forcing the AI to begin responses with `{`, but admitted that Claude still tends to include prefatory explanations.
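The “start the response for the model” trick discussed above can be sketched without calling any API. This is only an illustration: the payload shape (a trailing assistant turn holding the prefill) is an assumption about how a chat-style request would be built, the function names are hypothetical, and the cleanup step is a deliberately naive way to drop trailing chatter.

```python
import json


def build_prefilled_request(prompt: str, prefill: str = "{") -> dict:
    """Build a chat payload whose final turn is a partial assistant message."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            # The model is expected to continue from this partial reply.
            {"role": "assistant", "content": prefill},
        ]
    }


def parse_prefilled_json(prefill: str, completion: str) -> dict:
    """Re-attach the prefill and cut everything after the last closing brace."""
    text = prefill + completion
    end = text.rfind("}")
    if end == -1:
        raise ValueError("no JSON object found in completion")
    return json.loads(text[: end + 1])


request = build_prefilled_request("Return the author as JSON.")
print(request["messages"][-1])
print(parse_prefilled_json("{", '"name": "Ada"}\nHope this helps!'))
```

The naive cut at the last `}` fails if the trailing chatter itself contains a brace, so a stricter parser would be needed for untrusted output.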

Skunkworks AI Discord Summary

An Unexpected Recruitment Approach: User .papahh directly messaged @1117586410774470818, indicating a job opportunity and showing enthusiasm for their potential involvement.

Alignment Lab AI Discord Summary

- Value Hunting Across Species: @taodoggy is inviting collaboration on a project to probe into the biological and evolutionary origins of shared values among species, refine value definitions, and explore their manifestation in various cultures. The project overview is accessible via a Google Docs link.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1070 messages🔥🔥🔥):

- Discord Detects Spammer: Users noticed messages flagged for likely spam in the chat, particularly from @kquant, who was reported for messaging over 100 people with the same message, triggering Discord’s spam detection system.

- Exploring ChatGPT Performance: Users like @itsme9316 and @notreimu discussed their varying experiences with ChatGPT models. Some noted that GPT-4’s API was unreliable for them compared to alternatives like Mixtral or Miqu models.

- Model Merging Conversations: Various users, including @itsme9316 and @al_lansley, discussed model merging and how it doesn’t always result in smarter models. There was consensus that merging often depends on luck and the models’ compatibility.

- Concerns Over Contaminated Training Data: Users such as @itsme9316 expressed concerns about modern LLMs potentially being contaminated with outputs from other models like OpenAI’s, which could affect quality and reliability.

- Quantization and Model Performance: There was discussion led by @notreimu and @aiwaldoh about the performance differences between high-parameter models with low bit-per-weight (bpw) quantization and smaller models with higher bpw. Users shared varying experiences with different quantized models.

Links mentioned:

- Database Search: Search our database of leaked information. All information is in the public domain and has been compiled into one search engine.

- A look at Apple’s new Transformer-powered predictive text model: I found some details about Apple’s new predictive text model, coming soon in iOS 17 and macOS Sonoma.

- Microsoft-backed OpenAI valued at $80bn after company completes deal: Company to sell existing shares in ‘tender offer’ led by venture firm Thrive Capital, in similar deal as early last year

- Sad GIF - Sad - Discover & Share GIFs: Click to view the GIF

- writing-clear.png · ibm/labradorite-13b at main: no description found

- And death shall have no dominion: And death shall have no dominion. / Dead men naked they shall be one

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO · Hugging Face: no description found

- Uncensored Models: I am publishing this because many people are asking me how I did it, so I will explain. https://huggingface.co/ehartford/WizardLM-30B-Uncensored https://huggingface.co/ehartford/WizardLM-13B-Uncensore…

- BioMistral/BioMistral-7B · Hugging Face: no description found

- NousResearch/Nous-Hermes-2-SOLAR-10.7B · Hugging Face: no description found

- adamo1139 (Adam): no description found

- p1atdev/dart-v1-sft · Hugging Face: no description found

- google/gemma-7b-it · Buggy GGUF Output: no description found

- Attack of the stobe hobo.: Full movie. Please enjoy. Rip Jim Stobe.

- Fred again..: Tiny Desk Concert: Teresa Xie | April 10, 2023When Fred again.. first proposed a Tiny Desk concert, it wasn’t immediately clear how he was going to make it work — not because h…

- My Fingerprint- Am I Unique ?: no description found

- GitHub - MooreThreads/Moore-AnimateAnyone: Contribute to MooreThreads/Moore-AnimateAnyone development by creating an account on GitHub.

- adamo1139/rawrr_v2 · Datasets at Hugging Face: no description found

TheBloke ▷ #characters-roleplay-stories (511 messages🔥🔥🔥):

- LLM Roleplay Discussion: Users discussed the effectiveness of using Large Language Models (LLMs) for role-playing characters, including techniques for crafting character identities, such as telling the LLM “you are a journalist” to improve performance. @nathaniel__ suggested successful strategies involve assigning roles and detailed personalities and @maldevide shared a prompt structuring approach using `#define` syntax.

- Character Consistency: Several users, including @shanman6991 and @superking__, explored whether character consistency can be improved by giving LLMs detailed backstories and personality traits. There was particular interest in techniques to allow characters to lie or scheme convincingly within role-play scenarios.

- Prompt Engineering Tactics: @maldevide discussed the use of proper names and declarative statements in prompts to guide LLMs into desired patterns of conversation, while @superking__ provided examples of instruct vs. pure chat mode setups for better model guidance.

- Model Selection for Roleplay: Users like @superking__ indicated a preference for specific models such as miqu and mixtral for role-play purposes, often eschewing the use of system prompts. There was also mention of the potential for models to become less coherent with longer context lengths, and strategies to offset this were discussed.

- Naming Conventions in LLMs: @gryphepadar and @maldevide observed that certain names, like “Lyra” and “Lily”, seem to be particularly common in responses when LLMs are prompted to generate character names, leading to some speculation about the training data’s influence on these naming trends.

Links mentioned:

- Let Me In Eric Andre GIF - Let Me In Eric Andre Wanna Come In - Discover & Share GIFs: Click to view the GIF

- Sad Smoke GIF - Sad Smoke Pinkguy - Discover & Share GIFs: Click to view the GIF

- Why Have You Forsaken Me? GIF - Forsaken Why Have You Forsaken Me Sad - Discover & Share GIFs: Click to view the GIF

- maldv/conversation-cixot · Datasets at Hugging Face: no description found

- Hawk Eye Dont Give Me Hope GIF - Hawk Eye Dont Give Me Hope Clint Barton - Discover & Share GIFs: Click to view the GIF

- GitHub - UltiRTS/PrometheSys.vue: Contribute to UltiRTS/PrometheSys.vue development by creating an account on GitHub.

- GitHub - predibase/lorax: Multi-LoRA inference server that scales to 1000s of fine-tuned LLMs: Multi-LoRA inference server that scales to 1000s of fine-tuned LLMs - predibase/lorax

TheBloke ▷ #training-and-fine-tuning (86 messages🔥🔥):

- Perplexity AI as a New Tool: User @icecream102 suggested trying out Perplexity AI as a resource.

- Budget Training with QLoRA: @dirtytigerx advised that training large language models like GPT can be expensive and suggested using techniques like QLoRA to limit hardware requirements, though noting it would still take many hours of compute.

- Training and Inference Cost Estimates: In a discussion on estimating GPU hours for training and inference, @dirtytigerx recommended conducting a tiny test run and looking at published papers for benchmarks.

- Model Training Dynamics Discussed: @cogbuji questioned training a model with a static low validation loss, prompting @dirtytigerx to suggest altering the validation split and taking deduplication steps to address discrepancies.

- Model Generalization and Hallucination Concerns: @dirtytigerx and @cogbuji discussed training model generalization and the inevitable problem of hallucination during inference, suggesting the use of retrieval mechanisms and further evaluation strategies.

Links mentioned:

cogbuji/Mr-Grammatology-clinical-problems-Mistral-7B-0.5 · Hugging Face: no description found

TheBloke ▷ #model-merging (6 messages):

- Tensor Dimension Misalignment Issue: @falconsfly pointed out that an issue arose due to a single bit being misplaced or misaligned, resulting in incorrect tensor dimensions.

- Appreciation Expressed for Information: @222gate thanked @falconsfly for sharing the information about the tensor dimension problem.

- Query about Slerp or Linear Techniques: @222gate asked if the discussed merging techniques involved spherical linear interpolation (slerp) or just linear ties.

- Reflection on Diffusion Test Techniques: In response, @alphaatlas1 mentioned not being certain about @222gate’s specific query but shared that their diffusion test used dare ties and speculated that a HuggingFace test may have involved dare task arithmetic.

- Recommendation for Concatenation in Merging: @alphaatlas1 suggested trying concatenation for anyone doing the peft merging, stating it works well and noting there’s no full-weight merging analogue for it.
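For readers unfamiliar with the slerp versus linear distinction raised above, here is a small pure-Python sketch of both interpolations on flat weight vectors. It illustrates the textbook formulas only and is not the code of any merging tool; the function names are illustrative.

```python
import math


def lerp(a, b, t):
    """Linear interpolation: a straight line between the two vectors."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def slerp(a, b, t, eps=1e-8):
    """Spherical linear interpolation: follows the arc between the vectors."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a < eps or norm_b < eps:
        return lerp(a, b, t)  # angle is undefined for a zero vector

    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        return lerp(a, b, t)  # (anti)parallel vectors: fall back to linear

    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


print(lerp([1.0, 0.0], [0.0, 1.0], 0.5))
print(slerp([1.0, 0.0], [0.0, 1.0], 0.5))
```

For two orthogonal unit vectors the linear midpoint shrinks in norm, while the slerp midpoint stays on the unit circle, which is the usual argument for slerp when merging weights.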

TheBloke ▷ #coding (8 messages🔥):

- Eager for Collaboration: @wolfsauge expresses enthusiasm to learn from @falconsfly, anticipating a discussion on fresh ideas for enhancement after dinner.

- No GPU, No Speed?: @dirtytigerx states that without a GPU, speeding up processes is challenging, offering no alternative solutions for performance improvement.

- APIs for Acceleration: @tom_lrd suggests using APIs as an alternative to speed up processes, listing multiple services like huggingface, together.ai, and mistral.ai.

- Looking Beyond Colab for Hosted Notebooks: Despite @dirtytigerx mentioning the lack of hosted notebooks on platforms provided by cloud providers, @falconsfly points out that Groq.com offers fast inference.

- Modular MAX Enters the Game: @dirtytigerx shares news about the general availability of the modular MAX platform, announcing the developer edition preview and its vision to democratize AI through a unified, optimized infrastructure.

Links mentioned:

Modular: Announcing MAX Developer Edition Preview: We are building a next-generation AI developer platform for the world. Check out our latest post: Announcing MAX Developer Edition Preview

Mistral ▷ #general (992 messages🔥🔥🔥):

- NVIDIA’s Chat with RTX Demo Criticized: Users like @netrve expressed disappointment with NVIDIA’s “Chat with RTX” demo, which was meant to showcase retrieval-augmented generation (RAG) capabilities. The demo, which limited context size to 1024 tokens, faced issues with retrieving correct information and delivering coherent answers. NVIDIA’s use of LangChain in the reference architecture for RAG was also questioned.

- OpenAI and Meta Licensing Discussions: There was a heated discussion spearheaded by @i_am_dom and @netrve regarding Mistral AI’s usage of Meta’s LLaMa model, potential licensing issues, and implications of commercial use. The consensus suggested that an undisclosed agreement between Mistral and Meta was possible, given the seeming compliance with Meta’s licensing terms.

- Conversations about Mistral AI’s Open Weight Models: @mrdragonfox, @tarruda, and others discussed Mistral AI’s commitment to open weight models and speculated about future releases following the Mistral-7B model. The community expressed trust and expectations towards Mistral for providing more open weight models.

- RAG Implementation Challenges Highlighted: Several users, including @mrdragonfox and @shanman6991, discussed the complexities of implementing RAG systems effectively. They mentioned the significant impact of the embedding model on RAG performance and the difficulty in achieving perfection with RAG, often taking months of refinement.

- Mistral AI and Microsoft Deal Scrutinized: An investment by Microsoft in Mistral AI raised discussions about the size of the investment and its implications for competition in the AI space. @ethux shared information hinting that the investment was minimal, while @i_am_dom raised concerns about Microsoft’s cautious approach due to potential complexities with open-source models like Miqu.

Links mentioned:

- What Is Retrieval-Augmented Generation aka RAG?: Retrieval-augmented generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits: Recent research, such as BitNet, is paving the way for a new era of 1-bit Large Language Models (LLMs). In this work, we introduce a 1-bit LLM variant, namely BitNet b1.58, in which every single param…

- LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys: no description found

- Klopp Retro GIF - Klopp Retro Dancing - Discover & Share GIFs: Click to view the GIF

- Basic RAG | Mistral AI Large Language Models: Retrieval-augmented generation (RAG) is an AI framework that synergizes the capabilities of LLMs and information retrieval systems. It’s useful to answer questions or generate content leveraging …

- mlabonne/NeuralHermes-2.5-Mistral-7B · Hugging Face: no description found

- Legal terms and conditions: Terms and conditions for using Mistral products and services.

- Microsoft made a $16M investment in Mistral AI | TechCrunch: Microsoft is investing €15 million in Mistral AI, a Paris-based AI startup working on foundational models.

- Client code | Mistral AI Large Language Models: We provide client codes in both Python and Javascript.

- NVIDIA Chat With RTX: Customize and deploy your AI chatbot.

- Mistral Large vs GPT4 - Practical Benchmarking!: ➡️ One-click Fine-tuning & Inference Templates: https://github.com/TrelisResearch/one-click-llms/➡️ Trelis Function-calling Models (incl. OpenChat 3.5): http…

- Short Courses: Take your generative AI skills to the next level with short courses from DeepLearning.AI. Enroll today to learn directly from industry leaders, and practice generative AI concepts via hands-on exercis…

Mistral ▷ #models (12 messages🔥):

- More Meaningful Error Messages on Mistral: @lerela addressed an issue regarding system limitations, stating that a certain operation is not permitted with the large model, but users will now receive a more meaningful error message.

- Discussion on System/Assistant/User Sequence: @skisquaw remarked on having to change the sequence from system/assistant/user to user/assistant/user due to the model treating the first user input as a system one, despite a functionality need where assistant prompts follow system commands.

- Quantization Packs Mistral-7B Parameters: @chrismccormick_ inquired about the parameter count of Mistral-7B, originally tallying only around 3.5B. They later deduced that 4-bit quantization likely halves the tensor elements.

- Large Code Segments Questioned for Mistral: @frigjord contemplated whether querying long code segments, especially more than 16K tokens, might pose a problem for Mistral models.

- Complex SQL Queries with Mistral-7B: @sanipanwala asked about generating complex SQL queries with Mistral-7B, and @tom_lrd responded affirmatively, providing advice on formulating the queries and even giving an example for creating a sophisticated SQL query.
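The “only around 3.5B” tally in the quantization item above is consistent with simple packing arithmetic: if two 4-bit weights share one 8-bit storage element, counting tensor elements reports roughly half the real parameter count. A minimal sketch, assuming a round 7B figure and 8-bit containers (real checkpoints typically keep some tensors unquantized, so actual tallies differ):

```python
def packed_element_count(n_params: int, bits_per_weight: int = 4,
                         container_bits: int = 8) -> int:
    """Number of storage elements needed when weights are bit-packed."""
    if container_bits % bits_per_weight != 0:
        raise ValueError("container must hold a whole number of weights")
    weights_per_element = container_bits // bits_per_weight
    # Ceiling division: a partially filled last element still counts.
    return -(-n_params // weights_per_element)


n_params = 7_000_000_000  # assumption: round figure for a "7B" model
print(packed_element_count(n_params))                      # 4-bit in uint8
print(packed_element_count(n_params, bits_per_weight=8))   # 8-bit in uint8
```

Multiplying an element tally by the number of weights per element recovers the logical parameter count.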

Mistral ▷ #deployment (174 messages🔥🔥):

- Mistral Deployment Conundrum: @arthur8643 inquired about hardware requirements for running Mixtral 8x7B locally, contemplating a system upgrade. Users @_._pandora_._ and @mrdragonfox advised that his current setup wouldn’t suffice, recommending at least 100GB of VRAM for full precision deployment, and suggesting the use of services like together.ai for assistance.

- Debates on Optimal Server Specs: @latoile0221 sought advice on server specifications for token generation, considering a dual CPU setup and RTX 4090 GPU. The user received mixed responses regarding the importance of CPU versus GPU; @ethux stressed the GPU’s significance for inference tasks while discussions circled around the necessity of substantial VRAM for full precision models.

- Quantization Qualms and GPU Capabilities: Various participants expressed that quantized models underperform, with @frigjord and @ethux noting that quantized versions aren’t worthwhile for coding tasks. The consensus emerged that substantial VRAM (near 100GB) is needed to run non-quantized, full-precision models effectively.

- Self-Hosting, Model Types, and AI Limitations: Dialogue ensued about the practicalities of self-hosting AI models like Mixtral, with mentions of utilizing quant versions and alternatives like GGUF format. Users including @ethux and @sublimatorniq shared experiences, with a focus on the limitations of quantized models and better performance of full models on high-spec hardware.

- On the Topic of Specialized AI Models: The discussion touched on the potential advantages and challenges of training a specialized JS-only AI model. @frigjord and @mrdragonfox debated the effectiveness and handling of such focused models, with general agreement on the extensive work required to clean and prep datasets for any specialized AI training.
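The “near 100GB” figure quoted above can be reproduced with back-of-the-envelope arithmetic. The sketch below counts weights only (no KV cache or activations), reads “full precision” as 16-bit weights, and uses an approximate total parameter count for Mixtral 8x7B; all three are assumptions for illustration.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


MIXTRAL_8X7B_PARAMS = 46.7e9  # assumption: approximate total parameter count

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    gigabytes = weight_memory_gb(MIXTRAL_8X7B_PARAMS, bits)
    print(f"{label}: {gigabytes:.1f} GB")
```

The 16-bit estimate lands a little under 100 GB before any runtime overhead, which is also why 4-bit quantized variants are the common route for single-GPU setups.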

Links mentioned:

- Jurassic Park GIF - Jurassic Park World - Discover & Share GIFs: Click to view the GIF

- starling-lm: Starling is a large language model trained by reinforcement learning from AI feedback focused on improving chatbot helpfulness.

- Tags · mixtral: A high-quality Mixture of Experts (MoE) model with open weights by Mistral AI.

Mistral ▷ #ref-implem (76 messages🔥🔥):

- Typo Alert in Notebook: @foxalabs_32486 identified a typo in the `prompting_capabilities.ipynb` notebook, where an extra “or” was present. The correct text should read “Few-shot learning or in-context learning is when we give a few examples in the prompt…”

- Fix Confirmation: In response to @foxalabs_32486’s notice, @sophiamyang acknowledged the error and confirmed the fix.

- Typos Add Human Touch: @foxalabs_32486 mused about using occasional typos to make AI-generated content appear more human, sparking a discussion on the ethics of making AI seem human with @mrdragonfox.

- Ethics over Earnings: @mrdragonfox declined projects aimed at humanizing AI beyond ethical comfort, underscoring a preference to choose integrity over financial gain.

- AI Industry Hiring Challenges: @foxalabs_32486 discussed the difficulties in hiring within the AI industry due to a shortage of skilled professionals and the rapid expansion of knowledge required.

Mistral ▷ #finetuning (15 messages🔥):

- Limiting Model Answers to Specific Documents: @aaronbarreiro inquired about constraining a chatbot to only provide information from a specific document, such as one about wines, and not respond about unrelated topics like pizza.

- The Challenge of Controlling LLMs: @mrdragonfox explained that LLMs will likely hallucinate answers because they are fundamentally next-token predictors, so a robust system prompt is vital to direct responses.

- Language Models as Stateless Entities: @mrdragonfox highlighted the stateless nature of language models, meaning they don’t retain memory like a human would; if pushed beyond their token limit (the 32k context was specifically mentioned), they will forget earlier information.

- Strategies to Maintain Context Beyond Limits: @mrdragonfox discussed strategies to circumvent the context limitation, such as using function calling or retrieval-augmented generation (RAG), but acknowledged these methods are more complex and don’t work directly out-of-the-box.

- Fine-Tuning Time Depends on Dataset Size: When @atip asked about the time required to fine-tune a 7B parameter model on H100 hardware, @mrdragonfox stated it varies based on dataset size, implying the duration can’t be estimated without that information.
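Because the model is stateless, the client decides what history is resent on each turn. A minimal sketch of one simple policy, keeping the system prompt and the most recent turns that fit a budget, is shown below. The whitespace token count is a crude stand-in for a real tokenizer, and the function names are illustrative.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep system messages plus the newest turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # everything older than this point is dropped
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "Answer only about wines."},
    {"role": "user", "content": "What is Merlot?"},
    {"role": "assistant", "content": "A red grape."},
    {"role": "user", "content": "And what is Riesling?"},
]
print(trim_history(history, max_tokens=8))
```

Anything dropped this way is simply gone from the model’s point of view, which is why retrieval or summarization is usually layered on top for long conversations.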

Mistral ▷ #showcase (7 messages):

- Teaching Economics with AI: @patagonia50 shared about creating an app for an intermediate microeconomics course that provides instant personalized feedback by making API calls to gpt-4-vision-preview and Mistral models. The app, which adapts to different questions and rubrics via a JSON file, has been deployed on Heroku and is still being refined, with future plans to expand its capabilities with Mistral AI models.

- Interest Expressed in Educational App: @akshay_1 showed interest in @patagonia50’s educational app, asking if there was a GitHub repository available for it.

- Open Source Plans: In response to @akshay_1, @patagonia50 indicated that there isn’t a GitHub repository yet but plans to create one for the educational app.

- Request for a Closer Look: @akshay_1 expressed a desire for a sneak peek at @patagonia50’s educational app, demonstrating enthusiasm for the project.

Links mentioned:

- cogbuji/Mr-Grammatology-clinical-problems-Mistral-7B-0.5 · Hugging Face: no description found

- Use Mistral AI Large Model Like This: Beginner Friendly: We learn the features of High Performing Mistral Large and do live coding on Chat Completions with Streaming and JSON Mode. The landscape of artificial intel…

Mistral ▷ #random (2 messages):

- Seeking the Google Million Context AI: User @j673912 inquired about how to access the elusive Google 1M Context AI.

- Insider Connection Required: @dawn.dusk recommended having direct contact with someone from Deepmind to gain access.

Mistral ▷ #la-plateforme (41 messages🔥):

- Mistral Function Calls Require Adjustments: @michaelhunger discussed challenges with the Mistral function calling mechanism, noting the need for patches and system messages. Specifically, Mistral’s behavior contrasts with expectations, often preferring additional tool calls over answering the user’s query directly.

- Clarifying `tool_choice` Behavior: @liebke expressed confusion over the behavior of `tool_choice="auto"` in the context of Mistral’s function calling, as the setting does not seem to trigger tool calls as anticipated. @sophiamyang suggested that “auto” should work as intended, prompting a request for Liebke’s implementation details for further troubleshooting.

- Inconsistencies in Mistral Function Calling: @alexclubs provided feedback on integrating Mistral Function Calling into Profound Logic, noticing differences from OpenAI’s tool behavior and a lack of consistency in when functions are triggered.

- Reproducibility of Outputs on Mistral’s Platform Uncertain: @alexli3146 inquired about seedable outputs for reproducibility, while @foxalabs_32486 and @sublimatorniq discussed potential issues and existing settings in the API that may affect it.

- Mistral Message Roles Must Follow Specific Order: After discussing error messages encountered with “mistral-large-latest,” @not__cool discovered that wrapping a user message with two system messages is not supported, as confirmed by @lerela. However, @skisquaw successfully used the user/assistant format with the system role message in the first user role statement.
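The workaround in the last item, placing the system text inside the first user turn and keeping a strict user/assistant alternation, can be sketched as a small normalization step applied before a request is sent. This mirrors what the users describe rather than any documented API rule; the function name is hypothetical.

```python
def fold_system_into_first_user(messages: list[dict]) -> list[dict]:
    """Merge system messages into the first user turn and check ordering."""
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    turns = [dict(m) for m in messages if m["role"] != "system"]

    if not turns or turns[0]["role"] != "user":
        raise ValueError("conversation must start with a user turn")
    for index, turn in enumerate(turns):
        expected = "user" if index % 2 == 0 else "assistant"
        if turn["role"] != expected:
            raise ValueError(f"turn {index} should be {expected!r}")

    if system_parts:
        # System text goes first, followed by the user's original content.
        turns[0]["content"] = "\n\n".join(system_parts + [turns[0]["content"]])
    return turns


wrapped = [
    {"role": "system", "content": "You are a sommelier."},
    {"role": "user", "content": "Recommend a red."},
    {"role": "system", "content": "Keep it short."},
]
print(fold_system_into_first_user(wrapped))
```

Validating the order client-side turns an opaque server error into a local exception with a clear message.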

Links mentioned:

- Technology: Frontier AI in your hands

- AI Assistants are the Future | Profound Logic: With Profound AI, you can enhance your legacy applications with natural language AI assistants in just 3 steps.

- GitHub - liebke/mechanician: Daring Mechanician is a Python library for building tools that use AI by building tools that AIs use.: Daring Mechanician is a Python library for building tools that use AI by building tools that AIs use. - liebke/mechanician

- mechanician/packages/mechanician_mistral/src/mechanician_mistral/mistral_ai_connector.py at main · liebke/mechanician: Daring Mechanician is a Python library for building tools that use AI by building tools that AIs use. - liebke/mechanician

- mechanician/examples/notepad/src/notepad/main.py at main · liebke/mechanician: Daring Mechanician is a Python library for building tools that use AI by building tools that AIs use. - liebke/mechanician

Mistral ▷ #office-hour (1 messages):

- Mark Your Calendars for Evaluation Talk: @sophiamyang invites everyone to the next office hour on Mar. 5 at 5pm CET with a focus on evaluation and benchmarking. They express interest in learning about different evaluation strategies and benchmarks used by participants.

Mistral ▷ #le-chat (423 messages🔥🔥🔥):

- Le Chat Model Limit Discussions: User @alexeyzaytsev inquired about the limits for Le Chat on a free account. Although currently undefined, @ethux and @_._pandora_._ speculated that future restrictions might mimic OpenAI’s model, with advanced features potentially becoming paid services.

- Mistral on Groq Hardware: @foxalabs_32486 asked about plans to run Large on Groq hardware, while @ethux noted Groq’s memory limitations. @foxalabs_32486 provided a product brief from Groq, highlighting potential misconceptions about their hardware’s capabilities.

- Mistral’s Market Position and Microsoft Influence: In an extensive discussion, users @foxalabs_32486 and @mrdragonfox shared their perceptions of Mistral’s market positioning and the influence of Microsoft’s investment. They touched on topics like strategic hedging, the potential impact on OpenAI, and the speed of Mistral’s achievements.

- Feedback for Le Chat Improvement: Several users, including @sophiamyang, engaged in discussing ways to improve Le Chat. Suggestions included a “thumb down” button for inaccurate responses (@jmlb3290), ease of switching between models during conversations (@sublimatorniq), features to manage token counts and conversation context (@_._pandora_._), preserving messages on error (@tom_lrd), and support for image inputs (@foxalabs_32486).

- Debating Efficiency of Low-Bitwidth Transformers: Users, especially @foxalabs_32486 and @mrdragonfox, debated the implications of a low-bitwidth transformer research paper, discussing potential boosts in efficiency and the viability of quickly implementing these findings. They mentioned the work involved in adapting existing models and the speculative nature of immediate hardware advancements.

Links mentioned:

- Technology: Frontier AI in your hands

- Why 2024 Will Be Not Like 2024: In the ever-evolving landscape of technology and education, a revolutionary force is poised to reshape the way we learn, think, and…

- Unsloth update: Mistral support + more: We’re excited to release QLoRA support for Mistral 7B, CodeLlama 34B, and all other models based on the Llama architecture! We added sliding window attention, preliminary Windows and DPO support, and …

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

Mistral ▷ #failed-prompts (6 messages):

- Instructions for Reporting Failed Prompts: @sophiamyang provided a template requesting details for reporting failed prompts, specifying information like `model`, `prompt`, `model output`, and `expected output`.

- Witty Math Mistake Report: @blueaquilae humorously flagged an issue regarding mathematics with the Mistral Large model with their comment, “math, halfway there (pun intended) on large chat”.

- Tongue-in-Cheek Query Confirmation: In a playful exchange, @notan_ai queries whether a specific example counts as a failed prompt, to which @blueaquilae responds, “Synthetic data all the way?”

- General Failures on le chat: @blacksummer99 reports that all versions of Mistral, including Mistral next, fail on a prompt given on le chat, without providing specifics.

- Incomplete Issue Indication: @aiwaldoh mentions “Fondée en 2016?!” possibly pointing out an issue or confusion with the Mistral model’s output, but no further details are provided.

Mistral ▷ #prompts-gallery (5 messages):

- Invitation to Share Prompt Mastery: User @sophiamyang welcomed everyone to share their most effective prompts, emphasizing prompt crafting as an art form and looking forward to seeing users’ creations.

- Confusion About Channel Purpose: After user @akshay_1 simply mentioned “DSPy”, @notan_ai responded with curiosity about “SudoLang” but expressed confusion regarding the purpose of the channel.

- Possible Model Mention with Ambiguity: The model name “Mistral next le chat” was mentioned twice by @blacksummer99; however, no further context or details were provided.

OpenAI ▷ #ai-discussions (58 messages🔥🔥):

- Loader Choices for AI Models: @drinkoblog.weebly.com pointed out that LM Studio requires manual GUI interaction to start the API, which is impractical for websites. They recommended alternative loaders such as oobabooga or Jan dot ai for automation on boot.

- Automod Censorship on AI Discussions: @chonkyman777 reported their message was removed for showcasing problematic behavior by Copilot AI, and @eskcanta suggested reaching out to Discord mods via Modmail and reporting AI issues directly to OpenAI through their feedback form. Users debated the nuances of moderation and the scope of the rules in place.

- Concerns Over Mistral and Uncensored Content: @dezuzel shared a YouTube video discussing Mistral, an AI model considered powerful and uncensored. @tariqali raised questions about the implications of European AI regulation on Mistral, despite its promoted lack of censorship. @chief_executive compared Mistral Large to GPT-4 and found the latter superior for coding tasks.

- Fine-Tuning GPT-3.5 for Chatbot Use Case: @david_zoe sought advice on fine-tuning GPT-3.5-Turbo to perform better than the baseline and maintain conversation flow, but faced challenges matching the performance of GPT-4. @elektronisade recommended examining common use cases and consulting ChatGPT with actual data for further guidance on fine-tuning.

- Exploring Certifications for AI Specialization: @navs02, a young developer, inquired about certifications for specializing in AI. @dezuzel and .dooz advised focusing on real-world projects over certifications and mentioned learning resources including courses by Andrew Ng and Andrej Karpathy on YouTube.

Links mentioned:

- Chat model feedback: no description found

- This new AI is powerful and uncensored… Let’s run it: Learn how to run Mistral’s 8x7B model and its uncensored varieties using open-source tools. Let’s find out if Mixtral is a good alternative to GPT-4, and lea…

OpenAI ▷ #gpt-4-discussions (21 messages🔥):

- Confusion Over API and File Uploads: @ray_themad_nomad expressed frustration with the chatbot’s inconsistent responses after uploading files and creating custom APIs, noting that methods that worked months ago seem to fail now.

- Clarifying Document Size Limitations: @darthgustav. pointed out that the chatbot can only read documents within context size, and it will summarize larger files, which spurred a debate with @fawesum, who suggested that knowledge files can be accessed efficiently even if they are huge.

- Seed Parameters Causing Inconsistent Outputs: @alexli3146 asked if anyone had success with getting reproducible output using the seed parameter, but shared that they haven’t.

- Security Measures with Web Browsing and Code Interpreter: @darthgustav. explained that using Python to search knowledge files with the Code Interpreter can disable web browsing in the instance, which is a security decision.

- Proper Channel for Sharing The Memory Game: @takk8is shared a link to “The Memory” but was redirected by @solbus to share it in the dedicated channel to avoid it getting lost in the chat.
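On the seed question: OpenAI describes `seed` as a best-effort setting and returns a `system_fingerprint` with each response so callers can tell when the backend changed between runs. The sketch below only builds the request arguments and compares fingerprints. The helper names and the default model are placeholders chosen for illustration, and the actual network call is left as a comment.

```python
def build_request(prompt: str, seed: int, model: str = "gpt-3.5-turbo") -> dict:
    """Keyword arguments for a Chat Completions call with sampling pinned down."""
    return {
        "model": model,  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
        "seed": seed,  # the same seed is required for repeatable sampling
        "temperature": 0,  # reduces sampling variance on top of the seed
    }


def runs_are_comparable(fingerprint_a, fingerprint_b) -> bool:
    """Outputs are only expected to match when the backend fingerprint matched."""
    return fingerprint_a is not None and fingerprint_a == fingerprint_b


request = build_request("Name three prime numbers.", seed=1234)

# With the official `openai` package, the call would look like:
#   response = client.chat.completions.create(**request)
#   fingerprint = response.system_fingerprint
```

If two runs with identical arguments return different fingerprints, differing outputs do not necessarily mean the seed was ignored.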

OpenAI ▷ #prompt-engineering (391 messages🔥🔥):

- Prompt Engineering with MetaPrompting: @madame_architect shared their work on annotating “MetaPrompting” research, bringing their compiled list of prompt architecture papers to 42 total. The article details a method integrating meta-learning with prompts, aimed at improving initializations for soft prompts in NLP models. (MetaPrompting Discussion)

- LaTeX and KaTeX in ChatGPT: Several users, including @yami1010 and @eskcanta, discussed the capabilities of ChatGPT in handling LaTeX and KaTeX for creating visual data representations, with a focus on math and flowchart diagrams.

- Curly Brackets Saga in DALL-E 3: Users such as @darthgustav. and @beanz_and_rice encountered an issue where DALL-E 3 payloads were not accepting standard curly brackets in JSON strings. They found a workaround by using escape-coded curly brackets, which appeared to bypass the parser error.

- Enhancing ChatGPT Creativity for Artistic Prompts: When asked about improving creativity in artistic prompts, @bambooshoots and @darthgustav. suggested a multi-step iterative process and the use of semantically open variables to encourage less deterministic and more imaginative outputs from the AI.

- Challenges with Custom ChatGPT File Reading: @codenamecookie and @darthgustav. discussed issues with Custom ChatGPT’s inconsistent ability to read ‘.py’ files from its knowledge. They explored potential solutions such as converting files to plain text and avoiding unnecessary zipping for better AI parsing and responsiveness.
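The "convert to plain text, don't zip" suggestion can be scripted before uploading knowledge files. The following is a minimal sketch under assumed conventions: the function name and the flattened `pkg__module.txt` naming scheme are hypothetical and were not described in the discussion.

```python
from pathlib import Path


def export_as_text(src_dir: str, dst_dir: str) -> list[Path]:
    """Copy every .py file under src_dir into dst_dir as an unzipped .txt file."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    written = []
    for py_file in sorted(src.rglob("*.py")):
        # Flatten the relative path into the file name so files from
        # different subfolders cannot overwrite each other.
        relative = py_file.relative_to(src).with_suffix(".txt")
        target = dst / "__".join(relative.parts)
        # The content is copied unchanged; only the extension differs.
        target.write_text(py_file.read_text(encoding="utf-8"), encoding="utf-8")
        written.append(target)
    return written
```

Calling `export_as_text("my_project", "knowledge_upload")` would leave the original files untouched and produce one `.txt` file per Python source file.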

Links mentioned:

- Disrupting malicious uses of AI by state-affiliated threat actors: We terminated accounts associated with state-affiliated threat actors. Our findings show our models offer only limited, incremental capabilities for malicious cybersecurity tasks.

OpenAI ▷ #api-discussions (391 messages🔥🔥):

- Prompt Engineering Secrets: @yami1010 and @eskcanta shared insights on using Markdown, LaTeX, and KaTeX in prompts with ChatGPT for creating diagrams and flowcharts. They discussed the effectiveness of different diagram-as-code tools, with mentions of mermaid and matplotlib, and the peculiarities of dealing with curly brackets in the DALL-E 3 parser.

- MetaPrompting Annotated: @madame_architect added MetaPrompting to their list of 42 annotated prompt architecture papers. The list, which can be found on the AI-Empower GitHub, is maintained to keep high-quality standards and is useful for researching prompt engineering.

- The Curly Brackets Saga: A long discussion took place about the DALL-E 3 payload’s formatting issues with curly brackets ({ and }) in JSON strings, with multiple users like @darthgustav. and @yami1010 noting failures during image generation. A solution involving Unicode escape codes was found, bypassing the parser error.

- Custom ChatGPT File Reading: In a conversation about Custom ChatGPT, @codenamecookie expressed confusion about the model’s inconsistent ability to read Python files from its ‘knowledge’. @darthgustav. recommended not zipping the files and converting them to plain text while maintaining Python interpretation, which might help the AI process the files better.

- Boosting AI Creativity: For enhancing AI-created artistic prompts, users like @bambooshoots and @darthgustav. suggested using a multi-step process to develop the scene and elicit more creative responses from GPT-3.5 and GPT-4. The inclusion of semantically open variables and iterative prompting would help provoke less deterministic and more unique outputs.
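The Unicode-escape workaround for curly brackets can be illustrated with the standard `json` module. This is a sketch of the general technique, not the exact payload the users sent: the function name is hypothetical, and the summary does not say which strings the DALL-E 3 parser rejected or accepted.

```python
import json


def encode_without_braces(text: str) -> str:
    """Encode text as a JSON string literal with { and } written as \\u escapes."""
    encoded = json.dumps(text)  # a quoted JSON string, braces still literal
    # \u007b and \u007d are the JSON escape sequences for { and }.
    return encoded.replace("{", "\\u007b").replace("}", "\\u007d")


prompt = "A robot holding a sign that says {hello}"
encoded = encode_without_braces(prompt)

# No literal braces remain in the encoded form...
assert "{" not in encoded and "}" not in encoded
# ...but any JSON decoder recovers the original text.
assert json.loads(encoded) == prompt
```

The escaping is applied to a single string value rather than to a whole JSON document, because replacing the structural braces of an object would make it invalid.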

Links mentioned:

- Disrupting malicious uses of AI by state-affiliated threat actors: We terminated accounts associated with state-affiliated threat actors. Our findings show our models offer only limited, incremental capabilities for malicious cybersecurity tasks.

LM Studio ▷ #💬-general (484 messages🔥🔥🔥):

- Exploring Model Options: Users are discussing various LLMs and their compatibility with specific GPUs, with a focus on coding assistance models such as Deepseek Coder 6.7B and StarCoder2-15B. For example, @solusan. is looking for the best model to fit an Nvidia RTX 40 series with 12 GB, currently considering Dolphin 2.6 Mistral 7B.

- LM Studio GPU Compatibility Issues: Several users like @jans_85817 and @kerberos5703 are facing issues running LM Studio with certain GPUs. Discussions revolve around LM Studio’s compatibility mainly with newer GPUs, and older GPUs are presenting problems for which users are seeking solutions or alternatives.

- Hugging Face Outage Impact: A common issue reported by multiple members like @barnley and @heyitsyorkie is related to a network error when downloading models due to a Hugging Face outage affecting LM Studio’s ability to search for models.

- Image Recognition and Generation Queries: Questions regarding image-related tasks surfaced, and @heyitsyorkie clarified that while LM Studio cannot perform image generation tasks, it is possible to work with image recognition through Llava models.

- Hardware Discussions and Anticipations: Users like @pierrunoyt and @nink1 are discussing future hardware expectations for AI and LLMs, noting that current high-end AI-specific hardware may become more accessible with time.
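For questions like "which model fits a 12 GB card", a back-of-envelope estimate is parameter count times bits per weight, plus headroom for the KV cache and runtime buffers. The sketch below is a rough guide only: the 4.5 bits-per-weight figure for a 4-bit quantization and the 2 GB overhead are assumptions, not measurements, and real usage varies with context length and backend.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    # params_billion * 1e9 weights * bits / 8 bytes, divided by 1e9 bytes per GB.
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if the weights plus an assumed fixed overhead fit in the given VRAM."""
    return estimate_weights_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


# Model sizes from the discussion; the quantization levels are assumed.
for name, size_b in [("7B model", 7.0), ("15B model", 15.0)]:
    for bits in (4.5, 16.0):
        gb = estimate_weights_gb(size_b, bits)
        print(f"{name} at {bits} bits/weight: ~{gb:.1f} GB, "
              f"fits in 12 GB: {fits_in_vram(size_b, bits, 12.0)}")
```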

Links mentioned:

- GroqChat: no description found

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Stop Shouting Arnold Schwarzenegger GIF - Stop Shouting Arnold Schwarzenegger Jack Slater - Discover & Share GIFs: Click to view the GIF

- BLOOM: Our 176B parameter language model is here.

- Continue: no description found

- GeForce GTX 650 Ti | Specifications | GeForce: no description found

- MaziyarPanahi/dolphin-2.6-mistral-7b-Mistral-7B-Instruct-v0.2-slerp-GGUF · Hugging Face: no description found

- Specifications | GeForce: no description found

- 02 ‐ Default and Notebook Tabs: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

- Add support for StarCoder2 by pacman100 · Pull Request #5795 · ggerganov/llama.cpp: What does this PR do? Adds support for StarCoder 2 models that were released recently.

- bigcode/starcoder2-15b · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Anima/air_llm at main · lyogavin/Anima: 33B Chinese LLM, DPO QLORA, 100K context, AirLLM 70B inference with single 4GB GPU - lyogavin/Anima

- GitHub - MDK8888/GPTFast: Accelerate your Hugging Face Transformers 6-7x. Native to Hugging Face and PyTorch.: Accelerate your Hugging Face Transformers 6-7x. Native to Hugging Face and PyTorch. - MDK8888/GPTFast

- itsdotscience/Magicoder-S-DS-6.7B-GGUF at main: no description found

LM Studio ▷ #🤖-models-discussion-chat (61 messages🔥🔥):

- Seeking PDF chatbot guidance: @solenya7755 is looking to implement an accurate PDF chat bot with LM Studio and Llama 2 70B Q4, but experiences inaccuracies with hallucinated commands. @nink1 suggests extensive prompt work and joining the AnythingLLM discord for further assistance.

- StarCoder2 and The Stack v2 launch: @snoopbill_91704 shares news about the launch of StarCoder2 and The Stack v2 by ServiceNow, Hugging Face, and NVIDIA, noting a partnership with Software Heritage aligned with responsible AI principles.

- Qualcomm releases 80 open source models: @misangenius brings attention to Qualcomm’s release of 80 open source AI models for vision, audio, and speech applications, available on Hugging Face.

- Querying Models that prompt you with questions: @ozimandis inquires about local LLMs that ask questions and has mixed results with different models, while @nink1 shares success in getting models like dolphin mistral 7B q5 to ask provocative questions.

- Best setup for business document analysis and writing: @redcloud9999 seeks advice on the best LLM setup for analyzing and writing business documents with a high-spec machine. @heyitsyorkie advises searching for GGUF quants by “TheBloke” on Hugging Face and @coachdennis. suggests testing trending models.

Links mentioned:

- qualcomm (Qualcomm): no description found

- bigcode/starcoder2-15b · Hugging Face: no description found

- bigcode/the-stack-v2-train-full-ids · Datasets at Hugging Face: no description found

- Pioneering the Future of Code Preservation and AI with StarCoder2: Software Heritage’s mission is to collect, preserve, and make the entire body of software source code easily available, especially emphasizing Free and Open Source Software (FOSS) as a digital c…

LM Studio ▷ #🎛-hardware-discussion (42 messages🔥):

<ul>

<li><strong>Optimization Tips for Windows 11</strong>: `.bambalejo` advised users to disable certain features, such as Microsoft's core isolation and VM platform, on Windows 11 for better performance, and to ensure <em>VirtualizationBasedSecurityStatus</em> is set to 0.</li>

<li><strong>TinyBox Announcement</strong>: `senecalouck` shared a link with details on the TinyBox from TinyCorp, a new hardware offering found <a href="https://tinygrad.org">here</a>.</li>

<li><strong>E-commerce GPU Frustrations and Specs</strong>: `goldensun3ds` recounted a negative experience purchasing a falsely advertised GPU on eBay and said they would use Amazon for their next purchase. They also listed their robust PC specs, including dual RTX 4060 Ti 16GB.</li>