In the agent literature it is common to find that multiple agents outperform single agents (if you conveniently ignore inference cost). Cohere has now found the same for LLMs-as-Judges:

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

OpenAI News

- Memory feature now available to all ChatGPT Plus users: OpenAI announced on Twitter that the memory feature is now rolled out to all ChatGPT Plus subscribers.

- OpenAI partners with Financial Times for AI in news: OpenAI has signed a deal to license content from the Financial Times to train its AI models. An image was shared announcing the partnership to develop AI experiences for news.

- Concerns over OpenAI’s profitability with paid training data: In /r/OpenAI, a post questioned OpenAI’s profitability as they start paying to license training data, speculating local open source models may undercut their business.

- Possible reduction in GPT-4 usage limits: A user in /r/OpenAI noticed GPT-4’s usage has been reduced from 40 messages per 3 hours to around 20 questions per hour.

- Issues with ChatGPT after memory update: In /r/OpenAI, a user found ChatGPT struggled with data cleansing and analysis tasks after the memory update, producing errors and incomplete outputs.

OpenAI API Projects and Discussions

- Tutorial on building an AI voice assistant with OpenAI: A blog post was shared in /r/OpenAI on building an AI voice assistant using OpenAI’s API along with web speech APIs.

- AI-powered side projects discussion: In /r/OpenAI, a post asked others to share their AI-powered side projects. The poster made a requirements analysis tool with GPT-4 and an interactive German tutor with GPT-3.5.

- Interface agents powered by LLMs: A /r/OpenAI post discussed “interface agents” - AI that can interact with and control user interfaces like browsers and apps. It covered key components, tools, challenges and use cases.

- Difficulty resizing elements in GPT-4 generated images: In /r/OpenAI, a user asked for advice on instructing GPT-4 to shrink an element in a generated image, as the model struggles to consistently resize things.

Stable Diffusion Models and Extensions

- Seeking realistic SDXL models comparable to PonyXL: In /r/StableDiffusion, a user asked about realistic SDXL models on par with PonyXL’s quality and prompt alignment for photographic styles.

- Hi-diffusion extension for ComfyUI: A /r/StableDiffusion user found Hi-diffusion works well for generating detailed 2K images in ComfyUI with SD1.5 models, outperforming Kohya Deep Shrink. An extension is available but needs improvements.

- Virtuoso Nodes v1.1 adds Photoshop features to ComfyUI: Version 1.1 of Virtuoso Nodes for ComfyUI was released, adding 8 new nodes that replicate key Photoshop functions like blend modes, selective color, color balance, etc.

- Styles to simplify Pony XL prompts in Fooocus: A /r/StableDiffusion user created styles for Fooocus to handle the quality tags in Pony XL prompts, allowing cleaner and shorter prompts focused on content.

- Anime-style shading LoRA released: An anime-style shading LoRA was announced, recommended for use with Anystyle and other ControlNets. A Hugging Face link to the LoRA file was provided.

Stable Diffusion Help and Discussion

- Avoiding unwanted phallic elements in generated images: In /r/StableDiffusion, a user getting phallic elements in 80% of their generated images asked for negative prompt advice to generate “regular porn” instead.

- Creating short video clips with AI images and animated text: A /r/StableDiffusion post asked about APIs to generate AI images with animated text overlays to create short video clips.

- Newer Nvidia GPUs may be slower for AI despite gaming gains: A warning was posted that newer Nvidia GPUs like the 4070 laptop version use narrower memory buses than older models, making them slower for AI workloads.

- Proposal for community image tagging project: A /r/StableDiffusion post suggested a community effort to comprehensively tag images to create a dataset of consistently captioned images for training better models.

- Using VAEs for image compression: Experiments shared in /r/StableDiffusion show using VAE latents for image compression is competitive with JPEG in some cases. Saving generated images as latents is lossless and much smaller than PNGs.

- Generating a full body from a headshot: In /r/StableDiffusion, a user asked if it’s possible to generate a full body from a headshot image without altering the face much using SD Forge.

- Textual inversion of Audrey Hepburn: A /r/StableDiffusion user made a textual inversion of Audrey Hepburn that produces similar but varied faces, sharing example images and a Civitai link.
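The size advantage of VAE latents follows directly from the latent's shape. A back-of-the-envelope sketch, assuming an SD-style VAE with 8x spatial downsampling and 4 latent channels, latents stored as fp16, and uncompressed 8-bit RGB as the baseline (real PNG sizes vary with image content, so the ratio against PNG will differ):

```python
def raw_rgb_bytes(height, width):
    # Uncompressed 8-bit RGB: 3 channels x 1 byte per pixel.
    return height * width * 3


def latent_bytes(height, width, channels=4, factor=8, bytes_per_value=2):
    # SD-style VAE latent: spatial size divided by `factor`, `channels` channels.
    # Storing each value as fp16 (2 bytes) is an assumption, not a fixed format.
    return (height // factor) * (width // factor) * channels * bytes_per_value


for h, w in [(512, 512), (1024, 1024)]:
    raw, lat = raw_rgb_bytes(h, w), latent_bytes(h, w)
    print(f"{h}x{w}: raw RGB {raw:,} B, fp16 latent {lat:,} B, {raw / lat:.0f}x smaller")
```

Under these assumptions the latent is 24x smaller than raw RGB at any resolution divisible by 8. Decoding a latent back to pixels is still lossy for an arbitrary photo; the lossless point applies to generated images, where the latent is the original artifact.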

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

LLMs and AI Models

- Llama 3 Performance: @abacaj noted that llama-3 models with zero-training can get 32k context with exceptional quality, surpassing significantly larger models. @rohanpaul_ai mentioned Llama 3 captures extremely nuanced data relationships, utilizing even the minutest decimals in BF16 precision, making it more sensitive to quantization degradation compared to Llama 2.

- Llama 3 Benchmarks: @abacaj reported llama-3 70B takes 3rd place on a benchmark, replacing Haiku. @abacaj shared a completion from the model on a code snippet benchmark that requires the model to find a function based on a description.

- Llama 3 Variants: @mervenoyann noted new LLaVA-like models based on LLaMA 3 & Phi-3 that pass the baklava benchmark. @AIatMeta mentioned Meditron, an LLM suite for low-resource medical settings built by @ICepfl & @YaleMed researchers, which outperforms most open models in its parameter class on benchmarks like MedQA & MedMCQA using Llama 3.

- GPT-2 Chatbot: There was speculation about the identity of the gpt2-chatbot model, with @sama noting he has a soft spot for gpt2. Some theories suggested it could be a preview of GPT-4.5/5 or a derivative model, but most agreed it was unlikely to be the latest OAI model.

- Phi-3 and Other Models: @danielhanchen released a Phi-3 notebook that finetunes 2x faster and uses 50% less VRAM than HF+FA2. @rohanpaul_ai shared a paper suggesting transformers learn in-context by performing gradient descent on a loss function constructed from the in-context data within their forward pass.
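The gradient-descent view of in-context learning is easiest to see in the toy setting commonly used in this line of work: linear regression examples in the context and a single linear (softmax-free) attention layer. The sketch below is a simplified illustration, not the referenced paper's construction. It checks that one gradient step from zero weights and an attention readout with keys x_i, values y_i, and query x_q produce the same prediction, with the learning rate playing the role of the attention scale.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def gd_one_step_prediction(xs, ys, x_query, lr):
    # Squared loss L(w) = 1/2 * sum_i (w . x_i - y_i)^2, starting from w0 = 0.
    # The gradient at w0 is -sum_i y_i x_i, so one step gives w1 = lr * sum_i y_i x_i.
    d = len(x_query)
    w1 = [lr * sum(y * x[j] for x, y in zip(xs, ys)) for j in range(d)]
    return dot(w1, x_query)


def linear_attention_prediction(xs, ys, x_query, scale):
    # Softmax-free attention with keys x_i, values y_i and query x_query:
    # output = scale * sum_i y_i * (x_i . x_query).
    return scale * sum(y * dot(x, x_query) for x, y in zip(xs, ys))


rng = random.Random(0)
w_true = [0.5, -1.0, 2.0]  # hypothetical ground-truth weights
xs = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(5)]  # in-context inputs
ys = [dot(w_true, x) for x in xs]  # in-context targets
x_q = [rng.gauss(0, 1) for _ in range(3)]  # query input

gd = gd_one_step_prediction(xs, ys, x_q, lr=0.1)
attn = linear_attention_prediction(xs, ys, x_q, scale=0.1)
print(gd, attn)  # equal up to floating-point rounding
```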

Prompt Engineering and Evaluation

- Prompt Engineering Techniques: @cwolferesearch categorized recent prompt engineering research into reasoning, tool usage, context window, and better writing. Techniques include zero-shot CoT prompting, selecting exemplars based on complexity, refining rationales, decomposing tasks, using APIs, optimizing context windows, and iterative prompting.

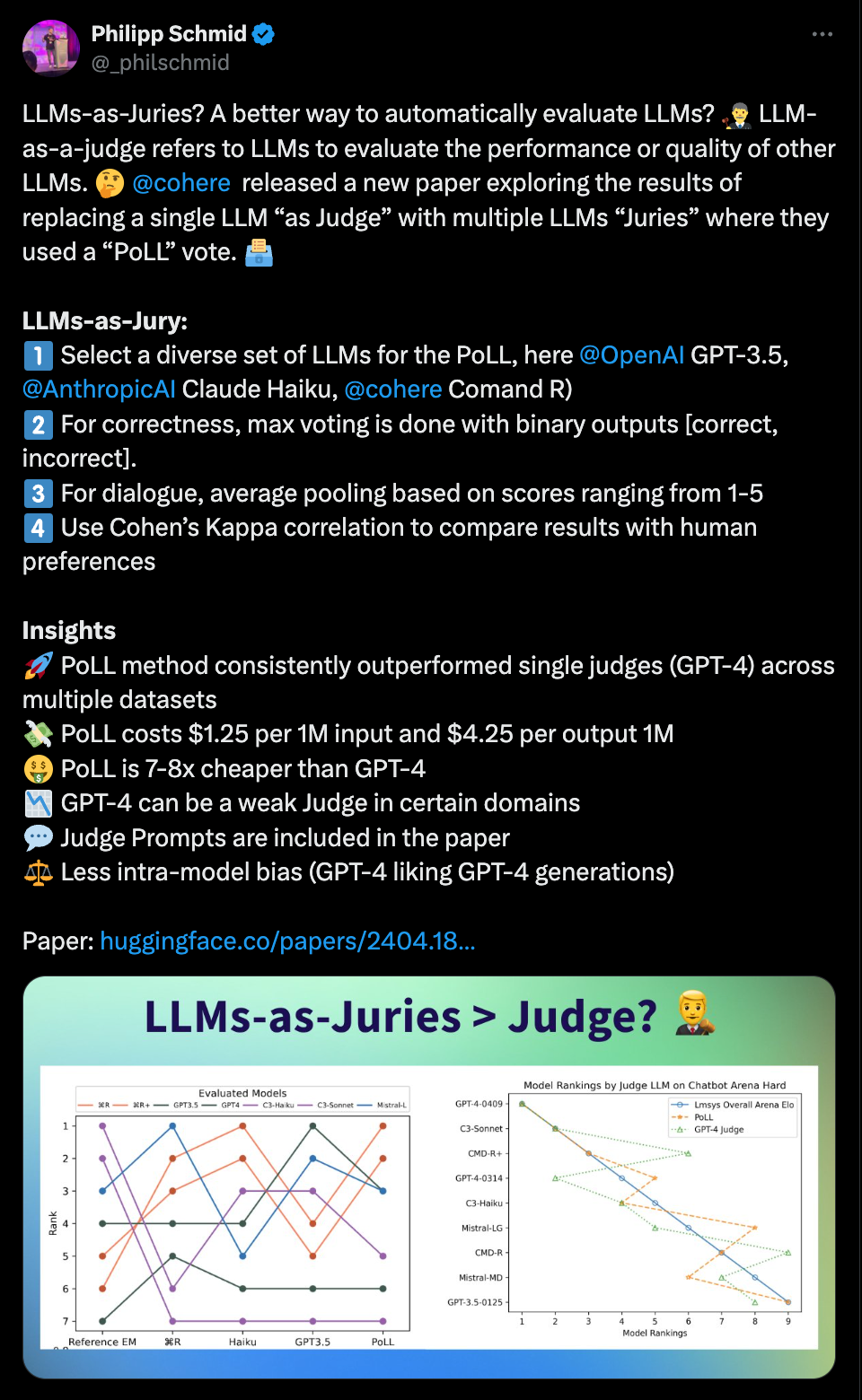

- LLMs as Juries: @cohere released a paper exploring replacing a single LLM judge with multiple LLM juries for evaluation. The “PoLL” method with a diverse set of LLMs outperformed single judges across datasets while being 7-8x cheaper than GPT-4.

- Evaluating LLMs: @_lewtun asked about research on which prompts produce an LLM-judge most correlated with human preferences for pairwise rankings, beyond the work by @lmsysorg. @_philschmid summarized the PoLL (Panel of LLM) method proposed by @cohere for LLM evaluation as an alternative to a single large model judge.
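A panel of judges needs a rule for combining individual verdicts. The sketch below shows two simple pooling functions of the kind a panel approach relies on: majority (max) voting for categorical verdicts and average pooling for numeric scores. Which rule the paper applies to which dataset, and the judge names, are assumptions here rather than details from the source.

```python
from collections import Counter
from statistics import mean


def max_vote(verdicts):
    # Majority vote over categorical verdicts from several judges.
    # Ties go to the verdict seen first (Counter.most_common keeps insertion order).
    return Counter(verdicts).most_common(1)[0][0]


def average_pool(scores):
    # Mean of numeric scores (e.g. 1-5 ratings) from several judges.
    return mean(scores)


# Hypothetical outputs from three judges of different model families.
verdicts = {"judge_a": "correct", "judge_b": "incorrect", "judge_c": "correct"}
scores = {"judge_a": 4, "judge_b": 5, "judge_c": 3}

print(max_vote(list(verdicts.values())))   # correct
print(average_pool(list(scores.values())))  # 4
```

Using judges from different model families is what the panel idea depends on: pooling reduces the influence of any single model's biases.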

Applications and Use Cases

- Financial Calculations: @llama_index shared a full-stack tutorial for building a financial assistant that can calculate percentage evolution, CAGR, and P/E ratios over unstructured financial reports using LlamaParse, RAG, Opus, and math formulas in @llama_index.

- SQL Query Generation: @virattt used @cohere cmd r+ to extract ticker and year metadata from financial queries in ~1s, then used the metadata to filter a vector db, fed results to GPT-4, and answered user query with ~3s total latency.

- Multi-Agent RAG: @LangChainAI announced a YouTube workshop on exploring “multi-agent” applications that combine independent agents to solve complex problems using planning, reflection, tool use, and their LangGraph library.

- Robotics and Embodied AI: @DrJimFan advocated for robotics as the next frontier after LLMs, sharing MIT AI Lab’s 1971 proposal emphasizing robotics and reflecting on the current state. @_akhaliq shared a paper on Ag2Manip, which improves imitation learning for manipulation tasks using agent-agnostic visual and action representations.
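The financial-assistant tutorial pairs retrieval with explicit math formulas rather than leaving arithmetic to the LLM. The standard definitions of the three metrics it mentions are sketched below; the function names and figures are illustrative and not taken from the tutorial's code.

```python
def percent_change(old, new):
    # Percentage evolution from one period to the next.
    return (new - old) / old * 100


def cagr(begin_value, end_value, years):
    # Compound annual growth rate: (end / begin) ** (1 / years) - 1.
    return (end_value / begin_value) ** (1 / years) - 1


def pe_ratio(share_price, earnings_per_share):
    # Price-to-earnings ratio.
    return share_price / earnings_per_share


# Hypothetical figures, e.g. extracted from two annual reports.
revenue_2021, revenue_2023 = 100.0, 121.0
print(f"change: {percent_change(revenue_2021, revenue_2023):.1f}%")  # 21.0%
print(f"CAGR: {cagr(revenue_2021, revenue_2023, years=2):.1%}")      # 10.0%
print(f"P/E: {pe_ratio(50.0, 2.5):.1f}")                              # 20.0
```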

Frameworks, Tools and Platforms

- LangChain Tutorials: @LangChainAI shared a 4-hour course on understanding how LangChain works with various technologies to build 6 projects. @llama_index provided a reference architecture for advanced RAG using LlamaParse, AWS Bedrock, and @llama_index.

- Diffusers Library: @RisingSayak explained how the Diffusers library supports custom pipelines and components, allowing flexibility in building diffusion models while maintaining the benefits of the `DiffusionPipeline` class.

- Amazon Bedrock: @cohere announced their Command R model series is now available on Amazon Bedrock for enterprise workloads. @llama_index showed how to use LlamaParse for advanced parsing in the AWS/Bedrock ecosystem and build RAG with the Bedrock Knowledge Base.

- DeepSpeed Support: @StasBekman noted a PR merged into the `main` branch of accelerate that makes FSDP converge at the same speed as DeepSpeed when loading fp16 models, by automatically upcasting trainable params to fp32.
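Why upcasting trainable parameters to fp32 matters can be shown without any framework. This toy, not accelerate's implementation, applies the same small update 100 times to a weight stored in fp16 and in fp32, using Python's `struct` half-precision (`e`) and single-precision (`f`) formats to simulate storage. Near 1.0 the spacing between fp16 values is 2^-10, so an update of 1e-4 is less than half a step and gets rounded away every time.

```python
import struct


def to_fp16(x):
    # Round a Python float to IEEE 754 half precision and back.
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    # Round a Python float to IEEE 754 single precision and back.
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(weight, update, steps, cast):
    # Apply the same small update repeatedly, storing the weight in the given precision.
    for _ in range(steps):
        weight = cast(weight + update)
    return weight


w16 = accumulate(1.0, 1e-4, 100, to_fp16)
w32 = accumulate(1.0, 1e-4, 100, to_fp32)
print("fp16 weight:", w16)  # stays at its starting value
print("fp32 weight:", w32)  # close to 1.0 + 100 * 1e-4
```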

Memes, Humor and Other

- ASCII Art: Several tweets poked fun at the ASCII art capabilities of LLMs, with @ylecun noting how AI hype has become indistinguishable from satire. @teortaxesTex shared a prompt to draw a Katamari Damacy level map using emojis that strains the instruction following of “GPT2”.

- Anthropic Slack: @alexalbert__ shared his 10 favorite things from Anthropic’s internal Slack channel where employees post cool Claude interactions and memes since its launch.

- Rabbit Disappointment: Several users expressed disappointment with the Rabbit AI device, noting its limited functionality compared to expectations. @agihippo questioned what the Rabbit r1 can do that a phone can’t.

AI Discord Recap

A summary of Summaries of Summaries

1) Fine-Tuning and Optimizing Large Language Models

- Challenges in Fine-Tuning LLaMA-3: Engineers faced issues like the model not generating EOS tokens, and embedding layer compatibility across bit formats. However, one member achieved success by utilizing LLaMA-3 specific prompt strategies for fine-tuning.

- LLaMA-3 Sensitive to Quantization: Discussions highlighted that LLaMA-3 experiences more degradation from quantization compared to LLaMA-2, likely due to capturing nuanced relationships from training on 15T tokens.

- Perplexity Fine-Tuning Challenges: Fine-tuning LLaMA-3 for perplexity may not surpass the base model’s performance, with the tokenizer suspected as a potential cause.
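To make “degradation from quantization” concrete, here is a toy symmetric round-to-nearest 4-bit quantizer with a single per-tensor scale. It is a deliberately simple baseline, not the scheme used by GGUF, GPTQ, or AWQ, and the weights are made-up numbers. Two weights that differ only in the fourth decimal (0.0123 and 0.0125) land on the same integer level, which is the kind of fine detail the community suspects Llama 3 relies on more heavily; the sketch illustrates the mechanism, not that hypothesis.

```python
def quantize_absmax(weights, bits=4):
    # Symmetric round-to-nearest with one scale for the whole tensor.
    # Uses the range [-qmax, qmax], e.g. -7..7 for 4 bits.
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


weights = [0.0123, 0.0125, -0.0871, 0.0502, 0.1000]  # hypothetical values
q, scale = quantize_absmax(weights)
recon = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, recon))
print(q, f"scale={scale:.5f}", f"max_err={max_err:.5f}")
```

Round-to-nearest bounds each reconstruction error by half the scale, so a wider spread of weight magnitudes in a tensor means coarser steps for every weight in it.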

2) Extending Context Lengths and Capabilities

- Llama-3 Hits New Context Length Highs: The release of Llama-3 8B Gradient Instruct 1048k extends the context length from 8k to over 1048k tokens, showcasing state-of-the-art long context handling.

- Llama 3 Gains Vision with SigLIP: A breakthrough integrates vision capabilities for Llama 3 using SigLIP, enabling direct use within Transformers despite quantization limitations.

- Extending Context to 256k with PoSE: The context length of Llama 3 8B has been expanded from 8k to 256k tokens using PoSE, though inferencing challenges remain for ‘needle in haystack’ scenarios.
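PoSE (Positional Skip-wise training) keeps the training window short but rewrites position indices so the model sees relative distances up to the target length. A simplified sketch of that idea, assuming equal-size chunks, an unshifted first chunk, and sorted uniform random skip offsets; the paper's exact sampling scheme may differ, and `skipwise_position_ids` is a hypothetical name.

```python
import random


def skipwise_position_ids(train_len, target_len, n_chunks=2, rng=None):
    # Split the training window into chunks, keep positions contiguous inside
    # each chunk, and shift later chunks by a random skip so indices span up to
    # target_len - 1 while only train_len tokens are actually processed.
    if rng is None:
        rng = random.Random()
    base = train_len // n_chunks
    sizes = [base] * (n_chunks - 1) + [train_len - base * (n_chunks - 1)]
    total_skip = target_len - train_len  # extra positions available to skip over
    # Non-decreasing offsets keep ids strictly increasing across chunks.
    biases = [0] + sorted(rng.randint(0, total_skip) for _ in range(n_chunks - 1))
    ids, start = [], 0
    for size, bias in zip(sizes, biases):
        ids.extend(range(start + bias, start + bias + size))
        start += size
    return ids


ids = skipwise_position_ids(train_len=8, target_len=32, n_chunks=2, rng=random.Random(0))
print(ids)  # 8 position ids, the second chunk shifted by a random skip
```

In a real training run these ids would be passed to the model's rotary position embedding in place of 0..train_len-1.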

3) Benchmarking and Evaluating LLMs

- Llama 3 Outperforms GPT-4 in German NLG: On the ScandEval German NLG benchmark, Llama 3 surpassed the performance of GPT-4, indicating its strong language generation capabilities.

- Mysterious GPT2-Chatbot Sparks Speculation: A GPT2-chatbot with GPT-4-level capabilities surfaced, leading to debates on whether it could be an early glimpse of GPT-4.5 or a finetuned version of the original GPT-2.

- Questioning Leaderboard Utility for Code Generation: A blog post challenges the effectiveness of AI leaderboards for code generation, citing the high operational cost of top performers like LLM debugger despite ranking highly.

4) Revolutionizing Gaming with LLM-Powered NPCs

- LLM-Powered NPCs and Inference Stack: The release of LLM-powered NPC models aims to enhance action spaces and simplify API calls, including a single LLM call feature and open-weights on Hugging Face.

- Overcoming LLM Challenges for Gameplay: Developers faced issues like NPCs breaking the fourth wall, missing details in large prompts, and optimizing for runtime speeds, suggesting solutions like output compression, minimizing model calls, and leveraging smaller models.

- Insights into Fine-Tuning LLMs for NPCs: Developers plan to share their struggles and triumphs in fine-tuning LLMs for dynamic NPC behavior through an upcoming blog post, pointing towards new strategies for gaming applications.

5) Misc

- CUDA Optimization Techniques: CUDA developers discussed various optimization strategies, including using `Packed128` custom structs for memory access patterns, replacing integer division with bit shifts (Compiler Explorer link), and comparing performance of CUTLASS vs cuBLAS for matrix multiplications. The Effort Engine algorithm was introduced, enabling adjustable computational effort during LLM inference to achieve speeds comparable to standard matrix multiplications on Apple Silicon (kolinko.github.io/effort, GitHub).

- LLaMA-3 Context Length Extension and Fine-Tuning: The LLaMA-3 8B model’s context length was extended to 256k tokens using PoSE (huggingface.co/winglian/llama-3-8b-256k-PoSE) and, in Gradient’s release, to over 1M tokens, sparking discussions on retrieval performance and compute requirements. Fine-tuning LLaMA-3 presented challenges like quantization degradation, EOS token generation, and embedding layer compatibility across bit formats. A potential breakthrough was shared in a GitHub pull request demonstrating successful fine-tuning with model-specific prompt strategies.

- Civitai Monetization Backlash: Stable Diffusion community members expressed discontent with Civitai’s monetization strategies, particularly the Buzz donation system, which was labeled a “rip-off” by some like Tower13Studios (The Angola Effect). Discussions also highlighted the potential profitability of NSFW AI-generated art commissions compared to the saturated SFW market.

- Perplexity AI Performance Issues: Users reported significant slowdowns and poor performance across various Perplexity AI models during Japan’s Golden Week, with specific issues in Japanese searches resulting in meaningless outputs. Frustrations arose over expired Pro subscription coupons and the removal of the 7-day free trial. Technical troubles included email link delays affecting login and inconsistencies in the iOS voice feature depending on app versions.

- Decentralized AI Training Initiatives: Prime Intellect proposed a decentralized training approach using H100 GPU clusters to enable open-source AI to compete with proprietary models (blog post). The initiative aims to address computing infrastructure limitations by leveraging globally distributed GPU resources.

PART 1: High level Discord summaries

CUDA MODE Discord

- Triton Troubles: Engineers discussed limitations with Triton blocks, identifying an issue where blocks of 4096 elements are feasible, yet blocks of 8192 are not, hinting at discrepancies with expected CUDA limits.

- CUDA Cognitions and Collaborations: Various CUDA topics were mulled over, including CUTLASS vs. cuBLAS performance, CUDA checkpointing, and the replacement of integer division with bit shifts. A link to the Compiler Explorer was shared to help with experiments.

- PyTorch Peculiarities Pursued: Members examined the behavior of PyTorch’s `linear` function and matrix multiplication kernel launches, with observations about double kernel launches and the false expectation of performance differences due to transposition.

- LLM Inference Optimization with Effort Engine: Discussion revolved around the Effort Engine algorithm, which enables adjustable computational effort during LLM inference, purportedly yielding speeds comparable to standard matrix multiplications on Apple Silicon at lower efforts. The implementation and details are provided on kolinko.github.io/effort and GitHub.

- InstaDeep’s Machine Learning Manhunt: InstaDeep is on the hunt for Machine Learning Engineers with expertise in high-performance ML engineering, custom CUDA kernels, and distributed training. Candidates can scout for opportunities at InstaDeep Careers.

- Llama-3 Levitates to Longer Contexts: The release of Llama-3 8B Gradient Instruct 1048k set a new benchmark for context length capabilities in LLMs.

- ROCm Rallies for Flash Attention 2: Conversations in the ROCm channel centered on adapting the CUDA-based Flash Attention 2 for ROCm, with a focus on compatibility with ROCm 6.x versions and a link to the relevant repository ROCm/flash-attention on GitHub.

- CUDA Conclave Converges on “Packed128” Innovations: The llmdotc channel was a hotspot with discussions focused on optimizing `Packed128` data structures and BF16 mixed-precision strategies, while also touching on the nuanced use of NVTX contexts and the utility of different benchmarking toolsets like Modal.
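The integer-division trick mentioned in the CUDA discussions relies on the fact that, for non-negative integers, dividing by 2^k equals a right shift by k and taking the remainder equals masking with 2^k - 1. The Python sketch below checks that and shows the caveat for signed values: C and CUDA integer division truncates toward zero, while an arithmetic right shift rounds toward negative infinity, so the two disagree for negative numbers. `c_style_div` is a hypothetical helper written to mimic C semantics.

```python
def c_style_div(x, d):
    # Mimics C/CUDA signed integer division, which truncates toward zero.
    q = abs(x) // abs(d)
    return q if (x >= 0) == (d > 0) else -q


# Non-negative values (e.g. thread or element indices): shift and mask are exact.
for x in range(0, 10_000):
    assert (x // 8) == (x >> 3) == c_style_div(x, 8)
    assert (x % 8) == (x & 7)

# Negative values: truncation toward zero and an arithmetic shift disagree.
print(c_style_div(-37, 8), -37 >> 3)  # -4 -5
```

Compilers generally perform this rewrite themselves when the divisor is a compile-time power of two, adding a fix-up for signed types, which is one reason to inspect the generated code in Compiler Explorer before hand-optimizing.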

Unsloth AI (Daniel Han) Discord

- Fusing Checkpoints to Avoid Overfitting: A member sought guidance on checkpoint merging to avoid overfitting and was directed to the Unsloth finetuning checkpoint wiki. Techniques such as warmup steps and resuming from checkpoints were recommended for nuanced training regimens.

- Quantization Quandary in WSL2: Users reported `RuntimeError: Unsloth: Quantization failed` when converting models to F16 within WSL2. Despite attempts at rebuilding `llama.cpp` and re-quantization, the error persisted.

- Phi-3: A Model of Interest: The upcoming release of Phi-3 stirred interest, with engineers debating whether to adopt the 3.8b version or wait for the heftier 7b or 14b variants.

- OOM Countermeasures and Performance Data Confusion: Tips for handling Out of Memory errors on Google Colab by clearing the cache were exchanged. Meanwhile, confusion surfaced over reported performance measures for quantized Llama 2 and Llama 3, hinting at a possible mix-up between Bits Per Weight (BPW) and Perplexity (PPL).

- Extended Possibilities: Llama 3 8B reached new potential with a context length increase to 256k tokens, achieved with PoSE, showcased at winglian/llama-3-8b-256k-PoSE. Community applause went to Winglian, though some voiced skepticism about the behavior of unofficial context-extended models.
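In quantization tables, BPW usually denotes bits per weight, a size measure, while PPL measures quality, so a quick calculation shows which column is which. Weight memory is roughly parameters x BPW / 8 bytes; the sketch below ignores KV cache, activations, and per-format overhead, and the parameter counts are nominal rather than exact.

```python
def weight_size_gb(n_params, bits_per_weight):
    # Weights only: parameters x bits per weight / 8 bits per byte, in GB (1e9 bytes).
    return n_params * bits_per_weight / 8 / 1e9


for label, n_params, bpw in [
    ("8B @ bf16", 8e9, 16),
    ("8B @ 4.5 bpw", 8e9, 4.5),
    ("70B @ 4.0 bpw", 70e9, 4.0),
]:
    print(f"{label}: ~{weight_size_gb(n_params, bpw):.1f} GB")
```

A column whose values scale like this with model size is BPW; a column that sits around single digits and rises as BPW drops is PPL.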

LM Studio Discord

- Groq’s Gift to Discord Bots: A user shared a YouTube video highlighting the free Groq API enabling access to the LLAMA-3 model’s impressive 300 tokens per second speed, optimally suited for small server Discord bots due to its no-cost setup.

- Spec Smackdown: Users recommended posting system specs in specific channels when troubleshooting LM Studio on Ubuntu GPUs, debated the compatibility of GPUs with inference tasks, and discussed the potentially incorrect VRAM capacity display in LM Studio causing concerns with GPU offloading efficiency.

- Model Mania: The community buzzed about alternative methods for downloading GGUF models from sources other than Hugging Face, the time and resource demands of creating iQuants and imatrices, and reward offers for optimizing the Goliath 120B Longlora model to create its iQuant version.

- Model Mayhem on Modest Machines: Users grappled with issues like the Phi-3 model’s leaking prompts, local training queries for Hugging Face-based models, and the unexpected noises from hard drives during token generation by the Llama3m. Some determined that more dated hardware could just about manage a 7b Q4 model but nothing heftier.

- ROCm Ruminations: Enthusiasts dissected ROCm versions, mulling over the benefits of beta 0.2.20 for AMD functionality, addressed confusion about compatibility—especially the RX 6600’s support with the current HIP SDK—and discussed discrepancies in ROCm’s functionality on different operating systems like Ubuntu versus Windows.

Stability.ai (Stable Diffusion) Discord

Buzz Off, Civitai: AI creators in the guild are upset with Civitai’s monetization strategies, particularly the Buzz donation system, which was labeled a “rip-off” by some members, such as Tower13Studios. The discontent revolves around value not being fairly returned to creators (The Angola Effect).

Finding The AI Art Goldmine: A vibrant discussion unfolded on the economics of AI-generated art, with consensus pointing towards NSFW commissions, including furry and vtuber content, as a more profitable avenue compared to the more crowded SFW market.

Race for Real-Time Rendering: Members actively shared Python scripting techniques for accelerating Stable Diffusion (SDXL) models, eyeing uses in dynamic realms like Discord bots, aiming to enhance image generation speed for real-time applications.

Anticipation Builds for Collider: The community is keenly awaiting Stable Diffusion’s next iteration, dubbed “Collider,” with speculation about release dates and potential advancements fueling eager anticipation among users.

Tech Troubleshooting Talk: Guild members exchanged insights and solutions on a spectrum of technical challenges, from creating LoRAs and IPAdapters to running AI models on low-spec hardware, demonstrating a collective effort to push the boundaries of model implementation and optimization.

Perplexity AI Discord

- Japanese Golden Week Glitches: During Japan’s Golden Week, users observed a noticeable performance drop in tools like Opus, Sonar Large 32K, and GPT-4 Turbo, with specific issues in Japanese searches, resulting in outputs that users deemed meaningless garbage. To address the problem, vigilant monitoring and optimization of these models was suggested.

- Frustration over Pro Subscription and Trial Perils: Pro subscription users reported expired coupons on the due date, with offers linked to the Nothing Phone 2(a) aborted prematurely due to fraudulent activities. Moreover, the 7-day free trial’s removal from the site prompted disappointment, emphasizing its value as a user conversion tool.

- Tech Turbulence with Perplexity AI: The community grappled with email link delays, causing login difficulties, particularly for non-Gmail services. Additionally, variations in the iOS voice feature were found to be dependent on the app version being used, reflecting inconsistencies in user experience.

- API Avenues Explored: Engineers queried the pplx-api channel regarding source URL access through the API, following its mention in roadmap documentation, and debated whether using Claude 3 would entail adherence to Anthropic’s political usage restrictions under Perplexity’s terms.

- Miscellaneous Inquiries and Insights Surface: A post in the #sharing channel spotlighted Lenny’s Newsletter on product growth and building concepts, while queries about WhatsApp’s autoreply feature and Vimeo’s API were thrown in. These discussions, particularly on the API, highlight engineers’ focus on integrating and utilizing various functionalities in their systems/processes.

Nous Research AI Discord

Bold Decentralization Move: Prime Intellect’s initiative for decentralized AI training, leveraging H100 GPU clusters, promises to push the boundaries by globalizing distributed training. The open-source approach may address current computing infrastructure bottlenecks as discussed in their decentralized training blog.

Retrieval Revolution with Llama-3: The extension of Llama-3 8B’s context length to over 1040K tokens sparks discussions on whether its retrieval performance lives up to the hype. Skeptics remain, emphasizing the ongoing need for improvements and training, supported by an arXiv paper on IN2 training.

PDF Challenges Tackled: To address PDF parsing challenges within AI models, particularly for tables, the community discussed workarounds and tools like OpenAI’s file search for better multimodal functionality handling roughly 10k files.

World Sims Showcase AI’s Role-Playing Prowess: Engagements with AI-driven world simulations highlight the capacities of llama 3 70b and Claude 3, from historical figures to business and singing career simulators. OpenAI’s chat on HuggingChat and links to niche simulations like Snow Singer Simulator reflect the diversity and depth achievable.

Leveraging Datasets for Multilingual Dense Retrieval: A Wikipedia RAG dataset on Hugging Face drew attention as a resource for multilingual retrieval. The inclusion of Halal and Kosher data points to a trend toward creating diverse and inclusive AI resources.

Modular (Mojo 🔥) Discord

- Mojo’s Memory Safety and Concurrency Debated: Despite buzz around Mojo’s potential, it was clarified that features like Golang-like concurrency and Rust-like memory safety are not currently implemented due to borrow checking being disabled. However, possibilities regarding the use of actor model concurrency are being explored which may enhance Mojo’s runtime efficiency.

- Installation Tactics for Mojo on Varied Systems: Users face challenges installing Mojo with Python 3.12.3 particularly on Mac M1, for which using a Conda environment is recommended. Also, while native Windows support is pending, WSL on Windows is a current workaround, with cross-compilation capabilities hinted through LLVM involvement.

- Community Contributions to Mojo Ecosystem: Several community-driven projects are enhancing the Mojo ecosystem, from a Mojo-based forum on GitHub to a 20% performance optimized atof-simd project for long strings. Enthusiasm for collaboration and knowledge-sharing is evident as members share projects and call for joint efforts to tackle challenges such as the 1brc.

- Nightly Compilations Trigger Discussions on SIMD and Source Location: A new nightly release of the Mojo compiler spurred conversation about the conversion of SIMD to `EqualityComparable` and the need for explicit `reduce_and` or `reduce_or` in place of implicit conversion to `Bool`. The move of `__source_location()` to `__call_location()` incited exchanges on proper usage within the language.

- Performance and Benchmarking Take the Spotlight: From optimizing SIMD-based error correction code to sharing substantial speed gains in the 1brc project, performance topics spurred discussions on LLVM/MLIR optimizations. There were calls to form a “team-mojo” for communal challenge tackling, underscoring a shared interest in progressing Mojo’s benchmarking endeavors against other languages.
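The 1brc is the One Billion Row Challenge: compute the min, mean, and max temperature per weather station over a large file of `station;value` lines. A straightforward single-pass baseline is sketched below in Python with made-up sample rows. The Mojo entries discussed optimize this same computation (for example with SIMD parsing), which this sketch does not attempt.

```python
from collections import defaultdict


def aggregate(lines):
    # Each line is "<station>;<temperature>".
    # Track [min, max, sum, count] per station in a single pass.
    stats = defaultdict(lambda: [float("inf"), float("-inf"), 0.0, 0])
    for line in lines:
        station, value = line.rsplit(";", 1)
        t = float(value)
        s = stats[station]
        s[0] = min(s[0], t)
        s[1] = max(s[1], t)
        s[2] += t
        s[3] += 1
    # Report (min, mean, max) per station, ordered by station name.
    return {name: (s[0], s[2] / s[3], s[1]) for name, s in sorted(stats.items())}


sample = ["Hamburg;12.0", "Bulawayo;8.9", "Hamburg;34.2", "Palembang;38.8"]
for station, (lo, avg, hi) in aggregate(sample).items():
    print(f"{station}={lo:.1f}/{avg:.1f}/{hi:.1f}")
```

At a billion rows the cost is dominated by reading and parsing, which is why fast entries focus on memory-mapped input, parallel chunking, and vectorized number parsing.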

HuggingFace Discord

Snowflake’s MoE Model Breaks Through: Snowflake introduced a 480B-parameter Dense + Hybrid MoE model with a 4K context window, entirely under the Apache 2.0 license, sparking excitement about its performance on complex tasks.

Gradio Share Server on the Fritz: Gradio acknowledges issues with their Share Server, impacting Colab integrations, which is under active resolution with updates available on their status page.

CVPR 2024 Sparks Competitive Spirit: CVPR 2024 announced competitive events like SnakeCLEF, FungiCLEF, and PlantCLEF, boasting over $120k in rewards and taking place June 17-21, 2024.

MIT Deep Learning Course Goes Live: MIT updates its Introduction to Deep Learning course for 2024, with comprehensive lecture videos on YouTube.

NLP Woes in Chatbot Land: Within the NLP community, effort mounts to finetune a chatbot using the Rasa framework, despite struggles with intent recognition and categorization, and plans to augment performance with a custom NER model and company-specific intents.

OpenRouter (Alex Atallah) Discord

- Alex Atallah Signposts Collaboration with Syrax: Alex Atallah has initiated experiments with Syrax and extended support by proposing a group chat for collaborative efforts, marking the start of a partnership acknowledged with enthusiasm by Mart02.

- Frontend for the Rest of Us: The community explored solutions for deploying multi-user frontends on shared hosting without advanced technical requirements. LibreChat was suggested as a viable platform, with Vercel’s free tier hosting mentioned as a means to address hosting and cost obstacles.

- LLMs Throwdown: A robust debate unfolded over several large language models including Llama-3 8B, Dolphin 2.9, and Mixtral-8x22B, touching on aspects like context window size and censorship concerns related to conversational styles and datasets.

- Training Unhinged AIs: An intriguing experiment involved training a model with a toxic dataset to foster a more “unhinged” persona. Discussions dug into model limitations with long contexts, with an agreement that although models like Llama 3 8B handle extensive contexts, performance dips were likely past a threshold.

- Cost-Effective Experimentation on OpenRouter: Conversations centered on finding efficient yet affordable models on OpenRouter. Noteworthy was the mix of surprise and approval for the human-like output of models like GPT-3.5 that deliver a solid blend of affordability and performance.

LlamaIndex Discord

AWS Architecture Goes Academic: LlamaIndex revealed an advanced AWS-based architecture for building sophisticated RAG systems, aimed at parsing and reasoning. Details are accessible in their code repository.

Documentation Bot Triumphs in Hackathon: Hackathon victors, Team CLAB, developed an impressive documentation bot leveraging LlamaIndex and Nomic embeddings; check out the hackathon wrap-up in this blog post.

Financial Assistants Get a Boost: Constructing financial assistants that interpret unstructured data and perform complex computations has been greatly improved. The methodology is thoroughly explored in a recent post.

Turbocharging RAG with Semantic Caching: Collaboration with @Redisinc demonstrated significant performance gains for RAG applications using semantic caching to speed up queries. The collaboration details can be found here.
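Semantic caching returns a stored answer when a new query is close enough in embedding space to one already answered, skipping retrieval and generation entirely. A minimal in-memory sketch with cosine similarity and a fixed threshold; the `SemanticCache` class, the 0.9 threshold, and the toy 3-dimensional vectors are hypothetical, and this is neither LlamaIndex's nor Redis's API. A real deployment would embed queries with a model and use a vector index instead of a linear scan.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class SemanticCache:
    # Stores (query embedding, answer) pairs in memory.
    def __init__(self, threshold=0.9):
        self.threshold = threshold
        self.entries = []

    def get(self, query_embedding):
        # Return the answer of the most similar cached query if it clears the threshold.
        best = max(self.entries, key=lambda e: cosine(e[0], query_embedding), default=None)
        if best is not None and cosine(best[0], query_embedding) >= self.threshold:
            return best[1]
        return None  # cache miss: run the full RAG pipeline, then put() the result

    def put(self, query_embedding, answer):
        self.entries.append((query_embedding, answer))


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0, 0.0], "cached answer")
print(cache.get([0.99, 0.05, 0.0]))  # near-duplicate query hits the cache
print(cache.get([0.0, 1.0, 0.0]))    # unrelated query misses
```

The threshold trades latency against correctness: set it too low and paraphrases of different questions receive the same cached answer.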

GPT-1: The Trailblazer Remembered: A reflective glance at GPT-1 and its contributions to LLM development was shared, discussing features like positional embeddings which paved the way for modern models like Mistral-7B. The nostalgia-laden blog post revisits GPT-1’s architecture and impact.

Eleuther Discord

Plug Into New Community Projects: Members are seeking opportunities to contribute to community AI projects that provide computational resources, addressing the issue for those lacking personal GPU infrastructure.

Unlock the Mysteries of AI Memory: Intricacies of memory processes in AI were covered with a particular focus on “clear-ing”, orthogonal keys, and the delta rule in compressive memory. There’s an interest in discussing whether infini-attention has been overhyped, despite its theoretical promise.
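The delta rule can be shown on a generic linear associative memory: a matrix M maps a key k to a value by computing M k, and a write adds (v - M k) k^T, correcting only the retrieval error instead of blindly accumulating v k^T. With orthonormal keys, writes under different keys do not interfere, and rewriting a key replaces its value rather than summing with it. Published compressive-memory variants, such as the one in Infini-attention, add a feature map on keys and a normalization term, both omitted from this sketch.

```python
def matvec(M, k):
    # Retrieve the value associated with key k: M k.
    return [sum(m * x for m, x in zip(row, k)) for row in M]


def delta_write(M, k, v, beta=1.0):
    # Delta rule: M <- M + beta * (v - M k) k^T.
    err = [vi - ri for vi, ri in zip(v, matvec(M, k))]
    return [[M[i][j] + beta * err[i] * k[j] for j in range(len(k))] for i in range(len(M))]


def additive_write(M, k, v):
    # Plain linear-attention-style accumulation: M <- M + v k^T.
    return [[M[i][j] + v[i] * k[j] for j in range(len(k))] for i in range(len(M))]


k1, k2 = [1.0, 0.0], [0.0, 1.0]  # orthonormal keys
M = [[0.0, 0.0], [0.0, 0.0]]
M = delta_write(M, k1, [1.0, 2.0])
M = delta_write(M, k2, [3.0, 4.0])

print(matvec(delta_write(M, k1, [5.0, 6.0]), k1))     # value replaced
print(matvec(additive_write(M, k1, [5.0, 6.0]), k1))  # old and new values summed
```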

Comparing Apples to Supercomputers: There’s an active debate regarding performance discrepancies between models like mixtral 8x22B and llama 3 70B, where llama’s reduced number of layers, despite having more parameters, may be impacting its speed and batching efficiency.

LLMs: Peering Inside the Black Box: The community is contemplating the “black box” nature of Large Language Models, discussing emergent abilities and data leakage. A connection was made between emergent abilities and pretraining loss, challenging the focus on compute as a performance indicator.

Bit Depth Bewilderment: A user reported significant degradation in output quality when encoding with 8-bit on models like llama3-70b and llama3-8b, suggesting a cross-model encoding issue that needs addressing.

LAION Discord

- GDPR Complaint Challenges AI Birthdays: An EU privacy advocate has filed a GDPR complaint after an AI model incorrectly estimated his birthday, triggering discussions on the potential implications for AI operations in Europe.

- Mysterious GPT-5 Speculations: Amid rumors of a new GPT-5 model release, the community debates inconsistent test outcomes and the absence of official communication or leaderboard recognition, questioning how the model manages to avoid generating hallucinations.

- Llama3 70B’s Slow Performance Spotlight: AI engineers are troubleshooting the Llama3 70B model’s unexpectedly sluggish token generation rate of 13 tokens per second on a dual 3090 rig, delving into possible hardware and configuration enhancements.

- Exllama Library Outraces Rivals: Users endorse Exllama for its fast performance on language model tasks and suggest utilizing the TabbyAPI repository for simpler integrations, naming it a superior choice compared to other libraries.

- Research Breakthrough with OpenCLIP: The successful application of OpenCLIP to cardiac ultrasound analysis has been published, highlighting the rigorous revision process and a move towards novel, non-zero-shot techniques; the study is available here. Meanwhile, r/StableDiffusion is back online, and a relevant CLIP training repository is discussed in the context of Reddit’s recent API changes in this Reddit discussion.
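Whether 13 tokens per second for Llama3 70B on a dual 3090 rig is actually slow can be sanity-checked with a memory-bandwidth bound: at batch size 1, each generated token requires reading roughly every weight once, so tokens per second cannot exceed bandwidth divided by model size in bytes. The sketch assumes about 70B parameters, 4-bit weights (the rig's actual quantization was not stated), the RTX 3090's 936 GB/s memory bandwidth, and a layer-wise split in which the two GPUs run one after the other. It ignores KV-cache reads, compute, and transfer overheads, so real throughput will land below it.

```python
def decode_tokens_per_sec_bound(params_billion: float, bits_per_weight: float,
                                bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decode speed when weight reads dominate.

    Assumes every weight is read once per generated token and that memory
    bandwidth is the only limit (no KV cache, compute, or launch overhead).
    """
    bytes_per_token = params_billion * 1e9 * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


# Layer-split across two GPUs: each GPU streams its half of the weights in turn,
# so the effective bandwidth is roughly that of one RTX 3090 (936 GB/s).
bound = decode_tokens_per_sec_bound(params_billion=70, bits_per_weight=4, bandwidth_gb_s=936)
print(f"{bound:.1f} tokens/s upper bound")
```

Under these assumptions the ceiling is about 27 tokens per second, so 13 is roughly half of it: there is headroom, but not an order-of-magnitude problem. Tensor parallelism, where both cards read their shards at the same time, would roughly double the bound.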

OpenAI Discord

Memory Lane with Upscaled ChatGPT Plus: ChatGPT Plus users can now ask the AI to remember specific context, a feature that can be toggled on and off in settings; the rollout has not yet reached Europe or Korea. In addition, both Free and Plus users gain enhanced data controls, including a ‘Temporary Chat’ option that discards conversations immediately after they end.

AI Ghosh-darn Curiosity and Camera Tricks: Discussions swung from defining AI curiosity and sentience through maze challenges to the merits of DragGAN for altering photos with new angles. Meanwhile, a long-context Llama-3 8B model emerged and is available on Hugging Face, but the community still wrestled with the accessibility of advanced AI technologies and the dream of inter-model collaboration.

GPT-4: Bigger and Maybe Slower?: The community dove into the attributes of GPT-4, noting that it is significantly larger than GPT-3.5 and raising concerns that its scale may affect processing speed. The possibility of mass-deleting archived chats was also discussed.

Prompt Engineering’s Competitive Edge: Prompt engineering drew attention, with suggestions for competitions to hone skills and for ‘meta prompting’ via GPT Builder to refine AI output. The group agreed that positive prompting beats listing prohibitions, and wrestled with capturing regional Spanish nuances in AI text generation.

Cross-Channel Theme of Prompting Excellence: Both the AI discussions and API channels tackled prompt engineering, with meta-prompting techniques in the spotlight, indicating a shift toward more efficient prompting strategies that might reduce the need for competitions. Navigating multilingual outputs also emerged as a shared challenge, with an emphasis on adaptation rather than prohibition.

OpenAccess AI Collective (axolotl) Discord

LLaMA 3 Struggles with Quantization: LLaMA 3 shows significantly more performance degradation from quantization than its predecessor, which might be because its training on 15T tokens captures very nuanced data relations. A critique within the community called a study on quantization sensitivity “worthless,” arguing that the issue may be related more to training approach than to model size; the critique referenced a study on arXiv.
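One commonly cited way a model becomes sensitive to low-bit quantization is that round-to-nearest on a coarse grid erases small weight differences, and a few large-magnitude outliers stretch that grid for every other weight. The sketch below uses simple per-tensor absmax quantization, an assumption chosen for illustration rather than the scheme any particular Llama 3 quantizer uses, to show how one outlier raises the rounding error of all the ordinary weights. Whether this mechanism explains Llama 3's behaviour is exactly what the study and its critics disagree about.

```python
import numpy as np


def absmax_quantize(w: np.ndarray, bits: int = 8):
    """Symmetric per-tensor quantization (bits <= 8, stored as int8)."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for 8-bit, 7 for 4-bit
    scale = float(np.abs(w).max()) / qmax or 1.0  # avoid a zero scale for all-zero input
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


def mean_abs_error(w: np.ndarray, bits: int) -> float:
    q, scale = absmax_quantize(w, bits)
    return float(np.abs(dequantize(q, scale) - w).mean())


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=4096).astype(np.float32)  # typical small weights
w_outlier = w.copy()
w_outlier[0] = 1.0                                        # one large outlier

for bits in (8, 4):
    clean = mean_abs_error(w, bits)
    # Measure error only on the ordinary weights, excluding the outlier itself.
    q, scale = absmax_quantize(w_outlier, bits)
    with_outlier = float(np.abs(dequantize(q, scale)[1:] - w_outlier[1:]).mean())
    print(f"{bits}-bit: clean {clean:.2e}, with outlier {with_outlier:.2e}")
```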

Riding the Zero Train: The Guild discussed Hugging Face’s ZeroGPU, a beta feature offering free access to multi-GPU resources like Nvidia A100s, with some members regretting that they missed early access. A member has shared access and is open to suggestions for testing on the platform.

Finetuning Finesse: Members were advised against starting with fine-tuning meta-llama/Meta-Llama-3-70B-Instruct and encouraged to begin with smaller models like the 8B to sharpen their fine-tuning skills. The Guild also clarified how to convert a fine-tuning dataset from OpenAI format to ShareGPT format, providing Python code for the transformation.
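The OpenAI-to-ShareGPT conversion is mostly a key and role rename. The sketch below assumes the OpenAI chat fine-tuning layout (one JSON object per line with a `messages` list of `role`/`content` pairs) and the ShareGPT layout commonly used with Axolotl (a `conversations` list of `from`/`value` pairs with `human` and `gpt` speakers). It is not the code shared in the Guild, and tool or function-call messages are rejected rather than guessed.

```python
import json
from typing import Iterable, Iterator

# Role names in OpenAI fine-tuning data -> ShareGPT speaker names.
ROLE_MAP = {"system": "system", "user": "human", "assistant": "gpt"}


def openai_to_sharegpt(record: dict) -> dict:
    """Convert one {"messages": [...]} record to {"conversations": [...]}."""
    conversations = []
    for message in record["messages"]:
        role = message["role"]
        if role not in ROLE_MAP:
            raise ValueError(f"unsupported role: {role!r}")
        conversations.append({"from": ROLE_MAP[role], "value": message["content"]})
    return {"conversations": conversations}


def convert_jsonl(lines: Iterable[str]) -> Iterator[str]:
    """Convert JSONL lines one at a time, skipping blank lines."""
    for line in lines:
        if line.strip():
            yield json.dumps(openai_to_sharegpt(json.loads(line)), ensure_ascii=False)
```

To convert a file, iterate over its lines with `convert_jsonl` and write each result as one line of the output JSONL file; because it is a generator, large datasets never need to fit in memory.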

Tutorial Spreads Its Wings: A helpful tutorial was shared on fine-tuning with Axolotl using dstack, showing the community’s knack for collaboratively improving practices. Members appreciated the tutorial’s ease of use.

Axolotl Adaptations: In a discussion of fine-tuning command-r within Axolotl and the related format adaptations, a member shared an untested pull request on the topic, noting that it was not yet ready to merge. There is also uncertainty about support for the phi-3 format and the implementation status of the sample packing feature, indicating a need for further clarification or development.
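Sample packing concatenates several short training examples into one fixed-length sequence so that less compute is spent on padding. The sketch below shows only the bin-packing half of the idea, using a first-fit-decreasing heuristic chosen for illustration; it is not Axolotl's implementation, and a real trainer also has to stop packed examples from attending to each other (for example with per-example attention masks or position resets).

```python
from typing import List, Sequence


def pack_sequences(lengths: Sequence[int], max_len: int) -> List[List[int]]:
    """Group example indices into bins whose total length is at most max_len.

    First-fit decreasing: place the longest examples first, each into the
    first bin that still has room, opening a new bin when none does.
    """
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins: List[List[int]] = []
    free: List[int] = []  # remaining token budget per bin
    for i in order:
        if lengths[i] > max_len:
            raise ValueError(f"example {i} ({lengths[i]} tokens) exceeds max_len")
        for b, room in enumerate(free):
            if lengths[i] <= room:
                bins[b].append(i)
                free[b] -= lengths[i]
                break
        else:
            bins.append([i])
            free.append(max_len - lengths[i])
    return bins


lengths = [700, 300, 500, 400, 100]
bins = pack_sequences(lengths, max_len=1024)
print(bins)  # [[0, 1], [2, 3, 4]]: two 1000-token sequences instead of five padded ones
```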

Latent Space Discord

- Memary: An Autonomous Agent’s Long-term Memory: The Memary project on GitHub has introduced a new approach for long-term memory in autonomous agents, using document similarity searches over traditional knowledge graphs.

- The GPT-2 Chatbot Enigma: Intense debates have emerged on a GPT2-chatbot that showcases surprisingly advanced capabilities, leading to speculation that it might be a finetuned version of OpenAI’s GPT-2.

- Can Decentralized Training Compete with Big Tech?: Prime Intellect’s blog post discusses decentralized training as a plausible avenue for open-source artificial intelligence to compete with the proprietary models developed by large corporations with extensive GPU resources.

- Redefining LLMs with Modular Context and Memory: Discussions are emerging that suggest a paradigm shift towards designing autonomous agents with modularized shared context and memory capabilities for reasoning and planning, stepping away from the reliance on standalone large language models (LLMs).

- Educational Resources for Aspiring AI Enthusiasts: For those seeking to learn AI fundamentals, community members recommended resources including neural network tutorials such as the one on YouTube and courses like Learn Prompting, providing a glimpse into AI engineering and prompt engineering basics.

OpenInterpreter Discord

OS Start-up with a Vision: A user attempting to launch OS mode with Moondream as a local vision model received gibberish output; the discussion did not yield a solution or direct advice.

Integration Achievements: An exciting integration of OpenInterpreter outputs into MagicLLight was mentioned, with anticipation for a future code release and pull request including a stream_out function hook and external_input.

Hardware Hiccup Help: Queries about running OpenInterpreter on budget hardware like a Raspberry Pi Zero were brought up alongside requests for assistance with debugging startup issues. Community members offered to help with troubleshooting once more details were provided.

Push Button Programming: An individual fixed an external push button issue on pin 25 and shared a code snippet, also getting community confirmation that the fix was effective.

Volume Up on Tech Talk: There were mixed opinions on whether tech YouTubers grasp AI technologies. Separately, members advised on options for increasing speaker volume, including using M5Unified or an external amplifier.

tinygrad (George Hotz) Discord

- Peek into Tinygrad’s Inner Workings: The tinygrad GitHub repository was recommended to someone curious about tinygrad, an educational project for enthusiasts of PyTorch and micrograd. Another community member inquired about graph visualization, leading to the suggestion to use the GRAPH=1 environment variable to generate diagrams for addressing backward pass issues (#3572).

- The Discovery of Learning Resources: The community explored learning AI with TinyGrad through resources like MicroGrad and MiniTorch, with MiniTorch singled out as particularly useful for understanding deep learning systems. The “tinygrad Quick Start Guide” was highlighted as a starting point for beginners.

- Taking the Symbolic Route: Implementing a symbolic mean operation in TinyGrad brought up discussions about LazyBuffer’s interaction with data types and the practicality of variable caching for operations like sum and mean. A pull request demonstrated symbolic code execution, while further GitHub compare views tackled the development of symbolic mean with variables at tinygrad symbolic-mean-var-pull and GitHub changes by gh.

- Bounty Hunting for Mean Solutions: The community sought guidance on the bounty challenges “Mean of symbolic shape” and “Symbolic arrange”. Discussion centered on implementation nuances and practical approaches to these problems in the TinyGrad environment.

- Cluster of Curiosities: A spontaneous question about how a member discovered the Discord server triggered a chain of speculation, with the respondent admitting they did not recall how they found it, adding a touch of mystery to the channel discourse.

Cohere Discord

- Single-Site Restrictions in Command-R: The Command R+ API’s web_search tool only allows one website at a time; the workaround discussed involves separate API calls for each site.

- Feature Request Frenzy: Engineers are eager for Command-R improvements with an emphasis on Connectors, including multi-website searches and extra parameter control; to get familiar with current capabilities, refer to the Cohere Chat Documentation.

- Multi-Step Connector Capabilities Currently Limited: It was confirmed that multi-step tool use with connectors isn’t yet possible within Command-R.

- Generate Option Gone Missing: Questions arose about the disappearance of the ‘Generate’ option for fine-tuning models from the dashboard, leaving its future in question.

- Strategic Embedding Sought: Discussion revolved around cost-effective strategies for keeping data fresh for embeddings, with a focus on reindexing only modified segments.

- Nordic Networking Noted: Members highlighted operations within Sweden using Cohere and existing connections through the company Omegapoint, spanning both Sweden and Norway.
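For the embedding-freshness discussion, reindexing only modified segments usually comes down to remembering a fingerprint of each chunk that has already been embedded and re-embedding only the chunks whose fingerprint changed. The sketch below assumes documents are already split into chunks with stable IDs and uses SHA-256 content hashes; `plan_reindex` is a hypothetical helper, and the actual embedding and vector-store calls (Cohere or otherwise) are left out.

```python
import hashlib
from typing import Dict, List, Tuple


def content_hash(text: str) -> str:
    """Stable fingerprint of a chunk's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(indexed: Dict[str, str], current: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Decide which chunks to (re-)embed and which stored vectors to delete.

    indexed: chunk_id -> hash of the text that is already embedded.
    current: chunk_id -> current chunk text.
    """
    to_embed = [cid for cid, text in current.items() if indexed.get(cid) != content_hash(text)]
    to_delete = [cid for cid in indexed if cid not in current]
    return to_embed, to_delete


indexed = {cid: content_hash(t) for cid, t in {"a": "intro", "b": "pricing v1", "c": "old faq"}.items()}
current = {"a": "intro", "b": "pricing v2", "d": "new section"}
print(plan_reindex(indexed, current))  # (['b', 'd'], ['c'])
```

After embedding the chunks in `to_embed`, store their new hashes alongside the vectors so the next run compares against them.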

LangChain AI Discord

- Gemini Experience Wanted & Observability Tools Sought: Users in the general channel are seeking expertise in Gemini 1.0 or 1.5 models and discussing available tools for Large Language Model (LLM) observability, with interest in self-hosted, open-source options compatible with LlamaIndex. Meanwhile, there’s a push for enhanced SQL security when connecting to OpenAI models and a technical discussion on integrating autoawq with LangGraph for high-speed AI agent inference using exllamav2 kernels.

- Asynchronous Adventures and Google Drive Gyrations: Within the langserve channel, a user is challenged by the lack of async support in AzureSearchVectorStoreRetriever and is considering whether to push for an async feature or to craft an async wrapper themselves. Separately, the discussion turned to the nuances of using Google Drive libraries and the importance of setting the drive key as an environment variable.

- Showcase Extravaganza & Plugin Revelation: In share-your-work, there’s an insights-filled trip back to GPT-1’s role in initiating current LLM advancements and several LangChain use cases, including a “D-ID Airbnb Use Case” and a “Pizza Bot”, both featured on YouTube. The VectorDB plugin for LM Studio also made an appearance, aiming to bolster ChromaDB vector databases in server mode, while QuickVid was launched to deliver YouTube video summaries and fact checks.

- RAG Agents Go Multilingual & Private: The tutorials channel shared resources for French speakers interested in building RAG assistants with LangChain, Mistral Large, and LlamaIndex. Another guide demonstrates enhancing llama3’s performance by incorporating personal knowledge bases to create agentic RAG systems, revealing potential for more localized and data-rich AI capabilities.
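For the langserve question about missing async support, the usual do-it-yourself option is to run the blocking call in a worker thread so the event loop stays free. The sketch below uses the standard library's `asyncio.to_thread` (Python 3.9+). `BlockingRetriever` and its `search` method are hypothetical stand-ins, not the AzureSearchVectorStoreRetriever or LangChain interface, so the wrapper would need adapting to whichever method the real retriever exposes.

```python
import asyncio
import time
from typing import List


class BlockingRetriever:
    """Hypothetical synchronous retriever; the sleep simulates a network round trip."""

    def search(self, query: str) -> List[str]:
        time.sleep(0.1)
        return [f"doc about {query}"]


class AsyncRetrieverWrapper:
    """Exposes an async method by running the blocking call in a worker thread."""

    def __init__(self, inner: BlockingRetriever):
        self._inner = inner

    async def asearch(self, query: str) -> List[str]:
        return await asyncio.to_thread(self._inner.search, query)


async def main() -> List[List[str]]:
    retriever = AsyncRetrieverWrapper(BlockingRetriever())
    # The three lookups overlap in worker threads instead of running back to back.
    return await asyncio.gather(*(retriever.asearch(q) for q in ["azure", "drive", "rag"]))


if __name__ == "__main__":
    start = time.perf_counter()
    print(asyncio.run(main()), f"{time.perf_counter() - start:.2f}s")
```

A thread pool is a pragmatic workaround; if the underlying SDK offers a native async client, using it avoids the extra threads.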

Alignment Lab AI Discord

Alert: Illicit Spam Floods Channels: Numerous messages across different channels promoted explicit material involving “18+ Teen Girls and OnlyFans leaks,” accompanied by a Discord invite link. All messages were similar in nature, using emojis and @everyone to garner attention, and are flagrant violations of Discord’s community guidelines.

Prompt Moderation Action Required: The repeated posts are indicative of a coordinated spam attack necessitating immediate moderation intervention. Each message invariably linked to an external Discord server, potentially baiting users into exploitative environments.

Engineer Vigilance Advocacy: Members are encouraged to report such posts to maintain professional decorum. The content breaches both legal and ethical boundaries and does not align with the guild’s purpose or standards.

Discord Server Safety at Risk: The proliferation of these messages highlights a concern for server security and member safety. The spam suggests a compromise of server integrity, underscoring the need for robust anti-spam measures.

Community Urged to Disregard Suspicious Links: Engineers and members are urged to avoid engaging with or clicking on unsolicited links. Such practices help safeguard personal information and the community’s credibility while adhering to legal and ethical codes.

AI Stack Devs (Yoko Li) Discord

- Game Devs Gear Up for Gamification: Rosebud AI’s Game Jam invites creators to build 2D browser-based games using Phaser JS, with a $500 prize pool, and an AIxGames Meetup is slated for Thursday in SF to bring together AI and gaming professionals (RSVP here).

- NPC Revolution with LLMs: A developer has introduced LLM-powered NPC models and an inference stack, available on GigaxGames at GitHub, promising a single-LLM-call feature and open weights on the Hugging Face Hub, despite a hiccup with a broken API access link.

- Grappling with Gaming NPC Realities: Developers are experimenting with output compression, minimized model calls, and smaller models to improve NPC runtime performance and grappling with NPCs that break the fourth wall, with the Claude 3 model showing promise in empathetic interactions for better gaming experiences.

- Blog Teased on LLMs for NPCs: There’s an upcoming blog post chronicling the struggles and triumphs in finetuning LLMs for dynamic NPC behavior, pointing towards new strategies that could be shared within the community.

- Navigating Windows Woes with Convex: The Convex local setup does not play nice with Windows, causing users to encounter sticking points, though potential solutions like WSL or Docker have been floated, and a Windows-compatible Convex is reportedly on the horizon.

Skunkworks AI Discord

Binary Quest in HaystackDB: Curiosity piqued about the potential use of 2-bit embeddings in HaystackDB, while Binary Quantized (BQ) indexing becomes a spotlight topic due to its promise of leaner and faster similarity searches.
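Binary quantization keeps one bit per embedding dimension (its sign), which cuts float32 storage by 32x and turns similarity search into a Hamming distance over packed bits. The sketch below is a generic NumPy illustration on random data, not HaystackDB's implementation; real systems often re-score the top binary candidates with full-precision vectors, and 2-bit variants keep a little more magnitude information per dimension.

```python
import numpy as np


def binarize(embeddings: np.ndarray) -> np.ndarray:
    """Keep the sign of each dimension and pack 8 dimensions per byte."""
    return np.packbits(embeddings > 0, axis=-1)


def hamming_search(query: np.ndarray, db_bits: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k database rows with the fewest differing sign bits."""
    q_bits = binarize(query)
    distances = np.unpackbits(np.bitwise_xor(db_bits, q_bits), axis=-1).sum(axis=-1)
    return np.argsort(distances, kind="stable")[:k]


rng = np.random.default_rng(0)
db = rng.standard_normal((1000, 256)).astype(np.float32)  # 1000 vectors, 256 dims
db_bits = binarize(db)                                    # 32 bytes per vector
query = db[42] + 0.01 * rng.standard_normal(256)          # slightly perturbed copy of row 42

print(db.nbytes // db_bits.nbytes)          # storage reduction factor
print(hamming_search(query, db_bits, k=3))  # row 42 should rank first
```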

The Rough Lane of Fine-Tuning LLaMA-3: Engineers face a bumpy road with LLaMA-3 fine-tuning, battling issues from the model neglecting EOS token generation to embedding layer compatibility across bit formats.

Perplexed by Perplexity: The community debates fine-tuning LLaMA-3 for perplexity, suggesting that performance may not surpass the base model, possibly due to tokenizer-related complications.

Shining a Light on LLaMA-3 Improvement: A beacon of hope shines as one user successfully fine-tunes LLaMA-3 with model-specific prompt strategies, sparking interest with a GitHub pull request for the collective’s scrutiny.

Off-Topic Oddities Go Unsummarized: A solitary link in #off-topic stands alone, contributing no technical discussion to the collective knowledge pool.

Mozilla AI Discord

- Mozilla’s AI Talent Search: Mozilla AI is actively recruiting for various roles. Those interested in contributing to its initiatives can find more information and apply using the provided link.

- LM-buddy: Eval Tool for Language Models: The release of LM-buddy, an open-source evaluation tool for language models, stands to improve the assessment of LLMs. Contributors and users are encouraged to engage with the project through the given link.

- Prometheus Benchmarks LLMs in Judicial Roles: The Prometheus project has demonstrated the potential for local large language models (LLMs) to act as arbiters, a novel concept sparking discussion. Interested parties can join the conversation about this application by following the link.

- In-Depth Code Analysis Request for LLaMA: An engineer has noted that token generation in llama.cpp/llamafile is a bottleneck, with matrix-vector multiplications consuming 95% of the inference time for LLaMA2. This has led to speculation on whether loop unrolling contributes to the 30% better performance of llama.cpp over alternative implementations.

- LLaMA Tales of Confusion and Compatibility: The Discord discussed amusing mix-ups and pseudonymous confusion with LLaMA parameters. Challenges were also shared regarding integration with Plush-for-comfyUI and LLaMA3’s compatibility issues on the M1 MacBook Air, with a promise of priority testing on the M1 once current LLaMA3 issues are addressed.

Interconnects (Nathan Lambert) Discord

- OLMo Deep Dive Shared by AI Maverick: A detailed talk on “OLMo: Findings of Training an Open LM” by Hanna Hajishirzi was posted, featuring her work at the Open-Source Generative AI Workshop. Her pace of presenting substantive content on OLMo, Dolma, Tulu, etc., was noted to be rapid, possibly challenging for students to digest, thus reflecting her expertise and the extensive research involved in these projects.

- RL in LM-Based Systems Exposed: Key takeaways from John Schulman’s discussion on reinforcement learning for language model-based systems were encapsulated in a GitHub Gist, providing engineers with a compressed synthesis of his approach and findings.

- AI Leaderboard Limitations Pointed Out: A blog post by Sayash Kapoor and Benedikt Stroebl challenges the effectiveness of AI leaderboards for code generation, highlighting the high operational cost of LLM Debugger (LDB) despite its top rankings and calling into question the utility of such benchmarks when costs are ignored.

- SnailBot: A mention of an update or news related to SnailBot was made but lacked further information or context for a substantive summary.


LLM Perf Enthusiasts AI Discord

- Gamma Seeking AI Wizard: Gamma is hiring an AI engineer to drive innovation in AI-driven presentation and website design, with a focus on prompt engineering, metrics, and model fine-tuning; details are at Gamma Careers. Despite the need for an in-person presence in San Francisco, the role is open to those with strong Large Language Model (LLM) skills even if they lack extensive engineering experience.

- AI-Powered Enterprise on Growth Fast-track: Flaunting over 10 million users and $10M+ in funding, Gamma is looking for an AI engineer to help sustain its growth while enjoying a hybrid work culture within its profitable and compact 16-member team.

- The Case of GPT-4.5 Speculations: A tweet by @phill__1 hinted at gpt2-chatbot possessing ‘insane domain knowledge,’ leading to speculation that it might represent the capabilities of a GPT-4.5 version.

- Chatbot Causes Community Commotion: The engineer community is abuzz with the idea that the gpt2-chatbot could be an unintentional glimpse at the prowess of GPT-4.5, with a member succinctly endorsing it as “good”.

Datasette - LLM (@SimonW) Discord

- Snazzy Syntax-Nixing for Code-Gen: A user discussed the concept of incorporating a custom grammar within a language model to prioritize identifying semantic rather than syntactic errors during code generation.

- Data-fied Dropdowns for Datasette: Suggestions were exchanged on improving Datasette’s UX, including a front-page design that features dropdown menus to enable users to generate summary tables based on selected parameters, such as country choice.

- UX Magic with Direct Data Delivers: Members proposed enhanced UX solutions for Datasette, including dynamically updating URLs or building homepage queries adjusted by user selection to streamline access to relevant data.
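The dropdown and dynamic-URL ideas map naturally onto Datasette's table URLs, which accept column filters in the query string. A minimal sketch, assuming Datasette's `?column=value` exact-match filter syntax; the host, database, table, and column names are hypothetical, and a front-end dropdown would simply call something like this with the selected values.

```python
from typing import Mapping, Optional
from urllib.parse import quote, urlencode


def datasette_table_url(base: str, database: str, table: str,
                        filters: Mapping[str, Optional[str]]) -> str:
    """Build a table URL from dropdown selections; unset (None) values add no filter."""
    path = f"{base.rstrip('/')}/{quote(database)}/{quote(table)}"
    query = urlencode({col: val for col, val in filters.items() if val is not None})
    return f"{path}?{query}" if query else path


print(datasette_table_url("https://example.com/", "stats", "exports",
                          {"country": "Sweden", "year": None}))
# https://example.com/stats/exports?country=Sweden
```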

DiscoResearch Discord

- Loading Anomalies Enigma: A conversation highlighted that a process loads in 3 seconds on a local machine but faces delays when run through job submission, implying that the issue may not be related to storage but perhaps environment-specific overheads.

- Llama Trumps GPT-4 in Language Benchmark: Llama 3 outperformed GPT-4 in the ScandEval benchmark for German NLG, as shown on ScandEval’s leaderboard.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

CUDA MODE ▷ #triton (1 messages):

- Clarifying Triton Block Size Limits: A member inquired about the maximum size of a Triton block, noting that while they can create blocks with 4096 elements, they cannot do the same with 8192, suggesting there’s a discrepancy with the expected CUDA limits.

CUDA MODE ▷ #cuda (8 messages🔥):

- Seeking Flash Attention Code: A user asked how to download the Flash Attention code from Lecture 12, presented by Thomas Viehmann; no answer was provided in the chat.

- Understanding CUDA Reductions: A member resolved their own confusion about row-wise versus column-wise reductions in CUDA, realizing that the performance difference comes from coalesced versus non-coalesced memory accesses.

- Integer Division in Kernel Code: An optimization discussion took place regarding replacing integer division with bit shifts; it was suggested that nvcc or ptxas may optimize division when divisors are powers of 2, and a compiler explorer link was provided for further experimentation.

- CUDA Checkpointing Resource Shared: An external GitHub resource for CUDA checkpoint and restore utility, NVIDIA/cuda-checkpoint, was shared without further discussion.

- Comparing CUTLASS and cuBLAS Performance: A member benchmarked matrix multiplication with cuBLAS and CUTLASS, reporting that CUTLASS outperforms cuBLAS in a standalone profiler but that the gains disappear once it is integrated into Python, with reference to Thonking AI’s post about matrix multiplications.
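The bit-shift discussion rests on a simple identity: for non-negative integers, dividing by 2^k is a right shift by k, and the remainder is a mask with 2^k - 1. Signed division is where it gets subtle, because C truncates toward zero while an arithmetic right shift rounds toward negative infinity, so a compiler must add a correction for possibly negative operands. The Python sketch below (helper names are hypothetical) checks both facts; it says nothing about what nvcc or ptxas actually emit, which is what the Compiler Explorer link is for.

```python
def div_pow2(x: int, k: int) -> int:
    """x // 2**k for non-negative x, as a single right shift."""
    return x >> k


def mod_pow2(x: int, k: int) -> int:
    """x % 2**k for non-negative x, as a bit mask."""
    return x & ((1 << k) - 1)


def c_style_signed_div_pow2(x: int, k: int) -> int:
    """C's x / 2**k for signed x (truncation toward zero) using a shift.

    Python's >> on negative ints is an arithmetic shift (rounds toward -inf),
    so negative x needs a bias of 2**k - 1 added before shifting.
    """
    bias = (1 << k) - 1 if x < 0 else 0
    return (x + bias) >> k


print(div_pow2(37, 3), mod_pow2(37, 3))         # 4 5
print(-7 >> 1, c_style_signed_div_pow2(-7, 1))  # -4 -3 (C gives -7 / 2 == -3)
```

This is one reason index arithmetic on unsigned or provably non-negative values tends to compile to fewer instructions than the same math on signed integers.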

Links mentioned:

- Strangely, Matrix Multiplications on GPUs Run Faster When Given "Predictable" Data! [short]: Great minds discuss flops per watt.

- GitHub - NVIDIA/cuda-checkpoint: CUDA checkpoint and restore utility: CUDA checkpoint and restore utility. Contribute to NVIDIA/cuda-checkpoint development by creating an account on GitHub.

- Compiler Explorer - CUDA C++ (NVCC 11.7.0): #include <algorithm> #include <cassert> #include <cstdio> #include <cstdlib> __global__ void sgemmVectorize(int M, int N, int K, float alpha, f...

CUDA MODE ▷ #torch (4 messages):

- Curiosity About Double Kernel Launches: A member inquired as to why, during matrix multiplication in PyTorch, the profiler sometimes indicates two kernel launches.

- Clarification on PyTorch linear Function: Another member clarified that linear in PyTorch transposes the weight matrix by default (it computes x @ W^T + b), which might not lead to a performance difference.
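For the `linear` question, it helps to spell out what `torch.nn.functional.linear` computes: `y = x @ W.T + b`, with the weight stored as (out_features, in_features). The NumPy sketch below mirrors that math (NumPy stands in for PyTorch here as an assumption, for portability) and shows that the transpose is a strided view rather than a copy. GEMM libraries such as cuBLAS likewise take transpose flags as arguments, which is one reason a transposed operand need not cost an extra kernel; whether that explains the second launch in a given profile would need the trace itself.

```python
import numpy as np


def linear(x: np.ndarray, weight: np.ndarray, bias=None) -> np.ndarray:
    """Same math as torch.nn.functional.linear: y = x @ weight.T + bias.

    weight has shape (out_features, in_features), so it is transposed on use.
    """
    y = x @ weight.T
    if bias is not None:
        y = y + bias
    return y


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3))  # batch of 2, in_features = 3
w = rng.standard_normal((4, 3))  # out_features = 4
b = rng.standard_normal(4)

print(linear(x, w, b).shape)                                # (2, 4)
print(np.shares_memory(w, w.T), w.T.flags["C_CONTIGUOUS"])  # True False: a view, not a copy
```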

CUDA MODE ▷ #algorithms (2 messages):

- Introducing Effort Engine for LLMs: The Effort Engine algorithm was shared, with the capability of dynamically adjusting the computational effort during LLM inference. At 50% effort, it reaches speeds comparable to standard matrix multiplications on Apple Silicon, and at 25% effort, it’s twice as fast with minimal quality loss, as per the details on kolinko.github.io/effort.

- Effort Engine’s Approach to Model Inference: This new technique allows for selectively loading important weights, potentially enhancing speed without substantial quality degradation. It’s implemented for Mistral and should be compatible with other models after some conversion and precomputation, with the implementation available on GitHub.

- FP16-Only Implementation and Room for Improvement: The Effort Engine currently supports FP16 only, and while the multiplications are fast, other operations such as softmax and attention summation still need improvement.

- Potential Limitations of Effort Engine Explored: A member highlighted that while the Effort Engine’s approach is innovative, it might share limitations with activation sparsity methods, especially in batched computations with batch size greater than one due to misaligned activation magnitudes.
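To make the idea of selectively loading important weights concrete, the sketch below approximates a vector-matrix product by keeping only the input dimensions whose contribution looks largest, estimated as |x_i| times the norm of weight row i. This is a coarse, hypothetical simplification for illustration, not the Effort Engine algorithm (see the linked write-up for how it actually selects and precomputes weights). It does show why batching is awkward: each row of a batch would pick a different set of weights to read.

```python
import numpy as np


def approx_vecmat(x: np.ndarray, W: np.ndarray, effort: float) -> np.ndarray:
    """Approximate x @ W using the top `effort` fraction of input dimensions.

    Importance of input i is estimated as |x_i| * ||W[i, :]||; only the
    selected rows of W are read and multiplied.
    """
    n = x.shape[0]
    keep = max(1, int(round(effort * n)))
    importance = np.abs(x) * np.linalg.norm(W, axis=1)
    top = np.argpartition(importance, n - keep)[n - keep:]  # indices of the `keep` largest
    return x[top] @ W[top]


rng = np.random.default_rng(0)
x = rng.standard_normal(512)
W = rng.standard_normal((512, 256))
exact = x @ W
for effort in (1.0, 0.5, 0.25):
    rel_err = np.linalg.norm(approx_vecmat(x, W, effort) - exact) / np.linalg.norm(exact)
    print(f"effort {effort:.0%}: relative error {rel_err:.3f}")
```

On random Gaussian data the error grows quickly as effort drops; the quality figures quoted above come from real model activations, which are typically far more skewed than this synthetic input.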

Link mentioned: Effort Engine: A possibly new algorithm for LLM Inference. Adjust smoothly - and in real time - how many calculations you’d like to do during inference.

CUDA MODE ▷ #jobs (1 messages):

- InstaDeep is Hiring ML Engineers: InstaDeep Research is actively seeking Machine Learning Engineers who are passionate about high-performance ML engineering and its real-world applications. Candidates who excel in building custom CUDA kernels, state-of-the-art model architectures, quantisation, and distributed training should reach out for opportunities.

- Join a Collaborative Innovator: InstaDeep offers a stimulating, collaborative environment to work on real-life decision-making and technology products, and encourages applications from talented individuals eager to make a transformative impact. The company emphasizes innovation and real-world applications in Bio AI and Decision Making AI.

- Seeking Interns and Multi-Applicants: Those interested in internships can explore InstaDeep’s internship opportunities, and candidates with the relevant skills may apply to multiple positions, though applying to more than two is discouraged to avoid rejection.

- Reapplication Guidelines Suggested: Those who applied previously and were not selected are advised to wait before reapplying, particularly if they applied within the last six months, allowing time for changes in the applicant’s profile or the company’s needs.

Link mentioned: Job Offer | InstaDeep - Decision-Making AI For The Enterprise: no description found

CUDA MODE ▷ #youtube-recordings (2 messages):

- No Updates on Progress: A member confirmed that there have been no new developments to report currently.

- Profiling Techniques on Video: A YouTube video titled “Lecture 16: On Hands Profiling” was shared in the chat, providing a resource for learning about profiling techniques, although no specific description was provided.

Link mentioned: Lecture 16: On Hands Profiling: no description found

CUDA MODE ▷ #ring-attention (1 messages):

- Llama-3 Hits New Context Length Highs: Gradient has released Llama-3 8B Gradient Instruct 1048k, which extends the context length from 8k to 1048k tokens. The achievement demonstrates that state-of-the-art language models can adapt to long contexts with minimal training adjustments.

Link mentioned: gradientai/Llama-3-8B-Instruct-Gradient-1048k · Hugging Face: no description found

CUDA MODE ▷ #off-topic (1 messages):

- CUTLASS: A Dance of Integers: A member observed that CUTLASS, despite being a linear algebra library, primarily handles integer operations and index manipulations before calling advanced linear algebra routines. This characteristic rationalizes its nature as a header-only library without the need for complex linking.

CUDA MODE ▷ #llmdotc (721 messages🔥🔥🔥):

- CUDA Programming Discussions & Packed128 Types: There was a detailed debate about using the Packed128 custom struct to optimize memory access patterns for both reads and writes. Special attention was given to the proper construction and use of Packed128, and to whether to use explicit typecasting with floatX and BF16 inside kernels.

- Mixed-Precision Strategy Concerns: There’s concern about the impact of using BF16 throughout the entire model and whether stochastic rounding might affect training convergence. There are plans to compare the loss metrics between llm.c’s BF16 approach and standard PyTorch mixed-precision implementations.

- Profiling & Debugging: A member added NVTX ranges for profiling with Nsight and used CUDA events for more accurate GPU timings. A member also observed that the AdamW kernel may need optimization regarding FP32 atomics and scratch storage usage.

- Tooling & Infrastructure for Benchmarking: Members discussed the potential utility of external platforms like Modal for running benchmarks on standardized specs, specifically the benefits and limitations of Modal with regard to profiling tools like nvprof and nsys.

- PR Reviews Prepared for Merge & CI Suggestions: Several PRs were prepared for merging, mostly pertaining to the f128 and Packed128 optimizations for various kernels. Members also highlighted the need to keep branch documentation updated, compile with -Wall, and add a CI check ensuring that the Python and C implementations produce similar results.
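Stochastic rounding, raised in the mixed-precision discussion, rounds a value up with probability equal to how far it sits between its two representable neighbours, so the rounding error is zero on average and small updates are not systematically lost. Because bfloat16 is the upper 16 bits of a float32, the idea can be sketched by adding 16 random bits to the discarded half before truncating. This NumPy version is an illustration under that assumption, not llm.c's kernel, and it does not handle NaN or infinity.

```python
import numpy as np


def to_bf16_stochastic(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Round float32 values to bfloat16 stochastically; returns them as float32.

    Adds uniform random bits to the 16 low bits that bfloat16 drops, then
    truncates, so a value rounds up with probability equal to its fractional
    position between the two neighbouring bfloat16 values.
    """
    bits = np.asarray(x, dtype=np.float32).view(np.uint32)
    noise = rng.integers(0, 1 << 16, size=bits.shape, dtype=np.uint32)
    rounded = (bits + noise) & np.uint32(0xFFFF0000)
    return rounded.view(np.float32)


rng = np.random.default_rng(0)
# 1 + 2**-9 sits a quarter of the way from 1.0 to the next bfloat16 value, 1 + 2**-7.
x = np.full(100_000, 1.0 + 2**-9, dtype=np.float32)
y = to_bf16_stochastic(x, rng)
print(np.unique(y))                 # only the two neighbours, 1.0 and 1.0078125
print(y.astype(np.float64).mean())  # close to 1 + 2**-9 = 1.001953125 on average
```

Round-to-nearest would map every one of these values to 1.0, which is the systematic bias stochastic rounding is meant to avoid.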

Links mentioned:

- Nvidia’s H100: Funny L2, and Tons of Bandwidth: GPUs started out as devices meant purely for graphics rendering, but their highly parallel nature made them attractive for certain compute tasks too. As the GPU compute scene grew over the past cou…

- cuda::associate_access_property: CUDA C++ Core Libraries

- FP8-LM: Training FP8 Large Language Models: In this paper, we explore FP8 low-bit data formats for efficient training of large language models (LLMs). Our key insight is that most variables, such as gradients and optimizer states, in LLM traini...

- cuda::memcpy_async: CUDA C++ Core Libraries

- Strangely, Matrix Multiplications on GPUs Run Faster When Given "Predictable" Data! [short]: Great minds discuss flops per watt.


- Compiler Explorer - CUDA C++ (NVCC 12.2.1): #include <cuda/barrier> #include <cuda/std/utility> // cuda::std::move #include <cooperative_groups.h> #include <cooperative_groups/reduce.h> t...

- llm.c/dev/cuda/layernorm_backward.cu at master · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- llm.c/train_gpt2.cu at master · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- WikiText 103 evaluation · Issue #246 · karpathy/llm.c: I've seen some repos use WikiText-103 as the dataset they use to eval GPT-like models, e.g.: https://github.com/tysam-code/hlb-gpt/tree/main Add prepro script to download and preprocess and tokeni...

- llm.c/train_gpt2.cu at 9464f4272ef646ab9ce0667264f8816a5b4875f1 · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- Compiler Explorer - CUDA C++ (NVCC 12.3.1): #include <cuda_fp16.h> template<class ElementType> struct alignas(16) Packed128 { __device__ __forceinline__ Packed128() = default; __device__ __forceinline__ exp...

- Add script to run benchmarks on Modal by leloykun · Pull Request #311 · karpathy/llm.c: This PR adds a script to run the benchmarks on the Modal platform. This is useful for folks who do not have access to expensive GPUs locally. To run the benchmark for the attention forward pass on ...

- GitHub - graphcore-research/out-of-the-box-fp8-training: Demo of the unit_scaling library, showing how a model can be easily adapted to train in FP8.: Demo of the unit_scaling library, showing how a model can be easily adapted to train in FP8. - graphcore-research/out-of-the-box-fp8-training

- GitHub - NVIDIA/cudnn-frontend: cudnn_frontend provides a c++ wrapper for the cudnn backend API and samples on how to use it: cudnn_frontend provides a c++ wrapper for the cudnn backend API and samples on how to use it - NVIDIA/cudnn-frontend

- round 1 of some changes. we will now always write in fp32, even if dtype is set to float16 or bfloat16 · karpathy/llm.c@3fb7252: next up, we actually want to write in lower precision, when the dtype is set so

- fixed potential error and generalized gelu forward by ngc92 · Pull Request #313 · karpathy/llm.c: This adds a helper function for safe casting from size_t to ints (may want to have that in utils.h too). that macro is then used to convert the size_t valued block_size * x128::size back to a regu...

- Feature/packed128 by karpathy · Pull Request #298 · karpathy/llm.c: no description found

- Updated adamw to use packed data types by ChrisDryden · Pull Request #303 · karpathy/llm.c: Before Runtime total average iteration time: 38.547570 ms After Runtime: total average iteration time: 37.901735 ms Kernel development file specs: Barely noticeable with the current test suite: Bef...

- Add NSight Compute ranges, use CUDA events for timings by PeterZhizhin · Pull Request #273 · karpathy/llm.c: CUDA events allow for more accurate timings (as measured by a GPU) nvtxRangePush/nvtxRangePop Adds simple stack traces to NSight Systems: Sample run command: nsys profile mpirun --allow-run-as-roo...

- yet another gelu by ngc92 · Pull Request #293 · karpathy/llm.c: more complicated Packet128 for cleaner kernels

- Full BF16 including layernorms by default (minimising number of BF16 atomics) by ademeure · Pull Request #272 · karpathy/llm.c: I added 4 different new versions of layernorm_backward_kernel, performance is best for: Kernel 4 (using atomicCAS, no scratch, but rounding many times so probably worse numerical accuracy Kernel 6...

- Removing Atomic Adds and adding memory coalescion by ChrisDryden · Pull Request #275 · karpathy/llm.c: This PR is ontop of the GELU memory coalescion PR and is essentially just a rewrite of the backwards encoder to use shared memory instead of atomic adds and then using the Packed struct to do coale...

- Packing for Gelu backwards by JaneIllario · Pull Request #306 · karpathy/llm.c: Update gelu backwards kernel to do packing into 128 bits, and create gelu brackward cuda file Previous kernel: block_size 32 | time 0.1498 ms | bandwidth 503.99 GB/s block_size 64 | time 0.0760...

- karpath - Overview: GitHub is where karpath builds software.

- Remove FloatN & simplify adam/reduce with BF16 LayerNorms by ademeure · Pull Request #295 · karpathy/llm.c: The MULTI_GPU path is untested, but everything else seems to work fine. I kept the per-tensor "param_sizeof" as it's used in test_gpt2.cu for example, it's not much code and may be u...

- Speedup `attention_forward_kernel2` by implementing Flash Attention 2 kernel by leloykun · Pull Request #60 · karpathy/llm.c: This speeds up the attention_forward_kernel2 kernel by replacing the implementation with a minimal Flash Attention 2 kernel as can be found in https://github.com/leloykun/flash-hyperbolic-attention...

- flash-hyperbolic-attention-minimal/flash_attention_2.cu at main · leloykun/flash-hyperbolic-attention-minimal: Flash Hyperbolic Attention in ~[...] lines of CUDA - leloykun/flash-hyperbolic-attention-minimal

- Flashattention by kilianhae · Pull Request #285 · karpathy/llm.c: Faster Flash Attention Implementation Added attention_forward6 to src/attention_forward: A fast flash attention forward pass to src/attention_forward written without any dependencies. We are assumi...

- Added packing for gelu forwards kernel by ChrisDryden · Pull Request #301 · karpathy/llm.c: This PR implements packing for the Gelu forwards kernel using the example provided. The kernel dev file was also updated to show the impact of changing the data types for floatX. Before changes: to...

- Update residual_forward to use packed input by JaneIllario · Pull Request #299 · karpathy/llm.c: Update residual_forward to use 128 bit packed input, with floatX Previous Kernel: block_size 32 | time 0.1498 ms | bandwidth 503.99 GB/s block_size 64 | time 0.0760 ms | bandwidth 993.32 GB/s b...
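Several of the PRs above (Packed128, the packed GELU and residual_forward kernels, the packed AdamW) move data in 128-bit packs, and PR #313 mentions converting `block_size * x128::size` back to an int. Below is a minimal Python sketch of the launch arithmetic involved. It assumes that one pack is 16 bytes, so it holds `16 / sizeof(element)` values, and that each thread handles exactly one pack. `elems_per_pack` and `num_blocks` are hypothetical helper names, not llm.c functions.

```python
# Hypothetical helpers (not llm.c code) showing 128-bit packing arithmetic.
PACK_BYTES = 16  # one 128-bit vectorized load/store

# Bytes per element for the dtypes discussed in the PRs above.
DTYPE_BYTES = {"fp32": 4, "fp16": 2, "bf16": 2}

INT32_MAX = 2**31 - 1


def elems_per_pack(dtype: str) -> int:
    """How many values of `dtype` fit in one 128-bit pack."""
    return PACK_BYTES // DTYPE_BYTES[dtype]


def num_blocks(n: int, block_size: int, dtype: str) -> int:
    """Grid size when each thread handles exactly one pack of an n-element array."""
    pack = elems_per_pack(dtype)
    if n % pack != 0:
        raise ValueError("n must be a multiple of the pack width")
    per_block = block_size * pack
    # Analogous to the safe-cast idea: the product must fit in a 32-bit int.
    if per_block > INT32_MAX:
        raise OverflowError("block_size * pack width does not fit in int32")
    return (n + per_block - 1) // per_block  # integer ceil-div


if __name__ == "__main__":
    for dt in ("fp32", "bf16"):
        print(dt, elems_per_pack(dt), num_blocks(1 << 20, 256, dt))
```

With a 16-bit type, twice as many values fit in each pack, so the same array needs half as many blocks at a fixed `block_size`.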

CUDA MODE ▷ #rocm (8 messages🔥):

- Inquiry on Flash Attention 2 for ROCm 6.x: A member asked whether anyone has been building Flash Attention 2 for ROCm 6.x. They noted they had done so successfully for ROCm 5.6 and Torch 2.2 but were interested in a newer stack.

- Building Woes for Torch Nightly: Members discussed difficulties building against current versions such as Torch 2.3. One member wanted to use Torch nightly but ran into issues.

- Official Fork Lagging Behind: Members noted that the official AMD fork of Flash Attention is outdated. It is still at Flash Attention 2.0, and recent developments have not been ported over.

- Backward Pass Update Confirmation: When asked whether a backward pass had been added to AMD's Flash Attention, a member confirmed that it had.

- Flash Attention GitHub Repository: A link to the ROCm/flash-attention repository on GitHub ("Fast and memory-efficient exact attention") was shared as a resource.

Link mentioned: GitHub - ROCm/flash-attention: Fast and memory-efficient exact attention: Fast and memory-efficient exact attention. Contribute to ROCm/flash-attention development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #general (487 messages🔥🔥🔥):

- Conversion Issues with Llama 3 on WSL2: A user reported errors when converting a model to F16 in WSL2, hitting `RuntimeError: Unsloth: Quantization failed`. Even after rebuilding `llama.cpp` and redoing the quantization, the problem persisted.

- Model Checkpoint Merging Queries: One member asked how to merge a specific checkpoint to avoid overfitting from the latest epoch. Another member pointed them to the Unsloth wiki for more on checkpointing. The ensuing conversation covered options such as warmup steps and resuming from a checkpoint in the training functions.

- Anticipation for Phi-3: Members discussed the Phi-3 release and were eager to try the 3.8B version. The conversation ranged from speculation about release timelines to whether to wait for the larger 7B or 14B versions.

- Training Tips and Troubleshooting: Various users discussed their experiences and strategies with training models like Gemma, LLaMA-3, and Mistral. Tips included the importance of saving checkpoints and adjusting training parameters like max steps and batch sizes.

- Updates on Unsloth Tools: Members stressed updating Unsloth installations to the newest version and discussed recent repository updates. They also speculated about multi-GPU support, which is in development for the platform.
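The checkpoint question above (keeping an earlier epoch instead of the overfit last one) mostly comes down to mapping epochs to step counts. Below is a minimal sketch of that mapping. It assumes Hugging Face Trainer-style output folders named `checkpoint-<step>`, one optimizer step per `batch_size * grad_accum` examples, and a single device. Exact counts can differ with dataloader settings. `steps_per_epoch` and `checkpoint_for_epoch` are hypothetical helpers, not Unsloth functions.

```python
import re
from typing import Iterable, Optional


def steps_per_epoch(num_examples: int, batch_size: int, grad_accum: int = 1) -> int:
    """Optimizer steps per epoch, assuming one step per batch_size * grad_accum examples."""
    per_step = batch_size * grad_accum
    return (num_examples + per_step - 1) // per_step  # integer ceil-div


def checkpoint_for_epoch(
    checkpoint_dirs: Iterable[str],
    epoch: int,
    num_examples: int,
    batch_size: int,
    grad_accum: int = 1,
) -> Optional[str]:
    """Latest 'checkpoint-<step>' folder saved at or before the end of `epoch`."""
    last_step = epoch * steps_per_epoch(num_examples, batch_size, grad_accum)
    best, best_step = None, -1
    for name in checkpoint_dirs:
        m = re.fullmatch(r"checkpoint-(\d+)", name)
        if not m:
            continue  # skip unrelated folders such as logs
        step = int(m.group(1))
        if best_step < step <= last_step:
            best, best_step = name, step
    return best
```

For example, you could pass `os.listdir(output_dir)` as `checkpoint_dirs`. The chosen path can then be given to a Hugging Face `Trainer` via `trainer.train(resume_from_checkpoint=path)`, or the adapter saved in that folder can be loaded for merging.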

Links mentioned:

- Tweet from RomboDawg (@dudeman6790): Currently training Llama-3-8b-instruct on the full 230,000+ lines of coding data in the OpenCodeInterpreter data set. I wonder how much we can increase that .622 on humaneval 🤔🤔 Everyone pray my jun...

- Google Colab: no description found

- unsloth/Phi-3-mini-4k-instruct-bnb-4bit · Hugging Face: no description found

- Tweet from RomboDawg (@dudeman6790): Here is a full colab notebook if you dont want to copy the code by hand. Again thanks to @Teknium1 for the suggestion https://colab.research.google.com/drive/1bX4BsjLcdNJnoAf7lGXmWOgaY8yekg8p?usp=shar...

- DiscoResearch/DiscoLM_German_7b_v1 · Hugging Face: no description found

- Here We Go Joker GIF - Here We Go Joker Heath Ledger - Discover & Share GIFs: Click to view the GIF

- Weird Minion GIF - Weird Minion - Discover & Share GIFs: Click to view the GIF

- Wheel Of Fortune Wheel GIF - Wheel Of Fortune Wheel Wof - Discover & Share GIFs: Click to view the GIF

- gradientai/Llama-3-8B-Instruct-Gradient-1048k · Hugging Face: no description found

- Load: no description found

- mlabonne/orpo-dpo-mix-40k · Datasets at Hugging Face: no description found

- crusoeai/Llama-3-8B-Instruct-Gradient-1048k at main: no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- botbot-ai/CabraLlama3-8b at main: no description found

- arthrod/cicerocabra at main: no description found

- [FIXED] NotImplementedError: No operator found for `memory_efficient_attention_forward` with inputs · Issue #400 · unslothai/unsloth: I'm a beginner to try unsloth. I run the free notebook Llama 3 (8B), and then got the following error: I also encountered the following error during the first installing step: ERROR: pip's dep...

- GitHub - M-Chimiste/unsloth_finetuning: Contribute to M-Chimiste/unsloth_finetuning development by creating an account on GitHub.

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- schedulefree optimizers by winglian · Pull Request #30079 · huggingface/transformers: What does this PR do? integrates meta's https://github.com/facebookresearch/schedule_free for adamw & sgd https://twitter.com/aaron_defazio/status/1776320004465582331 Before submitting This ...

- no title found: no description found

- Type error when importing datasets on Kaggle · Issue #6753 · huggingface/datasets: Describe the bug When trying to run import datasets print(datasets.__version__) It generates the following error TypeError: expected string or bytes-like object It looks like It cannot find the val...

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- GitHub - facebookresearch/xformers: Hackable and optimized Transformers building blocks, supporting a composable construction.: Hackable and optimized Transformers building blocks, supporting a composable construction. - facebookresearch/xformers

- unsloth (Unsloth AI): no description found

- llama : improve BPE pre-processing + LLaMA 3 and Deepseek support by ggerganov · Pull Request #6920 · ggerganov/llama.cpp: Continuing the work in #6252 by @dragnil1 This PR adds support for BPE pre-tokenization to llama.cpp Summary The state so far has been that for all BPE-based models, llama.cpp applied a default pre...

Unsloth AI (Daniel Han) ▷ #random (48 messages🔥):

- Handling Out of Memory in Colab: A member shared a tip for combating Out of Memory (OOM) errors in Google Colab: run a Python snippet that collects garbage and clears the cache using the `torch` and `gc` modules. Other members appreciated the hack and plan to adopt it.

- Confusion Over the Performance Data of Llama Models: There was a discussion about perplexity differences when quantizing Llama models, specifically Llama 2 and Llama 3. The posted data may have been miscommunicated, as members pointed out possible swaps or errors in the bits per weight (BPW) and perplexity (PPL) columns.

- Phi-3 Now Supported: An update was shared that Phi-3 is now supported, and members expressed excitement about using it in their projects. A link to a Colab notebook was supposed to be shared but apparently was not.

- Phi-3 Integration Issues: Members discussed errors when trying to use the Phi-3 model in an Unsloth notebook, including messages saying a custom script was needed. The discussion focused on troubleshooting and making sure the proper notebooks were used.

- Llama 3 License Questions: A member asked about the Llama 3 license conditions. They wondered whether all models derived from it must carry certain name prefixes and display credits as the license requires. Concerns were also raised about possible license violations by models on Hugging Face.
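The exact Colab snippet from the OOM tip was not posted here. Below is a minimal sketch of the same idea, assuming PyTorch. If PyTorch is not installed, it only runs Python's garbage collector. `free_memory` is a hypothetical helper name.

```python
import gc


def free_memory() -> int:
    """Collect unreachable Python objects, then release PyTorch's cached GPU blocks."""
    collected = gc.collect()  # number of unreachable objects found
    try:
        import torch  # optional: the helper still works without PyTorch
    except ImportError:
        return collected
    if torch.cuda.is_available():
        # Returns cached-but-unused blocks to the driver; live tensors are untouched.
        torch.cuda.empty_cache()
    return collected
```

`torch.cuda.empty_cache()` cannot free memory that is still referenced. Drop those references first (for example `del model, trainer`, with hypothetical variable names) and then call `free_memory()`.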

Link mentioned: Out of memory - Wikipedia: no description found
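The quantization-table confusion in this channel hinges on two quantities. Perplexity is the exponential of the mean per-token negative log-likelihood. In quantization comparisons, BPW usually means bits per weight, which sets the approximate weight size. Below is a minimal sketch of both. It assumes natural-log NLLs and ignores unquantized layers and file metadata. The helper names are hypothetical.

```python
import math
from typing import Sequence


def perplexity(token_nlls: Sequence[float]) -> float:
    """exp of the mean per-token negative log-likelihood (natural log)."""
    if not token_nlls:
        raise ValueError("need at least one token")
    return math.exp(sum(token_nlls) / len(token_nlls))


def weights_gb(num_params: float, bpw: float) -> float:
    """Approximate weight storage in GB (1e9 bytes): params * bits-per-weight / 8."""
    return num_params * bpw / 8 / 1e9
```

Fewer bits per weight shrinks the model, and the added quantization error usually pushes PPL up. A row where lower BPW comes with clearly lower PPL for the same model is therefore a hint that the columns may have been swapped.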

Unsloth AI (Daniel Han) ▷ #help (230 messages🔥🔥):

-