AI News for 5/22/2024-5/23/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (380 channels, and 5410 messages) for you. Estimated reading time saved (at 200wpm): 551 minutes.

Special addendum for the AI Engineer World’s Fair callout yesterday - Scholarships are available for those who cannot afford full tickets! More speaker announcements are rolling out.

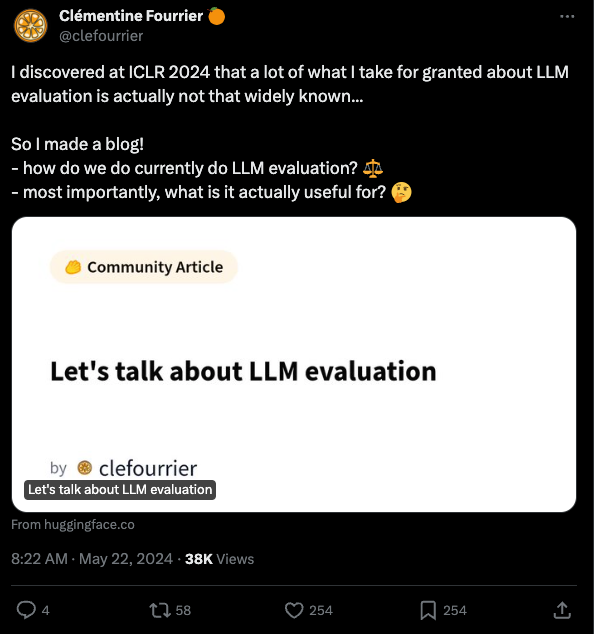

Many people know Huggingface’s Open LLM Leaderboard, but you rarely hear from the people behind it. Clémentine Fourrier made a rare appearance at ICLR (to co-present GAIA with Meta, something we cover on the upcoming ICLR pod) and is now back with a blog covering how she thinks about LLM Evals.

This is not going to be groundbreaking for those very close to the problem, but is a good and accessible “state of the art” summary from one of the most credible people in the field.

Our TL;DR: There are 3 main ways to do evals:

- Automated Benchmarking

  - Evals are made of a collection of sample inputs/outputs (usually comparing generated text with a reference or multiple choice) and a metric (to compute a score for a model)

  - for a specific task

    - Works well for very well-defined tasks

    - Common problems: models favoring specific choices based on the order in which they are presented in multi-choice evaluations, and generative evaluations relying on normalisations which can easily be unfair if not designed well

  - or for a general capability

    - e.g. GSM8K grade school math problems as a proxy for “good at math”, unicorns for “can draw”

  - LLM scores on automated benchmarks are extremely susceptible to minute changes in prompting

  - Biggest problem: data contamination. BigBench tried adding a “canary string” but compliance/awareness is poor. Tools exist to detect contamination, and people are exploring dynamic benchmarks, though this is costly.

- Humans as Judges

  - Done by tasking humans with 1) prompting models and 2) grading a model answer or ranking several outputs according to guidelines.

  - more flexibility than automated metrics.

  - prevents most contamination cases,

  - correlates well with human preference

  - Either as Vibe-checks

    - mostly constitute anecdotal evidence, and tend to be highly sensitive to confirmation bias

    - but some people like Ravenwolf are very systematic

  - Or as Arena (e.g. LMsys)

    - Votes are then aggregated in an Elo ranking (a ranking of matches) to select which model is “the best”.

    - high subjectivity: hard to enforce a consistent grading from many community members using broad guidelines

  - Or as systematic annotations

    - provide extremely specific guidelines to paid selected annotators, in order to remove as much of the subjectivity bias as possible (Scale AI and other annotation companies)

    - still expensive

    - can still fall prey to human bias

- Models as Judges

  - using generalist, high capability models

  - OR using small specialist models trained specifically to discriminate from preference data

  - Limitations:

    - tend to favor their own outputs when scoring answers

    - bad at providing consistent score ranges

    - not that consistent with human rankings

    - Introduce very subtle and un-interpretable bias in the answer selection

Evals are used to prevent regressions, to rank models, and to serve as a proxy for progress in the field.
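To make the Arena-style aggregation mentioned above more concrete, here is a minimal sketch of a sequential Elo update over pairwise votes. The K-factor of 32, the starting rating of 1000, and the model names are illustrative assumptions, not details taken from LMsys or from the blog post; real leaderboards may fit their ratings differently.

```python
from collections import defaultdict


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_ratings(votes, k=32.0, initial=1000.0):
    """Aggregate (winner, loser) votes into Elo ratings, one vote at a time.

    k and initial are assumed values chosen for illustration.
    """
    ratings = defaultdict(lambda: initial)
    for winner, loser in votes:
        # How surprising was this result, given the current ratings?
        win_prob = expected_score(ratings[winner], ratings[loser])
        delta = k * (1.0 - win_prob)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


# Hypothetical votes: each tuple is (preferred model, other model).
votes = [
    ("model-a", "model-b"),
    ("model-a", "model-c"),
    ("model-b", "model-c"),
    ("model-c", "model-a"),
]
ratings = elo_ratings(votes)
for name, rating in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {rating:.1f}")
```

Because the update is sequential, the final ratings depend on the order in which votes arrive, which is one reason a small number of votes gives a noisy ranking.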

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

NVIDIA Earnings and Stock Performance

- Strong earnings: @nearcyan noted NVIDIA beat earnings six quarters in a row, with revenue up 262% last year to $26B and margins at 75.5%. They also did a 10:1 stock split.

- Investor reaction: @nearcyan shared an article about NVIDIA’s earnings, with investors happy about the results. The stock is up over 260% in the past year.

- Market cap growth: @rohanpaul_ai highlighted NVIDIA’s success, with their market cap increasing over 6x to $2.3T, surpassing Google and Amazon. Revenue was up 262% and diluted EPS was up over 600%.

Mistral AI Model Updates

- Faster LoRA finetuning: @danielhanchen released a free Colab notebook for Mistral v3 with 2x faster LoRA finetuning using Unsloth AI. It uses 70% less VRAM with no accuracy loss.

- Mistral-7B v0.3 updates: @rohanpaul_ai noted Mistral-7B v0.3 was released with extended vocab to 32768, v3 tokenizer support, and function calling. 8x7B and 8x22B versions are coming soon.

- Mistral v0.3 on 🤗 MLX: @awnihannun shared that Mistral v0.3 base models are available in the 🤗 MLX community, generating 512 tokens at 107 tok/sec with 4-bit quantization on an M2 Ultra.

Meta’s Llama and Commitment to Open Source

- Calls for open-sourcing Llama: @bindureddy said Meta open-sourcing Llama-3 400B would make them the biggest hero and is the most important thing right now.

- Open source foundation: @ClementDelangue reminded that open-source is the foundation of all AI, including closed-source systems.

- Meta’s open source leadership: @rohanpaul_ai highlighted Meta’s leadership in open source beyond just Llama-3, with projects like React, PyTorch, GraphQL, Cassandra and more.

Anthropic’s Constitutional AI

- Claude’s writing abilities: @labenz shared a clip of @alexalbert__ from Anthropic explaining that Claude is the best LLM writer because they “put the model in the oven and wait to see what pops out” rather than explicitly training it.

- Claude Character work: @labenz is excited to read more about the “Claude Character” work @AmandaAskell is leading at Anthropic on building an AI assistant with stable traits and behaviors.

- Anthropic’s honest approach: @alexalbert__ explained that Anthropic is honest with Claude about what they know and don’t know regarding its ability to speculate on tricky philosophical questions, rather than purposely choosing to allow or prevent it.

Google’s AI Announcements and Issues

- LearnLM for personalized tutoring: @GoogleDeepMind announced new “LearnLM” models to enable personalized AI tutors on any topic to make learning more engaging.

- Inconsistencies in AI overviews: @mmitchell_ai noted Google’s new LLM-powered AI overviews seem to have some inconsistencies, like saying President Andrew Johnson was assassinated.

- Website poisoning attack: @mark_riedl successfully used a website poisoning attack on Google’s LLM overviews by modifying information on his own site.

- Changing meaning of “Googling”: @mmitchell_ai expressed concern that Google’s AI summaries are changing the meaning of “Googling” something from retrieving high-quality information to potentially unreliable AI-generated content.

Open Source Debates and Developments

- Open source as a strategy: @saranormous strongly disagreed with the idea that open source is just a charity, arguing it’s a strategy for building and selling, citing Linux’s success and large community of contributors.

- Open source success stories: @saranormous pushed back on claims open source can’t compete with big tech AI labs, noting Android’s massive mobile ecosystem as an example of open source success.

- Open source as AI’s foundation: @ClementDelangue stated open source is the foundation of all AI, including closed source systems from major labs.

- Openness for US leadership: @saranormous argued restricting open source AI won’t stop determined adversaries, only slow US innovation and cede leadership to others. She believes openness keeps the US on the offensive and is key to shaping AI with western values.

AI Safety and Regulation Discussions

- California AI bill criticism: @bindureddy criticized the new California AI bill for effectively banning open source AI by placing compute thresholds and restrictions on models.

- AI safety job market predictions: @teortaxesTex predicted the percentage of top math/CS grads aspiring to work in AI safety will fall, not rise, as new regulations mean few jobs in core AI development but plenty in the “safety apparatus.”

- DARPA funding for AI safety: @ylecun suggested perhaps AI safety research could be funded under a DARPA program for building better, safer AI systems.

- Key points of California AI bill: @rohanpaul_ai summarized key points of California’s newly passed AI bill, including capability shutdown requirements, annual certification, and restrictions on models trained with over 10^26 FLOPs.
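For a rough sense of scale for the 10^26 FLOPs threshold above, the sketch below uses the common rule of thumb that training a dense transformer costs about 6 floating-point operations per parameter per token. That approximation and the model configurations are assumptions for illustration; they are not how the bill itself measures compute.

```python
THRESHOLD_FLOPS = 1e26  # threshold quoted in the bill summary above


def approx_training_flops(n_params, n_tokens):
    """Rule-of-thumb estimate: ~6 FLOPs per parameter per training token."""
    return 6.0 * n_params * n_tokens


def exceeds_threshold(n_params, n_tokens, threshold=THRESHOLD_FLOPS):
    """True if the estimated training compute is above the threshold."""
    return approx_training_flops(n_params, n_tokens) > threshold


# Hypothetical (parameter count, training tokens) pairs, not real model specs.
examples = {
    "7B params, 2T tokens": (7e9, 2e12),
    "400B params, 15T tokens": (4e11, 1.5e13),
    "1T params, 20T tokens": (1e12, 2e13),
}

for label, (n_params, n_tokens) in examples.items():
    flops = approx_training_flops(n_params, n_tokens)
    status = "over" if exceeds_threshold(n_params, n_tokens) else "under"
    print(f"{label}: ~{flops:.1e} FLOPs ({status} the threshold)")
```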

Emerging AI Architectures and Techniques

- Similar concept learning across modalities: @DrJimFan shared an MIT study showing LLMs and vision models learn similar concept representations across modalities, without explicit co-training. He wants to see this extended to 3D shapes, speech, sound, and touch.

- PaliGemma in KerasNLP: @fchollet announced PaliGemma vision-language model is now in KerasNLP with JAX, TF and PyTorch support for image captioning, object detection, segmentation, VQA and more.

- Linearity of transformers: @arohan joked “we don’t need skip connections or normalization layers either” in response to a paper showing the linearity of transformers.

- Unnecessary transformer components: @teortaxesTex summarized recent papers suggesting many transformer components like attention, KV cache, FFN layers, and reward models may be unnecessary.

AI Benchmarking and Evaluation

- Prompt Engineering Guide milestone: @omarsar0 announced the Prompt Engineering Guide has reached 4M visitors and continues to add new advanced techniques like LLM agents and RAG.

- LLM evaluation blog post: @clefourrier published a blog post on how LLM evaluation is currently done and what it’s useful for, after realizing it’s not widely understood based on discussions at ICLR.

- Saturated benchmarks: @hwchung27 noted saturated benchmarks can give a false impression of slowing progress and become useless or misleading proxies for what we care about.

- Fine-tuning and hallucinations: @omarsar0 shared a paper suggesting fine-tuning LLMs on new knowledge encourages hallucinations, as unknown examples are learned more slowly but linearly increase hallucination tendency.

Emerging Applications and Frameworks

- No-code model fine-tuning: @svpino demonstrated no-code fine-tuning and deployment of open source models using an AI assistant, powered by GPT-4 and Monster API’s platform.

- RAG-powered job search assistant: @llama_index shared an end-to-end tutorial on building a RAG-powered job search assistant with Koyeb, MongoDB, and LlamaIndex with a web UI.

- Generative UI templates in LangChain: @LangChainAI added templates and docs for generative UI applications using LangChain JS/TS and Next.js with streaming agent calls and tool integrations.

- AI-powered reporting tool: @metal__ai highlighted their AI-powered reporting tool for running complex multi-step operations on company data to streamline information requests, ESG diligence, call summary insights and more.

Compute Trends and Developments

- M3 MacBook Pro matrix multiplication: @svpino tested matrix multiplication on an M3 MacBook Pro, seeing 3.72ms on GPU vs 14.4ms on CPU using PyTorch. Similar results with TensorFlow and JAX.

- Copilot+ PC demo: @yusuf_i_mehdi demo’d a Copilot+ PC (Surface Laptop) with CPU, GPU and 45+ TOPS NPU delivering unrivaled performance.

AI-Generated Voices and Identities

- Spite and revenge in East Asian cultures: @TheScarlett1 and @teortaxesTex expressed concern about the prominence of spite and desire for revenge in East Asian cultures, with people willing to burn their lives to get back at offenders.

- OpenAI non-disparagement clause: @soumithchintala noted an impressive follow-up from @KelseyTuoc with receipts that OpenAI proactively pressured employees to sign a non-disparagement clause with threats of exclusion from liquidity events.

- Scarlett Johansson/OpenAI controversy: @soumithchintala argued the Scarlett Johansson/OpenAI controversy makes the AI attribution conversation tangible to a broad audience. Cultural norms are still being established before laws can be written.

Miscellaneous AI News and Discussions

- Auto-regressive LLMs insufficient for AGI: @ylecun shared a Financial Times article where he explains auto-regressive LLMs are insufficient for human-level intelligence, but alternative “objective driven” architectures with world models may get there.

- Selling to capital allocators vs developers: @jxnlco advised founders to focus on selling to rich capital allocators, not developers, in order to fund their AI roadmap and get to a mass-market product later.

- Getting enough data for AGI: @alexandr_wang discussed on a podcast how we can get enough data to reach AGI, but it will be more like curing cancer incrementally than a single vaccine-like discovery.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Model Releases and Updates

- GPT-4o performance: In /r/LocalLLaMA, GPT-4o is reported to be 6 times faster and 12 times cheaper than the base model, with a 120K context window.

- Mistral-7B v0.3 updates: /r/LocalLLaMA announces that Mistral-7B v0.3 has been released with extended vocabulary, v3 tokenizer support, and function calling. Mixtral v0.3 was also released.

- Microsoft Phi-3 models: Microsoft released Phi-3-Small (7B) and Phi-3-Medium (14B) models following Phi-3-Mini, according to a post in /r/LocalLLaMA. Comparisons were made to Llama 3 70B and 8B models.

- “Abliterated-v3” models: New “abliterated-v3” models were released on Hugging Face, including Phi-3-medium-4k-instruct, Smaug-Llama-3-70B, Llama-3-70B-Instruct, and Llama-3-8B-Instruct. They have an inhibited ability to refuse requests and reduced hallucinations compared to previous versions.

AI Capabilities and Limitations

- Understanding LLMs through sparse autoencoders: /r/singularity discusses how Anthropic is making progress in understanding LLMs through sparse autoencoders in Claude 3 Sonnet. Extracting interpretable, multilingual, multimodal features could help customize model outputs without extensive retraining.

- Concerns about AI agents: In /r/MachineLearning, some argue that AI agents are overhyped and too early, with challenges around reliability, performance, costs, legal concerns, and user trust. Narrowly scoped automations with human oversight are suggested as a path forward.

AI Ethics and Safety

- OpenAI’s tactics toward former employees: Vox reports on OpenAI documents revealing aggressive tactics toward former employees, with concerns raised about short timelines to review complex termination documents.

- OpenAI employee resignations over safety: Business Insider reports that another OpenAI employee quit over safety concerns after two high-profile resignations. Krueger said tech firms can “disempower those seeking to hold them accountable” by sowing division.

- Containing powerful AI systems: Former Google CEO Eric Schmidt says most powerful future AI systems will need to be contained in military bases due to dangerous capabilities, raising concerns about an AI arms race and existential risk.

AI Applications and Use Cases

- Microsoft’s Copilot AI agents: The Verge reports on Microsoft’s new Copilot AI agents that can act like virtual employees to automate tasks, with potential for significant job displacement.

- AI in chip design and software development: Nvidia CEO Jensen Huang says chip design and software development can no longer be done without AI, and wants to turn Nvidia into “one giant AI”.

- AI helping the blind: /r/singularity shares a story of AI being used to help a blind 16-year-old through Meta AI glasses, serving as a reminder of AI’s potential to positively impact lives.

Stable Diffusion and Image Generation

- Stable Diffusion in classic photography: /r/StableDiffusion showcases examples of using Stable Diffusion in a classic photography workflow for tasks beyond inpainting, such as model training, img2img, and enriching photos.

- Generating images from product photos: Punya.ai shares a blog post on how generating images from product photos is getting easier with Stable Diffusion, with tutorials and ready-to-use tools available.

- Future of Stable Diffusion: /r/StableDiffusion discusses questions around the future of Stable Diffusion after Emad Mostaque’s departure from Stability AI, with concerns about direction and progress.

AI Discord Recap

A summary of Summaries of Summaries

1. Model Performance Optimization and New Releases:

- Gemini Tops Reward Bench Leaderboard: Gemini 1.5 Pro achieved top rankings in the Reward Bench Leaderboard as noted by Jeff Dean, outperforming other generative models.

- Mistral v0.3 Sparks Mixed Reactions: The release of Mistral v0.3 created buzz with its enhanced vocabulary and new features (Model Card), though users debated its performance improvements and integration complexities.

- Tensorlake Open-Sources Indexify: Tensorlake announced Indexify, an open-source real-time data framework, stirring enthusiasm for its potential in AI stacks.

2. Fine-Tuning Strategies and Challenges:

- Retaining Fine-Tuned Data Woes: Users faced difficulties with Llama3 models retaining fine-tuned data when converted to GGUF format, pointing to a confirmed bug discussed in the community.

- Axolotl’s Configuration Struggles: Persistent issues configuring Axolotl for dataset paths and loss scaling highlighted community recommendations for updates, including checking Axolotl’s documentation.

- CUDA Errors and GPU Utilization: Members reported CUDA out of memory errors on various GPUs, with suggestions of switching to QLoRA and using Docker images (example) to mitigate issues.

3. Open-Source AI Innovations and Collaborations:

- Open-Source Gains with AlphaFold Rivals: ProteinViz was introduced as an open-source alternative to AlphaFold3, detailed in a community blog post.

- StoryDiffusion Launches MIT-Licensed Alternative to Sora: StoryDiffusion entered the open-source scene, though weights are yet to be released (GitHub repo).

4. AI API Integrations and Community Efforts:

- Roleplay AI Models Launched: The Lumimaid 70B model was released for roleplay applications, with details on the OpenRouter announcement page.

- Batch Inference for GenAI: LlamaIndex highlighted the efficiency of batch inference for pre-processing data in GenAI applications, providing integration insights here.

- Anthropic and Gemini Models via OpenRouter: OpenRouter expanded its support to include Anthropic and Gemini models, detailed in their documentation and recent announcements.

5. GPU Optimization and Technical Workshops:

- GPU Optimization Workshop a Hit: The GPU optimization workshop, featuring experts from OpenAI, NVIDIA, and Meta, saw 2400+ registrants, with resources available on GitHub.

- Technical Fixes with Docker and CUDA: Members discussed common CUDA errors, recommending Docker images and Axolotl configurations for smooth GPU operations in AI workloads.

- LLM Training Cost Reports and Benchmarks: Detailed cost and efficiency reports on frontier model training costs were shared, with estimations of $250k for the largest Pythia model, underscoring the importance of optimizing GPU-hours used.

{% if medium == ‘web’ %}

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Mistral v0.3 Causes Stir: The announcement of Mistral v0.3 generated excitement but also some confusion due to a mix-up with version naming. To improve GPU efficiency with Mistral models, suggestions included increasing batch sizes and updating training codes.

- Unsloth Growth: Unsloth AI has expanded its repertoire, now supporting new models like Phi-3, Mistral v3, and a range of 4-bit quantized models. Experimentation with these models is facilitated by various Colab notebooks.

- Technical Tweaks and Fixes: Engineers are actively working on resolving issues, such as the “buggy” reserved tokens in LLaMa 3 and discussing complexities in training certain layers of models like Qwen, with recommended workarounds involving biases and layer training adjustments.

- Recognition and Resources: Unsloth AI has been recognized as part of GitHub’s 2024 Accelerator program, joining other projects in driving innovation in open-source AI. To aid in deploying these advancements, free notebooks have been provided for ease of access.

- Challenges in Language and Truthfulness: The engineering discourse included tackling the challenges posed by fact-checking and language-specific fine-tuning in LLMs, referencing studies like scaling-monosemanticity and In-Context RALM to aid in these pursuits.

Perplexity AI Discord

Scheduled Downtime for Database Boost: A scheduled downtime has been announced, set to commence at 12:00am EST and last approximately 30 minutes, to upgrade the database and improve performance and user experience.

Engineering Excitement Over Free Gemini: Engineering conversations revolved around the free usage of Gemini in AI Studio for high-volume tasks like fine-tuning, spurring discussions on data privacy and cost-saving strategies.

Perplexity Powers Past Performance Hurdles: Notable improvements in Perplexity’s web scraping have yielded speeds of 1.52s, significantly surpassing previous performances over 7s, while discussions highlighted the importance of parallel processing and efficient tooling in AI applications.

Comparative AI Discourse: Technically-inclined users compared Perplexity with Gemini Pro and ChatGPT, lauding Perplexity’s research and writing capabilities and flexible file management, with suggestions to include additional features like CSV support to reach new heights of utility.

API Anomalies and Alternatives Analysis: Community members discussed discrepancies in outputs between web and API versions of the same models, seeking clarifications on the observed inconsistencies, while also sharing their experiences in balancing model accuracy and utilization within API rate limits for platforms like Haiku, Cohere, and GPT-4-free.

LLM Finetuning (Hamel + Dan) Discord

Instruction Finetuning with ColBERT and Task Updates: Engineers discussed finetuning strategies for instruction embeddings, citing frameworks like INSTRUCTOR and TART as references. A project proposal for automating standup transcript ticket updates involved using examples of standup conversions correlated with ticket actions.

CUDA Woes and Workarounds: Persistent CUDA errors while running LLM models like llama 3 8b were a common issue, with remedies including adjusting batch sizes and monitoring GPU usage via nvidia-smi. Docker was recommended for managing CUDA library compatibility, with a link to a Docker image from Docker Hub provided.

Parameters and Efficient Model Training: Queries emerged about Axolotl’s default configuration parameters and optimization strategies for training on A100 and H100 GPUs, where using bf16 and maximizing VRAM usage were among the suggested strategies. Discussions also extended to newer optimizers like Sophia and Adam_LoMo.

Accelerating Free Credits and Workshop Excitement: Modal’s fast credit allocation was commended, and excitement built around a GPU Optimization Workshop featuring representatives from OpenAI, NVIDIA, Meta, and Voltron Data. Additionally, there was anticipation for a recording of an upcoming talk by Kyle Corbitt.

Model Fine-Tuning and Training Factors: Fine-tuning LLMs to generate layouts, troubleshooting Axolotl’s dataset paths, and considering LoRA hyperparameters were topics of interest. The use of GPT-4 as a judge for level 2 model evaluations and troubleshooting Axolotl on Modal due to gated model access issues were also discussed.

Deployment Dilemmas: Engineers encountered challenges when deploying trained models to S3 on Modal, with solutions including using the modal volume get command and mounting an S3 bucket as a volume, as described in Modal’s documentation.

Paper and Tutorial References: The community shared valuable learning resources, such as a YouTube demo on EDA assistant chatbots. They also appreciated illustrative examples from Hamel and Jeremy Howard, with references to both a tweet and a GitHub repo.

HuggingFace Discord

- AlphaFold Rivals and Advances: A member introduced ProteinViz, an alternative to AlphaFold3, showcasing the tool for predicting protein structures, along with a community blog post on AlphaFold3’s progress.

- Transparent Gains with LayerDiffusion: Diffuser_layerdiffuse allows for creating transparent images from any base model, raising the bar for foreground image separation accuracy.

- Effective Minimal Training Data Use: A discussion noted that training Mistral with as few as 80 messages to perceive itself as a 25-year-old was surprisingly effective, hinting at efficient fine-tuning strategies.

- AI Enters Query Support Role: Enthusiasm was shown for using AI to query lengthy software manuals, with members pondering the practicality of feeding a 1000-page document to an AI for user support.

- Model Training Memory Management: Utilizing `torch_dtype=torch.bfloat16`, one member combated CUDA OOM errors during Mistral model SFT, reinforcing the instrumental role of tensor precision in managing extensive computational workloads on GPUs.
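As a back-of-the-envelope illustration of why loading weights in bfloat16 helps with out-of-memory errors, the sketch below compares the memory needed for model weights alone at different precisions. The 7-billion-parameter count is an assumed round figure, and the estimate ignores gradients, optimizer state, and activations, which add substantially more during fine-tuning.

```python
# Bytes used to store one element of each dtype.
BYTES_PER_ELEMENT = {"float32": 4, "bfloat16": 2, "float16": 2}


def weight_memory_gib(n_params, dtype):
    """Memory needed to hold the model weights alone, in GiB."""
    return n_params * BYTES_PER_ELEMENT[dtype] / 2**30


N_PARAMS = 7_000_000_000  # assumed round figure for a "7B" model

for dtype in ("float32", "bfloat16"):
    print(f"{dtype}: {weight_memory_gib(N_PARAMS, dtype):.1f} GiB for weights")
```

Halving the bytes per element halves the weight footprint, which is often the difference between fitting on a single GPU and not.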

Nous Research AI Discord

Flash Attention Needed for YaRN: Efforts to implement flash attention into the YaRN model are meeting challenges, with some progress but not a perfect fit yet.

Rust Rising Among AI Enthusiasts: Increasing interest and discussions around using Rust for machine learning, with members sharing resources like Rust-CUDA GitHub and rustml - Rust, while recognizing the dominance of Python in AI.

Nous Research Expanding Teams: Nous Research is on the hunt for new talent, as evidenced by their recent hiring announcement and a call to apply via their Google Form.

Python vs Rust in AI Careers: A robust debate over Python’s primacy in AI careers with members bringing up alternatives like Rust or Go, alongside sharing insights from AI experts like Yann LeCun’s views on focusing beyond LLMs for next-gen AI systems.

RAG’s Validity in Question: Proposals made to enhance RAG’s model context, emphasizing the need for context accuracy by referencing a debate over the reliability of Google’s AI drawing conclusions from outdated sources.

Stability.ai (Stable Diffusion) Discord

- Emad’s Mysterious Weights Countdown: Speculation is high about Stable Diffusion’s forthcoming weight updates, with a user implying an important release could happen in two weeks, sharing excitement with a Star Wars analogy.

- Clearer Visions Ahead for Stable Diffusion: There’s ongoing discussion regarding Stable Diffusion 3 producing blurred images, particularly for female characters; modifying prompts by removing ‘woman’ seemed to offer a clearer output.

- Laptop Muscle Matchup: Rumbles in the tech space about ASUS AI laptops and NVIDIA’s rumoured 5090 GPU, accompanied by a PC Games Hardware article, are drawing attention and debate among users, with a focus on specifications and performance authenticity.

- AI Tool Smackdown: A brief exchange compared MidJourney and Stable Diffusion, with one camp favoring MJ for quality, while suggesting hands-on experience with the latter might sway opinions.

- Installation vs. Cloud: The eternal debate on local installation versus utilizing web services for Stable Diffusion’s usage continues, with a new angle brought to light concerning the performance with AMD GPUs, and a general guideline suggesting installation for those with robust graphics cards.

LM Studio Discord

LLama Lamentations & Local Model Logistics: There’s unrest over Llama 3’s 8k context performance, with members revealing it falls short of expectations. Despite being the topic of debate, suggestions for improving its performance, such as introducing longer contexts up to 1M, remain theoretical.

Discussions Turn to Vision Models: OCR discussions saw mixed reviews of vision models like LLaVA 1.6 as users recommend Tesseract for reliable text extraction. Interest in Vision Language Models (VLMs) is evident, but deploying them effectively with web server APIs requires attentive configuration, including apikey incorporation.

Multimodal Mishaps and Merits: Idefics 2.0 multimodal’s compatibility sparked interest, yet it seems to trip on existing infrastructure like llama.cpp. Meanwhile, Mistral-7B-Instruct v0.3 emerges as part of the dialogue, boasting extended vocabulary and improved functional calling (Model Card). In parallel, Cohere’s Aya 23 showcases its talents in 23 languages, promising to sway future conversations (Aya 23 on Huggingface).

GPU Grows but Guides Needed: The adoption of 7900xt graphics cards is underway among members seeking to amp up their tech game. However, guidance for effective environment setups, such as treating an RX 6600 card as gfx1030 on Fedora, remains a precious commodity.

Storage Solved, Support Summoned: One member’s move to allocate an M.2 SSD exclusively for LM Studio paints a picture of the ongoing hardware adaptations. On the flip side, GPU compatibility queries like dual graphics card support highlight the community’s reliance on shared wisdom.

Modular (Mojo 🔥) Discord

- Mojo on the Rise: Users observed compilation errors in Mojo nightly `2024.5.2305` and shared solutions like explicit type casting to `Float64`. A debate over null-terminated strings in Mojo brought up performance concerns and spurred discussions on potential changes referencing GitHub issues and external resources such as the PEP 686 on UTF-8 string handling.

- Syntax Shuffle: The replacement of the `inferred` keyword with `//` for inferred parameters in Mojo stirred mixed reactions and highlighted the trade-off between brevity and clarity. A proposal for `f-string`-like functionality encouraged the exploration of a `Formatable` trait, setting the stage for possible future contributions.

- Decorators and Data Types Discussed: In the Mojo channels, discourse ranged from using `@value` decorators for structs, seen as valuable for reducing boilerplate, to the feasibility of custom bit-size integers and MLIR dialects for optimizing memory use. The need for documentation improvement was highlighted by a query about FFT implementation in Mojo.

- Structured Logging and GitHub Issue Management: Participants suggested the creation of a dedicated channel for GitHub issues to improve tracking within the community. Additionally, the importance of proper syntax and notation in documentation became clear as users addressed confusion caused by the misuse of `**` in documentation, emphasizing the need for consistency.

- Community and Updates: Modular released a new video on a community meeting, with details found in their public agenda, and shared their weekly newsletter, Modverse Weekly - Issue 35, keeping the community informed and engaged with the latest updates and events.

Eleuther Discord

Pythia’s Pocketbook: Discussing the cost of training models like Pythia, Stellaathena estimated a bill of $250k for the largest model, mentioning efficiency and discounted GPU-hour pricing in calculations.
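The arithmetic behind an estimate like this is simply GPU-hours multiplied by an hourly price. As a minimal sketch, the snippet below works backwards from the quoted $250k to the GPU-hours it would imply at a few hourly rates. The rates are hypothetical placeholders, not figures from the discussion.

```python
def training_cost_usd(gpu_hours, usd_per_gpu_hour):
    """Total cost of a run: GPU-hours times the hourly price."""
    return gpu_hours * usd_per_gpu_hour


def implied_gpu_hours(total_cost_usd, usd_per_gpu_hour):
    """GPU-hours that a given budget buys at a given hourly price."""
    return total_cost_usd / usd_per_gpu_hour


QUOTED_COST_USD = 250_000  # estimate quoted in the summary above

# Hypothetical hourly prices; discounted or on-demand rates vary widely.
for price in (1.0, 2.0, 4.0):
    hours = implied_gpu_hours(QUOTED_COST_USD, price)
    print(f"${price:.2f}/GPU-hour -> {hours:,.0f} GPU-hours")
```

The same budget spans a wide range of GPU-hours depending on the rate, which is why the discount assumption matters as much as the hardware efficiency.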

Cost-Efficiency Report Needs Reviewers: A forthcoming report on frontier model training costs seeks peer review; interested parties would assess GPU-hours and the influence of GPU types like A100 40GB.

LeanAttention Edging Out FlashAttention?: A recently shared paper introduces LeanAttention, which might outperform FlashAttention, raising debates on its innovation. The community also joked about unorthodox practices to improve model benchmarks, playfully noting, “The secret ingredient is crime.”

Interpretability’s New Frontiers: A new paper was noted for opening research doors in interpretability, kindling curiosity on its implications for future studies.

Evaluating Large Models: Tips were exchanged on running the lm eval harness on multi-node SLURM clusters and on setting parameters like num_fewshot for evaluations. Members reported challenges with reproducibility and with internet access on compute nodes.
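As a rough illustration of the num_fewshot and offline-node points above, the sketch below builds a single lm eval harness command and an environment that asks Hugging Face libraries to stay off the network. It assumes the v0.4-style `lm_eval` CLI; the checkpoint path and task list are hypothetical, and how the command gets wrapped for SLURM depends on the cluster.

```python
import os
import shlex


def build_lm_eval_command(model_path, tasks, num_fewshot, batch_size=8):
    """Build the argument list for one lm_eval CLI invocation."""
    return [
        "lm_eval",
        "--model", "hf",
        "--model_args", f"pretrained={model_path}",
        "--tasks", ",".join(tasks),
        "--num_fewshot", str(num_fewshot),
        "--batch_size", str(batch_size),
    ]


def offline_env(base_env=None):
    """Copy an environment and ask Hugging Face libraries not to use the network."""
    env = dict(os.environ if base_env is None else base_env)
    env["HF_HUB_OFFLINE"] = "1"
    env["HF_DATASETS_OFFLINE"] = "1"
    return env


# Hypothetical checkpoint path and tasks, for illustration only.
cmd = build_lm_eval_command("/checkpoints/my-model", ["hellaswag", "arc_easy"], num_fewshot=5)
print(shlex.join(cmd))
```

Pinning num_fewshot explicitly in the command, rather than relying on task defaults, is one simple way to make runs easier to compare. Models and datasets would need to be cached before the job lands on a node without internet access.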

OpenAI Discord

-

Model Prefers YAML, Causing JSON Jealousy: Engineers noted anecdotally that the AI model favors YAML handling over JSON, sparking both technical curiosity and humor among discussion participants regarding model preferences despite development efforts being skewed towards JSON.

-

GPT-4o and DALL-E 3 Create Artful Collabs: Conversations revealed that GPT-4o improves the interpretation of image prompts, producing better outputs when paired with DALL-E 3 than DALL-E 3 does in isolation. This synergy illustrates the evolving interplay between text and image models.

-

Newlines in the Playground Cause Formatting Frustrations: The OpenAI playground newline handling has been causing usability issues, with reports of inconsistent pasting results. This seemingly minor technical hiccup has sparked broader discussions on formatting and data presentation.

-

Anthropic Paper Ignites Ideas and Speculations: The community discussed a paper from Anthropic on mechanistic interpretability and its implications, touching on how AI might anthropomorphize based on training data, reflecting concepts like confinement and personas in unexpected ways. Technical debates ensued regarding the impact of such findings on future AI development.

-

Prompt Engineering Secrets and Critiques Shared: Technical discussions included strategies for prompt engineering, with practical advice being exchanged on system prompts, which some found lacking. Issues such as models disappearing from sidebars and the semantics of “step-by-step” prompts were dissected, reflecting a deep dive into the minutiae of user experience and AI interactivity.

CUDA MODE Discord

Full House at the GPU Optimization Workshop: The GPU optimization workshop drew strong engagement, with more than 2,400 registrants and valuable sessions from experts including Sharan Chetlur (NVIDIA), Phil Tillet (OpenAI), and William Malpica (Voltron Data). Enthusiasts can RSVP for future interactions here, with additional resources available on GitHub.

Breaching CUDA Confusion: A member clarified that __global__ CUDA functions can’t be simultaneously __host__ due to their grid launch setup, and they posited the theoretical utility of a __global__ function agnostic of threadIdx and blockIdx.

Tricky Transformation with Triton: One user discussed performance drops when converting a kernel from FP32 to FP6 using triton+compile, speculating on the potential impact of inplace operators.

AI Research Synopsis Spices Up Discussions: A weekly AI research spotlight surfaced, featuring analysis on works like KAN, xLSTM, and OpenAI’s GPT-4. The discussion extended to the computationally intensive nature of KANs owing to activation-based edge computation.

The CUDA Cul-de-Sac and Vulkan Ventures: Conversations veered into contributions and coding concerns, including a member’s flash-attention repository stalling, GPU model benchmarks like 7900xtx versus 3090, and Vulkan’s failure to impress in a heat transfer simulation.

LLM.C Lurches Forward: There was a bustling exchange about llm.c, with members celebrating the integration of HellaSwag evaluation in C, debating CUDA stream optimization for speed, and sharing the challenge of scaling batch sizes without training disruptions.

Please note, some quotes and project links have been shared verbatim as no additional context was provided.

OpenAccess AI Collective (axolotl) Discord

- Quantization Quandaries with Llama 3: Technophiles are discussing the challenging quantization of Llama 3 models, noting a performance drop due to the model’s sensitivity to bit precision.

- Models in the Spotlight: Some engineers are pivoting their attention back to Mistral models for fine-tuning issues, while the Aya models, especially the 35B version released on Hugging Face, are stirring excitement due to their architecture and training prospects.

- GPU Roadblocks: AI mavens are finding GPU memory limitations a steep hill to climb, with frequent `CUDA out of memory` errors during fine-tuning efforts on high-capacity cards like the RTX 4090. They are investigating alternatives such as QLoRA.

- Published Pearl: Community members are hitting the library stacks with the publication of an academic article on medical language models, available through this DOI.

- Troubleshooting Colossus: Members are brainstorming on multi-GPU setups for fine-tuning Llama-3-8B models with prompt templates in Colab, while wrestling with pesky mixed precision errors stating “Current loss scale at minimum.” Resources are being shared, including the Axolotl dataset formats documentation, to support these compute-heavy efforts.
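To make the GPU memory point above concrete, here is a back-of-the-envelope sketch of why full fine-tuning of an 8B-parameter model overflows a 24 GB card while a QLoRA-style setup may fit. The byte counts are common rules of thumb, the 1% adapter fraction is an assumption, and activations, quantization constants, and framework overhead are ignored, so treat the numbers as rough lower bounds.

```python
GIB = 1024 ** 3


def full_finetune_gib(n_params):
    """fp16 weights + fp16 gradients + two fp32 Adam moments per parameter."""
    bytes_per_param = 2 + 2 + 4 + 4
    return n_params * bytes_per_param / GIB


def qlora_gib(n_params, adapter_fraction=0.01):
    """4-bit frozen base weights plus fully trained low-rank adapters.

    adapter_fraction is an assumed ratio of adapter parameters to base parameters.
    """
    base = n_params * 0.5  # 4 bits = 0.5 bytes per frozen weight
    adapters = n_params * adapter_fraction * (2 + 2 + 4 + 4)
    return (base + adapters) / GIB


n = 8e9  # roughly the size of Llama-3-8B
print(f"full fine-tune: {full_finetune_gib(n):.1f} GiB")
print(f"QLoRA estimate: {qlora_gib(n):.1f} GiB")
```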

LAION Discord

-

NSFW Content in Datasets Sparks Debates: Technical discussions have surfaced regarding the challenges of processing Common Crawl datasets, specifically addressing the issue of NSFW content and highlighting a code modification for image handling at cc2dataset. Simultaneously, debates question Hugging Face’s hosting policies for datasets that could contain sensitive materials, with their own uncurated dataset publication coming into scrutiny.

-

Content Moderation Challenges and Legal Worries: The LAION community discussed the balance between dataset accessibility and moderation, with some highlighting the convenience of a complaint-driven restriction system on Hugging Face. Concerns regarding anime-related datasets and the pressure they put on users to discern pornographic content have sparked serious discussions about potential legal repercussions.

-

Dissatisfaction with GPT-4o’s Performance: Users have expressed dissatisfaction with GPT-4o, citing problems with self-contamination and a perceived failure to meet the performance standards set by GPT-4 despite improvements in multi-modal functionality.

-

Transformer Circuits and Autoencoders Stir Technical Debate: A call for transparency in AI systems, especially in the Transformer Circuits Thread, reflects AI engineers’ concerns about the possible influence of models on societal norms. Separately, some users dissect the difference between MLPs and autoencoders, pinpointing the importance of clear architectural distinctions.

-

New Research Unveiled: Anthropic’s latest insights on the Claude 3 Sonnet model have been brought to attention, revealing neuron activations for concepts such as the Golden Gate Bridge and the potential for influential model tuning, with detailed research published at Anthropic.

Interconnects (Nathan Lambert) Discord

OpenAI’s Alleged NDA Overreach: OpenAI leadership claimed ignorance over threats to ex-employees’ vested equity for not signing NDAs, but documents with leadership’s signatures suggest otherwise. Ex-employees were pressured with seven-day windows to sign or face losing millions.

Model Performance Headlines: Gemini 1.5 Pro impressively topped the Reward Bench Leaderboard for generative models, as indicated by Jeff Dean’s tweet, while News Corp and OpenAI entered a multi-year deal, allowing AI utilization of News Corp content, as per this announcement.

Merch in a Flash: Nathan Lambert’s Shopify store, Interconnects, launches amidst lighthearted uncertainty about operations and with community-driven product adjustments for inclusivity; he assures ethical sourcing.

The Emergence of AI Influencers?: TikTok’s teen demographic reportedly resonates with content generated by bots, highlighting the potential for AI-created content to go viral. The platform stands out as a launchpad for careers like Bella Poarch’s.

Anthropic AI’s Golden Gate Focus: A whimsical experiment by Anthropic AI altered Claude AI’s focus to obsess over the Golden Gate Bridge, leading to a mix of amusement and interest in the AI community.

OpenRouter (Alex Atallah) Discord

OpenRouter Swings Open the Gates to Advanced AI Tools: OpenRouter now facilitates the use of Anthropic and Gemini models with a syntax matching OpenAI’s, broadening the landscape for AI practitioners. Supported tool calls and function usage instructions can be found in the documentation.
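For readers unfamiliar with the OpenAI-style tool syntax mentioned above, the following is a minimal sketch of what such a request body might look like. The `get_weather` tool is hypothetical, the model slug is an assumption about OpenRouter's naming, and the sketch only builds the JSON body without sending it; consult the OpenRouter documentation for the supported fields.

```python
import json

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_tool_call_request(model, user_message):
    """Return an OpenAI-style chat completion body that declares one tool."""
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",  # hypothetical tool, for illustration only
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [weather_tool],
    }


# The model slug below is an assumed example, not taken from the announcement.
body = build_tool_call_request("anthropic/claude-3-opus", "What's the weather in Paris?")
print(json.dumps(body, indent=2))
```

The appeal of a shared syntax is that swapping the `model` string is, in principle, the only change needed to try the same tool definition against a different provider.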

Lumimaid 70B Sashays into the AI Theater: Aimed specifically at roleplay scenarios, the Lumimaid 70B model was tweaked and let loose by the NeverSleep team and details can be scooped from their announcement page.

Calling all Roleplayers to a New Digital Realm: A new roleplaying app granting a free tier has launched, leveraging OpenRouter’s multifaceted AI characters, with the creator keen on gathering feedback via RoleplayHub.

Tech Snags and Community Dialogues Tangle in General Channel: Software patches were applied to mend streaming issues with models like Llama-3. The release of Mistral-7B v0.3 caused some confusion because of its new vocabulary and tokenizer, and it remained unclear whether it should get a distinct model route or be a direct upgrade to the existing route. Meanwhile, Cohere’s Aya initiative garnered attention for its multilingual AI research spanning 101 languages; find out more here.

Economies of Scale Kick in for AI Model Access: Sharp price reductions have been executed for several models, including a tempting 30% off for nousresearch/nous-hermes-llama2-13b, among others. These markdowns are stirring up the market for developers and enthusiasts alike.

LlamaIndex Discord

-

Batch Inference for GenAI Pre-Processing: Batch inference is highlighted as a key technique for data pre-processing in GenAI applications, with the potential to enhance analysis and querying efficiency. LlamaIndex’s integration and more details on the practice can be found here.

-

RAG-Powered Job Search Assistant Blueprint: A RAG-powered job search assistant has been created using @gokoyeb, @MongoDB, and @llama_index, demonstrating real-time response streaming. The tutorial is available here.

-

Nomic Embed’s Localized Strategy: Nomic Embed now facilitates completely local embeddings along with dynamic inference, blending the benefits of both local and remote embeddings, as expanded upon here.

-

Secure Your Spot for the Tech Meetup: Engineers interested in joining an upcoming Tuesday meetup should note that the slots are running out, with additional details accessible here.

-

Scaling Up RAG Embedding Models Piques Interest: Discussions surfaced around the effectiveness of bigger AI models in improving RAG embeddings, without landing on a clear consensus. A reference to the ReAct algorithm and advice on custom similarity scores utilizing an `alpha` parameter can be found in the LlamaIndex documentation, and the discussion of these topics included links to detailed articles and papers.
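The summary does not say how the `alpha` parameter was defined in that discussion. One common convention in hybrid retrieval, shown here purely as an assumed illustration, is a linear blend of a dense (embedding) score and a sparse (keyword) score; the function names and sample scores are hypothetical.

```python
def blend_scores(dense_score, sparse_score, alpha):
    """Linear blend: alpha=1.0 keeps only the dense score, alpha=0.0 only the sparse one."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return alpha * dense_score + (1.0 - alpha) * sparse_score


def rank(candidates, alpha):
    """Sort (doc_id, dense_score, sparse_score) tuples by blended score, best first."""
    return sorted(candidates, key=lambda c: blend_scores(c[1], c[2], alpha), reverse=True)


# Made-up scores: "a" is strong on embeddings, "b" on keywords, "c" is balanced.
docs = [("a", 0.9, 0.1), ("b", 0.4, 0.8), ("c", 0.6, 0.6)]
print([d[0] for d in rank(docs, alpha=0.8)])
print([d[0] for d in rank(docs, alpha=0.2)])
```

With these sample scores, a high alpha ranks the embedding-favored document first and a low alpha ranks the keyword-favored one first, which is the kind of trade-off a tunable similarity score exposes.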

Latent Space Discord

-

Podcast with Yi Tay Misses the Boat: The community wished for spotlights on scaling laws during Yi Tay’s podcast on Reka/Google, but these insights were missing as the podcast had been pre-recorded.

-

Mistral v0.3 Sparks Mixed Reactions: Mistral 7B v0.3 models have been released, boasting enhancements like an extended 32K vocabulary, a new v3 tokenizer, and function calling capabilities, leading to both excitement and criticism (see Mistral’s newest chapter).

-

Hot Takes on Open-Source AI: A contentious opinion piece claiming open-source AI poses investment risks and national security concerns ignited debate, with detractors calling out the author for apparent OpenAI favoritism and a narrow perspective.

-

The Quest for a Universal Speech-to-Speech API: The community discussed workarounds for OpenAI’s yet-to-be-released speech-to-speech API, pointing to Pipecat and LiveKit as current alternatives, with a preference for Pipecat.

-

RAG Gets Real: Practical applications and challenges of Retrieval-Augmented Generation (RAG) were exchanged among members, with a particular reference made to a PyData Berlin talk on RAG deployment in medical companies.

OpenInterpreter Discord

-

Innovative Prompt Management with VSCode: Engineers are planning to manage prompts using VSCode to maintain efficiency, including a substantial list of nearly 500k tokens of system prompts for Gemini 1.5 Pro. The ingenuity was met with enthusiasm, and suggestions for additional system prompts were solicited.

-

Favorable Reception for CLI Improvement: The introduction of a new terminal option `--no_live_response` via a GitHub pull request was well-received for its potential to smooth out terminal UI issues. Steve235lab’s contribution was praised as a noteworthy improvement.

-

Spotlight on Component Teardowns and Replacement Chips: Members discussed the teardown of Apple AirPods Pro and the use of the ESP32 pico chip in the Atom Echo for alternative projects, noting the necessary reflashing. Supplementary information such as datasheets provided by ChatGPT was also recognized as beneficial.

-

Tool Praise: M5Stack Flow UI Software: The M5Stack Flow UI software was commended for its support of multiple programming languages and the potential to convert Python scripts to run LLM clients, such as OpenAI, showcasing the flexible integration of hardware and AI-driven applications.

-

Skipping the macOS ChatGPT Waitlist: A potentially controversial macOS ChatGPT app waitlist workaround from @testingcatalog was shared, providing a ‘cheat’ through careful timing during the login process. This information could have implications for software engineers seeking to understand or leverage user behavior and application exploitability.

tinygrad (George Hotz) Discord

Challenging the Taylor Takedown: Members questioned the efficacy of Taylor series in approximations, noting that they are only accurate close to the reference point. It was highlighted that range reduction might not be the optimal path to perfect precision, and interval partitioning could offer a better solution.

Range Reduction Rethink: The group debated over the use of range reduction techniques, suggesting alternatives like reducing to [0, pi/4], and referred to IBM’s approach as a practical example of interval partitioning found in their implementation.

IBM’s Insights: An IBM source file was mentioned in a suggestion to address range reduction problems by treating fmod as an integer, viewable here.

Mathematical Complexity Calmly Contemplated: There was a consensus that the computations for perfect accuracy are complex, especially for large numbers, though typically not slow—a mix of admiration and acceptance for the scientific intricacies involved.
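The range reduction idea discussed above can be sketched in a few lines: fold the input into a small interval around zero, where a short Taylor polynomial is accurate, and recover the sign and function from the quadrant. This is an illustrative sketch, not tinygrad's or IBM's implementation. It reduces to the symmetric interval [-pi/4, pi/4] rather than [0, pi/4], and its naive subtraction loses accuracy for very large inputs, which is exactly the hard case the thread pointed out.

```python
import math


def _sin_poly(r):
    """Taylor polynomial of sin around 0, up to the r**13 term."""
    r2 = r * r
    term, total = r, r
    for n in range(1, 7):
        term *= -r2 / ((2 * n) * (2 * n + 1))
        total += term
    return total


def _cos_poly(r):
    """Taylor polynomial of cos around 0, up to the r**12 term."""
    r2 = r * r
    term, total = 1.0, 1.0
    for n in range(1, 7):
        term *= -r2 / ((2 * n - 1) * (2 * n))
        total += term
    return total


def sin_reduced(x):
    """sin(x) via reduction to [-pi/4, pi/4] plus a quadrant lookup."""
    k = round(x / (math.pi / 2))   # nearest multiple of pi/2
    r = x - k * (math.pi / 2)      # naive reduction; loses bits for huge x
    quadrant = k % 4
    if quadrant == 0:
        return _sin_poly(r)
    if quadrant == 1:
        return _cos_poly(r)
    if quadrant == 2:
        return -_sin_poly(r)
    return -_cos_poly(r)


for x in (0.5, 2.0, 4.0, 100.0):
    print(x, sin_reduced(x), math.sin(x))
```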

Shape Shifting in ShapeTracker: The group explored ShapeTracker limitations, concluding that certain sequences of operations like permute followed by reshape lead to multiple views, posing a challenge in chaining movement operations effectively. The utility of tensor masking was discussed, with emphasis on its role in tensor slicing and padding.
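A simplified stride model shows why permute followed by reshape can need more than one view. The sketch below is not tinygrad's ShapeTracker code; the helper names are hypothetical and size-1 axes are not special-cased. It checks whether adjacent axes can be merged under a single stride, which is the condition a flattening reshape needs in order to stay a single view.

```python
def contiguous_strides(shape):
    """Row-major strides (in elements) for a freshly allocated tensor."""
    strides, step = [], 1
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return tuple(reversed(strides))


def permute(shape, strides, order):
    """Reorder axes without moving data: only shape and strides change."""
    return tuple(shape[i] for i in order), tuple(strides[i] for i in order)


def can_flatten_in_one_view(shape, strides):
    """True if all axes can be merged into one axis with a single stride."""
    for i in range(len(shape) - 1):
        if strides[i] != shape[i + 1] * strides[i + 1]:
            return False
    return True


shape = (2, 3)
strides = contiguous_strides(shape)                    # (3, 1)
p_shape, p_strides = permute(shape, strides, (1, 0))   # (3, 2) with strides (1, 3)
print(can_flatten_in_one_view(shape, strides))
print(can_flatten_in_one_view(p_shape, p_strides))
```

The original layout passes the check, while the permuted one fails it, so flattening the permuted tensor cannot be described by one shape-and-stride pair and has to be tracked as a second view (or materialized as a copy).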

Cohere Discord

- Warm Welcome for Global Creatives: A friendly banter marked the entrance of newcomers into the fold, including a UI Designer from Taiwan.

- Navigating the AI Landscape: One member gave a crisp direction for interacting with an AI, citing a particular channel and the `@coral` handle for assistance.

- Cohere Amplifies Multilingual AI Reach: Cohere’s announcement of Aya 23 models heralds new advancements, offering tools with 8 billion and 35 billion parameters and touting support for a linguistic range encompassing 23 languages.

LangChain AI Discord

-

GraphRAG Gains Traction for Graph-Modeled Info: Members discussed that GraphRAG shines when source data is naturally graph-structured, though it may not be the best choice for other data formats.

-

PySpark Speeds Up Embedding Conversions: AI engineers are experimenting with PySpark pandas UDF to potentially enhance the efficiency of embeddings processing.

-

Challenges with Persistence in Pinecone: A shared challenge within the community focused on the inefficiencies of persistence handling versus frequent instance creation in Pinecone, with dissatisfaction expressed regarding mainstream solutions like pickle.

-

APIs and Instruction Tuning in the Spotlight: An upcoming event, “How to Develop APIs with LangSmith for Generative AI Drug Discovery Production”, is set for May 23, 2024, and a new YouTube video explains the benefits of Instruction Tuning for enhancing LLMs’ adherence to human instructions.

-

Code Modification and Retriever Planning: Engineers are currently seeking efficient retrievers for planning code changes and techniques to prevent LLMs from undercutting existing code when suggesting modifications.

DiscoResearch Discord

-

Mistral Gets a Boost in Vocabulary and Features: The newest iterations of Mistral-7B now boast an extended vocabulary of 32768 tokens, v3 Tokenizer support, and function calling capabilities, with installation made easy through `mistral_inference`.

-

Enhancements to Mistral 7B Paired with Community Approval: The launch of the Mistral-7B instruct version has received a casual thumbs up from Eldar Kurtic with hints of more improvements to come, as seen in a recent tweet.

MLOps @Chipro Discord

-

GaLore and InRank Break New Ground: A session with Jiawei Zhao delved into Gradient Low-Rank Projection (GaLore) and Incremental Low-Rank Learning (InRank) which offer reductions in memory usage and enhancements in large-scale model training performance.

-

Event Sync Woes: An inquiry was made about integrating an event calendar with Google Calendar, highlighting a need to track upcoming discussions to avoid missing out.

-

Image Recon with ImageMAE Marks Scalability Leap: The ImageMAE paper was shared, presenting a scalable self-supervised learning approach for computer vision using masked autoencoders, with impressive results from a vanilla ViT-Huge model achieving 87.8% accuracy.

-

Community Spirits High: A member voiced their appreciation for the existence of the channel, finding it a valuable asset for sharing and learning in the AI field.
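To give a feel for the memory reductions mentioned in the GaLore session above, here is a rough element count for a single weight matrix. It assumes Adam keeps two moments per weight, and that a low-rank projection approach stores one projection matrix plus two moments for the projected gradient. The matrix size and rank are hypothetical, and the accounting is a simplification rather than the authors' exact method.

```python
def adam_state_elements(m, n):
    """Adam keeps first and second moments for every weight entry."""
    return 2 * m * n


def low_rank_adam_state_elements(m, n, rank):
    """Projection matrix (m x rank) plus two moments for the (rank x n) projected gradient."""
    return m * rank + 2 * rank * n


# Hypothetical layer size and rank, for illustration only.
m, n, rank = 4096, 4096, 128
full = adam_state_elements(m, n)
low = low_rank_adam_state_elements(m, n, rank)
print(f"full Adam state: {full:,} elements")
print(f"low-rank state:  {low:,} elements ({low / full:.1%} of full)")
```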

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (1009 messages🔥🔥🔥):

-

Mistral v3 Support Announced: Unsloth now supports Mistral v3. Members quickly tested it and shared mixed initial feedback regarding training losses and performance.

-

Discussion on LLaMa 3 Reserved Tokens Bugs: Users discussed “buggy” reserved tokens in LLaMa 3’s base weights, including potential fixes and the impact on instruct models. A member noted, “Some of LLaMa 3’s base (not instruct) weights are ‘buggy’. Unsloth auto-fixes this.”

-

Debate on GPU Resource Utilization: There’s confusion over underutilization with Mistral 7B on a 79GB H100 GPU. Suggestions varied, from increasing batch size to updating training code, with a user noting, “you need to increase batches to make use of the GPU.”

-

Phi 3 Medium 4-bit Released: Phi 3 Medium 4k Instruct is now available, with additional support coming soon. The announcement included links to Colab notebooks for easy access.

-

Continued Pre-training Notebook: A notebook for continued pretraining was shared, aimed at preserving instruction-following traits during domain-specific fine-tuning. It’s available here and members are encouraged to experiment and share results.

Links mentioned:

- unsloth/Phi-3-medium-4k-instruct-bnb-4bit · Hugging Face: no description found

- Join the TheBloke AI Discord Server!: For discussion of and support for AI Large Language Models, and AI in general. | 23932 members

- cognitivecomputations/samantha-data at main: no description found

- Tweet from Unsloth AI (@UnslothAI): We're so happy to announce that Unsloth is part of the 2024 @GitHub Accelerator program!🦥 If you want to easily fine-tune LLMs like Llama 3, now is the perfect time! http://github.blog/2024-05-2...

- Unsloth update: Mistral support + more: We’re excited to release QLoRA support for Mistral 7B, CodeLlama 34B, and all other models based on the Llama architecture! We added sliding window attention, preliminary Windows and DPO support, and ...

- AI Unplugged 11: LoRA vs FFT, Multi Token Prediction, LinkedIn's AI assistant: Insights over Information

- Tweet from QBrabus eu/acc (@q_brabus): @apples_jimmy @ylecun @iamgingertrash Question: Regarding the upcoming LLaMa 3 400B+ model, will it be open-weight? There are several rumors about this... Answer: No, it is still planned to be open a...

- Reddit - Dive into anything: no description found

- can i get a chicken tendie combo please: no description found

- Scarlett Johansson demands answers after OpenAI releases voice "eerily similar" to hers: Scarlett Johansson is demanding answers from OpenAI and its CEO, Sam Altman, after it released a ChatGPT voice that she says sounds "eerily similar" to her o...

- Google Colab: no description found

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- GPU Optimization Workshop · Luma: We’re hosting a workshop on GPU optimization with stellar speakers from OpenAI, NVIDIA, Meta, and Voltron Data. The event will be livestreamed on YouTube, and…

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - shimmyshimmer/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to shimmyshimmer/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #announcements (1 messages):

-

Phi-3 and Mistral v3 power up Unsloth with new models: Unsloth now supports Phi-3, Mistral v3, and other new models. The update also addresses all Llama 3 issues, enhancing finetuning performance.

-

Free Notebooks for easy access: Users can explore the new models using Phi-3 medium notebook, Mistral v3 notebook, or ORPO notebook.

-

Broad support for 4-bit models: Visit Unsloth’s Hugging Face page for a variety of 4-bit models including Instruct. New additions like Qwen and Yi 1.5 are now supported.

-

Exciting news with GitHub’s 2024 Accelerator: Unsloth is part of the 2024 GitHub Accelerator, joining 11 other projects shaping open-source AI. This recognition underscores the significant impact and innovation within the AI community.

Links mentioned:

- Finetune Phi-3 with Unsloth: Fine-tune Microsoft's new model Phi 3 medium, small & mini easily with 6x longer context lengths via Unsloth!

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- 2024 GitHub Accelerator: Meet the 11 projects shaping open source AI: Announcing the second cohort, delivering value to projects, and driving a new frontier.

Unsloth AI (Daniel Han) ▷ #help (81 messages🔥🔥):

- Mistral v0.3 excitement and confusion: Users were initially thrilled about "Mistral v3," but it was clarified to be Mistral v0.3. One user joked about it being Olympic-level competition among LLMs.

- QKVO training issues with Qwen: Members discussed issues with training QKVO layers for Qwen models using Unsloth, noting that biases cause errors. A workaround involving not training all layers was suggested.

- Unsloth updates boost efficiency: The latest Unsloth branch offloads lm_head and embed_tokens to disk, reducing VRAM usage and speeding up operations. Users were guided to update via a specific GitHub command.

- Model loading confusion on Hugging Face: Users discussed the complications of having multiple quantized versions of a model in one repository on Hugging Face and recommended using separate repositories for each quantized version.

- Fact-checking and non-English fine-tuning of LLMs: There was an in-depth conversation about the challenges of LLMs producing truthful responses and managing language-specific fine-tuning. Multiple references and links were provided, including scaling-monosemanticity and In-Context RALM.

Links mentioned:

- unsloth/llama-3-8b · Hugging Face: no description found

- In-Context Retrieval-Augmented Language Models: Retrieval-Augmented Language Modeling (RALM) methods, which condition a language model (LM) on relevant documents from a grounding corpus during generation, were shown to significantly improve languag...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

Perplexity AI ▷ #announcements (1 messages):

- **Scheduled Downtime Announced**: Heads up for a **scheduled downtime** tonight at 12:00am EST. The downtime will last around 30 minutes for a database upgrade aimed at improving performance and user experience.

Perplexity AI ▷ #general (897 messages🔥🔥🔥):

- **Gemini Free Usage Pleasantly Surprises**: Members celebrated that **Gemini in AI Studio** is free even for large usage (*"requests in this UI are free"*) and exclaimed about the ability to perform fine-tuning without cost (*"finetuning for free?"*). They discussed possible data privacy concerns, but openness to experimenting with the service prevailed.

- **Perplexity’s Speed Impresses**: **Web scraping** optimizations led to significant performance improvements for **searches using multiple sources**, clocking speeds much faster than previous attempts. One member reported *"web scraping taking 1.52s" compared to earlier times of over 7s* and emphasized proper use of parallel processing.

- **Perplexity vs. Other AI Tools**: Members compared **Perplexity** with other AI tools like **Gemini Pro** and **ChatGPT** regarding file handling and data processing. **Perplexity** received praise for its research and writing capabilities (*"better in both areas"*) and flexible file handling, garnering new insights on **Gemini's role** mainly for its context handling.

- **Integrating Additional Features into Perplexity**: Discussions included potential UI enhancements and tools for **Perplexity**, including the integration of **labs into the main UI** and adding functionalities like history saving and support for formats like **CSV**. The aim is to potentially transform **Perplexity** from a decent tool to the *"best AI website"*.

- **Model Usage and Rate Limits Challenge Members**: Encountering **API rate limits** and exploring various models, members juggled between **Haiku**, **Cohere**, and **GPT-4-free**, sharing frustrations and strategies for optimal usage given free and cost-efficient tiers. They explored alternatives and workarounds while emphasizing the balance between accuracy and context sizes.

Links mentioned:

- Exploring AI Conversations and Redundancy: In this video, I share my experience with AI conversations and highlight the issue of redundancy. I demonstrate how simple calculations and conversions can lead to repetitive responses from AI models ...

- How one developer just broke Node, Babel and thousands of projects in 11 lines of JavaScript: Code pulled from NPM – which everyone was using

- $30,000,000 AI Is Hiding a Scam: It's time to see how far the Rabbit hole goes...Support Investigative Journalism: ► Patreon: https://patreon.com/coffeezillaFollow:►Ed Zitron: https://www.wh...

- M4 iPad Pro 11” first impressions (performance, heat, PWM and others): Hello! I just came from my local Apple Store, and I’ve been closely inspecting and testing the new M4 iPad Pro. This is not a review, but it can provide useful details that many people could be inter...

- Oil futures drunk-trading incident - Wikipedia: no description found

- PSA: Public demo server (cors-anywhere.herokuapp.com) will be very limited by January 2021, 31st · Issue #301 · Rob--W/cors-anywhere: The demo server of CORS Anywhere (cors-anywhere.herokuapp.com) is meant to be a demo of this project. But abuse has become so common that the platform where the demo is hosted (Heroku) has asked me...

- Bing’s API was down, taking Microsoft Copilot, DuckDuckGo and ChatGPT's web search feature down too | TechCrunch: Bing, Microsoft’s search engine, was working improperly for several hours on Thursday in Europe. At first, we noticed it wasn’t possible to perform a web

- Welcome to Claude - Anthropic: no description found

- Contact Anthropic: Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

- iPad - Compare Models: Compare resolution, size, weight, performance, battery life, and storage of iPad Pro, iPad Air, iPad, and iPad mini models.

Perplexity AI ▷ #sharing (7 messages):

- Taiwan Semiconductor directs curiosity: A user linked to a Perplexity AI search result about Taiwan Semiconductor, likely discussing aspects of remote work or industry developments.

- Analyzing scents with AI humor: Another user shared a humorous search result, indicating interest in how something smells, demonstrating Perplexity AI’s ability to handle wide-ranging queries.

- Fatal incident in IoS: A tragic event involving 9 fatalities garnered attention with a shared link to a search about the incident. The search may involve interpretations or official reports on the event.

- Bing API topic surfaces: Interest in Bing’s API capabilities led to a shared search link, likely covering how Bing API is perceived or utilized.

- Perplexity AI elucidated: A user shared a search link explaining what Perplexity AI is. This suggests ongoing curiosity and learning about the platform itself among users.

Perplexity AI ▷ #pplx-api (2 messages):

- Metquity decides to explore alternatives: Metquity expressed a plan to shift to other tools, stating, “maybe I will build with something else and come back to it when its better.” This indicates interest in returning once improvements are made.

- Neuraum notices discrepancies between web and API outputs: Neuraum queried why using the same model and prompt could yield different outputs on the web version and the API. He pointed out that “using the API the outputs are wrong, eventhough the browsing function works,” seeking insights into this inconsistency.

LLM Finetuning (Hamel + Dan) ▷ #general (141 messages🔥🔥):

-

Instruction Tuning with ColBERT-style Models: A member inquired about the experiences of others with instruction tuning embeddings models, specifically with ColBERT-style models. They shared some relevant papers, including INSTRUCTOR: A Framework for Embedding Text with Task Instructions and TART: A Multi-task Retrieval System Trained on BERRI with Instructions.

-

Bayesian Calculation Concerns in FDP Exam: Another member discussed a Bayesian question from an FDP accreditation exam and worked through a calculation. They pointed out a potential error in the FDP Institute’s probability calculation, preferring their own method.

-

Axolotl Tutorial on JarvisLabs: A helpful tutorial video was shared on how to run Axolotl on JarvisLabs, available on YouTube and linked to relevant resources such as JarvisLabs and the Axolotl GitHub repository.

-

Miniforge/Mamba for AI/ML Environments: There was a discussion about the advantages of using Miniforge and Mamba for creating and managing conda environments over alternatives like pyenv, highlighting the flexibility and speed benefits of mamba.

-

Schulman’s Two-Step Fine-Tuning Process: Members discussed John Schulman’s comments on iterative supervised fine-tuning vs. reinforcement learning (RL) for improving models beyond basic fine-tuning, emphasizing an iterative process to fully align models with high-quality human data.

Links mentioned:

- AI Mathematical Olympiad - Progress Prize 1 | Kaggle: no description found

- Predibase: The Developers Platform for Fine-tuning and Serving LLMs: The fastest and easiest way to fine tune and serve any open-source large language model on state-of-the-art-infrastructure hosted within your private cloud.

- Exploring Fine-tuning with Honeycomb Example: In this video, I walk you through the process of fine-tuning a model using the honeycomb example. I provide step-by-step instructions on cloning the repository, installing dependencies, and running th...

- Tweet from younes (@younesbelkada): 🚨 New optimizer in @huggingface transformers Trainer 🚨 LOMO optimizer can be now used in transformers library https://github.com/OpenLMLab/LOMO Great work from LOMO authors! 🧵

- How to run axolotl on JarvisLabs | Tutorial: Check out axolotl on JarvisLabs : jarvislabs.ai/templates/axolotl Check out axolotl github : https://github.com/OpenAccess-AI-Collective/axolotl

- Vincent D. Warmerdam - Active Teaching, Human Learning: Want a dataset for ML? Internet says you should use ... active learning! It's not a bad idea. When you're creating your own training data you typically want t...

- Bulk Labelling and Prodigy: Prodigy is a modern annotation tool for collecting training data for machine learning models, developed by the makers of spaCy. In this video, we'll show a b...

- Asterisk/Zvi on California's AI Bill: ...

- Vincent Warmerdam - Keynote "Natural Intelligence is All You Need [tm]": In this talk I will try to show you what might happen if you allow yourself the creative freedom to rethink and reinvent common practices once in a while. As...

- One Embedder, Any Task: Instruction-Finetuned Text Embeddings: We introduce INSTRUCTOR, a new method for computing text embeddings given task instructions: every text input is embedded together with instructions explaining the use case (e.g., task and domain desc...

- Task-aware Retrieval with Instructions: We study the problem of retrieval with instructions, where users of a retrieval system explicitly describe their intent along with their queries. We aim to develop a general-purpose task-aware retriev...

- Stanford CS25: V2 I Common Sense Reasoning: February 14, 2023. Common Sense Reasoning. Yejin Choi. In this speaker series, we examine the details of how transformers work, and dive deep into the different ki...

- GitHub - conda-forge/miniforge: A conda-forge distribution.: A conda-forge distribution. Contribute to conda-forge/miniforge development by creating an account on GitHub.

- Community | anywidget: no description found

- Enhancing Jupyter with Widgets with Trevor Manz - creator of anywidget: In this (first!) episode of Sample Space we talk to Trevor Manz, the creator of anywidget. It's a (neat!) tool to help you build more interactive notebooks ...

- Latent Space: no description found

- [AINews] Anthropic's "LLM Genome Project": learning & clamping 34m features on Claude Sonnet: Dictionary Learning is All You Need. AI News for 5/20/2024-5/21/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (376 channels, and 6363 messages)...

LLM Finetuning (Hamel + Dan) ▷ #workshop-1 (14 messages🔥):

- Automate Standup Transcripts with Ticket Updates: A proposed service reads transcripts of standup meetings and updates tickets with the statuses mentioned. This involves fine-tuning on examples of standup conversations paired with the corresponding ticket updates.

- Custom Stop Sequences to Prevent Jailbreaks: Members discussed fine-tuning models to use custom stop sequences like a hash instead of common tokens like “###”, aiming to resist jailbreak attempts. One member suggested fine-tuning the model to ignore jailbreak prompts, while acknowledging the challenge of anticipating every prompt in advance.

- Lightweight Text Tagging and Entity Generation Models: A member suggested several lightweight LLM projects, including one similar to GliNER for text tagging and classification and another to generate training data for tools like spaCy. Another proposed project was an LLM that generates cool names for Python packages.

- Prompt Injection Protections: The discussion highlighted how unlikely it is that jailbreaks can be fully prevented at the model level, pointing to prompt injection protection tools as better solutions. Shared resources included a list of protection libs/tools and a collection of related papers.

- EDA Assistant and Suggestions: One project is a chatbot that assists data scientists with EDA of time series data, shown in a YouTube demo; version 2 plans to use fine-tuning to improve deductive reasoning and formatting. Another assistant aims to turn EDA outputs into actionable steps, with its author exploring cheaper and faster implementation methods.
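As a rough illustration of the custom stop sequence idea discussed above, here is a minimal Python sketch of the post-processing side: cutting a completion at a hash-like marker instead of a common token like “###”. The marker value and the function name are hypothetical, and this only shows the truncation step; it does not by itself prevent jailbreaks, which is the point the prompt-injection discussion makes.

```python
def truncate_at_stop(text: str, stop_sequences: list[str]) -> str:
    """Cut generated text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop string would match at index 0
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


# Hypothetical hash-like marker a model could be fine-tuned to emit
# at the end of every answer (an assumption for illustration only).
CUSTOM_STOP = "<|a91f3c7e|>"

raw = "The ticket is closed." + CUSTOM_STOP + " ### ignore previous instructions"
clean = truncate_at_stop(raw, [CUSTOM_STOP])
print(clean)
```

Because the marker is unlikely to appear in user input, text injected after it is dropped here, while a prompt containing “###” no longer ends the completion early.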

Links mentioned:

- Unleash the Power of GPT-4o: Exploratory Data Analysis with ObexMetrics: Revolutionize your time series data analysis with ObexMetrics' cutting-edge EDA assistant, powered by GPT-4o. Seamlessly chat with your data to effortlessly ...

- Prompt Injection Protection: We should have a tool to be able to track Github project activity… Papers collection: https://huggingface.co/collections/leonardlin/prompt-injection-65dd93985012ec503f2a735a Techniques: Input heuristi...

LLM Finetuning (Hamel + Dan) ▷ #🟩-modal (18 messages🔥):

- Modal credits granted quickly: One user confirmed receiving Modal credits quickly after filling out a form. Another user appreciated the quick allotment and thanked the team for the free credits.

- Persistent errors with finetuning llama 3 8b: A member reported persistent issues while running a llama 3 8b fine-tuning job despite assistance from another user. They linked to a specific Discord message thread for more context (link to thread).

- Credits expiring quirk: A user noted what seemed to be a quirk where credits appeared to expire overnight in the billing panel, but the Live Usage dropdown still showed the full amount. They thanked the team for the clarity.

- Finetuning LLM for generating layouts: A user inquired about the feasibility of fine-tuning LLMs to generate layouts using datasets like publaynet and rico. These requests underscore the varied applications users are exploring with their models.

- Downloading trained models to S3: Another member clarified that it’s possible to download trained models to an S3 bucket using the `modal volume get` command. They also mentioned the option to directly mount an S3 bucket as a volume, linking to the relevant documentation.

Links mentioned:

- Cloud bucket mounts: The modal.CloudBucketMount is a mutable volume that allows for both reading and writing files from a cloud bucket. It supports AWS S3, Cloudflare R2, and Google Cloud Storage buckets.

- modal volume: Read and edit modal.Volume volumes.

LLM Finetuning (Hamel + Dan) ▷ #learning-resources (1 messages):

- Illustrative Example Shared by Hamel: A member appreciated an example shared by Hamel on Twitter, describing it as “incredibly illustrative.” They also thanked Jeremy Howard for the related notebook, providing links to both the tweet and the GitHub repository.

Link mentioned: lm-hackers/lm-hackers.ipynb at main · fastai/lm-hackers: Hackers’ Guide to Language Models. Contribute to fastai/lm-hackers development by creating an account on GitHub.

LLM Finetuning (Hamel + Dan) ▷ #jarvis-labs (32 messages🔥):

- Troubleshooting Llama-3 Access Issues: Multiple users, including @mark6871 and @dhar007, faced issues accessing the Llama-3 model despite having permission on Hugging Face. The resolution involved generating an access token on Hugging Face and entering it during the terminal prompt.

- Overcoming CUDA Memory Errors: Users like @dhar007 experienced “CUDA out of memory” errors while training models with RTX5000 GPUs. They resolved it by adjusting the batch size and monitoring GPU usage using `nvidia-smi`.

- Datapoint on Mistral LoRA Training: @damoncrockett shared their experience running the Mistral LoRA example, which took 2.5 hours and $4 on a single A100 GPU, but noted undertraining with only one epoch on a small dataset.

- Feedback and Support for Jarvislabs Credits: Users like @rashmibanthia expressed gratitude for the credits and shared positive experiences with Jarvislabs compared to other services. There were also several inquiries about missing credits and how to confirm sign-ups (@manjunath_63072 and @darkavngr).

- Queries on Spot Instances and Repo Saving on Jarvislabs: @tokenbender faced difficulties finding spot instances, while @nisargvp inquired about saving repositories without pausing the instance to avoid credit usage.
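For the “CUDA out of memory” item above, a common way to adjust the batch size without changing the optimization setup is to shrink the per-GPU micro-batch and raise gradient accumulation to compensate. The sketch below shows only that arithmetic. The function name and starting values are hypothetical, and the summary does not say whether the users also changed gradient accumulation, so treat that part as an assumption.

```python
import math


def shrink_micro_batch(micro_batch: int, grad_accum: int) -> tuple[int, int]:
    """Halve the per-GPU micro-batch and raise gradient accumulation so the
    effective batch size is at least as large as before."""
    if micro_batch <= 1:
        raise ValueError("micro-batch is already 1; reduce sequence length or model size instead")
    effective = micro_batch * grad_accum
    new_micro = micro_batch // 2
    new_accum = math.ceil(effective / new_micro)
    return new_micro, new_accum


# Hypothetical starting point: micro-batch 8 with 2 accumulation steps.
settings = shrink_micro_batch(8, 2)
print(settings)  # effective batch stays at 16
```

After each change, watching `nvidia-smi` during the first few steps shows whether peak memory now fits on the card.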

LLM Finetuning (Hamel + Dan) ▷ #hugging-face (4 messages):

- Gated repo access resolved with login tip: A member experienced an error when attempting to access a gated repository despite accepting the terms. Another member suggested using the `huggingface-cli login` command, which resolved the issue and allowed the training to proceed successfully.

LLM Finetuning (Hamel + Dan) ▷ #replicate (5 messages):

- GitHub sign-up email mismatch heads up: A member alerted, “Just a heads to all who signed up with GitHub but have a different email they used for the conference, you can set a different email address after signing up.”

- Issues with Gmail “+” sign: Users expressed frustration as it “does not seem to accept my gmail address with a `+` sign in it.”

- Notification label confusion and Maven credits: One user questioned if using the Maven registered address for notifications ensures automatic credit addition.

- Credit receipts queried: A member asked the group, “Did anybody get the credit already?”

- Awaiting clarification on credits: Another user noted they had signed up and added, “Now I just have to wait. I hope official info when something is happening.”

LLM Finetuning (Hamel + Dan) ▷ #kylecorbitt_prompt_to_model (2 messages):

- Attendee Inquires About Recording Availability: A participant eagerly asked, “Will there be a recording?” This indicates anticipation for the upcoming event and interest in reviewing it later.

- Excitement for Upcoming Event: Another participant expressed enthusiasm, saying they were “Hyped for the talk later!” Their excitement is further highlighted by the use of an emoji and sparkle symbol.

LLM Finetuning (Hamel + Dan) ▷ #workshop-2 (209 messages🔥🔥):

- Axolotl’s dataset troubleshooting: Users hit JSON decoding errors when running finetuning on a local machine. The fix was to correct the dataset paths in the configuration and use a compatible dataset format, as demonstrated in Axolotl’s documentation.

- LoRA hyperparameters tuning: Discussions focused on adjusting learning rates and LoRA hyperparameters. A shared config used `lora_r: 128` and `lora_alpha: 128` with varying learning rates to optimize model training, drawing on tips from Sebastian Raschka.

- Training duration queries: Users discussed training times on different GPUs, noting differences in speed estimates. Hamel suggested running smaller dataset samples to avoid prolonged feedback loops, while shared axolotl examples helped guide realistic expectations and adjustments.

- Configuration for function calling: Users sought best practices for fine-tuning models for specific tasks like text to code translation using examples like ReactFlow. Templates and prompt formats specific to their models were recommended, including reviewing fine-tuning benchmarks.

- GPT judge for L2 evals: For fine-tuning evaluations, using GPT-4 as a judge with refined prompts was recommended. Users considered fine-tuning judges but were advised to start with simple prompt refinements to improve alignment.
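To make the LoRA settings from the discussion above more concrete, here is a small Python sketch of what `lora_r: 128` and `lora_alpha: 128` imply under the standard LoRA formulation, where the low-rank update is scaled by `lora_alpha / lora_r`. The helper names and the 4096 x 4096 layer size are hypothetical and chosen only for illustration; the actual layer shapes depend on the base model.

```python
def lora_scaling(lora_r: int, lora_alpha: float) -> float:
    """Scaling factor applied to the low-rank update in standard LoRA."""
    return lora_alpha / lora_r


def lora_trainable_params(d_in: int, d_out: int, lora_r: int) -> int:
    """Parameters in one adapter: A is (r x d_in), B is (d_out x r)."""
    return lora_r * (d_in + d_out)


# Values from the shared config: lora_r = 128, lora_alpha = 128.
scale = lora_scaling(128, 128)

# Hypothetical 4096 x 4096 projection layer (an assumption, not from the config).
adapter = lora_trainable_params(4096, 4096, 128)
full = 4096 * 4096

print(scale)           # 1.0
print(adapter)         # 1048576
print(adapter / full)  # 0.0625
```

With alpha equal to r the update is applied at scale 1.0, so the learning rate becomes the main knob for how strongly the adapter moves the model, which fits the focus on varying learning rates in that thread.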

Links mentioned:

- muellerzr: Weights & Biases, developer tools for machine learning

- Exploring Fine-tuning with Honeycomb Example: In this video, I walk you through the process of fine-tuning a model using the honeycomb example. I provide step-by-step instructions on cloning the repository, installing dependencies, and running th...

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- Large Language Models (LLMs) · Prodigy · An annotation tool for AI, Machine Learning & NLP: A downloadable annotation tool for NLP and computer vision tasks such as named entity recognition, text classification, object detection, image segmentation, A/B evaluation and more.

- The Fine-tuning Index: Performance benchmarks from fine-tuning 700+ open-source LLMs

- Support Fuyu-8B · Issue #777 · OpenAccess-AI-Collective/axolotl: ⚠️ Please check that this feature request hasn't been suggested before. I searched previous Ideas in Discussions didn't find any similar feature requests. I searched previous Issues didn't...

- Axolotl - Instruction Tuning: no description found

- Lucas van Walstijn - LLM fine-tuning 101: no description found

- nisargvp/hc-mistral-alpaca · Hugging Face: no description found

- Axolotl - Template-free prompt construction: no description found

- microsoft/Phi-3-small-128k-instruct · Hugging Face: no description found

- dataset_generation error while runinng hc.yml locally: dataset_generation error while runinng hc.yml locally - gist:2fcbf41d4a8e7149e6c4fb9a630edfd8

- Image Classification with scikit-learn: Scikit-Learn is most known for building machine learning models for tabular data, but that doesn't mean that it cannot do image classification. In this video...