AI News for 3/14/2025-3/15/2025. We checked 7 subreddits, 433 Twitters and 28 Discords (222 channels, and 2399 messages) for you. Estimated reading time saved (at 200wpm): 240 minutes. You can now tag @smol_ai for AINews discussions!

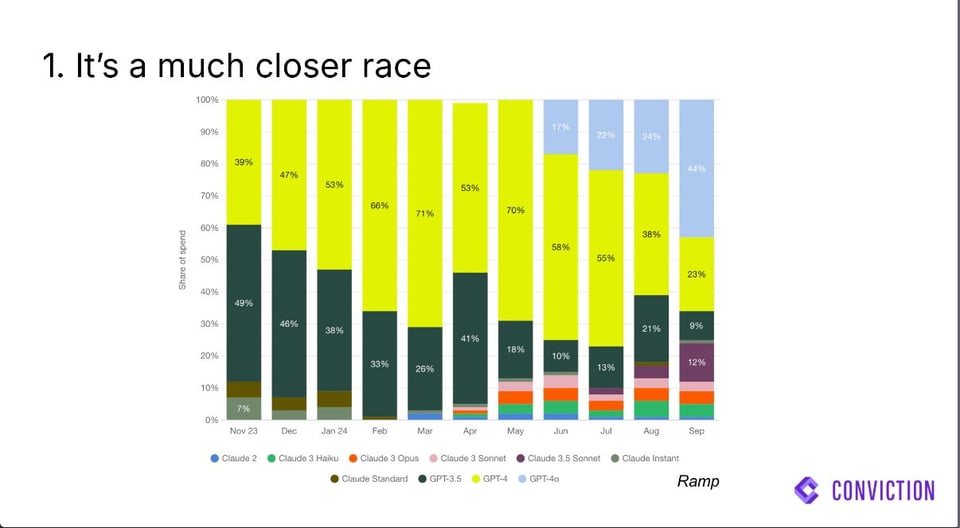

Happy 2nd birthday to GPT4 and Claude 1. Few would have guessed the tremendous market share shifts that have happened in the past year.

SPECIAL NOTE: We are launching the 2025 State of AI Engineering Survey today in preparation for the AI Eng World’s Fair in Jun 3-5. Please fill it out to have your voice heard!

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

Language Models and Model Updates

- Google’s Gemini 2.0 Updates and Features: @jack_w_rae announced improved Google Deep Research due to product development and underlying model updating from 1.5 Pro to 2.0 Flash Thinking. The Gemini app is launching improvements, including an upgraded Flash Thinking model with stronger reasoning, deeper app integration, Deep Research, and personalization @jack_w_rae. Additionally, @jack_w_rae noted the team’s progress in creating native image generation for Gemini 2, highlighting its difference from text-to-image models.

- Cohere’s Command A Model: @ArtificialAnlys reported that Cohere launched Command A, a 111B parameter dense model with an Artificial Analysis Intelligence Index of 40, close to OpenAI’s latest GPT-4o. The model has a 256K context window, a speed of 185 tokens/s, and is priced at $2.5/$10 per million input/output tokens. It is available on Hugging Face for research and commercially with a license from Cohere.

- Meta’s Dynamic Tanh (DyT): @TheTuringPost reported that Meta AI proposed Dynamic Tanh (DyT) as a replacement for normalization layers in Transformers, which works just as well or better without needing extra calculations or tuning and works for images, language, supervised learning, and self-supervised learning. Yann LeCun also announced the same thing on Twitter.

- Alibaba’s QwQ-32B: @DeepLearningAI highlighted Alibaba’s QwQ-32B, a 32.5-billion-parameter language model excelling in math, coding, and problem-solving. Fine-tuned with reinforcement learning, it rivals larger models like DeepSeek-R1 and outperforms OpenAI’s o1-mini on benchmarks. The model is freely available under the Apache 2.0 license.

- Google’s Gemma 3 Models: @GoogleDeepMind announced the release of Gemma 3, available in sizes from 1B to 27B, featuring a 128K token context window and supporting over 140 languages @GoogleDeepMind. It also announced the ShieldGemma 2, a 4B image safety checker built on the Gemma 3 foundation @GoogleDeepMind. @ArtificialAnlys benchmarked Gemma 3 27B with an Artificial Analysis Intelligence Index of 38, noting its strengths include a permissive commercial license, vision capability, and memory efficiency, while not being competitive with larger models like Llama 3.3 70B or DeepSeek V3 (671B). @sirbayes noted that Gemma 3 is best in class for a VLM that runs on 1 GPU.

Model Performance and Benchmarking

- Leaderboard Lore and History: @_lewtun shared the origin story of the Hugging Face LLM leaderboard, highlighting contributions from @edwardbeeching, @AiEleuther, @Thom_Wolf, @ThomasSimonini, @natolambert, @abidlabs, and @clefourrier. The post emphasizes the impact of small teams, early releases, and community involvement. @clefourrier added to this, noting that @nathanhabib1011 and they were working on an internal evaluation suite when the leaderboard went public, leading to industrializing the code.

- GPU Benchmarks and CPU Overhead: @dylan522p expressed their appreciation for GPU benchmarks that measure CPU overhead, such as vLLM and KernelBench.

- Tic-Tac-Toe as a Benchmark: @scaling01 stated they are a LLM bear until GPT-5 is released, citing that GPT-4.5 and o1 can’t even play tic-tac-toe consistently and @scaling01 argued that if LLMs can’t play tic-tac-toe despite seeing millions of games, they shouldn’t be trusted for research or business tasks.

- Evaluation Scripts for Reasoning Models: @Alibaba_Qwen announced a GitHub repo providing evaluation scripts for testing the benchmark performance of reasoning models and reproducing reported results for QwQ.

AI Applications and Tools

- AI-Assisted Coding and Prototyping: @NandoDF supports the idea that it’s a great time to learn to code, as coding is more accessible due to AI copilots, potentially leading to a wave of entrepreneurship. This sentiment was echoed by @AndrewYNg, noting that AI and AI-assisted coding have reduced the cost of prototyping.

- Agentic AI in IDEs: @TheTuringPost introduced Qodo Gen 1.0, an IDE plugin by @QodoAI that embeds agentic AI into JetBrains and VS Code, using LangGraph by LangChain and MCP by Anthropic.

- Integration of Gemini 2.0 with OpenAI Agents SDK: @_philschmid announced a one-line code change to use Gemini 2.0 with the OpenAI Agents SDK.

- LangChain’s Long-Term Agentic Memory Course: @LangChainAI and @DeepLearningAI announced a new DeepLearningAI course on Long-term Agentic Memory with LangGraph, taught by @hwchase17 and @AndrewYNg, focusing on building agents with semantic, episodic, and procedural memory to create a personal email assistant.

- UnslothAI Updates: @danielhanchen shared updates for UnslothAI, including support for full fine-tuning + 8bit, nearly any model like Mixtral, Cohere, Granite, Gemma 3, no more OOMs for vision finetuning, further VRAM usage reduction, speedup boost for 4-bit, Windows support, and more.

- Perplexity AI on Windows: @AravSrinivas announced the Perplexity App is now available in the Windows and Microsoft App Store, with voice-to-voice mode coming soon.

- HuggingSnap on TestFlight: @mervenoyann announced that HuggingSnap, an offline vision LM for phones built by @pcuenq and @cyrilzakka, is available on TestFlight, seeking feedback for further development.

- New Trends in Machine Translation: @_akhaliq highlighted a paper on New Trends for Modern Machine Translation with Large Reasoning Models.

- Microsoft and Shopify: @MParakhin announced Shopify has acquired VantageAI.

AI and Hardware

- AMD’s Radeon GPUs Support on Windows: @dylan522p reported on AMD’s @AnushElangovan discussing making Radeon GPUs first-class citizens on Windows at the RoCm User meetup, with support for multiple GPU architectures and a focus on CI and constant shipping.

- MLX LM New Home: @awnihannun announced that MLX LM has a new home.

AI Conferences and Events

- AI Dev 25 Conference: @AndrewYNg kicked off AI Dev 25 in San Francisco, noting that agents are the most exciting topic for AI developers. The conference included talks from Google’s Bill Jia @AndrewYNg, Meta’s Chaya Nayak @AndrewYNg, and a panel discussion on building AI applications in 2025 @AndrewYNg. @DeepLearningAI shared a takeaway from Nebius’ Roman Chernin emphasizing solving real-world problems, and @AndrewYNg highlighted a tip from Replit’s @mattppal on debugging by understanding the LLM’s context.

- GTC Fireside Chat: @ylecun announced they would be doing a fireside chat at GTC with Nvidia chief scientist Bill Dally on Tuesday next week.

- Interrupt Conference: @LangChainAI promoted the Interrupt conference, listing its sponsors, including CiscoCX, TryArcade, Box, and others @LangChainAI.

- Khipu AI in Santiago, Chile: @sirbayes shared their talk on Sequential decision making using online variational bayes at @Khipu_AI in Santiago, Chile. @sarahookr mentioned that the museum was really curious why their top item to see was the khipu.

Other

- The value of open-source models: @Teknium1 expressed concern that banning Chinese models from Americans won’t slow down their progress, and that not having access to the full range of models will make the US fall off.

- AI and Film Making: @c_valenzuelab discussed the divergent qualities of AI video generation, allowing for creative impulses and exploration of unexpected moments, unconstrained by physical limitations.

- The future of software: @c_valenzuelab speculates on the future of major public software companies, suggesting that companies focused on features and complex interfaces are at risk because the new software stack is intention-driven.

- Team Size: @scottastevenson made the argument that small teams are winning, and that clinging to old big team culture may be damaging for your career.

Humor/Memes

- “Everything is Transformer”: @AravSrinivas simply stated, “everything is transformer” with a picture of a transformer.

- “Our top technology at Midjourney is Domain Not Resolving”: @DavidSHolz joked that Midjourney’s top technology is “Domain Not Resolving”, seeking someone with at least 6 years of experience in the domain.

- “One million startups must perish”: @andersonbcdefg said “one million startups must perish”.

- “I will vibe edit human genome on a PlayStation 2”: @fabianstelzer posted “I will vibe edit human genome on a PlayStation 2”.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Gemma 3 Fine-Tuning Revolution: Performance and Efficiency in Unsloth

- Gemma 3 Fine-tuning now in Unsloth - 1.6x faster with 60% less VRAM (Score: 172, Comments: 36): Unsloth now allows fine-tuning of Gemma 3 (12B) with 1.6x faster performance and 60% less VRAM usage compared to Hugging Face + FA2, fitting models like the 27B in a 24GB GPU. The platform fixes issues such as infinite exploding gradients on older GPUs and double BOS tokens, and supports a broad range of models and algorithms, including full fine-tuning and Dynamic 4-bit quantization. For more details, visit their blog and access their Colab notebook for free fine-tuning.

- Users express enthusiasm for Unsloth’s advancements, particularly the support for full fine-tuning and the potential for 8-bit fine-tuning. Danielhanchen confirms that all methods, including 4-bit, 8-bit, and full fine-tuning, will be prioritized, and mentions the possibility of adding torchao for float8 support.

- There is interest in a more user-friendly interface, with requests for a webUI for local running to simplify usage. Few_Painter_5588 predicts that Unsloth will become the primary toolset for LLM fine-tuning.

- FullDeer9001 shares positive feedback on running Gemma3 on Radeon XTX with 8k context, highlighting VRAM usage and prompt statistics, and compares it favorably to Deepseek R1. Users discuss the idea of optimizing the 12B model for 16GB RAM to enhance performance.

Theme 2. Sesame CSM 1B Voice Cloning: Expectations vs. Reality

-

Sesame CSM 1B Voice Cloning (Score: 216, Comments: 30): Sesame CSM 1B is a newly released voice cloning model. No additional details were provided in the post.

- Voice Cloning Model Licensing and Usage: There is a discussion about the licensing differences between Sesame (Apache licensed) and F5 (Creative Commons Attribution Non Commercial 4.0), highlighting that Sesame can be used for commercial purposes. Users also mention the integration of voice cloning into a conversational speech model (CSM) as a potential advancement.

- Performance and Compatibility Issues: Users report slow performance of the voice cloning model, taking up to 50 seconds for a full paragraph on a GPU, and note that it may not be optimized for Windows. There are suggestions that it might work better on Linux and that running it on a mini PC without a dedicated GPU could be challenging due to the “experimental” triton backend for CPU.

- Technical Adjustments and API Access: Chromix_ shares steps to get the model working on Windows by upgrading to torch 2.6 and other packages, and mentions bypassing the need for a Hugging Face account by downloading files from a mirror repo. They also provide a link to the API endpoint for voice cloning.

-

Conclusion: Sesame has shown us a CSM. Then Sesame announced that it would publish… something. Sesame then released a TTS, which they obviously misleadingly and falsely called a CSM. Do I see that correctly? (Score: 154, Comments: 51): Sesame’s Controversy revolves around their misleading marketing strategy, where they announced a CSM but released a TTS instead, falsely labeling it a CSM. The issue could have been mitigated if Sesame had clearly communicated that it wouldn’t be open source.

- Misleading Marketing Strategy: Many users express disappointment with Sesame’s marketing tactics, noting that the company created significant hype by suggesting an open-source release, only to deliver a less impressive product. VC-backed companies often use such strategies to gauge product-market fit and generate investor interest, as seen with Sesame’s lead investor a16z.

- Technical Challenges and Model Performance: There’s a consensus that the released 1B model is underwhelming in performance, particularly in real-time applications. Users discuss technical aspects, such as the Mimi tokenizer and the model’s architecture, which contribute to its slow speed, and suggest optimizations like using CUDA graphs or alternative models like exllamav2 for better performance.

- Incomplete Product Release: Discussions highlight that Sesame’s release lacks crucial components of the demo pipeline, such as the LLM, STT, and VAD, forcing users to build these themselves. The demo’s impressive performance contrasts with the actual release, raising questions about the demo’s setup possibly using larger models or more powerful hardware like 8xH100 nodes.

Theme 3. QwQ’s Rise: Dominating Benchmarks and Surpassing Expectations

-

QwQ on LiveBench (update) - is better than DeepSeek R1! (Score: 256, Comments: 117): QwQ-32b from Alibaba surpasses DeepSeek R1 on LiveBench, achieving a global average score of 71.96, compared to DeepSeek R1’s 71.57. QwQ-32b consistently outperforms in subcategories like Reasoning, Coding, Mathematics, Data Analysis, Language, and IF Average, as highlighted in the comparison table.

- There is skepticism about the QwQ-32b’s performance compared to DeepSeek R1, with some users noting that Alibaba tends to optimize models for benchmarks rather than real-world scenarios. QwQ-32b is highlighted as a strong model, but there are doubts about its stability and real-world knowledge compared to R1.

- Coding performance is a contentious point, with users questioning how QwQ-32b approaches Claude 3.7 in coding capabilities. Discussions mention that LiveBench primarily tests in Python and JavaScript, while Aider tests over 30 languages, suggesting potential discrepancies in testing environments.

- Some users express excitement about the potential of QwQ-max, with anticipation that it might surpass R1 in both size and performance. There are also discussions on the impact of settings changes on the model’s performance, with links provided for further insights (Bindu Reddy’s tweet).

-

Qwq-32b just got updated Livebench. (Score: 130, Comments: 73): QwQ 32B has been updated on LiveBench, providing new insights into its performance. The full results can be accessed through the Livebench link.

- The QwQ 32B model is praised for its local coding capabilities, with some users noting it surpasses larger models like R1 in certain tasks. Users have discussed adjusting the model’s thinking time by tweaking settings such as the logit bias for the ending tag, and some have experimented with recent updates to resolve issues like infinite looping.

- Discussions highlight the evolving power of smaller models like QwQ 32B, with users noting their increasing potential for local applications compared to larger flagship models. Some users express surprise at the model’s creative capabilities and its ability to perform well on benchmarks, leading to decisions like dropping OpenAI subscriptions.

- There is a debate on the implications of open-sourcing models, with some users suggesting that China’s strategy of open-sourcing models accelerates development, contrasting with the U.S. approach focused on corporate profit. Concerns are raised about the future of open-source availability, especially if competitive advantages shift.

-

Meme i made (Score: 982, Comments: 55): The post titled “Meme i made” lacks detailed content as it only mentions a meme creation related to the QwQ Model’s Thinking Process. No additional information or context about the video or the meme is provided, making it difficult to extract further technical insights.

- Discussions highlight the QwQ Model’s tendency to doubt itself, leading to inefficient token usage and prolonged response times. This behavior is likened to “fact-checking” itself excessively, which some users find inefficient compared to traditional LLMs.

- There’s a consensus that current reasoning models, like QwQ, are in their early stages, akin to GPT-3’s initial release, with expectations for significant improvements in their reasoning capabilities over the next year. Users anticipate a shift towards reasoning in latent space, which could enhance efficiency by a factor of 10x.

- Humorous and critical commentary highlights the model’s repetitive questioning and self-doubt, drawing parallels to outdated technology and sparking discussions about the potential for these models to improve in handling complex reasoning tasks without excessive self-questioning.

Theme 4. Decentralized LLM Deployment: Akash, IPFS & Pocket Network Challenges

- HowTo: Decentralized LLM on Akash, IPFS & Pocket Network, could this run LLaMA? (Score: 229, Comments: 20): The post titled “HowTo: Decentralized LLM on Akash, IPFS & Pocket Network, could this run LLaMA?” suggests deploying a decentralized Large Language Model (LLM) using Akash, IPFS, and Pocket Network. It questions the feasibility of running LLaMA, a specific LLM, on this decentralized infrastructure, implying a focus on leveraging decentralized technologies for AI model deployment.

- Concerns about Security and Privacy: Users question the cryptographic verification process of Pocket Network, expressing doubts about ensuring the correct model is served and the privacy of prompts. There are concerns about whether user data, such as IP addresses, might be logged and how the network handles latency for anonymity.

- Challenges of Decentralized Infrastructure: Commenters highlight the technical challenges of running LLMs in a decentralized manner, especially the need for high bandwidth and low latency between nodes, which currently limits the feasibility of distributed LLM deployment compared to single-machine setups.

- Decentralization vs. Centralization: The discussion contrasts Pocket Network’s API relay role with centralized AI hosting, noting that while Pocket does not run the model itself, the use of Akash for model hosting offers benefits like resilience and potential cost savings, despite adding complexity with a crypto layer.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Advanced AI Video Generation with SDXL, Wan2.1, and Long Context Tuning

-

Another video aiming for cinematic realism, this time with a much more difficult character. SDXL + Wan 2.1 I2V (Score: 1018, Comments: 123): This post discusses the creation of a video aimed at achieving cinematic realism using SDXL and Wan 2.1 I2V. It highlights the challenge of working with a more difficult character in this context.

- Technical Challenges and Techniques: Parallax911 shares the complexity of achieving cinematic realism with SDXL and Wan 2.1 I2V, highlighting the use of Photopea for inpainting and compositing in Davinci Resolve. They mention the difficulty in achieving consistency and realism, especially with complex character designs, and the use of Blender for animating segments like the door opening.

- Project Costs and Workflow: The project incurred a cost of approximately $70 using RunPod’s L40S at $0.84/hr, taking about 80 hours of GPU time. Parallax911 utilized a workflow involving RealVisXL 5.0, Wan 2.1, and Topaz Starlight for upscaling, with scenes generated at 61 frames, 960x544 resolution, and 25 steps.

- Community Feedback and Suggestions: The community praised the atmospheric storytelling and sound design, with specific feedback on elements like water droplet size and the need for a tutorial. Some users suggested improvements, such as better integration of AI and traditional techniques, and expressed interest in more action-oriented scenes with characters like Samus Aran from Metroid.

-

Video extension in Wan2.1 - Create 10+ seconds upscaled videos entirely in ComfyUI (Score: 123, Comments: 23): The post discusses a highly experimental workflow in Wan2.1 using ComfyUI for creating upscaled videos, achieving approximately 25% success. The process involves generating a video from the last frame of an initial video, merging, upscaling, and frame interpolation, with specific parameters like Sampler: UniPC, Steps: 18, CFG: 4, and Shift: 11. More details can be found in the workflow link.

- Users are inquiring about the aspect ratio handling in the workflow, questioning if it’s automatically set or needs manual adjustment for input images.

- There is positive feedback from users interested in the workflow, indicating anticipation for such a solution.

- Concerns about blurriness in the second half of clips were raised, with suggestions that it might be related to the input frame quality.

-

Animated some of my AI pix with WAN 2.1 and LTX (Score: 115, Comments: 10): The post discusses the creation of animated AI videos using WAN 2.1 and LTX. Without further context or additional details, the focus remains on the tools used for animation.

- Model Usage: LTX was used for the first clip, the jumping woman, and the fighter jet, while WAN was used for the running astronaut, the horror furby, and the dragon.

- Hardware Details: The videos were generated using a rented cloud computer from Paperspace with an RTX5000 instance.

Theme 2. OpenAI’s Sora: Transforming Cityscapes into Dystopias

-

OpenAI’s Sora Turns iPhone Photos of San Francisco into a Dystopian Nightmare (Score: 931, Comments: 107): OpenAI’s Sora is a tool that transforms iPhone photos of San Francisco into images with a dystopian aesthetic. The post likely discusses the implications and visual results of using AI to alter real-world imagery, although specific details are not available due to the lack of text content.

- Several commenters express skepticism about the impact of AI-generated dystopian imagery, with some suggesting that actual locations in San Francisco or other cities already resemble these dystopian visuals, questioning the need for AI alteration.

- iPhone as the device used for capturing the original images is a point of contention, with some questioning its relevance to the discussion, while others emphasize its importance in understanding the image source.

- The conversation includes a mix of admiration and concern for the AI’s capabilities, with users expressing both astonishment at the technology and anxiety about distinguishing between AI-generated and real-world images in the future.

-

Open AI’s Sora transformed Iphone pics of San Francisco into dystopian hellscape… (Score: 535, Comments: 58): OpenAI’s Sora has transformed iPhone photos of San Francisco into a dystopian hellscape, showcasing its capabilities in altering digital images to create a futuristic, grim aesthetic. The post lacks additional context or details beyond this transformation.

- Commenters draw parallels between the dystopian images and real-world locations, with references to Delhi, Detroit, and Indian streets, highlighting the AI’s perceived biases in interpreting urban environments.

- There are concerns about AI’s text generation capabilities, with one commenter noting that sign text in the images serves as a tell-tale sign of AI manipulation.

- Users express interest in the process of creating such images, with a request for step-by-step instructions to replicate the transformation on their own photos.

Theme 3. OpenAI and DeepSeek: The Open Source Showdown

-

I Think Too much insecurity (Score: 137, Comments: 58): OpenAI accuses DeepSeek of being “state-controlled” and advocates for bans on Chinese AI models, highlighting concerns over state influence in AI development. The image suggests a geopolitical context, with American and Chinese flags symbolizing the broader debate over state control and security in AI technologies.

- The discussion highlights skepticism over OpenAI’s claims against DeepSeek, with users challenging the notion of state control by pointing out that DeepSeek’s model is open source. Users question the validity of the accusation, with calls for proof and references to Sam Altman’s past statements about the lack of a competitive moat for LLMs.

- DeepSeek is perceived as a significant competitor, managing to operate with lower expenses and potentially impacting OpenAI’s profits. Some comments suggest that DeepSeek’s actions are seen as a form of economic aggression, equating it to a declaration of war on American interests.

- There is a strong undercurrent of criticism towards OpenAI and Sam Altman, with users expressing distrust and dissatisfaction with their actions and statements. The conversation includes personal attacks and skepticism towards Altman’s credibility, with references to his promises of open-source models that have not materialized.

-

Built an AI Agent to find and apply to jobs automatically (Score: 123, Comments: 22): An AI agent called SimpleApply automates job searching and application processes by matching users’ skills and experiences with relevant job roles, offering three usage modes: manual application with job scoring, selective auto-application, and full auto-application for jobs with over a 60% match score. The tool aims to streamline job applications without overwhelming employers and is praised for finding numerous remote job opportunities that users might not discover otherwise.

- Concerns about data privacy and compliance were raised, with questions on how SimpleApply handles PII and its adherence to GDPR and CCPA. The developer clarified that they store data securely with compliant third parties and are working on explicit user agreements for full compliance.

- Application spam risks were discussed, with suggestions to avoid reapplying to the same roles to prevent being flagged by ATS systems. The developer assured that the tool only applies to jobs with a high likelihood of landing an interview to minimize spam.

- Alternative pricing strategies were suggested, such as charging users only when they receive callbacks via email or call forwarding. This approach could potentially be more attractive to unemployed users who are hesitant to spend money upfront.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. Google’s Gemma 3 Takes Center Stage Across Tools

- Unsloth Supercharges Gemma 3 Finetuning, Vision Too: Unsloth AI now boasts full support for Gemma 3, enhancing finetuning speeds by 1.6x, slashing VRAM usage by 60%, and expanding context length 6x compared to standard Flash Attention 2 setups on 48GB GPUs. Optimized versions for full finetuning, 8-bit, and pretraining are available on Hugging Face, and initial support for Gemma 3 vision is also implemented, though Ollama users might face compatibility issues for now.

- Gemma 3 12B Outsmarts Qwen, Needs GPT4All Update: Users reported Gemma 3 12B outperforming Qwen 14B and 32B in personal tests and excelling in multilingual question answering, yet GPT4All requires updates for full Gemma 3 12B support due to architectural shifts and the need for an

mmprojfile. In a basic physics test, Gemma-3-12b correctly predicted jar shattering when water freezes, unlike DeepSeek-R1. - vLLM and LigerKernel Gear Up for Gemma 3 Integration: vLLM is actively working on Gemma 3 support, tracked in this GitHub issue, while a draft implementation of Gemma 3 into LigerKernel is underway, noting high architectural similarity to Gemma 2 with minor RMSNorm call differences; however, some users are reporting context window size issues with Gemma3 and TGI.

Theme 2. New Models Emerge: OLMo 2, Command A, Jamba 1.6, PaliGemma 2 Mix

- AI2’s OLMo 2 32B Shines as Open-Source GPT-3.5 Killer: AI2 launched OLMo 2 32B, a fully open-source model trained on 6T tokens using Tulu 3.1, which it claims outperforms GPT3.5-Turbo and GPT-4o mini on academic benchmarks, while costing only one-third of Qwen 2.5 32B training; available in 7B, 13B, and 32B sizes, it is now available on OpenRouter and sparking discussion in Yannick Kilcher’s community about its open nature and performance.

- Cohere’s Command A and AI21’s Jamba 1.6 Models Arrive with Massive Context: Cohere unveiled Command A, a 111B parameter open-weights model with a 256k context window, designed for agentic, multilingual, and coding tasks, while AI21 released Jamba 1.6 Large (94B active parameters, 256K context) and Jamba 1.6 Mini (12B active parameters), both now featuring structured JSON output and tool-use, all models available on OpenRouter. However, Command A is exhibiting a peculiar bug with prime number queries, and local API performance is reportedly suboptimal without specific patches.

- Google’s PaliGemma 2 Mix Family Unleashes Vision-Language Versatility: Google released PaliGemma 2 Mix, a vision language model family in 3B, 10B, and 28B sizes, with 224 and 448 resolutions, capable of open-ended vision language tasks and document understanding, while Sebastian Raschka reviewed multimodal models including Meta AI’s Llama 3.2 in a blog post; users in HuggingFace are also seeking open-source alternatives to Gemini 2.0 Flash with similar image editing capabilities.

Theme 3. Coding Tools and IDEs Evolve with AI Integration

- Cursor IDE Users Cry Foul Over Performance and Claude 3.7 Downgrade: Cursor IDE faces user backlash for lag and freezing on Linux and Windows after updates like 0.47.4, with Claude 3.7 deemed dumb as bricks and rule-ignoring, costing double credits, and the Cursor agent criticized for spawning excessive terminals; despite issues, v0 remains praised for rapid UI prototyping, contrasting with Cursor’s credit system and limited creative freedom compared to v0.

- Aider and Claude Team Up, Users Debate Rust Port and MCP Server Setup: Users laud the powerful combination of Claude with Aider, augmented with web search and bash scripting, while discussions on porting Aider to Rust for speedier file processing are met with skepticism, citing LLM API bottlenecks; a user-improved readme for the Aider MCP Server emerged, yet setup complexities persist, and Linux users are finding workarounds to run Claude Desktop.

- ‘Vibe Coding’ Gains Momentum, Showcased in Game Dev and Resource Lists: The concept of “vibe coding”—AI-assisted collaborative coding—is gaining traction, exemplified by a developer creating a multiplayer 3D game 100% with AI in 20 hours for 20 euros using Cursor, and Awesome Vibe Coding, a curated list of AI coding tools and resources, has been released on GitHub, and a GitDoc VS Code extension for auto-committing changes is gaining popularity, sparking UI design ideas for “vibe coding” IDEs with visualized change trees.

Theme 4. Training and Optimization Techniques Advance

- Unsloth Pioneers GRPO for Reasoning Models, Dynamic Quantization for Speed: Unsloth introduces GRPO (Guiding Preference Optimization), enabling 10x longer context with 90% less VRAM for reasoning models, and highlights dynamic quantization outperforming GGUF in quality, especially for Phi-4, showcased on the Hugging Face leaderboard, while Triton bitpacking achieves massive speedups up to 98x over Pytorch, reducing Llama3-8B repacking time from 49 sec to 1.6 sec.

- DeepSeek’s Search-R1 Leverages RL for Autonomous Query Generation, IMM Promises Faster Sampling: DeepSeek’s Search-R1 extends DeepSeek-R1 with reinforcement learning (RL) to generate search queries during reasoning, using retrieved token masking for stable training and enhanced LLM rollouts, while Inductive Moment Matching (IMM) emerges as a novel generative model class promising faster inference via one- or few-step sampling, surpassing diffusion models without pre-training or dual-network optimization.

- Reasoning-Gym Explores GRPO, veRL, and Composite Datasets for Enhanced Reasoning: Group Relative Policy Optimization (GRPO) gains popularity for RL in LLMs, with reasoning-gym confirming veRL training success for chain_sum and exploring composite datasets for improved reasoning capabilities, moving towards a refactor for enhanced “all-around” model performance, and the project nears 500 stars, with version 0.1.16 uploaded to pypi.

Theme 5. Infrastructure and Access: H100s, VRAM, and API Pricing

- SF Compute Disrupts H100 Market with Low Prices, Vultr Enters Inference API Space: SF Compute offers surprisingly low H100 rental prices, especially for short-term use, advertising 128 H100s available hourly and launching an additional 2,000 H100s soon, while Vultr announces inference API pricing at $10 for 50 million output tokens initially, then 2 cents per million, accessible via an OpenAI-compatible endpoint, stemming from a large GH200 purchase.

- LM Studio Users Dive into Runtime Retrieval and Snapdragon Compatibility: LM Studio users are reverse-engineering the application to find download URLs for offline runtimes, after claims it runs offline, discovering CDN ‘APIs’ like Runtime Vendor, while Snapdragon X Plus GPU support in LM Studio requires direct llama.cpp execution, and users report Gemini Vision limitations potentially due to geo-restrictions in Germany/EU.

- VRAM Consumption Concerns Rise: Gemma 3 and SFT Discussed: Users report increased VRAM usage for Gemma 3 post-vision update, speculating CLIP integration as a cause, and Gemma’s SFT VRAM needs are debated, suggesting potentially higher requirements than Qwen 2.5 in similar conditions, while resources for estimating memory usage for LLMs are shared, like Substratus AI blog and Hugging Face space.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Unsloth’s Gemma 3 Support Gains Steam: Unsloth now supports Gemma 3, including full fine-tuning and 8-bit, and optimizes Gemma 3 (12B) finetuning by 1.6x, reduces VRAM usage by 60%, and extends context length by 6x compared to environments using Flash Attention 2 on a 48GB GPU.

- All Gemma 3 model uploads are available on Hugging Face, including versions optimized for full finetuning, 8-bit, and pretraining.

- Dynamic Quants Face Off GGUF Quality: Discussion compares dynamic quantization with GGUF models, especially regarding the trade-offs between size and quality, with Unsloth’s dynamic quants for Phi-4 on the Hugging Face leaderboard.

- A direct comparison with GGUF benchmarks is anticipated to clarify performance at different bit widths, with a likely holdup being llama-server lacking vision support yet.

- GRPO to Grant Reasoning Greatness: GRPO (Guiding Preference Optimization) is coming next week along with new notebooks, and now supports 10x longer context with 90% less VRAM, detailed in a blog post.

- The team stated, only if you let it reason about the rules first a la GRPO, which is specifically designed for reasoning models, offering significant memory savings and expanded context windows.

- Vision Models Get Unsloth’s Vision: Unsloth has implemented the train on completions feature and also resizing of images for Vision Language Models, and the models now auto resize images which stops OOMs and also allows truncating sequence lengths.

- A Qwen2_VL Colab notebook was also shared for images.

- QwQ-32B Bugfixes Bolster Model: Bugfixes have been implemented for the QwQ-32B model, as highlighted in a blog post with corresponding model uploads.

- These fixes improve the model’s stability and performance, ensuring a smoother user experience.

Cursor IDE Discord

- Cursor Experiences Performance Hiccups on Linux and Windows: Users have reported Cursor experiencing lag and freezing on Linux and Windows, particularly after updates such as 0.47.4 (download link).

- One user detailed that the UI freezes for seconds after just 20-30 messages on Linux; another noted constant lags on Windows even with a high performance laptop running version 3.7.

- Claude 3.7 Judged as Underperforming and Disobedient: Users find Claude 3.7 dumb as bricks following the update to 0.47.4, and using it now costs double the credits.

- Members mentioned that Sonnet 3.7 ignores global rules, even when prompted to output them, with one user jokingly suggesting to put ‘be a good boy’ in your prompt and it will fix anything, according to a tweet.

- Cursor Agent Uncorks Terminal Barrage: Multiple users are finding the Cursor agent is spawning an excessive amount of terminals, causing frustration, especially when it restarts servers that are already running.

- One member suggested that this functionality should either be built-in or users should just write the terminal commands themselves.

- V0 Praised for Prototyping Speed: Some users advocate using v0 for front-end prototyping due to its UI design capabilities with subframes, which is similar to Figma, before transferring designs to Cursor.

- One user stated it’s much better to build prototype and layout (better front end) imo then import locally to cursor, although others favor Cursor because of v0’s credit system and limited creative autonomy.

Eleuther Discord

- LM Studio Users Seek Support: A member suggested users facing issues with LM Studio seek assistance in the dedicated LM Studio Discord.

- This aims to provide more focused help for LM Studio related problems.

- SMILES String Encoders Sought for Stereoisomers: A member inquired about models or architectures that can encode a SMILES string into various stereoisomers or a ChemDraw input.

- The goal is to enable chemical descriptor extraction from these encodings.

- Diffusion Models Excel at Generative Tasks: A Nature article was shared, highlighting the proficiency of diffusion models (DMs) in modeling complex data distributions and generating realistic samples for diverse media.

- These models are now state-of-the-art for generating images, videos, audio, and 3D scenes.

- Search-R1 Autonomously Searches with RL**: The Search-R1 paper was introduced, detailing an extension of the DeepSeek-R1 model that employs reinforcement learning (RL) to generate search queries during reasoning (see paper).

- The model uses retrieved token masking for stable RL training, enhancing LLM rollouts through multi-turn search interactions.

- IMM Claims Faster Sampling Times**: A paper on Inductive Moment Matching (IMM) was shared, noting it as a novel class of generative models that promise faster inference through one- or few-step sampling, surpassing diffusion models.

- Notably, IMM does not require pre-training initialization or the optimization of two networks, unlike distillation methods.

HuggingFace Discord

- LLM Faceoff: Ministral 8B vs. Exaone 8B: Members suggested using Ministral 8B or Exaone 8B at 4-bit quantization for LLM tasks.

- A user running an M4 Mac mini with 24 GB RAM, was trying to figure out tokens per second.

- SmolAgents Has Troubles With Gemma3: A user reported errors running Gemma3 with SmolAgents, stemming from code parsing and regex issues, pointing to a potential fix on GitHub.

- The user resolved the problem by increasing the Ollama context length.

- Awesome Vibe Coding Curates Resources: A curated list of tools, editors, and resources for AI-assisted coding has been released, called Awesome Vibe Coding.

- The list includes AI-powered IDEs, browser-based tools, plugins, command line tools, and the latest news on vibe coding.

- PaliGemma 2 Models Drop: Google released PaliGemma 2 Mix, a family of vision language models with three sizes (3B, 10B, and 28B) and resolutions of 224 and 448 that can do vision language tasks with open-ended prompts.

- Check out the blog post for more.

- Chess Championship Models Make Illegal Moves?: A user shared a YouTube playlist titled Chatbot Chess Championship 2025, showcasing language models or chess engines playing chess.

- Participants speculated whether the models were true language models or merely calling chess engines, and one person noted a language model made illegal moves.

Perplexity AI Discord

- Complexity Extension Goes Full Maintenance: The Complexity extension for Perplexity AI is now in full maintenance mode due to a layout update breaking the extension.

- The developer thanked users for their patience during this maintenance period.

- Locking Kernel a Pipe Dream for Security: Users debated whether locking down the kernel would improve security, in the general channel.

- Others argued that this is not feasible due to the open-source nature of Linux, with one user joking about using Windows instead.

- Perplexity Users Beg for More Context: Users are requesting larger context windows in Perplexity AI and are willing to pay extra to avoid using ChatGPT.

- A user cited Perplexity’s features like unlimited research on 50 files at a time, spaces for custom instructions, and the ability to choose reasoning models as reasons to stay.

- Grok 3 Riddled with Bugs Upon Release: The newly released Grok AI is reportedly buggy.

- Users reported that suddenly the chat stops working or breaks in middle.

- Gemini Deep Research Not So Deep: Users testing the new Gemini Deep Research feature found it weaker than OpenAI’s offerings.

- One user found it retained less context than the regular Gemini, even with search disabled.

aider (Paul Gauthier) Discord

- Claude + Aider = Coding SuperPower: Members discussed using Claude with Aider, which augments it with web search/URL scrapping and running bash script calling, resulting in more powerful prompting capabilities.

- One user highlighted that each unique tool added to Claude unlocks a lot more than the sum of its parts, especially when the model searches the internet for bugs.

- Does Rust Rocket Aider Speed?: One user inquired about porting Aider to C++ or Rust for faster file processing, particularly when loading large context files for Gemini models.

- Others expressed skepticism, suggesting that the bottleneck remains with the LLM API and any improvements might not be quantifiable.

- Linux Lovers Launch Claude Desktop: Users shared instructions for getting the Claude Desktop app to work on Linux, as there isn’t an official version.

- One user referenced a GitHub repo providing Debian-based installation steps while another shared their edits to an Arch Linux PKGBUILD.

- Aider MCP Server Readme Rescued: Users discussed the Aider MCP Server, with one mentioning that another user’s readme was 100x better, referring to this repo.

- However, another user humorously stated that they still can’t setup ur mcp despite the readme’s existence.

- DeepSeek Models Speak Too Much: A user reported that DeepSeek models are generating excessive output, around 20-30 lines of phrases, and inquired about setting a

thinking-tokensvalue in the configuration.- It was noted that 20 lines is pretty standard for the R1 model, and one user shared that they once waited 2 minutes for the model to think on a 5 word prompt.

Latent Space Discord

- OLMo 2 32B Dominates GPT 3.5: AI2 released OLMo 2 32B, a fully open-source model trained up to 6T tokens using Tulu 3.1, outperforming GPT3.5-Turbo and GPT-4o mini on academic benchmarks.

- It is claimed to require only one third of the cost of training Qwen 2.5 32B while reaching similar performance and is available in 7B, 13B, and 32B parameter sizes.

- Vibe Coding Creates Games with AI: A developer created a multiplayer 3D game 100% with AI, spending 20 hours and 20 euros, calling the concept vibe coding, and sharing the guide.

- The game features realistic elements like hit impacts, smoke when damaged, and explosions on death, all generated via prompting in Cursor with no manual code edits.

- Levels.io’s AI Flight Sim Soars to $1M ARR: A member referenced the success of Levels.io’s flight simulator, built with Cursor, which quickly reached $1 million ARR by selling ads in the game.

- Levelsio noted, AI really is a creativity and speed maximizer for me, making me just way more creative and more fast.

- GitDoc Extension Auto-Commits Changes: Members shared the GitDoc VS Code extension that allows you to edit a Git repo and auto commit on every change.

- One user suggested branching, restarting and other features and said storage is cheap, like auto commit on every change and visualize the tree of changes.

- Latent Space Podcast Dives into Snipd AI App: The Latent Space podcast released a new Snipd podcast with Kevin Ben Smith about the AI Podcast App for Learning and released their first ever OUTDOOR podcast on Youtube.

- The podcast features a discussion about @aidotengineer NYC, switching from Finance to Tech, how AI can help us get a lot more out of our podcast time, and dish the details on the tech stack of Snipd app.

LM Studio Discord

- LM Studio’s Runtimes Retrieved via Reverse Engineering: A user decompiled LM Studio to locate download URLs for offline use, discovering the backends master list and CDN ‘APIs’ like the Runtime Vendor.

- This was after another user claimed LM Studio doesn’t need an internet connection to run, showing a demand for offline runtime access.

- Snapdragon Support Requires Direct llama.cpp Execution: A user reported that LM Studio did not detect their Snapdragon X Plus GPU, and another member replied that GPU support requires running llama.cpp directly.

- They directed the user to this github.com/ggml-org/llama.cpp/pull/10693 pull request for more information.

- Gemini Vision Hampered by Geo-Restrictions: Users reported issues testing Gemini 2.0 Flash Experimental’s image processing abilities, potentially due to regional restrictions in Germany/EU.

- One user in Germany suspected that the limitations were due to local laws while a user in the US reported that Gemini in AI Studio failed to perform the image manipulation.

- AI Chess Tournament Highlights Model Accuracy: An AI chess tournament featuring 15 models was held, with results available at dubesor.de/chess/tournament, where results are impacted by game length and opponent moves.

- Although DeepSeek-R1 achieved a 92% accuracy, the organizer clarified that accuracy varies based on game length and opponent moves, and normal O1 was too expensive to run in the tournament.

- VRAM Consumption for Gemma 3 Jumps After Vision Update: Following a vision speed increase update, a user reported a significant increase in VRAM usage for Gemma 3.

- Speculation arose that the download size increase may be due to CLIP being used for vision, potentially being called from a separate file, increasing the overall memory footprint.

Nous Research AI Discord

- DeepHermes 3 Converts to MLX: The model mlx-community/DeepHermes-3-Mistral-24B-Preview-4bit was converted to MLX format from NousResearch/DeepHermes-3-Mistral-24B-Preview using mlx-lm version 0.21.1.

- This conversion allows for efficient use on Apple Silicon and other MLX-compatible devices.

- Deep Dive into VLLM Arguments for Hermes 3: Members are sharing different configurations to get vllm working correctly with Hermes-3-Llama-3.1-70B-FP8, including suggestions like adding

--enable-auto-tool-choiceand--tool-call-parserfor Hermes 3 70B.- One member noted the need for

<tool_call>and</tool_call>tags in the tokenizer, which are present in Hermes 3 models but not necessarily in DeepHermes.

- One member noted the need for

- Vultr Announces Inference Pricing: A member from Vultr shared the official pricing for their inference API, which includes $10 for 50 million output tokens initially, then 2 cents per million output tokens after, accessible via an OpenAI-compatible endpoint at https://api.vultrinference.com/

- This pricing is a result of purchasing an absurd amount of gh200s and needing to do something with them, according to a member.

- Dynamic LoRAs Docking into VLLM: Members discussed the possibility of hosting dynamic LoRAs with vllm for various use cases, like up-to-date coding styles, referencing the vLLM documentation.

- It was suggested to let users pass in their huggingface repo IDs for the LoRAs and supply them into the VLLM serve command cli args.

MCP (Glama) Discord

- Astro Clients gear up for MCP Integration: A member plans to use MCP for their Astro clients, using AWS API Gateway with each MCP server as a Lambda function, leveraging the MCP bridge with the SSE gateway.

- The goal is to enable MCP usage specifically for customers and explore adding MCP servers to a single project for client visibility.

- Decoding MCP Server Architecture: A member inquired how clients like Cursor and Cline, which keep MCP servers on the client side, communicate with the backend.

- The discussion involved the architecture and communication methods used by these clients but was redirected to a more specific channel for detailed information.

- Smart Proxy Server Converts to Agentic MCP: A smart proxy MCP server converts standard MCP servers with many tools into one with a single tool via its own LLM, effectively a sub-agent approach using vector tool calling.

- The OpenAI Swarm framework follows a similar process of assigning a subset of tools to individual agents, now rebranded as openai-agents by OpenAI.

- Debugger Uses MCP Server to Debug Webpages: A member shared a debugger project, chrome-debug-mcp (https://github.com/robertheadley/chrome-debug-mcp), that uses MCP to debug webpages with LLMs, originally built with Puppeteer.

- The project has been ported to Playwright, with the updated GitHub repository pending after further testing.

- MCP Hub Concept Simplifies Server Management: To enhance enterprise adoption of MCP, a member created an MCP Hub concept featuring a dashboard for simplified server connections, access control, and visibility across MCP servers, as demoed in this video.

- The hub aims to address concerns about managing multiple MCP servers and permissions in enterprise settings.

Interconnects (Nathan Lambert) Discord

- DeepSeek Confiscates Employee Passports: The owner of DeepSeek reportedly asked R&D staff to surrender their passports to prevent foreign travel, according to Amir on Twitter.

- Members debated whether this would lead to more open source work from DeepSeek, or if the US might adopt similar measures.

- SF Compute H100 Prices Shock the Market: A member pointed out that SF Compute offers surprisingly low prices for H100s, especially for short-term rentals, advertising 128 H100s available for hourly use.

- San Francisco Compute Company is launching soon an additional 2,000 H100s and runs a market for large-scale, vetted H100 clusters, while also sporting a simple but powerful CLI.

- Gemma 3 License Raises Red Flags: A recent TechCrunch article highlighted concerns over model licenses, particularly Google’s Gemma 3.

- The article noted that while Gemma 3’s license is efficient, its restrictive and inconsistent terms could pose risks for commercial applications.

- User Data Privacy is Under Siege: A member reported their frustration with individuals discovering their phone number online and making unsolicited requests, such as “hey nato, can you post-train my llama2 model? ty”.

- They speculate that extensions or paid services are the source and are seeking methods to remove their data from sites like Xeophon.

- Math-500 Sampling Validated: In response to a question about seemingly random sampling in Qwen’s github repo evaluation scripts, it was confirmed that apparently sampling is random.

- Members cited Lightman et al 2023 and that long context evals and answer extraction is a headache and that Math 500 is very well correlated.

OpenRouter (Alex Atallah) Discord

- Cohere Commands Attention with 111B Model: Cohere launched Command A, a new open-weights 111B parameter model boasting a 256k context window, with a focus on agentic, multilingual, and coding applications.

- This model is designed to deliver high performance in various use cases.

- AI21 Jamba Jams with New Models: AI21 released Jamba 1.6 Large with 94 billion active parameters and a 256K token context window, alongside Jamba 1.6 Mini, featuring 12 billion active parameters.

- Both models now support structured JSON output and tool-use.

- Gemma Gems Gleam for Free: All variations of Gemma 3 are available for free: Gemma 3 12B which introduces multimodality, supporting vision-language input and text outputs and handles context windows up to 128k tokens.

- The model understands over 140 languages, and also features Gemma 3 4B and Gemma 3 1B models.

- Anthropic API Anomaly Averted: Anthropic reported an incident of elevated errors for requests to Claude 3.7 Sonnet, with updates posted on their status page.

- The incident has been resolved.

- Chess Tournament Pits AI Models Against Each Other: An AI chess tournament, accessible here, pits 15 models against each other using standard chess notations for board state, game history, and legal moves.

- The models are fed information about the board state, the game history, and a list of legal moves.

Yannick Kilcher Discord

- Go Wins at Practical Porting: Members debated the utility of Go vs Rust for porting, explaining that porting to Go function-by-function allows for exact behavior parity, avoiding rewriting code for years.

- While Rust is faster and more efficient, a member pointed out that Golang is really ergonomic to develop in, particularly for distributed, async, or networked applications.

- DeepSeek Hype Suspicions Sparked: Some members argued that the hype around DeepSeek is engineered and that their models are simplified, likening the comparison to frontier AI models comparing Ananas with Apple.

- Others defended DeepSeek, claiming their crazy engineers developed a filesystem faster than life.

- OLMo 2 32B Fully Open: OLMo 2 32B launched as the first fully-open model to outperform GPT3.5-Turbo and GPT-4o mini on academic benchmarks.

- It is claimed to be comparable to leading open-weight models while only costing one third of the training cost of Qwen 2.5 32B.

- ChatGPT is Overrated, Claude Preferred: One member expressed that ChatGPT is overrated because it actually doesn’t solve problems that I need solved, preferring Mistral Small 24B, QwQ 32B, and Claude 3.7 Sonnet.

- Another user shared, I’ve had better luck getting what I want from Claude, and that it seems better at understanding intention and motivation for whatever reason.

- Grok 3 Writes Professional Code: Members debated code generation qualities, highlighting that OpenAI models often generate legacy code, while Mistral can refactor it into more modern code.

- It was also noted that Grok 3 generates code that looks like a professional programmer wrote it, while in VSCode, one member prefers using Amazon Q over Copilot.

GPU MODE Discord

- Speech-to-Speech Models Spark Quest: A member is actively seeking speech-to-speech generation models that focus on conversational speech, distinguishing them from multimodal models like OpenAI Realtime API or Sesame AI.

- Block Diffusion Bridges Autoregressive and Diffusion: The Block Diffusion model, detailed in an ICLR 2025 Oral presentation, combines autoregressive and diffusion language model benefits, offering high quality, arbitrary-length generation, KV caching, and parallelizable processing.

- Code can be found on GitHub and HuggingFace.

- Triton bitpacking gets Huge Boost: Bitpacking in Triton achieved significant speed-ups versus the Pytorch implementation on the 4090, achieving 98x speedup for 32-bit packing and 26x for 8-bit packing.

- Re-packing a Llama3-8B model time was reduced from 49 sec -> 1.6 sec using the new bitpacking implementation, with code available on GitHub.

- Gemma3 Gains Traction in vLLM and LigerKernel: Members discussed adding Gemma 3 support to vLLM, referencing this GitHub issue, while a member has started drafting an implementation of Gemma3 into LigerKernel, and shared a link to the pull request.

- According to the pull request, Gemma3 has high similarities to Gemma2 with some differences in RMSNorm Calls.

- GRPO Gains Popularity for LLM Training: Members discussed how Group Relative Policy Optimization (GRPO) has become popular for Reinforcement Learning in Large Language Models, referencing the DeepSeek-R1 paper.

- A blog post from oxen.ai on GRPO VRAM requirements was shared, noting its effectiveness in training.

OpenAI Discord

- Intelligence Declines Spark Debate: Discussion sparked from a Financial Times article that average intelligence is dropping in developed countries, citing increased reports of cognitive challenges and declining performance in reasoning and problem-solving.

- One member theorized this could be due to technology, especially smartphones and social media, leading to outsourcing of thinking, however the graphics only showed the years really before ChatGPT became a thing.

- Is Tech the Culprit for Cognitive Decline?: Members debated potential causes for cognitive decline, including technology’s influence, immigration, and fluoridated water.

- One member pointed out that the rates of cognitive challenges were steadily increasing since the 1990s, and a sudden acceleration from around 2012.

- DeepSeek V3 Distilled from OpenAI Models: The discussion covers that Deepseek V3 (the instruct version) was likely distilled from OpenAI models.

- One member notes that even OpenAI unofficially supports distilling their models, they just don’t seem to like it when Deepseek does it.

- Claude Sonnet 3.7 Dominates in Coding Tasks: A member now uses Claude Sonnet 3.7 exclusively for coding, finding ChatGPT lagging behind.

- In related news, a member stated that the o3-mini-high model is better than o1.

- Food Additives Fuel Mental Slowdown: Members discuss that the availability and consumption of ultra-processed foods (UPFs) has increased worldwide and represents nowadays 50–60% of the daily energy intake in some high-income countries, and is linked to cognitive decline

- Another member mentions Multinational corporations such as Nestlé that operate in many countries produce and distribute worldwide, it seems understandable how different additives or changes made to these products in one of these corporations can have a worldwide impact.

Notebook LM Discord

- Gemini 2.0 Deep Research Joins NotebookLM?: Members are exploring pairing Gemini 2.0 Deep Research with NotebookLM for enhanced documentation handling.

- The community questioned if Deep Research might eventually supersede NotebookLM in functionality.

- NotebookLM Inspires African Project Ecokham: A member from Africa reported using NotebookLM to connect thoughts, edit roadmaps, and generate audio for his project, Ecokham.

- He expressed gratitude for NotebookLM’s contribution to inspiring his team.

- NotebookLM Prototyping PhytoIntelligence Framework: A member is leveraging NotebookLM to organize notes and prototype the PhytoIntelligence framework for autonomous nutraceutical design, with the aim of mitigating diagnostic challenges.

- The user acknowledged Google for the tool’s capabilities.

- Users Demand Image & Table Savvy in NotebookLM: Users are requesting image and table recognition in NotebookLM, complaining that the current state feels incomplete because of the need to constantly reopen source files and dig through Google Sheets; one user even shared a relevant cat GIF.

- The community emphasized that images are worth a “thousand words” and the clearest data is often found in tables.

- Mobile App still not here for NotebookLM: Users are actively requesting a mobile app version of NotebookLM for improved accessibility.

- The community feels a mobile version is “still not yet coming up”.

LlamaIndex Discord

- Google Gemini and Vertex AI Merge in LlamaIndex!: The

@googleaiintegration unifies Google Gemini and Google Vertex AI with streaming, async, multi-modal, and structured prediction support, even supporting images, detailed in this Tweet.- This integration simplifies building applications leveraging Google’s latest models.

- LlamaIndex Perks Debated: A member sought clarity on LlamaIndex’s advantages over Langchain for building applications.

- The inquiry did not lead to a conclusive discussion within the provided context.

- OpenAI’s Delta Event Absence Probed: A member questioned why OpenAI models do not emit delta events for tool calling, observing the events are emitted but empty.

- The consensus was that tool calling cannot be a stream because the LLM needs the full tool response to generate the subsequent response, advocating for a DIY approach.

- API for Agentic RAG Apps Questioned: There was a question on whether any API exists specifically for building Agentic RAG applications to streamline development and management.

- The conversation mentioned that multiple constructs are available in LlamaIndex but lacked a clear, definitive guide.

Nomic.ai (GPT4All) Discord

- Gemma 3 12B Edges Out Qwen in IQ Test: A user reported that the Gemma 3 12B model outperformed Qwen 14B and Qwen 32B in terms of intelligence on their personal computer.

- This was tested by asking questions in multiple languages; Gemma 3 and DeepSeek R1 consistently provided correct answers in the same language as the question.

- Gemma 3 Needs New GPT4All Support: Users noted that GPT4All may require updates to fully support Gemma 3 12B because its architecture differs from Gemma 2.

- Specifically, Gemma 3 needs an mmproj file to work with GPT4All, highlighting the challenges of quickly adapting to new AI model developments.

- Freezing Water Tests AI Knowledge: When queried about freezing water, DeepSeek-R1 incorrectly predicted that jars would break, while Gemma-3-12b accurately described the shattering effect due to water expansion.

- This demonstrates the models’ varying levels of understanding of basic physics, indicating the diverse reasoning capabilities across different architectures.

DSPy Discord

- Explicit Feedback Flows into Refine: A member requested the reintroduction of explicit feedback into

dspy.Refine, similar todspy.Suggestto enhance debugging and understanding.- The member emphasized the value of explicit feedback for identifying areas needing improvement.

- Manual Feedback Makes its Mark on Refine: The team announced the addition of manual feedback into

Refine.- The implementation involves including feedback in the return value of the reward function as a

dspy.Predictionobject, containing both a score and feedback.

- The implementation involves including feedback in the return value of the reward function as a

- Reward Function Returns Feedback: A team member inquired about the feasibility of integrating feedback as part of the return value of the reward_fn.

- The user responded affirmatively, expressing their gratitude.

Cohere Discord

- Command A Debuts on OpenRouter: Cohere’s Command A, a 111B parameter open-weights model boasting a 256k context window, is now accessible on OpenRouter.

- The model aims to deliver high performance across agentic, multilingual, and coding applications, setting a new standard for open-weight models.

- Command A Flunks Prime Number Test: Users discovered a peculiar bug in Command A: when queried about prime numbers whose digits sum to 15, the model either provides an incorrect response or gets stuck in an infinite loop.

- This unexpected behavior highlights potential gaps in the model’s mathematical reasoning capabilities.

- Local API struggles with Command A: Users are encountering performance bottlenecks when running Command A locally, reporting that even with sufficient VRAM, the model doesn’t achieve acceptable speeds without patching the modeling in ITOr or using the APIs.

- This suggests that optimizing Command A for local deployment may require further work to enhance its efficiency.

- Cohere unveils Compatibility base_url: A member suggests to use the Cohere Compatibility API.

- They recommend utilizing base_url for integration.

Modular (Mojo 🔥) Discord

- Discord Account Impersonation Alert: A member reported being messaged by a scam account impersonating another user, and the impersonated user confirmed that “That is not me. This is my only account”.

- The fake account caroline_frascaa was reported to Discord and banned from the server after a user posted a screenshot of the fake account.

- Mojo stdlib Uses Discussed: The use of some feature in the Mojo stdlib was mentioned by soracc in #mojo.

- The user mentioned it is used in

base64.

- The user mentioned it is used in

LLM Agents (Berkeley MOOC) Discord

- Self-Reflection Needs External Evaluation: A member inquired about statements on self-evaluation from the first lecture in contrast to the second, suggesting a contradiction regarding the role of external feedback.

- The first lecture emphasized that self-reflection and self-refinement benefit from good external evaluation, while self-correction without oracle feedback can degrade reasoning performance.

- Clarification on Self-Evaluation in Lectures 1 & 2 Sought: A user is seeking clarification on the apparent contradiction between the lectures regarding self-evaluation.

- They noted the emphasis on self-evaluation and improvement in the second lecture, while the first lecture highlighted the importance of external evaluation and the potential harm of self-correction without oracle feedback.

AI21 Labs (Jamba) Discord

- Vertex Preps for Version 1.6: Version 1.6 is not yet available on Vertex, but is slated to arrive in the near future.

- It will also be available on other platforms like AWS for broader access.

- AWS Soon to Host 1.6: Version 1.6 will be available on platforms like AWS in the near future, expanding its reach.

- This development aims to allow AWS customers access to the new features.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Unsloth AI (Daniel Han) ▷ #general (301 messages🔥🔥):

Gemma 3 Support in Unsloth, Multi-GPU Training, Dynamic Quantization vs GGUF, GRPO and Reasoning, Vision Models

- Unsloth Unleashes Gemma 3 Support: Unsloth now supports Gemma 3, including full fine-tuning and 8-bit, with almost any model like Mixtral, Cohere, Granite.

- According to a tweet, optimizations in Unsloth led to 10% less VRAM usage and a 10% speedup boost for 4-bit, plus fixes and improvements for Windows support and GGUF conversions.

- Multi-GPU Support Still on the Horizon: Despite user interest, Unsloth currently doesn’t natively support multi-GPU training in the free version.

- However, there is speculation that it is possible by deconstructing the components to your training code (FastLanguageModel), and there is the imminent release of the AGPL3 multi-GPU and the Enterprise version.

- Dynamic Quants Duel GGUF in Quality: There’s ongoing discussion about comparing dynamic quantization with GGUF models, especially regarding the trade-offs between size and quality.

- Unsloth’s dynamic quants for Phi-4 are on the Hugging Face leaderboard, but a direct comparison with GGUF benchmarks is anticipated to clarify performance at different bit widths.

- GRPO Powers Reasoning Prowess: The team mentioned that GRPO (Guiding Preference Optimization) is coming next week along with new notebooks.

- They will have a GRPO notebook; and they stated, only if you let it reason about the rules first a la GRPO.

- Vision Models Get the Unsloth Treatment: Unsloth has implemented the train on completions feature and also resizing of images for Vision Language Models, a highly demanded feature, to reduce OOM.

- The models now auto resize images which stops OOMs and also allows truncating sequence lengths.

Links mentioned:

- Tutorial: How to Run Gemma 3 effectively | Unsloth Documentation: How to run Gemma 3 effectively with our GGUFs on llama.cpp, Ollama, Open WebUI, LM Studio.

- Unsloth Benchmarks | Unsloth Documentation: Want to know how fast Unsloth is?

- Re-introducing Unsloth: In celebration of us being the #1 Trending GitHub repo of the day, we reflect on our journey and contributions to the open-source community.

- Unsloth - Dynamic 4-bit Quantization: Unsloth's Dynamic 4-bit Quants selectively avoids quantizing certain parameters. This greatly increases accuracy while maintaining similar VRAM use to BnB 4bit.

- 8-bit optimizers: no description found

- Google Colab: no description found

- Google Colab: no description found

- Unsloth update: Mistral support + more: We’re excited to release QLoRA support for Mistral 7B, CodeLlama 34B, and all other models based on the Llama architecture! We added sliding window attention, preliminary Windows and DPO support, and ...

- Tweet from Daniel Han (@danielhanchen): Excited to share that @UnslothAI now supports:• Full fine-tuning + 8bit• Nearly any model like Mixtral, Cohere, Granite, Gemma 3• No more OOMs for vision finetuning!Blogpost with details: https://unsl...

- unslothai/unsloth: Finetune Llama 3.3, DeepSeek-R1 & Reasoning LLMs 2x faster with 70% less memory! 🦥 - unslothai/unsloth

- AttributeError: 'Gemma3Config' object has no attribute 'vocab_size' · Issue #36683 · huggingface/transformers: System Info v4.50.0.dev0 Who can help? @ArthurZucker @LysandreJik @xenova Information The official example scripts My own modified scripts Tasks An officially supported task in the examples folder ...

- Google Colab: no description found

- llama : Add Gemma 3 support (+ experimental vision capability) by ngxson · Pull Request #12343 · ggml-org/llama.cpp: Model infoOfficial model page: https://ai.google.dev/gemma/docs/corePre-quantized GGUF: https://huggingface.co/collections/ggml-org/gemma-3-67d126315ac810df1ad9e913Available sizes: 1B, 4B, 12B,...

- [Model] Add support for Gemma 3 by WoosukKwon · Pull Request #14660 · vllm-project/vllm: This PR adds the support for Gemma 3, an open-source vision-language model from Google.NOTE:The PR doesn't implement the pan-and-scan pre-processing algorithm. It will be implemented by a fo.....

Unsloth AI (Daniel Han) ▷ #announcements (1 messages):

Gemma 3 models, Unsloth support for models, GRPO for reasoning models, QwQ-32B bugfixes, New model uploads

- Google’s Gemma 3 Integrated with Unsloth: Google’s new Gemma 3 model is now supported in Unsloth with a blog post and a Colab notebook provided.

- All Gemma 3 model uploads are available on Hugging Face, including versions optimized for full finetuning, 8-bit, and pretraining.

- Unsloth Boosts Gemma 3 Finetuning Speed: Unsloth accelerates Gemma 3 (12B) finetuning by 1.6x, reduces VRAM usage by 60%, and extends context length by 6x compared to environments using Flash Attention 2 on a 48GB GPU.

- The team fixed issues with training Gemma 3 and uploaded all versions including 2-8 bit GGUFs, dynamic 4-bit, and 16-bit versions.

- GRPO Enables Extended Context with Reduced VRAM: Unsloth now supports 10x longer context with 90% less VRAM using GRPO (Generalized Rank Position Optimization), detailed in a blog post and tutorial.

- This enhancement is designed for reasoning models, offering significant memory savings and expanded context windows.

- QwQ-32B Model Gets a Makeover: Bugfixes have been implemented for the QwQ-32B model, as highlighted in a blog post with corresponding model uploads.

- These fixes improve the model’s stability and performance, ensuring a smoother user experience.

- Fresh Model Uploads Hit Hugging Face: New model uploads include Gemma 3 GGUF variants (1B, 4B, 12B, 27B), Gemma 3 Dynamic 4-bit versions, QwQ-32B variants, and Phi-4-mini versions, all available on Hugging Face.

- These models cater to various hardware configurations and performance needs, expanding the accessibility of state-of-the-art models.

Links mentioned:

- Google Colab: no description found

- Fine-tune Gemma 3 with Unsloth: Gemma 3, Google's new multimodal models.Fine-tune & Run them with Unsloth! Gemma 3 comes in 1B, 4B, 12B and 27B sizes.

- Unsloth Documentation: no description found

- Unsloth Documentation: no description found

Unsloth AI (Daniel Han) ▷ #off-topic (5 messages):

Gemma 3, Ollama, Phi Vision, GGUFs vision

- Gemma 3 Image Compatibility with Ollama Questioned: A member inquired whether Gemma 3 works with images via Ollama, similar to Phi Vision.

- Another user clarified that their Gemma 3 GGUFs vision component functions on all engines except Ollama, including LM Studio and llama.cpp; this is likely due to llama-server lacking vision support yet.

- Ollama lacks vision support: The Gemma 3 GGUFs vision component functions on all engines except Ollama, including LM Studio and llama.cpp.

- This is likely due to llama-server lacking vision support yet.

Unsloth AI (Daniel Han) ▷ #help (51 messages🔥):

Gemma-3 GGUF and Ollama, Llama 3.2 inference cancellation, Phi-4-mini support, Gemma finetuning error, TurboML Continual Pre-Training

- Gemma-3 GGUF vision fails with Ollama: Support for Gemma-3 GGUF models with vision components in Ollama is currently non-functional due to a widespread issue affecting all uploaders.

- The recommendation is to use Ollama’s original Gemma model for text, until the issue can be debugged.

- Llama 3.2 inference needs cancellation method: A user inquired about the possibility of cancelling long-running inferences with Llama 3.2 models in Unsloth without unloading the model from memory.

- The user asked if they could stop it after a certain amount of time in a timeout loop.

- Phi-4-mini receives new Unsloth update: After upgrading to the latest Unsloth version, users should now be able to use Phi-4-mini models, which was previously causing a RuntimeError.

- The user reported that Phi-4-mini works fine, but

unsloth/Phi-4-mini-instructgives arope_scalingerror.

- The user reported that Phi-4-mini works fine, but

- Gemma finetuning error requires unsloth update: Users reported encountering an error (

only Tensors of floating point dtype can require gradients) when fine-tuning Gemma models after adding new tokens and training new embeddings, particularly during the evaluation phase.- Upgrading to the latest version of Unsloth is recommended, though some users have reported that the issue persists even after the update.

- TurboML seeks guidance on Continual Pre-Training dataset format: A member with a new framework - TurboML - sought advice on the correct dataset format for Continual Pre-Training (CPT), specifically for Sentence Completion and SFT tasks.

- They referenced a Unsloth notebook and inquired about the placement of the EOS token.

Links mentioned:

- Google Colab: no description found

- Google Colab: no description found

- huggi - Overview: huggi has 2 repositories available. Follow their code on GitHub.

- Google Colab: no description found

- Finetuning from Last Checkpoint | Unsloth Documentation: Checkpointing allows you to save your finetuning progress so you can pause it and then continue.

Unsloth AI (Daniel Han) ▷ #research (9 messages🔥):

Gemma SFT, Maximum Context Length, Memory Usage Calculation

- Gemma’s SFT VRAM Needs Debated: Members discussed Gemma’s VRAM usage in SFT, suggesting it may require more VRAM than Qwen 2.5 under similar training conditions, though specific numbers were not given.

- One shared a Qwen2_VL Colab notebook for images.

- Max Context Length is a Limitation, Not a Hyperparameter: Members clarified that the maximum context length is not a hyperparameter of a model but rather a limitation based on available memory.

- The longer the context, the more memory is needed to process it, but exact calculation is not solely based on model size.

- Estimating Memory Usage for LLMs: It was mentioned that the amount of memory needed to process context depends on the model architecture, with different layers requiring varying amounts of memory.

- Links were shared to approximate memory requirements (Substratus AI blog and Hugging Face space).

Links mentioned:

- Google Colab: no description found

- Google Colab: no description found

Cursor IDE ▷ #general (263 messages🔥🔥):

Cursor performance issues on Linux and Windows, Issues with Claude 3.7, Custom modes in Cursor, Gemini API key issues, Cursor agent spawning terminals

- Cursor Lags on Linux and Windows: Users report Cursor lagging and freezing on both Linux and Windows, even with powerful hardware, especially after recent updates like 0.47.4 (download link).

- One user mentioned that on Linux, after 20-30 messages, the UI freezes for seconds, while another cited that they have “lags all the time” on Windows with a beefed up laptop and version 3.7.