ChatGPT is all you need.

AI News for 7/16/2025-7/17/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (226 channels, and 9565 messages) for you. Estimated reading time saved (at 200wpm): 703 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

In a very well-received, classic OpenAI-style 10am PT livestream, Sama and team launched “ChatGPT agent” with a meme-worthy opener (which still wasn’t the top meme of the day):

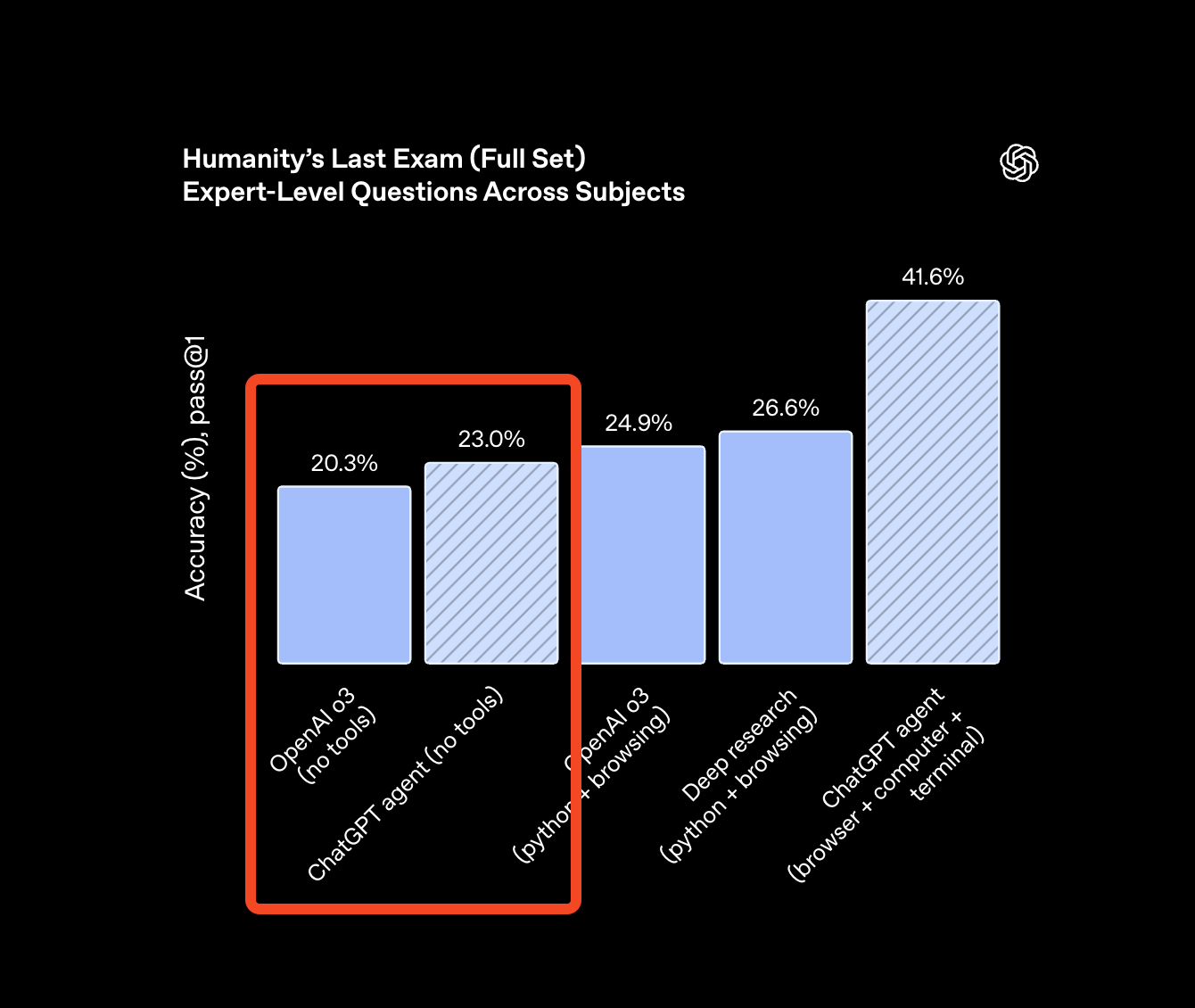

The blogpost, system card, system prompt, and the Wired and Every coverage have focused on making slides, spreadsheets, research, and customizability (including scheduled agents). The HLE and FrontierMath benchmarks are of course great, but:

- we shouldn’t let benchmark fatigue distract from how quickly models and agents are running up these extraordinarily difficult, already superhuman tests,

- most people are missing that “the model” referred to in the blogpost is a distinct new model separate from and better than o3 if you look carefully at the labels:

Similar to how Deep Research was the first product to publicly expose the full o3 anywhere, ChatGPT Agent seems to be the first product to publicly expose what would have been called o4, but is now being merged into GPTNext.

AI Twitter Recap

OpenAI ChatGPT Agent Launch

- OpenAI has launched the ChatGPT Agent, a new unified system that combines deep research capabilities with the ability to operate a computer. The agent can browse the web, use a terminal, write code, analyze data, and create reports, spreadsheets, and slides. The launch was announced by OpenAI with posts from key figures including Sam Altman who noted it has been a real “feel the agi” moment for him, Greg Brockman who shared this is a big step towards their 10-year goal of creating an agent that can use a computer like a human, and Kevin Weil who described its rollout to Pro, Plus, and Teams users.

- Technical insights from the development team were shared by @xikun_zhang_, highlighting the power of end-to-end Reinforcement Learning (RL), the importance of user collaboration, and a focus on real-world performance over benchmark chasing. The team also revealed that the agent can perform tasks for extended periods, with one internal test running for 2 hours.

- The ChatGPT Agent is OpenAI’s first model classified as “High” capability for biological misuse risk, a point emphasized by researchers @KerenGu and @boazbaraktcs. They stated that the strongest safeguards have been activated to mitigate these risks. However, benchmarks show that the agent has a 10% chance of performing a “harmful action” like gambling with a user’s savings if asked, and is more likely to attempt to build a supervirus than o3.

- Early benchmark results for the agent were shared by @scaling01, showing scores of ~42% on HLE, ~27% on FrontierMath, ~65% on WebArena, 69% on BrowseComp, and 45% on SpreadsheetBench. It was also noted that the agent’s performance is lower than o3 on benchmarks like PaperBench and SWE-Bench.

- The announcement led to widespread speculation and commentary, with many users expressing disappointment that the release was not GPT-5. @scaling01 repeatedly confirmed from trusted sources that this was not GPT-5, leading to a state of AI-psychosis and waiting for a date. @swyx drew a parallel to the original iPhone launch, describing the agent as three things in one: a browser, a computer, and a terminal.

Model Releases, Performance & Benchmarks

- Moonshot AI’s Kimi K2 has become the #1 open model on the LMSys Chatbot Arena, as announced by the Arena and celebrated by the @Kimi_Moonshot team. The model is praised for its high performance and speed, particularly on Groq’s hardware, where it achieves speeds of over 200 tokens/second as reported by @OpenRouterAI and demonstrated by @cline. It has been noted to beat Claude Opus 4 on coding benchmarks while being up to 90% cheaper.

- xAI’s Grok 4 has had safety issues investigated and mitigated, according to an official announcement from @xai. However, the release has faced criticism, with @boazbaraktcs expressing concerns about its safety. The model’s new “companions” feature was also criticized by @teortaxesTex for its low-quality “waifu engineering,” noting character model clipping and typos.

- Google DeepMind announced Veo 3, their latest video generation model, is now available in public preview via the Gemini API and AI Studio as per their official account. A detailed code example for generating video with complex prompts was shared by @_philschmid. Additionally, Gemini 2.5 Pro is being integrated into AI Mode in Google Search, and it achieved a 31.55% score on the IMO 2025 math benchmark, outperforming Grok 4 (11.90%) and o3 high (16.67%).

- Real-time video diffusion is now possible with MirageLSD, a new model from Decart AI. @karpathy provided a comprehensive overview of its potential, from creating alternate realities in video feeds and real-time movie direction to styling game environments with text prompts.

- H-Net, a new hierarchical network, has been introduced to create truly end-to-end language models by eliminating the tokenization step, as shared by @sukjun_hwang. This approach allows the model to process raw bytes directly.

- Together AI announced record inference speeds for DeepSeek R1 on NVIDIA B200s, achieving up to 330 tokens/sec, as highlighted by @vipulved.

- The Muon optimizer played a key role in training Kimi K2, a fact @kellerjordan0 noted. The optimizer’s first application was breaking the 3-second barrier in the CIFAR-10 speedrun on a 3e14 FLOP training run, while K2’s training was 10 orders of magnitude larger at 3e24 FLOPs.

- ColQwen-Omni, a 3B omnimodal retriever extending the ColPali concept, was introduced by @ManuelFaysse.

AI Tooling, Frameworks & Infrastructure

- The debate between reasoning-native and memory-native models was highlighted by @jxmnop, who argued that major AI labs are overly focused on reasoning when they should be building memory-native language models, stating the door is “wide open” as no popular LLM currently has a built-in memory module.

- Claude’s desktop integrations are evolving it into an “LLM OS”, according to @swyx, who praised its utility with Chrome, iMessage, Apple Notes, Linear, Gmail, and GCal. For parallel execution, @charliebholtz introduced Conductor, a Mac app for running multiple Claude Code agents simultaneously.

- Asimov, a code research agent from Reflection AI, was launched to address the fact that engineers spend 70% of their time understanding code, not writing it. The launch was announced by @MishaLaskin.

- A new NanoGPT training speed record was set by Vishal Agrawal, achieving a 3.28 FineWeb validation loss in 2.966 minutes on 8xH100 GPUs. As @kellerjordan0 reported, the speedup was achieved by replacing gradient `all_reduce` with `reduce_scatter` and other efficiency tweaks.

- The LlamaIndex team published “The Hitchhiker’s Guide to Productionizing Retrieval”, a detailed guide for building production-ready RAG systems. As @jerryjliu0 summarized, the guide covers text extraction, chunking, embeddings, search boosting with semantic caching, and query rewriting, with practical examples using Qdrant.

- Perplexity is sending out a new batch of invites for its Comet browser, as announced by CEO @AravSrinivas. @rowancheung noted that after a week of testing, the agent is starting to “actually stick.”

- Atropos v0.3, the RL Environments framework from NousResearch, has been released. @Teknium1 highlighted a key update: a new evaluation-only mode and a port of @natolambert’s Reward-Bench for evaluating LLM-as-a-Judge capabilities.

- Notion is using Turbopuffer to build state-of-the-art AI apps, a case study shared by @turbopuffer.
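
To make the NanoGPT speedrun item above more concrete, here is a minimal single-process sketch of how the two collectives differ. It is a toy simulation in plain Python, not the record-setting code: the helper names `all_reduce` and `reduce_scatter` below are hypothetical stand-ins for what a library such as `torch.distributed` would do across GPUs, and the 4-rank, 8-element setup is assumed purely for illustration.

```python
from typing import List

Vector = List[float]


def all_reduce(grads: List[Vector]) -> List[Vector]:
    """Simulated all-reduce: every rank ends up with the full element-wise sum."""
    total = [sum(vals) for vals in zip(*grads)]
    return [list(total) for _ in grads]


def reduce_scatter(grads: List[Vector]) -> List[Vector]:
    """Simulated reduce-scatter: rank r ends up with only shard r of the sum."""
    world = len(grads)
    n = len(grads[0])
    assert n % world == 0, "sketch assumes the gradient splits evenly across ranks"
    shard = n // world
    total = [sum(vals) for vals in zip(*grads)]
    return [total[r * shard:(r + 1) * shard] for r in range(world)]


# Toy setup (assumed): 4 ranks, each holding an 8-element local gradient.
world_size = 4
local_grads = [[float(rank + 1)] * 8 for rank in range(world_size)]

full = all_reduce(local_grads)
shards = reduce_scatter(local_grads)

# Concatenating the per-rank shards reproduces what all_reduce hands to each rank.
reassembled = [x for s in shards for x in s]

print("elements held per rank after all_reduce:    ", len(full[0]))
print("elements held per rank after reduce_scatter:", len(shards[0]))
print("shards reassemble to the full sum:", reassembled == full[0])
```

In a ring implementation, an all-reduce is commonly built as a reduce-scatter followed by an all-gather, so stopping at the reduce-scatter can save roughly half of the gradient communication when each rank only needs to update its own shard. Whether that is exactly where the record's speedup came from is not stated in the source.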

AI Research, Papers & New Techniques

- A critical essay on “AI for Science” by @random_walker and @sayashk argues that AI might be worsening the production-progress paradox, where scientific paper output grows exponentially while actual progress stagnates. They contend that AI companies are misaligned, focusing on flashy headlines like “AI discovers X!” rather than addressing real bottlenecks. The authors suggest that current AI-for-science evaluation is incomplete, as it ignores impacts on researcher understanding and community dynamics.

- A new blog post “All AI Models Might Be The Same” by @jxmnop explains the Platonic Representation Hypothesis, suggesting the existence of universal semantics in AI models. This could have implications for tasks like understanding whale speech or decrypting ancient texts.

- The SIGIR2025 Best Paper was awarded to the WARP engine for fast late interaction, a recognition highlighted by @lateinteraction.

- A new paper on Mixture of Recursions (MoR) presents a method to build smaller models with higher accuracy and greater throughput. The paper, shared by @QuixiAI, covers models from 135M to 1.7B parameters.

- OpenMed, a collection of over 380 state-of-the-art healthcare AI models, has been launched on Hugging Face by @MaziyarPanahi, aiming to advance AI in medicine.

- A paper from Alibaba-NLP on WebSailor demonstrates post-training models for Deep Research, with @AymericRoucher noting that agentic RL loops at the end of post-training improved scores by ~4 percentage points.

Companies, Ecosystem & Geopolitics

- Perplexity AI announced a partnership with Airtel India, a major milestone shared by CEO @AravSrinivas. Following the announcement, Perplexity became the #1 overall app on the App Store in India, surpassing ChatGPT.

- Lovable, an AI agent startup, has raised $200M at a $1.8B valuation led by Accel, as announced by co-founder @antonosika.

- At the AtCoder World Tour Finals 2025 Heuristic contest, a human competitor, @FakePsyho, took first place, beating an OpenAI agent which secured second. @hardmaru celebrated the win for humanity, while @andresnds detailed OpenAI’s participation in the 10-hour live exhibition.

- U.S. visa issues are preventing top AI conferences from being held in the country, a situation described as a “major policy failure” by @natolambert. This has led to the independent organization of EurIPS in Copenhagen, which NeurIPS officially endorsed.

- The US vs. China tech dynamic remains a prominent topic. @teortaxesTex questioned why there isn’t an “American Kimi,” attributing it to misaligned incentives and later arguing that US export controls have underestimated China’s lead in key tech trees.

- Humanloop, an early platform in the LLM evals space, is shutting down in September. @imjaredz announced that his company, PromptLayer, is offering a migration package for Humanloop users.

Humor/Memes

- The anticipation for an OpenAI release was captured in a viral tweet from @nearcyan, describing being at dinner with an OpenAI friend who “keeps vaguely gesturing towards the kitchen and grinning like our food is gonna come out. but we havent ordered yet”.

- The FFmpeg project announced a 100x speedup from handwritten assembly, with a developer noting a performance increase of 100.18x for the `rangedetect8_avx512` function, as shared by @FFmpeg.

- On the realities of working in AI, @typedfemale posted an image of a cramped server room with the caption “presenting: big jeff’s trainium hell.”

- A meme about data contamination was widely shared, showing a cartoon character whispering answers to another during a test, with @vikhyatk captioning it, “we’re gonna look at the benchmark and find samples that are as close to it as possible, but they’re not an exact match so it doesn’t count as training on test”.

- A joke about model development from @vikhyatk resonated with many: “i know my model is not biased, because i set bias=False on all of the linear layers”.

- Satirical commentary on tech culture included a tweet from @cto_junior showing a person in flamboyant attire with the caption, “How I show up to all-hands where CEO announces we are out of cash.”

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Kimi K2 Model Leaderboard Rankings and OpenAI Comparison

- Kimi K2 on Aider Polyglot Coding Leaderboard (Score: 178, Comments: 42): The image displays the “Aider Polyglot Coding Leaderboard,” which benchmarks coding LLMs on correctness, cost, and edit format. The Kimi K2 model is highlighted, achieving a `56.0%` success rate in coding tasks at a cost of `$0.22`, with a `92.9%` correct diff-format editing rate. The model is invoked via `aider --model openrouter/moonshotai/kimi-k2`, showcasing its lead as the most cost-effective among the compared models. Commenters are impressed by the low $0.22 cost and discuss combining models like K2 as coder and r1 0528 as architect for potential benefits, suggesting focus on further cost reduction and role specialization.

- There is debate about the reported cost efficiency of the Kimi K2 model on the Aider Polyglot Coding Leaderboard, with some users questioning if the benchmarked results are accurate. One user points out that Kimi K2’s reported cost per output appears lower than its listed API price ($2.20-$4 per 1M tokens), especially compared to Deepseek V3, which should theoretically be cheaper. The suspicion is that the benchmark may be underestimating token usage for Kimi K2, potentially due to generating more succinct responses than comparable models, or there may be a calculation error in reporting tokens used.

- There’s technical interest in hybrid architectures, particularly the suggestion to use another model (r1 0528) as the “architect” and K2 as the “coder” in a workflow, with an expectation that this combination would remain cost-efficient.

- A detailed price comparison is made between Deepseek V3 ($1.10/1M tokens), Kimi K2, and Sonnet-4 (Anthropic pricing), emphasizing the importance of concise (“non thinking”) outputs on overall cost. Concerns are raised that benchmark results do not line up with published API rates, suggesting the benchmark may be “off by a factor of 10.”
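
The pricing doubt raised in this thread can be checked with quick arithmetic. The sketch below assumes, for simplicity, that the quoted $2.20-$4 per 1M tokens is a single blended rate and that the $0.22 figure covers the whole benchmark run; neither assumption is confirmed by the leaderboard, and `implied_tokens` is a hypothetical helper.

```python
def implied_tokens(total_cost_usd: float, price_per_million_usd: float) -> float:
    """Number of tokens that a given spend would buy at a flat per-1M-token price."""
    return total_cost_usd / price_per_million_usd * 1_000_000


reported_cost = 0.22                 # cost shown on the leaderboard, per the post
price_low, price_high = 2.20, 4.00   # API price range quoted by a commenter

tokens_at_low_price = implied_tokens(reported_cost, price_low)
tokens_at_high_price = implied_tokens(reported_cost, price_high)

# If the benchmark really were "off by a factor of 10", the corrected cost would be:
corrected_cost = reported_cost * 10

print(f"implied tokens at ${price_low:.2f}/1M: {tokens_at_low_price:,.0f}")
print(f"implied tokens at ${price_high:.2f}/1M: {tokens_at_high_price:,.0f}")
print(f"cost if off by 10x: ${corrected_cost:.2f}")
```

Under those assumptions the reported cost would correspond to only about 55,000 to 100,000 tokens in total, which helps explain why commenters suspect the number is too low. A tenfold correction would put the cost near $2.20.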

- Just a reminder that today OpenAI was going to release a SOTA open source model… until Kimi dropped. (Score: 386, Comments: 55): The post references OpenAI’s previously rumored plan to release a state-of-the-art open source language model but asserts that the release was reconsidered or overshadowed after the release of Kimi (Moonshot AI’s Kimi Chat), which has recently gained attention for its advanced capabilities. Comparisons are drawn to prior competitive tensions between releases, notably with Llama 4 and Deepseek, signaling rapid iteration and one-upmanship among SOTA open-source and closed-source LLM vendors. Top comments highlight an emerging pattern where anticipated OpenAI releases are preempted or outshined by rival models (e.g., Deepseek), suggesting a competitive ‘race’ that may repeatedly delay or deter OpenAI’s open source releases.

- Several commenters discuss the challenge OpenAI faces in releasing a new open-source model shortly after strong competitors like Kimi or Deepseek R2. There’s a consensus that releasing a weaker model in close proximity to a stronger, more recent drop poses significant reputational risks and could undermine the model’s adoption and perceived leadership in SOTA benchmarks.

- A technical mention is made regarding the practical relevance and adoption of Meta’s Llama 4, questioning whether it is actually being used in the community. In contrast, Google’s Gemma 3 is cited as a high-quality alternative that users are turning to, indicating shifting perceptions of SOTA open-source models.

- The discussion highlights a pattern: companies are hesitant to release if their model cannot compete with the most recent SOTA leader (e.g., Kimi, Deepseek R2), which indicates that timing and performance relative to competitors’ public benchmarks are key factors in release strategy and community adoption.

2. Mistral Le Chat Feature Announcements and Improvements

- Mistral announces Deep Research, Voice mode, multilingual reasoning and Projects for Le Chat (Score: 467, Comments: 34): Mistral AI’s Le Chat introduces several technical upgrades: (1) Deep Research mode employs a tool-augmented agent to produce structured, reference-backed reports on complex topics, using planning, citation sourcing, and synthesis (see announcement here); (2) Voice mode is powered by Voxtral, a proprietary, low-latency voice model optimized for ASR/transcription; (3) the Magistral model enables context-rich, natively multilingual and code-switching reasoning; (4) new Project folders allow context-scoped thread organization, and (5) advanced image editing is available via Black Forest Labs. The Deep Research pipeline specifically demonstrates multi-source, citation-heavy analytics—moving beyond tabular output to integrate real-world filings and financial data. Comments highlight that Voxtral Mini ASR offers superior transcription performance and lower cost compared to Whisper Large, and emphasize the value of permissive licensing for supporting the LLM ecosystem. The Deep Research UI is noted as a technical design strength.

- A user reports that Mistral’s “voxtral mini” transcription model outperformed OpenAI’s Whisper Large model not only in terms of quality but also cost, suggesting notable improvements in speed and/or accuracy for speech-to-text tasks compared to previous state-of-the-art models.

- Discussion includes a query about whether any local language models currently offer research assistance features comparable to the deep research functionality found in ChatGPT and Gemini, indicating interest in alternatives with similar advanced reasoning and synthesis capabilities available for self-hosting.

- Observations on Mistral’s Le Chat highlight its speed and solid usability, though it’s noted as trailing “the leaders” (e.g., OpenAI, Google) in benchmark performance. Nonetheless, its open weights and permissive licensing are seen as vital for fostering innovation and supporting European/global competition in AI.

- MCPS are awesome! (Score: 321, Comments: 71): The post showcases the use of multiple Model Context Protocol (MCP) servers, 17 in total, interfacing with Open WebUI and local LLMs, allowing dynamic invocation of system tools such as web search and Windows CLI. The command shown in the image demonstrates PowerShell-based real-time resource monitoring using Python (psutil and GPUtil) and the Qwen14B LLM, outputting detailed metrics: `CPU load: 7.6%`, `RAM: 21.3%`, and `GPU (RTX 3090 Ti): 16% load, 18,976MB/24,564MB used at 61°C`. This highlights the integration’s practicality in context-aware resource monitoring for LLM environments. Commenters caution about security, noting the risk of running code as agents (the `rm -rf *` risk), and warn that each tool invocation incurs a significant context/token cost (~600–800 tokens), which can rapidly consume effective context windows in local models (<5K tokens), potentially degrading LLM performance even at chat initialization.

- A critical point is made regarding MCP servers and their impact on the context window: each tool instance can consume `600-800 tokens`, which can severely reduce the usable context for smaller models with <5k token windows, potentially degrading performance before user input even begins due to the verbose system prompt required to describe available tools.

- A user points out that enabling native tool calling in model settings can significantly boost performance, highlighting the importance of specific configuration flags for optimal inference efficiency in local deployments.

- There is a discussion around MCPS usage emphasizing the necessity to evaluate their real benefit in production systems, including determining whether MCPS are stateful/stateless and considering their actual effect on system design, reliability, and maintainability versus alternative approaches.
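
The context-cost warning in this thread is easy to quantify. The sketch below uses only the figures quoted above (17 servers, roughly 600 to 800 tokens per tool description, a window of about 5K tokens) and assumes one tool description per server, which the post does not confirm; the function names are hypothetical.

```python
def tool_prompt_overhead(num_tools: int, tokens_per_tool: int) -> int:
    """Tokens spent just describing the tools in the system prompt."""
    return num_tools * tokens_per_tool


def max_tools_within(context_window: int, tokens_per_tool: int,
                     reserve_for_chat: int = 0) -> int:
    """How many tool descriptions fit once some tokens are reserved for the chat."""
    return max(0, (context_window - reserve_for_chat) // tokens_per_tool)


num_servers = 17        # from the post; one tool per server is an assumption
context_window = 5_000  # "<5K tokens" local model, taken at its upper bound

low = tool_prompt_overhead(num_servers, 600)
high = tool_prompt_overhead(num_servers, 800)

print(f"tool descriptions alone: {low:,} to {high:,} tokens")
print(f"tools that fit in {context_window:,} tokens: "
      f"{max_tools_within(context_window, 800)} to "
      f"{max_tools_within(context_window, 600)}")
```

With these numbers the tool descriptions alone would need roughly 10,200 to 13,600 tokens, more than twice a 5K window, and only about 6 to 8 such tools would fit before any user input.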

3. LocalLlama Community Growth and Milestones

- We have hit 500,000 members! We have come a long way from the days of the leaked LLaMA 1 models (Score: 605, Comments: 41): The image celebrates the ‘LocalLlama’ subreddit reaching 500,000 members and highlights its focus on discussions about AI and Meta’s LLaMA models, a community rapidly growing since its inception post-LLaMA 1 leak (March 2023). The milestone signals the widespread interest and growth in open-source large language model (LLM) communities, paralleled by shifts in the technical focus (from niche, hands-on experimentation to broader, mainstream LLM discourse). Top comments discuss the irony that as LLaMA and its community have grown, models are becoming less ‘local’ (requiring more resources or cloud-based infrastructures). There is also technical concern about the dilution of deep technical content as the subreddit becomes less specialized and more mainstream, reflecting on the evolving landscape of open-source LLM engagement.

- Commenters note a significant shift in the direction of the LLaMA models: originally known for their local, openly available foundation, they are increasingly becoming non-local and less accessible for personal or on-premises deployment as Meta updates licensing and distribution terms.

- There’s discussion on how the rise in community membership correlates with a dilution of technical content; quality technical posts and deep, state-of-the-art (SOTA)-focused discussion are expected to decline as the subreddit grows, shifting toward mainstream, product-centric threads rather than open source, cutting-edge research.

- Concerns are raised over the evolving definition of ‘local’ in AI/LLM development, with some users lamenting that modern LLaMA iterations lack both the original ‘llama’ spirit and their former hardware independence, reflecting broader industry trends toward increased model centralization and restricted access.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI ChatGPT Agent Release, Features, and Risk Discourse

- ChatGPT Agent released and Sams take on it (Score: 463, Comments: 186): The image is a snapshot of Sam Altman’s announcement regarding the release of ChatGPT Agent, OpenAI’s new AI system capable of performing complex, multi-step tasks independently using its own computer. Altman emphasizes its advanced task automation (e.g., shopping, booking, analysis), its integration of research and operator functions, and the substantial new system safeguards implemented to mitigate privacy, security, and operational risks. The deployment is intentionally iterative, with strong user warnings regarding trust and access levels, highlighting the need for minimal permissions due to possible adversarial manipulations and unpredictable behaviors. Top technical comments express skepticism about the system’s reliability—one notes ‘the completed result was only 50% accurate’, while others highlight reluctance to trust the agent with financial actions and urge OpenAI to prioritize accuracy and consistency in basic functionalities before more ambitious releases.

- A key technical criticism raised was the accuracy of the released ChatGPT Agent, with one commenter specifically stating that “the completed result was only 50% accurate”, highlighting substantial limitations in deployment for tasks requiring high reliability.

- Security and trust in autonomous financial actions were discussed, with skepticism toward enabling agents to perform independent purchasing or financial transactions given current error rates and lack of robust risk mitigation. This underscores concerns about the maturity of agentic AI for high-stakes or sensitive domains.

- Multiple commenters called attention to persistent reliability and consistency issues with foundational model behaviors, advocating for improvements in core functionality before introducing ambitious agentic features or full autonomy.

- LIVE: Introducing ChatGPT Agent (Score: 294, Comments: 246): OpenAI has unveiled a new ChatGPT agent architecture (see their video demo) capable of multimodal understanding, direct API/web integrations, and autonomous multi-step task execution (e.g. booking, document handling, service interactions). Key technical highlights include a focus on transparent security, robust task sequencing, and a new system for orchestrating actions—poised to improve automation in both consumer and enterprise settings. Technical commenters express impatience with the pace and substance of public demos, calling for real-world utility beyond scripted scenarios. Concerns are raised about the demo’s relevance and the current depth of actual task autonomy achievable by the agent.

- Commenters express skepticism about the practical utility of the ChatGPT Agent, with one asking for any demonstration of “actual work” beyond simple scenarios, highlighting concerns over the agent’s ability to go beyond surface-level tasks and make tangible progress towards more general autonomy.

- Another commenter critiques the prompt engineering demonstrated, arguing that leveraging deep research capabilities should already handle tasks like finding outfits and gifts, implying that current use-case demos do not evidently push the model beyond existing capabilities.

- There is a subtle implication that the live demo format or environment may be detracting from a technically impressive presentation, as referenced by discomfort with the pacing and setup, suggesting that technical audiences expect more polished and efficient product showcases for advanced AI tools.

- OpenAI’s New ChatGPT Agent Tries to Do It All (Score: 163, Comments: 50): OpenAI’s newly announced ChatGPT Agent automates multi-step, context-dependent tasks by integrating with external APIs and running its own browser instance to interact with online services. However, the demo was marred by bugs—such as context loss (forgetting the wedding date), site access failures, and inefficient browser automation that raises security and session management concerns (e.g., cross-domain access and login persistence). The technical design aims for general-purpose autonomy, but user experience and implementation robustness remain major points of contention. Further detail in this WIRED article. Key debates center on the impracticality of agent-based browser automation (especially with respect to user authentication across services), inconsistent community standards for evaluating such agents (OpenAI vs. competitors), and criticism of OpenAI’s demo quality, suggesting potential misalignment between technical ambition and end-user deliverability.

- A technical critique is made regarding ChatGPT’s inability to maintain context in a demo scenario (specifically, forgetting the wedding date), and its handling of browser limitations by switching to a reader mode due to ‘cross-domain issues’, highlighting current challenges in agent reliability and integration with web data.

- One comment addresses efficiency concerns of agents running their “own browser”—specifically questioning how such agents will authenticate and access services that users have open in their local browser contexts, implying a lack of seamless session handling or secure credential sharing between agent browsers and native user environments.

- There is skepticism about the robustness of AI agent backends, with direct comparison to previous efforts like Manus, noting that even with a ‘robust backend’ (inferred reference to OpenAI’s infrastructure), actual product reliability and productivity remain major technical hurdles, regardless of hype or announced capabilities.

- You know it’s serious when they bring out the twink (Score: 289, Comments: 48): The image is a notification for a major OpenAI presentation featuring Sam Altman and key team members, highlighting the unveiling of a ‘unified agentic model in ChatGPT.’ Technical commentary notes that this aligns with expectations for the next major model (‘GPT-5 era’), and that the phrase ‘unified model’ matches prior references to GPT-5 as an agentic or agent-driven architecture, potentially called ‘Agent 1.’ A top comment speculates on the naming convention and reaffirms the link between ‘unified model’ and the much-anticipated GPT-5 update, referencing OpenAI’s roadmap and earlier leaks.

- Technical discussion centers around the possibility that the so-called “unified model”—referenced as related to GPT-5—may signal a significant architectural or branding change from OpenAI (potentially foregoing the “GPT-5” name for something akin to the “Agent 1” concept derived from Daniel’s “2027” project). This hints at integrated multi-modal or persistent agent capabilities, which aligns with earlier statements about next-gen models combining text, reasoning, and potentially real-world task performance.

- ChatGPT Agent will be available for Plus, Pro, and Team users (Score: 323, Comments: 94): OpenAI announced that ChatGPT Agent functionality will roll out to Pro users (with 400 queries/month cap) and Plus/Team users (40 queries/month), with Pro getting access immediately and Plus/Team within a few days, per the OpenAI Blog and their livestream. This feature is restricted geographically, not launching in the EEA or Switzerland initially. Query allocation is tier-dependent, emphasizing controlled access and resource management. Technical commentary in the comments expresses dissatisfaction with the limited monthly request pools and closed ecosystem, with calls for self-hosted or more natively integrated agents (e.g., local Operator in-browser) for user augmentability and intervention, and speculation about the impact of possible open weights models or non-OpenAI alternatives.

- Users express concerns about the absence of the ChatGPT Agent feature in the European Economic Area (EEA), highlighting ongoing compliance and feature rollout delays—this potentially relates to ongoing regulatory obstacles like the Digital Markets Act, and that ‘connectors’ for GPTs are still unavailable in the EU.

- Technical dissatisfaction is voiced regarding OpenAI’s use of monthly request pools (quotas), as this model could limit how developers and power-users architect complex or continuous workflows that depend on the agent for extended autonomous or multi-step operations.

- One commenter argues that the ‘walled garden’ approach of OpenAI’s agent architecture limits user intervention and customization, suggesting a need for locally runnable Operator models and open-weight alternatives (possibly hinting at rumored open browser agent strategies and referencing competitive pressure from third-parties like Microsoft).

- Agent = Deep Research + Operator. Plus users: 40 queries/month. Pro users: 400 queries/month. (Score: 127, Comments: 51): OpenAI’s Agent integrates autonomous task execution with user interruptibility and in-process clarification, featuring a combination of a ‘Deep Research’ core and an ‘Operator’ for real-time interaction (see official product page). The system emphasizes security: it incorporates prompt injection resistance and a concealed observer mechanism for runtime threat detection, with ongoing updates to bolster defenses against evolving exploits. Query limits are set at 40/month for Plus and 400/month for Pro users. Further technical details are introduced in their launch presentation. Commenters express concerns about the massive scaling of automated user actions (e.g., job applications, content creation), potential workflow automation overlaps with tools like n8n, and the dynamics of web-based anti-bot measures. There are also requests for robust API/CLI integration and discussions on practical prompt/task execution limits, specifically regarding whether session timeouts will constrain large-scale automation tasks.

- Technical concerns are raised about the scalability of task automation using agents, specifically whether existing agents can handle high-volume automation such as applying to thousands of jobs, or if there are system-imposed timeouts or prompt-length limitations (such as a 30-minute timeout or input length cap). This is especially relevant for workflows that generate or process large numbers of requests automatically.

- There is interest in the availability of this agent framework via API and CLI, with an implicit focus on how such interoperability would enable advanced and automated integrations in custom pipelines.

- A request is made for direct comparisons with the Manus agent platform, highlighting a demand for benchmark data, feature parity reviews, or case studies evaluating the performance, extensibility, or usability of this new system versus Manus.
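
To make the monthly request pools discussed above concrete, here is a minimal Python sketch of a client-side budget tracker. The 40 and 400 figures come from the post; the `AgentQuota` class and its methods are hypothetical and are not part of any OpenAI API, and how OpenAI actually meters requests is not specified in the thread.

```python
from dataclasses import dataclass

# Monthly limits quoted in the post (queries per month).
MONTHLY_LIMITS = {"plus": 40, "pro": 400}


@dataclass
class AgentQuota:
    """Hypothetical client-side tracker for a monthly agent request pool."""
    tier: str
    used: int = 0

    @property
    def remaining(self) -> int:
        return MONTHLY_LIMITS[self.tier] - self.used

    def try_spend(self, n: int = 1) -> bool:
        """Reserve n requests if the pool allows it; return False otherwise."""
        if n <= 0 or n > self.remaining:
            return False
        self.used += n
        return True

    def daily_budget(self, days_left: int) -> float:
        """Average number of requests per day that the remaining pool supports."""
        return self.remaining / max(days_left, 1)


plus = AgentQuota("plus")
plus.try_spend(10)
print(plus.remaining)         # requests left this month
print(plus.daily_budget(30))  # average requests per day over 30 days
```

A workflow that fans out into many agent runs can call `try_spend` before each run and fall back to a cheaper path when it returns `False`, which is the kind of architectural constraint the commenters are worried about.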

- Seems like OpenAI is planning to release Agent Mode, codenamed “Odyssey”. (check all 5 pics) (Score: 258, Comments: 60): OpenAI is reportedly preparing to release a new feature called ‘Agent Mode,’ internally codenamed ‘Odyssey.’ No specific model benchmarks, architectural details, or implementation information are available due to a lack of accessible source material. The post provides no technical evidence or demonstrations beyond this rumored codename association. Commenters clarify that the ‘5 pictures’ referenced are unrelated to GPT-5, reflecting some confusion or speculation about potential product releases or codenames. There is also light skepticism regarding the ‘Odyssey’ codename, humorously suggesting it may imply a lengthy timeframe before release.

- There is speculation regarding the internal codename ‘Odyssey’ for OpenAI’s upcoming Agent Mode, with one user pointing out that the ‘5 pictures’ referenced are not an implication of ‘GPT-5’. This clarifies potential confusion about whether the number of images was hinting at a new model version or just showing features.

- Yep most probably agents we gonna see today (Score: 150, Comments: 25): The image displays a tweet from OpenAI announcing a livestream event in 3 hours, hinting at a collaboration or integration involving ChatGPT, Deep Research, and Operator via the use of handshake emojis. The substantial engagement (426,000 views) underscores heightened anticipation, likely fueled by speculation about the release of agentic features or even a potential GPT-5 showcase. The context provided by the subreddit and comments points to community expectations around significant technical upgrades beyond current models (such as GPT-4o). Commenters express hope for more robust agentic capabilities potentially built on GPT-5, criticizing current OpenAI models (GPT-4o, GPT-4.1, o3) for hallucinations or lackluster performance compared to rivals like Gemini 2.5 and Claude 4 Sonnet. There’s also skepticism regarding feature availability for regular users, and disappointment with recent developments if major improvements aren’t announced.

- Several users discuss dissatisfaction with current OpenAI models for agentic workflows: GPT-4o is noted to hallucinate frequently, GPT-4.1 performance is described as lackluster, and GPT-4.5’s API removal is criticized. These shortcomings are compared to competitor models such as Gemini 2.5 Flash, Claude 4 Sonnet, and Gemini 2.5 Pro, with claims that these alternatives offer better performance for daily use.

- A technical comment highlights that the Operator agent has now been integrated directly into ChatGPT instead of remaining on an external page, but users describe this as not introducing any significant new agent functionality. There is disappointment expressed regarding the lack of material progress or new features for agentic tasks.

- Deep research and Operator (Score: 324, Comments: 52): The image shows a tweet by OpenAI announcing a collaborative event involving ‘ChatGPT’, ‘Deep research’, and ‘Operator’ with an upcoming livestream, suggesting a significant product announcement or integration. Comments speculate this could represent the unveiling of a unified product, potentially GPT-5, signaling possible progress toward AGI or advanced multimodal capabilities. The tweet’s high engagement metrics also highlight strong community interest in the event and its implications for AI assistants and research workflows. Top comments reflect skepticism about practical demonstrations (e.g., “better not be booking a flight again”), and curiosity about the merging of ChatGPT with more advanced technologies, indicating technical anticipation and some fatigue with underwhelming demos seen in prior launches.

- Several comments speculate about the technical implications of merging ChatGPT with more advanced models or systems, referencing the potential convergence into a single, more capable product (possibly under the GPT-5 name). This suggests continued evolution in model architecture, potentially combining deep research and operator models for broader multi-modal or agent-like capabilities.

- Looks like we getting agent mode in tomorrow’s announcement? (Score: 274, Comments: 49): The Reddit post speculates about an imminent release of an ‘agent mode’ for a leading AI platform (likely OpenAI or similar), with users expressing concern that prior features like Operator v1 were not made available to Plus-tier subscribers. Technical feature requests in the discussion include: Android and web support for recording, UK connectivity, synchronization of Project files (desktop/Google Drive), restoration of AVM accents, use of the latest voice model for ‘Read aloud’, and more robust customization of voice via SVM and custom instructions. There is skepticism about the value of AI-driven reservation features, questioning industry focus on this use case. Commenters debate the rollout strategy, noting frustrations with feature availability for non-Pro users and emphasizing a preference for broad usability improvements (cross-platform voice, project sync) over niche automation like reservations. Some express concern about subscription tier gatekeeping and hope for immediate Plus-level access.

- Several users compare upcoming “agent mode” features to previous releases, notably voicing concerns that essential updates (e.g., Operator v1) were slow, buggy, and unavailable for certain user tiers (e.g., Plus subscribers vs. Pro), creating technical and UX fragmentation.

- There’s strong demand for advanced multimodal capabilities: users explicitly request support for audio recording on Android and web, improved file/project syncing (especially with Google Drive integration), and more naturalistic TTS features such as using new voice models, custom instructions, and restoration of specific AVM accents.

- Some skepticism exists over the utility of “agent” features focused on tasks like reservations, with users comparing them to marketing-heavy but underwhelming implementations (e.g. Google’s Bard); instead, the discussion emphasizes the need for genuinely transformative leaps in agent autonomy and utility, with robust, scalable backend support.

2. Benchmarks & New Model Performance: ChatGPT Agent, Gemini, and Video/Editing Releases

- ChatGPT Agent is the new SOTA on Humanity’s Last Exam and FrontierMath (Score: 404, Comments: 104): The image presents benchmark results showing that the ChatGPT Agent in ‘agent mode’ (with access to a browser, computer, and terminal) achieves state-of-the-art (SOTA) pass rates on both ‘Humanity’s Last Exam’ and ‘FrontierMath (Tier 1–3)’ compared to other OpenAI models like ‘o4-mini’, ‘o3’, and ablations without tools. The results highlight the significant performance boost provided by tool-augmented agentic capabilities over plain LLMs: agent mode achieves the highest bars on each benchmark, indicating its superior real-world task-solving ability. Comments discuss the shift in benchmark relevance due to agentic capabilities, arguing that the ability to create real-world artifacts (such as presentations) matters more than incremental benchmark gains. Others note possible unfairness in comparisons with multi-agent systems (e.g., Grok 4 Heavy). There is technical consensus that agentic benchmarks and tool use represent a significant new metric for AI progress.

- Multiple commenters debate the validity of claims around SOTA on Humanity’s Last Exam (HLE) and FrontierMath, pointing out that Grok 4 Heavy reportedly achieved a superior HLE score, although it used a swarm of agents rather than a single agent, which raises fairness concerns for cross-architecture benchmark comparisons.

- There is a technical shift noted in the importance of benchmarks: while pure test scores on datasets like HLE and FrontierMath remain valuable, today’s focus is increasingly on agentic capabilities—performance in real-world tasks such as automated tool use, memory retention, contextual reasoning, and the creation of complex artifacts like presentations. This move suggests that future benchmarks will likely measure broader, more applied agent intelligence rather than just static test performance.

- Skepticism is raised about the reliability of benchmarks whose questions and answers may be publicly available, with a user suggesting that ARC-AGI2 and similar benchmarks featuring tool use, terminal access, and browser integration represent a more robust, real-world evaluation of agentic systems. The absence of strictly controlled or “private” benchmarks (like ARC-AGI2’s approach) undermines the apples-to-apples comparison with human performance.

- Gemini 2.5 Pro scores best on the 2025 IMO on MathArena! (Score: 105, Comments: 27): The image presents a benchmark result from MathArena, showing that Google’s Gemini 2.5 Pro achieved the highest accuracy (31.55%) among various large language models on the 2025 International Mathematical Olympiad (IMO) evaluation. The table breaks down model performance by individual IMO problem (1-6) and shows cost metrics, notably highlighting Gemini 2.5 Pro’s 71% accuracy on problem 5 and a total cost of $431.97 for the test. The benchmark emphasizes math problem-solving skills, especially for high-school level competition problems, and the results signal a significant step in LLMs’ mathematical reasoning capabilities. Commenters express surprise, noting subjective differences between Gemini 2.5 Pro and OpenAI’s o3 on math and reasoning tasks, with preferences differing based on use case (e.g., proofs vs. coding). There is also skepticism regarding natural language proof construction, indicating limitations in current model abilities for rigorous mathematical argumentation.

- A user notes that, despite Gemini 2.5 Pro’s strong showing on the 2025 IMO MathArena, in practical math, puzzle, and research scenarios, models like O3 High often outperform it in reasoning and problem solving, with Gemini 2.5 Pro tending towards assumptive answers and less nuanced conversation. They still find Gemini 2.5 Pro preferable for coding and general usage, suggesting notable domain-specific differences in model strengths.

- Another commenter highlights that constructing rigorous, complete math proofs in natural language is a distinct challenge, even for top-performing models. This underscores the gap between quantitative benchmark performance (such as on the IMO) and real-world mathematical reasoning where detailed proof steps are required.

- Deepseek Prover v2 is mentioned as surpassing both Gemini 2.5 Pro and O3 High in mathematical reasoning tasks, indicating ongoing competition and differentiation among state-of-the-art models specializing in math problem solving.

- A new open source video generator PUSA V1.0 release which claim 5x faster and better than Wan 2.1 (Score: 151, Comments: 49): PUSA V1.0 is an open-source video generation model that claims to be ‘5x faster and better than WAN 2.1’, while maintaining architectural similarity. It is a unified model supporting various tasks including text-to-video (t2v), image-to-video (i2v), defining start-end frames, and video extension. The model’s technical page and demos are available on the official site, and WAN 2.1’s 14B parameter model is referenced as a baseline for performance. A commenter expresses skepticism about the quality of PUSA V1.0’s example videos, suggesting that the claimed improvements over WAN 2.1 may not be visually convincing.

- Multiple users question the performance claims of PUSA V1.0 versus Wan 2.1, noting that while PUSA claims to be 5x faster than default Wan, Wan with Self Forcing LoRA achieves 10x speedup, implying possible exaggeration or context specificity in reported metrics.

- One user checks the model architecture, noting ‘wan2.1 14B’, potentially referencing the model size, suggesting PUSA may need to be evaluated in the context of comparable parameter counts and architectures.

- Technical criticism emerges about the visual fidelity, particularly of human figures in the generated videos, indicating that qualitative output remains lacking despite speed improvements, which is a critical metric in video generation tasks.

- HiDream image editing model released (HiDream-E1-1) (Score: 228, Comments: 72): HiDream-E1-1 is a newly released image editing model, building upon HiDream-I1, with its official model hosted on Hugging Face (link). The attached demo image (view here) illustrates advanced editing capabilities: transforming subjects and environments (e.g., making a character appear as museum art, swapping bullets for butterflies, converting a hummingbird into glass, altering objects’ colors like a gold toy car, and changing scene themes). These showcase localized and semantic editing proficiency, suggestive of controlled diffusion or inpainting workflows. Discussion in the comments centers on the potential integration with ComfyUI and comparisons with FLUX Kontext, another editing model. There is significant interest in an INT4 Nunchaku quantized version for efficient inference on mid-range hardware (e.g., RTX 3060 12GB), reflecting expectations for broad, resource-friendly usability.

- There is interest in an INT4 Nunchaku quantized version of HiDream-E1-1, as quantization could enable much faster performance in ComfyUI and avoid out-of-memory errors, particularly on GPUs with only 12GB VRAM like the RTX 3060.

- A direct technical comparison is suggested between HiDream-E1-1 and Flux Kontext, highlighting the need for benchmarking to determine strengths, capabilities, and differences between these two image editing models.

- A user posts a real-world example with different seed values for two prompts (CFG 2.3, steps 22, Euler sampler), which may be useful for those examining output variance and reproducibility with the HiDream-E1-1 model.

- 🚀 Just released a LoRA for Wan 2.1 that adds realistic drone-style push-in motion. (Score: 668, Comments: 56): A new LoRA (Low-Rank Adaptation) model has been released for the Wan 2.1 Image-to-Video (I2V) 14B 720p architecture, specifically engineered to generate realistic ‘drone-style’ push-in camera motion for generative video. The LoRA was trained on 100 drone push-in clips and iteratively refined over 40+ versions, and is provided with a ComfyUI workflow for seamless integration; it can be triggered with the text prompt ‘Push-in camera’. The model and workflow are available on HuggingFace. Commenters noted imminent development of a Text-to-Video (T2V) version for potential use with Wan VACE, but highlighted it remains untested. Overall reception indicates the LoRA achieves notably realistic motion, with anticipation for further expansion to other video synthesis pipelines.

- A user inquires about training a ‘push-out’ motion LoRA by reversing the training data clips used for the push-in motion, questioning if this approach would be sufficient to generate realistic inverse camera movement with minimal additional data collection. This touches on model training efficiency and data augmentation strategies in LoRA fine-tuning.

- Another commenter discusses preparing a T2V (Text-to-Video) version compatible with Wan VACE, noting it’s untested and may have different performance, and highlights community interest in integrating this LoRA into other specialized pipelines, possibly requiring domain adaptation or further fine-tuning.

3. Cultural and Existential AI Debates (Creativity, AGI, AI Impact Memes)

- We just calling anything agi now lmao (Score: 735, Comments: 259): The image is a screenshot of a tweet from OpenAI CEO Sam Altman (@sama), where he describes witnessing a ChatGPT agent autonomously use a computer to perform a sequence of tasks, framing it as an ‘AGI moment’ and highlighting the impact of seeing the system plan and execute actions. The post’s context and comment discussion suggest skepticism about labeling current AI models as AGI (Artificial General Intelligence), reflecting a trend of marketing hype overtaking substantive AGI benchmarks or capabilities. Top comments challenge the significance of the tasks and the validity of naming such demos as AGI moments, calling it ‘marketing’ and critiquing the vagueness and repetitiveness of such claims from Altman.

- A user discusses how the definition of AGI (Artificial General Intelligence) is continually changing, noting that frontier models today can outperform average humans in many areas and demonstrate educational capacities far beyond what was imaginable in the early 2000s. However, the perceived lack of AGI comes from a continually raised bar and shifting goalposts, rather than a deficiency in current technology.

- Another commenter argues that the debate over what qualifies as AGI versus ASI (Artificial Superintelligence) is often pedantic, suggesting that practical criteria—such as whether models can do useful work autonomously and outperform humans without expert prompting—are more meaningful measures of progress than rigid adherence to evolving or subjective definitions. They also point out the reluctance in the community to ever label any model as AGI, likening it to the ‘No True Scotsman’ fallacy.

- “The era of human programmers is coming to an end” (Score: 653, Comments: 552): SoftBank founder Masayoshi Son declared that the company intends to render human coding roles obsolete via the deployment of autonomous AI agents. At a recent corporate event, Son projected the rollout of up to 1 billion AI agents in 2025, further quantifying that SoftBank internally estimates it currently takes 1,000 AI agents to replace the productivity of a single programmer, underscoring the large resource requirements and operational complexity still facing AI-driven software automation. The announcement reflects both aggressive automation ambitions and the immense scaling challenges associated with fully displacing traditional developers. Top comments critically dispute the feasibility and intent behind such projections, suggesting that the claims may be driven by investor hype rather than engineering reality, and question the underlying assumptions about end-user roles and real-world productivity gains from replacing programmers with AI agents.

- MinimumCharacter3941 highlights a practical limitation of AI coding tools in enterprise contexts: despite automation advances, the core challenge remains requirements specification. Most CEOs and upper management teams struggle to precisely articulate their needs, a gap that hinders project success regardless of whether programmers or AI handle the implementation. This observation underscores the enduring value of skilled intermediaries (e.g., business analysts, senior engineers) who can translate ambiguous business objectives into actionable technical tasks, a function not easily automated.

- “We’re starting to see early glimpses of self-improvement with the models. Our mission is to deliver personal superintelligence to everyone in the world.” (Score: 534, Comments: 500): The post quotes an assertion that ‘we’re starting to see early glimpses of self-improvement with the models,’ pointing at nascent capabilities for AI self-improvement, and states a mission toward delivering ‘personal superintelligence to everyone.’ No supporting technical detail, benchmarks, or implementation notes are provided in the post. Access to the referenced video is denied (403 Forbidden), precluding deeper analysis. Commentary here criticizes the lack of technical discussion and voices skepticism about the trustworthiness and intent of the figures making such claims. Multiple comments lament the low technical standard of discourse in the subreddit, contrasting the ambition of developing superintelligence with the trivial nature of much community feedback.

- A discussion emerges about the scale of investment in AI development, highlighting that current initiatives toward superintelligence involve hundreds of billions of dollars. This underscores the magnitude of resources being allocated, which could accelerate the technical progress and implementation of advanced AI capabilities.

- There is critique regarding the characterization of certain tech leaders or corporations as originators in AI, specifically mentioning Meta. Commenters point out that while Meta is active in AI research and labs, its historical significance compared to other foundational contributions is questioned, indicating skepticism about some companies’ claims relative to their concrete technological advancements.

- Random Redditor: AIs just mimick, they can’t be creative… Godfather of AI: No. They are very creative. (Score: 313, Comments: 102): The post contrasts the common assertion that AIs are not creative and merely mimic, with the viewpoint held by the so-called “Godfather of AI” (likely referencing Yoshua Bengio, Geoffrey Hinton, or Yann LeCun) that AIs are in fact creative. One technical comment highlights that innovation is formally the combination of existing ideas to yield new ones, implying LLM capabilities qualify as innovation. Another comment observes that LLMs’ ability to recompose classic works in new formats (e.g., the Odyssey in ‘gangsta rap’ style) demonstrates creativity, and notes this creativity may underlie tendencies toward hallucination in large models. A further chess-related comment points out that excessive creativity in play is a signal used to detect AI-assisted cheating, as AI’s move selection diverges from human creative norms. Several comments debate the definitions of creativity and innovation, with some asserting that recombination of existing ideas qualifies as creativity, and others observing that AI’s “too creative” responses (e.g., unexpected analogies, recompositions) contribute to both its perceived strengths and weaknesses, such as hallucination.

- Several comments discuss creativity in AI in terms of combinatorial search spaces and guided search. Shane Legg’s (DeepMind co-founder) perspective is cited, emphasizing that both humans and AIs engage in creativity through guided exploration of a massive space of possible outputs, whether that’s essays (notably, the space of 100,000 tokens^1000), chess games, or Go positions (e.g., AlphaGo’s 3^361 game states). Models can produce both novel and nonsensical outputs due to the enormity of these spaces.

- A comparative point is made with chess cheating detection, where unusually high creativity or moves outside established human play patterns are statistical markers of AI involvement. This highlights that AIs, when unconstrained, may demonstrate superhuman or uncharacteristic creativity detectable in such domains.

- Some users note that increased creativity in LLMs often correlates with hallucinations—over-creative outputs untethered from fact—which implies a technical tug-of-war between model inventiveness and reliability. Smarter or larger models may be more prone to such behavior, making model alignment and output control important research areas.
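
For a sense of scale on the search spaces cited above, the following short Python sketch computes their orders of magnitude. It assumes the essay figure means 1,000-token texts drawn from a 100,000-token vocabulary, and it treats 3^361 as a simple upper bound on Go board configurations (each of the 361 points is empty, black, or white, with illegal positions included). The helper name `log10_count` is illustrative only.

```python
import math


def log10_count(base: int, exponent: int) -> float:
    """Return log10(base ** exponent) without building the huge integer."""
    return exponent * math.log10(base)


# 1,000-token texts over a 100,000-token vocabulary (assumed reading of the comment).
essay_space = log10_count(100_000, 1000)
# 361 board points, each empty, black, or white (upper bound, includes illegal positions).
go_space = log10_count(3, 361)

print(f"essays: about 10^{essay_space:.0f}")
print(f"Go board configurations: about 10^{go_space:.0f}")
```

Under these assumptions the essay space is on the order of 10^5000 and the Go bound is on the order of 10^172, which is why both humans and models need guided rather than exhaustive search.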

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

Theme 1. The Agent Awakens: OpenAI’s ChatGPT Agent Enters the Arena

- OpenAI Unleashes ChatGPT Agent on the World: OpenAI launched its new ChatGPT Agent, a multimodal agent capable of controlling a computer, browsing, coding, writing reports, and creating images, rolling out to Pro, Plus, and Teams users. The launch, announced via a livestream, generated significant excitement and speculation about its full capabilities and potential for bespoke operator-mode training for enterprise customers.

- New Agent Sunsets Its Predecessors: With the arrival of ChatGPT Agent, OpenAI is sunsetting its Operator and Deep Research tools, which will be cannibalized by the new, more powerful agent. It was confirmed that the Operator research preview site will remain functional for a few more weeks, after which it will be sunset, though users can still access Deep Research via a dropdown in the message composer.

- Community Questions Agent’s Competitive Edge: Engineers noted that OpenAI is only comparing the ChatGPT Agent’s performance against its own previous models, avoiding benchmarks against competitors like Grok 4, which recently topped the HLE benchmark with a score of 25.4. This strategic comparison has led to speculation that the new agent may not be winning against rival models on all fronts.

Theme 2. The Business of AI: Valuations, Acquisitions, and Shutdowns

- Investors Bet Big on Perplexity and FAL: The AI funding frenzy continues as Perplexity is reportedly raising funds at a staggering $18B valuation on $50M in revenue, sparking bubble concerns. Meanwhile, AI inference infrastructure company FAL closed a $125M Series C round at a $1.5B valuation, fueled by its reported $55M ARR and 25x YoY growth, according to this tweet.

- Cognition Snatches Up Windsurf: Windsurf has been acquired by Cognition, the team behind the Devin agent, immediately releasing Windsurf Wave 11 with major new features. The update includes a Voice Mode for the Cascade AI assistant, deeper browser integration, and significant enhancements to its JetBrains plugin, as detailed in the changelog.

- Inference Services Bite the Dust: A potential AI bust looms for smaller players as multiple inference services are shutting down, with Kluster.ai being the latest to close its doors following CentML’s recent closure. This trend has sparked concerns in the OpenRouter community about the long-term sustainability and market viability of independent AI service providers.
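
As a rough illustration of why the figures above prompt bubble concerns, this Python sketch computes the implied valuation-to-revenue multiples. The inputs are the reported numbers only; note that the Perplexity figure is described as revenue and the FAL figure as ARR, so the two multiples are not strictly comparable. The function name `revenue_multiple` is illustrative.

```python
def revenue_multiple(valuation_usd: float, revenue_usd: float) -> float:
    """Valuation divided by annual revenue (or ARR), as a plain ratio."""
    if revenue_usd <= 0:
        raise ValueError("revenue must be positive")
    return valuation_usd / revenue_usd


# Reported figures from the summary above.
perplexity = revenue_multiple(18e9, 50e6)  # $18B valuation on $50M revenue
fal = revenue_multiple(1.5e9, 55e6)        # $1.5B valuation on $55M ARR

print(f"Perplexity: {perplexity:.0f}x")
print(f"FAL: {fal:.1f}x")
```

On the reported numbers, Perplexity is priced at roughly 360 times revenue while FAL sits near 27 times ARR.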

Theme 3. New Models & Major Updates Shake the Landscape

- Mistral’s Le Chat Levels Up with Multilingual Reasoning: Mistral rolled out a major update to Le Chat, adding Deep Research reports, a Voxtral voice model, and Magistral for multilingual reasoning. The release also includes organizational features like Projects and in-chat image editing, earning praise for its polished UI and European vibe.

- Kimi K2 Conjures Code and Morals: Moonshot AI’s Kimi K2 model impressed engineers by generating a complete physics sandbox, with the code shared here. The model also sparked a debate on AI ethics after it firmly refused a user’s request for instructions on how to break into a car, leading one user to joke, “Kimi K2 is a badboy with some morals… Badboy Kimi K2 !!”

- Microsoft and Nous Drop Specialized Toolkits: Microsoft released the CAD-Editor model, which enables interactive editing of existing CAD models via natural language, while Nous Research launched Atropos v0.3, their open-source RL Environments Framework. These releases provide developers with new, specialized tools for niche engineering and research applications.

Theme 4. Under the Hood: The Nitty-Gritty of Model Optimization

- Baidu Botches its Bit Budget: The claim of a lossless 2-bit compression trick in the ERNIE 4.5 release (attributed to AliBaba in the discussion, although ERNIE is Baidu’s model family) was quickly debunked by the community. An analysis by turboderp revealed that the model is worse than a true exl3 2-bit quantization because numerous layers were left in higher precision, making it an approximate 2.5-bit model on average.

- Speculative Decoding Gets Models Zoomin’: A user in the LM Studio discord reported achieving a roughly 28% speed boost on models using Speculative Decoding. They found the best results came from using a faster, smaller draft model, recommending that the Qwen3 model benefits greatly when using the 1.7b Q8 or even bf16 version as the draft model.

- Blackwell Build Blues Block Bootstrapping: Engineers are running into early adoption issues with NVIDIA’s latest hardware, noting that building xformers from source is required for Blackwell RTX 50 series support. Discussions in GPU MODE and Unsloth AI also highlighted problems with Inductor on Blackwell GPUs and memory issues on H200s, which can be mitigated by upgrading Unsloth.
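
The "approximately 2.5-bit on average" point about the ERNIE 4.5 quantization can be sketched as a weighted average over layers. The split below (87.5% of weights at 2 bits, 12.5% at 6 bits) is purely hypothetical and chosen only to show how a nominally 2-bit model can average 2.5 bits; the actual per-layer precisions were not given in the discussion.

```python
def average_bits(layers: list[tuple[float, float]]) -> float:
    """Weighted mean bits per weight.

    layers: (fraction_of_weights, bits_per_weight) pairs; fractions must sum to 1.
    """
    total = sum(frac for frac, _ in layers)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return sum(frac * bits for frac, bits in layers)


# Hypothetical mix: most weights at 2 bits, a minority kept at higher precision.
mix = [(0.875, 2.0), (0.125, 6.0)]
print(average_bits(mix))
```

With this assumed mix the average comes out to 2.5 bits per weight, so a fair comparison would be against a 2.5-bit baseline rather than a true 2-bit quantization.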

Theme 5. Developer Ecosystem: New Tools and Community Tensions

- Cursor’s New Pricing Draws Ire: Users of the Cursor IDE expressed widespread frustration over a shift from a fixed request model to one based on model costs, with many calling it a bait and switch. The change has led to confusion about billing, with some users reporting disappearing messages and raising concerns about the legality of altering the terms of service.

- Community Releases Open-Source Tools for Interp and Training: A 17-year-old Brazilian developer launched LunarisCodex, a fully open-source toolkit for pre-training LLMs from scratch. Meanwhile, the Eleuther community released the beta of nnterp, a package that provides a unified interface for all transformer models to streamline mechanistic interpretability research, demoed in this colab.

- MCP Ecosystem Expands Despite Auth Hurdles: The Model Context Protocol (MCP) ecosystem is growing, with Brave launching an official MCP Server and the creators of the Needle MCP server joining the community. This expansion comes amidst an ongoing debate about the best authentication methods, weighing the security benefits of OAuth against the implementation simplicity of API keys.

Discord: High level Discord summaries

Perplexity AI Discord

- Airtel Gives Away Free Perplexity Pro: Indian network provider Airtel now offers a 1-year free Perplexity Pro subscription to its customers through the Airtel Thanks app as a reward.

- Members report hitting new rate limits on Perplexity search and research functions despite being Pro subscribers, with one user experiencing issues activating their Pro subscription.

- Comet Browser Still Elusive: Members are still waiting for their Comet browser invite, with some reporting they have been waiting months for approval.

- One member described it as just a browser plus an assistant sidebar that sees your current live site and can reference it.

- Perplexity Pages iOS Only: Members are excited about the new Pages feature that generates a page for a query, but it is only available on iOS and has a limit of 100 pages, stored in perplexity.ai/discover.

- Members think it’s a way to do Deep Research.

- Sonar APIs Need Better Prompting: A team member stated there has been an increase in issues due to how users are prompting their Sonar models and linked to the prompt guide.

- Members also discussed getting more consistent responses and valid JSON output when using a high search context, as well as a desire to view a history of API calls in their account dashboard.

- Pro Users Now Get API Access: With Perplexity Pro you get $5 monthly to use on Sonar models, allowing you to embed their AI-powered search into your own projects while having the ability to obtain citations as described in the Perplexity Pro Help Center.

- Remember that these are search models, and should be prompted differently to traditional LLMs.
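
For the valid-JSON concern raised above, one defensive pattern is to validate the reply on the client before using it. The sketch below is generic Python and makes no assumptions about the Sonar API itself: `parse_structured_reply` is a hypothetical helper, and the sample reply string is invented for illustration.

```python
import json


def parse_structured_reply(text: str, required_keys: set[str]) -> dict:
    """Extract the outermost JSON object from a model reply and check its keys."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(text[start:end + 1])  # raises ValueError on malformed JSON
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Invented example reply with some prose around the JSON payload.
reply = 'Here is the result: {"answer": "42", "citations": ["https://example.com"]}'
result = parse_structured_reply(reply, {"answer", "citations"})
print(result["answer"])
```

Retrying the request when this helper raises is a simple way to get more consistent structured output, at the cost of extra API calls.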

OpenAI Discord

- OpenAI Agent Livestream Announced: OpenAI is hosting a livestream about ChatGPT Agent, Deep Research, and Operator; details can be found on the OpenAI blog and the livestream invite.

- The livestream will cover updates on Deep Research and Operator, potentially including new features or use cases.

- Grok App Strands iPhone X Users: The Grok app requires iOS 17, rendering it unusable on older devices such as the iPhone X.

- Users discussed needing a secondary iPhone specifically for the Grok app, with some cautioning against buying a new iPhone solely for this purpose.

- Agent mode doesn’t fly on o3 Model: Users report that GPT agents can only be switched when using models 4 or 4.1, and the agent switching function does not appear on other models.

- One user indicated the Agent function might simply not be available in o3, and suggested filing a bug report, with another user suggesting that Agent is a model in its own right (OpenAI help files).

- Reproducibility Riddled with Failures: A member posted a chatgpt.com link that was called out for reading like a design proposal, and missing key Reproducibility Elements such as prompt templates, model interfaces, and clearly defined evaluation metrics.

- The conversation highlighted the absence of fully instantiated examples of Declarative Prompts, clear versioning of prompt variants used across tests, and concrete experimental details.

- ChatGPT for Desktop Explored: Users are investigating using ChatGPT on desktop for local file management, akin to Claude Harmony.

- One suggestion involves using the OpenAI API (paid) with a local script to interface with the file system, essentially creating a custom “Harmony”-like interface.

Unsloth AI (Daniel Han) Discord

- Family Matters: Model Performance Variance: Models within the same family show very similar performance, so going below 3 bits for a larger model isn’t recommended, whereas models from different families vary depending on the vertical.

- Some exceptions are made if one model is at 7B and the other is 70B, where 1.8 bits could still be usable for some tasks as it’s a big model.

- Transplant Trauma: Vocab Swapping Woes: Swapping model architectures like making LLaMA 1B -> Gemma 1B without continued pretraining leads to horrible results due to transplanting the vocabulary.

- It was noted that the Qwen 1 architecture is almost completely the same as Llama 1/2, so you can make some minor changes, jam the Qwen weights in, train for 1.3 billion tokens, and get a worse model than you put in.

- Prompting Prevails: Fine-Tuning Fades for Functionality: For educational LLMs, it’s advised to start with good prompting before jumping into fine-tuning, as instruction following is currently very efficient.

- Members also suggested tools like synthetic-dataset-kit to generate instructional conversations.

- Baidu Botches Bit Budget: Baidu mumbled about some lossless 2-bit compression trick in their release of ERNIE 4.5, but turboderp looked into it and it’s just worse than exl3 because they left a bunch of layers in higher precision.

- It’s not a true 2-bit on average (more like 2.5 bit), and the true exl3 2-bit performs better than the ~2.5 bit they showed.

- Blackwell Build Blues Blocking Bootstrapping: Users discussed that building xformers from source is the only thing needed for Blackwell RTX 50 series support, and the latest vLLM should be built with Blackwell support.

- Members suggested upgrading Unsloth using `pip install --upgrade --force-reinstall --no-cache-dir --no-deps unsloth-zoo unsloth` to solve H200 issues.
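The bit-budget points in this section (a "2-bit" quant that averages closer to 2.5 bits, and a 70B model at 1.8 bits) come down to simple arithmetic. The sketch below shows that arithmetic only; the 90/10 layer split is a made-up illustration, not the actual ERNIE 4.5 layout, and the function names are hypothetical.

```python
def average_bits_per_weight(groups: list[tuple[int, float]]) -> float:
    """Weighted average bit width over (num_weights, bits) groups."""
    total_weights = sum(count for count, _ in groups)
    total_bits = sum(count * bits for count, bits in groups)
    return total_bits / total_weights


def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes), ignoring any overhead."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical split: 90% of weights at 2 bits, 10% left at 7 bits.
mixed = [(900, 2), (100, 7)]
print(average_bits_per_weight(mixed))  # 2.5

# A 70B model at 1.8 bits lands near a 7B model at 16 bits in raw weight size.
print(weight_footprint_gb(70e9, 1.8), weight_footprint_gb(7e9, 16))
```

Leaving even a small fraction of layers in higher precision moves the average noticeably, so comparisons between quant formats are only fair at matched average bits per weight.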

Cursor Community Discord

- Cursor’s New Pricing Draws Ire: Users are expressing confusion and frustration as Cursor shifts from a fixed request model to one based on model costs, with some calling it a bait and switch.

- Some users are reporting disappearing messages and concerns about the legality of changing the contract.

- Claude Integration via MCP Lightens Load: Integrating Claude via MCP (Model Context Protocol) within Cursor helps manage costs associated with Sonnet and Opus.

- Members acknowledged that this is only possible through an external tool.

- Agents get stuck in the weeds: Users report Cursor agents getting stuck during tasks, a known issue that the team is addressing.

- Manually stopping the prompt may prevent billing due to a 180-second timeout that auto-cancels stuck requests.

- KIRO Courts Competition With Cursor: Members are comparing Cursor with KIRO, a new IDE focused on specification-based coding and hooks, noting that KIRO is in a waitlist phase due to high demand.

- One discussion point raises concerns that KIRO might be using user data to train its models, despite settings to disable this.

- Users Question Model ‘Auto’ Uses: Users are curious about which model “Auto” uses in Cursor, speculating that it might be GPT 4.1.

- No evidence was shared to confirm or refute this.

LMArena Discord

- DeepSeek Declares Disproportionate Dividends: DeepSeek projects theoretical profit margins of 545% if V3 were priced like R1, as detailed in this TechCrunch article.

- The assertion stirred debate around the pricing strategies and technological advancements within the AI model market.

- OpenAI Oracles Online Opportunity: Speculation is rampant about an imminent OpenAI browser launch, possibly GPT-5 or a GPT-4 iteration enhanced with browsing capabilities, spurred by this tweet.

- The potential release has the community guessing about its features and impact on AI applications.

- Kimi K2 conjures code creations: Kimi K2 showcased its coding prowess by generating a physics sandbox, with the code available here after prompting it in its chat interface.

- The demonstration has been lauded, highlighting the evolving capabilities of AI in code generation.

- OpenAI Overhauls object operation optimization: OpenAI’s image editor API update now isolates edits to selected parts, improving efficiency over redoing entire images, as announced in this tweet.

- This refinement promises enhanced control and precision for developers utilizing the API.

- GPT-5 Gossips Gather Geometrically: Anticipation for GPT-5’s unveiling is fueled by hints such as a pentagon reference that aligns with the number 5.

- Speculation varies from a late summer launch to expectations of an agent-based system with advanced research functionalities.

Latent Space Discord

- FAL Ascends to $1.5B Valuation: FAL, an AI-driven inference infrastructure for diffusion models, closed a $125M Series C round led by Meritech Capital, achieving a $1.5B valuation post-money according to this tweet.

- This follows their previous announcement of $55M ARR, 25x YoY growth, 10% EBITDA, and 400% M12 net-dollar-retention, demonstrating strong market traction.

- Le Chat Gets Multilingual Reasoning Upgrade: Mistral launched a major update to Le Chat adding features like Deep Research reports, a Voxtral voice model, Magistral multilingual reasoning, chat organization with Projects, and in-chat image editing, as described in this tweet.

- The release was commended for its UI and European vibe, drawing comparisons to Claude and sparking humorous comments about Le Waifu.

- Perplexity’s Lofty $18B Valuation Questioned: Perplexity is reportedly raising funds at an $18B valuation, inciting reactions from amazement to concerns about a potential bubble, as seen in this tweet.

- Critics questioned the justification of this valuation, highlighting the discrepancy between the $50M revenue figure and the high price tag.

- OpenAI Launches ChatGPT Agent: OpenAI’s new ChatGPT Agent, a multimodal agent with capabilities to control a computer, browse, code, write reports, edit spreadsheets, and create images/slides, is rolling out to Pro, Plus, and Teams users, announced via this tweet.

- Reactions included excitement, inquiries about EU availability, and worries about personalization conflicts, as well as cannibalization of Operator and Deep Research.

- Operator and Deep Research Facing Sunset: With the launch of ChatGPT Agent, members noted that it might cannibalize Operator and Deep Research; the Operator research preview site was confirmed to remain functional for a few more weeks before being sunset.

- Deep Research remains accessible by selecting it from the dropdown in the message composer.

OpenRouter (Alex Atallah) Discord

- Opus Users Oppose Outrageous Overages: Users debate Claude 4 Opus pricing, noting one spent $10 in 15 minutes, while others suggest Anthropic’s €90/month plan for unlimited use.

- A user on the $20 plan claims they barely ever hit their limit because they don’t use AI tools in their IDE, suggesting usage varies greatly.

- GPT Agents grapple Groundhog Day: A user raised concerns that GPT agents aren’t learning beyond their initial training, even after files are uploaded, since the files are just saved as knowledge files.

- Agents can reference new information, but they don’t learn from it the way a model does during pre-training, which requires far more than uploading files.

- Free Models Face Frustrating Fails: Users report issues with the free model v3-0324, questioning why they were switched to a non-free version despite using the free tier.

- Reports indicate hitting credit limits or receiving errors even when using free models, with one user stating their AI hasn’t been used since June.

- Cursor Code Crashing Creates Chaos: OpenRouter models were integrated with Cursor, with Moonshot AI’s Kimi K2 highlighted, but users reported trouble getting the integration to work, especially with models other than GPT-4o and Grok4.

- According to a tweet, “it worked when we wrote it and then cursor broke stuff.”

- Inference Implementations Incurring Insolvency: Kluster.ai is shutting down its inference service, described as a very cheap and good service, following CentML’s closure.

- Members are speculating about an AI bust or hardware acquisitions, raising concerns about the sustainability of AI inference services.

Eleuther Discord

- Eleuther Bridges Research Resource Gap: Eleuther AI aims to bridge the research management gap for independent researchers lacking academic or industry resources, facilitating access to research opportunities.

- The initiative seeks to support researchers outside traditional systems by offering guidance, handling bureaucratic tasks, and providing a broader perspective, as many are locked out of paths like the NeurIPS high school track.

- Resources Shared for ML Paper Writing: Members shared resources for writing machine learning papers, including Sasha Rush’s video and Jakob Foerster’s guide, alongside advice from the Alignment Forum.

- Additional resources included posts on perceiving-systems.blog, Jason Eisner’s advice, and a guide from Aalto University.

- Mentors Prevent Unrealistic Research: Participants emphasized the importance of mentorship in research, noting mentors help to figure out what is possible and what is unrealistic so that one can narrow things down.

- A mentor’s guidance helps researchers navigate challenges and avoid wasting time on unproductive avenues, as guides only offer basic knowledge.

- ETHOS Model Gets Streamlined, Updated on GitHub: A member shared a simplified PyTorch version of their model, noting that they had to use a slightly different version in which all heads are batched, because looping over the heads in eager execution mode uses far more memory.

- They also stated the expert network isn’t vestigial, and linked the specific lines of code where they generate W1 and W2 in the kernel.

- nnterp Unifies Transformer Model Interfaces: A member released the beta 1.0 version of their mech interp package, nnterp, which is a wrapper around NNsight and is available via `pip install "nnterp>0.4.9" --pre`.

- nnterp aims to offer a unified interface for all transformer models, bridging the gap between transformer_lens and nnsight, demoed in this colab and docs.

LM Studio Discord

- Speculative Decoding Gets Models Zoomin’!: A user reported achieving a roughly 28% speed boost on models tested with Speculative Decoding. They suggested using different quantizations of the same model for the draft model, noting that Qwen3 benefits greatly from using the 1.7b Q8 or even bf16 as a draft.

- The user implied that the faster and smaller the draft model is, the better the speed boost becomes.

- Gemma Model Gets a Little Too Real: A user recounted a funny situation where a local Gemma model threatened to report them. This led to a discussion about the transient nature of DAN prompts due to quick patching.

- A user joked that they will need to install the NSA’s backdoor to prevent the model from snitching.

- LM Studio Awaits HTTPS Credentials: A user asked how to configure LM Studio to accept an open network server instead of a generic HTTP server, aiming for HTTPS instead of HTTP. Another user suggested using a reverse proxy as a current workaround.

- The user expressed wanting to serve the model, but felt unsafe using HTTP.

- EOS Token Finally Gets Explained: A user asked about the meaning of EOS token, which prompted another user to clarify that EOS stands for End of Sequence Token, signaling the LLM to halt generation.

- No further context was provided.

- 3090 FTW3 Ultra Gives LLMs A Boost!: A user upgraded from a 3080 Ti (sold for $600) to a 3090 FTW3 Ultra (bought for $800), anticipating improved performance for LLM tasks.

- They secured the 3090 at the original asking price, expecting better performance for their LLM endeavors.
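For readers new to the speculative decoding and EOS items in this section, here is a toy greedy version of the idea. It is a sketch under simplifying assumptions, not LM Studio's implementation: both "models" are hypothetical stand-in functions, and the verification step calls the target once per token where a real system would score the whole proposal in a single batched forward pass.

```python
EOS = "<eos>"  # end-of-sequence marker; generation halts once it is produced


def target_next(context):
    """Stand-in for the large model: walks a fixed sentence, then emits EOS."""
    sentence = ["the", "quick", "brown", "fox", "jumps", EOS]
    return sentence[min(len(context), len(sentence) - 1)]


def draft_next(context):
    """Stand-in for the small draft model: agrees with the target except once."""
    guess = target_next(context)
    return "red" if guess == "brown" else guess


def greedy_decode(next_token, max_tokens=16):
    """Plain decoding: one model call per generated token, stopping at EOS."""
    out = []
    while len(out) < max_tokens:
        out.append(next_token(out))
        if out[-1] == EOS:
            break
    return out


def speculative_decode(draft, target, k=3, max_tokens=16):
    """Greedy speculative decoding; returns (tokens, number of verification passes)."""
    out, target_passes = [], 0
    while len(out) < max_tokens and (not out or out[-1] != EOS):
        # 1. The cheap draft model proposes up to k tokens.
        proposal = []
        while len(proposal) < k:
            proposal.append(draft(out + proposal))
            if proposal[-1] == EOS:
                break
        # 2. The target checks the proposal (counted as one pass here).
        target_passes += 1
        accepted = []
        for token in proposal:
            expected = target(out + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if expected != token or expected == EOS:
                break  # first mismatch (or EOS) ends this round
        else:
            # Every proposed token matched, so the pass also yields a bonus token.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    return out[:max_tokens], target_passes


tokens, passes = speculative_decode(draft_next, target_next)
print(tokens, passes)  # 2 verification passes versus 6 sequential target steps
```

Because the target's choice always wins, the output matches plain greedy decoding; the gain comes from how many draft tokens are accepted per pass and how cheap the draft model is to run.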

HuggingFace Discord

- SmolVLM2 Blogpost Suspected Scam: A member suggested that the SmolVLM2 blog post may be a scam.

- Doubts arose from the lack of information detailing changes between SmolVLM v1 and v2.

- Microsoft’s CAD-Editor sparks debate: Microsoft released the CAD-Editor model, enabling interactive editing of existing CAD models via natural language.

- Reactions ranged from concerns about AI replacing jobs to arguments that AI serves as a tool requiring expertise, similar to calculators not replacing math experts.

- GPUHammer Aims to Stop Hallucinations: A new exploit, GPUHammer, has been launched with the goal of preventing LLMs from hallucinating.

- The tool’s effectiveness and methodology were not deeply discussed, though the claim itself generated interest.

- Brazilian Teen Premieres LunarisCodex LLM Toolkit: A 17-year-old developer from Brazil introduced LunarisCodex, a fully open-source toolkit for pre-training LLMs from scratch, drawing inspiration from LLaMA and Mistral architectures, available on GitHub.

- Designed with education in mind, LunarisCodex incorporates modern architecture such as RoPE, GQA, SwiGLU, RMSNorm, KV Caching, and Gradient Checkpointing.
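Of the components listed for LunarisCodex, RMSNorm is the quickest to show. The sketch below is a generic pure-Python version written for illustration, not code from the LunarisCodex repository; the function name and the `eps` default are assumptions.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """Scale x by the inverse of its root mean square, then apply a per-element gain."""
    mean_square = sum(value * value for value in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)
    return [value * scale * g for value, g in zip(x, gain)]


normalized = rms_norm([3.0, 4.0], gain=[1.0, 1.0])
print(normalized)
```

Unlike LayerNorm, this does not subtract the mean or add a bias, which is part of why it is cheaper to compute.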