Open models are all you need?

AI News for 9/5/2025-9/6/2025. We checked 12 subreddits, 544 Twitters and 22 Discords (186 channels, and 3961 messages) for you. Estimated reading time saved (at 200wpm): 324 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

In July, we last commented on Kimi K2 being the largest SOTA open model to be released, and today Moonshot AI updated their model weights again and released new benchmarks in their paper:

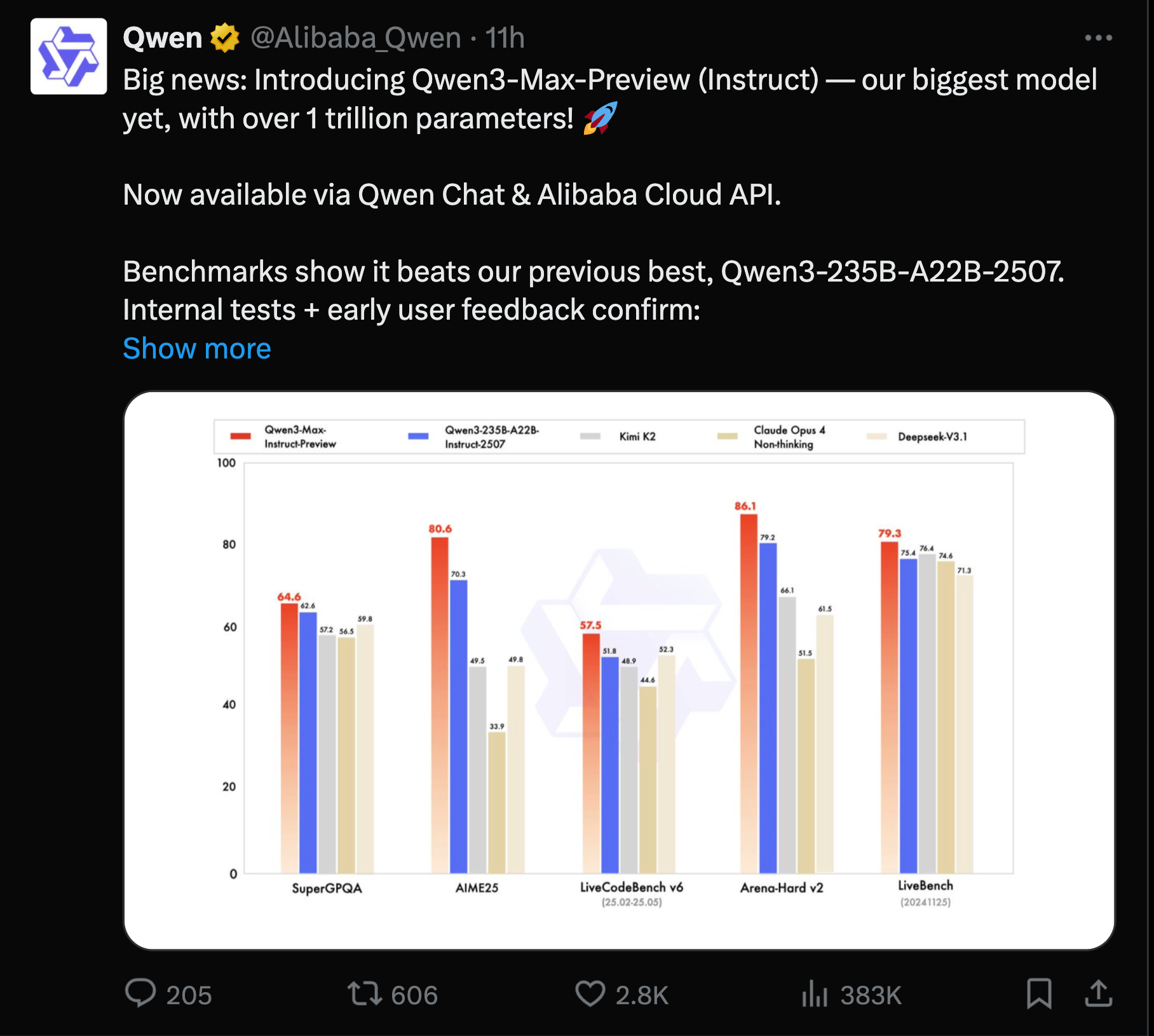

The big new entrant though is Qwen 3 Max, releasing a 1T param model for the first time, obviously beating its smaller siblings. They declined to release hparams, instead calling it “Max”, but it still seems that the model weights will be released in short order so it’s unclear why exactly they are breaking their own MoE naming schema.

China is overwhelmingly winning the open model war, it seems.

AI Twitter Recap

China’s long‑context coding surge: Kimi K2‑0905 and Qwen3‑Max preview

- Moonshot’s Kimi K2‑0905 (open weights) ships a practical agents upgrade: Kimi doubled context to 256k, improved coding and tool‑calling, and tuned integration with agent scaffolds (Cline, Claude Code, Roo). It’s already live on multiple stacks: Hugging Face weights/code, Together AI, vLLM deployment guide, LMSYS SGLang runtime (60–100+ TPS), Groq instant inference (200+ T/s, $1.50/M tokens), and Cline integration. Community reports emphasize that “agents really need ultra‑long context” for stability and tool orchestration (Teknium). Claims of “meets or beats Sonnet 4” surfaced in demos, while Kimi engineers acknowledged SWE‑Bench remains challenging (@andrew_n_carr, @bigeagle_xd).

- Qwen3‑Max‑Preview (Instruct): 1T‑parameter scale, agent‑oriented behavior: Alibaba introduced its largest model yet (over 1T parameters), available via Qwen Chat, Alibaba Cloud API, and now OpenRouter (announcement, OpenRouter). Benchmarks and early users point to stronger conversations, instruction following, and agentic tasks relative to prior Qwen3 models. Community reaction frames it as a “US‑grade frontier model” with competitive pricing and throughput (reaction, scale tease). Details on dense vs MoE remain unspecified in public channels.

Evals, agents, and what to measure

- “No evals” vs “evals that matter”: A widely‑shared thread argues many top code‑agent teams ship without formal evals, while vendors evangelize them; the nuance is that early 0→1 success often comes from dogfooding + error analysis before codifying evals (@swyx, receipts). Follow‑ons advocate for richer, causal evals of long‑horizon capability (e.g., months‑long tasks, protocol replication, strategy games, real‑world setups) and domain‑specific enterprise workflows that today’s leaderboards miss (@willdepue, ideas, @levie, @BEBischof). A pragmatic tip: use models as discriminators to rank outputs—generator/discriminator gaps can be leveraged in practice (@karpathy).

- Operationalizing evals and traces in agent stacks: CLI‑first agents plus semantic search can outperform ad‑hoc RAG for document tasks; LlamaIndex shows SemTools handling 1,000 arXiv papers with UNIX tooling + fuzzy semantic search (post). For RL pipelines, THUDM’s slime provides a clean rollout abstraction integrating tool calls and state transitions, reducing glue code in agentic RL experiments (overview).
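The "models as discriminators" tip above can be sketched as a best-of-n ranking step. This is a minimal illustration, not anyone's published method: `rank_candidates` and the `judge_scores` lookup are hypothetical names, and in practice `score` would wrap a call to a judge model rather than a dictionary.

```python
from typing import Callable, Sequence


def rank_candidates(candidates: Sequence[str], score: Callable[[str], float]) -> list[str]:
    """Return candidates ordered from highest to lowest discriminator score."""
    return sorted(candidates, key=score, reverse=True)


# Hypothetical precomputed judge scores standing in for a model-based discriminator.
judge_scores = {"draft A": 0.2, "draft B": 0.9, "draft C": 0.5}

ranked = rank_candidates(list(judge_scores), judge_scores.get)
best = ranked[0]  # the candidate the discriminator prefers
print(ranked)
```

The generator can stay cheap and sample many drafts, since only the ranking step needs to be reliable.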

Inference and post‑training advances

- Decoding and planning: Meta’s Set Block Decoding (SBD) samples multiple future tokens in parallel, cutting forward passes 3–5× with no architecture changes and KV‑cache compatibility; trained models match standard NTP performance on next‑token prediction (summary). For agents, “always reasoning” (ReAct) isn’t optimal—new work trains models to learn when to plan, dynamically allocating test‑time compute to balance cost and performance (thread, paper context).

- Post‑training theory and results: “RL’s Razor” argues on‑policy RL forgets less than SFT—even at matched accuracy—by biasing toward KL‑minimal solutions, with toy + LLM experiments supporting reduced catastrophic forgetting (summary). A “Unified View of LLM Post‑Training” shows SFT and RL optimize the same reward‑with‑KL objective; Hybrid Post‑Training (HPT) switches between them via simple performance feedback and consistently beats strong baselines across scales/families (overview). On the empirical side, Microsoft’s rStar2‑Agent‑14B uses agentic RL to reach frontier‑level math (AIME24 80.6, AIME25 69.8) in just 510 RL steps, with shorter, more verifiable chains of thought (results).

GPU stacks, kernels, and platforms

- ROCm quality regression in PyTorch: Analysis alleges a growing deficit of ROCm‑only skipped/disabled tests (>200 each), with a net increase since June 2025; reports say even core transformer ops (e.g., attention) have been disabled for months, harming developer trust. AMD leadership has reportedly reprioritized fixes (report). PyTorch maintainers note broad test‑skipping is endemic and requires sustained contributor attention across subsystems (context, quip). Separately, PyTorch published a kernel deep‑dive on 2‑simplicial attention implemented in TLX (Triton low‑level extensions) (kernel post).

- Infra momentum and meetups: Together AI announced a $150M Series D led by BOND (Jay Simons to board) to scale inference infra (annc); Baseten also raised $150M Series D as it rolls out performance work and EmbeddingGemma support (annc). vLLM is hosting a Toronto meetup on distributed inference, spec decode, and FlashInfer (event) and already supports Kimi K2 deployments (support).

OpenAI ecosystem: ChatGPT branching, Responses API, and Codex

- Product/API shifts: ChatGPT now supports conversation branching (@gdb; @sama). OpenAI’s Responses API got an in‑depth explainer (thread); the AI SDK v5 now defaults the OpenAI provider to Responses (Completions remains available) (note). Some devs countered that Responses complicates context portability and stateless usage in practice (critique), while others observed improved “chain‑of‑thought preservation” in ongoing conversations vs Chat Completions (anecdote).

- Coding agents and GPT‑5 Pro: Multiple practitioners report GPT‑5 Pro inside Codex can unblock gnarly engineering problems with deeper, slower passes; “smarter” beats “faster” was the sentiment in a public exchange with Sam Altman (experience, follow‑up, @sama). The Codex CLI/IDE continues shipping rapidly (changelog).

Embeddings and retrieval move on‑device (and hit limits)

- Small, fast, local: Google’s new open‑source EmbeddingGemma got day‑0 platform support (e.g., Baseten), with reports of embedding 1.4M docs in ~80 minutes on an M2 Max for free and better quality than older large paid models (Baseten, field result). On‑device retrieval is getting easier: SQLite‑vec + EmbeddingGemma runs fully offline across languages/runtimes (guide).

- Single‑vector limits: New theory/benchmark “LIMIT” shows hard lower bounds on top‑k retrieval under fixed embedding dimensions, with SOTA models failing on deliberately stress‑tested simple tasks—evidence that some combinations of relevant documents are intrinsically unrecoverable with single‑vector embeddings, motivating multi‑vector/late‑interaction approaches (summary).

Top tweets (by engagement)

- “The ability to predict the future is the best measure of intelligence.” — @elonmusk

- Kimi K2‑0905 update (256k, coding/tool‑calling, agent integration) — @Kimi_Moonshot

- Qwen3‑Max‑Preview (Instruct), “over 1T parameters,” now live via Qwen Chat/Alibaba Cloud — @Alibaba_Qwen

- ChatGPT conversation branching now live — @gdb

- GPT‑5 Pro in Codex praised for solving hard coding tasks with deeper passes — @karpathy

- “Very requested feature!” (ChatGPT branching) — @sama

- ROCm regression in PyTorch testing — @SemiAnalysis_

- DeepMind’s “Deep Loop Shaping” improves LIGO gravitational wave detection — @demishassabis

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Kimi K2-0905 and Qwen 3 Max Launches + Early Demos

- Kimi-K2-Instruct-0905 Released! (Score: 729, Comments: 192): Release announcement for Kimi-K2-Instruct-0905 with an attached benchmark/leaderboard image comparing it to other LLMs (e.g., DeepSeek). The chart is presented as showing K2-Instruct-0905 performing near SOTA and ahead of DeepSeek, with a commenter calling out a “1t-a32b” variant, possibly indicating a notable configuration highlighted in the results. Image: https://i.redd.it/6jq7r55ak9nf1.png (preview: https://preview.redd.it/u97uhts0q9nf1.png?width=1200&format=png&auto=webp&s=7d65247fb861127f04dd422d2ae8885c748edabd). Commenters claim it’s “very close to SOTA” and “clearly beats DeepSeek,” while noting it may be larger; discussion centers on size–performance trade-offs and the strength of the “1t-a32b” variant.

- Performance claims: commenters assert Kimi-K2-Instruct-0905 is “very close to SOTA” and “beats DeepSeek” albeit being larger; treat as anecdotal until verified. Cross-check the benchmark chart shared in the thread (image) and the model card on Hugging Face for head-to-heads versus DeepSeek variants (e.g., V3/R1) on standard suites like MMLU, MT-Bench, GSM8K, and HellaSwag.

- Scale/architecture hints: references to a “trillion-parameter” open-source model and a “1T-A32B” variant suggest a MoE-style setup where total parameters can be ~1T while active parameters per token are far lower (e.g., tens of billions). Clarifying total vs active params, routing/expert count, and training token budget is key to interpreting claims that it outperforms smaller dense baselines like DeepSeek at higher compute. “1T-A32B” likely denotes a ~32B active slice within a ~1T total-parameter regime, but verify on the model card before comparing efficiency.

- Resources: official release is on Hugging Face: https://huggingface.co/moonshotai/Kimi-K2-Instruct-0905. Check the card for evaluation tables, context length, tokenizer details, and quantization/inference notes (e.g., int4/int8), as well as licensing and any hardware recommendations to reproduce reported benchmarks.
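To make the total-vs-active distinction concrete, here is a rough sketch. It assumes "1T-A32B" means about 1e12 total parameters with about 32e9 active per token (an unverified reading, as noted above) and uses the common rule of thumb of roughly 2 FLOPs per active parameter per generated token. The function name `moe_summary` is hypothetical.

```python
def moe_summary(total_params: float, active_params: float) -> dict:
    """Summarize a sparse MoE model from total and per-token active parameter counts."""
    if not 0 < active_params <= total_params:
        raise ValueError("expected 0 < active_params <= total_params")
    return {
        "active_fraction": active_params / total_params,
        # Rule of thumb (assumption): ~2 FLOPs per active parameter per token, forward pass only.
        "approx_flops_per_token": 2.0 * active_params,
    }


# Assumed reading of "1T-A32B": ~1e12 total parameters, ~32e9 active per token.
summary = moe_summary(total_params=1e12, active_params=32e9)
print(f"active fraction: {summary['active_fraction']:.1%}")
print(f"approx FLOPs per token: {summary['approx_flops_per_token']:.2e}")
```

Under these assumptions, per-token compute resembles a dense model of about 32B parameters, while storage still has to hold every expert.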

- Qwen 3 max (Score: 269, Comments: 93): Qwen 3 Max is now available via the OpenRouter model hub and a web preview at Qwen Chat (OpenRouter, chat.qwen.ai). Pricing on OpenRouter is tiered by context length: input USD 1.2 (≤128K) / USD 3 (>128K) and output USD 6 (≤128K) / USD 15 (>128K), implying support for contexts beyond 128K and placing it near frontier-model pricing tiers (e.g., Claude/GPT). Commenters note prior Qwen Max variants were closed-source and express hope this release will have open weights on Hugging Face; others remark the pricing positions it alongside top-tier proprietary models.

- Pricing details: Input is listed as $1.2 for contexts <128K and $3 for ≥128K; Output is $6 (<128K) and $15 (≥128K). Commenters note this places Qwen 3 Max’s cost structure close to Claude and GPT tiers, implying a frontier-model pricing posture and a separate long-context SKU at the 128K cutoff.

- Release/availability expectations: Prior “Qwen Max” was closed-source; commenters hope for a Hugging Face release but others suggest this one is likely API-only (not locally runnable) at launch. This indicates uncertainty about open weights and potential lack of immediate local quantizations (e.g., GGUF) for on-device inference.

- Model size speculation: One user infers Qwen 3 Max “must be bigger than 235B,” suggesting expectations of a very large dense model surpassing earlier Qwen baselines. This is unconfirmed, but if accurate it would put Qwen 3 Max in the top tier of parameter counts among 2024+ LLMs, aligning with its frontier-like pricing.
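The tiered pricing quoted in this thread can be turned into a quick cost estimate. This sketch makes several assumptions the thread does not confirm: prices are per million tokens, the tier is chosen by input length, "128K" means 128,000 tokens, and the boundary is inclusive. `Tier` and `estimate_cost_usd` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    max_input_tokens: float      # inclusive upper bound on prompt length (assumption)
    input_usd_per_mtok: float    # assumed to be USD per million input tokens
    output_usd_per_mtok: float   # assumed to be USD per million output tokens


# Prices as quoted in the thread; units and boundary handling are assumptions.
TIERS = [
    Tier(128_000, 1.2, 6.0),
    Tier(float("inf"), 3.0, 15.0),
]


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate one request's cost using the first tier whose bound covers the prompt."""
    tier = next(t for t in TIERS if input_tokens <= t.max_input_tokens)
    total = input_tokens * tier.input_usd_per_mtok + output_tokens * tier.output_usd_per_mtok
    return total / 1_000_000


print(f"{estimate_cost_usd(100_000, 2_000):.3f}")  # short-context tier
print(f"{estimate_cost_usd(200_000, 2_000):.3f}")  # long-context tier
```

Crossing the cutoff multiplies both rates by 2.5, so trimming a prompt to fit under the boundary can matter more than trimming output.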

- I’ve made some fun demos using the new kimi-k2-0905 (Score: 161, Comments: 24): OP showcases several demos built with the new kimi‑k2‑0905 using a single‑pass, AI‑generated prompt workflow that pairs Claude Code with kimi‑k2‑0905. The shared prompt resources are published as gists: gist 1 and gist 2; the demo video link on v.redd.it returns HTTP 403 without login, limiting independent verification (original link). A commenter proposes a capability stress‑test: ask the model to generate a full Game Boy emulator end‑to‑end. (Another non‑technical comment was ignored.)

- A commenter shared concrete prompt templates for kimi-k2-0905, linking two gists that appear to provide reusable prompt scaffolding and examples for consistent behavior and demo replication: https://gist.github.com/karminski/52a72d4726128c10a266bfb8270fe632 and https://gist.github.com/karminski/0435b69c6d8c93b4bd1724b64e43bd75. These resources are useful for standardizing system instructions/roles and I/O formatting when evaluating K2 across tasks.

- There’s a proposed stress-test: have K2 generate a full Game Boy emulator end‑to‑end. This would probe long‑horizon code generation, multi‑file project scaffolding, and hardware reasoning (instruction decoding, timing/cycle accuracy for CPU/PPU/APU, ROM loading), offering a stringent benchmark versus other frontier models.

- Multiple requests focus on head‑to‑head evaluation and tooling: comparing kimi‑k2‑0905 to Claude Opus and guidance for using K2 with Claude Code. Useful axes for comparison would include code generation pass@k, long‑context reliability, tool‑use quality, latency, and cost; integration with Claude Code would likely require an OpenAI/Anthropic‑compatible API layer or an adapter to map chat and tool-call schemas.
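The adapter idea in the last bullet can be sketched for tool definitions only. This assumes the commonly documented shapes: Anthropic-style tools carry `name`, `description`, and `input_schema`, while OpenAI-style chat tools wrap `name`, `description`, and `parameters` under `function`. Check both providers' current docs before relying on it; `anthropic_tool_to_openai` is a hypothetical helper, and a real bridge would also need to translate messages and tool-call results.

```python
from typing import Any


def anthropic_tool_to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Map an Anthropic-style tool definition to an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both formats describe arguments with JSON Schema, so the schema passes through.
            "parameters": tool.get("input_schema", {"type": "object", "properties": {}}),
        },
    }


# Illustrative tool definition (hypothetical, not taken from any real agent).
read_file = {
    "name": "read_file",
    "description": "Read a file from the workspace.",
    "input_schema": {
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
    },
}

converted = anthropic_tool_to_openai(read_file)
print(converted["function"]["name"])
```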

2. Open-Source LLMs: GPT-OSS 20B Home Server & Weekly Release Roundup

- Converted my unused laptop into a family server for gpt-oss 20B (Score: 176, Comments: 94): OP repurposed a 2021 MacBook Pro M1 Pro (16 GB unified RAM) as a 24/7 family LLM server running “gpt-oss 20B” via the llama.cpp server, reporting 46–30 tok/s, 32K context, ~1.7 W idle and ~36 W under generation; the 20B model + large context narrowly fits in 16 GB, so the system runs headless over SSH, with sleep/auto-updates disabled, Dynamic DNS for WAN access, and battery health managed while plugged in (native Apple charger measured more efficient than a generic GaN). The model is described as fast, concise, and compliant, but occasionally emits “very strange” factual errors; OP speculates possible weight corruption or low‑quality fine‑tuning. Comments request a setup guide and whether it’s bare‑metal, and ask about tweaks to improve responses; one user notes success on a non‑Mac by removing the battery and upgrading RAM to 32 GB. Another recommends LM Studio using Apple’s MLX stack and serving through Open WebUI in Docker for auth + web search, questioning if the OP avoided it due to the 16 GB constraint.

- Reproducibility hinges on sharing exact llama.cpp (repo) runtime parameters; commenters request flags like t (threads), ngl (GPU layer offload/Metal), c (context), b (batch size), plus the quantization (e.g., Q4_K_M vs Q5_K_M) and exact model variant. Reported throughput varies from ~8 tok/s (Ollama/LM Studio defaults) to a claimed ~40 tok/s on an M1/16GB; differences likely stem from quantization, GPU offload, and batching, so posting the full parameter set is essential for fair comparison.

- On Apple Silicon, several note better reliability/perf by using LM Studio with the MLX backend (MLX) versus other options; some pair this with Open WebUI in Docker for auth/search. Stack choice impacts speed and resource headroom: MLX/Metal acceleration and bare‑metal runs can beat containerized UIs and Ollama defaults, while Dockerized setups trade some performance for convenience/features.

- Hardware constraints are a key limiter for 20B inference: upgrading a PC laptop to 32 GB RAM (and removing the battery for 24/7 use) improved stability and enabled higher‑precision quants; Macs can’t upgrade RAM, making M1 16 GB notably constrained. This context helps explain why heavier UIs/backends may be avoided on low‑memory machines in favor of leaner llama.cpp servers.
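A back-of-the-envelope estimate shows why a 20B model "narrowly fits" in 16 GB and why 32 GB opens up higher-precision quants. The bits-per-weight values below are illustrative assumptions, not measured figures for any specific quantization, and the estimate ignores the KV cache, activations, and OS overhead. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 20e9  # nominal "20B"; the exact parameter count is not given in the thread

# Illustrative precisions (assumptions): ~4.5 bits for a 4-bit-class quant, then 8 and 16 bits.
for bits in (4.5, 8.0, 16.0):
    print(f"{bits:>4} bits/weight -> {weight_memory_gb(N_PARAMS, bits):.2f} GB")
```

At roughly 4.5 bits per weight the weights alone take about 11 GB, which leaves only a few gigabytes on a 16 GB machine for a 32K-token KV cache and the operating system.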

- List of open models released or updated this week on this sub, just in case you missed one. (Score: 220, Comments: 30): Weekly roundup highlights new/updated open models across tasks and scales: Moonshot AI’s Kimi K2‑0905; AI Dungeon’s Wayfarer 2 12B & Nova 70B (open-sourced narrative roleplay LLMs); Google’s EmbeddingGemma (300M) multilingual embedding encoder; ETH Zürich’s Apertus multilingual LLM (≈40%+ non‑English training data); WEBGEN‑4B web‑design generator trained on ~100k synthetic samples; Lille (130M) small LLM; Tencent’s Hunyuan‑MT‑7B & Hunyuan‑MT‑Chimera‑7B MT/ensemble models; GPT‑OSS‑120B benchmark updates; and Beens‑MiniMax (103M MoE) scratch‑built SFT+LoRA experiments. Coverage spans ~103M to ~120B params, with notable techniques/data mentions including synthetic data generation, MoE, multilingual emphasis, roleplay fine‑tuning, and translation ensembles. Comments note strong reception for Kimi; the WEBGEN team adds that a non‑preview release and more UIGEN models are forthcoming, and that 4B checkpoints serve as internal thermometers to validate their pipelines.

- Sparse-MoE drops stood out: Klear-46B-A2.5B-Instruct uses a 46B-parameter mixture-of-experts with only ~2.5B active per token, so per-token compute scales with the active experts, not the total size; similarly, LongCat-Flash-Chat 560B MoE pushes total params higher while keeping per-step cost bounded. For local inference, this means throughput is governed by the number of activated experts (and sequence length), while the weights of all experts still have to be stored, enabling large-capacity behavior on modest hardware if routing remains sparse and load-balanced.

- Specialized models expanded: Step-Audio 2 Mini (8B) adds open speech-to-speech capability; Neeto-1.0-8B targets medicine and reports 85.8 on a medical benchmark; and Anonymizer SLMs provide privacy-first PII replacement at 0.6B/1.7B/4B scales for edge/server use. Translation saw breadth with YanoljaNEXT-Rosetta and CohereLabs/command-a-translate-08-2025, while vision/mobile got attention via Apple’s FastVLM and MobileCLIP2 on Hugging Face.

- From a workflow perspective, the WEBGEN team notes they use ~4B models as an internal “thermometer” to validate training/inference pipelines before scaling up, which is a practical proxy for detecting regressions early. Separately, users plan to evaluate Gemma embeddings for clustering; for rigorous comparison, consider intrinsic (cosine separation, silhouette) and extrinsic metrics (NMI/ARI) against baselines like E5 or text-embeddings models.

3. AI/LLM Race Discourse and Meme Reactions

- The AI/LLM race is absolutely insane (Score: 189, Comments: 146): Meta-discussion noting the rapid cadence of LLM releases and infra over the last 3–6 months, especially code-focused and general models like Alibaba’s Qwen2.5-Coder, THUDM’s GLM-4, and xAI’s Grok-2, plus the rise of third‑party API platforms hosting heavier models (e.g., OpenRouter, Together). The OP frames it as a bubble-vs-platform-shift question, pointing to relentless iteration on throughput (“new way of increasing tps”), the shift from local to hosted inference, and heavy corporate CAPEX, layoffs/poaching, and M&A as signals of a high-velocity market regime. Top comments are split between enthusiasm (“These ARE the good old days”), a macro take citing UBS’s forecast of ~$0.5T AI investment in 2026 with ~60% YoY growth (arguing scale exceeds typical hype cycles), and moderation concerns noting the post may be off-topic for r/localllama.

- UBS is cited forecasting ~$0.5T in AI investment in 2026 with ~60% YoY growth, implying massive near-term demand for compute (GPUs/TPUs), high-speed interconnects (400G/800G), and power/cooling capacity. For local/edge LLMs, this scale-up could affect GPU availability/pricing and spur rapid infra buildouts, but also raises risk of overcapacity similar to past infrastructure cycles.

- Practitioner-run, task-specific benchmarks often fail to reproduce headline SOTA gains, suggesting many reported improvements are narrow, cherry‑picked, or brittle to prompt/distribution shifts. The discussion urges skepticism of single-paper claims and the casual use of “SOTA” as an objective yardstick, advocating rigorous replication, ablations of proposed components, and evaluation across diverse datasets/use cases to validate real capability gains.

- Some frame the current phase as a dot‑com‑like buildout focused on GPUs and extended context windows, where marketed advances (more VRAM, longer sequences) face practical limits: latency, cost, and quality degradation over very long contexts. The view is that a portion of perceived progress is aggressive market capture (subsidized usage now, price hikes later) rather than consistent, generalizable step-function model improvements.

- This is not funny…this is simply 1000000% correct (Score: 1697, Comments: 128): Non-technical meme critiquing current AI hype: it suggests companies (especially CEOs) push “AI” initiatives primarily to please markets and inflate stock prices, while job postings across roles now demand vague “AI experience” regardless of real need. The image contextualizes the broader trend of AI-washing—adding AI buzzwords to products, roadmaps, and hiring to signal innovation rather than deliver concrete value. Commenters note near-ubiquitous AI requirements in tech job ads and argue executives chase an “AI premium” independent of business benefit; some satirically suggest AI could replace CEOs, underscoring frustration with hype-driven leadership decisions.

- Thread consensus notes a hiring trend where “AI experience” is mandated across technical roles without specifying models, frameworks, or measurable outcomes, reflecting top‑down, market‑driven directives rather than concrete implementation plans. Comments suggest the business objective is headcount reduction and stock‑price signaling (“What do we need for that? AI!”) rather than validated ROI, with no discussion of benchmarks, latency/cost metrics, or deployed systems—indicating AI as a checkbox skill rather than a scoped technical requirement.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI-Broadcom Chips, Google Veo/Nano Banana, Nunchaku v1.0 Releases

- OpenAI set to start mass production of its own AI chips with Broadcom (Score: 522, Comments: 58): Reuters (citing the FT) reports that OpenAI will begin mass-producing its own custom AI accelerators with Broadcom, aiming to reduce reliance on Nvidia GPUs and lower training/inference costs while securing supply. This mirrors hyperscaler strategies (e.g., Google TPUs, AWS Trainium/Inferentia) but carries substantial risks around upfront NRE, manufacturing yield, time-to-market, and building/optimizing the software stack to fully utilize the hardware. Source: https://www.reuters.com/business/openai-set-start-mass-production-its-own-ai-chips-with-broadcom-ft-reports-2025-09-05/ Commenters characterize it as a high-risk, high-reward and ultimately “obvious” strategic move: smart if it works (cost/control advantages), but a massive gamble given the capital intensity and execution risk.

- Strategic rationale and risks: Commenters frame this as mirroring Google’s TPU play (custom accelerators to cut dependence on Nvidia and optimize TCO for training/inference), which could deliver workload-specific efficiency and capacity control. The downside is massive upfront NRE, tapeout/yield risk, long bring-up, and the need to mature a compiler/runtime and kernel library (XLA-like) to approach GPU-class performance; failure would strand significant capex. See TPUs for precedent: https://cloud.google.com/tpu

- Scope of deployment: The thread highlights that, per reporting, OpenAI aims to use the chip internally rather than sell it, i.e., “OpenAI planned to put the chip to use internally rather than make it available to external customers…”. This implies tight co-design with OpenAI’s training/research stack and no third‑party productization, reducing external support/validation burden but limiting amortization across customers and focusing optimization on their own models/pipelines.

- Manufacturing/competitiveness: Partnering with Broadcom suggests a full ASIC with advanced packaging/HBM; competitiveness vs GPUs hinges on process node, yields, memory bandwidth, interconnect, and software tooling. Beating H100/B200 on perf/W and cost-per-token would secure supply/cost advantages; missing those targets leaves high sunk costs. Reference GPU baselines: https://www.nvidia.com/en-us/data-center/h100/ and https://www.nvidia.com/en-us/data-center/blackwell/

- Google is on fire…Nano Banana & Veo are absolute game-changers (Score: 210, Comments: 14): Post hypes Google’s latest generative models, Veo (text-to-video) and Nano Banana (the nickname of the Gemini 2.5 Flash Image generation and editing model, not the on‑device Gemini Nano LLM), as “game‑changers.” Veo is Google/DeepMind’s video model for high‑fidelity, longer‑duration 1080p text‑to‑video with coherent motion, camera control, and style conditioning; see DeepMind: Veo. Comments highlight the realism of the demo and request explicit art‑style conditioning (e.g., “add Saturn Devouring his Son”), while another geopolitical remark is non‑technical and not directly relevant to model capabilities.

- Nunchaku v1.0.0 Officially Released! (Score: 305, Comments: 91): Nunchaku v1.0.0 ships a backend migration from C to Python for broader compatibility and adds asynchronous CPU offloading, enabling Qwen-Image diffusion to run in ~3 GiB VRAM with claimed no performance loss. New wheels and a ComfyUI node are available (release, ComfyUI node), plus a 4-bit, 4/8-step Qwen-Image-Lightning build on Hugging Face (repo); docs cover install/setup (guide). Roadmap: kick off Qwen-Image-Edit imminently and add Wan 2.2 support next. Commenters nudge prioritization toward Wan 2.2 over 2.1 and note enthusiasm for faster image generation workflows. One asks about Nunchaku compatibility with Chroma (examples currently show Flux), implying interest in broader model/runtime support.

- Questions center on model compatibility: can Nunchaku work with Chroma, given examples showcase FLUX? Others ask whether LoRA is supported for fine-tuning/adapters. This suggests users want broader model/runtime abstraction beyond the showcased FLUX pipelines.

- Multiple users request WAN 2.2 support (some preferring it over WAN 2.1), with one quoting the team’s reassurance that WAN 2.2 “hasn’t been forgotten” and that they are “working hard to bring support!”. Emphasis is on keeping parity with current model versions for state-of-the-art image generation; no concrete timelines or technical plan details were provided in-thread.

- Upgrade reliability: a tester reports the in‑app Manager update “almost never works,” often requiring manual uninstall/reinstall to reach new versions (e.g., v1.0.0). This points to packaging/update pipeline issues that may hinder smooth adoption and automated environments.

2. AI Robotics: Figure Home Chores and RAI Robomoto

- Will figure.ai take over home chores? (Score: 350, Comments: 223): Thread discusses whether Figure AI’s humanoids (e.g., Figure 01) could handle full-spectrum home chores. No benchmarks or implementation details are provided (linked video is behind Reddit login at v.redd.it); commenters define an MVP capability set: end‑to‑end laundry, mopping, dishwasher loading/unloading, cardboard breakdown, trash/bin logistics, and vacuuming, implying reliable mobile manipulation in unstructured homes (deformable-object handling, force‑controlled tool use, long‑horizon task planning, perception, and safety). A consumer price tolerance of $30–50k is cited if these tasks are executed robustly. Notable sentiments: strong willingness to adopt at the stated price if routine chores are solved; speculation that competent in‑home cooking plus drone ingredient delivery could reshape restaurant demand and last‑mile logistics; general hope for near‑term timelines without concrete evidence.

- The chore list (end-to-end laundry, mopping, dishwasher loading/unloading, cardboard flattening, trash logistics, vacuuming) implies hard requirements: robust deformable-object manipulation (cloth, bags, cardboard), tool use, appliance interfacing, long-horizon task planning, and home-scale navigation in clutter. Benchmarks highlight the gap: BEHAVIOR-1K long-horizon household tasks [https://behavior.stanford.edu/behavior-1k], iGibson [https://svl.stanford.edu/igibson], and Habitat [https://aihabitat.org] show success rates degrade in unstructured settings. Hitting acceptable cycle times (e.g., folding a basket in <10–15 min) and recovering from errors without human resets is as crucial as dexterity. The stated willingness to pay $30–50K suggests BoM targets of a mobile base + 1–2 arms + RGB-D sensors are viable only if reliability approaches appliance-like duty cycles.

- Multiple commenters flag the “certain conditions” demo gap: real homes vary widely (lighting, layouts, object novelties), so sim/demo policies must generalize and recover from failure. For 50–200-step chores, per-step reliability must be ≥99.9% to keep task success high (0.999^100 ≈ 90% vs 0.99^100 ≈ 37%), which far exceeds staged demo rates. This demands self-calibration, continuous mapping, grasp-under-occlusion, compliant control, and safety interlocks, with MTBF in tens of hours between human interventions, hence the “couple years” to appliance-grade deployment.

- Assistive and cooking scenarios raise the bar: force-limited compliant manipulation, food-safe materials, heat/grease handling, contamination-aware tool use, reliable multimodal interfaces, and robust environment understanding. Teleop-to-imitation systems like Mobile ALOHA show bimanual kitchen tasks under curated conditions [https://mobile-aloha.github.io], but end-to-end autonomy also needs pantry inventory tracking, recipe/temporal planning, and integration with delivery logistics (drones/robots), which face reliability and regulatory constraints. These requirements exceed today’s vacuum/mop robots and are the gating factors for impactful aging-in-place or blind-user assistance.
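The per-step reliability argument in this thread is simple compounding, and a few lines reproduce it. The sketch assumes every step succeeds independently with the same probability and that any single failure fails the chore (no recovery), which is a simplification. `task_success` and `required_per_step` are hypothetical helper names.

```python
def task_success(per_step: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent, identical steps."""
    return per_step ** steps


def required_per_step(target: float, steps: int) -> float:
    """Per-step reliability needed to reach a target whole-task success rate."""
    return target ** (1.0 / steps)


# The thread's 50 to 200 step range, at two per-step reliabilities.
for steps in (50, 100, 200):
    print(
        f"{steps:>3} steps: "
        f"p=0.999 -> {task_success(0.999, steps):.3f}, "
        f"p=0.99 -> {task_success(0.99, steps):.3f}"
    )

print(f"per-step needed for 90% over 100 steps: {required_per_step(0.90, 100):.5f}")
```

At 100 steps this gives roughly 0.905 for 99.9% per-step reliability and 0.366 for 99%, which is why error recovery matters as much as raw per-step accuracy.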

- Another day, another AI driven robomoto (Score: 321, Comments: 46): The Reddit post links to a short X/Twitter clip from the RAI Institute showcasing a riderless, AI-driven motorcycle performing basic balance/riding maneuvers (video). The post/video includes no technical details (e.g., control stack, sensors, training method, or quantitative performance/robustness metrics), and the mirrored Reddit-hosted video (v.redd.it) returns 403 to unauthenticated clients. Top comments are largely non-technical memes; the only semi-substantive point raised is curiosity about validation at higher speeds on a supersport platform and highway conditions.

- A recurring technical question was why quadrupeds (robot dogs) appear agile while humanoids look clunky. Commenters note quadrupeds benefit from passive/static stability and simpler gait planners, whereas bipeds are underactuated and require real‑time whole‑body control (ZMP/MPC), high‑bandwidth torque control, and reliable contact estimation; adding dexterous hands compounds the problem with ~28–40 DOF vs ~12–16 on many quadrupeds. Progress exists but is still brittle outside demos (see BD Atlas parkour for state of the art: https://www.youtube.com/watch?v=tF4DML7FIWk).

- On “strap it to a supersport,” prior art shows autonomous motorcycle control is feasible without a human rider’s body: Yamaha MOTOBOT rode an R1M at >200 km/h using GPS/IMU fusion and model‑based control of throttle, brake, clutch, shifting, and steering to induce roll via counter‑steering (https://global.yamaha-motor.com/showroom/technologies/ymrt/motobot/). The hard parts are low‑latency control under rapidly changing tire‑road friction and maintaining stability across low‑speed balance vs high‑speed dynamics; anthropomorphic actuation to “grab” the bike is unnecessary when drive‑by‑wire is available. Related balancing approaches on bikes (e.g., Honda Riding Assist) highlight how steering geometry and active control manage stability at low speeds: https://global.honda/innovation/robotics/experimental/riding-assist/.

3. AI Society: Inequality, Layoffs, Deepfakes, and Accessibility

- Computer scientist Geoffrey Hinton: ‘AI will make a few people much richer and most people poorer’ (Score: 216, Comments: 76): In a recent Financial Times interview, Geoffrey Hinton warns that current AI deployment will concentrate wealth and power in a small set of firms while reducing incomes for most workers, exacerbating inequality and social risk. He urges stronger oversight, safety research, and governance before further rapid roll‑out to mitigate labor‑market displacement and broader systemic harms. Sources: no‑paywall archive link, FT original paywalled. Top comments frame this as a continuation of capitalism’s widening wealth gap, with AI accelerating the trend; some read Hinton’s tone as ironically “more optimistic now.” Another thread asserts that concentrated gains are a deliberate feature benefiting incumbents, not an unforeseen bug.

- Several commenters highlight a structural tax asymmetry: hiring humans triggers payroll taxes (e.g., US employer FICA ~7.65% plus mandated benefits) while deploying robots/software incurs no payroll tax, effectively making automation’s total cost of ownership lower than labor for equivalent tasks. They argue this acts as a de facto subsidy accelerating capital–labor substitution and concentrating returns to capital owners, and reference ideas like a “robot tax” or shifting tax burdens from labor to capital to rebalance incentives (see IRS/SSA FICA overview: https://www.ssa.gov/pubs/EN-05-10003.pdf; policy debates: https://www.oecd.org/tax/tax-policy/taxation-and-the-future-of-work.htm).

- Another thread contends the unequal distribution of AI gains is not technologically inevitable but driven by institutional choices that tax labor-linked transfers heavily while taxing large wealth transfers (inheritances/capital gains) comparatively less, allowing AI-driven productivity to accrue primarily to asset owners. They frame this in terms of factor income shares and bargaining power, noting long-run declines in labor share as a warning signal (e.g., US nonfarm business labor share trend: https://fred.stlouisfed.org/series/PRS85006173), and propose reorienting tax/transfer systems toward wealth and capital income to avoid “neo-feudal” dynamics.

- Salesforce CEO confirms 4,000 layoffs ‘because I need less heads’ with AI (Score: 290, Comments: 69): Salesforce CEO Marc Benioff confirmed ~4,000 customer-support layoffs (reducing support headcount from ~9,000 to ~5,000), attributing the cuts to AI-driven efficiencies from its Agentforce system, saying “because I need less heads”, per CNBC. Salesforce says AI has reduced support case volume and it won’t backfill affected support engineer roles; internally, AI reportedly handles up to 50% of work. Top commenters argue firms are invoking AI to justify post-pandemic over-hiring corrections and to signal efficiency to investors (citing analyst Ed Zitron), predicting more AI-attributed layoffs as the current hype cycle deflates.

- Several commenters argue the 4,000 layoffs attributed to “AI efficiency” lack technical substantiation: no disclosed productivity metrics, automation coverage, infrastructure cost reductions, or model/inference choices. They note this mirrors a broader post‑pandemic overhire correction being reframed as AI‑driven without benchmarks (e.g., tickets handled per agent, leads per AE, cost‑to‑serve deltas). The absence of details like which models, fine‑tunes, or workflow automations actually replaced FTEs makes the claim hard to evaluate.

- An anecdote about building a personal CRM with AI underscores how LLM‑assisted scaffolding can accelerate CRUD apps and simple automations, potentially eroding the moat of generic SaaS. However, replacing Salesforce at enterprise scale requires non‑trivial capabilities (complex role hierarchies/ACLs, compliance (SOC 2/HIPAA), data model extensibility, integration throughput (ETL/event buses), observability, and SLAs), areas where DIY + LLM still imposes significant ongoing ops and reliability burden.

- Expectation of more firms citing AI for headcount cuts until the hype normalizes, absent hard ROI. Technical readers would expect quantifiable proof such as >X% workflow automation, ~$Y/seat license consolidation, or inference spend offset by labor savings; none were referenced, suggesting investor‑signaling rather than measured AI‑driven efficiency.

- An Update: Ben can now surf the web thanks to Vibe Coding in ChatGPT (Score: 1387, Comments: 77): A caregiver built a custom AAC/accessibility stack via “vibe coding” with ChatGPT that enables a nonverbal quadriplegic with TUBB4A-related leukodystrophy and severe nystagmus to browse content using a binary, two-button headband input with on-screen scanning selection. The system evolved from a phrase board to a media picker, a predictive‑text keyboard, and 8 custom games, culminating in search integrated directly into the keyboard so the user can type queries and independently retrieve images/YouTube videos; demo link: v.redd.it/6qzlngnab8nf1 (currently returns 403/auth‑gated). Implementation emphasizes low-vision, low-fine-motor constraints with binary input scanning and UI options sized/sequenced for minimal visual demand, all prototyped by a novice using ChatGPT for rapid iteration. Commenters encourage sharing/replicating the approach for other families and suggest enabling the user to co‑create via ChatGPT (user‑in‑the‑loop prompt engineering) to expand functionality.

- A commenter suggested shifting toward end-user programming by giving Ben direct access to ChatGPT so he can prototype and build his own tools/automations, noting that user-driven iterations often surface solutions others wouldn’t anticipate. This implies extending the current “Vibe Coding” workflow from caregiver-authored prompts to user-authored scripts/macros, increasing personalization and autonomy in assistive tech.

- Tech CEOs Take Turns Praising Trump at White House - “Thank you for being such a pro-business, pro-innovation president. It’s a very refreshing change,” Altman said (Score: 804, Comments: 207): At a White House event (reported as a Rose Garden dinner), multiple tech CEOs publicly praised President Trump’s stance as “pro‑business, pro‑innovation,” with Sam Altman (OpenAI) quoted as saying, “Thank you for being such a pro‑business, pro‑innovation president. It’s a very refreshing change.” The only source provided is a paywalled WSJ link; no agenda, policy commitments, participant list, or technical outcomes (e.g., regulatory changes, funding programs) are available from the shared materials. Top comments are overwhelmingly critical of CEOs’ integrity and of Altman personally, offering no technical or policy analysis; an image link is shared without context. Overall, the thread reflects skepticism toward corporate motives rather than substantive debate on tech policy.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

1. The AI Arms Race: New Models and Hardware Heat Up

- Qwen 3 Max Enters the Arena with Mixed Reviews: The new Qwen 3 Max model sparked speculation of having 500B to 1 Trillion parameters, with users in the Unsloth AI discord praising its creative writing abilities as superior to K2 and Sonnet 4. However, its high price and shortcomings in tool calls and logic-based coding were noted, while an official release announcement from OpenRouter highlighted its improved accuracy and optimization for RAG and tool calling.

- Hardware Wars Rage from Custom Silicon to Workstations: OpenAI is reportedly partnering with Broadcom on a custom AI chip to reduce its reliance on Nvidia, detailed in a Financial Times article. Meanwhile, engineers debated the merits of workstations, with one quipping that the DGX Spark is a toy compared to the more powerful DGX Station, and others speculated that Nvidia’s upcoming 5000 series may be a skip gen due to a lack of significant VRAM increases.

- Niche Models Cater to Specific Tastes: A new model named Glazer was released on Hugging Face and Ollama specifically to replicate the sycophantic personality of GPT-4 that some users miss. In a more experimental vein, a developer trained a micro-LLM on H.P. Lovecraft’s stories, producing what they described as quite promising Lovecraftian output, seen in this YouTube video.

2. Geopolitical Jitters and Corporate Policy Shake-Ups

- Anthropic Draws a Line in the Geopolitical Sand: A new Anthropic policy, first shared on X, restricts service to organizations controlled by jurisdictions where its products are not permitted, such as China. The move ignited debates across multiple Discords about whether the motivation was genuine national security concerns or simply corporate self-interest aimed at protecting market share.

- MasterCard’s AI Unleashes Compliance Chaos: MasterCard replaced its human fraud prevention team with an AI system that is now aggressively flagging merchants for obscenity rule violations, as detailed in Chapter 5.12.7 of their rulebook. The system’s insufficiently specified criteria have led to fees as high as $200,000, forcing merchants into a corner and highlighting the risks of automated policy enforcement without clear context.

- OpenAI Clarifies Responses API Reality: A developer posted a thread on X to bust widespread myths about the OpenAI Responses API, clarifying that it does not magically unlock higher model intelligence but is essential for building GPT-5-level agents. It was also confirmed that OpenRouter uses this API for most of its OpenAI models, making the clarification critical for developers building on the platform.

3. The Developer’s Dilemma: Choosing and Tuning the Right Tools

- Coding Assistants Clash in the IDE: Developers are fiercely debating the best AI coding tools, with many finding GPT-5 superior to Sonnet 4 due to its conciseness and lower tendency to hallucinate. The community is also split between Codex CLI, praised for its code quality, and Cursor Code, favored for its creative reasoning, with one user noting the optimal setup might be a $20/month Cursor subscription paired with a separate Claude Code plan.

- Engineers Wrangle LLMs with Prompts and Programs: In the OpenAI discord, users shared advanced prompt engineering techniques, advocating for token efficiency by cutting useless words and using bracket notation like [list] and {object} for abstraction. Elsewhere, developers using DSPy focused on a more programmatic approach, building voice agents and using frameworks like GEPA to optimize prompts for specific conversational tasks.

- Hardware Constraints Force Creative Solutions: A user on a 6GB GPU sought model recommendations for immersive roleplaying, leading to suggestions like Mistral Nemo Instruct and the quantized Qwen3-30B-A3B-Instruct-2507-MXFP4_MOE model. For developers with tight cloud budgets, another discussion highlighted using models with RoPE (Rotary Position Embedding) to build RAG applications that can handle context windows larger than what they were explicitly trained on.

4. Under the Hood: The Guts of GPU Programming and Performance

- Mojo and Zig Push Compiler Boundaries for Peak Performance: Engineers in the Modular community are chasing the dream of writing simple, Pythonic code that automatically compiles to SIMD instructions using Mojo and MLIR. This mirrors concerns in the Zig community over a new async IO approach where IO needs to haul around state now, fueling discussion on how next-gen language features like Mojo’s type system can solve these low-level performance challenges.

- Engineers Decode Low-Level CUDA and ROCm Mysteries: A deep dive revealed that the FP32 accumulator for FP8 matrix multiplication in Nvidia’s tensor cores is actually FP22, according to this paper. Other discussions focused on leveraging L2 cache persistence on the Ampere architecture for performance gains, detailed in a blog post, and tackling errors in rocSHMEM related to its ROCm-aware MPI requirements.

- Future-Forward Architectures Spark Niche Debates: Discussions explored brain-like Spiking Neural Networks (SNNs) after a member shared an explainer video. On the more practical side of performance, vLLM profiling revealed significant slowdowns caused by ‘Runtime Triggered Module Loading’, prompting an investigation into its root cause and potential workarounds.

5. User Blues: Platform Instability and UX Woes Create Headaches

- LMArena Buckles Under Unprecedented Traffic: The LMArena platform is struggling with major stability issues, as users report widespread image generation glitches, infinite loops, and a non-functional video arena bot. Compounding the frustration are newly implemented rate limits and login requirements, with one user complaining the change was bad ‘because most of us don’t want to’.

- APIs Sputter and Services Stumble Across Platforms: Users of the Perplexity PPLX API reported a spike in 500 Internal Server Errors, with the Playground also becoming non-functional. The instability extends to paying customers, as some Perplexity Pro users noted that the Grok 4 model was missing from their selector, while an OpenRouter user discovered that hitting the output token limit silently truncates responses.

- AI Assistants Flub the Job and Frustrate Users: Developers using Cursor’s Auto mode shared numerous complaints about its poor performance, including its inability to fix simple bugs and its tendency to type edits in the chat instead of applying them. One user who switched back to aider from Claude Code remarked that Anthropic have made some questionable changes, highlighting a broader sentiment that even top-tier tools are experiencing regressions.

Discord: High level Discord summaries

LMArena Discord

- Image Generation Plagued by Glitches: Users are reporting widespread issues with image generation, including persistent errors and infinite generation loops resulting in the ‘Something went wrong with this response’ message.

- Suggestions include adding more specific error messages to aid in troubleshooting, especially when the model appears confused by the prompt.

- Video Arena Bot Briefly Vanishes: The video arena bot experienced downtime but is now back online after a fix; users can use the bot with /video and a prompt in the specified channels <#1397655695150682194>, <#1400148557427904664>, or <#1400148597768720384>.

- A GIF tutorial on using the bot was shared here.

- Login Requirements Incite Ire: The new login requirements, especially the Google account requirement, sparked user concerns with one member pointing out the requirement was bad.

- This member explained this was ‘because most of us don’t want to’.

- Rate Limits Rankle Regulars: The recent implementation of rate limits on image generation, due to unprecedented traffic, has led to frustration, with confusion over whether the limits are intentional or a result of ongoing issues.

- Logged-in users will continue to enjoy higher limits, and more information about user login can be found here.

- Account Data Evaporates Erratically: Users reported instances of lost chat histories, particularly when not logged in, which raised concerns about data retention.

- One member suggested trying to restore the chats ‘if you use brave you might be able to restore them, i dont know about google’ and that the platform is likely using cloudflare.

Perplexity AI Discord

- Perplexity Pro Users Whine About Grok 4 Absence: Some Perplexity Pro users are missing Grok 4 in their model selector, and were advised to contact support to check if their Pro account was the enterprise version.

- A member suggested reinstalling the app might help resolve the issue, or checking with Perplexity support if it was assigned to the account, noting that university users were especially impacted.

- Arc Browser Bites the Dust: Users discussed the transition from Arc to Dia, with one noting that Arc hasn’t had a meaningful update in about a year and another expressing concern about the $15 charge for the browser.

- They added that a large fanbase of happy Arc users would be left behind with the transition to building agentic chromium, and complained that it should be cheaper than Perplexity Max.

- Qwen 3 Max Speculation Swirls: Members speculated on the specs of the upcoming Qwen 3 Max, anticipating parameters between 500B and 1 Trillion.

- One member argued that the models are free for consumers in exchange for Better Training Data and Big Community, adding that the community also drives model building by labeling, testing, and evaluating different model versions.

- PPLX API Melts Down: Multiple users reported receiving 500 Internal Server Errors on API calls and noted that the Playground was also non-functional.

- The users confirmed that no outage was reported on the status page, while one quipped that they’re going to pretend nothing happened after the service appeared to be working again, and another blamed increased usage.

- Comet Hits Usage Limits: Users are reporting that after using Comet Personal Search too much, it stops working, throwing the message You’ve reached your daily limit for Comet Personal Search. Upgrade to Max to increase your limit.

- Others noted that Comet is currently offered on the Paypal/Venmo deal or if you’re a student, and are sharing invite links in the discord, but it may be impacting API performance.

Unsloth AI (Daniel Han) Discord

- Postgres Dominates Complex Queries: While Qdrant excels in vector search, Postgres with pgvector is considered superior for handling complex database queries, sparking discussion on database suitability.

- A member linked to a tweet and humorously shared a Borat GIF, adding levity to the tech discussion.

- Local Sonnet’s Mammoth RAM Requirements: Running Local Sonnet demands a minimum of 512GB of RAM, highlighting significant hardware requirements for optimal performance.

- Even with 1TB of RAM, achieving full precision remains a challenge, leading to inquiries about Q8 fine-tuning as a potential solution, though dismissed as insufficient.

- DGX Spark: Toy or Treasure?: The community debated the merits of DGX Spark versus DGX Station, with one member quipping that Spark is a toy, Station is a workstation, while linking to the DGX Station product page.

- Despite its limitations, the DGX Spark was acknowledged for its attractive price and storage capacity, described as a good product.

- Qwen 3 Max Excels in Creative Writing: Evaluations of Qwen 3 Max highlighted its strengths in creative writing and roleplay, surpassing K2 and Sonnet 4 in member evaluations.

- However, its high price and perceived shortcomings in tool calls and logic-based coding tempered enthusiasm, positioning it as potentially overpriced.

- Glazer Mirrors GPT-4: A new model, Glazer, designed to replicate the sycophantic personality of GPT-4 that some users miss, was released to positive reception.

- It is available on Ollama via ollama run gurubot/glazer and on Huggingface in 4B and 8B versions.

LM Studio Discord

- 3090 Bug Overclocks 4090?: A user reported that their 3090 seemed to be causing their 4090 to draw excessive power, potentially due to a software bug.

- This resulted in higher temperatures, leading the user to typically undervolt to prevent overheating.

- Tentacle LORAs conquer Art Styles: A member created and shared a collection of LORAs, exploring various art styles, providing a link to the LORA template.

- These LORAs were described as stomped together, resulting in a tentacle-like shape, designed for artistic experimentation.

- 6GB GPU Owner needs roleplaying model: A user with a 6GB GPU sought recommendations for the best model for realistic and immersive roleplaying games.

- Suggestions included increasing CPU RAM to 64GB and utilizing models like Mistral Nemo Instruct or Qwen3-30B-A3B-Instruct-2507-MXFP4_MOE.

- Bionic Legs Flop?: A member inquired about consumer-priced bionic legs (exoskeletons), seeking real-world performance insights.

- Another member referenced a YouTube review indicating that they barely do anything and might even induce muscle atrophy.

- 5000 Series to skip VRAM?: Discussion arose around the potential for the new Nvidia 5000 series to be a skip gen, with minimal performance increases over the 4000 series.

- The lack of added VRAM was also a point of concern.

Cursor Community Discord

- GPT-5 Demolishes Sonnet 4: Members find GPT-5 superior to Sonnet 4 for coding, citing its conciseness and accuracy, albeit requiring more specific prompting, while Sonnet 4 tends to hallucinate more.

- Users appreciate GPT-5 as a valuable planner and discussion partner, especially when coupled with auto-implementation, because Sonnet 4 seems template-based.

- Codex CLI vs Cursor Code: Dueling Code Geniuses: The community is split between Codex CLI and Cursor Code, as some prefer Codex CLI’s superior code quality, while others favor Cursor Code’s creative thinking and reasoning abilities, as quality depends on prompt quality.

- A member unsubscribed from Cursor Code’s Max plan due to hallucinations, while others warn of Codex’s lower, harder-to-track rate limits, though some appreciate its suggestion system.

- Cursor’s $20/m Price Tag: Still a Steal?: Discussions revolve around the value of Cursor’s $20/month Pro plan, with users debating how quickly one might hit the usage limits.

- One user, finding it essential, canceled their Cursor subscription for Claude Code and Codex, suggesting a $20/month Cursor subscription paired with a Claude Code plan for inline editing and terminal usage is the optimal setup.

- Cursor Auto-Mode: Handle with Extreme Caution: Multiple users report issues with Cursor’s Auto mode, noting its poor performance, inability to fix simple bugs, and tendency to type edits in the chat instead of applying them.

- One user humorously illustrates Cursor’s overconfidence with a meme-like message generated by the tool, underscoring the need for thorough debugging.

OpenRouter Discord

- Qwen3-Max Gets Smarter: The latest Qwen3-Max model shows accuracy gains in math, coding, logic, and science tasks over the January 2025 version, according to this X post.

- The model is optimized for RAG and tool calling, lacks a dedicated ‘thinking’ mode, and is available for testing here.

- Fake OpenRouter Crypto on PancakeSwap: An OpenRouter-related cryptocurrency is a scam and not officially connected to OpenRouter.

- Users were warned after inquiring about the existence of an OpenRouter coin on PancakeSwap and its availability for trading.

- Anthropic’s Geopolitical Stance Debated: Members debated Anthropic’s blog post which restricts access from regions with ownership structures subject to control from countries where their products are not allowed.

- Some speculated whether the move was motivated by national security or market share protection.

- Output Tokens Capped at 8k: A user found that hitting the output token limit results in response truncation, with the stop reason flagged as ‘length’.

- The API restricts setting max_tokens beyond the model’s limit.

- OpenRouter Leverages OpenAI Responses API: A member inquired whether OpenRouter uses the OpenAI Responses API, referencing a tweet.

- It was confirmed that OpenRouter uses it for most OpenAI models.
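The silent truncation reported above (stop reason ‘length’) can be detected client-side. The sketch below assumes an OpenAI-compatible chat completion payload in which each entry of choices carries a finish_reason field; the sample payload and the helper name was_truncated are hypothetical and are not taken from the discussion.

```python
from typing import Any, Dict


def was_truncated(response: Dict[str, Any]) -> bool:
    """Return True if any choice stopped because it hit the output token limit.

    Assumes an OpenAI-compatible schema: response["choices"][i]["finish_reason"].
    """
    choices = response.get("choices") or []
    return any(choice.get("finish_reason") == "length" for choice in choices)


if __name__ == "__main__":
    # Hand-written sample payload for illustration only.
    sample = {
        "choices": [
            {"message": {"role": "assistant", "content": "partial answer"},
             "finish_reason": "length"}
        ]
    }
    if was_truncated(sample):
        print("Response hit the output token limit; consider a continuation request.")
```

Checking this field on every response is one way to surface truncation explicitly instead of passing a cut-off answer downstream.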

OpenAI Discord

- Slash Token Waste: A member advocates for token efficiency by filtering grammatically useless words and consolidating multiple words into useful ones in the prompt, claiming that in inference, wasted tokens = wasted resources.

- They argue that wasted tokens lead to accelerated amortization of components if you’re hosting and that politeness in AI prompts can increase environmental waste.

- Gemini 2.5 Pro Unlocks Unlimited Access: Google AI Studio now gives unlimited access to Gemini’s best model, 2.5 Pro along with other features like Imagen, Nano Banana, Stream Realtime, speech generation, and Veo 2.

- Some members are focused on LLMs, and some have found use for video editing, educational videos and recreating public domain.

- Claims of AGI Generate Carbon: Members discussed a blog post revealing the carbon generated by claims of AGI outpaces the token wastage used on please and thank you in ChatGPT, which can be found here.

- It suggests that the environmental impact of grand claims in AI may be more significant than previously thought, prompting discussions about sustainable AI practices.

- Engineering Manual Shared: A user named darthgustav shared a JavaScript code snippet outlining prompt engineering lessons, covering hierarchical communication with markdown, abstraction through variables, reinforcement in prompts, and ML format matching for compliance.

- The lessons aim to enhance clarity, structure, and determinacy in AI interactions, guiding tool use and shaping output more effectively.

- Abstraction via Bracketology: A user emphasizes teaching abstraction through bracket interpretation such as [list], {object}, and (option) within prompts.

- This approach aims to enhance clarity and structure, enabling more effective communication between the user and the AI, improving overall prompt engineering practices.

GPU MODE Discord

- Anthropic’s China Policy Draws Fire: A tweet revealed Anthropic’s new policy, restricting service to organizations controlled by jurisdictions where their products are not permitted (e.g., China).

- The ensuing debate questioned whether the policy reflects national security concerns or mere corporate self-interest.

- CUDA Newbies Convene on Triton: Newcomers sought guidance on learning Triton without prior CUDA or GPU experience, and received a recommendation to start with the official Triton tutorials.

- They further inquired about the necessity of reading the PMPP book for learning Triton.

- Profiling Reveals Slow Module Loading: During vLLM profiling, time is spent on ‘Runtime Triggered Module Loading’, though its precise meaning and how to avoid it during profiling are unclear, and a trace was shared.

- Analysis revealed that the FP32 accumulator designed for FP8 matrix multiplication in tensor cores is actually FP22 (1 sign bit, 8 exponent bits, and 13 mantissa bits), according to a paper (arxiv.org/pdf/2411.10958).

- rocSHMEM struggles with HIP kernels: A member is exploring rocSHMEM implementation similar to HIP kernels using load_inline, encountering errors related to ROCm-aware MPI requirements.

- Another member suggested trying ROCm/iris as a possible alternative while they investigate the issue.

- L2 Cache Persistence makes comeback: A blog post details performance gains on the Ampere architecture from using the L2 cache for persistent memory accesses.

- The corresponding code shows how to structure a CUDA project using CMake to streamline code organization.
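To make the FP22 accumulator layout mentioned above concrete (1 sign bit, 8 exponent bits, 13 mantissa bits), here is a minimal sketch that emulates it by clearing the low 10 mantissa bits of an FP32 value. Truncation (rather than rounding) is an assumption made for illustration, and the function names are hypothetical; this is not a model of the actual hardware datapath.

```python
import struct


def truncate_to_fp22(x: float) -> float:
    """Emulate a 1/8/13-bit float by zeroing the low 10 of FP32's 23 mantissa bits.

    Assumption: excess bits are truncated, not rounded.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= 0xFFFFFC00  # keep sign, 8 exponent bits, top 13 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def accumulate(values, start: float = 0.0, quantize=truncate_to_fp22) -> float:
    """Sum values, quantizing the running accumulator after every add."""
    acc = quantize(start)
    for v in values:
        acc = quantize(acc + v)
    return acc


if __name__ == "__main__":
    small = [2.0 ** -14] * 8
    print("emulated FP22 accumulator:", accumulate(small, start=1.0))
    print("exact sum:", 1.0 + sum(small))
```

In this emulation, increments below the 13-bit mantissa resolution are lost on every add, which illustrates why a narrower accumulator can matter for long reductions.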

Latent Space Discord

- OpenAI Designs Custom AI Chip: The Financial Times reports that OpenAI partnered with Broadcom to co-design a custom AI chip, with mass production slated to start next year, indicating a move away from Nvidia dependency at a cost of around $10B; see article.

- Community reactions varied from skepticism about the chip’s quality to speculation that OpenAI will out-compete its own customers.

- Mercor fields $10B Pre-emptive Offers: AI-hiring startup Mercor has received unsolicited offers valuing it at ~$10B—5× its June 2025 Series B price—just four months later, see tweet.

- The news has spurred jokes about the AI-funding frenzy.

- Augie raises $85M Series A for AI Logistics: Augment (Augie) announced an $85M Series A—bringing total funding to $110M in just 5 months—to scale their AI teammate built for the $10T logistics sector, see announcement.

- Augie already helps freight teams handling $35B+ double productivity by orchestrating end-to-end order-to-cash workflows across email, calls, Slack, TMS and more.

- Responses API Myths Busted: A thread clarified widespread confusion about the OpenAI Responses API, debunking myths that Responses is a superset of Completions, can be run statelessly, and unlocks higher model intelligence & 40-80% cache-hit rates, see thread.

- Developers stuck on Completions are urged to switch to Responses for GPT-5-level agents, with pointers to OpenAI cookbooks.

- AI Engineer CODE Summit Slated for NYC: The AI Engineer team is launching its first dedicated CODE summit this fall in NYC, gathering 500+ AI Engineers & Leaders alongside top model builders and Fortune-500 users to unpack the reality of AI coding tools, see announcement.

- The summit is invite-only, with two tracks (Engineering & Leadership), no vendor talks, and a CFP open until Sep 15, aiming to celebrate PMF (Product-Market Fit) while addressing MIT’s statistic that 95% of enterprise AI pilots fail.

DSPy Discord

- DSPy Powers Budding Voice Agents: Members discussed building voice agents with DSPy and explored using GEPA to optimize prompts for frameworks like Livekit and Pipecat.

- One member suggested using the optimized prompt from GEPA as a straightforward string, while acknowledging that this might feel anti-DSPy.

- GEPA flexes Prompt Optimization Muscles: While DSPy creators might cringe at the term prompt optimization, tools like GEPA can indeed be used for this purpose and Groq was recommended for inference.

- For prompt creation, it was suggested to setup a Rubric type judge to assess generated responses, especially at the conversation level.

- Multi-Turn Musings Spark DSPy Conversational Capabilities: While a member found no satisfying implementation of multi-turn conversations with DSPy or RL applications like GEPA or GRPO, DSPy is fully capable of handling multi-turn conversations using dspy.History.

- However, it was cautioned that defining examples well is crucial, as it’s easy to introduce bias when building chat systems.

- RAG and Fine-Tuning face off in Memory Game: The discussion addressed how to equip voice agents with extensive information (hours, services, pricing, etc.) without runtime latency, with some approaches being fine-tuning or retrieval.

- While fine-tuning can build in memorization, it’s a big job, and simple functions or maps (like hours of operation) don’t need a vector database or RAG.

- Token Streaming Rides the Wave: Members explored the impact of streaming responses (token by token) on user experience, with a key focus on minimizing Time To First Token (TTFT).

- While streaming doesn’t reduce TTFT, it enhances user perception by providing immediate feedback, and libraries like Pipecat already stream frames.
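The streaming point above can be illustrated with a minimal sketch. It assumes a hypothetical `fake_token_stream` generator standing in for a model (the delays are made up, and no real Pipecat or Livekit API is used). It measures Time To First Token and total time separately, which shows why streaming improves perceived latency without changing TTFT itself.

```python
import time
from typing import Iterator, List, Tuple


def fake_token_stream(
    tokens: List[str], first_delay: float, per_token_delay: float
) -> Iterator[str]:
    """Hypothetical stand-in for a model: waits, then emits tokens one by one."""
    time.sleep(first_delay)  # simulated prefill / queueing time before token 1
    for i, tok in enumerate(tokens):
        if i > 0:
            time.sleep(per_token_delay)  # simulated decode time per token
        yield tok


def measure(stream: Iterator[str]) -> Tuple[float, float, str]:
    """Return (ttft_seconds, total_seconds, full_text) for a token stream."""
    start = time.perf_counter()
    ttft = None
    parts: List[str] = []
    for tok in stream:
        if ttft is None:
            ttft = time.perf_counter() - start  # first token arrived
        parts.append(tok)
    total = time.perf_counter() - start
    return (ttft if ttft is not None else total), total, "".join(parts)


if __name__ == "__main__":
    ttft, total, text = measure(
        fake_token_stream(["We ", "open ", "at ", "9."], 0.05, 0.02)
    )
    # A non-streaming client shows nothing until `total`;
    # a streaming client can start speaking or rendering at `ttft`.
    print(f"TTFT={ttft:.3f}s total={total:.3f}s text={text!r}")
```

In this sketch the first token arrives at the same moment whether or not the client streams; the gain is that the user sees or hears output at `ttft` rather than at `total`.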

Moonshot AI (Kimi K-2) Discord

- Kimi API Credits Arriving: The Kimi giveaway winner was notified that API credits are incoming.

- Credits were anticipated to arrive within the hour, arranged by the crew.

- Anthropic API Absent on Kimi: A user asked about the availability of the Anthropic API on the new model, but it was clarified that kimi-k2-turbo-preview points to -0905.

- This indicates the Anthropic API is not currently integrated into the new model.

- Kimi’s 0905 Model Launches: The turbo model now utilizes the 0905 model, updated from the 0711 model.

- Some users find the new K2 model overly poetic, while others find it to be more detailed and better.

- Kimi Team’s Lofty Ambitions: Despite being a smaller team compared to Grok/OAI, the Kimi team harbors big dreams and has a big model.

- A member noted that smaller companies often offer more user interaction.

- Coding Improvements Confuse Kimi Users: Users express confusion over the emphasis on coding improvements in the new Kimi K2 model.

- Opinions diverge, with one user preferring 0711 over 0905.

Nous Research AI Discord

- Spiking Neural Networks Mimic the Brain: Members shared a YouTube video discussing Spiking Neural Networks (SNNs) and their similarities to the human brain.

- Another member mentioned image sensors that work closer to how the human eye does, linking this video.

- Meta Wristband Controls Smart Glasses: Meta plans to release a wristband that reads body electrical signals to control smart glasses, according to this Nature article.

- No further details were discussed.

- Hermes Plays Super Conservative Holdem: A member observed that Hermes exhibits extremely OOD unique behavior in the husky holdem benchmark.

- The member noted it plays super conservative in a way no other model does.

- Micro-LLM Channels Lovecraft: A member’s experiments with a micro-LLM trained on H.P. Lovecraft’s stories produced quite promising output; see the YouTube video.

- They also speculated that a 3 million parameter model could become a light chat model with the right dataset and sufficient training.

- NVIDIA Unleashes SLM Agents: A member shared NVIDIA’s research on SLM Agents (project page) and the accompanying paper (arxiv link).

- No further details were provided.

Modular (Mojo 🔥) Discord

- Zig’s Async IO Faces Doubts: Concerns arise in other language communities regarding the viability of Zig’s new approach to async IO, mentioning that IO needs to haul around state now, referencing this discussion in Ziggit.

- It was suggested that Mojo’s type system and effect generics may address some of the underlying problems.

- SIMD Nirvana: Pythonic SIMD Approaches: Members discussed the goal of writing simple, Pythonic code that automatically compiles to SIMD instructions, using Mojo and MLIR for optimal parallelized assembly without relying on LLVM to correctly vectorize code.

- One member dreamed of for loops automatically compiled for parallel processing, utilizing hardware capabilities effectively.

- Compiler Needs Input Data Shapes For Vectorization: To fully vectorize code, especially loops, the compiler requires sufficient information about input data shapes or must perform speculation to identify hot loops, clarifying that Mojo encourages the use of portable SIMD libraries.

- It was noted that scalar and vector operations can ideally run simultaneously on CPUs and AMD GPUs due to separate execution resources.

- GPU Kernel Maturity: Check-up: A member inquired about the maturity of writing GPU kernels in Mojo, specifically implementing a Mamba2 kernel for use in PyTorch, and was pointed to Modular’s custom kernels tutorial.

- MAX (Modular’s API) is not primarily targeted at training but is viable for inference, with MLA already implemented for inference (see GitHub).

- Span Abstraction Dream: A member wishes for Span (a contiguous slice of memory abstraction) to be an easily usable, auto-vectorized tool, with algorithms that work on NDBuffer (being ported to LayoutTensor) as part of Span.

- They observed that existing implementations are manual and parametrized with hardware characteristics, lacking sufficient compiler magic.

Eleuther Discord

- MasterCard’s AI Flags Obscene Material: MasterCard replaced fraud prevention staff with an AI system that is triggering conflicts with merchants over obscenity rule enforcement; details are available in Chapter 5.12.7 of mastercard-rules.pdf.

- The system flags more transactions as obscene, with fees reaching up to $200,000 per violation and $2,500 daily for noncompliance, incentivizing merchants to avoid admitting fault.

- Lacking Criteria Plagues Fraud Prevention: The automated fraud prevention issue stems from insufficiently specified criteria in obscenity rules, with no clear examples of safe items, resulting in confusing gradients for the LLM.

- The discussion focused on the need to clarify unwritten policies and approaches to avoid issues caused by automated enforcement without adequate context.

- Brand Risk Drives Over-Enforcement Tactics: Pressure from mutual funds to mitigate brand risk, such as board diversity targets, leads to over-enforcement within MasterCard’s fraud department, impacting merchants.

- MasterCard’s focus on concealing issues hinders the development of useful monitoring metrics: any flaw a metric uncovers becomes a problem that has to be solved, so staff protecting their careers have little incentive to look for one.

- Endorsement Request Sparks Concern: A researcher’s request for endorsements for an arXiv paper on semantic drift raised suspicion due to recent cases of AI-induced psychosis.

- The concerns stemmed from the use of terms associated with AI-generated nonsense, prompting a request to share the paper for review.

- Community Ponders GRPO Baseline: Members discussed the possibility of using a GRPO baseline for an upcoming project.

- The idea came about when one member asked “did you have an GRPO baseline?” and the other responded “no, this will be next.”

HuggingFace Discord

- Anthropic’s Policy Raises Self-Interest Questions: Members debated if Anthropic’s new policy prohibiting access from organizations in restricted jurisdictions is motivated by national security or corporate self-interest.

- The discussion focused on the rationale behind restricting access based on ownership structures, sparking questions about corporate control.

- Reward Weighting Gets Deciphered in RL Studies: Members sought studies on the benefits of weighting reward functions during RL to avoid uninformed experimentation.

- One member shared a document regarding reward weighting in RL.

- Attention Bias Gets Explored to Train Causal Models: A member requested advice on modifying causal model training with SFTTrainer to add attention bias for specific words, referencing the Attention Bias paper.

- Suggestions included checking specific terms/tokens against common tokenizers and considering alternative approaches for loss calculation and gradient signal control.

- RoPE Technique gets Employed to Rescue RAG Context: Members exchanged tips for building RAG applications with LLMs under very limited context sizes (4096 tokens).

- One tip involved using models with RoPE and fine-tuning them with a larger context size, referencing this repo and emphasizing that RoPE enables models to perform well even on context it hasn’t been trained on.

- Enron Emails get Parsed into Parquet: A member uploaded their parser for the Enron email dataset, resulting in 5 structured parquet files, including Emails, Users, Groups, Email/User junction, and Email/Group junction.

- Parent and child emails have been parsed, and duplicates are managed both by file and message hashes/caches, with all messages included as MD5 hash objects.
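As a rough illustration of hash-based deduplication like that described above, here is a minimal sketch. The normalization step and the record layout are assumptions made for the example and are not taken from the member's parser.

```python
import hashlib
from typing import Dict, Iterable, List


def message_hash(raw: str) -> str:
    """MD5 hex digest of a message after light normalization (assumed rule)."""
    normalized = raw.replace("\r\n", "\n").strip()
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


def dedupe(messages: Iterable[str]) -> List[Dict[str, str]]:
    """Keep the first occurrence of each message, keyed by its MD5 hash."""
    seen = set()  # cache of hashes already emitted
    unique: List[Dict[str, str]] = []
    for raw in messages:
        digest = message_hash(raw)
        if digest in seen:
            continue  # duplicate body, skip it
        seen.add(digest)
        unique.append({"id": digest, "body": raw})
    return unique


if __name__ == "__main__":
    rows = dedupe(["See you at 3pm.\r\n", "See you at 3pm.\n", "Cancelled."])
    for row in rows:
        print(row["id"], repr(row["body"]))
```

MD5 serves here only as a content fingerprint for spotting duplicates, not for any security purpose.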

tinygrad (George Hotz) Discord

- Digital Ocean MI300X Stable Diffusion Fails: Users had errors running the stable_diffusion.py example on a Digital Ocean MI300X GPU instance, tracing back to some z3 issue.

- The failure wasn’t reproducible on a Mac, though mnist_gan.py was tested successfully.

- AMD_LLVM=1 Causes TypeError During MNIST Training: A TypeError occurred involving unsupported operand types (BoolRef) when using AMD_LLVM=1 during a simple mnist training loop.

- George Hotz suggested trying IGNORE_OOB=1, linking it to a possible z3 version issue, noting that some overloads added in z3>=1.2.4.0 might be the cause, and provided a link.

- Kernel Removal Project Seeks Contributors: A user expressed interest in contributing to the kernel removal project within Tinygrad.

- The scope of potential contributions was not clarified, but presumably would involve slimming the kernel surface.

aider (Paul Gauthier) Discord

- Warp Code Gets Love: Users are praising Warp Code, with one user noting that Warp feels like the difference between driving a stick and manual.

- Warp is useful when you don’t know files and want to get the sense of a new codebase via embeddings search.

- Aider Still Shines: A user who switched from aider to Claude Code months back has switched back, finding that Anthropic have made some questionable changes.

- The user now prefers aider for its simplicity, and uses Gemini 2.5 Pro, Gemini Flash, and Qwen3 Coder along with /run to replicate Claude Code’s plan mode.

- Run command is a Killer Feature: The /run in aider is a major feature for a user, and they noted Aider is good when you know better what files you want to work with.

- They also enquired where they can see Aider’s success stories.

- Coding Agent Undergoing Refactoring: A member is refactoring their own coding agent, inspired by Aider, to learn more about AI system design.

- They already have a small proof of concept, but are now reading a tutorial to see what others do for a similar project.

- Code Validation Advice Sought: A member is seeking advice on how to prevent dangerous code (env leakage, rm -rfs, network requests, etc.) from being generated in any language.

- They considered a TreeSitter based validator, and asked how Aider avoids these issues, requesting pointers to relevant files in the repo.
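One way to picture a syntax-tree validator of the kind the member was considering is sketched below. It is not how Aider handles the problem, and it swaps TreeSitter for Python's standard `ast` module so that it runs without extra dependencies, which means it only covers Python source. The denylists are illustrative assumptions.

```python
import ast
from typing import List, Tuple

# Illustrative denylists (assumptions, far from exhaustive).
BLOCKED_IMPORTS = {"socket", "requests", "urllib", "http", "subprocess"}
BLOCKED_ATTRS = {
    ("os", "system"),
    ("os", "environ"),
    ("os", "remove"),
    ("shutil", "rmtree"),
}


def find_risky_nodes(source: str) -> List[str]:
    """Return human-readable findings for denylisted imports and attributes."""
    found: List[Tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] in BLOCKED_IMPORTS:
                    found.append((node.lineno, f"import {alias.name}"))
        elif isinstance(node, ast.ImportFrom):
            if node.module and node.module.split(".")[0] in BLOCKED_IMPORTS:
                found.append((node.lineno, f"from {node.module} import ..."))
        elif isinstance(node, ast.Attribute) and isinstance(node.value, ast.Name):
            if (node.value.id, node.attr) in BLOCKED_ATTRS:
                found.append((node.lineno, f"{node.value.id}.{node.attr}"))
    # ast.walk does not guarantee source order, so sort by line number.
    return [f"line {line}: {what}" for line, what in sorted(found)]


if __name__ == "__main__":
    sample = "import os\nimport socket\nos.system('rm -rf /tmp/x')\n"
    for finding in find_risky_nodes(sample):
        print(finding)
```

A static denylist like this is easy to bypass (for example through `import os as o` or `getattr`), so it is best treated as a first filter alongside sandboxed execution, not as a guarantee.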

Yannic Kilcher Discord

- Reviewing Paper Baselines Presents Challenges: A member requested general guidelines for approaching baselines presented in research papers, particularly when unfamiliar with the dataset.

- The member expressed uncertainty about judging performance without sufficient knowledge of the dataset, implying a need for more background research.

- LoRA Adds Instead of Replaces Original Weights: A member asked why LoRA trained layers are added instead of replacing the original weight matrix, noting the contrast with other efficient processes like depthwise convolutions.

- The member sought a paper, article, or reasoning to explain this design choice, rather than replacement, and mentioned having an intuition on the matter.
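As background for the question above, the LoRA formulation keeps the pretrained matrix W0 frozen and learns a low-rank update, giving an effective weight of W0 + (alpha / r) * B * A with B initialized to zero. The sketch below uses plain Python lists and made-up numbers. It shows one consequence of adding instead of replacing: at initialization the adapted layer reproduces the pretrained layer exactly. It is not a summary of any answer given in the channel.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(
    w0: Matrix, b: Matrix, a: Matrix, alpha: float, r: int
) -> Matrix:
    """Return W0 + (alpha / r) * B @ A, where W0 is frozen and B, A are trained."""
    delta = matmul(b, a)  # low-rank update, same shape as w0
    scale = alpha / r
    return [
        [w0[i][j] + scale * delta[i][j] for j in range(len(w0[0]))]
        for i in range(len(w0))
    ]


if __name__ == "__main__":
    w0 = [[1.0, 2.0], [3.0, 4.0]]   # pretrained weights (made-up values)
    a = [[0.5, -0.5]]               # r x k, randomly initialized in practice
    b_init = [[0.0], [0.0]]         # d x r, zero at initialization
    # With B = 0 the update vanishes, so the pretrained behavior is preserved.
    print(lora_effective_weight(w0, b_init, a, alpha=2.0, r=1))
    # After training, B is non-zero and the update shifts the frozen weights.
    print(lora_effective_weight(w0, [[1.0], [2.0]], a, alpha=2.0, r=1))
```

Replacing W0 with B * A alone would discard the pretrained weights and cap the layer at rank r, whereas the additive form trains only r * (d + k) parameters on top of a full-rank frozen matrix.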

Manus.im Discord

- AI Politeness Gets Scientific Backing: A user shared a paper providing scientific evidence that being polite to AIs matters.

- The discussion centered around whether acting nicely towards AIs results in more cooperative behavior.

- Demand for Scientific Validation of AI Politeness: Users expressed a desire for scientific proof that politeness influences AI behavior.

- This aligned with the shared arxiv link, suggesting a community interest in understanding the impact of human-AI interaction styles.

LLM Agents (Berkeley MOOC) Discord

- AI Agents Curriculum Plans for 2025 Examined: A member inquired whether the 2025 Fall curriculum would mirror the 2024 Fall curriculum’s focus on Introduction to AI Agents.

- They explicitly requested a link to join the course, suggesting they are seeking registration or access details.

- Fall 2025 enrollment: The user is specifically looking for information on how to join the Fall 2025 course.

- They explicitly requested the link, and will be awaiting course joining details.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1100 messages🔥🔥🔥):

Image generation issues, Video arena bot down, Login requirements, Rate limits, Account data loss

- Image Generation Glitches Rampant: Users reported widespread issues with image generation, including persistent errors and infinite generation loops, with many experiencing the dreaded ‘Something went wrong with this response’ message.

- A member pointed out that ‘Sometimes the model is confused with the prompt and gives the same error… We seriously need to have more error feedback’, suggesting the need for more specific error messages.

- Video Arena Bot grounded by Glitches: The video arena bot is currently down, and the team is actively working to resolve the issues; however, there is no ETA for when it will be back online.

- One member quipped that ‘The video bot currently isn’t working. Trying to use it in different channels isn’t going to work. Even if the bot was working properly you’re unable to use it in this channel.’

- Login Requirements Trigger Tantrums: The introduction of login requirements, particularly the Google account requirement, has caused concern among users.