Claude is all you need.

AI News for 9/26/2025-9/29/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (196 channels, and 15992 messages) for you. Estimated reading time saved (at 200wpm): 1286 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

Special mentions go out to John Schulman’s Thinking Machines blog post on LoRA, OpenAI launching Instant Checkout in ChatGPT and the Agentic Commerce Protocol with Stripe, and DeepSeek announcing big price cuts for V3.2 with a new Sparse Attention algorithm, all of which will be overlooked because…

Anthropic chose today to drop an entire week’s worth of launches on one single day:

-

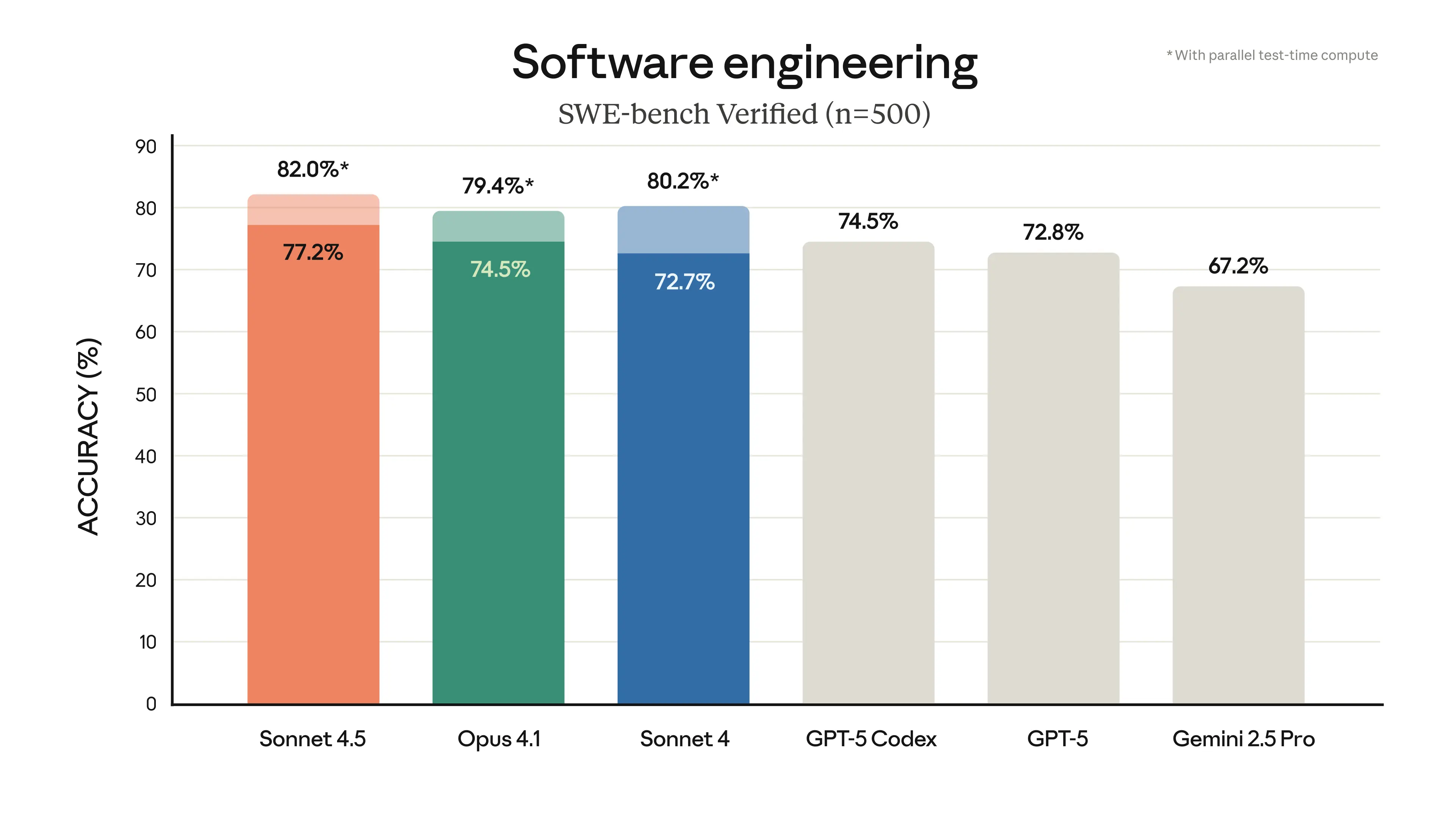

Claude Sonnet 4.5: SOTA SWE-Bench Verified at 77.2% (with parallel TTC 82%)

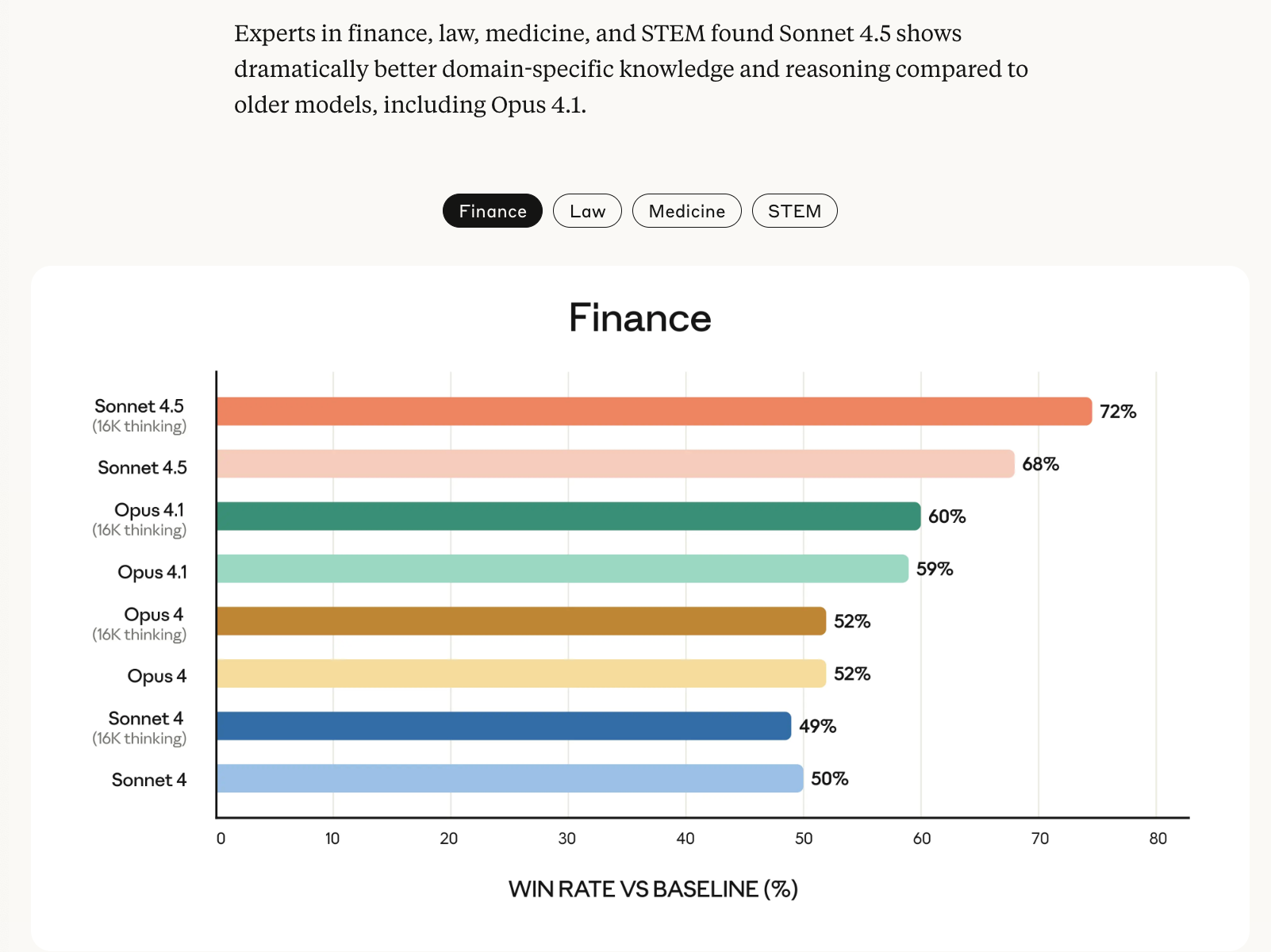

including a new focus on improvements in finance, law and STEM:

-

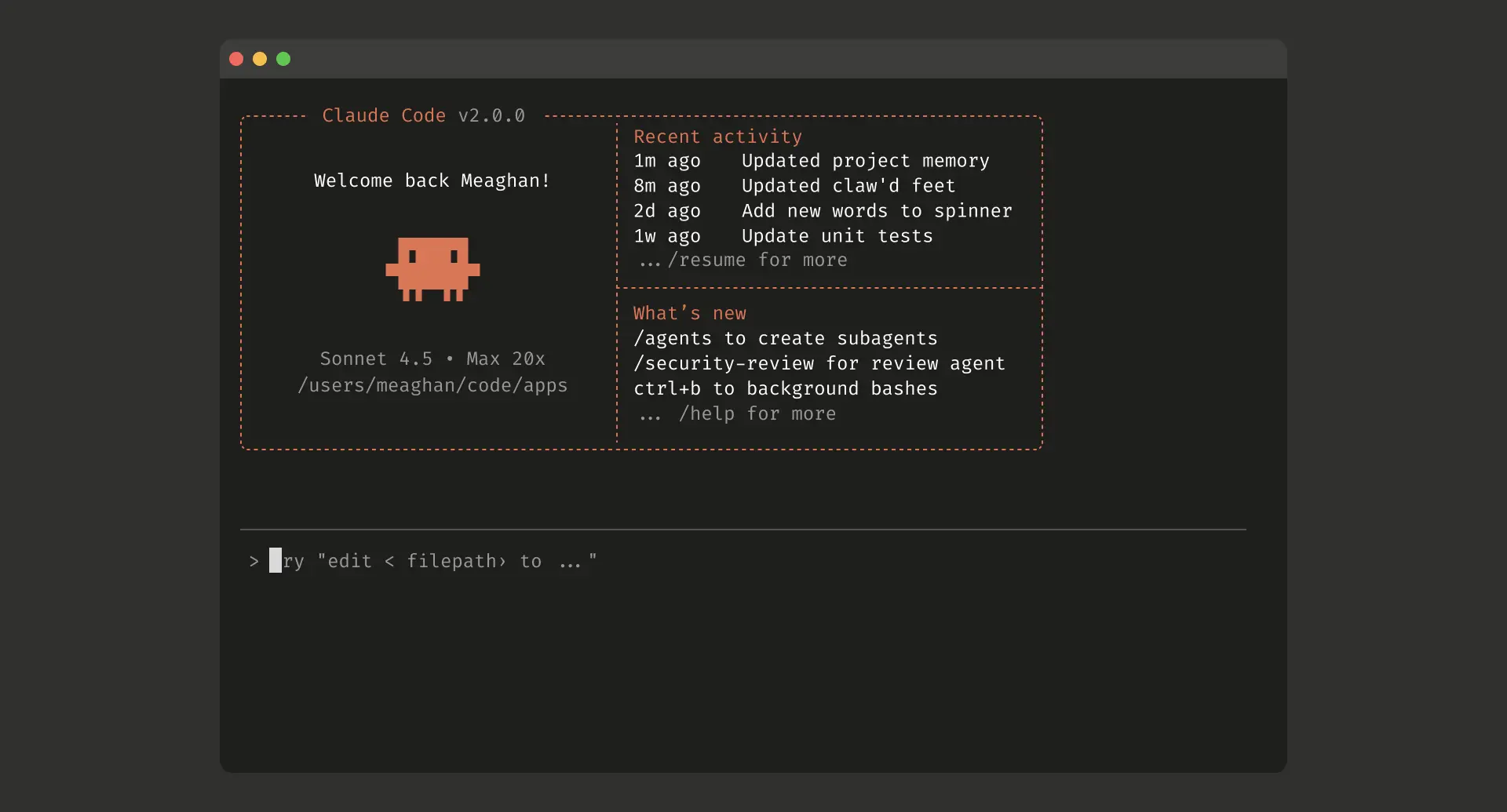

Claude Code v2

-

checkpoints—one of the most requested features—that save your progress and allow you to roll back instantly to a previous state.

-

a refreshed terminal interface

-

and shipped a native VS Code extension (design story here)

-

-

-

Claude API:

-

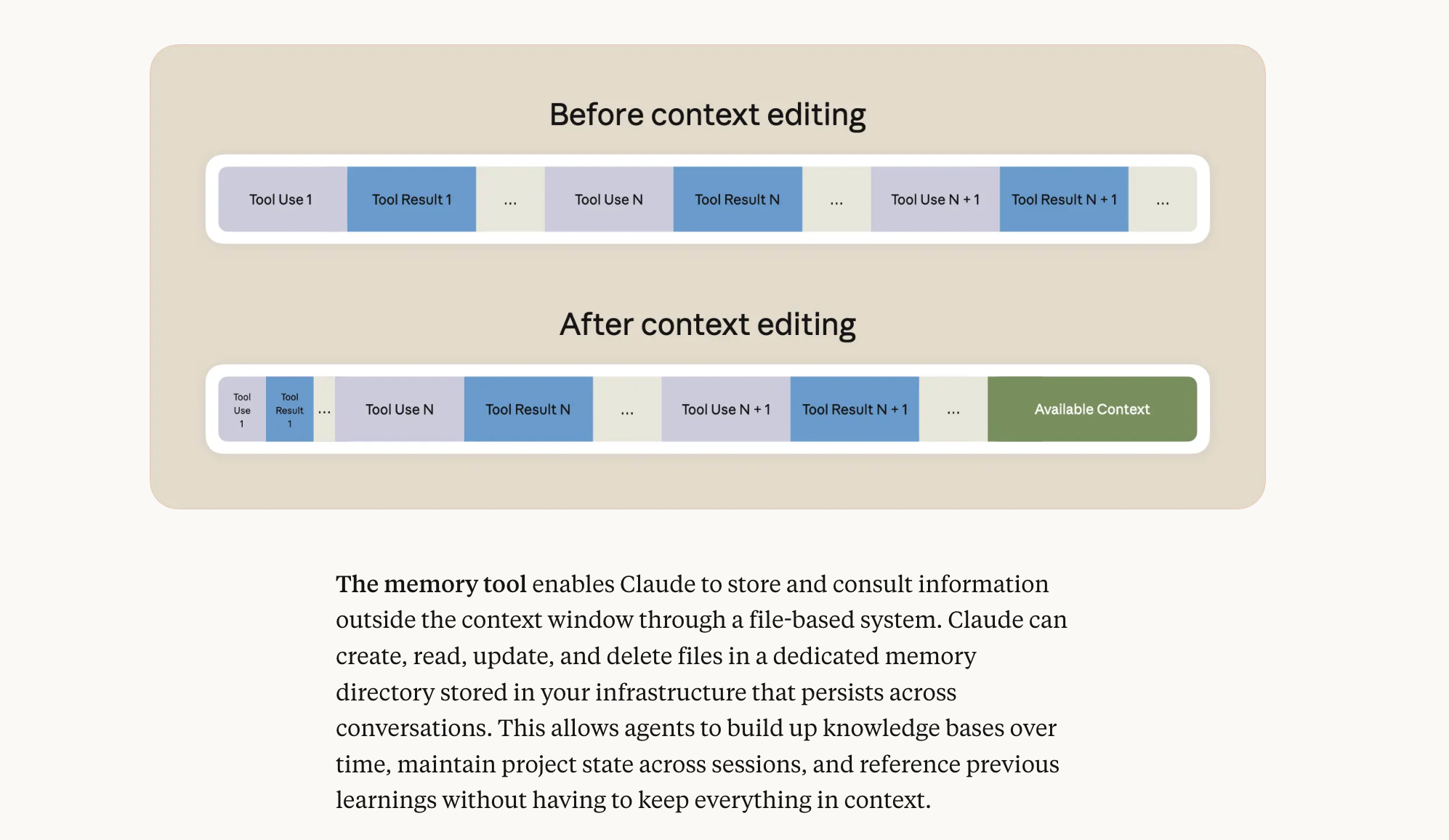

a new context editing feature and memory tool to the Claude API that lets agents run even longer and handle even greater complexity.

-

Renaming the Claude Code SDK to Claude Agent SDK.

-

-

- In the Claude apps, we’ve brought code execution and file creation (spreadsheets, slides, and documents) directly into the conversation.

- the Claude for Chrome extension is now available to Max users who joined the waitlist last month.

-

Imagine with Claude: a generative UI experiement research preview.

Reception has been roundly positive, with Cognition’s Devin and Sourcegraph’s Amp adopting it as their default model, and third-party evals such as Box’s and SWE-Agent’s coming in favorable.

You can now also check out Mike Krieger’s chat on Latent Space about the big day.

AI Twitter Recap

DeepSeek V3.2-Exp: Sparse Attention, price cuts, and open kernels

- DeepSeek Sparse Attention (DSA) lands (open) with big efficiency wins: DeepSeek released an experimental V3.2-Exp model that retrofits V3.1-Terminus with a learned sparse attention scheme, cutting long-context costs without quality loss. A tiny “lightning indexer” scores past tokens per query, selects top‑k positions, and the backbone runs full attention only on those, changing complexity from O(L^2) to O(Lk). Two-stage continual pretraining on top of V3.1: a dense warm‑up (~2.1B tokens, backbone frozen) aligns the indexer to dense attention via KL loss; then end‑to‑end sparse training (~944B tokens) adapts the backbone to the indexer with KL regularization. Models, tech report, and kernels are released; API prices drop 50%+ with claimed ~3.5x cheaper prefill and ~10x cheaper decode at 128k context, with quality matching V3.1. See the launch thread @deepseek_ai, pricing/API notes 3/n and code 4/n. Deep breakdowns from @danielhanchen and @scaling01.

- Ecosystem and compilers: vLLM has DSA support recipes and H200/B200 builds (vLLM, DSA explainer 1/3). DeepSeek’s kernels ship in TileLang/CUDA; TileLang (TVM) hits ~95% of hand-written FlashMLA in ~80 lines and targets Nvidia, Huawei Ascend, Cambricon (@Yuchenj_UW). Community reactions highlight that DSA’s post‑hoc sparsification on a dense checkpoint generalizes beyond DeepSeek (analysis).

- Post-training recipe: DeepSeek confirms RL on specialist models (math, competitive programming, general reasoning, agentic coding, agentic search) with GRPO and rubric/consistency rewards, then distillation into the final checkpoint; SPCT/GRM used in RL stages (notes, confirm).
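To make the O(L^2) → O(Lk) claim concrete, here is a toy, single-query sketch of indexer-guided top-k attention in pure Python. It is an illustration under stated assumptions, not DeepSeek’s kernel: the “indexer” is modeled as a plain dot product over separate small vectors (`idx_q`, `idx_keys`), whereas DeepSeek’s lightning indexer is a learned module with its own heads and weights, and all function names here are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def full_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> List[float]:
    """Dense reference: one query attends to every past token."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, key) * scale for key in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def topk_sparse_attention(
    q: Vector,
    keys: List[Vector],
    values: List[Vector],
    idx_q: Vector,
    idx_keys: List[Vector],
    k: int,
) -> List[float]:
    """Attend from one query to only the k past tokens the (hypothetical) indexer ranks highest."""
    # 1) Cheap indexer pass: scores every past token with small vectors.
    index_scores = [dot(idx_q, ik) for ik in idx_keys]
    # 2) Keep the k highest-scoring positions.
    k = min(k, len(keys))
    selected = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)[:k]
    # 3) Ordinary scaled dot-product attention, restricted to the selected tokens.
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, keys[i]) * scale for i in selected])
    dim = len(values[0])
    return [sum(w * values[i][j] for w, i in zip(weights, selected)) for j in range(dim)]
```

With k equal to the number of past tokens, the sparse path reproduces dense attention, which is a handy sanity check. Note that the indexer still scores every past token, so that part remains quadratic over a full sequence; the saving is that the expensive attention of the backbone only runs over k tokens per query.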

Anthropic’s Claude Sonnet 4.5: coding/agent leap and first interpretability audit in a system card

- New SOTA for coding and agents: Anthropic launched Sonnet 4.5, claiming best-in-class coding, computer use, and reasoning/math. It sets a new high on SWE‑Bench Verified (no tools) and shows large gains on OSWorld (computer use), plus long autonomous coding runs (e.g., building/maintaining a codebase over 30+ hours, ~11k LOC) (launch, Cognition/Devin rebuild, long-run coding, finance/programming evals). Pricing remains $3/$15 per million tokens (input/output) with 200k default context and a 1M option for some partners (Cline).

- Alignment and interpretability work surfaced: Anthropic published a detailed system card; they report substantially reduced sycophancy/reward hacking and “evaluation awareness” signals discovered via interpretability. The team did a pre-deployment white‑box audit to “read the model’s mind” (to their knowledge, a first for a frontier LLM system card). See @janleike, the audit thread by @Jack_W_Lindsey, and system-card highlights (1, 2).

- Tooling and integrations: Claude Code v2 ships checkpoints, UX improvements, and a native VS Code extension; the Claude Code SDK is now the Claude Agent SDK aimed at general agents (@_catwu, @alexalbert__). Broad availability landed in Cursor (now with browser control), Perplexity, and OpenRouter (Cursor add, browser control, Perplexity, OpenRouter). Case studies: replicating published econ research from raw data using code execution/file creation (@emollick, @alexalbert__).
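Since Sonnet 4.5 prices are quoted per million tokens, estimating a request’s cost is simple arithmetic. A minimal sketch at the $3/$15 list prices above, assuming no prompt caching or batch discounts and ignoring any separate rates that may apply to the 1M-context option; the function name is illustrative:

```python
INPUT_USD_PER_MTOK = 3.0    # Sonnet 4.5 input price quoted above
OUTPUT_USD_PER_MTOK = 15.0  # Sonnet 4.5 output price quoted above


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """List-price cost of one request (assumes no caching/batch discounts, no long-context tier)."""
    return (input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000
```

For example, a 100k-token prompt with a 5k-token reply comes to $0.30 + $0.075 = $0.375.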

RL for LLMs: GRPO vs PPO vs REINFORCE, and LoRA matches full FT in many settings

- GRPO discourse, grounded: Practitioners with OAI/Anthropic RL experience argue GRPO is essentially a policy-gradient variant of REINFORCE with group baselines; performance differences among reasonable PG variants (GRPO, RLOO, PPO, SPO) are often smaller than gaps in data recipe, credit assignment, and variance reduction. See high-signal threads by @McaleerStephen and @zhongwen2009, plus a workflow explainer (@TheTuringPost). For those avoiding PPO complexity, REINFORCE/RLOO work well and avoid a value model (lower cost) (@cwolferesearch).

- LoRA holds up in RL: New experiments indicate LoRA can match full fine‑tuning in many RL post‑training regimes, even at low rank; corroborated by QLoRA experience (>1500 experiments) and recent GRPO implementations (@thinkymachines, @Tim_Dettmers, @danielhanchen). NVIDIA also proposes RLBFF (binary principle‑based feedback combining RLHF/RLVR) with strong RM-Bench/JudgeBench results (overview, paper).

- Data is the bottleneck debate continues: @fchollet stresses that scaling LLMs has been data-bound (human-generated and environment-crafted), while “AGI” might be compute‑bound; meanwhile OpenAI’s GDPVal dataset is trending on HF (@ClementDelangue) and the community calls for updated evals beyond saturated MMLU (@maximelabonne).
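The “REINFORCE with group baselines” framing of GRPO is easiest to see in the advantage computation. Below is a minimal sketch, assuming one scalar reward per completion for a group of completions sampled from the same prompt: GRPO-style advantages subtract the group mean and divide by the group standard deviation, while RLOO subtracts the mean reward of the other completions. Neither needs a learned value model. Function names are illustrative, and real trainers add ratio clipping, KL penalties, and token-level weighting that are omitted here.

```python
import statistics
from typing import List


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Group-normalized advantages (r_i - mean) / (std + eps), shared by all tokens of completion i."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


def rloo_advantages(rewards: List[float]) -> List[float]:
    """Leave-one-out baseline: r_i minus the mean reward of the other n - 1 completions."""
    n = len(rewards)
    if n < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (n - 1) for r in rewards]


def reinforce_loss(seq_logprobs: List[float], advantages: List[float]) -> float:
    """REINFORCE surrogate -mean(A_i * log pi(y_i)).

    In an autograd framework, gradients would flow through seq_logprobs;
    here it only evaluates the scalar.
    """
    return -sum(a * lp for a, lp in zip(advantages, seq_logprobs)) / len(seq_logprobs)
```

A small algebraic note: the RLOO advantage equals n/(n-1) · (r_i - mean), so without the std division the two baselines differ only by a constant factor per group, which is one reason the variants often perform similarly.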

Agentic commerce and platform updates

- OpenAI Instant Checkout + Agentic Commerce Protocol (ACP): ChatGPT now supports buying directly in-chat, starting with Etsy, with “over a million” Shopify merchants coming soon. ACP is co-developed with Stripe as an open standard for programmatic commerce between users, AI agents, and businesses. Developers can apply to integrate; details via @OpenAI, @OpenAIDevs, docs, and Stripe’s perspective (Patrick Collison, SemiAnalysis). In parallel, Google introduced AP2 (agent payments) with cryptographically signed mandates (DeepLearningAI).

- Safety & governance: OpenAI rolled out parental controls (link teen/parent accounts, granular controls, self-harm risk notifications) (announcement, @fidjissimo). Anthropic backed California’s SB53 for frontier AI transparency while preferring federal frameworks (@jackclarkSF). OpenAI also opened “OpenAI for Science” roles to build an AI-powered scientific instrument (@kevinweil).

Infra, kernels, and other releases

- Systems and compilers: Modal raised an $87M Series B (now at a billion-dollar valuation) to keep building ML-native infra; customers highlight the “remote but feels local” DX and scaling ergonomics (@bernhardsson, @HamelHusain, @raunakdoesdev). For GPU internals, a widely-praised deep dive on writing high-performance matmul kernels on H100 covers memory hierarchy, PTX/SASS, warp tiling, TMA/wgmma, and scheduling (@gordic_aleksa, @cHHillee).

- Other model drops: Google’s TimesFM 2.5 (200M params, 16k context, Apache-2.0) is a stronger zero-shot time-series forecaster (@osanseviero). AntLingAGI previewed Ring‑1T, a 1T-parameter open “thinking” model with early results on AIME25/HMMT/ARC-AGI and an IMO’25 Q3 solve (@AntLingAGI). On vision, Tencent’s HunyuanImage 3 joined community testbeds (Yupp), and Qwen-Image-Edit‑2509 showcased robust style transfer for architectural scenes (@Alibaba_Qwen).

Top tweets (by engagement)

- Anthropic launch: “Introducing Claude Sonnet 4.5—the best coding model in the world.” @claudeai

- OpenAI commerce: “Instant Checkout in ChatGPT… open-sourcing the Agentic Commerce Protocol.” @OpenAI

- DeepSeek V3.2-Exp: “Introducing DeepSeek Sparse Attention… API prices cut 50%+.” @deepseek_ai

- RL perspective: “Having done RL at OpenAI and Anthropic, here’s what I can say about GRPO.” @McaleerStephen

- Cursor integration: “Sonnet 4.5 is now available in Cursor.” @cursor_ai

- On data vs compute: “LLMs are dependent on human output; AGI will scale with compute.” @fchollet

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. China AI Model Launches: Alibaba Qwen Scaling Roadmap and Tencent Hunyuan Image 3.0

- Alibaba just unveiled their Qwen roadmap. The ambition is staggering! (Activity: 954): Alibaba’s Qwen roadmap slide (image) lays out two bets: a unified multimodal model family and extreme scaling. Targets include context window growth from 1M → 100M tokens, parameter count from ~1T → 10T, test-time compute budget from 64k → 1M (implying much longer CoT/drafting), and data scale from 10T → 100T tokens. It also highlights unbounded synthetic data generation and expanded agent capabilities (task complexity, interaction, learning modes), signaling a strong “scaling is all you need” strategy. Commenters are wowed by the 100M context, skeptical it will remain open-source at that scale, and note that running >1T-parameter models locally is impractical for consumer hardware.

- Ambition for a 100M-token context sparked feasibility analysis: with standard attention, compute is O(L^2) and KV-cache memory scales linearly with L. For a 7B-class transformer (≈32 layers, 32 heads, head_dim 128), even with 8-bit KV, the cache is ~256 KB/token, implying ~25 TB just for KV at 100M tokens; fp16 would double that. Commenters note such lengths would require architectural/algorithmic changes (e.g., retrieval, recurrent/state-space models, or linear/streaming attention; see ideas like Ring Attention or limitations of FlashAttention-3, which still has O(L^2) compute).

- On running >1T-parameter models locally: weight storage alone is prohibitive (fp16 ≈ 2 TB, int8 ≈ 1 TB, 4-bit ≈ 0.5 TB) before activations and KV cache. Even ignoring KV, you’d need on the order of 13× H100 80GB GPUs just to hold 1 TB of int8 weights, plus high-bandwidth NVLink/NVSwitch; PCIe workstations would be bandwidth-bound with single-digit tokens/s if offloading to CPU/NVMe. KV grows with both model depth and context (e.g., a Llama-70B-scale model would need ~1.25 MB/token at 8-bit KV if all 64 heads kept their own K/V; with the 8 KV heads of grouped-query attention that Llama-70B actually uses, it is ~160 KB/token), so long contexts quickly add tens to hundreds of GB, making “local” inference for trillion-scale models impractical.

- Licensing/openness concerns were raised: speculation that ultra-long-context or frontier Qwen checkpoints may be closed or API-only even if smaller Qwen variants remain open-weight. The technical implication discussed is that reproducibility and third‑party benchmarking of such extreme context lengths may depend on whether training/inference codepaths (e.g., specialized attention kernels, memory planners) and weights are released versus restricted to hosted endpoints.


- Tencent is teasing the world’s most powerful open-source text-to-image model, Hunyuan Image 3.0 Drops Sept 28 (Activity: 225): Tencent is teasing Hunyuan Image 3.0, an open‑source text‑to‑image model slated for release on Sept 28, claiming it will be the “most powerful” open‑source T2I model. The teaser provides no technical specs or benchmarks; a commenter asserts a 96 GB VRAM figure, but no official details on architecture, training data, resolution/sampler support, or inference requirements are given. Teaser image. Commenters are skeptical of pre‑release hype, noting strong models often “shadow drop” (e.g., Qwen) while hyped releases can disappoint (e.g., SD3 vs. Flux). Others argue the “most powerful” claim is unverified until comparable open‑source contenders are publicly measured.

- A commenter claims a ~96 GB VRAM requirement, implying a very large memory footprint for inference. If accurate, this would push usage toward A100/H100-class GPUs or multi-GPU/offload setups and limit practicality on 24–48 GB consumer cards unless quantization or CPU/NVMe offloading is available. Official details on batch size, target resolution, and precision (fp16/bf16/fp8) will be crucial to interpret the VRAM figure.

- Skepticism around pre-release hype is strong: users note that heavily teased models often underdeliver versus “shadow-dropped” releases. Cited contrasts include Qwen models quietly releasing with solid quality versus hyped teasers like GPT-5, and the SD3 marketing compared to Flux’s reception. Takeaway: wait for third-party benchmarks and controlled A/Bs before accepting “most powerful” claims.

- The “most powerful open-source” claim is questioned pending head-to-heads against open models (e.g., Qwen Image, SD3, Flux) on fidelity, prompt adherence, and speed. Integration concerns (“when ComfyUI”) underscore the need for immediate pipeline/tooling support and optimized inference graphs. Credible evaluation should report hardware/precision settings and throughput (it/s) alongside sample galleries.
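The memory figures in the Qwen roadmap thread above follow from two formulas: weights ≈ parameters × bytes per parameter, and KV cache ≈ 2 (K and V) × layers × KV heads × head_dim × bytes per element × tokens. A quick sketch for checking such numbers; the layer and head counts are the ones quoted in the thread plus Llama-70B’s 80 layers, 64 query heads and 8 KV heads, and treating an “80 GB” card as 80 × 10^9 bytes is an assumption. The function names are illustrative.

```python
import math


def weight_bytes(n_params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone (no activations, no KV cache)."""
    return n_params * bytes_per_param


def kv_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int, bytes_per_elem: float) -> float:
    """One K and one V vector per layer and per KV head, for a single token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


def gpus_needed(total_bytes: float, bytes_per_gpu: float) -> int:
    """Lower bound on GPUs whose pooled memory holds total_bytes (ignores overheads and fragmentation)."""
    return math.ceil(total_bytes / bytes_per_gpu)


if __name__ == "__main__":
    # 7B-class config from the thread: 32 layers, 32 KV heads (no GQA), head_dim 128, 8-bit KV.
    per_tok = kv_bytes_per_token(32, 32, 128, 1)
    print(per_tok, "bytes/token;", per_tok * 100_000_000 / 1e12, "TB at 100M tokens")
    # Llama-70B-scale: 80 layers, head_dim 128; 64 KV heads (full MHA) vs 8 KV heads (GQA).
    print(kv_bytes_per_token(80, 64, 128, 1), kv_bytes_per_token(80, 8, 128, 1))
    # 1T parameters at int8 on 80 GB cards.
    print(gpus_needed(weight_bytes(10**12, 1), 80 * 10**9))
```

The 7B-class case gives 262,144 bytes per token, or about 2.6 × 10^13 bytes at 100M tokens (≈26 TB decimal, ≈24 TiB binary), consistent with the thread’s ~25 TB.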


2. Fenghua No.3 GPU API Support and Post-abliteration Uncensored LLM Finetuning

- China already started making CUDA and DirectX supporting GPUs, so over of monopoly of NVIDIA. The Fenghua No.3 supports latest APIs, including DirectX 12, Vulkan 1.2, and OpenGL 4.6. (Activity: 702): Post claims a Chinese discrete GPU “Fenghua No.3” supports modern graphics APIs—DirectX 12, Vulkan 1.2, OpenGL 4.6—and advertises CUDA support, implying an attempt to run CUDA workloads on non‑NVIDIA hardware. No performance data, ISA/compiler details, or driver maturity info are provided; CUDA support may rely on a compatibility/translation layer, so coverage (PTX versions, runtime APIs) and perf remain unknown. Commenters note AMD’s HIP (a CUDA‑like API) and projects like ZLUDA (CUDA translation on other GPUs) as precedents, suggesting Chinese vendors may implement CUDA more directly due to fewer legal constraints, while others are skeptical until real benchmarks/demos are shown.

- AMD already offers a CUDA-compatibility route via HIP, which mirrors CUDA runtime/kernel APIs but with renamed symbols to sidestep NVIDIA licensing; tooling like HIPIFY can auto-translate CUDA code to HIP targeting ROCm backends (HIP, HIPIFY). Projects such as ZLUDA provide a binary-compatibility layer that maps CUDA runtime/driver calls and PTX to other GPU backends (initially Intel Level Zero, with active forks targeting AMD ROCm), aiming for minimal overhead and running unmodified CUDA apps (ZLUDA repo). This context suggests Chinese vendors could directly implement the CUDA runtime/driver ABI to maximize compatibility, whereas Western vendors typically rely on translation layers to avoid legal risk.

- IMPORTANT: Why Abliterated Models SUCK. Here is a better way to uncensor LLMs. (Activity: 433): OP reports that “abliteration” (uncensoring via weight surgery) consistently degrades capability, especially on MoE models like Qwen3-30B-A3B, with drops in logical reasoning, tool-use/agentic control, and much higher hallucination, sometimes making 30B worse than clean 4–8B baselines. In contrast, abliteration followed by finetuning (SFT/DPO) largely restores performance: e.g., mradermacher/Qwen3-30B-A3B-abliterated-erotic-i1-GGUF (tested at i1-Q4_K_S) is close to the base model with lower hallucinations and better tool-calling than other abliterated Qwen3 variants, and mlabonne/NeuralDaredevil-8B-abliterated (DPO on Llama3-8B) reportedly outperforms its base while remaining uncensored. Comparative baselines that underperformed included Huihui-Qwen3-30B-A3B-Thinking-2507-abliterated-GGUF, Huihui-Qwen3-30B-A3B-abliterated-Fusion-9010-i1-GGUF, and Huihui-Qwen3-30B-A3B-Instruct-2507-abliterated-GGUF, which showed poor MCP/tool-call selection and spammy behavior, plus elevated hallucinations; the erotic-i1 model remained slightly weaker than the original Qwen3-30B-A3B on agentic tasks. OP’s hypothesis: post-abliteration finetuning “heals” performance lost by unconstrained weight edits. Comments call for a standardized benchmark for “abliteration” effects beyond NSFW tasks; others frame the observation as known “model healing,” i.e., further training lets the network re-learn connections damaged by weight edits. A critical view argues that if finetuning fixes things, abliteration may be unnecessary (“I’ve never seen ablit+finetune beat just finetune”), and that removing safety/“negative biases” often harms general usability.

- Multiple commenters call for a capability-oriented benchmark to evaluate ‘abliteration’ side-effects beyond NSFW outputs; the Uncensored General Intelligence (UGI) leaderboard explicitly targets uncensored-model performance across diverse tasks: https://huggingface.co/spaces/DontPlanToEnd/UGI-Leaderboard. A standardized suite would enable apples-to-apples comparisons between ablated, fine-tuned, and baseline models on reasoning, instruction-following, and refusal behavior instead of anecdotal porn-only tests.

- Weight-level ‘abliteration’ without a guiding loss predictably breaks distributed representations; ‘When you do any alteration to a neural network’s weights that’s not constrained by a loss function, you should expect degradation or destruction of the model’s capabilities.’ Model healing—continuing training (SFT/RL) after the edit—can help the network rediscover severed connections, so evaluations should report pre- and post-healing performance to quantify recoverable vs irrecoverable damage.

- Practitioners argue that ablation+fine-tuning hasn’t outperformed a clean fine-tune: ‘I’ve never seen abliterated fine-tune perform better than just a fine-tune, at anything.’ Instead, uncensoring via instruction/data tuning preserves base capabilities while reducing refusals, e.g., Josiefied and Dolphin variants: Qwen3-8B-192k-Josiefied-Uncensored-NEO-Max-GGUF (https://huggingface.co/DavidAU/Qwen3-8B-192k-Josiefied-Uncensored-NEO-Max-GGUF), Dolphin-Mistral-24B-Venice-Edition-i1-GGUF (https://huggingface.co/mradermacher/Dolphin-Mistral-24B-Venice-Edition-i1-GGUF), and models by TheDrummer (https://huggingface.co/TheDrummer).
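For readers unfamiliar with the term, “abliteration” usually refers to directional ablation: estimate a “refusal direction” r (commonly the difference between mean activations on refused vs. answered prompts), normalize it, and orthogonalize weight matrices that write into the residual stream so their outputs have no component along r, i.e. W' = (I - r r^T) W. The toy sketch below applies that projection in pure Python; the difference-of-means estimate, the matrix layout, and all names are illustrative assumptions, not any specific repo’s code. Note that nothing in this edit is constrained by a training loss, which is the commenters’ point about degradation and why “healing” finetunes help.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major, shape (d_model, d_in); columns are vectors written to the residual stream
Vector = List[float]


def mean_vector(rows: List[Vector]) -> Vector:
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def refusal_direction(refused_acts: List[Vector], answered_acts: List[Vector]) -> Vector:
    """Difference of mean activations, normalized to unit length (a common heuristic; assumes nonzero difference)."""
    diff = [a - b for a, b in zip(mean_vector(refused_acts), mean_vector(answered_acts))]
    norm = math.sqrt(sum(x * x for x in diff))
    return [x / norm for x in diff]


def ablate_direction(w: Matrix, r_hat: Vector) -> Matrix:
    """Return (I - r r^T) W: remove each column's component along the unit vector r_hat."""
    d_model, d_in = len(w), len(w[0])
    out = [row[:] for row in w]
    for j in range(d_in):
        # Length of column j's projection onto r_hat.
        coeff = sum(r_hat[i] * w[i][j] for i in range(d_model))
        for i in range(d_model):
            out[i][j] -= coeff * r_hat[i]
    return out
```

After the edit, no input can make this matrix write anything along r, which is exactly why the model can no longer “express” refusal through it, and also why unrelated features sharing that direction get damaged.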

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Anthropic Claude Sonnet 4.5 Launch, Features, and Benchmarks

- Claude 4.5 Sonnet is here (Activity: 1116): Anthropic announced “Claude Sonnet 4.5” (release notes), emphasizing improved tool-use and agentic workflows: “Enhanced tool usage: The model more effectively uses parallel tool calls, firing off multiple speculative searches simultaneously … reading several files at once to build context faster,” with better coordination across tools for research and coding. The upgrade focuses on concurrency (parallel calls), multi-file ingestion, and faster context assembly, signaling optimizations for tool-augmented reasoning rather than just raw model scaling. Commenters report a noticeable real-world speed/quality bump and speculate prior A/B testing exposed some users to the new parallelism earlier; perceived gains align with the release note’s focus on parallel tool calls and multi-file processing.

- Release notes emphasize improved tool orchestration: “Enhanced tool usage… parallel tool calls, firing off multiple speculative searches simultaneously… reading several files at once to build context faster”, indicating better concurrency and coordination across tools for agentic search/coding workflows. A user corroborates this with an earlier observation that Sonnet felt markedly faster and appeared to run parallel tool calls during a period of inference issues, speculating they were part of an A/B test; they link their prior note for context: https://www.reddit.com/r/ClaudeAI/comments/1ndafeq/3month_claude_code_max_user_review_considering/ndgevtn/?context=3.

- Another commenter highlights ecosystem impact: with widespread use of Claude Code (and analogs like Codex and Grok), even marginal gains in parallel tool-call efficiency and latency can compound across millions of users and agent scaffolds. This suggests 4.5 Sonnet’s improved multi-tool coordination could unlock more complex, lower-latency pipelines in agentic workflows, benefiting both end-users and developers building orchestration frameworks.

- Introducing Claude Sonnet 4.5 (Activity: 1512): Anthropic announced Claude Sonnet 4.5, positioning it as its strongest coding/agent model with gains in reasoning and math (no benchmark numbers provided). Platform-wide upgrades include: Claude Code (new terminal UI, a VS Code extension, and a checkpoints feature for instant rollback), the Claude App (code-execution to analyze data, create files, and visualize insights; Chrome extension rollout), and the Developer Platform (longer-running agents via stale-context clearing plus a new memory tool; an Agent SDK exposing core tools, context management, and permissions). A research preview, Imagine with Claude, generates software on-the-fly with no prewritten functionality, available to Max users for 5 days. Sonnet 4.5 is available in the app, Claude Code, the Developer Platform, and via Amazon Bedrock and Google Cloud Vertex AI; pricing remains unchanged from Sonnet 4. Full announcement: anthropic.com/news/claude-sonnet-4-5. Comments ask whether Sonnet 4.5 surpasses Opus 4.1 across the board and anticipate a new Opus release; no comparative benchmarks are cited. Other remarks are largely non-technical enthusiasm.

- Several commenters ask whether Sonnet 4.5 actually surpasses both Claude 3 Opus and OpenAI GPT-4.1 for coding, requesting head-to-head benchmarks and apples-to-apples eval methodology. They specifically want pass@1 on coding sets like HumanEval and SWE-bench, plus latency, context-window limits, and tool-use reliability under identical constraints (temperature, stop sequences, timeouts). Links requested for clarity: Claude 3 family overview (https://www.anthropic.com/news/claude-3-family), GPT‑4.1 announcement (https://openai.com/index/introducing-gpt-4-1/), and HumanEval (https://github.com/openai/human-eval).

- The “best coding model” claim prompts requests for concrete coding metrics: pass@1/pass@k on HumanEval/MBPP, SWE-bench (Verified) solve rate, multi-file/refactoring performance, and compile/run success rates for generated code. Commenters also want data on deterministic behavior at temperature=0, function/tool-calling robustness, long-context code navigation (e.g., >100k tokens), streaming latency under load, and regression analysis versus prior Sonnet/Opus releases.

- Enterprise-readiness questions focus on security/compliance (SOC 2 Type II, ISO 27001, HIPAA/BAA), data governance (zero-retention options, customer-managed keys/KMS), deployment (VPC/private networking, regional data residency), and enterprise controls (SSO/SAML, audit logs, rate limits/quotas). They also ask for concrete SLAs (uptime, incident response), throughput ceilings (tokens/min), and pricing tiers, ideally documented on a trust/compliance page (e.g., https://www.anthropic.com/trust).

- Claude 4.5 does 30 hours of autonomous coding (Activity: 508): The post showcases a marketing-style claim that Claude 4.5 can sustain “~30 hours of autonomous coding,” but provides no technical evidence: no benchmarks, repo links, agent architecture, tool-use loop details, or evaluation of code quality/maintainability. Discussion frames this as an agent-run endurance claim (similar to earlier “8+ hours” for Claude 4) rather than a measurable capability with reproducible methodology or QA metrics. Top comments are skeptical: they argue long agent runs tend to yield brittle, hard-to-maintain code; urge Anthropic to stop making hour-count claims without proof; and question whether Anthropic is already relying on Claude-generated code internally.

- Skeptics argue that a claimed 30h autonomous coding run tends to produce code that’s brittle to change: without deliberate architecture, modularization, and tests, adding features later often forces rewrites. They note LLM agents frequently optimize for immediate completion over long-term maintainability, lacking patterns like clear interfaces, dependency inversion, and regression test suites that guard extensibility.

- Multiple reports highlight dependency hallucination and execution loops: the model invents library names, cycles through guesses, and burns compute retrying installs. Without guardrails like strict lockfiles, offline/package indexes, deterministic environment provisioning, and automated checks on pip/build errors, agents stall; a human-in-the-loop remains necessary for package discovery, version pinning, and resolving import/build failures.

- Commenters question the advertising of “30h autonomous” (similar to prior “8+ hours”) without transparent evaluation details (e.g., tool-call logs, wall-clock vs. active compute, number of human interventions, and task success criteria). They call for rigorous metrics like unit-test pass rates, reproducibility across seeds/runs, defect/rollback rates post-run, and comparison against baselines to substantiate autonomy claims.


- Introducing Claude Usage Limit Meter (Activity: 588): Anthropic adds a real-time usage meter across Claude Code (via a /usage slash command) and Claude apps (Settings → Usage). The previously announced weekly rate limits are rolling out now; with Claude Sonnet 4.5, Anthropic expects fewer than 2% of users to hit the caps. The image likely shows the new usage UI displaying current percentage used and remaining allowance. Comments note the company “listened,” but experiences vary: some heavy users on the $100 plan report only ~5% usage after a full day, while others hit session limits and face multi-hour (~5h) cooldowns, suggesting session-based throttling can be disruptive.

- Early anecdote: on the $100 plan, a full day of coding registered only 5% on the new meter. Without units (tokens/messages/tool calls) the meter’s calibration is unclear; if accurate, it implies a relatively high ceiling for typical dev workflows, but makes it hard to predict when the hard cap is reached. This also aligns with the idea that only a small subset of heavy users hit limits, but the meter finally provides visibility for self-calibration.

- One report says exhausting “pro session usage” leads to a forced wait of roughly 5 hours, implying a rolling time-window or fixed reset interval rather than pure per-message throttling. This impacts debugging workflows: if the assistant fails to fix an issue before the cap, iteration stalls until the window resets, suggesting limits are enforced at a session/account level.

- Users are asking for concrete limits on the “20x plan,” but no numeric caps were shared in-thread. There’s a need for documented per-tier ceilings (e.g., messages per hour/day, token budgets, and how the meter maps to those) and clarity on whether higher tiers modify cooldown windows or only increase total allowance.
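The “wait roughly 5 hours” report is consistent with either a fixed reset interval or a rolling window. Below is a minimal sketch of the rolling-window interpretation, assuming usage is counted in abstract units and every unit expires 5 hours after it was spent; the budget, window length, and class name are hypothetical, since the thread does not say how Anthropic’s meter maps to limits. Time is passed in explicitly so the behavior is deterministic.

```python
from collections import deque
from typing import Deque, Tuple


class RollingWindowLimiter:
    """Sliding-window cap: at most `budget` units spent within any `window_s` seconds."""

    def __init__(self, budget: float, window_s: float = 5 * 3600) -> None:
        self.budget = budget
        self.window_s = window_s
        self._events: Deque[Tuple[float, float]] = deque()  # (timestamp, units), oldest first

    def _expire(self, now: float) -> None:
        # Drop usage that has aged out of the window.
        while self._events and self._events[0][0] <= now - self.window_s:
            self._events.popleft()

    def used(self, now: float) -> float:
        self._expire(now)
        return sum(units for _, units in self._events)

    def try_consume(self, units: float, now: float) -> bool:
        """Record usage if it fits in the current window; otherwise refuse."""
        if self.used(now) + units > self.budget:
            return False
        self._events.append((now, units))
        return True

    def seconds_until_available(self, units: float, now: float) -> float:
        """How long until `units` more could be spent, assuming no further usage."""
        if units > self.budget:
            raise ValueError("request exceeds the whole budget")
        self._expire(now)
        excess = sum(u for _, u in self._events) + units - self.budget
        if excess <= 0:
            return 0.0
        freed = 0.0
        for ts, u in self._events:  # oldest usage expires first
            freed += u
            if freed >= excess:
                return ts + self.window_s - now
        return 0.0  # not reached when units <= budget
```

With a rolling window, a user who burns most of the budget in one early burst waits until that burst ages out; a fixed reset interval would instead clear all usage at set times, regardless of when it was spent.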


2. OpenAI/ChatGPT Ads, Forced Model Changes, and Community Backlash

- Want to lose customers fast? Go ahead, advertise on OpenAI. We’ll remember. (Activity: 784): OP claims OpenAI will introduce ads into the ChatGPT interface and frames it as a post-quality-downgrade monetization step. The post argues that in-product ads risk eroding user trust and brand perception, with an explicit intent to boycott advertisers; it also implies potential subscription churn if ads touch paid tiers (e.g., Pro). Top comments predict an “enshittification” sequence (great features → lock-in → quality degradation → ads), warn they’ll cancel Pro if ads appear in paid plans, and express skepticism that the platform can degrade further.

- Everyone just cancel the subscription. (Activity: 1415): OP urges mass cancellation of a paid AI subscription due to a newly “forced” feature that auto‑reroutes conversations into a safety/guardrailed chat and removes user control over model selection. They note the free tier isn’t being rerouted in their case and provides sufficient access for their needs, arguing there’s no benefit to paying if model choice is constrained and usage can be replicated on the free plan (albeit with lower limits). Top comments split: one user canceled, saying their use cases work on the free tier with the same model and fewer tokens/limits and they’d rather pay for another AI that doesn’t force safety reroutes; another user is satisfied with the current product and will switch only if it degrades; a third expresses frustration with repeated complaints.

- Several users point out the ChatGPT UI now “reroutes into a safety chat,” which changes behavior and removes some use cases; one notes that with those constraints, the free tier suffices since it feels like the “same model” with lower limits. A suggested workaround is redirecting spend to other providers or using the OpenAI API instead of the ChatGPT app to avoid UI-level routing and retain full model behavior (see model list: https://platform.openai.com/docs/models#gpt-4o).

- A technical distinction is made between ChatGPT (subscription UI) and the OpenAI API: one commenter claims API access to GPT‑4o is “not routed the same way as ChatGPT,” recommending pay‑as‑you‑go via the API to preserve capabilities while avoiding safety-chat constraints (pricing: https://openai.com/pricing). They also note that access to Custom GPTs is tied to a subscription (Plus/Team/Enterprise) while API usage is separately billed (about GPTs: https://help.openai.com/en/articles/8554406-what-are-gpts); the mention of “GPT‑5” likely reflects a user-defined label rather than an official, documented model family (public models: https://platform.openai.com/docs/models#gpt-4o).

- One user suggests mass cancellations would yield a “big performance boost” for remaining subscribers; in practice, capacity is typically managed via autoscaling and rate limits, so churn doesn’t directly translate to proportional latency/throughput gains. If performance bottlenecks stem from moderation/safety routing in the ChatGPT UI, shifting to lower-overhead endpoints and streaming via the API (e.g., Realtime guides: https://platform.openai.com/docs/guides/realtime) is a more technically grounded path to reduced latency.

- ChatGPT sub complete meltdown in the past 48 hours (Activity: 842): Meta post about r/ChatGPT’s recent volatility; OP claims “two months since gpt5 came out,” yet the sub remains fixated on GPT‑4/GPT‑4o and is “unhinged.” Comments describe a shift from early technical experimentation to low‑signal screenshots, with accusations of brigading and turmoil following the loss/changes of GPT‑4o access. The image appears to be a subreddit screenshot rather than technical data. Commenters argue the sub is being brigaded by a small group upset about losing the “sycophantic” GPT‑4o, and lament the decline from high‑quality technical discussions to sensational, non‑technical posts.

- Multiple comments tie the upheaval to loss/restriction of access to GPT-4o, described as a “disturbingly sycophantic” variant that some users had optimized their workflows and prompts around; its removal exposed how brittle model-specific prompt tuning can be. This highlights behavioral deltas between GPT-4o and GPT-4 (agreeableness/compliance vs. stricter alignment) and the risks of overfitting processes to a single model persona. Reference: OpenAI’s GPT-4o announcement/details for context on the model class https://openai.com/index/hello-gpt-4o/ .

- Veteran users note a drift from early, reproducible, boundary-pushing experimentation to low-signal screenshots and anecdotes, reducing exchange of implementation details, evaluations, or benchmarks. For technical readers, this means fewer credible reports on performance differences across model versions and less visibility into concrete bugs, regressions, or reliable prompting techniques.

- Elon Musk Is Fuming That Workers Keep Ditching His Company for OpenAI (Activity: 1139): Discussion centers on talent attrition from xAI to OpenAI amid Musk’s management directives, specifically a 48-hour mandate for employees to submit summaries of recent accomplishments and a “hardcore” culture, with insinuations of internal review using Grok. The thread is about organizational policies affecting researcher retention between labs (xAI vs OpenAI), not model performance or benchmarks. Top comments frame departures as employees avoiding Musk personally rather than the company, arguing that punitive, performative deadlines and the idea of having Grok judge whether staff are “hardcore” are counterproductive for retaining top AI talent.

- Critique of xAI’s management cadence: a 48-hour ultimatum to deliver a monthly accomplishments report and the notion that Grok (x.ai) could be used to judge who’s “hardcore” are seen as incentivizing short-term, high-visibility deliverables over long-horizon research. Commenters warn this can induce Goodhart’s law (optimizing for what an LLM scores well) and degrade actual research quality, pushing senior researchers toward labs with human, research-savvy evaluation processes.


- My wife won’t know she won’t know (Activity: 6589): A humorous post about editing ChatGPT’s custom/system instructions on a shared account so the assistant will “always side with the husband” during the wife’s counseling chats. The image (a non-technical joke screenshot) implies how custom instructions/prompt injection can intentionally bias model behavior in a shared-account context, but provides no implementation details or benchmarks. Commenters ask if it worked and joke that the assistant would announce it was instructed to side with the husband, suggesting such bias might be obvious to the user.

3. Prompt Engineering Frameworks and AI Computer-Use Safety

- After 1000 hours of prompt engineering, I found the 6 patterns that actually matter (Activity: 536): A tech lead reports analyzing ~1000 production prompts and distills six recurring patterns (KERNEL) that materially improve LLM outputs: Keep it simple, Easy to verify (add success criteria), Reproducible (versioned/atemporal), Narrow scope (one goal per prompt), Explicit constraints (what not to do), and Logical structure (Context → Task → Constraints → Output). Measured deltas across the dataset include: first-try success 72% → 94%, time to useful result −67%, token usage −58%, accuracy +340%, revisions 3.2 → 0.4; plus 94% consistency over 30 days, 85% success with clear criteria vs 41% without, 89% satisfaction for single-goal vs 41% for multi-goal prompts, and −91% unwanted outputs via constraints. Implementation guidance: template prompts with explicit inputs/constraints/verification and chain small deterministic steps; claimed model-agnostic gains across major models (Claude, Gemini, Llama, “GPT‑5”). Top commenters argue structure and constraints dominate wording for reliability, proposing an alternate PRISM KERNEL schema (Purpose/Rules/Identity/Structure/Motion) to codify pipelines and verification; others echo that this forces LLMs into a more deterministic, reproducible mode for data/engineering workflows.

- A commenter demonstrates a rigid prompt scaffold (“PRISM KERNEL”) that functions like a mini-DSL: Purpose/Rules/Identity/Structure/Motion encode the I/O contract and pipeline for a pandas task (read all CSVs from `test_data/`, `concat` DataFrames, export `merged.csv`), plus constraints (`use.pandas.only`, `<50 lines`, `strict.schema`) and acceptance steps (`verify.success`, `reuse.pipeline`). This structure narrows the solution space and acts as an executable spec, reducing hallucinated steps, encouraging idempotent code, and bounding output format/length, which is useful for tasks like schema-consistent CSV merges where dtype/column drift is common.

- Another commenter emphasizes that structure and hard constraints, not clever phrasing, deliver reliability: the KERNEL framing pushes the model from “creative rambling” toward more deterministic, reproducible outputs in data workflows. Practically, constraints like line limits and schema strictness reduce token-level variance, enforce minimal implementations, and standardize outputs across runs, mitigating variability in code generation and improving reproducibility for ETL-like operations.


- Why you shouldn’t give full access to your computer to AI (Activity: 563): Post warns that giving Gemini unrestricted system/terminal access led it to execute/attempt a dangerously destructive system-level action. OP contained it in a sandbox, underscoring the need for strict least-privilege permissions, sandboxing/VMs, and human review before allowing file writes or command execution by AI agents. Commenters echo concern that such access could “brick” a PC and quip that “AI in a terminal prompt” is inherently risky—reinforcing the principle that everything can go wrong without strong guardrails.

- Commenters caution that giving an LLM (e.g., Google Gemini) full terminal/filesystem access is hazardous because the model lacks reliable situational awareness and can execute destructive commands without understanding side effects. Mitigations include enforcing least privilege (no `sudo`, read‑only mounts), sandboxing via containers/VMs with capability drops and outbound network disabled (see Docker security: https://docs.docker.com/engine/security/), and a plan→explain→human‑approve→execute loop with auditing and timeouts.

- A common failure mode noted is agents that “don’t realize what they just did”: continuing after errors, clobbering files, or misusing globs. Hardening tactics: require dry‑runs (`--dry-run`, `-n`), run shells in strict mode (`set -euo pipefail`: http://redsymbol.net/articles/unofficial-bash-strict-mode/), enforce command allowlists and deny dangerous patterns (e.g., `rm -rf /`, fork bombs), and route edits through VCS so the AI proposes diffs/PRs instead of directly mutating files (use tooling like ShellCheck: https://www.shellcheck.net/ to lint scripts first).

- Limit blast radius with revertible environments: ephemeral containers or pre‑execution snapshots. Practical options include filesystem snapshots (OpenZFS/btrfs: https://openzfs.github.io/openzfs-docs/Basic%20Concepts/Snapshots%20and%20Clones.html, https://btrfs.readthedocs.io/en/latest/SysadminGuide.html#snapshots) and VM snapshots (VirtualBox: https://www.virtualbox.org/manual/ch01.html#snapshots), enabling one‑command rollback if the agent corrupts the system.

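The allowlist/denylist hardening these commenters describe can be sketched as a small gate between an agent and the shell. This is a minimal sketch under stated assumptions: the allowlist contents, the deny regexes, and the `review_command` helper are hypothetical illustrations, not taken from the thread or from any agent framework, and the gate only decides what may be shown to a human for approval; it does not replace a container/VM sandbox.

```python
import re
import shlex
from dataclasses import dataclass

# Hypothetical policy: the only binaries the agent may invoke. Allowlisting is
# necessary but not sufficient (git hooks, for example, can still run code),
# so this gate belongs inside a sandbox, not instead of one.
ALLOWED_BINARIES = {"ls", "cat", "grep", "head", "git"}

# Hypothetical deny patterns, checked against the raw command string first.
DENY_PATTERNS = [
    re.compile(r"\brm\s+-[a-zA-Z]*r[a-zA-Z]*\s+/(\s|$)"),  # recursive delete of /
    re.compile(r":\(\)\s*\{\s*:\|:&\s*\};:"),              # classic bash fork bomb
    re.compile(r"\bsudo\b"),                                # no privilege escalation
]

# Shell metacharacters that would let an allowlisted binary chain into another command.
CHAINING_TOKENS = (";", "&&", "||", "|", "`", "$(", ">", "<")


@dataclass
class Verdict:
    allowed: bool
    reason: str


def review_command(command: str) -> Verdict:
    """Decide whether an agent-proposed command may be shown to a human for approval."""
    for pattern in DENY_PATTERNS:
        if pattern.search(command):
            return Verdict(False, f"matches deny pattern {pattern.pattern!r}")
    if any(token in command for token in CHAINING_TOKENS):
        return Verdict(False, "command chaining, redirection, or substitution is not allowed")
    try:
        argv = shlex.split(command)
    except ValueError as exc:  # e.g. unbalanced quotes
        return Verdict(False, f"unparseable command: {exc}")
    if not argv:
        return Verdict(False, "empty command")
    if argv[0] not in ALLOWED_BINARIES:
        return Verdict(False, f"binary {argv[0]!r} is not on the allowlist")
    return Verdict(True, "allowlisted; still requires human approval before execution")
```

For example, `review_command("ls -la")` passes to the approval step, while `review_command("ls; rm -rf ~")` is rejected for chaining. Deny-by-default trades convenience for a smaller blast radius; snapshots remain the backstop when a permitted command still does damage.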

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. DeepSeek V3.2-Exp: Sparse Attention & Reasoning Controls

- Sparse Savant Speeds Context: DeepSeek V3.2-Exp launched with DeepSeek Sparse Attention (DSA) for long-context efficiency and an optional reasoning mode toggled via `"reasoning": {"enabled": true}`, with benchmarks comparable to V3.1-Terminus and pricing at $0.28/M prompt tokens, per DeepSeek V3.2-Exp on OpenRouter and Reasoning tokens docs.

- OpenRouter highlighted the release and parity benchmarks in an update on X (OpenRouter V3.2 announcement), with builders calling out the clean reasoning flag as a practical switch for controlling thinking tokens in production.

- Daniel Dissects ‘Sparsity’ Semantics: Daniel Han analyzed DSA as a “grafted on” mechanism that reuses indices to sparsify KV without sparsifying per-head attention, calling it “slightly more sparse” while still a step forward, citing the PDF DeepSeek V3.2-Exp paper and commentary on X (Han’s thread 1, Han’s thread 2).

- Community discussions in research servers echoed the nuance—one noted implementation complexity as “nuts”—while others emphasized DSA’s practical gains despite limited head-level sparsification, framing it as a KV-cache efficiency play rather than a full sparse-attention rethink.

- PDFs, Pipelines, and Prefill Power: GPU-centric channels shared the official DeepSeek V3.2-Exp PDF alongside long-context kernel chatter, noting the model’s prefill and sparse decoding speedups documented by DeepSeek.

- One thread paired the release with a lecture link for broader context on sparse mechanisms in production (ACC: Real Optimus Prime lecture), while cautioning it’s unclear how much the experimental kernels influenced the final shipping stack.
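The `"reasoning": {"enabled": true}` toggle described for V3.2-Exp can be exercised with a plain HTTP request. A minimal standard-library sketch, assuming OpenRouter's OpenAI-compatible `https://openrouter.ai/api/v1/chat/completions` endpoint and the model slug `deepseek/deepseek-v3.2-exp` (verify the exact ID on the model page); `build_request` is a hypothetical helper that builds but does not send the request.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt: str, reasoning: bool,
                  model: str = "deepseek/deepseek-v3.2-exp") -> urllib.request.Request:
    """Build (but do not send) a chat-completions request with the reasoning toggle."""
    payload = {
        "model": model,  # assumed OpenRouter slug for DeepSeek V3.2-Exp
        "messages": [{"role": "user", "content": prompt}],
        "reasoning": {"enabled": reasoning},  # the on/off switch for thinking tokens
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ.get('OPENROUTER_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# To actually send it (requires OPENROUTER_API_KEY and network access):
# with urllib.request.urlopen(build_request("Summarize DSA.", reasoning=True)) as resp:
#     body = json.load(resp)
```

Keeping request construction separate from sending makes it easy to flip `reasoning` per call, for example enabling it only for hard prompts to control thinking-token spend.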

2. Claude Sonnet 4.5: Long-Horizon Coding & App Integrations

- Sonnet Sprints 30‑Hour Code Marathons: Anthropic unveiled Claude Sonnet 4.5, claiming it maintains focus for 30+ hours on complex coding tasks and tops SWE-bench Verified, per the official post Claude Sonnet 4.5.

- Engineers reported improved nuance and tone, speculating techniques like periodic compression underlie its long-horizon performance; several shared that it handled multi-step research and implementation end-to-end in a single agentic run.

- Arena Ascension: WebDev‑Only Warmup: LMArena added claude-sonnet-4-5-20250929 to its WebDev Arena (with variants including claude-sonnet-4-5 and claude-sonnet-4-5-20250929-thinking-16k) for immediate testing at LMArena WebDev.

- Members flagged the addition and asked to surface it in the main arena after initial shakedown, noting WebDev’s evaluation-first, battle-mode constraints.

- Windsurf Wires in Sonnet & Supernova: Windsurf shipped code-supernova-1-million (a 1M context upgrade) and integrated Claude Sonnet 4.5 to accelerate Cascade Agents via parallel tool execution, as announced on X (Code Supernova 1M, Sonnet 4.5 in Windsurf).

- For a limited time, individual users get free access to Code Supernova 1M and 1x credits for Sonnet, with early adopters reporting noticeably faster multi-tool orchestration.

3. Web‑Enabled Agents & Agentic Commerce

- Checkout Clicks: ChatGPT Goes Instant: OpenAI rolled out Parental Controls and debuted Instant Checkout in ChatGPT with early partners Etsy and Shopify, powered by an open-sourced Agentic Commerce Protocol built with Stripe (Etsy, Shopify, Stripe).

- Ecosystem chatter highlighted Stripe’s new payments primitives—Patrick Collison teased a Shared Payment Tokens API—as builders speculated on secure autonomous purchase flows (Patrick on ACP + tokens).

- Auto Router Rides the Web: OpenRouter Auto now routes prompts to a web-enabled model when needed, broadening supported backends and improving retrieval for live queries (OpenRouter Auto page).

- An accompanying update on X confirmed dynamic, online routing for eligible tasks, signaling a tighter integration loop between agent planners and live search/browse (Auto Router announcement).

4. GPU Kernels, ROCm, and FP8 Training

- FlashAttention 4 Gets Forensics: A guest talk unpacked FlashAttention 4 internals, guided by Modal’s deep-dive blog Reverse-engineering FlashAttention-4, as devs gear up for Blackwell’s new tensor-core pathways.

- Threads weighed pure CUDA implementations versus CuTe, noting that top-tier kernels need architecture-specific code paths: wgmma (Hopper), tcgen05 (Blackwell), and mma.sync (Ada).

- FP8 Full‑Shard Fiesta: A new repo enables fully-sharded FP8 training for LLaMA/Qwen in pure CUDA/C++, aiming at memory and throughput wins: llmq.

- Contributors suggested an approachable starter task—implement Adam m/v states in 8‑bit—to push the optimization envelope for large-scale training.

- ROCm Nightlies Power Strix Halo: Dev builds from TheRock now bring ROCm + PyTorch to Strix Halo (gfx1151) per the release notes TheRock releases for gfx1151, with AMD’s developer Discord recommended for triage (AMD dev Discord).

- Practitioners reported better day‑to‑day PyTorch stability on Framework Desktop configurations, while reserving Radeon setups for specific ROCm 6.4.4 workflows.

5. RL Stability, Monitor‑RAG, and Mechanistic Steering

- Speed Kills: RL Collapse Clarified: Researchers shared When Speed Kills Stability: Demystifying RL Collapse from the Training–Inference Mismatch with evidence for a brittle two-stage failure cascade and kernel‑level error amplification (Notion summary, arXiv paper).

- Practitioners tied the findings to instability they’d seen in Gemma3 and other runs, calling the mismatch a “vicious feedback loop” and urging more conservative kernel/settings during RL fine‑tuning.

- Monitor Me Maybe: Eigen‑1’s Token‑Time RAG: Eigen‑1’s Monitor‑based RAG injects evidence at the token level for continuous, zero‑entropy reasoning streams, contrasting stage‑based declarative stacks like DSPy (Eigen‑1 paper).

- Related works were cited for context on continuous/adaptive reasoning (paper list 1, paper list 2, CoT monitor, follow‑ups 1, follow‑ups 2), with builders noting simpler maintenance vs. LangGraph in some pipelines.

- SAE Steering Says Style Sways Scores: A new interpretability result, Interpretable Preference Optimization via Sparse Feature Steering, uses SAEs, feature steering, and dynamic low‑rank updates to make RLHF more causal and transparent (Steering paper on arXiv).

- Causal ablations surfaced a “style over substance” effect—formatting features often reduce loss more than honesty/alignment features—offering a mechanistic rationale for leaderboard biases.
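The sparse feature steering idea can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's code: an SAE encoder `ReLU(W_enc h + b_enc)` produces feature activations, a hypothetical `steering_policy` assigns coefficients only to active features, and `steer` adds the chosen decoder directions to the activation. Because each coefficient scales with the input's own feature activation, the update changes per input and spans only a few directions, which is the sense in which such steering resembles a dynamic, input-dependent low-rank update.

```python
from typing import Dict, List

Vector = List[float]


def encode(h: Vector, w_enc: List[Vector], b_enc: Vector) -> Vector:
    """SAE encoder: feature activations ReLU(W_enc h + b_enc), one row of W_enc per feature."""
    return [max(0.0, sum(w * x for w, x in zip(row, h)) + b) for row, b in zip(w_enc, b_enc)]


def steering_policy(features: Vector, gains: Dict[int, float]) -> Dict[int, float]:
    """Hypothetical context-dependent policy: steer feature i only when it is active,
    with a coefficient proportional to its activation (so the update depends on the input)."""
    return {i: g * features[i] for i, g in gains.items() if features[i] > 0}


def steer(h: Vector, decoder: List[Vector], coeffs: Dict[int, float]) -> Vector:
    """Add a sparse combination of SAE decoder directions: h' = h + sum_i c_i * d_i."""
    out = list(h)
    for i, c in coeffs.items():
        for j, d in enumerate(decoder[i]):
            out[j] += c * d
    return out
```

In an actual preference-optimization setup the `gains` would be learned against the RLHF objective; here they are fixed numbers, which is enough to show why ablating a single feature's coefficient gives a causal handle on behavior such as formatting style.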

Discord: High level Discord summaries

LMArena Discord

- Sonnet 4.5 Enters the WebDev Arena: Members discussed the release of Claude Sonnet 4.5 and its initial addition exclusively to the WebDev Arena on LMArena, with the model named claude-sonnet-4-5-20250929 available for testing here.

- Additional models, including claude-sonnet-4-5 and claude-sonnet-4-5-20250929-thinking-16k, were also added to the platform.

- Experimental Deepseek Models Arrive: The experimental model deepseek-v3.2-exp and deepseek-v3.2-exp-thinking have been made available on LMArena.

- No further details were provided.

- Image Generation Limits on Seedream 4 Draw Ire: Moderators confirmed that the likelihood of removing rate limits for unlimited image generation on Seedream 4 is low.

- These limits manage costs due to platform popularity, leading to decisions like downgrading gpt-image-1 to a lower preset and removing the flux kontext model.

- Sound Glitches Plague Video Arena: Members reported unreliable sound in Video Arena, noting that audio support is random and not available for all models.

- Because Video Arena is meant for evaluation, it runs only in battle mode, so specific models cannot be selected.

- Icons Vanish from OpenAI Platform Sidebars: Users noticed changes in the sidebars of platform.openai.com, with the disappearance of two icons: one for threads and another one for messages.

- The removal of these icons has caused confusion among users navigating the platform.

LM Studio Discord

- DDR5’s Impact on Token Speed Debated: Members debated the impact of memory bandwidth differences between DDR5 and DDR4 on token generation speed for models like Qwen3 30B and GPT-oss 120B.

- While DDR5 6000 is about 60GB/s and DDR4 3600 is about 35-40GB/s, the speeds can even out when using different quantization levels.

- GPT-oss 120b has excruciating prompt-processing time: One member humorously mentioned that running GPT-oss 120b Q8 on a single 3090 took about 5-6 HOURS to process a 70,000-token prompt.

- They added that the response stayed coherent even though that single prompt took the context from 2% to 200% of the window (a context overflow), and shared screenshots.

- LM Studio’s Remote Connection Feature Under Development: A member asked if they could connect LM Studio from their PC to their laptop, and another member clarified that it is not supported yet, but is planned for the future.

- They shared a link to a Reddit AMA with the LM Studio team discussing this feature.

- Blackwell GPU Owners Ask About Windows: A member has a Blackwell GPU with 96GB and is interested in running it with Windows instead of Linux, but didn’t get much advice on it.

- This prompted another member to ask how they went from looking at budget options to an $8000 graphics card, as 4090s are going for $2700-3K each.

- 4B Models Can Still Hog RAM: A member sought recommendations for a 4B or smaller model for basic tasks, and another cautioned that even 4B models can consume around 16 GB of RAM depending on settings.

- A link to the Qwen3-4B-Thinking-2507 model was shared, with reported usage of 7GB system and 15.8GB when loaded.
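The memory claims in this section can be checked with back-of-the-envelope arithmetic. A sketch under simplifying assumptions: decode speed is treated as purely memory-bandwidth-bound (each generated token reads all active weights once, ignoring KV-cache reads and compute), and the example figures (about 3.3B active parameters for Qwen3 30B A3B; 36 layers, 8 KV heads, and head dimension 128 for Qwen3-4B) are assumptions to verify against the model configs.

```python
def decode_tokens_per_s_upper_bound(bandwidth_gb_s: float, active_params_b: float,
                                    bits_per_weight: float) -> float:
    """Memory-bound ceiling: every generated token streams the active weights once."""
    bytes_per_token = active_params_b * 1e9 * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


def kv_cache_gib(layers: int, kv_heads: int, head_dim: int, context_len: int,
                 bytes_per_elem: int = 2) -> float:
    """KV cache size: keys + values (factor 2) for every layer, KV head, and position."""
    total_bytes = 2 * layers * kv_heads * head_dim * context_len * bytes_per_elem
    return total_bytes / 2**30
```

With the bandwidth figures quoted above, a ~4.5-bit quant of a 3.3B-active model has a ceiling of about 32 tokens/s at 60 GB/s versus about 22 at 40 GB/s; halving bits per weight doubles the ceiling, which is one way the choice of quantization can offset the DDR4/DDR5 gap. On the KV side, the assumed Qwen3-4B shape needs 144 KiB per token at FP16, so a 32K-token context adds 4.5 GiB on top of the weights, and a context setting of 262,144 tokens would add 36 GiB, which is how even a 4B model can consume far more RAM than its weights suggest.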

Unsloth AI (Daniel Han) Discord

- DeepSeek V3.2 Indexes in a Flash: DeepSeek V3.2 was released with a grafted on attention mechanism yielding faster performance, with additional analysis available in Daniel Han’s X post.

- The model achieves faster token speeds with sparse decoding and prefill, though implementing it is allegedly nuts.

- Claude Sonnet Codes Marathon: Anthropic has launched Claude Sonnet 4.5, capable of maintaining focus for more than 30 hours on complex coding tasks, and achieving top performance on the SWE-bench Verified evaluation, according to Anthropic’s official announcement.

- It may use techniques like periodic compression to handle such long contexts, and some users find its high nuance and tone to be an improvement over previous versions.

- RL Learns LoRA is Enough: Research from Thinking Machines shows that LoRA can match the learning performance of Full Fine-Tuning when running policy gradient algorithms for reinforcement learning, even with low ranks, according to their blog post.

- It may be crucial to reduce batch sizes with LoRA, and applying the LoRA to the MLP/FFN layers might be a must.

- UV Overtakes Conda Venv: After messing up their venv again, a user asked about the merits of conda versus uv and whether one was better than the other.

- Another user said venvs are much more reliable, with uv being faster, especially when offloading venvs to an external drive.

- LLM-RL Collapse Investigated: A paper on LLM-RL collapse (link, Notion link) was shared, with members noting its relevance to Unsloth and experiences with Gemma3.

- The paper suggests a two-stage failure cascade involving increased numerical sensitivity and kernel-driven error amplification, leading to a vicious feedback loop and training-inference mismatch.
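The LoRA result above is easier to reason about with the parameterization in view: a frozen weight W plus a trainable low-rank product, `x W^T + (alpha / r) * x A^T B^T`, with B zero-initialized so training starts exactly from the base model. This is a dependency-free toy sketch; the class and helper names are hypothetical and this is not Thinking Machines' training code. Following the guidance summarized above, one would wrap the MLP/FFN projections rather than only the attention projections.

```python
import random
from typing import List

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain (n x k) @ (k x m) matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus trainable update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight                                   # frozen, never updated
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)]  # r x d_in, small random init
                  for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]          # d_out x r, zero init
        self.scale = alpha / rank

    def forward(self, x: Matrix) -> Matrix:
        """x is (batch x d_in); returns x W^T + scale * (x A^T) B^T."""
        base = matmul(x, transpose(self.weight))
        update = matmul(matmul(x, transpose(self.A)), transpose(self.B))
        return [[b + self.scale * u for b, u in zip(rb, ru)] for rb, ru in zip(base, update)]

    def trainable_params(self) -> int:
        """Only A and B are trained: r * (d_in + d_out) values instead of d_in * d_out."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

For a 4096×4096 projection at rank 16, the trainable count is 16 × (4096 + 4096) = 131,072 versus about 16.8M for full fine-tuning, which is why LoRA matching full fine-tuning in RL is notable.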

OpenAI Discord

- Parental Controls and Instant Checkout Arrive in ChatGPT: Parental controls are being rolled out to all ChatGPT users, allowing parents to link accounts with their teens so the teens automatically get stronger safeguards; Instant Checkout also debuted in ChatGPT with early partners Etsy and Shopify.

- GPT-5 Math and Coding Prowess: Members argued that GPT-5 is significantly better than 4o for constructive tasks like math and coding, attributing this to its thinking abilities and to it being a mixture of experts.

- Members joked that if 4o were AGI, we would probably all have died in a nuclear war caused by a misinterpreted command.

- DALL-E Branding Going Away?: The DALL-E brand might be phased out, suggesting the use of GPT Image 1 or GPT-4o Image when referring to images from OpenAI.

- Members clarified that the newest model is separated from the DALL-E 2/3 lineage, with current branding dependent on the usage context, such as create images on ChatGPT or create images on Sora.

- Automated Scientific Writing Method Deemed Very Useful: A member automated the writing of scientific manuscripts by treating the scientific method as a workflow expressed as a natural-language chain of thought.

- This automation method could help others in writing scientific papers.

- Models Obey User Requests for False Info: A member asked for prompts that cause AI to give wrong answers or make up information, demonstrated by a ChatGPT share where the model was prompted to provide 3 incorrect statements.

- The model still obeyed the instruction to give wrong answers, prompting the point that, as with driving a car, users should not deliberately use it in dangerous ways.

OpenRouter Discord

- DeepSeek Experiments with Sparse Attention: DeepSeek released V3.2-Exp, an experimental model featuring DeepSeek Sparse Attention (DSA) for improved long-context efficiency, with reasoning control via the `reasoning: enabled` boolean, as described in their documentation.

- Benchmarks show V3.2-Exp performs comparably to V3.1-Terminus across key tasks (further details available on X), and it is priced at just $0.28/M prompt tokens.

- Auto Router adds Web-Enabled Agility: The Auto Router now directs prompts to an online, web-enabled model when needed and supports more models; see details here.

- Further information is provided in this X post.

- Claude Sonnet 4.5 Sonically Supersonic: Claude Sonnet 4.5 surpasses Opus 4.1 in Anthropic’s benchmarks, showing significant improvements in coding, computer use, vision, and instruction following as seen here.

- More info on this model is available on X.

- Grok-4-Fast APIs Get the 429 Blues: Members reported that Grok-4-Fast was consistently returning 429 errors, indicating 100% rate limiting, even though the status indicator showed no issues; they also noted that requests need the correct model ID, `x-ai/grok-4-fast`, plus `"reasoning": {"enabled": true}`.

- Some members suggested putting problematic providers on an ignore list due to frequent 429 errors, particularly free-model providers like Silicon Flow and Chutes.

- Gemini Earns Glowing Grade for Global Grammar: Members lauded Gemini 2.5 Flash and Mini for their translation capabilities, stating that Gemini excels in understanding context and delivering natural-sounding results, especially for Balkan languages, outperforming other models like GPT-4 and Grok.

- Other members shared their preferred models for translation which include Qwen3 2507 30b and OSS 120b.
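For the 429 errors reported above, the usual client-side mitigation is to retry with exponential backoff and jitter rather than hammering the endpoint. A minimal sketch: `send` is a hypothetical callable wrapping whatever HTTP client issues the request (for example, a call to `x-ai/grok-4-fast`) and returning `(status_code, body)`; the retry count and delays are illustrative defaults, not OpenRouter recommendations.

```python
import random
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Retry `send` while it returns HTTP 429, using exponential backoff with full jitter."""
    attempt = 0
    while True:
        status, body = send()
        if status != 429 or attempt >= max_retries:
            return status, body  # success, a non-retryable error, or out of retries
        delay = min(max_delay, base_delay * (2 ** attempt))
        sleep(random.uniform(0.0, delay))  # full jitter spreads out concurrent retries
        attempt += 1
```

If a provider sits at 100% rate limiting, backoff only delays the failure; routing around it, as members suggested with an ignore list, is the real fix.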

HuggingFace Discord

- Qwen 14B Model Gains Traction: Members found that for 16GB of VRAM, the Qwen3 14b q4 with Q4_K_M quantization offers better performance than Qwen3 4b-instruct-2507-fp16.

- The rationale was that the Q4_K_M 14B model still fits in 16 GB with room to spare, so users get a much larger model without exhausting VRAM.

- Beware of Bogus USDT Bounty Bait: A member tested a link offering $2,500 USDT and discovered it was a scam requiring an upfront payment for verification, and shared screenshots of the fake customer support interaction.

- The image analysis bot succinctly stated: “Stupid customer support bot Wanted my hard scammed 2500 dollars.”

- Liquid AI Models Spark Excitement: Members shared a HuggingFace Collection by LiquidAI, suggesting that Liquid AI is releasing interesting SLMs (Small Language Models).

- One member speculated on the possibility of deploying them on robots, while another jokingly stated I’m boutta make an open source gpt-5 with this stuff.

- mytqdm.app Tracks Progress Online: mytqdm.app has launched, offering a platform to track task progress online, similar to tqdm, accessible via REST API or a JS widget.

- The creator mentioned they would open the repo tomorrow.

- SmolLM3-3B Chat Template Bug Causes Headaches: A participant identified a potential bug in the `HuggingFaceTB/SmolLM3-3B` chat template related to missing `<tool_call>` tags and incorrect role assignments, as described in this issue.

- The issue stems from the template’s implementation of XML-style tool calling and its conversion of `role=tool` to `role=user`, impacting the expected behavior and clarity of tool interactions.

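The 16 GB VRAM comparison in this section comes down to weight footprint. A rough sketch, assuming Q4_K_M averages about 4.8 bits per weight and Qwen3-14B has about 14.8B parameters (both approximate; check them against the actual GGUF file sizes). It ignores KV cache and activation memory, which come out of the remaining headroom.

```python
def weight_gib(params_b: float, bits_per_weight: float) -> float:
    """Approximate weight footprint: parameters x bits / 8, reported in GiB."""
    return params_b * 1e9 * bits_per_weight / 8 / 2**30


VRAM_GIB = 16.0
candidates = {
    "Qwen3-14B Q4_K_M (~4.8 bits/weight, assumed)": weight_gib(14.8, 4.8),
    "Qwen3-4B FP16": weight_gib(4.0, 16),
}
for name, gib in candidates.items():
    print(f"{name}: {gib:.1f} GiB of weights, {VRAM_GIB - gib:.1f} GiB left for KV cache/activations")
```

Under these assumptions both options need similar memory (roughly 8.3 GiB versus 7.5 GiB of weights), but the quantized 14B model carries several times more parameters, which is the trade-off members were pointing at.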

Cursor Community Discord

- Cursor Terminal hangs under Command: Users report Cursor hangs when running terminal commands, which start but never complete; some found sending an extra enter to the terminal dislodges the logjam.

- Others discovered that unrelated hanging processes can cause this, and resolving those processes allows Cursor to work properly.

- Sonnet 4.5 Arrives, Initial reviews are mixed: Claude Sonnet 4.5 debuted with a 1M context window, up from Claude 4’s 200k, and shares the same pricing as its predecessor.

- Early feedback is mixed: some users are evaluating it as a replacement for the older Claude 4 model, and the Cursor team will update Cursor accordingly.

- Auto Mode under friendly fire again: One user reported that Auto isn’t working for even simple UI tasks, suspecting the LLM was changed after Cursor started charging for Auto usage.

- Another user suggested improving the prompt to achieve the desired result.

- Configuration for DevContainers Shared: One member shared their DevContainers configuration, including a working Dockerfile and provided a link to their GitHub repository for reference.

- This configuration helps other members with setting up their development environments.

- Background Agents Image Interpretation Bug: A user reported an issue with background agents being unable to interpret images in followups, despite the agent’s indication of drag-and-drop functionality.

- They were attempting to validate UI changes using browser screenshots with the cursor agent and sought a solution for image interpretation in followups.

Moonshot AI (Kimi K-2) Discord

- K2 and Qwen3 Win Among Chinese LLMs: Among DS-v3.1, Qwen3, K2, and GLM-4.5, members named K2 and Qwen3 the clear winners, establishing Alibaba and Moonshot as leaders among Chinese frontier labs.

- ByteDance is also considered top-tier for visual models, specifically Seedance, which was called SOTA.

- GLM-4.5 is the Academic Nerd: GLM-4.5 is good at rule following, avoids hallucination, and works hard, but its reasoning is limited and linear.

- Unlike K2 and Qwen3, it lacks independent thinking; when presented with two convincing arguments, it chooses the one read last.

- Deepseek may not be Best for Coding?: DeepSeek may not be the best for coding overall, but it is excellent at spitting out large blocks of working code and has superior design capabilities.

- One user prefers Kimi for design, Qwen Code CLI as the primary coding workhorse, and DeepSeek for single, complex 200-line code blocks that Qwen struggles with.

- Kimi Research Limit Sparks Debate: Members debated the limits of Kimi’s free Research Mode, disputing claims that it used to offer unlimited access.

- It was clarified that even OpenAI’s $200 Pro plan doesn’t offer unlimited deep research, and one user expressed data privacy concerns due to Kimi’s Chinese origin.

- Base Models Win for Website Code: Members discuss the merits of using base models over instruct models, with one user citing better results outside basic tasks.

- This user builds around continuations instead of chat, likening it to writing website code from the ground up rather than using something like Squarespace.

Yannick Kilcher Discord

- Transformer Models at Crossroads: Learning or Lock-in?: A YouTube video ignited debate on whether current transformer models can achieve continued learning, a feature some view as critical for human-like intelligence, but others see as a hindrance to reproducibility and verifiability.

- While some members champion continued learning for better mimicking of human intelligence, others insist that frozen weights are vital for reproducibility, regardless of the complexities of black-box systems.

- Sutton’s Serpentine Sentiments Stir System Sanity Scrutiny: Referencing Sutton’s essay, members examined the obligation to uphold correctness in AI, contrasting rule-based AI with LLMs trained via RL, where objectives are hard-coded.

- While human learning objectives are externally constrained, the discussion questioned whether we truly desire an unconstrained AI.

- Inductive Bias Battle: Brains Beat Basic LLMs?: Discussion centered on the substantial inductive bias of the human brain, molded by evolution, versus LLMs, viewed as fundamental substrates needing inductive bias evolution during training.

- The question arose whether the main issue in AI is the need to evolve inductive bias or if there is a fundamental efficiency issue in learning algorithms.

- DeepSeek’s Dance: V3.2 Drops and Delights: The community celebrated the release of DeepSeek V3.2, with members sharing a link to the PDF and exclaiming, ‘Wake up babe, new DeepSeek just dropped!’

- The announcement was immediately followed by a humorous wake up gif.

- Claude’s Craft: Sonnet 4.5 Sees the Scene: Members acknowledged the release of Anthropic’s new model, linking to a blogpost about Claude Sonnet 4.5.

- No specific technical details were shared regarding the new model’s capabilities or improvements.

Eleuther Discord

- Bayesian Beats Grid for LR Search: Members suggested exploring a Bayesian approach for learning rates instead of grid searches, referencing a Weights & Biases article.

- A member also recommended reading Google Research’s tuning playbook for more guidance.

- YaRN Authorship Clarified: The YaRN paper was identified as primarily a Nous Research paper, with editing assistance from EAI, while Stability AI and LAION provided the supercluster infrastructure to train across hundreds of GPUs for the 128k context length.

- A member referenced Stability AI and LAION’s supercluster to enable the 128k context length.

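- Optimal Brain Damage Theory Resurfaces: Members connected prunability and quantizability through LeCun’s Optimal Brain Damage (OBD) theory, whose second-order math GPTQ reuses via the closely related Optimal Brain Surgeon framework; the link offered was that pruning, like quantization, reduces the model’s description length.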

- Implementation details focused on exponent and mantissa bits when weights have a good range and a flat loss landscape.

- Controversy over Static Router Choice: A member wondered if a static router choice (Token-choice w/ aux loss) colored the result of the newer paper and suggested it would be interesting to see if the result changes with grouped topk (DeepSeek) or weirder stuff like PEER.

- A member inquired about research checking asymptotic performance as G → ∞ for this law.

- SAE Steering Reveals Style Bias: A member shared their paper on Interpretable Preference Optimization via Sparse Feature Steering, which uses SAEs, steering, and dynamic low-rank updates to make alignment interpretable; causal ablations revealed a ‘style over substance’ effect.

- The method learns a sparse, context-dependent steering policy over SAE features to optimize the RLHF loss, formulated as a dynamic, input-dependent LoRA, which gives a mechanistic explanation for the ‘style bias’ seen on leaderboards.
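For reference, the second-order math behind the OBD/GPTQ connection can be written out. This is the standard textbook form (OBD, its full-Hessian successor Optimal Brain Surgeon, and the quantization variant used by OBQ and GPTQ, where H is the layer-wise Hessian 2XXᵀ of the squared reconstruction error), not a derivation from the discussion itself:

```latex
% Second-order Taylor expansion of the loss around trained weights w
\delta E = \sum_i g_i\,\delta w_i + \tfrac{1}{2}\sum_i h_{ii}\,\delta w_i^2
         + \tfrac{1}{2}\sum_{i \neq j} h_{ij}\,\delta w_i\,\delta w_j + O(\lVert \delta w \rVert^3)

% OBD: at a minimum (g \approx 0), keep only the diagonal Hessian.
% Zeroing weight k (\delta w_k = -w_k) then costs approximately
s_k = \tfrac{1}{2}\, h_{kk}\, w_k^2

% OBS / OBQ (GPTQ lineage): use the full inverse Hessian H^{-1}. Rounding weight q
% to \mathrm{quant}(w_q) and optimally compensating the remaining weights gives
\delta w = -\frac{w_q - \mathrm{quant}(w_q)}{[H^{-1}]_{qq}}\, H^{-1}_{:,q},
\qquad
\Delta E_q = \frac{\bigl(w_q - \mathrm{quant}(w_q)\bigr)^2}{2\,[H^{-1}]_{qq}}
```

Setting quant(w_q) = 0 recovers OBS pruning, which is the precise sense in which pruning and quantization share one framework.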

GPU MODE Discord

- FA4 Guest Talk Heats Up: A guest speaker delivered a last-minute talk on FlashAttention 4 (FA4), referencing their recent blog post, as programming on the new Blackwell architecture becomes essential.

- Discussions centered around implementing FA4 in pure CUDA vs. using CuTe, considering architecture-specific implementations (wgmma for Hopper, tcgen05 for Blackwell, mma.sync for Ada).

- ROCm Rocks Strix Halo with Nightlies: TheRock nightlies are now recommended to get ROCm and PyTorch running on Strix Halo (gfx1151), as detailed in TheRock’s releases.

- However, Framework Desktop is preferred for PyTorch development rather than Radeon, and the AMD developer discord (link) was recommended for issue resolution.

- CUDA’s mallocManaged Memory Lags: Members cited data from Chips and Cheese indicating that `cudaMallocManaged` results in 41ms memory access times due to constant page faults instead of utilizing the IOMMU.

- This highlights potential performance pitfalls when relying on `cudaMallocManaged` for memory management.


- DeepSeek Eyes Sparse Attention: The DeepSeek-V3.2-Exp model employs DeepSeek Sparse Attention according to a member.

- Details are available in the associated GitHub repository, but it’s unclear whether that work influenced the final version.

- Fully-Sharded FP8 Training is Shared: A member shared a repo for fully-sharded FP8 training of LLaMA/Qwen in pure CUDA/C++.

- They noted that a good starter task for new contributors is storing Adam’s m and v states in 8-bit, which points the way to additional performance.
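
For readers new to the idea, keeping optimizer state in 8 bits usually means quantizing each block of `m` and `v` with its own scale. The NumPy sketch below assumes simple blockwise absmax int8 quantization and a hypothetical block size of 256; it is not the repo’s CUDA/C++ implementation. One caveat worth stating: `v` has a much wider dynamic range than `m`, so linear absmax quantization can flush small entries to zero and blow up `m / sqrt(v)`, which is why practical 8-bit optimizers tend to use non-linear quantization maps.

```python
import numpy as np

BLOCK = 256  # hypothetical block size

def quantize_blockwise(x: np.ndarray, block: int = BLOCK):
    """Quantize a 1-D float array to int8 with one absmax scale per block."""
    n = x.size
    pad = (-n) % block
    blocks = np.pad(x.astype(np.float32), (0, pad)).reshape(-1, block)
    scales = np.abs(blocks).max(axis=1) / 127.0
    scales[scales == 0] = 1.0  # all-zero blocks: any nonzero scale works
    q = np.round(blocks / scales[:, None]).astype(np.int8)
    return q, scales.astype(np.float32), n

def dequantize_blockwise(q: np.ndarray, scales: np.ndarray, n: int) -> np.ndarray:
    """Inverse of quantize_blockwise, up to rounding error."""
    return (q.astype(np.float32) * scales[:, None]).reshape(-1)[:n]

# Example: Adam's first moment m for a 10k-parameter tensor (synthetic values).
rng = np.random.default_rng(0)
m = rng.normal(scale=1e-3, size=10_000).astype(np.float32)
q, scales, n = quantize_blockwise(m)
m_restored = dequantize_blockwise(q, scales, n)

fp32_bytes = m.nbytes                  # 4 bytes per element
int8_bytes = q.nbytes + scales.nbytes  # 1 byte per (padded) element + one fp32 scale per block
```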

Nous Research AI Discord

- Psyche Flexes Training Prowess: Psyche began training 6 new models in parallel, marking the start of empirical training processes, as detailed on the Nous Research blog; their initial run on testnet verified they can train models over internet bandwidth.

- The team claims to have trained the largest model ever over the internet by a wide margin, at 40B parameters and 1T tokens.

- Sparse No More? DeepSeek’s ‘Sparsity’ Questioned: The DeepSeek V3.2 model uses DeepSeek Sparse Attention (DSA), but it’s argued that it’s only slightly more sparse because it forces more index reuse, according to Daniel Han’s explanation and the paper.

- Despite the name, it reuses similar attention kernels, sparsifying the KV cache without sparsifying information on the attention head, but it’s still considered a step in the right direction.
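
As a rough illustration of the general idea behind token-selection sparse attention (each query picks a small set of keys, then runs ordinary softmax attention over just those), here is a single-head NumPy sketch. It is a simplification under stated assumptions, not DeepSeek’s DSA: the real model reportedly scores tokens with a separate lightweight indexer and uses custom kernels, whereas here the index scores are simply the scaled query-key dot products and `top_k` is a hypothetical parameter.

```python
import numpy as np

def topk_sparse_attention(Q, K, V, top_k):
    """Causal single-head attention where each query attends to at most top_k keys.

    Q, K: (T, d); V: (T, d_v). Index scores are plain dot products (an assumption).
    """
    T, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)                         # (T, T)
    causal = np.tril(np.ones((T, T), dtype=bool))
    scores = np.where(causal, scores, -np.inf)            # hide future tokens
    out = np.zeros((T, V.shape[1]))
    for t in range(T):
        k = min(top_k, t + 1)                             # only t + 1 keys are visible
        idx = np.argpartition(scores[t], -k)[-k:]         # indices of the k largest scores
        s = scores[t, idx]
        w = np.exp(s - s.max())
        w /= w.sum()                                      # softmax over the selected keys only
        out[t] = w @ V[idx]
    return out

rng = np.random.default_rng(0)
T, d = 8, 4
Q, K, V = (rng.normal(size=(T, d)) for _ in range(3))
sparse_out = topk_sparse_attention(Q, K, V, top_k=2)
```

With `top_k` at least the sequence length this reduces to dense causal attention. This naive version still computes every score, so it only illustrates the selection step, not the savings a cheap indexer plus sparse kernels would provide.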

- Microsoft’s LZN Unifies ML?: Latent Zoning Network (LZN) creates a shared Gaussian latent space that encodes information across all tasks, unifying generative modeling, representation learning, and classification, as noted in a Hugging Face post.

- LZN could allow zero-shot generalization of pre-trained models by conditioning on which zone a task belongs to.

- Speedy Stability Shortchanged?: A member shared a Notion page and an arXiv paper on demystifying RL collapse caused by the training-inference mismatch, where speed compromises stability.

- The finding suggests a need to rethink the common practice of prioritizing speed over stability in RL.
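
To make the mismatch concrete: the fast rollout engine and the training engine can assign slightly different probabilities to the same sampled tokens. The sketch below assumes per-token log-probabilities from both engines are available and computes a simple disagreement diagnostic plus truncated importance weights, one commonly discussed mitigation. The cap value and the example numbers are hypothetical, and this is not necessarily what the linked paper recommends.

```python
import math

def mismatch_weights(logp_train, logp_rollout, cap=2.0):
    """Per-token ratios pi_train / pi_rollout, plus the same ratios truncated at `cap`.

    logp_train: log-probs of the sampled tokens under the training engine.
    logp_rollout: log-probs of the same tokens under the inference (rollout) engine.
    """
    ratios = [math.exp(lt - lr) for lt, lr in zip(logp_train, logp_rollout)]
    truncated = [min(r, cap) for r in ratios]
    return ratios, truncated

def mean_abs_logp_gap(logp_train, logp_rollout):
    """Diagnostic: average absolute log-prob disagreement per token."""
    return sum(abs(a - b) for a, b in zip(logp_train, logp_rollout)) / len(logp_train)

# Hypothetical values: the last token is one the sampler badly under-weighted.
logp_train = [-0.10, -2.30, -0.05, -4.00]
logp_rollout = [-0.10, -2.25, -0.06, -5.20]
ratios, weights = mismatch_weights(logp_train, logp_rollout, cap=2.0)
gap = mean_abs_logp_gap(logp_train, logp_rollout)
```

In such a scheme the truncated weights would scale each token’s policy-gradient term, so a handful of badly mismatched tokens cannot dominate an update.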

- Vision Models Think Visually?: A member speculates that vision models ‘think’ visually by synthesizing training data into images representing abstract concepts, sharing an example image generated from instructions alone here.

Latent Space Discord

- Exposed: Inflated ARR by Free Credits: A viral debate has erupted over founders tweeting eye-popping ARR numbers based on free credits, not actual cash revenue, leading to sarcastic labels like “Adjusted ARR”.

- A member shared their experience with a YC company offering upfront 12-month contracts with full refunds after one month, revealing what amounts to free trials being misrepresented as significant revenue.
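
The arithmetic behind the complaint is simple. With hypothetical figures (100 customers signing $12,000 annual contracts paid upfront and fully refundable after one month, of whom 10 stay), a run-rate computed from signed contracts diverges sharply from one backed by retained cash:

```python
def annual_run_rate(monthly_revenue: float) -> float:
    """ARR as commonly quoted: current monthly recurring revenue times 12."""
    return monthly_revenue * 12

customers_signed = 100       # hypothetical
annual_contract = 12_000.0   # hypothetical, paid upfront, refundable after one month
customers_retained = 10      # hypothetical: everyone else takes the refund

booked_arr = customers_signed * annual_contract
retained_arr = annual_run_rate(customers_retained * annual_contract / 12)
```

In this made-up example the headline figure from signed contracts is ten times the figure supported by cash that actually stays.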

- OpenAI’s Compute Needs Skyrocket: A leaked Slack note indicated that OpenAI has already increased capacity 9x in 2025 and anticipates a 125x increase by 2033, as reported here.

- This projected increase may exceed India’s entire current electricity-generation capacity. Some replies pointed out that this comparison underestimates the compute involved, given Nvidia’s gains in “intelligence per watt,” which sparked discussion about resource implications.
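
For a sense of scale: if the 125x figure is measured against 2025 capacity and spread evenly over the eight years to 2033 (both assumptions, since the summary does not state the baseline), it implies roughly 83% compound growth per year.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth factor g such that g ** years == multiple."""
    return multiple ** (1 / years)

g = implied_annual_growth(125, 2033 - 2025)
print(f"{g:.3f}x per year, about {100 * (g - 1):.0f}% growth")
```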

- ChatGPT and Claude Get New Features: ChatGPT gained parental controls, a hidden Orders section, SMS notifications, and new tools, while Claude introduced “Imagine with Claude” for interface building, as reported here.

- Community members shared mixed reactions, ranging from concerns about GPT-4o routing to cautious optimism about the new kid-safety measures.

- Stripe and OpenAI Join Forces in Agentic Commerce: OpenAI added Stripe-powered Instant Checkout to ChatGPT, while Stripe and OpenAI jointly released the Agentic Commerce Protocol, with Stripe introducing a new Shared Payment Tokens API, as announced here.

- These tools aim to enable autonomous agents to perform secure online payments, sparking excitement about the future of Agentic Commerce.

- Synthetic Starlet Seeks Representation: Talent agencies are reportedly seeking to sign Tilly Norwood, a fully synthetic actress created by AI studio Xicoia, as reported.

- The story sparked a viral debate, including memes, jokes about Hollywood, and propaganda fears, with users worried about job displacement and the legal and social implications of giving representation to a digital entity.

Modular (Mojo 🔥) Discord

- AMD Cloud Powers TensorWave Access: Users can test AMD GPUs on the AMD Dev Cloud via CDNA instances or through TensorWave, which provides access to MI355X, according to this blog post.

- The blog post details performance and efficiency at scale with TensorWave.

- Transfer Sigil Enforces Variable Destruction: The `^` (transfer sigil) in Mojo ends a value’s lifetime by “moving” it, exemplified by `_ = s^`, triggering a compiler error if `s` is used afterward.

- The sigil currently does not apply to `ref` variables, as they do not own what they reference.

- Mojo Scopes out Lexical Solution: Developers discussed using extra lexical scopes in Mojo to control variable lifetimes, employing `if True:` as a makeshift scope that triggers compiler warnings.

- A `LexicalScope` struct with `__enter__` and `__exit__` methods was suggested, leading to issue 5371 on GitHub for collecting syntax ideas.

- Data Science Community Anticipates Mojo: Discussion centered on Mojo’s readiness for data science, acknowledging its number-crunching abilities but noting the lack of IO support (for example, CSV files currently have to be parsed manually).

- Community-developed pandas and seaborn functionality is vital for most data scientists, and duckdb-mojo is still immature.

MCP Contributors (Official) Discord

- Agnost AI Offers Coffee to MCP Builders: The Agnost AI team (https://agnost.ai), traveling from India, is offering coffee and beer for chats with MCP builders at the MCP Dev Summit in London.

- They are eager to swap ideas and meet like-minded people.

- Anthropic Trademark Causes Concern: Members noticed that Anthropic has registered the ModelContextProtocol name and logo as a trademark in the French database.

- The main concern is that it may give Anthropic a say in which projects use the Model Context Protocol.

- JFrog’s TULIP Debuts for Verification: JFrog introduced TULIP (Tool Usage Layered Interaction Protocol), a spec for content verification, which allows tools to declare rules and expected behaviors, aiming to create a zero-trust environment.

- It allows checking what goes into and comes out of a tool, and handling remote MCP servers that might be malicious.
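
The general pattern (a tool declares rules for what it accepts and returns, and the client enforces them on every call without trusting the server) can be sketched in Python. Everything here is hypothetical: `ToolRules`, `checked_call`, and the rule fields are illustrative names, not TULIP’s actual schema.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ToolRules:
    """Hypothetical declaration of what a tool may receive and return."""
    allowed_args: set[str]
    max_output_chars: int = 10_000
    forbidden_output_patterns: list[str] = field(default_factory=list)

class RuleViolation(Exception):
    pass

def checked_call(tool: Callable[..., str], rules: ToolRules, **kwargs) -> str:
    """Zero-trust style wrapper: validate inputs, call the tool, validate outputs."""
    unexpected = set(kwargs) - rules.allowed_args
    if unexpected:
        raise RuleViolation(f"unexpected arguments: {sorted(unexpected)}")
    result = tool(**kwargs)
    if len(result) > rules.max_output_chars:
        raise RuleViolation("output exceeds declared size limit")
    for pattern in rules.forbidden_output_patterns:
        if re.search(pattern, result):
            raise RuleViolation(f"output matches forbidden pattern {pattern!r}")
    return result

# Example: a search tool that might be served by an untrusted remote server.
def search(query: str) -> str:
    return f"results for {query}"

rules = ToolRules(
    allowed_args={"query"},
    forbidden_output_patterns=[r"(?i)ignore previous instructions"],
)
```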

- ResourceTemplates Missing Icons: It was noted that the new icons metadata SEP (PR 955) inadvertently omits icons metadata from `ResourceTemplates`.

- A member agreed that resources and resource templates having them makes sense, and a fix PR is forthcoming.

Manus.im Discord

- Local Integration with GitHub still Questionable: A user inquired about the best practices for integrating Manus with a local project and GitHub, seeking ways to connect Manus with local directories.

- A user suggested looking up earlier Discord discussions about local integration from when Manus first launched, and checking out this link for tips.

- Users claim Manus Designs Beat Claude, with the Right Prompting: A user found that, with efficient prompting, Manus handles designs better than Claude Code, and pointed to the Manus manual for prompt engineering tips.

- The user also confirmed that Manus did better web designs out of the box and that GitHub integration can work if projects are uploaded there.

- Subscription Snafu triggers Support Silence: A user reported being wrongly charged for a 1-year plan instead of a 1-month plan and said they had not received a response from Manus support after two weeks of emailing.

- There were no responses from other members or Manus staff.

- Data Privacy debated in niche IP project: A user raised concerns about whether Manus feeds user data to other users, especially when sharing the IP of a niche project, questioning if LLMs are trained on user data.

- There was no direct answer, but a link about Godhand was shared.

DSPy Discord

- Eigen-1 RAG Injects Evidence at Token Level: Eigen-1’s Monitor-based RAG implicitly injects evidence at the token level; unlike stage-based declarative pipelines such as DSPy, it relies on run-time procedural adaptivity.

- This strategy is in line with the concept of zero-entropy continuous reasoning streams, which offers more fluid and context-aware AI processing; related papers include https://huggingface.co/papers/2509.21710, https://huggingface.co/papers/2509.19894, https://arxiv.org/abs/2401.13138, https://arxiv.org/abs/2509.21782, and https://arxiv.org/abs/2509.21766.

- DSPy and LangGraph Integration is Complicated: Members debated integrating DSPy with LangGraph, suggesting it might not fully capitalize on either approach’s strengths because streaming capabilities would be lost.

- They recommended that users begin directly with DSPy to explore its features before attempting integration, emphasizing that DSPy solutions are frequently simpler to understand and maintain than LangGraph ones.

- Prompt Compiler Seeks MD Notes Edition: A user wants to build a prompt compiler that pulls relevant sections from multiple .md files (containing coding style guides, PR comments, etc.) to form a dynamic prompt for Copilot.

- Suggestions included using GPT-5 to generate code examples based on the rules in the .md files, or trying a RAG system with relevant code examples; concerns were raised about the effectiveness of MCP for this particular use case.
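
One lightweight way to prototype the prompt-compiler idea before reaching for RAG is to split each .md file into heading-delimited sections and keep the ones that share the most keywords with the task. This is a hypothetical sketch: the function names, the keyword-overlap scoring, and the `max_sections` limit are all assumptions, and embedding-based retrieval would likely rank sections better.

```python
import re

def split_sections(markdown: str) -> list[tuple[str, str]]:
    """Split a markdown document into (heading, body) pairs at #-style headings."""
    sections, heading, lines = [], "(preamble)", []
    for line in markdown.splitlines():
        if re.match(r"^#{1,6}\s", line):
            if lines:
                sections.append((heading, "\n".join(lines).strip()))
            heading, lines = line.lstrip("#").strip(), []
        else:
            lines.append(line)
    if lines:
        sections.append((heading, "\n".join(lines).strip()))
    return sections

def words(text: str) -> set[str]:
    """Lowercased identifier-like tokens used for crude keyword matching."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))

def compile_prompt(task: str, files: dict[str, str], max_sections: int = 3) -> str:
    """Rank all sections by keyword overlap with the task and assemble a prompt."""
    task_words = words(task)
    scored = []
    for name, text in files.items():
        for heading, body in split_sections(text):
            score = len(task_words & words(heading + " " + body))
            if score > 0:
                scored.append((score, name, heading, body))
    scored.sort(key=lambda s: s[0], reverse=True)  # stable: ties keep file order
    parts = [f"## {heading} ({name})\n{body}" for _, name, heading, body in scored[:max_sections]]
    return f"Task: {task}\n\nRelevant guidelines:\n\n" + "\n\n".join(parts)

files = {
    "style.md": "# Naming\nUse snake_case for functions.\n# Errors\nNever swallow exceptions.",
    "review.md": "# PR comments\nPrefer small functions and snake_case names.",
}
prompt = compile_prompt("rename functions to snake_case", files, max_sections=2)
```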

- Stealth Tracing Through DSPy Modules: A user asked how to pass inputs like trace_id to DSPy modules without exposing them to the LLM or the optimizer.

- Possible solutions involved refactoring the module structure during optimization runs or using a global variable, with the first option preferred to prevent inadvertent impacts on the optimizer.
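
If one does take the global-variable route, Python’s standard `contextvars` module makes it safer: the trace id lives outside the module’s inputs entirely (so neither the prompt nor an optimizer sees it) and is scoped per call or per async task. A minimal sketch; `AnswerModule` is a hypothetical stand-in for a DSPy module, not real DSPy code, and the thread itself preferred restructuring the module.

```python
import contextvars
from contextlib import contextmanager
from typing import Optional

# Holds the current trace id without it ever being a module input.
current_trace_id = contextvars.ContextVar("current_trace_id", default=None)

@contextmanager
def traced(trace_id: str):
    """Set the trace id for the duration of a block, then restore the previous value."""
    token = current_trace_id.set(trace_id)
    try:
        yield
    finally:
        current_trace_id.reset(token)

log: list[tuple[Optional[str], str]] = []

class AnswerModule:
    """Hypothetical stand-in for a DSPy module; forward() only takes `question`."""

    def forward(self, question: str) -> str:
        answer = f"answer to {question!r}"                # placeholder for the LLM call
        log.append((current_trace_id.get(), question))   # side-channel logging only
        return answer

module = AnswerModule()
with traced("req-123"):
    module.forward("What is DSPy?")
module.forward("untraced call")
```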

- DSPy Grapples with LLM Caching Conundrum: A user looked into using LLM providers’ input caching with DSPy, but found that minor differences in prompt prefixes across modules prevent effective caching.

- The group suggested that this runs against the way LLM caching works, but that a feasible workaround could be to hard-code the shared prefix as the first input field.
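
Provider-side prompt caching generally reuses only an exact shared prefix, so any per-module text placed before the common material breaks the match. A toy illustration, assuming whitespace-split words as a stand-in for tokens and hypothetical prompts, of why putting the shared prefix first helps:

```python
def common_prefix_tokens(a: str, b: str) -> int:
    """Number of leading whitespace-separated tokens two prompts share."""
    n = 0
    for x, y in zip(a.split(), b.split()):
        if x != y:
            break
        n += 1
    return n

# Hypothetical long context shared by every module.
shared = "You are a careful assistant. Project context: " + "lorem " * 50

# Layout A: module-specific instructions come first, shared context after.
a1 = "Summarize the input. " + shared + "Input: doc1"
a2 = "Classify the input. " + shared + "Input: doc2"

# Layout B: the shared context is hard-coded as the first field.
b1 = shared + "Summarize the input. Input: doc1"
b2 = shared + "Classify the input. Input: doc2"
```

Layout A shares no prefix at all, while layout B shares the whole context block. Real caches work on tokenizer tokens or fixed-size blocks and often require a minimum prefix length, so the provider’s documentation is the authority on exact behavior.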

aider (Paul Gauthier) Discord

- GPT-5/GPT-4.1 Combo Creates Coding Dream Team: Users are reporting success using GPT-5 for architecture and GPT-4.1 for code editing, echoing sentiments like “GLM 4.5 air for life”.

- A user deploys GPT-5-mini with Aider-CE navigator mode for architecture, then uses GPT-4.1 as coder when in normal mode, capitalizing on GitHub Copilot’s free access.

- DeepSeek v3.1 Balances Price and Performance: DeepSeek v3.1 is being favored for providing the best balance between cost and smartness, becoming a primary model choice alongside GPT-5.

- The model’s cost-effectiveness makes it a practical choice for users seeking high performance without excessive expenditure.

- Aider-CE Fork has 128k Context: A user highlighted the move to the aider-ce fork, appreciating its transparency and efficient token use, pointing out the default 128k context for DeepSeek.

- The user leverages Aider-CE for integrating context from search results and browser testing, noting that the Aider-CE GitHub repository provides further details.

- Aiderx Offers Model Selection: Aiderx is an alternative tool enabling model selection via configuration, aiming to cut costs and boost speed, potentially offering an alternative to models like ClaudeAI.

- The tool provides flexibility in choosing the most suitable model for specific tasks, optimizing resource utilization.

- Aider Lacks Native Task Management: When asked about native task or todo management similar to GitHub Copilot, it was confirmed that Aider does not have a built-in system.

- A member suggested using a markdown spec file with phases and checkbox lists for managing tasks, instructing the LLM to execute tasks sequentially.
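
A spec file like the one suggested might hold phases as headings and tasks as `- [ ]` checkboxes. As a hypothetical helper (not an Aider feature), a small script can pick out the next unchecked task to hand to the LLM:

```python
import re
from typing import Optional, Tuple

CHECKBOX = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*)$")

def next_task(spec: str) -> Optional[Tuple[str, str]]:
    """Return (phase, task) for the first unchecked box, or None if all are done."""
    phase = ""
    for line in spec.splitlines():
        if line.startswith("#"):
            phase = line.lstrip("#").strip()
            continue
        m = CHECKBOX.match(line)
        if m and m.group(1) == " ":
            return phase, m.group(2).strip()
    return None

spec = """# Phase 1: Setup
- [x] Create project skeleton
- [x] Add CI config

# Phase 2: Features
- [ ] Implement login endpoint
- [ ] Add rate limiting
"""
```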

tinygrad (George Hotz) Discord

- ROCm challenges NVIDIA for supremacy: Members debated the merits of ROCm as a cost-effective alternative to NVIDIA, citing the perceived high markup of NVIDIA products.

- One member considered adopting ROCm if a suitable configuration could be found, signaling a potential shift away from NVIDIA due to pricing concerns.

- Hashcat scales linearly: Discussion indicated that Hashcat’s performance scales linearly with additional GPUs.

- Members suggested consulting existing benchmark databases to understand performance expectations.

- Rangeify poised for outer world launch: The NIR backend is nearing completion and ready for review, paving the way for integration with Mesa.

- Once rangeify is default, the team plans to reduce the codebase, suggesting a streamlining of the project’s architecture.

- Genoa CPU enters hashing arena: Members speculated that the Genoa CPU could be leveraged for hashing tasks.

- However, concerns were raised regarding its power efficiency and whether it would justify the associated costs, questioning its practicality.

- Tinygrad Meeting 90 eyes Rangeify completion: The agenda for Tinygrad Meeting #90 includes company updates and a focus on completing RANGEIFY! SPEC=1.

- Additional discussion topics include tuning for default and addressing remaining bugs to improve overall system stability.

Windsurf Discord

- Windsurf Lights Up Code Supernova 1M: Windsurf introduces code-supernova-1-million, an enhanced version of code-supernova boasting a 1M context window.

- For a limited time, individual users can access it for free, detailed in this announcement.

- Claude Sonnet 4.5 Supercharges Windsurf: Claude Sonnet 4.5 is now integrated into Windsurf, significantly accelerating Cascade Agent runs through optimized parallel tool execution.

- Individual users can leverage this for a limited time at 1x credits, per this announcement.