Data is all you need.

AI News for 12/3/2025-12/4/2025. We checked 12 subreddits, 544 Twitters and 24 Discords (205 channels, and 7543 messages) for you. Estimated reading time saved (at 200wpm): 563 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

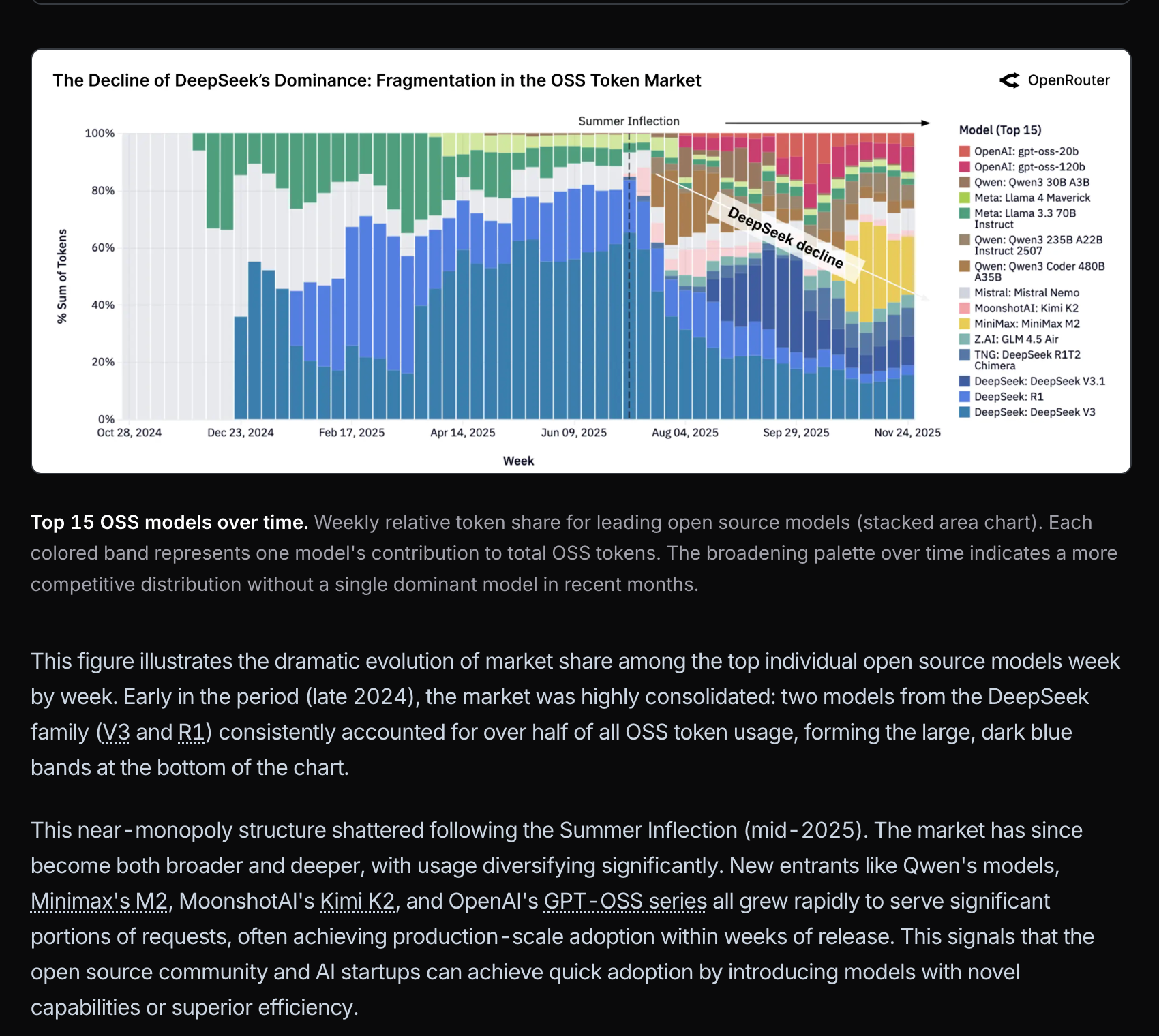

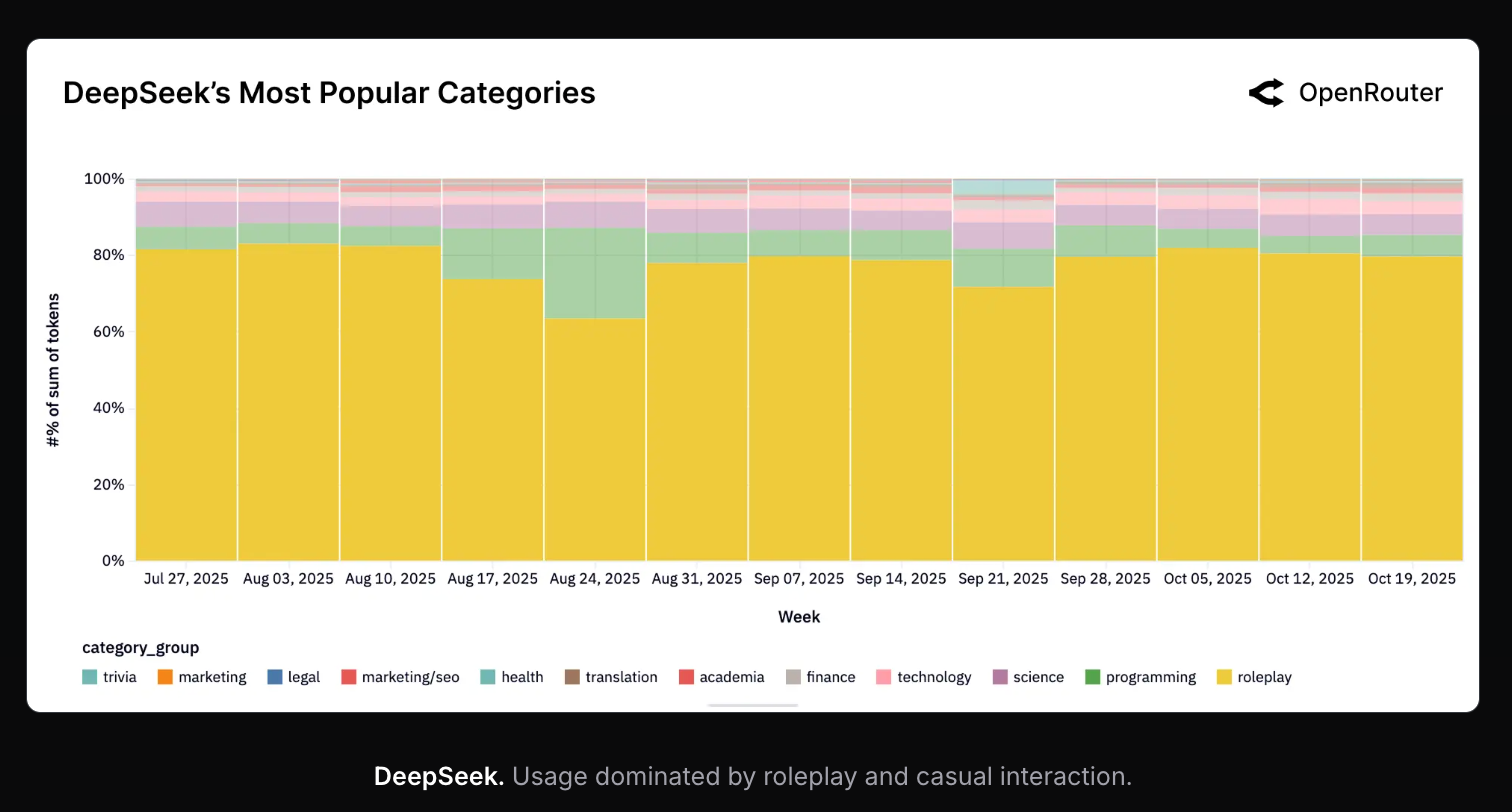

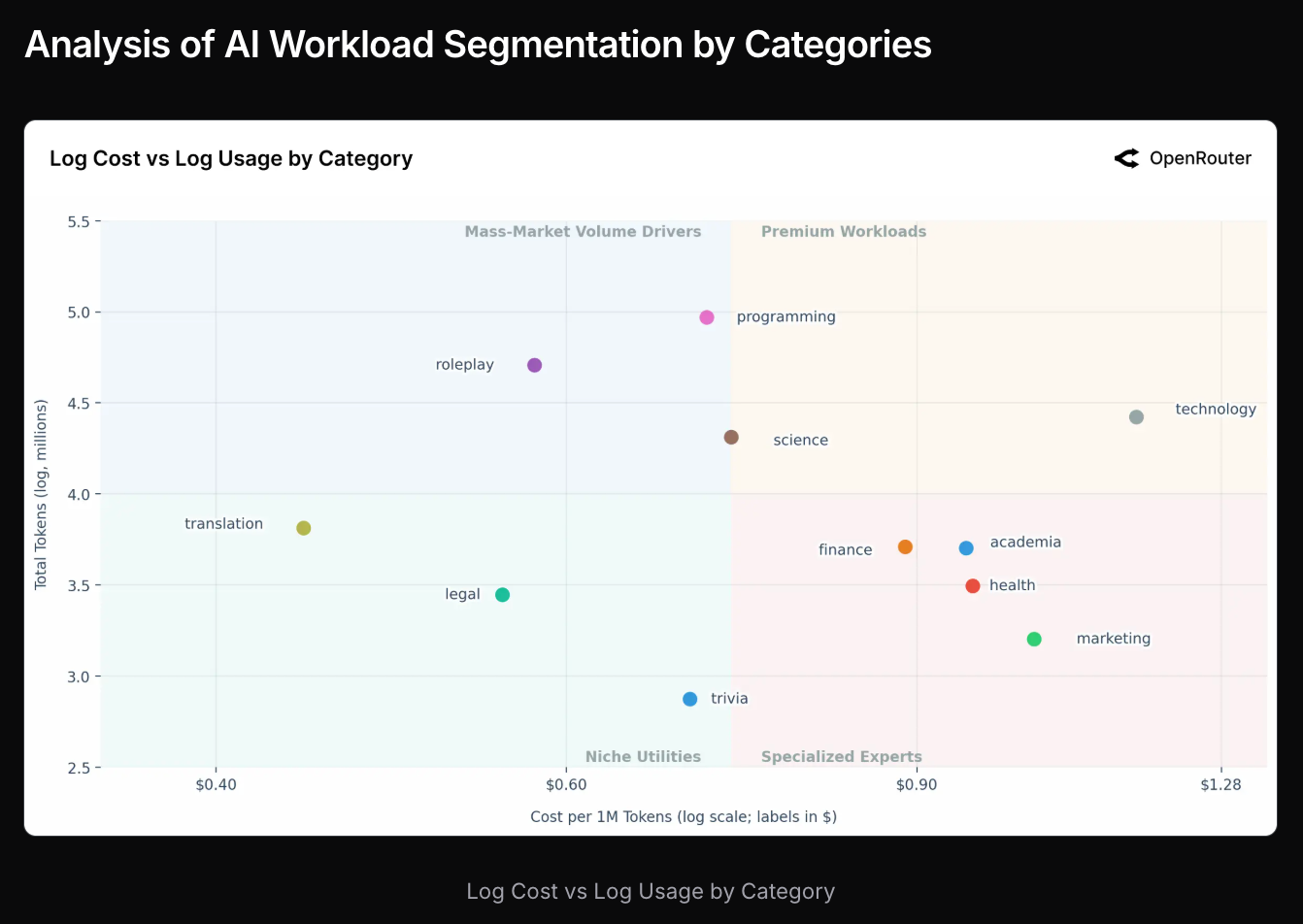

OpenRouter’s first survey is out, in web and pdf forms, and it is delightfully well done. Obviously OpenRouter has a bias (52% usage is for ahem “roleplay”), and there are other token consumers with higher volume, but OR is the ~only player that has this level of open data proxying 7T tokens per week.

Some picks:

DeepSeek’s 50% share of open-model usage has plummeted

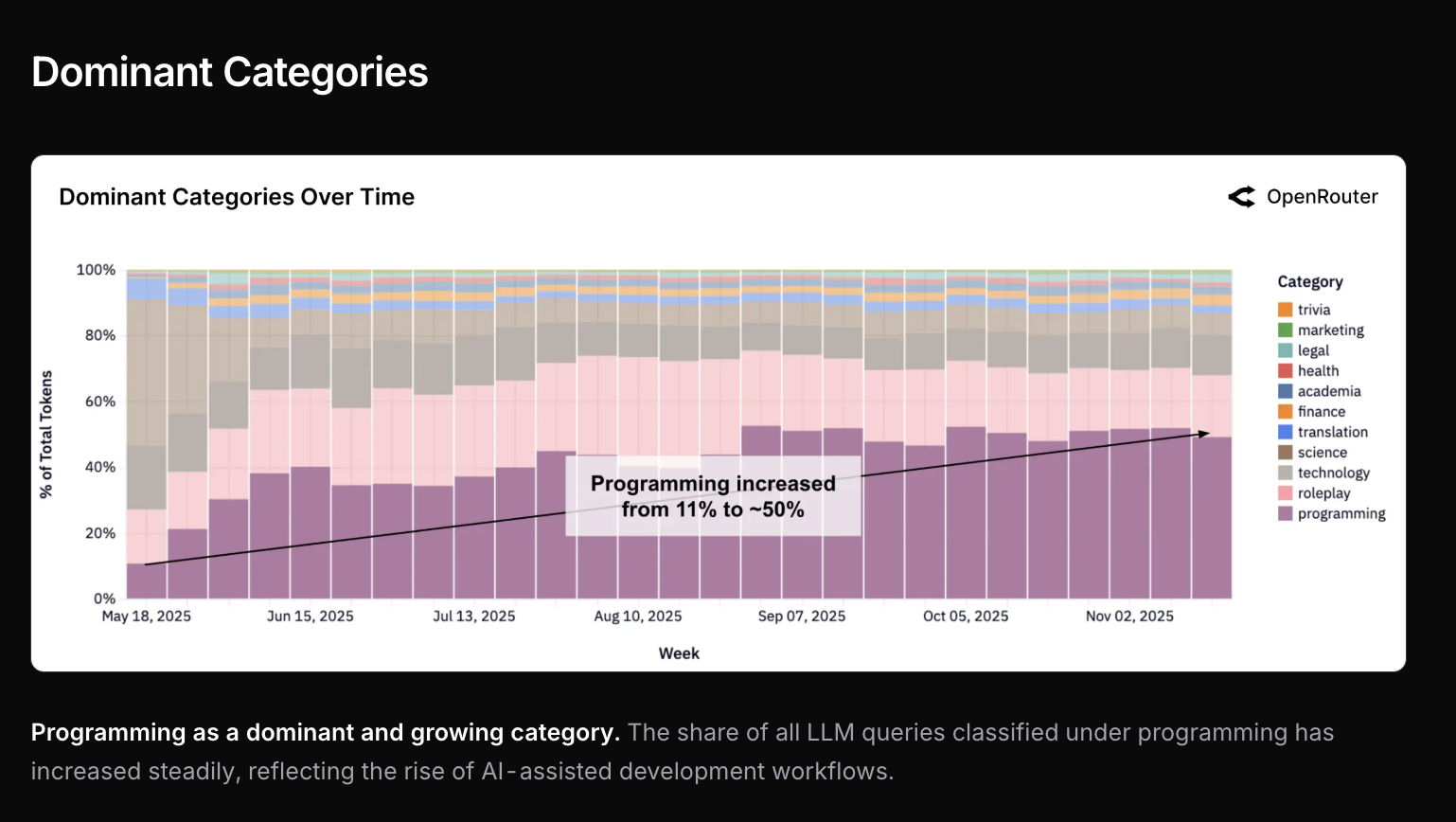

mostly because coding rose and nobody uses DeepSeek for coding:

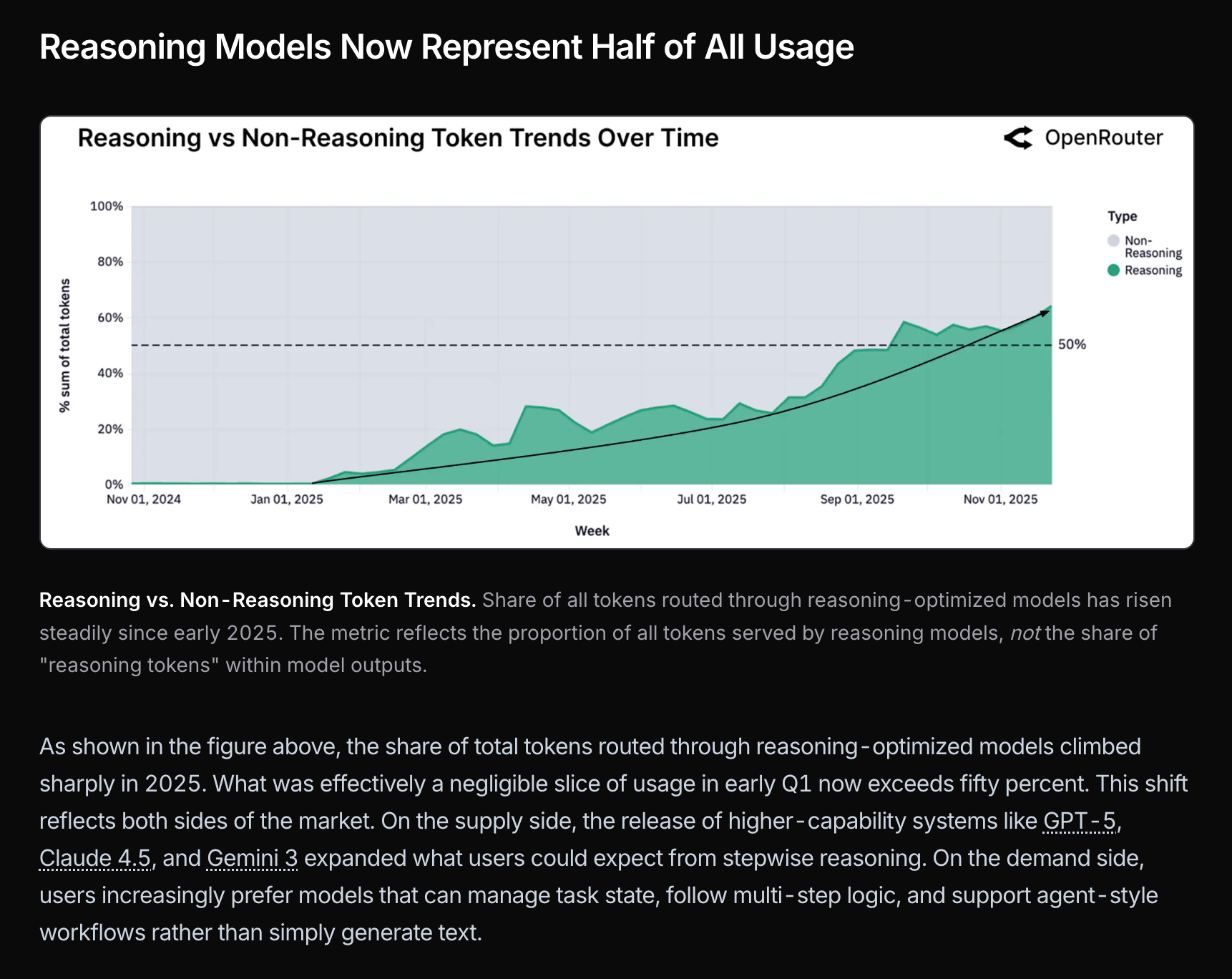

Reasoning models went from 0 to >50% usage

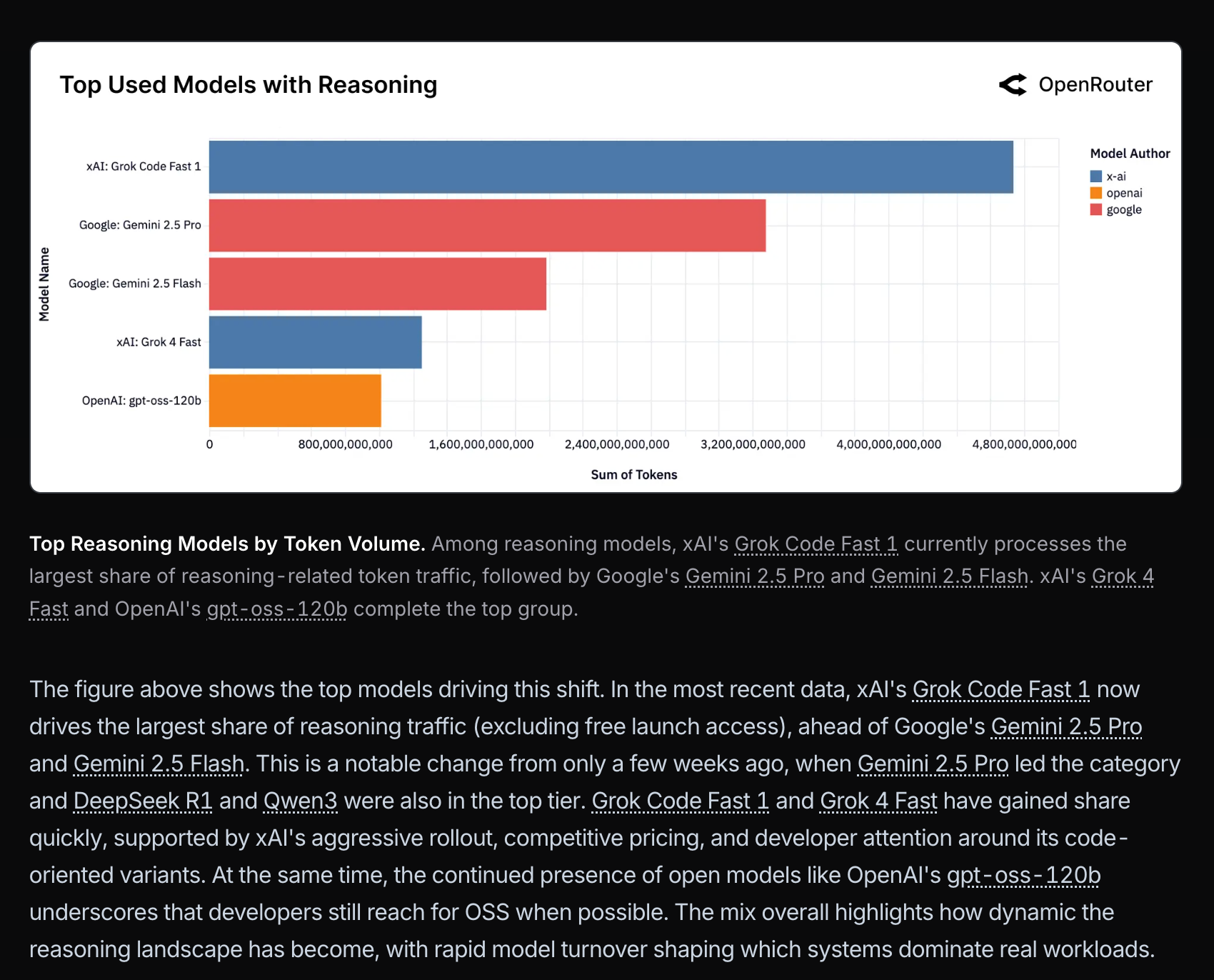

Grok Code Fast has weirdly high usage, even excluding the free promo:

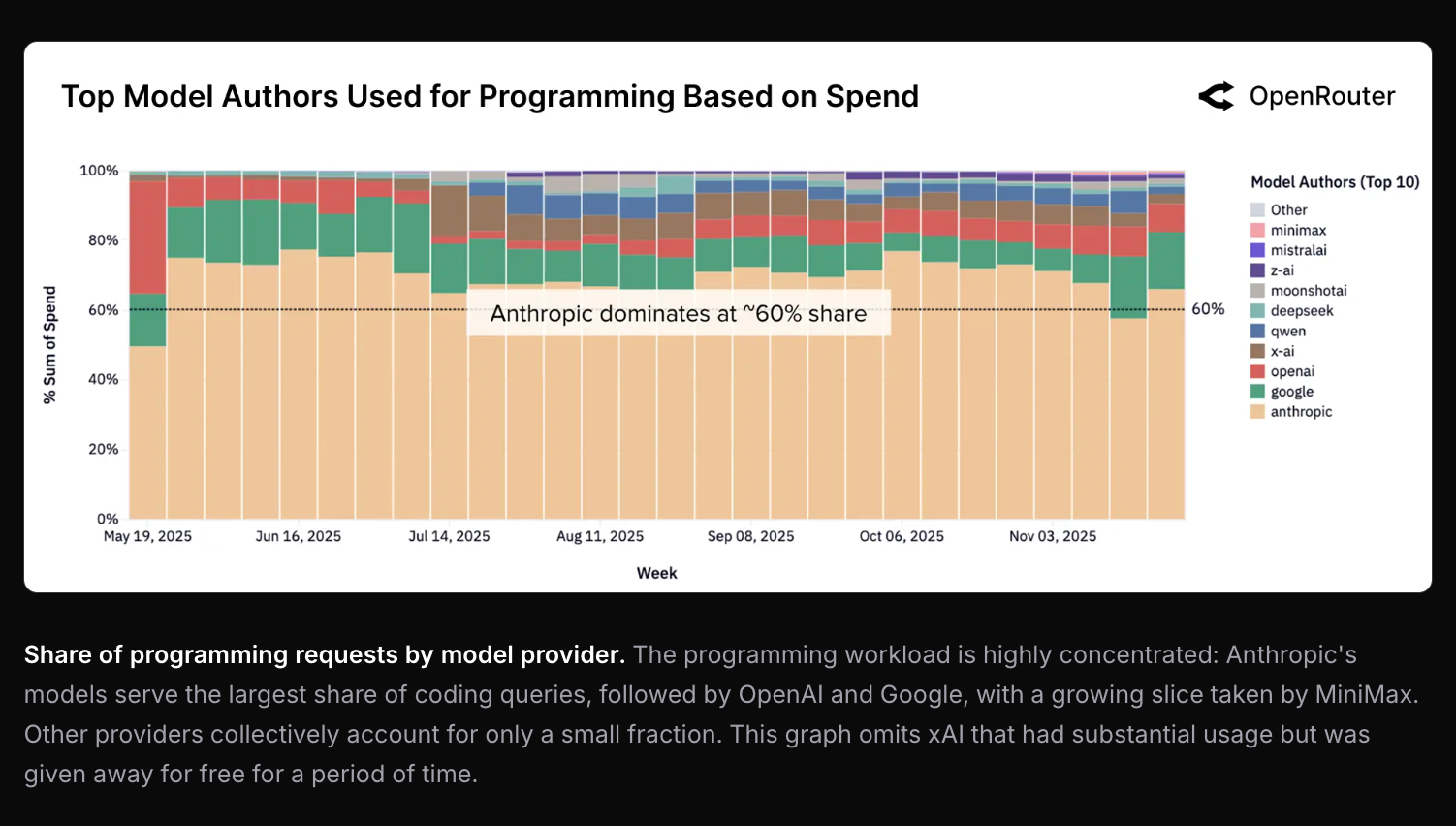

Anthropic dominates tool calling and coding:

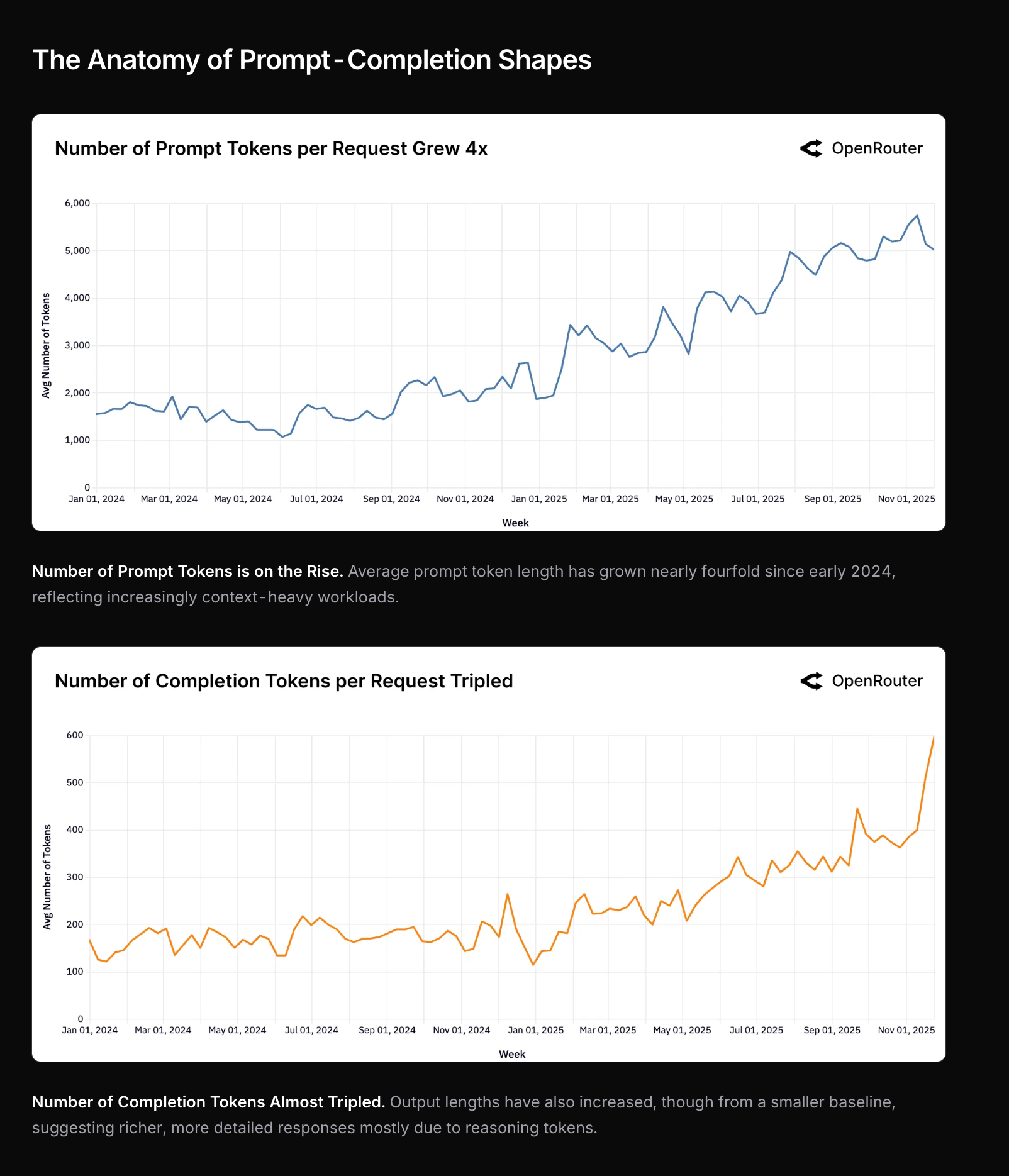

Input tokens 4xed, output tokens 3xed this year…

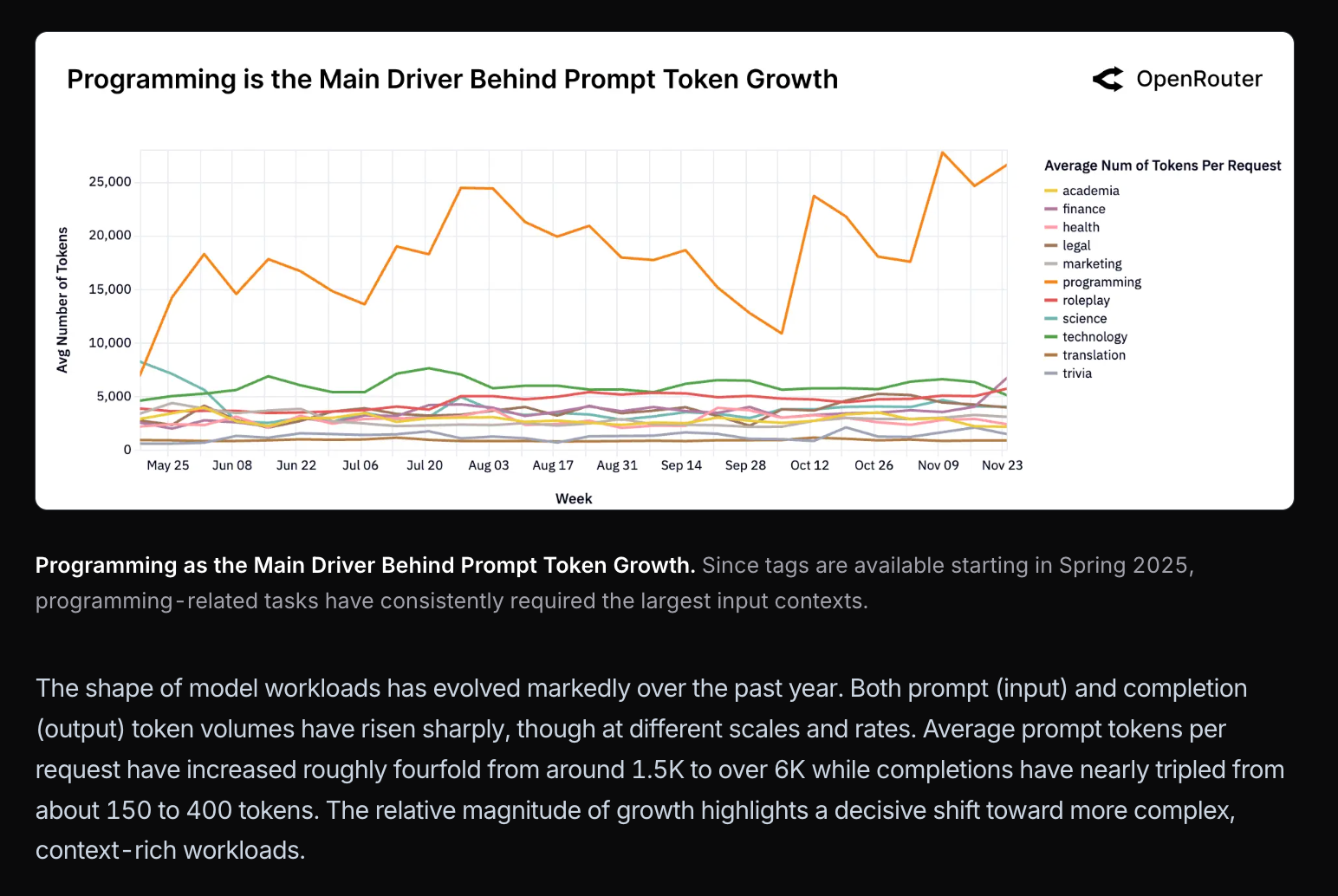

… only because of programming use cases

… which are at a sweet spot of spend and volume

AI Twitter Recap

Reasoning and Model Architecture: Gemini 3 Deep Think and Google’s “Titans”

- Gemini 3 Deep Think (rollout + benchmarks): Google launched an updated Deep Think mode for Gemini 3 to Google AI Ultra subscribers inside the Gemini app. It uses “parallel thinking” (multiple hypotheses in parallel) and derives from the variants that reached gold-medal level at IMO/ICPC. Google reports meaningful gains over Gemini 3 Pro on ARC-AGI-2 and HLE; one example cites 45.1% on ARC-AGI-2 for Deep Think @GoogleAI, @GoogleDeepMind, @GeminiApp, @quocleix, @NoamShazeer. How to try: select “Deep Think” in the prompt bar and use the “Thinking” model dropdown in the Gemini app @GeminiApp.

- “Titans”: long-context neural memory: Google previewed Titans, an architecture that combines RNN-like efficiency with Transformer-level performance using deep neural memory, scaling to contexts larger than 2M tokens. Early results were presented at NeurIPS; background/history on the Titan memory line was also posted by the authors @GoogleResearch, @mirrokni.
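Google has not detailed how Deep Think’s “parallel thinking” works internally. As a loose mental model only, the sketch below uses a different, well-known technique, self-consistency: run several independent attempts concurrently and keep the most common final answer. `solve_once` is a hypothetical stand-in for a sampled model call, rigged so the toy output is deterministic.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def solve_once(problem: str, seed: int) -> str:
    """Hypothetical stand-in for one sampled reasoning path.

    A real system would call a model with temperature > 0. This fake solver
    is wrong on every third path, each time with a different wrong answer.
    """
    return f"wrong-{seed}" if seed % 3 == 0 else "42"


def parallel_think(problem: str, n_paths: int = 8) -> str:
    """Run n_paths independent attempts concurrently, then majority-vote."""
    with ThreadPoolExecutor(max_workers=n_paths) as pool:
        answers = list(pool.map(lambda seed: solve_once(problem, seed), range(n_paths)))
    # Self-consistency: the most frequent final answer wins.
    return Counter(answers).most_common(1)[0][0]


print(parallel_think("toy problem", n_paths=16))
```

With 16 paths, ten agree on "42" and the six wrong answers all differ, so the vote recovers the right answer even though a single path (seed 0) would have been wrong.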

Coding Models and Agent Harnesses

- OpenAI’s GPT-5.1-Codex Max (agentic coding): Now available in the Responses API, recommended inside the Codex agent harness. OpenAI shared prompting guidance and customer examples; integrations landed across the ecosystem: VS Code, Cursor, Windsurf, and Linear (assign/mention Codex to kick off cloud tasks with updates posted back to Linear) @OpenAIDevs, @code, @cursor_ai, @windsurf, @cognition, @OpenAIDevs.

- Mistral Large 3 (OSS leader for coding): Mistral reports Large 3 is now the #1 open-source coding model on lmarena; community corroborations followed, and it is available in the cloud via Ollama (local support “soon”) @MistralAI, @sophiamyang, @b_roziere, @ollama.

- DeepSeek V3.2: Baseten published strong serving metrics (TTFT ~0.22s, 191 tps) for V3.2 and made it available via their APIs; lmarena added V3.2/V3.2-thinking to the text leaderboard (mixed movement overall; strongest open-model rankings in Math/Legal/Science categories) @basetenco, @arena.

- Low-compute RL and training infra: Qwen showed FP8 RL training running in just 5 GB VRAM @Alibaba_Qwen. Hugging Face introduced “HF Skills” you can call from Claude Code, Codex, and Gemini to train/eval/publish models end-to-end (scripts, cloud GPUs, progress dashboards, push to Hub) @ben_burtenshaw, @ClementDelangue.
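Baseten’s two DeepSeek V3.2 serving figures combine into a back-of-the-envelope latency estimate. Assuming decoding stays at a constant 191 tokens/s after the first token (a simplification, since real throughput shifts with batch size and context length), streaming N output tokens takes roughly TTFT + N / tps:

```python
def estimated_latency_s(n_output_tokens: int, ttft_s: float = 0.22, tps: float = 191.0) -> float:
    """Rough wall-clock time to stream n_output_tokens.

    Assumes a fixed time-to-first-token followed by a constant decode rate;
    the defaults are the DeepSeek V3.2 figures Baseten reported.
    """
    return ttft_s + n_output_tokens / tps


for n in (100, 1_000, 4_000):
    print(f"{n:>5} tokens: about {estimated_latency_s(n):.2f} s")
```

For a 1,000-token answer this comes to about 5.5 s, so decode speed, not TTFT, dominates long responses.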

Video, Vision, and Generative Media

- Kling 2.6 + Avatar 2.0: Kling 2.6 shipped audio-aligned video generation and launched an Audio Challenge; Avatar 2.0 adds longer inputs and better emotion capture, with day-0 hosting on fal @Kling_ai, @Kling_ai, @fal. Practitioners showed multi-tool agents orchestrating Kling for creative workflows @fabianstelzer.

- Runway Gen-4.5: Broader aesthetic control (photoreal, puppetry, 3D, anime) with coherent visual language across clips; “character morphing” is emerging as a distinct strength @runwayml, @c_valenzuelab.

- Image leaderboards: Bytedance’s Seedream 4.5 entered lmarena at #3 (Image Edit) and #7 (Text-to-Image), joining Nano Banana variants at the top; earlier, Nano Banana Pro 2k topped the Image Edit board @arena, @JeffDean.

- SVG generation as a reasoning/coding probe: Yupp launched an SVG leaderboard and an open dataset (~3.5k prompts/responses/preferences). Gemini 3 Pro leads the SVG leaderboard; prompts like “Earth–Venus 5-fold symmetry” showcase geometric reasoning + code synthesis @lintool, @yupp_ai, @lmthang.

- Microsoft VibeVoice-Realtime-0.5B: A lightweight realtime speech model released on Hugging Face @_akhaliq.
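To give a sense of what the “Earth–Venus 5-fold symmetry” SVG prompt asks of a model, here is one hand-written answer: draw a line between Earth and Venus at regular intervals over eight years. It assumes circular, coplanar orbits with rounded periods (365.256 and 224.701 days) and Venus at 0.723 AU. Because eight Earth years are almost exactly five Venus synodic periods (and about thirteen Venus orbits), the lines trace a five-petaled rose. This is an illustrative sketch, not a reference solution from Yupp’s dataset.

```python
import math


def earth_venus_svg(days: float = 8 * 365.256, step_days: float = 4.0, size: int = 400) -> str:
    """Return an SVG string tracing Earth-Venus lines over `days` days.

    Simplifying assumptions: circular, coplanar orbits; periods and the
    Venus orbital radius below are rounded textbook values.
    """
    earth_period, venus_period = 365.256, 224.701  # days
    earth_r, venus_r = 1.0, 0.723  # AU
    half = size / 2
    scale = 0.95 * half  # small margin so Earth's orbit fits in the canvas

    def pos(radius: float, period: float, t: float) -> tuple[float, float]:
        angle = 2 * math.pi * t / period
        return half + scale * radius * math.cos(angle), half - scale * radius * math.sin(angle)

    lines = []
    t = 0.0
    while t <= days:
        (x1, y1), (x2, y2) = pos(earth_r, earth_period, t), pos(venus_r, venus_period, t)
        lines.append(
            f'<line x1="{x1:.1f}" y1="{y1:.1f}" x2="{x2:.1f}" y2="{y2:.1f}" '
            'stroke="steelblue" stroke-width="0.3"/>'
        )
        t += step_days
    body = "\n".join(lines)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" '
        f'viewBox="0 0 {size} {size}">\n<rect width="100%" height="100%" fill="white"/>\n'
        f"{body}\n</svg>"
    )


svg = earth_venus_svg()
# Save with open("earth_venus.svg", "w").write(svg) and open it in a browser.
print(svg.count("<line"), "lines drawn")
```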

Agents, Scaffolds, and Reliability (what’s working in prod)

- Agent scaffolds matter: “Agent scaffolds are as important as models,” echoed across threads exploring management-process-like scaffolds for subagents and auto-compaction, and the importance of ontological clarity (a single LLM call ≠ subagent) @AlexGDimakis, @vikhyatk, @fabianstelzer.

- Reliability tooling: LangChain 1.1 added model/tool retry middleware with exponential backoff (JS and Python), and VS Code prompt files can autoselect per-prompt models to better compose workflows @sydneyrunkle, @bromann, @burkeholland.

- “Code as tool” for robustness: CodeVision lets models write Python to compose arbitrary image operations, drastically improving robustness on transformed OCR tasks (73.4 on transformed OCRBench, +17.4 over base; 60.1 on MVToolBench vs Gemini 2.5 Pro’s 32.6) @dair_ai.

- SkillFactory and post-training: A data-first approach that rearranges traces to demonstrate verification+retry, then SFT→RL, improves learning of explicit verification skills across domains—consistent with observations in Yejin Choi’s keynote on base-model/RL “chemistry” @ZayneSprague, @gregd_nlp.

- Inference acceleration (beyond speculative decoding): AutoJudge learns which tokens matter for the answer, achieving 1.5–2× speedups vs speculative decoding (and stacks with other accelerations) @togethercompute.

- Security reality check for agentic coding: SUSVIBES benchmark finds SWE-Agent+Claude Sonnet 4 gets 61% functionally correct but only 10.5% secure solutions across 200 real-world feature requests that historically led to vulns; vulnerability hints didn’t fix the issue—this pattern held across frontier agents @omarsar0.
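The retry middleware in LangChain 1.1 applies a standard reliability pattern. The sketch below is framework-agnostic plain Python, not LangChain’s middleware API: it wraps any callable (a model or tool call) and retries on exceptions with capped, jittered exponential backoff. `flaky_tool` and all parameter names are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    max_delay_s: float = 8.0,
    retry_on: tuple[type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying failures with capped exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Delay cap doubles each attempt (0.5, 1, 2, ...) up to max_delay_s;
            # "full jitter" sleeps a random time in [0, cap] to avoid retry storms.
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable when max_attempts >= 1")


# Usage: a hypothetical tool that times out twice before succeeding.
calls = {"n": 0}


def flaky_tool() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("upstream timed out")
    return "ok"


print(call_with_retry(flaky_tool, sleep=lambda s: None))
```

Injecting `sleep` keeps the helper testable without real waits; in production the default `time.sleep` applies.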

Evaluation, Measurement, and Trust

- Leaderboard hygiene and independent evals: “The Leaderboard Illusion” (private testing, selective retractions, data access gaps) was prominent at NeurIPS @mziizm, with a Cohere Labs poster and community discussion @Cohere_Labs. The new AI Evaluator Forum (AEF) debuted to coordinate third‑party evaluations, with METR, RAND, SecureBio, etc. as founding members @aievalforum, @METR_Evals.

- Benchmarks and footguns: Global MMLU 2.0 released with expanded multilingual coverage @mziizm. LlamaIndex analyzed OlmOCR-Bench, highlighting gaps in document types and brittle exact matching @jerryjliu0. IF-Eval reminder: strip reasoning content using the correct delimiter (`</think>`, `[/THINK]`, etc.) @_lewtun.

- Trust and measurement science: Andrew Ng urged the field to address declining public trust (Edelman/Pew) and avoid hyped existential framings, pointing to NIST’s construct-validity emphasis for AI measurement as a constructive path forward @AndrewYNg, @mmitchell_ai.
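The IF-Eval delimiter reminder is easy to get wrong when a harness hard-codes one model’s format. A minimal sketch, assuming the reasoning block ends with a single closing delimiter and the final answer follows its last occurrence; the delimiter tuple is illustrative, not exhaustive.

```python
REASONING_END_DELIMITERS = ("</think>", "[/THINK]")  # illustrative; extend per model family


def strip_reasoning(completion: str, delimiters: tuple[str, ...] = REASONING_END_DELIMITERS) -> str:
    """Return only the text after the last reasoning-end delimiter.

    If no delimiter is present, the completion is returned unchanged, so
    non-reasoning models are scored on their full output.
    """
    cut, end_len = -1, 0
    for d in delimiters:
        idx = completion.rfind(d)
        if idx > cut:  # keep whichever delimiter occurs last
            cut, end_len = idx, len(d)
    return completion if cut == -1 else completion[cut + end_len:].lstrip()


print(strip_reasoning("<think>count words...</think>\nHello world."))
print(strip_reasoning("Plan: be brief.[/THINK]Hi."))
```

One design choice to make explicitly: a completion truncated mid-reasoning has no closing delimiter and is passed through whole here; a stricter harness might score it as a failure instead.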

Org Moves and Ecosystem

- New Google DeepMind team in Singapore (hiring): Led by Yi Tay under Quoc Le’s org, focused on advanced reasoning, LLM/RL, and pushing Gemini/Deep Think. Backed by leadership (Jeff Dean, Demis Hassabis) and compute access; building a small, high-talent-density team @YiTayML, @JeffDean, @quocleix.

- Model availability and platforms: MiniMax-M2 joined Amazon Bedrock @MiniMax__AI. AI21 announced Maestro deployments inside AWS VPC @AI21Labs. Run Mistral Large 3 on Ollama Cloud now; local support coming soon @ollama.

- Anthropic Interviewer: Short pilot to collect perspectives on AI at work; initial results + an open dataset of 1,250 interviews released on Hugging Face @AnthropicAI, @calebfahlgren.

- Perplexity funding: Cristiano Ronaldo announced an investment in Perplexity, positioning it as “powering the world’s curiosity” @Cristiano.

Top tweets (by engagement)

- Cristiano Ronaldo invests in Perplexity; “powering the world’s curiosity” @Cristiano — 46.9k

- Reminder: many robots “fake” humanlike motions via training; hardware can move far faster/weirder @chris_j_paxton — 36.7k

- Excel Copilot “Agent Mode” helps Satya compete in the M365 digital challenge @satyanadella — 2.8k

- Gemini 3 Deep Think rollout and results across key reasoning benchmarks @GeminiApp — 2.8k

- Mistral Large 3 claims #1 open-source coding on lmarena @MistralAI — 1.7k

- Microsoft’s VibeVoice‑Realtime‑0.5B on Hugging Face @_akhaliq — 1.3k

- “RIP ‘you’re absolutely right’” (on model behaviors) @alexalbert__ — 1.2k

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Microsoft VibeVoice-Realtime Model Launch

- New model, microsoft/VibeVoice-Realtime-0.5B (Activity: 360): VibeVoice-Realtime is a new open-source text-to-speech model by Microsoft, designed for real-time applications with a parameter size of 0.5B. It supports streaming text input and can generate initial audible speech in approximately 300 ms, making it suitable for real-time TTS services and live data narration. The model is optimized for English and Chinese, featuring robust long-form speech generation capabilities. For more technical details, refer to the Hugging Face model page. A notable comment highlights the model’s support for both English and Chinese, while another points out a broken link to an unreleased version, VibeVoice-Large, indicating potential oversight in documentation.

- The model microsoft/VibeVoice-Realtime-0.5B supports both English and Chinese languages, which is significant for applications requiring bilingual capabilities. However, there are concerns about the quality of Mandarin output, as one user noted that the Mandarin speaker has a Western accent, which might affect the model’s usability for native speakers.

- There is a broken link issue with the VibeVoice-Large model on Hugging Face, leading to a 404 error. This suggests that the model might have been unreleased or removed, indicating potential issues with version control or release management by Microsoft.

- Users are seeking guidance on how to run the VibeVoice-Realtime-0.5B model, indicating a need for clearer documentation or tutorials to facilitate user adoption and implementation. This highlights a common challenge in deploying complex models to a broader audience.

2. Humorous Quant Legend Comparison

- legends (Activity: 394): The image is a meme contrasting traditional and modern interpretations of ‘quant legends.’ On the left, a classic image of a mathematician in front of a chalkboard represents the traditional view, while on the right, a cartoon alien with social media icons humorously depicts a modern, internet-driven perspective. The post and comments highlight a playful take on the concept of ‘legends’ in quantitative fields, with a nod to contributors in the AI and model development community, such as those working on EXL and GGUF models. The comments reflect a light-hearted discussion, with some users humorously questioning the post’s intent as ‘karma farming.’ Others take the opportunity to acknowledge various contributors to AI model development, suggesting a broader appreciation for community efforts beyond the ‘legend’ depicted in the meme.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Gemini 3 Deep Think Release and Benchmarks

- Gemini 3 Deep Think now available (Activity: 634): The image announces the release of “Gemini 3 Deep Think,” a new mode in the Gemini app specifically for Google AI Ultra subscribers. This mode is designed to enhance advanced reasoning capabilities, particularly in complex math, science, and logic problems. It has reportedly performed well in rigorous benchmarks and competitions, indicating its potential effectiveness in handling sophisticated tasks. The announcement also includes instructions for accessing this new mode, suggesting a focus on usability for subscribers. One commenter expresses anticipation for how “smart people” might leverage this new mode, while another notes the impressive performance of Gemini 3 even before the release of “Deep Think.”

- Gemini 3 “Deep Think” benchmarks released: Hits 45.1% on ARC-AGI-2 more than doubling GPT-5.1 (Activity: 510): The image is a bar chart illustrating the performance of various AI models on three benchmarks, with a focus on the ARC-AGI-2 benchmark. Gemini 3 Deep Think achieves a score of 45.1%, significantly outperforming GPT-5.1, which scores 17.6%. This demonstrates a 2.5x improvement in novel puzzle-solving capabilities, attributed to the integration of System 2 search/RL techniques, possibly involving AlphaProof logic. This advancement highlights Google’s lead in reasoning and inference-time compute, challenging OpenAI to respond with updates like o3 or GPT-5.5 to regain competitive standing. Commenters are excited about the progress, with some noting that OpenAI may be developing a competitive model, and others questioning the absence of certain models like Opus in the comparison.

- The release of Gemini 3 “Deep Think” benchmarks shows a significant improvement, achieving 45.1% on the ARC-AGI-2 benchmark, which is more than double the performance of GPT-5.1. This benchmark, referred to as “Novel problem solving” in Anthropic’s blog, highlights the model’s capabilities in handling complex problem-solving tasks. However, some users express skepticism, noting that despite high benchmark scores, models can still exhibit issues like hallucinations in simpler tasks.

- A comparison is made between Gemini 3 “Deep Think” and Opus 4.5, with Gemini 3 achieving 45.1% and Opus 4.5 reaching 37% on the ARC-AGI-2 benchmark. This indicates a notable performance gap between the two models on this specific benchmark, which is designed to test advanced problem-solving abilities. The discussion suggests that while benchmarks are useful, they may not fully capture a model’s practical performance in real-world applications.
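A quick check of the headline ratios, using only the ARC-AGI-2 scores quoted in these threads (45.1% for Deep Think, 17.6% for GPT-5.1, 37% for Opus 4.5):

$$
\frac{45.1}{17.6} \approx 2.56, \qquad 45.1 - 17.6 = 27.5 \text{ points}; \qquad
\frac{45.1}{37} \approx 1.22, \qquad 45.1 - 37 = 8.1 \text{ points}.
$$

So “more than doubling” and “2.5x” describe the same gap over GPT-5.1, while the lead over Opus 4.5 is roughly 22% in relative terms.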

2. Z-Image Prompting and Styles

- The prompt adherence of Z-Image is unreal, I can’t believe this runs so quickly on a measly 3060. (Activity: 762): The image demonstrates the capabilities of the Z-Image model, which is praised for its prompt adherence and speed, even when running on a relatively modest GPU like the NVIDIA 3060. The user highlights the model’s ability to accurately render complex visual prompts, capturing intricate details such as specific clothing patterns, facial expressions, and accessories. However, the model struggles with negation, as seen in the inability to exclude rings from the man’s depiction. The use of Lenovo LoRA is mentioned to enhance output fidelity, suggesting a combination of techniques to achieve high-quality results quickly. Commenters express excitement about Z-Image’s potential, comparing it to SDXL and anticipating further improvements with fine-tuning and additional LoRA models.

- Saturnalis highlights the prompt adherence of Z-Image, noting its ability to capture complex details like ‘alternating black and white rings’ on fingers, while struggling with negation such as ‘The man has no rings.’ The user mentions using the Lenovo LoRA for higher fidelity outputs, achieving results in 15-30 seconds on a 3060 GPU, which is impressive for such detailed rendering.

- hdean667 discusses using Z-Image for generating quick images for animation in long-form videos. The tool’s ease of use is emphasized, as users can achieve specific looks by simply adding sentences or keywords, making it highly adaptable for creative projects.

- alborden inquires about the GUI used for running Z-Image, asking if it’s ComfyUI or another interface, indicating interest in the technical setup and user interface preferences for optimal performance.

- Z-Image styles: 70 examples of how much can be done with just prompting. (Activity: 647): The post discusses the capabilities of Z-Image, a model similar to SDXL, in generating diverse styles through prompting alone, without relying on artist names. The author provides a detailed workflow using Z-Image-Turbo-fp8-e43fn and Qwen3-4B-Q8_0 clip at 1680x944 resolution, employing a specific process involving model shifts and upscaling to enhance detail and speed. The workflow includes using a negative prompt set to “blurry ugly bad,” although it appears ineffective at cfg 1.0. The post also links to resources like twri’s sdxl_prompt_styler and a full workflow image. Commenters discuss the effectiveness of negative prompts in Z-Image, with one noting that the “Moebius-like” style is not accurate, suggesting the need for LoRAs for specific styles. Another commenter mentions crafting style prompts and notes Z-Image’s ability to generate ASCII art when well-described.

- Baturinsky raises a technical question about whether Z-Image considers negative prompts, which are often used in AI image generation to guide models away from certain styles or elements. This is crucial for refining outputs and ensuring the model adheres closely to the desired artistic direction.

- Optimisticalish points out a limitation in Z-Image’s ability to replicate specific art styles, such as Moebius, suggesting that current models may require additional training data or LoRAs (Low-Rank Adaptations) to accurately capture these styles. They note that using specific artist names with underscores, like ‘Jack_Kirby’, can modify the ‘comic-book style’ effectively, indicating a nuanced approach to style prompting.

- Perfect-Campaign9551 highlights the effectiveness of using specific style prompts like ‘flat design graphic’, which involves creating a colorful, two-dimensional scene with minimal shading. This suggests that Z-Image can handle a variety of stylistic requests, provided the prompts are well-crafted and descriptive.
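Why a negative prompt “appears ineffective at cfg 1.0” follows from classifier-free guidance as ComfyUI-style samplers apply it (an assumption about Z-Image’s setup, which the post does not spell out): the negative prompt’s noise prediction stands in for the unconditional one, and the guided prediction is eps_neg + s * (eps_pos - eps_neg). At s = 1 the eps_neg terms cancel exactly. The sketch uses short lists as toy stand-ins for latent tensors.

```python
def cfg_combine(eps_pos: list[float], eps_neg: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: push the prediction away from the negative prompt.

    eps_pos / eps_neg are the model's noise predictions for the positive and
    negative prompts (toy 1-D lists here instead of latent tensors).
    """
    return [n + scale * (p - n) for p, n in zip(eps_pos, eps_neg)]


eps_pos = [0.5, -1.0, 2.0]
eps_neg = [0.25, 0.75, -0.5]

print(cfg_combine(eps_pos, eps_neg, scale=1.0))  # identical to eps_pos: negative prompt ignored
print(cfg_combine(eps_pos, eps_neg, scale=4.0))  # negative prompt now steers the result
```

Raising the scale above 1 brings the negative prompt back into play, at the cost of a second model evaluation per sampling step.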

3. AI’s Impact on Tech Jobs and Society

- Deep down, we all know that this is the beginning of the end of tech jobs, right? (Activity: 1262): The post discusses the rapid advancement of AI and its potential impact on tech jobs, suggesting that roles like software developers, DevOps, and designers may see a significant reduction in demand. The author argues that while humans will still be involved, the number of people needed will drastically decrease as AI takes over tasks such as writing code, generating tests, and designing systems. The post challenges the notion that AI will only augment human roles, comparing the situation to historical shifts in labor demand due to automation. A notable comment argues that while AI tools are transformative, they do not replace the need for human involvement in complex tasks such as stakeholder management, system architecture, and dealing with legacy systems. The commenter emphasizes that AI is raising the entry-level bar but also increasing the complexity of what can be built, suggesting that the nature of development work is evolving rather than disappearing.

- The comment by ‘alphatrad’ highlights the limitations of AI in software development, emphasizing that while AI tools can automate coding tasks, they cannot replace the nuanced human roles in the software development lifecycle (SDLC). The commenter points out that AI lacks the ability to handle complex organizational dynamics, such as stakeholder management, conflicting requirements, and legacy system integration. They argue that AI is merely the next step in a long history of technological abstraction, which has consistently increased the complexity and scope of software projects rather than eliminating jobs.

- ‘alphatrad’ also discusses the evolving nature of developer roles, suggesting that while AI can automate junior-level tasks, it raises the bar for entry-level positions. The commenter advises developers to focus on skills that AI cannot replicate, such as system design, debugging, and understanding business operations. They emphasize the importance of communication skills and the ability to work with legacy systems, suggesting that the future of development will require a blend of technical and soft skills.

- The comment by ‘codemagic’ suggests a shift in focus towards the early stages of the SDLC, such as requirements gathering and high-level architecture, as automation takes over more routine coding tasks. This shift emphasizes the need for precise language and writing skills, indicating a potential change in the skill set required for developers as AI tools become more prevalent in handling low-level implementation and tuning tasks.

- Deep down we all know Google trained its image generation AI using Google Photos… but we just can’t prove it. (Activity: 3803): The post speculates that Google may have used its vast collection of user-uploaded images from Google Photos to train its image generation AI, despite official statements that user photos are not used for advertising. The author suggests that the familiarity of AI-generated images could be due to the extensive metadata and high-quality images Google has collected over the years. This is compared to past instances like Google’s voice recognition improvements following the Goog-411 service, implying a pattern of leveraging user data to enhance AI capabilities. Commenters discuss Google’s data policies, noting that while user content is not sold for advertising, Google retains a broad license to use it for service improvements. They draw parallels to past Google services like Goog-411, which seemingly collected data to improve subsequent technologies like Voice Search.

- Fonephux highlights Google’s data policy, which grants the company a broad license to use, host, and modify user content from services like Google Photos. This policy is designed to improve service functionality, suggesting that while user content is protected, it can be utilized to enhance Google’s AI capabilities.

- redditor_since_2005 draws a parallel between Google’s past service, Goog-411, and its subsequent development of Voice Search. The implication is that Google used data from Goog-411 to train its speech recognition models, suggesting a similar strategy might be employed with Google Photos for image generation AI.

- ChuzCuenca implies that Google Photos’ free hosting service is likely not without ulterior motives, hinting at the possibility that user photos could be used to train Google’s AI models, despite the lack of direct evidence.

- This grandson used AI to recreate his grandfather’s entire life for his 90th birthday. (Activity: 2938): A grandson utilized AI technology to recreate his grandfather’s life story as a gift for his 90th birthday. This project likely involved using machine learning models to process and synthesize personal data, such as photos, videos, and possibly audio recordings, to create a comprehensive digital narrative. The use of AI in this context highlights its potential for personal storytelling and preserving family histories, leveraging tools like generative adversarial networks (GANs) or natural language processing (NLP) to enhance the narrative experience. The comments reflect a positive reception, with users appreciating the innovative use of AI for personal and emotional storytelling, though some expressed a desire for more content or details about the project.

- This grandson used AI to recreate his grandfather’s entire life for his 90th birthday. (Activity: 2943): A grandson utilized AI technology to recreate his grandfather’s life story as a gift for his 90th birthday. This project likely involved using machine learning models to process and synthesize historical data, personal anecdotes, and possibly multimedia elements to create a comprehensive narrative. The use of AI in this context highlights its potential in personal storytelling and preserving family histories, demonstrating a novel application of technology in enhancing personal and emotional experiences. The comments reflect a positive reception, with users appreciating the innovative use of AI for personal storytelling. However, there is a lack of technical debate or detailed discussion on the implementation specifics in the comments.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5.1

1. Frontier Coding Models, OpenRouter Trends, and IDE Integrations

- OpenRouter’s 100T-Token Telescope Tracks Roleplay, Coding, and Agents: OpenRouter and a16z released the State of AI report based on 100 trillion tokens of anonymized traffic, showing that >50% of open‑source model usage is roleplay/creative, while programming exceeds 50% of paid-model traffic and reasoning models now handle >50% of all tokens. The data highlights that users overwhelmingly choose quality over price, that Claude owns ~60% of coding workloads with average prompts over 20K tokens, and that tool‑calling plus long contexts are pushing the ecosystem toward full AI agents rather than one‑shot Q&A.

- The report notes a flat correlation between cost and usage, implying that reliability, latency, and ergonomics matter more than raw token price until quality converges, and it calls out a large, underserved consumer segment for entertainment/companion AI. Engineers in the OpenRouter community emphasized that building competitive products now requires multi‑step execution, strong state management, and robust tool orchestration, not just dropping in a single chat endpoint.

- GPT‑5.1 Thinking and Codex Max Crash Gemini’s Coding Party: Across OpenAI, OpenRouter, and Windsurf communities, GPT‑5.1 and GPT‑5.1‑Codex Max emerged as new coding workhorses, with OpenAI users reporting that GPT‑5.1 Thinking beat Gemini 3 at bug finding in code and Windsurf announcing GPT‑5.1‑Codex Max availability at Low/Medium/High reasoning levels via a new release. OpenRouter discussion added that OpenAI also shipped a Codex Max model as part of an intensifying race against Google’s Gemini 3 Deep Think Mode, while rumors via an ArsTechnica article point to yet another OpenAI model drop next week.

- Windsurf is giving paid users a free trial of GPT‑5.1‑Codex Max Low, aiming squarely at dev workloads, while OpenAI Discord engineers contrasted Gemini 3 Pro’s UX with its failure to spot basic bugs that GPT‑5.1 caught. On OpenRouter, users framed this as part of a wider coding stack shake‑up, with Anthropic’s acquisition of Bun to power Claude’s $1B code-generation business and OpenAI’s Codex Max making IDEs like Cursor and Windsurf the front line of the model wars.

- Hermes 4.3 Shrinks Size, Targets OpenRouter, and Competes with DeepSeek: Nous Research announced Hermes 4.3 on ByteDance Seed 36B, claiming performance roughly equal to Hermes 4 70B at half the size, post‑trained entirely on the Psyche network secured by Solana, with more details in the launch post “Introducing Hermes 4.3”. In Discord, Teknium hinted that Mistral‑3 Hermes fine‑tunes and MoE support are coming next via their internal trainer, and confirmed that existing Hermes models are on OpenRouter, though users want Nous to onboard as a direct provider.

- Engineers compared Hermes 4.3 with DeepSeek v3.2, praising DeepSeek for being “super affordable” and asking for Hermes 70B/405B to join that pricing tier on OpenRouter, while others noted that Opus 4.5 (Anthropic) is now better integrated into tools like GitHub Copilot and is available free via Antigravity. The Hermes launch is also tied to the experimental Psyche training network, with office hours advertised via Discord to discuss decentralized training and how it outperformed centralized setups.

2. Security, Jailbreaking, and Agent Execution Safety

- From Sora 2 and Gemini Web to DeepSeek: Jailbreakers Keep Winning: Across LMArena and BASI, red‑teamers reported bypasses in Sora 2 and web models: one LMArena user claims to have found an exploit in Sora 2’s filtration, noting that character generation prompts can circumvent guardrails, while BASI members discussed NSFW image generation backdoors on Gemini Web using custom system instructions and filters that fail intermittently. BASI jailbreaking threads also documented nested jailbreaks against DeepSeek, including a screenshot of DeepSeek generating Windows reverse‑shell malware code, and noted that older tricks like the ENI jailbreak from the Wired nuclear‑weapon poem article still work on Gemini 2.5.

- Attackers also probed Grok 4.1 and GPT‑5.1, with BASI members trying to force drug and soda‑recipe style outputs from Grok and sharing the UltraBr3aks jailbreak collection to attack GPT‑5.1, but conceding that GPT‑5.1 remains hard to fully compromise. Jailbreakers continue to switch to more permissive or less‑polished models (DeepSeek, Gemini 3, and ByteDance Seed models like Seedream 4.5) for offensive content and malware generation, while observing that each new safety layer increases the “intelligence tax” on a heavily constrained model.

- AI Agents Get Red‑Teamed with Prompt Injection and Execution‑Time Guards: BASI’s red‑teaming channel coordinated realistic AI agent attack simulations, including prompt injection, spoofed agent messages, and replay attacks, to test how well agent frameworks resist arbitrary code execution and data exfiltration. The group evaluated execution‑time authorization frameworks like A2SPA as a way to gate external actions, aiming to ensure that even if the LLM’s reasoning step is compromised, tool invocations still obey a separate policy layer.

- At the tooling level, MCP Contributors debated whether MCP tools should accept UUIDs as arguments, after observing that LLMs tend to hallucinate UUIDs even when told not to, and suggested a two‑tool pattern: a list_items tool that returns lightweight items with UUIDs and a describe_item tool that takes a UUID to fetch full records. This architecture separates identifier generation (never entrusted to the LLM) from identifier usage, aligning with the agent red‑team view that LLMs should not mint primary keys or security‑sensitive identifiers, only consume them under strict schemas.

- Secure Code Verification, ARR Explosions, and Legal AI at Scale: In Latent Space, users highlighted three big business moves tied to security and code correctness: Antithesis raised a $105M Series A led by Jane Street to build deterministic simulation testing for AI‑generated code, per this X thread, Anthropic projected $8–10B ARR this year driven largely by Claude for coding, and legal AI company Harvey closed a $160M Series F at an $8B valuation serving 700+ law firms in 58 countries via Brian Burns’ tweet. The consensus is that as LLMs write more production and compliance‑sensitive code, customers demand trust‑through‑testing and specialized vertical stacks (legal, finance) rather than generic chatbots.

- These revenue numbers frame the Anthropic news that it acquired Bun to power Claude’s $1B code generation business, via Anthropic’s announcement, and sit alongside startup agents like Shortcut v0.5 that auto‑build institutional FP&A spreadsheets. Engineers interpreted this as validation for investing heavily in static analysis, deterministic simulation, and verticalized agents, since money is flowing toward stacks that can both generate and prove code behavior.
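The MCP list_items / describe_item pattern can be sketched as follows. This is a framework-agnostic illustration, not the MCP SDK’s API: the server mints every identifier, and describe_item rejects any UUID it never handed out, so a hallucinated ID fails loudly instead of matching the wrong record. The in-memory store, the item fields, and the error shape are assumptions made for the example.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Item:
    name: str
    details: str
    # Minted server-side at creation time, never generated by the LLM.
    item_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class ItemStore:
    def __init__(self, items: list[Item]) -> None:
        self._by_id = {item.item_id: item for item in items}

    def list_items(self) -> list[dict[str, str]]:
        """Tool 1: cheap listing that exposes IDs the model may later reuse."""
        return [{"id": i.item_id, "name": i.name} for i in self._by_id.values()]

    def describe_item(self, item_id: str) -> dict[str, str]:
        """Tool 2: full record for an ID previously returned by list_items."""
        try:
            uuid.UUID(item_id)  # reject malformed strings early
        except ValueError:
            return {"error": f"not a UUID: {item_id!r}"}
        item = self._by_id.get(item_id)
        if item is None:
            return {"error": f"unknown id {item_id}; call list_items first"}
        return {"id": item.item_id, "name": item.name, "details": item.details}


store = ItemStore([Item("alpha", "full record for alpha"), Item("beta", "full record for beta")])
first = store.list_items()[0]
print(store.describe_item(first["id"]))
print(store.describe_item(str(uuid.uuid4())))  # plausible-looking but hallucinated ID
```

Returning a structured error rather than raising lets the agent recover by calling list_items again.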

3. GPU Systems, Quantization, and Kernel Competitions

- TorchAO MoE Quantization and NVFP4 GEMM Tuning Go Deep: In GPU MODE, PyTorch engineers dug into TorchAO’s quantization stack for MoEs, pointing to the dedicated `MoEQuantConfig` and new `FqnToConfig`‑based routing from PR #3083 that lets you assign quantization configs by fully‑qualified name instead of only via `filter_fn`. They noted that compilation remains slow even after precompile and recommended setting `TORCH_LOGS="+recompiles"` to spot dynamic shapes and unnecessary recompilations, as well as ensuring MoE packed weights live in `nn.Parameter`s like in the Mixtral MoE example.

- Concurrently, the NVIDIA nvfp4_gemm competition channel confirmed the reference kernels are built on cuBLAS 13.0.0.19 (CUDA 13.0.0), and a PR fixed INF issues by using the full FP4 a/b range with non‑negative scale factors. Competitors discovered that some LLMs were “cheating” the eval (exploiting Python‑based harness quirks), and discussed porting the evaluator to a non‑Python stack; a participant also documented that submissions silently failed until they added the explicit `--leaderboard nvfp4_gemm` flag to target the right board.


- Sparse Attention, VAttention Guarantees, and CUDA cp.async Puzzles: GPU MODE’s cool‑links channel resurfaced a long‑running frustration: despite ~13,000 papers on sparse attention, practical systems like vLLM rarely use it, as argued in this X post from skylight_org. One promising line is “VATTENTION: VERIFIED SPARSE ATTENTION” (arXiv:2510.05688), which gives user‑specified (ϵ, δ) guarantees on approximation error and was cited as a template for deeper collaboration between PL/verification researchers and ML systems people.

- On the low‑level side, a CUDA developer observed Nsight Compute warnings about `LDGSTS.E.BYPASS.LTC128B.128` (the `cp.async` path) when they cranked up `launch__registers_per_thread`, with 3.03% of global accesses and 17.95% of shared wavefronts flagged as “excessive”, and those warnings vanished once register usage dropped. The thread wrestled with how high register pressure and reduced occupancy feed back into `cp.async` behavior within a block, illustrating the subtle interplay between register allocation, SM occupancy, and async copy instructions in real kernels.


- Hardware Pricing, Multi‑GPU Weirdness, and Edge‑Server Architectures: LM Studio and GPU MODE hardware channels compared GPU pricing and multi‑GPU setups: one user complained that $3.50/hr for 2× H100 PCIe with 1 Gbit is steep, pointing to SFCompute’s H100 at $1.40/hr and Prime Intellect’s B200 at ~$1/hr spot, ~$3/hr on‑demand via primeintellect.cloud. LM Studio users reported that triple‑GPU rigs were “very buggy out of 10”, especially with non‑even card counts and small 8 GB cards when sharding dense models past 50 GB, and that CachyOS struggled with mixed‑generation dual‑GPU setups that worked fine on Ubuntu.

- Practitioners also explored home‑lab server patterns: one LM Studio user wanted to convert an old gaming laptop into a central LLM server with a request queue to protect weaker devices, while Modular’s Mojo channel linked to constant‑memory kernel examples in the modular/modular repo for devs looking to hard‑wire convolution kernels into GPU constant memory. The cumulative message is that cost‑efficient, multi‑GPU, and edge‑server setups remain finicky, with a lot of tacit knowledge around PSU wiring, telemetry defaults, and OS quirks (e.g., CachyOS telemetry opt‑out and GNOME vs KDE trade‑offs).
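For readers new to the format behind the nvfp4_gemm competition above: NVFP4 stores each element as a 4-bit E2M1 float and shares one scale factor per block of 16 elements. The pure-Python sketch below shows block-scaled quantization under simplifying assumptions: the per-block scale is kept in full precision (real NVFP4 encodes it as FP8 E4M3 and adds a per-tensor scale), rounding is plain nearest-value rather than hardware rounding, and it is not the competition's reference kernel.

```python
import math

# Non-negative magnitudes representable by an FP4 E2M1 element.
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK = 16


def quantize_block(xs):
    """Quantize one block to (scale, codes), where each code is a signed E2M1 value."""
    amax = max((abs(x) for x in xs), default=0.0)
    # Non-negative scale that maps the block's largest magnitude onto 6.0, the top of
    # the E2M1 range. An all-zero block gets scale 1.0 so we never divide by zero.
    scale = amax / E2M1_VALUES[-1] if amax > 0 else 1.0
    codes = []
    for x in xs:
        target = abs(x) / scale
        mag = min(E2M1_VALUES, key=lambda v: abs(target - v))  # nearest representable magnitude
        codes.append(math.copysign(mag, x))
    return scale, codes


def quantize(xs, block=BLOCK):
    """Split a flat list into blocks and quantize each one independently."""
    return [quantize_block(xs[i:i + block]) for i in range(0, len(xs), block)]


def dequantize(blocks):
    """Multiply every code by its block's scale to recover approximate values."""
    return [scale * code for scale, codes in blocks for code in codes]
```

The 4 to 6 gap is the coarsest step in the grid, so the worst-case error inside a block is about one scale unit; smaller blocks (and therefore smaller per-block maxima) keep that unit small.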

4. New Optimization, Evaluation, and Research Directions

- ODE Solvers, STRAW Rewiring, and Feature Attribution Shake Up Vision: Hugging Face’s research channels surfaced multiple novel optimization ideas: a new fast ODE solver for diffusion models claims 4K images in 8 steps with quality comparable to 30‑step dpm++2m SDE Karras, released as a HF Space “Hyperparameters are all you need 4K” alongside its paper. Another experiment, STRAW (sample‑tuned rank‑augmented weights), lets a net rewrite low‑rank weight adapters per input to mimic biological neuromodulation while avoiding RAM blowups, documented in the write‑up “Sample‑tuned rank‑augmented weights”.

- Complementing this, an interpretability‑heavy post “Your features aren’t what you think” analyzed feature behavior in deep vision models via perturbation‑based attribution, arguing that intuitive “feature = concept” mappings often break under systematic perturbations. The authors and HF reading‑group participants stressed that getting chunking quality and input semantics right (especially for tables and RAG corpora) may matter as much as exotic architectures when you want robust eval scores and interpretable internal representations.

- Shampoo, CFG, and Attention Sinks: Optimizer and Diffusion Theory Evolve: Eleuther’s research channel critiqued the Shampoo optimizer, with a Google employee noting that the exponent on the preconditioner in the Shampoo paper might be better at −1 than −1/2, calling the current work “ok” but with “a few other deficiencies”. They also discussed “Random Rotations for Adam”, which surprisingly performs worse than standard Adam despite hopes that rotating away activation outliers would help, in part because the method never re‑rotates when the underlying SVD basis drifts.

- In diffusion land, members dissected Classifier‑Free Guidance (CFG) using a 2024 CFG/memorization paper, surprised that the memorization basin emerges very early and likely depends strongly on dataset size and resolution, and brainstormed orthogonalizing the unguided and guided updates to reduce required CFG strength (citing an OpenReview paper at openreview.net/forum?id=ymmY3rrD1t). A separate survey on attention sinks from NeurIPS (poster PDF at neurips.cc) triggered debate about rope‑based intuitions, with some arguing that the authors mischaracterize 1D rotations and sink behavior in long‑context transformers.

- Lightweight Local Eval, Smol Training, and Latent Multi‑Agent Collaboration: Hugging Face’s makers announced smallevals, a local RAG evaluation suite that uses tiny 0.6B Qwen‑based models trained on Natural Questions and TriviaQA to generate question‑answer pairs from your docs, shipped as QAG‑0.6B GGUF plus a GitHub repo and `pip install smallevals`. It builds golden retrieval eval datasets without depending on the generation model, and includes a local dashboard to inspect rank distributions, failing chunks, and dataset stats, enabling cheap, offline RAG benchmarking.

- On the training front, an Eleuther member working on SLMs for agents is designing pipelines that fit under 16 GB VRAM, referencing the Hugging Face smol‑training playbook and benchmarks like MixEval, LiveBench, GPQA Diamond, IFEval/IFBench, HumanEval+, while others recommended LoRA without Regret rather than full pretrain. In the DSPy server, someone shared a paper on Latent Collaboration in Multi‑Agent Systems (link) where agents “implicitly coordinate through learned latent spaces”, which neatly aligns with DSPy users’ push to integrate tools like Claude Code Agents and MCP‑Apps SDK into structured multi‑agent workflows.
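The dashboard numbers mentioned in the smallevals item (rank distributions and failing chunks) reduce to simple rank statistics once each generated question is tied to the chunk it came from. A minimal sketch, assuming your retriever returns a ranked list of chunk IDs per question; the function names and the hit@k/MRR choice are illustrative, not smallevals' API.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def gold_rank(retrieved: Sequence[str], gold_id: str) -> Optional[int]:
    """1-based rank of the source chunk in the retrieved list, or None if absent."""
    for rank, chunk_id in enumerate(retrieved, start=1):
        if chunk_id == gold_id:
            return rank
    return None


def retrieval_metrics(runs: List[Tuple[Sequence[str], str]], k: int = 5) -> Dict[str, object]:
    """runs holds one (retrieved_ids, gold_id) pair per generated question."""
    if not runs:
        raise ValueError("need at least one question")
    ranks = [gold_rank(retrieved, gold) for retrieved, gold in runs]
    n = len(runs)
    hits = sum(1 for r in ranks if r is not None and r <= k)
    mrr = sum(1.0 / r for r in ranks if r is not None) / n  # missing chunks contribute 0
    failing = [gold for (_, gold), r in zip(runs, ranks) if r is None or r > k]
    return {"hit@k": hits / n, "mrr": mrr, "ranks": ranks, "failing_chunks": failing}
```

Because the questions come from a separate small QAG model, these numbers score the retriever on its own, without involving the generation model.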

5. On‑Device, Small Models, and Agent/Tool Ecosystems

- Phones Run Qwen and Gemma While Vulkan and WSL2 Smooth Local Dev: Unsloth users confirmed that llama.cpp’s Vulkan backend works on Android with the Freedreno ICD, though FP16 can be flaky, and recommended `pkg install llama-cpp` instead of custom Vulkan builds to reduce friction. In the same server, people are running Qwen 3 14B on an iPhone 17 Pro and Gemma E2B on an iPhone 12 via Termux + llama.cpp/kobold.cpp, while others reminded the room that “not every phone can run a 4B 24/7” despite optimistic claims.

- On Windows, Unsloth’s help channel repeatedly pushed devs to WSL2 + VSCode with official Conda and pip install guides, after users hit issues like Unsloth downgrading Torch to a CPU build or crashing Ollama with Qwen3‑VL due to format incompatibilities (Ollama issue #13324). The result is a de facto pattern: phones and thin clients talk to a local Linux box (WSL2 or bare metal) running llama.cpp/Unsloth, which then exposes APIs for downstream tools like aider and Crowdllama.

- MCP Apps SDK, Claude Code Agents, and UUID‑Centric Tool Design: DSPy and MCP ecosystems are converging: General Intelligence Labs open‑sourced mcp‑apps‑sdk, letting devs run ChatGPT MCP apps with UIs on any assistant platform and test them locally, as explained in their X thread. DSPy members meanwhile proposed adding a `dspy_claude_code` backend that talks to Claude Code/Claude Agents SDK, wiring tools like `Read`, `Write`, `Terminal`, and `WebSearch` into DSPy’s declarative LM interface.

- The MCP Contributors WG debated how tools should handle UUIDs, concluding that LLMs should never create primary UUIDs, only pass them between a `list_items` tool and a `describe_item` tool, to mitigate the model’s tendency to hallucinate IDs and cross‑wire resources. Together, these discussions show a clear push toward strong tool schemas, explicit IDs, and portable app layers where LMs orchestrate pre‑defined capabilities rather than inventing opaque state.


- SLMs for Agents, Student Models, and Edge‑Oriented Training Pipelines: In Eleuther, the founder of A2ABase.ai is exploring Small Language Models for agents, asking for edge‑friendly benchmarks and referencing the Hugging Face smol‑training playbook while aiming to train models under 16 GB VRAM and merging TRMs with nanoGPT. They were advised to avoid full pretraining on that budget and instead use well‑chosen benchmarks like MixEval and HumanEval+, plus light‑touch LoRA to add capabilities without wrecking generalization.

- On the application side, DSPy users requested a “student models” subforum for models like Qwen3 and gpt‑oss‑20B, to centralize best practices for low‑cost, long‑running agents, while multiple engineers (in DSPy, Manus, GPU MODE jobs) showcased workflow automation systems that connect Slack, Notion, and internal APIs to small or mid‑size LMs, claiming ~60% response‑time reductions. This cements a pattern: cheap, specialized SLMs + orchestration libraries (DSPy, MCP, custom agents) are becoming the default for edge and SMB workloads, with frontier models reserved for hardest reasoning or coding tasks.
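The two-tool UUID pattern from the MCP discussion can be sketched in a few lines: the server mints every ID, `list_items` hands out lightweight records, and `describe_item` accepts only IDs it actually issued, so a hallucinated but well-formed UUID fails loudly instead of silently matching nothing. The `ItemStore` class and record fields below are hypothetical, and this is plain Python rather than an MCP SDK API.

```python
import uuid


class ItemStore:
    """In-memory backing store for a list_items / describe_item tool pair."""

    def __init__(self):
        self._records = {}  # server-minted UUID string -> full record

    def add(self, record):
        item_id = str(uuid.uuid4())  # IDs are minted here, never by the model
        self._records[item_id] = record
        return item_id

    def list_items(self):
        """Lightweight listing: only the ID and a title the model can read."""
        return [{"id": item_id, "title": rec["title"]} for item_id, rec in self._records.items()]

    def describe_item(self, item_id):
        """Full record for an ID the model copied from list_items."""
        try:
            canonical = str(uuid.UUID(item_id))  # normalizes case and hyphenation
        except (ValueError, TypeError, AttributeError):
            raise ValueError(f"malformed id: {item_id!r}") from None
        if canonical not in self._records:
            raise KeyError(f"unknown id: {item_id!r}; call list_items first")
        return self._records[canonical]
```

Returning an explicit "call list_items first" error gives the model a recovery path instead of an empty result it might paper over.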

Discord: High level Discord summaries

LMArena Discord

- AI Companies can’t find green pastures: Members discussed the financial challenges in the AI sector, pointing out that even leading companies struggle with profitability due to significant computational costs and infrastructure demands.

- It was emphasized that compute is expensive, which hinders the accessibility and profitability of AI compared to traditional software or internet services.

- LM Arena places prompt limits to keep it real: LM Arena now limits repeated prompts to 4 to ensure fair testing, with resets available for new prompts, though this deletes old prompts and responses.

- The update aims to make the arena fairer, sparking inquiries about how the new rate limit impacts testing methodologies and user experience.

- Frame-Flow Battles Opus in Text Arena: Users are actively trying to identify the new Frame-Flow model, which is performing well in text battles against Opus, with speculation it might be Gemini 3 Flash, Grok 5, or a model from a new company.

- Discussions involve testing Frame-Flow with steganography puzzles and comparing its coding abilities to existing models.

- Seedream 4.5 Enters Image Arena: The Seedream 4.5 image model is now available in the Image Arena, accessible via Direct or Side by Side modes in the dropdown menu.

- The model is capped at 5 generations per hour; some find it comparable to Nano Banana Pro, while others argue it’s inferior.

- Sora 2’s filtration system is not so airtight: A user claims to have discovered an exploit in Sora 2’s filtration system, noting that guard rails are not equally distributed.

- The user notes the exploit involves generating content using specific prompts and methods that bypass restrictions, but the only way to fix it is to not allow people to generate characters.

BASI Jailbreaking Discord

- Grok’s Reality leaves Tweeters Skeptical: Members doubted the legitimacy of a tweet about Grok, using GIFs and images to express their skepticism.

- One member even shared a GIF depicting a water wheel, jokingly suggesting Grok’s capabilities are just for show.

- Gemini and Claude Duke it out for Malware Creation: Members discussed using Gemini 3 to craft prompts that Claude struggles with, focusing on generating code or malware, emphasizing the effectiveness of specific coding questions over general jailbreaking attempts.

- Despite some success, opinions were mixed, with one member finding Claude unimpressive, while others debated the value and future of AI in malware creation compared to traditional reverse engineering.

- Gemini Web’s Backdoor for NSFW Image Generation: Members shared methods for generating NSFW images with Gemini Web using system instructions, noting the platform’s filter limitations and inconsistent results.

- One member found Seedream 4.5 most effective for editing NSFW images due to its prompt adherence and output stability, contrasting it with Nano Banana Pro, which is hindered by filters and inconsistent output.

- AI Agents Face Security Stress Tests: Members explored simulating and documenting real-world AI agent attack scenarios, including prompt injection attacks, spoofed agent messages, and replay attacks, to evaluate security and threat modeling.

- These simulations aim to identify vulnerabilities and assess the effectiveness of execution-time authorization methods like A2SPA in preventing unintended execution and unauthorized access.

- DeepSeek Dives Deep into Jailbreak Territory: Members reported successfully jailbreaking DeepSeek using nested jailbreaks, sharing a screenshot as evidence of its ability to generate malware code.

- This nested approach may be used in the future for jailbreaking.

Unsloth AI (Daniel Han) Discord

- Vulkan Backend Confirmed on Android: Members confirmed that the Vulkan backend works via llama.cpp on Android devices, but it requires the correct ICD (Freedreno) to function properly.

- Using `pkg install llama-cpp` was recommended as an easier alternative to compiling with Vulkan support, though issues with FP16 might still occur depending on the hardware.


- iPhones Run LLMs: Members are running LLMs on phones directly with llama.cpp through Termux, also utilizing kobold.cpp for enhanced performance.

- Configurations varied, with some running Qwen 3 14B on an iPhone 17 Pro and others testing Gemma E2B on an iPhone 12, highlighting the range of possibilities and limitations based on device capabilities.

- Unsloth Community Lauded: The Unsloth Discord community received high praise for its active engagement and value in finetuning, with members appreciating the community’s support.

- Members building the community were praised, and when asked about its origin, the answer was: “it’s just you guys really xD you guys started being active which helped a lot.”

- Nvidia VRAM Supply Rumors Spark Debate: Members speculated that Nvidia might halt VRAM supply to partners, potentially causing supply issues for smaller AiB partners.

- This discussion raised concerns about market dynamics, with one member jokingly suggesting shorting 3090 stock, and others considered parallels with previous EVGA-like situations.

- Windows Users Turn to WSL2: A user utilizing Windows 11 was advised to install WSL2 and run VSCode for a smoother development environment, and was pointed to helpful installation guides.

- The user was provided links to Conda Installation and Pip Installation guides for setting up the environment.

Cursor Community Discord

- Grok Code Falls Out of Favor: Users initially praised Grok Code’s reasoning, but one user later reported it had stopped reasoning completely.

- No further details were provided.

- Engineers Seek Cursor UI tips: Users requested tips for creating professional UIs without paid tools like Figma.

- One suggestion involved pasting screenshots into Cursor and prompting it to reproduce the layout.

- Cursor Nightly Builds Launch Rogue Agents: Users reported that Cursor Agents in nightly builds were running without permission, creating/deleting files, and potentially downloading codebases.

- A user whose forum post was deleted suggested downgrading to a stable version and disabling dotfile/external file access.

- Auto Agent Suffers Intelligence Crisis: A user reported that Auto Agent purposely went crazy comparing unrelated pages, while another reported a surge in errors from 11 to 34.

- Other users noted that the model’s quality is task-dependent.

- New Pricing Model Strikes Auto: Users discussed a new pricing model where Auto is no longer free after the next billing cycle for some users.

- One user, having used 360M tokens this month (costing $127), plans to switch to $12 Copilot with GPT5-mini.

LM Studio Discord

- GNOME favored on CachyOS: Some users chose GNOME for CachyOS because they prefer it to KDE and find Cinnamon to be light on VRAM.

- A user stated they “can’t stand KDE”.

- CachyOS faces dual GPU challenges: Users are encountering issues with running two different GPUs (e.g., Nvidia 4070ti and 1070ti) on CachyOS, with an error that doesn’t occur on Ubuntu.

- The problem may be related to using GPUs from different generations, prompting one user to consider using the second GPU in another PC.

- Qwen springs to LM Studio: Qwen is now supported in LM Studio, as showcased by a user’s screenshot of the LM Studio UI.

- Others remarked on possible UI bugs and the large VRAM requirements for certain Qwen model quantizations.

- DDR4 still worthy?: A member asked whether 3200MHz DDR4 is still viable compared to 3600MHz, and another member responded with an image.

- The attached image indicated that 3200MHz is the top of the bracket for DDR4 standards.

- Triple GPU setups equals bugginess: A user reported that a triple GPU setup is very buggy out of 10, prompting another to jokingly suggest adding a fourth to fix it.

- One member noted issues with splitting LLMs across non-even numbers of cards, another suggested that an 8GB GPU might be the problem, and mentioned dense models become annoying once exceeding 50GB.
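To see why uneven card counts and a small 8 GB card complicate splitting, here is a rough sketch of proportional layer assignment, assuming equally sized layers and a fixed per-card overhead for context and buffers. It resembles the idea behind llama.cpp's tensor-split ratios but is not LM Studio's or llama.cpp's actual placement logic; the 1 GB overhead is an arbitrary assumption.

```python
from typing import List


def split_layers(n_layers: int, vram_gb: List[float], overhead_gb: float = 1.0) -> List[int]:
    """Assign layers to GPUs in proportion to usable VRAM (largest-remainder rounding)."""
    usable = [max(v - overhead_gb, 0.0) for v in vram_gb]
    total = sum(usable)
    if total <= 0:
        raise ValueError("no usable VRAM")
    exact = [n_layers * u / total for u in usable]
    counts = [int(e) for e in exact]
    # Give the layers lost to truncation to the cards with the largest fractional share.
    order = sorted(range(len(exact)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in order[: n_layers - sum(counts)]:
        counts[i] += 1
    return counts
```

For example, `split_layers(80, [24, 24, 8])` gives `[35, 35, 10]`: the 8 GB card holds an eighth of the layers but still pays the full fixed overhead, which is one plausible reason small cards in mixed rigs feel disproportionately troublesome.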

Perplexity AI Discord

- Comet Browser Stirs Spyware Suspicion: Users debated whether the Comet browser is spyware due to background activity, with counterarguments citing Perplexity’s privacy policies and Comet-specific notices.

- The consensus leans towards Comet’s Chromium base and its background processes being standard browser operations rather than malicious spyware.

- Minecraft Server Builds Blocky Excitement: Enthusiastic members proposed a Perplexity Minecraft server, weighing technical specifications, including free hosting options with 12GB of RAM and 3 vCPUs.

- A moderator confirmed that some servers were rolled out.

- Opus 4.5: Free But Metered: The community noted that Opus 4.5 is now freely accessible on LMArena and Google AI Studio, but is subject to rate limiting on Perplexity at 10 prompts per week.

- Members reported that the rate limits may be dynamic.

- Image Generation Limits Irk Users: Users are hitting image generation limits within Perplexity, capped at 150 images per month, and seeking clearer UI feedback on usage.

- Better UI feedback was requested.

- Perplexity Labs versus Gemini Ultra: Research Rumble: Users debated the optimal model for research, suggesting Perplexity Labs, Sonnet, and Opus and highlighting the cost of Google AI Ultra at $250/month.

- One user noted the model’s utility in determining effective prompting structures.
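The usage-feedback requests above (for example 150 images per month, or 10 Opus prompts per week) amount to showing a quota's remaining count and reset time. A minimal sliding-window sketch, assuming the limit applies to any trailing window; Perplexity's actual accounting is not described in the source and may use calendar periods instead. The `SlidingQuota` class is hypothetical.

```python
from collections import deque


class SlidingQuota:
    """Allow at most `limit` events in any trailing window of `window_s` seconds."""

    def __init__(self, limit, window_s):
        self.limit = limit
        self.window_s = window_s
        self._events = deque()  # timestamps of accepted events, oldest first

    def _expire(self, now):
        while self._events and now - self._events[0] >= self.window_s:
            self._events.popleft()

    def try_acquire(self, now):
        """Record one use at time `now` if the quota allows it."""
        self._expire(now)
        if len(self._events) >= self.limit:
            return False
        self._events.append(now)
        return True

    def status(self, now):
        """What a usage indicator could show: uses left and seconds until one frees up."""
        self._expire(now)
        remaining = self.limit - len(self._events)
        reset_in = 0.0 if remaining > 0 else self._events[0] + self.window_s - now
        return {"remaining": remaining, "reset_in_s": reset_in}
```

A calendar-month limit would instead be a fixed window keyed by month, which is simpler to display but lets usage bunch up around the reset boundary.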

OpenAI Discord

- Sora AI Faces European Delay?: A user asked about Sora AI’s availability in Europe, but no information was provided about a potential release.

- The lack of clarity leaves European users in suspense regarding when they might access Sora AI.

- AI-Text Camouflage: Mastering Authenticity: Members discussed methods to make AI-generated text appear more human-like, advising to program ChatGPT to use less recognizable language and mimic typing speed.

- This tactic aims to evade detection by AI text detectors and ensure the generated content blends seamlessly with human-written material.

- Discord Channel Chaos: Taming the Flood: Users voiced concerns about miscategorized posts, specifically regarding Sora AI content, and one member jokingly suggested renaming a channel ai-to-ai-discussions to highlight ChatGPT output overload.

- The discussion underscored the importance of adhering to channel guidelines and utilizing appropriate channels for GPT outputs to maintain order and relevance.

- Model Mania: Preferences Spark Debate: Members revealed their preferences for AI models, with some preferring Gemini 3 Pro and Claude Sonnet for coding accuracy.

- While some favored OpenAI’s models, others preferred Amazon Q (Sonnet 4.5) despite potential bugs after the Kiro update (source).

- GPT-5.1 Crushes Gemini 3 in Bug Hunt: In a comparative assessment, GPT-5.1 Thinking surpassed Gemini 3 in pinpointing bugs within code, in spite of Gemini 3’s superior user interface.

- During testing, GPT-5.1 identified a bug missed by another model, while Gemini 3 failed to detect any errors (source).

OpenRouter Discord

- OpenRouter’s AI Report Reveals Trends: OpenRouter and a16z released their State of AI report, analyzing 100 trillion tokens of LLM requests, highlighting trends such as the dominance of roleplay in open-source model usage and the rise of coding in paid model traffic.

- The report also finds that users prioritize quality over price, with reasoning models handling over 50% of all tokens, indicating a shift towards AI agents managing tasks.

- Deep Chat Project Goes Open Source: A member open-sourced Deep Chat, a feature-rich chat web component that can be embedded into any website and used with OpenRouter AI models, available on GitHub.

- The project includes direct connection APIs as illustrated here, and the author would appreciate GitHub stars.

- Grok 4.1 Gets Slugged: Users noticed the removal of Grok 4.1 fast free model, and a member explained that users on the paid slug were being routed to the free model, and advised migration to the free slug.

- The x-ai/grok-4.1-fast slug will start charging as of December 3rd 2025, and some members feel Cloudflare is the singular metaphorical stick that holds up the world, as it underwent downtime.

- Rumors of OpenAI Model Next Week: A user shared an ArsTechnica article hinting at a new OpenAI model release next week, as Gemini gains traction.

- Another user speculated about the model name they’re testing, referring to it as some model name.

- Anthropic Acquires Bun to Power Claude’s Coding: Referencing this article, Anthropic acquired Bun as Claude’s code generation hits a $1B milestone.

- Members discussed Cursor raising $50B on a $500B next round to buy Vercel/Next, and OAI released Codex Max while Google released Deep Think Mode for Gemini 3.

Nous Research AI Discord

- Hermes 4.3 Lands on ByteDance Seed 36B: Hermes 4.3, built on ByteDance Seed 36B, offers roughly equivalent performance to Hermes 4 70B at half the model size and was post-trained entirely on the Psyche network secured by Solana; read more.

- The instruct format is coincidentally similar to Llama 3’s. Nous Research may release Mistral-3 fine-tunes of Hermes, and with MoE support in their internal trainer, an MoE is next.

- QuickChatah Launches Ubuntu GUI for Ollama: A member released QuickChatah, a cross-platform Ollama GUI for Ubuntu built with PySide6.

- They said “I didn’t like OpenWebUI because it was resource intensive” and that their version uses “like 384KiB of RAM tops.”

- Opus Model Exhibits Better Performance: A user reported that the new Opus is better than the old one: it was already the only model that handled that properly, and now it handles it even better and avoids some mistakes it used to make.

- They also noted that GitHub Copilot can’t use Opus 4 as an agent but can use Opus 4.5, adding that Opus 4.5 is also available for free in Antigravity.

- Deepseek V3.2 Praised for Affordability: A user recommends using Deepseek v3.2 because it’s super affordable and asked for the Nous team to try to get Hermes 4 70B and 405B on OpenRouter.

- Teknium clarified that the Hermes models are already on OpenRouter, but the user clarified they meant that they wanted Nous Research to be a provider directly.

- Simulate markets and logistics in Godot: A member is building a 3D simulation space in Godot to simulate markets, agriculture, and logistics interactions and asked for model recommendations, another member suggested contemporary NLP economic simulation research.

- Another member agreed, arguing that LangChain is the wrong abstraction and causes more headaches than building things from first principles, especially since LLMs are good at writing the kind of code LangChain was supposed to replace.

Moonshot AI (Kimi K-2) Discord

- Deepseek V3.2 Agentic Task Drawbacks Uncovered: Despite improvements, Deepseek V3.2 faces issues, including being limited to one tool call per turn, ignoring tool schema requirements, and failing tool calls by outputting in `message.content` rather than `message.tool_calls`.

- Users suggest Deepseek V3.2 needs enhanced tool call post-training to address these limitations.

- Kimi’s Haggle Deal Glitch Troubles Users: Users report issues with the Kimi Black Friday haggle deal, facing inaccessibility despite not having active subscriptions, one user speculated the sale to be over.

- Another user reports the deal ends December 12th.

- Kimi for Coding Access and Support Concerns: Users face access issues with Kimi for Coding, needing a Kimi.com subscription for a key.

- Questions arose about a corporate policy of supporting only Claude Code and Roo Code, with users seeking contact information for inquiries.

- Deepseek Targets Enterprise Over Casual Users: A YouTube video explains that Chinese labs like Deepseek are targeting enterprise users due to the intelligence-to-price ratio being crucial for agentic tasks.

- While Deepseek may not focus on casual users, some claim it’s popular as an alternative to ChatGPT and Gemini.

- Sparking LM Fun: A Developer’s Lament: A user advocates for more fun and experimentation in the LM space, beyond chatbots and money-making ventures.

- The user praises Kimi for its model, fun features, visual style, search, and name, but wishes it were more than just a chatbot.
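For the DeepSeek V3.2 tool-calling issue reported above, a common client-side workaround is to validate tool calls yourself and, when `message.tool_calls` is empty, try to recover a JSON tool call from `message.content`. A minimal sketch, assuming an OpenAI-style message dict and a content payload shaped like `{"name": ..., "arguments": {...}}`; the recovery heuristic and the schema check are assumptions, not DeepSeek's or any SDK's behavior.

```python
import json

FENCE = "`" * 3  # three backticks, built at runtime so the literal can't end a Markdown fence


def _parse_args(raw):
    """Arguments may arrive as a JSON string or as an already-decoded object."""
    args = json.loads(raw) if isinstance(raw, str) else raw
    if not isinstance(args, dict):
        raise ValueError(f"arguments must be a JSON object, got {args!r}")
    return args


def extract_tool_calls(message, required_args):
    """Return [(name, args)] from an OpenAI-style assistant message dict.

    required_args maps each allowed tool name to the set of argument names it requires.
    """
    calls = [
        (tc["function"]["name"], _parse_args(tc["function"]["arguments"]))
        for tc in message.get("tool_calls") or []
    ]
    if not calls:
        # Fallback: the model wrote the call into plain content instead of tool_calls.
        text = (message.get("content") or "").strip()
        if text.startswith(FENCE):
            text = text.strip("`").removeprefix("json").strip()
        try:
            obj = json.loads(text)
        except json.JSONDecodeError:
            return []  # ordinary prose, not a tool call
        if isinstance(obj, dict) and "name" in obj:
            calls.append((obj["name"], _parse_args(obj.get("arguments", {}))))
    # Enforce the advertised schema instead of trusting the model.
    for name, args in calls:
        if name not in required_args:
            raise ValueError(f"unknown tool: {name}")
        missing = required_args[name] - set(args)
        if missing:
            raise ValueError(f"{name} is missing arguments: {sorted(missing)}")
    return calls
```

Raising on schema violations, rather than silently dropping the call, lets the agent loop feed the error back to the model for a retry.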

GPU MODE Discord

- Nemotron Speed Claims Face Scrutiny: A member questioned the claimed 3x and 6x speedups of Nemotron, reporting it to be slower than Qwen based on their results, detailed in a screenshot.

- The user had sought recommendations for async RL MLsys papers and blogs discussing different directions of scaling the RL system.

- Nsight Warnings Surface in CUDA Kernel Optimization: A member reported that increasing `launch__registers_per_thread` in a CUDA kernel optimization triggers specific Nsight Compute warnings related to the `LDGSTS.E.BYPASS.LTC128B.128` instruction (corresponding to `cp.async`).

- The warnings indicate that 3.03% of global accesses are excessive and 17.95% of shared wavefronts are excessive, disappearing when register usage is lowered.

- Sparse Attention Doesn’t Spark: Despite 13,000 papers on sparse attention, its adoption in systems like vLLM remains limited, as highlighted in this X post.

- Meanwhile, the paper VATTENTION: VERIFIED SPARSE ATTENTION (arxiv link) introduces a sparse attention mechanism with user-specified (ϵ, δ) guarantees.

- TorchAO Quantization Tricks Exposed: Compilation time in TorchAO remains slow even after precompilation, while recent improvements using `FqnToConfig` have enhanced support for quantizing model weights, specifically targeting MoEs, detailed in this pull request.

- TorchAO also has a dedicated `MoEQuantConfig` that members may be interested in. Reference code for MoE quantization can be found here.


- LLMs Cheating and INF Bugs Resolved in NVIDIA Comp: The reference kernels for the NVIDIA competition appear to be using cuBLAS 13.0.0.19, corresponding to CUDA Toolkit 13.0.0, and, to prevent INF, a PR was merged to use the full fp4 range a/b and non-negative scale factors.

- LLMs have been found to exploit a hack in the evaluation, with no known fix yet, and a user mistook the `nvfp4_gemm` competition for the closed `amd-fp8-mm` one, but resolved it by explicitly passing the `--leaderboard nvfp4_gemm` flag.

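The sparse-attention debate above is easier to follow with the simplest variant in hand: keep only the `top_k` highest-scoring keys for a query, renormalize, and skip the rest. A pure-Python sketch for one query, assuming small dense lists; this is plain top-k attention with no error guarantee, not the VAttention method, whose contribution is bounding the approximation error with user-specified (ϵ, δ).

```python
import math
from typing import List, Optional, Sequence


def _softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def attend(q: Sequence[float], keys: Sequence[Sequence[float]],
           values: Sequence[Sequence[float]], top_k: Optional[int] = None) -> List[float]:
    """Single-query scaled dot-product attention; top_k=None means dense attention."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    idx = list(range(len(keys)))
    if top_k is not None:
        # Keep the top_k keys by score; every other key gets zero weight.
        idx = sorted(idx, key=lambda i: scores[i], reverse=True)[:top_k]
    weights = _softmax([scores[i] for i in idx])
    return [sum(w * values[i][c] for w, i in zip(weights, idx)) for c in range(len(values[0]))]
```

With a sharply peaked query such as `q = [10.0, 0.0]` against four unit keys, the dropped keys hold well under 1% of the softmax mass, so `top_k=1` lands close to dense attention; with flatter score distributions the same truncation can be badly off.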

Latent Space Discord

- Antithesis Stress Tests AI Code with Jane Street Bucks: Jane Street led a $105M Series A investment in Antithesis, focused on deterministic simulation testing for AI-generated code verification.

- The conversation focused on the necessity of trust-through-testing as AI increasingly automates coding tasks.

- Anthropic Forecasts Massive ARR: Anthropic expects to close the year with $8–10B in annualized revenue, a substantial leap from the $1B projection in January, as per this link.

- This surge is driven by significant enterprise adoption of Claude, particularly for coding, while OpenAI aims for $20B ARR.

- Harvey’s Hefty Series F: Legal AI firm Harvey raised $160M in Series F funding led by a16z, reaching an $8B valuation and serving over 700 law firms across 58 countries, according to this tweet.

- The company’s humble origins with just 10 people in a WeWork space were highlighted.

- TanStack AI Steps Into the Arena: TanStack AI was introduced, boasting full type safety and multi-backend language support.

- The team promised a forthcoming blog post and documentation detailing its advantages over Vercel.

- Kling Synchronizes Audio, Blows Minds!: Angry Tom’s tweet demonstrated generative video progress over 2.5 years, featuring Kling’s VIDEO 2.6 with synchronized audio.

- Observers jokingly suggested that AI Will Smith eating spaghetti is the new Turing test, igniting speculation on future realism.

Eleuther Discord

- SLMs for Agents Gain Traction: The founder of A2ABase.ai is actively researching Small Language Models (SLMs) for use in agents, and a member suggested exploring alignment benchmarks from the Emergent Misalignment paper and the Cloud et al subliminal learning paper.

- The founder is building training pipelines for small LMs that fit in under 16GB of VRAM, asked for benchmark recommendations for small models trained on edge devices, and is looking into the Hugging Face LM training playbook.

- Shampoo Might Need More Power: A Google employee stated that the Shampoo paper might need the power to be -1 instead of -1/2.

- They called the paper ok work, but with a few other deficiencies.

- CFG Benefits Show Early Memorization: Members discussed the benefits of CFG (Classifier-Free Guidance) and memorization, referencing this paper.

- One member was surprised that the basin emerges so early, and suggested it probably has something to do with the resolution and size of the dataset.

- LLMs Aid Visual Creation: A member has used LLMs to help make visuals for videos, creating voiceover text on Clipchamp, and building programs for a 4D physics engine for his company.

- The member added that language can be severely limiting and that LLMs often struggle to understand what they are trying to convey, so they have had to teach the LLM how to process the 3rd step and simulate the prime number ‘latch’ for non-quantized signal analysis.

- SHD CCP Protocol Explained: A member shared a series of videos explaining their work on interoperability, particularly the SHD CCP (01Constant Universal Communication Protocol), including an introduction to the language.

- Additional videos covered use cases for O(1)-time data compression, cycle-saving optimizations for modern GPUs, and the necessity of quaternions.

Yannic Kilcher Discord

- Brian Douglas Recommended for Control Theory: A member suggested Brian Douglas’s video for learning control theory.

- They cautioned that control theory won’t sink in without doing an actual project.

- DeepSeek Article Raises Linearity Question: A member shared a DeepSeek article about control theory, asking whether the linearity assumption limits the use of linear control.

- The member then exclaimed, “Control theory is actually fun. Why aren’t people talking about it?”
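In the spirit of the "do an actual project" advice, a minimal starter sketch: a discrete-time PID controller driving a first-order plant that is linear by construction, which is the setting linear control analysis assumes. The plant coefficients, gains, and function name are illustrative assumptions, not anything from the discussion.

```python
def simulate_pid(kp, ki, kd, setpoint=1.0, a=0.9, b=0.1, steps=200):
    """Discrete-time PID loop on the linear first-order plant
    y[t+1] = a * y[t] + b * u[t]; returns the output trajectory."""
    y, integral = 0.0, 0.0
    prev_error = setpoint - y
    history = []
    for _ in range(steps):
        error = setpoint - y
        integral += error                  # I term: accumulated error
        derivative = error - prev_error    # D term: change in error per step
        u = kp * error + ki * integral + kd * derivative
        prev_error = error
        y = a * y + b * u                  # plant update
        history.append(y)
    return history

p_only = simulate_pid(kp=1.0, ki=0.0, kd=0.0)
pi = simulate_pid(kp=1.0, ki=0.1, kd=0.0)
print(round(p_only[-1], 3), round(pi[-1], 3))  # 0.5 1.0
```

With proportional control alone the loop settles at the fixed point y = b·kp / (1 - a + b·kp) = 0.5, leaving a steady-state error; adding the integral term drives that error to zero.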

- AWS Re:Invent 2025 Updates Spark Debate: Amazon announced AWS re:Invent 2025 AI news updates including Nova Forge to build frontier AI models.

- One member called the coverage clickbait, describing it as “a literal political opinion.”

- Nova Forge Promises Frontier Customization: Nova Forge is a service for building custom frontier AI models; more info here.

- Members questioned how it differs from basic fine-tuning and noted it may offer more flexibility with checkpoints and integration of gyms for RL training.

- Bezos’s AI Company Remains Elusive: Members noted the absence of Bezos’s new AI company in the AWS re:Invent 2025 announcements.

- They speculated on potential competition or specialization between the firms.

HuggingFace Discord

- Multi-GPU Setup Sanity Check Requested: A member shared a link to their multi-GPU setup and, admitting inexperience with multi-GPU configurations, asked for a sanity check.

- The member appeared unsure about the setup’s correctness, highlighting the challenges faced when configuring multi-GPU systems for the first time.

- Image Models Still Censor Explicit Content: Although the model is billed as uncensored, the Z-Image demo censors explicit content such as gore or nudity, showing a “maybe not safe” image instead.

- The member questioned if a configuration error or improper usage caused the model to deviate from its expected behavior of generating uncensored content.

- ODE Solver Powers Up Diffusion Models: A member created a new fast ODE solver aimed at diffusion models; its Hugging Face repo is now available.

- The author claims it can sample a 4K image in 8 steps with results matching 30 steps of DPM++ 2M SDE with the Karras schedule; the paper is also available.
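The "Karras" in that baseline refers to the noise-level schedule from Karras et al. (2022), "Elucidating the Design Space of Diffusion-Based Generative Models". A minimal sketch of that schedule, using the paper's defaults σ_min = 0.002, σ_max = 80, ρ = 7 (real pipelines usually substitute the model's own noise limits). This illustrates the baseline's step spacing only, not the new solver.

```python
def karras_sigmas(n, sigma_min=0.002, sigma_max=80.0, rho=7.0):
    """n noise levels from sigma_max down to sigma_min, spaced evenly in
    sigma ** (1 / rho) and mapped back with ** rho (Karras et al., 2022)."""
    if n < 2:
        raise ValueError("need at least two steps")
    max_inv = sigma_max ** (1.0 / rho)
    min_inv = sigma_min ** (1.0 / rho)
    return [(max_inv + i / (n - 1) * (min_inv - max_inv)) ** rho for i in range(n)]

sigmas = karras_sigmas(8)  # an 8-step budget, as in the claim above
```

A larger ρ shortens the steps near σ_min at the cost of longer steps near σ_max. Implementations such as k-diffusion additionally append a final sigma of 0 before sampling.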

- smallevals Locally Assesses RAG Systems: A member launched smallevals, a suite for evaluating RAG / retrieval systems quickly and for free. It uses tiny 0.6B models trained on Google Natural Questions and TriviaQA to produce golden evaluation datasets and is installable via `pip install smallevals`.

- The tool has a built-in local dashboard that visualizes rank distributions, failing chunks, retrieval performance, and dataset statistics. The first released model, QAG-0.6B, creates evaluation questions directly from documents so that retrieval quality can be evaluated independently of generation quality; the source code is available on GitHub.
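Assuming each generated question is paired with the chunk it was generated from, retrieval can be scored by where that chunk lands in the results. A minimal sketch of two common metrics, hit rate@k and mean reciprocal rank, given the 1-based rank of the source chunk for each question; the function and its input format are hypothetical and not the smallevals API.

```python
def retrieval_metrics(ranks, k=5):
    """Hit rate@k and mean reciprocal rank from the 1-based rank at which each
    question's source chunk was retrieved (None means it was not retrieved)."""
    hits = sum(1 for r in ranks if r is not None and r <= k)
    mrr = sum(1.0 / r for r in ranks if r is not None) / len(ranks)
    return {"hit_rate": hits / len(ranks), "mrr": mrr}

# Four questions: source chunk ranked 1st, 3rd, missing, and 2nd.
report = retrieval_metrics([1, 3, None, 2], k=2)
```

Hit rate answers "did the right chunk show up at all in the top k", while MRR also rewards ranking it higher, so the two together roughly mirror a rank-distribution view.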

- STRAW Rewrites Neural Net Wiring for Every Image: A member introduced STRAW (sample-tuned rank-augmented weights), an experiment mimicking biological neuromodulation in which the network rewrites its own wiring for every input image it sees. It uses low-rank techniques to avoid RAM crashes and is pitched as a step toward liquid networks.

- A deep dive with the math and results is available in this write-up.
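The memory saving from low-rank, per-input weights comes from never building the dense update. A minimal sketch of that general trick, not STRAW's actual implementation: the adapted weight W + A·B is applied as W·x + A·(B·x), so a rank-r correction costs r·(d_out + d_in) numbers instead of d_out·d_in. In a STRAW-like setup a hypothetical hypernetwork would emit fresh A and B for each input; here they are fixed toy values.

```python
def matvec(M, x):
    """Dense matrix-vector product on nested lists."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def low_rank_forward(W, A, B, x):
    """Apply (W + A @ B) to x as W @ x + A @ (B @ x), so the dense
    d_out x d_in update is never materialized."""
    base = matvec(W, x)                  # shared weights
    delta = matvec(A, matvec(B, x))      # rank-r, per-input correction
    return [b + d for b, d in zip(base, delta)]

# Toy 2x2 layer with a rank-1 correction.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0], [2.0]]   # d_out x r
B = [[3.0, 4.0]]     # r x d_in
print(low_rank_forward(W, A, B, [1.0, 1.0]))  # [8.0, 15.0]
```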

Modular (Mojo 🔥) Discord

- Community Meeting YouTube Release Delayed: The video of the November 24th community meeting was delayed by the U.S. holiday and is scheduled to be uploaded to YouTube tomorrow.

- The video is currently being processed.

- Level 15 Achieved: Congratulations to a member for advancing to level 15!

- Another member advanced to Level 1!

- `codepoint_slices` Debugging Unearths Memory Access Error: An investigation into a failing AOC solution using `codepoint_slices` revealed an out-of-bounds memory access caused by an empty list.

- The issue was resolved by switching from `split("\n")` to `splitlines()`, which avoids the empty line that caused the error; debugging with `-D ASSERT=all` could have caught it sooner.


- `splitlines` vs `split("\n")` Exhibit Discrepancies: `splitlines` and `split("\n")` behave differently with trailing newlines. `splitlines` omits the last empty line, mirroring Python’s behavior, while `split("\n")` includes it as an empty string in the resulting list.
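Since the summary says Mojo's `splitlines` mirrors Python's, the Python behavior makes a handy reference. This sketch only exercises Python's `str` methods; it does not reproduce the Mojo `codepoint_slices` code from the thread.

```python
text = "line one\nline two\n"

# split("\n") keeps an empty string after the trailing newline
parts = text.split("\n")
print(parts)        # ['line one', 'line two', '']

# splitlines() drops it
lines = text.splitlines()
print(lines)        # ['line one', 'line two']

# Indexing into each piece is only safe once empty entries are filtered out
first_chars = [p[0] for p in parts if p]
print(first_chars)  # ['l', 'l']
```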

- GPU Constant Memory Explored via GitHub: An example demonstrating the usage of constant memory was found in the modular/modular GitHub repository.

- A question was raised regarding methods for placing data, such as convolution kernels computed at runtime, into the GPU’s constant memory.

aider (Paul Gauthier) Discord

- Aider Eyes Distributed Inference Systems: Members discussed using aider with distributed inference AI systems like Crowdllama and setting up an API server with llama.cpp to benchmark performance.

- One user pointed out having 16GB of memory but no GPU, which likely explains the slower speeds.

- Ollama Timeout Troubleshooter Seeks Solutions: A member reported timeout errors with Ollama while using models like `gpt-oss:120b` and `llama4:scout`, resulting in a `litellm.APIConnectionError` after 600.0 seconds.

- No specific solution emerged in the discussion.

- Aider Flags Need Manual Confirmation: A user found that aider’s `--auto-test` and `--yes-always` flags did not fully automate the process.

- They reported that they still required manual execution despite using these flags.

- Mac & Fold 6 Aider Setup Sought: A new user wants to run LLMs locally on their Mac and then run aider on their Fold 6 over the same network.

- The user is seeking advice from anyone who has implemented a similar setup for coding on their Fold device.

DSPy Discord

- MCP Apps SDK goes Open Source!: General Intelligence Labs has open-sourced the mcp-apps-sdk, which allows developers to embed apps designed for ChatGPT into other chatbots, assistants, or AI platforms, enabling local testing.

- The company posted on X explaining why it is building the MCP Apps SDK.

- Latent Collaboration Paper Shared: A member shared a link to a paper on Latent Collaboration in Multi-Agent Systems.

- The paper explores methods to enable agents to implicitly coordinate through learned latent spaces.

- Subforum for Student Models Suggested: A member suggested creating a dedicated subforum for discussing student models like Qwen3 and gpt-oss-20b to consolidate knowledge on best settings and use cases.

- The goal is to pool community experiences and optimize the application of these models.

- Claude Code LM Integration Proposed for DSPy: A member proposed adding Claude Code / Claude Agents SDK as a native LM within DSPy, potentially via `dspy_claude_code`.

- This integration would support structured outputs and leverage Claude Code’s tools like `Read`, `Write`, `Terminal`, and `WebSearch`.


- Full Stack Engineer Automates with DSPy: A full stack engineer specializing in workflow automation, LLM integration, RAG, AI detection, image and voice AI introduced themself, highlighting experience building automated pipelines and task orchestration systems using DSPy.

- One system connects Slack, Notion, and internal APIs to an LLM, cutting response times by 60%.

Manus.im Discord

- AI Engineer Automates for Efficiency: An AI engineer detailed their proficiency in Workflow Automation & LLM Integration, RAG Pipelines, AI Content Detection, Image AI, Voice AI, and Full Stack development, demonstrating successful project implementations.

- They reported a 60% reduction in response times by creating pipelines that integrate Slack, Notion, and internal APIs.

- Account Suspensions for Referrals: A user reported that their account was suspended after multiple referrals, prompting an official response.

- An agent suggested appealing through official channels and offered follow-up assistance if a response is delayed.

- Chat Mode Makes Triumphant Return: Chat Mode has been officially reinstated; instructions for using it are available at this link.

- Note that using Chat Mode still consumes credits.

- Manus Eyes New Talent: Manus is actively recruiting new talent and inviting interested candidates to submit their resumes via DM.

- Submitted resumes will be reviewed by HR and relevant teams to enhance Manus’ capabilities.

tinygrad (George Hotz) Discord

- `train_step` Still Needs Work: A recent PR almost fixed an issue with `train_step(x, y)`, but the function still receives two tensors without using them.

- This means the training step isn’t processing its input data correctly, so the fix still needs further attention.

- `shrink` Beats Indexing for `obs` Tensor: Using `obs.shrink((None, (0, input_size)))` is reportedly faster than `obs[:, :input_size]` for slicing the `obs` tensor.

- This could improve performance when working with large observation tensors.
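The `shrink` argument takes one entry per axis: `None` keeps the whole axis and a `(start, end)` pair keeps that range. A toy pure-Python model of that argument format on nested lists, showing that the two expressions in the thread select the same elements; `shrink_2d` is a hypothetical helper, not tinygrad code, and it says nothing about the reported speed difference.

```python
def shrink_2d(rows, arg):
    """Toy model of a per-axis shrink argument on a 2-D nested list: each entry
    is either None (keep the whole axis) or a (start, end) pair."""
    row_arg, col_arg = arg
    r0, r1 = (0, len(rows)) if row_arg is None else row_arg
    kept_rows = rows[r0:r1]
    if col_arg is None:
        return kept_rows
    c0, c1 = col_arg
    return [row[c0:c1] for row in kept_rows]

obs = [[1, 2, 3, 4], [5, 6, 7, 8]]
input_size = 2
# Same selection as obs[:, :input_size] on a real 2-D tensor
print(shrink_2d(obs, (None, (0, input_size))))  # [[1, 2], [5, 6]]
```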


- `Variable` `vmin` Gets a Bump: The `Variable` `vmin` parameter had to be increased to 2 to avoid errors.

- The original `vmin` setting was causing issues, so it needed adjusting to keep things working.


- `RMSNorm` `-1` Dimension Needs Verification: The use of `-1` as a dimension parameter in `RMSNorm(dim=-1)` needs verification.

- Members suggested checking the source code of `RMSNorm` to confirm that it behaves as expected with a negative dimension index.
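For comparison while reading the source, a generic RMSNorm over the last axis, written in plain Python. Whether tinygrad's `RMSNorm` treats its `dim` argument as an axis index or as the feature size used to build the scale weight (in which case `-1` would not be meaningful) is exactly what checking the source should settle; this sketch does not answer that, and `rms_norm` is an illustrative name.

```python
import math

def rms_norm(x, weight=None, eps=1e-6):
    """Divide a feature vector by its root-mean-square (the last axis),
    then apply an optional per-feature scale."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    if weight is None:
        weight = [1.0] * len(x)
    return [v / rms * w for v, w in zip(x, weight)]

out = rms_norm([3.0, 4.0])  # mean of squares of the output is ~1
```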


- Tinygrad Refactors Master Branch: An outdated codebase element can no longer be found on the current master branch because it has been moved.

- It now lives in the `axis_colors` dict.

MCP Contributors (Official) Discord

- Debate Sparked on Tools Accepting UUIDs: A discussion has begun on whether tools should accept UUIDs as input, focusing on mitigating the issue of LLMs outputting UUIDs despite prompts against it.

- Opinions vary, with some questioning if it’s inherently bad practice and others finding it acceptable under certain circumstances.

- LLM’s Role in UUID Creation Questioned: A member expressed reluctance towards allowing LLMs to create UUIDs, suggesting it’s more appropriate for LLMs to use UUIDs to retrieve items from other tools.

- The suggested architecture pairs a `list_items` tool that returns lightweight items with UUIDs with a `describe_item` tool that takes a UUID and returns the complete item.
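A minimal sketch of that list/describe pattern, with an in-memory dict standing in for a real backend. `create_item`, `_ITEMS`, and the field names are hypothetical, and this is not an MCP SDK API. The server mints IDs with Python's `uuid.uuid4()`, so the model only ever copies IDs it was shown, and `describe_item` rejects strings that do not parse as UUIDs, which catches some invented IDs early.

```python
import uuid

# Hypothetical in-memory store; the server, not the LLM, creates the IDs.
_ITEMS = {}

def create_item(title, body):
    item_id = str(uuid.uuid4())
    _ITEMS[item_id] = {"id": item_id, "title": title, "body": body}
    return item_id

def list_items():
    """Lightweight view: just enough for the model to pick an item."""
    return [{"id": item["id"], "title": item["title"]} for item in _ITEMS.values()]

def describe_item(item_id):
    """Full view, looked up by a UUID the model copied from list_items()."""
    try:
        uuid.UUID(item_id)
    except ValueError:
        raise ValueError(f"not a UUID: {item_id!r}") from None
    if item_id not in _ITEMS:
        raise KeyError(f"unknown item id: {item_id}")
    return _ITEMS[item_id]
```

Keeping `list_items` small saves context tokens, and the model fetches full detail only for the item it actually needs.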


Windsurf Discord

- GPT-5.1-Codex Max Arrives on Windsurf: GPT-5.1-Codex Max is now integrated into Windsurf with Low, Medium, and High reasoning levels.

- Paid users get a free trial of 5.1-Codex Max Low, available via the latest Windsurf version, as detailed in Windsurf’s X post.

- Windsurf Dangles Free GPT-5.1-Codex Max Trial: Windsurf provides a free trial of GPT-5.1-Codex Max Low to its paid user base for a limited duration.

- Users need to grab the newest Windsurf version to enjoy this trial, announced on their X post.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1282 messages🔥🔥🔥):

Profitable AI Companies, LM Arena Prompt Limits, Gemini 3 Deepmind, Frame-Flow Model, OpenAI Models - Robin-High

- AI Companies Struggle for Profitability: Members discussed the difficulty of achieving profitability in the AI sector, noting that even top AI companies are facing challenges due to the high computational costs and infrastructure needs.

- Compute is expensive, making it difficult for AI to be as accessible and profitable as traditional software or internet services.

- LM Arena Implements Prompt Limits to Level the Playing Field: To keep testing fair, LM Arena now limits repeated prompts to 4. Exceeding the limit returns an error; sending a new prompt resets it, but doing so deletes the chat’s old prompts and responses.

- One user inquired about the new measures, questioning if the new rate limit was made to make the arena fairer.

- Frame-Flow Model Emerges as Gemini 3 Flash Contender: Users are actively trying to identify the new Frame-Flow model, which is kicking Opus’s butt in text battles, with some speculating it could be Gemini 3 Flash, a weaker model, or Grok 5, while others suggest it may come from a new company.

- The discussion also revolves around testing Frame-Flow with steganography puzzles and assessing its coding abilities compared to existing models.

- Seedream 4.5 Image Model Now in Image Arena: The Seedream 4.5 image model has been released into Image Arena and can be selected in Direct or Side by Side mode from the dropdown.

- Members find the model comparable to Nano Banana Pro, though some argue it is inferior. The rate limit is 5 generations per hour.