Very impressive for a SOTA open-source model you can run on your laptop. Discord users are also reviewing Mistral-medium positively, and are confused about the La Plateforme API.

[TOC]

OpenAI Discord Summary

- Users held intense discussions about various AI models and tools, comparing Perplexity with ChatGPT’s browsing tool. @chief_executive favored Perplexity for its superior data gathering compared to ChatGPT, its pricing, and its usage limits. Tools like Gemini Pro and Mistral were also mentioned for their distinct capabilities.

- Users expressed interest in AI’s ability to convert large code files to another programming language, with DeepSeek Coder being recommended for such tasks. Problems related to context window size with large models were discussed.

- A lively debate on the potential implications of the eradication of privacy for AI advancement was led by @【penultimate】 and @qpdv.

- Several points were raised about running LLMs on local and cloud GPUs. Users like @afterst0rm and @lugui detailed cost-effectiveness, complications, and the choice of suitable GPUs.

- Users reported multiple issues with OpenAI services, including performance issues with ChatGPT, loss of access to custom GPTs, unauthorized account access, and problems publishing a GPT as public. The new archive button in ChatGPT was a topic of discussion too.

- Future developments in AI drew interest, including discussion and speculation about GPT 4.5 and GPT 4, and the performance of various AI models in different tasks.

- Queries regarding a ChatGPT Discord bot and increased errors with the Assistant API were addressed in the OpenAI Questions channel.

- Inquiries and advice about crafting prompts for LLMs with large context windows and handling large contexts were exchanged in the prompt-engineering and api-discussions channels.

Note: All usernames and direct quotes are mentioned in italics.

OpenAI Channel Summaries

▷ #ai-discussions (154 messages🔥🔥):

- Performance of Various AI Models and Tools: @chief_executive compared the information-gathering capabilities of Perplexity and ChatGPT’s browsing tool, stating that Perplexity is superior and takes less time than ChatGPT. @chief_executive and @pietman also discussed Perplexity’s pricing and usage limits. @chief_executive mentioned that Perplexity offers more than 300 GPT-4 and Copilot uses a day and is worth the cost for its browsing capabilities.

- Converting Programming Languages via AI: @Joakim asked about AI models that can convert large code files to another programming language. @rjkmelb recommended trying DeepSeek Coder for such tasks, while @afterst0rm pointed out issues related to context window size in large models and suggested breaking larger tasks into smaller ones for better results.

- Discussion on Privacy and AI: @【penultimate】 and @qpdv speculated about the total removal of privacy for the sake of AI progress. The discussion pivoted towards the potential implications of interconnectivity and privacy.

- Utilization of AI models: @chief_executive shared their experience with Gemini Pro, mentioning its video analysis capabilities and its potential as a multimodal large language model (LLM). @afterst0rm shared that their work uses Mistral for classification (~80% right) and GPT-3.5 for generative tasks. They also mentioned that services like Cloudflare and Poe support Mistral and offer lower latency.

- Running LLMs on Local GPUs and Cloud GPUs: @afterst0rm and @lugui discussed the complications and costs of running LLMs locally on GPUs in the current market, and how lower-cost alternatives like HuggingFace or Cloudflare can be effective. @lugui mentioned the free tier on Cloudflare as a beneficial feature. @millymox sought advice on selecting a cloud GPU for their use case and compared the pricing and specifications of an RTX 3090 with 24GB VRAM and an A6000 with 48GB VRAM. Various users suggested getting whatever has more VRAM for the best price, and noted that more memory could be more beneficial than more tensor cores.
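
The "more VRAM for the price" advice can be checked with a rough back-of-the-envelope estimate. The sketch below assumes that model weights dominate memory use and ignores activations, KV cache, and framework overhead; the function names, the example model sizes, and the precisions are illustrative assumptions, not figures from the discussion.

```python
def weight_memory_gib(n_params_billion: float, bits_per_param: int) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = n_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / (1024 ** 3)


def fits(n_params_billion: float, bits_per_param: int, vram_gib: float) -> bool:
    """True if the weights alone fit in the given VRAM (no headroom counted)."""
    return weight_memory_gib(n_params_billion, bits_per_param) <= vram_gib


if __name__ == "__main__":
    # VRAM sizes taken from the discussion; treated here as GiB for simplicity.
    cards = {"RTX 3090": 24, "A6000": 48}
    for size in (7, 13, 34):      # hypothetical model sizes, in billions of parameters
        for bits in (16, 4):      # fp16 weights vs. 4-bit quantized weights
            need = weight_memory_gib(size, bits)
            ok = [name for name, vram in cards.items() if fits(size, bits, vram)]
            print(f"{size}B @ {bits}-bit: ~{need:.1f} GiB, fits on: {ok or 'neither'}")
```

Real workloads need headroom beyond the weights, so an estimate like this is a lower bound rather than a sizing guarantee.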

▷ #openai-chatter (546 messages🔥🔥🔥):

- ChatGPT New Features: @klenak, @thecroug and @dr.quantum discussed a newly added archive button next to the three-dot menu in the ChatGPT history interface. The new button archives a chat and removes it from the history. Users had difficulty finding an option to access the archived chats.

- False Information Regarding GPT 4.5: @DawidM and @satanhashtag debated the credibility of a leak about GPT 4.5. They noted that the rumor originated on Reddit and agreed that future announcements should be considered reliable only if they come directly from OpenAI or verified sources.

- AI Performance in Varying Tasks: @cynicalcola asked which is the better choice for summarizing and interpreting PDFs, Claude Pro or ChatGPT Plus with GPT 4 Advanced Data Analysis. @rjkmelb and @australiaball supported ChatGPT, stating that Claude seems to lose context in complicated or long documents while ChatGPT is able to focus on details and discuss anything the user asks. They added that the choice depends on the handling speed required and the complexity of the data under consideration.

- ChatGPT Performance Issues: Multiple users, including @jcrabtree410 and @.australiaball, reported issues with browsing speed and lag in the ChatGPT service. They indicated that these issues have persisted for several days. It was suggested that a server issue might be the cause.

- GPT Comparison: During a discussion on the performance of different versions of the GPT model, @arigatos and @barret agreed that Python tends to work best with the GPT model due to its linguistic similarities to English, which makes the translations more accurate and reliable.

▷ #openai-questions (102 messages🔥🔥):

- ChatGPT Discord bot on server: A user (@jah777) asked whether there is a ChatGPT bot for Discord that they could use on their server. @satanhashtag replied that it is possible with the API, but it is not free.

- Increased errors with Assistant API: @sweatprints reported seeing failed requests with the Assistant API over the past few hours, suggesting an increase in errors.

- Inability to access ChatGPT: Many users, such as @sharo98, @s1ynergy, and @crafty.chaos, reported problems accessing ChatGPT on their computers. @solbus suggested various possible fixes (such as trying different browsers and checking network settings), but no definitive solution seems to have been found yet.

- Loss of access to custom GPTs: @milwaukeeres raised a concern about losing access to a custom GPT they had spent time building. @fulgrim.alpha also asked about sharing access to a custom GPT without a Plus subscription, but @solbus explained that this is currently not possible.

- Unauthorized access to account: @babbalanja reported a breach of their account, with new unauthorized chats conducted. @solbus advised them to change their account password and reach out for assistance on help.openai.com.

▷ #gpt-4-discussions (48 messages🔥):

- Problems with Publishing GPT as Public: Users including @franck.bl, @optimalcreativity, and .parkerrex reported that the option to publish a GPT as public is greyed out or disabled. Despite having previously published GPTs, they are now unable to do so and suspect a glitch. @elektronisade also confirmed that the confirm button seems to switch to disabled after a delay or on click. @optimalcreativity reported seeing errors in the browser console.

- Archive Button Concerns: @imdaniel__, @rjkmelb, and @gare_62933 shared their experiences with the ‘Archive’ button. Conversation threads were disappearing from their menu, and they were able to recover them only by using browser history.

- ChatGPT lagging issue: User @.australiaball experienced lag in ChatGPT. The chat displayed, but the user could only scroll the side bar or had trouble interacting with the interface. .australiaball asked users to react with a thumbs up if they faced the same issue.

- Interest in GPT-4 Development: @lyraaaa_ joked about wanting to use GPT-4 as a junior developer if there were 8xA100 instances available.

- Work Performance of Different AI: @drcapyahhbara shared their opinion that while Gemini appears to be overhyped and underperforming, GPT-4 and Bing AI seem to be performing well, with Bing AI showing marked improvement.

▷ #prompt-engineering (9 messages🔥):

- LLM System Prompt techniques for RAG systems: User @jungle_jo asked about crafting prompts for an LLM whose context window receives a lot of information. The user provided a sample style for the prompt.

- Handling Large Contexts in ChatGPT: @eskcanta shared their experience with secretkeeper, which involves pulling data from uploaded knowledge files of up to 300k characters. They noted that although ChatGPT handles this scale of data, it might not work effectively with larger, more complex inputs.

- Marking Up Reference Texts: @knb8761 asked whether triple quotes are still necessary for marking up reference texts, citing the OpenAI Platform documentation. Their current practice is to insert an empty line and then paste the reference text.

▷ #api-discussions (9 messages🔥):

- LLM System Prompt techniques for RAG systems: @jungle_jo sought advice on techniques to use for an LLM system prompt in RAG systems, elaborating that the issue is how the LLM will deal with a lot of information in its context window to best answer a user query. They provided a prompt style example to demonstrate their point.

- Uploaded Knowledge Files: @eskcanta shared their experience with pulling data from uploaded knowledge files. They mentioned that they have used up to 300k characters of plain text but haven’t gone above that yet.

- Usage of Triple Quotes for Marking Up Reference Texts: @knb8761 asked if triple quotes are still needed for marking up reference texts, referencing the OpenAI documentation which recommends using them. They noted that they usually paste the reference text after an empty line following their question.
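
The two styles of marking up reference text mentioned above can be compared side by side. This is a minimal sketch; the helper name `build_prompt` and its parameters are hypothetical and do not come from the OpenAI documentation.

```python
def build_prompt(question: str, reference_text: str, use_triple_quotes: bool = True) -> str:
    """Combine a question with reference text.

    With use_triple_quotes=True the reference is wrapped in triple quotes;
    otherwise it is simply pasted after an empty line, as described above.
    """
    if use_triple_quotes:
        return f'{question}\n\n"""\n{reference_text}\n"""'
    return f"{question}\n\n{reference_text}"


if __name__ == "__main__":
    print(build_prompt("Summarize the text below.", "Some reference text."))
    print("---")
    print(build_prompt("Summarize the text below.", "Some reference text.", use_triple_quotes=False))
```

Explicit delimiters make the boundary between instruction and reference unambiguous, which may matter more when the reference itself contains blank lines or instructions.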

Nous Research AI Discord Summary

- Extensive discussion on AI model performance, including specific mentions of SOLAR-10.7B, GPT-3.5, Tulu, Qwen 72B, Hermes 2.5, PHI-2b, and OpenChat 3.5. Notably, @nemoia argued that SOLAR-10.7B outperforms all the 13B models, while @agcobra1 doubted that it surpasses larger models like Qwen 72B. In addition, @n8programs reported slower than expected run times for PHI-2b.

- The community discussed the best models for embeddings and vector storage. Suggestions included FastEmbed from Qdrant and gte-small. @lightningralf shared a cautionary tweet about synthetic data generation from an unmodified embedding of scientific papers on ArXiv using ada-002.

- Conversation on desired features and performance for model blending and merging sparked interest in tools like mergekit. Participants compared different infrastructures for running models, such as MLX, Ollama, and LM Studio.

- GPU requirements for AI training were debated, with ancillary discussion of industry advancements like the upcoming nVidia H200. There were questions about the requirements for renting GPUs, with suggestions for services that accept cryptocurrencies.

- Dialogue about potential benchmark contamination, specifically in relation to models trained on the metamath dataset. @nonameusr expressed concern, and @tokenbender linked to a similar discussion on Huggingface.

- Detailed guidance was provided on building tailored evaluations for AI models. @giftedgummybee offered a six-step strategy that included components such as identifying the evaluation scope and compiling results data.

- @mihai4256 announced a new model, “Metis-0.1”, aimed at reasoning and text comprehension. It is reported to perform strongly on GSM8K and was trained on a private dataset.

- Shared YouTube video links for “Viper - Crack 4 Tha First Time” and “Stanford CS25: V3 I Recipe for Training Helpful Chatbots”, as well as discussions about the strength of open-ended search with external verifiers, the Phi-2 update, and a comparison of FunSearch with openELM.

- A discussion on the state-space model architecture Mamba was initiated by @vincentweisser. The related GitHub link and Arxiv paper were shared.

- A discussion on the significance of a benchmark result: @gabriel_syme considered a score of 74 substantial, assuming that the community could fine-tune for improved results.

- @dragan.jovanovich asked whether anyone has experience replacing one of the experts in the Mixtral model with another pre-trained one. Separately, @crainmaker asked about the parameter differences required for character-level transformers to achieve comparable performance to BPE-based transformers.

Nous Research AI Channel Summaries

▷ #ctx-length-research (1 messages):

nonameusr: this makes a lot of sense

▷ #off-topic (8 messages🔥):

- Discussion on Embeddings and Vector Storage: @adjectiveallison initiated a discussion on the best models for embeddings and vector storage, and specifically asked about the performance of Jina 8k.

- FastEmbed from Qdrant: @lightningralf suggested using FastEmbed from Qdrant if that solution is being used. He urged caution in adopting a particular model due to the possibility of embedding the entire Arxiv database.

- Reference to a Twitter Post: @lightningralf shared a link to a tweet that refers to the generation of synthetic data from an unmodified embedding of every scientific paper on ArXiv. This process apparently uses ada-002.

- Preference for gte-small: @natefyi_30842 shared that they prefer using gte-small for their work, arguing that larger embeddings like Jina are better suited to projects involving large volumes of data, such as books.

▷ #benchmarks-log (2 messages):

- Benchmark Performance Discussion: User @artificialguybr mentioned that another user had already conducted a benchmark test, stating there was not a big gain. In response, @gabriel_syme suggested that a score of 74 could be considered substantial, and assumed that the community could fine-tune for even more significant results.

▷ #interesting-links (11 messages🔥):

- Open-ended Search with External Verifiers: User @gabriel_syme commented on a discussion about the strength of open-ended search with external verifiers.

- Phi-2 Inquiry: User @huunguyen asked for opinions or updates on Phi-2.

- FunSearch and ELM: @gabriel_syme compared FunSearch to openELM in-context and mentioned he will look into the islands method. He compared it to Quality-Diversity algorithms but noted they’re not the same.

- Shared YouTube Links: @nonameusr shared a music video titled “Viper - Crack 4 Tha First Time” and @atgctg shared “Stanford CS25: V3 I Recipe for Training Helpful Chatbots” from YouTube.

- Metis-0.1 Model Announcement: @mihai4256 introduced a 7b fine-tuned model named “Metis-0.1” for reasoning and text comprehension, stating that it should score high on GSM8K and recommending few-shot prompting when using it. He emphasized that the model is trained on a private dataset and not the MetaMath dataset.

▷ #general (189 messages🔥🔥):

- Concerns about Benchmark Contamination: Several users discussed the problem of models trained on benchmark datasets, specifically metamath.

@nonameusrexpressed concern that any models using metamath could be contaminated, and@tokenbenderlinked to a discussion on Huggingface on the same issue. There were calls for people to avoid using metamath in their blends. - Performances of Different Models: The performance and utility of different AI models were discussed, including Hermes 2.5, PHI-2b, and OpenChat 3.5.

@tsunemotooffered to provide mistral medium prompt replies on request.@n8programsreported that PHI-2b runs slower than expected at 35 tokens/second (mixed-precision) using mlx on an m3 max. - Interest in Merging AI Models: Various users expressed interest in merging different models to enhance performance. The toolkit mergekit by

@datarevisedwas suggested for this purpose, and there was discussion on merging Open Hermes 2.5 Mistral due to its good foundational properties. - Use of Different Inference Engines: There was discussion about the use of different infrastructures for running models, such as MLX, Ollama, and LM Studio.

@n8programsreported poor results from mlx, while@coffeebean6887indicated that ollama would likely be faster, even at higher precision than the default 4 bit. - Neural Architecture Search:

@euclaiseraised the idea of using Neural Architecture Search instead of merging parameters, on which there was brief discussion on potential application to subset of the search space concerning different model merges. - GPU requirements for AI: Debate was sparked about the demand for GPU resources in AI training and if centralized or decentralized compute would be more efficient. The discussion also drifted to industry advancements and potential upcoming GPU resources, notably the nVidia H200.

- Potential Issues with AI Services: User

@realsedlyfasked about the requirements for renting GPUs, specifically if a credit card was required, to which@qasband@thepoksuggested services accepting cryptocurrencies. - Neural Model Blending: User

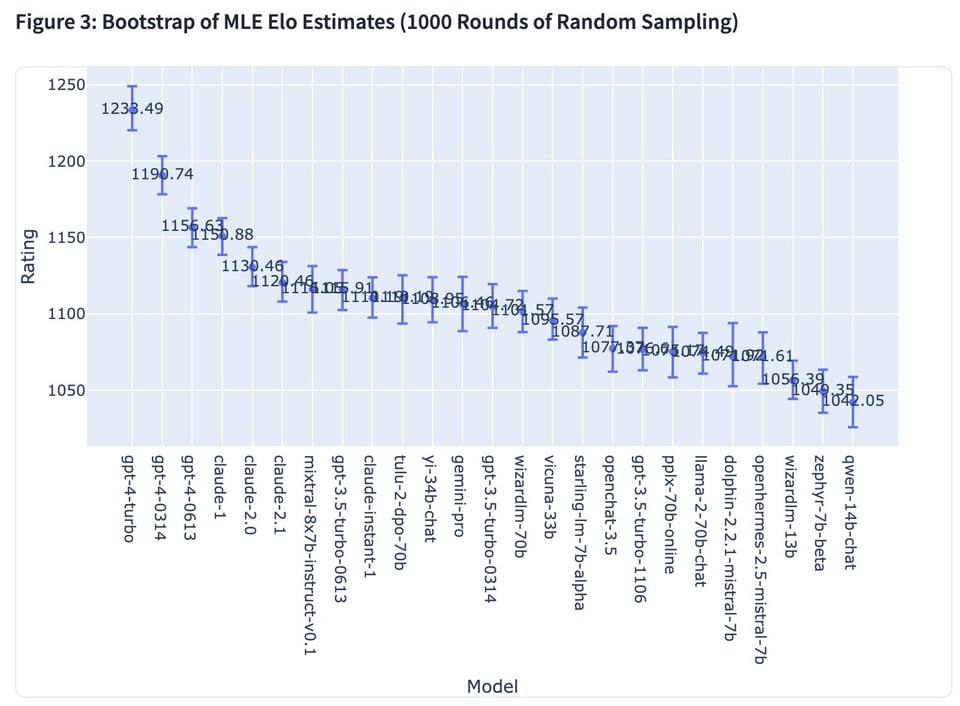

@dragan.jovanovichbrought up a question about experience in replacing one of the experts with another pre-trained one in Mixtral model. - Model Performance Comparison: A twitter post from LMSysOrg comparing the performance of different models was shared by user

@artificialguybr. Mixtral-Medium and Mistral-Medium were suggested for addition to the comparison by@zakkorand@adjectiveallisonrespectively.

▷ #ask-about-llms (45 messages🔥):

- Discussion about Model Performance: Users debated the performance of various models. @nemoia mentioned that SOLAR-10.7B outperforms all the 13B models, and @n8programs shared anecdotal results from experimenting with Tulu, describing it as almost equal to GPT-3.5. However, @agcobra1 expressed doubt about SOLAR-10.7B surpassing larger models such as Qwen 72B.

- Evaluation Strategy for Models: A discussion was sparked about building tailored evaluations. @natefyi_30842 shared that he needs to evaluate multiple use-cases, and @giftedgummybee provided a detailed 6-step guide, which includes identifying the evaluation scope, curating a list of ground truth examples, verifying that they aren’t in common datasets, building a structure to test the model, and compiling the results as data.

- Llava API Issue: @papr_airplane reported encountering a ValueError when working with llava’s API, caused by a mismatch between the number of `<image>` tokens and the number of actual images.

- Character-Level Transformer vs BPE-Based Transformer: @crainmaker asked about the parameter differences required for character-level transformers to achieve comparable performance to BPE-based transformers.

- State-Space Model Architectures: @vincentweisser brought up the state-space model architecture called Mamba and shared the relevant GitHub link and Arxiv paper.
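
The evaluation steps listed above (ground truth examples, a contamination check against common datasets, a test structure, and results compiled as data) can be sketched in a few lines. This is a toy outline under stated assumptions: `Example`, `drop_contaminated`, `run_eval`, and `accuracy` are hypothetical names, exact-match scoring is a simplification, and the stub model stands in for a real model call. It is not @giftedgummybee's actual procedure.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Example:
    prompt: str
    expected: str


def drop_contaminated(examples: List[Example], public_prompts: List[str]) -> List[Example]:
    """Keep only examples whose prompt does not appear in known public datasets."""
    seen = {p.strip().lower() for p in public_prompts}
    return [ex for ex in examples if ex.prompt.strip().lower() not in seen]


def run_eval(model: Callable[[str], str], examples: List[Example]) -> List[Dict]:
    """Run the model on each example and record one result row per example."""
    rows = []
    for ex in examples:
        answer = model(ex.prompt)
        rows.append({
            "prompt": ex.prompt,
            "expected": ex.expected,
            "answer": answer,
            "correct": answer.strip() == ex.expected,  # exact match, a simplification
        })
    return rows


def accuracy(rows: List[Dict]) -> float:
    """Fraction of rows marked correct; 0.0 for an empty result set."""
    return sum(r["correct"] for r in rows) / len(rows) if rows else 0.0


if __name__ == "__main__":
    examples = [Example("2+2=", "4"), Example("Capital of France?", "Paris")]
    clean = drop_contaminated(examples, public_prompts=["2+2="])
    stub_model = lambda prompt: "Paris"  # stand-in for a real model call
    rows = run_eval(stub_model, clean)
    print(rows, accuracy(rows))
```

Keeping per-example rows rather than only a final score makes it easier to inspect failures afterwards.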

Mistral Discord Summary

- Mixtral Configuration and Use: Discussion on issues with Mixtral’s configuration, with @dizee resolving issues by adjusting `config.json` files. Further conversation covered not using the sliding window in Mixtral models, with @tlacroix_ instructing users to set the context length to 32768.

- Chatbots and Documents Classification with Mistral: Users @.akatony and @naveenbachkethi_57471 initiated a discussion on building a chatbot and using Mistral for document classification. Various web UIs were recommended for use with the Mistral API, including the OpenAgents project suggested by @cyborgdream.

- The Performance and Optimisation of Models: A series of messages, mainly by @ex3ndr and @choltha, voiced frustration with the model’s inability to accurately answer rule-based questions and offered potential ways to optimize the MoE architecture.

- SillyTavern Roleplay and API Modification: @aikitoria advised @xilex. on how to modify the OpenAI API URL in the backend of SillyTavern for roleplay/story specifications.

- Library Updates, Input Data, and Fine-Tuning: @cpxjj announced the new version of LLaMA2-Accessory, alongside technical discussions on fine-tuning parameters and the correct data input format. @geedspeed noted discrepancies between example resources and the original instructions. Suggestions were made for learning rates for Mixtral fine-tuning.

- Showcase of New Developments: Users shared new projects, including the open-sourced library for integrating Mistral with .NET by @thesealman and the latest version of LLaMA2-Accessory from @cpxjj.

- Discussion and Requests on Features of la-plateforme: Users inquired about and discussed features of the Mistral platform, including API rate limits, open-sourcing the Mistral-Medium model, potential feature requests regarding function calling and VPC Deployment capabilities, comparisons and feedback on different Mistral versions, and Stripe verification issues.

Mistral Channel Summaries

▷ #general (90 messages🔥🔥):

- Mixtral Configuration Issues: User @dizee discussed some issues with getting Mixtral running with llama.cpp, pinpointing issues with `config.json` files. They were able to get the Mixtral branch working, but noted that the main llama.cpp repo was causing the error.

- Document Classification with Mistral: User @naveenbachkethi_57471 asked about using Mistral for document classification in a way similar to Amazon Bedrock. User @potatooff suggested using few-shot prompts for this purpose.

- Sliding Window in Mixtral Models: User @tlacroix_ clarified that for Mixtral models, the sliding window should not be used. The context length for these models remains at 32768.

- Chatbot Integration Discussion: User @.akatony initiated a discussion about building a chatbot, with @.dontriskit suggesting a combination of Rocketchat with a live chat plugin and N8N with an LLM.

- Mixtral Context and Configuration Questions: In a conversation about Mixtral’s context and configuration, @tlacroix_ explained that the context length for Mixtral is 32768, referencing the `sliding_window` setting.

- Available Chatbot UI for Mistral API: @cyborgdream asked for recommendations for a good web UI to use with the Mistral API that supports certain features. @lee0099 suggested Chatbot UI, and @cyborgdream found OpenAgents, which suits the outlined requirements.

- Introduction of Streamlit Chatapp: @jamsyns shared a Streamlit chat app which interacts with Mistral’s API.
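
The few-shot approach suggested for document classification can be illustrated with a simple prompt builder. This is a minimal sketch: the function name, the prompt wording, and the sample labels are assumptions made for illustration, and the resulting string would still need to be sent to a model through whichever client is in use.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], labels: List[str], document: str) -> str:
    """Build a few-shot classification prompt from labeled (text, label) examples."""
    lines = [f"Classify the document into one of: {', '.join(labels)}.", ""]
    for text, label in examples:
        lines += [f"Document: {text}", f"Label: {label}", ""]
    # The final entry is left unlabeled so the model completes it.
    lines += [f"Document: {document}", "Label:"]
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = few_shot_prompt(
        examples=[("Invoice #12 is due on Friday.", "invoice"),
                  ("This agreement is made between the parties.", "contract")],
        labels=["invoice", "contract"],
        document="Payment of 300 EUR is due within 30 days.",
    )
    print(prompt)
```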

▷ #models (9 messages🔥):

- Performance of Models Running on Default Parameters: @svilupp was considering testing models by adjusting parameters, but was uncertain whether it would be a fair comparison, since models are typically tested with their default settings.

- Models’ Ability to Answer Rule-Based Questions: @ex3ndr expressed frustration that the current model can’t correctly answer questions about the rules of FreeCell, a card game.

- Potential Modifications to MoE Architecture: @choltha proposed an idea for optimising the MoE architecture by adding a “forward-pass-termination” expert that would force the model to skip a step if it is only tasked with “easy” next tokens.

- Locally Running Mistral-Embed: @talon1337 asked for information about Mistral-Embed, expressing interest in the recommended distance function and how it can be run locally.

- Details on Mixtral Model Training Datasets: Both @pdehaye and @petet6971 had queries about Mixtral dataset specifics. @pdehaye was interested in what the 8 experts in Mixtral are expert at, and @petet6971 sought information or a license related to the datasets used to train Mixtral-8x7B and Mixtral-8x7B-Instruct.

▷ #deployment (5 messages):

- Roleplay/Story Utilization of SillyTavern: @aikitoria suggested that @xilex. use SillyTavern for roleplay/story specifications, mentioning that while it does not officially support the Mistral API, a workaround is to swap the OpenAI API URL in the backend.

- Swapping API URL in SillyTavern: @aikitoria provided guidance on where to modify the API URL in SillyTavern’s backend: in the `server.js` file.

▷ #ref-implem (2 messages):

- Discussion on Jinja Templates in Different Projects: User @saullu questioned why @titaux12 thought that a Jinja template had to be fixed. @saullu clarified that no one is saying it’s wrong, but highlighted that they only guarantee the reference. They also pointed out that using the chat template creates bugs in `vllm` and provided a link to the issue.

- Utility of Jinja Templates for Supporting Multiple LLMs: @titaux12 responded by noting how useful a working Jinja template is for projects that let multiple LLMs run seamlessly. They also mentioned working on supporting multiple models in `privateGPT`.

- Recommendations for Complying with Model Outputs: @titaux12 stated that they would do their best to comply with the output their model wants, emphasizing the importance of the BOS token (id `1`) appearing in the first position, with no duplication or encoding as another token.
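
The BOS requirement described above lends itself to a quick sanity check on tokenized prompts. The sketch below assumes the BOS id of 1 mentioned in the discussion; the helper name `check_bos` is hypothetical and it operates on plain lists of token ids rather than any specific tokenizer.

```python
from typing import List

BOS_ID = 1  # BOS token id as mentioned in the discussion


def check_bos(token_ids: List[int], bos_id: int = BOS_ID) -> bool:
    """True if BOS is the first token and appears nowhere else in the sequence."""
    if not token_ids or token_ids[0] != bos_id:
        return False
    return bos_id not in token_ids[1:]


if __name__ == "__main__":
    print(check_bos([1, 733, 16289]))     # BOS first, not repeated
    print(check_bos([1, 1, 733, 16289]))  # duplicated BOS, e.g. template and tokenizer both add it
    print(check_bos([733, 16289]))        # BOS missing
```

A duplicated BOS typically arises when a chat template writes the BOS string and the tokenizer then prepends its own, so running a check like this after templating can catch the problem early.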

▷ #finetuning (13 messages🔥):

- Fine-tuning on Apple Metal using mlx / mlx-examples: @cogbuji asked if the Mistral model from mlx-examples is suitable for fine-tuning for Q/A instructions on Apple Metal. They also asked if there’s a separate ‘instruction’ model that should be used for that purpose.

- Recommended Learning Rates For Tuning Mixtral: @ludis___ and @remek1972 reported that learning rates around 1e-4 and 0.0002, respectively, have yielded good results when tuning Mixtral.

- New Version of LLaMA2-Accessory and Finetuning Parameters: @cpxjj announced the latest version of LLaMA2-Accessory, which supports inference and instruction fine-tuning for mixtral-8x7b, and shared the detailed documentation for it. They also provided links to full finetuning settings and peft settings.

- Confusion about Formatting Input Data: @geedspeed expressed confusion about how to format the input data for fine-tuning mistral-7b-instruct and cited an example colab notebook from AI makerspace. They noticed a discrepancy between the example notebook and the instructions from Mistral about using [INST] tokens.

- API for Fine-tuning: @robhaisfield suggested implementing an API for fine-tuning, specifically mentioning the need to fine-tune “medium”. @jamiecropley echoed this sentiment, noting that it isn’t clear whether the current API supports fine-tuning.
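
For the [INST] formatting question, a small formatter makes the layout explicit. This is a sketch of the commonly described Mistral instruct layout (`[INST] user [/INST] assistant</s>`), written under the assumption that the BOS token is added by the tokenizer rather than by the string; the helper name is hypothetical, and the exact spacing should be confirmed against Mistral's own instructions before training.

```python
from typing import List, Optional, Tuple


def format_instruct(turns: List[Tuple[str, Optional[str]]], eos: str = "</s>") -> str:
    """Format (user, assistant) turns with [INST] markers.

    The assistant reply may be None for a final turn that the model should complete.
    BOS is intentionally not written here; it is assumed the tokenizer adds it.
    """
    parts = []
    for user, assistant in turns:
        parts.append(f"[INST] {user} [/INST]")
        if assistant is not None:
            parts.append(f" {assistant}{eos}")
    return "".join(parts)


if __name__ == "__main__":
    print(format_instruct([("What is 2+2?", "4"), ("And 3+3?", None)]))
```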

▷ #showcase (6 messages):

- Mistral with .NET: User @thesealman has completed an open-sourced library for using Mistral with .NET. The user would appreciate any feedback and has released a NuGet package. The GitHub repo is available at https://github.com/tghamm/Mistral.SDK.

- Understanding Mistral for Language Learning App: New community member @webcreationpastor is seeking resources to understand AI, specifically Mistral, for application in a language learning app.

- Blogposts for Understanding Mistral: In response to @webcreationpastor’s query, @daain has shared a series of blog posts for understanding Mistral. The available posts include “Understanding embeddings and how to use them for semantic search” and “What are large language models and how to run open ones on your device”.

- LLaMA2-Accessory Support for mixtral-8x7b: User @cpxjj has announced their latest version of LLaMA2-Accessory, which now supports both inference and instruction finetuning on the mixtral-8x7b model. Detailed documentation is available at https://llama2-accessory.readthedocs.io/en/latest/projects/mixtral-8x7b.html.

- Support for Mistral AI API in Microsoft’s Autogen: @tonic_1 shared a link to a GitHub issue discussing adding support for the Mistral AI API (and Mixtral2) in Microsoft’s Autogen.

▷ #random (1 messages):

balala: Hello

▷ #la-plateforme (68 messages🔥🔥):

- Rate Limits and API access: User @robhaisfield asked about the API rate limits, and @tlacroix_ answered: 2M tokens per minute, 200M per month. The conversation also raised the possibility of making the JavaScript client open-source so it can accept PRs for better compatibility.

- Open-source Mistral Medium discussions: @netbsd raised the question of open-sourcing the Mistral-Medium model. The community shared mixed thoughts about the feasibility and impact of doing so.

- Potential Mistral Platform Feature Requests: Users showed interest in new features: @lukasgutwinski in function calling, @pierre_ru in VPC Deployment capabilities, and @casper_ai in Mistral Large. @tlacroix_ confirmed that VPC Deployment capabilities are being worked on.

- Mistral Medium Feedback and Comparisons: Various users like @rad3vv, @lukasgutwinski, and @flyinparkinglot shared positive feedback about the performance of Mistral Medium. @tarruda also noted that he found the performance of Mistral Tiny superior to a quantized version of Mistral on Huggingface.

- Stripe Verification Issue: User @phantine faced issues with phone number verification via Stripe while subscribing, but resolved the issue by using the email verification option.
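
The two rate limits quoted above interact in a way that is easy to overlook: running at the full per-minute rate would use up the monthly quota quickly. A short calculation, assuming a 30-day month and that both limits apply to the same token count (the function names are illustrative):

```python
TOKENS_PER_MINUTE = 2_000_000    # per-minute limit quoted in the discussion
TOKENS_PER_MONTH = 200_000_000   # monthly limit quoted in the discussion


def minutes_to_exhaust(per_minute: int = TOKENS_PER_MINUTE, per_month: int = TOKENS_PER_MONTH) -> float:
    """Minutes of full-rate usage before the monthly quota is used up."""
    return per_month / per_minute


def sustainable_tokens_per_minute(per_month: int = TOKENS_PER_MONTH, days_in_month: int = 30) -> float:
    """Average rate that spreads the monthly quota evenly over the month."""
    return per_month / (days_in_month * 24 * 60)


if __name__ == "__main__":
    print(f"Monthly quota lasts {minutes_to_exhaust():.0f} minutes at the full per-minute rate")
    print(f"Even usage over 30 days: ~{sustainable_tokens_per_minute():.0f} tokens per minute")
```

Under these assumptions, the per-minute limit works as a burst ceiling while the monthly limit governs sustained use.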

OpenAccess AI Collective (axolotl) Discord Summary

- Detailed discussion on fine-tuning Mistral, touching on handling out-of-memory errors, GPU VRAM requirements for qLoRA Mixtral training, and model distribution across multiple GPUs using deepspeed or data parallel. A Twitter post by Sebastien Bubeck on the positive results from fine-tuning phi-2 on math exercises was also mentioned.

- In Axolotl development, understanding and handling GPU memory errors was explored, and a useful PyTorch blog post was shared. There were also attempts to resolve an import error related to the DeepSpeed PyTorch extension, a subsequent TypeError, and a possible GitHub PR hotfix for the issue.

- Persistent issues regarding the ChatML template and Axolotl’s inference capabilities were discussed in general help, particularly the inability of the models to properly learn.

- In datasets, a ToolAlpaca GitHub repository generalizing tool learning for language models was shared.

- Notable problems with inference on Runpod came to light, particularly long inference times and failure to complete, in comparison to the HuggingFace platform. There were also queries on how to stop Runpod pods automatically after training instead of the default restart behavior.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (16 messages🔥):

- Mistral Fine-Tuning with Sample Packing Issues: User @dirisala mentioned that they encountered out-of-memory errors when trying to fine-tune Mistral (not the latest version) with sample packing enabled. The errors were resolved by disabling sample packing.

- VRAM Requirement for qLoRA Mixtral Training: @jaredquek asked about the VRAM requirement for training qLoRA Mixtral. @faldore responded that the training used 4 A100s.

- Model Distribution Across Multiple GPUs: User @kaltcit asked whether axolotl supports distributing the model across several GPUs for training. @nruaif confirmed that it’s possible either by using deepspeed or normal data parallel. They also added that if the model doesn’t fit on a single GPU, deepspeed 3 can be used.

- Tweet On Phi-2 Fine-tuning: @noobmaster29 shared a Twitter link from Sebastien Bubeck discussing the promising results from fine-tuning phi-2 on 1M math exercises similar to CodeExercises and testing on a recent French math exam.

▷ #axolotl-dev (20 messages🔥):

- Volunteer for Template Work for Training: User .tostino expressed a willingness to help get the chat templates working for training, and stated that the main task is to run the training data through the template and properly tokenize and mask some parts of it.

- GPU Memory Issue: @tmm1 shared a PyTorch blog post on understanding and handling GPU memory errors, which concerns the error message `torch.cuda.OutOfMemoryError: CUDA out of memory.`

- Attempt to Resolve ImportError: User @hamelh is trying to assist @teknium in resolving an ImportError, specifically pertaining to the shared object file `/home/nous/.cache/torch_extensions/py310_cu117/fused_adam/fused_adam.so`. There is speculation that it could be due to a bad installation or something to do with flash attention within the DeepSpeed PyTorch extension.

- TypeError Encounter following PyTorch Fix: Following the fix of the PyTorch issue, @hamelh encountered a TypeError related to the `LlamaSdpaAttention.forward()` function. A YAML file and full traceback were provided to assist troubleshooting.

- Potential Hotfix: @caseus_ mentioned that a PR in Axolotl’s repository might fix the TypeError encountered by @hamelh. The PR is meant to address issues caused by the latest release of Transformers, which changed the use of SDPA for attention when flash attention isn’t used, thereby breaking all the monkey patches. You can find the PR here.

▷ #general-help (84 messages🔥🔥):

- Model Training and Templating Issues: @noobmaster29 and @self.1 discuss issues with the ChatML template and Axolotl’s inference capabilities. The primary concern seems to be the failure of models to properly learn the `

▷ #datasets (2 messages):

- ToolAlpaca Repository: User @visuallyadequate shared a GitHub link to ToolAlpaca, a project that generalized tool learning for language models with 3000 simulated cases.

▷ #runpod-help (2 messages):

- Issues with Inference on Runpod: @mustapha7150 is running a huggingface space on runpod (1x A100 80G) and experiencing issues with extremely long inference times of almost one hour which never complete, despite it taking only a few seconds on HuggingFace’s platform. They’ve also tried using an A40 with no notable difference.

- Query on Automatically Stopping Pods Post-Training: @_jp1_ asked for advice on how to automatically stop pods after training has finished, instead of having the default behavior of restarting. They were unable to find a way to change this beyond manually stopping it via API.

HuggingFace Discord Discord Summary

- A vibrant dialogue took place on the classification of GPU performance levels, instigated by @nerdimo’s question about the ranking of an RTX 4080. @osanseviero suggested that any set-up without 10,000+ GPUs could be considered “GPU poor”.

- Several users experienced and offered solutions for technical issues. For instance, @vishyouluck encountered a problem using the `autotrain_advanced` function, with @abhi1thakur recommending them to post their issue on GitHub for more thorough assistance.

- An in-depth conversation revolved around model hosting and inference speed. @sagar___ sought out tips for hosting a TensorRT-LLM model, and they, along with @acidgrim, discussed the challenges of memory use during inference and the concept of employing multiple servers for larger models.

- Multiple users expressed curiosity and shared their discoveries about recurrent neural networks, sparked by discussions on GRU vs. RNN, LSTM preference, and the TD Lambda algorithm. In this context, @nerdimo noted they were using the Andrew Ng ML specialization as their learning resource.

- Users shared and reviewed their creations, such as @not_lain’s RAG based space for pdf searching, @aabbhishekk0804’s Hugging Face space for PdfChat using the zephyr 7b model, and @vipitis’s development of a new metric for fine-tuned models.

- The reading group planned to review a paper on diffusion models titled “On the Importance of the Final Time Step in Training Diffusion Models”. @chad_in_the_house also offered insights into the problems with common diffusion noise schedules and sampler implementations.

- The guild announced the deployment of Diffusers benchmarks for tracking the common pipeline performance in `diffusers`, with automatic reporting managed by a `benchmark.yml` file in the GitHub repository.

- Various users raised queries, shared resources and guidance about training diffusion models with paired data, Segformer pretrained weights discrepancy, context length of Llama-2 model, the procedure for fine-tuning language models, and the size and download time for Mixtral-8x7B model.

- Among cool finds shared, noteworthy were the Reddit discussion on AI generated scripts for Flipper Zero, and a HuggingFace paper on an end-to-end music generator trained using deep learning techniques.

HuggingFace Discord Channel Summaries

▷ #general (56 messages🔥🔥):

- GPU Classes and Performance: @nerdimo and @osanseviero had a discussion about GPU classes and performance. @nerdimo asked if an RTX 4080 would be considered mid-class or poor-class GPU, to which @osanseviero responded by mentioning an article that humorously claims everyone without 10k+ GPUs is GPU poor.

- Issues with autotrain_advanced: @vishyouluck had issues when using `autotrain_advanced` for finetuning a model. @abhi1thakur responded that more information was needed to help and suggested posting the issue on GitHub.

- Survey on LLM Tool Usage: @jay_wooow shared a survey, which aims to understand the motivations and challenges in building with LLMs (Large Language Models).

- Segformer Performance Concerns: @shamik6766 expressed concerns that the pretrained Segformer model on Hugging Face does not match the official mIOU (mean Intersection over Union) values mentioned in the paper.

- Model Hosting and Inference Speed: @sagar___ asked for suggestions on how to host a TensorRT-LLM model permanently, observing that memory usage goes up during inference. @acidgrim also discussed running stablediffusion and Llama on mpirun, considering the use of multiple servers for large models.

▷ #today-im-learning (6 messages):

- GRU vs. RNN: User @nerdimo mentioned that their intuition was that the addition of gates made the GRU more complex than a standard RNN. They also indicated that they are learning from the Andrew Ng ML specialization, and they plan to supplement their learning through side projects.

- LSTM Preference: @nerdimo expressed a preference for LSTM over other options, citing that it provides more filtering and parameters.

- TD Lambda Algorithm: User @d97tum shared that they are trying to code the TD Lambda algorithm from scratch.

- GRU vs. LSTM: @merve3234 clarified that they were comparing GRU to LSTM, not RNN, which aligns with @nerdimo’s earlier comment.

- Model Addition to stabilityAI: @nixon_88316 queried if anyone was available to add any model to StabilityAI.

▷ #cool-finds (4 messages):

- AI generated code for Flipper Zero: User @_user_b shared a link to a Reddit discussion about the possibility of generating scripts for Flipper Zero, a fully open-source customizable portable multi-tool for pentesters and geeks.

- TensorRT-LLM model hosting: @sagar___ asked for suggestions on how to keep a model loaded continuously using TensorRT-LLM.

- Music Streaming with AI: @not_lain shared a HuggingFace paper about an end-to-end music generator trained using deep learning techniques, capable of responsive listening and creating music, ideal for projects like radio or Discord bots. The user was impressed by the model’s consistent performance and the fact that it can stream music without waiting for the AI to finish processing.

- Feedback on Musical AI: The user @osanseviero responded positively to the shared HuggingFace paper, commenting it was “very cool”.

▷ #i-made-this (3 messages):

- RAG Based Searching Space: @not_lain has completed building a RAG based space designed for pdf searching using the `facebook/dpr-ctx_encoder-single-nq-base` model. The model and its related code can be accessed via this link.

- PdfChat Space: @aabbhishekk0804 announced the creation of a space on Hugging Face for PdfChat. This utilized the zephyr 7b model as an LLM and can be viewed here.

- Metric Development: @vipitis shared preliminary results for the metric they are developing. This includes a problem with post-processing that needs to be addressed, as identified with fine tuned models.

▷ #reading-group (4 messages):

- Next Paper for Reading Group: @chad_in_the_house suggested the next paper for the reading group to be “On the Importance of the Final Time Step in Training Diffusion Models” due to its importance & influence in the field. Moreover, he mentioned the significant work @636706883859906562 has done in relation to this, sharing a link to the Colab notebook of his implementation.

- Discussion on Diffusion Models: @chad_in_the_house highlighted the issues of common diffusion noise schedules not enforcing the last timestep to have zero signal-to-noise ratio (SNR) and some sampler implementations not starting from the last timestep, indicating that these designs are flawed and cause discrepancies between training and inference.

- Problem Implementing the Paper: @chad_in_the_house found it interesting that stability failed to implement this exact research paper, inviting further discussion on this topic.

- Epsilon Loss Problems: @pseudoterminalx pointed out that the implementation fell short due to an issue with epsilon loss.
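The zero terminal SNR point can be made concrete with a small sketch. It assumes a plain linear beta schedule (1e-4 to 0.02 over 1000 steps, the original DDPM values), which is not necessarily the schedule any particular model in the discussion uses. The helper names are made up for this example, and the rescaling shown is one shift-and-scale approach to forcing the final timestep to pure noise, not a confirmed reproduction of the paper's code.

```python
import math

def linear_betas(num_steps, beta_start, beta_end):
    """Evenly spaced betas (assumed schedule, for illustration only)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def sqrt_alphas_cumprod(betas):
    """sqrt of the running product of (1 - beta_t) for every timestep."""
    values, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        values.append(math.sqrt(running))
    return values

def snr(sqrt_alpha_bar):
    """Signal-to-noise ratio alpha_bar / (1 - alpha_bar)."""
    alpha_bar = sqrt_alpha_bar ** 2
    return alpha_bar / (1.0 - alpha_bar)

def rescale_to_zero_terminal_snr(sqrt_ab):
    """Shift so the last value is 0, then scale so the first value is kept."""
    first, last = sqrt_ab[0], sqrt_ab[-1]
    scale = first / (first - last)
    return [(value - last) * scale for value in sqrt_ab]

betas = linear_betas(1000, 1e-4, 0.02)
original = sqrt_alphas_cumprod(betas)
rescaled = rescale_to_zero_terminal_snr(original)

# The unmodified schedule still leaks a little signal at the last timestep.
print("terminal SNR before rescaling:", snr(original[-1]))
print("terminal SNR after rescaling: ", snr(rescaled[-1]))
```

With the unmodified schedule the final timestep still carries a small amount of signal during training, while sampling typically starts from pure noise. That mismatch is the training/inference discrepancy described above.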

▷ #core-announcements (1 messages):

- Introduction of Diffusers Benchmarks: User @sayakpaul announced the introduction of Diffusers benchmarks to track the performance of most commonly used pipelines in `diffusers`. The benchmarks are accessible here.

- The automatic reporting workflow is managed by a file named `benchmark.yml`, residing in the `.github/workflows` directory of the `diffusers` GitHub repository. The workflow file can be found here.

- The benchmarks include several configurations, such as `StableDiffusionXLAdapterPipeline`, `StableDiffusionAdapterPipeline`, and `StableDiffusionXLControlNetPipeline` using TencentARC models like `t2i-adapter-canny-sdxl-1.0` and `t2iadapter_canny_sd14v1`.

▷ #diffusion-discussions (2 messages):

- Discussion on Diffusion Model Training: User @guinan_16875 inquired about the possibility of training diffusion models with paired data, offering an example of a child’s photo and a corresponding photo of the father. They questioned if the practice of training diffusion models with images of the same style could be seen as a form of style transfer.

- Diffusion Models & Paired Data Training: In response to @guinan_16875’s query, @asrielhan suggested looking into Instructpix2pix and InstructDiffusion, stating these may involve a similar process to the proposed method.

▷ #computer-vision (1 messages):

- Segformer Pretrained Weights Discrepancy: @shamik6766 brought up an issue regarding the mismatch of mIOU values of Segformer b4 or b5 models on cityscape or ADE20k datasets, between what is referenced in the paper and what’s available in Hugging Face. The user asked for assistance in obtaining the correct pretrained weights. They also mentioned using #nvidia.

▷ #NLP (8 messages🔥):

- Context Length of Models: User @ppros666 asked about the context length of Llama 2, particularly whether it’s 4000 or 4096. @Cubie | Tom clarified that it’s 4096, and this information can be found in the model configuration on HuggingFace. Additionally, @Cubie | Tom shared that in Python, this value can be accessed via `model.config.max_position_embeddings`.

- Fine-tuning Language Models: @ppros666 also expressed their intention to fine-tune a Language Model for the first time. They found a tutorial titled “Llama-2 4bit fine-tune with dolly-15k on Colab”, and asked if it is a good resource to follow given the fast-paced nature of the field.

- Model Download Size and Time: @vikas8715 asked about the download size and time for Mixtral-8x7B model. @vipitis reported that the size is approximately 56GB. @nerdimo advised that a powerful GPU with high VRAM is recommended for handling such large models.
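The context-length lookup can be illustrated without downloading a model. The sketch below parses a hand-written, abbreviated config fragment (an assumption for this example, not the full Llama 2 config file) and reads the same field that `model.config.max_position_embeddings` exposes. The `context_length` helper is hypothetical.

```python
import json

# Hypothetical, abbreviated config fragment written for this example.
# The 4096 value is the Llama 2 context length stated in the discussion.
CONFIG_JSON = """
{
  "model_type": "llama",
  "max_position_embeddings": 4096
}
"""

def context_length(config_text: str) -> int:
    """Return the maximum number of positions declared in a model config."""
    config = json.loads(config_text)
    return int(config["max_position_embeddings"])

print(context_length(CONFIG_JSON))
```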

▷ #diffusion-discussions (2 messages):

- Discussion on Training Data for Diffusion Models: User @guinan_16875 started a discussion on the training data used for existing diffusion models. They noted that current models use images of the same style, making the task essentially one of style transfer. The user proposed an alternative paired data training method, using an example of a photo of a child and the corresponding photo of the father in one training session. The aim is to use the trained model to generate a predicted photo of the father from the child’s photo.

- In response, @asrielhan mentioned Instructpix2pix and InstructDiffusion as possible models that can perform similar tasks.

LangChain AI Discord Summary

- Gemini-Pro Streaming Query: @fullstackeric inquired about streaming capabilities in Gemini-Pro, receiving a reference to Google’s conversational AI Nodejs tutorial, and explored the potential for compatibility with the Langchain library.

- Community Interaction - User Surveys and Announcements: @jay_wooow shared a developer-focused open-source survey via tally.so link, while @ofermend announced an early-preview sign up for an Optical Character Recognition (OCR) feature in Vectara through a google form.

- Discussion on Handling JSON Input in Prompts: @infinityexists. questioned the feasibility of incorporating JSON in PromptTemplate, which, as per @seththunder, can be implemented using a Pydantic Output Parser.

- Experiences with AI Workflow Tools, Particularly Aiflows and LangChain: @tohrnii elicited feedback from users on their experiences with Aiflows, comparing it to LangChain.

- Technical Issue Reports: User @ackeem_wm reported a “422 Unprocessable Entity” error during usage of Langserve with Pydantic v1. Concurrently, @arborealdaniel_81024 flagged a broken link in the Langchain release notes.

LangChain AI Channel Summaries

▷ #general (42 messages🔥):

- Gemini-Pro Streaming Inquiry: @fullstackeric asked if gemini-pro has streaming, which was confirmed by @holymode with a code snippet for it in Python and later in JavaScript. Google’s conversational AI Node.js tutorial was also suggested for reference. However, @fullstackeric is looking for a solution through the langchain library, which doesn’t appear to be supported just yet, according to @holymode.

- Survey Notification: User @jay_wooow shared a tally.so link encouraging the community to participate in an open-source survey to help understand developers’ motivations and challenges while working with Large Language Models (LLMs). The findings will also be published in an open-sourced format.

- Prompt Accepting JSON Input Query: @infinityexists. asked whether JSON can be passed in a PromptTemplate, with @seththunder suggesting that this can be achieved using a Pydantic Output Parser.

- AI Workflow Tools Discussion: @tohrnii asked about community experiences with aiflows (a toolkit for collaborative AI workflows), comparing it to LangChain. The tool can be found in this GitHub link.

- Vectara’s OCR Feature Announcement: @ofermend announced an optical character recognition (OCR) feature for Vectara that would allow users to extract text from images. Those interested in being part of the early preview were directed to sign up via a provided google form.
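On the question of passing JSON through a prompt template, one practical wrinkle is that literal braces collide with placeholder syntax. The sketch below uses only the standard library and assumes `str.format`-style placeholders. It does not call LangChain or the Pydantic output parser that @seththunder suggested, and the template text and `render` helper are made up for illustration.

```python
import json

# Literal JSON braces are doubled so str.format treats them as plain text;
# only {record} is a real placeholder.
TEMPLATE = (
    'Answer using this schema: {{"name": "string", "age": 0}}\n'
    "Input record: {record}"
)

def render(record: dict) -> str:
    """Fill the placeholder with a JSON-serialized record."""
    # Braces inside the substituted value are not re-parsed by str.format.
    return TEMPLATE.format(record=json.dumps(record))

prompt = render({"name": "Ada", "age": 36})
print(prompt)
```

Serializing the record with `json.dumps` before substitution keeps the JSON valid. Doubling the braces is only needed for JSON written directly in the template text.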

▷ #langserve (1 messages):

- “POST /openai-functions-agent/invoke HTTP/1.1” 422 Unprocessable Entity Error: User @ackeem_wm reported receiving a “422 Unprocessable Entity” error when using Langserve with Pydantic v1, even though the playground works fine with his requests.

▷ #langchain-templates (1 messages):

- Broken Link in Release Notes: User @arborealdaniel_81024 reported a broken link in the release notes which was supposed to lead to a template in question. The URL specified was https://github.com/langchain-ai/langchain/tree/master/templates/rag-chroma-dense-retrieval. The user has flagged this for attention so it can be fixed.

DiscoResearch Discord Summary

- Engaged discussion on evaluation models and benchmarks between users @bjoernp and _jp1_, exploring the probability of rating accuracy and potential methods of creating effective benchmarks, including using a test set of the eval model’s training set.

- Conversations surrounding technical aspects of Mixtral implementations with users @huunguyen, @tcapelle, @goldkoron, @dyngnosis, @bjoernp and @someone13574. Topics ranged from training of linear experts and router retraining to execution of Mixtral on specific GPUs and backpropagation techniques through a topk gate.

- Extensive discussions on Llama-cpp-python issues, debugging strategies and other potential inference methods amongst @rtyax, @bjoernp and @.calytrix. After reports of Llama-cpp-python failing silently, alternative inference engines like VLLM were suggested, leading to the idea of a unified API that includes Ooba. Additionally, different efficient methods for downloading models were shared by @rtyax and @bjoernp.

DiscoResearch Channel Summaries

▷ #disco_judge (6 messages):

- Evaluation Models and Benchmarks Discussion: User @bjoernp and _jp1_ engaged in a discussion about the use of evaluation models and benchmarks. @bjoernp explained that the evaluation model is used to rate other models, demonstrating a positive correlation between actual benchmark score and the rating model score, indicating correct rating with high probability. On the other hand, _jp1_ argued that the evaluation model’s capability should be almost equivalent to measuring MMLU of the evaluation model itself.

- Test Set as Benchmark: _jp1_ suggested that a test set of the eval model training set could be used as the eval model benchmark, while @bjoernp agreed that a held-out test set could indeed be an effective benchmark, especially if the dataset creator is trusted.

- Invitation to Collaborate: _jp1_ extended an invitation for others to work on developing a better English model based on their existing German data eval model.

▷ #mixtral_implementation (8 messages🔥):

- Expert Copies in Training: User @huunguyen questioned whether the experts are copies of an original linear layer that are then trained further, or completely new linear layers trained end to end.

- Running Mixtral on A100 with 40GB: @tcapelle enquired if anyone managed to run Mixtral on an A100 GPU with 40GB of memory. In response, @goldkoron mentioned they run it on a combination of 3090 and 4060ti 16GB GPUs which theoretically equate to 40GB.

- Router Retraining for New Experts: @dyngnosis theorized that the router portion might need to be retrained to properly route tokens to the new expert.

- Backpropagation Through Topk Gate: @bjoernp expressed uncertainty about how backpropagation works through the topk gate, suggesting further study on router outputs could provide insight.

- Training With or Without Topk: @someone13574 wondered whether topk is used during training, or whether a small amount of “leak” is allowed through topk.
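The backpropagation question can be explored with a toy gate. The sketch below implements one common sparse mixture-of-experts formulation: a softmax over the k largest router logits, with every other expert given weight zero. Whether this matches how Mixtral was actually trained is not confirmed in the discussion. The function names and logit values are made up for illustration.

```python
import math

def top_k_gate(logits, k):
    """Softmax over the k largest router logits; other experts get weight 0."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    weights = [0.0] * len(logits)
    for i, value in exps.items():
        weights[i] = value / total
    return weights

def gate_jacobian(weights):
    """d weights[i] / d logits[j], treating the top-k selection as fixed."""
    n = len(weights)
    return [
        [weights[i] * ((1.0 if i == j else 0.0) - weights[j]) for j in range(n)]
        for i in range(n)
    ]

router_logits = [2.0, 0.5, 1.0, -1.0]  # made-up values for four hypothetical experts
weights = top_k_gate(router_logits, k=2)
jacobian = gate_jacobian(weights)

print("gate weights:", weights)
# Derivatives with respect to an unselected expert's logit are all zero.
print("column for expert 1:", [row[1] for row in jacobian])
```

Under this formulation the selection step itself is not differentiated. Gradients reach the router only through the logits of the experts that were picked, so there is no “leak” to the others unless one is added deliberately.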

▷ #benchmark_dev (20 messages🔥):

- LLama-cpp-python issues: @rtyax reported that Llama-cpp-python is failing silently during generate / chat_completion, even with the low level api example code. This behavior was observed after rebuilding llama-cpp-python for mixtral and it’s not clear if the problem is localized. Despite these issues, Oobabooga was reported to work correctly with the same Llama-cpp-python wheel.

- Debugging suggestions: @bjoernp suggested debugging easier models first, like Mistral-7b. He also pointed out the possibility of tweaking thread settings in `fasteval/evaluation/constants.py` to potentially help with the issue.

- Alternative models and inference methods: @bjoernp offered VLLM as an alternative inference engine since it supports `min_p` like Llama-cpp-python. However, @rtyax showed interest in continuing to explore Llama-cpp-python further. Both @.calytrix and @bjoernp advocated for a unified API approach, with @.calytrix specifically mentioning the value of having Ooba as an inference engine for increased flexibility.

- Model downloading techniques: Both @rtyax and @bjoernp shared their preferred methods for downloading models. @rtyax uses `download_snapshot` from Hugging Face, while @bjoernp uses `huggingface-cli download` with the `HF_HUB_ENABLE_HF_TRANSFER=1` option for optimized download speeds. @.calytrix acknowledged the effectiveness of the latter, with recent improvements making it more reliable.

Latent Space Discord Summary

- Discussions covered AI model hosting and pricing. ‘@dimfeld’ compared providers and reported that Anyscale performed worse on Mistral 8x7b than Fireworks and DeepInfra.

- Anyscale’s performance work was then highlighted: ‘@coffeebean6887’ shared a Twitter post showing that Anyscale has acknowledged these performance issues and is working to resolve them.

- A follow-up update on Anyscale’s benchmarking improvements cited 4-5x lower end-to-end latency.

- The intense price competition in AI model hosting/serving, a lesser-known aspect of the AI industry, was also discussed.

- ‘@guardiang’ shared a link to the OpenAI API documentation for the benefit of guild members.

- ‘@swyxio’ briefly mentioned the term ‘Qtransformers’ in the #llm-paper-club channel, without any accompanying context or discussion.

Latent Space Channel Summaries

▷ #ai-general-chat (13 messages🔥):

- Performance of Anyscale on Mistral 8x7b: @dimfeld reported that Anyscale was significantly slower when tried on Mistral 8x7b compared to Fireworks and DeepInfra.

- Performance Enhancement Efforts by Anyscale: In response to the above, @coffeebean6887 shared a Twitter post indicating that Anyscale is aware of the performance issue and they are actively working on improvements.

- Benchmarking Improvements: In a follow-up, @coffeebean6887 noted that a PR indicates that Anyscale has achieved 4-5x lower end-to-end latency in benchmarks.

- Price Competition in Hosting/Serving: @coffeebean6887 and @dimfeld discussed the noticeable price competition around hosting and service providing for AI models.

- Link to OpenAI API Doc: @guardiang posted a link to the new Twitter/OpenAIDevs account on the OpenAI platform.

▷ #llm-paper-club (1 messages):

swyxio: Qtransformers

LLM Perf Enthusiasts AI Discord Summary

- Discussion regarding the usage and performance of the GPT-4 Turbo API in production environments. User @evan_04487 sought clarification on operating the API despite its preview status, and also inquired about any initial intermittent issues which @res6969 assured were non-existent in their experience.

- Inquiry on resources for MLops about scaling synthetic tabular data generation, particularly books and blogs, by user @ayenem.

- @thebaghdaddy queried on the effectiveness of MedPalm2 for kNN purposes compared to their currently used model and GPT4, and planned to run comparative trials soon.

LLM Perf Enthusiasts AI Channel Summaries

▷ #gpt4 (3 messages):

- Using GPT-4 Turbo API in Production: User @evan_04487 raised a question about using the GPT-4 Turbo API in production environments despite its preview status. It was mentioned that while the limit for tokens per minute (TPM) was raised to 600k, doubling the capacity of GPT-4, and requests per minute (RPM) stayed the same as GPT-4’s at 10,000, it still remained technically a preview. @res6969 responded positively, stating that they actively used it and that it performed well. @evan_04487 further asked about the initial intermittent issues with the system, and whether those were resolved.
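As a worked example of how the two quoted limits interact, the sketch below assumes TPM counts tokens per minute and that each request consumes a fixed number of tokens. Both are simplifications, and the 1,500-token request size is a made-up figure. The two limit values are the ones quoted in the discussion.

```python
TPM_LIMIT = 600_000  # tokens per minute, as quoted in the discussion
RPM_LIMIT = 10_000   # requests per minute, as quoted in the discussion

def max_requests_per_minute(tokens_per_request: int) -> int:
    """Requests per minute allowed once both limits are applied."""
    allowed_by_tokens = TPM_LIMIT // tokens_per_request
    return min(RPM_LIMIT, allowed_by_tokens)

# Request size at which both limits are reached together.
break_even_tokens = TPM_LIMIT // RPM_LIMIT
print("break-even tokens per request:", break_even_tokens)

# Hypothetical 1,500-token requests: the token limit binds first.
print("requests per minute at 1,500 tokens:", max_requests_per_minute(1_500))
```

Under these assumptions, any request larger than 60 tokens is constrained by the token limit rather than the request limit. For typical prompt sizes, TPM is therefore the figure that matters.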

▷ #resources (1 messages):

- MLops Resources for Scaling Synthetic Tabular Data Generation: User @ayenem asked if anyone knew of books or blogs on the subject of MLops specifically discussing scaling synthetic tabular data generation for production.

▷ #prompting (1 messages):

- Usage and Comparison of Models: @thebaghdaddy is currently using an unspecified model and finds it satisfactory. They haven’t used MedPalm2 so they are unable to compare the two. They are also pondering whether MedPalm2 could provide CoT more effectively for kNN purposes than GPT4, and plan to test this soon.

Skunkworks AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- LLM Developers Survey: @jay_wooow is conducting an open-source survey aimed at understanding the motivations, challenges, and tool preferences of those building with LLMs (Large Language Models). He wants to gather data that could help the wider developer community. The survey results, including the raw data, will be published when the target number of participants is met. The objective is to influence product development and to provide useful data for other AI developers.

YAIG (a16z Infra) Discord Summary

Only 1 channel had activity, so no need to summarize…

- DC Optimization for AI workloads: A user observed that most existing Data Centers (DCs) are not well optimized for AI workloads because the economic factors differ.

- Inference at Edge and Training: The user found the concept of performing inference at the edge and training where power is cheaper to be an interesting approach.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Engineer Foundation Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.