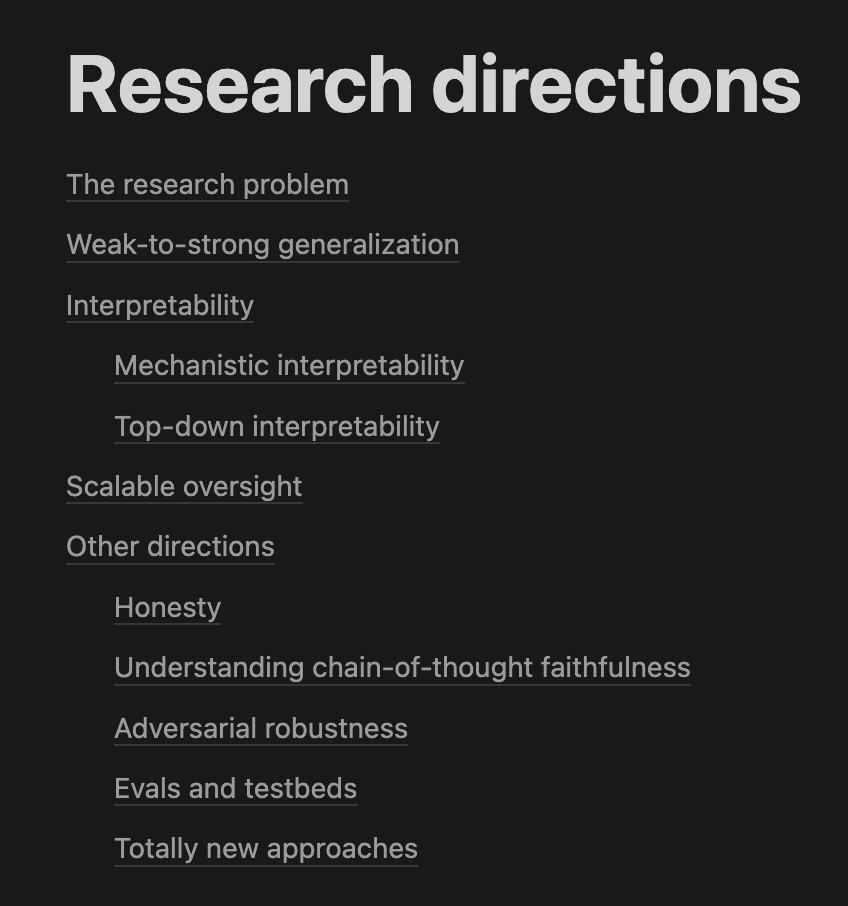

The Notion page has research directions to explore in more detail, with many good papers.

[TOC]

OpenAI Discord Summary

- OpenAI introduced a new OpenAI Developers account on Twitter, @OpenAIDevs, and encouraged community members to follow it for updates.

- Discussions of the differences between Google's Gemini and Bard chatbots, with one user reporting that the bots can read each other's instructions and offer newer, distinct perspectives on solving coding problems.

- Various issues with OpenAI's services were highlighted, from problems with the OpenAI website to features like archiving and an unexpected phishing captcha. Conversations also covered GPT customization and dissatisfaction with the business relationship between OpenAI and Microsoft.

- A heated debate arose over GPT-4's performance and functionality. Users reported problems such as erratic responses, the service being inaccessible, and capacity limits, and there was speculation about the potential release of an updated version, GPT-4.5.

- Users shared their difficulties with custom GPTs, their struggles attaching PDF files, and the potential of using DALL·E 3 in GPT for style imitation. It was also reported that an image recognition bug had been resolved.

- Prompt engineering discussions covered the difficulty of getting the AI to follow detailed guidelines for modifying code and to rewrite original text into a form suitable for reading aloud. Convo-lang's new JSON mode support was also noted.

- The API discussions covered the newly introduced JSON mode support in Convo-lang, a rule for producing Venn diagrams, GPT-4 stripping comments and inserting placeholder comments when modifying large scripts, and the challenge of making human-written text sound natural for TTS in informative ‘talking head’ videos, especially in the medical field.

OpenAI Channel Summaries

▷ #annnouncements (1 messages):

- Announcement of New OpenAI Developers Twitter Handle: @abdubs introduced the new OpenAI Developers handle on Twitter, @OpenAIDevs, and encouraged users to follow it for updates.

▷ #ai-discussions (91 messages🔥🔥):

- Gemini vs Bard: @offline discusses their experience using Google's Gemini and Bard chatbots. They mention that the bots can read each other's instructions, offer distinct perspectives on coding issues, and provide more recent information than GPT. @muyfashionista says they have not noticed significant differences between the two.

- Use of AI for Illegal Activities: @sylviajade raises a concern about ChatGPT being used to generate malware and asks whether any tracking or monitoring of such use is in place. @lugui clarifies that violating the Terms of Service (ToS), which prohibit illegal activities, can result in an OpenAI account ban.

- Customizing GPTs: @rudish, @elektronisade, and @aznironman discuss how to customize GPT for various purposes, including Discord bots and an offline chatbot. @webhead recommends Llama 2-based models for most cases, citing their favorable licensing and the wide range of available models.

- Concerns with GPTs and Alternatives: @stevenramenby and @jerrybobs share their dissatisfaction with GPTs, citing rambling output and plugin failures. @jerrybobs suggests looking into AI Assistants, which are harder to set up but reportedly more reliable.

- Perplexity Chatbot: @chief_executive shares their positive experience with the Perplexity chatbot, praising its real-time browsing for information gathering. They find Perplexity superior to both ChatGPT's browsing tool and Bing AI, and note that its Plus version offers 600 uses a day for $20 a month.

▷ #openai-chatter (544 messages🔥🔥🔥):

- Performance Issues and Concerns with GPT-4: @koll0212, @stevenramenby and @lindeberg02 reported problems with GPT-4, describing it as "acting up", responding with random words and emojis, or being unusable altogether; some even cancelled their subscriptions over it. @stevenramenby shared several chat transcripts showing the issues, which were discussed with @offline, @rjkmelb, and @solbus. For @stevenramenby the problem persisted across custom agents, prompting further requests for help in the relevant channels.

- ChatGPT Downtime: @zappymonster, @7877, @andrew.lol, @gardener_windson, @acaba1806, @bricky___, @smokzz and @thepitviper reported ChatGPT being down, with the site returning 502 errors, timing out, or failing to open.

- Usage Cap and User Interface Concerns: @lindeberg02, @openheroes, @solbus and @kyoei discussed the GPT-4 usage cap for ChatGPT Plus. @solbus clarified that the cap is currently 40 messages per 3 hours for base GPT-4 and 25 messages per 3 hours for custom GPTs. @lindeberg02 complained about restrictions after paying and asked for more information about the cap.

- Expectations and Speculation on a GPT-4.5 Release: @realmunknown, @DawidM, @realgavok, @mrcrack_, and @lugui speculated about a potential update, specifically GPT-4.5. The speculation was based on rumors and leaks, with @DawidM noting that a Reddit user was one source of information about the possible model update.

- GPT Performance and Function Concerns: @skrrt8227, @you.wish, @afterst0rm, @kyper, and @bad3r criticized GPT's perceived limitations, including an inability to format text effectively, inconsistent output, and the impact of the usage cap. @bad3r specifically criticized the platform's server capacity, arguing that ChatGPT Plus users should be allocated more of it.

▷ #openai-questions (251 messages🔥🔥):

- Issues with OpenAI Services: Several users reported problems with OpenAI's services, ranging from the website itself (@jcrabtree410, @mohammad_soqar101, @l3xp3rt, @kamikolart, @dsssssssss and @cosmosfractal) to specific features such as archiving (@nickiee and @xhls) and a phishing captcha that popped up despite logging in from a familiar device (@vassiou).

- Chatbot Discussions: Multiple users sought help with GPT usage and customization. @stevenramenby, @bionicle1337, and .cybersenpai raised questions about custom GPTs, plugin behavior, and fine-tuning, and received clarifications from @elektronisade and @satanhashtag, among others.

- Disputes Around OpenAI and Microsoft: @bionicle1337 expressed dissatisfaction with the business relationship between OpenAI and Microsoft, alleging unfair business practices and antitrust violations. Community members such as @elektronisade and @Rock offered different perspectives, but the discussion remained unresolved.

- Product Features and Improvements: @stevenramenby reported anomalies in a custom GPT's output, and @jtensetti discussed a name toggle issue with the Plus subscription. Along the same lines, .staycurious asked about setting up automatic motivational emails, and @jah777 asked about integrating a ChatGPT bot into Discord. Other members offered relevant suggestions.

- Multilingual Discussions: Several users conversed in other languages and were reminded by @satanhashtag to use English for broader communication.

▷ #gpt-4-discussions (16 messages🔥):

- Chat Limit Issues: @zeriouszhit was concerned that failed requests caused by network errors or bugs on OpenAI's end still count toward the chat limit. They described a case where their images could not be processed, yet the failed attempts still reduced their remaining chat allowance.

- Image Recognition Bug Fix: @solbus mentioned that an image recognition bug had been resolved, according to reports in a specific Discord thread.

- Custom GPT Disappearances: @ps5671 and @pietman talked about custom GPTs disappearing or becoming uneditable; the issues seem to have resolved eventually.

- Generating Detailed Technical Analysis Charts: @consciousness3783 asked whether GPT-4 can generate technical analysis charts with details such as price action indicators, using both images and text within a single prompt.

- Issues with PDF Files: @jazy_87295 had difficulty attaching a PDF file in GPT and asked whether converting it to TXT format is necessary.

- Style Imitation with DALL·E 3: @xchronos shared an idea of using DALL·E 3 in GPT to create images that imitate the style of other provided images.

▷ #prompt-engineering (22 messages🔥):

- Convo-Lang Update: @iyioio reported that Convo-lang now supports JSON mode. They provided detailed examples of defining structs and using JSON for various data formats, including its use with Vision and function calling. More details are in the NPM docs for Convo-lang.

- Instructions for Chatbots: @offline suggested prefixing instructions with numbers so they are easy to reference, and stressing that the bot should only produce Venn diagrams with 3 circles unless the user specifies otherwise.

- Script Modification Guidelines: @joeyjoejoeshabadoo42 is struggling to get the AI to follow their detailed guidelines for modifying code once the code reaches about 110 lines or more. The rules include preserving all code comments, changing as little code as possible, clearly explaining each modification, honoring the code's history, and always returning the full modified script without placeholder comments.

- Natural Language Processing: @.ursium described difficulty getting the AI to rewrite original text into a form suitable for reading aloud, specifically for medical videos. The AI sometimes changes nuances in the text, occasionally altering the meaning of important information.

- NLP Responses: @world_designer suggested telling the AI about the context and nature of the script so it can interpret it more accurately, which @.ursium agreed to try.
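
The code-modification rules above also lend themselves to a mechanical check on each reply. The sketch below is one possible approach, not something shared in the channel: it compares full-line Python comments between the original and modified script and flags lines matching a few placeholder patterns. The PLACEHOLDER_PATTERNS list is a hypothetical assumption, since real placeholder phrasing varies.

```python
import re
from typing import Dict, List

# Hypothetical phrasings a model might emit instead of returning the real code.
PLACEHOLDER_PATTERNS = [
    re.compile(r"#\s*\.\.\."),  # e.g. "# ..."
    re.compile(r"#.*\b(rest of|remaining) (the )?code\b", re.IGNORECASE),
    re.compile(r"#.*\bunchanged\b", re.IGNORECASE),
]


def comment_lines(source: str) -> List[str]:
    """Return full-line '#' comments; inline trailing comments are ignored."""
    return [line.strip() for line in source.splitlines() if line.strip().startswith("#")]


def check_modification(original: str, modified: str) -> Dict[str, List[str]]:
    """Report comments dropped from `original` and placeholder comments added in `modified`."""
    original_comments = comment_lines(original)
    kept = set(comment_lines(modified))
    missing = [c for c in original_comments if c not in kept]
    placeholders = [
        line.strip()
        for line in modified.splitlines()
        if any(p.search(line) for p in PLACEHOLDER_PATTERNS)
        and line.strip() not in original_comments
    ]
    return {"missing_comments": missing, "placeholders": placeholders}
```

A non-empty result signals that the reply broke the "preserve comments" or "no placeholders" rule and can be sent back with the specific lines quoted.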

▷ #api-discussions (22 messages🔥):

- JSON Mode Support in Convo-lang: @iyioio introduced JSON mode support in Convo-lang, with examples covering both simple and complex scenarios, such as listing the planets in the solar system and describing people in an image. More details are in the NPM documentation for convo-lang.

- Rule for Producing Venn Diagrams: @offline stated a rule that generated Venn diagrams should have only 3 circles unless the user specifies otherwise.

- Custom Instructions for Code Modifications: @joeyjoejoeshabadoo42 has been running into problems with large Python scripts when using custom instructions with GPT-4 in the web chat UI. Once a script reaches around 110 lines, the AI stops following the instructions: it inserts placeholder comments and strips existing code comments.

- Making Text Sound Natural for ‘Talking Head’ Videos: @.ursium is trying to make human-written text sound more natural for TTS in informative ‘talking head’ videos in the medical field. When asked to simplify the text or remove hard-to-pronounce words, the AI rewrites it and replaces or removes critical qualifiers such as ‘may’ or ‘sometimes’, changing the meaning.

- TTS AI Model vs ChatGPT: In a conversation with @.ursium, @world_designer initially proposed using a separate TTS model. After @.ursium clarified that the task is adapting written text for spoken delivery rather than generating speech, @world_designer suggested explaining the script's context to ChatGPT.
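
Because the core risk in the TTS rewrite is losing qualifiers like ‘may’ or ‘sometimes’, one cheap safeguard is to diff those words between the original and the rewrite. This is a sketch of that idea under stated assumptions, not a technique from the discussion; the HEDGE_WORDS set is a hypothetical, non-exhaustive example that would need tailoring to the medical content.

```python
import re
from collections import Counter
from typing import Dict

# Hypothetical, non-exhaustive qualifiers whose loss can change a medical claim.
HEDGE_WORDS = {"may", "might", "can", "could", "sometimes", "often", "usually", "rarely"}


def hedge_counts(text: str) -> Counter:
    """Count hedge words in `text`, case-insensitively, matching whole words only."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w in HEDGE_WORDS)


def dropped_hedges(original: str, rewritten: str) -> Dict[str, int]:
    """Map each hedge word that occurs less often after rewriting to the number lost."""
    before, after = hedge_counts(original), hedge_counts(rewritten)
    return {w: before[w] - after[w] for w in before if before[w] > after[w]}
```

Any non-empty result marks a rewrite to reject or re-prompt, for example by listing the dropped words and asking the model to restore them.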

OpenAccess AI Collective (axolotl) Discord Summary

- Extensive dialogue on AI performance and training strategies involving PHI-2 models and A6000 GPUs. Key conversations covered potential overalignment of AI models, strategies for securing free compute, handling CUDA out-of-memory errors with techniques such as reducing the micro batch size, and the risk of overtraining during fine-tuning.

- A deep dive into DPO training was started by @faldore, who shared a link to RL/DPO pull request #935 on the OpenAccess AI Collective Axolotl repository.

- Significant discussions on template and token configuration, such as the logic behind the LLAMA2 SeparatorStyle, which appeared to skip index 0 of the messages and drop any instructions, and an issue raised by @Aleksa Gordić about unexpected spaces added by the Hugging Face tokenizer.

- Users also shared their experiences with end-of-sequence (EOS) token generation issues, when to load the ChatML template at inference time for a QLoRA-trained model, and finding the right configuration format for training.

- Suggested fixes were often accompanied by pull requests, such as PR #952 submitted by @hamelh to fix the Llama templating issue.

- The guild discussed datasets in detail, including the token-to-TB ratio of training data, hardware requirements, and training duration. There was also a request for function calling datasets, with the Glaive Function Calling v2 dataset shared as a potential resource.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (85 messages🔥🔥):

- AI Overalignment and Performance: @noobmaster29 and @faldore discussed whether their AI models might be overaligned. @faldore shared a humorous system prompt he used for his model, and the group discussed what might happen if an AI realized that the incentives it had been promised were false.

- AI Training: @faldore discussed his plans to train a Phi-2 model. @le_mess asked for advice on getting free compute, and @faldore suggested applying to Microsoft's Level 3 startup programme.

- Fine-tuning and OOM Issues: @mihai4256 hit CUDA out-of-memory errors while using DeepSpeed ZeRO-3 to fine-tune a Yi model on 8 x A100 (8 × 80 GB); @nruaif suggested reducing the micro batch size and increasing the gradient accumulation steps. @dangfutures also ran out of memory while fine-tuning the full model.

- DPO Training and Git Repo: The users discussed DPO training, with @faldore sharing a link to RL/DPO pull request #935 on the OpenAccess AI Collective Axolotl repository.

- Troubleshooting Issues: @matts9903 and @dangfutures reported issues when saving checkpoints and after one epoch of training, and received troubleshooting suggestions from @caseus_ and @dangfutures.
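
The fix @nruaif suggested works because the optimizer cares about the effective batch size. That size stays the same when the micro batch shrinks and accumulation grows by the same factor, while the activation memory each GPU holds at once goes down. The minimal arithmetic sketch below assumes plain data parallelism across the GPUs. In an Axolotl config the corresponding keys are micro_batch_size and gradient_accumulation_steps. The example numbers are hypothetical, not @mihai4256's actual settings.

```python
def effective_batch_size(micro_batch_size: int, grad_accum_steps: int, num_gpus: int) -> int:
    """Samples contributing to each optimizer step under data-parallel training."""
    return micro_batch_size * grad_accum_steps * num_gpus


def rebalance(micro_batch_size: int, grad_accum_steps: int, factor: int = 2) -> tuple:
    """Shrink the micro batch and grow accumulation by `factor`.

    The effective batch size is unchanged, but fewer samples' activations
    sit in GPU memory at the same time, which is what relieves OOM errors.
    """
    if micro_batch_size % factor != 0:
        raise ValueError("micro_batch_size must be divisible by factor")
    return micro_batch_size // factor, grad_accum_steps * factor
```

For example, 8 GPUs with a micro batch of 4 and 2 accumulation steps give 64 samples per step; rebalancing to a micro batch of 2 with 4 accumulation steps keeps that at 64 at the cost of more forward/backward passes per step.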

▷ #axolotl-dev (111 messages🔥🔥):

- Fine-tuning and Overfitting: @caseus_, @faldore, @noobmaster29, and @nruaif discussed how much full fine-tuning and QLoRA (Quantized Low-Rank Adaptation) training to run on A6000s with PHI-2 models, which raised concerns about overtraining. Users suggested trying both full fine-tuning and QLoRA first, then adjusting if the model begins to overfit.

- Optimization Concerns on Mixtral: @casper_ai shared his unsuccessful attempt to apply the optimization suggestions from unsloth.ai. He is waiting for their potential pull request (PR) to check whether the improvements hold up, especially for Mixtral models, where memory is crucial.

- Llama2 Templating Issue: @Aleksa Gordić raised an issue with the logic behind airoboros, llama-2, and other templates that rely on SeparatorStyle.LLAMA2: it appears to skip index 0 of the messages, dropping any instructions. He proposed yielding index 0 together with the system prompt. The discussion then moved on to more robust solutions, test cases, and other Llama 2 issues such as prompt assembly.

- Fix for Llama Templating Issue: @caseus_ highlighted a PR opened by @hamelh to fix the Llama templating issue. PR #952 addresses errors in how EOS/BOS tokens are applied, among other issues.

- Token Space and Decoding Issues: @Aleksa Gordić identified unexpected spaces being added by the Hugging Face tokenizer. This sparked a discussion about the complexities of token manipulation, with @hamelh suggesting Hugging Face chat templates as the "source of truth" for resolving such issues.
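
To make the index-0 problem concrete, here is a minimal string-level sketch of Llama 2 chat prompt assembly in which the first user message is merged with the system prompt rather than skipped, as @Aleksa Gordić proposed. It is not Axolotl's code or the contents of PR #952. Real pipelines usually add <s> and </s> as special token IDs during tokenization rather than as literal text, which is also where stray-space issues like the one above can creep in.

```python
from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(system: str, turns: List[Tuple[str, Optional[str]]]) -> str:
    """Assemble a Llama 2 chat prompt string.

    `turns` holds (user_message, assistant_reply) pairs; the final reply may be
    None when the model is expected to generate it.
    """
    parts = []
    for idx, (user, reply) in enumerate(turns):
        if idx == 0 and system:
            # Index 0 is folded into the system block instead of being dropped.
            user = f"{B_SYS}{system}{E_SYS}{user}"
        segment = f"<s>{B_INST} {user.strip()} {E_INST}"
        if reply is not None:
            segment += f" {reply.strip()} </s>"
        parts.append(segment)
    return "".join(parts)
```

A regression test in the spirit of the thread is simply to assert that the first user message appears in the assembled prompt.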

▷ #general-help (79 messages🔥🔥):

- Discussion on Special Tokens and Configuration: @noobmaster29 described a problem with their models not generating an end-of-sequence (EOS) token, so outputs continued up to the token limit. They shared the configuration settings they had tried, including different ways of setting the EOS token, and asked others for advice.

- Running QLoRA with a ChatML Template: @noobmaster29 was unsure when to load the ChatML template at inference time. @caseus_ confirmed that the template should be loaded in that format.

- Configuration Format for Training Models: There was also discussion of which configuration format to use when training. @noobmaster29 and @self.1 were unsure of the correct token configuration for running prompts in the ChatML format.

- Inference Issues: @noobmaster29 reported that with a particular conversational setting at inference time, the model did not follow the ChatML format. @nanobitz suggested checking the full output using the preprocess CLI with --debug.

- Links of Interest: @noobmaster29 shared a link to a Hugging Face model whose configuration worked well with ChatML, but could not reproduce the same results when replicating it.
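
For reference, ChatML wraps every message as <|im_start|>{role}, a newline, the content, then <|im_end|>. The sketch below renders that layout and, at inference time, opens an empty assistant turn for the model to complete. It also checks whether a training sample ends with the stop marker. Treating <|im_end|> as the EOS/stop token is an assumption that matches many ChatML fine-tunes, not necessarily @noobmaster29's config. If the marker the model learns to emit differs from the token generation stops on, one likely symptom is output that runs to the token limit.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = False) -> str:
    """Render messages as '<|im_start|>{role}\\n{content}<|im_end|>\\n' blocks."""
    out = "".join(f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages)
    if add_generation_prompt:
        # At inference time, open an assistant turn for the model to complete.
        out += "<|im_start|>assistant\n"
    return out


def ends_with_stop(sample: str, stop_token: str = "<|im_end|>") -> bool:
    """True if a training sample closes with the stop marker (assumed to be the EOS token)."""
    return sample.rstrip("\n").endswith(stop_token)
```

Running ends_with_stop over a preprocessed dataset is a quick way to spot samples that never teach the model to stop.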

▷ #datasets (33 messages🔥):

- Dataset Sizes and Training Metrics: @natefyi_30842, @noobmaster29 and @nanobitz discussed the token-to-TB ratio of training data and the time and hardware needed to train models, referring to the PHI-2 and OPT models. @natefyi_30842 cleared up a misunderstanding about the time and GPUs required to train different models (PHI-2 card, OPT-125m card).

- Training Smaller Models: @noobmaster29 suggested that models in the hundred million token range might be feasible for individual efforts, linking to the OPT model on Hugging Face.

- Enquiry about Function Calling Datasets: @le_mess asked for recommendations on function calling datasets and shared the Glaive Function Calling v2 dataset and a leaderboard for function calling. @bjoernp also showed interest.
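
The back-of-the-envelope arithmetic behind such estimates can be sketched as follows. Both constants are rules of thumb rather than figures from the discussion: roughly 4 bytes of English text per BPE token, and training compute of about 6 × parameters × tokens FLOPs for a dense transformer. The peak throughput and utilization in the example are hypothetical inputs.

```python
def tokens_in_corpus(num_bytes: float, bytes_per_token: float = 4.0) -> float:
    """Rough token count for a text corpus; bytes_per_token varies by tokenizer and language."""
    return num_bytes / bytes_per_token


def training_flops(num_params: float, num_tokens: float) -> float:
    """Common ~6 * N * D approximation of dense transformer training compute."""
    return 6.0 * num_params * num_tokens


def gpu_hours(total_flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """GPU-hours needed at a given fraction of peak throughput."""
    return total_flops / (peak_flops_per_gpu * utilization) / 3600.0


if __name__ == "__main__":
    tokens = tokens_in_corpus(1e12)          # 1 TB of text -> ~2.5e11 tokens
    flops = training_flops(125e6, tokens)    # a 125M-parameter model, one pass over that text
    print(f"{tokens:.2e} tokens, {flops:.2e} FLOPs")
    print(f"{gpu_hours(flops, 312e12, 0.4):.0f} GPU-hours at an assumed 312 TFLOP/s peak, 40% utilization")
```

Published model cards remain the better source for actual training budgets; the formula is mainly useful for checking whether a proposed run is in the right order of magnitude.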

Nous Research AI Discord Summary

- In-depth discussions on AI model training strategies and dataset handling. Much of the conversation centered on potential contamination of the MetaMath dataset, with proposals for automatic contamination checks despite cost concerns. Members also floated strategies such as Neural Architecture Search (NAS) over merge parameters as an alternative to focusing excessively on training models for benchmarks.

- Speculation about upcoming models, including anticipation of the capabilities of Llama 3 and Galactica 2.0. Coding-specialized models drew particular attention, notably Dolphin 2.5 Mixtral 8x7b, which was noted for its claimed proficiency at coding.

- Discussion of creating a decoupled and continuously improving world model, raised by @maxwellandrews, who is considering a high-level approach to it.

- A shared interest in high-performance workstations for purposes beyond gaming, such as 3D rendering and machine learning. Shared links included a high-performance workstation named “Big Boss” and a NixOS forums discussion on managing Python packages with Nix.

- Discussions of AI model architectures and tokenization, including the Based architecture, MorphPiece as a potential BPE replacement, and Phi-2's performance. Disappointment was expressed that Phi-2 did not patch the holes left by the earlier Phi-1/1.5 models. Shared links included a tweet on Based, a paper on MorphPiece, an OpenAI research paper, and a blog post on FunSearch.

- Model performance updates from users: @gabriel_syme reported the Solar model's average score and later suggested fine-tuning and slerping it, and @bevvy reported that the RedPajama 3B model was working, albeit slowly.

- Criticism of GPT-4 Turbo's pricing, with general agreement that the costs are too high even for high-value tasks.

- Questions and pointers in the ask-about-llms channel about Solar 10's preset, alternatives to MLC with a suggestion of an Android/iOS setup, and a reference to LLM Farm.

- Lastly, a personal update from @gezegen, who was recovering from the flu and had missed some conversations.

Nous Research AI Channel Summaries

▷ #ctx-length-research (1 messages):

- Decoupled and Continuously Improving World Model: @maxwellandrews is contemplating a high-level approach to creating a decoupled and continuously improving world model.

▷ #off-topic (27 messages🔥):

- Graphics Card Discussion: @everyoneisgross described issues with their graphics card, specifically black elements and checkered renders, and considered the limitations of their Colorful 3060 card.

- High-performance Workstation: @skadeskoten shared an ExtremeTech link about “Big Boss”, a high-performance workstation from Germany with 7 RTX 4090 GPUs and a 64-core Threadripper Pro 5995WX. The workstation is designed for 3D rendering and machine learning rather than gaming.

- Model Finetuning: @euclaise stated that their GPT-7b models can be finetuned on a single GeForce 3090 using their custom optimizer, and speculated that the Mixtral model could also fit on the “Big Boss” setup.

- NixOS and Nix Package Management: @euclaise asked whether anyone in the channel uses NixOS and manages Python packages with Nix, and shared a link to a discussion on the NixOS forums.

- Training Models for a Specific Language: @skadeskoten expressed a desire to train language models for Norwegian, specifically medical Norwegian. @euclaise responded that such a task would take a long time.

▷ #benchmarks-log (2 messages):

- Solar Model Performance: @gabriel_syme reported that the Solar model averages 74, without specifying what the metric measures.

- Finetuning and Slerping the Solar Model: In a subsequent message, @gabriel_syme suggested finetuning and slerping the Solar model.

▷ #interesting-links (36 messages🔥):

- Discussion on Phi-2 Performance: @bevvy and @georgejrjrjr discussed the performance of Phi-2. @georgejrjrjr expressed disappointment that Phi-2 did not patch the holes left by the previous Phi-1/1.5 models and speculated that it might actually be a case of Phi-CTNL.

- Based Model Architecture & MorphPiece Tokenization: The discussion covered new model architectures including Based, with @nods sharing a tweet by Simran Arora detailing its simplicity and efficiency. @georgejrjrjr noted how rapidly new architectures are appearing and brought up MorphPiece as a potential replacement for BPE (Byte Pair Encoding), sharing a paper on the subject.

- Fine-tuning Dataset Creation: @atgctg raised the creation of instruction datasets for fine-tuning as an important task running parallel to pre-training data curation.

- Pricing of GPT-4 Turbo: @gabriel_syme and @giftedgummybee criticized the high pricing of GPT-4 Turbo, judging it unjustified even for high-value tasks.

- Weak-to-Strong Generalization Paper & FunSearch: @giftedgummybee shared a research paper by OpenAI, and @coffeebean6887 shared the GitHub repository with its code. @nods shared a blog post on FunSearch, which sparked discussion about its applications and novelty.
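
Since MorphPiece is pitched as a replacement for BPE, a minimal sketch of classic BPE training may help show what it would replace: start from characters and repeatedly merge the most frequent adjacent pair. This is the textbook algorithm in simplified form (no word frequencies, end-of-word markers, or byte-level fallback), not MorphPiece and not any production tokenizer.

```python
from collections import Counter
from typing import List, Optional, Tuple

Pair = Tuple[str, str]


def most_frequent_pair(words: List[List[str]]) -> Optional[Pair]:
    """Most common adjacent symbol pair; ties go to the pair seen first."""
    pairs: Counter = Counter()
    for symbols in words:
        pairs.update(zip(symbols, symbols[1:]))
    if not pairs:
        return None
    return max(pairs, key=pairs.__getitem__)


def merge_pair(words: List[List[str]], pair: Pair) -> List[List[str]]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = []
    for symbols in words:
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged.append(out)
    return merged


def learn_bpe(corpus: List[str], num_merges: int) -> Tuple[List[Pair], List[List[str]]]:
    """Learn up to `num_merges` merge rules from a list of words."""
    words = [list(word) for word in corpus]
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words
```

On ["low", "lower", "lowest"], two merges yield the rules ("l", "o") then ("lo", "w"), purely from frequency. A morphology-aware scheme like MorphPiece instead aims to split along linguistic units such as stems and suffixes.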

▷ #general (139 messages🔥🔥):

- Discussion on MetaMath Dataset: There was significant debate about potential contamination of the MetaMath dataset. @bjoernp stressed the importance of avoiding contaminated data in model training, and @mihai4256 confirmed likely contamination after running a test that scored 0.96, where anything above 0.85 indicates a high likelihood of contamination. Members also discussed automatically checking for contamination when models are submitted, though cost was raised as a concern.

- AI Model Training: Various users discussed training strategies, with @nonameusr expressing concern that too much focus is placed on training models for benchmarks. @euclaise suggested applying Neural Architecture Search (NAS) over merge parameters, prompting further discussion of model merging.

- Upcoming Models: Users such as @Error.PDF and @gabriel_syme speculated about the release and capabilities of future models like Llama 3 and Galactica 2.0. No release dates or features were confirmed.

- Focus on Coding: @nonameusr and @metaldragon01 discussed coding-specialized models, focusing on the recently released Dolphin 2.5 Mixtral 8x7b model, which claims to be very good at coding.

- Model Execution: .beowulfbr mentioned QuIP models, and the discussion covered how to run inference on them effectively. @decruz mentioned running OpenHermes on a phone.
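
The summary does not say which test produced the 0.96 score. The following sketch therefore illustrates only the general idea behind automated contamination checks, using a simpler, swapped-in heuristic: the fraction of a benchmark item's word n-grams that also appear in the training data. Its scores are not comparable to the 0.85 threshold mentioned above. The choice of n=8 and whitespace tokenization are assumptions. A real check would also need an index over the full training set rather than a single string.

```python
from typing import Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Lowercased, whitespace-tokenized word n-grams."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_score(train_text: str, test_text: str, n: int = 8) -> float:
    """Fraction of the test item's n-grams that also occur in the training text."""
    test_grams = ngrams(test_text, n)
    if not test_grams:
        return 0.0  # test item shorter than n words: nothing to compare
    return len(test_grams & ngrams(train_text, n)) / len(test_grams)
```

Any threshold on this score would have to be calibrated on known-clean and known-leaked examples before it could gate submissions.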


▷ #ask-about-llms (6 messages):

- RedPajama 3B Performance: @bevvy reported that RedPajama 3B was working, albeit slowly: prefill was slow and generation ran at 3 tokens/s on a Pixel 7.

- Question about Solar 10 Preset: @agcobra1 asked which preset Solar 10 uses. No responses appear in the given message history.

- Alternative to MLC: @gezegen asked whether there is an alternative to MLC and suggested setting up on Android/iOS.

- Reference to LLM Farm: @orabazes mentioned LLM Farm, though the context isn't clear from the given message history.

- User Illness: @gezegen noted that they were recovering from the flu and may have missed some earlier conversations.

HuggingFace Discord Summary

- NeurIPS Meetup Outreach & LSTM Discussion: @.yosun invited last-minute attendees to a NeurIPS meetup and an AI3D breakfast. Additionally, @vipitis explained how LSTMs work and shared a link to the PyTorch LSTM documentation.

- Chatbots & VSCode Plugins Talk: @criticaldevx, @jeffry4754, @shrap42 and @ahmad3794 briefly discussed customizing the AI name in LLAMA chatbots, with tips including fine-tuning and replacing the name post-generation using a script, while @funapple asked about VSCode plugins for local LLMs.

- Deep Dive into RNNs, LSTMs & GRUs: @nerdimo gave a thorough explanation of recurrent neural network concepts, along with components like LSTMs and GRUs, and discussed the resource they are learning from: the Andrew Ng Machine Learning specialization. .memoshi contributed an arXiv paper on the SwitchHead method.

- Space-Themed Twitter Account & Inclusive AI: @boggdanu_ shared their astronomy-focused Twitter account, @madpapayas, and @sk21_ discussed inclusive AI design, citing a post by Dr Maria Panagiotidi.

- AI-Related Projects & Tutorials: Users shared their AI projects and asked for feedback. @marielandryceo presented AI methodologies, @rwitz_ introduced a merge of two AI models, and @appstormer_25583 unveiled an AI that analyzes logos. A question about fine-tuning was resolved.

- MagVIT2 Presentation & New Reading Material Suggestions: @chad_in_the_house presented MagVIT2 and shared associated resources, @memehunter7209 suggested Mathematics for Machine Learning as a potential reading group book, and @netskink.1 invited members to participate in their dataset project.

- Image-Conditioned Diffusion Model & Other Discussions: @mr.frog2014 shared insights from their experiments with image-conditioned diffusion models, @nixon_88316 sought information about stablecode, and @sebys7 asked about parameter tweaks in SD-X4-Upscaler.

- PyTorch Model Training & Hierarchical Text Classification: @merve3234 provided instructions on training transformer models with PyTorch, and @stroggoz discussed hierarchical text classification using a separate model for each level. Also, @ppros666 sought advice on fine-tuning an LLM for the first time and highlighted a guide on the topic.

HuggingFace Discord Channel Summaries

▷ #general (82 messages🔥🔥):

- NeurIPS Meetup: @.yosun is calling for last-minute attendees to a NeurIPS meetup and an AI3D breakfast the next day, and shared a link to a Twitter post.

- Working with LSTM: @vipitis explained how LSTMs work, including multi-layer LSTMs, and suggested the PyTorch LSTM documentation for understanding the implementation.

- Customizing AI Name in LLAMA: @criticaldevx, @jeffry4754, @shrap42, and @ahmad3794 discussed how to change the AI's name in LLAMA chatbots. Suggestions included fine-tuning and replacing the name post-generation with a script.

- Training Trouble: @vishyouluck raised an issue with fine-tuning a model using autotrain_advanced, suspecting a problem with the train.csv file.

- VSCode Plugins: @funapple asked for recommendations on VSCode plugins that work well with local large language models (LLMs) for real-time code suggestions.
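
The post-generation approach can be as simple as a whole-word substitution on the model's output. A minimal sketch, where the names are hypothetical placeholders. Word boundaries still match inside hyphenated forms such as "Llama-2", so a real script may need a stricter pattern.

```python
import re


def rename_assistant(text: str, old_name: str, new_name: str) -> str:
    """Replace whole-word occurrences of the model's default name in generated text."""
    pattern = re.compile(rf"\b{re.escape(old_name)}\b")
    # A function replacement avoids re.sub interpreting backslashes in new_name.
    return pattern.sub(lambda _match: new_name, text)
```

This is cheaper than fine-tuning but only changes the surface text: the model still "thinks" of itself by its original name, so phrasing around the name may not fit the new persona.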

▷ #today-im-learning (6 messages):

- Understanding Recurrent Neural Networks: @nerdimo delved into the concepts behind recurrent neural networks (RNNs), including Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) cells. They explained that LSTMs and GRUs use gates to update their internal memory and retain relevant information, which helps them make accurate predictions and mitigates the vanishing gradient problem.

- In further discussion, @nerdimo described bidirectional RNNs, where information flows both from left to right and from right to left, as well as the power (and computational cost) of stacking RNN layers into a deep RNN.

- @merve3234 asked whether there is a significant performance difference between GRUs and LSTMs, given that GRUs are generally more efficient to train, and what resources @nerdimo is learning from.

- In response, @nerdimo said they are learning from the Andrew Ng Machine Learning specialization, and shared their intuition that LSTMs would be the better choice because of their additional filtering and parameters.

- SwitchHead Method for Transformers: .memoshi shared an arXiv paper on the SwitchHead method, which claims to reduce both the compute and memory requirements of self-attention layers in Transformers, achieving practical speedups without sacrificing language modeling performance.
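
Two points above can be made concrete in plain Python: the gating @nerdimo described, and @merve3234's point about GRU efficiency. The LSTM step uses a single hidden unit with hypothetical scalar weights. The parameter-count helper follows the layout of PyTorch's nn.LSTM and nn.GRU, which keep two bias vectors per gate block. Under that layout a GRU layer has three gate blocks to the LSTM's four, hence roughly 25% fewer parameters.

```python
import math
from typing import Dict, Tuple

Weights = Dict[str, Tuple[float, float, float]]  # gate name -> (w_x, w_h, bias)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x: float, h_prev: float, c_prev: float, w: Weights) -> Tuple[float, float]:
    """One time step of a single-unit LSTM; returns (h, c)."""

    def pre(gate: str) -> float:
        w_x, w_h, b = w[gate]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))    # how much new content to write
    f = sigmoid(pre("forget"))   # how much old memory to keep
    o = sigmoid(pre("output"))   # how much memory to expose as h
    g = math.tanh(pre("cell"))   # candidate content
    c = f * c_prev + i * g       # additive memory update eases gradient flow
    h = o * math.tanh(c)
    return h, c


def rnn_layer_params(kind: str, input_size: int, hidden_size: int, bidirectional: bool = False) -> int:
    """Parameters in one PyTorch-style LSTM/GRU layer: weights plus two bias vectors per gate block."""
    gate_blocks = {"lstm": 4, "gru": 3}[kind]
    per_direction = gate_blocks * hidden_size * (input_size + hidden_size + 2)
    return per_direction * (2 if bidirectional else 1)
```

For a stacked (deep) RNN, each layer after the first takes the previous layer's output as input, so its input_size is hidden_size, or twice that when the layer below is bidirectional.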

▷ #cool-finds (2 messages):

- Astronomy Twitter Account: @boggdanu_ shared a link to his astronomy-focused Twitter account, @madpapayas.

- Creating Inclusive AI: @sk21_ highlighted a Substack post titled “Creating Inclusive AI” by Dr Maria Panagiotidi. Subtitled “A strengths-based approach to Artificial Intelligence”, the post discusses inclusivity issues in AI design.

▷ #i-made-this (5 messages):

- Navigating the Depths: The Critical Thinking ToT and Scientific Method CoT in AI: User @marielandryceo shared an extensive overview of two methodologies for guiding AI reasoning: the Critical Thinking Tree of Thoughts (ToT) and the Scientific Method Chain of Thoughts (CoT). The post walked step by step through how each methodology works in AI and emphasized the importance of continually refining our understanding. It was tagged #CriticalThinkingToT, #ScientificMethodCoT, and #AIExploration.

- Merge of AI Models: @rwitz_ shared a link to a merge of two AI models, viethq188/LeoScorpius-7B-Chat-DPO and GreenNode/GreenNodeLM-7B-v1olet, that used slerp as the merge method. He detailed the slice sources and parameters involved in the merge. The base model for the merge is viethq188/LeoScorpius-7B-Chat-DPO.

- Brand Logo Analyzer GPT: User @appstormer_25583 introduced a GPT that gives design feedback and improvement tips for a logo based on an uploaded image. A link to the project was provided.

- @merve3234 asked @rwitz_ whether he had submitted his merged model to the leaderboard, and he confirmed that he had.
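The NumPy sketch below shows spherical linear interpolation (slerp) between two weight tensors of the same shape, for readers unfamiliar with it. It only illustrates the math. It is not the tooling or configuration @rwitz_ used. The `merge_state_dicts` helper is hypothetical, and applying a single global `t` is a simplifying assumption; merge configs can vary `t` per layer or slice.

```python
import numpy as np


def slerp(t, v0, v1, dot_threshold=0.9995, eps=1e-8):
    """Spherical linear interpolation between two same-shaped weight tensors."""
    a = v0.ravel().astype(np.float64)
    b = v1.ravel().astype(np.float64)
    # The angle is measured between unit-normalized copies of the flattened tensors.
    a_unit = a / (np.linalg.norm(a) + eps)
    b_unit = b / (np.linalg.norm(b) + eps)
    dot = float(np.clip(np.dot(a_unit, b_unit), -1.0, 1.0))
    if abs(dot) > dot_threshold:
        # Nearly (anti)parallel: sin(omega) is ~0, so fall back to linear interpolation.
        out = (1.0 - t) * a + t * b
    else:
        omega = np.arccos(dot)
        sin_omega = np.sin(omega)
        out = (np.sin((1.0 - t) * omega) / sin_omega) * a + (np.sin(t * omega) / sin_omega) * b
    return out.reshape(v0.shape)


def merge_state_dicts(sd_a, sd_b, t=0.5):
    """Hypothetical helper: slerp every tensor; assumes identical keys and shapes."""
    return {name: slerp(t, sd_a[name], sd_b[name]) for name in sd_a}
```

Unlike a plain average, slerp follows the arc between the two directions. For tensors of similar norm, the interpolated result therefore does not shrink when the inputs point in different directions.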

▷ #reading-group (15 messages🔥):

- Presentation of MagVIT2: User @chad_in_the_house gave a presentation on MagVIT2, a model introduced by Carnegie Mellon and Google in the paper “MagVIT2: Language Model Beats Diffusion: Tokenizer is key to visual generation”. The model represents images as tokens (analogous to words), and the paper reports that it beats diffusion models. A link to a blog post with a more in-depth discussion was shared, and the code is available here.

- Suggestion for Reading Group Material: @memehunter7209 suggested studying the mml-book in the reading group to better understand the math required for machine learning.

- Discussion about mml-book: @Pantera and @charlieparker8035 asked about the book’s level and content, respectively. @memehunter7209 advised that it is more of a review book and pointed them to Gilbert Strang’s lectures and his course on edX.

- Project Invitation: @netskink.1 invited members to join their project, which uses a dataset of images and weather conditions to detect icy bridges.

- Suggestion on Unsolved Math Problems: @caleb_sol proposed the paper on AI’s capacity to solve previously unsolved math problems as a good topic for the reading group.

▷ #diffusion-discussions (3 messages):

- Image Conditioned Diffusion Model: User @mr.frog2014 shared an experiment with an image-conditioned diffusion model. The idea is to add the condition signal directly to the noisy input x and subtract it again after the denoising model. They also asked whether injecting the condition through an attention module would work better.

- Query about StableCode: User @nixon_88316 asked whether anyone had information about StableCode.

- Parameter Query for SD-X4-Upscaler: User @sebys7 is using the SD-X4-Upscaler with diffusers and asked which parameter tweaks might produce a specific kind of result.

▷ #NLP (6 messages):

- Model Training Tips: User @merve3234 gave detailed instructions on using transformer models in PyTorch with decoder input tokens. They suggested feeding decoder_input_ids in manually during the forward pass of the training loop.

- Hierarchical Text Classification Discussion: @stroggoz proposed classifying academic texts with a network of smaller BERT models. Texts would first be classified by primary topic (e.g., math, chemistry) and then by subtopic, with a separate model for each level.

- LLM Context Length Clarification: User @ppros666 asked whether the context length of Llama 2 is 4000 or 4096 tokens. @Cubie | Tom clarified that it is 4096 and linked to the relevant entry in the Hugging Face model’s config.json file.

- Request for LLM Fine-Tuning Guidance: @ppros666, who wants to fine-tune an LLM for the first time, asked for an up-to-date tutorial or example code. They pointed to a guide titled “Llama-2 4bit fine-tune with dolly-15k on Colab” and asked whether it is reliable.
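Some context for @merve3234’s tip: encoder-decoder models are usually trained with teacher forcing. The decoder input is the target sequence shifted one position to the right, with a start token in front. Many Hugging Face seq2seq models build this internally when only `labels` are passed. Building it yourself lets you pass `decoder_input_ids` explicitly. The NumPy sketch below assumes the common convention that label positions ignored by the loss are set to -100. The token ids are made up.

```python
import numpy as np


def shift_right(labels, decoder_start_token_id, pad_token_id):
    """Build decoder_input_ids for teacher forcing from a (batch, seq_len) label array."""
    labels = np.asarray(labels)
    decoder_input_ids = np.empty_like(labels)
    decoder_input_ids[:, 1:] = labels[:, :-1]          # token t-1 is the input for predicting token t
    decoder_input_ids[:, 0] = decoder_start_token_id   # every sequence starts from the start token
    # -100 only marks positions ignored by the loss; the model needs a real token id there.
    decoder_input_ids[decoder_input_ids == -100] = pad_token_id
    return decoder_input_ids


labels = np.array([[5, 6, 7, -100],
                   [8, 9, -100, -100]])
print(shift_right(labels, decoder_start_token_id=0, pad_token_id=1))
# [[0 5 6 7]
#  [0 8 9 1]]
```

In a PyTorch training loop, the resulting ids would be converted to a tensor and passed as `decoder_input_ids=` alongside `labels=` in the model’s forward call.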

LangChain AI Discord Summary

- Detailed discussions on LangChain integration and usage covered the official documentation, YouTube tutorials, creating and using Large Language Models (LLMs), output streaming, and implementing metadata.

- Questions about the progress of Plan-and-Execute, difficulties copying text from LangServe’s ‘DOCUMENTS’ section, and accessing LangServe endpoints and fields led to collective troubleshooting and information exchange.

- Contributions shared included @andysingal’s Medium blog post on LangChain Expression Language (LCEL), @appstormer_25583’s GPT-based brand logo analyzer, @pagerize_admin’s Pagerize video summarizer, @gokusan’s TinyLLM library for production applications, and @joshuasundance’s pre-commit hook to detect API keys.

- Guild members were invited to take an LLM user survey hosted by Catena Labs that gathers insights on market preferences, accessible here.

LangChain AI Channel Summaries

▷ #general (48 messages🔥):

- Integration and Learning Resources for LangChain: @chasemcdo suggested using the official LangChain site for documentation and its YouTube channel for further information and tutorials.

- Plan-and-Execute Progress Inquiry: @casper_mars asked about progress on Plan-and-Execute, to which @manlylubbin replied that they are still planning.

- Implementation of Metadata on LLM: @reddiamond69 pointed out that when generating output, LangChain can return the source documents along with their metadata, which users can surface in their own applications.

- Difficulties with Streaming Output Through API: @menny9762 shared struggles with streaming output through Next.js. A lengthy discussion followed, involving @seththunder and others, on creating and using LLMs and on streaming and callback methods.

- LLM Survey from Catena Labs: @jay_wooow promoted a community survey that gathers data on LLM usage and preferences to inform product development and provide useful market data. The survey is hosted here.

▷ #langserve (3 messages):

- Copying Text from Output Section: @fritz4374 reported being unable to copy text from the ‘DOCUMENTS’ section of a run, although copying from the input and output sections works.

- LangServe Endpoint & Accessing Fields: @khophi.co is seeking help accessing the <payload>.history field in the LangChain context when a frontend request such as { 'mymessage': 'message' } is sent to path="/myendpoint" in LangServe. LangServe handles the ‘input’ field automatically, and the user wants to know how to retrieve other fields.

▷ #share-your-work (7 messages):

- LangChain Expression Language (LCEL) Instructions: @andysingal shared a Medium blog post on mastering the LangChain Expression Language (LCEL), with examples of applying it to scraping Wikipedia.

- GPT-based Brand Logo Analyzer: @appstormer_25583 showcased a brand logo analyzer GPT that offers design feedback and improvement suggestions based on an uploaded logo image.

- Pagerize - AI Video Summarizer: @pagerize_admin presented Pagerize, an AI summarizer for YouTube videos. They included an example summary of a Theory of Mind webinar by LangChain and Plastic Labs, viewable here.

- TinyLLM - LLM Library for Production Applications: @gokusan developed TinyLLM, a library for running LLM applications at scale. An example of creating and evaluating an agent can be viewed here.

- Pre-Commit Hook to Detect LLM API Keys: @joshuasundance created a pre-commit hook that prevents developers from committing their API keys to source control.
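The idea behind @joshuasundance’s hook can be sketched in Python. pre-commit passes the staged file paths to a hook as command-line arguments and blocks the commit when the hook exits with a nonzero status. The regular expressions below are illustrative assumptions about key formats (an `sk-` prefix and a `hf_` prefix). They are not the patterns his hook actually uses, and real detectors cover many more providers.

```python
import re
import sys

# Illustrative patterns only (assumptions); real key formats vary and change over time.
KEY_PATTERNS = {
    "openai-style": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "huggingface-style": re.compile(r"\bhf_[A-Za-z0-9]{30,}"),
}


def find_keys(text):
    """Return (pattern_name, line_number) pairs for suspected API keys in text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in KEY_PATTERNS.items():
            if pattern.search(line):
                hits.append((name, lineno))
    return hits


def main(paths):
    """Scan each path; return 1 (blocking the commit) if any suspected key is found."""
    found = False
    for path in paths:
        try:
            with open(path, encoding="utf-8", errors="ignore") as fh:
                text = fh.read()
        except OSError:
            continue  # unreadable or deleted files are skipped
        for name, lineno in find_keys(text):
            print(f"{path}:{lineno}: possible {name} API key")
            found = True
    return 1 if found else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```

Once registered as a local hook in the repository’s pre-commit configuration, the script would run against the staged files on every commit.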

▷ #tutorials (1 messages):

potatooff: https://www.youtube.com/watch?v=mrjq3lFz23s

DiscoResearch Discord Summary

- LLM-Assisted Scoring Evaluation Experiment: User @_jp1_ started a discussion about an early-stage AI startup’s LLM-assisted batch evaluation tool for highlight extraction. This sparked debate on whether reducing the number of score categories and using special rating tokens would affect accuracy and discrimination (“Link to the original post”). The conversation later branched out into potential collaboration and the evaluation method itself, featuring @bjoernp and @_jp1_.

- Mixtral Implementation: The conversation in the mixtral_implementation channel covered best practices, access to high-end hardware, expert selection strategies, and benchmark results.

  - The main points include a custom build of llama.cpp shared by @kalomaze and a tweet shared by @someone13574 suggesting that using 3 experts yields the best perplexity results.

- OpenAI’s Alignment Plan and Phi-2 Discussion: Members discussed the alignment plan in OpenAI’s new paper and the effectiveness of Phi-2. @flozi00 also suggested implementing scoring models to improve data quality in Disco Research.

- Progress on llama.cpp Integration and FastEval Evaluation: The conversation covered integrating llama.cpp into the project, completing a substantial part of the FastEval evaluation work, and problems with llama-cpp-python. New developments included a fork of the FastEval project on GitHub by Disco Research. @bjoernp suggested that @rtyax debug with simpler models first and use a single thread for the local model.

DiscoResearch Channel Summaries

▷ #disco_judge (11 messages🔥):

- LLM-Assisted Scoring Evaluation Experiment: User @_jp1_ shared a Reddit post about an early-stage AI startup’s results with its LLM-assisted batch evaluation tool. The tool uses a scoring model for highlight extraction from news articles and on the MMLU benchmark. The startup cited three research papers (1, 2, 3) as the basis of its evaluation method. This led to a discussion about whether reducing the number of score categories and using special rating tokens could affect accuracy and the ability to discriminate between responses. Link to the original post

- Potential Collaboration: User @bjoernp expressed interest in the evaluation approach and suggested collaborating with the startup, which is working on similar projects.

- Evaluation Method Understanding: @bjoernp and @_jp1_ discussed the circular nature of using benchmarks to evaluate evaluation models. A good benchmark could remove the need for an eval model altogether. Eval models tend to be used when there is no easy way to benchmark, or when building benchmarks for everything one wants to measure would take too much effort.

- Confusion on Benchmarking and Evaluation Models: @_jp1_ was unsure about the evaluation method and asked whether measuring the eval model’s MMLU is the same as evaluating the model directly. @bjoernp acknowledged the similarity but noted a slight difference: rating a response is an easier task.

- Other Potential Benchmark: In conclusion, @bjoernp suggested that a held-out test set could serve as a good benchmark for the rating model.
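@bjoernp’s held-out test set suggestion amounts to comparing the rating model’s scores with trusted reference scores on examples it has never seen. The sketch below assumes integer scores and uses a hypothetical `rate` callable as a stand-in for the eval model. Exact agreement and Pearson correlation are just two common choices of metric.

```python
from statistics import fmean
from typing import Callable, List, Tuple

# (prompt, response, reference_score) triples held out from any tuning of the rater.
Example = Tuple[str, str, int]


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; undefined (division by zero) if either list is constant."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def evaluate_rater(rate: Callable[[str, str], int], held_out: List[Example]) -> dict:
    """Compare a rating model's scores with reference scores on a held-out set."""
    predicted = [rate(prompt, response) for prompt, response, _ in held_out]
    reference = [score for _, _, score in held_out]
    agreement = sum(p == r for p, r in zip(predicted, reference)) / len(reference)
    return {"exact_agreement": agreement, "pearson": pearson(predicted, reference)}
```

Reducing the number of score categories, as discussed above, tends to raise exact agreement. The correlation shows whether the rater still ranks responses in the right order.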

▷ #mixtral_implementation (29 messages🔥):

- Mixtral Implementation Best Practices: @nul3 asked whether the instruct or the non-instruct version is better for chat, and @goldkoron recommended the instruct version.

- Custom MoE Routing: @kalomaze shared a custom build of llama.cpp that modifies the Mixtral PR so users can customize the number of experts routed per token.

- Access to High-End Hardware: @tarikoctapm offered access to a machine with 4 x RTX 4090 GPUs and asked in return that results from the models be shared.

- Expert Selection Strategy Discussion: @chrismcmaster and @kalomaze exchanged ideas on expert selection strategies, including hardcoding a fixed number of experts, a ‘min_p’ strategy, and top-k experts. @kalomaze explained that ‘min_p’ acts as a minimum probability threshold set relative to the maximum probability.

- Benchmark Results: @bjoernp shared preliminary benchmarks showing sub-optimal performance when hardcoding either 1 or 4 top-k experts. @someone13574 shared a tweet suggesting that using 3 experts yields the best perplexity results. @kenjiqq critiqued these results, observing inconsistencies in the Q6 quant.
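The routing strategies under discussion can be compared in a few lines of NumPy. Mixtral’s router scores 8 experts per token and by default uses the top 2, with their weights renormalized. The `route_min_p` function is a sketch of the ‘min_p’ idea as @kalomaze described it: keep every expert whose probability is at least `min_p` times the largest one. It is not the code from his llama.cpp build, and the router probabilities in the example are made up.

```python
import numpy as np


def softmax(logits):
    z = np.exp(logits - np.max(logits))
    return z / z.sum()


def route_top_k(logits, k):
    """Fixed number of experts: the k most probable, with weights renormalized."""
    probs = softmax(logits)
    chosen = np.argsort(probs)[::-1][:k]
    return chosen, probs[chosen] / probs[chosen].sum()


def route_min_p(logits, min_p):
    """Variable number of experts: every expert with prob >= min_p * max prob."""
    probs = softmax(logits)
    chosen = np.flatnonzero(probs >= min_p * probs.max())
    chosen = chosen[np.argsort(probs[chosen])[::-1]]  # most probable first
    return chosen, probs[chosen] / probs[chosen].sum()


# Router output for one token over 4 experts (made-up numbers).
logits = np.log(np.array([0.5, 0.3, 0.1, 0.1]))
experts, weights = route_top_k(logits, k=2)
print(experts.tolist(), np.round(weights, 3).tolist())  # [0, 1] [0.625, 0.375]
print(len(route_min_p(logits, 0.5)[0]))  # 2: only experts with prob >= 0.25
print(len(route_min_p(logits, 0.1)[0]))  # 4: threshold 0.05 keeps every expert
```

Top-k spends the same compute on every token. min_p lets confident tokens use fewer experts and uncertain tokens use more, which is why the two are worth benchmarking against each other.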

▷ #general (5 messages):

- OpenAI’s Alignment Plan: @_jp1_ pointed to interesting content about OpenAI’s alignment plan in the company’s new paper. No link was provided.

- Discussion on Phi-2: @bjoernp and @flozi00 discussed Phi-2, questioning its effectiveness and the legitimacy of its benchmarks. @bjoernp was skeptical, while @flozi00 shared some optimistic observations from examples on Twitter, especially for smaller-scale use cases on edge devices.

- Data Quality Scoring Models in Disco Research: @flozi00 suggested implementing scoring models to improve data quality in Disco Research. The aim is not only to deduplicate datasets but also to clean out low-quality data such as raw lists from Wikipedia or translation errors.
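@flozi00’s proposal pairs deduplication with a quality filter. The Python sketch below assumes exact-duplicate detection on case- and whitespace-normalized text. It uses a hypothetical `listiness_score` heuristic as a stand-in for a learned scoring model. That heuristic only catches list-like text, not translation errors. Real pipelines typically also add near-duplicate detection such as MinHash.

```python
import hashlib
from typing import Callable, Iterable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def dedupe(docs: Iterable[str]) -> List[str]:
    """Drop exact duplicates (after normalization), keeping the first occurrence."""
    seen = set()
    kept = []
    for doc in docs:
        key = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            kept.append(doc)
    return kept


def listiness_score(doc: str) -> float:
    """Hypothetical stand-in for a learned quality model: 1.0 = prose, 0.0 = all bullets."""
    lines = [line for line in doc.splitlines() if line.strip()]
    if not lines:
        return 0.0
    bullets = sum(line.lstrip().startswith(("*", "-", "•")) for line in lines)
    return 1.0 - bullets / len(lines)


def clean(docs: Iterable[str], score: Callable[[str], float], threshold: float = 0.5) -> List[str]:
    """Deduplicate, then keep only documents the scorer rates at or above the threshold."""
    return [doc for doc in dedupe(docs) if score(doc) >= threshold]


corpus = [
    "Paris is the capital of France.",
    "paris is the  capital of   France.",   # duplicate after normalization
    "* item one\n* item two\n* item three",  # raw list, scored 0.0
]
print(clean(corpus, listiness_score))  # ['Paris is the capital of France.']
```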

▷ #benchmark_dev (13 messages🔥):

- Collaboration on llama.cpp Integration: @bjoernp asked @rtyax to collaborate on incorporating llama.cpp into their project. He also asked for clarification on the tokenizer-related problems @rtyax had mentioned earlier.

- FastEval Evaluation Completion and Future Steps: @bjoernp outlined the next steps. These include supporting n repetitions of each benchmark and calculating the mean and standard deviation, which would complete a substantial part of the evaluation work. He also noted that the llama.cpp integration is still needed to examine the effects of min_p and to enable grammar-based inference that enforces the output format.

- FastEval Repository on DiscoResearch GitHub: @bjoernp created a fork of FastEval under the DiscoResearch GitHub account for coordination and asked users, including @rtyax, to submit their changes there. The repository is at https://github.com/DiscoResearch/FastEval.

- Issues with llama-cpp-python: @rtyax reported that llama-cpp-python was failing silently when running generate/chat_completion. They suspected the problem might not lie with the tokenizer but could not identify it. Having recently rebuilt llama-cpp-python for Mixtral, they were unsure whether the issue was local or more widespread.

- Debugging Suggestions: @bjoernp suggested debugging with a simpler model first, such as mistral-7b, and changing the FastEval evaluation constants to use a single thread for the local model. @rtyax said that only one GPU in his machine was being used. Because a try/catch block swallowed the error, the evaluation carried on as if generation had never been called.
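The planned n-repetitions support can be sketched as follows: run the same benchmark several times (for example, with different sampling seeds) and report the mean and sample standard deviation. `run_once` is a hypothetical callable that returns one score per run, and this is not FastEval’s actual code. Errors from `run_once` are deliberately allowed to propagate rather than being swallowed by a try/catch, the failure mode @rtyax ran into.

```python
import statistics
from typing import Callable, Tuple


def repeat_benchmark(run_once: Callable[[int], float], n: int) -> Tuple[float, float]:
    """Run a benchmark n times and return (mean, sample standard deviation)."""
    if n < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    # Exceptions from run_once propagate, so a failed generation cannot pass silently.
    scores = [run_once(seed) for seed in range(n)]
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical scores from three runs of the same benchmark.
fake_scores = [0.70, 0.72, 0.74]
mean, std = repeat_benchmark(lambda seed: fake_scores[seed], n=3)
print(f"{mean:.3f} ± {std:.3f}")  # 0.720 ± 0.020
```

`statistics.stdev` uses the sample (n - 1) formula, which suits the small number of repetitions a benchmark run can usually afford.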

Latent Space Discord Summary

- Announcement of Anthropic’s new Google Sheets tool, Claude for Sheets™, which @swyxio praised, saying “spreadsheets are the best prompt engineering tool”. Claude for Sheets™

- In-depth coverage of the Mamba model in a YouTube video shared by @swyxio.

- Introduction to the Mistral-kit project, which uses mistral-7b and ollama; @kevmodrome shared the repository on GitHub. Github link

- Discussion of the rumored release of GPT 4.5, started by @swyxio with a linked tweet.

- Access to the Mistral API was granted to @fanahova and @coffeebean6887; the latter also noted that Anyscale has added Mistral as an endpoint. Anyscale URL

- Announcement of a new podcast episode by @fanahova on Twitter and Hacker News. (Twitter Link)

- A mention of Qtransformers by @swyxio in the #llm-paper-club channel, with no further context.

Latent Space Channel Summaries

▷ #ai-general-chat (9 messages🔥):

- Anthropic’s New Google Sheets Tool: @swyxio mentioned a new product, Claude for Sheets™, which brings Anthropic’s AI assistant Claude to Google Sheets™. @swyxio stated that “spreadsheets are the best prompt engineering tool”.

- Discussion on Mamba Model: @swyxio shared a YouTube video that gives a thorough explanation of the Mamba model.

- Mistral-kit Project: @kevmodrome shared a GitHub link to the mistral-kit project, which uses mistral-7b and ollama.

- Rumors About GPT 4.5: @swyxio mentioned rumors that GPT 4.5 is coming soon.

- Access to Mistral API: Both @fanahova and @coffeebean6887 said they had received access to the Mistral API. @coffeebean6887 also pointed out that Anyscale has added Mistral as an endpoint and posted its URL.

▷ #ai-event-announcements (1 messages):

fanahova: New pod is out! https://twitter.com/FanaHOVA/status/1735371425836568905

Also live on HN

▷ #llm-paper-club (1 messages):

swyxio: Qtransformers

LLM Perf Enthusiasts AI Discord Summary

- In the general channel, two key discussions stood out:

  - Issue with Model 3.5: @0xmmo reported unusual behavior from model 3.5, which was producing an excessive number of new lines in its responses.

  - Finals Week Workload: @res6969 humorously speculated that ChatGPT could be overloaded by increased usage from students during finals week.

- In the finetuning channel, @robertchung offered advice for a text extraction task. He recommended 30-50 samples for initial fine-tuning, with more examples added if the results are unsatisfactory.

- In the opensource channel, @robhaisfield discussed getting around rate limits by rotating among various fine-tuning providers. He noted the challenge of accounting for the subtle behavioral differences between each provider’s fine-tunes.

- In the resources channel, @nosa_ shared a Twitter link with useful information.

- The openai channel featured two notable references: @pantsforbirds pointed out an OpenAI paper on supervising larger models with smaller ones, and @firefox8975 noted that Google AI’s function calling support is competitive, referencing the official documentation.

LLM Perf Enthusiasts AI Channel Summaries

▷ #general (3 messages):

- Possible Issue with Model 3.5: @0xmmo reported getting strange responses from model 3.5, which had started responding to their function calls with an excessive number of new lines.

- AI Workload during Finals Week: On a lighter note, @res6969 humorously speculated that ChatGPT might be overloaded because of “finals week”, the period of intense end-of-term exams. @potrock backed this up, mentioning that many people in a graduate-level Natural Language Processing (NLP) course are using GPT-generated synthetic data for their final projects.

▷ #finetuning (2 messages):

- Text Extraction and Fine-Tuning: @robertchung suggested that 30-50 samples may suffice for initial fine-tuning on a text extraction task. If the results are not satisfactory, one can add more examples and continue fine-tuning the already fine-tuned model.

▷ #opensource (1 messages):

- Discussion on Rotating Among Providers: @robhaisfield shared his thoughts on getting past rate limits by programmatically rotating among various fine-tune providers. However, he was concerned that subtle behavioral differences between the providers’ fine-tunes might make it tricky to treat them as interchangeable commodities.
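A round-robin rotation like the one @robhaisfield described can be sketched in Python. The provider clients and the `RateLimited` exception are hypothetical stand-ins, since each real provider SDK signals rate limits differently. The rotator returns the provider’s name with each completion, so behavioral differences between fine-tunes can at least be tracked per provider.

```python
import itertools
from typing import Callable, Dict, Tuple


class RateLimited(Exception):
    """Hypothetical error a provider client raises when it hits its rate limit."""


class ProviderRotator:
    def __init__(self, providers: Dict[str, Callable[[str], str]]):
        self.providers = providers
        self._order = itertools.cycle(list(providers))

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (provider_name, completion), skipping providers that are rate limited."""
        # Try each provider at most once, starting from the next one in the rotation.
        for _ in range(len(self.providers)):
            name = next(self._order)
            try:
                return name, self.providers[name](prompt)
            except RateLimited:
                continue
        raise RateLimited("all providers are rate limited")
```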

▷ #resources (2 messages):

- Sharing Useful Resources: User @nosa_ shared a Twitter link with potentially valuable information for the community, and user @ayenem responded positively.

▷ #openai (2 messages):

- Supervising Larger Models with Small Models: User @pantsforbirds shared an OpenAI paper about using small models (GPT-2) to supervise larger models (GPT-4).

- Google AI’s Function Calling: @firefox8975 mentioned that Google AI supports function calling, which they had explored and found competitive with OpenAI’s. They also linked Google AI’s official documentation.

Alignment Lab AI Discord Summary

- The [oo] channel featured updates on Depth Upscaling, which Upstage applied to a franken llama-mistral to reach 10.7B parameters, producing the model SOLAR-10.7B-Instruct-v1.0. “Upstage claims to have surpassed Mixtral with their approach.”

- New Open Source AI Grants were discussed in the same channel, where users were congratulated on their grants via an announcement from a16z.

- [oo-priority] contained an event link from @entropi, although no details of the event were provided.

- In [phi-tuning], @entropi shared an update on Phi-2, a Transformer model with 2.7 billion parameters, with a link to more information. The model was trained on the same data as Phi-1.5 plus a new data source of synthetic NLP texts and websites filtered for safety and educational value. Updates to the model’s weights were also mentioned.

Alignment Lab AI Channel Summaries

▷ #oo (2 messages):

- Depth Upscaling on Franken Llama-Mistral: User @entropi shared a link to an article about Upstage’s use of “Depth Upscaling” on a franken llama-mistral to reach 10.7B parameters with continued pretraining. Upstage claims to have surpassed Mixtral with this approach. The end result is their model SOLAR-10.7B-Instruct-v1.0.

- New Open Source AI Grants: @entropi also congratulated users <@257999024458563585> and <@503006108928180245> on their new AI grants, sharing the announcement from a16z.
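Upstage’s SOLAR paper describes depth upscaling roughly as follows (details worth double-checking against the paper). Start from a 32-layer base model and make two copies. Drop the last 8 layers from one copy and the first 8 from the other. Stack the results into a 48-layer model, which then receives continued pretraining. The sketch shows only that layer bookkeeping, using a list of labels. In a real model, each entry would be a copied transformer block.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def depth_upscale(layers: Sequence[T], m: int) -> List[T]:
    """Stack two copies of a layer list, dropping the last m layers from the first
    copy and the first m layers from the second: n layers become 2 * (n - m)."""
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must be in [0, n)")
    return list(layers[: n - m]) + list(layers[m:])


base = [f"block_{i}" for i in range(32)]  # stand-ins for 32 transformer blocks
upscaled = depth_upscale(base, m=8)
print(len(upscaled))               # 48
print(upscaled[23], upscaled[24])  # block_23 block_8
```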

▷ #oo-priority (1 messages):

entropi: @here https://discord.com/events/

▷ #phi-tuning (2 messages):

- Phi-2 Model Summary: @entropi shared a link about Phi-2, a Transformer with 2.7 billion parameters. It was trained on the same data as Phi-1.5, plus a new data source consisting of various synthetic NLP texts and websites filtered for safety and educational value.

- Phi-2 Weights Update: @entropi reported that the weights for Phi-2 were updated recently.

YAIG (a16z Infra) Discord Summary

Only 1 channel had activity, so no need to summarize…

- AI Economics Discussion: @stevekamman shared insights on AI economics, comparing training and inference costs with revenue. He said: “Inference must pay the bills - GPU, S&M, overheads…” and argued that inference revenue must be noticeably higher than the cost of training to stay profitable in the long term.

- Consideration on Training Costs: @stevekamman noted that training costs can be offset by selling foundation models. He also pointed out that buyers of these models must in turn fund the purchase, either through revenue or through efficiency gains.

- Future of FM Companies: @spillai speculated on whether foundation model (FM) companies need to build their own clouds to keep capturing enough long-term value from their customers to become profitable. They also raised the potential need for vertical AI infrastructure providers for large language models (LLMs).

- Utilization of GPU by FM companies: @stevekamman questioned how efficiently FM companies use their GPUs, given that utilization is effectively binary (100% or 0%). This may limit the utilization efficiencies that scale would otherwise bring.

Skunkworks AI Discord Summary

- Open-source survey launched by @jay_wooow to understand the motivations and challenges of building with LLMs (Large Language Models). The survey data will be used internally and to help the wider AI community build development tools.

- Raw survey data and key insights will be open-sourced in a report and published online once the target number of participants is reached.

- A link to the survey was shared; participating developers remain anonymous.

- pradeep1148 posted a link to a YouTube video in off-topic without any context or follow-up discussion, so its relevance is unclear.

Skunkworks AI Channel Summaries

▷ #general (1 messages):

- LLM Tools Usage Survey: @jay_wooow has launched an open-source survey to understand the motivations and challenges involved in building with LLMs (Large Language Models). The data will be used to improve internal productivity and to give the wider community market data for building better development tools. The survey takes approximately 10 minutes to complete.

- Results Publication: Once the target number of participants is reached, all raw data and key insights will be open-sourced in a report and published on a blog platform. The report could be a valuable resource for AI developers seeking to understand goals, challenges, and tool usage in the community.

- Participation Link: The link to participate in this survey was shared. All participating developers’ identities will remain anonymous.

▷ #off-topic (1 messages):

pradeep1148: https://www.youtube.com/watch?v=MOimHasrCKk

MLOps @Chipro Discord Summary

Only 1 channel had activity, so no need to summarize…

- Mastering MLOps and ML Engineering: Key Strategies for 2024: @amitqwak announced a live session titled “Mastering MLOps and ML Engineering: Key Strategies for 2024”, scheduled for January 17th, 2024 at 11:30 AM EST. The session aims to give organizations advanced insights and strategies for effectively incorporating and managing AI and ML in their business frameworks, with a focus on MLOps and ML engineering trends. The event is free of charge and is aimed primarily at ML engineers, data scientists, data leaders, and software engineering managers. The registration link is here.

- Arize Holiday Special: @sarahwelsh announced the Arize Holiday Special, scheduled for December 15, 2023. The event consists of a series of live, virtual, hands-on workshop sessions on prompt engineering, search and retrieval workflows, and LLM system evaluations, with speakers from Hugging Face, PromptLayer, Shopify, and Arize. The registration link is here.

AI Engineer Foundation Discord Summary

Only 1 channel had activity, so no need to summarize…

- Upcoming Event Notification: ._z posted a notification for an upcoming event, along with the associated Discord link.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.