We developed the Depth Up-Scaling technique. Built on the Llama2 architecture, SOLAR-10.7B incorporates the innovative Upstage Depth Up-Scaling. We then integrated Mistral 7B weights into the upscaled layers, and finally, continued pre-training for the entire model.
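As a rough illustration of the layer arithmetic behind depth up-scaling, the sketch below follows the recipe as described in the SOLAR 10.7B paper, to the best of our understanding: duplicate a 32-layer base model, drop the last 8 layers from one copy and the first 8 from the other, then concatenate the two into a 48-layer stack. Layers are represented by plain integer indices; the function name and defaults are illustrative assumptions, not Upstage's code, and the continued pre-training step is not shown.

```python
def depth_up_scale(base_layers, drop=8):
    """Return the layer order of a depth-up-scaled model.

    base_layers: list of layer identifiers from the base model.
    drop: number of layers removed at the seam from each copy (assumed 8).
    """
    if not 0 <= drop < len(base_layers):
        raise ValueError("drop must be between 0 and len(base_layers) - 1")
    top_copy = base_layers[: len(base_layers) - drop]  # first copy without its last `drop` layers
    bottom_copy = base_layers[drop:]                    # second copy without its first `drop` layers
    return top_copy + bottom_copy


base = list(range(32))      # a 32-layer base model (assumed layout for a 7B model)
scaled = depth_up_scale(base)
print(len(scaled))          # 48
print(scaled[22:26])        # [22, 23, 8, 9]
```

The seam at positions 23/24 is where layer 23 of the first copy is followed by layer 8 of the second, which is presumably why continued pre-training of the whole model is needed afterwards.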

The Nous community thinks it’s good but not great.

In other news, weights for the Phi-2 base model were released - it’s 1.4T tokens of Phi 1.5 + 250B worth of new GPT3-created synthetic texts and GPT4-filtered websites trained over 96 A100s for 14 days.


OpenAI Discord Summary

- Challenges, solutions, and discussions on OpenAI’s GPT Models: Users across the guilds reported difficulties and proposed solutions with the workings of various GPT models, mentioning problems like incoherent output from GPTs, GPT’s refusal to check knowledge files before answering, and errors with GPT-4 Vision API. These discussions also covered specific issues like GPT’s inability to play Hangman effectively, generate diagrams, and create quality content, where higher performance is seen after creating an outline first and enhancing it later.

- OpenAI API Usage Concerns and Clarifications: Discussion focused on the AI’s limitations, including its inability to generate visual content, its usage cap, and its tendency to produce less useful bullet-point responses. Users compared GPT Assistants with Custom GPTs and explored use cases for OpenAI’s APIs, such as uploading bulk PDFs and cross-referencing food ingredients against dietary restrictions. The difficulty of getting the model to play Hangman was also highlighted; some users shared successful examples that rely on the Python tool, at the cost of slower responses.

- Account Issues and Functionality Problem Reports: There were numerous discussions about account-related problems (account downgrades, deletions, login issues), and users were advised to contact official OpenAI support. Other reported issues included loss of chat data, inability to load certain conversations, browser-specific troubles affecting GPT models, and confusion over GPT’s message limit, which appears to be 40 messages per hour.

- Collaborative Understanding of AGI and ASI: Users in the guild deepened their understanding of Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI), discussing rising expectations and their potential implications.

- Responses to OpenAI Business Strategies: Users voiced concerns over OpenAI’s partnership with Axel Springer due to fears of potential bias and the ethical implications of such a partnership. Changes in pricing and access to GPT Plus also sparked conversations across the guild.

OpenAI Channel Summaries

▷ #ai-discussions (55 messages🔥🔥):

- ChatGPT and PDF Reading: @vantagesp recounted an issue where the AI seemed uncertain about its ability to read and summarize PDFs, even though it is capable of doing so.

- Discussions about AGI and ASI: @【penultimate】 sparked a conversation about the definitions and expectations of Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI), asserting that people’s expectations of AGI continue to rise.

- Jukebox by OpenAI: @【penultimate】 shared a link to OpenAI’s project called Jukebox, a music generation model, expressing hope that a more updated or enhanced version of such a model would be developed.

- Issues with GPT-4 and ChatGPT: Users @Saitama and @kyoei reported issues with GPT-4, including mediocre response quality and an error in the input stream. User @slickog also mentioned incoherent output (“word spaghetti”) from GPTs.

- Usage of Gemini AI: Users @iron_hope_shop, @lugui, @solbus, and @jason.scott discussed Gemini, a chat model integrated into Bard. @【penultimate】 also noted that Microsoft was able to get GPT-4 to perform at the level of Gemini Ultra with proper prompting.

▷ #openai-chatter (270 messages🔥🔥):

- Integration Issues with GPT-4 Vision: Several users discussed difficulties in using the GPT-4 Vision API. For instance, @moonkingyt and @gingerai both reported issues, with @gingerai noting that errors often occur when uploading images. @lugui and @rjkmelb provided some guidance and troubleshooting tips (bug report thread).

- Discussions around Limitations of GPT: User @mischievouscow initiated a discussion about the limitations of ChatGPT, specifically its usage cap and its tendency to generate less useful bullet-point responses. The conversation continued with @dabonemm comparing GPT’s learning process to the “monkey typewriter experiments.”

- ChatGPT Plus Access Issues and Notifications: Several users raised issues and questions about accessing and purchasing ChatGPT Plus. For instance, @isrich and @themehrankhan asked about price changes and access to GPT Plus, while @openheroes and @miixms announced that ChatGPT had re-enabled subscriptions.

- Concerns over OpenAI Partnership with Axel Springer: Users @zawango, @jacobresch, @loschess and others expressed disappointment and concerns about OpenAI’s partnership with Axel Springer, citing potential bias and the ethical implications of partnering with a news outlet that has faced controversy.

- Various Use Cases and Opinions of ChatGPT: @textbook1987 shared positive feedback on using ChatGPT to draft a professional-sounding letter to a doctor. @loschess noted limitations in the AI’s ability to code complex projects and had to hire a developer to finish a project. @thepitviper criticized prompt limits for interfering with the user experience, which could push users towards alternatives.

▷ #openai-questions (168 messages🔥🔥):

- Account Issues: Users including @vexlocity, @Kevlar, .draixon, norvzi, @thedebator, and .sweetycuteminty discussed various account-related issues such as login problems, account downgrades and account deletions. They were advised to reach out to official OpenAI support for help.

- Problems with GPT Functionality: Users like @woodenrobot, @david_36209, @vadimp_37142, @8u773r, @astoundingamelia, @bayeslearner, and @happydiver_79 reported and discussed issues regarding the functionality of their models. Problems included GPT refusing to check knowledge files before answering, external APIs/Actions no longer working in custom GPTs, slow performance, models not interacting properly with images, and inability to access voice chat in OpenAI GPT.

- Use Case Discussions: @samuleshuges sought advice on a project involving “uploading bulk pdf’s and then chatting with them”, while @core4129 asked about the feasibility of using the API for cross-referencing food ingredients against diet and allergy restrictions. @skrrt8227 was interested in using OpenAI to dictate notes into Notion.

- Browser-Related Issues: @Mdiana94 and @jordz5 discussed browser-specific problems affecting the functioning of GPT models. Clearing the cache and changing browsers were suggested as potential solutions.

- Data Loss Issues: Users like @singularity3100, @Mdiana94, and @Victorperez4405 raised concerns about losing their chat data or being unable to load certain conversations. Besides suggestions to log out and log back in, users were advised to contact official support.

▷ #gpt-4-discussions (21 messages🔥):

- Reaching OpenAI staff for support: User @eskcanta explained that this Discord is mostly community members. They mentioned that some OpenAI officials with gold names could be contacted. There’s a link to a post by @Michael, an OpenAI member, clarifying the process of reaching a human through their help portal. “[It should] lead to a person, says they intend to make it easier and faster to reach where you can message a human.” (Link to Discord post)

- Modifying conversation titles of GPTs: @ldupervalasked if it’s possible to affect the title a GPT gives to a conversation. They were interested in ways to identify the GPT that created it.

- GPT grading essays: User @bloodgore expressed difficulties getting their GPT to scan and respond based on an uploaded document (a rubric). They are trying to use GPT for grading essays, but the model hallucinates its own rubrics despite the correct one being in its knowledge base.

- Uploading files for GPT reference: @solbus and @mysticmarks1 suggested referencing the specific filename in the user’s request to get the GPT to analyze the document, while cautioning that the context limit might not allow for complete document evaluation.

- Message Limits and Upgrade: Users @elpapichulo1308, @bloodgore, @satanhashtag, @solbus and @loschess discussed the message limit rules. The limit seems to be 40 messages per hour, contrary to some users’ perception that it was per day. @solbus pointed out that “days” was a translation error. @loschess brought up that upgrading was supposed to result in “No wait times”.

▷ #prompt-engineering (45 messages🔥):

- How to Enhance AI Outputs: User @cat.hemlock advised instructing the AI to create an outline or brainstorm the article first, and then expand and proofread the generated content to achieve better results.

- Issues with AI and Hangman: @eskcanta shared a link to a conversation where the AI failed to correctly play the game of Hangman, even with clear instructions and examples.

- AI Successfully Runs Python Game for Hangman: Responding to @eskcanta, @thepitviper shared a link to a successful instance of the AI generating a Python game for Hangman, though it runs slowly because it goes through the Python tool.

- Seeking Effective Marketing Advice from GPT: @dnp_ solicited advice for getting more specific and actionable output from the AI, such as particular methods or power words to use in marketing campaigns. @bambooshoots suggested telling the AI that it is an expert in a specific field responding to a highly knowledgeable audience.

- Visualizing Elements of Viral Content: @dnp_ also expressed interest in creating diagrams to visually represent the elements necessary for viral content, but struggled with the AI’s inability to generate visual content like Venn diagrams. @exhort_one confirmed that GPT-3 is text-based and cannot create visuals unless combined with other tools like DALL-E. @bambooshoots provided a prompt for generating visualizations using Python, though @dnp_ noted limitations with breakdowns that involve more than 3 elements.
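The Hangman bookkeeping that the model struggled with is easy to do deterministically in code, which may be why generating a Python game worked where free-form play did not. A minimal sketch, using a hypothetical `play_hangman` helper and a fixed list of guesses (this is not the game @thepitviper shared):

```python
def play_hangman(word, guesses, max_misses=6):
    """Replay a sequence of letter guesses and return (masked word, misses, status)."""
    word = word.lower()
    revealed = set()
    misses = 0
    status = "in progress"
    for letter in guesses:
        letter = letter.lower()
        if letter in word:
            revealed.add(letter)
        else:
            misses += 1
        if all(ch in revealed for ch in word):
            status = "won"
            break
        if misses >= max_misses:
            status = "lost"
            break
    # Show guessed letters in place and underscores for the rest.
    masked = " ".join(ch if ch in revealed else "_" for ch in word)
    return masked, misses, status


print(play_hangman("python", ["e", "p", "y", "t"]))  # ('p y t _ _ _', 1, 'in progress')
```

Because the game state lives in ordinary variables rather than in the model's context, the letter positions and miss count cannot drift between turns.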

▷ #api-discussions (45 messages🔥):

- Creating Quality Content with OpenAI APIs: @cat.hemlock shared an approach to creating quality AI content by first brainstorming an outline and then generating content around it, emphasizing the importance of refining and proofreading after the content is generated.

- Challenges with Hangman Game: @eskcanta pointed out that ChatGPT struggles to play Hangman, even with a known word and a known order of letters to pick. However, @thepitviper shared a Python game they generated in which Hangman worked well, noting that going through the Python tool makes it slow.

- OpenAI API and Content Creation: @dnp_ asked for advice on using ChatGPT to get specific answers such as methods, frameworks, and power words for a marketing campaign. @bambooshoots suggested telling the AI to assume it is an expert in a particular field and to tailor responses to the expertise level of the audience. @dnp_ also expressed interest in creating data visualizations with the model, to which @exhort_one responded that GPT is text-based and cannot create visual images or diagrams.

- Comparison of GPT Assistants and Custom GPTs: @dnp_ asked how GPT Assistants compare with Custom GPTs, but no clear answer was provided. @bambooshoots provided an advanced script for creating diagrams in Python with further explanation, but @dnp_ mentioned the limitation that Venn diagrams cannot support more than 3 circles.
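The outline-first workflow described above can be sketched as three chained prompts. This is only an illustration: `generate` is a placeholder for whatever model call is used, the prompt wording is an assumption, and `echo_model` is a stand-in so the example runs without any API access.

```python
def outline_then_write(topic, generate):
    """Three-pass drafting: outline, expand, proofread.

    `generate` is any callable that maps a prompt string to a completion string.
    """
    outline = generate(f"Brainstorm a bullet-point outline for an article about: {topic}")
    draft = generate(f"Write the full article following this outline:\n{outline}")
    final = generate(f"Proofread and tighten the following article:\n{draft}")
    return final


def echo_model(prompt):
    # Stand-in for a real model call; echoes the first line of the prompt.
    return "[model output for: " + prompt.splitlines()[0] + "]"


print(outline_then_write("quantization", echo_model))
```

Keeping each pass as a separate call makes it possible to inspect or edit the outline before any long-form text is generated.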

Nous Research AI Discord Summary

-

Discussion on server rack preferences and potential performance issues when running high-load applications, such as running an LLM on GPU VRAM, Python maximising the CPU, and multi-screening YouTube videos; options for reducing graphics load and the unique multitasking abilities of Intel’s cores were discussed.

-

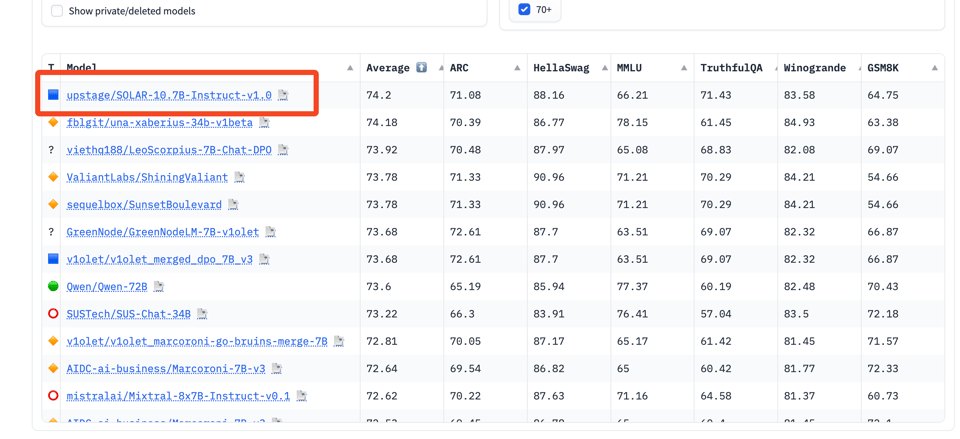

Benchmarked performance comparison between the SOLAR 10.7B model and Mistral 7B on several tests, showing improvements with SOLAR 10.7B;

@euclaisesuggested benchmarking the DeciLM-7B model next and shared a link to the model. -

Introduction of and discussion around the SOLAR-10.7B model, its features and potential inaccuracies in its performance claims; a debate about the quality and quantity of data used for training AI models; suggestions for using quality heuristics, semantic de-duplication and SSL Prototype filtering for mass data transformations.

-

Advancements in RWKV discussed, with highlight on increased performance and reduced VRAM usage; dialogue around the long prompt length limitation in LLM Modelling; continued debate on GPT’s AGI capabilities and performance; memory usage concerns with DeBERTa 1B model; discussion on open-source communities, potential copyright conflicts and the implications of licensing restrictions.

-

Recommendations for models including Mamba, Mistral0.2, Mixtral, and openHermes to benchmark performance on a task related to conversation snippets; comparison of running speed between stable diffusion and a 3 billion parameter LLM; multiple users sharing resources and models; inquiry about running Mistral-7B-v0.1 on a 2080 Ti graphics card with recommendations for quantized versions and model offloading provided; discussion about running small models on mobile devices.

Nous Research AI Channel Summaries

▷ #off-topic (9 messages🔥):

- Server Rack Preferences: @fullstack6209 and @erichallahan expressed a preference for a stealth 4U server rack and air-cooling instead of water-cooling for their tech setup. @fullstack6209 mentioned readiness to pay a high price for this specific setup.

- Graphics Performance Concerns: @everyoneisgross asked about expected glitches when running an LLM on GPU VRAM, having Python maxing the CPU, and multi-screening YouTube videos. @coffeebean6887 predicted potential lagging, freezing, and out-of-memory errors under these conditions.

- Lowering Graphics Load: @coffeebean6887 suggested running the system headless, disconnecting extra monitors, and using a lower monitor resolution to reduce graphics load and improve system performance.

- Multitasking with Intel’s Cores: @airpods69 mentioned successful multitasking using Intel’s efficient cores for web-browsing and performance cores for model inference, without experiencing lag.

- API Limitations: @fullstack6209 remarked on the absence of stop or log bias features in a particular API.

▷ #benchmarks-log (19 messages🔥):

- Performance Comparison of SOLAR 10.7B and Mistral 7B: @teknium shared the benchmark results of the SOLAR 10.7B model in comparison to Mistral 7B on several tests. Key observations included:

  - SOLAR 10.7B scored around 39% in AGIEval, a significant improvement over Mistral 7B’s 30.65%.

  - In the GPT4All benchmark, SOLAR 10.7B achieved a score of 72%, slightly ahead of Mistral 7B’s 71.16%.

  - SOLAR 10.7B performed only slightly better than Mistral 7B in the TruthfulQA benchmark, scoring 45% as compared to Mistral 7B’s 42.5%.

- BigBench Results: The BigBench test was also conducted for the SOLAR 10.7B model. According to the results shared by @teknium, the model had an average score of 38.66%.

- Perceptions of the SOLAR 10.7B Model: Some users, like @artificialguybr and @teknium, mentioned that while SOLAR 10.7B showed good performance, it didn’t stand out significantly compared to other models of similar complexity.

- DeciLM-7B: Following the performance summaries of other models, @euclaise suggested benchmarking the DeciLM-7B model next, and shared a link to the model, indicating its high performance on the Open LLM Leaderboard.

▷ #interesting-links (103 messages🔥🔥):

- Introduction of SOLAR-10.7B Model: Metaldragon01 shared a link to the new 10.7 billion parameter model, SOLAR-10.7B, which claimed to be better than Mixtral using a new method. teknium promised to benchmark the model against that claim and share the results.

- Discussion on SOLAR-10.7B Model Performance: The discussion revolved around the performance of the SOLAR-10.7B model. Several members, including n8programs and teknium, expressed skepticism based on initial test results, questioning whether the claims made in the model card were accurate. Carsonpoole mentioned that SOLAR-10.7B is only a pre-trained model and requires fine-tuning for specific tasks.

- Updates on Phi-2 Weights: metaldragon01 reported that the Phi-2 weights have been made available, and giftedgummybee hinted that the weights would soon be available on Hugging Face (HF). Discussions on the usability and compatibility of Phi-2 with HF followed.

- Debate on Data Quality and Amount for Model Training: A detailed discussion, led by georgejrjrjr and crainmaker, revolved around the choice and amount of data used for training AI models. Topics covered include the idea of minimizing data requirements instead of maximizing them, the question of repeated epochs vs. new data, and the concept of synthetic data being used as padding.

- Data Generation, Transformation and Filtering: atgctg asked georgejrjrjr about data transformation on a large scale, and the latter responded with suggestions on filtering junk text with quality heuristics, semantic de-duplication, and SSL Prototype filtering.
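A toy sketch of the kind of filtering pipeline mentioned above. The heuristics and thresholds are illustrative assumptions, and de-duplication on normalized text is a deliberately simple stand-in for semantic de-duplication, which would normally compare embeddings; SSL Prototype filtering is not shown.

```python
import re


def looks_like_junk(text, min_words=5, max_symbol_ratio=0.3):
    """Cheap quality heuristics: too short, or dominated by non-alphanumeric symbols."""
    words = text.split()
    if len(words) < min_words:
        return True
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / len(text) > max_symbol_ratio


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so trivial variants compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def filter_corpus(docs):
    """Drop junk documents, then drop duplicates of already-kept documents."""
    seen = set()
    kept = []
    for doc in docs:
        if looks_like_junk(doc):
            continue
        key = normalize(doc)
        if key in seen:
            continue
        seen.add(key)
        kept.append(doc)
    return kept


docs = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick brown fox jumps over the lazy dog",
    "$$$ ### !!! ??? *** &&& %%%",
    "Too short.",
]
print(filter_corpus(docs))  # ['The quick brown fox jumps over the lazy dog.']
```

Running the cheap heuristics first keeps the more expensive de-duplication step (embedding-based in a real pipeline) off text that would be discarded anyway.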

▷ #general (382 messages🔥🔥):

- RWKV Discussion and Updates: Users @vatsadev, @gabriel_syme, .benxh and @giftedgummybee discussed the advancements in RWKV, noting the rapid development and improvements in each version. User @vatsadev highlighted the increase in performance and reduced VRAM usage in RWKV as compared to transformers. In addition, @gabriel_syme shared his experience with training an Architext model on RWKV 2. Relevant link: Architext Model

- Long Prompt Length in LLM Modelling: Users @ldj, @giftedgummybee, and @carsonpoole discussed the limitation of 6k tokens per second at a batch size of 256 for Mistral 7b on A100, noting this would likely exceed memory capacity if batch size was set to 1.

- Debate on GPT’s AGI Capabilities: Users @fullstack6209, @nruaif, .benxh and @crainmaker had a discussion around GPT’s AGI capabilities, suggesting that GPTs might benefit from more few-shot prompting. @kenshin9000’s twitter post suggests that understanding of “propositional logic” and “concept” can significantly enhance GPT4’s performance.

- DeBERTa 1B Discussion: @euclaise and @coffeebean6887 discussed the high memory usage of the DeBERTa 1B model, noting that it tends to take more memory than larger models.

- Open Source Communities and Model Sharing: @yikesawjeez, @atgctg, @.benxh, @tsunemoto, @beowulfbr, @nruaif and others discussed the sharing of models on Hugging Face, arguing over licensing agreements, the potential for copyright conflicts, and the pros and cons of model sharing within the community. They also touched upon Microsoft’s Phi-2 release and the implications of its licensing restrictions. Relevant link: Phi-2

▷ #ask-about-llms (72 messages🔥🔥):

- Benchmarking Models for Conversation Snippets: @brace1 requested recommendations for open-source models to benchmark performance on a task related to extracting and understanding text from conversation snippets. @night_w0lf suggested several models, including Mamba, Mistral0.2, Mixtral, and openHermes. They also recommended looking at the models’ ELO on the chatbot arena leaderboard. @n8programs cautioned that these leaderboards are benchmark-based and suggested putting more weight on a model’s ELO.

- Speed Comparison Between Models: @n8programs discussed the disparity in speed between stable diffusion and a 3 billion parameter LLM. Despite being similarly sized, stable diffusion can only complete one iteration per second, while the latter can process 80+ tokens/second on the same hardware.

- Resource and Model Sharing: Various users shared resources and models throughout the discussion. For instance, @tsunemoto posted a link to the MLX Mistral weights. @coffeebean6887 discussed their experience with Apple’s model conversion script and shared a Github link with examples to guide others through the process. @.benxh suggested using the open source ChatGPT UI, located in this Github repo.

- Quantization and Model Running: @Fynn inquired about running Mistral-7B-v0.1 on a 2080 Ti graphics card with 10GB of VRAM through Hugging Face Transformers, asking for advice on using a quantized version or model offloading. @.beowulfbr suggested a quantized version of Mistral from Hugging Face. They also shared a Google Colab notebook on fine-tuning Mistral-7B using Transformers, which contains a section on inference.

- LLMs on Mobile Devices: @gezegen and @bevvy asked about running small models on iPhone and Android, respectively. @tsunemoto suggested using the LLM Farm TestFlight app to run quantized GGUF models on iPhone, while @night_w0lf mentioned mlc-llm as a solution for deploying models natively on a range of hardware backends and platforms, including Android and iOS.
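A back-of-the-envelope calculation shows why quantization comes up for a card with about 10GB of VRAM. The sketch below estimates memory for the weights alone, using an assumed round figure of 7 billion parameters; activations, the KV cache and framework overhead come on top and are not modeled.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory for model weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


params = 7e9  # assumed round figure for a "7B" model
for bits in (16, 8, 4, 2):
    print(f"{bits:>2} bits/weight: {weight_memory_gib(params, bits):.1f} GiB")
```

At 16 bits per weight the weights alone come to roughly 13 GiB, which already exceeds 10GB, while 4-bit weights need a little over 3 GiB; this is the gap that quantized checkpoints or offloading are meant to close.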

DiscoResearch Discord Summary

- Focus on the new OpenChat version, trained on the Prometheus dataset. Participants wondered whether this had contributed to the reported improved performance, despite the dataset containing no code-related examples. Reference to OpenChat’s detailed description on HuggingFace.

- Discussions about Mixture of Experts (MoE) indicate that orthogonality between experts might result in better performance. The concept of lower order rank approximation of individual experts was also brought up. Other topics included dynamic allocation of experts based on a set minimum threshold and custom MoE routing.

- The QuIP# method was discussed. This is a weights-only quantization method that allows a model to achieve near fp16 performance using 2 bits per weight and operate on an 11G GPU. Find more details on GitHub and the model in question on HuggingFace.

- Conversation around OpenSource German embedding models with reference to Deutsche Telekom’s models and Jina’s forthcoming German embedding model. Also mentioned were upcoming models based on LeoLM Mistral 7B, LeoLM Llama2 70B, and Mixtral 8x7B.

- Debates on the effectiveness of EQ Bench, in light of potential subjectivity in emotional evaluations. Agreement that subjectivity in emotional intelligence measurement presents a challenge.

- Debate about why Mixtral’s benchmark performance was lower than expected, despite reports that the model seems “smarter” than other 7B models. Several models were tested during the evaluation, and one hypothesis was that a base 7B model may limit certain types of cognition, which might also affect role-playing and creative writing tasks.

DiscoResearch Channel Summaries

▷ #disco_judge (3 messages):

- OpenChat Version Training on Prometheus Data: User _jp1_ mentioned that the new OpenChat version was trained on the Prometheus dataset and asked if this inclusion improved or deteriorated the overall evaluation performance. _jp1_ provided a link to the OpenChat version’s details on the Huggingface website: OpenChat Details.

- Improved HumanEval Performance: User le_mess responded to _jp1_’s query, stating that there has been an insane improvement on humaneval after the incorporation of the Prometheus dataset.

- Effect of Prometheus Dataset and C-RLFT Training: User _jp1_ expressed curiosity about why the Prometheus dataset, which contains no code-related examples, improved performance. _jp1_ also specified that the custom C-RLFT training appears to be working effectively, with potential for future enhancement.

▷ #mixtral_implementation (160 messages🔥🔥):

- Mixture of Experts and Router Performance: @fernando.fernandes shared a conjecture that mixtures of experts (MoE) perform better because orthogonality between experts leads to higher ranks and thus more efficient storage and retrieval of information. They also mentioned that a “lower order rank approximation” of individual experts would be challenging because of their assumed high matrix ranks (discussion).

- Quantization-Based QuIP# Method for GPUs: @2012456373 shared an AI model from Hugging Face that uses a 2-bit per weight, weights-only quantization method (QuIP#) and can run on an 11GB GPU. This method is said to achieve near fp16 performance (model link and source code).

- Dynamic Allocation of Experts: @kalomaze and @nagaraj_arvind discussed the possibility of dynamically allocating experts based on a set minimum threshold for each layer/token. It led to a discussion on modifying and rebuilding llama.cpp to support such a parameter (discussion).

- Custom MoE Routing: @kalomaze developed a custom version of llama.cpp that allows the number of routed experts to be specified in the experts.txt file created by the script (source code).

- Discussion on Experts and MoE Routing System: Experimenting with the routing mechanism and the number of experts selected to process each token was a predominant topic. Posts from Reddit and a Discord server (TheBloke discord) were referenced (Reddit post and Discord server invite).
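One way to picture threshold-based expert allocation is the sketch below. It is a hypothetical illustration in plain Python, not @kalomaze's llama.cpp changes, whose details the summary does not give: experts are kept when their router probability reaches an assumed `min_prob`, the best expert is always kept, and the kept weights are renormalized.

```python
import math


def route_experts(router_logits, min_prob=0.2, max_experts=None):
    """Select experts whose softmax probability is at least `min_prob`.

    Always keeps the top-scoring expert, optionally caps the count, and
    renormalizes the kept probabilities so they sum to 1.
    Returns a list of (expert_index, weight) pairs, highest weight first.
    """
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]  # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]

    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = [i for i in ranked if probs[i] >= min_prob] or ranked[:1]
    if max_experts is not None:
        chosen = chosen[: max(1, max_experts)]  # never drop below one expert

    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]


# A confident token is routed to one expert, an ambiguous one to several.
print(route_experts([4.0, 0.5, 0.2, 0.1]))
print(route_experts([1.0, 0.9, 0.8, -2.0]))
```

Compared with a fixed top-k, this spends compute on extra experts only when the router is genuinely undecided, at the cost of a variable amount of work per token.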

▷ #general (18 messages🔥):

- Quantization-based QuIP# method: @2012456373 shared information on a model that uses the QuIP# method, a weights-only quantization method that achieves near fp16 performance using only 2 bits per weight. The model can run on a GPU with 11G of memory, and more details about the QuIP# method can be found on GitHub.

- STK Training Code for Mixtral: @e.vik is seeking help with stk training code for mixtral. They are close to getting it working but are experiencing memory access errors.

- German Embedding Models: @thewindmom and _jp1_ discussed various open-source German embedding models they’ve tested. _jp1_ also mentioned an upcoming DiscoLM German v2 model planned for release during the Christmas period, which would use new finetuning datasets. @rasdani recommended Deutsche Telekom’s gbert-large-paraphrase-euclidean and gbert-large-paraphrase-cosine models, while @flozi00 announced Jina’s intent to release a German embedding model.

- New Models under Leolm and Mixtral: _jp1_ shared plans for creating models based on LeoLM Mistral 7B, LeoLM Llama2 70B, and Mixtral 8x7B. However, they clarified that not all models may be ready/released immediately.

- Comparison of Mixtral and Qwen-72b: @aslawliet inquired about the comparison between Mixtral and Qwen-72b, while @cybertimon expressed anticipation for the release of the Mixtral 8x7B model.

▷ #benchmark_dev (6 messages):

- Concerns about Sharing Code on GitHub: User @rtyax expressed hesitation about sharing their code on GitHub due to potential privacy issues. They did, however, consider creating a new GitHub account to sidestep this concern.

- EQ Bench and Subjectivity in Emotion Evaluation: @_jp1_ pointed out that the EQ Bench could be influenced by subjectivity in the assessment of emotions and suggested conducting a comparative study of expert evaluations. @calytrix agreed that the inherent subjectivity of emotional intelligence measurement is a challenge, but still believes the EQ Bench can effectively discriminate between different responses.

- Questions about Mixtral’s Performance: @_jp1_ also questioned why Mixtral’s performance wasn’t as high as expected. @calytrix responded that they used the specified tokenisation and tested several models including: DiscoResearch/mixtral-7b-8expert, mattshumer/mistral-8x7b-chat, mistralai/Mixtral-8x7B-Instruct-v0.1, migtissera/Synthia-MoE-v3-Mixtral-8x7B. They hypothesized that having a base 7b model might limit certain types of cognition and expect this might also impact role playing & creative writing tasks.

- Subjective Perceptions of Model Performance: @technotech remarked that despite the lower performance scores, in their subjective opinion, the Mixtral model seems “much smarter” than other 7B models.

OpenAccess AI Collective (axolotl) Discord Summary

- Announcement of the availability of Microsoft Phi-2 model weights for research purposes Microsoft’s Official Phi-2 Repo. Comparison of Phi-2 and GPT4 was also discussed.

- Conversation about diverse instruction datasets, with topics on how unstructured datasets are structured.

- Mention of an idea to establish an AI model benchmark; fun discussion on the concept and a hypothetical dataset for benchmarking purposes.

- Update announcement for Mixtral (FFT issue fix), enabling full fine-tuning (FFT) on specific hardware configurations.

- Discussion of a credit card issue and attempts to find a solution.

- Interest in enabling evaluation of finetuned models against a test dataset for comparison with the base model.

- Exchange about loss spikes and methods to stabilize them, such as adjustments in learning rate.

- Conversation about memory constraints when training large models and potential solutions like the use of offload.

- Dialogue on an issue with multi-GPU training; shared strategies to counteract this problem, including a Pull Request on Github.

- Shared observations about the performance of the Mixtral model versus the Mistral model, including a useful Pull Request for better DeepSpeed loading.

- Discussion around an issue with Flash Attention installation and transformers’ attention implementation, involving changes made by HuggingFace (HuggingFace Changes).

- Tactical attempts to fix the training error, like setting sample_packing to false in configuration files.

- Fix deployed to tackle the aforementioned training error; verification of its success.

- Exploration of potential reasons behind a sudden loss spike in pretraining.

- Shared user experience with a PDF parsing tool that was not fully satisfactory.

- Shared and discussed a tweet by Jon Durbin.

- Willingness expressed to experiment with different solutions for better PDF parsing.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (71 messages🔥🔥):

- Phi-2 Weights Availability: User @caseus_ shared that the Microsoft Phi-2 weights are now available on Hugging Face for research purposes, following a discussion about the model’s availability on Azure sparked by @noobmaster29. The model is reportedly not as effective as GPT4 but comparable in certain aspects (Microsoft’s Official Phi-2 Repo).

- Instruction Datasets Discussion: Users @dreamgen and _dampf discussed various instruction datasets like OpenOrca, Platypus, Airoboros 3.1, and OpenHermes 2.5. Questions were raised about how such datasets are curated and how unstructured datasets are made structured.

- Potential Model Benchmark: There was a humorous discussion about creating a benchmark for AI models. @faldore suggested naming it Dolphin Benchmark, while @le_mess offered the idea of generating a dataset using one of @faldore’s models. They joked about training on the test set and comparing results of various prompting strategies.

- Mixtral FFT Update: @caseus_ announced an update for Mixtral that fixes a full fine-tuning (FFT) issue, enabling FFT on certain hardware configurations.

- Credit Card Issue: User @nruaif reported a problem with their credit card getting rejected while trying to access some services. They also sent a friend request to another user on the chat for assistance.

▷ #axolotl-dev (82 messages🔥🔥):

- Evaluation of Finetuned Models: @z7ye expressed interest in being able to evaluate finetuned models against a test dataset for comparison with the base model. They included an example of potential commands they’d like to run.

- Loss Spikes: @casper_ai experienced sudden loss spikes when training, but managed to stabilize this by adjusting the learning rate to 0.0005.

- Memory Constraints: A discussion arose about memory constraints when training large models. @casper_ai mentioned they were unable to fit a model on 8x A100 using FFT due to its memory-consuming nature. @nruaif suggested the use of offload, assuming the VM has sufficient RAM.

- Issue with Model Saving: Several members, including @casper_ai and @caseus_, discussed an issue when trying to save a model during multi-GPU training. @caseus_ shared a link to a Pull Request on Github that addresses the problem.

- Mixtral Model Performance: When comparing the Mixtral and Mistral models, @casper_ai observed that the Mixtral model seemed to have more stable loss during finetuning. @caseus_ also shared a Pull Request which aims to improve DeepSpeed loading for the Mixtral model.

▷ #general-help (14 messages🔥):

- Flash Attention Installation and Transformers Attention Implementation: @jovial_lynx_74856 had trouble with training due to a change in the transformers attention implementation. @caseus_ found that the patch may not be working as intended due to this change and believes the implementation may be falling back to SDPA.

- Changes in Transformers Attention Implementation: @caseus_ pointed out the recent changes made by Hugging Face in the transformers attention implementation that could be causing the problem.

- Attempt to Fix the Training Error: @jovial_lynx_74856 managed to run the training without packing by setting sample_packing to false in openllama-3b/lora.yml.

- Updated Branch for Training Error: @caseus_ pushed another update to fix the issue. @jovial_lynx_74856 confirmed that the training runs without any issue now.

- Loss Spike in Pretraining: @jinwon_k reported a loss spike of unidentified cause during pretraining and asked whether there are known reasons behind it.

▷ #datasets (6 messages):

- Discussion on PDF Parsing Tool: User @visuallyadequate discussed their experience with a PDF parsing tool. They stated that the tool doesn’t work with some of their PDFs and mentioned that it is not open-source. They also reported that the API rejects some files without explanation, that the tool is slow when it does work, and that it garbles the formatting.

- Tweet Share from Jon Durbin: @lightningralf shared a tweet from Jon Durbin for discussion.

- Further Discussion on PDF Parsing: Responding to @lightningralf, @visuallyadequate expressed a willingness to keep trying different solutions for better PDF parsing.

Mistral Discord Summary

- Discussion on API Access Invitations and Mistral Model Performance, with users expressing feedback and inquiries on topics like model evaluation and access procedure.

- Various technical considerations on Mistral vs Qwen Models, privacy concerns, data sovereignty, and hosting and pricing of Mistral API were brought up.

- The pricing can be found on the pay-as-you-go platform.

- Detailed conversations on hyperparameters for Mistral Models, API Output Size, Discrepancies Between API and HF Model Outputs, and various responses were made surrounding these areas.

- Announcement of a new channel, <#1184444810279522374>, for API and dashboard-related questions.

- Several deployment related topics were explored, with inquiries about endpoint open source, FastChat Tokenization, and recommended settings for Mixtral.

- In reference to implementation, discussion consisted of rectifying wrong HF repo for Mistral v0.2 along with sharing the correct one, Mistral-7B-Instruct-v0.2.

- Links provided to updated documentation for Mistral-7B-v0.2 Instruct files and Mixtral-8x7B-v0.1-Instruct.

- Query by user @casper_ai on the finetuning learning rate of Mistral instruct.

- Showcased work and experiments included the release of a new fine-tune of Mistral-7b, termed “Bagel,” and the sharing of a GitHub repository for Chat Completion Streaming Starter.

- Random questions and humor in regards to querying LLMs with random questions and comparing Mistral to QMoE Mixtral.

- Within the la-plateforme channel, several topics were featured including API Usage, Performance issues, Grammar Integration, Model Feedback and Playground, Rate Limit, Billing, and Bug Reports.

- Creation of a playground for interfacing with Mistral, shared as a GitHub link and a web link (https://mistral-playground.azurewebsites.net/).

Mistral Channel Summaries

▷ #general (74 messages🔥🔥):

- API Access Invitations: Users such as @vortacs and @jaredquek inquired about the speed of invites for API access. @aikitoria signed up a couple of hours after the announcement and got access earlier, indicating a relatively quick process.

- Mistral Model Performance: @someone13574 discussed the possibility of dynamic expert count routing in Mistral and asked for evaluations for different numbers of active experts per token at inference time.

- Hosting and Pricing of Mistral API: The hosting and pricing of the Mistral API was the main topic of discussion. It was confirmed that the API is hosted in Sweden on Azure. For international customers, the billing is in Euros and will incur some currency conversion fees. US customers will be billed directly in USD in the future. The pricing can be found on their pay-as-you-go platform.

- Privacy Concerns and Data Sovereignty: Users expressed concerns regarding the Patriot Act and the hosting of data by US cloud providers. @lerela from Mistral assured users that it’s an important topic and that they are working on providing better guarantees to their customers. The models can also be deployed on-premises for enterprise customers.

- Mistral vs Qwen Models: @aslawliet and @cybertimon compared the Mistral and Qwen models, concluding that Mistral is faster, easier to run, and better than Qwen 7b and 14b. The comparison with Qwen 72b wasn’t exact due to a lack of in-depth testing, but users lean towards Mistral for its stated benefits.
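
The question about varying the number of active experts per token can be made concrete with a small sketch of top-k gating. Mixtral 8x7B activates 2 of its 8 experts per token; the function below is a generic illustration in plain Python, not Mistral’s implementation, and the name `route_top_k` and the example logits are made up for this sketch.

```python
import math


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Hypothetical helper for illustration only; returns (expert_index, weight) pairs.
    """
    if not 1 <= k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest router logits, highest first.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax restricted to the selected experts (shifted for numerical stability).
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Made-up router scores for 8 experts; compare different active-expert counts.
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]
for k in (1, 2, 4):
    print(k, route_top_k(logits, k))
```

Changing `k` at inference time only changes how many expert outputs are mixed; whether quality holds up for values other than the one used in training is exactly what the requested evaluations would have to show.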

▷ #models (21 messages🔥):

- Hyperparameters for Mistral Models: User @tim9422 expressed concern about the lack of information on hyperparameters used for Mistral model training, seeking guidance for full-finetuning.

- API Output Size: @vince_06258 discussed limitations with API outputs, finding that independent of prompt length, the generated responses are consistently brief. @aikitoria suggested adding a system message at the start to generate longer responses, but confirmed that there isn’t any parameter for “desired length”.

- Discrepancies Between API and HF Model Outputs: @thomas_pernet had issues reconciling API results with outputs from the transformers model from Hugging Face, ending up with significantly different results. @daain pointed out that the API uses the instruct model rather than the base model. @thomas_pernet managed to reproduce the API results using the correct instruct model.

- New Channel for API Inquiries: @lerela announced the creation of a new channel, <#1184444810279522374>, dedicated to API and dashboard-related questions, noting that the API utilizes instruct-only models.

- Discussion on Mixtral and SOLAR 10.7B Models: Users @_dampf, @_bluewisp, and @vijen. initiated a conversation about Mixtral and SOLAR 10.7B models. Discussion points included whether Mixtral was trained on new English data, a comparison of Mixtral’s performance with the newly released SOLAR 10.7B-Instruct-v1.0 model, and inquiries on the specific use cases for Mixtral.
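
The system-message suggestion for longer responses can be sketched as a request payload. This is a minimal sketch that only builds the JSON body and sends nothing; the model name, the wording of the system message, the `min_words` knob, and the `max_tokens` value are assumptions made for illustration, and the payload shape follows the common chat-completions convention rather than anything confirmed in the discussion.

```python
import json


def build_chat_request(user_prompt, model="mistral-small", min_words=400):
    """Build a chat-completions style payload that asks for a longer answer.

    Hypothetical helper: there is no "desired length" parameter, so the
    length request lives in the system message text.
    """
    system = (
        "Answer thoroughly and in detail. "
        f"Aim for at least {min_words} words unless the question is trivial."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user_prompt},
        ],
        # max_tokens is only an upper bound on output, not a target length.
        "max_tokens": 2048,
    }


print(json.dumps(build_chat_request("Explain mixture-of-experts routing."), indent=2))
```

Because the instruction is plain text, the model may still ignore it; raising `max_tokens` alone would not make answers longer.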

▷ #deployment (6 messages):

- Endpoint Open Source Inquiry: @yvaine_16425 asked if the endpoint is also open-source.

- FastChat Tokenization Issue: @lionelchg noted a tokenization caveat of FastChat (used in vLLM) and asked if this issue is also present in the TensorRT-LLM deployment or if NVIDIA correctly sends tokens. @tlacroix_ clarified that tensorrt-llm is just token-in/token-out and suggested following Triton Inference Server tutorials for setting up pipelines for tokenization/detokenization.

- Recommended Settings for Mixtral: @sa_code asked for recommended settings for top-p and temperature for Mixtral.

- Queries about RPS Cap on API: @kml1087 is considering deploying to production, but first wanted to know if there’s a cap on the requests per second (RPS) for Mistral’s API.

▷ #ref-implem (8 messages🔥):

- Wrong HF Repo for Mistral v0.2: User @titaux12 pointed out that the Mistral AI Platform page had an incorrect link to the HuggingFace repo for Mistral v0.2. The incorrect link pointed to Mistral-7B-v0.1, but it should point to Mistral-7B-Instruct-v0.2. The error was in the section Generative endpoints > Mistral-tiny.

- Error Correction: User @lerela confirmed the mistake and thanked @titaux12 for pointing it out. The issue was subsequently fixed.

- Mistral-7B-v0.2-Instruct Docs Update: @tlacroix_ shared a link to the Mistral-7B-v0.2 Instruct files, indicating that the link would be added to the documentation. @vgoklani appreciated the update.

- Request for Mixtral-8x7B-Instruct-v0.1: @vgoklani requested a non-HuggingFace version of Mixtral-8x7B-Instruct-v0.1. @tlacroix_ responded jovially and promised to work on it.

- Reference Implementation Preference: @vgoklani expressed a preference for the reference implementation, citing cleaner code and efficient performance with FA2 (FlashAttention-2), particularly when fused with implementations from Tri Dao for both the RMS_NORM and Rotary Embeddings. @vgoklani also mentioned working on flash-decoding and a custom AWQ implementation for the reference model.

- Link to Mixtral-8x7B-v0.1-Instruct: @tlacroix_ provided the requested link for Mixtral-8x7B-v0.1-Instruct and confirmed this would be added to the documentation.

▷ #finetuning (1 messages):

- Finetuning of Mistral Instruct: User @casper_ai asked about the learning rate used to finetune Mistral instruct. No further discussion or response to the question was captured in the provided message history.

▷ #showcase (8 messages🔥):

- Running Mistral on Macbook: @sb_eastwind mentioned that he was running a 3bit version of Mistral on his 32gb Macbook with llamacpp and LM Studio, sharing a Twitter post that illustrates the setup.

- Increasing Memory Allocation: In response to @sb_eastwind, @daain suggested that more memory could be allocated to llamacpp on MacOS to run the 4bit version of Mistral, pointing to a Discord message for details.

- Difference between Q3 and Q4: @sb_eastwind asked about the difference between q3_k_m and Q4, to which @daain stated they don’t know as they haven’t tried Q3.

- Chat Completion Streaming Starter: User @flyinparkinglot shared a GitHub repository for Chat Completion Streaming Starter, which allows users to toggle between OpenAI GPT-4 and Mistral Medium in a React app.

- Release of Bagel, Mistral-7b Fine-Tune: @jondurbin announced the release of a new fine-tune of Mistral-7b, termed “Bagel”, which comes with features such as dataset merging, benchmark decontamination, multiple prompt formats, NEFTune, and DPO.

▷ #random (3 messages):

- Querying LLMs with Random Questions: @basedblue suggested compiling a list of random questions for large language models (LLMs).

- Querying Mistral Equivalent to Querying QMoE Mixtral: The user @sb_eastwind humorously questioned whether querying the Mistral AI is similar to querying the QMoE Mixtral.

- Can Generated Text from Mistral AI be Used for Commercial Datasets: @nikeox posed a question for intellectual property lawyers, AI ethics experts and data privacy specialists regarding the commercial use of generated text from Mistral AI. The user broke down their enquiry into a 2-part expert plan and requested confirmation from others in the channel.

▷ #la-plateforme (52 messages🔥):

- API Usage and Performance issues: User @_jp1_ inquired about tracking usage, and @qwertio identified a typo in the documentation. User @flyinparkinglot expressed interest in a Function Calling feature, while @sa_code and @coco.py agreed. @svilup experienced API access issues which turned out to be a URL rendering error.

- Grammar Integration and Model Usage: @nikeox requested information on the possibility of integrating grammars such as those supported by llama.cpp. @tlacroix_ confirmed that function calling and grammars are on the Mistral roadmap, and further queried @nikeox on the specifics of their grammar usage. @lionelchg, @tlacroix_ and @nikeox also discussed using a system role in the chat completions API and how to include it.

- Mistral Model Feedback and Playground: @delip.rao shared positive feedback on the mistral-medium model for handling complex coding tasks, linking to a Twitter post (https://x.com/deliprao/status/1734997263024329157?s=20). User @xmaster6y created a playground to interface with the Mistral API and shared a GitHub link and a web link (https://mistral-playground.azurewebsites.net/).

- Rate Limit, Billing, and Model Embeddings: Questions about rate limits were raised by @_jp1_ and @yusufhilmi_, and @tlacroix_ explained them as approximately 1.5M tokens per minute and 150M per month. @akshay_1 inquired about the embedding model, for which @alexsablay recommended a context of up to 512. Additional inquiries on the batch limit for embedding requests were raised by @lars6581 and @tlacroix_, expecting answers on precise rate limits for the APIs.

- Bug Reports: @nikeox reported an inconsistency between the real API response and the example response provided in the API documentation. ‘oleksandr_now’ reported issues with the API’s responsiveness over time and requested a Billing API to monitor their usage.
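
To put the quoted rate limits in proportion, a short calculation helps. It assumes the approximate figures reported above (1.5M tokens per minute, 150M tokens per month) and a 30-day month; the function names are made up for this sketch.

```python
TOKENS_PER_MINUTE = 1_500_000    # approximate per-minute limit quoted above
TOKENS_PER_MONTH = 150_000_000   # approximate monthly limit quoted above


def minutes_to_exhaust(monthly_limit, per_minute_limit):
    """Minutes of traffic at the per-minute cap before the monthly cap is hit."""
    return monthly_limit / per_minute_limit


def sustainable_rate(monthly_limit, days=30):
    """Average tokens per minute that would spread the monthly cap over `days`."""
    return monthly_limit / (days * 24 * 60)


print(minutes_to_exhaust(TOKENS_PER_MONTH, TOKENS_PER_MINUTE))  # 100.0
print(round(sustainable_rate(TOKENS_PER_MONTH)))                # 3472
```

In other words, the per-minute cap only matters for bursts: running flat out would use the whole month’s allowance in about 100 minutes, while an even spread over 30 days works out to roughly 3.5K tokens per minute.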

HuggingFace Discord Summary

- Several updates and releases were discussed in the realm of AI and machine learning models including Transformers, Mixtral, multimodal LLMs, Seamless M4T v2 models, Phi 1 and 1.5 from Microsoft, and AMD GPU support for Llama-inspired architectures; all part of the open-source updates mentioned by @osanseviero. Additionally, the Spaces Analytics got a shoutout along with announcements regarding LoRAs inference in the API and models tagged as text-to-3D. A new course by Codecademy focused on Hugging Face was also shared (Intro to Hugging Face Course & Gaussian Splatting).

- The General channel had various discussions, notably multiple plans and fine-tuned versions for the Deepsex-34b model, brain simulations aiming for artificial intelligence, and potential text classification using HuggingFace models. There was a mention of an Australian supercomputer, DeepSouth, for simulating the human brain. Members also discussed LSTMs for time series prediction.

- The ‘Today I’m Learning’ channel had discussions mainly around Mamba architecture, RNN, LSTM, and GRUs. User @merve3234 provided guidance to @nerdimo in understanding these RNN architectures (Mamba Paper).

- New language models caught attention in the ‘Cool Finds’ channel, including the MOE-8x7B release by Mistral AI, along with a paper on an inductive bias for tabular deep learning. Security issues of ML models were also shared with an interesting read on membership inference attacks. A diffusion model for depth estimation called Marigold was revealed, along with a free AI code-along series from DataCamp that includes a session on “Building NLP Applications with Hugging Face” (DataCamp NLP session). A comprehensive article on precision medicine was also shared.

- The ‘I Made This’ channel primarily focused on individual achievements. @rwitz_ published a fine-tuned model, Go Bruins V2, and @thestingerx compiled a project, RVC, which offers audio conversion and TTS capabilities. Bringing in the festive cheer, @andysingal used Stable Diffusion to create Christmas vibes. An online artspace by @Metavers Artspace was introduced along with a quantization blog post by @merve3234 on LLMs.

- The ‘Reading Group’ channel opened with a proposed discussion topic: Distilling the Knowledge in a Neural Network.

- GPU upgrade possibility was brought up in the ‘Diffusion Discussions’ channel.

- In the ‘Computer Vision’ channel, model recommendations are sought for recognizing 2D construction drawings.

- The NLP channel saw proposals on combining graph theory or finite state automata theory with models, looking for solutions to distribute model checkpoints between GPUs, seeking advice on models for resume parsing, and dealing with issues on custom datasets for machine translation.

HuggingFace Discord Channel Summaries

▷ #announcements (1 messages):

- Transformers Release: User @osanseviero announced several updates including compatibility of Mixtral with Flash Attention, introduction of multimodal LLMs (Bak)LlaVa, Seamless M4T v2 models that offer multiple translation capabilities, forecasting models like PatchTST and PatchTSMixer, Phi 1 and 1.5 from Microsoft, AMD GPU support and Attention Sinks for Llama-inspired architectures. Further details can be found on the official GitHub release page.

- Open Source: Some significant updates include Small Distil Whisper, which is 10x smaller and 5x faster with similar accuracy to the larger Whisper v2; Llama Guard, a 7B model for content moderation to classify prompts and responses; and Optimum-nvidia, which allows achieving faster latency and throughput with a 1-line code change. Additionally, there are updates on models in Apple’s new MLX framework available on the HuggingFace platform.

- Product Updates: Spaces Analytics is now available in settings pages of Spaces. LoRAs inference in the API has vastly improved. Models tagged as text-to-3D can now be easily found on the Hub. You can learn more about LoRAs inference on Huggingface’s blog page.

- Learning Resources: Codecademy launched an Intro to Hugging Face course. Here is the link to the course. Also mentioned is an intriguing introduction to Gaussian Splatting, available here.

▷ #general (57 messages🔥🔥):

- Deepsex-34b Model Collaborations: User @databoosee_55130 shared a set of fine-tuned versions of the Deepsex-34b model by different contributors including [TheBloke](https://huggingface.co/TheBloke/deepsex-34b-GGUF), [waldie](https://huggingface.co/waldie/deepsex-34b-4bpw-h6-exl2), and others from the HuggingFace community. He announced plans to create a new model based on the “Seven Deadly Sins” series.

- Brain Simulation and AI: A discussion on brain simulations and artificial intelligence was initiated by @databoosee_55130, stating that simulating the human brain is extremely complex and current artificial neural networks only accomplish a fraction of the human brain’s complexity. @ahmad3794 added that hardware implementations mimicking real neurons could yield more efficient simulations.

- Supercomputer for Brain Simulations: @stroggoz shared information on the DeepSouth supercomputer, capable of simulating human brain synapses, being developed by Australia’s International Center for Neuromorphic Systems and scheduled for launch in 2024.

- Inquiries on Text Classification and HuggingFace Support: Users @jeffry4754 and @fireche requested advice on text document classification, specifically using existing models for category prediction. @cakiki suggested reaching out to experts using the HuggingFace support form.

- Debate on LSTM Neural Network: @cursed_goose received feedback from the group on implementing LSTM cells for time series prediction. He shared a basic implementation in Rust and asked for guidance on creating an LSTM layer and making predictions. @vipitis suggested doing unsupervised training or clustering, or trying a zero-shot task with large models.

▷ #today-im-learning (4 messages):

- Discussion on Mamba by @merve3234: Introduced Mamba, an architecture that surpasses Transformers in both performance and efficiency, sharing a link to the Mamba paper on Arxiv (Mamba Paper).

- Learning about Recurrent Neural Network (RNN) Architectures by @nerdimo: Starting to learn about GRUs and LSTMs, specifically to tackle the vanishing gradient problem faced by standard RNNs.

- @merve3234’s Advice on Understanding RNN, LSTM, and GRUs: Noted the initial complexity in understanding LSTMs and GRUs compared to standard RNNs. @nerdimo shared their learning experience, noting the difficulties in understanding the intricate logic gate mechanisms of LSTMs and GRUs.
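
The gate mechanisms mentioned above are easier to follow when written out for a single scalar unit. The sketch below uses the standard LSTM equations; it is not code from the discussion, and the parameter dictionary `p` with its key names (`wf`, `uf`, `bf`, and so on) is a made-up layout for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p holds hypothetical weights and biases."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old cell state to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new candidate to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much cell state to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate cell value
    c = f * c_prev + i * g   # additive cell update
    h = o * math.tanh(c)     # new hidden state
    return h, c


# Arbitrary small weights, run over a short made-up sequence.
params = {k: 0.5 for k in ("wf", "uf", "bf", "wi", "ui", "bi",
                           "wo", "uo", "bo", "wg", "ug", "bg")}
h, c = 0.0, 0.0
for x in (1.0, 0.5, -0.3):
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

The additive update `c = f * c_prev + i * g` is the part that addresses vanishing gradients: when the forget gate stays near 1, the cell state (and its gradient) passes through time steps largely unchanged instead of being repeatedly squashed.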

▷ #cool-finds (7 messages):

- Mistral AI’s Latest Language Model: @tokey72420 shared a link about Mistral AI’s recent breakthrough in language models with the release of MOE-8x7B. Check here for more.

- Tabular Deep Learning: @d3aries recommended a paper titled “An inductive bias for tabular deep learning”, available on Amazon.science.

- Machine Learning Security: @shivank2108 shared a link to a research paper on the security of machine learning and membership inference attacks, accessible on arXiv.

- New Depth Estimation Model, Marigold: @merve3234 mentioned Marigold, a diffusion model for depth estimation and an alternative to dense prediction transformers, available on huggingface.co.

- AI Code-Along Series: @datarhys shared a free 9-part AI code-along series from DataCamp. The series includes a session on “Building NLP Applications with Hugging Face” and is freely accessible at Datacamp NLP session.

- Precision Medicine and Its Role in Medical Practices: @jeffry4754 shared an article on how precision medicine, driven by technologies like genomics and proteomics, has the potential to change medical practices, accessible on link.springer.com.

▷ #i-made-this (7 messages):

- Go Bruins V2 - A Fine-tuned Language Model: @rwitz_ shared a link to their fine-tuned language model, Go Bruins V2.

- RVC Project: @thestingerx discussed their RVC project that includes audio conversion, various TTS capabilities, and more. They mentioned the challenge of running on CPU and shared a link to the project.

- Christmas Vibes using Stable Diffusion: @andysingal shared a YouTube video highlighting the application of Stable Diffusion to create Christmas vibes.

- Metavers Artspace: @Metavers Artspace shared a link to an online artspace.

- Quantization of LLMs Blog Post: @merve3234 wrote a blog post on the quantization of LLMs, excluding AWQ. They encouraged other users to write blog posts on Hugging Face.

- @nerdimo expressed interest in examining @merve3234’s blog post on quantization in their free time.

▷ #reading-group (5 messages):

- Channel Introduction: User @murtazanazir indicated interest in discussing and understanding more about a particular topic. @merve3234 suggested that the discussion be held on the channel to allow others to join.

- Discussion Topic: @murtazanazir suggested a topic for discussion: Distilling the Knowledge in a Neural Network.

▷ #diffusion-discussions (3 messages):

- GPU Upgrade Discussion: @pseudoterminalx mentioned that, even though it was possible to upgrade the GPU in early 2023, it used 350w for 2 seconds per iteration. @knobels69 responded that it might be time for them to upgrade their GPU.

▷ #computer-vision (3 messages):

- 2D Construction Data Recognition: User @fireche inquired about a model that can recognize 2D construction drawings based on image classification, which @merve3234 clarified.

▷ #NLP (9 messages🔥):

- Merging Graph Theory or Finite State Automata With Models: User @stroggoz proposed the idea of an open-source library that combines graph theory or finite state automata theory with models. The decision of which model to use would be based on the predictions/scores.

- Parallelism for Distributing Model Checkpoints Between GPUs: In response to @acidgrim’s enquiry about splitting a model between two distinct systems, @merve3234 referred them to the concept of parallelism as explained in HuggingFace’s documentation on Text Generation Inference and the Transformers documentation on Performance Optimization for Training.

- Model for Resume Parsing: @surya2796 sought information on creating a model for resume parsing. No further conversation or suggestions followed this query.

- Issues with Custom Dataset for Machine Translation: @dpalmz faced a ValueError while trying to train with a custom dataset for machine translation. @nerdimo offered to assist, having encountered the same problem in the past.

▷ #diffusion-discussions (3 messages):

- GPU Upgrade Discussion: User @pseudoterminalx suggested that it is not practical to use a certain feature, which they did not specify, due to high power usage, saying “most likely the simplest answer is No and even if you could (it was briefly possible in early 2023) it used 350w for 2 seconds per iteration”. In response, @knobels69 showed interest in upgrading their GPU.

LangChain AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Azure Search Service with LangChain: @hyder7605 is seeking assistance with integrating multi-query retrieval or fusion RAG capabilities with Azure Cognitive Search, utilizing LangChain. He aims to define filters and search params during document retrieval and incorporate advanced features like hybrid and semantic search.

- ReRanking model with Node.js: @daii3696 is looking for recommendations on incorporating the ReRanking model into a Node.js RAG application, noting that the Cohere Re-Ranking model is currently only accessible for LangChain in Python.

- GPT-3.5 Language Response: @b0otable is discussing the difficulty in getting gpt-3.5 to respond in a desired language, especially with longer and mixed-language context. The current prompt works about 85% of the time.

- LangChain Error: @infinityexists. is experiencing an error while running any model with LangChain: “Not implemented error: text/html; charset=utf-8 output type is not implemented yet”.

- gRPC Integration with LangChain: @manel_aloui is looking for information on how to integrate gRPC with LangChain and asked the community if anyone has found a solution.

- Document Discrepancy: @vuhoanganh1704 noticed discrepancies between the LangChain JavaScript and Python documentation, causing confusion.

- LANGSMITH Sudden Non-Response: @thejimmycz brought up an issue where LANGSMITH isn’t saving any calls. @seththunder confirmed seeing the same problem.

- Stream Output with Next.js: @menny9762 is asking for advice on streaming the output using Next.js and LangChain. @seththunder suggested a callback function called StreamCallBack and setting stream = true as a parameter in the language model.

- Reranking with Open Source, Multilingual Support: @legendary_pony_33278 is interested in open-source reranking techniques for RAG applications that support multiple languages, including German, noting that most tutorials use the Cohere Reranker Model.

- Fine-tuning Mixtral-8x7B with LangChain: @zaesar is inquiring about how to fine-tune the Mixtral-8x7B model with LangChain.

- LangChain Materials: @sniperwlf. is searching for learning materials for LangChain, similar to O’Reilly books. @arborealdaniel_81024 commented that the project is too young and unstable for a book yet.

- Indexing Solutions with Sources on RAG: @hucki_rawen.io asked about indexing solutions with sources used in RAG, and was asked in turn about the desired outcome from this process.

Latent Space Discord Summary

- Video presentation, “Ben Evans’s 2023 AI Presentation”, was shared. YouTube link by @stealthgnome

- Introduction of the AI News service, a tool that summarizes discussions across AI Discord servers, with an option to sign up for the service launch. @swyxio’s post

- Discussions on Langchain’s rearchitecture prompted by the post on the subject in the AI News service. Langchain rearchitecture post

- Sharing of the first Mistral Medium report, and subsequent successful experimentation with Mistral-Medium by @kevmodrome for UI components creation. Twitter link by @swyxio

- A talk on LLM development beyond scaling was attended by @swyxio, with a link to the discussion shared. Twitter link by @swyxio

- Commentary on an unspecified subject by Sama was shared, albeit described as not being particularly insightful. Twitter link by @swyxio

- Active organization of paper reviews and related discussions:

- An invitation to a chatroom discussion about the Q-Transformer paper was extended. Paper link

- Encouragement to sign up for weekly reminders for the recurring LLM Paper Club events. Event signup link

- In the LLM Paper Club channel:

- Announcement of a presentation on the Q-Transformer paper by @cakecrusher, with a link to a copilot on the topic. Copilot link

- User queries and clarifications on technical issues and topics for upcoming discussions.

Latent Space Channel Summaries

▷ #ai-general-chat (9 messages🔥):

- Ben Evans’s 2023 AI Presentation: @stealthgnome shared a link to a YouTube video of Ben Evans’s 2023 AI presentation.

- AI News Service Announcement: @swyxio introduced the AI News service, an MVP service that summarizes discussions across AI Discord servers, and shared a sign-up link for the upcoming service launch.

- Langchain Rearchitecture: The AI News service included a link to a post about the Langchain rearchitecture.

- First Mistral Medium Report: @swyxio shared the first Mistral Medium report’s Twitter link.

- Experimentation with Mistral-Medium: @kevmodrome mentioned their successful experimentation with Mistral-Medium in generating UI components.

- Discussion on LLM Development: @swyxio attended a talk about LLM development beyond scaling and shared the Twitter link to the discussion.

- Sama Commentary: @swyxio shared a Twitter link to a commentary by Sama, pointing out it doesn’t say much.

▷ #ai-event-announcements (1 messages):

- Q-Transformer Paper Discussion: @swyxio invited the server members to join a chatroom led by <@383468192535937026>, discussing the Q-Transformer paper. The paper, titled “Q-Transformer: Scalable Offline Reinforcement Learning via Autoregressive Q-Functions”, is authored by multiple researchers, including Yevgen Chebotar, Quan Vuong, Alex Irpan, and Karol Hausman. The paper can be accessed at https://qtransformer.github.io/ and is intended for open discussion.

- LLM Paper Club: @swyxio also encouraged signups for weekly reminders for the LLM Paper Club, a recurring event that conducts weekly paper reviews, breaking down and discussing various LLM papers. Interested members can register for this event at https://lu.ma/llm-paper-club. Hosted by Kevin Ball, Eugene Yan & swyx, it encourages a read-through of the chosen paper prior to the discussion.

▷ #llm-paper-club (7 messages):

- Q-Transformer Presentation Plans: @cakecrusher announced that they will be presenting the Q-Transformer paper and shared a link to their “copilot” Q-Transformer on OpenAI Chat.

- Access Issue: User @swyx.io reported having trouble with something, although it’s unclear what exactly the problem was.

- Confusion About Paper Topic: @__pi_pi__ expressed confusion about the paper being discussed in the next meeting. This was cleared up by @slono providing a direct link to the thread discussing the Q-Transformer paper.

- Next Week’s Paper: @coffeebean6887 inquired about the paper to be discussed in the following week. There was no response to this query in the provided messages.

LLM Perf Enthusiasts AI Discord Summary

- Interest in Gemini Pro API usage and latency stats on Google’s Gemini expressed by dongdong0755 and rabiat, respectively, indicating a need for more information on the topic.

- Queries regarding GPT-4 and GPT Tasks evidenced by .psychickoala’s request for updates or examples and @dhruv1’s query about GPT tasks in the #resources channel.

- Conversations around fine-tuning AI, including @robertchung seeking to understand the term “nested replies” and @robhaisfield discussing using GPT-4 via TypeChat for a specific Email interface.

- Sharing of valuable resources, including @robotums sharing a blog post titled “Phi-2: The Surprising Power of Small Language Models” and @nosa_. providing a helpful link in the #resources channel, potentially relating to GPT.

- Discussions surrounding evaluation metrics for large language model (LLM) apps. @joschkabraun shared a blog post on the topic and underlined the thoughts of Bryan Bischof, Head of AI at Hex, on using defensive code parsing in GitHub Copilot.

- Unfinished inquiry by jeffreyw128 on uncovering a better understanding of an unspecified subject matter under the #prompting channel.

LLM Perf Enthusiasts AI Channel Summaries

▷ #general (1 messages):

dongdong0755: Anyone trying out gemini pro api?

▷ #gpt4 (1 messages):

.psychickoala: Any update here? Anyone have examples of this?

▷ #finetuning (2 messages):

- Nested Replies Discussion: @robertchung asked for clarification about the term “nested replies”, questioning if it refers to the composition of 1st inbound -> other replies.

- Fine-tuning AI for Email Interface: @robhaisfield talked about using GPT-4 via TypeChat to produce a specific Email interface. He also speculated that a fine-tuned GPT-3.5 or Mistral might be able to do it as effectively, but without fine-tuning, he believed that GPT-4 would be the most viable option.

▷ #opensource (1 messages):

- Phi-2 Small Language Models: User @robotums shared a blog post from Microsoft Research about the potential of small language models. The blog post, titled “Phi-2: The Surprising Power of Small Language Models”, includes contributions from various researchers such as Marah Abdin, Jyoti Aneja, and Sebastien Bubeck, among others.

▷ #resources (2 messages):

- Discussing GPT Tasks: User @dhruv1 asked an unspecified user about the kind of task they are running with GPT.

- Resource Link Shared: @nosa_. shared a link that they found useful, potentially related to the GPT discussion.

▷ #speed (1 messages):

rabiat: Are there any latency stats on googles gemini?

▷ #eval (1 messages):

- Evaluation Metrics for LLM Apps: @joschkabraun shared a blog post on evaluation metrics (including code) that do not rely on ground truth data, making them suitable for evaluating live traffic or offline experiments with an LLM app.

- Quality Control and Evaluation in LLM Apps: Insights reported from Bryan Bischof (Head of AI at Hex) point to the use of defensive code parsing in GitHub Copilot, amounting to thousands of lines written to catch undesired model behaviors. Bryan mentioned that this defensive coding can be created with evaluation metrics, emphasizing their importance in quality control and evaluation for building production-grade LLM applications.
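
As a rough illustration of the two ideas above, here is a minimal Python sketch of defensive parsing combined with a metric that needs no ground truth. It is not taken from the blog post or from GitHub Copilot; the function names `parse_model_json` and `valid_reply_rate`, and the assumption that the model is asked to reply with a single JSON object, are hypothetical choices made for this example.

```python
import json
from typing import Optional


def parse_model_json(raw: str) -> Optional[dict]:
    """Defensively parse a model reply that should contain one JSON object.

    Returns the parsed dict, or None when the reply is unusable.
    (Hypothetical helper, written for illustration only.)
    """
    # Models often wrap JSON in prose or code fences, so keep only the
    # outermost {...} span before parsing.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        parsed = json.loads(raw[start : end + 1])
    except json.JSONDecodeError:
        return None
    return parsed if isinstance(parsed, dict) else None


def valid_reply_rate(replies: list[str], required_keys: set[str]) -> float:
    """Share of replies that parse and contain every required key.

    No reference answers are needed, so this can run on live traffic.
    """
    if not replies:
        return 0.0
    ok = 0
    for raw in replies:
        parsed = parse_model_json(raw)
        if parsed is not None and required_keys.issubset(parsed.keys()):
            ok += 1
    return ok / len(replies)
```

Tracking a rate like this over time gives a cheap signal of format regressions; it says nothing about whether the content of a well-formed reply is correct.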

▷ #prompting (1 messages):

jeffreyw128: Anyone find a good way to understand

Alignment Lab AI Discord Summary

- Discussion of recent version updates, with @lightningralf and @gabriel_syme comparing the newer v0.2 release to the difference between OpenHermes 2.5 and 2.

- Mention of a potential new base model with more extensive pretraining, as indicated by @gabriel_syme.

- Dialogue on Upstage’s Depth Upscaling technique, applied to a franken Llama-Mistral to reach 10.7B parameters with continued pretraining. The method reportedly yields results superior to Mixtral, as claimed by @entropi. The resulting model, SOLAR-10.7B-Instruct-v1.0, fine-tuned for single-turn conversation, was shared on Hugging Face.

- Conversation about Phi-2 and whether anyone has built interesting applications with it, started by @joshxt.

- Sharing of the Phi-2 model summary by @entropi, with a link to the Hugging Face model card of Phi-2, a transformer model. Phi-2 was trained on the same sources as Phi-1.5, plus additional NLP synthetic texts and filtered websites.

- Recent availability of the Phi-2 weights, as noted by @entropi.

Alignment Lab AI Channel Summaries

▷ #general-chat (4 messages):

- Version Discussion: @lightningralf and @gabriel_syme discussed the latest version, with @lightningralf noting that it is v0.2, which is newer. @gabriel_syme compared this with the difference between OpenHermes 2.5 and 2.

- Model Pretraining: @gabriel_syme mentioned the expectation of a new base model with more pretraining.

▷ #oo (1 messages):

- Upstage Depth Upscaling: User @entropi shared Upstage’s Depth Upscaling on a franken Llama-Mistral to achieve a size of 10.7B params with continued pretraining. They claimed to beat Mixtral with this approach and shared the link to their model named SOLAR-10.7B-Instruct-v1.0 on Hugging Face. They pointed out it is a fine-tuned version for single-turn conversation.
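
For readers unfamiliar with the idea, the layer-stacking step behind depth up-scaling can be sketched in a few lines of Python. This is an illustration only, not Upstage’s code: the function name `depth_up_scale` is hypothetical, the lists hold layer labels rather than weights, and the figures used (a 32-layer base with 8 layers dropped from each copy) are assumptions drawn from Upstage’s published description rather than from the messages summarized here.

```python
def depth_up_scale(layers: list, overlap: int) -> list:
    """Stack two copies of a layer list, dropping `overlap` layers at the seam.

    Hypothetical helper: a real implementation would copy the weights of
    each layer instead of reusing list entries.
    """
    if not 0 <= overlap < len(layers):
        raise ValueError("overlap must be in [0, len(layers))")
    first = layers[: len(layers) - overlap]  # copy 1 without its last `overlap` layers
    second = layers[overlap:]                # copy 2 without its first `overlap` layers
    return first + second


# Assumed figures: 32 base layers, 8 dropped from each copy.
base = [f"layer_{i}" for i in range(32)]
scaled = depth_up_scale(base, overlap=8)
print(len(scaled))  # 48
```

The stacked model is then trained further (the “continued pretraining” mentioned above) so that the layers on either side of the seam learn to work together.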

▷ #phi-tuning (3 messages):

- Discussion on Phi-2: @joshxt asked if anyone has done anything interesting with Phi-2.

- Phi-2 Model Summary: @entropi shared a link to the Hugging Face model card of Phi-2, a transformer model with 2.7 billion parameters. The model was trained using the same data sources as Phi-1.5, augmented with a new data source of various NLP synthetic texts and filtered websites.

- Availability of Phi-2 Weights: @entropi mentioned that the weights for Phi-2 had just been made available.

AI Engineer Foundation Discord Summary

- Proposal by @tonic_1 to create Langchain openagents using Gemini.

- Feedback by @tonic_1 on the recent Google Gemini walkthrough, described as disrupted by the presenter’s excessive coughing, with several interruptions and switches to elevator music.

- Update from @juanreds on the Java SDK: they explained their absence from a meeting and mentioned that they are still working on getting the Java SDK.

AI Engineer Foundation Channel Summaries

▷ #general (2 messages):

- Creation of Langchain Openagents Using Gemini: User @tonic_1 proposed creating Langchain openagents using Gemini.

- Google Gemini Walkthrough Issues: @tonic_1 described the recent Google Gemini walkthrough as disrupted by the presenter’s excessive coughing, which led to several interruptions and switches to elevator music.

▷ #events (1 messages):

- Java SDK Acquisition: User @juanreds apologized for missing the previous meeting, mentioning that they are still working on getting the Java SDK.

MLOps @Chipro Discord Summary

- Announcement by @marie_59545 of the Feature and Model Management with Featureform & MLFlow Webinar, scheduled for December 19th at 8 AM PT. The session aims to help Data Scientists, ML Engineers, and related roles understand how to leverage Featureform’s data handling and MLflow’s model lifecycle management tools. Registrations can be made through this link.

- User @misturrsam is interested in online courses focusing on ML model deployment, with a particular emphasis on Microsoft Azure, Google Cloud, and Amazon AWS platforms, and is seeking community recommendations.

MLOps @Chipro Channel Summaries

▷ #events (1 messages):

- Feature and Model Management with Featureform & MLFlow Webinar: @marie_59545 announced an upcoming webinar on December 19th at 8 AM PT, hosted by Simba Khadder. The session will demonstrate how to enhance machine-learning workflows using Featureform’s efficient data handling and MLflow’s model lifecycle management tools. The webinar is aimed at Data Scientists, Data Engineers, ML Engineers, and MLOps/Platform Engineers. The event is free and attendees can sign up at this link.

- About Featureform and MLflow: Featureform is an open-source feature store used for managing and deploying ML feature pipelines, while MLflow is a system for managing the end-to-end ML model lifecycle. Both tools together create a robust environment for machine learning projects.

▷ #general-ml (1 messages):

- ML Model Deployment Courses: User @misturrsam requested recommendations for good online courses focusing on ML model deployment for specific platforms: Microsoft Azure, Google Cloud, and Amazon AWS.

Skunkworks AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Benchmark Comparison of Phi and Zephyr: @albfresco inquired if there is a benchmark comparison of the new Phi and Zephyr models, both of which are claimed to be strong 3B models with extraordinarily high benchmarks.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.