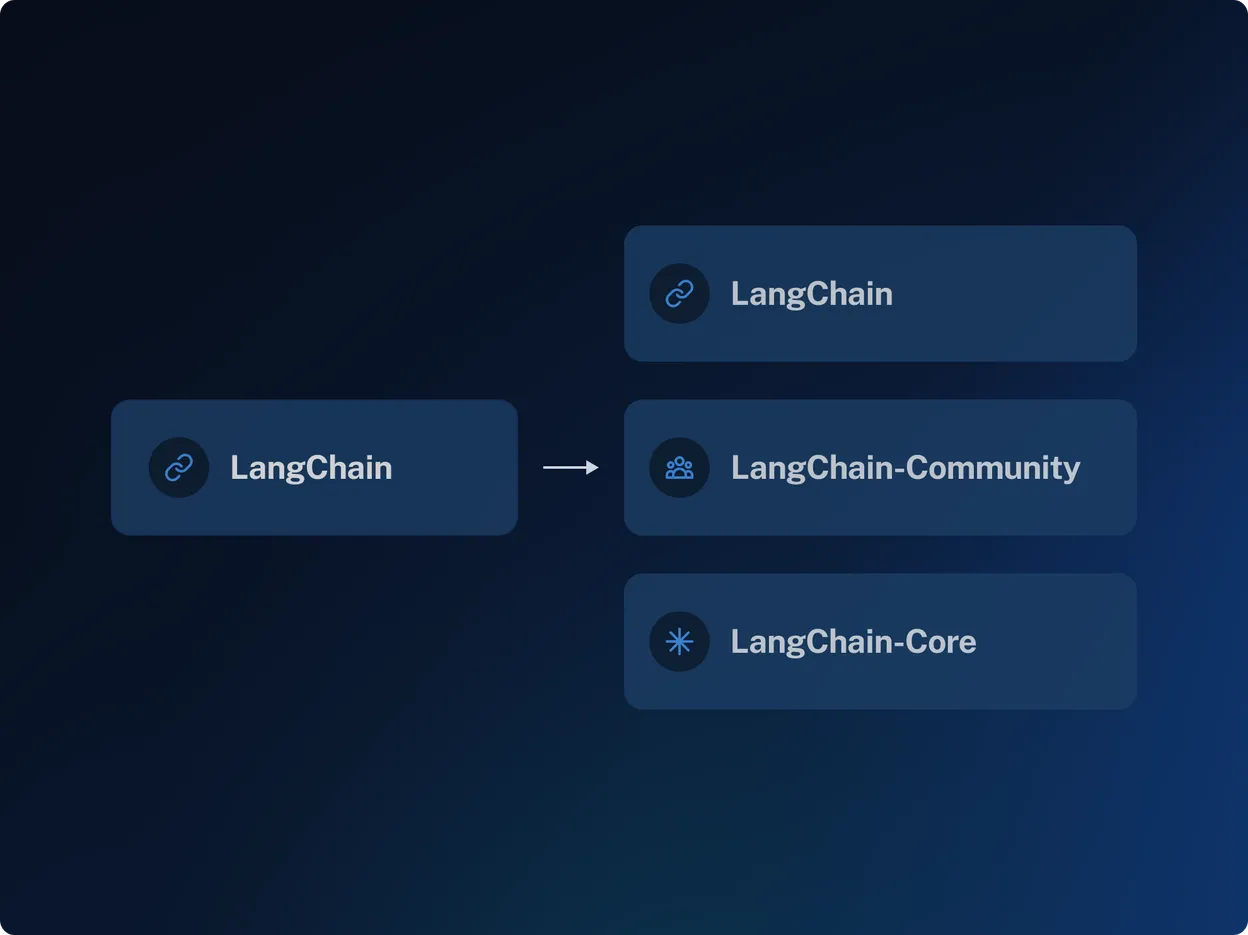

This splits up the LangChain repo to make it more maintainable and scalable, an inevitable step for every integration-heavy open source framework.

It’s all backwards compatible so no big rush.

In other news, Mistral has a new Discord (we’ll be adding to our tracker) and Anthropic is rumored to be raising another $3b.

[TOC]

OpenAI Discord Summary

- Discussion on Information Leakage in AI Training and Mixture of Experts Models: @moonkingyt asked about information leakage during AI conversations and expressed curiosity about a model called “mixtral 8x7b”. It was clarified that this model is a type of Mixture of Experts (MoE) model.

- Advanced Use Cases of AI: Users explored various potential AI use cases, such as modifying a company’s logo for specific events, explaining complex concepts in large classes at universities, and potential applications for local language models.

- Technical Challenges with OpenAI: Multiple users shared issues they have experienced with ChatGPT, such as server unresponsiveness, slow response times, and difficulty with image uploads. Users also discussed the feasibility of training an AI model using a Discord server and HTML text files, and how to go about doing it.

- Concerns about ChatGPT’s Performance and Behavior: User frustrations included perceived degradation in response quality/nuance, poor memory retention, and poor customer support. Some users reported that GPT is not answering questions as before and instead provides references to search answers on the internet.

- Discussion on Accessing OpenAI GPT-4 API: Users discussed the access requirements and cost of the GPT-4 API, the ChatGPT Plus usage limit, and how to reach human support. An issue with incorrect highlighting in diff markdown was found and reported.

- Prompt Engineering Techniques: Users discussed various advanced prompt engineering tactics, with an emphasis on clear prompt setting for obtaining desired outputs. Techniques such as outlining for longer written outputs, iterative proofreading, and implementation of a dynamic model system were proposed.

- OAuth2 Issues and Solutions: Users collaborated to solve OAuth2 authentication issues. Minor bugs and the process of reporting them were also discussed.

- Using AI for Games: Users shared their attempts at getting AI to play games like hangman. A potential feature of toggling the Python display in the interface for such engagements was proposed.

- Request for Detailed 2D Illustration: A user requested a detailed 2D illustration and was guided to use DALL·E 3 through Bing’s image creator or ChatGPT Plus. An inability to edit custom GPTs was also reported.

OpenAI Channel Summaries

▷ #ai-discussions (42 messages🔥):

- Information Leakage in AI Training: @moonkingyt asked about cases where chatbots leak information during conversations. @xiaoqianwx responded affirmatively without elaborating further.

- Discussion on Mixture of Experts Models: @moonkingyt expressed curiosity about the term “mixtral 8x7b”. @xiaoqianwx clarified that it is a type of Mixture of Experts (MoE) model.

- Logo Generation with AI: @julien1310 sought guidance on whether an AI can modify a company’s existing logo for a special event like a 20th anniversary. @elektronisade suggested using specific features like inpainting from StyleGAN or its derivatives.

- Usage of AI in Educational Institutions: @offline mentioned that some universities don’t permit AI assistance, hinting at the potential of AI like GPT-3 to explain complex concepts, often in more detail than instructors in large classes can.

- Question about Local Language Models: @Shunrai asked the group for recommendations on good local language models. This spurred further discussion, but no specific models were recommended.

- Microsoft’s New Language Model: @【penultimate】 shared news about a small language model named Phi-2 being released by Microsoft. Further details or a link to more information were not provided.

- AGI (Artificial General Intelligence) Debates: A side discussion was sparked by @chief_executive questioning the term AGI if it keeps getting “nerfed” at every step. Meanwhile, @【penultimate】 criticized the constant shifting of AGI’s goalposts.

▷ #openai-chatter (337 messages🔥🔥):

- Issues with OpenAI ChatGPT: Users @ruhi9194, @stupididiotguylol, @rjkmelb, @yyc_, @eskcanta, @toutclaquer, @gingerai, @ivy7k, @millicom, @ayymoss, @knightalfa, @loschess, and @primordialblanc reported multiple technical issues with ChatGPT, including server unresponsiveness, slow response times, difficulty with image uploads, and error messages such as “Hmm…something seems to have gone wrong.”

- Discussion on Using Custom GPT Files: User @maxdipper asked about the possibility of training a model off a Discord server and whether HTML text files can be used to feed data to the model.

- Confusion Over Image Analysis in ChatGPT: User @toutclaquer shared an issue where image analysis in ChatGPT was not working. Error messages were displayed when attempting to analyze images.

- Concerns about ChatGPT’s Behavior and Performance: Users @prncsgateau, @the_time_being, @.nasalspray, @one_too_many_griz, and @fefe_95868 shared their frustrations with ChatGPT’s change in behavior, perceived degradation in response quality/nuance, poor memory retention, and poor customer support.

- Questions about Accessing GPT-4 API: User @moonkingyt inquired about the access and cost of GPT-4’s API. @elektronisade provided a link to OpenAI’s documentation outlining the access conditions, and @offline further clarified the requirement of being a Pay-As-You-Go customer with a minimum spend of $1. @DawidM raised concerns about degradation of service for premium users.

▷ #openai-questions (170 messages🔥🔥):

- ChatGPT Troubleshooting and Questions: Many users, such as @mustard1978, @yyc_, @blinko0440, and @jonsnov, among others, reported various issues with the platform. Common problems were inconsistent responses, error messages, trouble uploading images, and failures once the usage limit had been reached. @solbus provided extensive troubleshooting advice, suggesting solutions such as checking VPN status, trying different browsers, and clearing browser cache and cookies.

- Access Termination Issues: User @mouse9005 shared an issue around account termination and appealed for help. @rjkmelb advised them to contact OpenAI support via their help center.

- ChatGPT Plus Subscription and Usage Limit: A discussion centered on the usage limit of ChatGPT Plus, involving @lunaticspirit, @rjkmelb, and @DkBoss. The limit for ChatGPT Plus is 40 messages per 3 hours. A reference for the discussion was provided in the form of this link.

- Accessing Tools and Plugins in the New ChatGPT Plus UI: @_.gojo inquired about how to access the new GPT-4 model and plugins in the playground with the ChatGPT Plus subscription. @solbus provided a link on how to access the GPT-4 API and explained how to enable plugins from the account settings.

- Payment Issues: Users like @Ugibugi, @michaelyungkk, and @tardis77b encountered problems with the payment process for subscribing to the service. @solbus and @eskcanta recommended contacting the OpenAI help center for assistance.

▷ #gpt-4-discussions (74 messages🔥🔥):

- OAuth2 Issues and Solutions: Users @jdo300 and @draennir discussed issues they were facing with OAuth2 authentication for their API servers. They worked on the problems together, and @draennir eventually solved his issue by adding `application/x-www-form-urlencoded` among the parsers in Express.

- Dynamic model suggestion for GPT: User @offline suggested implementing a dynamic model system that would decide which GPT model to use based on the requirements of the user’s request. The idea was further discussed and supported by @solbus.

- Minor bugs & Bug reporting: An issue of incorrect highlighting in diff markdown was found and reported by user @offline, who discussed the bug reporting process with @solbus.

- GPT Behaviour Issues: User @maypride reported that GPT was no longer answering questions as before and was instead providing references for searching the answers on the internet. @cris_cezar reported an inability to edit one of their custom GPTs, even after trying multiple browsers and clearing the cache.

- User Interface & Support: @cris_cezar expressed confusion about the Discord interface, comparing it to an aviation cockpit. The user was guided to OpenAI staff support via the help.openai.com chat. @solbus and @eskcanta discussed the process of reaching human support through the help.openai.com chat interface.
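The OAuth2 fix above comes down to body encoding: OAuth2 token requests are sent as `application/x-www-form-urlencoded` rather than JSON, so a server that only parses JSON sees no fields. The thread concerned Express, but the same idea can be sketched in Python with the standard library. The function name and the request values below are hypothetical placeholders, not anything taken from the discussion.

```python
import json
from urllib.parse import parse_qs


def parse_token_request(content_type: str, raw_body: bytes) -> dict:
    """Decode a request body according to its Content-Type header."""
    media_type = content_type.split(";")[0].strip().lower()
    text = raw_body.decode("utf-8")
    if media_type == "application/x-www-form-urlencoded":
        # parse_qs returns a list per key; keep the first value of each.
        return {key: values[0] for key, values in parse_qs(text).items()}
    if media_type == "application/json":
        return json.loads(text)
    # Unknown encodings yield no fields, which is how a server with a
    # missing parser ends up seeing an "empty" token request.
    return {}


# Hypothetical token request body; all values are placeholders.
body = b"grant_type=authorization_code&code=abc123&redirect_uri=https%3A%2F%2Fexample.com%2Fcb"
fields = parse_token_request("application/x-www-form-urlencoded; charset=utf-8", body)
print(fields["grant_type"], fields["redirect_uri"])
```

A server that registers only the JSON branch would take the fall-through path for this request, which matches the symptom of a token endpoint that appears to receive nothing.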

▷ #prompt-engineering (32 messages🔥):

- ChatGPT and Writing Specific Word Count: @davidmajoulian mentioned that ChatGPT falls short of the requested word count when asked to write an article of a specific length. @rjkmelb responded that ChatGPT doesn’t know how to count words and that it’s not realistic to expect it to produce an article of a specific length. The two users also discussed the possibility of using tools that add such functionality to OpenAI models.

- Exploration of Advanced Prompt Engineering: @yautja_cetanu initiated a discussion on advanced prompt engineering and the challenge of finding before-and-after examples where adjusting a prompt significantly improved the model’s performance. Several users agreed that, given the advancements in models like ChatGPT, many traditional prompt engineering techniques seem less important, with output quality depending largely on the clarity and specificity of the instructions given to the AI.

- Prompt Engineering Techniques: @eskcanta and @cat.hemlock emphasized the importance of clear, specific, error-free prompts for obtaining desired results from the AI, with the latter suggesting outlining for longer written outputs and iterative proofreading.

- Using AI for Games: @eskcanta shared a failed attempt at getting the AI to play hangman, and @thepitviper responded with an example where they managed to get the AI to generate a working Python game for hangman. There was also a suggestion for the ability to toggle the Python display on and off for this kind of engagement.

- Detailed 2D Illustration Request: @yolimorr requested a detailed 2D illustration. @solbus mentioned that image generation is not supported on the server and directed the user to DALL·E 3 on Bing’s image creator or with ChatGPT Plus.

▷ #api-discussions (32 messages🔥):

- Prompt Length with ChatGPT: @davidmajoulian asked about ChatGPT producing less content than requested in his prompts. He stated that when he requested a 1,500-word article, it returned only roughly 800 words. @rjkmelb clarified that ChatGPT doesn’t know how to count words and suggested using the tool to assist in constructing an article rather than expecting a set word length. @rjkmelb also hinted at building other tools using the OpenAI GPT-4 API for advanced features.

- Advanced Prompt Engineering: @yautja_cetanu initiated a discussion on advanced prompt engineering, expressing difficulty in providing before-and-after examples for his meetup talk because of how good ChatGPT already is. @tryharder0569 suggested focusing more on how specific prompts enhance an application’s functionality. @madame_architect recommended considering step-back prompting, or thinking about output quality in terms of higher value or more helpfulness instead of a working vs non-working dichotomy.

- Prompt Output Quality Control: @cat.hemlock emphasized the importance of being specific and deliberate in instructing the AI to avoid generating ‘AI slop’ (garbage output). They suggested using ChatGPT to generate an article outline first and refining it before expanding it into full content, followed by a final proofread.

- ChatGPT Capability Limitations: Certain limitations of ChatGPT were also mentioned. @eskcanta provided an example of the model failing to correctly play a game of Hangman even with a known word, known order of letters, in-conversation correction, and step-by-step examples to follow. @thepitviper demonstrated the use of Python with ChatGPT to run a slow, yet accurate Hangman game.

- Python Tool Display Toggle: @thepitviper and @eskcanta discussed a potential feature for toggling the Python display in the interface, which could improve the user experience. It would need to handle situations where the system is performing an action, to prevent undesired outcomes.
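The Hangman exchange suggests why handing state tracking to code helps: the masked word and the miss count are computed rather than recalled. The sketch below is a minimal illustration of that kind of program, not @thepitviper's actual code; the function names, the secret word, and the guesses are all hypothetical.

```python
def mask_word(secret: str, guessed: set[str]) -> str:
    """Show guessed letters in place and hide the rest with underscores."""
    return " ".join(ch if ch in guessed else "_" for ch in secret)


def play_round(secret: str, guesses: list[str], max_misses: int = 6) -> tuple[str, int, bool]:
    """Apply guesses in order; return (masked word, misses, solved?)."""
    guessed: set[str] = set()
    misses = 0
    for letter in guesses:
        letter = letter.lower()
        if letter in guessed:
            continue  # repeated guesses cost nothing
        guessed.add(letter)
        if letter not in secret:
            misses += 1
        # Stop once the player has lost or the word is fully revealed.
        if misses >= max_misses or all(ch in guessed for ch in secret):
            break
    solved = all(ch in guessed for ch in secret)
    return mask_word(secret, guessed), misses, solved


# Hypothetical game: one wrong guess, three correct ones.
state = play_round("python", ["e", "p", "y", "n"])
print(state)
```

Because every update is deterministic, the board cannot drift out of sync with the guesses, which is the failure @eskcanta described when the model tracked the game in conversation alone.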

Nous Research AI Discord Summary

- Extensive discussion of the SOLAR 10.7B model across multiple channels, covering benchmark results, performance comparisons, and the validity of its claims to outperform other models. The model showed significant gains on AGIEval, and initial skepticism gave way to recognition of its performance.

  - “from first look, it’s a flop” - @teknium

  - “TLDR: Good but dont deserve so much att” - @artificialguybr

- Interaction on technical challenges in various areas: Base AI model described as an “untamed beast”, the query on GPU performance leading to a discussion on cooling methods for Lambda’s Vector One workstations, and queries on optimizing the inference speed with HuggingFace transformers.

- Dialogue on OpenHermes 2.5’s ability for function calling and the complexities involved with shared experiences and resources. Microsoft’s Phi-2 was mentioned for reportedly outperforming Mistral 7b and Llama 7b on benchmarks.

- Ongoing discourse on various Mistral models and the potential of fine-tuning models on Apple Silicon. Resources and discussion threads were shared for further exploration.

- Community collaboration and assistance sought in areas like AI repositories needing frontend interface development, advice on choosing an orchestration library for developing an LLM-based application, with recommendations against LangChain favoring Llama-Index or custom platforms.

- The release announcement of the UNA-Neural-Chat-v3-3-Phase 1 model, described as outperforming the original in initial tests.

- Miscellaneous points: Humorous observations on working at Microsoft, involvement in gene editing technologies, grammar and spelling problem-solving using bots, sharing content of interest across different channels in the form of tweets and video links.

Nous Research AI Channel Summaries

▷ #ctx-length-research (1 messages):

teknium: https://fxtwitter.com/abacaj/status/1734353703031673200

▷ #off-topic (18 messages🔥):

- Concerns about Base Model: @nagaraj_arvind described the base AI model as an “untamed beast,” expressing some challenges or difficulties with it.

- AI Repos Assistance: @httpslinus asked about AI-related repos that need help with building or extending a frontend interface, offering to assist with their current skill set.

- Workplace Woes: @airpods69 shared their experience of leaving a workplace due to dissatisfaction and stress caused by the practices of a senior developer.

- DeciLM-7B Discussion: @pradeep1148 shared a YouTube video about DeciLM-7B, a 7 billion-parameter language model. However, @teknium expressed disappointment, stating that DeciLM-7B’s performance is driven by its gsm8k score and that it is worse at mmlu than Mistral.

- Vector One Workstations Analysis: @erichallahan shared a Twitter post providing analysis on Lambda’s Vector One workstations. This led to a discussion on cooling methods, with @fullstack6209 expressing a desire for air-cooled systems and @erichallahan agreeing with this preference.

- GPU Utilization Query: @everyoneisgross asked about GPU performance when running an LLM in GPU VRAM, using Python to max out the CPU, and multi-screening YouTube videos at the same time, alluding to possible visual glitches or artifacts.

▷ #benchmarks-log (15 messages🔥):

- SOLAR 10.7B Benchmarks Shared: @teknium posted benchmark scores for the SOLAR 10.7B AI model on various tasks, including truthfulqa_mc at 0.3182 (mc1) and 0.4565 (mc2), and arc_challenge at 0.5247 (acc) and 0.5708 (acc_norm).

- Comparative Evaluation with Other Models: @teknium compared the performance of SOLAR 10.7B with that of Mistral 7b. SOLAR 10.7B achieved a notable gain in AGIEval (39% vs 30.65%) but scored similarly in other evaluations (72% vs 71.16% in gpt4all, 45% vs 42.5% in truthfulqa).

- Remarks on SOLAR 10.7B’s Performance: Despite the initial perception that SOLAR 10.7B’s performance was not impressive ("from first look, it's a flop" and "good but probably not as good as yi like it claims"), the comparative results led @teknium to reassess the model’s performance, particularly in AGIEval.

- Final Thoughts on SOLAR 10.7B: @artificialguybr summarised the discussion by saying that while SOLAR 10.7B had decent performance, it did not seem to warrant significant attention ("TLDR: Good but dont deserve so much att").

▷ #interesting-links (42 messages🔥):

- SOLAR-10.7B Model Discussion: @metaldragon01 linked to a Hugging Face post that introduced the first 10.7 billion parameter model, SOLAR-10.7B.

- Claims of Outperforming Other Models: The SOLAR team claims their model outperforms others, including Mixtral. Users @n8programs and @teknium expressed skepticism about the claims.

- Evaluating the SOLAR-10.7B Model: @teknium began benchmarking the new SOLAR model and planned to compare the results with Mixtral’s scores.

- SOLAR-10.7B Characterization: In discussion, users concluded that SOLAR is likely pre-trained but may not be a base model, based on its similarity to a past iteration of SOLAR, which was not a base model.

- Tweet on Mixtral and 3090 Setup: @fullstack6209 asked about the optimal setup for Mixtral and a 3090, to which @lightningralf responded with a Twitter link possibly containing the answer.

▷ #bots (48 messages🔥):

- Gene Editing Technologies: User @nagaraj_arvind asked about advances in gene editing technologies since 2015. The bot replied, but the details were not elaborated in the messages.

- Backward Sentences Completion: @crainmaker asked the bot to complete a string of backward sentences.

- Questions in Portuguese: @f3l1p3_lv asked a series of questions in Portuguese, most of them about basic math problems, day-of-the-week calculations, analogy formation, and appropriate pronoun selection for sentences. The bots @gpt4 and @compbot helped to solve most of these questions.

- Grammar and Spelling Problems: @f3l1p3_lv also posted multiple spelling and grammar problems for the bot to solve, such as filling in blanks with specific letters or groups of letters, selecting pronouns for sentences, and choosing correctly written sentences. The bot @gpt4 provided the solutions and explanations.

▷ #general (337 messages🔥🔥):

- Discussion on Function Calling Capabilities: User @realsedlyf inquired about OpenHermes 2.5’s ability to do function calling. Other users, including @.beowulfbr and @tobowers, shared their experiences with function calling on various models. @.beowulfbr shared a link to a HuggingFace model with function calling capabilities, whereas @tobowers shared his implementation of function calling using the SocialAGI code from GitHub.

- Announcement of UNA-Neural-Chat-v3-3-Phase 1 Release: User @fblgit announced the release of UNA-Neural-Chat-v3-3-Phase 1, which in initial tests outperforms the original model.

- Discussion on Optimization of Inference Speed w/ hf transformers: @lhl shared his experiences optimizing inference speed with HuggingFace transformers, detailing how he achieved better results.

- Discussion on Usefulness and Performance of Small Scale Models: @a.asif asked about the best-performing small scale models that can run on laptops. Plugyy asked whether the Mixtral MoE model could run on limited hardware with the help of quantization and llama.cpp.

- Links shared:

  - A tweet by @nruaif discussing a new development in AI modeling techniques.

  - A tweet shared by @tokenbender discussing real SoTA work in function calling.

  - A tweet by @weyaxi discussing Marcoroni models.

  - Microsoft’s Phi-2 was mentioned in the discussion for reportedly outperforming Mistral 7b and Llama 7b on benchmarks.

  - User @benxh has a dataset to upload which would lead to >100B high quality tokens if properly processed.

▷ #ask-about-llms (63 messages🔥🔥):

- Mistral Model Discussion: Users @agcobra1, @teknium, @gabriel_syme, and @giftedgummybee discussed various Mistral models, including base and instruct versions.

- Library for LLM-based Application: User @coco.py asked for advice on choosing an orchestration library between LangChain, Haystack, and Llama-Index for developing an LLM-based application. Users @.beowulfbr and @decruz described their problems with LangChain and recommended trying Llama-Index or building a custom platform.

- Fine-tuning Discussion: Users @n8programs, @teknium, @youngphlo, and @eas2535 discussed the potential of fine-tuning models on Apple Silicon and brought up some resources, including the GitHub discussion thread https://github.com/ggerganov/llama.cpp/issues/466 and the Reddit posts Fine tuning on Apple Silicon and Fine tuning with GGML and Quantization.

- Benchmarking Models: @brace1 asked for recommendations for benchmarking open-source models on a specific text extraction task. @night_w0lf suggested models like Mamba, Mistral0.2, Mixtral, and OpenHermes, and also pointed to a leaderboard on Hugging Face. @n8programs cautioned about the limitations of benchmarks and recommended checking the models’ ELO on another leaderboard.

- Parameter Impact on Speed: User @n8programs asked why “stable diffusion”, despite having around 3 billion parameters, was significantly slower than a 3 billion parameter LLM.

▷ #memes (1 messages):

- Discussion on Microsoft Depiction: User @fullstack6209 shared a humorous observation about working at Microsoft, also questioning the absence of a certain group in their representation.

DiscoResearch Discord Summary

- Release of Mixtral and Model optimization: Mistral released Mixtral 8x7B, a high-performance SMoE model with open weights. The model was praised for its efficiency and speed, with @aortega_cyberg emphasizing that it is 4 times faster than Goliath and performs better for coding. Users also noted improvements in Hermes 2.5 over Hermes 2. The availability and usage of the Mistral-Medium model were also brought up. Read the full release details and pricing in Mistral’s blog post.

- Quantization, Software Integration and Challenges: @vince62s and @casper_ai discussed quantization methods, including INT3 quantization and AWQ. A need for exllamav2 support for contexts over 4k was also mentioned, and @aiui brought up software limitations in LM Studio and exllama.

- Model Efficiency and Function-Calling: The model’s efficiency is theorized to originate from the “orthogonality” of its experts, as per @fernando.fernandes. A minimum-p routing system for expert evaluations was suggested, which could enable scalable speed optimization. Strategies to mitigate similar problems and enrich Mixtral training data, such as including function-calling examples, were addressed with relevant resources shared, among them the Glaive Function-Calling v2 dataset on HuggingFace.

- Benchmarks, Scoring Models, and Emotional Intelligence: A new benchmark, EQ-Bench, focusing on the emotional intelligence of LLMs, was introduced by @.calytrix, who reported strong correlations with other benchmarks. Concerns about possible bias due to GPT-4 usage and potential improvements were discussed. GSM8K was recommended as a SOTA math benchmark. Score variations between mixtral instruct and other models on EQ-Bench prompted discussion of potential causes.

- Resources and New Development: An explainer from Hugging Face on the Mixture of Experts (MoEs) model was shared here. A new model “Yi_34B_Chat_2bit” by Minami-su on HuggingFace was introduced here. The corrected pricing page link was shared here and Mistral’s Discord server link is here.

DiscoResearch Channel Summaries

▷ #mixtral_implementation (170 messages🔥🔥):

- Mixtral Model Performance and Optimization: Users responded positively to the Mixtral model’s performance and efficiency. @Makya stated that Hermes 2.5 performs better than Hermes 2 after adding code instruction examples. Regarding the Mixtral-Instruct model, @aortega_cyberg highlighted that although Goliath is better for creative writing, Mixtral performs better for coding and is 4x faster. @goldkron pointed out repetition loops in Poe’s Mixtral implementation.

- Quantization and Software Integration: Participants discussed different quantization methods, including 1-bit and 3-bit options. @vince62s discussed the need for INT3 quantization with AWQ. @casper_ai responded that Mixtral is not yet ready for AWQ but anticipated that it should run faster than 12 tokens per second once it is. In the meantime, @vince62s suggested that finetuning with six active experts might allow the Mixtral model to run on a single 24GB card. Furthermore, users discussed the need for exllamav2 support for contexts over 4k, with @aiui pointing out current limitations in LM Studio and exllama.

- Importance of Experts in Model Efficiency: @fernando.fernandes theorized that the efficiency of Mixtral potentially results from the “orthogonality” of its experts, leading to maximal knowledge compression. @someone13574 proposed a minimum-p routing system where experts are evaluated based on their softmax score compared to the top expert. This would potentially enable scalable speed optimization by controlling the minimum-p level.

- Using Function Call Examples in Training: @fernando.fernandes suggested enriching the Mixtral training data with function-calling examples for diversity and to mitigate similar problems. He shared the Glaive Function-Calling v2 dataset on HuggingFace as a potential resource. Users also shared other potential datasets for this purpose.

- Availability of the Mistral-Medium Model: Users mentioned that the Mistral-Medium model is now available through the Mistral API. They speculated that this version could be around 70 billion parameters, though the model’s exact size is as yet unconfirmed.
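The minimum-p routing idea from the discussion above can be sketched as follows. This is one possible reading of the proposal, not a confirmed design: an expert is kept when its softmax probability is at least `min_p` times the top expert's probability, and the surviving weights are renormalized. The function names and the router logits are hypothetical.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a list of router logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_route(router_logits: list[float], min_p: float) -> dict[int, float]:
    """Keep experts whose probability is >= min_p * top probability,
    then renormalize the kept weights so they sum to 1."""
    probs = softmax(router_logits)
    threshold = min_p * max(probs)
    kept = {i: p for i, p in enumerate(probs) if p >= threshold}
    norm = sum(kept.values())
    return {i: p / norm for i, p in kept.items()}


# Hypothetical router logits for a layer with 8 experts.
logits = [2.0, 1.5, 0.1, -1.0, 0.0, -0.5, 1.9, -2.0]
for level in (0.9, 0.5, 0.1):
    chosen = min_p_route(logits, level)
    print(level, sorted(chosen))
```

Under this reading, raising `min_p` activates fewer experts per token (faster, less capacity) and lowering it activates more, which is the speed knob the proposal describes. The top expert is always kept for any `min_p` up to 1.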

▷ #general (9 messages🔥):

- Mixtral Release and Pricing: @bjoernp shared the official announcement about Mistral’s release of Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) with open weights. He noted that it offers the fastest performance at the lowest price: up to 100 tokens/s for $0.0006/1K tokens on the Together Inference Platform. Optimized versions are available for inference on Together’s platform. Full details are in Mistral’s blog post.

- Mixture of Experts Explainer: @nembal shared a link to an explainer from HuggingFace about the Mixture of Experts (MoEs) model. The post dives into the building blocks of MoEs, training methods, and trade-offs to consider for inference. Check out the full explainer here.

- Pricing Page Issue: @peterg0093 reported that Mistral’s pricing page link returned a 404 error. However, @_jp1_ provided the correct link to Mistral’s pricing information.

- Mistral Discord Link: @le_mess asked for Mistral’s Discord server link, which @nembal provided. Join their server here.

- New Model by Minami-su: @2012456373 shared a new model by Minami-su on HuggingFace named “Yi_34B_Chat_2bit”. The model is optimized to run on a GPU with 11GB of memory, using a weights-only quantization method called QuIP# to achieve near-fp16 performance with only 2 bits per weight. Detailed information can be found here.
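A rough back-of-the-envelope check shows why 2 bits per weight makes an 11GB GPU plausible for a 34B model. The sketch assumes exactly 34e9 parameters (taken from the "34B" in the model name) and counts weights only; activations, the KV cache, and any quantization metadata are ignored, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 34e9  # assumption: parameter count implied by "34B" in the model name
fp16_gb = weight_memory_gb(params, 16)
two_bit_gb = weight_memory_gb(params, 2)
print(f"fp16: {fp16_gb:.1f} GB, 2-bit: {two_bit_gb:.1f} GB")
# prints "fp16: 68.0 GB, 2-bit: 8.5 GB"
```

The 2-bit weights come to about 8.5 GB, leaving some headroom under 11GB, whereas fp16 weights alone would need about 68 GB.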

▷ #benchmark_dev (15 messages🔥):

- EQ-Bench for Emotional Intelligence: @.calytrix introduced his paper and benchmark on emotional intelligence for LLMs, EQ-Bench, claiming that it correlates strongly with other benchmarks (r=0.97 with MMLU, for example). He mentioned that EQ-Bench seems to be less sensitive to fine-tuning aimed at scoring well on other benchmarks. Notably, parameter count seems to matter more, as the nuanced understanding and interpretation required for emotional intelligence seem more strongly emergent at larger param sizes. He also shared the Paper Link and the Github Repository.

- Potential Improvements and Limitations to EQ-Bench: @onuralp. suggested potential improvements, including incorporating item response theory and reporting per-subject MMLU score correlations. The benefit of exploring model responses for well-structured scenarios involving agreeableness and negotiation was also suggested. A concern about potential bias due to choosing GPT-4 as the generator was brought up.

- EQ-Bench creator’s response: @.calytrix shared that the decision to use GPT-4 was based on resource constraints but confirmed that it did not generate the questions and answers. Defending EQ-Bench, he argued that it genuinely measures some deep aspects of cognitive capabilities and offers useful discriminatory power.

- Math Benchmark queries: In response to a request from @mr.userbox020 for references about SOTA math benchmarks, @.calytrix recommended GSM8K for its focus on reasoning over raw calculation. He also shared a Paper Link exploring the use of the inference space as a scratchpad for working out problems using left-to-right heuristics.

- Score Disparities between models on EQ-Bench: Users @bjoernp, @rtyax, and @.calytrix discussed the lower scores mixtral instruct gets on EQ-Bench compared to its scores on other benchmarks. They were curious about the disparity and suggested possible reasons, including a quick and dirty fine-tune and inherent limitations of using 7b models as the base for MoE.

OpenAccess AI Collective (axolotl) Discord Summary

- Engaged discussion around Mixtral, covering a TypeError when loading mixtral via vllm, training on a single A100 using qlora, LM Studio’s support, and code updates including support for Mixtral. Notably, @le_mess shared a wandb project showing a possible training setup here.

- Technical dialogue surrounding Axolotl, including activating the “output_router_logits” setting in the model configuration for the auxiliary balancing loss, and issues with loading multiple datasets with hyphenated names.

- An inquiry about current quantization techniques led to the mention of Hugging Face’s APIs, GenUtils, and autogptq for choice-based inference.

- Active discussion about training models, particularly the minimum VRAM required for training and fine-tuning AI models, issues and solutions related to stuck Mixtral training, and the sharing of wandb project results. Users shared their experiences setting up Axolotl and debugging it with Docker, and discussed issues, views, and workarounds for Axolotl training problems related to huggingface/transformers. A question about loss spikes in pretraining remains open, without consensus.

- Dataset-specific chat covered the pros and cons of training AI on YouTube debate transcripts, interest in the local search repository, and personal recommendations and experiences with tools for converting PDF to markdown, specifically Marker. The discussion broadened to alternative solutions for document processing, but budget constraints ruled them out for some users.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (48 messages🔥):

- Issues loading mixtral via vllm: @papr_airplane reported a TypeError when trying to load mixtral via vllm using “pt” format. @caseus_ announced the official mixtral modeling code release now supports mixtral.

- Impact of Rope on Training: User @kaltcit sparked a discussion on the effect of enabling rope on VRAM usage and training sequences. @nanobitz clarified that rope itself doesn’t affect VRAM, but increasing the sequence length will.

- Loading mixtral gguf onto LM studio: @papr_airplane asked if anyone has loaded mixtral gguf onto LM studio, but @nruaif replied that LM studio doesn’t support it yet, while @faldore claimed to achieve it using ollama.

- Training Mixtral on a single a100 using qlora: @le_mess shared that training Mixtral using qlora on a single a100 seems possible, linking to a wandb project.

- Quality of Life Improvement in Docker Images: @caseus_ announced a new update adding evals_per_epoch and saves_per_epoch parameters that would be available in docker images soon. The update is a quality of life improvement that eliminates the need to calculate total steps or back-calculate from the number of total epochs.
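As a rough illustration of the bookkeeping that evals_per_epoch and saves_per_epoch are meant to remove, the sketch below converts a per-epoch count into a step interval by hand. The function name, dataset size, and batch size are hypothetical, and the rounding is an assumption rather than Axolotl's actual logic.

```python
import math


def steps_between_events(num_examples, batch_size, events_per_epoch):
    """Return how many optimizer steps to wait between evals (or saves).

    Hypothetical helper: this is the manual calculation a per-epoch
    setting would make unnecessary, not Axolotl's implementation.
    """
    # One epoch is one pass over the data, rounded up to whole batches.
    steps_per_epoch = math.ceil(num_examples / batch_size)
    # Spread the events evenly across the epoch, never dropping below 1 step.
    return max(1, steps_per_epoch // events_per_epoch)


# Example: 1000 training examples with an effective batch size of 8.
eval_interval = steps_between_events(1000, 8, events_per_epoch=4)
save_interval = steps_between_events(1000, 8, events_per_epoch=1)
print(eval_interval, save_interval)
```

With a per-epoch parameter, the same intent is stated once and stays valid when the dataset size or batch size changes.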

▷ #axolotl-dev (11 messages🔥):

- Updated Modeling Code: @bjoernp mentioned that the updated modeling code for Mixtral has been pushed.

- Auxiliary Balancing Loss for the Router: @bjoernp inquired about the activation of the auxiliary balancing loss for the router. According to @caseus_, enabling this feature is done through the configuration. @caseus_ provided a sample code snippet, which indicates that it should be possible to enable this feature in Axolotl by adding "output_router_logits: true" under model_config.

- Loading Multiple Datasets: @le_mess reported an issue with loading multiple datasets that have - in their names, and asked if anyone else had encountered this problem. According to @nanobitz and @noobmaster29, the loading should work fine if using quotes or if they have not updated to the latest mixtral update for Axolotl.
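To make the idea of an auxiliary balancing loss concrete, here is a minimal library-free sketch in the style of the Switch Transformer load-balancing term. It is not the huggingface/transformers or Axolotl implementation; the function name, the toy router probabilities, and the normalization by top_k are assumptions made for illustration.

```python
def load_balancing_loss(router_probs, top_k=2):
    """Toy auxiliary loss: num_experts * sum_i(f_i * P_i).

    router_probs: one list of per-expert probabilities for each token.
    f_i is the share of routing slots assigned to expert i, and P_i is
    the mean router probability for expert i. Hypothetical sketch only.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    counts = [0] * num_experts
    prob_sums = [0.0] * num_experts
    for probs in router_probs:
        # Pick the top_k experts with the highest router probability.
        ranked = sorted(range(num_experts), key=lambda e: probs[e], reverse=True)
        for expert in ranked[:top_k]:
            counts[expert] += 1
        for expert, p in enumerate(probs):
            prob_sums[expert] += p
    frac_slots = [c / (num_tokens * top_k) for c in counts]
    mean_probs = [s / num_tokens for s in prob_sums]
    return num_experts * sum(f * p for f, p in zip(frac_slots, mean_probs))


# Four tokens, four experts, top-1 routing.
balanced = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
collapsed = [[0.7, 0.1, 0.1, 0.1]] * 4  # every token prefers expert 0

balanced_loss = load_balancing_loss(balanced, top_k=1)
collapsed_loss = load_balancing_loss(collapsed, top_k=1)
print(balanced_loss, collapsed_loss)
```

The term is smallest when tokens are spread evenly over experts and grows when routing collapses onto one expert, which is why adding it to the training loss discourages collapse.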

▷ #other-llms (3 messages):

- Discussion on Quantization Techniques: User @jovial_lynx_74856 asked about the current technology being used for quantization and inference, suggesting Hugging Face’s APIs and GenUtils. In response, @nanobitz mentioned autogptq for quantization, along with advising choice-based inference.

▷ #general-help (70 messages🔥🔥):

- Training Models with Different VRAMs: Users discussed the minimum VRAM requirements for training AI models. @nruaif mentioned that the minimum is 1 A6000, which equates to 48 GB of VRAM. @gameveloster asked if 24 GB or 48 GB VRAM is enough to fine-tune a 34b model, to which @le_mess confirmed that 48 GB should be enough, but there could be problems with 24 GB.

- Mixtral Training: @le_mess reported a stuck Mixtral training run with no drop in loss. Other users suggested enabling output_router_logits, increasing the learning rate by a factor of 10, and provided references to similar training issues. @le_mess tracked the experiment using Weights & Biases and shared links to two results. @caseus_ suggested a reference training run with a higher learning rate.

- Axolotl Setup and Debugging with Docker: @jovial_lynx_74856 posted about issues encountered while setting up Axolotl with CUDA version 12.0 on an 80 GB A100 server and running the example command. @le_mess suggested running the setup in a Docker environment on Ubuntu and CentOS.

- Axolotl Training Issues: @jovial_lynx_74856 reported errors encountered during Axolotl training. @caseus_ offered some insights and shared a link to the relevant changes introduced by huggingface/transformers. The issue seems to come down to falling back to SDPA (Scaled Dot Product Attention) instead of the eager version, and @jovial_lynx_74856 found a workaround by setting sample_packing to false in the YML file.

- Loss Spike in Pretraining: @jinwon_k asked about loss spikes in pretraining. No solutions or pointers were offered, so the question remains open.
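The 24 GB versus 48 GB question for a 34b model can be sanity-checked with back-of-the-envelope arithmetic on weight storage alone. The sketch below is an estimate under stated assumptions: the helper name is hypothetical, the 4-bit case assumes QLoRA-style quantized weights (the discussion did not state the method), and activations, optimizer state, adapters, and framework overhead are all ignored.

```python
def weight_memory_gb(num_params_billion, bits_per_param):
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    total_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


fp16_gb = weight_memory_gb(34, 16)   # half-precision weights
int4_gb = weight_memory_gb(34, 4)    # 4-bit quantized weights (assumed)
print(f"34b weights: {fp16_gb:.0f} GB in fp16, {int4_gb:.0f} GB in 4-bit")
```

Under these assumptions the 4-bit weights alone take about 17 GB, which leaves little headroom on a 24 GB card once activations and adapter gradients are added, while 48 GB is comfortable. That is consistent with the advice given in the channel.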

▷ #datasets (20 messages🔥):

- Training AI on Debate YouTube Transcripts: @papr_airplane suggested training AI on YouTube transcripts of debate competitions to improve reasoning skills. However, @faldore countered that it risks teaching the AI to always argue with the user.

- Access to Local Search Repository: @emrgnt_cmplxty offered to give early access to a new repository after @papr_airplane expressed interest.

- Discussion on PDF to Markdown Conversion Tools: @visuallyadequate shared personal experiences with multiple tools and libraries for PDF to markdown conversion. They shared the link to Marker as a strong candidate, although noted its limitations with tables and tables of contents. They concluded that some PDFs convert better than others.

- Alternative Solutions for Document Processing: Other users suggested alternatives for document processing. @lightningralf mentioned using Llama Index Hub, while @joshuasundance mentioned using Azure Document Intelligence with Langchain. However, these alternatives weren’t very suitable for @visuallyadequate given budget constraints.

HuggingFace Discord Discord Summary

- Technical Outage: A reported issue with all models not working, acknowledged by user @harrison_2k.

- Query about starting study in NLP with a suggested exploration into transformer models as an initial step.

- Question about fine-tuning BERT Model on a Turkish Q/A dataset for better performance and accuracy.

- Conversation on creating an EA for XM’s standard account for FX Automated trading and speculations about it.

- Users participated in a discussion on a Neural Network Distillation paper, with a link to the paper provided in both the general and reading group channels, supporting the exchange of ideas and further understandings.

- Discussion around the runtime error in the Nougat Transformers space with a suggestion to try running it in a different space provided by user @osanseviero.

- Introduction of a new model “Deepsex-34b” by user @databoosee_55130 and several shared links associated with the model.

- Shared interest in the value of an Object, Property, Value list (OPV) in the digital field.

- Concern about the layout of the required dataset for a research paper in the field.

- Sharing of an article on a neuromorphic supercomputer that simulates the human brain.

- Promotion of Learning Machine Learning for Mathematicians paper for more mathematicians to apply machine learning techniques.

- Presentation of a variety of educational videos and articles, including a Retrieval Augmented Generation (RAG) video, XTTS2 Installation guide video, and a breakthrough in language models - MOE-8x7b.

- Sharing of newly created models on HuggingFace, such as the SetFit Polarity Model with sentence-transformers/paraphrase-mpnet-base-v2 and the Go Bruins V2 Model, with requests for guides on how to create similar models like the ABSA Model.

- Discussions primarily held on Wednesdays in the Reading Group channel in addition to the introduction to the paper Distilling the Knowledge in a Neural Network.

- Inquiry on creating separate bounding boxes for each news article in a newspaper.

- Discussion around the best Encoder/Decoder for a RAG system, with BERT being the current model in use, and a high interest in knowing more about model rankings for multilingual or code-specific use cases. The Palm model is frequently recommended.

HuggingFace Discord Channel Summaries

▷ #general (21 messages🔥):

- Fault in Models: @noone346 reported that all models appear to be down, a concern that was acknowledged by @harrison_2k.

- Initiating NLP Study: @bonashio sought advice on starting with NLP, already having a basic understanding of Deep Learning. @jskafer suggested an exploration into transformer models first.

- Fine-tuning BERT Model on Turkish Q-A: @emirdagli. asked for suggestions on fine-tuning a changeable BERT model on Turkish Q-A with a dataset of ~800 questions and ~60-70 answers.

- Inquiry about MT4 and FX Automated Trading: @ronny28717797 mentioned their intent to create an EA for performing on XM’s standard account, detailing specific performance expectations and preferences.

- Discussion on Distillation Paper: @murtazanazir initiated a discussion on a distillation paper, providing a link to the paper on arXiv.

- Nougat Transformers Space Runtime Error: @pier1337 reported a runtime error on @697163495170375891’s Nougat Transformers space, asking if it can be run on a 16GB RAM CPU only. @osanseviero provided a link to try it in a different space.

- Introduction of Deepsex-34b Model: @databoosee_55130 introduced a new model, “Deepsex-34b” and shared multiple links related to it.

- Object, Property, Value (OPV) List: @noonething sparked a discussion by questioning the value of an Object, Property, Value list in the field.

- Dataset Layout Query: @largedick asked about the layout of a dataset required for a paper.

- Neuromorphic Supercomputer Article Share: @bread browser shared a New Scientist article about a supercomputer that simulates the entire human brain.

▷ #today-im-learning (1 messages):

- Machine Learning for Mathematicians: @alexmath shared a link to an arXiv paper encouraging more mathematicians to learn to apply machine learning techniques.

▷ #cool-finds (3 messages):

- Retrieval Augmented Generation (RAG) Video: User @abelcorreadias shared a video from Umar Jamil that explains the entire Retrieval Augmented Generation pipeline.

- XTTS Installation Guide: @devspot posted a link to a YouTube video that explains how to install XTTS2, a popular Text-To-Speech AI model, locally using Python.

- Breakthrough in Language Models - MOE-8x7b: @tokey72420 shared a link to an article on MarkTechPost about Mistral AI’s new breakthrough in language models with its MOE-8x7b release.

▷ #i-made-this (5 messages):

- SetFit Polarity Model Creation: @andysingal shared the link to his newly created model on HuggingFace, the SetFit Polarity Model with sentence-transformers/paraphrase-mpnet-base-v2, and encouraged others to try Aspect-Based Sentiment Analysis (ABSA) with it.

- Guide Request: @fredipy asked for a guide on how to create models similar to @andysingal’s ABSA Model.

- Go Bruins V2 Model Showcase: @rwitz_ showcased his fine-tuned language model, Go Bruins V2, on the HuggingFace platform.

▷ #reading-group (3 messages):

- Meeting Schedules: @chad_in_the_house confirmed that meetings usually occur on Wednesdays and are often held in a dedicated thread.

- Discussion on Distillation Paper: @murtazanazir initiated a discussion on the paper Distilling the Knowledge in a Neural Network, inviting others to join in for a deeper understanding.

▷ #computer-vision (2 messages):

- Bounding Box for Newspaper Segmentation: User @navinaananthan asked for suggestions on models or methodologies to create a separate bounding box for each news article when uploading a newspaper. The question had not received an answer yet.

▷ #NLP (5 messages):

- Best Encoder/Decoder for RAG System: User @woodenrobot inquired about the most suitable encoder/decoder for a RAG system. They currently use BERT, but are open to other suggestions as they are unsure about BERT’s ability to scale up as data grows over time.

- Rankings for Multilingual/Code: @woodenrobot expressed an interest in seeing model rankings specifically for multilingual/code use cases.

- Palm as a Top Model Suggestion: @woodenrobot noted, based on interactions with bard, that Palm appeared frequently as the recommended model.

LangChain AI Discord Summary

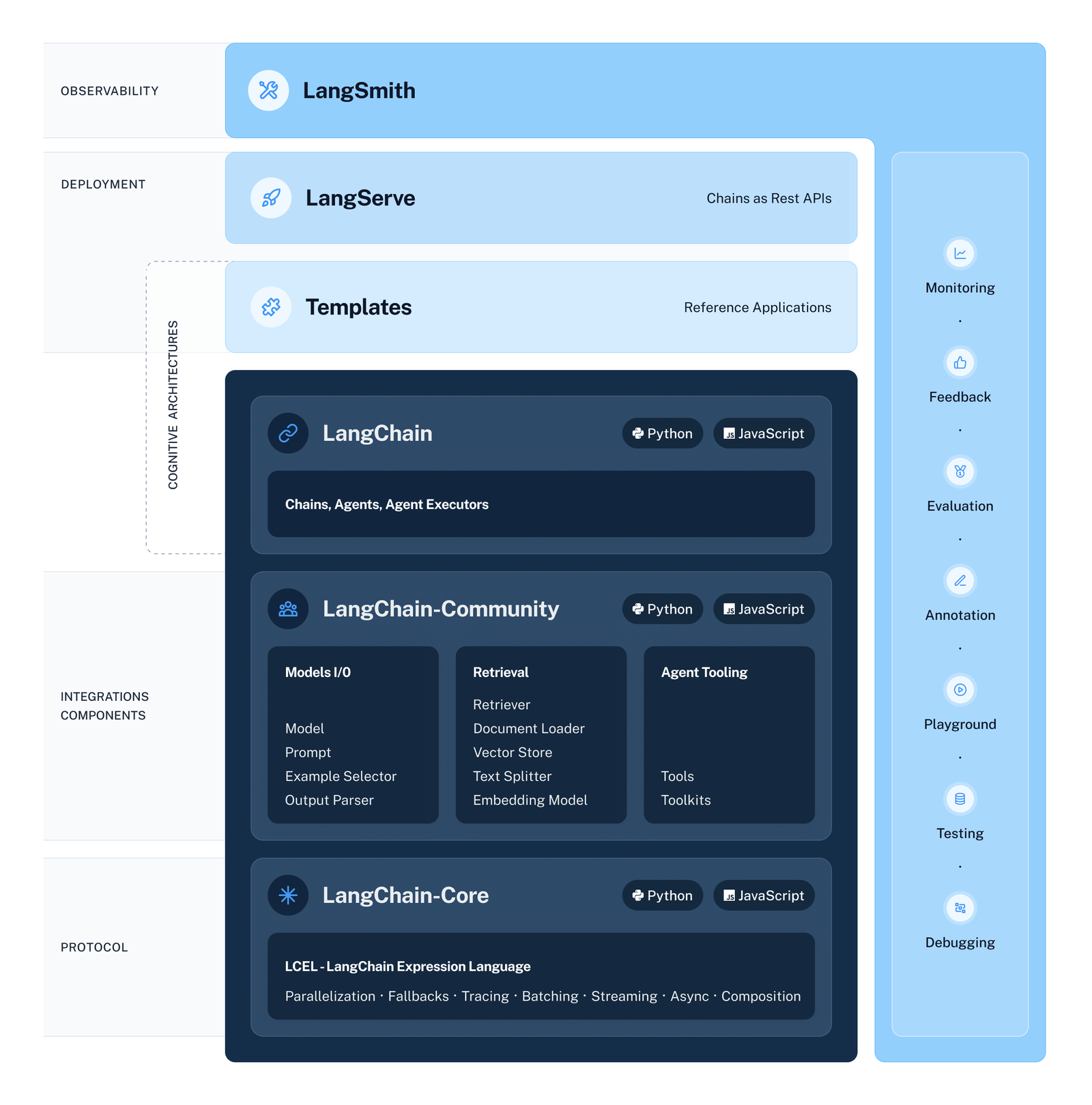

- A new architecture for LangChain, namely langchain-core and langchain-community, was introduced by @hwchase17. This updated architecture will facilitate the development of context-aware reasoning applications using LLMs. Questions about this change are welcomed.

- Various technical inquiries and issues:

  - New techniques for tracking token consumption per LLM call and improving SQL query result summarization were requested.

  - An issue with the LangSmith API failing to trace LLM calls over the last 45 minutes was reported.

  - Community members sought advice on using LangChain for specific task completion, employing open-source alternatives for OpenAIEmbeddings, and enhancing chatbots to show similar records from a database.

  - Discussion about using LangChain to improve Azure Search Service’s multi-query retrieval and fusion RAG capabilities.

- In the langserve channel:

  - Users faced challenges with callback managers in Llama.cpp and the integration of langserve, langchain, and Hugging Face pipelines.

  - A proposal was made to create an issue about challenges with Langserve and possible adjustments to use RunnableGenerators over RunnableLambdas.

  - A request for exposing the agent_executor in langserve.

- A free 9-part code-along series on building AI systems using OpenAI & LangChain was shared. The first part specifically covers sentiment analysis with GPT and LangChain, MRKL prompts, and building a simple AI agent. The course can be found on this link and a corresponding YouTube Session is also available.

LangChain AI Channel Summaries

▷ #announcements (1 messages):

- New LangChain Architecture: @hwchase17 shared a blog post detailing the new langchain-core and langchain-community packages. The goal of this re-architecture is to make the development of context-aware reasoning applications with LLMs easier. This change is a response to LangChain’s growth and community feedback, and is implemented in a completely backwards-compatible way. @hwchase17 also offered to answer questions regarding this change.

▷ #general (12 messages🔥):

- Tracking Token Consumption Per LLM Call: @daii3696 inquired about a way to track token consumption for each LLM call in JavaScript. In response, @pferioli suggested using a callback and provided a code snippet, dealing specifically with the handleLLMEnd callback, which logs the token usage details.

- Improve SQL Query Result Summarization: @jose_55264 asked about possible methods to expedite the summarization of results from SQL query execution. No specific solutions were provided in the given messages.

- Using LangChain for Task Completion: @sampson7786 expressed a desire to utilize LangChain for completing a task with a specific procedure, seeking assistance on the platform for this issue.

- LangSmith API Issues: @daii3696 raised concerns about an apparent issue with the LangSmith API as they were unable to trace their LLM calls for the past 45 minutes.

- Open Source Alternatives for OpenAIEmbeddings in YouTube Assistant Project: @infinityexists. is working on a YouTube assistant project and asked how HuggingFace can be used as an open-source alternative to OpenAIEmbeddings. They provided a link to the GitHub code used in their project.

- Increasing Odds in Vector Search: @rez0 queried about the name of a function that splits retrieval into three queries in vector search to improve the chances of getting the wanted results.

- Enhanced Search Capabilities in Chatbot: @hamza_sarwar_ is interested in enhancing their chatbot’s capabilities to display similar records from a database (e.g., vehicle info) when there are no precise matches to a user’s query.

- Azure Search Service Multi-query Retrieval/Fusion RAG with LangChain: @hyder7605 is working with Azure Search Service and wishes to integrate multi-query retrieval or fusion RAG abilities with Azure Cognitive Search using LangChain. They also aim to include advanced features like hybrid and semantic search in their query process but are unsure about defining filters and search parameters with LangChain.
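Multi-query retrieval and fusion RAG, both raised above, typically run several query variants and then merge the ranked results. A common merging rule is reciprocal rank fusion (RRF). The sketch below is library-free and is not LangChain's or Azure's API; the function name, the document IDs, and the constant k=60 are assumptions chosen for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list it appears in, so
    documents that rank well across many query variants rise to the top.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from three rephrasings of the same user question.
results_per_query = [
    ["a", "b", "c"],
    ["b", "a", "d"],
    ["b", "c", "a"],
]
fused = reciprocal_rank_fusion(results_per_query)
print(fused)
```

Because RRF uses only rank positions, it can combine results from retrievers whose raw scores are not comparable, such as keyword and vector search in a hybrid setup.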

▷ #langserve (5 messages):

- Issues with Callback Managers in Llama.cpp: User @jbdzd raised a challenge with callback managers in Llama.cpp during their use of langserve. They got a RuntimeWarning indicating a coroutine ‘AsyncCallbackManagerForLLMRun.on_llm_new_token’ was never awaited.

- Struggles with Implementing Streaming in Langserve: @fortune1813 expressed trouble with the integration of langserve, langchain, and hugging face pipelines, especially with respect to streaming. They have investigated the notebooks but requested further clarification on proper streaming implementation.

- Proposal to Create Issue in Langserve: @veryboldbagel suggested that @fortune1813 create an issue about their challenge in Langserve and share the full server script. They also pointed out that RunnableGenerators should be used instead of RunnableLambdas for common operations, noting that this has been poorly documented.

- Request for Agent Executor Exposure in Langserve: @robertoshimizu queried about exposing agent_executor in langserve and shared a code snippet with an example. However, they are struggling as the input seems different when invoked in a Python script.

▷ #tutorials (1 messages):

- AI Code-Along Series: User @datarhys shared a free 9-part code-along series on AI, with a focus on “Building AI Systems with OpenAI & LangChain”, which was released by DataCamp. This series aims to guide learners from basic to more advanced topics in AI and LangChain.

  - The first code-along covers:

    - Performing sentiment analysis with GPT and LangChain

    - Learning about MRKL prompts used to help LLMs reason

    - Building a simple AI agent

  - The instructor, Olivier Mertens, is praised for his ability to present complex topics in an accessible manner.

  - To start this code-along, follow the link provided: Code Along

  - A YouTube session for the code-along is also available: YouTube Session

Alignment Lab AI Discord Summary

- Conversation about a Mixtral-based OpenOrca Test initiated by @lightningralf, with reference to a related fxtwitter post from OpenOrca’s development team.

- Speculation on the speed of the machine learning process; the proposed solution includes using server 72 8h100 to enhance performance.

- @teknium’s mention of testing an unidentified model, with the model still needing clarification.

- Inquiry from @mister_poodle on ways to extend or fine-tune Mistral-OpenOrca for specific tasks, namely boosting NER task performance using their datasets and generating JSON outputs.

Alignment Lab AI Channel Summaries

▷ #oo (5 messages):

- Discussion about a Mixtral-based OpenOrca Test: @lightningralf asked @387972437901312000 if they tested Mixtral based on OpenOrca, linking a fxtwitter post.

- Question about Process Speed: @nanobitz expressed surprise about the speed of the process, with @lightningralf suggesting the use of server 72 8h100.

- Unidentified Model Testing: @teknium mentioned testing some model, but being uncertain about which one.

▷ #open-orca-community-chat (1 messages):

- Extending/Fine-tuning Mistral-OpenOrca for Specific Tasks: User @mister_poodle expressed interest in using their datasets to boost Mistral-OpenOrca’s performance on an NER task with JSON outputs. They sought examples or suggestions for extending or fine-tuning Mistral-OpenOrca to achieve this goal.

Latent Space Discord Summary

- Discussion about fine-tuning open source models with recommendations for platforms such as Replicate.ai with the ability to run models with a single line of code shared by @henriqueln7.

- Introduction of an all-in-one LLMOps solution, Agenta-AI, including prompt management, evaluation, human feedback, and deployment by @swizec.

- Recognition of the popularity of daily AI newsletters highlighted through a Twitter post by Andrej Karpathy shared by @swyxio.

- Inquiry about the ideal size of language models for RAG applications by @henriqueln7, focusing the debate on balancing world knowledge and reasoning capacity.

- Sharing of a YouTube video presenting insights into the future of AI, titled “AI and Everything Else - Benedict Evans | Slush 2023” by @stealthgnome.

- Request for access to Smol Newsletter Discord text and API made by @yikesawjeez with the goal of displaying content as embeds or creating a daily digest.

- Development progress update by @yikesawjeez on a SK plugin, planning to complete this before catching up on backlog items.

Latent Space Channel Summaries

▷ #ai-general-chat (11 messages🔥):

- Fine-tuning Open Source Models: User @henriqueln7 asked for recommendations on platforms to fine-tune open source models and presented https://replicate.ai/ as a suggestion. This platform allows one to run open-source models with one single line of code.

- All-in-one LLMOps platform: User @swizec shared a link to the GitHub repository for Agenta-AI. This platform provides an all-in-one LLMOps solution, including prompt management, evaluation, human feedback, and deployment.

- Daily AI newsletters: @swyxio commented on the popularity of daily AI newsletters, putting forward a Twitter post by Andrej Karpathy as an example.

- Size of Language Models for RAG Applications: @henriqueln7 posed a question about the ideal size of language models for RAG Applications. The question aimed to clarify whether a smaller or larger model would be better, considering the smaller model’s limited world knowledge and the larger model’s superior reasoning abilities.

- AI Overview for 2023: User @stealthgnome shared a YouTube link to the ‘AI and Everything Else - Benedict Evans | Slush 2023’ video, offering insights into the future of AI.

▷ #llm-paper-club (2 messages):

- Requesting Access to Smol Newsletter Discord Text and API: User @yikesawjeez asked @272654283919458306 for an API to access the raw text or .md files from the smol newsletter discord. An API would enable them to display the content as embeds using another user, Red, or create a daily digest.

- Development on SK Plugin: @yikesawjeez is currently working on a sk plugin and plans on finishing that before going through their backlog.

LLM Perf Enthusiasts AI Discord Summary

- Conversation around Anthropic Fundraising with user @res6969 mentioning a rumor about Anthropic raising an additional $3 billion, and jesting about function calling issues, suggesting that more funds might solve them.

- Discussion about Fine-tuning for Email Parsing initiated by @robhaisfield, regarding the number of examples needed for creating a JSONL file to parse email strings into structured objects.

- Share by @robotums of a Microsoft Research blog article about Phi 2: The Surprising Power of Small Language Models.

- User @lhl shared their experience with Inference Engine Performance, claiming a 50X performance boost after replacing some code with vLLM. They also compared transformers with other engines and shared a GitHub repository containing detailed results.

- Dialogue on prompting techniques including the MedPrompt method and DeepMind’s Uncertainty Routed CoT (Chain of Thought). This discussion also touched on OCR (Optical Character Recognition) usage and MS Research’s achievements. All topics were introduced and discussed by users @robotums and @dhruv1.

LLM Perf Enthusiasts AI Channel Summaries

▷ #claude (3 messages):

- Anthropic Fundraising: User @res6969 commented about a rumor that Anthropic is raising an additional $3 billion.

- Function Calling Issues: @res6969 made a jesting remark that maybe a few more billion dollars will get function calling to function properly.

▷ #finetuning (2 messages):

- Fine-tuning for Email Parsing: User @robhaisfield queried about the number of examples needed for creating a JSONL file to fine-tune a model to parse an email string into a structured object with nested replies. They asked if 30 examples would be sufficient.
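For context, a fine-tuning JSONL file holds one JSON object per line. The sketch below assumes the chat-style messages layout used for OpenAI chat fine-tuning; the helper name, the system prompt, the sample email, and the output schema with nested replies are all hypothetical.

```python
import json


def to_jsonl(pairs, system_prompt="Parse the email into a JSON object."):
    """Serialize (email_text, parsed_object) pairs as chat-format JSONL."""
    lines = []
    for email_text, parsed in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": email_text},
                # The target output is itself JSON, stored as a string.
                {"role": "assistant", "content": json.dumps(parsed)},
            ]
        }
        # json.dumps escapes newlines, so each record stays on one line.
        lines.append(json.dumps(record))
    return "\n".join(lines)


# One hypothetical training example with a single nested reply.
example_pairs = [
    (
        "From: a@example.com\nHi team\n> From: b@example.com\n> Earlier note",
        {
            "sender": "a@example.com",
            "body": "Hi team",
            "replies": [
                {"sender": "b@example.com", "body": "Earlier note", "replies": []}
            ],
        },
    ),
]
jsonl_text = to_jsonl(example_pairs)
print(jsonl_text)
```

Generating the file from structured pairs like this also makes it easy to hold out a few examples for checking whether a given number of training examples is enough.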

▷ #opensource (1 messages):

- Phi 2: The Surprising Power of Small Language Models: @robotums shared a link to a Microsoft Research blog article addressing the potential of smaller language models, accompanied by a list of contributors to the content.

▷ #speed (1 messages):

- Inference Engine Performance: User @lhl detailed their experience with different inference engines. They noted that after replacing some existing code with vLLM, they observed a 50X performance boost. They also compared various inference options from transformers with other engines and shared their findings through a GitHub repository. The repo contains detailed results of the inferencing tests.

▷ #prompting (5 messages):

- Understanding When to Use OCR: @robotums expressed curiosity about how to determine when OCR (Optical Character Recognition) is necessary for a page, asking if anyone knows how chatDOC accomplishes it.

- Inquiring About the MedPrompt Technique: @dhruv1 asked if anyone has used the MedPrompt technique in their application.

- Achievement by MS Research Using MedPrompt: @dhruv1 shared that MS Research has written a post about using the MedPrompt technique to surpass Gemini’s performance on MMLU (Massive Multitask Language Understanding).

- DeepMind’s Uncertainty Routed CoT Technique: @dhruv1 informed the channel that DeepMind has revealed a new technique called uncertainty routed CoT (Chain of Thought) that outperforms GPT on MMLU.

- Sharing the CoT Technique: @dhruv1 promised to share more about the CoT technique.
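As a rough sketch of the routing idea behind uncertainty routed CoT: sample several chain-of-thought answers, take the majority vote when the samples agree strongly enough, and otherwise fall back to the single greedy answer. This reflects a general understanding of the technique rather than DeepMind's code; the function name, the threshold value, and the hard-coded answers are assumptions, and no model is called here.

```python
from collections import Counter


def uncertainty_routed_answer(sampled_answers, greedy_answer, threshold=0.6):
    """Pick the majority-vote answer only when agreement is high enough.

    sampled_answers: final answers extracted from k sampled reasoning chains.
    greedy_answer: the answer from one greedy (temperature 0) decode.
    threshold: hypothetical agreement level; in practice it would be tuned
    on a validation set.
    """
    votes = Counter(sampled_answers)
    top_answer, top_count = votes.most_common(1)[0]
    agreement = top_count / len(sampled_answers)
    # High agreement: trust the vote. Low agreement: defer to greedy decoding.
    return top_answer if agreement >= threshold else greedy_answer


confident = uncertainty_routed_answer(["B", "B", "B", "A", "B"], greedy_answer="A")
uncertain = uncertainty_routed_answer(["A", "B", "C", "D"], greedy_answer="C")
print(confident, uncertain)
```

The routing step matters because majority voting over samples can hurt when the samples disagree widely, so the agreement level acts as a cheap confidence signal.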

AI Engineer Foundation Discord Summary

Only 1 channel had activity, so no need to summarize…

- Event Timing Mix-up: @yikesawjeez inquired about the event’s time being marked at 9:30 PM, which they had expected to be at 8:00 AM. @._z clarified that the event is actually scheduled to be at 9:30 AM PST. There was a minor confusion due to the time being marked incorrectly as PM instead of AM.

Skunkworks AI Discord Summary

- Mention of an individual’s influence on mistral licensing in the #moe-main channel, with a comment from user “.mrfoo”: “Influencing mistral licensing I see. Nice!”.

- In the #off-topic channel, user “pradeep1148” shared a YouTube link: https://www.youtube.com/watch?v=wjBsjcgsjGg. The content of the video was not discussed.

Skunkworks AI Channel Summaries

▷ #moe-main (1 messages):

.mrfoo: <@1117586410774470818> : Influencing mistral licensing I see. Nice!

▷ #off-topic (1 messages):

pradeep1148: https://www.youtube.com/watch?v=wjBsjcgsjGg

MLOps @Chipro Discord Summary

Only 1 channel had activity, so no need to summarize…

- The Complete Data Product Lifecycle: @viv2668 shared links to two parts of an enhanced end-to-end guide on practical Data Products.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.