[TOC]

LM Studio Discord Summary

- Extensive discussions were held on various GPU-related problems, ranging from model operational issues to compatibility concerns. For instance,

@pminevrequired assistance with GPU-related issues and on configuring the model for other functions and@dagbsguided them towards the Inference Server in LM Studio. - An ongoing conversation on incorporating a Discord bot with OpenAI API was witnessed.

@thelefthandofurzashared a Github link aiding users in tweaking the bot’s existing code as per their needs. - The community also interacted about specific LM Studio use-cases and compatibility, discussing character prompts in roleplaying contexts and integrating a GitHub wiki with the LLM assistant for more contextual responses.

- In terms of hardware, the topic revolved around the limitations that users faced in utilizing various models due to GPU restrictions. Possible solutions for running models with large context sizes were also speculated, with

@fabguyremarking, “Large context sizes slow down processing and they eat up RAM/vRAM like crazy.”. - Discussion on server hosting for embedding and LLM models was initiated by

@rofliexwith helpful input provided by@vic49. Also discussed was integrating with the embeddings API and the use of Databerry Project for building custom LLM Agents. - Community members proposed updates on the discord channels, requesting set up of a dedicated category for model development, and advocated for a visible leaderboard model section with data from trusted external sources. They also expressed the need to exercise caution in accepting submissions due to polluted training data issues.

- User

@.greglyshared a temp fix for a gibberish problem in the 0.2.10 (Windows) version in the beta releases discussion. - The Autogen topics revolved around installation troubles, understanding error messages, usage confusion about Docker, and Autogen’s operation nature. Clarity was provided by users who conducted detailed explanations about how Autogen functions under different conditions.

LM Studio Channel Summaries

▷ #🎄🎅-general (102 messages🔥🔥):

- GPU Related Issues and Queries:

@pminevfaced issues with the model operation and received suggestions to check GPU-related issues from@dagbs.@pminevwas also interested in configuring the model for other functions similar to OpenAI, and@dagbspointed him towards the Inference Server in LM Studio. - Discord Bot Implementation Discussions: Users

@Tripand@rewireshowed an interest in finding a Discord bot that works well with the OpenAI API.@thelefthandofurzashared a Github link for a discord bot, noting that users may have to tweak the existing code. - LM Studio Use-Case and Compatibility Discussions:

@olofpsuggested opening a specific channel for discussing use-cases of LM studio.@vanthrynasked for the best practices regarding character prompts in the context of using an LLM to roleplay.@professorakramsought advice for integrating a GitHub wiki with the LLM assistant for context in responses and@dagbssuggested using autogen. - System Compatibility Queries:

@katanasoul91and@basedkinghad issues related to their system compatibility with certain models.@fabguy,@yagilb, and@dagbsoffered their advice and guidance. - Model Performance Queries:

@jihaand@rocketraccoon6074were looking for models that align with their specific hardware capabilities and requirements. Suggestions and guidance were offered by@fabguy,@dagbs, and others.

Links mentioned:

- LM Studio Beta Releases

- GitHub - BBC-Esq/ChromaDB-Plugin-for-LM-Studio: Plugin that creates a ChromaDB vector database to work with LM Studio running in server mode!: Plugin that creates a ChromaDB vector database to …

- GitHub - openai/gpt-discord-bot: Example Discord bot written in Python that uses the completions API to have conversations with the

text-davinci-003model, and the moderations API to filter the messages.: Example Discord bot written in Python that uses th…

▷ #🤖-models-discussion-chat (6 messages):

- Confusion on Model Training Purpose: User

@dagbsexpressed confusion over the datasets used and the end-goal of a certain model. They stated, “I’m so confused by the end-goal of the Model and what it was trained for.” - Hardware Limitations: User

@dagbsalso noted the model’s size (8x7b) prevents them from running it due to hardware limitations. - Excitement Over Potential of SOLARC-MOE-10.7Bx4 Model:

@jihashared their enthusiasm about the potential power of the untestedSOLARC-MOE-10.7Bx4model, providing its link at the coverage. They also expressed a desire to see it tested but lamented not having the necessary hardware. - Speed and Memory Challenges with Large Context Sizes:

@fabguywarned about performance issues with large context sizes, stating “Large context sizes slow down processing and they eat up RAM/vRAM like crazy.”. They suggested a RAG setup could be beneficial. - Questions on MoE Model Processing Speeds:

@a1vxraised a query about the processing speed of MoE models, seeking to understand how an expert FFN router from a7bmodel could be stacked eight times.

Links mentioned:

TheBloke/SOLARC-MOE-10.7Bx4-GGUF · Hugging Face

▷ #🧠-feedback (9 messages🔥):

- Model Development Channel Request: User

@dagbsproposed setting up a specific channel category for model development, including subcategories such as general, pretraining, datasets, finetuning, and quantization, to foster collaboration within the LM Studio community. - Leader Board Model Section Suggestion:

@pandora_box_openrecommended adding a leaderboard model section visible to all. The data for this could be fetched from external sources like HuggingFace and they linked to OpenCompass as an example. - User

@fabguyaffirmed the idea of a leaderboard but also cautioned that currently no submissions are being accepted due to issues with polluted training data. @pandora_box_openresponded by suggesting the possibility of having a section for reviewers using LMstudio for rankings, which could serve as promotion for LM Studio while benefiting the community.

Links mentioned:

▷ #🔗-integrations-general (13 messages🔥):

- Hosting Servers for Embedding and LLM Models:

@rofliexasked about the possibility of hosting the server on 1234 and LM Studio on 1234 for embeding + LLM model.@vic49clarified that his program connects to LM Studio running in server mode and additional server mode isn’t required by his program.@rofliexexpressed appreciation for this solution. - Using Embeddings API:

@rofliexenquired about the need to clear suffix/preffix textboxes in LM Studio server panel configuration for utilizing embedings api and whether this requirement was only specific to@vic49’s chat implementation. In response,@vic49suggested disabling “automatic prompt formatting” in LM Studio, selecting a prompt, then updating settings in his program. He also advised removing anything in the prefix/suffix boxes in LM Studio. - Attempt to Run Databerry Project:

@rofliexmentioned attempting to run the Databerry Project, a no-code platform for building custom LLM Agents, and expressed the need for the correct embedding api for qdrant. - Feeding Multiple Folder to LLM:

@andrew.lostraised the question of whether it’s possible to feed an LLM a folder containing multiple sub-folders and files for reading and scanning. This query remained unanswered as per the message log.

Links mentioned:

GitHub - gmpetrov/databerry: The no-code platform for building custom LLM Agents: The no-code platform for building custom LLM Agent…

▷ #🎛-hardware-discussion (12 messages🔥):

- Using Models on GPU:

@taigasasori_94251asked how to make models run on a 4090 GPU as only CPU load was shown.@dagbssuggested setting the GPU parameter to-1or a positive number, while@fabguynoted that the application UI doesn’t show GPU utilization. Later,@pefortinadvised to check the GPU offloading box in the UI and monitor vRAM usage using system tools. - Efficiency of Model on GPUs:

@pefortinshared their experience with dolphin mixtral Q5 on a combo of 3090 and 3060ti using PCIe x1 to x16 riser. They observed an increase in tokens per second from 6 to 10-11 and planned to test with old 10xx and 20xx series GPUs. - Issue on AMD GPU:

@LokedTMXexperienced issues of GPU non-utilization while off-loading to an RX 6950xt AMD GPU.@yagilbacknowledged it as a known issue concerning AMD GPUs and provided a link for updates as well as invited comments to potentially test a beta build when available.

▷ #🧪-beta-releases-discussion (2 messages):

- Gibberish Problem in 0.2.10 (Windows):

@.greglynoticed that switching truncation strategies and regenerating seems to temporarily fix the gibberish problem in version 0.2.10 (Windows), although the issue reoccurs on the next generation. This feedback was identified as useful by@yagilb.

▷ #autogen (21 messages🔥):

-

Installation and usage of AutoGen: User

@ddhmksoishared their struggle with setting up AutoGen. They followed the steps including downloading the latest zip from git, running install.py, and using pip install for autogen. However, they encountered issues while running certain Autogen scripts. -

Understanding Error Messages in AutoGen:

@ddhmksoiencountered an error message that refers to issues withautogen.oai.completionand dependencies onopenai<1anddiskcachewhich raised concerns. -

Use of Docker with AutoGen:

@ddhmksoiexpressed confusion over Docker’s involvement in the AutoGen process. They installed Docker as recommended but did not observe an active instance within the Docker application. -

How AutoGen Works: User

@dagbsprovided insight into how Autogen works. They pointed out that Autogen’s behavior not only heavily depends on the model used, but also on the prompt given. Autogen may terminate prematurely if it determines the task is completed. To prevent early termination,@dagbssuggested adding asystem_messageinside ofUserProxyAgent()to guide the model on task completion status. -

Location of AutoGen Files:

@ddhmksoiinquired about the location where Autogen files are saved after execution.@dagbsclarified that Autogen does not save any files, as it is a Python script that’s meant for direct interaction.

Nous Research AI Discord Summary

-

User

@pradeep1148sparked a discussion on AI Models by inquiring about the differences between transformer architectures in llama2 and mistral on the #off-topic channel. A man contacting an astronaut on the ISS using a homemade antenna was also discussed through a Twitter link shared by@teknium. -

In the #benchmarks-log channel,

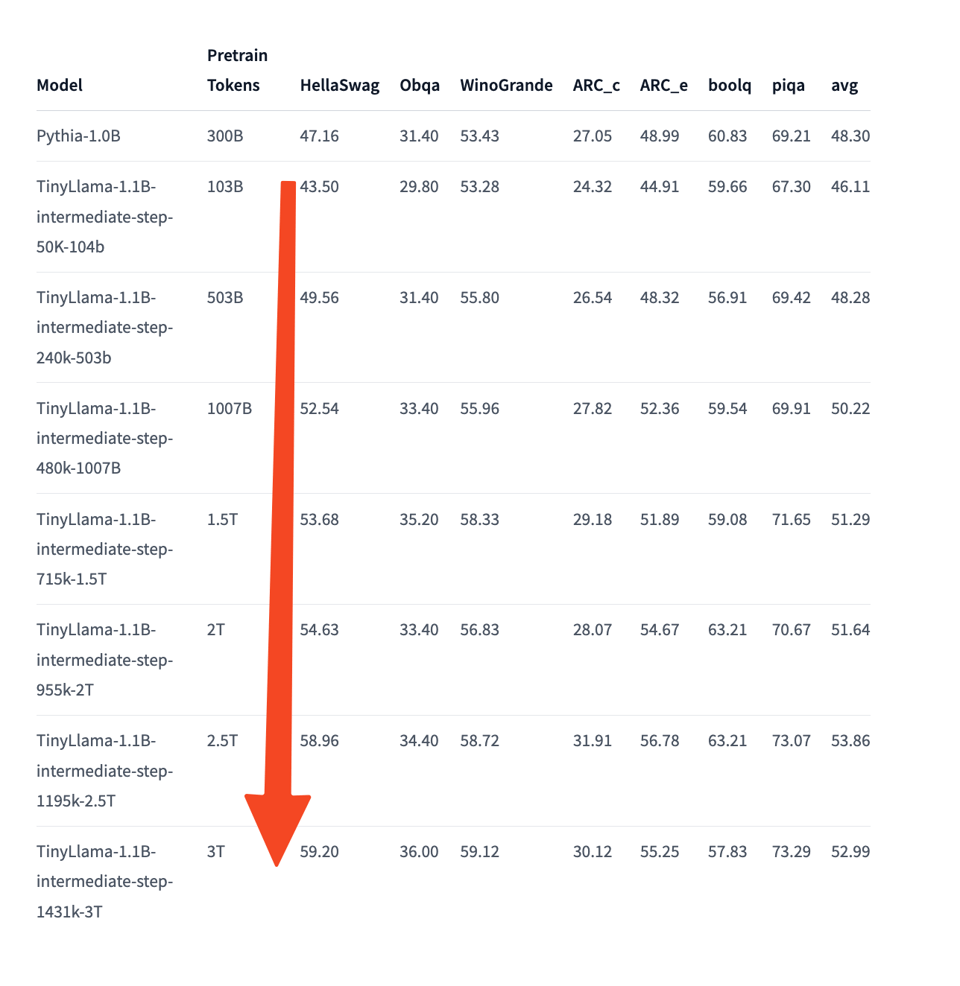

@tekniumshared benchmarks displaying varied performance across multiple tasks and data sets for different versions ofTinyLlama. -

The #interesting-links channel had discussions on running benchmarks for

Tinyllamacheckpoints, gaming in modded Minecraft, building a knowledge graph with Instructor and Pydantic for response_type, and a comprehensive comparison and ranking of various 7B models, including dolphin-2.6-mistral-7b, dolphin-2.6-mixtral-8x7b, Marcoroni-7B-v3, and mistral-ft-optimized-1218. -

@tekniumignited a thoughtful conversation on AI consciousness in the #general channel while discussing the Hermes 2 AI bot’s view on consciousness, sentience, and qualia in AI. The potential effects of doubling the model layers in AI models and the desire to train a tiny-llama semantic chunker using GPT4 data, were other areas of interest. -

The #ask-about-llms channel had interesting exchanges about the simplicity of tokenization and the unveiling of a new AI model called NeuralMix-2x7b, a Mixture of Experts (MoE) created using mergekit.

-

@vic49sparked a discussion about a query with a script execution in the #project-obsidian channel. The discussion evolved into project integration topics and code suggestions for smooth operations.

Nous Research AI Channel Summaries

▷ #off-topic (3 messages):

- User Shared Links:

@pradeep1148shared a YouTube link without any context.@tekniumshared a Twitter link describing a man contacting an astronaut on the International Space Station using a homemade antenna.

- Discussion on AI Models:

@pradeep1148asked about the differences between transformer architectures in llama2 and mistral.

Links mentioned:

Tweet from Historic Vids (@historyinmemes): This guy contacted an astronaut on the ISS using a…

▷ #benchmarks-log (8 messages🔥):

- Training TinyLlama:

tekniumshared benchmarks for different versions ofTinyLlama, specifically intermediate steps with various batch sizes.- TinyLlama-1.1B-intermediate-step-1431k-3T: Demonstrated varied performance across different tasks. Achieved an average accuracy of 52.99% on a set of tasks including ARC, Boolq, HellaSwag, and others. Achieved an average accuracy of 21.05% on a set of tasks from AgeIVal (AQA, LogiQA, LSAT AR, etc.). Performed at 31.95% average on a set of tasks from BigBench. “TruthfulQA MC” performance is reported with mc1 and mc2 metrics.

- TinyLlama-1.1B-intermediate-step-1195k-token-2.5T: Showed slightly inconsistent performance compared to the previous model. Obtained an average of 53.84% on a set similar to the first batch, but then dropped to 21.45% on a set from AgeIVal. In the BigBench tasks, it achieved an average performance of 31.73%. Similar to the previous model, “TruthfulQA MC” performance is reported.

▷ #interesting-links (10 messages🔥):

- Benching Tinyllama Checkpoints:

@tekniummentioned running benchmarks for the last three checkpoints of Tinyllama. - Gaming Discussion: A discussion about playing modded Minecraft was initiated by

@tekniumcasually inquiring if@1084792750001618965indulges in it. The conversation ended up including@max_paperclips, who mentioned that they do play the game occasionally. - Knowledge Graph Building:

@fullstack6209shared a GitHub Gist link about their project on building knowledge graph data with guidance using Instructor and Pydantic for response_type. They mentioned the process taking about 30 minutes on a 2080ti/3090 setup with VLLM. - AI Model Comparison:

@metaldragon01shared a Reddit post offering a comprehensive comparison and ranking of various 7B models, including dolphin-2.6-mistral-7b, dolphin-2.6-mixtral-8x7b, Marcoroni-7B-v3, and mistral-ft-optimized-1218. The Nous Capybara model was mentioned favorably. - Model Offloading:

@gabriel_symeshared a GitHub link about running Mixtral-8x7B models in Colab or consumer desktops.

Links mentioned:

- Reddit - Dive into anything

- GitHub - dvmazur/mixtral-offloading: Run Mixtral-8x7B models in Colab or consumer desktops: Run Mixtral-8x7B models in Colab or consumer deskt…

- asdf.py: GitHub Gist: instantly share code, notes, and snip…

▷ #general (75 messages🔥🔥):

- Speculation on AI Consciousness:

@tekniumshared a Hermes 2 AI bot’s output contemplating consciousness, sentience, and qualia in artificial intelligence. The bot highlighted the abstract and poorly understood nature of these concepts, and how they might manifest within AI. Despite some forms of AI exhibiting characteristics of human sentience and consciousness, the AI concluded that current understanding and technology don’t support the assertion that AI possesses the conscious, sentient, or qualitative attributes of living beings. - Discussion on Scaling AI Models:

@.wooserspeculated about doubling the model layers and the effects it could have on the model’s performance. They questioned if such actions would provide a fourfold increase in performance efficiency. - Mistral’s Ranking:

@mihai4256mentioned that Mistral’s strongest model now ranks similarly to 7b models, which shows a different trend against other benchmarks. They were still investigating the reason for this trend. - Semantic Chunking on GPT4:

@gabriel_symeexpressed interest in training a tiny-llama semantic chunker using GPT4 data. Their approach would involve taking 4k token text inputs provided to GPT4 and splitting the output into 1-10 sentence chunks based on semantic context. - Impressed with Mergekit:

@mihai4256remarked on how shocked they were to discover that Mergekit has a mixtral branch. Expectedly, users are looking forward to seeing how well it performs.

Links mentioned:

- nRuaif/IWasDointCrystalMethOnTheKitchenButThenMomWalkedIn-NeuralHermesStripedCapybara-Mistral-11B-SLERP · Hugging Face

- mlabonne/Beyonder-4x7b · Hugging Face

- Tweet from lmsys.org (@lmsysorg): @MistralAI’s strongest model, Mistral-Medium, …

- RAG

- Tweet from Delip Rao e/σ (@deliprao): AI researcher tuning hyperparameters of their LLM

▷ #ask-about-llms (6 messages):

- Tokenization Explained:

@woosercommented on the simplicity of tokenization, stating that it’s computationally super easy and involves chopping up a text file using items from a dictionary. - New AI Model - NeuralMix-2x7b:

@jason.todayshared a new AI model called NeuralMix-2x7b, a Mixture of Experts (MoE) created using mergekit (mixtral branch). It’s composed of the following base models: OpenPipe/mistral-ft-optimized-1218 and mlabonne/NeuralHermes-2.5-Mistral-7B. - Unexpected Language Output:

@fullstack6209reported that NeuralMix-2x7b started speaking Russian for an undisclosed reason.

Links mentioned:

mlabonne/NeuralMix-2x7b · Hugging Face

▷ #collective-cognition (1 messages):

dogehus: show me how I can operate the lasted neo cortex we have available pleas

▷ #project-obsidian (9 messages🔥):

- Code Execution Discussion: User

@vic49raised an issue about a problem with a script execution, presuming it to be a command line argument creator. @qnguyen3confirmed that LMStudio uses the same code for testing purposes.- Project Integration Topic:

@qnguyen3shared insights on people integrating Obsidian into their app. They suggested that if there were any issues, they should have been reported on both HF and GitHub. @vic49specified that his reference is related to using the native format of Obsidian, not the GGUF version used by LMStudio.- Code Suggestion:

@qnguyen3proposed a command to try:python llava/serve/cli.py --image-file your_image.jpg.

OpenAccess AI Collective (axolotl) Discord Summary

-

Discussion about loss reduction techniques for ultimate model performance and usage of Wandb for better visualization.

- “Increase the batch size and reduce the learning rate to reduce the fluctuation in training loss.” -

_jp1_

- “Increase the batch size and reduce the learning rate to reduce the fluctuation in training loss.” -

-

Conversation on estimating model performance with a new tokenizer, with the mention of using the 16/32 rank alpha combination for training, and checking performance through task completions.

-

Focus on Airship Axolotl training: the discussion concerning a VRAM spike issue with

sample_packing, suggestion of adding chat templates totokenizer_config.jsonfor traits likechatml,vicuna, andllama2 chat. -

A call for managing Axolotl installation issues via a reproducible pip / conda environment, and considering

mambaas a dependency. -

A milestone by the TinyLlama project: pretraining a 1.1B model on 3 trillion tokens.

-

Observations on the performance difference between Mixtral and Mistral models, and complications arising from EOS token conflicts when merging instruct models and story models.

-

Insight into the ultrachat_200k dataset, with questions on how to use it for training, understanding the

train_genformat, and confirming the usage oftrain_sftsplit and binarized dataset based on specific recipes.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (5 messages):

- Loss Reduction Techniques:

@_jp1_recommended increasing the batch size and reducing the learning rate to reduce the fluctuation in training loss. He added that fluctuations in training loss are not concerning as long as the evaluation loss is decreasing. - Usage of Wandb:

@_jp1_emphasized the importance of using wandb for learning and tracking model performance, stating that its use only requires setting an environment variable and adding a line in the axolotl configuration. - Evaluation of Model Performance:

@noobmaster29questioned the possible ways to estimate if the model is working well with a new tokenizer. He also mentioned that a loss of around 2 seems decent for text completion. - Rank and Alpha Combination: In reply to

@noobmaster29,@nanobitzsuggested using the 16/32 rank alpha combination for training the model. He also added that testing the model can simply be done through some completions.

▷ #axolotl-dev (45 messages🔥):

-

VRAM spike issue with sample_packing: Users

@_jp1_and@nanobitzdiscussed a VRAM spike issue, which appears when usingsample_packingduring training. This issue seems to be specific to certain datasets. While a definite solution has not been found, the issue doesn’t occur whensample_packingis switched off. -

Adding chat templates to the tokenizer_config.json:

@le_messraised the topic of adding chat templates totokenizer_config.json, asking users what chat templates, other thanchatml, should be included.@caseus_suggested includingllama2-chat,chatml,vicuna, andalpaca-instruct. -

Reproducible pip / conda environment for Axolotl:

@nanobitzraised the need for a reproducible pip / conda environment for Axolotl due to several installation issues observed.@le_messsuggested using thepip freeze > requirements.txtcommand, and@xyzzyrznoted complications with supporting multiple versions of torch for CI processes that build docker images. -

Mamba Dependency for Axolotl: Discussion occurred regarding making

Mambaa required dependency for Axolotl to avoid related issues.@nanobitzmentioned an issue comment related to this, to which@caseus_responded affirmatively. -

TinyLlama Project milestone:

@faldorereported that the TinyLlama project had reached the milestone of pretraining a 1.1B Llama model on 3 trillion tokens.

Links mentioned:

- TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T · Hugging Face

- Adds chat templates by mhenrichsen · Pull Request #1022 · OpenAccess-AI-Collective/axolotl: Adds Chat templates for easier inferencing chat mo…

- fix: warn user to install mamba_ssm package by NanoCode012 · Pull Request #1019 · OpenAccess-AI-Collective/axolotl: Fixes #975 . Warns when user does not have package…

▷ #general-help (15 messages🔥):

- Performance Difference Between Mixtral and Mistral:

@semantic_zonehas noticed a significant decrease in training and evaluation loss when switching the model from Mixtral to Mistral on their large dataset.@_jp1_suggested that the learning rate could be adjusted as it should probably be much smaller with a smaller batchsize and without sample packing.@_jp1_also provided a link to a classifier tutorial that suggested using a linear predictor on embeddings instead of next token prediction. - ChatML Models and EOS Token Conflicts:

@henk717shared that merging instruct models with story models previously led to EOS token conflicts, causing problems with models that specified `

Links mentioned:

- Intel/neural-chat-7b-v3-1 · Prompt Template?

- mistral-src/tutorials/classifier.ipynb at main · mistralai/mistral-src: Reference implementation of Mistral AI 7B v0.1 mod…

▷ #datasets (3 messages):

- Training with ultrachat_200k dataset: User

@noobmaster29inquired about methods to use the ultrachat_200k dataset for training and whether there is an axolotl data template for this purpose or whether manual configuration is needed in the dataset. He provided a dataset card link for more information on ultrachat_200k. - train_gen split in ultrachat_200k:

@noobmaster29also sought clarity on the format of thetrain_gensplit in the ultrachat_200k dataset, as he didn’t see a chosen/rejected pair in this split compared to what is present in theultrafeedback_binarizeddataset, sharing its dataset card link for reference. - Use of train_sft split and binarized dataset: According to

@noobmaster29, based on the alignment handbook’s recipts on Zephyr-7B-β, only thetrain_sftsplit from the ultrachat_200k dataset was used for sft, and the binarized dataset was used for dpo. He provided a link to the recipe for reference. - ultrachat prompt strategy merging: User

@caseus_responded that they had just merged an ultrachat prompt strategy.

Links mentioned:

- HuggingFaceH4/ultrachat_200k · Datasets at Hugging Face

- HuggingFaceH4/ultrafeedback_binarized · Datasets at Hugging Face

- alignment-handbook/recipes/zephyr-7b-beta at main · huggingface/alignment-handbook: Robust recipes for to align language models with h…

Mistral Discord Summary

- Language Learning Models (LLMs) and Function Choice Optimization: Users explored how to optimize LLMs function choice through prompt modifications. User

.tanuj.shared this method of defining a function call using system messages and context generation from prompt in their code. - Interests and Discussions on Different Mistral Models: Chatters expressed anticipation for the Mistral Medium feature, and shared experiences of Mistral-Medium’s performance. Also, question regarding using wasmedge to run Mistral and the possibility of fine-tuning Mistral on non-English languages were made.

- Deployment and Hardware-related Inquiries: User discussions included potential model choices based on hardware capabilities, including suggestions such as the openchat 3.5 model for systems with hardware limitations. Query about deploying a chatbot on an integrated GPU was also raised.

- Mistral Model Discussions and Showcasing: Users shared experiments with different models, including Mistral-7b and Mistral-7B-Instruct-v0.2. Inquiries about MoE model’s capabilities and a predictive future of resolving performance issues by the end of January were discussed. Users shared the HuggingFace blog post and an AI research survey video for a deeper understanding of MoE.

- Model Limitations and their Impact: User

@gilford3641sought advice on a local GPT model that supports a high number of input tokens to facilitate large text processing. A suggestion was given for Mistral-7B-Instruct-v0.2, however, it didn’t fit@gilford3641’s needs. - Platform and Model-related Discussions: Users compared Mistral AI client vs OpenAI client; speculated performance of mistral-tiny API; anticipated the introduction of 2FA on Mistral.ai; faced issues with rate limits on Mistral and experienced issues with mistral-medium model. Identified issues with LLMs performance with Huggingface libraries were discussed.

@daainsuggested a GitHub project to generate answers or code in Python using a LLM.

Mistral Channel Summaries

▷ #general (9 messages🔥):

- Prompting Method and LLMs Abstract APIs:

@theledgerluminaryinquired for examples on how to prompt Language Learning Models (LLMs) to optimize function choice. They suggested that LLMs API’s are abstract and function ideally by adjusting the prompt templates.@.tanuj.responded by sharing a high-level overview of his approach, emphasizing creating system messages for tasks, generating context with few-shot examples, allowing the agent to iterate series of steps and ensuring there is no unintentional side effect. They shared a link to their code for processing the LLM’s output into a clearly defined function call. - Interest in Mistral Medium:

@lee0099and@meyeloexpressed anticipation for Mistral Medium feature. - Mistral Medium Performance and Local Usage:

@gilford3641shared their experience that Mistral-Medium appears to perform better than Mistral-8x7b. They further inquired if it’s possible to run Mistral-Medium locally, to which@lee0099responded that it hasn’t been released yet. - Warm Welcome to New Members:

@akasik7243and@aircactus500announced their arrival and expressed their excitement to be part of the chat.

Links mentioned:

microchain/microchain/engine/engine.py at main · TanGentleman/microchain: function calling-based LLM agents. Contribute to T…

▷ #models (1 messages):

ved_ikke: Anybody using wasmedge to run mistral?

▷ #deployment (6 messages):

- Chatbot Deployment with Specific Hardware:

@ethuxadvised that deploying a 4 or 3bit GGUF model would be possible with a GPU that has 4GB VRAM and an additional 8GB Shared VRAM from the PC. - Alternative Model Recommendation:

@ethuxsuggested to consider the openchat 3.5 model for those with hardware limitations.@azetismeexpressed intent to look into this suggestion. - Integrated GPU Inquiry:

@hharryrqueried about the possibilities of deploying a chatbot on an integrated GPU, particularly the one on R7 7840H, which is paired with 32GB of RAM. This question remained unanswered in the given data.

Links mentioned:

TheBloke/openchat-3.5-1210-GGUF at main

▷ #finetuning (3 messages):

- Fine-tuning Mistral on Non-English Languages:

@deatheater006enquires about the process of fine-tuning Mistral on a non-English language. Specifically, they express interest in the Tamil language.@pieswapengages with the query, seeking specific details on the intended language.

▷ #showcase (10 messages🔥):

- Mistral-7b Performance:

@.gue22shared their experience with the Mistral-7b model running on Nvidia A40 (Large) GPU hardware, noting subpar performance, lack of generalization, and the benefit of Google search speed over the chatbot’s responses. - Local Model Execution:

@fayironrecommended running models locally using text-generation-webui for improved performance and control, especially on a Debian desktop. - Potential of MoE Models: In response to

@.gue22,@daainelaborated on the transition to Mixture of Experts (MoE) models for robust performance at lower computational costs, citing the HuggingFace blog post on the subject. - Future Projections:

@daainalso predicted that by the end of January, the remaining performance issues with MoE models will be resolved, enabling a mid-range model to run locally at small model speeds and opening up new use cases. - Model Education Resource: For further understanding of MoE,

@pradeep1148and@.gue22shared a YouTube link to an AI research survey video. The video covers the impact of Mixture of Experts (MoE), multimodal learning, and Artificial General Intelligence (AGI) on generative AI.

Links mentioned:

- Mixture of Experts Explained

- mistralai/mistral-7b-instruct-v0.1 – Run with an API on Replicate

- From Google Gemini to OpenAI Q*: A Survey of Reshaping the Generative AI Research Landscape: This survey examines the impact of Mixture of Expe…

▷ #random (4 messages):

- Discussion on GPT Model that Supports Large Input Tokens: User

@gilford3641was seeking suggestions on a local GPT model that allows a high number of input tokens. They aim to enter a large text (up to 10k tokens), which the model will paragraph conditionally. Their trials with several models on Gpt4All resulted unsuccessful, citing the apps’ lack of support for long inputs. - Suggestion from bam4d: In response,

@bam4drecommended Mistral-7B-Instruct-v0.2, a model that is tuned for a 32k context window. - gilford3641’s Previous Experience with Mistral Medium Model: Despite acknowledging the suggestion,

@gilford3641stated that they had previously tested this model, which was unable to fully process their input. They provided more detail about their experiment, mentioning that their attempt involved an input of over 7k Simplified Chinese characters (with 1 character equal to 1 token). The model processed about 60% of the text and did not yield more output. They questioned whether emotional medium’s token count relies on ASCII or a 2-byte encoding, suggesting this as a potential reason for its failure to process 7k * 2 tokens.

Links mentioned:

mistralai/Mistral-7B-Instruct-v0.2 at main

▷ #la-plateforme (17 messages🔥):

-

Mistral AI Client vs OpenAI Client:

@lerelaclarified that the Mistral AI client primarily focuses on completions, whereas the OpenAI client incorporates numerous OpenAI-exclusive features. They emphasized that while Mistral’s client is more streamlined, the openai Python package is still a viable choice if an application is already utilizing it. -

Performance of mistral-tiny API:

@sublimatorniqposed a query around the performance of the mistral-tiny API, hypothesizing it might be sharper than the mistral-7b weights they downloaded to operate locally, potentially due to having the quant v. The speculation was concurred by.superintendent, attributing the sharpness to the API running at fp16 and the local operation running a quant. -

Two-Factor Authentication on Mistral.ai:

@ved_ikkeinquired about the anticipated introduction of two-factor authentication (2FA) when logging into Mistral.ai. There was no answer at this time. -

Rate Limits on Mistral: Michaelwechner discussed their experience with the rate limits, noting that a single user received an average of 34 responses per minute with an average response time of 1.76 seconds. The user ran into an issue when they increased to two concurrent users and began receiving a “request rate limit exceeded” message after a few queries. They linked to the Mistral pricing and rate limits document for further reference.

-

Limited Language Model Performance with Huggingface Libraries:

@casper_aivoiced an observation that most large language models (LLMs), including Mistral medium, seem quite weak while working with Huggingface libraries—they tend to hallucinate arguments to simple functions. They also expressed hope for future optimization. In response,@daainsuggested a project on GitHub that uses a vector database embedding the ~1200 most popular Python libraries, which can be used in conjunction with an API or a local LLM for generating answers or code. -

Mistral-medium Model Issues:

@pw3456reported experiencing issues with the mistral-medium model no longer following chat protocols and replying on behalf of both parties.@sublimatorniqreported not seeing this issue currently.

Links mentioned:

- Pricing and rate limits | Mistral AI Large Language Models: Pay-as-you-go

- GitHub - fleet-ai/context: A CLI tool & API over the top 1221 Python libraries.: A CLI tool & API over the top 1221 Python libr…

HuggingFace Discord Discord Summary

- Conversation around safe script sharing practices, with a recommendation to leverage platforms like GitHub or Hugging Face Hub instead of .zip files.

- “avoid sharing .zip files” - cakiki

- Various resources for AI job searches were discussed, with specific mention of https://www.aimljobs.fyi/ and solicitation for additional platforms for AI related positions.

- Queries and interest around large language models (LLMs) and gradient-free methods, specifically evolutionary algorithms, for model training.

- A user displayed interest upon MoE model SOLARC-MOE-10.7Bx4 and its potential performance, sharing the model’s Hugging Face link.

- A call for advice and resources for fine-tuning the Blenderbot model and understanding the dataset format.

- “fine-tuning the Blenderbot model” - tchi_tchi_

- Learning exploration of model soups and LightGBM as shared by a user on the guild.

- “model soups and LightGBM” - onceabeginner

- A recommendation to explore Trending Papers, a resource that ranks the top trending papers in computer science.

- Announcement and launch of MindMirror, an AI-based app providing audio transcriptions and sentiment analysis, and the Bunkoer Library was introduced as a new open-source Python library aimed at enhancing data security for LLM tasks with Github link.

- Sharing of the Canarim-Bert-Nheengatu project, a BERT model pre-trained for the Nheengatu language with the link here.

- “Canarim-Bert-Nheengatu Project” - dominguesm

- Discussion on personalization techniques in Diffusers, and a document was shared showcasing techniques to control the generation of diffusion models.

- A discussion on the InternVL model, ViT-6B and comparison to Google’s ViT-22B, alongside an interest in the Sloot Digital Coding System and shared Wikipedia link.

- Addressing concerns on validation of legal data and limitations set by using a typical train-test split methodology.

- Insight on the performance of Korean SOLAR-LLM-10.5B and its comparison to Mixtral7*8B.

HuggingFace Discord Channel Summaries

▷ #general (16 messages🔥):

- Sharing scripts:

@cakikiadvised to avoid sharing .zip files in the server, recommending GitHub or Hugging Face Hub instead. - AI job search:

@Priyansh Rastogiasked for resources on finding AI related jobs, mentioning they currently use https://www.aimljobs.fyi/ but are seeking other platforms. - LLMs and gradient-free methods:

@_hazlerinquiring about any known research related to training LLMs with gradient-free methods such as evolutionary algorithms. - MoE model SOLARC-MOE-10.7Bx4:

@jihadrew attention to the SOLARC-MOE-10.7Bx4 model, expressing interest in its potential performance. They shared the model’s Hugging Face link. - Fine-tuning Blenderbot:

@tchi_tchi_asked for assistance with fine-tuning the Blenderbot model and needed help understanding the dataset format.

Links mentioned:

▷ #today-im-learning (1 messages):

- Model Soups and LightGBM: User

@onceabeginnershared that they are currently learning about model soups (averaging weights of models) and LightGBM (gradient boosting decision tree).

▷ #cool-finds (2 messages):

- Trending Papers in Computer Science:

@cyruscaoshared a link to Trending Papers, a resource that ranks the top trending papers in computer science. The site added 616 new papers in the last three days.@horosinfollowed up by asking about@cyruscao’s specific area of interest (architecture).

Links mentioned:

▷ #i-made-this (6 messages):

- MindMirror App Announcement:

@ddchikenannounced the demo of a new app, MindMirror, which is an AI audio transcription tool designed to analyze thoughts and emotions throughout conversations. Currently providing basic sentiment analysis, the app’s future plans are to include summarization, action items, and other insights. Designed with privacy in mind, it does not perform data synchronization or transmit users’ audio off-device. The app is free, works on mobile and desktop via web browser, and does not require an account.@ddchikenencourages everyone to use it and provide feedback. MindMirror App link. - Canarim-Bert-Nheengatu Project Sharing:

@dominguesmshared their project, a BERT model pre-trained for the Nheengatu language—an indigenous language spoken in Brazil. The project was particularly time-consuming due to the extensive data collection required, sourcing primarily from books dating to the 1800s and 1900s.@dominguesmsays the model could be useful for future NLP tasks aimed at developing resources for the Nheengatu language. Project link. - User

.naptasticasked whether the dataset for Canarim-Bert-Nheengatu is available.@dominguesmreplied that it’s not available yet but will be soon. - Introduction of Bunkoer Library:

@jossai88introduced a new open-source Python library, Bunkoer, aimed at enhancing data security in LLM tasks. Capabilities include data anonymization—specifically for CSV and PDF files, Streamlit integration for a user-friendly interface, and contextual anonymization for local data security. The library is actively developed with plans for further expansion, encouraging contributions. For detailed information, they shared the link to the GitHub repository.

Links mentioned:

- dominguesm/canarim-bert-nheengatu · Hugging Face

- Star Trek Star Trek Tos GIF - Star Trek Star Trek Tos Scotty - Discover & Share GIFs: Click to view the GIF

- GitHub - Bunkoer/bunkoer: This the bunkoer library, for secure your data on all your llm task: This the bunkoer library, for secure your data on …

- MindMirror

▷ #diffusion-discussions (1 messages):

- Personalization Techniques in Diffusers:

@sayakpauldiscusses the techniques to control outputs generated by diffusion models, which is an active research topic in the community. He mentions that subtle changes in inputs can drastically change outputs in diffusion models. He also shares a HuggingFace document presenting some of the techniques diffusers support to control generation of diffusion models. The goal is to map changes in input accurately to changes in output, influence qualities of generated images beyond semantic preservation, and generate outputs with good quality that adhere to a particular style or be realistic.

Links mentioned:

▷ #computer-vision (6 messages):

- InternVL Discussion:

@chklrdshared a link to the model card of InternVL-Chat-ViT-6B-Vicuna-13B, which was developed by OpenGVLab. InternVL scales up the Vision Transformer (ViT) to 6 billion parameters and aligns it with Language Model. It has achieved 32 state-of-the-art performances on tasks such as visual perception, cross-modal retrieval, and multimodal dialogue. [(Project Link)]. @nielsr_pointed out that InternVL presents an open-source alternative to Google’s ViT-22B.- Sloot Digital Coding System:

@tomgale_brought up the topic of the Sloot Digital Coding System, a data sharing technique that allegedly could store a complete digital movie file in 8 kilobytes of data. He noted that he has all the sources and proofs based on scientific method and observation and is seeking help for the algebra side of the project. He shared a link to a Wikipedia article about the system.

Links mentioned:

▷ #NLP (2 messages):

- Validation of Legal Data:

@shilz0145expressed concerns about how to perform validation on a chunk of legal data and the limitations of using a train-test split in this scenario. - Performance of Korean SOLAR-LLM-10.5B:

@harsh_xx_tec_87517pointed out the impressive performance of Korean SOLAR-LLM-10.5B on HuggingFace leaderboard, noting that it almost matches the performance of Mixtral7*8B and inquired about the difference in these models.

▷ #diffusion-discussions (1 messages):

- Controlling Diffusion Models Output: User

@sayakpaulshared a link to a document on the HuggingFace site discussing how to control outputs generated by diffusion models, an active research topic. The document explores ways to preserve semantics in the inputs for consistent outputs, and techniques supported bydiffusersto regulate the generation of diffusion models. Find the document here.

Links mentioned:

LangChain AI Discord Summary

- Discussion regarding tools and processes in LangChain, with users sharing inquiries and solutions. Key topics include per user retrieval with Chroma, creating more advanced RAG in Node, adjusting

RecursiveCharacterTextSplitter, and using the URL document loader from LangChain for FAQ generation. Various questions about API options, Firebase support in Python, and MongoDB Atlas Vector Search were also raised. User@3h0480specifically highlighted an issue regarding the transfer of information between generations in LangChain (source). Other users sought clarification on terminology within LangChain. - Exploration of data security with Large Language Models, with

@jossai88initiating a discussion about safely handling sensitive data with models like ChatGPT 4, Llama 2, or Mistral AI, highlighting the parallels with launching a Docker container. - Announcement of a new software release, Bunkoer v0.0.3, designed to anonymize PDFs and CSV files, aiming to enhance data protection in AI applications.

@jossai88invited contributions to the Bunkoer repository on Git, though no specific link was provided. - Sharing of a tutorial link in the #tutorials channel with no context (source).

- Brief mention in the #langserve channel by user

@a404.ethsuggesting the holiday season as a reason for some unspecified situation.

Links mentioned:

- Per-User Retrieval | 🦜️🔗 Langchain

- neuralmagic/bge-large-en-v1.5-quant · Hugging Face

- DOC: langchain LCEL - transfer of information between generations · Issue #15247 · langchain-ai/langchain

LangChain AI Channel Summaries

▷ #general (12 messages🔥):

- Query regarding per user retrieval using Chroma:

@pauln07asked how they can implement per user retrieval using Chroma like they did with pinecone based on this tutorial. - Creating more advanced RAG in Node:

@andremiksought advice on whether to use a fastapi server with LangChain in Python or create it in Node. The user mentioned wanting to use features like query expansion, hybrid search against supabase or pinecone, and cohere reranking. - Tool names must be alphanumeric:

@a404.ethmentioned that tool names in LangChain need to be alphanumeric. - Adjusting

RecursiveCharacterTextSplitter:@nas0875asked how they can adjust theRecursiveCharacterTextSplitterso that full stops appear at the end of the chunks rather than at the start. - Using URL document loader from LangChain:

@kvn2000suggested using the URL document loader from LangChain to generate FAQs. This process involves passing the loaded URL content to LLM with a prompt and then using output schemas and parsers for output formatting. - Query regarding MongoDB Atlas Vector Search:

@vaironmaninquired if anyone has experience with MongoDB Atlas Vector Search. - Choosing between different HuggingFaceEmbeddings or APIs:

@mr.dronieasked for advice on choosing betweenTaylorAI/bge-micro-v2,neuralmagic/bge-large-en-v1.5-quant,together.ai, orperplexityAPI for better models and faster inference, sharing the link of the HuggingFace neuralmagic model. - Support for Firebase in Python:

@atefyaminasked if Firebase is supported for memory in Python, noting that there’s a JavaScript implementation. - Issue with langchain:

@3h0480asked for help regarding an issue they encountered involving the transfer of information between generations in LangChain, linking a related GitHub issue for reference. - Definition of agent and chain:

@shivam51asked for clarification regarding the difference between an agent and a chain in LangChain.

Links mentioned:

- neuralmagic/bge-large-en-v1.5-quant · Hugging Face

- Per-User Retrieval | 🦜️🔗 Langchain: When building a retrieval app, you often have to b…

- DOC: langchain LCEL - transfer of information between generations · Issue #15247 · langchain-ai/langchain: Issue with current documentation: I do not underst…

▷ #langserve (1 messages):

a404.eth: Maybe b/c it’s the week between xmas and NYE?

▷ #share-your-work (1 messages):

- Data Security with Large Language Models: User

@jossai88raised a discussion about the importance of data security when processing sensitive data with advanced Large Language Models like ChatGPT 4, Llama 2, or Mistral AI. They emphasized the necessity for this issue to be addressed with the same caution as launching a Docker container. - Bunkoer v0.0.3:

@jossai88also presented their latest release, Bunkoer v0.0.3, which is designed to anonymize PDFs and CSV files. This update aims to provide advanced data protection features for secure and reliable AI applications. - Call for Contribution to Bunkoer Repo on Git: The user invited community members to contribute to the Bunkoer repository on Git, especially if they are using tools like LangChain, LlamaIndex, Pinecone, FAISS, Auto-GPT, llamacpp, or OpenAI. No specific link was provided to the Bunkoer Git repository.

▷ #tutorials (1 messages):

datasciencebasics: https://youtu.be/Z50BFFrmMbc?si=rsn4AbIcbzmU6GgJ

DiscoResearch Discord Summary

Only 1 channel had activity, so no need to summarize…

- German Embedding/Retrieval Models Discussion: User

@thewindmominitiated a discussion on German embedding/retrieval model progress. Several other users expressed interest in the topic, including@_jp1_and@rasdani. Some specific models were mentioned such asColbertv2,sentence-transformers/paraphrase-multilingual-mpnet-base-v2, anddeutsche-telekom/gbert-large-paraphrasemodels. Link to German BERT large paraphrase cosine and German BERT large paraphrase euclidean were shared by@rasdani. - Vision Models Inquiry: User

@lightvector_asked about the current progress of vision models.@rasdaniresponded, suggesting the user check out the ThursdAI podcast which recently covered multimodal models. Link to podcast. - DPO Dataset/Tokenization Issue:

_jp1_expressed difficulty with the expected format for the dataset for DPO according to TRL’s documentation and HuggingFace’s Alignment Handbook. A comparison was also made regarding the format used in the Ultrafeedback Binarized dataset. A problem with tokenization with ChatML and datasets with a system prompt was identified and subsequently fixed by user. They suggested to create a PR, as they saw a potential bug in the existing DPO pipeline.

Links mentioned:

- 📅 ThursdAI - Dec 28 - a BUNCH of new multimodal OSS, OpenAI getting sued by NYT, and our next year predictions — ThursdAI - The top AI news from the past week — Overcast

- deutsche-telekom/gbert-large-paraphrase-cosine · Hugging Face

- deutsche-telekom/gbert-large-paraphrase-euclidean · Hugging Face

- sentence-transformers/distiluse-base-multilingual-cased-v2 · Hugging Face

- DPO Trainer)

- HuggingFaceH4/ultrafeedback_binarized · Datasets at Hugging Face

- alignment-handbook/src/alignment/data.py at 61a11a5c7d66179ed0a930b0dd12e532fce701dd · huggingface/alignment-handbook: Robust recipes for to align language models with h…

Latent Space Discord Summary

- Discussion on Optimizing Code Metrics with LLM: User

@slonohighlighted the impact of LLM on his coding habits, allowing for more efficient refactoring and quality tool development, with an example of a tool for managing mass ticket deletion on Zendesk. - Exchange on LLM Codemods and TypeScript’s Role in Refactoring:

@swizecis enthusiastic about “LLM codemods” for large-scale refactoring and added value of TypeScript in catching basic mistakes. - Conversation on the Future of AI Field:

@swyxioshared a Tweet with a list of open questions for the AI industry in 2024, including potential breakthroughs, architecture, data privacy, and unchecked AI behaviour. - Podcasts and the AI Community:

@swyxioacknowledged an appreciative Tweet featuring a quote endorsing the approach of improving software without adding complexity, shared by@JackMcCloyin@latentspacepod. - Announcement of the Last Podcast of 2023: In

#ai-event-announcements,@swyxioshared a preview link to the podcast, set to be the last one for the year 2023.

Latent Space Channel Summaries

▷ #ai-general-chat (11 messages🔥):

- Optimizing Code Metrics with LLM: User

@slonohighlighted the impact of LLM (the Latent Language Model) on his coding habits, noting that it allowed for a paradigm shift in how programming problems can be approached. This includes more efficient refactoring, and the creation of better quality tools that offer extensive assistance in his refactoring processes. An example he gave was the tool he developed in 3 hours to manage mass ticket deletion on Zendesk. - LLM Codemods and TypeScript’s Role in Refactoring:

@swizecexpressed enthusiasm about the concept of “LLM codemods” for large-scale refactoring and added that the ability of TypeScript to catch basic mistakes further facilitates the refactoring process. - Questions for the AI Field in 2024:

@swyxioshared a Tweet with a list of open questions for the AI industry in 2024 by@jxmnop. The questions cover potential breakthroughs, architecture, optimal parameters, data privacy, unchecked AI behaviour, and future learning models. - Podcast Shoutout:

@swyxioacknowledged an appreciative Tweet from@JackMcCloythat featured a quote from George Hotz on the@latentspacepod, endorsing the approach of improving software without adding complexity.

Links mentioned:

- Tweet from jack morris (@jxmnop): people keep saying AI is moving so fast. some days…

- go-go-labs/cmd/apps/zendesk at main · go-go-golems/go-go-labs: GO GO EXPERIMENTAL LAB. Contribute to go-go-golems…

- Tweet from Jack McCloy (@JackMcCloy): “You can always make your software do more. Th…

▷ #ai-event-announcements (1 messages):

swyxio: preview of last pod of 2023 https://www.latent.space/p/f05ffdf0-2563-4b9e-b9a7-96a3660d4780

Skunkworks AI Discord Summary

- A question was posed about comparisons being made between children’s reasoning abilities and Language Model’s (LLMs) reasoning abilities instigated by

@leuyannin the General channel. - In regards to ChatGPT, a user named

@hdarteminitiated a discussion about the use of Nougat for inputting data for paper reviews in the Papers channel. - A possibility of collaborations was mentioned and flagged by

@hdartemin the Off-Topic channel, citing potential overlapping work others might be doing. - Shared resource in the Off-Topic channel from

@pradeep1148with a link to a YouTube video discussing a new quantization technique called Half-Quadratic Quantization (HQQ). - Lastly, user

lightvector_asked about any updates on vision in OSS in the Bakklava-1 channel.

Skunkworks AI Channel Summaries

▷ #general (1 messages):

- Comparing children’s and LLM’s reasoning abilities: User

@leuyanninitiated a discussion asking if anyone has read any insights or research about comparing children’s reasoning abilities and Language Model’s (LLMs) reasoning abilities.

▷ #papers (1 messages):

- Use of Nougat for ChatGPT:

@hdartemdiscussed using a tool called Nougat to input information onto ChatGPT for potential paper reviews and asked for clarification on the types of papers that were of interest.

▷ #off-topic (2 messages):

- Potential Collaborations:

@hdartemmentioned that some people might already be working on an unspecified project, suggesting the need to identify these individuals. - Resource Sharing:

@pradeep1148shared a YouTube video titled: “Half-Quadratic Quantization of LLM’s (colab)”, discussing a new quantization technique called Half-Quadratic Quantization (HQQ).

Links mentioned:

Half-Quadratic Quantization of LLM’s (colab): In this article, we propose a new quantization tec…

▷ #bakklava-1 (1 messages):

lightvector_: any updates on vision in oss?

LLM Perf Enthusiasts AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Configuring Azure OpenAI Service: User

@0xmmoexpressed frustration with the process of configuring Azure’s OpenAI service, likening it to wanting to “pierce my eyelids with dull rusty nails”. They ended the vent shortly after, not adding more details.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.