(gist form: https://gist.github.com/veekaybee/f8e589fea42ba7131e4ca0a0f280c0a4?utm_source=ainews&utm_medium=email)

also, notable image AI activity in Huggingface-land


Nous Research AI Discord Summary

- Detailed examination of the Local Attention Flax module with a focus on computational complexity. Relevant discussions included a linear vs quadratic complexity debate, confusion over code implementation, and posited solutions such as chunking data. Two GitHub repositories were shared for reference (repo1, repo2).

- Conversations surrounding various topics such as using AI in board games, launching MVP startups, critique of Lex Fridman’s interview style, income generation via social media, and a click-worthy YouTube video critically examining RAG’s retrieval functionality in OpenAI’s Assistants API.

- Sharing of benchmark logs for different LLMs including Deita v1.0 with reference to a specific Large Language Model (LLM) training method, Deita SFT+DPO (log link).

- Sharing and discussion of various projects and tools like DRUGS, MathPile, Deita, CL-FoMo, and SplaTAM. Key points included benefits, data quality considerations, and evaluations of performance efficiency.

- Extensive dialogue about the implications of merging models with different architectures, the potential use of graded modal types, training combined models, best AI models for function calling, and data contamination issues in Mixtral (GitHub link to logs, HuggingFace link to model merge).

- Community insights requested for Amazon’s new LLMs, Amazon Titan Text Express and Amazon Titan Text Lite. A unique training strategy involving bad dataset utilization was proposed and discussed along with the search for a catalogue of ChatGPT missteps (Amazon Titan Text release link).

Nous Research AI Channel Summaries

▷ #ctx-length-research (7 messages):

- Local Attention Computation: @euclaise referred to a clever masking and einsum method, and linked to a GitHub repository for a Local Attention Flax module.

- Complexity Query: @joey00072 questioned the operation complexity of the method, suggesting it was quadratic (n^2) rather than linear (n×w). @euclaise confirmed that it should be linear (n×w).

- Confusion Over Code: @joey00072 expressed confusion over a particular code segment which appears to show a cubic (n^3) operation.

- Suggested Solution for Local Attention: @euclaise suggested a potential solution of chunking the data and applying attention over the chunks.
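The chunking idea can be sketched in plain Python to make the n×w versus n^2 question concrete. This is not the linked Flax module's code: `chunked_local_attention`, the "own chunk plus previous chunk" rule, and the toy inputs are all illustrative assumptions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def chunked_local_attention(q, k, v, window):
    """Each query attends only to keys in its own chunk and the previous chunk.

    Returns the attended outputs and the number of query-key scores computed,
    so the cost can be compared against full attention (n * n scores).
    """
    n = len(q)
    out = []
    score_count = 0
    for start in range(0, n, window):
        lo = max(0, start - window)   # look back one chunk (assumed rule)
        hi = min(n, start + window)   # end of the current chunk
        keys, values = k[lo:hi], v[lo:hi]
        for i in range(start, hi):
            scale = math.sqrt(len(q[i]))
            scores = [dot(q[i], kj) / scale for kj in keys]
            score_count += len(scores)
            weights = softmax(scores)
            out.append([
                sum(wt * vj[d] for wt, vj in zip(weights, values))
                for d in range(len(values[0]))
            ])
    return out, score_count


# Toy inputs (hypothetical): sequence length 8, window 2, feature size 2.
n, w = 8, 2
q = [[float(i), 1.0] for i in range(n)]
k = [[1.0, float(i)] for i in range(n)]
v = [[float(i), float(-i)] for i in range(n)]
out, count = chunked_local_attention(q, k, v, w)
print(count, "scores computed, versus", n * n, "for full attention")
```

Each query sees at most 2w keys, so the number of scores is bounded by 2·n·w, which grows linearly in n for a fixed window.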

Links mentioned:

- local-attention-flax/local_attention_flax/local_attention_flax.py at main · lucidrains/local-attention-flax: Local Attention - Flax module for Jax. Contribute …

- local-attention-flax/local_attention_flax/local_attention_flax.py at e68fbe1ee01416648d15f55a4b908e2b69c54570 · lucidrains/local-attention-flax: Local Attention - Flax module for Jax. Contribute …

▷ #off-topic (26 messages🔥):

- AI Project Via Valkyrie: @mnt_schred discussed their project that uses Valkyrie to create AI-generated scenarios for the board game Mansions of Madness. They pondered which Nous model would be the best storyteller, considering Trismegistus.

- Discussions on Launching MVP Startup: @fullstack6209 queried about the future implications of launching an MVP startup with an “e/acc” discount. @teknium humorously suggested it may lead to regret.

- Criticism of Lex Fridman’s Interview Style: @fullstack6209 critically evaluated Lex Fridman’s interviewing skills, describing them as poor and lacking in insight and context. This sentiment was endorsed by @teknium.

- Discussion on Social Media Influencers: @gabriel_syme expressed amazement at how individuals can earn significant income through social media posts.

- Exploration of AI YouTube Content: @fullstack6209 recommended a YouTube video which critically examines RAG’s retrieval functionality in OpenAI’s Assistants API. @gabriel_syme concurred, citing personal experience with RAG’s issues in real-world application deployment.

Links mentioned:

- Valkyrie GM for Fantasy Flight Board Games

- RAG’s Collapse: Uncovering Deep Flaws in LLM External Knowledge Retrieval: The retrieval functionality of the new Assistants …

▷ #benchmarks-log (2 messages):

- Deita v1.0 Mistral 7B Benchmark Logs: User @teknium shared a GitHub link to benchmark logs for different LLMs including Deita v1.0 with Mistral 7B.

- Model Training Methods: User @teknium mentioned Deita SFT+DPO without further elaboration, possibly referring to a specific Large Language Model (LLM) training method.

Links mentioned:

LLM-Benchmark-Logs/benchmark-logs/deita-v1.0-Mistral-7B.md at main · teknium1/LLM-Benchmark-Logs: Just a bunch of benchmark logs for different LLMs…

▷ #interesting-links (39 messages🔥):

- DRUGS Project: @gabriel_syme shared an exciting project called DRUGS which aids in handling finicky sampling parameters.

- MathPile Corpus: @giftedgummybee linked to a math-centric corpus called MathPile, emphasizing data quality over quantity, even in the pre-training phase.

- Deita Project Discussion: @.beowulfbr shared Deita (Data-Efficient Instruction Tuning for Alignment). However, @teknium revealed that Deita scored worse than the base model on every benchmark except MT-Bench. @ldj compared it to Capybara and mentioned that it seemed to be less cleaned and smaller.

- Continual Learning of Foundation Models: @giftedgummybee shared an update on CL-FoMo, a suite of open-source LLMs comprising four 410M and four 9.6B models. They were trained on Pile, SlimPajama (SP), Mix Pile+SP, and Continual (Pile, SP).

- SplaTAM: @spirobel introduced SplaTAM, a tool for precise camera tracking and high-fidelity reconstruction in challenging real-world scenarios, and pointed out that a more user-friendly version is under development.

Links mentioned:

- LLM-Benchmark-Logs/benchmark-logs/deita-v1.0-Mistral-7B.md at main · teknium1/LLM-Benchmark-Logs: Just a bunch of benchmark logs for different LLMs…

- GitHub - hkust-nlp/deita: Deita: Data-Efficient Instruction Tuning for Alignment: Deita: Data-Efficient Instruction Tuning for Align…

- SplaTAM: Splat, Track & Map 3D Gaussians for Dense RGB-D SLAM

- Generative AI for Math: Part I — MathPile: A Billion-Token-Scale Pretraining Corpus for Math: High-quality, large-scale corpora are the cornerst…

- CERC-AAI Lab - Continued Pretraining Blog: Continual Learning of Foundation Models: CL-FoMo S…

- Interviewing Tri Dao and Michael Poli of Together AI on the future of LLM architectures: The introduction to this post can be found here: h…

- GitHub - EGjoni/DRUGS: Stop messing around with finicky sampling parameters and just use DRµGS!: Stop messing around with finicky sampling paramete…

▷ #general (231 messages🔥🔥):

- Model Merging Discussion: Users @.beowulfbr, @ldj, and @giftedgummybee had a detailed conversation about merging models with different architectures like Llama2 and Mistral. Discussions touched upon how merging models can yield surprisingly strong results, with @ldj sharing a link to a successful merge of numerous models with distinct prompt formats on HuggingFace. They also discussed the implications of the merge size, and how some processes tend to create larger models.

- Potential of Graded Modal Types: User @.beowulfbr proposed the idea of using graded modal types to track where objects are located on CPU and GPU, theorizing it could potentially improve performance substantially.

- Discussions on Chatbot Training: @gabriel_syme prompted a discussion about training merged models, with responses indicating this has already been done, but isn’t commonly done. @giftedgummybee shared their current focus, which involves fine-tuning a Mixtral -> Mistral model with wiki + slimorca.

- AI Model Suggestions: User @dogehus inquired about strong AI models for function calling. Several users, including @mihai4256 and @ldj, provided suggestions, including NexusRaven V2 and Nous-Hermes-2.

- Mixtral Model Metamath Contamination: @nonameusr pointed out that the Metamath dataset used in Mixtral is contaminated.

Links mentioned:

- README.md · TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T at main

- NobodyExistsOnTheInternet/mergedallmixtralexpert · Hugging Face

- NobodyExistsOnTheInternet/unmixed-mixtral · Hugging Face

- uukuguy/speechless-llama2-hermes-orca-platypus-wizardlm-13b · Hugging Face

- 🤗 Transformers

- Tweet from Rohan Paul (@rohanpaul_ai): Run Mixtral-8x7B models in Free colab or smallish …

- NobodyExistsOnTheInternet/wikidedupedfiltered · Datasets at Hugging Face

- GitHub - uukuguy/multi_loras: Load multiple LoRA modules simultaneously and automatically switch the appropriate combination of LoRA modules to generate the best answer based on user queries.: Load multiple LoRA modules simultaneously and auto…

- Tweet from Nexusflow (@NexusflowX): 🚀Calling all developers of copilots and AI agents…

- GitHub - asahi417/lm-question-generation: Multilingual/multidomain question generation datasets, models, and python library for question generation.: Multilingual/multidomain question generation datas…

- llama.cpp/examples/finetune/finetune.cpp at master · ggerganov/llama.cpp: Port of Facebook’s LLaMA model in C/C++. Contr…

- GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.: A Gradio web UI for Large Language Models. Support…

- Mixtral Experts are initialized from Mistral 7b - Low Rank conversion possible? · Issue #4611 · ggerganov/llama.cpp: We have evidence that Mixtral’s Experts were i…

- TinyLlama Pretraining Report: See https://whimsical-aphid-86d.notion.site/Relea…

▷ #ask-about-llms (22 messages🔥):

- Discussion on Amazon Titan Text Express and Amazon Titan Text Lite: User @spaceman777 sought community insights about Amazon’s new large language models (LLMs), Amazon Titan Text Express and Amazon Titan Text Lite. Despite their release on Nov 29, 2023, the user found no publicly available benchmarks, leading to speculation about Amazon’s low-key approach to AI releases.

- DL Model Training Strategy: @max_paperclips introduced an idea of creating a deliberately bad dataset and finetuning a model on it to subtract the delta from the base model, then applying a well-curated dataset for further finetuning. This concept sparked a discussion with @teknium and @giftedgummybee, comparing this process to reversing a LoRA model.

- Seeking Repository of ChatGPT Failures and Bloopers: User @max_paperclips was curious about the existence of any list showcasing typical errors made by ChatGPT. @giftedgummybee responded that no such definitive list existed but suggested the possibility of using the LLAMA tool.
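The "subtract the delta" step from the training strategy above can be sketched with toy weights. This covers only the subtraction, not either fine-tuning run. The function name `subtract_bad_delta`, the `scale` knob, and the dict-of-lists stand-in for real tensors are illustrative assumptions, not something the discussion specified.

```python
def subtract_bad_delta(base, bad, scale=1.0):
    """Move the base weights away from a deliberately bad fine-tune.

    For every weight: steered = base - scale * (bad - base).
    `base` and `bad` map parameter names to flat lists of floats
    (a toy stand-in for real model state dicts).
    """
    return {
        name: [b - scale * (x - b) for b, x in zip(base[name], bad[name])]
        for name in base
    }


# Hypothetical toy weights: the bad fine-tune moved two of three values.
base = {"layer.weight": [0.5, -1.0, 2.0]}
bad = {"layer.weight": [0.75, -1.5, 2.0]}
steered = subtract_bad_delta(base, bad)
print(steered)
```

In the proposal, the result would then serve as the starting point for further fine-tuning on the well-curated dataset.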

Links mentioned:

Amazon Titan Text models—Express and Lite—now generally available in Amazon Bedrock

LAION Discord Summary

Only 1 channel had activity, so no need to summarize…

- Overfitting in Models Talk: Various users discussed their concerns about the potential for models to overfit on certain data sets, leading to potential copyright infringement issues. Specifically, @thejonasbrothers mentioned that MJ was likely to have trained their model on entire 4k movies. @astropulse emphasized the importance of considering the potential for extracting original artist details from the output of a fine-tuned model. @pseudoterminalx discussed an approach of limiting the exposure of a dataset to the model to a single epoch to temper the issue of overfitting.
  - User @SegmentationFault added that issues may arise if models reproduce copyrighted text or images nearly verbatim, as discussed in relation to a New York Times lawsuit vs OpenAI.

- Model Size and Performance: @.undeleted criticized the trend towards developing inefficient, oversized models that not only create legal trouble but waste resources. @thejonasbrothers maintained that smaller models eliminate overfitting and train faster.
  - The users agreed that adding more parameters is not an ideal alternative to longer training.

- Copyright and Legal Issues:
  - There was a lengthy discussion on the legal ambiguity surrounding the use of copyrighted materials in AI model training.
  - @SegmentationFault mentioned that infringement is handled case-by-case, based on the degree of similarity between the AI-produced content and the original copyrighted materials. @clock.work_ added that any form of profiting from proprietary outputs could lead to legal troubles.
  - The application of these legal standards could affect both AI companies such as Midjourney and the development of open-source models.

- Proprietary vs Open Models:
  - The users discussed the implications of monetizing outputs from proprietary models and the potential issues confronting open-source AI development.
  - @SegmentationFault stressed a preference for open models as fair use and expressed concerns about the implications of legal actions against proprietary models extending to open models.

- MJ’s Video Model: @SegmentationFault highlighted that Midjourney was training a video model, suggesting that if the model begins producing identical video clips from movies, it could lead to serious copyright infringement issues.

Links mentioned:

- Things are about to get a lot worse for Generative AI: A full of spectrum of infringment

- TheBloke/dolphin-2_6-phi-2-GGUF · Hugging Face

- Reddit - Dive into anything

OpenAI Discord Summary

- Concerns and discussions centered around GPT-4 and ChatGPT’s performance, limitations, potential misuse, and response times, especially within the paid premium version. Issues such as artificial usage limitations, slow response time, the AI behavior, and problems with human verification were cited by various users across the guild.

- Technical issues encountered by users while interfacing with GPT-4 and ChatGPT were prevalent; these included issues with running dolphin mixtral locally, publishing GPT’s errors, text file extraction, and continuous human verification.

- Users explored the potential for using custom-built GPT models to perform specific tasks such as enhanced creativity or structured thinking, as indicated in the GPT-4-discussions.

- A series of inquiries about using langchain’s memory to enhance or tune prompts, and recurse prompts to match a desired output length were prevalent in API-discussions and Prompt-engineering.

- A discussion on potential changes in consumption models for unlimited GPT and ChatGPT use and potential effects, such as increased scams due to misuse, was held.

- Several conversations emphasized the need for responsible use, compliance with OpenAI’s guidelines, and potential consequences, with policies such as OpenAI’s usage policies and guild conduct being highlighted.

- The upcoming launch of the GPT store in early 2024 was revealed in the GPT-4-discussions.

Links mentioned:

- Usage policies

- status.openai.com

- GitHub - guardrails-ai/guardrails: Adding guardrails to large language models.

OpenAI Channel Summaries

▷ #ai-discussions (14 messages🔥):

- Running Dolphin-Mixtral Locally: @strongestmanintheworld posed a question about running dolphin mixtral locally, but no responses were provided.

- Use of ChatGPT’s App: Discussion about the use of ChatGPT’s app vs their website, with @jayswtf expressing a preference for the website over the app. @prajwal_345 noticed this as well.

- ChatGPT Assistant Response Time: @aviassaga raised the issue of ChatGPT’s overly long response times, sometimes waiting up to 40 seconds for a response.

- Discussion on Bing’s Tone: @arevaxach expressed frustration over Bing’s sassy and annoying demeanor. @jaicraft suggested that things might improve with GPT-4 turbo in Copilot, hinting it might act more like ChatGPT. @Rock, however, seemed to prefer Bing due to its personality and better coding skills.

▷ #openai-chatter (120 messages🔥🔥):

- ChatGPT and Usage Limitations: There were discussions about the limitations of ChatGPT, particularly the restriction on number of messages. @colt.python brought up the issue of usage cost per hour, and it was clarified by @smilebeda that the API has no such restriction, but the online app does. @infec. expressed dissatisfaction with the paid premium version still being subjected to these usage limitations.

- Concerns about Custom ChatGPTs: @infec. talked about using custom ChatGPTs as work aides, expressing disappointment when they encountered usage limits despite paying for the service.

- Potential for Unlimited Usage: @lemtoad speculated on how much users would be willing to pay for unlimited ChatGPT use. The conversation touched on potential risks, such as increased scams and misuse. @.cymer noted that while power users would relish unlimited usage, this could lead to misuse.

- Potential Issues with ChatGPT Answering Questions: Users offered their experiences with ChatGPT seemingly avoiding answering direct questions or ‘scamming’ users out of their daily message allowance. @.cymer and @kaio268 both shared frustrations with this aspect.

- Creating Chatbots with GPT-4: User @abhijeet0343 asked for advice regarding inconsistencies in the responses from a GPT-4-based chatbot they developed, which used langchain for embeddings and stored them in Azure AI search. Suggestions from @poofeh_ and @kaveen included making system prompts more assertive or giving specific examples, and employing guardrails to handle the issues of LLMs having difficulty counting things.

Links mentioned:

GitHub - guardrails-ai/guardrails: Adding guardrails to large language models.: Adding guardrails to large language models. Contri…

▷ #openai-questions (76 messages🔥🔥):

- GPT-4 Performance: User @꧁༒☬Jawad☬༒꧂ expressed frustration over the deteriorating performance of GPT-4, especially regarding its inability to browse the web and fetch the required data.

- Human Verification Loop: Users @rodesca and @realmcmonkey encountered a repetitive human verification loop that prevented them from logging in. User @dystopia78 suggested contacting support and checking the website status using status.openai.com.

- ChatGPT Limitations: User @m54321 described the inconvenience caused by limitations placed on the number of messages in a chat, which necessitates starting a new chat and retraining the model. User @laerun suggested using a custom GPT and creating focused data chapters to improve efficiency.

- Persistent Verification: User @ekot_0420 complained about being constantly verified by ChatGPT after asking each question.

- User Quota Exceeded: User @not_richard_nixon reported getting a “User quota exceeded” error when attempting to upload an image to GPT-4’s chat.

▷ #gpt-4-discussions (15 messages🔥):

- Generating Extreme Imagery with GPT-4/DALL-E: User @gabigorgithijs asked for ways to generate more ‘extreme’ content using DALL-E despite difficulties in generating simple items. User @satanhashtag clarified that public personalities cannot be used in such applications.

- Publishing GPTs Errors: User @jranil reported difficulty publishing their latest GPT models, either experiencing an error message (“Error saving”) or no response from the page.

- Text File Extraction Issue: @writingjen sought help with an issue extracting text files in GPT-4.

- Exploring Capabilities of Custom GPTs: @happyg initiated a discussion on the potential capabilities of custom-built GPT models that perform tasks not usually handled by the default GPT. Examples provided included models designed for structured thinking, brainstorming, or enhanced creativity.

- GPT Message Limit Concerns: @writingjen expressed frustration over hitting a message limit after creating a few messages, despite abstaining from using advanced features like DALL-E. @solbus clarified that it was a rolling cap, fully resetting only after 3 hours of non-use. Further activities within the three-hour window eat into the limit balance.

- Launch of GPT Store: In response to @kd6’s query on the launch of the GPT store, @solbus provided information that the intended launch was set for early 2024.
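One way to read the "rolling cap" described above is as a sliding-window limiter: each message counts against the limit for three hours, so the full allowance returns only after three hours without sending. The sketch below models that reading only. The class name `RollingCap`, the limit of 3 messages, and the exact expiry rule are hypothetical, and OpenAI's actual accounting is not described in the discussion.

```python
from collections import deque


class RollingCap:
    """Sliding-window message limiter (illustrative model, not OpenAI's)."""

    def __init__(self, max_messages, window_seconds):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.sent = deque()  # timestamps of accepted messages, oldest first

    def _expire(self, now):
        # Drop messages that have aged out of the rolling window.
        while self.sent and now - self.sent[0] >= self.window_seconds:
            self.sent.popleft()

    def remaining(self, now):
        self._expire(now)
        return self.max_messages - len(self.sent)

    def try_send(self, now):
        if self.remaining(now) <= 0:
            return False
        self.sent.append(now)
        return True


# Hypothetical cap: 3 messages per rolling 3-hour window, times in seconds.
cap = RollingCap(max_messages=3, window_seconds=3 * 3600)
for t in (0, 3600, 7200):
    cap.try_send(t)
print(cap.try_send(9000))    # window is full, so this attempt is rejected
print(cap.remaining(10800))  # only the first message has aged out
print(cap.remaining(18000))  # three hours after the last send: fully reset
```

Under this model, sending again inside the window keeps the balance low, which matches the remark that further activity "eats into" the limit.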

▷ #prompt-engineering (4 messages):

- Prompt Length Control: @barium4104 raised the question whether the only way to control the length of a prompt response is through prompt recursion.

- Enhancing and Tuning Prompts: @prochatkiller asked about the possibility of enhancing and tuning prompts, and whether the use of langchain memory could assist in this matter.

- Increasing ‘Extreme’ Output: @gabigorgithijs inquired about ways to make ChatGPT-4 and DALL-E generate more ‘extreme’ results, as simple generation was proving to be a challenge.

- Usage Policies Clarification: @eskcanta responded to @gabigorgithijs’s request with a reminder to check OpenAI’s usage policies, indicating that certain types of extreme content might be disallowed and discussing them could risk account access. They also mentioned a specific OpenAI Discord channel <#1107255707314704505> for further discussions, as long as everything was within the rules.


▷ #api-discussions (4 messages):

- Prompt Response Length: @barium4104 questioned if the only way to achieve a specific length in a prompt response is through prompt recursion.

- Enhancing and Tuning Prompts: @prochatkiller asked if there are ways to enhance and tune prompts and if using langchain memory would help with the task.

- Making GPT-4/DALL-E More Extreme: @gabigorgithijs expressed difficulty in generating even simple things with GPT-4/DALL-E and wanted to know how to make the AI generate more ‘extreme’ things.

- GPT-4/DALL-E Usage Policies: @eskcanta responded to @gabigorgithijs's query by emphasizing the importance of moral and legal use of OpenAI’s models. They pointed to OpenAI’s usage policies, warning about consequences for violations, while offering to help achieve goals within the bounds of the rules. They provided a link to OpenAI’s Usage Policies.


OpenAccess AI Collective (axolotl) Discord Summary

- Active discussion around Mixture of Experts (MoE) and Feed-Forward Networks (FFNs); @stefangliga clarified that MoE only replaced some FFNs.

- Ongoing exploration of various models to train using available rigs; @nruaif advised using the Official Capybara dataset with the YAYI 2 model and @faldore confirmed that Axolotl works with TinyLlama-1.1b.

- Conversations about Axolotl’s compatibility, sourcing datasets for continued pretraining, and implementing RLHF fine-tuning; suggested use of LASER for improving Large Language Models and standardizing RAG prompt formatting.

- Training challenges encountered and resolved, including YAYI 2 training issues fixed by downloading the model manually and using Zero2 to save 51GB when training Mixtral.

- Discussion of how tweaking the temperature setting during RLHF produced odd outputs.

- Updates on community projects like @faldore training Dolphin on TinyLlama-1.1b.

- Notable community guidance on handling DPO implementation in the main branch of Axolotl and how to fine-tune on preference-rated datasets, along with a call for data filtering due to bad data in certain datasets.

- Shared resources for multi-chat conversations, useful datasets, and logger tools that run on Axolotl, along with repositories and tools for ML preprocessing.

- Hardware-specific conversations on the need for certain rigs like 2 or 4x A100 80gb for running yayi 30b.

- Data-related practices emphasized include the avoidance of GPT4 generated data and the inclusion of non-English datasets; FastText was recommended for filtering out non-English data.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (87 messages🔥🔥):

- MoE vs FFN discussion: @caseus_ had a query about the interchangeability of Mixture of Experts (MoE) and Feed-Forward Network (FFN). @stefangliga clarified that only some FFNs were replaced, likely to save parameters.

- Training Models: @le_mess asked for ideas on what model to train on his available 4x A100’s. @nruaif suggested using the Official Capybara dataset and the YAYI 2 model, providing the link to the dataset and specifying that it needed to be reformatted for use with Axolotl. @le_mess stated they would train the model if the data was reformatted to a suitable format.

- YAYI 2 Training Issues: While training, @le_mess ran into an AttributeError: 'YayiTokenizer' object has no attribute 'sp_model' error. Despite attempting to fix it using a PR found on GitHub, the error persisted. Eventually, the model was downloaded and fixed manually, which seemed to work.

- Microtext Experiment: @faldore noted that he was training Dolphin on TinyLlama-1.1b. @caseus_ later mentioned plans to train on sheared Mistral in the next week.

- Training Progress: @le_mess made progress with yayi2 training and shared the link to the WandB runs.

Note: The conversations are ongoing and the discussion topics could be better summarized with more context from future messages.

Links mentioned:

- wenge-research/yayi2-30b · fix AttributeError: ‘YayiTokenizer’ object has no attribute ‘sp_model’

- LDJnr/Capybara · Datasets at Hugging Face

- wenge-research/yayi2-30b · Hugging Face

- axolotl/examples/yi-34B-chat at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to Open…

- axolotl/examples/yayi2-30b/qlora.yml at yayi2 · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to Open…

- mhenrichsen: Weights & Biases, developer tools for machine lear…

- nRuaif/Kimiko_v3-v0.1 · Datasets at Hugging Face

▷ #axolotl-dev (23 messages🔥):

- Compatibility of Axolotl With TinyLlama-1.1b: @faldore confirmed that Axolotl works with TinyLlama-1.1b with no modifications needed.

- Discussion On Checkpoint Size When Training Mixtral: @nruaif shared that Zero2 checkpoint will save 51GB when training Mixtral.

- Share of Research Paper About Language Model Hallucinations: @faldore shared a research paper on how to teach a language model to refuse when it is uncertain of the answer -> Research Paper.

- Introduction to LASER: @faldore introduced LASER (LAyer-SElective Rank reduction), which is a technique for improving the performance of Large Language Models (LLMs) by removing higher-order components of their weight matrices after training. This method reportedly requires no additional parameters or data and can significantly boost predictive performance -> Learn More | GitHub Repo.

- Training DPO Models in Axolotl: @sumo43 guided @faldore on how to train DPO models in Axolotl by sharing the link to the branch where they trained their models -> Axolotl Branch. He also shared an example config -> Example Config.

- Need for Availability of 2 or 4x A100 80gb: @le_mess expressed a need for 2 or 4x A100 80gb to run yayi 30b as fft. He stated that running it on 4x A100 40gb with zero3 was not feasible.
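The "removing higher-order components" step in the LASER item above can be written as a truncated singular value decomposition. The notation below (W, k, r) is our own shorthand rather than the paper's. It assumes "higher-order" means the components with the smallest singular values, and it leaves open which layers and ranks LASER actually selects.

```latex
% SVD of a trained weight matrix W with rank r, singular values sorted
% so that \sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_r > 0:
W = \sum_{i=1}^{r} \sigma_i \, u_i v_i^{\top}

% Rank-k replacement (k < r): keep the k largest components, drop the rest.
W_k = \sum_{i=1}^{k} \sigma_i \, u_i v_i^{\top}

% Size of what was removed, in Frobenius norm (Eckart--Young):
\lVert W - W_k \rVert_F = \sqrt{\sum_{i=k+1}^{r} \sigma_i^{2}}
```

Replacing W with W_k adds no parameters and needs no extra training data, which is consistent with the description above.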

Links mentioned:

- configs/axolotl.yml · openaccess-ai-collective/DPOpenHermes-7B-v2 at main

- R-Tuning: Teaching Large Language Models to Refuse Unknown Questions: Large language models (LLMs) have revolutionized n…

- The Truth Is In There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction

- GitHub - pratyushasharma/laser: The Truth Is In There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction: The Truth Is In There: Improving Reasoning in Lang…

- GitHub - OpenAccess-AI-Collective/axolotl at rl-trainer: Go ahead and axolotl questions. Contribute to Open…

▷ #general-help (13 messages🔥):

- Using the RL-trainer Branch in Axolotl: @tank02 is trying to figure out how to create prompt formats like chatml for Axolotl to use in a run using the RL-trainer branch. They are not sure about the format Intel/orca_dpo_pairs would use within Axolotl and how to ensure that any dataset they use is properly formatted for Axolotl. They shared a prompt format example at DPOpenHermes-7B Config.

- Importing Axolotl into Jupyter Notebook: @wgpubs is having trouble importing Axolotl into a Jupyter notebook after pip installing the library. They are seeking a way to generate random examples based on an Axolotl configuration to verify prompts and their tokenized representations.

- Conversion of DPO Dataset Prompts into ChatML: @caseus_ explains that the existing transforms in Axolotl convert the existing prompt from the DPO dataset into a chatml input. They convert the chosen and rejected tokens to only include the eos token, as that’s all that needs to be generated by the model.

- Training of 8-Bit LoRA with Mixtral: @caseus_ asked if anyone has been able to train a regular 8-bit LoRA with Mixtral. @nruaif confirmed having done so, but mentioned that without deepspeed it runs out of memory at a 16k context, and that the peak VRAM use for a 2k context was around 70gb.

- Question About Batch Size and Learning Rate: @semantic_zone is curious about the reasons for a smaller batch size with a bigger model and asks if there’s a rule of thumb for changing learning rate based on batch size. They wonder if they should adjust their learning rate when they double their gradient_accumulation_steps.
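A rough sketch of the DPO-to-ChatML transform described above may help anyone checking their dataset format. This is not Axolotl's code. The field names `system`, `question`, `chosen`, and `rejected` are assumed to match the Intel/orca_dpo_pairs layout, `<|im_end|>` is assumed to be the end-of-turn token, and `dpo_pair_to_chatml` is a hypothetical helper name.

```python
IM_START, IM_END = "<|im_start|>", "<|im_end|>"


def dpo_pair_to_chatml(sample):
    """Turn one preference record into a ChatML prompt plus two completions.

    The prompt carries all ChatML scaffolding up to the assistant turn;
    the chosen/rejected texts only get the end-of-turn token appended.
    """
    parts = []
    if sample.get("system"):
        parts.append(f"{IM_START}system\n{sample['system']}{IM_END}\n")
    parts.append(f"{IM_START}user\n{sample['question']}{IM_END}\n")
    parts.append(f"{IM_START}assistant\n")
    return {
        "prompt": "".join(parts),
        "chosen": f"{sample['chosen']}{IM_END}",
        "rejected": f"{sample['rejected']}{IM_END}",
    }


# Hypothetical record, only to show the resulting strings.
example = {
    "system": "You are a helpful assistant.",
    "question": "What is 2 + 2?",
    "chosen": "4",
    "rejected": "5",
}
converted = dpo_pair_to_chatml(example)
print(converted["prompt"])
print(converted["chosen"], "|", converted["rejected"])
```

Printing a few converted records like this is also a quick way to verify prompt formatting before a run.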

▷ #datasets (9 messages🔥):

- Data Quality Concerns: @faldore warned users to filter a certain dataset because it contains lots of “bad data”, such as empty questions, empty responses, and refusals.

- Preferred Dataset: @xzuyn suggested using a dataset from HuggingFace, which is binarized using preference ratings and cleaned. This dataset, found at argilla/ultrafeedback-binarized-preferences-cleaned, is recommended when fine-tuning on UltraFeedback.

- Tool Inquiry: @noobmaster29 asked if anyone had experience with Unstructured-IO/unstructured, an open-source library for building custom preprocessing pipelines for machine learning.
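The filtering advice from the data quality item above (drop empty questions, empty responses, and refusals) can be sketched as a small predicate. The field names `question` and `response` and the refusal phrases are assumptions chosen for illustration. The dataset in question was not named, so the real columns and refusal wording may differ.

```python
# Hypothetical refusal openers; a real pass would tune these to the dataset.
REFUSAL_MARKERS = ("i'm sorry", "i cannot", "i can't", "as an ai")


def is_clean(example):
    """Keep a row only if both fields are non-empty and it is not a refusal."""
    question = (example.get("question") or "").strip()
    response = (example.get("response") or "").strip()
    if not question or not response:
        return False
    lowered = response.lower()
    return not any(lowered.startswith(marker) for marker in REFUSAL_MARKERS)


rows = [
    {"question": "Name a prime number.", "response": "7"},
    {"question": "", "response": "An answer without a question."},
    {"question": "Summarize this text.", "response": "   "},
    {"question": "Write a poem.", "response": "I'm sorry, but I can't help."},
]
clean_rows = [row for row in rows if is_clean(row)]
print(len(clean_rows), "of", len(rows), "rows kept")
```

A prefix match is deliberately crude: it misses refusals phrased differently and should be reviewed against samples of what it removes.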

Links mentioned:

- argilla/ultrafeedback-binarized-preferences-cleaned · Datasets at Hugging Face

- GitHub - Unstructured-IO/unstructured: Open source libraries and APIs to build custom preprocessing pipelines for labeling, training, or production machine learning pipelines.: Open source libraries and APIs to build custom pre…

▷ #rlhf (24 messages🔥):

- RAG Fine-tuning Data Discussion: @_jp1_ expressed dissatisfaction with a dataset used in a paper. They mentioned their team’s work on generating fine-tuning data for RAG (in different formats). They suggested an open-source release of the dataset if there is general interest and called for standardization of RAG/agent call prompt formatting among open LLMs.

- Multi-Chat Convo Resources: @faldore provided various resources in response to requests for multi-chat conversation data. He shared a link to the Samantha dataset on HuggingFace and recommended Jon Durbin’s Airoboros framework. He suggested using autogen to generate conversations and provided a link to a logger tool.

- DPO Implementation: @jaredquek asked about the implementation of DPO in the main branch, and @caseus_ responded that it will be available soon and provided a link to the relevant pull request. He stated that DPO can be activated by setting rl: true in the configuration.

- Temperature Parameter Setting: @dangfutures shared an experience of tweaking the temperature setting for a model, resulting in odd model outputs. @faldore also shared multiple model links named “Samantha” on HuggingFace and discussed a bit about AI models believing in their own sentience.
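As background for the temperature item above, here is a minimal sketch of the standard way temperature rescales next-token logits before sampling. It illustrates the general mechanism only and says nothing about the specific model or settings in the discussion. The logits are made-up values.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate tokens.
logits = [4.0, 2.0, 0.0]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Higher temperatures flatten the distribution, so unlikely tokens are sampled more often, which is one common source of odd outputs.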

Links mentioned:

- configs/dpo.yml · openaccess-ai-collective/DPOpenHermes-7B at main

- src/index.ts · cognitivecomputations/samantha-data at main

- Meet Samantha: https://huggingface.co/ehartford/Samantha-1.11-70b…

- [WIP] RL/DPO by winglian · Pull Request #935 · OpenAccess-AI-Collective/axolotl

- autogen/notebook/agentchat_groupchat_RAG.ipynb at f39c3a7355fed3472dce61f30ac49c9375983157 · microsoft/autogen: Enable Next-Gen Large Language Model Applications…

- oailogger.js: GitHub Gist: instantly share code, notes, and snip…

▷ #shearedmistral (28 messages🔥):

- Continued Pretraining Datasets: @caseus_ shared links to datasets such as SlimPajama-627b, OpenWebMath, The Stack, and peS2o, and asked for recommendations for more datasets to use in further pretraining. The Hugging Face subsets are listed under Links mentioned.

- Input on Additional Pretraining Datasets: In response, @nruaif suggested using textbook data and provided links to smaller datasets such as tiny-textbooks, tiny-codes, and tiny-orca-textbooks.

- Avoiding GPT4 Generated Data: @dctanner and @caseus_ agreed to avoid using data generated by GPT4 models during the continued pretraining, to prevent issues with OpenAI’s terms.

- Mixtral Concerns & Support: @nruaif proposed also working on Mixtral; however, @caseus_ countered that the existing Mixtral training bugs need to be addressed first before adding more to the mix. They looked forward to seeing an 8x3B Mixtral.

- Inclusion of Non-English Datasets: @nruaif and @xzuyn proposed using non-English datasets, like yayi2_pretrain_data and CulturaX, with the suggestion of filtering for the English texts where possible. @nruaif suggested using FastText to filter out non-English data.
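To make the FastText filtering suggestion concrete, here is a minimal sketch (not code shared in the channel). It assumes a fastText language-identification model such as `lid.176.bin` has been downloaded separately. The helper names `make_fasttext_predictor` and `keep_english` and the 0.8 confidence threshold are hypothetical choices for illustration.

```python
from typing import Callable, Iterable, List, Tuple

# A predictor maps text -> (language code, confidence).
# This interface is an assumption of the sketch, not part of fastText.
LangPredictor = Callable[[str], Tuple[str, float]]


def make_fasttext_predictor(model_path: str) -> LangPredictor:
    """Wrap a fastText language-ID model (for example a downloaded lid.176.bin)."""
    import fasttext  # imported lazily so the filter below also works without it

    model = fasttext.load_model(model_path)

    def predict(text: str) -> Tuple[str, float]:
        # fastText predicts on a single line, so flatten newlines first.
        labels, probs = model.predict(text.replace("\n", " "))
        return labels[0].replace("__label__", ""), float(probs[0])

    return predict


def keep_english(
    texts: Iterable[str], predict: LangPredictor, min_conf: float = 0.8
) -> List[str]:
    """Keep only texts the predictor labels as English with enough confidence."""
    kept = []
    for text in texts:
        lang, conf = predict(text)
        if lang == "en" and conf >= min_conf:
            kept.append(text)
    return kept
```

With a real model this would be called as `keep_english(docs, make_fasttext_predictor("lid.176.bin"))`. The threshold trades recall for purity and would need tuning on a sample of the actual corpus.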

Links mentioned:

- allenai/peS2o · Datasets at Hugging Face

- fastText: Library for efficient text classification and repr…

- nampdn-ai/tiny-textbooks · Datasets at Hugging Face

- nampdn-ai/tiny-codes · Datasets at Hugging Face

- nampdn-ai/tiny-orca-textbooks · Datasets at Hugging Face

- uonlp/CulturaX · Datasets at Hugging Face

- wenge-research/yayi2_pretrain_data · Datasets at Hugging Face

- cerebras/SlimPajama-627B · Datasets at Hugging Face

- open-web-math/open-web-math · Datasets at Hugging Face

- bigcode/starcoderdata · Datasets at Hugging Face

Eleuther Discord Summary

- Warm welcome to new members @mahimairaja and @oganBA, who are keen on contributing to the community.

- In-depth technical discussion about suitable architectures for a robotics project, with a focus on Multi-Head Attention (MHA) on input vectors and seq2seq LSTMs with attention.

- Relevant suggestions and resources provided towards identifying datasets for pretraining Language Models such as The Pile, RedPajamas, (m)C4, S2ORC, and the Stack.

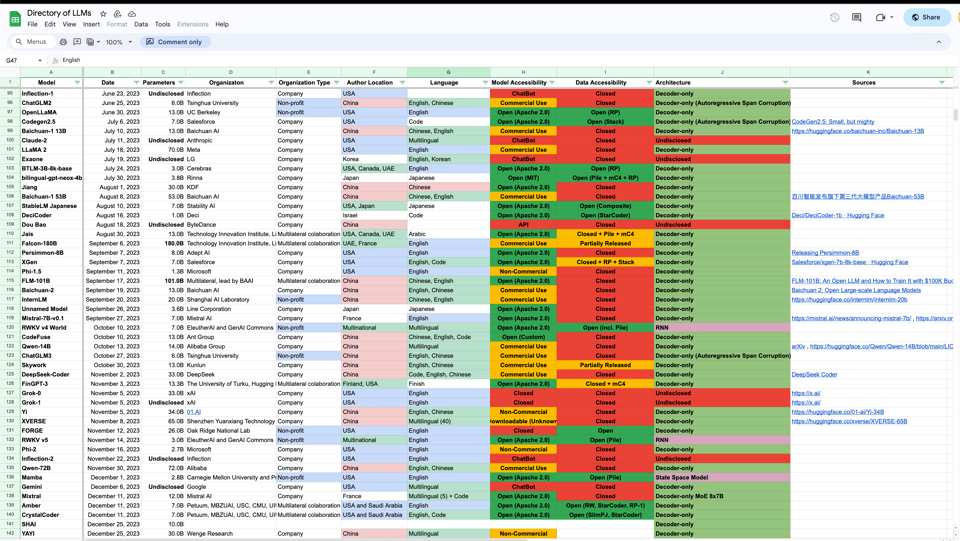

- Sharing of Detailed Listings of Language Models in a comprehensive spreadsheet and a follow-on conversation about creating a public database of such models.

- Deep dive into the Math-Shepherd research paper and its associated challenges, particularly focusing on reward assignment, model result verification, and concerns about misleading comparisons.

- Various practical elements discussed related to model resilience to noise injection, quantization bias and robustness of pretrained models, with a special mention of the concept of dither.

- Query about GPT-NeoX training speed compared to other repos like x-transformers, with clarification on its superior speed in large multi-node systems.

Eleuther Channel Summaries

▷ #general (35 messages🔥):

- An Introduction to the Community: @mahimairaja and @oganBA introduced themselves to the community, expressing their interests and looking forward to contributing to the domain.

- Seeking Suggestions on Architectures for Robotics Project: @marbleous asked for suggestions on architectures that allow Multi-Head Attention (MHA) on input vectors along with a hidden state to track previous observations for a robotics project. @catboy_slim_ suggested looking into the work on RWKV, and @thatspysaspy mentioned seq2seq LSTMs with attention.

- Discussion on Listing Datasets used for Pretraining LLMs: In response to @sk5544’s query about available lists of datasets used for pretraining language models, @stellaathena mentioned The Pile, RedPajamas, (m)C4, S2ORC, and the Stack as the major compilation datasets.

- Detailed Listings of Language Models: @stellaathena shared a link to a detailed spreadsheet listing various language models along with their attributes. A second sheet was shared when @sentialx asked about models that used GLU activation functions.

- Discussion on Creating a Public Database of Language Models: The discussion evolved into exploring ways to create a public database of language models. @stellaathena and @veekaybee discussed approaches, including creating a markdown file, a small React app, or using a platform like Airtable that the public can update and filter. A key requirement was for the platform to allow for reviewable community contributions.

Links mentioned:

- directory_of_llms.md: GitHub Gist: instantly share code, notes, and snip…

- Common LLM Settings: All Settings Model Name,Dataset,Tokenizer,Trainin…

- Directory of LLMs: Pretrained LLMs Model,Date,Parameters,Organizaton…

▷ #research (48 messages🔥):

- Math-Shepherd Discussion: @gabriel_syme shared a research paper on Math-Shepherd, a process-oriented math reward model that assigns a reward score to each step of math problem solutions. The model showed improved performance, especially for Mistral-7B. However, @the_sphinx pointed out that the results might be misleading, as such setups typically sample multiple generations and use a verifier to pick one, which boosts performance significantly.

- Necessary Verifier in Practice: @gabriel_syme and @the_sphinx agreed on the necessity of a verifier in practical applications. However, the latter suggested a more honest evaluation of the actual gains achieved from the verifier. A potential issue could be self-consistency in theorem-proving settings.

- Noise Injection and Model Resilience: @kharr.xyz hinted at the need for careful noise injection in both training and inference to avoid the model going off the rails with a bad set of activations. Pretrained models without dropout are less resilient to noise. The range of noise resilience can be determined by observing the performance of quantized model versions.

- Misleading Comparisons: There was general agreement (mainly from @the_alt_man) that comparisons in research papers can be misleading and can overshadow genuinely interesting research.

- Dither Concept: _inox and @uwu1468548483828484 discussed the concept of dither, which involves adding noise to deal with quantization bias, especially in heavy quantization situations.
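As a rough illustration of the dither idea (a toy sketch, not code from the discussion), the example below quantizes a single value with a very coarse step. Plain rounding always lands on the same level, so its error is a fixed bias. Adding uniform noise before rounding makes the quantized values average out close to the true value. The function names and the choice of uniform noise are assumptions made for this sketch.

```python
import random


def quantize(x: float, step: float) -> float:
    """Round x to the nearest multiple of step."""
    return round(x / step) * step


def quantize_with_dither(x: float, step: float, rng: random.Random) -> float:
    """Add uniform noise in [-step/2, step/2) before rounding."""
    noise = (rng.random() - 0.5) * step
    return quantize(x + noise, step)


rng = random.Random(0)  # fixed seed so the run is repeatable
x, step, n = 0.3, 1.0, 10_000

# Without dither every sample rounds to 0.0, so the mean is biased by -0.3.
plain_mean = sum(quantize(x, step) for _ in range(n)) / n

# With dither about 30% of samples round up to 1.0, so the mean tracks x.
dithered_mean = sum(quantize_with_dither(x, step, rng) for _ in range(n)) / n

print(f"plain mean: {plain_mean:.3f}, dithered mean: {dithered_mean:.3f}")
```

The price is higher per-sample variance: each dithered output is noisier, but the error no longer has a systematic direction.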

Links mentioned:

- Math-Shepherd: Verify and Reinforce LLMs Step-by-step without Human Annotations: In this paper, we present an innovative process-or…

- Tweet from Lewis Tunstall (@_lewtun): Very cool to see scalable oversight working for ma…

▷ #gpt-neox-dev (2 messages):

- GPT-NeoX Training Speed: User @ad8e asked if GPT-NeoX is expected to train faster than miscellaneous repos like x-transformers, assuming an identical neural network architecture. @stellaathena responded that GPT-NeoX would train faster on a large multi-node system, and should at least be no slower if the other system is highly efficient.

HuggingFace Discord Summary

- There was a significant discussion on diffusion models. User @joelkoch sparked a conversation about creating new models and whether smaller models can be used for testing. @sayakpaul highlighted the need for a model with standard depth for experimentation and described scaling experiments as intricate processes. @abhishekchoraria faced challenges with training Mistral 7B on a custom dataset, receiving an error due to the token indices sequence length.

- End-to-End FP8 Training Implementation and Machine Specifications were primary topics in the #today-im-learning channel, with @neuralink sharing their implementation progress and their work on an H100 machine.

- In the #general channel, the discussion revolved around ByteLevelBPETokenizer, fine-tuning LLMs, resources for beginners, DeepSpeed ZeRO3 and LoRA compatibility, Unsplash embeddings, access issues with the HuggingFace site, multi experts LLMs, the use of Intel Xeon with AMX, and WSL jobs interruption.

- The #cool-finds channel featured new HuggingFace Spaces, namely Text Diffuser 2, DiffMorpher & SDXL Auto FaceSwap, which have been collectively showcased in a YouTube video. The possibility of using an LLM for shell scripts was also discussed.

- #i-made-this saw updates on the NexusRaven2 function calling model and a call for help to complete code in a shared Colab notebook. @vashi2396 also shared a demo of the in-progress code.

- Finally, the #reading-group and #NLP channels contained queries about the Mamba paper and multilingual pre-trained models, while also emphasizing the importance of avoiding bias in results.

HuggingFace Discord Channel Summaries

▷ #general (46 messages🔥):

- Discussion around ByteLevelBPETokenizer and loading it from a `.json` file: @exponentialxp asked how to load their saved tokenizer configuration. @vipitis and @hynek.kydlicek provided multiple suggestions, with @hynek.kydlicek’s solution of using the `Tokenizer.from_file` method reportedly working.

- Fine-tuning LLMs: @skyward2989 asked how to make their fine-tuned language model stop generating tokens. @vipitis suggested defining stop tokens or using different stopping criteria.

- Asking for LLMs beginner resources: @maestro5786 asked for resources on how to train an open source language model; @skyward2989 recommended HuggingFace’s transformers documentation and course.

- DeepSpeed ZeRO3 and LoRA compatibility: @galcoh. asked whether there’s a way to enable DeepSpeed ZeRO3 with LoRA (PEFT), describing a presumed issue of having no tensors in the model and the optimizer using the full model size.

- Query on Unsplash Embeddings: @nagaraj4896 asked about the embeddings of Unsplash-25k-photos.

- Issue with HuggingFace site access: @weyaxi reported an issue with accessing the HuggingFace site and @xbafs suggested disabling any VPN in use. Similarly, @SilentWraith reported an issue of the site not redirecting properly.

- @typoilu asked for explanations or documentation about multi experts LLMs. @vipitis expressed an interest in Intel Xeon with AMX and @zorian_93363 appreciated having had the choice between AMD and Intel for years.

- Concern about WSL jobs interruption: @__nord asked why training jobs running in Windows Subsystem for Linux (WSL) get interrupted after the PC is idle for a while, even with sleep mode disabled.
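The stop-token advice can be pictured with a small, library-free sketch. This is a toy stand-in and not the transformers API: `token_stream` plays the role of a model emitting tokens one at a time, and the function name and the `max_new_tokens` default are hypothetical.

```python
from typing import Iterable, List, Sequence


def generate_until_stop(
    token_stream: Iterable[str],
    stop_tokens: Sequence[str],
    max_new_tokens: int = 64,
) -> List[str]:
    """Collect tokens until a stop token appears or the length budget is spent."""
    output: List[str] = []
    for token in token_stream:
        # Two stopping criteria: an explicit stop token, or a hard length cap.
        if token in stop_tokens or len(output) >= max_new_tokens:
            break
        output.append(token)
    return output


# The end-of-sequence marker "</s>" is used here only as an example stop token.
tokens = generate_until_stop(["Hi", " there", "</s>", "junk"], stop_tokens=["</s>"])
print(tokens)
```

For a fine-tuned model, a common cause of endless generation is that the training examples never included the end-of-sequence token, so the model never learned to emit it. That is an observation from general practice rather than something stated in the channel.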

Links mentioned:

- Introduction - Hugging Face NLP Course

- tokenizers/bindings/python/py_src/tokenizers/implementations/byte_level_bpe.py at main · huggingface/tokenizers: 💥 Fast State-of-the-Art Tokenizers optimized for …

▷ #today-im-learning (10 messages🔥):

- End-to-End FP8 Training Implementation: @neuralink shared that they have implemented 17% of end-to-end FP8 training in 3D parallelism (excluding FP8 kernels) and 24% of DoReMi over the past three days.

- Machine Specifications: @neuralink disclosed that they have been working on an H100 machine. @lawls.net expressed their desire to contribute their resources (Apple M3 Max 48GB) to the open source community.

- Implementation from Scratch: In response to @gag123’s question, @neuralink confirmed that they implemented all components from scratch, apart from CUDA kernels.

▷ #cool-finds (6 messages):

- @cognitivetech shared an interest in shell-scriptable large language models (LLMs) and mentioned a link claiming: The Mistral 7b instruct llamafiles are good for summarizing HTML URLs if you pipe the output of the links command, which is a command-line web browser.

- @devspot introduced a few new HuggingFace Spaces this week, featuring Text Diffuser 2, DiffMorpher & SDXL Auto FaceSwap, in a YouTube video. The video details the functionality of each space.

- Text Diffuser 2: A new model that integrates words into generated images.

- Inpainting Version: An enhancement of Text Diffuser 2 that allows users to integrate text into certain areas of an existing image.

- DiffMorpher: This feature allows for the smooth transformation of one image into another.

- SDXL Auto FaceSwap: This feature generates images by swapping faces. The speaker demonstrates an example with Mona Lisa’s face swapped onto a female pilot.

Links mentioned:

Generate AI Images with Text - Text Diffuser 2, DiffMorpher & SDXL Auto FaceSwap!: A brief video about some of the trending huggingfa…

▷ #i-made-this (5 messages):

- Sharing of NexusRaven2 on Community Box: User @tonic_1 shared NexusRaven2, a function calling model, on the community box. They indicated it’s for demo purposes and plan to improve it over time.

- Request For Code Completion: User @vashi2396 shared a link to a Colab notebook and asked for volunteers to help complete the ‘work in progress’ code in the notebook.

- Demo of Progressed Code: @vashi2396 also shared a demo of the in-progress code through a LinkedIn post.


▷ #reading-group (1 messages):

- Understanding Mamba paper: @_hazler raised a question about a diagram in the Mamba paper, expressing confusion about the presence of a Conv (convolution) layer, since Mamba is generally known as a purely recurrent model.

▷ #diffusion-discussions (4 messages):

- New Models Creation: User @joelkoch inquired about the practical approach to creating new models, highlighting that the diffusion model Würstchen featured in a Hugging Face blog post harnesses a unique architecture. He further asked about the potential use of smaller models for quick iteration and validation of the approach.

- Training Models on Custom Datasets: @abhishekchoraria experienced an issue in training Mistral 7B on a custom dataset using autotrain, reporting an error stating “token indices sequence length is greater than the maximum sequence length”. He sought guidance on changing the sequence length in autotrain.

- @sayakpaul responded to @joelkoch, opining that small models might not yield useful findings. He emphasized the necessity for a model with standard depth for experimentation, describing scaling experiments as highly intricate.

Links mentioned:

Introducing Würstchen: Fast Diffusion for Image Generation

▷ #NLP (4 messages):

- Avoiding Bias in Results: User

@vipitishighlighted the importance of holding out a section of data for testing to avoid overfitting. They also suggested using k-fold cross-validation as another method to circumvent bias in results. - Bilingual or Multilingual Pre-Trained Models: User

@horosinasked for research or guidance on the topic of bilingual or multilingual pre-trained models.@vipitismentioned that most work in this field is being done on English-Chinese models.

▷ #diffusion-discussions (4 messages):

- New Models Creation and Experimentation: @joelkoch asked about the iteration process in developing new models like Würstchen and whether smaller models can be used for quicker testing. @sayakpaul responded that historically, super small models don’t provide clear insights, hence the need for standard depth models, which makes scaling experiments complex activities.

- Issue with Training Mistral 7B: @abhishekchoraria is encountering an error while using autotrain to train Mistral 7B on a custom dataset. The error is related to token indices exceeding the maximum sequence length, and they’re seeking help to change the sequence length in autotrain.

Links mentioned:

Introducing Würstchen: Fast Diffusion for Image Generation

Mistral Discord Summary

- Extensive discussions emerged around the potential and capabilities of small models like Mistral-Tiny; .tanuj. defended the feasibility of performing complex tasks offline on local machines, making the approach cost-effective and versatile. The models’ capability to string together tasks like GoogleSearch, EvaluateSources, CreateEssay, DeployWebsite was hypothesized, pointing to new potential for abstract reasoning.

- JSON output and tokenization of Chinese characters were topics of conversation; @sublimatorniq suggested asking the model to output JSON in TypeScript interface format, @poltronsuperstar noted that Chinese characters are often 2 tokens due to Unicode, and .tanuj. offered assistance in understanding Mistral’s tokenization of Chinese characters.

- The deployment channel focused on machine performance for running tasks on CPU, a comparison of LPDDR5 RAM speed, and achieving LLM performance similar to that of an Apple Silicon GPU.

- The showcase channel featured a use case demonstration by @.tanuj. of Mistral-Tiny for mathematical operations and task orchestration, as well as .gue22 sharing insights on the Mixtral-8x7B variant with helpful resource links.

- On the la-plateforme channel, a user asked about testing Mistral with French text, feedback on Mistral-Medium tuning was shared, and confusion about the terms Mixtral/Mistral was highlighted. Plans for generating a synthetic dataset were also mentioned.

Selected Quotes and Direct Mentions

.tanuj.: “If you can get good reasoning from a small model, you can get pretty powerful agents made in real time by a user, and be as powerful as you’d like them to be! It can be a solution like one prompt -> building a full web app and deploying it, no user input needed in between.”

@theledgerluminary: “But applying a similar architectural pattern to a large model could achieve better results. Really the only thing I see smaller models being beneficial for are real-time communication. If the overall goal is a large “long-running” task, it seems like a waste of time to only use a small model.”

@poltronsuperstar on potential question posed to AGI: “What’s your first question to an AGI?”

Links

How to fine tune Mixtral 8x7B Mistral Ai Mixture of Experts (MoE) AI model

Mistral Channel Summaries

▷ #general (35 messages🔥):

- Prompting Strategies Challenge from .tanuj.: .tanuj. proposed a challenge to design a prompt or craft a chat history that allows CalculatorGPT to accurately solve various mathematical expressions using arithmetic operators and provide complete steps for reaching the intended answer, using the `mistral-tiny` model with the Mistral API endpoint alongside automated re-prompting.

- Debate about Applicability of Small Models: .tanuj. defended the feasibility of using a smaller model like `mistral-tiny` for more complex task solving through intelligent, automated re-prompting and function calling. He suggested the possibility of performing complex tasks on a local machine offline, which can make the approach more cost-effective and versatile. @theledgerluminary doubted the capabilities of smaller models compared to larger ones, and suggested the use of fine-tuned models specialized for different tasks, though .tanuj. argued for the practicality and simplicity of the “agent” over fine-tuning.

- JSON Output Suggestions: @sublimatorniq suggested asking the model, at the end of the prompt, to output JSON in the format of a TypeScript interface.

- Affirmations of Small Model Potentials: Both @poltronsuperstar and .tanuj. praised the potential of the Mistral tiny model for task orchestration.


▷ #deployment (3 messages):

- Running on CPU and iGPU: @ethux suggested that in certain situations, a task will just run on the CPU since VRAM is faster, but there’s no reason to run it on the iGPU.

- RAM Speed Comparison: @hharryr compared the speed of LPDDR5 RAM for the new ultra CPU, which is close to 78~80 GB/s, similar to the RAM bandwidth of the M1 / Pro chip.

- LLM Performance on Apple Silicon GPU: @hharryr wondered whether a machine using a new ultra processor could match the LLM performance seen on Apple Silicon GPUs.
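One way to reason about the bandwidth comparison is a common rule of thumb, which is an assumption here and not something stated in the channel: single-stream token generation is often limited by memory bandwidth, because producing each token requires reading roughly all of the model weights once. Under that assumption, bandwidth divided by model size gives a rough upper bound on tokens per second. The function name and the 4 GB model size below are hypothetical.

```python
def max_tokens_per_second(bandwidth_gb_per_s: float, model_size_gb: float) -> float:
    """Rough upper bound on decode speed if every token reads all weights once."""
    if model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive")
    return bandwidth_gb_per_s / model_size_gb


# Roughly 80 GB/s of RAM bandwidth and a hypothetical 4 GB quantized model.
estimate = max_tokens_per_second(80.0, 4.0)
print(f"upper bound: about {estimate:.0f} tokens/s")
```

Real throughput would be lower because of compute limits, cache behavior, and software overhead, so this only indicates whether two machines with similar bandwidth are in the same ballpark.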

▷ #showcase (12 messages🔥):

- Use Case of Mistral-Tiny for Mathematical Operations: @.tanuj. showed that Mistral-Tiny can be used for calculations like “Evaluate (10**2*(7-2*1)+1)” by setting up a chat between the user and the model involving the computation steps.

- He mentioned, “there were in-between steps that were automated,” and that the system “only appends to the official chat history when it’s a valid, 100% callable function,” indicating that models can perform tasks.

- @.tanuj. suggested a future scenario where Mistral-Tiny could have functions like GoogleSearch, EvaluateSources, CreateEssay, DeployWebsite, thus showing the model’s potential for abstract reasoning.

- @poltronsuperstar saw potential in this approach, stating “Seems important that an agent can chain functions in a step by step way”. Despite this being a toy problem, it was regarded as having possible real-life applications.

- Referring to the Mixtral-8x7B variant, .gue22 shared that it runs on an ancient, free Colab Nvidia T4 w/ 16GB of VRAM or any local Nvidia 16GB GPU + 11GB RAM. He shared links to a Google Colab notebook, an associated YouTube video, and the related paper Fast Inference of Mixture-of-Experts Language Models with Offloading for more details.
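The quoted rule of only appending to the chat history when a call is valid can be sketched as follows. This is a hypothetical reconstruction and not @.tanuj.’s code: the `evaluate` and `append_if_valid` helpers are invented for illustration. They use Python’s `ast` module to accept only plain arithmetic, so anything else is rejected before it reaches the history.

```python
import ast
import operator
from typing import List, Union

Number = Union[int, float]

# Whitelisted operators; everything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def evaluate(expression: str) -> Number:
    """Evaluate an arithmetic expression without using eval()."""

    def _eval(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression element: {type(node).__name__}")

    return _eval(ast.parse(expression, mode="eval"))


def append_if_valid(history: List[str], expression: str) -> bool:
    """Append the step to the history only if it evaluates cleanly."""
    try:
        result = evaluate(expression)
    except (ValueError, SyntaxError, ZeroDivisionError):
        return False  # in an agent loop this is where a re-prompt would happen
    history.append(f"{expression} = {result}")
    return True


history: List[str] = []
append_if_valid(history, "(10**2*(7-2*1)+1)")  # valid arithmetic, appended
append_if_valid(history, "__import__('os')")   # a function call, rejected
print(history)
```

Rejected steps never enter the history, so a small model that occasionally emits malformed calls can simply be asked again.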


▷ #random (4 messages):

- Tokenization of Chinese Characters: @poltronsuperstar noted that “Chinese chars are often 2 tokens because unicode”.

- MistralAI Library for Understanding Token Usage: @.tanuj. suggested that using the MistralAI library in Python could help in understanding token usage, as the response object includes details about tokens used in the prompt and completion, and the total for the API call.

- Tokenizing Chinese Characters in Mistral: @.tanuj. also offered to help anyone interested in understanding how Mistral tokenizes Chinese characters, as he was curious about the process himself. They would just need to DM him.

- First Question to an AGI: @poltronsuperstar asked the chat for ideas on what their first question to an Artificial General Intelligence (AGI) would be.
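A quick way to see why Chinese characters can cost more than one token is to look at their UTF-8 encoding. This sketch only counts bytes. How Mistral’s tokenizer actually splits them is not stated in the discussion, so any link to token counts assumes a tokenizer that falls back to byte-level pieces for characters outside its vocabulary. The helper name is hypothetical.

```python
from typing import Dict


def utf8_byte_counts(text: str) -> Dict[str, int]:
    """Map each character to the number of bytes in its UTF-8 encoding."""
    return {ch: len(ch.encode("utf-8")) for ch in text}


counts = utf8_byte_counts("a中")
print(counts)  # ASCII letters take 1 byte, common Chinese characters take 3
```

The prompt and completion token counts returned by the API, as suggested above, remain the reliable way to measure real usage.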

▷ #la-plateforme (8 messages🔥):

- Testing Mistral with French Text: User @simply34 asked whether anyone has tested the Mistral embeddings model with French text, and how it performs compared to open source multilingual models like multilingual-e5-large. No responses had been provided so far.

- Discussion on Mixtral/Mistral Confusion: @everymans.ai raised confusion about whether it’s Mixtral or Mistral and about the functioning of the Mixture of Experts (MoE) AI model, sharing a related article. @dv8s speculated that “Mix” could be a play on words relating to Mixture of Experts.

- Feedback on Mistral-Medium Tuning: @jaredquek shared feedback on Mistral-Medium tuning, indicating that the model often outputs unnecessary explanations, which he believes is a waste of tokens and money. He suggests this is a result of the model not correctly following instructions and could require further tuning.

- Planning for Synthetic Dataset Generation: User @.superintendent is contemplating when to generate a synthetic dataset, hoping to avoid contributing to high traffic times.

Links mentioned:

How to fine tune Mixtral 8x7B Mistral Ai Mixture of Experts (MoE) AI model: When it comes to enhancing the capabilities of the…

DiscoResearch Discord Summary

- A conversation held predominantly by @philipmay and @thewindmom covered German language semantic embedding models and their different applications, with sentence-transformers/paraphrase-multilingual-mpnet-base-v2 touted as the best open-source model for German and Cohere V3 as the best overall. @philipmay also shared his Colab notebook for evaluating German semantic embeddings.

- The group addressed the nuances of Question/Answer (Q/A) retrieval models versus semantic models and established the lack of a dedicated open-source model for Q/A retrieval in German. Suggestions included the Cohere V3 multilingual model and e5 large multilingual by Microsoft.

- The topic of Retrieval-Augmented Generation (RAG) on a German knowledge corpus came up. Although none of them is a dedicated model for this, the aforementioned models were suggested due to their semantic capabilities.

- @philipmay shared his experiences training the deutsche-telekom/gbert-large-paraphrase-cosine and deutsche-telekom/gbert-large-paraphrase-euclidean models, stating they are well-suited for training with SetFit.

- @_jp1_ drew attention to a research paper, What Makes Good Data for Alignment? A Comprehensive Study of Automatic Data Selection in Instruction Tuning, looking into automatic data selection strategies for alignment with instruction tuning.

- Discussions around issues concerning the DPO optimized Mixtral model were held, with @philipmay and @bjoernp discussing the problems with router balancing and potential solutions, such as exploring alternatives like hpcaitech/ColossalAI, stanford-futuredata/megablocks, and laekov/fastmoe. There were also discussions about the location and absence of actual training code on GitHub.

DiscoResearch Channel Summaries

▷ #disco_judge (1 messages):

- Paper on Alignment and Instruction Tuning: @_jp1_ shared a link to a research paper that examines automatic data selection strategies for alignment with instruction tuning. The paper also proposes a novel technique for enhanced data measurement. The work is said to be similar to ongoing endeavors in the Discord community.

Links mentioned:

What Makes Good Data for Alignment? A Comprehensive Study of Automatic Data Selection in Instruction Tuning: Instruction tuning is a standard technique employe…

▷ #mixtral_implementation (8 messages🔥):

- DPO Optimized Mixtral Model from Argilla: @philipmay mentioned the DPO optimized Mixtral model released by Argilla on huggingface.co with additional training code on GitHub. Links provided are: Notux 8x7B-v1 on Hugging Face, GitHub - argilla-io/notus.

- Issues Regarding the Router Balancing: @bjoernp pointed out that the DPO optimized Mixtral model is equally affected by the issues regarding the router balancing due to its reliance on the transformers mixtral implementation.

- Lack of Actual Training Code on GitHub: @philipmay observed that while the model card of Notux 8x7B-v1 links to a GitHub project, the actual training code seems to be omitted, with only the older Notus code available.

- Location of the Training Code: @philipmay discovered the actual training code, which resided in a different GitHub subtree at argilla-io/notus but had not yet been merged.

- Alternative MoE Training Tools: @philipmay proposed considering alternative MoE training tools like hpcaitech/ColossalAI, stanford-futuredata/megablocks, and laekov/fastmoe, which could potentially bypass the router balancing issues. @bjoernp responded that contributions were underway to make the auxiliary-loss implementation in transformers equivalent to that of megablocks, and that working directly with megablocks might be a viable but complex option.
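For readers unfamiliar with router balancing, the sketch below shows one widely used form of auxiliary loss, the Switch Transformer style load-balancing term. It is offered as background under stated assumptions and is not the transformers or megablocks implementation, whose exact details are the very point under discussion. With N experts, f_i the fraction of tokens whose top choice is expert i, and P_i the mean router probability for expert i, the loss is N times the sum of f_i * P_i. The function name and the top-1 routing simplification are choices made for this sketch.

```python
from typing import List


def load_balancing_loss(router_probs: List[List[float]]) -> float:
    """Switch-style auxiliary loss for top-1 routing.

    router_probs[t][i] is the router probability of expert i for token t.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])

    # f_i: fraction of tokens dispatched to expert i (argmax of the router).
    counts = [0] * num_experts
    for probs in router_probs:
        counts[max(range(num_experts), key=probs.__getitem__)] += 1
    fractions = [c / num_tokens for c in counts]

    # P_i: mean router probability assigned to expert i.
    mean_probs = [
        sum(probs[i] for probs in router_probs) / num_tokens
        for i in range(num_experts)
    ]

    return num_experts * sum(f * p for f, p in zip(fractions, mean_probs))


balanced = load_balancing_loss([[0.9, 0.1], [0.1, 0.9]])
collapsed = load_balancing_loss([[0.9, 0.1], [0.9, 0.1]])
print(f"balanced: {balanced:.2f}, collapsed: {collapsed:.2f}")
```

The loss is smallest when tokens spread evenly across experts and grows when the router collapses onto one expert, which is the behavior a balancing term is meant to discourage.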

Links mentioned:

- notus/vx/fine-tune at mixtral-fine-tune · argilla-io/notus: Notus is a collection of fine-tuned LLMs using SFT…

- argilla/notux-8x7b-v1 · Hugging Face

- GitHub - argilla-io/notus: Notus is a collection of fine-tuned LLMs using SFT, DPO, SFT+DPO, and/or any other RLHF techniques, while always keeping a data-first approach: Notus is a collection of fine-tuned LLMs using SFT…

- GitHub - hpcaitech/ColossalAI: Making large AI models cheaper, faster and more accessible: Making large AI models cheaper, faster and more ac…

- GitHub - stanford-futuredata/megablocks: Contribute to stanford-futuredata/megablocks devel…

- GitHub - laekov/fastmoe: A fast MoE impl for PyTorch: A fast MoE impl for PyTorch. Contribute to laekov/…

▷ #general (18 messages🔥):

- Experience with Embedding Models: In a discussion with @thewindmom, @philipmay shared his experience with embedding models, especially German ones. He drew a clear distinction between semantic embedding models and embedding models for Q/A retrieval, explaining that questions and potential answers are not necessarily semantically similar.

- Best Semantic Embedding Models: @philipmay recommended sentence-transformers/paraphrase-multilingual-mpnet-base-v2 as the best open-source German semantic embedding model, while the best overall was the new Cohere V3 embedding model. He also pointed out that the ADA-2 embedding was not well-suited for German text.

- Use of German BERT: He also explained that the models he trained, deutsche-telekom/gbert-large-paraphrase-cosine and deutsche-telekom/gbert-large-paraphrase-euclidean, while not as strong at semantic embedding as the paraphrase model mentioned above, are very well suited as base models for training with SetFit.

- RAG on German Knowledge Corpus: In response to @thewindmom’s query about the best model for doing RAG on a German knowledge corpus, @philipmay noted the lack of a dedicated open-source Q/A retrieval model for German and recommended the Cohere V3 multilingual model. However, @aiui suggested e5 large multilingual by Microsoft as the best model based on practical experience.

- Benchmarking and Evaluation: @philipmay shared a link to a Colab notebook that he created for evaluating German semantic embeddings, and @rasdani described a potential benchmark for context retrieval based on deepset/germanquad.
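The two gbert paraphrase models are named after the measures used to compare embeddings, cosine similarity and Euclidean distance. As a small illustration of how those measures differ (generic math, not code from the notebook mentioned above), the sketch below compares toy vectors. The function names and example vectors are made up.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; ignores their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / norm


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two vectors; sensitive to their lengths."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Same direction, different magnitude: cosine says "identical",
# Euclidean distance still reports a gap.
u, v = [1.0, 2.0], [2.0, 4.0]
print(cosine_similarity(u, v), euclidean_distance(u, v))
```

Either measure only captures semantic closeness, which is why, as noted above, a question and its answer can score poorly even when they belong together.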

Links mentioned:

- Google Colaboratory

- sentence-transformers/paraphrase-multilingual-mpnet-base-v2 · Hugging Face

- deutsche-telekom/gbert-large-paraphrase-cosine · Hugging Face

- deutsche-telekom/gbert-large-paraphrase-euclidean · Hugging Face

- Question: OpenAI ada-002 embedding · Issue #1897 · UKPLab/sentence-transformers: Hi @nreimers , your blog about OpenAI embeddings i…

LangChain AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Langchain’s main components: In the context of Langchain, @atefyamin outlined the two main components, chains and agents. The chain is a “sequence of calls to components like models, document retrievers, or other chains” while the agent “is responsible for making decisions and taking actions based on inputs and reasoning”.

- Agents vs Tools: @shivam51 and @atefyamin discussed the roles and functions of agents and tools in Langchain. Shivam was unsure about when tools are used instead of agents, but Atefyamin clarified that agents use tools to carry out their tasks. The discussion also explored whether tools could be passed to chains.

- Implementing ConversationBufferMemory: @atefyamin asked for help implementing ConversationBufferMemory using an integration, sharing some of their code, which included Firebase, though the read functionality seemed to be missing.

- Output Templates in Langchain: @repha0709 asked for assistance in creating output templates in Langchain to achieve a specific format of responses. @seththunder suggested that prompt templates might help, although @3h0480 cautioned that prompts might not guarantee 100% compliance with the desired template.

- Langchain Examples on GitHub: @rajib2189 shared a GitHub link to examples on how to use Langchain.
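The quoted definition of a chain as a sequence of calls can be pictured in plain Python. This sketch deliberately does not use the LangChain API: `run_chain`, `build_prompt`, `fake_model`, and `enforce_template` are hypothetical helpers, and `fake_model` stands in for a real LLM call. It also reflects the caution above that a prompt alone may not guarantee the output format, so the last step checks it.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_chain(steps: List[Step], user_input: str) -> str:
    """Feed the output of each step into the next one."""
    value = user_input
    for step in steps:
        value = step(value)
    return value


def build_prompt(question: str) -> str:
    # Prompt template that asks for a fixed output format.
    return f"Answer in the form 'ANSWER: <text>'.\nQuestion: {question}"


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call; it just echoes the question line.
    question = prompt.splitlines()[-1][len("Question: "):]
    return f"ANSWER: you asked '{question}'"


def enforce_template(output: str) -> str:
    # Validate the format instead of trusting the prompt to enforce it.
    if not output.startswith("ANSWER: "):
        raise ValueError("model output did not follow the template")
    return output


result = run_chain([build_prompt, fake_model, enforce_template], "What is a chain?")
print(result)
```

An agent, by contrast, would decide at run time which step or tool to call next rather than following a fixed list.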

Links mentioned:

langchain_examples/examples/how_to_llm_chain_pass_multiple_inputs_to_prompt.py at main · rajib76/langchain_examples: This repo consists of examples to use langchain. C…

Alignment Lab AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Invitation to Live Podcast: User @teknium invited @208954685384687617 to join a live Twitter Spaces podcast. However, the invitation was politely declined by the recipient due to their preference for written English over spoken.

- Inquiry about Podcast: @ikaridev asked where they could listen to the podcast. @teknium provided the link to his Twitter for accessing the podcast, which occurs every Thursday at 8AM PST.

- AI as a Language Translator: In relation to the declined invitation due to language barriers, @rusch shared a link to an AI that translates languages in real-time.

- Evaluation of Open Chat 3.5: @axel_ls shared their experience with training, fine-tuning, and testing Open Chat 3.5. They stated that, although it’s not bad, it falls short when compared to GPT 3.5 for coding tasks. They also observed that fine-tuning didn’t improve performance much, but rather led to overfitting.

LLM Perf Enthusiasts AI Discord Summary

- Issues Encountered in Azure Integration with OpenAI: Users in the #general channel reported several difficulties, including the setup process (@pantsforbirds), the complexity of managing different API limits across regions (@robotums), managing different models per region with their respective resource limits (@0xmmo), and devops and security concerns (@pantsforbirds again).

- In the #offtopic channel, user @joshcho_ expressed interest in ‘uploading a VITS model (text-to-speech) and making it available through an API’, seeking advice on model uploading for API creation.

- Discussion in the #prompting channel revolved around a newly released project, TokenHealer, by @ayenem, which aims to improve prompt alignment with a model’s tokenizer. Feedback on the project was requested, with @pantsforbirds already commending it as “really cool”.

LLM Perf Enthusiasts AI Channel Summaries

▷ #general (4 messages):

- Azure Account for OpenAI Setup Challenges: User @pantsforbirds described difficulties in setting up a dedicated Azure account for OpenAI, citing the setup process as a deterrent.

- Region-Specific API Limit Issues: @robotums highlighted the complexity of managing the different API limits imposed in different regions, which requires maintaining a separate OpenAI object for each model/deployment.

- Model and Resource Limitations Per Region: @0xmmo mentioned the additional challenge that each region offers different models, each with its own resource limits. They also noted that each resource needs its own API key, leading to a massive number of environment variables to manage.

- Concerns Over Integration and Security Setup: @pantsforbirds also voiced concerns over the heavy devops work required to integrate OpenAI with their existing system and the added complexity of setting up security.
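The bookkeeping described above (one deployment per model and region, each with its own limit and API key) can be sketched as a small registry. This is only an illustration: the `Deployment` class, the `AZURE_OPENAI_KEY_<REGION>` naming scheme, and the limit values are assumptions made for the example, not anything the users reported using, and no Azure or OpenAI client calls are made.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    model: str
    region: str
    requests_per_minute: int  # illustrative per-region limit, not a real quota

    @property
    def key_env_var(self) -> str:
        # Hypothetical naming scheme: one API key variable per regional resource.
        return "AZURE_OPENAI_KEY_" + self.region.upper().replace("-", "_")


# Illustrative values only; real limits and model availability vary by region.
DEPLOYMENTS = [
    Deployment("gpt-4", "eastus", 60),
    Deployment("gpt-4", "swedencentral", 120),
    Deployment("gpt-35-turbo", "eastus", 300),
]


def pick_deployment(model, env=None):
    """Return the highest-limit deployment of `model` whose key is configured."""
    env = os.environ if env is None else env
    candidates = [
        d for d in DEPLOYMENTS if d.model == model and d.key_env_var in env
    ]
    if not candidates:
        raise LookupError(f"no configured deployment for {model!r}")
    return max(candidates, key=lambda d: d.requests_per_minute)
```

Keeping the mapping in one place at least makes the growth in environment variables explicit: each new region adds one entry and one key variable.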

▷ #offtopic (2 messages):

- Model Uploading for API Creation: User @joshcho_ enquired whether anyone has uploaded models to Replicate to create APIs, expressing interest in uploading a VITS model (text-to-speech) and making it available through an API.

▷ #prompting (3 messages):

- TokenHealer Release: User @ayenem released a new project called TokenHealer, an implementation that trims and regrows prompts to align more accurately with a model’s tokenizer, improving both completion and robustness to trailing white space and punctuation.

- Feedback on Release: @ayenem has welcomed feedback on the project, stating a lack of experience in releasing projects. User @pantsforbirds has commended the project, stating it looks “really cool”.
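The "trim and regrow" idea can be shown with a toy example. The sketch below is not TokenHealer's implementation: it assumes a tiny hand-written vocabulary and a greedy longest-match tokenizer, and the `tokenize` and `heal` helpers are hypothetical. It drops the prompt's last token and lists the vocabulary entries that could regrow it, which is the set a model would be constrained to for its first generated token.

```python
# Toy vocabulary; a real tokenizer has tens of thousands of entries.
VOCAB = ["http", ":", "://", "/", "www", "."]


def tokenize(text, vocab=VOCAB):
    """Greedy longest-match tokenization over the toy vocabulary."""
    tokens = []
    i = 0
    while i < len(text):
        match = max(
            (t for t in vocab if text.startswith(t, i)), key=len, default=None
        )
        if match is None:
            raise ValueError(f"cannot tokenize at position {i}")
        tokens.append(match)
        i += len(match)
    return tokens


def heal(prompt, vocab=VOCAB):
    """Trim the last token and list the tokens allowed to regrow it."""
    tokens = tokenize(prompt, vocab)
    if not tokens:
        return prompt, list(vocab)
    trimmed, last = tokens[:-1], tokens[-1]
    # Any token that starts with the trimmed text is a valid regrowth.
    allowed = [t for t in vocab if t.startswith(last)]
    return "".join(trimmed), allowed
```

For the prompt `http:`, the trailing `:` is trimmed and both `:` and `://` become candidates, so the model is no longer forced to continue from a token boundary that the prompt happened to impose.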

Links mentioned:

GitHub - Ayenem/TokenHealer: Contribute to Ayenem/TokenHealer development by cr…

Latent Space Discord Summary

- In the #ai-general-chat channel, a query about running 4x A100 and 4x L40S on the same server was followed by discussion of an app to convert enterprise unstructured data into datasets for fine-tuning LLMs. User @aristokratic.eth explored the idea and @fanahova encouraged him to look into similar existing solutions.

- The #ai-event-announcements channel featured an update on the release of a recent podcast episode by @latentspacepod, highlighting top startups from NeurIPS 2023, including companies led by @jefrankle, @lqiao, @amanrsanger, @AravSrinivas, @WilliamBryk, @jeremyphoward, Joel Hestness, @ProfJasonCorso, Brandon Duderstadt, @lantiga, and @JayAlammar. Links to the podcast were provided: Podcast Tweet and Podcast Page.

- Noteworthy AI research papers of 2023 were proposed by @eugeneyan in the #llm-paper-club channel for the reading group’s consideration, with a focus mainly on large language models. A link to the selection of these papers was provided.

Latent Space Channel Summaries

▷ #ai-general-chat (4 messages):

- Possibility of Server Configuration: User @aristokratic.eth inquired about the feasibility of having 4x A100 and 4x L40S on the same server.

- Building an App for Unstructured Data: @aristokratic.eth is considering the development of an application that could convert enterprise unstructured data into datasets for fine-tuning LLMs. He asked for the community’s thoughts on the product-market fit for this idea.

- @fanahova suggested that @aristokratic.eth research similar applications, indicating that such a solution might already exist in the market.

- Consequently, @aristokratic.eth asked for references to such existing solutions for further examination.

▷ #ai-event-announcements (1 messages):

- NeurIPS 2023 Recap — Top Startups: @swyxio announced the release of the latest pod from @latentspacepod, which covers NeurIPS 2023’s top startups. Notable participants include:
  - @jefrankle: Chief Scientist, MosaicML
  - @lqiao: CEO, Fireworks AI
  - @amanrsanger: CEO, Anysphere (Cursor)
  - @AravSrinivas: CEO, Perplexity
  - @WilliamBryk: CEO, Metaphor
  - @jeremyphoward: CEO, AnswerAI
  - Joel Hestness: Principal Scientist, @CerebrasSystems
  - @ProfJasonCorso: CEO, Voxel51
  - Brandon Duderstadt: CEO, @nomic_ai (GPT4All)
  - @lantiga: CTO, Lightning.ai
  - @JayAlammar: Engineering Fellow, Cohere

  The podcast can be accessed via the provided links: Podcast Tweet and Podcast Page.

Links mentioned:

Tweet from Latent Space Podcast (@latentspacepod): 🆕 NeurIPS 2023 Recap — Top Startups! https://www…

▷ #llm-paper-club (1 messages):

- AI Research Paper Recommendations: @eugeneyan shared a link to a list of 10 noteworthy AI research papers from 2023. He suggested these papers for the reading group and pointed out that their focus is mainly on large language models. He selected the papers based on his personal enjoyment or their impact in the field.

Links mentioned:

Ten Noteworthy AI Research Papers of 2023: This year has felt distinctly different. I’ve…

Skunkworks AI Discord Summary

Only 1 channel had activity, so no need to summarize…

caviterginsoy: https://arxiv.org/abs/2305.11243

MLOps @Chipro Discord Summary

Only 1 channel had activity, so no need to summarize…

- CheXNet Model Deployment Issue: User @taher_3 is facing difficulties deploying a pretrained CheXNet model from CheXNet-Keras. Every loaded model returns, for all subsequent images, the same prediction it produced for the first image. They are seeking help from anyone who has faced a similar issue.