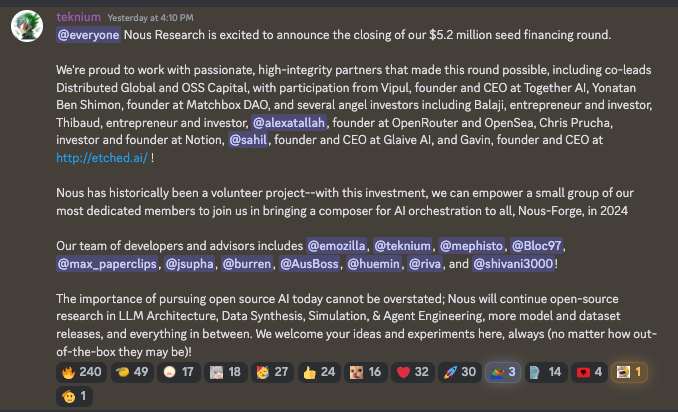

Nous announced their seed, and the business focus is Nous Forge:

Rabbit also demoed its r1 at CES, and opinions were sharply divided.

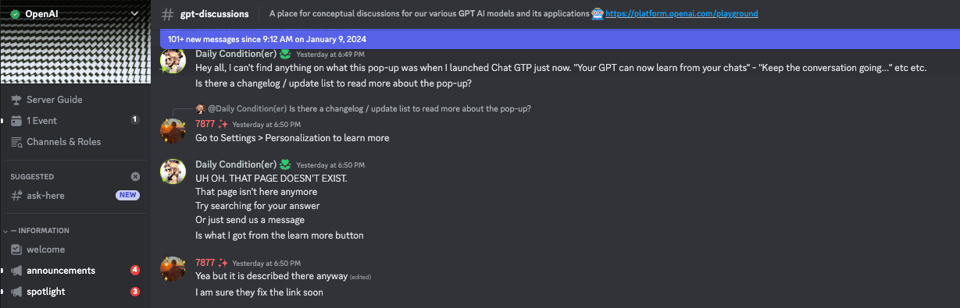

In other news, OpenAI shipped the GPT Store today and briefly leaked its upcoming personalization feature.

—

Table of Contents

[TOC]

Nous Research AI Discord Summary

- Breaking the LLM Context Window Limit with Activation Beacon: @kenakafrosty shared an arXiv paper on Activation Beacon, a new approach that could help Large Language Models (LLMs) get past their limited context windows. @_stilic_ confirmed that the code will be available on GitHub.

- Vibes on Tech Gadgets and AI Use: In the off-topic channel, topics included the M2-equipped Apple Vision Pro, the Rabbit r1, the WEHEAD AI companion, layoffs at Humane, and jokes about using Large Language Models (LLMs) for foreign languages.

- Curated Tech and AI Links: Links and ideas shared include combining Light Activation Beacon Training with MaybeLongLM Self-Extend, AI agents that explain other AI systems, discussions about Rabbit.tech and model interpolation, a 2x7B MoE model, and WikiChat.

- Nous Research’s Exciting Seed Financing and Future Plans: @teknium announced Nous Research’s successful $5.2 million seed financing round. Further open-source research and the development of Nous-Forge are in the pipeline. The announcement channel also linked Etched’s page (Etched’s founder joined the round), which describes etching the transformer architecture into chips to build servers for real-time voice agents, improved coding, and trillion-parameter models.

- Various Projects on AI & LLMs: A broad range of topics came up in the general channel, including QLoRA fine-tuning experiments, research on a wearable AI mentor, fine-tuning and merging large models, sharing custom architectures, experiments with the WikiChat dataset, and a spontaneous Spanish-speaking session.

- LLM-related discussions and inquiries: In the ask-about-llms channel, discussions centered on replicating the Phi models, solutions to VRAM issues with Mixtral and Ollama, and strategies for building LLMs tailored to a proprietary knowledge base. Users considered the Synthesizer tool and suggested ways to create synthetic datasets.

- Obsidian Project Code Request: In the project-obsidian channel, users expressed interest in the Obsidian script that @qnguyen3 used for their work. Once shared, the script would be useful to other guild members for their own projects.

Nous Research AI Channel Summaries

▷ #ctx-length-research (3 messages):

- Activation Beacon: A solution for the LLM context window issue: @kenakafrosty shared a link to an arXiv paper on a new approach named Activation Beacon. The paper states that Activation Beacon “condenses LLM’s raw activations into more compact forms such that it can perceive a much longer context with a limited context window”. The method appears to balance memory and time efficiency during both training and inference.

- Upcoming Code for Activation Beacon on GitHub: @_stilic_ gave an update, saying that the code for Activation Beacon will be available on GitHub (linked below).
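To make "condensing activations" concrete, here is a toy Python sketch. It is not Activation Beacon's method: the paper learns the condensation through special beacon tokens inside the model, while this sketch just mean-pools each chunk of `ratio` activation vectors into one vector. The function name `condense` and the fixed pooling ratio are assumptions made for illustration.

```python
from typing import List

Vector = List[float]

def condense(activations: List[Vector], ratio: int) -> List[Vector]:
    """Mean-pool every `ratio` consecutive activation vectors into one.

    Toy stand-in for learned condensation (an assumption, not the paper's
    mechanism): n vectors become ceil(n / ratio) vectors, so a fixed-size
    window could hold roughly `ratio` times more context.
    """
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    condensed: List[Vector] = []
    for start in range(0, len(activations), ratio):
        chunk = activations[start:start + ratio]
        dim = len(chunk[0])
        # Average each hidden dimension across the chunk.
        condensed.append([sum(vec[d] for vec in chunk) / len(chunk) for d in range(dim)])
    return condensed

if __name__ == "__main__":
    seq = [[float(i), float(-i)] for i in range(8)]  # 8 activations, hidden size 2
    print(condense(seq, 4))  # [[1.5, -1.5], [5.5, -5.5]]
```

Eight activations shrink to two. A learned scheme such as the paper's chooses what to keep instead of averaging blindly.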

Links mentioned:

- Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon: The utilization of long contexts poses a big challenge for large language models due to their limited context window length. Although the context window can be extended through fine-tuning, it will re…

- FlagEmbedding/Long_LLM/activation_beacon at master · FlagOpen/FlagEmbedding: Dense Retrieval and Retrieval-augmented LLMs. Contribute to FlagOpen/FlagEmbedding development by creating an account on GitHub.

▷ #off-topic (95 messages🔥🔥):

- M2-equipped Apple Vision Pro, and the Rabbit product discussed: @nonameusr was excited about the M2 in the Apple Vision Pro, while @beowulfbr was skeptical about the Rabbit product’s cost and inference coverage. They also speculated that Apple might release a similar product this year.

- Weighing in on WEHEAD AI companion in 2024: Following a link shared by @teknium, several users reacted with humor to the WEHEAD AI companion. @everyoneisgross admired the low-poly aesthetic, and @youngphlo imagined carrying the AI around like a baby. The PCMag post is linked below.

- Chats on Humane layoffs ahead of their first device launching: @mister_poodle shared an article about layoffs at Humane ahead of the startup shipping its first device, a preordered $699 screenless, AI-powered pin. The article is linked below.

- Humor about Large Language Models (LLMs) and their use: @Error.PDF and @n8programs joked about how useful LLMs are for understanding and communicating in foreign languages. They also humorously speculated on the next advances in LLMs, such as Discord mods that automatically translate all on-screen text into the user’s native language.

Links mentioned:

- Tweet from PCMag (@PCMag): The WEHEAD AI companion is not the assistant we envisioned for 2024. #CES2024

- Mcmahon Crying He Was Special GIF - Mcmahon Crying He was special WWE - Discover & Share GIFs: Click to view the GIF

- Humane lays off 4 percent of employees before releasing its AI Pin: The cuts were described as cost-cutting measures.

▷ #interesting-links (39 messages🔥):

- Exploring Light Activation Beacon Training with MaybeLongLM Self-Extend: @kenakafrosty raised the idea of combining Light Activation Beacon Training with MaybeLongLM Self-Extend to potentially eliminate the context window problem.

- AI Agents Unraveling AI Systems: @burnydelic shared an article on a novel approach from MIT CSAIL researchers, who used AI models to run experiments on other AI systems and explain their behavior.

- Is Rabbit.tech the Next Big Thing?: @kevin_kevin_kevin_kevin_kevin_ke sparked a discussion on Rabbit.tech, a company offering standalone AI hardware. Some users (@georgejrjrjr and @teknium) were skeptical about needing a separate device when smartphone apps could offer similar functionality, while others (@gezegen) defended specialized hardware for AI companions.

- Criticisms and Defence of Model Interpolation: In the context of AI model development, @georgejrjrjr, @romaincosentino and @charlie0.o discussed the limitations and potential advantages of model interpolation. @romaincosentino argued that model interpolation lacks a theoretical foundation, while @charlie0.o viewed it as a form of regularization.

- Stumbled Upon a 2x7B MoE Model and WikiChat: @nonameusr shared two links. One was to a 2x7B MoE model based on the Intel-neural series v3. The other was a tweet about WikiChat, a tool claiming better factual accuracy than GPT-4. The tweet prompted @decruz to ask how WikiChat differs from systems grounded with RAG.
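A common way to do the model interpolation debated above is spherical linear interpolation (Slerp, which also shows up among the #general links). This Python sketch interpolates two flattened weight vectors. Treating a whole model as one flat list and falling back to linear interpolation for zero or (anti)parallel vectors are simplifying assumptions. Merge tools such as mergekit may not work this way.

```python
import math
from typing import List

def lerp(a: List[float], b: List[float], t: float) -> List[float]:
    """Plain linear interpolation, used as a fallback."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]

def slerp(a: List[float], b: List[float], t: float, eps: float = 1e-8) -> List[float]:
    """Spherical linear interpolation between flattened weight vectors a and b, t in [0, 1]."""
    denom = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if denom < eps:
        return lerp(a, b, t)  # a zero vector has no direction to rotate along
    # Angle between the two vectors; the clamp guards against rounding just outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b)) / denom))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if sin_omega < eps:
        return lerp(a, b, t)  # (anti)parallel vectors: Slerp is undefined, so fall back
    w_a = math.sin((1 - t) * omega) / sin_omega
    w_b = math.sin(t * omega) / sin_omega
    return [w_a * x + w_b * y for x, y in zip(a, b)]

if __name__ == "__main__":
    print(slerp([1.0, 0.0], [0.0, 1.0], 0.5))  # both entries ~0.7071, norm stays 1
    print(lerp([1.0, 0.0], [0.0, 1.0], 0.5))   # [0.5, 0.5], norm shrinks to ~0.7071
```

For unit-norm inputs that point in different directions, the Slerp midpoint stays on the unit circle. A plain average shrinks toward the origin, which is one reason people prefer Slerp for merges.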

Links mentioned:

- rabbit — home: r1: your pocket companion. Order now: $199, no subscription required.

- fblgit/UNAversal-2x7B-v1 · Hugging Face

- AI agents help explain other AI systems: FIND (function interpretation and description) is a new technique for evaluating automated interpretability methods. Developed at MIT, the system uses artificial intelligence to automate the explanati…

- Tweet from Owen Colegrove (@ocolegro): This result is so fascinating: WikiChat: Stopping LLM Hallucination - Achieves 97.9% factual accuracy in conversations with human users about recent topics, 55.0% better than GPT-4! Anyone interes…

▷ #announcements (1 messages):

- Nous Research Announces $5.2M Seed Financing Round: @teknium announced the close of the $5.2 million seed financing round. It was co-led by Distributed Global and OSS Capital, with participation from several angel investors, including Vipul (founder and CEO at Together AI), Yonatan Ben Shimon (founder at Matchbox DAO), Balaji, Thibaud, the founder at OpenRouter and OpenSea, Chris Prucha (founder at Notion), the founder and CEO at Glaive AI, and Gavin (founder and CEO at etched.ai).

- Burning Transformers Architecture Into Chips: The linked Etched page describes a plan to create the world’s most powerful servers for transformer inference by burning the transformer architecture into chips.

- Products Impossible with GPUs: The same page lists the projected capabilities of these servers, emphasising real-time voice agents, improved coding through tree search, and multicast speculative decoding.

- Room for Trillion Parameter Models: The servers are expected to run trillion-parameter models, with a fully open-source software stack, expandability to 100T-parameter models, beam search, and MCTS decoding.

- Open-Source Pursuits & Future Project, Nous-Forge: Stressing the importance of open-source research, @teknium announced that the funding will support continued research in LLM architecture, data synthesis, simulation, and agent engineering, as well as the development of Nous-Forge, planned for 2024. The team of developers and advisors mentioned includes <@153017054545444864>, <@387972437901312000>, <@265269014148808716>, and <@187418779028815872>.
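Beam search, one of the decoding strategies listed above, keeps the k most probable partial sequences at each step instead of committing to a single greedy choice. This Python sketch runs it over a hypothetical next-token table (`TOY_MODEL`) that stands in for a real language model. It does not reflect any vendor's implementation.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy "model": maps the last token to next-token probabilities.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.5, "a": 0.4, "</s>": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.9, "dog": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}

Hypothesis = Tuple[List[str], float]  # (tokens, total log-probability)

def beam_search(beam_width: int, max_len: int) -> List[Hypothesis]:
    """Return up to `beam_width` finished sequences, best first."""
    beams: List[Hypothesis] = [(["<s>"], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates: List[Hypothesis] = []
        for tokens, score in beams:
            for nxt, prob in TOY_MODEL[tokens[-1]].items():
                candidates.append((tokens + [nxt], score + math.log(prob)))
        # Keep only the `beam_width` highest-scoring candidates.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == "</s>":
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.sort(key=lambda c: c[1], reverse=True)
    return finished[:beam_width]

if __name__ == "__main__":
    for tokens, score in beam_search(beam_width=2, max_len=4):
        print(" ".join(tokens), round(math.exp(score), 2))
```

With `beam_width=2`, the search ranks `<s> a cat </s>` (0.4 × 0.9 = 0.36) above the greedy path `<s> the cat </s>` (0.5 × 0.6 = 0.3). Greedy decoding would have committed to "the" at the first step and missed the better sequence.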

Links mentioned:

Etched | The World’s First Transformer Supercomputer: Transformers etched into silicon. By burning the transformer architecture into our chips, we’re creating the world’s most powerful servers for transformer inference.

▷ #general (377 messages🔥🔥):

- Nous Team raises $5.2 million: The Nous team posted a tweet announcing its $5.2M seed round, and members and friends of the team offered congratulations. Source: @youngphlo

- Development of QLORA: @n8programs shared progress on QLoRA, a parameter-efficient method for fine-tuning quantized LLMs. He trained a QLoRA adapter on a single M3 Max and concluded that the result generally performed better than the base Mistral model. Source: @n8programs

- Researching Wearable AI mentor: @mg_1999 is working on a wearable AI mentor and asked the community which Nous Research model would work best. They shared a link to the product’s website: AISAMA

- Discussion on Finetuning Large Models: The community discussed the benefits and challenges of training and merging large versus small models. Users argued for different strategies, such as merging fine-tuned models with base models and using multiple adapters. Notable links shared include LM-Cocktail and CFG.

- Suggestion of Modal and Runpod platforms: @decruz discussed modal.com, a serverless platform used for inference, and offered introductions to people at the company for anyone in need of GPUs. @kenakafrosty mentioned RunPod as an alternative with similar capabilities.

- Use of custom architectures: @mihai4256 asked how to share custom architectures inherited from LlamaForCausalLM. He was advised to follow the approach Qwen uses: ship a custom modeling file and let users import it with trust_remote_code=True.

- Interest in WikiChat Dataset: @emrgnt_cmplxty expressed interest in the dataset used by the WikiChat team, saying their own tests with it produced promising results. They raised the idea of reproducing the dataset to fine-tune OpenHermes-2.5-Mistral-7B. Source: stanford-oval/WikiChat

- Spontaneous Spanish Speaking Session: Various users had a lighthearted conversation in Spanish. It carried little informative value but ended in good cheer.
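For readers new to QLoRA: it fine-tunes a frozen, 4-bit-quantized base model through small low-rank adapter matrices (LoRA). This plain-Python sketch covers only the LoRA part, computing y = W x + (alpha / r) · B(A x). Quantization and training are omitted, and the matrix sizes and values are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """Frozen weight W (d_out x d_in) plus a low-rank update B @ A scaled by alpha / r.

    A is r x d_in and B is d_out x r; in LoRA only A and B are trained.
    """
    r = len(A)
    base = matvec(W, x)              # frozen path
    delta = matvec(B, matvec(A, x))  # low-rank adapter path
    return [b + (alpha / r) * d for b, d in zip(base, delta)]

if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # 2x2 frozen weight (identity)
    A = [[1.0, 1.0]]              # rank r = 1
    B = [[0.5], [0.0]]
    print(lora_forward(W, A, B, [2.0, 3.0], alpha=2.0))  # [7.0, 3.0]
```

LoRA usually initializes B to zeros, so the adapter starts as a no-op. The savings come from the rank. For a 4096 × 4096 layer with r = 8, the adapter has 2 × 8 × 4096 = 65,536 trainable parameters, versus 16,777,216 in W.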

Links mentioned:

- Tweet from anton (@abacaj): Why am I recommending bigger models? I mean look at this. Even Qwen-1.8B-chat fails at properly associating turns… smaller models will not cut it

- Google Colaboratory

- LM-Cocktail: LM_Cocktail

- Slerp - Wikipedia

- Evangelion Laugh GIF - Evangelion Laugh Smile - Discover & Share GIFs: Click to view the GIF

- Sama AI App: What if humans had infinite memory? Our new AI wearable is created to give you unlimited memory.

- Modal: Modal helps people run code in the cloud. We think it’s the easiest way for developers to get access to containerized, serverless compute without the hassle of managing their own infrastructure.

- Stay on topic with Classifier-Free Guidance: Classifier-Free Guidance (CFG) has recently emerged in text-to-image generation as a lightweight technique to encourage prompt-adherence in generations. In this work, we demonstrate that CFG can be us…

- Oh God Kyle Broflovski GIF - Oh God Kyle Broflovski Stan Marsh - Discover & Share GIFs: Click to view the GIF

- Issues · stanford-oval/WikiChat: WikiChat stops the hallucination of large language models by retrieving data from Wikipedia.

- Tweet from Nous Research (@NousResearch): Nous Research is excited to announce the closing of our $5.2 million seed financing round. We’re proud to work with passionate, high-integrity partners that made this round possible, including c…

- Tweet from rabbit inc. (@rabbit_hmi): Introducing r1. Watch the keynote. Order now: http://rabbit.tech #CES2024

- GitHub - cg123/mergekit: Tools for merging pretrained large language models.

- Example reading directly from gguf file by jbochi · Pull Request #222 · ml-explore/mlx-examples: This loads all weights, config, and vocab directly from a GGUF file using ml-explore/mlx#350 Example run: $ python llama.py models/tiny_llama/model.gguf [INFO] Loading model from models/tiny_llama/…

- GGUF support by jbochi · Pull Request #350 · ml-explore/mlx: Proposed changes This adds GGUF support using the excellent gguflib from @antirez. Would there be interest in this? GGUF is currently very popular for local inference, and there are tons of models …
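The Classifier-Free Guidance paper linked above applies CFG to language models. It combines next-token scores from a prompted (conditional) pass and an unprompted (unconditional) pass as uncond + gamma · (cond - uncond). This Python sketch shows only that combination step on made-up logits. The paper's exact variants (for example, what counts as the unconditional prompt) may differ.

```python
import math
from typing import List

def softmax(logits: List[float]) -> List[float]:
    """Convert logits to probabilities (max-subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cfg_logits(cond: List[float], uncond: List[float], gamma: float) -> List[float]:
    """Guided scores: uncond + gamma * (cond - uncond); gamma = 1 recovers cond."""
    return [u + gamma * (c - u) for c, u in zip(cond, uncond)]

if __name__ == "__main__":
    cond = [2.0, 1.0, 0.0]    # hypothetical logits with the prompt
    uncond = [1.0, 1.0, 1.0]  # hypothetical logits without it
    guided = cfg_logits(cond, uncond, gamma=1.5)
    print(guided)  # [2.5, 1.0, -0.5]
    print(softmax(cond)[0] < softmax(guided)[0])  # True: prompt-favored token gains mass
```

With gamma above 1, tokens that the prompt makes more likely get pushed further up. That is the "stay on topic" effect the paper describes.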

▷ #ask-about-llms (44 messages🔥):

- Exploration of Replicating Phi Models: @gson_arlo asked whether any open models aim to replicate the Phi series. In response, @georgejrjrjr pointed out that Owen from sci-phi has focused on synthetic data more than anyone else they know. They also mentioned related projects such as refuel.ai and Ben Anderson’s Galactic.

- Addressing VRAM Issues with Mixtral and Ollama: @colby_04841 asked for advice on handling VRAM limits while running Mixtral 8x7b with Ollama on a system with four RTX 3090 GPUs.

- RAG vs Fine-Tuning for Proprietary Knowledge Base: @bigdatamike asked whether to use RAG, fine-tuning, or both to build a language model tailored to a company’s proprietary knowledge base. Opinions were mixed: @colby_04841 favored RAG, while @georgejrjrjr suggested transforming the data in the retrieval store to better match the desired output format.

- Usefulness of Synthesizer Tool in Data Creation: @georgejrjrjr recommended Synthesizer, SciPhi-AI’s multi-purpose LLM framework for RAG and data creation. @everyoneisgross confirmed they had added it to their project list.

- Best Way to Create Synthetic Data Sets: @gezegen asked about generating synthetic datasets. @emrgnt_cmplxty pointed to open-source models as a scalable option and stressed the need to maintain accuracy.
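As a rough illustration of the retrieval half of the RAG option discussed above, this Python sketch ranks documents from a hypothetical proprietary knowledge base by bag-of-words cosine similarity. It then pastes the best matches into a prompt. Real systems typically use learned embeddings and a vector store. The scoring function, prompt template, and sample documents here are all assumptions.

```python
import math
import re
from collections import Counter
from typing import List

def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]

def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the question in retrieved context (hypothetical template)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    kb = [
        "Expense reports are due on the fifth business day of each month.",
        "The VPN requires two-factor authentication for all employees.",
        "Office plants are watered on Fridays.",
    ]
    print(build_prompt("When are expense reports due?", kb, k=1))
```

Fine-tuning changes how the model answers, while retrieval changes what facts it sees at answer time. That split is the usual argument for trying RAG first on a knowledge base that changes often.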

Links mentioned:

GitHub - SciPhi-AI/synthesizer: A multi-purpose LLM framework for RAG and data creation.

▷ #project-obsidian (4 messages):

- Obsidian script sharing request: @qnguyen3 mentioned they had just run Obsidian for their work. In response, @vic49. asked @qnguyen3 to share the script, and @thewindmom also expressed interest in it.

OpenAI Discord Summary

- The Wait Game for GPT-4 Turbo: @_treefolk_ said they were waiting for the full release of GPT-4 Turbo, for cheaper usage and a higher token limit, and pointed out how vague “early January” is as a promised release timeframe.

- The AI vs. Coders Debate: @you.wish and @【penultimate】 had a spirited discussion about what future GPT versions mean for coders. @【penultimate】 envisioned a future where “Pretty much everybody on earth will be the world’s best coder with the next versions of GPT.” @you.wish countered that current AI models can only perform very elementary tasks.

- When Discord Rules Stir Discussions: The guild had a lengthy debate over rule interpretation after @you.wish asked for upvotes on a Reddit post. It centered on the server’s Rule 7, which prohibits “self-promotion, soliciting, or advertising”.

- LimeWire Takes AI to Music: @shartok shared a musical piece composed with LimeWire AI Studio, sparking a discussion on AI-generated music.

- Mixed Reviews on Midjourney’s Latest Version: @dino.oats, @darthgustav. and @satanhashtag shared their experiences with and impressions of the newest version of Midjourney (MJ), discussing its move away from Discord and its new privacy features. However, @you.wish expressed dissatisfaction with the version.

- Brand Guidelines Puzzle: In the GPT-4 discussions, @mrbr2023 asked why a section of the brand guidelines documentation for OpenAI GPTs, received in an email, was blacked out. They also asked why they could not share a screenshot or link to the document.

- Roadblocks in GPT Publishing: @mrbr2023 could not select ‘Publish to EVERYONE’ for their GPTs. The fix was to check both the ‘Name’ and ‘Domain’ boxes in the builder profile settings, which makes the option available.

- Exploring GPT Personalization: @winsomelosesome and @darthgustav. shared their experiences with the new GPT memory feature (personalization), which lets GPT learn from their chats.

- ChatGPT Romantically Challenged: In the prompt engineering channel, @rchap92 pointed out that ChatGPT struggles to write even a simple romantic scene without triggering a guideline warning. @rjkmelb confirmed this, noting that ChatGPT is designed to be “G-rated”.

- ChatGPT’s Conservative Guidelines; Possible Workaround?: For content that might violate the guidelines, @exhort_one suggested a workaround: have ChatGPT write a censored version of the content and let the user fill in the blanks.

- AI’s Potential Across Different Fields: @shoga4605 reflected on the broad potential of AI and language models in fields such as linguistics, ecology, environments, and more. They also speculated about applying AI to agriculture, for example to estimate how much food could theoretically be grown on lawn space.

OpenAI Channel Summaries

▷ #ai-discussions (160 messages🔥🔥):

- Impatience for GPT-4 Turbo’s official release: @_treefolk_ was eager for GPT-4 Turbo to leave preview for cheaper usage and a higher token limit, and asked what the promised “early January” release actually means.

- Discussion on the future of coding with GPT: @you.wish and @【penultimate】 had a lively debate about whether future GPT versions could replace coders. @【penultimate】 believes that “Pretty much everybody on earth will be the world’s best coder with the next versions of GPT.” @you.wish argued that current models can only handle very basic tasks.

- Self-promotion content prompts Discord rule debate: @you.wish’s request for upvotes on a Reddit post started an extensive discussion about applying the server’s Rule 7, which prohibits “self-promotion, soliciting, or advertising”.

- AI generated music shared: @shartok shared a link to an AI-generated music piece created with LimeWire AI Studio.

- Chat about latest Midjourney (MJ) version: @dino.oats, @darthgustav. and @satanhashtag described their experiences with and opinions on the newest version of MJ, including its move away from Discord and its new privacy features. @you.wish expressed dissatisfaction with the version.

Links mentioned:

- Using a ChatGPT made game to fool a Vet Gamedev: A lot of people were saying Games are safe from AI, so I asked AI to make a game that can fool the legendary creator of God Of War! 😜Thanks to @DavidJaffeGa…

- Radiant Warrior - LimeWire: “Check out Radiant Warrior from shartok on LimeWire”

▷ #gpt-4-discussions (154 messages🔥🔥):

- Confusion Over Brand Guidelines: @mrbr2023 was confused about why a section of the brand guidelines documentation for OpenAI GPTs, sent in an email, had been blacked out. They were also puzzled that they could not share a screenshot or link to the document in the group.

- Voice Quality for Custom GPTs: @vantagesp asked why the voice for custom GPTs was subpar, but no one responded.

- Struggles with Publishing GPTs: @mrbr2023 was frustrated at not being able to select ‘Publish to EVERYONE’ for their GPTs. They eventually figured out that the ‘EVERYONE’ option becomes available only after ticking both the ‘Name’ and ‘Domain’ boxes in the builder profile settings.

- Users Encounter Technical Issues with GPTs: Several users reported that their GPTs had disappeared and that some website pages were inaccessible, attributing the problems to a new OpenAI update.

- Explored Personalization Feature of GPT: @winsomelosesome and @darthgustav. explored the new GPT memory feature (personalization) in Settings, which allows GPT to learn from your chats. However, @darthgustav. noted that it seemed to be removed shortly after they discovered it.

- Appeal for GPT Feedback: @faazdataai_71669 asked for feedback on their GPT, ‘Resume Tailor’, and shared a link to it.

Links mentioned:

Brand guidelines: Language and assets for using the OpenAI brand in your marketing and communications.

▷ #prompt-engineering (6 messages):

- ChatGPT struggles with romantic scenarios: @rchap92 raises a concern that ChatGPT struggles to outline even a basic romantic scene without getting the ‘may violate guidelines’ highlight. @rjkmelb confirms that ChatGPT is indeed designed to be “G-rated”.

- Workarounds for ChatGPT’s conservative approach: @exhort_one suggests asking ChatGPT to censor any part that might violate the guidelines, so that the user can fill in the blanks.

- Prompt-engineering guide shared: @scargia shares a link to the Prompt Engineering guide on OpenAI’s website.

- Inspiring potential use cases for AI: @shoga4605 discusses potential use cases for AI and language models in understanding and modeling ecology, environments, habitats, and overall biodiversity. They also consider applications of AI in agriculture, such as determining how much food could theoretically be produced from lawn space.

- Warm welcome to a new user: @beanz_and_rice welcomes @shoga4605 to the community, appreciating their enthusiasm and ideas.

▷ #api-discussions (6 messages):

- Censoring needed content: @rchap92 asked whether ChatGPT really cannot create a romantic scene beyond a kiss without getting a violation warning. @rjkmelb confirmed that ChatGPT is designed to be G-rated.

- Working around ‘violation’ highlights: @exhort_one suggested asking ChatGPT to censor any part that may violate the guidelines, so that users can fill in the blanks themselves.

- Prompt Engineering Guide: @scargia shared a link to OpenAI’s guide on prompt engineering.

- Enthusiasm for AI potential: @shoga4605 expressed excitement about the potential of AI and language models and considered their application in fields such as linguistics, ecology, environments, and more.

- Welcome to the discussion: @beanz_and_rice greeted and welcomed @shoga4605 to the chat.

LM Studio Discord Summary

- Ubuntu Server and LMStudio Compatibility Issue: @m.t.m inquired about running LMStudio on an Ubuntu 22.04 server without an X server. @heyitsyorkie replied that LMStudio does not support a headless or CLI option and recommended llama.cpp for such needs.

- The GPU Debate, RTX 4070 vs. RTX 4090: @b0otable, @heyitsyorkie, @fabguy, @senecalouck, and @rugg0064 discussed whether to buy an RTX 4070 or an RTX 4090, focusing on performance, VRAM, and price.

- Use of LM Studio for LM-as-a-service Queried: @enavarro_ asked about using only the LM Studio backend to set up an LM-as-a-service. @heyitsyorkie said that this feature is not currently offered and pointed to llama.cpp as a potential solution.

- Forward-Looking Talk on ROCm Support & ML’s Future: ROCm support and the future landscape of machine learning sparked a conversation. @senecalouck pointed out that ROCm support is already available in ollama and hoped it would soon come to LM Studio. The focus then shifted to how ML will be deployed in the future and to emerging players like TinyBox.

- Finding a 7B-13B Model for Tool Selection and Chat: @_anarche_ described their struggle to find a locally usable 7B-13B model that can handle both tool selection (function calling) and chat, possibly as a franken/merged model. Their goal is to move away from gpt-3.5-turbo.

- Stanford DSPy Highlighted as a Potential Solution: @nitex_dkr highlighted Stanford DSPy, a framework for programming (not prompting) foundation models, as a potential solution to @_anarche_’s challenge.

- Linux Loading Issue and Misleading Version Number Flagged: @moko081jdjfjddj reported that the model won’t load on Linux and noticed a discrepancy in the Linux version number on the website. @heyitsyorkie and @fabguy explained the version issue and directed the user to the dedicated Linux Beta channel for further support.

- Unsupported Platform Issue Encountered: @keryline received an error message saying their platform is not supported by LM Studio because their processor does not support AVX2. @dagbs suggested trying the AVX beta.

- Mac vs. PC for Running Large Models Provokes Discussion: @scampbell70 started a conversation about the hardware needed to run larger models like Mixtral 8x7B, Falcon 180B, or Goliath 120B efficiently. Some members praised Macs (particularly the Mac Studio) for their performance, while others raised concerns about their lack of upgradability.

- GPUs with Memory Slots Unavailable: @doderlein asked where to buy a GPU with memory slots, which @ptable said isn’t possible. @heyitsyorkie pointed to an ASUS card that pairs a GPU with an M.2 NVMe SSD, a hybrid storage-graphics card.

- Hardware Usage in LM Studio Clarified: @besiansherifaj asked whether the CPU is needed in LM Studio when using an RTX 4090. @fabguy clarified that the CPU is always used, even when the full model is on the GPU.

- Autogen Studio and LMStudio Usage Questioned: In the autogen channel, @thelefthandofurza asked whether anyone had used AutoGen Studio with LM Studio. The discussion did not go further.

LM Studio Channel Summaries

▷ #💬-general (71 messages🔥🔥):

- LmStudio Install Issues on Ubuntu Server: @m.t.m. asked whether it’s possible to run LMStudio on an Ubuntu 22.04 server without an X server. @heyitsyorkie responded that LMStudio currently does not support a headless or CLI option and recommended llama.cpp for such needs.

- Nicknames and Server Rules: @sexisbadtothebone asked whether their nickname breaks the server rules on SFW content. @heyitsyorkie advised editing nicknames to comply with the rules and keep the environment work-appropriate.

- The RTX 4070 vs. RTX 4090 Debate: @b0otable sought advice on whether to buy an RTX 4070 or an RTX 4090. The discussion involved @heyitsyorkie, @fabguy, @senecalouck, and @rugg0064, focusing on performance, VRAM, and price.

- Using LM Studio for LM-as-a-service: @enavarro_ asked whether the LM Studio backend alone could be used to set up an LM-as-a-service. @heyitsyorkie said that this feature is not available and pointed to llama.cpp as a potential solution.

- Discussing ROCm Support & Future of ML: The conversation turned to ROCm support and the future landscape of machine learning. @senecalouck mentioned that ROCm support is available in ollama, with hopes of seeing it in LM Studio soon. The discussion then moved on to how ML technology will be deployed in the future and to new players like TinyBox.

Links mentioned:

- TheBloke/LLaMA-Pro-8B-Instruct-GGUF · Hugging Face

- ROCm support by 65a · Pull Request #814 · jmorganca/ollama: #667 got closed during a bad rebase attempt. This should be just about the minimum I can come up with to use build tags to switch between ROCm and CUDA, as well as docs for how to build it. The exi…

▷ #🤖-models-discussion-chat (18 messages🔥):

- Seeking 7B-13B Model for Tool Selection and Chat: @_anarche_ described their struggle to find a local 7B-13B model capable of both tool selection (function calling) and chat, potentially in a franken/merged form. They aim to move away from gpt-3.5-turbo.

- Dolphin Model Serves as Suitable All-Around: @dagbs recommended the Dolphin model as a good generalist for coding, functions, tools, etc. They noted mixed results with some tools that rely on function calling, such as crewai and autogen.

- Langchain Compatibility Considerations: @_anarche_ explained that they intend to integrate the new model into Langchain and have already adjusted their bot to use the LM Studio API.

- Uncensored Model Concerns on Discord: @dagbs cautioned that using an uncensored model in a Discord environment could result in a ban, underscoring the need for careful model selection.

- Stanford DSPy Shared as Possible Solution: @nitex_dkr flagged Stanford DSPy, a framework for programming (not prompting) foundation models, as a promising solution to @_anarche_’s challenge.
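For the tool-selection-plus-chat use case above, one common pattern is to have the local model reply either with a JSON tool call or with plain text, then route on that. This Python sketch assumes a hypothetical reply format `{"tool": ..., "arguments": {...}}` and a hypothetical `get_weather` tool. It is not the API of LM Studio, LangChain, or DSPy.

```python
import json
from typing import Any, Callable, Dict

def get_weather(city: str) -> str:
    """Hypothetical tool used only for this illustration."""
    return f"Sunny in {city}"

# Registry of tools the model is allowed to call (assumed names).
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}

def route(model_reply: str) -> str:
    """Run a tool if the reply is a JSON tool call; otherwise treat it as chat."""
    try:
        call: Any = json.loads(model_reply)
    except json.JSONDecodeError:
        return model_reply  # plain chat answer
    if not isinstance(call, dict) or call.get("tool") not in TOOLS:
        return model_reply  # valid JSON, but not a known tool call
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return model_reply  # malformed arguments: fall back to chat
    return TOOLS[call["tool"]](**args)

if __name__ == "__main__":
    print(route('{"tool": "get_weather", "arguments": {"city": "Oslo"}}'))  # Sunny in Oslo
    print(route("Hi! How can I help?"))  # Hi! How can I help?
```

The bot stays usable when the model emits malformed JSON or chats instead of calling a tool, because any reply that is not a valid tool call falls back to being returned as-is.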

Links mentioned:

GitHub - stanfordnlp/dspy: Stanford DSPy: The framework for programming—not prompting—foundation models

▷ #🧠-feedback (10 messages🔥):

- Linux Loading Issue: @moko081jdjfjddj reported that the model doesn’t load on Linux. @heyitsyorkie and @fabguy directed the user to Channels and Roles to select the Linux Beta role and post the issue in that channel. @moko081jdjfjddj also noticed a discrepancy in the Linux version number on the website. @fabguy explained that Beta version 0.2.10 had stability issues, so the site had not been updated.

- Unsupported Platform Issue: @keryline got an error from LM Studio on their Windows machine stating that their platform is not supported because their processor does not support AVX2 instructions. To resolve this, @dagbs suggested trying the AVX beta.

- New Beta Version Request:

@logandarkrequested a new beta version that includes a specific commit from the llama.cpp repository.

▷ #🎛-hardware-discussion (29 messages🔥):

- Mac vs. PC for Running Large Models: @scampbell70 started a discussion on the hardware needed to run larger models such as Mistral 8x7b, Falcon 180b, or Goliath 120 with the least quality loss and the best performance. @telemaq and @heyitsyorkie suggested a MacBook Pro or a Mac Studio, while @pydus noted the cost-effectiveness of a Mac Studio with 192GB of unified memory at about $7K. However, @scampbell70 expressed concerns about Macs' lack of upgradability (source).

- VRAM Allocation on Apple Machines: @heyitsyorkie shared a Reddit thread explaining how the amount of memory allocated as VRAM can be controlled at runtime with the command `sudo sysctl iogpu.wired_limit_mb=12345` (source).

- Purchasing GPUs with Memory Slots: @doderlein asked where to buy a GPU with memory slots, and @ptable answered that this isn't possible. @heyitsyorkie mentioned an ASUS card that pairs the GPU with an M.2 NVMe SSD, creating a hybrid storage/graphics card (source).

- Mac Performance with Goliath 120b Q8: @telemaq shared a Reddit post from a user who ran Goliath 120b Q8 on a Mac Studio M2 Ultra with 192GB of memory at about 7 tok/s, showing that a Mac can handle larger models (source).

- Hardware Usage in LM Studio: @besiansherifaj asked whether the CPU is needed in LM Studio when using an RTX 4090 GPU. @fabguy clarified that the CPU is always utilized, even when the full model is on the GPU.
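A rough way to see why 192GB of unified memory matters: weight memory is about parameters × bits per weight ÷ 8. This sketch assumes exactly 8 bits per weight for a Q8 quantization (real Q8 formats carry some extra scale data) and ignores the KV cache and runtime overhead, so treat the results as lower bounds. The parameter counts are nominal values taken from the model names.

```python
def weight_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GiB (2**30 bytes)."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


# Nominal parameter counts taken from the model names (assumption).
models = {"Goliath 120b": 120e9, "Falcon 180b": 180e9}

for name, n in models.items():
    for bits in (16, 8, 4):
        print(f"{name} @ {bits}-bit: ~{weight_gib(n, bits):.0f} GiB")
```

By this estimate, Goliath 120b at 8 bits needs roughly 112 GiB for weights alone, which fits in 192GB, while Falcon 180b at 8 bits (about 168 GiB) would leave little headroom for the KV cache.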

Links mentioned:

- Reddit - Dive into anything

- Reddit - Dive into anything

- ASUS Announces Dual GeForce RTX 4060 Ti SSD Graphics Card

▷ #autogen (1 messages):

thelefthandofurza: Has anyone used autogen studio with lmstudio?

Eleuther Discord Summary

- Improving Model Performance without Additional Data, Training, or Scale: A question from @sehaj.dxstiny about improving performance without extra resources turned up some interesting resources, courtesy of @ad8e and @vatsala2290, including the results of the HITY workshop poll from NeurIPS 2022.

- Taking AI Development Mobile: @_fleetwood steered @pawngrubber toward mlc-llm for starting machine learning development on mobile devices. This open-source project develops, optimizes, and deploys AI models natively on devices.

- Unpacking Llama-2-70B's Benchmark Conundrum: @tirmizi7715 was confused about why Llama-2-70B performs worse on MT-Bench while doing well on other benchmarks.

- Any Language for Mistral: A discussion started by @maxmatical led @thatspysaspy and @stellaathena to clarify that modern tokenizers, which can handle all Unicode characters, allow language transfer.

- Unravelling Huggingface Model Structures: @sk5544 received a coding approach from @thatspysaspy for extracting the PyTorch model definition code of a model loaded from Huggingface.

- Kaggle LLM Contest Piques Interest: @grimsqueaker highlighted an ongoing Kaggle LLM contest of potential interest to the community.

- Mechanism Behind MLM Loss Calculation: A discussion started by @jks_pl about why MLM loss is only computed on masked/corrupted tokens drew explanations from @bshlgrs and @stellaathena.

- AI Behavior Explained through Evaluation: @burnydelic shared an MIT News article about AI models that run experiments on other AI systems to explain their behavior.

- muP for Simplified Hyperparameter Tuning: @ad8e, @thatspysaspy, @ricklius, and @cubic27 shared thoughts on muP's ability to simplify hyperparameter tuning across scales, while noting it is not a magic solution.

- Twitter Data Limited in Datasets: @stellaathena clarified to @rybchuk that the datasets under discussion are unlikely to contain significant amounts of Twitter data.

- The Truth Behind Mixtral Routing Analysis: @tastybucketofrice shared a tweet reporting that a Mixtral routing analysis found experts did not specialize to specific domains, countering a widespread misconception; @stellaathena and @norabelrose discussed it further.

- Gaining Insight with GPT/LLM Visualization Tool: @brandon_xyz announced a new tool that visualizes a GPT/LLM's "thinking" process, citing a tweet, and welcomed private requests for access.

- Understanding Mechanistic Interpretability and BIMT: @g_mine pointed to a paper on the role of Brain-Inspired Modular Training (BIMT) in improving neural network interpretability.

- Pythia Data Preparation Standard: @joshlk's questions about Pythia data prep were answered by @pietrolesci, who clarified that it follows a standard pre-training process, even if information about pre-training data prep is sparse online.

- EOD Token Issue in Pythia-Deduped Dataset: @pietrolesci noted that the Pythia-deduped dataset lacked EOD tokens; @hailey_schoelkopf suggested possible causes, including omission of the `--append-eod` option during tokenization.

- EOD Tokens & Packing for Pythia Models: @pietrolesci and @hailey_schoelkopf discussed whether the missing EOD tokens would change the "packed" dataset that the Pythia models saw during training.

- Masking Role in Document Attention: @joshlk asked what the masks are for, and @hailey_schoelkopf clarified that they are not used to prevent cross-attention between documents.
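The exact approach @thatspysaspy shared isn't recorded here. One common way to see the PyTorch definition behind a loaded model is Python's standard `inspect` module, since a Transformers model is an instance of an ordinary Python class defined in an installed `.py` file. A minimal sketch; the helper names `model_source` and `model_source_file` are hypothetical.

```python
import inspect


def model_source(model: object) -> str:
    """Return the source code of the class that defines `model`."""
    # inspect.getsource needs the class to live in a .py file on disk,
    # which holds for classes from installed libraries such as transformers.
    return inspect.getsource(type(model))


def model_source_file(model: object) -> str:
    """Return the path of the file that defines the model's class."""
    return inspect.getfile(type(model))
```

With Transformers installed, loading `AutoModelForCausalLM.from_pretrained("gpt2")` and passing the result to `model_source` would print the `GPT2LMHeadModel` class, and `model_source_file` points to the modeling file to read for helper modules; `print(model)` additionally shows the nested module structure.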

Eleuther Channel Summaries

▷ #general (61 messages🔥🔥):

- Optimization Options for Model Performance: @sehaj.dxstiny asked how to improve a model's performance without additional data, training, or scale. Recommended resources included a machine learning workshop poll shared by @ad8e and work by the Amitava Das group at USC shared by @vatsala2290.

- Exploring ML Development on Mobile: @pawngrubber was interested in starting machine learning development (specifically, inference) on mobile devices. @_fleetwood suggested mlc-llm as a starting point, a tool for developing, optimizing, and deploying AI models natively on devices.

- Understanding Llama-2-70B's MT-Bench Performance: @tirmizi7715 asked why Llama-2-70B performed worse on MT-Bench than Mixtral and GPT-3.5 while performing comparably on other benchmarks.

- Japanese Pretraining on Mistral: In a discussion started by @maxmatical about StableLM's Japanese pretraining on top of the English language model Mistral, @thatspysaspy and @stellaathena clarified that modern tokenizers can handle all Unicode characters, which allows for language transfer.

- Understanding Huggingface Model Structure: @sk5544 asked how to obtain the PyTorch model definition code of a model loaded from Huggingface, and @thatspysaspy shared a coding approach for doing so.
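To see why a tokenizer built mostly on English text can still represent Japanese, consider byte-level fallback: every Unicode string encodes to UTF-8 bytes, so a vocabulary that includes all 256 byte values can represent any text, just less efficiently for scripts it rarely saw. This toy tokenizer shows only that fallback idea; it is not StableLM's or Mistral's actual tokenizer (Llama-style SentencePiece tokenizers, including Mistral's, reserve byte-fallback tokens for this purpose). The vocabulary layout below is an assumption.

```python
def byte_fallback_tokens(text: str, vocab: dict[str, int]) -> list[int]:
    """Toy tokenizer: known characters map to vocab ids, others to byte ids.

    Byte tokens occupy ids 0-255, so any Unicode text can be encoded.
    Known-character ids are assumed to start at 256.
    """
    ids: list[int] = []
    for ch in text:
        if ch in vocab:
            ids.append(vocab[ch])
        else:
            # Unknown character: emit one token per UTF-8 byte.
            ids.extend(ch.encode("utf-8"))
    return ids


def decode(ids: list[int], vocab: dict[str, int]) -> str:
    """Invert byte_fallback_tokens by rebuilding the UTF-8 byte stream."""
    inverse = {i: ch for ch, i in vocab.items()}
    out = bytearray()
    for i in ids:
        if i < 256:
            out.append(i)
        else:
            out.extend(inverse[i].encode("utf-8"))
    return out.decode("utf-8")


english_vocab = {ch: 256 + n for n, ch in enumerate("abcdefghijklmnopqrstuvwxyz ")}
ids = byte_fallback_tokens("hi 日本", english_vocab)
print(ids)
print(decode(ids, english_vocab))
```

Each kanji here costs three byte tokens instead of one, which is why continued pretraining (and often vocabulary extension) helps a model become efficient in a new language.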

Links mentioned:

- Counter Turing Test CT^2: AI-Generated Text Detection is Not as Easy as You May Think — Introducing AI Detectability Index: With the rise of prolific ChatGPT, the risk and consequences of AI-generated text has increased alarmingly. To address the inevitable question of ownership attribution for AI-generated artifacts, the …

- HITYWorkshopPoll/PollResults.pdf at main · fsschneider/HITYWorkshopPoll: Results of the poll performed for the HITY workshop at NeurIPS 2022.

- GitHub - mlc-ai/mlc-llm: Enable everyone to develop, optimize and deploy AI models natively on everyone's devices.

▷ #research (25 messages🔥):

- Kaggle LLM Contest: @grimsqueaker mentioned an ongoing Kaggle LLM contest that might interest the research community.

- Discussion on Masked Language Modeling (MLM) Loss Computation: @jks_pl asked why MLM loss is calculated only on masked/corrupted tokens. @bshlgrs and @stellaathena explained that predicting an original, unmasked token is an easy task for the model, so including those positions adds little useful information for learning.

- Novel Method of Explaining AI Behavior: @burnydelic shared an MIT News article about researchers at MIT's CSAIL who developed AI models that run experiments on other AI systems to explain their behavior.

- muP: A Boon for Hyperparameter Tuning: @ad8e shared his key takeaways about muP, emphasizing that it simplifies hyperparameter tuning across model scales. He also noted that muP is not a magic solution and may run into issues with certain setups, such as tanh activations. @thatspysaspy, @ricklius, and @cubic27 agreed that muP's main benefit is enabling hyperparameter transfer across scales.

- Absence of Twitter Data in Certain Datasets: In response to @rybchuk's question about extracting Twitter data from certain datasets, @stellaathena replied that none of the datasets under discussion are likely to contain a significant amount of Twitter data.
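The masked-only loss fits in a few lines. Assuming the per-position log-probabilities of the original tokens are already computed, the loss averages negative log-likelihood over masked positions only; unmasked positions are skipped, much as PyTorch's `CrossEntropyLoss` skips targets equal to its `ignore_index` (Hugging Face's MLM data collator marks unmasked labels with -100 for this). Function and argument names are illustrative.

```python
import math


def mlm_loss(target_log_probs: list[float], is_masked: list[bool]) -> float:
    """Mean negative log-likelihood over masked positions only.

    target_log_probs[i] is the model's log-probability of the original token
    at position i; positions where is_masked[i] is False contribute nothing.
    """
    if len(target_log_probs) != len(is_masked):
        raise ValueError("inputs must have the same length")
    losses = [-lp for lp, m in zip(target_log_probs, is_masked) if m]
    if not losses:
        raise ValueError("no masked positions")
    return sum(losses) / len(losses)


# Toy values: unmasked positions are near-certain because the model sees
# those tokens in its input, so they would mostly dilute the signal.
log_probs = [math.log(0.99), math.log(0.25), math.log(0.99), math.log(0.5)]
masked = [False, True, False, True]
print(round(mlm_loss(log_probs, masked), 4))
```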

Links mentioned:

AI agents help explain other AI systems: FIND (function interpretation and description) is a new technique for evaluating automated interpretability methods. Developed at MIT, the system uses artificial intelligence to automate the explanati…

▷ #interpretability-general (8 messages🔥):

- Mixtral routing analysis finds lack of specialization: @tastybucketofrice shared a tweet from @intrstllrninja stating that a Mixtral routing analysis showed experts did not specialize to specific domains. @stellaathena expressed puzzlement at how widespread the opposite misunderstanding had been.

- Previous findings align with Mixtral analysis: @norabelrose commented that another analysis had shown the same thing about a year earlier, so the finding isn't entirely new.

- Trigram frequencies computed on Pythia-deduped training set: @norabelrose also shared a link to a file of trigram frequencies computed on 11.4% of the Pythia-deduped training set.

- Innovative tool for GPT/LLM visualization: @brandon_xyz mentioned a new tool he created that visualizes the thinking and understanding processes of a GPT/LLM, shared his tweet as an example, and welcomed private requests for access.

- Mechanistic Interpretability and BIMT: @g_mine pointed to a paper on the mechanistic interpretability of large language models and the role of Brain-Inspired Modular Training (BIMT) in making neural networks more interpretable.
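Trigram frequencies like those @norabelrose shared are, at heart, counts of consecutive token triples. A minimal sketch over lists of token ids using `collections.Counter`; it is not the script behind `trigrams.pkl.zst`, it assumes trigrams should not cross document boundaries, and a real run over a large slice of the Pile would need streaming and far more compact storage.

```python
from collections import Counter
from typing import Iterable, Sequence


def count_trigrams(docs: Iterable[Sequence[int]]) -> Counter:
    """Count (t0, t1, t2) token triples within each document.

    Trigrams never span document boundaries (a design assumption).
    """
    counts: Counter = Counter()
    for tokens in docs:
        counts.update(zip(tokens, tokens[1:], tokens[2:]))
    return counts


def trigram_prob(counts: Counter, t0: int, t1: int, t2: int) -> float:
    """Estimate P(t2 | t0, t1) from raw counts, with no smoothing."""
    context_total = sum(c for (a, b, _), c in counts.items() if (a, b) == (t0, t1))
    return counts[(t0, t1, t2)] / context_total if context_total else 0.0
```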

Links mentioned:

- Tweet from interstellarninja (@intrstllrninja): mixtral routing analysis shows that experts did not specialize to specific domains

- Evaluating Brain-Inspired Modular Training in Automated Circuit Discovery for Mechanistic Interpretability: Large Language Models (LLMs) have experienced a rapid rise in AI, changing a wide range of applications with their advanced capabilities. As these models become increasingly integral to decision-makin…

- trigrams.pkl.zst

- Tweet from Brandon (@brandon_xyzw): This is what a GPT/LLM looks like as it’s thinking and understanding

▷ #gpt-neox-dev (13 messages🔥):

- Pythia Data Preparation: @joshlk noted that there is plenty of information online about data prep for fine-tuning but little on pre-training, and asked whether Pythia's process is typical or different. @pietrolesci commented that it is a standard process for training (decoder-only) LLMs in general, not just for Pythia.

- Missing EOD Tokens: @pietrolesci raised the issue that EOD tokens weren't found in the Pythia-deduped dataset. @hailey_schoelkopf found this surprising and suggested that the `--append-eod` option may not have been passed when tokenizing the Pile and the deduped Pile into the Megatron format.

- Different Packing for Pre-Trained Pythia Models?: @pietrolesci pointed out that if no EOD token was added, the resulting "packed" dataset would differ from what the Pythia models saw during training: with N documents there would be N missing tokens, shifting every subsequent token in the pack. @hailey_schoelkopf agreed that if neither the pre-shuffled nor the raw idxmaps datasets contain EOD tokens, they should match each other, and noted that the NeoX codebase does not add EOD tokens itself when packing (Source).

- Masking in Document Attention: @joshlk asked about the masks and whether they are used to prevent cross-attention between documents. @hailey_schoelkopf clarified that masks are not used for this purpose.
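The shift @pietrolesci described can be reproduced with a toy packer. This sketch concatenates documents, optionally appends an EOD token after each, and slices the stream into fixed-length chunks (the linked issue discusses Pythia's 2049-token samples; the idea is the same at scale). It is a simplified illustration, not the NeoX `gpt2_dataset.py` logic, and the token ids are made up.

```python
from typing import List, Optional


def pack(docs: List[List[int]], seq_len: int, eod: Optional[int]) -> List[List[int]]:
    """Concatenate documents and split the stream into seq_len-sized chunks.

    If eod is not None, it is appended after every document. A trailing
    partial chunk is dropped.
    """
    stream: List[int] = []
    for doc in docs:
        stream.extend(doc)
        if eod is not None:
            stream.append(eod)
    n_full = len(stream) // seq_len
    return [stream[i * seq_len:(i + 1) * seq_len] for i in range(n_full)]


docs = [[11, 12, 13], [21, 22], [31, 32, 33, 34]]
with_eod = pack(docs, seq_len=4, eod=0)
without_eod = pack(docs, seq_len=4, eod=None)
print(with_eod)
print(without_eod)
```

Without EOD tokens, token 31 moves from stream position 7 to position 5 (two missing EODs precede it), so chunk boundaries fall on different tokens than in the EOD-delimited stream.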

Links mentioned:

- gpt-neox/megatron/data/gpt2_dataset.py at e6e944acdab75f9783c9b4b97eb15b17e0d9ee3e · EleutherAI/gpt-neox: An implementation of model parallel autoregressive transformers on GPUs, based on the DeepSpeed library.

- Batch Viewer : Why Sequence Length 2049? · Issue #123 · EleutherAI/pythia: Hi, I am using utils/batch_viewer.py to iterate through Pythia’s training data and calculate some batch-level statistics. Firstly, there are some gaps between the actual code in batch_viewer.py an…

- GitHub - EleutherAI/pythia: The hub for EleutherAI's work on interpretability and learning dynamics

- EleutherAI/pile-deduped-pythia-preshuffled · Datasets at Hugging Face

- EleutherAI/pythia_deduped_pile_idxmaps · Datasets at Hugging Face

OpenAccess AI Collective (axolotl) Discord Summary

- Optimizing Mistral Training: @casper_ai shared in-depth details about optimizing Mistral model training: “MoE layers can be run efficiently on single GPUs with high performance specialized kernels. Megablocks casts the feed-forward network (FFN) operations of the MoE layer as large sparse matrix multiplications, significantly enhancing the execution speed”.

- Potential slowdown in DeepSpeed multi-GPU usage: @noobmaster29 highlighted a regression in the DeepSpeed integration of `accelerate==0.23` that makes training slower. Downgrading to `accelerate==0.22` or using the `main` branch was suggested while the fix awaits release (source).

- Tracking Experiments with Axolotl and MLFlow: @caseus_ and @JohanWork discussed adding MLFlow to Axolotl for experiment tracking (Pull Request #1059).

- Axolotl WebSocket for External Job Management: @david78901 proposed a WebSocket endpoint in the Axolotl project for better external job management. @caseus_ expressed interest in incorporating the idea into the main project.

- A Discussion on the Impact of System Messages in Training: @le_mess stated that the content of system messages has no significant impact on model performance; it could be as random as “ehwhfjwjgbejficfjeejxkwbej” (source).

- Implementing “Shearing” in ShearedMistral Training: @caseus_ pointed to a specific step in the shearing process, referencing a GitHub repo. He also weighed using SlimPajama instead of RedPajama v2 for better deduplication and quality, noting that RedPajama v2 no longer includes subsets (source).

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (7 messages):

- Mistral training optimization methods explained: @casper_ai detailed how to optimize training for the Mistral model. In particular, MoE layers can be run efficiently on single GPUs with high-performance specialized kernels: Megablocks [13] casts the feed-forward network (FFN) operations of the MoE layer as large sparse matrix multiplications, which significantly speeds up execution and naturally handles cases where different experts are assigned a variable number of tokens.

- Request for Mistral model file on Ollama: @dangfutures asked which Mistral model file to use on Ollama.

- Potential regression for Accelerate/DeepSpeed multi-GPU users: @noobmaster29 shared a tweet from @StasBekman warning about a regression in the DeepSpeed integration of `accelerate==0.23` that makes training much slower. Downgrading to `accelerate==0.22` or using the latest `main` branch was advised until the fix is released.
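The "variable number of tokens per expert" point is easiest to see in a toy dispatch step. This sketch routes scalar "tokens" with top-k gating (Mixtral routes each token to its top 2 experts), groups them per expert, where groups naturally differ in size, and combines the weighted outputs. It illustrates the routing problem only; Megablocks' contribution is doing this with block-sparse GPU kernels rather than padding or dropping tokens, which is not shown. All names are illustrative.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Expert = Callable[[float], float]


def moe_forward(
    tokens: Sequence[float],
    router_logits: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[List[float], Dict[int, int]]:
    """Toy MoE layer over scalar tokens with top-k routing.

    Returns the combined outputs and how many tokens each expert received.
    """
    # 1. Route: collect (token index, gate weight) pairs per expert.
    assignments: Dict[int, List[Tuple[int, float]]] = defaultdict(list)
    for i, logits in enumerate(router_logits):
        chosen = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:top_k]
        m = max(logits[e] for e in chosen)  # subtract max for numerical stability
        gates = [math.exp(logits[e] - m) for e in chosen]
        total = sum(gates)
        for e, g in zip(chosen, gates):
            assignments[e].append((i, g / total))

    # 2. Compute: each expert handles however many tokens were routed to it.
    out = [0.0] * len(tokens)
    for e, items in assignments.items():
        for i, weight in items:
            out[i] += weight * experts[e](tokens[i])

    load = {e: len(items) for e, items in assignments.items()}
    return out, load
```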

Links mentioned:

Tweet from Stas Bekman (@StasBekman): Heads up to Accelerate/Deepspeed multi-gpu users There was a regression in accelerate==0.23 (deepspeed integration) which would make your training much slower. The fix has just been merged - so you c…

▷ #axolotl-dev (37 messages🔥):

- Axolotl to incorporate MLFlow for experiment tracking: @caseus_ discussed adding MLFlow for experiment tracking in the Axolotl project, as proposed by @JohanWork in Pull Request #1059.

- System prompts in YAML configuration: Members discussed configuring an initial system prompt for sharegpt datasets within the YAML file. @dctanner suggested this would be cleaner than adding it to every dataset record, while @le_mess shared that they currently add it manually each time.

- Peft update fixed Phi LoRA issue: @marktenenholtz identified that an error in handling Phi LoRA was fixed by updating to `peft==0.7.0`. Previous peft versions did not handle shared memory correctly, and the identification of LoRA modules needed to be specific to embedding and linear layers.

- Websockets proposed for Axolotl external job management: @david78901 proposed adding a websockets endpoint to the Axolotl project to allow external triggering and monitoring of jobs. @caseus_ showed interest in incorporating this into the main project.

- Accelerate Pinning: @caseus_ noted that Accelerate needs to be pinned to the correct version, as pointed out by @nanobitz; this is addressed in Pull Request #1080.
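For reference, the manual approach @le_mess described (adding the system prompt to every record) might look like this for ShareGPT-style records, where each record holds a `conversations` list of `{"from": ..., "value": ...}` turns. The field names follow the common ShareGPT layout; the helper is a hypothetical preprocessing step, not an Axolotl feature.

```python
import copy
from typing import Any, Dict, List

Record = Dict[str, Any]


def add_system_prompt(records: List[Record], system_prompt: str) -> List[Record]:
    """Return copies of ShareGPT-style records with a leading system turn.

    Records whose first turn is already a system turn are copied unchanged.
    """
    result = []
    for record in records:
        new_record = copy.deepcopy(record)  # leave the input untouched
        turns = new_record.setdefault("conversations", [])
        if not turns or turns[0].get("from") != "system":
            turns.insert(0, {"from": "system", "value": system_prompt})
        result.append(new_record)
    return result


data = [{"conversations": [{"from": "human", "value": "Hi"},
                           {"from": "gpt", "value": "Hello!"}]}]
with_system = add_system_prompt(data, "You are a helpful assistant.")
```

A config-level option would avoid rewriting the dataset files, which is the cleanliness argument @dctanner made.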

Links mentioned:

- GitHub Status

- Issues · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- GitHub - kmn1024/axolotl: for testing: for testing. Contribute to kmn1024/axolotl development by creating an account on GitHub.

- pin accelerate for deepspeed fix by winglian · Pull Request #1080 · OpenAccess-AI-Collective/axolotl: see https://twitter.com/StasBekman/status/1744769944158712210

- GitHub - dandm1/axolotl: Go ahead and axolotl questions about the API: Go ahead and axolotl questions about the API. Contribute to dandm1/axolotl development by creating an account on GitHub.

- update peft to 0.7.0 by mtenenholtz · Pull Request #1073 · OpenAccess-AI-Collective/axolotl: peft==0.6.0 has a bug in the way it saves LoRA models that comes down to safetensors. This is fixed in peft==0.7.0.

- be more robust about checking embedding modules for lora finetunes by winglian · Pull Request #1074 · OpenAccess-AI-Collective/axolotl: @mtenenholtz brought up in the discord that phi has a more nuanced embedding module name. this PR attempts to handle other architectures a little more gracefully

- Add: mlflow for experiment tracking by JohanWork · Pull Request #1059 · OpenAccess-AI-Collective/axolotl: Adding MLFOW to Axolotl for experiment tracking, looked into how Weight and Bias has been setup and tried to follow the same pattern. Have tested the changes and everything looks good to me. Happy …

▷ #other-llms (1 messages):

leoandlibe: I use the exllamav2 convert.py to make EXL2 quants 😄

▷ #general-help (10 messages🔥):

- Searching for a Chat UI that Supports ChatML or chat_template: @le_mess asked about chat interfaces that support ChatML or chat_template out of the box. In response, @nanobitz suggested ooba.

- Interest in Testing GGUF: @le_mess expressed interest in testing GGUF models. @nanobitz recommended either LM Studio or Ollama but didn't give specific instructions for Ollama.

- Query about ZeRO-2 Training Speed and GPU Interconnect: @athenawisdoms asked whether there is a significant difference in ZeRO-2 training speed between two multi-GPU systems (e.g., A6000s), one using PCIe 3.0 x16 and the other PCIe 4.0 x16. No response was recorded.

▷ #rlhf (3 messages):

- Prompt Strategy a Necessity for AI Interaction: @caseus_ suggested that a prompt strategy, which formats prompts and combines previous turns into the input, is needed.

- Prompt Strategy Already in Action: Following up, @xzuyn mentioned that they have already been implementing a prompt strategy.
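A prompt strategy in this sense formats each turn and folds the earlier turns into the model input. As one hedged example, here is that idea using the ChatML layout (`<|im_start|>role ... <|im_end|>`), plus a helper producing the prompt/chosen/rejected shape many DPO trainers expect. The function names and the choice of ChatML are illustrative assumptions, not the strategy @caseus_ or @xzuyn actually used.

```python
from typing import Dict, List, Tuple

Turn = Tuple[str, str]  # (role, content), e.g. ("user", "Hi")


def build_chatml_prompt(history: List[Turn], next_role: str = "assistant") -> str:
    """Format earlier turns in ChatML and open a new turn for the model."""
    parts = [f"<|im_start|>{role}\n{content}<|im_end|>\n" for role, content in history]
    # The final turn is left open so the model writes its content.
    parts.append(f"<|im_start|>{next_role}\n")
    return "".join(parts)


def to_preference_example(history: List[Turn], chosen: str, rejected: str) -> Dict[str, str]:
    """Build a prompt/chosen/rejected record for preference training."""
    return {
        "prompt": build_chatml_prompt(history),
        "chosen": chosen + "<|im_end|>",
        "rejected": rejected + "<|im_end|>",
    }
```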

▷ #community-showcase (1 messages):

- System message has little impact on training performance: @le_mess stated that the content of the system message has no significant impact on the performance of the trained model. In their words, “The system message could be ‘ehwhfjwjgbejficfjeejxkwbej’ and the performance would probably still be the same.”

▷ #shearedmistral (8 messages🔥):

- Referencing Shearing Method Implementation: @caseus_ pointed to a particular step in the shearing process via a link to a GitHub repository. He suggested using the pre-processed data from the project's Google Drive, but cautioned that this would tie them to the same dataset.

- Consideration for SlimPajama Use: @caseus_ considered whether it's worthwhile to choose SlimPajama over the RedPajama v2 dataset for better deduplication and quality. He also observed that RedPajama v2 no longer includes subsets (source).

- Positive Reception to Dataset Subsets: In response, @emrgnt_cmplxty said they liked the subset feature and questioned why it was removed.

- Potential Shift to SlimPajama: @emrgnt_cmplxty suggested a possible pivot to SlimPajama for the project.

Links mentioned:

- LLM-Shearing/llmshearing/data at main · winglian/LLM-Shearing: Preprint: Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning - winglian/LLM-Shearing

- togethercomputer/RedPajama-Data-V2 · Datasets at Hugging Face

HuggingFace Discord Summary

- New Channels & Updates to Open Source Libraries: @lunarflu announced the launch of two new discussion channels, transformers.js and ML and cybersecurity, while celebrating diffusers reaching 20,000 GitHub stars (source tweet).

- Attention Shifted to Attention & Self-attention: In diffusion-discussions, @grepolian asked about the difference between attention and self-attention, but didn't receive a response (source link).

- Advancements in AI Tech for Gaming: In cool-finds, @papasancho discussed AI integration in video games using Herika and Mantella, calling it a significant advance in gaming (Herika link), (Mantella link).

- The Mystery of Phi-2 Behaviour: In the general channel, @admin01234 described a peculiar behavior of the Phi-2 model, where a correct response is followed by seemingly random answers unrelated to the input.

- LLMs & SQL Injection Attacks: In the NLP channel, members discussed the potential vulnerability of LLM-integrated web applications to SQL injection attacks, using a paper on arXiv as a reference (source link).

- Conversations on Deep Reinforcement Learning: In today-im-learning, @cloudhu announced finishing the Deep RL course; @muhammadmehroz showed interest in taking it, and @gduteaud and @cloudhu pointed to the course (source link).

- CCTV Query and Skew Detection: In the computer vision channel, @iloveh8 discussed using GPT-V or LLaVA for real-time CCTV applications, and @vishnu5n shared their work on skew detection in document images (source link).
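Since @grepolian's question went unanswered: in scaled dot-product attention, each query scores every key and takes a softmax-weighted average of the values. "Self-attention" is the case where queries, keys, and values all come from the same sequence; in cross-attention (as when a diffusion UNet attends to text embeddings) the queries come from one sequence and the keys/values from another. A minimal pure-Python sketch that omits the learned Q/K/V projections and multiple heads:

```python
import math
from typing import List

Matrix = List[List[float]]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    out: Matrix = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)  # subtract max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def self_attention(x: Matrix) -> Matrix:
    # The same sequence plays all three roles (learned projections omitted).
    return attention(x, x, x)


def cross_attention(x: Matrix, context: Matrix) -> Matrix:
    # Queries come from x; keys and values from another sequence.
    return attention(x, context, context)
```

The only difference between the two is where the keys and values come from; the attention computation itself is identical.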

HuggingFace Discord Channel Summaries

▷ #announcements (1 messages):

- New channels and open source updates grace HuggingFace's roster: @lunarflu announced the launch of two new discussion channels: transformers.js and the intersection of ML and cybersecurity. In addition, diffusers reached 20,000 GitHub stars, and a new training script was released that integrates pivotal tuning (from @cloneofsimo's cog-sdxl) and the Prodigy optimizer (from kohya's scripts), along with compatibility with AUTO1111. See the tweet for details.

- Transformers.js gets a 2024 glow-up: @xenovacom revealed significant improvements for Transformers.js developers, including conditional typing of pipelines, inline documentation with code snippets, and pipeline-specific call parameters and return types. See here for more.

- Direct Mistral / Llama / TinyLlama safetensors pull from the Hub: MLX: @reach_vb confirmed that MLX can now pull Mistral/Llama/TinyLlama safetensors directly from the Hub, including support for all Mistral/Llama fine-tunes. More information about the installation here.

- Gradio brings out version 4.13 with critical fixes and compatibility: Version 4.13 comes with fixes for Button + `.select()` + Chatbot, security patches, and compatibility with Python 3.12. Check out the comprehensive Changelog.

- Swifter Whisper with speculative decoding: A noteworthy improvement cited was a 200% faster Whisper thanks to speculative decoding. See the tweet for more.
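Speculative decoding speeds up generation by letting a small draft model propose several tokens that the large model then verifies. A minimal greedy sketch, assuming both models are plain functions from a token prefix to their next token; real implementations verify all proposals in a single batched forward pass and use a probabilistic acceptance rule when sampling. With greedy decoding, the output is identical to running the large model alone; the speedup comes from how many proposals get accepted per verification pass.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # greedy next-token function


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding with a k-token draft window."""
    seq = list(prompt)
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target model checks each proposed position (a real system
        #    does this in one batched forward pass, not a loop).
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if expected != tok:
                accepted.append(expected)  # target's correction ends the window
                break
            accepted.append(tok)
        else:
            # All proposals accepted: the verification pass yields one extra token.
            accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq[: len(prompt) + max_new]
```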

Links mentioned:

- Tweet from Linoy Tsaban🎗️ (@linoy_tsaban): Let’s go 2024 🚀: 🆕 training script in 🧨 @diffuserslib leveraging techniques from the community: ① pivotal tuning (from @cloneofsimo cog-sdxl) ② prodigy optimizer (from kohya’s scripts) + …

- Tweet from Sayak Paul (@RisingSayak): 🧨 diffusers reached 20k stars on GitHub 💫 But like many others, I am not a firm believer in this metric. So, let’s also consider the number of repos that rely on it and the SUM of their stars. …

- Tweet from Xenova (@xenovacom): 🚨 We’re kicking off 2024 with several improvements for Transformers.js developers: - Conditional typing of pipelines based on task. - Inline documentation + code snippets. - Pipeline-specific cal…

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): PSA 📣: MLX can now pull Mistral/ Llama/ TinyLlama safetensors directly from the Hub! 🔥 pip install -U mlx is all you need! All mistral/ llama fine-tunes supported too! 20,000+ checkpoints overall!…

- Gradio Changelog: Gradio Changelog and Release Notes

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): Parakeet RNNT & CTC models top the Open ASR Leaderboard! 👑 Brought to you by @NVIDIAAI and @suno_ai_, parakeet beats Whisper and regains its first place. The models are released under a commercial…

- 2023, year of open LLMs

- Welcome aMUSEd: Efficient Text-to-Image Generation

▷ #general (22 messages🔥):

- Event Reading Group on the Block: @admin01234 asked about the timing of the reading group event, and @lunarflu confirmed it would take place later in the week, with additional discussion happening in the Discord thread.

- Machine Learning Courses Query: @daksh3551 asked for suggestions of structured Machine Learning courses, both paid and free.

- Enigma of the Phi-2 Behaviour: @admin01234 reported that the Phi-2 model would output a correct response followed by seemingly random responses.

- Desire for High-Powered Computing Environments: In a lengthy message, @s4vyss described difficulties using free computing resources like Kaggle and Google Colab notebooks for larger projects, citing the lack of autocompletion and error debugging and the limitations of working within a single notebook. The user asked about alternative machine learning coding environments that would allow local coding while still offering free compute.

- Identifying PII in Headers using StarPII: @benny0917 shared his experience trying to identify Personally Identifiable Information (PII) in headers using the StarPII model from Hugging Face. The model struggles to correctly identify PII headers whose meaning depends on context.

▷ #today-im-learning (7 messages):

- Benchmark Results for Mistral-7B-instruct & vLLM: @harsh_xx_tec_87517 shared benchmark results for Mistral-7B-instruct served with vLLM, calling vLLM a great library for deploying OSS LLMs. The detailed results are in their LinkedIn post.

- Completion of the DRL course: @cloudhu announced completing the DRL course and was congratulated by @osanseviero.

- Queries about the DRL course: @muhammadmehroz showed interest in taking the DRL course. In response, @gduteaud and @cloudhu both suggested Hugging Face's Deep Reinforcement Learning Course, which takes learners from beginner to expert level.

Links mentioned:

Welcome to the 🤗 Deep Reinforcement Learning Course - Hugging Face Deep RL Course

▷ #cool-finds (3 messages):

- Fine-tuning VLMs like LLaVa: @silamine asked for research papers or GitHub repos that provide guidance on fine-tuning a VLM like LLaVa.

- Embed charts in README with mermaid: @not_lain recommended mermaid, a tool for embedding charts in README files, and shared its GitHub link.

- AI Integration in Video Games: @papasancho shared their view that AI integration in video games is a significant advance, comparing it to the days of the Atari 2600. They pointed to Herika and Mantella as examples and shared links to their Nexus Mods pages; both mods use AI to enhance in-game interactions.

Links mentioned:

- GitHub - mermaid-js/mermaid: Generation of diagrams like flowcharts or sequence diagrams from text in a similar manner as markdown

- Herika - The ChatGPT Companion: ‘Herika - The ChatGPT Companion’ is a revolutionary mod that aims to integrate Skyrim with Artificial Intelligence technology. It specifically adds a follower, Herika, whose responses and interactions

- Mantella - Bring NPCs to Life with AI: Bring every NPC to life with AI. Mantella allows you to have natural conversations with NPCs using your voice by leveraging Whisper for speech-to-text, LLMs (ChatGPT, Llama, etc) for text generation,

▷ #i-made-this (3 messages):

- World's Fastest Conversational AI Unveiled: @vladi9539 shared a YouTube video of their attempt at building the world's fastest conversational AI software for real-time conversation with an AI. They claim the implementation has lower latency than any other conversational system they have seen.

- AlgoPerf Competition Launched: @franks22 announced the launch of the AlgoPerf competition, which aims to find the best algorithms for training contemporary deep architectures. The competition is open to everyone and offers a $25,000 prize in each of its two categories. More information can be found in their GitHub repository.

- MusicGen Extension Update Announced: @.bigdookie shared new features in their MusicGen browser extension, including undo functionality and the ability to crop AI-generated music. They invited community members to test the extension, shared a YouTube link, and asked for help speeding up MusicGen outputs.

Links mentioned:

- Tweet from thecollabagepatch (@thepatch_kev): ok the browser extension for ai remixes of youtube tracks now has cropping / undo functionality lots of @_nightsweekends but it’s rdy for a user or ten. dm me #buildinpublic https://youtub…

- AI roasting his programmer (I made world’s fastest conversational AI): This is me having a conversation with an AI in real time.This implementation has the lowest latency conversational algorithm compared to everything I’ve seen…

- GitHub - mlcommons/algorithmic-efficiency: MLCommons Algorithmic Efficiency is a benchmark and competition measuring neural network training speedups due to algorithmic improvements in both training algorithms and models.

▷ #reading-group (5 messages):

- Splitting Databases for Efficient Learning: @chad_in_the_house suggested separating the data into a development database and a testing database. Questions in the development database are answered, the correct answers are stored along with their text embeddings and chains of thought, and this stored information is then used for in-context learning on new questions.

- SG Event Proposal: @lunarflu indicated plans to set up an event tomorrow but did not share further details.

- Invitation to Discuss in Reading Group: @lunarflu showed interest in @bluematcha’s topic and invited them to cover it in more depth at the next Reading Group session.

- Variational Inference Book Promotion: @ypbio shared a book on variational inference that claims to offer a comprehensive review of the topic and everything needed to develop world-class foundational machine learning expertise, along with a link to the book’s website, www.thevariationalbook.com.

- Time Zone Challenges: @skyward2989 expressed disappointment that the discussion would take place at an inconvenient time for them: 3 AM.
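@chad_in_the_house’s proposal is essentially retrieval-based few-shot prompting. A minimal sketch, assuming each correctly answered development-set question is stored with a precomputed embedding vector; the toy 2-D vectors and the `SolvedExample` and `build_prompt` names are hypothetical, and a real setup would obtain embeddings from an embedding model and likely use a vector store.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class SolvedExample:
    """A development-set question that the model answered correctly."""
    question: str
    chain_of_thought: str
    answer: str
    embedding: List[float]  # assumed precomputed by some text-embedding model


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_prompt(question: str, query_emb: List[float], bank: List[SolvedExample], k: int = 2) -> str:
    """Prepend the k most similar solved examples, with their reasoning, as demonstrations."""
    nearest = sorted(bank, key=lambda ex: cosine(query_emb, ex.embedding), reverse=True)[:k]
    shots = [f"Q: {ex.question}\nReasoning: {ex.chain_of_thought}\nA: {ex.answer}" for ex in nearest]
    return "\n\n".join(shots + [f"Q: {question}\nReasoning:"])


# Toy usage: the arithmetic example is closest to the new arithmetic question.
bank = [
    SolvedExample("What is 2 + 3?", "Add 2 and 3.", "5", [1.0, 0.0]),
    SolvedExample("What is the capital of France?", "Recall the capital city.", "Paris", [0.0, 1.0]),
]
prompt = build_prompt("What is 4 + 4?", [0.9, 0.1], bank, k=1)
print(prompt)
```

Storing the chain of thought alongside the answer means the retrieved demonstrations show the model how a similar question was solved, not only the final answer.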

Links mentioned:

The Variational Inference Book: A comprehensive review of variational inference in one concise book.

▷ #diffusion-discussions (3 messages):

- Loading and Fusing LoRA Weights: @sayakpaul explained how to load LoRA weights and fuse them into the base UNet model: call `pipeline.load_lora_weights()` and then `pipeline.fuse_lora()`.

- Query on Attention Mechanisms: @grepolian asked about the difference between attention and self-attention, but no answer appears in this chat history.

- Efficient LoRA Inference Discussed in Blog Post: @yondonfu posted a link to a recent Hugging Face blog post on optimizing LoRA inference, which describes efficient ways to load LoRA adapters and speed up inference. A key observation is that, with diffusers, batching did not significantly improve throughput while increasing latency sixfold.

- Batching with Diffusers Not Effective?: @yondonfu focused on batching with diffusers, questioning whether it is worthwhile given only a minor throughput gain at batch size 8 against a sixfold increase in latency, and asked why this might be the case. The questions were still unanswered when the chat closed.
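Conceptually, fusing a LoRA adapter folds its low-rank update into the base weight, so inference runs one matmul per layer instead of the base path plus the adapter path. The sketch below shows that arithmetic on a toy linear layer in plain Python, assuming the common LoRA parameterization W' = W + (alpha / r) · B · A; it illustrates the idea only and is not diffusers’ internal implementation of `fuse_lora()`. The matrix values are made up.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add(x: Matrix, y: Matrix) -> Matrix:
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


def scale(x: Matrix, s: float) -> Matrix:
    return [[s * a for a in row] for row in x]


def fuse_lora(W: Matrix, A: Matrix, B: Matrix, alpha: float, rank: int) -> Matrix:
    """Fold the low-rank update into the base weight: W + (alpha / rank) * B @ A."""
    return add(W, scale(matmul(B, A), alpha / rank))


# Toy layer with 2 inputs, 2 outputs and a rank-1 adapter (values are illustrative).
W = [[1.0, 0.0], [0.0, 1.0]]  # base weight, out x in
A = [[0.5, 0.5]]              # down-projection, rank x in
B = [[2.0], [0.0]]            # up-projection, out x rank
alpha, rank = 1.0, 1
x = [[1.0], [3.0]]            # input as a column vector

# Unfused: base path plus the adapter path, i.e. extra matmuls on every call.
unfused = add(matmul(W, x), scale(matmul(B, matmul(A, x)), alpha / rank))
# Fused: one matmul with the merged weight gives the same output.
fused = matmul(fuse_lora(W, A, B, alpha, rank), x)
print(unfused, fused)  # [[5.0], [3.0]] [[5.0], [3.0]]
```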

Links mentioned:

Goodbye cold boot - how we made LoRA Inference 300% faster

▷ #computer-vision (2 messages):

- Query on Real-Time CCTV Use Case: @iloveh8 started a discussion about using GPT-V or LLaVA for real-time CCTV applications such as theft detection or baby monitoring.

- Skew Detection Resource Shared: In response, @vishnu5n shared their work modeling skewed document images together with their respective skewness. The work is available as a Kaggle notebook and could serve as a reference for similar problems.
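The thread does not say how the Kaggle notebook estimates skew. As a point of comparison, here is the classic projection-profile method, a non-learned baseline swapped in for illustration rather than @vishnu5n’s approach: undo each candidate angle on the ink-pixel coordinates and keep the angle whose row histogram is sharpest. The synthetic three-line "page" is hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, float]


def rotate(points: List[Point], angle_deg: float) -> List[Point]:
    """Rotate points counter-clockwise by angle_deg around the origin."""
    t = math.radians(angle_deg)
    s, c = math.sin(t), math.cos(t)
    return [(x * c - y * s, x * s + y * c) for x, y in points]


def profile_score(points: List[Point], angle_deg: float) -> int:
    """Undo a candidate skew and score how concentrated the row histogram is."""
    rows = Counter(round(y) for _, y in rotate(points, -angle_deg))
    # Aligned text lines pile pixels into few rows, which maximizes the sum of squares.
    return sum(n * n for n in rows.values())


def estimate_skew(points: List[Point], max_angle: float = 5.0, step: float = 0.5) -> float:
    """Return the candidate angle in degrees whose deskewed profile is sharpest."""
    n = int(round(max_angle / step))
    candidates = [i * step for i in range(-n, n + 1)]
    return max(candidates, key=lambda a: profile_score(points, a))


# Synthetic page: three horizontal "text lines" of 100 ink pixels, skewed by 3 degrees.
page = [(float(x), float(y)) for y in (0, 10, 20) for x in range(100)]
print(estimate_skew(rotate(page, 3.0)))  # 3.0
```

A learned model, as in the shared notebook, can handle noisier scans, but a baseline like this is useful for sanity-checking its predictions.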

Links mentioned:

skew_detection: Explore and run machine learning code with Kaggle Notebooks | Using data from rdocuments

▷ #NLP (18 messages🔥):

- SQL Injection Vulnerabilities in LLMs: @jryarianto discussed potential latency issues related to computational resources in a developing country and asked about strategies for defending against SQL injection attacks, suggesting parameterized queries as one option. They referenced an arXiv paper that examines how prompt injections against Large Language Model (LLM)-integrated web applications can lead to SQL injection attacks.

- Open Source LLM Suggestions for a Conversational Chatbot: @jillanisofttech asked for an open-source LLM suitable for fine-tuning on a large custom dataset of PDF, TXT, and DOC files. They need to build a conversational chatbot that handles both text and voice input, and also asked which framework to use for the application.

- Text Generation Using the NSQL-2B Model: @madi_n asked about setting `max_new_tokens` above 2048 for text generation with the NSQL-2B model, and whether increasing `max_new_tokens` is safe given the model’s predefined maximum length.

- Fine-tuning Mistral 7B: Identifying the Correct Syntax: @denisjannot asked about the correct prompt syntax for fine-tuning Mistral 7B after noticing irregular behavior from the trained model. @asprtnl_50418 recommended consistently using the same prompt template that was used during training, and linked to the model’s end-of-sequence (EOS) token.

- GPU Usage for the suno/bark-small Model in a TTS Task: @x_crash_ asked how to explicitly use the GPU with the `suno/bark-small` model on Google Colab after noticing that the model did not seem to be using GPU resources, and shared the Python script they had tried.
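Parameterized queries help because the database driver passes user- or LLM-supplied values as data, never as SQL text. A minimal sketch with Python’s built-in `sqlite3` module; the `users` table and the injected string are hypothetical. This only protects query values: if an LLM is allowed to write whole SQL statements, as in the attacks the paper describes, additional safeguards are still needed.

```python
import sqlite3
from typing import List, Tuple

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)


def find_user(name: str) -> List[Tuple[str, str]]:
    # The ? placeholder makes sqlite3 bind `name` as a value, so quotes or
    # SQL keywords inside it cannot change the structure of the query.
    cur = conn.execute("SELECT name, email FROM users WHERE name = ?", (name,))
    return cur.fetchall()


print(find_user("alice"))         # [('alice', 'alice@example.com')]
print(find_user("x' OR '1'='1"))  # [] because the payload is treated as a literal name
```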

Links mentioned:

- tokenizer_config.json · mistralai/Mistral-7B-v0.1 at main

- From Prompt Injections to SQL Injection Attacks: How Protected is Your LLM-Integrated Web Application?: Large Language Models (LLMs) have found widespread applications in various domains, including web applications, where they facilitate human interaction via chatbots with natural language interfaces. I…
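On @denisjannot’s Mistral 7B question, the advice to reuse one prompt template and to end each training example with the EOS token can be made concrete with a small formatting helper. The `[INST] ... [/INST]` layout is the one used by Mistral’s instruct models and is an assumption here (a base model such as Mistral-7B-v0.1 can be fine-tuned on any template, as long as training and inference match); `<s>` and `</s>` are the BOS and EOS token strings from the `tokenizer_config.json` linked above. The `format_example` name is hypothetical.

```python
from typing import Optional

BOS, EOS = "<s>", "</s>"  # special-token strings from Mistral-7B-v0.1's tokenizer_config.json


def format_example(instruction: str, response: Optional[str] = None) -> str:
    """Render one example with a single fixed template (the [INST] layout is an assumption).

    Training examples end with the response followed by EOS so the model learns when
    to stop; for inference, omit the response and let the model continue after [/INST].
    If your tokenizer already prepends BOS automatically, drop the literal BOS here
    to avoid a doubled BOS token.
    """
    prompt = f"{BOS}[INST] {instruction.strip()} [/INST]"
    if response is None:
        return prompt
    return f"{prompt} {response.strip()}{EOS}"


train_text = format_example("Translate 'merci' to English.", "Thank you.")
infer_text = format_example("Translate 'merci' to English.")
print(train_text)  # <s>[INST] Translate 'merci' to English. [/INST] Thank you.</s>
```

Because inference prompts are generated by the same function, they are guaranteed to be an exact prefix of the training examples, which is the consistency the thread recommends.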

▷ #diffusion-discussions (3 messages):

- Instructions on loading LoRA: @sayakpaul shared how to load LoRA weights into the base UNet model by calling `pipeline.load_lora_weights()` and `pipeline.fuse_lora()`.

- Question about attention mechanisms: @grepolian asked about the difference between attention and self-attention (no responses were included).

- Deep dive into LoRA inference optimization: @yondonfu referenced a section of a Hugging Face blog post on how LoRA inference was optimized. The post explains that batching with diffusers does not improve throughput significantly and usually results in higher latency. This finding raised two questions:
  - Is batching with diffusers generally not worthwhile, given marginal throughput gains and significant latency increases?
  - Why does batching with diffusers fail to improve performance even when enough VRAM is available?
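The trade-off behind these questions is simple arithmetic: throughput is images per unit time, so if a batch takes several times longer than a single image, the throughput gain is only the batch size divided by that latency multiplier. A small sketch using the relative figures reported in the thread (batch size 8, roughly six-fold latency) rather than measured timings:

```python
from typing import Tuple


def batching_tradeoff(batch_size: int, latency_multiplier: float) -> Tuple[float, float]:
    """Return (throughput gain, latency increase) of one batch vs. one image.

    Latencies are relative: a single image takes 1.0 time unit, and a batch of
    `batch_size` images takes `latency_multiplier` units.
    """
    single_throughput = 1 / 1.0                           # images per time unit
    batched_throughput = batch_size / latency_multiplier  # images per time unit
    return batched_throughput / single_throughput, latency_multiplier


gain, slowdown = batching_tradeoff(batch_size=8, latency_multiplier=6.0)
print(f"throughput x{gain:.2f}, latency x{slowdown:.1f}")  # throughput x1.33, latency x6.0
```

One plausible explanation, offered as an assumption rather than something stated in the thread, is that a single high-resolution UNet pass already keeps the GPU’s compute busy, so batch latency grows almost linearly with batch size and spare VRAM alone does not buy throughput.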

Links mentioned:

Goodbye cold boot - how we made LoRA Inference 300% faster

Perplexity AI Discord Summary

- API and Model Outage Notices: @phinneasmctipper reported outages for the `pplx-7b-online` and `pplx-70b-online` models via the API and API sandbox, which @icelavaman acknowledged. Separately, @monish0612 reported a 500 internal server error in #pplx-api.

- Call for Improved Citation Format in AI Responses: @Chris98 suggested replacing numerical citation references with hyperlinks in Perplexity’s AI responses. The idea received support from @byerk_enjoyer_sociology_enjoyer.

- Clarification on Subscription Billing During Free Trial: @alekswath asked why they were billed immediately when switching from a monthly to an annual plan during a free trial, prompting a discussion on subscription pricing.

- Integration of Perplexity as a Search Engine: @bennyhobart wanted to set Perplexity as the default search engine in Chrome. @mares1317 shared a link to the Perplexity - AI Companion extension on the Chrome Web Store.

- Changes Noticed in Claude 2.1 Responses: @Chris98 and @Catto noticed a shift in Claude 2.1’s tone and were unsatisfied with its recent responses, comparing them to GPT Copilot’s style and wishing for Claude 2.1’s original voice.

- Issue in Citing Sources: In the #pplx-api channel, @hanover188 asked whether the pplx-70b-online model can cite sources. @brknclock1215 clarified that it currently does not cite sources the way the Perplexity app does; they first suggested this might come in a future update, but later corrected that it is not on Perplexity’s roadmap.

Perplexity AI Channel Summaries

▷ #general (51 messages🔥):

- Possible Outages in Perplexity’s Models: @phinneasmctipper reported 500 error codes when trying to access the `pplx-7b-online` and `pplx-70b-online` models via the API and API sandbox. @icelavaman acknowledged the issue and promised a fix even though it was outside their working hours. Link to the conversation

- Request for Citation Format Change in Responses: @Chris98 asked for the numerical citation references in Perplexity’s AI responses to be replaced with hyperlinks. @byerk_enjoyer_sociology_enjoyer agreed, and @Chris98 showed support with a ⭐ emoji reaction on a related issue that had been raised previously.

- Question on Subscription Pricing: @alekswath asked why they were immediately billed $200 when trying to switch from a monthly to an annual plan during a free trial, and whether something was wrong with the free trial.

- Utilizing Perplexity as Default Search Engine: @bennyhobart asked how to set Perplexity as the default search engine in Chrome. @mares1317 shared a link to the Perplexity - AI Companion extension on the Chrome Web Store to help with this.

- Change in Claude 2.1 Responses: @Chris98 and @Catto expressed dissatisfaction with Claude 2.1’s recent responses, noting a perceived drop in quality and a shift in tone toward sounding like GPT Copilot. They wished for a return to the original Claude 2.1.
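The citation-format request amounts to a simple text transformation. A minimal sketch in Python, assuming the answer text marks sources as `[1]`, `[2]`, and so on, and that the ordered list of source URLs is available separately; both inputs are hypothetical, and this is not Perplexity’s actual response format.

```python
import re
from typing import List


def link_citations(text: str, sources: List[str]) -> str:
    """Turn numeric markers such as [2] into Markdown links to the matching source URL.

    Markers without a corresponding source are left unchanged.
    """
    def replace(match: re.Match) -> str:
        n = int(match.group(1))
        if 1 <= n <= len(sources):
            return f"[[{n}]]({sources[n - 1]})"
        return match.group(0)

    return re.sub(r"\[(\d+)\]", replace, text)


answer = "First claim [1]. Second claim [2]. Unmatched marker [3]."
urls = ["https://example.com/a", "https://example.com/b"]
print(link_citations(answer, urls))
# First claim [[1]](https://example.com/a). Second claim [[2]](https://example.com/b). Unmatched marker [3].
```

Keeping the number as the link text preserves the compact look of numbered citations while making each one clickable.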

Links mentioned:

- Application Status

- What is Search Focus?: Explore Perplexity’s blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- Perplexity - AI Companion: Ask anything while you browse

- Reddit - Dive into anything

▷ #sharing (8 messages🔥):

- Stress Testing Web Application Resources Shared: @whoistraian shared a link to resources on stress testing a web application.

- Inquiry about Perplexity AI’s Functionality: @myob171 asked whether Perplexity AI is an AI search engine.

- Discord Channel Links Shared: @mares1317 shared two Discord channel links, possibly pointing to other related discussions.

- OpenAI Response Shared: @__sahilpoonia__ posted a link about how OpenAI responds to certain queries.

- Praise for Perplexity’s Calendar Integration: @clockworksquirrel highlighted how Perplexity simplifies calendar management through natural language, which is especially helpful given their physical disability. They also noted how useful copying and pasting within the tool is.

- Gratitude Expressed for Perplexity: @siriusarchy expressed their appreciation for Perplexity.

- Volkswagen Incorporates ChatGPT: According to @ipsifu, Volkswagen has integrated ChatGPT into its car systems.

▷ #pplx-api (6 messages):

- 500 Internal Server Error: @monish0612 reported getting a 500 internal server error from the API for several hours. As a paid user, they hoped for a quick resolution.

- PPLX-70b-online Model’s Source Citation Issue: @hanover188 asked whether the pplx-70b-online model can cite its sources like the Perplexity app does, since they need this for a build that requires summarized real-time data with actionable source information.

- No Direct Citation in PPLX-70b-online: In response to @hanover188’s query, @brknclock1215 provided a link and quoted its summary: “no - the pplx-70b-online model does not directly cite sources like the Perplexity app does… Adding support for grounding facts and citations is on Perplexity’s roadmap for the future.”

- Feature Not on the Roadmap?: Contradicting the earlier information, @brknclock1215 later corrected that support for grounding facts and citations is actually not on Perplexity’s roadmap, sharing another Discord link to a discussion confirming this.

LAION Discord Summary

- AI Debates Heat Up - “Utopia or Not?”: In a spirited discussion, @SegmentationFault set out to dissect the views of anti-AI critics, concluding that their stances largely stem from virtue signaling and unrealistic utopian thinking. @mkaic added that the utopian goal of guaranteed income becomes more attainable as AI spreads. User .undeleted humorously summed up the anti-AI camp’s three-step plan: ban AI, preserve current jobs, and prevent technology from eliminating jobs in the future. @SegmentationFault countered by championing AI’s role in productivity and global competitiveness.

- Pizza AI or Human?: Amid the steady flow of AI discourse, @thejonasbrothers added a lighthearted note, asking whether the sentence “I am a pizza” was written by an AI.

- Game-changing AI Training Hacks Surfaced: In an in-depth discussion, @pseudoterminalx described several training techniques, arguing for biasing timestep selection toward early timesteps. They shared an image sampled with Euler from a model using zero-terminal SNR that, in their view, surpassed Midjourney v6, and recommended training on random crops and full frames together.

- AI Detector Faces Credibility Crisis: @lixiang01 doubted the reliability of a specific AI detector, arguing that it can easily be fooled with carefully constructed prompts.

- State Space Models vs Transformers: @thejonasbrothers shared a research paper on MoE-Mamba, which combines State Space Models (Mamba) with Mixture of Experts and argues that this combination can compete with Transformers. The paper can be accessed here.

- Watermarking Woes for Generative Models: @chad_in_the_house highlighted a blog post based on the research paper Watermarks in the Sand: Impossibility of Strong Watermarking for Generative Models. The post examines the difficulty of watermarking generative models in a way that preserves output quality while still allowing AI-generated content to be verified. The full post can be found here.

- HiDiffusion Framework - A New Viable Option: @gothosfolly drew attention to HiDiffusion, a framework for text-to-image diffusion models that generates high-resolution images. The research paper on HiDiffusion can be viewed here.

- Precise Location of RAU Block Questioned: