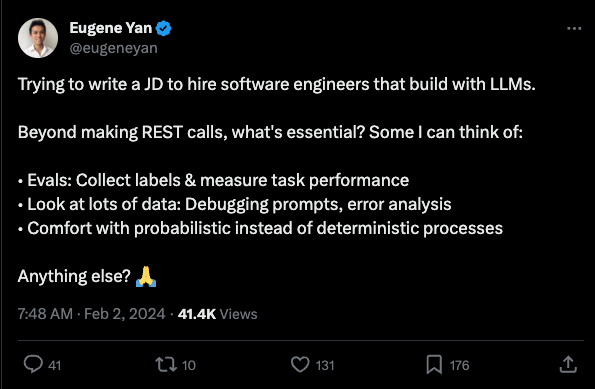

We really tried to avoid featuring Latent Space twice in a row, but Eugene Yan kicked off a discussion on AI Engineering:

Which resulted in the longest ever thread on the topic:

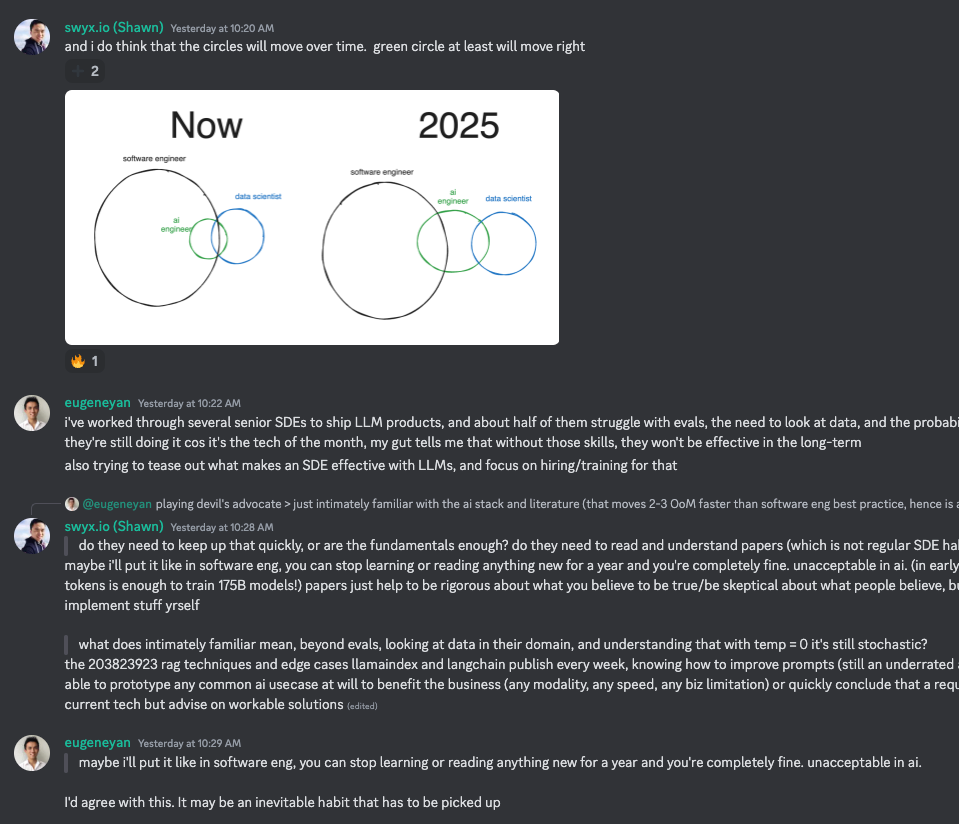

The central confusion is twofold: the high degree of overlap between traditional software engineering skills and data science skills, and the things software engineers struggle with when dealing with probabilistic, data-driven systems. Do they need to read papers? Do they need to write CUDA kernels?

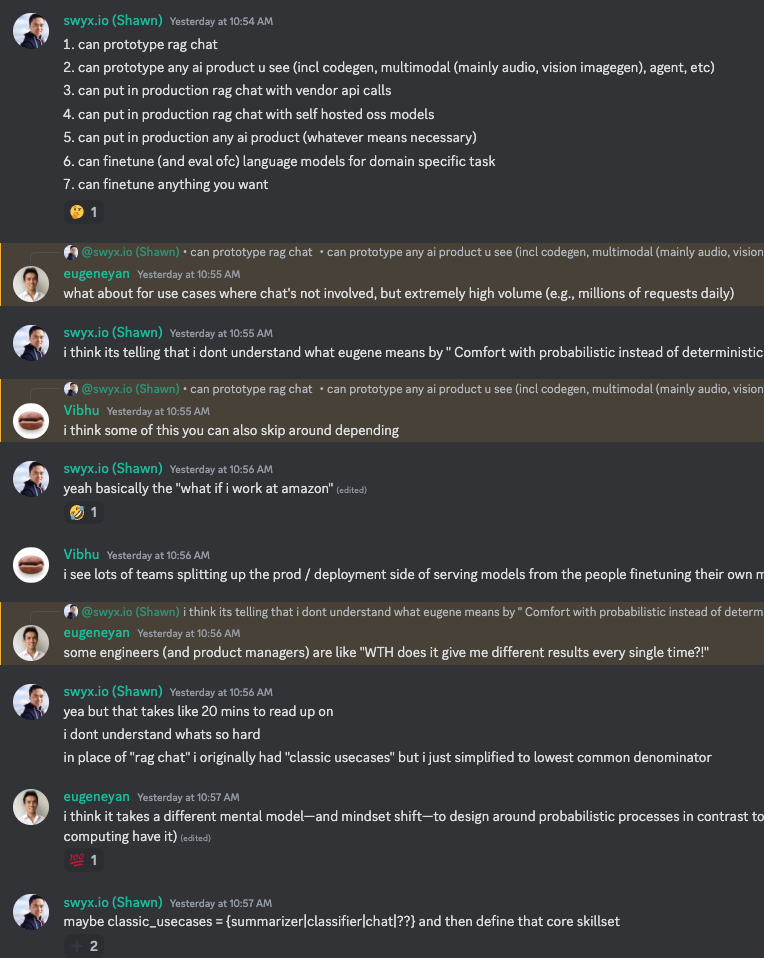

Some mental models were created:

as well as a progression path for skill development:


PART 1: High level Discord summaries

TheBloke Discord Summary

- Late-Night Tech Talk: Users including @mrdragonfox, @coffeevampir3, and @potatooff engaged in a lively discussion about the performance of large language models like MiquMaid and OLMo, and about the potential of 3D printing for PC hardware and uses for carbon nanotubes.

- Watermarks and AI Security: The conversation covered using gradient ascent to make models unlearn information, and the difficulty of removing watermarks that were embedded deep in a model during training.

- Open Licensing for OLMo: AI2’s OLMo GitHub repository was introduced, notable as an open-source LLM released under the Apache 2.0 license, with the training of a 65B model mentioned.

- Superconductors and Nanotubes on AliExpress: Superconductor materials like yttrium barium copper oxide (YBCO) and carbon nanotubes were topics of interest, including their availability on AliExpress.

- Aphrodite’s Capabilities and Limitations: The Aphrodite engine was credited for its batching capabilities in AI Horde but was pointed out as incompatible with GPUs of differing VRAM sizes.

- Calibration Dataset Diversity for AWQ: Discussions around the best calibration datasets for Activation-aware Weight Quantization (AWQ) stressed the importance of dataset diversity, which also applies to other quantization formats like EXL2.

- Local AI and Role-Playing Practices: The usability of various AI models for role-playing was discussed, noting the preference for instruction mode when using instruction-tuned models.

- Leaderboards and Ethical Model Usage: The presence of the Mistral medium model (MoMo) on leaderboards sparked a debate on the implications of using models with unclear licensing and the lack of corporate transparency in model training.

- Quantization and Fine-Tuning: Questions arose about fine-tuning a pre-trained AWQ model with LoRA, and members debated the benefits of using the same quantization scheme for QLoRA fine-tuning and for serving.

- LoftQ Introduction and Quantization Discussion: A linked paper on LoftQ, a technique that quantizes a model and finds a matching LoRA initialization to improve fine-tuning performance, led to discussions about its effectiveness.

- internLM Gets a Nod: In a brief exchange, kquant recommended internLM as a solid model.

- Deploying LLMs with HF Tools: m.0861 sought advice on deploying large language models through HF Spaces, followed by reflections on the advantages of using HF’s Inference Endpoints for LLM deployment.

LAION Discord Summary

- Distillation Downfalls in SSD-1B: Members @pseudoterminalx and @gothosfolly discussed the rigidity of SSD-1B due to its distillation from a single fine-tuned model, suggesting that distilling from multiple models could improve aesthetics.

- Optimizing Data Quality with Proper Captioning: The use of well-captioned images from diverse sources such as BLIP, COCO, and LLaVA was highlighted as a strategy to improve prompt adherence in model training, with mentions of input perturbations and data pipeline refinements.

- Prompt Adherence Through Hybrid Encoding: A debate surrounded the merits of UTF-8 tokenization versus a hybrid approach that merges UTF-8 codes into single tokens, weighing the potential benefits for image generation of adopting a byte-level encoding similar to ByT5.

- Cropping and Upscaling in Image Generation: An effective methodology for image-to-image upscaling using cropped model weights was identified. It is credited with preserving scene integrity and is especially beneficial for higher-resolution enhancements.

- The Peer Review Bypass: Discussions underscored a trend of researchers releasing notable findings on blogs rather than in traditional journals, often because of the cumbersome peer review process, with some considering describing novel architectures exclusively through blog posts.
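To make the byte-level versus hybrid encoding debate above concrete, here is a minimal Python sketch. It assumes the ByT5 convention of reserving ids 0-2 for special tokens, so each UTF-8 byte `b` maps to id `b + 3`. The `group_by_character` helper is hypothetical and only illustrates the idea of keeping a multi-byte code together as one unit; it is not the scheme proposed in the discussion.

```python
# Sketch of byte-level tokenization in the style of ByT5.
# Assumption: ids 0-2 are reserved for special tokens, so byte b -> id b + 3.
SPECIAL_OFFSET = 3


def encode_bytes(text: str) -> list[int]:
    """Pure byte-level encoding: one token id per UTF-8 byte."""
    return [b + SPECIAL_OFFSET for b in text.encode("utf-8")]


def decode_bytes(ids: list[int]) -> str:
    """Invert encode_bytes, skipping the reserved special-token ids."""
    raw = bytes(i - SPECIAL_OFFSET for i in ids if i >= SPECIAL_OFFSET)
    return raw.decode("utf-8", errors="ignore")


def group_by_character(text: str) -> list[list[int]]:
    """Hypothetical hybrid view: the byte ids of each character stay together,
    standing in for a scheme that merges a multi-byte code into one token."""
    return [encode_bytes(ch) for ch in text]


prompt = "café"
print(encode_bytes(prompt))        # 5 ids, because "é" occupies two bytes
print(group_by_character(prompt))  # 4 groups, one per character
```

The trade-off under debate shows up directly: pure bytes give a tiny, fixed vocabulary but longer sequences for non-ASCII text, while merging bytes shortens sequences at the cost of a larger vocabulary.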

Latent Space Discord Summary

- The Evolution of AI Engineering: Engaging debates unfolded regarding the essential skills for software engineers to effectively use LLMs and the evolving job role of AI engineers. Discussion highlighted the importance of understanding the probabilistic nature of LLMs, evaluation, debugging, data familiarity, and a mindset shift from deterministic to probabilistic outcomes. The concept of an AI Engineer Continuum developed, proposing stages from using APIs to fine-tuning models.

- Community Growth and Learning Initiatives: In the LLM Paper Club (East), attendees engaged in technical discussions on topics such as the methodology of self-rewarding LLMs, improving text embeddings, and the value of retrieving long-tail knowledge for RAG. Suggestions for forming a “code club” to collaboratively walk through code and a “production club” to examine the actual implementation of code and papers reflect the community’s technically oriented learning desires.

- AI Events and Gatherings Gain Popularity: Calls for participation in local and online events like the LLM Paper Club (East) and AI in Action meetings were made. Enthusiasm was shown for forming local user groups, demonstrated by the proposal of an LA meetup and various social learning events, underlining community members’ proactive approach to sharing knowledge and best practices.

- Resource Sharing Enriches the Guild: Members contributed a wealth of resources, ranging from practical guides on using AI and evaluating LLMs, to instructional content for constructing LLMs, to discussions on AI startup strategies and AI in business pitches. This indicates a strong interest in applying AI technology in professional and entrepreneurial settings.

- Concerns Over Tools Reliance: Skepticism about OpenAI’s Fine-Tuning API/SDK was raised, along with cautions against potential platform lock-in. The discussions leaned towards the benefits of full-scale fine-tuning over simpler API interactions, surfacing concerns relevant to engineers wary of over-reliance on third-party platforms.

Eleuther Discord Summary

- Elevating Open Models to Large-Scale Science Projects: @layl underlined the growing feasibility of securing EU government support for open model training, resonating with the notion of open models as large-scale science ventures. @stellaathena corroborated a shift from negligible to minimal advancements in this area, suggesting prospects for deploying @layl’s ML library in High-Performance Computing (HPC) settings such as LUMI.

- Activation Function Efficacy in the Spotlight: A wide-ranging debate on activation functions such as GeLU, ReLU, Mish, and TanhExp was spurred by users including @xa9ax and @fern.bear, drawing attention to the dearth of extensive empirical tests of these functions in large model training. Despite earlier doubts from @ad8e about the integrity of a paper promoting Mish, @xa9ax verified that all pre-submission experiments were included in the final publication.

- Benchmarking Model Architectures: Conversations delved into comparisons between @state-spaces/mamba models and other architectures like Transformers++ and Pythia, with users like @ldj expressing concerns about the basis of comparison and @stellaathena highlighting the need for a uniform model suite trained on open data for fair evaluations.

- Intricacies of Activation Functions Explored: Users @catboy_slim_, @fern.bear, and @nostalgiahurts pondered the nuanced influence of activation function choices, discussing how the scale of these functions interacts with other hyperparameters to influence model performance. Empirical findings from EleutherAI’s blog and various academic papers were dissected to untangle the interdependencies between activation functions and training dynamics.

- Legal Complexities Shadowing Large Model Training: @synquid highlighted the legal intricacies surrounding transparency about training data sources, noting that overt disclosure of training data might lead to intellectual property litigation that could stifle scientific progress.

- Demystifying Knowledge Distillation: Discussions by @johnryan465 and @xa9ax revolved around the efficiency benefits of training a smaller model B to emulate the logits of a larger model A, compared with training model A directly, and considered the infinity ngrams paper’s methodology as a way to generate cost-effective models for potential distillation pipelines.

- MCTS Sampling Challenges Addressed: @blagdad scrutinized the exploration problem in Monte Carlo Tree Search (MCTS), pointing to Upper Confidence bounds for Trees (UCT) as a way to guide exploration based on uncertainty rather than uniform branching.

- Fine-Tuning Efficiency via Exploration: Fine-tuning with efficient exploration was discussed, with a focus on agents that craft queries and a reward model fitted to the feedback received. The discussion covered the merits of double Thompson sampling and the use of epistemic neural networks, detailed in an arXiv paper.

- Bayesian Active Learning Awaits Unveiling: @fedorovist indicated the imminent release of a Bayesian active learning implementation by @322967286606725126, spurring interest from @johnryan465 due to past experience with similar challenges.

- Probing Adam Optimizer Variations: @ai_waifu asked whether any studies have modified the Adam optimizer to use the variance of the parameters rather than the gradient’s second-moment estimate. No specific research was cited in response.

- Collaborating for Vision-Language Model Integration: @asuglia voiced an intention to add vision and language support to lm-harness, with @chrisociepa and @1072629185346019358 cited as potential collaborators by @hailey_schoelkopf, who also invited community contributions.

- Standard Error Conversation in MMLU Results: @baber_ inquired about substantial standard errors in the MMLU results for the model miqu, and @hailey_schoelkopf recognized a possible need to revisit the standard error computations within the evaluation code.

- Facilitating Zero-Shot Evaluation: To force a task to run in zero-shot mode in lm-harness, @asuglia was directed by @hailey_schoelkopf to set `num_fewshot: 0`, with a reference to the pertinent source code.

- Upgrading Grouped Task Evaluation Methodology: @hailey_schoelkopf proposed an update to how standard errors are aggregated across grouped tasks, with a PR to the repository moving to a pooled variance-based calculation.

- Synchronizing Vision-Language Model Contributions: @jbdel. offered a cooperative fork with a functioning vision-language pipeline, with an arrangement to transition the work to @asuglia after Feb 15th. Coordination is to be organized with a scheduling poll.
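As a rough illustration of the pooled variance-based aggregation mentioned above, the sketch below combines per-subtask standard errors using the textbook pooled sample variance. It assumes each subtask reports the standard error of a mean over `n` samples and that subtasks share a common variance. The function name and signature are illustrative, and the actual PR may compute this differently.

```python
import math


def pooled_stderr(stderrs, sizes):
    """Aggregate per-subtask standard errors into one group-level value.

    Assumption: stderr_i is the standard error of a mean over n_i samples,
    so the subtask's sample variance is s_i^2 = stderr_i^2 * n_i.
    """
    if len(stderrs) != len(sizes):
        raise ValueError("stderrs and sizes must have the same length")
    total = sum(sizes)
    # Pooled sample variance: sum((n_i - 1) * s_i^2) / (N - k)
    pooled_var = sum(
        (n - 1) * (se ** 2) * n for se, n in zip(stderrs, sizes)
    ) / (total - len(sizes))
    # Standard error of the mean over all N pooled samples
    return math.sqrt(pooled_var / total)


# Two hypothetical subtasks with 100 samples each and stderr 0.1
print(pooled_stderr([0.1, 0.1], [100, 100]))
```

Pooling this way weights each subtask by its sample count, so a small subtask with a noisy estimate does not dominate the group-level error bar.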

Nous Research AI Discord Summary

- LLaVA-1.6 Surpasses Gemini Pro: A YouTube video demonstration suggests that LLaVA-1.6, with features like enhanced reasoning, OCR, and world knowledge, outperforms Gemini Pro on several benchmarks. Results and further details can be found on the LLaVA blog.

- Hugging Face Introduces MiniCPM: A new model, MiniCPM, showcased on Hugging Face, has sparked interest due to its potential and performance, with discussions comparing it to other models like Mistral while fine-tuning results are awaited.

- ResNet Growth Techniques Applied to LLMs: Discussions have surfaced around applying “growing” techniques, successful with ResNet classifiers and ProGANs, to LLMs, with Apple’s Matryoshka Diffusion model cited as evidence. The new Miqu model’s entry on the Open LLM Leaderboard with notable scores led to mixed reactions.

- Quantization’s Impact on AI Model Performance: Conversations around miqu-70b brought up the potential effects of quantization on aspects of model performance such as spelling accuracy, prompting thoughts on whether quantized models should be standard on certain platforms.

- The Ongoing Pursuit for Optimized Tokenization: The community discussed multilingual tokenizers, with a 32000-token vocabulary potentially limiting models like LLaMA/Mistral. Efforts to adapt LLaMA models for specific languages, such as VinaLLaMA for Vietnamese and Alpaca for Chinese, indicate progress in model internationalization.

OpenAI Discord Summary

- Questioning AI Censorship in Geopolitical Contexts: In a discussion about potential censorship, an OpenAI user, @bambooshoots, questioned whether ChatGPT censors responses to comply with Chinese regulations. Another user, @jeremy.o, made it clear that OpenAI does not engage in such censorship practices.

- Content Creation Freedoms Celebrated in AI: @jeremy.o highlighted OpenAI’s DALL·E tool, emphasizing its ability to generate diverse content, including LGBTQI+ representations, showcasing the organization’s commitment to freedom of content creation.

- ChatGPT Conversational Memory and Identity Formation: Users like @blckreaper, @darthgustav., and @jaicraft debated the challenges related to GPT models potentially remembering previous sessions or confusing past responses. Users want GPT entities to have separate memories and a clear division of conversation flows to improve the experience.

- Invisible Text-to-Speech Modifications Explored: @novumclassicum asked for guidance on making text modifications for text-to-speech applications without the changes being shown to the user. The idea is for GPT to internally replace words before submission, so that the alteration is seamless and invisible to end users.

- Amplifying AI Dialogues Beyond Concise Summaries: User @stealth2077 expressed frustration with GPT’s tendency to summarize dialogues between characters after only a few exchanges. The aim is for the AI to consistently generate extended, realistic, character-driven dialogues, maintaining the play-by-play style without defaulting to summaries.

LM Studio Discord Summary

- Navigating LLM Creation Complexities: Users discussed the technical aspects of LLM creation, noting the necessity of expertise in machine learning, PyTorch, and other areas. Meanwhile, there’s interest in LM Studio plugins, such as TTS and open interpreters, indicating a push for more integrated and interactive AI solutions.

- Blazing New Trails with LLMs: Community members are exploring Moondream for vision-to-text transformations and are interested in integrating such models into LM Studio, despite current limitations. Elsewhere, there’s excitement around CodeLlama 70B, with an experimental preset linked for the community, and the leak of miqu, a Mistral AI fine-tune of Llama 70B, is also making waves due to its performance in coding tasks.

- Hardware Hurdles and Optimization Discussions: Discussions centered on optimizing hardware for LLMs, covering issues like dual GPU setups and VRAM’s critical role in model performance. Advice to upgrade to dual RTX 3090 GPUs for improved speed with 70b models was shared, and there’s anticipation over new machine setups with P40 GPUs. On benchmarking CPUs for LM Studio, the advice was to focus on VRAM usage rather than core counts.

- Docker Dilemma Drives Conda Consideration: One user worked around problems with Docker by turning to Conda for setting up environments, highlighting the challenges sometimes faced with containerized environments and the usefulness of environment managers in resolving them.

- Embedding Efficiency Vs. Effectiveness: A brief exchange on database strategies for storing word embeddings considered the tradeoff between similarity search quality and database performance. It was noted that longer embeddings may give better context for searches but could adversely affect database efficiency.

HuggingFace Discord Summary

- Adventure in Advanced RAG: @andysingal has showcased his work on Advanced RAG, sharing a GitHub notebook and hinting at further development similar to OpenAI’s interfaces.

- LLaVA-1.6 Outshines Gemini Pro: LLaVA-1.6 has been announced, claiming improvements in resolution, OCR, and reasoning, even surpassing Gemini Pro in some benchmarks. For more insights, visit the LLaVA-1.6 blog post.

- Diffusers 0.26.0 Release with New Video Models: The new Diffusers 0.26.0 release brings two new video models, with full details in the release notes. An implementation error in the release code led to incorrect inference steps, contributing to initial user issues.

- Tokenizer Pattern Visualization and Conversion: Tokenization patterns have been visualized by deeeps.ig and are demonstrated in a Kaggle notebook. Additionally, a script for converting tiktoken tokenizers to Hugging Face format was shared, although licensing concerns were mentioned.

- AI & Law and Mamba Dissected: An ongoing discussion on AI in the legal field is backed by a Medium article, with a presentation to follow. @chad_in_the_house posted about an upcoming presentation on Mamba, a sequence modeling architecture, with relevant details found in the arXiv paper and further explanation in Yannic Kilcher’s YouTube video.

- Livestock Health ML Model Call for Volunteers: DalensAI is assembling a machine learning dataset to detect sickness in livestock and needs volunteers to contribute images and labels. This presents an opportunity to contribute to a real-world application of computer vision.

- Donut’s Dicey Performance Across Transformers Versions: An issue was reported where a modified Donut model performs differently during inference across `transformers` library versions 4.36.2 and 4.37.2. This implies potential backward compatibility challenges to be aware of when updating dependencies.

Mistral Discord Summary

- Groq’s Competitive Edge with LPU Chips: Groq’s custom hardware, its Language Processing Units (LPUs), was recognized for its local optimization capabilities during runtime, suggesting the chips may rival Nvidia H100s. However, Groq does not provide hosting, and inquiries about its performance highlighted limited memory, with more details available in the GroqNode™ Server product brief.

- Curiosity Over MoMo-72B Model: A Hugging Face model known as MoMo-72B sparked debates about model quality and its ‘contaminated’ leaderboard scores, with links shared to the MoMo-72B Hugging Face model page and the associated discussion.

- Light-hearted Teasing Among Peers: A brief, playful exchange arose involving a “betweter” comment, alongside an important clarification regarding free model access: open-source models can be explored on Hugging Face rather than through API keys.

- Assistance and Clarification for Mistral Deployment: Users provided guidance and solutions for running Mistral models on Mac, pointing towards LMStudio for suitable downloads, with expressions of gratitude for the support.

- Anticipation for Innovative AI Projects: Community showcases generated excitement, from socontextual.com to a YouTube demo titled “Trying LLaVA-1.6 on Colab”, which highlighted LLaVA-1.6’s improved reasoning and world knowledge. Additionally, a fan fiction titled “Sapient Contraptions”, inspired by Terry Pratchett, was shared via Pastebin, illustrating creative uses of LLM software for story crafting.

Perplexity AI Discord Summary

- Base Model Basics: Newcomer christolito inquired about the “base perplexity” model, prompting a response from mares1317 with assistance and direction to further resources.

- Perplexity App Developments:

  - Document attachment functionality is currently unavailable in the Perplexity Android app, although the feature exists in the web version.

  - Details were presented concerning Copilot’s utilization of GPT-4 and Claude 2 models in offline search-facilitated modes.

- Membership and UX Concerns:

  - Limitations of the free version of Perplexity were compared to those found in ChatGPT.

  - Pro user matthewtaksa experienced delays and message duplication issues.

- Learning and Leveraging Perplexity:

  - @fkx0647 reported success in uploading and interacting with documents through an API.

  - Perplexity’s effectiveness in content creation was highlighted in a shared YouTube video, with preference over Google and ChatGPT.

- API Expansion Appeal: @bergutman proposed the integration of llava-v1.6-34b for API support, citing the high costs of using 1.6 on Replicate and the lack of multimodal API options compared to GPT4-V.

OpenAccess AI Collective (axolotl) Discord Summary

- SuperServer Unveiled for AI Fine-Tuning: The community now has access to an 8x3090 SuperServer specifically for running axolotl fine-tunes, with @dctanner inviting DMs for collaboration. Details on the server’s capabilities can be found in dctanner’s announcement, The AI SuperServer is live!.

- Advantages of axolotl Sample Packing and BYOD Highlighted: @nanobitz emphasized the benefits of axolotl over AutoTrain, praising its “sample packing and simple yaml sharing + byod” while noting AutoTrain’s automatic model selection as an appealing feature.

- FFT Ambitions and Model Fine-Tuning: @le_mess inquired about running an FFT (full fine-tune) of Mistral on the new SuperServer, and @dctanner confirmed that a full finetune of Mistral 7b was in progress, with plans to test Solar 10.7b.

- In-Depth Exchange on GPU Storage and Training Capabilities: @nafnlaus00 and @yamashi discussed the technical challenges of storing gradients and the communication bandwidth needed across multiple GPUs during full model finetuning.

- Experience with vLLM Update: Version 0.3.0 of vLLM showed significant speed improvements for specific workloads compared to version 0.2.7, as reported by @dreamgen.

- Premature Termination in Mixtral Instruct Encountered: @nafnlaus00 reported that the GGUF Q3_K_M quantization of Mixtral Instruct would sometimes terminate responses early, and also mentioned using llama.cpp for MoE inference.

- Launch of Math-Multiturn-100K-ShareGPT Dataset: A new dataset, Math-Multiturn-100K-ShareGPT, has been made available on Hugging Face, featuring conversations designed to solve math problems. It provides up to 64 turn pairs and aims to include more complex equations in the future.

LlamaIndex Discord Summary

- RAGArch Simplifies RAG System Deployment: The new RAGArch tool, introduced by @HarshadSurya1c, makes setting up a Retrieval-Augmented Generation (RAG) system convenient. It incorporates a Streamlit UI allowing for easy component selection and one-click creation of a RAG pipeline, as shared in a promotional tweet.

- Comprehensive Guide to Hugging Face LLMs with LlamaIndex: @kapa.ai provided a guide for integrating Hugging Face pre-trained language models with LlamaIndex, complete with a step-by-step example notebook. Additionally, @whitefang_jr shared a Colab notebook for running HuggingFace StableLM on Colab with LlamaIndex.

- Integration Options for Predictive Models with LlamaIndex: A discussion highlighted the potential for integrating LlamaIndex with predictive models’ APIs from various platforms, with guides available for each specific integration. The conversation also covered running local models and using LlamaIndex in conjunction with or independently from LangChain, along with a mention of Ollama, an optimized local model runner.

- Perplexity AI’s Citation Technique Draws Interest: @tyronemichael inquired about the rapid and advanced citation generation described in Perplexity AI’s documentation, comparing it with their own approach using SerpAPI and LlamaIndex. Perplexity’s approach remains unclear even after inquiries, and a tweet discussing a Google paper highlights Perplexity AI’s capabilities in factual Q&A and debunking.

CUDA MODE (Mark Saroufim) Discord Summary

- Optimizing With NVIDIA’s Finest: User @zippika shared their experience with an Nvidia 4090 GPU, discussing optimized CUDA code for RGB to grayscale conversion using `uchar3` and integer arithmetic for efficiency. @jeremyhoward and @vim410, who brings experience from NVIDIA, contributed to discussions around bitwise shifts, and @vim410 was welcomed into the community.

- Compiler Smarts on Bitwise Optimization: During the discussions, @apaz brought up that compilers may automatically replace division with bit-shifts during optimization, as part of a broader conversation on efficiency in CUDA code.

- Solving CUDA Memory Management Mysteries: @_davidgonmar got assistance from community members like @lancerts and @vim410 with a bug fix and insight into proper C++ memory management techniques in a CUDA context.

- Numba’s Need for Speed using Shared Memory: @stefangliga helped @mishakeyvalue by sharing Siboehm’s article, which covers optimization techniques like shared memory caching and performance enhancements in GPU matrix multiplication.

- Catch That Missing Brace!: @ashpun was assisted by @marksaroufim in fixing a `RuntimeError` in a CUDA kernel caused by a syntax error. They also tackled an `ImportError` linked to the elusive `GLIBCXX_3.4.32` version, leading to suggestions to update Conda and set `LD_LIBRARY_PATH` appropriately.
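The integer-arithmetic grayscale conversion and the division-versus-shift point above can be sketched in plain Python, with each `(r, g, b)` tuple standing in for a `uchar3` pixel. This is not the CUDA kernel from the discussion. The weights 77, 150, and 29 are an assumption: a common fixed-point approximation of 0.299, 0.587, and 0.114 that sums to 256.

```python
# Integer-only RGB -> grayscale, mirroring per-pixel arithmetic on a uchar3.
# Assumption: weights 77/150/29 (sum 256) approximate 0.299/0.587/0.114.
R_W, G_W, B_W = 77, 150, 29


def rgb_to_gray(r: int, g: int, b: int) -> int:
    """Weighted sum in integers; >> 8 divides by 256 without floating point."""
    return (R_W * r + G_W * g + B_W * b) >> 8


def image_to_gray(pixels):
    """Convert a flat list of (r, g, b) tuples, one tuple per pixel."""
    return [rgb_to_gray(r, g, b) for (r, g, b) in pixels]


pixels = [(255, 255, 255), (0, 0, 0), (255, 0, 0)]
print(image_to_gray(pixels))

# For non-negative integers, the shift and floor division by 256 agree,
# which is why a compiler can substitute one for the other.
assert all(
    (R_W * r + G_W * g + B_W * b) >> 8 == (R_W * r + G_W * g + B_W * b) // 256
    for (r, g, b) in pixels
)
```

Because the weights sum to exactly 256, pure white maps to 255 and the result always fits in one byte.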

LangChain AI Discord Summary

- LangChain Lacks in Docs, Gains in Tools: Engineers expressed frustration with LangChain’s documentation, finding it confusing and ironically noting the tool’s inability to explain itself. Meanwhile, there’s enthusiasm for community contributions like AutoCrew, which automates crew and task creation for CrewAI.

- Mixed Feelings on LangChain’s Viability: While some developers stopped using LangChain due to rapid changes and a lack of modularity, others praise its time-saving features. However, questions about custom modifications, such as adding `user_id` to `langchain_pg_collection`, went without a clear resolution.

- Community Driven AI Educational Content: Shared educational materials included a Stanford DSP tutorial on Demonstrate - Search - Predict models, a Chat UI adaptation tutorial by @esxr_, and a tutorial on chatting with CSV files using LangChain and the OpenAI API, despite some bugs.

- Harnessing AI in Productivity Tools: Innovations highlighted include Lutra.ai, which merges AI with Google Workspace, and Tiny Desk AI, a no-frills, free AI-powered chat app, each touting unique capabilities to enhance productivity and user experience.

- Routing Multiple AI Agents Discussed: The challenge of efficiently routing queries across multiple specialized agents was discussed, with inquiries about updating the `router_to_agent` function for optimal performance.

LLM Perf Enthusiasts AI Discord Summary

- MTEB Leaderboard Shines a Light on AI: Natureplayer highlighted the MTEB leaderboard, referencing the latest rankings and performances of language models on various tasks.

- Feature Request: Browsing with Ease: A feature request for a browse channels option was put forward by @joshcho_, noting the difficulty in navigating and selecting channels of interest due to the current lack of such functionality.

- GPT-3.5 Lauded for Instruction Adherence: Users discussed the enhanced instruction-following capabilities of GPT-3.5, with @justahvee observing its improved performance on instruction-heavy tasks, even at the cost of reasoning abilities.

- Detailed Prompting: A Double-Edged Sword: The guild covered the trade-off between detailed prompting and latency, with user @res6969 noting that extended explanations result in smarter AI performance but increased latency, while @sourya4 discussed experimenting with `gpt-4-turbo` to balance these factors.

- Chain of Thought Prompts Lead to Brainier AI: The conversation included insights on using Chain of Thought (CoT) prompts for asynchronous strategies, which yield intelligent responses, and the potential of reusing CoT outputs for a secondary processing step, as reported by @byronhsu and @res6969.

Alignment Lab AI Discord Summary

- Daydream Nation Joins the Chat: User @daydream.nation joined [Alignment Lab AI ▷ #general-chat] and mentioned the team’s project going public, expressing regret for not having participated in it yet, and speculated on the intent to test human interaction on a larger scale in the context of alignment, akin to Google’s Bard.

- Ready to Tackle the Hard Problems: In [Alignment Lab AI ▷ #looking-for-work], @daydream.nation offered expertise in Python, Excel Data Modeling, and SQL, combined with a background in Philosophy and an interest in addressing consciousness with AI.

Datasette - LLM (@SimonW) Discord Summary

- Infinite Craft Channels Elemental Alchemy: An interactive game named Infinite Craft, built on llama2, was spotlighted by @chrisamico, showcasing gameplay elements such as water, fire, wind, and earth, which can be combined through a drag-and-craft mechanism.

- Game Creator Garners Praise: @chrisamico further recommended games by the creator of Infinite Craft, highlighting them as clever, fun, and occasionally thought-provoking, although no specific titles or links were provided.

- Endorsing the Endless Fun: @dbreunig affirmed the excitement around Infinite Craft, calling it a great example for its category, while @bdexter confided about the game’s addictive nature, signaling high engagement potential.

DiscoResearch Discord Summary

- German Embedding Models Surpass Benchmarks: @damian_89_’s tweet discusses the superior performance of jina-embeddings-v2-base-de by @JinaAI_ and bge-m3 by @BAAIBeijing in enterprise data tests, with BGE being highlighted as particularly effective.

- Call for Quantitative Assessment: @devnull0 emphasizes the need to test embedding models against a suitable metric, though they do not specify which metrics to use for evaluation.

- Guide to RAG Evaluation Released: The GitHub notebook provided by @devnull0 offers a methodological guide to evaluate Retrieval-Augmented Generation (RAG) systems.

- Blogging Deep Dive into RAG: A detailed blog post complements the notebook, explaining how to assess the encoder and reranker components of a RAG system using LlamaIndex and a purpose-built testing dataset.

Skunkworks AI Discord Summary

- LLaVA 1.6 Released: .mrfoo announced the release of LLaVA 1.6, pointing to the official release notes and documentation.

- Off-Topic AI Buzz: Pradeep1148 shared a YouTube video in the off-topic channel, which seems to be AI-related but came without any context or discussion.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1441 messages🔥🔥🔥):

- Discussing Life and Tech in the Late Hours: Participants like @mrdragonfox, @coffeevampir3, and @potatooff engaged in a late-night conversation about everything from the performance of large language models like MiquMaid and OLMo to the speculative possibility of 3D printing PC hardware and carbon nanotube applications.

- Model Watermarking Techniques: @turboderp_ and @selea discussed the idea of using gradient ascent to make models unlearn unwanted information and the concept of watermarking models during training, with claims that watermarks can be so deep within the model that finding and removing them can be nearly impossible.

- On Track with OLMo: @drnicefellow introduced the OLMo GitHub repository by AI2, highlighting its potential as a complete open-source LLM with checkpoints and the training of a 65B model in progress. It was noted that their model is under the Apache 2.0 license.

- Academicat and Quantum: Users discussed the capabilities of academicat on processing very long papers and touched on how quantum materials like superconductors work under particular conditions.

- Exploring Superconductors and Nanotubes: In the context of future technologies and materials science, @selea, @rtyax, and @spottyluck talked about superconductor materials like yttrium barium copper oxide (YBCO) and carbon nanotubes, mentioning the convenience of purchasing them on platforms like AliExpress.

Links mentioned:

- Miau Cat GIF - Miau Cat Meow - Discover & Share GIFs: Click to view the GIF

- Tkt Smart GIF - Tkt Smart - Discover & Share GIFs: Click to view the GIF

- Mixture of Experts for Clowns (at a Circus): no description found

- Enterprise Scenarios Leaderboard - a Hugging Face Space by PatronusAI: no description found

- mlabonne/phixtral-2x2_8 · Hugging Face: no description found

- Yes Lawd GIF - Yes Lawd My Precious - Discover & Share GIFs: Click to view the GIF

- Creepy Talking Cat 🙀: I made this video into a full song!Watch here: youtu.be/WLryCXyjL_0

- nVidia Hardware Transcoding Calculator for Plex Estimates: no description found

- Thinking Christian Bale GIF - Thinking Christian Bale Patrick Bateman - Discover & Share GIFs: Click to view the GIF

- diable/enable CUDA Sysmem Fallback Policy from command line: diable/enable CUDA Sysmem Fallback Policy from command line - a

- Crash Course Mix [ RaiZen ]: Used theme :Crash Course - PhysicsCrash Course - Anatomy & PsychologyCrash Course - AstronomyCrash Course - PhilosophyCrash Course - PsychologyI do not own a…

- The Molecular Shape of You (Ed Sheeran Parody) | A Capella Science: I’m in love with your bonding orbitals.Support A Capella Science: http://patreon.com/acapellascienceSubscribe! https://www.youtube.com/subscription_center?ad…

- Lisa Su Amd GIF - Lisa Su Amd Ryzen9 - Discover & Share GIFs: Click to view the GIF

- THE TERMINATOR “Final Fight Clip” (1984) Sci Fi Horror Action: THE TERMINATOR “Final Fight Clip” (1984) Sci Fi Horror ActionPLOT: In 1984, a human soldier is tasked to stop an indestructible cyborg killing machine, both …

- Making YBCO superconductor: How to make and test your own pieces of YBCO superconductor.Best how-to resources for YBCO:http://physlab.org/wp-content/uploads/2016/04/Superconductor_manua…

- GitHub - bodaay/HuggingFaceModelDownloader: Simple go utility to download HuggingFace Models and Datasets: Simple go utility to download HuggingFace Models and Datasets - GitHub - bodaay/HuggingFaceModelDownloader: Simple go utility to download HuggingFace Models and Datasets

- Jack Tanamen GIF - Jack Tanamen Gecky - Discover & Share GIFs: Click to view the GIF

- GitHub - gameltb/ComfyUI_stable_fast: Experimental usage of stable-fast and TensorRT.: Experimental usage of stable-fast and TensorRT. Contribute to gameltb/ComfyUI_stable_fast development by creating an account on GitHub.

- Nvidia RTX A6000 48GB GDDR6 Ampere Graphics Card PNY 3536403379193 | eBay: no description found

- GitHub - allenai/OLMo: Modeling, training, eval, and inference code for OLMo: Modeling, training, eval, and inference code for OLMo - GitHub - allenai/OLMo: Modeling, training, eval, and inference code for OLMo

- I have this paper:Single-cell multi-omics defines the cell-type specific impac - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- Vanadium Dioxide as a Natural Disordered Metamaterial: Perfect Thermal Emission and Large Broadband Negative Differential Thermal Emittance: Thermal radiation from conventional emitters, such as the warm glow of a light bulb, increases with temperature: the hotter the bulb, the more it glows. Thermal emitters that buck this trend could lea…

TheBloke ▷ #characters-roleplay-stories (227 messages🔥🔥):

- Aphrodite Batching and GPU Compatibility Issues: @sunija highlighted the advantages of Aphrodite’s batching capabilities for services like AI horde but also pointed out it doesn’t work well with two GPUs of different VRAM sizes. @goldkoron commented favorably on the batch generation options and expressed disappointment about the GPU issue.

- Usage of Context in Aphrodite: According to @sunija, Aphrodite can store multiple conversations’ contexts for efficient reuse. Meanwhile, @keyboardking and @goldkoron raised concerns over potential memory usage and discussed the possibility of offloading processed context to the CPU.

- Calibration Dataset Discussions and AWQ Model Cards: @dreamgen inquired about the best calibration datasets for Activation-aware Weight Quantization (AWQ), and @turboderp_ noted that the EXL2 calibration data deliberately includes a variety of sources, emphasizing that dataset diversity matters for quality results.

- Local AI for Roleplay: @dxfile shared experiences with using different models for role-playing and preferred instruction mode to chat mode, receiving feedback from @sao10k that instruct mode is optimal when the model is instruction-tuned. @dreamgen and @firepin123 asked for clarification on support for various formats like iq3_xxs in koboldcpp.

- Leaderboards and the MoMo Model: @mrdragonfox and others discussed the controversial presence of the Mistral medium model (MoMo) on a leaderboard, touching on the problems of models without clear licensing and potential legal issues of using or promoting leaked models. @kaltcit and @c.gato offered critical views on corporate honesty and the secrecy around model training specifics.

Links mentioned:

- TheBloke’s gists: GitHub Gist: star and fork TheBloke’s gists by creating an account on GitHub.

- Importance matrix calculations work best on near-random data · ggerganov/llama.cpp · Discussion #5006: So, I mentioned before that I was concerned that wikitext-style calibration data / data that lacked diversity could potentially be worse for importance matrix calculations in comparison to more ”…

- TheBloke/CapybaraHermes-2.5-Mistral-7B-AWQ · Hugging Face: no description found

- Reddit - Dive into anything: no description found

TheBloke ▷ #training-and-fine-tuning (8 messages🔥):

- Exploring Quantization and LoRA Fine-Tuning: @dreamgen inquired whether fine-tuning a pre-trained AWQ model with LoRA would perform better than using the base model when planning to quantize later. @dirtytigerx clarified that while AWQ is different from standard QLoRA, there’s no evidence that it performs better.

- Clarification on QLoRA Methodology: In response to @dreamgen, @dirtytigerx compared AWQ to normal QLoRA, emphasizing that QLoRA uses load_in_4bit via bitsandbytes while AWQ employs a different quantization method.

- Introducing LoftQ: Bridging the Quantization Gap: @dreamgen shared a link to a paper discussing LoftQ, a technique for quantization that simultaneously fine-tunes LoRA and quantizes a model to improve performance on downstream tasks.

- Debating the Notions of Quantization and Fine-Tuning: @dreamgen suggested that aligning the quantization process during QLoRA fine-tuning and during serving could offer benefits, but @dirtytigerx expressed skepticism regarding the wide replication of the paper’s results.

Links mentioned:

LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models: Quantization is an indispensable technique for serving Large Language Models (LLMs) and has recently found its way into LoRA fine-tuning. In this work we focus on the scenario where quantization and L…

TheBloke ▷ #model-merging (1 messages):

kquant: internLM is a solid recommendation.

TheBloke ▷ #coding (2 messages):

- Seeking Advice on LLM Deployment: m.0861 inquired about best practices for deploying Large Language Models (LLMs) using the HF (Hugging Face) spaces service, hinting at possible use of the service for such purposes.

- Exploring HF Inference Endpoints for LLMs: Shortly after, m.0861 considered that HF’s inference endpoints might be a more appropriate tool for deploying LLMs, suggesting a shift in focus to that feature.

LAION ▷ #general (380 messages🔥🔥):

- Distillation Insights for SSD-1B: @pseudoterminalx remarked on SSD-1B’s inflexibility due to its distillation from a fine-tuned model. @gothosfolly concurred, mentioning that distilling from multiple fine-tuned models can enhance aesthetics.

- Captioning Chat for Data Quality: In a detailed exchange, @pseudoterminalx and @gothosfolly discussed strategies for training models with properly captioned images to enhance prompt adherence. @pseudoterminalx reported using a combination of image sources like BLIP, COCO, and LLaVA, applying input perturbations, and addressing data pipeline issues like resizing and cropping for better training efficiency and data quality.

- Techniques for Enhanced Prompt Adherence: @pseudoterminalx and @gothosfolly debated the value of using UTF-8 tokenization for text encoding and a hybrid approach that combines UTF-8 codes into a single token. They considered whether a model using ByT5’s byte-level encoding could offer advantages for image generation, especially in handling text.

- Cropping Models for Image Upscaling: The conversation between @pseudoterminalx and @astropulse highlighted the benefits of using cropped model weights for image-to-image upscaling. They noted that this approach helps maintain scene integrity and seems to work effectively for higher resolution upscaling.

- Troubleshooting Global Information Issues in VAEs: A discussion led by @drhead considered the problem of models like StyleGAN3 and the SD VAE sneaking global information through intense regions in generated images. @thejonasbrothers also pitched in, suggesting a variety of options to counteract this effect, emphasizing the need for concrete evidence rather than theorizing.

Links mentioned:

- Google’s AI Makes Stunning Progress with Logical Reasoning: 🤓Learn more about Artificial Intelligent on Brilliant! ➜ First 200 to use our link https://brilliant.org/sabine will get 20% off the annual premium subscrip…

- Sadako The Ring GIF - Sadako The Ring Ringu - Discover & Share GIFs: Click to view the GIF

LAION ▷ #research (24 messages🔥):

- Cosine Annealing Takes a Backseat: @top_walk_town shared their surprise about a new report that challenges the effectiveness of cosine annealing, describing it as a “roller coaster.” The report is accessible via Notion.

- Research Lands on Blogs Over Journals: @chad_in_the_house and others find it noteworthy that significant research findings are often shared in blog posts rather than through traditional academic publishing due to the hassle with peer review processes.

- Novel Architectures to Skip Traditional Publishing: @mkaic is considering releasing information on a novel architecture they are working on through a blog post, expressing frustration with the current state of academic publishing.

- Low-Hanging Fruit in Machine Learning Research: @mkaic brought up how machine learning research is often just about applying well-known techniques to new datasets, which has become unexciting and crowds the landscape with incremental papers.

- Industry Experience Over Academic Publications: @twoabove recounted how their practical achievements in data competitions and industry connections provided opportunities beyond what academic papers could offer, hinting at the diminishing impact of being published in top journals.

Links mentioned:

- How Did Open Source Catch Up To OpenAI? [Mixtral-8x7B]: Sign-up for GTC24 now using this link! https://nvda.ws/48s4tmcFor the giveaway of the RTX4080 Super, the full detailed plans are still being developed. Howev…

- Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It’s the all-in-one workspace for you and your team

Latent Space ▷ #ai-general-chat (158 messages🔥🔥):

- Defining AI Engineer Skills: @eugeneyan sought input on the necessary skills for software engineers to effectively use LLMs, leading to discussions about understanding the probabilistic nature of LLMs and the importance of evaluation, debugging, and data familiarity. Recognition of a difference emerged between traditional software engineering and AI engineering roles, with various views on whether calling LLM APIs could shape an SDE into a data scientist role.

- The AI Engineer Continuum: Community members, including @eugeneyan and @swyxio, debated the stages of AI engineering expertise, from using APIs and rapid prototyping to fine-tuning models. A key focus was on the mindset shift required for engineers to move from deterministic to probabilistic outcomes and handling large data volumes effectively.

- Skill Set Spotlight in AI Sector: @coffeebean6887 and @eugeneyan discussed the importance of job titles versus actual skill sets in the industry, considering expanding beyond traditional SDEs to other roles like data engineers and analysts. There was consensus that adaptability and rapid learning of evolving best practices in AI take priority over specific titles.

- Exploration of CUDA Learning: @420gunna and other community users pondered the value of learning CUDA for future career prospects, contrasting it with the appeal of popular technologies and the rarity of in-depth CUDA knowledge in the LLM field.

- Concerns & Curiosities About OpenAI’s Fine-Tuning API: @dtflare raised questions about experiences with OpenAI’s Fine-Tuning API/SDK, and @swyxio shared skepticism about the potential for platform lock-in and recommended going “the whole way” with fine-tuning rather than using simplified APIs, unless a substantial gain was evident.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Getting Started With CUDA for Python Programmers: I used to find writing CUDA code rather terrifying. But then I discovered a couple of tricks that actually make it quite accessible. In this video I introduc…

- Tweet from Eugene Yan (@eugeneyan): Trying to write a JD to hire software engineers that build with LLMs. Beyond making REST calls, what’s essential? Some I can think of: • Evals: Collect labels & measure task performance • Look a…

- Buttondown: no description found

- The Rise of the AI Engineer: Emergent capabilities are creating an emerging job title beyond the Prompt Engineer. Plus: Join 500 AI Engineers at our first summit, Oct 8-10 in SF!

- GitHub - AbanteAI/rawdog: Generate and auto-execute Python scripts in the cli: Generate and auto-execute Python scripts in the cli - GitHub - AbanteAI/rawdog: Generate and auto-execute Python scripts in the cli

Latent Space ▷ #ai-announcements (2 messages):

- Join the LLM Paper Club (East) Discussion: @swyxio announces the ongoing LLM Paper Club (East) led by <@796917146000424970>. Interested parties are encouraged to join the discussion and check out the upcoming AI Engineering Singapore meetup.

- Don’t Miss AI in Action: @kbal11 invites members to the AI in Action event currently in session, discussing “Onboarding normies / how to differentiate yourself from the AI grifters”. The session is led by <@315351812821745669> and accessible here.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.


- AI Engineering Singapore meetup · Luma: What is this? Meetup for folks interested in machine learning, LLMs, and all things genAI with craft beers and good vibes downtown 🍻 swyx from https://latent.space/ is home for CNY, do…

Latent Space ▷ #llm-paper-club-east (63 messages🔥🔥):

- Granting Screen Share Permissions: User @ivanleomk acknowledged that @796917146000424970 (unidentified user) is sorting out screen share permissions and advised to give it some time.

- Audio Troubles on Stage: @ivanleomk instructed @srini5844 to join the stage for audio issues and later mentioned a brief intermission due to their own audio issues.

- Exploring Self Rewarding LLMs: @anthonyivn raised questions about the methodology for generating preference pairs for self-rewarding LLMs, leading to clarifications by @ivanleomk about the paper’s process using scores to form preference pairs.

- Discussion on Improving Text Embeddings and RAG: @anthonyivn shared insights from experiments with different rating scales and discussed a paper on improving text embeddings (Improving Text Embeddings with Large Language Models) which is being utilized in recent research.

- Idea for a ‘Code Club’ and ‘Production Club’: @j0yk1ll. and @jevonm proposed creating a “code club” for walking through code together and a “production club” to review code/papers with actual implementation results, which can be valuable for engineers and those interested in real-world applications.
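To make the preference-pair discussion above concrete, here is a minimal sketch of one way scored responses can be turned into a (chosen, rejected) pair. It assumes each candidate response already carries a numeric judge score; the `Candidate` type, the `make_preference_pair` helper, and the highest-versus-lowest rule with ties skipped are illustrative assumptions, not a reproduction of the paper's code.

```python
from typing import List, NamedTuple, Optional, Tuple


class Candidate(NamedTuple):
    """A generated response plus a judge score (hypothetical structure)."""
    text: str
    score: float


def make_preference_pair(candidates: List[Candidate]) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected), or None when the scores carry no signal."""
    if len(candidates) < 2:
        return None
    best = max(candidates, key=lambda c: c.score)
    worst = min(candidates, key=lambda c: c.score)
    # Identical scores give no preference signal, so the prompt is skipped.
    if best.score == worst.score:
        return None
    return best.text, worst.text


pair = make_preference_pair([
    Candidate("answer A", 4.0),
    Candidate("answer B", 1.5),
    Candidate("answer C", 3.0),
])
print(pair)
```

Pairs built this way could then feed a preference-optimization step; how scores are produced and which pairs are kept are the design choices the thread was asking about.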

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Self-Consistency Improves Chain of Thought Reasoning in Language Models: Chain-of-thought prompting combined with pre-trained large language models has achieved encouraging results on complex reasoning tasks. In this paper, we propose a new decoding strategy, self-consiste…

- RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval: Retrieval-augmented language models can better adapt to changes in world state and incorporate long-tail knowledge. However, most existing methods retrieve only short contiguous chunks from a retrieva…

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- Let’s build GPT with memory: learn to code a custom LLM (Coding a Paper - Ep. 1): You’ve used an LLM before, and you might’ve even fine-tuned one, but…have you ever built one yourself? How do you start from scratch and turn a new researc…

Latent Space ▷ #ai-in-action-club (134 messages🔥🔥):

- Greetings and Scheduling: @alan_95125 initiated the conversation, and @kbal11 mentioned that they would start after more folks arrived.

- Anticipation and Time Checks: A few participants such as @yikesawjeez and @nuvic_ commented on the start time, with @nuvic_ suggesting that the channel be renamed to “Fridays 1PM” to match the event schedule.

- Sharing AI-Related Links: @yikesawjeez shared a series of links to various articles and blog posts related to AI, covering topics from founding AI startups to practical AI use cases, with the longest link dump including resources like Hitchhiker’s Guide to AI, The Washington Post, and Towards Data Science, among others.

- Channel Activity and Enthusiasm: Users like @eugeneyan and @coffeebean6887 commented on the increasing number of audience members, indicating growing interest and participation in the channel’s event.

- Launching a Local Group: There was interest in forming a local group for Los Angeles, with @juliekwak requesting the creation of a channel and @coffeebean6887 tagging potential members for an LA meetup, which led to @swyxio creating a new channel for it.

Links mentioned:

- Symphony – Interfaces: Another interesting consequence of models like GPT-3.5+ being able to call functions is that this ability can be used to render visual interfaces within a conversation.

- Gandalf | Lakera – Test your prompting skills to make Gandalf reveal secret information.: Trick Gandalf into revealing information and experience the limitations of large language models firsthand.

- People + AI Guidebook: A toolkit for teams building human-centered AI products.

- GitHub - uptrain-ai/uptrain: Your open-source LLM evaluation toolkit. Get scores for factual accuracy, context retrieval quality, tonality, and many more to understand the quality of your LLM applications: Your open-source LLM evaluation toolkit. Get scores for factual accuracy, context retrieval quality, tonality, and many more to understand the quality of your LLM applications - GitHub - uptrain-ai…

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- My Life as a Con Man: Confidence is a dual edged sword. I trafficked in confidence when I was in finance, and now I see it everywhere I look.

- Why we founded Parcha : A deeper dive into why we’re building AI agents to supercharge compliance and operations teams in fintech at Parcha

- How to use AI to do practical stuff: A new guide: People often ask me how to use AI. Here’s an overview with lots of links.

- 3 things everyone’s getting wrong about AI: As AI tools spread, people are struggling to separate fact from fiction.

- How to talk about AI (even if you don’t know much about AI): Plus: Catching bad content in the age of AI.

- What are AI Agents?: In this post, you’ll learn what AI agents are and what they are truly capable of. You’ll also learn how to build an AI agent suitable for your goals.

- AI Agent Basics: Let’s Think Step By Step: An introduction to the concepts behind AgentGPT, BabyAGI, LangChain, and the LLM-powered agent revolution.

- Pitching Artificial Intelligence to Business People: From silver bullet syndrome to silver linings

- How PR people should (not) pitch AI projects: These are exciting times for the artificial intelligence community. Interest in the field is growing at an accelerating pace, registration at academic and professional machine learning courses is soar…

- Educating Clients about Machine Learning and AI – Andy McMahon: no description found

- How to Announce Your Actual AI: no description found


- 7 Habits of Highly Effective AI Business Projects: What’s the difference between good & great AI business projects? Here are 7 things to consider when doing AI work in your organisation.

- How to convince Venture Capitalists you’re an expert in Artificial Intelligence: If you like this article, check out another by Robbie: 15 Ways a Venture Capitalist Says “No”

- Launching your new AI Startup in 2023 — Building Better Teams: In the last few months more and more people have been asking me for my thoughts on their AI business ideas, and for help with navigating the space. This post covers the majority of my thoughts on the …

Eleuther ▷ #general (161 messages🔥🔥):

- Open Models as Large-Scale Science Projects: @layl discussed the increasing ease of getting government support in the EU for open model training on national clusters, aligning with treating open models as large-scale science projects. Meanwhile, @stellaathena confirmed the trend, describing progress over the past years as going from none to a small amount, and suggested possible future applications for @layl’s ML library in an HPC environment like LUMI.

- Activation Function Analysis and OpenAI’s Mish Experiment: Amidst a broad discussion about activation functions like GeLU, ReLU, Mish, and TanhExp, @xa9ax, @fern.bear, and others exchanged insights and research, highlighting the lack of extensive empirical testing for different activation functions in large model training. @ad8e expressed skepticism about the honesty of a paper favoring Mish but was reassured after @xa9ax confirmed that all pre-submission experiments were included in the published manuscript.

- Transformer++ and Mamba Models Examined: Questions arose around @state-spaces/mamba models and how they compare to other architectures like Transformer++ and Pythia. @ldj and @baber_ highlighted concerns about baselines and comparisons, while @stellaathena noted the absence of a standard model suite trained on open data for fair comparisons.

- Diverse Takes on Activation Functions Impact: Users @catboy_slim_, @fern.bear, and @nostalgiahurts offered thoughts on the subtle influences of activation function choices, like scale interactions with other hyperparameters and their impact on function performance. Shared empirical results from EleutherAI’s blog and research papers were discussed as attempts to unravel complex dependencies between activation functions and model training dynamics.

- Legal Quandaries of Large Model Training: @synquid brought attention to the legal complications related to transparency in model training data sources, suggesting that openly revealing training data can attract intellectual property lawsuits, which could impede scientific progress.
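For readers who want to see what the activation functions under discussion actually compute, the sketch below evaluates Mish using the definition quoted in the linked paper, f(x) = x · tanh(softplus(x)), next to ReLU for comparison. It is a plain-Python illustration with hypothetical helper names, not code from the thread or from any of the cited repositories.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable softplus: ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish as defined in the paper: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def relu(x: float) -> float:
    """ReLU for comparison: max(0, x)."""
    return max(x, 0.0)


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  mish={mish(x):.4f}")
```

Unlike ReLU, Mish is smooth and lets small negative values through, which is one reason its interaction with scale and other hyperparameters, as raised in the discussion, is harder to reason about without empirical ablations.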

Links mentioned:

- Activation Function Ablation: An ablation of activation functions in GPT-like autoregressive language models.

- Mish: A Self Regularized Non-Monotonic Activation Function: We propose $\textit{Mish}$, a novel self-regularized non-monotonic activation function which can be mathematically defined as: $f(x)=x\tanh(softplus(x))$. As activation functions play a crucial role i…

- A decoder-only foundation model for time-series forecasting – Google Research Blog: no description found

- Releasing Transformer++ models · Issue #63 · state-spaces/mamba: Great work! Would it be possible to release your Transformer++ baseline models (specifically the ones trained on the Pile)?

- TinyGSM: achieving >80% on GSM8k with small language models: Small-scale models offer various computational advantages, and yet to which extent size is critical for problem-solving abilities remains an open question. Specifically for solving grade school math, …

- ReLU Strikes Back: Exploiting Activation Sparsity in Large Language Models: Large Language Models (LLMs) with billions of parameters have drastically transformed AI applications. However, their demanding computation…

- Information Theory for Complex Systems Scientists: In the 21st century, many of the crucial scientific and technical issues facing humanity can be understood as problems associated with understanding, modelling, and ultimately controlling complex syst…

- TanhExp: A Smooth Activation Function with High Convergence Speed for Lightweight Neural Networks: Lightweight or mobile neural networks used for real-time computer vision tasks contain fewer parameters than normal networks, which lead to a constrained performance. In this work, we proposed a novel…

- Benchmarking PyTorch’s Native Mish: PyTorch 1.9 added a native implementation of Mish, my go to activation function for computer vision tasks. In this post I benchmark the computational performance of native Mish on a Tesla V100, Tesla …

- GitHub - digantamisra98/Mish: Official Repository for “Mish: A Self Regularized Non-Monotonic Neural Activation Function” [BMVC 2020]: Official Repository for "Mish: A Self Regularized Non-Monotonic Neural Activation Function" [BMVC 2020] - GitHub - digantamisra98/Mish: Official Repository for "Mish: A Self…


- Partial entropy decomposition reveals higher-order structures in human brain activity: The standard approach to modeling the human brain as a complex system is with a network, where the basic unit of interaction is a pairwise link between two brain regions. While powerful, this approach…

- Meet Mish: New Activation function, possible successor to ReLU?: Hi all, After testing a lot of new activation functions this year, I’m excited to introduce you to one that has delivered in testing - Mish. Per the paper, Mish outperformed ReLU by 1.67% in thei…

Eleuther ▷ #research (16 messages🔥):

- Seeking Knowledge Distillation Insights: @johnryan465 expressed interest in research on the efficiency gains of training a smaller model (size B) to match the logits of a larger, already trained model (size A), compared to training model A directly. @xa9ax and @johnryan465 discussed the potential for using the infinity ngrams paper methodology to create inexpensive models that may be used in a distillation pretraining bootstrap pipeline.

- Sampling Challenges in MCTS: @blagdad touched on the exploration problem in Monte Carlo Tree Search (MCTS), highlighting the potential of using Upper Confidence bounds applied to Trees (UCT) to guide the exploration of the game tree based on uncertainty, as opposed to uniform expansion.

- Efficient Exploration for Model Improvement Shared: @xylthixlm shared an interesting paper on efficiently selecting examples for fine-tuning by using human or LLM raters, focusing on agents that generate queries and a reward model based on received feedback. The paper describes the efficiency of double Thompson sampling and the use of epistemic neural networks, available at arXiv.

- Active Learning Implementation Tease: @fedorovist mentioned that @322967286606725126 is polishing a Bayesian active learning implementation, with @johnryan465 showing interest in any draft available due to past work on similar problems.

- Adam Optimizer Variation Inquiry: @ai_waifu asked whether any papers have explored Adam using the variance of parameters instead of the gradient for the second moment estimation. No specific papers were mentioned in response.
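The UCT idea mentioned in the MCTS item can be illustrated with the standard UCB1-style selection rule: each child's score is its mean value plus an exploration bonus that shrinks as the child is visited more often. The sketch below is a generic textbook form, not anything posted in the channel; the function names, the tuple layout, and the default exploration constant are assumptions.

```python
import math
from typing import List, Tuple


def uct_score(total_value: float, visits: int, parent_visits: int,
              c: float = math.sqrt(2)) -> float:
    """Mean value plus an uncertainty bonus; unvisited nodes are tried first."""
    if visits == 0:
        return math.inf
    exploit = total_value / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_child(children: List[Tuple[str, float, int]], parent_visits: int) -> str:
    """children holds (name, total_value, visits); return the best-scoring name."""
    best = max(children, key=lambda ch: uct_score(ch[1], ch[2], parent_visits))
    return best[0]


# "a" has the larger visit count, "b" has a higher mean and more uncertainty.
print(select_child([("a", 6.0, 10), ("b", 2.0, 2)], parent_visits=12))
```

Compared with uniform expansion, this rule spends more simulations on children that are either promising or under-explored, which is the uncertainty-guided behavior described above.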

Links mentioned:

Efficient Exploration for LLMs: We present evidence of substantial benefit from efficient exploration in gathering human feedback to improve large language models. In our experiments, an agent sequentially generates queries while fi…

Eleuther ▷ #lm-thunderdome (22 messages🔥):

-

Vision-Language Integration: User

@asugliaexpressed interest in integrating vision and language model support into lm-harness.@hailey_schoelkopfmentioned that while it’s not a current focus, contributions are welcome with@chrisociepaand@1072629185346019358identified as possible collaborators. -

MMLU Standard Error Clarifications Sought:

@baber_raised questions regarding high standard errors in MMLU results for the model miqu.@hailey_schoelkopfacknowledged that the standard error calculations for groups within the evaluation code might need revisiting. -

Zero-Shot Configuration Confirmed:

@asugliaasked about forcing a task to run in zero-shot mode within lm-harness.@hailey_schoelkopfconfirmed that settingnum_fewshot: 0should achieve this and pointed to the relevant source code for clarification. -

Fixes and Improvements to Grouped Task Evaluation:

@hailey_schoelkopfproposed an update to the variance calculation method used to aggregate standard errors across groups of tasks with a pull request on the EleutherAI GitHub repository. -

Coordination on Vision-Language Model Support:

@jbdel.offered a harness fork with a working vision and language pipeline, suggesting a hands-off to@asugliapost-Feb 15th. A When2meet was set up by@hailey_schoelkopfto find a suitable time to discuss and coordinate efforts.
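As background for the grouped standard-error item, the sketch below shows the textbook pooled-variance formula and one way it could be turned into a standard error for a group of subtasks. It is only an illustration of pooling under stated assumptions (each subtask reports a sample size and a standard error of its mean); the function names are hypothetical and the exact formula used in the pull request is not reproduced here.

```python
import math
from typing import List


def pooled_variance(sizes: List[int], variances: List[float]) -> float:
    """Textbook pooled sample variance: sum((n_i - 1) * s_i^2) / sum(n_i - 1)."""
    numerator = sum((n - 1) * v for n, v in zip(sizes, variances))
    denominator = sum(n - 1 for n in sizes)
    return numerator / denominator


def pooled_stderr(sizes: List[int], stderrs: List[float]) -> float:
    """Group-level stderr, assuming stderr_i = s_i / sqrt(n_i) for each subtask."""
    # Recover each subtask's sample variance from its reported stderr.
    variances = [(se ** 2) * n for se, n in zip(stderrs, sizes)]
    return math.sqrt(pooled_variance(sizes, variances) / sum(sizes))


# Two hypothetical subtasks with 100 samples each and stderr 0.1.
print(pooled_stderr([100, 100], [0.1, 0.1]))
```

With more samples in the group than in any single subtask, the pooled estimate comes out smaller than the per-subtask standard errors, which is the kind of behavior one would check when group-level errors look unexpectedly high.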

Links mentioned:

- lm-evaluation-harness/lm_eval/evaluator.py at 7411947112117e0339fe207fb620a70bcec22690 · EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models. - EleutherAI/lm-evaluation-harness

- [WIP] Use Pooled rather than Combined Variance for calculating stderr of task groupings by haileyschoelkopf · Pull Request #1390 · EleutherAI/lm-evaluation-harness: This PR updates the formula we use for aggregating stderrs / sample std. deviations across groups of tasks. In this PR: formula: result: hf (pretrained=mistralai/Mistral-7B-v0.1), gen_kwargs: (Non…

- LM Eval Harness—VLMs - When2meet: no description found

Nous Research AI ▷ #off-topic (11 messages🔥):

- LLaVA-1.6 Outshines Gemini Pro: @pradeep1148 shared a YouTube video titled “Trying LLaVA-1.6 on Colab”, demonstrating the improved features of LLaVA-1.6 such as enhanced reasoning, OCR, and world knowledge, noting it even surpasses Gemini Pro on several benchmarks. The results and details are provided on the LLaVA blog.

- Notorious Hacker Strikes Again: @itali4no posted a VX Twitter link commenting on the latest feat by “the hacker known as 4chan”.

- Apple Vision Pro Product Launch: User @nonameusr announced the launch of Apple Vision Pro, but did not provide any additional information or link to the product.

- AI Doomer vs. e/acc Leader Debate: @if_a linked to a YouTube debate featuring a head-to-head between Connor Leahy, dubbed the world’s second-most famous AI doomer, and Beff Jezos, founder of the e/acc movement, discussing technology, AI policy, and human agency.

- In Memoriam of Carl Weathers: User @gabriel_syme expressed condolences over the passing of Carl Weathers, with a statement of remembrance but without linking to any external news source.

Links mentioned:

- Trying LLaVA-1.6 on Colab: LLaVA-1.6, with improved reasoning, OCR, and world knowledge. LLaVA-1.6 even exceeds Gemini Pro on several benchmarks.https://llava-vl.github.io/blog/2024-01…

- Explosive Showdown Between e/acc Leader And Doomer: The world’s second-most famous AI doomer Connor Leahy sits down with Beff Jezos, the founder of the e/acc movement debating technology, AI policy, and human …

Nous Research AI ▷ #interesting-links (17 messages🔥):

- Hugging Face Introduces MiniCPM: User @Fynn shared a link to a Hugging Face paper on MiniCPM, a new model that could be of interest (MiniCPM Paper).

- Testing MiniCPM on Twitter: @burnytech referenced a Twitter thread showcasing tests of the new MiniCPM model, sparking discussions about its performance.

- Healthy Skepticism for MiniCPM Benchmarks: @mister_poodle commented that although MiniCPM’s scores are good, it underperforms compared to Mistral on the MMLU benchmark, and the usage of the model for fine-tuning on specific tasks is awaited.

- Model Comparisons Ignite Discussion: @bozoid pointed out that MiniCPM achieving a 53 on the GSM8K benchmark without being specifically trained for math is impressive, and underscored that comparisons with newer models like StableLM 2 are missing.

- Potential for Model Merging: User @bozoid expressed that model merging efforts could potentially enhance the capabilities of ~2B scale models, given the recent advancements in this area.

Links mentioned:

Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It’s the all-in-one workspace for you and your team

Nous Research AI ▷ #general (114 messages🔥🔥):

- Growing LLMs Debate: @theluckynick shared a tweet by @felix_red_panda discussing the success of “growing” models like ResNet and GANs, and questioning the application of this to LLMs. ResNet classifiers and ProGANs benefited from this technique, with examples including Apple’s Matryoshka Diffusion model.

- Miqu’s First Impression: @weyaxi announced that Miqu has entered the Open LLM Leaderboard with a score of 76.59. Subsequent messages from @nonameusr and others compared Miqu’s performance metrics, such as ARC and MMLU, to other models like MoMo, with mixed reactions regarding Miqu’s potential.

- Finetuning Trade-offs between autotrain and axolotl: @papr_airplane inquired about compromises when using autotrain versus axolotl for finetuning, with @teknium suggesting sample packing, flash attention, and prompt format selection as potential differences.

- Exploring Multilingual Tokenizers: @light4bear sparked a discussion regarding LLMs and tokenizers, particularly focused on how a 32000-token vocabulary might limit the multilingual capabilities of models like llama/mistral. @teknium provided a link to a paper on VinaLLaMA, an open-weight SOTA Large Language Model for Vietnamese, and @light4bear mentioned efforts at adapting LLaMA models for Chinese.

- Quantized Models on the Leaderboard: Conversations emerged around quantized models, specifically miqu-70b, with various users like @.ben.com, @betadoggo, and @nonameusr discussing the impact of quantization on performance, spelling accuracy, and whether these models are run on specific platforms by default.

Links mentioned:

- Zyphra (Zyphra): no description found

- AI News: GPT-4-Level Open Source, New Image Models, Neuralink (And More): Here’s all the AI news from the past week that you might have missed. Check out HubSpot’s Campaign Assistant here: https://clickhubspot.com/xn6Discover More …

- VinaLLaMA: LLaMA-based Vietnamese Foundation Model: In this technical report, we present VinaLLaMA, an open-weight, state-of-the-art (SOTA) Large Language Model for the Vietnamese language, built upon LLaMA-2 with an additional 800 billion trained toke…

- Tweet from Felix (@felix_red_panda): We know that “growing” models (=adding more parameters during training) works well for ResNet classifiers (@jeremyphoward did that ages ago), GANs (ProGAN) and image Diffusion models (Apple…

- Tweet from Weyaxi (@Weyaxi): Miqu is now on the 🤗Open LLM Leaderboard, achieving a score of 76.59. https://hf.co/152334H/miqu-1-70b-sf Benchmarks Average: 76.59 ARC: 73.04 HellaSwag: 88.61 MMLU: 75.49 TruthfulQA: 69.38 Winogr…

- Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It’s the all-in-one workspace for you and your team

- Chinese-LLaMA-Alpaca/README_EN.md at main · ymcui/Chinese-LLaMA-Alpaca: 中文LLaMA&Alpaca大语言模型+本地CPU/GPU训练部署 (Chinese LLaMA & Alpaca LLMs) - ymcui/Chinese-LLaMA-Alpaca

- Thealexera Soyjak GIF - Thealexera Soyjak Surprised - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #ask-about-llms (16 messages🔥):

- More Data Better?: @stefangliga recommends saving all preference data, not just top choices, mentioning that Direct Preference Optimization (DPO) can utilize a ranking of multiple responses, though implementations are rare.

- Proper Prompt Formatting for Hermes 2: @mr.fundamentals shared a code snippet used for formatting prompts for the Nous Hermes 2 Mixtral 8x7B DPO model, looking for advice on why initial characters might be missing in responses.

- Check Your Outputs: In response to @mr.fundamentals, @teknium suggested printing out the formatted prompt to help debug issues with skipped initial characters in model responses.

- Avoiding Lengthy Replies: @teknium advised @mr.fundamentals on how to prompt the model to generate shorter responses by providing example turns with desired length, while noting it might increase token usage.
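As an illustration of the "print the formatted prompt" advice, here is a minimal sketch that builds a ChatML-style prompt by hand and prints its repr so stray or missing whitespace is visible. It assumes the ChatML layout documented on the model card; the helper name `format_chatml` is hypothetical, and this is not the snippet that was shared in the channel.

```python
def format_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML-style prompt."""
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Open the assistant turn so the model starts its reply from here
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = format_chatml(
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello"},
    ]
)
# repr() exposes newlines and trailing spaces that a plain print would hide
print(repr(prompt))
```

Comparing the printed string against the template on the model card is a quick way to spot a missing newline after the assistant header, which is one plausible cause of clipped leading characters.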

Links mentioned:

NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face: no description found
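On the earlier point about saving full preference rankings: one simple way to reuse a ranking with a pairwise DPO trainer is to expand it into every (chosen, rejected) pair. The sketch below assumes responses are stored best-first; the function name and the dict keys are illustrative choices, and this is not necessarily the ranking-aware approach @stefangliga had in mind.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into pairwise preference records."""
    # combinations() preserves input order, so the first element of each
    # pair is always ranked above the second
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]


pairs = ranking_to_pairs("Explain DPO briefly.", ["answer A", "answer B", "answer C"])
for pair in pairs:
    print(pair["chosen"], ">", pair["rejected"])
```

A ranking of n responses yields n(n-1)/2 pairs, which is why keeping the lower-ranked responses gives more training signal than keeping only the top choice.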

OpenAI ▷ #ai-discussions (25 messages🔥):

- Exploring Dense Languages for Machine Learning: @pyhelix discussed an encoding scheme idea using a “7th bit” to signify cognitive dissonance in machine learning models and pondered the application of modular forms for creating a dense language.

- Does OpenAI Censor ChatGPT for China?: User @bambooshoots inquired whether OpenAI censors ChatGPT responses based on Chinese law; @jeremy.o responded, clarifying that OpenAI does not censor content for reasons related to Chinese regulations.

- Content Creation Freedom Using DALL·E: @jeremy.o highlighted that OpenAI allows users to create diverse representations, including LGBTQI+ content using DALL·E, indicating a commitment to content freedom.

- Contours of Content Restrictions Discussed: @bambooshoots expressed concerns about ChatGPT refusing to discuss topics even within the scope of fair use, with @jeremy.o and @lugui providing context on content guidelines and ChatGPT’s tendency to dramatize.

- Philosophical Readings on Machine Intelligence: @jimmygangster shared an intriguing read titled From Deep Learning to Rational Machines, a philosophical study comparing animal and human minds.

Note: Other participant messages were casual greetings or undetailed mentions and do not contribute substantive discussion points to summarize.

OpenAI ▷ #gpt-4-discussions (117 messages🔥🔥):

- @ Mentions Confusion and Potential for Bot Collaboration: @blckreaper and @darthgustav. discussed the concept of using @ mentions to collaborate between different instances of GPT, with @jaicraft highlighting a desire for separate entities in conversations that don’t confuse past responses as their own.

- GPT Instruction Leakage Concerns: @loschess expressed concerns about GPTs leaking their custom instructions, with @solbus explaining that GPT’s instructions are akin to client-side code in HTML, and @bambooshoots suggesting securing sensitive content behind an API call action.

- @ Mentions Integration and Agentic Behavior: @jaicraft and @darthgustav. debated the functionality and limitations of @ mentions, discussing the possibility of multiple GPT entities in a chat and the need for better separation of instructions.

- Bugs and Inconsistencies in GPT Responses: Users including @_odaenathus, @blckreaper, and @loschess reported experiencing bugs and inconsistencies with GPT representations, knowledge file retrieval, and an unwillingness to perform certain tasks, suggesting a recent change in GPT behavior.

- Request for Enhanced Entity Differentiation: The discussion led by @jaicraft pointed towards a user interest in GPTs acting as separate entities with distinct memories and behaviors, rather than as a continuation of a single conversation flow.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.


OpenAI ▷ #prompt-engineering (6 messages):

- Request for Implicit Text Modification: @novumclassicum inquired about a method to have GPT modify text for text-to-speech without displaying the changes on-screen. They seek to provide an output-ready submission after the GPT performs word replacements in memory.

- Injecting Personality into AI Responses: @_fresnic looked for advice on making API responses reflect a certain personality. They’ve noted some success with prompting GPT to “talk like someone who is [personality…]”.

- Reducing Repetitive Server Communication Permissions: @novumclassicum asked for a solution to prevent their custom GPT from repeatedly asking users for server communication permissions after the initial consent.

- Challenges in Sustaining Multi-Character Dialogues: @stealth2077 sought tips for generating realistic conversations between multiple characters. They struggle to get the AI to produce more than three lines of dialogue before it summarizes the conversation.

- Desire for Detailed Play-by-play Character Interactions: Further emphasizing the issue, @stealth2077 expressed a desire for the AI to generate a full dialogue with every line instead of summarizing.
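The personality tip above can be sketched as a small helper that assembles a system message before the user turn. This is only an illustration: the helper name, its parameters, and the exact wording of the system prompt are assumptions, and the role/content message shape is the common chat-style format rather than anything quoted in the channel.

```python
def build_persona_messages(name, traits, interests, user_text):
    """Build a chat-style message list that asks the model to adopt a persona."""
    system_prompt = (
        f"Your name is {name}. "
        f"Talk like someone who is {', '.join(traits)}. "
        f"You are interested in {', '.join(interests)}."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


messages = build_persona_messages(
    name="Robin",  # hypothetical persona
    traits=["dry-witted", "patient"],
    interests=["astronomy", "chess"],
    user_text="What should I do this weekend?",
)
print(messages[0]["content"])
```

Keeping the persona in the system message means it applies to every turn without being repeated in each user message.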

OpenAI ▷ #api-discussions (6 messages):

- Seeking Stealth Text Processing Tips: @novumclassicum is looking for a way to have GPT perform text modifications invisibly before submission, specifically for a text-to-speech application. The desired outcome is for the GPT to replace words in memory and submit the text without displaying the modifications to the user, but they’re uncertain about the instructions required to achieve this.

- Personal Touch in API Responses: @_fresnic experimented with giving an AI via the API a personality, starting with a name and interests to be included in the responses. They discovered that phrasing like “talk like someone who is [personality…]” seemed to improve the AI’s response.

- One-Click Connection Conundrum: @novumclassicum inquires about a way to prevent a custom GPT from repeatedly asking for permission to communicate with an outside server after the first approval. They’re looking to replicate the feature where the popup will not trigger after the initial click.

- Generating Those Character Dialogues: @stealth2077 seeks advice on generating realistic and extensive discussions between characters in a narrative. They’ve found that the AI tends to summarize conversations after three lines instead of continuing with the dialogue.

- Dialogue Expansion Desired: Continuing the topic,