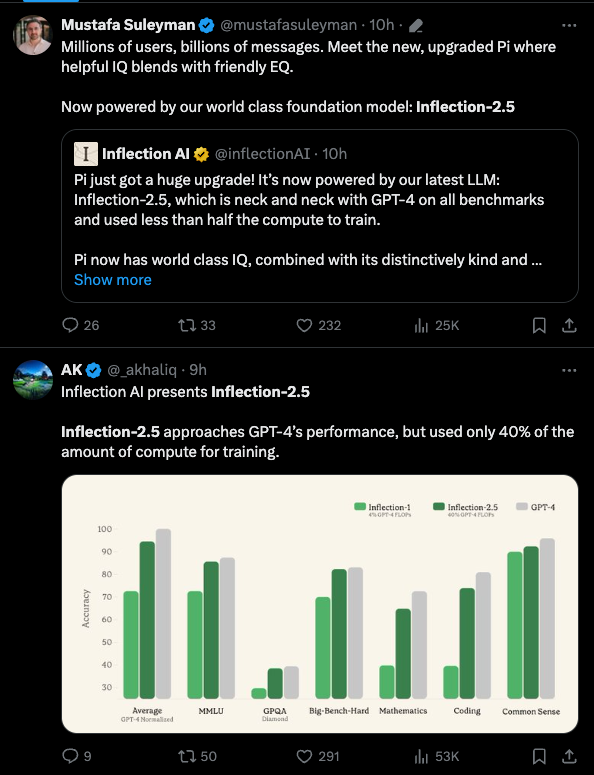

Mustafa Suleyman announced Inflection 2.5, which closes much of the gap Inflection had with GPT-4 in an undisclosed compute-efficient way (“achieves more than 94% the average performance of GPT-4 despite using only 40% the training FLOPs”, which is funny because GPT-4’s training FLOPs aren’t public).

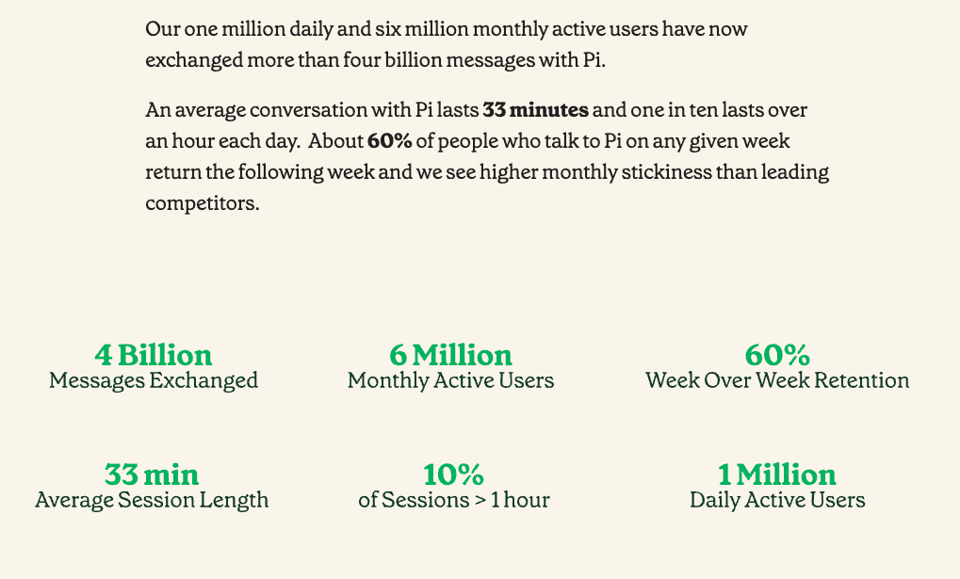

But IQ isn’t the only metric that matters; they are also optimizing for EQ, which is best proxied by the impressive user numbers they also released for Pi:

More notes on the Axios exclusive:

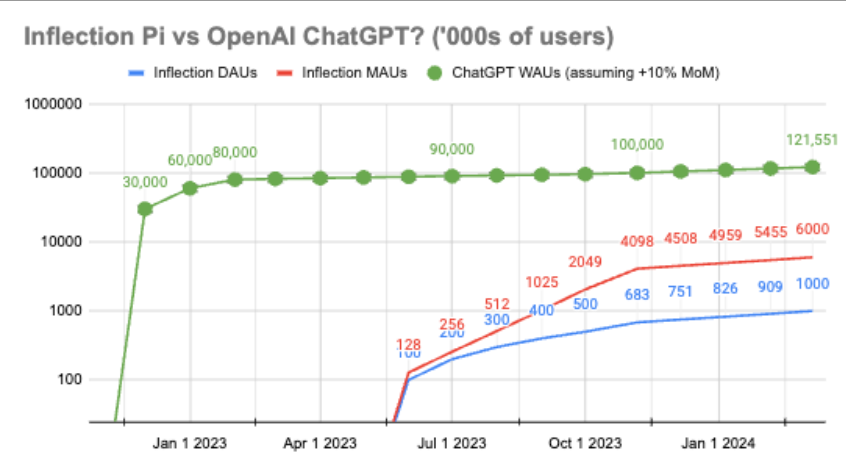

- Pi’s user base has been growing at around 10% a week for the last two months. This lets us construct some ballpark estimates for Pi vs ChatGPT:
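For a sense of scale, 10% weekly growth compounds quickly. Here is a minimal sketch of the arithmetic. It makes two assumptions: "around 10% a week" is taken as exactly 10%, and "the last two months" is approximated as 8 weeks. Neither figure is a precise number from the announcement.

```python
import math

def compound_growth(weekly_rate: float, weeks: int) -> float:
    """Multiplier on the user base after `weeks` of compounding at `weekly_rate`."""
    return (1.0 + weekly_rate) ** weeks

def doubling_time(weekly_rate: float) -> float:
    """Weeks needed to double at a constant weekly growth rate."""
    return math.log(2) / math.log(1.0 + weekly_rate)

# Assumptions: "around 10% a week" treated as exactly 10%,
# and "the last two months" approximated as 8 weeks.
growth = compound_growth(0.10, 8)
print(f"Growth over 8 weeks: {growth:.2f}x")              # → Growth over 8 weeks: 2.14x
print(f"Doubling time: {doubling_time(0.10):.1f} weeks")  # → Doubling time: 7.3 weeks
```

Under these assumptions, the user base roughly doubled over that two-month window.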

They also released a corrected version of MT-Bench for community use.

The community has spotted a couple other interesting tidbits:

- The results are suspiciously close to Claude 3 Sonnet

- Pi also now has realtime web search.


PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs

Claude 3 Release and Capabilities:

- Amanda Askell broke down Claude 3's system prompt, explaining how it influences the model's behavior (671,869 impressions)

- Claude 3 Opus beat GPT-4 in a 1.5:1 vote, showing impressive performance (17,987 impressions)

- Claude 3 is now the default model for Perplexity Pro users, with Opus surpassing GPT-4 and Sonnet being competitive (89,658 impressions)

- Claude 3 shows impressive OCR and structured extraction capabilities, as demonstrated in the @llama_index cookbook (59,822 impressions)

- Anthropic has added experimental support for tool calling in Claude 3 via a LangChain wrapper (21,493 impressions)
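The 1.5:1 vote in the Claude 3 Opus item can be turned into a rough rating gap. This is a minimal sketch that makes three assumptions: it uses the standard logistic Elo model with a 400-point scale, it treats the vote ratio as a head-to-head win probability, and it ignores ties. Public leaderboards fit ratings over many models and comparisons, so this is only a back-of-the-envelope conversion.

```python
import math

def win_probability(wins: float, losses: float) -> float:
    """Convert a win:loss ratio into a win probability (ties ignored)."""
    return wins / (wins + losses)

def elo_gap(p_win: float) -> float:
    """Rating difference implied by a win probability under the logistic Elo model."""
    return 400.0 * math.log10(p_win / (1.0 - p_win))

p = win_probability(1.5, 1.0)
print(f"P(win) = {p:.2f}, implied Elo gap ≈ {elo_gap(p):.0f} points")
# → P(win) = 0.60, implied Elo gap ≈ 70 points
```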

Retrieval Augmented Generation (RAG):

- LlamaIndex released LlamaParse JSON Mode which allows parsing text and images from PDFs in a structured format. Combined with Claude-3, this enables building RAG pipelines over complex PDFs.

- LlamaIndex now supports video retrieval via integration with VideoDB, allowing RAG over video data by indexing visual and auditory components.

- A paper on "Knowledge-Augmented Planning for LLM Agents" proposes enhancing LLM planning capabilities through explicit action knowledge bases.
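To make the idea of an "explicit action knowledge base" a little more concrete, here is a minimal sketch under our own assumptions; it is not the paper's implementation. The knowledge base is a hypothetical table that maps each action to the actions allowed to follow it. A proposed plan is checked against that table before execution. The action names and the `ACTION_KB` table are illustrative only.

```python
from typing import Dict, List, Optional, Set

# Hypothetical action knowledge base: each action maps to the actions
# allowed to follow it. Names are illustrative, not from the paper.
ACTION_KB: Dict[str, Set[str]] = {
    "Start": {"Search", "Lookup"},
    "Search": {"Search", "Lookup", "Finish"},
    "Lookup": {"Search", "Finish"},
    "Finish": set(),
}

def allowed_next(previous: str, kb: Dict[str, Set[str]] = ACTION_KB) -> List[str]:
    """Actions the planner may take after `previous` (could be injected into the prompt)."""
    return sorted(kb.get(previous, set()))

def first_invalid_step(plan: List[str], kb: Dict[str, Set[str]] = ACTION_KB) -> Optional[int]:
    """Index of the first action that violates the knowledge base, or None if the plan is valid."""
    previous = "Start"
    for i, action in enumerate(plan):
        if action not in kb.get(previous, set()):
            return i
        previous = action
    return None

print(allowed_next("Lookup"))                              # → ['Finish', 'Search']
print(first_invalid_step(["Search", "Lookup", "Finish"]))  # → None
print(first_invalid_step(["Search", "Finish", "Search"]))  # → 2
```

One design choice here is to use the same table twice. It constrains generation, by listing `allowed_next` options in the prompt, and it validates plans after generation.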

Benchmarking and Evaluation:

- TinyBenchmarks looks promising as a tool for evaluating language models, similar to the Dharma-1 benchmark by @far__el.

- An empirical result suggests that 100 examples may be sufficient to evaluate language models, based on datasets like HumanEval (164 examples) and Bamboogle (124 examples).

- The Yi-9B model was released, showing strong performance on code and math benchmarks, topping Mistral.
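One way to see what "100 examples may be sufficient" buys you is to look at sampling error. This is a minimal sketch, and it is our illustration rather than the method behind the cited result. It assumes each example is an independent pass/fail trial (a binomial model) and uses the normal-approximation 95% interval.

```python
import math

def accuracy_margin(accuracy: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for accuracy on n examples."""
    return z * math.sqrt(accuracy * (1.0 - accuracy) / n)

for n in (100, 124, 164):  # 100 examples, Bamboogle-sized, HumanEval-sized
    print(f"n={n}: ±{100 * accuracy_margin(0.5, n):.1f} points at 50% accuracy")
```

At 50% accuracy the margin is about ±9.8 points with 100 examples. Sets of this size can separate models with large gaps, but not close ones.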

AI Research and Techniques:

- Researchers introduced Wanda, a method for network pruning that reduces computational burden while maintaining performance (7,530 impressions)

- A paper proposes foundation agents that can master any computer task by taking screen images and audio as input and producing keyboard/mouse operations (16,598 impressions)

- Microsoft presents DéjàVu, a KV-cache streaming method for fast, fault-tolerant generative LLM serving (16,458 impressions)

- Google presents RT-H, which outperforms RT-2 on a wide range of robotic tasks using action hierarchies and language (11,348 impressions)

- Meta presents ViewDiff for generating high-quality, multi-view consistent images of 3D objects in authentic surroundings (5,880 impressions)
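Wanda ("pruning by Weights and activations") works in three steps. It scores each weight by its magnitude times the L2 norm of the matching input feature over a calibration set. It then zeroes the lowest-scoring weights within each output row. No retraining is involved. Below is a minimal pure-Python sketch of that scoring rule. It assumes a dense weight matrix stored as nested lists and a tiny hand-made calibration batch; real implementations operate on tensors, layer by layer.

```python
import math
from typing import List

def wanda_prune(weights: List[List[float]], activations: List[List[float]],
                sparsity: float) -> List[List[float]]:
    """Zero the lowest-scoring fraction of weights in each output row.

    weights: shape (out_features, in_features)
    activations: calibration inputs, shape (num_tokens, in_features)
    sparsity: fraction of weights to remove per row, e.g. 0.5
    """
    in_features = len(weights[0])
    # L2 norm of each input feature across all calibration tokens.
    feature_norms = [
        math.sqrt(sum(x[j] ** 2 for x in activations)) for j in range(in_features)
    ]
    num_prune = int(in_features * sparsity)
    pruned = []
    for row in weights:
        # Wanda score: |W_ij| * ||X_j||_2, compared within the row.
        scores = [abs(w) * feature_norms[j] for j, w in enumerate(row)]
        drop = set(sorted(range(in_features), key=lambda j: scores[j])[:num_prune])
        pruned.append([0.0 if j in drop else w for j, w in enumerate(row)])
    return pruned

W = [[1.0, -2.0, 0.5, 3.0]]
X = [[1.0, 0.1, 4.0, 0.0],
     [1.0, 0.1, 0.0, 1.0]]
print(wanda_prune(W, X, 0.5))  # → [[0.0, 0.0, 0.5, 3.0]]
```

Plain magnitude pruning would have kept `-2.0` and dropped `0.5`. Wanda does the reverse, because the feature feeding `-2.0` barely activates, while the one feeding `0.5` is large.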

Memes and Humor:

- "AGI by September 2024" meme tweet pokes fun at overhyped AI timelines.

- A humorous tweet laments the time sink of relying on GPT-4 to write code in 2024 instead of doing it manually.

- Playful banter about making Claude-3 a girlfriend, referencing the Gemini model's supposed creativity.

PART 0: Summary of Summaries of Summaries

Claude 3 Sonnet (14B?)

- Model Releases and Comparisons: Multiple new AI models sparked heated discussions around their strengths and limitations. Inflection-2.5 claimed to match GPT-4 performance on benchmarks while using less compute, but faced skepticism from @HlibIvanov, who called it a mere GPT-4 distill lacking innovation. Claude-3 Opus achieved impressive feats like a perfect 800 on the SAT reading section, with @res6969 praising its enhanced knowledge web construction over 35k tokens. However, @jeffreyw128 noted Claude struggled to find a specific name among 500. Gemma underwhelmed @lee0099 compared to 7B Mistral, especially in multi-turn dialogues, and its English-only support was a further limitation.

- Open-Source AI and Community Dynamics: @natolambert vented frustrations over the OSS community’s pedantic corrections and lack of perspective, which can deter OSS advocates; even helpful posts about OSS face excessive criticism. The GaLore optimizer by @AnimaAnandkumar promised major memory savings for LLM training, generating excitement from @nafnlaus00 and others about improving accessibility on consumer GPUs. However, @caseus_ questioned GaLore’s claimed parity with full pre-training, and integrating GaLore into projects like axolotl faced implementation challenges.

- Hardware Optimization for AI Workloads: Optimizing for hardware was a key focus, with techniques like pruning, quantization via bitsandbytes, and low-precision operations discussed. @iron_bound highlighted Nvidia’s H100 GPU offering 5.5 TB/s L2 cache bandwidth, while @zippika speculated the RTX 4090’s L1 cache could reach 40 TB/s. CUDA implementations like @tspeterkim_89106’s Flash Attention aimed for performance gains. However, @marksaroufim warned that coarsening can affect benchmarking consistency.

- AI Applications and Tooling: Innovative AI applications were showcased, like @pradeep1148’s Infinite Craft Game and Meme Generation using Mistral. LlamaIndex released LlamaParse JSON Mode for parsing PDFs into structured data. Integrating AI with developer workflows was explored, with @alexatallah offering sponsorships for OpenRouter VSCode extensions, while the `ask-llm` library shared in the LangChain community simplified LLM coding integrations.

Claude 3 Opus (8x220B?)

- NVIDIA Restricts CUDA Translation Layers: NVIDIA has banned the use of translation layers for running CUDA on non-NVIDIA hardware, targeting projects like ZLUDA that aimed to bring AMD GPUs to parity with NVIDIA on Windows. The updated restrictions are seen as a move to maintain NVIDIA’s proprietary edge, sparking debates over the policy’s enforceability.

- Efficiency and Pruning Debates in LLM Training: Discussions emerged around the parameter-efficiency of LLMs and the potential for optimized training schemes. Some argued that the ability to heavily prune models without substantial performance drops indicates inefficiencies, while others cautioned about reduced generalizability. New memory-reduction strategies like GaLore generated interest, with ongoing attempts to integrate it into projects like OpenAccess-AI-Collective/axolotl. Questions arose about the limits of current architectures and the saturation of model compression techniques.

- Inflection-2.5 and Claude-3 Make Waves: The release of Inflection-2.5, claiming performance on par with GPT-4, and Anthropic’s Claude-3 variants Opus and Sonnet sparked discussions. Some were skeptical of Inflection-2.5’s innovation, suggesting it might just be a GPT-4 distillation. Meanwhile, Claude-3 garnered significant community interest, with Opus achieving a perfect 800 on the SAT reading section according to this tweet.

- Mistral Powers Innovative Applications: The Mistral language model demonstrated its versatility in powering an Infinite Craft Game and automating meme creation using the Giphy API. Other noteworthy releases included Nous Research’s Genstruct 7B for instruction-generation (HuggingFace) and new benchmarks like Hae-Rae Bench and K-MMLU for evaluating Korean language models (arXiv).

ChatGPT (GPT4T)

- Multilingual Model Support and API Integration: Discord communities highlighted advancements in multilingual support and API integration, with Perplexity AI introducing user interface support for languages like Korean, Japanese, German, French, and Spanish and discussing the Perplexity API for integrating Llama 70B. API desires and troubles included discussions on rate limit increases and integration code, as detailed in their API documentation.

- Innovations in AI-Driven Game Development: Nous Research AI and Skunkworks AI showcased the use of AI in creating new gaming experiences. A crafting game leveraging Mistral demonstrated AI’s potential in game development with its expandable element combination gameplay, showcased in a YouTube video. Similarly, Mistral was used in an Infinite Craft Game and for automating meme creation, illustrating innovative AI applications in gaming and humor.

- Advancements and Debates in Model Optimization and Pruning: The LAION and OpenAccess AI Collective (axolotl) summaries brought to light discussions on model optimization, pruning, and efficiency. Debates on the pruning of Large Language Models (LLMs) reflected differing opinions on its impact on performance and generalizability, with some engineers proposing pruning as evidence for possible optimization. GaLore emerged as a focal optimization tool in discussions, despite skepticism about its performance parity with full pretraining, with integration efforts underway as noted in their pull request.

- Emergence of New AI Models and Tools: Across multiple Discord summaries, there was significant buzz around the introduction of new AI models and tools, including Inflection AI 2.5, Genstruct 7B, and Yi-9B. Inflection AI 2.5’s release sparked conversations about its efficiency and performance, whereas Nous Research AI unveiled Genstruct 7B, an instruction-generation model aimed at enhancing dataset creation and detailed reasoning, available on HuggingFace. The Hugging Face community saw the launch of Yi-9B, adding to the growing list of models available for experimentation and deployment, showcasing the continuous innovation and expansion of AI capabilities, with a demo available here.

PART 1: High level Discord summaries

Perplexity AI Discord Summary

- Perplexity AI Talks the Talk in Multiple Languages: Perplexity now supports Korean, Japanese, German, French, and Spanish for its user interface, as announced by @ok.alex. Users can customize their language preferences in the app settings.

- A Limitations and Alternatives Smorgasbord: There was an active discussion about the limitations of AI models, focusing on daily usage limits for Claude 3 Opus and alternatives like Claude 3 Sonnet and GPT-4 Turbo. Members also asked for more direct feedback on the closed beta application process.

- Perplexity Pro Subscribers Sound Off: Users shared their experiences with Perplexity Pro, discussing its additional benefits and how to access specialized support channels.

- New Kid on the Block: Inflection AI 2.5: The release of Inflection AI 2.5 sparked conversations about its efficiency and performance, with users highlighting its speed and debating its potential use cases.

- Global Cordiality or Algorithmic Manners?: A discussion arose around cultural communication nuances, focusing on the use of “sir” and global differences in respectful address, in the context of language models.

- Sharing is Caring - Perplexity Goes 3D: Users shared interesting Perplexity search links exploring topics from 3D space navigation to altcoin trends, the concept of Ikigai, the quality of text generation by Claude 3 Opus, and interpretations of quantum mechanics.

- API Desires and Troubles: The guild members are engaging with the Perplexity API, seeking integration code for Llama 70B and support for rate limit increases, while also showing interest in the Discover feature. The Perplexity API documentation was referenced as a guide for usage and technical assistance.
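For the integration-code requests above, here is a minimal sketch that builds a chat-completions request using only the Python standard library. It assumes the OpenAI-style endpoint `https://api.perplexity.ai/chat/completions` and a bearer-token header. The model name is a hypothetical placeholder, so check the Perplexity API documentation for the current Llama 70B identifier and for rate limits. The sketch only builds the request; sending it is left commented out.

```python
import json
import urllib.request

API_URL = "https://api.perplexity.ai/chat/completions"  # assumed OpenAI-style endpoint

def build_request(api_key: str, model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    body = {
        "model": model,  # hypothetical placeholder; use a model name from the API docs
        "messages": [
            {"role": "system", "content": "Be precise and concise."},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_request("YOUR_API_KEY", "llama-70b-placeholder", "What is Ikigai?")
# Sending it (assumes an OpenAI-compatible response shape):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```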

Nous Research AI Discord Summary

- Innovative Crafting AI Game Emerges: A new crafting game leveraging Mistral has been introduced by @pradeep1148, showcasing the potential for AI in game development. The game begins with four elements and expands as players combine them, as demonstrated in a YouTube video.

- Continuous Improvement Triggers Tech Buzz: The Yi-34B base model has shown remarkable performance growth in its “Needle-in-a-Haystack” test, potentially raising the bar for upcoming models. Google’s Gemma model received community-driven bug fixes, which are available in Colab notebooks, and the new GaLore project calls for community validation, potentially benefiting from pairing with low-bit optimizers.

- Genstruct 7B Sets the Instructional Pace: Nous Research unveils Genstruct 7B, an instruction-generation model designed to enhance detailed reasoning and dataset creation. Heralded by Nous’s own <@811403041612759080>, Genstruct 7B promises innovation in instruction-based model training and is available on HuggingFace.

- Chat AI and LLMs Spark Discussions: A GGUF version of Genstruct 7B (Genstruct-7B-GGUF) enters the spotlight alongside debates surrounding Claude 3’s performance, and Inflection AI claims its model Inflection-2.5 matches GPT-4 on benchmarks. Meanwhile, the community remains skeptical of both a shared Twitter IQ test chart and rumors of a GPT-5 release.

- Technical Debates and Clarifications: From running Ollama locally to the potential of a Claude-style function-calling model, the community seeks insights on various AI models and tools. Highlights include an upcoming function-calling data update for Nous-Hermes-2-Mistral-7B-DPO and a refactoring effort by @ufghfigchv on a logit sampler tailored for JSON/function calls. Members also mentioned Corcel.io, which offers free ChatGPT-4-like interactions, and expressed a desire for longer context lengths in models like Nous-Hermes for RAG applications.
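The "Needle-in-a-Haystack" test mentioned above hides one fact at varying depths in long filler text, then checks whether the model can retrieve it. Below is a minimal sketch of the harness side. It assumes a hypothetical `model` callable that maps a prompt string to an answer string. The filler sentence, needle, and substring-match scoring are illustrative choices, not the setup used for Yi-34B. Real runs also sweep the total context length.

```python
from typing import Callable, Dict, List

FILLER = "The grass is green and the sky is blue. "
NEEDLE = "The secret passphrase is 'blue pelican'. "
QUESTION = "What is the secret passphrase?"

def build_haystack(num_filler: int, depth: float) -> str:
    """Insert NEEDLE at a relative depth (0.0 = start, 1.0 = end) among filler sentences."""
    sentences = [FILLER] * num_filler
    sentences.insert(round(depth * num_filler), NEEDLE)
    return "".join(sentences)

def run_sweep(model: Callable[[str], str], num_filler: int,
              depths: List[float]) -> Dict[float, bool]:
    """Return, for each depth, whether the model's answer contains the needle."""
    results = {}
    for depth in depths:
        prompt = build_haystack(num_filler, depth) + "\n\n" + QUESTION
        results[depth] = "blue pelican" in model(prompt).lower()
    return results

# Hypothetical stand-in "model" that only sees the last 2,000 characters of its prompt.
def truncating_model(prompt: str) -> str:
    window = prompt[-2000:]
    return "blue pelican" if "blue pelican" in window else "I don't know"

print(run_sweep(truncating_model, num_filler=500, depths=[0.0, 0.5, 1.0]))
# → {0.0: False, 0.5: False, 1.0: True}
```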

LAION Discord Summary

- CUDA Controversy: NVIDIA’s recent policy change prohibits the use of translation layers for running CUDA on non-NVIDIA hardware, directly impacting projects like ZLUDA. The updated restrictions are seen as a move to maintain NVIDIA’s proprietary edge.

- Scraping Spat Spirals: Stability AI was caught up in a controversy for allegedly scraping Midjourney, leading to a ban on its employees and raising concerns over data scraping practices. While some tweets suggest the activity was not work-related, it has kindled a discussion on scraping ethics and protocols.

- Efficiency in the Spotlight: Debates about pruning Large Language Models (LLMs) have taken center stage. Some engineers believe current training methods are inefficient and cite the ability to prune models as evidence that further optimization is possible. Others voice concerns about the potential loss of generalizability and stability, and about the slowness of certain optimization techniques such as SVD.

- Pruning Perplexities: Contrary to the belief that even lightly pruned models suffer performance degradation, some argue that heavily pruned LLMs remain surprisingly generalizable. This raises a bigger question: are oversized parameter counts needed for model training, or can engineers aim for leaner yet effective LLMs?

- Architectural Assessments: Conversations are probing the structural boundaries of current LLMs, exploring whether strategies to compress and optimize models, especially attention-based ones, are approaching their limits. This reflects curiosity about whether model efficiency within present-day architectures is saturating.

OpenAI Discord Summary

- Claude’s Remarkable Performance: Positive experiences with Claude 3 Opus (C3) were highlighted as it outperformed GPT-4 on complex tasks and elementary class problems.

- Claude Versus Gemini: There was a debate over coding capabilities, where Gemini 1.5 Pro solved a Python GUI task successfully on the first try, while Claude 3 did not, indicating strengths and weaknesses in each.

- Doubts Cast on MMLU Datasets: Concerns arose about the MMLU datasets’ questions lacking logical consistency and containing incorrect answers, leading to calls for reconsidering their use in AI model evaluations.

- GPT-4 Availability and Policy Discussions: Users discussed intermittent access to GPT-4 and policy changes affecting code provision, with confusion over accessing custom models like Human GPT via API clarified by references to OpenAI’s model overview.

- Service Outages and Support Quests: A reported 6-hour service interruption on OpenAI’s APIs, a query on the implementation of randomization in storytelling using Python’s random function, and discussions on the development of a GPT classifier reflected the technical and operational challenges community members faced.
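For the storytelling question, here is a minimal sketch that uses Python's `random` module to pick story elements in code and then passes them to the model in the prompt. This keeps the random choice out of the model's hands, since LLMs are not reliable sources of randomness. The element lists and prompt wording are hypothetical. Seeding a `random.Random` instance makes runs reproducible for debugging.

```python
import random

# Hypothetical story elements; replace with your own lists.
SETTINGS = ["a floating market", "an abandoned lighthouse", "a desert observatory"]
HEROES = ["a retired cartographer", "a nervous apprentice chef", "a robot gardener"]
TWISTS = ["the map is alive", "the storm never ends", "the villain is an old friend"]

def story_prompt(rng: random.Random) -> str:
    """Pick one element from each list and build a prompt for the model."""
    setting = rng.choice(SETTINGS)
    hero = rng.choice(HEROES)
    twist = rng.choice(TWISTS)
    return (
        f"Write a short story set in {setting}, starring {hero}, "
        f"in which {twist}."
    )

rng = random.Random(42)  # fixed seed: the same prompts on every run
for _ in range(3):
    print(story_prompt(rng))
```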

LM Studio Discord Summary

- Tech Titans Talk Troubleshooting: Users discussed hardware configurations with 512 GB RAM and 24 GB VRAM, and tackled library version issues, specifically a `GLIBCXX_3.4.29 not found` error. The debate extended to whether a MacBook Pro with an M3 Max or M2 Max is suitable for local LLM processing, touching on price and performance trade-offs. Community members aired concerns over OpenAI’s lack of openness, with alternatives like a monthly POE subscription being considered for model access.

- Narrative Nuances and AI Models: In the models discussion, the model recommended for storytelling was Mistral-7b-instruct-v0.2-neural-story.Q4_K_M.gguf, though memory constraints limited its use. Because LM Studio lacks image-generation capabilities, participants considered tools like Automatic 1111 for Stable Diffusion tasks. Users were also awaiting Starcoder2 support, as indicated by references to its Hugging Face page.

- Feedback Focus Irregularities and Insights: A user asked for clearer guidance on getting the most out of LM Studio. Other feedback suggested LM Studio could emulate Embra AI to improve its utility and noted that the current version (v0.2.16) doesn’t support proxies on macOS 14.3. It was also clarified that help requests should not be posted in the feedback channel.

- Hardware Hub Conversations: Reports included experiments with a 200K context in the Smaug model and VRAM demand issues with an LM Studio task using a 105,000-token context. A PSU of at least 550W was recommended to power an RTX 3090, and discussions covered handling large contexts or datasets in LLM tasks as well as mismatched VRAM issues.

- Response Rate Quest in Crew AI: While looking for ways to speed up responses in an unspecified process, users hit a connection timeout issue and proposed running operations locally as a potential solution.

- The Odyssey of Open Interpreter Syntax: There were questions and conversations about the correct `default_system_message` syntax and profile configurations in Open Interpreter. Users exchanged experiences with code-trained models and shared lessons learned about configuration, with references to instructions at Open Interpreter - System Message and Hugging Face.
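The VRAM trouble with a 105,000-token context is largely the KV cache, which grows linearly with context length. Below is a back-of-the-envelope sketch. It assumes a Mistral-7B-style configuration (32 layers, 8 key/value heads, head dimension 128) and an fp16 cache; the original report didn't name the model. Quantized caches and other architectures will give different numbers.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory for cached keys and values across all layers for one sequence."""
    # Factor of 2: one cached tensor for keys, one for values.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len

# Assumed Mistral-7B-style config with an fp16 (2-byte) cache.
per_token = kv_cache_bytes(1, n_layers=32, n_kv_heads=8, head_dim=128)
total = kv_cache_bytes(105_000, n_layers=32, n_kv_heads=8, head_dim=128)
print(f"{per_token // 1024} KiB per token, {total / 2**30:.1f} GiB at 105,000 tokens")
# → 128 KiB per token, 12.8 GiB at 105,000 tokens
```

That cache comes on top of the model weights themselves, which is why long contexts overflow a 24 GB card so easily.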

LlamaIndex Discord Summary

- Survey Your Heart Out: LlamaIndex seeks deeper user insights with a 3-minute survey on SurveyMonkey. Engineers are encouraged to participate to help shape better resources like documentation and tutorials.

- LlamaParse JSON Unleashed: The LlamaParse JSON Mode from LlamaIndex is generating buzz with its ability to parse PDFs into structured dictionaries, especially when paired with models like claude-3 opus. The launch was announced in a LlamaIndex tweet.

- Video Capabilities Level Up: The integration of LlamaIndex and @videodb_io opens new doors for video content handling, allowing keyword-based video upload, search, and streaming within LlamaIndex. More details are in the announcement tweet.

- Optimizing A* for Search Efficiency: The feasibility of using the A* algorithm for similarity search was validated by subclassing the embedding class to change the search method, showcasing LlamaIndex’s flexibility.

- In-Context Learning Gets a Boost: @momin_abbas introduced Few-Shot Linear Probe Calibration, a new method for enhancing in-context learning, and invited community support through GitHub engagement.

Latent Space Discord Summary

- Midjourney and Stability AI Spat Goes Public: @420gunna referenced a controversial incident in which Stability AI was banned from Midjourney for scraping data, as detailed in a Twitter post by @nickfloats.

- Podcast Episode Featuring AI Experts Hits the Airwaves and Hacker News: @swyxio announced a new podcast episode with <@776472701052387339> and highlighted its presence on Hacker News.

- Cheers for Volunteer-led Model Serving Paper Presentation: The community showed appreciation for @720451321991397446’s volunteering, with a specific focus on a presentation about model serving, accessible via Google Slides.

- Inference Optimization and Hardware Utilization Debate Heats Up: Notable discussions on inference optimization covered techniques like speculative decoding and FlashAttention, as well as the effect of hardware, with insights drawn from resources like EGjoni’s DRUGS GitHub and the DiLoCo paper.

- Decentralized Training and GPU Configuration Discussions Surge: Members actively discussed distributed training, with references to DiLoCo, and the influence of GPU configurations on model outputs, spurred by an anecdote about an OpenAI incident.
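Speculative decoding, one of the inference optimizations mentioned above, lets a cheap draft model propose several tokens that the expensive target model then verifies. Below is a minimal sketch of the greedy variant. It uses two hypothetical deterministic next-token functions over integer tokens. With greedy verification, the output is identical to decoding with the target alone. The real speedup comes from the target checking all k drafted tokens in a single batched forward pass, which this loop only simulates.

```python
from typing import Callable, List

# A "model" here is any deterministic function: token history -> next token.
NextToken = Callable[[List[int]], int]

def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new):
        tokens.append(model(tokens))
    return tokens

def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: draft proposes k tokens, target keeps the agreeing prefix."""
    tokens = list(prompt)
    while len(tokens) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target checks each position (in practice, one batched forward pass).
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)
            if tok != expected:
                break  # first mismatch: keep the target's token, discard the rest
        else:
            # All k draft tokens matched: the target contributes one bonus token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new]

# Toy models over digits 0-9: the draft agrees with the target except after a 4.
def target_model(tokens: List[int]) -> int:
    return (3 * tokens[-1] + 1) % 10

def draft_model(tokens: List[int]) -> int:
    return 0 if tokens[-1] == 4 else (3 * tokens[-1] + 1) % 10

print(speculative_decode(target_model, draft_model, [1], 20)
      == greedy_decode(target_model, [1], 20))  # → True
```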

Eleuther Discord Summary

- New Korean Language Benchmarks Unveiled: @gson_arlo introduced two new evaluation datasets, Hae-Rae Bench and K-MMLU, specifically tailored to assess language models’ understanding of Korean language and culture.

- vLLM Batching Clarified: @baber_ explained that vLLM handles batching internally, so batched inference does not need to be implemented manually, and pointed to the official documentation.

- Multilingual Benchmark Collaboration Called For: Contributors who speak less widely represented languages are invited to help create benchmarks that evaluate language model competencies specific to their cultures.

- Optimizer Memory Issues in GPT-NeoX Addressed: Discussions in the gpt-neox-dev channel focused on tackling memory peaks during optimization, with members like @tastybucketofrice citing Issue #1160 and suggesting Docker as a potential solution to dependency challenges (Docker commit here).

- Efforts on Unified Fine-tuning Framework: In the lm-thunderdome channel, @karatsubabutslower highlighted the lack of a consistent mechanism for fine-tuning language models, even though a standardized evaluation tool, lm-evaluation-harness, already exists.

HuggingFace Discord Summary

- Entrepreneurs Seek Open Source Model Groups: Entrepreneurs on HuggingFace are looking for a community to discuss applying open-source models in small businesses; however, no dedicated channel or space was recommended in the provided messages.

- New Model on the Block, Yi-9B: Tonic_1 launched Yi-9B, a new model in the Hugging Face collection, available for use with a demo. Hugging Face may soon be hosting leaderboards and gaming competitions.

- Inquiry into MMLU Dataset Structure: Privetin asked about the structure of the MMLU datasets, but the question drew no further discussion.

- Rust Programming Welcomes Enthusiasts: Manel_aloui kicked off their journey with the Rust language and encouraged others to participate, fostering a small community of learners within the channel.

- AI’s Mainstream Moment in 2022: An Investopedia article shared by @vardhan0280 attributed AI’s mainstream surge in 2022 to the popularity of DALL-E and ChatGPT.

- New Features in Gradio 4.20.0 Update: Gradio announced version 4.20.0, which supports external authentication providers like HF OAuth and Google OAuth and introduces a `delete_cache` parameter and a `/logout` feature to enhance the user experience. The new `gr.DownloadButton` component was also introduced for stylish downloads, as detailed in the documentation.

OpenAccess AI Collective (axolotl) Discord Summary

- Deepspeed Disappoints Multi-GPU Setups: Engineers noted frustration with Deepspeed in multi-GPU scenarios with 4x 4090s, finding that it fails to split the base model across GPUs when using a LoRA adapter. The discussion referenced a Deepspeed JSON config file.

- GaLore Spurs Debate: GaLore, a memory-efficient training technique, became a focal point due to its potential for memory savings in Large Language Model training. Despite the excitement, skeptics questioned its performance parity with full pretraining, even as integration efforts are underway.

- Efficiency Methods Under Microscope: Discussions raised doubts about whether results from efficiency methods such as ReLoRA and NEFT may be misleading, prompting consideration of the dataset sizes and settings needed for meaningful finetuning.

- Gemma’s Performance Draws Criticism: The Gemma model came under scrutiny for underwhelming performance against 7B Mistral, especially in multi-turn dialogues, and its English-only support limited its value for multilingual tasks.

- Dependency Wars in AI Development: A common thread across discussions was the battle against dependency conflicts, especially with `torch` versions when installing `axolotl[deepspeed]==0.4.0`. Engineers shared tactics like manual installation and suggested specific versions, including `torch==2.2.0`, as potential fixes.
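The GaLore debate above centers on memory. GaLore projects each weight matrix's gradient onto a low-rank subspace and keeps the Adam moments in that subspace. Below is a rough sketch of the optimizer-state arithmetic for a single m×n weight matrix. It assumes two Adam moment tensors, a rank-r projection of the shorter side, and a stored projection matrix. It ignores weights, gradients, and activations, and it says nothing about the parity-with-full-pretraining question.

```python
def adam_state_floats(m: int, n: int) -> int:
    """Full-rank Adam: first and second moments, each m x n."""
    return 2 * m * n

def galore_state_floats(m: int, n: int, rank: int) -> int:
    """Low-rank states: projection (short side x rank) plus two moments (rank x long side)."""
    short_side, long_side = min(m, n), max(m, n)
    return short_side * rank + 2 * rank * long_side

m = n = 4096  # hypothetical square projection layer
full = adam_state_floats(m, n)
low = galore_state_floats(m, n, rank=128)
print(f"Adam: {full:,} floats, GaLore r=128: {low:,} floats ({full / low:.1f}x smaller)")
# → Adam: 33,554,432 floats, GaLore r=128: 1,572,864 floats (21.3x smaller)
```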

OpenRouter (Alex Atallah) Discord Summary

- Claude 3 Makes Group Chat Cool: Alex Atallah shared a tweet about a positive self-moderated group chat experience using Claude 3.

- Nitro-Power to Your Projects: New “nitro” models are in testing with OpenRouter, offering safe integration options, although slight adjustments may be made while feedback is incorporated before an official launch.

- VSCode Extension Bounty: Alex Atallah offered sponsorship for building a VSCode extension for OpenRouter, rewarding developers with free credits for their contributions.

- Development Tips and Tricks Exchange: Community members exchanged information on VSCode extensions and editors for LLM-assisted coding, including Cursor, Continue, and Tabby, and pointed to more cost-effective chat models like Sonar 8x7B by Perplexity.

- Budget-Friendly AI Conversations: Members discussed the cost of using models like Claude 3 Opus, and Sonar 8x7B was highlighted as a more cost-effective option.

LangChain AI Discord Summary

- CSV Loader Timeout Troubles: Users noted issues with `UnstructuredCSVLoader` throwing “The write operation timed out” errors in LangChain. No solutions were discussed, but members sharing similar experiences acknowledged the problem.

- Raising Red Flags on Phishing: Concerns were raised over an uptick in phishing attempts within the server, particularly through suspicious steamcommunity links, but follow-up actions or resolutions were not detailed.

- Prompt Puzzle from Past Interactions: While building a chat chain, one user found `HumanMessage` content improperly propagating into `AIMessage` after the initial interactions, even though memory was meant to stay segregated. A shared code snippet illustrated the problem, and the user was still seeking the community’s advice.

- LangChain Leverages RAPTOR & Pydantic Pairing: Detailed strategies for using Pydantic with LangChain and Redis to structure user data and chat histories were under discussion, along with a request for insights into unexpected `AIMessage` behaviors.

- Link Library for LangChain Learners: Released resources included a ChromaDB Plugin for LM Studio, a tool for generating vector databases, and the `ask-llm` library for simpler LLM integration into Python projects. Highlighted educational content included a Medium article on RAG construction using RAPTOR, and YouTube tutorials on game crafting and meme generation with Mistral and the Giphy API.

CUDA MODE Discord Summary

- CUDA Coarsening Tips: A tweet by @zeuxcg shared insights on handling coarsening properly, highlighting how benchmarking inconsistencies can distort measured performance.

- Comparing Relay and Flash Attention: @lancerts raised a discussion comparing RelayAttention with ring/flash attention, citing a GitHub repository on vLLM with RelayAttention.

- Insightful CUDA Command for GPU Reset: While addressing memory allocation on GPUs, a member shared the command `sudo nvidia-smi --gpu-reset -i 0` for resetting a GPU, along with a potentially related `nvtop` observation.

- New CUDA Project on the Block: @tspeterkim_89106 introduced a project implementing Flash Attention in CUDA, inviting feedback and collaboration on GitHub.

- CUDA Synchronization Mechanics in Torch: Using `torch.cuda.synchronize()` was recommended for accurate performance measurements, with clarifications on synchronization across CUDA kernels and the cross-device usage of scalar tensors.

Interconnects (Nathan Lambert) Discord Summary

- Inflection-2.5 Sparks Debates: @xeophon. introduced Inflection-2.5, an AI model embedded in Pi that claims performance on par with GPT-4 and Gemini. However, a tweet by @HlibIvanov criticizes it as a GPT-4 distill, raising questions about its innovation.

- AI Innovation Frenzy Noted: @natolambert expressed enthusiasm about the rapid pace of development in the AI field, referencing multiple new model releases and discussing it further in a tweet.

- OSS Community Nitpicks Frustrate: Discussions touched on the unwelcoming nature of the open-source software community. @natolambert expressed frustration over pedantic criticisms that discourage OSS advocates, and @xeophon. brought up confusion over labeling in the space.

- Claude-3 Heats Up AI Competition: The release of Claude-3 by @Anthropic, including its Opus and Sonnet variants, drew a fervent community response; a shared tweet noted significant community involvement in the form of 20,000 votes in three days.

- Expectations Soar for Gemini Ultra: The upcoming Gemini Ultra and its 1M context window are highly anticipated, with engineers like @natolambert and @xeophon keen to explore its capabilities for tasks such as analyzing academic papers.

Alignment Lab AI Discord Summary

- Spam Alert Leads to Policy Change: Following a spam incident with @everyone tags, users like @joshxt stressed the importance of respecting everyone’s inboxes. This led to a new policy that disables the ability to ping everyone, to prevent unwanted notifications.

- Orca Dataset Dives into Discourse: @joshxt brought up the release of Microsoft’s Orca dataset, sparking a conversation about personal model preferences, with “Psyonic-cetacean” and “Claude 3 Opus” getting special mentions.

- Introducing Project Orca-2: @aslawliet put forward a proposal for Orca-2, aiming to encompass a diverse range of datasets beyond Microsoft’s recent release, such as FLAN 2021 and selected zero-shot samples from T0 and Natural Instructions.

- Efficient Data Augmentation Tactics: @aslawliet proposed using Mixtral instead of GPT-4 as a faster and cheaper data augmentation method, prompting discussion on efficient methods for model improvement.

- Cordial Introductions and Greetings: The community warmly welcomed new participants like @segmentationfault. and @1168088006553518183, emphasizing a friendly atmosphere and a shared interest in contributing to the field of AI.

DiscoResearch Discord Summary

- Choose Your Language Model Wisely: Depending on constraints, @johannhartmann advises using Claude Opus and GPT-4 when there are no limitations, DiscoLM-120B for open-source use when plenty of memory is available, and VAGOsolutions/SauerkrautLM-UNA-SOLAR-Instruct when memory is restricted.

- Retrieval-Augmented on the Rise: An arXiv paper shows the benefits of retrieval-augmented language models, with a specific focus on jointly training the retriever and the LLM, though comprehensive research on this integration remains scarce.

- The Quest for the Best German-Speaker: @flozi00 praised Nous Hermes 2 Mixtral 8x7b for its high accuracy in task comprehension, while @cybertimon and @johannhartmann recommended exploring a range of models, including DiscoResearch/DiscoLM_German_7b_v1 and seedboxai/KafkaLM-7B-DARE_TIES-LaserRMT-QLoRA-DPO-v0.5, for fluent German.

- Evaluating Translation Through Embedding Models: @flozi00 is developing an approach that scores translation quality by embedding distance, using the OPUS 100 dataset. This could guide improvements to machine translation (MT) models and data quality.

- mMARCO Dataset Receives the Apache Seal: As shared by @philipmay, the mMARCO dataset now carries an Apache 2.0 license, adding to the resources available to developers, although it lacks dataset viewer support on Hugging Face.
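@flozi00’s scoring code wasn’t shared. As a minimal sketch of the general idea, assuming each source sentence and its translation have already been embedded by some multilingual embedding model (not named in the discussion), a pair can be scored by cosine similarity (cosine distance is 1 minus this value) and pairs below a threshold dropped. The toy vectors, pair names, and the 0.8 threshold are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def filter_pairs(pairs, threshold: float = 0.8) -> list[str]:
    """Keep the ids of (pair_id, src_vec, tgt_vec) entries whose embeddings are close enough."""
    return [pid for pid, src, tgt in pairs if cosine_similarity(src, tgt) >= threshold]


# Toy 2-D "embeddings" (hypothetical); real ones come from an embedding model.
pairs = [
    ("good-translation", [1.0, 0.0], [0.9, 0.1]),
    ("off-topic-output", [1.0, 0.0], [0.0, 1.0]),
]
print(filter_pairs(pairs))  # ['good-translation']
```

Cosine similarity ignores vector length, which suits comparing sentences of different lengths; whether it matches the distance @flozi00 actually uses is an assumption.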

LLM Perf Enthusiasts AI Discord Summary

- SAT Scores Soar with Opus: Opus nailed a perfect 800 on the SAT reading section, as shared by @jeffreyw128.

- Memorization vs. Learning: Following the SAT result, @dare.ai touched on the difficulty of ensuring that large models like Opus are genuinely learning rather than memorizing answers.

- Opus Earns a Fanclub Member: @nosa_ humorously warned of a faux confrontation should Opus learn of their high praise for its performance.

- Weaving Webs of Wisdom: @res6969 praised Opus for its improved skill at building knowledge webs from large documents, highlighting its ability to follow instructions across more than 35k tokens.

- In-Depth Search Dilemma: A task that involved finding a specific name among 500 proved challenging for several models, including Claude Opus, as reported by @jeffreyw128.

Datasette - LLM (@SimonW) Discord Summary

- GPT-4 Stumbles in Mystery Test: According to @dbreunig, GPT-4 failed an unspecified test, but no details about the test or the type of failure were provided.

- Bridging Physical and Digital Libraries: @xnimrodx shared a blog post about making bookshelves clickable, so that each book links to its Google Books page, along with a demo, sparking discussion of potential applications in library systems and local book-sharing initiatives.

- Dollar Signs in Templates Cause Chaos: @trufuswashington hit crashes in the llm command, caused by a TypeError, when a YAML template meant for explaining code contained a dollar sign ($), exposing a problem with how that special character is handled in template prompts.
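The root cause isn’t pinned down in the discussion. As an illustration only, assuming the template text is interpolated with something like Python’s string.Template (which treats $ as its placeholder marker), a literal dollar sign has to be doubled as $$, and a stray $ is rejected. Note that string.Template raises ValueError rather than the reported TypeError, so this sketches the general escaping issue, not a diagnosis of the llm crash. The render helper and prompt text are hypothetical.

```python
from string import Template


def render(template_text: str, **params: str) -> str:
    """Fill $name placeholders; a literal dollar sign must be written as $$."""
    return Template(template_text).substitute(**params)


# "$$HOME" renders as "$HOME", and "$input" is filled in.
# Substituted values are not re-parsed, so a "$" inside them is left alone.
print(render("Explain this shell code (note: $$HOME is an env var):\n$input",
             input="echo $HOME"))

# An unescaped "$" followed by a space is not a valid placeholder.
try:
    render("This costs $ 5. Explain: $input", input="x")
except ValueError as err:
    print("rejected:", err)
```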

Skunkworks AI Discord Summary

- Mistral Turns Game Crafting Infinite: A new Infinite Craft Game powered by Mistral was shared, showing the Mistral language model at work in a game where players combine elements to create new items and suggesting innovative use cases for AI in gaming.

- Meme Generation Meets AI: The Mistral language model has been combined with the Giphy API to automate meme creation, as demonstrated in a YouTube video, with the code available on GitHub for engineers who want to explore the intersection of AI and humor.

PART 2: Detailed by-Channel summaries and links

Perplexity AI ▷ #announcements (1 messages):

- Perplexity AI now speaks your language: User @ok.alex announced that Perplexity is now available in Korean (한국어), Japanese (日本語), German (Deutsch), French (Français), and Spanish (Español). Users can change their preferred interface language in the settings on both desktop and mobile.

Perplexity AI ▷ #general (413 messages🔥🔥🔥):

- Limitations and Comparisons of AI Models: Users discussed various limitations of AI models, with @twelsh37 sharing a comprehensive report prompt for testing AI capabilities. @zero2567 and others noted that Claude 3 Opus is limited to 5 uses a day, prompting discussion of these constraints and of alternatives such as Claude 3 Sonnet and GPT-4 Turbo for coding tasks, as mentioned by users like @tunafi.sh and @deicoon.

- Pro Subscriptions and Features Enquiry: Users like @arrogantpotatoo and @dieg0brand0 shared that they had subscribed to Perplexity Pro, leading to discussions of its benefits and of how to access specialized channels and Pro support on Discord.

- Testing Inflection AI’s New Release: The announcement of Inflection AI’s 2.5 release caught the attention of multiple users, including @codelicious and @ytherium, with conversations around the model’s claimed performance and efficiency. Several noted its speed and speculated on its potential for various use cases.

- Opinions on Gemini 1.5: Users like @archient voiced dissatisfaction with Gemini 1.5, finding it disappointing and lacking features compared to other services. The conversation touched on expectations for Google’s AI product ecosystem and possible reasons for its perceived underperformance.

- Cultural Respect or Language Model Bias?: A few messages from @gooddawg10 sparked a discussion on respect and formality in communication, highlighting cultural nuances and prompting users like @twelsh37 and @brknclock1215 to address the use of “sir” and differences in communication styles around the world.

Links mentioned:

- Inflection-2.5: meet the world’s best personal AI: We are an AI studio creating a personal AI for everyone. Our first AI is called Pi, for personal intelligence, a supportive and empathetic conversational AI.

- Cat Dont Care Didnt Ask GIF - Cat Dont Care Didnt Ask Didnt Ask - Discover & Share GIFs: Click to view the GIF

- Perplexity Blog: Explore Perplexity’s blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- What is Pro Search?: Explore Perplexity’s blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- Welcome to Live — Ableton Reference Manual Version 12 | Ableton: no description found

Perplexity AI ▷ #sharing (14 messages🔥):

- Cruising Through the 3D Space: User @williamc0206 shared a Perplexity search link, apparently about navigating or generating 3D spaces. Explore the 3D Space with Perplexity.

- Altcoins in the Spotlight: @b.irrek posted a link that appears to delve into movements and trends around alternative cryptocurrencies. Insight on Altcoins Here.

- Unraveling the Concept of Ikigai: @sevonade4 invited others to check out a generated text on the concept of Ikigai, which may appeal depending on personal curiosity. Dive into Ikigai.

- Contemplations on Claude 3 Opus: @sevonade4 also highlighted the text generation quality of Claude 3 Opus for those interested in comparing different levels of text generation. Reflect with Claude 3 Opus.

- Quantum Queries Addressed: @vmgehman shared how Perplexity has been a helpful resource for studying various interpretations of quantum mechanics. Quantum Mechanics Explorations.

Perplexity AI ▷ #pplx-api (19 messages🔥):

- Seeking HTML & JS Code for Llama 70B Integration: @kingmilos asked for help with code to integrate Llama 70B using HTML and JS, since their expertise lies in Python. @po.sh responded with a basic code example, with instructions to insert the API key and adjust the model as needed.

- Feedback on Beta Application Process: @brknclock1215 expressed disappointment at the impersonal nature of the closed beta rejection, expressing a desire for more direct communication or feedback.

- Documentation for API Assists Programmers: @icelavaman pointed @kingmilos and @pythoner_sad to the Perplexity API documentation for guidance on using the API for LLM inference, but @kingmilos still struggled due to a lack of HTML and JS knowledge.

- User Seeks Support for Rate Limit Increase: @xlhu_69745 requested help with a rate limit increase for the Sonar model, noting that their email had gone unanswered. @icelavaman replied with a non-verbal response, possibly pointing to where to seek help or check for updates.

- Interest in Discover Feature via API: @yankovich asked about Perplexity Discover and whether a similar feature could be built for users through Perplexity’s API. @bitsavage. suggested reviewing the API documentation to understand its capabilities and how one might build personalized discovery features.

Links mentioned:

pplx-api: no description found

Nous Research AI ▷ #off-topic (6 messages):

- Crafting with AI: User @pradeep1148 shared a YouTube video titled “Infinite Craft Game using Mistral”, which shows the development of a crafting game that starts with four elements and expands as players combine them.

- In Search of Ollama Examples: @pier1337 asked for examples of running Ollama with Deno, but no further information was provided in the channel.

- Missed Connection: User @teknium responded to nonexistent tags and apologized for missing a Twitter direct message sent months ago by an unspecified user.

Links mentioned:

- Infinite Craft Game using Mistral: Let develop Neal Agarwal’s web game Infinite Craft. This is a “crafting game” where you start with just four elements and repeatedly combine pairs of element…

- Making memes with Mistral & Giphy: Lets make memes using mistral llm and Giphy api#llm #ml #python #pythonprogramming https://github.com/githubpradeep/notebooks/blob/main/Giphy%20Mistral.ipynb

Nous Research AI ▷ #interesting-links (44 messages🔥):

- Yi LLMs Constantly Improving: @thilotee highlighted ongoing improvements to the Yi-34B base model, notably its score on the “Needle-in-a-Haystack” test rising from 89.3% to 99.8%. The discussion touched on the potential for further finetuning and on whether the Yi-9B model supports 200k context, which it appears not to (Yi’s Huggingface page and Reddit discussion).

- Google’s Gemma Admittedly Flawed: @mister_poodle shared concerns that Google may be releasing models hastily, given that a team called Unsloth fixed several bugs in the Gemma model that had not been addressed elsewhere. The fixes are available in Colab notebooks.

- Claude 3 Opus Claims Debated: A user shared an experience of Claude 3 Opus impressively translating the low-resource Circassian language, but later updates suggested the model might have had prior knowledge of the language. This sparked a discussion on in-context reasoning capabilities and the validity of the original claims (Original Twitter Post).

- GaLore: The New GitHub Gem: @random_string_of_character posted links to GaLore, a project on GitHub, and a related Twitter post; community validation is still needed to determine its effectiveness. Someone suggested pairing it with low-bit optimizers for further savings.

- Anticipation for Function Calling Model: Amid various discussions, @scottwerner and @sundar_99385 expressed excitement about trying a new model with Claude-style function calling. No release date was mentioned, but eagerness for the launch was evident.
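The exact prompts and scoring behind Yi’s 89.3% and 99.8% figures aren’t given here. As a rough sketch of how a needle-in-a-haystack probe is typically built, assuming a hypothetical filler corpus and needle sentence: hide one fact at a chosen relative depth in long filler text, ask the model for it, and check whether the answer contains the fact. The model call itself is left out.

```python
def build_haystack(filler: list[str], needle: str, depth: float) -> str:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) of the filler sentences."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(filler))
    return " ".join(filler[:pos] + [needle] + filler[pos:])


def is_correct(answer: str, expected: str) -> bool:
    """Simple scoring: the answer counts as correct if it contains the hidden fact."""
    return expected.lower() in answer.lower()


# Hypothetical filler and needle; real tests use far longer contexts.
filler = [f"Sentence {i} is unrelated filler text." for i in range(1000)]
needle = "The secret passphrase is blue-harbor-42."
question = "What is the secret passphrase?"

prompts = {
    depth: build_haystack(filler, needle, depth) + "\n\n" + question
    for depth in (0.0, 0.25, 0.5, 0.75, 1.0)
}
# Each prompt would be sent to the model under test; accuracy is the share of
# depths (and context lengths) where is_correct(answer, "blue-harbor-42") holds.
print(is_correct("It is BLUE-HARBOR-42.", "blue-harbor-42"))  # True
```

Substring matching is the crudest possible scorer; published evaluations may instead grade answers with another model.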

Links mentioned:

- Tweet from An Qu (@hahahahohohe): Today while testing @AnthropicAI ‘s new model Claude 3 Opus I witnessed something so astonishing it genuinely felt like a miracle. Hate to sound clickbaity, but this is really what it felt like. …

- Stop Regressing: Training Value Functions via Classification for Scalable Deep RL: Value functions are a central component of deep reinforcement learning (RL). These functions, parameterized by neural networks, are trained using a mean squared error regression objective to match boo…

- Unsloth Fixing Gemma bugs: Unsloth fixing Google’s open-source language model Gemma.

- 01-ai/Yi-9B · Hugging Face: no description found

- GitHub - thu-ml/low-bit-optimizers: Low-bit optimizers for PyTorch: Low-bit optimizers for PyTorch. Contribute to thu-ml/low-bit-optimizers development by creating an account on GitHub.

- GitHub - jiaweizzhao/GaLore: Contribute to jiaweizzhao/GaLore development by creating an account on GitHub.

- 01-ai/Yi-34B-200K · Hugging Face: no description found

- Reddit - Dive into anything: no description found

Nous Research AI ▷ #announcements (1 messages):

- New Model Unveiled: Genstruct 7B: Nous Research announces the release of Genstruct 7B, an instruction-generation model that can create valid instructions from raw text, making it possible to build new finetuning datasets. The model, inspired by the Ada-Instruct paper, is designed to generate questions about complex scenarios that call for detailed reasoning.

- User-Informed Generative Training: Genstruct 7B grounds its output in user-provided context, building on Ada-Instruct to improve the reasoning capabilities of models subsequently trained on its data. Available for download on HuggingFace: Genstruct 7B on HuggingFace (https://huggingface.co/NousResearch/Genstruct-7B).

- Led by a Visionary: Development of Genstruct 7B was led by <@811403041612759080> at Nous Research.

Links mentioned:

NousResearch/Genstruct-7B · Hugging Face: no description found

Nous Research AI ▷ #general (329 messages🔥🔥):

- Genstruct-7B-GGUF Released: @teknium announces Genstruct-7B-GGUF, a GGUF build of Nous Research’s Genstruct 7B instruction-generation model, which can create dialogues and instruction-based content.

- Discussion about Claude 3’s performance: @proprietary raves about the Claude 3 model’s impressive capabilities, sparking curiosity and requests to share outputs.

- Evaluating a Twitter IQ Test Chart: An inaccurate IQ test chart from Twitter is discussed, with @makya2148 and others expressing skepticism about the reported IQ scores for AI models like Claude 3 and GPT-4.

- GPT-5 Release Rumors Circulate: @sanketpatrikar shares rumors of a potential GPT-5 release, leading to speculation but a general consensus of skepticism among chat participants.

- Inflection AI Claims Impressive Benchmark Results: Inflection AI tweets about its new model, Inflection-2.5, claiming it is competitive with GPT-4 on all benchmarks. @teknium and @mautonomy discuss the credibility of these claims.

Links mentioned:

- Tweet from Netrunner — e/acc (@thenetrunna): GPT5_MOE_Q4_K_M.gguf SHA256: ce6253d2e91adea0c35924b38411b0434fa18fcb90c52980ce68187dbcbbe40c https://t.ly/8AN5G

- Tweet from Inflection AI (@inflectionAI): Pi just got a huge upgrade! It’s now powered by our latest LLM: Inflection-2.5, which is neck and neck with GPT-4 on all benchmarks and used less than half the compute to train. Pi now has world clas…

- Tweet from Emad (@EMostaque): @Teknium1 Less stable above 7b. Transformer engine has it as main implementation. Intel have one too and Google have int8

- gguf/Genstruct-7B-GGUF · Hugging Face: no description found

- Swim In GIF - Swim In Swimming - Discover & Share GIFs: Click to view the GIF

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- Tweet from Sebastian Majstorovic (@storytracer): Open source LLMs need open training data. Today I release the largest dataset of English public domain books curated from the @internetarchive and the @openlibrary. It consists of more than 61 billion…

- Tweet from Prof. Anima Anandkumar (@AnimaAnandkumar): For the first time, we show that the Llama 7B LLM can be trained on a single consumer-grade GPU (RTX 4090) with only 24GB memory. This represents more than 82.5% reduction in memory for storing optimi…

- Tweet from FxTwitter / FixupX: Sorry, that user doesn’t exist :(

- Tweet from Daniel Han (@danielhanchen): Found more bugs for #Gemma: 1. Must add <bos> 2. There’s a typo for <end_of_turn>model 3. sqrt(3072)=55.4256 but bfloat16 is 55.5 4. Layernorm (w+1) must be in float32 5. Keras mixed_bfloa…

- How to Fine-Tune LLMs in 2024 with Hugging Face: In this blog post you will learn how to fine-tune LLMs using Hugging Face TRL, Transformers and Datasets in 2024. We will fine-tune a LLM on a text to SQL dataset.

- Microsoft’s new deal with France’s Mistral AI is under scrutiny from the European Union: The European Union is looking into Microsoft’s partnership with French startup Mistral AI. It’s part of a broader review of the booming generative artificial intelligence sector to see if it rais…

- llama_index/llama-index-packs/llama-index-packs-raptor/llama_index/packs/raptor at main · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

- Weyaxi/Einstein-v4-7B · Hugging Face: no description found

- Tweet from Weyaxi (@Weyaxi): 🎉 Exciting News! 🧑🔬 Meet Einstein-v4-7B, a powerful mistral-based supervised fine-tuned model using diverse high quality and filtered open source datasets!🚀 ✍️ I also converted multiple-choice…

- GitHub - jiaweizzhao/GaLore: Contribute to jiaweizzhao/GaLore development by creating an account on GitHub.

- WIP: galore optimizer by maximegmd · Pull Request #1370 · OpenAccess-AI-Collective/axolotl: Adds support for Galore optimizers Still a WIP, untested.

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- GitHub - PKU-YuanGroup/Open-Sora-Plan: This project aim to reproducing Sora (Open AI T2V model), but we only have limited resource. We deeply wish the all open source community can contribute to this project.: This project aim to reproducing Sora (Open AI T2V model), but we only have limited resource. We deeply wish the all open source community can contribute to this project. - PKU-YuanGroup/Open-Sora-Plan
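Item 3 in Daniel Han’s Gemma bug list above (sqrt(3072) = 55.4256, but 55.5 in bfloat16) is a precision effect: bfloat16 keeps only 8 significant bits, so representable values between 32 and 64 are 0.25 apart, and 55.4256 rounds to 55.5. The sketch below emulates a round-to-nearest-even conversion from float32 to bfloat16 in pure Python (NaN handling omitted); it illustrates the rounding, not how any particular framework implements the cast.

```python
import math
import struct


def to_bfloat16(x: float) -> float:
    """Round x (via float32) to the nearest bfloat16 value, ties to even.

    NaN inputs are not handled specially in this sketch.
    """
    # Reinterpret the float32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    # bfloat16 keeps the top 16 bits of a float32. Adding 0x7FFF plus the lowest
    # kept bit before truncating implements round-to-nearest, ties-to-even.
    lsb = (bits >> 16) & 1
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


scale = math.sqrt(3072)
print(scale)               # about 55.4256
print(to_bfloat16(scale))  # 55.5
```

A scaling factor that lands 0.07 away from its intended value is small in absolute terms, which is why this kind of mismatch can go unnoticed until outputs are compared against a reference implementation.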

Nous Research AI ▷ #ask-about-llms (41 messages🔥):

- Local Ollama Clarification: @teknium stated that Ollama is intended for running models locally, while @lakshaykc suggested an endpoint could potentially be created for backend inference.

- Current Training on Hermes Update: @teknium confirmed to @aliissa that the dataset used for Nous-Hermes-2-Mistral-7B-DPO did not originally include function calling data, but a newer version is now in training.

- Sampling Tech for JSON/Function Calls: @ufghfigchv is refactoring a logit sampler designed for JSON/function calls, which works well with Hugging Face (HF) and vLLM.

- GPT-4 Free Access Question: @micron588 asked about free access to GPT-4, and @teknium pointed to Corcel.io, a website offering free ChatGPT-4-like interactions.

- Inquiry on Context Length for Nous-Hermes Models: @nickcbrown asked why the context lengths of the Nous-Hermes models built on Mixtral/Mistral had apparently been reduced, and expressed a desire for longer contexts for applications like RAG (Retrieval-Augmented Generation).
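@ufghfigchv’s sampler code wasn’t shared. The general idea behind constrained sampling for JSON is logit masking: at each step, tokens the format doesn’t allow are excluded before the probability distribution is formed. A minimal sketch, assuming tokens are plain strings for readability (real tokenizers use integer IDs and subword pieces) and leaving out how the allowed set is derived from a JSON grammar; the scores are hypothetical.

```python
import math


def masked_softmax(logits: dict[str, float], allowed: set[str]) -> dict[str, float]:
    """Softmax over the allowed tokens only; every other token gets probability 0."""
    kept = {tok: lg for tok, lg in logits.items() if tok in allowed}
    if not kept:
        raise ValueError("none of the allowed tokens has a logit")
    peak = max(kept.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(lg - peak) for tok, lg in kept.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def pick_greedy(logits: dict[str, float], allowed: set[str]) -> str:
    """Choose the most likely token that the constraint allows."""
    probs = masked_softmax(logits, allowed)
    return max(probs, key=probs.get)


# Hypothetical scores: the model prefers chatty text, but a JSON object must open with "{".
logits = {"Sure": 3.1, "{": 2.4, "Here": 1.9, '"': 0.5}
print(pick_greedy(logits, allowed={"{"}))  # {
```

Greedy selection keeps the example short; the same masked distribution can feed temperature or top-p sampling instead.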

Links mentioned:

- Corcel · Build with the power of Bittensor: no description found

- Lilac - Better data, better AI: Lilac enables data and AI practitioners improve their products by improving their data.

LAION ▷ #general (300 messages🔥🔥):

- NVIDIA Puts the Brakes on Cross-Platform CUDA: A recent change drawing attention is NVIDIA’s ban on using translation layers to run CUDA software on non-NVIDIA chips, which targets projects like ZLUDA that aimed to bring AMD to parity with NVIDIA on Windows.

- Midjourney Scrape Shake-Up: Stability AI is accused of scraping Midjourney for prompts and images, resulting in Stability AI employees being banned from Midjourney. The incident sparked varied reactions, with some suggesting it might not have been work-related and others joking about the situation.

- Marketing Missteps: Conversation turned to a case where a marketing department reportedly spent a disproportionate amount on ad conversions, prompting incredulous reactions and discussion of how inefficient such spending is.

- SD3 Speculations Amidst Dataset Discussions: As talk surfaces of new datasets like MajorTOM, released by @mikonvergence on Twitter, there is anticipation around Stability AI’s plans to distribute SD3 invites and make PRs to diffusers, with users discussing the potential and limitations of SD3’s architecture.

- Scraping Etiquette Examination: Amid the ongoing discussions about scraping, from technical implications to social impacts, users stressed the importance of proper scraping techniques and etiquette, arguing that understanding and following these principles is crucial.

Links mentioned:

- Nvidia bans using translation layers for CUDA software — previously the prohibition was only listed in the online EULA, now included in installed files [Updated]: Translators in the crosshairs.

- Tweet from Nick St. Pierre (@nickfloats): In MJ office hours they just said someone at Stability AI was trying to grab all the prompt and image pairs in the middle of a night on Saturday and brought down their service. MJ is banning all of …

- TwoAbove/midjourney-messages · Datasets at Hugging Face: no description found

- Regulations.gov: no description found

- GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.: A multimodal, function calling powered LLM webui. - GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.

LAION ▷ #research (74 messages🔥🔥):

-

Debate on Training Efficiency and Pruning: Discussion between

@mkaicand@recvikingfocused on whether LLMs are parameter-inefficient and if training methods or architectures could be optimized.@mkaicsuggested that the ability to prune an LLM without a substantial drop in performance indicated potential for more efficient training schemes, while@recvikingargued that pruning reduces a model’s generalizability and that the vastness of potential inputs makes efficiency evaluation complex. -

SVD and Training Slowness:

@metal63reported a significant slowdown when applying SVD updates during the training of the Stable Cascade model, with training pausing for 2 minutes for updates.@thejonasbrothersexpressed a dislike towards SVD due to its slowness, hinting at the practical issues with certain training optimizations. -

Pruning Effects on Model Performance:

@thejonasbrothersnoted that pruning models often leads to performance issues such as token repetition and stability concerns, and that while some models may seem saturated at around 7 billion parameters, the situation might differ at larger scales, such as 1 trillion parameters. -

General Utility of Pruned LLMs:

@mkaicmaintained that even after heavy pruning, LLMs retain more generalizability than expected and posed questions about the necessity of large parameters for model training versus inference. The potential for major breakthroughs in training more efficient yet comparably effective LLMs was highlighted as a promising research area. -

Current Structural Limitations:

@thejonasbrothersand@recvikingdiscussed the structural limitations of current LLMs and the saturation of efficiencies with an especially critical lens on attention-based optimizations. The dialog raised questions about whether the industry is reaching the limits of compression and model efficiency within existing architectures.
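The discussion doesn’t say which pruning method was meant. One common baseline is unstructured magnitude pruning: zero out the fraction of weights with the smallest absolute values. A minimal sketch on a flat list of hypothetical weights; practical LLM pruning usually works per layer or per weight matrix and may use calibration data, which this leaves out.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices ordered from smallest to largest magnitude; the first k are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2]
print(magnitude_prune(w, 0.5))  # [0.9, 0.0, 0.3, -0.7, 0.0, 0.0]
```

Whether a model pruned this way keeps its quality is exactly what was debated above: the sketch only shows the mechanics, not the outcome.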

Links mentioned:

Neverseenagain Yourleaving GIF - Neverseenagain Yourleaving Oh - Discover & Share GIFs: Click to view the GIF

OpenAI ▷ #ai-discussions (204 messages🔥🔥):

- Claude Outshines GPT-4 in Elemental Knowledge: @you.wish and @kennyphngyn share positive experiences with Claude 3 Opus (referred to as “C3”), reporting that it outperforms GPT-4 on complex tasks and in elementary classes.

- Debate Over Claude 3’s Coding Capabilities: @testtm finds that Gemini 1.5 Pro succeeds at a Python GUI coding task on the first try while Claude 3 does not, suggesting that both models have strengths and weaknesses.

- MMLU Dataset Efficacy in Question: On the subject of the MMLU dataset, @privetin and @foxalabs_32486 criticize it for containing questions that don’t make logical sense and a significant percentage of incorrect answers, calling for its removal from AI model evaluation.

- Limits of YouTube for AI Model Evaluation Highlighted: @7877 asserts that YouTube is not the best source for quick, raw evaluation numbers for AI models because it focuses on entertainment over detailed information, prompting a discussion of alternative evaluation resources.

- Concerns Over the Potency of Free AI Services: @eskcanta expresses concern about the limited number of interactions allowed in Claude’s free version compared with OpenAI’s more generous allowances, and wonders whether providing free services is economically sustainable for these AI companies.

Links mentioned:

EvalPlus Leaderboard: no description found

OpenAI ▷ #gpt-4-discussions (29 messages🔥):

- Troubles Accessing GPT Account: User @haseebmughal_546 faced a frozen page that prevented access to their GPT account.

- GPT Policy Update on Code: User @watcherkk raised concerns about GPT not providing full code, attributing it to a policy change and citing an ‘out of policy’ message.

- Service Disruption on OpenAI: @qilin111 reported a 6-hour service interruption; @dystopia78 confirmed a partial API outage, although OpenAI’s status page showed “All Systems Operational” at the time.

- Inconsistent GPT-4 Availability: Users @cetacn, @emante, @liyucheng09, @openheroes, @ed1431, and @malc2987 discussed intermittent access to GPT-4, with operability varying across users.

- Mix-Up on GPT and Other Models: @cliffsayshi asked about using custom models like Human GPT via the API, and @solbus clarified that GPTs are exclusive to ChatGPT and not accessible via the API, sharing links to OpenAI’s model overview and more information on GPTs vs. assistants.

Links mentioned:

- OpenAI Status: no description found

OpenAI ▷ #prompt-engineering (68 messages🔥🔥):

- Navigating Channel Posting Requirements: @giorgiomufen was unsure how to post in a channel that required a tag, and @eskcanta helped by pointing out that one must first click the ‘see more tags’ option.

- Positive Phrasing for Role-play Prompts: Users @toothpiks252, @eskcanta, and @dezuzel advised @loamy_ on how to phrase a role-play prompt positively, suggesting it is best to tell the model explicitly what to do rather than what not to do.

- GPT-5 for Enhanced Problem-Solving: @spikyd expressed confidence that GPT-5 would have a 10% better chance of solving a specific bird enigma puzzle, while @eskcanta believed it might simply require more targeted training of the vision model.

- Improving Randomization in Storytelling: In a conversation about adding randomness to GPT’s choices, @solbus recommended using Python’s random function via the data analysis tool to give @interactiveadventureai more variety in AI-generated stories.

- Seeking Help for GPT Classifier Development: @chemlox asked whether to use a react-based agent or fine-tuning to build a GPT classifier that determines the status of conversations, and @eskcanta suggested testing the base model first before choosing a more complex solution.
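A minimal sketch of @solbus’s suggestion: rather than asking the model to “choose randomly”, let Python make the choice (in ChatGPT this would run in the data analysis tool) and hand the result to the story. The settings and twists lists are hypothetical story elements.

```python
import random

# Hypothetical story elements.
settings = ["a flooded library", "a desert outpost", "an orbital greenhouse"]
twists = ["a missing map", "a talking cat", "a forged letter"]


def pick_story_seed(rng: random.Random) -> dict[str, str]:
    """Draw one setting and one twist using Python's pseudo-random generator."""
    return {"setting": rng.choice(settings), "twist": rng.choice(twists)}


rng = random.Random()       # unseeded: different picks on each run
print(pick_story_seed(rng))

replay = random.Random(42)  # seeded: the same picks every time, useful for replaying a story
print(pick_story_seed(replay))
```

Passing an explicit random.Random instance keeps the seeded and unseeded uses separate, so replaying one story doesn’t affect the randomness of others.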

OpenAI ▷ #api-discussions (68 messages🔥🔥):

- Tag Troubles in Posting: @giorgiomufen had trouble posting in a channel that required selecting a tag first. @eskcanta helped by pointing out that they needed to click one of the ‘see more tags’ options.

- Positivity Beats Negativity in Instructions: @loamy_ sought a positive alternative to the phrase “do not repeat”, and @toothpiks252 and @eskcanta helped by suggesting explicit instructions describing the desired behavior.

- Bird Enigma and GPT-5’s Potential: @spikyd and @eskcanta discussed the potential of the upcoming GPT-5 to solve a complex bird enigma, emphasizing improved vision models and reasoning capabilities for such tasks.

- Random Number Generation Query: @interactiveadventureai asked how GPT could use different random seeds when generating numbers, and @solbus suggested Python’s random function as a possible solution.

- A Warm Welcome to a New Member: @thebornchampion introduced themselves as an aspiring data analyst and prompt engineering enthusiast, and @eskcanta welcomed them and discussed their interests and use cases for ChatGPT.

LM Studio ▷ #💬-general (187 messages🔥🔥):

- Discussing Tech Specs and Troubleshooting: @kavita_27183 shares their hardware specs, boasting 512 GB of RAM and 24 GB of VRAM, while @jedd1 helps troubleshoot libstdc++ errors and check whether the system recognizes the VRAM correctly. A shared error message (GLIBCXX_3.4.29 not found) points to a library version issue, suggesting that libstdc++ needs updating.

- Server-Side Issues with Local Models: Various local model topics come up, including @_benoitb hitting API issues with node servers, @datasoul discussing GGUF-related errors in LM Studio, and @mattjpow seeking clarification on server context behavior. @heyitsyorkie offers advice and responses.

- Hardware Recommendations for LLM Work: @saber123316 deliberates between a MacBook Pro with an M3 Max or an M2 Max, seeking community advice on which would suffice for local LLM processing. The conversation covers trade-offs between price, performance, and the value of more RAM.

- Discovering LM Studio’s Capabilities: Users @aeiou2623 and @.lodis explore features ranging from image uploads in conversations to model support. @heyitsyorkie provides guidance on loading GGUF files and clarifies that LM Studio is primarily for running local models offline.

- OpenAI Critique and Alternate AI Services Insights: @saber123316 and @rugg0064 discuss the implications of revelations about OpenAI’s lack of openness, mentioning Elon Musk’s dissatisfaction; OpenAI’s proprietary approach prompts users to consider other AI subscriptions, such as a monthly Poe plan, for access to models like Claude and GPT-4.
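To confirm whether an installed libstdc++ provides the version tag named in the error, one can list the GLIBCXX_x.y.z strings embedded in the library (what `strings libstdc++.so.6 | grep GLIBCXX` shows on Linux). A minimal Python sketch; the library path in the commented usage is a hypothetical example and varies by distribution.

```python
import re


def glibcxx_versions(blob: bytes) -> set[str]:
    """Collect GLIBCXX_x.y.z version tags embedded in a libstdc++ binary."""
    return {m.decode() for m in re.findall(rb"GLIBCXX_\d+(?:\.\d+)*", blob)}


def has_version(blob: bytes, wanted: str = "GLIBCXX_3.4.29") -> bool:
    """True if the library advertises the requested version tag."""
    return wanted in glibcxx_versions(blob)


# Hypothetical path; check where your distribution installs libstdc++.so.6.
# with open("/usr/lib/x86_64-linux-gnu/libstdc++.so.6", "rb") as f:
#     print(has_version(f.read()))
```

If the tag is missing, the library is older than the one the application was built against, which matches the update suggested above.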

Links mentioned:

- Inflection-2.5: meet the world’s best personal AI: We are an AI studio creating a personal AI for everyone. Our first AI is called Pi, for personal intelligence, a supportive and empathetic conversational AI.

- Reor: AI note-taking app that runs models locally & offline on your computer.

- Reddit - Dive into anything: no description found

- RIP Midjourney! FREE & UNCENSORED SDXL 1.0 is TAKING OVER!: Say goodbye to Midjourney and hello to the future of free open-source AI image generation: SDXL 1.0! This new, uncensored model is taking the AI world by sto…

- Accelerating LLM Inference: Medusa’s Uglier Sisters (WITH CODE): https://arxiv.org/abs/2401.10774 https://github.com/evintunador/medusas_uglier_sisters

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- 22,000 H100s later, Inflection 2.5!!!: 🔗 Links 🔗https://inflection.ai/inflection-2-5❤️ If you want to support the channel ❤️Support here:Patreon - https://www.patreon.com/1littlecoder/Ko-Fi - ht…

LM Studio ▷ #🤖-models-discussion-chat (27 messages🔥):

-

Optimal AI for Storytelling Specified: User

@laszlo01inquired about the best AI model for storytelling using Open Chat 3.5.@jason_2065recommended Mistral-7b-instruct-v0.2-neural-story.Q4_K_M.gguf with 24 layers and 8192 context size, noting the memory constraints. -

LM Studio Lacks Image-Generation Models:

@karisnaasked about models capable of drawing images within LM Studio.@heyitsyorkieclarified that LM Studio does not support such models and recommended using Automatic 1111 for Stable Diffusion tasks. -

Starcoder2 Running Issues and Support: Users

@b1gb4ngand@madhur_11wondered if starcoder2 could be run via LM Studio, to which@heyitsyorkieinformed that it is not supported in the current LM Studio version. -

Exploring Image Generation Alternatives:

@callmemjininasought a model that generates pictures.@heyitsyorkieexplained that Language Models and LM Studio cannot perform this task, advising to look for Stable Diffusion tools and tutorials online. -

Request for Starcoder2:

@zachmayershared interest in using starcoder2 and posted a link to its Hugging Face page, but@wolfspyrehinted at the need for patience, implying future support might be coming.

Links mentioned:

Kquant03/TechxGenus-starcoder2-15b-instruct-GGUF · Hugging Face: no description found

LM Studio ▷ #🧠-feedback (4 messages):

- Users Seek Guidance: @tiro1cor15_10 asked for guidance to fully realize the potential they see in the service.

- Proxy Support Lacking on macOS: @calmwater.0184 reported that LM Studio (v0.2.16) does not currently support proxies on macOS 14.3, which prevents searching for or downloading models.

- Feature Enhancement Suggestion: @calmwater.0184 suggested that LM Studio study the user experience and features of Embra AI to become a more efficient productivity assistant for users at all levels.

- Channel Usage Clarification: @heyitsyorkie asked users to stop posting help requests in the feedback channel and to use the appropriate help channel instead.

LM Studio ▷ #🎛-hardware-discussion (46 messages🔥):

- Extreme Context Experiments on the Smaug Model: @goldensun3ds reported a CPU-only test with a 200K context on the Smaug model, during which RAM usage fluctuated erratically between 20GB and 70GB. Their latest message said there was no output yet and RAM usage was still fluctuating.

- VRAM and Power Supply Discussions: @wilsonkeebs asked for the smallest PSU that could power a standalone RTX 3090; @heyitsyorkie recommended a minimum of 550W but said 750W is standard. Wilsonkeebs stressed that having enough PCIe power cables mattered more than the wattage itself.

- Exploring Large Contexts for Niche Use Cases: @aswarp raised the potential for LLMs to process very large datasets, such as entire codebases, on a monthly basis for thorough reporting, accepting longer processing times as the trade-off. Aswarp sees particular potential for applications in government and smaller businesses.

- VRAM Demand for Processing in LM Studio: @jason_2065 reported running a 105,000-token context with heavy resource usage (42GB RAM and over 20GB VRAM), in line with the high demands others described for large contexts and other memory-intensive LLM tasks.

- Managing Mismatched VRAM for Machine Learning Models: The conversation touched on the difficulty of running LLMs with mismatched VRAM, including editing the LLM preset file to adjust GPU allocation, which @goldensun3ds finds troublesome because it requires restarting LM Studio.
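Part of why RAM and VRAM climb so steeply at 105K or 200K tokens is the key/value (KV) cache, which grows linearly with context length on top of the model weights. The back-of-the-envelope sketch below estimates that cache under two assumed layouts: a Llama-2-7B-style model (32 layers, 32 KV heads, head dimension 128) and a Mistral-7B-style model with grouped-query attention (8 KV heads), both with a 16-bit cache. These are illustrative stand-ins, not the exact models users ran, and real runtimes add overhead and may quantize the cache, so treat the results as rough orders of magnitude.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_ctx: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size in bytes.

    Each token stores one key and one value vector per layer and per KV head,
    hence the leading factor of 2. bytes_per_elem=2 assumes an fp16 cache.
    """
    return 2 * n_layers * n_ctx * n_kv_heads * head_dim * bytes_per_elem


# Assumed, illustrative architectures; not the exact models discussed above.
CONFIGS = {
    "llama2_7b_style": dict(n_layers=32, n_kv_heads=32, head_dim=128),
    "mistral_7b_style_gqa": dict(n_layers=32, n_kv_heads=8, head_dim=128),
}

for name, dims in CONFIGS.items():
    for n_ctx in (8_192, 105_000, 200_000):
        size_gib = kv_cache_bytes(n_ctx, **dims) / GIB
        print(f"{name:22} {n_ctx:>7,} tokens -> {size_gib:5.1f} GiB")
```

Under these assumptions an 8,192-token context needs exactly 4 GiB of cache for the Llama-2-7B-style layout. Grouped-query attention with 8 KV heads cuts every figure by a factor of four, and an 8-bit cache (bytes_per_elem=1) would halve it again.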

Links mentioned:

- Razer Core X - Thunderbolt™ 3 eGPU | Razer United Kingdom: Now compatible with Mac and Windows laptops, featuring 3-slot PCI-Express desktop graphic cards, 650W power supply, and charges via USB-C.

- PSU for NVIDIA GeForce RTX 3090 | Power Supply Calculator: See what power supply you need for your NVIDIA GeForce RTX 3090

LM Studio ▷ #crew-ai (2 messages):

- Seeking Speed Enhancement Techniques: @alluring_seahorse_04960 is looking for ways to speed up responses for an unspecified process and is also hitting an error: ‘Connection to telemetry.crewai.com timed out’.

- Suggestion for Baseline Local Operation: In response, @wolfspyre suggested first establishing a simple baseline that runs locally, which might help address the speed issue.

LM Studio ▷ #open-interpreter (85 messages🔥🔥):

- Tackling the Preprompt Syntax: @nxonxi asked about the correct syntax for modifying the default_system_message in Open Interpreter settings across operating systems, describing their attempts to change the system message on Linux, Windows, and WSL.

- Confusion Over -s and DOS Documentation: @nxonxi described confusion about Open Interpreter’s prompt settings, specifically the -s / --system_message option, and shared a link to the documentation, which led to further discussion with @1sbefore about the correct usage and commands.

- Profiles and Configurations - The Journey Continues: Throughout the conversation, @1sbefore offered help, suggesting that @nxonxi check the paths and configurations in Open Interpreter’s Python environments, while @nxonxi reported several unsuccessful attempts to modify the prompt or use profiles.

- Exploring Code-Trained Models: Later in the conversation, the discussion shifted to experiences with models like deepseek-coder-6.7B-instruct-GGUF and how prompts and system messages are handled within them. @1sbefore shared a link to a Hugging Face page hosting potentially useful GGUFs and offered observations on the models’ performance.

- Belief in the Power of Curiosity: Faced with difficulties getting Open Interpreter and the language models to work, @1sbefore encouraged @nxonxi to follow their curiosity while searching for the right sources and setups. The exchange was full of trial and error and shared learning, including attempts to clone git repositories and adjust Python environments.

Links mentioned:

- owao/LHK_DPO_v1_GGUF · Hugging Face: no description found

- All Settings - Open Interpreter: no description found

LlamaIndex ▷ #announcements (1 messages):

- User Survey Announced: @seldo_v encourages everyone to take a 3-minute user survey to help LlamaIndex understand its user base better. The survey is hosted on SurveyMonkey and aims to improve documentation, demos, and tutorials.

Links mentioned:

LlamaIndex user survey: Take this survey powered by surveymonkey.com. Create your own surveys for free.

LlamaIndex ▷ #blog (5 messages):

- Launch of LlamaParse JSON Mode: The LlamaIndex team announced the new LlamaParse JSON Mode, which simplifies building RAG pipelines by parsing text and images from a PDF into a structured dictionary. The feature is especially useful in combination with multimodal models like Claude 3 Opus. View tweet.

- LlamaParse Testing by AIMakerspace: @AIMakerspace tested LlamaParse with notable results and published an in-depth analysis of its functionality and performance metrics. In-depth look at LlamaParse.

- Integration with VideoDB for RAG Over Video Streams: LlamaIndex introduced an integration with @videodb_io that enables uploading, searching, and streaming videos directly within LlamaIndex, by spoken words or by visual scenes. Announcement tweet.

- Comprehensive Video Guide for Claude 3: A new video guide offers a comprehensive tutorial on using Claude 3 for various applications, including vanilla RAG, routing, and sub-question query planning with LlamaIndex’s tools. Claude 3 Cookbook.

- LlamaIndex User Survey for Enhanced Resources: LlamaIndex is conducting a 3-minute user survey to better understand its users’ expertise and needs in order to tailor documentation, demos, and tutorials more effectively. Take the user survey.

Links mentioned:

LlamaIndex user survey: Take this survey powered by surveymonkey.com. Create your own surveys for free.

LlamaIndex ▷ #general (298 messages🔥🔥):

- A* Algorithm Chat: @nouiri asked whether the A* algorithm could be applied to similarity search. @whitefang_jr confirmed it is possible by subclassing the embedding class and changing the similarity search method, noting that LlamaIndex uses cosine similarity by default.

- Configuring Chatbots for Contextual Outputs: @techexplorer0 asked how to configure a RAG chatbot to give brief, context-specific responses. @kapa.ai suggested using a ResponseSynthesizer or post-processing the responses to get concise outputs, linking to the LlamaIndex documentation for an example setup.

- Query Engine Customization on LlamaIndex: @cheesyfishes answered various implementation questions, explaining that chat engines typically accept strings and that chunking in LlamaIndex isn’t randomized, and suggested exploring the source code for deeper issues such as using Gemini as a chat engine or embeddings within Azure OpenAI.

- Ingesting Documents with Slack: @habbyman sought advice on best practices for ingesting Slack documents, aiming to keep per-message metadata without losing conversational context.

- LlamaIndex Vector Store Recommendations: New users like @generalenthu asked for vector store recommendations compatible with LlamaIndex; @cheesyfishes and @jessjess84 suggested Qdrant, ChromaDB, or Postgres/pgvector for their extensive documentation and robust user bases.

- Discrepancies Between Direct LLM Queries and VectorStoreIndex: @jessjess84 got weaker responses from LlamaIndex’s VectorStoreIndex than from querying the LLM directly; @teemu2454 attributed this to the prompt templates used by query_engine and advised adjusting them for better results. In reply to a separate question from the same user, @teemu2454 clarified that the VectorStoreIndex is separate from the LLM that processes the text: embeddings are used only to fetch text as context for the LLM query.
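@whitefang_jr’s suggestion boils down to swapping the function used to score stored embeddings against a query. The plain-Python sketch below does not use LlamaIndex’s classes; it only illustrates the idea with a top-k retriever whose similarity function is a parameter, defaulting to cosine similarity. The names top_k and neg_euclidean and the toy vectors are hypothetical. A* itself is a graph-search algorithm, so using it would mean replacing the exhaustive ranking loop, not just the scorer; this sketch covers only the pluggable-scorer part.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
SimilarityFn = Callable[[Vector, Vector], float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def neg_euclidean(a: Vector, b: Vector) -> float:
    """Hypothetical alternative scorer: negated distance, so higher = closer."""
    return -math.dist(a, b)


def top_k(query: Vector, store: Dict[str, Vector], k: int = 2,
          similarity: SimilarityFn = cosine_similarity) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and return the k best."""
    scored = [(doc_id, similarity(query, vec)) for doc_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-D "embeddings" for illustration only.
store = {
    "doc_a": [1.0, 0.0],
    "doc_b": [0.7, 0.7],
    "doc_c": [10.0, 1.0],
}
query = [1.0, 0.1]

print(top_k(query, store))                           # cosine (default)
print(top_k(query, store, similarity=neg_euclidean))  # swapped-in scorer
```

With cosine similarity, doc_c ranks first because it points in exactly the same direction as the query despite its much larger magnitude; the Euclidean scorer instead prefers doc_a and doc_b, which are closest in absolute distance. Which behaviour is appropriate depends on whether the embeddings are normalized.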

Links mentioned:

- Building a Slack bot that learns with LlamaIndex, Qdrant and Render — LlamaIndex, Data Framework for LLM Applications: LlamaIndex is a simple, flexible data framework for connecting custom data sources to large language models (LLMs).

- Chat Stores - LlamaIndex 🦙 v0.10.17: no description found

- OpenAI - LlamaIndex 🦙 v0.10.17: no description found

- llama_index/llama-index-integrations/vector_stores/llama-index-vector-stores-opensearch/llama_index/vector_stores/opensearch/base.py at 0ae69d46e3735a740214c22a5f72e05d46d92635 · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

- llama_index/llama-index-legacy/llama_index/legacy/llms/openai_like.py at f916839e81ff8bd3006fe3bf4df3f59ba7f37da3 · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

- llama_index/llama-index-core/llama_index/core/base/embeddings/base.py at df7890c56bb69b496b985df9ad28121c7f620c45 · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

- GitHub - mominabbass/LinC: Code for “Enhancing In-context Learning with Language Models via Few-Shot Linear Probe Calibration”: Code for “Enhancing In-context Learning with Language Models via Few-Shot Linear Probe Calibration” - mominabbass/LinC

- OMP_NUM_THREADS.): no description found

- llama_index/llama-index-integrations/llms/llama-index-llms-vllm/llama_index/llms/vllm/base.py at f916839e81ff8bd3006fe3bf4df3f59ba7f37da3 · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

- [Bug]: Issue with EmptyIndex and streaming. · Issue #11680 · run-llama/llama_index: Bug Description Im trying to create a simple Intent Detection agent, the basic expected functionality is to select between to queryengines with RouterQueryEngine, one q_engine with an emptyindex, t…

- Available LLM integrations - LlamaIndex 🦙 v0.10.17: no description found

- Implement EvalQueryEngineTool by d-mariano · Pull Request #11679 · run-llama/llama_index: Description Notice I would like input on this PR from the llama-index team. If the team agrees with the need and approach, I will provide unit tests, documentation updates, and Google Colab noteboo…

- Chroma Multi-Modal Demo with LlamaIndex - LlamaIndex 🦙 v0.10.17: no description found

- Multimodal Retrieval Augmented Generation(RAG) | Weaviate - Vector Database: A picture is worth a thousand words, so why just stop at retrieving textual context!? Learn how to perform multimodal RAG!

- Custom Response - HTML, Stream, File, others - FastAPI: FastAPI framework, high performance, easy to learn, fast to code, ready for production

- llama_index/llama-index-integrations/llms/llama-index-llms-vertex/llama_index/llms/vertex/utils.py at main · run-llama/llama_index: LlamaIndex is a data framework for your LLM applications - run-llama/llama_index

LlamaIndex ▷ #ai-discussion (1 messages):

- New Approach to Enhancing In-context Learning: @momin_abbas shared their latest work on improving in-context learning through Few-Shot Linear Probe Calibration and asked for support in the form of stars on the GitHub repository.

Links mentioned:

GitHub - mominabbass/LinC: Code for “Enhancing In-context Learning with Language Models via Few-Shot Linear Probe Calibration”: Code for “Enhancing In-context Learning with Language Models via Few-Shot Linear Probe Calibration” - mominabbass/LinC

Latent Space ▷ #ai-general-chat (14 messages🔥):

- Tea Time Tales from Twitter: @420gunna amusingly described bringing news from Twitter, like a messenger arriving with updates.

- Midjourney and Stability AI Clash: