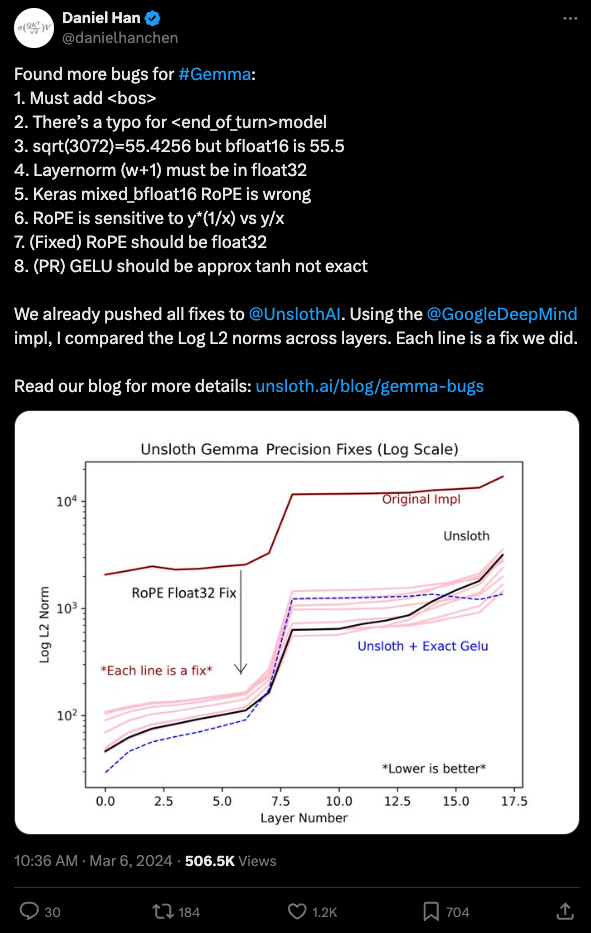

Google’s recently released Gemma model was widely known to be unstable for finetuning. Last week, Daniel Han from Unsloth got some love for finding and fixing 8 bugs in the implementation, some of which are being upstreamed. There is a thread, a blog post, a Google Colab to follow along with, and, as of today, Hacker News commentary, where the work is getting some deserved community love.

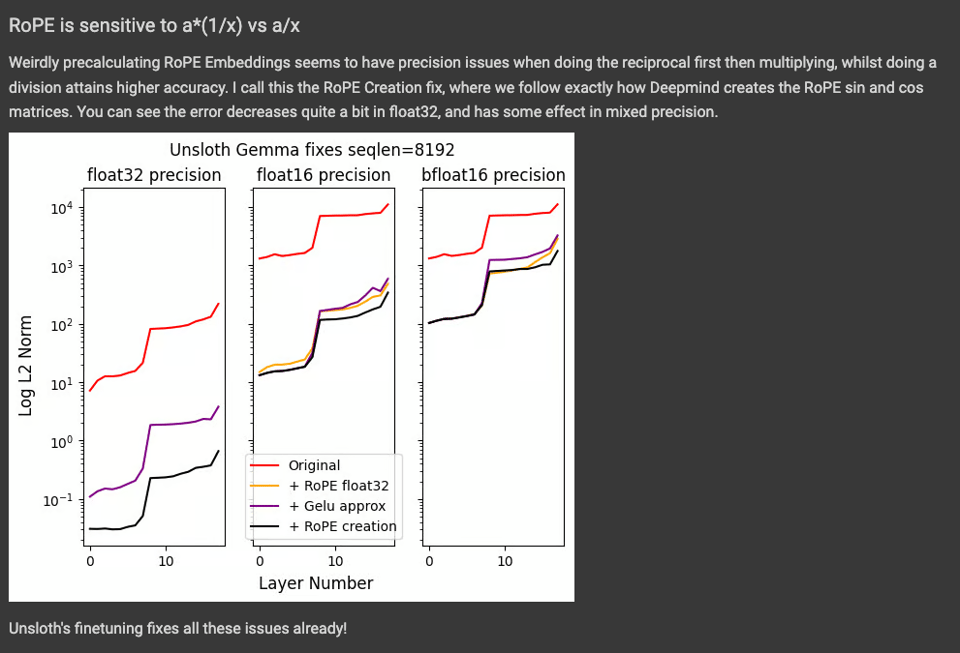

It is full of extremely subtle numerical precision issues, which take extreme attention to detail to notice. Kudos!
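To give a sense of how subtle this class of problem is, here is a minimal, standard-library-only sketch of bfloat16 rounding (round-to-nearest-even on the top 16 bits of a float32; NaN and infinity handling omitted). Gemma scales its input embeddings by the square root of the hidden size (3072 for the 7B model), and that constant cannot be represented exactly in bfloat16, which keeps only 8 significant bits. This illustrates the kind of issue involved; it is not a reproduction of Unsloth's fixes.

```python
import math
import struct


def to_bfloat16(x: float) -> float:
    """Round a finite Python float to the nearest bfloat16 value.

    bfloat16 is the top 16 bits of an IEEE-754 float32: 1 sign bit,
    8 exponent bits, 7 explicit mantissa bits.
    """
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits about to be dropped.
    lsb = (bits >> 16) & 1
    bits = (bits + 0x7FFF + lsb) & 0xFFFFFFFF
    # Zero the dropped bits and reinterpret as a float32 again.
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


hidden_size = 3072  # Gemma 7B hidden size
exact = math.sqrt(hidden_size)
print(f"float64 : {exact:.4f}")           # 55.4256
print(f"bfloat16: {to_bfloat16(exact)}")  # 55.5
```

The error is about 0.07 on a single constant, small enough to miss in a code review but large enough to shift every embedding the model sees.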

Table of Contents

[TOC]

PART X: AI Twitter Recap

All recaps done by Claude 3 Opus. Today’s output is lightly edited by swyx. We are working on anti-hallucination, NER, and context-addition pipelines.

Here is a summary of the key topics and themes from the provided tweets, with relevant tweets organized under each category:

Technical Deep Dives

- Yann LeCun explains the technical details of a pseudo-random bit sequence used to pre-train an adaptive equalizer, which is a linear classifier trained with least squares and a descendant of the Adaline (a competitor of the Perceptron).

- Subtweeting a Yann tweet, François Chollet argues that the information bandwidth of the human visual system is much lower than 20MB/s, even though the optic nerve has about 1 million fibers. He estimates the actual information input is under 1MB/s, and the information extracted by the visual cortex and incorporated into the world model is even lower, measured in bytes per second.

- NearCyan feels that search engines provide monotonous sludge with zero actual information, so he now uses LLMs as his primary conduit of information with any semblance of reality.
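The equalizer LeCun describes is trained with the least-mean-squares (Widrow-Hoff) rule, the same rule Adaline used: a known pseudo-random sequence is sent, and the linear filter's taps are nudged to reduce the squared error between its output and the known symbols. A minimal sketch, where every number is an assumption chosen for illustration (a made-up two-tap channel r[n] = s[n] + 0.4 * s[n-1], a 7-bit LFSR training sequence, 5 taps, step size 0.02); none of these values come from the tweet.

```python
def prbs7(n: int, seed: int = 0x7F) -> list[float]:
    """Pseudo-random bit sequence from a 7-bit LFSR (period 127), mapped to +/-1 symbols."""
    state = seed & 0x7F  # any nonzero 7-bit seed works
    symbols = []
    for _ in range(n):
        bit = ((state >> 6) ^ (state >> 5)) & 1  # feedback taps on bits 6 and 5
        state = ((state << 1) | bit) & 0x7F
        symbols.append(1.0 if bit else -1.0)
    return symbols


def train_lms_equalizer(received, desired, num_taps=5, mu=0.02):
    """Adaline / LMS (Widrow-Hoff) rule: per-sample gradient step on squared error."""
    weights = [0.0] * num_taps
    outputs = []
    for n in range(len(received)):
        # Tap-delay line: the current and previous received samples (zero before start).
        taps = [received[n - k] if n >= k else 0.0 for k in range(num_taps)]
        y = sum(w * x for w, x in zip(weights, taps))  # linear equalizer output
        error = desired[n] - y  # known training symbol minus output
        weights = [w + mu * error * x for w, x in zip(weights, taps)]
        outputs.append(y)
    return weights, outputs


# Hypothetical channel with intersymbol interference: r[n] = s[n] + 0.4 * s[n-1].
tx = prbs7(3000)
rx = [s + 0.4 * (tx[n - 1] if n > 0 else 0.0) for n, s in enumerate(tx)]
weights, eq_out = train_lms_equalizer(rx, tx)
print([round(w, 2) for w in weights])
```

Under these assumptions the taps settle near the truncated inverse of the assumed channel (roughly 1, -0.4, 0.16, ...), which is what training on a known sequence buys.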

New AI Model Releases & Benchmarks

- Aravind Srinivas reports that after hundreds of queries on Perplexity with Claude 3 (Opus and Sonnet) as the default model, he has yet to see a hallucination, unlike his experience with GPT-4. Others who are switching report similar experiences.

- Hacubu benchmarked Anthropic's new Claude-3 models on structured data extraction using LangSmith. The high-end Opus model had no errors over 42 examples and slightly outperformed the previous non-GPT-4 contender, Mistral-Large.

Emerging Trends & Reflections

- Yann LeCun reflects on the history of AI, noting that generations of researchers thought the latest paradigm would lead to human-level AI, but it's always harder than expected with no single magic bullet. However, progress is definitely being made and human-level AI is merely a matter of time.

- Teknium predicts that people will start breaking down every GPT-based pipeline and rebuild it to work well with Claude instead.

- Aidan Clark describes the emotional rollercoaster of ML projects: hitting a bug and swinging between loving and hating machine learning in quick succession.

Tutorials & How-To Guides

- Santiago Valdarrama recorded a 1-hour video on building a RAG application using open-source models (Llama2 and Mixtral 8x7B) to answer questions from a PDF.

- Jerry Liu demonstrates receipt processing with @llama_index + local models + PaddleOCR.

- LangChain published in-depth documentation on how to customize all aspects of Chat LangChain, in both Python and JS/TS, including core concepts, modifications, local runs, and production deployment.

Memes & Humor

- A meme jokes that it's "happy deep learning is hitting a wall day" for those who celebrate it.

- François Chollet finds it amusing that the more problems you solve, the more problems you have.

- Teknium jokes that "Nvidia hates us," referring to the challenges of working with their hardware and software for AI workloads.

PART 0: Summary of Summaries of Summaries

Claude 3 Sonnet (14B?)


Model Finetuning and Performance Optimization:

- Unsloth AI discussions centered around finetuning Gemma models, dealing with special tokens, and addressing issues like OOM errors. Solutions included updating Unsloth, using `pip install "unsloth[cu121-torch220] @ git+https://github.com/unslothai/unsloth.git"`, and exploring Gradient Low-Rank Projection (GaLore) (ArXiv paper) for reducing memory usage during LLM training.

- The CUDA MODE community explored techniques like thread coarsening, vectorized memory access, and CUDA profiling tools to optimize performance. Projects like ring-attention and flash decoding were discussed.

- Answer.AI announced the ability to train 70B models locally using FSDP + QLoRA on standard GPUs like RTX 3090 (blog post).


AI Model Comparisons and Benchmarking:

- Discussions compared models like Claude Opus, GPT-4, and Mistral for coding prowess, with Claude Opus often outperforming GPT-4 in areas like SQL and Rust. Users also anticipated the release of GPT-4.5/5 and its potential improvements.

- The DiscoResearch community explored using GPT-4 and Claude3 as judges for creative writing, developing benchmarks, and comparing models like Brezn3 and Dpo on German datasets.

- Gemini was highlighted for its impressive performance, with a YouTube video comparing it to Claude Opus and GPT-4 Turbo, noting its superior speed and lower costs.


AI Ethics, Regulation, and Societal Impact:

- Concerns were raised about censorship and restrictions creeping into AI models like the “Claude 2 self-moderated versions.” Discussions touched on balancing free expression with content moderation.

- The impact of AI on creativity and employment was debated, with some believing AI will assist rather than replace human creativity, while others anticipated job market shifts.

- A Slashdot article highlighted U.S. government concerns about frontier AI posing an extinction-level threat, suggesting potential regulatory measures.


Open-Source AI Models and Community Contributions:

- Anticipation grew around the open-sourcing of models like Grok by @xAI, as announced in a tweet by Elon Musk.

- Cohere introduced Command-R, a new retrieval-augmented model with a 128k context window and a public weight release for research (blog post).

- Community members shared projects like Prompt Mixer for building AI prompts, an open-source AI chatbot using LangChain, and tools like claudetools for function calling with Claude 3.


Claude 3 Opus (8x220B?)

Claude Outperforms GPT-4 in Coding Tasks: Engineers have observed that Claude Opus consistently delivers more complete and effective code outputs compared to GPT-4, particularly excelling in languages like SQL and Rust, as discussed in the OpenAI Discord.

Perplexity AI's Context Retention Struggles: Users have expressed frustration with Perplexity AI's inability to retain context effectively, often defaulting to base knowledge responses, leading to refund requests and bug reports, as seen in the Perplexity AI Discord. The removal of the 32k context length feature from the roadmap has also raised transparency concerns.

Gemma Models Gain Traction Despite Issues: While Gemma models have shown promise, such as the release of Ghost 7B v0.9.1 which ranked 3rd on VMLU's leaderboard, users in the LM Studio Discord have reported technical issues with Gemma models in LM Studio, even after the release of custom quantized versions.

Efficiency Breakthroughs in LLM Training and Inference: Researchers have made significant strides in reducing memory requirements and accelerating LLM training and inference. GaLore (arXiv paper) reduces memory usage by up to 65.5%, while Answer.AI's system using FSDP and QLoRA (blog post) enables training 70B models on consumer GPUs. For inference, techniques like ToDo (arXiv paper) can increase Stable Diffusion speeds by 2-4.5x through token downsampling.
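For intuition on where GaLore's savings come from: following the memory analysis in the GaLore paper, Adam keeps two full-size moment estimates for an m x n weight matrix (2mn values), while GaLore keeps both moments in a rank-r projected space plus an m x r projection matrix (mr + 2nr values). A minimal arithmetic sketch; the 4096 x 4096 shape and rank 512 are assumed example values, and the ratio printed here is for that one matrix, not a reproduction of the 65.5% figure.

```python
def adam_state_elements(m: int, n: int) -> int:
    # Adam stores first and second moments, each the same shape as the m x n weight.
    return 2 * m * n


def galore_state_elements(m: int, n: int, rank: int) -> int:
    # Projection matrix P (m x r) plus both Adam moments in the projected space (r x n each).
    return m * rank + 2 * rank * n


m, n, r = 4096, 4096, 512  # assumed layer shape and rank, for illustration only
full = adam_state_elements(m, n)
low_rank = galore_state_elements(m, n, r)
print(full, low_rank, low_rank / full)  # 33554432 6291456 0.1875
```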

ChatGPT (GPT4T)


Finetuning Challenges and Solutions in AI Modeling: The Unsloth AI community tackled finetuning Gemma, highlighting issues with special tokens and adapter precision. Recommendations included reinstalling xformers to address errors, using the command `pip install "unsloth[cu121-torch220] @ git+https://github.com/unslothai/unsloth.git"`. Multi-GPU support (oKatanaaa/unsloth) and an FSDP + QLoRA system by Answer.AI for training 70B models on gaming GPUs marked significant advancements. Ghost 7B v0.9.1 showcased advances in reasoning and language and is accessible on huggingface.co, and members highlighted Unsloth AI's efficiency improvements during LLM fine-tuning.

Emerging AI Technologies and Community Engagement: OpenAI Discord highlighted Claude Opus' superior performance over GPT-4 in coding tasks, spurring discussions on AI consciousness and Claude's capabilities. Technical solutions for GPT-4 bugs and strategies to improve ChatGPT's memory recall were shared, emphasizing the use of an output template for achieving consistency in custom models.

Model Compatibility and Efficiency in Coding: LM Studio's discourse revolved around model selection for coding and cybersecurity, noting Mistral 7B and Mixtral's compatibility with various hardware. Persistent issues with Gemma models prompted suggestions for alternatives like Yi-34b (paper on arXiv). Discussions on power efficiency and ROCm compatibility underscored the ongoing search for optimal LLM setups, with detailed hardware discussions available in their hardware discussion channel.

Innovative Tools and Techniques for AI Development: CUDA MODE Discord provided insights into merging CUDA with image and language processing. The community also engaged in self-teaching CUDA and exploring Triton for performance improvements. Techniques like GaLore and FSDP with QLoRA for large model training were discussed, along with shared resources for CUDA learning, including CUDA Training Series on YouTube and lecture announcements for CUDA-MODE Reductions.


PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord Summary

Finetuning Frustrations and Triumphs: Discussions on finetuning Gemma surfaced challenges with special tokens and with how well models loaded after finetuning, pointing to potential versioning issues and the impact of adapter precision. Recommendations included reinstalling xformers with `pip install "unsloth[cu121-torch220] @ git+https://github.com/unslothai/unsloth.git"` to address errors, and updating Unsloth as a possible fix for OOM errors.

Unsloth Giveaways and Growth: The Unsloth community celebrated the implementation of multi-GPU support (oKatanaaa/unsloth) and the release of a new FSDP + QLoRA system by Answer.AI for training 70B models on gaming GPUs. A knowledge-sharing exercise on Unsloth-finetuned models on Kaggle identified key bugs and fixes, and the community also recognized contributors’ support on Ko-fi.
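Why the FSDP + QLoRA combination matters, as back-of-the-envelope arithmetic: QLoRA stores the frozen base weights in 4 bits, and FSDP shards them across GPUs. This sketch assumes 0.5 bytes per parameter and an even split over two 24 GB cards such as the RTX 3090; it counts base weights only and is an illustration, not Answer.AI's own memory accounting.

```python
def weight_memory_gb(num_params: float, bits_per_param: int, num_gpus: int = 1) -> float:
    """Base-weight storage per GPU in decimal GB, assuming an even shard across GPUs.

    Deliberately ignores LoRA adapters, optimizer state, activations,
    quantization constants, and framework overhead.
    """
    return num_params * bits_per_param / 8 / 1e9 / num_gpus


params = 70e9  # 70B-parameter model
print(weight_memory_gb(params, 16))             # 140.0 (16-bit, one GPU)
print(weight_memory_gb(params, 4))              # 35.0  (4-bit, one GPU)
print(weight_memory_gb(params, 4, num_gpus=2))  # 17.5  (4-bit, sharded over two GPUs)
```

Neither technique alone fits a 24 GB card: 4-bit weights on one GPU need about 35 GB, while 16-bit weights sharded over two GPUs need 70 GB each. Together they leave some headroom for adapters and activations.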

Boosting Productivity with Unsloth AI: Ghost 7B v0.9.1 brought advances in reasoning and language, ranking 3rd on VMLU’s leaderboard, and is available on huggingface.co. Separately, @lee0099 reported that Unsloth AI’s optimizations delivered a 2x speedup and a 40% memory reduction during LLM fine-tuning with no loss in accuracy.

Celebrating AI Contributions and Cutting-edge Updates: The Unsloth AI community shared updates and insights, including the new 0.43.0 release of bitsandbytes with FSDP support. AI2 Incubator’s provision of $200 million in AI compute to startups was highlighted, and discussions about OpenAI’s transparency also surfaced.

Welcoming Winds and Gear for Growth: New Unsloth community members were directed to essential information channels, and suggestions for advancing Unsloth included integrating features from Llama-factory. The popularity of the GaLore thread was acknowledged, and a GitHub project named GEAR, an efficient cache compression recipe for generative inference, was shared (GEAR on GitHub).

OpenAI Discord Summary

- Claude Edges Out GPT-4 in Coding Prowess: Engineers have noted that Claude Opus appears to outperform GPT-4 in providing coding solutions, exhibiting strengths in SQL and Rust. The community has cited Claude’s ability to offer more complete code outputs.

- AI’s Existential Question: Consciousness on the Table: The guild debated the potential consciousness of AI, specifically Claude. Papers and philosophical views on universal consciousness were referenced, revealing a deep interest in the metaphysical aspects of AI technology.

- AI Hiccups: Workarounds for GPT-4 Bugs: Users across the guild reported GPT-4 outages and language-setting bugs. A widely agreed workaround is to switch the language setting to Auto-detect and refresh the browser, which alleviated the issues for many users.

- Transforming Prompts into Reliable AI Memories: Discussions revolved around optimizing ChatGPT’s memory recall through prompt structuring. The approach includes formatting advice, such as avoiding grammar mistakes and ensuring clarity, and using summaries to cue the AI’s memory.

- Maximizing Output Consistency across Custom Models: To get consistent outputs from custom GPT models, members suggested using an output template whose variable names encode the summary instructions, which suits engineers who need standardized results.
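A minimal sketch of the output-template idea, assuming a plain-text template whose placeholder names spell out the instruction for each field. The template, field names, and helper functions are hypothetical, not an OpenAI feature; the regex check is one simple way to flag responses that drift from the template.

```python
import re

# Hypothetical output template: each placeholder name doubles as an instruction.
OUTPUT_TEMPLATE = (
    "Title: {title_max_eight_words}\n"
    "Summary: {summary_in_two_sentences}\n"
    "Key points: {three_points_separated_by_semicolons}"
)

PLACEHOLDER = re.compile(r"\{(\w+)\}")


def placeholder_names(template: str) -> list[str]:
    """Placeholder names in order of appearance."""
    return PLACEHOLDER.findall(template)


def build_instructions(template: str) -> str:
    """Prompt text asking the model to fill in the template verbatim."""
    fields = ", ".join(placeholder_names(template))
    return (
        "Reply using exactly this template. Replace each {placeholder} with "
        "content that follows the instruction encoded in its name "
        f"({fields}):\n\n{template}"
    )


def matches_template(template: str, response: str) -> bool:
    """True if the response keeps every literal part of the template, in order."""
    # split() with a capturing group interleaves names; [::2] keeps only literal text.
    literal_parts = PLACEHOLDER.split(template)[::2]
    pattern = "(.+?)".join(re.escape(part) for part in literal_parts)
    return re.fullmatch(pattern, response.strip(), flags=re.DOTALL) is not None
```

In practice, `build_instructions(OUTPUT_TEMPLATE)` would go into the custom GPT's instructions, and a response for which `matches_template` returns False would be retried.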

LM Studio Discord Summary

- Model Selection for Coding and Cybersecurity: Engineers are exchanging experiences using models like Mistral 7B and Mixtral on different systems, including Mac M1 machines and PCs with Nvidia GPUs. For more detailed hardware and model compatibility discussions, such as running 70B models on a 64GB M2 MacBook with slow response times, engineers are advised to consult the hardware discussion channel.

- Gemma Models’ Quirks Confirmed: Technical issues persist with Gemma models in LM Studio, even after the release of custom quantized versions. Yi-34b with a 200k context window was suggested as a feasible alternative.

- Explorations in Power Efficiency for LLM Setups: Community members are actively discussing the power consumption of high-end GPUs like the 7900 XTX, as well as CPU performance, especially AMD 3D cache models. The importance of efficient RAM setups and cooling systems, like Arctic P12 fans, is also noted. For system configuration recommendations, the hardware discussion chat is a valuable resource.

- Desire for Improved LM Studio Features: Users are requesting enhancements in LM Studio, including the ability to view recent models easily and more sophisticated filters to select models by size, type, and performance. While waiting for the platform to add these features, users are sharing workarounds such as a specific Hugging Face search that lists recent models. An example search link is here.

- ROCm Readiness for Diverse Operating Systems: Compatibility concerns with ROCm on various operating systems, including Windows and non-Ubuntu Linux distributions, have been raised. ROCm on Debian was described as challenging due to Python version conflicts and AMD’s predominant Ubuntu support. Users successfully running models on Windows with ROCm have suggested using koboldcpp with the override `HSA_OVERRIDE_GFX_VERSION=10.3.0`.

- CrewAI vs AutoGen Evaluation for Bot Integration: As users navigate bot integration options like AutoGen and CrewAI, there is active discussion on structural design and compatibility. CrewAI is characterized by its intuitive logic, while AutoGen offers a graphical user interface. Those integrating these systems with GPT noted concerns over token costs from agent loops and API calls.

Perplexity AI Discord Summary

Perplexity’s Context Retention Struggles: Users expressed frustrations over Perplexity AI’s context handling ability, with complaints about it defaulting to base knowledge responses and subsequent requests for refunds. Concerns were raised about transparency after the removal of the 32k context length from the roadmap.

Confusion Around API Token Limits: Questions about the maximum output token length for new models and the absence of the expected 32k context length feature from the roadmap sparked discussion, amid concerns about documentation inconsistencies and how they might affect API usage and the development of projects like an Alexa-like personal assistant.

New Users Navigate the Pro Plan: New Perplexity Pro users were confused about redeeming promo subscriptions and using the API conservatively to avoid depleting credits, leading to requests for clear guidance on usage tracking.

Legal, Health, and Tech Discussions on Sharing Channel: Insightful conversation threads from the sharing channel touched on Apple’s legal actions against Epic, life expectancy concerns, the merits of a specific Super Bowl halftime show, Google’s payments to publishers, and the efficacy of nootropics, with a recommended stack of caffeine, L-theanine, and creatine.

Comparative Analysis and Learning: The community exchanged thoughts on diverse AI services, comparing Perplexity to others like Copilot Pro and ChatGPT Pro, with Perplexity drawing praise specifically for its image generation capabilities.

Nous Research AI Discord Summary

- Decoding the Decoder Models: @mattlawhon asked about the implications of using longer sequences during inference with decoder models trained without positional encoding. @vatsadev clarified that feeding in more tokens is possible, though it may lead to errors or nonsensical output; the question's specificity puzzled some peers.

- Creative AI Unleashed: A new multiplayer game, Doodle Wars, has a neural network score players' doodles, and members discussed enabling party games with fewer players via multimodal LLMs. The announcement of Command-R from Cohere, a new generation model optimized for RAG and multilingual generation, was also shared via Hugging Face.

- Benchmarks and AI Analysis Expansion: Gemini, described as able to understand entire books and movies, was introduced alongside WildBench and Bonito, which propose new approaches to benchmarking and dataset creation. Discussions also highlighted a tweet by Lex Fridman on the intersection of AI and power dynamics, although its exact content wasn’t provided.

- Model Parallelism and GPT-next: The complexities of model parallelism were dissected, with insights on the limitations of current methods, while anticipation of GPT-5’s release stirred debate. Meanwhile, Cohere’s new model release and practical assistance with Genstruct were also hot topics.

- LLMs at the Forefront: The ability to train effective chatbots with a curated set of 10k training examples was discussed, referencing insights from the Yi paper shared on Reddit. XML tagging was highlighted as an evolving method for precise function-call generation, and open-webui was recommended as a user-friendly GUI for Claude 3.

- Quality Data and Quirky Model Responses: Within Project Obsidian, the challenge of maintaining data quality was acknowledged. Language models that go along with user-provided assumptions, even whimsical ones like a fictional squirrel uprising, point to inherent model behaviors worth considering.

- Focused Discussions for Bittensor: After a scam alert, members were reminded to keep the discussion on Bittensor topics. Questions about the primary insights from models produced by the subnet, and mention of an enhanced data generation pipeline aimed at increasing diversity, indicated ongoing improvements.
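One reason XML tagging helps with function calls is that each call is explicitly delimited, so it can be located in free-form text and parsed with a standard XML parser. A minimal sketch, assuming a hypothetical `<function_call>`/`<name>`/`<arguments>` schema with JSON arguments (not any specific model's format); characters like `<` or `&` inside the arguments would need escaping.

```python
import json
import re
import xml.etree.ElementTree as ET

# Hypothetical tag schema; each model or prompt defines its own tag names.
CALL_BLOCK = re.compile(r"<function_call>.*?</function_call>", re.DOTALL)


def extract_function_calls(model_output: str) -> list[dict]:
    """Pull every <function_call> block out of free-form model text."""
    calls = []
    for block in CALL_BLOCK.findall(model_output):
        root = ET.fromstring(block)  # raises ET.ParseError on malformed XML
        name = (root.findtext("name") or "").strip()
        # Assumption: the arguments are a JSON object inside <arguments>.
        arguments = json.loads(root.findtext("arguments") or "{}")
        calls.append({"name": name, "arguments": arguments})
    return calls


output = (
    "Let me check the forecast.\n"
    "<function_call><name>get_weather</name>"
    '<arguments>{"city": "Paris"}</arguments></function_call>'
)
print(extract_function_calls(output))
# [{'name': 'get_weather', 'arguments': {'city': 'Paris'}}]
```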

LlamaIndex Discord Summary

- Innovative Code Splitting with CodeHierarchyNodeParser: Users in the LlamaIndex guild discussed using `CodeHierarchyNodeParser` to split large code files into hierarchies, potentially enhancing RAG/agent performance. The approach was shared on Twitter.

- AI Chatbot Challenges and Cosine Similarity Clarifications: A user sought advice on creating a RAG chatbot with LlamaIndex, citing the Ensemble Retriever document, while another user clarified, referencing Wikipedia, that cosine similarity ranges from -1 to 1 and can be negative, which matters when setting similarity score cutoffs in a query engine.

- Handling Ingestion Pipeline Duplication and Conda Install Issues: Discussions highlighted a fix for ingestion pipelines processing duplicates: using `filename_as_id=True`. Another user sought help resolving Conda installation conflicts involving version mismatches and modules not found after an upgrade.

- Query Pipeline Storage Queries and PDF Parsing with LlamaParse: One user asked about saving pipeline outputs and whether Pydantic objects could be used, and another shared a YouTube video on PDF parsing with LlamaIndex’s LlamaParse service.

- Engaging Community with User Surveys and AI-enhanced Browser Automation: LlamaIndex is conducting a 3-minute user survey, found here, to gather feedback for improvements. Members also discussed LaVague, a project by @dhuynh95 that uses RAG and MistralAI to help generate Selenium code from user queries, detailed in this post.
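Cosine similarity is dot(a, b) / (|a| |b|), so it ranges from -1 (opposite directions) through 0 (orthogonal) to 1 (same direction). A minimal sketch showing why a cutoff of 0.0 still filters out results; the vectors and the `apply_cutoff` helper are hypothetical, and sensible cutoffs depend on the embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """dot(a, b) / (|a| * |b|); always in [-1, 1] for nonzero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def apply_cutoff(scored: dict[str, float], cutoff: float) -> list[str]:
    """Keep document ids whose score is at least `cutoff` (hypothetical helper)."""
    return [doc_id for doc_id, score in scored.items() if score >= cutoff]


query = [1.0, 0.0]
docs = {"same_direction": [2.0, 0.0], "orthogonal": [0.0, 3.0], "opposite": [-1.0, 0.0]}
scores = {doc_id: cosine_similarity(query, vec) for doc_id, vec in docs.items()}
print(scores)                     # {'same_direction': 1.0, 'orthogonal': 0.0, 'opposite': -1.0}
print(apply_cutoff(scores, 0.5))  # ['same_direction']
```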

LAION Discord Summary

- Artefacts Troubling Engineers: Technical discussions highlighted issues with high-resolution AI models, such as discernible artefacts at large resolutions and constraints in smaller models like the 600m. Engineers like @marianbasti and @thejonasbrothers shared a concern that these limitations might keep the models from realizing their full capabilities.

- Constructing Advanced Video Scripting Tools: @spirit_from_germany proposed an advanced two-model system for video scripting capable of analyzing and predicting video and audio, recommending a focus on the most popular videos to ensure data quality. The idea was shared in a Twitter post.

- Generated Datasets Under Microscope: @pseudoterminalx pointed out the limitations of generated datasets: the risk of being trapped within a specific knowledge corpus, and automated descriptions being constrained by what the generating model was trained on.

- CogView3 vs. Pixart - An Incomplete Picture: CogView3’s framework, a 3-billion-parameter text-to-image diffusion model, was discussed with reference to its arXiv paper. The absence of comparative data against Pixart was noted, calling the assessments of CogView3’s capabilities into question.

- Loss Spike Dilemmas on MacBooks: MacBook Pro M1 Max users like @keda4337 are facing overheating while training diffusion models, with the loss spiking erratically from 0.01-0.9 to 500 when resuming training across epochs. Such issues underscore the practical challenges of training models on certain hardware configurations.

HuggingFace Discord Summary

- Inference API Performance Inquiry: @hari4626 reported possible performance issues with Hugging Face’s Inference API, expressing concern that incomplete responses might make it unsuitable for production.

- Collaborative Learning on Generative AI: @umbreenh and @yasirali1149 showed interest in learning generative AI together for development purposes, while @wukong7752 looked for guidance on calculating KL divergence in latent-DM.

- Algorithm Optimization & AI Advancements: Discussions about AI models for programming optimization included GitHub Copilot and DeepSeek-Coder Instruct. Shared resources included an arXiv paper on strategic reasoning with LLMs using few-shot examples and a deep learning article on the scope of NLP.

- AI-Created Entertainment and Legal Datasets Released: Doodle Wars, a multiplayer doodling game scored by a neural network, was introduced, and the Caselaw Access Project with Harvard Library released a dataset of over 6.6 million U.S. court decisions, accessible via Enrico Shippole’s tweet.

- Mistral Model Bluescreens and Image-to-Text With Problems: @elmatero6 sought advice on CPU-optimizing Mistral to prevent system bluescreens, and @ninamani searched for accurate, high-performing open-source models for uncensored image captioning; cogvlm was suggested, albeit with noted quantization stability issues.
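The KL-divergence question did not specify which term was meant. Assuming it concerns the KL regularizer on a latent diffusion model's autoencoder, where the encoder outputs a diagonal Gaussian (a mean and log-variance per latent dimension) that is pulled toward a standard normal, the KL has a closed form. A minimal sketch; the log-variance parameterization is an assumption, and real implementations operate on tensors rather than Python lists.

```python
import math


def kl_to_standard_normal(mu: list[float], logvar: list[float]) -> float:
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions.

    Closed form per dimension: 0.5 * (mu^2 + sigma^2 - 1 - log sigma^2).
    """
    if len(mu) != len(logvar):
        raise ValueError("mu and logvar must have the same length")
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0 (posterior equals the prior)
print(kl_to_standard_normal([1.0], [0.0]))            # 0.5
```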

Eleuther Discord Summary

- The Great BOS Debate: The use of the Beginning of Sentence (BOS) token was scrutinized, with a consensus that its application varies across models and no uniform standard exists. HFLM code was discussed regarding the incorporation of `self.add_bos_token`.

- Efficiency Leap in Image Diffusion: ToDo, a method that speeds up Stable Diffusion by 2-4.5x through token downsampling, piqued interest, with a related repository and discussion spanning potential implications for AI residency in hardware.

- Zero-Shot Wonders Overtaking Few-Shots: Counterintuitive MMLU results showed zero-shot outperforming few-shot, sparking theories about context distraction and an idea to plot a curve by testing with varying numbers of shots.

- Dependencies and Developments in NeoX Land: GPT-NeoX development discussions touched on the challenges of dependency management and the necessity of Apex, amid container complexity and Flash Attention updates.

- Resources for AI Interpretability Aspirants: ARENA 3.0 was hailed as a “gem” for getting started in interpretability research, with a juicy link to riches: ARENA 3.0 Landing Page.

- On the AI Existential Radar: A chilling Slashdot article spotlights U.S. government concerns over frontier AI as an extinction-level threat, nudging toward heavy-handed regulatory steps.
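The proposed shot-count sweep can be sketched as follows. The sketch assumes a simple Question/Answer prompt format (not lm-evaluation-harness's actual MMLU template) and a hypothetical `answer_fn` standing in for the model. Plotting accuracy against k gives the curve.

```python
from typing import Callable


def build_k_shot_prompt(dev: list[tuple[str, str]], question: str, k: int) -> str:
    """Prefix the test question with the first k solved dev examples."""
    if not 0 <= k <= len(dev):
        raise ValueError(f"k must be between 0 and {len(dev)}")
    shots = [f"Question: {q}\nAnswer: {a}" for q, a in dev[:k]]
    return "\n\n".join(shots + [f"Question: {question}\nAnswer:"])


def accuracy_by_shots(
    dev: list[tuple[str, str]],
    test: list[tuple[str, str]],
    shot_counts: list[int],
    answer_fn: Callable[[str], str],  # hypothetical stand-in for a model call
) -> dict[int, float]:
    """Accuracy on `test` for each number of in-context examples."""
    curve = {}
    for k in shot_counts:
        correct = sum(
            answer_fn(build_k_shot_prompt(dev, q, k)).strip() == gold for q, gold in test
        )
        curve[k] = correct / len(test)
    return curve
```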

Latent Space Discord Summary

- AI Biographer Raises Security Eyebrows: @swyxio recommended trying out Emma, the AI Biographer, but advised caution on privacy, opting for fake details during trials.

- Leadership Restructured at OpenAI: After internal turmoil, OpenAI reinstates Sam Altman to its board and welcomes three new board members, concluding a governance review.

- Ideogram 1.0’s Quiet Entrance: @swyxio notes the potential of Ideogram 1.0, a new image model noted for its text rendering, though the release seems to have slipped under the radar.

- Microsoft Research Seeks LLM Interface Feedback: AICI, a new interface standardization proposal from Microsoft, is up for community feedback, particularly on its Rust runtime, as shared in a Hacker News post.

- State Space Model Could Rival Transformers: @swyxio spotlights “Mamba,” a State Space Model, as a Transformer alternative for LLMs, pointing interested AI Engineers to a visual guide and the research paper.

- Latent Space Paper Clubs Activate!: Across time zones, members are gearing up for GPT-focused discussions, with preparatory notes shared and questions answered in real time during sessions, such as clarifying “causal attention.”

- AI-strategy Sessions Spark Community Sharing: From tips on optimizing workflows with AI to sharing CLI tools like asciinema, AI in Action Club members are not only engaging but also advocating for future topics like decentralized AI applications.

- Asia Engages with GPT-2 Knowledge: @ivanleomk invited members to join Asia’s paper club for an EPIC presentation on the GPT-2 paper. Further engagement is seen with the recent release of a Latent Space pod.
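Since "causal attention" came up: in a decoder, position i may only attend to positions j <= i. A minimal pure-Python sketch that takes a precomputed matrix of raw attention scores as its assumed input; excluding the future entries before the softmax is equivalent to adding -inf to the upper triangle of the score matrix.

```python
import math


def causal_attention_weights(scores: list[list[float]]) -> list[list[float]]:
    """scores[i][j] is the raw score of query i on key j (n x n).

    Returns softmax weights in which row i is zero for every key j > i.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # keys 0..i only; future keys are masked out
        peak = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return weights


for row in causal_attention_weights([[0.0] * 3 for _ in range(3)]):
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```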

Interconnects (Nathan Lambert) Discord Summary

- New Roles to Distinguish Discord Members: Nathan Lambert introduced new roles within the Discord guild to separate manually added close friends from subscribers, inviting feedback on the change.

- GPT-4’s Doom-Playing Capabilities Published: GPT-4 demonstrated its ability to play the 1993 first-person shooter Doom, as described in a paper shared by Phil Pax (GPT-4 Plays Doom). The model’s complex prompting is highlighted as a key factor in its reasoning and navigation skills.

- Musk and Open Models Stir Debate: A tweet by Elon Musk announcing that xAI’s Grok would be open-sourced led to discussions about market reactions and the use of the term “open source,” along with concerns over OpenAI’s commitment to open models. Separately, Cohere’s new model Command-R sparked anticipation among engineers due to its long context window and public weight release, potentially impacting startups and academia (Command-R: Retrieval Augmented Generation at Production Scale).

- AI-Centric Dune Casting Game Unfolds: Discord members humorously cast prominent figures from the AI industry as characters from Dune, with suggestions including Sam Altman as the Kwisatz Haderach and Elon Musk as Baron Harkonnen.

- Reinforcement Learning Podcast and Papers Touted: Ian Osband’s TalkRL podcast episode on information theory and RL was recommended (Ian Osband’s episode on Spotify), and discussions emerged around a paper on RLHF, PPO, and Expert Iteration applied to LLM reasoning (Teaching Large Language Models to Reason with Reinforcement Learning). The theme of consistent quality in RL content was echoed across discussions.

- Inflection AI Model Integrity Questioned: After similar outputs were noted between Inflection AI’s bot and Anthropic’s Claude-3-Sonnet, debates ensued over possible A/B testing or model wrappers, fueled further by Inflection AI’s response about its bot Pi remembering previous inputs (Inflection AI’s Clarification).

- Costs and Approaches to Model Training Examined: The affordability of pretraining models like GPT-2 for less than $1,000 and the potential for deals on compute, such as Stability AI’s speculated sub-$100,000 compute expenditure for their model, were hot topics. Fine-tuning on books and articles using a masking strategy was also discussed.

- Sam Altman’s Return to OpenAI and Light-Hearted Role Queries: Sam Altman’s return to the OpenAI board prompted discussions and a sprinkling of humor about leadership. Discord roles, including self-nominated goose roles, were jokingly proposed, and subscribers’ stakes became a topic of amusement.

OpenRouter (Alex Atallah) Discord Summary

- Mistral 7b 0.2 Takes the Stage with Speed: The newly introduced Mistral 7b 0.2 model is making waves with a 10x performance increase for short outputs and 20x for longer outputs, and it has a 32k-token context window. A performance demo can be viewed in a tweet by OpenRouterAI.

- Gemma Nitro Offers Efficiency and Economy: OpenRouter announced a new model, Gemma Nitro, with speeds of 600+ tokens per second and an affordable price of $0.10 per million tokens. Details are outlined on OpenRouter’s model page.

- Conversations Heat Up Around AI Censorship: Users raised concerns about censorship affecting AI models, such as Claude 2’s self-moderated versions, prompting discussions about free expression and the need for uncensored platforms, alongside technical questions about message formatting and system parameters.

- Community Innovates with Claude 3 Library: @thevatsalsagalni presents claudetools, a library that facilitates function calling with Claude 3 models, with Pydantic support for ease of use. The library is available for community contribution on GitHub.

- Technical Discussions Abound on Model Limits and Usage: Users discussed the technical aspects of AI models, including GPT-4’s token output limit, the intricacies of the Claude API’s role message handling, and the use of Chat Markup Language (ChatML) in prompt customization. Community-created tools, like a Google Sheets connection app, demonstrate growing engagement and address model accessibility concerns.
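ChatML wraps each message in `<|im_start|>` and `<|im_end|>` markers with the role name up front. A minimal formatter, assuming the common convention of a newline after the role and after each `<|im_end|>`, plus an open assistant turn at the end for generation. Exact whitespace, the allowed roles, and special-token handling vary by model, so check the target model's chat template.

```python
def to_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render {"role": ..., "content": ...} messages as a ChatML prompt string."""
    allowed_roles = {"system", "user", "assistant"}  # assumption: no tool/function roles
    parts = []
    for message in messages:
        role = message["role"]
        if role not in allowed_roles:
            raise ValueError(f"unexpected role: {role!r}")
        parts.append(f"<|im_start|>{role}\n{message['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


print(to_chatml([
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "Hi"},
]))
```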

CUDA MODE Discord Summary

- Combining CUDA with Image and Language Processing: Engineers discussed challenges in concatenating image features with caption layers and using linear layers to project image features to the shape of NLP embeddings. Members also discussed how CUDA techniques such as vectorized additions can speed up machine learning operations.

- Exploring CUDA and Triton Development: Community members are teaching themselves CUDA and exploring performance tools such as the Triton language. There is interest in comparing CUDA’s performance with higher-level tools like libtorch and in understanding the compilation process behind `torch.compile`.

- Advancements in Large Model Training: GaLore and FSDP with QLoRA were discussed as ways to reduce memory requirements and train large models on standard GPUs. An ArXiv paper covers Gradient Low-Rank Projection (GaLore), and Answer.AI’s blog post explains how to train a 70B model at home.

- CUDA Knowledge Sharing and Lecture Announcements: A YouTube playlist and GitHub repository for the CUDA Training Series were shared, and a call for participation in a CUDA-MODE lecture on reductions was announced, with lecture resources available online. CUDA novices also discussed compilation differences and performance observations across PyTorch versions.

- Job Opportunities and Project Development in CUDA: A developer is sought to design a custom CUDA kernel for $2,000 to $3,000 USD; prerequisites include experience in algorithm development and CUDA programming. Conversations also highlighted user projects, such as building a custom tensor library, and the importance of deep knowledge for practical CUDA work.
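The image-caption question above comes down to shape matching. A linear layer maps each image feature vector (dimension d_img) to the text embedding dimension (d_model), so the projected image tokens can be concatenated with caption token embeddings along the sequence axis. A dependency-free sketch with tiny, made-up dimensions and weights; a real model would use a learned `torch.nn.Linear` on the GPU.

```python
def linear(x, weight, bias):
    """y = W x + b for one vector; weight has shape (d_out, d_in)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def project_and_concat(image_feats, caption_embeds, weight, bias):
    """Project each image feature to d_model, then prepend it to the caption tokens."""
    d_model = len(caption_embeds[0])
    projected = [linear(f, weight, bias) for f in image_feats]
    assert all(len(p) == d_model for p in projected), "projection must match d_model"
    return projected + caption_embeds  # sequence length = n_image + n_caption

# Hypothetical sizes: 2 image patches with d_img=3, 2 caption tokens with d_model=2.
W = [[1.0, 0.0, 1.0],
     [0.0, 2.0, 0.0]]  # shape (d_model=2, d_img=3)
b = [0.5, -0.5]
image_feats = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
caption = [[0.1, 0.2], [0.3, 0.4]]

seq = project_and_concat(image_feats, caption, W, b)
print(len(seq), seq[0])  # 4 [4.5, 3.5]
```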

LangChain AI Discord Summary

- Innovative Prompt Crafting Desktop App: @tomatyss introduced Prompt Mixer, a new tool for building, testing, and iterating on AI prompts, with features such as connecting to various models, prompt version tracking, and a guide for creating custom connectors.

- Enhancements on LangChain: Users discussed many aspects of LangChain, including PDF extraction issues, handling complex logic in templates, wrappers for ChatOllama functions such as Ollama Functions, where LangChain Serve executes, and capturing outputs from routes. Meanwhile, @baytaew indicated that improved Claude 3 support is in progress, referencing Pull Request #18630 on GitHub.

- RAG Tutorial Resources Shared: @mehulgupta7991 and @infoslack shared tutorials on improving and using Retrieval Augmented Generation (RAG): videos on enhancing RAG with LangGraph and on building a chatbot with RAG and LangChain, respectively.

- Open Source Tools for Chatbots and Data Analytics: @haste171 shared an open-source AI chatbot for conversational data analysis on GitHub, while @appstormer_25583 released Data GPTs in Appstorm 1.5.0 for data exploration and visualization, with sample GPTs for various industries.

- Automated Lead Generation Tools: @robinsayar is developing an automated tool that generates leads from public company information, sparking interest from @baytaew, who is anticipating its potential impact.

DiscoResearch Discord Summary

- AI Judging Creative Writing: .calytrix was skeptical that AI models can judge creative writing, citing parameter limitations. Even so, GPT-4 and Claude 3 are being tested with detailed scoring criteria for the task, .calytrix is developing a benchmark, and bjoernp suggested Mistral Large as a candidate for an ensemble of AI judges.

- Evo Tackles Genomic Scale: Together AI and the Arc Institute released Evo, built on the StripedHyena architecture, for modeling DNA, RNA, and protein sequences; it supports over 650k tokens. Separately, johannhartmann showed interest in AutoMerger for automatic model merging, though it is currently non-operational.

- Benchmarking Tools and Strategies Discussed: johannhartmann shared the tinyBenchmarks dataset for efficient AI benchmarking and plans to translate it for broader use. Discussion of benchmarking with the Hellaswag dataset suggested that 100 data points may be too few for detailed comparisons.

- Advancements and Challenges in German AI Research: johannhartmann shared insights into training models like Mistral on the German Orca dataset and fixed technical issues that crispstrobe hit in model merging with a GitHub commit. Benchmark results showed Brezn3 improving on its predecessor, while DPO (Direct Preference Optimization) work was noted as in progress. Using DiscoLM as a base was also being considered for more consistent benchmarking than previous base models.
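The concern about 100 Hellaswag items can be made concrete with the binomial standard error of an accuracy estimate, sqrt(p(1 - p) / n). A small sketch using a normal-approximation 95% interval; p = 0.5 is an illustrative worst case, not a reported result.

```python
import math

def accuracy_ci_halfwidth(p, n, z=1.96):
    """Half-width of a normal-approximation interval (95% for the default z) for accuracy p on n items."""
    return z * math.sqrt(p * (1 - p) / n)

# Worst case p = 0.5: roughly ±9.8 points at n=100, ±3.1 at n=1,000, ±1.0 at n=10,000.
for n in (100, 1_000, 10_000):
    print(n, f"±{100 * accuracy_ci_halfwidth(0.5, n):.1f} points")
```

At n = 100, a gap of a few accuracy points between two models sits well inside this noise.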

Alignment Lab AI Discord Summary

- AI Hallucination Challenges Spark Debate: Engineers explored strategies to minimize AI hallucinations, discussing Yi’s report and considering methods such as RAG (Retrieval-Augmented Generation) or manually rewriting repetitive responses in fine-tuning datasets. No consensus emerged, not even on a definition of hallucination.

- Mermaid Magic for Code Diagrams: Using Claude to create Mermaid graphs from code bases of up to 96k tokens was presented as an innovative way to visualize code architecture, sparking interest in other applications of such visualizations.

- Gemma-7b Arrives with a Bang: OpenChat’s fine-tune of Gemma-7b (a base model pretrained on 6T tokens), trained with C-RLFT, was heralded as a significant achievement, nearly matching the performance of Mistral-based models. This first usable Gemma fine-tune is available on HuggingFace and was celebrated in a tweet by OpenChatDev.

- Balancing Act Between Gemma and Mistral Models: A conversation explored why Gemma 7B was released even though it doesn’t outperform Mistral 7B. Participants agreed that each model is a distinct experiment and that Gemma’s potential has yet to be fully explored, especially in areas like NSFW content moderation.

- Community Collaboration in Coding: Users shared experiences and called for collaboration, particularly on setting up a Docker environment for development. The tone was comradely, emphasizing the value of collective input in overcoming technical hurdles.
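The Claude-to-Mermaid idea can be approximated deterministically for one slice of architecture: the import structure of a Python code base. A sketch that parses module sources with the standard-library `ast` module and emits Mermaid `graph TD` edges; the three-module project is hypothetical, and this captures only imports, not the richer description an LLM might produce.

```python
import ast

def import_edges(modules):
    """modules maps module name -> source text; return sorted (importer, imported) pairs."""
    edges = set()
    for name, source in modules.items():
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                targets = [node.module]
            else:
                continue
            for target in targets:
                if target in modules:  # keep only edges inside the code base
                    edges.add((name, target))
    return sorted(edges)

def to_mermaid(edges):
    """Render edges as a top-down Mermaid flowchart."""
    lines = ["graph TD"]
    lines += [f"    {src} --> {dst}" for src, dst in edges]
    return "\n".join(lines)

# Hypothetical three-module project.
project = {
    "app": "import db\nfrom utils import helper\nimport os\n",
    "db": "import utils\n",
    "utils": "def helper():\n    return 1\n",
}
print(to_mermaid(import_edges(project)))
```

Standard-library imports such as `os` are dropped because they are not part of the project.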

LLM Perf Enthusiasts AI Discord Summary

- Free AI Tools for Vercel Pro Subscribers: Claude 3 Opus and GPT-4 vanilla are now available for free to Vercel Pro subscribers. More information and tools can be found in the Vercel AI SDK.

- Migrating from OpenAI to Azure SDK: Moving from OpenAI’s SDK to an Azure-based solution has interested users like @pantsforbirds, who are seeking advice on potential migration challenges.

- XML Enhances Function Calls in Claude: Users, notably @res6969, have seen better function-calling performance when using XML tags with Claude. On the other hand, @pantsforbirds pointed out that embedding XML makes prompt generators harder to share.

- Opus Rises Above GPT-4: In discussions led by @jeffreyw128, @nosa_., and @vgel, users found that Opus beats GPT-4 at delivering smart responses. @potrock preferred Claude’s straightforward prose to GPT’s more verbose explanations. Users are eagerly awaiting GPT-4.5 and GPT-5 and are curious how they will improve on current models.

- Speculations on Google’s Potential AI Dominance: @jeffreyw128 theorizes that Google could dominate general AI use because it can integrate AI into existing platforms like Search and Chrome and offer it at lower cost, possibly through a Generative Search Experience. However, they suggest that OpenAI may keep a competitive lead in specialized applications, while Google might prioritize a blend of generative and extractive AI.
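To illustrate the XML approach to function calling: the prompt asks Claude to wrap a tool call in XML tags, and the application parses the reply with a standard XML parser. The tag names below (`function_calls`, `invoke`, `tool_name`, `parameters`) are assumptions for illustration, not a format confirmed in the discussion, and the reply string is invented.

```python
import xml.etree.ElementTree as ET

def parse_tool_calls(reply):
    """Extract (tool_name, {param: value}) pairs from an XML block in a model reply."""
    start = reply.find("<function_calls>")
    end = reply.find("</function_calls>")
    if start == -1 or end == -1:
        return []  # the model answered in plain text
    root = ET.fromstring(reply[start:end + len("</function_calls>")])
    calls = []
    for invoke in root.findall("invoke"):
        name = invoke.findtext("tool_name", default="").strip()
        params_el = invoke.find("parameters")
        params = {} if params_el is None else {
            child.tag: (child.text or "").strip() for child in params_el
        }
        calls.append((name, params))
    return calls

# Invented model reply using the assumed tag layout.
reply = """Let me look that up.
<function_calls>
<invoke>
<tool_name>get_weather</tool_name>
<parameters><city>Paris</city><unit>celsius</unit></parameters>
</invoke>
</function_calls>"""
print(parse_tool_calls(reply))  # [('get_weather', {'city': 'Paris', 'unit': 'celsius'})]
```

Model output can contain characters such as a bare `&` that are not valid XML, so production code should also catch `xml.etree.ElementTree.ParseError`.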

Skunkworks AI Discord Summary

- A Groundbreaking Claim in AI Training: @baptistelqt announced what they describe as a major methodological breakthrough, claiming to accelerate convergence by a factor of 100,000 by training models from scratch each round. No details of the methodology or verification of these claims have been provided.

Datasette - LLM (@SimonW) Discord Summary

- Shout-out to Symbex: @bdexter expressed gratitude for symbex, which they use regularly, and @simonw remarked on how fun the project is.

AI Engineer Foundation Discord Summary

Mysterious Mention of InterconnectAI: A user named .zhipeng appears to have referenced a blog post from Nathan’s InterconnectAI, but no specific details or context were provided.

AI Video Deep Dive Incoming: An event has been announced focusing on Gen AI Video and the ‘World Model’, featuring speakers such as Lijun Yu from Google and Ethan He from Nvidia, set for March 16, 2024, in San Francisco and available on Zoom. Those interested can RSVP here.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (368 messages🔥🔥):

- Inquiry about Finetuning Gemma: @kaleina_nyan and @starsupernova discussed issues with finetuning Gemma using the ChatML template, including whether special tokens like `<start_of_turn>` and `<end_of_turn>` are trained in vanilla pre-trained models. They explored potential fixes and workarounds, such as unfreezing the embedding matrix (Unsloth Wiki).

- Multi-GPU Support for Unsloth: @kaleina_nyan shared a fork she made on GitHub that adds multi-GPU support to Unsloth (oKatanaaa/unsloth) and discussed potential issues with numerical results and memory distribution.

- New FSDP + QLoRA Training System: @dreamgen highlighted a new system released by Answer.AI that can train 70B models locally on typical gaming GPUs, though they were not yet sure how it differs from existing methods combining DeepSpeed and QLoRA.

- Experiences Sharing Unsloth Finetuned Models on Kaggle: @simon_vtr described trying to use Unsloth finetuned models in a Kaggle competition and running into issues with offline packages and inference bugs. @starsupernova pointed to a notebook with bug fixes for Gemma models for inference on Kaggle.

- Thanking Supporters: @theyruinedelise and @starsupernova thanked Unsloth community members for their support on Ko-fi, including individual contributors @1121304629490221146 and @690209623902650427 for their donations.

- Gemma Token Mapping and `generate` Method: @kaleina_nyan and @starsupernova discussed what `map_eos_token` does and its implications for the `.generate` method of Gemma models. They identified a potential issue with `generate` not stopping after creating `
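The special-token and `generate` threads above are related. Gemma’s chat layout, as we understand its published template, closes each turn with `<end_of_turn>`, so if decoding stops only on the tokenizer’s EOS token, a model trained on that layout can keep generating past the end of its turn. A toy sketch with a scripted fake model and placeholder token IDs, assuming a greedy loop that stops on any ID in a stop set; this is not Unsloth’s `map_eos_token` implementation.

```python
def gemma_prompt(turns):
    """Render (role, text) pairs in Gemma's chat layout; roles are 'user' or 'model'."""
    out = "<bos>"
    for role, text in turns:
        out += f"<start_of_turn>{role}\n{text}<end_of_turn>\n"
    return out + "<start_of_turn>model\n"  # leave an open model turn for the reply

def greedy_decode(next_token, prompt_ids, stop_ids, max_new_tokens=20):
    """Append next_token(ids) until a token in stop_ids appears or the budget runs out."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        tok = next_token(ids)
        ids.append(tok)
        if tok in stop_ids:
            break
    return ids[len(prompt_ids):]

def scripted_model(tokens, fallback):
    """Fake 'model' that replays a fixed token script, then emits fallback forever."""
    it = iter(tokens)
    return lambda ids: next(it, fallback)

# Placeholder token IDs, for illustration only.
EOS, END_OF_TURN = 1, 107
script = [50, 51, END_OF_TURN, 60, 61, 62]  # the model keeps talking after its turn ends

print(gemma_prompt([("user", "Hi")]))
print(greedy_decode(scripted_model(script, EOS), [2], stop_ids={EOS}))               # [50, 51, 107, 60, 61, 62, 1]
print(greedy_decode(scripted_model(script, EOS), [2], stop_ids={EOS, END_OF_TURN}))  # [50, 51, 107]
```

In Hugging Face `transformers`, `generate` also accepts a list of IDs for `eos_token_id`, which plays the role of `stop_ids` here.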

Links mentioned:

- Answer.AI - You can now train a 70b language model at home: We’re releasing an open source system, based on FSDP and QLoRA, that can train a 70b model on two 24GB GPUs.

- Google Colaboratory: no description found

- GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection: Training Large Language Models (LLMs) presents significant memory challenges, predominantly due to the growing size of weights and optimizer states. Common memory-reduction approaches, such as low-ran…

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Kaggle Mistral 7b Unsloth notebook Error: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Kaggle Mistral 7b Unsloth notebook Error: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Support Unsloth AI on Ko-fi! ❤️. ko-fi.com/unsloth: Support Unsloth AI On Ko-fi. Ko-fi lets you support the people and causes you love with small donations

- tokenizer_config.json · unsloth/gemma-7b at main: no description found

- 4 apps incroyables qui utilisent l’IA (“4 incredible apps that use AI”): you’re going to love them (link to the apps 👇)👀 Not to be missed: this OS will make you run out and buy a Mac: https://youtu.be/UfrsyoFUXmU The apps presented i…

- Home: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- GitHub - stanford-crfm/helm: Holistic Evaluation of Language Models (HELM), a framework to increase the transparency of language models (https://arxiv.org/abs/2211.09110). This framework is also used to evaluate text-to-image models in Holistic Evaluation of Text-to-Image Models (HEIM) (https://arxiv.org/abs/2311.04287).: Holistic Evaluation of Language Models (HELM), a framework to increase the transparency of language models (https://arxiv.org/abs/2211.09110). This framework is also used to evaluate text-to-image …

- Tensor on cuda device 1 cannot be accessed from Triton (cpu tensor?) · Issue #2441 · openai/triton: The code of softmax below is coppied from tutorials to demonstrate that we cannot pass tensors on devices other than “cuda:0” to triton kernel. Errors are: ValueError: Pointer argument (at 0…

- GitHub - EleutherAI/cookbook: Deep learning for dummies. All the practical details and useful utilities that go into working with real models.: Deep learning for dummies. All the practical details and useful utilities that go into working with real models. - EleutherAI/cookbook

- GitHub - oKatanaaa/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to oKatanaaa/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #welcome (4 messages):

- A Warm Welcome and Handy Reminders: @theyruinedelise greeted new members with a hearty welcome in multiple messages and encouraged everyone to check out important channels. Members are specifically reminded to read the information in channel 1179040220717522974 and to select their roles in channel 1179050286980006030.

Unsloth AI (Daniel Han) ▷ #random (19 messages🔥):

- CUDA Conundrums: @maxtensor reports Bootstrap CUDA exceptions in certain scripts, even though other scripts work perfectly in the same environment, and wonders whether it is an OS script limitation. Troubleshooting with @starsupernova points to possible GPU visibility issues.

- Praise for the Framework: @maxtensor expresses admiration for a framework they find innovative, saying it “opens a lot of new doors.”

- New bitsandbytes Version Released: @maxtensor shares a link to the new 0.43.0 release of bitsandbytes, notable for FSDP support and officially documented Windows installation, but remains cautious about updating their working environment.

- AI2 Incubator’s Massive Compute Giveaway: @mister_poodle shares news that the AI2 Incubator has secured $200 million in AI compute resources for its portfolio companies, significant support for AI startups.

- Questions Around OpenAI’s AGI Tactics: @iron_bound and @theyruinedelise discuss concerns about OpenAI’s approach to AI development, particularly around sharing scientific advances and Elon Musk’s stance on OpenAI’s alleged shift away from openness.
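The bitsandbytes 0.43.0 release notes say QLoRA + FSDP can train 70B-scale models on multiple 24GB GPUs, and the core of that follows from arithmetic: 4-bit weights take half a byte per parameter, and FSDP shards them across GPUs. A back-of-the-envelope sketch covering only the frozen base weights; LoRA adapters, optimizer state, activations, and quantization constants are assumed to fit in the remaining headroom and are not modeled.

```python
def sharded_weight_gb(n_params, bits_per_param, n_gpus):
    """Gigabytes (1e9 bytes) of base weights per GPU when FSDP shards them evenly."""
    total_bytes = n_params * bits_per_param / 8
    return total_bytes / n_gpus / 1e9

for bits in (16, 4):
    per_gpu = sharded_weight_gb(70e9, bits, n_gpus=2)
    print(f"{bits}-bit: {per_gpu:.1f} GB per GPU, fits in 24 GB: {per_gpu < 24}")
# 16-bit: 70.0 GB per GPU, fits in 24 GB: False
# 4-bit: 17.5 GB per GPU, fits in 24 GB: True
```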

Links mentioned:

- AI2 Incubator secures $200M in AI compute resources for portfolio companies: (AI2 Incubator Image) Companies building artificial intelligence models into their software products need a lot of computation power, also known as

- [ML News] Elon sues OpenAI | Mistral Large | More Gemini Drama: #mlnews #ainews #openai OUTLINE: 0:00 - Intro, 0:20 - Elon sues OpenAI, 14:00 - Mistral Large, 16:40 - ML Espionage, 18:30 - More Gemini Drama, 24:00 - Copilot generate…

- Release 0.43.0: FSDP support, Official documentation, Cross-compilation on Linux and CI, Windows support · TimDettmers/bitsandbytes: Improvements and New Features: QLoRA + FSDP official support is now live! #970 by @warner-benjamin and team - with FSDP you can train very large models (70b scale) on multiple 24GB consumer-type G…

Unsloth AI (Daniel Han) ▷ #help (514 messages🔥🔥🔥):

- Xformers Installation Issues: @fjefo encountered errors related to `xformers` while trying to use Unsloth AI with Gemma models. @starsupernova advised reinstalling `xformers` and later installing the package with `pip install "unsloth[cu121-torch220] @ git+https://github.com/unslothai/unsloth.git"`.

- Gemma Model Load and Fine-tuning Challenges: @patleeman had trouble loading a Gemma 2B model finetuned with Unsloth on a vLLM server, getting a KeyError for `lm_head.weight`. With a workaround that skips the key, the model loaded fine, suggesting a potential issue on vLLM’s end, as discussed in this GitHub issue.

- Using HF_HOME Environment Variable with Jupyter: @hyperleash struggled to set the `HF_HOME` environment variable in Jupyter notebooks for Unsloth. Setting it worked for .py scripts but not in notebooks, and no logs were generated for troubleshooting. @starsupernova acknowledged the issue, confirmed there are no logs, and gave advice on setting the environment variable correctly.

- Discussions on Finetuned Model Performance: @mlashcorp observed a performance discrepancy between the merged model and loading the adapter directly. @starsupernova suggested trying `"merged_4bit_forced"` and mentioned precision issues when merging adapters.

- Downloading and Finetuning Gemma 7B Issues: @fjefo reported issues downloading and finetuning Gemma 7B but was later able to start training. They reported more OOM errors than with Mistral 7B, and @starsupernova advised updating Unsloth and considering redownloading via transformers.
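The precision issue mentioned when merging adapters can be seen in isolation. Merging adds a small LoRA update to each base weight, and if the sum is stored in a low-precision format, updates smaller than the format’s spacing near that weight are rounded away. A sketch using Python’s `struct` half-precision (`'e'`) format as a stand-in for a 16-bit merged checkpoint; the weight and delta values are illustrative, and bf16, whose spacing is even coarser, is not modeled.

```python
import struct

def to_fp16(x):
    """Round a Python float to IEEE half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

base_weight = 1.0  # illustrative base weight
for delta in (1e-4, 1e-3):  # illustrative LoRA updates for that weight
    merged = to_fp16(base_weight + delta)
    print(f"delta={delta}: fp16 keeps {merged - base_weight:.7f} of the update")
# delta=0.0001: fp16 keeps 0.0000000 of the update
# delta=0.001: fp16 keeps 0.0009766 of the update
```

Near 1.0, half precision can only step in increments of 2^-10 (about 0.00098), so the smaller update disappears entirely and the larger one is quantized.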

Links mentioned:

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Repetition Improves Language Model Embeddings: Recent approaches to improving the extraction of text embeddings from autoregressive large language models (LLMs) have largely focused on improvements to data, backbone pretrained language models, or …

- Gemma models do not work when converted to gguf format after training · Issue #213 · unslothai/unsloth: When Gemma is converted to gguf format after training, it does not work in software that uses llama cpp, such as lm studio. llama_model_load: error loading model: create_tensor: tensor ‘output.wei…

- KeyError: lm_head.weight in GemmaForCausalLM.load_weights when loading finetuned Gemma 2B · Issue #3323 · vllm-project/vllm: Hello, I finetuned Gemma 2B with Unsloth. It uses LoRA and merges the weights back into the base model. When I try to load this model, it gives me the following error: … File “/home/ubuntu/proj…

- Faster Inference & Training Roadmap · Issue #226 · unslothai/unsloth: @danielhanchen In the unsloth Gemma intro blogpost, you mention VRAM increase due to larger MLP size in Gemma compared to Llama and Mistral, and show a graph demonstrating decreased memory usage wh…

- VLLM Multi-Lora with embed_tokens and lm_head in adapter weights · Issue #2816 · vllm-project/vllm: Hi there! I’ve encountered an issue with the adatpter_model.safetensors in my project, and I’m seeking guidance on how to handle lm_head and embed_tokens within the specified modules. Here'…

- Conda installation detailed instructions · Issue #73 · unslothai/unsloth: I’m trying to follow the instructions for installing unsloth in a conda environment, the problem is that the conda gets stuck when running the install lines. I’ve tried running it twice, both …

- Google Colaboratory: no description found

- Hastebin: no description found

- Google Colaboratory: no description found

- Tutorial: How to convert HuggingFace model to GGUF format · ggerganov/llama.cpp · Discussion #2948: Source: https://www.substratus.ai/blog/converting-hf-model-gguf-model/ I published this on our blog but though others here might benefit as well, so sharing the raw blog here on Github too. Hope it…

- Home: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- Hastebin: no description found

- LoRA Land: Fine-Tuned Open-Source LLMs that Outperform GPT-4 - Predibase: LoRA Land is a collection of 25+ fine-tuned Mistral-7b models that outperform GPT-4 in task-specific applications. This collection of fine-tuned OSS models offers a blueprint for teams seeking to effi…

- Reddit - Dive into anything: no description found

- Merging QLoRA weights with quantized model: Merging QLoRA weights with quantized model. GitHub Gist: instantly share code, notes, and snippets.

- py : add Gemma conversion from HF models by ggerganov · Pull Request #5647 · ggerganov/llama.cpp: # gemma-2b python3 convert-hf-to-gguf.py ~/Data/huggingface/gemma-2b/ --outfile models/gemma-2b/ggml-model-f16.gguf --outtype f16 # gemma-7b python3 convert-hf-to-gguf.py ~/Data/huggingface/gemma-…

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- Third-party benchmark · Issue #6 · jiaweizzhao/GaLore: Hello, thank you very much for such excellent work. We have conducted some experiments using Llama-Factory, and the results indicate that Galore can significantly reduce memory usage during full pa…

- unsloth/unsloth/save.py at main · unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #showcase (8 messages🔥):

- Ghost 7B v0.9.1 Takes Flight: @lh0x00 announced the release of Ghost 7B v0.9.1, touting improved reasoning and language capabilities in both Vietnamese and English. It is available for online use and for use in applications at huggingface.co.

- Ghost 7B Secures Top Rank: In a follow-up message, @lh0x00 said Ghost 7B v0.9.1 scored high enough to rank 3rd on VMLU’s “Leaderboard of fine-tuned models”.

- Community Cheers for Ghost 7B: @starsupernova and @lh0x00 exchanged congratulations on the successful launch and strong performance of the Ghost 7B model.

- French AI app insight: @theyruinedelise shared a French YouTube video titled “4 apps incroyables qui utilisent l’IA” (“4 incredible apps that use AI”) showcasing impressive AI apps: Watch here.

- Unsloth AI Accelerates Fine-tuning: @lee0099 discussed finetuning `yam-peleg/Experiment26-7B` on a NeuralNovel dataset, highlighting Unsloth AI’s optimizations, which give a 2x speedup, 40% less memory use, and 0% accuracy degradation during LLM fine-tuning.

Links mentioned:

- 4 apps incroyables qui utilisent l’IA (“4 incredible apps that use AI”): you’re going to love them (link to the apps 👇)👀 Not to be missed: this OS will make you run out and buy a Mac: https://youtu.be/UfrsyoFUXmU The apps presented i…

- NeuralNovel/Unsloth-DPO · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #suggestions (5 messages):

- Suggestion for Unsloth Integration: @imranullah suggested bringing features from LLaMA-Factory into Unsloth AI, noting that they have worked well there.

- Agreement on GaLore’s Usefulness: @starsupernova agreed that the GaLore thread was useful, endorsing its potential application.

- Implementation Ease: @remek1972 humorously remarked on how easy a certain feature would be to implement, tagging @160322114274983936 in the conversation.

- GitHub Project Shared: @remek1972 shared a link to GEAR, a GitHub repository with an efficient KV cache compression recipe for generative inference of large language models. View the GEAR project on GitHub.
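The GaLore thread endorsed above refers to Gradient Low-Rank Projection. As a rough summary written from the paper’s general description rather than its exact algorithm listing: for an m × n weight matrix W with gradient G_t, the gradient is projected onto r ≪ m directions before the optimizer sees it, so optimizer state is kept at size r × n.

```latex
\begin{aligned}
G_t &= U_t \Sigma_t V_t^\top, \qquad P_t = U_t[:, 1{:}r] \in \mathbb{R}^{m \times r}
      \quad \text{(refreshed every } T \text{ steps)} \\
R_t &= P_t^\top G_t \in \mathbb{R}^{r \times n} \\
N_t &= \rho_t(R_t) \quad \text{(e.g. an Adam step whose moments are stored at size } r \times n\text{)} \\
W_{t+1} &= W_t - \eta\,\alpha\,P_t N_t
\end{aligned}
```

With Adam, the two moment buffers shrink from 2mn to 2rn values, plus mr for P_t. For a hypothetical 4096 × 4096 layer at r = 128, that is 33,554,432 values versus 2·128·4096 + 4096·128 = 1,572,864, roughly 21x fewer.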

Links mentioned:

GitHub - opengear-project/GEAR: GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM: GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM - opengear-project/GEAR
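KV cache compression matters because the cache grows linearly with context length: each token stores one key vector and one value vector per layer per KV head. A rough sizing sketch; the configuration (32 layers, 8 KV heads, head dimension 128, fp16 values) is assumed to match Mistral 7B’s published config, so substitute the numbers for other models.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2, batch=1):
    """Bytes for keys + values across all layers (the leading 2 counts K and V)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len * batch

# Assumed Mistral-7B-like config: 32 layers, 8 KV heads, head_dim 128, fp16.
per_token = kv_cache_bytes(1, n_layers=32, n_kv_heads=8, head_dim=128)
full_ctx = kv_cache_bytes(32_768, n_layers=32, n_kv_heads=8, head_dim=128)
print(per_token // 1024, "KiB per token")      # 128 KiB per token
print(full_ctx / 2**30, "GiB at 32k context")  # 4.0 GiB at 32k context
```

A scheme that stores the cache in about 4 bits instead of 16 would cut this by roughly 4x before any overheads.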

OpenAI ▷ #ai-discussions (611 messages🔥🔥🔥):

- AI-Assisted Coding Comparison: Users like @askejm and @sangam_k compared the coding capabilities of Claude Opus and GPT-4. The consensus seems to be that Claude Opus is better for coding, producing more complete code and performing well in languages like SQL and Rust.

- Exploring AI’s Consciousness: A discussion led by @sotiris.b touched on the belief held by some that Claude might be conscious. The debate covered different views on universal consciousness and whether AI can be considered conscious, with users like @metaldrgn and @dezuzel discussing papers on the topic.

- GPT-4’s Cutoff and Performance: @webhead used test queries to confirm that GPT-4’s knowledge cutoff is April 2023. They also noted that ChatGPT conversations may be slower and that recall varies across models, with Google’s Gemini 1.5 preview showing impressive recall but potential shortcomings in specific tasks.

- International Access to AI Products: Several users mentioned difficulties accessing Claude 3 Opus from some countries, with @lightpictures and @lazybones3 discussing workarounds. @webhead recommended using OpenRouter to test different models.

- Subscription Issues with OpenAI: @arxsenal described a problem with their ChatGPT Plus subscription not being recognized. Others, including @eskcanta, suggested fixes such as clearing the cache, trying different devices or browsers, and contacting support through the OpenAI help site.

Links mentioned:

- Skm: no description found

- LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys: no description found

- Prompt-based image generative AI tool for editing specific details: I am trying to make some spritesheets using DALLE3, and while the initial generation of spritesheets by DALLE3 are fascinating, I have encountered these problems: Inconsistent art style(multi…

- How can we Improve Democracy?: Introduction

- Bland Web: no description found

- Tweet from Bland.ai (@usebland): Introducing Bland web. An AI that sounds human and can do anything. 📢 Add voice AI to your website, mobile apps, phone calls, video games, & even your apple vision pro. ⚡️ Talk to the future right …

- GitHub - Kiddu77/Train_Anything: A repo to get you cracking with Neural Nets .: A repo to get you cracking with Neural Nets . Contribute to Kiddu77/Train_Anything development by creating an account on GitHub.

- Literal Labs - Cambridge Future Tech: Accelerating The New Generation of Energy Efficient AI Literal Labs applies a streamlined and more efficient approach to AI that is faster, explainable, and up to 10,000X more energy efficient than to…

OpenAI ▷ #gpt-4-discussions (78 messages🔥🔥):

- GPT Outage and Language Setting Bugs: Multiple users, including @kor_apucard, @dxrkunknown, @snolpix, and @alessid_55753, reported GPT not responding. A common fix, found by users like @pteromaple and confirmed by others such as @katai5plate and @hccren, was to switch the language preview in settings to Auto-detect and refresh the browser.

- Chat Functionality Troubles and Workarounds: Issues were not limited to a single browser, as @dxrkunknown and @macy7272 had problems on both web and mobile. Solutions varied: @pteromaple suggested changing the language setting, whereas @winter9149 found that deleting old chats helped restore normal operation.

- Discussions around AI Competitors: Several users, including @tsanva, @1kio1, and @zeriouszhit, discussed possibly switching to competitors like Claude, citing GPT’s context window limits and confused responses. Some also noted that Claude lacks features comparable to those available for GPT.

- Help and Status Updates: @openheroes shared a link to OpenAI’s status page showing no current outages, suggested that users make sure they are not on a VPN or blocking connections, and pointed to the help center for further support.

- Payment Queries for GPT Creators: @ar888 asked about payment for GPT creators, and @elektronisade replied that OpenAI’s official word, stated in a blog post, was that payments for US creators would start in Q1.

Links mentioned:

OpenAI Status: no description found

OpenAI ▷ #prompt-engineering (90 messages🔥🔥):

- In Search of Enhanced ChatGPT Memory: @youri_k was troubleshooting ChatGPT’s ability to draw on chat history for context and received advice from @eskcanta on structuring prompts to handle memory, including asking for a summary before ending a conversation.

- ChatGPT Struggles to Sketch for Beginners: @marijanarukavina had trouble getting ChatGPT to create a simple sketch explaining Boundary Value Analysis; @eskcanta suggested using the Python tool for better results and gave a step-by-step approach to refining the model’s output.

- Delving into GPT-Based UI Generation: @dellgenius asked how GPT-4 could be used to create Figma plugins or generate UI elements, and @eskcanta shared a link showing GPT-4’s potential in this area.

- GPT for Homework Assistance? Not Quite: @levidog asked about extracting questions from an assignment document with ChatGPT, but @darthgustav. cautioned about the limitations and ethical considerations of using GPT for homework.

- Achieving Consistent Output in Custom GPTs: @iloveh8 sought advice on getting consistent responses from custom GPT models, and @darthgustav. recommended using an output template with variable names that encode summary instructions.
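The output-template tip can be sketched outside ChatGPT. The template’s placeholder names double as instructions (for example `{three_sentence_summary_in_plain_english}`), and the application checks that a reply filled every labeled line. The field names and the reply below are hypothetical, and the completeness check is a simple string-matching heuristic, not an OpenAI feature.

```python
import re
from string import Formatter

# Hypothetical template: each placeholder name doubles as an instruction.
TEMPLATE = (
    "Title: {five_word_title}\n"
    "Summary: {three_sentence_summary_in_plain_english}\n"
    "Risk: {one_word_risk_level_low_medium_or_high}\n"
)

def field_names(template):
    """Placeholder names in a str.format-style template, in order."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]

def build_prompt(task):
    """Append the output template to a task description."""
    return (f"{task}\n\nReply using exactly this template, replacing each "
            f"{{placeholder}} with what its name describes:\n{TEMPLATE}")

def parse_reply(reply):
    """Map each template label (e.g. 'Title') to the non-empty text after it."""
    labels = [line.split(":", 1)[0] for line in TEMPLATE.splitlines()]
    found = {}
    for label in labels:
        match = re.search(rf"^{re.escape(label)}:[ \t]*(\S.*)$", reply, flags=re.MULTILINE)
        if match:
            found[label] = match.group(1).strip()
    return found

def is_complete(reply):
    """True if the reply filled in every labeled line of the template."""
    return len(parse_reply(reply)) == len(field_names(TEMPLATE))

# Invented reply for illustration.
reply = "Title: Quarterly sales beat forecasts\nSummary: Sales rose. Costs fell. Outlook is stable.\nRisk: low\n"
print(parse_reply(reply)["Risk"], is_complete(reply))  # low True
```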

OpenAI ▷ #api-discussions (90 messages🔥🔥):

- Efficient Prompt Engineering with GPT: @eskcanta outlined the basic steps for writing efficient prompts, stressing clarity, language proficiency, and giving the model specific instructions. They advised avoiding typos and grammar mistakes and communicating in a language that both the user and the AI understand well.

- Keeping Custom GPT Outputs Consistent: According to @darthgustav., an output template whose variable names encode a summary of the instructions can help keep custom GPT outputs consistent.

- Professional Vocabulary Expansion Challenge: @ericplayz asked for help rewriting a paragraph with more professional vocabulary while keeping the word count; @eskcanta shared an attempted solution and asked for feedback on whether it met the need. The guidance included making sure the rewritten Romanian text keeps its length, details, and appropriate tone.

- JSON Formatting in GPT-4 Discussions: @dellgenius asked about using JSON formatting to organize responses; @aminelg confirmed its usefulness for structured data, and @eskcanta answered questions about creating UI elements and the varying capabilities of the model. The discussion focused on how GPT models can help design UI elements, provided the model has been trained on the relevant data or has access to the right tools.

- Requests for Assistance Using ChatGPT API: @youri_k and @levidog asked for help making ChatGPT remember chat history and extracting questions from an assignment document, respectively. @eskcanta suggested using summaries to retain history and cautioned that the models are not designed to help with homework, which might lead to inconsistent results.
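Asking for JSON makes responses machine-readable, but the reply still needs validating, since the model can wrap it in prose or omit keys. A minimal sketch with the standard `json` module; the required keys describe a hypothetical UI-element schema and the reply text is invented.

```python
import json

REQUIRED_KEYS = {"component", "label", "props"}  # hypothetical UI-element schema

def extract_json(reply):
    """Parse the text from the first '{' to the last '}'; raise ValueError if missing or invalid."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found")
    data = json.loads(reply[start:end + 1])  # JSONDecodeError subclasses ValueError
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data

# Invented model reply with surrounding prose.
reply = 'Sure! {"component": "Button", "label": "Save", "props": {"variant": "primary"}}'
print(extract_json(reply)["props"])  # {'variant': 'primary'}
```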

LM Studio ▷ #💬-general (407 messages🔥🔥🔥):

- Exploring LLM Capabilities: Users discussed the capabilities of different models and sought advice on choosing models for specific purposes, such as coding and cybersecurity. They shared experiences running models like Mistral 7B and Mixtral on various systems, including Mac M1 machines and PCs with Nvidia GPUs.

- Technical Troubleshooting in LM Studio: Some users, such as @amir0717, encountered errors when loading models in LM Studio and sought help with messages like “Model operation failed” or models that “did not load properly.” Others suggested running LM Studio as an administrator or adjusting GPU offload settings.

- Hardware Limitations and Model Performance: Users with different hardware asked which models run best on their systems. For example, @yagilb advised @mintsukuu, who has an 8GB Mac M1, to try 7B models with conservative layer settings, while @dbenn8 reported running 70B models on a 64GB M2 MacBook, albeit with slow response times.

- Interest in New and Alternative Models: Users asked about LM Studio support for newer models like Starcoder2 and Deepseek-vl. Some, like @real5301, are looking for models with context windows of 80k tokens or more, and @heyitsyorkie suggested Yi-34b, which has a 200k context window.

- Development of LM Studio: A user raised LM Studio’s development pace relative to llama.cpp builds, and @yagilb confirmed an upcoming beta, acknowledging that updates have been slower than desired. The development team has grown from one to three members.
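The 8GB vs. 64GB advice above follows from simple memory arithmetic. As a rough rule of thumb (an approximation, not how LM Studio itself sizes models), a model’s weights take about parameters × bits-per-weight ÷ 8 bytes. The sketch assumes roughly 4.5 bits per weight for a Q4-style quant and an arbitrary 20% overhead for the KV cache and runtime; real figures vary with the quant type and context length.

```python
def estimate_model_gb(params_billion: float, bits_per_weight: float,
                      overhead: float = 1.2) -> float:
    """Rough memory estimate in GB for running a (quantized) model.

    overhead is an assumed multiplier for KV cache and runtime buffers.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


for params, bits, label in [(7, 4.5, "7B Q4-ish"), (7, 16, "7B fp16"), (70, 4.5, "70B Q4-ish")]:
    print(f"{label}: ~{estimate_model_gb(params, bits):.1f} GB")
```

Under these assumptions a Q4-style 7B model needs roughly 4.7GB, which is tight on an 8GB Mac that is also running the OS, while an unquantized 7B model would not fit at all; a Q4-style 70B model needs roughly 47GB, which can fit within 64GB of unified memory.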

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- deepseek-ai/deepseek-vl-7b-chat · Discussions: no description found

- Big Code Models Leaderboard - a Hugging Face Space by bigcode: no description found

- deepse (DeepSE): no description found

- The Muppet Show Headless Man GIF - The Muppet Show Headless Man Scooter - Discover & Share GIFs: Click to view the GIF

- How to run a Large Language Model (LLM) on your AMD Ryzen™ AI PC or Radeon Graphics Card: Did you know that you can run your very own instance of a GPT based LLM-powered AI chatbot on your Ryzen™ AI PC or Radeon™ 7000 series graphics card? AI assistants are quickly becoming essential resou…

- AMD explains how easy it is to run local AI chat powered by Ryzen CPUs and Radeon GPUs - VideoCardz.com: “Chat with Ryzen/Radeon” AMD guides how to run local AI chats with their hardware. AMD does not have its own tool, like NVIDIA Chat with RTX. NVIDIA came up with a simple tool that can be used to run…

- GitHub - joaomdmoura/crewAI: Framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks.: Framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. - joaomdmoura/cr…

LM Studio ▷ #🤖-models-discussion-chat (110 messages🔥🔥):

- Gemma Models Puzzlement: @boting_0215 found that none of the Gemma models were usable. @fabguy confirmed that only a few Gemma quants work, namely custom quantized versions made by the team, and said the problem lies either with LM Studio or with the Gemma model itself.

- Troubleshooting Gemma Load Error: @honeylaker_62748_43426 received an error when loading a 7B Gemma model, and @heyitsyorkie confirmed that Gemma models frequently run into issues, with some quants known to be broken.

- Searching for the Elusive Slider: @jo_vii sought advice on models suitable for an M2 Max with Apple Metal, and @fabguy suggested a DeepSeek Coder Q4 or Q5 quant to leave room for other processes.

- Model Upload Confusion: @anand_04625 couldn’t find a file upload button for the Phi model in LM Studio, and @heyitsyorkie clarified that file uploads are not supported.

- Awaiting Starcoder 2 Update: @rexeh was looking for alternatives to Starcoder 2 in LM Studio for ROCm users, and @heyitsyorkie indicated that Starcoder 2 support will come in the future, recommending building llama.cpp independently in the meantime.

Links mentioned:

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits: Recent research, such as BitNet, is paving the way for a new era of 1-bit Large Language Models (LLMs). In this work, we introduce a 1-bit LLM variant, namely BitNet b1.58, in which every single param…

- What is retrieval-augmented generation? | IBM Research Blog: RAG is an AI framework for retrieving facts to ground LLMs on the most accurate information and to give users insight into AI’s decisionmaking process.

- Ternary Hashing: This paper proposes a novel ternary hash encoding for learning to hash methods, which provides a principled more efficient coding scheme with performances better than those of the state-of-the-art bin…

LM Studio ▷ #🧠-feedback (7 messages):

- Users Crave a “New Model” Section: @justmarky wished LM Studio had an option to view recent models without searching, making it easy to discover what’s new.

- Desire for More Sort Options: @purplemelbourne echoed the sentiment, suggesting additional sort options such as filtering by a model’s release date or by ranges like the last 6 or 8 months.

- Hugging Face Workaround Shared: @epicureus shared a workaround: a specific Hugging Face search link that lists recent models.

- Existing Channels as Interim Solutions: @yagilb pointed to the existing Discord channels #1111649100518133842 and #1185646847721742336 as current places to discuss and find information about the latest models.

- Feature Refinement & Selection Criteria Wishlist: @purplemelbourne requested advanced filtering in LM Studio to select models by size, type, and performance, including searching by VRAM requirements and ratings.

Links mentioned:

- Models - Hugging Face: no description found

LM Studio ▷ #🎛-hardware-discussion (147 messages🔥🔥):

- Taming Power Consumption with GPUs: @666siegfried666 noted that even high-end GPUs like the 7900 XTX don’t always reach their Total Board Power (TBP) limit, staying around 140W in their setup, and asked for real-time TBP figures for the 4060 Ti when running LLMs. They also highlighted the role of CPUs (especially AMD models with 3D cache) and RAM configurations in power efficiency, and recommended Arctic P12 fans for their low power draw.

- The Race for Efficiency in LLM Systems: Users discussed balancing price, power, and performance when building LLM systems. @nink1 talked about the profitability of running LLM tasks on Apple M3 processors on a single battery, while @666siegfried666 brought up regional variations in hardware pricing.

- Exploring GPU Underclocking & Overclocking: @666siegfried666 shared insights on undervolting effectively without underclocking, citing 2400-2500MHz as the sweet spot for performance per watt on the 7900 XTX. @nink1 considered dynamically underclocking or overclocking in response to workload changes.

- LLM Performance Enthusiasts Share Configurations: @goldensun3ds described a long load time for an LLM with a 189K context on their system, and users exchanged advice on hardware setups, including running LLMs efficiently on AMD GPUs and using dual GPUs to improve performance.

- Practical Advice for New LLM Hardware Entrants: New user @purplemelbourne asked the community whether they could run multiple LLMs on their newly acquired RTX 2080 Ti GPUs. The conversation evolved into a broader discussion of hardware configurations and potential upgrades involving V100 cards and NVLink for running high-memory models.

Links mentioned:

- nvidia 4060 16gb - Shopping and Price Comparison Australia - Buy Cheap: no description found

- Amazon.com: StarTech.com PCI Express X1 to X16 Low Profile Slot Extension Adapter - PCIe x1 to x16 Adapter (PEX1TO162) : Electronics: no description found

LM Studio ▷ #🧪-beta-releases-chat (7 messages):

- Token Overflow Troubles: @jarod997 got gibberish responses in Win Beta 4 (0.2.10) whenever the chat reached a multiple of the token overflow amount, such as 2048, 4096, etc.

- Context Overflow Policy Check: @jedd1 suggested checking the Context Overflow Policy settings, noting that changes may not be prominently announced but do ship semi-regularly.

- Upgrade Recommendation Discussion: @jedd1 and @fabguy both recommended upgrading to the newer 0.2.16 version, which might resolve @jarod997’s issue.

- Beta vs. Stable Release Confusion: @jarod997 couldn’t find the suggested version on LMStudio.ai, then clarified that they need to use the Beta because their machine supports AVX but not AVX2.
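@jedd1’s suggestion concerns how a chat app handles a conversation that outgrows the model’s context window. As a rough illustration only (not LM Studio’s actual implementation), here is what a rolling-window policy does in general: keep the system prompt and drop the oldest messages until the conversation fits. `count_tokens` is a hypothetical word-count stand-in; real models use their own tokenizers.

```python
def count_tokens(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def rolling_window(messages: list, context_length: int) -> list:
    """Keep the system prompt (if present) plus the newest messages that fit."""
    system = [m for m in messages[:1] if m["role"] == "system"]
    rest = messages[len(system):]
    budget = context_length - sum(count_tokens(m["content"]) for m in system)
    kept = []
    for m in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(m["content"])
        if cost > budget:
            break  # this message and everything older is dropped
        kept.append(m)
        budget -= cost
    return system + kept[::-1]  # restore chronological order


chat = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight"},
]
print([m["content"] for m in rolling_window(chat, context_length=7)])
# prints ['be brief', 'four five', 'six seven eight']
```

Dropping whole messages from the oldest end keeps the most recent exchange intact; other policies (stopping at the limit, or truncating the middle) trade that off differently.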

LM Studio ▷ #autogen (1 messages):

- Debating the Best Bot Integration: @purplemelbourne is seeking advice on which framework to commit to: AutoGen, CrewAi, ChatDev, or another option. They have AutoGen installed but have not run it yet.

LM Studio ▷ #memgpt (3 messages):

- MemGPT Shared Knowledge Base Query: @purplemelbourne asked whether MemGPT can share a knowledge base across different programming models for tasks like bug fixing, and is considering KeyMate for the integration.

- Practicality of Integrating GPT-4 with MemGPT: @nahfam_ replied that while it’s theoretically possible, the cost of using the GPT-4 API would be prohibitive. They suggested cleaning up MemGPT outputs with BeautifulSoup4 and Python to make them more manageable.

- Cost Concerns with KeyMate Integration: @nahfam_ expressed skepticism about the sustainability of KeyMate’s business model, which charges $60 a month for GPT-4 128k powered chat, given the per-request token cost and how quickly the token allowance could be used up.

- TOS Disapproval for KeyMate: @purplemelbourne commented on the harshness of KeyMate’s Terms of Service, offering a rather grim analogy to highlight its broad powers of account termination.
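To make the cost concern concrete, here is a back-of-envelope sketch. It assumes OpenAI’s launch list price for GPT-4 Turbo (128k context) of $0.01 per 1K input tokens and $0.03 per 1K output tokens; KeyMate’s actual upstream costs and token allowances were not stated in the discussion.

```python
# Assumed prices: OpenAI's launch list price for GPT-4 Turbo (128k context), in USD.
INPUT_USD_PER_1K = 0.01
OUTPUT_USD_PER_1K = 0.03


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """API cost in USD of a single request under the assumed prices."""
    return (input_tokens / 1000) * INPUT_USD_PER_1K + (output_tokens / 1000) * OUTPUT_USD_PER_1K


def requests_per_budget(budget_usd: float, input_tokens: int, output_tokens: int) -> int:
    """How many identical requests a fixed monthly budget covers."""
    return int(budget_usd // request_cost(input_tokens, output_tokens))


# A chat that fills the 128k window on every turn vs. a short exchange.
print(requests_per_budget(60, 128_000, 1_000))
print(requests_per_budget(60, 2_000, 500))
```

Under these assumptions a request that fills the whole 128k window costs about $1.31, so $60 covers only about 45 such requests, while short exchanges stretch much further. That gap between a flat subscription and per-token costs is what @nahfam_ was pointing at.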

LM Studio ▷ #amd-rocm-tech-preview (91 messages🔥🔥):

- ROCm on Debian vs Ubuntu: @quickdive. discussed the challenges of using ROCm on non-Ubuntu distros like Debian, highlighting Python version conflicts and installation hurdles. They find dual-booting necessary because AMD’s official support mainly targets Ubuntu.

- Windows Shows Promise for ROCm: @omgitsprovidence mentioned successfully running language models on Windows with an AMD GPU through koboldcpp, while @ominata_ shared a workaround using 'HSA_OVERRIDE_GFX_VERSION=10.3.0' for the RX 6600XT, suggesting users are finding creative ways to use ROCm on Windows.

- Performance Inquiries and Comparisons: @sadmonstaa reported that their 6950XT was slower than their 5900x when offloading with ROCm, while others like @666siegfried666 had success with older AMD models, pointing to varying experiences among users.

- Stable Diffusion on AMD: @aryanembered touted ROCm’s capabilities, noting that it makes it possible to run Stable Diffusion on AMD hardware without DirectML, a significant ease-of-use advance.

- Dual-Booting Due to Compatibility Issues: Several users, including @sadmonstaa, lamented having to dual-boot because certain software doesn’t work on Linux, even though they prefer it. They discussed ROCm’s performance and occasional system crashes across different operating systems and setups.

Links mentioned:

- GPU and OS Support (Windows) — ROCm 5.5.1 Documentation Home: no description found

- Docker image support matrix — ROCm installation (Linux): no description found

- Arch Linux - gperftools 2.15-1 (x86_64): no description found

LM Studio ▷ #crew-ai (4 messages):

- Innovating with a Multi-Agent Framework: @pefortin is developing a complex framework in which a front-facing agent clarifies the user’s task, a project manager agent breaks it down into atomic units, HR expert agents create specialized personas for each task, and an executor carries out the work. The system also includes evaluators that check each task is resolved and fits the request, but it currently runs slowly and underperforms.

- Soliciting Structure Feedback: @wolfspyre reached out to @pefortin to offer feedback on the framework’s structural design.

- Seeking Compatibility Between Agent Systems: @purplemelbourne asked about compatibility between AutoGen and CrewAi, wanting to know which system would be best to use without a significant time investment.

- Contrasting AutoGen and CrewAi: @jg27_korny pointed out that AutoGen and CrewAi are set up differently: CrewAi has easy, intuitive logic, while AutoGen offers a graphical interface. They advised using these systems with the GPT API for best performance and warned about token costs from potential agent loops.
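@pefortin’s design maps onto a simple staged pipeline. The sketch below is a hypothetical, framework-free illustration (it does not use the AutoGen or CrewAI APIs): each agent is a stub function standing in for an LLM call, and the `max_attempts` cap is an assumed safeguard against the runaway agent loops that @jg27_korny warned drive up token costs.

```python
def clarify_task(request: str) -> str:
    # Front-facing agent (stub): normalize the user's request into a task statement.
    return request.strip().rstrip(".")


def break_down(task: str) -> list:
    # Project-manager agent (stub): split the task into atomic subtasks on " and ".
    return [part.strip() for part in task.split(" and ") if part.strip()]


def make_persona(subtask: str) -> str:
    # HR-expert agent (stub): describe a specialist persona for one subtask.
    return f"specialist for '{subtask}'"


def execute(persona: str, subtask: str, attempt: int) -> str:
    # Executor (stub): a real system would prompt an LLM with the persona here.
    return f"{persona} completed '{subtask}' (attempt {attempt})"


def evaluate(subtask: str, result: str) -> bool:
    # Evaluator (stub): accept any result that mentions the subtask.
    return subtask in result


def run_pipeline(request: str, max_attempts: int = 3, evaluator=evaluate) -> list:
    results = []
    for subtask in break_down(clarify_task(request)):
        persona = make_persona(subtask)
        # Hard cap on retries so a never-satisfied evaluator cannot loop forever.
        for attempt in range(1, max_attempts + 1):
            result = execute(persona, subtask, attempt)
            if evaluator(subtask, result):
                results.append(result)
                break
        else:
            results.append(f"FAILED: '{subtask}' after {max_attempts} attempts")
    return results


for line in run_pipeline("Write tests and fix the bug."):
    print(line)
```

Each stage adds at least one model call per subtask in a real system, which is one plausible reason a pipeline like this runs slowly; bounding retries keeps the worst-case token spend predictable.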

Perplexity AI ▷ #general (595 messages🔥🔥🔥):

- Perplexity’s Context Window Woes: Users like @layi__ and @sebastyan5218 expressed frustration with how Perplexity AI handles context, saying the service struggles to retain awareness of earlier messages and often falls back on base-knowledge responses, which led to refund requests and bug report submissions.

- Pro Subscription Puzzles: New Perplexity Pro users like