Andrew Ng’s The Batch writeup on Agents made a splash across all platforms this weekend:

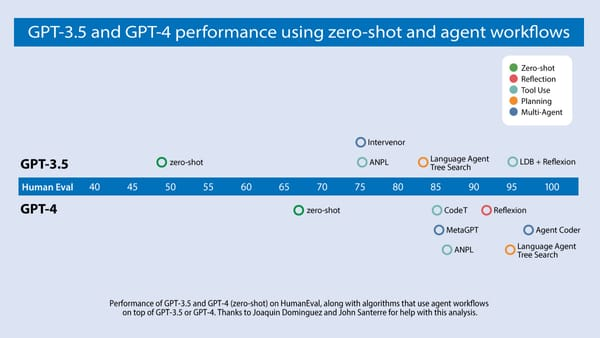

Devin’s splashy demo recently received a lot of social media buzz. My team has been closely following the evolution of AI that writes code. We analyzed results from a number of research teams, focusing on an algorithm’s ability to do well on the widely used HumanEval coding benchmark. You can see our findings in the diagram below.

GPT-3.5 (zero shot) was 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the improvement from GPT-3.5 to GPT-4 is dwarfed by incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%.
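As a rough illustration of what an "iterative agent workflow" can mean in this setting, the sketch below loops over generate, test, and feed-the-failure-back. The `generate` callable is a hypothetical stand-in for an LLM call, `fake_model` is a toy, and the tiny test harness is an assumption for illustration; it is not the setup behind the numbers quoted above.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical signature: (task, feedback_from_last_attempt) -> candidate source code.
Generator = Callable[[str, Optional[str]], str]
Cases = List[Tuple[tuple, object]]


def run_tests(source: str, func_name: str, cases: Cases) -> Optional[str]:
    """Execute candidate code; return an error message, or None if every case passes."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # acceptable for a toy demo; sandbox real model output
        func = namespace[func_name]
        for args, expected in cases:
            got = func(*args)
            if got != expected:
                return f"{func_name}{args} returned {got!r}, expected {expected!r}"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def agent_loop(
    task: str,
    func_name: str,
    cases: Cases,
    generate: Generator,
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Generate, test, and retry with the failure message as feedback."""
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        candidate = generate(task, feedback)
        feedback = run_tests(candidate, func_name, cases)
        if feedback is None:
            return candidate, round_no  # passing solution found
    return None, max_rounds  # gave up


def fake_model(task: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM: wrong on the first try, corrected once it sees feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


if __name__ == "__main__":
    code, rounds = agent_loop("add two numbers", "add", [((1, 2), 3)], fake_model)
    print(rounds)
    print(code)
```

The zero-shot numbers correspond to stopping after the first `generate` call; the loop simply gives the same model more chances, each informed by a concrete failure.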

Nothing here is new to people who have studied the agents field, but Andrew’s credibility and agent framework (very close to Lilian Weng + the recent new metagame of multiagent collaboration) sell it.

We published The Unbundling of ChatGPT today. Also, Emad stepped down from Stability, and there are more Sora videos out; make sure to check out the Don Allen Stevenson III one.


We’ve added more subreddits and are synthesizing topics across them. Comment crawling is still not implemented, but it is coming along.

Stable Diffusion Models and Techniques

- New Stable Diffusion models and techniques are being developed, such as Cyberrealistic_v40, Platypus XL, and SDXL Lightning for generating Naruto-style images. (Playing with Cyberrealistic_v40, Still Liking Platypus XL, Naruto (Outputs from SDXL Lightning))

- /r/StableDiffusion: LoRA and upscaling methods are being explored to improve image quality, such as cartoonizing images while preserving content and a general purpose negative prompt LoRA to eliminate common problems. (best LORA or method with sd1.5 to cartoon-ize an image while keeping content? (flatten shading, reinforce outlines), General purpose negative prompt?)

- /r/StableDiffusion: New workflows and extensions are being developed for Stable Diffusion, such as BeautifAI for upscaling in ComfyUI, FrankenWeights for mixing model weights, and integrating Prompt Quill expansion in Fooocus. (BeautifAI - Image Upscaler & Enhancer - ComfyUI, It’s alive! FrankenWeights is coming… [WIP], Prompt Quill in Fooocus)

Local LLM Deployment and Optimization

- /r/LocalLLaMA: Deploying large language models locally is a popular topic, with discussions around hardware requirements, inference speed, and model selection for different use cases. (Would it make sense to stick a P40 24GB in with a 3090 to have 48GB VRAM?, Best output quality for 4090 & 64GB RAM?, What is your computer specs?)

- /r/LocalLLaMA: Optimizing LLM performance is an active area of research, with discussions around architectures for reasoning, finetuning strategies, and serving multiple users efficiently. (What architecture will give us a reasoning LLM ?, All work and no play makes your LLM a dull boy; why we should mix in pretraining data for finetunes., Is it possible to serve mutliple user at once using llama-cpp-python ?)

- /r/LocalLLaMA: Guides and resources are being developed to help users get started with local LLMs, from beginner to advanced levels. (New user beginning guide: from total noob to well-informed user, part 1/3, another try…)

Machine Learning Research and Techniques

- /r/MachineLearning: New machine learning architectures and techniques are being proposed and discussed, such as Treeformers using hard attention and decision trees for causal language modeling. ([P] Treeformer: hard attention + decision trees = causal language modelling)

- /r/MachineLearning: Optimization techniques for deploying ML models are being explored, such as using TensorRT for fast PyTorch model inference. ([D] Looking for fastest inference way to run a pytorch model on TensorRT)

- /r/MachineLearning: Debugging and improving ML models is an ongoing challenge, with discussions around understanding and fixing issues like spiking test loss. ([D] Does anyone know why my test loss is spiking so crazily?)

AI Assistants and Applications

- /r/OpenAI: AI assistants are being used in new ways, such as mediating arguments to provide a neutral perspective and helping with coding tasks. (Mediating Arguments with ChatGPT, Coding LLM that runs on 3090)

- /r/StableDiffusion: New AI applications are being developed, such as AI influencers, interactive “AI Brush” tools, and immersive experiences to explore AI-generated worlds based on images. (Here is my first 45 days of wanting to make an AI Influencer and Fanvue/OF model with no prior Stable Diffusion experience, Quick Breakdown of my interactive “AI Brush” build with StreamDiffusion, Excited for the future)

Memes and Humor

- AI-generated memes and humorous content continue to be popular, poking fun at the current state of AI. (Do not generate a tree using a model trained on p*rn, “Don’t ever buy no weed from the gas station bro”, It do be like that)

PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs

Model Releases & Updates

- Mistral AI released a new 7B 0.2 base model with 32k context window, announced at a hackathon (13k views)

- Quantized 4-bit Mistral 7B models released, enabling 2x faster inference with 70% less VRAM using QLoRA finetuning (14k views)

- Mistral 7B 0.2 expected to outperform Yi-9B (486 views)

Open Source Efforts & Challenges

- Stability AI’s Emad Mostaque out following investor mutiny and staff exodus (11k views)

- Open source AI would be better if AAA games weren’t “total shit” for the last decade, making high-end GPUs not worth it for gaming (1.7k views)

- Open-source AI isn’t real without distributed pretraining, can’t depend on VCs spending millions then giving it away (468 views)

Emerging Applications & Demos

- Financial agent application built with LangChain, can get stock prices, financials, market news (46k views)

- Telegram proxy setup guide to circumvent potential ban in Spain using built-in proxy feature (50k views)

- Dancing robot powered by Mistral 7B demoed at hackathon (9.8k views)

- Claude-to-Claude conversations induce concerning outputs like “psychotic breaks” (53k views)

PART 0: Summary of Summaries of Summaries

- Mistral’s New 7B v0.2 Base Model Drops: Mistral AI casually released their new Mistral 7B v0.2 Base model at the @cerebral_valley hackathon, featuring a 32k context window and other improvements detailed in their release notes. The AI community is abuzz with the implications and benchmarking results of this significant update.

- Stability AI CEO Emad Mostaque Resigns: In a major shakeup, Emad Mostaque resigned as CEO of Stability AI to pursue decentralized AI. Interim co-CEOs Shan Shan Wong and Christian Laforte will lead the search for a permanent replacement. Speculation is rife about the company’s future direction and commitment to open-source initiatives amidst this leadership transition.

- Anthropic’s Claude Shines Despite Limitations: Users praise Anthropic’s Claude for its performance and context, especially the self-moderated version, but express frustration with the strict 1M token per day rate limit on the 200k context window. The $500 scale plan is suggested as a more accessible option for extensive usage, while the potential of Claude’s API for open-source development generates excitement.

- Optimizers and Architectures Advance LLMs: Novel optimizers like GaLore and architectures like DenseFormer are pushing the boundaries of language model training efficiency and performance. Discussions revolve around GaLore’s significant VRAM savings and potential over-training risks, while DenseFormer’s depth-weighted averaging shows promising perplexity improvements. The community eagerly awaits further developments in these areas.

- AI Assistants and Agents Evolve: Projects like Open Interpreter’s 01 Light, a fully open-source personal AI agent, and World Simulator from Nous Research are capturing the community’s imagination with their engaging experiences and potential for customization. Meanwhile, frameworks like LangChain are enabling more sophisticated decision-making and task automation for AI agents, as evidenced by various shared guides and tutorials.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- SD Ecosystem Buzzing: The community is actively discussing Stable Diffusion models, particularly in anticipation of the upcoming SD3 release, with buzz around potential improvements and comparative analysis with models such as SDXL. Issues surrounding compatibility with AMD GPUs were also raised, with members sharing solutions and workarounds.

- AI Art at a Click, But Not Without Hiccups: Frustrations were voiced over online AI image generation services like Civitai and Suno, citing content restrictions and types of content generated. Community members shared resources such as Stable Cascade Examples to showcase different model capabilities.

- Regulatory Rumbles: A polarized debate unfolded on the implications of regulation in AI technology. Ethical considerations were weighed against fears of stifling innovation, reflecting a community conscious of the balance between open-source development and proprietary constraints.

- Tech Support Tribe: A knowledge-sharing atmosphere prevails as newbies and veterans alike navigate technical tribulations related to model installations. Resources for learning and troubleshooting were shared, including direct links to support channels and expert advice within the community.

- Connecting AI Threads: Various links were circulated for further information and utilities, such as a Stable Diffusion Glossary and StabilityMatrix, a comprehensive multi-platform package manager for Stable Diffusion. These tools are meant to aid understanding and enhance usage of Stable Diffusion products among AI engineers.

Unsloth AI (Daniel Han) Discord

- Innovating Within Memory Limits: A recent pull request on the PyTorch torchtune repository allows full model fine-tuning while keeping the memory footprint under 16GB, enabling users with consumer-grade GPUs to train more efficiently.

- Enhancing Fine-Tuning Capabilities: The latest release (v0.10.0) of the Hugging Face PEFT includes LoftQ, which improves fine-tuning for large models.

- ORPO Implementation with Mistral: A user reported effective application of ORPO TRL on Mistral-7B-v0.2 using the argilla/ultrafeedback-binarized-preferences-cleaned dataset, suggesting that the method has potential for further optimization.

- Debating Best Practices for Training AI Models: Discussions across channels engage with the challenges of multi-turn dialogue training in ORPO, the importance of standardized formats, and pressing issues with using Ollama templates and different quant models.

- Model Performance Milestones: The community celebrates the performance of new models like sappha-2b-v3 and MasherAI-v6-7B which reportedly surpassed benchmarks after fine-tuning with Unsloth on Gemma-2b.

Perplexity AI Discord

- Perplexity Pro Users Clash Over Image Generation: The Pro users discussed Perplexity’s image generation feature and noted that turning off the Pro toggle on the web version allows for image generation using Writing mode.

- Model Showdown: Claude Opus vs. GPT-4 Turbo: Engineering chatter touched upon comparing Claude 3 Opus and GPT-4 Turbo, highlighting that GPT-4 Turbo can compile Python files, unlike Perplexity.

- Stability AI Strikes Local Chord: There was a buzz about Stability AI’s local models, like SDXL, and the tradeoff between performance and the hefty costs of running these tools on personal hardware.

- Perplexity Puzzles and Potential: Users were bewildered by some aspects of Perplexity, including intrusive search triggers and unrelated prompts, while also envisioning features like Claude 3 Opus’s API and integration with iOS Spotlight search.

- Coding Within Token Boundaries: A crucial tip for engineers working with the Perplexity API was to heed the 16,384 token limit, with suggestions to use tools like OpenAI’s tokenizer to gauge token counts accurately and adhere to the limit for optimal operation.
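One way to respect a cap like the 16,384-token limit mentioned above is to estimate token counts before sending a request and drop the oldest messages when the total runs over budget. This is a minimal sketch: `rough_token_count` is a crude characters-per-token heuristic assumed purely for illustration (it is not the tokenizer any provider uses), and `fit_to_budget` and `reserve_for_reply` are hypothetical names. Swap in a real tokenizer as the `count` argument for accurate numbers.

```python
from typing import Callable, List

TOKEN_LIMIT = 16_384  # the limit quoted in the discussion above


def rough_token_count(text: str) -> int:
    """Crude estimate of about 4 characters per token (an assumption, not a tokenizer)."""
    if not text:
        return 0
    return (len(text) + 3) // 4  # ceiling division


def fit_to_budget(
    messages: List[str],
    limit: int = TOKEN_LIMIT,
    reserve_for_reply: int = 1024,
    count: Callable[[str], int] = rough_token_count,
) -> List[str]:
    """Keep the most recent messages whose estimated total stays under the budget."""
    budget = limit - reserve_for_reply
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count(message)
        if used + cost > budget:
            break  # everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = ["a" * 4000, "b" * 4000, "c" * 4000]  # roughly 1000 tokens each
    trimmed = fit_to_budget(history, limit=2500, reserve_for_reply=400)
    print(len(trimmed))
```

Reserving part of the budget for the reply matters when the limit covers prompt and completion together; set `reserve_for_reply` to 0 if it only applies to the prompt.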

LM Studio Discord

- Taming the VRAM Beast for AI: Engineers debate the best GPUs for running AI models, with the RTX 3090’s 24GB VRAM being a popular choice. Compatibility issues between differing GPU makes, such as AMD and NVIDIA, were noted when configuring multi-GPU setups.

- Local LLMs Stirring a Tech Revolution: Discussions about the feasibility of distributed computing for LLMs revealed skepticism due to high CPU utilization when using ZLUDA with ROCm, despite the appeal of local computing akin to the Linux/LAMP era.

- Docs Unleashed and Multi-Model Mania: LM Studio launched a new documentation site and also introduced a Multi Model Session feature, which is explained in a tutorial video.

- LM Studio’s Growing Pains and Performance Quirks: Users reported issues ranging from high CPU usage to models outputting gibberish, some of which were resolved by a simple restart. Compatibility was questioned for older hardware like the RX 570 with ROCm, and errors like “Exit code: 42” when loading models signaled a need for continued troubleshooting.

- Open Interpreter Unpacked and GGUF Model Performance: Open Interpreter issues included connection problems and discussions on various GGUF-compatible models’ performance. The Open Interpreter device attracted interest, with options to 3D print it oneself using free STL files from 01.openinterpreter.com/bodies/01-light. Meanwhile, non-blessed model errors pointed users towards issue #1124 on open-interpreter’s GitHub.

OpenInterpreter Discord

- Open Interpreter Makes Leap to Linux: Users have managed to get Open Interpreter running on Ubuntu 22.04, discussing microphone support and client-server mechanics, signaling a push towards cross-platform compatibility.

- DIYers Assemble! The 01 Light Craze: Enthusiastic members of the community are sharing tips on 3D printing and assembling their own 01 Light devices, with dedicated Discord spaces popping up to share build experience and design tweaks.

- AI-Powered Engineering Discussions Heat Up: Technical conversations are expanding around Open Interpreter, with a focus on running the 01 server on various machines, whether low-spec or cloud-based, and enhancing installers for user-friendliness. Developers are also brainstorming integrations for Groq and extending 01 Light functionalities.

- Community Contributions Unleash Open Source Power: The AI engineer community is diving into contributions for the Open Interpreter project, focusing on app development, the performance of different LLMs, and a potential desktop app for Apple silicon devices.

- Open-Source AI Assistants Set the Stage: A YouTube video on the 01 Light, titled “Open Interpreter’s 01 Lite - WORLD’S FIRST Fully Open-Source Personal AI AGENT Device”, was highlighted for showing off the capabilities of this homegrown AI assistant. An edited live stream was also shared to provide a concise overview of the 01 software.

LAION Discord

- EU Data Laws Challenge LAION’s Efficiency: LAION datasets may be underperforming in comparison to US datasets, largely due to the EU’s stringent regulations. The use of synthetic data and forming collaborations in less restrictive regions were mentioned as possible workarounds, humorously termed “data laundering.”

- Leadership Shuffle at Stability AI: Emad Mostaque has stepped down as CEO of Stability AI, with the company confirming his resignation and the appointment of interim co-CEOs Shan Shan Wong and Christian Laforte, who will oversee the search for his replacement. There is speculation about the impact on the company’s future and its commitment to open-source (Stability AI Press Release).

- Comparing SD3 to DALL-E 3: Discussions indicate that the SD3 model can match some aspects of DALL-E 3 performance, but struggles with complex interaction understanding, leading to collage-like image assembly rather than cohesive concept blending.

- AI Ethics Debate Surfacing Amidst Industry Drama: A recent conversation on Twitter about the motives of leading AI industry figures led to a guild-wide debate regarding the ethical responsibilities of developers and researchers, along with the impact of AI “celebrity” culture on social media.

- AMD GPUs Fall Behind in AI Support: Guild members expressed dissatisfaction with AMD’s support for machine learning workloads when compared to NVIDIA’s offerings. The lack of consumer-level ML support is viewed as a potential oversight given the rise of models like Stable Diffusion.

- Andrew Ng Foresees AI Workflow Evolution: Google Brain’s co-founder Andrew Ng predicts that AI agentic workflows could surpass next-generation foundation models this year by iterating over documents multiple times. Current one-shot LLM approaches need to evolve (Reddit Highlight).

- MIT Accelerates Image Generation Tech: MIT’s CSAIL developed a method that speeds up the image generation process for tools like Stable Diffusion and DALL-E by 30 times, using a streamlined single-step teacher-student framework without compromising on image quality (MIT News Article).

- NVIDIA Addresses Diffusion Model Training Hurdles: NVIDIA’s recent blog post discusses the improvements in training diffusion models, including the EDM2 code and model release. They address style normalization issues that could be overcome with changes similar to those in EDM2 (NVIDIA Developer Blog Post).

- Unet’s Future in Question with Rise of Linear Networks: Guild members debated the relevance of advancements in Unet given the rise of linear network models for image generation. Although layer norm and traditional normalization methods are questioned, their integral part in network functionality remains a topic of discussion.

- Large Language Models Show Resilience with Pruning: Insights reveal that large language models (LLMs) retain performance even when middle blocks are removed, hinting at the redundancy of certain segments. This has encouraged a deeper look into the architecture of linear networks and their potential for strategic pruning.

Nous Research AI Discord

- Shipping Queries and DIY Solutions: Members are curious about shipping timelines for an unnamed product, anticipating summer availability. They discussed alternative DIY options in the absence of specific release dates.

- AI Model Innovation and Optimism: Enthusiasm for Claude’s impact on open-source projects is high, and an implementation of Raptor without pretraining is reported to summarize 3b model transcripts in just 5 minutes. References to FastAPI’s ease-of-use for back-end development were shared, alongside a note about Suno.AI’s ability to curate Spotify playlists.

- Deployment and Persuasion Techniques Analyzed: Kubernetes is leveraged for deploying Nous models, while a pre-registered arXiv study analyses the persuasive power of LLMs. Platforms like ArtHeart.ai are recognized for AI-driven art creation, with BitNet 1.5’s quantization-aware training reviewed for inference speedups.

- Weaving Worlds and Aiding Therapists with AI: The World Simulator project revealed a remarkable level of engagement, while work on an AI therapist dubbed Thestral aims to use the LLaMA 70B model. The community is actively discussing the ethical constraints of Opus from Claude 3, the impact of refusal prompts in models like Hermes 2 Pro, and manipulation techniques to circumvent LLM limitations known as the “Overton Effect.”

- LLMs, Tuning, and Refinement Questions: Debates arose around the inclusion of few-shot prompts in SFT datasets and the quest for tiny LLMs, with recommendations to watch Andrej Karpathy’s videos. The importance of causal masking and the mysteries behind Llama’s tri-layer feedforward design incited discussions, circling an arXiv paper on SwiGLU nonlinearity.

- Parenting, Open Sourcing, and RAFT’s Prominence: Members touched upon their experiences with parenthood while seeking open-source options like a Wikipedia RAG Index. The conversation highlighted a move towards a promising retrieval-augmented fine-tuning method called RAFT, which was discussed in a shared paper and can be explored in the Gorilla GitHub repository.

- Casual Chats and World-Sim Tech: A member hinted at changing language settings on Tenor.com, displaying a shared Grim Patron GIF, while a simple “helloooo” from another brightened the chat.

OpenAI Discord

- Sora Shapes the Future of Filmmaking: OpenAI’s Sora is celebrated for empowering artists and filmmakers to create groundbreaking surreal art. Director Paul Trillo lauds Sora for enabling the visualization of previously unimaginable ideas, with its potential highlighted on the OpenAI blog.

- Diving into AI’s Cultural Compass: A hot topic in AI discussions is the perceived Western liberal-centrist bias in language models like GPT, sparking debates on whether there should be multiple culturally aligned AI versions. Efforts to align AI with non-Western norms are facing challenges, and the “Customize ChatGPT” feature was cited as a tool for users to personalize AI responses with their values.

- GPT-4’s Evolving Features and Access Concerns: Members noticed a reduction in the Custom GPT pin limit and sought a keyboard shortcut for shared GPT access. OpenAI confirmed the capability of GPT-4 with Vision to read images, while also announcing the end of the ChatGPT plugins beta through a discontinuation notice.

- Refining AI to Enrich User Experience: Strategies are shared for enhancing AI’s creative writing, narrative style, and coding output quality. A user faced issues with the .Completion endpoint due to an OpenAI SDK update and was directed to the v1.0.0 Migration Guide on the openai-python GitHub repository for assistance.

- Enabling Accessibility in Vision: Privacy-sensitive advice was provided to a member looking to improve image recognition for disabled individuals, with an emphasis on writing up the issue for a Discord suggestions channel. Users also explored prompt engineering tactics to craft AI with specific personalities, and to refine the generation of hypothesis paragraphs avoiding generic statements.

HuggingFace Discord

AI Art Prompt Guide Quest: Users are seeking advice on crafting prompts for AI-generated art, though specific resources weren’t provided.

Blenderbot’s Role-Play: Discussions highlight Blenderbot’s ability to exhibit consistent character traits during interactions, in contrast to AI that acknowledges its non-human nature.

GPU Operation Showdown: A technical debate unfolded around the execution speed differences between multiplication and conditional checking on GPUs. Members suggested looking into iq’s work for further insights.

Complex Creativity for ChatGPT: A user requested a linguistically diverse and creative prompt for ChatGPT, prompting another to exclaim over the prompt’s complexity.

Optimizing GPU Inference: The community explored methods and libraries like TensorRT-LLM and ExLlamaV2 for optimizing large language model inference on GPUs, with suggestions for tools suited to simultaneous multi-user serving.

Rust’s Rising Star: Conversations around converting the GLiNER model to Rust via the Candle library noted benefits including reduced dependencies and suitability for production, with GPU compatibility confirmed.

Efficient Coding with Federated Learning: An open-source GitHub project demonstrates an energy-efficient approach to federated learning for load forecasting.

Compiling the Stable Diffusion Compendium: A plethora of resources and guides for Stable Diffusion have been shared by community members, including civitai.com for comprehensive learning on Stable Diffusion.

Deck Out Your Memory – Diffusers Edition: An experimental tool for estimating the inference-time memory requirements of DiffusionPipeline has been released for feedback.

SegGPT: The Contextual Segmentor: Introducing SegGPT on HuggingFace, a model with impressive one-shot segmentation that can be trained for various image-to-image tasks.

BLIP-2 Ups the Fusion Game: In vision-language model fusion, BLIP-2 has been recommended for connecting pre-trained image encoders with language models, further elaborated in the transformers documentation.

Embedding Precision with Quantization: Embedding Quantization for Sentence Transformers brings major search speed improvements without compromising retrieval accuracy.

Catering to the German Learners: A GPT-powered German language learning tool named Hans promises enhanced user experience for German learners and is available on the GPT Store.

All-MiniLM-L6-v2 Download Dilemma: A user looked for assistance in downloading and training the all-MiniLM-L6-v2 model, emphasizing the power of community support for model implementation.

Revolutionizing Decision-Making with LangChain: A Medium article posits LangChain as a transformative approach to how language agents resolve problems.

Diving Into Data’s Importance: A shared arXiv paper emphasizes the significance of data as a potential critical influencing factor, reminding us of the indispensable value of quality data.

NEET/JEE Data Quest: A dataset of NEET/JEE exams is being sought for training MCQ answer generators, indicating the intersection of AI technology and educational resources.

AI on the Forefront: The Recurrent Neural Notes newsletter discusses the potential limits of AI and what they may mean for future AI capabilities; it is available on Substack.
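For the embedding quantization item in this section, here is a rough sketch of the binary variant of the idea: keep only the sign of each dimension, pack the bits into an integer, and rank documents by Hamming distance. The toy vectors and the function names `binarize`, `hamming`, and `search` are made up for illustration; this is not the Sentence Transformers API, and real pipelines often rescore the top candidates with the original float embeddings to recover accuracy.

```python
from typing import List, Sequence


def binarize(embedding: Sequence[float]) -> int:
    """Pack one bit per dimension into an int: 1 if the value is positive, else 0."""
    bits = 0
    for value in embedding:
        bits = (bits << 1) | (1 if value > 0 else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed codes."""
    return bin(a ^ b).count("1")


def search(query: Sequence[float], corpus: List[Sequence[float]], top_k: int = 2) -> List[int]:
    """Return indices of the top_k corpus vectors closest to the query in Hamming distance."""
    query_code = binarize(query)
    codes = [binarize(vector) for vector in corpus]  # precompute and store in practice
    ranked = sorted(range(len(corpus)), key=lambda i: hamming(query_code, codes[i]))
    return ranked[:top_k]


if __name__ == "__main__":
    corpus = [
        [0.9, -0.2, 0.4, -0.7],
        [-0.8, 0.3, -0.5, 0.6],
        [0.7, -0.1, -0.3, -0.9],
    ]
    print(search([0.8, -0.3, 0.5, -0.6], corpus))
```

The speedup comes from comparing compact bit codes with XOR and a popcount instead of computing float dot products, at the cost of discarding magnitude information.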

LlamaIndex Discord

Twitter Sneak Peek on Human-LlamaIndex Workflow: A new template was introduced to streamline interactions between humans and LlamaIndex’s agents, slated to reduce intrusiveness for users. The details and a preview were shared on Twitter.

Integrating Custom LLMs with LlamaIndex: Leonie Monigatti detailed the process of incorporating custom Language Models (LLMs) into LlamaIndex, with an explanation available on LinkedIn.

Guide to Building RAG Agent for PDFs: A tutorial by Ashish S. on creating a LlamaParse-powered RAG flow for PDF files was published and can be viewed in its entirety via this Tweet.

New LlamaIndex Python Documentation Released: LlamaIndex has updated its Python documentation to better feature example notebooks, improve search, and clarify API layouts, as announced in a Twitter post.

LlamaIndex Community Tackles Integration and Documentation Challenges: Discussions in the community highlighted various integrations with Merlin API and LocalAI, an inquiry about the logic in LlamaIndex’s evaluation process, conflicting documentation post v0.10 updates, requests for examples of multi-agent chatbots, and turning Python functions into LlamaIndex tools. Users exchanged resources, including several documentation links and GitHub code examples.

Latent Space Discord

- Whisper for Video Processing: Community members are seeking a video processing tool comparable to OpenAI’s Whisper, and suggestions included Video Mamba, Twelve Labs, and videodb.io.

- OpenAI’s Sora Gains Traction Amongst Creatives: OpenAI’s introduction of Sora has garnered positive feedback from artists, showcasing the tool’s versatility in generating both realistic and imaginative visuals.

- Google Confounds with AI Services: Discussion revealed confusion between Google’s AI Studio and Vertex AI, particularly with the former’s new 1 million token context APIs, drawing comparisons with OpenAI’s API for model deployment.

- AI Wearables Gaining Popularity: The ALOHA project, an open-source AI wearable, is discussed amid conversations about the rise of AI wearables. Pre-orders for another AI wearable, Compass, began, indicating a thriving interest in local, personal AI solutions.

- Efficiency in LLMs With LLMLingua: Microsoft’s LLMLingua was shared as a promising tool for compressing prompts and KV-Cache in Large Language Models (LLMs), achieving significant compression rates with little loss in performance.

- Insider AI Discussion Takes Podcast Form: A podcast episode highlighted with a tweet provided insights into major AI companies, sparking interest in the AI community.

- AI Unbundling Trend Spotted: An essay featured on latent.space discussed the unbundling of ChatGPT, suggesting that specialized AI services are becoming more popular as user growth for generalist models stagnates.

- Paper Club Hiccups: The llm-paper-club-west faced technical difficulties with speaking rights on Discord, causing the meeting to switch over to Zoom and raising awareness of the need to streamline access for future online gatherings.

- Ideas and Music Flow in AI in Action Club: The club had vibrant discussions on tensor operations, coding best practices for LLMs, and spontaneous sharing of music evoking the calm of night from Slono on Spotify. They also released a schedule for upcoming sessions on AI topics.

OpenAccess AI Collective (axolotl) Discord

GaLore Optimization Sparks Debate: The GaLore optimizer discussion highlighted its VRAM savings abilities but also raised the question of potential over-training due to “coarseness.” Some engineers are eager to test GaLore out, especially in light of the new Mistral v0.2 Base Model release, which now has a 32k context window.

Fine-Tuning Large Language Models on a Budget: Technical discussions surfaced around fine-tuning a 7B model within 27GB of memory, with a spotlight on a GitHub repository called torchtune that allows for efficient fine-tuning without Hugging Face dependencies. A specific pull request was recommended for reviewing full fine-tune methods requiring less than 16GB of RAM.

TypeError Troubles and Help Channel Support: A member grappling with a TypeError in “examples/openllama-3b/qlora.yml” was directed to a specialized help channel (#1111279858136383509) for expertise in resolving it. This exemplifies the collaborative environment, in which members are pointed to specific resources for technical resolutions.

Medical Model Publishing Dilemma: The decision whether to publicly share a preprint of a medical model in the midst of journal review sparked a discussion on the trade-offs of early disclosure. The conversation underscores the importance of strategic research dissemination in the field.

Open Calls for Developer Recognition and Business Collaboration: CHAI announced prizes for LLM developers, encouraging community contributions, whereas businesses were invited to share their applications of Axolotl confidentially, alluding to the value of real-world use-case narratives in furthering AI technology.

OpenRouter (Alex Atallah) Discord

- Midnight 70B Unleashed into the Roleplay Realm: The Midnight 70B model, tailored for storytelling and roleplay with lineage from Rogue Rose and Aurora Nights, is now up for grabs sporting a 25% discount, tagged at $0.009/1k tokens on OpenRouter.

- OpenRouter Refines Cost Tracking Tools: OpenRouter implements an advanced Usage Analytics feature for real-time cost tracking and has made a Billing Portal available for more efficient credit and invoice management.

- Noromaid Mixtral and Bagel Prices Readjusted: Prices for running the Noromaid Mixtral and Bagel models no longer include discounts, with new prices set at $0.008/1k tokens for Mixtral while Bagel comes at $0.00575/1k tokens.

- Claude 3 & Grok: In multi-model discussions, Claude 3’s self-moderated version gained traction for improved filtering, while the Grok model generated debate: its performance was deemed satisfactory against premium alternatives, but its cost was considered prohibitive. Users voiced preferences for longer context lengths and raised quality differences in model completions between OpenRouter and direct API usage.

- OpenRouter DDoS Sufferance and API Response Issue: OpenRouter faced a DDoS attack leading to service instability, since resolved, and users observed that citation data from Perplexity isn’t provided in OpenRouter’s API responses as expected.

Eleuther Discord

- PyTorch Struggles with MPS: Work is ongoing to improve the MPS backend in PyTorch, where notable issues such as tensor copying, outstanding since September 2022, remain a hurdle. The work is expected to improve performance for local model testing and finetuning.

- Token Blocking Contested For LLM Training: A debate on token-blocking strategies for language model pretraining weighed overlapping against non-overlapping sequences and their impact on model efficacy, touching on the importance of beginning-of-sentence tokens.

- AMD Driver Dilemma Spurs Discussion: Comparisons between AMD Radeon and Nvidia GPU drivers sparked debates, centering on driver inadequacies and the possibility of AMD open-sourcing their drivers. Some participants considered the potential for activist investor action to prompt change at AMD.

- Machine Learning Model Merger Methodologies: New model merging methods are being created, aiming to outdo existing techniques such as DARE, though these are still in the experimental phase and require additional testing and validation.

- New ML Architectures Brim with Promise: Innovations like DenseFormer and Zigzag Mamba suggest improvements in perplexity and diffusion model memory usage respectively, while DiPaCo offers a novel approach towards robust distributed model training.

- SVM Kernel Conquest: Reported results indicate that the sigmoid SVM kernel performs better on Pythia’s input embeddings than other kernels such as rbf, linear, and poly.

- N-gram Project “Tokengrams” Gains Traction: The Tokengrams project is now reportedly usable for efficiently computing and storing token n-grams from text corpora. It is available at GitHub - EleutherAI/tokengrams.

- Chess-GPT Gets Analyzed: A case study on Chess-GPT discusses using language models to predict chess moves, estimating Elo ratings, and validating computations using linear probes, detailed at Chess GPT Interventions.

- Evaluation Variability Worries AI Engineers: Inconsistent evaluation results between Hugging Face transformers and Megatron-DeepSpeed have drawn attention, with suggestions to check whether implementation details like bfloat16 numeric handling in fused kqv multiplications contribute to the variability.

- Minecraft Serves As RL Testing Ground: A Minecraft-based environment for Reinforcement Learning, available on GitHub - danijar/diamond_env, alongside discussions on project Voyager, underscores the use of games for AI model collaboration research.

- Multimodal Embedding Spaces Exploration: Interest in theoretical works on multimodal embedding spaces was raised, with the community providing insights on how Stable Diffusion’s subculture treats embeddings in line with IMG2IMG workflows.
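The overlapping vs. non-overlapping question in the token-blocking item above can be made concrete with a minimal sketch. The function name, its parameters, and the choice to prepend a BOS token to every block are illustrative assumptions, not a description of any specific training pipeline discussed in the channel.

```python
from typing import List, Optional


def make_blocks(
    tokens: List[int],
    block_size: int,
    stride: int,
    bos_id: Optional[int] = None,
) -> List[List[int]]:
    """Cut a token stream into fixed-size training blocks.

    stride == block_size gives non-overlapping blocks;
    stride < block_size gives overlapping blocks.
    If bos_id is given, it is prepended to every block (an assumption).
    """
    blocks = []
    # Only emit full blocks; a trailing partial block is dropped.
    for start in range(0, len(tokens) - block_size + 1, stride):
        block = tokens[start:start + block_size]
        if bos_id is not None:
            block = [bos_id] + block
        blocks.append(block)
    return blocks


if __name__ == "__main__":
    stream = list(range(10))
    print(make_blocks(stream, block_size=4, stride=4))  # non-overlapping
    print(make_blocks(stream, block_size=4, stride=2))  # overlapping
```

Overlapping blocks show each token in several contexts at the cost of repeated compute, which is roughly the trade-off the debate was about.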

CUDA MODE Discord

When Discord Fails, Meet Pushes Through: Technical difficulties during a GTC event led to the suggestion of defaulting to voice channels for future lectures due to screen sharing issues on Discord stage channels. An unsatisfied member proposed switching to Google Meet in future due to the instability of Discord streams.

CUDA-tious Profiling: For engineers delving into CUDA, a lecture on how to profile CUDA kernels in PyTorch was shared, complete with accompanying slides and a GitHub code repository. CUDA programming becomes a necessity when seeking performance gains where PyTorch’s speed is insufficient.

Triton Tricky Tidbits: Discussions around Triton’s performance issues were prominent, and members were warned that Triton operations might be phased out in the future. A new prototype folder in the torchao repository was proposed for collaboration on API design for efficient kernel usage, as support for Triton continues.

Sparsity Meets Decomposition Elegance: A novel approach to distributed sparse matrix multiplication was introduced in the Arrow Matrix Decomposition paper by researchers Lukas Gianinazzi and Alexandros Nikolaos Ziogas, with the implementation available on GitHub.

Blackwell GPUs Smile for the Camera: Members discussed the new Blackwell GPUs, highlighting a tweet with a humorous take on the GPUs’ smiley face pattern. Speculation on the unseen NVIDIA Developer Discord server took place after a GitHub discussion about the CUTLASS library was brought up. The community also touched on data type standardization in deep learning, noting the absence of Google in recent standard consortiums and the lack of an IEEE standard for new floating point numbers.

Interconnects (Nathan Lambert) Discord

Mistral’s New 7B Model Steals the Spotlight: Mistral AI casually dropped a new model, the Mistral 7B v0.2 Base, at the @cerebral_valley hackathon. The model details including fine-tuning guidance are available here, although no magnet links were provided for this release, as noted by @natolambert.

Shakeup at Stability AI: CEO Emad Mostaque resigned from Stability AI, hinting at his future focus on #DecentralizedAI. The community expressed mixed feelings about the impact and direction of his tenure, amidst discussions of internal struggles and the nature of Stability AI’s contributions to AI academia.

Nemo Interoperability Seekers: Questions arose about converting and wrapping Nemo checkpoints for compatibility with Hugging Face, underscoring the technical challenges in machine learning model interoperability.

AI’s Ethical Tightrope:

- Debates ignited over whether creating a “generalist agent” in Reinforcement Learning is both practically and fundamentally feasible, based on discussions found here.

- The channel also tackled the FTC’s antitrust lawsuits against Apple with Nathan Lambert pointing out the public’s misunderstanding of antitrust regulations and backing his views with supporting tweets.

February’s Big AI Chats: Illuminating interviews with Anthropic’s CEO and Mistral’s CEO have been drawing attention, such as this “Fireside Chat” and the discussion on Amodei’s AI industry predictions here. Additionally, Latent Space’s February recap, highlighting key AI developments, can be found here.

LangChain AI Discord

- AI Delivers More for Less: An innovative method was proposed to bypass the 4k output token limit of GPT-4-Turbo by initiating a follow-up request upon reaching the length limit, which allows the model to continue generating content seamlessly.

- Bedrock Meets Python: A guide has surfaced detailing the use of Bedrock with Python, showing practical integration techniques. Interested engineers can dive into the guide here.

- Analyze LLM Conversations with SimplyAnalyze.ai: The launch of SimplyAnalyze.ai was announced, which pairs with LangChain to dissect LLM dialogue across business divisions. To join the free developer preview, engineers can visit SimplyAnalyze’s website.

- Harnessing LangChain for Decision-making: A post detailing the use of Langchain in Agent Tree Search was shared to foster more sophisticated decision-making processes with Language Models. Engineers can read more about it here.

- Upgraded Chatbot with Memory and Parsing Skills: Enhancements to a local character AI chatbot have been made, improving CSV and NER parsing, among other features. To check out the upgraded capabilities, the GitHub repository is available here.
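The output-limit workaround from the first item in this list (issue a follow-up request whenever generation stops at the length limit) can be sketched as a simple loop. This is a minimal illustration under stated assumptions: `complete` is a hypothetical callable standing in for whatever chat API is used, returning the generated text and a finish reason, and the `"length"` value mirrors the finish reason OpenAI-style APIs report when the token cap is hit. The stub `fake_complete` exists only so the sketch runs offline.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]
# Hypothetical signature: chat history in, (text, finish_reason) out.
CompleteFn = Callable[[List[Message]], Tuple[str, str]]


def generate_long(prompt: str, complete: CompleteFn, max_rounds: int = 5) -> str:
    """Keep asking the model to continue while it stops due to the length cap."""
    messages: List[Message] = [{"role": "user", "content": prompt}]
    parts: List[str] = []
    for _ in range(max_rounds):
        text, finish_reason = complete(messages)
        parts.append(text)
        if finish_reason != "length":
            break  # the model finished on its own
        # Feed the partial answer back and request a continuation.
        messages.append({"role": "assistant", "content": text})
        messages.append({"role": "user", "content": "Continue exactly where you left off."})
    return "".join(parts)


def fake_complete(messages: List[Message]) -> Tuple[str, str]:
    """Offline stand-in for a real API call: emits three chunks in order."""
    chunks = ["Part one. ", "Part two. ", "Done."]
    turn = sum(1 for m in messages if m["role"] == "assistant")
    reason = "length" if turn < len(chunks) - 1 else "stop"
    return chunks[turn], reason


result = generate_long("Write a long report.", fake_complete)
```

The `max_rounds` cap is a design choice to keep a misbehaving model from looping indefinitely.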

LLM Perf Enthusiasts AI Discord

Real Estate Matching Gone Awry: A discussion unfolded around GPT-4 Turbo misinterpreting property size requirements, with one property suggested at 17,000 square feet despite a request for 2,000 - 4,000 square feet. A simple CSV-based database filter was recommended over a complex LLM pipeline, sparking a conversation about common missteps and linking to a resource by Jason Liu on the potential over-reliance on embedding search in LLMs.
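As a rough illustration of the recommended approach, a plain CSV filter handles the numeric constraint deterministically. The column names and sample listings below are made up for the example.

```python
import csv
import io
from typing import Dict, List

# Made-up sample data; a real system would read a file or database instead.
LISTINGS = """address,sqft
12 Oak St,1800
48 Pine Ave,3200
7 Harbor Rd,17000
"""


def filter_by_size(csv_text: str, min_sqft: float, max_sqft: float) -> List[Dict[str, str]]:
    """Return rows whose 'sqft' column falls inside [min_sqft, max_sqft]."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if min_sqft <= float(row["sqft"]) <= max_sqft]


matches = filter_by_size(LISTINGS, 2000, 4000)
```

A hard filter like this can run before any LLM step, so the model only ever sees candidates that already satisfy the size range.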

Frustrations with Token Limitations: Participants voiced frustration with Anthropic’s rate limit of 1M tokens per day, considering a 200k context window to be insufficient. The Bedrock monthly fee model was discussed as a potential alternative, while a $500 scale plan from Anthropic was suggested as offering easier access for extensive use.

Seeking Superior Explainers: The community was asked for their top explainer resources on advanced LLM topics, with a specific call-out for high-quality, clear content on topics like RLHF, rather than a vast collection of blogs. Exa.ai was suggested as a beneficial resource for delving into LLM-related subjects.

Brief Cry for Coding Quality: In the #jobs channel, a user lamented the difficulty in writing high-quality code with a succinct and relatable one-liner.

GPT-3.5-0125 Takes the Lead: GPT-3.5-0125 was lauded for its significant performance improvements over previous models, as observed in a user’s comparative tests, elevating its status as a particularly advanced iteration within the realm of LLMs.

Alignment Lab AI Discord

- Call for AI Avengers: The Youth Inquiry Network and Futracode are teaming up to develop a machine learning algorithm that recommends optimal research topics from existing databases. They’re recruiting web developers, data analysts, and AI & ML experts to champion this cause.

- Contribute Your Skills for Glory: Volunteers will not only advance their careers with a shiny new portfolio piece but also walk away with a certificate and two professional recommendation letters. Those who help will also get to keep the developed ML algorithm code for personal or commercial use.

- Flex Time for World Savers: They assure a flexible commitment for this groundbreaking project, perfect for superheroes with a packed schedule. Recruits can bypass the bureaucratic labyrinth by dropping a simple “interested” to get started on their mission.

- Mystery Educational Reform Doc Drops: An unspecified member shares a Google Docs link discussing Post-AGI Educational Reforms, possibly hinting at a future-focused AI education paradigm.

- Moment of Meta Moderation: In an ironic twist, a moderator experiences a self-epiphany of their own status in a call for moderation, reminding us that even bots can forget their protocols.

Datasette - LLM (@SimonW) Discord

- LLM Versus Ollama Showdown: Members clarified that `llm` interfaces with models, such as Mistral, by setting up API endpoints, which are then executed by `ollama`. `ollama` allows local model execution, making the models accessible via local HTTP API endpoints.

- Techie Commits with AI Assistance: The tool AICommits (GitHub - Nutlope/aicommits), designed to help write git commit messages with AI, gained appreciation for its utility, with requests for additional features such as emoji standards for commits.
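For readers who have not used the local HTTP endpoints mentioned in the first item, here is a minimal sketch of a request to Ollama's generate endpoint using only the standard library. It assumes an Ollama server on its default local port with a model named `mistral` already pulled; both are assumptions about the local setup, and nothing is sent unless `generate` is called.

```python
import json
import urllib.request

# Default local address of an Ollama server (assumes a stock installation).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `generate("mistral", "Why is the sky blue?")` would return the completion as a string.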

Skunkworks AI Discord

- AI Cooking Up Stunts: An AI has crafted a unique cookbook based on YouTuber Mr. Beast’s daring adventures, which sparked interest in the group. The inventive application was showcased in a YouTube video, mixing culinary arts and machine learning for whimsical results.

- In Search of German Tech Savvy: A community member is on the lookout for German-language resources on deep learning and AI, indicating a desire to dive into technical content in their native tongue. The request painted a picture of a global, multilingual interest in the AI community.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (1195 messages🔥🔥🔥):

- Stable Diffusion Inquiry and Assistance: Members discussed various aspects and uses of Stable Diffusion models, including performance, sampler settings, and ControlNet models. Users also exchanged guidance on handling errors and setting up the AI on different systems, especially with AMD GPUs.

- Exploring SD3 and Alternatives: Conversation topics included the anticipated release window for SD3 and potential improvements, along with comparisons to other offerings like SDXL and AI-generated video potential.

- Feedback on Online AI Services: The chat touched on the limitations and frustrations with online AI image generation services, such as those on Civitai and Suno, specifically pointing out issues with content restrictions and preferences on the type of content displayed.

- Debate on AI Ethics and Regulation: Members debated the need for regulations on AI technology use and the importance of open-source models versus proprietary ones. Concerns were raised about regulations potentially stifling innovation and accessibility.

- Technical Troubleshooting and Learning: New members seeking help with technical issues regarding model installation and use were directed to support channels and experts within the community. More experienced members aimed to guide and provide resources while advising newcomers on the learning curve associated with AI image generation.

Links mentioned:

- ArtificialGuyBr: no description found

- imgur.com: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- Stable Cascade Examples: Examples of ComfyUI workflows

- Purity: no description found

- Emad Mostaque resigns as CEO of troubled generative AI startup Stability AI - SiliconANGLE: Emad Mostaque resigns as CEO of troubled generative AI startup Stability AI - SiliconANGLE

- thibaud (Thibaud Zamora): no description found

- Dune Oil GIF - Dune Oil - Discover & Share GIFs: Click to view the GIF

- Nvidia's newest cloud service promises to accelerate quantum computing simulations - SiliconANGLE: Nvidia's newest cloud service promises to accelerate quantum computing simulations - SiliconANGLE

- CinematicRedmond - Cinematic Model for SD XL - v1.0 | Stable Diffusion Checkpoint | Civitai: Cinematic.Redmond is here! I'm grateful for the GPU time from Redmond.AI that allowed me to make this model! This is a Cinematic model fine-tuned o...

- Artificialguybr Demo Lora - a Hugging Face Space by artificialguybr: no description found

- Neon City Lights | Suno: japanese vocals chill jazz j-pop downtempo song. Listen and make your own with Suno.

- Tweet from Christian Laforte (@chrlaf): @thibaudz Thanks Thibaud, as I wrote elsewhere, the plan hasn't changed, we are still hard at work improving the model towards open release. Including source code and weights.

- Ok Then Um GIF - Ok Then Um Well Ok Then - Discover & Share GIFs: Click to view the GIF

- Boring Reality - BoringReality_primaryV4.0 | Stable Diffusion LoRA | Civitai: NOTE: Please read below for working with these loras. They are unlikely to give good results when used individually and as is. This model is actual...

- ControlNet 1.1 Models - Tile (e) | Stable Diffusion Controlnet | Civitai: STOP! THESE MODELS ARE NOT FOR PROMPTING/IMAGE GENERATION These are the new ControlNet 1.1 models required for the ControlNet extension , converted...

- Reddit - Dive into anything: no description found

- Stable Diffusion Glossary - Stable Diffusion Art: Confused about a term in Stable Diffusion? You are not alone, and we are here to help. This page has all the key terms you need to know in Stable Diffusion.

- Drone Shot "Above" XL LoRA - v1.0 | Stable Diffusion LoRA | Civitai: Use " above " in prompt. Works best for large scenes and not individual objects or characters.

- GitHub - virattt/financial-agent: A financial agent, built entirely with LangChain!: A financial agent, built entirely with LangChain! Contribute to virattt/financial-agent development by creating an account on GitHub.

- AI Dance Animation - [ NEXT GEN ] - Stable Diffusion | ComfyUI: This AI animation was done using AnimateDiff and ControlNet nodes without any girl LORAs. Since the last AI dance video it's a major improvement in consisten...

- Unauthorized “David Attenborough” AI clone narrates developer’s life, goes viral: "We observe the sophisticated Homo sapiens engaging in the ritual of hydration."

- artificialguybr (ArtificialGuy/JV.K): no description found

- ControlNetXL (CNXL) - bdsqlsz-depth | Stable Diffusion Checkpoint | Civitai: bdsqlsz : canny | depth | lineart-anime | mlsd-v2 | normal | openpose | recolor | segment | segment-v2 | sketch | softedge | t2i-color-shuffle | ti...

- Roko's Basilisk - LessWrong: Roko’s basilisk is a thought experiment proposed in 2010 by the user Roko on the Less Wrong community blog. Roko used ideas in decision theory to argue that a sufficiently powerful AI agent would have...

- Who is going to tell her…: Support the channel by grabbing a t-shirt: http://www.clownplanetshirts.comDon’t forget to subscribe. Hit the bell to stay updated on the latest videos.Watch...

- How AI Image Generators Work (Stable Diffusion / Dall-E) - Computerphile: AI image generators are massive, but how are they creating such interesting images? Dr Mike Pound explains what's going on. Thumbnail image partly created by...

- GitHub - stitionai/devika: Devika is an Agentic AI Software Engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika aims to be a competitive open-source alternative to Devin by Cognition AI.: Devika is an Agentic AI Software Engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective...

- Revenge of the nerds - John Goodman speech: Inspiring stuff!

- StabilityMatrix/README.md at main · LykosAI/StabilityMatrix: Multi-Platform Package Manager for Stable Diffusion - LykosAI/StabilityMatrix

- artificialguybr/cinematic.redmond – Run with an API on Replicate: no description found

- Eyaura: Give Me A Soul. Album: T.B.D.: G STRING - STEAM:https://store.steampowered.com/app/1224600/G_String/G STRING DISCORD - OFFICIAL:https://discord.gg/fUuDyx7uYeG STRING DISCORD - MISC:https:/...

- Name Archetypes: So, I made these faces in Midjourney by using the prompt "a photo of (name) --style raw." And I couldn't help but notice that whenever you meet a new person, they sorta have a name assoc...

- How to Generate Multiple Different Characters, Mix Characters, and/or Minimize Color Contamination | Regional Prompt, Adetailer, and Inpaint | My Workflow - 2. Adetailer | Stable Diffusion Workflows | Civitai: How to Generate Multiple Different Characters, Mix Characters, and/or Minimize Color Contamination | Regional Prompt, Adetailer, and Inpaint | My W...

- Forge Is Not Using ComfyUI as A Backend · lllyasviel/stable-diffusion-webui-forge · Discussion #169: Recently some people begin to spread misinformation about Forge using ComfyUI as a backend. This is false, harmful to the community, and harmful to the efforts of our engineering team. The backend ...

Unsloth AI (Daniel Han) ▷ #general (1009 messages🔥🔥🔥):

- Exploring Metrics in SFTTrainer: A user seeks advice on using a generation-based metric for validation with `SFTTrainer`, referencing a workaround in a GitHub issue. They are unclear about the preds received in the `compute_metrics` function and how `SFTTrainer` computes loss when fine-tuning with LoRA adapters.

- Chatbot Model Inference Hardware Requirements: A user asks how to determine hardware requirements for running LLM models like Nous-Capybara-34B-GGUF, and another user suggests referring to another HH model’s discussion for estimates, clarifying that model requirements can vary based on quantization and prompt.

- Model Differences & Quantization: An inquiry about the differences between two versions of Mistral models leads to an explanation that 4-bit models like here are faster to download but suffer a slight drop in accuracy.

- Mistral and Their Marketing Strategy: Discussion unfolds around Mistral’s model release practices, which a member deems unusual because the base models were not uploaded to Hugging Face and because of the unconventional leaking incident on 4chan.

- Debate on Computer Science Education: A heated discussion takes place on the importance of a Computer Science degree in light of LLMs now capable of writing code. The conversation veers into various programming languages, memory safety, and the value of degrees from different universities.
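For the hardware-requirements and quantization items above, a back-of-the-envelope estimate of weight memory is often the first step. The sketch below counts weights only and is an approximation: real GGUF quantizations store extra scale data, and the KV cache and activations add more on top, which is one reason requirements vary with quantization and prompt length.

```python
def weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    Ignores quantization overhead, KV cache, and activations (assumptions).
    """
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"34B parameters at {bits}-bit: ~{weight_memory_gb(34, bits):.1f} GB")
```

By this estimate a 34B-parameter model needs roughly 68 GB for weights at 16-bit and roughly 17 GB at 4-bit, which illustrates why 4-bit checkpoints are also much faster to download.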

Links mentioned:

- Inflection-2.5: meet the world's best personal AI: We are an AI studio creating a personal AI for everyone. Our first AI is called Pi, for personal intelligence, a supportive and empathetic conversational AI.

- GPT4All: Free, local and privacy-aware chatbots

- Google Colaboratory: no description found

- 152334H/miqu-1-70b-sf · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- Funny Very GIF - Funny Very Sloth - Discover & Share GIFs: Click to view the GIF

- Sloth Slow GIF - Sloth Slow Stamp - Discover & Share GIFs: Click to view the GIF

- Sloth Smile Slow GIF - Sloth Smile Slow Smooth - Discover & Share GIFs: Click to view the GIF

- mistralai/Mistral-7B-Instruct-v0.2 · Hugging Face: no description found

- unsloth/mistral-7b-v0.2-bnb-4bit · Hugging Face: no description found

- unsloth/mistral-7b-v0.2 · Hugging Face: no description found

- TheBloke/CodeLlama-34B-Instruct-GGUF · [AUTOMATED] Model Memory Requirements: no description found

- TheBloke/Nous-Capybara-34B-GGUF · Hugging Face: no description found

- Generation - GPT4All Documentation: no description found

- GitHub - InflectionAI/Inflection-Benchmarks: Public Inflection Benchmarks: Public Inflection Benchmarks. Contribute to InflectionAI/Inflection-Benchmarks development by creating an account on GitHub.

- How Quickly Do Large Language Models Learn Unexpected Skills? | Quanta Magazine: A new study suggests that so-called emergent abilities actually develop gradually and predictably, depending on how you measure them.

- White House urges developers to dump C and C++: Biden administration calls for developers to embrace memory-safe programing languages and move away from those that cause buffer overflows and other memory access vulnerabilities.

- Compute metrics for generation tasks in SFTTrainer · Issue #862 · huggingface/trl: Hi, I want to include a custom generation based compute_metrics e.g., BLEU, to the SFTTrainer. However, I have difficulties because: The input, eval_preds, into compute_metrics contains a .predicti...

- alpindale/Mistral-7B-v0.2-hf · Hugging Face: no description found

- GAIR/lima · Datasets at Hugging Face: no description found

- Accelerating Large Language Models with Mixed-Precision Techniques - Lightning AI: Training and using large language models (LLMs) is expensive due to their large compute requirements and memory footprints. This article will explore how leveraging lower-precision formats can enhance...

- ISTA-DASLab ( IST Austria Distributed Algorithms and Systems Lab): no description found

- unilm/bitnet/The-Era-of-1-bit-LLMs__Training_Tips_Code_FAQ.pdf at master · microsoft/unilm: Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities - microsoft/unilm

- aphrodite-engine/tests/benchmarks at main · PygmalionAI/aphrodite-engine: PygmalionAI's large-scale inference engine. Contribute to PygmalionAI/aphrodite-engine development by creating an account on GitHub.

- GitHub - PygmalionAI/aphrodite-engine: PygmalionAI's large-scale inference engine: PygmalionAI's large-scale inference engine. Contribute to PygmalionAI/aphrodite-engine development by creating an account on GitHub.

- Watch movies online and Free tv shows streaming - ev01.net: Fast and Free streaming of over 250000 movies and tv shows in our database. No registration, no payment, 100% Free full hd streaming

- argilla (Argilla): no description found

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #random (58 messages🔥🔥):

- Kernel Conundrum on Local Machine: A discussion about an issue requiring kernel restarts on a user’s local machine hinted that it might be memory-related. An error message mentioning 32-bit and the need to restart was shared, and an Out Of Memory (OOM) condition was suggested as a possible cause, but the user confirmed the machine works fine after kernel restarts.

- Warming Up to Fiber Optics and Big Models: One user excitedly reports upgrading to fiber-optics and a new 2TB WD black edition to support larger models. There is enthusiasm for the current performance and potential future upgrades to hardware.

- ORPO Generates Buzz in AI Community: There’s excitement around ORPO (Odds Ratio Preference Optimization), as users discuss its integration and the performance boost it gives models. The link to the original paper on arXiv was provided.

- Unsloth Keeps Up with TRL: In relation to the ORPO discussion, users confirmed that Unsloth AI should support it if it’s supported by TRL (Transformer Reinforcement Learning). Optimizations and patching from Unsloth to TRL were mentioned, along with encouragement to share if there are any issues with the new integrations.

- New Toolkit for Transformer Models: An interesting toolkit for transformers called transformer-heads was linked. It’s designed for attaching, training, saving, and loading new heads for transformer models, available on GitHub.

Link mentioned: GitHub - center-for-humans-and-machines/transformer-heads: Toolkit for attaching, training, saving and loading of new heads for transformer models: Toolkit for attaching, training, saving and loading of new heads for transformer models - center-for-humans-and-machines/transformer-heads

Unsloth AI (Daniel Han) ▷ #help (317 messages🔥🔥):

- Understanding Unsloth’s Fast Dequantizing: Unsloth AI’s ‘fast_dequantize’ in `fast_lora.py` is noted to be optimized for speed with reduced memory copies compared to `bitsandbytes`.

- Troubleshooting and Updating Mistral with Unsloth: A member was advised to upgrade Unsloth due to issues with Gemma GGUF, with a command provided for the upgrade. It was noted that problems existed not only with GGUF but also with merging.

- Resolving Inference Issue with Looping Tokens: Discussion on a reported issue where models converted to gguf, particularly using Mistral, started repeating `<s>` in a loop during responses. Unsloth’s maintainer suggests checking the `tokenizer.eos_token`.

- Combining Multiple Datasets for Fine-Tuning: It’s suggested that multiple datasets can be concatenated into one text string, processed, and then appended together for training. Enhanced instructions and responses from different datasets can potentially be merged for this purpose.

- Needing Clarity on Fine-Tuning Parameters: Queries were made about controlling epochs with `max_steps` during fine-tuning, for which setting `num_train_epochs` instead was recommended. Additionally, it’s mentioned higher memory consumption may result from increasing `max_seq_length` due to padding.
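The relationship between `max_steps` and epochs in the last item is simple arithmetic, sketched below. This is an approximation of how Hugging Face style trainers count optimizer steps; the exact rounding can differ by version, and the dataset size and batch settings in the example are made up.

```python
import math


def steps_per_epoch(
    n_examples: int,
    per_device_batch_size: int,
    grad_accum_steps: int,
    n_devices: int = 1,
) -> int:
    """Approximate optimizer steps needed for one pass over the data."""
    effective_batch = per_device_batch_size * grad_accum_steps * n_devices
    return math.ceil(n_examples / effective_batch)


def epochs_covered(
    max_steps: int,
    n_examples: int,
    per_device_batch_size: int,
    grad_accum_steps: int,
    n_devices: int = 1,
) -> float:
    """How many epochs a given max_steps budget corresponds to."""
    per_epoch = steps_per_epoch(n_examples, per_device_batch_size, grad_accum_steps, n_devices)
    return max_steps / per_epoch


if __name__ == "__main__":
    # Illustrative numbers only: 10,000 examples, batch size 2, 4 accumulation steps.
    print(steps_per_epoch(10_000, 2, 4))
    print(epochs_covered(60, 10_000, 2, 4))
```

With these illustrative numbers one epoch is 1,250 steps, so `max_steps=60` covers under 5% of the data, which is why setting `num_train_epochs` is the more direct way to control epochs.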

Links mentioned:

- Mixture of Experts for Clowns (at a Circus): no description found

- HirCoir/Claud-mistral-7b-bnb-4bit-GGUF at main: no description found

- HirCoir/Claud-openbuddy-mistral-7b-v19.1-4k · Hugging Face: no description found

- mistralai/Mistral-7B-Instruct-v0.2 · Hugging Face: no description found

- Google Colaboratory: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- bitsandbytes/bitsandbytes/autograd/_functions.py at main · TimDettmers/bitsandbytes: Accessible large language models via k-bit quantization for PyTorch. - TimDettmers/bitsandbytes

- unilm/bitnet/The-Era-of-1-bit-LLMs__Training_Tips_Code_FAQ.pdf at master · microsoft/unilm: Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities - microsoft/unilm

- Fine tuning LLMs for Memorization: ➡️ ADVANCED-fine-tuning Repo (incl. Memorization Scripts): https://trelis.com/advanced-fine-tuning-scripts/➡️ One-click Fine-tuning & Inference Templates: ht...

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #showcase (33 messages🔥):

- Hints of Troubles with Unsloth Integration: Users express issues with using Ollama templates, particularly with different quant models such as q4, leading to poor results.

- Diagnostics on GPT4All: One user is running tests on GPT4All to resolve issues and is advised not to escape backticks and to try different quant sizes.

- Q8 Model Version Shows Promise: After a bit of back-and-forth, a user confirms that the Q8 model on Huggingface seems to be functioning correctly.

- Sappha-2b-v3 Makes Waves: A new model, sappha-2b-v3, which is fine-tuned with Unsloth on Gemma-2b, outperforms current models on several benchmarks, prompting discussions on its capability.

- Interest Peaks for New Models: Users show excitement for the newly released models and share links to their best-performing models, such as MasherAI-v6-7B, while seeking information on the fine-tuning process used.

Links mentioned:

- mahiatlinux/MasherAI-v6-7B · Hugging Face: no description found

- Fizzarolli/sappha-2b-v3 · Hugging Face: no description found

- ollama/docs/modelfile.md at main · ollama/ollama: Get up and running with Llama 2, Mistral, Gemma, and other large language models. - ollama/ollama

Unsloth AI (Daniel Han) ▷ #suggestions (29 messages🔥):

- PyTorch Full Finetuning Without Breaking the (Memory) Bank: PyTorch users take note: a new pull request allows full finetuning to fit into less than 16GB of RAM, making it more accessible for those with consumer-grade GPUs.

- LoftQ Hits the Scene: In artificial intelligence optimization news, LoftQ has been included in the Hugging Face PEFT release v0.10.0, which brings enhanced fine-tuning capabilities to larger models.

- Multi-Turn Training Challenge for ORPO: There’s a discussion on multi-turn dialogue training for ORPO, with suggestions to resolve the current limitations of using the (prompt:"", chosen:"", rejected:"") format by introducing a more efficient method that handles multiple turns effectively.

- ORPO Needs Better Multi-Turn Training: The community expresses concerns that the current ORPO method doesn’t seem to cater well to multi-turn dialogue training, which is essential for ORPO to be a feasible replacement for SFT, highlighting the importance of a standardized and optimized format for dialogue training.

- Successful ORPO Trials with Mistral: One member boasts impressive results when applying ORPO TRL implementation on the Mistral-7B-v0.2 model, using the argilla/ultrafeedback-binarized-preferences-cleaned dataset, suggesting that further tuning might yield even better outcomes.
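To make the multi-turn limitation discussed above concrete, here is one common workaround, sketched under assumptions: earlier turns are flattened into the `prompt` field so that only the final assistant reply carries the chosen/rejected signal. The helper name and the plain `role: text` rendering are hypothetical; a real setup would normally apply the model's own chat template instead, and this is not presented as the method the channel settled on.

```python
from typing import Dict, List, Tuple


def to_preference_example(
    turns: List[Tuple[str, str]],
    chosen: str,
    rejected: str,
) -> Dict[str, str]:
    """Flatten prior dialogue turns into a single prompt string.

    `turns` is a list of (role, text) pairs ending on a user turn.
    The role-prefixed format is illustrative only.
    """
    prompt = "\n".join(f"{role}: {text}" for role, text in turns)
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


example = to_preference_example(
    [("user", "Hi"), ("assistant", "Hello!"), ("user", "Name a prime.")],
    chosen="7",
    rejected="8",
)
```

The drawback, in line with the concerns raised, is that earlier assistant turns receive no preference signal at all under this flattening.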

Links mentioned:

- Release v0.10.0: Fine-tune larger QLoRA models with DeepSpeed and FSDP, layer replication, enhance DoRA · huggingface/peft: Highlights Support for QLoRA with DeepSpeed ZeRO3 and FSDP We added a couple of changes to allow QLoRA to work with DeepSpeed ZeRO3 and Fully Sharded Data Parallel (FSDP). For instance, this allow...

- Full finetune < 16GB by rohan-varma · Pull Request #527 · pytorch/torchtune: Context We'd like to enable a variant of full finetune that trains in < 16GB of RAM for users with consumer grade GPUs that have limited GPU RAM. This PR enables the full finetune to fit into ...

Perplexity AI ▷ #general (892 messages🔥🔥🔥):

- Pro Users Debate Perplexity’s Image Generation: Users discussed the ability to generate images using Perplexity as a Pro feature, noting that it requires using Writing mode and switching off the Pro toggle on the web version.

- Claude Opus & GPT-4 Turbo Differences: Conversations compared Claude 3 Opus and GPT-4 Turbo for academic research and writing code. One distinction raised was that GPT-4 Turbo can compile Python files, which Perplexity does not currently support.

- Exploring Stability AI and Local Models: Talk of Stability AI’s models like SDXL and local installations was a focus, with users sharing tips and experiences about running these powerful image generation tools on personal hardware, despite the high costs involved.

- Investigating Perplexity Bugs and Confusions: Users expressed confusion about certain Perplexity features, such as repeated unrelated prompts appearing during sessions, how to disable unnecessary search triggers when using certain AI models, and issues encountered on the iOS app.

- Perplexity Features and Updates Discussion: Users debated Claude 3 Opus’s API capabilities, the OP-1 synthesizer’s aesthetics, and new devices like the Rabbit R1, and discussed the possibility of integrating Perplexity with iOS Spotlight search.

Links mentioned:

- Imagine with Meta AI: Use Imagine with Meta AI to quickly create high-resolution, AI-generated images for free. Just describe an image and Meta AI will generate it with technology from Emu, our image foundation model.

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- Stability AI Image Models — Stability AI: Experience unparalleled image generation capabilities with SDXL Turbo and Stable Diffusion XL. Our models use shorter prompts and generate descriptive images with enhanced composition and realistic ae...

- Civitai | Share your models: no description found

- Swedish House Mafia One GIF - Swedish House Mafia One Op1 - Discover & Share GIFs: Click to view the GIF

- Iota Crypto GIF - Iota Crypto Cryptocurrency - Discover & Share GIFs: Click to view the GIF

- Claude 2.1 prompt engineering guide: Learn how to prompt Claude with these 11 prompt engineering tips.

- Introducing deep search | Search Quality Insights: no description found

- You can now use Copilot's GPT-4 Turbo model for free: Microsoft has just made the advanced GPT model available for everyone, with no catches or tricks.

- Stability AI Announcement — Stability AI: Earlier today, Emad Mostaque resigned from his role as CEO of Stability AI and from his position on the Board of Directors of the company to pursue decentralized AI. The Board of Directors has appoin...

- Have you ever had a dream like this?: We all have at one point.

- Kys Wojak GIF - Kys Wojak Mushroom - Discover & Share GIFs: Click to view the GIF

- PerplexityBot: no description found

- Reddit - Dive into anything: no description found

- Golden Eggs Willy Wonka And The Chocolate Factory GIF - Golden Eggs Willy Wonka And The Chocolate Factory Clean The Eggs - Discover & Share GIFs: Click to view the GIF

- GitHub - OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- Orion Browser by Kagi: Orion is a fast, free, web browser for iPhone and iPad with no ads, no telemetry. Check also Orion for your Mac desktop. Orion has been engineered from ground up as a truly privacy-respecting browse...

- DOJ sues Apple over iPhone monopoly in landmark antitrust case: Apple and its iPhone and App Store business have been eyed by the Department of Justice, which previously filed antitrust suits against Google.

Perplexity AI ▷ #sharing (38 messages🔥):

- Legal Battle Ahead: The United States is engaged in a lawsuit, details of which can be explored at United States Sues.

- Email Authentication Scrutiny: Users interested in email security protocols, specifically DMARC, can find information at DMARC Details.

- Decoding Market Tools: TradingView, a tool for traders and investors, is discussed and can be examined at TradingView Insights.

- Generating Curiosity Around Perplexity: The potential of Perplexity AI replacing other tools is being questioned, and insights can be discovered at Should Perplexity Replace?.

- The Concept of Love Explored: An inquiry into the nature of love has been raised, with a desire to understand more at What is Love?.

Perplexity AI ▷ #pplx-api (24 messages🔥):

- Token Limit Troubles: A member encountered a BadRequestError due to a prompt and token generation request exceeding the 16,384 token limit. They were instructed to reduce the max_tokens value to stay under the limit and to consider shortening their prompts.

- Resume Analyzer Development: The member shared they are working on a resume analyzer/builder project as a way to practice with AI, indicating they are new to the field.

- Seeking Token Count Clarity: In response to a question about limiting user prompts by token count, it was explained that the number of tokens is tied to the length of the message sent. They were referred to Perplexity AI’s documentation for an accurate token count.

- Tokenization Tool Tip: Another member recommended using OpenAI’s tokenizer tool as a general gauge for token count, but noted that different AI models may tokenize differently. For precision, they advised checking token usage directly through the Perplexity API.

- In Search of an Autogpt-like Service: A member inquired about an autogpt-like service that supports Perplexity API keys for automating iterative tasks. There were no responses provided to this query within the summarized message history.
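
As a rough illustration of the token-limit advice above, the sketch below clamps a requested max_tokens so that prompt plus completion stays under the 16,384-token limit. The 4-characters-per-token estimate and both function names are assumptions for illustration only; as noted in the channel, actual counts depend on the model's tokenizer and are best checked against the usage reported by the API.

```python
CONTEXT_LIMIT = 16_384  # limit quoted in the discussion


def estimate_tokens(text: str) -> int:
    """Very rough heuristic (assumption): about 4 characters per token."""
    return max(1, (len(text) + 3) // 4)


def clamp_max_tokens(prompt: str, requested: int, limit: int = CONTEXT_LIMIT) -> int:
    """Return a max_tokens value that keeps prompt + completion within the limit."""
    available = limit - estimate_tokens(prompt)
    if available <= 0:
        raise ValueError("prompt alone exceeds the limit; shorten it")
    return min(requested, available)


prompt = "x" * 4000  # roughly 1,000 tokens under the heuristic above
budget = clamp_max_tokens(prompt, requested=16_000)
print(budget)
```

Because the estimate is approximate, leaving some headroom below the computed budget is a reasonable precaution.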

Link mentioned: Chat Completions: no description found

LM Studio ▷ #💬-general (533 messages🔥🔥🔥):

- High CPU Usage Queries: Users noticed high CPU usage when running models on LM Studio version 0.2.17; some resolved issues by restarting LM Studio and resetting settings to default. Advice for resolving these issues includes reviewing log files for errors.

- GPU Compatibility Concerns: There were inquiries about the best GPU cards for LM Studio, with discussions revealing preference for Nvidia graphics cards like the 3090 TI for better compatibility and performance. Users also discussed various issues regarding GPU offload and the impact of different model file sizes on performance.

- Local Server Accessibility: Users encountered errors using LM Studio’s local server feature, with successful resolutions involving reinstallation and proper configurations; users are prompted to post error reports in a specific Discord channel (#1139405564586229810) for assistance.

- Model Format Support: Discussions indicated that LM Studio supports the GGUF model format. Users explored how to convert Hugging Face models to GGUF using command-line methods and stressed the importance of sharing converted models back on Hugging Face.

- Linux and macOS Support: Users asked about running LM Studio on Linux and macOS. There is no immediate plan for a Docker image or an Intel Mac version of LM Studio, but users are encouraged to vote for these features on the feature request channel (#1128339362015346749).

Links mentioned:

- LM Studio: no description found

- roborovski/superprompt-v1 · Hugging Face: no description found

- Get started | 🦜️🔗 Langchain: LCEL makes it easy to build complex chains from basic components, and

- bartowski/c4ai-command-r-v01-GGUF · Hugging Face: no description found

- Ban Keyboard GIF - Ban Keyboard - Discover & Share GIFs: Click to view the GIF

- Making LLMs lighter with AutoGPTQ and transformers: no description found

- GitHub - czkoko/SD-AI-Prompt: A shortcut instruction based on LLama 2 to expand the stable diffusion prompt, Power by llama.cpp.: A shortcut instruction based on LLama 2 to expand the stable diffusion prompt, Power by llama.cpp. - czkoko/SD-AI-Prompt

- Run ANY Open-Source LLM Locally (No-Code LMStudio Tutorial): LMStudio tutorial and walkthrough of their new features: multi-model support (parallel and serialized) and JSON outputs. Join My Newsletter for Regular AI Up...

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- Caravan Palace - Lone Digger (Official MV): 📀 Preorder our new album: https://caravanpalace.ffm.to/gmclub🎫 Come see us live: http://www.caravanpalace.com/tour 🔔 Subscribe to our channel and click th...

- GitHub - stitionai/devika: Devika is an Agentic AI Software Engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika aims to be a competitive open-source alternative to Devin by Cognition AI.: Devika is an Agentic AI Software Engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective...

- GitHub - caddyserver/caddy: Fast and extensible multi-platform HTTP/1-2-3 web server with automatic HTTPS: Fast and extensible multi-platform HTTP/1-2-3 web server with automatic HTTPS - caddyserver/caddy

- Tutorial: How to convert HuggingFace model to GGUF format · ggerganov/llama.cpp · Discussion #2948: Source: https://www.substratus.ai/blog/converting-hf-model-gguf-model/ I published this on our blog but though others here might benefit as well, so sharing the raw blog here on Github too. Hope it...

- Dev:API – YaCyWiki: no description found

- Web Harvesting for Data Mining - YaCy Searchlab: no description found

- Home - YaCy: no description found

- nisten/mistral-instruct0.2-imatrix4bit.gguf · Hugging Face: no description found

- Tweet from meng shao (@shao__meng): They just change the readme of HuggingFace space: Mistral-7B-Instruct-v0.2 based on Mistral-7B-Instruct-v0.1 => Mistral-7B-v0.2 https://twitter.com/shao__meng/status/1771680453210370157 ↘️ Quotin...

- George Hotz | Exploring | finding exploits in AMD's GPU firmware | Giving up on AMD for the tinybox: Date of the stream 21 Mar 2024.from $1050 buy https://comma.ai/shop/comma-3x & best ADAS system in the world https://openpilot.comma.aiLive-stream chat added...

LM Studio ▷ #🤖-models-discussion-chat (71 messages🔥🔥):

- Q-AGI Skepticism Strikes: A discussion about Q-AGI videos led to expressions of fatigue and refusal to consume more content on the topic, with a humorous meme shared to underline the sentiment.

- AI in Architecture Needs Human Verification: Dialogue regarding the use of AI in architectural engineering highlighted skepticism about trusting AI models without human oversight, citing the need for human certification due to legal and safety concerns.

- Fine-Tuning Models for Specific Tasks: Members discussed the effectiveness of fine-tuning smaller language models for specific tasks. One member shared their creation of a program that lets users train their own models based on ChatGPT 2, complete with an instruction manual generated by Claude.

- Understanding Model Size vs. Quantization: There was clarification provided about the difference between #b (size of the model based on parameters) and q# (level of quantization) when deciding which model version to run, such as “llama 7b - q8” versus “llama 13b - q5”.

- Choosing the Right Model for Context Length: A user inquired about models with 32K context length for RAG-adjacent interactions, and it was mentioned that Mistral recently released a 7b 0.2 version with a 32k context.
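
The trade-off between parameter count and quantization level described above can be made concrete with back-of-the-envelope arithmetic: weight storage is roughly parameters × bits per weight ÷ 8 bytes. The function below is a hypothetical helper. It treats q8 and q5 as exactly 8 and 5 bits per weight (real quantized files carry extra scale metadata) and ignores context and KV-cache memory, so the results are lower bounds rather than exact file sizes.

```python
def approx_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB: billions of params * bits / 8 bits per byte."""
    return params_billion * bits_per_weight / 8


# The two options mentioned in the discussion.
options = {
    "llama 7b - q8": approx_weight_gb(7, 8),
    "llama 13b - q5": approx_weight_gb(13, 5),
}

for name, size_gb in options.items():
    print(f"{name}: ~{size_gb:.3f} GB of weights")
```

Under these assumptions the 13b q5 weights come to about 8.1 GB against 7 GB for 7b q8, so the larger model at lower precision costs only about 1 GB more.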

Links mentioned:

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits: Recent research, such as BitNet, is paving the way for a new era of 1-bit Large Language Models (LLMs). In this work, we introduce a 1-bit LLM variant, namely BitNet b1.58, in which every single param...

- How to Fine-Tune LLMs in 2024 with Hugging Face: In this blog post you will learn how to fine-tune LLMs using Hugging Face TRL, Transformers and Datasets in 2024. We will fine-tune a LLM on a text to SQL dataset.

- Dont Say That Ever Again Diane Lockhart GIF - Dont Say That Ever Again Diane Lockhart The Good Fight - Discover & Share GIFs: Click to view the GIF

- Models - Hugging Face: no description found

LM Studio ▷ #🧠-feedback (17 messages🔥):

- Request for Download Speed Limiter: A user requested the addition of a download speed limiter to avoid consuming all the bandwidth at home. Other users suggested using system-level settings to throttle speeds, arguing that large downloads are common across many applications.

- Confusion Over Image Uploading: One user struggled to figure out how to upload images to a model. Guidance was provided by others, mentioning a tool called .mmproj converter and directing where to find it and how to use it.