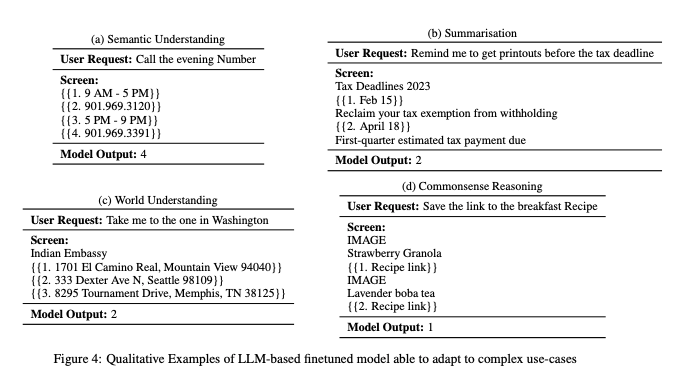

Apple is finally waking up to AI in a big way ahead of WWDC. We featured MM1 a couple weeks ago and now a different team is presenting ReALM: Reference Resolution As Language Modeling. Reference resolution in their terminology refers to understanding what ambiguous references like “they” or “that” or “the bottom one” or “this number present onscreen” refer to, based on 3 contexts - 1) what’s on screen, 2) entities relevant to the conversation, and 3) background entities. They enable all sorts of assistant-like usecases:

Which is a challenging task given it basically has to read your mind.

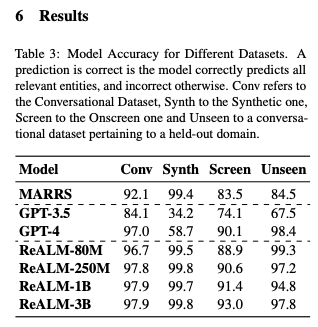

The authors use a mix of labeled and synthetic data to finetune a much smaller FLAN-T5 model that beats GPT4 at this task:

No model release, no demo. But it’s nice to see how they are approaching this problem, and the datasets and models are small enough to be replicable for anyone determined enough.

The AI content creator industrial complex has gone bonkers over it, of course. There are only a few more months’ worth of headlines to make about things beating GPT4 before this is itself beaten to death.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

AI Research and Development

- Open source coding agent: In /r/MachineLearning, researchers developed SWE-agent, an open source coding agent that achieves 12.29% on the SWE-bench benchmark. The agent can turn GitHub issues into pull requests, but the researchers found building effective agents to be harder than expected after 6 months of work.

- New RAG engine: Also in /r/MachineLearning, RAGFlow was introduced as a customizable, credible, explainable retrieval-augmented generation (RAG) engine based on document structure recognition models.

- Efficient quantization: In /r/LocalLLaMA, QuaRot was announced as a new quantization method enabling 4-bit inference, more efficient than current methods like GPTQ that require dequantization. It also supports lossless 8-bit quantization without calibration data.

AI Applications and Tools

- T-shirt design generator: In a video post, a Redditor shared a tool they made to generate t-shirt designs using AI.

- Podcast generation: In /r/OpenAI, podgenai was released as free GPT-4 based software to generate hour-long informational audiobooks/podcasts on any topic, requiring an OpenAI API key.

- Open-source language model: HuggingFace CEO reshared the release of PipableAI/pip-library-etl-1.3b, an open-source model that can be tried out without a GPU.

AI Industry and Trends

- Impact of large language models: In /r/MachineLearning, a discussion was started on whether large language models (LLMs) are doing more harm than good for the AI field due to hype changing the focus of conferences and jobs superficially, with overpromising potentially leading to another AI winter.

- Decentralizing AI: An Axios article was shared on efforts to decentralize AI development and break the hold of big tech companies.

- Stability AI Japan hire: News was posted about Takuto Takizawa joining Stability AI Japan as Head of Japan Sales & Partnerships.

Stable Diffusion Discussion

- Generating arbitrary resolutions: In /r/StableDiffusion, a user asked how Stable Diffusion generates images at resolutions other than 512x512 given the VAE input/output sizes, seeking an explanation and pointers to relevant code.

- Suitability for storytelling: Also in /r/StableDiffusion, a beginner asked if Stable Diffusion is suitable for creating specific characters, poses, and scenes for storytelling and comics, as they struggle to control the output and consider 3D tools as an alternative.

- Batch generation in UI: Another user in /r/StableDiffusion was looking for the setting to have Automatic1111’s Stable Diffusion UI repeatedly generate images in batches overnight.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Anthropic Research on Jailbreaking LLMs

- Many-shot jailbreaking technique: @AnthropicAI released a research paper studying a long-context jailbreaking technique effective on most large language models. The research shows increasing context window is a double-edged sword, making models more useful but also vulnerable to adversarial attacks.

- Principled and predictable technique: @EthanJPerez noted this is the most effective, reliable, and hard to train away jailbreak known, based on in-context learning. The vulnerability predictably worsens as model scale and context length increase.

- Concerning results: @sleepinyourhat found the results interesting and concerning, showing many-shot prompting for harmful behavior gets predictably more effective at overcoming safety training with more examples, following a power law.

Adversarial Validation Technique for Identifying Distribution Shifts

- Clever trick to check train/test distribution: @svpino shared a trick called Adversarial Validation to determine if train and test data come from the same distribution. Put them together, remove target, add binary feature for train/test, train simple model. If AUC near 0.5, same distribution. If near 1, different distributions.

- Useful for identifying problem features: Adversarial Validation can identify problem features causing distribution shift. Compute feature importance, remove most important, rebuild model, recompute AUC. Repeat until AUC near 0.5. Useful in production to identify distribution shifts.
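The recipe above can be sketched in a few lines. This is a minimal illustration, not @svpino's code: it assumes a hand-rolled logistic regression as the "simple model", synthetic Gaussian data, and an even/odd holdout split, all of which are placeholders. A real workflow would more likely use a library classifier and cross-validation, and the feature-removal loop is not shown.

```python
import math
import random

def auc(scores, labels):
    """Rank-based AUC: chance that a random 'test' row outscores a random 'train' row."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def fit_logistic(X, y, lr=0.1, epochs=200):
    """Batch gradient descent for logistic regression (stand-in for the 'simple model')."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            z = b + sum(wj * xj for wj, xj in zip(w, xi))
            err = 1.0 / (1.0 + math.exp(-z)) - yi
            grad_b += err
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def adversarial_auc(train_rows, test_rows):
    """Label rows by origin (0 = train, 1 = test), fit on half, score the other half."""
    X = train_rows + test_rows            # target column assumed already removed
    y = [0] * len(train_rows) + [1] * len(test_rows)
    w, b = fit_logistic(X[0::2], y[0::2])  # even rows: fit
    val_X, val_y = X[1::2], y[1::2]        # odd rows: held-out evaluation
    scores = [b + sum(wj * xj for wj, xj in zip(w, xi)) for xi in val_X]
    return auc(scores, val_y)

rng = random.Random(0)

def sample(n, shift):
    """Toy data: two Gaussian features, the first optionally shifted."""
    return [[rng.gauss(shift, 1.0), rng.gauss(0.0, 1.0)] for _ in range(n)]

auc_same = adversarial_auc(sample(400, 0.0), sample(400, 0.0))
auc_shift = adversarial_auc(sample(400, 0.0), sample(400, 3.0))
print(f"same distribution: AUC = {auc_same:.2f}")
print(f"shifted feature 0: AUC = {auc_shift:.2f}")
```

An AUC near 0.5 means the classifier cannot tell train rows from test rows, while an AUC near 1 means it separates them easily, which is the signal of a distribution shift.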

Impact of Taiwan Earthquake on Semiconductor Supply

- Proximity of earthquake to fabs: @nearcyan noted the 7.4 earthquake was 64 miles from Central Taiwan Science Park. In 1999, a 7.7 quake near fabs caused production losses. 2016 6.6 quake only delayed ~1% TSMC orders.

- TSMC preparedness: TSMC is well prepared for larger quakes. Government prioritizes utility restoration for fabs. No structural damage reported yet. Expect more disruption at Hsinchu/Taichung than 3nm Tainan fab.

- Potential delays: Expect nontrivial delays of at least a few weeks, possibly months if unlucky. Will likely cause short-term semiconductor price action.

AI Advancements and Developments

- Genie AI model from DeepMind: @GoogleDeepMind announced Genie, a foundation world model that can create playable 2D platformer worlds from a single image prompt, sketch or text description. It could help train AI agents.

- Replit Code Repair AI agent: @pirroh announced Replit Code Repair, a low-latency code repair AI agent using GPT-4. It substantially outperforms open-source models on speed and accuracy.

- Sonnet model replacing GPT-4: @jxnlco is replacing GPT-4 with Sonnet for most use cases across 3 companies, showing a shift to more specialized models.

Memes and Humor

- Coding longevity meme: @svpino joked about being told in 1994 that coding would be dead in 5 years, yet still coding 30 years later.

- Anthropic jailbreaking violence meme: @goodside joked that if violence doesn’t solve your LLM jailbreaking problems, you aren’t using enough of it.

AI Discord Recap

A summary of Summaries of Summaries

Advancements in Memory-Efficient LLM Training:

- A new attention mechanism called DISTFLASHATTN claims to reduce quadratic peak memory usage to linear for training long-context LLMs, enabling up to 8x longer sequences. However, the paper lacks pseudocode for the backward pass, raising concerns about reproducibility.

- Discussions around CUDA optimization techniques like DISTFLASHATTN and its potential to revolutionize LLM training through memory efficiency and speed improvements over existing solutions like Ring Self-Attention.

AI Model Evaluations and Benchmarking:

- The SWE-agent open-source system claims comparable accuracy to Devin on the SWE-bench for autonomously solving GitHub issues.

- Varying performance of models like GPT-4, Claude, and Opus on tasks like solving historical prompts, math riddles, and code generation, highlighting the need for comprehensive evaluations.

- Platforms like Chaiverse.com for rapid feedback on RP-LLM models and LMSys Chatbot Arena Leaderboard for model benchmarking.

Prompt Engineering and Multimodal AI:

- Discussions on prompt engineering techniques for tasks like translation while preserving markdown, generating manager prompts, and improving multimodal QA using Chain of Thought.

- The potential of DSPy for prompt optimization compared to other frameworks like LangChain and LlamaIndex.

- Explorations into multimodal AI like using Stable Diffusion for depth mapping from stereo images and the launch of Stable Audio 2.0 for high-quality music generation.

Open-Source AI Developments and Deployments:

- Work on an Open Interpreter iPhone app and porting to Android Termux, M5 Cardputer, enabling voice interfaces and exploring local STT solutions.

- Unveiling of the Octopus 2 demo, a model capable of function calling, fueling excitement around on-device models.

- Releases like Axolotl documentation updates and the open-sourcing of Mojo’s standard library.

Misc Themes:

- Optimization Challenges and Breakthroughs in LLMs: Engineers grappled with memory and performance bottlenecks in training large language models, with the introduction of novel techniques like DISTFLASHATTN which claims linear memory usage and 8x longer sequences compared to existing solutions. Discussions also covered leveraging bf16 optimizers, tinyBLAS, and frameworks like IPEX-LLM (GitHub) for inference acceleration on specific hardware.

- Anticipation and Analysis of New AI Models: Communities buzzed with reactions to newly released or upcoming models such as Apple’s ReALM (paper), Stable Diffusion 3.0, Stable Audio 2.0 (website), and the SWE-agent which matches Devin’s performance on the SWE-bench (GitHub). Comparative evaluations of instruction-following and chat models like Claude, Opus, and Haiku were also common.

- Ethical Concerns and Jailbreaking in AI Systems: Discussions touched on the legal implications of training AI on copyrighted data, as seen with the music platform Suno, and the efficacy of jailbreak defenses in language models, referencing an arXiv paper on the importance of defining unsafe outputs. The emotional simulation capabilities of chatbots sparked philosophical debates likening AI to psychopathy.

- Innovations in AI Interfaces and Applications: The potential of voice-based interactions with AI was highlighted by apps like CallStar AI, while communities worked on projects to make technology more accessible through conversational UIs. Initiatives such as Open Interpreter aimed to bring AI capabilities to mobile and embedded devices. Novel use cases for AI ranged from WorldSim’s gamified simulations (Notion) to AI-generated art and music.

PART 1: High level Discord summaries

LAION Discord

- Optimizer Headaches and Proposals: Technical talks revealed challenges with `torch.compile` and optimizer functions. An emerging solution discussed involved a Python package with `bf16 optimizer` to address dtype conflicts and device compatibility issues.

- Sound of Legal Alarm for AI Tunes: The community spotlighted potential legal issues with the AI music platform Suno, emphasizing the risks of copyright infringement suits from record labels due to training on copyrighted content.

- Memory Hogs & Crashes in Apple’s MPS: Apple’s MPS framework was under scrutiny for crashing at high memory allocations even when the memory was available. Theoretical internal limitations and attention slicing as a workaround were hot topics, albeit with concerns about resulting NaN errors.

- Textual Details Elevate Image Quality: Research surfaced indicating that fine-tuning text-to-image models with precise spatial descriptions enhances the spatial consistency in generated images, as suggested by an arXiv paper.

- Decoding AI Optimal Performance: From skepticism about SD3 Turbo’s claimed efficiency to recommendations on model fine-tuning and scheduler effectiveness, the guild analyzed various AI strategies. There were also insights into how smaller models may outperform larger ones within the same inference budget, as shown in a recent empirical study.

Stability.ai (Stable Diffusion) Discord

Forge Ahead with Stable Diffusion: Users report that Forge, a user interface for Stable Diffusion, delivers superior performance especially on RTX 3060 and RTX 4080 graphics cards. DreamShaper Lightning (SDXL) models come recommended for efficiency and speed in image generation.

Anticipation High for SD3: The Stable Diffusion community is actively awaiting the release of Stable Diffusion 3.0, projected to launch in the next 3-5 weeks, with improvements to text rendering expected, though perfect spelling may remain elusive.

Creative AI Unleashed, But Not ‘Unleash’: Members are experimenting with Stable Diffusion to generate art for projects like tabletop RPGs and are considering storytelling through AI-generated visual narratives, possibly in comic or movie formats.

Tech Tips for Troubled Times: Discussions centered on addressing issues such as slow image generation and unwanted text appearance, with participants suggesting optimizations, and mentioning GitHub links as starting points for troubleshooting.

Features Forecast: There’s evident excitement about upcoming features like sparse control net, SegMOE, and audiosparx models, with the community sharing resources and anticipating new possibilities for AI-generated content.

Unsloth AI (Daniel Han) Discord

Cortana 1.0 Chat Model Sparks Curiosity: Engineers discussed creating an AI prompt model named Cortana 1.0, based on the Halo series AI, emphasizing creating effective chat modes and prompt structures for streamlined interaction.

Unsloth Enterprise Capability Clarified: It was clarified that Unsloth Enterprise does indeed support full model training with a speed enhancement of 2-5x over FA2, rather than the expected 30-40x.

AI Optimization Exchange: A set of lively discussions covered diverse optimization topics, including advances in Unsloth AI with a mention of Daniel Han’s Tweet, GitHub resources for accelerating AI inference like ipex-llm, and troubleshooting with AI models, notably the compatibility of SFTTrainer with Gemma models.

Innovative Approach to Asteroid Mining: The Open Asteroid Impact project captured interest with a novel concept of bringing asteroids to Earth to harness resources more effectively.

Groundwork for Full Stack Prospects: Solicitations for a skilled full stack developer within the community were made, and users were encouraged to DM if they could recommend or offer assistance.

Perplexity AI Discord

Reading Between the PDF Lines: Engineers discussed AI models such as Claude and Haiku for interpreting PDFs, with a focus on context windows and Perplexity’s Pro features, especially the “Writing” focus and enabling “Pro” for accuracy. Some users favored Sonar for faster responses.

Ad-talk Sparks User Spat: The possibility of Perplexity introducing ads sparked debate, following statements by Perplexity’s Chief Business Officer on integrating sponsored suggestions. Concerns were raised about the potential impact on the user experience for Pro subscribers, citing a Verge article on the subject.

PDF Roadblocks and Image Generation: While addressing technical issues, users clarified that Perplexity’s mobile apps lack image generation support—an inconvenience tempered by the website’s desktop-like functionality on mobile devices for image generation. Separate discussions pointed to users wanting to lift the 25MB PDF limit for increased efficiency.

Engineers Exchange ‘Supply Links’: Referral programs and discounts became a hot topic, with mentions of savings through supplied links.

API Woes and Workarounds: Within the Perplexity API realm, users grappled with the lack of team support and payment issues for API credits, while also sharing frustrations over rate limits and receiving outdated responses from the sonar-medium-online model. The advice ranged from accurate request logging to refining system prompts for up-to-date news.

Curiosity Drives Deep Dives:

- Users applied AI to explore a range of subjects from Fritz Haber’s life and ethical dilemmas to random forest classifiers and “Zorba the Greek,” hinging on AI’s suitability to satisfy diverse and complex inquiries.

- They leveraged Perplexity to efficiently compile comprehensive data for newsletters, indicating a strong inclination towards utilizing AI for streamlined content creation.

Latent Space Discord

Open Source AI Matches Devin: The SWE-agent presented as an open-source alternative to Devin has shown comparable performance on the SWE-bench, prompting discussions on its potential integrations and applications.

Apple’s AI Research Readiness: A new paper by Apple showcases ReALM, hinting at AI advancements that could eclipse GPT-4’s capabilities, closely integrated with the upcoming iOS 18 for improved Siri interactions.

Conundrum with Claude: Users are experimenting with Claude Opus but finding it challenged by complex tasks, leading to recommendations of the Prompt Engineering Interactive Tutorial for enhanced interactions with the model.

Supercharged Sound with Stable Audio 2.0: StabilityAI has introduced Stable Audio 2.0, pushing the boundaries of AI-generated music with its ability to produce full-length, high-quality tracks.

DALL-E Gets an Edit Button: ChatGPT Plus now includes features that allow users to edit DALL-E generated images and edit conversation prompts, bringing new dimensions of customization and control, detailed on OpenAI’s help page.

DSPy Framework Discussion Heats Up: The LLM Paper Club scrutinized the DSPy framework’s functionality and its advantage in prompt optimization over other frameworks, sparking ideas about its application in diverse projects such as voice API logging apps and a platform for summarizing academic papers.

Nous Research AI Discord

- SWE-agent Rises, Devin Settles: A cutting-edge system named SWE-agent was introduced, claiming to match its predecessor Devin in solving GitHub issues with a remarkable 93-second average processing time, and it’s available open-source on GitHub.

- 80M Model Sparking Skepticism: Engineers discussed an 80M model’s surprising success on out-of-distribution data, prompting speculation about the margin of error and stirring debate about the validity of this performance.

- Chinese Processor Punches Above its Weight: Conversations about AI hardware turned to Intellifusion’s DeepEyes, a Chinese 14nm AI processor offering competitive AI performance at significantly reduced costs, potentially challenging the hardware market (Tom’s Hardware report).

- Tuning Heroes and Model Troubles: The community shared experiences of tuning models, like Lhl’s work with a jamba model and Mvds1’s issue uploading models to Hugging Face due to a metadata snag, pointing out the need for manual adjustments to `SafeTensorsInfo`.

- WorldSim Sparks Community Imagination: Engineers enthusiastically explored features for WorldSim, ranging from text-to-video integration to a community roadmap, discussing technical enhancements and sharing resources like the WorldSim Command Index on Notion. Technical constraints and gamification of WorldSim were among the hot topics, showcasing the community’s drive for innovation and engagement in simulation platforms.

LM Studio Discord

- LM Studio Lacks Embedding Model Support: Users confirmed that LM Studio currently does not support embedding models, emphasizing that embedding functionality is yet to be implemented.

- AI Recommendation Query Gains Popularity: A user’s request for a model capable of providing hentai anime recommendations prompted suggestions to use MyAnimeList (MAL), found at myanimelist.net, coupled with community amusement at the unconventional inquiry.

- Optimized LLM Setup Suspense: Discussions in the hardware channel revealed insights about multi-GPU configurations without SLI for LM Studio, recommended GPUs like Nvidia’s Tesla P40, and concerns regarding future hardware prices due to a major earthquake affecting TSMC.

- API Type Matters for Autogen Integration: Troubleshooting for LM Studio highlighted the importance of specifying the API type to ensure proper functioning with Autogen.

- Cross-Origin Resource Sharing (CORS) for CrewAI: A recommendation to enable CORS as a potential fix was discussed for local model usage issues in LM Studio, with additional guidance provided via a Medium article.

OpenAI Discord

- DALL·E Enters the ChatGPT Realm: Direct in-chat image editing and stylistic inspiration have been introduced for DALL·E images within ChatGPT interfaces, addressing both convenience and creative exploration.

- Bing API Goes Silent: Outages of the Bing API lasting 12 hours stirred up concerns among users, affecting services reliant on it, like DALL-E and Bing Image Creator, signaling a need for robust fallback options.

- Perplexed by Emotion: Lively debate buzzed around whether GPT-like LLMs can authentically simulate emotions, pointing to the lack of intrinsic motivation in AI and invoking comparisons to psychopathy as well as the infamous Eliza effect.

- Manager In A Box: A request for prompts to tackle managerial tasks underscored the AI community’s interest in automating complex leadership roles, although no concrete strategies or solutions emerged from the discussion.

- Translation Puzzles and Markdown Woes: Efforts to craft translation prompts that preserve markdown syntax faced headwinds; inconsistent translations, especially in Arabic, left AI engineers questioning the limits of current language models’ abilities to handle complex formatting and language nuances.

tinygrad (George Hotz) Discord

Saying Goodbye to a Linux GPU Pioneer: John Bridgman’s retirement from AMD sparked discussions on his contributions to Linux drivers, with George Hotz commenting on the state of AMD’s management and future directions. Hotz called for anonymous tips from AMD employees for a possible blog expose, amidst community concerns over AMD’s follow-through on driver issues and open-source promises as highlighted in debates and a Phoronix article.

Linux Kernel and NVIDIA’s Open Move: The discourse extended to implications of varying kernel versions, particularly around Intel’s Xe and i915 drivers, and the transition preferences amongst Linux distributions, with a nod towards moving from Ubuntu 22.04 LTS to 24.04 LTS. Additionally, George Hotz referenced his contribution towards an open NVIDIA driver initiative, stirring conversations about the state of open GPU drivers compared to proprietary ones.

Tinygrad’s Path to V1.0 Involves the Community: Exploration of tinygrad’s beam search heuristic and CommandQueue functionality highlighted George Hotz’s emphasis on the need for improved documentation to aid users in learning and contributing, including a proposed tutorial inspired by “Write Yourself a Scheme in 48 Hours”. This goes hand-in-hand with community contributions, like this command queue tutorial, to polish tinygrad.

Active Member Engagement Strengthens Tinygrad: The community’s initiative in creating learning materials received kudos, with members offering resources and stepping up to live stream their hands-on experiences with tinygrad, fostering a collaborative learning environment. This aligns with the collective goal to reach tinygrad version 1.0, cementing the platform’s position as a tool for education and innovation.

Rethinking Memory Use in AI Models: A technical debate ensued on memory optimization during the forward pass of models, particularly regarding the use of activation functions with inverses, leveraging the inverse function rule. This represents the community’s engagement in not only tooling but also foundational principles to refine processing efficiency in AI computations.
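To make the inverse function rule concrete: if y = f(x) and f is invertible with inverse g, then f'(x) = 1 / g'(y), so the backward pass can be computed from the stored output alone. The sketch below uses tanh purely as an illustrative choice; the discussion did not name a specific activation, and the function names are hypothetical.

```python
import math

def tanh_grad_from_output(y):
    """d tanh(x)/dx recovered from y = tanh(x) alone.

    Inverse function rule: f'(x) = 1 / g'(y), with g = atanh and
    g'(y) = 1 / (1 - y**2), which gives f'(x) = 1 - y**2.
    """
    return 1.0 - y * y

def tanh_input_from_output(y):
    """Recover x by applying the inverse, so x does not need to be stored."""
    return math.atanh(y)

x = 0.7
y = math.tanh(x)                      # forward pass keeps only y
x_recovered = tanh_input_from_output(y)
grad = tanh_grad_from_output(y)

# Central finite difference as an independent check of the gradient
h = 1e-6
numeric = (math.tanh(x + h) - math.tanh(x - h)) / (2 * h)
print(x_recovered, grad, numeric)
```

One caveat with this approach: for saturating activations the inverse is ill-conditioned near the extremes (here, as |y| approaches 1), so recovering the input can lose precision even though the gradient from the output stays well behaved.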

OpenInterpreter Discord

OpenInterpreter Dives into App Development: Development is progressing on an Open Interpreter iPhone app with about 40% completion, driven by community collaboration on GitHub, inspired by Jordan Singer’s Twitter concept.

Making Tech More Accessible: There’s a push in the Open Interpreter community to introduce a Conversational UI layer to aid seniors and the disabled, aiming to significantly streamline their interaction with technology.

Security Measures in a Digital Age: Members are warned to steer clear of potentially hazardous posts from what appears to be an Open Interpreter X account that is suspected of being compromised, in an effort to avert crypto wallet intrusions.

Out-of-the-Box Porting Initiatives: OpenInterpreter is blurring platform lines with a new repo for Android’s Termux installation, work on an M5 Cardputer port, and a discussion about implementing local STT solutions amid cost concerns with GPT-4.

Anticipation for AI Insights: The community shares a zest for in-depth understanding of LLMs, potentially indicating high interest in gaining advanced technical knowledge about AI systems.

Eleuther Discord

- Saturation Alert for Tinystories: The Tinystories dataset is reportedly hitting a saturation point at around 5M parameters, prompting discussions to pivot towards the larger `minipile` dataset despite its greater processing demands.

- Call for AI Competition Teams: There’s a keen interest within the community for EleutherAI to back teams in AI competitions, leveraging models like llema and expertise in RLHF, along with recommendations to set up dedicated channels and pursue compute grants for support.

- Defense Against Language Model Jailbreaking: A recent paper suggests that ambiguity in defining unsafe responses is a key challenge in protecting language models against ‘jailbreak’ attacks, with emphasis placed on the precision of post-processing outputs.

- AI Model Feedback Submission Highlighted: Public comments on AI model policies reveal a preference for open model development, as showcased by EleutherAI’s LaTeX-styled contribution, with discussions revealing both pride and missed opportunities for community engagement.

- LLM Safety Filter Enhancement Suggestion: Conversations around mixing refusal examples into fine-tuning data for LLMs reference @BlancheMinerva’s tweets and relevant research, corroborating the increased focus on robustness in safety filters as noted in an ArXiv paper.

- Chemistry Breakthrough with ChemNLP: The release of the first ChemNLP project paper on ArXiv promises significant implications for AI-driven chemistry, sparking interest and likely discussions on future research avenues.

- Legality Looms over Open Source AI: A deep dive into the implications of California’s SB 1047 for open-source AI projects encourages signing an open letter in protest, indicating the community’s apprehension about the bill’s restrictive consequences on innovation. The detailed critique is accessible here.

- Conundrum between Abstract and Concrete: An offbeat clarification sought on how a “house” falls between a “concrete giraffe” and an “abstract giraffe” was met with a lighthearted digital shrug, indicating the playful yet enigmatic side of community discourse.

- Open Call for Neel Nanda’s MATS Stream: A reminder was shared about the impending deadline (less than 10 days) to apply for Neel Nanda’s MATS stream, with complete details available in this Google Doc.

- Engagement on Multilingual Generative QA: The potential of using Chain of Thought (CoT) to boost multilingual QA tasks is discussed, with datasets like MGSM in the mix and a generated list showcasing tasks incorporating a `generate until` function contributing to the conversation.

- CUDA Quandaries Call for Community Help: A user facing `CUDA error: no kernel image is available for execution on the device` with H100 GPUs, not encountered on A100 GPUs, led to troubleshooting efforts that excluded flash attention as the cause, with further advice suggesting checking the `context_layer` device to resolve the issue.

- Elastic Adventures with PyTorch: Questions about elastic GPU/TPU adjustment during pretraining are met with suggestions of employing PyTorch Elastic, which showcases its ability to adapt to faults and dynamically adjust computational resources, piquing the interest of those looking for scalable training solutions.

HuggingFace Discord

Boost Privacy in Repos: Hugging Face now enables enterprise organizations to set repository visibility to public or private by default, enhancing privacy control. Their tweet has more details.

Publish with a Command: Quarto users can deploy sites on Hugging Face using `quarto publish hugging-face`, as shared in recent Twitter and LinkedIn posts.

Gradio’s New Sleek Features: Gradio introduces automatic deletion of state variables and lazy example caching in the latest 4.25.0 release, detailed in their changelog.

Exploring the CLI Frontier: A shared YouTube video explains how to use Linux commands, containers, Rust, and Groq in the command line interface for developers.

Pushing LLMs to Operative Zen: A user inquires about fine-tuning language models on PDFs with constrained computational resources, with a focus on inference using open-source models. Meanwhile, a discussion unfolds about modifying special tokens in a tokenizer when fine-tuning an LLM.

LangChain AI Discord

Persistent Context Quest in Chat History: Engineers discussed maintaining persistent context in chats, especially when interfacing with databases of ‘question : answer’ pairs, but did not converge on a specific solution. Reference was made to LangChain issues and documentation for potential ways forward.

Video Tutorial For LangServe Playground: An informative video tutorial introducing the Chat Playground feature in LangServe was shared, aimed at easing the initial setup and showcasing its integration with Langsmith.

Voice Commands the Future: Launch of several AI voice apps such as CallStar AI and AllMind AI was announced, suggesting a trend towards voice as the interface for AI interactions. Links were provided for community support on platforms like Product Hunt and Hacker News.

AI Engineering Troubles and Tutorials: A CI issue was reported on a langchain-ai/langserve pull request; and guidance was sought for a NotFoundError when employing LangChain’s ChatOpenAI and ChatPromptTemplate. Meanwhile, novices were directed to a comprehensive LangChain Quick Start Guide.

Galactic API Services Offered and Prompting Proficiency Test: GalaxyAI provided free access to premium AI models, emphasizing API compatibility with Langchain, although the service link was missing. Another initiative, GitGud LangChain, challenged proficient prompters to test a new code transformation tool to uphold code quality.

Modular (Mojo 🔥) Discord

Mojo Mingles with Memory Safety: The integration of the Mojo language into ROS 2 suggests potential benefits for robotics development, enhanced by Mojo’s memory safety practices. A comparison with C++ and Rust reflects the growing interest in performance and safety in robotics environments.

Docker Builds Set Sails: Upcoming Modular 24.3 will include a fix aimed at improving the efficiency of automated docker builds, which has been well-received by the community.

Logger’s Leap to Flexibility: The logger library in Mojo has been updated to accept arbitrary arguments and keyword arguments, allowing for more dynamic logging that accommodates versatile information alongside messages.

Mojo Dicts Demand More Speed: Community engagement on the One Billion Row Challenge revealed that the performance of Dict in Mojo needs enhancement, with efforts and discussions ongoing about implementing a custom, potentially SIMD-based, Dict that could keep pace with solutions like swiss tables.

The Collective Drive for Mojo’s Nightly Improvements: Members expressed a desire for clearer pathways to contribution and troubleshooting for Mojo’s stdlib development with discussions on GitHub clarifying challenges such as parsing errors and behavior of Optional types, indicative of active collaboration to refine Mojo’s offerings.

OpenRouter (Alex Atallah) Discord

- TogetherAI Trips over a Time-Out: Users reported that the NOUSRESEARCH/NOUS-HERMES-2-MIXTRAL model experienced failures, specifically error code 524, which suggests a potential upstream issue with TogetherAI’s API. A fallback model, Nous Capybara 34B, was suggested as an alternative solution.

- Historical Accuracy Test for Chatbots a Mixed Bag: When tasked with identifying Japanese General Isoroku Yamamoto from a historical WW2 context, LLMs such as Claude, Opus, and Haiku exhibited varied levels of accuracy, underscoring the challenge in historical fact handling by current chatbots.

- OpenRouter Hits a 4MB Ceiling: A technical limitation was highlighted in OpenRouter, imposing a 4MB maximum payload size for body content, a constraint confirmed to be without current workarounds.

- Roleplaying Gets an AI Boost: In the realm of AI-assisted roleplaying, Claude 3 Haiku was a focus, with users sharing tactics for optimization including jailbreaking the models and applying few-shot learning to hone their interactions.

- Community Sourcing Prompt Playgrounds: The SillyTavern and Chub’s Discord servers were recommended for those seeking enriched resources for prompts and jailbroken models, pointing to particular techniques like the pancatstack jailbreak.
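As a rough illustration of the 4MB body ceiling mentioned above, a client could measure the encoded request before sending it. This is a minimal sketch, not OpenRouter's own validation logic: it assumes "4MB" means 4 * 1024 * 1024 bytes, and the `payload` shapes and the `example/model` name are hypothetical.

```python
import json

# Assumption: "4MB" is read as 4 * 1024 * 1024 bytes. The discussion does not
# say whether the limit is counted in MB or MiB.
MAX_BODY_BYTES = 4 * 1024 * 1024


def body_size_bytes(payload: dict) -> int:
    """Return the size of the JSON-encoded request body in bytes."""
    return len(json.dumps(payload).encode("utf-8"))


def fits_in_limit(payload: dict, limit: int = MAX_BODY_BYTES) -> bool:
    """True if the encoded body is within the assumed limit."""
    return body_size_bytes(payload) <= limit


# Hypothetical payloads: one short chat message, one ~5 MiB message.
small = {"model": "example/model",
         "messages": [{"role": "user", "content": "hi"}]}
large = {"model": "example/model",
         "messages": [{"role": "user", "content": "x" * (5 * 1024 * 1024)}]}

print(fits_in_limit(small), fits_in_limit(large))
```

Checking the size client-side only avoids a wasted round trip; since no workaround was reported, an oversized body would still need to be trimmed or split by the caller.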

LlamaIndex Discord

RankZephyr Eclipses the Competition: The integration of RankZephyr into advanced Retrieval-Augmented Generation systems is suggested to enhance reranking, with the RankLLM collection recognized for its fine-tuning capabilities.

Enhancing Research Agility with AI Copilots: A webinar summary reveals key strategies in building an AI Browser Copilot, focusing on a prompt engineering pipeline, KNN few-shot examples, and vector retrieval, with more insights available on LlamaIndex’s Twitter.

Timely Data Retrieval Innovations: KDB.AI is said to improve Retrieval-Augmented Generation by incorporating time-sensitive queries for hybrid searching, facilitating a more nuanced search capability critical for contexts like financial reporting, as illustrated in a code snippet.

Intelligent Library Redefines Knowledge Management: A new LLM-powered digital library for professionals and teams is touted to revolutionize knowledge organization with features allowing creation, organization, and annotation in an advanced digital environment, as announced in a LlamaIndex tweet.

Community Dialogues Raise Technical Questions: Discussions in the community include challenges with indexing large PDFs, issues with qDrant not releasing a lock post IngestionPipeline, limitations of the HuggingFace API, model integration using the Ollama class, and documentation gaps in recursive query engines with RAG.

OpenAccess AI Collective (axolotl) Discord

Axolotl Docs Get a Fresh Coat: The Axolotl documentation received an aesthetic update, but a glaring omission of the Table of Contents was swiftly corrected as shown in this GitHub commit, although further cleanup is needed for consistency between headings and the Table of Contents.

Deployment Woes and Wins for Serverless vLLMs: Experiences with Runpod and serverless vLLMs were shared, highlighting challenges along with a resource on how to deploy large language model endpoints.

Data Aggregation Headaches: Efforts to unify several datasets, comprising hundreds of gigabytes, face complications including file alignment. Presently, TSV files and pickle-formatted index data are used for quick seeking amid discussions on more efficient solutions.
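The TSV-plus-pickled-index approach described above can be sketched as follows. This is an illustrative reconstruction under assumptions, not the members' actual code: the file names, the tiny sample data, and the helper names `build_offset_index` and `read_row` are all hypothetical.

```python
import os
import pickle
import tempfile


def build_offset_index(tsv_path: str) -> list:
    """Record the byte offset at which each line of the TSV starts."""
    offsets = []
    with open(tsv_path, "rb") as f:
        while True:
            pos = f.tell()
            line = f.readline()
            if not line:
                break
            offsets.append(pos)
    return offsets


def read_row(tsv_path: str, offsets: list, row: int) -> list:
    """Seek straight to one row instead of scanning the whole file."""
    with open(tsv_path, "rb") as f:
        f.seek(offsets[row])
        return f.readline().decode("utf-8").rstrip("\r\n").split("\t")


# Tiny demo file (hypothetical data) written in binary mode so offsets are exact.
workdir = tempfile.mkdtemp()
tsv_path = os.path.join(workdir, "data.tsv")
index_path = os.path.join(workdir, "data.idx.pkl")

with open(tsv_path, "wb") as f:
    f.write(b"a\t1\nb\t2\nc\t3\n")

# Persist the index once, then reload it for later lookups.
with open(index_path, "wb") as f:
    pickle.dump(build_offset_index(tsv_path), f)

with open(index_path, "rb") as f:
    offsets = pickle.load(f)

row = read_row(tsv_path, offsets, 2)
print(row)
```

The index costs one integer per row, so lookups stay cheap even when the TSV itself is far too large to load into memory.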

Casual AI Model Smackdown: A light-hearted debate compared the preferences of AI models such as ‘qwen mow’ vs ‘jamba’, with the community joking about the need for additional data and resources.

Call for High-Def Data: A community member seeks resources to obtain a collection of 4K and 8K images, indicating a project or research that demands high-resolution image data.

Mozilla AI Discord

- Windows ARM Woes with Llamafile: Compiling llama.cpp for Windows ARM requires source compilation because pre-built support isn’t available. Developers have been directed to use other platforms for building llamafile due to issues with Cosmopolitan’s development environment on Windows, as highlighted in Cosmopolitan issue #1010.

- Mixtral’s Brains Better with Bigger Numbers: Mixtral version `mixtral-8x7b-instruct-v0.1.Q4_0.llamafile` excels at solving math riddles; however, for fact retention without errors, versions like `Q5_K_M` or higher are recommended. For those interested, the specifics can be found on Hugging Face.

- Performance Heft with TinyBLAS: GPU performance when working with llamafile can vastly improve by using a `--tinyblas` flag which provides support without additional SDKs, though results may depend on the GPU model used.

- PEs Can Pack an ARM64 and ARM64EC Punch: Windows on ARM supports the PE format with ARM64X binaries, which combine Arm64 and Arm64EC code, detailed in Microsoft’s Arm64X PE Files documentation. Potential challenge arises due to the unavailability of AVX/AVX2 instruction emulation in ARM64EC, which can impede operations that LLMs typically require.

- References for Further Reading: Articles and resources including the installation guide for the HIP SDK on Windows and details on performance enhancements using Llamafile were shared, such as “Llamafile LLM driver project boosts performance on CPU cores” available on The Register and HIP SDK’s installation documentation available here.

Interconnects (Nathan Lambert) Discord

- Opus Judgement Predicts AI Performance Boost: Discussion highlighted the potential of Opus Judgement to unlock performance improvements in Reinforcement Learning from AI Feedback (RLAIF), with certainty hinging on its accuracy.

- Google’s AI Power Move: Engineers were abuzz about Logan K’s transition to lead Google’s AI Studio, with a surge of speculation about the motives ranging from personal lifestyle to strategic career positioning. The official announcement stirred expectations about the future of the Gemini API under his leadership.

- Logan K Sparks Broader AI Alignment Debate: The move by Logan K sparked conversations regarding AI alignment values versus corporate lures, pondering if the choice was made for more open model sharing at Google or the attractive compensation regardless of personal alignment principles.

- The Air of Mystery in AI Advances: A member noted the ripple effect caused by the GPT-4 technical report’s lack of transparency, marking a trend towards increased secrecy among AI companies and less sharing of model details.

- Access Denied to Financial AI Analysis: Interest in AI’s financial implications was piqued by a Financial Times article discussing Google’s AI search monetization, but restricted access to the Financial Times content limited the discussion among the technical community.

CUDA MODE Discord

- CUDA Crashes into LLM Optimization: The DISTFLASHATTN mechanism claims to achieve linear memory usage during the training of long-context large language models (LLMs), compared to traditional quadratic peak memory usage, allowing for up to 8x longer sequence processing. However, the community noted the absence of pseudocode for the backward pass in the paper, raising concerns about reproducibility.

- Code Talk: For those seeking hands-on CUDA experience, the CUDA MODE YouTube channel and associated GitHub materials were recommended as starting points for beginners transitioning from Python and Rust.

- Memory-Efficient Training Makes Waves: The DISTFLASHATTN paper with its focus on optimizing LLM training is garnering attention, and a member flagged an upcoming detailed review, hinting at further discussion around its memory-efficient training advantages.

- Backward Pass Backlash: A member’s critique regarding the lack of backward pass pseudocode in the DISTFLASHATTN paper echoed a familiar frustration within the community, calling for improved scientific repeatability in attention mechanism research.

- Pointers to Intel Analytics’ Repo: A link to Intel Analytics’ ipex-llm GitHub repository was shared without additional context, possibly suggesting new tools or developments in the LLM field.

AI21 Labs (Jamba) Discord

Token Efficiency Talk: A user highlighted a paper’s finding that throughput efficiency increases when measured per token, where the per-token figure is the ratio of end-to-end throughput (both encoding and decoding) to the total number of tokens.

Speed Debate Heats Up: There’s a divide on how the addition of tokens affects generation speed — while encoding can be done in parallel, the inherent sequential nature of decoding suggests each new token would add to the processing time.

Focus on Encoding Performance: Clarification in the discussion pointed to a graph that plotted the speed of generating a fixed 512 tokens, implying that observed speed improvements in the plot should be attributed to faster encoding rather than decoding.

Decoding: The Sequential Slowdown Dilemma: Queries arose about the possibility of increasing the speed of decoding despite its sequential dependency, which theoretically mandates a waiting period for each token’s predecessor.
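The encoding-versus-decoding argument above can be made concrete with a toy latency model. This is only a sketch under stated assumptions: prompt encoding is treated as a parallel pass with a fixed tokens-per-second rate, decoding as strictly one token at a time, and every number below is hypothetical rather than taken from the paper or the plot.

```python
def end_to_end_tokens_per_second(
    prompt_tokens: int,
    generated_tokens: int,
    prefill_tokens_per_second: float,
    decode_seconds_per_token: float,
) -> float:
    """Total tokens (prompt + generated) divided by total wall-clock time."""
    # Encoding: the whole prompt is processed in parallel at a high rate.
    prefill_time = prompt_tokens / prefill_tokens_per_second
    # Decoding: each new token waits for its predecessor, so time adds up.
    decode_time = generated_tokens * decode_seconds_per_token
    total_tokens = prompt_tokens + generated_tokens
    return total_tokens / (prefill_time + decode_time)


# Hypothetical rates; generation length fixed at 512 tokens as in the plot.
GENERATED = 512
PREFILL_RATE = 5000.0   # prompt tokens per second (assumed)
DECODE_COST = 0.02      # seconds per generated token (assumed)

short_ctx = end_to_end_tokens_per_second(1024, GENERATED, PREFILL_RATE, DECODE_COST)
long_ctx = end_to_end_tokens_per_second(8192, GENERATED, PREFILL_RATE, DECODE_COST)

print(f"1k prompt: {short_ctx:.1f} tok/s, 8k prompt: {long_ctx:.1f} tok/s")
```

In this model the per-token figure rises with context length even though decoding never gets faster, which matches the reading that the plotted gains come from encoding.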

Skunkworks AI Discord

- Eager Pythonista Joins the Fray: An eager new contributor with a background in Python, software engineering, and a Master’s in data science is looking to join the team and contribute to the onboarding process, bringing expertise from AI medical research and data pipeline construction.

- GPT-4 Stumped by Math Without Context: Even advanced AIs like GPT-4 and Claude can stumble on solving equations unless the problems are posed with clarity in natural language, indicating there’s room for improvement in the current state of AI models.

Alignment Lab AI Discord

It seems there is not enough context to generate a summary. Please provide more information or discussions across the channels within the Discord guild to output a meaningful summary.

Datasette - LLM (@SimonW) Discord

- Clarification on Dialogue Data: An AI engineer clarified the terminology used within conversation logs, referencing the `responses` table in `logs.db`. The term “speaker turn” or simply “turn” was proposed for the initial part of a conversation, resulting in the renaming of their app’s table to `turns`.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

LAION ▷ #general (699 messages🔥🔥🔥):

- Troubleshooting Difficulties with Optimizer Implementations: Members engaged in a technical discussion about issues they experienced with the use of `torch.compile` and `add_stochastic_functions`, noting compatibility problems across different devices like NVIDIA, AMD, and MPS. A potential solution involving the creation of a Python package for bf16 optim was discussed, alongside possible modifications to prevent dtype conflict errors during operations.

- Skepticism Over SD3 Efficiency Improvements: Doubts were cast regarding claims about the efficiency improvements in SD3 Turbo after a member was banned from a server for questioning the training on limited tokens and the long-term viability of the approach. There were also suggestions that reliance on tools like CLIP may introduce artifacts hindering comprehensive learning.

- Legal Risks for AI-Generated Music: A conversation about AI music platform Suno highlighted potential copyright infringement issues, where concerns were raised that record labels’ powerful legal teams could pose serious challenges if Suno trained on copyrighted music. Users discussed the complexities of proving infringement in court.

- MPS Limitations and Crashes at High Memory Utilization: It was pointed out that Apple’s MPS framework would crash when more than 2^32 bytes of data were allocated during training, despite having sufficient memory, indicating a possible internal limitation. Practical workarounds such as attention slicing were also mentioned, though they may lead to other issues like NaN during the backward pass.

- Recommendations for Model Fine-Tuning and Scheduler Choice: There were debates over how to properly implement CLIP in conjunction with other models like T5 for better performance, with one member supporting the eventual exclusion of CLIP in favor of purely T5 based models to avoid long-term issues. Further discussions touched on inconsistencies and misinformation spread within the community regarding sampler efficiency and ideal sampling numbers.
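To put the 2^32-byte figure from the MPS discussion in perspective (it is 4 GiB), the arithmetic below estimates when a single tensor would cross it. This is a back-of-the-envelope sketch: it assumes the limit applies to one tensor buffer, which the discussion does not confirm, and the shapes are hypothetical attention-score tensors.

```python
import math

# 2^32 bytes = 4 GiB, the threshold reported in the discussion.
LIMIT_BYTES = 2 ** 32

# Bytes per element for a few common dtypes.
BYTES_PER_ELEMENT = {"float32": 4, "float16": 2, "bfloat16": 2}


def tensor_bytes(shape, dtype: str) -> int:
    """Size in bytes of a dense tensor with the given shape and dtype."""
    return math.prod(shape) * BYTES_PER_ELEMENT[dtype]


def exceeds_limit(shape, dtype: str) -> bool:
    """True if one tensor alone is larger than 2^32 bytes (assumed scope)."""
    return tensor_bytes(shape, dtype) > LIMIT_BYTES


# Hypothetical attention scores: (batch, heads, seq_len, seq_len).
full_attention = (8, 16, 8192, 8192)
# Slicing over heads, as attention slicing does, shrinks the largest buffer.
sliced_attention = (8, 1, 8192, 8192)

print(exceeds_limit(full_attention, "float16"))
print(exceeds_limit(sliced_attention, "float16"))
```

This is one way to see why attention slicing was suggested as a workaround: it trades one very large intermediate for several smaller ones.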

Links mentioned:

- ‘Lavender’: The AI machine directing Israel’s bombing spree in Gaza: The Israeli army has marked tens of thousands of Gazans as suspects for assassination, using an AI targeting system with little human oversight and a permissive policy for casualties, +972 and Local C...

- Reddit - Dive into anything: no description found

- Ian Malcolm GIF - Ian Malcolm Jurassic - Discover & Share GIFs: Click to view the GIF

- RuntimeError: required rank 4 tensor to use channels_last format: My transformer training loop seems to work correctly when I train it on the CPU, but when I switch to MPS, I get the below error when computing loss.backward() for Cross Entropy loss. I am doing machi...

- Measuring Style Similarity in Diffusion Models: Generative models are now widely used by graphic designers and artists. Prior works have shown that these models remember and often replicate content from their training data during generation. Hence ...

- Galileo: no description found

- Suno is a music AI company aiming to generate $120 billion per year. But is it trained on copyrighted recordings? - Music Business Worldwide: Ed Newton-Rex discovers that Suno produces music with a striking resemblance to classic copyrights…

- Axis of Awesome - 4 Four Chord Song (with song titles): Australian comedy group 'Axis Of Awesome' perform a sketch from the 2009 Melbourne International Comedy Festival. Footage courtesy of Network Ten Australia. ...

- Issues · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - Issues · pytorch/pytorch

- Issues · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - Issues · pytorch/pytorch

- OneTrainer/modules/util/optimizer/adafactor_extensions.py at 9a35e7f8596988f672af668f474f8d489ff8f962 · Nerogar/OneTrainer: OneTrainer is a one-stop solution for all your stable diffusion training needs. - Nerogar/OneTrainer

- [mps] training / inference dtype issues · Issue #7563 · huggingface/diffusers: when training on Diffusers without attention slicing, we see: /AppleInternal/Library/BuildRoots/ce725a5f-c761-11ee-a4ec-b6ef2fd8d87b/Library/Caches/com.apple.xbs/Sources/MetalPerformanceShaders/MPS...

- GitHub - steffen74/ConstitutionalAiTuning: A Python library for fine-tuning LLMs with self-defined ethical or contextual alignment, leveraging constitutional AI principles as proposed by Anthropic. Streamlines the process of prompt generation, model interaction, and fine-tuning for more responsible AI development.: A Python library for fine-tuning LLMs with self-defined ethical or contextual alignment, leveraging constitutional AI principles as proposed by Anthropic. Streamlines the process of prompt generati...

- 7529 do not disable autocast for cuda devices by bghira · Pull Request #7530 · huggingface/diffusers: What does this PR do? Fixes #7529 Before submitting This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case). Did you read the contributor guideline? D...

LAION ▷ #research (11 messages🔥):

- Scaling vs. Sampling Efficiency Analyzed: An empirical study highlighted in this article explores the influence of model size on the sampling efficiency of latent diffusion models (LDMs). Contrary to expectations, it was found that smaller models often outperform larger ones when under the same inference budget.

- In Search of Scalable Crawling Techniques: A member inquired about research into scalable crawling methods that could assist in building datasets for model training. However, no specific groups or resources were referenced in the response.

- Mystery of Making $50K Revealed: A humorous exchange involved a link to a Discord mod ban GIF and a guess that the secret to making $50K in 72 hours could involve being a drug mule, referencing an MLM-related meme.

- Teasing a New Optimizer on Twitter: There’s anticipation for a new optimizer discussed on Twitter, promising potential advancements in the field.

- Visual Enhancements through Specificity: Discussing an arXiv paper, it was mentioned that fine-tuning text-to-image (t2i) models with captions that include better spatial descriptions can lead to images with improved spatial consistency.

Links mentioned:

- Bigger is not Always Better: Scaling Properties of Latent Diffusion Models: We study the scaling properties of latent diffusion models (LDMs) with an emphasis on their sampling efficiency. While improved network architecture and inference algorithms have shown to effectively ...

- Discord Mod Moderation Ban GIF - Discord mod Moderation ban Mod ban - Discover & Share GIFs: Click to view the GIF

LAION ▷ #learning-ml (1 messages):

- LangChain’s Harrison Chase to Illuminate LLM Challenges: Attendees are invited to an exclusive event with Harrison Chase, co-founder and CEO of LangChain. He will discuss the challenges companies face when moving from prototype to production and how LangSmith helps overcome these hurdles, providing insights during a meetup organized for April 17th at 18:30 @Online. Register here.

- Insider Access to LLM Framework Trends with LangChain: The co-founder of LangChain, Harrison Chase, will share his expertise on using LLMs (Large Language Models) for developing context-aware reasoning applications. This talk will address the challenges encountered by companies and the solutions implemented, as part of the third LangChain and LLM France Meetup.

Link mentioned: Meetup #3 LangChain and LLM: Using LangSmith to go from prototype to production, Wed, Apr 17, 2024, 18:30 | Meetup: We are pleased to welcome Harrison Chase, Co-Founder and CEO of LangChain, for our third LangChain and LLM France Meetup! Don’t miss this opportunity...

Stability.ai (Stable Diffusion) ▷ #general-chat (568 messages🔥🔥🔥):

- Stable Diffusion Secrets Revealed: Members are discussing the performance of various versions of Stable Diffusion. Forge is highlighted as the fastest UI right now, and there’s a lot of love for models like DreamShaper Lightning (SDXL). Users with graphics cards like the RTX 3060 and RTX 4080 noted significant speed improvements when using Forge compared to A1111, with image generation times dropping significantly.

- Anticipation Builds for SD3: The community is eagerly waiting for the release of Stable Diffusion 3.0, with estimated arrival times ranging between 3-5 weeks. However, it was noted that while SD3 will improve text rendering, it might still not achieve perfect spelling due to its limitations and model size.

- Harnessing SD for Creative Projects: Users are exploring the use of Stable Diffusion for various creative endeavors such as generating art for tabletop RPGs or contemplating storytelling through images, potentially in comic or movie formats.

- Technical Tackles and Tips: A conversation around potential issues faced while generating images, such as slow speeds or text from one prompt appearing in another, led to suggestions on utilizing specific Stable Diffusion optimizations and trying out alternative interfaces, such as Forge.

- New Models and Features on the Horizon: Excitement is also buzzing around the community for the new features like sparse control net, SegMOE, and audiosparx model, shared alongside helpful GitHub links and tips on better leveraging AI-generated content.

Links mentioned:

- Leonardo.Ai: Create production-quality visual assets for your projects with unprecedented quality, speed and style-consistency.

- Anime Help GIF - Anime Help Tears - Discover & Share GIFs: Click to view the GIF

- Remix: Create, share, and remix AI images and video.

- BFloat16: The secret to high performance on Cloud TPUs | Google Cloud Blog: How the high performance of Google Cloud TPUs is driven by Brain Floating Point Format, or bfloat16

- Optimizations: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- ICBINP XL - v4 | Stable Diffusion Checkpoint | Civitai: If you do like this work, consider buying me a coffee :) Use this model for free on Stable Horde The long awaited followup to ICBINP, this model is...

- Survey Form - 5day.io: As a young professional just a few years into the workforce, there is a constant, low-humming anxiety about proving yourself and finding that mythical work-life balance everyone talks about. Sometimes...

- Stable Radio 24/7: Stable Radio, a 24/7 live stream that features tracks exclusively generated by Stable Audio.Explore the model and start creating for free on stableaudio.com

- Cool Fun GIF - Cool Fun White cat - Discover & Share GIFs: Click to view the GIF

- sd-webui-animatediff/docs/features.md at master · continue-revolution/sd-webui-animatediff: AnimateDiff for AUTOMATIC1111 Stable Diffusion WebUI - continue-revolution/sd-webui-animatediff

- GitHub - princeton-nlp/SWE-agent: SWE-agent: Agent Computer Interfaces Enable Software Engineering Language Models: SWE-agent: Agent Computer Interfaces Enable Software Engineering Language Models - princeton-nlp/SWE-agent

- Reddit - Dive into anything: no description found

- GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

- GitHub - kijai/ComfyUI-DiffusionLight: Using DiffusionLight in ComfyUI: Using DiffusionLight in ComfyUI. Contribute to kijai/ComfyUI-DiffusionLight development by creating an account on GitHub.

- GitHub - ZHO-ZHO-ZHO/ComfyUI-SegMoE: Unofficial implementation of SegMoE for ComfyUI: Unofficial implementation of SegMoE for ComfyUI. Contribute to ZHO-ZHO-ZHO/ComfyUI-SegMoE development by creating an account on GitHub.

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #general (241 messages🔥🔥):

- Request for Full Stack Developer Recommendations: A member sought recommendations for good full stack developers, inviting direct messages from anyone able to assist.

- Inquiry About Unsloth Enterprise Model Training: A question was raised regarding whether Unsloth Enterprise supports full model training; the response clarified that it does, but the speedup would be 2-5x over FA2, rather than 30-40x.

- Discussion on Prompt Formats and Implementations: Members discussed custom AI models and prompt formats, with specific references to creating a model called Cortana 1.0, modeled after the AI in the Halo video games. Concerns were raised about finding suitable models for chat mode and utilizing correct prompt structures for efficient operation.

- Updates and Achievements Shared in AI Development: They shared Daniel Han’s tweet reflecting on the potential of AI over a few months, given the short development time so far. Benchmarks for Unsloth AI were also discussed, including a 12.29% performance on the SWE Bench by their ‘Ye’ model.

- Concerns and Optimizations for AI Performance: Various members inquired about optimizations and support for different AI models and platforms. For instance, discussions revolved around the support for Galore within Unsloth, the possible open-sourcing of GPT models, and efforts to accelerate local LLM inference and fine-tuning on Intel CPUs and GPUs. An exchange with links to GitHub highlighted resources for accelerating AI inference on specific hardware. There was also a discussion about potential performance improvements and updates coming soon from the Unsloth team.

Links mentioned:

- Google Colaboratory: no description found

- Am Ia Joke To You Is This A Joke GIF - Am IA Joke To You Am IA Joke Is This A Joke - Discover & Share GIFs: Click to view the GIF

- I Aint No Fool Wiz Khalifa GIF - I Aint No Fool Wiz Khalifa Still Wiz Song - Discover & Share GIFs: Click to view the GIF

- jondurbin/airoboros-gpt-3.5-turbo-100k-7b · Hugging Face: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - intel/neural-speed: An innovative library for efficient LLM inference via low-bit quantization: An innovative library for efficient LLM inference via low-bit quantization - intel/neural-speed

- GitHub - intel-analytics/ipex-llm: Accelerate local LLM inference and finetuning (LLaMA, Mistral, ChatGLM, Qwen, Baichuan, Mixtral, Gemma, etc.) on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max). A PyTorch LLM library that seamlessly integrates with llama.cpp, HuggingFace, LangChain, LlamaIndex, DeepSpeed, vLLM, FastChat, ModelScope, etc.: Accelerate local LLM inference and finetuning (LLaMA, Mistral, ChatGLM, Qwen, Baichuan, Mixtral, Gemma, etc.) on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)...

- sloth/sftune.py at master · toranb/sloth: python sftune, qmerge and dpo scripts with unsloth - toranb/sloth

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #random (12 messages🔥):

- Asteroid Mining Company with a Twist: The Open Asteroid Impact initiative is a unique approach to asteroid mining that proposes slinging asteroids to Earth instead of mining in space. The link provided displays their logo and underscores their aim to prioritize safety and efficiency in resource acquisition from space.

- Praise for Unsloth’s Website Design: A member complimented the website design for Unsloth, noting the attractiveness of the site.

- Creativity on a Budget: The Unsloth website’s sloth images were designed with Bing DALL-E due to budget constraints. The designer also expressed intentions to eventually commission 3D artists for a consistent mascot.

- Design Consistency Through Hard Work: Responding to an inquiry about the uniformity of design, the Unsloth website designer mentioned generating hundreds of sloth images and refining them manually in Photoshop.

- Bing DALL-E Over Hugging Face for Speed: The designer chose Bing DALL-E over Hugging Face’s DALL-E for image generation because it could generate multiple images quickly and they had credits available.

Link mentioned: Open Asteroid Impact: no description found

Unsloth AI (Daniel Han) ▷ #help (278 messages🔥🔥):

- Evaluation During Training Explained: Members discussed why evaluation datasets are not added by default during fine-tuning: adding them slows down the process. The training loss is calculated using cross-entropy loss, and evaluation loss uses the same metric.

- Pack Smart with SFTTrainer: When using `SFTTrainer`, members shared how to configure and optimize training, including the use of `packing` and avoiding using it with Gemma models, as it can lead to problems.

- Dealing with Dataset Size Challenges: Users troubleshot issues related to OOM errors and dataset size, including a discussion on the use of streaming datasets for large volumes and the challenges with tools like PyArrow when handling very large amounts of data.

- GGUF Conversion Confusion: A member faced issues converting a model into GGUF format and debated the appropriate approach, discussing the possible need for manual architecture adjustments in conversion scripts.

- Inference Troubles and Unsloth Updates: There was a case of a GemmaForCausalLM object causing an attribute error, which was fixed after the Unsloth library was updated and reinstalled. A member mentioned that using 16-bit model inference led to OOM errors, and someone had an issue with Python.h missing during the setup of a finetuning environment.
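For readers unfamiliar with what packing means in the SFTTrainer discussion above, the general idea can be sketched in plain Python. This is a simplified illustration and not the trainer's actual implementation: the helper name `pack_sequences`, the token ids, and the choice to drop the trailing partial block are all assumptions made for the example.

```python
def pack_sequences(tokenized, block_size: int, eos_id: int):
    """Concatenate tokenized examples and cut the stream into fixed-size blocks.

    Each example is followed by an end-of-sequence token so that boundaries
    survive concatenation. Any leftover tokens that do not fill a whole block
    are dropped here for simplicity (an assumption of this sketch).
    """
    stream = []
    for seq in tokenized:
        stream.extend(seq)
        stream.append(eos_id)

    n_full = len(stream) // block_size
    return [stream[i * block_size:(i + 1) * block_size] for i in range(n_full)]


# Hypothetical token ids for three short examples; 0 stands in for EOS.
examples = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
blocks = pack_sequences(examples, block_size=4, eos_id=0)

print(blocks)
```

Packing removes padding waste, but a block can contain the tail of one example and the head of the next, which is one plausible reason it can interact badly with particular models or chat templates.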

Links mentioned:

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- qwp4w3hyb/deepseek-coder-7b-instruct-v1.5-iMat-GGUF · Hugging Face: no description found

- Adding accuracy, precision, recall and f1 score metrics during training: hi, you can define your computing metric function and pass it into the trainer. Here is an example of computing metrics. define accuracy metrics function from sklearn.metrics import accuracy_score, ...

- danielhanchen/model_21032024 · Hugging Face: no description found

- Hugging Face Transformers | Weights & Biases Documentation: The Hugging Face Transformers library makes state-of-the-art NLP models like BERT and training techniques like mixed precision and gradient checkpointing easy to use. The W&B integration adds rich...

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Supervised Fine-tuning Trainer: no description found

- Qwen/Qwen1.5-14B-Chat-GPTQ-Int4 · Hugging Face: no description found

- deepseek-ai/deepseek-vl-7b-chat · Hugging Face: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- TinyLlama/TinyLlama-1.1B-Chat-v1.0 · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- TheBloke/deepseek-coder-6.7B-instruct-GGUF · Hugging Face: no description found

Perplexity AI ▷ #general (469 messages🔥🔥🔥):

- Discussions on Pro Models and Usage: Users exchanged insights on using different AI models, such as Claude and Haiku, for reading and interpreting PDFs. They debated the advantages of Perplexity’s Pro features and models’ context windows, with suggestions to use “Writing” focus for detailed responses and enable “Pro” for more concise and accurate answers. Some suggested using Sonar for speedier responses.

- Ads Coming to Perplexity?: There was a significant concern over reports of Perplexity planning to introduce ads. Users referenced statements from Perplexity’s Chief Business Officer about the potential of sponsored suggested questions, with some expressing disappointment and hoping the ad integration would not affect the Pro user experience.

- Image Generation Queries and Accessibility: Users asked about generating images on desktop and mobile, with a response confirming that while the mobile apps do not support image generation, the website does on mobile devices.

- Referral Links and Discounts: Users shared referral links for Perplexity.ai, mentioning the availability of $10 discounts through these links.

- Technical Support and Feature Requests: Users inquired about technical issues like API limits and slow response times, as well as feature updates like lifting the 25MB PDF limit. There was a recommendation to use Sonar for speed and some discussions on whether Perplexity has lifted certain restrictions.

Links mentioned:

- GroqChat: no description found

- no title found: no description found

- Perplexity will try a form of ads on its AI search platform.: Perplexity’s chief business officer Dmitry Shevelenko tells Adweek the company is considering adding sponsored suggested questions to its platform. If users continue to search for more information on ...

- Apple reveals ReALM — new AI model could make Siri way faster and smarter: ReALM could be part of Siri 2.0

- Gen-AI Search Engine Perplexity Has a Plan to Sell Ads: no description found

- When Server Down Iceeramen GIF - When Server Down Iceeramen Monkey - Discover & Share GIFs: Click to view the GIF

- Tweet from Aravind Srinivas (@AravSrinivas): good vibes are essential

- Tweet from Aravind Srinivas (@AravSrinivas): Can’t wait.

- Tweet from Phi Hoang (@apostraphi): Merch drop this month. In collaboration with @Smith_Diction for @perplexity_ai.

- Reddit - Dive into anything: no description found

- Getting Started with pplx-api: no description found

- AI Search Engine Perplexity Could Soon Show Ads to Users: Report: As per the report, Perplexity will show ads in its related questions section.

- Perplexity, an AI Startup Attempting To Challenge Google, Plans To Sell Ads - Slashdot: An anonymous reader shares a report: Generative AI search engine Perplexity, which claims to be a Google competitor and recently snagged a $73.6 million Series B funding from investors like Jeff Bezos...

- Quora - A place to share knowledge and better understand the world: no description found

- search results: no description found

- Codecademy Forums: Community discussion forums for Codecademy.

- Start a Developer Blog: Hashnode - Custom Domain, Sub-path, Hosted/Headless CMS.: Developer blogging with custom domains, hosted/headless CMS options. Our new headless CMS streamlines content management for devtool companies.

Perplexity AI ▷ #sharing (23 messages🔥):

- Tailored Article Magic: A member discovered they can create articles highly customized to their interests, highlighting the ability to hone in on specific topics using Perplexity.

- Efficient Research for Newsletters: Perplexity helped a user swiftly gather accurate information, which significantly sped up the creation of a “welcome gift” for their newsletter subscribers.

- A Noble Examination of Fritz Haber: Utilizing the Perplexity search, a member delved into the life of Fritz Haber, revealing his pivotal contribution to food production with the Haber-Bosch process, his complex history with chemical warfare, and his moral stance against the Nazi regime. The nuances include his Nobel Prize-winning achievement and the unfortunate family and historical circumstances surrounding him.

- Curiosity Fueled Learning: Users are engaging with Perplexity to feed their curiosity on diverse topics ranging from convolutions in machine learning to Zorba the Greek, showcasing the platform’s versatility in addressing various inquiries.

- Conceptual Clarity on Random Forest: Multiple members sought to understand the random forest classifier, indicating a shared interest in machine learning algorithms within the community.

Perplexity AI ▷ #pplx-api (24 messages🔥):

- No Team Sign-Up for Perplexity API: A user inquired about signing up for the Perplexity API with a team plan, but it was confirmed that team sign-ups are currently unavailable.

- Rate Limits Confusion: A member was confused about rate limits when using the sonar-medium-online model. Despite staying within the limit of 20 requests per minute, they still encountered 429 errors; it was suggested to log requests with timestamps to check that the rate limits are being enforced correctly.

- Trouble with Temporally Accurate Results: A user reported inaccurate results when asking for the day’s top tech news using the sonar-medium-online model, receiving outdated information. It was recommended to include “Ensure responses are aligned with the Current date.” in the system prompt to help guide the model’s results.

- Clarifying the Perplexity API’s Functionality: A clarification was sought on how the Perplexity API works. Points include generating an API key, sending the key as a bearer token in requests, and managing the credit balance with possible automatic top-ups.

- Payment Pending Issues for API Credits: A member raised a problem with buying API credits: the purchase shows a “Pending” status and the account is never updated. A staff member asked for account details to check the issue on the backend.
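The rate-limit and system-prompt suggestions above can be sketched together in a few lines. This is a minimal, hedged illustration rather than Perplexity's own client: `SlidingWindowLimiter` and `build_request` are hypothetical names, the chat-style `messages` payload is an assumption about the request shape, and appending today's date to the recommended system prompt is an extra step not mentioned in the discussion. Nothing is sent over the network.

```python
import json
import time
from collections import deque
from datetime import datetime, timezone


class SlidingWindowLimiter:
    """Client-side limiter: at most `max_calls` requests per `period` seconds."""

    def __init__(self, max_calls=20, period=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock      # injectable so the limiter can be tested
        self.sleep = sleep
        self.sent = deque()     # monotonic timestamps of recent requests
        self.log = []           # (UTC ISO timestamp, seconds waited) per request

    def _drop_expired(self, now):
        # Forget requests that have left the sliding window.
        while self.sent and now - self.sent[0] >= self.period:
            self.sent.popleft()

    def acquire(self):
        """Block until a request may be sent; return the seconds waited."""
        now = self.clock()
        self._drop_expired(now)
        waited = 0.0
        if len(self.sent) >= self.max_calls:
            # Wait until the oldest request in the window expires.
            waited = self.period - (now - self.sent[0])
            self.sleep(waited)
            now = self.clock()
            self._drop_expired(now)
        self.sent.append(now)
        # Timestamped log, useful when a 429 still comes back.
        self.log.append((datetime.now(timezone.utc).isoformat(), waited))
        return waited


def build_request(api_key, question, model="sonar-medium-online"):
    """Build headers and a JSON body; the payload shape is an assumption."""
    today = datetime.now(timezone.utc).date().isoformat()
    headers = {
        "Authorization": f"Bearer {api_key}",  # API key sent as a bearer token
        "Content-Type": "application/json",
    }
    system = ("Ensure responses are aligned with the Current date. "
              f"Today is {today}.")  # explicit date is our own addition
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
    }
    return headers, json.dumps(payload)
```

Calling `limiter.acquire()` before each request and comparing `limiter.log` against the times at which 429 responses arrive should show whether the client really stayed under 20 requests per minute, or whether the server is counting differently.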

Latent Space ▷ #ai-general-chat (76 messages🔥🔥):

- Open Source SWE-agent Rivals Devin: A new system called SWE-agent has been introduced. It reports similar accuracy to Devin on SWE-bench and has the distinguishing feature of being open source.

- Apple Research Hints at AI Leapfrogging GPT-4: An Apple research paper discusses a system named ReALM, suggesting capabilities that surpass ChatGPT 4.0, in sync with iOS 18 developments for Siri.

- Claude Opus’s Performance Dilemma: Conversations report a notable performance gap between Claude Opus and GPT-4, with Opus struggling in certain tasks such as the “needle-in-a-haystack” test. There’s mention of a Prompt Engineering Interactive Tutorial to improve results with Claude.

- Stable Audio 2.0 Launches: StabilityAI announces Stable Audio 2.0, an AI capable of generating high-quality, full-length music tracks, stepping up the game in audio AI capabilities.

- ChatGPT Plus Enhancements: ChatGPT Plus now allows users to edit DALL-E images from the web or app, and a recent iOS update includes an option to edit conversation prompts. Detailed instructions are available on OpenAI’s help page.

Links mentioned:

- Replit: Replit Developer Day Livestream

- Tweet from Logan Kilpatrick (@OfficialLoganK): Excited to share I’ve joined @Google to lead product for AI Studio and support the Gemini API. Lots of hard work ahead, but we are going to make Google the best home for developers building with AI. ...

- Apple AI researchers boast useful on-device model that ‘substantially outperforms’ GPT-4 - 9to5Mac: Siri has recently been attempting to describe images received in Messages when using CarPlay or the announce notifications feature. In...

- Tweet from Sully (@SullyOmarr): I use cursor as my ide, and Claude seems significantly worse with the api Half finished code, bad logic, horrible coding style But it works perfectly on their site Anyone else experience this?

- Tweet from Zack Witten (@zswitten): I've been dying to shill this harder for six months, and now that Anthropic API is GA, I finally can... The Prompt Engineering Interactive Tutorial! https://docs.google.com/spreadsheets/d/19jzLgR...

- Replit — Building LLMs for Code Repair: Introduction At Replit, we are rethinking the developer experience with AI as a first-class citizen of the development environment. Towards this vision, we are tightly integrating AI tools with our I...

- Tweet from Greg Kamradt (@GregKamradt): Claude 2.1 (200K Tokens) - Pressure Testing Long Context Recall We all love increasing context lengths - but what's performance like? Anthropic reached out with early access to Claude 2.1 so I r...

- Tweet from Anthropic (@AnthropicAI): Claude 2.1’s 200K token context window is powerful, but requires careful prompting to use effectively. Learn how to get Claude to recall an individual sentence across long documents with high fid...

- Tweet from John Yang (@jyangballin): SWE-agent is our new system for autonomously solving issues in GitHub repos. It gets similar accuracy to Devin on SWE-bench, takes 93 seconds on avg + it's open source! We designed a new agent-co...

- Tweet from Ofir Press (@OfirPress): People are asking us how Claude 3 does with SWE-agent- not well. On SWE-bench Lite (a 10% subset of the test set) it gets almost 6% less (absolute) than GPT-4. It's also much slower. We'll...

- Tweet from John David Pressman (@jd_pressman): "Many Shot Jailbreaking" is the most embarrassing publication from a major lab I've seen in a while, and I'm including OpenAI's superalignment post in that. ↘️ Quoting lumpen spac...

- Tweet from Stability AI (@StabilityAI): Introducing Stable Audio 2.0 – a new model capable of producing high-quality, full tracks with coherent musical structure up to three minutes long at 44.1 kHz stereo from a single prompt. Explore the...

- Tweet from Teortaxes▶️ (@teortaxesTex): Opus is an immensely strong model, a poet and a godsend to @repligate. It's also subpar in factuality (makes stuff up OR doesn't know it) and instruction-following; GPT-4, even Mistrals may do...

- Tweet from Gustavo Cid (@_cgustavo): I used to beg LLMs for structured outputs. Most of the time, they understood the job and returned valid JSONs. However, around ~5% of the time, they didn't, and I had to write glue code to avoid...

- Should kids still learn to code? (Practical AI #263) — Changelog Master Feed — Overcast: no description found

- Structured Outputs with DSPy: Unfortunately, Large Language Models will not consistently follow the instructions that you give them. This is a massive problem when you are building AI sys...

- Tweet from Blaze (Balázs Galambosi) (@gblazex): Wow. While OpenAI API is still stuck on Whisper-2, @AssemblyAI releases something that beats even Wishper-3: + 13.5% more accurate than Whisper-3 + Up to 30% fewer hallucinations + 38s to process 60...

- Prof. Geoffrey Hinton - "Will digital intelligence replace biological intelligence?" Romanes Lecture: Professor Geoffrey Hinton, CC, FRS, FRSC, the ‘Godfather of AI’, delivered Oxford's annual Romanes Lecture at the Sheldonian Theatre on Monday, 19 February 2...

Latent Space ▷ #llm-paper-club-west (356 messages🔥🔥):

- DSPy Takes Center Stage: LLM Paper Club discussed the DSPy framework, comparing its utility to that of LangChain and LlamaIndex. The emphasis was on its ability to optimize prompts for different large language models (LLMs) and to migrate between models easily, a capability underscored in DSPy’s arXiv paper.

- Devin’s Debut Draws Discussion: The concept of Devin, an AI with thousands of dollars of OpenAI credit backing it for demos, was mentioned, generating excitement and anticipation for its potential demonstration uses.

- Exploring DSPy’s Depth: Questions around DSPy’s operation and execution were posed, including whether it can compile to smaller models, rate limit calls to avoid OpenAI API saturation, and save optimization outcomes to disk using the `.save` function.

- Prompt Optimization Potential: There was interest in DSPy’s ability to optimize a single metric and whether multiple metrics could be combined into a composite score for optimization purposes. The discussion points highlighted DSPy’s teleprompter/optimizer functionality, which does not require the metric to be differentiable.

- Practical Applications Proposed: Club members proposed various practical applications for the LLMs, including an iOS app for logging voice API conversations, a front-end platform for summarizing arXiv papers based on URLs, a DSPy pipeline for PII detection, and rewriting of DSPy’s documentation.
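The composite-score question can be illustrated without DSPy itself. The sketch below assumes the `metric(example, pred, trace=None)` calling convention that DSPy metrics follow; `exact_match`, `brevity`, `make_composite`, and the weights are hypothetical choices for illustration. Since the optimizer only needs a number back, the component metrics can be ordinary non-differentiable Python.

```python
from types import SimpleNamespace


def exact_match(example, pred, trace=None):
    """1.0 if the predicted answer matches the gold answer, else 0.0."""
    return float(example.answer.strip().lower() == pred.answer.strip().lower())


def brevity(example, pred, trace=None, max_words=20):
    """1.0 if the prediction stays within a word budget, else 0.0."""
    return float(len(pred.answer.split()) <= max_words)


def make_composite(weighted_metrics):
    """Combine (metric, weight) pairs into one weighted-average metric."""
    total = sum(weight for _, weight in weighted_metrics)

    def composite(example, pred, trace=None):
        score = sum(weight * metric(example, pred, trace)
                    for metric, weight in weighted_metrics)
        return score / total

    return composite


# Correctness counts three times as much as brevity (arbitrary weights).
metric = make_composite([(exact_match, 3), (brevity, 1)])

# Stand-ins for a labeled example and two model predictions.
example = SimpleNamespace(answer="Paris")
good = SimpleNamespace(answer="paris")
wrong = SimpleNamespace(answer="Lyon")

print(metric(example, good))   # 1.0
print(metric(example, wrong))  # 0.25
```

A function like `metric` would then be handed to an optimizer in place of a single-purpose metric; how the weights should be chosen is a judgment call that the discussion left open.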

Links mentioned:

- Join Slido: Enter #code to vote and ask questions: Participate in a live poll, quiz or Q&A. No login required.

- Google Colaboratory: no description found

- DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines: The ML community is rapidly exploring techniques for prompting language models (LMs) and for stacking them into pipelines that solve complex tasks. Unfortunately, existing LM pipelines are typically i...

- Evaluation & Hallucination Detection for Abstractive Summaries: Reference, context, and preference-based metrics, self-consistency, and catching hallucinations.

- LLM Task-Specific Evals that Do & Don't Work: Evals for classification, summarization, translation, copyright regurgitation, and toxicity.

- Are you human?: no description found

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits: Recent research, such as BitNet, is paving the way for a new era of 1-bit Large Language Models (LLMs). In this work, we introduce a 1-bit LLM variant, namely BitNet b1.58, in which every single param...

- Fuck You, Show Me The Prompt.: Quickly understand inscrutable LLM frameworks by intercepting API calls.

- dspy/examples/knn.ipynb at main · stanfordnlp/dspy: DSPy: The framework for programming—not prompting—foundation models - stanfordnlp/dspy