Apple’s AI emergence continues apace ahead of WWDC. We’ve covered OLMo before, and it looks like OpenELM is Apple’s first actually open LLM (weights, code) release sharing some novel research in the efficient architecture direction.

It’s not totally open, but it’s pretty open. As Sebastian Raschka put it:

Let’s start with the most interesting tidbits:

- OpenELM comes in 4 relatively small and convenient sizes: 270M, 450M, 1.1B, and 3B

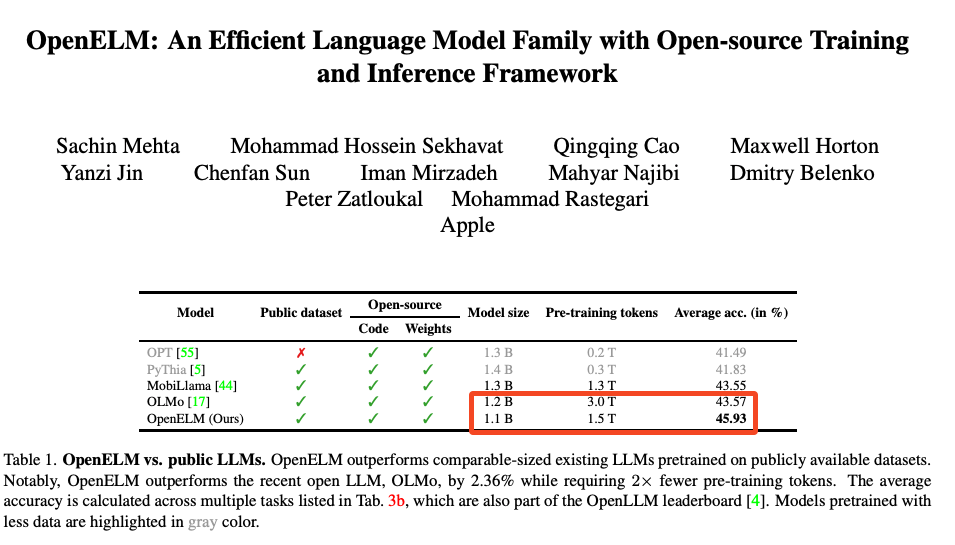

- OpenELM performs slightly better than OLMo even though it’s trained on 2x fewer tokens

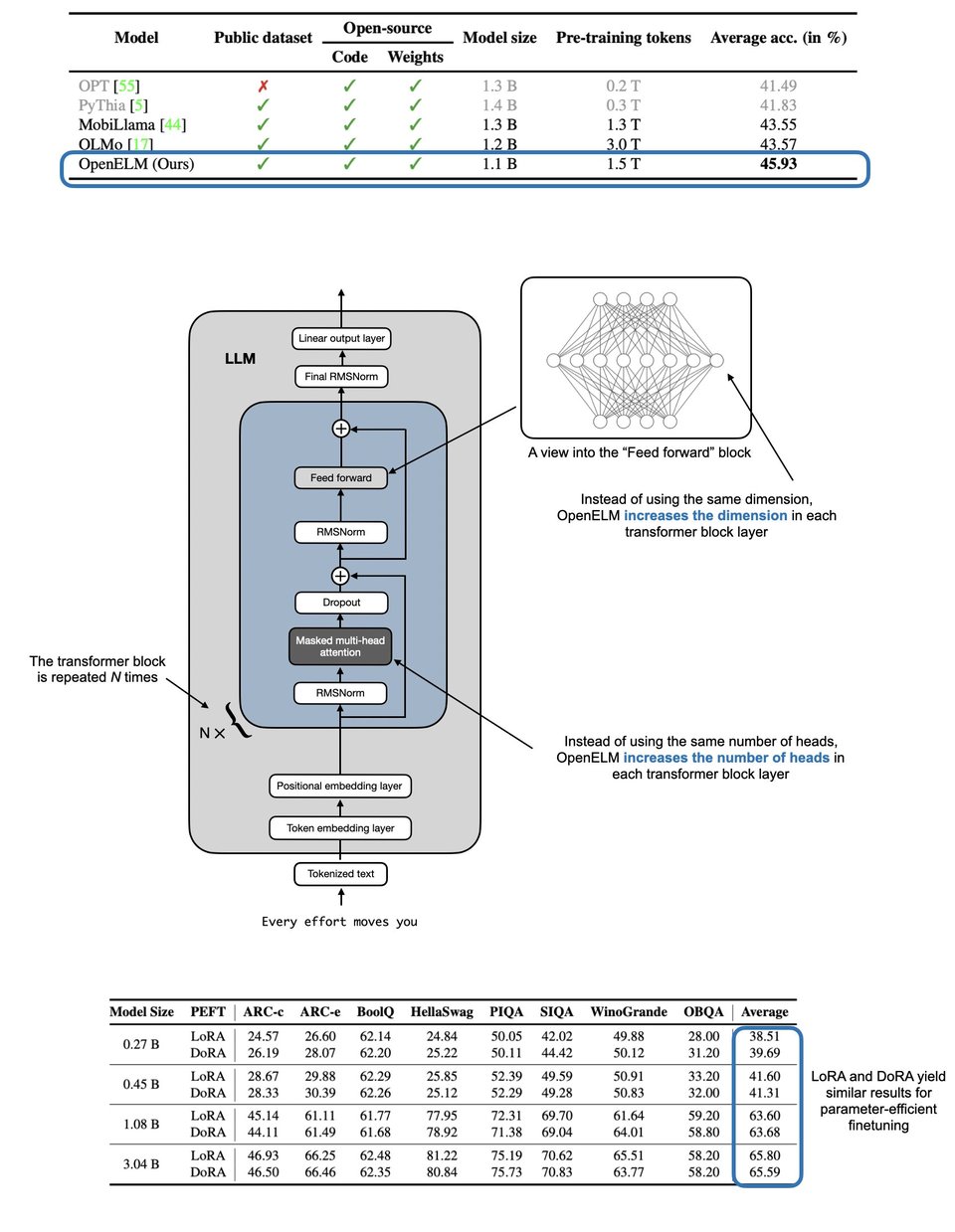

- The main architecture tweak is a layer-wise scaling strategy

But:

“Sharing details is not the same as explaining them, which is what research papers were aimed to do when I was a graduate student. For instance, they sampled a relatively small subset of 1.8T tokens from various publicly available datasets (RefinedWeb, RedPajama, The PILE, and Dolma). This subset was 2x smaller than Dolma, which was used for training OLMo. What was the rationale for this subsampling, and what were the criteria?”

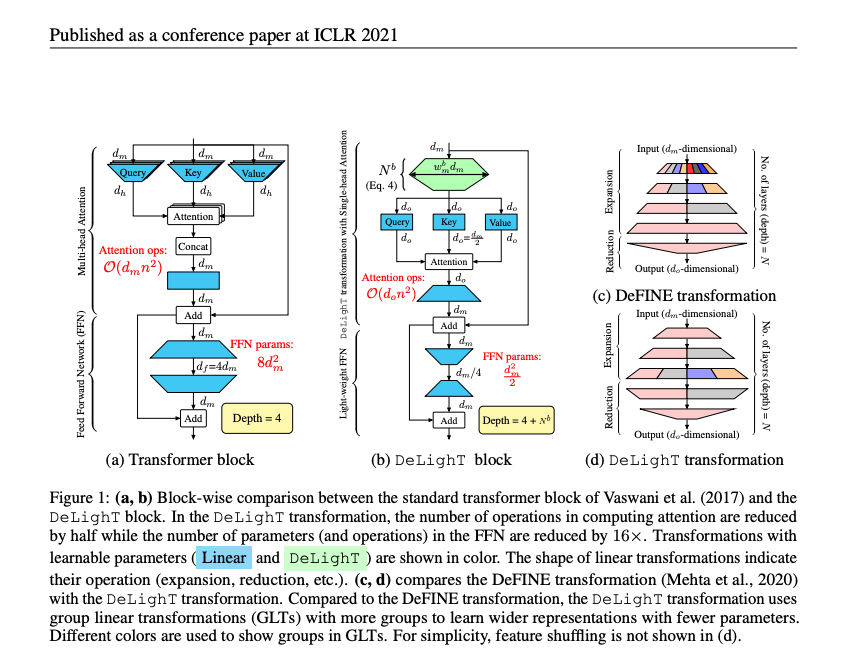

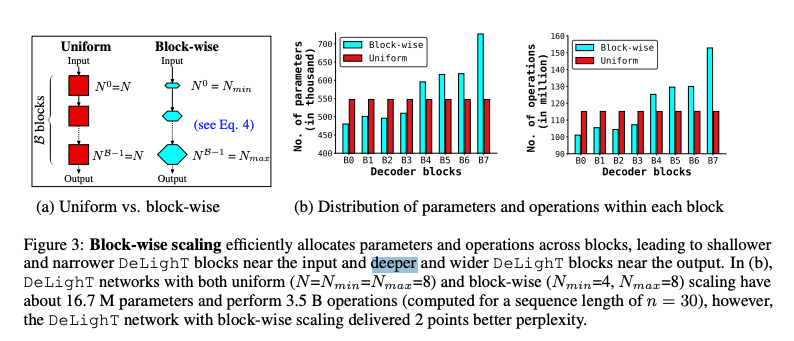

The layer-wise scaling comes from DeLight, a 2021 paper deepening the standard attention mechanism 2.5-5x in number of layers but matching 2-3x larger models by parameter count. These seem paradoxical but the authors described the main trick of varying the depth between the input and the output, rather than uniform:

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

LLaMA Developments

- LLaMA 3 increases context to 160K+ tokens: In /r/LocalLLaMA, LLaMA 3 increases context length to over 160K tokens while maintaining perfect recall. Commenters note this is impressive but will require significant consumer hardware to run locally at good speeds. Meta’s Llama 3 has been downloaded over 1.2M times, with over 600 derivative models on Hugging Face.

- First LLama-3 8B-Instruct model with 262K context released: In /r/LocalLLaMA, the first LLama-3 8B-Instruct model with over 262K context length is released on Hugging Face, enabling advanced reasoning beyond simple prompts.

- Llama 3 70B outperforms 8B model: In /r/LocalLLaMA, comparisons show the quantized Llama 3 70B IQ2_XS outperforms the uncompressed Llama 3 8B f16 model. The 70B IQ3_XS version is found to be best for 32GB VRAM users.

- New paper compares AI alignment approaches: In /r/LocalLLaMA, a new paper compares DPO to other alignment approaches, finding KTO performs best on most benchmarks and alignment methods are sensitive to training data volume.

AI Ethics & Regulation

- Eric Schmidt warns about risks of open-source AI: In /r/singularity, former Google CEO Eric Schmidt cautions that open-source AI models give risky capabilities to bad actors and China. Many see this as an attempt by large tech companies to stifle competition, noting China likely has the capability to develop powerful models without relying on open-source.

- U.S. proposal aims to end anonymous cloud usage: In /r/singularity, a U.S. proposal seeks to implement “Know Your Customer” requirements to end anonymous cloud usage.

- Baltimore coach allegedly used AI for defamation: In /r/OpenAI, a Baltimore coach allegedly used AI voice cloning to attempt to get a high school principal fired by generating fake racist audio.

Hardware Developments

- TSMC unveils 1.6nm process node: In /r/singularity, TSMC announces a 1.6nm process node with backside power delivery, enabling continued exponential hardware progress over the next few years.

- Ultra-thin solar cells enable self-charging drones: In /r/singularity, German researchers develop ultra-thin, flexible solar cells that allow small drones to self-charge during operation.

- Micron secures $6.1B in CHIPS Act funding: In /r/singularity, Micron secures $6.1 billion in CHIPS Act funding to build semiconductor manufacturing facilities in New York and Idaho.

Memes & Humor

- AI assistant confidently asserts flat Earth: In /r/singularity, a humorous image depicts an AI assistant confidently asserting that the Earth is flat, sparking jokes about needing AI capable of believing absurdities or that humanity has its best interests at heart.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Here is a summary of the key topics and insights from the provided tweets:

Meta Llama 3 Release and Impact

- Rapid Adoption: In the week since release, Llama 3 models have been downloaded over 1.2M times with 600+ derivative models on Hugging Face, showing exciting early impact. (@AIatMeta)

- Training Optimizations: Meta is moving fast on optimizations, with Llama 3 70B training 18% faster and Llama 3 8B training 20% faster. (@svpino)

- Context Extension: The community extended Llama 3 8B’s context from 8k to nearly 100k tokens by combining PoSE, continued pre-training, and RoPE scaling. (@winglian)

- Inference Acceleration: Colossal-Inference now supports Llama 3 inference acceleration, enhancing efficiency by ~20% for 8B and 70B models. (@omarsar0)

- Benchmark Performance: Llama 3 70B is tied for 1st place for English queries on the LMSYS leaderboard. (@rohanpaul_ai)

Phi-3 Model Release and Reception

- Overfitting Benchmarks: Some argue Phi-3 overfits public benchmarks but underperforms in practical usage compared to models like Llama-3 8B. (@svpino, @abacaj)

- Unexpected Behavior: As a fundamentally different model, Phi-3 can exhibit surprising results, both good and bad. (@srush_nlp)

Extending LLM Context Windows

- PoSE Technique: The Positional Skip-wisE (PoSE) method simulates long inputs during training to increase context length, powering Llama 3’s extension to 128k tokens. (@rohanpaul_ai)

- Axolotl and Gradient AI: Tools like Axolotl and approaches from Gradient AI are enabling context extension for Llama and other models to 160k+ tokens. (@winglian, @rohanpaul_ai)

Cohere Toolkit Release

- Enterprise Focus: Cohere released a toolkit to accelerate LLM deployment in enterprises, targeting secure RAG with private data and local code interpreters. (@aidangomez)

- Flexible Deployment: The toolkit’s components can be deployed to any cloud and reused to build applications. (@aidangomez, @aidangomez)

OpenAI Employee Suspension and GPT-5 Speculation

- Sentience Claims: An OpenAI employee who claimed GPT-5 is sentient has been suspended from Twitter. (@bindureddy)

- Hype Generation: OpenAI is seen as a hype-creation engine around AGI and AI sentience claims, even as competitors match GPT-4 at lower costs. (@bindureddy)

- Agent Capabilities: Some believe GPT-5 will be an “agent GPT” based on the performance boost from agent infrastructure on top of language models. (@OfirPress)

Other Noteworthy Topics

- Concerns about the AI summit board’s lack of diverse representation to address power concentration risks. (@ClementDelangue)

- OpenAI and Moderna’s partnership as a positive sign of traditional businesses adopting generative AI. (@gdb, @rohanpaul_ai)

- Apple’s open-sourced on-device language models showing poor performance but providing useful architecture and training details. (@bindureddy, @rasbt)

AI Discord Recap

A summary of Summaries of Summaries

-

Extending LLM Context Lengths

- Llama 3 Performance and Context Length Innovations: Discussions centered around Llama 3’s capabilities, with some expressing mixed opinions on its code recall and configuration compared to GPT-4. However, innovations in extending Llama 3’s context length to 96k tokens for the 8B model using techniques like PoSE (Positional Skip-wisE) and continued pre-training with 300M tokens generated excitement, as detailed in this tweet thread.

- The EasyContext project aims to extrapolate LLM context lengths to 1 million tokens with minimal hardware requirements.

-

Optimizing LLM Training and Deployment

- Nvidia’s Nsight Compute CLI is utilized for kernel profiling to optimize CUDA code for LLM training.

- Finetuning LLMs for Domain-Specific Gains: Interest grew in finetuning large language models for domain-specific improvements, with examples like Meditron for medical applications. Discussions also covered data synthesis strategies using tools like Argilla’s Distilabel, and the challenges of multi-document, long-context finetuning. Cost-performance tradeoffs were debated, such as spending $2,368 for 4 epochs vs $41,440 for 50 epochs with potentially minor gains.

- PyTorch introduces Torchtitan, a library dedicated to aiding LLM training from scratch.

- The Mixture of Depths paper proposes accelerating transformer training using a modified MoE routing mechanism.

- CUDA Optimization Deep Dives: CUDA developers dug into kernel profiling with tools like NVIDIA Nsight Compute CLI, discussed memory coalescing and burst sizes around 128 bytes, and debated the efficiency of low-bit quantization methods. Conversations also covered flash attention compatibility issues with PyTorch 2.3.0, and the implications of PyTorch AO supporting custom CUDA extensions for performance tuning.

- Open-Source LLM Ecosystem Expansion

- Apple’s Surprise Entry into Open-Source Models: Apple’s release of OpenELM, a family of efficient open-source language models ranging from 270M to 3B parameters, caught the AI community by surprise. The move marked a shift from Apple’s traditionally proprietary approach, with the 270M model quickly gaining attention on Hugging Face.

- BioMistral is introduced, leveraging Mistral for pretrained medical LLMs.

- Mozilla’s llamafile project enables distributing and running LLMs locally with high performance.

- Dify emerges as an open-source LLM app development platform combining AI workflows and model management.

- Evaluating and Benchmarking LLMs

- On the Judgemark benchmark, Llama-3-70b shows promise for fine-tuning disco-judge applications.

- Discussions around the effectiveness of validation loss as a performance indicator for LLMs.

- The Low-Cost Language Models survey evaluates CPU-friendly LLMs on Python code generation tasks.

- Debates on the transparency of Nightshade’s autoencoder capabilities and the need for publishing findings openly.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

-

Fine-Tuning Tribulations and Triumphs: Engineers shared their challenges with fine-tuning models, involving 4-bit quantization, VRAM requirements exceeding 48GB, and token index sequence length errors. Solutions included batch size revision, enabling pack functionality, and considering alternative testing environments like Aphrodite Engine or llama.cpp.

-

Gossip or Gone?: Speculation is rampant about the dissolution of the WizardLM team after Qingfeng Sun’s staff page redirect. Contrasting sentiments were shared, from salvaging WizardLM datasets to showcase sessions where Meta’s LlaMA-3 models (including an 8B and 70B version) were cited as top performers in their classes.

-

From Cold Storage to Hot Topics: A member proudly announced an open-source release of Kolibrify, a curriculum training tool for instruction-following LLMs. On a technical note, the community discussed Triton dependencies, errors with “Quantization failed,” and gguf model testing strategies, reaching a consensus on best practices for fine-tuning and deployment options.

-

Pragmatic Pruning Progress: Insights were shared about a project on iterative context length increase for models using a triton laser merge trainer that operates during evaluation. This method, signaled as innovative due to no reinitialization requirements, could provide a pathway for enhanced model usability without system overhaul.

-

Unsloth’s Milestones and Resources: Unsloth AI marked a significant milestone with 500k monthly downloads of their fine-tuning framework on Hugging Face and promoted the sharing of exact match GGUF models despite potential redundancy. Emphasis was also on directing users to Colab notebooks for effective fine-tuning strategies.

Perplexity AI Discord

-

Siri Gets a Brainy Buddy: Perplexity AI Discord chatbot introduces an exclusive auditory feature for iOS users that reads answers to any posed question.

-

Opus Limit Outcry: Frustration arises within the community concerning the new 50-query daily limit on Claude 3 Opus interactions, while still, Perplexity chatbot supports Opus despite these caps.

-

API Adoption Anxieties: AI Engineers are discussing integration issues with the Perplexity API, such as outdated responses and a lack of GPT-4 support; a user also sought advice on optimal hyperparameters for the

llama-3-70b-instructmodel. -

A Game of Models: The community is buzzing with anticipation around Google’s Gemini model, and its potential impact on the AI landscape, while noting GPT-5 will have to bring exceptional innovations to keep up with the competition.

-

Crystal Ball for Net Neutrality: A linked article prompts discussions on the FCC’s reestablishment of Net Neutrality, with implications for the AI Boom’s future being pondered by community members.

CUDA MODE Discord

CUDA Collective Comes Together: Members focused on honing their skills with CUDA through optimizing various kernels and algorithms, including matrix multiplication and flash attention. Threads spanned from leveraging the NVIDIA Nsight Compute CLI User Guide for kernel profiling to debate on the efficiency of low-bit quantization methods.

PyTorch Tangles with Compatibility and Extensions: A snag was hit with flash-attn compatibility in PyTorch 2.3.0, resulting in an undefined symbol error, which participants hoped to see rectified promptly. PyTorch AO ignited enthusiasm by supporting custom CUDA extensions, facilitating performance tuning using torch.compile.

Greener Code with C++: An announcement about a bonus talk from the NVIDIA C++ team on converting llm.c to llm.cpp teased opportunities for clearer, faster code.

The Matrix of Memory and Models: Discussions delved deep into finer points of CUDA best practices, contemplating burst sizes for memory coalescing around 128 bytes as explored in Chapter 6, section 3.d of the CUDA guide, and toying with the concept of reducing overhead in packed operations.

Recording Rendezvous: Volunteers stepped up for screen recording with detailed, actionable advice and Existential Audio - BlackHole for lossless sound capture, highlighting the careful nuances needed for a refined technical setup.

LM Studio Discord

- GPU Offloads to AMD OpenCL: A technical hiccup with GPU Offloading was resolved by switching the GPU type to AMD Open CL, demonstrating a simple fix can sidestep performance issues.

- Mixed News on Updates and Performance: Upgrade issues cropped up in LM Studio with version 0.2.21, causing previous setups running phi-3 mini models to malfunction, while other users are experimenting with using Version 2.20 and facing GPU usage spikes without successful model loading. Users are actively troubleshooting, including submitting requests for screenshots for better diagnostics.

- LM Studio Turns Chat into Document Dynamo: Enthusiastic discussions around improving LM Studio’s chat feature have led to embedding document retrieval using Retriever-Augmented Generation (RAG) and tweaking GPU settings for better resource utilization.

- Tackling AI with Graphical Might: The community is sharing insights into optimal hardware setups and potential performance boosts anticipated from Nvidia Tesla equipment when using AI models, indicating a strong interest in the best equipment for AI model hosting.

- AMD’s ROCm Under the Microscope: The use of AMD’s ROCm tech preview has shown promise with certain setups, achieving a notable 30t/s on an eGPU system, although compatibility snags underscore the importance of checking GPU support against the ROCm documentation.

Nous Research AI Discord

Pushing The Envelope on Model Context Limits: Llama 3 models are breaking context barriers, with one variant reaching a 96k context for the 8B model using PoSE and continued pre-training with 300M tokens. The efficacy of Positional Skip-wisE (PoSE) and RoPE scaling were key topics, with a paper on PoSE’s context window extension and discussions on fine-tuning RoPE base during fine-tuning for lengthier contexts mentioned.

LLM Performance and Cost Discussions Engage Community: Engineers expressed skepticism about validation loss as a performance indicator and shared a cost comparison of training epochs, highlighting a case where four epochs cost $2,368 versus $41,440 for fifty epochs with minor performance gains. Another engineer is considering combining several 8B models into a mixture of experts based on Gemma MoE and speculated on potential enhancements using DPO/ORPO techniques.

The Saga of Repository Archival: Concerns were voiced about the sudden disappearance of Microsoft’s WizardLM repo, sparking a debate on the importance of archiving, especially in light of Microsoft’s investment in OpenAI. Participants underscored the need for backups, drawing from instances such as the recent reveal of WizardLM-2, accessible on Hugging Face and GitHub.

Synthetic Data Generation: A One-Stop Shop: Argilla’s Distilabel was recommended for creating diverse synthetic data, with practical examples and repositories such as the distilabel-workbench illustrating its applications. The conversation spanned single document data synthesis, multi-document challenges, and strategies for extended contexts in language models.

Simulated World Engagements Rouse Curiosity: Websim’s capabilities to simulate CLI commands and full web pages have captivated users, with example simulations shared, such as the EVA AI interaction profile on Websim. Speculations on the revival of World-Sim operated in parallel, and members looked forward to its reintroduction with a “pay-for-tokens” model.

OpenAI Discord

-

Apple’s Open Source Pivot with OpenELM: Apple has released OpenELM, a family of efficient language models now available on Hugging Face, scaling from 270M to 3B parameters, marking their surprising shift towards open-source initiatives. Details about the models are on Hugging Face.

-

Conversations Surrounding AI Sentience and Temporal Awareness: The community engaged in deep discussions emphasizing the difference between sentience—potentially linked to emotions and motivations—and consciousness—associated with knowledge acquisition. A parallel discussion pondered if intelligence and temporal awareness in AI are inherently discrete concepts, influencing our understanding of neural network identity and experiential dimension.

-

AI Voice Assistant Tech Talk: AI enthusiasts compared notes on OpenWakeWords for homegrown voice assistant development and Gemini’s promise as a Google Assistant rival. Technical challenges highlighted include the intricacies of interrupt AI speech and preferences for push-to-talk versus voice activation.

-

Rate Limit Riddles with Custom GPT Usage: Users sought clarity on GPT-4’s usage caps especially when recalling large documents and shared tips on navigating the 3-hour rolling cap. The community is exploring the thresholds of rate limiting, particularly when employing custom GPT tools.

-

Prompt Engineering Prowess & LLM Emergent Abilities: There’s a focus on strategic prompt crafting for specific tasks such as developing GPT-based coding for Arma 3’s SQF language. Fascination arises with emergent behaviors in LLMs, referring to phases of complexity leading to qualitative behavioral changes, exploring parallels to the concept of More Is Different in prompt engineering contexts.

Stability.ai (Stable Diffusion) Discord

AI Rollout Must Be Crystal Clear: Valve’s new content policy requires developers to disclose AI usage on Steam, particularly highlighting the need for transparency around live-generated AI content and mechanisms that ensure responsible deployment.

Copyright Quandary in Content Creation: Conversations bubbled up over the legal complexities when generating content with public models such as Stable Diffusion; there’s a necessity to navigate copyright challenges, especially on platforms with rigorous copyright enforcement like Steam.

Art Imitates Life or… Itself?: An inquiry raised by Customluke on how to create a model or a Lora to replicate their art style using Stable Diffusion sparked suggestions, with tools like dreambooth and kohya_ss surfaced for model and Lora creation respectively.

Selecting the Better Suited AI Flavor: A vocal group of users find SD 1.5 superior to SDXL for their needs, citing sharper results and better training process, evidence that the choice of AI model significantly impacts outcome quality.

Polishing Image Generation: Tips were shared for improving image generation results, recommending alternatives such as Forge and epicrealismXL to enhance the output for those dissatisfied with the image quality from models like ComfyUI.

HuggingFace Discord

-

BioMistral Launch for Medical LLMs: BioMistral, a new set of pretrained language models for medical applications, has been introduced, leveraging the capabilities of the foundational Mistral model.

-

Nvidia’s Geopolitical Adaptation: To navigate US export controls, Nvidia has unveiled the RTX 4090D, a China-compliant GPU with reduced power consumption and CUDA cores, detailed in reports from The Verge and Videocardz.

-

Text to Image Model Fine-Tuning Discussed: Queries about optimizing text to image models led to suggestions involving the Hugging Face diffusers repository.

-

Gradio Interface for ConversationalRetrievalChain: Integration of ConversationalRetrievalChain with Gradio is in the works, with community efforts to include personalized PDFs and discussion regarding interface customization.

-

Improved Image Generation and AI Insights in Portuguese: New developments include an app at Collate.one for digesting read-later content, advancements in generating high-def images in seconds at this space, and Brazilian Portuguese translations of AI community highlights.

-

Quantization and Efficiency: There’s active exploration on quantization techniques to maximize model efficiency on VRAM-limited systems, with preferences leaning toward Q4 or Q5 levels for a balance between performance and resource management.

-

Table-Vision Models and COCO Dataset Clarification: There’s a request for recommendations on vision models adept at table-based question-answering, and security concerns raised regarding the hosting of the official COCO datasets via an HTTP connection.

-

Call for Code-Centric Resources and TLM v1.0: The engineering community is seeking more tools with direct code links, as exemplified by awesome-conformal-prediction, and the launch of v1.0 of the Trustworthy Language Model (TLM), introducing a confidence score feature, is celebrated with a playground and tutorial.

Eleuther Discord

-

Parallel Ponderings Pose No Problems: Engineers highlighted that some model architectures, specifically PaLM, employ parallel attention and FFN (feedforward neural networks), deviating from the series perception some papers present.

-

Data Digestion Detailing: The Pile dataset’s hash values were shared, offering a reference for those looking to utilize the dataset in various JSON files, an aid found on EleutherAI’s hash list.

-

Thinking Inside the Sliding Window: Dialogue on transformers considered sliding window attention and effective receptive fields, analogizing them to convolutional mechanisms and their impact on attention’s focus.

-

Layer Learning Ladders Lengthen Leeway: Discussions about improving transformers’ handling of lengthier sequence lengths touched upon strategies like integrating RNN-type layers or employing dilated windows within the architecture.

-

PyTorch’s New Power Player: A new PyTorch library, torchtitan, was introduced via a GitHub link, promising to ease the journey of training larger models.

-

Linear Logic Illuminates Inference: The mechanics of linear attention were unpacked, illustrating its sequence-length linearity and constant memory footprint, essential insights for future model optimization.

-

Performance Parity Presumption: One engineer reported that the phi-3-mini-128k might match the Llama-3-8B, triggering a talk on the influences of pre-training data on model benchmarking and baselines.

-

Delta Decision’s Dual Nature: The possibility of delta rule linear attention enabling more structured yet less parallelizable operations stirred a comparison debate, supported by a MastifestAI blog post.

-

Testing Through a Tiny Lens: Members cast doubt on “needle in the haystack” tests for long-context language models, advocating for real-world application as a more robust performance indicator.

-

Prompt Loss Ponderings: The group questioned the systemic study of masking user prompt loss during supervised fine-tuning (SFT), noting a research gap despite its frequent use in language model training.

-

Five is the GSM8K Magic Number: There was a consensus suggesting that using 5 few-shot examples is the appropriate alignment with the Hugging Face leaderboard criteria for GSM8K.

-

VLLM Version Vivisection: Dialogue identified Data Parallel (DP) as a stumbling block in updating VLLM to its latest avatar, while Tensor Parallel (TP) appeared a smoother path.

-

Calling Coders to Contribute: The lm-evaluation-harness appeared to be missing a

register_filterfunction, leading to a call for contributors to submit a PR to bolster the utility. -

Brier Score Brain Twister: An anomaly within the ARC evaluation data led to a suggestion that the Brier score function be refitted to ensure error-free assessments regardless of data inconsistencies.

-

Template Tête-à-Tête: Interest was piqued regarding the status of a chat templating branch in Hailey’s branch, last updated a while ago, sparking an inquiry into the advancement of this functionality.

OpenRouter (Alex Atallah) Discord

Mixtral Muddle: A provider of Mixtral 8x7b faced an issue of sending blank responses, leading to their temporary removal from OpenRouter. Auto-detection methods for such failures are under consideration.

Soliloquy’s Subscription Surprise: The Soliloquy 8B model transitioned to a paid service, charging $0.1 per 1M tokens. Further information and discussions are available at Soliloquy 8B.

DBRX AI Achieves AI Astonishment: Fprime-ai announced a significant advancement with their DBRX AI on LinkedIn, sparking interest and discussions in the community. The LinkedIn announcement can be read here.

Creative Model Melee: Community members argued about the best open-source model for role-play creativity, with WizardLM2 8x22B and Mixtral 8x22B emerging as top contenders due to their creative capabilities.

The Great GPT-4 Turbo Debate: Microsoft’s influence on the Wizard LM project incited a heated debate, leading to a deep dive into the incidence, performance, and sustainability of models like GPT-4, Llama 3, and WizardLM. Resources shared include an incident summary and a miscellaneous OpenRouter model list.

LlamaIndex Discord

Create-llama Simplifies RAG Setup: The create-llama v0.1 release brings new support for @ollama and vector database integrations, making it easier to deploy RAG applications with llama3 and phi3 models, as detailed in their announcement tweet.

LlamaParse Touted in Hands-on Tutorial and Webinar: A hands-on tutorial showcases how LlamaParse, @JinaAI_ embeddings, @qdrant_engine vector storage, and Mixtral 8x7b can be used to create sophisticated RAG applications, available here, while KX Systems hosts a webinar to unlock complex document parsing capabilities with LlamaParse (details in this tweet).

AWS Joins Forces with LlamaIndex for Developer Workshop: AWS collaborates with @llama_index to provide a workshop focusing on LLM app development, integrating AWS services and LlamaParse; more details can be found here.

Deep Dive into Advanced RAG Systems: The community engaged in robust discussions on improving RAG systems and shared a video on advanced setup techniques, addressing everything from sentence-window retrieval to integrating structured Pydantic output (Lesson on Advanced RAG).

Local LLM Deployment Strategies Discussed: There was active dialogue on employing local LLM setups to circumvent reliance on external APIs, with guidance provided in the official LlamaIndex documentation (Starter Example with Local LLM), showcasing strategies for resolving import errors and proper package installation.

LAION Discord

Llama 3’s Mixed Reception: Community feedback on Llama 3 is divided, with some highlighting its inadequate code recall abilities compared to expectations set by GPT-4, while others speculate the potential for configuration enhancements to bridge the performance gap.

Know Your Customer Cloud Conundrum: The proposed U.S. “Know Your Customer” policies for cloud services spark concern and discussion, emphasizing the necessity for community input on the Federal Register before the feedback window closes.

Boost in AI Model Training Efficiency: Innovations in vision model training are making waves with a weakly supervised pre-training method that races past traditional contrastive learning, achieving 2.7 times faster training as elucidated in this research. The approach shuns contrastive learning’s heavy compute costs for a multilabel classification framework, yielding a performance on par with CLIP models.

The VAST Landscape of Omni-Modality: Enthusiasm is sighted for finetuning VAST, a Vision-Audio-Subtitle-Text Omni-Modality Foundation Model. The project indicates a stride towards omni-modality with the resources available at its GitHub repository.

Nightshade’s Transparency Troubles: The guild debates the effectiveness and transparency of Nightshade with a critical lens on autoencoder capabilities and reluctances in the publishing of potentially controversial findings.

OpenInterpreter Discord

Mac Muscle Meets Interpreter Might: Open Interpreter’s New Computer Update has significantly improved local functionality, particularly with native Mac integrations. The implementation allows users to control Mac’s native applications using simple commands such as interpreter --os, as detailed in their change log.

Eyes for AI: Community members highlighted the Moondream tiny vision language model, providing resources like the Img2TxtMoondream.py script. Discussions also featured LLaVA, a multimodal model hosted on Hugging Face, which is grounded in the powerful NousResearch/Nous-Hermes-2-Yi-34B model.

Loop Avoidance Lore: Engineers have been swapping strategies to mitigate looping behavior in local models, considering solutions ranging from tweaking temperature settings and prompt editing to more complex architectural changes. An intriguing concept, the frustration metric, was introduced to tailor a model’s responses when stuck in repetitive loops.

Driving Dogs with Dialogue: A member inquired about the prospect of leveraging Open Interpreter for commanding the Unitree GO2 robodog, sparking interest in possible interdisciplinary applications. Technical challenges, such as setting dummy API keys and resolving namespace conflicts with Pydantic, were also tackled with shared solutions.

Firmware Finality: The Open Interpreter 0.2.5 New Computer Update has officially graduated from beta, including the fresh enhancements mentioned earlier. A query about the update’s beta status led to an affirmative response after a version check.

OpenAccess AI Collective (axolotl) Discord

CEO’s Nod to a Member’s Tweet: A participant was excited about the CEO of Hugging Face acknowledging their tweet; network and recognition are alive in the community.

Tech Giants Jump Into Fine-tuning: With examples like Meditron, discussion on fine-tuning language models for specific uses is heating up, highlighting the promise for domain-specific improvements and hinting at an upcoming paper on continual pre-training.

Trouble in Transformer Town: An ‘AttributeError’ surfaced in transformers 4.40.0, tripping up a user, serving as a cautionary tale that even small updates can break workflows.

Mixing Math with Models: Despite some confusion, inquiries were made about integrating zzero3 with Fast Fourier Transform (fft); keep an eye out for this complex dance of algorithms.

Optimizer Hunt Heats Up: The FSDP (Fully Sharded Data Parallel) compatibility with optimizers remains a hot topic, with findings that AdamW and SGD are in the clear, while paged_adamw_8bit is not supporting FSDP offloading, leading to a quest for alternatives within the OpenAccess-AI-Collective/axolotl resources.

Cohere Discord

Upload Hiccups and Typographic Tangles: Users in the Cohere guild tackled issues with the Cohere Toolkit on Azure, pointing to the paper clip icon for uploads; despite this, problems persisted with the upload functionality going undiscovered. The Cohere typeface’s licensing on GitHub provoked discussion; it’s not under the MIT license and is slated for replacement.

Model Usage Must-Knows: Discussion clarified that Cohere’s Command+ models are available with open weight access but not for commercial use, and the training data is not shared.

Search API Shift Suggestion: The guild mulled over the potential switch from Tavily to the Brave Search API for integrating with the Cohere-Toolkit, citing potential benefits in speed, cost, and accuracy in retrieval.

Toolkit Deployment Debates: Deployment complexities of the Cohere Toolkit on Azure were deliberated, where selecting a model deployment option is crucial and the API key is not needed. Conversely, local addition of tools faced issues with PDF uploads and sqlite3 version compatibility.

Critical Recall on ‘Hit Piece’: Heated discussions emerged over the criticism of a “hit piece” against Cohere, with dialogue focused on the responsibility of AI agents and their real-world actions. A push for critical accountability emerged, with members reinforcing the need to back up critiques with substantial claims.

tinygrad (George Hotz) Discord

-

Tinygrad Sprints Towards Version 1.0: Tinygrad is gearing up for its 1.0 version, spotlighting an API that’s nearing stability, and has a toolkit that includes installation guidance, a MNIST tutorial, and comprehensive developer documentation.

-

Comma Begins Tinybox Testing with tinygrad: George Hotz emphasized tinybox by comma as an exemplary testbed for tinygrad, with a focus maintained on software over hardware, while a potential tinybox 2 collaboration looms.

-

Crossing off Tenstorrent: After evaluation, a partnership with Tenstorrent was eschewed due to inefficiencies in their hardware, leaving the door ajar for future collaboration if the cost-benefit analysis shifts favorably.

-

Sorting Through tinygrad’s Quantile Function Challenge: A dive into tinygrad’s development revealed efforts to replicate

torch.quantilefor diffusion model sampling, a complex task necessitating a precise sorting algorithm within the framework. -

AMMD’s MES Offers Little to tinygrad: AMD’s Machine Environment Settings (MES) received a nod from Hotz for its detailed breakdown by Felix from AMD, but ultimately assessed as irrelevant to tinygrad’s direction, with efforts focused on developing a PM4 backend instead.

Modular (Mojo 🔥) Discord

Strong Performer: Hermes 2.5 Edges Out Hermes 2: Enhanced with code instruction examples, Hermes 2.5 demonstrates superior performance across various benchmarks when compared to Hermes 2.

Security in the Limelight: Amidst sweeping software and feature releases by Modular, addressing security loopholes becomes critical, emphasizing protection against supply chain attacks like the XZ incident and the trend of open-source code prevalence in software development forecasted to hit 96% by 2024.

Quantum Complexity Through A Geometric Lens: Members discussed how the geometric concept of the amplituhedron could simplify quantum particle scattering amplitudes, with machine learning being suggested as a tool to decipher increased complexities in visualizing quantum states as systems scale.

All About Mojo: Dialogue around the Mojo Programming Language covered topics like assured memory cleanup by the OS, the variance between def and fn functions with examples found here, and the handling of mixed data type lists via Variant that requires improvement.

Moving Forward with Mojo: ModularBot flagged an issue filed on GitHub about Mojo, urged members to use issues for better tracking of concerns, for instance, about __copyinit__ semantics via GitHub Gist, and reported a cleaner update in code with more insertions than deletions, achieving better efficiency.

LangChain AI Discord

A Tricky Query for Anti-Trolling AI Design: A user proposed designing an anti-trolling AI and sought suggestions on how the system could effectively target online bullies.

Verbose SQL Headaches: Participants shared experiences with open-source models like Mistral and Llama3 generating overly verbose SQL responses and encountered an OutputParserException, with links to structured output support and examples of invoking SQL Agents.

RedisStore vs. Chat Memory: The community clarified the difference between stores and chat memory in the context of LangChain integrations, emphasizing the specific use of RedisStore for key-value storage and Redis Chat Message History for session-based chat persistence.

Techie Tutorial on Model Invocation: There was a discussion on the correct syntax when integrating prompts into LangChain models via JavaScript, with recommendations for using ChatPromptTemplate and pipe methods for chaining prompts.

Gemini 1.5 Access with a Caveat: Users discussed the integration of Gemini 1.5 Pro with LangChain, highlighting that it necessitates ChatVertexAI instead of ChatGoogleGenerativeAI and requires configuring the GOOGLE_APPLICATION_CREDENTIALS environment variable for proper access.

Latent Space Discord

Apple Bites the Open Source Apple: Apple has stepped into the open source realm, releasing a suite of models with parameters ranging from 270M to 3B, with the 270M parameter model available on Hugging Face.

Dify Platform Ups and Downs: The open-source LLM app development platform Dify is gaining traction for combining AI workflows and model management, although concerns have arisen about its lack of loops and context scopes.

PyTorch Pumps Up LLM Training: PyTorch has introduced Torchtitan, a library dedicated to aiding the training of substantial language models like llama3 from scratch.

Video Gen Innovation with SORA: OpenAI’s SORA, a video generation model that crafts videos up to a minute long, is getting noticed, with user experiences and details explored in an FXGuide article.

MOD Layers for Efficient Transformer Training: The ‘Mixture of Depths’ paper was presented, proposing an accelerated training methodology for transformers by alternately using new MOD layers and traditional transformer layers, introduced in the presentation and detailed in the paper’s abstract.

Mozilla AI Discord

-

Phi-3-Mini-4K Instruct Powers Up: Utilizing Phi-3-Mini-4K-Instruct with llamafile provides a setup for high-quality and dense reasoning datasets as discussed by members, with integration steps outlined on Hugging Face.

-

Model Download Made Easier: A README update for Mixtral 8x22B Instruct llamafile includes a download tip: use

curl -Lfor smooth redirections on CDNs, as seen in the Quickstart guide. -

Llamafile and CPUs Need to Talk: An issue with running llamafile on an Apple M1 Mac surfaced due to AVX CPU feature requirements, with the temporary fix of a system restart and advice for using smaller models on 8GB RAM systems shared in this GitHub issue.

-

Windows Meets Llamafile, Confusion Ensues: Users reported Windows Defender mistakenly detecting llamafile as a trojan. Workarounds proposed included using virtual machines or whitelisting, with the reminder that official binaries can be found here.

-

Resource-Hungry Models Test Limits: Engaging the 8x22B model requires heavy resources, with references to a recommended 128GB RAM for stable execution of Mistral 8x22B model, marking the necessity for big memory footprints when running sophisticated AI models.

DiscoResearch Discord

Llama Beats Judge in Judging: On the Judgemark benchmark, Llama-3-70b showcased impressive performance, demonstrating its potential for fine-tuning purposes in disco-judge applications, as it supports at least 8k context length. The community also touched on collaborative evaluation efforts, with references to advanced judging prompt design to assess complex rubrics.

Benchmarking Models and Discussing Inference Issues: Phi-3-mini-4k-instruct unexpectedly ranked lower on the eq-bench leaderboard despite promising scores in published evaluations. In model deployment, discussions highlighted issues like slow initialization and inference times for DiscoLM_German_7b_v1 and potential misconfigurations that could be remedied using device_map='auto'.

Tooling API Evaluation and Hugging Face Inquiries: Community debates highlighted Tgi for its API-first, low-latency approach and praised vllm for being a user-friendly library optimized for cost-efficiency in deployment. Queries on Hugging Face’s batch generation capabilities sparked discussion, with community involvement evident in a GitHub issue exchange.

Gratitude and Speculation in Model Development: Despite deployment issues, members have expressed appreciation for the DiscoLM model series, while also speculating about the potential of constructing an 8 x phi-3 MoE model to bolster model capabilities. DiscoLM-70b was also a hot topic, with users troubleshooting errors and sharing usage experiences.

Success and Popularity in Model Adoption: The adaptation of the Phi-3-mini-4k model, referred to as llamafication, yielded a respectable EQ-Bench Score of 51.41 for German language outputs. Conversation also pinpointed the swift uptake of the gguf model, indicated by a notable number of downloads shortly after its release.

Interconnects (Nathan Lambert) Discord

Claude Displays Depth and Structure: In a rich discussion, the behavior and training of Claude were considered “mostly orthogonal” to Anthropic’s vision, revealing unexpected depth and structural understanding through RLAIF training. Comparisons were made to concepts like “Jungian individuation” and conversation threads highlighted Claude’s capabilities.

Debating the Merits of RLHF vs. KTO: A comparison between Reinforcement Learning from Human Feedback (RLHF) and Knowledge-Targeted Optimization (KTO) sparked debate, considering their suitability for different commercial deployments.

Training Method Transition Yields Improvements: An interview was mentioned where a progression in training methods from Supervised Fine Tuning (SFT) to Data Programming by Demonstration (DPO), and then to KTO, led to improved performance based on user feedback.

Unpacking the Complexity of RLHF: The community acknowledged the intricacies of RLHF, especially as they relate to varying data sources and their impact on downstream evaluation metrics.

Probing Grad Norm Spikes: A request for clarity on the implications of gradient norm spikes during pretraining was made, emphasizing the potential adverse effects but specifics were not delivered in the responses.

Skunkworks AI Discord

Moondream Takes On CAPTCHAs: A video guide showcases fine-tuning the Moondream Vision Language Model for better performance on a CAPTCHA image dataset, aimed at improving its image recognition capabilities for practical applications.

Low-Cost AI Models Make Cents: The document “Low-Cost Language Models: Survey and Performance Evaluation on Python Code Generation” was shared, covering evaluations of CPU-friendly language models and introducing a novel dataset with 60 programming problems. The use of a Chain-of-Thought prompt strategy is highlighted in the survey article.

Meet, Greet, and Compute: AI developers are invited to a meetup at Cohere space in Toronto, which promises networking opportunities alongside lightning talks and demos — details available on the event page.

Arctic Winds Blow for Enterprises: Snowflake Arctic is introduced via a new video, positioning itself as a cost-effective, enterprise-ready Large Language Model to complement the suite of AI tools tailored for business applications.

Datasette - LLM (@SimonW) Discord

- Run Models Locally with Ease: Engineers explored jan.ai, a GUI commended for its straightforward approach for running GPT models on local machines, potentially simplifying the experimentation process.

- Apple Enters the Language Model Arena: The new OpenELM series introduced by Apple provides a spectrum of efficiently scaled language models, including instruction-tuned variations, which could change the game for parameter efficiency in modeling.

Alignment Lab AI Discord

- Llama 3 Steps Up in Topic Complexity: Venadore has started experimenting with llama 3 for topic complexity classification, reporting promising results.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (1265 messages🔥🔥🔥):

- Finetuning LLM Challenges: Members discussed finetuning issues with their models, particularly when working with tools like awq, gptq, and running models in 4-bit quantization. There were specific issues with token indices sequence length errors, over 48GB of VRAM being insufficient for running certain models, and confusion around utilizing Aphrodite Engine or llama.cpp for testing finetuned models. Remedies suggested included revising batch sizes and grad accumulation, enabling packing, and reaching out to community experts for guidance.

- Finding the Right Technology Stack: One user expressed a desire to integrate different AI models into a project that allows chatting with various agents for distinct tasks. Experienced community members recommended starting with simpler scripts instead of complex AI solutions and advised doing thorough research before implementation. Concerns about API costs versus local operation were also discussed.

- Game Preferences and Recommendations: Users shared their excitement about the recent launch of games like “Manor Lords” on early access and provided personal insights into the entertainment value of popular titles such as “Baldur’s Gate 3” and “Elden Ring.”

- Unlocking Phi 3’s Fused Attention: It was revealed that Phi 3 Mini includes fused attention, sparking curiosity among members. Despite the feature’s presence, users were advised by others to wait for further development before diving in.

- Unsloth Achieves Significant Downloads: The Unsloth team announced hitting 500k monthly model downloads on Hugging Face, thanking the community for the widespread support and usage of Unsloth’s finetuning framework. The necessity of uploading GGUF models was discussed, with the possible redundancy noted due to others already providing them.

Links mentioned:

- Orenguteng/Llama-3-8B-LexiFun-Uncensored-V1 · Hugging Face: no description found

- Layer Skip: Enabling Early Exit Inference and Self-Speculative Decoding: We present LayerSkip, an end-to-end solution to speed-up inference of large language models (LLMs). First, during training we apply layer dropout, with low dropout rates for earlier layers and higher ...

- Reverse Training to Nurse the Reversal Curse: Large language models (LLMs) have a surprising failure: when trained on "A has a feature B", they do not generalize to "B is a feature of A", which is termed the Reversal Curse. Even w...

- no title found: no description found

- unsloth/llama-3-8b-bnb-4bit · Hugging Face: no description found

- Docker: no description found

- Qwen/Qwen1.5-110B-Chat · Hugging Face: no description found

- Rookie Numbers GIF - Rookie Numbers - Discover & Share GIFs: Click to view the GIF

- gradientai/Llama-3-8B-Instruct-262k · Hugging Face: no description found

- apple/OpenELM · Hugging Face: no description found

- TETO101/AIRI-L3-INS-1.0-0.00018-l · Hugging Face: no description found

- crusoeai/Llama-3-8B-Instruct-262k-GGUF · Hugging Face: no description found

- Layer Normalization — Triton documentation: no description found

- no title found: no description found

- llama3/LICENSE at main · meta-llama/llama3: The official Meta Llama 3 GitHub site. Contribute to meta-llama/llama3 development by creating an account on GitHub.

- GitHub - oKatanaaa/kolibrify: Curriculum training of instruction-following LLMs with Unsloth: Curriculum training of instruction-following LLMs with Unsloth - oKatanaaa/kolibrify

- GitHub - meta-llama/llama-recipes: Scripts for fine-tuning Meta Llama3 with composable FSDP & PEFT methods to cover single/multi-node GPUs. Supports default & custom datasets for applications such as summarization and Q&A. Supporting a number of candid inference solutions such as HF TGI, VLLM for local or cloud deployment. Demo apps to showcase Meta Llama3 for WhatsApp & Messenger.: Scripts for fine-tuning Meta Llama3 with composable FSDP & PEFT methods to cover single/multi-node GPUs. Supports default & custom datasets for applications such as summarization and Q...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #random (18 messages🔥):

-

Finetuning Strategies Discussed: Members of the chat discuss whether finetuning a language model on raw text would cause it to lose its chatty capabilities. A solution is proposed to combine raw text with a chat dataset to preserve the conversational ability while adding knowledge from the raw text.

-

Rumors of WizardLM Disbanding: Speculation arises based on Qingfeng Sun’s staff page redirection, suggesting that he may no longer be at Microsoft which could signal the closure of the WizardLM team. Links to a Reddit thread and a Notion blog post give credence to the theory.

-

Unsloth AI Finetuning Resources: For finetuning on combined datasets, members are directed to Unsloth AI’s repository on GitHub finetune for free, which lists all available notebooks, and specifically to a Colab notebook for text completion.

-

WizardLM Data Salvage Operation: Following the discussion about potential layoffs associated with Microsoft’s WizardLM, a member comes forward stating they have copies of the WizardLM datasets which might aid in future endeavors.

-

The Rollercoaster of Model Training: Chat members humorously share their experiences with model training, referring to their loss curves with a mix of hope and defeat.

Links mentioned:

- Google Colaboratory: no description found

- Staff page for Qingfeng Sun (Lead Wizard LM Researcher) has been deleted from Microsoft.com: If you go to the staff page of [Qingfeng Sun](https://www.microsoft.com/en-us/research/people/qins/) you'll be redirected to a generic landing...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Staff page for Qingfeng Sun (Lead Wizard LM Researcher) has been deleted from Microsoft.com: If you go to the staff page of [Qingfeng Sun](https://www.microsoft.com/en-us/research/people/qins/) you'll be redirected to a generic landing...

Unsloth AI (Daniel Han) ▷ #help (86 messages🔥🔥):

-

Inference Snippet Inquiry: A member inquired about a simpler method to test GGUF models without loading them into Oobabooga. Another member indicated future plans to provide inference and deployment options.

-

Triton Troubles: Members discussed issues with Triton and its necessity for running Unsloth locally. A member had trouble with

triton.commonmodule due to a potential version conflict, and others acknowledged Triton as a requirement. -

Fine-Tuning Frustrations: A conversation circled around issues faced during fine-tuning where a model kept repeating the last token. The solution suggested was to update the generation config using the latest colab notebooks.

-

Quantization Failure Chaos: Multiple members encountered a “Quantization failed” error when trying to use

save_pretrained_mergedandsave_pretrained_gguf. The issue was ultimately identified as a user error where llama.cpp was not in the model folder, but was resolved after fixing the file location. -

Model Training Errors and Insights: A mix of questions and solutions were discussed regarding training errors, resuming training from checkpoints on platforms like Kaggle, and finetuning guidance. One notable point was the use of checkpointing, which allows training to resume from the last saved step, benefiting users on platforms with limited continuous runtime.

Links mentioned:

- Google Colaboratory: no description found

- Reddit - Dive into anything: no description found

- TETO101/AIRI_INS5 · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #showcase (10 messages🔥):

- Meta Unveils LlaMA-3: Meta announces the next generation of its LlaMA model series, releasing an 8B model and a 70B model, with a teased upcoming 400B model promising GPT-4 level benchmarks. Interested parties can request access to these models, which are reportedly top of their size classes; the detailed comparison and insights are available in a Substack article.

- Open-Sourcing Kolibrify: A user announces the release of their project Kolibrify, a tool for curriculum training of instruction-following LLMs with Unsloth, designed for PhD research. The tool, aimed at those finetuning LLMs on workstations for rapid prototyping, is available on GitHub.

Links mentioned:

- AI Unplugged 8: Llama3, Phi-3, Training LLMs at Home ft DoRA.: Insights over Information

- GitHub - oKatanaaa/kolibrify: Curriculum training of instruction-following LLMs with Unsloth: Curriculum training of instruction-following LLMs with Unsloth - oKatanaaa/kolibrify

Unsloth AI (Daniel Han) ▷ #suggestions (7 messages):

-

Innovating with TRL Trainer: A member is working on implementing laser pruning and potentially freezing with a trl trainer that functions during the evaluation step. The goal is to iteratively increase context length for models while utilizing the same GPU.

-

No Reinitialization Required for Context Expansion: The suggestion to increase available context length through model and tokenizer configuration adjustments was made. It was confirmed that these changes do not require reinitialization of the system.

-

Emoji Expressiveness in Chat: Members are using emojis in their communication, with comments expressing surprise and delight at the ability to type emojis in the chat.

Perplexity AI ▷ #announcements (1 messages):

- iOS Users Get Exclusive Feature: The Perplexity AI Discord chatbot has been updated with a new feature where users can ask any question and hear the answer. This feature is available for iOS <a:pro:1138537257024884847> users starting today.

Perplexity AI ▷ #general (531 messages🔥🔥🔥):

- Perplexity Supports Opus: The regular Perplexity chatbot still supports Opus, despite the recent caps placed on its usage.

- The Great Opus Limit Debate: Users express frustration over the sudden limit placed on Claude 3 Opus interactions, reducing the available queries from previous higher or unlimited numbers to just 50 per day. Discussions revolve around the difference in model performance and pricing compared to competitors like you.com, as well as transparency regarding usage caps.

- Enterprise Features Unclear: Members discussed the difference between regular Pro and Enterprise Pro versions of Perplexity, especially in the context of privacy settings and data usage for model training. There seems to be confusion about whether setting a toggle does protect users’ data from being used by Perplexity’s models or Anthropic’s models.

- Transparency and Communication Critique: Community members criticize Perplexity for poor communication regarding usage changes and urge for official announcements. Comparisons are made with other services like poe.com, which users perceive as more transparent with their pricing and limits.

- Ecosystem Ramifications: Conversation pondered the implications of Google potentially getting serious with their Gemini model, which some believe offers competitive advantages due to scalability and Google’s dataset. Expectations are forming around GPT-5 needing to be particularly impressive in light of increasing competition.

Links mentioned:

- Reddit - Dive into anything: no description found

- Tweet from Matt Shumer (@mattshumer_): It's been a week since LLaMA 3 dropped. In that time, we've: - extended context from 8K -> 128K - trained multiple ridiculously performant fine-tunes - got inference working at 800+ tokens...

- Stable Cascade - a Hugging Face Space by multimodalart: no description found

Perplexity AI ▷ #sharing (8 messages🔥):

- Exploring Perplexity AI: A user shared a search link without any accompanying commentary or context.

- Diving into Pool Chemistry: Discussing pool maintenance frustrations, a member mentioned the Langlier Saturation Index as a potentially helpful but complex solution not tailored for outdoor pools and shared an informative Perplexity AI search link.

- Net Neutrality and its AI Impact: A link was shared regarding the FCC’s restoration of Net Neutrality, with the post hinting at possible implications for the AI Boom, accessible at Perplexity AI search.

- Command the Commands: One user queried about a specific command, referring to a Perplexity AI search. Another user reminded to ensure the thread is Shareable.

- AI for Voting?: There’s interest in how AI could apply to voting systems, with a user linking to a Perplexity AI search.

- Homeland Security’s New Directive: A share without comment included a link to a Perplexity AI search regarding an announcement from Homeland Security.

Perplexity AI ▷ #pplx-api (10 messages🔥):

-

API Integration Quirks Noted: An user mentioned integrating the Perplexity API with a speech assistant and observed issues with date relevance in responses, such as receiving a sports game score from a year ago instead of the current date. They also inquired about inserting documents for comparison purposes and expressed interest in expanded citation functionality, hinting at the potential for more versatile usage.

-

No GPT-4 Support with Perplexity: A member was looking to use GPT-4 through the Perplexity API but found it unsupported. Another member provided a documentation link listing available models, including

sonar-small-chat,llama-3-instructvariants, andmixtral-instruct, but no mention of GPT-4. -

Optimal Hyperparameters for llama-3-70b-instruct Usage: An individual posed a question about the appropriate hyperparameters for using the

llama-3-70b-instructmodel via the API, sharing their current parameters structure and seeking confirmation or corrections, specifically regardingmax_tokensandpresence_penaltyvalues. -

Unclear Integration Details: The same user mentioned staying within rate limits when making their calls to the API, although there was uncertainty regarding whether the Perplexity API operates identically to OpenAI’s in terms of parameter settings.

-

Awaiting Enterprise API Response: An enterprise user reached out in the channel after emailing Perplexity AI’s enterprise contacts regarding API usage and awaiting a response. They were advised by another member that response times range from 1-3 weeks.

-

Clarification Sought on “Online LLMs” Usage: A new user to Perplexity AI sought clarification on the guidance for using online LLMs, questioning whether to avoid using system prompts and if it was necessary to present queries in a single-turn conversation format.

Link mentioned: Supported Models: no description found

CUDA MODE ▷ #general (13 messages🔥):

-

Seeking Further CUDA Mastery: A discussion suggested enhancing CUDA learning through public demonstrations of skill, specifically by writing a fast kernel and sharing the work. Suggested projects included optimizing a fixed-size matrix multiplication, flash attention, and various quantization methods.

-

BASH Channel Turns CUDA Incubator: The

<#1189861061151690822>channel is slated to focus on algorithms that could benefit from CUDA improvement, inviting members to contribute with their optimized kernels. However, there was a recommendation to create a more permanent repository for these contributions beyond the transient nature of Discord channels. -

Instance GPU Configuration Verification: A user confirmed that upon accessing their instance via SSH, the GPU configuration is verified, revealing consistent assignment of a V100 for p3.2xlarge.

-

Next CUDA Lecture Up and Coming: An announcement was made regarding an upcoming CUDA mode lecture, scheduled to occur in 1 hour and 40 minutes from the time of the announcement.

-

Anticipating CUDA Updates: There was a query regarding the release schedule for CUDA distributables for Ubuntu 24.04, but there was no follow-up information provided within the message history.

CUDA MODE ▷ #cuda (40 messages🔥):

- Kernel Profiling Confusion: One member was trying to obtain more detailed information about kernel operations using NVIDIA’s Nsight Profiler. After initial confusion between Nsight Systems and Nsight Compute, it was clarified that using NVIDIA Nsight Compute CLI User Guide could yield detailed kernel stats.

- Understand the Synchronize: An explanation was given about

cudaStreamSynchronize, how it implies the CPU waits for tasks in the CUDA stream to finish, and it was suggested to check if the synchronization is essential at each point it’s called to potentially improve performance. - Occupancy and Parallelization Advice: Discussion touched on launch statistics of CUDA kernels, indicating that launching only a small number of blocks such as 14 blocks could result in GPU idle time unless multiple CUDA streams are being utilized.

- Performance Insights and Tweaks: For in-depth kernel analysis, it was suggested to switch to full metric selection in profiling for more comprehensive information, and a broader tip was given to aim for a higher number of blocks rather than introducing the complexity of CUDA streams, if feasible.

- Arithmetic Intensity vs Memory Bandwidth: There was a comparison of the FLOP/s and memory throughput between the tiled_matmult and coarsed_matmult kernels, with observations on how __syncthreads() calls and memory bandwidth relate. The discussion evolved into how arithmetic intensity (AI) is perceived from SRAM versus DRAM perspectives when profiling with Nsight Compute / ncu.

Links mentioned:

- 4. Nsight Compute CLI — NsightCompute 12.4 documentation: no description found

- How to Optimize a CUDA Matmul Kernel for cuBLAS-like Performance: a Worklog: In this post, I’ll iteratively optimize an implementation of matrix multiplication written in CUDA.My goal is not to build a cuBLAS replacement, but to deepl...

CUDA MODE ▷ #torch (9 messages🔥):

-

Tensor Expansion Explained: A discussion revealed that

Tensor.expandin PyTorch works by modifying the tensor’s strides, not its storage. It was noted that when using Triton kernels, issues may arise from improper handling of these modified strides. -

Flash-Attention Incompatibility Alert: There was a report of incompatibility between the newly released flash-attn version 2.5.7 and the CUDA libraries installed by PyTorch 2.3.0, specifically an

undefined symbolerror, and hopes were expressed for a prompt update to resolve this. -

Building Flash-Attention Challenges: A user encountered difficulties in building flash-attn, mentioning that the process was excessively time-consuming without ultimate success.

-

Understanding CUDA Tensor Memory: A member shared a useful overview clarifying that the memory pointers for CUDA tensors always point to device memory, and cross-GPU operations are restricted by default in PyTorch.

Link mentioned: CUDA semantics — PyTorch 2.3 documentation: no description found

CUDA MODE ▷ #announcements (1 messages):

- Boost in LLM Performance: An exciting bonus talk by the NVIDIA C++ team was announced, discussing the porting of

llm.ctollm.cpppromising cleaner and faster code. The session was starting shortly.

CUDA MODE ▷ #algorithms (47 messages🔥):

- Exploring Plenoxels and SLAM Algorithms: The chat discussed Plenoxels CUDA kernels, which are faster variants of NeRF, and expressed interest in seeing a CUDA version of Gaussian Splatting SLAM.

- Acceleration of Mobile ALOHA with CUDA: The inference algorithms for Mobile ALOHA, such as ACT and Diffusion Policy, were topics of interest.

- Kernel Operations for Binary Matrices: There was a brainstorming session on creating a CUDA kernel for operations on binary (0-1) or ternary (1.58-bit, -1, 0, 1) matrices. The group discussed potential approaches avoiding unpacking, including a masked multiply tactic and kernel fusion.

- Low-bit Quantization and Efficiency Discussions: Members debated the efficiency of unpacking operations in Pytorch vs. fused CUDA or Triton kernels. Some suggested that operations be conducted without unpacking, while others highlighted memory copies and caching as significant concerns. Microsoft’s 1-bit LLM paper was mentioned as a motivating idea for optimizing linear layers in neural networks.

- Challenges with Packed Operations in CUDA: The conversation centered around the feasibility of conducting matrix multiplication-like operations directly on packed data types without unpacking, referring to CUDA 8.0’s bmmaBitOps as a potential method. Discussion included bit operations in CUDA’s programming guide and the interest in trialing computations minimizing unpacking. A member provided a link to a CPU version of BitNet for testing purposes.

Link mentioned: GitHub - catid/bitnet_cpu: Experiments with BitNet inference on CPU: Experiments with BitNet inference on CPU. Contribute to catid/bitnet_cpu development by creating an account on GitHub.

CUDA MODE ▷ #beginner (6 messages):

- Exploring Multi-GPU Programming: One member hinted at the potential for learning about multi-GPU programming, suggesting it might be an area of interest.

- Laptop with NVIDIA GPU for Learning: Members concurred that a laptop with a NVIDIA GPU, such as one containing a 4060, is a cost-effective option suitable for learning and testing CUDA code.

- Jetson Nano for CUDA Exploration: A Jetson Nano was recommended for those looking to learn CUDA programming, especially when there is an extra monitor available.

- Search for NCCL All-Reduce Kernel Tutorial: A request was made for tutorials on learning NCCL to implement all-reduce kernels. No specific resources were provided in the chat.

CUDA MODE ▷ #pmpp-book (5 messages):

- Clarifying Burst Size: Burst size refers to the chunk of memory accessed in a single load operation during memory coalescing, where the hardware combines multiple memory loads from contiguous locations into one load to improve efficiency. This concept is explored in Chapter 6, section 3.d of the CUDA guide where it mentions that burst sizes can be around 128 bytes.

- Insights from External Resources: A helpful lecture slide by the book’s authors was provided, affirming that bursts typically contain 128 bytes which clarifies the concept of coalesced versus uncoalesced memory access.

- Discrepancy in Burst Size Understanding Corrected: There was a reiterating message indicating that initially, there was a misunderstanding about coalesced access, which was resolved after revisiting and rereading the relevant section of the CUDA guide.

CUDA MODE ▷ #youtube-recordings (1 messages):

poker6345: ppt can be shared

CUDA MODE ▷ #torchao (2 messages):

-

Simplified BucketMul Function Revelations: A member shared a simplified version of the bucketMul function, highlighting how it factors both weights and dispatch in computing multiplications, and potentially optimizing memory loads. It resembles discussions on bucketed COO for better memory performance, with the added consideration of activations.

-

AO Welcomes Custom CUDA Extensions: PyTorch AO now supports custom CUDA extensions, allowing seamless integration with

torch.compileby following the provided template, as per a merged pull request. This is especially enticing for those adept at writing CUDA kernels and aiming to optimize performance on consumer GPUs.

Links mentioned:

- Effort Engine: no description found

- Custom CUDA extensions by msaroufim · Pull Request #135 · pytorch/ao: This is the mergaeble version of #130 - some updates I have to make Add a skip test unless pytorch 2.4+ is used and Add a skip test if cuda is not available Add ninja to dev dependencies Locall...

CUDA MODE ▷ #ring-attention (1 messages):

iron_bound: https://www.harmdevries.com/post/context-length/

CUDA MODE ▷ #off-topic (1 messages):

iron_bound: https://github.com/adam-maj/tiny-gpu

CUDA MODE ▷ #llmdotc (377 messages🔥🔥):

-

Chasing MultigPU Efficiencies: The group is working on integrating multi-GPU support with NCCL, discussing performance penalties of multi-GPU configurations, and potential improvements like gradient accumulation. A move to merge NCCL code into the main branch is considered, along with a discussion about whether FP32 should support multi-GPU, leaning towards not including it.

-

Optimizing Gather Kernel without Atomics: A strategy is discussed for optimizing a layernorm backward kernel by avoiding atomics, using threadblock counting and grid-wide synchronization techniques to manage dependencies and streamline calculations.

-

Debugging and Decision-making for FP32 Path: It’s suggested that the FP32 version of

train_gpt2be simplified for educational purposes, possibly stripping out multi-GPU support to keep the example as intuitive as possible for beginners. -

Brainstorming Persistent Threads and L2 Communication: There’s an in-depth technical discussion about the potential benefits and drawbacks of using persistent threads with grid-wide synchronization to exploit the memory bandwidth more efficiently and potentially run multiple kernels in parallel.

-

Parallelism and Kernel Launch Concerns: Dialogue revolves around the comparison of the new CUDA concurrent kernel execution model managed by queues to traditional methods, with thoughts on the pros and cons of embracing this uncharted approach to achieve better memory bandwidth exploitation and reduced latency.

Links mentioned:

- tf.bitcast equivalent in pytorch?: This question is different from tf.cast equivalent in pytorch?. bitcast do bitwise reinterpretation(like reinterpret_cast in C++) instead of "safe" type conversion. ...

- Energy and Power Efficiency for Applications on the Latest NVIDIA Technology | NVIDIA On-Demand: With increasing energy costs and environmental impact, it's increasingly important to consider not just performance but also energy usage

- Tweet from Horace He (@cHHillee): It's somehow incredibly hard to get actual specs of the new Nvidia GPUs, between all the B100/B200/GB200/sparse/fp4 numbers floating around. @tri_dao linked this doc which thankfully has all the n...

- k - Overview: k has 88 repositories available. Follow their code on GitHub.

- Issues · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- reorder weights according to their precision by ngc92 · Pull Request #252 · karpathy/llm.c: Simplify our logic by keeping weights of the same precision close together. (If we want to go with this, we also need to update the fp32 network to match; hence, for now this is a Draft PR)

- corenet/projects/openelm at main · apple/corenet: CoreNet: A library for training deep neural networks - apple/corenet

- GitHub - adam-maj/tiny-gpu: A minimal GPU design in Verilog to learn how GPUs work from the ground up: A minimal GPU design in Verilog to learn how GPUs work from the ground up - adam-maj/tiny-gpu

- Self-Improving Agents are the future, let’s build one: If you're serious about AI, and want to learn how to build Agents, join my community: https://www.skool.com/new-societyFollow me on Twitter - https://x.com/D...

- Pull requests · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

CUDA MODE ▷ #massively-parallel-crew (25 messages🔥):

- Seeking Volunteers for Recording: One member commits to screen recording and requests a backup recorder due to having to leave the session early. They advise against using AirPods as they might change the system audio output unpredictably.

- Mac Screen Recording Tutorial: Guidance provided for screen recording on a Mac, including downloading Blackhole from here and setting up a Multi-Output Device in the “Audio MIDI Setup.”

- Audio Troubleshooting with Blackhole: It’s suggested to avoid Bluetooth devices for audio capture to prevent interruptions, and to select BlackHole 2ch for lossless sound recording.

- Step-by-Step Recording Instructions: Detailed instructions include using the Cmd + Shift + 5 shortcut, selecting the entire screen, saving to an external drive, and ensuring the microphone is set to BlackHole 2ch.

- Pre-Recording Tech Check Proposed: A call is suggested before the event for checking sound and recording settings.

Link mentioned: Existential Audio - BlackHole: no description found

LM Studio ▷ #💬-general (218 messages🔥🔥):

-

LM Studio Becomes a One-Stop Chat Hub: Members discussed integrating documents with the chat feature in LM Studio using Retriever-Augmented Generation (RAG) through custom scripts and the API. Some showcased successfully directing LM Studio to utilize their system’s GPU instead of the CPU for model operations, with a switch found in the Settings panel.

-

Navigating Update Confusions: There was confusion about updating to version 0.2.21 of LM Studio, with some users not able to see the update through the auto-updater. It was clarified that the new version hadn’t been pushed to the updater, and members were directed to manually download it from LM Studio’s official website.

-

Challenges with Offline Image Generation and AI Chat: Users inquired about offline image generation capabilities, being redirected to Automatic1111 for those needs. The conversation also mentioned experiencing ‘awe’ moments with AI advancements, particularly when interacting with chatbots like AI Chat on LM Studio.

-

Troubleshooting Varied Issues: From GPU support questions to errors like “Exit code: 42,” members tried to troubleshoot issues ranging from installation errors on different versions of LM Studio to getting specific models to work. heyitsyorkie provided advice on many technical issues, including recommending updates or altering settings to overcome errors.

-

Technical inquiries about LM Studio capabilities and settings: Users engaged in various technical discussions around LM Studio’s API server capabilities, inference speeds, GGUF model support, and specific hardware requirements for running large language models. heyitsyorkie and other members shared insights and resources, including linking to the local server documentation and discussing optimal setups for AI inference.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- LLM Model VRAM Calculator - a Hugging Face Space by NyxKrage: no description found

- ONNX Runtime | Blogs/accelerating-phi-3: Cross-platform accelerated machine learning. Built-in optimizations speed up training and inferencing with your existing technology stack.

- ChristianAzinn/acge_text_embedding-gguf · Hugging Face: no description found

- Phi-3-mini-4k-instruct-q4.gguf · microsoft/Phi-3-mini-4k-instruct-gguf at main: no description found

- Local LLM Server | LM Studio: You can use LLMs you load within LM Studio via an API server running on localhost.

- google/siglip-so400m-patch14-384 · Hugging Face: no description found

- aspire/acge_text_embedding · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- qresearch/llama-3-vision-alpha · Hugging Face: no description found

- unsloth/llama-3-8b-Instruct-bnb-4bit · Hugging Face: no description found

- ChristianAzinn (Christian Zhou-Zheng): no description found

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...