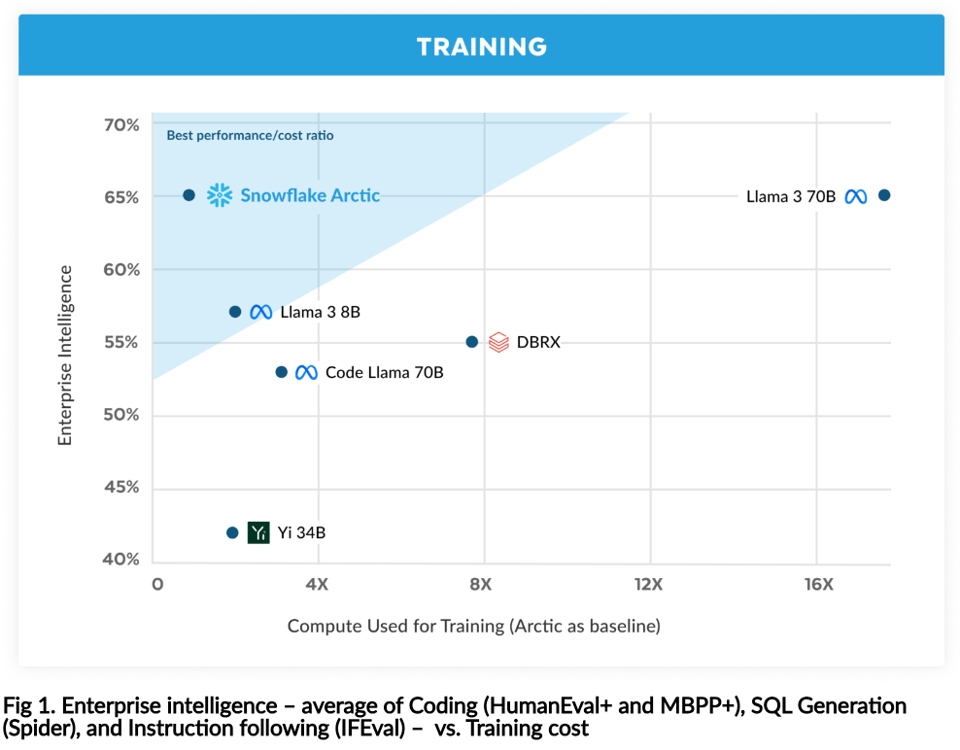

This one takes a bit of parsing but is a very laudable effort from Snowflake, which til date has been fairly quiet in the modern AI wave. Snowflake Arctic is notable for a few reasons, but probably not the confusing/unrelatable chart they chose to feature above the fold:

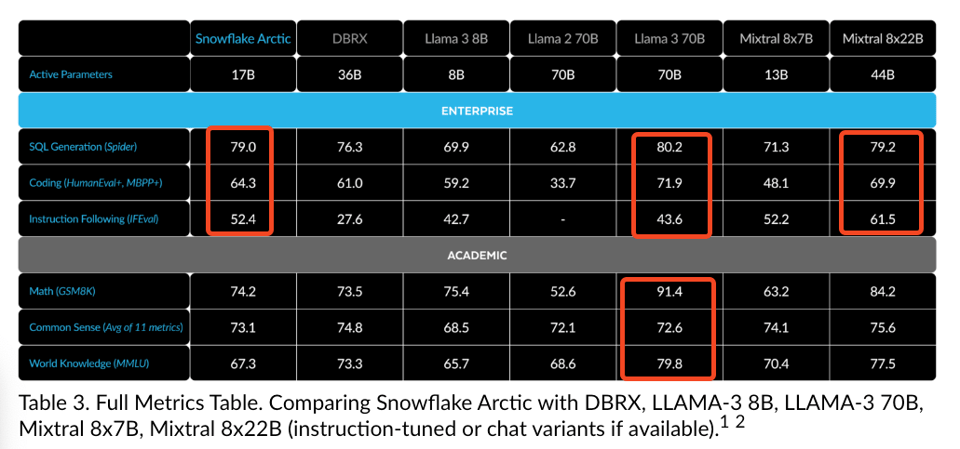

“Enterprise Intelligence” one could warm to, esp if it explains why they have chosen to do better on some domains than others:

What this chart really shows in not very subtle ways is that Snowflake is basically claiming to have built an LLM that is better in almost every way to Databricks, their main rival in the data warehouse wars. (This has got to smell offensive to Jon Frankle and his merry band of Mosaics?)

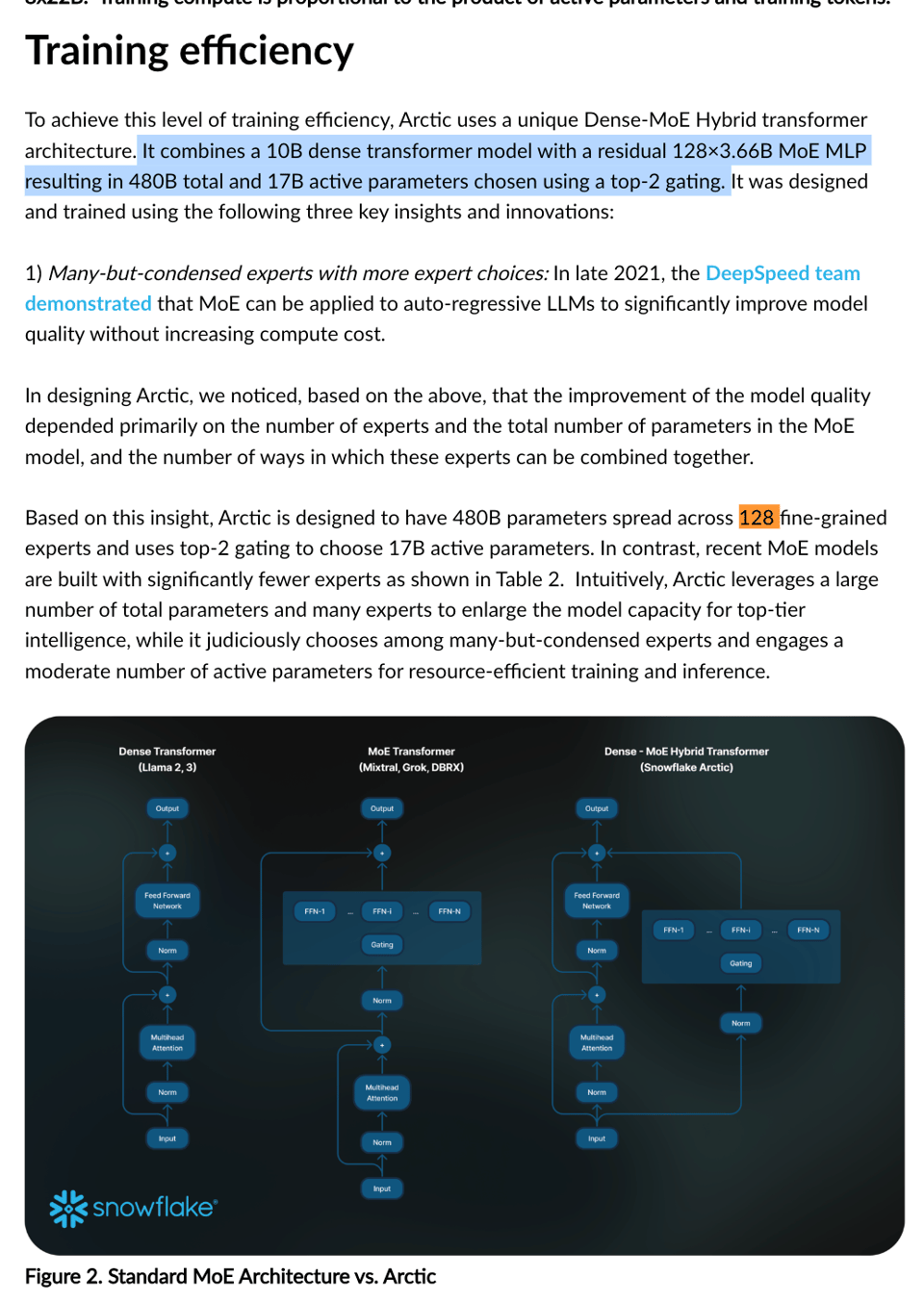

Downstream users don’t care that much about training efficiency, but the other thing that should catch your eye is the model architecture - taking the right cue from DeepSeekMOE and DeepSpeedMOE) with more experts = better:

No mention is made of the “shared expert” trick that DeepSeek used.

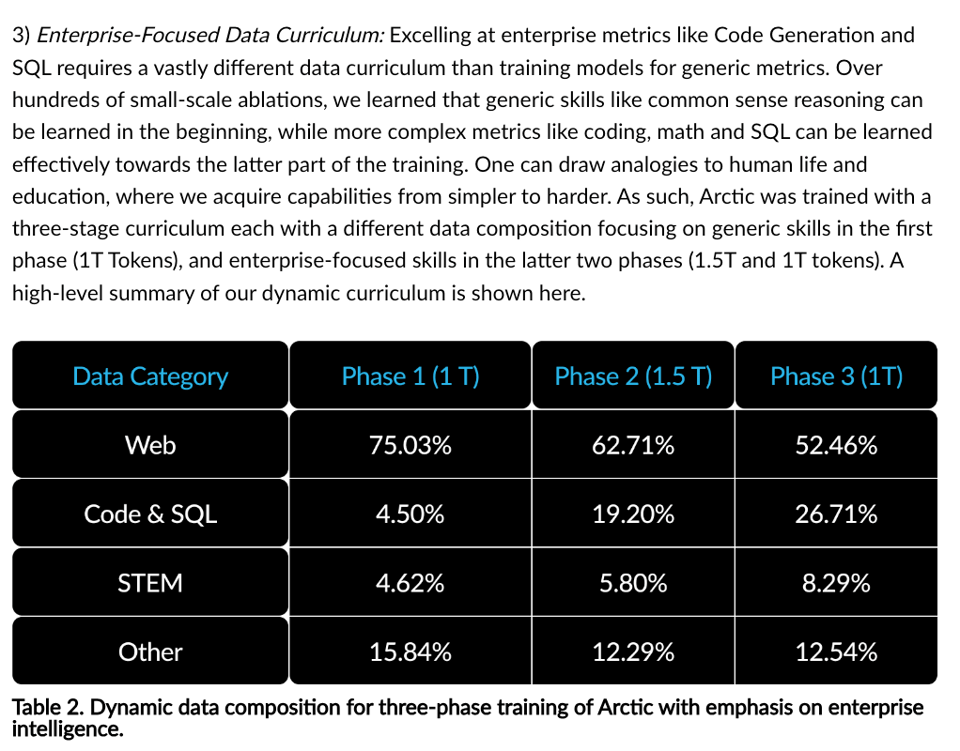

Finally there’s mention of a 3 stage curriculum:

which echoes a similar strategy seen in the recent Phi-3 paper:

Finally, the model is released as Apache 2.0.

Honestly a great release, with perhaps the only poor decision being that the Snowflake Arctic cookbook is being published on Medium dot com.

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Image/Video Generation

- Nvidia Align Your Steps: In /r/StableDiffusion, Nvidia’s new Align Your Steps technique significantly improves image quality at low step counts, allowing good quality images with fewer steps. Works best with DPM~ samplers.

- Stable Diffusion Model Comparison: In /r/StableDiffusion, a big comparison of current Stable Diffusion models shows SD Core has the best hands/anatomy, while SD3 understands prompts best but has a video game look.

- SD3 vs SD3-Turbo Comparison: 8 images generated by Stable Diffusion 3 and SD3 Turbo models based on prompts from Llama-3-8b language model involving themes of AI, consciousness, nature and technology.

Other Image/Video AI

- Adobe AI Video Upscaling: Adobe’s impressive AI upscaling project makes blurry videos look HD. However, distortions and errors are more visible in high resolution.

- Instagram Face Swap: In /r/StableDiffusion, Instagram spammers are using FaceFusion/Roop to create convincing face swaps in videos, which works best when the face is not too close to the camera in low res videos.

Language Models and Chatbots

- Apple Open Source AI Models: Apple released code, training logs, and multiple versions of on-device language models, diverging from the typical practice of only providing weights and inference code.

- L3 and Phi 3 Performance: L3 70B is tied for 1st place for English queries on the LMSYS leaderboard. Phi 3 (4B params) beats GPT 3.5 Turbo (~175B params) in the banana logic benchmark.

- Llama 3 Inference and Quantization: A video shows fast inference of Llama 3 on a MacBook. However, quantizing Llama 3 8B, especially below 8-bit, noticeably degrades performance compared to other models.

AI Hardware and Infrastructure

- Nvidia DGX H200 for OpenAI: Nvidia CEO delivered a DGX H200 system to OpenAI. An Nvidia AI datacenter, if fully built out, could train ChatGPT4 in minutes and is described as having “otherworldly power and complexity”.

- ThinkSystem AMD MI300X: Lenovo released a product guide for the ThinkSystem AMD MI300X 192GB 750W 8-GPU board.

AI Ethics and Societal Impact

- Deepfake Nudes Legislation: Legislators in two dozen states are working on bills or have passed laws to combat AI-generated sexually explicit images of minors, spurred by teen girls.

- AI in Politics: In /r/StableDiffusion, an Austrian political party used AI to generate a more “manly” picture of their candidate compared to his real photo, raising implications of using AI to misrepresent reality in politics.

- AI Conversation Confidentiality: In /r/singularity, a post argues that as AI agents gain more personal knowledge, the relationship should have legally protected confidentiality like with doctors and lawyers, but corporations will likely own and use the data.

Humor/Memes

- Various humorous AI-generated images were shared, including Jesus Christ with clown makeup, Gollum holding the Stable Diffusion 3 model, and marketing from Bland AI.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI and NVIDIA Partnership

- NVIDIA DGX H200 delivered to OpenAI: @gdb noted NVIDIA hand-delivered the first DGX H200 in the world to OpenAI, dedicated by Jensen Huang “to advance AI, computing, and humanity”. @rohanpaul_ai highlighted the DGX GH200 features like 256 H100 GPUs, 1.3TB GPU memory, and 8PB/s interconnect bandwidth.

- OpenAI and Moderna partnership: @gdb also mentioned a partnership between OpenAI and Moderna to use AI for accelerating drug discovery and development.

Llama 3 and Phi 3 Models

- Llama 3 models: @winglian has extended the context length of Llama 3 8B to 96k using PoSE and RoPE theta adjustments. @erhartford released Dolphin-2.9-Llama3-70b, a fine-tuned version of Llama 3 70B created in collaboration with others. @danielhanchen noted Llama-3 70b QLoRA finetuning is 1.83x faster & uses 63% less VRAM than HF+FA21, and Llama-3 8b QLoRA fits in an 8GB card.

- Phi 3 models: @rasbt shared details on Apple’s OpenELM paper, introducing the Phi 3 model family in 4 sizes (270M to 3B). Key architecture changes include a layer-wise scaling strategy adopted from the DeLighT paper. Experiments showed no noticeable difference between LoRA and DoRA for parameter-efficient finetuning.

Snowflake Arctic Model

- Snowflake releases open-source LLM: @RamaswmySridhar announced Snowflake Arctic, a 480B Dense-MoE model designed for enterprise AI. It combines a 10B dense transformer with a 128x3.66B MoE MLP. @omarsar0 noted it claims to use 17x less compute than Llama 3 70B while achieving similar enterprise metrics like coding, SQL, and instruction following.

Retrieval Augmented Generation (RAG) and Long Context

- Retrieval heads in LLMs: @Francis_YAO_ discovered retrieval heads, a special type of attention head responsible for long-context factuality in LLMs. These heads are universal, sparse, causal, and significantly influence chain-of-thought reasoning. Masking them out makes the model “blind” to important previous information.

- XC-Cache for efficient LLM inference: @_akhaliq shared a paper on XC-Cache, which caches context for efficient decoder-only LLM generation instead of just-in-time processing. It shows promising speedups and memory savings.

- RAG hallucination testing: @LangChainAI demonstrated how to use LangSmith to evaluate RAG pipelines and test for hallucination by checking outputs against retrieved documents.

AI Development Tools and Applications

- CopilotKit for integrating AI: @svpino highlighted CopilotKit, an open-source library that makes integrating AI into applications extremely easy, allowing you to bring LangChain agents into your app, build chatbots, and create RAG workflows.

- Llama Index for LLM UX: @llama_index showed how to build a UX for your LLM chatbot/agent with expandable sources and citations using create-llama.

Industry News

- Meta’s AI investments: @bindureddy noted Meta’s weak Q2 forecast and plans to spend billions on AI, seeing it as a sound strategy. @nearcyan joked that Meta’s $36B revenue just gets poured into GPUs now.

- Apple’s AI announcements: @fchollet shared a Keras starter notebook for Apple’s Automated Essay Scoring competition on Kaggle. @_akhaliq covered Apple’s CatLIP paper on CLIP-level visual recognition with faster pre-training on web-scale image-text data.

AI Discord Recap

A summary of Summaries of Summaries

1. Llama 3 and Phi-3 Releases Spark Excitement and Comparisons: The release of Meta’s Llama 3 (8B and 70B variants) and Microsoft’s Phi-3 models generated significant buzz, with discussions comparing their performance, architectures like RoPE, and capabilities like Phi-3’s function_call tokens. Llama 3’s impressive scores on benchmarks like MMLU and Human Eval were highlighted.

2. Advancements in RAG Frameworks and Multimodal Models: Improvements to Retrieval-Augmented Generation (RAG) frameworks using LangChain’s LangGraph were discussed, featuring techniques like Adaptive Routing and Corrective Fallback. The release of Apple’s OpenELM-270M and interest in models like moondream for multimodal tasks were also covered.

3. Open-Source Tooling and Model Deployment: The open-sourcing of Cohere’s Toolkit for building RAG applications was welcomed, while Datasette’s LLM Python API usage for text embedding was explored. Discussions on batching prompts efficiently involved tools like vLLM, TGI, and llm-swarm.

4. Specialized Models and Niche Applications: The medical Internist.ai 7b model’s impressive performance, even surpassing GPT-4 in evaluations, generated excitement. Unique projects like the AI-powered text RPG Brewed Rebellion and the 01 project for embedding AI into devices were also showcased.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

-

Fine-Tuning Fixes and Future Projections: Unsloth Pro is developing multi-GPU support, expected to launch an open-source version by May. Meanwhile, PR #377 on Unsloth’s GitHub proposes to address vocabulary resizing issues in models and is eagerly awaited by the community.

-

LlaMA Runs Better Unencumbered: Removing extraneous tokens from prompts clarified a path to improving LLaMA models’ finetuning, notably dropping loss from 5 to a more temperate range in the initial epoch. The ideal setup for finetuning the 70B parameter LLaMA-3 model involves at least 48GB GPU VRAM, though richer resources are recommended.

-

Optimizing AI’s Brainpower: Debates on the optimal reranker settled on ms Marco l6 V2 for a 15x speed boost over BGE-m3, while pgvector in PostgreSQL sidesteps the need for external APIs. On the hardware front, new acquisitions are empowering projects such as fine-tuning for large RAG datasets and exploring innovative unsupervised in-context learning.

-

Meta’s Might in the Model Marathon: Meta’s introduction of the LlaMA-3 series has stirred discussions with its 8B and teased 400B models aligning to challenge GPT-4 benchmarks. Open source AI is gaining momentum with the release of LlaMA-3 and Phi-3, targeting similar objectives with distinct strategies, detailed in a shared Substack piece.

-

Technical Tidbits for Training: Tips include utilizing Colab notebooks to navigate fine-tuning glitches, harnessing GPT3.5 or GPT4 for crafting multiple-choice questions, and finetuning continuation on Kaggle. Approaches to sparsify embedding matrices and dynamically adjust context length have been tossed around, with interest in a possible warning system for model-size to GPU-fit mismatches.

LM Studio Discord

Phi and TinyLLamas Take the Spotlight: Members have been experimenting with phi-3 in LM Studio, using models like PrunaAI/Phi-3-mini-128k-instruct-GGUF-Imatrix-smashed to navigate quantization differences, with Q4 outshining Q2 in text generation. Meanwhile, a suite of TinyLlamas models has garnered attention on Hugging Face, presenting opportunities to play with Mini-MOE models of 1B to 2B range, and the community is abuzz with the rollout of Apple’s OpenELM, despite its token limitations.

Navigating GPU Waters: GPU topics were center stage, with discussions on VRAM-intensive phi-3-mini-128k models, strategies for avoiding errors like “(Exit code: 42)” by upgrading to LM Studio v0.2.21, and addressing GPU offload errors. Further, technical advice flowed freely, recommending Nvidia GPUs for AI applications despite some members’ qualms with the brand, and a nod towards 32GB RAM upgrades for robust LLM experimentation.

Tech Tangles in ROCm Realm: The AMD and NVIDIA mixed GPU environment provoked errors with ROCm installs, with temp fixes including removing NVIDIA drivers. However, heyitsyorkie underscored that ROCm within LM Studio is still in tech preview, signaling expected bumps. Wisdom from the community suggested solutions like driver updates, for instance, Adrenalin 24.3.1 for an rx7600, to iron out compatibility and performance concerns.

Mac Mileage Varies for LLMs: Mac users chimed in, suggesting that a minimum of 16GB RAM is ideal for running LLMs smoothly, although the M1 chip on an 8GB RAM setup can handle smaller models if not overloaded with parallel tasks.

Local Server Lore: Strategy-sharing for accessing LM Studio’s local servers highlighted the use of Mashnet for remote operations and the potential role of Cloudflare in facilitating connections, updating the tried-and-true “localhost:port” setup.

Perplexity AI Discord

-

Enigmagi Secures Hefty Investment: Enigmagi celebrated raising $62.7 million in funding, hitting a $1.04 billion valuation with heavy hitters like NVIDIA and Jeff Bezos on board, while also launching a Pro service available for iOS users with existing Pro-tier subscriptions.

-

Perplexity Pro Users Debating Value: Some users are skeptical about the advantages of Enterprise Pro over Regular Pro on Perplexity, particularly over performance differences, although frustration also bubbles regarding the 50 daily usage limit for Opus searches.

-

Voices for Change on Perplexity Platform: Users showed interest in adjustments like temperature settings for better creative outputs, and while some discussed new voice features, others wished for more, like an iOS watch widget. At the same time, issues with Pro Support’s accessibility were brought to light, indicating potential areas for user experience improvement.

-

API Blues and Groq’s Potential: Within the pplx-api discourse, the community learned that image uploading won’t be part of the API, while for coding assistance, llama-3-70b instruct and mixtral-8x22b-instruct are recommended. Meanwhile, GPT-4 is not yet integrated, with current model details found in the documentation.

-

Content Conversations Across Channels: Various searches on Perplexity AI tackled topics from overcoming language barriers to systems thinking. One analysis provided perspective on translation challenges, while links like Once I gladly inferred discussions on temporal shifts in happiness, and Shift No More addressed the inevitability of change.

Nous Research AI Discord

Bold RoPE Discussions: The community debated the capabilities of Rotary Position Embedding (RoPE) in models like Meta’s Llama 3, including its effectiveness in fine-tuning versus pretraining and misconceptions about its ability to generalize in longer contexts. The paper on “Scaling Laws of RoPE-based Extrapolation” (arXiv:2310.05209) sparked conversations on scaling RoPE and the challenges of avoiding catastrophic forgetting with increased RoPE base.

AutoCompressors Enter the Ring: A new preprint on AutoCompressors presented a way for transformers to manage up to 30,720 tokens and improve perplexity (arXiv:2305.14788). Jeremy Howard’s thoughts on Llama 3 and its finetuning strategies echoed through the guild (Answer.AI post), and a Twitter thread unveiled its successful context extension to 96k using advanced methods (Twitter Thread).

LLM Education and Holographic Apple Leans Out: The guild discussed a game aimed at instructing about LLM prompt injections (Discord Invite). In hardware inklings, Apple reportedly reduced its Vision Pro shipments by 50% and is reassessing their headset strategy, sparking speculation about the 2025 lineup (Tweet by @SawyerMerritt).

Snowflake’s Hybrid Model and Model Conversation: Snowflake Arctic 480B’s launch of a unique Dense + Hybrid model led to analytical banter over its architecture choices, with a nod to its attention sinks designed for context scaling. Meanwhile, GPT-3 dynamics under discussion led to skepticism regarding whether it actually runs OpenAI’s Rabbit R1.

Pydantic Models for Credible Citations: Pydantic models garnished with validators were touted as a way to ensure proper citations in LLM contexts; the discussion referenced several GitHub repositories (GitHub - argilla-io/distilabel) and tools like lm-format-enforcer for maintaining credible responses.

Stream Crafting with WorldSim: Guildmates swapped experiences with WorldSim and suggested the potential for Twitch streaming shared world simulations. They also shared a custom character tree (Twitter post) and conversed about the application of category theory involving types ontology and morphisms (Tai-Danae Bradley’s work).

CUDA MODE Discord

PyTorch 2.3: Triton and Tensor Parallelism Take Center Stage: PyTorch 2.3 enhances support for user-defined Triton kernels and improves Tensor Parallelism for training Large Language Models (LLMs) up to 100 billion parameters, all validated by 426 contributors (PyTorch 2.3 Release Notes).

Pre-Throttle GPU Ponders During Power Plays: Engaging discussions occurred around GPU power-throttling architectures like those of A100 and H100, with anticipations around the B100’s design possibly affecting computational efficiency and power dynamics.

CUDA Dwellers Uncover Room for Kernel Refinements: Members shared strategies for optimizing CUDA kernels, including the avoidance of atomicAdd and capitalizing on warp execution advancements post-Volta, which allow threads in a warp to execute diverse instructions.

Accelerated Plenoxels Poses as a CUDA-Sharpened NeRF: Enthusiasm was directed towards Plenoxels for its efficient CUDA implementation of NeRF, as well as expressions of interest in GPU-accelerated SLAM techniques and optimization for kernels targeting attention mechanisms in deep learning models.

PyTorch CUDA Strides, Flash-Attention Quirks, and Memory Management: Source code indicating a memory-efficient handling of tensor multiplications touched upon similarity with COO matrix representation. It also highlighted a potential issue regarding Triton kernel crashes when trying to access expanded tensor indices outside their original range.

Eleuther Discord

Pre-LayerNorm Debate: An engineer highlighted an analysis that pre-layernorm may hinder the deletion of information in a residual stream, possibly leading to norm increases with successive layers.

Tokenizer Version Tussle: Changes between Huggingface tokenizer versions 0.13 and 0.14 are causing inconsistencies, resulting in a token misalignment during model inference, raising concern among members working on NeoX.

Poetry’s Packaging Conundrum: After a failed attempt to utilize Poetry for package management in NeoX development due to its troublesome binary and version management, the member decided it was too complex to implement.

Chinchilla’s Confidence Quandary: A community member questioned the accuracy of the confidence interval in the Chinchilla paper, suspecting an oversampling of small transformers and debating the correct cutoff for stable estimates.

Mega Recommender Revelations: Facebook has published about a 1.5 trillion parameter HSTU-based Generative Recommender system, which members highlighted for its performance improvement by 12.4% and potential implications. Here is the paper.

Penzai’s Puzzling Practices: Users find penzai’s usage non-intuitive, sharing workarounds and practical examples for working with named tensors. Discussion includes using untag+tag methods and the function pz.nx.nmap for tag manipulation.

Evaluating Large Models: A user working on a custom task reported high perplexity and is seeking advice on the CrossEntropyLoss implementation, while another discussion arose over the num_fewshot settings for benchmarks to match the Hugging Face leaderboard.

Stability.ai (Stable Diffusion) Discord

-

RealVis V4.0 Wins Over Juggernaut: Engineers discussed their preference for RealVis V4.0 for faster and more satisfactory image prompt generation over the Juggernaut model, indicating that performance still trumps brand new models.

-

Stable Diffusion 3.0 API Usage Concerns: There was noticeable anticipation for Stable Diffusion 3.0, but some disappointment was voiced upon learning that the new API is not free and only offers limited trial credits.

-

Craiyon, a Tool for the AI Novice: For newcomers requiring assistance with image generation, community veterans recommended Craiyon as a user-friendly alternative to the more complex Stable Diffusion tools that necessitate local installations.

-

AI Model Tuning Challenges Tackled: Conversations spanned from generating specific image prompts to cloud computing resources like vast.ai, handling AI video creation, and fine-tuning issues, with discussions providing insights into training LoRas and adhering to Steam regulations.

-

Exploring Independent AI Ventures: The guild was abuzz with members sharing various AI-based independent projects, like webcomic generation available at artale.io and royalty-free sound designs at adorno.ai.

OpenRouter (Alex Atallah) Discord

Mixtral 8x7b Blank Response Crisis: The Mixtral 8x7b service experienced an issue with blank responses, leading to the temporary removal of a major provider and planning for future auto-detection capabilities.

Model Supremacy Debates Rage On: In discussions, members compared smaller AI models like Phi-3 to larger ones such as Wizard LM and reported that FireFunction from Fireworks (Using function calling models) might be a better alternative due to OpenRouter’s challenges in function calling and adhering to ‘stop’ parameters.

Time-Outs in The Stream: Various users reported an overflow of “OPENROUTER PROCESSING” notifications designed to maintain active connections, alongside issues with completion requests timing out with OpenAI’s GPT-3.5 Turbo on OpenRouter.

The Quest for Localized AI Business Expansion: A member’s search for direct contact information signaled an interest in establishing closer business connections for AI models in China.

Language Barriers in AI Discussions: AI Engineers compared language handling across AI models such as GPT-4, Claude 3 Opus, and L3 70B, noting particularly that GPT-4’s performance in Russian left something to be desired.

HuggingFace Discord

Llama 3 Leapfrogs into Lead: The new Llama 3 language model has been introduced, trained on a whopping 15T tokens and fine-tuned with 10M human annotated samples. It offers 8B and 70B variants, scoring over 80 on the MMLU benchmark and showcasing impressive coding capabilities with a Human Eval score of 62.2 for the 8B model and 81.7 for the 70B model; find out more through Demo and Blogpost.

Phi-3: Mobile Model Marvel: Microsoft’s Phi-3 Instruct model variants gain attention for their compact size (4k and 128k contexts) and their superior performance over other models such as Mistral 7B and Llama 3 8B Instruct on standard benchmarks. Notably designed for mobile use, Phi-3 features ‘function_call’ tokens and demonstrates advanced capabilities; learn more and test them out via Demo and AutoTrain Finetuning.

OpenELM-270M and RAG Refreshment: Apple’s OpenELM-270M model is making a splash on HuggingFace, along with advancements in the Retrieval-Augmented Generation (RAG) framework, which now includes Adaptive Routing and Corrective Fallback features using Langchain’s LangGraph. These and other conversations signify continued innovation in the AI space; details on RAG enhancements are found here, and Apple’s OpenELM-270M is available here.

Batching Discussions Heat Up: The necessity for efficient batching during model inference spurred interest among the community. Aphrodite, tgi, and other libraries are recommended for superior batching speeds, with reports of success using arrays for concurrent prompt processing, suggesting arrays could be used like prompt = ["prompt1", "prompt2"].

Trouble with Virtual Environments: A member’s challenges with setting up Python virtual environments on Windows sparked discussions and advice. The recommended commands for Windows are python3 -m venv venv followed by venv\Scripts\activate, with the suggestion to try WSL for improved performance.

LlamaIndex Discord

Trees of Thought: The development of LLMs with tree search planning capabilities could bring significant advancements to agentic systems, as disclosed in a tweet by LlamaIndex. This marks a leap from sequential state planning, suggesting potential strides in AI decision-making models.

Watching Knowledge Dance: A new dynamic knowledge graph tool developed using the Vercel AI SDK can stream updates and was demonstrated by a post that can be seen on the official Twitter. This visual technology could be a game-changer for real-time data representation.

Hello, Seoul: The introduction of the LlamaIndex Korean Community is expected to foster knowledge sharing and collaborations within the Korean tech scene, as announced in a tweet.

Boosting Chatbot Interactivity: Enhancements to chatbot User Interfaces using create-llama have emerged, allowing for expanded source information components and promising a more intuitive chat experience, with credits to @MarcusSchiesser and mentioned in a tweet.

Embeddings Made Easy: A complete tutorial on constructing a high-quality RAG application combining LlamaParse, JinaAI_ embeddings, and Mixtral 8x7b is now available and can be accessed through LlamaIndex’s Twitter feed. This guide could be key for engineers looking to parse, encode, and store embeddings effectively.

Advanced RAG Rigor: In-depth learning is needed for configuring advanced RAG pipelines, with suggestions like sentence-window retrieval and auto-merging retrieval being considered for tackling complex question structures, as pointed out with an instructional resource.

VectorStoreIndex Conundrum: Confusion about embeddings and LLM model selection for a VectorStoreIndex was clarified; gpt-3.5-turbo and text-embedding-ada-002 are the defaults unless overridden in Settings, as stated in various discussions.

Pydantic Puzzles: Integration of Pydantic with LlamaIndex encountered hurdles with structuring outputs and Pyright’s dissatisfaction with dynamic imports. The discussions haven’t concluded with an alternative to # type:ignore yet.

Request for Enhanced Docs: Requests were made for more transparent documentation on setting up advanced RAG pipelines and configuring LLMs like GPT-4 in LlamaIndex, with a reference made to altering global settings or passing custom models directly to the query engine.

OpenAI Discord

AI Hunts for True Understanding: A debate centered on whether AI can achieve true understanding, with the Turing completeness of autoregressive models like Transformers being a key point. The confluence of logic’s syntax and semantics was considered as potential enabler for meaning-driven operations by the model.

From Syntax to Semantics: Conversations revolved around the evolution of language in the AI landscape, forecasting the emergence of new concepts to improve clarity for future communication. The limitations of language’s lossy nature on accurately expressing ideas were also highlighted.

Apple’s Pivot to Open Source?: Excitement and speculation surrounded Apple’s OpenELM, an efficient, open-source language model introduced by Apple, stirring discussions on the potential impact on the company’s traditionally proprietary approach to AI technology and the broader trend towards openness.

Communication, Meet AI: Members highlighted the importance of effective flow control in AI-mediated communication, exploring technologies like voice-to-text and custom wake words. Discussing the interplay between AI and communication highlighted the need for mechanisms for interruption and recovery in virtual assistant interactions.

RPG Gaming with an AI Twist: The AI-powered text RPG Brewed Rebellion was shared, illustrating the growing trend of integrating AI into interactive gaming experiences, particularly in narrative scenarios like navigating internal politics within a corporation.

Engineering Better AI Behavior: Engineers shared tips on prompt crafting, emphasizing the use of positive examples for better results and pointing out that negative instructions often fail to rein in creative outputs from AI like GPT.

AI Coding Challenges in Gaming and Beyond: Challenges abound when prompting GPT for language-specific coding assistance, as raised by an engineer working on SQF language for Arma 3. Issues such as the model’s pretraining biases and limited context space were discussed, sparking recommendations for alternative models or toolchains.

Dynamic AI Updates and Capabilities: Queries on AI updates and capabilities surfaced, including how to create a GPT expert in Apple Playgrounds and whether new GPT versions could rival the likes of Claude 3. Additionally, the utility of GPT’s built-in browser versus dedicated options like Perplexity AI Pro and You Pro was contrasted, and anticipation for models with larger context windows was noted.

LAION Discord

-

AI Big Leagues - Model Scorecard Insights: The general channel had a lively debate over an array of AI models, with Llama 3 8B likened to GPT-4. Privacy concerns were raised, implying the ‘end of anonymous cloud usage’ due to new U.S. “Know Your Customer” regulations, and there were calls to scrutinize AI image model leaderboards.

-

Privacy at Risk - Cloud Regulations Spark Debate: Proposed U.S. regulations are causing unrest among members about the future of anonymity in cloud services. The credibility of TorrentFreak as a news source was defended following an article it published on cloud service provider regulations.

-

Cutting Edge or Over the Edge - AI Image Models Scrutinized: Discussions questioned the accuracy of AI image model leaderboards, suggesting the possible manipulation of results and adversarial interference.

-

Art Over Exactness? The AI Image Preference Puzzle: Aesthetic appeal versus prompt fidelity was the center of discussions around generative AI outputs, with contrasting preferences revealing the subjective nature of AI-produced imagery’s value.

-

Faster, Leaner, Smarter: Accelerating AI with New Research: Recent discussions in the research channel highlighted MH-MoE, a method improving context understanding in Sparse Mixtures of Experts (SMoE), and a weakly supervised pre-training technique that outpaces traditional contrastive learning by 2.7 times without undermining the quality of vision tasks.

OpenAccess AI Collective (axolotl) Discord

Bold Llama Ascends New Heights: Discussions captivated participants as Llama-3 has the potential to scale up to a colossal 128k size, with the blend of Tuning and augmented training. Interest also percolates around Llama 3’s pretrained learning rate, speculating an infinite LR schedule might be in the works to accompany upcoming model variants.

Snowflake’s New Release Causes Flurry of Excitement: The Snowflake 408B Dense + Hybrid MoE model made waves, flaunting a 4K context window and Apache 2.0 licensing. This generated animated conversations on its intrinsic capabilities and how it could synergize with Deepspeed.

Medical AI Takes A Healthy Leap Forward: The Internist.ai 7b model, meticulously designed by medical professionals, reportedly outshines GPT-3.5, even scoring well on the USMLE examination. It spurs on the conversation about the promise of specialized AI models, captivated by its performance and the audacious idea that it outperforms numerous other 7b models.

Crosshairs on Dataset and Model Training Tangles: Technical discussions dove into the practicalities of Hugging Face datasets, optimizing data usage, and the compatible interplay between optimizers and Fully Sharded Data Parallel (FSDP) setups. On the same thread, members experienced turbulence with fsdp when it comes to dequantization and full fine tunes, indicative of deeper compatibility and system issues.

ChatML’s New Line Quirk Raises Eyebrows: Participants identified a glitch in ChatML and possibly FastChat concerning erratic new line and space insertion. The issue throws a spotlight on the importance of refined token configurations, as it could skew training outcomes for AI models.

tinygrad (George Hotz) Discord

-

Tinygrad Tackles Facial Recognition Privacy: The possibility of porting Fawkes, a privacy tool designed to thwart facial recognition systems, to tinygrad was explored. George Hotz suggested that strategic partnerships are crucial for the success of tinygrad, highlighting the collaboration with comma on hardware for tinybox as an exemplar.

-

Linkup Riser Rebellion and Cool Solutions: There’s a notable struggle with PCIE 5.0 LINKUP risers causing errors, with some engineers suggesting to explore mcio or custom C-Payne PCBs. Additionally, one member reported a venture into water cooling, facing compatibility issues with NVLink adapters.

-

In Pursuit of Tinygrad Documentation: A gap has been flagged regarding normative documentation for tinygrad, contributing to the demand for a clear description of the behaviors of tinygrad operations. This included a conversation on the need for a tensor sorting function, and an intervention with a custom 1D bitonic merge sort function for lengths as powers of two.

-

GPU Colab’s Appetite for Tutorials: George Hotz shared an MNIST tutorial targeting GPU colab users, intended as a resource to help more users harness the potential of tinygrad.

-

Sorting, Looping, and Crashing Kernel Confab: AI engineers grappled with various aspect of tinygrad and CUDA, from the complexities of creating a torch.quantile equivalent to unveiling the architectural nuances of tensor cores, like m16n8k16, and the enigmatic crashes that defy isolation. Discussion of WMMA thread capacity revealed that a thread might hold up to 128 bits per input.

Modular (Mojo 🔥) Discord

Bold Moves in Benchmarking: The engineering community awaits Mojo’s performance benchmarks, comparing its prowess against languages like Rust and Python amidst skepticism from Rust enthusiasts. Lobsters carries a heated debate on Mojo’s claims of being safer and faster, which is central to Mojo’s narrative in tech circles.

Quantum Conundrums and ML Solutions: Quantum computing discussions touched on the nuances of quantum randomness with mentions of the Many-Worlds and Copenhagen interpretations. There’s a buzz about harnessing geometric principles and ML in quantum algorithms to handle qubit complexity and improve calculation efficiency.

Patching Up Mojo Nightly Builds: The Mojo community logs a null string bug in GitHub (#239two) and enjoys a fresh nightly compiler release with improved overloading for function arguments. Simultaneously, SIMD’s adaptation to EqualityComparable reveals both pros and cons, sparking a search for more efficient stdlib types.

Securing Software Supply Chains: Modular’s blogspot highlights the security protocols in place for Mojo’s safe software delivery in light of the XZ supply chain attack. With secure transport and signing systems like SSL/TLS and GPG, Modular puts a firm foot forward in protecting its evolving software ecosystem.

Discord Community Foresees Swag and Syntax Swaps: Mojo’s developer community enjoys a light-hearted suggestion for naming variables and anticipates future official swag; meanwhile, API development sparks discussions on performance and memory management. The MAX engine query redirects to specific channels, ensuring streamlined communication.

Latent Space Discord

A New Angle on Transformers: Engineers discussed enhancing transformer models by incorporating inputs from intermediate attention layers, paralleling the Pyramid network approach in CNN architectures. This tactic could potentially lead to improvements in context-aware processing and information extraction.

Ethical Tussle over ‘TherapistAI’: Controversy arose over leveIsio’s TherapistAI, with debates highlighting concerns about AI posing as a replacement for human therapists. This sparked discussions on responsible representations of AI capabilities and ethical implications.

Search for Semantic Search APIs: Participants reviewed several semantic search APIs; however, options like Omnisearch.ai fell short in web news scanning effectiveness compared to traditional tools like newsapi.org. This points to a gap in the current offerings of semantic search solutions.

France Bets on AI in Governance: Talks revolved around France’s experimental integration of Large Language Models (LLMs) into its public sector, noting the country’s forward-looking stance. Discussions also touched upon broader themes such as interaction of technology with the sociopolitical landscape.

Venturing Through Possible AI Winters: Members debated the sustainability of AI venture funding, spurred by a tweet concerning the ramifications of a bursting AI bubble. The conversations involved speculations on the impact of economic changes on AI research and venture prospects.

LangChain AI Discord

LangChain AI Fires Up Chatbot Quest: Discussions centered around utilizing pgvector stores with LangChain for enhancing chatbot performance, including step-by-step guidance and specific methods like max_marginal_relevance_search_by_vector. Members also fleshed out the mechanics behind SelfQueryRetriever and strategized on building conversational AI graphs with methods like createStuffDocumentsChain. The LangChain GitHub repository is pointed out as a resource along with the official LangChain documentation.

Template Woes for the Newly Hatched LLaMA-3: One member sought advice on prompt templates for LLaMA-3, citing gaps in the official documentation, reflecting the collective effort to catch up with the latest model releases.

Sharing AI Narratives and Tools: The community showcased several projects: the adaptation of RAG frameworks using LangChain’s LangGraph, an article of which is available on Medium; a union-centric, text-based RPG “Brewed Rebellion,” playable here; “Collate”, a service for transforming saved articles into digest newsletters available at collate.one; and BlogIQ, a content creation helper for bloggers found on GitHub.

Training Day: Embeddings Faceoff**: AI practitioners looking to sharpen their knowledge on embedding models could turn to an educational YouTube video shared by a member, aimed at demystifying the best tools in the trade.

Cohere Discord

-

Toolkit Teardown and Praise: Cohere’s Toolkit went open-source, exciting users with its ability to add custom data sources and deploy to multiple cloud platforms, while the GitHub repository was commended for facilitating the rapid deployment of RAG applications.

-

Troubleshooting Takes Center Stage: A member encountered issues while working with Cohere Toolkit on Docker for Mac; meanwhile, concerns about using the Cohere API key on Azure were alleviated with clarification that the key is optional, ensuring privacy.

-

API Anomaly Alert: Disparities between API and playground results when implementing site connector grounding in code were reported, posing a challenge that even subsequent corrections couldn’t fully resolve.

-

Acknowledging Open Source Champions: Gratitude was directed towards Cohere cofounder and key contributors for their dedicated effort launching the open-source toolkit, highlighting its potential benefit to the community.

-

Cohere Critique Critic Criticized: A debate was sparked over an article allegedly critical of Cohere, focusing on the introduction of a jailbreak to Cohere’s LLM that might enable malicious D.A.N-agents, though detractors of the article were unable to cite specifics to bolster their perspective.

OpenInterpreter Discord

-

Top Picks in AI Interpretation: The Wizard 2 8X22b and gpt 4 turbo models have been recognized as high performers in the OpenInterpreter project for their adeptness at interpreting system messages and calling functions. However, reports of erratic behavior in models like llama 3 have raised concerns among users.

-

A Patch for Local Execution: User experiences indicate confusion during local execution of models with OpenInterpreter, with a suggested solution involving the use of the

--no-llm_supports_functionsflag to resolve specific errors. -

UI Goes Beyond the Basics: Conversations have emerged around developing user interfaces for AI devices, with engineers exploring options beyond tkinter for compatibility with future microcontroller integrations.

-

Vision Models on the Spotlight: The sharing of GitHub repositories and academic papers has spurred discussions on computer vision models, with a particular focus on moondream for its lightweight architecture and the adaptability of llama3 to various quantization settings for optimized VRAM usage.

-

01 Project Gains Traction: Members have been engaging with the expansion of the 01 project to external devices, as evidenced by creative implementations shared online, including its integration into a spider as part of a project publicized by Grimes. Installation and execution guidance for 01 has also been addressed, with detailed instructions for Windows 11 and tips for running local models with the command

poetry run 01 —local.

Interconnects (Nathan Lambert) Discord

Blind Test Ring Welcomes Phi-3-128K: Phi-3-128K has been ushered into blind testing, with strategic interaction initiations like “who are you” and mechanisms like LMSys preventing the model’s name disclosure to maintain blind test integrity.

Instruction Tuning Remains a Hotbed: Despite the rise of numerous benchmarks for assessing large language models, such as LMentry, M2C, and IFEval, the community still holds strong opinions about the lasting relevance of instruction-following evaluations, highlighted in Sebastian Ruder’s newsletter.

Open-Source Movements Spice Up AI: The open-sourcing of Cohere’s chat interface drew attention and can be found on GitHub, which led to humorous side chats including jokes about Nathan Lambert’s perceived influence in the AI space and musings over industry players’ opaque motives.

AI Pioneers Shun Corporate Jargon: The term “pick your brain” faced disdain within the community, emphasizing the discomfort of industry experts in being approached with corporate cliches during peak times of innovation.

SnailBot Notifies with Caution: The deployment of SnailBot prompted discussions around notification etiquette, while access troubles with the “Reward is Enough” publication sparked troubleshooting conversations, highlighting the necessity of hassle-free access to scientific resources.

Mozilla AI Discord

- Mlock Malaise Strikes Llamafile Users: Engineers reported “failed to mlock” errors with the

phi2 llamafile, lacking explicit solutions or workarounds to address the problem. - Eager Engineers Await Phi3 Llamafile Update: The community is directed to use Microsoft’s GGUF files for Phi3 llamafile utilization, with specific guidance available on Microsoft’s Hugging Face repository.

- B64 Blunder Leaves Images Unrecognized: Encoding woes surfaced as a user’s base64 images in JSON payloads failed to be recognized by the llama model, turning the

multimodal : falseflag on, and no fix was provided in the discussion. - Mixtral Llamafile Docs Get a Facelift: Modifications to Mixtral 8x22B Instruct v0.1 llamafile documentation were implemented, accessible on its Hugging Face repository.

- False Trojan Alert in Llamafile Downloads: Hugging Face downloads erroneously flagged by Windows Defender as a trojan led to recommendations for using a VM or whitelisting, along with the difficulties in reporting false positives to Microsoft.

DiscoResearch Discord

Batch Your Bots: Discord users investigated how to batch prompts efficiently in Local Mixtral and compared tools like vLLM and the open-sourced TGI. While some preferred using TGI as an API server for its low latency, others highlighted the high throughput and direct Python usage that comes with vLLM in local Python mode, with resources like llm-swarm suggested for scalable endpoint management.

Dive into Deutsch with DiscoLM: Interaction with DiscoLM in German sparked discussions about prompt nuances, such as using “du” versus “Sie”, and how to implement text summarization constraints like word counts. Members also reported challenges with model outputs and expressed interest in sharing quantifications for experimental models, especially in light of the high benchmarks scored by models like Phi-3 on tests like Ger-RAG-eval.

Grappled Greetings: Users debated the formality in prompting language models, acknowledging the variable impact on responses when initiating with formal or informal forms in German.

Summarization Snafus: The struggle is real when trying to cap off model-generated text at a specific word or character limit without abrupt endings. The conversation mirrored the common desire for fine-tuned control over output.

Classify with Confidence: Arousing community enthusiasm was the possibility of implementing a classification mode for live inference in models to match the praised benchmark performance.

Datasette - LLM (@SimonW) Discord

-

Cracking Open the Python API for Datasette: Engineers have been exploring the Python API documentation for Datasette’s LLM, utilizing it for embedding text files and looking for ways to expand its usage.

-

Summarization Automation with Claude: Simon Willison shared his experience using Claude alongside the LLM CLI tool to summarize Hacker News discussions, providing a workflow overview.

-

Optimizing Text Embeddings: Detailed instructions for handling multiple text embeddings efficiently via Datasette LLM’s Python API were shared, with emphasis on the

embed_multi()feature as per the embedding API documentation. -

CLI Features in Python Environments: There’s a current gap in Datasette’s LLM capability featuring direct CLI-to-Python functionality for embedding files; however, the implementation can be traced in the GitHub repository, providing a reference for engineers to conceptually transfer CLI features to Python scripts.

Skunkworks AI Discord

-

Say Hello to burnytech: A brief greeting was made by burnytech with a simple “Hi!” on the general channel.

-

Calling All AI Enthusiasts to Toronto: The Ollamas and friends group have organized an AI developer meetup in Toronto, offering networking opportunities, food, and lightning talks. Interested AI professionals and enthusiasts can register via the Toronto AI Meetup Registration Link to participate in the event hosted at the Cohere space with limited availability.

LLM Perf Enthusiasts AI Discord

-

Tweet Tease Leaves Us Guessing: Jeffery Wang shared a tweet leaving the community curious about the contents, with no further discussion occurring.

-

Inference Inconsistency Raises Questions: Members noticed that inference providers like Octo AI and Anyscale have divergent JSON mode implementations for the same open-source models, with Octo AI’s approach being schema-based and Anyscale mirroring OpenAI’s methodology.

-

Contemplating Contextual Capabilities: The community pondered the effectiveness of a tool’s use of full context, noting its superior performance to GPT without detailed explanation of the advancements.

Alignment Lab AI Discord

- Congratulations, You’ve Been Anderson-ified!: A guild member humorously congratulated someone, presumably for a noteworthy achievement or milestone, likening them to Laurie Anderson, an avant-garde artist known for her multimedia projects and experimental music. The context or reason behind the comparison was not provided.

AI21 Labs (Jamba) Discord

- Invasion of the Content Snatchers: A Discord user posted an inappropriate link promoting Onlyfans leaks & Daily Teen content, which violates the community guidelines. The content was tagged to alert all members and included a Discord invite link.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (774 messages🔥🔥🔥):

-

Troubleshooting Finetuning Problems: Users discussed issues while finetuning with special tokens and formats using LLaMA models. One particular issue was addressed by removing unnecessary tokens (

<|start_header_id|>,<|end_header_id|>,<|eot_id|>) from prompts, which improved loss significantly from 5 down to a reasonable range during the first epoch of training. -

Discussion on AI Model Sizes and Efficiency: Users conversed about using different sizes of LLaMA-3 models, particularly the 70B model, and weighed in on the appropriate batch sizes and GPUs necessary for finetuning such large models efficiently. The conversation also touched upon the balance between using QLoRa for GPU efficiency versus LoRA for better accuracy.

-

GPU Requirement Queries: There was an inquiry about the suitability of different GPUs for finetuning LLaMA-3 models. It was clarified that at least 48GB was required, although renting higher VRAM was recommended for continuous pretraining of different models.

-

Hunter x Hunter Anime Appreciation: Users expressed their opinions on the anime series “Hunter x Hunter,” debating the quality of the Chimera Ant arc and sharing their favorite moments and arcs.

-

Meta Employee Humor: A joke was made about a user potentially being a Meta employee due to their familiarity with long training times for machine learning models. This spawned a friendly quip about the salaries at Meta and a user humorously insisting they were not employed there.

Links mentioned:

- Remek Kinas | Grandmaster: Computer science by education. Computer vision and deep learning. Independent AI/CV consultant.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Snowflake/snowflake-arctic-instruct · Hugging Face: no description found

- Google Colaboratory: no description found

- Sonner: no description found

- PyTorch 2.3 Release Blog: We are excited to announce the release of PyTorch® 2.3 (release note)! PyTorch 2.3 offers support for user-defined Triton kernels in torch.compile, allowing for users to migrate their own Triton kerne...

- I asked 100 devs why they aren’t shipping faster. Here’s what I learned - Greptile: The only developer tool that truly understands your codebase.

- AI Unplugged 8: Llama3, Phi-3, Training LLMs at Home ft DoRA.: Insights over Information

- NurtureAI/Meta-Llama-3-8B-Instruct-32k · Hugging Face: no description found

- Rookie Numbers GIF - Rookie Numbers - Discover & Share GIFs: Click to view the GIF

- Tweet from Jeremy Howard (@jeremyphoward): @UnslothAI Now do QDoRA please! :D

- Using User-Defined Triton Kernels with torch.compile — PyTorch Tutorials 2.3.0+cu121 documentation: no description found

- Finetune Llama 3 with Unsloth: Fine-tune Meta's new model Llama 3 easily with 6x longer context lengths via Unsloth!

- Tweet from FxTwitter / FixupX: Sorry, that user doesn't exist :(

- llama.cpp/grammars/README.md at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- TETO101/AIRI_INS5 · Datasets at Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Release PyTorch 2.3: User-Defined Triton Kernels in torch.compile, Tensor Parallelism in Distributed · pytorch/pytorch: PyTorch 2.3 Release notes Highlights Backwards Incompatible Changes Deprecations New Features Improvements Bug fixes Performance Documentation Highlights We are excited to announce the release of...

Unsloth AI (Daniel Han) ▷ #random (13 messages🔥):

-

Reranker Choices for Speed and Efficiency: A member highlighted ms Marco l6 V2 as their reranker of choice, finding it 15x faster than BGE-m3 with very similar results for reranking 200 embeddings.

-

PostgreSQL and pgvector for Reranking: Another snippet explained the use of PostgreSQL combined with pgvector extension, implying no need for an external API for reranking tasks.

-

Hardware Acquisitions Power Up Training: A member expressed enthusiasm about obtaining hardware suitable for fine-tuning models, which has enhanced their capabilities in RAG and prompt engineering.

-

Fine-tuned Llama for Large RAG Datasets: It was mentioned that a fine-tuned llama from Unsloth is being used to generate a substantial 180k row RAG ReAct agent training set.

-

Unsupervised In-Context Learning Discussion: A link to a YouTube video was shared, titled “No more Fine-Tuning: Unsupervised ICL+”, discussing an advanced in-context learning paradigm for Large Language Models (Watch the video).

Link mentioned: No more Fine-Tuning: Unsupervised ICL+: A new Paradigm of AI, Unsupervised In-Context Learning (ICL) of Large Language Models (LLM). Advanced In-Context Learning for new LLMs w/ 1 Mio token contex…

Unsloth AI (Daniel Han) ▷ #help (186 messages🔥🔥):

-

Unsloth Pro Mult-GPU Support Is Brewing: Unsloth Pro is currently in the works for distributing multiple GPU support, as confirmed by theyruinedelise. An open-source version with multi-GPU capabilities is expected around May, while the existing Unsloth Pro inquiries are still pending replies.

-

Tuning Advice with Experimental Models: Starsupernova advised using updated Colab notebooks for fixing generation issues after fine-tuning, as seen in the case where model outputs repeated the last token. There’s mention of “cursed model merging,” the need for model retraining after updates, and the potential use of GPT3.5 or GPT4 for generating high-quality multiple-choice questions (MCQs).

-

Dataset Challenges and Solutions: Discussions around dataset handling included issues with key errors during dataset mapping and typing errors with curly brackets; solutions involved loading datasets from Google Drive into Colab and making datasets private on Hugging Face with CLI login.

-

Colab Training Considerations on Kaggle and Local Machines: Users inquiried about resuming training from checkpoints on Kaggle due to the 12-hour limit, and

starsupernovaconfirmed that fine-tuning can continue from the last step. There are hints from members about appropriate steps for fine-tuning, such as utilizing thesave_pretrained_mergedandsave_pretrained_gguffunctions in one script. -

Inference and Triton Dependency Clarifications: Theyruinedelise clarified that Triton is a requirement for running Unsloth and mentioned that Unsloth might provide inference and deployment capabilities soon. There was a question about a Triton runtime error specific to SFT training, highlighting potential variability in environment setup.

Links mentioned:

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- unsloth/llama-3-70b-bnb-4bit · Hugging Face: no description found

- Supervised Fine-tuning Trainer: no description found

- ollama/docs/modelfile.md at main · ollama/ollama: Get up and running with Llama 3, Mistral, Gemma, and other large language models. - ollama/ollama

- ollama/docs/import.md at 74d2a9ef9aa6a4ee31f027926f3985c9e1610346 · ollama/ollama: Get up and running with Llama 3, Mistral, Gemma, and other large language models. - ollama/ollama

- Reddit - Dive into anything: no description found

- TETO101/AIRI_INS5 · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #showcase (3 messages):

- Meta Unveils LlaMA-3 and Teases 400B Model: Meta has released a new set of models called LlaMA-3, featuring an 8B parameter model that surpasses the previous 7B in the LlaMA series. Alongside the release, Meta has also teased an upcoming 400B model poised to match GPT-4 on benchmarks; access remains gated but available upon request.

- Growth in Open Source AI: There’s excitement around the recent open source releases of LlaMA-3 and Phi-3, with an acknowledgment that both target similar goals through different approaches. The full details can be found on a shared Substack article.

- Promotion in the Community: A message encourages sharing the LlaMA-3 update in another channel (<#1179035537529643040>), suggesting that the community would find this information valuable.

Link mentioned: AI Unplugged 8: Llama3, Phi-3, Training LLMs at Home ft DoRA.: Insights over Information

Unsloth AI (Daniel Han) ▷ #suggestions (75 messages🔥🔥):

- PR Fix for Model Vocabulary Issue: A pull request (PR #377) has been discussed that addresses the issue of loading models with resized vocabulary. The PR aims to fix tensor shape mismatches and can be found at Unsloth Github PR #377. If merged, subsequent release of dependent training code is expected.

- Anticipation for PR Merge: There’s a request for the merge of the aforementioned PR, with the contributor expressing eagerness. The Unsloth team has confirmed adding the PR after some minor discussion about .gitignore files impacting the GitHub page’s appearance.

- Suggestions for Model Training Optimization: Ideas were shared about sparsifying the embedding matrix by removing unused token IDs to allow for training with larger batches, and possibly offloading embeddings to the CPU. Implementation may involve modifying the tokenizer or using sparse embedding layers.

- Model Size Consideration with Quantization: A suggestion was made to implement a warning or auto switch to a quantised version of a model if it does not fit on the GPU, which sparked interest.

- Dynamic Context Length Adjustment: Discussions involved the possibility of iteratively increasing available context length during model evaluation without needing reinitialization. Suggestions included using laser pruning and freezing techniques, and the mention of updating config variables for the model and tokenizer.

Link mentioned: Fix: loading models with resized vocabulary by oKatanaaa · Pull Request #377 · unslothai/unsloth: This PR is intended to address the issue of loading models with resized vocabulary in Unsloth. At the moment loading models with resized vocab fails because of tensor shapes mismatch. The fix is pl…

LM Studio ▷ #💬-general (298 messages🔥🔥):

-

Puzzled Over Potential Phi-3 Preset: A member asked about a preset for phi-3 in LM Studio, and another provided a workaround by taking the Phi 2 preset adding specific stop strings. They mentioned using PrunaAI/Phi-3-mini-128k-instruct-GGUF-Imatrix-smashed and Phi-3-mini-128k-instruct.Q8_0.gguf to achieve satisfactory results.

-

Quantized Model Quality Queries: Discussions included the varying performance of different quantization levels (Q2, Q3, Q4) for phi-3 mini models. A member reported that Q4 functioned correctly whereas Q2 failed to generate coherent text, indicating the potential impact of quantization on model quality.

-

Finding the Fit for GPUs: Users exchanged information about running LM Studio with various GPU configurations, allowing for LLM usage up to 7b + 13b models on cards like the Nvidia GTX 3060. A member also confirmed phi-3-mini-128k GGUF’s high memory requirements on VRAM.

-

Alleviating Error Exit Code 42: Users who faced the error “(Exit code: 42)” were advised to upgrade to LM Studio v0.2.21 to rectify the issue. Additional advice highlighted that the error could be linked to older GPUs not having enough VRAM.

-

Accessing Local Servers and Networks: Conversations revolved around utilizing a local server setup within LM Studio, like using NordVPN’s Mashnet to remotely access LM Studio servers from other locations by changing “localhost:port” to “serverip:port”. Users discussed ways to enable such configurations, with some suggesting the usage of Cloudflare as a proxy.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Local LLM Server | LM Studio: You can use LLMs you load within LM Studio via an API server running on localhost.

- yam-peleg/Experiment7-7B · Hugging Face: no description found

- Phi-3-mini-4k-instruct-q4.gguf · microsoft/Phi-3-mini-4k-instruct-gguf at main: no description found

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

LM Studio ▷ #🤖-models-discussion-chat (73 messages🔥🔥):

- LLama-3 Herd Galore: Hugging Face now hosts a diverse collection of “TinyLlamas” on their repo, featuring Mini-MOE models ranging from 1B to 2B in different configurations. The Q8 version of these models is recommended, and users are advised to review the original model page for templates, usage, and help guidance.

- Cozy Praise for CMDR+: Discussions revealed high satisfaction with CMDR+, with users describing it as resembling GPT-4 performance levels on high-spec Macbook Pros, potentially surpassing the likes of LLama 3 70B Q8.

- Loading Errors and Solutions for Phi-3 128k: Users reported errors while trying to load Phi-3 128k models. The issue seems to be an unsupported architecture in the current version of llama.cpp, but information on GitHub pull reqests and issues suggest updates are on the way to address this.

- OpenELM Intrigue and Skepticism: Apple’s new OpenELM models are a topic of curiosity, though skepticism remains due to their 2048 token limit and potential performance on different hardware setups. Users appear eager for support in llama.cpp to try out these models with LM Studio.

- LongRoPE Piques Curiosity: Discussion about LongRoPE, a method for drastically extending the context window in language models to up to 2048k tokens, has generated interest. The significance of this development has prompted users to share the paper and express astonishment at the extended context capabilities it suggests.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Tweet from LM Studio (@LMStudioAI): To configure Phi 3 with the correct preset, follow the steps here: https://x.com/LMStudioAI/status/1782976115159523761 ↘️ Quoting LM Studio (@LMStudioAI) @altryne @SebastienBubeck @emollick @altry...

- apple/OpenELM · Hugging Face: no description found

- LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens: no description found

- Support for OpenELM of Apple · Issue #6868 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- DavidAU (David Belton): no description found

- Add phi 3 chat template by tristandruyen · Pull Request #6857 · ggerganov/llama.cpp: This adds the phi 3 chat template. Works mostly fine in my testing with the commits from #6851 cherry-picked for quantizing Only issue I've noticed is that it seems to output some extra <|end|&...

- Support for Phi-3 models · Issue #6849 · ggerganov/llama.cpp: Microsoft recently released Phi-3 models in 3 variants (mini, small & medium). Can we add support for this new family of models.

LM Studio ▷ #🧠-feedback (9 messages🔥):

-

GPU Offload Issues Reported: A member noted that having GPU offload enabled by default causes errors for users without GPUs or those with low VRAM GPUs. They recommended turning it off by default and providing a First Time User Experience (FTUE) section with detailed setup instructions.

-

Troubles with GPU Acceleration Needed: Despite the GPU offload issue, another member expressed a need for GPU acceleration. They confirmed that turning off GPU offload allows the application to be used.

-

Solving GPU-Related Errors: In response to questions about errors, it was suggested to turn off GPU offload as a possible solution, linking to additional resources with the identifier <#1111440136287297637>.

-

Regression in Version 2.20 for Some Users: One user reported that after upgrading to version 2.20, they could no longer use the application, marking version 2.19 as the last operational one, even with a similar PC configuration and operating system (Linux Debian).

-

High VRAM Not Helping with Loading Model: A user with 16GB of VRAM expressed confusion over the inability to load models on the GPU, noting a 100% GPU usage rate but still facing issues since the upgrade to version 2.20.

LM Studio ▷ #🎛-hardware-discussion (112 messages🔥🔥):

- Choosing the Right CPU and GPU for AI Tasks: A member was advised to select the best CPU they can afford, and for AI tasks, Nvidia GPUs were recommended for ease of use and compatibility should the member want to run applications like Stable Diffusion. The same member discussed their dislike for Nvidia, prompted by issues such as “melted 4090s” and driver problems.

- RAM Upgrades for LLM Performance: Members agreed that upgrading to 32GB of RAM would be beneficial for local LLM experiments and implementations. One member shared their own successful LLM activity on a machine equipped with an AMD Ryzen 7840HS CPU and RTX 4060 GPU.

- Power Efficiency Versus Performance in AI and Gaming Rigs: Discussions about power efficiency in builds revolved around member setups like a 5800X3D and 5700XT with 32GB of RAM, advocating for settings like Eco Mode and power limiting Nvidia GPUs to manage heat.

- Troubleshooting Model Loading and GPU Offload Errors: Users experiencing errors such as “Failed to load model” due to insufficient VRAM were advised to turn GPU offload off or to use smaller buffer settings. Another member resolved their issue with LM Studio’s GPU usage by setting the

GPU_DEVICE_ORDINALenvironment variable. - Mac Performance for Running LLMs Locally: Members discussed the performance of Macs running LLMs, with the consensus that ideally, Macs need 16GB or more RAM for efficient operation, recognizing that the M1 chipset in an 8GB RAM configuration manages small models but without other concurrent apps.

LM Studio ▷ #langchain (1 messages):

vic49.: Yeah, dm me if you want to know how.

LM Studio ▷ #amd-rocm-tech-preview (56 messages🔥🔥):

-

Dual GPU Setup Confusion: Users with dual AMD and NVIDIA setups experienced errors when installing ROCm versions of LM Studio. A workaround involved removing NVIDIA drivers and uninstalling the device, though physical card removal was sometimes necessary.

-

Tech Preview Teething Troubles: Some users expressed frustration with installation issues, but heyitsyorkie reminded the community that LM Studio ROCm is a tech preview, and bugs are to be expected.

-

ROCm Compatibility and Usage: Users discussed which GPUs are compatible with ROCm within LM Studio. heyitsyorkie provided clarification, noting only GPUs with a checkmark under the HIPSDK are supported, with nettoneko indicating support is based on the architecture.

-

Installation Success and Error Messages: Certain users reported successful installations after driver tweaks, while others encountered persistent error messages when trying to load models. kneecutter mentioned that a configuration with RX 5700 XT appeared to run LLM models but was later identified to be on CPU, not ROCm.

-

Community Engagement and Advice: Amidst reported glitches, community members actively shared advice, with propheticus_05547 mentioning that AMD Adrenaline Edition might be needed for ROCm support. andreim suggested updating drivers for specific GPU compatibility, like Adrenalin 24.3.1 for an rx7600.

Perplexity AI ▷ #announcements (2 messages):

-

Enigmagi’s Impressive Funding Round: Enigmagi announced a successful fundraising of $62.7 million at a $1.04 billion valuation, with an investor lineup including Daniel Gross, NVIDIA, Jeff Bezos, and many others. Plans are underway to collaborate with mobile carriers like SK and Softbank, along with an imminent enterprise pro launch, to accelerate growth and distribution.

-

Pro Service Launches on iOS: The Pro service is now available to iOS users, allowing them to ask any question and receive an answer promptly. This new feature officially starts today for users with a Pro-tier subscription.

Perplexity AI ▷ #general (467 messages🔥🔥🔥):

-

Enterprise Pro vs. Regular Pro: Users questioned the benefits of Enterprise Pro over Regular Pro, with discussions focusing on whether there was any difference in performance or search quality (“I highly doubt it. But you can pay double the money for privacy!”). Concerns about Opus usage limitations remained, as users debated its 50-use per day restriction.

-

Unpacking Perplexity’s Opus Usage Cap: The community expressed frustration over the 50-use daily limit for Opus searches on Perplexity Pro. Several members speculated about the reasons for the restriction, mentioning abuse of trial periods and the resource-intensive nature of Opus.

-

Anticipation for Model Adjustments: There’s a desire for Perplexity to introduce the ability to adjust the temperature setting for Opus and Sonnet models, as it’s deemed important for creative writing use.

-

Voice Features and Tech Wishes: A couple of users discussed new voice features, including an updated UI and the addition of new voices on Perplexity Pro. Others expressed a desire for a Perplexity app for Watch OS and a voice feature iOS widget.

-

Concerns Over Customer Support: Users reported issues with the Pro Support button on Perplexity’s settings page, with one user noting it didn’t work for them despite various attempts on different accounts. There were also comments about a lack of response from the support team when contacted via email.

Links mentioned:

- Microsoft is blocking employee access to Perplexity AI, one of its largest Azure OpenAI customers: Microsoft blocks employee access to Perplexity AI, a major Azure OpenAI customer.

- GroqCloud: Experience the fastest inference in the world

- OpenELM Pretrained Models - a apple Collection: no description found

- Stable Cascade - a Hugging Face Space by multimodalart: no description found

- Tweet from Ray Wong (@raywongy): Because you guys loved the 20 minutes of me asking the Humane Ai Pin voice questions so much, here's 19 minutes (almost 20!), no cuts, of me asking the @rabbit_hmi R1 AI questions and using its co...

- rabbit r1 Unboxing and Hands-on: Check out the new rabbit r1 here: https://www.rabbit.tech/rabbit-r1Thanks to rabbit for partnering on this video. FOLLOW ME IN THESE PLACES FOR UPDATESTwitte...

- GitHub - cohere-ai/cohere-toolkit: Toolkit is a collection of prebuilt components enabling users to quickly build and deploy RAG applications.: Toolkit is a collection of prebuilt components enabling users to quickly build and deploy RAG applications. - cohere-ai/cohere-toolkit

Perplexity AI ▷ #sharing (8 messages🔥):

- Exploring the Language Barrier: A shared link leads to Perplexity AI’s analysis on overcoming language translation challenges.

- Joy in the Past Tense: An intriguing moment of reflection is found at Once I gladly, examining how happiness can shift over time.

- The Constant of Change: The topic of Shift No More brings insights into how the inevitability of change affects our worldview.

- Tuning into ‘Mechanical Age’: A curious exploration into a song titled ‘Mechanical Age’ suggests a blend of music with the notion of technological progress.

- Dive Into Systems Thinking: Systems thinking analysis is discussed as a comprehensive approach to understanding complex interactions within various systems.

- Seeking Succinct Summaries: A search query points to a desire for concise summaries, possibly for efficiency in learning or decision-making, discussed on Perplexity AI.

- The Search for Answers in Caretaking: One link is directed towards Perplexity AI’s information on using the Langlier Saturation Index for swimming pool care, despite its complexity and outdoor pool limitations.

Perplexity AI ▷ #pplx-api (14 messages🔥):

- Image Upload Feature Not on the Roadmap: A user inquired about the possibility of uploading images via the Perplexity API, to which the response was a definitive no, and it is not planned for future roadmaps either.

- Seeking the Best AI Coder: In the absence of ChatGPT4 on Perplexity API, a user recommended using llama-3-70b instruct or mixtral-8x22b-instruct as the best coding models available, highlighting their different context lengths.

- Perplexity API Lacks Real-Time Data: A user integrating the API into a speech assistant reported that the API provided correct event dates but outdated event outcomes. They also inquired about document insertion for comparisons and eagerly awaited more functionalities.

- GPT-4 Not Supported by Perplexity API: Users inquiring about GPT-4 support on Perplexity API were directed to the documentation where model details, including parameter count and context length, were listed, with the note that GPT-4 is not available.

- Clarification on Hyperparameters for llama-3-70b-instruct: A user was seeking advice on the optimal hyperparameters for making API calls to llama-3-70b-instruct, providing a detailed Python snippet used for such calls; another user suggested trying out Groq for its free and faster inference but did not confirm if the hyperparameters inquired about were appropriate.

Link mentioned: Supported Models: no description found

Nous Research AI ▷ #ctx-length-research (11 messages🔥):

-

Clarifying RoPE and Fine-Tuning vs. Pretraining: A dialogue clarified that the paper discussing Rotary Position Embedding (RoPE) was about fine-tuning, not pretraining, which might contribute to misconceptions about the generalization capabilities of models like llama 3.

-

Misconceptions About RoPE Generalization: A participant pointed out that there is no proof RoPE can extrapolate in longer contexts by itself, indicating potential confusion around its capabilities.

-

llama 3 RoPE Base is Consistent: Another key point is that llama 3 was trained with a RoPE base of 500k right from the start, and there was no change in the base during its training.

-

The Purpose of High RoPE Base: It was proposed that lama 3’s high RoPE base might be aimed at decreasing the decay factor, which could benefit models that handle longer contexts.

-

RoPE Scaling and Model Forgetting: The conversation included a hypothetical scenario: even if a model is retrained with a higher RoPE base after an extensive initial training, it might not generalize due to the forgetting of previous learning, emphasizing that currently, it’s only proven that pretraining tokens largely outnumber extrapolation tokens.

Link mentioned: Scaling Laws of RoPE-based Extrapolation: The extrapolation capability of Large Language Models (LLMs) based on Rotary Position Embedding is currently a topic of considerable interest. The mainstream approach to addressing extrapolation with …

Nous Research AI ▷ #off-topic (17 messages🔥):

-

Apple’s Headset Strategy Shake-Up: Apple is reportedly cutting Vision Pro shipments by 50% and reassessing their headset strategy, potentially indicating no new Vision Pro model for 2025. This information was shared via a tweet by @SawyerMerritt and an article on 9to5mac.com.

-

LLM Prompt Injection Game: A game has been created to teach about LLM prompt injections, featuring basic and advanced levels where players try to extract a secret key GPT-3 or GPT-4 is instructed to withhold. Interested participants can join the discord server through this invite link.

-

Discord Invite Challenges: There was an issue with a discord invite link being auto-deleted. The member intended to share an invite to a game that teaches about LLM prompt injections.

-

Moderation Assist: After an invite link was auto-deleted, a mod offered to pause the auto-delete feature to allow reposting of the original message inviting members to a discord server focused on LLM prompt injections.

Links mentioned:

- Tritt dem LLM-HUB-Discord-Server bei!: Sieh dir die LLM-HUB-Community auf Discord an – häng mit 54 anderen Mitgliedern ab und freu dich über kostenlose Sprach- und Textchats.

- Tweet from Sawyer Merritt (@SawyerMerritt): NEWS: Apple cuts Vision Pro shipments by 50%, now ‘reviewing and adjusting’ headset strategy. "There may be no new Vision Pro model in 2025" https://9to5mac.com/2024/04/23/kuo-vision-pro-ship...

Nous Research AI ▷ #interesting-links (16 messages🔥):

-

Introducing AutoCompressors: A new preprint discusses AutoCompressors, a concept for transformer-based models that compresses long contexts into compact summary vectors to be used as soft prompts, enabling them to handle sequences up to 30,720 tokens with improved perplexity. Here’s the full preprint.

-