AI News for 10/4/2024-10/7/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (226 channels, and 5768 messages) for you. Estimated reading time saved (at 200wpm): 640 minutes. You can now tag @smol_ai for AINews discussions!

Multiple notable things, but nothing headline worthy:

- Cursor was on Lex Fridman, the first time 4 guests have been on the show at once and a notable break for Lex for covering a developer tool + an early stage startup. Imrat’s 20 point summary of the podcast was handy.

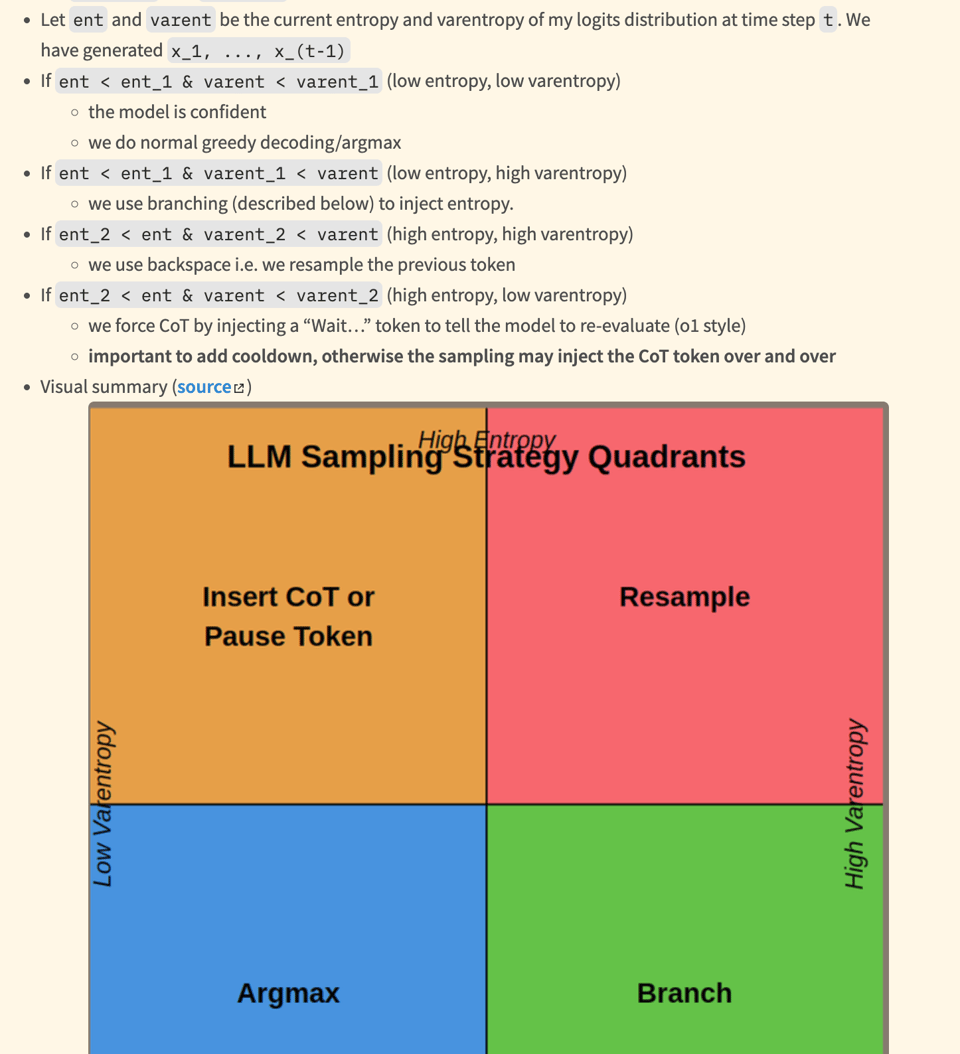

- There is a lot of interest in “open o1” reproductions. Admittedly, none are RL based: Most are prompting techniques and finetunes, but the most promising project could be entropix which uses entropy-based sampling to insert pause tokens.

- Reka updated their 21B Flash Model with temporal understanding (for video) and native audio (no separate ASR) and tool use and instruction chaining

- SWEBench launched a multimodal version.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Comparisons

-

OpenAI’s o1-preview performance: @JJitsev noted that o1-preview claims strong performance on olympiad and PhD-level tasks, but shows fluctuations on simpler AIW+ problems, indicating potential generalization deficits. @giffmana observed that o1-preview is clearly in a league apart, solving 2/6 variants and getting around 50% on the rest, while other models got less than 10%.

-

Claude 3.5 Sonnet vs OpenAI o1: @_philschmid reported that Claude 3.5 Sonnet can be prompted to increase test-time compute and match reasoning strong models like OpenAI o1. The approach combines Dynamic Chain of Thoughts, reflection, and verbal reinforcement.

-

LLM convergence: @karpathy observed that many LLMs sound similar, using lists, discussing “multifaceted” issues, and offering to assist further. @rasbt suggested this might be due to external companies providing datasets for preference tuning.

-

Movie Gen: Meta unveiled Movie Gen, described as the “most advanced media foundation model to-date”. It can generate high-quality AI videos from text and perform precise video editing.

AI Research and Applications

-

Retrieval Augmented Generation (RAG): @LangChainAI shared an implementation of a Retrieval Agent using LangGraph and Exa for more complex question/answering applications.

-

AI in customer support: @glennko reported building end-to-end customer service agents that have automated 60-70% of a F500 client’s customer support volume.

-

Synthetic data generation: A comprehensive survey of 417 Synthetic Data Generation (SDG) models over the last decade was published, covering 20 distinct model types and 42 subtypes.

-

RNN resurgence: A paper found that by removing hidden state dependencies, LSTMs and GRUs can be efficiently trained in parallel, making them competitive with Transformers and Mamba for long sequence tasks.

AI Safety and Ethics

-

Biologically-inspired AI safety: @labenz highlighted AE Studio’s work on biologically-inspired approaches to design more cooperative and less deceptive AI systems, including training models to predict their own internal states and minimizing self-other distinction.

-

AI risk debate: @RichardMCNgo discussed the polarization in the AI risk debate, noting that skeptics often shy away from cost-benefit reasoning under uncertainty, while many doomers are too Bayesian.

Industry News and Developments

-

OpenAI funding: OpenAI closed a new $6.6B funding round, valuing the company at $157B and solidifying its position as the most well-funded AI startup in the world.

-

Cloudflare SQLite improvements: @swyx highlighted Cloudflare’s SQLite improvements, including synchronous queries with async performance and the ability to rollback state to any point in the last 30 days.

Memes and Humor

-

@ylecun responded with “Haha 😄” to an unspecified tweet.

-

@bindureddy joked about the irony of Elon Musk receiving hate for his political views, despite the idea of stopping hate and spreading joy.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Advancements in Small-Scale LLM Performance

-

Adaptive Inference-Time Compute: LLMs Can Predict if They Can Do Better, Even Mid-Generation (Score: 66, Comments: 3): Adaptive Inference-Time Compute allows Large Language Models (LLMs) to dynamically adjust their computational resources during generation, potentially improving output quality. The approach involves the model predicting whether additional computation would enhance its performance, even mid-generation, and adapting accordingly. This technique could lead to more efficient and effective use of computational resources in LLMs, potentially improving their overall performance and adaptability.

- [{‘id’: ‘lqn3n3c’, ‘score’: 8, ‘body’: “This is one of those papers that would be so much better if accompanied by code. Nothing extreme, a barebone implementationand a good documentation and I would work my way around hooking it up into my preferred inference engine.Anecdotally, I’ve come across more quality research papers this past week than during the entirety of the summer. I don’t know if o1’s release pushed researchers to put their quality stuff out or if it is just a cycle thing.”, ‘author’: ‘XMasterrrr’, ‘is_submitter’: False, ‘replies’: [{‘id’: ‘lqn4dmw’, ‘score’: 2, ‘body’: “Yeah I’m seeing a lot of good papers lately. Big focus on CoT and reasoning lately.I hope someone can cobble together usable code from this, it looks very interesting.”, ‘author’: ‘Thrumpwart’, ‘is_submitter’: True, ‘replies’: []}, {‘id’: ‘lqni1zg’, ‘score’: 2, ‘body’: ‘>We release a public github implementation for reproducability.Right at the top of the appendix… The github is currently empty, but there is shareable code and they plan to release it. Maybe open an issue if you really care to ask what the ETA for this is.’, ‘author’: ‘Chelono’, ‘is_submitter’: False, ‘replies’: []}]}]

-

3B Qwen2.5 finetune beats Llama3.1-8B on Leaderboard (Score: 69, Comments: 11): A Qwen2.5-3B model finetuned on challenging questions created by Arcee.ai’s EvolKit has outperformed Llama3.1-8B on the leaderboard v2 evaluation, achieving scores of 0.4223 for BBH, 0.2710 for GPQA, and an average of 0.2979 across six benchmarks. The model is available for testing on Hugging Face Spaces, but the creator cautions it may not be production-ready due to its specialized training data and the qwen-research license.

Theme 2. Open-Source Efforts to Replicate o1 Reasoning

-

It’s not o1, it’s just CoT (Score: 95, Comments: 35): The post critiques open-source attempts to replicate OpenAI’s Q/Strawberry* (also known as o1), arguing that many are simply Chain of Thought (CoT) implementations rather than true o1 capabilities. The author suggests that Q/Strawberry* likely involves Reinforcement Learning techniques beyond standard RLHF, and urges the open-source community to focus on developing genuine o1 capabilities rather than embedding CoT into existing Large Language Models (LLMs). To illustrate the difference, the post references the official OpenAI blog post showcasing raw hidden reasoning chains, particularly highlighting the “Cipher” example as demonstrative of o1’s distinct approach compared to classic CoT.

-

A new attempt to reproduce the o1 reasoning on top of the existing models (Score: 81, Comments: 58): A new attempt aims to reproduce o1 reasoning on existing language models, focusing on enhancing their capabilities without the need for retraining. The approach involves developing a specialized prompt that guides models to generate more structured and logical outputs, potentially improving their performance on complex reasoning tasks. This method could offer a way to leverage current AI models for advanced reasoning without the computational costs of training new architectures.

- Users debate the feasibility of reproducing o1 reasoning locally, with some arguing that it requires more than just a well-trained LLM. The discussion highlights the need for multiple AI calls and significant technical improvements to achieve similar functionality and speed.

- A user proposes a test to count the letter ‘R’ in “strawberry,” noting that 70B models often resort to spelling out the word. This suggests an emerging feature in larger models where they can spell and count despite not “knowing” individual letters.

- The discussion critiques the post’s claim, with one user suggesting it’s more about reproducing “just CoT, not o1” on existing models. Others humorously compare the attempt to amateur rocketry, highlighting skepticism about the approach’s viability.

-

Introducing My Reasoning Model: No Tags, Just Logic (Score: 322, Comments: 100): The post introduces a reasoning model inspired by the O1 system, which adds an intermediate reasoning step between user input and assistant output. The author trained two models, Reasoning Llama 3.2 1b-v0.1 and Reasoning Qwen2.5 0.5b v0.1, using a 10,000-column dataset from the Reasoning-base-20k collection. Both models are available on HuggingFace, with links provided in the post.

- The model is described as CoT (Chain of Thought) rather than O1, with users noting that O1’s reasoning chain is significantly longer (5400 Llama3 tokens vs 1000) and involves a tree-search monte carlo algorithm.

- A user implemented a 16-step reasoning pipeline based on leaked O1 information, testing it with Gemini 8B Flash. The implementation improved code generation results but took ~2 minutes per response. Colab link provided.

- Users requested and received GGUF versions of the models. There’s interest in applying this approach to larger models like Qwen 2.5 72b or 32B, with some suggesting benchmarking against base models to assess improvements.

Theme 3. DIY AI Hardware for Local LLM Inference

-

Built my first AI + Video processing Workstation - 3x 4090 (Score: 378, Comments: 79): The post describes a high-performance AI and video processing workstation built with a Threadripper 3960X CPU, 3x NVIDIA RTX 4090 GPUs (two Suprim Liquid X and one Founders Edition), and 128GB DDR4 RAM in an NZXT H9 Flow case with a 1600W PSU. This system is designed to run Llama 3.2 70B model with 30K-40K word prompts of sensitive data offline, achieving 10 tokens/second throughput, and excels at prompt evaluation speed using Ollama and AnythingLLM, while also being capable of video upscaling and AI enhancement with Topaz Video AI.

-

AMD Instinct Mi60 (Score: 31, Comments: 32): The AMD Instinct Mi60 GPU, purchased for $299 on eBay, features 32GB of HBM2 memory with 1TB/s bandwidth and works with Ubuntu 24.04, AMDGPU-pro driver, and ROCm 6.2. Benchmark tests using Llama-bench show the Mi60 running qwen2.5-32b-instruct-q6_k at 11.42 ± 2.75 t/s for pp512 and 4.79 ± 0.36 t/s for tg128, while llama3.1 8b - Q8 achieves 233.25 ± 0.23 t/s for pp512 and 35.44 ± 0.08 t/s for tg128, with performance capped at 100W TDP.

Theme 5. Multimodal AI: Combining Vision and Language

- Qwen 2 VL 7B Sydney - Vision Model that will love to comment on your dog pics (Score: 32, Comments: 15): Qwen 2 VL 7B Sydney is a new vision language model designed to provide detailed commentary on images, particularly excelling at describing dog pictures. The model, developed by Alibaba, is capable of generating extensive, multi-paragraph descriptions of images, offering a more verbose output compared to traditional image captioning models.

- Users expressed interest in merging vision language models with roleplay-finetuned LLMs for enhanced image interaction. Concerns were raised about larger companies restricting access to such models, with Chameleon cited as an example.

- The model’s creator shared plans to finetune Qwen 2 VL 7B with Sydney’s personality, aiming to create a more positive and engaging multimodal model. The project involves 42M tokens of text and image data, with all resources open-sourced.

- Discussion touched on the model’s compatibility with LM Studio, which is unlikely due to lack of support for Qwen 2 VL 7B in llama.cpp. The creator provided an inference script, noting it requires a 24GB VRAM GPU for optimal performance.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

TO BE COMPLETED

AI Discord Recap

A summary of Summaries of Summaries

Claude 3.5 Sonnet

1. AI Model Releases and Benchmarks

- DeepSeek V2 Challenges GPT-4: DeepSeek-V2 has been announced, with claims of surpassing GPT-4 on benchmarks like AlignBench and MT-Bench in some areas.

- The model’s performance sparked discussions on Twitter, with some expressing skepticism about the significance of the improvements over existing models.

- Dracarys 2 Debuts as Top Open-Source Coding Model: Dracarys 2 was introduced as a powerful open-source coding model, outperforming Sonnet 3.5 on benchmarks like LiveCodeBench.

- While achieving 67% in code editing tasks, some users viewed it as more of a rebranding of existing models rather than a significant innovation in capabilities.

- Open O1 Challenges Proprietary Models: The Open O1 project aims to create an open-source model matching OpenAI’s o1 performance in reasoning, coding, and mathematical problem-solving.

- However, some community members felt discussions around Open O1 lacked depth, calling for more rigorous scrutiny of such models and their claimed capabilities.

2. AI Agent and Reasoning Advancements

- SwiftSage v2 Enhances Reasoning Capabilities: The release of SwiftSage v2 introduces an agent system for reasoning that integrates fast and slow thinking, focusing on in-context learning for complex problem-solving.

- This open-source project aims to compete with proprietary systems in math and MMLU-style reasoning tasks, showcasing strengths in various cognitive challenges.

- GenRM Revolutionizes Reward Models: The introduction of GenRM allows reward models to be trained as next-token predictors instead of classic classifiers, enabling Chain-of-Thought reasoning for reward models.

- This innovation provides a single policy and reward model, enhancing overall performance in various tasks and potentially improving AI alignment with human values.

- COCONUT Paradigm for Continuous Latent Space Reasoning: A new paper introduces COCONUT, a paradigm allowing language model reasoning in a continuous latent space instead of traditional language space.

- This approach suggests that using hidden states for reasoning can alleviate tokens’ constraints in traditional models, enabling more complex thinking and potentially enhancing LLM capabilities.

3. AI Tooling and Infrastructure Improvements

- Mojo Benchmarking Framework Launch: Mojo has introduced a benchmark package for runtime performance evaluation, similar to Go’s testing framework.

- Users can now use

benchmark.runto efficiently assess function performance and report mean durations and iterations, enhancing development workflows in the Mojo ecosystem.

- Users can now use

- LlamaIndex RAG-a-thon Announced: The LlamaIndex Agentic RAG-a-thon is set for October 11-13 in Silicon Valley, focusing on Retrieval-Augmented Generation technology in partnership with Pinecone and VESSL AI.

- This event aims at advancing AI agents for enterprise applications, with an opportunity for developers to win cash prizes as highlighted in this link.

- Entropix Enhances Prompt Optimization: The Entropix/Entropy Guided Adaptive Sampler enhances prompt optimization, focusing on attention entropy to boost model performance.

- Advantages noted include improved narrative consistency and reduced hallucinations, suggesting capabilities even in small models, as stated by @_xjdr on social media.

4. Open Source AI Projects and Collaborations

- Meta Movie Gen Research Paper Released: Meta announced a research paper detailing their Movie Gen innovations in generative modeling for films.

- This document is an essential reference for understanding the methodologies behind Meta’s advancements in movie generation technology, providing insights into their latest AI-driven creative tools.

- Python 3.13 Release Brings Major Updates: Python 3.13 was officially released with significant updates, including a better REPL and an option to run Python without the GIL.

- Highlighted features also include improved support for iOS and Android platforms, marking them as Tier 3 supported due to developments by the Beeware project.

- Intel and Inflection AI Collaborate on Enterprise AI: A collaboration between Intel and Inflection AI to launch an enterprise AI system was announced, signaling significant developments in the enterprise AI space.

- This partnership suggests potential reshaping of technology usage in enterprise environments, though specific details on the system’s capabilities were not provided in the initial announcement.

GPT4O (gpt-4o-2024-05-13)

1. LLM Advancements

- Qwen Models Rival LLaMA: Discussions on Qwen 2.5 7B models revealed their comparable performance to LLaMA models in conversational tasks, with significant differences in training efficiency noted.

- Concerns about switching performance between these models were raised, suggesting potential for optimization in fine-tuning strategies.

- Llama 3.2 Model Loading Issues: Users faced challenges loading models in LM Studio, specifically errors related to outdated CPU instructions like AVX2 when working with ‘gguf’ format.

- Suggestions included upgrading hardware or switching to Linux, highlighting the need for better compatibility solutions.

2. Model Performance Optimization

- DALI Dataloader Demonstrates Impressive Throughput: The DALI Dataloader achieved reading 5,000 512x512 JPEGs per second, showcasing effective GPU resource utilization for large image transformations.

- Members noted its consistent performance even with full ImageNet transforms, emphasizing its efficiency.

- Optimizing Onnxruntime Web Size: Discussions focused on reducing the default WASM size for Onnxruntime Web from 20 MB to a more manageable 444K using minified versions.

- Members explored strategies like LTO and tree shaking to further optimize package size while incorporating custom inference logic.

- Parallelizing RNNs with CUDA: Challenges in parallelizing RNNs with CUDA were discussed, with references to innovative solutions like S4 and Mamba.

- The community expressed interest in overcoming sequential dependencies, highlighting ongoing research in this area.

3. Multimodal AI Innovations

- Reka Flash Update Enhances Multimodal Capabilities: The latest Reka Flash update now supports interleaved multimodal inputs like text, image, video, and audio, significantly improving functionality.

- This enhancement highlights advancements in multimodal understanding and practical applications.

- Exploring Luma AI Magic: Discussions centered on Luma AI and its impressive video applications, particularly its utility in film editing and creating unique camera movements.

- Members shared resources and examples, emphasizing the tool’s potential in creative fields.

4. Open-Source AI Frameworks

- OpenRouter Collaborates with Fal.ai: OpenRouter has partnered with Fal.ai, enhancing LLM and VLM capabilities within Fal’s image workflows via this link.

- The integration allows users to leverage advanced AI models for improved image processing tasks.

- API4AI Powers AI Integration: The API4AI platform facilitates easy integration with services like OpenAI and Azure, providing diverse real-world interaction APIs.

- These features empower developers to build robust AI applications, enhancing functionality and user experience.

5. Fine-Tuning Challenges

- Challenges in Fine-Tuning LLaMA: Users noted issues with LLaMA 3.1 creating endless outputs post-training, signaling challenges in the fine-tuning process.

- Discussions emphasized the necessity of proper chat templates and end-of-sequence definitions for improved model behavior.

- Utilizing LoRA in Model Fine-Tuning: The feasibility of LoRA in fine-tuning sparked debate, with some arguing that full fine-tuning might yield better results overall.

- Varying opinions on effective implementation of LoRA surfaced, highlighting its limitations with already fine-tuned models.

GPT4O-Aug (gpt-4o-2024-08-06)

1. Model Fine-Tuning and Optimization

- Challenges in Fine-Tuning LLaMA Models: Users across Discords report issues with fine-tuning models like LLaMA 3.1, encountering endless generation outputs and emphasizing the need for correct chat templates and end-of-sequence definitions. Discussions highlight the importance of LoRA as a fine-tuning strategy, with debates on its efficacy compared to full fine-tuning.

- The community shares strategies for overcoming these challenges, such as combining datasets for better results and leveraging LoRA for efficient fine-tuning.

- Quantization and Memory Optimization: Techniques such as NF4 training have been noted to reduce VRAM requirements from 16G to 10G, offering significant performance improvements. Community discussions also cover strategies for optimizing Onnxruntime Web size and CUDA memory management during testing.

- Members celebrate a speedup from 11 seconds per step to 7 seconds per step with NF4, emphasizing the benefits of these optimizations for model performance.

2. AI Model Integration and Application

- OpenRouter Enhances Image Workflows: OpenRouter integrates with Fal.ai to enhance LLM and VLM capabilities in image workflows, allowing users to streamline their tasks using Gemini.

- This integration promises improved efficiency and output for users, encouraging them to rethink their processes with the new functionalities.

- Companion Discord Bot Revolutionizes Engagement: The Companion bot, powered by Cohere, introduces dynamic persona modeling and moderation capabilities, aiming to elevate user interaction within Discord communities.

- The project invites exploration as it strengthens moderation efficiency and enhances community discussions.

3. AI Research and Development

- Meta Movie Gen Research Paper Released: Meta’s research paper on Movie Gen offers insights into their advancements in generative modeling for films, highlighting innovative methodologies.

- This document is an essential reference for understanding the methodologies behind Meta’s advancements in movie generation technology.

- Entropix Sampler’s Capabilities Explored: The Entropix/Entropy Guided Adaptive Sampler demonstrates improvements in model performance by optimizing attention entropy, reducing hallucinations, and enhancing narrative consistency.

- The project shows promising results even in small models, suggesting significant capabilities for improving narrative coherence.

4. AI Tools and Frameworks

- Sci Scope Offers Personalized AI Research Summaries: Sci Scope aggregates and summarizes new ArXiv papers weekly, providing personalized newsletters to keep researchers informed of critical developments.

- Subscribers benefit from a tailored list of papers relevant to their interests, ensuring they never miss important developments in AI research.

- Aider v0.59.0 Launch Brings Enhancements: The new Aider v0.59.0 release includes updates to the

/read-onlycommand, YAML config format changes, and performance improvements with new sanity checks.- These updates improve performance with better handling during coding tasks and introduce new sanity checks to streamline launch processes.

5. AI Community and Support

- LlamaIndex RAG-a-thon Kicks Off: The LlamaIndex Agentic RAG-a-thon event, in partnership with Pinecone and VESSL AI, focuses on advancing Retrieval-Augmented Generation technology.

- The event aims at advancing AI agents for enterprise applications, with opportunities for developers to win cash prizes.

- Community Support for AI Tools: Discord communities emphasize the importance of collaboration and feedback, with users seeking support for tools like Cohere and OpenRouter to improve their AI workflows.

- Members are encouraged to connect for support and share feedback, underscoring the importance of community-driven enhancements.

O1-mini

Theme 1. Model Fine-Tuning and Training Challenges

-

Overcoming Fine-Tuning Bottlenecks with Unsloth Studio: The upcoming Unsloth Studio GUI streamlines the fine-tuning process by automating dataset formatting and dependency management, targeting beginners lacking advanced programming skills.

- Users report challenges with models like LLaMA 3.1 generating endless outputs post-training, emphasizing the need for proper chat templates and end-of-sequence definitions for better behavior.

-

LoRA Limitations in Model Refinement Debated: Feasibility of LoRA in model fine-tuning sparks debate, with some advocating for full fine-tuning for superior results, while others highlight LoRA’s constraints on already fine-tuned models.

- Varying opinions emerge on effective LoRA implementation, showcasing its limitations and the community’s pursuit of better fine-tuning optimization techniques.

-

Gradient Checkpointing Enhances TinyGrad Training: Implementing gradient checkpointing proves crucial for training larger models efficiently in TinyGrad, enabling the handling of parameters beyond very small toy models.

- Without these optimizations, models in TinyGrad struggle with extensive training sessions, limiting their practical application.

Theme 2. New Model Releases and Performance Comparisons

-

Qwen 2.5 Rivals LLaMA in Conversational Tasks: Discussions reveal that Qwen 2.5 7B models perform similarly to LLaMA in conversational tasks, with debates on their training efficiency and potential performance switches.

- Users report significant differences in fine-tuning capabilities, suggesting Qwen as a viable alternative for future model optimizations.

-

Dracarys 2 Outperforms Sonnet 3.5 on Code Benchmarks: The newly announced Dracarys 2 model surpasses Sonnet 3.5 on performance benchmarks like LiveCodeBench, achieving 67% in code editing tasks.

- Despite its impressive initial claims, some users question its innovation, labeling it as a rehash of existing models rather than a groundbreaking advancement.

-

Phi-3.5 Model Faces Community Backlash Over Safety Features: Microsoft’s Phi-3.5 model, designed with heavy censorship, humorously receives community mocking for its excessive moderation, leading to the sharing of an uncensored version on Hugging Face.

- Users engage in satirical responses, highlighting concerns over its practicality for technical tasks due to overzealous content restrictions.

Theme 3. Integration, Tools, and Deployment

-

Unsloth Studio Simplifies AI Model Training: The introduction of Unsloth Studio GUI targets ease of fine-tuning AI models by automatically handling dataset formatting and dependency management, especially catering to beginners without deep programming knowledge.

- Users highlight its potential in mitigating common fine-tuning issues, thereby enhancing accessibility for a broader range of users.

-

RYFAI App Promotes Private AI Access: The open-source RYFAI app emphasizes offline operation and user privacy, aiming to provide competitive alternatives to established AI tools like Ollama and OpenWebUI.

- Concerns regarding market saturation and differentiation strategies are discussed, with users debating its ability to compete with more established solutions.

-

TorchAO Anticipates NF4 Support for VRAM Optimization: The community eagerly awaits NF4 implementation in TorchAO, which could reduce VRAM requirements from 16G to 10G and improve training speed from 11s to 7s per step.

- Members celebrate these anticipated performance enhancements as game-changers for efficient model fine-tuning and resource management.

Theme 4. API Issues, Costs, and Support

-

Cohere API Errors Disrupt Projects: Users struggle with frequent Cohere API errors like ‘InternalServerError’ during model fine-tuning, causing significant project setbacks.

- Moderators acknowledge the prioritization of support tickets due to high error backlogs, urging affected users to remain patient while solutions are implemented.

-

OpenAI API Costs Rise for Large-Scale Media Analysis: Analyzing thousands of media files using OpenAI API could exceed $12,000, prompting discussions on the feasibility of local solutions despite high associated storage and processing costs.

- Users inquire about potential cost-effective alternatives, weighing the benefits of cloud-based APIs against the financial challenges for project budgets.

-

Double Generation Issue Persists on OpenRouter API: Users report persistent double generation responses when utilizing the OpenRouter API, indicating setup-specific issues while some face 404 errors after adjusting their response parsers.

- Troubleshooting suggestions include reviewing API setup configurations and optimizing response parsers to mitigate the double response problem.

Theme 5. Data Pipelines and Synthetic Data Usage

-

Synthetic Data Enhances Model Training in Canvas Project: The Canvas project utilizes synthetic data generation techniques, such as distilling outputs from OpenAI’s o1-preview, to fine-tune GPT-4o, enabling rapid enhancement of AI model capabilities.

- This method allows for scalable model improvements without the extensive need for human-generated datasets, demonstrating efficiency and innovation in data handling.

-

SWE-bench Multimodal Evaluates Visual Issue Solving: The newly launched SWE-bench Multimodal introduces 617 new tasks from 17 JavaScript repositories to evaluate AI agents’ ability to solve visual GitHub issues, addressing current limitations in agent performance.

- This comprehensive benchmark aims to improve AI models’ multimodal understanding and practical problem-solving skills in real-world coding environments.

-

Entropix Sampler Warns Against Synthetic Data Overuse: The Entropix/Entropy Guided Adaptive Sampler cautions against the overuse of synthetic data from AI outputs to prevent model overfitting, while acknowledging its effectiveness in early training phases.

- Users explore alternative data generation methods, focusing on maintaining model reliability and performance through balanced dataset strategies.

O1-preview

Theme 1: Innovations and Tools in Fine-Tuning and Model Training

- Unsloth GUI Makes Fine-Tuning a Breeze for Beginners: The upcoming ‘Unsloth Studio’ GUI aims to simplify fine-tuning by automatically handling dataset formatting and dependencies. This innovation targets beginners who face challenges in model training without advanced programming skills.

- Torchtune Listens: KTO Training Support Requested: Users are eager for KTO training support in Torchtune, suggesting it could be added to the DPO recipe. Developers recommended raising an issue to track this feature request.

- TinyGrad Supercharges Training with Gradient Checkpointing: Discussions highlight the importance of gradient checkpointing in tinygrad to efficiently train larger models. Without these optimizations, tinygrad can only handle “very small toy models,” limiting its overall performance.

Theme 2: New AI Models and Their Capabilities

- OpenAI’s o1 Model Claims to Think Differently, Sparks Skepticism: Debates arise over OpenAI’s o1 integrating reasoning directly into the model, with some calling it a “simplification” and questioning its true capabilities. Skeptics highlight that underlying challenges may not be fully addressed.

- Dracarys 2 Breathes Fire, Claims Top Coding Model Spot: Dracarys 2 announces itself as the world’s best open-source coding model, outperforming Sonnet 3.5 with a 67% score on LiveCodeBench. Critics argue it’s a rehash of existing models rather than a true innovation.

- Meta Drops Blockbuster: Movie Gen Research Paper Released: Meta shares their Movie Gen research paper, detailing advancements in generative movie modeling. This document is essential for understanding the methodologies behind Meta’s innovations in movie generation technology.

Theme 3: Enhancements in AI-Assisted Tools and Applications

- Swarm of Agents Auto-Create YouTube Videos, Take Over Content Creation: A project demonstrates building a ‘swarm’ of agents using LlamaIndex to autonomously create AI-generated YouTube videos from natural prompts. This approach highlights the potential of multi-agent architectures in simplifying video generation workflows.

- Cursor Team Codes the Future, Chats with Lex Fridman: The Cursor team discusses AI-assisted programming and the future of coding in a conversation with Lex Fridman, showcasing their innovative environment. Topics include GitHub Copilot and the complexities of AI integration in coding workflows.

- Companion Discord Bot Makes Friends with Cohere Integration: The new Companion bot utilizes Cohere to enhance dynamic persona modeling and user interaction, while offering integrated moderation tools for Discord servers. This strengthens community engagement and moderation efficiency within Discord.

Theme 4: AI Communities Grapple with Platform and API Hiccups

- Cohere Users Pull Out Hair Over API Errors and 429 Woes: Frustrated users report persistent ‘InternalServerError’ and 429 errors with the Cohere API, impacting their projects and trials. Moderators confirm prioritization of support tickets due to a significant backlog.

- Perplexity AI Cuts Opus Limit, Users Riot Over Reduced Messages: Outrage ensues as Perplexity AI reduces Opus messages to 10 per day; user backlash apparently leads to a reversal back to 50 messages. Users expressed frustration over the sudden change, raising questions about consumer rights.

- Aider Gets Stuck in the Mud, Users Complain of Slow Performance: Users experience significant delays in Aider when using the Sonnet 3.5 API, especially with large files. Suggestions include limiting context files and utilizing verbose flags, as many seek alternatives like OpenRouter for API management.

Theme 5: Advances in AI Research and Theoretical Explorations

- Entropy-Based Sampling Promises Smarter AI Prompts: The Entropix project introduces Entropy Guided Adaptive Sampling, enhancing prompt optimization by evaluating attention entropy. Advantages include improved narrative consistency and reduced hallucinations, suggesting capabilities even in small models.

- GenRM Blends Policy and Reward Models for Better AI Alignment: The introduction of Generative Reward Models (GenRM) trains reward models as next-token predictors, improving Chain-of-Thought reasoning and alignment with human values. This method seeks to boost reasoning capabilities in decision-making.

- RWKV Series Leaves Researchers Dizzy with Version Changes: Community members struggle to track changes across RWKV versions; a paper provides a stepwise overview to assist in understanding. This highlights the need for clearer documentation in rapidly evolving models.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Unsloth GUI Simplifies Fine-Tuning: The upcoming ‘Unsloth Studio’ GUI aims to streamline the fine-tuning process by automatically managing dataset formatting and dependencies.

- This innovation targets beginners who face challenges in model training without advanced programming skills.

- Qwen Models Rival LLaMA: Discussions highlighted that Qwen 2.5 7B models can perform similarly to LLaMA models in conversational tasks, with users reporting significant differences in training efficiency.

- Concerns about performance switching between the two models were raised, suggesting potential avenues for fine-tuning optimization.

- Challenges in Fine-Tuning LLaMA: Users noted issues with LLaMA 3.1 creating endless generation outputs post-training, signaling challenges in the fine-tuning process.

- Discussions focused on the necessity of proper chat templates and end-of-sequence definitions for improved model behavior.

- Utilizing LoRA in Model Fine-Tuning: The feasibility of LoRA in fine-tuning sparked debate, with some arguing that full fine-tuning might yield better results overall.

- Varying opinions on how to effectively implement LoRA surfaced, highlighting its limitations with already fine-tuned models.

- RYFAI App Brings Private AI Access: The introduction of RYFAI, an open-source app for various operating systems, emphasizes user privacy and offline operation.

- Concerns were raised over its ability to compete with established tools, with discussions on market saturation and differentiation.

HuggingFace Discord

- Debate on AGI and AI Reasoning: A discussion unfolded on the achievability of AGI, emphasizing its relation to probabilistic constructs akin to human brain functions.

- Participants highlighted the varying interpretations of reasoning in LLMs versus human thought processes.

- Hugging Face Models and Memory Limitations: Users inquired about the context windows of models like Llama 3.1 on Hugging Face, sharing experiences with high-memory configurations.

- Concerns about the associated costs with running high-context models on cloud platforms were prevalent.

- Challenges with Fine-tuning Models: Users reported struggles with fine-tuned models, specifically noting inaccuracies in bounding boxes with a DETR model, linked further for context.

- These inaccuracies spur discussions regarding optimization for better performance in specific tasks.

- Exploration of Synthetic Data: Conversations included the implications of using synthetic data, warning against potential overfitting despite initial performance improvements.

- Participants voiced common interests in learning alternative data generation methods to optimize model training.

- Ongoing Service Outage Updates: Service outages affecting Share API and Share Links were reported on October 6, with users directed to the status page for updates.

- Fortunately, it was soon announced that all affected systems were back online, easing user disruptions.

GPU MODE Discord

- LLM Trainer Consultations Sparked: A member expressed temptation to spend 100 hours writing an LLM trainer in Rust and Triton, with Sasha available for consultation or collaboration.

- This could lead to innovative developments in LLM training.

- DALI Dataloader Demonstrates Impressive Throughput: DALI Dataloader can read 5,000 512x512 JPEGs per second, effectively utilizing GPU resources for large image transformations.

- Members noted its performance remains strong even with full ImageNet transforms.

- Progress in Parallelizing RNNs with CUDA: The discussion centered around the challenges in parallelizing RNNs using CUDA, with references to innovative solutions like S4 and Mamba.

- This revealed community interest in overcoming sequential dependencies within RNN architectures.

- Optimizing Onnxruntime Web Size: The default WASM size for Onnxruntime Web is 20 MB, prompting discussions on optimizations while incorporating custom inference logic.

- Members explored various strategies, including using a minified version that is only 444K for potential efficiency improvements.

- Anticipation for NF4 Support in TorchAO: Members expressed eagerness for TorchAO to implement NF4, noting it can reduce VRAM requirements from 16G to 10G.

- They celebrated that speed improved from 11 seconds per step to 7 seconds per step, highlighting performance enhancements.

OpenAI Discord

- Automating Document Categorization: Users explored how AI tools can significantly streamline document categorization through content analysis, emphasizing structured approaches to enhance efficiency.

- Concerns were raised about potential gaps in communication on project objectives, possibly hindering automation progress.

- Cost Implications of OpenAI API: Discussing the financial side, it emerged that analyzing thousands of media files with the OpenAI API could surpass $12,000, posing a challenge for projects reliant on this service.

- This led to inquiries about the feasibility of local solutions, despite the potentially high costs tied to local storage and processing capabilities.

- GPT-4’s Handling of Complex Math: GPT-4o was reported to manage complex math challenges effectively, especially when used in conjunction with plugins like Wolfram.

- One user mentioned the stochastic nature of GPT behaviors and proposed enhancing reliability through closer integration with external tools.

- Need for Effective Keyword Selection: With a user eyeing the selection of 50 keywords from a massive set of 12,000, challenges arose due to the model’s context window limitations, underscoring the task’s complexity.

- Participants suggested batch queries and structured data presentations to streamline the keyword selection process.

- Challenges of Prompt Engineering: Many users expressed difficulties in crafting effective prompts, particularly for deterministic tasks, indicating a lack of streamlined methods for conveying requirements to AI.

- Conversations highlighted the gap in understanding necessary to create actionable prompts, suggesting a need for clearer guidelines.

aider (Paul Gauthier) Discord

- Aider v0.59.0 Launch Brings Enhancements: The new release v0.59.0 enhances support for the

/read-onlycommand with shell-style auto-complete and updates the YAML config format for clarity.- The update improves performance with better handling during coding tasks and introduces new sanity checks to streamline launch processes.

- Concerns with Aider’s Slow Performance: Users are experiencing significant delays in Aider while using the Sonnet 3.5 API, particularly when handling large files or extensive code contexts.

- Suggestions include limiting context files and utilizing verbose flags, as many users seek alternatives like OpenRouter for API management.

- Introducing Dracarys 2 as a Top Coding Model: Dracarys 2 is announced as a powerful coding model, outstripping Sonnet 3.5 on performance benchmarks like LiveCodeBench.

- Though it achieved 67% in code editing, some users deemed it a rehash of existing models rather than a true innovation in capabilities.

- Python 3.13 Features Stand Out: The official release of Python 3.13 showcases enhancements such as a better REPL and running Python without the GIL.

- Noteworthy updates also include expanded support for iOS and Android as Tier 3 supported platforms via the Beeware project.

- Innovations in Semantic Search Techniques: Discussion on the benefits of semantic search over keyword search highlighted the ability to enhance query results based on meaning rather than exact matches.

- However, examples reveal that over-reliance on semantic search could lead to unexpected poor outcomes in practical applications.

Nous Research AI Discord

- Nous Research Innovates with New Models: Nous has introduced exciting projects like Forge and Hermes-3-Llama-3.1-8B, showcasing their cutting-edge technology in user-directed steerability.

- These advancements highlight impressive creativity and performance, potentially transforming future developments in AI.

- Meta Movie Gen Research Paper Released: Meta announced a research paper detailing their Movie Gen innovations in generative modeling.

- This document is an essential reference for understanding the methodologies behind Meta’s advancements in movie generation technology.

- GenRM Enhances Reward Model Training: The introduction of GenRM showcases a significant shift in how reward models are trained, integrating next-token predictions and Chain-of-Thought reasoning.

- This advancement allows for improved performance across numerous tasks by leveraging a unified policy and reward model.

- SwiftSage v2 Open-Source Agent Introduced: The new SwiftSage v2 agent system, which integrates different thinking styles for enhanced reasoning, is now available on GitHub.

- The system targets complex problems, showcasing strengths in various reasoning tasks using in-context learning.

- Open Reasoning Tasks Project Clarified: The Open Reasoning Tasks channel was clarified as a collaborative space for discussing ongoing work on GitHub.

- Members are encouraged to contribute insights and developments related to enhancing reasoning tasks in AI systems.

LM Studio Discord

- Model Loading Woes: Users faced issues loading models in LM Studio, encountering errors like ‘No LM Runtime found for model format ‘gguf’!’, often due to outdated CPU instructions like AVX2.

- They suggested upgrading hardware or switching to Linux for better compatibility.

- GPU Configuration Conundrum: The community evaluated challenges of mixing GPUs in multi-GPU setups, specifically using 4090 and 3090 models, highlighting potential performance limitations.

- The consensus indicated that while mixing is feasible, the slower GPU often bottlenecks overall performance.

- Image Processing Insights: Inquiries about models supporting image processing led to recommendations for MiniCPM-V-2_6-GGUF as a viable option.

- Users raised concerns about image sizes and how resolution impacts model inference times.

- Prompt Template Essentials: The correct use of prompt templates is crucial for LLMs; improper templates can lead to unexpected tokens in outputs.

- Discussion revealed that straying from default templates could result in significant output mismatches.

- GPU Memory Showdown: Comparative performance discussions highlighted that the Tesla P40 with 24GB VRAM suits AI tasks well, whereas the RTX 4060Ti with 16GB holds up in some scenarios.

- Concerns arose regarding the P40’s slower performance in Stable Diffusion, emphasizing underutilization of its capabilities.

OpenRouter (Alex Atallah) Discord

- OpenRouter collaborates with Fal.ai: OpenRouter has officially partnered with Fal.ai, enhancing LLM and VLM capabilities in image workflows via this link.

- Users can reimagine their workflow using Gemini through OpenRouter to streamline image processing tasks.

- API4AI powers AI integration: The API4AI platform facilitates easy integration with services such as OpenAI and Azure, providing a host of real-world interaction APIs including email handling and image generation.

- These features empower developers to build diverse AI applications more effectively.

- Double generation issue persists: Users reported double generation responses when utilizing the OpenRouter API, indicating setup-specific issues while some faced 404 errors after adjusting their response parsers.

- This suggests a need for troubleshooting potential timeouts or API availability delays.

- Math models excel in STEM tasks: Users highlighted o1-mini as the preferred model for math STEM tasks due to its efficiency in rendering outputs, raising questions about LaTeX rendering capabilities.

- The community is keen on optimizing math formula interactions within the OpenRouter environment.

- Discounts for non-profits sought: Inquiries emerged regarding potential discounts for non-profit educational organizations in Africa to access OpenRouter’s services.

- This reflects a broader desire within the AI community for affordable access to technology for educational initiatives.

Eleuther Discord

- MATS Program Gains a New Mentor: Alignment Science Co-Lead Jan Leike will mentor for the MATS Winter 2024-25, with applications closing on Oct 6, 11:59 pm PT.

- This mentorship offers great insights into alignment science, making it a coveted opportunity for prospective applicants.

- Understanding ICLR Paper Release Timing: Discussions clarified that the timing of paper releases at ICLR often depends on review processes, with potential informal sharing of drafts.

- Members highlighted that knowing these timelines is crucial for maintaining research visibility., especially for those waiting on final preprints.

- RWKV Series and Versioning Challenges: The community explored difficulties in tracking RWKV series version changes, signaling a need for clearer documentation.

- A linked paper provides a stepwise overview of the RWKV alterations, which may assist in testing and research understandings.

- Generative Reward Models to Enhance AI Alignment: Members discussed Chain-of-Thought Generative Reward Models (CoT-GenRM) aimed at improving post-training performance and alignment with human values.

- By merging human and AI-generated feedback, this method seeks to boost reasoning capabilities in decision-making.

- Support for JAX Models in Development: A conversation sparked about the potential for first-class support for JAX models, with members eager for updates.

- This highlights the growing interest in optimizing frameworks to suit evolving needs in machine learning development.

Cohere Discord

- Cohere API Errors and Frustrations: Users struggled with frequent Cohere API errors like ‘InternalServerError’ during projects, particularly on the fine-tuning page, impacting techniques vital for advancing trials.

- Moderators confirmed prioritization of support tickets due to a significant backlog, as members emphasized issues like 429 errors affecting multiple users.

- Companion Discord Bot Revolutionizes Engagement: Companion, a Discord bot utilizing Cohere, was introduced to enhance dynamic persona modeling and user interaction while providing integrated moderation capabilities.

- The GitHub project, which is designed to elevate community discussions, invites exploration as it strengthens moderation efficiency within Discord.

- Debate on API Usage for Commercial Purposes: Community members confirmed that Cohere APIs can be leveraged commercially, targeting enterprise solutions while users were directed to FAQs for licensing details.

- Discussions highlighted the importance of API stability and efficiency, with developers keen on understanding nuances in transitioning from other platforms.

- Rerank API Responses Under Scrutiny: Concerns surfaced about the Rerank API not returning expected document data, despite using the return_documents: True parameter, hindering data retrieval processes.

- Users were eager to understand if recent updates altered functionality, seeking answers to previous efficiencies compromised.

- Community Focus on Collaboration and Feedback: Members urged users to connect for support and share feedback with Cohere’s team, underscoring the importance of community-driven enhancements.

- Dialogue revolved around the necessity of actionable insights to improve user experiences and technical performance in the Cohere ecosystem.

Latent Space Discord

- SWE-bench Multimodal launched for visual issue solving: The new SWE-bench Multimodal aims to evaluate agents’ ability to solve visual GitHub issues with 617 new tasks from 17 JavaScript repos, introducing the SWE-agent Multimodal for better handling.

- This initiative targets existing agent limitations, promoting effective task completion in visual problem-solving.

- Reka Flash update enhances multimodal capabilities: The latest update for Reka Flash supports interleaved multimodal inputs like text, image, video, and audio, significantly improving its functionality.

- This enhancement highlights advancements in multimodal understanding and reasoning within practical applications.

- Cursor team discusses AI-assisted programming with Lex Fridman: In a chat with Lex Fridman, the Cursor team dived into AI-assisted programming and the evolving future of coding, showcasing their innovative environment.

- Discussions covered impactful topics like GitHub Copilot and the complexities of AI integration in coding workflows.

- Discord Audio Troubles Stun Users: Members faced audio issues during the call, prompting suggestions to switch to Zoom due to hearings difficulties.

- Verymadbear quipped, ‘it’s not a real meeting if one doesn’t have problems with mic’, outlining the frustrations faced.

- Exploring Luma AI Magic: Conversation centered on Luma AI, showcasing impressive video applications and projects developed with this tool, particularly its utility in film editing.

- Karan highlighted the creativity Luma brings to filmmaking, emphasizing its capability for unique camera movements.

Stability.ai (Stable Diffusion) Discord

- AMD vs NVIDIA: The Great Debate for SD: Users favor the RTX 4070 over the RX 6900 XT for generating images in Stable Diffusion, citing superior performance.

- Some suggest the 3080 Ti as a 30% faster alternative to the 4070, adding another layer to the GPU comparison.

- CogVideoX Takes the Crown in Video Generation: For text-to-video generation, CogVideoX is now the leading open-source model, outpacing older models like Svd.

- Users noted that Stability has fallen behind, with alternative models proving to be cognitively superior.

- UI Battle: ComfyUI vs Forge UI for Stable Diffusion: Transitioning from Automatic1111, users are split between ComfyUI and Forge UI, both showcasing distinct strengths.

- While many prefer ComfyUI for ease, others appreciate the enhancements in Forge as a decent fork of Auto1111.

- LoRA Training Troubles Hit Community: Users expressed challenges in training LoRA for SDXL, seeking help in community channels dedicated to troubleshooting.

- Communities rallied to provide support, sharing resources to aid in the creation of effective LoRA models.

- After-Generation Edits: Users Want More: Discussions around post-generation edits emerged, focusing on the ability to upload and regenerate specific image areas.

- Users are intrigued by the concept of highlighting and altering sections of generated images, seeking improvements in workflows.

Perplexity AI Discord

- Opus Limit Sparks User Outrage: Users expressed frustration over the sudden reduction of Opus messages to 10 per day, raising questions about consumer rights.

- Later updates suggested the limit might have reverted to 50 messages, easing some concerns within the community.

- Perplexity Experiences User Struggles: Multiple users reported issues with Perplexity involving access to pro features and customer support lags.

- Concerns mounted as users noted a shift towards promotional content over meaningful feature enhancements.

- Developer Team’s Focus Under Scrutiny: Questions emerged about the developer team’s priorities beyond the Mac app, with users desiring more visible new features.

- Community feedback hinted at a pivot towards giveaways as opposed to significant platform improvements.

- Tapping into Structured Outputs for API: Discussions on integrating Structured Outputs in the Perplexity API mirrored capabilities found in the OpenAI library.

- This exploration emphasizes growing interest in expanding the API’s functionality to better meet user needs.

- Quantum Clocks Promise Precision: An innovative concept involving quantum clocks highlighted advancements in precision timekeeping.

- The technology promises superior accuracy compared to traditional methods, opening doors for future applications.

LlamaIndex Discord

- LlamaIndex struggles with Milvus DB Integration: Users report challenges integrating Milvus DB into their LlamaIndex workflows due to unexpected API changes and reliance on native objects.

- They are calling for a more modular design to effectively utilize pre-built components without enforcing dependency on structured objects.

- Swarm Agents Create AI-Generated Videos: A project showcases how to build a ‘swarm’ of agents that autonomously create an AI-generated YouTube video starting from natural prompts, detailed in this tutorial.

- This approach highlights the potential of multi-agent architectures in simplifying video generation workflows.

- Dynamic Data Source Reasoning in RAG Pipelines: An agent layer on top of a RAG pipeline allows framing different data sources as ‘tools’, enhancing reasoning about source retrieval, summarized here.

- This dynamic approach emphasizes the shift towards more interactive and responsive retrieval mechanisms in data processing.

- Quick Setup for Agentic Retrieval: A helpful guide offers a swift setup for agentic retrieval in RAG, paving the way for more flexible data handling compared to static retrieval methods, detailed in this guide.

- Users appreciated the ease of implementation, marking a shift in how retrieval architectures are utilized.

- Legal Compliance through Multi-Agent System: A multi-agent system aids companies in assessing compliance with regulations and drafting legal responses, more details available here.

- This system automates the review of legal precedents, demonstrating significant efficiency improvements in legal workflows.

tinygrad (George Hotz) Discord

- Gradient Checkpointing Enhances Training: A member inquired about gradient checkpointing, which is crucial for training larger models efficiently, highlighting its role in improving training capabilities.

- Without these optimizations, tinygrad can only handle very small toy models, limiting its overall performance.

- VAE Training for Color Space Adaptation: Discussion emerged around training a Variational Autoencoder (VAE) to adapt an existing model to the CIE LAB color space for improved outputs.

- Significant alterations to inputs would require extensive modifications beyond simple finetuning, complicating the process.

- Tinybox Clarified as Non-Server Tool: A user sought clarity on tinygrad’s functionality, questioning if it acts as a local server for running LLMs.

- It was clarified that tinygrad is more akin to PyTorch, focusing on development rather than server capabilities.

- KAN Networks Usher in Speedy Training: Members noted the difficulty in finding existing implementations of KAN networks in TinyGrad, despite the hype, while showcasing examples that enable efficient training.

- FastKAN achieves a 10x speedup on MNIST, emphasizing its performance advantages.

- Updates on VIZ and Scheduler Enhancements: Members received updates on a complete rewrite of the VIZ server, targeting enhancements for kernel and graph rewrites.

- Key blockers for progress include addressing ASSIGN and refining fusion and grouping logic as development continues.

Interconnects (Nathan Lambert) Discord

- OpenAI o1 integrates reasoning: Discussion revealed that OpenAI o1 integrates reasoning directly into the model, moving past traditional methods like MCTS during inference.

- Despite this, skepticism arose regarding the simplification of underlying challenges, especially as some discussions seemed censored.

- Entropix provides prompt optimization: The Entropix/Entropy Guided Adaptive Sampler enhances prompt optimization, focusing on attention entropy to boost model performance.

- Advantages noted include improved narrative consistency and reduced hallucinations, suggesting capabilities even in small models.

- Reflection 70B fails to meet benchmarks: A member noted disappointment in their replication of Reflection 70B, which did not match its originally reported benchmarks.

- Nonetheless, they remain committed to reflecting on tuning concepts, promising more detailed insights soon.

- Open O1 emerges as a competitor: Open O1 is introduced as a viable alternative to proprietary models, asserting superiority in reasoning, coding, and mathematical tasks.

- Some community members felt discussions lacked depth, prompting a request for a more thorough analysis of the model.

- RNN investment plea gains attention: A tweet fervently called for funding to develop ‘one more RNN’, claiming it could destroy transformers and address long-context issues.

- With enthusiasm, the member emphasized the urgency of support, urging the community to take action.

DSPy Discord

- Class Generation Notebook Released: The GitHub repository now features a Jupyter notebook on class generation showcasing structured outputs from DSPy and Jinja2.

- This project aims to enhance structured output generation, inviting further contributions on GitHub.

- Livecoding Session Coming Up: An exciting livecoding session has been announced for members to observe the creation of notebooks directly within Discord.

- Members are encouraged to join in the thread to interact during the session, fostering collaborative notebook development.

- TypedPredictors Ready for Action: There’s talk about using

TypedPredictorswithout formatting logic for schemas, with an estimate that it could be implemented in about 100 lines.- Integration into

dspy.Predictis expected soon, providing an efficient pathway for developers.

- Integration into

- Traceability Not as Tricky as It Seems: A user inquired about adding traceability to DSPy for tracking token counts to manage costs without external libraries.

- The suggestion involved utilizing the

your_lm.historyattribute to effectively monitor expenses.

- The suggestion involved utilizing the

- Facing Challenges with Transition to dspy.LM: A new user reported a segmentation fault during the shift from

dspy.OllamaLocaltodspy.LM, indicating a possible version mismatch.- Responses advised reinstalling DSPy or confirming the use of correct model endpoints to resolve the issue.

LLM Agents (Berkeley MOOC) Discord

- Real-time Streaming from chat_manager: A streamlit UI enables real-time streaming from chat_manager, facilitated by a GitHub pull request for message processing customization.

- This setup is essential for interactive applications requiring immediate user feedback on messages.

- In-person Attendance is Exclusive: Due to capacity constraints, only Berkeley students can attend upcoming lectures in person, restricting broader access.

- This limitation was confirmed in discussions regarding the seating availability for non-Berkeley students.

- Omar’s Lecture Sparks Excitement: Members expressed enthusiasm for an upcoming lecture from Omar that will focus on DSPy, emphasizing its relevance.

- Active contributions to the DSPy project were highlighted, reflecting member commitment to advancing their expertise.

- Members Pitched into DSPy Contributions: A member detailed their recent contributions to the DSPy project, showcasing their engagement and desire to enhance the framework.

- This ongoing involvement signals a strong community interest in improving DSPy functionalities.

Modular (Mojo 🔥) Discord

- Resyntaxing Mojo Argument Conventions: A member shared a proposal on resyntaxing argument conventions aiming to refine aspects of the Mojo programming language.

- They encouraged community feedback through the GitHub Issue to help shape this proposal.

- Benchmarking Framework Launches in Mojo: Mojo has introduced a benchmark package for runtime performance evaluation, similar to Go’s testing framework.

- Members discussed using

benchmark.runto efficiently assess function performance and report mean durations and iterations.

- Members discussed using

- Enums Now with Variant Type: Members clarified that there is no dedicated enum syntax in Mojo, but the Variant type can serve similar functionality.

- You can create tags via struct declarations and aliases until full sum types are introduced.

- Max Inference Engine Faces Errors: Users reported issues with the max inference engine on Intel NUC, encountering errors related to

libTorchRuntimePlugin-2_4_1_post100.soand ONNX operations.- Problems included failed legalization of operations and complications when altering the opset version.

- Clarification on Torch Version for Compatibility: A user inquired about PyTorch installation, asking What torch version do you have? to ensure compatibility.

- The provided output revealed PyTorch version 2.4.1.post100 and included specifics on GCC version 13.3 and Intel optimizations from conda-forge.

Torchtune Discord

- Torchtune lacks KTO training support: A member inquired if Torchtune supports KTO training, with indications that this could potentially be added to the DPO recipe if necessary.

- They recommended raising an issue to track this feature request.

- AssertionError with large custom CSV datasets: Users reported an AssertionError with custom CSV datasets larger than 100MB when shuffle=false, but smaller datasets functioned without issue.

- This suggests that the error may be tied to dataset size rather than the code.

- LLAMA 3.2 3B fine-tuning issues: There was a discussion about full fine-tuning of LLAMA 3.2 3B, emphasizing that distilled models often require specific handling like lower learning rates.

- One user raised the learning rate to achieve satisfactory loss curves, though they lacked comprehensive evaluation data.

- Grace Hopper chips under scrutiny: Members shared inquiries about the performance of Grace Hopper chips, specifically how they stack up against standard architectures with Hopper GPUs.

- This illustrates a keen interest in the implications of using newer hardware designs.

- Training efficiency: Max sequence length vs batch size: Guidance suggests optimizing max sequence length rather than increasing batch size to enhance performance in the blockmask dimension.

- Using longer sequences may improve packing efficiency but might reduce data shuffling due to static packing methods.

OpenAccess AI Collective (axolotl) Discord

- Finetuned GPT-4 Models Gone Missing: A member humorously claimed that OpenAI may have taken everyone’s finetuned GPT-4 models, stating, ‘I lost my models’ and suggesting the performance was trash.

- Another member pointed out, ‘you only finetune weights you own,’ highlighting the risks of using shared resources.

- Group Logo Change Confusion: A member stated they lost track of the community due to a logo change, humorously lamenting the confusion it caused.

- This emphasizes the impact of branding changes on community recognition.

- Intel and Inflection AI Team Up: A member shared an article detailing the collaboration between Intel and Inflection AI to launch an enterprise AI system, calling it interesting.

- This announcement suggests significant developments in enterprise AI that could reshape technology usage.

- Exploration of non-pip packagers for Axolotl: A member inquired about switching Axolotl to a non-pip packager like uv due to frustrations with dependency issues.

- They expressed a willingness to contribute to enhancing the package management experience.

- fschad package not found error: A user reported a ‘Could not find a version that satisfies the requirement fschat (unavailable)’ error while installing

axolotl[deepspeed,flash-attn].- Available versions listed range from 0.1.1 to 0.2.36, yet none are marked as available, causing confusion.

LAION Discord

- LlamaIndex RAG-a-thon Kicks Off: The LlamaIndex Agentic RAG-a-thon is set for October 11-13 in Silicon Valley, focusing on Retrieval-Augmented Generation technology in partnership with Pinecone and VESSL AI.

- This event aims at advancing AI agents for enterprise applications, with an opportunity for developers to win cash prizes as highlighted in this link.

- O1 Fails on Simple Tasks: Discussion reveals that O1 claims strong performance on olympiad-level tasks but struggles with simpler problems, raising concerns about its generalization abilities as noted in a related discussion.

- The findings prompt questions on how SOTA LLMs effectively manage generalization, a concern supported by a research paper.

- Seeking Clarity on Clip Retrieval API: There’s ongoing interest in the clip retrieval API with a member asking for updates, indicating a gap in communication regarding this tech development.

- Lack of responses suggests that more info from team leads or developers is necessary.

- Epoch Training Experience Shared: A user shared insights from training with 80,000 epochs, setting a stage for deeper conversations about model training performance.

- This detail highlights the varying approaches to achieving optimal results in model training.

- New Tools Enter the Arena: A link to AutoArena was shared, touted as an intriguing tool, reflecting interest in resources for model improvements.

- This interest underscores the community’s push toward leveraging practical tools in AI development.

OpenInterpreter Discord

- Grimes’ Coachella 01 AI Build Revealed: A guide outlines how Grimes and Bella Poarch set up their 01 AI assistant using a macro keypad and microphone at Coachella. This simple setup involves purchasing a macro keypad and microphone and remapping buttons to interact with the AI.

- Members learned that the setup allows for efficient and direct engagement with the assistant, emphasizing usability in dynamic environments.

- Challenges with Local LlamaFile Model: A member encountered an error with their local LlamaFile model, stating: ‘Model not found or error in checking vision support’ when trying to interact. Their model ‘Meta-Llama-3.1-8B-Instruct’ should be properly mapped according to the linked configuration.

- This raised confusion about the configuration details and led to discussions on litellm/model_prices_and_context_window.json for context and pricing.

- Discord Automod Targets Spam Control: There was a discussion suggesting the use of Discord Automod to block @everyone tags from normal users to reduce spam. A member noted that 95% of spam bots attempt to tag everyone, making this an effective method.

- Implementing this could streamline community interactions, minimizing spam distractions during crucial discussions.

- Comparing 01 Costs: 11 Labs vs OpenAI: A member raised a question about the costs related to using the 01 service between 11 Labs and OpenAI. There were concerns about potentially needing to upgrade their membership with 11 Labs.

- This reflects a broader interest in understanding the financial implications of utilizing these platforms, especially for those relying heavily on multiple services.

- Innovative Digital Assistant Cap Idea: A user proposed a cap integrated with a digital assistant, featuring speaker, microphone, and push-to-talk button functionalities for seamless interactions. The project aims to include phone notifications, question answering, and calendar management, potentially leading to an open source project with a build guide.

- Another user expressed enthusiasm for a device that enhances their coding projects, highlighting a desire for improved coding productivity.

LangChain AI Discord

- Join the LlamaIndex RAG-a-thon!: The LlamaIndex Agentic RAG-a-thon is taking place in Silicon Valley from October 11-13, focused on Retrieval-Augmented Generation technology.

- Interested participants can check out details here and connect via Slack or Discord.

- Automating QA with Natural Language: A member discussed Autonoma, a platform for automating QA using natural language and computer vision, aimed at reducing bugs.

- Key features include web and mobile support, CI/CD readiness, and self-healing capabilities.

- Stay ahead with Sci Scope: Sci Scope aggregates new ArXiv papers weekly and delivers personalized summaries directly to your inbox.

- This personalized newsletter ensures subscribers never miss critical developments in AI research.

- Interest in Spending Agents: A user raised the question of agents capable of spending money, leading to discussions about potential applications and innovations in this area.

- While no concrete projects were shared, the concept intrigued many members.

- Guidance for Multi-tool Agent Implementation: Members expressed a desire for guidance on how to implement agents using multiple tools, reflecting a need for effective data source integration.

- Interest in creating agents that can utilize diversified tools continues to grow within the community.

MLOps @Chipro Discord

- 5th Annual MLOps World + GenAI Conference Incoming!: Join the MLOps World + GenAI Conference on November 7-8th in Austin, TX, featuring 50+ topics, hands-on workshops, and networking opportunities. Check out the full agenda here including a bonus virtual day on Nov. 6th!

- Mark your calendars! This is a prime opportunity for AI engineers to connect and learn about the latest in MLOps.

- Manifold Research Lab Launches CRC Updates: Manifold is hosting interactive updates known as CRCs, addressing breakthroughs in Multimodality, Robotics, and various research projects. Get more insights on their Events page and plug into the community here.

- These sessions offer deep dives into cutting-edge research, perfect for tech enthusiasts wanting to stay ahead in the field.

Mozilla AI Discord

- Podcast Highlights Data Pipelines: This Wednesday, AIFoundry.org will host a podcast covering data pipelines for models fine-tuning, emphasizing the necessary volume of data for success.

- The event is expected to spark discussion on optimal adjustments required for various fine-tuning tasks.

- Community Queries on Data Selection: A lively discussion in the community revolves around the process of data selection and processing, with many seeking guidance on effective methodologies.

- The focus is on adapting these processes to enhance suitability for specific fine-tuning tasks.

DiscoResearch Discord

- New Research Insight Published: A new research paper titled ‘Title of the Paper’ was shared, focusing on advancements in AI methodologies.

- This highlights the continuous evolution of AI research and its implications for future benchmarks.

- AI Benchmarking Discussions: Discussions highlighted the importance of developing robust benchmarks to assess AI performance accurately amidst evolving technologies.

- Members emphasized the need for standards to ensure comparability among different AI models.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Unsloth AI (Daniel Han) ▷ #general (729 messages🔥🔥🔥):

Unsloth GUI for fine-tuningQwen model performanceMultimodal support in modelsDataset formatting for trainingTraining Persian language models

- Unsloth GUI for Fine-Tuning: A GUI titled ‘Unsloth Studio’ is anticipated for fine-tuning, which will simplify the process for users by handling dataset formatting and dependencies automatically.

- This tool aims to make it easier for beginners to train models without needing advanced programming knowledge.

- Qwen Model Performance Compared to LLaMA: Users discussed Qwen models, noting that 1B models and larger ones can perform similarly in conversational contexts, with Qwen 2.5 7B being a potential model for fine-tuning to improve performance.

- Some users reported a notable difference in performance and training efficiency when switching between Qwen and LLaMA models.

- Multimodal Support in Models: There’s ongoing work for integrating image input capabilities into models like LLaMA 3.2, though detailed timelines for release are still unclear.

- Users mentioned the complexities involved in fine-tuning multimodal models and expressed hopes for future support.

- Dataset Formatting for Training: Formatting datasets for fine-tuning models was addressed, with emphasis on ensuring the correct structure for training conversations.

- It’s suggested to encapsulate conversation parts as single blocks of text, adjusting formats based on the model specifications.

- Training Persian Language Models: Users inquired about effective models for fine-tuning with Persian language datasets, with Qwen being suggested as a suitable option.

- The conversation highlighted the need for quality datasets in non-English languages for achieving better model performance.

Links mentioned:

- Google Colab: no description found

- Google Colab: no description found

- Slurm Workload Manager - Documentation: no description found

- fixie-ai/ultravox-v0_4-mistral_nemo · Hugging Face: no description found

- Qwen 2.5 - a unsloth Collection: no description found

- Wow GIF - Wow - Discover & Share GIFs: Click to view the GIF

- llama-recipes/recipes/multilingual/README.md at 0efb8bd31e4359ba9e8f52e8d003d35ff038e081 · meta-llama/llama-recipes: Scripts for fine-tuning Meta Llama with composable FSDP & PEFT methods to cover single/multi-node GPUs. Supports default & custom datasets for applications such as summarization and Q&...

- unclemusclez/unsloth-llama3.2: Llama 3.2 with Unsloth

- Reddit - Dive into anything: no description found

- GitHub - chigkim/Ollama-MMLU-Pro: Contribute to chigkim/Ollama-MMLU-Pro development by creating an account on GitHub.

- macadeliccc/opus_samantha · Datasets at Hugging Face: no description found