AI News for 1/31/2025-2/3/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 16942 messages) for you. Estimated reading time saved (at 200wpm): 1721 minutes. You can now tag @smol_ai for AINews discussions!

When introducing Operator (our coverage here), sama hinted at more OpenAI Agents soon on the way, but few of us were expecting the next one in 9 days, shipped from Japan on a US Sunday no less:

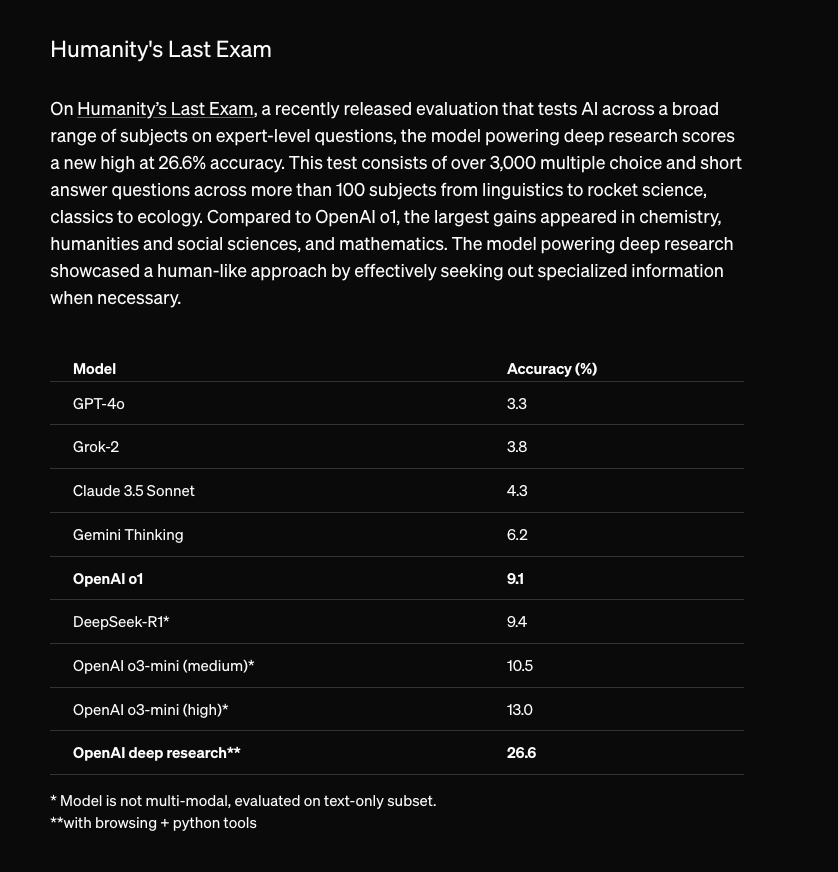

The blogpost offers more insight into intended usecases, but the bit notable is Deep Research’s result on Dan Hendrycks’ new HLE benchmark more than doubling the result of o3-mini-high released just on Friday (our coverage here).

They also released a SOTA result on GAIA - which was criticized by coauthors for just releasing public test set results - obviously problematic for an agent that can surf the web, though there is zero reason to question the integrity of this especially when confirmed in footnotes and as samples of the GAIA test traces were published.

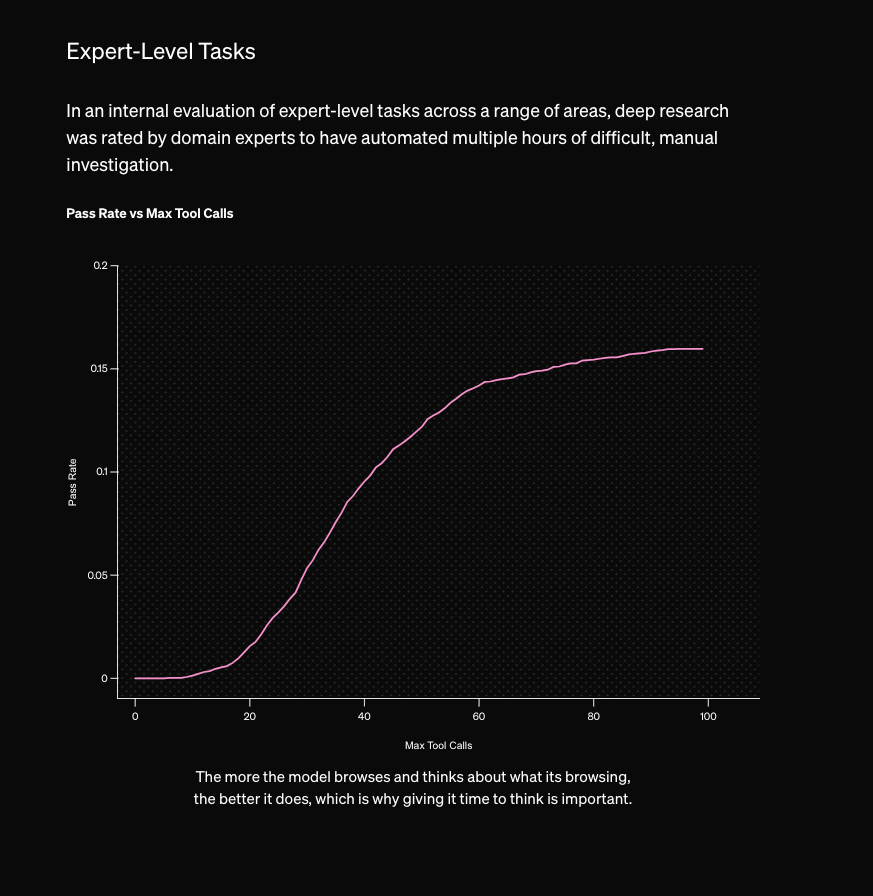

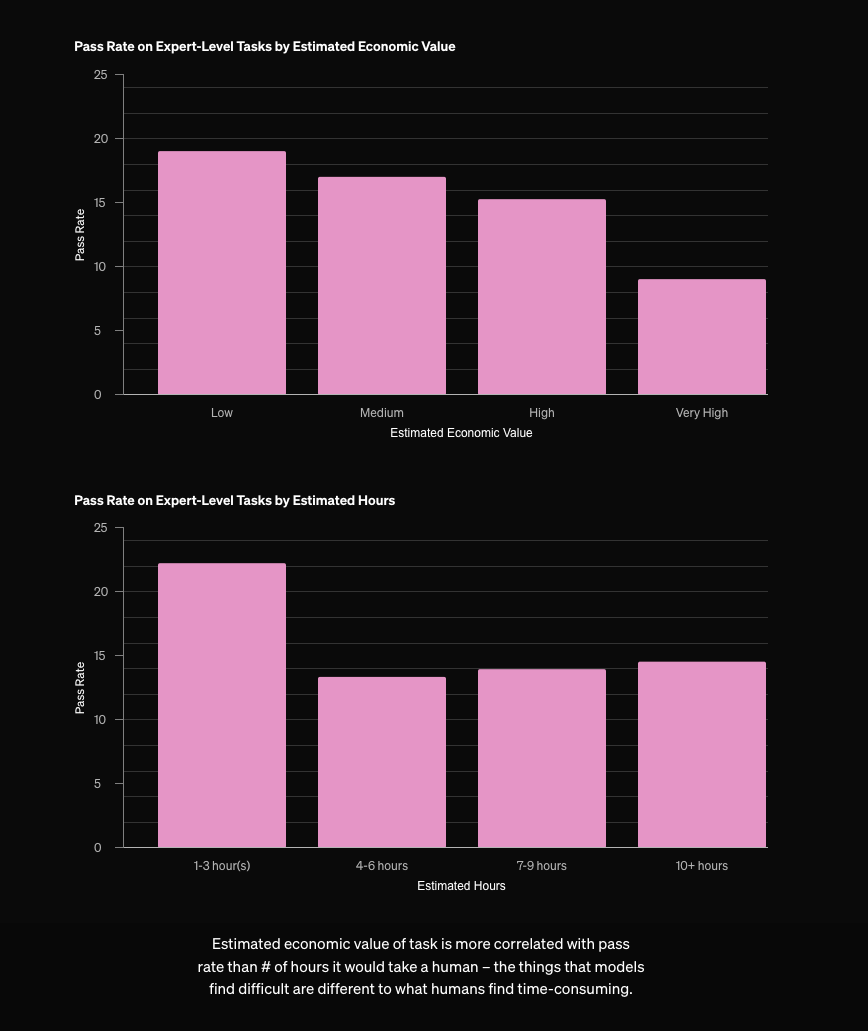

OAIDR comes with its own version of the “inference time scaling” chart which is very impressive - not in the scaling of the chart itself, but in the clear rigor demonstrated in the research process that made producing such a chart possible (assuming, of course, that this is research, not marketing, but here the lines are unfortunately blurred to sell a $200/month subscription).

OpenAI staffers confirmed that this is the first time the full o3 has been released in the wild (and gdb says it is “an extremely simple agent”), and the blogpost notes that a “o3-deep-research-mini” version is on the way which will raise rate limits from the 100 queries/month available today.

Reception has been mostly positive, sometimes to the point of hyperventilation. Some folks are making fun of the hyperbole, but on balance we tend to agree with the positive takes of Ethan Mollick and Dan Shipper, though we do experience a lot of failures as well.

Shameless Plug: We will have multiple Deep Research and other agent builders, including the original Gemini Deep Research team, at AI Engineer NYC on Feb 20-22. Last call for applicants!

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

Advances in Reinforcement Learning (RL) and AI Research

-

Reinforcement Learning Simplified and Its Impact on AI: @andersonbcdefg changed his mind and now thinks that RL is easy, reflecting on the accessibility of RL techniques in AI research.

-

s1: Simple Test-Time Scaling in AI Models: @iScienceLuvr shared a paper on s1, demonstrating that training on only 1,000 samples with next-token prediction and controlling thinking duration via a simple test-time technique called budget forcing leads to a strong reasoning model. This model outperforms previous ones on competition math questions by up to 27%. Additional discussions can be found here and here.

-

RL Improves Models’ Adaptability to New Tasks: Researchers from Google DeepMind, NYU, UC Berkeley, and HKU found that reinforcement learning improves models’ adaptability to new, unseen task variations, while supervised fine-tuning leads to memorization but remains important for model stabilization.

-

Critique of DeepSeek r1 and Introduction of s1: @Muennighoff introduced s1, which reproduces o1-preview scaling and performance with just 1K high-quality examples and a simple test-time intervention, addressing the data intensity of DeepSeek r1.

OpenAI’s Deep Research and Reasoning Models

-

Launch of OpenAI’s Deep Research Assistant: @OpenAI announced that Deep Research is now rolled out to all Pro users, offering a powerful AI tool for complex knowledge tasks. @nickaturley highlighted its general-purpose utility and potential to transform work, home, and school tasks.

-

Improvements in Test-Time Scaling Efficiency: @percyliang emphasized the importance of efficiency in test-time scaling with only 1K carefully chosen examples, encouraging methods that improve capabilities per budget.

-

First Glimpse of OpenAI’s o3 Capabilities: @BorisMPower expressed excitement over what o3 is capable of, noting its potential to save money and reduce reliance on experts for analysis.

Developments in Qwen Models and AI Advancements

-

R1-V: Reinforcing Super Generalization in Vision Language Models: @_akhaliq shared the release of R1-V, demonstrating that a 2B model can outperform a 72B model in out-of-distribution tests within just 100 training steps. The model significantly improves performance in long contexts and key information retrieval.

-

Qwen2.5-Max’s Strong Performance in Chatbot Arena: @Alibaba_Qwen announced that Qwen2.5-Max is now ranked #7 in the Chatbot Arena, surpassing DeepSeek V3, o1-mini, and Claude-3.5-Sonnet. It ranks 1st in math and coding, and 2nd in hard prompts.

-

s1 Model Exceeds o1-Preview: @arankomatsuzaki highlighted that s1-32B, after supervised fine-tuning on Qwen2.5-32B-Instruct, exceeds o1-preview on competition math questions by up to 27%. The model, data, and code are open-source for the community.

AI Safety and Defending Against Jailbreaks

-

Anthropic’s Constitutional Classifiers Against Universal Jailbreaks: @iScienceLuvr discussed Anthropic’s introduction of Constitutional Classifiers, safeguards trained on synthetic data to prevent universal jailbreaks. Over 3,000 hours of red teaming showed no successful attacks extracting detailed information from the guarded models.

-

Anthropic’s Demo to Test New Safety Techniques: @skirano announced a new research preview at Anthropic, inviting users to try to jailbreak their system protected by Constitutional Classifiers, aiming to enhance AI safety measures.

-

Discussion on Hallucination in AI Models: @OfirPress shared concerns about hallucinations in AI models, emphasizing it as a significant problem even in advanced systems like OpenAI’s Deep Research.

AI Tools and Platforms for Developers

-

Launch of SWE Arena for Vibe Coding: @terryyuezhuo released SWE Arena, a vibe coding platform, supporting real-time code execution and rendering, covering various frontier LLMs and VLMs. @_akhaliq also highlighted SWE Arena, noting its impressive capabilities.

-

Enhancements in Perplexity AI Assistant: @AravSrinivas introduced updates to the Perplexity Assistant, encouraging users to try it out on new devices like the Nothing phone, and mentioning upcoming features like integration into Android Auto. He also announced that o3-mini with web search and reasoning traces is available to all Perplexity users, with 500 uses per day for Pro users (tweet here).

-

Advancements in Llama Development Tools: @ggerganov announced over 1000 installs of llama.vscode, enhancing the development experience with llama-based models. He shared what a happy llama.cpp user looks like here.

Memes and Humor

-

Observations on AI Research and Naming Skills: @jeremyphoward humorously noted that it’s impossible to be both a strong AI researcher and good at naming things, calling it a universal fact across all known cultures.

-

Generational Reflections on Talent: @willdepue remarked that Gen Z consists of either radically talented individuals or “complete vegetables,” attributing this polarization to the internet and anticipating its acceleration with AI.

-

Humorous Take on Interface Design: @jeremyphoward joked about having only five grok icons on his home screen, suggesting more could fit, playfully engaging with technology design.

-

Happy llama.cpp User: @ggerganov shared an image depicting what a happy llama.cpp user looks like, adding a lighthearted touch to the AI community.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Paradigm Shift in AI Model Hardware: From GPUs to CPU+RAM

- Paradigm shift? (Score: 532, Comments: 159): The post suggests a potential paradigm shift in AI model processing from a GPU-centric approach to a CPU+RAM configuration, specifically highlighting the use of AMD EPYC processors and RAM modules. This shift is visually depicted through contrasting images of a man dismissing GPUs and approving a CPU+RAM setup, indicating a possible change in hardware preferences for AI computations.

- CPU+RAM Viability: The shift towards AMD EPYC processors and large RAM configurations is seen as viable for individual users due to cost-effectiveness, but GPUs remain preferable for serving multiple users. The cost of building an EPYC system is significantly higher, with estimates ranging from $5k to $15k, and performance is generally slower compared to GPU setups.

- Performance and Configuration: There is a focus on optimizing configurations, such as using dual socket 12 channel systems and ensuring all memory slots are filled for optimal performance. Some users report achieving 5.4 tokens/second with specific models, while others suggest that I/O bottlenecks and not utilizing all cores can affect performance.

- Potential Breakthroughs and MoE Models: Discussions include the potential for breakthroughs in Mixture of Experts (MoE) models, which could allow for reading LLM weights directly from fast NVMe storage, thus reducing active parameters. This could change the current hardware requirements, but the feasibility and timing of such advancements remain uncertain.

Theme 2. Rise of Mistral, Qwen, and DeepSeek outside the USA

- Mistral, Qwen, Deepseek (Score: 334, Comments: 114): Non-US companies such as Mistral AI, Qwen, and DeepSeek are releasing open-source models that are more accessible and smaller in size compared to their US counterparts. This highlights a trend where international firms are leading in making AI technology more available to the public.

- The Mistral 3 small 24B model is receiving positive feedback, with several users highlighting its effectiveness and accessibility. Qwen is noted for its variety of model sizes, offering more flexibility and usability on different hardware compared to Meta’s Llama models, which are criticized for limited size options and proprietary licensing.

- Discussions around US vs. international AI models reveal skepticism about the US’s current offerings, with some users preferring international models like those from China due to their open-source nature and competitive performance. Meta is mentioned as having initiated the open weights trend, but users express concerns about the company’s reliance on large models and proprietary licenses.

- There is a debate about the strategic interests of companies in keeping AI model weights open or closed. Some argue that leading companies keep weights closed to maintain a competitive edge, while challengers release open weights to undermine these leaders. Meta’s Llama 4 is anticipated to incorporate innovations from DeepSeek R1 to stay competitive.

Theme 3. Phi 4 Model Gaining Traction for Underserved Hardware

- Phi 4 is so underrated (Score: 207, Comments: 84): The author praises the Phi 4 model (Q8, Unsloth variant) for its performance on limited hardware like the M4 Mac mini (24 GB RAM), finding it comparable to GPT 3.5 for tasks such as general knowledge questions and coding prompts. They express satisfaction with its capabilities without concern for formal benchmarks, emphasizing personal experience over technical metrics.

- Phi 4’s Strengths and Limitations: Users praise Phi 4 for its strong performance in specific areas, such as knowledge base and rule-following, even outperforming larger models in instruction adherence. However, it struggles with smaller languages, producing poor output outside of English, and lacks a 128k context version which limits its potential compared to Phi-3.

- User Experiences and Implementations: Many users share positive experiences using Phi 4 in various workflows, highlighting its versatility and effectiveness in tasks like prompt enhancement and creative benchmarks like cocktail creation. Some users, however, report poor results in specific tasks like ticket categorization, where other models like Llama 3.3 and Gemma2 perform better.

- Tools and Workflow Integration: Discussions include using Phi 4 in custom setups, like Roland and WilmerAI, to enhance problem-solving by combining it with other models like Mistral Small 3 and Qwen2.5 Instruct. The community also explores workflow apps like n8n and omniflow for integrating Phi 4 into broader AI systems, with links to detailed setups and tools provided (WilmerAI GitHub).

Theme 4. DeepSeek-R1’s Competence in Complex Problem Solving

- DeepSeek-R1 never ever relaxes… (Score: 133, Comments: 30): The DeepSeek-R1 model showcased self-correction abilities by solving a math problem involving palindromic numbers, initially making a mistake but then correcting itself before completing its response. Notably, OpenAI o1 was the only other model to solve the problem, while several other models, including chatgpt-4o-latest-20241120 and claude-3-5-sonnet-20241022, failed, raising questions about potential issues with tokenizers, sampling parameters, or the inherent mathematical capabilities of non-thinking LLMs.

- Discussions highlight the self-correcting capabilities of LLMs, particularly in zero-shot settings. This ability stems from the model’s exposure to training data where errors are corrected, such as on platforms like Stack Overflow, influencing subsequent token predictions to correct mistakes.

- DeepSeek-R1 and other models like Mistral Large 2.1 and Gemini Thinking on AI Studio successfully solve the palindromic number problem, while the concept of Chain-of-Thought (CoT) models is explored. CoT models are contrasted with non-CoT models, which typically struggle to correct errors mid-response due to different training paradigms.

- The conversation delves into the foundational differences in training data across generational models (e.g., gen1, gen1.5, gen2) and the implications of these differences on error correction capabilities. There is a suggestion that presenting model outputs as user inputs for validation might help address these challenges.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. DeepSeek and Deep Research: Disruptive AI Challenges

-

Deep Research Replicated Within 12 Hours (Score: 746, Comments: 93): The post highlights Ashutosh Shrivastava’s tweet about the swift creation of “Open DeepResearch,” a counterpart to OpenAI’s Deep Research Agent, achieved within 12 hours. It includes code snippets that facilitate comparing AI companies like Cohere, Jina AI, and Voyage by examining their valuations, growth, and adoption metrics through targeted web searches and URL visits.

- Many commenters argue that OpenAI’s Deep Research is superior due to its use of reinforcement learning (RL), which allows it to autonomously learn strategies for complex tasks, unlike other models that lack this capability. Was_der_Fall_ist emphasizes that without RL, tools like “Open DeepResearch” are just sophisticated prompts and not true agents, potentially leading to brittleness and unreliability.

- The discussion highlights the importance of focusing not just on models but on the tooling and applications around them, as noted by frivolousfidget. They argue that significant capability gains can be achieved through innovative use of existing models, rather than solely through model improvements, citing examples like AutoGPT and LangChain.

- GitHub links and discussions about the cost and accessibility of models emphasize the financial barriers to competing with top-end solutions like OpenAI’s. YakFull8300 provides a GitHub link for further exploration, while others discuss the prohibitive costs associated with high-level AI model training and deployment.

-

DeepSeek might not be as disruptive as claimed, firm reportedly has 50,000 Nvidia GPUs and spent $1.6 billion on buildouts (Score: 535, Comments: 157): DeepSeek reportedly possesses 50,000 Nvidia GPUs and has invested $1.6 billion in infrastructure development, raising questions about its claimed disruptive impact in the AI industry. The scale of their investment suggests significant computational capabilities, yet there is skepticism about whether their technological advancements match their financial outlay.

- The discussion highlights skepticism about DeepSeek’s claims, with some users questioning the validity of their reported costs and GPU usage. DeepSeek’s paper clearly states the training costs, but many believe the media misrepresented these numbers, leading to misinformation and confusion about their actual expenses.

- There is a debate over whether DeepSeek’s open-source model represents a significant advancement in AI, with some arguing that it challenges the US’s dominance in AI development. Critics suggest that Western media and sinophobia have contributed to the narrative that DeepSeek’s achievements are overstated or misleading.

- The financial impact of DeepSeek’s announcements, like the 17% drop in Nvidia’s stock, is a focal point, with users noting the broader implications for the AI hardware market. Some users argue that the open-source nature of DeepSeek’s model allows for cost-effective AI development, potentially democratizing access to AI technology.

-

EU and UK waiting for Sora, Operator and Deep Research (Score: 110, Comments: 23): The post mentions that the EU and UK are waiting for Sora, Operator, and Deep Research tools, but provides no additional details or context. The accompanying image depicts a man in various contemplative poses, suggesting themes of reflection and solitude, yet lacks direct correlation to the post’s topic.

- Availability and Pricing Concerns: Users express frustration over the delayed availability of Sora in the UK and EU, speculating whether the delay is due to OpenAI or government regulations. Some are skeptical about paying $200/month for the service, and there’s mention of a potential release for the Plus tier next week, though skepticism about timelines remains.

- Performance and Utility: A user shared a positive experience with the tool, noting it generated a 10-page literature survey with APA citations in LaTeX within 14 minutes. This highlights the tool’s impressive capabilities and efficiency in handling complex tasks.

- Regulatory and Operational Insights: There is speculation that the delay might be a strategic move by OpenAI to influence policy-makers or due to resource allocation issues, particularly in processing user activity in the Republic of Ireland. The discussion suggests that the regulatory process should ideally enhance model safety, and contrasts OpenAI’s delay with other AI companies that manage day-one releases in the UK and EU.

Theme 2. OpenAI’s New Hardware Initiatives with Jony Ive

-

Open Ai is developing hardware to replace smartphones (Score: 279, Comments: 100): OpenAI is reportedly developing a new AI device intended to replace smartphones, as announced by CEO Sam Altman. The news article from Nikkei, dated February 3, 2025, also mentions Altman’s ambitions to transform the IT industry with generative AI and his upcoming meeting with Japan’s Prime Minister.

- OpenAI’s AI Hardware Ambitions: Sam Altman announced plans for AI-specific hardware and chips, potentially disrupting tech hardware akin to the 2007 iPhone launch. They aim to partner with Jony Ive, targeting a “voice” interface as a key feature, with the prototype expected in “several years” (Nikkei source).

- Skepticism on Replacing Smartphones: Many commenters doubted the feasibility of replacing smartphones, emphasizing the enduring utility of screens for video and reading. They expressed skepticism about using “voice” as the primary interface, questioning how it could replace the visual and interactive elements of smartphones.

- Emerging AI Assistants: Gemini is noted as a growing competitor to Google Assistant, with integration in Samsung devices and the ability to be chosen over other assistants in Android OS. Gemini’s potential expansion to Google Home and Nest devices is in beta, indicating a shift in AI assistant technology.

-

Breaking News: OpenAI will develop AI-specific hardware, CEO Sam Altman says (Score: 138, Comments: 29): OpenAI plans to develop AI-specific hardware, as announced by CEO Sam Altman. This strategic move indicates a significant step in enhancing AI capabilities and infrastructure.

- Closed Source Concerns: There is skepticism about the openness of OpenAI’s initiatives, with users noting the irony of “Open” AI developing closed-source software and hardware. This reflects a broader concern about transparency and accessibility in AI development.

- Collaboration with Jony Ive: The collaboration with Jony Ive is highlighted as a strategic move, potentially leading to the largest tech hardware disruption since the 2007 iPhone launch. The focus is on creating a new kind of hardware that leverages AI advancements for enhanced user interaction.

- Custom AI Chips: OpenAI is working on developing its own semiconductors, joining major tech companies like Apple, Google, and Amazon. This move is part of a broader trend towards custom-made chips aimed at improving AI performance, with a prototype expected in “several years” emphasizing voice as a key feature.

Theme 3. Critique on AI Outperforming Human Expertise Claims

-

Exponential progress - AI now surpasses human PhD experts in their own field (Score: 176, Comments: 86): The post discusses a graph titled “Performance on GPQA Diamond” that compares the accuracy of human PhD experts and AI models GPT-3.5 Turbo and GPT-4o over time. The graph shows that AI models are on an upward trend, surpassing human experts in their field from July 2023 to January 2025, with accuracy ranging from 0.2 to 0.9.

- AI Limitations and Misleading Claims: Commenters argue that AI models, while adept at pattern recognition and data retrieval, are not capable of genuine reasoning or scientific discovery, such as curing cancer. They highlight that AI surpassing PhDs in specific tests does not equate to surpassing human expertise in practical, real-world problem-solving.

- Criticism of Exponential Improvement Claims: The notion of AI models improving exponentially is criticized as misleading, with one commenter comparing it to a biased metric that doesn’t truly reflect the complexity and depth of human expertise. The discussion emphasizes that while AI can excel in theoretical knowledge, it lacks the ability to conduct experiments and make new discoveries.

- Skepticism Towards AI’s Expertise: Many express skepticism about AI’s ability to provide PhD-level insights without expert guidance, likening AI to an advanced search engine rather than a true expert. Concerns are raised about the credibility of claims that AI models have surpassed PhDs, with some attributing these claims to marketing rather than actual capability.

-

Stability AI founder: “We are clearly in an intelligence takeoff scenario” (Score: 127, Comments: 122): Emad Mostaque, founder of Stability AI, asserts that we are in an “intelligence takeoff scenario” where machines will soon surpass humans in digital knowledge tasks. He emphasizes the need to move beyond discussions of AGI and ASI, predicting enhanced machine efficiency, cost-effectiveness, and improved coordination, while urging consideration of the implications of these advancements.

- Many commenters express skepticism about the imminent replacement of humans by AI, citing examples like challenges in generating simple code tasks with AI models like o3-mini and o1 pro. RingDigaDing and others argue that AI still struggles with reliability and practical application in real-world scenarios, despite benchmarks suggesting proximity to AGI.

- IDefendWaffles and mulligan_sullivan discuss the motivations behind AI hype, mentioning investment interests and the lack of factual evidence for claims of imminent AGI. They highlight the need for grounded arguments and the difference between current AI capabilities and the speculative future of AI advancements.

- Users like whtevn and traumfisch discuss AI’s potential to augment human work, with whtevn sharing experiences of using AI as a development assistant. They emphasize AI’s ability to perform tasks efficiently, though not without human oversight, and the potential for AI to transform industries gradually rather than instantly.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking (gemini-2.0-flash-thinking-exp)

Theme 1. DeepSeek AI’s Ascendancy and Regulatory Scrutiny

- DeepSeek Model Steals Thunder, Outperforms Western Titans : China’s DeepSeek AI model is now outperforming Western competitors like OpenAI and Anthropic in benchmark tests, sparking global discussions about AI dominance. DeepSeek AI Dominates Western Benchmarks. This leap in performance is prompting legislative responses in the US to limit collaboration with Chinese AI research to protect national innovation.

- DeepSeek’s Safety Shield Shatters, Sparks Jailbreak Frenzy: Researchers at Cisco found DeepSeek R1 model fails 100% of safety tests, unable to block harmful prompts. DeepSeek R1 Performance Issues. Users also report server access woes, questioning its reliability for practical applications despite its benchmark prowess.

- US Lawmakers Draw Swords, Target DeepSeek with Draconian Bill: Senator Josh Hawley introduces legislation to curb American AI collaboration with China, specifically targeting models like DeepSeek. AI Regulation Faces New Legislative Push. The bill proposes penalties up to 20 years imprisonment for violations, raising concerns about stifling open-source AI innovation and accessibility.

Theme 2. OpenAI’s o3-mini: Performance and Public Scrutiny

- O3-mini AMA: Altman & Chen Face the Music on New Model: OpenAI schedules an AMA session featuring Sam Altman and Mark Chen to address community questions about o3-mini. OpenAI Schedules o3-mini AMA. Users are submitting questions via Reddit, keen to understand future developments and provide feedback on the model.

- O3-mini’s Reasoning Prowess Questioned, Sonnet Still King: Users are reporting mixed performance for o3-mini in coding tasks, citing slow speeds and incomplete solutions. O3 Mini Faces Performance Critique. Claude 3.5 Sonnet remains the preferred choice for many developers due to its consistent reliability and speed, especially with complex codebases.

- O3-mini Unleashes “Deep Research” Agent, But Questions Linger: OpenAI launches Deep Research, a new agent powered by o3-mini, designed for autonomous information synthesis and report generation. OpenAI Launches Deep Research Agent. While promising, users are already noting limitations in output quality and source analysis, with some finding Gemini Deep Research more effective in synthesis tasks.

Theme 3. AI Tooling and IDEs: Winds of Change

- Windsurf 1.2.5 Patch: Cascade Gets Web Superpowers, DeepSeek Still Buggy: Codeium releases Windsurf 1.2.5 patch, enhancing Cascade web search with automatic triggers and new commands like @web and @docs. Windsurf 1.2.5 Patch Update Released. However, users report ongoing issues with DeepSeek models within Windsurf, including invalid tool calls and context loss, impacting credit usage.

- Aider v0.73.0: O3-mini Ready, Reasoning Gets Effort Dial: Aider launches v0.73.0, adding full support for o3-mini and a new

--reasoning-effortargument for reasoning control. Aider v0.73.0 Launches with Enhanced Features. Despite O3-mini integration, users find Sonnet still faster and more efficient for coding tasks, even if O3-mini shines in complex logic. - Cursor IDE Updates Roll Out, Changelogs Remain Cryptic: Cursor IDE rolls out updates including a checkpoint restore feature, but users express frustration over inconsistent changelogs and undisclosed feature changes. Cursor IDE Rolls Out New Features. Concerns are raised about performance variances and the impact of updates on model capabilities without clear communication.

Theme 4. LLM Training and Optimization: New Techniques Emerge

- Unsloth’s Dynamic Quantization Shrinks Models, Keeps Punch: Unsloth AI highlights dynamic quantization, achieving up to 80% model size reduction for models like DeepSeek R1 without sacrificing accuracy. Dynamic Quantization in Unsloth Framework. Users are experimenting with 1.58-bit quantized models, but face challenges ensuring bit specification adherence and optimal LlamaCPP performance.

- GRPO Gains Ground: Reinforcement Learning Race Heats Up: Discussions emphasize the effectiveness of GRPO (Group Relative Policy Optimization) over DPO (Direct Preference Optimization) in reinforcement learning frameworks. Reinforcement Learning: GRPO vs. DPO. Experiments show GRPO boosts Llama 2 7B accuracy on GSM8K, suggesting it’s a robust method across model families and DeepSeek R1 outperforms PEFT and instruction fine-tuning.

- Test-Time Compute Tactics: Budget Forcing Enters the Arena: “Budget forcing” emerges as a novel test-time compute strategy, extending model reasoning times to encourage answer double-checking and improve accuracy. Test Time Compute Strategies: Budget Forcing. This method utilizes a dataset of 1,000 curated questions designed to test specific criteria, pushing models to enhance their reasoning performance during evaluation.

Theme 5. Hardware Hurdles and Horizons

- RTX 5090 Blazes Past RTX 4090 in AI Inference Showdown: Conversations reveal the RTX 5090 GPU offers up to 60% faster token processing than the RTX 4090 in large language models. RTX 5090 Outpaces RTX 4090 in AI Tasks. Benchmarking results are being shared, highlighting the performance leap for AI-intensive tasks.

- AMD’s RX 7900 XTX Grapples with Heavyweight LLMs: Users note AMD’s RX 7900 XTX GPU struggles to match NVIDIA GPUs in efficiency when running large language models like 70B. AMD RX 7900 XTX Struggles with Large LLMs. The community discusses limited token generation speeds on AMD hardware for demanding LLM tasks.

- GPU Shared Memory Hacks Boost LM Studio Efficiency: Discussions highlight leveraging shared memory on GPUs within LM Studio to increase RAM utilization and enhance model performance. GPU Efficiency Boosted with Shared Memory. Users are encouraged to tweak LM Studio settings to optimize GPU offloading and manage VRAM effectively, especially when working with large models locally.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Dynamic Quantization in Unsloth Framework: Unsloth’s dynamic quantization reduces model size by up to 80%, maintaining accuracy for models like DeepSeek R1. The blog post outlines effective methods for running and fine-tuning models using specified quantization techniques.

- Users face challenges with 1.58-bit quantized models, as dynamic quantization doesn’t always adhere to the bit specification, raising concerns about performance with LlamaCPP in current setups.

- VLLM Offloading Limitations with DeepSeek R1: VLLM currently lacks support for offloading with GGUF, especially for the DeepSeek V2 architecture unless recent patches are applied.

- This limitation poses optimization questions for workflows reliant on offloading capabilities, as highlighted in recent community discussions.

- Gradient Accumulation in Model Training: Gradient accumulation mitigates VRAM usage by allowing models to train on feedback from generated completions only, enhancing stability over directly training on previous inputs.

- This method is recommended to preserve context and prevent overfitting, as discussed in Unsloth documentation.

- Test Time Compute Strategies: Budget Forcing: Introducing budget forcing controls test-time compute, encouraging models to double-check answers by extending reasoning times, aiming to improve reasoning performance.

- This strategy leverages a curated dataset of 1,000 questions designed to fulfill specific criteria, as detailed in recent research forums.

- Klarity Library for Model Analysis: Klarity is an open-source library released for analyzing the entropy of language model outputs, providing detailed JSON reports and insights.

- Developers are encouraged to contribute and provide feedback through the Klarity GitHub repository.

Codeium (Windsurf) Discord

- Windsurf 1.2.5 Patch Update Released: The Windsurf 1.2.5 patch update has been released, focusing on improvements and bug fixes to enhance the Cascade web search experience. Full changelog details enhancements on how models call tools.

- New Cascade features allow users to perform web searches using automatic triggers, URL input, and commands @web and @docs for more control. These features are available with a 1 flow action credit and can be toggled in the Windsurf Settings panel.

- DeepSeek Model Performance Issues: Users have reported issues with DeepSeek models, including error messages about invalid tool calls and loss of context during tasks, leading to credit consumption without effective actions.

- These issues have sparked discussions on improving model reliability and ensuring efficient credit usage within Windsurf.

- Windsurf Pricing and Discounts: There are concerns regarding the lack of student discount options for Windsurf, with users questioning the tool’s pricing competitiveness compared to alternative solutions.

- Users expressed frustration over the current pricing structure, feeling that the value may not align with what is being offered.

- Codeium Extensions vs Windsurf Features: It was clarified that Cascade and AI flows functionalities are not available in the JetBrains plugin, limiting some advanced features to Windsurf only.

- Users referenced documentation to understand the current limitations and performance differences between the two platforms.

- Cascade Functionality and User Feedback: Users shared strategies for effectively using Cascade, such as setting global rules to block unwanted code modifications and using structured prompts with Claude or Cascade Base.

- Feedback highlighted concerns over Cascade’s ‘memories’ feature not adhering to established instructions, leading to unwanted code changes.

aider (Paul Gauthier) Discord

- Aider v0.73.0 Launches with Enhanced Features: The release of Aider v0.73.0 introduces support for

o3-miniand the new--reasoning-effortargument with low, medium, and high options, as well as auto-creating parent directories when creating new files.- These updates aim to improve file management and provide users with more control over reasoning processes, enhancing overall functionality.

- O3 Mini and Sonnet: A Performance Comparison: Users report that O3 Mini may experience slower response times in larger projects, sometimes taking up to a minute, whereas Sonnet delivers quicker results with less manual context addition.

- Despite appreciating O3 Mini for quick iterations, many prefer Sonnet for coding tasks due to its speed and efficiency.

- DeepSeek R1 Integration and Self-Hosting Challenges: Integration of DeepSeek R1 with Aider demonstrates top performance on Aider’s leaderboard, although some users express concerns about its speed.

- Discussions around self-hosting LLMs reveal frustrations with cloud dependencies, leading users like George Coles to consider independent hosting solutions.

- Windsurf IDE and Inline Prompting Enhancements: The introduction of Windsurf as an agentic IDE brings advanced AI capabilities for pair programming, enhancing tools like VSCode with real-time state awareness.

- Inline prompting features allow auto-completion of code changes based on previous actions, streamlining the coding experience for users leveraging Aider and Cursor.

Cursor IDE Discord

- O3 Mini Faces Performance Critique: Users have shared mixed reviews on O3 Mini’s performance in coding tasks, highlighting issues with speed and incomplete solutions.

- Claude 3.5 Sonnet is often preferred for handling large and complex codebases, offering more reliable and consistent performance.

- Cursor IDE Rolls Out New Features: Recent updates to Cursor include the checkpoint restore feature aimed at enhancing user experience, though lack of consistent changelogs has raised concerns.

- Users have expressed frustration over undisclosed features and performance variances, questioning the impact of updates on model capabilities.

- Advanced Meta Prompting Techniques Discussed: Discussions have surfaced around meta-prompting techniques to deconstruct complex projects into manageable tasks for LLMs.

- Shared resources suggest these techniques could significantly boost user productivity by optimizing prompt structures.

Yannick Kilcher Discord

- DeepSeek AI Dominates Western Benchmarks: China’s DeepSeek AI model outperforms Western counterparts like OpenAI and Anthropic on various benchmarks, prompting global discussions on AI competitiveness. The model’s superior performance was highlighted in recent tests showing its capabilities.

- In response, legislative measures in the US are being considered to limit collaboration with Chinese AI research, aiming to protect national innovation as DeepSeek gains traction in the market.

- AI Regulation Faces New Legislative Push: Recent AI regulatory legislation proposed by Senator Josh Hawley targets models like DeepSeek, imposing severe penalties that could hinder open-source AI development. The bill emphasizes national security, calling for an overhaul of copyright laws as discussed in this article.

- Critics argue that such regulations may stifle innovation and limit accessibility, echoing concerns about the balance between security and technological advancement.

- LLMs’ Math Capabilities Under Scrutiny: LLMs’ math performance has been criticized for fundamental mismatches, with comparisons likening them to ‘brushing teeth with a fork’. Models like o1-mini have shown varied results on math problems, raising questions about their reasoning effectiveness.

- Community discussions highlighted that o3-mini excelled in mathematical reasoning, solving complex puzzles better than counterparts, which has led to interest in organizing mathematical reasoning competitions.

- Self-Other Overlap Fine-Tuning Enhances AI Honesty: A paper on Self-Other Overlap (SOO) fine-tuning demonstrates significant reductions in deceptive AI responses across various model sizes without compromising task performance. Detailed in this study, SOO aligns AI’s self-representation with external perceptions to promote honesty.

- Experiments revealed that deceptive responses in Mistral-7B decreased to 17.2%, indicating the effectiveness of SOO in reinforcement learning scenarios and fostering more reliable AI interactions.

- OpenEuroLLM Launches EU-Focused Language Models: The OpenEuroLLM initiative has been launched to develop open-source large language models tailored for all EU languages, earning the first STEP Seal for excellence as announced by the European Commission.

- Supported by a consortium of European institutions, the project aims to create compliant and sustainable high-quality AI technologies for diverse applications across the EU, enhancing regional AI capabilities.

LM Studio Discord

- DeepSeek R1 Faces Distillation Limitations: Users have reported confusion over the DeepSeek R1 model’s parameter size, debating whether it’s 14B or 7B.

- Many are frustrated with the model’s auto-completion and debugging capabilities, particularly for programming tasks.

- AI-Powered Live Chatrooms Taking Shape: A user detailed creating a multi-agent live chatroom in LM Studio, featuring various AI personalities interacting in real-time.

- Plans include integrating this system into live Twitch and YouTube streams to showcase AI’s potential in dynamic environments.

- GPU Efficiency Boosted with Shared Memory: Discussions highlight using shared memory on GPUs for higher RAM utilization, improving model performance.

- Users are encouraged to tweak settings in LM Studio to optimize GPU offloading and manage VRAM for large models.

- RTX 5090 Outpaces RTX 4090 in AI Tasks: Conversations revealed that the RTX 5090 offers up to 60% faster token processing compared to the RTX 4090 in large models.

- Benchmarking results were shared from GPU-Benchmarks-on-LLM-Inference.

- AMD RX 7900 XTX Struggles with Large LLMs: Users noted that AMD’s RX 7900 XTX isn’t as efficient as NVIDIA GPUs for running large language models like the 70B.

- The community discussed the limited token generation speed of AMD GPUs for LLM tasks.

OpenAI Discord

- OpenAI Schedules o3-mini AMA: An AMA featuring Sam Altman, Mark Chen, and other key figures is set for 2PM PST, addressing questions about OpenAI o3-mini and its forthcoming features. Users can submit their questions on Reddit here.

- The AMA aims to provide insights into OpenAI’s future developments and gather community feedback on the o3-mini model.

- OpenAI Launches Deep Research Agent: OpenAI has unveiled a new Deep Research Agent capable of autonomously sourcing, analyzing, and synthesizing information from multiple online platforms to generate comprehensive reports within minutes. Detailed information is available here.

- This tool is expected to streamline research processes by significantly reducing the time required for data compilation and analysis.

- DeepSeek R1 Performance Issues: Users reported DeepSeek R1 exhibiting a 100% attack success rate, failing all safety tests and struggling with access due to frequent server issues, as highlighted by Cisco.

- DeepSeek’s inability to block harmful prompts has raised concerns about its reliability and safety in real-world applications.

- OpenAI Sets Context Token Limits for Models: OpenAI’s models enforce strict context limits, with Plus users capped at 32k tokens and Pro users at 128k tokens, limiting their capacity to handle extensive knowledge bases.

- A discussion emerged on leveraging embeddings and vector databases as alternatives to manage larger datasets more effectively than splitting data into chunks.

- Comparing AI Models: GPT-4 vs DeepSeek R1: Conversations compared OpenAI’s GPT-4 and DeepSeek R1, noting differences in capabilities like coding assistance and reasoning tasks. Users observed that GPT-4 excels in certain areas where DeepSeek R1 falls short.

- Members debated the pros and cons of models including O1, o3-mini, and Gemini, evaluating them based on features and usability for various applications.

Nous Research AI Discord

- DeepSeek and Psyche AI Developments: Participants highlighted DeepSeek’s advancements in AI, emphasizing how Psyche AI leverages Rust for its stack while integrating existing Python modules to maintain p2p networking features.

- Concerns were raised about implementing multi-step responses in reinforcement learning, focusing on efficiency and the inherent challenges in scaling these features.

- OpenAI’s Post-DeepSeek Strategy: OpenAI’s stance after DeepSeek has been scrutinized, especially Sam Altman’s remark about being on the ‘wrong side of history,’ raising questions about the authenticity given OpenAI’s previous reluctance to open-source models.

- Members stressed that OpenAI’s actions need to align with their statements to be credible, pointing out a gap between their promises and actual implementations.

- Legal and Copyright Considerations in AI: Discussions focused on the legal implications of AI development, particularly regarding copyright issues, as members debated the balance between protecting intellectual property and fostering AI innovation.

- A law student inquired about integrating legal-centric dialogues with technical discussions, highlighting potential regulations that could impact future AI research and development.

- Advancements in Model Training Techniques: The community explored Deep Gradient Compression, a method that reduces communication bandwidth by 99.9% in distributed training without compromising accuracy, as detailed in the linked paper.

- Stanford’s Simple Test-Time Scaling was also discussed, showcasing improvements in reasoning performance by up to 27% on competition math questions, with all resources being open-source.

- New AI Tools and Community Contributions: Relign has launched developer bounties to build an open-sourced RL library tailored for reasoning engines, inviting contributions from the community.

- Additionally, members shared insights on the Scite platform for research exploration and encouraged participation in community-driven AI model testing initiatives.

Interconnects (Nathan Lambert) Discord

- OpenAI’s Deep Research Enhancements: OpenAI introduced Deep Research with the O3 model, enabling users to refine research queries and view reasoning progress via a sidebar. Initial feedback points to its capability in synthesizing information, though some limitations in source analysis remain.

- Additionally, OpenAI’s O3 continues to improve through reinforcement learning techniques, alongside enhancements in their Deep Research tool, highlighting a significant focus on RL methodologies in their model training.

- SoftBank Commits $3B to OpenAI: SoftBank announced a $3 billion annual investment in OpenAI products, establishing a joint venture in Japan focused on the Crystal Intelligence model. This partnership aims to integrate OpenAI’s technology across SoftBank subsidiaries to advance AI solutions for Japanese enterprises.

- Crystal Intelligence is designed to autonomously analyze and optimize legacy code, with plans to introduce AGI within two years, reflecting Masayoshi Son’s vision of AI as Super Wisdom.

- GOP’s AI Legislation Targets Chinese Technologies: A GOP-sponsored bill proposes banning the import of AI technologies from the PRC, including model weights from platforms like DeepSeek, with penalties up to 20 years imprisonment.

- The legislation also criminalizes exporting AI to designated entities of concern, equating the release of products like Llama 4 with similar severe penalties, raising apprehensions about its impact on open-source AI developments.

- Reinforcement Learning: GRPO vs. DPO: Discussions highlighted the effectiveness of GRPO over DPO in reinforcement learning frameworks, particularly in the context of RLVR applications. Members posited that while DPO can be used, it’s likely less effective than GRPO.

- Furthermore, findings demonstrated that GRPO positively impacted the Llama 2 7B model, achieving a notable accuracy improvement on the GSM8K benchmark, showcasing the method’s robustness across model families.

- DeepSeek AI’s R1 Model Debut: DeepSeek AI released their flagship R1 model on January 20th, emphasizing extended training with additional data to enhance reasoning capabilities. The community has expressed enthusiasm for this advancement in reasoning models.

- The R1 model’s straightforward training approach, prioritizing sequencing early in the post-training cycle, has been lauded for its simplicity and effectiveness, generating anticipation for future developments in reasoning LMs.

Latent Space Discord

- OpenAI Launches Deep Research Agent: OpenAI introduced Deep Research, an autonomous agent optimized for web browsing and complex reasoning, enabling the synthesis of extensive reports from diverse sources in minutes.

- Early feedback highlights its utility as a robust e-commerce tool, though some users report output quality limitations.

- Reasoning Augmented Generation (ReAG) Unveiled: Reasoning Augmented Generation (ReAG) was introduced to enhance traditional Retrieval-Augmented Generation by eliminating retrieval steps and feeding raw material directly to LLMs for synthesis.

- Initial reactions note its potential effectiveness while questioning scalability and the necessity of preprocessing documents.

- AI Engineer Summit Tickets Flying Off: Sponsorships and tickets for the AI Engineer Summit are selling rapidly, with the event scheduled for Feb 20-22nd in NYC.

- The new summit website provides live updates on speakers and schedules.

- Karina Nguyen to Wrap Up AI Summit: Karina Nguyen is set to deliver the closing keynote at the AI Engineer Summit, showcasing her experience from roles at Notion, Square, and Anthropic.

- Her contributions span the development of Claude 1, 2, and 3, underlining her impact on AI advancements.

- Deepseek API Faces Reliability Issues: Members expressed concerns over the Deepseek API’s reliability, highlighting access issues and performance shortcomings.

- Opinions suggest the API’s hosting and functionalities lag behind expectations, prompting discussions on potential improvements.

Eleuther Discord

- Probability of Getting Functional Language Models: A study by EleutherAI calculates the probability of randomly guessing weights to achieve a functional language model at approximately 1 in 360 million zeros, highlighting the immense complexity involved.

- The team shared their basin-volume GitHub repository and a research paper to explore network complexity and its implications on model alignment.

- Replication Failures of R1 on SmolLM2: Researchers encountered replication failures of the R1 results when testing on SmolLM2 135M, observing worse autointerp scores and higher reconstruction errors compared to models trained on real data.

- This discrepancy raises questions about the original paper’s validity, as noted in discussions surrounding the Sparse Autoencoders community findings.

- Censorship Issues with DeepSeek: DeepSeek exhibits varied responses to sensitive topics like Tiananmen Square based on prompt language, indicating potential biases integrated into its design.

- Users suggested methods to bypass these censorship mechanisms, referencing AI safety training vulnerabilities discussed in related literature.

- DRAW Architecture Enhances Image Generation: The DRAW network architecture introduces a novel spatial attention mechanism that mirrors human foveation, significantly improving image generation on datasets such as MNIST and Street View House Numbers.

- Performance metrics from the DRAW paper indicate that images produced are indistinguishable from real data, demonstrating enhanced generative capabilities.

- NeoX Performance Metrics and Challenges: A member reported achieving 10-11K tokens per second on A100s for a 1.3B parameter model, contrasting the 50K+ tokens reported in the OLMo2 paper.

- Issues with fusion flags and discrepancies in the gpt-neox configurations were discussed, highlighting challenges in scaling Transformer Engine speedups.

MCP (Glama) Discord

- Remote MCP Tools Demand Surges: Members emphasized the need for remote capabilities in MCP tools, noting that most existing solutions focus on local implementations.

- Concerns about scalability and usability were raised, with suggestions to explore alternative setups to enhance MCP functionality.

- Superinterface Products Clarify AI Infrastructure Focus: A cofounder of Superinterface detailed their focus on providing AI agent infrastructure as a service, distinguishing from open-source alternatives.

- The product is aimed at integrating AI capabilities into user products, highlighting the complexity involved in infrastructure requirements.

- Goose Automates GitHub Tasks: A YouTube video showcased Goose, an open-source AI agent, automating tasks by integrating with any MCP server.

- The demonstration highlighted Goose’s ability to handle GitHub interactions, underscoring innovative uses of MCP.

- Supergateway v2 Enhances MCP Server Accessibility: Supergateway v2 now enables running any MCP server remotely via tunneling with ngrok, simplifying server setup and access.

- Community members are encouraged to seek assistance, reflecting the collaborative effort to improve MCP server usability.

- Load Balancing Techniques in Litellm Proxy: Discussions covered methods for load balancing using Litellm proxy, including configuring weights and managing requests per minute.

- These strategies aim to efficiently manage multiple AI model endpoints within workflows.

Stackblitz (Bolt.new) Discord

- Bolt Performance Issues Impactating Users: Multiple users reported Bolt experiencing slow responses and frequent error messages, leading to disrupted operations and necessitating frequent page reloads or cookie clearing.

- The recurring issues suggest potential server-side problems or challenges with local storage management, as users seek to restore access by clearing browser data.

- Supabase Preferred Over Firebase: In a heated debate, many users favored Supabase for its direct integration capabilities and user-friendly interface compared to Firebase.

- However, some participants appreciated Firebase for those already immersed in its ecosystem, highlighting a split preference among the community.

- Connection Instability with Supabase Services: Users faced disconnections from Supabase after making changes, necessitating reconnection efforts or project reloads to restore functionality.

- One user resolved the connectivity issue by reloading their project, indicating that the disconnections may stem from recent front-end modifications.

- Iframe Errors with Calendly in Voiceflow Chatbot: A user encountered iframe errors while integrating Calendly within their Voiceflow chatbot, leading to display issues.

- After consulting with representatives from Voiceflow and Calendly, it was determined to be a Bolt issue, causing notable frustration among the user.

- Persistent User Authentication Challenges: Users reported authentication issues, including inability to log in and encountering identical errors across various browsers.

- Suggested workarounds like clearing local storage failed for some, pointing towards underlying problems within the authentication system.

Nomic.ai (GPT4All) Discord

- GPT4All v3.8.0 Crashes Intel Macs: Users report that GPT4All v3.8.0 crashes on modern Intel macOS machines, suggesting this version may be DOA for these systems.

- A working hypothesis is being formed based on users’ system specifications to identify the affected configurations, as multiple users have encountered similar issues.

- Quantization Levels Impact GPT4All Performance: Quantization levels significantly influence the performance of GPT4All, with lower quantizations causing quality degradation.

- Users are encouraged to balance quantization settings to maintain output quality without overloading their hardware.

- Privacy Concerns in AI Model Data Collection: A debate has arisen over trust in data collection, contrasting Western and Chinese data practices, with users expressing varying degrees of concern and skepticism.

- Participants argue about perceived double standards in data collection across different countries.

- Integrating LaTeX Support with MathJax in GPT4All: Users are exploring the integration of MathJax for LaTeX support within GPT4All, emphasizing compatibility with LaTeX structures.

- Discussions focus on parsing LaTeX content and extracting math expressions to improve the LLM’s output representation.

- Developing Local LLMs for NSFW Story Generation: A user is seeking a local LLM capable of generating NSFW stories offline, similar to existing online tools but without using llama or DeepSeek.

- The user specifies their system capabilities and requirements, including a preference for a German-speaking LLM.

Notebook LM Discord Discord

- API Release Planned for NotebookLM: Users inquired about the upcoming NotebookLM API release, expressing enthusiasm for extended functionalities.

- It was noted that the output token limit for NotebookLM is lower than that of Gemini, though specific details remain undisclosed.

- NotebookLM Plus Features Rollout in Google Workspace: A user upgraded to Google Workspace Standard and observed the addition of ‘Analytics’ in the top bar of NotebookLM, indicating access to NotebookLM Plus.

- They highlighted varying usage limits despite similar interface appearances and shared screenshots for clarity.

- Integrating Full Tutorials into NotebookLM: A member suggested incorporating entire tutorial websites like W3Schools JavaScript into NotebookLM to enhance preparation for JS interviews.

- Another member mentioned existing Chrome extensions that assist with importing web pages into NotebookLM.

- Audio Customization Missing Post UI Update: Users reported the loss of audio customization features in NotebookLM following a recent UI update.

- Recommendations included exploring Illuminate for related functionalities, with hopes that some features might migrate to NotebookLM.

Modular (Mojo 🔥) Discord

- Mojo and MAX Streamline Solutions: A member highlighted the effectiveness of Mojo and MAX in addressing current engineering challenges, emphasizing their potential as comprehensive solutions.

- The discussion underscored the significant investment required to implement these solutions effectively within existing workflows.

- Reducing Swift Complexity in Mojo: Concerns were raised about Mojo inheriting Swift’s complexity, with the community advocating for clearer development pathways to ensure stability.

- Members emphasized the importance of careful tradeoff evaluations to prevent rushed advancements that could compromise Mojo’s reliability.

- Ollama Outpaces MAX Performance: Ollama was observed to perform faster than MAX on identical machines, despite metrics initially suggesting slower performance for MAX.

- Current developments are focused on optimizing MAX’s CPU-based serving capabilities to enhance overall performance.

- Enhancing Mojo’s Type System: Users inquired about accessing specific struct fields within Mojo’s type system when passing parameters as concrete types.

- The responses indicated a learning curve for effectively utilizing Mojo’s type functionalities, pointing to ongoing community education efforts.

- MAX Serving Infrastructure Optimizations: The MAX serving infrastructure employs

huggingface_hubfor downloading and caching model weights, differentiating it from Ollama’s methodology.- Discussions revealed options to modify the

--weight-path=parameter to prevent duplicate downloads, though managing Ollama’s local cache remains complex.

- Discussions revealed options to modify the

Torchtune Discord

- GRPO Deployment on 16 Nodes: A member successfully deployed GRPO across 16 nodes by adjusting the multinode PR, anticipating upcoming reward curve validations.

- They humorously remarked that being part of a well-funded company offers significant advantages in such deployments.

- Final Approval for Multinode Support in Torchtune: A request was made for the final approval of the multinode support PR in Torchtune, highlighting its necessity based on user demand.

- The discussion raised potential concerns regarding the API parameter

offload_ops_to_cpu, suggesting it may require additional review.

- The discussion raised potential concerns regarding the API parameter

- Seed Inconsistency in DPO Recipes: Seed works for LoRA finetuning but fails for LoRA DPO, with inconsistencies in sampler behavior being investigated in issue #2335.

- Multiple issues related to seed management have been logged, focusing on the effects of

seed=0andseed=nullin datasets.

- Multiple issues related to seed management have been logged, focusing on the effects of

- Comprehensive Survey on Data Augmentation in LLMs: A survey detailed how large pre-trained language models (LLMs) benefit from extensive training datasets, addressing overfitting and enhancing data generation with unique prompt templates.

- It also covered recent retrieval-based techniques that integrate external knowledge, enabling LLMs to produce grounded-truth data.

- R1-V Model Enhances Counting in VLMs: R1-V leverages reinforcement learning with verifiable rewards to improve visual language models’ counting capabilities, where a 2B model outperformed the 72B model in 100 training steps at a cost below $3.

- The model is set to be fully open source, encouraging the community to watch for future updates.

LLM Agents (Berkeley MOOC) Discord

- Upcoming Lecture on LLM Self-Improvement: Jason Weston is presenting Self-Improvement Methods in LLMs today at 4:00pm PST, focusing on techniques like Iterative DPO and Meta-Rewarding LLMs.

- Participants can watch the livestream here, where Jason will explore methods to enhance LLM reasoning, math, and creative tasks.

- Iterative DPO & Meta-Rewarding in LLMs: Iterative DPO and Meta-Rewarding LLMs are discussed as recent advancements, with links to Iterative DPO and Self-Rewarding LLMs papers.

- These methods aim to improve LLM performance across various tasks by refining reinforcement learning techniques.

- DeepSeek R1 Surpasses PEFT: DeepSeek R1 demonstrates that reinforcement learning with group relative policy optimization outperforms PEFT and instruction fine-tuning.

- This shift suggests a potential move away from traditional prompting methods due to DeepSeek R1’s enhanced effectiveness.

- MOOC Quiz and Certification Updates: Quizzes are now available on the course website under the syllabus section, with no email alerts to prevent inbox clutter.

- Certification statuses are being updated, with assurances that submissions will be processed soon, though some members have reported delays.

- Hackathon Results to Be Announced: Members are anticipating the hackathon results, which have been privately notified, with a public announcement expected by next week.

- This follows extensive participation in the MOOC’s research and project tracks, highlighting active community engagement.

tinygrad (George Hotz) Discord

- NVDEC Decoding Complexities Unveiled: Decoding video with NVDEC presents challenges related to file formats and the necessity for cuvid binaries, as highlighted in FFmpeg/libavcodec/nvdec.c.

- The lengthy libavcodec implementation includes high-level abstractions that could benefit from simplification to enhance efficiency.

- WebGPU Autogen Nears Completion: A member reported near completion of WebGPU autogen, requiring only minor simplifications, with tests passing on both Ubuntu and Mac platforms.

- They emphasized the need for instructions in cases where dawn binaries are not installed.

- Clang vs GCC Showdown in Linux Distros: The debate highlighted that while clang is favored by platforms like Apple and Google, gcc remains prevalent among major Linux distributions.

- This raises discussions on whether distros should transition to clang for improved optimization.

- HCQ Execution Paradigm Enhances Multi-GPU: HCQ-like execution is identified as a fundamental step for understanding multi-GPU execution, with potential support for CPU implementations.

- Optimizing the dispatcher to efficiently allocate tasks between CPU and GPU could lead to performance improvements.

- CPU P2P Transfer Mechanics Explored: The discussion speculated that CPU p2p transfers might involve releasing locks on memory blocks for eviction to L3/DRAM, considering D2C transfers efficiency.

- Performance concerns were raised regarding execution locality during complex multi-socket transfers.

Cohere Discord

- Cohere Trial Key Reset Timing: A member questioned when the Cohere trial key resets—whether 30 days post-generation or at the start of each month. This uncertainty affects how developers plan their evaluation periods.

- Clarifications are needed as the trial key is intended for evaluation, not long-term free usage.

- Command-R+ Model Praised for Performance: Users lauded the Command-R+ model for consistently meeting their requirements, with one user mentioning it continues to surprise them despite not being a power user.

- This sustained performance indicates reliability and effectiveness in real-world applications.

- Embed API v2.0 HTTP 422 Errors: A member encountered an ‘HTTP 422 Unprocessable Entity’ error when using the Embed API v2.0 with a specific cURL command, raising concerns about preprocessing needs for longer articles.

- Recommendations include verifying the API key inclusion, as others reported successful requests under similar conditions.

- Persistent Account Auto Logout Issues: Several users reported auto logout problems, forcing repeated logins and disrupting workflow within the platform.

- This recurring issue highlights a significant user experience flaw that needs addressing to ensure seamless access.

- Command R’s Inconsistent Japanese Translations: Command R and Command R+ exhibit inconsistent translation results for Japanese, with some translations failing entirely.

- Users are advised to contact support with specific examples to aid the multilingual team or utilize Japanese language resources for better context.

LlamaIndex Discord

- Deepseek Dominates OpenAI: A member noted a clear winner between Deepseek and OpenAI, highlighting a surprising narration that showcases their competitive capabilities.

- This discussion sparked interest in the relative performance of these tools, emphasizing emerging strengths in Deepseek.

- LlamaReport Automates Reporting: An early beta video of LlamaReport was shared, demonstrating its potential for report generation in 2025. Watch it here.

- This development aims to streamline the reporting process, providing users with efficient solutions for their needs.

- SciAgents Enhances Scientific Discovery: SciAgents was introduced as an automated scientific discovery system utilizing a multi-agent workflow and ontological graphs. Learn more here.

- This project illustrates how collaborative analysis can drive innovation in scientific research.

- AI-Powered PDF to PPT Conversion: An open-source web app enables the conversion of PDF documents into dynamic PowerPoint presentations using LlamaParse. Explore it here.

- This application simplifies presentation creation, automating workflows for users.

- DocumentContextExtractor Boosts RAG Accuracy: DocumentContextExtractor was highlighted for enhancing the accuracy of Retrieval-Augmented Generation (RAG), with contributions from both AnthropicAI and LlamaIndex. Check the thread here.

- This emphasizes ongoing community contributions to improving AI contextual understanding.

DSPy Discord

- DeepSeek Reflects AI Hopes and Fears: The article discusses how DeepSeek acts as a textbook power object, revealing more about our desires and concerns regarding AI than about the technology itself, as highlighted here.

- Every hot take on DeepSeek shows a person’s specific hopes or fears about AI’s impact.

- SAEs Face Significant Challenges in Steering LLMs: A member expressed disappointment in the long-term viability of SAEs for steering LLMs predictably, citing a recent discussion.

- Another member highlighted the severity of recent issues, stating, ‘Damn, triple-homicide in one day. SAEs really taking a beating recently.’

- DSPy 2.6 Deprecates Typed Predictors: Members clarified that typed predictors have been deprecated; normal predictors suffice for functionality in DSPy 2.6.

- It was emphasized that there is no such thing as a typed predictor anymore in the current version.

- Mixing Chain-of-Thought with R1 Models in DSPy: A member expressed interest in mixing DSPy chain-of-thought with the R1 model for fine-tuning in a collaborative effort towards the Konwinski Prize.

- They also extended an invitation for others to join the discussion and the collaborative efforts related to this initiative.

- Streaming Outputs Issues in DSPy: A user shared difficulties in utilizing dspy.streamify to produce outputs incrementally, receiving ModelResponseStream objects instead of expected values.

- They implemented conditionals in their code to handle output types appropriately, seeking further advice for improvements.

LAION Discord

- OpenEuroLLM Debuts for EU Languages: OpenEuroLLM has been launched as the first family of open-source Large Language Models (LLMs) covering all EU languages, prioritizing compliance with EU regulations.

- Developed within Europe’s regulatory framework, the models ensure alignment with European values while maintaining technological excellence.

- R1-Llama Outperforms Expectations: Preliminary evaluations on R1-Llama-70B show it matches and surpasses both o1-mini and the original R1 models in solving Olympiad-level math and coding problems.

- These results highlight potential generalization deficits in leading models, sparking discussions within the community.

- DeepSeek’s Specifications Under Scrutiny: DeepSeek v3/R1 model features 37B active parameters and utilizes a Mixture of Experts (MoE) approach, enhancing compute efficiency compared to the dense architecture of Llama 3 models.

- The DeepSeek team has implemented extensive optimizations to support the MoE strategy, leading to more resource-efficient performance.

- Interest in Performance Comparisons: A community member expressed enthusiasm for testing a new model that is reportedly faster than HunYuan.

- This sentiment underscores the community’s focus on performance benchmarking among current AI models.

- EU Commission Highlights AI’s European Roots: A tweet from EU_Commission announced that OpenEuroLLM has been awarded the first STEP Seal for excellence, aiming to unite EU startups and research labs.

- The initiative emphasizes preserving linguistic and cultural diversity and developing AI on European supercomputers.

Axolotl AI Discord

- Fine-Tuning Frustrations: A member expressed confusion about fine-tuning reasoning models, humorously admitting they don’t know where to start.

- They commented, Lol, indicating their need for guidance in this area.

- GRPO Colab Notebook Released: A member shared a Colab notebook for GRPO, providing a resource for those interested in the topic.

- This notebook serves as a starting point for members seeking to learn more about GRPO.

OpenInterpreter Discord

- o3-mini’s Interpreter Integration: A member inquired whether o3-mini can be utilized within both 01 and the interpreter, highlighting potential integration concerns.

- These concerns underline the need for clarification on o3-mini’s compatibility with Open Interpreter.

- Anticipating Interpreter Updates: A member questioned the nature of upcoming Open Interpreter changes, seeking to understand whether they would be minor or significant.

- Their inquiry reflects the community’s curiosity about the scope and impact of the planned updates.

MLOps @Chipro Discord

- Mastering Cursor AI for Enhanced Productivity: Join this Tuesday at 5pm EST for a hybrid event on Cursor AI, featuring guest speaker Arnold, a 10X CTO, who will discuss best practices to enhance coding speed and quality.

- Participants can attend in person at Builder’s Club or virtually via Zoom, with the registration link provided upon signing up.

- High-Value Transactions in Honor of Kings Market: The Honor of Kings market saw a high-priced acquisition today, with 小蛇糕 selling for 486.

- Users are encouraged to trade in the marketplace using the provided market code -<344IRCIX>- and password [[S8fRXNgQyhysJ9H8tuSvSSdVkdalSFE]] to buy or sell items.

Mozilla AI Discord

- Lumigator Live Demo Streamlines Model Testing: Join the Lumigator Live Demo to learn about installation and onboarding for running your very first model evaluation.

- This event will guide attendees through critical setup steps for effective model performance testing.

- Firefox AI Platform Debuts Offline ML Tasks: The Firefox AI Platform is now available, enabling developers to leverage offline machine learning tasks in web extensions.

- This new platform opens avenues for improved machine learning capabilities directly in user-friendly environments.

- Blueprints Update Enhances Open-Source Recipes: Check out the Blueprints Update for new recipes aimed at enhancing open-source projects.

- This initiative equips developers with essential tools for creating effective software solutions.

- Builders Demo Day Pitches Debut on YouTube: The Builders Demo Day Pitches have been released on Mozilla Developers’ YouTube channel, showcasing innovations from the developers’ community.

- These pitches present an exciting opportunity to engage with cutting-edge development projects and ideas.

- Community Announces Critical Updates: Members can find important news regarding the latest developments within the community.

- Stay informed about the critical discussions affecting community initiatives and collaborations.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Unsloth AI (Daniel Han) ▷ #general (1121 messages🔥🔥🔥):

Unsloth Framework, DeepSeek R1, Batch Inference, Legal Considerations for AI Training, LLM Performance

- Understanding Unsloth and its Capabilities: Unsloth is primarily a fine-tuning framework designed to quickly test models, but it is not aimed at production inference, which would be better served through systems like vllm.

- Unsloth inference can be used to validate fine-tuning results more efficiently than traditional transformer inference, though it doesn’t support batch processing.

- Challenges with Training Data Quality: Participants discussed the need for curated and balanced datasets to improve the performance of models being fine-tuned, particularly in avoiding biases related to specific content types.

- Participants emphasized the importance of cleaning and organizing data to ensure effective model training and prevent overfitting.

- Legal Considerations in AI Training: The conversation touched on the legal ramifications of using copyrighted data for model training, including varying international laws and potential repercussions for non-compliance.

- Given the evolving landscape of AI regulations, it is advised to consult legal resources to understand the boundaries of using textual data for training purposes.

- Performance of Different LLMs: The performance and efficiency of models like DeepSeek R1 and others were reviewed, with comments about various models’ speeds and capabilities, including the potential for operational overhead in local setups.

- Participants noted the need for better computational resources to handle models effectively, especially those requiring significant GPU memory.

- Community Resources and Collaboration: Users shared links to resources, including GitHub repositories and Colab notebooks, aimed at assisting new users in navigating the complexities of fine-tuning and leveraging LLM architectures.

- The community expressed a willingness to help one another out with projects and seek collaboration in handling data tasks and improving model performance.

Links mentioned:

- Tweet from Joey (e/λ) (@shxf0072): mange to hack grpo with unsloth on free colabits painfully slowbut works :phttps://colab.research.google.com/drive/1P7frB3fjMv6vjSINqiydAf6gnMab2TiL?usp=sharingQuoting Joey (e/λ) (@shxf0072) OOMxiety

- Tweet from vLLM (@vllm_project): We landed the 1st batch of enhancements to the @deepseek_ai models, starting MLA and cutlass fp8 kernels. Compared to v0.7.0, we offer ~3x the generation throughput, ~10x the memory capacity for token...

- GGUF My Repo - a Hugging Face Space by ggml-org: no description found

- Examples — vLLM: no description found

- Writing Mathematics for MathJax — MathJax 3.2 documentation: no description found

- Mistral-Small-24B-2501 (All Versions) - a unsloth Collection: no description found

- ContactDoctor/Bio-Medical-Llama-3-8B · Hugging Face: no description found

- Fast JSON Decoding for Local LLMs with Compressed Finite State Machine | LMSYS Org: <p>Constraining an LLM to consistently generate valid JSON or YAML that adheres to a specific schema is a critical feature for many applications.In this blo...

- DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning: We introduce our first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT)...

- Backus–Naur form - Wikipedia: no description found

- Hugging Face – The AI community building the future.: no description found

- Google Colab: no description found

- unsloth/Mistral-Small-24B-Instruct-2501 · Hugging Face: no description found

- Installing + Updating | Unsloth Documentation: Learn to install Unsloth locally or online.

- unsloth/Qwen2.5-14B-Instruct-1M-unsloth-bnb-4bit at main: no description found

- Fine Tune DeepSeek R1 | Build a Medical Chatbot: In this video, we show you how to fine-tune DeepSeek R1, an open-source reasoning model, using LoRA (Low-Rank Adaptation). We'll also be using Kaggle, Huggin...

- Unsloth Notebooks | Unsloth Documentation: Below is a list of all our notebooks:

- GitHub - getAsterisk/deepclaude: A high-performance LLM inference API and Chat UI that integrates DeepSeek R1's CoT reasoning traces with Anthropic Claude models.: A high-performance LLM inference API and Chat UI that integrates DeepSeek R1's CoT reasoning traces with Anthropic Claude models. - getAsterisk/deepclaude