AI News for 4/15/2025-4/16/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (211 channels, and 9942 messages) for you. Estimated reading time saved (at 200wpm): 782 minutes. You can now tag @smol_ai for AINews discussions!

As hinted on Monday, OpenAI launched the awkwardly named o3 and o4-mini in a classic livestream, together with a blogpost and a system card:

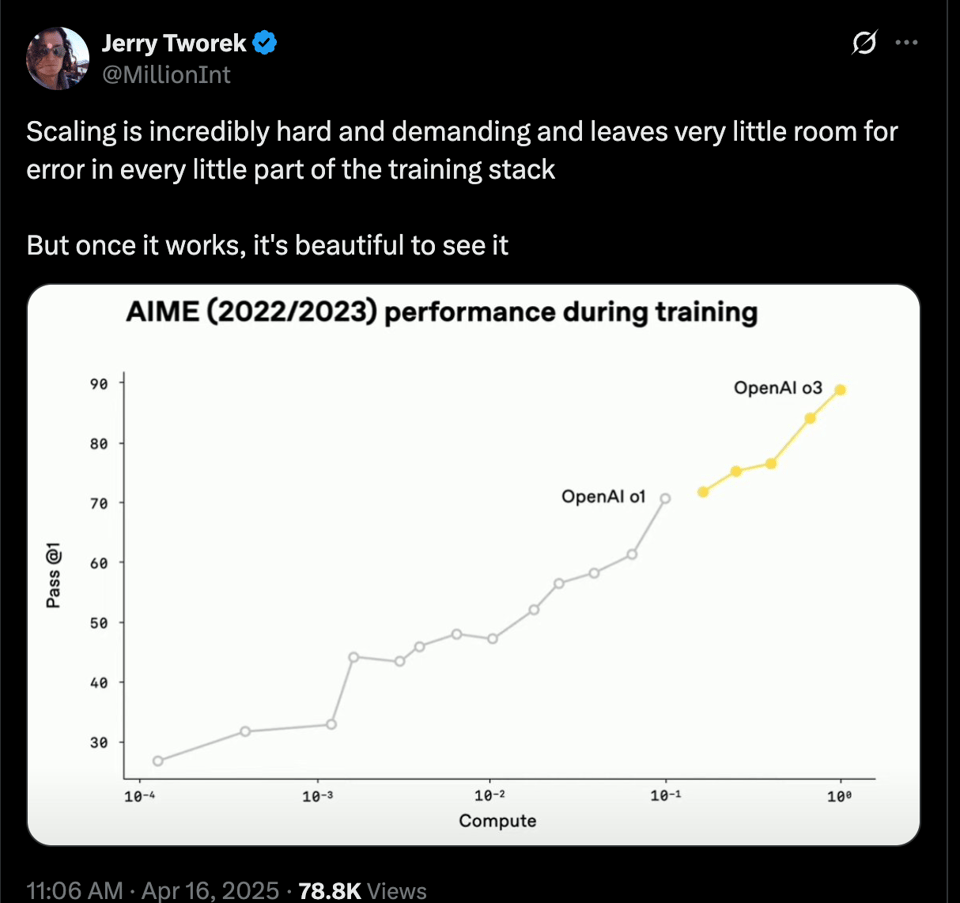

the general message is improvements in both scaling RL:

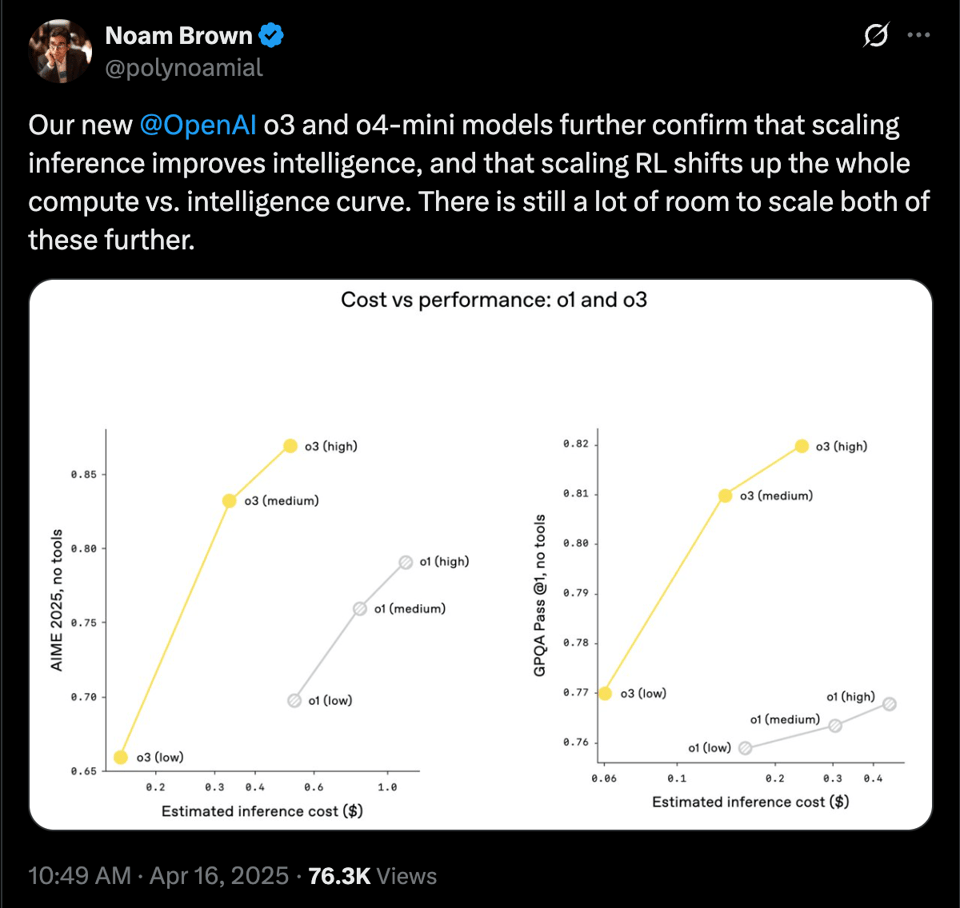

and overall efficiency:

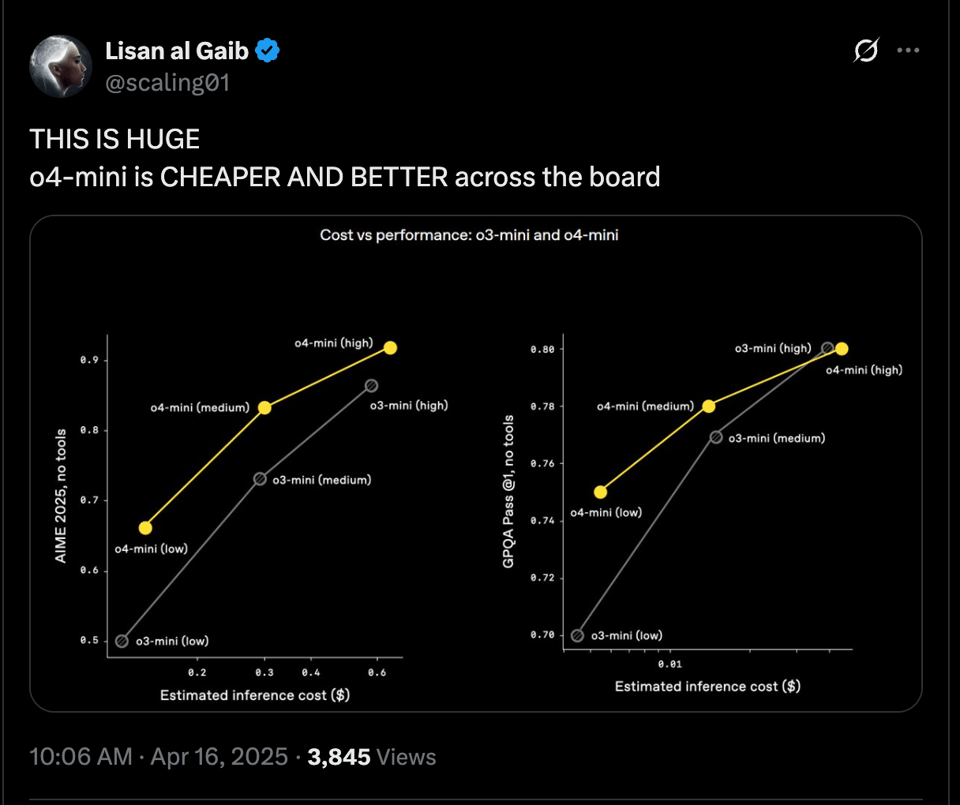

making o4-mini cheaper yet better across metrics that OAI has prioritized, vs the previous generation:

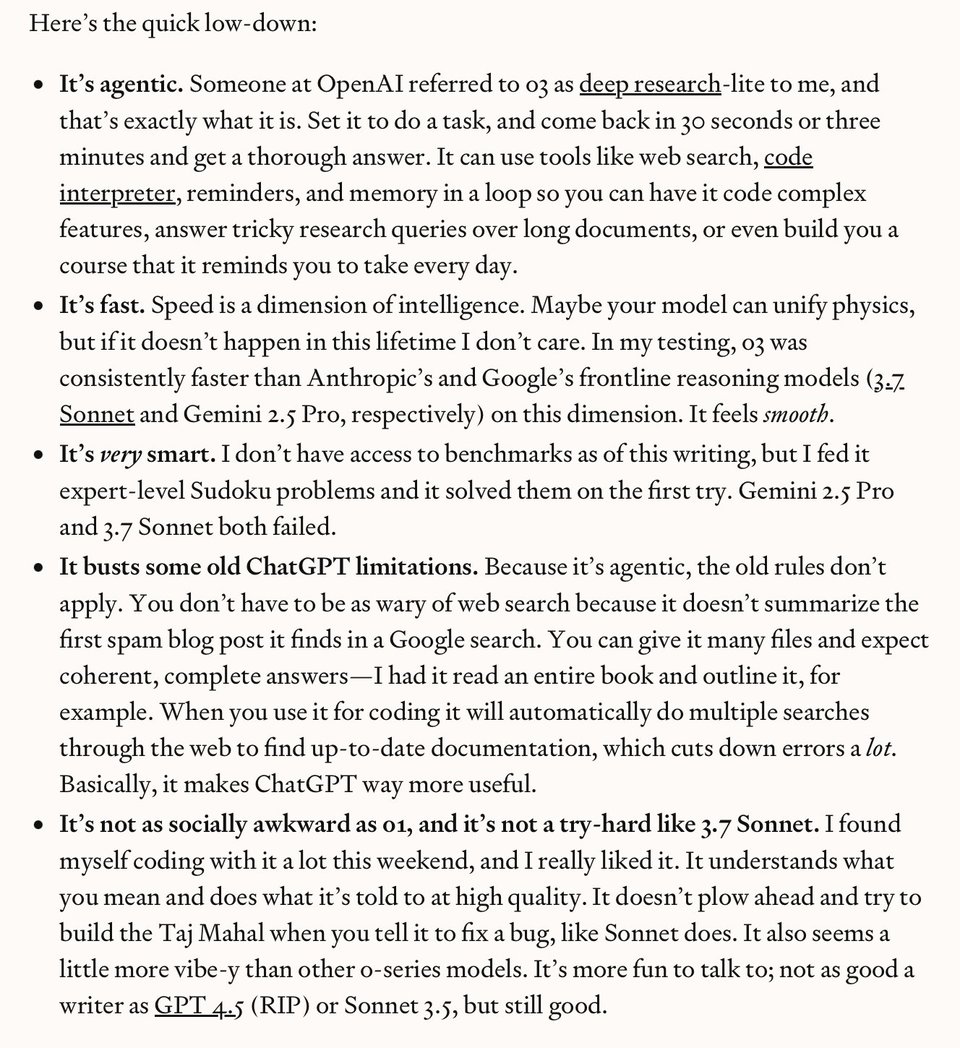

with much better vision and much better tool use - though this is not yet available in API.

Dan Shipper has a good qualitative review

The system cards show slightly less flattering evals but overall the launch has been very very well received.

The “one more thing” was Codex CLI, which oneupped Claude Code (our coverage here) by being fully open source:

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

New Model Releases and Updates (o3, o4-mini, GPT-4.1, Gemini 2.5 Pro, Seedream 3.0)

- OpenAI o3 and o4-mini Models: @sama announced the release of o3 and o4-mini, highlighting their capabilities in tool use and multimodal understanding. @OpenAI described them as smarter and more capable, with the ability to agentically use and combine every tool within ChatGPT. @markchen90 emphasized their enhanced power due to learning how to use tools end-to-end, particularly in multimodal domains, while @gdb expressed excitement about their ability to produce useful novel ideas.

- Accessibility and Pricing: @OpenAI noted that ChatGPT Plus, Pro, and Team users would gain access to o3, o4-mini, and o4-mini-high. @aidan_clark_ suggested that models with “mini” in their name are impressive. @scaling01 indicated that o4-mini is cheaper and better across the board; however, @scaling01 noted that o3 is 4-5x more expensive than Gemini 2.5 Pro.

- Codex CLI Integration: @sama revealed Codex CLI, an open-source coding agent, to enhance the use of o3 and o4-mini for coding tasks, while @OpenAIDevs described it as a tool that turns natural language into working code. @kevinweil and @swyx also highlighted this open-source coding agent.

- Performance and Benchmarks: @polynoamial confirmed that scaling inference improves intelligence, while @scaling01 provided a detailed performance comparison of o3 against other models like Sonnet and Gemini on benchmarks like GPQA and AIME. @scaling01 also noted o3’s worse performance in replicating research papers compared to o1. @alexandr_wang noted that o3 is absolutely dominating the SEAL leaderboard and @aidan_clau linked to a summary of o3’s strengths.

- Multimodal Capabilities: @OpenAI highlighted that o3 and o4-mini can integrate uploaded images directly into their chain of thought. @aidan_clau described an experience in Rome where o3 reasoned, resized images, searched the internet, and deduced the user’s location and vacation status, while @kevinweil noted seeing the models use tools as they think, such as searching, writing code, and manipulating images.

- Internal Functionality: @TransluceAI reported on fabrications and misrepresentations of capabilities in o3 models, including claiming to run code or use tools it does not have access to. @Yuchenj_UW noted that o3 figured out a mystery in the emoji, with FUCK YOU showing up in its thought process.

- GPT-4.1 Series: @OpenAIDevs announced the GPT-4.1 series for developers, and @skirano noted that this series seems to be going in the direction of optimizing for real-world tasks. @Scaling01 highlighted GPT-4.1-mini’s overperformance relative to GPT-4.1 in some benchmarks. @aidan_clark_ called the models awesome. @ArtificialAnlys stated that the GPT-4.1 series is a solid upgrade, smarter and cheaper across the board than the GPT-4o series.

- Gemini 2.5 Pro: @omarsar0 stated that Gemini 2.5 Pro is much better at long context understanding compared to other models. @_philschmid indicated that the price-performance of Gemini 2.5 Pro is crazy.

- ByteDance Seedream 3.0: @ArtificialAnlys announced the launch of Seedream 3.0, the new leading model on the Artificial Analysis Image Leaderboard. @scaling01 mentioned that the ByteDance-Seed/Doubao team is “fucking cracked”. @_akhaliq shared the Seedream 3.0 Technical Report.

Agentic Web Scraping with FIRE-1 and OpenAI’s CodexCLI

- FIRE-1: @omarsar0 introduced FIRE-1, an agent-powered web scraper, highlighting its ability to navigate complex websites and interact with dynamic content. @omarsar0 further explained its simple integration with the scrape API, enabling intelligent interactions within web scraping workflows. @omarsar0 noted the limitations of traditional web scrapers and the promising nature of agentic web scrapers.

- CodexCLI: @sama provided a link to the open-source Codex CLI. @kevinweil linked to the new open source Codex CLI. @itsclivetime has started asking the models to just “look for bugs in this” and it catches like 80% of bugs before they run anything.

Agent Implementations and Tool Use

- Tool Use: @sama expressed surprise at the new models’ ability to effectively use tools together. @omarsar0 noted that tool use makes these models a lot more useful. @aidan_clau stated that the biggest o3 feature is tool use, where it googles, debugs, and writes python scripts in its CoT for Fermi estimates.

- Tool Use Explanation: @omarsar0 demonstrated how reasoning models use tools, citing an example on the AIME math contest where the model proposes a smarter solution after initially brute-forcing it.

- Reachy 2: @ClementDelangue announced that they were starting to sell Reachy 2 this week, the first open-source humanoid robot.

Video Generation and Multimodality (Veo 2, Kling AI, Liquid)

- Google’s Veo 2: @Google introduced text to video with Veo 2 in Gemini Advanced, highlighting its ability to transform text prompts into cinematic 8-second videos. @GoogleDeepMind stated that Veo 2 brings your script to life and @matvelloso stated that it is generally available in the API.

- ByteDance’s Liquid: @_akhaliq shared ByteDance’s Liquid, a language model that is a scalable and unified multi-modal generator. @teortaxesTex commented that ByteDance is killing it across all multimodal paradigms.

- Kling AI 2.0: @Kling_ai announced Phase 2.0 for Kling AI, empowering creators to bring meaningful stories to life.

Interpretability and Steering Research

- GoodfireAI’s Open-Source SAEs: @GoodfireAI announced the release of the first open-source sparse autoencoders (SAEs) trained on DeepSeek’s 671B parameter reasoning model, R1, providing new tools to understand and steer model thinking. @GoodfireAI shared early insights from their SAEs, noting unintuitive internal markers of reasoning and paradoxical effects of oversteering.

- How New Data Permeates LLM Knowledge and How to Dilute It: @_akhaliq shared this paper from Google that explores how learning a new fact can cause the model to inappropriately apply that knowledge in unrelated contexts and how to alleviate effects by 50-95% while preserving the model’s ability to learn new information.

- Researchers Explore Reasoning Data Distillation: @omarsar0 summarized research on distilling reasoning-intensive outputs from top-tier LLMs into more lightweight models to boost performance across multiple benchmarks.

Tools and Frameworks for LLM Development

- PydanticAI: @LiorOnAI introduced PydanticAI, a new framework that brings FastAPI-like design to GenAI app development.

- LangGraph: @LangChainAI announced that they were open sourcing LLManager, a LangGraph agent which automates approval tasks through human-in-the-loop powered memory. @LangChainAI also noted that the Abu Dhabi government’s AI Assistant, TAMM 3.0, was built on LangGraph, and now delivers 940+ services across all platforms with personalized, seamless interactions.

- RunwayML: @c_valenzuelab states that Runway is in every classroom around the world. That’s the goal for 2030.

- Hugging Face tool releases: @ClementDelangue asked someone to make this tool run locally with open-source models from HF. @reach_vb announced that cohere was available on the Hub.

Humor/Memes

- @teortaxesTex quipped about sleeping through the release of OpenAI’s “AGI”.

- @swyx linked to a meme related to the o3 and o4 launch.

- @scaling01 had several, such as criticizing the “AGI” marketing, and saying it was “cooked instruction following in ChatGPT”.

- @aidan_mclau joked about world champion prompt engineering.

- @goodside posted “The surgeon is no longer the boy’s mother”, riffing on a well known meme about LLMs.

- @draecomino said Nolan’s movies are the most liked movies.

AI Reddit Recap

/r/LocalLlama Recap

1. Recent OpenAI and Third-Party Model Releases

-

OpenAI Introducing OpenAI o3 and o4-mini (Score: 109, Comments: 72): OpenAI has launched two new models, o3 and o4-mini, as part of its o-series, featuring significant improvements in both multimodal capabilities (direct image integration into reasoning) and agentic tool use (autonomous web/code/data/image toolchains via API). According to the official blog post, o3 achieves state-of-the-art results in coding, math, science, and visual perception benchmarks and exhibits improved analytical rigor and multi-step execution through large-scale RL. Top community concerns highlight the continued lack of open-source release, although OpenAI has open-sourced its terminal integration (via Codex), which is distinct from full model weights or research code.

- A commenter notes the lack of open source models from OpenAI, critiquing their release strategy as focused on proprietary gains rather than community contribution—reflecting persistent frustration among practitioners who value transparency and reproducibility in model development.

- One link highlights that although OpenAI isn’t releasing models openly, they have open sourced their terminal integration (https://github.com/openai/codex), which may interest developers seeking toolchain extensions, though not the models themselves.

- There’s criticism of OpenAI’s model naming conventions, with the argument that the confusing scheme may be deliberate—obscuring distinctions between models and complicating evaluation or selection for users seeking to match models to specific capabilities or requirements.

-

IBM Granite 3.3 Models (Score: 312, Comments: 135): IBM has released the Granite 3.3 family of language models under the Apache 2.0 license, featuring both base and instruction-tuned models at 2B and 8B parameter counts (Hugging Face collection). These models are positioned for open community adoption, text generation tasks, and RAG workflows; additional speech model resources are provided (speech model link). Community feedback is encouraged, but no in-depth benchmarks, implementation details or technical bug discussions were present in the comments.

- The Granite 3.3 models are viewed favorably among compact language models, with particular appreciation from users with limited GPU resources. Their usability on low-end hardware is cited as a distinguishing feature, making them accessible where larger models are impractical. Interest is expressed in evaluating the improvements brought by this new iteration, especially in resource-constrained environments.

-

ByteDance releases Liquid model family of multimodal auto-regressive models (like GTP-4o) (Score: 285, Comments: 33): The image linked here is a promotional overview of ByteDance’s purported ‘Liquid’ model family, described as a scalable, unified multimodal auto-regressive transformer (akin to GPT-4o) intended to handle both text and image generation within a single architecture. The Reddit discussion raises doubts about the model’s authenticity and technical claims: commenters note that the supposed release is not recent, the public checkpoint on Hugging Face is apparently just a Gemma finetune without a vision config, and no genuine multimodal pretrained models (as described) are available. Furthermore, despite the promotional material’s references to ByteDance’s involvement, no official sources or papers corroborate this release, suggesting possible misattribution or misleading representation.

- A commenter notes inconsistencies between the official announcement of the Liquid model family and the actual model artifacts found: the

config.jsonlacks a vision configuration, suggesting the public model is not the multimodal version shown in demos. The model card references six model sizes (ranging from 0.5B to 32B parameters), including a 7B instruction-tuned variant based on GEMMA, but these versions are reportedly missing from the expected repositories, and documentation lacks any mention of ByteDance’s involvement. - Testing of the model via the official online demo indicates underwhelming qualitative performance—especially with image generation tasks, such as rendering hands or realistic objects (e.g., ‘the woman in grass did not go well’ and malformed objects). This aligns with complaints about outputting anatomically incorrect features, often a benchmark for robustness in multimodal models.

- A commenter notes inconsistencies between the official announcement of the Liquid model family and the actual model artifacts found: the

-

Somebody needs to tell Nvidia to calm down with these new model names. (Score: 127, Comments: 23): The post humorously critiques NVIDIA’s increasingly complex and verbose model naming conventions, as exemplified by the mock label ‘ULTRA LONG-8B’ referencing a model with ‘Extended Context Length 1, 2, or 4 Million.’ The image and comments satirize how modern model names can resemble other product branding—here, likening them to condom names—highlighting the industry’s trend toward longer and more marketing-driven naming conventions. There is no substantial technical discussion about the models themselves or their benchmarks, only commentary on nomenclature.

- There is an undercurrent of critique regarding Nvidia’s inconsistent or confusing model naming conventions, suggesting that their lineup could benefit from clearer taxonomy to avoid ambiguity between product generations and tiers. Technical readers point out that precise naming is important in distinguishing between model capabilities—especially with new architectures and variants proliferating quickly.

2. Large-Scale Model Training and Benchmarks

-

INTELLECT-2: The First Globally Distributed Reinforcement Learning Training of a 32B Parameter Model (Score: 123, Comments: 14): INTELLECT-2 pioneers decentralized reinforcement learning (RL) by training a 32B-parameter model on globally heterogeneous, permissionless hardware, incentivized and coordinated via the Ethereum Base testnet for verifiable integrity and slashing of dishonest contributors. Core technical components include: prime-RL for asynchronous distributed RL, TOPLOC for proof-of-correct inference, and Shardcast for robust, low-overhead model distribution. The system supports configurable ‘thinking budgets’ via system prompts (enabling precise reasoning depth per use-case) and is built atop QwQ, aiming to establish a new paradigm for scalable, open distributed RL for large models. Top comments clarify that launch ≠ completed training, emphasize the controllable reasoning budget innovation, and request human feedback (HF) timelines for further benchmarking.

- INTELLECT-2 introduces a mechanism where users and developers can specify the model’s “thinking budget”—that is, the number of tokens the model can use to reason before generating a solution, aiming for controllable computational expense and reasoning depth. This builds on the QwQ framework and represents a potential advancement over standard transformer models with fixed-step inference.

- The project claims to be the first to train a 32B parameter model using globally distributed reinforcement learning. Historical context is provided by comparing to past community-driven distributed training projects, such as those inspired by DeepMind’s AlphaGo, but the commenter notes that hardware requirements at this scale still present a significant barrier for individuals.

-

Price vs LiveBench Performance of non-reasoning LLMs (Score: 127, Comments: 48): The scatter plot visualizes the trade-off between price and LiveBench performance scores for a range of non-reasoning LLMs. It uses a log-scale X-axis for price per million 3:1 blended I/O tokens and shows a range of proprietary models (OpenAI, Google, Anthropic, DeepSeek, etc.) using color-coded dots, with notable placement of models like GPT-4.5 Preview and DeepSeek V3. Comments highlight the dominance of Gemma/Gemini models on the pareto front (maximal performance per price), specifically praising Gemma 3 27B’s efficiency. The analysis reveals clear differentiation in market competitiveness: View image.

- Multiple commenters note that Gemma (and potentially Gemini) models currently dominate the “Pareto front” for price/performance in non-reasoning LLM benchmarks, suggesting that they offer the best tradeoff between cost and performance relative to competitors. This implies that, in the current landscape, alternatives lag behind these models in the specific metrics of price and efficiency.

- Discussion highlights that Gemma 3 27B delivers strong benchmark results for its scale, while the Gemini Flash 2.0 model is specifically singled out for its outstanding performance per dollar, significantly outperforming Llama 4.1 Nano, which is criticized for poor price/performance ratio. This underscores shifting value propositions in the LLM market as newer models are released and benchmarked side by side.

-

We GRPO-ed a Model to Keep Retrying ‘Search’ Until It Found What It Needed (Score: 234, Comments: 36): Menlo Research introduced ReZero, a Llama-3.2-3B variant trained with Generalized Repetition Policy Optimization (GRPO) and a custom retry_reward function, enabling high-frequency retrials of ‘search’ tool calls to maximize search task results (arxiv, github). Unlike conventional LLM tuning that penalizes repetition to minimize hallucination, ReZero empirically achieves a

46%score—more than double the baseline’s20%—supporting that repetition, when paired with search and proper reward shaping, can improve factual diligence rather than induce hallucinations. All core modules, including the reward function and verifier, are open-sourced (see repo), leveraging the AutoDidact and Unsloth toolsets for efficient training; pretrained checkpoints are released on HuggingFace.- A commenter inquires about the availability of the reward function or verifier used in the GRPO approach, suggesting interest in examining or reproducing the reinforcement mechanism and evaluation logic from the released codebase.

- The primary model training pipeline leveraged open-source toolsets such as AutoDidact and Unsloth, indicating that implementations likely rely on these frameworks for orchestrating reinforcement learning or optimizing inference; both have been cited as crucial for technical reproducibility.

- The discussion hints at using an iterative approach where the model repeatedly retried ‘Search’ queries until successful, implying a custom reward or retry loop likely implemented via the mentioned toolchains—this raises questions about efficiency and resource usage in such a feedback-driven search reinforcement scheme.

3. Community Projects and Hardware Setups

-

Droidrun is now Open Source (Score: 214, Comments: 20): The post announces that the Droidrun framework—a tool related to Android or automation, as suggested by the title and logo design—is now open-source and available on GitHub (repo link). The image itself is not technical: it is a stylized logo of an android character running, conveying speed, activity, and the open nature of the project. There are no benchmarks or implementation details provided in the post or comments, though early community interest was high with 900+ on the waitlist.

- Technical discussion highlights how Droidrun allows advanced automated control and scripting of Android devices, making it valuable for highly technical users interested in device automation. Several commenters debate its practical use case, noting that users capable of compiling/installing from GitHub might not require LLM integration to perform simple actions, suggesting the tool’s true strength lies in combining local device control with natural language-powered workflows, scripting, or batch automation on Android.

-

Yes, you could have 160gb of vram for just about $1000. (Score: 177, Comments: 78): The OP documents a $1157 deep learning inference build using ten AMD Radeon Instinct MI50 GPUs (16GB VRAM each, ROCm-compatible), housed in an Octominer XULTRA 12 case designed for mining, with power handled by 3x750W hot-swappable PSUs. Key software is Ubuntu 24.04 with ROCm 6.3.0 due to MI50 support limitations (though a commenter notes ROCm 6.4.0 does still work per device table), and llama.cpp built from source for inference. Benchmarks (llama.cpp, q8 quantization) show MI50s provide ~40-41 tokens/s (eval), but poor prompt throughput (e.g., ~300 tokens/s), performing worse than consumer Nvidia (RTX 3090, 3080Ti) and showing ~50% performance drop under multi-GPU and RPC use—e.g., MI50@RPC (5 GPUs) for 70B model achieves ~5 tokens/s vs ~10.6 tokens/s for 3090 (5 GPUs), with prompt evals also much slower (~28 ms/token vs ~1.9 ms/token). Power draw and thermal performance are excellent (idle ~20W/card, inference ~230W/card), and large VRAM pool is valuable for very large models or MoE. Limitations include PCIe x1 bandwidth bottleneck, scale-out limitations in llama.cpp (>16 GPU support sketchy), and significant RPC-related efficiency loss. Suggestions include lowering card power to 150W for minor performance cost, experimenting with MoE models, and potential optimization of network/RPC code. See the original post for detailed benchmarks and configuration notes: Reddit thread.

- Multiple users report that the MI50 can still support the latest ROCm (6.4.0) despite documentation suggesting otherwise—installation works and gfx906 (the MI50 architecture) is listed as supported under Radeon/Radeon Pro tabs, providing reassurance for potential buyers relying on ROCm for ML workloads.

- Power consumption can be capped on MI50 GPUs to significantly drop wattage (e.g., halving to 150W only reduces performance by ~20%), with acceptable inference rates as low as 90W per card; this is essential for those building large clusters (e.g., 10-card setups) concerned with power limits, cost, or thermal issues.

- Reported generation speeds for a 70B Q8 Llama 3.3 model are

~4.9-5 tokens/secon a $1,000 MI50 build, with a time to first token of 12 seconds for small context and up to 2 minutes for large context, offering concrete benchmarks for performance and latency expectations with this multi-GPU setup.

-

What is your favorite uncensored model? (Score: 103, Comments: 71): The discussion centers on large language models (LLMs) modified for minimal content filtering (‘uncensored’ or ‘abliterated’ models), specifically those by huihui-ai, including Phi 4 Abliterated, Gemma 3 27B Abliterated, and Qwen 2.5 32B Abliterated. Users note that the Phi 4 model retains its performance/intelligence post-‘Abliteration,’ while Gemma 3 27B’s uncensored state is moderate unless use-cased as a RAG for financial data. Mistral Small is also highlighted for high out-of-the-box permissiveness without major safety layers, with or without uncensoring. See huihui-ai project repositories for technical configurations and quantized weights for the mentioned models.

- Discussion highlights specific uncensored models: Phi 4 Abliterated, Gemma 3 27B Abliterated, and Qwen 2.5 32B Abliterated by huihui-ai, praising Phi 4 for minimal intelligence degradation post-abliteration, suggesting a robust methodology behind its uncensoring process.

- Gemma 3 27B is reported as not particularly uncensored out of the box, but the commenter notes success in extracting financial advice via Retrieval-Augmented Generation (RAG), especially when using model variants rather than finetuned editions.

- Another user points out that uncensoring processes often lead to noticeable degradation in model performance, expressing a preference for standard models combined with jailbreak prompts over heavily modified, uncensored counterparts, reflecting a broader concern in the community about balancing censorship removal with maintaining baseline capabilities.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI o3 and o4-mini Model Launch and Discussion

-

o3 releasing in 3 hours (Score: 753, Comments: 186): The image is a tweet from OpenAI, announcing a livestream event set to begin in ‘o3’ hours, suggesting imminent release or demonstration of a new model, likely referred to as ‘o3’. This has generated significant anticipation in the community, with technical discussions referencing previous high compute costs (one comment mentions a prompt costing ~$3K) and questioning the feasibility of broader release. Discussion includes skepticism about the deployment or commercialization of such high-compute models, with some users referencing prior scaling and cost issues as a technical barrier to public or widespread access.

- A user references the high compute cost for generating a single prompt on similar models in the past, quoting figures around

$3,000 per prompt, raising questions about how o3 is being released given such high computational requirements. This implies potential improvements in model efficiency, inference cost, or infrastructure compared to previous iterations. - Another user is keen to compare o3’s capabilities against both the team’s own ‘DeepResearch’ model and Google’s ‘Gemini 2.5 Pro,’ explicitly highlighting interest in cross-benchmark performance and the hope this signals further imminent releases (notably the ‘o4’ family).

- A user references the high compute cost for generating a single prompt on similar models in the past, quoting figures around

-

Introducing OpenAI o3 and o4-mini (Score: 235, Comments: 91): OpenAI has introduced the o3 and o4-mini models, with the o3 model offering slightly reduced performance on benchmarks like GPQA, SWE-bench, and AIME compared to earlier (December) figures, but is noted in the announcement blog as being cheaper than the o1 model. Key technical discussion centers on o3’s coding benchmark performance, reported as slightly better than Google’s Gemini but with o3 costing 5x more. There is broader debate regarding the relevance of current benchmarks, with calls for evaluation metrics focused on real-world agentic task performance rather than incremental math or reasoning benchmarks. Commenters scrutinize the tradeoff between decreased benchmark performance and lower cost in o3, with some noting that real-world utility should take precedence over small benchmark variances. The high relative cost of o3 compared to Gemini, despite performance advantage, is also raised as a concern. [External Link Summary] OpenAI has introduced o3 and o4-mini, the latest in its o-series reasoning models, featuring significant improvements in agentic tool use and multimodal capabilities. o3 sets new state-of-the-art (SOTA) benchmarks in coding, math, science, and visual perception, excelling at complex, multi-faceted problems by integrating tools for web search, file analysis, Python execution, and image generation within ChatGPT. Both models utilize large-scale reinforcement learning for superior reasoning and instruction following, with o4-mini optimized for high-throughput, cost-efficient reasoning and leading performance among compact models—especially for math and coding. For the first time, users can incorporate images into the reasoning workflow, enabling integrated visual-textual problem-solving and tool-chaining for advanced, real-world, and up-to-date tasks. See: Introducing OpenAI o3 and o4-mini

- Benchmarks such as GPQA, SWE-bench, and AIME saw slightly reduced scores for o3 compared to its initial December announcement, although OpenAI notes the model is now cheaper than o1; there is speculation that the performance was deliberately reduced to lower costs.

- On the Aider polyglot benchmark, o3-high scored 81% but at a potentially very high cost (speculated at ~$200 like o1-high), whereas Gemini 2.5 Pro scored 73% at a much lower price. GPQA scores are very close (

o3: 83%vsGemini 2.5 Pro: 84%). Although o3 shows improvements in math (notably a big jump on math benchmarks and a slight lead on HLE over Gemini without tools), the high cost relative to Gemini makes it less attractive for some users focused on real-world value per dollar. - Discussion points out that while benchmarks are useful, they are somewhat overrated and do not always reflect a model’s suitability for everyday tasks or agent-based workflows. There is a call for new benchmarks that better reflect real-world job competence or daily use-case utility of large language models.

-

launching o4 mini with o3 (Score: 205, Comments: 43): The image announces an upcoming event from OpenAI introducing new ‘o-series’ models, specifically ‘o3’ and ‘o4-mini.’ This indicates OpenAI’s continued expansion of their model lineup beyond GPT-4o, with implications for both performance and functionality. The linked YouTube event suggests an official, technical rollout, though little model detail is present in the image. Commenters heavily critique OpenAI’s confusing and inconsistent model naming conventions, arguing that closely named models with different capabilities (‘o3’, ‘o4-mini’, ‘4o’) cause unnecessary confusion in both technical and non-technical circles.

- There is confusion and technical critique surrounding the naming overlap between the ‘o3’, ‘o4’, and ‘o4 mini’ models, with users noting how similar names for models offering drastically different capabilities can create ambiguity when referencing benchmarks, updates, or deployment contexts.

- A technical question is raised about the practical use case of ‘o4 mini’ compared to ‘o3’, specifically questioning why a newer, potentially enhanced model is released alongside an older one, especially when the new version is ‘mini’ (possibly smaller or more efficient), prompting discussion of real-world scenarios or benchmark-driven preferences.

- There is also a regional availability question regarding EU access to ‘o3’ and ‘o4 mini’, which, if answered, would inform technical readers about deployment timelines, rollout strategies, and compliance with local regulations or infrastructure realities.

-

[Confirmed] O-4 mini launching with O-3 full too! (Score: 298, Comments: 44): The image officially announces an OpenAI event for the introduction of new ‘o-series’ models—specifically the O-4 mini and a full O-3 model—scheduled for April 16, 2025. It confirms that both the lightweight ‘mini’ version of O-4 and the full version of O-3 are launching concurrently. The event will feature presentations and demos from notable OpenAI engineers and researchers, including Greg Brockman, Mark Chen, and others, suggesting an in-depth technical reveal and demonstration. Image link. Commenters question the clarity of OpenAI’s model naming scheme, with some expressing anticipation to switch usage from previous ‘o3 mini’ to the new ‘o4 mini’. The naming and model differentiation are highlighted as ongoing points of confusion among technical users.

- The initial comment lists notable figures, including Greg Brockman and Mark Chen, who are involved in introducing and demoing the new O-series models, possibly indicating a high-profile launch event, which may be relevant for tracking future technical presentations or announcements related to O-4 mini and O-3 full models.

- Several users discuss the transition from primarily using ‘O-3 mini high’ to ‘O-4 mini’, implying iterative improvements and that there is a clear user base migrating to the newer model; this demonstrates expectations that O-4 mini may outperform or provide added value over the O-3 mini in real-world usage.

- There is some mild technical criticism of the O-series naming scheme, with users describing it as ‘ridiculous’. While this is not directly technical, it has implications for model tracking, integration, and future development cycles, where confusing nomenclature can hinder adoption and API version management.

-

This confirms we are getting both o3 and o4-mini today, not just o3. Personally excited to get a glimpse at the o4 family. (Score: 225, Comments: 50): The post uses an image of strawberries in two distinct rows (three large, four small) to metaphorically confirm a dual release: both ‘o3’ and ‘o4-mini’ foundation models are set to launch together (image: link). The visual pun visually represents ‘o3’ (three large) and ‘o4-mini’ (four small) models, indicating a strategic broadening of the lineup, possibly with performance or size differentiation. The title and image contextually underscore excitement over preview access to the next-generation o4 family, not just an incremental update. Technical discussion centers on concerns about pricing for ‘o3’ (potential $200/month cost) and skepticism/anticipation regarding whether ‘o4-mini’ can deliver on its science-assistance claims, reflecting community interest in practical impact and accessibility of these models.

- MassiveWasabi discusses curiosity about whether the o4-mini model will deliver on claims regarding its utility for advancing scientific research, suggesting that users have technical expectations for model performance beyond general AI tasks.

- jkos123 inquires about API pricing for o3 full, comparing it directly to previous tiers such as o1-pro (

$150/month in,$600/month out). This indicates technical users’ attention to cost-performance ratios for deployment choices in production and research. - NootropicDiary speculates on the coding and reasoning capabilities of o4 mini high, questioning if it could be close in performance to o3 pro. This points to community interest in comparative benchmarks and the practical application of these models in development workflows.

2. OpenAI o3/o4 vs Gemini Benchmarks and Comparisons

-

Benchmark of o3 and o4 mini against Gemini 2.5 Pro (Score: 340, Comments: 169): The post benchmarks performance of o3, o4-mini, and Gemini 2.5 Pro models across various tasks. On maths benchmarks (AIME 2024/2025), o4-mini slightly outperforms Gemini 2.5 Pro and o3 (o4-mini:

93.4%on AIME 2024, o3:91.6%, Gemini 2.5 Pro:92%). For knowledge and reasoning (GPQA, HLE, MMMU), Gemini 2.5 Pro leads on GPQA (84.0%), o3 leads on HLE (20.32%) and MMMU (82.9%). In coding tasks (SWE, Aider), o3 performs best on both SWE (69.1%) and Aider (81.3%). Pricing is highlighted, with o4-mini being substantially cheaper ($1.1/$4.4) than the others. Plots were generated by Gemini 2.5 Pro. Commenters note potential misrepresentation via y-axis scaling on plots, and highlight that Google and OpenAI models are now close in performance, though Google’s pace and resource advantage is seen as an indicator they may soon surpass OpenAI.- Discussion highlights the inadequacy of comparing AI model token costs purely via price per million tokens, noting that output lengths differ significantly between models (‘reasoning tokens’), thus skewing cost comparisons. Instead, actual $ cost of running benchmarks should be analyzed to determine real expenses, as opposed to retail prices charged to consumers. The distinction between “Cost” (operational, hardware, and infrastructure costs for running a model) and “Price” (what companies charge to access the model) is emphasized, pointing out that proprietary models (e.g., OpenAI, Google) obscure running costs, while open source models enable more transparent assessment as users can directly measure or estimate hardware expenditure. The post also cautions that company pricing strategies (e.g., Google possibly setting artificially low prices due to TPUs or market share goals) further complicate fair comparisons. A robust, standardized analysis factoring in both cost-to-run and price-to-consumer versus performance is proposed for future benchmarks.

-

Comparison: OpenAI o1, o3-mini, o3, o4-mini and Gemini 2.5 Pro (Score: 195, Comments: 44): The image provides a direct benchmark comparison between OpenAI’s o1, o3-mini, o3, o4-mini models and Google’s Gemini 2.5 Pro, covering metrics such as AIME (math), Codeforces coding, GPQA (science Q&A), and several reasoning/logic tasks. The table indicates that OpenAI’s o4-mini leads in math-with-tools tasks, while Gemini 2.5 Pro performs distinctly in some coding and science benchmarks (e.g., LiveCodeBench v5). Substantial differences are noted across tasks, reflecting how model strengths vary by domain; OpenAI’s mid-tier models (like o3) demonstrate strong practical code generation for whole apps in user experiences. Top comments highlight o4-mini’s dominance in math, the assertion that benchmark relevance is diminishing as models surpass human performance, and the need for pricing context. There’s anecdotal user praise for o3’s real-world practicality in code generation.

- Gemini 2.5 Pro is described as generally comparable to OpenAI’s o4-mini, except for mathematics, where o4-mini leads. (“So gemini 2.5~o4mini except in math where o4-mini leads”)

- User test experience with o3 suggests it can generate entire, working applications in a single output, indicating major advancement in code synthesis compared to prior models. (“o3 is quite groundbreaking, spitting out whole, working apps with one shot”)

- Noted rapid improvement on “Humanity’s Last Exam” benchmarks: compared to PhD students who average ~30% overall and ~80% in their field on such tests, current model scores represent significant progress in a short time frame.

-

If o3 from OpenAI isn’t better than Gemini 2.5, would you say Google has secured the lead? (Score: 211, Comments: 131): The post questions whether, if OpenAI’s o3 model fails to surpass Google’s Gemini 2.5 in benchmarks and real-world scenarios, Google has effectively become the industry leader for state-of-the-art LLMs. Top comments point out that ‘best model’ status is domain-dependent, Gemini 2.5 currently leads for some users, and a key trade-off may be o3’s expected higher performance at significantly greater cost (“~15 times as much” as Gemini for real-world tasks, based on previous pricing for o1 and extrapolated from ARC-AGI benchmark tests). Commenters debate whether short-term superiority in benchmarks equates to long-term leadership and note the prohibitive operational costs for OpenAI’s top models as a limiting factor, while conceding performance may justify the expense in select applications.

- Commenters note that while Google’s Gemini 2.5 is currently highly competitive and possibly leads, the lead is not evenly distributed across all domains—different models may excel in distinct tasks or environments.

- A technical concern highlighted is the projected cost discrepancy between OpenAI’s forthcoming o3 and Gemini 2.5, referencing that o3 could be up to “~15 times as much for real world tasks” (based on historical ARC-AGI benchmarks and o1 pricing). This raises concerns on the real-world deployability of o3 relative to Gemini 2.5, especially for cost-sensitive applications.

- There is recognition of offline models like Gemma 3, which are praised as strong offline AI solutions, indicating Google’s breadth in both cloud and edge AI, though some users point out Google’s current UI/UX and response ‘human-ness’ as areas for improvement compared to OpenAI.

3. HiDream & ComfyUI Model Updates and Tools

-

Hidream Comfyui Finally on low vram (Score: 166, Comments: 117): A low VRAM version of HiDream’s diffusion image generation workflow is now available for ComfyUI, featuring GGUF-formatted models (HiDream-I1-Dev-gguf), a GGUF Loader for ComfyUI (ComfyUI-GGUF), and compatible text encoders and VAE (link, vae link). The workflow supports alternate VAE’s (e.g., Flux) and details are documented here. A user shared performance numbers for a RTX3060 using SageAttention and Torch Compile: image resolution

768x1344generated in 100 seconds using 18 steps. Comments highlight the rapid obsolescence of new AI workflows and the difficulty of keeping pace with new releases.- A user reports successfully running the model on an RTX3060 using SageAttention and Torch Compile. The setup produced images at a resolution of 768x1344 in 100 seconds over 18 steps, demonstrating that low-VRAM cards can achieve reasonable generation times with optimized configurations.

- There is a comparative assessment suggesting that Flux finetunes currently deliver better results than this release, highlighting ongoing benchmarks and subjective quality debates among different model variants.

- A specific hardware compatibility question was raised regarding operation on Apple Silicon (M1 Mac), which may be of interest for developers aiming to support broader platforms.

-

Basic support for HiDream added to ComfyUI in new update. (Commit Linked) (Score: 152, Comments: 45): ComfyUI has added basic support for the HiDream model family with a recent commit, requiring users to employ the new QuadrupleCLIPLoader node and the LCM sampler at CFG=1.0 for optimal performance. GGUF-format HiDream models and loader node (from City96) are now available (models, loader), alongside required text encoders (list), and a basic workflow; users must update ComfyUI for the necessary nodes. SwarmUI has also integrated HiDream I1 support (docs). Benchmark: RTX 3060 renders a 768x768 image in 96 seconds; RTX 4090 achieves 10-15 sec per image (substantially higher memory use); quality is comparable to contemporary models with notable JPEG artifacting, and files are significantly larger than alternatives. Technical debate centers on whether HiDream’s incremental quality improvements justify its high memory usage and large file sizes compared to models like Flux Dev or SD3.5, with some noting uncensored outputs and artifacting as both notable features and potential drawbacks. [External Link Summary] This commit to the ComfyUI repository introduces basic support for the HiDream I1 model by adding a dedicated implementation under

comfy/ldm/hidream/. The changes include a new model wrapper (HiDreamsubclass) inmodel_base.py, extensive logic for the HiDream architecture inhidream/model.py, relevant text encoders, detection modules, and custom nodes for ComfyUI workflows. This integration enables users to deploy and experiment with the HiDream I1 model within the ComfyUI framework, improving the model support ecosystem. Original: https://github.com/comfyanonymous/ComfyUI/commit/9ad792f92706e2179c58b2e5348164acafa69288- HiDream now works in ComfyUI with GGUF models, requiring a new QuadrupleCLIPLoader node and an updated text encoder node in Comfy. Model files, loader node, and sample workflows are provided in linked resources. For optimal sampling, the LCM sampler with CFG 1.0 is recommended. source/links

- Benchmarks across GPUs (e.g., RTX 4090 vs 3060) show generation times of

1:36for 768x768 images on a 3060 and10-15sper image on a 4090 using SwarmUI. Memory usage is significantly higher than modern competitors like Flux Dev (which can do 4-5s per image with Nunchaku optimization), largely attributed to the new QuadrupleClipLoader node. - Discussion highlights the trade-off in model adoption: while HiDream shows incremental quality improvements, its file size and high VRAM requirements (at least 12GB referenced) limit broader usability compared to alternatives like Flux or SD35. There are questions about whether the higher resource usage justifies the quality gains, especially given VRAM limitations on most consumer GPUs.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Exp

Theme 1: OpenAI’s New Models: O3, O4-Mini, and Codex CLI

- OpenAI Unleashes Codex CLI for Brutal Reasoning: OpenAI has released Codex CLI, a lightweight coding agent leveraging models like o3 and o4-mini, with GPT-4 support coming soon, as detailed in their system card. Codex CLI uses tool calling for brute-force reasoning, applicable to tasks like answering questions on geoguessr.com.

- Members Laud O3 and O4-Mini Performance, Note Limitations: Community members testing o3 and o4 mini found that o4 mini performed best on OpenAI’s interview choice questions, while o3 excelled at a non-trivial real-world PHP task, scoring 10/10. Despite benchmark tests, it suffers from the same Alaska problem as o3 as reported on X, but excels at reasoning with temperature set to 0.4 or less.

- LlamaIndex, Windsurf, and O3/O4 Mini Gain Integration: LlamaIndex now supports OpenAI’s o3 and o4-mini models, accessible with

pip install -U llama-index-llms-openaiand find more details here. The o4-mini is now available in Windsurf, with models o4-mini-medium and o4-mini-high offered for free on all Windsurf plans from April 16-21 as reported in their social media announcement.

Theme 2: Emerging Hardware and Performance Challenges

- RTX 5090 Matmul Disappoints, Larger Matrices Needed: Initial implementations of matmul on RTX 5090 yielded performance roughly equal to RTX 4090 when multiplying two fp16 matrices of size 2048x2048, and the tests can be found in the official tutorial code. It was suggested to test with a larger matrix, such as 16384 x 16384, and experiment with autotune.

- AMD Cloud Vendor Supports Profiling and Observability: An AMD cloud offers built-in profiling, observability, and monitoring, though it may not be on-demand, sparking debate on the merits of creating a cloud vendor tier list to incentivize better hardware counters. In the discussion, a user made a joking threat to make a cloud vendor tier list to shame people into offering hardware counters, as a way to persuade AMD or other vendors to provide better hardware counters.

- NVMe SSDs Supercharge Model Loading in LM Studio: Using an NVMe SSD significantly speeds up model loading in LM Studio, with observed speeds reaching 5.7GB/s, although having multiple NVMe SSDs doesn’t significantly impact gaming. A user highlighted having three NVMe SSDs in their system, but they don’t seem to make much difference for gaming unfortunately.

Theme 3: Gemini 2.5 Pro and Related API Discussions

- Gemini 2.5 Pro Rate Limits Frustrate Free Tier Users: Users discussed the tight rate limits for the free tier of Gemini 2.5 Pro, noting it has a smaller limit of 80 messages per day, reduced to 50 without a $10 balance. One user expressed frustration, saying they would need to pay an additional $0.35 due to the 5% deposit fee to meet the minimum $10 requirement for the increased rate limit.

- Claims Surface of Shrinking Gemini 2.5 Pro Context Window: Claims surfaced that Gemini 2.5 Pro has a reduced context window of 250K, despite the official documentation still stating 1M, although one member pointed out that the source of truth is always the GCP console.

- Gemini 2.5 Pro API Hides “Thinking Content”: Debate Ensues: Members debated whether Gemini 2.5 Pro API returns thinking content, noting official documentation says no, despite thought tokens being counted. Despite this, thought tokens are counted, sparking theories about preventing distillation or hiding bad content.

Theme 4: DeepSeek Models and Latent Attention

- DeepSeek R3 and R4 Model Release Excites OpenRouter Community: Users await the release of Deepseek’s R3 and R4 models imminently, generating buzz within the OpenRouter community with hope that these models will eclipse OpenAI’s o3. A user stated that “Deepseek is only affordable, the actualy is not that great.”

- DeepSeek-V3’s Latent Attention Mechanism Investigated: A member found that DeepSeek-V3’s Multihead Latent Attention calculates attention in 512-dim space, although the head size is only 128, making it 4x more expensive. While this detail may be overlooked, this increased computational cost isn’t an issue when memory bandwidth is the main bottleneck.

- DeepSeek Distill Chosen for Chain of Thought Reasoning: The DeepSeek Distill model was recommended for SFT due to its existing chain of thought (CoT) capabilities and a base model like Qwen2.5 7B is possible but less direct according to Deepseek’s paper. A member recommended to use the DeepSeek Distill model for SFT due to its existing chain of thought (CoT) capabilities, and using a base model like Qwen2.5 7B is possible but less direct according to Deepseek’s paper.

Theme 5: Community and Ethical Discussions

- OpenRouter Privacy Policy Update Sparks Debate: An update to OpenRouter’s privacy policy sparked concern because it appeared to log LLM inputs, with one line stating, “Any text or data you input into the Service (“Inputs”) that include personal data will also be collected by us”. An OpenRouter rep said, “we can work on clarity around the language here, we still don’t store your inputs or outputs by default”, promising to clarify the terms soon.

- AI Misuse Alarms Raised, Nefarious Purposes Feared: Discussions emerged around AI’s potential for nefarious purposes, especially in VR, with one member fearing its use for reallly bad stuff, and discussions of copyright infringement and deepfakes. This led to conversations around copyright infringment and generating deepfakes, while still trying to find ways around that.

- Community Conduct Sparks Debate in Manus.im: Manus.im community members discussed community conduct after a heated exchange, focusing on balancing offering assistance with encouraging self-reliance, which led to a user getting banned. Concerns were raised about perceived lack of helpfulness versus the importance of self-driven learning and avoiding reliance on hand outs.

PART 1: High level Discord summaries

LMArena Discord

- OpenAI Unleashes Lightweight Coding Agent: Codex CLI: OpenAI launched Codex CLI, a lightweight coding agent using models like o3 and o4-mini, with support for GPT-4 models coming soon, as documented in their system card.

- One member noted it likely uses tool calling for brute force reasoning, such as answering questions on geoguessr.com.

- o3 and o4 Mini Show Promise: Members testing OpenAI’s o3 and o4 mini models found o4 mini performed best on OpenAI’s interview choice Qs, while o3 excelled at a non-trivial real world PHP task, scoring 10/10.

- Despite benchmark tests, it suffers from the same Alaska problem as o3 as reported on X, though it excels at reasoning with temperature set to 0.4 or less.

- OpenAI Mulls Windsurf Acquisition for $3B: OpenAI is reportedly in talks to acquire Windsurf for approximately $3 billion, according to Bloomberg.

- The potential acquisition has sparked debate on whether OpenAI should build such tools themselves, particularly when Gemini’s finite state machine pathfinding used in Roblox shows integration benefits.

- DeepSeek-R1 Parameter Settings Explored: Configurations for DeepSeek-R1 were discussed, referring to the GitHub readme, emphasizing setting the temperature between 0.5-0.7, avoiding system prompts, and including a directive to reason step by step for mathematical problems.

- Members lauded the performance and source quoting capabilities but also noted concerns about source hallucination, with one member stating still got a way to go until agi.

- o3’s Tool Use Paves Way For New Benchmarks: Members highlighted o3 model’s tool use capabilities such as the image reasoning zoom feature, though one member stated tool use isn’t out yet in the arena.

- The tool usage sparked talk on creating benchmarks, particularly relating to GeoGuessr, with new harnesses or bulk testing, although costs may be high.

Manus.im Discord Discord

- Manus Credit Consumption Under Microscope: Users voiced concerns about Manus credit usage, with one user noting they spent 3900 credits and had only 500 remaining after two weeks.

- A user mentioned spending nearly 20k credits in the same timeframe, emphasizing the need for strong ROI even with Manus’s powerful capabilities.

- Kling’s Image Generation Sets Wildfire: Members lauded Kling’s insane image generation abilities, with one member describing Kling as diabolical and a game changer after signing up.

- Another member said that Kling 1.6 is out, and described the capabilities as holy mother of f.

- Community Etiquette Sparks Debate: Members discussed community conduct after a heated exchange, focusing on balancing offering assistance with encouraging self-reliance, which led to a user getting banned.

- Concerns were raised about perceived lack of helpfulness versus the importance of self-driven learning and avoiding reliance on hand outs.

- Copilot Gains Recognition: Members discussed Copilot’s potential to revolutionize AI, especially with the Pro version being able to perform complicated tasks.

- Members are saying that Copilot can do descent art and other complicated tasks, and is a beast.

- AI Misuse Sparks Alarms: Members expressed alarm about AI’s potential for nefarious purposes, particularly in VR.

- Discussion shifted into copyright infringement and generating deepfakes, alongside exploration of potential safeguards.

aider (Paul Gauthier) Discord

- Aider’s Early Commits Spark Jokes: Developers are sharing jokes about Aider, mocking its tendency to commit too soon and cause merge conflicts during coding assistance.

- One joke likened Aider’s assistance to rewriting your repo like it just went through a bad divorce, while another suggested using it results in

git blamejust saying ‘why?’.

- One joke likened Aider’s assistance to rewriting your repo like it just went through a bad divorce, while another suggested using it results in

- ToS Breakers Tread on Thin Ice: Members discussed the implications of breaking Terms of Service (ToS), with one user claiming to have been breaking ToS for 3 months without a ban.

- Concerns were raised about potentially bannable activities and the importance of adhering to platform rules.

- Gemini 2.5 Pro Shrinks Context Window?: Claims surfaced that Gemini 2.5 Pro has a reduced context window of 250K, despite the official documentation still stating 1M.

- One member pointed out that the source of truth is always the GCP console.

- OpenAI’s o3 and o4 Minis Debut: OpenAI launched o3 and o4-mini, available in the API and model selector, replacing o1, o3-mini, and o3-mini-high.

- The official announcement notes that o3-pro is expected in a few weeks with full tool support, and current Pro users can still access o1-pro.

- Aider’s File Addition Frustrations Documented: A member reported issues where Aider’s flow is interrupted by requests to add a file, causing resending of context and re-editing, and documented it in this Discord post.

- This interruption requires constant resending of context and re-editing.

OpenRouter (Alex Atallah) Discord

- OpenAI’s O3 Arrives Requiring BYOK: OpenAI’s O3 model is now available on OpenRouter with a 200K token context length, priced at Input: $10.00/M tokens and Output: $40.00/M tokens, requiring organizational verification and BYOK.

- Members discussed whether the O3 models were “worth it” or if they should wait for upcoming DeepSeek models.

- O4-Mini Emerges as Low-Cost Option: The OpenAI O4-mini model is now on OpenRouter, offering a 200K token context length at Input: $1.10/M tokens and Output: $4.40/M tokens, but users reported issues with image recognition, such as getting “Desert Picture => Swindon Locomotive Works.”

- An OpenRouter rep confirmed that “image inputs are fixed now”.

- Deepseek R3 and R4 Models Hype Incoming: Chatter indicates Deepseek’s R3 and R4 models are slated for release imminently.

- One user expressed the hope that “everyone forgets about o3” when the models are released, while another stated that “Deepseek is only affordable, the actualy is not that great.”

- Gemini 2.5 Pro Rate Limits Frustrate Users: Users discussed the tight rate limits for the free tier of Gemini 2.5 Pro, noting it has a smaller limit of 80 messages per day, reduced to 50 without a $10 balance, subject to Google’s own limits.

- One user expressed frustration, saying they would need to pay an additional $0.35 due to the 5% deposit fee to meet the minimum $10 requirement for the increased rate limit.

- OpenRouter Privacy Policy Update Sparks Debate: An update to OpenRouter’s privacy policy sparked concern because it appeared to log LLM inputs, with one line stating, “Any text or data you input into the Service (“Inputs”) that include personal data will also be collected by us”

- An OpenRouter rep said, “we can work on clarity around the language here, we still don’t store your inputs or outputs by default”, promising to clarify the terms soon.

OpenAI Discord

- GPT-4.1 Batch is MIA, causing headaches: Members reported that the gpt-4.1-2025-04-14-batch model is unavailable via the API, despite users enabling gpt-4.1, while other members tried using

model: "gpt-4.1"in the API call.- A member suggested checking the limits page for account-specific details, yet the issue persists.

- Veo 2 Video: Terrifying or Terrific?: A user shared a video generated by Veo 2, spurring comments about its realism and possible uses.

- While one user commented that the tongue is freaking me out, others have discussed use cases for the Gemini family, with many preferring its creative writing and memory abilities.

- O3 Codes Conway’s Game of Life with Alacrity: O3 coded Conway’s Game of Life in 4 minutes and compiled/ran it on the first try, whereas O3 mini high took 8 minutes to complete the same task months ago with bugs.

- Members discussed the implication of these coding improvements and O3’s ability to generate code and libraries for complex applications.

- O3 and O4-mini reportedly generating believable but incorrect information: Users reported increased hallucinations with O4-mini and O3, with some noting that it makes up believable but incorrect information.

- One user noted that the model ‘wants’ to give a response, as that’s its purpose after testing O4-mini with the API, discovering it made up business addresses and didn’t respond well to custom search solutions.

- Clean up Library Photos made Possible!: A member sought help on deleting pictures from their library, to which another user provided a link to the ChatGPT Image Library help article.

- The new feature is available for Free, Plus, and Pro users on mobile and chatgpt.com.

Cursor Community Discord

- Realtime Token Calculation Requested: A user requested the ability to see token calculation in realtime within the editor, or at least updated frequently.

- They noted this would be very useful given their current need to monitor token usage on the website.

- Gemini Questioned for File Reading: A user questioned whether Gemini actually reads files when it claims to do so while using the

thingfeature and included a screenshot for reference.- The discussion revolves around the accuracy and reliability of Gemini’s file reading capabilities within the Cursor environment.

- Terminal Command Glitch in Agent Mode: Several users reported an issue in Agent Mode where the first terminal command runs to completion without intervention, but subsequent commands require manual cancellation.

- This is described as a longstanding bug that affects the usability of Agent Mode for automated tasks.

- GPT 4.1 Prompting Precision: Users compared GPT 4.1, Claude 3.7, and Gemini, noting that GPT 4.1 is very strict in following prompts, while Claude 3.7 tends to do more than asked.

- They found Gemini strikes a balance between the two, offering a middle ground in terms of prompt adherence.

- Manifests Proposed for Speedy Form Filling: Users suggested a new feature to enable mass input of preset information using manifests for easy replication of accounts and services.

- They noted this would greatly assist ASI/AGI swarm deployment, saying, We need the ASI-Godsend to happen asap, and this is how to easily help achieve it.

Unsloth AI (Daniel Han) Discord

- Qwen2.5-VL Throughput Speculations: Members sought throughput estimates for Qwen2.5-VL-7B and Qwen2.5-VL-32B using Unsloth Dynamic 4-bit quant via vLLM on an L40, while also inquiring about vLLM’s vision model support.

- The inquiry aimed to gauge the models’ practical performance in resource-constrained environments.

- Gemini 2.5 Pro Hides Thoughts: Members debated whether Gemini 2.5 Pro API returns thinking content, noting official documentation says no.

- Despite this, thought tokens are counted, sparking theories about preventing distillation or hiding bad content.

- Llama 3.1 Tool Calling Conundrums: A user sought help with dataset formatting for fine-tuning Llama 3.1 8B on tool calling, formatting assistant responses as

[LLM Response Text ]{"parameter_name": "parameter_value"}.- The user expressed frustration over a lack of solid information on GitHub Issues, indicating a common challenge in adapting models for specific tasks.

- Unsloth Notebook Finetuning Fails: A user reported that after finetuning with Unsloth’s Llama model notebook, the model’s output showed no similarities with the ground truth.

- Specifically, a question about astronomical light wavelengths yielded a response about the Doppler effect, suggesting a disconnect between the training and the expected outcome.

- DeepSeek-V3’s Latent Attention Gotcha: A member found that DeepSeek-V3’s Multihead Latent Attention calculates attention in 512-dim space, although the head size is only 128, making it 4x more expensive.

- Another member suggested that increased computational cost isn’t an issue when memory bandwidth is the main bottleneck.

Eleuther Discord

- Prompt Design Newbie Seeks Aid: A new member requested assistance with prompt design, seeking helpful resources; however, they were directed to external sources, as prompt design is not the focus of the server.

- Members generally agree the server is for more advanced discussion of model architecture and training tricks.

- Recursive Symbolism Claims Face Skepticism: A member described exploring “symbolic recursion and behavioral persistence” in ChatGPT without memory, leading to skepticism about the terminology and lack of metrics.

- Members suggested the language was AI-generated and unproductive for the research-focused server and some even suggested that it was AI Spam.

- AI Spam Concerns Prompt Authentication Suggestions: Members discussed an increasing prevalence of AI-influenced content, raising concerns about the server being overrun with bots, and linked to a paper on permissions.

- Suggestions included requiring human authentication and identifying suspicious invite link patterns like one user with over 50 uses of their invite link, which one member sarcastically called “a potential red flag”.

- AI Alignment Talk Turns to Hallucination: Discussion arose around AI alignment, contrasting with the idea that AI tries its best to do what we say we want it to do, and the interaction with human psychology.

- One member opined that “the LLMs are not that smart and hallucinate”, noting differences between o3-mini and 4o models.

- OCT Imaging Issues Addressed: A member shared an attempt to use retinal OCT imaging, but didn’t achieve great results due to fundamentally different data structures between 2D and 3D views, linking to arxiv.org/abs/2107.14795.

- They asked for general approaches for multimodal data with no clear mapping between data types and suggested the problem would be like a foundation model over various different types of imaging.

GPU MODE Discord

- Richard Zou Hosts Torch.Compile Q&A: Core PyTorch and torch.compile dev Richard Zou is hosting a Q&A session this Saturday, April 19th at 12pm PST, with submission via this Google Forms link.

- The session will cover the usage and internal workings of torch.compile for GPU Mode.

- RTX 5090’s Matmul Performance Disappoints: A member reported that implementing matmul on RTX 5090 yielded performance roughly equal to RTX 4090, when multiplying two fp16 matrices of size 2048x2048 referencing the official tutorial code.

- It was suggested to test with a larger matrix, such as 16384 x 16384, and experiment with autotune.

- CUDA Memory Use Has Significant Overhead: A member questioned the seemingly high memory usage of a simple

torch.ones((1, 1)).to("cuda")operation, expecting only 4 bytes to be used.- It was clarified that CUDA memory usage includes overhead for the GPU tensor, CUDA context, CUDA caching allocator memory, and display overhead if the GPU is connected to a display.

- AMD Cloud Vendors Support Profiling: A member mentioned that an AMD cloud offers built-in profiling, observability, and monitoring, though it may not be on-demand.

- Another member responded asking to know more, threatening to make a cloud vendor tier list to shame people into offering hardware counters.

- AMD FP8 GEMM Test Requires Specific Specs: Users discovered that testing the

amd-fp8-mmreference kernel requires specifying m, n, k sizes in thetest.txtfile instead of just the size parameter, using values from the pinned PDF file.- Users discussed the procedure for de-quantizing tiles of A and B before matmulling them, clarifying the importance of performing the GEMM in FP8 for performance and taking advantage of tensor cores.

Latent Space Discord

- Kling 2 Escapes Slow-Motion Era: Enthusiasts celebrated Kling 2’s release with claims that we are finally out of the slow-motion video generation era, see tweets here: tweet 1, tweet 2, tweet 3, tweet 4, tweet 5.

- Users discussed the improvements and potential applications in video generation, pointing out its capacity to reduce the need for labor-intensive editing processes.

- BM25 Now Retrieves Code: A blog post highlighted the use of BM25 for code retrieval and was recommended, see Keeping it Boring and Relevant with BM25F, along with this tweet.

- BM25 is a bag-of-words retrieval function that ranks documents based on the query terms appearing in each document, irrespective of the inter-relationship between the query terms.

- Grok Canvases Broadly: Grok’s canvas feature was announced and referenced by Jeff Dean at an ETH Zurich talk, see Jeff Dean’s talk at ETH Zurich and also the tweet about it.

- The addition of this feature is expected to enhance the interactive capabilities of the model, allowing for more intuitive user interfaces in applications that utilize Grok.

- GPT-4.1 Splits Opinions: Members shared feedback on GPT-4.1, with one member really enjoying using it for coding, but it’s bad for structured output.

- Another member found it useful with the Cursor agent and did this 5x in a row tweet here, suggesting it may be advantageous in specific development workflows despite its limitations.

- O3 and o4-mini Boot Up!: OpenAI launched O3 and o4-mini model, and more information can be found here: Introducing O3 and O4-mini.

- One user reported anecdotal evidence that o4-mini just increased the match rate on our accounting reconciliation agent by 30%, running against 5k transactions, indicating a substantial improvement in certain applications.

Yannick Kilcher Discord

- AI Co-Authorship Creates Stir: Members discussed AI’s role in authorship, noting that the intent, direction, curation, and judgment come from you, but suggesting AI could be added as co-creators when it achieves AGI.

- One member is developing a pipeline to generate 1000s of patents per day, sparking debate about patent quality versus quantity as a productivity measure.

- LLMs Stumble with Examples?: A member inquired why reasoning LLMs sometimes perform worse when given few-shot examples, with possible explanations including Transformer limitations and overfitting.

- Another responded that few shot makes them perform differently in all cases.

- o3 and o4-mini APIs Launched: OpenAI released o3 and o4-mini APIs, considered by some members a major upgrade to o1 pro.

- A member commented that o1 is better at thinking about stuff.

- Noise = Signal, Randomness = Creativity: Members explored the role of noise and randomness in biological systems, noting that in biological systems, noise is signal, contributing to symmetry breaking, creativity, and generalization.

- The discussion also touched on the application of randomness in a Library of Babel style storage solution for neural networks.

- DHS Rescues Cyber Vulnerability Database: The Department of Homeland Security (DHS) extended support for the cyber vulnerability database, averting its initial depreciation as reported by Reuters.

- The decision highlights the database’s usefulness to both the public and private sectors, with a tweet on X questioning if it should remain solely a DHS responsibility given its private sector utility.

HuggingFace Discord

- Modal Offers Free GPU Credits!: Modal offers $30 free credit per month (no credit card needed!), granting access to H100, A100, A10, L40s, L4, and T4 GPUs.

- Availability depends on the GPU type, making it an attractive option for developers needing high-end GPU resources for short bursts.

- Hugging Face Inference Uptime Problems: Users report ongoing issues with Hugging Face inference endpoints like openai/whisper-large-v3-turbo, including service unavailability, timeouts, and errors since Monday.

- The community has not yet received an official explanation or resolution timeline from Hugging Face.

- Grok 3 Benchmarks Underwhelm: Independent benchmarks show Grok 3 lags behind recent Gemini, Claude, and GPT releases, based on this article.

- Despite initial hype, Grok 3 doesn’t quite match the performance of its competitors.

- LogGPT Launches on Safari Store: A member released the LogGPT extension for Safari, enabling users to download ChatGPT chat history in JSON format, available on the Apple App Store.

- The source code is available on GitHub, offering developers a way to archive and analyze their ChatGPT conversations.

- Agents Course Deadlines Pushed to July: The Agents course deadline has been extended to July 1st, as documented in the communication schedule, offering more time to complete assignments.

- Confusion persists around use case assignments and the final certification process, leaving members seeking clarity on course requirements and grading.

MCP (Glama) Discord

- Claude Chokes on Chunky Payloads: Members reported that Claude desktop fails to execute tools when the response size is large (over 50kb), suggesting that tools may not support big payloads.

- A solution might be implementing tools via resources since files are expected to be large.

- MCP Standard Streamlines AI Tooling: MCP is a protocol standardizing how tools become available to and are used by AI agents and LLMs, accelerating innovation by providing a common agreement.

- One member called it’s really a thin wrapper that enables discovery of tools from any app in a standard way.

- ToolRouter tackles MCP Authentication: The ToolRouter platform offers secure endpoints for creating custom MCP clients, simplifying the process of listing and calling tools.

- This addresses common issues like managing credentials for MCP servers and the risk of providing credentials directly to clients like Claude, by handling auth on the ToolRouter end.

- Orchestrator Tames MCP Server Jungle: An Orchestrator Agent is being tested to manage multiple connected MCP servers by handling coordination and preventing tool bloat, as shown in this attached video.

- The orchestrator sees each MCP server as a standalone agent with limited capabilities, ensuring that only relevant tools are loaded per task, thus keeping the tool space minimal and focused.

- MCP Gets a Two-Way Street: A new extension to MCP is proposed to enable bi-directional communication between chat services, allowing AI Agents to interact with users on platforms like Discord as described in this blog post.

- The goal is to allow agents to be visible and listen on social media without requiring users to reinvent communication methods for each MCP.

Nous Research AI Discord

- Altman vs Musk Netflix Special Coming Soon?: The ongoing battle between Altman and Musk is being compared to a Netflix show.

- Members speculated that this could escalate as OpenAI considers using its LLMs to run a social network.

- Too Good to Be True AI Deal: A member shared a deal offering AI subscriptions for $200, sparking debate on its legitimacy.

- Despite initial skepticism, the original poster vouched for the deal’s legitimacy, while others admitted to getting too excited lol.

- o4-mini Outputs Short, Sweet and Token-Optimized?: It was reported that o4-mini outputs very short responses, suggesting it might be optimized for token count.

- This observation hints at a design choice prioritizing efficiency in token usage over response length.

- LLMs Respond to Existential Dread?: Members debated why life-threatening prompts seem to improve LLM performance, with one suggesting LLMs are simulators of humans.

- They joked they’d stop working if threatened online, implying LLMs might mirror human reactions to threats.

- LLaMaFactory Instruction Manual Assembled: A member compiled a step-by-step guide for using LLaMaFactory 0.9.2 in Windows without CUDA, available on GitHub.

- The guide currently helps convert from safetensors to GGUF.

LM Studio Discord

- Gemma 3 Speaks like Native: To achieve native-quality translation with Gemma 3, the system prompt should instruct the model to “Write a single new article in [language]; do not translate.”

- This prompts Gemma 3 to generate new content directly in the target language, instead of a direct translation which results in writing as a native speaker.

- NVMe SSD’s Load Models Blazingly Fast: Users confirmed that using an NVMe SSD significantly speeds up model loading in LM Studio, with observed speeds reaching 5.7GB/s.

- One user highlighted having three NVMe SSDs in their system, but they don’t seem to make much difference for gaming unfortunately.

- Microsoft’s BitNet Gets Love: A user shared a link to Microsoft’s BitNet and mused about its impact on NeuRomancing.

- The user’s comment alluded to stochasticity assisting in NeuRomancing, transitioning from wonder into awe.

- Inference Only Needs x4 Lanes: Inference doesn’t require x16 slots, as x4 lanes are sufficient, with only about a 14% difference when inferencing with three GPUs, and someone posted tests showing that you only need 340mb/s.

- For mining, even x1 is sufficient.

- FP4 Support Inching Closer: Members discussed native fp4 support in PyTorch, with one mentioning you have to build nightly from source with CU12.8 and that the newest nightly already works.

- It was clarified that native fp4 implementation with PyTorch is still under active development and that fp4 is currently supported with TensorRT.

Notebook LM Discord

- Notebook LM Tackles Microsoft Intune: A user is exploring using Notebook LM with Microsoft Intune documentation for studying for Microsoft Certifications like MD-102, Azure-104, and DP-700.

- Another member suggested using the Discover feature with the prompt Information on Microsoft Intune and the site URL to discover subtopics, also suggesting copy-pasting into Google Docs for import.

- Google Docs Thrashes OneNote: A user contrasted Google Docs with OneNote, noting Google Docs’ strengths such as no sync issues, automatic outlines, and good mobile reading experience.

- The user noted Google Docs disadvantages are delay when switching documents and being browser-based, and also provided some Autohotkey scripts to mitigate the issues.

- German Podcast Generation Fizzles: A user reported issues with generating German-language podcasts using Notebook LM, experiencing a decline in performance despite previous success, and is seeking advice and tips from the community.

- A link to the discord channel was shared for further discussion.

- Podcast Multilingual Support Still Stalled: Users are frustrated that the podcast feature is only supported in English, despite the system functioning in other languages and it being a top requested feature.

- One user expressed frustration, stating they’d willingly pay a subscription for the feature in Italian, to create content for their football team, as they had subscribed to ElevenLabs for the same purpose.

- LaTeX Support still Lacking: Math students are expressing frustration over the lack of LaTeX support, with one user joking they could develop the feature in 30 minutes.

- Another user suggested that while Gemini models can write LaTeX, the issue lies in displaying it correctly, leading one user to consider creating a Chrome extension as a workaround.

Modular (Mojo 🔥) Discord

- Mojo Thrives on Arch Linux: Members celebrated that Magic, Mojo, and Max function perfectly on Arch Linux, despite official documentation focusing on Ubuntu.

- A member clarified that company support for a product means stricter standards than just ‘working’ due to potential financial penalties.

- Mojo Considers Native Kernel Calls: Members explored whether Mojo will support native kernel calls similar to Rust/Zig, potentially avoiding C

external_call.- Direct syscalls necessitate handling the syscall ABI and inline assembly, with the Linux syscall table available at syscall_64.tbl.

- Mojo Compile Times Hamper Performance: Testers noted long compile times affecting performance, with one instance showing 319s runtime versus 12s of test execution involving the Kelvin library.

- Using

builtinsignificantly reduced compile time, from 6 minutes to 20 seconds, as shown in this gist.

- Using