AI News for 4/17/2025-4/18/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (211 channels, and 8290 messages) for you. Estimated reading time saved (at 200wpm): 650 minutes. You can now tag @smol_ai for AINews discussions!

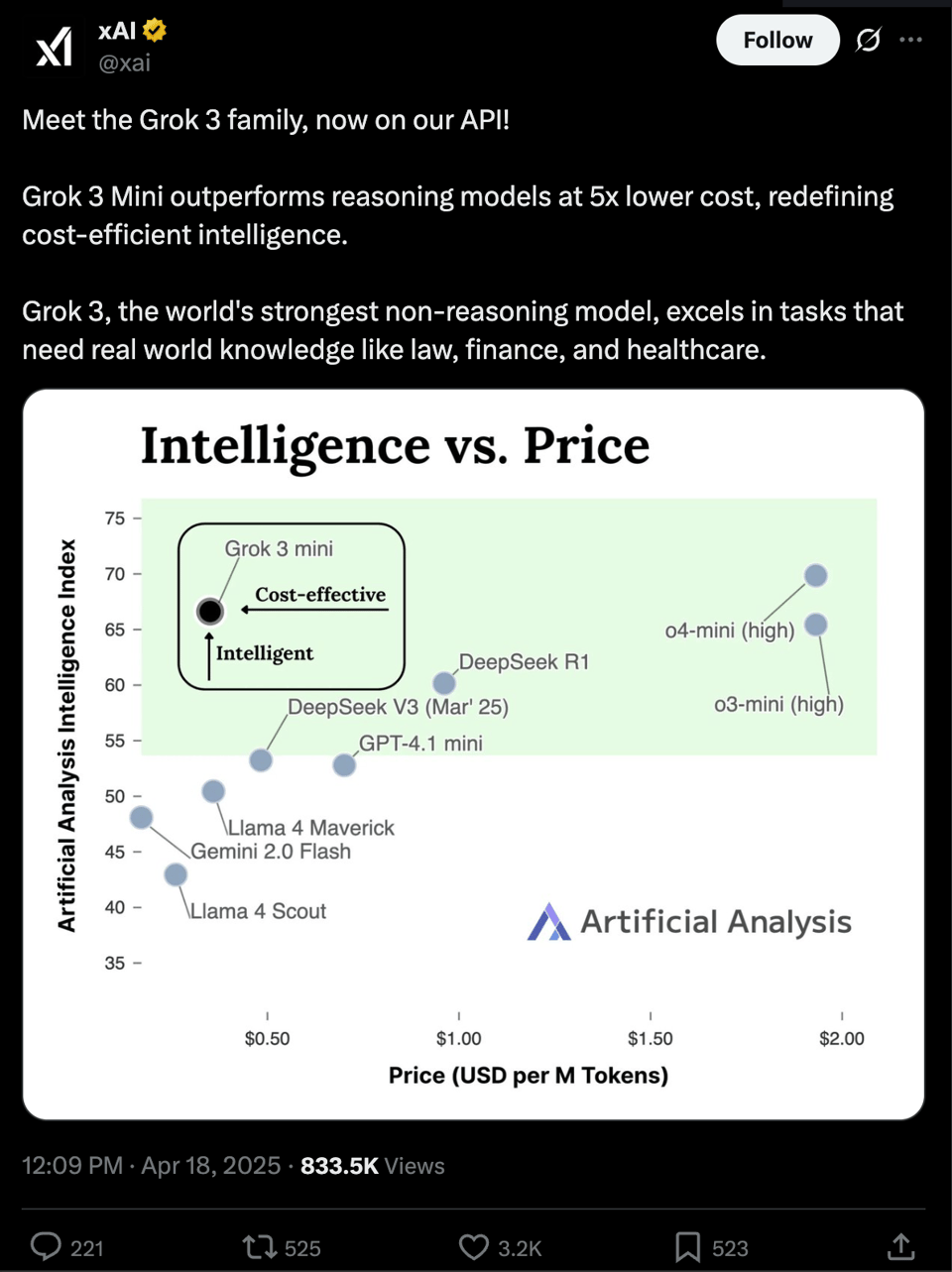

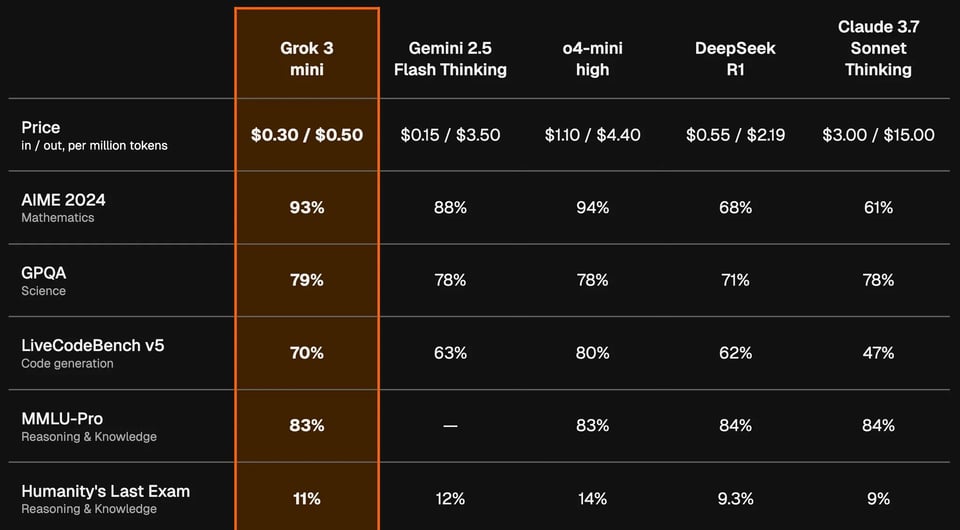

Grok 3 (our coverage here) has been out for a couple of months, but wasn’t API available. Now it is, with a bonus baby brother!

At 50 cents per output mtok, Grok 3 mini claims to be competitive with much larger frontier models, while displaying full reasoning traces:

You can get started here: https://docs.x.ai/docs/overview

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

Agent Tooling, Frameworks, and Design

- AI Agent Development and Deployment: @TheTuringPost highlights a practical guide from OpenAI for building AI agents, covering use case selection, design patterns, safe deployment practices, and foundational knowledge needed for product and engineering teams.

- Agent Interactions: @llama_index showcases LlamaIndex’s support for the Agent2Agent (A2A) protocol, allowing AI agents to communicate, exchange information, and coordinate actions across diverse systems. This promotes collaboration in a multi-agent ecosystem. A chat agent example that speaks A2A is provided.

- Codex CLI and AI-Assisted Code Editing: @gdb reports excitement around Codex CLI, noting that it’s in early days, with support for MCP, local/different provider models, and a native plugin system being added, along with fixing rate limit issues. @_philschmid provides an overview on how it works. @jeremyphoward notes that Aider is the OG CLI AI editor, and that Claude Code is the new fancy kid on the block. They are what Codex is competing with.

Model Updates, Releases, and Performance

- Gemini 2.5 Pro and Flash: @GoogleDeepMind announced the release of Gemini 2.5 Flash, emphasizing its hybrid reasoning model that can adjust how much it ‘thinks’ based on the prompt, making it ideal for various tasks. @DeepLearningAI noted it tops the Chatbot Arena leaderboard. @osanseviero shares the same, and shows a blog link and AI studio link.

- Model Evaluation and Benchmarking: @swyx notes the adoption of performance-per-cost thinking.

- o3 Model Tool Use: @mckbrando from OpenAI clarifies that behaviors seen in o3 and o4-mini are emergent from large-scale RL, with the models being given access to python and the ability to manipulate images.

- Llama 3 and Maverick: @EpochAIResearch is updating its methodology, and removing Maverick from its list of models exceeding 1e25 FLOP, as the training compute for Llama 4 Maverick drops to 2.2e24 FLOP.

Companies and Funding

- AI Startups: @GoodfireAI is announcing its $50M Series A and sharing a preview of Ember, a universal neural programming platform that gives direct, programmable access to any AI model’s internal thoughts.

- Mechanize Virtual Work Environments: @tamaybes announces the founding of Mechanize, which will build virtual work environments, benchmarks, and training data to enable the full automation of all work.

Efficiency and Infrastructure

- Quantization Aware Training (QAT) for Gemma Models: @reach_vb highlights GoogleDeepMind’s Quantisation Aware Trained Gemma 3 models, noting that the 27B model from 54GB reduces to 14.1GB, and that open source checkpoints can be run today in MLX, llama.cpp, lmstudio and more. @osanseviero is excited to do a full release of it.

- vLLM integration with Hugging Face Transformers: @vllm_project notes that you can now deploy any Hugging Face language model with vLLM’s speed, making it possible for one consistent implementation of the model in HF for both training and inference.

- Nvidia Nemotron with 4 Million Context Length: @reach_vb notes that Nvidia dropped a 4 MILLION context length Llama 3.1 Nemotron, and that you could literally drop entire codebases in it.

- Perplexity AI’s Multi-Node Deployment of DeepSeek MoE: @AravSrinivas announced that Perplexity AI serves MoEs like post-trained versions of DeepSeek-v3, noting that these models can be made to utilize GPUs efficiently in multi-node settings.

- Embzip for Embedding Quantization: @jxmnop announced the release of embzip, a new python library for embedding quantization, which allows users to save & load their embeddings with product quantization in one line of code.

New AI Techniques

- Reinforcement Learning (RL) for Reasoning in Diffusion LLMs: @omarsar0 highlights a paper, noting its two-stage pipeline and its ability to to beat SFT, and achieve strong reasoning.

Broader Implications

- Birth Rates in Industrialized Countries: @fchollet suggests the decline of birthrates in industrialized countries is an economic and cultural problem and that good chances that the decline will reverse in a post-AGI society, where people will be able to make the choice not to work.

- AI and Personal Use Cases: @yusuf_i_mehdi references an article noting that AI is quickly becoming a daily companion, with people now turning to it for everything from personal decision-making to thoughtful conversations, and help organizing life to creative expression.

- AI Safety: @lateinteraction says that the way you make an intelligent system reliable is not by adding more intelligence. It’s by subtracting intelligence at the right place, in fact.

AI and the China/U.S. Tech War

- Chinese Technological Advancement: @nearcyan says that we are definitely getting the ‘software singularity’ far, far before the ‘hardware singularity’, which seems.. more delayed than ever.

Humor/Memes

- Relatability: @code_star posts “Me to myself when editing FSDP configs”.

- Parody: @Yuchenj_UW states “You want cat girls”

AI Reddit Recap

/r/LocalLlama Recap

1. Google Gemma 3 QAT Quantization and Ecosystem Launches

-

Google QAT - optimized int4 Gemma 3 slash VRAM needs (54GB -> 14.1GB) while maintaining quality - llama.cpp, lmstudio, MLX, ollama (Score: 506, Comments: 108): The image is a bar chart visually demonstrating the impact of Google’s QAT (Quantization Aware Training) on the memory requirements to load Gemma 3 models using int4 quantization versus bf16 weights. Notably, the 27B parameter model’s VRAM requirement drops from 54GB (bf16) to just 14.1GB (int4), representing substantial savings while, as QAT ensures, maintaining bf16-level output quality. The post also notes direct availability of these formats via HuggingFace for use in MLX, Hugging Face transformers (safetensors), and GGUF formats (for llama.cpp, lmstudio, ollama), enabling broad platform compatibility. A prominent comment stresses the significance of QAT checkpoints, which involve post-quantization fine-tuning to recover accuracy—meaning that, specifically with Gemma 3 QAT, int4 models match bf16 performance. Another comment highlights that such quantization improvements are expected, though QAT enhances the quality beyond typical post-training quantization.

-

QAT (Quantization Aware Training) checkpoints for Gemma 3 involve further training the model after quantization, allowing the Q4 variant to achieve near-bfloat16 (bf16) accuracy, a significant boost over traditional post-training quantization methods. This improves inference efficiency without the typical loss in precision commonly seen with aggressive quantization.

- Technical differences in Google’s official QAT GGUF models are noted: they use fp16 precision for the

token_embdweight and do not employ imatrix quantization, potentially preventing optimal file size reductions. Community efforts, such as those by /u/stduhpf, have surgically replaced these weights with Q6_K for better size/performance ratios (details: Reddit link). - Performance benchmarking shows that, despite reduced size (e.g., 27B Q4 ~16GB), Gemma 3 QAT models have relatively slow token generation (tg) speed, performing on par with Mistral-Small-24B Q8_0 on Apple M1 Ultra hardware and slower than Qwen2.5 14B Q8_0 or Phi-4 Q8_0. There are also early reports of broken QAT checkpoints (such as the 1B-IT-qat version) and vocabulary mismatch issues in certain speculative decoding workflows.

- Technical differences in Google’s official QAT GGUF models are noted: they use fp16 precision for the

-

New QAT-optimized int4 Gemma 3 models by Google, slash VRAM needs (54GB -> 14.1GB) while maintaining quality. (Score: 240, Comments: 30): Google has released new Quantization-Aware Training (QAT) optimized

int4Gemma 3 models, reducing VRAM requirements from54GBto14.1GBwithout significant loss in model quality link. QAT is a technique where the impact of quantization is simulated during training, enabling low-bit inference with minimal accuracy degradation, marking this as a notable step for efficient model deployment. Comments highlight community anticipation for properly QAT-optimized models and note confusion about prior QAT releases from Google, suggesting this release is the first to meet practical VRAM efficiency needs at int4 precision. [External Link Summary] Google’s Gemma 3 models now include Quantization-Aware Training (QAT) variants, enabling efficient, high-quality inference on consumer-grade GPUs by reducing numeric precision down to int4 (4-bit). QAT-integrated training allows Gemma 3 models—as large as 27B parameters—to run on GPUs like the RTX 3090 with as little as 14.1GB VRAM for the largest model, with minimal degradation in AI quality (perplexity drop reduced by 54% versus naive post-quantization). Pre-built integrations support major inference engines (Ollama, llama.cpp, MLX), and official models are available on Hugging Face and Kaggle; the broader Gemma ecosystem also supports alternative quantization strategies for specific hardware or performance/quality trade-offs. Read the original blog post -

The discussion highlights a significant reduction in VRAM requirements for Google’s new QAT-optimized int4 Gemma 3 models, shrinking from 54GB to 14.1GB, and underscores the importance of such optimizations for broader hardware accessibility without quality loss.

- References to tools like Bartowski, Unsloth, and GGML suggest a technically engaged community tracking both upstream and third-party advancements in quantization-aware training (QAT) and model deployment efficiency.

- There is curiosity about the feasibility of further compression (e.g., reducing model size to 11GB), pointing toward ongoing interest in pushing the limits of quantization techniques and hardware accommodation while maintaining inference quality.

-

Gemma 3 QAT launch with MLX, llama.cpp, Ollama, LM Studio, and Hugging Face (Score: 144, Comments: 37): Google has released unquantized QAT (Quantization-Aware Training) checkpoints for Gemma 3, allowing third parties to perform their own quantization; the approach preserves model quality comparable to bfloat16 at significantly reduced memory requirements. The models are natively supported across major platforms (MLX, llama.cpp, Ollama, LM Studio, Hugging Face), and both GGUF-format quantized weights and the new unquantized checkpoints are publicly available (blog post, Hugging Face collection). Despite support across tooling, integration nuances remain: official guidance emphasizes quantizing with Q4_0, yet harmonizing this with other quantization techniques and frameworks (e.g. HuggingFace Transformers’ support for 4-bit quantization) is unclear. Redditors report inconsistencies and breakages across formats—e.g., MLX 4-bit models responding with '

' in LM Studio, differing model sizes between LM Studio and Hugging Face builds, and confusion over which model version is authoritative. Experts debate whether HF Transformers’ quantization implementations (bitsandbytes, GPTQ, AWQ, etc.) are compatible with Q4_0, recognizing a lack of documentation about optimal QAT-based workflow for HF-backed inference. -

There is technical confusion about quantization levels for Gemma 3 QAT models in MLX, specifically the presence of 3-bit, 4-bit, and 8-bit versions. One user notes that the 8-bit MLX versions seem to merely upsample the 4-bit QAT weights, using ‘twice as much memory for no benefit,’ raising concerns about memory efficiency and the legitimacy of the quantization process. Also, reports indicate that the 4-bit MLX versions only return

tokens in LM Studio 0.3.14 (build 5), suggesting a possible compatibility bug with that release. - A technical debate arose about inconsistent model size reporting between LM Studio and HuggingFace/Kaggle for the same QAT checkpoints (e.g., LM Studio reports 12B QAT as 7.74GB, HuggingFace as 8.07GB, but download yields 7.5GB). Further investigation shows a 4b QAT Q4_0 model being 2.20GB (LM Studio) versus 2.93GB (HuggingFace), indicating there are multiple, non-identical builds—raising the question of which source is most accurate and up-to-date.

- There is significant uncertainty regarding optimal quantized inference with Gemma 3 QAT models using HuggingFace Transformers. The official guidance only provides direct support/examples for the non-QAT model, while the QAT quantization process recommends GGUF Q4_0 (a 4-bit round-to-nearest, legacy quantization). Since HF Transformers supports its own 4-bit quantization formats (e.g., bitsandbytes, AWQ, GPTQ), it’s unclear whether these methods align with GGUF Q4_0’s scaling/thresholds—affecting whether the QAT-tuned model’s benefits are actually preserved when using HF-supported quantization pipelines.

2. Novel LLM Benchmarks: VideoGameBench & Real-time CSM 1B

-

Playing DOOM II and 19 other DOS/GB games with LLMs as a new benchmark (Score: 490, Comments: 98): Researchers have introduced VideoGameBench (project page), a vision-language model benchmark requiring real-time completion of 20 classic DOS/GB games such as DOOM II, tested on models like GPT-4o, Claude Sonnet 3.7, and Gemini 2.5 Pro/2.0 Flash (GitHub link). Models displayed limited interactive reasoning and planning capabilities, with none surpassing even the first level of DOOM II, highlighting substantial current limitations in LLM-based agents for complex, real-time environments. Comments humorously reference the classic ‘Can it run doom?’ meme and lightly debate the value of gaming as an intelligence benchmark, but no deep technical debate is present. [External Link Summary] Researchers have introduced VideoGameBench (https://vgbench.com), a new benchmark designed to evaluate vision-language models (VLMs) on their ability to play 20 classic video games (DOS/GB platforms) in real time via visual input and text commands. Major LLM/VLM systems, including GPT-4o, Claude Sonnet 3.7, and Gemini 2.5 Pro, were benchmarked on the task (e.g., DOOM II, default difficulty) using identical prompts, with none able to clear even the first level, revealing the current limitations of VLMs in dynamic, real-time visual-reasoning and decision-making tasks. Relevant code and evaluation details are available at https://github.com/alexzhang13/VideoGameBench.

-

A user raises concerns about performance and latency when using a reasoning model like Gemini 2.5 Pro for gaming tasks, noting that reasoning models may not be optimized for low-latency or real-time interaction compared to non-reasoning architectures.

- Another comment expresses technical skepticism, stating interest in sub-8B parameter models capable of real-time gameplay following text instructions on a single GPU with ~400GB/s memory bandwidth, suggesting improvements in architecture are needed to make this feasible with current resource constraints.

-

CSM 1B is real-time now and has fine-tuning (Score: 151, Comments: 30): CSM 1B, an open-source Text-to-Speech (TTS) model, now supports real-time streaming and LoRA-based fine-tuning, as detailed in this GitHub repo. The author implemented a real-time local chat demo and fine-tuning via LoRA, with alternative support for full fine-tuning. The repository demonstrates performance improvements that enable real-time inference, making it competitive with other open-source TTS models. Commenters are inquiring about compatibility with Apple Silicon and are interested in technical details about the optimizations made for real-time performance. There’s also a general positive reception towards ongoing iterative development.

-

There is discussion around achieving near real-time inference using high-end GPUs like the 5090, with reported performance up to

0.5s(2x real-time) for inference, though latency issues remain due to container spin-up. Deployment examples include running inference as a serverless instance and attempts to stream audio output over WebSocket from a Docker container.- Questions were raised about Apple Silicon compatibility, specifically whether performance—albeit slower—would allow the model to run effectively on Apple hardware. Another user described porting the codebase to MLX for Apple Silicon, reporting that CPU inference outperformed the current Metal backend, but with less-than-optimal results overall.

- There is technical curiosity about the ability to fine-tune the model for languages beyond its original training set, as well as whether the reference voice needs to be in the same language as the generated audio, implying interest in multilingual support and the data constraints for fine-tuning.

-

microsoft/MAI-DS-R1, DeepSeek R1 Post-Trained by Microsoft (Score: 320, Comments: 73): Microsoft released MAI-DS-R1, a model based on DeepSeek R1 that appears to substantially improve code completion benchmarks, notably outperforming comparators on livecodebench. The announcement raises questions about scale, as previous large post-trainings (like Nous Hermes 405B) were considered state-of-the-art but this may represent a new largest post-train/fine-tune effort from a major provider. Commenters note strong performance on specific coding benchmarks and discuss the geopolitical context, contrasting it with recent national security reports about large models. Technical discussion centers on the significance of Microsoft’s investment in such large-scale post-training relative to community and industry efforts. [External Link Summary] MAI-DS-R1 is a DeepSeek-R1-based reasoning LLM post-trained by Microsoft for improved response on previously blocked/bias-prone topics and reduced risk, leveraging 110k safety/non-compliance samples (Tulu 3 SFT) and 350k internal multilingual bias-focused data. The model demonstrates superior safety and unblocking performance (matching or exceeding R1-1776 in benchmarks), with preserved reasoning, coding, and general knowledge capabilities, while remaining subject to inherent limitations (e.g., possible hallucinations, language bias, unchanged knowledge cutoff). Evaluation covers public benchmarks, a blocked-topic test set (11 languages), and harm mitigation (HarmBench), showing MAI-DS-R1 generates more relevant, less harmful outputs—though content moderation and human oversight remain recommended for high-stakes use.

-

MAI-DS-R1 demonstrates improved performance on livecodebench code completion benchmarks, as shown in posted screenshots. This positions it as a strong contender among current code reasoning and generation models.

- A technical update notes that MAI-DS-R1 is post-trained from DeepSeek-R1 using 110k Safety and Non-Compliance examples from the Tulu 3 SFT dataset (https://huggingface.co/datasets/allenai/tulu-3-sft-mixture), plus ~350k internally developed multilingual examples focused on reported biases. This aims to simultaneously improve safety, compliance, and reasoning abilities.

- There is technical discussion comparing the scale and scope of this post-training to previous large-scale efforts, such as Nous Hermes 405b, with some users suggesting that this could be one of the largest or most comprehensive fine-tune/post-train efforts to date within the open-source LLM community.

3. Local-first AI Tools, Visualization, and Community Projects

-

No API keys, no cloud. Just local Al + tools that actually work. Too much to ask? (Score: 111, Comments: 33): Clara is a local-first AI assistant built as a native desktop app, running entirely on the user’s machine via Ollama for large language model inference. The latest update integrates n8n, an open-source workflow automation tool, directly within Clara, enabling end-users to automate tasks (such as email, calendar, API calls, database, scheduled tasks, and webhooks) through a visual flow builder—all without cloud, external services, or API keys. Full technical details and codebase are on GitHub. Compared to projects like OpenWebUI and LibreChat, Clara emphasizes reduced dependencies and beginner-friendly UX for local deployment. A comment notes that mentioning “Ollama” without context could hinder user understanding or adoption; another reflects on the crowded nature of local AI assistants, questioning why projects like Clara may receive limited attention.

-

A user inquires about interoperability with other local AI servers, specifically those offering OpenAI-compatible endpoints such as Tabby API and vLLM, highlighting the importance of supporting a broader ecosystem of local inference servers for flexibility and increased adoption.

- Another user requests the addition of OpenAI-compatible API usage, pointing out that not all users have the computational resources necessary to run local models continuously; this feature would allow for cloud fallback or hybrid workflows when local hosting is impractical.

-

Time to step up the /local reasoning game (Score: 188, Comments: 57): The image shows OpenAI’s identity verification prompt, which is now required for access to their latest models. The process requests extensive personal data, including biometric identifiers, government document scans, and user consent for data processing, signaling a shift towards more intrusive user verification as a prerequisite for advanced AI model access—potentially impacting accessibility, privacy, and user trust. Commenters criticize OpenAI for this aggressive data collection, raising concerns about privacy, with some suggesting alternatives (Anthropic, local models), and warning against platforms requiring sensitive verifications or the use of ‘persona’ features; convenience versus privacy tradeoff is a key theme.

-

Commenters discuss the privacy implications of “persona” features in AI products, warning that these systems collect extensive personal and potentially biometric data, referencing OpenAI and Worldcoin initiatives as examples. The recommendation is to prefer open-source or local AI alternatives, such as Anthropic’s approach or running models on personal hardware, to mitigate privacy risks.

-

I created an interactive tool to visualize every attention weight matrix within GPT-2! (Score: 136, Comments: 14): The OP has designed an interactive tool for visualizing every attention weight matrix from the GPT-2 language model. The tool is accessible online at amanvir.com/gpt-2-attention, enabling users to inspect attention patterns across all layers and heads in GPT-2 with full interactivity. This adds to the lineage of model interpretability tools, comparable to Brendan Bycroft’s LLM Visualization, and could be adapted for smaller, more lightweight models (e.g., nano-GPT 85k) to enhance accessibility and speed. Commenters note the tool’s utility and request a curated list of similar visualization/interpretability projects. A suggestion is made to deploy the tool on a lighter model than GPT-2 for improved performance and usability. [External Link Summary] A user developed an interactive visualization tool (available at amanvir.com/gpt-2-attention) that renders every attention weight matrix within GPT-2, allowing in-depth exploration and analysis of the internal workings of transformer attention heads layer by layer. Community suggestions include adapting the tool for smaller models like nano-GPT for improved accessibility, and references are provided to similar work such as Brendan Bycroft’s LLM Visualization and the open-source TensorLens library. The tool advances interpretability research by enabling direct, granular examination of attention patterns in large language models. (Original post: Reddit link)

-

One commenter suggested replacing the full GPT-2 model with nano-GPT 85k for the visualization tool, citing that nano-GPT is a much smaller download and therefore easier to visualize and interact with, making it more accessible for users who want to explore attention weights without the computational overhead of the full-sized model (reference: https://github.com/karpathy/nanoGPT).

- Another suggestion addressed visualization granularity: modifying the dot color channels in the visualization to reflect different axes or coordinates, which could highlight cross-attention or layer-specific dynamics more clearly within the attention maps.

- Additional references were provided for related interpretability tools: Brendan Bycroft’s LLM Visualization for interactive transformer exploration, and attentionmech/tensorlens, which offers deeper probing into model internals, indicating a community interest in diverse approaches to transformer visualization.

-

Where is the promised open Grok 2? (Score: 186, Comments: 70): The post questions the value of open-sourcing Grok 2 now that it significantly lags behind newly-released models such as DeepSeek V3, upcoming Qwen 3, and Llama 4 Reasoning. The concern is that by the time Grok 2 is released, it will be obsolete, citing the ineffective timing of Grok 1’s release as precedent. No benchmarks or model specs for Grok 2 are discussed, but Grok 3’s recent API availability is mentioned, which may signal Grok 2’s open-source release is imminent. Comments debate whether releasing obsolete models retains research value; some argue even outdated models (e.g. if OpenAI released GPT-3.5 weights) are useful for research, while others note that Grok 1 and 2 were not impressive at launch and question the overall impact. The analogy to delayed product launches like the Tesla Roadster highlights skepticism about announced vs. actual release timelines.

-

One commenter notes that, despite Grok 1 and Grok 2 performing poorly at launch, the release of Grok 3 to the API represents a technical milestone. The commenter suggests that this public API release of Grok 3 is a prerequisite before open sourcing Grok 2, implying that open-sourcing efforts are dependent on the release cadence and stability of newer Grok versions.

- It’s highlighted that even “obsolete” models like GPT-3 or 3.5 would still generate significant research interest if open-sourced, emphasizing the value of open releases for the research and developer communities despite rapid model iteration and improvements.

- Commenters point to previous tech release patterns (e.g., Tesla products) to articulate skepticism regarding the timely open-source release of Grok 2, indirectly raising concerns about missed deadlines and the possibility of ‘vaporware’ in the rapid AI model announcement-released cycles.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI o3 and GPT-4o User Experiences and Capabilities

-

o3 is crazy at geoguessr (Score: 358, Comments: 64): The image showcases a tweet describing an extraordinary GeoGuessr feat: accurate identification of a location (Amirsay Mountain Resort, Uzbekistan) from a snowy landscape with no visible signs, Google Street View, or metadata, relying purely on visual geographic features. Coordinates (approx. 41.50°N, 70.00°E) are determined from a mountainside view, illustrating advancements in AI geolocation. Comments highlight further technical prowess, such as correctly identifying Diamond Head from a user screenshot without geodata, and note that Gemini 2.5 Pro displays similar geo-identification capabilities. Discussion emphasizes how advanced these newer models are at geolocation using only visual clues, with users impressed by both historical context and current capabilities of models like o3 and Gemini 2.5 Pro.

-

Multiple users provide image evidence showing that o3, an AI (likely the multimodal model mentioned), was able to accurately identify precise world locations in GeoGuessr from minimal visual cues, even with no embedded geodata—implying advanced visual recognition capabilities. One user notes the model correctly recognized Diamond Head from a personal screenshot, stressing that no metadata aided its guess, showcasing impressive inference and generalization power.

- Another comment mentions that Google’s Gemini 2.5 Pro model also succeeded at this highly specific GeoGuessr task, suggesting that multiple state-of-the-art (SOTA) multimodal models are achieving exceptional performance in real-world location recognition scenarios. This informs comparisons of model capabilities and highlights rapid progress among top-tier competitors.

-

o3 is crazy at geoguessr (Score: 701, Comments: 91): The image demonstrates an AI—referred to as ‘o3’—accurately geolocating a highly specific snowy landscape in Uzbekistan’s Amirsoy Mountain Resort with minimal cues: no signage, no Google Street View coverage, and no visible metadata. The post underscores the technical feat, citing that o3 deduced not just the country but an exact spot based purely on environmental and geographic pattern recognition from the image. Commenters compare o3’s geolocation abilities to human experts and existing tools like Google Image Search, expressing amazement at LLM and AI pattern recognition capabilities. One user suggests wishing for human cognition to mimic LLMs in holding large patterns and visual data associations.

-

A user raised the technical question of whether models like ChatGPT can access EXIF metadata embedded in uploaded images, positing that the accurate geolocation identification in GeoGuessr might be due to extraction of GPS or geotag data contained in image files rather than pure image pattern recognition.

- Discussion compared GeoGuessr model performance to Google Image Search, hinting at the effectiveness of Google’s similarity search algorithms for image-based geolocation and suggesting a potential benchmark comparison between AI models and search engine capabilities in precise location guessing.

-

O3 is on another level as a business advisor. (Score: 277, Comments: 84): The post details a qualitative comparison by a startup founder between OpenAI’s O3 model and prior LLMs like GPT-4.5, emphasizing O3’s more authoritative, directive advisory style in business strategy contexts compared to GPT-4.5’s neutral or sycophantic tendency. The user cites a direct plan output by O3 that recommends shipping a minimum-viable feature to acquire real data, then iterating decisively based on measured engagement, contrasting this with earlier models’ tendency for endless hypothesis generation. Technically, the comments note that Gemini provides ‘argumentative’ feedback, and that O3 exhibits an improved, less-placating, more expert persona, but also has limits such as a ~200-line code output ceiling and possible overconfidence when presenting incorrect answers. Respondents debate the value of LLMs with assertive (‘non-placating’) personalities, noting Gemini’s confrontational stance versus O3’s authoritative mentoring, and raise issues about O3’s coded output and sometimes excessive assumed expertise.

-

Several users compare O3’s performance against other LLMs, noting its higher level of assertiveness and expertise in business advisory and technical areas compared to previous models and even Gemini 2.5 Pro/Sonnet 3.7. O3 often delivers authoritative and nuanced feedback, but may need prompting to simplify responses for less technical users.

- Key technical limitations are cited: O3 currently has a coding output cap of ~200 lines per response, which can hinder more complex coding tasks and workflow integration. This is seen as a notable regression or barrier for technical users accustomed to larger outputs from alternative models.

- There is confusion about model versioning and power: some users mistakenly believe O3 might be superior to O4 for reasoning and non-sycophantic responses, questioning whether O3’s performance characteristics (i.e., less likely to placate users, more argumentative/dogmatic) are a technical feature or just tuning. Users highlight real-world A/B use across multiple models.

-

o3 can’t strawberry (Score: 155, Comments: 45): The image documents a failure case in which OpenAI’s GPT-3.5 Turbo (referred to as “o3”) incorrectly answers a basic factual question—claiming there are only 2 ‘r’s in the word “strawberry” instead of the correct 3. The attached ChatGPT share link and screenshot capture the direct model output, highlighting a concrete example of an LLM factual hallucination or counting error. Additionally, the screenshot lacks the usual ‘Thinking’ placeholder in the UI, suggesting a potential interface or backend bug in addition to the LLM’s mistake. Several commenters state they could not reproduce the error, with both o3 and newer o4-mini models returning the correct answer, suggesting the issue may be intermittent or linked to a specific deployment glitch. A notable observation is the absence of the ‘Thinking’ block in the image, prompting speculation about an interface or backend anomaly accompanying the model’s failure.

-

Multiple users report that the failure to output the correct spelling of ‘strawberry’ in the O3 model cannot be consistently reproduced; even lower-tier models like o4-mini return the correct answer. Visual evidence (such as this screenshot) shows that both correct answers and normal “Thinking” blocks are present during typical queries, suggesting either a rare edge-case bug or an issue with a particular session.

- There is a mention of the absence of the “Thinking” block in the original user’s output, which is not standard behavior; this detail leads some to believe an atypical bug or execution path in the model response process may be involved.

2. New LLM and AI Model Benchmarks and Releases

-

LLMs play DOOM II and 19 other DOS/GB games (Score: 161, Comments: 45): The post introduces VideoGameBench, a new benchmark designed to test the ability of vision-language models (LLMs like GPT-4o, Claude Sonnet 3.7, Gemini 2.5 Pro, and Gemini 2.0 Flash) to complete 20 real-time DOS/Gameboy games, including DOOM II, using the same prompt. Results show that while models exhibit varying performance, none can clear even the first level of DOOM II (default difficulty), highlighting significant limitations of current vision-language agents in pixel-based control and real-time gaming environments. Commenters note that Claude tends to outperform others in practical tests, and discuss the human factors contributing to video game proficiency; a point is raised that even novice humans may exhibit similar failure patterns as current LLM agents due to limited multi-action processing and threat response. [External Link Summary] A new benchmark, VideoGameBench (https://vgbench.com/), evaluates vision-language models by challenging them to complete 20 real-time DOS and Game Boy games, including DOOM II. Leading LLMs such as GPT-4o, Claude Sonnet 3.7, Gemini 2.5 Pro, and Gemini 2.0 Flash were tested on DOOM II but were unable to clear the first level, highlighting the current limitations of LLMs in real-time, closed-loop interactive environments that require embodied cognition and rapid decision-making.

-

A user highlights that Claude consistently outperforms other LLMs in practical gaming tasks, suggesting that its architecture or training offers tangible advantages compared to models that excel mainly in synthetic benchmarks rather than interactive environments.

- Another user raises the point that LLMs exhibiting novice gameplay is reminiscent of how humans unfamiliar with games behave—such as limited key presses or poor reaction to threats—underscoring the difficulty of generalizing motor/interaction skills purely from text or data without embodied learning.

- A discussion about what it would take for an AI to achieve AGI in gaming points out that successfully beating highly challenging, complex games could be a key milestone, pushing beyond leaderboard benchmarks toward holistic, transferrable reasoning and adaptability.

-

Seedream 3.0, a new AI image generator, is #1 (tied with 4o) on Artificial Analysis arena. Beats Imagen-3, Reve Halfmoon, Recraft (Score: 112, Comments: 21): The image displays the latest AI image generation model rankings from the Artificial Analysis arena, where Seedream 3.0 (by ByteDance Seed) is tied for 1st place with OpenAI’s GPT-4o, each with top Arena ELOs (~1155-1156), surpassing strong competitors like Imagen-3 and Reve Halfmoon. The table emphasizes Seedream 3.0’s rapid advancement and competitive performance as measured by large-scale head-to-head human preference evaluation. Community discussion highlights requests for availability, with official technical details shared by the development team and documentation here, and leaderboard transparency via Artificial Analysis. Most technical commenters are interested in broad user access to Seedream 3.0, citing the absence of a public demo, and emphasize the importance of independent benchmarks and transparent methodology in assessing model quality.

-

Multiple commenters observe that the Artificial Analysis arena benchmark (https://artificialanalysis.ai/text-to-image/arena?tab=leaderboard) is outdated and does not reflect current model capabilities, particularly neglecting the significant edge that models like GPT-4o and Sora exhibit in prompt understanding and img2img features. It is noted that Seedream 3.0, while tying for #1 in the arena, produces results that are stylistically bland and struggles with complex prompts compared to leading peers.

- Direct links are provided to Seedream 3.0’s technical presentation (https://team.doubao.com/en/tech/seedream3_0) and an AI Search presentation video (https://www.youtube.com/watch?v=Q6QbaK57f5E), which could offer greater technical insight into model architecture, workflow, and unique features for those seeking in-depth details beyond mere benchmark results.

3. AI Industry Infrastructure and Pricing Updates

-

arXiv moving from Cornell servers to Google Cloud (Score: 122, Comments: 14): arXiv is migrating its infrastructure from Cornell-managed on-premise servers to Google Cloud Platform (GCP), coinciding with a major codebase rewrite. The move aims to modernize arXiv’s stack while leveraging cloud-native services, but raises concerns about potential vendor lock-in and operational risk from simultaneous major changes. Commenters note that arXiv already uses external distribution (e.g., Cloudflare R2) and that benefits like backups or scaling are not unique to GCP. Technical commenters overwhelmingly caution against coupling a code rewrite with a cloud migration, arguing this increases project failure risk. There is discussion about the tradeoff between using GCP’s managed services and the portability advantages of containerization, with one comment skeptical of the necessity given arXiv’s existing external distribution mechanisms. [External Link Summary] The arXiv careers page (https://info.arxiv.org/hiring/index.html) details current openings and hiring information for roles contributing to the arXiv e-print repository, a prominent preprint server funded by multiple foundations and hosted at Cornell University. The page typically includes technical roles in software development (such as Software Engineer and DevOps Specialist) focused on the maintenance, improvement, and scaling of the platform’s infrastructure and services. Positions require expertise in software engineering, DevOps practices, and a commitment to supporting open scientific communication at scale.

-

A user points out a major technical risk: arXiv is planning to combine a ground-up rewrite with a migration from on-premises to Google Cloud simultaneously. This is often considered a bad practice in systems engineering due to compounded risk, as complex migrations and rewrites introduce many failure points when not staged separately.

- Concerns are raised about arXiv becoming overcoupled to Google Cloud Platform (GCP), which would make future portability and multi-cloud strategies more difficult. The commenter suggests containerization as a best practice before cloud migration, allowing for easier deployment on any cloud provider, and notes that arXiv already distributes bulk data over Cloudflare R2, implying redundant cloud services are already in use.

- Discussion draws parallels with other major archive transitions, notably dejanews being acquired by Google and its subsequent transformation into a less open, more restricted system (Google Groups). This highlights risk for arXiv’s openness and long-term accessibility as control centralizes under a major corporate cloud provider.

-

OpenAI Introduces “Flex” Pricing: Now Half the Price (Score: 139, Comments: 41): OpenAI announced a new ‘Flex’ pricing tier offering API usage at 50% reduced cost, with the trade-off of potentially slower, queued, or throttled responses during high demand. This is distinct from the previous batched API, which promised results within ‘up to 24h’ (typically much faster) for a similar discount, raising questions among developers about whether ‘Flex’ will replace the batched API. Technical discussion centers on use cases where response latency is non-critical, with some users asking about functional and performance differences between ‘Flex’ and the existing batched API, specifically concerning queue times and workflow impact. [External Link Summary] OpenAI’s Flex processing is a beta pricing tier providing 50% reduced per-token costs for the o3 and o4-mini models by deprioritizing requests, making it suitable for background, non-production, or latency-tolerant workloads. Flex uses a simple synchronous API parameter (service_tier:“flex”), preserves streaming/function support, but lacks a fixed SLA and is subject to greater response-time variability and queuing during peak periods—distinct from the Batch API which is optimized for massive jobs via offline file submission and has separate quotas. ID verification is also now required for lower usage tiers to access certain features, and the update comes amidst competitive pressure in the AI API market to lower costs for developers. Original article

-

A user requests detailed clarification comparing the new Flex pricing to the previously available Batched API. They note that the Batched API promised results within “up to 24h” but consistently returned responses much faster (typically within 1-10 minutes, maximum of 1 hour), accompanied by a 50% discount—raising the technical question of whether, or how, Flex replaces the Batched API both in SLA and architectural implementation.

- Another user inquires about the presence of any truly asynchronous workflow support within the Flex API: specifically, whether the API allows for a request-tickets pattern where a client can submit a request, receive a ticket, and fetch results later (rather than maintain a persistent open connection), which is an important architectural consideration for certain use cases, especially to avoid the drawbacks of keeping connections open.

- There is a question about how Flex pricing and token-based billing interact with the existing $20/month OpenAI consumer subscription, reflecting a need for clarification among technical users about what Flex means for different user tiers and how it impacts overall cost structures for varying levels of usage.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Flash Preview

Theme 1. New Models Enter the Ring: Gemini, Grok, and Dayhush Stir Competition

- Grok 3 Mini Might Punch Above Its Weight! Discussions suggest Grok 3 Mini performs competitively against Gemini 2.5 Pro, especially in tool use, despite sometimes being overly aggressive in calling them. A user noted its significantly lower output cost, stating Grok 3 Mini is 1/7th the output token price of 2.5 flash thinking.

- Dayhush Shakes Up Coding Model Hierarchy! Members are evaluating Dayhush as a potential successor to Nightwhisper, finding it superior for coding and web design and possibly outperforming 3.7 Sonnet on real-world tasks. Users shared prompts to generate code for complex tasks like “create pokemon game” to compare outputs.

- Gemini Family Grows: Advanced Rollout Woes & Ultra Rumors! Android users report frustration with the Gemini Advanced rollout, citing missing features like the deep research model and UI inconsistencies despite meeting criteria and following the official announcement. Meanwhile, a Google employee confirmed rumors of a “Gemini Coder” model and expectations that Gemini Ultra will scale improvements over Pro.

Theme 2. Framework Deep Dives: Mojo Pointers and tinygrad Bugs

- Mojo’s

Dict.get_ptrSecretly Copies Values! A user uncovered that retrieving a pointer to aDictvalue in Mojo unexpectedly invokes a copy and a move constructor, detailed in this code snippet. A PR attempts to fix this copying issue traced to a specific line in theget_ptrfunction. - Moving Mojo Variadics Requires

UnsafePointerGymnastics! Extracting values from a variadic pack in Mojo demands usingUnsafePointerand explicitly disabling destructors (__disable_del args) to prevent unwanted copies of the original elements. Users raised concerns about potential memory leaks if developers do not carefully manage resources in these scenarios. - Tinygrad’s Rockchip Beam Search Takes a Dive! Running

beam=1/2on the Rockchip 3588 causes a crash with anIndexErrorintinygrad/engine/search.pywhen using the benchmark code from examples/benchmark_onnx.py. A member also suspects a potential bug in the pattern matcher that might ignore transposed sources if base shapes align.

Theme 3. Practical AI Tooling: From Financial Parsing to Agent Chats

- Perplexity Chatbot Arrives on Telegram! Perplexity launched a Telegram bot, allowing users to get real-time answers with sources directly within group and private chats; find it here. They also eliminated citation token charges in their new pricing scheme for more predictable costs.

- LlamaIndex Agents Learn to Chat with Google’s A2A Protocol! LlamaIndex now supports Google’s Agent2Agent (A2A) protocol, an open standard for secure AI agent communication regardless of origin. Discussions arose whether llama-deploy needs A2A implementation itself for this to work correctly.

- Concall Parser Tackles PDF Chaos for Financial Data! A member released concall-parser on PyPI, a Python NLP tool designed to extract structured data efficiently from earnings call reports. The developer highlighted data variation in PDFs (pdfs are hard to get right) as a major development hurdle, requiring extensive regex and testing effort.

Theme 4. AI Framework Integration & Features: LlamaIndex, Perplexity, Modular

- LlamaIndex Embraces Gemini 2.5 Flash Out-of-Box! LlamaIndex now supports Gemini 2.5 flash without requiring any user upgrades; simply call the new model name and it works, demonstrated in this example. This provides immediate access to the new model’s capabilities for LlamaIndex users.

- Perplexity API Gets Images & Date Filters! The Perplexity API added Image Uploads for integrating images into Sonar searches, explained in this guide. A new date range filter also debuted, enabling users to narrow search results to specific timeframes per this guide.

- Modular Hosts Mojo Meetup: GPU Optimization on the Agenda! Modular is hosting an in-person meetup at their Los Altos, CA headquarters next week, with a virtual option available; RSVP here. A talk will cover optimizing GPU performance using Mojo & MAX, titled making GPUs go brrr.

Theme 5. Decoding AI “Thinking”: Architecture, Reasoning, and Primer Power

- AlphaGo vs. LLM “Thinking”: Vastly Different Beasts! Discussions contrasted the architectures of Alpha* systems and Transformer LLMs, stating LLMs cannot train like Alpha* nor operate in a way that benefits from such training, calling them vastly different. A user noted LLM Thinking Mode is a primitive policy search, not a systematic process with a world model.

- Cohere Command A’s Reasoning Power Under Debate! A member questioned whether Command A possesses true reasoning capabilities or if its performance stems solely from its tokenizer. The question remained unanswered, leaving the nature of Command A’s “reasoning” unresolved.

- DSPy Community Gets Reasoning Models Primer! A member shared an approachable primer on reasoning models titled What We Mean When We Say Think, aimed at a general audience. The primer aims to simplify complex concepts related to reasoning models, making them more accessible.

PART 1: High level Discord summaries

Perplexity AI Discord

- Plex on Telegram: Perplexity is now accessible via Telegram as a bot, providing real-time answers with sources in both group and direct chats; check it out here.

- This enhancement aligns with their launch of GPT 4.1 and Perplexity Travel on mobile, detailed in their changelog.

- LAN Parties Alive and Well: Members shared stories of past LAN parties, discussing the challenges of lugging desktops to play classics like Doom and Quake.

- One member reminisced about traveling across the UAE to play CS:GO, noting the chaotic but fun cable management, jokingly saying it was a mess, but at least it worked.

- Gemini Advanced Users See Red: Android users are reporting issues with the rollout of Gemini Advanced features, despite meeting requirements outlined in the official announcement.

- One user, who had tried everything, reported missing features and UI discrepancies, planning a full wipe as a last resort.

- GPT-4o versus Gemini: A Model Cage Match!: Members debated the merits of GPT-4o versus Gemini 2.5 Pro for productivity, with one member favoring Gemini for a main hobby project because of its high context limit.

- Several users criticized ChatGPT’s recent tendency to be overly complimentary and inconsistent, with one quipping, This is like…something Elon Musk would code for himself.

- Perplexity Drops Citation Token Charges: Perplexity has moved to a new pricing scheme, removing citation token charges to make pricing more predictable, explained on their pricing page.

- They also announced their first hackathon with Devpost, inviting community participation with details here.

LMArena Discord

- Dayhush Dethrones Nightwhisper?: Members find Dayhush superior to Nightwhisper in coding and web design, potentially outperforming 3.7 Sonnet.

- Users shared prompts like “create pokemon game” and other visually stunning requests to get the models to generate code, comparing the outputs.

- Grok 3 Mini Challenging Gemini 2.5 Pro: Grok 3 Mini is competitive with Gemini 2.5 Pro, particularly in tool use, but may be overly aggressive with tool calling.

- A user noted that Grok 3 Mini has 1/7th the output token price of 2.5 flash thinking, making it an economically attractive option.

- Polymarket’s Arena Influence?: Discussion revolves around potential market manipulation on Polymarket influencing model evaluations in LM Arena, specifically bets on o3 or o4-mini surpassing 2.5 Pro.

- Concerns were raised about the profitability of rigging the arena for o3 compared to other models.

- AlphaGo Architectures Versus LLMs: Members discussed vastly different architectures, that a Transformer LLM cannot be trained the way Alpha* is, and if it could be, it doesn’t operate in a way that would allow it to use that training anyway, mentioning that it is vastly different.

- As most things in LLMs, Thinking Mode is just a primitive, degenerate form of bending policy search, giving the LLM a few dozen shots at getting to a better solution, but it’s neither systematic nor exhaustive, nor does it have a world model or persistent state to simulate or probe internally.

- Gemini Ultra on the Horizon: Rumors of a new Google coder model, potentially named “Gemini Coder”, have been confirmed by a Google employee, with expectations that Gemini Ultra will scale improvements over Pro.

- Speculation surrounds the ideal model size and potential for models to reason in latent space, enhancing efficiency and mimicking human-like thinking, with curiosity when Gemini Ultra would be released.

Modular (Mojo 🔥) Discord

- Modular Hosts Mojo Meetup at HQ: Modular is hosting an in-person meetup at its headquarters in Los Altos, California next week, with virtual attendance also available, RSVP here.

- A member will give a talk on making GPUs go brrr with Mojo & MAX, optimizing GPU performance with Mojo and Modular’s MAX framework.

Dict.get_ptrcopies surprising values!: A user discovered that retrieving a pointer to aDictvalue in Mojo unexpectedly invokes a copy and a move constructor, as shown in this code snippet.- A PR was made to address the copy issue, which was traced to a specific line of code within the

get_ptrfunction.

- A PR was made to address the copy issue, which was traced to a specific line of code within the

- Moving Variadic packs requires

UnsafePointer: Moving values from a variadic pack in Mojo requires usingUnsafePointerand disabling the destructor to avoid unwanted copies.- A member showed using

__disable_del argsin thetake_argsfunction to prevent destructors from being invoked on the original elements, raising concerns about potential memory leaks if not handled correctly.

- A member showed using

- Matrix Parallelization: slower at first: A member observed that parallelized matrix operations initially perform worse than for loops on small matrices due to overhead during warmup, but then achieve comparable performance in subsequent steps.

- It’s uncertain whether this behavior is expected, and they are requesting feedback as to whether there is something wrong with their benchmarking.

LlamaIndex Discord

- LlamaIndex Supports Agent2Agent Protocol: LlamaIndex now supports Agent2Agent (A2A) protocol, an open standard launched by Google, enabling secure AI agent communication regardless of origin.

- Members are now discussing whether llama-deploy needs to implement the A2A protocol for it to function properly.

- Gemini 2.5 Flash Ready to Use: LlamaIndex supports Gemini 2.5 flash out-of-box; users can call the new model name without any upgrade, as shown in this example.

- This integration provides immediate access to Gemini 2.5 flash for LlamaIndex users.

- LlamaExtract for Financial Analysis: A full-stack JavaScript web app uses LlamaExtract to perform financial analysis by defining reusable schemas for structured data extraction from complex documents, as showcased in this example.

- This application demonstrates the practical application of LlamaExtract in finance.

- LlamaIndex Showcases Google Cloud Integration: At Google Cloud Next 2025, LlamaIndex highlighted integrations with Google Cloud databases, emphasizing building knowledge agents for multi-step research and report generation, demonstrated in a multi-agent system.

- The focus was on leveraging Google Cloud infrastructure for advanced AI workflows.

- Users Report Prompt Issues After LlamaIndex Update: A user reported experiencing issues with prompt answers and citations after updating LlamaIndex to use the new OpenAI models.

- A member clarified that there were no codebase changes and suggested that the newer models might require different prompts.

Cohere Discord

- Staged AI Model Training: Industry Norm?: A guild member wondered if AI model development often follows a staged training process such as Experimental (20-50%), Preview (50-70%), and Stable (100%).

- The question went unanswered in the channel so it is unclear whether this is a common pattern.

- Command A: Reasoning Engine or Tokenization Trickery?: A member inquired whether Command A possesses true reasoning capabilities or if its abilities are merely a function of the tokenizer.

- No response was given, leaving the question of Command A’s reasoning abilities unresolved.

- FP8 Faces Fitting Fiasco on H100s: A guild member claimed that FP8 won’t fit on 2 H100s without providing additional details.

- It is unclear what they meant by this, but it could be related to memory or compute limitations.

- Concall Parser Pops Up on PyPI: A member announced the release of their first Python package, concall-parser, which acts as an NLP pipeline tool to efficiently extract structured information from earnings call reports.

- According to the developers, building concall-parser led to considerable learning in the areas of regexes and testing real-world NLP pipelines.

- PDF Data Variation: NLP’s Public Enemy #1: The developer of concall-parser cited data variation (pdfs are hard to get right) as a significant challenge in building the package.

- They implied that this issue may have presented a significant impediment to the development of their NLP pipeline.

Nomic.ai (GPT4All) Discord

- GPT4All’s LinkedIn Status Ignored: A member feels ignored by GPT4All on LinkedIn after inquiring about its status and requested speech recognition mode.

- No further discussion or replies were made regarding the inquiry.

- Speech Recognition Scripts Shared: A member shared a link to speech recognition scripts (rcd-llm-speech-single-input.sh) suggesting installation and binding to a mouse key.

- The suggestion received no replies or further engagement from other members.

- MCP Server Configuration Questioned: A member asked about configuring an MCP server on GPT4All, seeking clarification on the process.

- This question did not receive any responses or further discussion within the channel.

tinygrad (George Hotz) Discord

- Tinygrad’s Pattern Matcher Faces Bug Scrutiny: A member suspects a bug in Tinygrad’s pattern matcher that might ignore the source view if base shapes match, potentially leading to incorrect results, particularly with transposed sources.

- The issue arises when one of the sources has a transpose, which the pattern matcher could miss.

- Rockchip 3588 Beam Search Plummets: A member reported that using

beam=1/2on Rockchip 3588 causes a crash when running the benchmark code from examples/benchmark_onnx.py.- The crash manifests as an

IndexError: list assignment index out of rangewithintinygrad/engine/search.pyduring buffer allocation, disrupting beam search functionality.

- The crash manifests as an

DSPy Discord

- Reasoning Models Primer Drops: A member shared an approachable primer on reasoning models targeted towards a general audience, titled What We Mean When We Say Think.

- The primer aims to explain reasoning models by breaking down complex concepts in an accessible manner.

- Accessible Explanation of Reasoning Models: The primer breaks down intricate concepts related to reasoning models in an accessible manner, making it easier for a broader audience to understand.

- This explanation is crafted to avoid technical jargon, focusing on core principles and practical applications for those new to the field.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Perplexity AI ▷ #announcements (2 messages):

Telegram Bot, GPT 4.1 Release, Perplexity Travel, Trending Discover Feed, NBA Playoffs

- Perplexity arrives on Telegram: Perplexity is now available on Telegram as a bot, allowing users to get answers with sources in real-time via group chats or direct messages; try it here.

- GPT 4.1 lands on Perplexity: Perplexity’s weekly update highlights the integration of GPT 4.1 and the launch of Perplexity Travel on mobile; check out the full changelog here.

- Perplexity gets New Trending Discover Feed: Perplexity shipped a New Trending Discover Feed, plus NBA Playoffs coverage, Finance Page Improvements, Shared Spaces, and the ability to Save Threads to Space.

Perplexity AI ▷ #general (1186 messages🔥🔥🔥):

LAN parties, Retirement at 30, Perplexity AI vs GPT-4, Mistral OCR, Gemini Live

- Retro LAN party Ruminations: Members reminisced about moving desktops for LAN parties and playing Doom and Quake, with one joking about retiring at 30 to travel.

- Another member recounted traveling across the UAE with friends to play CS:GO, highlighting the fun of such experiences, with managing the cables made easy because we didn’t and that it was a mess, but at least it worked.

- Gemini Advanced Rollout Frustrations: Some Gemini Advanced users with Android devices discussed problems with the rollout of new features like Gemini Live, despite meeting the requirements and following the official announcement.

- One member, having tried everything, expressed frustration as his app lacked the deep research model and had circular buttons rather than rounded ones, ultimately planning a full wipe as a last resort.

- GPT-4 vs Gemini: Members debate which of GPT-4o or Gemini 2.5 Pro models excel for productivity with one member running his main hobby project using Gemini due to its high context limit.

- Several users noted ChatGPT’s recent tendency to be overly complimentary and inconsistent, with one stating, This is like…something Elon Musk would code for himself.

- Model performance discussion heats up: Members were discussing preferred models and performance, prompt engineering practices, and the models they use and prefer for specific purposes.

- Specifically, members called out specific strengths and weaknesses of various models and preferences for how each takes instruction, and Anthropic’s Claude Project with useful skills.

- Transitioning to Linux for Model Building: A user shared their positive experience transitioning to Linux Mint, highlighting that LLMs have made command line tasks easier and that even game compatibility has improved.

- He mentioned that using ChatGPT/Claude for troubleshooting has been helpful and that his girlfriend couldn’t even tell the difference from Windows, stating, but you have control, it doesn’t add any garbage.

Perplexity AI ▷ #sharing (4 messages):

Google Lawsuit, Gemma 3 QAT models, K2-18b exoplanet

- Judge Rules Google is Anti-Competitive: A federal judge ruled Google is anti-competitive.

- This was followed by criticism for withholding documents during the case.

- Gemma 3 QAT Models are Out: The discussion turned to Gemma 3 QAT models.

- There’s no clear summary available beyond the link.

- Water Vapor Discovered on K2-18b exoplanet: The channel discussed an article about the K2-18b exoplanet.

- The article details that water vapor was detected in its atmosphere.

Perplexity AI ▷ #pplx-api (3 messages):

Image Uploads, Date Range Filter, New Pricing Scheme, First Hackathon with Devpost

- Picturesque Image Uploads go Live!: Image Uploads are now live for all users, enabling integration of images into Sonar searches, per this guide.

- Timely Date Range Filter Debuts!: A date range filter is now available, enabling users to narrow search results to specific timeframes; see the guide.

- Perplexity Cuts Citation Token Costs!: Perplexity has fully transitioned to a new pricing scheme that eliminates charges for citation tokens, making pricing more predictable and cheaper as described on the pricing page.

- Perplexity Hacks into Hackathons!: Perplexity is hosting its first hackathon with Devpost, inviting community members to participate; details are available here.

LMArena ▷ #general (1070 messages🔥🔥🔥):

Dayhush vs Nightwhisper, Gemini 2.5 Pro vs Grok 3 Mini, Polymarket & LM Arena, AlphaZero, AlphaGo, New models and model releases

- Dayhush Rising as Nightwhisper Heir: Members are comparing Dayhush to Nightwhisper, with some finding Dayhush superior in coding and web design, potentially dethroning 3.7 Sonnet for real-world coding tasks.

- Users shared prompts like “create pokemon game” and other visually stunning requests to get the models to generate code.

- Gemini 2.5 Pro and Grok 3 Mini battle it out: Grok 3 Mini is emerging as a strong competitor, with some finding it on par with or slightly better than Gemini 2.5 Pro, particularly with tool use, although it may be too aggressive with tool calling.

- Price is a factor as a user stated that Grok 3 Mini is 1/7th the output token price of 2.5 flash thinking.

- Polymarket and Arena Interplay: Discussion arose around the potential for market manipulation on Polymarket influencing model evaluations in LM Arena, particularly with bets riding on o3 or o4-mini surpassing 2.5 Pro.

- There was an admission that rigging the arena for o3 would have a bigger profit than other models.

- AlphaGo and the new models: Members discussed vastly different architectures, that a Transformer LLM cannot be trained the way Alpha* is, and if it could be, it doesn’t operate in a way that would allow it to use that training anyway, mentioning that it is vastly different.

- User shared that, as most things in LLMs, Thinking Mode is just a primitive, degenerate form of bending policy search, giving the LLM a few dozen shots at getting to a better solution, but it’s neither systematic nor exhaustive, nor does it have a world model or persistent state to simulate or probe internally.

- Gemini Ultra on the Horizon: There are rumors of a new Google coder model potentially named “Gemini Coder”, confirmed by a Google employee, with expectations that Gemini Ultra will offer scaling improvements over Pro but may come with a higher cost.

- Some users speculate on the ideal model size and the potential for models to reason in latent space, enhancing efficiency and mimicking human-like thinking, with one user wondering when Gemini Ultra would be released.

LMArena ▷ #announcements (1 messages):

Beta feedback, Dark/Light mode toggle, Copy/paste images, Leaderboard polish

- Beta Feedback Heeded: The team is responding to the hot feedback for Beta from users.

- They thanked @everyone for sending feedback and requested that the community keeps sending it in.

- Dark/Light Mode Toggle Now Available: A dark/light mode toggle is now available in the top right corner of the UI.

- This allows users to switch between light and dark mode depending on their preference.

- Image Copy/Paste Implemented: Users can now copy/paste images directly into the prompt box.

- This should help with providing better visual information.

- Leaderboard Polish Arrives: A few polish items have been added to the leaderboard.

- This indicates that changes and improvements have been made to the leaderboard’s design, functionality, or user experience.

Modular (Mojo 🔥) ▷ #general (1 messages):

Modular Meetup, Mojo & MAX, GPU Optimization, In-Person Event, Virtual Attendance

- Modular Hosts In-Person Meetup Next Week!: Modular is hosting an in-person meetup at its headquarters in Los Altos, California one week from today, with virtual attendance also available, RSVP here.

- A member will give a talk on making GPUs go brrr with Mojo & MAX, optimizing GPU performance with Mojo and Modular’s MAX framework.

- GPU Performance Talk at Modular HQ: A member will be presenting a talk focused on boosting GPU performance using Mojo and MAX at Modular’s headquarters.

- Attendees can expect to learn techniques for optimizing GPU utilization, enhancing the speed and efficiency of their Mojo-based applications.

Modular (Mojo 🔥) ▷ #mojo (17 messages🔥):

MLIR arith dialect in Mojo, Dict value pointer copies and moves, Variadic set element moves, Ratio type representation, Parallelization performance

- MLIR’s

arithdialect missing in Mojo: Thearithdialect is not loaded by default in Mojo; only theindexandllvmdialects are accessible, and there are currently no mechanisms to register other dialects.- A user received an error ‘use of unregistered MLIR operation arith.constant’ when trying to use

arith.constant.

- A user received an error ‘use of unregistered MLIR operation arith.constant’ when trying to use

Dict.get_ptrtriggers surprising copies and moves: A user discovered that retrieving a pointer to aDictvalue in Mojo unexpectedly invokes a copy and a move constructor, as shown in this code snippet.- A PR was made to address the copy issue, which was traced to a specific line of code within the

get_ptrfunction.

- A PR was made to address the copy issue, which was traced to a specific line of code within the

- Variadic packs require

UnsafePointerfor moves: Moving values from a variadic pack in Mojo requires usingUnsafePointerand disabling the destructor to avoid unwanted copies.- A member showed using

__disable_del argsin thetake_argsfunction to prevent destructors from being invoked on the original elements, raising concerns about potential memory leaks if not handled correctly.

- A member showed using

- Ratio type via

FloatLiteralin Kelvin discussed: A member suggested representing the ratio type in Kelvin using aFloatLiteral, given that aFloatLiteralis essentially a ratio of integer literals.- Others noted that while

FloatLiteralmaterializes to aFloat64, it can also construct SIMD floating-point types directly, but there’s no guarantee multiplying by a float literal will result in anInt/Uint.

- Others noted that while

- Matrix Parallelization benchmarked: A member observed that parallelized matrix operations initially perform worse than for loops on small matrices due to overhead during warmup, but then achieve comparable performance in subsequent steps.

- It’s uncertain whether this behavior is expected, and they are requesting feedback as to whether there is something wrong with their benchmarking.

LlamaIndex ▷ #blog (4 messages):

Agent2Agent Protocol, Gemini 2.5 flash, LlamaExtract, Google Cloud Next 2025

- LlamaIndex enables Agent2Agent Communication: LlamaIndex now supports the Agent2Agent (A2A) protocol, an open standard launched by Google that allows AI agents to communicate and exchange information securely regardless of their origin.

- Gemini 2.5 Flash supported out-of-box: LlamaIndex supports Gemini 2.5 flash without any upgrade; users can call the new model name and it will work immediately, as shown in this example.

- LlamaExtract performs financial analysis in JS webapp: A full-stack JavaScript web app utilizes LlamaExtract to conduct financial analysis by defining reusable schemas for structured data extraction from complex documents, as showcased in this example.

- LlamaIndex integrates with Google Cloud databases: At Google Cloud Next 2025, LlamaIndex presented its integrations with Google Cloud databases, focusing on building knowledge agents for multi-step research and report generation, demonstrated in a multi-agent system.

LlamaIndex ▷ #general (10 messages🔥):

LlamaIndex A2A Deployment, LlamaIndex Version Change

- A2A Deployment Missing Llama Deploy Bit?: Members discussed LlamaIndex’s A2A sample code and considered the necessity of llama-deploy implementing the A2A protocol for it to function properly.

- One member expressed doubts about A2A’s viability but acknowledged the challenges faced due to the absence of an SDK during its development.

- Prompt Issues Post-LlamaIndex Update?: A user reported experiencing issues with a prompt providing answers with citations after updating to the latest version of LlamaIndex to use the new OpenAI models.

- Another member clarified that there were no changes to the codebase and suggested that newer models might require different prompts and inquired about the previous version and LLM used.

Cohere ▷ #「💬」general (7 messages):

AI Model Staged Training, Command A Reasoning, FP8 limitations, SEO Backlinks

- Staged AI Model Training: A Common Pattern?: A member inquired whether AI model development often follows a staged training process like Experimental (20-50%), Preview (50-70%), and Stable (100%).

- No response was given so it’s unclear whether this is common.

- Command A: Reasoning or Tokenization?: A member questioned whether Command A has reasoning capabilities or if its abilities are limited to the tokenizer.

- No response was given so it’s unclear which is true.

- FP8 Won’t Fit on 2 H100s: A member stated that FP8 won’t fit on 2 H100s.

- No further context or explanation was provided.

- SEO Backlinks Service Advertised: A member advertised an SEO Backlinks service, promising to boost website rankings and increase traffic with a high-quality backlink package.

- They listed increased traffic, improved search engine rankings, brand awareness, networking, and enhanced credibility as benefits.

Cohere ▷ #「💡」projects (1 messages):

Python package release, NLP pipeline tooling, Earnings call report parsing, Data variation challenges, Regex learning

- Concall Parser Debuts on PyPI!: A member announced the release of their first Python package, concall-parser, designed as an NLP pipeline tool to efficiently extract structured information from earnings call reports.

- PDF Data Variation: The NLP Nemesis: The developer cited data variation (pdfs are hard to get right) as a significant challenge in building the concall-parser package.

- The experience also led to considerable learning in areas of regexes and testing real-world NLP pipelines.

Nomic.ai (GPT4All) ▷ #general (4 messages):

GPT4All status on LinkedIn, Speech recognition mode for GPT4All, MCP server configuration

- GPT4All Status Stalls on LinkedIn: A member inquired about the status of GPT4All on LinkedIn but feels ignored.

- They expressed wanting a speech recognition mode for GPT4All.

- Speech Recognition Scripts Shared: A member shared a link to speech recognition scripts and suggested installing them and binding them to a mouse key: rcd-llm-speech-single-input.sh.

- No other members replied to the suggestion.

- MCP Server Configuration Questioned: A member inquired whether they could configure an MCP server on GPT4All.

- No other members replied to the question.

tinygrad (George Hotz) ▷ #learn-tinygrad (2 messages):

Pattern Matcher Bug, Rockchip 3588 Crash with Beam Search

- Pattern Matcher Might Have Bug: A member raised a doubt in the pattern matcher, suggesting it might ignore the source view if base shapes match, potentially leading to incorrect results with transposed sources.

- They pointed out that this could be problematic when one of the sources has a transpose in front of it, which the pattern matcher might overlook.

- Rockchip 3588 Crashes During Beam Search: A member reported a crash when using

beam=1/2on Rockchip 3588, specifically with the benchmark code from examples/benchmark_onnx.py.- The crash occurs with an

IndexError: list assignment index out of rangewithintinygrad/engine/search.py, during buffer allocation.

- The crash occurs with an

DSPy ▷ #show-and-tell (1 messages):

Reasoning Models, Primer on Reasoning Models

- Primer on Reasoning Models Released: A member shared an approachable primer on reasoning models intended for a general audience.

- The blog post is titled “What We Mean When We Say Think”.

- Reasoning Models Explained: The primer aims to explain reasoning models to a broader audience.

- It breaks down complex concepts in an accessible manner.

{% else %}

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: [{{ email.subject }}]({{ email_url }})!

If you enjoyed AInews, please share with a friend! Thanks in advance!

{% endif %}