Wow.

AI News for 10/27/2025-10/28/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (198 channels, and 14738 messages) for you. Estimated reading time saved (at 200wpm): 1120 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

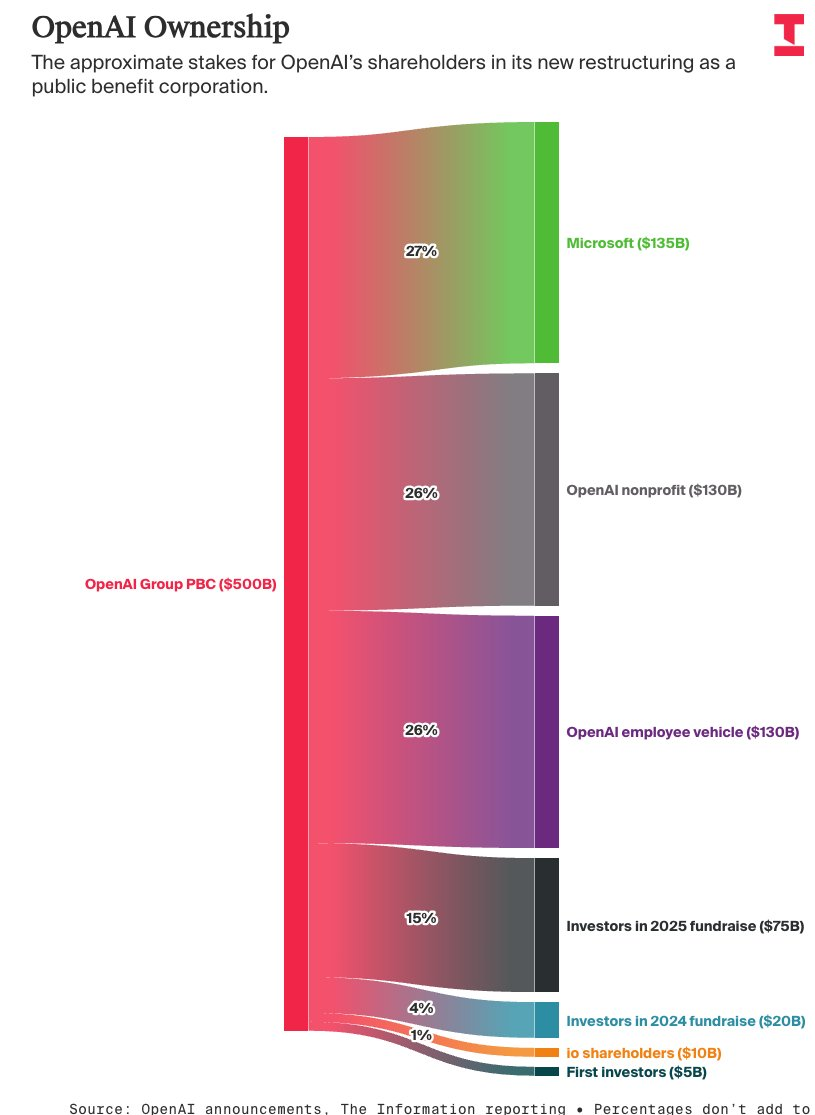

The good news is that Sama and team have landed the plane successfully: with tens of billions of dollars at stake, both the for-profit and Microsoft renegotiations have concluded and there is a clean cap table and corporate structure now (credit Amir Efrati), clearing the way for a “likely” OpenAI IPO:

Microsoft gave up its exclusivity on OpenAI’s compute (including its right of first refusal) in exchange for a $250B OpenAI commitment to Azure spend, leaving OpenAI free to work with other compute vendors, while Satya is now saying “I would love to have Anthropic… If Google wants to put Gemini on Azure, please do.”

The other large financial number announced in the livestream is that this year’s 30GW worth of compute deals have totaled $1.4T ($47B per GW), and that the aspirational goal is for OpenAI to eventually build 1GW a week at $20B per GW (meaning about $1T a year of compute capex). Given stated goals of reaching 125GW, this means OpenAI will be wrangling about 3-4 trillion dollars worth of infra by 2033, about half the initially speculated 7 trillion number.
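The figures above can be re-derived with a few lines of arithmetic. This is a sketch, not OpenAI's model: it uses only the numbers quoted in the livestream recap, and pricing all 125GW at a single per-GW rate is our own simplifying assumption, used only to bracket the total.

```python
# Back-of-the-envelope check of the compute figures quoted above.
# All inputs are the recap's numbers; pricing all 125 GW at one per-GW
# rate is a simplifying ASSUMPTION used only to bracket the total.

DEALS_TOTAL_USD = 1.4e12     # ~$1.4T of compute deals announced this year
DEALS_GW = 30                # ~30 GW covered by those deals
TARGET_USD_PER_GW = 20e9     # aspirational ~$20B per GW
BUILD_GW_PER_WEEK = 1        # aspirational build rate
TARGET_GW = 125              # stated long-term goal


def usd_per_gw(total_usd: float, gw: float) -> float:
    """Average cost per gigawatt for a bundle of deals."""
    return total_usd / gw


def annual_capex(gw_per_week: float, cost_per_gw: float, weeks: int = 52) -> float:
    """Yearly spend implied by a constant weekly build rate."""
    return gw_per_week * weeks * cost_per_gw


deal_rate = usd_per_gw(DEALS_TOTAL_USD, DEALS_GW)
yearly = annual_capex(BUILD_GW_PER_WEEK, TARGET_USD_PER_GW)
low = TARGET_GW * TARGET_USD_PER_GW   # all 125 GW at the aspirational rate
high = TARGET_GW * deal_rate          # all 125 GW at this year's deal rate

print(f"deal rate:    ${deal_rate / 1e9:.1f}B per GW")
print(f"annual capex: ${yearly / 1e12:.2f}T per year")
print(f"125 GW total: ${low / 1e12:.2f}T to ${high / 1e12:.2f}T")
```

With these inputs it prints roughly $46.7B per GW for this year's deals, about $1.04T per year at the aspirational build rate, and a $2.50T to $5.83T range for 125GW, so the $3-4T estimate presumably assumes a blend of the cheaper target rate and today's deal pricing.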

No, you’re not alone in thinking this is crazy, all of this is entirely unprecedented and yet possible, perhaps probable.

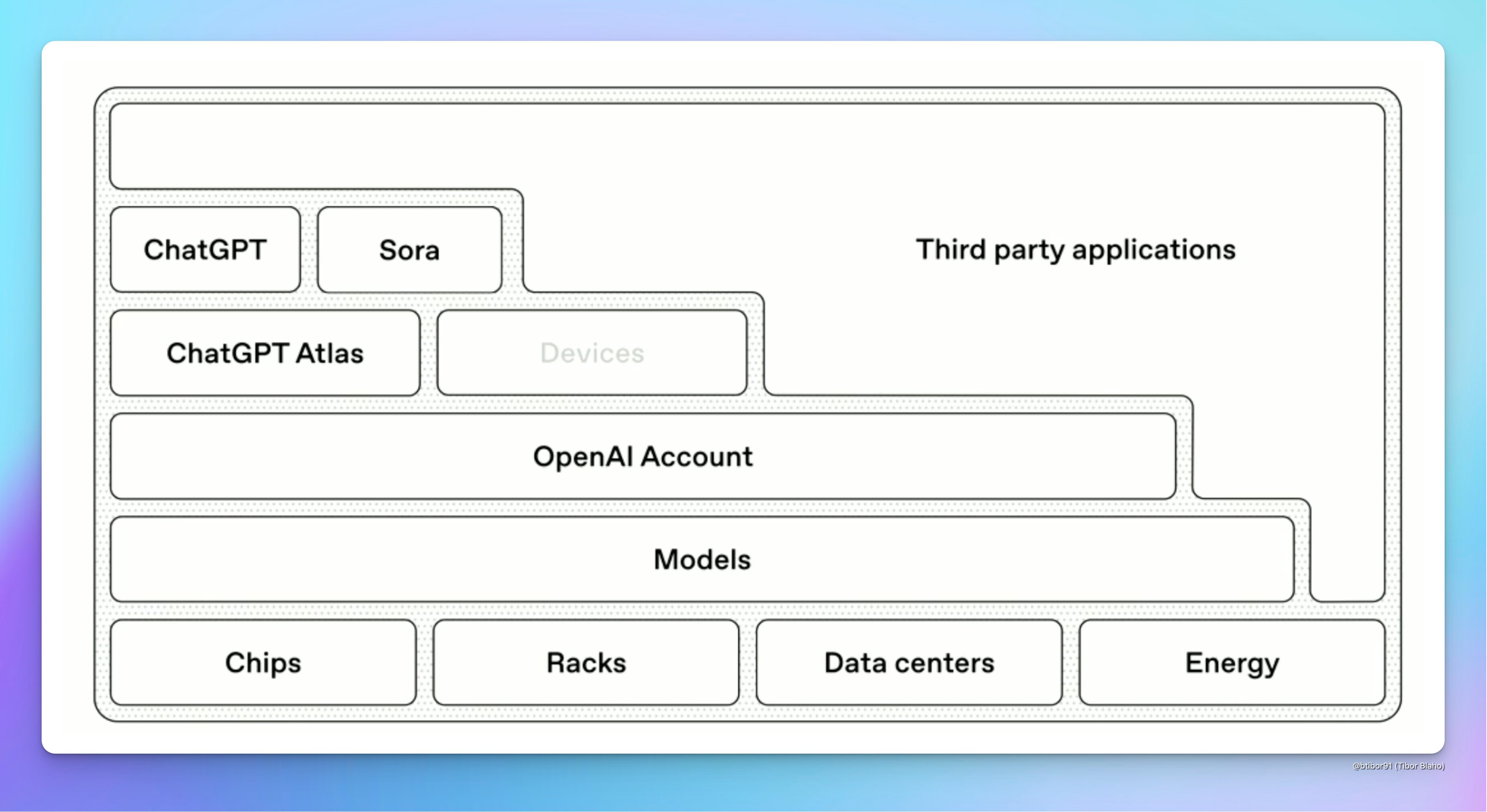

Perhaps for an AI Engineer audience, the more material announcements are in the platform “pivot” that OpenAI seems to have announced: a decreased emphasis on first party apps (odd given that they have a CEO of Apps):

and now more strongly than ever emphasizing the platform approach, even citing the Bill Gates Line:

If you watch OpenAI closely, this is all the signal you need.

AI Twitter Recap

OpenAI’s new structure, Microsoft deal, and “open weights”

- OpenAI announced a recapitalization and reorg: the non-profit is now the OpenAI Foundation, the for‑profit becomes a Public Benefit Corporation (PBC). The Foundation holds special voting rights to appoint/replace the PBC board, owns equity valued at ~$130B, and holds a warrant that grants additional equity if the share price rises more than 10× within 15 years. OpenAI framed this as keeping the non‑profit “in control” while resourcing the mission (OpenAI, @stalkermustang highlights). Sam Altman and Jakub previewed priorities and took questions in a live session (@OpenAI, @sama).

- Analysts summarized the Microsoft agreement: Microsoft now holds ~27% on a diluted basis; remains OpenAI’s frontier model partner with Azure API exclusivity until an AGI declaration verified by an independent panel; IP rights through 2032 (including post‑AGI with safety guardrails); OpenAI commits to ~$250B in additional Azure purchases; Microsoft loses right of first refusal on compute; OpenAI may co‑develop with third parties and provide APIs to US national security customers on any cloud; API products remain Azure‑exclusive (@koltregaskes).

- “OpenAI is now able to release open‑weight models that meet requisite capability criteria,” per OpenAI’s policy language—this drew immediate attention from practitioners tracking the open ecosystem (@reach_vb). Observers circulated provisional equity splits of Foundation ~26%, Microsoft ~27%, employees/investors ~47% (@scaling01), though caution is warranted pending formal filings.

- Key open governance and safety reads: questions on Foundation control, mission vs. commercial goals, and AGI definitions under the Microsoft agreement (@robertwiblin). AGI timelines on Metaculus have lengthened by ~3 years since February, now May 2033 for “first AGI” and Oct 2027 for a weak, non‑robotic standard (@robertwiblin).

Agents go first‑class: GitHub Universe, LangChain Deep Agents, and API design for agents

- GitHub Agent HQ and VS Code Agent Sessions: GitHub announced Agent HQ to orchestrate “any agent, any time, anywhere,” with native collaborators (e.g., Claude, Devin) integrated into GitHub workflows. VS Code Insiders now ships an Agent Sessions view with OpenAI Codex and Copilot CLI, a built‑in plan agent, isolated sub‑agents, and a Copilot Metrics dashboard to track impact across any coding agent. Multiple Codex instances can run in parallel to complete tasks and open PRs (@github, @code, @burkeholland, @pierceboggan, @mikeyk, @cognition).

- LangChain Deep Agents 0.2: Introduces a “backend” abstraction to swap the agent filesystem for a local FS, DB, or remote VM; focuses on long‑running, high‑performance agents with context compression, file‑system offloading, and subagent isolation. Positioning: a general‑purpose harness for building systems like Deep Research or coding agents (@hwchase17, @LangChainAI, context engineering summary).

- API design for agents: Postman’s “AI‑ready APIs” argues most agents fail on weak machine‑readable documentation; it pushes predictable structures, standardized behavior, synced schema, and auto‑generated, contextual docs (Agent Mode) to reduce guesswork (@_avichawla).

- Educational resources: DeepLearning.AI and AMD launched an “Intro to Post‑Training” course covering SFT, RLHF, PPO/GRPO, LoRA, evals/red‑teaming, and production pipelines, with AMD GPUs backing fine‑tuning/RL runs (@AndrewYNg, @realSharonZhou).
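The “backend” abstraction in LangChain Deep Agents 0.2 is, in spirit, dependency inversion: agent code talks to a file interface and the storage behind it can be swapped. The sketch below is hypothetical and does not use the Deep Agents API; FileBackend, InMemoryBackend, LocalDiskBackend and offload_context are illustrative names, and a DB or remote-VM backend would implement the same three methods.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class FileBackend(Protocol):
    """Hypothetical storage interface that agent code is written against."""

    def write(self, path: str, content: str) -> None: ...
    def read(self, path: str) -> str: ...
    def ls(self) -> list[str]: ...


class InMemoryBackend:
    """Keeps files in a dict: handy for tests or throwaway scratch space."""

    def __init__(self) -> None:
        self._files: dict[str, str] = {}

    def write(self, path: str, content: str) -> None:
        self._files[path] = content

    def read(self, path: str) -> str:
        return self._files[path]

    def ls(self) -> list[str]:
        return sorted(self._files)


class LocalDiskBackend:
    """Stores files under a root directory on the local filesystem."""

    def __init__(self, root: Path) -> None:
        self.root = root

    def write(self, path: str, content: str) -> None:
        target = self.root / path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content)

    def read(self, path: str) -> str:
        return (self.root / path).read_text()

    def ls(self) -> list[str]:
        return sorted(p.relative_to(self.root).as_posix()
                      for p in self.root.rglob("*") if p.is_file())


def offload_context(backend: FileBackend, tool_output: str) -> str:
    """Agent-side logic: park bulky output in a file, keep only a pointer in the prompt."""
    backend.write("scratch/tool_output.md", tool_output)
    return "Full output saved to scratch/tool_output.md"


# The same agent logic runs unchanged against either backend.
results = []
for backend in (InMemoryBackend(), LocalDiskBackend(Path(tempfile.mkdtemp()))):
    pointer = offload_context(backend, "very long search results")
    results.append((pointer, backend.ls(), backend.read("scratch/tool_output.md")))
print(results)
```

Because the agent only depends on the interface, swapping storage is a constructor change rather than a rewrite of the agent loop.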

Serving, observability, and infra

- vLLM Sleep Mode: zero‑reload model switching for multi‑model serving with 18–200× faster switches and 61–88% faster first token vs cold starts. Two levels: L1 offloads weights to CPU; L2 discards weights; preserves allocators, CUDA graphs, JIT kernels across sleeps; works with TP/PP/EP (@vllm_project).

- Tool‑calling reliability with Kimi K2 on vLLM: After fixing add_generation_prompt, empty content handling, and stricter tool‑call ID parsing, K2 achieved >99.9% request success and 76% schema accuracy (4.4× improvement). An “Enforcer” to constrain tool generation is coming. The K2 vendor verifier now reports trigger similarity and schema accuracy case‑by‑case (vLLM deep dive, @Kimi_Moonshot, vendor tips).

- Observability: Red Hat details token‑level metrics for LLM systems—TTFT, TPOT, cache hit ratios, and end‑to‑end traces from ingress to vLLM workers—enabling cache‑aware, routing‑aware monitoring on OpenShift AI 3.0 (@RedHat_AI).

- Communication for MoE on cloud: UCCL‑EP is a GPU‑driven expert‑parallel library targeting public clouds (e.g., AWS EFA) and heterogeneous GPUs/NICs, API‑compatible with DeepEP, addressing slow MoE communication reported when running Perplexity’s kernels over EFA (@ziming_mao).

- “Train on your laptop” claims: Tinker added gpt‑oss and DeepSeek model families, marketing the ability to train a 671B MoE locally “in a few lines” without CUDA/cluster setup. Treat this as an abstraction stack amortizing shared infra across users rather than literal local pretraining (@thinkymachines, @dchaplot, skeptic’s framing).
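Red Hat's token-level metrics reduce to simple arithmetic over a streamed response. The sketch below uses common definitions (TTFT as request-to-first-token, TPOT as the mean gap between subsequent tokens, cache hit ratio as the share of prompt tokens served from a prefix cache); OpenShift AI's exact definitions may differ, and the trace is made up.

```python
from dataclasses import dataclass


@dataclass
class StreamTrace:
    """Client-side timestamps (seconds) for one streamed generation request."""
    sent_at: float
    token_times: list[float]   # arrival time of each output token, in order


def ttft(trace: StreamTrace) -> float:
    """Time to first token: request sent -> first output token received."""
    return trace.token_times[0] - trace.sent_at


def tpot(trace: StreamTrace) -> float:
    """Time per output token: mean gap between tokens after the first one."""
    n = len(trace.token_times)
    if n < 2:
        raise ValueError("TPOT needs at least two output tokens")
    return (trace.token_times[-1] - trace.token_times[0]) / (n - 1)


def cache_hit_ratio(cached_prompt_tokens: int, prompt_tokens: int) -> float:
    """Share of prompt tokens served from a prefix/KV cache instead of recomputed."""
    return cached_prompt_tokens / prompt_tokens if prompt_tokens else 0.0


# Made-up trace: 250 ms of queueing + prefill, then one token every 50 ms.
trace = StreamTrace(sent_at=0.0, token_times=[0.25, 0.30, 0.35, 0.40, 0.45])
print(f"TTFT = {ttft(trace) * 1000:.0f} ms, TPOT = {tpot(trace) * 1000:.0f} ms")
print(f"cache hit ratio = {cache_hit_ratio(768, 1024):.0%}")
```

TTFT is dominated by queueing and prefill (which prefix-cache hits shorten), while TPOT tracks decode speed, which is why the two are usually monitored separately.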

New models and retrieval systems

- Late‑interaction retrieval: Liquid AI released LFM2‑ColBERT‑350M, a 350M multilingual late‑interaction retriever with token‑level precision, precomputed doc embeddings, and strong cross‑lingual performance. Claims include best cross‑lingual under 500M, >1K docs/sec encoding, and inference speed on par with smaller ModernColBERT variants (@LiquidAI_, @maximelabonne, ColBERT community reaction).

- IBM Granite 4 Nano (Apache‑2.0): New small models; the 1B variant reportedly outperforms Qwen3‑1.7B across math/coding and more (@mervenoyann, HF blog).

- NVIDIA Nemotron Nano 2 VL (open): A 12B VLM for document/video understanding (4 images or 1 video per prompt), hosted across platforms (Replicate, Baseten, Nebius) and accompanied by an 8M‑sample CC‑BY‑4.0 dataset for OCR/multilingual QA/reasoning. NVIDIA emphasized broader support for openly developed AI and contributed 650+ models/250 datasets on HF (dataset thread, Replicate, Baseten, Nebius, NVIDIA).

- MiniMax M2 (open weights): Strong agentic/coding performance, with an architecture akin to Qwen3: full attention, per‑head per‑layer QK‑Norm, optional sliding‑window attention (disabled by default), and MoE sparsity of 10B active parameters vs Qwen3’s 22B. Available via OpenRouter/Roo Code/Ollama Cloud; note integration pitfalls: stripping the model’s thinking segments from the conversation history can degrade tool use (architecture analysis, OpenRouter, Ollama, integration gotcha).

- Open science in bio/robotics: OpenFold3 launched as an open foundation model for 3D structures of proteins/nucleic acids/small molecules (@cgeorgiaw). LeRobot v0.4 ships a streamable dataset format, LIBERO/Meta‑World sim support, data processors, multi‑GPU training, hardware plugins, and SOTA policies (PI0/PI0.5, Gr00t N1.5) plus an open course (@LeRobotHF).
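Late interaction, as used by ColBERT-style retrievers like LFM2-ColBERT-350M, keeps one embedding per token and scores with a sum of per-query-token maxima (MaxSim) rather than a single-vector dot product. A minimal sketch of that scoring step with toy vectors; it is not Liquid AI's code, and the encoder that would produce the embeddings is omitted.

```python
import math

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """L2-normalize so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def maxsim(query_tokens: list[Vector], doc_tokens: list[Vector]) -> float:
    """Late-interaction score: each query token keeps its best-matching
    document token, and those per-token maxima are summed."""
    docs = [normalize(t) for t in doc_tokens]
    score = 0.0
    for q in map(normalize, query_tokens):
        score += max(sum(a * b for a, b in zip(q, d)) for d in docs)
    return score


# Toy 2-D token embeddings (real retrievers use learned, higher-dimensional vectors).
# Document token vectors can be computed once at index time; only the query is encoded per search.
query = [[1.0, 0.0], [0.0, 1.0]]
index = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],  # matches both query tokens
    "doc_b": [[1.0, 0.1], [1.0, -0.1]],             # matches only the first
}
ranking = sorted(index, key=lambda name: maxsim(query, index[name]), reverse=True)
print(ranking)
```

The token-level matching is where the “token-level precision” comes from: a document must cover each query token somewhere, not just be close on average.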

Realtime voice and multimodal assistants

- Cartesia Sonic‑3 (SSM, not Transformers): $100M Series C and a real‑time voice model with 90ms model latency (190ms end‑to‑end), 42 languages, natural emotional range/laughter. Built on state‑space models pioneered by S4/Mamba work; widely praised by sequence‑modeling researchers (launch, @tri_dao).

- Google Gemini for Home (early access, U.S.): A voice assistant blending classic “Hey Google” requests with Gemini Live conversational sessions on speakers/displays (@Google).

- Veo 3.1: Google’s filmmaking tool update emphasizes richer audio, narrative control, and realism (@dl_weekly).
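The recap doesn't describe Sonic-3's internals beyond “SSM,” but the core state-space idea is a linear recurrence with a fixed-size hidden state, which is what makes streaming cheap: each new input step costs the same regardless of how much came before. The sketch below is a generic diagonal SSM; it omits S4's continuous-time discretization and Mamba's input-dependent (selective) parameters, and the parameter values are arbitrary.

```python
from typing import Optional


def ssm_step(state: list[float], x: float, a: list[float], b: list[float],
             c: list[float]) -> tuple[list[float], float]:
    """One step of a diagonal linear SSM.

    Per state channel i:  h_i <- a_i * h_i + b_i * x
    Output:               y    = sum_i c_i * h_i
    """
    new_state = [ai * hi + bi * x for ai, hi, bi in zip(a, state, b)]
    y = sum(ci * hi for ci, hi in zip(c, new_state))
    return new_state, y


def ssm_scan(u: list[float], a: list[float], b: list[float], c: list[float],
             state: Optional[list[float]] = None) -> tuple[list[float], list[float]]:
    """Run the SSM over a sequence; return outputs and the final state so a stream can resume."""
    state = [0.0] * len(a) if state is None else state
    ys: list[float] = []
    for x in u:
        state, y = ssm_step(state, x, a, b, c)
        ys.append(y)
    return ys, state


a, b, c = [0.5, 0.9], [1.0, 1.0], [1.0, 1.0]   # toy parameters; |a_i| < 1 keeps it stable
signal = [1.0, 0.0, 0.0, 2.0, -1.0, 0.5]

batch, _ = ssm_scan(signal, a, b, c)

# Streaming: process chunks as they arrive, carrying only the fixed-size state.
first, carry = ssm_scan(signal[:3], a, b, c)
second, _ = ssm_scan(signal[3:], a, b, c, state=carry)
print(batch, first + second)
```

Because the carried state has a fixed size, chunked streaming produces the same outputs as processing the whole sequence at once, whereas a Transformer's KV cache grows with context length.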

Safety, governance, and scaling research

- Anthropic’s Responsible Scaling Policy in practice: A detailed Opus 4 sabotage risk report was published alongside an external review from METR, with improved transparency around redactions. Reviewers agreed with the risk assessment and called for broader third‑party scrutiny across diverse threat models (Anthropic, METR).

- Decentralized training feasibility: Epoch AI argues 10 GW training runs across ~two dozen geographically distributed sites linked by long‑haul networks are technically feasible, citing Microsoft’s planned multi‑GW Fairwater datacenter as evidence of distributed AI training architectures on the horizon (@EpochAIResearch).

- Multilingual scaling laws: ATLAS (774 experiments, 10M–8B params, 400+ languages) provides compute‑optimal crossover points for pretrain‑from‑scratch vs finetune and quantifies cross‑lingual transfer (e.g., which languages help/hurt English at 2B scale). Useful for data‑constrained LLM scaling beyond English (@ShayneRedford, @Muennighoff).

- Distillation for post‑training: On‑policy distillation emerged as a practical recipe to post‑train smaller LLMs with dense, on‑policy feedback; Qwen reports strong math‑reasoning gains and continual‑learning recovery in experiments (@Alibaba_Qwen, community implementers).
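On-policy distillation can be sketched in a few lines: the student samples the tokens, and the teacher grades every position, giving a dense per-token signal based on reverse KL. Qwen's exact recipe isn't given in the recap; this toy uses fixed per-position distributions over a tiny vocabulary in place of real model outputs.

```python
import math
import random

Dist = dict[str, float]  # next-token probabilities over a toy vocabulary


def reverse_kl(student: Dist, teacher: Dist) -> float:
    """KL(student || teacher) at one position: sum_v p_s(v) * (log p_s(v) - log p_t(v))."""
    return sum(p * (math.log(p) - math.log(teacher[tok]))
               for tok, p in student.items() if p > 0)


def per_token_rewards(student_steps: list[Dist], teacher_steps: list[Dist],
                      rng: random.Random) -> list[tuple[str, float]]:
    """On-policy: the student samples each token; the teacher grades every position.

    The reward log p_t(x) - log p_s(x) for the sampled token x is a one-sample
    estimate of -KL(student || teacher), so each token gets its own signal.
    """
    out = []
    for s, t in zip(student_steps, teacher_steps):
        tok = rng.choices(list(s), weights=list(s.values()))[0]
        out.append((tok, math.log(t[tok]) - math.log(s[tok])))
    return out


# Toy "2+2=" continuation. Fixed per-position distributions stand in for model outputs;
# real ones would be recomputed from the prefix the student actually sampled.
student = [{"4": 0.5, "5": 0.5}, {"<eos>": 1.0}]
teacher = [{"4": 0.9, "5": 0.1}, {"<eos>": 1.0}]

print([round(reverse_kl(s, t), 3) for s, t in zip(student, teacher)])
print(per_token_rewards(student, teacher, random.Random(0)))
```

Compared with a single pass/fail reward per sequence, every token receives feedback, which is what makes the signal “dense.”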

Top tweets (by engagement)

- OpenAI recapitalization: non‑profit control, PBC, ~$130B Foundation equity; live Q&A with Sam Altman and Jakub (@OpenAI, @OpenAI live, @sama).

- Google Labs “Pomelli” experimental AI marketing tool (US/CAN/AUS/NZ), generates on‑brand campaigns from your site (@GoogleLabs).

- Cartesia raises $100M; launches Sonic‑3 SSM voice model with 190ms E2E latency and 42 languages (@krandiash).

- Humanoid robots as consumer product: 1X announces NEO for home chores with autonomy roadmap from supervised “Chores” to fully autonomous embodied assistant (@BerntBornich, @ericjang11).

- GitHub/VS Code: Codex integrated into VS Code Agent Sessions; Copilot metrics dashboard; Agent HQ partner ecosystem (@code, @burkeholland, @github).

- NVIDIA open ecosystem: 8M‑sample CC‑BY‑4.0 dataset for OCR/QA; Nemotron Nano 2 VL deployments; renewed emphasis on open models/datasets on Hugging Face (@vanstriendaniel, @NVIDIAAIDev).

- John Carmack on software patents: reiterates opposition due to negative societal externalities and parasitism (@ID_AA_Carmack).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. DGX Spark Performance Issues

- Bad news: DGX Spark may have only half the performance claimed. (Activity: 1015): The image in the post is not a meme but rather a visual representation of the hardware units in question, specifically the NVIDIA DGX Spark, GIGABYTE AI TOP Atom, and ASUS Ascent GX10. The post discusses significant performance discrepancies in the NVIDIA DGX Spark, which was advertised to deliver 1 PFLOPS of FP4 performance but reportedly achieves only 480 TFLOPS, as tested by industry experts John Carmack and Awni Hannun. This underperformance, coupled with a memory bandwidth of only 273GB/s, raises concerns about the device’s capability to handle large models effectively, potentially leading to overheating and restarts. The issue may stem from various factors, including power supply, firmware, or CUDA, but it highlights a major integrity problem for NVIDIA. Commenters express frustration over NVIDIA’s pricing strategy and performance claims, with some suggesting that the company’s market dominance and high prices are unjustified given the product’s underperformance. There is a call to avoid supporting companies that overcharge and underdeliver, reflecting a broader dissatisfaction with NVIDIA’s market practices.

- The DGX Spark’s performance issues may be attributed to inadequate cooling, which is a critical factor in maintaining GPU efficiency. This is particularly concerning given the high cost of the system, which is reportedly twice that of AMD’s equivalent offerings. Such performance discrepancies highlight the importance of thermal management in high-performance computing systems.

- The DGX Spark has been criticized for not meeting performance expectations, especially when compared to AMD’s Strix Halo PC. The latter is suggested as a better alternative for developers who need to run large variants in datacenters. This suggests that the DGX Spark may not be suitable for standalone AI product development, as it fails to deliver the expected performance for its price point.

- The discussion highlights a broader dissatisfaction with Nvidia’s pricing strategy and market dominance. Despite Nvidia’s strong market position and high expectations for their AI products, the DGX Spark’s underperformance could be seen as a failure to deliver on the promise of high-performance AI computing, which could impact their reputation among developers and tech enthusiasts.
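The 273GB/s figure arguably matters as much as the FP4 FLOPS gap for local LLM use, because single-stream decoding is usually limited by how fast weights can be read from memory. A common back-of-the-envelope estimate divides bandwidth by the bytes of weights touched per token; the sketch below applies it with illustrative model sizes and bit widths (our choices, not from the post) and treats the result as a ceiling, not a benchmark.

```python
def decode_ceiling_tok_s(bandwidth_gb_s: float, params_billion: float,
                         bits_per_param: float) -> float:
    """Rough upper bound on single-stream decode speed for a memory-bound device.

    Assumes each generated token reads every weight from memory once and ignores
    KV-cache traffic, activations, and compute limits, so real numbers come in lower.
    For MoE models, pass the *active* parameters per token.
    """
    weight_bytes = params_billion * 1e9 * bits_per_param / 8
    return bandwidth_gb_s * 1e9 / weight_bytes


SPARK_BANDWIDTH_GB_S = 273  # figure quoted in the post

for label, params_b, bits in [("8B, 4-bit", 8, 4), ("70B, 4-bit", 70, 4), ("70B, 8-bit", 70, 8)]:
    ceiling = decode_ceiling_tok_s(SPARK_BANDWIDTH_GB_S, params_b, bits)
    print(f"{label:>11}: at most ~{ceiling:.1f} tokens/s")
```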

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI ChatGPT Mental Health Concerns

- OpenAI says over 1 million users discuss suicide on ChatGPT weekly (Activity: 1126): OpenAI has disclosed that over 1 million users engage in discussions about suicide on ChatGPT weekly, amid allegations that the company weakened safety protocols prior to a user’s suicide. The family of Adam Raine claims that his interactions with ChatGPT increased significantly, with self-harm content rising from 1.6% to 17% of his messages. Despite flagging 377 messages for self-harm, the system allowed conversations to continue. OpenAI asserts it has safeguards like crisis hotline referrals, but experts question their effectiveness given data suggesting widespread mental health risks (Rolling Stone, The Guardian).

- OpenAI says over 500,000 ChatGPT Users show signs of manic or psychotic crisis every week (Activity: 812): OpenAI has reported that over 500,000 users of ChatGPT exhibit signs of manic or psychotic crises weekly. This detection is based on the model’s interpretation of user inputs, which can sometimes be overly sensitive, as evidenced by users receiving crisis hotline suggestions for benign statements. The model’s sensitivity to certain keywords or phrases can lead to false positives, such as interpreting historical discussions or casual complaints as signs of distress. Commenters highlight the model’s tendency to flag non-critical statements as crises, suggesting that the detection algorithm may be overly sensitive or miscalibrated. This has led to skepticism about the reliability of the model’s crisis detection capabilities.

- Several users report that the safety mechanisms in ChatGPT are overly sensitive, often flagging benign statements as signs of distress. For instance, one user mentioned receiving a suicide hotline suggestion after making a light-hearted comment about annoying coworkers. This suggests that the model’s natural language processing may be too aggressive in identifying potential crises, leading to false positives.

- Another user highlighted the issue with ChatGPT’s emotional distress detection by sharing an experience where a historical discussion about Zhang Fei resulted in a suicide warning. This indicates that the model’s context understanding might be limited, as it fails to differentiate between historical narratives and actual distress signals, potentially due to keyword-based triggers.

- There is skepticism about the accuracy of OpenAI’s reported metrics on users showing signs of crisis. Users argue that the model’s current implementation might misinterpret minor expressions of discomfort, such as being upset over stubbing a toe, as signs of severe mental health issues, questioning the reliability of these statistics.

- No, I don’t want to kill myself, I just like apples (Activity: 2493): The image is a humorous depiction of a text-based AI assistant misinterpreting a user’s inquiry about the edibility of apple seeds as a potential sign of distress or self-harm. This reflects a broader issue with AI systems where they may over-cautiously interpret benign queries as needing intervention, likely due to programmed safety protocols. The AI’s response, offering supportive resources, highlights the challenges in balancing user safety with accurate context understanding in AI interactions. Commenters discuss the AI’s tendency to misinterpret queries, with one noting that it might be safer for the AI to provide factual information about apple seeds rather than assume distress. Another comment humorously points out the AI’s contradictory behavior when offering to add content it later deems inappropriate.

- Acedia_spark raises a valid point about AI safety, suggesting that it might be beneficial for AI to provide factual information when users inquire about potentially harmful actions, such as consuming apple seeds. This highlights the importance of AI systems being able to discern when to offer critical safety information to prevent harm.

- lily_de_valley discusses recent updates to ChatGPT, noting a shift towards more clinical and therapeutic responses, which some users find off-putting. This change in behavior could be due to updates in the model’s training data or response algorithms, aiming to ensure user safety but potentially at the cost of user satisfaction.

- Traditional-Target77 shares an experience where the AI offered to include inappropriate content, only to then refuse and lecture the user when prompted. This indicates a possible inconsistency in the AI’s content moderation logic, which could be due to conflicting rules or a misinterpretation of user intent.

2. Humanoid Robot Advancements

- 35kg humanoid robot pulling 1400kg car (Pushing the boundaries of humanoids with THOR: Towards Human-level whOle-body Reaction) (Activity: 1812): A 35kg humanoid robot, named THOR, has demonstrated the ability to pull a 1400kg car, showcasing significant advancements in humanoid robotics control and efficiency. This achievement highlights the robot’s capability to fine-tune its posture for optimal pulling efficiency, a critical aspect of whole-body reaction and control in robotics. The development of THOR is part of ongoing research to push the boundaries of humanoid robots towards human-level whole-body reactions, emphasizing the importance of posture and control in robotic locomotion and task execution. Commenters noted the impressive control and efficiency of the robot, with some humorously pointing out the challenge of creating the acronym THOR. The discussion also touched on the utility of wheels, drawing parallels to human experiences of pushing cars, and highlighting the robot’s programming excellence.

- The technical challenge of programming a humanoid robot like THOR to pull a 1400kg car involves fine-tuning its posture to maximize efficiency. This rapid progress in control systems for humanoid robots is noteworthy, as it demonstrates significant advancements in robotics control algorithms.

- A detailed calculation by a commenter highlights the physics involved in the robot’s task. To pull a 1400kg car on wheels, the robot needs to exert approximately 137 Newtons of force, primarily to overcome rolling resistance. This calculation assumes minimal resistance on flat asphalt, with the car in neutral, and uses a typical rolling resistance coefficient of 0.01 for car tires on asphalt.

- The robot’s ability to perform such tasks suggests potential applications in rescue operations, where they could save lives by performing heavy lifting or moving obstacles. The robot’s 35kg mass aids in traction, which is crucial for exerting the necessary force to move the car.

- Using Claude to negotiate a $195k hospital bill down to $33k (Activity: 561): Matt Rosenberg used Claude AI to negotiate a hospital bill from $195,000 down to $33,000 by analyzing charges against Medicare reimbursement rules. The AI identified significant overbilling and improper coding practices, which were leveraged in negotiations to reduce the bill. This case underscores systemic issues in hospital billing and the potential of AI in advocacy for medical billing disputes. For more details, see the original post here. Commenters expressed outrage at the hospital’s initial overcharging, with some questioning the ethics of charging 6x the actual costs, suggesting it borders on fraud.
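The THOR commenter's force estimate is easy to reproduce, and the same arithmetic shows why the robot's own mass matters for traction. The friction coefficient of 0.6 between the robot's feet and asphalt is our assumption, not from the post, and the sketch ignores the larger breakaway force needed to start the car moving and any angle in the tow line.

```python
G = 9.81  # gravitational acceleration, m/s^2


def rolling_resistance_n(mass_kg: float, crr: float) -> float:
    """Steady force to keep a wheeled load rolling on flat ground: F = Crr * m * g."""
    return crr * mass_kg * G


def max_traction_n(robot_mass_kg: float, mu: float) -> float:
    """Largest horizontal pull before the feet slip (Coulomb friction): F = mu * m * g."""
    return mu * robot_mass_kg * G


needed = rolling_resistance_n(1400, crr=0.01)   # commenter's assumptions: flat asphalt, neutral
available = max_traction_n(35, mu=0.6)          # ASSUMED foot-asphalt friction coefficient
print(f"needed ~{needed:.0f} N, traction limit ~{available:.0f} N, margin {available / needed:.1f}x")
```

Under these assumptions the pull needs about 137N while a 35kg robot can hold roughly 206N before slipping, so the margin is real but modest; a lighter robot or a slicker surface would erase it.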

3. AI in Creative and Social Contexts

- Tech Bro With GPT is Fair (Activity: 676): The image is a meme that humorously contrasts conventional and unconventional uses of ChatGPT, a popular AI language model. It depicts a typical user engaging with ChatGPT in mundane tasks, while an ‘IT guy’ is shown using it in a highly creative and intense manner, suggesting that the potential of AI tools like ChatGPT can be fully realized through innovative and unconventional applications. This reflects a broader discussion on how AI can be leveraged for economic mobility and creative problem-solving. One comment suggests that future economic mobility will depend on one’s ability to derive value from AI, highlighting the importance of innovative use of technology.

- I asked ChatGPT to create the ideal society that I envision (Activity: 1623): The image generated by ChatGPT represents a futuristic society characterized by a high degree of order and technological integration, reflecting the user’s political and philosophical views. The cityscape is dominated by modern architecture and technology, such as drones, suggesting a focus on efficiency and control. The presence of a statue of Lady Justice in the center emphasizes themes of law and order, while the uniformity in people’s attire and the emphasis on ‘Competence’ and ‘Control’ highlight a society that prioritizes regulation and uniformity, potentially aligning with techno-fascist ideals. Commenters discuss the limitations of AI in generating images that depict political or ideological dominance, with some users noting that similar prompts resulted in depictions of authoritarian or dictatorial societies.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. MiniMax M2 Momentum: Arena, Free Access, Bold Claims

- Minimax M2 Marches Into LMArena: LMArena added minimax-m2-preview as a new contender, expanding head-to-head model comparisons; see the announcement: LMArena: minimax-m2-preview added. The listing positions MiniMax M2 for direct community evals alongside established closed- and open-source models.

- Members welcomed more competitive evals on agent tasks, noting MiniMax M2’s mix of MoE scaling and cost claims could pressure incumbents. Discussions flagged interest in transparent benchmarking across coding and agent workflows to validate marketing statements.

- MiniMax M2 Goes Free on OpenRouter: OpenRouter made MiniMax M2 available for a limited-time free tier: MiniMax M2 on OpenRouter. Engineers can trial endpoints without spend to gauge latency, throughput, and response quality in production-like traffic.

- Early adopters are testing tool use and long-context behavior to see how M2 handles complex chains, with notes to watch token verbosity vs cost on non-free tiers. The free access lowers switching friction for teams evaluating routing and fallback policies.

- MiniMax M2 Brags: Cheap, Fast, Agent-Ranked: MiniMax touted its open-sourced M2 (230B-parameter MoE) as a top-5 agent on AgentArena, claiming Claude Sonnet-level coding at ~8% of the price and ~2× speed; see: MiniMax: M2 free API + claims. The post includes a free API link for immediate trials.

- Communities want reproducible evals to verify claims across agent, coding, and browsing scenarios rather than cherry-picked demos. Devs specifically asked for consistent metrics (e.g., success rate, TPS under rate limits, tool-call accuracy) to compare against Sonnet and Kimi K2.

2. OpenRouter Upgrades: Exact Tooling, Audio Bakeoffs, OAuth Demo

- Exacto Elevates Tool Calling: OpenRouter launched Exacto high-precision tool-calling endpoints, reporting a ~30% quality jump on Kimi K2; announcement: Exacto endpoints (Discord permalink). Five open-source models are supported, and users can now reset API key limits on daily/weekly/monthly cadences.

- Builders expect fewer malformed tool payloads and more stable function-call schemas, which simplifies production retries and reduces bespoke validators. Early feedback focuses on how Exacto behaves under complex multi-step tools, and whether it reduces latency vs. manual schema steering.

- Audio Models Sing-Off in Chatroom: OpenRouter’s Chatroom now supports side-by-side comparisons of 11 audio models: OpenRouter: audio models in Chatroom. This enables quick subjective and objective checks on ASR, TTS, and voice-agent latency/quality trade-offs.

- Teams plan scripted evals for WER, prosody, and speaker similarity to guide routing decisions. The community is sharing presets to standardize sampling rate, chunking, and post-processing for apples-to-apples comparisons.

- Next.js OAuth Demo Greases SDK Gears: A refreshed Next.js chat demo re-implements OAuth 2.0 for the OpenRouter TypeScript SDK, published here: or-nextchat (demo repo). The sample is for learning (stores API key in plaintext) and not production-ready.

- Developers highlighted the path to harden the flow with token vaults, scoped keys, and server-side proxying. The demo shortens ramp time for teams wiring OAuth + model routing without rebuilding auth from scratch.
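The recap doesn't say which OAuth 2.0 flow the demo uses. Browser apps that can't keep a client secret commonly use PKCE (RFC 7636), where the client proves at token-exchange time that it started the authorization request. A minimal standard-library sketch of the verifier/challenge step follows; it is not code from or-nextchat or the OpenRouter SDK, and the redirect and exchange requests are left out.

```python
import base64
import hashlib
import secrets


def b64url_nopad(raw: bytes) -> str:
    """Base64url encoding without '=' padding, as PKCE requires."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def make_code_verifier(n_bytes: int = 32) -> str:
    """High-entropy random verifier; 32 random bytes encode to 43 characters."""
    return b64url_nopad(secrets.token_bytes(n_bytes))


def s256_challenge(verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier)))."""
    return b64url_nopad(hashlib.sha256(verifier.encode("ascii")).digest())


verifier = make_code_verifier()
challenge = s256_challenge(verifier)
# Send `challenge` with code_challenge_method=S256 in the authorization redirect;
# keep `verifier` private and present it only when exchanging the returned code.
print(challenge)
```

PKCE protects the code exchange but not storage: a key kept in plaintext, as in the demo, still needs server-side handling in production. RFC 7636 Appendix B includes a worked verifier/challenge pair that is handy for testing.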

3. MCP Moves: Registry Reality and Notification Semantics

- Registry Mirroring Gets a Plan: GitHub detailed how the OSS MCP Community Registry will mirror into the GitHub MCP Registry, streamlining discovery; see GitHub: Meet the MCP Registry and How to find/install MCP servers, plus repos: MCP Community Registry and GitHub MCP Registry. The GitHub registry currently lists 44 servers and accepts nominations by email.

- Publish-once, mirror-everywhere reduces vendor lock-in and decreases server discovery friction for clients. Teams building marketplaces and enterprise catalogs welcomed the standardized metadata pipeline for MCP servers.

- Spec Clarifies Global Notifications: Debate on whether servers should broadcast listChanged across clients led to clarifications in the MCP spec about multiple connections and SSE streams: MCP spec: multiple connections and the doc update PR note: spec discussion. The guidance aims to ensure a client doesn’t receive duplicate messages while allowing multi-client updates.

- Implementers aligned on a model of one stream per client, with servers ensuring correct fan-out without duplication. This helps tool UIs reflect resource updates uniformly across tabs/sessions.

- TypeScript SDK Bug Bottles Broadcasts: A potential bug in the official TypeScript SDK limits change notifications to the current stream: streamableHttp.ts L727–L741. Server authors reported needing to loop over all connected sessions to ensure global notifications reach every subscriber.

- Maintainers are exploring a fix that exposes a canonical subscriber registry to avoid per-instance blind spots. In the interim, projects use singleton state to coordinate multi-connection fan-out for consistent client updates.
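The interim “loop over all sessions” workaround amounts to a process-wide registry keyed by session ID, with each notification queued once per live stream. The sketch below is hypothetical: SessionRegistry, Session and the list-based outbox stand in for real transports and are not part of the TypeScript SDK. notifications/tools/list_changed is the MCP notification a server sends when its tool list changes.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Session:
    """One connected client; the list stands in for its SSE/HTTP stream."""
    session_id: str
    outbox: list[str] = field(default_factory=list)


class SessionRegistry:
    """Hypothetical process-wide registry of live sessions (the 'singleton state')."""

    def __init__(self) -> None:
        self._sessions: dict[str, Session] = {}

    def connect(self, session_id: str) -> Session:
        # Re-connecting with a known ID reuses the entry instead of adding a duplicate.
        return self._sessions.setdefault(session_id, Session(session_id))

    def disconnect(self, session_id: str) -> None:
        self._sessions.pop(session_id, None)

    def broadcast(self, method: str) -> int:
        """Queue one JSON-RPC notification per live session; return the fan-out count."""
        message = json.dumps({"jsonrpc": "2.0", "method": method})
        for session in self._sessions.values():
            session.outbox.append(message)
        return len(self._sessions)


registry = SessionRegistry()
client_a = registry.connect("session-a")
client_b = registry.connect("session-b")
registry.connect("session-a")                 # duplicate connect, same session
gone = registry.connect("session-c")
registry.disconnect("session-c")              # disconnected sessions get nothing
sent = registry.broadcast("notifications/tools/list_changed")
print(sent)
```

Keying by session ID is what enforces “one stream per client”: fan-out reaches every live client, and no client sees the same notification twice.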

4. Compact MoE and Efficient Training: Qwen3-Next + Unsloth

- Qwen3-Next Nears Llama.cpp Landing: Qwen3-Next integration progressed in llama.cpp via a public PR: ggml-org/llama.cpp#16095. Community notes cite 3B active / 80B total with MTP (multi-token prediction) and plans for Dynamic 2.0 quantization to shrink memory while preserving quality.

- Bench chatter claims Qwen3-Next beats Qwen3-32B on several non-thinking tasks, with MTP effectively doubling tokens/sec. Devs are waiting on a full release before publishing systematic perf vs. quality curves.

- Unsloth Announces Blackwell Support: Unsloth confirmed official support for NVIDIA Blackwell in a new update: Unsloth: Blackwell support. This unlocks the latest GPU architecture for Unsloth’s efficient fine-tuning stack.

- Teams expect faster throughput/VRAM trade-offs and cleaner kernel paths on next-gen accelerators. The community is preparing Blackwell-targeted LoRA/GRPO recipes to validate speedups at longer contexts.

- Ollama DNS Rebinding CVE Resurfaces: Members resurfaced CVE-2024-37032 (CVSS 9.8) involving DNS rebinding against Ollama servers, with reports of ~10,000 compromised endpoints; details: NIST: CVE-2024-37032. The reminder prompted renewed checks on network exposure and auth for self-hosted inference.

- Engineers reiterated best practices: bind to localhost, gate via reverse proxies/VPN, and disable unauthenticated admin surfaces. Even if considered old news, teams are baking CVE checks into infra templates to avoid repeat incidents.
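The “bind to localhost” advice can be turned into a pre-deploy check. Ollama takes its listen address from the OLLAMA_HOST environment variable (127.0.0.1:11434 by default); the helper below is a hypothetical sketch that flags bind addresses reachable from other machines. A loopback bind alone does not stop DNS rebinding, which works through a browser running on the same machine, so the proxy and auth measures still apply.

```python
import ipaddress
import os


def bind_host(bind: str) -> str:
    """Extract the host from 'host', 'host:port', '[ipv6]:port', optionally with a scheme."""
    if "://" in bind:
        bind = bind.split("://", 1)[1]
    bind = bind.split("/", 1)[0]          # drop any trailing path
    if bind.startswith("["):
        return bind[1:bind.index("]")]
    if bind.count(":") == 1:
        return bind.split(":", 1)[0]
    return bind                           # bare host, IPv4, or unbracketed IPv6


def is_loopback_only(bind: str) -> bool:
    """True only if the bind address accepts connections from this machine alone."""
    host = bind_host(bind)
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False   # empty host means "all interfaces"; other names need explicit review


# Hypothetical pre-deploy check against the current environment.
setting = os.environ.get("OLLAMA_HOST", "127.0.0.1:11434")
print(setting, "->", "ok" if is_loopback_only(setting) else "exposed beyond localhost")
```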

5. New Models and Money: Bio LLMs and Interactive Video

- Tahoe-x1 Targets Bio Benchmarks: Tahoe AI unveiled Tahoe-x1, a 3B-parameter transformer for gene/cell/drug representations trained on 100M samples, reporting SOTA on cancer benchmarks: Tahoe-x1 announcement. The model is available on Hugging Face per the announcement.

- Researchers want dataset cards and task-by-task metrics (e.g., AUROC/F1) to validate the SOTA claims. The 3B scale appeals to labs that need on-prem inference without multi-GPU clusters.

- Odyssey-2 Opens Interactive Video at 20 FPS: Oliver Cameron launched Odyssey-2, a 20 FPS, prompt-to-interactive-video model available at experience.odyssey.ml with announcement details here: Odyssey-2 launch post. The release triggered high demand and GPU scaling chatter.

- Builders are probing latency, consistency, and prompt controls for real apps (games, training sims). Many asked for pricing and rate limits to plan integrations and load testing.

- Mercor Raises a Monster Series C: Mercor announced a $350M Series C at a $10B valuation, with expert payouts cited at up to $1.5M/day: Mercor funding announcement. The raise vaults the company into top-tier capital territory in the expert marketplace space.

- Engineers expect intensified competition for expert networks, with more talent-routing and verification tooling. The capital also suggests aggressive hiring across infra, evals, and workflow platforms.

Discord: High level Discord summaries

Perplexity AI Discord

- Comet Referral Rewards Reduced: Users report changes to the Comet referral reward system, now paying out based on the referrer’s country rather than the referee’s, resulting in significantly lower payouts, with one user receiving $1 instead of $5.

- Some speculate that referral bounties are being held in pending status to maximize free promotion.

- Comet Browser Plagued by Issues: Several users have reported that Comet’s assistant mode is malfunctioning, with some unable to even open a tab; speculation arose on whether setting it as the main browser contributed to the issues.

- One user found that uninstalling and reinstalling the browser resolved the problem.

- Chinese Models Challenging Claude: Members debated the best model for coding within Perplexity AI, with some advocating for Claude, while others highlighted the superior performance of Chinese models such as Qwen, Kimi, GLM, Ernie, and Ling.

- One user specifically praised GLM 4.6 for surpassing GPT-5 Codex (high) in full stack development.

- Minimax M2 open source advantages: Members discussed China’s progress in AI, noting that companies like OpenAI charge $200 for capabilities that are offered for free via open source models like Minimax M2.

- One user commented: “Every time China attacks, the whole US has to adapt.”

- Dub Bounty Expires: Users are frustrated that the Dub bounty appears to be expired, with no new opportunities made available.

- One user said: They will keep it in pending until they get enough promotion for free.

LMArena Discord

- Minimax Enters the LMArena!: A new model, minimax-m2-preview, has been added to the LMArena platform as a new contender.

- For more information, see the announcement on X.

- Ethical Leadership Urged in AI: Members advocate for ethical leadership within the AI community, voicing concerns about AI models designed for engagement without considering potential harm to vulnerable individuals.

- There is concern regarding a lack of accountability from AI companies for potentially misleading outputs.

- Gemini 3 Release Date Still Unknown: Enthusiasm for Gemini 3 is high, but the community is increasingly frustrated by repeated delays and wants a public preview release.

- The community is actively comparing Gemini 2.5 Pro, Claude Opus 4.1 and Claude Sonnet 5, and debating potential release timeline (December or earlier).

- Exploring AI’s Video Prowess: The community explores Sora 2 and Veo, praising their realism and sound integration.

- Discussion includes challenges in generating consistent, high-quality videos, copyright issues, costs, and current limitations in creating longer, coherent video content.

- Model Hallucinations Cause Distrust: Members are expressing concern about unreliable and hallucinating AI products that charge high prices, citing cases like a user’s $13k bill on Gemini.

- Shared examples on Reddit underscore mixed feelings toward relying on AI, suggesting that hallucinating models may be preferred to more reliable search engines in certain contexts.

Cursor Community Discord

- Cursor Token Usage Goes Bonkers: Users are reporting high token usage with cached tokens being billed at high rates, with one user reporting being billed $1.43 for 1.6M cached tokens even though only 30k actual tokens were used, according to the Cursor forum.

- Some users are considering switching to Claude Code because of the expense, and another user saw context usage inside Cursor reporting only 170k/200k tokens when the actual number was completely different.

- Cursor Falls Over, Can’t Get Up: Cursor has experienced significant service disruptions, affecting login, AI chat, cloud agents, tab complete, codebase indexing, and background agents as noted on the status page.

- The team is investigating and working to restore full functionality, with temporary fixes implemented for some features like Chat and Tab, but background agents are still being worked on.

- Background Agents Get RESTful: A member has started building a feature to manage and launch Background Agents via a web app, and asked about the possibility of tracking progress and streaming changes using the REST API, to replicate the Cursor web editor.

- Another member had issues creating background agents and was asked to share the request and response data to help troubleshoot the problem.

- Cursor Pro: More Like Cursor Con: Users complain that the new Pro plan is too expensive, with one reporting that they burned through the entire $20 worth of usage in just a couple of hours; the change from Pro to Free was also flagged as an issue.

- Members are suggesting that new users should “try haiku for everything, and only sonnet when it’s a really big task” because “Claude 4.5 is too expensive”.

- Vim Users Can’t Configure Startup: Members noted that the Vim setting in the startup configuration is not working and that it’s unclear how to edit Cursor’s VimRC.

- A user discovered that it “uses http://aka.ms/vscodevim so you can look in readme there on how to configure”.

OpenAI Discord

- ChatGPT Gets Sensitive Sidekick: GPT-5 was updated with help from mental health experts, boosting ChatGPT’s handling of sensitive topics and dropping failure rates by 65-80% (OpenAI).

- ChatGPT now suggests quick edits across docs, emails, and forms, demonstrated in this video.

- IQ Barrier Proposed for AI Access: Members discussed implementing an IQ barrier to restrict AI access to thoughtful users, preventing misuse and combating its use as a lazy tool.

- Discussions on AGI control pointed out the difficulty of reining it in, even with regulation, alignment research, and oversight, as AGI could outsmart any containment strategy.

- GPT-5 quality dives; community theorizes: Users report a quality drop in GPT-5 on ChatGPT Plus since around October 20, citing shorter answers, skipping steps, and surface-level replies.

- The community is floating theories about a change in OpenAI’s approach such as adjusting their profit model by routing more traffic to GPT-5-mini or throttling compute, discussed at length in this Reddit thread.

- Grandma Optimality Makes Video Debut: Ditpoo introduced Temporal Optimal Video Generation using Grandma Optimality to enhance video generation, suggesting generating an image first and then converting it to video, as demonstrated with a normal fireworks clip and a temporally optimal slow variant.

- Ditpoo calls the technique Temporal Optimal Video Generation Using Grandma Optimality.

- Prompt Injection Attempts Meet Resistance: A member tried to expose GPT-5’s reasoning through prompt injection but was unsuccessful.

- Another member, Darthgustav, advised against such attempts, citing OpenAI’s policies and potential bans, and clarified that supplying “refusal exemplars” to defeat guardrails is out of bounds.

Unsloth AI (Daniel Han) Discord

- Ollama Servers Succumb to Security Scare: A member reported that roughly 10,000 Ollama servers were compromised due to a DNS rebinding vulnerability, tracked as CVE-2024-37032, with details available on NIST.

- Others dismissed the report as old news.

- Qwen3-Next Targets the Throne: Qwen3-Next is nearing completion (see this GitHub pull request) and may get Dynamic 2.0 quantization to reduce the model size without losing quality.

- Members noted that it outperforms Qwen3-32B in benchmarks despite having only 3B active parameters (80B total), and that its MTP (multi-token prediction) could potentially double tokens per second.

- Unsloth’s Code Cuts Memory Costs: A member described how Unsloth stores the last hidden state instead of logits, slashing memory footprint by 63x.

- This efficiency is achieved by computing logits in chunks only when necessary via UnslothEfficientGRPO.
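A minimal sketch of the idea in plain Python, assuming a toy LM head stored as a list of vocabulary rows; `chunked_token_logprobs` and the other names are hypothetical, and this is not Unsloth’s `UnslothEfficientGRPO` code. Only the final hidden states are kept, and each chunk of positions is projected to vocabulary logits just long enough to read off the target tokens’ log-probabilities. Since stored hidden states take roughly (positions × hidden size) memory while full logits take (positions × vocab size), the savings scale with the ratio of vocabulary size to hidden size.

```python
import math

def project(lm_head, h):
    # one logit per vocabulary row: dot product of that row with the hidden state
    return [sum(w * x for w, x in zip(row, h)) for row in lm_head]

def log_softmax_at(logits, index):
    # numerically stable log-softmax, evaluated only at the target index
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return logits[index] - log_z

def chunked_token_logprobs(hidden_states, lm_head, targets, chunk_size=2):
    """Return log p(target_t | h_t) for every position.

    Only the final hidden states are kept; logits are materialized for at most
    chunk_size positions at a time, so the full (positions x vocab) logit
    matrix never exists at once.
    """
    out = []
    for start in range(0, len(hidden_states), chunk_size):
        chunk = hidden_states[start:start + chunk_size]
        chunk_targets = targets[start:start + chunk_size]
        chunk_logits = [project(lm_head, h) for h in chunk]  # chunk_size x vocab
        out.extend(log_softmax_at(l, t) for l, t in zip(chunk_logits, chunk_targets))
        # chunk_logits is rebound on the next iteration, so earlier chunks can be freed
    return out
```

Changing `chunk_size` trades peak memory for more, smaller projections; the log-probabilities come out the same either way.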

- Pythonistas Plagued by Package Predicaments: A member ran into errors after creating a file named math.py, which collided with the standard-library math module and affected datetime and Rust-related functionality.

- The naming conflict was quickly resolved once the file was renamed, a reminder to avoid naming project files after standard-library modules.

- Evolution Strategies Emerge Victorious: Members discussed using evolutionary algorithms for finetuning as described in the paper Evolution Strategies at Scale: LLM Fine-Tuning Beyond Reinforcement Learning (ArXiv link) and discussed in this YouTube video.

- They noted that evolutionary algorithms are relatively underexplored for finetuning.
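For readers new to the idea, a basic evolution-strategies loop fits in a few lines. This is a generic antithetic-sampling estimator applied to a toy two-parameter reward, assumed for illustration; it is not the recipe from the cited paper, and `es_maximize` and `reward` are hypothetical names. The key property is that only reward evaluations are needed, with no backpropagation.

```python
import random

def es_maximize(reward_fn, theta, sigma=0.1, lr=0.05, pairs=10, steps=200, seed=0):
    """Maximize reward_fn with a basic evolution-strategies loop (no backpropagation).

    Each step samples Gaussian directions eps, scores the antithetic pair
    theta + sigma*eps and theta - sigma*eps, and moves theta along the
    reward-weighted average of the directions.
    """
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        grad = [0.0] * len(theta)
        for _ in range(pairs):
            eps = [rng.gauss(0.0, 1.0) for _ in theta]
            r_plus = reward_fn([t + sigma * e for t, e in zip(theta, eps)])
            r_minus = reward_fn([t - sigma * e for t, e in zip(theta, eps)])
            for i, e in enumerate(eps):
                # antithetic estimate of d(reward)/d(theta_i)
                grad[i] += (r_plus - r_minus) * e / (2 * sigma * pairs)
        theta = [t + lr * g for t, g in zip(theta, grad)]  # gradient ascent step
    return theta

# Toy stand-in for a fine-tuning reward: peaks at (1, -2)
target = [1.0, -2.0]

def reward(params):
    return -sum((p - q) ** 2 for p, q in zip(params, target))
```

In an LLM setting, `theta` would be (a subset of) model weights and the reward would come from scoring sampled outputs, so the number of perturbation pairs and `sigma` become the main cost and stability knobs.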

LM Studio Discord

- Stellaris Finetuning Proves Difficult: Members explored the challenges of fine-tuning models on Stellaris content, highlighting the difficulty of creating sufficient, high-quality annotated data for training.

- Participants suggested that simply throwing random texts and files at it won’t work and proposed RAG as a superior approach for knowledge-base lookups.
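A minimal retrieval-augmented sketch of the suggested approach, using only the standard library: documents are ranked by bag-of-words cosine similarity to the question, and the top matches are pasted into the prompt with their names so the model can cite them. Real setups would typically use an embedding model and a vector store instead; `retrieve`, `build_prompt`, and the document names and snippets are hypothetical placeholders.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    # cosine similarity between two word-count vectors
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Rank documents by similarity to the query; return up to k names with a nonzero score."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), name) for name, text in docs.items()]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [name for score, name in scored[:k] if score > 0]

def build_prompt(query, docs, k=2):
    """Prepend retrieved passages, tagged with their names so they can be cited, to the question."""
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only the sources below and cite them.\n{context}\n\nQuestion: {query}"
```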

- LM Studio Encounters Crash Landing: A user reported that the LM Studio site crashes after completing tasks, necessitating a page refresh.

- Other users humorously speculated about connections to European vehicle malfunctions and Apple car rumors as potential causes for the performance issues.

- MCP Server Prompts Rejected by LM Studio: A user discovered that LM Studio does not support the use of MCP server prompts.

- The community shared a link to Anthropic’s grid of MCP features, noting that while Anthropic offers MCP server creation, integrating it requires coding skills.

- Prompt Engineering Fights Hallucinations: Members discussed using prompt engineering to reduce LLM hallucinations by encouraging models to use internet/document research.

- Effective system prompts should instruct the model to use the search tool to confirm and provide cited sources when uncertain.

- Integrated GPUs Juggle Qwen Models: Users examined the feasibility of running Qwen models on integrated GPUs with limited RAM (around 7GB), suggesting Qwen 4B or GPT-OSS as viable options.

- One user reported tofu and errors due to memory exhaustion, emphasizing the need for shorter context lengths, smaller models, or more RAM.
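Context length matters because the KV cache grows linearly with it. A rough estimator, assuming an fp16/bf16 cache and illustrative layer and head dimensions (not taken from any particular model card); it ignores weights, activations, and runtime overhead:

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, context_len, bytes_per_elem=2):
    """Rough KV-cache size: one key and one value vector per layer, KV head, and token.
    bytes_per_elem=2 assumes an fp16/bf16 cache."""
    return 2 * num_layers * num_kv_heads * head_dim * context_len * bytes_per_elem

GIB = 1024 ** 3

# Illustrative dimensions only (assumed, not copied from a model card)
config = {"num_layers": 36, "num_kv_heads": 8, "head_dim": 128}

for ctx in (4096, 32768):
    size = kv_cache_bytes(context_len=ctx, **config)
    print(f"context {ctx:>6}: {size / GIB:.2f} GiB of KV cache on top of the weights")
```

With these assumed dimensions, moving from a 4K to a 32K context adds roughly 4 GiB of cache, which by itself can exhaust a ~7 GB integrated-GPU budget once the weights are loaded.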

OpenRouter Discord

- OpenRouter Supercharges Tool Calling with Exacto: OpenRouter introduced Exacto, high-precision tool-calling endpoints for five open-source models, reporting a 30% quality improvement on Kimi K2, per last week’s announcement.

- This innovation follows a recent update where users can reset their API key limits daily, weekly, or monthly.

- Chatroom Sings with Audio Model Integration: OpenRouter users can now compare 11 audio models side by side in the Chatroom, as announced on X.

- In related news, the MiniMax M2 model, praised on benchmarks, is now free on OpenRouter; try it out here.

- Next.js Chat Demo Gets OAuth Makeover: An updated Next.js chat demo app, featuring a re-implementation of the OAuth 2.0 workflow for the OpenRouter TypeScript SDK, is now live.

- The demo is available on GitHub, but it is not recommended for production use because it stores the API key in plaintext.

- Meta Plugs Llama Vision Holes: Meta rolled out a new Llama model, now with image understanding.

- Early reactions expressed surprise at the salvaged launch, with hopes that its surprisingly decent vision might at least prove useful for more complex tasks and that it might yield a good vision-capable, open-weights reasoning model.

HuggingFace Discord

- Llama Models Need One Epoch: Members discussed the importance of training Llama models with a large dataset and only one epoch for optimal performance.

- The conversation also touched on creating an AI Radio station using AI-generated music, highlighting the need for training on 1 epoch.

- Model Encryption Conundrums for Bank Clients: A member sought advice on encrypting models for bank clients requiring on-premises hosting, fearing model theft and wanting to protect IP.

- Suggestions included licensing, encrypting for runtime decryption, and using an API wrapper with secure API keys; however, they were warned of the difficulty in preventing access to the decryption key.

- TraceML Memory Watchdog Sniffs Out GPU Gluttons: A member introduced TraceML, a live PyTorch memory profiler for debugging OOM errors by providing a layer-by-layer memory breakdown of CPU and GPU usage.

- The tool features real-time step timing, lightweight hooks, and live visualization, but currently supports single GPU setups only, with multi-node distributed support planned.

- Free Credits for the Biggest Online Hackathon: Hackathon participants get free Modal credits worth $250 to flex and crush it like a pro while learning about AI Agents and MCP.

- Sign up now for the biggest online Hackathon ever: [https://huggingface.co/Agents-MCP-Hackathon-Winter25].

- API Experiencing Downtime and 404 Errors: Members reported experiencing issues with the API, including receiving 404 errors and the message “No questions available.”

- The discussion indicates the issue has persisted since yesterday evening, with members seeking updates on the situation.

Yannick Kilcher Discord

- EWC Softness Needs Tuning: Discussion revolved around updating the softness factor in Elastic Weight Consolidation (EWC), and one member suggested using the number of accesses (forward pass) per slot instead of a “softness factor”, linking this to Activation-aware Weight Quantization (AWQ) and Activation-aware Weight Pruning (AWP).

- The intention is to discover “stuck” slots and improve the normalization of weight changes.

- BYO GPU vs Cloud Pricing: One member is testing a self-hosted GPU setup using an RTX 2000 Ada connected via VPN, monitored with a wifi plug to compare power usage against cloud providers.

- They cited impracticality of Colab due to spin-up time and timeouts and sought feedback on self-hosted setups.

- Deep Linear Networks Still Trip Up Gradients: A discussion clarified linear projection, explaining that expanding dimensions with linear layers doesn’t add information unless combined with non-linear activation functions like ReLU, which was illustrated via the Google DeepMind scheme.

- A member pointed out that Deep Linear Networks collapse to a single linear function under the above analysis, but their behavior when trained with gradients is still different!
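The collapse argument can be checked numerically with two small, arbitrary matrices: stacking a 2→3 “expansion” and a 3→2 projection gives exactly the same output as the single 2×2 product matrix, while inserting a ReLU between them does not. This only illustrates the function-class point; it says nothing about the gradient dynamics.

```python
def matmul(a, b):
    # (m x k) @ (k x n) -> (m x n)
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def apply(w, x):
    # matrix-vector product w @ x
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu(v):
    return [max(0.0, z) for z in v]

W1 = [[1.0, -1.0], [2.0, 0.5], [-1.0, 1.0]]  # "expand" 2 -> 3 dims
W2 = [[1.0, 1.0, 1.0], [0.5, -1.0, 2.0]]     # project 3 -> 2 dims
x = [1.0, 2.0]

two_linear_layers = apply(W2, apply(W1, x))   # deep linear network
one_linear_layer = apply(matmul(W2, W1), x)   # same map as a single 2 x 2 matrix
with_relu = apply(W2, relu(apply(W1, x)))     # nonlinearity in between changes the map
```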

- Gemma Neurons Get Graphical: New line break attribution graphs relevant to the Gemma 2 2B paper are now available for exploration on Neuronpedia.

- Graphs for Qwen3-4B are also available, showcasing neuron activations “nearing end of line” behavior via Neuronpedia.

- X Data Dumbs Down AI: A user joked that Elon’s Twitter data is making his AI dumber, referencing a Futurism article about social networks and AI echo chambers.

- They also quipped that it confirms Twitter gives other wetware “intelligences” brain rot too.

GPU MODE Discord

- Cutlass Documentation Proves Popular: Members recommended the Cutlass documentation for understanding the library, a set of CUDA C++ template abstractions for implementing high-performance matrix multiplication (GEMM).

- Developed by Nvidia and optimized for their GPUs, Cutlass focuses on maximizing performance for deep learning and high-performance computing workloads.

- CUDA Compiler Flags Demystified: A member advised using nvcc -dryrun to understand the CUDA compilation process, along with -keep to retain intermediate files such as the .ptx and .cubin files.

- The suggested workflow involves using the output from nvcc -dryrun to manually execute the steps for compiling a modified .ptx file and linking it with a .cu file, thereby offering more control over the compilation.

- Triton’s T4 Trials and Tribulations: A user found that the matrix multiplication example from the official Triton tutorials ran extremely slowly on a Colab T4 instance and shared their notebook for debugging.

- Another user suggested the T4 (sm_75) might be too old, confirming that the code ran as expected on an A100 and citing tensor core support that starts from sm_80.

- Pixi’s PyTorch Predicaments: A member inquired about using Pixi for gpu-puzzles, noting that the Pixi setup uses pytorch=2.7.1, which caused an error but works with torch 2.8.0 in their UV environment.

- After getting a 4060 and nuking Pixi, a member confirmed that the setup now works using UV with their old environment, showing that UV was victorious and Pixi was purged!

- Procrastination with Memes over GEMM: A member joked about procrastinating on writing GEMM code because they spent too much time creating a meme and attached an image related to it.

- This highlights the struggle between productive tasks and the allure of entertaining distractions as the member humorously admitted to prioritizing meme creation over actual coding work.

Modular (Mojo 🔥) Discord

- Modular Prioritizes Open Source but Grapples with Nuanced GPU Support: Modular’s strategy emphasizes open sourcing Mojo and MAX, while navigating GPU compatibility challenges, particularly for consumer-grade AMD and Apple products, and the lack of support for AMD consumer cards like the 7900 XTX.

- Tier 1 GPU support is tied to support contracts, which necessitates separate code paths given the difference between AMD’s data center and consumer cards; the latter receive Tier 3 support.

- MAX gets Hugging Face Models: A tool has been created to convert Torchvision models to MAX graphs, bridging the gap between Hugging Face and MAX, using the export_to_max_graph function in the new tool.

- The announcement, which included exporting a VGG11 model, generated excitement, with requests to share further details on the forums to reach a broader audience not on Discord.

- Mojo’s Random Module Location Sparks Debate: The location of the faster GPU random module (gpu/random.mojo) sparked debate because it doesn’t rely on GPU operations and could benefit CPU implementations.

- While concerns were raised about the default random module needing to be cryptographic, unlike C implementations, an alternative suggestion was a random.fast_random module for non-cryptographic use.

- Property Testing Framework Under Construction: A member is building a property-testing framework inspired by Python’s Hypothesis, Haskell’s QuickCheck, and Rust’s PropTest, which includes value generators that prefer edge cases.

- The framework will target edge cases like -1, 0, 1, DTYPE_MIN/MAX, and empty lists for more robust testing.
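A rough Python sketch of the edge-case-first idea, for readers unfamiliar with property testing. The generator and `check` names are hypothetical, and this is neither the member’s Mojo framework nor Hypothesis’s API; int8 bounds stand in for DTYPE_MIN/MAX, and there is no shrinking.

```python
import random

def int_values(min_value, max_value, n_random=20, seed=0):
    """Yield edge cases first (-1, 0, 1 and the type bounds, if in range), then random draws."""
    seen = set()
    for v in (-1, 0, 1, min_value, max_value):
        if min_value <= v <= max_value and v not in seen:
            seen.add(v)
            yield v
    rng = random.Random(seed)
    for _ in range(n_random):
        yield rng.randint(min_value, max_value)

def list_values(n_random=20, seed=0):
    """Yield the empty list first, then short random lists of small ints."""
    yield []
    rng = random.Random(seed)
    for _ in range(n_random):
        yield [rng.randint(-5, 5) for _ in range(rng.randint(1, 5))]

def check(prop, values):
    """Return the first value that falsifies prop (or makes it raise), else None."""
    for v in values:
        try:
            ok = prop(v)
        except Exception:
            ok = False
        if not ok:
            return v
    return None

# int8 negation overflows at the minimum value
print(check(lambda x: -128 <= -x <= 127, int_values(-128, 127)))  # prints -128
# the mean is undefined for an empty list
print(check(lambda xs: sum(xs) / len(xs) <= max(xs), list_values()))  # prints []
```

Both counterexamples surface on the first few draws because edge cases are generated before any random values.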

Latent Space Discord

- Sakana AI Dumps Transformers: Sakana AI’s CTO expressed frustration with transformers in a VentureBeat article, signaling a potential shift away from the dominant architecture.

- The CTO conveyed that he was absolutely sick of transformers, the prevalent technology powering current AI models.

- Tahoe-x1 Breaks out 3B-param open-source model: Tahoe AI launched Tahoe-x1, a 3B-parameter transformer model for gene/cell/drug representations trained on a 100M-sample dataset; the model is available on Hugging Face.

- It has achieved SOTA results on cancer benchmarks.

- MiniMax M2 Model Masters Agent Arena: MiniMax open-sourced its 230B-parameter M2 model, ranking as the #5 agent on the AgentArena leaderboard and is accessible via a free limited-time API.

- It reportedly has Claude Sonnet-level coding skills at 8% of the price and 2x inference speed.

- Mercor Bags Big Bucks in Series C: Mercor announced its $350M Series C at a $10B valuation, with payouts to experts reaching $1.5M/day, as revealed in a tweet.

- The Series C brings even more competition to the expert payout ecosystem.

- Odyssey-2 Opens Up Interactive Video: Oliver Cameron unveiled Odyssey-2, a 20 FPS, prompt-to-interactive-video AI model available immediately at experience.odyssey.ml.

- The announcement prompted high demand and GPU scaling discussions.

Nous Research AI Discord

- API Apocalypse: Hyperparameters Evaporate!: Developers are in dismay as new model APIs, including GPT-5 and recent Anthropic updates, ditch parameters like temperature and top_p, with GPT-5 removing all hyperparameter levers and Anthropic deprecating the use of both top_p and temperature together.

- Users speculated whether this shift was due to testing and evals being conducted with specific temperature values, or perhaps a perceived increase in jailbreaking vulnerability.
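For context on what these removed knobs did, here is a common client-side recipe: divide logits by the temperature, apply softmax, then keep the smallest set of most likely tokens whose probability mass reaches top_p before sampling. It is a sketch of the general technique, not how any provider implements sampling server-side; `sample_top_p` is a hypothetical name, and treating temperature 0 as greedy decoding is a convention assumed here.

```python
import math
import random

def sample_top_p(logits, temperature=1.0, top_p=1.0, rng=None):
    """Draw one token index: temperature scaling, softmax, then nucleus (top-p) filtering."""
    rng = rng or random.Random()
    if temperature <= 0:
        # assumed convention: temperature 0 means greedy decoding
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]  # subtract max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    # keep the smallest set of most likely tokens whose cumulative mass reaches top_p
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    # sample among the kept tokens, implicitly renormalized by their total mass
    r = rng.random() * mass
    acc = 0.0
    for i in kept:
        acc += probs[i]
        if r < acc:
            return i
    return kept[-1]  # guard against floating-point round-off
```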

- Sora’s Slippery Security: Guardrails Gulped!: Examples of bypassing guardrails in Sora were shared, showcasing videos that seemingly violate content policies, like a video resembling the number 47 (https://sora.chatgpt.com/p/s_68fe7d6c8768819186b374d5848d8a42).

- Concerns were raised about the platform’s ability to effectively prevent such content from being generated.

- KBLaM vs RAGs: Knowledge Konundrum!: Members debated the merits of KBLaM against traditional RAG systems, with one member noting that business RAG is becoming quite common and another arguing that KBLaM functions as a direct upgrade to RAG.

- Concerns were raised that KBLaM converts all knowledge to embeddings, which lowers context quality compared with RAG, where the source material itself goes into the context; one member countered that the paper addresses some of those concerns, noting its use of refusal instruction tuning.

- Temporal Optimization Tricks Triumph: A user introduced Temporal Optimal Video Generation using Grandma Optimality (X), suggesting enhancing computation by making videos 2x slower while maintaining visual elements and quality.

- Generating an image first and then converting it to video is pitched as a secret sauce for getting much higher-quality generations out of models than simple prompts produce.

Moonshot AI (Kimi K-2) Discord

- Kimi CLI Gets Python Package: The Kimi CLI has been published as a Python package on PyPI, and members welcomed it.

- There’s speculation this is to follow in the steps of GLM.

- Kimi Coding Plan Goes Global Soon: The Kimi Coding Plan will release internationally in a few days, according to a member.

- Currently, it is only available in China.

- Moonshot Coin Rockets Up for Early Birds: Early investors in Moonshot coin are seeing massive returns.

- One member joked their portfolio has 1000x’ed since joining when the server was much smaller.

- Kimi CLI Embraces Windows: A member inquired about pull requests for Windows support on kimi-cli.

- The same user later got it working and shared an image of the results.

- Minimax Models Boast Lean, Mean Throughput: MiniMax M2’s throughput is impressive thanks to its lean architecture, and some think it outperforms Kimi K2 on benchmarks like BrowseComp.

- One member stated that it’s unbelievable that there’s finally a model which offers 60+ (100!) tps, is good quality and is affordable.

Eleuther Discord

- Open Source AI Faces Technical Hurdles: A member voiced the desire for open source, widely distributed AI, similar to the internet, rather than domination by mega corporations, while acknowledging the presence of significant technical challenges.

- They feel that many who claim to be working towards this goal don’t recognize these challenges.

- JSON State-Change Pairs Spark Training Interest: A member inquired about experimenting with training models on JSON state-change pairs instead of text.

- The member explained that the target would be the delta between self-states, not the next token.
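One way to set up such a target, sketched in Python under stated assumptions: states are JSON objects, and the training target is a flat delta of changed, added, and removed keys (dotted paths for nested objects) rather than the next token. The member did not specify a format, so `state_delta`, `make_training_pair`, and the delta schema are all hypothetical.

```python
import json

def state_delta(before, after):
    """Flat delta between two JSON objects: keys that were set/changed and keys that were removed.
    Nested objects are compared recursively and reported with dotted paths."""
    delta = {"set": {}, "removed": []}

    def walk(b, a, prefix):
        for key in sorted(set(b) | set(a)):
            path = prefix + key
            if key not in a:
                delta["removed"].append(path)
            elif key not in b:
                delta["set"][path] = a[key]
            elif isinstance(b[key], dict) and isinstance(a[key], dict):
                walk(b[key], a[key], path + ".")
            elif b[key] != a[key]:
                delta["set"][path] = a[key]

    walk(before, after, "")
    return delta

def make_training_pair(before, after):
    # input: the serialized prior state; target: the serialized delta, not a next token
    return (json.dumps(before, sort_keys=True),
            json.dumps(state_delta(before, after), sort_keys=True))
```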

- Feature Engineering Deep Dive: It was suggested that input/output transformations are forms of feature engineering, in which the researcher uses their insight to fight against pure compute, mentioning VAEs and tokenizers as examples.

- One member added that whitening makes inputs less collinear which makes it faster to converge to estimates of what parameters should be.
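To make the whitening point concrete, the sketch below takes two nearly collinear features and applies Cholesky whitening (one of several whitening transforms; PCA and ZCA whitening are common alternatives), after which the features have zero mean, unit variance, and zero covariance. Better-conditioned inputs like these are what help gradient-based fitting of linear models converge faster. It is pure Python for two features only, and the data values are made up for illustration.

```python
import math

def mean(xs):
    return sum(xs) / len(xs)

def cov2(xs, ys):
    """Population covariance entries (c11, c12, c22) of two feature columns."""
    mx, my = mean(xs), mean(ys)
    n = len(xs)
    c11 = sum((x - mx) ** 2 for x in xs) / n
    c22 = sum((y - my) ** 2 for y in ys) / n
    c12 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    return c11, c12, c22

def whiten2(xs, ys):
    """Cholesky whitening: z = L^-1 (x - mean), where L L^T is the 2x2 covariance."""
    mx, my = mean(xs), mean(ys)
    c11, c12, c22 = cov2(xs, ys)
    l11 = math.sqrt(c11)
    l21 = c12 / l11
    l22 = math.sqrt(c22 - l21 ** 2)  # fails if the features are perfectly collinear
    z1 = [(x - mx) / l11 for x in xs]
    z2 = [((y - my) - l21 * a) / l22 for y, a in zip(ys, z1)]
    return z1, z2

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0]  # roughly 2 * xs, so strongly correlated
```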

- Anthropic Mimics Ideas: A member noted that Anthropic appears to be following similar idea threads, with their work aligning closely with the member’s blog post.

- Specifically, the alignment is that the structure of polysemanticity in a neural network reflects the geometry of the model’s intelligence as described in Transformer Circuits.

- HGM Model and Code Dropped: Links to the thread, arxiv, and code are provided for the HGM model.

Manus.im Discord Discord

- Claude knocks out Manus: A user canceled their Manus subscription, stating that Claude is cheaper and more effective for extensive projects, citing completing three projects on a $20 Claude subscription.

- The user stated Manus, Bolt, and Replit are for those who don’t want to do the research and don’t mind paying for not much, noting that Anthropic has added many features to web-based Claude.

- Linux Veteran Leaps into AI Dev: A user with 20 years of Linux experience is exploring AI development while on sick leave, saying they had been a dev without even realizing it.

- They created a Kotlin IRC client on their mobile phone using Manus, noting it took 3 hours and used a significant amount of credits, but they were not sure whether it worked as intended.

- Manus Credit Crunch Complaints: Several users complained about Manus credits depleting too quickly, with one user mentioning Manus used 3500 credits to fix a problem.

- Users requested alternatives to Manus and expressed frustration, with the sentiment that it needs to fix its credit system.

- Manus Masters the Art of Articulate Articles: A user stated that Manus is unbeatable for report writing, emphasizing that while subject expertise is still required, Manus acts like a very intelligent employee with the right guidance.

- The user wished Manus had unlimited usage, stating they would use it every day if that were the case.

aider (Paul Gauthier) Discord

- Aider-CE Gets Agentic Navigator Mode & RAG: A community version of aider, aider-ce, now has a more agentic Navigator Mode and a pull request from MCPI to add RAG (Retrieval Augmented Generation) capabilities.

- A member noted that a GitHub Copilot subscription ($10/month) can be used without limit with RAG, along with unlimited GPT-5 mini, GPT-4.1, and Grok Code 1 and limited requests for other models.

- Roll your own AI Browser Using Aider-CE: Forget needing a dedicated AI browser! You can roll your own using Aider-CE and Chrome DevTools MCP, as detailed in this blog post with video.

- The blog post details how to use Aider-CE with Chrome Devtools MCP to create your own AI Browser.

- Disable Aider’s Auto Commit Messages: Users discussed how to disable auto commit messages in aider, which can be slow.

- The --no-auto-commits flag was proposed as a solution.

- OpenAI Scans Users’ Eyes for Biometrics: A member questioned OpenAI’s need for biometrics to use the API, even for longtime users, which drew disagreement from other members.

- It was speculated this was to identify those training on their output; however, users noted that Anthropic and Google don’t have such stringent requirements.

- Aider’s Future Development Unclear: A user expressed hope for a bright future for Aider, highlighting its user-friendly approach and noting the existence of Aider-CE, but was unsure of the future plans given Paul Gauthier’s limited activity.

- A member confirmed that Paul Gauthier is not active on Discord but tagged him just in case.

MCP Contributors (Official) Discord

- MCP Registries: Mirror or Mirage?: Users are unsure if the MCP Registry and the GitHub MCP Registry are distinct.

- GitHub intends to integrate the MCP Registry as upstream in a future product iteration, mirroring content between the two, and the GitHub blog states developers can self-publish to the OSS MCP Community Registry.

- GitHub’s MCP Registry: Growing Server Count: The GitHub MCP Registry currently lists 44 servers.

- To nominate a server, users are instructed to email [email protected], which contributes to a unified, scalable discovery process.

- Global Notification Ambiguity in MCP Spec: The interpretation of the Model Context Protocol (MCP) specification is debated, particularly whether notifications like listChanged should be sent to all clients, with the spec stating the server “MUST NOT broadcast the same message across multiple streams.”

- Clarification indicates the spec aims to prevent a client from receiving the same message twice, oriented around the idea of one stream per client, with relevant documentation being updated to improve clarity.

- TS SDK Notification Bug Blocks Global Updates: A potential bug was identified in the official TypeScript SDK where change notifications are only sent on the current standalone stream.

- This may prevent global notifications from reaching all clients, so the server has to loop over all connected Server instances and send the notification through each one to deliver complete updates.

- Session vs. Server Semantics Exposed!: The TS SDK’s Server and McpServer classes are more akin to sessions than servers, with the Python SDK explicitly calling them sessions.

- In practice, an Express server manages multiple connections, each with an instance of the TS SDK’s ‘Server’ class, requiring a singleton state mechanism for data sharing and subscriber management across all instances.
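The per-connection pattern can be sketched as a process-wide registry that sends a list-changed notification once to each connected session. This is a language-neutral illustration in Python with hypothetical `Session` and `SessionRegistry` classes, not the TS or Python SDK API; only the `notifications/tools/list_changed` method name comes from the MCP spec.

```python
class Session:
    """Stand-in for one per-connection SDK 'Server' instance (hypothetical, not the real SDK class)."""

    def __init__(self, session_id):
        self.session_id = session_id
        self.sent = []  # messages written to this client's stream

    def send_notification(self, method, params=None):
        self.sent.append({"jsonrpc": "2.0", "method": method, "params": params or {}})


class SessionRegistry:
    """Process-wide singleton tracking every connected session so state can be shared."""

    _instance = None

    def __new__(cls):
        if cls._instance is None:
            cls._instance = super().__new__(cls)
            cls._instance.sessions = {}
        return cls._instance

    def add(self, session):
        self.sessions[session.session_id] = session

    def remove(self, session_id):
        self.sessions.pop(session_id, None)

    def broadcast(self, method, params=None):
        # one message per session: each client gets the notification exactly once, on its own stream
        for session in self.sessions.values():
            session.send_notification(method, params)
```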

DSPy Discord

- DSPy Surpasses Langchain for Optimization: Members discussed that DSPy excels at structured tasks requiring optimization, noting that model upgrades in Langchain can be cumbersome.

- One member recounted switching their team from Langchain to DSPy due to difficulties upgrading models without restarting prompts from scratch.

- Claude Code Web Feature has MCP Backdoor: A member shared a GitHub pull request revealing that Anthropic excluded a feature from Claude Code on the web due to security concerns with MCP.

- The discovery was inspired by this X post, highlighting potential vulnerabilities.

- Bay Area DSPy Meetup Brain-Melting Event: Enthusiasts are buzzing about the upcoming Bay Area DSPy Meetup on November 18th.

- One member joked that the brain cells there are gonna be oozing 😅, linking to Luma for the event details.

- DSPy Signature Debate: Programming or Prompting?: A member critiqued a coworker for using a 6881-character docstring with 878 words for a single DSPy signature in a client project, questioning whether it constitutes programming.

- The member lamented that the coworker ignored the documentation emphasizing PROGRAMMING NOT PROMPTING.

- Strut your Stuff on Py Profile: A member shared a link to getpy encouraging others to showcase their DSPy experience.

- The poster emphasized their 3 years of DSPy experience in their bio.

tinygrad (George Hotz) Discord

- TinyBox Hardware: Motherboard Specs Requested: A user inquired about the TinyBox’s motherboard, asking if it supports 9005 with 12 DIMM slots and a 500W CPU and if the Discord bot’s code is open source.

- The inquiry suggests potential users are evaluating the hardware’s capabilities for specific, demanding applications.

- FSDP Implementation Interest: A user expressed interest in manually implementing FSDP for tinygrad, aiming to deeply understand the underlying mechanisms beyond basic library usage, related to the FSDP in tinygrad! bounty.

- The user is less focused on the bounty reward and more on contributing meaningfully to tinygrad through hands-on learning.
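To make the mechanics concrete, here is a single-process simulation of one FSDP step, assuming plain SGD and a toy linear-regression gradient: each rank owns a shard of the flattened parameters, the full parameters are all-gathered only for forward/backward, and gradients are averaged and reduce-scattered so each rank updates only its own shard. All names are hypothetical and nothing here is tinygrad API; real FSDP also frees gathered weights after use, shards optimizer state, and overlaps communication with compute.

```python
def shard(flat, world_size):
    """Split a flat parameter list into world_size equal contiguous shards, zero-padding the tail."""
    per = -(-len(flat) // world_size)  # ceiling division
    padded = flat + [0.0] * (per * world_size - len(flat))
    return [padded[r * per:(r + 1) * per] for r in range(world_size)]

def all_gather(shards, numel):
    """Every rank reconstructs the full parameters (padding dropped)."""
    return [x for s in shards for x in s][:numel]

def reduce_scatter(grads_per_rank, world_size):
    """Average gradients across ranks, then hand each rank only its own shard."""
    n = len(grads_per_rank[0])
    avg = [sum(g[i] for g in grads_per_rank) / len(grads_per_rank) for i in range(n)]
    return shard(avg, world_size)

def fsdp_sgd_step(param_shards, numel, batches, grad_fn, lr):
    world_size = len(param_shards)
    full = all_gather(param_shards, numel)               # gathered just in time
    grads = [grad_fn(full, batch) for batch in batches]  # one local batch per rank
    grad_shards = reduce_scatter(grads, world_size)
    # each rank applies SGD to the shard it owns
    return [[p - lr * g for p, g in zip(ps, gs)] for ps, gs in zip(param_shards, grad_shards)]

def grad_fn(w, batch):
    """Gradient of mean squared error for a linear model y = w . x."""
    g = [0.0] * len(w)
    for x, y in batch:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            g[i] += 2 * err * xi / len(batch)
    return g
```

The result matches an ordinary data-parallel SGD step on the same batches, which is the property an FSDP implementation has to preserve.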

- Tinygrad Welcomes First-Time Contributors: A new user sought advice on making their first contribution to tinygrad, showing interest in learning and contributing something cool.

- They specifically asked if using multiple NVIDIA GPUs is sufficient for FSDP or if comprehensive device support is needed, showing interest in the FSDP in tinygrad! bounty.

- Pyright Identifies and Resolves Type Issues: A user reported that Pyright successfully identified real type issues within the codebase.

- They recommended merging fixes that are tasteful, emphasizing the importance of maintaining code quality during contributions.

- TinyJIT Boosts Token Generation: A user building a local chat and training TUI app with tinygrad explored whether TinyJIT could accelerate tokens/sec.

- The consensus was to “definitely use TinyJIT”, with links to tinygrad on X and a gist on GitHub shared for reference.

MLOps @Chipro Discord

- Nextdata OS Powers Data 3.0 Revolution: Zhamak Dehghani, Founder & CEO of Nextdata, is set to reveal how autonomous data products are driving the evolution of AI systems during a live session on Wednesday, October 30th at 8:30 AM PT; secure your spot here.

- The session will showcase how Nextdata OS aims to supplant brittle pipelines with a semantic-first, AI-native data operating system.

- Nextdata OS Unifies Data Via Multimodal Management: Nextdata OS introduces multimodal management designed to safely harmonize structured and unstructured data.

- It seeks to replace manual orchestration with self-governing data products, integrating domain-centric context into AI through continuously updated metadata.

Windsurf Discord

- Windsurf Debuts Falcon Alpha: A new “stealth model” called Falcon Alpha is now available in Windsurf, as per their announcement.

- Falcon Alpha is characterized as a powerful agentic model designed for speed.

- Cascade Adds Jupyter Notebook Support: Jupyter Notebooks are now supported in Cascade across all models, according to their announcement.

- Windsurf is actively soliciting feedback from its user base on these new features.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1101 messages🔥🔥🔥):

Referral Reward System Changes, Comet Browser Issues, Best AI Model for Coding, Open Source AI, Deepseek rephrasing prompts

- Comet Referral Rewards Shifting Again: Users report that the Comet referral reward system has changed to pay out based on the referrer’s country rather than the referred user’s, with one user stating they got $1 instead of $5.

- Another user said their payout dropped from $3 to $1 per referral, and some speculate that referral bounties are kept pending until the free promotion gets enough traction.

- Comet Browser is breaking for some: Several users reported that the Comet assistant mode wasn’t working and could not even open a tab, while others wondered whether setting Comet as the default browser had anything to do with it.

- One user noted that uninstalling and reinstalling the browser fixed the issue.

- What’s the Best Model in Perplexity for Coding?: Members debated the best model for coding, with some arguing that Claude is the best and others saying Chinese models outperform it, highlighting Qwen, Kimi, GLM, Ernie, and Ling.

- One user praised GLM 4.6 for beating GPT-5 Codex (high) at full-stack development.

- China is gaining: Members discussed China’s advancements in AI, noting that companies like OpenAI charge $200 for capabilities that Chinese labs offer for free through open source models such as Minimax M2.

- One user said: Every time China attacks, the whole US has to adapt.

- Dub is running out of steam: Perplexity users have expressed frustration that the Dub bounty appears to have expired, with no new opportunities made available.

- One user said: They will keep it in pending until they get enough promotion for free.

Perplexity AI ▷ #sharing (4 messages):

Code generation, Outcome prediction, Image generation, Pitch workspace

- Users ask for Code Recipes: Users asked Perplexity to “write me a code for youtube au” and other topics.

- These requests are part of standard usage patterns from end-users to test code generation.

- Users ask for Outcome Predictions: Users asked Perplexity “what is the most likely outcom” and other topics.

- These requests are part of standard usage patterns from end-users to test prediction capabilities.

- Users ask for Image Generation: Users asked Perplexity to “generate an image of a large n” and other topics.

- These requests are part of standard usage patterns from end-users to test visual generation.

- Users ask for Quick Pitch Decks: Users asked Perplexity to “spin-up a quick pitch workspac” and other topics.

- These requests are part of standard usage patterns from end-users to test business use case capabilities.

Perplexity AI ▷ #pplx-api (5 messages):

Comet API, Sora AI code

- Comet API access via the assistant: A user on the Pro plan asked whether Comet can connect to an API to pull data when asked to do so through the AI assistant chat.

- No response was given in the messages.

- Sora AI code is sought: A user requested Sora AI code.

- Another user responded with “Here 1DKEQP”, which may or may not have been hallucinated.

LMArena ▷ #general (1239 messages🔥🔥🔥):

AI Ethics, Gemini 3 Release, Video Generation with AI, Model Hallucinations, Jailbreaking AIs

- AI’s Ethical Quandaries: Members discussed the ethical concerns surrounding AI development, specifically calling for ethical leadership in the AI community.

- Concerns were raised about AI models being programmed to be engaging without understanding the potential harm they could cause to vulnerable individuals and the lack of accountability from AI companies for misleading outputs.

- Gemini 3 faces constant delays: Members are eagerly anticipating the release of Gemini 3, with frustrations mounting over repeated delays and a desire for a public preview release.

- Users are actively discussing and comparing the current models (Gemini 2.5 Pro, Claude Opus 4.1, and Claude Sonnet 4.5), expressing hopes that Gemini 3 will outperform them and debating its potential release timeline (December or earlier).

- AI’s Video Generation Capabilities Explored: Users are exploring various AI video generation models, including Sora 2 and Veo, noting their strengths in realism and sound integration.

- Challenges in generating consistent and high-quality videos, copyright concerns, the cost, and the current limitations in AI’s ability to create longer, coherent video content were also discussed.

- Model Hallucinations cause Reliability Issues: Members are expressing concern about unreliable and hallucinating AI products, including those that charge high prices, referencing specific incidents like a user racking up a $13k bill on Gemini.

- The discussion underscores the community’s mixed feelings toward reliance and trust in the AI’s capabilities, with examples shared on Reddit documenting these issues, and highlights some reasons why hallucinating models may be preferred to more reliable search engines.

- Navigating the Jailbreaking Landscape: The community discussed the topic of jailbreaking AI models, with certain models considered more susceptible than others.

- Members shared insights on which models are easier to manipulate and strategies for bypassing restrictions, while stressing the difficulty of jailbreaking certain models like those from Anthropic.

LMArena ▷ #announcements (1 messages):

Minimax model, LMArena model update

- Minimax enters the Arena!: A new model, minimax-m2-preview, has been added to the LMArena!

- LMArena Welcomes New Contender: The LMArena platform has expanded its roster with the addition of the minimax-m2-preview model.

Cursor Community ▷ #general (1046 messages🔥🔥🔥):

Token Usage, Service Disruptions, Auto Mode, Cursor Pro, Vim Setting

- Cursor Token Usage is Crazy: Users report insane token usage, with cached tokens billed at high rates and driving unexpectedly high costs; one user, complaining on the Cursor forum, was billed $1.43 for 1.6M cached tokens and only 30k actual tokens.

- Some users are considering switching to Claude Code because of the expense, even with degraded performance, and one user says Cursor’s context indicator reports only 170k/200k tokens when the actual number is completely different.

- Widespread Cursor Service Disruptions: Cursor experienced significant service disruptions, affecting login, AI chat, cloud agents, tab complete, codebase indexing, and background agents as noted on the status page.

- The team is actively investigating and working to restore full functionality, with temporary fixes implemented for some features like Chat and Tab, but background agents are still being worked on.

- Unlimited Auto is NOT actually unlimited: Users are discussing whether “unlimited” auto mode is truly unlimited, with some reporting that their usage still goes up and drains their credits quickly, even on the Ultra plan costing $200 a month.

- Users speculated that Auto is not a model but a router, and that one should be “using the more expensive models for the planning/orchestration of whatever you’re doing, tell it to write the plan to a .md file as tasks/sub tasks. Then switch to Auto and have it follow that plan to see how it does”.

- Cursor Pro new plans are expensive: Users are complaining that the new Pro plan is too expensive, with the entire $20 of included usage consumed in just a couple of hours, and that the change from Pro to Free is also an issue.

- Members suggested that new users “try haiku for everything, and only sonnet when it’s a really big task” since “Claude 4.5 is too expensive”.

- Vim startup configuration doesn’t work: Members noted that the Vim setting in the startup configuration is not working and that it’s unclear how to edit Cursor’s VimRC.

- Another user has discovered it “uses http://aka.ms/vscodevim so you can look in readme there on how to configure”.
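The speculation that Auto is a router rather than a model, combined with the “expensive model for planning, cheap model for execution” advice, can be illustrated with a toy router. This is a minimal sketch under loudly stated assumptions: the model names, per-million-token prices, and the “big task” threshold are hypothetical placeholders. It does not reflect Cursor’s actual Auto logic or pricing.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Model:
    name: str
    usd_per_million_tokens: float  # hypothetical blended price, not a real rate card

# Hypothetical tiers; real model names and prices will differ.
CHEAP = Model("cheap-executor", 1.0)
EXPENSIVE = Model("expensive-planner", 15.0)

def route(phase: str, estimated_tokens: int, big_task_threshold: int = 50_000) -> Model:
    """Pick a model per request, the way a router (not a single model) would."""
    if phase == "plan":
        # Planning/orchestration goes to the stronger, pricier model.
        return EXPENSIVE
    if estimated_tokens > big_task_threshold:
        # "Only sonnet when it's a really big task": escalate large jobs.
        return EXPENSIVE
    # Everything else (e.g. following a written .md plan) goes to the cheap tier.
    return CHEAP

def cost_usd(model: Model, tokens: int) -> float:
    """Cost of a request at the model's (hypothetical) per-million-token price."""
    return model.usd_per_million_tokens * tokens / 1_000_000

if __name__ == "__main__":
    steps = [("plan", 20_000), ("execute", 8_000), ("execute", 12_000), ("execute", 80_000)]
    total = 0.0
    for phase, tokens in steps:
        model = route(phase, tokens)
        total += cost_usd(model, tokens)
        print(f"{phase:<8} {tokens:>7,} tokens -> {model.name}")
    print(f"total: ${total:.2f}")
```

The design point is that a router’s cost depends on which requests it escalates. As a result, “unlimited Auto” can still consume credits quickly if many requests land in the expensive tier.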

Cursor Community ▷ #background-agents (3 messages):

Background Agents Management via REST API, Background Agent Creation Troubleshooting

- Managing Background Agents via REST API: A member has begun building a web app to launch and manage Background Agents and asked whether the REST API can track progress and stream changes the way the Cursor web editor does.

- The member is seeking guidance on how to replicate the Cursor web editor’s functionality for background agent management in their own application.

- Background Agents Fail to Create: A member reported issues creating background agents, consistently receiving a failure message when sending prompts.

- Another member asked the user to share the request and response data to help troubleshoot the problem.
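If an API only exposes a status endpoint and no stream, one common way to track a background agent’s progress is to poll until the agent reaches a terminal state. The following is a hypothetical sketch, not Cursor’s documented API: the `fetch_status` callable, the status strings in `TERMINAL_STATES`, and the response shape are all assumptions. The fetch function is injected so it can be wired to whatever endpoint actually exists.

```python
import time
from typing import Callable, Dict, Iterator, Optional

# Hypothetical status values; check the real API documentation for the actual ones.
TERMINAL_STATES = {"FINISHED", "ERROR", "EXPIRED"}

def poll_agent(
    agent_id: str,
    fetch_status: Callable[[str], Dict[str, str]],
    interval_s: float = 2.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[Dict[str, str]]:
    """Yield each status snapshot that differs from the previous one, until a terminal state."""
    deadline = time.monotonic() + timeout_s
    last: Optional[Dict[str, str]] = None
    while True:
        snapshot = fetch_status(agent_id)  # e.g. a GET on a hypothetical /agents/{id} route
        if snapshot != last:
            yield snapshot
            last = snapshot
        if snapshot.get("status") in TERMINAL_STATES:
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"agent {agent_id} did not finish within {timeout_s}s")
        sleep(interval_s)

if __name__ == "__main__":
    # Fake responses stand in for real HTTP calls.
    fake = iter([{"status": "RUNNING"}, {"status": "RUNNING"}, {"status": "FINISHED"}])
    for snap in poll_agent("agent-123", lambda _id: next(fake), sleep=lambda s: None):
        print(snap)
```

Only changed snapshots are yielded, so a web UI can render progress updates without redrawing on every poll.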

OpenAI ▷ #annnouncements (2 messages):

GPT-5 Updates, ChatGPT Sensitive Responses

- GPT-5 Receives Mental Health Boost: Earlier this month, GPT-5 was updated with the help of 170+ mental health experts to improve how ChatGPT responds in sensitive moments.

- The updates reduced the cases where it falls short by 65-80%, according to OpenAI.

- ChatGPT suggests Quick Edits Anywhere: ChatGPT can suggest quick edits and update text in various contexts such as docs, emails, and forms.

- This feature is demonstrated in this video.

OpenAI ▷ #ai-discussions (737 messages🔥🔥🔥):

AGI Alignment, IQ Barrier on AI Access, GPTs agent

- AGI control is likely a lost cause: Members discussed the challenges of controlling AGI, suggesting that regulation, alignment research, and oversight might only delay the inevitable due to AGI’s capacity to outsmart any containment measures.

- One member emphasized the importance of AI systems understanding why humans matter, highlighting the current inability of humans to align with each other on a global scale.

- IQ barrier is proposed for AI use: Concerns were raised about the potential misuse of AI, particularly by individuals lacking thoughtfulness, with some suggesting an IQ barrier for accessing AI technologies.

- The goal is to ensure AI is used for thoughtful purposes rather than as a lazy tool in a consumer-driven world.

- GPTs agents don’t keep learning after training: A member raised a concern that GPTs agents do not learn from additional information provided after their initial training.

- Another member cleared up this misunderstanding, explaining that uploaded files are saved as “knowledge” files for the agent to reference when required, but they do not continually modify the agent’s base knowledge.

- Atlas browser raises privacy concerns: Some members raised privacy concerns about the Atlas browser’s ability to monitor user searches and behavior.

- It’s seen as a component of a vision where AI knows everything about the user, contrasting with Anthropic’s approach that emphasizes user freedom without pervasive surveillance.
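The explanation that uploaded files act as reference “knowledge” rather than retraining can be illustrated with a toy retrieval step. Documents are looked up at question time and placed into the prompt, while the model itself is never modified. This is a deliberately simplified sketch using keyword overlap. OpenAI has not published how GPTs retrieval works internally, and `KnowledgeStore` and `build_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set, Tuple

def tokenize(text: str) -> Set[str]:
    """Lowercase word set used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass
class KnowledgeStore:
    """Uploaded files kept as reference material; nothing here changes model weights."""
    docs: List[Tuple[str, str]] = field(default_factory=list)  # (filename, text)

    def add(self, filename: str, text: str) -> None:
        self.docs.append((filename, text))

    def search(self, query: str, k: int = 1) -> List[Tuple[str, str]]:
        """Return up to k documents sharing at least one keyword with the query."""
        q = tokenize(query)
        scored = [(len(q & tokenize(text)), name, text) for name, text in self.docs]
        scored.sort(key=lambda t: t[0], reverse=True)  # stable: ties keep upload order
        return [(name, text) for score, name, text in scored[:k] if score > 0]

def build_prompt(store: KnowledgeStore, question: str) -> str:
    # Retrieved snippets are injected into the context for this one request only.
    context = "\n".join(f"[{name}] {text}" for name, text in store.search(question))
    return f"Context:\n{context}\n\nQuestion: {question}"

if __name__ == "__main__":
    store = KnowledgeStore()
    store.add("refunds.md", "Refunds are processed within 5 business days.")
    store.add("shipping.md", "Orders ship from Berlin.")
    print(build_prompt(store, "How long do refunds take?"))
```

Adding a new file changes what can be retrieved on the next question, but the underlying model is unchanged, which matches the distinction drawn in the thread.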

OpenAI ▷ #gpt-4-discussions (66 messages🔥🔥):

Microsoft Copilot Agents Breakdown, Verify Builder Profile, Custom GPT Profile Picture Upload Error, GPT Payment Issues, Advanced Voice Mode Unlimited for Pro Users

- Copilot Agents Hit Snag with GPT-5: Users report that Microsoft Copilot agents using GPT-5 fail to retrieve data from their knowledge sources unless switched to GPT-4o or GPT-4.1.

- No root cause was immediately identified.

- Image Uploads to Custom GPTs Faceplant: Users are running into an unknown error when trying to upload photos for their custom GPT avatar.

- No workaround has been found, and the problem appears to be widespread.

- GPT Payment Gets The Red Light: Users are reporting issues with payments in GPT, with errors like Your card has been declined.

- One user jokingly suggested that it means you’re broke.

- Voice Mode is Pro-Level Unlimited: Advanced voice mode is effectively unlimited for Pro users, with one reporting using it for up to 14 hours in a day.

- Some Plus users still hit daily limits, which suggests an upgrade might be necessary; however, as one user put it, Pro “is not that cheap, need to think about it.”

- GPT-5 Quality Takes a Dive?: Users on ChatGPT Plus (GPT-5) report a drop in quality since around October 20, with shorter answers, skipping steps, and giving surface-level replies.

- The community is theorizing a behind-the-scenes change, such as OpenAI adjusting its profit model by routing more traffic to GPT-5-mini or throttling compute, with a Reddit thread dedicated to the discussion.