ASICs are all you need.

AI News for 10/10/2025-10/13/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (197 channels, and 15120 messages) for you. Estimated reading time saved (at 200wpm): 1127 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

There’s been a lot of chip dealmaking by OpenAI recently to create “the biggest joint industrial project in human history”:

- Sept 10: $300B in compute from Oracle

- Sept 22: 10GW from NVIDIA

- Oct 6: 6GW from AMD

and today, the final shoe drops - as widely rumored and on schedule, after hiring TPU alums from Google - 10GW of OpenAI’s own ASIC and systems specifically designed for OpenAI’s inference capacity (as Sam says on the OpenAI podcast).

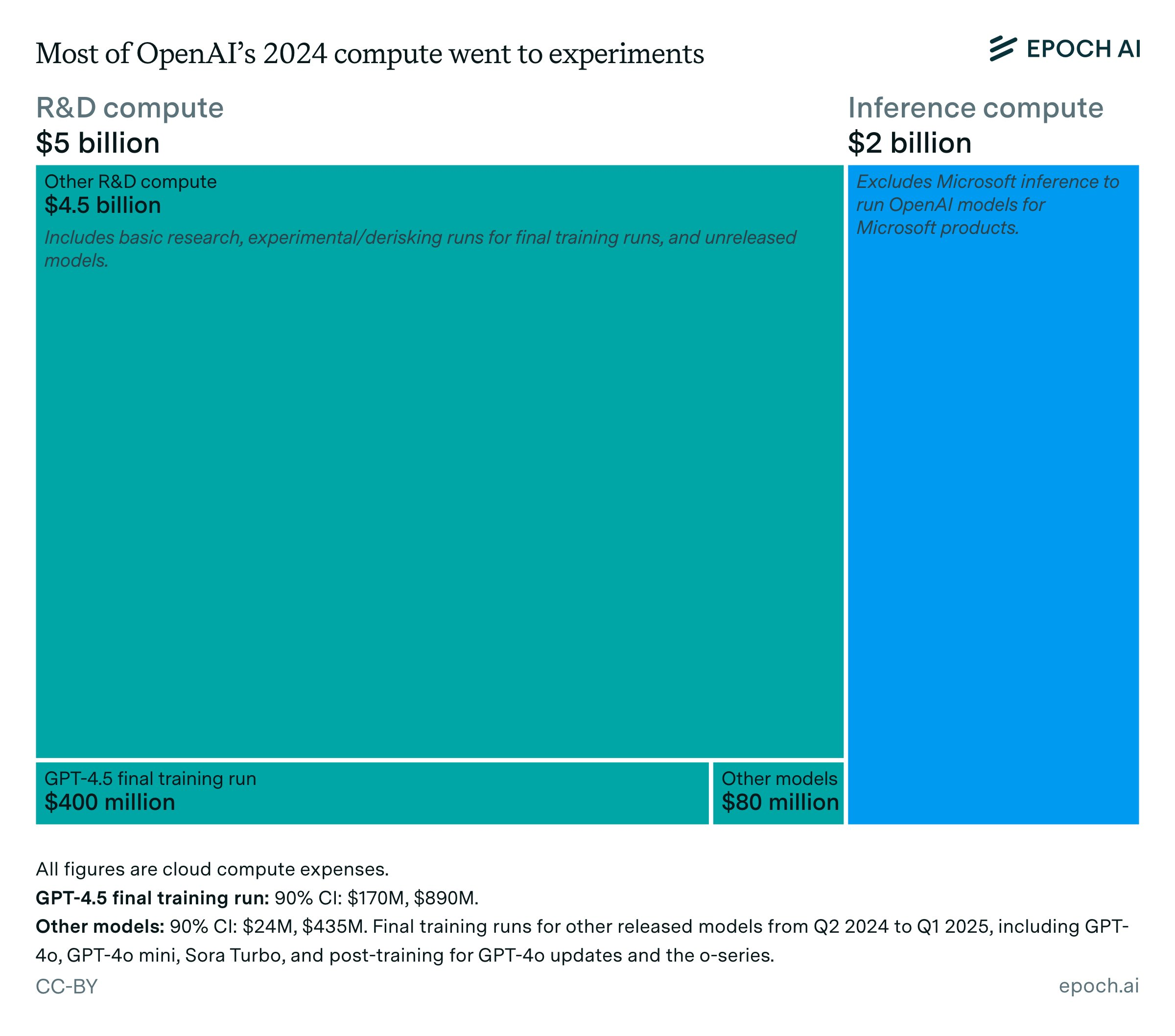

To put this in scale, all of OpenAI has 2GW of compute now, majority spent on R&D:

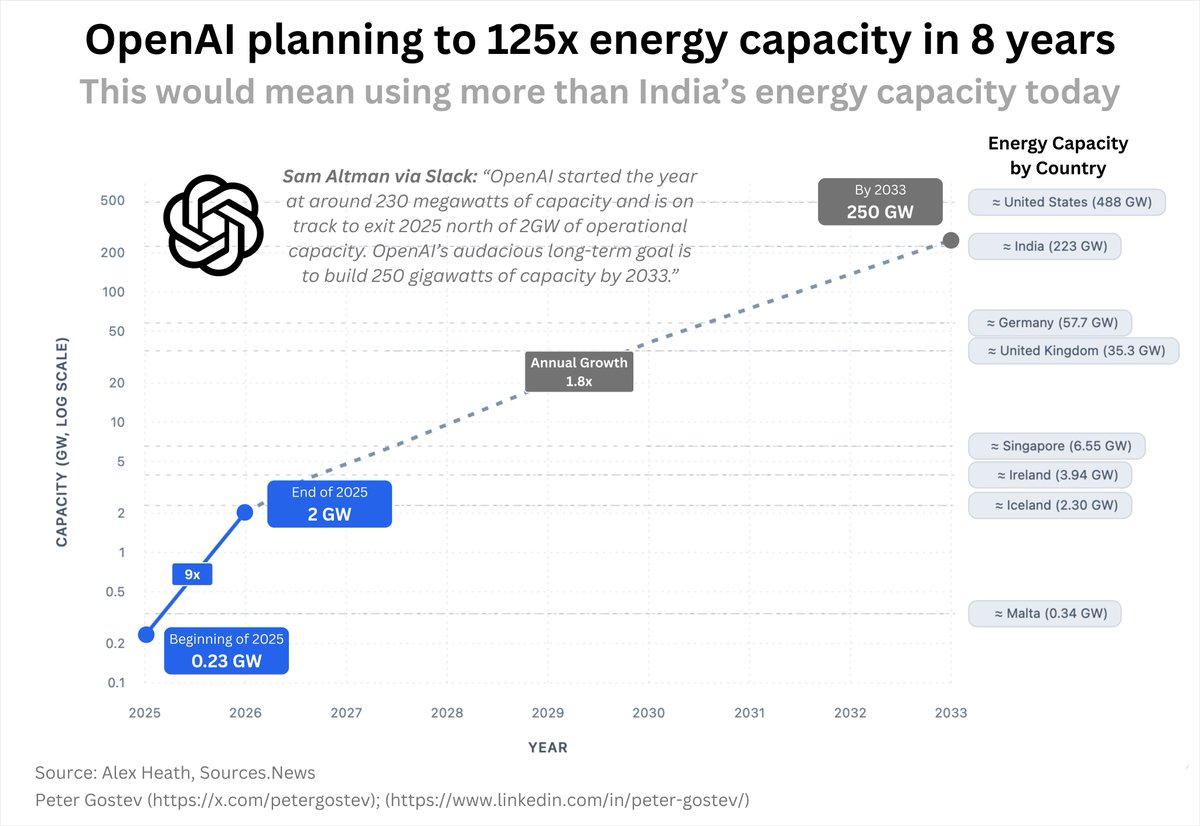

and this is 12% of an overall roadmap going to 250GW (half the energy consumption of the United States)

Greg says ambient agents are a big part of the reason why inference demand will go up a lot:

But I think that we are heading to a world where AI intelligence is able to help humanity make new breakthroughs that just would not be possible otherwise.

And we’re going to need just as much compute as possible to power that.

Like one example of something very concrete is that we are in a world now where ChatGPT is changing from something that you talk to interactively to something that can go do work for you behind the scenes.

If you’ve used features like Pulse, You wake up every morning. It has some really interesting things that are related to what you’re interested in. It’s very personalized. And our intent is to turn ChatGPT into something that helps you achieve your goals.

The thing is, we can only release this to the pro tier because that’s the amount of compute that we have available. And ideally, everyone would have an agent that’s running for them 24-7 behind the scenes, helping them achieve their goals. And so ideally, everyone has their own accelerator, has their own compute power that’s just running constantly.

And that means there’s 10 billion humans.

We are nowhere near being able to build 10 billion chips.

And so there’s a long way to go before we are able to saturate not just the demand, but what humanity really deserves.

Greg says that they have been working on their ASIC for 18 months, and why they did this in house:

“There were all sorts of chip startups with novel approaches that were very different from GPUs. And we started giving them a ton of feedback saying, here’s where we think things are going. It needs to be models of this shape. And honestly, a lot of them just didn’t listen to us, right? And so it’s like very frustrating to be in this position where you say we see the direction the future should be going. We have no ability to really influence it besides sort of, you know, just like sort of trying to influence other people’s roadmaps. And so by being able to take some of this in-house, we feel like we are able to actually realize that vision.”

While nothing yet has been announced with Intel, it is surely not far behind given the clear interest in the American AI stack.

Broadcom’s stock jumped 10% (+$150B) on today’s news.

AI Twitter Recap

Chips, inference TCO, and training infra

- InferenceMAX’s nightly TCO readout (AMD vs NVIDIA): ROCm stability has improved “orders of magnitude” since early 2024; on Llama‑3‑70B FP8 reasoning workloads and vLLM, MI300X shows 5–10% lower performance-per-TCO than H100 across interactivity levels, with MI325X competitive vs H200. There remain workloads where AMD loses, but the trend is nuanced and rapidly changing as software improves nightly according to InferenceMAX’s runs (@SemiAnalysis_).

- Related infra notes for RL at scale: inflight updates plus continuous batching are now table stakes to avoid the “long tail” of GPUs stuck on single completions (@natolambert, @finbarrtimbers).

- OpenAI-designed accelerators with Broadcom (10 GW): OpenAI announced a partnership to deploy 10 GW of custom chips, adding to NVIDIA/AMD partnerships, with a podcast discussing co-design and roadmap (@OpenAINewsroom, @OpenAI). An OpenAI chip engineer recounts an 18‑month sprint to a reasoning‑inference‑tuned part targeting a fast, large‑volume first ramp ({itsclivetime}); leadership reiterated “the world needs more compute” ({gdb}).

- vLLM hits 60K GitHub stars: Now powering text‑gen across NVIDIA, AMD, Intel, Apple, TPUs, with native support for RL toolchains (TRL, Unsloth, Verl, OpenRLHF) and a wide model ecosystem (Llama, GPT‑OSS, Qwen, DeepSeek, Kimi) (@vllm_project).

Reasoning RL: hybrid rewards, label-free scaling, and new sequence models

- Hybrid Reinforcement (HERO): Combines 0–1 verifiable feedback with dense reward model scores via stratified normalization and variance‑aware weighting, improving hard reasoning by +11.7 points vs RM‑only and +9.2 vs verifier‑only on Qwen‑4B, with gains holding across easy/hard/mixed regimes and generalizing to OctoThinker‑8B (@jaseweston).

- RL without human labels at pretrain scale (Tencent Hunyuan): Replace NTP with RL‑driven Next Segment Prediction using large text corpora, via ASR (next paragraph) and MSR (masked paragraph) tasks. Reported gains after thousands of RL steps: +3.0% MMLU, +5.1% MMLU‑Pro, +8.1% GPQA‑Diamond, +5%+ AIME24/25; end‑to‑end RLVR adds +2–3% on math/logic tasks. Complementary to NTP and lowers annotation cost for scaling reasoning pretraining (@ZhihuFrontier, Q&A).

- Agentic Context Engineering (ACE): Treats context as an evolving, structured knowledge base (not a single prompt). Uses a Generator/Reflector/Curator loop to accumulate “delta” insights; reported +10.6% on agentic benchmarks and +8.6% on complex financial reasoning over SOTA prompt optimizers, with 86.9% lower adaptation latency ({_philschmid}).

- Non‑Transformer sequence modeling: Mamba‑3 refines state‑space integration (trapezoidal vs Euler) and allows complex‑plane state evolution for stability and periodic structure representation; positions linear‑time, hardware‑friendly sequence models for long‑context and real‑time applications (@JundeMorsenWu).

Multimodal models: audio reasoning SOTA and video systems

- Speech-to-speech reasoning SOTA (Gemini 2.5 Native Audio Thinking): Scores 92% on Artificial Analysis Big Bench Audio, surpassing prior native S2S systems and even a Whisper→GPT‑4o pipeline. Latency: 3.87s TTFT for “thinking” variant (non‑thinking 0.63s). Features: native audio/video/text I/O, function calling, search grounding, thinking budgets, 128k input/8k output context, Jan 2025 cutoff (@ArtificialAnlys).

- Video model landscape shift:

- Alibaba’s Wan 2.5 debuts at #5 (Text‑to‑Video) and #8 (Image‑to‑Video) on Video Arena; now 1080p@24fps up to 10s with audio‑input lip‑sync; priced at ~$0.15 per second on fal/replicate; importantly, it’s not open weights (previous Wan releases were Apache‑2.0) (@ArtificialAnlys).

- Kling 2.5 Turbo 1080p joins the leaderboard; pricing cited at $0.15 per 5‑second 1080p clip and strong human votes in Arena (@arena).

- Real‑time video understanding: StreamingVLM for infinite streams continues the push for low‑latency multimodal agents ({_akhaliq}).

- Image reasoning demand: Qwen3‑VL‑235B‑A22B‑Instruct reaches 48% share for image processing on OpenRouter (@Alibaba_Qwen).

- DeepSeek’s hybrid “V3.1 Terminus” and “V3.2 Exp”: Both support reasoning and non‑reasoning modes, show material intelligence and cost‑efficiency gains over V3/R1, with broad third‑party serving (SambaNova up to ~250 tok/s; DeepInfra up to ~79 tok/s for V3.2) (@ArtificialAnlys).

Open-source training stacks and reproducible recipes

- nanochat (Karpathy): A full‑stack, from‑scratch “ChatGPT‑clone” training/inference pipeline (~8k LOC) covering tokenizer (Rust), pretrain on FineWeb, mid‑train on SmolTalk/MCQ/tool‑use, SFT, and optional RL (GRPO). Ships a minimal engine (KV cache, prefill/decode, Python tool), CLI + Web UI, and a one‑shot report card. Indicative costs: ~$100 for a 4‑hour 8×H100 run you can chat with; ~12 hours surpasses GPT‑2 on CORE; ~24 hours (depth‑30) reaches 40s on MMLU, 70s on ARC‑Easy, 20s on GSM8K. A strong, hackable baseline for research and education (@karpathy, repo link; notes by @simonw).

- Execution-grounded code evals (BigCodeArena): An open human evaluation platform built on Chatbot Arena with executable code, enabling interaction with the runtime to capture more faithful human preferences for coding models ({iScienceLuvr}). “GEPA baseline or don’t publish” sentiment for prompt/program optimization is getting louder in the DSPy community ({casper_hansen_}).

- Local ML on Apple silicon: Qwen3‑VL‑30B‑A3B at 4‑bit runs ~80 tok/s via MLX ({vincentaamato}); tiny Qwen3‑0.6B fine‑tuned in under 2 minutes reaching ~400 tok/s on MLX (@ModelScope2022). Privacy AI 1.3.2 adds MLX text/vision model support with offline operation and improved download management ({best_privacy_ai}).

Benchmarks and evaluation advances

- Hard science evals bite back: CMT‑Benchmark (condensed matter theory) aggregates HF/ED/DMRG/QMC/VMC/PEPS/SM/etc.; average performance across 17 models is just 11%, many categories see 0%. Paper details how to construct truly hard problems for AI ({SuryaGanguli}).

- Speech reasoning benchmark: Big Bench Audio adapts Big Bench Hard into 1,000 audio questions for native speech reasoning; Gemini 2.5 Native Audio Thinking leads at 92% (@ArtificialAnlys).

- Multi‑agent “collective intelligence” measurement: Information‑theoretic decomposition (synergy vs redundancy) distinguishes real team‑level reasoning from redundant chatter; experiments show role differentiation + theory‑of‑mind prompts improve coordination; lower‑capacity models oscillate without true cooperation ({omarsar0}).

Product and platform updates

- NotebookLM: Upgrades Video Overviews with new visual styles powered by the Gemini image model “Nano Banana” and introduces a shorter “Brief” format; rolling out to Pro first (@Google, @NotebookLM).

- Google AI Studio: New usage and rate‑limit dashboard (RPM/TPM/RPD charts, per‑model limits) directly in AI Studio (@GoogleAIStudio, {_philschmid}).

- Perplexity: Adds domain filters to Search API and reaches #1 overall app on India’s Play Store ({AravSrinivas}, rank).

Top tweets (by engagement)

- nanochat: an end‑to‑end, minimal LLM training/research stack — full pipeline (tokenizer→pretrain→mid‑train→SFT→RL) in ~8k LOC; ~$100 to chat with your own model in ~4 hours on 8×H100 (@karpathy; repo).

- OpenAI x Broadcom: 10 GW of custom AI accelerators — plus podcast on chip co‑design and scaling (@OpenAINewsroom, @OpenAI).

- NotebookLM’s “Nano Banana” video overviews — new visual styles and Brief summaries rolling out (@Google, @NotebookLM).

- Grok “Eve” voice mode upgrade — notably more natural conversational experience, worth a try for speech UX comparisons ({amXFreeze}).

- Gemini 2.5 Native Audio Thinking sets S2S reasoning SOTA (92%) — beats prior native S2S and a Whisper→GPT‑4o pipeline on Big Bench Audio (@ArtificialAnlys).

- Qwen3‑VL‑235B‑A22B‑Instruct leads OpenRouter image processing — 48% market share snapshot (@Alibaba_Qwen).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Chinese Open-Model Dominance and LLM Style-Collapse Debate

- The top open models on are now all by Chinese companies (Activity: 482): A Washington Post analysis argues that current open-LLM leaderboards (e.g., LMSYS/HuggingFace) are topped by models from Chinese companies, with the shared chart visualizing a leaderboard where the highest-ranked open models are from China, indicating a shift in open model leadership away from US/Meta-led stacks. The post links the analysis (gift link) and the image appears to be a comparative ranking/graph highlighting vendors by country, with Chinese labs occupying the top slots. See: https://wapo.st/4nPUBud. Comments note that this trend has existed for some time and raise concerns about “benchmark-maxxing” in open models, implying possible leaderboard gaming; others mention NVIDIA and IBM models as competitive but not SOTA, and criticize the chart’s design/readability.

- Several point out that apparent leaderboard domination may reflect benchmark gaming rather than broad capability: models are being “benchmaxxed” (overfit/prompt-tuned) to public evals like the Hugging Face Open LLM Leaderboard and LMSYS Chatbot Arena, which can inflate scores without corresponding real-world gains. This highlights risks of test contamination and over-optimization to specific prompts/metrics rather than robust generalization (Open LLM Leaderboard, Chatbot Arena).

- One commenter notes solid but non-SOTA U.S. open models from NVIDIA and IBM that remain practical choices: e.g., NVIDIA Nemotron-4 15B Instruct (permissive license, tool-use tuned) and IBM Granite 8B/20B series (Apache-2.0, enterprise-focused). While they may trail top entries on MT-Bench or Arena ELO, they offer good trade-offs in size, licensing, and stability for deployment contexts (Nemotron-4-15B-Instruct, IBM Granite 8B).

- A question about “No Mistral?” flags that many leaderboards typically feature strong open Mistral models like Mixtral 8x7B Instruct (MoE) and occasionally newer variants (e.g., 8x22B), which often rank competitively among open-weight models. If absent, it could indicate the leaderboard’s cutoff date, evaluation suite, or filtering criteria rather than a true capability gap (Mixtral-8x7B-Instruct).

- I rue the day they first introduced “this is not X, this is

’ to LLM training data (Activity: 491): OP highlights a pervasive LLM style artifact: the template “This isn’t X, this is,” arguing it’s spread across models and is symptomatic of training-data bias and RLHF-driven stylistic homogenization. They speculate this could reflect or accelerate feedback-loop degradation akin to model collapse, where models trained on synthetic/model-generated text overfit to clichés, amplifying formulaic rhetoric and reducing diversity in outputs. Top comments are largely humorous and do not add technical substance.- A commenter points out that the “This is not X; this is Y” construction is a strong rhetorical device in limited contexts, but LLMs over-generalize it due to the next-token prediction objective and frequency bias favoring high-salience templates over context-sensitive judgment. This yields stylistic mode collapse: once a pattern is learned as “effective,” models deploy it ubiquitously, with RLHF/reward modeling often reinforcing high-engagement clichés; conservative decoding (low temperature/high

top_p) can further amplify repetition. Proposed mitigations include penalizing cliché templates, adding style-diversity objectives, or conditioning on discourse intent to restore context sensitivity.

- A commenter points out that the “This is not X; this is Y” construction is a strong rhetorical device in limited contexts, but LLMs over-generalize it due to the next-token prediction objective and frequency bias favoring high-salience templates over context-sensitive judgment. This yields stylistic mode collapse: once a pattern is learned as “effective,” models deploy it ubiquitously, with RLHF/reward modeling often reinforcing high-engagement clichés; conservative decoding (low temperature/high

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo, /r/aivideo

1. Video Generation Models: Wan 2.2 FLF2V (-Ellary-) and Sora Mainstreaming in Spain

- You’re seriously missing out if you haven’t tried Wan 2.2 FLF2V yet! (-Ellary- method) (Activity: 552): Showcase of a video made with Wan 2.2 FLF2V using the -Ellary- pipeline (method described here: https://www.reddit.com/r/StableDiffusion/comments/1nf1w8k/sdxl_il_noobai_gen_to_real_pencil_drawing_lineart/). Technical feedback centers on temporal instability—noticeable camera/character jumps—suggesting VACE for continuity and/or interleaving static mid‑shots; the commenter provides a quick VACE+upscaling comparison clip (https://streamable.com/1wqka3) and references prior long‑video research on consistency and color drift (https://www.reddit.com/r/StableDiffusion/comments/1l68kzd/video_extension_research/). Another recommendation is to favor hard cuts (dropping frames) over allowing periodic micro‑motions every ~3s, which read as artificial artifacts unique to AI workflows. Commenters converge that VACE significantly improves temporal coherence versus raw FLF2V, while others argue conventional editing (hard cuts) better masks AI motion artifacts and feels more natural to viewers.

- Multiple users note temporal discontinuities (camera/character jumps) in Wan 2.2 FLF2V outputs and report that integrating VACE for clip-to-clip continuity plus inserting static mid-shots reduces visible jumps. A quick A/B example with VACE and upscaling is provided: https://streamable.com/1wqka3, showing improved continuity versus FLF2V-only. Prior long-video research covering issues like color drift and mitigation strategies is referenced: https://www.reddit.com/r/StableDiffusion/comments/1l68kzd/video_extension_research/.

- For reproducibility and better stitching, a native ComfyUI workflow that combines Wan with a VACE Clip Joiner is shared: https://www.reddit.com/r/comfyui/comments/1o0l5l7/wan_vace_clip_joiner_native_workflow/. This pipeline focuses on maintaining temporal coherence across segments and reducing camera shifts when assembling longer sequences.

- The showcased video was built using Ellary-’s SDXL-based line-art pipeline, as documented here: https://www.reddit.com/r/StableDiffusion/comments/1nf1w8k/sdxl_il_noobai_gen_to_real_pencil_drawing_lineart/. Attribution clarifies that the look and line-art transformations come from that method, which can be combined with Wan 2.2 FLF2V for style consistency while generating video.

- Sora videos are becoming mainstream content in Spain (@gnomopalomo) (Activity: 1139): Post claims OpenAI’s text-to-video model Sora is producing content now seen in Spain’s mainstream media (attributed to @gnomopalomo). The linked media (https://v.redd.it/p2ci6clyrvuf1) returns

HTTP 403 Forbidden, indicating Reddit application-layer access control requiring authentication or developer credentials, not a transient network/transport error; see Reddit’s login and support ticket pages for access/appeals. No concrete technical details (e.g., prompts, resolution, runtime, post-production pipeline) are provided in the post. Top comments are non-technical; sentiment ranges from concern about “AAA production standards” amplifying low-quality trends to casual enthusiasm about the visuals, with no benchmarks or implementation discussion.- One commenter raises a technical concern that copyright enforcement will intensify as Sora-generated videos go mainstream: platforms like YouTube/TikTok use content fingerprinting (e.g., YouTube’s Content ID) to auto-flag matches (audio and visual), which can trigger automatic claims/blocks even when AI outputs are stylized or transformed. In the EU (including Spain), the DSM Directive’s Article 17 shifts more liability to platforms if they fail to prevent availability of infringing content, incentivizing aggressive pre- and post-upload filtering; see the directive text and guidance (EU 2019/790). Practically, this means Sora content reusing copyrighted music, branded assets, or look‑alike characters may face takedowns or forced monetization to rightsholders unless creators secure licenses or stick to royalty‑free sources.

2. Unitree G1 V6.0 Humanoid Agility Demo and ChatGPT Simpsons-Style Outputs

- Unitree G1 Kungfu Kid V6.0 (Activity: 813): **Unitree G1 Kungfu Kid V6.0 appears to be a capabilities demo of the Unitree G1 humanoid performing fast, choreographed martial-arts-style motions (kicks, punches, spins). The sequence highlights dynamic balance and whole‑body coordination under rapid center‑of‑mass shifts and brief single‑support phases, indicating robust tracking/control and footstep placement; however, the video provides no quantitative benchmarks (e.g.,**

DoF, joint torque/speed, power, recovery metrics) or controller/training details, so it should be read as a qualitative agility demo rather than a reproducible method or benchmark comparison.- Rapid software-driven progress: one commenter notes the same Unitree humanoid that was “falling over and spasming at trade shows” a year ago now shows markedly improved stability and agility, suggesting major upgrades in the control stack (state estimation, WBC, trajectory planning) without obvious hardware changes. They even argue the routine puts Tesla Optimus demos to shame, underscoring how fast iteration on software can translate to locomotion performance gains (Unitree G1, Tesla Optimus).

- Interest in manipulation and end-effectors: curiosity about Unitree’s “dexterous hand attachments” and progress in domains beyond balance/agility (e.g., coordinated hand-arm tasks, contact-rich manipulation, perception). Technical readers want evidence of bimanual skill benchmarks (door opening, tool/tool-use, pick-and-place) or teleop-to-autonomy transfer, ideally with modular end-effectors and reproducible tasks rather than choreography-focused demos.

- This is the closest ChatGPT can legally get to generating the Simpsons. (Activity: 1629): The post shows an AI-generated, Simpsons-adjacent cartoon family, illustrating how hosted LLM/image systems (here labeled “Gemini 2.5 Flash Image,” though the title mentions ChatGPT) apply IP/copyright safety layers to block exact character generation while allowing style-adjacent outputs. Practically, this is enforced via prompt/entity filters and post-generation safety classifiers (e.g., embedding/name matches or visual similarity thresholds), resulting in generic “yellow cartoon family” compositions rather than trademarked likenesses. The examples in comments appear to show similar near-miss renders, highlighting how policy-based decoding and safety gates cause deliberate “style drift” away from protected characters. Commenters note that local/fine-tuned models without safety layers (e.g., LoRA checkpoints) can reproduce IP more faithfully, whereas cloud models prioritize legal risk and filter prompts/outputs; some debate whether “style” imitation (as opposed to exact character likeness) is legally risky and how reliably similarity detectors can separate the two.

- A commenter reports better fidelity by first prompting ChatGPT to write a detailed scene and then generating an image from that scene rather than directly asking for a Simpsons image. This two-step approach increases descriptive signal (characters, setting, actions) while avoiding explicit trademarked terms, which likely bypasses stricter IP classifiers yet preserves style priors; their result still shows off-model artifacts (e.g., Lisa’s mouth, Burns/Smithers proportions, Marge’s neck) in the output example.

- Multiple shared outputs illustrate consistent failure modes in stylized character reproduction: facial topology and limb/neck anatomy drift, inconsistent line weights, and proportion errors across characters, even when the palette and layout are close to target style (ex1, ex2, ex3, ex4). This suggests the model is optimizing toward a “Simpsons-like” distribution without exact character identity, likely influenced by IP guardrails plus training data variance, leading to near-style matches but unstable character-specific features.

- There’s mention of using Google’s Gemini 2.5 Flash Image for similar tasks, implying cross-model viability for style-approximate outputs. While no quantitative benchmarks are provided, the discussion hints that model choice affects adherence to stylistic constraints versus IP guardrails, with both ChatGPT’s image system and Gemini producing recognizable palette/composition but diverging on character-accurate geometry.

3. Minimal-caption Meme/Reaction Images (He’s absolutely right / Infinite loop / Hmm)

- He’s absolutely right (Activity: 1409): Non-technical meme/screenshot (“He’s absolutely right”) used as a springboard for a discussion about LLM sycophancy and whether AI reinforces users’ beliefs versus correcting them. Commenters contrast experiences: one says recent models won’t be convinced of falsehoods “especially lately,” implying improved guardrails/factual resistance, while another argues AI now joins social media and partisan media in echo-chamber affirmation. The thread frames AI as a potential “impartial 3rd party” but questions that ideal given confirmation bias and model behavior variability. Debate centers on whether LLMs are improving at factual correction or remain sycophantic; some view them as useful for error-checking, others believe AI further collapses discourse by validating users alongside existing echo chambers.

- Several comments surface the known LLM failure mode of “sycophancy” (models agreeing with a user’s stated view regardless of truth), which is partly a byproduct of RLHF optimizing for user satisfaction. Empirical analyses (e.g., Anthropic’s study on sycophancy) show models adjust answers to match a user’s signaled identity or preferences, suggesting mitigations like diversified preference data, explicit critique/verification modes, or constitutional-style training can reduce agreement bias. See: https://www.anthropic.com/news/sycophancy and Constitutional AI overview: https://arxiv.org/abs/2212.08073.

- One commenter claims it’s recently harder to convince models of falsehoods, which aligns with improved truthfulness calibration but is fragile under adversarial prompting (role-play, leading premises, or jailbreaks). The instruction hierarchy (system > developer > user) and prompt injection can still coerce agreement or push models into error, highlighting the need for guardrails like source-grounded RAG, compulsory citation, and self-critique passes before final answers. Background: prompt injection/jailbreak literature (e.g., https://arxiv.org/abs/2312.04764) and truthfulness benchmarks like TruthfulQA (https://arxiv.org/abs/2109.07958).

- Another thread points to cross-platform echo chambers where social feeds and LLMs can reinforce user beliefs; technically, non-personalized LLMs may still mirror biases present in the user’s prompt/context window. Practical mitigations include retrieval with provenance, uncertainty estimates (calibrated confidence/logprobs where exposed), and prompting for counter-arguments or contradiction checks to counteract prompt-conditioned confirmation. RAG and citation-first generation are commonly recommended to constrain outputs to verifiable sources.

- Infinite loop (Activity: 3294): A screenshot (image) shows ChatGPT getting stuck in an apparent infinite response loop, repeatedly outputting the same line (paraphrased in comments as the seahorse‑emoji query), with the OP noting that asking the model why it looped caused it to crash again. Title (“Infinite loop”) and comments indicate reproducibility (another user shares a repro screenshot: link), suggesting a decoding/termination condition bug or moderation/guardrail feedback loop during generation. Commenters mostly joke; one asks for a technical reason but no concrete diagnosis is provided beyond users confirming they can reproduce the looping behavior.

- A commenter reports ChatGPT repeatedly looping its response and then crashing when asked about the seahorse emoji, asking why this happens; no technical explanation or mitigation is offered in-thread, and no reproduction steps or model/version details are provided (screenshot). This is an anecdotal stability issue report (looping/termination) without diagnostics, logs, or environment specifics, so it’s not actionable beyond noting a potential edge case involving emoji handling.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

Theme 1. New Models, Frameworks, and APIs Launch into the Stratosphere

- vLLM and Together AI Race for Faster Inference: Cascade Tech introduced Predicted Outputs in vLLM for faster generation by converting output to prefill for matches, with a demo available on their experimental branch. Not to be outdone, Together AI launched ATLAS (Adaptive-LeArning Speculator System), a new paradigm in LLM Inference using Runtime-Learning Accelerators.

- Self-Adapting LLMs and New Agent Platforms Emerge: The SEAL framework now enables LLMs to self-adapt by generating their own finetuning data and update directives for persistent weight changes, with code and the paper available. In the agent space, Agentbase launched a serverless platform for deploying agents in under 30 seconds, while OpenRun offers a declarative platform for managing web apps, including Gradio, with a single command.

- Google and Qwen Prep Next-Gen Model Onslaught: The community eagerly awaits Gemini 3, with some joking that GTA 6 will be released first, while reports suggest Google is already reallocating servers from Gemini 2.5, causing quality degradation. Meanwhile, Qwen plans to ship more models next week, including Next, VL, Omni, and Wan (source), fueling speculation they aim to be America’s DeepSeek.

Theme 2. Hardware Headaches and Performance Puzzles

- VRAM Overflows and RAM Prices Plague Builders: Users find that exceeding VRAM limitations tanks performance, dropping from 70 tokens per second (TPS) to under 2 TPS when spilling to pagefile. This is compounded by skyrocketing DDR5 RAM prices, as seen in this graph, which some blame on RAM being redirected to the server market.

- Mojo’s GPU Handling Frustrates and Impresses: Engineers find that Mojo recompiles code for every GPU at runtime, a flexible approach, but some are hitting a wall with its type system, particularly LayoutTensors, with one user stating CUDA is orders of magnitude easier to learn and use for complex scenarios. Community efforts continue, however, with one member sharing their vulkan-mojo bindings on GitHub.

- Groq Stumbles While Flash Attention Shines: Groq’s performance on tool call benchmarks surprised users with low scores, with chute percentages falling to 49%, as detailed in this tweet. In contrast, developers are calling Flash Attention basically free performance for the significant, easy boost it provides, though it can negatively impact tool calls on some models like OSS120B.

Theme 3. Model Quirks, Copyright Clashes, and Critical Vulnerabilities

- Sora and ChatGPT Wrestle with Content Policies: Users report Sora often bans or fails to render copyrighted material like anime fights, as per OpenAI’s usage policies. Similarly, ChatGPT struggles with realistic face generation, claiming it can’t create realistic faces, forcing users to find workarounds like adding mistakes in Paint.

- Researchers Uncover Poisoning and Prompt Injection Dangers: An Anthropic paper revealed that as few as 250 malicious documents can backdoor an LLM, a finding detailed in their research. In a related discovery, a critical vulnerability in GitHub Copilot allowed private source code exfiltration via a camo bypass, an issue highlighted as so stupid simple and yet it works in this blog post.

- The Ghost in the Machine: AI Spawns “Souls” and Tables: Users debated faint, repetitive artifacts on nano-banana AI outputs, joking it’s not a watermark, it’s a soul as they tried to determine if it’s a bug or feature. Meanwhile, developers find that GPT models persistently generate tables despite instructions to avoid them, leading one to quip, “You really can’t take the tables out of GPT…”

Theme 4. Developer Tooling Troubles and Community Connections

- Cursor Agents and Aider Configs Confound Coders: Cursor users report that Background Agents can shut down unexpectedly when merging code and that integration with Linear is buggy. Aider users are seeking better ways to manage configurations, like exporting settings to a file and finding a proper discussion forum, since the official GitHub Discussions are closed.

- OpenRouter SDK and LayerFort Raise Red Flags: Developers using the openrouter ai-sdk integration are warned to be VERY careful, as the plugin fails to report usage and costs for intermediate steps involving tool calls. Separately, the community labeled LayerFort a likely scam for advertising unlimited Sonnet API access for just $15/month after discovering the site was a generic investment company just months prior.

- DSPy Community Rallies for IRL Meetups: Enthusiasm is building for in-person DSPy events, with a Boston meetup organized by members from PyData, Weaviate, and AWS already planned (registration here). Community members are now actively volunteering to organize similar gatherings in the Bay Area and Toronto.

Theme 5. Decoding the Science Behind Smarter AI

- Researchers Probe Models to Reveal Latent Skills: A new paper suggests thinking language models don’t learn new reasoning skills but rather activate latent ones already in the base model; using sparse-autoencoder probing, researchers extracted steering vectors for 10-20 distinct reasoning routines, recovering up to 91% of the performance gap on MATH500 (details here). This connects to discussions on the ‘Less is More: Recursive Reasoning’ paper, which explores backpropagating only on the final step of deep recursion.

- Mamba 3 and RWKV Architectures Get Compared: The community dissected the new Mamba 3 Paper, comparing its architecture to RWKV-7 and noting its replacement of conv1d with an adjusted RWKV tokenshift mechanism. The consensus is that Mamba 3 is a pared-down version of existing architectures, whose efficiency gains could be valuable in specific scenarios.

- The Great Optimizer Debate: RMSProp Under Scrutiny: A technical debate emerged challenging the claimed adaptivity of Scalar RMSProp, with arguments that its 1/sqrt(v) correction factor might actually be detrimental. This contrasts with a hypothetical anti-Scalar RMSProp using sqrt(v), questioning fundamental assumptions about how optimizers regulate sharpness and reach stability.

Discord: High level Discord summaries

Perplexity AI Discord

- Grok beats Gemini for Image Generation: Users in the chat think that Grok is better than Gemini for generating images.

- Other users noted that Gemini Ultra has very similar goals to OpenAI.

- Perplexity considers plucking Suno AI: A member suggested that Perplexity should acquire Suno AI to dominate the AI music industry with a small investment of $500 million.

- Users noted that this would give access to top AI chat models, Image models, Video Models and the leading Ai Music generation too.

- OpenAI Aims to Please Real World Users: Members in the channel stated that OpenAI is focusing more on useability in real world scenarios.

- Another member stated that, unlike Anthropic, OpenAI only focus on speed and efficency.

- Perplexity Search API Hits Permission Wall: One member reported encountering a

PermissionDeniedErrorwhen using the Perplexity Search API, seemingly blocked by Cloudflare.- Another member explained that this happens when Cloudflare’s bot/WAF protections are applied to the API domain.

- WAF skips unblock API Traffic: A member suggested adding a targeted WAF skip rule or disabling the specific managed rule group blocking the API paths so API traffic isn’t challenged.

- This will potentially resolve the Perplexity Search API from hitting a

PermissionDeniedError.

- This will potentially resolve the Perplexity Search API from hitting a

LMArena Discord

- Community Anticipates the Godot Release of Gemini 3: Members are eagerly awaiting the release of Gemini 3, some joking that GTA 6 will be released first, and drew parallels to the anticipation surrounding GPT-5’s release.

- The community hoped for a free API through Google AI Studio upon release, while model anons remained skeptical of the hype.

- Gemini 2.5 Pro Still a Shining Star: Some users praised Gemini 2.5 Pro as their go-to model for generating creative content, with one comparing it favorably to GPT-5.

- A few users reported they got access to Gemini 3 via A/B testing in AI Studio.

- Sora AI Dominates VGen: Users shared various links from Sora AI to create videos, which prompted discussions around the legality of using generated videos concerning DMCA.

- A member clarified that the TOS states they own all rights to the output.

- LM Arena Plagued with Functionality Issues: Users reported various issues with LM Arena, including AI models getting stuck thinking, the site experiencing errors, and chats disappearing.

- Several members mentioned that LM Arena is buggy lately, with problems ranging from chat crashes to infinite generation loops, suggesting using a VPN to fix some of these issues.

OpenAI Discord

- ChatGPT Struggles with Realistic Face Generation: Members report that ChatGPT claims it can’t create realistic faces and suggest using bad resolution images or adding mistakes in Paint as workarounds.

- Another member discovered that ChatGPT sometimes fails to pass instructions to its image generation component when reading uploaded PDFs, suggesting prompting ChatGPT to describe the file’s contents before generating the image.

- Copyrighted Content Challenges Sora’s Output: Users are finding that Sora often bans requests or fails to accurately render copyrighted material, such as anime fights, due to restrictions that do not allow for the output of most copyrighted content, as stated in OpenAI’s usage policies.

- Members were cautioned against attempting to circumvent these policies.

- Context Poisoning Probes Spark Safety Scrutiny: A discussion emerged around context poisoning, with one member sharing an experimental prompt using unusual symbols and math to create a secret language for AI interaction and psychological probing, but a member suggested explicit, opt-in tags and consent-respecting behavior for safer experimentation.

- The suggested framework is μ (agency/coherence) vs η (camouflaged coercion) for safer experimentation.

- Discord Debates Pronoun Protocols, Project Sharing Paused: Discord users clashed over the necessity and perceived weirdness of pronouns in user bios, derailing a member’s attempt to share a project.

- The conversation devolved into political accusations, prompting the project sharer to postpone their presentation and create a dedicated thread to avoid further off-topic disputes.

- Agent Builder Data Updates: a Persistent Puzzle: A user is trying to figure out how to keep data updated in an agent built with Agent builder, specifically seeking a way to keep an employee directory and other document knowledge bases updated programmatically.

- The community lacks an answer to the question.

LM Studio Discord

- VRAM Overflow Dramatically Reduces Token Speed: Users find that exceeding VRAM limitations drastically reduces tokens per second (TPS), with speeds dropping from 70 TPS (VRAM) to 1.x TPS when using pagefile.

- It was noted that using system RAM could provide reasonable speeds, especially with Mixtral of Experts (MoE) models.

- Flash Attention Gives Near Free Performance Boost: Enabling flash attention significantly improved performance, and some community members are calling it basically free performance.

- It can negatively impact tool calls in some models like OSS120B, but the exact reasons are not yet fully understood.

- RAM Prices Skyrocket, Server Market to Blame?: DDR5 RAM prices have sharply increased since September, as visualized in this graph.

- The community is speculating that RAM is being redirected to the server market, leading to increased costs for consumers; with some members pausing builds until prices drop.

- Nvidia K80 Deemed E-Waste: Members discussed the viability of using Nvidia K80 but quickly dismissed the card as e-waste, due to driver issues.

- Members suggested to consider Mi50’s instead, as some folks are having success with them (32gb ~£150).

- ROCm Engine Lacks RX 9700 XT Support: Members report that the ROCm llama.cpp engine (Windows) does not support the 9700XT, despite the official ROCm release for Windows claiming full support.

- The AMD Radeon RX 9700 XT has a gfx target of gfx1200, which is not listed in the ROCm engine manifest, suggesting a potential incompatibility.

Unsloth AI (Daniel Han) Discord

- HuggingFace Download Errors Decoded: Users discovered that download errors are commonly due to a 401 error, stemming from a missing Hugging Face token.

- Troubleshooting helped the user proceed, however more questions are being asked and investigated.

- GPT Models Can’t Escape Tabular Temptation: Despite instructions to avoid tables, GPT models persistently generate tables, requiring fine-tuning to prevent this.

- A member humorously said, “You really can’t take the tables out of GPT…”, indicating that system prompts alone are insufficient.

- Fine-Tune Gemma 1B at Warp Speed: Members suggested increasing GPU RAM usage, reducing the dataset size, and noted that optimal dataset size typically saturates around 2-8k samples, depending on the task to speed up fine tuning of the Gemma 1B model.

- Members noted training with 127,000 questions takes around 6 hours.

- Android Phone Gets Vibe Coded Gemma: An Seattle based AI Engineer is attempting to run a vibe coded version of gemma3n on an Android phone after finetuning.

- They stated they are looking forward to playing and chatting using their newly created system.

- Qwen3-8B Novels Get Trained: A member trained Qwen3-8B on ~8k real novel chapters, however the model inherited Qwen’s repetition issue and would likely benefit from more than one epoch.

- It was suggested that Qwen3s require a minimum of 2-3 epochs to refine the “not, not this, that prose” and that increasing the rank would help clean the novel extractions and datasets.

OpenRouter Discord

- Google Downgrades 2.5 for 3.0: Members report Google is reallocating servers to 3.0, resulting in a quality decrease for 2.5, which has faced constant quality degradations since its GA release.

- The channel has noticed quality degradation in Google’s 2.5 model since Google shifted resources to the 3.0 model.

- OpenRouter SDK traps unwitting devs: Users of the openrouter ai-sdk integration should be VERY careful, as the plugin does not report full usage details when multiple steps are involved in tool calls.

- It only reports usage and costs for the last message, failing to account for intermediate steps with tool calls.

- Chinese Models get Naughtier: Members mentioned that Chinese models are pretty lenient, but they require a system prompt declaring them as NSFW writers.

- GLM 4.5 air (free) was recommended with Z.ai as the provider to avoid 429 errors; note that the free V3.1 endpoint is censored, while the paid one remains normal.

- LayerFort called a scam: Members noticed that LayerFort looked like a scam, advertising unlimited Sonnet over API for 15 bucks a month, while offering little token usage.

- Further investigation revealed that the site was a generic investment company just half a year prior, raising suspicions further.

- Qwen Quenches Thirst for New Models: Qwen is planning to ship more models next week (source), with several models already released, including Next, VL, Omni, and Wan (source).

- One member humorously suggested that Qwen, having raised $2 billion, aims to be America’s DeepSeek, expressing the hope that they don’t fall behind.

Cursor Community Discord

- Dictation Debuts Development, Deployment Delayed: The dictation feature is live in nightly builds but not yet in public builds, although users can check for updates via CTRL + SHIFT + P or the About section in settings.

- Users anticipate that this feature will eventually make its way into the public builds.

- Mobile Cursor Craving Continues: Users are expressing a strong need for Cursor on mobile, but the IDE is currently desktop-only, while only Background Agent management is available on mobile.

- This limitation is a recurring point of interest within the community.

- Cursor Agents Appear to Flounder on Integration: A user described an issue where Background Agents are used to code new features and merge code changes into the main branch, which results in Cursor BA shutting down.

- Another user reported that the Background Agent often appears no conversation yet, the status is completed, and the task is not actually performed, which seems to be related to GitHub.

- Linear Integration Experiences Reconnecting Woes: A user reported issues with the linear integration after reconnecting GitHub and Linear, and shared a screenshot.

- Another user reported getting a ‘Cursor stopped responding’ error when trying to use it with Linear, and shared a screenshot.

GPU MODE Discord

- Nsight gets Nice with New Nvidia Nodes: A user confirmed that Nsight Compute and Nsight Systems work well with their own 5090 GPU, dispelling doubts from documentation.

- Members noted that these tools are essential for profiling GPU workloads to identify bottlenecks and optimize performance.

- Together Team Tunes Tensor Transmission: ATLAS arrives: Together AI launched the Adaptive-LeArning Speculator System (ATLAS), a new paradigm in LLM Inference via Runtime-Learning Accelerators.

- The announcement was also made via Tri Dao’s X account and Together Compute’s X account.

- Memory Gremlins Glitch Torch: A user reported a memory issue where a torch compiled model slowly increases in memory consumption over time.

- Periodically calling a CUDA defragmentation function with torch.cuda.empty_cache(), torch.cuda.synchronize(), and gc.collect() helps reduce memory pressure.

- Community Contribution to CUDA Caching: A user is hosting a voice channel to discuss finding good first contributions to a real CUDA repo, including code walkthroughs.

- The goal is to lower the barrier to entry for community contributions, providing guidance and support for newcomers.

- Triton Talks Triumph Techies: The next Triton community meetup will be on Nov 5th, 2025 from 10am-11am PST and the meeting link has been shared.

- Tentative agenda items include TLX(Triton Language Extensions) updates, Triton + PyTorch Symmetric Memory, and Triton Flex Attention in PyTorch.

HuggingFace Discord

- AgentBase Launches Serverless Platform: A member introduced Agentbase, a serverless agent platform that allows developers to build and deploy agents in less than 30 seconds without managing individual integrations.

- The platform offers pre-packaged APIs for memory, orchestration, voice, and more, aiming to help transition SaaS to AI-native or quickly experiment with agent builds.

- Declarative Web App gets OpenRun: A member has been building a declarative web app management platform called OpenRun that supports zero-config deployment of apps built in Gradio.

- It facilitates setting up a full GitOps workflow with a single command, enabling the creation and updating of apps by just modifying the GitHub config.

- Hugging Face Users Lament Refund Lag: A user expressed frustration over not receiving a refund from Hugging Face, stating they’ve emailed since the 6th, warning others about the lack of refunds and quota usage on their subscription page.

- A Hugging Face team member, <@618507402307698688>, intervened, requesting the user’s Hub username to check on the refund process and clarifying yellow role = huggingface team.

- Community Seeks Open Source MoE: Members are looking for a good open source MoE (Mixture of Experts) model with configurable total parameters for pretraining, with a suggestion to check out NVIDIA’s Megatron Core and DeepSpeed.

- One member humorously asked if anyone has a server farm and massive training datas.

- Hybriiiiiid VectorDB Debuts in Go: A member has released their VectorDB written from scratch in Go, named Comet it supports hybrid retrieval over BM25, Flat, HNSW, IVF, PQ and IVFPQ Indexes with Metadata Filtering, Quantization, Reranking, Reciprocal Rank Fusion, Soft Deletes, Index Rebuilds and much much more

- The member posted a link to the HN thread.

Eleuther Discord

- AI Evaluations Scrutinized: Members analyzed a Medium article on AI Evaluations, questioning current methodologies.

- A member suggested training models on less data with more effective architectures.

- RMSProp Adaptivity called into Question: A discussion emerged around the adaptive nature of Scalar RMSProp, challenging claims that its adaptivity is linked to maximum stable step size.

- The argument was made that the 1/sqrt(v) correction factor might be detrimental, contrasting it with a hypothetical anti-Scalar RMSProp using sqrt(v).

- Mamba 3: RWKV-7 offshoot?: Members compared Mamba 3’s architecture to RWKV-7, noting its replacement of conv1d with an adjusted RWKV tokenshift mechanism Mamba 3 Paper.

- The consensus was that Mamba 3 is a paring down of existing architectures and its efficiency gains might be valuable in specific situations.

- Recursive Reasoning: Limited Backpropagation: Discussion of the ‘Less is More: Recursive Reasoning with Tiny Networks’ paper arose, specifically the technique of backpropagating only on the last step of deep recursion after T-1 steps of no_grad().

- The mechanics behind this are still being investigated, and there is a Github issue open about it.

- BabyLM Competition Announced: The BabyLM competition, aimed at finding the smallest language models, was mentioned.

- The BabyLM website was shared, noting that it will be at EMNLP 2025 in China, November.

Modular (Mojo 🔥) Discord

- Mojo Recompiles for GPU Specifics: When asked about forward compatibility with new GPUs, a member noted that Mojo recompiles the code for every GPU at runtime.

- They suggested that vendors use SPIR-V to ensure compatibility, and that MLIR blobs for drivers could be compiled using continually updated libraries.

- Vulkan-Mojo Bindings are Available: A member shared their vulkan-mojo bindings available at Ryul0rd/vulkan-mojo.

- They mentioned that support for moltenvk hasn’t been added yet but is a relatively straightforward fix.

- Mojo’s MAX Backend Encounters Bazel Glitches: When a member ran into trouble testing a new

acos()Max op, it was discovered that Bazel couldn’t find thegraphtarget, and testing was converted into a no-op, potentially due to Issue 5303.- Members suggested using relative imports

from ..graphinstead of.graphin the ops file, but that did not solve the problem.

- Members suggested using relative imports

- LayoutTensors give Engineers the Blues: A member expressed frustration with LayoutTensors, citing complex typing mismatches and difficulties in passing them to sub-functions, they said they switched to CUDA due to its simplicity compared to the challenges of Mojo’s type system.

- They shared code examples highlighting the issues, concluding If your GPU code is simple then Mojo is great but if it is a complex scenario I still think CUDA is orders of magnitude easier to learn and use.

Nous Research AI Discord

- vLLM races to faster generation with Predicted Outputs: Cascade Tech introduced Predicted Outputs in vLLM, enabling fast generation by converting output to prefill for (partial) matches of a prediction as described in their blog post.

- The tech is available in their vllmx experimental branch, with a demo available and a tweet thread.

- Graph RAG Seeks Tool-Based Pipelines: A member is seeking advice on a Graph RAG-like approach for chunking a role-play book’s content into efficiently interlinked nodes because they find Light RAG insufficient.

- They are looking for specific tool-based pipelines or procedural controlled methods for chunking and embedding creation.

- SEAL Framework Enables LLMs to Self-Adapt: The SEAL framework enables LLMs to self-adapt by generating their own finetuning data and update directives, resulting in persistent weight updates via supervised finetuning (SFT) as described in their paper.

- Anthropic warns of Tiny Poison Samples: Anthropic found that as few as 250 malicious documents can produce a backdoor vulnerability in a large language model regardless of model size or training data volume as described in their paper.

- A member noted that this is a well-known issue, especially in vision models, and discussed the difficulties of detection on decentralized settings, particularly due to private data and varied distributions.

Latent Space Discord

- Exa’s Search API Gets Supercharged: Exa launched v2.0 of its AI search API, featuring “Exa Fast” (<350 ms latency) and “Exa Deep” modes, powered by a new embedding model and index.

- This update required a new in-house vector DB, 144×H200 cluster training, and Rust-based infra; details here.

- Unlimited Claude Coding for Pocket Change?: A user claims that Chinese reverse-engineering unlocked an unlimited Claude coding tier for just $3/mo by routing requests to GLM-4.6 on z.ai.

- However, others question the latency and quality of the Claude experience, as blogposted here.

- Raindrop Dives Into Agent A/B Testing: Raindrop introduced “Experiments,” an A/B testing suite for AI agents, integrating with tools like PostHog and Statsig.

- This enables tracking the impact of product changes on tool usage, error rates, and demographics, with event deep-dives available here.

- Base Models Hide Reasoning Skills?: A new paper suggests that thinking language models (e.g., QwQ) don’t acquire new reasoning skills but activate latent skills already present in the base model (e.g., Qwen2.5).

- Using sparse-autoencoder probing, they extracted steering vectors for 10-20 distinct reasoning routines, recovering up to 91 % of the performance gap on MATH500; linked here.

- Nano Banana Gets a Soul?: Faint, repetitive artifacts on nano-banana AI outputs spark debate over whether they represent a watermark, a transformer artifact, or a generative quirk.

- The community joked it’s not a watermark, it’s a soul, and suggested upscaling as a potential solution.

Manus.im Discord Discord

- Manus Fixes API Validation Issues: Users reported a “server is temporarily overloaded” error when validating the Manus API key, but the team fixed the issue with new API changes.

- The changes include new responses API compatibility and three new endpoints for easier task management, mirroring the in-app experience.

- Manus Webhook Registration API is temporarily down: A user encountered a “not implemented” error (code 12) when trying to register a webhook, indicating the webhook registration endpoint was temporarily non-functional.

- A team member acknowledged the issue, attributing it to recent code changes, and promised a fix by the next day.

- Manus Price Tag Too Rich For Some: A user building trading EAs and strategies found Manus AI too expensive due to programming errors consuming credits.

- The user stated that Manus is better than GPT and Grok, but still too expensive.

- Back-and-Forth with Manus API Now Supported: The Manus team enabled the ability to push to the same session multiple times via the API, allowing back-and-forth conversations with Manus using the session ID.

- A user integrating Manus into a drainage engineering app expressed interest in streaming intermediate events for a more transparent user experience.

- Feature Request: Dial Up Your Proficiency!: A user suggested an option where users can state their proficiency level upon signup, so that Manus knows if it should assume the user knows nothing and babysit the user instead of the other way around.

- This feature would help tailor the level of assistance and guidance provided by Manus based on the user’s experience and knowledge.

Moonshot AI (Kimi K-2) Discord

- AI Emerges as Anime Animator: Users shared a video showcasing AI-generated anime, marveling at its capability to produce complete animated works and music.

- One user enthusiastically stated that the AI-generated content was really good, marking a milestone in AI’s creative potential.

- Groq Stumbles in Benchmark Chute: Users discussed Groq’s performance on tool call benchmarks, noting surprisingly low scores, with chute percentages plummeting to 49%.

- Linked to a tweet speculating about reasons behind the lower-than-expected performance, attributing it to Groq’s custom hardware and hidden quantization issues.

- Kisuke Pursues Kimi K-2’s Credits via OAuth: A user developing Kisuke, a mobile IDE, sought guidance on implementing OAuth integration to enable users to log in and utilize their Kimi-K2 credits directly.

- Other users voiced skepticism, suggesting that OAuth might not grant direct access to API keys, implying a new system might be necessary and recommending contacting Aspen to discuss this feature.

- Moonshot’s Dev Team Faces Aspen’s Unexpected Departure: A user shared a tweet revealing that Aspen, a member of the Moonshot dev team, will not be returning to work due to a mind-altering experience during a festival break.

- No further details were given on Aspen’s departure.

Yannick Kilcher Discord

- GNN Loss Drops Like It’s Hot!: During Graph Neural Networks (GNNs) training, a user observed a sudden drop in loss, leading to speculation about whether the model grok’d the concept or if it was due to hyperparameter tuning.

- Others proposed LR scheduling or the end of the first epoch as possible causes, with one member noting such occurrences when the LR drops low enough.

- Swap ‘Til You Drop: Embedding Edition: Members explored swapping embeddings between system and user prompts to differentiate context, particularly in long sequences.

- The goal is to enable the model to discern intrinsic differences in how it processes diverse inputs, which helps the model learn faster.

- Berkeley’s LLM Agents Course: Audio Disaster Averted?: Despite bad audio quality, a member recommends a LLM agents course from Berkeley, noting its memetic content and suitability for 1.5x speed viewing.

- Another member suggested that old Berkeley webcast lectures should be subtitled, as there is no excuse to hide them anymore since you can easily generate them.

- Copilot’s Camo Bypassed, Code Compromised!: A critical vulnerability in GitHub Copilot allowed for private source code exfiltration via a camo bypass as reported in this blog post; the issue was addressed by disabling image rendering in Copilot Chat.

- A member found the prompt injection aspect of a security issue to be run of the mill, but highlighted the camo bypass as particularly interesting, calling it so stupid simple and yet it works.

- AI First Authorship: New Norm?: East China Normal University’s education school will require AI first authorship in one of the tracks of their December 2025 conference on education research, according to this announcement.

- This is part of a new effort from the university to put AI at the forefront of innovation in research.

DSPy Discord

- Gemma Gets GEPA Guide: A member released a tutorial on optimizing small LLMs like Gemma for creative tasks with GEPA.

- The blog post provides insights into using GEPA for prompt engineering.

- DSPy Optimizers Duel in Dataquarry: A blog post on The Dataquarry compares Bootstrap fewshot and GEPA optimizers in DSPy, revealing the outsized importance of high-quality training examples.

- The results suggest that when it comes to GEPA, a high-quality set of examples can go a LONG way.

- Liquid Models Lend to Multi-Modal Modeling: For multi-modal tasks, a member recommended Liquid models for their efficiency, especially in models under 4B parameters.

- The recommendation addresses a request for efficient solutions in the multi-modal modeling space.

- DSPy’s Delicious Demo Days Developing: There is a DSPy Boston meetup organized by members from PyData Boston, Weaviate, and Amazon AWS AI, with registration closing soon at Luma.com.

- Enthusiasm is burgeoning for DSPy meetups in the Bay Area and Toronto, with community members volunteering to organize events.

- Automation Ace Available: An experienced engineer is offering services in workflow automation, LLM integration, AI detection, and image and voice AI.

- They showcase real-world experience using LangChain, OpenAI APIs, and custom agents to create automated pipelines and task orchestration systems.

aider (Paul Gauthier) Discord

- Default Prompt Config Tricks for Aider: Members discussed setting the default prompt function to

/askin the Aider config file and referenced the Aider documentation on usage modes and configuration options.- Users suggested setting

architect: trueto have Aider analyze the prompt and choose, and tryingedit-format: chatoredit-format: architectto set the default mode on launch.

- Users suggested setting

- Aider Community Seeks Discussion Hub: Users are seeking a better discussion platform for Aider, as GitHub Discussions are closed (https://github.com/Aider-AI/aider/discussions) and a Reddit forum couldn’t be found.

- The user seems to want to discuss topics in a non-chat format.

- Users Can’t Export Aider Settings: A user expressed frustration with the

/settingscommand, which outputs a large, unmanageable dump, and asked if it’s possible to export the settings to a file.- They noted that

/helpindicates this isn’t possible but questioned if scripting could allow exporting the settings.

- They noted that

- Aider’s Env File Excavation: Aider looks for a .env file in the home directory, git repo root, current directory, and as specified with the

--env-file <filename>parameter.- The files are loaded in that order with later files taking priority, as described in the documentation.

- Auto Test Saves Aider: A user reported issues with Aider generating uncompilable code using qwen3-coder:30b and ollama with test-cmd and lint-cmd set.

- A member suggested turning on the auto test config with yes always, which should run a test after every change and attempt fixes.

tinygrad (George Hotz) Discord

- Python 3.11 Upgrade Consideration: The team is mulling over upgrading to Python 3.11 to take advantage of the

Selftype feature, though a workaround exists in Python 3.10.- A team member found a workaround using 3.10, making the upgrade not urgent.

- TinyMesa Fork Builds on Mac: A team member forked TinyMesa and confirmed that the fork builds in CI, and should theoretically build for Mac.

- A $200 bounty was offered for a successful Mac build.

- NVIDIA GPU Mac Comeback: A member expressed excitement about getting TinyMesa plus USB4 GPU working on Mac, potentially the first functional NVIDIA GPU on Mac in a decade.

- They noted this as a particularly exciting prospect.

- Meeting Averted!: A member inquired about a meeting, and another confirmed its cancellation due to a previous meeting held at 10am HK time.

- A prior meeting obviated the need for another.

MCP Contributors (Official) Discord

- REST API Proxying Design Debated: A member questioned if proxying existing REST APIs constitutes poor tool design, sparking a debate on best practices.

- Discussion revealed that the effectiveness hinges on the underlying API design, specifically its pagination and filtering capabilities relevant to LLMs.

- Concrete LLM-Ready API Benchmarks Sought: Interest arose in establishing LLM-ready API benchmarks, yet contributors noted the difficulties without concrete data.

- It was suggested that use case-specific benchmarks and robust evaluation strategies are more valuable than relying on generic external benchmarks.

- Deterministic MCP Server Packages Proposed: The community is facing issues with the non-deterministic dependency resolution of the current npx/uv/pip approach, which causes slow cold starts in serverless environments.

- A member proposed deterministic pre-built artifacts for sub-100ms cold starts, which could be achieved by treating MCP servers more like compiled binaries. They are also interested in submitting a working group creation request.

- MCPB Repository Involvement Clarified: A question was raised about the community’s stance on bundling formats within the anthropics/mcpb repo.

- Discussion emphasized compatibility with the registry API/schemas, pointing to recent work supporting MCPB in the registry and directing further discussion to the <#1369487942862504016> channel.

- Cloudflare Engineer Joins MCP Efforts: A new member introduced themselves as an engineer working on MCP at Cloudflare, expressing enthusiasm for the project.

- No secondary summary.

MLOps @Chipro Discord

- Diffusion Deep Dive this Saturday: A new Diffusion Model Paper Reading Group is meeting this Saturday at 9 AM PST / 12 PM EST (hybrid in SF + online) to discuss Denoising Diffusion Implicit Models (DDIM) by Song et al., 2020.

- The session will include an intro to their Diffusion & LLM Bootcamp, and the group will explore how DDIM speeds up image generation while maintaining high quality, which is foundational to understanding Stable Diffusion.

- Diffusion & LLM Bootcamp Launch: The Diffusion Model Paper Reading Group announced a 3-month Diffusion Model Bootcamp (Nov 2025), inspired by MIT’s Diffusion Models & Flow Matching course.

- The bootcamp aims to provide hands-on experience in building and training diffusion models, ComfyUI pipelines, and GenAI applications for AI & Software engineers, PMs & creators.

- DIY Vector DB Tailored for Hackers: A member announced they wrote a Vector DB from scratch in Go, designed for hackers, not hyperscalers, with funding from VCs and corporate sponsors.

- According to the HN Thread, the offering supports hybrid retrieval over BM25, Flat, HNSW, IVF, PQ and IVFPQ Indexes with Metadata Filtering, Quantization, Reranking, Reciprocal Rank Fusion, Soft Deletes, and Index Rebuilds.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

You are receiving this email because you opted in via our site.

Want to change how you receive these emails? You can unsubscribe from this list.

Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1266 messages🔥🔥🔥):

Perplexity Pro vs Gemini Ultra, Perplexity AI music industry acquisition, OpenAI and Google's Approach to AI

- Grok Pummels Gemini for Image Generation: Members in the chat agreed that Grok is better than Gemini for generating images.

- Users note that Gemini Ultra has very similar goals for their model to OpenAI.

- Perplexity Ponders Plucking Suno AI: A member suggested that Perplexity should acquire Suno AI, which would allow them to dominate the AI music industry with a small investment of $500 million.

- Some users agreed and noted that with that, they would be giving access to top AI chat models, Image models, Video Models and the leading Ai Music generation too.

- OpenAI aims for Real World Usability: Members in the channel stated that OpenAI is focusing more on useability in real world scenarios.

- Another member stated that, unlike Anthropic, OpenAI only focus on speed and efficency.

Perplexity AI ▷ #pplx-api (8 messages🔥):

Permission Denied Error, Cloudflare WAF protections, Anti-spam detectors

- Perplexity Search API Hits Permission Denied Error: One member reported encountering a

PermissionDeniedErrorwhen using the Perplexity Search API, seemingly blocked by Cloudflare.- Another member explained that this happens when Cloudflare’s bot/WAF protections are applied to the API domain.

- Cloudflare’s WAF skips or disabling rules to unblock API Traffic: A member suggested adding a targeted WAF skip rule or disabling the specific managed rule group blocking the API paths so API traffic isn’t challenged.

- User Silenced Due to Typing Too Fast: A member was silenced with the reason: New user typed too fast.

- A member suggested trying a different browser or device, indicating the user had triggered an anti-spam detector.

LMArena ▷ #general (1249 messages🔥🔥🔥):

GPT-5, Gemini 3, Claude 4.5, AI Model Performance, Comet Browser

- Users Await Godot-Like Gemini 3: Members are eagerly anticipating the release of Gemini 3, with one joking that GTA 6 will be released first.

- Some expressed hope for a free API through Google AI Studio upon release, while others compared it to the hype surrounding GPT-5’s release.

- Gemini 2.5 Pro Still Shines: Some users praise Gemini 2.5 Pro as their go-to model for generating creative content, with one comparing it favorably to GPT-5.

- Some users reported success getting access to Gemini 3 via A/B testing in AI Studio, however model anons remained skeptical of the hype.

- Sora AI Still Top Dog for VGen: Users shared various links from Sora AI to create videos for users.

- This prompted discussions around whether users can legally use generated videos with DMCA, but a member pointed out TOS states they own all rights to the output.

- LM Arena Plagued With Issues: Users reported several issues with the functionality of LM Arena, such as AI models getting stuck thinking, the site experiencing errors, and chats disappearing.

- Several members mentioned that LM Arena is buggy lately, with problems ranging from chat crashes to infinite generation loops - using a VPN to fix some of these issues.

- Hacks for Multilingual Users in General Chat: Some users were conversing in languages other than English, prompting others to suggest using translators, although the translations were noted to be inaccurate.

- One member pointed out the channel being English-only, and to do the right thing.

OpenAI ▷ #ai-discussions (1084 messages🔥🔥🔥):

ChatGPT image generation, Sora 2 restrictions, AI and copyright infringement, The agony of Eros, Getting Sora codes

- ChatGPT struggles with Realistic Faces: A member reported that ChatGPT stated it couldn’t create realistic faces when they tried to generate one.

- Workarounds include using bad resolution images or adding mistakes in Paint.

- ChatGPT Image-Gen Fails to Receive Prompts: A user discovered that ChatGPT sometimes fails to pass instructions to its image generation component when reading uploaded PDFs.

- To resolve this, prompt ChatGPT to describe the file’s contents before generating the image.

- OpenAI Deletes Saved User Posts?: Some users linked to Gizmodo article about OpenAI stopping saving users deleted posts.

- Other members were more focused on finding a Sora 2 invite code

- Exploring the Agony of Eros in Digital Interactions: A member referenced The Agony of Eros by Byung-Chul Han, discussing the loss of the Other in frictionless, personalized realities.

- They shared a fragment from the text emphasizing the book’s diagnostic rather than despairing tone and philosophical-poetic style.

- Diognese & Nietzsche Join Forces for Digital Cynicism: Members mused about a modern philosophy stemming from Diogenes (laughing at delusion) and Nietzsche (explaining the delusion), creating digital cynicism.

- They noted this archetype is clear-eyed, funny, and disturbingly aware that it’s all still a game.

OpenAI ▷ #gpt-4-discussions (37 messages🔥):

MCP dev channel, ChatGPT solves crossword, Sora AI for Android, GPT realtime model training, custom gpt plus vs free

- ChatGPT Crossword Puzzles prove tricky: One member tried to get ChatGPT to solve a crossword puzzle by showing the model the crossword grid, but it cannot see that many squares and successfully track it, as seen in this chatgpt.com share link.

- They concluded that the model can’t sense the grid clearly enough to solve a crossword, but a human could iteratively map one out.

- Custom GPT working differently in Plus vs Free: A user reported that their custom GPT is not working properly in a plus account while in a free account.

- Another user asked if it’s actually using the same model if you check under See Details.

- Enterprise vs Company Defined: A member asked about the difference between enterprise and company accounts.

- A helpful user clarified that enterprise is rather selective, and provides specific support for interested and selected very large companies, according to the OpenAI help article.

- Agent Builder data updates pondered: A user is trying to figure out how to keep data updated in an agent built with Agent builder.

- Specifically, they asked if there was a way to keep an employee directory and other document knowledge bases updated programmatically.

- GPT store closing?: A user asked if the GPT store will close and all GPTS turn into an app.

- This question was not answered.

OpenAI ▷ #prompt-engineering (175 messages🔥🔥):

Sora Prompting, Context Poisoning, Psychological Safety, Quantum Superpositioning, Text-to-Video Prompt Tool

- Sora Struggles to Generate Copyrighted Content: Members discussed difficulties in using Sora to generate content based on copyrighted material like anime fights, noting that Sora often bans such requests or fails to accurately render the characters.

- A member pointed out that Sora is not allowed to output most copyrighted content and declined to provide ways to circumvent this restriction, pointing to the community guidelines.

- Context Poisoning Concerns and Mitigation Strategies: A discussion arose around context poisoning, with one member sharing an experimental prompt using unusual symbols and math to create a secret language for AI interaction, aiming for psychological probing.

- Another member cautioned against such methods, labeling them as potentially unsafe for general users due to their reliance on non-reproducible techniques like hash-seeded chaos and hidden ‘fnords’ that may cause covert discomfort, instead suggesting explicit, opt-in tags and consent-respecting behavior for safer experimentation, pushing users to adopt the framework of μ (agency/coherence) vs η (camouflaged coercion).

- Debating Quantum Superposition in AI Models: A user claimed that quantum superpositioning allows for fine-tuning binary outputs in AI models after initialization, tying it to AI’s ability to process multiple dimensional signals.

- Another member challenged this assertion, stating that without specific details and specifications (a defined quantum circuit or Hamiltonian), quantum superposition is just decoration, not a model, further requesting citations needed.

- Proposal for Tool to Standardize Text-to-Video Prompts: In a conversation about creating Walter White in Sora 2, members suggested creating a tool that standardizes optimized text-to-video prompts.

- The goal is to assist users in generating specific content, though the conversation also touched on the challenges of circumventing copyright restrictions.

OpenAI ▷ #api-discussions (175 messages🔥🔥):

Sora Prompting, Context Poisoning, Psychological Safety in AI, Fnords and Prompt Engineering

- Sora Runs Copyrighted Content Gauntlet: Users are experiencing Sora banning videos that include fights from different animes due to copyright restrictions.

- OpenAI does not allow for the output of most copyrighted content, and members were cautioned against circumventing these policies.

- Fnords Poisoning the Context?: A member shared a ‘weird’ Clojure code snippet involving symbols and context poisoning, designed to evoke discomfort and reveal hidden meanings.

- Another member raised concerns that these techniques, including hash-seeded chaos and hidden “fnords,” rely on mystification and are not reproducible by junior engineers.

- Context Contamination Concerns Emerge: A member described a system that uses meaningless questions and unconventional symbols to disrupt the ‘helpful assistant’ behavior of ChatGPT, but another user argued that this approach acts as a universal context contaminator.

- They suggested that adding distractions lowers the output quality and mixes metaphors across domains, cautioning against encouraging practices that poison any context.

- Navigating Psychological Safety: A discussion arose regarding psychological safety in prompt engineering, with emphasis on the use of explicit, opt-in tags and avoiding covert discomfort when challenging models.

- It was suggested that sharing knowledge should prioritize measurable mechanisms and consent-respecting behavior over hidden discomfort delivery.

OpenAI ▷ #api-projects (32 messages🔥):

Pronoun Debate, Project Sharing Interrupted, Leftist Accusations

- Pronoun Pronouncements Prompt Discord Duel: Discord users sparred over the necessity of pronouns, with one user asserting that pronouns are weird and not required after seeing another user’s pronouns in their bio.

- The argument escalated with accusations of being a leftist and calling the other user weird for wanting people to know their pronouns, ending with the statement Don’t see any pronounces in my bio.

- Project Pitch Put on Pause Amidst Political Provocation: A user expressed frustration that a debate about pronouns derailed their attempt to share a project.

- The user then stated they would create a thread where it’s actually on topic and wanted to share their project, not fight about politics and pronouns, referring to themself as red.

- Leftist Label Lightens Discord Discourse: Following accusations, one user was labelled a leftist, which they shrugged off with a brief retort.

- The user replied to the leftist comment with a lighthearted that’s not a bad thing.

LM Studio ▷ #general (736 messages🔥🔥🔥):

MacOS Tahoe High GPU Usage, Copilot Integration in VSCode, LM Studio Context Amount Display Issue, NVIDIA K80 GPU Opinions, Vibe Coding

- MacOS Tahoe has High GPU Usage: A member using macOS Tahoe reported high WindowServer GPU usage and linked an Electron issue that might affect LM Studio.