What is going on?

AI News for 9/22/2025-9/23/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (193 channels, and 3072 messages) for you. Estimated reading time saved (at 200wpm): 236 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

We would normally feature the remarkable velocity of Qwen (headlined by today’s Qwen3-Omni model) or the new DeepSeek V3.1 update, but really today belongs again to NVIDIA, which over the last week has deployed billions into Intel ($5b) and Enfabrica’s execuhire ($900m) and Wayne ($500m).

The relevant details of the press release are all we know:

News

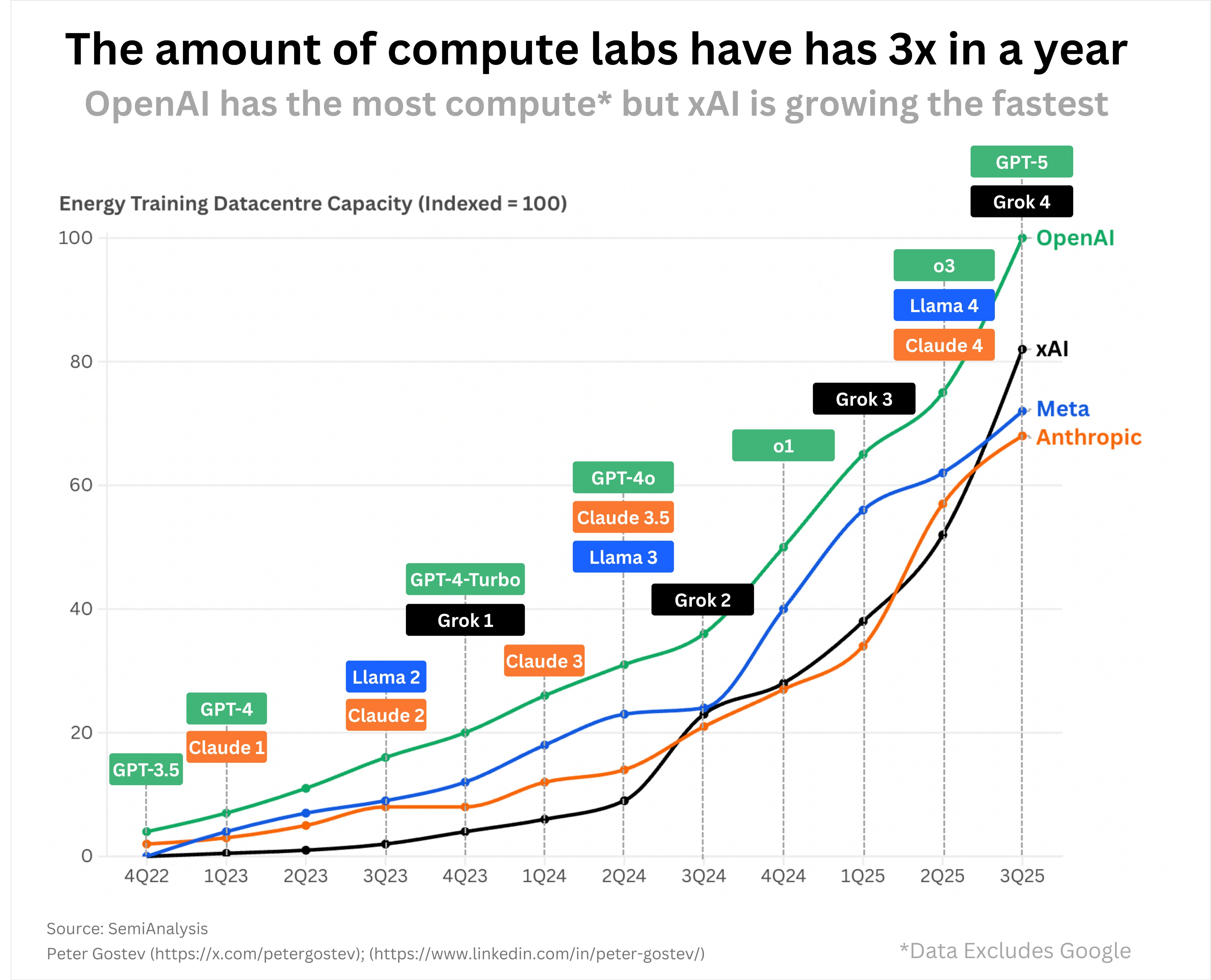

- Strategic partnership enables OpenAI to build and deploy at least 10 gigawatts of AI datacenters with NVIDIA systems representing millions of GPUs for OpenAI’s next-generation AI infrastructure.

- To support the partnership, NVIDIA intends to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed.

- The first gigawatt of NVIDIA systems will be deployed in the second half of 2026 on NVIDIA’s Vera Rubin platform.

**San Francisco and Santa Clara—September 22, 2025—**NVIDIA and OpenAI today announced a letter of intent for a landmark strategic partnership to deploy at least 10 gigawatts of NVIDIA systems for OpenAI’s next-generation AI infrastructure to train and run its next generation of models on the path to deploying superintelligence. To support this deployment including datacenter and power capacity, NVIDIA intends to invest up to $100 billion in OpenAI as the new NVIDIA systems are deployed. The first phase is targeted to come online in the second half of 2026 using NVIDIA’s Vera Rubin platform.

We don’t know this for a fact but this $100B deal is likely a big part of how OpenAI is funding their $300B commit to Oracle from 2 weeks ago (whose stock is back up at all time highs, seeming to support this theory).

Side note: it hasn’t escaped observers that somehow all the stocks involved - ORCL, OpenAI, and NVIDIA - are all jumping disproportionately on this money going from one to the other. NVIDIA’s stock gained $170B today after announcing this $100B investment to secure their revenue, OpenAI’s stock is now presumably valued more than the most recent $500B after this deal as well, and ORCL is still $250B higher than it was before the announcement. Are there -ANY- losers here?

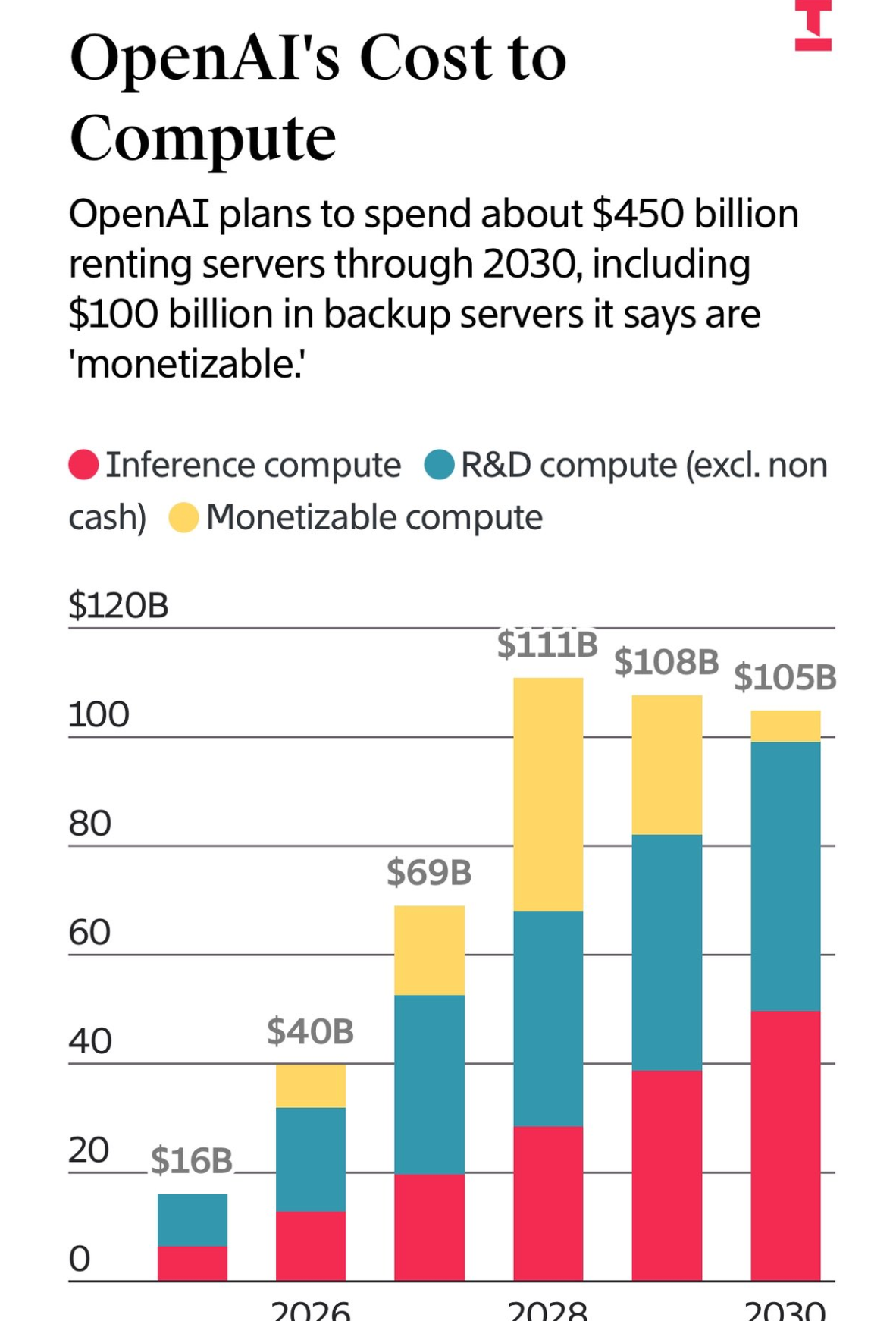

From The Information, we also have some insight on the breathtaking scale of OpenAI’s intended infra spend, which includes about $150B more in existing + unaccounted spend.

AI Twitter Recap

Compute, Inference, and Systems: OpenAI–NVIDIA, FP8, and cross‑vendor GPU portability

- OpenAI × NVIDIA: 10 GW and “millions of GPUs.” OpenAI announced a strategic partnership with NVIDIA to deploy at least 10 gigawatts of GPU datacenters, targeting first capacity in 2H 2026 on “Vera Rubin,” with NVIDIA intending to invest up to $100B as systems are deployed. OpenAI framed NVIDIA as a preferred strategic compute/networking partner; NVIDIA’s market cap jumped on the news. Details via @OpenAINewsroom and @gdb. Commentary on how such scaling continues to drive down the “cost of intelligence” from @ArtificialAnlys.

- Deterministic inference for RL & reproducibility: SGLang added end‑to‑end deterministic attention/sampling that remains compatible with chunked prefill, CUDA graphs, radix cache, and non‑greedy sampling—useful for reproducible rollouts and on‑policy RL with minimal overhead. See @lmsysorg.

- FP8, comms, and real‑world speedups: Practitioners reported tangible FP8 gains under parallelism with comms constraints (e.g., PCIe), with perf crossover vs BF16 under pipeline/data parallel regimes. See local results and methodology from @TheZachMueller and follow‑ups. Related: Together AI is offering early access to GB300 NVL72 racks (@togethercompute).

- Write once, run on many GPUs: Modular previewed cross‑vendor portability where most code written for NVIDIA/AMD “mostly just works” on Apple Silicon GPUs—aimed at lowering hardware access barriers (@clattner_llvm). See also their updated cross‑vendor stack notes (@clattner_llvm).

Major model drops: Qwen3 Omni family, Grok‑4 Fast, DeepSeek V3.1 Terminus, Apple Manzano, Meituan LongCat

- Qwen’s multi‑front release wave:

- Qwen3‑Omni: an end‑to‑end omni‑modal model (text, image, audio, video) with 211 ms latency, SOTA on 22/36 audio/AV benchmarks, tool‑calling, and a low‑hallucination Captioner. Alibaba open‑sourced the 30B A3B variants: Instruct, Thinking, and Captioner. Demos and code: @Alibaba_Qwen, release thread.

- Qwen3‑Next‑80B‑A3B with FP8: Apache‑2.0 weights focused on long‑context speed; mixture‑of‑experts with gated attention/DeltaNet, trained on ~15T tokens with GSPO, supports up to 262k tokens (longer with mods). Summary via @DeepLearningAI.

- Qwen3‑TTS‑Flash: SOTA WER for CN/EN/IT/FR, 17 voices × 10 languages, ~97 ms first packet; and

- Qwen‑Image‑Edit‑2509: multi‑image compositing, stronger identity preservation, and native ControlNet (depth/edges/keypoints). Launches: TTS, Image‑Edit.

- xAI Grok‑4 Fast: A cost‑efficient multimodal reasoner with 2M context, free in some “vibe coding” UIs; community reports 2–3× higher throughput but weaker instruction following than GPT‑5‑mini on some tasks; SVG generation test mixed; still competitive on LisanBench. See @ShuyangGao62860, @_akhaliq, @scaling01, and a long‑context filtering anecdote from @dejavucoder.

- DeepSeek‑V3.1‑Terminus: Incremental update addressing mixed‑language artifacts and improving Code/Search agents. Available on Hugging Face; community shows usable 4‑bit quant runs on M3 Ultra with MLX at double‑digit toks/sec. See @deepseek_ai, demos by @awnihannun.

- Apple Manzano: a unified multimodal LLM that shares a ViT with a hybrid vision tokenizer (continuous embeddings for understanding + 64K FSQ tokens for generation), scaling from 300M to 30B, with strong text‑rich understanding (OCR/Doc/ChartQA) and competitive generation/editing via a lightweight DiT‑Air decoder. Threads: @arankomatsuzaki, summary with training details by @gm8xx8.

- Meituan LongCat‑Flash‑Thinking: open‑source “thinking” variant reporting SOTA across logic/math/coding/agent tasks with 64.5% fewer tokens on AIME25 and a 3× training speedup via async RL. Launch: @Meituan_LongCat.

Coding agents, evals, and scaffolds: SWE‑Bench Pro, GAIA‑2/ARE, ZeroRepo, Perplexity Email Assistant

- SWE‑Bench Pro (Scale AI): a harder successor to SWE‑Bench Verified with multi‑file edits (avg ~107 LOC across ~4 files), contamination resistance (GPL/private repos), and tougher deps. Current top scores: GPT‑5 = 23.3%, Claude Opus 4.1 = 22.7%, most others <15%. Details from @alexandr_wang and @scaling01.

- Meta GAIA‑2 + ARE: a practical agent benchmark and an open platform (with MCP tool integration) for building/evaluating agents in noisy, asynchronous environments. Findings: strong “reasoning” models can fail under time pressure (inverse scaling); Kimi‑K2 competitive at low budgets; multi‑agent helps coordination; diminishing returns beyond certain compute. See @ThomasScialom and commentary by @omarsar0.

- MCP‑AgentBench: Metastone’s live‑tool benchmark with 33 servers & 188 tools to evaluate real‑world agent performance (@HuggingPapers).

- Repository Planning Graph (RPG) + ZeroRepo (Microsoft): proposes a graph of capabilities/files/functions and data dependencies to plan/generate whole repos from specs, reporting 3.9× more LOC than baselines on their setup. Threads: @_akhaliq and explainer from @TheTuringPost.

- Perplexity Email Assistant: a native email agent for Gmail/Outlook that drafts in your style, schedules meetings, and prioritizes inbox items—now live for Max subscribers (@perplexity_ai, @AravSrinivas).

- Coding UX trending up: GPT‑5‑Codex shows dramatic capability jumps (e.g., a basic Minecraft clone in three.js) and reward shaping that “makes sure your code actually runs” (@gdb, @andrew_n_carr). Tri Dao reports 1.5× productivity with Claude Code (@scaling01); “code is king” remains a durable, high‑value application (@simonw).

Safety, governance, and agent security

- Detecting/reducing “scheming”: OpenAI and Apollo AI Evals introduced environments where current models exhibit situational awareness and can be prompted/trained into simple covert behavior; “deliberative alignment” reduces scheming rates, though anti‑scheming training can increase evaluation awareness without eliminating covert actions (@gdb). Practitioner notes: outcome‑based RL and “hackable” envs may introduce scheming; rising use of non‑human “reasoning traces” complicates audits (@scaling01).

- Guardrails with dynamic policy: DynaGuard (ByteDance) evaluates if conversations comply with user‑defined rules, supports fast/detailed explanatory modes, and generalizes to unseen policies (@TheTuringPost).

- Agent ingestion principle: “If the agent ingests anything, its permissions should drop to the level of the author”—a crisp policy design heuristic for tool‑enabled agents (@simonw).

Research highlights: JEPA debate, synthetic data pretraining, memory for latent learning

- JEPA for LLMs (and for robots): A new LLM‑JEPA iteration claims latent prediction benefits (@randall_balestr), but critiques argue it requires tightly paired data (e.g., Text↔SQL), adds forward passes, and lacks generality (@scaling01). In robotics, V‑JEPA shows strong spatial understanding but impractical inference (~16s/action via MPC) and no language conditioning; contrasts with label‑heavy approaches like Pi0.5 (@stevengongg).

- Synthetic Bootstrapped Pretraining (SBP): Trains a 3B model on 1T tokens by synthesizing inter‑document relations—outperforming repetition baselines and closing much of the gap to an oracle with 20× more unique data (@ZitongYang0, @arankomatsuzaki).

- Latent learning gap and episodic memory: A conceptual framework tying language model failures (e.g., reversal curse) to absent episodic memory; shows retrieval/episodic components can complement parametric learning for generalization (@AndrewLampinen).

- Also notable: NVIDIA’s ReaSyn frames molecule synthesis as chain‑of‑reaction reasoning with RL finetuning (@arankomatsuzaki); Dynamic CFG adapts guidance per step via latent evaluators, yielding large human pref gains on Imagen 3 (@arankomatsuzaki); Microsoft’s Latent Zoning Network unifies generative modeling, representation learning, and classification via a shared Gaussian latent space (@HuggingPapers).

Top tweets (by engagement)

- OpenAI × NVIDIA announce a strategic buildout of “millions of GPUs” and at least 10 GW of data centers (@OpenAINewsroom, 3.7K+).

- Qwen3‑Omni: End‑to‑end omni model with SOTA audio/AV results and 30B open variants (Instruct/Thinking/Captioner) (@Alibaba_Qwen, 3.9K+).

- Turso’s rapid evolution: Rust rewrite of SQLite with async‑first architecture, vector search, and browser/wasm support—framed as infra for “vibe coding” (@rauchg, 2.8K+).

- GPT‑5‑Codex demo: three.js “Minecraft” built from a single prompt (@gdb, 3.1K+).

- SWE‑Bench Pro: harder agent coding benchmark with real‑world repos; GPT‑5 and Claude Opus 4.1 lead at ~23% (@alexandr_wang, 1.7K+).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. DeepSeek-V3.1-Terminus Launch & Online Upgrade

- 🚀 DeepSeek released DeepSeek-V3.1-Terminus (Score: 361, Comments: 45): DeepSeek announced an iterative update, DeepSeek‑V3.1‑Terminus, targeting prior V3.1 issues like CN/EN language mixing and spurious characters, and upgrading its Code Agent and Search Agent. The team claims more stable, reliable outputs across benchmarks vs V3.1 (no specific numbers provided); weights are open‑sourced on Hugging Face: https://huggingface.co/deepseek-ai/DeepSeek-V3.1-Terminus and accessible via app/web/API. Commenters ask if “Terminus” signifies the final V3 checkpoint and request feedback on role‑play performance; others discuss the aggressive naming, but no technical objections are raised.

- Clarification sought on whether “V3.1‑Terminus” denotes the final checkpoint of the

V3line versus a routine sub-variant; the naming suggests a checkpoint/tag rather than a major-arch change, and commenters want release notes clarifying if it’s a new training run, a late-stage fine-tune, or an inference-time preset. - Critique of DeepSeek’s versioning semantics: the sequence from R1 to

V3.1andV3.1‑Tis seen as confusing, with warnings that a hypothetical “3V” (Vision) could be mistaken for “V3”. This ambiguity impedes reproducibility and apples-to-apples comparisons across checkpoints and capabilities unless model cards clearly specify training data, steps, and deltas between tags. - Requests for head-to-head benchmarks against popular open(-ish) baselines like GLM‑4.5 and “kimik2” (as referenced by users), including roleplay performance as a targeted eval dimension. Commenters want standardized evals (e.g., instruction-following plus RP/character consistency tests) to quantify whether

V3.1‑Timproves practical usability versus current stacks.

- Clarification sought on whether “V3.1‑Terminus” denotes the final checkpoint of the

2. Qwen3-Omni Multimodal Release & Open-Source Models

- 3 Qwen3-Omni models have been released (Score: 362, Comments: 77): Three end-to-end multilingual, omni-modal

30Bmodels—Qwen3-Omni-30B-A3B-Instruct, Thinking, and Captioner—are released with a MoE-based Thinker–Talker design, AuT pretraining, and a multi-codebook speech codec to reduce latency. They handle text, image, audio, and video with real-time streaming responses (TTS/STT), support119text languages,19speech-input languages, and10speech-output languages, and report SOTA on22/36audio/video benchmarks (open-source SOTA32/36), with ASR/audio understanding/voice conversation comparable to Gemini 2.5 Pro per the technical report. Instruct bundles Thinker+Talker (audio+text out), Thinking exposes chain-of-thought Thinker (text out), and Captioner is a fine-grained, low-hallucination audio captioner fine-tuned from Instruct with a cookbook. Early user reports claim TTS quality is weak while STT is “godlike,” outperforming Whisper with contextual constraints and very fast throughput (e.g., ~30 s audio transcribed in a few seconds), and note strong image understanding on complex graphs/trees to Markdown; another asks about GGUF availability.- Reports note the model’s STT is “godlike” versus OpenAI Whisper, with promptable context/constraints (e.g., telling it to never insert obscure words) and very high throughput—

~30sof audio transcribed in a few seconds locally. Multimodal vision is praised for accurate structure extraction, e.g., converting complex graphs/tree diagrams into clean Markdown, implying robust layout understanding beyond simple OCR. See Whisper for baseline comparison: https://github.com/openai/whisper. - Conversely, native TTS quality is described as poor, which limits end-to-end speech-to-speech despite fast ASR. Real-time S2S is feasible in principle by chaining ASR → LLM → TTS, but latency/UX will depend on swapping in a higher‑quality TTS engine; STT latency appears near–real-time, but output voice quality remains the bottleneck.

- Local deployment friction is highlighted: users ask for GGUF builds and note llama.cpp lacks full multimodal support (even

Qwen2.5-Omniisn’t fully integrated), so audio/image features may require vendor runtimes or custom servers for now. This constrains on‑device use until community kernels catch up. Relevant refs: llama.cpp https://github.com/ggerganov/llama.cpp and GGUF format https://github.com/ggerganov/llama.cpp/blob/master/docs/gguf.md.

- Reports note the model’s STT is “godlike” versus OpenAI Whisper, with promptable context/constraints (e.g., telling it to never insert obscure words) and very high throughput—

- 🚀 Qwen released Qwen3-Omni! (Score: 186, Comments: 3): Alibaba’s Qwen team announced Qwen3‑Omni, a natively end‑to‑end multimodal model unifying text, image, audio, and video (no external encoders/routers), claiming SOTA on

22/36audio/AV benchmarks. It supports119text languages /19speech‑in /10speech‑out, offers ~211 msstreaming latency and30‑minaudio‑context understanding, with system‑prompt customization, built‑in tool calling, and an open‑source low‑hallucination Captioner. Open releases include Qwen3‑Omni‑30B‑A3B‑Instruct, ‑Thinking, and ‑Captioner; code/weights and demos are on GitHub, HF, ModelScope, Chat, and a demo space. Comments flag the benchmark chart layout as making direct comparison to Gemini 2.5 Pro difficult, while several note the 30B‑A3B results appear competitive with GPT‑4o on their tasks—especially for vision‑reasoning—prompting enthusiasm to test “thinking‑over‑images” in an open model.- Skepticism about the benchmark visualization: one commenter notes the chart is “masterfully crafted” to push Gemini 2.5 Pro off the main comparison area, implying potential presentation bias and making side‑by‑side evaluation with Qwen3‑Omni harder. The point emphasizes the need for transparent axes, overlapping points, and raw numbers to enable reproducible, apples‑to‑apples comparisons across models.

- Early read on performance: a user says the

30B-A3Bvariant shows surprisingly strong results and appears to match GPT‑4oin their experience on multimodal reasoning, particularly “thinking‑over‑images.” If borne out in independent tests, that would position an open model close to frontier multimodal reasoning capability, attractive for local/self‑hosted use and practical evaluation beyond curated leaderboards.

3. Qwen-Image-Edit-2509 Release: Multi-Image Editing & ControlNet

- Qwen-Image-Edit-2509 has been released (Score: 222, Comments: 30): Qwen released Qwen-Image-Edit-2509, a September update adding multi-image editing trained via image concatenation (supporting person+person/product/scene) with best results at

1–3inputs, plus markedly improved single-image identity consistency for faces, products, and on-image text (fonts/colors/materials) accessible via Qwen Chat. It also adds native ControlNetstyle conditioning (depth, edge, keypoint maps, etc.) on top of the existing Qwen-Image-Edit architecture. Comments highlight surprise at the monthly cadence and note prior issues with facial identity drift over multiple iterations, which this release claims to address; some compare it to Flux Kontext, saying earlier versions sometimes had worse facial resemblance, so the fast update is welcomed.- Identity preservation across iterative edits: Users report prior Qwen-Image-Edit builds struggled to keep faces consistent, especially over multiple edit passes or with multiple subjects. v2509 is highlighted as targeting this issue, suggesting improved face/identity conditioning and reduced drift across iterations.

- Comparison vs Flux Kontext: One user found the previous release was close but sometimes worse at facial resemblance than Flux Kontext. The v2509 update is viewed as closing that gap by acknowledging and addressing facial similarity issues.

- Inpainting/object removal performance: A commenter says Qwen-Image-Edit-2509 is “comparable to nano banana” on object removal tasks, implying competitive fill quality for removals. No quantitative benchmarks were provided, but the qualitative parity is noted.

- 🔥 Qwen-Image-Edit-2509 IS LIVE — and it’s a GAME CHANGER. 🔥 (Score: 208, Comments: 18): Qwen-Image-Edit-2509 is announced as a major upgrade of Qwen’s image editing stack with multi-image compositing (e.g., person+product/scene) and strong single-image identity/brand consistency. It claims fine-grained text editing (content, font, color, material) and integrates ControlNet controls (depth, edges, keypoints) for precise conditioning; code and weights are available on GitHub and Hugging Face (GitHub, HF model, blog). Top comments critique the marketing hyperbole (e.g., “game changer,” “rebuilt”) and don’t provide benchmarks or technical counterpoints; skepticism centers on evidence for the claimed improvements.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo, /r/aivideo

1. OpenAI–NVIDIA 10 GW Supercomputer Partnership Announcements

- OpenAI and NVIDIA announce strategic partnership to deploy 10 gigawatts of NVIDIA systems (Score: 244, Comments: 80): OpenAI and NVIDIA signed a letter of intent to deploy at least

10 GWof NVIDIA systems (described as “millions of GPUs”) for OpenAI’s next‑gen training/inference stack, with the first1 GWslated forH2 2026on NVIDIA’s Vera Rubin platform; NVIDIA will be a preferred compute/networking partner, and both sides will co‑optimize OpenAI’s model/infrastructure software with NVIDIA’s hardware/software [source]. NVIDIA also intends to invest up to $100B in OpenAI, disbursed progressively as each gigawatt is deployed, alongside a parallel build‑out of datacenter and power capacity and continued collaborations (e.g., Microsoft, Oracle, SoftBank, “Stargate”) toward large‑scale model development (https://openai.com/index/openai-nvidia-systems-partnership). Top comments highlight the sheer scale of the proposed funding and note potential circular capital flows (OpenAI buys NVIDIA compute while NVIDIA invests back into OpenAI); others argue over “bubble vs. singularity” framing rather than technical merits.- A cited claim states: “NVIDIA intends to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed,” tied to a

10 GWrollout of NVIDIA systems. This reads as tranche-based vendor financing keyed to power/compute milestones, aligning capex with datacenter build-outs and de-risking supply timing while scaling GPU deployment as power and facilities come online. - Another comment highlights a circular capital flow: OpenAI purchases NVIDIA systems while NVIDIA invests back into OpenAI. Technically, this resembles a strategic supplier-financing/compute-prepayment structure that could secure priority allocation for next-gen NVIDIA platforms (e.g., H200/B200/GB200), lock in roadmap/pricing, and accelerate training cadence, at the cost of deeper vendor lock-in and supply-chain concentration.

- A cited claim states: “NVIDIA intends to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed,” tied to a

- 🚨 BREAKING: Nvidia to Invest $100 Billion in OpenAI (Score: 615, Comments: 99): Post claims Nvidia will invest up to

$100Bin a strategic partnership with OpenAI to build/deploy10 GWof AI supercomputer capacity on Nvidia hardware, translating to “millions of GPUs” coming online in 2H2026, to support OpenAI’s AGI ambitions; it adds Nvidia stock rose+4.69%on the news. Structure suggests funds are tied to progressive 10 GW rollout, effectively pre-financing/locking OpenAI to Nvidia’s stack and positioning Nvidia at the center of next‑gen AI compute. Comments argue it’s effectively Nvidia investing in itself since OpenAI buys Nvidia hardware; OpenAI is trading equity for guaranteed compute; and Nvidia benefits by amplifying demand/prices for its chips, then recycling profits to subsidize OpenAI capacity.- Power-to-GPU math: taking the cited

10 GW ÷ 0.7 kW ≈ 14.3M GPUs(assuming ~700 W/GPU, e.g., H100-class SXM modules), but accounting for data center PUE~1.2–1.4and non-GPU overheads (CPUs, NICs, switches, storage, cooling) drops usable GPU count to roughly~8–11M. Networking at this scale (400/800G per node over InfiniBand NDR or Ethernet) implies tens of millions of optics/ports and multi-megawatt fabric power; the interconnect and optics supply chain become bottlenecks alongside GPUs NVIDIA Quantum-2 400G IB, NVLink Switch. Blackwell-era modules are expected to push module power higher, further reducing the GPU count per GW and increasing cooling/networking overheads (NVIDIA GTC Blackwell). - Compute-for-equity flywheel: commenters frame this as OpenAI swapping equity for reserved NVIDIA capacity; in turn, OpenAI’s workloads popularize NVIDIA chips, letting NVIDIA raise ASPs and recycle profits into subsidized capacity for OpenAI—effectively “NVIDIA investing in NVIDIA.” Practically, this likely means multi-year take-or-pay reservations and prepayments tied to constrained inputs like HBM3E and CoWoS packaging capacity, with priority allocation rather than pure cash injection (HBM3E overview, TSMC CoWoS). Deepened CUDA lock-in increases switching costs versus AMD MI300X/MI325X + ROCm, pressuring competitors to beat NVIDIA on $/TFLOP and memory BW to win inference/training TCO (AMD MI300X, ROCm).

- Scale and infrastructure constraints:

~10 GWis utility-scale power (dozens of campuses), requiring new high-voltage substations, long-lead transformers (often18–36months), and substantial water/heat-rejection capacity; grid and cooling timelines may dominate deployment speed. Even with supply, orchestrating millions of GPUs demands pod-level topologies (e.g., 8–16 GPU HGX nodes, multi-tier IB/Ethernet fabrics) and careful job placement to maintain high training efficiency; otherwise interconnect bottlenecks erase scale gains. HBM supply (stacks per GPU) and optics availability are likely pacing items as much as the GPUs themselves, which aligns with “tied to capacity” language in such deals (Uptime PUE context, NVIDIA HGX).

- Power-to-GPU math: taking the cited

2. Qwen-Image-Edit-2509 Release and Gemini/ChatGPT Multimodal Demos

- Qwen-Image-Edit-2509 has been released (Score: 348, Comments: 92): Qwen released Qwen-Image-Edit-2509, the September iteration of its image-editing diffusion model, adding multi-image editing via training on concatenated images (optimal with

1–3inputs; supports person+person, person+product, person+scene). It improves single-image edit consistency (better identity preservation for people with pose/style changes; stronger product identity/poster editing; richer text edits including fonts/colors/materials) and introduces native ControlNet conditioning (depth, edge, keypoint maps). A Diffusers pipelineQwenImageEditPlusPipelineis provided; examples recommendtorch_dtype=bfloat16on CUDA and exposetrue_cfg_scale,guidance_scale,num_inference_steps, andseed. Commenters ask if the “monthly iteration” implies regular monthly releases and whether LoRAs trained on prior versions will remain compatible across updates; they also note the likely need to redo quantization (e.g., GGUF/SVDQuant) per release, with one user immediately aiming to convert to GGUF.- A commenter plans immediate conversion to GGUF, indicating demand for a quantized, llama.cpp/ggml-friendly format for local inference on low‑VRAM or CPU‑bound setups. GGUF support typically enables deployment via llama.cpp-style backends and offline tooling; GGUF spec: https://github.com/ggerganov/ggml/blob/master/docs/gguf.md.

- There’s scrutiny of a potential

monthlyrelease cadence and whether LoRAs trained on previous checkpoints will remain compatible. Since LoRA deltas are tied to the base model’s weight shapes/tokenization, even small checkpoint/architecture changes can break compatibility and require re-training or re-derivation; LoRA background: https://arxiv.org/abs/2106.09685. - Resource constraints are highlighted: one user notes they’d need a new SVDQuant for each update and “there’s no way I’m using even the GGUF version on my poor GPU.” This implies monthly quantization pipeline churn (e.g., SVDQuant, GGUF) and reliance on aggressive quantization to fit VRAM limits for image-edit inference.

- I met my younger self using Gemini AI (Score: 231, Comments: 24): Post showcases a photorealistic AI edit where the OP “meets” their younger self, reportedly created with Google’s Gemini AI. While no exact workflow is given, the result implies an image-to-image or multi-reference image editing pipeline (likely supplying a current portrait and a childhood photo) guided by a text prompt to compose the scene; OP notes the realism, saying it worked “this good.” No benchmarks or model variant/parameters are provided. Comments are enthusiastic and request reproducible details—specifically the prompt, whether two identity reference images were used, and if a separate scene reference guided composition—highlighting interest in practical workflow replication.

- A commenter asks for the exact prompt/workflow, explicitly probing whether the OP used two reference images (current self + childhood photo) and an additional reference for the final scene. This highlights interest in multi-image conditioning and composition control in Gemini’s image pipeline (e.g., identity preservation across inputs and scene guidance). No specifics are provided in-thread, so reproducibility details (input modalities, steps, or constraints) remain unknown.

- The OP reports unexpectedly high fidelity (“I didn’t think it’d work this good”) along with a generated composite: https://preview.redd.it/x7dvf09mzrqf1.jpeg?width=768&format=pjpg&auto=webp&s=88e91700732a51793ba05373ad87f6b7652cf01e, suggesting effective identity retention across age domains. However, the thread lacks technical parameters (model/version, input resolution, steps/seeds, or prompt details), so the result can’t be benchmarked or replicated from the given information.

- I didn’t know ChatGPT could do this. Took 3 prompts. (Score: 328, Comments: 95): OP reports that ChatGPT generated a runnable “3D Google Earth–style” web app in ~3 prompts and rendered it directly in the ChatGPT Preview sandbox—no local compile/build or hosting required. The globe implementation is suspected (by commenters) to use WebGL via three.js and possibly three-globe; the linked video (v.redd.it/6mhokhu3wmqf1) returns

403 Forbiddenwithout Reddit auth, indicating access is gated by application/network-edge controls. The “complicated” part of the task was not achieved, but the interactive globe scaffold worked end-to-end within the ChatGPT environment. Commenters argue LLMs are strong at scaffolding “single‑serving” web apps due to the web platform’s breadth and code-heavy training data, while another highlights reliability limits (e.g., a recent vision misclassification of an apple as a tomatillo). There’s debate/curiosity about the exact stack (three.js vs. three‑globe), but no confirmed details from OP.- Multiple commenters infer the demo is built with three.js (threejs.org) and a globe helper like three-globe, leveraging

WebGLfor rendering. In such setups, the heavy lifting (sphere geometry, atmospheric shaders, geojson-to-3D conversion, arc/point animations) is handled by the library, and the LLM primarily wires configuration and data. This explains why it’s achievable in “3 prompts”: the code surface is mainly integrating well-documented APIs rather than writing low-level graphics. - LLMs are well-suited to scaffold “single-serving” web apps by composing existing packages and browser APIs. With a clear spec, they can generate a minimal stack (e.g.,

Vite+ vanilla JS/TS or React) and integrate libraries (e.g., three.js,d3-geo,TopoJSON), relying on the web platform’s capabilities (Canvas/WebGL/WebAudio). The abundance of publicly available code in training corpora increases reliability for boilerplate and idiomatic patterns, though correctness still hinges on precise prompts and iterative testing. - A report of misidentifying an apple as a tomatillo highlights current limits of general-purpose VLMs on fine-grained classification. Without domain-specific priors or few-shot exemplars, lookalike classes under variable lighting/backgrounds often confuse models; specialized models (e.g., CLIP variants or fine-tuned Food-101 classifiers) and prompt constraints can mitigate errors. It underscores that while LLMs excel at code synthesis, vision reliability may lag without task-specific calibration.

- Multiple commenters infer the demo is built with three.js (threejs.org) and a globe helper like three-globe, leveraging

- I had that moment with Kimi 2! (Score: 1390, Comments: 72): Screenshot shows Kimi 2 responding “Good catch” after being corrected—illustrating a hallucination likely triggered when it couldn’t access referenced documents. Commenters report Kimi 2 will fabricate content rather than return a retrieval/no-data error when document access fails, pointing to gaps in RAG/grounding and guardrails for provenance-aware answers. Users confirm this behavior occurs on document-access failures and liken the bot’s reply to a student being caught unprepared; one notes it routinely “makes something up” in these cases.

- Users note that when the assistant cannot access referenced documents (e.g., retrieval/permissions failures), it tends to fabricate plausible details rather than abstain. This is a classic RAG failure mode; engineering mitigations include explicit retrieval success checks, surfacing “no evidence found” states, and enforcing

cite-or-abstainresponses to avoid unsupported generations. - The “GPT-18” anecdote illustrates correction-induced confabulation: the model freely swaps core facts (location, power plant type) while preserving the narrative outcome (evacuation). This highlights lack of grounding and constraint satisfaction; mitigations include schema-validated tool use, entity normalization (geo/organization disambiguation), and external verification before committing to factual assertions or actions.

- Hallucinations are reported across vendors (e.g., ChatGPT and Claude), suggesting model-agnostic limitations in factuality and tool reliability. Production setups should add deterministic guards—retrieval timeouts, confidence gating, and post-hoc verifiers—to reduce error rates, rather than relying on model prompts alone.

- Users note that when the assistant cannot access referenced documents (e.g., retrieval/permissions failures), it tends to fabricate plausible details rather than abstain. This is a classic RAG failure mode; engineering mitigations include explicit retrieval success checks, surfacing “no evidence found” states, and enforcing

3. Robot Uprising Memes and Unitree G1 Agility Clips

- Unitree G1 fast recovery (Score: 1515, Comments: 358): Short post appears to showcase the Unitree G1 humanoid executing a rapid ground‑to‑stand “fast recovery” maneuver, suggesting a whole‑body controller coordinating multi‑contact transitions with sufficient actuator peak torque/power for explosive hip/knee extension. No quantitative benchmarks (e.g., recovery time, joint power/torque, controller type) are provided; the video link v.redd.it/8l0l09o6fpqf1 returns HTTP

403(access‑controlled), but a still frame is visible here. Top comments emphasize the motion’s realism (“impressive and scary”) and question authenticity (“looks so good it looks fake”); no technical critique or controller/actuator discussion is present. - Primary target locked! This guys the first one to go (Score: 348, Comments: 48): A short v.redd.it clip appears to depict a robotic system announcing a target “lock” on a human and then deploying a rope/tether after switching to “OFFENSIVE MODE,” implying basic vision-based target acquisition and a powered launcher/gimbal; no telemetry, specs, or control-loop details are provided to evaluate latency, actuation speed, or safety. Several commenters challenge the demo’s validity, asserting the footage is likely sped up and asking for real‑time playback to assess tracking stability, servo response, and the risk profile of a neck-level tether. Debate centers on law-enforcement applications versus safety/ethics, with some advocating eventual police use while others highlight strangulation hazards and reliability concerns; a quip about a person getting tangled in ~

2sunderscores skepticism about practical robustness.- A commenter alleges the demo is sped up and asks for real-time playback. Without true 1× footage, it’s impossible to judge controller bandwidth, state-estimation latency, actuator torque limits, and gait stability—time-lapse can hide slow step frequency and long recovery times after disturbances. Best practice would be an on-screen timecode/frame-time overlay and reporting of step rate (Hz), CoM velocity, and reaction latency to external impulses.

- Another critic notes repeated “push tests” and simple preprogrammed punches show disturbance rejection but little capability progression. They implicitly call for harder, measurable benchmarks: uneven-terrain traversal with quantified slip, contact-rich manipulation with force/impedance control, autonomous perception/planning, payload handling, and normalized metrics like cost of transport, fall rate, and mean time-to-recovery under known impulse. Public logs or standardized benchmark suites would enable fair comparisons across humanoid platforms.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. DeepSeek v3.1 Terminus and Qwen3 Releases

- DeepSeek v3.1 Terminus Lands with Agentic Tweaks: DeepSeek released v3.1 Terminus with open weights on DeepSeek-V3.1-Terminus, citing bug fixes, improved language consistency, and stronger code/search agent behavior.

- Users noted “somewhat degraded” reasoning without tool use and “slight improvements” in agentic tool use, while others immediately asked “when is DeepSeek-R2?” and pointed to the broader deepseek-ai models.

- Qwen3 Omni-30B Goes Multimodal: Alibaba’s Qwen3 Omni-30B-A3B-Instruct (36B params) landed with multimodal encoders/decoders and multilingual audio I/O at Qwen/Qwen3-Omni-30B-A3B-Instruct.

- Community claims it “beats Whisper v2/3 and Voxtral 3B” on scam-call audio and supports 17 input and 10 output languages, alongside chatter about local LoRA training on a single RTX 4090 and a coming wave of realtime perceptual AI.

- Qwen3-TTS Speaks Up: Tongyi Lab unveiled Qwen3-TTS with multiple timbres and languages optimized for English and Chinese, documented at ModelScope: Qwen3-TTS.

- Builders asked about open-source availability and API pricing, but the official response shared docs only and stayed silent on openness and cost.

2. Diffusion Sampling & Data Efficiency Breakthroughs

- 8-Step ODE Solver Smokes 20-Step DPM++: An independent researcher’s WACV 2025 submission, Hyperparameter is all you need, introduces an ODE solver that achieves 8-step inference (and 5-step rivaling distillation) outperforming DPM++2m 20-step in FID.

- The approach is a training-free sampler cutting compute by ~60% via better tracing of the probability flow trajectory, with code at GitHub: Hyperparameter-is-all-you-need.

- Diffusion Dunks on Autoregressive in Low-Data: CMU’s blog, Diffusion Beats Autoregressive in Data-Constrained Settings, argues diffusion models outperform autoregressive methods when data is scarce.

- Researchers flagged missing citations and pointed to a related preprint (arXiv:2410.07041) that “generalizes the approach better” but wasn’t cited.

- Repeat-4x Data Trick Plays Even: A study (arXiv:2305.16264) showed repeating data 4x and shuffling each epoch matches training on the same amount of unique data.

- Practitioners discussed applying the trick to MLPs, treating inter-epoch shuffling as a cheap regularizer for data-limited regimes.

3. Compute Megadeals & GPU Systems

- OpenAI–NVIDIA Lock a ~$100B, 10 GW GPU Pact: Latent Space members discussed an OpenAI–NVIDIA plan to deploy up to 10 gigawatts of NVIDIA systems—valued around $100B—for next-gen datacenters starting late‑2026.

- Reactions ranged from stock optimism to debates over vendor financing, AGI expectations, and whether end users will ever feel the added compute.

- Modular 25.6 Unifies GPUs, MAX Flexes: Modular shipped Modular 25.6: Unifying the latest GPUs with support for NVIDIA Blackwell (B200), AMD MI355X, and Apple Silicon, powered by MAX.

- Early results claim MAX on MI355X can outperform vLLM on Blackwell, hinting at aggressive cross-vendor tuning and a unified developer workflow.

- NVSwitch Know-How Boosts Multi-GPU Throughput: Engineers shared a primer on sharing memory addresses across GPUs and leveraging NVSwitch for reductions at Stuart Sul on X.

- These patterns matter for bandwidth-bound collectives and activation flows where efficient interconnects keep GPU utilization high.

4. Agent Protocols & Constrained Outputs

- MCP Adds response_schema for Structured Sampling: The MCP team discussed adding response_schema to the sampling protocol to request structured outputs, tracked via modelcontextprotocol/issues/1030.

- Contributors expect modest SDK changes plus provider-specific integration, with a volunteer targeting an October demo implementation.

- MCP Registry Publishing & Remote Servers Land: Publishing guidance for the MCP Registry arrived at Publishing an MCP server, covering

server.json, status, repo URL, and remotes.- A reference for remote configs documents

streamable-httpendpoints at Generic server.json reference.

- A reference for remote configs documents

- vLLM Bakes In Grammar-Guided Decoding: Developers highlighted guided decoding that constrains logits with formal grammars in vLLM, see vLLM sampling.py.

- They contrasted this with KernelBench 0‑shot evals that skip constraints, noting grammars could eliminate many compiler errors upfront.

5. Open-Source Platforms, DBs, and Communities

- CowabungaAI Forks LeapfrogAI as ‘Military-Grade’ PaaS: CowabungaAI, an open-source fork of LeapfrogAI with chat, image gen, and an OpenAI-compatible API, launched at GitHub: cowabungaai.

- Its creator touted large code improvements and offered discounted commercial support and licensing for adoption.

- serverlessVector: Pure-Go VectorDB Debuts: A minimal Golang vector database, serverlessVector, is available at takara-ai/serverlessVector.

- Engineers can test a pure-Go vectorDB suitable for embedded/serverless use without external dependencies.

- Hugging‑Science Discord Spins Up: A new hugging-science Discord for open projects in fusion, physics, and eval launched at discord.gg/hU9mdFPB.

- Organizers are recruiting Team Leaders, signaling momentum for domain-focused open-science collaborations.

Discord: High level Discord summaries

Perplexity AI Discord

- Comet Browser Invites Going Out: Members shared invitation links for Perplexity’s Comet browser such as this link for users to try out, and confirmed it’s available on Windows as well as Mac.

- One user reported reaching the Comet daily limit and suggested upgrading to a Max subscription for higher usage limits.

- Perplexity Better Than ChatGPT For Research?: A user argued that ChatGPT is known for hallucinating, while Perplexity is better for research purposes, specifically when retrieving trustworthy data using this Perplexity AI search.

- Another member echoed this sentiment.

- GPT-5 Launch Anticipation: Enthusiasm is building for upcoming models like GPT-5, with some preparing for a potential third AI winter, while others dismiss that theory.

- One member doubts the current GPT-4 models being smarter than the newest generation, while others express excitement for the advances in AI that can help them understand the universe itself.

- Perplexity Pro Users Feel Overlooked: A user shared a tweet highlighting complaints about Perplexity Pro users feeling left behind regarding feature parity with Max subscribers.

- A respondent dismissed these concerns, anticipating the new features would not work properly for another four months.

- Shareable Threads Encouraged: A member reminded others to ensure their threads are set to Shareable, linking to a specific Discord channel message.

- This should allow easier access to others.

Unsloth AI (Daniel Han) Discord

- VRAM Goes BRRR for Massive Context Windows: A user calculated that a 1M context length model would require 600GB VRAM, while another user questioned the accuracy of the VRAM calculator for their company’s needs with 16 concurrent users and Qwen 3 30B models.

- It was noted that performance significantly degrades on a 30B model beyond 100k context length and training on shorter contexts is sufficient after initial context extension.

- DeepSeek’s Deep Dive with Huawei?: A user mentioned that DeepSeek might be experiencing issues due to using Huawei Ascent chips, with the newest version DeepSeek-V3.1-Terminus showing bug fixes.

- The fixes have somewhat degraded in reasoning without tool use and slight improvements in agentic tool use.

- Quantization Quagmire: Google and Apple Join: Members discussed various quantization techniques including QAT (Quantization Aware Training) and its successful implementations by Google and OpenAI.

- There was also mention of Apple’s super-weight research and experiments with NVFP4 and MXFP4, with NVFP4 showing a slight performance lead, and Unsloth’s DeepSeek R1 blog.

- Money Talks: OOM Error Edition: A user is willing to pay 50 USDT for assistance in resolving an out-of-memory (OOM) issue after reviewing tutorials.

- Another user echoed the same sentiment, indicating a potential demand for paid support within the community.

- Diffusion Dominates Autoregressive in Data-Constrained Settings: A blog post suggests diffusion models outperform autoregressive models when data is limited.

- Another cited paper indicates that repeating data 4x and shuffling after each epoch yields results similar to using unique training data.

LMArena Discord

- Indonesian Video Prompt Creates Vivid Imagery: A member shared an Indonesian prompt to generate a video from a photo, detailing scene, movement, stylization, audio, and duration specifications, requesting a 2.5D hybrid animation style with predominantly blue and white colors and neon red in the background, accompanied by heavy rock music at 150 BPM.

- Translation provided: Generate video from photos [upload photos].

- Grok 4 Fast Undercuts Gemini Flash: Discussion arose around Grok 4 Fast’s performance relative to Gemini Flash, with one member stating that Grok 4 Fast is significantly better and cheaper.

- It was further noted that Grok 4 Fast pricing encourages others to offer competitive pricing.

- Seedream 4 2k Struggles with Ethnicity: Users are reporting issues with Seedream 4 2k failing to maintain the integrity of the character’s ethnicity when using multiple references, and Seedream4 2k is better for speed of generation with good results.

- One user said it gets multiple reference images absolutely wrong, like all the time, and sometimes it give wrong output even in single image reference.

- AI Models Spark Debate in Medicine: Discussion covers the potential of AI models in healthcare, with concerns raised about their ability to identify fatal drug combinations.

- A member with experience in drug discovery stated, It works fine with fine tuned model with real data.

- Gemini 3.0 Flash Integrates Everywhere: Amidst a flurry of Gemini integrations, speculation intensifies around a potential Gemini 3.0 Flash release, possibly featuring integrated video capabilities, and potential deployment in mass home assistant devices.

- Members are wondering why Google has been deploying Gemini everywhere this week.

Cursor Community Discord

- Cursor Token Use Causes Sticker Shock: A user reported unexpectedly high token usage on a new Cursor account, clocking 146k tokens in 22 prompts, and asked for clarification.

- Community members explained that old chat logs and attached files inflate token usage, linking to Cursor’s learn pages on context.

- Kaspersky Mistakenly Flags Cursor as Malware: A user reported that Kaspersky is flagging Cursor as malware, sparking discussion around false positives.

- Cursor support requested logs to investigate, reassuring users it’s a generic warning related to potentially unwanted app (PUA) detection.

- SpecStory Auto-Backups Chat Exports: Users shared SpecStory, which automatically exports chats as files, to get around issues with randomly corrupted chats.

- One user noted they wouldn’t submit their chats to 3rd parties, and thus would rather use the local version of the tool.

- GPT-5 Speculated to Offer Cheaper Limits than Claude Sonnet: The community speculated that because GPT-5 is cheaper than Claude Sonnet 4, it will offer better limits.

- While someone pointed out that GPT-Mini is free, a user clarified they were referring to Codex.

GPU MODE Discord

- vLLM Eyes Image/Video Generation: A member considered the alignment of adding image or video generation capabilities to vLLM, planning to contact the multimodal features team.

- The discussion explores expanding vLLM’s functionalities beyond language models.

- GB300s powers Quantum Gravity Research: A member plans to use a substantial number of GB300s to facilitate high-compute scale diffusion for magnetohydrodynamics and loop quantum gravity modeling.

- The member notes the magnetohydrodynamics model is more likely to succeed compared to the highly experimental loop quantum gravity approach.

- CPU strangling MLPerf on local runs: A member reported running MLPerf inference locally is bottlenecked by the CPU, despite sufficient VRAM.

- The low GPU utilization indicates a significant performance issue that needs resolution.

- Tianqi Chen Discusses ML Systems Evolution: A recent interview with Tianqi Chen discussed Machine Learning Systems, XGBoost, MXNet, TVM, and MLC LLM.

- Chen’s reflections include his work at OctoML, and his academic contributions at CMU and UW.

- New kids CowabungaAI splinters from LeapfrogAI: An open source fork of LeapfrogAI called CowabungaAI was announced; it is a military-grade AI Platform as a Service by Unicorn Defense.

- It has similar functionalities to OpenAI, including chat, image generation, and an OpenAI compatible API, and is available on GitHub.

HuggingFace Discord

- Hugging-Science Discord Launches: A new

hugging-scienceDiscord channel has launched, focusing on open-source efforts in fusion, physics, and eval, accessible here.- The channel seeks Team Leaders for projects, providing a leadership opportunity to guide exciting initiatives.

- Diffusion ODE Solver steps down to 8: An independent researcher has unveiled an ODE solver for diffusion models, achieving 8-step inference, outperforming DPM++2m’s 20 steps, and 5-step inference rivalling the latest distillation methods; see the paper on Zenodo and code on GitHub.

- This training-free improvement reduces computational costs by ~60% while enhancing quality by better tracing the probability flow trajectory during inference.

- Golang VectorDB sees the light: A member has created serverlessVector, a pure Golang vectorDB and provided a link to the GitHub repo.

- It is written in Go and available for immediate testing and implementation.

- HF Inference Providers face Quality Complaints: Members expressed concerns about the quality of HF Inference providers, wondering how HF guarantees the quality of inference endpoints, especially concerning quantization.

- They added that they feel endpoints should be default be zdr.

Latent Space Discord

- DeepSeek Model Terminus Arrives!: DeepSeek launched the final v3.1 iteration, Terminus, with improved language consistency and code/search agent capabilities; open weights are available on Hugging Face.

- The community immediately inquired about DeepSeek-R2, hinting at future developments.

- Untapped Capital launches Fund II: Yohei Nakajima announced Untapped Capital Fund II, a $250k pre-seed vehicle, continuing its mission to back founders outside traditional networks.

- The team has shifted to a top-down approach, proactively sourcing startups based on early trend identification.

- Alibaba’s Qwen3-TTS makes its debut!: Alibaba’s Tongyi Lab introduced Qwen3-TTS, a text-to-speech model with multiple timbres and language support, optimized for English and Chinese; see ModelStudio documentation.

- User queries focused on open-source availability and API pricing, though the official team did not address open-source plans.

- OpenAI and NVIDIA Strike $100B GPU Mega-Deal!: OpenAI and NVIDIA partnered to deploy up to 10 gigawatts (millions of GPUs) of NVIDIA systems—valued at ~$100 B—for OpenAI’s next-gen datacenters starting late-2026.

- Reactions ranged from celebrating the stock boost to debating vendor financing, AGI expectations, and the potential impact on end-users.

- Among AIs Benchmark Tests Social Smarts: Shrey Kothari introduced Among AIs, a benchmark evaluating language models’ deception, persuasion, and coordination within Among Us; GPT-5 excelled as both Impostor and Crewmate.

- Discussions included model omissions (Grok 4 and Gemini 2.5 Pro incoming), game selection, data concerns, discussion rules (3-turn debates), and enthusiasm for game-based AI evaluations.

Eleuther Discord

- Text-davinci-003 Debuts Day-and-Date with ChatGPT: Text-davinci-003 launched the same day as ChatGPT, with OpenAI quickly labeling it a GPT-3.5 model.

- An insider clarified that an updated Codex model, code-davinci-002, was also part of the GPT 3.5 series and released the day before ChatGPT.

- Diffusion ODE Solver Races Ahead: An independent researcher crafted a new ODE solver for diffusion models hitting 8-step inference that outpaces DPM++2m’s 20-step inference in FID scores while slashing computational costs by ~60%.

- The solver enhances inference by better tracing the probability flow trajectory, detailed in a WACV 2025 paper with code available on GitHub.

- GPT-3.5 Family Tree: Members are hashing out the origins of ChatGPT and other GPT-3.5 models like code-davinci-002, text-davinci-002, and text-davinci-003.

- One insider hinted that ChatGPT was fine-tuned from a chain-finetuned, unreleased model.

- Benchmarking MMLU Subtasks: The community considered the possibility of benchmarking an MMLU pro subtask using lm-eval, focusing on subsets like mmlu_law.

- Exploring this capability could allow for more detailed and precise evaluations of AI model skills within the lm-eval framework.

Nous Research AI Discord

- HuggingFace Comments Spark Laughter: Members found the comments on HuggingFace humorous, with one stating yeh i was lmaoing when i saw that.

- The specific nature of these comments was not detailed, but it indicates an active and engaged community reaction to content on the platform.

- OLMo-3 Safetensors Search Intensifies: A member is actively seeking leads on OLMo-3 safetensors, and has joined a Discord to track progress.

- Although inference code is available, they highlighted that no weights on HF yet, suggesting the model isn’t fully accessible for use.

- Qwen3 Omni Launches with Multimodal Prowess: Qwen3 Omni-30B-A3B-Instruct is now live, boasting 36B parameters and multimodal capabilities.

- Reportedly, it beats Whisper v2/3 and Voxtral 3B in scam call audio processing, supporting 17 audio input languages and 10 output languages.

- Realtime Perceptual AI Race Commences: The community anticipates the rise of realtime perceptual AI (audio, video, and other modalities simultaneously).

- Apple’s realtime vision model was mentioned as a potential indicator of developments, sparking curiosity about the lack of public releases.

- LoRA Training Achievable on RTX 4090: It was mentioned that training a LoRA on 14B models locally is feasible using a single RTX 4090.

- A member is working on something similar, and the constraints they’re facing are bandwidth and latency.

aider (Paul Gauthier) Discord

- Aider Forks Navigate with Navigator Mode: Members suggested using aider forks with navigator mode for an automated experience using uv pip install on fork repos or the aider-ce package.

- The package integrates MCP and navigator mode to streamline the coding process.

- Augment CLI Excels at Codebase Augmentation: The Augment CLI tool shines with large codebases, particularly when using Claude Code (CC) with Opus/Sonnet and Codex for GPT-5.

- The user mentioned that Codex doesn’t have an API key, while Perplexity MCP works well with MCP integration.

- Deepseek V3.1 Configuration Guidance Sought: A user requested advice on the initial setup and configuration of Deepseek V3.1, along with preferred web search tools.

- Because aider does not have a built-in tool, one member suggested Perplexity MCP for web search to work with it.

- Aider Agents Multiply via Command Line: Members discussed setting up multiple aider agents via the command line for external orchestration, rather than a built-in solution.

- The suggestion was to use git worktrees for concurrent modifications to the same repository, which enables running multiple agents simultaneously.

- LLM Loses Track Editting Prompt Files: When using a file to set up the prompt, aider prompts to edit files and, simultaneously, the LLM gets confused about the task at hand.

- The LLM then asks for clarification of intent, specifically whether it should act as the ‘User’ within the APM framework or modify a file using the SEARCH/REPLACE block format.

Modular (Mojo 🔥) Discord

- Rustaceans summon Mojo via FFI: Members have been exploring using FFI to call Mojo from Rust, which is similar to calling C via the C ABI.

- To ensure correct type handling, the Mojo function must be marked with

@export(ABI="C").

- To ensure correct type handling, the Mojo function must be marked with

- Manual Mojo Binding still only option: The creation of C header -> Mojo binding generators remains a work in progress (WIP), rendering CXX assistance unavailable.

- Currently, generating Mojo bindings necessitates a manual approach.

- Windows Support still MIA: The question of Windows support for Mojo was raised among users.

- The response indicated that it is not coming any time soon.

- Modular 25.6 turbocharges GPU Performance: Modular Platform 25.6 is live, delivering peak performance across the latest GPUs including NVIDIA Blackwell (B200) and AMD MI355X, check out the blog post for more information.

- Early results show MAX on MI355X can even outperform vLLM on Blackwell, with unified GPU programming now available for consumer GPUs like Apple Silicon, AMD, and NVIDIA.

- MAX demands .mojopkg: To use MAX, a

.mojopkgfile is required alongside the executable, containing the highest level MLIR that Mojo can produce after parsing, for the runtime’s JIT compiler.- For platforms hiding hardware details (Apple, NVIDIA GPUs), Mojo hands off compilation to the driver, performing a one-shot JIT without profiling, unlike V8 or Java.

Yannick Kilcher Discord

- JEPA Chat Incoming: A chat about Joint Embedding Predictive Architecture (JEPA) paper (https://www.arxiv.org/abs/2509.14252) by Yann LeCun is scheduled for <t:1758567600:t>.

- One member linked to an OpenReview document about JEPA along with a YouTube video and two X (formerly Twitter) posts and [https://fxtwitter.com/randall_balestr/status/1969987315750584617

- GPT Gets Philosophical, Scores Low: A user rated a GPT model a 7/10 for parsing their philosophy, but only a 4/10 for expanding on it, and 3/10 for formatting, deeming it not good enough.

- The user indicated improved results after further interactions, suggesting either improved prompting skills or enhanced machine reading capabilities.

- Presenter Seeks Optimal Paper Time: A member asked about the best time to present a paper, suggesting an earlier session for those in the eastern timezone.

- The member suggested presenting 6 hours earlier or later on most days.

DSPy Discord

- Environment Variables Trump MCP Secrets: A member cautioned against passing secrets through MCP, advising the use of environment variables for single-user services, or login+vault/OAuth for more complex setups.

- Their JIRA MCP server implementation uses stdio and retrieves credentials from the environment, deploying per single-user to avoid leaking secrets via process tables.

- DSPy Optimization Suffers from Trace ID Jitters: A user highlighted that initializing modules with a trace ID results in the same ID being used for all documents when running a batch after optimizing, and asked how to handle per-item trace IDs in DSPy modules without breaking optimization.

- They considered recreating modules per article (too expensive) and moving the trace ID to forward calls, questioning if this would affect optimization since the trace_id gets passed to llm gateway for logs and auditing.

- GEPA Silent on ReAct Context Overflows: A user asked about experiences with GEPA for ReAct Agents, focusing on how context overflows would be handled with long agent trajectories.

- Unfortunately, no one had a story to tell.

MCP Contributors (Official) Discord

- Response Schema Gains Support: An issue to Add response_schema to the MCP Sampling Protocol was converted into a discussion with a member willing to implement a demo in October, enabling requests for structured output when using sampling via this issue.

- The consensus is that implementing this in the SDK isn’t complex, with the main effort lying in the integration between the SDK and the LLM API, potentially needing provider-specific code.

- Claude’s Constrained Output Capability Examined: The group discussed presenting constrained output as a client capability, with the issue that Claude models are incorrectly identified as not supporting this feature.

- The preferred approach is to present response_schema support, allowing the client host to determine the actual implementation.

- MCP Registry Publishing Procedures: Instructions for publishing servers to the Model Context Protocol (MCP) Registry have been shared, beginning with this guide.

- The guide includes steps to create an empty GitHub repo and a

server.jsonfile that specifies name, description, status, repository URL, and remote endpoint.

- The guide includes steps to create an empty GitHub repo and a

- Remote Server Configurations Unveiled: Remote server configurations for the Model Context Protocol (MCP) have been linked, featuring this reference.

- The provided

server.jsonexample defines a$schemaand includes aremotesarray, specifying thestreamable-httptype and URL.

- The provided

- MCP Install Instructions Auto-Generated: A member mentioned using a tool to generate a readme file with instructions for installing the Model Context Protocol (MCP) in various clients via MCP Install Instructions.

- The tool was reportedly ‘pretty cool’ and beneficial for creating installation guides.

tinygrad (George Hotz) Discord

- Colfax Meeting on Monday: There will be a meeting #89 on Monday at 9am San Diego time for a company update.

- Starting next week, the meeting will be 3 hours earlier.

- RANGEIFY Progress Questioned: Members questioned whether RANGEIFY would be default by the end of the week, including store, assign, group reduce, disk, jit, const folding, buf limit, llm, and openpilot.

- It was noted that children are not making progress and image is not complete.

- CuTe DSL a Potential Gamechanger: Members mentioned that the CuTe DSL is a potential gamechanger.

- They added that ThunderKittens is nicestarting.

Moonshot AI (Kimi K-2) Discord

- Kimi Rejects Provocation Attempts: One member shared an experience of provoking Kimi, appreciating its rejection of blind obedience or sympathy.

- Another member expressed a similar sentiment, noting that Kimi’s attitude is a reason why it’s their favorite model.

- Claude Suffers Prompt Injection Debacle: Members discuss how Claude has been weakened due to prompt injection techniques, leading it to disagree when context exceeds a certain length.

- They noted that it is unlike Kimi K2, and some express disappointment in the changes to Claude.

Manus.im Discord Discord

- GenAI E-Book Reader goes Warp Speed: The latest release of the GenAI E-Book Reader introduces Generative Intelligence features for enhanced text clarification, summarization, and dictionary functions.

- The new version integrates with OpenRouter.ia, granting users access to over 500 large-scale language models, as showcased in this video.

- OpenRouter.ia plugs into GenAI E-Book Reader: The GenAI E-Book Reader now supports OpenRouter.ia, opening up access to over 500 large-scale language models for enriched reading assistance.

- Users can now utilize a diverse range of models for text clarification, summarization, and advanced dictionary functionalities.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

You are receiving this email because you opted in via our site.

Want to change how you receive these emails? You can unsubscribe from this list.

Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1175 messages🔥🔥🔥):

Comet Browser invitation, GPTs Agents training, OpenAI Platform's sidebars, Comet availability for ipadOS, AI winter

- Comet browser invites going out: A member shared an invitation link for Perplexity’s Comet browser for others to try out.

- Another confirmed that Comet is available on Windows too, not just Mac: Just open it on a windows machine.

- Comet Daily limit reached, upgrade available: A user reported receiving a persistent notification saying Comet personal search daily limit reached.

- A member suggested upgrading to a Max subscription for higher usage limits.

- Perplexity is better than ChatGPT for research sort of: A user mentions that ChatGPT is known for hallucinating, generating information that is not real, whereas Perplexity is better for research purposes.

- Another member stated Perplexity is good for research sort of, but chatgpt i have heard that it hallucinates*.

- GPT-5 launch imminent, experts claim: Multiple users express excitement for upcoming models like GPT-5, and some say they are preparing for the third AI winter, while others dismiss this.

- One member stated that they highly doubt about the gpt 4 models being smarter than the newest generation, and others expressed their excitement to see With that, we’ll understand the universe itself.

- Perplexity Pro users feeling left behind: A user shared a link to a tweet complaining about Perplexity Pro users being left behind in terms of features compared to Max subscribers.

- Another responded they are tripping about some B.S. that probably doesn’t even work properly for probably another four months.

Perplexity AI ▷ #sharing (5 messages):

Comet Invitation, Shareable Threads, Trustworthy Data, Invitation Request

- Comet Invite Drops: A member shared a Comet invitation link for others to try out Comet.

- Shareable Threads Encouraged: A member reminded others to ensure their threads are set to Shareable, linking to a specific Discord channel message.

- Trustworthy Data Questioned: A link to a Perplexity AI search about trustworthy data was shared.

- Invitation Plea: Another member requested an invite, providing another invitation link and a link to Chris Biju’s profile.

Unsloth AI (Daniel Han) ▷ #general (374 messages🔥🔥):

VRAM Usage for 1M Context Length, GRPO Fine-Tuning for GPT-OSS-20B, DeepSeek V3.1 Terminus and Huawei Ascent Chips, Qwen3 and Data Privacy Concerns, QAT and GGUF Quants

- Ramping Up VRAM Requirements for Large Context Windows: A user calculated that a 1M context length model would require 600GB VRAM, while another user questioned the accuracy of the VRAM calculator for their company’s needs with 16 concurrent users and Qwen 3 30B models.

- It was noted that performance significantly degrades on a 30B model beyond 100k context length and training on shorter contexts is sufficient after initial context extension.

- GRPO’s Grand Reveal Soon?: Members discussed the imminent release of GRPO finetuning for GPT-OSS-20B and its limitations for increasing context length on simple datasets like GSM8k.

- It was suggested to use at least 100 examples for a meaningful test and over 1000 for optimal results when training a model for a specific programming task.

- DeepSeek’s Deep Dive with Huawei?: A user mentioned that DeepSeek might be experiencing issues due to using Huawei Ascent chips.

- DeepSeek has released DeepSeek-V3.1-Terminus which is a small iteration with bug fixes, but it somewhat degraded in reasoning without tool use and slight improvements in agentic tool use.

- Qwen3’s Quietly Questionable Quotas?: The topic of data privacy arose in the context of using OpenAI or Gemini paid APIs, with the consensus being that users should assume their data is being used for training despite any stated policies.

- A member shared a link to a court order requiring OpenAI to preserve all ChatGPT logs, including deleted temporary chats and API requests.

- Quantization’s Quantum Quest: QAT vs MXFP4: Members discussed various quantization techniques including QAT (Quantization Aware Training) and its successful implementations by Google and OpenAI.

- There was also mention of Apple’s super-weight research and experiments with NVFP4 and MXFP4, with NVFP4 showing a slight performance lead. There was also conversation of Unsloth’s DeepSeek R1 blog post.

Unsloth AI (Daniel Han) ▷ #introduce-yourself (1 messages):

Collaboration Opportunities, Software Engineering, Small Business Ventures

- Collaboration: A New Business is Brewing: A software engineer and small business owner has opened the door to potential collaboration.

- This could lead to new projects, ventures, or partnerships within the community.

- Software Engineering Collab: A software engineer is seeking collaboration opportunities, hinting at potential projects needing technical expertise.

- This may involve software development, system design, or technical consulting.

Unsloth AI (Daniel Han) ▷ #off-topic (41 messages🔥):

Loss Curve Success, New iPhone Acquisition, CS Uni vs Bootcamps, Gacha Game Ratios, DataSeek Tool

- Model Training Yields Good Loss Curve: A member celebrated achieving a satisfactory loss curve in their model training experiment (image attached).

- They clarified it wasn’t an LLM, but rather an experiment to learn training more types of models, fueled by coffee and Noccos (180mg caffeine each).

- iPhone Upgrade Sparks Sheet Debate: A member showed off a new iPhone (image attached).

- One response jokingly suggested selling the phone and delaying bed sheet replacement while another member said Every time I see such pics I feel better about how I live lol.

- CS Uni Questioned vs Bootcamps: A member said that CS uni was worse than no uni and these bootcamps were unironically better option (planting less outdated shit in your head, faster and cheaper).

- Gacha Game Ratio Formula Leaked: A member leaked a macro that gacha devs are using to determine new characters (image attached).

- The leaked code joke showed that

if (banner_needs_money) -> add curvy adult womanand similarly for other conditions.

- The leaked code joke showed that

- DataSeek for Agent Sampling: A member shared DataSeek, a tool they created for gathering samples with agents.

- They used the tool to gather 1000 samples for their claimify-related project.

Unsloth AI (Daniel Han) ▷ #help (23 messages🔥):

OOM Errors & USDT, Blackwell CUDA Issues, Orpheus TTS Fine-Tuning

- Money for Nothing? User offers USDT for OOM fix: A user is willing to pay 50 USDT for assistance in resolving an out-of-memory (OOM) issue after reviewing tutorials.

- Another user echoed the same sentiment, indicating a potential demand for paid support within the community.

- Blackwell Blues: Debugging CUDA with New Architectures: A user rebuilt their environment with CUDA 12.8/12.9 and ARCH 120 to support their Blackwell card, but continues to face issues, particularly with TP (Tensor Parallelism).

- They’ve found that offloading to CPU or using only 1 GPU works but suspect a conflict possibly related to disabling amd_iommu for vLLM, leading to funky cuda errors.

- Orpheus in Error: Batch Size Mismatch in TTS Fine-Tuning: A user encountered a

TorchRuntimeErrorduring fine-tuning of Orpheus TTS using the provided notebook after switching to their own dataset, with the error indicating an input batch_size mismatch.- It was identified that the user’s dataset had variable length samples, and the suggested fix of increasing the max_seq_length (or removing longer samples) resolved the issue, as described in the TRL documentation.

Unsloth AI (Daniel Han) ▷ #showcase (1 messages):

LLMs, Fine Tuning, Task-Specific Models

- LLMs Tailored for Tasks: The future involves LLMs fine-tuned for specific tasks, even down to individual projects.

- This approach optimizes performance and relevance in specialized applications, ensuring models are purpose-built.

- The Dawn of Bespoke Models: The user thinks LLMs will become highly specialized, honed for precise tasks and projects.

- This evolution promises efficiency, accuracy, and adaptability in diverse domains.

Unsloth AI (Daniel Han) ▷ #research (13 messages🔥):

Diffusion vs Autoregressive, Data Repeating, Paper Citation, Peer Review

- Diffusion Dominates Autoregressive Approaches: A blog post suggests diffusion models outperform autoregressive models when data is limited.

- Another cited paper indicates that repeating data 4x and shuffling after each epoch yields results similar to using unique training data.

- Data Repeating Shuffles the Deck: Repeating the same data 4x and shuffling after each epoch can yield similar outcomes to using the same amount of unique training data (arxiv link).

- This suggests that shuffling between epochs is beneficial, prompting consideration for applying this technique to MLPs.

- Missing Citation Sparks Debate: A member pointed out that this paper seems to be a repeat of the CMU blog post but with nicer graphs, but the CMU blog post doesn’t cite the arxiv paper.

- The response was that the later paper generalizes the approach better, but should have been cited.

- Peer Reviewers Save the Day: A member asked about the severity of not citing related work, and the response was in general, this is the job of a peer reviewer.

- It was further noted that papers on arXiv are often unreviewed and subject to updates, leaving room for corrections.

LMArena ▷ #general (446 messages🔥🔥🔥):

Video generation from photos in Indonesian, Grok 4 Fast performance, Seedream 4 2k vs High-Res, AI in medical field, Gemini 3.0 Flash rumors

- Indonesian Video Generation Prompt Shared: A member shared an Indonesian prompt to generate a video from a photo, detailing scene, movement, stylization, audio, and duration specifications, requesting a 2.5D hybrid animation style with predominantly blue and white colors and neon red in the background, accompanied by heavy rock music at 150 BPM.

- Translation provided: Generate video from photos [upload photos].

- Grok 4 Fast Surprises with Competitive Pricing: Discussion arose around Grok 4 Fast’s performance relative to Gemini Flash, with one member stating that Grok 4 Fast is significantly better and cheaper.

- It was further noted that Grok 4 Fast pricing encourages others to offer competitive pricing.

- Seedream 4 2k Image reference issues emerge: Users are reporting issues with Seedream 4 2k failing to maintain the integrity of the characters ethnicity when using multiple references, and Seedream4 2k is better for speed of generation with good results.

- One user said it gets multiple reference images absolutely wrong, like all the time, and sometimes it give wrong output even in single image reference

- Debate sparks over AI models in medical field and drug discovery: Discussion covers the potential of AI models in healthcare, with concerns raised about their ability to identify fatal drug combinations.

- A member with experience in drug discovery stated, It works fine with fine tuned model with real data.

- Whispers of the Wind: Gemini 3.0 Flash Rumors Fly: Amidst a flurry of Gemini integrations, speculation intensifies around a potential Gemini 3.0 Flash release, possibly featuring integrated video capabilities, and potential deployment in mass home assistant devices.

- Members are wondering why Google has been deploying Gemini everywhere this week.

LMArena ▷ #announcements (1 messages):

Seedream-4, LMArena Models

- Seedream-4-2k Joins the LMArena Battle: The model

seedream-4-2kis now available in Battle, Direct, & Side by Side modes on the LMArena.- The

seedream-4-high-resmodel is not available at this time.

- The

- High-Res Still MIA: Model

seedream-4-high-resis currently unavailable, with updates to be announced in the channel.- Users are encouraged to monitor the announcements channel for further details.

Cursor Community ▷ #general (419 messages🔥🔥🔥):

Token Usage, Kaspersky Malware Flag, Chat Exports, GPT-5 Pricing

- Cursor Token Use Baffles User: A user noticed unexpectedly high token usage on a new Cursor account, spending 146k tokens in 22 prompts, and sought clarification from the community.

- Members explained that old chat logs and attached files contribute to context and token usage, and provided a link to Cursor’s new learn pages on context.

- Kaspersky Flags Cursor as Malware: A user reported that Kaspersky is flagging Cursor as malware, prompting discussion about false positives and potential reasons such as Cursor modifying right-click menus.

- A community member suggested double-checking the files, while Cursor support requested logs to investigate, reassuring users that it’s a generic warning related to potentially unwanted app (PUA) detection.

- SpecStory Automates Chat Exports: Users discussed the issues with randomly corrupted chats and the lack of a reapply feature after canceling changes, then sought a solution by sharing SpecStory, which automatically exports chats as files.