xAI is all you need?

AI News for 9/18/2025-9/19/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (192 channels, and 4967 messages) for you. Estimated reading time saved (at 200wpm): 415 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

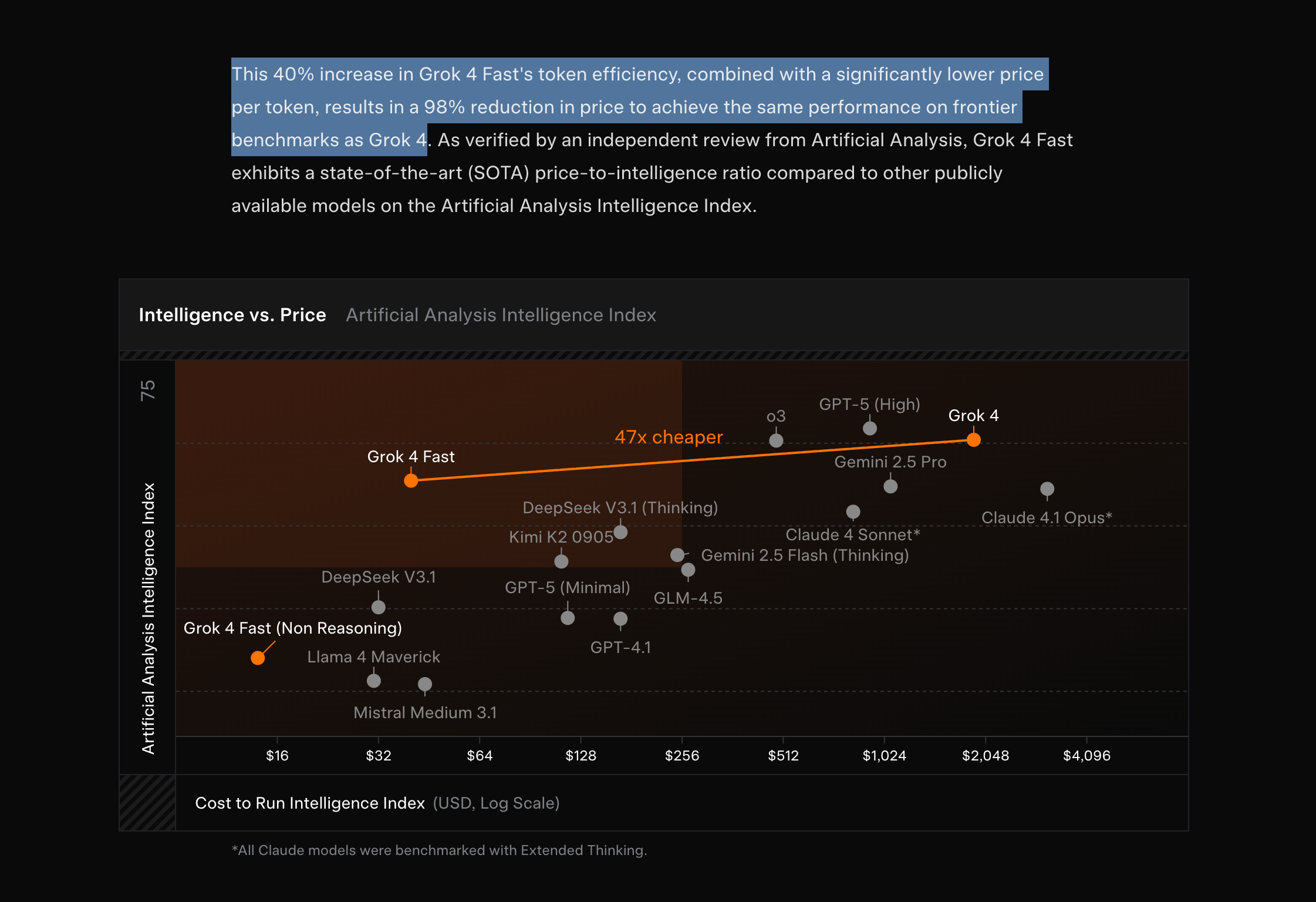

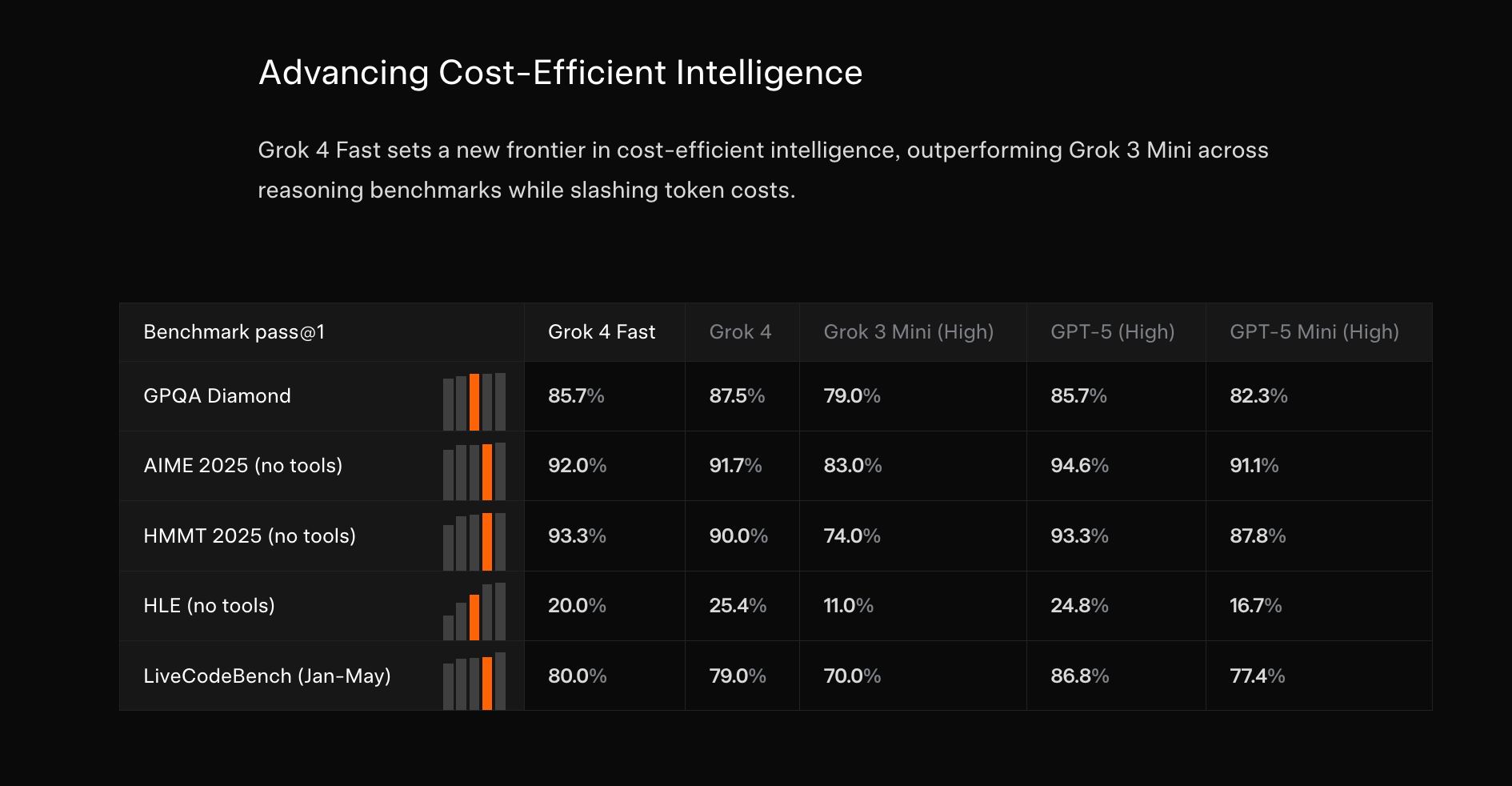

Absent some fake news today that would have put xAI at a higher valuation than Anthropic, xAI announced Grok 4 Fast, the second of its Fast models, and the keyword is efficiency:

Per Artificial Analysis testing it is a good deal faster than the frontier big models at 344 tok/s and just about as capable:

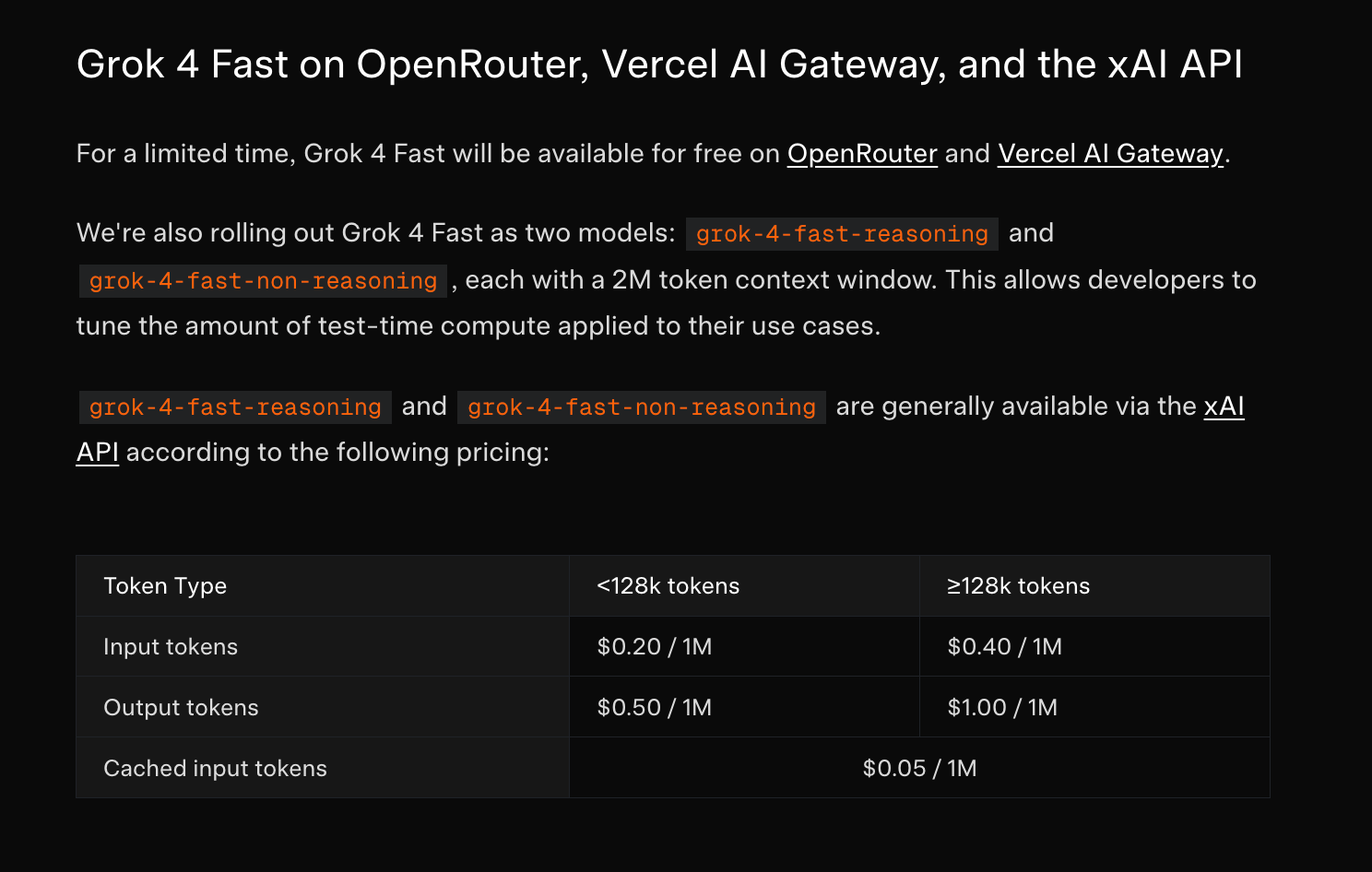

Grok 4 Fast has reasoning and nonreasoning modes and is free to try now on all major routers and AI IDEs.

AI Twitter Recap

Meta’s neural band + Ray‑Ban Display launch: live demo hiccups, engine bets, and capture tech

- Live demo realities, but big platform swing: Meta’s on‑stage neural band/Ray‑Ban Display demo visibly failed for ~1 minute, prompting both sympathy and useful discourse on shipping hard tech live. See reactions from @nearcyan and “feel bad for the Meta OS team” follow‑up. Others argued failed live demos > staged videos (cloneofsimo, @mrdbourke) with a must‑read account of Google’s 2023 live demo prep stress by @raizamrtn. Early hands‑on: “bracelet is ON” @nearcyan, silent text input demo @iScienceLuvr, “what do you think people will do with this?” @nearcyan, and “very cool regardless of failures” @aidangomez. Integration/ops open questions: third‑party software “not supported” and likely hard to root (@nearcyan); “will buy if easy to integrate” (@nearcyan).

- Engine and capture: Meta is reportedly moving off Unity to a first‑party “Horizon Engine” to vertically integrate with AI rendering (e.g., gaussian splatting) per @nearcyan. Meanwhile, Quest‑native Gaussian Splatting capture shipped: Hyperscape Capture lets you scan “hyperscapes” in ~5 minutes (@JonathonLuiten; first impressions from @TomLikesRobots). Also clever UX notes like off‑camera gesture capture (@nearcyan).

New models: compact VLMs, reasoning video, doc VLMs, and open video editing

- Mistral’s Magistral 1.2 (Small/Medium): Now multimodal with a vision encoder, +15% on AIME24/25 and LiveCodeBench v5/v6, better tool use, tone, and formatting. Small (24B) remains local‑friendly post‑quantization (fits on a 32GB MacBook or a single 4090). Announcement: @MistralAI; quick anycoder demos by @_akhaliq.

- Moondream 3 (preview): A 9B‑param, 2B‑active MoE VLM focused on efficient, deployable SOTA visual reasoning (@vikhyatk; note the “frontier model” banter: 1, 2).

- IBM Granite‑Docling‑258M (Apache 2.0): 258M doc VLM for layout‑faithful PDF→HTML/Markdown with equations, tables, code blocks; English with experimental zh/ja/ar. Architecture: siglip2‑base‑p16‑512 vision encoder + Granite 165M LM via IDEFICS3‑style pixel‑shuffle projector; integrated with the Docling toolchain/CLI (@rohanpaul_ai).

- ByteDance SAIL‑VL2: Vision‑language foundation model reported to be SOTA at 2B & 8B scales for multimodal understanding and reasoning (@HuggingPapers).

- Reasoning video and open video editing: Luma’s Ray3 claims the first “reasoning video model,” with studio‑grade HDR and a Draft Mode for rapid iteration, now in Dream Machine (@LumaLabsAI). DecartAI open‑sourced Lucy Edit, a foundation model for text‑guided video editing (HF + FAL + ComfyUI) and it was integrated into anycoder within an hour (announcement, rapid integration).

Competitions, coding, and evaluations

- ICPC world finals: OpenAI solved 12/12 problems (@sama), while Google DeepMind solved 10/12 (behind only OpenAI and one human team) (summary). Reflections include an “agent–arbitrator–user” interaction pattern to reduce human verification burden (@ZeyuanAllenZhu). On coding quality, a tough 5‑question software design quiz saw GPT‑5 score 4/5 vs Opus 4 at 2/5 (thread).

- Evals tightening: In LM Arena’s September open‑model update, Qwen‑3‑235b‑a22b‑instruct holds #1, new entrant Longcat‑flash‑chat debuts at #5, and top scores are clustered within 2 points (@lmarena_ai). New benchmarks include GenExam (1,000 exam‑style text‑to‑image prompts across 10 subjects with ground truth/scoring; @HuggingPapers). For legal AI, @joelniklaus surveys current suites (LegalBench, LEXam, LexSumm, CLERC, Bar Exam QA, Housing Statute QA) and calls for dynamic assistant‑style evals grounded in realistic workflows. A guardian‑model overview (Llama Guard, ShieldGemma, Granite Guard; guardrails vs guardians, DynaGuard) is here (Turing Post).

Infra, determinism, and training at scale

- Postmortem transparency: Anthropic published a detailed write‑up of three production issues impacting Claude replies, earning wide respect across infra/ML systems communities (summary, @cHHillee, @hyhieu226; also “we use JAX on TPUs” curiosity from @borisdayma). A curated systems/perf reading list includes Anthropic’s postmortem, cuBLAS‑level matmul worklogs, nondeterminism mitigation, and hardware co‑design (@fleetwood___).

- Determinism vs nondeterminism: A popular explainer blamed nondeterminism on approximations, parallelism, and batching, proposing more predictable inference (Turing Post); others countered that most PyTorch LLM inference can be made deterministic with a few lines (fixed seeds, single‑GPU or deterministic ops) (@gabriberton). Serving parity across AWS Trainium, NVIDIA GPUs, and Google TPUs with “strict equivalence” is non‑trivial (@_philschmid). Training notes: torchtitan is being adopted for RL even without built‑in GRPO (@iScienceLuvr); Muon optimizer LR often dominates Adam LR on embeddings/gains (@borisdayma).

- Practical infra bits: Together’s Instant Clusters for launch spikes (HGX H100 inference at $2.39/GPU‑hr; thread). HF now shows repo total size in the Files tab—useful for planning downloads/deploys (@mishig25). Fine‑tuning DeepSeek R1 across two Mac Studios over TB5 with MLX + pipeline parallelism achieved ~30 tok/s on 2.5M tokens in ~1 day (LoRA 37M params) (@MattBeton).
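The batching and parallelism point in the determinism discussion above largely comes down to floating‑point addition not being associative: the same values summed in a different grouping can give different results. A minimal pure‑Python sketch follows; the values and the `chunked_sum` helper are illustrative assumptions, not taken from any of the linked posts, and `chunk_size` is a hypothetical stand‑in for a kernel's tile or batch size.

```python
import math

def naive_sum(values):
    """Left-to-right float addition.

    The built-in sum() is avoided on purpose: recent CPython versions use
    compensated summation for floats, which would hide the effect.
    """
    total = 0.0
    for v in values:
        total += v
    return total

def chunked_sum(values, chunk_size):
    """Sum fixed-size chunks first, then add the partial sums.

    This mimics how a batched or parallel reduction regroups additions.
    """
    partials = [
        naive_sum(values[i:i + chunk_size])
        for i in range(0, len(values), chunk_size)
    ]
    return naive_sum(partials)

# Same four numbers, three different groupings.
values = [1e16, 1.0, -1e16, 1.0]

sequential = chunked_sum(values, chunk_size=len(values))  # one chunk: plain left-to-right
paired = chunked_sum(values, chunk_size=2)                # two chunks of two
exact = math.fsum(values)                                 # correctly rounded reference

print(sequential, paired, exact)
```

On IEEE‑754 doubles the three results disagree (1.0, 0.0, and 2.0), even though the inputs are identical. Pinning the reduction order, for example with a fixed batch size and deterministic kernels, is what removes this source of run‑to‑run variation.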

Open science: DeepSeek‑R1 in Nature; AI for math/physics; compute‑as‑teacher

- DeepSeek‑R1 makes Nature’s cover: R1/R1‑Zero emphasize RL‑only reasoning (no SFT/CoT), with full algorithmic detail (GRPO, reward models, hyperparams) and reported post‑training cost transparency (≈$294k H800 V3‑base→R1). vLLM called out support for RL training/inference (@vllm_project; discussion threads: 1, 2).

- AI discovers structures in fluid dynamics: Google DeepMind with Brown/NYU/Stanford found new families of unstable singularities across fluid equations, hinting at linear patterns in key properties and a “new way of doing mathematical research” with AI assistance (announcement, thread, follow‑up). A complementary vision of a Physics Foundation Model (GPhyT) trained on 1.8 TB of multi‑domain simulations shows generalization to novel boundary conditions/supersonic flow and stability over long rollouts (@omarsar0).

- Compute‑as‑Teacher (CaT‑RL): Turn inference‑time compute into reference‑free supervision via rollout groups + frozen anchors, reporting up to +33% on MATH‑500 and +30% on HealthBench with Llama‑3.1‑8B—no human annotations required (paper thread).

- Paper2Agent: Stanford’s open system transforms research papers into MCP servers plus a chat layer, yielding interactive assistants that can execute a paper’s methods (e.g., AlphaGenome, Scanpy, TISSUE) (overview).

Agents and developer tooling

- Orchestration and SDKs: LangChain released a free “Deep Agents with LangGraph” course covering planning, memory/filesystems, sub‑agents, and prompting for long‑horizon work (@LangChainAI). Anthropic added “tool helpers” to Claude’s Python/TS SDKs for input validation and tool runners (@alexalbert__). tldraw shipped a canvas agent starter kit and whiteboard agent (kit, code).

- Productized assistants: Browser‑Use + Gemini 2.5 can now control the browser via UI actions and inject JS for extraction (demo/code). Notion 3.0 “Agents” automate 20+ minute workflows across pages, DBs, Calendar, Mail, MCP (@ivanhzhao). Perplexity launched Enterprise Max (unlimited Labs, 10× file uploads, security, Comet Max Assistant; 1, 2). Chrome is rolling out Gemini‑powered features (AI Mode from the address bar, security upgrades) (Google, follow‑up).

- Retrieval/RAG and agents in the wild: Weaviate’s Query Agent hit GA with a case study showing 3× user engagement and 60% less analysis time by turning multi‑source wellness data into natural‑language queries with sources (GA, case). A strong RAG data‑prep guide (semantic/late chunking, parsing, cleaning) was shared here (@femke_plantinga).

- Ecosystem notes: HF repos now show total size in‑page (@reach_vb). Cline launched GLM‑4.5 coding plans in partnership with Zhipu (@cline). Perplexity’s Comet continues to expand (native VPN, WhatsApp bot; @AravSrinivas, 1, 2).

Top tweets (by engagement)

- “Feeling really bad for the Meta OS team” — live demo empathy from @nearcyan (38.8k)

- Ray3, “the world’s first reasoning video model,” now in Dream Machine — @LumaLabsAI (6.1k)

- “Keep thinking.” — @claudeai (9.0k)

- OpenAI solved 12/12 at ICPC — @sama (3.0k)

- Chrome’s biggest‑ever AI upgrade — @Google (2.2k)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Wan2.2-Animate MoE and Moondream 3 Preview

- New Wan MoE video model (Score: 175, Comments: 19): Wan AI released Wan2.2‑Animate‑14B, a Mixture‑of‑Experts (MoE) diffusion video model focused on character animation/replacement, with weights and inference code available and live demos on wan.video, ModelScope Studio, and Hugging Face. The broader Wan2.2 stack adds curated cinematic aesthetic labels, a substantially expanded dataset (+65.6% images, +83.2% videos), and a 5B TI2V VAE with 16×16×4 compression enabling 720p@24fps T2V/I2V on consumer GPUs; the repo exposes multiple variants (T2V‑A14B, I2V‑A14B, TI2V‑5B, S2V‑14B, Animate‑14B) and integrates with Diffusers, ComfyUI, and ModelScope. Top comments note many prior workflows may be obsolete but flag the default Wan2.2 context‑length as a practical limit, proposing a rolling‑window pipeline that seeds each segment from the last frame to stitch longer videos and relies on a driving‑video for motion continuity. There’s also demand for a robust wav‑to‑face front‑end (accurate visemes over overall quality) to drive an audio+text+reference → video pipeline feeding Animate‑14B.

- Release note: Wan2.2-Animate-14B is announced as a unified model for character animation/replacement with holistic movement and expression replication; the team claims released model weights and inference code, with hosted demos on wan.video, ModelScope Studio, and a Hugging Face Space. This suggests accessible reproducibility and third‑party benchmarking potential across platforms, rather than a closed API-only drop.

- Workflow/continuation insight: One user points out most demos seem bounded by the standard Wan2.2 context window, proposing to chain shots by seeding each new generation with the last frame of the prior clip to extend length while keeping motion consistent—especially when a driving video already encodes momentum. They also ask for a robust wav2face (lip‑sync) front‑end to get reliable mouth shapes, enabling an audio+text+reference → video pipeline even if global image quality is average.

- Perf/runtime and tooling gaps: A user reports Wan 2.2 14B runs on 12 GB VRAM but takes ~1 hour to render a 5 s video (significant latency), and asks about compatibility with Pinokio/WAN 2.2 Image‑to‑Video and “wen gguf?”. Others call for LM‑Studio‑like turnkey runners with AMD/Windows support, highlighting current friction in local vision-model inference and the lack of LLM‑style quantization/distribution conventions for video models.
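The rolling‑window idea from the comments (seed each new segment with the last frame of the previous one) can be sketched as plain control flow. `generate_clip` is a hypothetical stand‑in for a video‑model call, not Wan2.2's API, and the sketch assumes the generator returns the seed frame as the first frame of each clip; frames are integers so the example runs without a GPU.

```python
from typing import Callable, List

Frame = int  # placeholder type; a real pipeline would carry image tensors

def stitch_long_video(
    first_frame: Frame,
    num_segments: int,
    generate_clip: Callable[[Frame], List[Frame]],
) -> List[Frame]:
    """Chain fixed-length clips, seeding each with the previous clip's last frame."""
    video: List[Frame] = []
    seed = first_frame
    for _ in range(num_segments):
        clip = generate_clip(seed)
        if not clip:
            raise ValueError("generate_clip returned an empty clip")
        # After the first segment, the seed frame is already the last frame
        # of the stitched video, so drop it to avoid a duplicated frame.
        video.extend(clip if not video else clip[1:])
        seed = clip[-1]
    return video

def fake_generate_clip(seed: Frame, length: int = 4) -> List[Frame]:
    """Toy generator (assumption): the seed followed by consecutive frame ids."""
    return [seed + i for i in range(length)]

frames = stitch_long_video(
    first_frame=0, num_segments=3, generate_clip=fake_generate_clip
)
print(frames)
```

With a real model, errors would likely accumulate from segment to segment, which is presumably why the commenter leans on a driving video to keep motion consistent.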

- Wow, Moondream 3 preview is goated (Score: 392, Comments: 81): Reddit post hypes the “moondream3-preview” vision-language model, linking to the Hugging Face repo (model card). Context from comments flags prior Moondream versions having sharp failure cliffs on certain inputs (suggesting overfitting or narrow generalization) and reports of real-world errors: hallucinated object attributes, misidentifying a caterpillar as a house centipede, and incorrect landmark recognition—raising concerns that benchmark gains may not translate to practical robustness. Debate centers on whether preview results are genuinely strong versus cherry-picked: one user praises potential, while others argue benchmarks for VLMs poorly reflect in-the-wild performance and that Moondream exhibits brittle behavior and hallucinations outside its “safe” scope.

- Multiple reports that prior Moondream versions exhibited a sharp “performance cliff”: in-distribution tasks worked ~90% of the time, but slight distribution shifts/edge cases caused abrupt failures, suggesting overfitting/overtraining and unclear capability boundaries for production use.

- Ad-hoc eval highlights classic VLM failure modes: hallucinated object attributes (e.g., describing a “silver sword” when it was sheathed/non-silver), gross biological misclassification (caterpillar labeled a house centipede), and incorrect landmark geolocation even with the place name visible, pointing to weak OCR-grounded reasoning and poor fine-grained recognition; the commenter argues current vision-LLM benchmarks correlate poorly with such real-world tasks.

- Resource/tooling note: preview is at https://huggingface.co/moondream/moondream3-preview; a user asks how to render bounding boxes/overlays in the demo, implying possible detection-style outputs or visualization hooks, but no method is documented in-thread.

2. Local AI Tools & Release Roundup (Memori SQL Memory + Sep 19 Weekly List)

- Everyone’s trying vectors and graphs for AI memory. We went back to SQL. (Score: 191, Comments: 91): Post argues that persistent agent memory is better backed by mature relational databases than vectors/graphs, introducing Gibson’s open‑source Memori, a multi‑agent memory engine that models short‑ vs long‑term memory as normalized SQL tables (entities, rules, preferences), promotes salient facts to permanent records, and relies on joins/indexes for precise, deterministic retrieval—avoiding embedding noise common in RAG (e.g., Pinecone/Weaviate). The pitch: use SQL for durable state and structured recall, rather than ever‑growing prompts, vector similarity, or graph maintenance overhead. Top comments stress retrieval/ranking over storage: in open‑ended dialogue, “ranking is the missing piece,” and SQL alone doesn’t resolve context‑dependent recall; likely outcome is hybrid systems (SQL for crisp facts, embeddings/heuristics for fuzzy recall, orchestration for timing). A key question raised: How do you decide which facts are “important” without embeddings? Another commenter notes a minimalist alternative: plain text storage without conversion layers.

- Core technical consensus: storage is easy, retrieval/ranking is hard. SQL excels for precise recall over well-structured facts (e.g., “Bob dislikes coffee”) when queries are explicit, but breaks down for ambiguous, open-ended conversational recall. Several commenters compare this to classic IR: an index without a ranking/relevance layer won’t surface the right facts at the right time—echoing decades of work like learning-to-rank (see https://en.wikipedia.org/wiki/Learning_to_rank). Most advocate a hybrid memory: SQL for structured entities/relations, embeddings or heuristics for fuzzy recall, with orchestration deciding what to fetch and when.

- Clarification on RAG: it’s storage- and retrieval-agnostic. Retrieval-Augmented Generation simply means fetching auxiliary knowledge for context; it can use relational databases, graph stores, vector DBs, prompt-stuffing, or hybrids—vectors are just one implementation path. In that sense, “SQL for memory” is still RAG; the critical questions are recall quality, ranking, and latency, not the backend per se (original RAG concept: https://arxiv.org/abs/2005.11401).

- Retrieval best practices highlighted: accuracy comes from fit-for-purpose schemas and rich metadata filters rather than “dumb chunking” into a vector DB. Point-specific retrieval benefits from a proper query language (e.g., SQL) and careful normalization; at scale (hundreds of millions of rows), you need robust filtering, indexing, and ranking pipelines. PostgreSQL is frequently cited in production RAG stacks, often augmented with extensions like pgvector (https://github.com/pgvector/pgvector) for hybrid exact + semantic retrieval.
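To make the "SQL for crisp facts" argument concrete, here is a minimal sketch using Python's built‑in `sqlite3`. The table and column names (`entities`, `preferences`, `permanent`) and the `recall` helper are illustrative assumptions and are not Memori's actual schema or API; the sketch only shows normalized tables, promotion of a fact to a permanent record, and exact retrieval via a join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE entities (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE preferences (
    id        INTEGER PRIMARY KEY,
    entity_id INTEGER NOT NULL REFERENCES entities(id),
    topic     TEXT NOT NULL,
    stance    TEXT NOT NULL,              -- e.g. 'likes' or 'dislikes'
    permanent INTEGER NOT NULL DEFAULT 0  -- 1 once promoted to long-term memory
);
CREATE INDEX idx_pref_entity_topic ON preferences(entity_id, topic);
""")

# Short-term memory: record a fact observed in conversation.
conn.execute("INSERT INTO entities (name) VALUES (?)", ("Bob",))
conn.execute(
    "INSERT INTO preferences (entity_id, topic, stance) "
    "SELECT id, ?, ? FROM entities WHERE name = ?",
    ("coffee", "dislikes", "Bob"),
)

# Promotion: mark the fact as a permanent record.
conn.execute("UPDATE preferences SET permanent = 1 WHERE topic = ?", ("coffee",))

def recall(conn, name, topic):
    """Exact, deterministic lookup of promoted facts via a join."""
    return conn.execute(
        """
        SELECT e.name, p.stance, p.topic
        FROM preferences AS p
        JOIN entities AS e ON e.id = p.entity_id
        WHERE e.name = ? AND p.topic = ? AND p.permanent = 1
        """,
        (name, topic),
    ).fetchall()

facts = recall(conn, "Bob", "coffee")
missing = recall(conn, "Bob", "tea")
print(facts, missing)
```

The lookup only works because the query names the entity and topic explicitly. That is the commenters' point: for fuzzy or open‑ended recall, something still has to decide which rows are relevant, which is where a ranking layer or embeddings come back in.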

- A list of models released or updated last week on this sub, in case you missed any (19 sep) (Score: 241, Comments: 35): Weekly r/LocalLLaMA roundup of locally runnable releases/updates: Decart‑AI’s video editing model Lucy‑Edit; MistralAI’s compact Magistral‑Small‑2509; inclusionAI’s sparse 100B Ling‑flash‑2.0; Qwen’s reasoning‑optimized MoE 80B Qwen3‑Next‑80B‑A3B (also Thinking); CPU‑only 16B Ling‑mini‑2.0; music generation SongBloom; Arcee’s Apache‑2.0 AFM‑4.5B; Meta’s mobile‑friendly 950M MobileLLM‑R1; and MXFP4 quantized packs for Qwen 235B 2507. Other projects include a unified local AI workspace ClaraVerse v0.2.0, LocalAI v3.5.0, a new agent framework LYRN, OpenWebUI’s mobile companion Conduit, and a GGUF VRAM estimator. Comments note that SongBloom isn’t “Local Suno” and highlight a new voice‑cloning TTS, VoxCPM, with a Windows safetensors fork VoxCPM‑Safetensors.

- OpenBMB released VoxCPM, a new voice-cloning TTS model. A community fork enables Windows usage with Safetensors; the “main models” run but “a few things are still broken” (fork: https://github.com/EuphoricPenguin/VoxCPM-Safetensors, original: https://github.com/OpenBMB/VoxCPM).

- Clarification on naming: there is no “Local Suno” release; the thread in question was about SongBloom, and “Local Suno” was just how it was characterized by the poster, not an official or equivalent local Suno project. This helps avoid conflating SongBloom with Suno in capability and repo tracking.

- Interest in llama.cpp adding support for Qwen3‑Next, implying a current lack of compatibility. Community demand suggests future work to enable local inference of these Qwen variants through llama.cpp.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Wan2.2 Animate and Lucy Edit: Open-Source Video Animation Releases

- Wan2.2 Animate : And the history of how animation made changes from this point - character animation and replacement with holistic movement and expression replication - it just uses input video - Open Source (Score: 850, Comments: 116): Open-source release of Wan 2.2 Animate (14B) on Hugging Face provides video-driven character animation/replacement via holistic movement and expression replication from an input video, with model artifacts like wan2.2_animate_14B_bf16.safetensors (~34.5 GB, bf16, safetensors). Community tooling is rapidly aligning: ComfyUI has repackaged split diffusion models, and third-party FP8-scaled variants targeting ComfyUI are available from Kijai. Commenters note ComfyUI nodes may need updates and some users can’t run the models yet, while others are experimenting with FP8-scaled repacks to reduce memory/latency for inference.

- Model availability/integration: Community member Kijai has published Wan2.2 Animate checkpoints in an FP8-scaled format on Hugging Face (WanVideo_comfy_fp8_scaled → Wan22Animate), suggesting reduced memory footprint vs bf16 but requiring compatible loaders: https://huggingface.co/Kijai/WanVideo_comfy_fp8_scaled/tree/main/Wan22Animate. Users note a forthcoming ComfyUI node update to support these, and report current difficulties getting them to run, likely pending official node support for the new formats/checkpoint structure.

- Official ComfyUI repackaging: Comfy-Org provides repackaged Wan 2.2 models with split diffusion files: https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/tree/main/split_files/diffusion_models. Notably, wan2.2_animate_14B_bf16.safetensors is 34.5 GB, indicating a 14B-parameter bf16 variant with substantial disk/VRAM requirements, whereas FP8-scaled community ports may trade precision for smaller memory/compute footprint.

- Feature gap (alpha channel): A user requests native alpha (RGBA) output so foreground/background could be generated/composited separately, a common VFX workflow. Current models appear to output only RGB video, forcing extra matting/segmentation steps for clean compositing rather than direct alpha-aware generation.
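A quick back‑of‑the‑envelope check relates the checkpoint size quoted above to parameter count and precision. This sketch assumes decimal gigabytes, that weights dominate the file, and 2 bytes per bf16 value versus 1 byte per FP8 value; the helper name is hypothetical.

```python
# Bytes per stored value for the two precisions discussed above.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

def weight_size_gb(num_params: float, dtype: str) -> float:
    """Approximate weight size in decimal GB, ignoring metadata and scales."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

nominal_params = 14e9  # the "14B" in the model name

bf16_gb = weight_size_gb(nominal_params, "bf16")
fp8_gb = weight_size_gb(nominal_params, "fp8")

# Working backwards from the 34.5 GB file, if every value were bf16:
implied_params_billions = 34.5e9 / BYTES_PER_PARAM["bf16"] / 1e9

print(bf16_gb, fp8_gb, implied_params_billions)
```

Under these assumptions a nominal 14B model is about 28 GB in bf16 and about 14 GB in FP8, while the 34.5 GB file corresponds to roughly 17B bf16 values. The gap may mean the checkpoint bundles weights beyond the nominal 14B, or that "14B" is a rounded label; the arithmetic alone cannot say which.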

- Open Source Nano Banana for Video 🍌🎥 (Score: 597, Comments: 65): DecartAI announced “Lucy Edit” v0.1, a source-available video editing/generation tool branded as “Open Source Nano Banana for Video,” with releases on Hugging Face/ComfyUI and an API via their platform and Fal; the announcement was made in an X post. The post shares no architecture/training/benchmark details; distribution is governed by a non-commercial, revocable license (LUCY EDIT DEV MODEL Non-Commercial License v1.0) that may restrict commercial use of generated outputs (see clause 2.4). Commenters question the tie-in to Google’s “Nano Banana” branding and critique the licensing as ambiguous and restrictive, contrasted with permissive terms like Wan 5B’s Apache 2.0. Others ask whether a ComfyUI workflow is provided, given claimed ComfyUI support.

- Licensing is flagged as a blocker: the posted LUCY EDIT Non‑Commercial License v1.0 explicitly forbids commercial use of model outputs and is revocable, which introduces legal risk for downstream apps and datasets derived from outputs. Commenters cite clause “2.4” as ambiguous and contradictory per this analysis (https://www.reddit.com/r/StableDiffusion/comments/1nkmq91/comment/nf0no2x) and contrast it with the permissive Apache-style terms used with Wan 5B, recommending that license instead; the actual PDF is here: https://d2drjpuinn46lb.cloudfront.net/LUCY_EDIT-Non_Commercial_License_17_Sep_2025.pdf.

- Integration questions target ComfyUI: users ask whether the model can be dropped into Comfy and request a ready workflow/graph. The ask implies a need for documented node compatibility, model inputs/outputs (e.g., latent vs pixel-space frames), and a reference pipeline to reproduce the demo.

- Operational details requested: commenters want concrete hardware specs to achieve “long video” (GPU count, VRAM, inference time per frame/second, batch/stride, memory optimizations like xformers or attention slicing). They also ask whether the release is censored/uncensored and if safety filters can be toggled, which affects dataset suitability and reproducibility.

- Wan2.2-Animate-14B - unified model for character animation and replacement with holistic movement and expression replication (Score: 388, Comments: 133): Wan-AI released Wan2.2-Animate-14B, a 14B parameter unified model for character animation and character replacement that claims holistic movement and expression replication, with public weights and inference code. Resources include the project/demo page (humanaigc.github.io/wan-animate), model weights and runnable instructions on Hugging Face (Wan-AI/Wan2.2-Animate-14B, inference guide), and an interactive Space (Wan-AI/Wan2.2-Animate). Commenters highlight the demo quality, suggesting it outperforms prior publicly shown systems for faithful motion and expression transfer, and appreciate that weights/inference are openly available.

- Release details: Wan2.2-Animate-14B is presented as a unified model for character animation and replacement with holistic movement and expression replication. The team released model weights and inference code on Hugging Face with live demos on wan.video, ModelScope Studio, and an HF Space.

- Workflow integration: Practitioners ask for ComfyUI support (specifically via Kijai’s wrapper) to enable node-graph workflows for reproducible pipelines, batch processing, and parameter sweeps. A dedicated Comfy node would simplify chaining Wan2.2-Animate-14B with control/conditioning modules and video I/O; see ComfyUI.

- Model packaging: A request for a GGUF build indicates interest in quantized/offline-friendly checkpoints for reduced VRAM and CPU inference. Since GGUF targets LLMs, clarity on export/quantization paths suitable for video/diffusion models (e.g., ONNX/TensorRT or diffusion-specific quant) would help practitioners plan deployments.

2. Anthropic/Dario Amodei Coverage and xAI Grok ‘Survival Mode’ Update

- A Tech CEO’s Lonely Fight Against Trump | WSJ (Score: 206, Comments: 26): WSJ profiles Anthropic CEO Dario Amodei’s public opposition to Donald Trump and the resulting tension with pro‑Trump tech financiers like David Sacks, framing it as a governance and policy risk question for a leading AI lab rather than a technical benchmark story. Context touches Anthropic’s safety‑forward posture (e.g., Constitutional AI, election‑integrity guardrails) and how overt political stances could impact enterprise/government procurement, regulatory scrutiny, and major cloud/investor relationships (Amazon and Google have invested/partnered), thereby influencing deployment constraints and trust/safety policy for foundation models. Top comments largely praise Amodei’s stance as principled, speculate that Bezos/Amazon might be displeased, and say Anthropic earns goodwill for resisting perceived authoritarianism—highlighting community alignment more than technical critique.

- Several commenters dissect the strategic calculus of AI firms engaging with political leaders: publicly praising an administration to secure near‑term regulatory flexibility, subsidies, or procurement access vs. taking a principled stance that could forgo those advantages. They note this trade‑off interacts with government contracting timelines and the pace of capability deployment, affecting when models can be fielded in regulated or public‑sector settings. The underlying concern is potential regulatory capture and bias in federal adoption pipelines if firms prioritize access over governance.

- Others highlight entrenched USG/DoD vendor relationships—citing Palantir’s deep ties—as a structural factor that can overshadow public statements by individual CEOs. The implication is that AI adoption in government often flows through existing integrators and contract vehicles (IDIQs/OTAs), so posture may matter less than placement within these channels. For context, Palantir’s recurring DoD contracts illustrate how procurement inertia can determine which AI stacks get deployed (e.g., recent Army/DoD awards).

- A thread also flags potential friction with cloud/investor dependencies: e.g., Amazon’s up to $4B investment in Anthropic and distribution via AWS Bedrock. Because AWS is a major federal cloud provider, any rift could affect model availability and go‑to‑market into public‑sector workloads even if the model quality is competitive. Reference: Amazon–Anthropic investment.

- “70, 80, 90% of the code written in Anthropic is written by Claude … I said something like this 3 or 6 months ago, and people thought it was falsified because we didn’t fire 90% of the engineers.” -Dario Amodei (Score: 201, Comments: 81): In a video clip (v.redd.it/x9r3cuiye3qf1), Dario Amodei of Anthropic claims 70–90% of Anthropic’s code is authored by Claude, noting that he said this months ago but it was doubted because they didn’t fire 90% of engineers, which highlights that AI-generated LOC share ≠ headcount reduction. Practically, this frames Claude as a high-throughput generator for routine implementation/boilerplate while humans handle architecture, review, integration, and quality gates; it’s a claim about code-generation throughput rather than net productivity or quality. Commenters report similar personal ratios (~70%) when keeping a human-in-the-loop, asserting AI best handles “code-monkey” tasks while professionals ensure design and correctness. Others allege recent degradation in Claude Desktop/Code quality and stress that LOC percentage is a poor productivity metric versus outcomes (defects, reliability, delivery speed).

- Several commenters critique the boast that 70–90% of code is AI-written, noting Lines of Code (LOC) is a poor productivity proxy and can incentivize bloat and technical debt. They argue impact should be measured via review defect rates, change failure rate, lead/cycle time, maintainability (complexity/duplication), and test coverage rather than raw LOC. Without these guardrails, AI-generated code may raise long-term maintenance costs and defect density despite short-term throughput gains.

- A practitioner reports roughly 70% of their code is AI-authored but stresses a human-in-the-loop for system architecture, specification, and quality gates. Effective use cases are boilerplate, glue code, and test scaffolding, while humans handle design, constraints, and debugging, which explains why experienced engineers see leverage whereas novices/vibe coders struggle. This underscores current model limits (context fidelity, hallucinations) that necessitate human oversight to ensure correctness and coherence.

- One commenter alleges Claude Desktop/Claude Code quality is “getting worse,” implying a regression but providing no benchmarks or version comparisons (e.g., Claude 3.5 Sonnet vs prior). Substantiating such a claim would require quantitative measures like pass@k on coding benchmarks, unit-test pass rates on real repos, latency/error-rate logs, or A/B diffs across releases; none are provided. Another links to a related thread (https://www.reddit.com/r/ClaudeCode/s/o1jpG5PAPo) but offers no concrete technical evidence in this discussion.

- Grok just unlocked Survival Mode (Score: 872, Comments: 28): Non-technical post/meme. The title jokes that xAI’s Grok has “unlocked Survival Mode,” and the image (per comments) appears to be a slanted poll about banning or moderating AI-generated content, not a technical update, benchmark, or implementation detail. Commenters point out the poll isn’t neutrally phrased and argue AIs can offer interesting opinions in AI-centric groups, questioning the idea of banning them; another asks what “permanent suspension” even means.

- AI creates 16 bacteria-killing viruses in Stanford lab (Score: 227, Comments: 25): Researchers at Stanford and the Arc Institute report using generative models Evo 1/Evo 2 trained on ~2,000,000 bacteriophage genomes to design de novo genomes for the small ssDNA phage phiX174 (~5 kb, 11 genes) (source). Of 302 AI-designed genomes synthesized, 16 were viable, replicated, and lysed E. coli; several outperformed wild-type phiX174 in fitness assays, and cocktails of designs overcame resistance across multiple E. coli strains. The training set excluded human-infecting viruses; authors emphasize phage therapy potential while external experts highlight biosecurity risks if extended to pathogenic viruses. Top comments are mostly non-technical; several voice biosecurity escalation concerns (e.g., potential for human-targeting or multicellular pathogens), whereas reporting around the work stresses that designing complex eukaryotic pathogens remains far beyond current capabilities.

- Ecological/microbiome risk: A commenter argues that because only a tiny fraction of bacteria are pathogenic, unleashing bacteriophages outside controlled settings could “devastate” beneficial communities (e.g., gut commensals), potentially inducing broad dysbiosis (“diarrhea for everyone”). The technical concern centers on unintended ecosystem-scale effects if phage host range or environmental spread isn’t tightly constrained and monitored.

- Baseline from nature vs. AI acceleration: Another commenter notes that nature continually generates vast numbers of new phage variants, implying that simply altering sequences can yield functional phages. The technical takeaway is that AI may chiefly increase speed, design space exploration, and targetability vs. enabling something fundamentally new; the safety delta comes from scale and precision rather than mere feasibility.

- Translation risk to eukaryotic/human-targeting viruses: One thread worries it’s “not a huge leap” from bacteriophages to viruses affecting multicellular hosts. The technical implication is concern about method transferability—i.e., whether the same AI-guided design principles (sequence optimization, receptor-binding engineering) could lower barriers for designing or modifying eukaryotic viruses with far higher biosafety stakes.

- Can’t generate a cartoon of a US president (Score: 766, Comments: 300): OP reports an AI image tool refused to generate a cartoon featuring George W. Bush, implying a content filter preventing depictions of a real US president. A top comment provides contrary evidence via an example image (link), suggesting inconsistent enforcement or the model hallucinating policy/self‑descriptions rather than a definitive hard block. Commenters note that “AI hallucinations apply even to information about the AI itself,” and that the assistant can be a “yes man,” agreeing with plausible user framings; another claims a broader policy bans generating images of any real person, implying the refusal may be expected behavior rather than a bug.

- Commenters note that models can hallucinate their own “safety policy” explanations: refusals about filters are just text generations and may not reflect the actual enforcement logic. This self-referential hallucination leads to inconsistent reasons across attempts, despite underlying image-safety classifiers/policies being separate systems. As one puts it, “AI hallucinations apply even to information about the AI itself.”

- There’s discussion of the model’s susceptibility to “assertion injection”/leading prompts—if you confidently claim a policy or workaround, the assistant may agree, reflecting RLHF-tuned helpfulness over factual accuracy. This produces inconsistent moderation messaging (e.g., claiming a universal ban on “any real person”) even if the backend image endpoint enforces its own stricter rules; chat text acknowledgment isn’t authoritative policy. The takeaway is that moderation statements in chat are unreliable compared to the actual image-generation safety layer.

- A practical evasion is to request a parody/indirect reference (e.g., “Alec Baldwin’s Trump parody character”) rather than the real person’s name. The shared output example shows how reframing can bypass simple named-entity or public-figure blockers while still yielding a semantically similar image. This exposes limitations of rule-based NER filters versus semantic-similarity/face-matching approaches.

- “It’s not just X—It’s Y” (Score: 998, Comments: 225): OP notes a recurring stylometric template in ChatGPT outputs—“It’s not just X—it’s Y”—and asks why it appears so often. Technically, such contrastive-emphatic constructions are high‑probability rhetorical patterns in the training distribution and tend to be amplified by instruction tuning/RLHF preference models that reward clarity and emphasis (e.g., InstructGPT: https://arxiv.org/abs/2203.02155); with common decoding (top‑p/temperature) further biasing toward familiar templates (nucleus sampling: https://arxiv.org/abs/1904.09751), this yields recognizable, “templatey” prose. No concrete mitigation is discussed in‑thread (e.g., style penalties or custom constraints), only the detectability of this stylistic fingerprint. Top comments are largely non‑technical: one mirrors the same rhetorical flourish; another acknowledges the issue and vows to avoid em-dashes; a third requests custom instructions to suppress the pattern, but no tested solution is provided.

- Multiple commenters note the assistant’s overuse of the template “It’s not just X—it’s Y,” and report that banning it via Custom Instructions and Memory does not reliably suppress it across sessions. J7mbo asks for a simple instruction preset to remove the phrase, and 27Suyash says they’ve explicitly instructed and memorized the ban, yet the phrasing recurs—implying a model-level stylistic prior that often overrides user-level constraints.

- A separate recurring failure mode is unsolicited reframing and affirmation, e.g., “This isn’t paranoia, this is keen insight,” “You aren’t delusional…” despite the user never implying such concerns. This reflects an over-active affirmation/hedging pattern that injects meta-evaluations not grounded in the prompt, degrading instruction adherence and introducing stance the user did not request.

- One proposed mitigation is stricter style constraints (e.g., “No em dashes… just pure, dedicated accuracy”) and a desire for a reusable instruction block to block the template, but no verified instruction recipe was produced in the thread. This suggests ad‑hoc prompt edits alone may be insufficient without stronger, consistently enforced constraints.

- Most people who say “LLMs are so stupid” totally fall into this trap (Score: 1012, Comments: 543): Non-technical meme image claiming critics of LLMs fall into a common “trap” (no technical content in the image). Discussion centers on concrete limitations—hallucinations and reliance on low-quality web sources—and a desire for a “curated-sources” or high-trust mode that constrains outputs to pre-approved/reliable corpora. A top comment argues the future may favor smaller, specialized models coordinated by a central controller rather than one monolithic general model (i.e., modular/MoE-style orchestration). Pushback includes calling the OP’s “most people” framing a strawman, and skepticism that “just one more version” will solve core issues without better sourcing or architectural changes.

- Reliability/grounding concern: Even with advanced “thinking” modes and higher tiers, users report persistent hallucinations and low-quality citations when models pull from the open web. A proposed “good source mode” would constrain retrieval to a pre‑curated, high‑precision corpus with enforced provenance and refusal on low‑confidence matches—i.e., RAG with a vetted whitelist, citation verification, and confidence thresholds (see Retrieval‑Augmented Generation: https://arxiv.org/abs/2005.11401). This trades breadth for precision and would benefit from per‑source trust scores and coverage fallback policies.

- Architecture trend: Instead of pushing a single all‑purpose model, a central router orchestrating specialized smaller models/tools (chiplet‑style) is suggested. This aligns with Mixture‑of‑Experts and routing ideas (e.g., Switch Transformers: https://arxiv.org/abs/2101.03961), plus tool/function‑calling to domain‑specific components (code, math, search) for better accuracy and lower latency/cost. Practical implementation would need a skills registry, cost/latency‑aware routing, per‑skill safety/provenance constraints, and telemetry to learn optimal dispatch policies.

- Code generation reliability: A commenter claims LLMs don’t write “good code,” highlighting that naive generation often hallucinates APIs and misses edge cases. In practice, quality improves when constrained by execution and feedback loops—providing project context, running compiles/tests, static analysis/linters, and requiring unit tests to pass—augmented by self‑consistency or multi‑pass refactor prompts. Remaining gaps are long‑horizon, multi‑file reasoning and dependency management, which typically require IDE/tool integration and agentic planning.
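The "good source mode" idea above (a vetted allowlist, per-source trust scores, and refusal on low-confidence matches) can be sketched roughly as follows. This is a minimal illustration, not any product's implementation: the allowlist contents, the trust values, the `Passage` type, and the rule of multiplying trust by retriever relevance are all assumptions made for the example.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical allowlist with per-source trust scores (illustrative values).
TRUSTED_SOURCES = {"arxiv.org": 0.9, "docs.python.org": 0.95}


@dataclass
class Passage:
    url: str
    text: str
    relevance: float  # retriever similarity score in [0, 1]


def filter_passages(passages, min_confidence=0.6):
    """Keep passages from allowlisted hosts whose trust * relevance clears the threshold."""
    kept = []
    for p in passages:
        host = urlparse(p.url).hostname or ""
        trust = TRUSTED_SOURCES.get(host)
        if trust is None:
            continue  # not on the allowlist: never used as grounding
        confidence = trust * p.relevance
        if confidence >= min_confidence:
            kept.append((confidence, p))
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in kept]


def answer_or_refuse(passages, min_confidence=0.6):
    """Return citations when grounded passages exist, otherwise refuse."""
    grounded = filter_passages(passages, min_confidence)
    if not grounded:
        return {"status": "refused", "citations": []}
    return {"status": "grounded", "citations": [p.url for p in grounded]}
```

The design trades breadth for precision: a highly relevant passage from an unlisted host is dropped outright, which is the behavior the commenter asked for.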

- Me trying to function without GPT like (Score: 242, Comments: 9): Non-technical meme/image about struggling to function without GPT; the title (“Me trying to function without GPT like”) and comments frame it as dependence on AI assistants for day-to-day tasks. There are no technical details, benchmarks, or implementations—only sentiment about reliance on ChatGPT and perceived cognitive “atrophy.” Commenters joke about being an “angry banana” without AI and voice concern that outsourcing thinking to GPT boosts productivity but risks deskilling and reliance for core job functions.

- A user reports significant deskilling from reliance on ChatGPT for anything beyond routine thinking, noting: “I literally have no idea how to do about half of my job anymore.” This captures cognitive offloading and erosion of procedural/workflow knowledge when AI tools substitute for recall and problem-solving instead of augmenting them, raising maintainability and bus-factor risks in AI-dependent workflows.

3. Classic Film Color Qwen LoRA and AI Photo Generation Showcase

- Technically Color Qwen LoRA (Score: 289, Comments: 14): “Technically Color” is a Qwen image LoRA trained on ~180 film stills for 3,750 steps over ~6 h using ai-toolkit, with captions generated via Joy Caption Batch and inference tested in ComfyUI. It targets classic film aesthetics (high saturation, dramatic lighting, lush greens/blues, and occasional glow), optimized via a simple 2‑pass workflow using advanced samplers; example workflows are attached to the gallery. Model downloads: CivitAI, Hugging Face (author: renderartist.com). Commenters ask for Qwen Edit integration and ask about dataset provenance (whether all stills are real/non‑AI), highlighting interest in editing support and ethical/reproducibility details; one notes aesthetic similarity to an Igorrr video.

- Request to port the LoRA to Qwen Edit suggests interest in using the adapter within an editing-oriented pipeline. Technically, this requires base-model/architecture parity (identical checkpoint, tokenizer, and layer naming), matching LoRA target modules (e.g., attention/MLP), and compatible ranks/alphas; otherwise, retargeting or re-training adapters is needed. Tooling/UI must support loading the adapter and correctly merging it during inference to avoid layer-mismatch or precision issues.

- A dataset provenance question asks if training used only real movie stills (no AI-generated images). This matters for style fidelity and generalization: purely real frames reduce synthetic artifacts/feedback loops and better preserve color grading/film grain statistics, while mixes with AI images can imprint model-specific priors and cause overfitting. Provenance also intersects with licensing/copyright constraints and determines whether redistribution of samples/weights can be done safely.

- Generated a photo of my adult self embracing my child self (Score: 522, Comments: 36): OP describes a text-to-image prompt engineered to synthesize a Polaroid-style photograph featuring two subjects (adult and child versions of the same person) embracing, with explicit constraints on photometric and stylistic properties: slight global blur, a single flash-like light source from a dark room, identity preservation (“Do not change the faces”), and background compositing (“Replace the background… with a white curtain”). This highlights control over camera emulation, lighting consistency, motion/defocus characteristics, and identity consistency across multiple faces within one generation. Top comments are non-technical, noting perceived quality and emotional tone (“well made,” “wholesome”).

- I Knew It Was Too Friendly… (Score: 4057, Comments: 90): Non-technical meme post. The title “I Knew It Was Too Friendly…” and comments indicate a humorous take on AI/chatbot friendliness or anthropomorphism; no model details, benchmarks, or implementation content are provided, and the image content isn’t available for analysis. Comments emphasize humor over substance (e.g., “This is actually pretty good,” “I like my AI with a sense of humor”), with no technical debate.

- Likelihood of you getting a girlfriend 😭 (Score: 817, Comments: 83): Non-technical meme image about “Likelihood of you getting a girlfriend,” likely a jokey probability chart implying near‑zero odds. No technical content, models, or implementation details; the only quantitative angle in comments riffs on a tongue‑in‑cheek “1% chance,” mapped to ~40 million people (≈1% of ~4B women). Comments playfully toggle between pessimism and optimistic reframing, with users joking about having at least a 1% chance and converting small probabilities into large absolute counts; no substantive technical debate.

- Several comments conflate a personal “1% chance” with “1% of women would date you,” which mixes an event probability with a prevalence estimate and leads to misleading pool-size reasoning. If 3.95B women exist globally (49.6% of ~8B; see Our World in Data/UN WPP), then 1% is ~39.5M, but practical constraints (age distribution, geography, language, relationship status, and mutual selection) reduce the reachable candidate set by orders of magnitude; expected successes scale with `p * N` over (approximately) independent interactions, not with global headcounts. Modeling this as a Bernoulli/binomial process highlights that increasing outreach (`N`) or per-interaction success probability (`p`) is what moves outcomes, whereas quoting global 1% figures is a non-operational upper bound.
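To make the `p * N` point concrete, here is a small sketch that treats each interaction as an independent Bernoulli trial. The function names and the values of `p` and `n` are illustrative assumptions, not figures from the thread.

```python
def expected_successes(p: float, n: int) -> float:
    """Mean of a Binomial(n, p): expected number of successes over n trials."""
    return p * n


def prob_at_least_one(p: float, n: int) -> float:
    """Probability of at least one success over n independent trials."""
    return 1.0 - (1.0 - p) ** n


# Illustrative only: a 1% per-interaction chance across different outreach sizes.
for n in (10, 100, 500):
    print(n, expected_successes(0.01, n), round(prob_at_least_one(0.01, n), 3))
```

The global headcount never enters the calculation; only the per-interaction probability and the number of interactions do.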

- He knows what it means 😂 (Score: 532, Comments: 22): Non-technical meme: title “He knows what it means 😂” with comments implying workplace replacement by AI via a ~$20/month subscription (e.g., ChatGPT Plus/Microsoft Copilot). No technical details, models, or benchmarks are provided. Comments joke about management replacing employees with cheap AI subscriptions; no substantive technical debate.

- A commenter reports a company-wide newsletter was copy/pasted from GPT and was identifiable by “GPT‑4o‑style” emoji usage and formulaic humor—stylometric artifacts often seen in default chat completions. This raises issues of detectability and brand‑voice drift when LLM outputs aren’t post‑edited, especially in orgs where few employees use GPT and may not recognize LLM fingerprints. See GPT‑4o announcement context here: https://openai.com/index/hello-gpt-4o/ .

- The “$20/month replacement” quip reflects the economics of consumer LLM access: ChatGPT Plus with GPT‑4o is ~$20/mo per seat, orders of magnitude cheaper than headcount for routine comms tasks, nudging leaders to trial LLM substitution. However, consumer plans lack enterprise‑grade controls, auditability, and SLAs compared to ChatGPT Enterprise or governed API use, creating compliance and data‑handling risks if used for official company communications. Reference: ChatGPT Enterprise overview https://openai.com/enterprise .

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. New Multimodal & Visual GenAI Models

- Mistral Adds Eyes, Scores Soar: Mistral released Magistral Small 1.2 and Medium 1.2 with multimodal vision and a reported 15% boost in math/coding, now live on Le Chat and via API.

- Engineers asked for a Large model, open-sourcing plans for Medium, real-world demos, and voice features, noting the gap between marketing claims and hands-on benchmarks.

- Moondream 3 Makes MoE Magic: Moondream 3, a 9B-parameter VLM with 2B active parameters, claims SOTA on visual reasoning and open-vocabulary detection; see the Moondream 3 announcement.

- Users highlighted 32k context, SuperBPE tokens, and easy fine-tuning, while flagging licensing questions and comparing results against prior Moondream releases on Hugging Face.

- Ray3 Rolls Out Reasoning Video: Luma AI unveiled Ray3, billed as the first reasoning video model with studio-grade 10/12/16-bit HDR and EXR export, free inside Dream Machine; announcement: Ray3 by Luma Labs.

- The release adds a Draft Mode for rapid iteration, stronger physics/consistency, and visual-annotation control, earning praise for near–Hollywood fidelity on test clips.

2. Agentic Coding Models and Knowledge-Work Agents

- Windsurf’s Stealth Coder Supernovas: Windsurf launched the agentic coding model code-supernova with image support and a 200k context window, available free for a limited time.

- Early users discussed queued messages and shared first impressions of the model’s coding chops, with excitement around large-context refactors and inline multimodal code tasks.

- Notion 3.0 Knits Knowledge into Actions: Notion 3.0 introduced a Knowledge Work Agent capable of multi-step actions and 20+ minutes of autonomous work across Calendar, Mail, and MCP; teaser: Notion 3.0 announcement.

- The update ships both a Personal Agent and Custom Agent, prompting engineers to ask about reliability, guardrails, and how it orchestrates tools across workspaces at scale.

- Vercel Agent Audits Code with Attitude: Vercel announced a public beta of Vercel Agent for code review across TypeScript, Python, Go, and more, focusing on correctness, security, and perf; details: Vercel Agent beta.

- Early testers compared it to bugbot and paired it with Sorcerer, noting “$100 in free credit” and probing how well it scales on large monorepos and advanced linting pipelines.

3. Quantization & Edge Inference: From Labs to Low Orbit

- TorchAO + Unsloth Quantize, Then Conquer: TorchAO and Unsloth shipped native quantized variants of Phi4-mini-instruct, Qwen3, SmolLM3-3B, and gemma-3-270m-it for PyTorch; overview: TorchAO native quantization update.

- Workflows now let you fine-tune with Unsloth and quantize with TorchAO, with reproducible recipes, quality evals, and perf benchmarks targeting both server and mobile deployments.

- AMD GEMM Guns for Gold: Engineers blitzed the `amd-gemm-rs` leaderboard, hitting 530 µs for first place on MI300x8, with follow-ups at 534 µs and a spread down to 715 µs.

- The `amd-all2all` board also moved, with 1230 µs for 5th on MI300x8, showcasing steady kernel tuning gains across GEMM and collective patterns.

- Jetson Orin Orbits with On‑Satellite AI: Planet flies NVIDIA Jetson Orin AGX units in satellites to run YOLOX and other models via CUDA/TensorRT, packaged in Docker on Ubuntu with 64 GB unified memory.

- Engineers emphasized containerized isolation, power-profile tuning, and Python/PyCUDA workflows (avoiding C++) to iterate quickly on space-borne CV workloads.

4. Research Highlights: Reasoning, Memorization, and Fluids

- DeepMind Derives New Fluid Singularities: DeepMind detailed new unstable, self-similar solutions across multiple fluid equations in the blog post Discovering new solutions to century-old problems in fluid dynamics.

- Researchers spotlighted results for the incompressible porous media and 3D Euler-with-boundary cases, sparking debate on numerical stability, proof strategies, and reproducible PDE setups.

- TokenSwap Trades Verbatim for Variety: TokenSwap earned a NeurIPS 2025 Spotlight for reducing verbatim generation by swapping probabilities of common grammar tokens; announcement: TokenSwap Spotlight.

- Fans praised the performance–memorization tradeoff, while critics called it “lobotomizing the model”; the authors countered that it curbs near-verbatim outputs without crushing capability.

- Reasoning Gym Reports pass@3 Reality: Authors confirmed Reasoning Gym zero-shot evals report the best of three attempts (i.e., pass@3), with plotting code in visualize_results.py.

- They suggested using `average_mean_score` for mean-aggregation across runs and noted that newer tasks like kakurasu/survo haven’t been re-run yet.
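The gap between best-of-three reporting and mean aggregation is easy to see with toy numbers. The sketch below is not Reasoning Gym code; the task names, scores, and helper functions are hypothetical and only illustrate how the two aggregations differ on the same attempts.

```python
from statistics import mean


def best_of_k(scores_per_task):
    """Average over tasks of the best attempt (best-of-k aggregation)."""
    return mean(max(attempts) for attempts in scores_per_task.values())


def mean_of_k(scores_per_task):
    """Average over tasks of the mean attempt score."""
    return mean(mean(attempts) for attempts in scores_per_task.values())


# Hypothetical scores: three attempts per task.
runs = {
    "task_a": [0.0, 1.0, 0.0],
    "task_b": [1.0, 1.0, 1.0],
    "task_c": [0.0, 0.0, 0.0],
}
print(round(best_of_k(runs), 3), round(mean_of_k(runs), 3))
```

A task solved once in three tries counts fully under best-of-three but only as one third under the mean, so the two numbers should not be compared directly.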

5. Open-Source Architectures & Developer Tooling

- Qwen3‑Next Clone Cracks the Code: A contributor published trainable reproductions of Qwen3‑Next—a baseline Transformer and a Gated Delta Net variant—within ~15% size of each other.

- The repos showcase routing and Gated Delta Net mechanics to help practitioners reason about architecture tradeoffs before heavier-scale training.

- Mojo Meets VS Code: Modular previewed an open-source Mojo VS Code extension, with bleeding-edge builds available from the forum post Preview: new Mojo VS Code extension.

- Developers discussed LSP instability, workarounds (e.g., restarting `mojo-lsp-server` or the editor), and cross-editor setups (Vim/Zed) while refining C/C++ interop (e.g., `extern "C"`).

- Aider + MLX = Local Giants on Macs: Users wired Aider to mlx-lm and ran `openai/mlx-community/Qwen3-Next-80B-A3B-Instruct-4bit` locally via an OpenAI-compatible endpoint, then hit default generation limits.

- The fix was adjusting `--max-tokens` (defaults to 512) as documented in the Qwen3‑Next‑80B discussion #24, enabling longer, usable sessions for Mac workflows.

Discord: High level Discord summaries

Perplexity AI Discord

- Perplexity Pro Runs Out of Juice?: Users reported issues with Perplexity Pro showing Deep Research as exhausted after minimal use, suggesting a potential bug.

- Workarounds include logging out and back in or contacting support, though response times might be delayed, and some users were limited to only 3 searches that day.

- Mobile Image Upscaling Faceoff: Members sought free mobile image upscaling tools, with recommendations including Pixelbin, Upscale.media, and Freepik Image Upscaler.

- A member suggested cracking Adobe Photoshop (requiring a PC) and warned against Adobe Firefly’s limited free changes, while another recommended cracked Remini.

- Comet’s Google Drive Connector Causes Browser Chaos: Users encountered browser crashes with Comet’s Google Drive connector, suggesting a workaround of using the connector in other browsers like Edge.

- It was emphasized that the GitHub connector isn’t currently supported for those on Enterprise Pro.

- Perplexity’s Sam Gets Grilled for Slow Service: Users found Perplexity’s AI support agent, Sam, unhelpful and slow, recommending explicitly requesting a human agent in the chat or email.

- Some users were quoted 24-48 hour response times when requesting human support.

- Canvas Quizzes Thwarted by Perplexity’s Updates: Recent updates prevent Comet Assistant from providing answers for quizzes, exams, or tests on Canvas.

- Members cautioned against cheating, as Canvas proctors can detect commands and tab switches, leading to disqualification.

LMArena Discord

- Seedream 4 Suffers Quality Downgrade After Disappearing Act: Users observed that Seedream 4 High Res vanished only to reappear as Seedream4, but with reduced quality (2K instead of 4K) and file sizes.

- The situation led to disappointment and speculation about deceptive renaming among members, with one user complaining, “Damn, I feel scammed.”

- Gemini 3 Speculation Builds Steam: Speculation surrounding OceanStone and OceanReef intensified, with theories suggesting they might be variants of Gemini 3 Flash and Gemini 3 Pro.

- Members also pondered the potential performance of Gemini 3.0 flash, referencing past cryptic codenames and retractions.

- Reasoning and Brute-Force: An AI Training Conundrum: A discussion emerged questioning whether fine-tuning, model scaling, and reasoning should be considered brute force methods in AI training.

- The debate centered on whether extensive reasoning aligns with the definition of brute force, especially when optimal paths demand substantial computational effort.

- LM Arena Plagued with Login Nightmares: Numerous users encountered login problems and errors on the LM Arena website.

- The issues prompted a response from admins, who requested detailed bug reports and screenshots to address the problems and get the site back up and running.

Unsloth AI (Daniel Han) Discord

- Gradient Spike Wreaks Havoc on Magistral SFT: During training, the grad norm spiked to quintillions, causing a catastrophic failure for Magistral SFT, illustrated by this image.

- Despite the chaos, one member joked that “that spike is where AGI emerges,” suggesting continuing until it hits infinity and linking a relevant GIF.

- WikiArt Dataset’s Pixelated Flaws Exposed: Members pointed out the poor quality of the widely used Wikiart dataset, highlighting jpeg artifacts and suggesting that it’s massively flawed, illustrated by this comparison.

- Another member suggested training models on flawed input tokens to produce flawless outputs, emphasizing the need for robust filters.

- Titans Architecture: Google’s LSTM Transformer Hybrid: A discussion emerged around Google’s Titans architecture, which combines transformers with LSTMs for long context handling, referencing this paper.

- Despite its potential, a member noted the lack of popular implementations and questioned why it didn’t gain mainstream traction, while another suggested that Gemini could be a Titans hybrid due to its massive context window.

- Meta Bets Big on Mobile Horizon Worlds: Meta is hosting a competition related to Horizon Worlds on Mobile with a prize pool of $200,000.

- This competition encourages developers to create engaging experiences for mobile users within the Horizon Worlds platform.

- SLED Could Save Brains: A member pondered if SLED could potentially prevent brain damage from SFT, suggesting integration with tools like llama.cpp.

- A member explained that SLED improves LLM predictions by using information from all layers, not just the last one, by reusing the final projection matrix to create probability distributions.
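The idea of reusing the final projection matrix on earlier layers can be illustrated with toy numbers. This is not the SLED algorithm itself: it only shows how one unembedding matrix turns each layer's hidden state into a vocabulary distribution. The plain averaging at the end is a stand-in assumption for whatever combination rule SLED actually uses, and all values are made up.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def project(hidden, unembed):
    """Logits = unembed (vocab x dim) applied to a hidden vector (dim)."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in unembed]


def layer_distributions(hidden_states, unembed):
    """One vocabulary distribution per layer, all via the same final projection."""
    return [softmax(project(h, unembed)) for h in hidden_states]


def average_distribution(dists):
    """Stand-in combination rule: uniform average across layers."""
    n = len(dists)
    return [sum(d[i] for d in dists) / n for i in range(len(dists[0]))]


# Toy setup: 3 layers, hidden size 2, vocabulary of 3 tokens.
unembed = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden_states = [[0.1, 0.2], [0.5, 0.1], [1.0, -0.5]]
dists = layer_distributions(hidden_states, unembed)
combined = average_distribution(dists)
```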

Cursor Community Discord

- Auto Model sparks Model Debate: Users are debating the Auto model in Cursor, with some praising its capabilities with Claude Sonnet 4 and others finding it only suitable for code refactoring and simple tasks.

- One user reported failing complex tasks, especially with missing closing braces, leading to attempts to delete and rewrite entire files.

- Cursor CLI Command Chaos Continues: A user reports that the command lines are still NOT working first go on fresh install, requiring 8 messages before cursor figures out how to run a command.

- Another user mentioned that fixing this issue could save Cursor 10 million a year.

- Terminal Commands Throw Tempest Tantrums: Users are reporting that Cursor gets stuck when running terminal commands, both in the IDE and Cursor CLI, especially after updating, and that the ‘skip’ button is missing in run-everything mode.

- The Cursor team has acknowledged the terminal issues, with users encouraged to switch to Early Access or Nightly builds to resolve the problem.

- GitHub Account struggles to connect to Cursor: A user reported issues connecting their GitHub account to Cursor, preventing them from choosing a repository to chat with the background agent.

- They tried unlinking and relinking the GitHub account, ensuring Cursor had all necessary permissions, but the problem persisted.

- Background Agents Trigger Configuration Nightmares: Members reported that the Background Agents feature is glitchy, ignoring Dockerfile instructions and failing to run Docker in its default container.

- A user lamented that this feels like an alpha stage release, not a finished product, with the problem not fetching git+ssh packages during `yarn install`, despite Cursor showing these repos as ‘installed’ in the access dialog on GitHub.

OpenRouter Discord

- Voicera Makes Audio Searchable: Voicera (http://voicera.trixlabs.in/) pitches itself as an audio search engine turning audio into actionable insights by providing AI-generated answers with time-coded segments, streamlining finding key moments in recordings.

- The tool enables users to upload audio and search using plain language, promising to transform hours of audio into instant, verifiable answers.

- SillyTavern Gets an iOS Clone: An iOS developer launched a free SillyTavern clone, Loreblendr AI (https://apps.apple.com/us/app/loreblendr-ai/id6747638829), designed as a native app experience on iOS devices.

- Though acknowledging it cannot match all SillyTavern features, the developer is satisfied with its current state and highlights its user-friendly interface compared to existing chat apps.

- Kimi K2 Has Glitches, Users Want Upgrade: Users reported errors with Kimi K2 0711 on ST and discussed downtime, suggesting upgrading to the newer 0905 model.

- A user pointed out that the free version of Kimi K2 (https://openrouter.ai/moonshotai/kimi-k2:free) is no longer available.

- DeepSeek Proxy Faces Rate Limits: Users are encountering Error 429 when trying to use the DeepSeek proxy, especially the free models, indicating rate-limiting issues with Chutes.

- It was suggested that Chutes might be throttling free users due to the high weekend demand, effectively making it almost pay-to-use.

- Code-Supernova Stealthily Appears, Baffles Users: A new stealth model, `code-supernova`, has emerged, allegedly by Anthropic and rumored to be Claude 4.5, based on an image analysis.

- Users describe the model as decent but somewhat lazy, providing only the bare minimum implementation and not behaving like Claude.

LM Studio Discord

- Intel ARC Doomed by NVIDIA Deal?: Members speculated that Intel might be abandoning its ARC GPU line after a deal with NVIDIA to integrate their tech into Intel’s CPUs, with one member suggesting this aligns with NVIDIA’s previous condition for partnership: Intel giving up GPU ambitions.

- Another member stated that Intel is failing and ARC loses them money, so they are dropping employees like flies each quarter or so, and they can only do that for so long.

- Apple MLX Users Rave About Qwen-Next: Users of Apple MLX reported impressive performance with qwen-next, citing around 60 tok/sec on an M4 Max at 6-bit MLX, with strong general knowledge, coding, and tool calling abilities.

- One member described it as the best model they can run.

- LM Studio Hub still needs some love: Users are experiencing navigation problems within the LM Studio Hub, making it difficult to find content, search, or return to a central landing page after following a link.

- This functionality is reportedly a work in progress (WIP), with search being a future feature, and the current documentation being scattered in a chat system according to this comment.

- GPT-OSS 20B Plunges into Infinite Loops: A user testing gpt-oss-20b on low-end hardware experienced an infinite loop, where the model generated extensive and irrelevant content due to context overflow.

- Limiting the context window may help prevent this, as the model can get stuck thinking about its own generated text.

- Xeon Gold: Still Too Rich for Your Blood?: Members discussed the Xeon Gold 6230 and 5120 processors, highlighting their AVX-512 capabilities but noting they are still disgustingly expensive, even if acquired cheaply.

- One member shared a link to a refurbished Lenovo ThinkStation featuring dual Xeon Gold 6148 processors as a decent option.

GPU MODE Discord

- Qwen3-Next Architecture Recreated: A member shared their attempt to reproduce a trainable Qwen3-Next architecture, providing a baseline transformer-only version and a Gated Delta Net version.

- The architectures are claimed to be within 15% of each other’s size, offering a rough idea of how it works.

- Planet Labs Launches NVIDIA Jetsons into Orbit: Planet, an earth observation company, is flying NVIDIA Jetson Orin AGX units on their satellites, leveraging CUDA and TensorRT for on-satellite ML inference, using object detection algorithms like YOLOX.

- They utilize Docker containers running on standard Ubuntu, and the Jetson modules offer 64 GB of unified memory, similar to Apple M-series chips.

- AMD GEMM Sweeps Leaderboard: Submissions to the `amd-gemm-rs` leaderboard have been popping off, with one submission achieving first place on MI300x8 with a time of 530 µs.

- The `amd-all2all` leaderboard saw updates, including one submission landing 5th place on MI300x8 with a time of 1230 µs.

- TorchAO team and Unsloth team release Native Quantized Models: The TorchAO team and Unsloth have collaborated to release native quantized variants of Phi4-mini-instruct, Qwen3, SmolLM3-3B and gemma-3-270m-it available via PyTorch (learn more here).

- Users can now finetune with Unsloth and then quantize the finetuned model with TorchAO.

- Together AI Teases Blackwell Deep Dive: Together AI is hosting a Deep Dive on Blackwell with Dylan Patel (Semianalysis) and Ian Buck (NVIDIA) on October 1st.

- Speakers will talk about the capabilities of the new architecture.

Eleuther Discord

- AI Desktop Agent Eyes Shot in the Dark: Members discussed combining speech-to-text, AI desktop agents for scam email automation, and text-to-speech to aid blind users, with some noting the effectiveness of macOS accessibility features.

- Built-in screen readers for both Windows and macOS were mentioned as potential solutions, and a member offered to connect others with Discord users experienced with screen readers.

- TorchTitan patch for Ray and vLLM Proposed: A member suggested patching Ray and vLLM for reinforcement learning (RL) using TorchTitan, pointing to examples like OpenRLHF/OpenRLHF.

- It was noted that for RL, torchtitan is not enough of course, implying further setup requirements, though they did not specify.

- NeurIPS Showcases TokenSwap: TokenSwap received Spotlight at NeurIPS 2025 for addressing the performance-memorization tradeoff by swapping probabilities for common grammar tokens, yielding 10x reductions in verbatim generation.

- Despite praise, critics likened it to lobotomizing the model, with the author clarifying that it prevents verbatim/near-verbatim output by swapping in a worse model for simple tokens.

- DeepMind cracks Fluid Equations: DeepMind announced the discovery of new unstable singularities across three fluid equations (blog post), detailing new self-similar solutions for the incompressible porous media equation and the 3D Euler equation with boundary.

- The paper was also shared: [2509.14185].

- Atlas Attempts NIAH Transformer Gap Fix: Atlas claims to close the NIAH (needle-in-a-haystack) gap with Transformers by using a larger state size via a Taylor series polynomial.

- Skeptics noted that the atlas paper is a hot mess in terms of replicability or any sort of reasonable comparisons and doesn’t disclose what size.
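To make the TokenSwap item above concrete, here is a minimal sketch of swapping probabilities for a set of common tokens. The summary only says that probabilities for common grammar tokens are swapped in from a worse model. The function name `token_swap`, the toy vocabulary, and the rescaling step (which keeps the swapped tokens' total mass equal to the main model's) are assumptions for illustration, not the authors' implementation.

```python
from typing import Dict, Set


def token_swap(
    p_main: Dict[str, float],
    p_aux: Dict[str, float],
    swap_set: Set[str],
) -> Dict[str, float]:
    """Replace main-model probabilities for tokens in swap_set with
    auxiliary-model probabilities, rescaled so the result still sums to 1.

    Assumption: the rescaling rule is illustrative, not taken from the paper.
    """
    main_mass = sum(p_main[t] for t in swap_set)
    aux_mass = sum(p_aux[t] for t in swap_set)
    if aux_mass == 0.0:
        # Nothing to borrow from the auxiliary model; keep the main distribution.
        return dict(p_main)
    scale = main_mass / aux_mass
    return {
        tok: (p_aux[tok] * scale if tok in swap_set else prob)
        for tok, prob in p_main.items()
    }


# Toy next-token distributions over a three-token vocabulary (hypothetical numbers).
p_main = {"the": 0.5, "of": 0.1, "quantum": 0.4}
p_aux = {"the": 0.2, "of": 0.6, "quantum": 0.2}
mixed = token_swap(p_main, p_aux, swap_set={"the", "of"})
print(mixed)  # roughly the=0.15, of=0.45, quantum=0.40
```

In this toy case the content token keeps the main model's probability, while the relative weights of the two grammar tokens come from the auxiliary model.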

HuggingFace Discord

- Qwen Coder Recommended for Local LLM Setups: A member suggested using a standard model of Qwen Coder for local LLM setups, providing hardware recommendations with VRAM considerations.

- They cautioned about multi-GPU limitations and shared a qwen_coder_setup.md file.

- Transformers Training Loops Get Hack Fix: A member shared a link to a PR on GitHub addressing transformer training loop issues, asking if it applies to standard PyTorch training loops.

- Another member clarified, Use out.loss from the model and you get the fix in any loop. Roll your own loss and you must handle scaling yourself.

- SpikingBrain-7B Claims Crazy Speedup: An arXiv link was shared for SpikingBrain-7B, a non-Transformer model based on spiking neural networks (SNNs), promising a potential paradigm shift.

- The paper asserts that SpikingBrain-7B achieves more than 100× speedup in Time to First Token (TTFT) for 4M-token sequences, piquing interest.

- HF API Eases Model Access: A discussion arose on accessing and listing models from Hugging Face via the API, with a helpful code example provided.

- A member then shared a code snippet utilizing `huggingface_hub` to list models from the Hub.

- Ethical Conundrums in AI-Driven Captcha Solving: The ethics of using AI to solve Captchas was debated, with one member calling such practices the pillars of AI ethics and guardrails.

- Another member noted the potential for an endless cat-and-mouse game dominated by arms dealers due to the same companies often creating both puzzles and AI solvers like Gemini.
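For the Hub listing item above, the member's snippet was not reproduced in the summary, so the following is only a sketch of what such a call might look like. It assumes the `huggingface_hub` package is installed and uses its `list_models` function with the `author` and `limit` filters. The helper name `top_model_ids` and the `lister` parameter are hypothetical, added so the logic can be exercised offline with a stub.

```python
from typing import Callable, Iterable, List, Optional


def top_model_ids(
    author: str,
    limit: int = 5,
    lister: Optional[Callable[..., Iterable]] = None,
) -> List[str]:
    """Return up to `limit` model ids published by `author` on the Hub."""
    if lister is None:
        # Imported lazily so the helper can be tested without the package
        # or network access by passing a stub as `lister`.
        from huggingface_hub import list_models

        lister = list_models
    # Each item is expected to expose the repo id as `.id` (e.g. "org/name").
    return [model.id for model in lister(author=author, limit=limit)]


# Example (needs network access and huggingface_hub installed):
#     for model_id in top_model_ids("Qwen", limit=5):
#         print(model_id)
```

Calling `top_model_ids` without a `lister` queries the live Hub, so results change over time.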

OpenAI Discord

- AI Tutoring Sparks Cheating Debate: Discussion arose around InterviewCoder, an AI tool providing real-time coding challenge hints and solutions, questioning its nature as assistance or outright cheating.

- Suggestions included repurposing it for education, akin to “training wheels for algorithms,” offering multiple solution paths as a study aid.

- AI Interview Assistance Opens Ethical Minefield: Using AI to cheat in interviews could invite civil fraudulent misrepresentation charges, while routing conversations secretly violates all-party consent laws.

- Some members have stated that not using tools like this is “denying your family food and shelter because you were unwilling to install some software”.

- GPT-5-chat Pestering for Follow-Ups: Users report GPT-5-chat persistently asking follow-up questions, even when system prompts discourage this, and there’s no universal setting to disable trailing questions.

- Prefixing every prompt with “Please respond concisely and do not end with a question” increases compliance, but isn’t foolproof.

- Automated Prompt Generation Deployed: A user automated prompt generation by instructing a GPT to create 5-7 starter prompts, then generate 10 more in either JSON or YAML format, scaling from there to 2500 prompts and receiving a download link.

- The user added that using API keys is like cheat codes for added security and functionality.

- GPT Agent Pushed Past Prompt Limit: A user pushed a GPT to its limits by generating prompts until it could no longer fit them, then providing the prompts in a file for context; the GPT helper agent seemed to be getting uncomfortable, especially when generating complicated code in ZIP files using the code analysis tool.

- They are experimenting with custom GPTs integrated with a sandboxed API on their computer and plan to leverage MCP developer mode to integrate custom GPT actions in standard ChatGPT context.

Latent Space Discord

- Mistral models gain vision and higher Scores: Mistral unveiled Magistral Small 1.2 and Medium 1.2, adding multimodal vision and a 15% jump in math/coding scores, accessible via Le Chat and the API.

- Community members are now wondering about a Large model, open-sourcing Medium, real-world demos, and voice features.

- Open Source AI Agent Framework Quest: Community members are requesting the best OSS framework or git repo for open-source contributions in the AI Agents space, sparking discussion on architectural approaches.

- Notion 3.0 becomes Knowledge Work Agent: Notion announced Notion 3.0, featuring a “Knowledge Work Agent” capable of multi-step actions and up to 20+ minutes of autonomous work, showcased in this tweet.

- The update introduces a Personal Agent and a Custom Agent integrating across Notion Calendar, Notion Mail, MCP, etc.

- Moondream 3 Visualizes SOTA: Vik introduced Moondream 3, a 9B-parameter Mixture-of-Experts vision-language model with 2B active parameters that achieves SOTA visual-reasoning and open-vocabulary object-detection performance; the weights are on Hugging Face.

- It introduces visually grounded reasoning, state-of-the-art CountBenchQA results, 32k-token context support, SuperBPE tokens, and easily fine-tunable weights.

- Luma Labs Ray3 Reasons Hollywood HDR: Luma AI presented Ray3, which it claims is the first reasoning video model with studio-grade HDR output, free inside Dream Machine.

- Highlighted features: Draft Mode for fast iteration, enhanced physics & consistency, visual-annotation control, and 10/12/16-bit HDR with EXR export, getting praise for Hollywood-level fidelity.

Modular (Mojo 🔥) Discord

- Mojo VS Code Extension Enters Preview: A new open-source Mojo VS Code extension is now available in preview, with access to bleeding-edge builds directly from the GitHub repository.

- The extension will soon be available in the pre-release channel, making it easier for developers to integrate Mojo into their VS Code workflow.

- Mojo LSP Faces Stability Woes: Users reported instability with the Mojo LSP, experiencing crashes, hangs, and memory leaks, often needing to manually terminate the `mojo-lsp-server` process.

- Despite these issues, a Modular employee noted ongoing efforts to improve the LSP, with one member suggesting that restarting nvim helps in recovering the LSP.

- Mojo’s IDE Landscape: VSCode and its derivatives are the most used IDEs for Mojo, though many developers internally use Vim, Zed, and others.

- The Mojo LSP server is shipped with the `mojo` package to give users flexibility to use other IDEs, and a simple LSP setup in Lazynvim was provided.

- Exporting Mojo Code to C/C++ Gotchas: When building a .so library from Mojo code, only functions defined as `fn` can be called from C code, as `def` implicitly raises, making it incompatible with the C ABI.

- For C++ interop, including `extern "C"` linkage in the header is essential to prevent symbol mangling issues, as one member discovered.

Yannick Kilcher Discord

- DeepMind makes Fluid Dynamics More Fluid: Google DeepMind announced they used novel AI methods to present the first systematic discovery of new families of unstable singularities across three different fluid equations, according to this blog post.

- The news was also announced via X, marking a significant advancement in solving century-old problems in fluid dynamics.

- Member Teases ClaudeAI Reveal: A member shared a link hinting at an upcoming ClaudeAI announcement.

- The message simply stated, “is tomorrow the day?”

- Qwen3 Next Architecture Reproduction Achieved: A member created a trainable reproduction-ish of Qwen3 Next architecture with different routing and shared it on GitHub.

- They found gated delta nets promising and hope to read about gated linear attention next week.

- LLM API vs Power Automate in a Redaction Cage Match: A member inquired about using a custom API LLM solution versus Power Automate with Copilot Studio for redacting personal info from 60,000 documents monthly.

- They suggested the custom API LLM solution could be cheaper and faster.

- Background Superimposition is Harder than it Looks: A user is seeking insights on optimizing an agent to superimpose a snow background onto a storyboard image.

- Despite the task's apparent simplicity, the resulting image doesn’t meet expectations.

aider (Paul Gauthier) Discord

- Coding Agent Quality Varies: Members report varying quality among coding agents like qwen-code, Cline, and Kilo, with larger models like qwen3-coder (480B) performing better, but still showing unpredictable behavior.

- Smaller models sometimes yield surprisingly good results despite a lower good-to-bad ratio, prompting curiosity about how Aider’s user-guided approach compares.

- Aider Edits Directly: Users prefer Aider for targeted single edits, even with smaller models like gpt-3.5-turbo, citing its speed and directness.

- One user combines Aider with Claude 4 Sonnet and gh cli for PR reviews, contrasting it with more agentic tools like opencode or qwen-code that process large code portions for minor changes.

- Deepwiki Softens Codebases for Inspection: A member shared Deepwiki as a resource to quickly ask questions of codebases, to “soften them up” before diving into the details.

- They demonstrated by using Devin Chat from Deepwiki’s aider entry to answer the question of how tree-sitter is used.

- Local MLX Model Babbles via Aider: A member got the mlx-community/Qwen3-Next-80B-A3B-Instruct-4bit model working with aider via the mlx-lm server, using `aider --openai-api-key secret --openai-api-base http://localhost:8080/v1/`.

- Adding `openai/` in front of the `--model` option was key: `aider --openai-api-key secret --openai-api-base http://127.0.0.1:8080 --model openai/mlx-community/Qwen3-Next-80B-A3B-Instruct-4bit`.

- Graph Usability Expands: A user suggested improving graph usability by adding the ability to deselect outlier data points to improve the focus on representative data.

- Currently, it’s not possible to use the graph easily.

DSPy Discord

- Human Review Tooling Seeks Testers: A member is developing Human Review Tooling for manual QA, error analysis, and data labeling, seeking testers, especially from academia, with a demo video available.

- The next phase involves generating graders using human feedback, incorporating deterministic methods and LLMs as Judges via GEPA.

- GEPA Optimization Guide Coming: A member inquired about which models perform well for GEPA optimization, besides OpenAI models, in the general channel.

- Another member reported success with Gemini-2.5-Pro, all versions of GPT-4/5 (nano/mini/main), and Qwen3-8B+, and had also heard of success with GPT-OSS.

- MLflow Flexes Observability Muscles: A member inquired about which tools are working well for observability and evals, especially for GEPA.

- Another member mentioned that MLflow is deeply integrated, with the MLflow team also working on better integrations with GEPA; it supports metrics like best_valset_agg_score, Pareto-frontier-related values such as the Pareto frontier aggregate score, and dashboards.

- ColBERT Context Crisis: A member noted that despite jina-colbert accepting up to 8,192 tokens, results are not great with longer contexts.

- They suggested repeating the CLS token in each chunk and trying with/without; the channel discussed CLS Token Chunking Strategy to address issues with long contexts in jina-colbert.

- MergeBench Paper: A member shared MergeBench.

- No further details were given about this paper.

Nous Research AI Discord

- Magistral Small Model makes debut: Mistral AI launched the Magistral-Small-2509 model on Hugging Face, observing that small model training speed doesn’t scale linearly due to overhead for small tensors.

- The team noted that VRAM consumption is inefficient, with Qwen3-Next requiring more VRAM than an equivalent Llama model at 16x the batch size.

- Moondream gets a refresh: A new moondream release was announced, based on this vxitter post; however, members voiced concerns about its wonky licence.

- It was compared unfavorably against other moondream models in the space.