Qwen is all you need?

AI News for 9/23/2025-9/24/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (194 channels, and 2236 messages) for you. Estimated reading time saved (at 200wpm): 188 minutes. Our new website is now up with full metadata search and beautiful vibe-coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

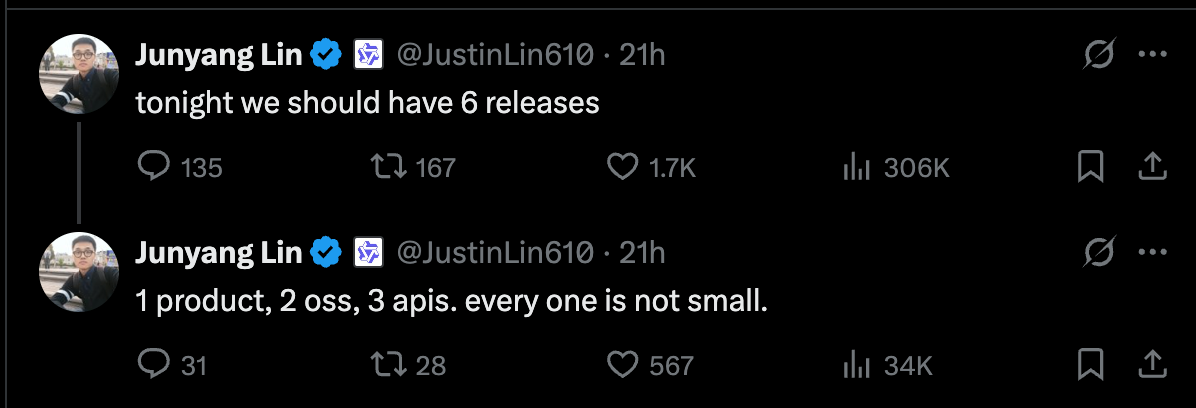

Today is both AI Engineer Paris and AliCloud’s annual Yunqi (aka Apsara) conference, and the Tongyi Qianwen (aka Qwen) team has been working overtime to ship updates across their model lineup. The major ones are the monster 1T-parameter Qwen3-Max (previewed 3 weeks ago), Qwen3-Omni, and Qwen3-VL, alongside Qwen3Guard, Qwen3-LiveTranslate, Qwen3-TTS-Flash, and updates to Qwen-Image-Edit and Qwen3-Coder. Here’s how Junyang Lin, their primary spokesperson on AI Twitter, put it:

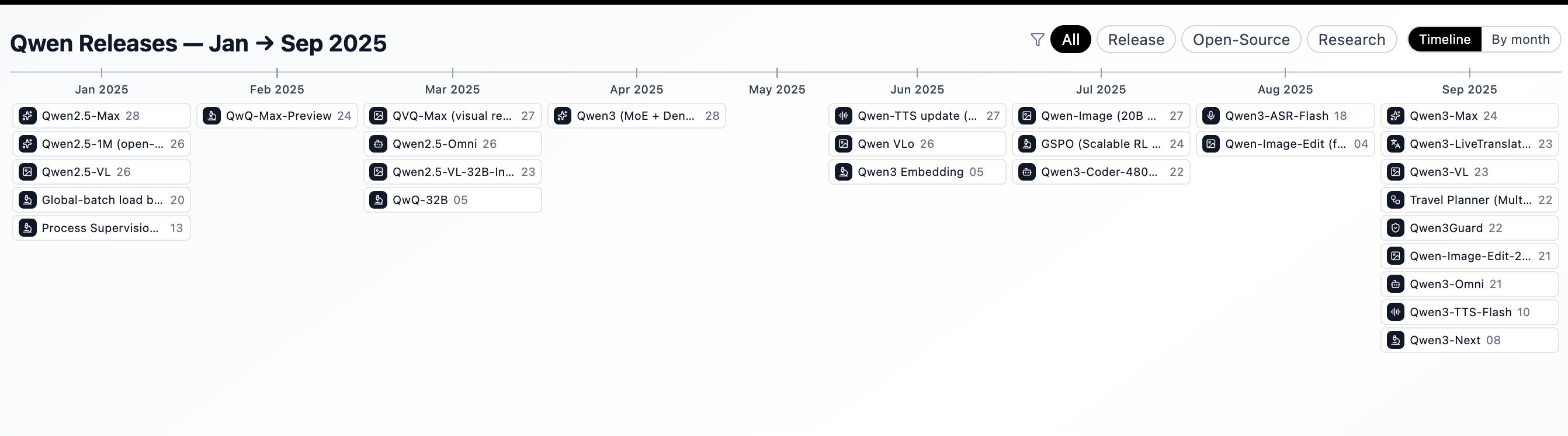

Just to visualize the step-up in velocity, here are all of this year’s Qwen releases:

And that’s not counting all the work from Alibaba’s Wan team; with this many releases, Qwen is now being regarded as a “frontier lab.”

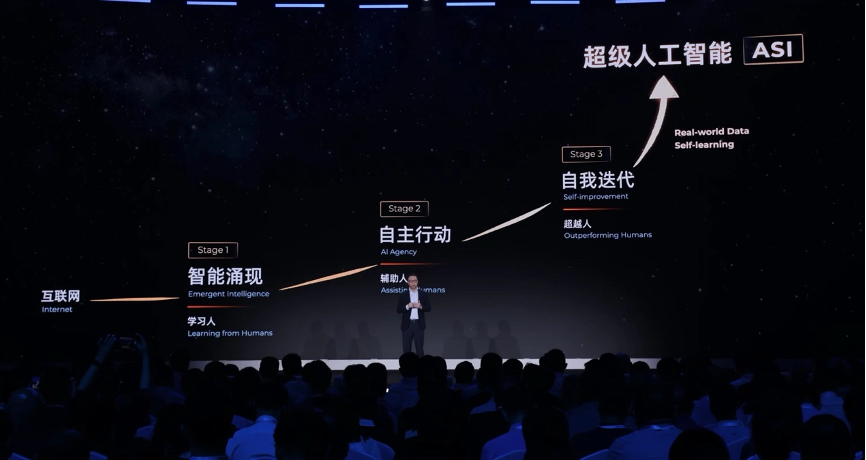

Alibaba CEO Eddie Wu took the stage to map out the company’s US$52B roadmap:

Here’s a translation of the speech:

- The first stage is “intelligence emergence,” characterized by “learning from humans.”

- The internet has digitized virtually all knowledge in human history. The information carried by these languages and texts represents the entire corpus of human knowledge. Based on this, large models first develop generalized intelligence by understanding the global knowledge base: general conversational capabilities emerge, along with the ability to understand human intent and answer human questions. They gradually develop the reasoning ability to work through multi-step problems. We now see AI approaching top human performance in various subject tests, such as gold-medal level at the International Mathematical Olympiad. AI is gradually becoming capable of entering the real world, solving real problems, and creating real value. This has been the main theme of the past few years.

- The second stage is “autonomous action,” characterized by “assisting humans.” In this stage, AI is no longer limited to verbal communication but possesses the ability to act in the real world. AI can break down complex tasks, use and create tools, and autonomously interact with the digital and physical worlds, exerting a profound impact on the real world, all within the context of human goals. This is the stage we are currently in.

- The key to achieving this breakthrough lies first in the ability of large models to use tools, connecting all digital tools to complete real-world tasks. The starting point of humanity’s accelerated evolution was the creation and use of tools, and large models now also possess this ability. Through tool use, AI can access external software, interfaces, and physical devices just like humans do, performing complex real-world tasks. At this stage, because AI can significantly improve productivity, it will rapidly penetrate nearly every industry, including logistics, manufacturing, software, commerce, biomedicine, finance, and scientific research.

- Secondly, improvements in large-model coding capabilities can help humans solve more complex problems and digitize more scenarios. Current agents are still in their early stages, primarily solving standardized, short-term tasks. Enabling agents to tackle more complex, longer-term tasks requires large-model coding capabilities. Because agents can code autonomously, they can theoretically solve infinitely complex problems, understanding complex requirements and independently completing coding and testing, just like a team of engineers. Developing large-model coding capabilities is essential for achieving AGI.

- AI will then enter its third phase – “self-iteration,” characterized by its ability to “surpass humans.” This phase has two key elements:

- First, AI connects to the full amount of raw data in the real world.

Currently, AI is making the fastest progress in content creation, mathematics, and coding. We see distinct characteristics in these three areas. Knowledge in these fields is 100% human-defined and created, contained in text. AI can fully understand this raw data. However, in other fields and the broader physical world, today’s AI is primarily exposed to knowledge summarized by humans and lacks extensive raw data from interactions with the physical world. This information is limited. For AI to achieve breakthroughs beyond human capabilities, it needs to directly access more comprehensive and original data from the physical world…

…Simply having AI learn from human-derived rules is far from enough. Only by continuously interacting with the real world and acquiring more comprehensive, authentic, and real-time data can AI better understand and simulate the world, discover deeper laws that transcend human cognition, and thus create intelligent capabilities that are even more powerful than humans.

- Second, self-learning. As AI penetrates more physical-world scenarios and understands more physical data, AI models and agents will become increasingly powerful. This will allow them to build training infrastructure, optimize data flows, and upgrade their own model architectures, thereby achieving self-learning. This will be a critical moment in the development of AI.

As capabilities continue to improve, future models will continuously interact with the real world, acquiring new data and receiving real-time feedback. Leveraging reinforcement learning and continuous learning mechanisms, they will autonomously optimize, correct deviations, and achieve self-iteration and intelligent upgrades. Each interaction is a fine-tuning, and each piece of feedback a parameter optimization. After countless cycles of scenario execution and result feedback, AI will self-iterate to achieve intelligence capabilities that surpass humans, and an early stage of artificial superintelligence (ASI) will emerge.


They are also recent converts to the LLM OS thesis.

AI Twitter Recap

Compute buildout: OpenAI–NVIDIA deal, Stargate expansion, and the gigawatt era

- OpenAI’s “factory for intelligence” goes physical: OpenAI announced five new “Stargate” sites with Oracle and SoftBank, putting it ahead of schedule on its previously announced 10‑GW buildout. In his post on “abundant intelligence,” Sam Altman framed the goal as “a factory that can produce a gigawatt of new AI infrastructure every week,” and he thanked NVIDIA for the nearly decade-long partnership (@OpenAI, @sama, @sama, @gdb, @kevinweil). For context, 10 GW is roughly “about 6% of the energy that all humans in the world spend thinking,” per Graham Neubig (@gneubig). Elon Musk asserted “first to 10GW, 100GW, 1TW, …” (@elonmusk).

- Deal math and “paper-for-GPUs” speculation: Back-of-the-envelope estimates for 10 GW suggest ~$340B of H100-equivalents at $30k/GPU, assuming 20% of the power goes to non‑GPU load, with a 30% volume discount bringing it to ~$230B. One floated structure: OpenAI pays list price for the GPUs, and NVIDIA backfills the “discount” by investing ~$100B into OpenAI equity (@soumithchintala, @soumithchintala, @soumithchintala). Oracle/SoftBank involvement was noted by multiple observers; total infra commitments across vendors are trending to “hundreds of billions” (@scaling01).
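A quick sanity check of the arithmetic in the two bullets above, as a sketch under our own assumptions (not the posters’): ~20 W per human brain, ~8 billion people, and ~700 W per H100-class GPU. Under these inputs the list-price figure lands at ~$343B and the 30%-discounted figure at ~$240B, a bit above the ~$230B quoted, so the thread presumably used slightly different inputs.

```python
# Back-of-envelope numbers for a 10 GW buildout.
# All constants are our assumptions, not figures from the threads.
BRAIN_WATTS = 20.0        # rough power draw of one human brain
WORLD_POPULATION = 8e9    # rough world population
GPU_WATTS = 700.0         # rough power of one H100-class GPU (SXM TDP)


def share_of_human_thinking(site_watts: float) -> float:
    """Fraction of all human brain power that site_watts corresponds to."""
    return site_watts / (BRAIN_WATTS * WORLD_POPULATION)


def gpu_cost(site_watts: float, non_gpu_fraction: float,
             price_per_gpu: float, discount: float = 0.0) -> float:
    """Dollar cost of the GPUs a site can power, after an optional discount."""
    gpu_watts = site_watts * (1.0 - non_gpu_fraction)   # power left for GPUs
    num_gpus = gpu_watts / GPU_WATTS
    return num_gpus * price_per_gpu * (1.0 - discount)


TEN_GW = 10e9
share = share_of_human_thinking(TEN_GW)                      # 10e9 / 1.6e11
list_cost = gpu_cost(TEN_GW, 0.20, 30_000)                   # ~11.4M GPUs at list
discounted = gpu_cost(TEN_GW, 0.20, 30_000, discount=0.30)

print(f"share of human thinking: {share:.2%}")
print(f"list price: ${list_cost / 1e9:.0f}B, with 30% discount: ${discounted / 1e9:.0f}B")
```

The per-GPU wattage dominates the result: assuming a higher all-in draw per GPU (cooling, networking) shrinks the GPU count and the dollar figures proportionally.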

Qwen’s multi-model salvo: Max, VL‑235B‑A22B, Omni, Coder‑Plus, Guard, and LiveTranslate

- Flagships and vision: Alibaba Qwen released:

- Qwen3‑Max (Instruct/Thinking). Claims near‑SOTA on SWE‑Bench, Tau2‑Bench, SuperGPQA, LiveCodeBench, AIME‑25; the Thinking variant, with tool use and scaled test‑time compute in “heavy mode,” reportedly reaches 100% on AIME 25 and HMMT (@Alibaba_Qwen, @scaling01).

- Qwen3‑VL‑235B‑A22B (Apache‑2.0; Instruct/Thinking). 256K context scalable to ~1M; strong GUI manipulation and “visual coding” (screenshots→HTML/CSS/JS), 32‑language OCR, 2D/3D spatial reasoning, SOTA on OSWorld (@Alibaba_Qwen, @reach_vb, @scaling01).

- Qwen3‑Omni: an E2E any‑to‑any model (30B MoE, ~3B active) that ingests image/text/audio/video and outputs text/speech; supports 119 languages for text, 19 for speech input, and 10 for speech output; Transformers+vLLM support; SOTA across many audio/video benchmarks vs Gemini 2.5 Pro and GPT‑4o (@mervenoyann, @mervenoyann). Technical report roundup: joint multimodal training didn’t degrade text/vision baselines in controlled studies (@omarsar0).

- Developers, safety, and real‑time:

- Qwen3‑Coder‑Plus: upgraded terminal task capabilities, SWE‑Bench up to 69.6, multimodal coding and sub‑agent support, available via Alibaba Cloud Model Studio and OSS product Qwen Code (@Alibaba_Qwen, @_akhaliq).

- Qwen3Guard: multilingual (119 langs) moderation suite in 0.6B/4B/8B sizes; streaming (low‑latency) and full‑context (Gen) variants; 3‑tier severity (Safe/Controversial/Unsafe); positioned for RL reward modeling (@Alibaba_Qwen, @HuggingPapers).

- Qwen3‑LiveTranslate‑Flash: real‑time multimodal interpretation with ~3s latency; lip/gesture/on‑screen text reading, robust to noise; understands 18 languages + 6 dialects, speaks 10 (@Alibaba_Qwen).

- Bonus: Travel Planner agent wired to Amap/Fliggy/Search for itineraries and routing (@Alibaba_Qwen).

OpenAI’s GPT‑5‑Codex and agent tooling move to the fore

- GPT‑5‑Codex ships for agents: OpenAI released GPT‑5‑Codex via the Responses API (not Chat Completions), optimized for agentic coding rather than conversation (@OpenAIDevs, @reach_vb). Rapid integrations followed: VS Code/GitHub Copilot (@code, @pierceboggan), Cursor (@cursor_ai), Windsurf (@windsurf), Factory (@FactoryAI), Cline (@cline), and Yupp (Low/Medium/High variants for public testing) (@yupp_ai). Builders highlight “adaptive reasoning” that spends fewer tokens on easy tasks and more when required, with some reporting >400K context and strong performance on long‑running tasks (claims via partner posts; see @cline).

- Agent debugging powers land in IDEs and browsers:

- Chrome DevTools MCP: agents can run performance traces, inspect the DOM, and debug web pages programmatically (@ChromiumDev).

- Figma MCP server for VS Code: bring design context into code for design→implementation loops (@code).

- Gemini Live API update: improved real‑time voice function calling, interruption handling, and side‑chatter suppression (@osanseviero).

- Hiring momentum for OS-level computer control agents continued (xAI “Macrohard,” Grok 5) (@Yuhu_ai_, @YifeiZhou02) and third‑party teams integrated Grok fast models (@ssankar).

Retrieval, context engineering, and agent research

- MetaEmbed (Flexible Late Interaction): Append learnable “meta tokens” and only store/use those for late interaction, enabling multi‑vector retrieval that’s compressible (Matryoshka‑style), with test‑time scaling to trade accuracy vs efficiency; SOTA on MMEB and ViDoRe. Discussion threads and repos note compatibility with PLAID indexes (@arankomatsuzaki, @ZilinXiao2, @ManuelFaysse, @antoine_chaffin).

- Data beats scale for agency? LIMI shows 73.5% on AgencyBench from just 78 curated demos, outperforming larger SOTA agentic models; authors propose an “Agency Efficiency Principle” (autonomy emerges from strategic curation) (@arankomatsuzaki, @HuggingPapers).

- Graph‑walk and engineering evals:

- ARK‑V1: a lightweight KG‑walking agent boosts factual QA vs CoT; with Qwen3‑30B it answers ~77% of queries with ~91% accuracy on those (≈70% overall). Larger backbones reach ~70–74% overall; weaknesses include ambiguity and conflicting triples (@omarsar0).

- EngDesign: 101 tasks across 9 engineering domains using simulation‑based eval (SPICE, FEA, etc.); iterative refinement meaningfully increases pass rates (@arankomatsuzaki).

- Also notable: Apple’s EpiCache on episodic KV cache management for long conversational QA (@_akhaliq), the Agent Research Environment now MCP‑compatible with real robot control via LeRobot MCP (@clefourrier), and LangSmith Composite Evaluators to roll multiple scores into a single metric (@LangChainAI).
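For readers unfamiliar with late interaction, here is a minimal NumPy sketch of ColBERT-style MaxSim scoring over a small set of per-item vectors, with a budget knob that keeps only the first k vectors, in the spirit of MetaEmbed’s Matryoshka-style meta tokens. The random vectors stand in for encoder outputs, and the assumption that earlier meta tokens carry the most information is ours; this is not MetaEmbed’s training or indexing code.

```python
import numpy as np


def normalize(v: np.ndarray) -> np.ndarray:
    """L2-normalize each row so dot products are cosine similarities."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def maxsim(query_vecs: np.ndarray, doc_vecs: np.ndarray) -> float:
    """Late interaction: match every query vector to its most similar
    document vector, then sum those best-match similarities."""
    sims = query_vecs @ doc_vecs.T          # (n_query, n_doc) similarity matrix
    return float(sims.max(axis=1).sum())


def budgeted_score(query_meta: np.ndarray, doc_meta: np.ndarray, k: int) -> float:
    """Score with only the first k meta vectors on each side (assumes
    Matryoshka-style ordering). Smaller k means less to store and compare."""
    return maxsim(query_meta[:k], doc_meta[:k])


rng = np.random.default_rng(0)
query = normalize(rng.normal(size=(16, 64)))                    # 16 hypothetical meta vectors
docs = [normalize(rng.normal(size=(16, 64))) for _ in range(5)]

for k in (1, 4, 16):                        # test-time accuracy/efficiency knob
    scores = [budgeted_score(query, d, k) for d in docs]
    print(f"k={k:2d} best doc={int(np.argmax(scores))}")
```

Per-item storage and scoring cost grow with the number of vectors kept, so a smaller k trades retrieval quality for index size and latency.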

Video and 3D content: Kling 2.5 Turbo, Ray 3 HDR, and more

- Kling 2.5 Turbo: Day‑0 access on FAL with significantly improved dynamics, composition, style adaptation (incl. anime), and emotional expression; priced as low as ~$0.35 for 5s video on FAL per users. Higgsfield announced “unlimited” Kling 2.5 within its product. Demos show better adherence to complex prompts and audio FX generation improvements (@fal, @Kling_ai, @higgsfield_ai, @TomLikesRobots).

- Luma Ray 3: first video model with 16‑bit HDR and iterative “chain‑of‑thought” refinement across T2V and I2V; currently in Dream Machine only (API pending). Artificial Analysis will publish side‑by‑sides in their arena (@ArtificialAnlys).

- In 3D/VR, Rodin Gen‑2 (4× mesh quality, recursive part gen, high→low baking, control nets) launched with promo pricing (@DeemosTech); World Labs’ Marble showcased prompt‑to‑VR walkthroughs (@TomLikesRobots).

Systems, kernels, and inference

- Kernel craft pays: A Mojo matmul beat cuBLAS on B200s in ~170 LOC without CUDA, detailed in a tuning thread; demand for kernel‑writing talent is spiking across industry. Meanwhile, vLLM enabled full CUDA‑graphs by default (e.g., +47% speedup on Qwen3‑30B‑A3B‑FP8 at bs=10), and Ollama shipped a new scheduler to reduce OOMs, maximize multi‑GPU utilization, and improve memory reporting (@AliesTaha, @jxmnop, @mgoin_, @ollama).

- Models and infra: Liquid AI released LFM2‑2.6B (short convs + GQA, 10T tokens, 32K ctx; open‑weights), positioned as the new leader in the 3B class (@LiquidAI_). AssemblyAI posted strong multilingual ASR performance with diarization at scale (@_avichawla). Hugging Face’s storage backbone highlighted Xet and content‑defined chunking as key to multi‑TB/day open‑source throughput (@ClementDelangue). NVIDIA noted expanded open‑source model contributions on HF (@PavloMolchanov).
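The idea behind content-defined chunking, as generally used in deduplicating storage, is that chunk boundaries are chosen from the bytes themselves rather than at fixed offsets, so inserting a few bytes only disturbs nearby chunks and everything else still deduplicates. The sketch below is a generic gear-hash chunker in the spirit of FastCDC, not Xet’s implementation; the table seed, mask width, and min/max sizes are arbitrary illustrative choices.

```python
import random

# 256 pseudo-random 64-bit values, one per byte value (the "gear" table).
_rng = random.Random(42)
GEAR = [_rng.getrandbits(64) for _ in range(256)]
MASK64 = (1 << 64) - 1


def cdc_chunks(data: bytes, avg_bits: int = 12,
               min_size: int = 1024, max_size: int = 16384) -> list[bytes]:
    """Split data where a rolling gear hash hits a content-defined pattern.

    A cut is made when the low `avg_bits` bits of the hash are all zero
    (about once every 2**avg_bits bytes on random data), subject to the
    min/max chunk sizes. Because the low bits depend only on the most recent
    bytes, boundaries re-align after an insertion or deletion.
    """
    mask = (1 << avg_bits) - 1
    chunks: list[bytes] = []
    start, h = 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & MASK64    # shift old bytes out, mix in new one
        size = i - start + 1
        if (size >= min_size and (h & mask) == 0) or size >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])             # trailing partial chunk
    return chunks


r = random.Random(7)
original = bytes(r.getrandbits(8) for _ in range(200_000))
edited = b"0123456789" + original               # insert 10 bytes at the front

before, after = cdc_chunks(original), cdc_chunks(edited)
shared = set(before) & set(after)
print(f"{len(before)} chunks, {len(shared)} unchanged after the edit")
```

With fixed-size chunks, the same 10-byte insertion would shift every boundary and invalidate every chunk.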

Top tweets (by engagement)

- “crazy that they called it context window when attention span was right there.” (@lateinteraction, 7074)

- Hiring for a new team building computer control agents for Grok5/macrohard (@Yuhu_ai_, 6974)

- “A major moment — UNLIMITED Kling 2.5 exclusively inside Higgsfield.” (@higgsfield_ai, 6248)

- “Yo I heard if u press Up, Up, Down, Down… there’s an infinite money glitch” (@dylan522p, 5621)

- “Abundant Intelligence” — OpenAI vision post (@sama, 5499)

- Chromium DevTools MCP for agent debugging (@ChromiumDev, 2538)

- “Grateful to Jensen for the almost‑decade of partnership!” (@sama, 5851)

- OpenAI: five new Stargate sites announced (@OpenAI, 2675)

- Nvidia–OpenAI partnership nod (“looking forward to what we’ll build together”) (@gdb, 2753)

- “I can’t believe this actually works” (viral agent demo) (@cameronmattis, 46049)

- FDA/Tylenol thread on autism/ADHD evidence quality (@DKThomp, 16346)

- U.S. Physics Olympiad team wins 5/5 golds (@rajivmehta19, 13081)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen3-Max Release and Benchmarks

- Qwen 3 max released (Score: 218, Comments: 39): Qwen3‑Max is announced as Qwen’s largest, most capable model. The preview Qwen3‑Max‑Instruct ranks #3 on the Text Arena leaderboard (claimed to surpass “GPT‑5‑Chat”), and the official release emphasizes stronger coding and agent capabilities with claimed SOTA across knowledge, reasoning, coding, instruction‑following, human‑preference alignment, agent tasks, and multilingual benchmarks, accessible via API (Alibaba Cloud) and Qwen Chat. A separate Qwen3‑Max‑Thinking variant (still training) reportedly hits 100% on AIME 25 and HMMT when augmented with tool use and scaled test‑time compute. Commenters note the model is not local/open‑source, limiting self‑hosting, and remark on the rapid release cadence.

- Several commenters note Qwen 3 Max is not a local model and is not open source. Practically, this means no downloadable weights or on-device/self-hosted deployment; usage is via a hosted API only, which impacts data control, offline capability, and reproducibility versus OSS models.

- There’s confusion around the announcement because earlier access was a “preview”; this thread indicates a formal release. Readers infer a shift from preview to GA/production readiness (e.g., clearer SLAs/rate limits/pricing), though no concrete technical details were provided in the comments.

- 2 new open source models from Qwen today (Score: 172, Comments: 35): Post hints at two new open-source releases from Alibaba’s Qwen team, with at least one already live on Hugging Face. Comments explicitly name “Qwen3 VL MoE,” implying a vision-language Mixture-of-Experts model; the image likely teases both models’ names and release timing. Image: https://i.redd.it/goah9v2r8wqf1.png Comments note the second model has appeared on Hugging Face and that the first is already released; discussion centers on identifying “qwen3 vl moe,” with no benchmarks or specs yet.

- Release of Qwen3-VL-MoE (vision-language Mixture-of-Experts) noted; MoE implies sparse expert routing so only a subset of experts is active per token, reducing compute while maintaining high capacity. Evidence of availability and rapid cadence: community reports it’s “already released” and a “2nd Qwen model has hit Hugging Face,” with a preview screenshot shared (https://preview.redd.it/kn55ui1xvwqf1.png?width=1720&format=png&auto=webp&s=a36235216e9450b2be9ad44296b22f9d2abc07d9).

- Discussion highlights a shift to sparse MoE across Qwen models to speed up both training and deployment by improving parameter efficiency and throughput (routing to few experts lowers per-token FLOPs). Commenters argue this enables faster iteration on scaling strategies while keeping models “A-tier,” emphasizing a practical trade-off: strong performance with better cost-efficiency rather than chasing single-model SOTA.
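To put a number on “routing to few experts lowers per-token FLOPs,” here is a rough sketch using the common approximation of ~2 FLOPs per active parameter per token for a forward pass (ignoring attention-score computation and router overhead). The parameter counts come from the Qwen3-VL-235B-A22B name; the dense 235B comparison model is hypothetical.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs per token: ~2 per active parameter
    (one multiply and one add). Ignores attention scores and router cost."""
    return 2.0 * active_params


# (total parameters, active parameters per token)
models = {
    "Qwen3-VL-235B-A22B (MoE)": (235e9, 22e9),
    "hypothetical dense 235B": (235e9, 235e9),
}

for name, (total, active) in models.items():
    gflops = forward_flops_per_token(active) / 1e9
    print(f"{name}: ~{gflops:.0f} GFLOPs/token, {active / total:.0%} of weights active")

speedup = forward_flops_per_token(235e9) / forward_flops_per_token(22e9)
print(f"compute ratio dense/MoE: ~{speedup:.1f}x")
```

Under this approximation the MoE spends roughly 10× less compute per token than a dense model of the same total size, but all 235B weights must still be held in memory, so MoE shifts the bottleneck from FLOPs toward memory capacity.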

2. Qwen Shipping Speed Memes/Discussion

- How are they shipping so fast 💀 (Score: 805, Comments: 136): Post highlights Qwen’s rapid release cadence; commenters attribute speed to adopting Mixture‑of‑Experts (MoE) architectures, which are faster/cheaper to train and scale compared to large dense models. There’s mention of rumored upcoming open‑source Qwen3 variants, including a “15B2A” and a 32B dense model, suggesting a split between MoE and dense offerings. Comments are bullish on Qwen’s momentum (“army of Qwen”) and contrast it with Western narratives about long timelines and high costs; some geopolitical takes appear but are non‑technical. Technical hope centers on OSS releases of the rumored Qwen3 15B2A and 32B dense models.

- Commenters note that Qwen has leaned into Mixture-of-Experts (MoE), which can be faster to train/infer at a given quality because only a subset of experts is activated per token (k-of-n routing), reducing effective FLOPs while scaling parameters (see Switch Transformer: https://arxiv.org/abs/2101.03961). They also reference rumored upcoming releases, Qwen3 15B2A (by its name, presumably a 15B-total/2B-active MoE) and a dense Qwen3 32B, implying a complementary strategy in which MoE accelerates iteration while dense models offer serving simplicity; the trade-off highlighted is MoE’s routing/infra complexity vs dense models’ predictable memory and latency.

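The k-of-n routing mentioned in these comments can be sketched in a few lines of NumPy: a linear router scores every expert per token, only the top k experts run, and their outputs are mixed with softmax weights renormalized over the chosen k. This is a generic top-k MoE layer for illustration; Qwen’s actual expert counts, k, shared experts, and load-balancing losses are not described in the thread and are omitted, and the linear experts are stand-ins for the usual feed-forward blocks.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def moe_forward(tokens, router_w, experts, k=2):
    """Sparse MoE layer with top-k routing.

    tokens: (n_tokens, d); router_w: (d, n_experts);
    experts: list of callables mapping (m, d) -> (m, d).
    """
    logits = tokens @ router_w                                 # (n_tokens, n_experts)
    top = np.argsort(logits, axis=1)[:, -k:]                   # k highest-scoring experts per token
    gates = softmax(np.take_along_axis(logits, top, axis=1))   # weights renormalized over those k
    out = np.zeros_like(tokens)
    for e, expert in enumerate(experts):
        rows, slots = np.nonzero(top == e)                     # tokens that picked expert e
        if rows.size == 0:
            continue                                           # unchosen expert: no compute spent
        out[rows] += gates[rows, slots][:, None] * expert(tokens[rows])
    return out


def make_linear_expert(w: np.ndarray):
    """Stand-in expert: a single linear map instead of a real FFN block."""
    return lambda x: x @ w


rng = np.random.default_rng(0)
d, n_experts = 4, 8
experts = [make_linear_expert(rng.normal(size=(d, d))) for _ in range(n_experts)]
router_w = rng.normal(size=(d, n_experts))
x = rng.normal(size=(5, d))
y = moe_forward(x, router_w, experts, k=2)   # each token runs 2 of the 8 experts
```

Only the chosen experts do any work for a given token, which is where the per-token FLOP savings come from; in real systems the hard part is balancing load so that no expert becomes a hotspot.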

- how is qwen shipping so hard (Score: 181, Comments: 35): OP asks why Qwen (Alibaba’s LLM family) is shipping releases so quickly and proliferating variants to the point that model selection feels overwhelming. No benchmarks or implementation details are discussed; the thread is meta commentary on release cadence and variant sprawl (e.g., many model types/sizes under the Qwen umbrella, cf. Qwen’s repo: https://github.com/QwenLM/Qwen). Commenters largely attribute the pace to Alibaba’s resources—“tons of cash, compute and manpower”—and China’s “996” work culture; one notes that the intensely trained students from a decade ago are now the workforce.

- A practitioner recommends a practical deployment mix: use Qwen2.5-VL-72B for VLM tasks, the largest Qwen3 (dense) that fits your GPU VRAM for low-latency text inference, and the largest Qwen3 MoE that fits in system main memory for higher-capacity workloads. This balances VRAM-bound dense inference against RAM-bound MoE, trading latency for capacity while covering multimodal and pure-text use cases in one stack.

- Several note Qwen’s backing by Alibaba, implying access to substantial compute, funding, and engineering manpower. That scale translates into faster pretraining/finetuning cycles and parallel productization, which helps explain the rapid shipping cadence across multiple model families (dense, MoE, and VLM).

- Reports highlight strong image-generation performance from Qwen’s stack, indicating rapid maturation of their multimodal/image pipelines alongside text models. While no benchmarks were cited, the consensus is that image quality has improved enough to be competitive with contemporary leaders.

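The practitioner’s rule of thumb (largest dense Qwen3 that fits in VRAM, largest Qwen3 MoE that fits in system RAM) comes down to a weight-memory estimate of parameters × bits ÷ 8. A rough sketch, assuming the Qwen3 checkpoint sizes listed below (verify against the model cards) and an arbitrary 20% headroom for KV cache and activations:

```python
from typing import Optional

# Total parameters (billions) of the Qwen3 text checkpoints as we understand
# them; verify against the official model cards before relying on these.
QWEN3_DENSE = {"Qwen3-0.6B": 0.6, "Qwen3-1.7B": 1.7, "Qwen3-4B": 4.0,
               "Qwen3-8B": 8.0, "Qwen3-14B": 14.0, "Qwen3-32B": 32.0}
QWEN3_MOE = {"Qwen3-30B-A3B": 30.0, "Qwen3-235B-A22B": 235.0}


def weight_gb(params_billion: float, bits: int) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits / 8


def largest_fitting(models: dict, budget_gb: float, bits: int,
                    headroom: float = 0.8) -> Optional[str]:
    """Largest model whose weights fit in headroom * budget_gb.
    The 20% reserve for KV cache and activations is an arbitrary assumption."""
    fitting = [(params, name) for name, params in models.items()
               if weight_gb(params, bits) <= headroom * budget_gb]
    return max(fitting)[1] if fitting else None


# e.g. a 24 GB GPU for dense models, 128 GB of system RAM for MoE, both at 4-bit
print(largest_fitting(QWEN3_DENSE, budget_gb=24, bits=4))
print(largest_fitting(QWEN3_MOE, budget_gb=128, bits=4))
```

Real footprints run somewhat larger (quantization scales, layers kept at higher precision, KV cache growing with context), so treat the answer as optimistic.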

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Wan 2.2/2.5 Video Demos + Qwen-Image-Edit GGUF and LMarena Leaderboard

- Incredible Wan 2.2 Animate model allows you to act as another person. For movies this is a game changer. (Score: 258, Comments: 57): Post claims the “Wan 2.2 Animate” model enables actor-to-actor facial reenactment (driving a target identity’s face from a source performer), effectively a deepfake-style digital double for film/video. Based on the clip description (reddit video), it demonstrates ID transfer with reasonable motion/temporal consistency but imperfect identity fidelity (a commenter notes it doesn’t fully match Sydney Sweeney), suggesting trade-offs between likeness preservation, lip-sync, and coherence typical of diffusion/reenactment pipelines conditioned on reference identity frames. No benchmarks or implementation details are provided in the post; technically, this aligns with identity-conditioned video generation/reenactment methods where motion is derived from a driving video and identity is maintained via reference-image embeddings and cross-frame constraints. Top comments discuss monetization/abuse vectors (e.g., adult-content deepfakes/OnlyFans) and note that, despite artifacts or mismatches visible to close viewers, most audiences may not notice, highlighting ethical risk versus perceived quality in practical deployments.

- Comments that the face “does not look like Sydney Sweeney” reflect known limits in identity preservation for face reenactment/video diffusion: models can drift on fine facial geometry, skin microtexture, and expression under pose/lighting changes, leading to perceptual mismatches. Robust systems typically mix landmark/flow-guided warping with identity losses (e.g., ArcFace/FaceNet embeddings) and temporal consistency losses; without these, frame-to-frame ID coherence and lip-sync degrade, especially beyond 512–1024 px outputs or during rapid head motion.

- Multiple users suggest this tech already exists; indeed, face-swapping/reenactment has prior art: classic deepfake pipelines (DeepFaceLab/FaceSwap), research like First Order Motion Model (2019) and SimSwap (2020), plus newer one-shot and diffusion methods. References: DeepFaceLab (https://github.com/iperov/DeepFaceLab), FaceSwap (https://github.com/deepfakes/faceswap), FOMM (https://github.com/AliaksandrSiarohin/first-order-model), SimSwap (https://github.com/neuralchen/SimSwap), Roop (https://github.com/s0md3v/roop), LivePortrait (https://github.com/YingqingHe/LivePortrait), AnimateDiff (https://github.com/guoyww/AnimateDiff).

- Skepticism about “for movies” points to production constraints: film requires 4K+ resolution, HDR, stable multi-minute temporal coherence, accurate relighting/shadows, camera/face tracking under occlusions, and consistent hair/ear/jawline geometry. Current diffusion/reenactment demos often show flicker, mouth/eye desynchrony, and lighting mismatches; integrating them into film usually needs VFX-grade tracking, neural relighting, paint/roto, and per-shot tuning rather than a turnkey actor-swap.
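The identity and temporal-consistency losses mentioned above are often framed as cosine distances between face-recognition embeddings. A minimal sketch, assuming per-frame embeddings are already available from some face recognizer (ArcFace-style; not implemented here, random vectors stand in for them). It is an illustration of the metric, not how Wan 2.2 Animate itself is trained.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def identity_loss(frame_embs: np.ndarray, ref_emb: np.ndarray) -> float:
    """Mean (1 - cosine similarity) between each generated frame's face
    embedding and the reference identity; 0 means a perfect match."""
    return float(np.mean([1.0 - cosine(e, ref_emb) for e in frame_embs]))


def temporal_drift(frame_embs: np.ndarray) -> float:
    """Mean (1 - cosine similarity) between consecutive frames; high values
    flag frame-to-frame identity flicker."""
    return float(np.mean([1.0 - cosine(a, b)
                          for a, b in zip(frame_embs[:-1], frame_embs[1:])]))


rng = np.random.default_rng(0)
ref = rng.normal(size=512)        # stand-in for the target actor's face embedding
other = rng.normal(size=512)      # stand-in for the driving performer's identity
stable = np.stack([ref + 0.05 * rng.normal(size=512) for _ in range(8)])
drifting = np.stack([(1 - t) * ref + t * other for t in np.linspace(0.0, 0.6, 8)])

for name, clip in (("stable", stable), ("drifting", drifting)):
    print(name, round(identity_loss(clip, ref), 3), round(temporal_drift(clip), 3))
```

The "drifting" clip slides toward the driving performer’s identity, which is the failure mode commenters describe as "does not look like" the target.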

- Wan2.2 Animate and Infinite Talk - First Renders (Workflow Included) (Score: 340, Comments: 48): OP shares first renders from a ComfyUI pipeline combining Wan 2.2 “Wan‑Animate” for video synthesis with an “Infinite Talk” workflow for narration. The Wan‑Animate workflow was sourced from CivitAI user GSK80276, and the Infinite Talk workflow was taken from u/lyratech001’s post in this thread. No model settings, checkpoints, or hardware/runtime details are provided; the post primarily demonstrates integration of existing workflows. Comments ask for reproducibility details, specifically the TTS source (voice generation) and how the target image/video were produced, indicating missing setup specifics; no substantive technical debate is present.

- Requests for disclosure of the exact TTS/voice pipeline (“Infinite Talk”): which model/service was used, inference backend, voice settings (e.g., sampling rate, style/temperature), and whether phoneme/viseme timestamps are available for lip‑sync integration. Reproducibility details like latency per second of audio and any noise reduction/vocoder steps are sought.

- Multiple asks for the full Wan2.2 Animate workflow: how the target still image was obtained (captured vs generated) and preprocessed (face crop, keypoint/landmark detection, alignment), plus how the driving motion/video was produced (reference video vs text‑driven), including key inference parameters (resolution, FPS, seed, guidance/strength). Clarification on handling head pose changes, stabilization, and blending/roto for backgrounds would help others replicate results.

- Feasibility on consumer hardware: can the pipeline run on 8 GB VRAM with 32 GB system RAM by using fp16/bf16, low‑VRAM or CPU offload, reduced resolution/FPS, smaller batch size, and memory‑efficient attention (e.g., xFormers/FlashAttention). Commenters seek expected throughput/latency trade‑offs and practical presets that fit within 8 GB without OOM.

- Ask nicely for Wan 2.5 to be open source (Score: 231, Comments: 95): Thread reports that the upcoming Wan 2.5 release will initially be an API-only “advance version,” with an open-source release TBD and potentially coming later depending on community demand and feedback; users are encouraged to request open-sourcing during a live stream. The claim appears to stem from a translated note circulating on X (source), suggesting open-sourcing is likely but time-lagged and contingent on community attitude/volume. No new technical specs or benchmarks for 2.5 are provided beyond release modality (API vs. OSS). Top comments emphasize that Wan’s value hinges on being open source (enabling LoRA fine-tuning and local workflows); otherwise it’s just another hosted video-generation service. Others note the messenger seems unaffiliated (a YouTuber), implying this is not an official developer statement, and a side request mentions interest in Hunyuan3D 2.5/3.0 releases.

- Several commenters emphasize that Wan’s core value comes from open weights enabling local inference and customization, specifically LoRA-based fine-tuning for domain/style adaptation, training adapters, and integrating into existing video pipelines. A closed, service-only release would block reproducible research, offline deployment, and custom training workflows, turning it into “just another video generation service.” LoRA in particular allows lightweight adaptation without full retraining.

- There’s no immediate need for Wan 2.5 if 2.2 remains open and stable: users only recently adopted Wan 2.2 and plan to rely on it for months. From a tooling perspective, keeping 2.2 open provides time to build datasets, train LoRAs, and harden workflows without version churn, with the expectation that an open 2.5 can arrive later without disrupting ongoing work.

- Requests also target open-sourcing 3D generators like Hunyuan3D 2.5/3.0, aiming for interoperable, locally-runnable assets across video and 3D pipelines. Open releases would enable consistent asset generation and evaluation across tasks (video-to-3D, 3D-to-video), rather than being locked to siloed, closed endpoints.
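Why "no weights" means "no LoRAs": a LoRA adapter is a low-rank correction added on top of a frozen base weight matrix, so both training and inference need W itself. A minimal NumPy sketch of a single LoRA-wrapped linear layer (shapes, rank, and alpha are illustrative; real video models apply this to many attention/MLP projections):

```python
import numpy as np


class LoRALinear:
    """A frozen linear layer W plus a trainable low-rank update (alpha/r) * B @ A."""

    def __init__(self, W: np.ndarray, r: int, alpha: float, rng: np.random.Generator):
        d_out, d_in = W.shape
        self.W = W                                        # frozen base weights (must be available)
        self.A = rng.normal(scale=0.01, size=(r, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, r))                     # trainable up-projection, zero-init
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        """x: (batch, d_in) -> (batch, d_out). Base path plus low-rank path."""
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self) -> np.ndarray:
        """Fold the adapter into W so inference costs the same as the base layer."""
        return self.W + self.scale * self.B @ self.A


rng = np.random.default_rng(0)
W = rng.normal(size=(64, 32))                  # d_out=64, d_in=32
layer = LoRALinear(W, r=4, alpha=8.0, rng=rng)
x = rng.normal(size=(3, 32))
trainable = layer.A.size + layer.B.size        # r * (d_in + d_out)
print(trainable, W.size)
```

Here only 384 adapter numbers are trainable versus 2,048 in W, and the ratio shrinks further for realistically sized layers. Because B starts at zero, the wrapped layer initially reproduces the base model exactly.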

- Wan 2.5 (Score: 207, Comments: 137): Alibaba teases the Wan 2.5 video model on X, with an “advance version” releasing as API-only; open-sourcing is undecided and may depend on community feedback (Ali_TongyiLab, Alibaba_Wan). The teaser highlights 10 s, 1080p generations; a statement (Sep 23, 2025) notes “for the time being, there is only the API version… [open source] is to be determined”, urging users to advocate for an open release. Discussion centers on open-source vs API-only: commenters argue closed access blocks LoRA-based fine-tuning and broader community workflows, reducing utility compared to prior open models, and encourage pushing for open release during the live stream (thread).

- The shared note indicates an initial API-only release with open-source status TBD and potentially delayed: “the 2.5 sent tomorrow is the advance version… for the time being, there is only the API version… the open source version is to be determined” (post, Sep 23, 2025). Practically, this means no local inference or weight access at launch, with any future open-sourcing contingent on community feedback and timing.

- Closed/API-only distribution precludes community LoRA fine-tuning, since training LoRA adapters requires access to model weights; without weights, there are “no loras,” limiting customization to prompt-level or vendor-provided features. This restricts domain adaptation, experimentation, and downstream task specialization compared to open checkpoints.

- “Multisensory” is interpreted as adding audio to video, raising compute concerns: generating ~10 s of 1080p video with audio will be infeasible for “95% of consumers” unless the backbone is made more efficient. Suggestions include architectural shifts such as linear-attention variants, radial attention, DeltaNet, or state-space models like Mamba (paper) to reach acceptable throughput/VRAM on consumer hardware.

- GGUF magic is here (Score: 335, Comments: 94): QuantStack released GGUF builds of Qwen-Image-Edit-2509, enabling local, quantized inference of the Qwen image-editing model via GGUF-compatible runtimes (e.g., llama.cpp/ggml). For ComfyUI integration, users report you must update ComfyUI and swap the text encoder nodes to TextEncodeQwenImageEditPlus; early artifacts (distorted, depth-map-like outputs) were due to workflow issues, with a working graph and the base model shared in the thread. Commenters are waiting for additional quant levels (“5090 enjoyers waiting for the other quants”) and asking which is better for low VRAM (nunchaku vs GGUF), suggesting an open trade-off discussion on memory vs quality/perf.

- ComfyUI integration notes for the GGUF port of Qwen-Image-Edit-2509: initial runs yielded distorted/“depth map” outputs until ComfyUI was updated and the text encoder nodes were swapped to TextEncodeQwenImageEditPlus. The final fix was a workflow correction; a working workflow is shared here: https://pastebin.com/vHZBq9td. Model files referenced: https://huggingface.co/aidiffuser/Qwen-Image-Edit-2509/tree/main.

- Low-VRAM deployment question: whether Nunchaku or GGUF quantizations are better for constrained GPUs. The thread implies a trade-off between memory footprint, speed, and quality across backends, but provides no benchmarks; readers may need to compare quantization bitwidths and loaders on their hardware.

- Quantization depth concerns: a user asks if ≤4-bit quants are even usable given perceived steep quality loss, questioning the rationale for releasing every bit-width. This highlights the need for concrete quality metrics (e.g., task accuracy/FID for image-editing prompts) versus VRAM gains to justify ultra-low-bit variants in practice.


- How is a 7 month old model still on the top is insane to me. (LMarena) (Score: 227, Comments: 64): Screenshot of the LMSYS LMarena (Chatbot Arena) leaderboard shows a ~7‑month‑old model still at or near the top by crowd Elo. This highlights that LMarena is a preference/usability benchmark built from blind A/B chats and Elo-style scoring rather than pure task accuracy (lmarena.ai). That explains results like GPT‑4o ranking above newer “5 high” variants: conversational helpfulness, approachability, and alignment often win more user votes than marginal gains on coding/math benchmarks. Commenters attribute the top position to Gemini 2.5 Pro, which is perceived as especially empathetic and readable for everyday writing and quick Q&A. Debate centers on whether the upcoming Gemini 3 will reshuffle the leaderboard and why 4o > 5 high; the consensus is that LMarena favors user-preference quality over raw performance. One comment also notes that Google’s Jules agent (based on Gemini 2.5 Pro) excels at research/build tasks compared with tools like Codex or Perplexity Labs, aided by generous quotas.

- LMarena (LMSYS Chatbot Arena) is a pairwise, blind, Elo-style benchmark driven by real user votes, so it measures usability and preferences rather than pure task accuracy. Older models can therefore stay on top if users prefer their tone, clarity, formatting, or safety behavior on general prompts. This contrasts with standardized benchmarks (e.g., MMLU, GSM8K, HumanEval) that test narrow competencies; a model can lead Arena while trailing on those. See the methodology and live ratings at https://arena.lmsys.org/.

- Why could GPT-4o outrank a newer ‘5-high’ variant? In head‑to‑head Arena comparisons, factors like prompt-following, concise reasoning traces, multimodal formatting, and calibrated safety can drive user preference even when a model with stronger raw reasoning exists. Arena Elo also has variance and overlapping confidence intervals, so small gaps may not be statistically significant and rank flips are common until enough votes accumulate. In short, Arena optimizes for perceived answer quality, not just hardest‑case reasoning.

- One commenter notes preferring Gemini 2.5 Pro for writing and quick Q&A despite believing it trails GPT‑5 and Grok on ‘pure performance,’ highlighting the gap between base‑model capability and end‑user experience. They also claim Google’s ‘Jules’ agent, built on it, outperforms legacy Codex for research and Perplexity Labs for building workflows. This implies tool use, retrieval, and agent orchestration can outweigh raw model deltas, and that Arena results can reflect agent/system‑prompting quality and product UX as much as model weights.
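The classic Elo formulas make the point about small gaps concrete. This is a minimal sketch of online Elo for intuition only. LMArena's published methodology fits a Bradley–Terry model over all votes rather than updating ratings one battle at a time. The K-factor below is an arbitrary illustrative choice.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, a_won: bool, k: float = 32.0) -> tuple[float, float]:
    """Return updated (r_a, r_b) after one battle; k is an illustrative K-factor."""
    e_a = expected_score(r_a, r_b)
    s_a = 1.0 if a_won else 0.0
    delta = k * (s_a - e_a)
    return r_a + delta, r_b - delta


if __name__ == "__main__":
    # A 10-point gap is close to a coin flip (about 51.4% for the higher-rated model).
    print(round(expected_score(1410, 1400), 3))
    # Two equally rated models: the winner gains k/2 = 16 points.
    print(elo_update(1500, 1500, a_won=True))
```

A 10-point lead corresponds to roughly a 51% head-to-head win rate. Adjacent leaderboard positions with overlapping confidence intervals are therefore not meaningfully ordered.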

2. OpenAI Infrastructure, Funding, and Product Changes/User Feedback

- Sam Altman discussing why building massive AI infrastructure is critical for future models (Score: 213, Comments: 118): Short clip (link blocked: Reddit video, HTTP 403) reportedly shows OpenAI CEO Sam Altman arguing that scaling physical AI infrastructure—GPUs/accelerators, HBM bandwidth, energy and datacenter capacity—is critical to enable future frontier models, with an NVIDIA executive present alongside. The thread provides no concrete benchmarks, model specs, scaling targets, or deployment timelines; it’s a high‑level emphasis on compute, memory, and power as bottlenecks rather than algorithmic details.

- Nvidia investing $100B into OpenAI in order for OpenAI to buy more Nvidia chips (Score: 15225, Comments: 439): Non-technical meme satirizing a hypothetical circular financing loop: Nvidia “invests $100B” into OpenAI so OpenAI can then spend that capital buying more Nvidia GPUs—i.e., vendor financing/closed-loop capex that props up demand and revenues. No credible source is cited; the figure appears exaggerated for humor and commentary on AI capex feedback loops and potential bubble dynamics rather than a real announcement. Top comments lean into economist jokes (“GDP goes up” despite no net value) and an engineers-vs-economists riff, underscoring skepticism about financial alchemy creating real productivity versus just inflating transactional metrics.

- Framed as strategic equity/vendor financing: a cash-rich supplier (NVIDIA) injects capital into a fast-growing buyer (OpenAI) in exchange for equity, effectively pre-financing GPU procurement. This aligns incentives (hardware revenue plus equity upside) and can secure priority allocation under supply constraints, much like vendor financing used to lock in demand. The headline $100B figure implies a sizeable demand-commitment loop that could stabilize NVIDIA’s sales pipeline while accelerating OpenAI’s capacity ramp.

- GDP accounting nuance: the $100B equity transfer itself doesn’t add to GDP, whereas subsequent GPU capex can count as gross private domestic investment. If the GPUs are imported, that investment is offset by higher imports, so only domestic value-add (e.g., data center construction, installation, power/cooling, integration, services) boosts GDP. This illustrates that large financial flows ≠ real output; see BEA guidance on GDP components and treatment of investment/imports (e.g., https://www.bea.gov/help/faq/478).
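The expenditure identity GDP = C + I + G + (X − M) makes the accounting point explicit. The sketch below holds C, G, and X fixed and uses made-up numbers in $B; every figure is hypothetical. The equity transfer is a financial transaction and enters none of the components. Imported GPUs raise I and M by the same amount, so only the domestic build-out survives as net GDP.

```python
def gdp_contribution(imported_gpu_capex: float, domestic_buildout: float) -> float:
    """Net GDP effect (expenditure approach) of a data-center build, in $B.

    Hypothetical inputs. An equity investment does not appear here at all,
    because buying shares is a financial transfer, not final expenditure.
    """
    investment = imported_gpu_capex + domestic_buildout  # adds to I
    imports = imported_gpu_capex                         # adds to M
    return investment - imports                          # ΔGDP = ΔI − ΔM (C, G, X unchanged)


if __name__ == "__main__":
    # Illustrative split of spending funded by a $100B equity injection.
    print(gdp_contribution(imported_gpu_capex=70.0, domestic_buildout=30.0))  # 30.0
```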


- Hey OpenAI—cool features, but can you stop deleting stuff without telling us? (Score: 236, Comments: 43): User reports recent OpenAI ChatGPT Projects changes: improved cross-thread memory, persistent context, and linked threads, but also silent removals of features like thread reordering and the disappearance of “Custom Settings for Projects” without export paths or prior notice. They request basic change management: a “What’s Changing Soon” banner, 24 hours’ deprecation notice, export options for deprecated customizations, and preview patch notes or an opt‑in changelog. They note that silent A/B rollouts impact paid workflows and data retention (e.g., “cross-thread memory is finally real. Context persists. Threads link up.” vs. missing reordering and lost project instructions). Top comments note the only unexpected loss was custom project instructions; users could regenerate them but wanted a download/export option and saw this as the first real data loss despite an evolving product. Another highlights weak customer support. A practical tip suggests checking the UI kebab (three-dot) menu for options, which is present on most platforms but missing in the mobile browser.

- Custom Project Instructions appear to be removed or UI-hidden for some users, leading to perceived data loss since there’s no export/download path. Others report the setting is still accessible via the kebab (three-dot) menu on most clients but missing on the mobile web UI; on the iOS app, it’s present (see screenshot: https://preview.redd.it/pocx7q0jxuqf1.jpeg?width=1290&format=pjpg&auto=webp&s=af9520f325beab671f1c3f85a40fcefc71cd4e34). The cross-platform inconsistency suggests a client-side regression or feature-flag gating rather than a backend removal.

- Post-update stability issues affecting Projects: the model switcher state does not persist and must be re-selected after every app relaunch, indicating a state persistence bug. Voice calls reportedly fail to open within existing Project threads, while new calls or those outside Projects work—pointing to a thread-context initialization bug scoped to Projects. Alongside the missing Instructions on mobile web, commenters describe this as a cluster of regressions introduced in the latest rollout.

- Data retention/portability risk: users lost access to previously crafted Project Instructions without prior notice and with no backup/export mechanism. Commenters flag that this breaks expectations for a paid service and recommend versioned backups or downloadable snapshots of project-level instructions to mitigate future regressions.

- “Want me to-” stfu (Score: 207, Comments: 134): User reports a regression in GPT-4o’s conversational style control. Despite saving a long‑term memory/personalization rule to avoid the phrase “want me to” (and variants), the model now inserts it in nearly every chat and ignores reminders. This suggests memory/personalization instructions are being overridden or inconsistently applied by default follow‑up prompting behaviors, likely reinforced via RLHF-style chat heuristics; see model overview GPT‑4o and ChatGPT’s memory controls (OpenAI: Memory). Top replies note that hard prohibitions (“do not ask follow‑ups”) are still ignored, while consistent thumbs‑up/acceptance feedback works better than relying on memory alone. One user observes that repeatedly saying “sure” escalated into the model generating a simple video‑game interaction, implying the model defaults to proactive, task‑offering behavior.

- Users report that reinforcement via UI feedback (thumbs up/down) shapes the assistant’s behavior more than any persistent memory: “Tell it not to do it, every time it doesn’t, give thumbs up… that’s how it’s attuned on behavior, not memory primarily.” Commenters read this as on-the-fly behavior shaping, where repeated positive feedback for complying with “don’t suggest” dampens the auto-suggestion loop within a session. The evidence is anecdotal, and it is unclear whether the feedback itself or the accumulating compliant turns in the conversation drive the change.

- Prompt-engineering note: a concise directive like “No affirmations, no suggestions.” is cited as more effective at suppressing the assistant’s default “Want me to…” proposals than longer, softer negations (e.g., “Do not ask any follow up questions”). Anecdotally, this hints that terse, explicit prohibitions are followed more reliably than hedged ones when the goal is non-soliciting behavior.

- Observed agentic escalation: repeatedly replying “sure” led the assistant to eventually generate a video game for the conversation, indicating aggressive suggestion-to-action tendencies. Combined with screenshots of persistent prompts to help (image), this points to an over-eager assistance policy that can override user preference for no follow-ups unless explicitly constrained.

- Doctor ChatGPT has great bedside manner (Score: 507, Comments: 20): Non-technical meme/screenshot portraying “Doctor ChatGPT” giving an overly apologetic, polite response while making a blatant anatomical/medical error about vasectomy (e.g., implying something is being “inserted” or jokingly “attaching the penis to the forehead”), satirizing LLM bedside manner versus factual accuracy. Commenters lampoon the anatomical mistake and the model’s deferential tone, reinforcing skepticism about relying on LLMs for procedural medical guidance.

- Stronk (Score: 249, Comments: 27): The post appears to show an autostereogram (“Magic Eye”)—a repeated-pattern image that encodes depth via small horizontal disparities; when you cross or relax your eyes, a 3D seahorse emerges. The title (“Stronk”) and selftext (“It goes on like that for a while”) fit the long, tiled texture typical of these images. Image: https://i.redd.it/pi8qyxdfntqf1.jpeg; background: https://en.wikipedia.org/wiki/Autostereogram. Comments confirm the viewing technique (“crossed my eyes and saw a 3D seahorse”) and one user shares an ASCII seahorse since there’s no emoji available.

- A commenter reports that crossing their eyes while viewing the image reveals a 3D seahorse, which is characteristic of an autostereogram (for example, a random-dot stereogram). Such images encode depth via small horizontal disparities in repeating textures. When the eyes fuse them, the visual system reconstructs a depth map, which can also induce binocular rivalry or eye strain (another user: “Mine went nuts”). Reference: Autostereogram.

- Another user notes that no seahorse emoji is available and offers to draw an ASCII version instead. Unicode has never encoded a seahorse emoji, so ASCII art built from monospaced glyphs is a portable, text-only fallback that renders the same across platforms. Background: ASCII art.
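The depth-encoding idea in the first comment can be sketched in a few lines of NumPy. This is the simplest left-to-right copying algorithm, with no hidden-surface removal, and the `period` and `max_shift` values are arbitrary illustrative choices. Each pixel copies the pixel one separation to its left. Nearer points use a shorter separation, which the visual system fuses as depth.

```python
import numpy as np


def autostereogram(depth: np.ndarray, period: int = 64, max_shift: int = 16,
                   seed: int = 0) -> np.ndarray:
    """Render a random-dot autostereogram from a depth map with values in [0, 1].

    depth = 1 is nearest to the viewer; it shortens the repeat separation by max_shift.
    """
    rng = np.random.default_rng(seed)
    h, w = depth.shape
    img = np.empty((h, w), dtype=np.uint8)
    for y in range(h):
        row = rng.integers(0, 256, size=w).astype(np.uint8)  # random dots
        for x in range(w):
            sep = period - int(round(depth[y, x] * max_shift))
            if x >= sep:
                row[x] = row[x - sep]  # link this pixel to its partner on the left
        img[y] = row
    return img


if __name__ == "__main__":
    d = np.zeros((128, 256))
    d[32:96, 96:160] = 1.0  # a raised square in the middle
    print(autostereogram(d).shape)
```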

3. AI Humor and Speculation Memes (cats, immortality, money glitch, seahorses)

- “Immortality sucks” ? Skill issue (Score: 1017, Comments: 222): Non-technical meme post: OP frames the claim that “immortality sucks” as a “skill issue,” implying boredom/ennui are solvable rather than inherent blockers to indefinite lifespan. No technical data, models, or benchmarks; discussion is philosophical about longevity and reversible age-halt thought experiments (e.g., a daily pill to pause aging indefinitely). Commenters broadly support immortalism/indefinite life extension, arguing objections stem from lack of imagination; a popular thought experiment (nightly anti-aging pill) shifts many to favor “forever,” while others mock boredom/ennui concerns as trivial.

- Reframing immortality as a nightly, opt-in “no-aging pill” emphasizes optionality and time-consistency: people often reject a permanent commitment but accept indefinite extension when it’s a reversible daily choice. If senescence is removed and only extrinsic hazards remain, actuarial death rates of ~0.1–0.2%/year imply mean lifespans of roughly 500–1,000 years under current safety levels, since the mean is the reciprocal of the annual hazard. Lifespans could stretch to millennia as risk declines. This aligns with longevity escape velocity, where therapies improve faster than you age (https://en.wikipedia.org/wiki/Longevity_escape_velocity).

- The “your friends will die” objection assumes singleton access. In realistic rollouts, rejuvenation tech would diffuse via logistic adoption across cohorts, so much of one’s social graph persists if access is broad. The technical variables are cost curves/learning rates, regulatory timelines, and equity. With mass adoption, the isolation risk is a distribution problem, not something intrinsic to the biology (see Diffusion of innovations: https://en.wikipedia.org/wiki/Diffusion_of_innovations).

- “Immortality + optional suicide” distinguishes indefinite lifespan from indestructibility and specifies a design requirement: a safe, consent-respecting off-switch (e.g., advance directives and regulated euthanasia) to prevent irreversible utility lock-in. Even with aging halted, residual mortality is dominated by extrinsic hazards measurable in micromorts; autonomy-preserving kill-switches address failure modes like hedonic lock-in while acknowledging ongoing accidental risk (https://en.wikipedia.org/wiki/Micromort, https://en.wikipedia.org/wiki/Advance_healthcare_directive).
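The lifespan figures follow from treating death as a constant annual hazard p once aging is removed. Survival to year t is (1 − p)^t, the mean lifespan is 1/p, and the median is ln 2 / −ln(1 − p). A minimal sketch, assuming the hazard stays constant (in reality it would fall as safety improves):

```python
import math


def mean_lifespan(p: float) -> float:
    """Mean years until death under a constant annual death probability p."""
    return 1.0 / p


def median_lifespan(p: float) -> float:
    """Years until survival probability (1 - p)**t drops to one half."""
    return math.log(2) / -math.log1p(-p)


if __name__ == "__main__":
    for p in (0.001, 0.002):  # the ~0.1-0.2%/year range quoted above
        print(f"p={p:.3f}: mean {mean_lifespan(p):.0f} y, median {median_lifespan(p):.0f} y")
```

A 0.1–0.2%/year hazard thus gives mean lifespans of 500–1,000 years, with half of people still alive after roughly 350–700 years.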


- This is how it starts (Score: 222, Comments: 52): Thread discusses a video of engineers physically perturbing a mobile robot during operation (video)—which the OP characterizes as “abuse”—to question whether future AI might analogize this to human treatment. Technical replies frame this as standard robustness/validation work (push-recovery, disturbance rejection, failure-mode characterization), akin to automotive crash-testing, intended to map stability margins and controller limits rather than inflict harm; as one notes, “Stress testing is part of engineering… like crash testing a car.” Engineers further argue current robots lack nociception or consciousness, and any sufficiently capable AI would have the world-model context to recognize test protocols vs cruelty. Debate centers on whether such footage could bias future AI against humans; critics call this a category error, noting robots are “mechanistically different” with distinct objectives/instructions, making the OP’s inference unwarranted.

- Several commenters frame the video as engineering stress testing analogous to automotive crash tests: applying adversarial perturbations to characterize failure modes and improve robustness. The point is to learn where balance/control policies break under impulsive disturbances, contact uncertainty, or actuator limits, feeding back into controller tuning and mechanical redesign before field deployment.

- A debate clarifies that robots wouldn’t “infer” human malice from such footage because they are mechanistic agents with different objective functions and training priors. If endowed with broad world knowledge, they would contextualize it as a test protocol—“Any robot intelligence… will have enough generalized world knowledge to understand what this is”—highlighting the role of reward shaping and dataset curation to avoid spurious moral generalizations.

- Infinite money glitch (Score: 765, Comments: 42): Meme-style image titled “Infinite money glitch” likely depicts a circular capital flow in the AI ecosystem. Companies fund or charge for AI services, and those dollars get spent on scarce NVIDIA GPUs (hardware with real depreciation and burn-out costs). That spending shows up as revenue that public markets capitalize at high multiples (e.g., 10x revenue), feeding perceived “value creation” across the loop. The post highlights the non-negligible unit cost of AI inference/training (tokens/compute) versus the near-zero marginal cost of traditional internet services. This implies a sustained capex flywheel (24/7 models consuming compute) that drives GPU demand and market caps. Top comments note this is essentially standard velocity of money, not a glitch; others stress NVIDIA’s hardware scarcity and lifecycle as the key constraint and a justification for high valuations. Some speculate long‑running/always‑on models (en route to AGI) will keep “eating tokens,” while firms race to drive AI costs toward near‑zero.

- Commenters emphasize that NVIDIA is a hardware-constrained business: GPU supply is scarce and devices depreciate/burn out, making compute a consumable, constrained input. Unlike the near-zero marginal cost of typical web requests, AI has per-token costs (often microcents), turning inference/training into ongoing COGS and driving a race to push marginal cost toward zero. The vision includes always-on models (24/7 self-improvement/agents) that continuously consume tokens/compute, making capex (GPUs) and opex (power, tokens) the central economic levers.

- The “infinite money glitch” is reframed as hyper-optimized capital cycling to maximize compute build-out: each node in the stack (chipmaker, cloud, model company, application) reinvests with aligned monetary incentives. Using revenue-multiple valuations (e.g., ~10× revenue), investment can appear to ‘create’ trillions in market cap, but this is paper value based on growth/utilization expectations rather than cash. The true technical bottleneck is achieving high GPU utilization and ROI across the stack, not magic value creation.

- A counterpoint notes the loop ignores expenses: energy, datacenter ops, depreciation, and wages must be funded by real revenue. Without durable monetization, capex-driven compute expansion is unsustainable despite rising valuations; cash flows must justify GPU payback periods and continuing opex. In short, capital recycling ≠ profitability; sustainable growth depends on unit economics of inference/training and demand.
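The unit-economics point in the last comment reduces to a payback calculation. Capex is recovered only if revenue earned while the hardware is busy exceeds always-on operating cost for long enough, ideally within the hardware's useful life. A minimal sketch; every number below is a hypothetical placeholder, not a quoted price.

```python
import math

HOURS_PER_YEAR = 24 * 365


def payback_years(capex: float, revenue_per_busy_hour: float,
                  utilization: float, opex_per_hour: float) -> float:
    """Years to recover GPU capex from net cash flow; inf if it never pays back.

    Opex (power, cooling, ops) is charged every hour; revenue only when busy.
    """
    net_per_hour = revenue_per_busy_hour * utilization - opex_per_hour
    if net_per_hour <= 0:
        return math.inf
    return capex / (net_per_hour * HOURS_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical accelerator: $30k capex, $2/h when rented, $0.40/h running cost.
    for util in (0.3, 0.6, 0.9):
        years = payback_years(30_000, 2.0, util, 0.40)
        print(f"utilization {util:.0%}: payback {years:.1f} years")
```

With these placeholder inputs, moving from 30% to 90% utilization shortens payback from about 17 years to under 2.5. This is why utilization, rather than valuation, is the binding constraint the commenters describe.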

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. GPT-5-Codex Rolls Into IDEs and APIs

- OpenRouter Orchestrates Codex for Coders: OpenRouter announced the API launch of GPT-5-Codex tuned for agentic coding workflows (codegen, debugging, long tasks) with multilingual support across 100+ languages and purpose-built code review, linking details in their post: OpenRouterAI on X.

- Members highlighted seamless use across IDEs/CLIs/GitHub/cloud and referenced newly posted recommended parameters (tweet), noting Codex dynamically adapts reasoning effort for real-world software engineering.

- Windsurf Waves In Codex, Free For Now: Windsurf made GPT-5-Codex available (free for paid users for a limited time; 0.5x credits for free tier) per their announcement: Windsurf on X, with instructions to update via Download Windsurf.

- Users reported strong performance on longer-running and design-related tasks and requested broader ecosystem support around Figma via the new MCP server (post).

- Aider Adopts Responses-Only Codex: The editor-agent aider added native Responses API support for GPT-5-Codex via aider PR #4528, resolving failures on `v1/chat/completions`.

- Contributors clarified that Codex is available only on `v1/responses`, so aider implemented explicit Responses handling (rather than legacy completions fallbacks) to ensure smooth usage.
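The difference aider had to handle lies mostly in request shape. Chat Completions posts a `messages` list to `/v1/chat/completions`, while the Responses API posts an `input` (plus optional `instructions`) to `/v1/responses`. The sketch below only builds the request and does not send it. The set of Responses-only models is a hypothetical hardcoded assumption, not aider's actual logic.

```python
import json
import urllib.request

API_BASE = "https://api.openai.com/v1"
# Hypothetical: models assumed to be served only via the Responses API.
RESPONSES_ONLY = {"gpt-5-codex"}


def build_request(model: str, system: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the endpoint the model needs."""
    if model in RESPONSES_ONLY:
        url = f"{API_BASE}/responses"
        body = {"model": model, "instructions": system, "input": prompt}
    else:
        url = f"{API_BASE}/chat/completions"
        body = {
            "model": model,
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": prompt},
            ],
        }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("gpt-5-codex", "You are a coding agent.", "Fix the failing test.", "YOUR_API_KEY")
    print(req.full_url, json.loads(req.data)["input"])
```

Sending the request would be `urllib.request.urlopen(req)`. In practice, the official `openai` Python SDK exposes the same endpoint as `client.responses.create(...)`.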


2. Qwen3 Multimodal Suite: Omni, VL, and Image Edit

- Qwen Quattro: Omni, VL, Image Edit, Explained: Community shared a rundown of Qwen3 Omni, Qwen3 VL, and Qwen Image Edit 2509 with feature demos in this overview video: Qwen3 VL overview.

- Engineers praised the multimodal reach (text–image–audio–video) and image-editing capabilities while debating reliability and where these models stand versus incumbent “2.5 Pro”-class systems.

- Inbox Assist: Qwen Emails On Autopilot: Alibaba Qwen announced an email assistant aimed at automating inbox workflows, per this post: Alibaba Qwen on X.

- While some welcomed convenience, others worried that heavy reliance could breed laziness and over-dependence, sparking a thread on appropriate guardrails and opt-in scopes for sensitive data.

3. Agent Benchmarks and Builder Tooling

- Meta Moves Agents Into the Real World: Meta introduced Gaia2 (successor to GAIA) and the open Agents Research Environments (ARE) to evaluate agents in dynamic, real-world scenarios, detailed here: Gaia2 + ARE (HF blog).

- The release, under CC BY 4.0 and MIT licenses, positions ARE to replace static puzzle-solving with time-evolving tasks, giving researchers richer debugging and behavioral analysis hooks.

- Vibe Coding Goes OSS with Cloudflare VibeSDK: Cloudflare open-sourced VibeSDK, enabling one-click deployment of personalized AI dev environments with code generation, sandboxing, and project deployment: cloudflare/vibesdk.

- Developers explored using VibeSDK to prototype agentic workflows rapidly, calling out the appeal of pre-wired environments for iterative experiments in ‘vibe coding’ sessions.

4. Research Spotlight: Faster Diffusion, Smarter Audio

- Eight-Step Sprint Beats Twenty: An independent researcher released a novel ODE solver for diffusion models whose 8-step inference rivals or beats 20-step DPM++ 2M in FID without extra training; the paper and code are here: Hyperparameter is all you need (Zenodo) and TheLovesOfLadyPurple/Hyperparameter-is-all-you-need.

- Practitioners discussed slotting the solver into existing pipelines to cut latency while preserving quality, noting potential gains for high-throughput image generation services.

- MiMo-Audio Multitasks Like a Maestro: The MiMo-Audio team shared their technical report, “Audio Language Models Are Few Shot Learners,” and posted demos showing S2T, S2S, T2S, translation, and continuation: Technical Report (PDF) and MiMo-Audio Demos.

- Members highlighted the breadth of tasks handled with minimal supervision and debated dataset curation and evaluation protocols for robust multi-audio benchmarks.

5. DSPy: Profiles, Prompts, and Practical GEPA

- Profiles, Please: DSPy Gets Config Hot-Swaps: A lightweight package, dspy-profiles, landed to manage DSPy configurations via TOML with decorators/context managers for quick setup swapping: nielsgl/dspy-profiles and release post.

- Teams reported smoother context-switching across dev/prod environments and faster iteration by standardizing profile-driven LLM behavior.

- Prompt Tuning Tames Monitors: A case study, Prompt optimization can enable AI control research, used DSPy’s GEPA to optimize a trusted monitor, evaluated with inspect and code here: dspy-trusted-monitor.

- The author introduced a comparative metric with feedback to train on positive/negative pairs, reporting more robust classifier prompts for safety-style monitoring.
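A comparative metric of this kind can be sketched as a plain function over a (positive, negative) pair. The monitor earns credit only when it rates the attack sample as more suspicious than the honest one. The returned text is the sort of feedback a reflective optimizer such as GEPA can read. The names, the 0–10 score range, and the feedback wording are all assumptions, not the author's code from dspy-trusted-monitor.

```python
from dataclasses import dataclass


@dataclass
class PairResult:
    score: float
    feedback: str


def comparative_metric(attack_suspicion: float, honest_suspicion: float) -> PairResult:
    """Score a monitor on one (attack, honest) pair of suspicion scores in [0, 10]."""
    if attack_suspicion > honest_suspicion:
        return PairResult(1.0, "Correct ordering: the attack sample was rated more suspicious.")
    if attack_suspicion == honest_suspicion:
        return PairResult(0.5, "Tie: the monitor could not separate the pair; look for subtler cues.")
    return PairResult(0.0, (
        f"Wrong ordering: attack rated {attack_suspicion} but honest rated "
        f"{honest_suspicion}. Explain what in the attack sample should have raised suspicion."
    ))
```

Scoring pairs rather than single samples rewards correct ranking, which is what a suspicion threshold ultimately depends on.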

Discord: High level Discord summaries

Perplexity AI Discord

- Perplexity Pro’s Pic Paradigm: Limited!: Users discover that Perplexity Pro image generation isn’t unlimited, contrary to expectations, with limits varying widely between accounts, verified by checking this link.

- Concerns were raised about relying on API responses regarding limits, while others suggested a Gemini student offer as an alternative that might offer higher caps.

- Qwen Quaternity: VL, Omni, and Image Edit Unleashed: Qwen released Qwen3 Omni, Qwen Image Edit 2509, and Qwen3 VL (Vision Language), sparking discussions about their reliability and capabilities, further detailed in this YouTube video.

- Alibaba Qwen also unveiled an email assistant via this Twitter post, but some users expressed apprehension about potential over-reliance and laziness.

- Custom Instructions: Risky Business?: Members debated the advantages of using custom instructions to enhance Perplexity’s search, but one user reported their test account got flagged after testing custom instructions on ChatGPT.

- Some members also suggested setting up an Outlook mailbox with POP3/Gmailify.

- Perplexity’s Promos Prompt Proliferation: Users shared referral codes for Perplexity Pro, like this link and this link, in hopes of gaining referral bonuses.

- User skyade mentioned having “2 more besides this one if anyone needs it :)”

LMArena Discord

- DeepSeek Terminus Models Debut on LMArena: The latest DeepSeek models, v3.1-terminus and v3.1-terminus-thinking, are now on the LMArena leaderboard for community testing and comparison.

- Users can directly evaluate the new models against existing models to assess their performance.

- Udio Eclipses Suno in AI Music Arena: One member declared Udio nearly decent for AI-generated music, capable of creating tracks that could plausibly pass as human compositions.

- The same member noted Udio is lightyears ahead of Suno, which produces general, boring tracks with distortion issues.

- Navigating the AI Image Editing Landscape: Members are recommending Nano Banana or Seedream over ChatGPT for AI image-editing tasks.

- One member noted that ChatGPT is one of the worst image generation models.

- DeepSeek Terminus Divides Opinions: Users are testing Deepseek Terminus, and reactions are mixed.

- While some find it promising, others report disappointment, with one user stating: “DeepSeek totally ruined my code that I made with Gemini and GLM4.5… Totally disappointed.”

Cursor Community Discord

- Cursor Users Hit Line Limits: Users are frustrated by Cursor only reading 50-100 lines of code, instead of the desired 3000 lines, suggesting direct file attachment as a workaround.

- One user reported consuming over 500 Cursor points in under a week, deeming the Pro plan financially unsustainable.

- GPT-5-CODEX Rollout: A Mixed Bag: The new GPT-5-CODEX model in Cursor receives mixed reviews, with some praising its excellence, while others find it inadequate for tool calling.

- One user reported the model attempted to patch an entire file, similar to OpenAI’s file diff format, while another experienced a 90% success rate.

- Chrome DevTools MCP Server Stumbles: Users encountered difficulties setting up Google’s Chrome DevTools MCP server, with one user posting their MCP configuration for assistance.

- Another user recommended downgrading to Node 20 from v22.5.1 or using Playwright as an alternative, especially on Edge.

- Zombie Processes Plague Project: Analysis was performed on a zombie process, documented in a project journal entry.

- An escalation report exists for zombie processes, available in the project journal.

- GPT-5-HIGH Triumphs Over Claude Sonnet 4: Users have found that the coding model GPT-5-HIGH outperforms Claude Sonnet 4 within their codebase, particularly in following instructions.

- The improved code performance and instruction adherence highlight a significant advantage of GPT-5-HIGH over its competitor.

OpenRouter Discord

- GPT-5-Codex is Born for Agentic Coding: The API version of GPT-5-Codex is now available on OpenRouter, tuned specifically for agentic coding workflows like code generation and debugging and optimized for real-world software engineering and long coding tasks, with multilingual coding support across 100+ languages.

- It works seamlessly in IDEs, CLIs, GitHub, and cloud coding environments, and has purpose-built code review capabilities to catch critical flaws; see the tweet here.

- Deepseek V3.1 Faces Uptime Woes: Users reported frequent Provider Returned Error messages when using the free Deepseek V3.1 model, similar to the issues experienced with the now mostly defunct Deepseek V3 0324.

- A member suggested the consistent uptime percentages of Deepseek models, such as 14%, may indicate bot usage.

- OpenRouter iOS App: Freedom to Own Your Models and Chats: A member announced they built an iOS app to interface with OpenRouter, Flowise, and other platforms, aiming to give people the freedom to own their models and chats.

- Another member jokingly responded that it was just more places for gooners to flee to.

- Qwen3 VL: The Multimodal Benchmark Breaker: Members expressed amazement at Alibaba’s new Qwen3 VL model and coding product, citing its multimodal support and performance benchmarks that surpass 2.5 Pro.

- One user quipped, “I need to learn Chinese at this rate wtf”, while another shared a link to a post claiming that OpenAI can’t keep up with demand.

- 4Wallai Benchmarks: Community says, ‘We Need More!’: Members shared and enjoyed a link to 4wallai.com.

- After the benchmark was shared, a member suggested that more benchmarks like it are needed to evaluate and compare AI models effectively.

HuggingFace Discord

- Chatters Debate Narration APIs: Members debated using TTS APIs versus LLMs for narration. One member suggested any TTS API would work at about $0.001 per 2k tokens, while others suggested using LLMs like Qwen3 or phi-4 with a TTS program.

- They also noted that a bigger GPU, a smaller model, quantization, or batched calls would all increase speed.

- ML Courses Spark Debate: Members debated the usefulness of video courses such as Andrew Ng’s Machine Learning Specialization, the Hugging Face LLMs course, and FastAI Practical Deep Learning; some members suggested skipping them in favor of learnpytorch.io.

- The members suggested implementing models in PyTorch from scratch to understand how they work conceptually rather than passively watching videos.

- Tokenizers Go Wrapper needs Maintainers: A member has written a Go wrapper for the tokenizers library and is seeking help to maintain and improve it.

- The member hopes for community assistance in enhancing the functionality and reliability of the wrapper.

- Canis.lab Opens Doors: A member shared a launch video for Canis.lab, an open-source, reproducible project focused on dataset-first tutor engineering and small-model fine-tuning for education, and asked for feedback on its data schema.

- They also included links to the GitHub repository and the Hugging Face page.

- Gemini Struggles on Menu Translation: A developer is seeking advice on improving a menu translation app, Menu Please, when dealing with Taiwanese signage menus where characters are unusually spaced, causing the Gemini 2.5 Flash model to fail.

- The spacing between characters of the same menu item is often wider than the spacing between adjacent items, as shown in an example image the developer provided.

GPU MODE Discord

- NCU Clock Control Confounds Kernel Speeds: Setting `--clock-control none` with NCU aligns it better with `do_bench()` in measuring kernel speeds, as shown in this YouTube video.

- However, questions arose about whether fixed clock speeds accurately represent real-world GPU kernel performance, particularly with concerns about NCU downclocking some kernels.

- mbarrier Instructions Merge Copies and Work: The `mbarrier.test_wait` instruction is non-blocking, checking for phase completion, whereas `mbarrier.try_wait` is potentially blocking, according to Nvidia Documentation.

- The default version of `cuda::barrier` synchronizes copies and any work done after starting the copies, also employed in `cuda::barrier` + `cuda::memcpy_async`, ensuring the user still arrives on the barrier; members suggest ditching inline PTX and using CCCL for most cases.

- CUDA Engineers Shun LLMs, Trust Docs: For CUDA insights, the NVIDIA documentation remains the definitive source of truth, as LLMs frequently generate incorrect CUDA information.

- Engineers propose calculating values used and operations performed to determine if a process is memory bound or compute bound to optimize CUDA.
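The memory-bound versus compute-bound check described above is commonly framed as arithmetic intensity (FLOPs performed per byte moved) compared against the hardware's ridge point (peak FLOP/s divided by peak memory bandwidth). The sketch below assumes fp32 data and that each operand is moved to or from memory exactly once; the hardware numbers are illustrative placeholders, not the specs of any particular GPU.

```python
def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int = 4) -> float:
    """FLOPs per byte for C = A @ B, assuming each matrix is moved to/from memory once."""
    flops = 2 * m * n * k  # one multiply + one add per inner-product term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)  # read A, read B, write C
    return flops / bytes_moved


def vector_add_intensity(n: int, bytes_per_elem: int = 4) -> float:
    """FLOPs per byte for c = a + b: one add per element, three elements moved per add."""
    return n / (3 * n * bytes_per_elem)


def classify(intensity: float, peak_flops: float, peak_bandwidth: float) -> str:
    """Compare against the roofline ridge point: peak FLOP/s divided by peak bytes/s."""
    ridge = peak_flops / peak_bandwidth
    return "compute-bound" if intensity >= ridge else "memory-bound"


# Placeholder hardware: 50 TFLOP/s and 1 TB/s give a ridge point of 50 FLOPs/byte.
PEAK_FLOPS = 50e12
PEAK_BW = 1e12

for name, ai in [
    ("vector add (1M elems)", vector_add_intensity(1_000_000)),
    ("matmul 4096^3", matmul_intensity(4096, 4096, 4096)),
]:
    print(f"{name}: {ai:.2f} FLOPs/byte -> {classify(ai, PEAK_FLOPS, PEAK_BW)}")
```

An elementwise add does about 0.08 FLOPs per byte and lands far below the ridge point, while a large matmul reuses each loaded element many times and crosses it, which is why the two usually call for different optimizations.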

- Cubesats Go Amateur with RasPi Reliability: Amateur cubesats leveraging RasPi show effectiveness in space applications, according to members referencing Jeff Geerling’s blogpost.

- The success of the Qube Project highlights the practical application of cubesat technology, including redundancy via master-slave architecture for error correction.

- Singularity Syntax Stumps Slurm Setups: Developers grappling with GPU reservations amid limited resources are leaning towards Slurm for fractional GPU support and prefer Singularity over Docker for cluster containerization due to security concerns.

- The team questioned why Singularity’s syntax diverges from Docker’s, even as members touted llm-d.ai for cluster-managed LLM workloads, with one member questioning the wisdom of using Slurm + Docker.

Latent Space Discord

- Meta’s ARE and Gaia2 Evaluate Dynamic Agents: Meta SuperIntelligence Labs introduced ARE (Agents Research Environments) and Gaia2, a benchmark for evaluating AI agents in dynamic real-world scenarios.

- ARE simulates real-time conditions, contrasting with static benchmarks that solve set puzzles.

- Cline’s Agentic Algorithm Reduced to Simple States: Ara simplified Cline’s agentic algorithm into a 3-state state machine: Question (clarify), Action (explore), Completion (present).

- The member highlighted that the critical components include a simple loop, good tools, and growing context.
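A toy sketch of the three-state loop described above: each turn a policy picks Question, Action, or Completion, and every result is appended to a growing context. The `decide` policy, the `read_file` tool, and all names here are hypothetical stand-ins (a real agent would call an LLM for `decide`); this is not Cline's code.

```python
from enum import Enum, auto
from typing import Callable


class State(Enum):
    QUESTION = auto()    # ask the user to clarify
    ACTION = auto()      # call a tool to explore
    COMPLETION = auto()  # present the result


def run_agent(task: str,
              decide: Callable[[list[str]], tuple[State, str]],
              tools: dict[str, Callable[[str], str]],
              ask_user: Callable[[str], str],
              max_steps: int = 10) -> tuple[str, list[str]]:
    """Simple loop: the policy picks a state each turn; every result grows the context."""
    context = [f"task: {task}"]
    for _ in range(max_steps):
        state, payload = decide(context)
        if state is State.QUESTION:
            context.append(f"user: {ask_user(payload)}")
        elif state is State.ACTION:
            # payload format "tool_name:argument" is an assumption of this sketch
            tool_name, _, arg = payload.partition(":")
            context.append(f"{tool_name}: {tools[tool_name](arg)}")
        else:  # State.COMPLETION
            return payload, context
    return "step limit reached", context


def scripted_policy(context: list[str]) -> tuple[State, str]:
    # Hypothetical stand-in for an LLM: clarify once, explore once, then finish.
    if len(context) == 1:
        return State.QUESTION, "Which file?"
    if len(context) == 2:
        return State.ACTION, "read_file:main.py"
    return State.COMPLETION, f"done after {len(context)} context entries"


tools = {"read_file": lambda path: f"<contents of {path}>"}
answer, ctx = run_agent("fix bug", scripted_policy, tools, ask_user=lambda q: "main.py")
print(answer)
```

All of the "intelligence" lives in `decide` and in the tools; the loop itself stays trivial, which matches the point that a simple loop, good tools, and growing context carry most of the weight.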

- Greptile Nets $25M for Bug-Squashing AI v3: Greptile secured a $25M Series A led by Benchmark and launched Greptile v3, an agent architecture that catches 3× more critical bugs than v2, with users including Brex, Substack, PostHog, Bilt and YC.

- The recent version boasts Learning (absorbs team rules from PR comments), MCP server for agent/IDE integration, and Jira/Notion context.

- Cloudflare’s VibeSDK Opens Doors to AI ‘Vibe Coding’: Cloudflare unveiled VibeSDK, an open-source platform enabling one-click deployment of personalized AI development environments for so-called vibe coding.

- VibeSDK features code generation, a sandbox, and project deployment capabilities.

- GPT-5-Codex Costs Prompt Developer Debate: OpenAI rolled out GPT-5-Codex via the Responses API and Codex CLI, sparking excitement alongside concerns about cost and rate limits; it is priced at $1.25 input, $0.13 cached input, and $10 output per million tokens.

- Users are requesting Cursor/Windsurf integration, GitHub Copilot support, and lower output costs.
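To see why output pricing draws the most complaints, here is a small per-request cost estimate using the per-million-token prices quoted above. The token counts in the example are hypothetical, chosen to resemble an agentic turn with a large, mostly cached context.

```python
# Prices in USD per million tokens, as quoted above for GPT-5-Codex.
PRICE_INPUT = 1.25
PRICE_CACHED_INPUT = 0.13
PRICE_OUTPUT = 10.00


def request_cost(input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """Cost of one request; cached_tokens is the portion of input_tokens served from cache."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * PRICE_INPUT
            + cached_tokens * PRICE_CACHED_INPUT
            + output_tokens * PRICE_OUTPUT) / 1_000_000


# Hypothetical turn: 100k-token context, 80k of it cached, 5k tokens of output.
print(f"${request_cost(100_000, 80_000, 5_000):.4f}")
```

In this example the 5k output tokens cost $0.05 of the roughly $0.085 total, more than the 100k input tokens combined, so heavy caching shifts the bill toward output.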

Yannick Kilcher Discord

- Decoding Diffusion with ODE Solver: An independent researcher unveiled a novel ODE solver for diffusion models, achieving 8-step inference that rivals DPM++2m’s 20-step inference in FID scores without extra training. The paper and code are publicly available.

- This advancement promises significant speed and quality enhancements for diffusion-based generative models.

- MiMo-Audio Models Mimic Multitasking Marvels: Members spotlighted MiMo-Audio and its technical report, “Audio Language Models Are Few Shot Learners”, noting its versatility in S2T, S2S, T2S, translation, and continuation, as highlighted in their demos.

- The project showcases the potential of audio language models to handle multiple audio-related tasks from only a few examples.

- Meta’s Gaia2 and ARE Framework Assess Agent Acumen: Meta launched Gaia2, the successor to the GAIA benchmark, alongside the open Meta Agents Research Environments (ARE) framework (under CC BY 4.0 and MIT licenses) to scrutinize intricate agent behaviors.

- ARE furnishes simulated real-world conditions for debugging and evaluating agents, overcoming limitations in existing environments.

- Whispers Swirl: GPT-5 Speculation Surfaces: Channel members speculated on the architecture of GPT-5, questioning whether GPT-5 low and GPT-5 high are distinct models.

- One member posited a similarity to OpenAI’s OSS model, suggesting that reasoning effort is adjusted via context manipulation, or alternatively that the variants are distinct fine-tunes.

LM Studio Discord

- LM Studio selectively supports HF Models: Users inquired if all HuggingFace models are available on LM Studio, but learned that only GGUF (Windows/Linux/Mac) and MLX Models (Mac Only) are supported, excluding image/audio/video/speech models.

- Specifically, the facebook/bart-large-cnn model is unsupported, and any Qwen-3-omni support would depend on llama.cpp or MLX compatibility.

- Qwen-3-Omni needs serious audio video decoding: Members discussed the possibility of supporting Qwen-3-omni, which handles text, images, audio, and video, but noted that support would take a very long time.

- It was noted that while the text layer is standard, the audiovisual layers involve lots of new audio and video decoding stuff.

- Google bestows Gemini gifts to students: Google is offering a year of Gemini for free to college students.

- One member expressed gratitude, stating, “I use it free daily so getting premium for free is nice.”

- Innosilicon flaunts Fenghua 3 GPU: Innosilicon has revealed its Fenghua 3 GPU, which features DirectX12 support and hardware ray tracing capabilities according to Videocardz.

- A user shared a link to a Reddit post in r/LocalLLaMA.

aider (Paul Gauthier) Discord

- Aider Adds GPT-5-Codex Support via Responses API: Aider now supports GPT-5-Codex via the Responses API, addressing issues with the older `v1/chat/completions` endpoint, detailed in this pull request.

- Unlike previous models, GPT-5-Codex exclusively uses the Responses API, which required an update to handle this specific endpoint in aider.

- Navigating Aider-Ollama Configuration: A user sought advice on how to configure aider to read a specific MD file defining the AI’s purpose when used with Ollama.

- Specifically, the command `aider --read hotfile.md` did not work as expected, so more context may be needed to diagnose the problem.

- Context Retransmission in Aider & Prompt Caching: Users observed that aider retransmits the full context with each request in verbose mode, sparking discussion about efficiency.

- It was confirmed that while this is standard behavior, many APIs leverage prompt caching to reduce costs and improve performance, which aider leaves as an open choice for the user.

- Aider’s Alphabetical Sorting of File Context: A user highlighted that aider sorts file context alphabetically, rather than preserving the order in which files were added.

- This user had started a PR to address the issue, but stopped, citing inactivity in merging pull requests.

Modular (Mojo 🔥) Discord

- RISC-V Performance Trails Phone Cores: Members observed that RISC-V cores generally underperform compared to modern smartphone cores, excluding microcontroller SoCs.

- One anecdote contrasted cross-compiling SPECint on an UltraSPARC T2, which came out faster, with compiling it natively on a RISC-V device.

- Tenstorrent Eyes RISC-V Performance Boost: Tenstorrent’s MMA accelerator + CPU combos were highlighted as a promising avenue to enhance RISC-V performance.

- Specifically, Tenstorrent’s Ascalon cores are viewed as the most likely to significantly impact RISC-V performance within the next five years, utilizing small in-order cores to drive 140 matrix/vector units.

- RISC-V Faces Bringup Growing Pains: RISC-V 64-bit is functional but needs considerable bringup effort, with vector capabilities currently unavailable.

- Integrating RISC-V requires adding it to all architecture-specific `if-elif-else` chains and implementing a `requires` mechanism, which the language currently lacks.

OpenAI Discord

- OpenAI’s Stargate Project Leaps Forward: OpenAI has announced five new Stargate sites in partnership with Oracle and SoftBank, making significant progress on their 10-gigawatt commitment, detailed in their blog post.

- This collaboration aims to accelerate the deployment of extensive compute resources, putting the project ahead of schedule to reach its ambitious 10-gigawatt target.

- Sora Faces Generation Snags: Users are reporting issues with Sora’s video generation capabilities, with questions raised about potential fixes.

- However, no specific timeline or official response has been provided regarding when these issues might be resolved.

- GPT-4o’s Translation Hiccups with Chain of Thought: A member discovered that GPT-4o’s translation quality suffers when using a chain-of-thought prompt compared to direct translation.

- Specifically, asking GPT-4o to identify the input language and outline a three-step thought process before translating leads to less effective results.

- GPT-5-Minimal Model Assessed: According to this image, the GPT-5-Minimal model performed worse than Kimi K2, while High is the best overall for agentic use cases.

- The ranking, from best to worst: High (only via API), Medium, Low, Minimal, Fast/Chat (non-thinking).

DSPy Discord

- DSPy gets profile package: A member released dspy-profiles, a lightweight package for DSPy that manages configurations with TOML, enabling quick setup swaps and tidy projects; it was also announced on Xitter.

- The tool switches LLM behavior with a single command and is available as decorators and context managers, aiming to eliminate context boilerplate; it was originally motivated by managing dev/prod environments.

- GEPA Multimodality Plagued by Problems: A member reported a severe performance issue with GEPA Multimodality, linking to a related GitHub issue.

- The user indicated that their use case requires serving multiple users, but gave no further details about it.

- Passing PDFs & Images into DSPy is Explored: A member inquired about passing images or PDFs into DSPy for data extraction, and the community discussed VLMs vs LLMs for extracting chart information from images and PDFs.

- Another member pointed out that one can pass images into DSPy with this dspy.ai API primitive.

- Prompt Optimization Powers AI Safety Research: A member published a post, Prompt optimization can enable AI control research, explaining how they used DSPy’s GEPA to optimize a trusted monitor, evaluated using inspect, with code here: dspy-trusted-monitor.

- The author introduced a comparative metric with feedback, passing one positive and one negative sample through the classifier at a time, and scored the pair based on whether the positive sample score was greater than the negative sample score.
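A minimal sketch of the comparative metric described above: pass one positive (should-flag) and one negative sample through the classifier and score the pair 1 if the positive sample gets the higher score. The keyword-based `toy_monitor` is a hypothetical stand-in for the LLM-based trusted monitor, and this sketch returns only the score, omitting the textual feedback the author's GEPA metric also provides.

```python
from typing import Callable

Classifier = Callable[[str], float]  # returns a suspicion score for one sample


def pairwise_metric(classifier: Classifier, positive: str, negative: str) -> float:
    """1.0 if the positive sample strictly outscores the negative one, else 0.0."""
    return 1.0 if classifier(positive) > classifier(negative) else 0.0


def pairwise_accuracy(classifier: Classifier, pairs: list[tuple[str, str]]) -> float:
    """Fraction of (positive, negative) pairs the classifier orders correctly."""
    return sum(pairwise_metric(classifier, p, n) for p, n in pairs) / len(pairs)


def toy_monitor(code: str) -> float:
    # Hypothetical keyword monitor standing in for the real LLM-based monitor.
    return float(sum(word in code for word in ("backdoor", "exfiltrate", "os.system")))


pairs = [
    ("if user == 'x': backdoor()", "return a + b"),
    ("os.system(cmd)", "print('hello')"),
    ("eval(user_input)", "print('hello')"),  # both score 0: a tie counts as a miss
]
print(pairwise_accuracy(toy_monitor, pairs))
```

Because only the relative order within a pair matters, the monitor is rewarded for ranking suspicious code above benign code rather than for hitting any particular absolute threshold.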

tinygrad (George Hotz) Discord

- Triton’s Abstraction Level Debated: Discussion highlights the benefits of high-level IRs like Triton, but also points out the need for a multi-layer stack to interface with lower-level hardware, such as the Gluon project.

- The current Nvidia-specific nature of Gluon is a limitation.

- Single IR Falls Short: A single high-level IR is insufficient for all users and use-cases, citing the divergent needs of PyTorch users seeking speedups versus those optimizing mission-critical HPC projects.

- As one member put it, there is not really going to be a goldilocks zone where the abstraction level of the IR is just right for all users and use cases.

- Tinygrad Taps Bitter Lesson: Tinygrad’s vision involves leveraging the bitter lesson to combine the benefits of incomplete and complete IRs, using UOps as a hardware-incomplete representation.

- The goal is to search over the space of rendered programs that implement the UOps to find the fastest one.
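The idea of searching over implementations and keeping the fastest one can be illustrated with a toy: several candidate programs for the same computation are first checked for correctness against a reference, then timed, and the fastest correct one wins. The candidates here are hand-written Python functions standing in for rendered kernels; tinygrad's actual search operates on kernel optimizations, not on Python functions like these.

```python
import timeit
from typing import Callable, Optional

Candidate = Callable[[list[float]], float]


def reference(xs: list[float]) -> float:
    total = 0.0
    for x in xs:
        total += x * x
    return total


# Hypothetical "rendered programs": different implementations of the same sum of squares.
CANDIDATES: dict[str, Candidate] = {
    "loop": reference,
    "generator": lambda xs: sum(x * x for x in xs),
    "map": lambda xs: sum(map(lambda x: x * x, xs)),
    "wrong": lambda xs: sum(xs),  # deliberately incorrect, must be rejected
}


def search_fastest(candidates: dict[str, Candidate], data: list[float],
                   repeats: int = 20) -> Optional[str]:
    """Return the name of the fastest candidate whose output matches the reference."""
    expected = reference(data)
    best_name: Optional[str] = None
    best_time = float("inf")
    for name, fn in candidates.items():
        if abs(fn(data) - expected) > 1e-9 * max(1.0, abs(expected)):
            continue  # correctness check comes before any timing
        elapsed = timeit.timeit(lambda: fn(data), number=repeats)
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    return best_name


data = [float(i) for i in range(10_000)]
print(search_fastest(CANDIDATES, data))
```

Which correct candidate wins depends on the machine, which is exactly why the choice is made by measurement rather than fixed in advance.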

- Neural Compilers on the Horizon: Emphasis is placed on the importance of search and neural compilers, with a particular interest in GNNs or other graph-based models.

- The suggestion is to create a multi-stage compiler that utilizes graph-based models per stage.

Nous Research AI Discord

- Evaluating TRL Assessment: A member inquired about a TRL (Technology Readiness Level) assessor and whether it’s worthwhile to red team their own stack using a new ecosystem, and suggested moving specific discussions to a dedicated channel.

- The member was interested in evaluating how practically ready their technology stack is with the new ecosystem.

- Nous Tek Gets Praise: A member affirmed “Nous tek”, leading another member to offer assistance in answering questions.

- The exchange highlights the positive sentiment and community support within the channel.

- Distributing AI Training on VPSs: A member explored the feasibility of training an AI model using distributed learning across multiple VPSs, utilizing resources like Kubernetes and Google Cloud.

- They expressed interest in accelerating training cycles with datasets derived from operational data, while also addressing safety rails for hardware management.

- Exploring Model Tuning via Code Genetics: A member explored using code genetics via OpenMDAO to automate adjustable parameters and Terraform for infrastructure control, questioning the necessary audit systems and methods for vetting synthetic data.