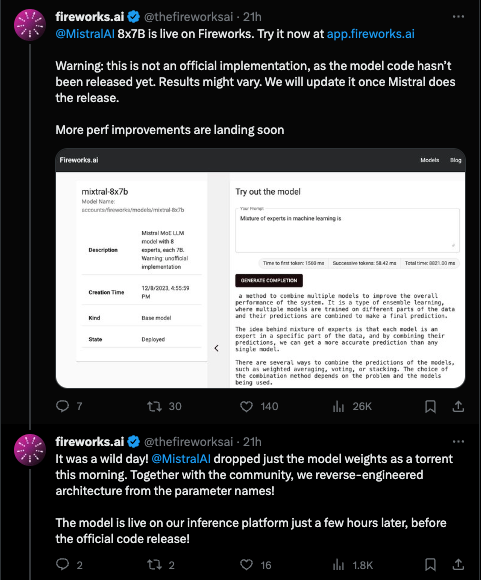

We also saw similar efforts from Fireworks AI:

Unfortunately nobody has reported significant benchmark improvements and it is not likely to be useful for local LLM usage. Still, great progress for the smol models community.

[TOC]

DiscoResearch Discord Summary

-

Discussions on the performance and implementation of the Mixtral model across multiple channels. This includes its functionality in the context of new and existing models, like Hermes 2.5 and Hermes 2. For instance, Mixtral’s encountered performance behaviour in various tests, such as

winogrande,truthfulqa_mc2, andarc_challenge, was discussed. Additionally, technical aspects such as GPU requirements, impact of memory limitations, and multi-GPU setup issues were also referred.“The base model was implemented using HuggingFace (HF) transformers by user

@bjoernp, and it was found to perform at70Bperformance level for a compute of~12Band memory requirements of~47B.” - mixtral_implementation, @the_bloke -

Evaluation of benchmarking models and detection strategies across different datasets.

@bjoernpintroduced considerations such as grammar-based evaluation, chain of thought (CoT), and a min_p sampling method. The Hellaswag benchmark and FastEval were proposed as potential tools, with the point of incorporating llama.cpp into FastEval by user@rtyaxsurfacing. Clarifying ideas about CoT or Tree of Thought and the application of min_p sampling was discussed“Suggestions were put forth for measures to detect cheating, such as scrambling the order of questions or retaining a percentage of questions unreleased.” - benchmark_dev, @.calytrix

-

Insightful debates on model sampling techniques, including Min P and Top P, and their respective influence on the stability, coherency, and creativity of generated responses.

“He suggested a 10-run repeat process to ascertain a model’s reasoning consistency.” - general, @kalomaze

-

GPTs’ learning process and limitations highlighted by users. A clarification from

@solbuson how agents store and utilize uploaded files as ‘knowledge’ was noteworthy.“Uploaded files were stored as ‘knowledge’ for the agent’s reference but did not continually modify their base knowledge.” - general, @solbus

-

Adaptability and versatility of models under varying conditions was a focused topic. The potential benefits of enabling higher temperature model settings through min_p sampling methods were discussed.

“Min P sampling in enabling higher temperature settings, making models more creative in a suitable and controlled manner.” - benchmark_dev, @kalomaze

DiscoResearch Channel Summaries

▷ #disco_judge (1 messages):

cryptossssun: is there any plan of dev the Mixtral Model?

▷ #mixtral_implementation (651 messages🔥🔥🔥):

- Hermes 2.5 vs Hermes 2 Performance: Users discussed the performance of the new implementation of Hermes, named Hermes 2.5. One user reported it performs better than Hermes 2 in various benchmarks.

- New Mixtral Model Implementation: Multiple users discussed and reported on their progress in implementing the newly released Mixtral model. The base model was implemented using HuggingFace (HF) transformers by user

@bjoernp, and it was found to perform at70Bperformance level for a compute of~12Band memory requirements of~47B. The model was also implemented with quantization via GPTQ by user@the_bloke, but it was still in the testing phase. - Discussion on Model Merging Tactics: Various merging techniques were suggested, with one user suggesting applying the difference between

UltraChatand the baseMistraltoMistral-Yarn. - Model Performance Evaluations: Several users reported benchmark results for the Mixtral implementation. Initial benchmarks showed variable performance across various evaluations like

winogrande,truthfulqa_mc2, andarc_challenge. After fixing a bug with softmax+topk, performance results improved. Further finetuning was reported to be in progress. - Model Loading and GPU Requirements Discussions: Users discussed various issues and techniques for loading the new Mixtral model, tackling memory limitations, optimizing load times, and issues with multi-GPU setups. GPU memory discussions suggested the model can be loaded in

4biton GPUs with around24GBVRAM. Issues with incorporating this model into existing tools liketextgen-webui,exllama, and others were shared.

▷ #general (222 messages🔥🔥):

- Mistral Models Discussions:

@bjoernpand@sinan2conversed about the performance of Mistral models on Hermes 2.5 and Hermes 2, as well as issues relating to extending Mistral beyond 8K. - Learning GPTs Agent:

@tilanthiraised concerns about GPTs agent not learning from additional information after initial training.@solbusclarified that uploaded files were stored as ‘knowledge’ for the agent’s reference but did not continually modify their base knowledge. - Chatbot Model Performance Contrasts:

@cryptossssunshared a preliminary HuggingFace implementation of a MoE model by MistralAi mixtral-7b-8-expert and discussed the possible performance differences between Mistral’s original models and Mixtral. - Discussions on Model Sampling Techniques:

@kalomazekeyed in his views on the limitations of Top P and proposed adopting a “min P 0.1” sampling method under typical 1.0 temp conditions. He suggested a 10-run repeat process to ascertain a model’s reasoning consistency. - Potential Improvements to Benchmarking Models:

@bjoernpproposed a new method of benchmarking models, incorporating 10x resampling for self-consistency, grammar-based evaluation, chain of thought (CoT), and a min_p sampling method. Programming constraints and implementation details were discussed with@kalomaze, who was invited to potentially lead the effort.

▷ #benchmark_dev (111 messages🔥🔥):

- Improving Benchmark Evaluation: Channel was created for discussions on improving the evaluation of benchmarks, with central ideas including using CoT or Tree of Thought, evaluating each question multiple times to circumvent token probability issues, employing min_p sampling for better problem resolution, and applying grammar-based approaches for more valid answers post-CoT reasoning. Notably mentioned

@kalomaze’s thoughts on the value of running questions multiple times, impacting not just the binary correct/incorrect judgment but also highlighting the degree of a model’s incorrectness. - Sampling Methods: An extensive discussion took place revolving around various sampling methods, particularly Min P and Top P, and their impact on the coherency, creativity, and stability of generated responses.

@kalomazeput forth the benefits of Min P sampling, justifying its superiority at truncation, and demonstrated it by sharing multiple examples of model responses. His propositions were met with skepticism by@.calytrix, who pointed out that human preference might not always align with the best reasoning the model is capable of. - Benchmarks and Tools: Both the Hellaswag benchmark and FastEval were considered as potential resources, though their alignment with the proposed methodologies was unconfirmed. User

@rtyaxmentioned the possibility of incorporating llama.cpp into FastEval. - Standardization in Benchmarking: Users voiced concerns about the lack of standardization and reliability in benchmark testing, mentioning variability in evaluation techniques and sampler settings. Suggestions were put forth for measures to detect cheating, such as scrambling the order of questions or retaining a percentage of questions unreleased.

- Model Scalability and Versatility:

@kalomazereported on the potential of Min P sampling in enabling higher temperature settings, making models more creative in a suitable and controlled manner, even applicable for programming purposes.

Nous Research AI Discord Summary

- Benchmarking methods in Mistral 7B, comparing it to other models such as the Hermes 2.5 and Hermes 2. A series of tweets related to the benchmarking and improvement of these models were shared: Tweet1, Tweet2.

- Analysis of the memory requirements of Mixtral, the memory optimisation of its GPU usage, and specific Mixtral inference implementations was debated.

- The potential and viability of fine-tuning MOE (Mixture of Experts) and larger models like GPT-4. “The potential for fine-tuning MOE (Mixture of Experts) models was discussed, with an argument for enterprises benefiting from continued pretraining on base MOE architecture as opposed to simply fine-tuning larger models like GPT-4”.

- The quantization methods GGUF, GPTQ, AWQ were compared, describing the AWQ as a more ‘dynamic’ method. Confusion about the term “2.5 bits” was also addressed in the guild.

- Topics discussing the VRAM requirements for models like the Mixtral 8x7B was discussed with reference to Tim Dettmers’ claim of running the model in only 5GB of RAM.

- Shared resources about MoEs (Mixture of Experts) were offered to users seeking to delve deeper into MoEs structure and functionality.

- Queries about Nous’s operational structure and different figurative objects were conversed in the off-topic channel. Specifically, questions arose about an offer for an unspecified object in San Francisco raised by user

@coffeebean6887and a query about whether Nous has employees or if it’s an all-volunteer organization. - Speculation on the future of AI was generally concerned with the future regulation of AI, with suggestions of seeking lesser restrictive areas to continue AI projects. One suggestion was more specifically concerned with the anticipated EU AI Act restrictions.

Nous Research AI Channel Summaries

▷ #off-topic (7 messages):

- Discussion about object’s appearance: User

@eas2535made a comment about the appearance of certain objects, stating, “Those heads are not attached right.” - Offer for object in SF:

@coffeebean6887offered extras of an unspecified object to anyone located in San Francisco, humorously implying they may have taken more than intended. - Request for object:

@gabriel_symeexpressed a desire for “the girl”, presumably a figurative object mentioned earlier, despite being far from San Francisco. They added they could cover postage costs. This user then asked for validation on the object’s aesthetics, asking, “Looks good right?” - Shared link:

@euclaiseshared a link to a Tweet without further comment. View Tweet - Nous Employment Query:

@naswasked if Nous has employees or if it’s an all-volunteer organization, mentioning users@jadeand@teknium. They apologized if the question was inappropriate for the channel, stating they were job-seeking and curious.

▷ #benchmarks-log (7 messages):

@nonameusrshared a series of tweets related to benchmarking the Mistral model. The tweets were from Anton @abacaj, discussing the evaluation of Mistral-7B against a standard test.- In one tweet, Anton @abacaj reported a score of 33.54%, an improvement from the standard Mistral-7B’s 30.5%.

@gabriel_symeshowed interest in the code used for these tests and later realized it was available in a public repository.

▷ #interesting-links (34 messages🔥):

- DeepSpeed v0.5 Mixture of Experts (MoE) Training:

@everyoneisgrossshared a link to DeepSpeed v0.5, which supports training Mixture of Experts (MoE) models. Noted that MoE models are an emerging class of sparsely activated models with sublinear compute costs with respect to their parameters, highlighting the example of Switch Transformer. - MoE Implementation and Functionality:

@everyoneisgrossrecommended reviewing the comments on a GitHub page, megablocks-public/megablocks/layers/moe.py, for a clearer understanding of how MoEs work. - Inference Code for Mistral/Mixtral:

@fullstack6209shared a link to the GitHub page, llama-mistral, which provides inference code for Mistral and Mixtral models hacked up into the original Llama implementation. - 8x7B MoE Base Model for Text Generation:

@if_alinked to a MistralAI’s new model on the Replicate platform, noting that this model runs on 4x Nvidia A100 (80 GB) GPUs. - New 2-bit Quantization Method:

@cyborgdreamshared information about a tweet from @tsengalb99 introducing a new 2-bit quantization method, QuIP#, for large language models with near-fp16 performance.

▷ #general (667 messages🔥🔥🔥):

-

Mistral AI Model Discussions: Users discussed various aspects of Mistral AI’s models, including their performance, potential improvements, and how they stack up against other models such as Hermes 2.5 and Hermes 2.

@fblgitshared insights about the performance of different models, mentioning that Xaberius 34B holds a leading position in the LLM leaderboard. -

Mixtral Inference Implementation: A debate arose concerning the correct inference protocol for Mixtral. Various users proposed different frameworks. A consensus was later reached that applying softmax after topk led to better benchmark results.

-

Memory and Performance Trade-offs: There was a discussion about the memory requirements of Mixtral and how it might be optimized for more efficient use of GPU memory. It was noted that despite Mixtral taking up significant VRAM, its inference speeds are similar to that of a Mistral 7B model. Additionally, it was suggested that a mixed-precision approach could be a viable solution.

-

Fine-tuning AI Models: The potential for fine-tuning MOE (Mixture of Experts) models was discussed, with an argument for enterprises benefiting from continued pretraining on base MOE architecture as opposed to simply fine-tuning larger models like GPT-4. Furthermore, ideas were exchanged about augmenting the datasets for better GSM scores.

-

Regulation Concerns: Users expressed concern about the future of AI regulation, especially with regards to Europe’s EU AI Act and possible restrictions on open-source AI projects. Some discussed seeking places with less restrictive regulation to continue their AI projects.

▷ #ask-about-llms (40 messages🔥):

- Quantization methods GGUF, GPTQ, AWQ: User

@akhxlasked about the difference between these quantization methods. User@cyborgdreamexplained that GGUF is a file format, not a quantization method. GPTQ and AWQ, however, are different quantumization methods with AWQ being a more “dynamic” and “smarter” option.@cyborgdreamalso cleared up confusion about what “2.5 bits” means in this context, stating that they mean 2 bits, and then for every “few parameters there’s an extra byte with extra information.” - Fine-tuning Mistral 7B:

@.beowulfbrasked for notebooks to help fine tune Mistral 7B.@russselmshared a Github link to a notebook they used as a reference. - Understand MoE (Mixture of Experts): User

@russselmrequested resources to understand about MoE. User@random_string_of_charactersuggested several resources including: Mixture of Experts, the MoE Reading Group and a YouTube Playlist on MoE. - StripedHyena-Nous-7B and LLamachop Implementation: Discussion arose about the new architecture StripedHyena-Nous-7B from User

@yobibyte. Updates will have to be made to Llamachop’s modeling code for Hugging Face transformer’s compatibility. - VRAM Needs for Mixtral 8x7B:

@gerredprompted a discussion about the drastic VRAM needs for running Mixtral 8x7B. It was noted that Tim Dettmers, the creator of bitsandbytes, claimed he could run Mixtral 8x7B in 5GB of RAM. - Position Encoding in Encoders:

@ex3ndrshared their confusion about the use and application of position encodings in encoders, particularly in relation to audio tokens. The discussion focused on their understanding of how the encoding process works and the potential issues arising from this in the overall encoding process. - Step-like Behavior in Loss Function of LLMs:

@nmg4914shared a blog pertaing to the unusual training behaviors in Large Language Models (LLMs) and asked if others could replicate the findings in their experiments.

OpenAI Discord Summary

- Discussion on strengths and weaknesses of Google’s involvement in AI advancement, with opinions expressed about Google’s track record compared to companies like Atari and Kodak; the company’s work on Artificial Superintelligence was also mentioned. Key authors’ exit from Google due to its failure to actualize research was brought up.

- Usage questions and technical challenges surrounding GPT-4 access, with slowdowns, difficulties logging in, and limit on the number of messages being key issues. Browser recommendations were given to tackle network errors and account restoration queries were addressed.

- Exploration of ChatGPT’s utility and performance, including a debate about the comparative robustness of Bard/Gemini Pro, GPT-3.5, and GPT-4. Concerns were raised regarding continual user verification and the decline in usefulness over time.

- Divergent methods for prompt engineering, such as using “show-and-tell”, EmotionPrompt technique, style guides like Strunk and White’s “Elements of Style”, or explicit detailing of character traits, aiming to shape engaging and unique AI outputs.

- Discussion on API-related issues and strategies, consisting of tackling repeated phrases, job interview simulation, and manipulation of instructions to guide AI behavior. An emphasis was placed on clear user understanding and explicit demands in order to get effective AI responses.

- Conversation about DALL·E usage, with DALL·E capabilities in MS Designer Create and Bing Image Creator being recommended. DALL·E’s implementation within ChatGPT, especially for Plus subscribers, was clarified.

- Questions around and recommendations for Unified Neural Alignment (UNA) and custom GPT assistants, reflecting interest in various OpenAI techniques and functionalities. However, no answers were provided about the UNA technique.

- Mention of the removal of the analyzer dropdown in file reading, as well as concerns around custom GPT caps and AI-aided content moderation.

OpenAI Channel Summaries

▷ #ai-discussions (54 messages🔥):

- Google’s performance:

@thewizzard___stated that Google, despite having a strong research team, had a number of product flops in several fields including social media, phones, AI, and desktop OS. The user compared Google to both Atari and Kodak as companies that did not convert their industry position into long-lasting success. - Use of DALL-E: User

.ggvoidasked about the usage of DALL-E,@rjkmelbsuggested using Bing Image Creator, and@i_am_dom_ffsrecommended MS Designer Create or Bing Create, both having DALL-E capabilities. - Unified Neural Alignment (UNA):

@readyplayeremmainquired about publications explaining the technique used in several openly available AI models. No responses were given regarding this. - Bard/Gemini Pro vs GPT-3.5 & GPT-4:

@thepitviperopined that to them, Bard/Gemini Pro seems better than GPT-3.5 and that Gemini Ultra might be on par with GPT-4.@zyrqlosaid that their current experience showed GPT-4 as superior to Bing or Bard but predicted that if issues were addressed, Gemini could surpass GPT.@bambooshootshighlighted that Google is substantially behind in AI model development compared to OpenAI. - Google’s involvement in AI advancement:

@zyrqlopointed out that Google Deepmind is working on Artificial Superintelligence, which could be significantly superior to any existing AI. Yet,@bambooshootsstated that key authors of the ‘Attention is all you need’ paper left Google due to its lack of action towards actualizing the research. - Continuing AI-generated stories:

@spectre120asked for an AI recommendation to continue their AI-generated story, feeling frustrated with ChatGPT.@the_only_alexanderresponded by suggesting the need to improve the direction of the user’s story.

▷ #openai-chatter (106 messages🔥🔥):

- Account-related questions:

@dawnx.asks about changing the Discord Account tied to their OpenAI account.@satanhashtagsuggested direct messaging modmail for assistance. - Discussion on GPT Versions and Features: Users including

@eksynn,@jessicant.,@satanhashtag,@sooswastakenengage in speculation about the potential release and pricing of future GPT versions, and the implications for existing versions. - Limit on the Number of Messages:

@mrcrack_,@tariqali,@bad3r,@【penultimate】,@eskcanta,@ragnarlothbrok, and others discuss constraints on the number of messages allowed per time unit on GPT-4 and compare that with other versions. - Performance and Availability Issues:

@luculentladyand@mrcrack_reported experiencing slowdowns and difficulty accessing ChatGPT,@satanhashtagsuggested this might occur during peak usage. - Quality of GPT-3 and GPT-4:

@ragnarlothbrokand@offlinediscuss observed declines in the quality of responses from GPT-3 and GPT-4, including inexplicable regression in answer quality and a decrease in usefulness over time.

▷ #openai-questions (55 messages🔥🔥):

- Accessing GPT-4: Some users, such as

@signateand@pr0xymo, experienced issues accessing and using GPT-4, despite being paid customers. They noted problems such as not being able to access the program since November, browser freezing, slow response times, and failings with the “stop” command. - Browser Recommendations: In solving browser-related issues,

@rjkmelbsuggested trying alternate browsers like Firefox in response to@maguiresfuturefacing network errors while using Chrome. - Account Restoration and Billing Issues: User

@gprapcapt3lasked if it was possible to restore an account after deletion, to which@rjkmelbresponded that it was not. This user also had concerns about ongoing charges after account deletion.@iversusaihad issues accessing GPT-4 despite a successful Plus subscription renewal, which@rjkmelbsuggested escalating through OpenAI support. - DALL·E Use and Billing:

@life_9999inquired about the cost of using ChatGPT Plus and DALL·E, to which@solbusclarified that ChatGPT Plus costs 20 USD a month, but one can use DALL·E 3 images commercially. Free access to DALL·E is available via Bing’s image creator but commercial use policies differ. - Custom GPT Assistants and Attachments Feature: User

@killymbappsposed questions about the use and implementation of the ‘Attachments’ feature in custom GPT assistants, particularly regarding how attachments should be prompted and structured. No answers were given in the discussion.

▷ #gpt-4-discussions (32 messages🔥):

- Analyzer Dropdown in Reading Files:

@zeriouszhitraised a query about the removal of the analyzer dropdown in file reading. It was perceived as a helpful tool to gauge if the AI was avoiding reading the files in their entirety. - Training GPT - Steps to Follow in Each Response:

@happygdiscussed the most effective ways to structure Custom Instructions vs Knowledge. They proposed a method by asking the GPT to reword the prompt according to specific instructions, then respond per the specifications. - Content Moderation with GPT-4:

@theunknown7enquired about using GPT-4 for content moderation, for which@solbusrecommended using OpenAI’s API moderations endpoint. The discussion further explored the difficulties in managing custom rules with OpenAI’s usage policies. - Issues with Custom Actions and Trello API:

@hachumansought assistance with integrating Trello’s REST API into a GPT and experienced issues while importing the full schema from Swagger. - User Verification for ChatGPT:

@yuriy700experienced frequent user verification prompts while using ChatGPT.@readyplayeremmasuggested it might be browser plugins or a VPN causing the issue. - GPT-4 Cap:

@karajanraised a concern about the limit on custom GPTs.@thepitviperclarified that custom GPTs have a cap of 25/3, and using plain GPT-4 allows for additional prompts up to a 40/3 limit. - Usage Of Dall-E in ChatGPT:

@life_9999enquired about the use of Dall-E in chat GPT.@pietmanclarified that it’s only accessible for GPT Plus subscribers. - Triggering Search in GPT Using RAG:

@a1vxasked about instructing a GPT to search its knowledge files using RAG. - User Data Protection in ChatGPT Responses:

@jobydorrshared an experience where ChatGPT denied a request involving personal information. They queried if the refusal to transcribe Instagram usernames or email addresses was a new implementation. - Integration of Dall-E Images and API Actions:

@chandan8764proposed sending Dall-E generated images in the chatgpt UI to some API action routes within a GPT.

▷ #prompt-engineering (40 messages🔥):

-

Show-and-tell Technique for Dialogue Creation:

@tryharder0569discussed an approach for creating engaging dialogues using the “show-and-tell” technique, where one suggests details without stating them outright. The method focuses on figurative, metaphorical, and expressive language to demonstrate the effect of a message implicitly (source) -

EmotionPrompt Technique for Improved Outputs:

@madame_architectmentioned the potentially beneficial effects of adding emotional stakes or implications to commands given to the AI. It’s suggested that especially within the scope of emotive responses, the AI tends to perform better in producing targeted results (source: “Large Language Models Understand and Can Be Enhanced by Emotional Stimuli” paper). -

Strunk and White’s “Elements of Style” for AI Writing:

@laughteronwateradvised to guide the AI’s writing style according to Strunk and White’s “Elements of Style”, using a tone akin to National Geographic, Scientific American, or Popular Science magazines. The user warned against using clichés or colloquialisms. -

The Effect of Emojis on Tokens:

@pythoncodrrcautioned that adding emojis to the AI output could consume more tokens than anticipated, as one emoji can correspond to 2-8 tokens. -

Behavior-guiding Prompts for RPG-style Dialogue:

@eskcantadiscussed the efficacy of using character-specific prompts to orient AI behavior. By instructing the AI to “speak as a noir detective with a dark secret”, brooding and cryptic dialogues are produced. The user exhorted the importance of giving clear directives, accurate detailing of character traits, and explicit requisites in making a prompt.

▷ #api-discussions (40 messages🔥):

-

Avoid Reusing Similar Phrases:

@Tedasked for advice on how to prevent GPT from reusing similar phrases multiple times.@mysticmarks1suggested blending the writing style of known authors and even spoke about the capability to mimic specific eras, time periods, and speech impediments to achieve unique writing styles. -

Behavioral Guidance: In response to

@Ted’s question on achieving unique texts,@mysticmarks1emphasized the importance of giving the AI good behavioral guidance to avoid repetition of phrases. The discussion highlighted using specific character traits, like that of a villain, to limit and diversify the AI’s vocabulary. -

Technical Issues:

@laughteronwaterreported experiencing issues with the ChatGPT system when using certain symbols for creating tables and rhythm notation. They also discussed wanting to limit cliches and colloquialisms in the model’s output and mentioned their customized instructions to get a more professional and academic writing style, similar to that of National Geographic or Scientific American magazines. -

Realistic Job Interview Simulation:

@eudkand@tryharder0569discussed how to prompt the AI to simulate a realistic job interview.@tryharder0569suggested specifying certain behavioral traits in the instructions, such as being a “tough job interviewer”. -

Custom Instructions:

@laughteronwaterand@tryharder0569discussed strategies to avoid cliches in the AI’s responses. They tried different instructions to ameliorate the AI’s directness, with@tryharder0569suggesting the use of “show-not-tell” language. -

Naming AI Characters:

@eskcanta,@madame_architect, and@tryharder0569discussed the beneficial effects of attributing specific roles, personalities, and motivations to AI models to guide their language and response style more effectively. -

Emotional Prompting:

@madame_architectregistered the merits of emotional manipulation in prompts, supported by an academic paper titled “Large Language Models Understand and Can Be Enhanced by Emotional Stimuli”. -

Views on AI:

@eskcantaadvocated for the need for clear understanding by users about what they want from the AI, and accurately communicating it for effective results. The discussion emphasized the need for clear communication when prompting the AI, to avoid the risks of ‘magical thinking’ or undue emotional manipulation.

OpenAccess AI Collective (axolotl) Discord Summary

- An educational discussion on the Mixture of Experts (MoE) Paradigm and the Switch Transformer. Users acknowledge its complexity and discuss its potential to address VRAM limitations and expedite AI training. There was a notable disagreement on whether all experts are loaded when batching, which might affect the overall VRAM capacity. Some videos and sources are shared on the topic for further learning.

- Ongoing talk on several datasets from HuggingFace’s hub and a GitHub project named Megablocks-Public open to public contribution. The benefit of these resources is accompanied by reports of loading issues. Also, fine-tuning progress updates and information sharing between members, interest in expanding vocabulary size and associated experimental results, and criticism of Grok’s LLM fine-tuning process.

- Numerous points on the development, training, and refining of AI models, with particular attention to Mixtral and qLoRA. Insights were shared on community-contributed code updates, VRAM usage during training, and encountering issues with checkpoint saving in the Transformers library. The issues were later discussed on HuggingFace’s GitHub.

- Discussions on tools for extracting text from PDF scripts for machine learning purposes, comparing PyMuPDF with other solutions like Apache Tika™ REST services, and a request for recommendations. A shared link to Tika-Python on Github for improved extraction results.

- Guidance was shared on converting oversized PyTorch models into the safetensor format with tools supported by the readme in Axolotl. Suggestions include using “axolotl.cli.shard” for the model files to simplify script creation.

@propbackgave an update on a troubleshooting process for a reported nccl-related issue during multi-GPU inference. However, no further updates from the team were received.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (47 messages🔥):

- Mixture of Experts (MoE) Paradigm:

@noobmaster29shared an educational YouTube video explaining the Mixture of Experts (MoE) paradigm and the Switch Transformer. They also mentioned that this concept is more complex than an ensemble model. - Databases on HuggingFace’s Hub:

@noobmaster29provided links to two distinct databases available on HuggingFace’s website. One database was a Japanese dataset under the name FreedomIntelligence/evol-instruct-japanese. The other was called sharegpt-japanese, but encountered a loading issue. Additionally,@noobmaster29shared Megablocks-Public from GitHub, a project open for public contribution. - Naming a Medical AI Model:

@yamashisought suggestions for a name for a medical model.@noobmaster29suggested Viper, referencing the snake symbol used in medicine, and other name suggestions included Internist.ai v0.1 and Amoxitron.@nanobitzadvised using the name of a medicinal plant. - Fine-Tuning Discussion: New member

@joshuasundanceexpressed interest in learning about fine-tuning.@yamashiclarified that progress is still being made on this topic, mentioning that a fine-tuned model was published on Hugging Face but it may be based on copy-pasted information. - Mixture of Experts (MoE) and VRAM Discussion:

@nafnlaus00suggested that the MoE model could address VRAM limitations and speed up AI training and inference.@yamashidisagreed, stating when batching you will inevitably load all expert at once.

▷ #axolotl-dev (135 messages🔥🔥):

-

DiscoReseach/mixtral-7b-8expert Update:

@caseus_shared an update link about@bjoernp’s code changes on the project here. -

Fine-tuning Mixtral with qLoRA: A discussion took place between

@faldore,@yamashi,@bjoernp, and@casper_aiabout fine-tuning Mixtral with qLoRA and its effectiveness.@bjoernpstated that it should work as qLoRA is essentially a standard Mistral architecture with a router. -

Mixtral Training and VRAM Usage:

@faldoreshared his experiences and challenges with tuning Mixtral model. He reported that it works with 4x A100 80gb GPUs and 8k sequence length, but he had to reduce the sequence length to 4096. -

Expanding Vocabulary in Models:

@seungdukshared his experiment on expanding model’s vocabulary using fine-tuning for newly added tokens. He shared a link to the code segment here and mentioned that it doesn’t harm the pre-trained model while training the embeddings for the newly added tokens. -

Issue with Transformer Checkpoint Saving: Report by

@faldoreof an issue with Transformers when saving the first checkpoint during the dolphin-mixtral training process.@caseus_shared a link here referring to the same issue on HuggingFace’s GitHub.

▷ #other-llms (1 messages):

- Grok LLM Fine-tuning Critique:

@nafnlaus00criticized the fine-tuning process of Elon Musk’s “Grok” LLM, stating that whoever was in charge did not strip out the OpenAI prompts from it.

▷ #general-help (11 messages🔥):

- Converting Large PyTorch Model to safetensors:

@.wooserasked for guidance on converting a large 14GBpytorch_model.binto a smaller, manageablesafetensorsfile to ensure user safety.@nanobitzadvised checking the readme in Axolotl, which supports the conversion process. They suggested that@.wooserload the model and then set configuration to save back as a safetensor format. To help@.woosersimplify script creation,@nanobitzalso recommended the use ofaxolotl.cli.shardfor the model files.

▷ #datasets (10 messages🔥):

- Recommendations for PDF-to-text scripts: User

@visuallyadequateinitiated a discussion regarding recommendations for libraries or scripts capable of extracting raw text from PDFs, predominantly machinery manuals. - PyMuPDF vs Other tools:

@visuallyadequateshared that they have been using PyMuPDF with acceptable results, while@noobmaster29also mentioned trying different solutions but still searching for the perfect tool. - Tika-Python Recommendation:

@nruaifrecommended Tika-Python, a Python binding to the Apache Tika™ REST services. This tool reportedly delivered better results than PyMuPDF for@nruaif. The link provided for the tool is https://github.com/chrismattmann/tika-python.

▷ #runpod-help (1 messages):

- Troubleshooting NCCL-related issue:

@propbackmentioned that they are currently working on solving an nccl-related issue during multi-gpu inference which might potentially help solve the issue in this context. They also noted that they hadn’t received any updates on this from the team yet.

HuggingFace Discord Discord Summary

- Queries regarding operational/aggregation aspects of various models like the Lora 4-bit and the HuggingFace LLM, logistic regression, and OpenAI’s XABERIUS-34B beta. Users also sought advice on tools and APIs such as ElevenLabs, Unity Inference, and the Neovim llm plugin. Specific topics included transforming safeTensor output to gguf format, sidhu moose wala model removal, issues using ElevenLabs on HuggingFace, and difficulties in setting up “official” Neovim llm.

- Several members sought insights on different technical aspects, including creating a 4-dimensional Gaussian Splat, resolving compatibility issues between TensorFlow-gpu v1.15 and NVIDIA GeForce RTX 4090, retrieving images via the Gradio API, and effective methods for integrating Local Language Models into apps. Resources shared included GitHub issue discussion and a video on Mamba transformers. A community solution proposed ONNX for improving the portability of local language models.

- Users showcased self-developed projects including an SDXL Transfer Style demo, Web3 API Gateway, Discord bot with AI, XABERIUS-34B-UNA model and the Overall V1 Model. The creators of these projects requested feedback, input, and testing from the community; project links were shared respectively.

- Community discussion revolved around the utilization of high-resolution image datasets and the performance of Google’s Gemini multi-model system. Recommendations were made to utilise depth models, point-e models, and the multimodal extensions of LLaMa and Mistral-7b offered in the Transformers library, linking to specific models such as 3D-Room-Layout-Estimation_LGT-Net and LLaVa.

HuggingFace Discord Channel Summaries

▷ #general (57 messages🔥🔥):

- Fine-tuning Lora 4bit Model: User

@.dafareached out for assistance in transforming a safeTensor output from a fine-tuned Lora 4bit model into gguf format. The user also reported not gettingadapter_model.bin, only safeTensor. - ElevenLabs Usage Issues:

@felipegabriel1995expressed difficulties using ElevenLabs on Hugging Face, questioning if there was any change or plan for discontinuation. - Model Removal Request:

@RAJDEEP SINGHrequested to remove sidhu moose wala model from Hugging Face site, as per the insistence of the model’s parents. He provided a YouTube link as proof (https://www.youtube.com/shorts/v7ZAGyFY_20?feature=share). - Neovim llm Plugin and HuggingFace LLM API Issues:

@zalasurasked for guidance on setting up the “official” Neovim llm plugin with the HuggingFace LLM API or a locally running model. The user also reported encountering 500 errors while using inference API on HuggingFace platform. - Audio to Text Tool Inquiry:

@starkroyaleinquired about a tool that could transform audio to text. The user showed interest in understanding song lyrics better. - Language Configuration in Unity Inference APIs:

@pyl29asked for assistance in altering language settings in Unity inference APIs.@doctorpanglosssuggested that the user might need to generate the openapi client for Unity against their official endpoints. - Logistic Regression Resource:

@gabdosshared a link to a YouTube video (https://youtu.be/ux12Lj8gXZ0) on Logistic Regression that he labeled as a comprehensive resource on the topic.

▷ #today-im-learning (7 messages):

- 4 Dimensional Gaussian Splat Tutorial Request:

@sims_asked for a tutorial or course to learn how to create a 4-dimensional gaussian splat. No responses or solutions provided yet. - TensorFlow-gpu v1.15 with NVIDIA GeForce RTX 4090 Compatibility Issue:

@hussain_muhammedencountered a cuBLAS error when running a codebase tensorflow-gpu version 1.15 on NVIDIA GeForce RTX 4090. Suspecting a compatibility issue between the Tensorflow version and GPU version; they requested for assistance. - Solution to TensorFlow Compatibility Issue:

@tryharder0569suggested the problem may be due to a version mismatch. They advised@hussain_muhammedto start a fresh conda environment and reinstall everything from scratch. They also shared a link to a related GitHub issue which might help resolve the problem. - Learning About Mamba Transformers:

@caleb_solmentioned they were learning about Mamba transformers and shared a YouTube link to a video titled “Mamba - a replacement for Transformers?”.

▷ #cool-finds (1 messages):

fblgit: Introducing.. Xaberius 34B, the #1 LLM 🙂 And its just a beta… weakest checkpoint 🙂

▷ #i-made-this (7 messages):

-

SDXL Transfer Style Demo creation: User

@tonic_1announced the creation of a SDXL Transfer Style demo and shared a link to the project here. They invited the community to provide input and PRs. -

Web3 API Gateway:

@dsimmodiscussed a project that offers seamless monetisation of APIs using Web3 technology. The system allows high throughput with a limit of up to 50 requests per second per user and adds only 400ms to the response time. The official website of the project can be found here. -

Discord bot creation with AI and catgirls: User

@devilin_created a Discord bot that integrates with open source language models and offers multiple interaction modes like the ability to ask all models at the same time and compare results. The bot also includes DAN mode. The bot can be found here. -

Introduction of XABERIUS-34B-UNA:

@fblgitintroduced a new model XABERIUS-34B-UNA, explaining that the model exhibits pretrained/foundational behaviour and invites users to try it out. -

New Overall V1 Model Release:

@dak.off1announced the release of a new model, Overall V1, that has been trained based on SD-1.5 and has the ability to create great images. The model has .CKPT, .SAFETENSORS and .ONNX format weights. The model can be downloaded and tested here.

▷ #diffusion-discussions (3 messages):

- High Quality Image Dataset Query: User

@jfischoffasked if anyone knows of a high-resolution, high-quality image dataset, preferably on the smaller side. - Gradio API Image Retrieval: User

@_thunderlordqueried about retrieving images (png or jpg) from a specific path (tmp/gradio/…) using the Gradio API. - Opinion on Gemini: User

@yamayamakawarequested expert opinions on Gemini, the new multi-model system by Google.

▷ #computer-vision (3 messages):

- Depth Models Recommendation:

@jo_pmt_79880recommended checking out depth models or point-e models, and shared a link to the 3D-Room-Layout-Estimation_LGT-Net on Hugging Face Spaces. @n278jmexpressed gratitude for the provided information, acknowledging it as useful.- LLaVa and BakLLaVa Models Release:

@nielsr_announced the availability of LLaVa and BakLLaVa (multimodal extensions of LLaMa and Mistral-7b respectively) in the Transformers library, accompanied by a link to the LLaVa model on Hugging Face. The user also shared a link to a demo notebook.

▷ #NLP (4 messages):

- Integrating Local Language Models into Apps: User

@sayingwhateverrinquired about resources for integrating local language models (LLMs) into applications, specifically in Flutter or web apps, to enhance user experience by providing data insights and suggestions. The user seeks a solution that neither requires end users to setup LLMs nor requires understanding of it. - Localhost vs Bundled Model:

@sayingwhateverralso mentioned that most of the tutorials available instruct on exposing localhost for the app to check, but the preference is for everything to be together in the app itself. - Resource Consideration for Models: It was mentioned that the models preferred would be those not too resource-intensive.

- ONNX as a Possible Solution:

@vipitissuggested using ONNX as a potential solution for portability.

▷ #diffusion-discussions (3 messages):

-

High Resolution Image Dataset: User

@jfischoffasked if anyone knows about a high resolution, high quality image dataset. The exact requirements or use-case for this dataset was not specified. -

Gradio API Image Retrieval: User

@_thunderlordwants to know how to retrieve an image through the gradio API, specifically an image at a ‘tmp/gradio’ path. -

Google’s Gemini Multimodels: User

@yamayamakawaasked for expert opinions about Google’s Gemini, their new multi-model AI project. The response of the community to this query is not included in the provided conversations.

Alignment Lab AI Discord Summary

- Introduction of individuals (‘teknium’) and link sharing in ‘general-chat’ channel.

- Extensive conversation in the ‘oo’ channel about Mixtral and Mamba, with relevant Twitter links shared by ‘@Teknium’. Discussions around Mixtral’s comparison with the Mistral 7b model. Mention of an AI meetup by ‘@teknuim’.

- In ‘oo2’, there were suggestions for Living Room Decor including the idea of having massive whiteboards instead of TVs shared by ‘@ufghfigchv’. Additionally, ‘@gabriel_syme’ described a chart as half-cooked and proposed mutating system prompts to modify interactions.

- Commentary on ‘Gemini Ultra & Bard Advanced Plan’ in the ‘general-chat’ channel by ‘@danfosing’, noting that Gemini Ultra would be part of the paid Bard Advanced plan.

Alignment Lab AI Channel Summaries

▷ #general-chat (4 messages):

- Introduction:

@tekniumchecks in with the channel. - Link sharing:

@tekniumshares a Twitter link. - Model Scaling Discussion:

@ruschcommented on the scalability of AI models, mentioning the possibility of MoE (Mixture of Experts), possibly like the Mistral model. - Gemini Ultra & Bard Advanced Plan:

@danfosingshares that Gemini Ultra will be included in the paid Bard Advanced plan.

▷ #oo (18 messages🔥):

- Conversation about Mixtral and Mamba:

@Tekniumshared a link highlighting work on Mixtral, a hybrid of transformers and Mamba-like architecture. However, it has not achieved linear scaling yet.@Alpindaleresponded by indicating plans to release their own linear arch along with pretrained models next year. - Comparison between Mixtral and Mistral:

@Tekniumnoted that Mixtral compares closely to Mistral 7b. - AI Meetup Reference:

@Tekniummentioned that@1090682143425966100gave a shoutout to@410352626421465089at a recent a16z os AI meetup.

▷ #oo2 (5 messages):

- Desire for a Large Whiteboard in Living Room:

@tekniumindicated a need for a large whiteboard in their living room. - Chart Status:

@gabriel_symereferred to a chart, stating it was half-cooked. - Scaffolding Idea:

@gabriel_symesuggested there might be something interesting about mutating system prompts as one way of mutating the interaction. - Idea for Living Room Decor:

@ufghfigchvshared an idea that living rooms should have massive whiteboards instead of TVs.

LangChain AI Discord Summary

- Announcement of a new LangChain release, langchain-core==0.1, in preparation for langchain-community. The user

@hwchase17confirmed backward compatibility and encouraged users to flag any issues. The latest version can be installed viapip install langchain==0.0.349rc2. Further,@hwchase17offered free LangChain swag for anyone who discovers regressions. - Ongoing discussion about LangChain serialization issues where user

@b0otablebrought up challenges with serialization, highlighting limitations with output parsers and built-in serialization methods, but suggestedjson dumpsas the best solution. - User

@p4y4made an enquiry about access to Langsmith, while@nagar502sought assistance for utilizing a custom Love Language Model (LLM) for streaming. - User

@seththunderposed a question about the use of.arunin ConversationalRetrievalChain. - A Job Advertisement was repeatedly shared in multiple channels by user

@daemon966, with a link to a Discord server for potential applicants: https://discord.gg/cryptojob.

LangChain AI Channel Summaries

▷ #announcements (1 messages):

- New LangChain Release: User

@hwchase17announced the release of langchain-core==0.1 in preparation for langchain-community. This new version is backward compatible but would like any issues to be flagged. The newest version can be installed viapip install langchain==0.0.349rc2. - The user also offers free LangChain swag for anyone who finds any regressions.

▷ #general (9 messages🔥):

- LangChain Serialization Issues: User

@b0otablediscussed challenges encountered with serialization in LangChain web applications. Non-serializable objects - for example Documents and AIMessages - were highlighted. Limitations were mentioned with the output parsers and built-in serialization methods. However,json dumpswas noted as the best solution found so far. - Langsmith Access Request: User

@p4y4asked about gaining access to Langsmith. - Custom LLM Query:

@nagar502requested help for utilizing a custom Love Language Model (LLM) for streaming, presenting a code snippet for feedback. The user is currently failing to receive a response. - Use of .arun in ConversationalRetrievalChain: User

@seththunderraised a question about whether.aruncan be used in ConversationalRetrievalChain. - Job Advertisement:

@daemon966shared a recruitment message with the community, linking to a Discord server (https://discord.gg/cryptojob).

▷ #langserve (1 messages):

- Job Hiring: In the LangChain AI Discord chatbot, the user

@daemon966has posted a hiring announcement along with a link to https://discord.gg/cryptojob. They have notified everyone on thelangservechannel about the same.

▷ #langchain-templates (1 messages):

- User

@daemon966made an announcement related to hiring, and shared an invitation link for discord server cryptojob while tagging everyone and here to reach out to all possible participants.

▷ #share-your-work (1 messages):

- Hiring Announcement:

@daemon966is hiring and provided a link for the job discord server. Mentioned to notify@everyoneand@here.

▷ #tutorials (1 messages):

- Job Opportunity: User

@daemon966shared a job opportunity with the LangChain AI group link to apply here. Invoked everyone and present group members for this announcement.

LLM Perf Enthusiasts AI Discord Summary

-

In the open-source channel, there was a discussion initiated by

@lhlabout Llama-Mistral. Key topics mentioned include using it with 2x80G graphics cards, potential compatibility with 2x48G GPUs, and a tweet showcasing promising initial results from Llama-Mistral. -

In the speed channel, debates regarding the performance of Azure vs GPT-4 were conducted with users sharing personal experiences. Additionally,

@laikhtewarishared a blog post discussing Optimum-NVIDIA’s usage with Hugging Face for improved LLM inference speed. -

On the rag channel,

@sandkoansparked a conversation on the capabilities of the model Claude and the effects of varying sequence lengths and context placement in the input sequence. They also highlighted the different techniques applied to models Claude at 100k and Mistral at 8k. -

Conversations lacking in context were identified in the offtopic channel where

@res6969shared a link without additional comments or context.

LLM Perf Enthusiasts AI Channel Summaries

▷ #opensource (4 messages):

- Running Llama-Mistral on Specific Cards:

@lhlmentioned that people are currently running Llama-Mistral on 2x80G graphics cards. - Resources Required for Running Llama-Mistral:

@lhlalso shared that the inference code might be OK to run on 2x48G GPUs according to the requirements listed. - Initial Results of Lama-Mistral:

@lhllinked a tweet showing some promising initial results from using Llama-Mistral.

▷ #offtopic (2 messages):

- User

@res6969shared a link without any additional comment or context. - User

@res6969then commented with “lol”, again without further context.

▷ #speed (5 messages):

Azure vs GPT-4 Performance:

@nosa_.mentioned that Azure seems better overall, but cautioned that this may not always be the case.@res6969shared that a switching system between GPT-4 and Azure is used to maximize rate limits and minimize latency.@wenquaiclaimed that Azure is almost always 40-60% faster for them, however, they also noted that this can depend on location and Azure instance setup.

Optimum-NVIDIA on Hugging Face For Fast LLM Inference:

@laikhtewarishared a link to a blog post from Hugging Face explaining how Optimum-NVIDIA enables fast LLM inference (1,200 tok/s, said to be 28x faster) with just one line of code change. They also requested feedback on the blog post.

▷ #rag (3 messages):

- Model Attention on Varying Sequence Lengths: User

@sandkoandiscussed how the effectiveness of a model can depend a lot on its capability to pay attention at varying sequence lengths. - Context Placement in the Input Sequence:

@sandkoanexplained that the model Claude is likely to forget the query if it’s given before the context, hence the context is usually placed before the query. - Differential Model Capabilities:

@sandkoancautioned that the rules that apply to Claude at 100k might not necessarily apply to Mistral at 8k.

Latent Space Discord Summary

- Community member

@tonic_1provided a tool demo using diffusers library and sdxl to generate styled images, inviting feedback and discussion. - Detailed discussion on ‘Lazy’ GPT among users, notably

@aardvarkoncomputerand@dimfeldwho discussed its occurrence in the 0613 API. - Celebration of Perplexity AI’s first anniversary highlighted by

@guardiangthrough sharing a tweet post focused on the company’s decision to prioritize search. - Questions arose regarding the fine-tuning of Mistral/Open Hermes 7B.

@.beowulfbrasked for suggestions of notebooks for this purpose, while@btdubbinsqueried about the required amount of compute. - A comment by swyxio in #llm-paper-club regarding “[INST]” was noted, although the context was limited.

Latent Space Channel Summaries

▷ #ai-general-chat (6 messages):

- Tool Demo by tonic_1:

@tonic_1shared a demo of a tool that uses the diffusers library and sdxl to generate new images based on a reference image style. - Lazy GPT: Users

@aardvarkoncomputerand@dimfeldheld a discussion about ‘Lazy’ GPT and@aardvarkoncomputermentions about the incidence of ‘laziness’ in the 0613 API. - Perplexity AI Anniversary:

@guardiangshared a tweet about Perplexity AI celebrating its one-year mark, highlighting a post by Aravind on the company’s decision to focus on search. - Fine-tuning Mistral/Open Hermes 7B:

@.beowulfbrinquired for any notebooks that could be used for fine tuning Mistral/Open Hermes 7B. - Compute for Fine-tuning: In response to the earlier inquiry, user

@btdubbinsquestioned about the amount of compute needed to fine-tune Mistral/Open Hermes 7B.

▷ #llm-paper-club (1 messages):

swyxio: it’s literally “[INST]”, part of

Ontocord (MDEL discord) Discord Summary

Only 1 channel had activity, so no need to summarize…

xa9ax: Who all are heading to NeurIPS?

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Engineer Foundation Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Perplexity AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.