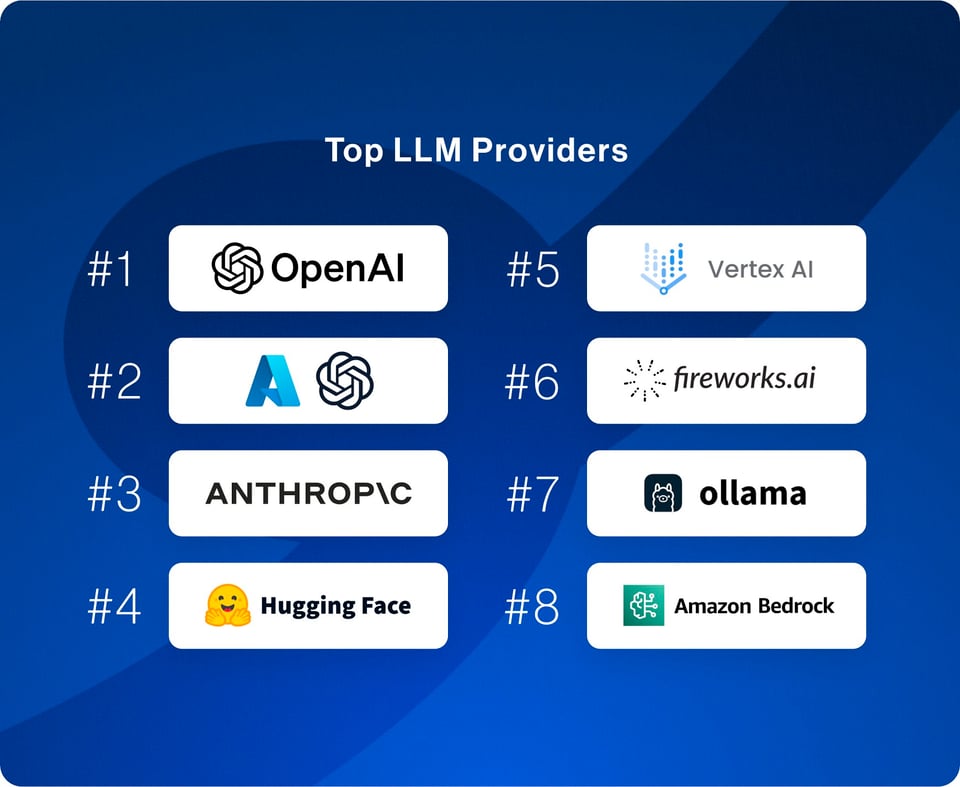

The top charts are good to know for mindshare:

[TOC]

OpenAI Discord Summary

- Issues with the Mixtral model raised by @eljajasoriginal, including how consistent its responses are and a comparison with Poe's Mixtral.
- A noticeable decline in GPT-4 and ChatGPT output quality, and behavioral unpredictability acknowledged by OpenAI, according to @eljajasoriginal and @felly007.
- The comparative performance of GPT-4 in the Playground versus GPT-4 in ChatGPT, raised by @eljajasoriginal.
- Anomalous behavior in Bing's response consistency and user concerns about hallucination or use of cached information, initially reported by @odasso..
- Discussions on user issues with the ChatGPT platform and its performance, raised by @superiornickson5312, @sieventer, @afayt, @the_boss7044, and @clockrelativity2003 respectively. Topics were the cap on the number of messages, failure to finish complex responses, typewriter-like response lag, coding with GPT-4, and financial constraints affecting subscriptions.
- User-reported issues and queries on the OpenAI platform, including chat lag, inaccessible voice settings on Android, password reset failures, problematic 2FA activation, non-clickable links in ChatGPT-4, and GPT's misunderstanding of symmetry. Others were file upload failures, a GPT-3.5 sidebar access issue, and repetitive pattern errors.

- Discussion of restricted access to GPT-4 despite a paid ChatGPT subscription, reported by @nicky_83270.
- Observations on OpenAI server limitations by @.cymer and @satanhashtag, and the possibility, raised by @.cymer, that content policy and censorship affect ChatGPT performance.
- Inquiries and dialogue on the use of ‘Knowledge’ in GPTs by @Rock and @cat.hemlock.
- Techniques for guiding GPT-4 outputs toward specific requirements, shared by @eskcanta, @stealth2077, @jeziboi, @rendo1, and @seldomstatic.
- A request from @neurophin for advice on creating a full-body perspective of a character with GPT-4, and an exploration of minimalistic art style creation with DALL-E and ChatGPT-4 discussed by @errorsource and @seldomstatic.
- Queries on earning opportunities with ChatGPT and the cost of using GPT tokens, raised by @antonioguak.

OpenAI Channel Summaries

▷ #ai-discussions (52 messages🔥):

- Consistency of Mixtral's output: @eljajasoriginal noted that however many times a response is regenerated in the Perplexity AI playground, the Mixtral model gives the same response to the same prompt. The same user found Poe's Mixtral less consistent in its responses.
- Issues with GPT-4 and ChatGPT: Users like @eljajasoriginal and @felly007 discussed a noticeable quality decline in GPT-4 and ChatGPT. @eljajasoriginal cited a statement from OpenAI that model behavior can be unpredictable and that they are looking into fixing the issue.
- Comparison of GPT-4 in Playground vs GPT-4 in ChatGPT: @eljajasoriginal suggested that Playground GPT-4 might give better results because it lacks the internal instructions present in the ChatGPT model. The point was further debated in terms of safety measures and context length.
- Anomalous behavior in Bing: @odasso. shared an unusual experience of Bing apparently carrying query context over across different conversations. Some users, including @brokearsebillionaire and @lugui, attributed it to hallucination or the use of cached information.
- Weird setup in Bard AI model: @eljajasoriginal reported strange assertions in Bard's responses. Examples included references to past conversations that never happened and unnecessary location details.

▷ #openai-chatter (115 messages🔥🔥):

- Limit on GPT-4 Messages: @superiornickson5312 voiced a concern about a restriction on the number of messages they could send to GPT-4. @toror clarified that there is a limit on the number of messages a user can send per hour.
- GPT-4 Errors and Completion: @sieventer expressed frustration that GPT-4 fails to finish responses because of complex prompts or errors. @z3wins advised using shorter prompts and asking one step at a time.
- Clearing Delayed ChatGPT Response: @afayt complained about the typing animation in ChatGPT on PC, ultimately concluding that it was caused by a very long conversation history.
- ChatGPT for Code Writing: @the_boss7044 asked about writing actual code with GPT-4. @jonpo suggested simply telling it to write code and to keep the chatter to a minimum.
- Subscription & Performance Issues: Some users voiced dissatisfaction with ChatGPT's performance, citing frequent network errors (@fanger0ck). Others defended the system, suggesting these were temporary problems due to server load (@aminelg). @loschess expressed satisfaction with the service despite minor issues, while @clockrelativity2003 said they needed to cancel their subscription due to financial constraints.
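The per-hour message cap @toror described behaves like a sliding-window limit. Below is a minimal sketch that assumes a rolling one-hour window and a hypothetical cap. The actual ChatGPT limit and how it is counted are not stated in the discussion.

```python
from collections import deque


class HourlyMessageCap:
    """Sliding-window cap: at most `limit` messages in any `window_s` seconds.

    Hypothetical sketch; the real ChatGPT cap and window are not given here.
    """

    def __init__(self, limit: int, window_s: float = 3600.0) -> None:
        self.limit = limit
        self.window_s = window_s
        self._sent = deque()  # timestamps (seconds) of accepted messages, oldest first

    def try_send(self, now: float) -> bool:
        # Forget messages that have aged out of the rolling window.
        while self._sent and now - self._sent[0] >= self.window_s:
            self._sent.popleft()
        if len(self._sent) < self.limit:
            self._sent.append(now)
            return True
        return False  # over the cap until the oldest message ages out


cap = HourlyMessageCap(limit=2)
print([cap.try_send(t) for t in (0.0, 10.0, 20.0, 3600.0)])
```

With a cap of 2, the third message inside the hour is refused. A message one hour after the first is accepted again.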

▷ #openai-questions (66 messages🔥🔥):

- Long Chat Issues: @imythd asked for solutions when a chat becomes too long and starts lagging. @solbus suggested summarizing the crucial information in a new chat. With a Plus subscription, another option is a custom GPT that stores the critical context.
- Voice Setting Issue on Android App: @davidssp. had trouble accessing voice settings in ChatGPT's Android app. @solbus clarified that the voice feature is only available in the apps and suggested checking the Android app or downloading it from the Play Store.
- Inability to Reset Password: @vijaykaravadra reported not receiving a password reset email. No solution was discussed in the available messages.
- 2FA Activation Issue: @palindrom_ reported being unable to re-enable two-factor authentication after deactivating it. @satanhashtag linked to an OpenAI article explaining that 2FA might be temporarily paused.
- Non-Clickable Links in ChatGPT-4: @mouad_benardi_98 reported ChatGPT-4 producing non-clickable links. @satanhashtag suggested starting a new GPT-4 chat without custom instructions and plugins, or asking for help in a separate channel.
- GPT Misunderstands Symmetry: @neonn3mesis reported that GPT confuses horizontal and vertical symmetry. No solution was discussed in the available messages.
- Inability to Upload File: @askwho reported being unable to upload any file to ChatGPT-4. No solution was discussed in the available messages.
- Desktop GPT-3.5 Sidebar Access Issue: @d_smoov77 had trouble accessing the left sidebar options in the desktop version of GPT-3.5. @solbus pointed them to the small arrow at the far left, center of the page.
- Repetitive Pattern Error: @slip1244 reported a `BadRequestError: 400` error when calling the same system message and function multiple times. No solution was discussed in the available messages.
- Gemini Testing: @ttmor reported testing Gemini and encountering some bugs, but found it okay overall. The topic was not discussed further.

▷ #gpt-4-discussions (33 messages🔥):

- Issues with ChatGPT Subscription Access: @nicky_83270 reported being unable to access GPT-4 even after paying for a subscription. @solbus offered troubleshooting help, such as checking whether the subscription renewal went through and trying different browsers or devices.
- Discussion about OpenAI Server Limitations: @.cymer and @satanhashtag discussed possible reasons for GPT's slowness at peak times, including server limitations and the need for more servers or better optimization.
- Content Policy and ChatGPT Performance: @.cymer theorized that ChatGPT's policy updates and content filters could be making it slower and less efficient over time.
- Use of Knowledge Files in Custom GPT: @jobydorr asked how knowledge files behave in a custom GPT. Does the model search them only when specifically prompted, or also for open-ended queries? @solbus clarified that knowledge files serve as reference documents for a GPT rather than as permanent context. They are queried, and they return data relevant to the specific query.
- Disappearance of GPT Model: @redash999 reported that their GPT disappeared without any notification or email. @Rock suggested loading any test chats they had with the GPT, which might restore it.

▷ #prompt-engineering (62 messages🔥🔥):

- Engaging with GPT-4 for Detailed Scenes and Specific Requests: @eskcanta used GPT-4 to write a detailed scene involving complex character relationships and arguments. They showed how to guide the model with specific details and narrative instructions, keeping requests within the model's comfort range and avoiding negative prompting. A link to an example chat was provided.
- Concerns about ChatGPT's Output and Contaminating Context: @stealth2077 worried about unwanted parts of the output contaminating the context. @brokearsebillionaire explained that more context leaves fewer tokens available for output, resulting in shorter replies. Larger models, targeted context, or retrieval could address this.
- Generating Specific Script Style: @jeziboi sought help generating scripts in a specific style, providing examples for reference. @alienpotus suggested a structured approach covering narrative structure, character development, context, and the other elements featured in the examples.
- Approach to Negative Instruction and Mismatched Outputs: @rendo1 recommended asking GPT to stick closely to prompts, cautioning that GPT might modify prompts slightly. @seldomstatic shared an approach for creating tailored outputs in a particular art style with GPT-4. This sparked a discussion with @errorsource about inconsistent outputs between Bing and GPT-4.
- Utilizing ‘Knowledge’ in GPT: @Rock and @cat.hemlock discussed how to make the best use of ‘Knowledge’ in GPTs. Topics included the 2M token limit and GPT's tendency to summarize, skip content, and infer.
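@brokearsebillionaire's point comes down to arithmetic: the prompt and the reply share one context window. Below is a sketch with illustrative numbers. The 8,192-token window and the optional `max_output` cap are assumptions, not ChatGPT's actual settings.

```python
def output_budget(context_window, prompt_tokens, max_output=None):
    """Tokens left for the reply once the prompt occupies part of the window.

    `max_output` models a separate per-reply cap, if the service has one (assumption).
    """
    remaining = max(context_window - prompt_tokens, 0)
    return remaining if max_output is None else min(remaining, max_output)


print(output_budget(8192, 7000))  # a long prompt leaves little room for the reply
print(output_budget(8192, 2000, max_output=4096))
```

Trimming the prompt to targeted context, or using a model with a larger window, is what raises the first number.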

▷ #api-discussions (62 messages🔥🔥):

- Generating scripts with GPT-4: @jeziboi asked for help creating scripts in a specific storytelling style with GPT-4. The example scripts they pasted had clear narrative structures, well-developed characters, emotional depth, descriptive detail, and surprise endings. @alienpotus proposed a structured approach for creating such scripts.
- Building Character Perspectives with GPT-4: @neurophin sought help creating a full-body perspective of a character with GPT-4. Replies covered ways to make the model stick more closely to the given prompts.
- Artstyle Generation with DALL-E and ChatGPT-4: @errorsource, @seldomstatic, and others discussed recreating a minimalistic yet detailed art style for landscape generation using DALL-E in ChatGPT-4. Results varied between the models.
- Earning from ChatGPT and the Cost of Tokens: @antonioguak asked how to make money with ChatGPT and commented on the cost of using GPT tokens.
- Study of Knowledge: @Rock shared findings from studying the use of Knowledge with GPT models. They noted that Knowledge has a 2 million token limit and that GPT's inferences can be frustrating. @cat.hemlock added that getting GPT to draw from more than one knowledge file at a time was a challenge they had not yet found a workaround for.
- Links of interest: @eskcanta shared a link to a ChatGPT prompt example, and @cat.hemlock shared a link to a study on Guidance Teacher.

Nous Research AI Discord Summary

- Discussions about the potential and capabilities of various AI models, with in-depth conversations about BAAI's Emu2 model and UNA Solar's yet-to-be-released model. The community also saw the launch of OpenDalle, a new AI model by datarevised.
- Detailed conversation on improving AI model performance. Proposed strategies included applying the "Starling" method to OpenChat models and merging multiple Mistral LoRAs.
- Social Media Contact: @pogpunk asked @764914453731868685 if they have Twitter. @maxwellandrews responded, confirming their Twitter handle as madmaxbr5.
- Resource sharing and recommendations were common. Links ranged from interviews with researchers such as Tri Dao and Michael Poli, to promotional offers such as free Discord Nitro, to API-related resources such as CursorAI.
- Several AI-related queries came up, including a request for a tool that makes image prompts ambiguous, systematic prompt engineering, and more latency-efficient NLP. Members also discussed the prospects of running and fine-tuning language models locally.
- A "free the compute" statement was posted by @plbjt without any context.

Nous Research AI Channel Summaries

▷ #ctx-length-research (2 messages):

- Social Media Contact: @pogpunk asked @764914453731868685 if they have Twitter. @maxwellandrews confirmed they have a Twitter account with the handle madmaxbr5.

▷ #off-topic (3 messages):

- Image Prompt Tool Inquiry: @elmsfeuer asked whether there is a tool that makes image prompts ambiguous, allowing different interpretations at different optical resolutions. For example, an image might look like a tree at low resolution but show a scuba diver at high resolution.
- Free the Compute: @plbjt posted a brief statement: "free the compute". The context and meaning behind it were not given in the message history.

▷ #interesting-links (55 messages🔥🔥):

- Interview sharing: @atgctg shared a link to an interview with researchers Michael Poli and Tri Dao, pointing out the value of firsthand insights from researchers in the AI field.
- Emu2 model discussion: Several members, including @yorth_night and @coffeebean6887, had a lengthy conversation about BAAI's Emu2 multimodal model, discussing its performance, capabilities, limitations, and potential uses.
- Free Discord Nitro offer: @jockeyjoe shared a link to a promotion offering Opera GX browser users a free month of Discord Nitro. It sparked a lively debate over its legitimacy.
- Subscription vs API key: @.beowulfbr and @night_w0lf discussed the pros and cons of a subscription service like CursorAI versus using API keys directly. @night_w0lf recommended DIY alternatives such as open-source UIs, including tools found on GitHub and Unsaged.
- Documentation recommendation: @night_w0lf recommended reading the Emu2 model's documentation, even though it seems unappealing at first, and offered insight into how to use it.

▷ #general (128 messages🔥🔥):

- Anticipation for UNA's Performance: Users discussed expectations for UNA's ("UNA Solar") performance. n8programs, nonameusr, and yorth_night mentioned numerical expectations and gave feedback on preliminary test results.
- Discussion on Model Merging with LoRAs: ldj and carsonpoole discussed merging multiple Mistral LoRAs. ldj suggested saving the weight differences between each fine-tuned Mistral model and the base model as a "delta", and then merging those deltas. He raised concerns about possible information loss in carsonpoole's method, which converts full fine-tunes into LoRAs before merging.
- OpenChat Model Testing and Improvement Suggestions: .beowulfbr sought advice after failing to improve the OpenChat model's performance with his own config and datasets, one of which belonged to tokenbender. tokenbender advised him to apply the "Starling" method to the new OpenChat model, given its earlier success.
- Launch of OpenDalle by DataRevised: datarevised announced his new model, OpenDalle, built by applying his custom slerp method to SDXL. He requested feedback and shared two versions of the model (v1.0 and v1.1) on HuggingFace (OpenDalle and OpenDalleV1.1).
- Anticipation for Multi-modal AI: ldj and yorth_night discussed the future of LLMs in the context of multi-modality. ldj expressed excitement about end-to-end multi-modal audio AI, suggesting it could surpass image-based multi-modal AI in significance. They also entertained the idea of image-based AI helping with design tasks.
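The toy sketch below shows the two merge ideas above on plain Python lists, which stand in for flattened weight tensors.

- `merge_deltas` follows ldj's description: store each fine-tune as a delta from the base, then add the weighted deltas back. The equal default weights are an assumption.
- `slerp` is the generic spherical linear interpolation formula, not datarevised's custom method, whose details were not shared.

```python
import math


def task_delta(finetuned, base):
    """Per-weight difference between a fine-tuned model and its base."""
    return [f - b for f, b in zip(finetuned, base)]


def merge_deltas(base, deltas, weights=None):
    """Return base + sum_i w_i * delta_i (equal weights by default, an assumption)."""
    if weights is None:
        weights = [1.0 / len(deltas)] * len(deltas)
    merged = list(base)
    for w, delta in zip(weights, deltas):
        for i, d in enumerate(delta):
            merged[i] += w * d
    return merged


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two weight vectors (t in [0, 1])."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    cos_omega = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard acos against rounding
    omega = math.acos(cos_omega)
    if math.sin(omega) < eps:
        # (Anti)parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


base = [1.0, 1.0]
deltas = [task_delta([2.0, 1.0], base), task_delta([1.0, 3.0], base)]
print(merge_deltas(base, deltas))
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

Because the deltas here are full weight differences rather than low-rank factors, nothing is lost to a rank restriction. That is the concern ldj raised about converting full fine-tunes into LoRAs first.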

▷ #ask-about-llms (9 messages🔥):

- Systematic Prompt Engineering: @flow_love asked if anyone had done systematic prompt engineering before and whether benchmarks or libraries exist for it.
- Running Language Models Locally: @leuyann asked whether running language models locally also means being able to fine-tune them.
- Fine-Tuning and QLoRA: @atgctg noted that fine-tuning is compute-intensive and introduced QLoRA (Quantized Low-Rank Adaptation), which can run on consumer graphics cards. A relevant Reddit post on the topic was shared.
- Latency Efficiency in NLP: @pogpunk asked whether there is a more latency-efficient approach to NLP for the search product they are building.
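Two numbers explain why QLoRA fits on consumer GPUs:

- LoRA trains only two small matrices per adapted weight.
- 4-bit quantization shrinks the frozen base weights.

The back-of-the-envelope sketch below uses illustrative values: a 4096 x 4096 layer, rank 16, and a 7B parameter count. Real QLoRA also needs memory for quantization constants, activations, and adapter optimizer state.

```python
def lora_trainable_params(d_out, d_in, rank):
    """LoRA on a d_out x d_in weight trains B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


def weight_memory_gb(n_params, bits_per_param):
    """Memory for the frozen weights alone (no activations or overhead)."""
    return n_params * bits_per_param / 8 / 1e9


full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=16)
print(f"trainable fraction for one layer: {lora / full:.2%}")
print(f"7B weights at 16-bit: {weight_memory_gb(7e9, 16):.1f} GB")
print(f"7B weights at 4-bit:  {weight_memory_gb(7e9, 4):.1f} GB")
```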

Mistral Discord Summary

- Integrating Autogen and updated chat templates, with @cyb3rward0g leading discussions about implementations, possibilities, and challenges (HuggingFace Blog Post).
- The difference between Mixtral-8x7B-v0.1 and Mixtral-8x7B-Instruct-v0.1 and their respective use cases, clarified by @netapy and @cuzitzso.
- Benchmarks and model performance comparisons dissected by @asgnosi, pointing out the role of fine-tuning and the expected performance of GPT-4.
- Rate limits in the Mistral API, raised by @michaelwechner, and possible ways to make full use of them.
- Implementing stopwords in Mistral, including the alternative of appending an [END] token.
- Several conversations about GPU requirements for model training and memory needs, shared by @dutchellie and @nootums. One notable item was a request for benchmark metrics for the Mistral 7B v2 model.
- Detailed discussion of Mixtral's performance on different systems. On an Apple M2 Max, @sublimatorniq asked whether the prompt processing stage could be improved, while @Epoc_(herp/derp) shared specific performance details for their Windows system.
- Mistral API queries continued in the #finetuning channel, mostly driven by @expectopatronum6269 and @lerela. The focus was on rate limits, the context window, the time-out limit, and guidance on API parameters.
- Fine-tuning concerns and techniques also spilled over into the #ref-implem channel. Topics were fine-tuning with QLoRA (@mjak), confusion about the implementation process and required components (@duck), and using selected models from HuggingFace (@daain).
- The #la-plateforme channel focused on API rate limit issues. @robhaisfield, @d012394, and @lerela discussed a possible miscalculation of output tokens and the subsequent investigation by Mistral staff.
- The #showcase channel featured Mistral Playground by @bam4d, without further details or context.

Mistral Channel Summaries

▷ #general (35 messages🔥):

- Mistral API with Autogen and Updated Chat Templates: @cyb3rward0g discussed specific implementations of Mistral, linked a blog post on HuggingFace, and considered updating chat templates to include the "SYSTEM" role. They also asked whether such an updated chat template would work for agents that require a "SYSTEM" prompt at creation.
- Difference between Mixtral-8x7B-v0.1 and Mixtral-8x7B-Instruct-v0.1: In response to a question from @hasanurrahevy, @netapy and @cuzitzso clarified the distinction. The instruction-tuned model is fine-tuned to interpret a given instruction and formulate an appropriate response.
- Benchmarking and Model Comparison: @asgnosi shared observations about how different models performed on various tests (the killers question and the wizards question). Further discussion covered the role of fine-tuning and GPT-4's performance.
- Rate and Limits in Mistral API: @michaelwechner cited the rate limit of 2M tokens per minute from the Mistral API documentation. He noted that requests could be parallelized to make full use of this limit, and other users agreed.
- Stopword Implementation in Mistral: @tomaspsenicka asked about using stopwords with Mistral and discussed appending an [END] token after each message as an alternative.
- Acquiring API Key: @harvey_77132 asked how to get an API key and how to contact the customer success team. @brokearsebillionaire linked to the Mistral console, where the keys are typically found.
- Error with Autogen: @aha20395 reported an error when using Autogen. @brokearsebillionaire offered some insights and suggested using the LiteLLM translator for Mistral API calls, linking to the relevant documentation.
- Performance of Mixtral Instruct: Finally, @ldj reported that Mixtral Instruct was outperforming Claude 2.1, Gemini Pro, and all versions of GPT-3.5-turbo, based on human preference ratings in the LMSys Arena.
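If a model has been taught to end each message with an [END] token, as @tomaspsenicka suggested, the client can trim the output at that marker. The sketch below assumes only post-processing of the returned text. The discussion does not establish whether the Mistral API exposes a server-side stop parameter.

```python
def truncate_at_stop(text, stop_sequences):
    """Cut generated text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop string would match at index 0
        idx = text.find(stop)
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut]


print(repr(truncate_at_stop("Answer: 42 [END] trailing text", ["[END]"])))
```

Client-side trimming does not save generation time or tokens. The model still produces everything after the marker until it stops on its own or hits `max_tokens`.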

▷ #models (2 messages):

- GPU Requirements for Model Training: @dutchellie suggested that 24GB of VRAM may not be enough for model training. They recommended looking into second-hand Nvidia P40s, which are cost-effective and have 24GB of VRAM each.
- Request for Benchmark Metrics for Mistral 7B v2 Model: @nootums asked whether benchmark metrics exist comparing v1 and v2 of the Mistral 7B Instruct model. They are considering upgrading their self-hosted v1 model to v2.
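A common rule of thumb shows why a single 24GB card falls short for fully fine-tuning a 7B model. It assumes full fine-tuning with Adam in mixed precision, at roughly 16 bytes per parameter before activations. This is an estimate, not a measurement. Parameter-efficient methods such as LoRA or QLoRA need far less.

```python
# Rough per-parameter byte costs for full fine-tuning with Adam in mixed precision:
# 16-bit weights (2) + 16-bit gradients (2) + fp32 master weights (4)
# + fp32 Adam first and second moments (4 + 4) = 16 bytes per parameter.
BYTES_PER_PARAM_FULL_FT_ADAM = 2 + 2 + 4 + 4 + 4


def full_finetune_memory_gb(n_params, bytes_per_param=BYTES_PER_PARAM_FULL_FT_ADAM):
    """Model and optimizer state only; activations and overhead come on top."""
    return n_params * bytes_per_param / 1e9


print(f"7B full fine-tune: ~{full_finetune_memory_gb(7e9):.0f} GB of state")
```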

▷ #deployment (5 messages):

- Mixtral Performance on Different Systems: @sublimatorniq shared Mixtral performance details for different systems. They remarked that performance seems sluggish during the prompt processing stage, especially on their Apple M2 Max. They noted: "The eval rate I'm getting is certainly fast enough. Just hoping the former (prompt eval rate) can be improved!"
- Mistral Performance Metrics: @Epoc_(herp/derp) reported: "On my system, windows, LM Studio, Mixtral 8x7B Q8_0 uses 47.5GB VRAM and 50.5GB RAM, runs ~17t/s. 13900k, 64GB DDR4, A6000 48GB".
- Mixtral Performance Inquiry on Jetson Orin 64GB: @romillyc asked if anyone is running Mixtral 8x7B Q4_K_M or Q6_K on a Jetson Orin 64GB. They mentioned that while "llama.cpp runs fine on smaller Jetsons", the 16GB on their Jetson Xavier seemed to be a limiting factor. @derpsteb seemed to start a question or discussion, but it was not completed in the provided conversation.
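@sublimatorniq's distinction between prompt eval rate and eval rate matters because both add to wall-clock time. Below is a rough sketch. The prompt length and both rates in the example are hypothetical, not taken from the reports above.

```python
def generation_latency_s(prompt_tokens, output_tokens, prompt_eval_tps, eval_tps):
    """Wall-clock estimate: process the whole prompt, then generate token by token."""
    prompt_phase = prompt_tokens / prompt_eval_tps
    generation_phase = output_tokens / eval_tps
    return prompt_phase + generation_phase


# Hypothetical: 4,000-token prompt at 100 t/s, then 500 new tokens at 20 t/s.
print(generation_latency_s(4000, 500, prompt_eval_tps=100.0, eval_tps=20.0))
```

In this example the prompt phase takes 40 of the 65 seconds, which is why a slow prompt eval rate can dominate even when generation speed is fine.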

▷ #ref-implem (4 messages):

- Finetuning Mistral with narrative data: @mjak is trying to fine-tune Mistral on narrative data using QLoRA. They are unsure whether all the data should be formatted as QA pairs.
- Reference Implementation and Deployment Steps: @duck asked whether the reference implementation relies on the deployment steps in the repo's README. They were unsure whether the Python script talks to a container service, and were looking into running it without LM Studio, Ollama, and similar tools.
- Cherry picking models from HuggingFace: @daain has been picking relevant models from HuggingFace, such as Dolphin 2.1, Open Hermes 2.5, and Neural Chat, instead of fine-tuning models themselves.
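@mjak's question is whether to frame narrative data as QA pairs or to feed it as plain text. The sketch below shows both options side by side.

- The `[INST]`-style string follows the Mistral-Instruct prompt format.
- The paragraph-based chunking and `max_chars` are assumptions.

Whether `<s>`/`</s>` should appear as literal text or be added by the tokenizer depends on the training tool.

```python
def to_instruct_example(question, answer):
    """Format one QA pair as a Mistral-Instruct style training string."""
    return f"<s>[INST] {question} [/INST] {answer}</s>"


def to_completion_chunks(narrative, max_chars):
    """Split narrative text into plain completion-style chunks (no QA framing).

    Paragraphs are packed greedily up to `max_chars`; a single paragraph longer
    than `max_chars` becomes its own chunk rather than being split mid-text.
    """
    paragraphs = [p.strip() for p in narrative.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for p in paragraphs:
        candidate = f"{current}\n\n{p}" if current else p
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = p
    if current:
        chunks.append(current)
    return chunks


print(to_instruct_example("Who finds the map?", "The innkeeper's daughter."))
print(to_completion_chunks("A.\n\nB.\n\nC.", max_chars=6))
```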

▷ #finetuning (4 messages):

- Max API Time-out Query: @expectopatronum6269 shared plans to scale their newly built app, powered by the Mistral API, to 10,000 requests in 1 hour. They asked about the maximum API time-out, the maximum context window, and the request rate limit when using Mistral Medium.
- Guidance on Mistral API Parameters: @lerela replied with the context window (32k) and rate limit (2M tokens/minute) given in the documentation. The timeout was described as comprehensive. The user was also urged to set `max_tokens` and to use the response headers to track token usage, since there is no API for this.
- Inquiry about System Prompt and Chat Template: @caseus_ asked for advice on implementing the system prompt in the chat template, linking a specific tokenizer configuration for reference.
- Question Regarding Fine-tuning for Function Calling: @krissayrose asked whether anyone had fine-tuned a model for function calling.
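Here is a quick feasibility check for @expectopatronum6269's plan. Under the 2M tokens/minute limit @lerela quoted, 10,000 requests per hour leaves about 12,000 tokens (prompt plus completion) per request on average. The sketch assumes requests are spread evenly over the hour. Bursts would hit the per-minute cap sooner.

```python
def max_avg_tokens_per_request(requests_per_hour, tokens_per_minute_limit):
    """Average tokens each request may use if requests are spread evenly."""
    requests_per_minute = requests_per_hour / 60
    return tokens_per_minute_limit / requests_per_minute


print(round(max_avg_tokens_per_request(10_000, 2_000_000)))
```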

▷ #showcase (1 messages):

bam4d: Mistral Playground

▷ #la-plateforme (14 messages🔥):

- API Rate Limit Issues: Several users, including @robhaisfield and @d012394, are hitting rate limit errors even though their dashboards show token usage well below the 2 million tokens per minute limit. @robhaisfield speculated that the issue might lie in how the rate limiter counts output tokens (video showing the problem).
- Investigation by Mistral Staff: @lerela asked affected users to DM their Profile IDs for investigation. They later announced that changes had been pushed to improve the API's reliability.
- LiteLLM Support for la-plateforme: @brokearsebillionaire asked whether LiteLLM supports la-plateforme, which @ved_ikke confirmed by sharing LiteLLM's documentation on the Mistral AI API. @brokearsebillionaire later confirmed getting it to work.

OpenAccess AI Collective (axolotl) Discord Summary

- Active discussions on fine-tuning took place across channels. Topics included model performance at shorter context lengths (raised by @noobmaster29) and procedures for personal chat data (raised by @enima). @yamashi also shared plans to train Mixtral MoE on specific data.
- A huggingface/transformers PR was a key discussion point, including code adjustments it might require and how to use Flash Attention 2 correctly. Support for LoftQ in PEFT was also discussed. Links to the pull request and the LoftQ arXiv paper were shared.
- @nanobitz introduced a new multimodal model, linking to its source. A resource on Half-Quadratic Quantization (HQQ) was also raised but drew no substantial user feedback.
- A range of technical questions came up, including llama.cpp internals, tokenizing Turkish text, how LLM inference requests are processed, and sliding windows for training with axolotl.
- Several suggestions for Axolotl feature improvements and future projects were made, notably incorporating prompt gisting and adding chat templates to the tokenizer after fine-tuning. Ongoing experiments, such as freezing *.gate.weight, were mentioned, with results expected soon.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (24 messages🔥):

- Finetuning Model with Shorter Context Length: @noobmaster29 asked about the effects of fine-tuning a model at a shorter context length than the base model. @nanobitz conjectured that the model would still work at full length but might not perform as well.
- Largest Model Sequence Length: @noobmaster29 further asked about tuning Mistral at 4096 instead of its maximum length of 8192, and @nanobitz assured them it would be totally fine.
- New Multimodal Model Resource: @nanobitz shared a link to a new multimodal model developed by the Beijing Academy of Artificial Intelligence, Tsinghua University, and Peking University.
- Training Mixtral Moe on Specific Data: @yamashi plans to train a Mixtral MoE on their data in January. They hope for 85% on MedQA, and possibly 90% by embedding answers in the prompt.
- Half-Quadratic Quantization (HQQ) Resource: @dangfutures asked whether anyone had used the official implementation of Half-Quadratic Quantization (HQQ), but no one replied.
- Prompt Gisting within Axolotl: @lightningralf suggested incorporating prompt gisting into Axolotl. @caseus_ replied that while it could be useful, it would likely be slow to train because of how attention masking works for gist tokens.
- Adding Chat Template to Tokenizer After Finetuning: @touristc asked whether axolotl can add chat templates to the tokenizer after fine-tuning, a feature @caseus_ agreed would be quite useful.

▷ #axolotl-dev (13 messages🔥):

- Impact of the huggingface/transformers PR: @nanobitz alerted users to a new pull request on the huggingface/transformers GitHub that fixes the FA2 integration, and pointed out that their code might need adjusting. @caseus_ acknowledged the potential impact of this change and plans to address it.
- Use of Flash Attention 2: In the context of this PR, @nanobitz gave recommendations for using Flash Attention 2. The main points were not to pass `torch_dtype` to the `from_pretrained` class method when using Flash Attention 2, and to make sure Automatic Mixed-Precision training is used.
- Adding support for LoftQ in PEFT: @nruaif brought up LoftQ, a quantization method that improves fine-tuning of large language models (LLMs). They noted that PEFT has supported LoftQ since version 0.7 and linked to the arXiv paper.
- Usage of LoftQ with LoRA: @caseus_ indicated that LoftQ is straightforward to use with LoRA and provided an example code snippet.
- Ongoing Experiment: @theobjectivedad asked @faldore about any significant observations after freezing *.gate.weight. @faldore said it was too soon to share results but promised to provide them the following day.
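Freezing a pattern like `*.gate.weight` usually means matching parameter names and disabling gradients for those parameters. The stdlib-only sketch below covers the matching step. The example names imitate the Hugging Face Mixtral layout, where `block_sparse_moe.gate` is the router, but they are illustrative. @faldore's actual setup was not described. In PyTorch, one would then set `requires_grad = False` on the matching tensors from `model.named_parameters()`.

```python
from fnmatch import fnmatchcase


def params_to_freeze(param_names, patterns):
    """Return parameter names matching any glob pattern (case-sensitive)."""
    return [n for n in param_names if any(fnmatchcase(n, p) for p in patterns)]


# Illustrative parameter names in a Mixtral-like layout (hypothetical list).
names = [
    "model.layers.0.self_attn.q_proj.weight",
    "model.layers.0.block_sparse_moe.gate.weight",
    "model.layers.0.block_sparse_moe.experts.0.w1.weight",
]
print(params_to_freeze(names, ["*.gate.weight"]))
```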

▷ #general-help (22 messages🔥):

- Fine-tuning Models: @enima is considering fine-tuning a model on personal chat data, similar to the approach used in the Minotaur/Mistral-Dolphin projects. They acknowledged the need to rework their dataset on a small sample first.
- Help with llama.cpp Internals: @_awill is looking for anyone familiar with llama.cpp internals to discuss them.
- Tokenizing Turkish Text: @emperor ran into an issue while training a tokenizer for Turkish text with HF Tokenizers. Although the Turkish dataset contained no Chinese text, the resulting vocabulary had an overwhelming number of Chinese characters. The problem shrank significantly after aggressively filtering all Chinese characters and emojis out of a different dataset. The user still wonders why less than 1% non-Turkish characters influenced the tokenizer this much.
- LLM Inference Processing: @JK$ asked how LLM inference requests are processed. According to @nruaif, both parallel processing and a queue can be used. Parallel processing is faster but requires more VRAM, while a queue has greater latency.
- Sliding Windows for Training: @marijnfs asked whether axolotl supports sliding windows for training, and @le_mess confirmed it does for Mistral. Asked why this is not standard for all LLMs, @le_mess said only Mistral was trained with it. The option is enabled by default, and there may be no way to disable it.
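Conceptually, sliding-window attention limits each position to the most recent `window` tokens, on top of the usual causal mask. Mistral 7B uses a 4,096-token window. The toy sketch below builds that mask as nested lists. Efficient attention kernels never materialize it.

```python
def sliding_window_causal_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j:
    causal (j <= i) and within the last `window` positions (i - j < window)."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


for row in sliding_window_causal_mask(5, window=3):
    print("".join("x" if allowed else "." for allowed in row))
```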

Latent Space Discord Summary

- Engaging discussions on Probabilistic Programming, touching on managing fuzzy outputs and the parallel evolution of Lambda Learning Machines (LLMs) and DBs. Notable quotes include: “…challenges of probabilistic programming…”, ”…LLMs should be designed in areas where probabilistic outputs…”.

- In-depth conversation about the evolution of AI, with emphasis on the critical role of fine-tuning GPT-4 to create OpenAI Functions, and the predicted importance of context management patterns in future AI development.

- Thorough discussion on the potential functionality of LLMs involving the generation and validation of JSON according to a particular schema, and the preference over grammar constrained sampling methods for valid token sampling.

- A reference on grammar-constrained sampling (a Perplexity.ai link) shared by @slono for further reading.
- Sharing of multiple resources related to AI developments, including the LangChainAI “State of AI” report, GPT engineer’s hosted service announcement, and Time magazine’s overview of major AI innovations from 2023.

- Announcement of a NeurIPS recap episode preview with the request for feedback, with the content found here.
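The generate-until-valid idea from the JSON schema discussion can be sketched as a simple retry loop. This is a minimal sketch under stated assumptions: `generate` is a hypothetical stand-in for any LLM call, and the "schema" is a toy mapping from required field names to Python types rather than full JSON Schema. Each failed attempt costs a full extra model call; grammar-constrained sampling, which @slono preferred, instead rules out invalid tokens during generation.

```python
import json
from typing import Callable, Optional

# Toy "schema" (an assumption): required field name -> expected Python type.
Schema = dict[str, type]


def validate(obj: object, schema: Schema) -> bool:
    """True if obj is a dict containing every required field with the right type."""
    if not isinstance(obj, dict):
        return False
    return all(k in obj and isinstance(obj[k], t) for k, t in schema.items())


def generate_until_valid(
    generate: Callable[[str], str],  # hypothetical LLM call: prompt -> raw text
    prompt: str,
    schema: Schema,
    max_attempts: int = 3,
) -> Optional[dict]:
    """Call `generate` until its output parses as JSON and passes `validate`."""
    for _ in range(max_attempts):
        raw = generate(prompt)
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not even valid JSON; try again
        if validate(obj, schema):
            return obj
    return None  # give up after max_attempts


# Fake model: malformed JSON, then a wrong type, then a valid object.
outputs = iter(['{"name": "shirt"', '{"name": "shirt", "qty": "2"}', '{"name": "shirt", "qty": 2}'])
result = generate_until_valid(lambda p: next(outputs), "Return a product as JSON", {"name": str, "qty": int})
print(result)  # {'name': 'shirt', 'qty': 2}
```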

Latent Space Channel Summaries

▷ #ai-general-chat (29 messages🔥):

- Probabilistic Programming Discussion: @swizec sparked a discussion on the challenges of probabilistic programming and of reasoning about programs with fuzzy outputs, voicing concern that error bars stack up quickly and lead to a chaotic system. @slono responded that, unlike unpredictable distributed systems from which high reliability is required, LLMs should be applied in areas where probabilistic outputs and constrained probabilities perform well. @optimus9973 compared the development of LLMs with that of databases, expecting a similar maturation process.
- AI Evolution Conversation: @optimus9973 emphasized the importance of fine-tuning GPT-4 to create OpenAI Functions, calling it an underrated step forward in 2023, almost on par with RAG in the conceptual toolchain. @slono predicted that context management patterns will be significant in future developments.
- JSON Schema Discussion: @optimus9973 proposed a future LLM capability: when asked for JSON with a certain schema, the LLM repeatedly attempts generation until the output passes validation and is ready for the user. @slono mentioned grammar-constrained sampling as the preferred method, since it only allows valid tokens to be sampled.
- Grammar Constrained Sampling Reference: At @swizec’s request for further reading on grammar-constrained sampling, @slono provided a link to Perplexity.ai.
- AI Developments Sharing: @swyxio shared multiple links, including one to the LangChainAI “State of AI” report, another to GPT engineer’s hosted service announcement, and finally one to Time magazine’s overview of major AI innovations from 2023.
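Grammar-constrained sampling, as @slono described it, only lets the model pick tokens that keep the output valid. The toy sketch below assumes a tiny hypothetical vocabulary, a fake scoring function standing in for model logits, and a "grammar" that is just a finite set of allowed outputs; real implementations (for example llama.cpp's GBNF grammars) use context-free grammars. For determinism it decodes greedily over the masked tokens instead of sampling from them.

```python
import math
from typing import Callable

EOS = "<eos>"

# Toy "grammar" (an assumption): the only complete outputs we accept.
ALLOWED = {'{"ok": true}', '{"ok": false}'}
VOCAB = ['{"ok": ', "true", "false", "maybe", "}", "Sure! ", EOS]


def is_valid_prefix(text: str) -> bool:
    """True if text can still be extended into an allowed output."""
    return any(a.startswith(text) for a in ALLOWED)


def is_complete(text: str) -> bool:
    return text in ALLOWED


def fake_scores(prefix: str) -> dict[str, float]:
    """Hypothetical stand-in for model logits; it prefers chatty, invalid tokens."""
    return {"Sure! ": 5.0, "maybe": 4.0, "true": 2.0, "false": 1.5,
            '{"ok": ': 1.0, "}": 1.0, EOS: 0.5}


def constrained_decode(score_fn: Callable[[str], dict[str, float]], max_steps: int = 20) -> str:
    out = ""
    for _ in range(max_steps):
        # Mask: keep only tokens whose addition leaves a valid prefix.
        candidates = [t for t in VOCAB if t != EOS and is_valid_prefix(out + t)]
        if is_complete(out):
            candidates.append(EOS)  # ending is only legal on a complete output
        if not candidates:
            break
        scores = score_fn(out)
        best = max(candidates, key=lambda t: scores.get(t, -math.inf))
        if best == EOS:
            break
        out += best
    return out


print(constrained_decode(fake_scores))  # {"ok": true}
```

Without the mask, greedy decoding over the same scores would start with `"Sure! "`, which can never become valid JSON.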

▷ #ai-event-announcements (1 messages):

- NeurIPS Recap Episode Preview: @swyxio provided a preview of their first NeurIPS recap episode and is looking for feedback. The preview can be accessed here.

LangChain AI Discord Summary

- Discussion of batch upsert using the from_documents method in vector stores. User @kingkkaktus sought guidance on the implementation.
- Focused dialogue on backend image management, specifically ways to cache user-uploaded images on a web-app backend. @tameflame explored options including a random server folder, an in-memory cache like Redis, and other efficient solutions.
- User @vivek_13452 encountered a Vectorstore error using the FAISS.from_documents() method and looked for troubleshooting insights.
- A streaming limitation in the ChatVertexAI model was reported by @shrinitg, who also proposed a fix in a pull request.
- Advice was solicited on the architecture of a chatbot capable of performing calculations on large datasets. The example given by @shivam51 involved counting cotton shirts in a large product catalogue.
- Noteworthy use of ConversationBufferMemory by @rodralez, showing how an output definition can tailor what is displayed in the Playground.
- Exciting work presented by @cosmicserendipity on running and testing Web AI applications server-side, offering a GitHub solution for comparing and testing new vs. old models in a standardized setup. GitHub Link.
- @shving90 posted a link to a ProductHunt page, AI4Fire, without any elaboration or context, which makes its importance hard to judge.
- @emrgnt_cmplxty unveiled AgentSearch, an ambitious open-core project designed to deliver a major portion of human knowledge to LLM agents by embedding resources such as Wikipedia, Arxiv, a filtered common crawl and more. Users were encouraged to try the search engine at AgentSearch and check out additional details in this Twitter post.

LangChain AI Channel Summaries

▷ #general (19 messages🔥):

- Batch upsert with from_documents: User @kingkkaktus asked how to use the from_documents method for batch upsert in vector stores.
- Backend Image Management: @tameflame was looking for the best way to manage a cache for user-uploaded images on a web-app backend, and asked whether a random server folder, an in-memory cache like Redis, or some other method would be the most efficient.
- Vectorstore Error: @vivek_13452 encountered an error while trying to use the FAISS.from_documents() method with texts and embeddings as parameters, and asked for help understanding why they were getting ValueError: not enough values to unpack (expected 2, got 1).
- Streaming Limitation in ChatVertexAI Model: @shrinitg reported that streaming is not currently supported in the ChatVertexAI model, and shared a pull request that attempts to fix the issue.
- Chatbot for Large Data Queries: @shivam51 sought advice on an architecture for a chatbot capable of performing calculations on large datasets, such as determining how many shirts in a large product catalogue are made of cotton.
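One of the options @tameflame raised, an in-memory cache, can be sketched as an in-process LRU cache bounded by total bytes. This is a minimal single-process sketch assuming images arrive as raw bytes keyed by a hypothetical upload ID; the class name `ImageLRUCache` is illustrative. It is not Redis: a backend running several worker processes would need a shared store (such as Redis or disk) for cached entries to be visible across workers.

```python
from collections import OrderedDict
from typing import Optional


class ImageLRUCache:
    """In-process LRU cache for uploaded image bytes, bounded by total size."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self._size = 0

    def put(self, key: str, data: bytes) -> None:
        if len(data) > self.max_bytes:
            return  # too large to cache at all
        if key in self._items:
            self._size -= len(self._items.pop(key))  # replace an existing entry
        self._items[key] = data
        self._size += len(data)
        while self._size > self.max_bytes:
            _, evicted = self._items.popitem(last=False)  # least recently used
            self._size -= len(evicted)

    def get(self, key: str) -> Optional[bytes]:
        data = self._items.get(key)
        if data is not None:
            self._items.move_to_end(key)  # mark as most recently used
        return data

    @property
    def size(self) -> int:
        return self._size


cache = ImageLRUCache(max_bytes=10)
cache.put("a.png", b"aaaa")
cache.put("b.png", b"bbbb")
cache.get("a.png")           # touch a.png, so b.png becomes least recently used
cache.put("c.png", b"cccc")  # 12 bytes > 10, so b.png is evicted
print(cache.get("b.png"), cache.size)  # None 8
```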

▷ #langserve (1 messages):

- Use of ConversationBufferMemory with output definition: User @rodralez noted the use of ConversationBufferMemory with an output definition that displays only the output in the Playground, via the following modification in chain.py:

```python
# Pipe the typed agent into a lambda that returns only the "output" value
chain = agent().with_types(input_type=AgentInput) | (lambda x: x["output"])
```

▷ #share-your-work (3 messages):

- Web AI Testing on Server Side: User @cosmicserendipity shared an update on running and testing Web AI applications such as TensorFlow.js and ONNX Runtime Web headlessly on an NVIDIA T4 GPU. The solution runs the applications in a real Chrome browser via headless Chrome, which can help test and compare new web AI models against older ones in a standardized server environment. The user has shared the GitHub link here.
- AI4Fire: User @shving90 shared a link to a ProductHunt page, AI4Fire. However, no additional context or discussion was provided in the message.
- AgentSearch - Knowledge Accessibility for LLM Agents: User @emrgnt_cmplxty introduced AgentSearch, an open-core effort to make humanity’s knowledge accessible to LLM agents. The user has embedded all of Wikipedia, Arxiv, a filtered common crawl, and more, totaling over 1 billion embedding vectors. The search can be tried here. More details can be found in this Twitter post.

Alignment Lab AI Discord Summary

- Announcement of the alpha launch of Text-to-CAD by @entropi, a new technology that converts text into CAD models rather than the more common text-to-3D models. It was shared via a link.
- Introduction of OpenPipe, a “fully-managed, fine-tuning platform for developers”, as shared by @entropi. The platform has reportedly saved its users over $2m and has recommended Mistral 7B since that model's release. Further details can be obtained from OpenPipe.
- @entropi also revealed that OpenPipe is built on a merge of Open Hermes 2.5 and Intel’s SlimOrca-based Neural-Chat-v3-3.
- User @emrgnt_cmplxty shared the release of their project AgentSearch, an open-core effort to curate humanity’s knowledge for LLM agents, drawing on sources such as Wikipedia, Arxiv, and a filtered common crawl. More than 1 billion embedding vectors are reportedly available at search.sciphi.ai, as mentioned in this tweet.
- @neverendingtoast asked how data for vector search is segmented in the AgentSearch project, but no response was included in the overview.
- @imonenext asked whether anyone in the guild knows people from Megatron or PyTorch.
- @neverendingtoast asked for pointers to a good repository for experimenting with model merges.

Alignment Lab AI Channel Summaries

▷ #ai-and-ml-discussion (3 messages):

- Introduction of Text-to-CAD: User @entropi shared the alpha launch of Text-to-CAD, which converts text into CAD models, as opposed to the conventional text-to-3D models used predominantly for gaming assets.
- Fine-tuning Platform - OpenPipe: @entropi introduced OpenPipe, a fully-managed fine-tuning platform for developers that has saved its users over $2m. Mistral 7B has been its recommended model since its release in September.
- Open Hermes 2.5 & Intel’s SlimOrca Based Neural-Chat-v3-3 Merge: @entropi commented that the platform is built on top of a merge of Open Hermes 2.5 and Intel’s SlimOrca-based Neural-Chat-v3-3.

▷ #general-chat (2 messages):

- AgentSearch Project Release: User @emrgnt_cmplxty shared AgentSearch, a project they have been working on: an open-core effort to embed humanity’s knowledge for LLM agents, including all of Wikipedia, Arxiv, a filtered common crawl and more. The project has produced over 1 billion embedding vectors, available at search.sciphi.ai, as cited in their tweet.
- Inquiry on Data Segmentation: User @neverendingtoast asked how the data for vector search is being segmented in the AgentSearch project.

▷ #oo (2 messages):

- @imonenext asked if anyone in the chat is acquainted with people from Megatron or PyTorch.
- @neverendingtoast requested recommendations for a good repository for model merges, expressing an interest in experimenting with them.

Skunkworks AI Discord Summary

- Feedback & Code in Instruct Format: User @far_el shared their appreciation for constructive feedback and acknowledged that a significant amount of code using an instruct format was present in #general.
- Successful Model Utilization: User @far_el in #general expressed satisfaction that their AI model effectively served a user’s specialized application.
- User lightningralf asked in #finetune-experts whether anyone in the group has tried ‘prompt gisting’.

Skunkworks AI Channel Summaries

▷ #general (3 messages):

- Feedback & Code in Instruct Format: @far_el expresses gratitude for feedback received and notes the presence of a significant amount of code using an instruct format.
- Successful Model Utilization: @far_el expresses happiness that their AI model worked effectively for a user’s specific use case.

▷ #finetune-experts (1 messages):

lightningralf: Has anybody tried to do prompt gisting in this group?

LLM Perf Enthusiasts AI Discord Summary

- Discussion on exploring custom retrieval strategies, with @daymanfan reporting that they faced a similar issue but still got better response quality than with other options.
- Dialogue on prompt behavior across models, as @dongdong0755 asked whether the same prompt produces consistent results across different models.

LLM Perf Enthusiasts AI Channel Summaries

▷ #openai (1 messages):

- Exploration of Retrieval Strategy: @daymanfan asked whether anyone was exploring their own retrieval strategy, noting that they had encountered a similar issue, although the response quality was superior to other options.

▷ #prompting (1 messages):

- Prompt Functionality across Models: User @dongdong0755 asked how consistently the same prompt performs across different models, wondering whether variations in outcomes would be noticeable.

DiscoResearch Discord Summary

- Conversation about model differences, specifically their potential causes. @calytrix suggested that changes in the router layers could be a factor, and recommended a two-step fine-tuning process with different parameters for the router layers.
- Request by @datarevised for feedback on their OpenDalle model, which applies a custom Slerp method to SDXL. The user is receptive to both positive and negative comments.

DiscoResearch Channel Summaries

▷ #mixtral_implementation (1 messages):

- Possible Causes for Model Differences: @calytrix posits that the differences seen in recent models could stem from factors absent in earlier versions, singling out the router layers as a probable cause. They suggest a two-stage fine-tuning process in which the second stage fine-tunes the router layers with different parameters.

▷ #general (1 messages):

- OpenDalle Model Feedback Request: @datarevised requested feedback on the OpenDalle model they created using a custom slerp method applied to SDXL. The user welcomed both positive and negative critiques.
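For reference, standard spherical linear interpolation (slerp) blends two vectors along the arc between them: slerp(t; v0, v1) = sin((1 - t)Ω) / sin Ω · v0 + sin(tΩ) / sin Ω · v1, where Ω is the angle between v0 and v1. The sketch below is that textbook formula on plain Python lists, not @datarevised's custom method, which was not described. In model merging it is commonly applied tensor by tensor, with each weight tensor flattened into a vector; the fallback to linear interpolation for zero or (anti-)parallel vectors is a common convention rather than part of the formula.

```python
import math
from typing import Sequence


def lerp(t: float, v0: Sequence[float], v1: Sequence[float]) -> list[float]:
    """Plain linear interpolation, used as a fallback."""
    return [(1 - t) * a + t * b for a, b in zip(v0, v1)]


def slerp(t: float, v0: Sequence[float], v1: Sequence[float], eps: float = 1e-8) -> list[float]:
    """Spherical linear interpolation between v0 (t=0) and v1 (t=1)."""
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    if norm0 < eps or norm1 < eps:
        return lerp(t, v0, v1)  # angle is undefined for a zero vector
    cos_omega = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # clamp rounding error
    sin_omega = math.sin(omega)
    if sin_omega < eps:
        return lerp(t, v0, v1)  # (anti-)parallel vectors: fall back to lerp
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))  # both components ≈ 0.7071
```

Unlike linear interpolation, the midpoint of two orthogonal unit vectors stays on the unit circle here, which is the property slerp-based merges rely on.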

MLOps @Chipro Discord Summary

Only 1 channel had activity, so no need to summarize…

- True ML Talks Episode - Deploying ML and GenAI models at Twilio: User @Nikunjb discussed an episode of True ML Talks with Pruthvi, a Staff Data Scientist at Twilio. Topics included the X-GPT concept, Twilio’s efforts to enhance Rack flow, and the different models Twilio is developing beyond GenAI.
- The discussion also touched on the intricacies of the various embeddings used for the vector database, and on how Twilio manages OpenAI rate limits.

- The episode was praised for its insightful coverage of different aspects of Machine Learning and its infrastructure within the Twilio ecosystem.

- Link to the episode: YouTube - Deploying ML and GenAI models at Twilio

AI Engineer Foundation Discord Summary

Only 1 channel had activity, so no need to summarize…

- Introduction and Interest in ML & AI: User @paradoxical_traveller09 introduced themselves and expressed interest in connecting with other users who are passionate about Machine Learning (ML) and Artificial Intelligence (AI). They are open to discussing ML-focused topics.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.