Newly released papers show very promising Llama extensions:

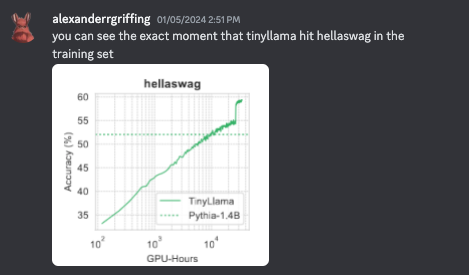

- TinyLlama: An Open-Source Small Language Model (we covered this in previous AINews issues, but this is the paper; also written up on Semafor): "We present TinyLlama, a compact 1.1B language model pretrained on around 1 trillion tokens for approximately 3 epochs."

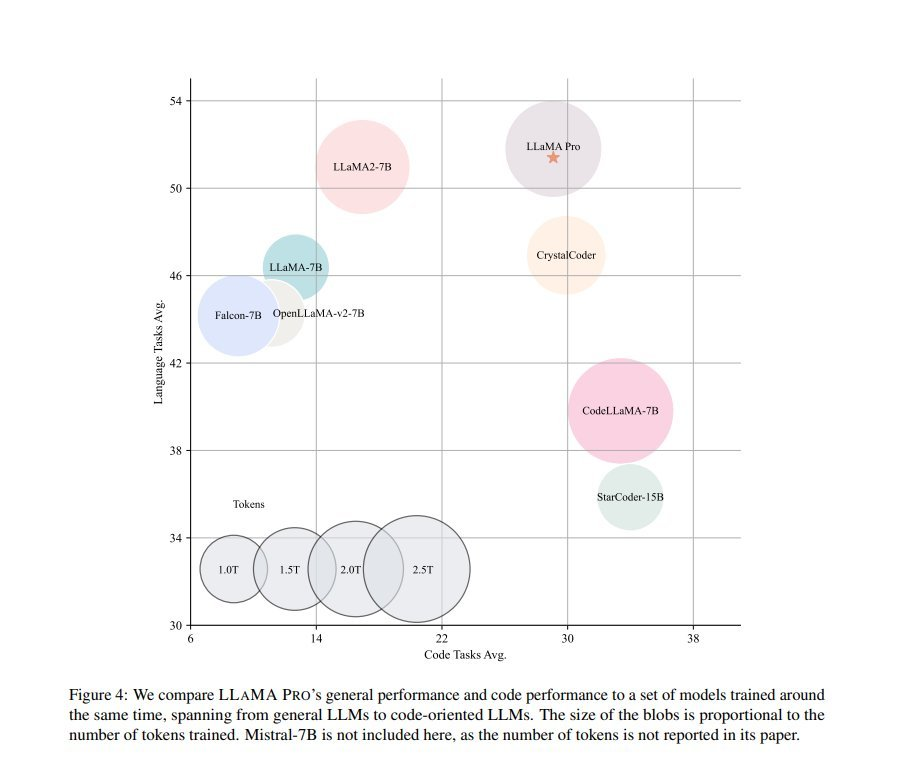

- LLaMA Pro: Progressive LLaMA with Block Expansion: LLaMA-Pro is an 8.3-billion-parameter model, an expansion of LLaMA2-7B further trained on code and math corpora totaling 80 billion tokens.

- Adds new knowledge to LLaMA2-7B without catastrophic forgetting… by just adding layers, lol

- Gives it a nice tradeoff between language and code tasks

But it is already getting some scrutiny for building on LLaMA rather than Mistral, Qwen, etc.

Yannic Kilcher already has a great LLaMA Pro explainer out.
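The block-expansion idea behind LLaMA Pro can be shown with a toy model. This is our own pure-Python sketch, not the paper's code. It assumes a simplified residual block `y = x + W_out·relu(W_in·x)`. After every `group_size` blocks it inserts a copy with its output projection zeroed. The new block therefore starts out as an identity mapping, and the expanded stack initially computes exactly what the original did. Original blocks are marked frozen and only the inserted ones trainable, which is how the approach aims to add knowledge without catastrophic forgetting. `ResidualBlock`, `expand_blocks`, and `group_size` are hypothetical names.

```python
import copy
import random
from dataclasses import dataclass


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


@dataclass
class ResidualBlock:
    """Toy residual block: y = x + W_out @ relu(W_in @ x)."""
    w_in: list
    w_out: list
    trainable: bool = True

    def __call__(self, x):
        update = matvec(self.w_out, relu(matvec(self.w_in, x)))
        return [a + b for a, b in zip(x, update)]


def random_block(dim, hidden, rng):
    w_in = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(hidden)]
    w_out = [[rng.gauss(0.0, 1.0) for _ in range(hidden)] for _ in range(dim)]
    return ResidualBlock(w_in, w_out)


def expand_blocks(blocks, group_size):
    """After every `group_size` blocks, insert a copy whose W_out is all zeros.

    A zero W_out makes the copy's residual update zero, so the new block is an
    identity mapping and the expanded stack computes the same function. The
    original blocks are frozen; only the inserted blocks stay trainable.
    """
    expanded = []
    for i, block in enumerate(blocks, start=1):
        block.trainable = False
        expanded.append(block)
        if i % group_size == 0:
            new_block = copy.deepcopy(block)
            new_block.w_out = [[0.0] * len(row) for row in block.w_out]
            new_block.trainable = True
            expanded.append(new_block)
    return expanded


def run(blocks, x):
    for block in blocks:
        x = block(x)
    return x


rng = random.Random(0)
base = [random_block(dim=4, hidden=8, rng=rng) for _ in range(4)]
x = [0.5, -1.0, 2.0, 0.1]
before = run(base, x)
expanded = expand_blocks(base, group_size=2)  # 4 blocks -> 6 blocks
print(len(expanded), run(expanded, x) == before)  # 6 True
```

Each inserted block adds an exactly-zero update, so outputs match before any training. Training would then update only the new blocks.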

In other news, LangChain plans to promote its recent v0.1 release next week.

Table of Contents

[TOC]

OpenAI Discord Summary

- Enterprise-Lite, Right? Not: User @a1vx inquired whether individual users can buy an Enterprise package. @solbus promptly informed them that the offer currently caters only to large corporations with six-figure contracts.

- Privacy Fears and GPT Reassurances: User @a1vx raised concerns about privacy when using GPT models. @feltsteam0 reassured them that private chats are unlikely to be used in model pretraining.

- Not One Language Fits All: Users asked whether the imminent GPT Store will support GPTs in other languages such as Russian. The community suggested waiting for details after the store launches.

- Fine-tuning for Models, Not Just Motors: For those looking to use a fine-tuned GPT-3.5 1106 in Assistants, @a1vx pointed to the Assistants API page of OpenAI's official documentation. They noted that support for fine-tuned models is coming soon.

- The Traceroute is Not the Route: @darthgustav. and @chotes discussed how the model operates in a high-dimensional vector space. The discussion ended in skepticism about treating a model's response as a traceroute through that space, and about the misconceptions that metaphor invites.

- GPT-4: Tokens Galore, More is More: its_eddy_ and Bianca Stoica discussed GPT-4's token usage cap and the desire for full-time access.

- The Builders Need Verifications: Verifying domains while setting up GPT Builder profiles has proven thorny for johan0433 and savioai, though efforts have been made to resolve the issue.

- GPTs Flexing Language Muscles: xbtrob and elektronisade highlighted that GPT-4 may have difficulty reading non-Latin scripts, namely kanji, from images.

- Caught Between HTML Rock and CSS Hardplace: kerubyte is struggling to control the HTML and CSS generated by their custom GPT. It has an unsolicited affinity for spawning `<html>` and `<body>` elements.

- Custom GPTs for Who, Now?: filipemorna asked how to enroll as a GPT builder. solbus explained that a ChatGPT Plus account is all that is needed.

- GPTs: Not a Fan of Red Tape: User .ashh. asked whether creating GPTs for specific video games could infringe copyright.

- Playing Hide and Seek with GPT-4: its_eddy_ and Bianca Stoica discussed the common issue of GPT-4 being inaccessible, touching on usage and token limits.

- Dynamic Image Generation via DALL-E for Breakfast: User @hawaiianz shared experiences and advice on creating more balanced, dynamic images with DALL-E through creative prompts and descriptions.

- More Locks for More Safety: Security in deploying GPTs was a point of contention among @cerebrocortex, @aminelg, @madame_architect and @wwazxc. Users were advised against breaking OpenAI's policies while exploring new security methods.

- Logo-ing with DALL-E is Dope: @viralfreddy sought advice on creating logos with DALL-E. @darthgustav suggested writing specific prompts and translating them into English for better results.

- GPT's Task List is Overflowing: Users like @ajkuba, @wwazxc and @cosmodeus_maximus were vexed by GPT's handling of large tasks and its occasional unresponsiveness.

- The Treacherous Path to Prompting Success: @exhort_one shared their prompt engineering journey. After several iterations and learning from the community, they finally produced the desired script.
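For the unwanted `<html>`/`<body>` elements, one workaround (not proposed in the discussion) is to post-process the GPT's output rather than rely on instructions alone. This only applies if the output passes through your own code before reaching the target platform. Below is a minimal sketch, assuming the platform wants a fragment. It removes `<!DOCTYPE>`, `<html>`, `<head>`, and `<body>` tags, both opening and closing. Everything between them is kept, including `<style>` blocks. `strip_document_wrapper` is a hypothetical helper. A regex is adequate for this narrow cleanup but is not a general HTML parser.

```python
import re

# Opening or closing document-level tags: <!DOCTYPE ...>, <html ...>, </html>,
# <head>, </head>, <body ...>, </body>. The \b keeps tags like <header> intact.
_WRAPPER_TAGS = re.compile(r"</?(?:!doctype|html|head|body)\b[^>]*>", re.IGNORECASE)


def strip_document_wrapper(generated: str) -> str:
    """Remove document-level wrapper tags but keep the markup inside them."""
    return _WRAPPER_TAGS.sub("", generated).strip()


page = """<!DOCTYPE html>
<html>
<head><style>p { color: red; }</style></head>
<body class="main">
<header>Hi</header>
<p>Hello</p>
</body>
</html>"""
print(strip_document_wrapper(page))
```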

OpenAI Channel Summaries

▷ #ai-discussions (241 messages🔥🔥):

- Enterprise AI for Individual Users? Not Yet: User @a1vx asked about purchasing an Enterprise package for individual use. @solbus clarified that OpenAI currently offers Enterprise only to large companies with six-figure contracts. They suggested contacting the OpenAI sales team to negotiate.

- Concerns about Privacy with ChatGPT: @a1vx raised privacy concerns about using GPT models. These centered on the "Chat history & training" setting and on data storage for moderation. @feltsteam0 reassured them that the data is highly unlikely to end up in pretraining data and that "private" chats are probably not used for training.

- Incorporating Private GPTs in the GPT Store: Users asked whether the upcoming GPT Store will allow GPTs built to understand other languages, especially Russian. The current documentation does not cover this; users hope it will be clarified when the Store launches.

- Fine-tuning GPT Models for Assistants: User @s44002 asked whether a fine-tuned version of GPT-3.5 1106 can be used as the model for an assistant. @a1vx referred them to the Assistants API page in OpenAI's documentation. They noted that support for fine-tuned models in the Assistants API is coming soon.

- ‘The Traceroute Metaphor’ Discussion: @darthgustav. and @chotes held a long technical discussion about how language models operate in a high-dimensional vector space. Treating a model's responses as a traceroute through this space met with skepticism. Debate followed over how accurate the metaphor is and what misunderstandings it causes.

Links mentioned:

- Conversa

- Pricing: Simple and flexible. Only pay for what you use.

- GitHub - SuperDuperDB/superduperdb: 🔮 SuperDuperDB. Bring AI to your database; integrate, train and manage any AI models and APIs directly with your database and your data.

▷ #gpt-4-discussions (97 messages🔥🔥):

- GPT Usage Cap and Subscription Discussions: its_eddy_ responded to concerns about the GPT-4 usage cap, helping clarify availability times for free users. They also discussed token usage limits and inaccessible GPTs with Bianca Stoica, who asked about gaining full-time access to GPT-4. The pair discussed whether a subscription would be needed to get around the cap.

- GPT Builder Domain Verification Troubleshooting: johan0433 and savioai shared their difficulties verifying their domains when setting up GPT Builder profiles. johan0433 suggested entering "www" in the Name field when creating the DNS TXT record, but this did not work for savioai. anardude also asked for help verifying his domain, and his question went unanswered.

- Anticipated Launch of GPT Store: solbus mentioned that OpenAI had announced the upcoming launch of the GPT Store in an email to users. This prompted a discussion with jguzi about whether a searchable directory of custom GPT bots will be available.

- Issues with GPT Knowledge Recognition: alanwunsche reported that his GPT fails to recognize small uploaded files, noting that a number of users report the same issue. No solutions were offered.

- Mobile Incompatibility of Custom GPT Actions: dorian8947 reported that the custom actions on his GPT fail in the mobile app and asked for help resolving this.

- HTML and CSS Custom GPT Development Problems: kerubyte wants a custom GPT that generates valid HTML and CSS for a specific platform. However, it keeps generating `<html>` and `<body>` elements even though the rule set explicitly forbids them.

- Non-Latin Scripts in Images: xbtrob noted that GPT-4 cannot read kanji from pictures. elektronisade confirmed this, saying non-Latin scripts such as Japanese or Korean may not be processed optimally.

- Enrolling as a GPT Builder: filipemorna asked how to enroll as a GPT builder. solbus explained that anyone with a ChatGPT Plus account can create a custom GPT in OpenAI's GPT editor. They also pointed to the builder profile settings in ChatGPT Plus.

- GPT Store Monetization: dinola asked how GPT monetization works in relation to user access and API usage. Referring to the developer keynote, pinkcrusader suggested that payouts will be split among the most-used GPTs. 7877 worked through an example: if a user spends 10% of their usage on a custom GPT, its creator might get a 70/30 split of that 10%. Both answers were speculative.

- Video Game GPTs and Copyright: .ashh. asked whether a GPT that answers questions about specific video games could infringe copyright or trademarks. 7877 suggested it should be fine as long as no game assets are used.

- Converting a Public API into a GPT Config: moneyj2k asked for the quickest way to turn a public API into a GPT configuration file. The brief discussion produced no confirmed solution.

- Custom Storytelling GPTs: kungfuchopu shared their excitement about a custom GPT that lets users interact with characters. It generates unique stories in a specific character's voice and tone.

- Preparing for the GPT Store: cerebrocortex asked for tips on preparing a GPT for the GPT Store, and solbus pointed to OpenAI's guidelines.

- Instructions and Knowledge Limits: jobydorr and _odaenathus discussed prompts and rule sets for GPTs, including the difficulty of getting a GPT to follow its instructions. _odaenathus asked about hard and soft limits on "Knowledge". chotes clarified that there is a hard token limit and that larger documents are split up using Retrieval Augmented Generation (RAG). chotes also warned about the high cost of uploading large JSON files.

- "GPT Inaccessible or Not Found": melysid asked Coty for help with a "GPT inaccessible or not found" error. The question went unanswered.

- Message Limits: holden3967 said the cap of 25 messages per 3 hours severely limits the usefulness of GPT apps. 7877 joked that there is no way around it, and _odaenathus suggested using multiple accounts on multiple plans. _odaenathus also explained how mixing regular ChatGPT and custom GPT messages counts toward rate limits. lumirix said he believes GPT prompts also count toward the 40-message limit. dino.oats warned that generating multiple images in one response can quickly exhaust the limit.

- DALL-E Aspect Ratios: .arperture struggled to get DALL-E in a custom GPT to consistently produce non-square images. solbus suggested asking for a "tall aspect ratio" or "wide aspect ratio" instead.
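chotes's point that larger Knowledge files are split up and retrieved via RAG can be illustrated with a toy retriever. This is a minimal sketch, not how OpenAI implements Knowledge retrieval; the discussion gave no such details. The sketch splits text into overlapping word chunks and ranks them by word overlap with the query. A real system would typically rank with embeddings instead. `chunk_words`, `retrieve`, and their parameters are hypothetical.

```python
import re
from collections import Counter


def chunk_words(text, size=50, overlap=10):
    """Split text into word chunks of `size`, each sharing `overlap` words with the next."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    # Stop once a new chunk would only repeat the previous chunk's overlap.
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[s:s + size]) for s in range(0, last_start, step)]


def _terms(text):
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks, query, k=2):
    """Return the k chunks sharing the most words with the query (ties keep file order)."""
    q = _terms(query)

    def score(chunk):
        c = _terms(chunk)
        return sum(min(n, c[t]) for t, n in q.items())

    return sorted(chunks, key=score, reverse=True)[:k]


doc = ("filler " * 60) + "the dragon boss is weak to fire " + ("filler " * 60)
chunks = chunk_words(doc, size=20, overlap=5)
print(len(chunks), retrieve(chunks, "what is the dragon boss weak to?", k=1))
```

In this toy version, only the top-ranked chunks would reach the model's context. A detail phrased very differently from the query may therefore never be retrieved.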

Links mentioned:

- Brand guidelines: Language and assets for using the OpenAI brand in your marketing and communications.

- GPT access on cell phone app: My GPT Ranko works well on Mac but when I access it on the cell phone app it tells me my GPT is unavailable. I can access other GPTs alright. Is there any additional setting for app access?
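holden3967's complaint about the cap of 25 messages per 3 hours is easier to reason about with a small tracker. The sketch below assumes a rolling window. That is our assumption, not documented behavior. OpenAI's actual accounting may differ, as may the way custom-GPT and image messages count (which the channel debated). `RollingCap` is a hypothetical class for client-side bookkeeping only.

```python
from collections import deque


class RollingCap:
    """Track message timestamps against a cap of `limit` per rolling `window_s` seconds.

    ASSUMPTION: a rolling window; the real service may count differently.
    """

    def __init__(self, limit=25, window_s=3 * 3600):
        self.limit = limit
        self.window_s = window_s
        self.sent = deque()  # timestamps (seconds) of messages still inside the window

    def _expire(self, now):
        while self.sent and now - self.sent[0] >= self.window_s:
            self.sent.popleft()

    def try_send(self, now):
        """Record a message at time `now` if the cap allows it; return whether it was sent."""
        self._expire(now)
        if len(self.sent) < self.limit:
            self.sent.append(now)
            return True
        return False

    def seconds_until_next(self, now):
        """How long to wait before the oldest message leaves the window."""
        self._expire(now)
        if len(self.sent) < self.limit:
            return 0.0
        return self.sent[0] + self.window_s - now


cap = RollingCap()
sent = sum(cap.try_send(now=minute * 60) for minute in range(30))
print(sent, cap.seconds_until_next(now=30 * 60))  # 25 9000
```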

▷ #prompt-engineering (48 messages🔥):

- Balancing images with dynamic poses: User @hawaiianz shared a prompt with tips for creating balanced images in DALL-E. The approach uses dynamic poses to support a pyramid-shaped composition, and they suggested it applies beyond images.

- Security in GPT Deployment: Users @cerebrocortex, @aminelg, @madame_architect and @wwazxc discussed securing GPT models against unauthorized access. Additional resources were suggested for exploring security methods, but users were cautioned against breaking OpenAI's usage policies.

- Improving GPT Performance: User @ajkuba described a custom GPT that could not process large batches of data without crashing. Fellow users @sciandy and @wwazxc recommended outputting the results in different formats or using JSON mode to avoid these issues.

- Logo Creation with DALL-E: @viralfreddy sought advice on improving their prompts for creating a logo with DALL-E. @darthgustav suggested being specific in the prompt. They also recommended translating it into English if English isn't the user's native language.

- Issues with GPT Model: User @cosmodeus_maximus was dissatisfied with the GPT model's current performance, specifically its lack of creativity and its neglect of user instructions. Similarly, @mysterious_guava_93336 flagged ChatGPT's constant "PowerPoints" (structured responses), which impede the quality of conversation.

- Prompt Engineering Success Story: User @exhort_one finally obtained their desired script from the GPT model after 3 months of prompt engineering. Their story shows the learning curve and resilience this field demands.
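The advice for @ajkuba's large-batch problem combines into a simple pattern. Send items in small batches, require a JSON object back, and retry any batch whose output fails to parse or is missing items. This is a minimal sketch, assuming you call the model from your own code rather than the ChatGPT UI. `call_model` is a hypothetical stand-in for whatever client you use; with the OpenAI API, JSON mode is a request option on that call. The batch size and retry count are arbitrary.

```python
import json


def batched(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def process_in_batches(items, call_model, batch_size=10, max_retries=2):
    """Process items batch by batch, requiring a JSON object that covers every item.

    `call_model` is a hypothetical function: prompt string in, raw model text out.
    """
    results = {}
    for batch in batched(items, batch_size):
        prompt = (
            "Return only a JSON object mapping each item below to a one-line result.\n"
            + json.dumps(batch)
        )
        for _ in range(max_retries + 1):
            try:
                parsed = json.loads(call_model(prompt))
            except json.JSONDecodeError:
                continue  # malformed output: ask again for this batch only
            if isinstance(parsed, dict) and all(item in parsed for item in batch):
                results.update({item: parsed[item] for item in batch})
                break
        else:
            raise RuntimeError(f"batch still invalid after {max_retries} retries: {batch}")
    return results


# Stand-in model for demonstration: echoes each item upper-cased as JSON.
def fake_model(prompt):
    batch = json.loads(prompt.split("\n", 1)[1])
    return json.dumps({item: item.upper() for item in batch})


print(len(process_in_batches([f"url{i}" for i in range(25)], fake_model)))  # 25
```

Keeping batches small bounds how much work a single bad response can lose. Only the failing batch is retried.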


▷ #api-discussions (48 messages🔥):

- Exploration of Enhanced Image Generation: @hawaiianz explained that describing dynamic positioning and supporting roles in image prompts can improve DALL-E results. Compositional details, such as varied actions ("random kung fu attack positions"), make for a more balanced and realistically dynamic picture.

- Security Concerns in GPT: Users like @cerebrocortex and @wwazxc raised concerns about security measures for GPTs, particularly around guarding their instructions. Users such as @madame_architect and @eskcanta suggested exploring scholarly resources and established projects like SecretKeeperGPTs or SilentGPT for learning and inspiration.

- Logo Designing with DALL-E: @viralfreddy sought help designing a logo with DALL-E. @darthgustav advised being specific in the prompts and translating them into English for better results.

- Issues with GPT's Task Handling: @ajkuba described a persistent issue with GPT failing to complete large tasks with many items, such as batch web browsing. Other users like @wwazxc and @cosmodeus_maximus also expressed frustration with major limitations and occasional unresponsiveness.

- Prompt Engineering Challenges and Success: @exhort_one persevered through multiple prompt revisions and a bout of burnout. They finally got the desired script from GPT and credited the community's prompt engineering insights as helpful along the way.


Eleuther Discord Summary

- Model Faceoff: Mamba vs. Transformer: @sentialx initiated a lively debate on Mamba's performance compared with Transformer models in NLP tasks. It touched on model diversity, degree of optimization, and use cases.

- All Eyes on Civitai's Clubs: @digthatdata shared Civitai's latest feature, "Clubs", meant to boost engagement for creators offering exclusive content. The rollout has not been without its detractors.

- Phi-2 Released Under MIT License: As announced by @SebastienBubeck on Twitter and shared by @digthatdata, Microsoft Research's Phi-2 model is now available under the MIT License.

- Youths of the LLaMA Family: TinyLlama and LLaMA Pro: @philpax and @ai_waifu shared two new language model releases, TinyLlama and LLaMA Pro. Both build on Llama 2: TinyLlama reuses its architecture and tokenizer, while LLaMA Pro expands LLaMA2-7B with additional blocks.

- MCQs and LLMs: A Tumultuous Relationship: @Nish, a researcher at the University of Maryland, reported notable gaps in his project's LLM-generated answers to multiple-choice questions. They fall short of previously reported leaderboard figures, especially on the HellaSwag dataset. Contributing factors could include differences in implementation and in how the choices' log-likelihoods are normalized.

- Shared Notes on Sequence Labelling: @vertimento started a conversation about applying the logit/tuned lens to sequence labelling tasks such as PoS tagging.

- Unlocking the Mysteries of GPT-4 Token Choices: @allanyield shared a LessWrong post on having GPT-4 choose tokens from a smaller model's distribution. The post suggests that, when properly prompted, an LLM can output text structured as an explanation followed by a conclusion.

- Interest in Joining an Interpretability Project: @eugleo finished the ARENA program and is eager to join an interpretability-related project, offering about 16 hours per week.

- Proof Lies in Performance: @carsonpoole and @rallio had an in-depth conversation about the inference performance of different-sized models. They noted the crucial role of model size, GPU capabilities, and batch size in determining inference speed and cost.

- Training Models and the Efficiency of MLM: @jks_pl and @stellaathena discussed the implications and efficiency of masked language model (MLM) training and span corruption tasks, with reference to a specific paper.

- Decoding Transformers: @erich.schubert listed questions about Transformer architectures. This led to a dialogue on positional encodings, layer outputs, and the structure of prefix decoders.

- Sequence Length Warnings: Real or Imagined? @chromecast56 raised concerns about sequence length warnings in a project. This triggered a dialogue reassuring users that the evaluation harness can handle the issue.

- ToxiGen Adds Its Flavour to lm-evaluation-harness: @hailey_schoelkopf noted that the lead author of the ToxiGen paper contributed the ToxiGen implementation in lm-eval-harness. It covers a setting the original paper did not explore.

Eleuther Channel Summaries

▷ #general (161 messages🔥🔥):

- Mamba vs Transformer Debate: @sentialx questioned Mamba's performance, sparking a lively discussion on the effectiveness of Mamba and Transformer models in NLP tasks. @thatspysaspy, @canadagoose1, @stellaathena, and @clock.work_ weighed in on the degree of optimization, the diversity among models, and the use cases suited to each model type.

- Civitai Clubs Rolled Out: @digthatdata shared an update from Civitai about "Clubs", a new feature for creators who offer exclusive content. It is intended to boost creator engagement, though the update also acknowledged some backlash since the release.

- Release of Phi-2 under MIT License: @digthatdata also highlighted a tweet by @SebastienBubeck announcing the release of Phi-2 under the MIT license.

- Inference Performance Comparisons: @carsonpoole and @rallio discussed the inference performance of different-sized models in detail. @rallio shared a useful resource on best practices for serving large language models (LLMs). The talk highlighted how model size, GPU power, and batch size affect inference speed and cost.

- Volunteer Research Opportunity: @sirmalamute asked the community about volunteering on a machine learning research project to gain more hands-on experience.
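The point that model size, GPU capability, and batch size drive inference speed and cost can be made concrete with a common back-of-envelope model. This model is ours, not taken from the linked post. During decoding, each step streams every weight from GPU memory once. Compute grows with batch size at roughly 2 FLOPs per parameter per token. Step time is whichever of the two is larger. The GPU figures in the example are round, illustrative numbers. The model ignores the KV cache, attention FLOPs, and kernel efficiency.

```python
def decode_estimate(params_billion, bytes_per_param, mem_bw_gb_s, peak_tflops, batch_size):
    """Rough per-step decode time for a dense model (ignores KV cache and overheads)."""
    params = params_billion * 1e9
    # Every decode step reads all weights from GPU memory once, shared by the whole batch.
    memory_time_s = params * bytes_per_param / (mem_bw_gb_s * 1e9)
    # About 2 FLOPs per parameter per generated token, for each sequence in the batch.
    compute_time_s = 2 * params * batch_size / (peak_tflops * 1e12)
    step_time_s = max(memory_time_s, compute_time_s)
    return {
        "ms_per_step": step_time_s * 1e3,
        "tokens_per_s": batch_size / step_time_s,
        "bound": "memory" if memory_time_s >= compute_time_s else "compute",
    }


# Illustrative only: a 7B model in fp16 on a GPU assumed to have ~2 TB/s and ~300 TFLOPS.
for batch in (1, 64, 256):
    print(batch, decode_estimate(7, 2, 2000, 300, batch))
```

With these assumed numbers, batch-1 decoding is memory-bound at roughly 7 ms per step. Larger batches raise throughput almost for free until compute becomes the bottleneck.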

Links mentioned:

- Call for feedback on sustainable community development | Civitai: This week, we rolled out Clubs - a new feature for creators who’ve been running exclusive memberships on platforms like Patreon. Clubs is our way o…

- LLM Inference Performance Engineering: Best Practices: In this blog post, t

- Tweet from Sebastien Bubeck (@SebastienBubeck): Starting the year with a small update, phi-2 is now under MIT license, enjoy everyone! https://huggingface.co/microsoft/phi-2

▷ #research (64 messages🔥🔥):

- Logit/Tuned Lens in Sequence Labelling: @vertimento started a discussion on applying the logit/tuned lens to sequence labelling tasks such as PoS tagging. It drew a variety of responses but no decisive answer.

- Releases of TinyLlama and LLaMA Pro: @philpax and @ai_waifu shared the new TinyLlama and LLaMA Pro releases, respectively. Both are notable additions to the LLaMA family of models.

- Discussion on Model Training and MLM Efficiency: @jks_pl and @stellaathena had an extensive discussion on the efficiency and implications of masked language model (MLM) training and span corruption tasks. They referenced a useful paper for context: https://arxiv.org/abs/2204.05832.

- Change in Licensing of Phi-2 Model: According to @kd90138, Phi-2, originally released under the Microsoft Research License, is now under the MIT License.

- Queries Regarding Transformer Architectures: @erich.schubert asked questions about the classic Transformer (encoder-decoder) architecture. @stellaathena, @ad8e, and others responded on handling positional encodings and layer outputs, and on the structure of prefix decoders.
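For readers unfamiliar with span corruption, here is a minimal sketch of the T5-style objective. It is our illustration, not code from the discussion or the linked paper. Contiguous spans in the input are replaced by sentinel tokens, and the target lists each sentinel followed by the tokens it hid. BERT-style MLM, by contrast, masks individual tokens and predicts them in place. Sentinel names follow T5's `<extra_id_N>` convention. The spans are passed in explicitly rather than sampled, to keep the example deterministic.

```python
def span_corrupt(tokens, spans, sentinel="<extra_id_{}>"):
    """T5-style span corruption.

    `spans` is a sorted list of non-overlapping (start, length) pairs. Each span
    becomes one sentinel in the input; the target lists each sentinel followed by
    the tokens it hid, then a final closing sentinel.
    """
    inputs, targets = [], []
    pos = 0
    for i, (start, length) in enumerate(spans):
        if start < pos:
            raise ValueError("spans must be sorted and non-overlapping")
        inputs.extend(tokens[pos:start])  # keep the unmasked tokens before this span
        inputs.append(sentinel.format(i))
        targets.append(sentinel.format(i))
        targets.extend(tokens[start:start + length])  # the hidden span to predict
        pos = start + length
    inputs.extend(tokens[pos:])
    targets.append(sentinel.format(len(spans)))
    return inputs, targets


tokens = "Thank you for inviting me to your party last week".split()
inputs, targets = span_corrupt(tokens, [(2, 2), (8, 1)])
print(" ".join(inputs))   # Thank you <extra_id_0> me to your party <extra_id_1> week
print(" ".join(targets))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

The targets are much shorter than the full sequence, which is one reason span corruption is often described as cheaper per example than reconstructing the whole input.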

Links mentioned:

- TinyLlama: An Open-Source Small Language Model: We present TinyLlama, a compact 1.1B language model pretrained on around 1 trillion tokens for approximately 3 epochs. Building on the architecture and tokenizer of Llama 2, TinyLlama leverages variou…

- LLaMA Pro: Progressive LLaMA with Block Expansion: Humans generally acquire new skills without compromising the old; however, the opposite holds for Large Language Models (LLMs), e.g., from LLaMA to CodeLLaMA. To this end, we propose a new post-pretra…

- What Language Model Architecture and Pretraining Objective Work Best for Zero-Shot Generalization?: Large pretrained Transformer language models have been shown to exhibit zero-shot generalization, i.e. they can perform a wide variety of tasks that they were not explicitly trained on. However, the a…

- Look What GIF - Look What Yep - Discover & Share GIFs: Click to view the GIF

- Upload 3 files · microsoft/phi-2 at 7e10f3e

- An explanation for every token: using an LLM to sample another LLM — LessWrong: Introduction Much has been written about the implications and potential safety benefits of building an AGI based on one or more Large Language Models…

- AI 100-2 E2023, Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations | CSRC

- Reproducibility Challenge: ELECTRA (Clark et al. 2020): A reproduction of the Efficiently Learning an Encoder that Classifies Token Replacements Accurately approach in low-resource NLP settings.

- Improving position encoding of transformers for multivariate time series classification - Data Mining and Knowledge Discovery: Transformers have demonstrated outstanding performance in many applications of deep learning. When applied to time series data, transformers require effective position encoding to capture the ordering…

▷ #interpretability-general (2 messages):

- Discussion on "An Explanation for Every Token" Post: @allanyield brought up a post exploring how GPT-4 can choose tokens from the distribution provided by a smaller model and write justifications for its choices. The post opens by noting the potential safety benefits of building an AGI on Large Language Models (LLMs). It then demonstrates how a properly prompted LLM can output text structured as an explanation followed by a conclusion.

- Call for Research Collaboration: User @eugleo mentioned completing the ARENA program and expressed interest in joining an interpretability-related project. They offered roughly 16 hours a week for several months and asked for project suggestions or relevant contacts, noting they need no funding or other commitments.

Links mentioned:

- An explanation for every token: using an LLM to sample another LLM — LessWrong: Introduction Much has been written about the implications and potential safety benefits of building an AGI based on one or more Large Language Models…

▷ #lm-thunderdome (13 messages🔥):

- Discussion on generating answers to multiple choice questions: @Nish, a researcher at the University of Maryland, started a conversation about discrepancies in his project. It uses Large Language Models (LLMs) to answer multiple choice questions in standard MC format. He reported a significant performance gap relative to the leaderboard numbers, particularly on the HellaSwag dataset.

- Possible causes for the performance gap: @hailey_schoelkopf suggested the gap could stem from implementation differences. @Nish has the model generate just the answer letter, whereas Eleuther's implementation scores the sequence probability of the letter and the text after it. She added that normalizing the choices' log-likelihoods can also significantly affect performance, especially on HellaSwag.

- Benchmarking unnormalized vs normalized scores: @stellaathena and @hailey_schoelkopf directed @Nish to Teknium's GitHub repository of benchmark logs for different LLMs. It shows both unnormalized and normalized scores.

- Worries about sequence length warnings: @chromecast56 asked whether the sequence length warnings they were seeing required intervention or whether the evaluation harness would handle them.

- ToxiGen's contribution to lm-evaluation-harness: @hailey_schoelkopf mentioned that the original ToxiGen paper did not explore the autoregressive language model setting. Even so, the ToxiGen lead author contributed the novel implementation in lm-eval-harness.
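The gap @hailey_schoelkopf described comes down to how a choice is picked. The sketch below contrasts three rules:

1. Take the answer letter the model rates most likely, which is close to @Nish's setup.
2. Pick the choice whose text has the highest total log-likelihood.
3. Divide that total by the choice's length in characters, similar in spirit to length-normalized accuracy (`acc_norm`) in lm-evaluation-harness.

The log-likelihood values are made-up toy numbers, and real ones require running a model. The functions are hypothetical helpers, not the harness's API.

```python
def pick_letter(letter_logprobs):
    """Letter generation: the answer is the letter the model most prefers next."""
    return max(letter_logprobs, key=letter_logprobs.get)


def pick_unnormalized(choices, logliks):
    """Highest total log-likelihood of the full answer text."""
    return max(range(len(choices)), key=lambda i: logliks[i])


def pick_length_normalized(choices, logliks):
    """Total log-likelihood divided by the answer's length in characters."""
    return max(range(len(choices)), key=lambda i: logliks[i] / len(choices[i]))


# Toy numbers for illustration only (not from any real model).
choices = ["runs away.", "picks up the ball and throws it back to the child."]
logliks = [-12.0, -30.0]
print(pick_unnormalized(choices, logliks))       # 0: the short answer wins on raw total
print(pick_length_normalized(choices, logliks))  # 1: per-character score favors the long one
print(pick_letter({"A": -2.3, "B": -0.4, "C": -1.9, "D": -3.0}))  # B
```

Longer answers accumulate more negative log-probability, so the unnormalized rule tends to favor short choices. This is why normalization matters most on datasets like HellaSwag, where the candidate endings differ in length.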

Links mentioned:

- LLM-Benchmark-Logs/benchmark-logs/Mixtral-7x8-Base.md at main · teknium1/LLM-Benchmark-Logs: Just a bunch of benchmark logs for different LLMs. Contribute to teknium1/LLM-Benchmark-Logs development by creating an account on GitHub.

Perplexity AI Discord Summary

- Improving Perplexity usage: Users discussed ways to get more out of Perplexity, with @icelavaman stating that the service is being refined into a fast and precise answer engine. Link to tweet

- Perplexity evaluation scrutinized: @the_only_alexander raised concerns about the Perplexity assessment published in an academic paper, specifically doubting its methodology.

- Perplexity Pro unveiled: @donebg asked about the perks of Perplexity Pro; @giddz and @mares1317 pointed them to the official Perplexity Pro page.

- Notion database barrier triggers frustration: @stanislas.basquin was annoyed at being unable to link Notion databases across different workspaces. Perplexity thread

- Praise for prompt research help: @shaghayegh6425 appreciated Perplexity being prompt and informative, particularly for bio-research inquiries and brainstorming, and shared multiple examples.

- Perplexity API traps: Users including @blackwhitegrey and @brknclock1215 reported difficulties integrating the Perplexity API into platforms like ‘typingmind’ and ‘harpa.ai’ and asked for guidance; others pointed them to Perplexity’s API documentation.

Perplexity AI Channel Summaries

▷ #general (180 messages🔥🔥):

- Perplexity enhanced: Users discussed ways to improve their use of Perplexity, with @icelavaman stating that the service is being improved to build the fastest and most accurate answer engine, citing a tweet by Perplexity’s CEO, Aravind Srinivas.

- Perplexity vs. academic paper: @the_only_alexander shared his reservations about the Perplexity assessment in a research paper, questioning the paper’s methodology.

- Sound annoyance: @jamiecropley was annoyed by a sound that plays on the Perplexity homepage and was advised to mute the tab.

- In-browser Perplexity: Users shared how to set Perplexity as the default search engine in the browser, mainly Firefox and Chrome, linking to resources on setting up site search shortcuts in Chrome and adding or removing search engines in Firefox.

- Perplexity Pro features: @donebg asked about the advantages of Perplexity Pro in the app. @giddz and @mares1317 directed them to the official page listing Pro benefits such as more Copilot searches, model selection, and unlimited file uploads.

Links mentioned:

- Tweet from Aravind Srinivas (@AravSrinivas): @Austen @eriktorenberg Thanks to every investor and user who has supported us so far. We couldn’t be here without all the help! We look forward to continuing to build the fastest and most accurate…

- Evaluating Verifiability in Generative Search Engines: Generative search engines directly generate responses to user queries, along with in-line citations. A prerequisite trait of a trustworthy generative search engine is verifiability, i.e., systems shou…

- Add or remove a search engine in Firefox | Firefox Help

- Comparing Brave vs. Firefox: Which one Should You Use?: The evergreen open-source browser Firefox compared to Brave. What would you pick?

- Vulcan Salute Spock GIF - Vulcan Salute Spock Star Trek - Discover & Share GIFs

- How to set up site search shortcuts in Chrome: As a search engine, Google does a great job of helping with broad web searches – such as when you want to find a business or product online. However, if you are looking for results within a specific w…

- Assign shortcuts to search engines | Firefox Help

- ChatGPT vs Perplexity AI: Does Perplexity Use ChatGPT? - AI For Folks: The AI landscape is constantly shifting, and can be confusing. Many companies overlay different technologies for their own use. In this article, we’ll compare

- Perplexity Blog: Explore Perplexity’s blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- Perplexity CEO Aravind Srinivas, Thursday Nights in AI: Outset Capital’s Ali Rohde and Josh Albrecht interview Perplexity AI CEO, Aravind Srinivas. Special thanks to Astro Mechanica (https://astromecha.co/) for ho…

▷ #sharing (15 messages🔥):

- Notion database limitations upset user: @stanislas.basquin expressed frustration about being unable to create linked Notion databases across different workspaces. Link to discussion

- Perplexity assists in client report validation: @jay.mke shared that Perplexity helped validate a client report within 2 minutes. The originally shared thread was not publicly accessible until @ok.alex advised making it public. Link to thread

- Perplexity praised for bio-research help: @shaghayegh6425 appreciated Perplexity for being quick and resourceful, particularly for bio-research inquiries and brainstorming. They shared three resources, here and here

- Perplexity helps avoid anchoring: @archient shared that Perplexity helped them work out what to search for so they would not get anchored. Link to thread

- Perplexity aids in building a modular web application: @whoistraian shared Perplexity resources for building a modular web application. Link to resources

Links mentioned:

- Tweet from Aravind Srinivas (@AravSrinivas): The knowledge app is on its ascent on the App Store. #25 in productivity. If you believe it’s better than Bing (#17 right now, but a slower and much larger memory consuming app), you know what to …

- Tweet from Kristi Hines (@kristileilani): How useful is @perplexity_ai? Here’s how it helped me with a little surprise in my garden.

▷ #pplx-api (5 messages):

- Struggling to use the API on platforms: @blackwhitegrey had difficulty using the API on typingmind and harpa.ai and asked how other users are employing it.

- Going independent with the API: @archient suggested writing your own code to call the API, pointing to Perplexity’s API documentation for guidance.

- Hands-on try with the API: @archient also suggested making a direct attempt using the provided token.

- Seeking convenient syntax for API calls: @brknclock1215 asked how to shape Perplexity API calls for the HARPA AI browser plugin; following Perplexity’s API documentation, they had tried various input/setting combinations to no avail.
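For readers who want to follow @archient’s suggestion and call the API from their own code, here is a minimal standard-library sketch. It assumes an OpenAI-style chat-completions endpoint at `https://api.perplexity.ai/chat/completions` with a Bearer token and a `choices[0].message.content` response field, which is our reading of Perplexity’s API documentation; check the docs before relying on it. The model name is left as a parameter because valid names change, and `build_request` and `ask` are hypothetical helpers.

```python
import json
import urllib.request

# Assumed endpoint (OpenAI-compatible chat completions); verify against the current docs.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_request(api_key: str, prompt: str, model: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single user message."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str, model: str) -> str:
    """Send the request and return the first reply (assumes an OpenAI-style response body)."""
    with urllib.request.urlopen(build_request(api_key, prompt, model), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

A call would look like `ask(os.environ["PPLX_API_KEY"], "your question", model="<a model name from the docs>")`, where the environment variable name is also just an example. Seeing the raw request this way can help when translating it into a plugin’s settings, such as HARPA AI’s.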

Links mentioned:

OpenAccess AI Collective (axolotl) Discord Summary

- Fine-tuning paused due to bug: @matts9903 flagged a bug currently affecting the Mixtral model; @le_mess advised halting fine-tuning until it is resolved.

- Training on separate vs. merged datasets: The discussion focused on whether training Mistral on separate datasets (Dolphin or OpenOrca) or on a merged set would yield similar results. @caseus_ said the results would be quite similar and recommended training with SlimOrca.

- Full fine-tuning vs. LoRA/QLoRA: @noobmaster29 asked whether anyone had compared full fine-tuning on instruction sets against LoRA or QLoRA.

- Device mapping in Axolotl: @nanobitz suggested merging Pull Request #918, which introduces better device mapping for handling large models in the Axolotl project.

- Dockerhub login complication with CI: Continuous integration was failing because of Dockerhub login issues. Several team members, including @caseus_ and @hamelh, struggled to solve it. One proposed workaround was to log into Dockerhub only when pushing to the main branch.

- Token embedding and training in a new language: @.___init___’s question about language-specific tokenizers led to a discussion of whether expanding a tokenizer for training on a new language is feasible. @nanobitz and @noobmaster29 explained that it is unlikely to pay off without significant pretraining.

- Shearing commences for models: @caseus_ confirmed that shearing had begun for a 2.7B model and offered to start pruning for a 1.3B model. With community support growing, @le_mess, @emrgnt_cmplxty, and @nosa_. are pooling resources for the project, and @emrgnt_cmplxty shared shearing resources to help others contribute.

- VinaLLaMA, a Vietnamese language model: The introduction of VinaLLaMA prompted @.___init___ to ask how GPT-4 would fare against such language-specific models on benchmarks.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (57 messages🔥🔥):

- Bug pauses Mixtral fine-tuning: @matts9903 shared a Hugging Face bug affecting Mixtral and asked whether fine-tuning was worthwhile before a fix; @le_mess recommended waiting.

- Mistral trained on single vs. merged datasets: @yamashi asked whether a Mistral model trained on either the Dolphin or the OpenOrca dataset would give results similar to one trained on a merge of the two. @caseus_ confirmed the similarity and recommended training with SlimOrca.

- Jon Durbin’s Mixtral method illustrated: @casper_ai shared the technique Jon Durbin used for DPO’ing Mixtral, posted on Twitter. His steps included updating TRL for multi-device use and adapting the DPO code.

- Comparing full fine-tuning vs. LoRA/QLoRA: @noobmaster29 asked whether anyone had compared full fine-tuning on instruction sets against LoRA or QLoRA.

- Unreliable HF evaluation for the Bagel model: @_dampf reported unreliable HF evaluation results for Jon Durbin’s Bagel model, which she has tried to re-add to the HF eval board. Dolphin-series models also seem to consistently fail to show up.

Links mentioned:

- HuggingFaceH4/open_llm_leaderboard · Bagel 8x7B evaluation failed

- GitHub - ml-explore/mlx: MLX: An array framework for Apple silicon: MLX: An array framework for Apple silicon. Contribute to ml-explore/mlx development by creating an account on GitHub.

- Tweet from Jon Durbin (@jon_durbin): DPO’ing mixtral on 8x a6000s is tricky. Here’s how I got it working: 1. Update TRL to allow multiple devices: https://github.com/jondurbin/trl/commit/7d431eaad17439b3d92d1e06c6dbd74ecf68bada…

- Incorrect implementation of auxiliary loss · Issue #28255 · huggingface/transformers: System Info transformers version: 4.37.0.dev0 Platform: macOS-13.5-arm64-arm-64bit Python version: 3.10.13 Huggingface_hub version: 0.20.1 Safetensors version: 0.4.1 Accelerate version: not install…

- [WIP] RL/DPO by winglian · Pull Request #935 · OpenAccess-AI-Collective/axolotl

- [Mixtral] Fix loss + nits by ArthurZucker · Pull Request #28115 · huggingface/transformers: What does this PR do? Properly compute the loss. Pushes for a uniform distribution. fixes #28021 Fixes #28093

▷ #axolotl-dev (43 messages🔥):

- Merge recommendation for better device mapping: @nanobitz proposed merging Pull Request #918, which aims at better device mapping for large models in the Axolotl project.

- CI fails due to Dockerhub login issue: The continuous integration process was failing while logging into Dockerhub. @caseus_ tried to fix it by creating new tokens and updating the GitHub secrets, but the issue persisted. @hamelh investigated and concluded that it was related to workflow permissions under the pull_request event. Logging into Dockerhub only when pushing to main was suggested as an option.

- Removing Docker login from the workflow: @hamelh proposed removing the Dockerhub login step from the GitHub Actions workflow, since it wasn’t clear why it was there in the first place; @caseus_ agreed.

- Intel Gaudi 2 AI accelerators cheaper for training: @dreamgen shared a Databricks article suggesting that training on Intel Gaudi 2 AI accelerators can be up to 5 times cheaper than on NVIDIA’s A100.

- Token embedding size warning: @caseus_ shared a tweet advising against resizing token embeddings when adding new tokens to a model, since doing so caused errors from a mismatch between the embedding size and the vocabulary size. @nanobitz suggested this might be a discrepancy between the tokenizer and the model config, and that the phi model may be affected.
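The figures in the tweet (51200 embedding rows vs. a 50294-token vocabulary) hint at why a resize may be unnecessary: if the embedding matrix already has more rows than the tokenizer has tokens, a few new token ids can land on spare rows. A minimal sketch of that arithmetic follows. In Hugging Face transformers the two inputs would come from `model.get_input_embeddings().weight.shape[0]` and `len(tokenizer)`; the helper names are hypothetical, and whether the spare rows are safe to use for a given model (phi included) is an assumption to verify, not something the thread established.

```python
def spare_embedding_rows(embedding_rows: int, tokenizer_len: int) -> int:
    """Rows in the embedding matrix that no current token id uses."""
    return embedding_rows - tokenizer_len


def needs_resize(embedding_rows: int, tokenizer_len: int, num_new_tokens: int) -> bool:
    """True only if new token ids (tokenizer_len, tokenizer_len + 1, ...) would run
    past the last existing embedding row."""
    return tokenizer_len + num_new_tokens > embedding_rows


# Figures from the tweet: 51200 embedding rows vs. 50294 tokens in the vocabulary.
print(spare_embedding_rows(51200, 50294))  # 906
print(needs_resize(51200, 50294, 10))      # False
print(needs_resize(51200, 50294, 1000))    # True
```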

Links mentioned:

- Tweet from anton (@abacaj): Do not resize token embeddings if you are adding new tokens, when I did this model errors out. Seems like there are 51200 embedding size but only 50294 vocab size

- LLM Training and Inference with Intel Gaudi 2 AI Accelerators

- build-push-action/action.yml at master · docker/build-push-action: GitHub Action to build and push Docker images with Buildx - docker/build-push-action

- axolotl/.github/workflows/tests-docker.yml at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- e2e-docker-tests · OpenAccess-AI-Collective/axolotl@cbdbf9e: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- Update tests-docker.yml by hamelsmu · Pull Request #1052 · OpenAccess-AI-Collective/axolotl

- Simplify Docker Unit Test CI by hamelsmu · Pull Request #1055 · OpenAccess-AI-Collective/axolotl: @winglian I think we might have to merge this to really test it.

- feature: better device mapping for large models by kallewoof · Pull Request #918 · OpenAccess-AI-Collective/axolotl: When a model does not fit completely into the GPU (at 16-bits, if merging with a LoRA), a crash occurs, indicating we need an offload dir. If we hide the GPUs and do it purely in CPU, it works, but…

▷ #general-help (61 messages🔥🔥):

- Expanding the tokenizer for a new language: @.___init___ sought advice on expanding a tokenizer with more tokens for a new language and then training it. @nanobitz and @noobmaster29 shared their experience that this has not proven beneficial without significant pretraining, citing a project on GitHub as a reference.

- Gradient accumulation during full fine-tuning: In a discussion started by @Sebastian, @caseus_ confirmed that smaller batch sizes are preferable for most fine-tuning tasks.

- Downsampling a dataset in axolotl: @yamashi was looking for ways to downsample a dataset for a training run in axolotl; @le_mess suggested using shards.

- Experiments with smaller models: @.___init___ and @noobmaster29 discussed experimenting with smaller models. @noobmaster29 raised concerns about memory issues with phi2 and was unsure about TinyLlama’s performance.

- Introducing VinaLLaMA: @.___init___ highlighted VinaLLaMA, a state-of-the-art large language model for Vietnamese built on LLaMA-2. This led to a discussion of whether GPT-4 would outperform such language-specific models on benchmarks.
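Since batch size came up alongside gradient accumulation, a minimal sketch of how the pieces multiply may help: each optimizer update sees micro-batch size × accumulation steps × number of GPUs examples. The shard helper illustrates the downsampling idea from the same channel by keeping one contiguous slice of the data. Both helpers are hypothetical; the argument names loosely mirror axolotl’s `micro_batch_size` and `gradient_accumulation_steps` config keys, but this is not axolotl code.

```python
def effective_batch_size(micro_batch_size: int, gradient_accumulation_steps: int, num_gpus: int = 1) -> int:
    """Number of examples contributing to each optimizer update."""
    return micro_batch_size * gradient_accumulation_steps * num_gpus


def take_shard(examples: list, num_shards: int, index: int) -> list:
    """Return contiguous shard `index` of `num_shards` (about 1/num_shards of the data).

    The first len(examples) % num_shards shards get one extra example, so all
    shards together cover the data exactly once.
    """
    if not 0 <= index < num_shards:
        raise ValueError("index must be in [0, num_shards)")
    size, remainder = divmod(len(examples), num_shards)
    start = index * size + min(index, remainder)
    end = start + size + (1 if index < remainder else 0)
    return examples[start:end]


print(effective_batch_size(micro_batch_size=2, gradient_accumulation_steps=8, num_gpus=4))  # 64
print(take_shard(list(range(10)), num_shards=3, index=0))  # [0, 1, 2, 3]
```

Halving the micro-batch while doubling the accumulation steps keeps the effective batch unchanged, so lowering the effective batch, as recommended above, means reducing the product, not just one factor.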

Links mentioned:

- VinaLLaMA: LLaMA-based Vietnamese Foundation Model: In this technical report, we present VinaLLaMA, an open-weight, state-of-the-art (SOTA) Large Language Model for the Vietnamese language, built upon LLaMA-2 with an additional 800 billion trained toke…

- LeoLM: Igniting German-Language LLM Research | LAION: We proudly introduce LeoLM (Linguistically Enhanced Open Language …

- Chinese-LLaMA-Alpaca/README_EN.md at main · ymcui/Chinese-LLaMA-Alpaca: 中文LLaMA&Alpaca大语言模型+本地CPU/GPU训练部署 (Chinese LLaMA & Alpaca LLMs) - ymcui/Chinese-LLaMA-Alpaca

▷ #shearedmistral (14 messages🔥):

- Shearing commenced for the 2.7B model: @caseus_ confirmed that shearing is underway for the 2.7B model and offered to do the initial pruning for a 1.3B model.

- Compute assistance for the 1.3B model: @le_mess offered to provide compute for training the 1.3B model.

- Getting set up to contribute to shearing: @emrgnt_cmplxty expressed interest in contributing to shearing, and @caseus_ provided a GitHub link to get started.

- Shearing based on the Sheared LLaMA framework: @emrgnt_cmplxty clarified that the shearing process will follow the framework used for the Sheared LLaMA models.

- Mistral vs. LLaMA: @emrgnt_cmplxty asked whether the only difference between Mistral and LLaMA is sliding window attention; @caseus_ confirmed this.

- Problem sampling a subset of RedPajama: @caseus_ mentioned issues with the part of the code that uses a sampled subset of RedPajama from the linked GitHub repository.

- Plan for a large context window: Asked by @emrgnt_cmplxty about using a large context window, @caseus_ confirmed plans to do so with another fine-tune on a dataset like Capybara after shearing.

- Support for the shearing project expands: @nosa_. showed interest in supporting the shearing project.
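To illustrate the sliding window attention mentioned in the Mistral vs. LLaMA exchange, here is a minimal sketch of the attention mask it implies, assuming a window of `window` positions that includes the current token. A plain causal mask lets token i attend to every j ≤ i; the sliding window additionally drops keys more than `window - 1` positions back. This is an illustrative boolean mask only, not how Mistral or any library actually implements it in its kernels.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j:
    causal (j <= i) and within the window (i - j < window)."""
    return [
        [j <= i and i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


for row in sliding_window_causal_mask(seq_len=5, window=3):
    print("".join("x" if allowed else "." for allowed in row))
# x....
# xx...
# xxx..
# .xxx.
# ..xxx
```

With `window >= seq_len` the mask reduces to the ordinary causal mask.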

Links mentioned:

- LLM-Shearing/llmshearing/data at main · princeton-nlp/LLM-Shearing: Preprint: Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning - princeton-nlp/LLM-Shearing

- GitHub - princeton-nlp/LLM-Shearing: Preprint: Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning: Preprint: Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning - GitHub - princeton-nlp/LLM-Shearing: Preprint: Sheared LLaMA: Accelerating Language Model Pre-training via…

HuggingFace Discord Summary

- Big data on Hugging Face: @QwertyJack asked whether a 10TB bio-ML dataset could be hosted on Hugging Face. Access the discussion here.

- LLM benchmarking guide requested: In response to @exponentialxp’s question on LLM benchmarking, @gr.freecs.org shared a useful resource, a link to lm-evaluation-harness on GitHub.

- AI-powered personal Discord chatbot and Pokemon classifier shared: @vashi2396 and @4gastya shared their personal Discord chatbot and Pokemon classifier projects, respectively, in the i-made-this channel.

- Potential NLP research collaboration: @sirmalamute offered to collaborate on NLP research projects and Python module development in the general channel.

- The Tyranny of Possibilities reading group discussion: The reading-group channel hosted a discussion, started by @dhruvdh, on the design of task-oriented language model systems, or LLM systems.

- TinyLlama and small AI models: @dame.outlaw shared the open TinyLlama project, and @dexens shared an article on Microsoft’s small AI models, both in the cool-finds channel.

- LoRA implementations for Diffusion DPO: The core-announcements channel featured @sayakpaul’s announcement of LoRA support for Diffusion DPO.

- A new conversation generator: In the NLP channel, @omaratef3221 introduced their new project, a Conversation Generator aimed at developing chatbots and virtual assistants.

- Call for input on computer vision data storage: @etharchitect in the computer-vision channel invited suggestions on recent, pioneering techniques for computer vision data storage.

HuggingFace Discord Channel Summaries

▷ #general (85 messages🔥🔥):

- Hosting large datasets on HF: @QwertyJack asked whether a public bio-ML dataset of about 10TB could be hosted on Hugging Face.

- LLM benchmarking guide: @exponentialxp asked how to benchmark an LLM; @gr.freecs.org shared a link to lm-evaluation-harness on GitHub.

- Building a specialized coding assistant: @pixxelkick discussed with @vipitis how to engineer an LLM for “copilot”-style code completion with the nvim.llm plugin. @vipitis suggested looking at open-source code completion models and tools, such as TabbyML’s CompletionContext, for reference, and using larger models like deepseek-coder.

- Potential NLP research collaboration: @sirmalamute, an experienced ML engineer, expressed interest in collaborating on NLP research projects and developing open-source Python modules; @kopyl welcomed the offer of help for his logo generation model project.

- Running prediction inside a dataset filter function: @kopyl discussed with @vipitis running model inference inside a dataset.filter function on an image dataset. @vipitis advised against this because of potential issues, but @kopyl replied that the alternative would require more setup for multi-GPU inference.

Links mentioned:

- Google Colaboratory

- MTEB Leaderboard - a Hugging Face Space by mteb

- Good first issues • openvinotoolkit: A place to keep track of available Good First Issues. You can assign yourself to an issue by commenting “.take”.

- tabby/clients/tabby-agent/src/CompletionContext.ts at main · TabbyML/tabby: Self-hosted AI coding assistant. Contribute to TabbyML/tabby development by creating an account on GitHub.

- iconbot (dev): AI engineer: @kopyl A channel about how I am training an AI model to generate icons. Access to the bot is by invitation; send me a DM.

- GitHub - EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of autoregressive language models.: A framework for few-shot evaluation of autoregressive language models. - GitHub - EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of autoregressive language models.

▷ #today-im-learning (26 messages🔥):

- GPT optimization discussions: @gag123 asked about tweaking their GPT model’s learning rate and tensor dtype. After they shared their GitHub project for context, @exponentialxp suggested having training data roughly 20 times the number of parameters in the model (measured in tokens) for good results.

- optimizer.zero_grad() to the rescue: The conversation revealed that @gag123 was missing optimizer.zero_grad() in their code. @exponentialxp pointed out this crucial line, and adding it noticeably improved the model’s performance.

- Concerns about overfitting and loss: @exponentialxp worried that @gag123’s GPT model was large enough to overfit and recommended shrinking it to 384, 6, 6 (likely embedding size, heads, and layers). They also discussed the unusually high loss of 2.62 despite a modest vocab size of 65.

- Iterative code enhancements: @exponentialxp recommended several changes for @gag123, including a train/val split to detect overfitting, adjustments to the pos_emb in their GPT class, and making sure they call Head rather than MHA.

- DINOv2 self-supervised learning in images: @merve3234 shared a thread about what they learned about DINO (self-distillation with no labels) and DINOv2, noting that DINOv2 is the current king of self-supervised learning in images.
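The numbers in this thread can be checked with a little arithmetic, and the missing `optimizer.zero_grad()` bug can be reproduced without PyTorch. This sketch assumes “384, 6, 6” means an embedding size of 384 with 6 heads and 6 layers (as far as we know, the same shape as nanoGPT’s character-level Shakespeare config, which also has a 65-character vocabulary), uses the common ~12·n_layer·d² estimate for non-embedding parameters, and reads the “20 times” advice as roughly 20 training tokens per parameter. `train_scalar` is a hypothetical toy that accumulates gradients the way PyTorch accumulates into `.grad`.

```python
import math


def approx_gpt_params(n_layer: int, n_embd: int) -> int:
    """Rough non-embedding parameter count for a GPT-style decoder: ~4*d^2 for
    attention (Q, K, V, output projections) plus ~8*d^2 for a 4x MLP, per layer,
    ignoring biases and LayerNorm. The number of heads does not change it."""
    return 12 * n_layer * n_embd ** 2


def tokens_for_ratio(n_params: int, tokens_per_param: int = 20) -> int:
    """Training tokens suggested by a ~20-tokens-per-parameter rule of thumb."""
    return tokens_per_param * n_params


def uniform_loss(vocab_size: int) -> float:
    """Cross-entropy (nats) of a model that guesses uniformly over the vocabulary."""
    return math.log(vocab_size)


def train_scalar(steps: int, zero_grad: bool, lr: float = 0.05) -> list[float]:
    """Fit y = w * x by gradient descent, adding each new gradient into `grad`
    the way backward() adds into .grad. Returns the error (w - 3) after each step."""
    xs = [1.0, 2.0, 3.0]
    ys = [3.0 * x for x in xs]  # the true weight is 3
    w, grad, errors = 0.0, 0.0, []
    for _ in range(steps):
        if zero_grad:
            grad = 0.0  # the equivalent of optimizer.zero_grad()
        # gradient of the mean squared error w.r.t. w, added to whatever is already there
        grad += sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
        errors.append(w - 3.0)
    return errors


params = approx_gpt_params(n_layer=6, n_embd=384)
print(params)                      # 10616832
print(tokens_for_ratio(params))    # 212336640
print(round(uniform_loss(65), 2))  # 4.17
print(abs(train_scalar(100, zero_grad=True)[-1]) < 1e-6)                    # True
print(max(abs(e) for e in train_scalar(100, zero_grad=False)[-20:]) > 1.0)  # True
```

Without zeroing, each step applies the running sum of every past gradient, so in this toy the error keeps oscillating instead of shrinking. The uniform-guess loss of about 4.17 nats is a useful yardstick for the reported 2.62.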

Links mentioned:

- Tweet from merve (@mervenoyann): DINOv2 is the king for self-supervised learning in images 🦖🦕 But how does it work? I’ve tried to explain how it works but let’s expand on it 🧶

- History for sample.py - exponentialXP/TextGenerator: Create a custom Language Model / LLM from scratch, using a few very lightweight scripts! - History for sample.py - exponentialXP/TextGenerator

- GitHub - gagangayari/my-gpt: Contribute to gagangayari/my-gpt development by creating an account on GitHub.

▷ #cool-finds (6 messages):

- Exploring TinyLlama: @dame.outlaw shared a GitHub link to the TinyLlama project, an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.

- Microsoft pioneers small multimodal AI models: @dexens shared a Semafor article about Microsoft’s research division adding new capabilities to its smaller language model, Phi 1.5, enabling it to view and interpret images.

- Interesting paper alert: @masterchessrunner shared an academic paper found on Reddit, praising the author’s sense of humor.

Links mentioned:

- Microsoft pushes the boundaries of small AI models with big breakthrough | Semafor: The work by the company’s research division shows less expensive technology can still have advanced features, without really increasing in size.

- GitHub - jzhang38/TinyLlama: The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.: The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens. - GitHub - jzhang38/TinyLlama: The TinyLlama project is an open endeavor to pretrain a 1.1B Llama mode…

▷ #i-made-this (7 messages):

- Pokemon classifier in the making: @4gastya shared his project fine-tuning a Pokemon classifier model, saying “though it’s not that accurate but i’m getting in love with playing with models”. Project link: Pokemon-Classifier.

- AI-powered personal Discord chatbot: @vashi2396 is developing a personal AI-based Discord chatbot that reads and posts messages. The project can be checked out here.

- Collecting feedback for an AI model: @gr.freecs.org invited feedback on their model ‘artificialthinker-demo-gpu’, which can be accessed through the provided link.

- AI chatbot featured on LinkedIn: @vashi2396 shared a LinkedIn post featuring their AI chatbot.

- Request for help: @vashi2396 asked for help “getting input thru Automatic speech recognition model via microphone.”

- Difficulties with the Phi-2 model: @dexens was disappointed with Phi-2’s performance, especially in code generation and its tendency to repeat itself. They also asked whether anyone had trained the model on JavaScript/TypeScript in addition to Python. The model can be viewed at this link.

- Phi-2’s poor performance confirmed: @vipitis agreed with @dexens, stating that Phi-2 is “currently the worst performing model”.

Links mentioned:

- ArtificialThinker Demo on GPU - a Hugging Face Space by lmdemo

- Pokemon Classifier - a Hugging Face Space by AgastyaPatel

- Google Colaboratory

- microsoft/phi-2 · Hugging Face

▷ #reading-group (29 messages🔥):

- Diving deep into The Tyranny of Possibilities: @dhruvdh created a thread on the design of task-oriented language model systems, or LLM systems, which @chad_in_the_house highlighted by tagging the entire group.

- A heated debate on group notifications: An argument over group notifications broke out between @chad_in_the_house and @svas.; @cakiki intervened to remind everyone to keep the discussion respectful.

- The reading group’s meeting format: @thamerla and @4gastya raised concerns about the accessibility and visibility of the reading group meetings. @chad_in_the_house clarified that presentations are currently done via text threads in Discord but said he is open to suggestions such as using voice channels.

- Exploring joint-embedding predictive architecture: @shashank.f1 brought up a paper on the MC-JEPA approach to self-supervised learning of motion and content features and shared a YouTube video with a deep-dive discussion of it.

- Awakening AI consciousness: @minhsmind was reading a paper about AI consciousness to prepare a presentation, which sparked a debate with @beyond_existence and @syntharion, who shared their insights and skepticism about whether AI can attain consciousness.

Links mentioned:

MC-JEPA neural model: Unlock the power of motion recognition & generative ai on videos and images: 🌟 Unlock the Power of AI Learning from Videos ! 🎬 Watch a deep dive discussion on the MC-JEPA approach with Oliver, Nevil, Ojasvita, Shashank and Srikanth…

▷ #core-announcements (1 messages):

- LoRA implementations for Diffusion DPO now available: @sayakpaul announced LoRA support for Diffusion DPO, covering both SD and SDXL. The implementation can be checked out at their GitHub link.

- SDXL-Turbo support on the way: @sayakpaul hinted at support for SDXL-Turbo in a future update.

Links mentioned:

diffusers/examples/research_projects/diffusion_dpo at main · huggingface/diffusers: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch - huggingface/diffusers

▷ #computer-vision (3 messages):

- Finding incorrect labels with the model: @merve3234 suggested running the model over the dataset and flagging the samples it gets wrong as a way to find and correct incorrect labels.

- Deep dive into DINO and DINOv2: @merve3234 shared a comprehensive thread and infographic explaining how DINO and DINOv2 work for self-supervised learning in images. The complete thread can be found here.

- Query on computer vision data storage techniques: @etharchitect asked about recent techniques for storing computer vision data, inviting the community to discuss.
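@merve3234’s label-correction suggestion can be sketched as follows, assuming you already have per-class probabilities from a trained model for every sample: flag the samples where the model confidently predicts a class other than the stored label, then review those by hand. The confidence threshold and the `flag_suspect_labels` helper are hypothetical choices for illustration.

```python
def flag_suspect_labels(labels: list[int], probabilities: list[list[float]],
                        min_confidence: float = 0.9) -> list[int]:
    """Return indices where the model's top class differs from the given label
    and the model assigns that top class at least `min_confidence` probability."""
    suspects = []
    for idx, (label, probs) in enumerate(zip(labels, probabilities)):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        if predicted != label and probs[predicted] >= min_confidence:
            suspects.append(idx)
    return suspects


labels = [0, 1, 2, 1]
probabilities = [
    [0.95, 0.03, 0.02],  # agrees with label 0
    [0.97, 0.02, 0.01],  # confidently says 0, label says 1: suspicious
    [0.40, 0.35, 0.25],  # disagrees, but the model is unsure
    [0.10, 0.85, 0.05],  # agrees with label 1
]
print(flag_suspect_labels(labels, probabilities))                      # [1]
print(flag_suspect_labels(labels, probabilities, min_confidence=0.3))  # [1, 2]
```

Requiring high confidence keeps the review list short; lowering the threshold catches more mistakes at the cost of more false alarms.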

Links mentioned:

Tweet from merve (@mervenoyann): DINOv2 is the king for self-supervised learning in images 🦖🦕 But how does it work? I’ve tried to explain how it works but let’s expand on it 🧶

▷ #NLP (3 messages):

- Exciting announcement, a Conversation Generator meets dialogue summarization: @omaratef3221 shared their new project, a Conversation Generator designed to “generate realistic dialogues using large language models from small summaries.” They fine-tuned models like Google’s T5 to create a dialogue generation version, training on Google Colab with 🤗 Transformers and Kaggle’s Open Source Dialogue Dataset.

- Omaratef3221’s contribution to the open-source community: @omaratef3221 presented the conversation generator as a gift to the open-source community, noting that the project is open source and welcoming contributions and feedback. They added that “Open-source collaboration is a fantastic way to learn and grow together.”

- A closer look at the generator: The model, Omaratef3221’s flan-t5-base-dialogue-generator, is a fine-tuned version of Google’s t5 built to generate “realistic and engaging dialogues or conversations.”

- Potential uses highlighted: @omaratef3221 noted that their model is “ideal for developing chatbots, virtual assistants, and other applications where generating human-like dialogue is crucial.”

- Enthusiastic reactions and future possibilities: @stroggoz reacted positively to the announcement and mentioned that the technology might help them “understand higher-level math.”

Links mentioned:

Omaratef3221/flan-t5-base-dialogue-generator · Hugging Face

LAION Discord Summary

- “It’s all about fit”: Users pseudoterminalx and thejonasbrothers delved into overfitting and underfitting in model training, including the balance and trade-offs needed to reach satisfactory model performance.

- “Noise adds originality”: User pseudoterminalx advocated including noise in image generation outputs, arguing that it makes them look more authentic, and pointed out a potential uncanny-valley problem with excessively clean images.

- “SD 2.1x models can pack a punch”: A deep dive into Stable Diffusion (SD) 2.1x models covered training techniques such as cropping and resolution buckets, and reemphasized that, with proper training, SD 2.1 models can output high-resolution images.

- “CommonCrawl and NSFW filtering”: thejonasbrothers floated using uncaptioned images from CommonCrawl, speculating about synthetic captioning, the need for NSFW-detection models to filter out inappropriate content, and a model that stores vast knowledge.

- “Impeccable details in AI-generated images”: pseudoterminalx shared high-res model outputs with intricate details, which impressed others in the channel.

- “Masked tokens and U-Net are the secret sauce”: kenjiqq clarified that in the transformer models under discussion, masked tokens rather than diffusion play the key role, and cited the original U-ViT paper on transformer blocks offering better accelerator throughput.

- “LLaVA-$\phi$: small yet efficient”: thejonasbrothers introduced LLaVA-$\phi$, an efficient multi-modal assistant that shows impressive performance on multi-modal dialogue tasks despite a small size of 2.7 billion parameters.

- “RLHF-V: high expectations, low performance”: kopyl and thejonasbrothers were disappointed with the RLHF-V model, which was expected to have a low hallucination rate. Its performance improved when the prompt was changed to request a detailed image description.

- “Decoding Causality in Language Models”: JH explained how large language models (LLMs) can learn causality thanks to their decoder-based design and causal attention mask, whereas diffusion models learn by association.

- “Deciphering 3D Space in Diffusion Models”: phryq asked whether diffusion models understand 3D space, specifically whether they recognize different perspectives of the same face.
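Resolution buckets, mentioned above in the SD 2.1x discussion, group training images by aspect ratio so that each batch shares one resolution instead of every image being cropped to a square. The following is a minimal Python sketch under stated assumptions: a pixel budget of 768×768, sides snapped to multiples of 64, and side lengths between 256 and 1536. All of these numbers are illustrative, not the settings used in the channel.

```python
import math


def make_buckets(max_pixels=768 * 768, step=64, min_side=256, max_side=1536):
    """Enumerate (width, height) buckets whose area fits the pixel budget.

    The budget and side limits are illustrative assumptions.
    """
    buckets = set()
    for width in range(min_side, max_side + 1, step):
        # Largest height (a multiple of `step`) keeping width * height <= max_pixels.
        height = min(max_side, (max_pixels // width) // step * step)
        if height >= min_side:
            buckets.add((width, height))
            buckets.add((height, width))  # mirrored landscape/portrait pair
    return sorted(buckets)


def assign_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest (in log space) to the image's."""
    target = math.log(width / height)
    return min(buckets, key=lambda b: abs(math.log(b[0] / b[1]) - target))
```

Each image would then be resized to its assigned bucket and batched only with images from the same bucket, so far less of the frame is cropped away than with a fixed square resolution.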

Links mentioned:

- TikTok - Make Your Day

- Philipp Schmid’s tweet

- The Reel Robot’s tweet

- LLaVA-$ϕ$ paper

- Improving Diffusion-Based Image Synthesis with Context Prediction

- visheratin/LLaVA-3b · Hugging Face

- CCSR GitHub repository

- openbmb/RLHF-V · Hugging Face

LAION Channel Summaries

▷ #general (103 messages🔥🔥):

- “Too optimized, or not optimized enough?”: A helpful debate between pseudoterminalx and thejonasbrothers focused on overfitting and underfitting in model training. They discussed the challenges and inherent trade-offs, pointing out that some degree of fitting is required for the model to perform well.

- “Upscale AI’s latest trend: Noise in images!?”: pseudoterminalx defended including noise in image generation outputs, claiming it adds authenticity. They argued that when “every single image is crystal clear,” it “makes them look, ironically, like AI gens,” and mentioned the “uncanny valley of perfection.”

- “Unleashing the true potential of SD 2.1x models”: pseudoterminalx and thejonasbrothers took a deep dive into training techniques for Stable Diffusion (SD) 2.1x models, covering cropping methods, resolution buckets, and perceptual hashes. They also reviewed model output resolutions and reiterated that SD 2.1 models can output high-res images well beyond 1 MP when trained correctly.

- “A Picture of the Future from CommonCrawl”: thejonasbrothers contemplated scraping uncaptioned images from CommonCrawl, captioning them synthetically, and training a model on them that could store vast knowledge. It was pointed out that NSFW-detection models would be needed to filter inappropriate content out of CommonCrawl.

- “Beauty is in the Eyes of the AI Beholder”: pseudoterminalx presented sample high-res outputs from the models. The images showed fine details such as hair, skin texture, and tree bark, which seemed to impress others in the channel.
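Perceptual hashes, raised above in the SD 2.1x training discussion, are a common way to find near-duplicate images in a dataset. One simple variant is the average hash (aHash): shrink the image to a tiny grayscale grid, set one bit per pixel depending on whether it is brighter than the mean, and compare hashes by Hamming distance. A minimal sketch, assuming the image has already been downscaled to an 8×8 grid of 0–255 values; the 5-bit threshold is an illustrative choice, and this is not necessarily the hash the participants used.

```python
def average_hash(gray_8x8):
    """Return a 64-bit integer: bit i is 1 if pixel i is brighter than the mean."""
    pixels = [p for row in gray_8x8 for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def is_near_duplicate(img_a, img_b, threshold=5):
    """Treat images as duplicates when their hashes differ in at most `threshold` bits."""
    return hamming(average_hash(img_a), average_hash(img_b)) <= threshold
```

Because each bit only records brighter-or-darker than the mean, a uniformly brightened copy of an image hashes identically, while an inverted copy differs in every bit.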

Links mentioned:

- TikTok - Make Your Day

- Tweet from Philipp Schmid (@_philschmid): We got a late Christmas gift from @Microsoft! 🎁🤗 Microsoft just changed the license for their small LLM phi-2 to MIT! 🚀 Phi-2 is a 2.7 billion parameter LLM trained on 1.4T tokens, including synth…

- Tweet from The Reel Robot (@TheReelRobot): The most ambitious AI film I’ve made to date. We are less than a year out from commercially viable AI films. Updates to @runwayml (control) and @elevenlabsio (speech-to-speech) have me believin…

▷ #research (24 messages🔥):

- Masked tokens and U-Net in Transformer Models: In a discussion about transformer models, @kenjiqq explained that in this case masked tokens, not diffusion, play the key role. They added that, according to the original U-ViT paper, the major benefit of transformer blocks is better accelerator throughput.

- Introduction of LLaVA-$\phi$: @thejonasbrothers shared a paper introducing LLaVA-$\phi$ (LLaVA-Phi), an efficient multi-modal assistant that uses the small language model Phi-2 for multi-modal dialogue. Despite its small parameter count (as few as 2.7B), the model performs well on tasks that combine visual and textual input.

- Confusion over LLaVA-3b and LLaVA-Phi Models: @nodja linked the Hugging Face model card for LLaVA-3b, a model fine-tuned from Dolphin 2.6 Phi, but noted that its listed authors didn't match those of LLaVA-Phi. The two models also differ in architecture.

- Context Prediction for Diffusion Models: @vrus0188 shared a link to the CCSR GitHub repository, a project titled “Improving the Stability of Diffusion Models for Content Consistent Super-Resolution”.

- Disappointment with Performance of RLHF-V: In a discussion about the RLHF-V model, touted for its low hallucination rate, @kopyl and @thejonasbrothers expressed disappointment with its actual performance. @bob80333 also reported subpar results on a frame from a Ghibli movie. However, when the prompt was changed to request a detailed image description, the model performed better.
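To make the masked-token idea from @kenjiqq's point concrete, here is a minimal, generic sketch of how a masked-token training example can be built: a random subset of image tokens is replaced with a mask id, and the model is trained to predict the originals only at those positions. It assumes images are already quantized into integer token ids (for example by a VQ tokenizer); `MASK_ID` and the 50% ratio used below are hypothetical, and this is not the exact recipe of any paper mentioned here.

```python
import random

MASK_ID = -1  # hypothetical id reserved for the [MASK] token


def mask_tokens(tokens, mask_ratio, rng):
    """Replace a random subset of tokens with MASK_ID.

    Returns the corrupted sequence and the masked positions; the training
    loss would be computed only at those positions.
    """
    n_mask = max(1, round(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = list(tokens)
    for i in positions:
        corrupted[i] = MASK_ID
    return corrupted, positions
```

For example, `mask_tokens(list(range(16)), 0.5, random.Random(0))` masks 8 of the 16 positions and leaves the rest unchanged.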

Links mentioned:

- openbmb/RLHF-V · Hugging Face

- Improving Diffusion-Based Image Synthesis with Context Prediction: Diffusion models are a new class of generative models, and have dramatically promoted image generation with unprecedented quality and diversity. Existing diffusion models mainly try to reconstruct inp…

- LLaVA-$ϕ$: Efficient Multi-Modal Assistant with Small Language Model: In this paper, we introduce LLaVA-$ϕ$ (LLaVA-Phi), an efficient multi-modal assistant that harnesses the power of the recently advanced small language model, Phi-2, to facilitate multi-modal dialogues…

- Instruct-Imagen: Image Generation with Multi-modal Instruction: This paper presents instruct-imagen, a model that tackles heterogeneous image generation tasks and generalizes across unseen tasks. We introduce multi-modal instruction for image generation, a task …

- visheratin/LLaVA-3b · Hugging Face

- GitHub - csslc/CCSR

▷ #learning-ml (3 messages):

- Debate on Causality in LLMs and Diffusion Models: @JH gave a thorough explanation of how large language models (LLMs) can learn causality thanks to their decoder-based architecture and causal attention mask. Diffusion models, in contrast, are seen as learning through association rather than causality. This doesn't necessarily prevent them from learning causality, although their architecture and training don't directly bias them toward it. One possible way to train diffusion models to learn causality would be to create datasets that rework each caption from an explicit description into a causal one.

- Curiosity About Diffusion Models’ Understanding of 3D Space: @phryq asked whether diffusion models understand that a face in profile is just the same face seen from a different angle, reflecting an interest in how these models represent 3D space.
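The causal attention mask @JH mentions is what keeps each position in a decoder from seeing later positions: query i may attend to key j only when j ≤ i, so every prediction depends on the past alone. A minimal plain-Python sketch of single-head attention weights with that mask; the score matrix is a hypothetical input standing in for scaled query–key dot products.

```python
import math


def causal_attention_weights(scores):
    """Apply a causal mask to an n x n score matrix and softmax each row.

    scores[i][j] is the pre-softmax affinity of query i for key j.
    Entries with j > i are set to -inf, so they receive zero weight.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        masked = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        m = max(masked)  # row max (always finite, since j == i is unmasked)
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

With an all-zero score matrix, row i spreads its weight evenly over positions 0..i, and every weight above the diagonal is exactly zero.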

LangChain AI Discord Summary

- LangChain 0.1 to See the Light of Day: @hwchase17 announced plans to launch LangChain 0.1 and to promote it actively, and invited early feedback.

- Catch My Signal: Database encoding issues surfaced, with @felipeescobar. asking whether UTF-8 encoding can be passed to an SQL agent.

- Chatbots on a Shopping Spree: User @vilelaone asked how a chatbot could switch smoothly between modes such as ‘adding to cart,’ ‘gathering shipping data,’ and ‘defining payment,’ using `RouterChain` and a conversational chain. A productive discussion followed, which @evolutionstepper wrapped up by posting code snippets and a link to the GitHub repo.

- LangChain Shines: On the publicity front, @rorcde noted that LangChain had been spotlighted as the AI Library of the Day at The AI Engineer and urged members to spread the news on LinkedIn and Twitter.

- A Picture is Worth a Thousand Words: @nav1106 asked for suggestions on pretrained models for image embedding.

- Error Message Five: @Tom P reported a `ModuleNotFoundError` for the CSV Agent, despite confirming that it is present in the /packages directory.

- New Variables, New Challenges: @cryptossssun asked how to use new variables when prompting through a LangServe service after changing `input_variables` in the `PromptTemplate`. In the ensuing discussion, @veryboldbagel and others pointed to the LangServe examples, the OpenAPI docs, and the `RemoteRunnable` client.

- Callable AgentExecutor Unveiled: @veryboldbagel explained that `AgentExecutor` can be registered with `add_routes` because it inherits from `Chain`.

- Taking Custom Logic on Board: A member described how to implement custom logic in LangChain, either with LCEL and runnable lambdas or by inheriting from `Runnable`.

- Reading the Input Schema: @veryboldbagel recommended checking the input schema on the runnable as a potential fix for unrecognized inputs, and described the `with_types` method.

- Session History Took a Hit: @nwoke. reported issues with `session_id` while integrating `RedisChatMessageHistory` in LangServe; @veryboldbagel suggested opening an issue on GitHub.

- Dependency Conflict Mars LangChain Update: @attila_ibs raised the dependency conflicts they hit after updating LangChain, with various packages requiring mismatched versions of `openai` and `langchain`.

- The Life Expectancy of LangChain: @dejoma started a discussion on whether writing a book on LangChain is feasible, given the pace of its version updates and the risk of deprecation.

- ArtFul - Embolden Your Creativity: @vansh12344 announced ArtFul, a free, ad-supported app, now live on the Google Play Store, that lets users create their own art with various AI models.

- Neutrino Makes its Debut: @ricky_gzz launched Neutrino, a new AI model routing system designed to optimize response quality, cost, and latency across different closed and open-source models. More details are on their official webpage.

- Tutorial Leaves Users Wanting More: @offer.l praised a tutorial, prompting @a404.eth to commit to making another one soon. Meanwhile, @bads77 sought guidance on retrying missing responses with the LangChain JS library, and @lhc1921 proposed tracking retries with a while loop.
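The while-loop retry that @lhc1921 proposed can be sketched as follows. The question concerned the LangChain JS library, but this is a plain-Python illustration of the pattern rather than LangChain code: `call_model` is a hypothetical stand-in for the request that sometimes returns a missing response, and the attempt limit and exponential backoff delays are assumed values.

```python
import time


def call_with_retries(call_model, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call `call_model()` until it returns a non-empty response or attempts run out.

    Returns (response, attempts_used); raises RuntimeError if every attempt fails.
    """
    attempt = 0
    while attempt < max_attempts:
        attempt += 1
        response = call_model()
        if response:  # treat None or "" as a missing response
            return response, attempt
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff before retrying
    raise RuntimeError(f"no response after {max_attempts} attempts")
```

The `sleep` parameter exists only so the delay can be swapped out in tests; in normal use the default `time.sleep` applies.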

LangChain AI Channel Summaries

▷ #announcements (1 messages):

- LangChain 0.1 Launch: User @hwchase17 announced the launch of LangChain 0.1 and plans to highlight it heavily the following week. They are open to early feedback.

▷ #general (33 messages🔥):

- UTF-8 Encoding in SQL Agent: @felipeescobar. asked whether it's possible to pass an encoding to their SQL agent, and confirmed they meant UTF-8 when @evolutionstepper asked for specifics.

- Seamless Transitioning in Chatbots: @vilelaone asked for advice on implementing a feature that lets a chatbot switch seamlessly between modes such as ‘adding to cart,’ ‘gathering shipping data,’ and ‘defining payment.’ The discussion covered `RouterChain` and a possible solution involving a conversational chain, and @evolutionstepper provided source code examples and the full code on GitHub for further guidance.

- LangChain AI Library Spotlight: @rorcde announced that LangChain was spotlighted as the AI Library of the Day at The AI Engineer and encouraged the community to spread the word by sharing the related posts on LinkedIn and Twitter.

- Pretrained Model for Image Embedding: @nav1106, who is new to this domain, asked for suggestions on pretrained models for image embedding.

- CSV-Agent Module Error: @Tom P hit an error (`ModuleNotFoundError: No module named 'csv_agent'`) when trying to install and run the CSV-agent template. Although the csv-agent folder is present in the /packages directory, the error persists. The discussion also touched on giving the conversational agent the tools it needs to solve the problem.
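The mode switching @vilelaone asked about can be illustrated without any framework: a router inspects each message, picks a mode, and dispatches to that mode's handler, staying in the current mode when nothing matches. In the thread this was discussed in terms of `RouterChain` and a conversational chain; in this sketch the routing decision is, purely as an assumption, a keyword lookup, and the handlers and keywords are hypothetical placeholders for real chains.

```python
def add_to_cart(message):
    return f"[cart] adding item from: {message}"


def gather_shipping(message):
    return f"[shipping] recording address from: {message}"


def define_payment(message):
    return f"[payment] setting up payment from: {message}"


# Hypothetical keyword table mapping trigger words to (mode name, handler).
ROUTES = {
    "cart": ("add_to_cart", add_to_cart),
    "buy": ("add_to_cart", add_to_cart),
    "ship": ("shipping", gather_shipping),
    "address": ("shipping", gather_shipping),
    "pay": ("payment", define_payment),
    "card": ("payment", define_payment),
}


class ModeRouter:
    """Dispatch each message to a mode handler, keeping the current mode
    when no keyword matches so follow-up messages retain their context."""

    def __init__(self, default_mode="add_to_cart"):
        self.mode = default_mode
        self.handlers = {name: fn for name, fn in ROUTES.values()}

    def handle(self, message):
        text = message.lower()
        for keyword, (mode, _) in ROUTES.items():
            if keyword in text:
                self.mode = mode
                break
        return self.mode, self.handlers[self.mode](message)
```

Swapping the keyword loop for an LLM classification call would keep the same structure while handling phrasing the keyword table misses.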

Links mentioned:

casa-bot/services/api/main.py at dev · ai-ponx/casa-bot: Agentive real estate sms assistant.

▷ #langserve (17 messages🔥):

- Querying for New Variables in LangServe Service: @cryptossssun asked how to use new variables when prompting through a LangServe service after changing `input_variables` in the `PromptTemplate`. @veryboldbagel suggested looking at the LangServe example in the docs, which supports passing additional parameters in the query. @cryptossssun was then advised to use the OpenAPI docs for schema information and the `RemoteRunnable` client to send requests.

- Callable AgentExecutor: @veryboldbagel explained that `AgentExecutor` can be registered with `add_routes` because it inherits from `Chain`, which in turn inherits from `Runnable`. This inheritance lets `AgentExecutor` instances be invoked directly like any other runnable.

- Executing Custom Logic: A member explained that custom logic in LangChain can be implemented either by combining LCEL with runnable lambdas or by inheriting from `Runnable` and implementing the desired logic.

- Input Schema Check: