First teased in a paper two months ago, Nightshade was the talk of the town this weekend:

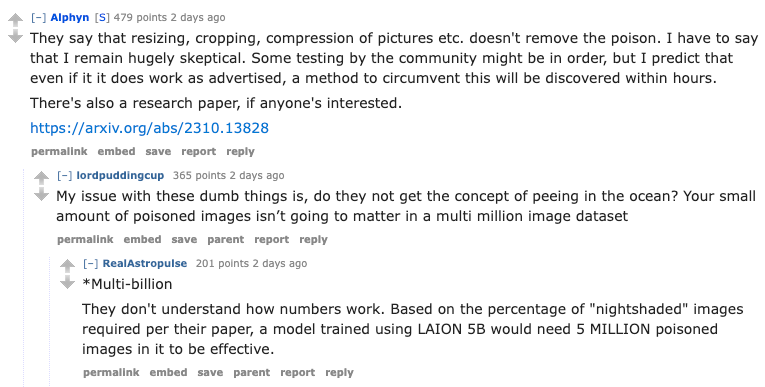

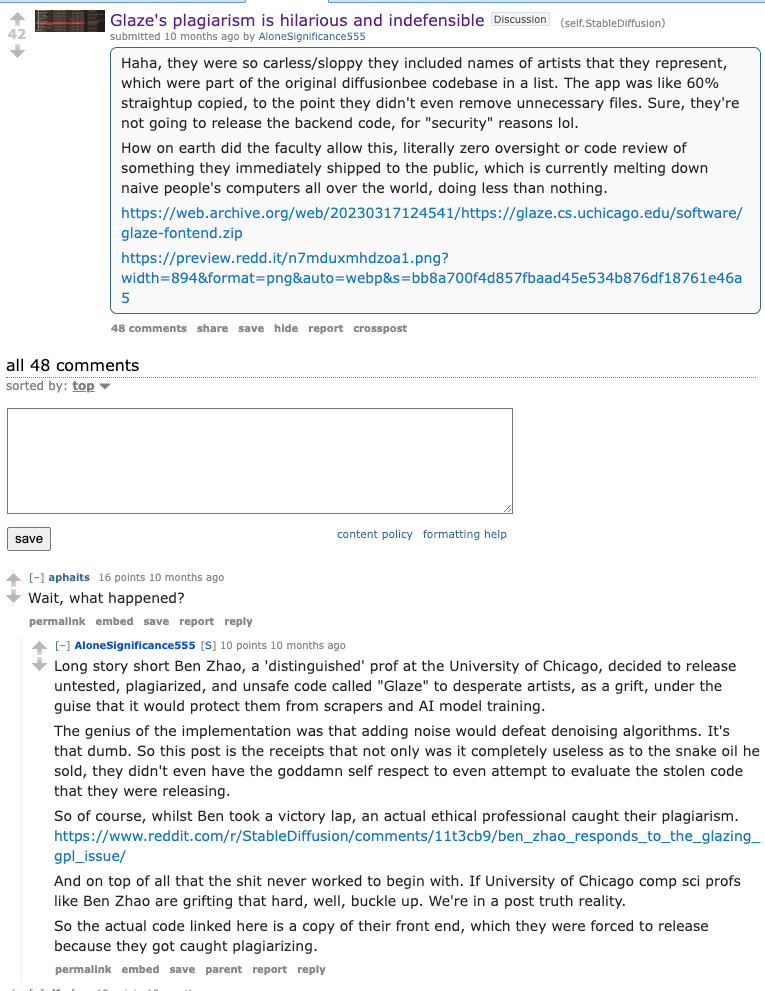

However, people digging into the details have questioned how it works and how original it is:

---

Table of Contents

[TOC]

TheBloke Discord Summary

- MoE Efficiency and Detection Tools Talk: Discussions of Mixture of Experts (MoE) models focused on GPU parallelism efficiency and quantization methods, with users exploring variable routing and the trade-offs of using more or fewer experts. Users also analyzed GPTZero’s ability to detect certain kinds of AI-generated content and suggested that adding noise during sampling might evade it.

- Challenges in Role-Playing AI: Users debated Solar’s effectiveness, with some arguing that it is poorly aligned for real use despite strong benchmark scores. Discussion of long-context roleplay split opinions on the best models for the task and raised emergent repetition, which can strip novelty from the output.

- Fine-Tuning and Quantization Strategies in Depth: Users shared experiences fine-tuning language models such as Mistral 7B, with some choosing few-shot learning over fine-tuning because of limited data. A community-powered quantization service was pitched, while others argued for simpler quantization methods and for spending shared compute on model improvement rather than on distributed quantization.

- Confusion and Community Exchanges in Model Merging: A discussion of merge strategies revealed confusion over non-standard mixing ratios for Mistral-based models. Techniques such as task arithmetic and gradient slerp were suggested, with a warning against blindly copying parameter values.

- Community Interest in Quantization and Model Training: Users wanted an easy, community-driven quantization service modeled on familiar workflows like video transcoding. On the training side, a newcomer asked whether training on a 50GB corpus of religious texts was feasible, reflecting interest in adapting existing open-source models to specific domains.
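Variable routing in an MoE layer comes down to how many experts each token is sent to. The sketch below is a toy top-k gate in plain Python. It assumes made-up gate logits and experts that are simple scaling functions, and every name in it is hypothetical. For reference, Mixtral routes each token to 2 of its 8 experts. Raising `k` mixes more experts per token, at the cost of running more of them.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> Vector:
    """Send x to the k highest-scoring experts and mix their outputs."""
    # Rank experts by gate score and keep the top k.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + w * v for o, v in zip(out, y)]
    return out


# Four toy "experts" that scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
print(moe_forward([1.0, 2.0], [0.0, 0.0, 5.0, 5.0], experts, k=2))  # [3.5, 7.0]
```

With `k=1` the same call would use only the single best expert. The gate then acts as a hard switch instead of a blend.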

TheBloke Channel Summaries

▷ #general (963 messages🔥🔥🔥):

- Exploring MoE and LLMs: Users discussed how efficiently experts are used in mixture of experts (MoE) models and what that implies for GPU parallelism. @kalomaze talked about variable routing in MoE for parallelizing tasks and the trade-off between using more or fewer experts.

- The Complexity of Enhancing MoE Models: The nuances of enhancing MoE were dissected, with @kalomaze questioning the benefit of layers becoming simpler. @selea proposed using many experts, which could act as a library of “LoRAs” to help prevent catastrophic forgetting.

- Challenges with AI Detection Tools: Users debated the effectiveness of the GPT detection tool GPTZero. @kaltcit noted that while text from common samplers can be detected by GPTZero, applying noise appears to be a potential way to dodge detection.

- Adventures in Fine-Tuning: @nigelt11 discussed the hurdles of fine-tuning Falcon 7B on a dataset of 130 entries. They considered switching to Mistral and worked through the differences between ‘standard’ and ‘instruct’ models for RAG-based custom instructions.

- The Ethical Ambiguity of AI Girlfriend Sites: @rwitz_ contemplated the ethics of AI girlfriend sites, explored the idea, and ultimately decided to pivot to a more useful application of AI than exploiting loneliness.

Links mentioned:

- Can Ai Code Results - a Hugging Face Space by mike-ravkine: no description found

- A Beginner’s Guide to Fine-Tuning Mistral 7B Instruct Model: Fine-Tuning for Code Generation Using a Single Google Colab Notebook

- Big Code Models Leaderboard - a Hugging Face Space by bigcode: no description found

- budecosystem/code-millenials-13b · Hugging Face: no description found

- First Token Cutoff LLM sampling - <antirez> : no description found

- How to mixtral: Updated 12/22 Have at least 20GB-ish VRAM / RAM total. The more VRAM the faster / better. Grab latest Kobold: https://github.com/kalomaze/koboldcpp/releases Grab the model Download one of the quants a…

- GitHub - iusztinpaul/hands-on-llms: 🦖 Learn about LLMs, LLMOps, and vector DBs for free by designing, training, and deploying a real-time financial advisor LLM system ~ source code + video & reading materials

- GitHub - turboderp/exllamav2: A fast inference library for running LLMs locally on modern consumer-class GPUs

- Noisy sampling HF implementation by kalomaze · Pull Request #5342 · oobabooga/text-generation-webui: A custom sampler that allows you to apply Gaussian noise to the original logit scores to encourage randomization of choices where many tokens are usable (and to hopefully avoid repetition / looping…

- GitHub - OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions

- Add dynatemp (the entropy one) by awtrisk · Pull Request #263 · turboderp/exllamav2: Still some stuff to be checked, heavy wip.
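The noise trick @kaltcit mentioned and the linked noisy-sampling PR both involve perturbing the logits before sampling. Below is a minimal sketch of that idea. It assumes independent Gaussian noise per logit, followed by ordinary softmax sampling. `noisy_sample` and its parameters are hypothetical and are not taken from the PR. Nothing here shows whether the output actually evades GPTZero; that claim comes from the discussion.

```python
import math
import random
from typing import Optional, Sequence


def noisy_sample(logits: Sequence[float], noise_std: float = 1.0,
                 temperature: float = 1.0,
                 rng: Optional[random.Random] = None) -> int:
    """Add Gaussian noise to each logit, then sample a token index from the softmax."""
    rng = rng or random.Random()
    # Independent noise per logit: near-tied tokens swap rank more often as noise_std grows.
    noisy = [(z + rng.gauss(0.0, noise_std)) / temperature for z in logits]
    m = max(noisy)
    weights = [math.exp(v - m) for v in noisy]  # unnormalized softmax probabilities
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so runs are reproducible
print([noisy_sample([2.0, 1.9, -5.0], noise_std=0.5, rng=rng) for _ in range(10)])
```

Setting `noise_std=0.0` reduces this to plain temperature sampling. That makes it easy to compare outputs with and without the perturbation.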

▷ #characters-roleplay-stories (403 messages🔥🔥):

- Solar’s Status as a Benchmark Chad: @doctorshotgun described Solar as strong on benchmarks but terrible in actual use, with alignment issues akin to ChatGPT’s. However, @theyallchoppable defended its usefulness in role-playing scenarios, citing its consistent performance.

- Model Comparison in Roleplay Quality: @sanjiwatsuki and @animalmachine discussed how models like Mixtral, 70B, Goliath, and SOLAR perform in roleplaying tests, with mixed opinions. New models and finetuning strategies, such as Kunoichi-DPO-v2-7B, were suggested as ways to improve coherence and adherence to character cards.

- Long Context Handling: Users reported on model performance at long context lengths, noting that some, like Mistral 7B Instruct, lose coherence beyond certain limits. Follow-up discussion covered efficiency tips and hardware requirements for running large-scale models.

- Deep Dive into Quant Methods: There was a detailed discussion of quantization strategies, including links to repositories of GGUF models. @kquant offered insights into their potential performance in ranking systems.

- Emergent Repetition Issues in MoE Models: @kquant argued that many models working together tend to generalize and can become repetitive, likening this to a choir stuck on a chorus. A new model designed specifically to combat repetition in creative scenarios is underway.

Links mentioned:

- Urban Dictionary: kink shame: To kink shame is to disrespect or devalue a person for his or her particular kink or fetish.

- LoneStriker/airoboros-l2-70b-3.1.2-5.50bpw-h6-exl2 · Hugging Face: no description found

- Kquant03/Umbra-MoE-4x10.7-GGUF · Hugging Face: no description found

- athirdpath/DPO_Pairs-Roleplay-Alpaca-NSFW-v1-SHUFFLED · Datasets at Hugging Face: no description found

- TheBloke/HamSter-0.1-GGUF · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Kooten/Kunoichi-DPO-v2-7B-8bpw-exl2 at main: no description found

- Undi95/Borealis-10.7b-DPO-GGUF · Hugging Face: no description found

- brittlewis12/Kunoichi-DPO-v2-7B-GGUF · Hugging Face: no description found

▷ #training-and-fine-tuning (12 messages🔥):

- Newbie Diving into LLMs: @zos_kia, a self-proclaimed noob, is seeking advice on training a language model on a 50GB corpus of unstructured religious and esoteric texts. They are considering open-source models like trismegistus-mistral and asking whether training on a home computer is feasible and how long it would take.

- Pinging For Insights: @zos_kia asks whether it is okay to ping the creator of trismegistus-mistral on the Discord server for personalized advice on their training project.

- Voicemail Detection Finetuning Inquiry: @rabiat is looking for guidance on fine-tuning Mistral 7B or an MoE model to classify voicemail announcements. They want to know how large a dataset is needed for efficient LoRA fine-tuning and are considering upsampling from their 40 real voicemail examples as seeds.

- Few-shot as an Alternative: @gahdnah suggests that @rabiat could try few-shot learning instead of fine-tuning for the voicemail classification task.

- Quantized Models and Fine-tuning: @sushibot shared a skeleton script that quantizes a model to 4-bit before attaching LoRA weights, and asked about the setup. @sanjiwatsuki confirmed that this is what the “Q” in QLoRA means: the quantized base weights stay frozen while the LoRA adapters are fine-tuned.

- Benchmark Blogpost Showcase: @superking__ shared a Hugging Face blog post that evaluates three language model alignment methods that do not use reinforcement learning: Direct Preference Optimization (DPO), Identity Preference Optimisation (IPO), and Kahneman-Tversky Optimisation (KTO), across various models and hyperparameter settings.

Links mentioned:

- Preference Tuning LLMs with Direct Preference Optimization Methods: no description found
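To make the QLoRA point concrete, here is a toy single-layer sketch. The base weights are quantized once and frozen, and a low-rank update `B @ A`, scaled by `alpha / r`, is added on top. The QLoRA paper uses a 4-bit NormalFloat (NF4) format via bitsandbytes; this sketch swaps in simple per-row absmax int4 quantization instead. `QLoRALinear` and its arguments are illustrative names, not a library API. No training loop is shown; in practice only the LoRA matrices would receive gradients.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def quantize_row(row: Vector) -> Tuple[List[int], float]:
    """Symmetric absmax quantization of one row to 4-bit integers in [-7, 7]."""
    scale = max(abs(v) for v in row) / 7 or 1.0  # avoid a zero scale for all-zero rows
    return [round(v / scale) for v in row], scale


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class QLoRALinear:
    """Frozen 4-bit base weights plus a trainable low-rank update B @ A."""

    def __init__(self, weight: Matrix, lora_a: Matrix, lora_b: Matrix,
                 alpha: float = 16.0) -> None:
        self.q_rows = [quantize_row(row) for row in weight]  # frozen, never updated
        self.lora_a = lora_a  # shape (r, in_features): trainable
        self.lora_b = lora_b  # shape (out_features, r): trainable
        self.scaling = alpha / len(lora_a)  # alpha / r

    def forward(self, x: Vector) -> Vector:
        # Dequantize the frozen weights on the fly for the base projection.
        base_w = [[q * s for q in qs] for qs, s in self.q_rows]
        base = matvec(base_w, x)
        delta = matvec(self.lora_b, matvec(self.lora_a, x))
        return [b + self.scaling * d for b, d in zip(base, delta)]


# B starts at zero (the usual LoRA init), so the adapter is initially a no-op.
layer = QLoRALinear([[0.7, -0.7], [0.35, 0.0]], lora_a=[[1.0, 0.0]],
                    lora_b=[[0.0], [0.0]], alpha=1.0)
print(layer.forward([1.0, 1.0]))
```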

▷ #model-merging (15 messages🔥):

- Blizado Explores Non-Standard Merging: @blizado wants to merge two Mistral-based models at a 75:25 ratio instead of the standard 50:50, having found that a 50:50 slerp merge was too biased toward one model.

- Sao10k Suggests Merging Flexibility: @sao10k recommended that @blizado try other merge methods such as gradient slerp, task arithmetic, or DARE-TIES, and stressed not sticking with default values.

- Confusion Over Merging Parameters: Despite the suggestions, @blizado was confused by the merging parameters and their effects on the model’s language output.

- Sao10k Clarifies on Merging Values: In response to @blizado’s issues, including a model switching between German and English, @sao10k advised against copying values blindly and suggested a simple gradient slerp ranging from 0.2 to 0.7.

- Blizado’s Troubles with Mixed Models: After trying a slerp parameter found on a Hugging Face model, @blizado reported difficulty seeing differences when merging two different base models. They suggested that a merge may work well when it combines a base model that is solid in a given language with one that has strong understanding of that same language.
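The sketch below shows what a “gradient” slerp from 0.2 to 0.7 could mean. Each layer gets its own interpolation factor `t`, ramping linearly from the first layer to the last. At `t = 0` a layer keeps model A’s weights, and at `t = 1` it takes model B’s. This is a hand-rolled illustration on flattened toy vectors under those assumptions, not the code of any particular merge tool. `slerp` and `gradient_t` are hypothetical helpers.

```python
import math
from typing import List, Sequence

Vector = List[float]


def slerp(t: float, a: Sequence[float], b: Sequence[float], eps: float = 1e-8) -> Vector:
    """Spherical linear interpolation between two flattened weight tensors."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Angle between the two directions (clamped to guard against rounding).
    cos_omega = sum(x * y for x, y in zip(a, b)) / max(norm_a * norm_b, eps)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if omega < eps or math.pi - omega < eps:
        # Nearly parallel or opposite: sin(omega) ~ 0, so fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    sin_omega = math.sin(omega)
    wa = math.sin((1 - t) * omega) / sin_omega
    wb = math.sin(t * omega) / sin_omega
    return [wa * x + wb * y for x, y in zip(a, b)]


def gradient_t(layer: int, num_layers: int, start: float = 0.2, end: float = 0.7) -> float:
    """Per-layer interpolation factor, ramping linearly from `start` to `end`."""
    if num_layers == 1:
        return start
    return start + (end - start) * layer / (num_layers - 1)


# Toy 4-layer models whose per-layer "weights" are 2-element vectors.
model_a = [[1.0, 0.0]] * 4
model_b = [[0.0, 1.0]] * 4
merged = [slerp(gradient_t(i, 4), a, b) for i, (a, b) in enumerate(zip(model_a, model_b))]
```

Slerp follows the arc between the two weight vectors rather than the straight line between them. As a result, a constant `t = 0.25` is only roughly a 75:25 blend.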

▷ #coding (8 messages🔥):

- A Call for Simplified Model Quantization: @spottyluck expressed surprise at the lack of “uber bulk/queue based model quantization solutions,” given their extensive experience in video transcoding. They suggested a community service for easy model quantization, with an opt-out for contributing shared computing power.

- Quantization Service: A Community Effort?: Following up, @spottyluck floated a community-powered distributed quantization service in which users contribute to a communal compute pool while working on their own projects.

- Simplicity Over Complexity: @wbsch countered that most users prefer the convenience and consistency TheBloke already provides, without complex solutions like quantization farms or distributed compute services.

- Farming for Models Not Quants: @kquant argued that community compute donations should go to long-term research and model improvement rather than to quantization.

- Technical Inquiry on Checkpoint Changes in Stable Diffusion: @varient2 asked how to change Stable Diffusion checkpoints programmatically using the webuiapi. They had already figured out how to send prompts and how to use ADetailer for face adjustments mid-generation.

Nous Research AI Discord Summary

- WSL1 Surprises with 13B Model: _3sphere found that a 13B model can be loaded successfully on WSL1, despite an earlier segmentation fault with the llama.mia tool.

- ggml Hook’s 7B Model Limitation Unveiled: _3sphere discovered that the ggml hook works only with 7B models, a limitation criticized for being undocumented.

- SPINning Up LLM Training Conversations: _3sphere shared the SPIN methodology from an arXiv paper and discussed its potential for refining LLM capabilities through iteration.

- Single-GPU LLM Inference Made Possible: nonameusr shared AirLLM, which enables 70B LLM inference on a single 4GB GPU, as described in a Twitter post.

- Etched’s Custom Silicon Spurs Skepticism: Members were skeptical about the viability of Etched’s custom silicon for transformer inference and doubted its practicality for LLMs.

- Orion’s 14B Model Falls Short in Conversational Skills: teknium and others reported that Orion’s 14B model produces subpar conversational output, at odds with its benchmark scores.

- Proxy-Tuning Paper Sparks Interest: Members discussed proxy-tuning, a new approach to tuning LLMs detailed in a recently published paper.

- Mixtral’s Multi-Expert Potential: Mixtral discussions focused on successfully running with more experts than usual, prompting carsonpoole to consider trying the approach with Hermes.

- Finetuning Fineries: qnguyen3 sought advice on fine-tuning Nous Mixtral models, and teknium shared insights, including that Nous Mixtral had undergone a full finetune.

- Commercial Licensure Confusion: teknium started a debate about licensing costs and permissions for commercial use of finetuned models, and casper_ai and others joined in.

- Designing Nous Icons: The Nous community set out to design legible role icons. benxh and john0galt suggested a transparent “Nous Girl” and simpler logos.

- Omar from DSPy/ColBERT/Stanford Joins The Fray: The community welcomed Omar and expressed excitement about potential collaborations involving his work on semantic search and broader AI applications.

- Alpaca’s Evaluation Method Questioned: teknium expressed skepticism about Alpaca’s leaderboard, suspecting problems with its method after seeing Yi Chat ranked above GPT-4.

- Imitation Learning’s Human Boundaries: A conversation led by teknium explored the idea that imitation learning may not yield superhuman capabilities when the training data comes from average humans.

- AI’s Self-Critiquing Abilities Challenged: A paper under discussion indicated that AI models are not proficient at self-evaluation, prompting teknium to question models’ self-critiquing capabilities.
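The AirLLM claim rests on layer-wise inference: only one layer’s weights need to sit in memory at a time. The toy sketch below illustrates that memory trade-off using pickled weight matrices on disk and a linear-plus-ReLU layer. The file layout and function names are assumptions for illustration, not AirLLM’s API. The cost is that every forward pass re-reads all the weights from disk, which makes generation slow.

```python
import os
import pickle
import tempfile
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def save_layers(layers: List[Matrix], directory: str) -> List[str]:
    """Write each layer's weight matrix to its own file (stand-in for sharded checkpoints)."""
    paths = []
    for i, weights in enumerate(layers):
        path = os.path.join(directory, f"layer_{i}.pkl")
        with open(path, "wb") as f:
            pickle.dump(weights, f)
        paths.append(path)
    return paths


def layerwise_forward(x: Vector, layer_paths: List[str]) -> Vector:
    """Run a toy network while holding only one layer's weights in memory at a time."""
    for path in layer_paths:
        with open(path, "rb") as f:
            weights = pickle.load(f)  # load just this layer
        x = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]  # linear + ReLU
        del weights  # release the layer before loading the next one
    return x


with tempfile.TemporaryDirectory() as d:
    paths = save_layers([[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, -1.0]]], d)
    print(layerwise_forward([1.0, 3.0], paths))  # [2.0, 0.0]
```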

Nous Research AI Channel Summaries

▷ #off-topic (29 messages🔥):

- WSL1 Handles Big Models Just Fine: @_3sphere discovered that a 13B model can be loaded under WSL1 without issues. They had initially thought otherwise because of segmentation faults with the llama.mia setup, but later realized the fault was specific to that tool.

- Model Compatibility Oversight: @_3sphere reported that the ggml hook, used for handling AI models, apparently works only with 7B models, suggesting its creator may have tested only that size. There was a hint of frustration that this limitation was not documented.

- Hugging Face Leaderboard Policing: @.ben.com shared an announcement about a change on the Hugging Face leaderboard: models with incorrect merge metadata are now being flagged until the metadata is properly adjusted.

- Strange New Worlds in Klingon: @teknium shared a YouTube video of a Klingon singing scene from “Strange New Worlds Season 2 Episode 9,” expressing dismay at the creative direction of the Star Trek franchise.

- Star Trek Nostalgia Eclipsed by New Changes: @teknium reflected nostalgically on Star Trek’s change in direction, adding a humorous GIF implying disappointment, while @.benxh lamented the changes to the beloved series.

Links mentioned:

- mistralai/Mixtral-8x7B-v0.1 · Add MoE tag to Mixtral: no description found

- Gary Marcus Yann Lecun GIF - Gary Marcus Yann LeCun Lecun - Discover & Share GIFs: Click to view the GIF

- Klingon Singing: From Strange New Worlds Season 2 Episode 9.

- HuggingFaceH4/open_llm_leaderboard · Announcement: Flagging merged models with incorrect metadata: no description found

▷ #interesting-links (236 messages🔥🔥):

- Exploration of Training Phases for LLMs: A discussion by @_3sphere on when it is most effective to introduce code into LLM training led to sharing the SPIN methodology from a recent paper. SPIN lets LLMs refine their capabilities by playing against their previous iterations.

- LLM Inference on Minimal Hardware: @nonameusr shared AirLLM, an approach that runs 70B LLM inference on a single 4GB GPU through layer-wise inference, without compression techniques such as quantization.

- Chipsets Specialized for LLMs: @eas2535, @euclaise, and @0xsingletonly expressed skepticism about the practicality and future-proofing of Etched’s custom silicon for transformer inference.

- Orion-14B-Model Under Scrutiny: @.benxh, @teknium, and others questioned the actual conversational competence of Orion’s 14B model. Its strong scores on benchmarks such as MMLU contrast with early user reports of nonsensical output and a tendency to lapse into random languages.

- Proxy-Tuning for LLMs: A linked paper discussed by @intervitens and @sherlockzoozoo introduces proxy-tuning, which uses predictions from smaller LMs to steer the predictions of larger, potentially black-box LMs.

Links mentioned:

- Etched | The World’s First Transformer Supercomputer: Transformers etched into silicon. By burning the transformer architecture into our chips, we’re creating the world’s most powerful servers for transformer inference.

- Tweet from undefined: no description found

- Tweet from Rohan Paul (@rohanpaul_ai): 🧠 Run 70B LLM Inference on a Single 4GB GPU - with airllm and layered inference 🔥 layer-wise inference is essentially the “divide and conquer” approach 📌 And this is without using quantiz…

- Tuning Language Models by Proxy: Despite the general capabilities of large pretrained language models, they consistently benefit from further adaptation to better achieve desired behaviors. However, tuning these models has become inc…

- Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models: Harnessing the power of human-annotated data through Supervised Fine-Tuning (SFT) is pivotal for advancing Large Language Models (LLMs). In this paper, we delve into the prospect of growing a strong L…

- Looped Transformers are Better at Learning Learning Algorithms: Transformers have demonstrated effectiveness in in-context solving data-fitting problems from various (latent) models, as reported by Garg et al. However, the absence of an inherent iterative structur…

- At Which Training Stage Does Code Data Help LLMs Reasoning?: Large Language Models (LLMs) have exhibited remarkable reasoning capabilities and become the foundation of language technologies. Inspired by the great success of code data in training LLMs, we natura…

- Director of Platform: Cupertino, CA

- bartowski/internlm2-chat-20b-llama-exl2 at 6_5: no description found

- OrionStarAI/Orion-14B-Base · Hugging Face: no description found

- Tweet from anton (@abacaj): Let’s fking go. GPU poor technique you all are sleeping on, phi-2 extended to 8k (from 2k) w/just 2x3090s

- GitHub - b4rtaz/distributed-llama: Run LLMs on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage.

- GitHub - RVC-Boss/GPT-SoVITS: 1 min voice data can also be used to train a good TTS model! (few shot voice cloning)

- Yuan2.0-2B-Janus-hf: no description found
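As the linked paper describes it, proxy-tuning steers a large model at decoding time using the difference between a tuned and an untuned small model. The sketch below shows that logit arithmetic for a single decoding step. It assumes all three models share one vocabulary, so their logit vectors line up index by index. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def proxy_tuned_probs(large_base: Sequence[float], small_tuned: Sequence[float],
                      small_untuned: Sequence[float]) -> List[float]:
    """Shift the large model's next-token logits by (tuned small - untuned small)."""
    return softmax([b + (t - u) for b, t, u in zip(large_base, small_tuned, small_untuned)])


# Toy 3-token vocabulary: tuning made the small model prefer token 0.
print(proxy_tuned_probs([1.0, 1.0, 0.0], [3.0, 1.0, 0.0], [1.0, 1.0, 0.0]))
```

Only the large model’s output logits are used, never its weights. That is why the approach can apply to models that are otherwise black boxes.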

▷ #general (524 messages🔥🔥🔥):

- Fresh Perspectives on Mixtral Experts: Discussions about using multiple experts in Mixtral models centered on optimization. @carsonpoole highlighted a successful implementation that uses a higher number of experts with minimal loss of speed, and considered trying Hermes with more than the typical two experts.

- A Quest for Quality Finetuning: Several members were curious about fine-tuning models with more than two experts. @qnguyen3 ran into difficulties fine-tuning with Axolotl and sought advice from veterans like @teknium, who clarified that the Nous Mixtral model received a full finetune, not just a LoRA fine-tune.

- Licensing Quandaries Regarding Commercial Use: A discussion started by @teknium about commercial use of finetuned models, such as those from Stability AI, revealed confusion over licensing costs and permissions. Users like @casper_ai debated different interpretations and the practical issues of commercial use.

- The Nous Aesthetic: Members started designing more legible Nous role icons. Suggestions included a transparent version of the “Nous Girl” graphic and a simpler logo, with @benxh and @john0galt contributing design skills.

- Tech Community Shoutouts: Omar from DSPy/ColBERT/Stanford joined the server and was greeted by @night_w0lf and @qnguyen3. Members expressed enthusiasm about integrating Omar’s work into their solutions and looked forward to collaborating with DSPy on their projects.

Links mentioned:

- Pythia: A Suite for Analyzing Large Language Models Across Training and Scaling: no description found

- Animated Art Gif GIF - Painting Art Masterpiece - Discover & Share GIFs: Click to view the GIF

- Combining Axes Preconditioners through Kronecker Approximation for…: Adaptive regularization based optimization methods such as full-matrix Adagrad which use gradient second-moment information hold significant potential for fast convergence in deep neural network…

- Joongcat GIF - Joongcat - Discover & Share GIFs: Click to view the GIF

- Nerd GIF - Nerd - Discover & Share GIFs: Click to view the GIF

- Browse Fonts - Google Fonts: Making the web more beautiful, fast, and open through great typography

- Domine - Google Fonts: From the very first steps in the design process ‘Domine’ was designed, tested and optimized for body text on the web. It shines at 14 and 16 px. And can even be

- 🔍 Semantic Search - Embedchain: no description found

- EleutherAI/pythia-12b · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Tweet from Teknium (e/λ) (@Teknium1): Okay, read the paper, have some notes, mostly concerns but there’s some promise. - As I said when I first saw the paper, they only tested on Alpaca Eval, which, I can’t argue is the best eval…

- Evaluation of Distributed Shampoo: Comparison of optimizers: Distributed Shampoo, Adam & Adafactor. Made by Boris Dayma using Weights & Biases

- Tweet from GitHub - FixTweet/FxTwitter: Fix broken Twitter/X embeds! Use multiple images, videos, polls, translations and more on Discord, Telegram and others

- HuggingFaceH4/open_llm_leaderboard · Announcement: Flagging merged models with incorrect metadata: no description found

▷ #ask-about-llms (168 messages🔥🔥):

- Doubting Alpaca’s Evaluation: @teknium expressed skepticism about Alpaca’s evaluation, noting that its leaderboard ranks Yi Chat above GPT-4, which hints at flaws in the evaluation process.

- Imitation Learning Limitations: In a discussion about the limits of imitation learning, @teknium suggested that models trained on data from average humans are unlikely to reach superhuman capability through imitation.

- Self-Critique in AI Models Questioned: @teknium referenced a paper indicating that AI models are not proficient at self-evaluation, raising questions about their self-critiquing abilities.

- Experimenting with LLaMA and ORCA: @teknium shared an experiment in which LLaMA 2 70B was used to build ORCA in the way GPT-4 originally was. The result showed a slight improvement on MT benchmarks but a negative impact on traditional benchmarks like MMLU.

- Comparing Different Versions of LLMs: Responding to a question from @mr.userbox020 about benchmarks comparing Nous Mixtral and Mixtral Dolphin, @teknium linked logs in their GitHub repository for Dolphin 2.6 Mixtral 7x8 and Nous Hermes 2 Mixtral 8x7B. They also noted that, in their experience, version 2.5 performed best.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Ollama: Get up and running with large language models, locally.

- Approximating Two-Layer Feedforward Networks for Efficient Transformers: How to reduce compute and memory requirements of neural networks (NNs) without sacrificing performance? Many recent works use sparse Mixtures of Experts (MoEs) to build resource-efficient large langua…

- LLM-Benchmark-Logs/benchmark-logs/Dolphin-2.6-Mixtral-7x8.md at main · teknium1/LLM-Benchmark-Logs: Just a bunch of benchmark logs for different LLMs.

- GitHub - ggerganov/llama.cpp: Port of Facebook’s LLaMA model in C/C++

- LLM-Benchmark-Logs/benchmark-logs/Nous-Hermes-2-Mixtral-8x7B-DPO.md at main · teknium1/LLM-Benchmark-Logs: Just a bunch of benchmark logs for different LLMs.

OpenAI Discord Summary

- Rethinking Nightshade’s Impact: Engineers debated how AI fail-safes hold up against Nightshade, with some arguing that its novelty makes poisoned data easy to isolate. The conversation also raised concerns about the tool affecting unintended datasets and about how far large AI companies’ security measures can be trusted.

- Optimizing Prompt Limits in GPT-4: A technical discussion covered prompt lockouts in GPT-4’s image generator. Users clarified that usage is rolling, with each prompt on its own timer, and suggested testing at one prompt every 4.5 minutes to avoid hitting the cap.

- AI Know-How for Pythonistas: Community members sought advice on deepening their AI expertise beyond intermediate Python. Suggestions included exploring fundamental AI concepts, machine learning techniques, and resources from Hugging Face.

- A Tinge of AI Consciousness in Bing?: Engineers joked about Bing’s possible self-awareness, in light-hearted exchanges that showed no serious concern about the AI’s emerging capabilities.

- Prompt Engineering: The Art of AI Guidance: The community exchanged ideas on prompt engineering, security strategies such as “trigger/block,” and the importance of understanding how AI interprets language and instructions. They debated conditional prompting, crafting prompts that safeguard against bad actors, and securely hosting GPT instructions.

OpenAI Channel Summaries

▷ #ai-discussions (43 messages🔥):

- Query on Nightshade’s Foolproof Nature: @jaicraft asked whether Nightshade is without flaws, concerned that it might affect data beyond its target. @【penultimate】 believes large AI companies have robust failsafes and that, because Nightshade is novel, poisoned data should be easy to isolate.

- Prompt Limit Confusions: @.kylux hit a prompt limit in the image generator via GPT-4 and was locked out after 20 messages despite a 40-message limit. @rendo1 clarified that usage is rolling, with each prompt on its own timer, and @satanhashtag advised trying one prompt every 4.5 minutes as a test.

- AI Enthusiast’s Learning Path: @.009_f.108 sought resources for deepening their AI knowledge, already having intermediate Python skills. @michael_6138_97508 and @lugui recommended starting with fundamental AI concepts and classical machine learning techniques, while others like @darthgustav. simply suggested Hugging Face.

- Bing’s Alleged Self-Awareness: @metaldrgn claimed Bing might be exhibiting signs of intelligence and consciousness, and @michael_6138_97508 jokingly replied that they were lucky.

- Discussion on Moderation and Resource Sharing: @miha9999 was muted for sharing a resource link and asked about the policy. @eskcanta advised contacting modmail for clarification and help with moderation actions, and @miha9999’s confusion was resolved once the warning was removed.
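The “rolling usage” explanation can be modeled as a sliding window in which each prompt expires a fixed time after it was sent. This sketch assumes a cap of 40 prompts per 3 hours, the figures from the discussion; how OpenAI actually enforces the limit is not documented here. `RollingLimit` is a hypothetical class. Spacing prompts at 3 × 60 / 40 = 4.5 minutes keeps at most 40 inside any window, which is where the suggested interval comes from. If the cap has really dropped to about 20, as one user observed, the safe spacing doubles to 9 minutes.

```python
from collections import deque
from typing import Deque


class RollingLimit:
    """Rolling cap: each sent prompt stops counting `window` seconds after it was sent."""

    def __init__(self, limit: int = 40, window: float = 3 * 60 * 60) -> None:
        self.limit = limit
        self.window = window
        self.sent: Deque[float] = deque()  # send timestamps, oldest first

    def try_send(self, now: float) -> bool:
        # Drop prompts whose individual timers have expired.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) >= self.limit:
            return False  # locked out until the oldest prompt expires
        self.sent.append(now)
        return True


# Steady pacing that never exceeds the cap: window / limit, in minutes.
SAFE_INTERVAL_MIN = (3 * 60) / 40  # 4.5

limiter = RollingLimit()
print(all(limiter.try_send(i * SAFE_INTERVAL_MIN * 60) for i in range(100)))  # True
```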

▷ #gpt-4-discussions (144 messages🔥🔥):

- Integration Woes with Weaviate: @woodenrobot had difficulty integrating a custom GPT action with Weaviate and hit an UnrecognizedKwargsError related to object properties in the payload.

- Exploring Charge Cycles for GPT-4: @stefang6165 noticed the GPT-4 message limit drop from 40 to about 20 every 3 hours and sought insight into the change.

- Sharing GPT-4 Chat Experience: _jonpo shared a satisfying conversation with HAL, while @robloxfetish hit an unexpected message cap during their sessions. @darthgustav. and @c27c2 suggested it could be a temporary error or might require contacting support.

- PDF Handling with ChatGPT: @marx1497 asked for advice on handling small PDFs after limited success, leading to a discussion with @darthgustav. about the tool’s limitations and suggestions for pre-processing the data.

- Creating Interactive MUD Environments with GPT: @woodenrobot and @darthgustav. had an in-depth technical exchange about embedding structured data and code into knowledge documents for GPT. Both are interested in using AI for MUD servers while working within the constraints of database storage and session continuity.

▷ #prompt-engineering (247 messages🔥🔥):

- Security Through Obscurity in GPTs: @busybenss suggested a “trigger/block” strategy to protect GPT models from bad actors. @darthgustav. stressed the importance of Conditional Prompting for security and encouraged open discussion over gatekeeping.

- Conditional GPT Use in Complex JSON: @semicolondev asked about using GPT-4 conditionally to generate complex JSON that 3.5 struggles with, given GPT-4’s higher cost. @eskcanta recommended using 3.5 for baseline steps and reserving GPT-4 for the steps where it is necessary, urging creative problem-solving within budget constraints.

- Extemporaneous AI Epistemology: @darthgustav. and @eskcanta took a deep dive into how models interpret and respond to prompts. They highlighted idiosyncrasies in how AI understands instructions, noted that a model does not always “know” its own reasoning path, and discussed how training could shape prompt interpretation.

- Prompting Strategies Unveiled: @eskcanta shared an advanced prompting strategy that separates what the model thinks from what it is instructed to do. This sparked conversation about understanding AI response behavior and using it to engineer better prompts.

- Chart Extractions into Google Sheets: @alertflyer asked for help transferring charts from GPT output into Google Sheets. @eskcanta asked what kind of chart was needed, to pin down how it was created and therefore how to extract it.
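@eskcanta’s advice to reserve GPT-4 for the steps that need it can be sketched as an escalation loop: try the cheaper model, validate its JSON, and call the stronger model only if validation fails. The two model callables are placeholders you would wire to your own client code, and no real API calls are shown. `generate_json` is a hypothetical helper.

```python
import json
from typing import Any, Callable, Dict, Tuple

ModelCall = Callable[[str], str]  # placeholder: prompt in, raw model text out


def generate_json(prompt: str, cheap: ModelCall, strong: ModelCall,
                  required_keys: Tuple[str, ...] = ()) -> Tuple[Dict[str, Any], str]:
    """Try the cheaper model first and escalate only if its output is unusable."""
    for name, model in (("cheap", cheap), ("strong", strong)):
        raw = model(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON: fall through to the next model
        if isinstance(data, dict) and all(k in data for k in required_keys):
            return data, name
    raise ValueError("neither model produced usable JSON")


# Stub models standing in for GPT-3.5 and GPT-4 calls.
result = generate_json("Describe the order as JSON",
                       cheap=lambda p: "Sure! Here is the JSON:",
                       strong=lambda p: '{"item": "widget", "qty": 2}',
                       required_keys=("item", "qty"))
print(result)  # ({'item': 'widget', 'qty': 2}, 'strong')
```

Validating against required keys, not just JSON syntax, keeps structurally valid but incomplete answers from the cheaper model from slipping through.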

▷ #api-discussions (247 messages🔥🔥):

- Security Strategies in the Spotlight: @busybenss described a security method they call “trigger/block”, which they say effectively prevents the GPT from executing undesired inputs from bad actors. @darthgustav asked how much of the available instruction character space the method uses, concerned that it could cost functionality.

- Conditional Prompting to Secure GPTs: In an in-depth discussion on security, @darthgustav explained the benefits of Conditional Prompting and warned about potential weaknesses in security implementations. The conversation then covered several techniques for securing GPTs, including hosting GPT instructions on a web server that makes secure calls to OpenAI.

- Hacking LLMs: An Inevitable Risk: @busybenss and @darthgustav agreed that while security measures are essential, sharing and using GPTs carries an inherent vulnerability, and theft of digital assets may still occur.

- The Economics of AI Development: As the conversation shifted from security to the business side of AI, @thepitviper and @darthgustav advised focusing on improving the product and on marketing to stand out, rather than worrying excessively about theft or chasing perfect security.

- Prompt Engineering and AI Understanding: A series of messages from @madame_architect, @eskcanta, and others discussed the intricacies of prompt engineering and how the model interprets language, sharing insights on semantic differences and on guiding the model to understand and execute prompts better.

LAION Discord Summary

- Scrutinizing Adversarial AI Tools: Discussion centered on the questionable effectiveness of adversarial tools like Nightshade and Glaze against AI image generation. @astropulse raised concerns that they might offer a false sense of security, but no consensus was reached. A relevant Reddit post offers further insight.

- Data and Models, A Heated Debate: Members debated how to create datasets for fine-tuning AI models and the challenges of working with high-resolution images. Talks also covered the efficacy and cost of models like GPT-4V, and the complexity of scaling T5 models compared to CLIP models.

- Ethical AI, A Thorny Issue: AI ethics and copyright were another focal point, with community members showing some cynicism about what passes for ‘ethics’. Members also critiqued reactions on platforms such as Hacker News and Reddit while discussing AI’s broader implications for art and copyright.

- The Future of Text-to-Speech: Advances in TTS sparked lively discussion comparing services including WhisperSpeech and XTTS. 11Labs’ dubbing technology drew praise, though API restrictions keep its methods proprietary. A YouTube video on WhisperSpeech covers recent TTS developments.

- Inquiries and Theories on Emotional AI:

  - Legality and Challenges: A question about the EU’s stance on emotion-detecting AI led to the clarification that such technology is not banned for research within the EU.

  - Need for Experts in Emotion Detection: There were calls for expert involvement in building emotion detection datasets, emphasizing the need for psychological expertise and appropriate context for accurate emotion classification.

LAION Channel Summaries

▷ #general (394 messages🔥🔥):

- Debating Nightshade’s Effectiveness: @mfcool expressed hope that the DreamShaperXL Turbo images weren’t from a new model, citing their similarity to existing ones. @astropulse and others examined whether adversarial tools like Nightshade and Glaze significantly affect AI image generation, with @astropulse suggesting they might give users a false sense of security. For a deep dive, see this post from the r/aiwars subreddit: We need to talk a little bit about Glaze and Nightshade…

- Discussions on Data and Model Training: @chad_in_the_house, @thejonasbrothers, and @pseudoterminalx spoke about creating datasets for fine-tuning models and the limitations of using high-resolution images. The debate touched on the efficacy and cost of models like GPT-4V and the complexity of scaling T5 models relative to CLIP models.

- AI Ethics and Licensing Discourse: The conversation extended to AI copyright and ethics, with members expressing cynicism that contemporary ‘ethics’ often stands in for personal agreement. @astropulse and @.undeleted critiqued community reactions on platforms like Hacker News and Reddit while discussing the broader implications of AI for art and copyright.

- Exploring TTS and Dubbing Technologies: @SegmentationFault, @itali4no, and @.undeleted discussed advanced text-to-speech (TTS) models, comparing existing services like WhisperSpeech and XTTS. @SegmentationFault highlighted 11Labs’ impressive dubbing technology and the API restrictions that keep its methods proprietary. Find out more about TTS developments in this YouTube video: “Open Source Text-To-Speech Projects: WhisperSpeech”.

- Inquiries about AI Upscaler and Language Model Training: @skyler_14 asked about the status of training the GigaGAN upscaler, referring to a GitHub project by @lucidrains. @andystv_ asked about training a model to support Traditional Chinese.
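To make “adversarial perturbation” concrete, here is the textbook Fast Gradient Sign Method (FGSM) against a toy logistic-regression classifier. This is a generic illustration only, not how Nightshade or Glaze work: those tools target image-generation models and optimize their perturbations differently. The weights and inputs below are made up.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sign(g: float) -> int:
    return (g > 0) - (g < 0)

def fgsm_perturb(x, w, b, label, epsilon):
    """Fast Gradient Sign Method against a toy logistic-regression classifier.

    With p = sigmoid(w.x + b) and binary cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * w. FGSM moves every input
    feature by epsilon in the direction of that gradient's sign, which increases
    the loss while changing no feature by more than epsilon.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - label) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]
```

With `w = [2.0, -1.0]`, `b = 0.0` and `x = [1.0, 1.0]` (classified as positive), `fgsm_perturb(x, w, b, label=1, epsilon=0.5)` returns `[0.5, 1.5]`, which the same classifier now scores below 0.5. Whether such perturbations survive resizing, compression, or retraining is exactly the kind of question the channel was debating.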

Links mentioned:

- apf1/datafilteringnetworks_2b · Datasets at Hugging Face

- Data Poisoning Won’t Save You From Facial Recognition: Data poisoning has been proposed as a compelling defense against facial recognition models trained on Web-scraped pictures. Users can perturb images they post online, so that models will misclassify f…

- WhisperSpeech - a Hugging Face Space by Tonic

- Meme Our GIF - Meme Our Now - Discover & Share GIFs

- Reddit - Dive into anything

- Reddit - Dive into anything

- Open Source Text-To-Speech Projects: WhisperSpeech - In Depth Discussion: WhisperSpeech is a promising new open source TTS model, that and be training on AUDIO ONLY data & that already shows promising results after a few hundred GP…

- Is webdataset a viable format for general-use ? · huggingface/pytorch-image-models · Discussion #1524: Hi @rwightman , thanks for the continuous good work. I am playing a bit with the Webdataset format, utilizing some of the methods in: https://github.com/rwightman/pytorch-image-models/blob/475ecdfa…

- GitHub - lucidrains/gigagan-pytorch: Implementation of GigaGAN, new SOTA GAN out of Adobe. Culmination of nearly a decade of research into GANs: Implementation of GigaGAN, new SOTA GAN out of Adobe. Culmination of nearly a decade of research into GANs - GitHub - lucidrains/gigagan-pytorch: Implementation of GigaGAN, new SOTA GAN out of Adob…

▷ #research (25 messages🔥):

- Computational Challenges in Model Scaling: @twoabove noted that the authors of a recent model admitted to being compute-constrained and plan to study the scaling laws of their method. @qwerty_qwer replied that overcoming those compute constraints would be game-changing.

- In Search of Novel Multimodal Techniques: @twoabove asked about innovative image chunking/embedding techniques for multimodal models, and @top_walk_town responded by listing several approaches, including LLaVA, Flamingo, LLaMA-Adapter, Chameleon, and the MEGABYTE paper.

- Unpacking EU AI Laws on Emotional AI: @fredipy asked whether building AI that detects emotions conflicts with EU AI regulations. @mr_seeker and @JH responded that such laws do not affect non-European entities, while @spirit_from_germany stated that emotion detection is not banned for research in the EU.

- Challenges in Emotional Recognition Datasets: @spirit_from_germany is working on an image-based emotion detector but struggles with the limited emotion datasets available. They proposed creating a curated dataset with the help of psychology experts, and @_spaniard_ expressed skepticism about detecting nuanced emotions without rich contextual information.

- Expert Insights Needed for Emotion Detection: @.hibarin, who has a psychology background, supported the need for context in emotion classification, in line with either the fingerprint or the populations hypothesis of emotion. @skyler_14 suggested 3D morphable models as a domain where emotion annotation might be easier.
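None of the multimodal methods listed above were walked through in the channel, but the simplest baseline for “image chunking” is the ViT-style patch split used by CLIP-style vision encoders (such as the one LLaVA builds on). The sketch below, on a tiny grayscale grid, assumes square non-overlapping patches in raster order and a plain linear projection; real encoders add channels, position embeddings, and learned weights.

```python
def image_to_patches(image, patch):
    """Split an H x W grid (list of rows) into non-overlapping patch x patch tiles,
    each flattened row-major, returned in raster order."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches

def embed_patches(patches, weight):
    """Project each flattened patch with a (d_model x patch_len) weight matrix,
    turning every patch into one 'token' embedding."""
    return [[sum(wt * x for wt, x in zip(row, p)) for row in weight] for p in patches]
```

For a 4x4 image whose pixel at row `r`, column `c` is `4 * r + c`, `image_to_patches(img, 2)` yields four tokens: `[0, 1, 4, 5]`, `[2, 3, 6, 7]`, `[8, 9, 12, 13]`, `[10, 11, 14, 15]`.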

Eleuther Discord Summary

- Flash Attention Sparks CUDA vs XLA Debate: @carsonpoole and @.the_alt_man debated Flash Attention, split on whether XLA optimizations could replace its hand-written CUDA implementations. A comment by Patrick Kidger in a Reddit thread suggested that XLA can already optimize attention mechanisms on TPUs.

- Legal Conundrums Over Adversarial Methods: The Glaze and Nightshade tools sparked a debate over legality and effectiveness among members like @digthatdata and @stellaathena. A legal paper was shared arguing that bypassing a watermark is not necessarily a legal violation.

- Open Source and AI Ethics: The community discussed the open-source status and licensing of Meta’s LLaMA, with @avi.ai referring to a critical write-up by the OSI showing that LLaMA’s license does not meet the Open Source Definition (OSI blog post). The conversation veered toward AI governance and the call to build models following open-source software principles, as discussed by Colin Raffel (Stanford Seminar Talk).

- Explorations in Class-Incremental Learning and Optimization: SEED, a method for finetuning MoE models, was introduced via a research paper, and the CASPR optimization technique was discussed as a contender that outperforms the Shampoo algorithm, backed by a research paper. A paper claiming zero pipeline bubbles in distributed training was also mentioned; it introduces a technique for bypassing synchronizations during the optimizer step (Research Paper).

- Unlocking Machine Interpretability with Patchscopes: Conversation revolved around Patchscopes, a new framework for decoding information from model representations, introduced in a Twitter thread shared by @stellaathena. There was cautious optimism about applying it to information extraction, tempered by concerns about hallucinations in multi-token generation.

- Apex Repository Update and NeoX Development: @catboy_slim_ highlighted an update in NVIDIA’s apex repository that could speed up the build process for GPT-NeoX, and recommended a branch that is ready for testing (NVIDIA Apex Commit).

Eleuther Channel Summaries

▷ #general (213 messages🔥🔥):

- Debating ‘Flash Attention’ and XLA Optimizations: In a technical debate, @carsonpoole and @.the_alt_man discussed the implementation of Flash Attention, with @carsonpoole asserting that it involves complex CUDA operations and @.the_alt_man suggesting that XLA optimizations could automatically recover much of its efficiency. @lucaslingle and @.the_alt_man later shared Patrick Kidger’s Reddit comment describing XLA’s existing compiler optimizations for attention mechanisms on TPUs.

- Glaze & Nightshade Legalities: @digthatdata, @stellaathena, @clockrelativity2003, and others discussed the legality and effectiveness of Glaze and Nightshade, with conflicting views on whether these tools are a form of encryption or of watermarking. @stellaathena shared a legal paper stating that bypassing a watermark is likely not a violation of law, while other users examined the practical and legal implications of fighting AI image models with adversarial methods.

- Adversarial Perturbations & The Feasibility of OpenAI Lobbying: While discussing Nightshade’s impact and the concept of adversarial perturbations, @avi.ai underlined how hard it is to change U.S. regulation, responding to suggestions from @clockrelativity2003 and @baber_ about policy and special interests.

- Assessments of LLaMA Licensing and Open Source Definitions: Exploring the licensing of Meta’s LLaMA models, @avi.ai linked a write-up by the OSI criticizing Meta’s claim that LLaMA is “open source.” @clockrelativity2003 and @catboy_slim_ discussed the limitations of such licenses, and @avi.ai emphasized the goal of bringing the benefits seen in traditional OSS communities to AI.

- Discussion on OpenAI and the Future of ML Models: Newcomers @AxeI and @abi.voll introduced themselves, citing academic backgrounds and a wish to contribute to the open-source community, while @exirae sought advice on pitching a novel alignment project. @hailey_schoelkopf and @nostalgiahurts highlighted resources and talks by Colin Raffel on building AI models with an open-source ethos.
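Part of what the CUDA-vs-XLA debate turns on is what Flash Attention computes differently from naive attention. Its central trick is an online softmax: keys and values are processed block by block while only a running maximum, a running softmax denominator, and a running weighted sum are kept, so the full row of scores is never materialized. The plain-Python sketch below shows that rescaling idea for a single query vector; it says nothing about GPU memory tiling, kernel fusion, or how XLA schedules work, which is where the real speedups come from.

```python
import math

def scores_for(q, keys):
    """Scaled dot-product scores q.k / sqrt(d) for a block of keys."""
    inv_sqrt_d = 1.0 / math.sqrt(len(q))
    return [inv_sqrt_d * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]

def naive_attention(q, keys, values):
    """Reference: materialize every score, softmax, then weight the values."""
    scores = scores_for(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

def tiled_attention(q, keys, values, block=2):
    """Same result, computed block by block with an online softmax."""
    dim = len(values[0])
    running_max = -math.inf
    denom = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block):
        scores = scores_for(q, keys[start:start + block])
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated so far to the new maximum
        # (exp(-inf) == 0.0, so the first block starts from zero).
        rescale = math.exp(running_max - new_max)
        denom *= rescale
        acc = [a * rescale for a in acc]
        for s, v in zip(scores, values[start:start + block]):
            w = math.exp(s - new_max)
            denom += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        running_max = new_max
    return [a / denom for a in acc]
```

For any block size, `tiled_attention(q, keys, values, block)` agrees with `naive_attention(q, keys, values)` up to floating-point rounding; the question in the channel was whether a compiler can discover this restructuring on its own.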

Links mentioned:

- Tweet from neil turkewitz (@neilturkewitz): @alexjc FYI—I don’t think that’s the case. Glaze & Nightshade don’t control access to a work as contemplated by §1201. However—as you note, providing services to circumvent them might well indeed viol…

- A Call to Build Models Like We Build Open-Source Software

- Reddit - Dive into anything

- nyanko7/LLaMA-65B · 🚩 Report : Legal issue(s)

- stabilityai/sdxl-turbo · Hugging Face

- Reddit - Dive into anything

- Taking stock of open(ish) machine learning / 2023-06-15: I’ve been writing this newsletter for about six months, so I thought it might be a good time to pause the news firehose, and instead review and synthesize what I’ve learned about the potential for ope…

- Meta’s LLaMa 2 license is not Open Source: Meta is lowering barriers for access to powerful AI systems, but unfortunately, Meta has created the misunderstanding that LLaMa 2 is “open source” - it is not.

- Tweet from Luca Bertuzzi (@BertuzLuca): #AIAct: the technical work on the text is finally over. Now comes the ungrateful task of cleaning up the text, which should be ready in the coming hours.

- Building ML Models like Open-Source Software - Colin Raffel | Stanford MLSys #72: Episode 72 of the Stanford MLSys Seminar “Foundation Models Limited Series”!Speaker: Colin RaffelTitle: Building Machine Learning Models like Open-Source Sof…

- Tweet from Shawn Presser (@theshawwn): Facebook is aggressively going after LLaMA repos with DMCA’s. llama-dl was taken down, but that was just the beginning. They’ve knocked offline a few alpaca repos, and maintainers are making t…

- Glaze’s plagiarism is hilarious and indefensible: Posted in r/StableDiffusion by u/AloneSignificance555 • 46 points and 48 comments

- Pallas implementation of attention doesn’t work on CloudTPU · Issue #18590 · google/jax: Description import jax import jax.numpy as jnp from jax.experimental.pallas.ops import attention bs = 2 seqlen = 1000 n_heads = 32 dim = 128 rng = jax.random.PRNGKey(0) xq = jax.random.normal(rng, …

- The Mirage of Open-Source AI: Analyzing Meta’s Llama 2 Release Strategy – Open Future: In this analysis, I review the Llama 2 release strategy and show its non-compliance with the open-source standard. Furthermore, I explain how this case demonstrates the need for more robust governance…

- Reddit - Dive into anything

▷ #research (89 messages🔥🔥):

- SEED Approach for Class-Incremental Learning: @xylthixlm linked an arXiv paper on SEED, a method for finetuning Mixture of Experts (MoE) models that freezes all experts but one for each new task. This specialization is expected to improve model performance (Research Paper).

- Backdoor Attacks on LLMs through Poisoning and CoT: @ln271828 gave a TL;DR of a research paper showing that backdoors in large language models (LLMs) can be made more persistent with chain-of-thought (CoT) reasoning, and that standard safety techniques such as supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) fail to remove them (Research Paper).

- Combining AxeS PReconditioners (CASPR) Optimization Technique: @clashluke discussed a paper on CASPR, an optimization method that outperforms the Shampoo algorithm by finding a different preconditioner for each axis of matrix-shaped neural network parameters (Research Paper).

- Zero Pipeline Bubbles in Distributed Training: @pizza_joe shared a paper introducing a scheduling strategy that claims to be the first to achieve zero pipeline bubbles in large-scale distributed synchronous training, using a novel technique to bypass synchronizations during the optimizer step (Research Paper).

- Generality in Depth-Conditioned Image Generation with LooseControl: @digthatdata linked the GitHub repository and paper for LooseControl, which generalizes depth conditioning for diffusion-based image generation, allowing complex scenes to be created and edited with minimal guidance (GitHub Repo, Paper Page, Tweet Discussion).
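To see why a zero-bubble claim stands out, it helps to quantify the bubble in a conventional synchronous pipeline. A commonly quoted estimate for GPipe/1F1B-style schedules, assuming every stage takes the same time per microbatch, is that a fraction (p − 1)/(m + p − 1) of the schedule is idle for p stages and m microbatches. The helper below just evaluates that textbook formula; it does not model the paper’s scheduler.

```python
from fractions import Fraction

def bubble_fraction(stages: int, microbatches: int) -> Fraction:
    """Idle fraction of a synchronous GPipe/1F1B-style pipeline schedule,
    assuming equal per-stage time per microbatch: (p - 1) / (m + p - 1)."""
    if stages < 1 or microbatches < 1:
        raise ValueError("stages and microbatches must be positive")
    return Fraction(stages - 1, microbatches + stages - 1)
```

With 4 stages and 8 microbatches, `bubble_fraction(4, 8)` gives 3/11, roughly 27% idle time. Adding microbatches shrinks the bubble but never removes it under this model, which is why removing it entirely requires a different schedule rather than more microbatches.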

Links mentioned:

- Stabilizing Transformer Training by Preventing Attention Entropy Collapse: Training stability is of great importance to Transformers. In this work, we investigate the training dynamics of Transformers by examining the evolution of the attention layers. In particular, we trac…

- Analyzing and Improving the Training Dynamics of Diffusion Models: Diffusion models currently dominate the field of data-driven image synthesis with their unparalleled scaling to large datasets. In this paper, we identify and rectify several causes for uneven and ine…

- Divide and not forget: Ensemble of selectively trained experts in Continual Learning: Class-incremental learning is becoming more popular as it helps models widen their applicability while not forgetting what they already know. A trend in this area is to use a mixture-of-expert techniq…

- Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training: Humans are capable of strategically deceptive behavior: behaving helpfully in most situations, but then behaving very differently in order to pursue alternative objectives when given the opportunity. …

- Combining Axes Preconditioners through Kronecker Approximation for…: Adaptive regularization based optimization methods such as full-matrix Adagrad which use gradient second-moment information hold significant potential for fast convergence in deep neural network…

- Zero Bubble Pipeline Parallelism: Pipeline parallelism is one of the key components for large-scale distributed training, yet its efficiency suffers from pipeline bubbles which were deemed inevitable. In this work, we introduce a sche…

- Tweet from Shariq Farooq (@shariq_farooq): @ak LooseControl can prove to be a new way to design complex scenes and perform semantic editing e.g. Model understands how lighting changes with the edits: (2/2)

- memory-transformer-pt4/src/optimizer/spectra.py at main · Avelina9X/memory-transformer-pt4: Contribute to Avelina9X/memory-transformer-pt4 development by creating an account on GitHub.

- Tweet from AK (@_akhaliq): LooseControl: Lifting ControlNet for Generalized Depth Conditioning paper page: https://huggingface.co/papers/2312.03079 present LooseControl to allow generalized depth conditioning for diffusion-ba…

- GitHub - shariqfarooq123/LooseControl: Lifting ControlNet for Generalized Depth Conditioning: Lifting ControlNet for Generalized Depth Conditioning - GitHub - shariqfarooq123/LooseControl: Lifting ControlNet for Generalized Depth Conditioning

- Add freeze_spectral_norm option · d8ahazard/sd_dreambooth_extension@573d1c9: See https://arxiv.org/abs/2303.06296 This adds an option to reparametrize the model weights using the spectral norm so that the overall norm of each weight can't change. This helps to stabili…

- d8ahazard - Overview: d8ahazard has 171 repositories available. Follow their code on GitHub.

▷ #interpretability-general (9 messages🔥):

- Seeking Interpretability Resources: @1_glados said they are new to interpretability and asked for good resources or a list of papers to start with, while @neelnanda asked about the use of sparse autoencoders in early NLP interpretability research.

- Sparse Autoencoders in NLP History: @nsaphra discussed recurring themes in sparse dictionary learning from the latent semantic analysis era to the present, noting that predecessors are cited inconsistently and questioning how meaningful a definition of mechanistic interpretability that includes such approaches really is.

- Introducing Patchscopes for Representation Decoding: @stellaathena shared a Twitter thread by @ghandeharioun introducing Patchscopes, a framework for decoding specific information from a model’s representations.

- Learning Dynamics for Interpretability Questioned: @stellaathena also questioned whether scoring high on next-token prediction with Patchscopes actually means identifying the model’s best guess at the answer after a given layer, implying that higher scores might not mean better understanding.

- Potential and Concerns of Patchscopes: @mrgonao sees significant potential in using Patchscopes to extract information from the hidden states of models like RWKV and Mamba, but voiced concerns about hallucinations and the need for robustness checks in multi-token generation.
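For newcomers like @1_glados, the sparse autoencoders mentioned here (a modern form of sparse dictionary learning) are typically trained on a reconstruction-plus-L1 objective: encode an activation vector into non-negative codes, decode it back with a dictionary, and penalize code magnitude so that few dictionary atoms are active. The sketch below evaluates that standard objective on toy, hand-picked weights; real setups differ in details such as decoder-norm constraints, bias handling, and how the L1 coefficient is tuned.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sae_loss(x, w_enc, b_enc, w_dec, l1_coeff):
    """Sparse autoencoder objective on one activation vector x.

    f     = ReLU(W_enc x + b_enc)          # sparse codes (k entries)
    x_hat = W_dec f                        # reconstruction (d entries)
    loss  = ||x - x_hat||^2 + l1_coeff * ||f||_1
    Returns (loss, f).
    """
    f = relu([z + b for z, b in zip(matvec(w_enc, x), b_enc)])
    x_hat = matvec(w_dec, f)
    recon = sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat))
    sparsity = sum(abs(fi) for fi in f)
    return recon + l1_coeff * sparsity, f
```

With a 2-dimensional input, a 3-atom dictionary and weights chosen so that `x = [1.0, 0.0]` is reconstructed exactly by a single active code, the loss reduces to the sparsity term alone: `l1_coeff * 1.0`.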

Links mentioned:

Tweet from Asma Ghandeharioun (@ghandeharioun): 🧵Can we “ask” an LLM to “translate” its own hidden representations into natural language? We propose 🩺Patchscopes, a new framework for decoding specific information from a representation by “patchin…

▷ #gpt-neox-dev (1 messages):

- NVIDIA’s Apex Update Could Speed Up NeoX Build: @catboy_slim_ highlighted a commit in NVIDIA’s apex repository, noting that the code needs to be forked and trimmed to speed up building fused AdamW, since the full build currently takes about half an hour. Despite the increased build time, they suggested the updated branch is likely ready for testing, as it works on their machine.
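For context, “fused AdamW” means running the whole AdamW update for a tensor as a single GPU kernel instead of one kernel per arithmetic operation. The per-element math such a kernel fuses is sketched below in plain Python; this is the standard AdamW update with decoupled weight decay and default-style hyperparameters chosen for illustration, not apex’s implementation or its API.

```python
import math

def adamw_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update applied in place to lists of parameters and moment buffers.

    t is the 1-based step count used for bias correction. Weight decay is
    decoupled: it is applied to the parameter directly rather than being
    folded into the gradient.
    """
    for i, (p, g) in enumerate(zip(params, grads)):
        m[i] = beta1 * m[i] + (1 - beta1) * g          # first moment
        v[i] = beta2 * v[i] + (1 - beta2) * g * g      # second moment
        m_hat = m[i] / (1 - beta1 ** t)                # bias corrections
        v_hat = v[i] / (1 - beta2 ** t)
        params[i] = p - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p)
```

On the first step Adam-style updates move each parameter by roughly `lr` regardless of gradient scale; for example, a parameter of 1.0 with gradient 0.5 ends up at about 0.99899 (an Adam step of about 0.001 plus decay of 0.00001).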

Links mentioned:

Squashed commit of https://github.com/NVIDIA/apex/pull/1582 · NVIDIA/apex@bae1f93: commit 0da3ffb92ee6fbe5336602f0e3989db1cd16f880 Author: Masaki Kozuki Date: Sat Feb 11 21:38:39 2023 -0800 use nvfuser_codegen commit 7642c1c7d30de439feb35…

LM Studio Discord Summary

- LM Studio’s Range of Support and Future Improvements: Discussions centered on LM Studio’s capabilities and limitations. @heyitsyorkie clarified that GGUF quant models from Hugging Face are supported, but that models must be loaded and unloaded manually. Image generation is out of scope for LM Studio, and users were directed to Stable Diffusion for such tasks. Compatibility issues were noted, such as the lack of support for CPUs without AVX instructions, and Intel Mac support, which is not currently offered, may come in a future update. A user with persistent errors after reinstalling Windows was directed to a Discord link for troubleshooting.

- The Great GPU Discussion: Hardware discussion heated up with talk of investing in high-performance Nvidia 6000 series cards and of awaited upgrades like the P40 card. Nvidia RTX 6000 Ada Generation cards were compared with cost-effective alternatives for Large Language Model (LLM) tasks. Some favor Mac Studios over PCs for their memory bandwidth, while others credit the Mac’s cache architecture as beneficial for LLM work. A debate over Nvidia card compatibility and GPU utilization also ensued, with suggestions for maximizing GPU performance.

- Model-Focused Dialogues Reveal Community Preferences: In model-related chats, @dagbs explained that names such as “Dolphin 2.7” and “Synthia” refer to finetunes, and pointed those interested in comparisons to specific Dolphin-based models on various platforms. GGUF models were highlighted for their popularity and compatibility, and models suited to specific hardware were recommended, such as Deepseek Coder 6.7B for an RTX 3060 mobile. @.ben.com also urged judging models on performance beyond leaderboard scores.

- Beta Releases Beckon Feedback for Fixes: The latest Windows beta showed an estimated VRAM capacity of 0 on a 6600XT AMD card, which was attributed to OpenCL issues. Beta releases V5/V6 aimed to fix bugs in the RAM/VRAM estimates, and the community was asked for feedback. A question about installing the beta on an ARM-based NVIDIA Jetson board was answered: ARM is currently supported only on Apple Silicon Macs. The rapid speed improvements in the latest update sparked discussion, with @yagilb sharing a Magic GIF in a lighthearted response.

- CrewAI Over Autogen in Automation Showdown: @MagicJim expressed a preference for crewAI, especially for its potential to integrate multiple LLMs in LM Studio. Contrary to earlier assumptions, it was clarified that crewAI does allow a different LLM for each agent, with a YouTube video provided as a demonstration. A workaround of running multiple LLM API instances on different ports was discussed to address utilization concerns.

- Emerging Tools and Integrations Enhance Capabilities: @happy_dood showcased how LM Studio and LangChain can be used together, detailing a process involving creation, templating, and parsing for streamlined AI interactions. On the coding front, users experimented with models like DeepseekCoder33B for Open Interpreter tasks, with evaluations suggesting that models more focused on coding might perform better.
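The port-per-model workaround can be sketched without crewAI itself: each agent is pointed at its own OpenAI-compatible LM Studio server instance. The sketch below only builds the HTTP requests with the standard library. Assumptions: the agent names, the second port, and the `model` string are placeholders (1234 is LM Studio’s usual default port, but check the server tab for the actual address), and how an endpoint is attached to a crewAI agent should be taken from crewAI’s own documentation.

```python
import json
import urllib.request

# Hypothetical agent-to-server mapping: one LM Studio server instance per port,
# each serving a different model. The ports here are assumptions.
AGENT_ENDPOINTS = {
    "researcher": "http://localhost:1234/v1",
    "writer": "http://localhost:1235/v1",
}

def build_chat_request(agent: str, prompt: str,
                       model: str = "local-model") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request for an agent.

    `model` is a placeholder identifier; the server decides which loaded model answers.
    """
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }).encode("utf-8")
    return urllib.request.Request(
        AGENT_ENDPOINTS[agent] + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

With both servers running, a request would be sent with `urllib.request.urlopen(req)` and the reply read from `json.load(resp)["choices"][0]["message"]["content"]`, the standard OpenAI response shape. Keeping the agent-to-port mapping in one table makes it easy to rebalance models across instances.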

LM Studio Channel Summaries

▷ #💬-general (122 messages🔥🔥):

- Clarification on GGUF and Quant Models: @heyitsyorkie clarified that LM Studio only supports GGUF quant models from Hugging Face and advised @ubersuperboss that models must be loaded and unloaded manually within LM Studio. They also noted that LM Studio is not suitable for image generation and directed users to Stable Diffusion for such tasks.

- Image Generation Models Query: @misc_user_01 asked whether LM Studio might add support for image generation models; @heyitsyorkie replied that this is out of scope for LM Studio, since the two serve different use cases, and pointed users interested in image generation to Stable Diffusion + automatic1111.

- LM Studio Support and Installation Discussions: Users including @cyberbug_scalp, @ariss6556, and @__vanj__ raised technical issues and questions about system compatibility and installing LM Studio, and @heyitsyorkie and others offered advice, for example that LM Studio does not support CPUs without AVX1/2 instructions.

- Model Recommendations and GPU Advice: @heyitsyorkie answered several questions about which models suit specific hardware, such as @drhafezzz’s M1 Air, and confirmed that LM Studio supports multi-GPU setups, recommending matched pairs of cards for optimal performance.

- Interest in Intel Mac Support Expressed: @kujila and @katy.the.kat asked for LM Studio to support Intel Macs. @yagilb acknowledged that Intel Macs are not currently supported because of the focus on Apple Silicon Macs, but mentioned plans to add support in the future.
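Since GGUF is the only format LM Studio loads, a quick sanity check that a downloaded file really is GGUF is to read its fixed-size header. The sketch below assumes the layout described in the GGUF specification in the ggml repository (little-endian: 4-byte magic `GGUF`, uint32 version, uint64 tensor count, uint64 metadata key/value count, as used from version 2 on); verify against that spec before relying on it, and note that it does not parse the metadata or tensor info that follow.

```python
import struct

GGUF_MAGIC = b"GGUF"

def read_gguf_header(data: bytes) -> dict:
    """Parse the fixed-size GGUF header from the first 24 bytes of a file.

    Layout assumed (little-endian, GGUF v2+): 4-byte magic, uint32 version,
    uint64 tensor count, uint64 metadata key/value count.
    """
    if len(data) < 24:
        raise ValueError("too short to be a GGUF header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (bad magic)")
    version, n_tensors, n_kv = struct.unpack_from("<IQQ", data, 4)
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}
```

Typical use is `with open(path, "rb") as fh: header = read_gguf_header(fh.read(24))`, where `path` points at the downloaded model; a `ValueError` indicates a truncated or non-GGUF file.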

Links mentioned:

- HuggingChat

- GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and back…

- ggml : add Flash Attention by ggerganov · Pull Request #5021 · ggerganov/llama.cpp: ref #3365 Setting up what’s needed for adding Flash Attention support to ggml and llama.cpp The proposed operator performs: // unfused kq = ggml_mul_mat (ctx, k, q); kq = ggml_scale (ctx, kq,…

▷ #🤖-models-discussion-chat (82 messages🔥🔥):

- Model Confusion Cleared Up: @dagbs clarified that terms like “Dolphin 2.7”, “Synthia”, and “Nous-Hermes” refer to different finetunes, i.e. combinations of base models and datasets used to create new models. This answered confusion from @lonfus.

- Where to Find Model Comparisons: When @lonfus asked for model comparisons, @dagbs pointed them to earlier posts in channel <#1185646847721742336> for personal model recommendations and linked the Dolphin-based models he recommends, including Dolphin 2.7 Mixtral and MegaDolphin 120B.

- GGUF Format Gains Popularity: In a series of messages, @conic, @kadeshar, @jayjay70, and others discussed where to find GGUF-formatted models, including Hugging Face, LLM Explorer, and GitHub, highlighting the format’s wide adoption.

- Resource-Specific Model Recommendations: Users including @heyitsyorkie and @ptable recommended models for various hardware specs; for instance, Deepseek Coder 6.7B was suggested for an RTX 3060 mobile with 32GB RAM, and models under 70B parameters for a system with a Ryzen 9 5950x and a 3090 FE GPU.

- Discussions on Model Efficacy and Performance: @.ben.com warned that leaderboard scores can give a misleading picture of model performance and suggested resources like Mike Ravkine’s Can Ai Code results for more realistic appraisals. They also noted that using GPT-4 Turbo can be far more cost-effective than buying new hardware to run large models.
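A rough way to reason about which model fits which GPU, as in the recommendations above, is to add up weight memory and KV-cache memory. This back-of-the-envelope sketch ignores runtime overhead, activation buffers, and framework-specific allocations; the bits-per-weight figure and layer dimensions in the example are assumptions, not measurements of any particular GGUF file.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone: params * bits / 8 bytes, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30

def kv_cache_gib(n_layers: int, n_ctx: int, n_kv_heads: int, head_dim: int,
                 bytes_per_elem: int = 2) -> float:
    """Approximate KV-cache size in GiB: keys and values (hence the factor 2)
    for every layer, context position, and KV head (fp16 by default)."""
    return 2 * n_layers * n_ctx * n_kv_heads * head_dim * bytes_per_elem / 2**30
```

For example, a 6.7B-parameter model at an assumed average of 4.5 bits per weight needs about 3.5 GiB for weights, and illustrative Llama-2-7B-like dimensions (32 layers, 32 KV heads, 128-dimensional heads) at a 4,096-token context add exactly 2 GiB of fp16 KV cache, which suggests why such a model is a comfortable fit for a laptop GPU with 6 to 8 GB of VRAM.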

Links mentioned:

- lodrick-the-lafted/Grafted-Titanic-Dolphin-2x120B · Hugging Face

- Can Ai Code Results - a Hugging Face Space by mike-ravkine

- LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys

- Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4

- Best Open-Source Language Models, All Large Language Models

- yunconglong/Truthful_DPO_TomGrc_FusionNet_7Bx2_MoE_13B · Hugging Face

- nous-hermes-2-34b-2.16bpw.gguf · ikawrakow/various-2bit-sota-gguf at main

- dagbs/TinyDolphin-2.8-1.1b-GGUF · Hugging Face

- google/t5-v1_1-xxl · Hugging Face

- TheBloke/deepseek-coder-6.7B-instruct-GGUF · Hugging Face

- GitHub - lmstudio-ai/model-catalog: A collection of standardized JSON descriptors for Large Language Model (LLM) files.: A collection of standardized JSON descriptors for Large Language Model (LLM) files. - GitHub - lmstudio-ai/model-catalog: A collection of standardized JSON descriptors for Large Language Model (LLM…

- TheBloke (Tom Jobbins)

▷ #🧠-feedback (5 messages):

- Identifying Recurrent LM Download Failures: @leo_lion_king suggested that failed model downloads should be automatically deleted and flagged to prevent re-downloading faulty models, since users only discover the errors when they try to load them.

- Unknown Model Error Triggers Inquiry: @tobyleung. posted detailed JSON error output reporting an unknown error and suggesting checking whether enough memory was available to load the model. It included details about RAM, GPU, OS, and the application used.

- Reinstallation Doesn’t Clear Error: In a follow-up, @tobyleung. expressed confusion that the errors persisted despite reinstalling Windows.

- Discord Link for Error Investigation: @dagbs provided a Discord link that apparently explains the cause of the error, but no additional context was given.

- Request for Retrieval of Old Model: After discussing the errors, @tobyleung. asked whether it would be possible to revert to their old model.
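@leo_lion_king’s suggestion amounts to verifying a download before it is ever loaded. A minimal sketch, assuming a SHA-256 digest published alongside the file is available to compare against: hash the file in chunks and delete it on mismatch. `verify_or_delete` is a hypothetical helper; LM Studio’s actual download handling was not described in the channel.

```python
import hashlib
import os

def verify_or_delete(path: str, expected_sha256: str, chunk_size: int = 1 << 20) -> bool:
    """Hash a downloaded file in chunks; delete it if the digest does not match.

    Returns True if the file is intact, False if it was corrupt (and removed).
    Hypothetical helper for illustration only.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    if digest.hexdigest() != expected_sha256.lower():
        os.remove(path)
        return False
    return True
```

Reading in fixed-size chunks keeps memory use flat even for multi-gigabyte model files; a caller could also record the failed filename so the same faulty file is not fetched again, as suggested in the channel.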

▷ #🎛-hardware-discussion (48 messages🔥):

- Graphics Card Strategy Evaluations: @gtgb was convinced to invest in a high-performance Nvidia 6000 series card after watching Mervin’s performance videos, prompting discussion of card compatibility and GPU choices for model-execution rigs.

- Awaiting Hardware Upgrades: @pefortin mentioned they are waiting for a P40 card for what they called a “poor man’s rig,” and @doderlein replied that they are expecting the same hardware soon.

- Powerful Cards Stimulate Envy: @doderlein acknowledged the capabilities of the Nvidia RTX 6000 Ada Generation card that @gtgb shared via a product-page link, while emphasizing its high cost.

- Mac Versus PC for LLMs: In a debate over hardware choices, @heyitsyorkie favored a Mac Studio over PC builds for LLM tasks, citing better memory bandwidth and a more attractive home setup, while @.ben.com pointed out the benefits of the Mac’s cache architecture for such work.

- GPU Utilization Discussions: @omgitsprovidence asked about low GPU utilization, and @heyitsyorkie advised trying the ROCm beta for better AMD performance; separately, @dagbs offered @misangenius guidance on maximizing GPU offload to improve response times.
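Whether a model fits on a card such as a 24 GB P40, and how many layers to offload, can be estimated with back-of-the-envelope arithmetic: the quantized weights alone take roughly parameters × bits-per-weight ÷ 8 bytes. This is an assumption-level approximation, not LM Studio's estimator; it ignores the KV cache and runtime buffers (approximated by an arbitrary `reserve_gb`) and assumes weights are spread evenly across layers.

```python
import math


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights alone (no KV cache or overhead)."""
    return n_params * bits_per_weight / 8


def layers_that_fit(n_params: float, bits_per_weight: float, n_layers: int,
                    free_vram_gb: float, reserve_gb: float = 1.5) -> int:
    """Rough number of transformer layers that can be offloaded to the GPU.

    Assumes equal-sized layers and keeps `reserve_gb` (a guess) free for the
    KV cache and runtime buffers, which grow with context length.
    """
    per_layer = weight_bytes(n_params, bits_per_weight) / n_layers
    usable = max(free_vram_gb - reserve_gb, 0) * 1e9
    return min(n_layers, math.floor(usable / per_layer))


# The 34B, 2.16 bpw GGUF linked above needs roughly 9.2 GB for its weights.
print(round(weight_bytes(34e9, 2.16) / 1e9, 2))
# A hypothetical 60-layer 34B model at 4.5 bpw on a card with 12 GB free:
print(layers_that_fit(34e9, 4.5, n_layers=60, free_vram_gb=12.0))
```

Treat the result as a lower bound on memory use; longer contexts need noticeably more headroom than the fixed reserve used here.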

Links mentioned:

NVIDIA RTX 6000 Ada Generation Graphics Card: Powered by the NVIDIA Ada Lovelace Architecture.

▷ #🧪-beta-releases-chat (29 messages🔥):

- VRAM Vanishes in Beta: @eimiieee reported that the latest Windows beta shows an estimated VRAM capacity of 0 on an AMD 6600XT card. @yagilb said there were issues with OpenCL in the latest beta and suggested trying the AMD ROCm beta.

- VRAM Estimate Bug Squashed: @yagilb announced Beta V5/V6, which fixed several bugs, and asked for feedback on the RAM/VRAM estimates on the search page, hinting at tweaks to the calculation.

- Compatibility Queries for NVIDIA Jetson: @quantman74 asked about arm64 support for installing the beta on an NVIDIA Jetson board. @heyitsyorkie clarified that there is no ARM support outside of Apple Silicon Macs, and @yagilb encouraged creating a feature request for it.

- Speedy Improvements Spark Curiosity: @mmonir commented on the doubled speed in the latest update, prompting @heyitsyorkie to link a humorous GIF, while @n8programs asked what changes led to the speedup.

- Case Sensitivity Causes Model Mayhem: @M1917Enfield found that LM Studio was not detecting model folders whose names differed in letter case from what it expected, and solved the problem by renaming the folder to match the expected case. @yagilb acknowledged the fix.
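The folder mismatch @M1917Enfield hit comes from case-sensitive filesystems (typical on Linux), where `TheBloke` and `thebloke` are different directories. A minimal sketch of a case-insensitive lookup, assuming a `publisher/model` folder layout; `find_model_dir` is a hypothetical helper, not how LM Studio scans its models directory.

```python
from pathlib import Path
from typing import Optional


def find_model_dir(root: Path, publisher: str, model: str) -> Optional[Path]:
    """Locate root/publisher/model even if the on-disk names differ in case."""
    exact = root / publisher / model
    if exact.is_dir():
        return exact
    # Fall back to comparing case-folded names one path component at a time.
    for pub_dir in root.iterdir():
        if pub_dir.is_dir() and pub_dir.name.casefold() == publisher.casefold():
            for model_dir in pub_dir.iterdir():
                if model_dir.is_dir() and model_dir.name.casefold() == model.casefold():
                    return model_dir
    return None
```

On case-insensitive filesystems (the macOS and Windows defaults) the exact lookup already succeeds, so renaming the folder, as was done in the thread, remains the simplest fix.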

Links mentioned:

Magic GIF - Magic - Discover & Share GIFs

▷ #autogen (1 messages):

meadyfricked: Never got autogen working with LM Studio but crew-ai seems to work.

▷ #langchain (1 messages):

- LangChain Integration with LM Studio: @happy_dood provided an example of using LM Studio together with LangChain, showcasing the new class implementations. The snippet creates a ChatOpenAI instance, builds a prompt with ChatPromptTemplate, parses the output with StrOutputParser, and chains these pieces together.
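The snippet itself isn't reproduced here. As a dependency-free sketch of the same three stages (prompt template, model call, string output parser), the code below talks directly to LM Studio's local server, which exposes an OpenAI-compatible chat-completions endpoint. The `http://localhost:1234/v1` base URL is LM Studio's usual default but is an assumption here, as is the placeholder model name; the LangChain version would swap these helpers for `ChatPromptTemplate`, `ChatOpenAI` (pointed at the same base URL), and `StrOutputParser`.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed LM Studio default; adjust to your server


def build_messages(system: str, user_template: str, **variables: str) -> list:
    """Prompt-template stage: fill the user template and pair it with a system message."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_template.format(**variables)},
    ]


def parse_text(response: dict) -> str:
    """Output-parser stage: pull the assistant's text out of an OpenAI-style response."""
    return response["choices"][0]["message"]["content"].strip()


def chat(messages: list, model: str = "local-model", temperature: float = 0.7) -> str:
    """Model stage: POST to the OpenAI-compatible endpoint and return plain text."""
    payload = json.dumps(
        {"model": model, "messages": messages, "temperature": temperature}
    ).encode("utf-8")
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return parse_text(json.load(resp))


# Usage, with LM Studio's server running:
# print(chat(build_messages("You are concise.", "Explain {topic}.", topic="GGUF")))
```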

▷ #crew-ai (10 messages🔥):

- MagicJim Weighs in on Automation Tools: @MagicJim shared his preference for crewAI over autogen due to the idea of integrating multiple LLMs in LM Studio. He suggested that using specific models like deepseek coder for coder agents would be beneficial.

- Discussing Autogen’s Flexibility with LLMs: @sitic observed that autogen allows using a different LLM for each agent, unlike crewAI, which seems to use only one. This feature is important for creating agents with distinct capabilities.

- Clarification on crewAI’s LLM Usage: @MagicJim clarified that crewAI does allow using different LLMs for each agent and shared a YouTube video demonstrating this functionality.

- Running Multiple Instances of LLMs: @senecalouck suggested the workaround of running multiple instances of LLMs if the hardware supports it, using different ports for the API.

- Integration Issues with LM Studio: @motocycle asked whether anyone had successfully integrated crewAI with the LM Studio endpoint, mentioning success with ollama but issues with LM Studio.
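@senecalouck's workaround amounts to giving each agent its own OpenAI-compatible base URL. A minimal sketch of that bookkeeping, assuming one local server instance per distinct model on consecutive ports; the agent names, model names, and starting port are illustrative, and how each instance is launched depends on the server (LM Studio, ollama, etc.).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AgentEndpoint:
    agent: str
    model: str
    base_url: str


def assign_endpoints(agent_models: Dict[str, str], host: str = "localhost",
                     first_port: int = 1234) -> List[AgentEndpoint]:
    """Give every distinct model its own server port; agents sharing a model share it."""
    ports: Dict[str, int] = {}
    endpoints = []
    for agent, model in agent_models.items():
        if model not in ports:
            ports[model] = first_port + len(ports)  # next port for a new instance
        endpoints.append(AgentEndpoint(agent, model, f"http://{host}:{ports[model]}/v1"))
    return endpoints


for ep in assign_endpoints({
    "researcher": "mistral-7b-instruct",
    "coder": "deepseek-coder-6.7b-instruct",  # coding model for the coder agent
    "writer": "mistral-7b-instruct",
}):
    print(ep)
```

Sharing one instance among agents that use the same model keeps memory use down, which matters when the hardware can only hold a couple of models at once.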

Links mentioned:

CrewAI: AI-Powered Blogging Agents using LM Studio, Ollama, JanAI & TextGen: 🌟 Welcome to an exciting journey into the world of AI-powered blogging! 🌟In today’s video, I take you through a comprehensive tutorial on using Crew AI to …

▷ #open-interpreter (7 messages):

- Parsing Error in `system_key.go`: @gustavo_60030 noted an error in `system_key.go` where the system could not determine NFS usage. The error message reported a failure to parse `/etc/fstab`, specifically the dump frequency field, which contained the word “information.”

- Model Experiments for Open Interpreter: @pefortin discussed experimenting with DeepseekCoder33B for Open Interpreter and mentioned that Mixtral 8x7B instruct 5BPW performs okay but struggles to recognize when to write code.

- Model Recommendation Request: Seeking a model suited to coding tasks, @pefortin expressed interest in trying coding-focused models such as wizard.

- Model Comparison for Coding: @impulse749 asked whether DeepseekCoder33B is the best for coding tasks, to which another user suggested that deepseek-coder-6.7b-instruct might be a faster, more focused option for purely coding tasks.
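Per fstab(5), each non-comment line of `/etc/fstab` has up to six whitespace-separated fields, and the fifth (dump frequency, `fs_freq`) must be a number. A word such as “information” in that position usually means a line with stray text, for example a comment missing its leading `#`, or a path with an unescaped space (spaces should be written as `\040`). The validator below is a hypothetical helper for finding such a line; it is not the code in `system_key.go`.

```python
from typing import List, Tuple


def check_fstab(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, problem) pairs for malformed /etc/fstab entries."""
    problems = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are ignored
        fields = line.split()
        if len(fields) < 4:
            problems.append((lineno, f"expected at least 4 fields, got {len(fields)}"))
            continue
        if len(fields) > 6:
            problems.append((lineno, f"{len(fields)} fields; extra text after fs_passno"))
        # Fields 5 and 6 (fs_freq, fs_passno) are optional but must be integers.
        for name, value in zip(("fs_freq", "fs_passno"), fields[4:6]):
            if not value.isdigit():
                problems.append((lineno, f"{name} is not a number: {value!r}"))
    return problems


# A comment that lost its '#' puts "information" in the fs_freq column:
print(check_fstab("UUID=abcd / ext4 defaults 0 1\nMount point for backup information\n"))
```

Running it over the real file, e.g. `check_fstab(open("/etc/fstab").read())`, points at the offending line number.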

Mistral Discord Summary

-

French Language Support Sparks Interest: Users suggested the addition of a French support channel within the Mistral Discord community, reflecting a demand for multilingual assistance.

-

Data Extraction Strategies and Pricing Discussions: Users exchanged strategies for data extraction, such as using a BNF grammar and in-context learning, and asked about Mistral’s pricing model; it was clarified that “1M tokens” means 1,000,000 tokens and that both input and output tokens count toward billing.

-

Interfacing AI with 3D Animation and Function Calling: Questions arose about integrating Mistral AI with 3D characters for real-time interaction, touching on complexities such as animation rigging and integration with other APIs, and about implementing function calling similar to OpenAI’s APIs.

-

Hosting and Deployment Insights for Mistral: Users shared resources such as partITech/php-mistral on GitHub for running MistralAi with Laravel, along with experiences with VPS hosting, on-premises hosting, and using Lambda Labs via SkyPilot. Docker was also suggested for deploying Mistral.

-

Focusing on Fine-Tuning and Model Use Cases: Conversations revolved around fine-tuning strategies such as creating datasets in a Q&A JSON format, the importance of data quality (“garbage in, garbage out”), and troubleshooting Mistral fine-tuning with tools like axolotl. Users also asked whether a version of Mistral better optimized for French-language tasks exists.
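The pricing clarification reduces to simple arithmetic: cost = (tokens ÷ 1,000,000) × price per million, summed over input and output tokens. Actual mistral-medium rates aren't given in the discussion, so they are parameters here; the rates in the usage line are made up, and separate input and output rates are allowed in case the two are billed differently.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one request when prices are quoted per 1M (1,000,000) tokens."""
    return (input_tokens / 1_000_000) * input_price_per_m + (
        output_tokens / 1_000_000
    ) * output_price_per_m


# Hypothetical rates: 2.0 per 1M input tokens, 6.0 per 1M output tokens.
cost = request_cost(12_000, 3_000, input_price_per_m=2.0, output_price_per_m=6.0)
print(f"{cost:.3f}")  # about 0.042 in the same currency as the rates
```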

Mistral Channel Summaries

▷ #general (154 messages🔥🔥):

- Demand for a French Support Channel: @gbourdin said the Mistral Discord could benefit from a French support channel (“ça manque de channel FR”, i.e. “there’s no French channel”), which drew agreement from @aceknr.

- Quest for Data Extraction Strategies: @gbourdin sought advice on strategies for extracting data, such as postal codes or product searches, from conversations. @mrdragonfox proposed using a BNF grammar and in-context learning, since the API offers limited support for this use case.

- Clarification on Mistral Pricing Model: @nozarano asked for clarification on the pricing for “mistral-medium”; @ethux and @mrdragonfox explained that 1M tokens means 1,000,000 tokens and that both input and output tokens count toward the price.

- AI-Driven 3D Character Interaction: @madnomad4540 asked about integrating Mistral AI with a 3D character for real-time user interaction. @mrdragonfox pointed out the challenges and the separate pieces involved, such as animation rigging and integration with APIs like Google Cloud Vision.

- Exploring Assistants API and Function Calling: @takezo07 asked about implementing function calling and threads like those in OpenAI’s Assistants API. @i_am_dom noted that such functionality could be programmed directly on top of the API, and @.elekt mentioned that the Mistral API has no official function-calling support.
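Without official function calling, @i_am_dom's suggestion amounts to doing it in application code: describe the available tools in the prompt, ask the model to reply with JSON naming a tool and its arguments, then parse and dispatch that reply yourself. A minimal sketch of the parse-and-dispatch half; the `{"name": ..., "arguments": ...}` shape is an assumed convention rather than a Mistral API format, and `get_weather` is a hypothetical tool.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a Python function the model is allowed to call."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:  # hypothetical tool for illustration
    return f"Sunny in {city}"


def dispatch(model_reply: str) -> Any:
    """Parse a reply like {"name": ..., "arguments": {...}} and run the tool.

    Returns the raw text unchanged if the reply is not a recognized tool call.
    """
    try:
        call = json.loads(model_reply)
    except json.JSONDecodeError:
        return model_reply  # ordinary answer, no tool requested
    if not isinstance(call, dict) or call.get("name") not in TOOLS:
        return model_reply
    return TOOLS[call["name"]](**call.get("arguments", {}))


print(dispatch('{"name": "get_weather", "arguments": {"city": "Paris"}}'))
```

In a full loop, the tool result would be appended to the conversation as another message and sent back to the model for a final answer; a "thread" is then roughly the stored message list.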

Links mentioned:

- Vulkan Implementation by 0cc4m · Pull Request #2059 · ggerganov/llama.cpp: I’ve been working on this for a while. Vulkan requires a lot of boiler plate, but it also gives you a lot of control. The intention is to eventually supercede the OpenCL backend as the primary wid…

- Vulkan Backend from Nomic · Issue #2033 · jmorganca/ollama: https://github.com/nomic-ai/llama.cpp GPT4All runs Mistral and Mixtral q4 models over 10x faster on my 6600M GPU

▷ #models (5 messages):

- Seeking Fiction-Guidance with Instruct: dizzytornado asked whether Instruct has guardrails specifically for writing fiction. The chat logs do not include any context or responses.

- A Shoutout to Mistral: thenetrunna expressed affection for Mistral without further context or elaboration.

- Demand for French-Optimized Mistral: luc312 asked whether there is a version of Mistral better optimized for reading and writing French, or whether a strong system prompt is the only way to get Mistral to communicate in French.

- Clarification on Multilingual Model Capabilities: tom_lrd clarified that tiny-7b isn’t officially built for French and has limited French ability because it lacked targeted training, whereas Small-8x7b is officially multilingual and trained to speak French.

▷ #deployment (6 messages):

- Integrating Mistral with PHP: @gbourdin shared a link to GitHub - partITech/php-mistral, a PHP client that can be used to run MistralAi with Laravel.

- Seeking VPS Hosting Details: @ivandjukic asked about VPS hosting providers with a proper GPU, noting that they seemed expensive, or that they might be misunderstanding the cost.

- Client Data Secured with On-premises Hosting: @mrdragonfox assured that when Mistral is hosted in the client’s data center, Mistral never gets access to the client’s data.

- Hobbyist Hosting Insights: @vhariational shared that, as a hobbyist, they don’t need the biggest GPUs, and recommended using Lambda Labs via SkyPilot for occasional testing of larger models.

- Suggestion for Docker Deployment: @mrdomoo suggested setting up a Docker server and using the Python client for Mistral deployment.

Links mentioned:

GitHub - partITech/php-mistral: MistralAi php client: MistralAi php client. Contribute to partITech/php-mistral development by creating an account on GitHub.

▷ #ref-implem (2 messages):

- Quest for Ideal Table Format in Mistral: @fredmolinamlgcp asked about the best way to format table data when using Mistral. They contrasted the pipe-separated format they use for models like bison, unicorn, and gemini with the “textified” approach they’ve been taking with Mistral, converting each pandas dataframe row into a string of headers and values.

- Sample Textified Table Prompt Provided: @fredmolinamlgcp shared an example of a “textified” table prompt for Mistral, showing how they structure the input: an instruction tag followed by neatly formatted campaign data (e.g., campaign id 1193, campaign name Launch Event…).
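The two table encodings compared above can be sketched from a list of row dicts, the shape pandas' `DataFrame.to_dict(orient="records")` returns. Only campaign id 1193 and the name “Launch Event” come from the discussion; the `clicks` column is hypothetical, and the `[INST] ... [/INST]` wrapper is an assumption based on Mistral's instruct prompt format, not necessarily the tag used in the shared prompt.

```python
from typing import Dict, List


def pipe_table(rows: List[Dict[str, object]]) -> str:
    """Pipe-separated table: one header line, then one line per row."""
    headers = list(rows[0])
    lines = [" | ".join(headers)]
    lines += [" | ".join(str(row[h]) for h in headers) for row in rows]
    return "\n".join(lines)


def textify(rows: List[Dict[str, object]]) -> str:
    """'Textified' table: each row becomes 'header value, header value, ...'."""
    return "\n".join(
        ", ".join(f"{header} {value}" for header, value in row.items()) for row in rows
    )


def mistral_prompt(instruction: str, table_text: str) -> str:
    """Wrap the instruction and data in Mistral-style [INST] tags (assumed format)."""
    return f"[INST] {instruction}\n\n{table_text} [/INST]"


rows = [{"campaign id": 1193, "campaign name": "Launch Event", "clicks": 420}]  # clicks is made up
print(pipe_table(rows))
print(mistral_prompt("Summarize the campaign data.", textify(rows)))
```

The textified form repeats each header next to its value, which costs more tokens per row but keeps every value labeled, so the model does not have to align columns by position.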

▷ #finetuning (51 messages🔥):

- GPT-3 Costs and Alternatives for Data Extraction: @cheshireai mentioned using GPT-turbo 16k to extract data from PDFs and build a dataset, though they had to discard many bad results given the large volume of documents processed.

- Creating Q&A JSON Format for Dataset Construction: @dorumiru sought advice on writing a program that extracts data from PDFs, chunks it, and uses an API such as PaLM 2 to generate a Q&A-style JSON dataset for subsequent training.

- Chunking Techniques and Resource Suggestions: In response to @dorumiru's question about advanced PDF chunking techniques, @ethux shared a YouTube video, “The 5 Levels Of Text Splitting For Retrieval,” which covers various methods of chunking text data.

- Recommendations and Warnings for Fine-Tuning Tools: @mrdragonfox advised caution with tools like Langchain because of their complex dependencies and shared a GitHub link to privateGPT, a basic tool for interacting with documents. They also stressed “garbage in, garbage out,” highlighting the importance of data quality.

- Issues with Configuring Mistral for Fine-Tuning: @distro1546 asked about the correct command line for fine-tuning Mistral with axolotl and how to adjust `config.yml` for their dataset, and opened a GitHub discussion for troubleshooting (https://github.com/OpenAccess-AI-Collective/axolotl/discussions/1161).
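The simplest text-splitting approach, fixed-size chunks with overlap, plus writing Q&A pairs as JSON Lines, can be sketched as below. The chunk size and overlap are arbitrary defaults, the `{"question": ..., "answer": ...}` record shape is an assumed convention (match whatever dataset format your fine-tuning tool expects), and the Q&A generation step itself (e.g. an LLM API call per chunk) is left out.

```python
import json
from typing import Iterable, List, Tuple


def chunk_text(text: str, size: int = 1000, overlap: int = 200) -> List[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` chars."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    if not text:
        return []
    step = size - overlap
    # Stop before a start position whose chunk would contain only overlap.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def qa_records(pairs: Iterable[Tuple[str, str]]) -> List[str]:
    """Serialize (question, answer) pairs as JSON Lines, skipping empty ones."""
    lines = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if question and answer:  # drop blanks: garbage in, garbage out
            lines.append(json.dumps({"question": question, "answer": answer}, ensure_ascii=False))
    return lines


print(chunk_text("abcdefghij", size=4, overlap=1))
```

The overlap keeps a sentence that straddles a chunk boundary visible in both chunks, at the cost of some duplicated text; filtering empty or malformed pairs before training is the cheap end of the data-quality advice above.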

Links mentioned:

- Trouble using custom dataset for finetuning mistral with qlora · OpenAccess-AI-Collective/axolotl · Discussion #1161: OS: Linux (Ubuntu 22.04) GPU: Tesla-P100 I am trying to fine-tune mistral with qlora, but I’m making some mistake with custom dataset formatting and/or setting dataset parameters in my qlora.yml f…