Lumiere - text to video

Enter Lumiere from Google Research. Every part of this video is computer generated:

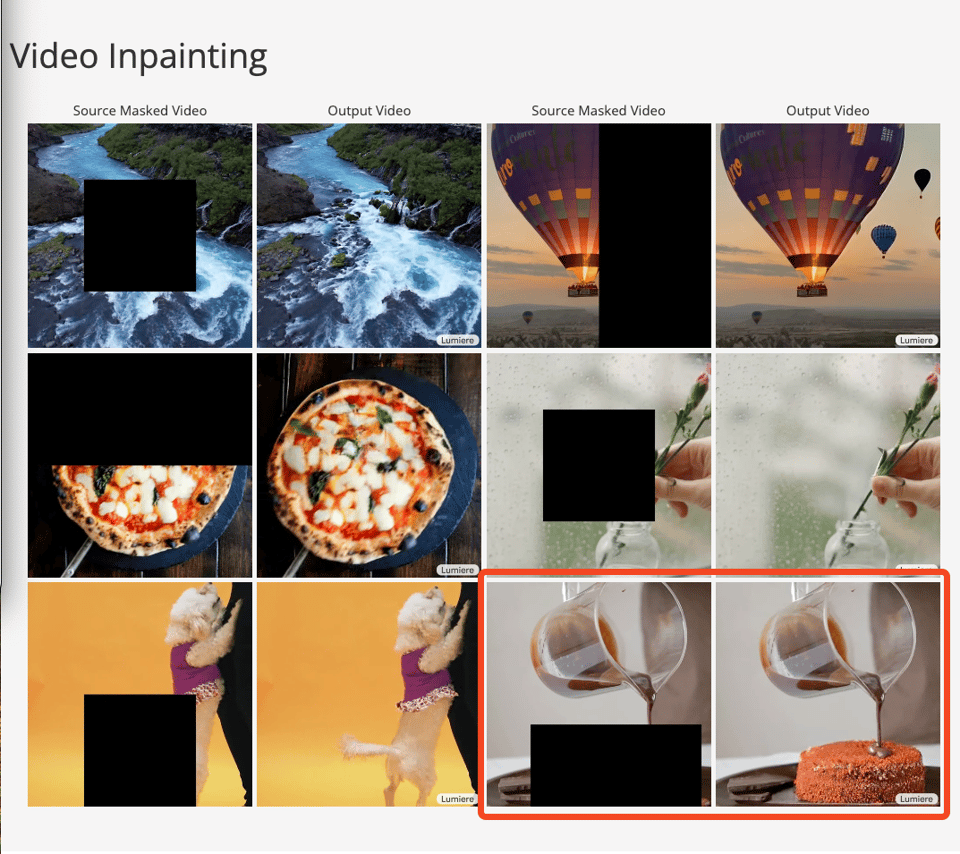

In particular I would draw your attention to their inpainting capabilities - watch the syrup pour on the cake and stay there:

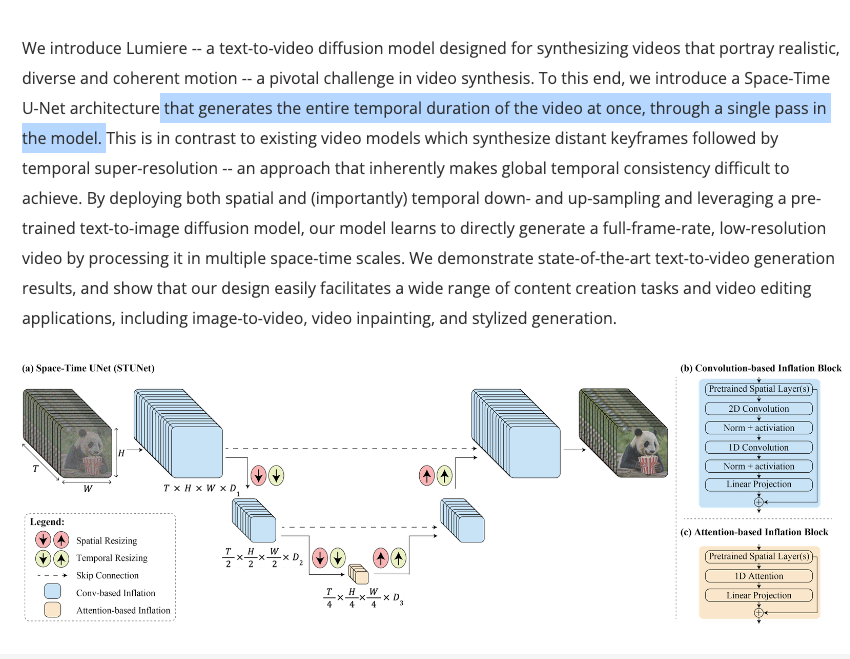

This is a step above anything we’ve yet seen coming out of Pika and Runway. This seems to come from a Space-Time diffusion process:

which we think Einstein would particularly enjoy.

Code Evals beyond HumanEval

In other news, Manveer of UseScholar.org is collating a comprehensive list of all evals, including some code ones we haven’t heard of:

- https://github.com/amazon-science/cceval

- https://infi-coder.github.io/inficoder-eval/

- https://evalplus.github.io/leaderboard.html

- https://leaderboard.tabbyml.com/

- https://huggingface.co/datasets/mbpp

- https://huggingface.co/datasets/nuprl/CanItEdit

—

Table of Contents

[TOC]

PART 1: High level Discord summaries

TheBloke Discord Summary

-

Reheating the Old Flamewar: A fiery debate erupted around AI and roleplay, spurred by

@frankenstein0424and@kalomaze, steering the conversation towards moderation and the lighter side of things with humor. -

The Rat Pack’s Digital Cards: Rat-themed character cards flooded

#general, enticing a range of reactions from@rogue_variant,@mrdragonfox, and@.justinobserver. -

Model Mayhem: Seeking API Assistance:

@priyanshuguptaiitgnavigated the labyrinth of running models like Mistral-7B through an API, aided by@itsme9316and@kalomaze, among others. Missing tokenizers and fine-tuning generated much buzz in the hive. -

Need for Speed: GPUs & Ai Model Rentals: The community dove deep into GPUs, discussing storage on platforms like runpod.io and pondering over NVIDIA’s A100 GPU end-of-life, alongside practical nuances of renting GPUs for AI processing.

-

Discussion of LLM Deployment and API Usage:

@frankenstein0424opened a dialogue seeking insights on deploying AI models for bot hosting, with responses pointing towards utilizingSillyTavernfor connecting LLM APIs such as together.ai and mistral.ai. -

Probing Mergekit’s Possibilities:

@222gateinvestigated the potential of mergekit to connect gguf models while also seeking wisdom on the daring endeavor of fusing vision and non-vision AI models. -

Frankenstein’s Model: Conversations center on LLava’s multimodal abilities and the aspiration of frankenmerging—

@seleaclarifies LLava’s working and discusses the challenges inherent in model cross-integration and training.

Nous Research AI Discord Summary

- Antique Data for Modern Testing: One user is testing models using Q&As from antique school books, questioning the reliability of models on current datasets.

- Learning Rates for LLMs Discussed: A consensus is suggesting to start with learning rates around 1e-5, referencing rates from previous architecture papers, and adjusting based on early epoch observations.

- RestGPT Earns Recognition: RestGPT is gaining attention as an example of LLMs controlling applications via RESTful APIs, showcasing the expanding capabilities of LLMs in real-world interfacing.

- Inference and Fine-Tuning Quirks: Various users have noted issues with inference methods, including sporadic

EOStoken inclusions, as well as OOM issues during LLM fine-tuning, with suggestions to sort data by decreasing length to troubleshoot. - Code-Related Evaluations for LLMs: Benchmarks like

HumanEval,MBPP, andDeepSeekare being used for LLM evaluations, along with a shared concern about the maintenance of the Hugging Face code and open PRs.

Mistral Discord Summary

-

Mistral Instructs on Autocomplete Design:

@i_am_domprovided clarification that Mistral Instruct is designed as an autocomplete system which would suggest omitting tags for plain text input. Sophia Yang’s association with Mistral garnered attention in the community, confirmed by her responsive emoji. -

Deployment & docker with vLLM: vLLM supports using a local model file with the

--modelflag, and for docker users, the HuggingFace cache directory is bind-mounted which eliminates the need to redownload the model on Docker rebuilds. -

Mixtral 8x7b Summarization Issues: Users reported unpredictable response cutoffs in summarization tasks with Mixtral 8x7b, despite stable VRAM, and changing prompt syntax was suggested as a partial fix. Meanwhile, JSON-formatted API responses remain a challenge and Mistral’s 7B models are now deployable through Amazon SageMaker JumpStart.

-

Finetuning Challenges and Recommendations: Attempting to finetune the Mixtral model appears costly and complex with mixed success, while ColbertV2 is recommended for training embeddings models. Both prompt optimization and fine-tuning were also discussed as methods to improve results.

-

Sharing Code for Debugging and Client Package Discussions:

@jakobdylancshared a link to their code on GitHub to debug an issue with the “openai” python package, which led to a discussion about the comparability of Mistral’s client library. The conversation included the possibility of transitioning to Mistral’s package for its lightness but raised concerns about compatibility with vision models. -

Philosophical and Math-Transformers Engagement: A user’s philosophical inquiry did not garner traction, while another suggested combining mathematical theory with transformer models and the A* algorithm to produce new mathematical concepts, which reflects the community’s creative and theoretical discussions.

Key links mentioned:

- Mistral 7B models on SageMaker

- Discord LLM Chatbot code snippet

- Mistra - Overview on GitHub

- OpenAI python package

LM Studio Discord Summary

- Ubuntu Users Overcome libclblast.so.1 Hurdle: Ubuntu 22.04 users faced a missing

libclblast.so.1error in LM Studio, which was tackled by creating symbolic links as a fix. - Apple Neural Engine Integration in LM Studio: Discussions in LM Studio probed the utilization of Apple’s Neural Engine via Metal API, and the “asitop” tool was suggested for monitoring.

- Mixing and Matching AI Models with LM Studio: An inquisitive approach to integrate the Retrieval-Augmented Generation (RAG) with LM Studio was met with suggestions pointing to third-party applications and setup help.

- Model Behavior and Performance Variance: LM Studio users grappled with model inconsistency issues, sharing tips like reducing “Randomness” or “Temperature” settings, and queried about model difference, such as between Dolphin versions 2.5 and 2.7, although the Discord link with specifics was not accessible in the provided content.

- CodeShell’s GPU Acceleration Conundrum: Users reported that GPU acceleration was greyed out for CodeShell in LM Studio, with a possible workaround involving renaming the model file to insert “llama,” but with uncertain results.

- Hardware Enthusiasts Wrestle with VRAM Display Errors: One user’s Nvidia 3090 displaying “0Bytes” of VRAM kicked off discussions on hardware specifications, budget-fitting setups for running models like Mixtral, and stability configurations for offloading workloads to GPU.

- Enticing Intel GPU Support on the Horizon?: A GitHub pull request hinted at upcoming support for Intel GPUs in llama.cpp, potentially boosting LM Studio’s hardware compatibility.

OpenAccess AI Collective (axolotl) Discord Summary

Logit Distillation’s Progress and Voice Synthesis Challenge: Discussions revealed progress in logit distillation using GPT-4 logits with success in backfilling strategies. However, adding custom tokens for voice synthesis to LLMs, as high as 8k, would require extensive pretraining, as shared by participants like @ex3ndr, @le_mess, and @stefangliga.

Jupyter SSL Woes and Self-Rewarding Language Models: SSL issues with Jupyter in the Latitude container surfaced without a solution, leading @dctanner to utilize SSH port forwarding. Interest in Self-Rewarding Language Models sparked discussion, with a PyTorch implementation shared by @caseus_.

DPO Dataset Loading Success, Strategy Struggles, and Local Dataset Queries: Members discussed overcoming DPO dataset loading issues using a PR, with @dangfutures using a micro batch size of 1 amidst out-of-memory errors. There was a collaborative effort to address prompt strategies and finetuning with llava models, indicating the Axolotl framework’s flexibility, referenced by @caseus_, @noobmaster29, and @gameveloster.

Insight into Optimal LoRA Hyperparameters and Dataset Overlap Confirmation: A shared Lightning AI article provided insights on effective LoRA hyperparameter usage, as @noobmaster29 and @c.gato discussed alpha, rank, and batch size variations. Dataset overlap concerns between dolphin and openorca datasets were confirmed, signaling data redundancy awareness.

YAML Configuration and Prompt Tokenization for RLHF: RLHF projects encountered a KeyError within YAML configurations, but a resolution via new type formats (chatml.argilla and chatml.intel) was found and shared by @alekseykorshuk. Configurations for local datasets and prompt tokenization strategy updates were also discussed, emphasizing the evolving nature of these components.

Cog Configurations for ML Containers: @dangfutures shared a Cog configuration guide detailing the use of CUDA “12.1”, Python “3.11”, and Python packages installations for machine learning containers, as per the Cog’s documentation. This practical snippet demonstrates active community guidance on infrastructure setup.

Eleuther Discord Summary

Byte-Level BPE Enables Multilingual LLM Responses: The Llama 2 model generates responses in multiple languages using byte-level BPE, which supports Hindi, Tamil, and Gujarati.

Mamba’s Scalability Questioned: Enthusiastic debate unfolded over Mamba’s potential to scale and replace Transformers, with a lack of evidence concerning its performance at larger scales provoking skepticism among technical users.

Google Steals the Show with Lumiere: Google Research’s space-time diffusion model for video generation, Lumiere, attracted attention, despite concerns over dataset size and data advantages.

First-of-its-kind Conference on Language Modeling: Excitement buzzed around the announcement of the inaugural Conference on Language Modeling at the University of Pennsylvania, promising to bring deep insights into language modeling research.

MoE Implementation Challenges and Parallelism: A developer shared a pull request to implement Mixture of Experts (MoE) in GPT-NeoX, voicing conundrums on validating MoE with single GPU limits and seeking insights into parallelism optimizations, while another pull request scrutinizes the potential of fused layernorm in performance enhancements.

LAION Discord Summary

-

Quick Model Training Estimates: A training time inquiry from

@astropulseregarding a tiny model of size 128x128 led@nodjato estimate a couple of days’ runtime on a dual 3090 rig, referencing Appendix E of an unspecified paper for further details. -

GPT-4 Caps Confusion: User

@helium__discussed reduced token caps for GPT-4 with others like@astropulseconfirming they have encountered similar constraints. -

Implications of Image Scale on Model Performance: According to

@thejonasbrothers, ImageNet models with resolutions under 256x256 tend to underperform, advocating for larger image resolutions despite increased training times. -

Safe Multimodal Dataset Discussions: A conversation led by

@irina_rishregarding the safety and integrity of datasets for multimodal model training saw participation and solution-seeking by@thejonasbrothers,@progamergov, and others. -

First Language Modeling Conference Promoted:

@itali4noinformed about the Conference on Language Modeling (CoLM) that is scheduled to happen at the University of Pennsylvania. A pertinent tweet provides more details about the event. -

Innovations in Reward Modeling: A paper shared by

@thejonasbrotherssuggests Weight Averaged Reward Models (WARM) as a solution for reward hacking in LLMs, find the details in the linked paper. -

Advancing Unsupervised Video Learning:

@vrus0188showcased a paper on VONet, an unsupervised video object learning framework outperforming contemporary techniques, with the corresponding code available on GitHub.

HuggingFace Discord Summary

-

HuggingFace Introduces New Perks and Releases: Community members are engaging with new activities and opportunities highlighted by HuggingFace, including a new channel for high-level contributors, the second chapter of the Making games with AI course, and a performance breakdown on how Gradio was optimized. Furthermore,

transformers v4.37features new models and a 4-bit serialization, while Transformers.js now supports running Meta’s SAM model in the browser. -

Enthusiasm and Challenges in Open-Sourcing and Machine Learning: Open-source contributions remain a vibrant part of the community’s spirit. Users report difficulties with ONNX model exports and seek starter guides for learning machine learning — being directed to a useful guide on Hugging Face. Another user is creating a privacy-conscious transcription tool for sensitive audio consultations, intending to use Hugging Face’s transformers and pyannote.audio 3.1, combining it with Go and protobuf definitions.

-

AI Innovation and Collaboration Showcase: The channel features a variety of AI projects and intellectual discussions, including InstantID for identity-preserving generation and Yann LeCun’s endorsement. Users also share information about Hedwig AI’s new video platform and inquire about AI background effects used in a YouTube video. Open-source contributions like QwenLM’s journey with Large Language Models are presented with related resources.

-

Creators Flaunt Their Latest AI Tools and Studies: Community members show off enhancements such as a faster

all-MiniLM-L6-v2, scripts for PCA in embedding comparisons, and projects for detecting fakes visually. An enhancement of the Open LLM Leaderboard with Cosmos Arena gets a nod, and tools like HF-Embed-Images for easy image dataset embedding, 3LC for ML training and data debugging, and Gabor Vecsei’s GitHub repositories are highlighted. -

Peering into the Diffuser-Discussions: Karras’s improvements in DPM++ generate anticipation among users, who also share reflections on diffusion scheduling and its origins, citing k-diffusion and a paper about diffusion-based generative models. One user is working on diagrams for diffusion models to gain a comprehensive understanding for potential reimplementation.

-

Exploring NLP and Diffusion Models: The NLP channel discusses the nuances of model parallel training, with a guide being shared to help transition from single GPU to multi-GPU training setups. Curiosity arises about multilingual models generating responses without direct language tokens, while slow inference issues with DeciLM-7B models prompt users to seek speed optimization solutions.

Perplexity AI Discord Summary

-

RPA AI Efficiency and Dark Mode Dominance: Discussions touched on the efficiency of RPA AI, while users lamented the lack of a light mode in labs, noting only dark mode availability. Frustrations also surfaced with Android microphone permission settings lacking adequate options.

-

Dream Bot Discontinued Creating Channel Confusion: Confirmations were made regarding channel closures, with notably the Dream bot being no longer available, leading to user confusion and a suggestion for more regular news summaries on channel updates.

-

GPT-4 vs. Gemini Pro Clarified: Users sought to distinguish between GPT-4 and Gemini Pro models within Perplexity AI’s pro version, receiving guidance on model selection settings, and prompting community managers to encourage community recognition of helpful contributions.

-

Feature Inquiry and Credit Support: Questions arose about a potential teams feature and issues with credit support, alongside speculation regarding future app support for Wear OS in light of a potential collaboration with Rabbit.

-

Extended API and VSCode Integration Hints: There were requests for information on increasing API rate limits for product integration, recommendations for the Continue.dev extension to integrate with VSCode, and light-hearted encouragement for Pro subscribers to donate credits to an imaginary “church of the God prompt”.

LlamaIndex Discord Summary

-

Hackathon Heats Up with LlamaIndex: IFTTT is hosting an in-person hackathon from February 2-4, featuring $13,500 in prizes, including $8,250 in cash, with the objective to build projects that solve real problems. The excitement is palpable and expertise is guaranteed with access to mentors. Hackathon announcement tweet.

-

Meet MemGPT for Memorable Chatbots: MemGPT is a new OSS project, highlighted by

@charlespacker, designed for creating chat experiences with enriched capabilities like long-term memory and self-editing, leveraging LlamaIndex technology for advanced AI chat solutions. It can be installed via pip, paving a path to a personalized AI experience. OSS project spotlight tweet. -

SQLite Meets Llama-Index:

@pveierlandasked about any existing sqlite-vss integrations for llama-index but no documentation or solutions could be identified during the discussions. -

Pandas Query Engine Pandemonium: Members discussed issues related to the PandasQueryEngine with open-source LLMs like Zephyr 7b, shedding light on the complexity of query pipelines in large language models. Documentation was shared but the pressing CSV file issue in RAG chatbot building remained largely unresolved.

-

Enhancing RAG Chatbots with Dynamic Knowledge:

@sl33p1420provided insights through their Medium article on how to augment RAG chatbots by integrating dynamic knowledge sources. The comprehensive guide walks readers through the nuances of model selection and server setup to chat engine construction for creating a robust LLM-powered RAG QA chatbot. Empowering Your Chatbot article.

OpenAI Discord Summary

-

AI Community Connections Fall Short: A user sought more LLM discord servers, but received no recommendations, highlighting a potential gap in community resource sharing.

-

AI Behavior Benchmark: The comparison of AI diversity to human complexity prompted discourse, underlining the notion that a variety of AI behaviors might emerge from distinct designs and environments.

-

Seeking the Best Tools: Queries for effective LLM evaluation & monitoring tools for a GPT-4-based chatbot were raised but went unanswered, indicating a demand for such resources.

-

Image AI Scrutiny: Questions about Dall.E’s image handling capabilities were asked, however, there was no conclusive discussion on the specifics or causes of the issues.

-

AGI Control Argument: Control over AGI dominated conversations with questions around who will maintain authority over such technology and considerations about its potential uses.

-

File Upload Constraints Clarity: Clarifications were made about limits for file uploads in Custom GPT (up to 20 files, 512MB each, and 2 million tokens for text files), while discussing strategies to bypass the restrictions, such as merging documents.

-

GPTs Marketplace Vanishing Act: An inquiry was made about missing CustomGPTs on GPTs Marketplace, which remained unresolved, signaling a possible need for transparency or technical support.

-

Word Processing with Grimoire GPT: The development of a word processor using Grimoire GPT within ten minutes was shared, showcasing the rapid implementation capabilities of GPT-based applications.

-

Custom GPT Network Troubles: Network errors following responses from a custom GPT were reported with no solutions presented, highlighting continuing technical concerns within the community.

-

Thread Intricacies in GPT: Confusion surfaced when a file from one GPT thread seemingly influenced another, suggesting possible file handling issues across threads, with the community awaiting confirmation on expected behavior.

-

Enhanced Context Management for AI: A concern regarding the handling of chat logs for extracting information was mentioned, specifically about the significant impact the size and format of logs can have on the AI’s performance in this area.

-

Refining AI Assistance via Prompts: Suggestions for prompt ideas suitable for organization or executive assistance roles were discussed, with emphasis on tailoring Custom GPT prompts to include user background info for enhanced performance.

-

Championing Clear Communication Goals: Advice on refining tasks within the description fields of a custom GPT was shared, with the recommendation to articulate clear objectives and desired outcomes, whether seeking AI or human collaboration.

-

Simplicity vs. Effectiveness in AI Command: A user advised focusing on clear goals over the ‘most effective’ language when guiding AI, suggesting a pragmatic approach to achieve desired outcomes.

DiscoResearch Discord Summary

-

Prompt Perplexity? Template Tinkering To The Rescue: Community collaboration identifies a discrepancy in templates between a local and demo instance of a model, suggesting f-strings and newline formatting for better compatibility with DiscoLM models. Guidance includes reference to DiscoLM German 7b v1 and community gratitude for support in navigating LLM intricacies.

-

Translation Evaluation and Predictive Musings: The implementation of Lilac for translation quality and Distilabel for filtering bad translations is discussed, though GPT-4 costs are mentioned as a concern. Llama-3 predictions emphasize a 5-trillion-token pretraining focused on multilingual capability, with a hat tip to advanced context chunking research, and a new German LM with a 1-trillion-token dataset announces its impending debut, hinting at significant compute demands.

-

Mistral Molds New Paths: A project similar to the Mistral embedding model is launched on GitHub utilizing Quora data, with discussions around hosting on Hugging Face or GitHub and whether to craft a BigGraph or Table Embedding model. Also, Voyage’s new code embedding model,

voyage-code-2, is spotlighted for its advancements in semantic code retrieval, detailed in their blog post. -

Axolotl Adoption Anecdotes: Troubleshooting for Axolotl includes advice on dataset integration using supported formats and referencing in the Axolotl config, managing GPU recognition in Docker with the NVIDIA Container Toolkit, and a hint to seek specialized help on the Axolotl Discord. A problematic newline issue with DiscoLM German model prompts a community chipset fix, amending the

config.jsonto resolve output glitches as discussed on Hugging Face.

Latent Space Discord Summary

-

Karpathy Sheds Light on Tech’s Human Impact: Andrej Karpathy’s new blog post discusses the difficulty those outside the tech industry face in adapting to rapid technological changes. Anxiety and discomfort are common emotional responses to the pace of innovation.

-

Perplexity’s Complex Progress Visualized: A tweet from

@madiatorshows the non-linear development trajectory of the AI model Perplexity over a span of three months. -

Scaling Down Model Size While Keeping Cognition Intact: Research on training smaller language models (LMs) reveals potential to maintain grammar and reasoning capabilities, as discussed in the TinyStories paper.

-

Discord Enlists AI for Smarter Notifications: Discord has begun using large language models (LLMs) for summarizing community messages to create notification titles, signaling a potential shift in privacy policy considerations.

-

Breakthrough in Image Generation by Stability AI: Stability AI has developed a diffusion model capable of generating megapixel-scale images outright, which could signal the end of traditional latent diffusion techniques.

-

Lucidrains Set to Tackle SPIN and Meta Paper:

@lucidrainsis preparing implementations of SPIN and a new Meta paper approach in separate projects, with self-rewarding-lm-pytorch being the repository to watch for progress updates.

LangChain AI Discord Summary

-

LangChainJS Experimental Foray:

@ilguappohas shared an in-development project on GitHub entitled LangChainJS Workers which controversially strays from best web API practices but explores a novel endpoint for emoji reactions in Discord messages. They are also tackling the steep learning curve of TypeScript and its integration into the current project. -

Teaming Up for RAG Systems: An interest in end-to-end Retrieval-Augmented Generation (RAG) solutions has been voiced by

@alvarojauna, seeking collaborations or precedents, while@allenpan_36670has sparked a clarifying discussion on GPT chat completion’s handling of message lists, with@lhc1921alluding to ChatML’s prompt structures as a method for handling such data. -

Initiating Intelligent PDF Dialogues:

@a404.ethbroadcasted the launch of a tutorial series with Part 1 available on YouTube, guiding users through the creation of Full Stack RAG systems enabling conversations with PDF documents leveraging PGVector, unstructured.io, and semantic chunker technologies. -

LLaMA Outshines Baklava in Artistic Judgement: In a comparison battle of AI models,

@dwb7737posted findings on a GitHub Gist showcasing LLaMA’s superior performance over Baklava in art analysis tasks. -

Engineers Beware of Mischievous Links: A cautionary note regarding a potential spam message posted by

@eleussin the langserve that included a sequence of bars, underscores, and a suspicious Discord invite link, implying the need for vigilance against such behaviors in technical communities.

LLM Perf Enthusiasts AI Discord Summary

- New Horizons for LLM Perf Guild:

@jeffreyw128kicked off 2024 with an energizing welcome and revealed intentions to expand the Discord guild through a new wave of select invitations and member referrals. - Eyeing the State-of-the-Art in Document Layout: Discourse in the guild highlighted the Vision Grid Transformer as a cutting-edge model for understanding document layouts, particularly excel at identifying charts within PDFs as shared by

@res6969, with the GitHub repository available here. - #share Your Knowledge: A new channel named #share emerged from community collaboration, ready to house mutual knowledge exchanges, as decided by

@degtrdgand@jeffreyw128. - Synergy Through LLM Activities:

@yikesawjeeztouched on the vibrancy of the LLM space, pointing out engaging happenings such as paper clubs, implementation sessions, and codejams which are quite the nexus for the LLM performance aficionados. - Infiltrate with Intelligence: In a light-hearted tone,

@yikesawjeezproposed that members expand their reach and influence by bringing their LLM performance expertise to outside events.

Skunkworks AI Discord Summary

Based on the provided messages, there isn’t sufficient context or substantial technical content relevant to an engineer audience to generate a summary. Both messages appear to be informal communications without any discernible technical discussion or key points.

YAIG (a16z Infra) Discord Summary

- Quest for Cloud Independence: @floriannoell asked about on-premise AI solutions that do not rely on major cloud providers like AWS, GCP, or Azure, mentioning watsonx.ai as a point of reference for desired capabilities.

- Tailoring AI to Fit the Mold: In the process of discussing on-premise solutions, @spillai suggested @floriannoell elucidate specific AI requirements such as pretraining, finetuning, or classification, to guide the search towards a more fitting on-premise AI system.

Alignment Lab AI Discord Summary

- Catch the Slim Orca Dataset on Hugging Face: Slim Orca dataset is now hosted on Hugging Face, boasting ~500k GPT-4 completions with enhanced quality through GPT-4 refinements. This dataset is noted for needing only 2/3 the computational power for performance comparable to larger data slices (Slim Orca).

- Training Made Efficient with Slim Orca Models: Two models, jackalope-7b and Mistral-7B-SlimOrca, demonstrate the high efficiency and performance of practice on the Slim Orca subset. This advancement was shared by

@222gatein the community chat, spotlighting the dataset’s reduced computational requirement without compromising output quality.

Datasette - LLM (@SimonW) Discord Summary

- Offline LLM Enhancement Unveiled: The

llm-gpt4allversion 0.3 has been released, featuring improvements including offline functionality for models and the ability to adjust model options such as-o max_tokens 3. The release also incorporates fixes from community contributors.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1398 messages🔥🔥🔥):

- AI and RP community unite: Users

@frankenstein0424and@kalomazeengage in a heated discussion about AI and roleplay, leading to calls for moderation and jokes about the situation. - Obsession with rats: The chat room

#generalhas been spammed with rat-related character cards, inciting mixed reactions from users such as@rogue_variant,@mrdragonfox, and@.justinobserver. - Mistral 7B and coding with AI: User

@priyanshuguptaiitgseeks help running models like Mistral-7B through an API and receives directions from@itsme9316,@kalomaze, and others. They discuss difficulties with the API, mentioning issues like missing tokenizers and fine-tuning options. - Exploring MoEs and Mergekit: A serious technical discussion unfolds between users

@kquant,@sanjiwatsuki, and@kalomaze, focusing on the nuances of fine-tuning Mixture of Experts (MoE) models, their performance, and their unique challenges. - GPU talk and renting for AI: The chat delves into the world of GPUs, discussing storage options on platforms like runpod.io, and the End-of-Life announcement for NVIDIA’s A100 GPU. They also touch on the practical aspects of using rented GPUs for large language models.

Links mentioned:

- No Way GIF - Stunned Wow Omg - Discover & Share GIFs: Click to view the GIF

- Tweet from Jon Durbin (@jon_durbin): Working on an RP-enhancing DPO dataset using cinematika data, meaning the responses are human-written (but still llm augmented). Let’s see if this works 🤞🏻

- Screenshot to HTML - a Hugging Face Space by HuggingFaceM4: no description found

- openaccess-ai-collective/mistral-7b-llava-1_5-pretrained-projector · Hugging Face: no description found

- Brain GIF - Brain - Discover & Share GIFs: Click to view the GIF

- TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF · Hugging Face: no description found

- All Your Base Are Belong To Us Cats GIF - All Your Base Are Belong To Us Cats Cat - Discover & Share GIFs: Click to view the GIF

- Create a Network Volume: no description found

- Prompt-Engineering for Open-Source LLMs: Turns out prompt-engineering is different for open-source LLMs! Actually, your prompts need to be engineered when switching across any LLM — even when OpenAI…

- Mad Men Conversing GIF - Mad Men Conversing Feel Bad For You - Discover & Share GIFs: Click to view the GIF

- What Do You Mean By That GIF - What Do You Mean By That - Discover & Share GIFs: Click to view the GIF

- DIE ANTWOORD - RATS RULE [Music Video]: Die Antwoord - Mount Ninji and Da Nice Time Kid - Rats Rule/ Featuring JACK BLACK !More of these comming soon, subscribe to dont miss out!Used videos:/ DIE A…

- Speaking with Angry Rats Baldur’s Gate 3: Speaking with Angry Rats Baldur’s Gate 3. You can see Baldur’s Gate III Speaking with Angry Rats Scene following this video guide. Baldur’s Gate III is a rol…

- yahma/alpaca-cleaned · Datasets at Hugging Face: no description found

- GitHub - openai/consistencydecoder: Consistency Distilled Diff VAE: Consistency Distilled Diff VAE. Contribute to openai/consistencydecoder development by creating an account on GitHub.

- Neil deGrasse Tyson Explains the Simulation Hypothesis: Neil deGrasse Tyson and comic co-host Chuck Nice are here (or are they?) to investigate if we’re living in a simulation. We explore the ever-advancing comput…

- GitHub - deep-floyd/IF: Contribute to deep-floyd/IF development by creating an account on GitHub.

- Samsung 870 QVO 8TB SSD Memory Storage | Samsung UK: Discover incredible storage with a Samsung SSD. Enjoy improved performance, easy management with Samsung Magician and awesome reliability.

- GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Mod…

- Mac | Jan: Jan is a ChatGPT-alternative that runs on your own computer, with a local API server.

- Port of self extension to server by Maximilian-Winter · Pull Request #5104 · ggerganov/llama.cpp: Hi, I ported the code for self extension over to the server. I have tested it with a information retrieval, I inserted information out of context into a ~6500 tokens long text and it worked, at lea…

- Nature: Nature is the foremost international weekly scientific journal in the world and is the flagship journal for Nature Portfolio. It publishes the finest …

- Solving olympiad geometry without human demonstrations - Nature: A new neuro-symbolic theorem prover for Euclidean plane geometry trained from scratch on millions of synthesized theorems and proofs outperforms the previous best method and reaches the performance of…

- main : add Self-Extend support by ggerganov · Pull Request #4815 · ggerganov/llama.cpp: continuation of #4810 Adding support for context extension to main based on this work: https://arxiv.org/pdf/2401.01325.pdf Did some basic fact extraction tests with ~8k context and base LLaMA 7B v…

- YT Industries: Decoy MX CORE 3

TheBloke ▷ #characters-roleplay-stories (427 messages🔥🔥🔥):

-

Model Comparison and Usage Queries: Users discussed their experiences with various models like

Nous HermesandSanjiWatsuki/Lelantos-Maid-DPO-7Bfor specific roleplay tasks.@ks_cfoundKunoichi dpo v2to be the best for character interpretation. -

Frontend Features and Lorebooks:

@animalmachineshared insights on the value of a lorebook feature for roleplay chats and pointed to the relevant documentation on SillyTavern’s usage of World Info. -

Automating Data Collection for Model Training:

@frankenstein0424is scripting to automate the creation of training data for their bot from website messaging, planning to gather a dataset for a highly specialized task. -

Quantization and Model Performance:

@keyboardkingdiscussed the difficulty in getting grammatically correct output from sub 10gb models and voiced concerns about whether deeper quantization renders models like7Bsuboptimal. -

Deployment and API Choices for Bots:

@frankenstein0424sought advice for hosting and using models likeMixtral AIthrough external APIs, with suggestions including usingSillyTavernfrontend to connect to various LLM APIs such as together.ai and mistral.ai.

Links mentioned:

- Mistral AI | Open-weight models: Frontier AI in your hands

- World Info | docs.ST.app: World Info (also known as Lorebooks or Memory Books) enhances AI’s understanding of the details in your world.

- NeverSleep/Noromaid-13B-0.4-DPO-GGUF · Hugging Face: no description found

- LoneStriker/Noromaid-13B-0.4-DPO-3.0bpw-h6-exl2 · Hugging Face: no description found

- makeMoE: Implement a Sparse Mixture of Experts Language Model from Scratch: no description found

- Rentry.co - Markdown Paste Service: Markdown paste service with preview, custom urls and editing.

- Models - Hugging Face: no description found

- Another LLM Roleplay Rankings: (Feel free to send feedback to AliCat (.alicat) and Trappu (.trappu) on Discord) We love roleplay and LLMs and wanted to create a ranking. Both, because benchmarks aren’t really geared towards rolepla…

- TheBloke/CaPlatTessDolXaBoros-Yi-34B-200K-DARE-Ties-HighDensity-GGUF · Hugging Face: no description found

- text-generation-webui/modules/sampler_hijack.py at 837bd888e4cf239094d9b1cabcc342266fee11c0 · oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

- How to mixtral: Updated 12/22 Have at least 20GB-ish VRAM / RAM total. The more VRAM the faster / better. Grab latest Kobold: https://github.com/kalomaze/koboldcpp/releases Grab the model Download one of the quants a…

- text-generation-webui/modules/logits.py at main · oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

- The Sarah Test: (by #theyallchoppable on the Ooba and SillyTavern Discord servers) See also: https://rentry.org/thecelltest The Sarah Test is a simple prompt to test a model’s coherency, logical consistency, whatever…

- Intro: Intro Changelog Performing the cell test Checking logits Effect of samplers Model Results Summary Model Results Table Closing thoughts Future improvements Appendix A: Potential problem with the prompt…

TheBloke ▷ #training-and-fine-tuning (5 messages):

- Eager to Train Locally:

@superking__expressed enthusiasm about starting to experiment with training locally to save on compute costs before becoming proficient. - Credit for Implementation Queried: A user asked

@superking__if they were responsible for implementing a method for loading a single model, recalling their name from a pull request (PR). - Acknowledgement of Contribution:

@jondurbinconfirmed he implemented the 2-adapter method, clarifying the existing code for null reference was already present. - Seeking Solution for a Shared Issue:

@carlito.88inquired about a previous issue, wondering if a resolution had been found. - Query on LLMS and TensorRT Conversion:

@bycloudquestioned the community for experiences on training/finetuning large language models (LLMs) and converting them into TensorRT optimized models.

TheBloke ▷ #model-merging (2 messages):

- Inquiry on Merging gguf Models:

@222gateasked if anyone knows whether merging gguf’s is possible using mergekit, expressing interest in experimenting with it despite assumptions of infeasibility. - Fusion of Vision and Non-Vision Models:

@222gatequeried the community for any attempts or documentation on merging a vision model with a non-vision model, indicating a need for guidance on such cross-modality merges.

TheBloke ▷ #coding (13 messages🔥):

- Understanding LLava’s Composition:

@seleaexplained that LLava models incorporate CLIP for image recognition; tokens from CLIP are appended before a text prompt in a language model. There’s a necessity to train the model to interpret CLIP embeddings correctly. - Adding Multimodality is Complex: For

@lordofthegoonsenquiry on adding multimodality,@seleaindicated the challenge lies in training the text model to understand and utilize image embeddings efficiently. - Frankenmerging Models:

@lordofthegoonspondered about partially extracting layers from LLava to merge them with another model, while@seleaadmitted to not knowing much about frankenmerges but speculated on the possibility of adding CLIP understanding to another model. - Glitches in Frankenmerged Models:

@seleamentioned that even if a frankenmerge were successful, the resultant model would likely operate glitchily due to the inherent complexities of combining different systems. - Improving Model Training with LLava:

@seleaproposed the idea of using LLava to distill accurate image descriptions and retrain the text-processing part of models like Stable Diffusion which currently employs a “wacky machine code” for prompting. ,

Nous Research AI ▷ #off-topic (18 messages🔥):

- Charming Old School Data: User

@everyoneisgrossmentioned that they purchase antique school books to manually input Q&As for model testing, expressing some skepticism with models that perform too well on modern datasets. - Fine-Tuning AI Models:

@pradeep1148shared a YouTube video titled “Finetuning TinyLlama using Unsloth,” which includes sections on data preparation, training, inference, and model saving. - Turning “Machine Learning” into “Money Laundering”:

@euclaiseposted a funny tweet about a Chrome extension that replaces “machine learning” with “money laundering” and shared the GitHub link for the extension. - Cuda Kernels Allow Non-Power-of-Two Configurations:

@carsonpoolediscussed the advantages of writing kernels in CUDA over Triton, noting that configurations can be set to non-powers-of-two, sometimes yielding better performance. - Satirical Spin on AI Company Expectations:

@sumo43joked about the lofty expectations set by companies calling themselves AI companies, suggesting a play on words with “token companies” that merely generate tokens.

Links mentioned:

- Tweet from cts🌸 (@gf_256): twitter is more funny if you replace “machine learning” with “money laundering”. So i made a chrome extension that does this https://github.com/stong/ml-to-ml

- Finetuning TinyLlama using Unsloth: You will learn how to do data prep, how to train, how to run the model, & how to save it (eg for Llama.cpp).**[NOTE…

Nous Research AI ▷ #interesting-links (38 messages🔥):

- Prompt Lookup Revolution:

@leontellopromoted efficient prompt lookup for input-grounded tasks by sharing a mention of its significance, stating it’s a “free lunch” that should be utilized more. - Control Applications with LLMs:

@mikahdanghighlighted RestGPT, a project showcasing an LLM-based autonomous agent that can control real-world applications via RESTful APIs. - Function Calling as the Future:

@mikahdangand@tekniumheld a passionate agreement on the importance of function calling for reasoning and planning as integral to the future of LLMs integration with APIs. - Unraveling Non-determinism in GPT-4: A conversation led by

@burnytechlinked articles discussing GPT-4’s non-determinism due to Sparse MoE, with contributions from@stefangliga,@stellaathena, and@betadoggoon the challenges and implications. - Diffusion Model Considerations:

@mikahdangshared research on Contrastive Preference Learning and scalability of diffusion language models, sparking a debate about their underestimation in NLP. Different views were expressed by@_3sphere,@betadoggo, and@manojbhregarding the potential and challenges of merging autoregressive and diffusion models for various tasks.

Links mentioned:

- Are Diffusion Models Vision-And-Language Reasoners?: Text-conditioned image generation models have recently shown immense qualitative success using denoising diffusion processes. However, unlike discriminative vision-and-language models, it is a…

- Diffusion Language Models Can Perform Many Tasks with Scaling and…: The recent surge of generative AI has been fueled by the generative power of diffusion probabilistic models and the scalable capabilities of large language models. Despite their potential, it…

- Contrastive Preference Learning: Learning from Human Feedback…: Reinforcement Learning from Human Feedback (RLHF) has emerged as a popular paradigm for aligning models with human intent. Typically RLHF algorithms operate in two phases: first, use human…

- Tweet from Weyaxi (@Weyaxi): I published BagelHermes-2x34B, a Mixture of Experts model, combining @jon_durbin’s bagel 🥯 and @NousResearch’s Hermes2 📨 Hermes excels in math, while Bagel is superior in QA and science. So…

- GitHub - Yifan-Song793/RestGPT: An LLM-based autonomous agent controlling real-world applications via RESTful APIs: An LLM-based autonomous agent controlling real-world applications via RESTful APIs - GitHub - Yifan-Song793/RestGPT: An LLM-based autonomous agent controlling real-world applications via RESTful APIs

- Non-determinism in GPT-4 is caused by Sparse MoE: It’s well-known at this point that GPT-4/GPT-3.5-turbo is non-deterministic, even at temperature=0.0. This is an odd behavior if you’re used to dense decoder-only models, where temp=0 shou…

- Tweet from Maksym Andriushchenko 🇺🇦 (@maksym_andr): GPT-4 is inherently not reproducible, most likely due to batched inference with MoEs (h/t @patrickrchao for the ref!): https://152334h.github.io/blog/non-determinism-in-gpt-4/ interestingly, GPT-3.5 …

Nous Research AI ▷ #general (271 messages🔥🔥):

-

Batch Size Example Disclosed:

@leontelloshared an example of batched inference for machine learning models, providing a code snippet to illustrate how to run batched prompts using a model and tokenizer from thetransformerslibrary on a GPU. -

Helpful Tools for Chat Templating:

@osansevieromentioned a helpful resource for those working with chat templates, pinpointing potential usefulness for developers. -

Discussion on OpenAI’s Logit Distillation: “Is anyone doing logit (soft) distillation of GPT4?”

@dreamgenqueried, sparking a conversation on the availability of logits from OpenAI’s API and the feasibility and methods of distilling large language models. Users debated the value and strategies of distillation, noting it as a potentially unexplored area. -

RUGPULL Visualization App Development:

@n8programsis working on an application called RUGPULL, intended for exploring UMAP representations of corpora, with an ability to see the distance and relevance between chunks, all in an engaging, interactive graph format. -

Qwen 72B Base vs Llama 2 70B Base Discussed: The conversation turned towards comparing the Qwen 72B base and Llama 2 70B base models regarding their usability for fine-tuning. Some users like

@intervitensand@s3nh1123mentioned issues like VRAM consumption and the advantages of multilingual support, respectively; however, the consensus seemed elusive due to a lack of extensive experimentation with Qwen.

Links mentioned:

- Introducing Qwen: 4 months after our first release of Qwen-7B, which is the starting point of our opensource journey of large language models (LLM), we now provide an introduction to the Qwen series to give you a whole…

- Brain GIF - Brain - Discover & Share GIFs: Click to view the GIF

- Cat Explode GIF - Cat Explode Explosion - Discover & Share GIFs: Click to view the GIF

- Tweet from anton (@abacaj): I got some concrete numbers on phi-2 DPO. You can see clear jump in model capabilities first turn and second turn for MT-bench using DPO. More epochs does not really help overall, my model was overfit…

- GitHub - KillianLucas/aifs: Local semantic search. Stupidly simple.: Local semantic search. Stupidly simple. Contribute to KillianLucas/aifs development by creating an account on GitHub.

- HuggingFaceH4/open_llm_leaderboard · Discussions: no description found

- HuggingFaceH4/open_llm_leaderboard · Flagging models with incorrect tags: no description found

- 01-ai/Yi-34B · Hugging Face: no description found

- cognitivecomputations/dolphin · Datasets at Hugging Face: no description found

Nous Research AI ▷ #ask-about-llms (57 messages🔥🔥):

-

Finding the Optimal Learning Rate (LR): Users

@alyosha11and@bozoid.discussed finding the best LR for LLMs, suggesting to use evaluation datasets or benchmarks.@bozoid.mentioned that learning rates from previous architectures’ papers could yield decent results, while@tekniumadvised starting with a ballpark LR of around 1e-5 and adjust after observing results at 3 epochs. -

Inference Tricks for Mistral and Llama: User

@blackl1ghtquestioned about alternative inference methods formistraloverllama.cpp, and@.ben.comrecommendedexllamav2. In connection,@blackl1ghtreported an issue with theEOStoken being included in streaming responses, which@max_paperclipsconfirmed happens sporadically, depending on the model. -

OOM Issues and Sequence Length in Fine-tuning LLMs: User

@besiktasdescribed an out-of-memory (OOM) issue encountered when fine-tuning, even when previous forward/backward passes were successful.@yonta0098recommended checking if longer sequences are causing the issues and maybe sorting the data by decreasing length to trigger OOM early if that’s the case. -

Discussions on LLM Evaluation and Fine-tuning:

@rememberlennyinitiated a discussion on code-related evaluations for LLMs, and various users, including@manveerxyzand@besiktas, mentioned benchmarks such asHumanEval,MBPP, andDeepSeek.@besiktasalso raised concerns about the quality of some parts of the Hugging Face code and mentioned PRs that haven’t been addressed. -

Fine-tuning Challenges and Hugging Face Problems:

@besiktasprovided a link to a test implementation to diagnose fine-tuning memory leaks by gradually increasing the context length and described difficulties with the Hugging FaceFuyuProcessor. This sparked a discussion about the challenges of contributing to such large-scale collaborative projects.

Links mentioned:

-

pretrain-mm/tests/test_model.py at 4159505915d5e15952957aa5607eadf9fc6c70cd · grahamannett/pretrain-mm: Contribute to grahamannett/pretrain-mm development by creating an account on GitHub.

-

nuprl/CanItEdit · Datasets at Hugging Face: no description found

-

mbpp · Datasets at Hugging Face: no description found

-

FuyuProcessor broken and causes infinite loop · Issue #27879 · huggingface/transformers: transformers/src/transformers/models/fuyu/processing_fuyu.py Line 618 in 75336c1 while (pair := find_delimiters_pair(tokens, TOKEN_BBOX_OPEN_STRING, TOKEN_BBOX_CLOSE_STRING)) != ( I am not sure exa…

-

GitHub - amazon-science/cceval: CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion (NeurIPS 2023): CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion (NeurIPS 2023) - GitHub - amazon-science/cceval: CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-Fil…

-

InfiCoder-Eval: Systematically Evaluating Question-Answering for Code Large Language Models: no description found

-

EvalPlus Leaderboard: no description found

-

Coding LLMs Leaderboard: no description found

,

Mistral ▷ #general (225 messages🔥🔥):

- Mistral Instruct Autocomplete Clarification: User

@i_am_domhas clarified that Mistral Instruct is autocomplete by design and suggested skipping tags for plain text input, which will prompt the model to predict completion to the input. - Sophia Yang Confirmed as Mistral: User

@jarsalfirahelexpressed surprise at learning Sophia Yang, known from YouTube, is associated with Mistral. Sophia acknowledged with a thank you emoji. - Mistral Knowledge Base File Uploads: User

@vivacious_gull_97921inquired if Mistral supports uploading files to the knowledge base, to which@sophiamyangresponded that it’s not currently supported, suggesting the use of Mistral with other RAG tools. - Mistral 7B Foundation Models on Amazon SageMaker:

@sophiamyangshared a blog on SageMaker, announcing the availability of Mistral 7B models for deployment via Amazon SageMaker JumpStart. The post illustrates how to discover and deploy the model. - Moderation on Mistral Discord and Future Plans:

@sophiamyangconfirms that moderators are set up on the Mistral Discord after@ethuxsuggested the need for them due to scams. They welcomed recommendations for better moderation setups.

Links mentioned:

- Cat Berg Cat GIF - Cat Berg Cat Orange Cat - Discover & Share GIFs: Click to view the GIF

- Mistral 7B foundation models from Mistral AI are now available in Amazon SageMaker JumpStart | Amazon Web Services: Today, we are excited to announce that the Mistral 7B foundation models, developed by Mistral AI, are available for customers through Amazon SageMaker JumpStart to deploy with one click for running in…

- Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4: no description found

- GPT-4: We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less ca…

Mistral ▷ #models (81 messages🔥🔥):

- Summarization Shenanigans with Mixtral 8x7b: User

@atom202300reported issues with Mixtral 8x7b and the Huggingface Text Generation Interface (TGI) wherein the model would unpredictably cut off responses during summarization tasks. Despite a stable VRAM, the problem persisted for specific examples, suggesting sensitivity to prompt structure. - Prompt Patterns Prompt Problems:

@sublimatorniqand@atom202300discussed the effect of altering prompt syntax on summarization performance, finding that changing from square brackets to parentheses reduced premature stopping, while@mrdragonfoxmentioned that Mixtral requires careful increment adjustments to prevent spamming. - Troubleshooting Model Stops with Seeds:

@sublimatorniqtheorized that the use of different seeds could induce variable stopping behaviors—with one seed causing early stops and another leading to extended responses. - Open-Weights and Finetuning Frustrations:

@wayne_denginquired about the availability of source code and finetuning possibilities for the Mixtral model.@mrdragonfoxstated that many have attempted finetuning without success due to high costs and the model’s complexity. - Mistral API JSON Response Request: User

@madmax____sought help with forcing the Mistral API to return responses in JSON format, but found theresponse_formatparameter ineffective.@akshay_1shared a link, possibly as a solution to the issue but the context of the link was not provided.

Links mentioned:

- Getting Started with pplx-api: no description found

- GitHub - huggingface/text-generation-inference: Large Language Model Text Generation Inference: Large Language Model Text Generation Inference. Contribute to huggingface/text-generation-inference development by creating an account on GitHub.

Mistral ▷ #deployment (21 messages🔥):

- Local Model Use in vLLM:

@shihard_85648asked for a way to use a local model file with vLLM, having downloaded the “raw” Mistral file, and@mrdragonfoxadvised to use the--modelflag followed by the full local path. This bypasses the need to download the model from HuggingFace, which is the usual process as explained by@vhariational. - vLLM Docker Image Clarification:

@vhariationalreferenced the vLLM documentation, indicating that the Huggingface cache directory is bind-mounted at runtime, meaning you wouldn’t need to redownload the model when rebuilding the Docker image. - Model Path Configuration Instructions:

@mrdragonfoxprovided a detailed link to the vLLM documentation, elaborating on the engine arguments including how to specify paths for both model and tokenizer. - Efficient Mistral MoE Setup Inquiries: User

@yoann_binquired about an economical setup for running Mistral MoE at 12token/s on hardware less than $3,000, leading to@mrdragonfoxsuggesting a 32GB M1 Mac or using two 3090 GPUs for higher performance. - Technical Specs and Performance Metrics: In the discussions about configurations,

@mrdragonfoxmentioned running mistral 8x7b on anA6000(a 48GB VRAM GPU) with ‘6bpw’ (bits per weight), achieving about 60 tokens/second withexllamav2.

Links mentioned:

Engine Arguments — vLLM: no description found

Mistral ▷ #finetuning (7 messages):

- Prompt Optimizations Proposed:

@akshay_1suggested optimizing the prompts as an easier solution for the issues encountered with the Retriever-Augmented Generator (RAG) application. - Embedding Model Training vs. Cost: Training an embedding model might not be cost-effective at a small scale, according to

@akshay_1. - ColbertV2 for Embedding Training: For those looking into training an embedding model,

@akshay_1recommended checking out ColbertV2. - Identifying RAG App Limitations:

@mrdragonfoxhighlighted that an embedding model might need training when dealing with unique terminologies as they might not cluster effectively with intended meanings.

Mistral ▷ #showcase (2 messages):

- Tips for Enhanced Function Calling:

@akshay_1recommends usingdspy,SGLang,outlines, andinstructorfor better function calling, stating it works really good. - Advocating for Fine-tuning: In a follow-up,

@akshay_1mentions that fine-tuning on a dataset will yield better results if one is not satisfied with the initial solutions suggested.

Mistral ▷ #la-plateforme (11 messages🔥):

- Code Sharing for Error Investigation:

@sophiamyangasked@jakobdylancto share their code because of an unfamiliar error. Jake provided a link to a specific part of their GitHub repository, describing the issue with the “openai” python package used in their Discord LLM Chatbot. - Package Compatibility Discussion:

@sophiamyangquestioned@jakobdylancabout the python client package being used, linking to Mistral’s client (Mistra - Overview), and expressed uncertainty about reproducing the error. Jakob confirmed the use of the OpenAI python package and contemplated switching to Mistral’s package for its lightweight nature despite potential issues with vision models. - Error Reproduction Troubles:

@jakobdylancadmits difficulty in reproducing the error but promises to report back to@sophiamyangif it occurs again. - Philosophical Query Lacks Response: User

@jrffvrrposed an existential question about the most beautiful person in the world and followed with a test message, seemingly checking functionality with no further discussion on the topic. - Intersection of Transformers and Mathematics:

@stefatorusproposed the idea of training transformer models on mathematics and using an A* algorithm to generate potentially fruitful mathematical ideas worthy of exploration.

Links mentioned:

-

Discord-LLM-Chatbot/llmcord.py at ec908799b21d88bb76f4bafd847f840ef213a689 · jakobdylanc/Discord-LLM-Chatbot: Multi-user chat | Choose your LLM | OpenAI API | Mistral API | LM Studio | GPT-4 Turbo with vision | Mixtral 8X7B | And more 🔥 - jakobdylanc/Discord-LLM-Chatbot

-

Mistra - Overview: Mistra has 29 repositories available. Follow their code on GitHub.

-

GitHub - openai/openai-python: The official Python library for the OpenAI API: The official Python library for the OpenAI API. Contribute to openai/openai-python development by creating an account on GitHub.

,

LM Studio ▷ #💬-general (172 messages🔥🔥):

-

Ubuntu Users Encounter libclblast.so.1 Error: Ubuntu 22.04 users, including

@d0mperand@josemanu72, were struggling with an error when opening LM Studio related to a missinglibclblast.so.1file. After much discussion, the creation of symbolic links resolved the issue. -

Performance Questions on Apple Silicon Neural Engine: LM Studio’s utilization of the Apple Silicon Neural Engine was a question posed by

@crd5, where@Aqualitekinghelped clarify that the neural engine might be used indirectly via Apple’s Metal API and suggested monitoring with “asitop” tool. -

Queries on AI Modeling and Setup: Various users, including

@golangorgohome,@cloakedman, and@christianazinn, exchanged info on the suitable hardware for LM Studio and alternative setups for different AI applications, such as image-to-text and local hosting of model implementations. -

LM Studio Model Compatibility and Troubleshooting: Users like

@bright_chipmunk_28966and@yagilbdiscussed issues with loading certain models in LM Studio, leading to advice on updating to newer versions and checking for compatibility on platforms like HuggingFace. -

Exploration of RAG with LM Studio:

@elevonsinquired about integrating RAG with LM Studio, and though there were no straightforward solutions within LM Studio itself,@heyitsyorkieand@thelefthandofurzaprovided guidance on third-party apps and setup assistance.

Links mentioned:

- LM Studio Beta Releases: no description found

- CLBlast/doc/installation.md at master · CNugteren/CLBlast: Tuned OpenCL BLAS. Contribute to CNugteren/CLBlast development by creating an account on GitHub.

- GitHub - HeliosPrimeOne/ragforge: Crafting RAG-powered Solutions for Secure, Local Conversations with Your Documents - V2 Web GUI 🌐 Product of PrimeLabs: Crafting RAG-powered Solutions for Secure, Local Conversations with Your Documents - V2 Web GUI 🌐 Product of PrimeLabs - GitHub - HeliosPrimeOne/ragforge: Crafting RAG-powered Solutions for Secure,…

- GitHub - john-rocky/CoreML-Models: Converted CoreML Model Zoo.: Converted CoreML Model Zoo. Contribute to john-rocky/CoreML-Models development by creating an account on GitHub.

- Core ML Tools — Guide to Core ML Tools: no description found

LM Studio ▷ #🤖-models-discussion-chat (15 messages🔥):

- Model Error Mystery: User

@alex_m.presented an issue with LM Studio where the model fails regardless of configuration, showing a JSON error with Exit code: 0.@gustavo_60030responded, suggesting checking a different Discord channel for possible solutions. - Channel Direction Confusion: After

@alex_m.was directed to one support channel,@heyitsyorkieintervened to recommend another as the appropriate place for discussing model errors. - AI’s Unpredictable Personality:

@cloakedmancommented on the unpredictability of AI models, remarking how the same model can provide different responses.@fabguysuggested that reducing the temperature setting can increase consistency in the AI’s responses. - AI Consistency Tips:

@cloakedmaninquired about what@fabguymeant by reducing temperature.@fabguyreplied, clarifying that setting “Randomness” or “Temperature” to zero can yield consistent answers given the same seed is used. - Dolphin Version Differences:

@cloakedmanasked for insights on the differences between Dolphin 2.5 and 2.7 AI versions. Although@fabguyprovided a Discord link for detailed comparison, the link was not accessible in the summary provided.

LM Studio ▷ #🧠-feedback (16 messages🔥):

- GPU Acceleration Greyed Out for CodeShell:

@czkokoreported that CodeShell was listed as not supported for GPU acceleration despite being supported in version 0.2.11.@yagilbconfirmed that the architecture is not currently considered supported by the LM Studio app. - Potential Workaround for GPU Support:

@yagilbsuggested a workaround by renaming the model file to include “llama” which might enable GPU acceleration, but@czkokofollowed up saying the workaround did not change the greyed-out GPU acceleration or RoPE. - Conservative App Behavior Regarding GPU Acceleration:

@yagilbpointed out that the app errs on the side of caution by graying out options for unsupported architectures, mentioning a previous discussion. - User Feedback on GPU Acceleration:

@heyitsyorkieconfirmed that the GPU acceleration remains grayed out even after trying the suggested workaround and commented that the current UI state, which indicates “not supported,” is clear enough. - Discussion Invitation:

@yagilbextended an invitation to continue the conversation about the GPU acceleration issue for CodeShell in a different thread, providing a Discord channel link for further discussion.

LM Studio ▷ #🎛-hardware-discussion (29 messages🔥):

- VRAM Woes Amidst Hardware Talk: User

@cheerful_panda_16252reported an issue with VRAM capacity showing as “0Bytes” despite owning a Nvidia 3090 with 24GB VRAM.@cloakedmanalso expressed concern that this might affect the recommended settings of the software. - Call for Hardware Specs:

@yagilbdirected users to provide their hardware specifications in order to address the VRAM capacity problem, guiding them to a specific Discord channel with a posted link. - Potential Boost for Intel GPU Users: A GitHub pull request shared by

@heyitsyorkiesuggests that Intel GPU users might soon see support in llama.cpp View Pull Request. However,@goldensun3dsshowed skepticism regarding the timeline of this update. - Exploring Budget Configurations for Mixtral:

@yoann_binquired about the cheapest hardware configuration capable of running Mixtral at 12t/s, mentioning the potential of an M1 Pro.@rugg0064contributed by clarifying the bandwidth differences between M2 Pro, M1, and high-end GPUs like the RTX 4090. - Hardware Compatibility Discussions and Recommendations:

@cloakedmanshared difficulties with system crashes when offloading to a GPU, and@bobzdaroffered troubleshooting tips including layer adjustments and prompt compression. The discussion evolved into@cloakedmanfinding a stable setting for their system.

Links mentioned:

- Part 1:Building and Optimizing a High-Performance Proxmox Cluster On a Budget.: In our guide for building a Proxmox cluster, we’ve primarily focused on utilizing second-hand components to cater to small producers or…

- Feature: Integrate with unified SYCL backend for Intel GPUs by abhilash1910 · Pull Request #2690 · ggerganov/llama.cpp: Motivation: Thanks for creating llama.cpp. There has been quite an effort to integrate OpenCL runtime for AVX instruction sets. However for running on Intel graphics cards , there needs to be addi…

LM Studio ▷ #🧪-beta-releases-chat (2 messages):

- Thumbs Up for WhiteRabbit 33B: User

@johntdaviesmentioned successful testing with the WhiteRabbit 33B model (Q8), giving positive feedback for its performance.

LM Studio ▷ #autogen (1 messages):

senecalouck: Try it using 127.0.0.1 in the script.

LM Studio ▷ #langchain (1 messages):

gciri001: Is it possible to use Langchain and MySql with LLAMA 2 withouts openAI api?

LM Studio ▷ #crew-ai (4 messages):

- Model Loading Failure Frustration: User

@ferrolingaencountered an error with the message “unknown (magic, version) combination” when trying to load a model. The error report included system diagnostics indicating sufficient RAM and VRAM but a potential issue with the model file itself. - Incorrect File Format Diagnosis:

@draco9900quickly identified that@ferrolinga’s issue stemmed from using a model that is not in GGUF format—a necessary format for LM Studio. - Solution Suggestion: In response to the loading issue,

@heyitsyorkieadvised@ferrolingato use GGUF files specifically and recommended searching for “TheBloke - GGUF” in the Model Explorer for optimal results, as LM Studio requires GGUF model formats, not pytorch/ggml or .bin files. ,

OpenAccess AI Collective (axolotl) ▷ #general (50 messages🔥):

- Logit Distillation Chat:

@dreamgendiscusses logit distillation using GPT-4 logits and techniques like backfilling with open-source models and masking loss.@nruaifexpresses interest in the topic and considers further discussion. - Voice Synthesis Adaptation Inquiry:

@ex3ndrqueries about adding custom tokens for voice synthesis to LLMs and learns from@le_messand@stefangligathat adding a large number of tokens, like 8k, would necessitate extensive pretraining. - Challenges with QLoRA Finetuning: Several participants including

@stefangliga,@noobmaster29, and@c.gatodiscuss the limitations of QLoRA finetuning, especially with a significant number of new tokens, stressing that simple auxiliary networks won’t suffice and full embedding layer finetuning must be considered. - Model Finetuning Tips and Loss Evaluation:

@ex3ndrshares their experience with finetuning custom tokens, highlighting concerns with unusually high loss figures, while@noobmaster29and@c.gatoprovide insights on what loss metrics to aim for in different scenarios. - Using Special Tokens in Axolotl Finetuning: Guidance on how to configure special tokens for finetuning is shared by

@faldore, including code snippets for embeddinglm_headand specifyingeos_token. This was in response to@dreamgenpointing to@faldore’s success with a project like Dolphin on Hugging Face.

Links mentioned:

- Magic GIF - Magic - Discover & Share GIFs: Click to view the GIF

- axolotl/src/axolotl/utils/lora_embeddings.py at dc051b861d4d0f20c673ad55ac93b2a43fa56fc4 · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- cognitivecomputations/dolphin-2.6-mixtral-8x7b at main: no description found

OpenAccess AI Collective (axolotl) ▷ #axolotl-dev (4 messages):

- Jupyter issues in Latitude container:

@dctannerraised a problem encountering SSL issues with Jupyter running in the Latitude container due to Cloudflare’s tunneling for port forwarding. No solution was provided yet. - SSH Port Forwarding as a Band-Aid:

@dctanneris currently using SSH port forwarding as a workaround for the Jupyter issue in the Latitude container. - Intriguing Idea: Self-Rewarding Language Models:

@dctannershares interest in incorporating the concept of Self-Rewarding Language Models into the axolotl framework. - Self-Rewarding Model Implementation:

@caseus_responds to the idea with a link to a PyTorch implementation on GitHub, lucidrains/self-rewarding-lm-pytorch, which is an implementation of the training framework proposed in Self-Rewarding Language Model from MetaAI.

Links mentioned:

GitHub - lucidrains/self-rewarding-lm-pytorch: Implementation of the training framework proposed in Self-Rewarding Language Model, from MetaAI: Implementation of the training framework proposed in Self-Rewarding Language Model, from MetaAI - GitHub - lucidrains/self-rewarding-lm-pytorch: Implementation of the training framework proposed in…

OpenAccess AI Collective (axolotl) ▷ #general-help (116 messages🔥🔥):

-

DPO Dataset Mysteries Unraveled:

@dangfuturesand@c.gatodiscussed issues with loading datasets for DPO, finding success with a prior pull request (PR #1137).@dangfuturesmentioned overcoming an out-of-memory error by using a micro batch size of 1. -

Struggling with Strategy?:

@c.gatohelped@dangfuturesnavigate prompt strategies for datasets, sharing code snippets and a GitHub file link. To fix a persistent error, they advised to implement a fix from the DPO fixes branch on GitHub. -

CI-CD Goodness or Local Frustration?:

@caseus_highlighted an automated ci-cd sanity check for remote datasets, which doesn’t cover local datasets. Meanwhile,@dangfuturesand@c.gatodiscovered that reverting to a previous commit allowed for using local datasets, despite initial errors. -

LoRA Hyperparameter Head-Scratchers:

@noobmaster29inquired about optimal settings for LoRA’s alpha and rank hyperparameters, prompting@c.gatoto share an article providing insights into their effective usage. Discussion included varying alpha and rank as well as batch size considerations during training. -

Branching out for LLAMA:

@gamevelosterand@noobmaster29explored finetuning with llava models, pointing to a specific branch of axolotl and considering whether existing configs could be adapted for this purpose. The conversation highlights the community’s collaborative effort in sharing knowledge and resources.

Links mentioned:

- axolotl/src/axolotl/prompt_strategies/dpo/chatml.py at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- axolotl/tests/e2e/test_dpo.py at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- GitHub - OpenAccess-AI-Collective/axolotl at llava: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- Finetuning LLMs with LoRA and QLoRA: Insights from Hundreds of Experiments - Lightning AI: LoRA is one of the most widely used, parameter-efficient finetuning techniques for training custom LLMs. From saving memory with QLoRA to selecting the optimal LoRA settings, this article provides pra…

- configs/pretrain-llava-mistral.yml · openaccess-ai-collective/mistral-7b-llava-1_5-pretrained-projector at main: no description found

- Feat: Add sharegpt multirole by NanoCode012 · Pull Request #1137 · OpenAccess-AI-Collective/axolotl: Description: Allow multiple roles for input and output. NOTE: Beta and hardcoded values for now! How to use: - type: sharegpt + type: sharegpt.load_multirole Only supports conversation: (chatml|zep…

- DangFutures/DPO_RAG · Datasets at Hugging Face: no description found

OpenAccess AI Collective (axolotl) ▷ #datasets (4 messages):

- Dolphin Data Doubts:

@noobmaster29inquired if there was any overlap between the dolphin dataset and the openorca dataset.@dangfuturesexpressed belief that there is sure to be overlap. - Overlap Confirmation: Upon hearing

@dangfutures’ belief,@noobmaster29sought clarification and confirmed understanding that the two datasets do indeed overlap.

Links mentioned:

cognitivecomputations/dolphin · Datasets at Hugging Face: no description found

OpenAccess AI Collective (axolotl) ▷ #rlhf (13 messages🔥):

-

Configuration Key Error for

argilla_apply_chatml:@alekseykorshukencountered aKeyError: 'prompt'when usingargilla_apply_chatmlin their YAML configuration for a project with Reinforcement Learning Hub (RLHF). They initially sought assistance with this configuration issue. -

Solution Identified in Unittests: Later,

@alekseykorshukresolved the issue by discovering newtypeformats (chatml.argillaandchatml.intel) within the unittests on the main branch, and confirmed that this solution worked for them, prompting them to share the update with the community. -

Clarification Sought on Branch Usage: After the solution was shared,

@dangfuturessought clarification on which branch@alekseykorshukused to find the successful newtypeformats.@alekseykorshukclarified they used the main branch. -

Config Doubt for Local Datasets:

@matanvetzlerinquired if the same configuration would apply to local datasets and requested to see the config setup.@alekseykorshukassumed local datasets should work similarly by just changing thetype. -

Prompt Tokenization Strategy Issue and Solution:

@pierrecolomboreported aValueError: unhandled prompt tokenization strategy: intel_apply_chatml, to which@c.gatoresponded advising to update tochatml.intelif using the latest commit due to breaking changes.@pierrecolomboacknowledged the solution with thanks.

OpenAccess AI Collective (axolotl) ▷ #replicate-help (1 messages):

- Cog Configuration Guide Shared:

@dangfuturesprovided a snippet defining a configuration for Cog, referencing documentation on their GitHub page. The configuration is set up for GPU usage with CUDA “12.1”, uses Python “3.11”, and includes installation of various Python packages likeaiohttp[speedups],megablocks,autoawq, and more via a custompip installcommand referencing multiple package URLs.

Links mentioned:

-

cog/docs/yaml.md at main · replicate/cog: Containers for machine learning. Contribute to replicate/cog development by creating an account on GitHub.

-

no title found: no description found

,

Eleuther ▷ #general (56 messages🔥🔥):

-

Byte-Level BPE’s Multilingual Abilities:

@synquidexplained that the Llama 2 model can generate responses in languages like Hindi, Tamil, and Gujarati using byte-level BPE (Byte Pair Encoding), which does include tokens for these languages. -

Seeking Code for Mistral 7b Fine-Tuning: User

@aslawlietrequested assistance for code to fine-tune Mistral 7b for token classification, but did not receive a direct response within the provided messages. -

Skepticism About Mamba Replacing Transformers: Users, including

@stellaathena,@stefangliga, and@mrgonao, expressed skepticism regarding Mamba scaling and replacing Transformers, noting the absence of evidence that it will maintain its performance at larger scales. Discussions centered on the engineering challenges and the need for more research to validate Mamba’s scalability. -

Finetuning as a Service Inquiry: User

@kh4dienreached out for recommendations on finetuning large language models, expressing a preference for full supervision tuning rather than methods like QLORA.@stellaathenasuggested that running finetuning personally on rented GPUs might be a simple out-of-the-box solution. -

Evaluating Impact of Fine-Tuning LLMs:

@everlasting_gomjabbarqueried the community about comprehensive studies highlighting the benefits of fine-tuning Large Language Models (LLMs), suggesting that the real-world justifications for the investment in fine-tuning are often unclear. No direct response catered to this query was provided in the discussion.

Links mentioned:

Self-Rewarding Language Models: We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal. Current approaches commonly train reward models from human prefer…

Eleuther ▷ #research (65 messages🔥🔥):

-

Exploring Cryptographic Hiding in LLMs:

@ai_waifushared a paper that introduces a cryptographic method to hide a secret payload in a Large Language Model’s response, requiring a key for extraction and remaining undetectable without it.@fern.bearquestioned the claim that the method doesn’t modify the response distribution, arguing that some distribution must change to convey information. -

Weight Averaged Reward Models (WARM) Introduced by Google DeepMind:

@jacquesthibshighlighted a paper that discusses WARM, a strategy to combat reward hacking in LLMs aligning with human preferences through RLHF, by averaging fine-tuned reward models in weight space, and shared an author’s thread for further insights. -

Google’s Realistic Video Generation Research: