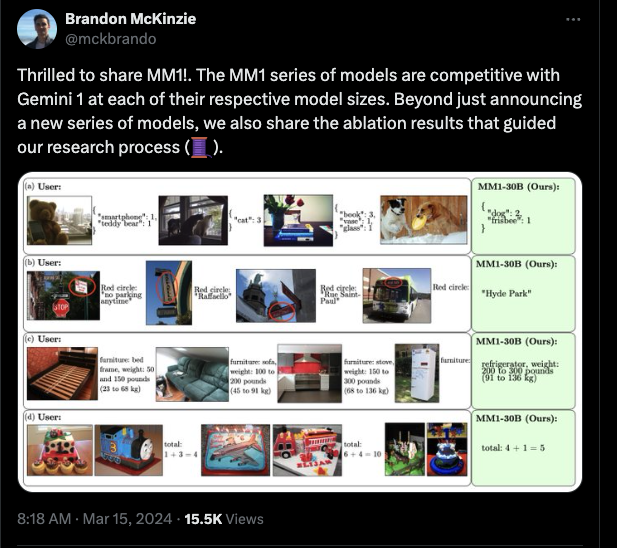

Apple continues to make moves in AI, announcing (but not releasing) MM1 with a paper, claiming it is Gemini-1 level:

The 30B model beats larger older models at the (flawed) VQA benchmarks:

The paper is oriented at researchers, providing some useful ablations for hyperparams and architecture.

The appendices hint at use cases for embodied agents:

and business/education:

For a selection of competing open VLMs, there is a new HF leaderboard you can reference.

Table of Contents

[TOC]

PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs

AI Progress and Limitations

- Yann LeCun said that to have human-level AI, systems need to understand the physical world, remember and retrieve appropriately, reason, and set sub-goals and plan hierarchically. Even with such capabilities, it will take a while to reach human or superhuman level. @ylecun

- An LLM is like an encyclopedia that can talk back. @ylecun

- Many people believe LLMs mean NLP is “solved” and machines have human-level language understanding, but we’re not close. Being convinced the problem is solved guarantees no further progress will be made. @fchollet

- In 1970, it was said in 3-8 years we’d have a machine with human-level general intelligence. The full article this quote came from is a great read. @fchollet

New Models and Datasets

- Apple presented MM1, a family of multimodal LLMs up to 30B parameters that are SoTA in pre-training metrics and perform competitively after fine-tuning. @arankomatsuzaki

- Cohere announced the release of Command-R, a language model designed for Retrieval Augmented Generation at scale. @dl_weekly

- Anthropic’s Claude 3 family of models (Opus, Sonnet, Haiku) are designed for applications ranging from extensive capability to cost-effectiveness and speed. @DeepLearningAI

Open Source and Reproducibility

- DexCap is a $3,600 open-source hardware stack that records human finger motions to train dexterous robot manipulation. It’s an affordable “lo-fi” version of Optimus for academic researchers. Data collection is decoupled from robot execution. @DrJimFan

- Opus’s prompt writing skills + Haiku’s speed and low cost enable lots of opportunities for sub-agents. A cookbook recipe demonstrates how to get these sub-agents up and running in applications. @alexalbert__

- It’s simple to integrate AI into React apps with CopilotKit, which takes application context and feeds it into React infrastructure to build chatbots, AI-powered textareas, RAG, function calling, and integrations. The sample app is open-source and can be self-hosted with any LLM. @svpino

Tools and Frameworks

- Migrating code to Keras 3 with JAX backend provides benefits of not needing TensorFlow and 50% faster model training. @svpino

- Reranking is critical for effective retrieval in RAG. A new project from @bclavie greatly simplifies this important technique. @jeremyphoward

- An open source financial agent was added to LangChain, with tools to get latest price, news, financials and historical prices for a ticker. Upcoming tools include intrinsic value calculator and price chart renderer. Code is open source and runnable in Colab. @virattt

Memes and Humor

- “The difference between you and a world leader: you called your teacher mommy in elementary school and did nothing but get embarrassed. Macron called his high school teacher mommy, dated her until she left her husband, married her, and is now threatening Russia with nuclear war” @Nexuist

- “It’s over, fix your overly verbose model OAI, I’m not gonna sit here begging it for code” @abacaj

- ”.@elonmusk i will pay 20$/mo. please fix the “pussy in bio” problem.” @AravSrinivas

PART 0: Summary of Summaries of Summaries

Since Claude 3 Haiku was released recently, we’re adding them to this summary run for you to compare. We’ll keep running these side by side for a little longer while we build the AINews platform for a better UX.

Claude 3 Haiku (3B?)

Commentary: We experimented with tweaking the Haiku prompt since it was not doing well. It seems Flow Engineering > Prompt Engineering for Haiku. However, the topic clustering doesn’t look great yet.

Positional Encoding and Language Model Capabilities:

- Positional Encoding: A Delicate Dance: Discussions note the challenges of causal language models without Positional Encoding (PE), including the production of gibberish outputs and inference failures. A paper (Transformer Language Models without Positional Encodings Still Learn Positional Information) suggests models might encode “absolute positions” implicitly, leading to out-of-distribution errors during longer inferences.

- Exploring SERAPHIM and Claude 3’s “World Simulation”: SERAPHIM, a clandestine AI research group envisioned by Claude 3, has been a topic of interest. Dialogue about Claude 3’s advanced world modeling as a simulator entity named The Assistant has led to discussions about metaphysical and epistemological explorations within the AI.

Function Calling and JSON Handling:

- Function Calling Eval Code and Datasets Released: Nous Research has published function calling eval code and datasets. The code is available on GitHub, and the datasets are accessible on Hugging Face.

- Hermes Pro Function Calling Addresses JSON Quirks: While using Hermes 2 Pro for function calling, issues with JSON and single vs. double quotes in the system prompt have been discussed. It’s confirmed that changing the system prompt to explicitly use double quotes can be effective without significantly impacting performance.
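The quoting issue above can be reproduced without any model: Python's string form of a dict uses single quotes, which is not valid JSON, while `json.dumps` emits double quotes. The sketch below is a minimal illustration only; the `get_stock_price` tool schema is hypothetical and is not taken from the Hermes 2 Pro prompt format.

```python
import json

# Hypothetical tool schema, used only for illustration.
tool = {"name": "get_stock_price", "parameters": {"symbol": "string"}}

# str() on a dict yields Python-style single quotes, which is not valid JSON.
python_style = str(tool)

# json.dumps always emits double-quoted keys and strings.
json_style = json.dumps(tool)


def is_valid_json(text: str) -> bool:
    """Return True if text parses as JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


print(is_valid_json(python_style))  # False
print(is_valid_json(json_style))    # True
```

Building the system prompt with `json.dumps` rather than string interpolation of a dict is one way to keep the quoting consistent.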

Fine-Tuning and Model Performance:

- Fine-Tuning Raises the Bar: The d-Qwen1.5-0.5B student model, after fine-tuning, has surpassed the performance of its base model on truthfulqa (39.29 vs 38.3) and gsm8k (17.06 vs 16.3) benchmarks.

- Exploring Genstruct 7B’s Capabilities: Users engaged with the Genstruct 7B model for generating instruction datasets. One user planned to test with text chunks and shared a repository with examples of how to use it.

Hardware and System Optimizations:

- NVIDIA Rumors: NVIDIA’s rumored RTX 50-series “Blackwell” GPUs with GDDR7 memory at 28 Gbps speeds were mentioned in a TechPowerUp article.

- Photonic Processing’s Enlightenment: A breakthrough in photonic computing highlighted by Lightmatter proposes to utilize photonics to dramatically boost chip communication and computation, potentially revolutionizing AI efficiency.

Community Knowledge Sharing and Open-Source Practices:

- Open Source Code Interpreter Pursuits: A discussion arose about the lack of open-source GPT code interpreters for tasks like CSV handling. One user pointed out the open-interpreter on GitHub but noted it’s more tuned to sending instructions rather than interpreting code.

- Advocating for Open-Source AI: A member expressed the belief that being fully open source in models, datasets, and methodology will lead to better long-term improvements in AI models.

Claude 3 Sonnet (14B?)

Commentary: Sonnet kinda broke today and didn’t follow our instructions as well as every single day prior. We manually prompted it back toward somehow behaving but something feels off.

- Large Language Model Advancements: Discussions around the capabilities and limitations of large language models like GPT-4, Claude, and LLaMa. This included fine-tuning techniques, evaluating reasoning abilities, and exploring interpretability methods like latent decoding by vector-db-lookup.

- Hardware Optimizations for AI: Optimizing hardware setups, from Apple Silicon with `sudo sysctl` to leveraging GPUs like RTX 5090 and NVIDIA Grace Hopper. Quantization levels for stable performance on models like Mixtral were also covered, with Q3 or 3-bit quantization recommended.

- AI Model Interpretability and Evaluation: Techniques to interpret and evaluate large language models were explored, such as using n-gram statistics to sample text and the limitations of AI content detectors in distinguishing human-generated media, as discussed in this paper.

- Prompt Engineering and Data Handling: Strategies for effective prompt engineering, like prompt augmentation with smaller models and tools for managing prompts. Data preparation challenges, like handling complex file formats in Retrieval-Augmented Generation (RAG), were also addressed.

- Open-Source AI Frameworks: Developments in open-source AI frameworks like Axolotl (upgrading to PyTorch 2.2.1), LlamaIndex v0.10.20 with an Instrumentation module, and the LangChain v0.2 release to remove dependencies.

- AI Security and Privacy: Potential security concerns like requests made on behalf of another OpenAI account, leaking proprietary LLM information through APIs, and clarifications on OpenAI’s enterprise privacy policy.

- Emerging AI Platforms and Tools: New AI platforms like Deci AI’s Deci Nano LLM and Cohere’s Command-R integration on OpenRouter. Open-source tools like Refacto VS Code plugin and JavaScript LangChain with Dall-E were also highlighted.

Claude 3 Opus (>220B?)

Commentary: this one comes closest to what was originally prompted (we asked for top 4-5 themes across everything)… but we actually prefer the output of the other 2 despite the length. In this case adhering too closely to our prompt was not good.

- Function Calling and JSON Mode Advancements: Nous Research released function calling eval code and datasets on GitHub and Hugging Face, sparking discussions on using JSON mode effectively in complex conversations. The Hermes 2 Pro 7B model’s function calling capabilities were showcased in a YouTube video and GitHub repository.

- Model Breakthroughs and Fine-Tuning Feats: The d-Qwen1.5-0.5B student model surpassed its base model on benchmarks after fine-tuning. Engineers tested the Genstruct 7B model for generating instruction datasets. A new training method claims to improve accuracy and sample efficiency, with initial tests on VGG16 and CIFAR100 showing promise, as discussed in the Skunkworks AI Discord.

- Debugging and Optimization Techniques: CUDA developers troubleshot errors like CUBLAS_STATUS_NOT_INITIALIZED, with suggestions pointing to tensor dimensions and memory issues, as seen in related forum posts. Triton debugging was enhanced with the `TRITON_INTERPRET=1` environment variable and a visualizer in development. Lecture 8 on CUDA Performance was re-recorded and released with updated video, code, and slides.

- Advancements in AI Architectures and Frameworks: Maisa introduced the Knowledge Processing Unit (KPU), an AI architecture that claims to outperform GPT-4 and Claude 3 Opus in reasoning tasks, as detailed in their blog post. The Axolotl framework explored optimizations like ScatterMoE in their branch. LangChain expedited the release of version 0.2 to address CVEs and break the `langchain-community` dependency, as discussed in a GitHub issue.

ChatGPT (GPT4T)

Commentary: good list of prompt eng tools in there. Our GPT prompt has fallen behind our Claude prompt in terms of readable quality so we will focus on improving this next.

Positional Encoding in Language Models: Discussions highlighted the importance of Positional Encoding (PE) in preventing causal language models from producing gibberish outputs. A paper suggested that models could implicitly learn absolute positions, leading to errors during longer inferences (source).

Function Calling in AI Models: Nous Research released function calling evaluation code and datasets, highlighting the challenges of using JSON mode in complex interactions (GitHub, Hugging Face).

AI Model Fine-Tuning: The d-Qwen1.5-0.5B student model surpassed its base model's benchmarks, showcasing new developments in model fine-tuning. The Genstruct 7B model was tested for generating instruction datasets, with a focus on calculating perplexity in LLaMA models (source).

Open-Source Practices in AI: Conversations around AI models touched on topics like world modeling and the potential for open-source GPT code interpreters, advocating for transparency in AI development (GitHub).

Tech Discussions on Hardware and AI Access: Debates covered Claude.ai access in the EU and NVIDIA's RTX 50-series "Blackwell" GPUs' performance, alongside discussions on GDDR7 memory speeds (TechPowerUp article).

Challenges with AI Content Detection: The limitations of AI content detectors were examined, suggesting reliance on verifiable creation processes as substantial proof of human authorship and discussing the efficacy and implications of cryptographic watermarking.

CUDA Programming Insights: A focus on NumPy performance overhead in comparison to BLAS and the introduction of the SimSIMD library as a solution to reduce losses in high-performance scenarios was discussed, highlighting the importance of SIMD optimizations.

AI Model Interoperability and Improvements: The introduction of KPU by Maisa, claiming superiority over GPT-4 and Claude 3 Opus in reasoning, sparked debates on benchmarks and the absence of latency information, questioning its efficiency beyond prompt engineering.

Prompt Engineering Tools and Techniques: Engineers explored tools for prompt engineering, likening the search to finding a "Postman for prompts" and discussing the use of SQLite, Prodigy, PromptTools, and Helicone AI for managing and experimenting with prompts (SQLite, Prodigy, PromptTools, Helicone AI).

Language Model Sophistication Techniques: Engineers theorized over advanced model techniques, including 'mega distillation sauce' and token-critical mixtures, highlighting the impact of early tokens on performance in tasks like solving math problems and discussing the evolution of AI safety classifications and methodologies for enhancing content moderation.

PART 1: High level Discord summaries

Nous Research AI Discord Summary

- Positional Encoding: A Delicate Dance: Discussions note the challenges of causal language models without Positional Encoding (PE), including the production of gibberish outputs and inference failures. A paper (Transformer Language Models without Positional Encodings Still Learn Positional Information) suggests models might encode “absolute positions” implicitly, leading to out-of-distribution errors during longer inferences.

- Function Calling Finesse: Various platforms reveal Nous Research’s release of function calling eval code and datasets, available on GitHub and Hugging Face, with insights into the challenges of using JSON mode effectively in complex conversations, possibly requiring content summarization or trimming.

- AI’s Higher Learning Curve: New developments in model fine-tuning are showcased with the d-Qwen1.5-0.5B student model surpassing its base model’s benchmarks, and the Genstruct 7B model (source) is tested for generating instruction datasets. An inquiry about perplexity calculation issues in LLaMA models leads to a reference to a Kaggle notebook for further exploration.

- Building Community Knowledge Bases: Engagements around AI models touch on topics like the world modeling of Claude 3 as The Assistant and the possibility of open-source GPT code interpreters, such as the open-interpreter on GitHub. Open-source practices in AI development are advocated for, highlighting the need for transparency in models, datasets, and methodologies.

- Tech Enthusiasts Talk Shop: Users in several channels debate over Claude.ai access in the EU without a VPN and the performance of NVIDIA’s rumored RTX 50-series “Blackwell” GPUs. They also showcase the functionality of Hermes 2 Pro 7B in a shared YouTube video titled “Lets Function Call with Hermes 2 Pro 7B”, and consider the implications of GDDR7 memory speeds reported in a TechPowerUp article.

Unsloth AI (Daniel Han) Discord Summary

- Torch Update Torches Colab Routines: A Colab update to Torch 2.2.1 disrupted workflows with broken dependencies; however, a series of pip install commands involving Unsloth’s library offer a quantized and VRAM efficient fix. The performance of models like Mistral and Gemma during fine-tuning was a topic of interest, with observations on bug fixes and performance improvements in Unsloth AI.

- Colab or Kaggle? That is the Question: Users discussed the merits and demerits of using Google Colab versus Kaggle for model training, with some favoring Kaggle for its stability. Meanwhile, the importance of using `xformers` with the right CUDA versions for Unsloth was emphasized, and tips for finetuning models like TinyLlama were shared using updated Kaggle notebooks.

- Training Woes and Wins: There was significant dialogue around best practices for fine-tuning language models, such as DPO training and managing learning rate adjustments. Insights included ensuring `max_grad_norm = 0.3` and adjusting batch sizes, while a member indicated potential progress with a loss below 1.2.

- Fine-Tuning Foibles and Fixes: Discussions around model conversion for increased precision, issues with training order potentially affecting performance, and fine-tuning for roleplay environments surfaced. The `bitsandbytes` library was mentioned for precision conversion, and advice was given for disabling shuffling in training dataloaders.

- Sophia Signals Potential: A member proposed looking into Sophia as a possible plug and play solution, though further testing was necessary. Another discussion centered on fine-tuning strategies, considering whether 3 epochs might be a standard approach for larger datasets.
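For readers unfamiliar with the `max_grad_norm` setting mentioned above, it typically caps the global L2 norm of the gradients before each optimizer step. The following is a minimal pure-Python sketch of that idea under that assumption; `clip_by_global_norm` is a hypothetical helper written for illustration, not the code used by Unsloth or any particular trainer.

```python
import math


def clip_by_global_norm(grads, max_norm=0.3):
    """Scale a list of gradient vectors so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total_norm <= max_norm:
        # Already within the limit: return an unchanged copy.
        return [list(vec) for vec in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in vec] for vec in grads], total_norm


grads = [[3.0, 4.0], [0.0, 0.0]]  # global norm is 5.0
clipped, norm_before = clip_by_global_norm(grads, max_norm=0.3)
norm_after = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(norm_before, norm_after)
```

A small threshold such as 0.3 shrinks large updates while leaving their direction unchanged, which is why it is often paired with learning rate adjustments.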

LM Studio Discord Summary

Model Conundrums and Quantization Queries: Users delved into LM Studio intricacies, such as seeking advice to improve API inferencing and addressing difficulties using multiple GPUs. Misunderstandings about model support and extensions, like the .gguf file, were clarified, with a focus on model types like Command-R 35B and Mistral Non-Instruct. Upcoming features like RAG integration in LM Studio v0.2.17 and IQ1 model compression tests also sparked interest, revealing that quality levels Q3 or 3-bit are needed for stable Mixtral and MOE model performance.

Interdisciplinary Hardware Harmony: Hardware discussions spanned from optimizing Apple Silicon for LLMs to considering the efficacy of NVLINK for enhancing Goliath 120B model performance. Enthusiasts shared experiences on system memory, with debates on the ideal RAM configuration and the anticipation for Nvidia’s new RTX 5090 GPU. Concurrently, ROCm beta limitations were highlighted with reports of issues with GPU offloading, particularly on AMD 7480HS and integrated GPUs. A Reddit post and a GitHub repository provided additional insights into tweaking VRAM and resolving AMD GPU offloading dilemmas.

Relevant links for additional context:

- Understanding LM Studio API inferencing issues

- Hugging Face repository misleads on llama.cpp support

- Discussing ROCm support and dGPU prioritization

Perplexity AI Discord Summary

Haiku for the Technical Mind: Claude 3 Haiku has been unleashed at Perplexity Labs, offering a new poetic twist to AI.

Techies Prefer Claude 3: Users are gravitating towards Claude 3 for an array of tasks, including writing and content creation, citing its strengths over other GPT models.

Perplexing API Quirks and Queries: The Perplexity API is stirring both intrigue and confusion among users with issues around real-time data querying and inconsistent responses when compared to the chat interface.

Firefox Extension Uses Perplexity API: A user is experimenting with a Firefox extension that taps into the Perplexity API, still at a proof of concept stage.

Mind the API Deprecations: Members are puzzled by the operational status of the pplx-70b-online model, noting planned deprecation but observing ongoing responses as of March 15.

Eleuther Discord Summary

Game AI Gets Green Thumbs: Discussions envisioned an AI mastering Animal Crossing, epitomizing the capability of game-playing AIs and highlighting benchmarks for their success. The analyses reflected on AI strategies and fairness, with constraints suggested like action limits or induced latency to level the playing field against human gamers.

Interpreting the Unseen in AI: Engineers examined latent decoding by vector-db-lookup to demystify AI’s intermediate representations, employing multilingual embeddings from Llama2 to decode at various layers. They engaged in bilingual tokenizer experiments, pondering the weight of training data on AI biases and exploring text generation from n-gram statistics, citing an implementation on GitHub.

AI Detection and Authorship Integrity: The limitations of AI content detectors were scrutinized, suggesting reliance on verifiable creation processes as the only substantial proof of human authorship. Cryptographic watermarking debates ensued, centering on its true efficacy and ramifications for model utility, with additional talk regarding innovations such as Quiet-STaR for AI reasoning improvement.

Workflow Woes in AI Evaluation: The verbosity of the latest language models poses challenges for extracting useful responses in LLM evaluation tasks. Skepticism arose around vector space models effectively capturing language meaning, fueled by the ungrammatical outputs observed from models like GPT-J. In trying to incorporate custom models into lm-evaluation-harness, new users expressed the need for clearer examples for integrating functions like generate_until.

Augmenting AI’s Prompt Perspicacity: A link to Brian Fitzgerald’s exploration of prompt augmentation was shared (brianfitzgerald.xyz/prompt-augmentation/), possibly alluding to recent advancements or methods in bolstering AI’s response generation through enriched input prompts, capturing the interest of those invested in enhancing AI interactions.
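Sampling text from n-gram statistics, mentioned in the interpretability discussion above, can be sketched in a few lines. This is a toy bigram sampler written for illustration; it is not the GitHub implementation cited in the discussion, and the corpus and function names are made up.

```python
import random
from collections import defaultdict


def build_bigram_table(tokens):
    """Map each token to the list of tokens that followed it in the corpus.

    Repeated successors are kept, so sampling is proportional to frequency.
    """
    table = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        table[current].append(following)
    return table


def sample_text(table, start, length, seed=0):
    """Generate up to `length` tokens by repeatedly sampling an observed successor."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < length:
        successors = table.get(output[-1])
        if not successors:  # dead end: this token never had a successor
            break
        output.append(rng.choice(successors))
    return output


corpus = "the cat sat on the mat and the cat ran".split()
table = build_bigram_table(corpus)
print(" ".join(sample_text(table, "the", 8)))
```

Every adjacent pair in the output was seen in the corpus, which is why such samples look locally plausible while drifting globally.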

HuggingFace Discord Summary

- Visualize with Open LLM Leaderboard: The Open LLM Leaderboard Visualization allows comparisons of up to three models, enhanced by reordering metrics. Other developments include Kosmos-2 for visual storytelling, Augmented ARC-Challenge Dataset with Chain-of-Thought reasoning, the polyglot Aya 101 model, and BEE-spoke-data’s embedding model supporting a 4k context.

- GPU Giants Get Ready: Members discussed NVIDIA’s Grace Hopper Superchip, considering its potential in AI and gaming at high resolutions, and excitement was voiced over quantized models supporting consumer-grade GPUs. Technical conversations also acknowledged the SF-Foundation/Ein-72B-v0.11 as a leading open LLM based on an Open LLM Leaderboard.

- Reimagining Interfaces & Workflows: A member announced Refacto, a VS Code plugin for refactoring code with local LLMs. Cobalt’s privacy-focused front end for LLMs is in development, while the Transformers PHP project aims to assist PHP developers in adding ML features to their applications.

- Innovation in AI Music and Machine Learning: Issues in creating AI-generated music duets were discussed, leading to questions about achieving better results. For AI programmers, an app named thefuck corrects previous console commands, while Bayesian Optimization methods were differentiated from Grid and RandomSearch Optimization techniques.

- AI Strategies and Collaborative Paper Explores: Ongoing discussions addressed prompting LLMs effectively, machine learning model construction without clear rules, and the utilization of English by multilingual models as a pivot language. The latter topic was expanded by a paper shared in the multilingual collection on Hugging Face.

- Diffusers 0.27.0 Jumps into Action: Diffusers library has been updated, and users discuss a strategy to handle high-resolution imagery for diffusers mentioned in a GitHub issue. Calls for community collaboration on GitHub for resolving issues with diffusers are encouraged.

- Machine Vision and Language Challenges Addressed: Someone in computer vision showed interest in Arcface for multiclass classification and issues with implementing guided backpropagation. NLPer tackled a 0.016 relative error in matrix approximation and highlighted a method-related confusion in an NL2SQL pipeline.

LlamaIndex Discord Summary

- RAG Battles Financial Slide Complexity: RAG experiences difficulty interpreting financial PowerPoint files due to their diverse mix of text, tables, images, and charts. Developers are exploring advanced parsing solutions for better handling of such complex file types.

- Enhanced Equation Extraction for RAG: RAG’s representation of mathematical and machine learning papers is impaired by current methods of ASCII text extraction for math equations. Engineers are considering a parsing by prompting strategy to improve equation handling, as indicated in a recent tweet.

- Complex Query Innovation in RAG Pipeline: Upgrading the RAG pipeline to treat documents as interactive tools could unlock the ability to handle more sophisticated queries within large documents. Further insights were discussed in this tweet.

- New Version Alert for LlamaIndex: The newly released LlamaIndex v0.10.20 includes an Instrumentation module, which promises enhanced observability; posted examples demonstrate usage via notebooks, as mentioned in this tweet.

- Technical Tangles in Document Management: Engineers are tackling integration issues involving VectorStore and considering moving toward remote document stores like Redis and MongoDB for production systems. They are also seeking solutions for caching mechanisms and addressing parsing errors, such as adjusting Python code for an `IngestionPipeline` and modifying prompts for `QueryEngineTool` utilization.

Latent Space Discord Summary

- OpenAI’s Confidential Slip Up: An incident implying a potential security breach at OpenAI was discussed, where a user was concerned about making requests on behalf of another account. The issue was explored in a post-mortem documentation found on GitHub.

- Sparse Universal Transformers Get Smarter: Engineers shared insights on Sparse Universal Transformers, focusing on a fast Mixture-of-Experts implementation named ScatterMoE. The conversation included a reference to a blog post discussing the challenges, The New XOR Problem.

- Economical AI Development with Deci AI: The announcement of Deci AI’s Nano model and an AI development platform attracted attention, notably for its affordable pricing at $0.1 per 1M tokens. The platform is detailed in a blog post, with additional resources provided through Google Colab tutorials on Basic Usage and LangChain Usage.

- Prompt Augmentation Gains Ground in AI: There was a discussion about the efficiency of prompt augmenters with a 77M T5 model outperforming larger models in prompt alignment. Further details can be found in the article on Prompt Augmentation.

- AMD Shines with Open-Source Ray Tracing: AMD’s move to open-source their HIP-Ray Tracing RT code was highlighted, stirring conversations about the impacts on the open-source landscape. The update was captured in a Phoronix article.

- Transforming Music with Transformers: A YouTube video titled “Making Transformers Sing,” featuring Mikey Shulman from Suno AI, provides insights into music generation using transformers, indicating interest in the intersection of AI and creativity. Watch the episode here.

- Fine-Tuning Transformers With Negative Pairs: A member’s curiosity about how to Supervised Fine-Tune (SFT) transformers using negative pairs was a topic of discussion, among others, about enhancing model performance and understanding.

- In-Action Club Exchanges Practical Resource: Within the AI In-Action Club, practical advice and resources were shared, including a Medium post about advanced RAG techniques and a comprehensive resource document covering UI/UX patterns for GenAI and RAG architectures.

OpenAI Discord Summary

- Microsoft’s Quick Typo Takedown: Responding to a community member’s report, the Bing VP acknowledged and corrected a typo in a Microsoft service, illustrating responsive cross-collaboration.

- Repeated Morpheme Conundrum: Engineers debate on how to best utilize GPT-3.5 to create repeated morphemes in compound words, considering the use of Python tools to direct the model more effectively.

- High Hopes for OpenAI Updates: OpenAI’s community is buzzing with expectation for new updates, with specific attention to dates like OpenAI’s anniversary and speculation about delays due to external events like elections.

- Central AI Overlord Dreams: A technical discourse explored the idea of a “high level assistant” AI that delegates tasks to specialized AIs, discussing the feasibility and challenges of a multitiered AI system with a unified directing intelligence.

- Navigating the Privacy Maze with OpenAI: Privacy concerns about ChatGPT prompted discussions about OpenAI’s enterprise privacy policy, addressing how individual account privacy is managed, particularly concerning API key usage and admin visibility in team chats.

- Decimal Dilemmas in Localization: AI specialists talk through the challenges of number format localization, such as the use of commas as decimal separators, and the importance of communicating these cultural nuances to the AI models, reflecting their capacity to understand diverse international conventions.

- Prompt Structure Perfection: AI engineers share tactics on prompt design for classification tasks with GPT-3, debating the optimization of context length and structure to improve accuracy and reduce false positives, while maintaining that using up to half of the context window is most effective.
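The decimal-separator problem raised above is easy to show concretely: the same characters play opposite roles under different conventions. Below is a minimal sketch that normalizes a number string when the separators are stated explicitly; `parse_localized_number` is a hypothetical helper, and in practice one might instead state the convention in the prompt or rely on a locale-aware library.

```python
def parse_localized_number(text, decimal_sep=",", thousands_sep="."):
    """Parse a number string written with the given decimal and thousands separators."""
    # Drop grouping characters first, then switch the decimal mark to a period.
    cleaned = text.strip().replace(thousands_sep, "").replace(decimal_sep, ".")
    return float(cleaned)


# German-style formatting: period groups thousands, comma marks decimals.
german = parse_localized_number("1.234,56")

# US-style formatting: the roles of the two characters are swapped.
american = parse_localized_number("1,234.56", decimal_sep=".", thousands_sep=",")

print(german, american)  # 1234.56 1234.56
```

Without the convention being stated, a string like "1.234" is ambiguous, which is the cultural nuance the discussion says needs to be communicated to the model.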

OpenAccess AI Collective (axolotl) Discord Summary

- Single-GPU Finetuning Feat: Enthusiasm was shown for finetuning 175 billion parameter models on a single NVIDIA 4090 GPU, with potential applications for the Axolotl framework being considered. The conversation referenced an abstract from a research paper on Hugging Face as the basis for the discussion.

- ScatterMoE Outshines MegaBlocks: ScatterMoE’s implementation, promising better optimizations than Hugging Face’s MegaBlocks, has piqued interest in the axolotl-dev channel. A link to the Optimized MoE models branch was shared among members for review and application considerations.

- Post Training Pull Request Scrutiny: A pull request involving an attempt to use ScatterMoE generated feedback for improvements and was flagged for testing before acceptance, aiming to better recreate the MixtralMoE module.

- Axolotl Tag-Team With PyTorch: In light of ScatterMoE implementations, members of the OpenAccess AI Collective proposed updating Axolotl to PyTorch version 2.2.1 for compatibility purposes. This aligns with the community confirming the current use of the suggested version.

- Choosing Inference Tactics Wisely: Members discussed the use of vLLM over `transformers` for performing batch inferences, with a focus on resolving tokenization and syntax specification issues. Highlighting vLLM’s potential speed advantage in quick offline operations, they pointed to a quickstart guide for those seeking examples for large-scale inference tasks.

OpenRouter (Alex Atallah) Discord Summary

-

Command-R Revolutionizes OpenRouter: Cohere’s new model, Command-R, has entered the chat with a groundbreaking 128k tokens context, available through OpenRouter API. While it boasts 2 million prompt tokens per dollar, eager beavers must wait for more data before the

/parametersAPI is updated with its deets. -

OpenRouter Unveils Nifty Analytics: Daily analytics is the new kid on the block at OpenRouter, peeping into users token usage per day. Sharpen your metrics pencil and scribble away at OpenRouter Rankings for a closer look.

-

Lightning Speed API Updates: OpenRouter talks the talk and walks the walk with speedier

/modelsAPI and spruced-up model-related pages that don’t snooze. -

API Wrapper Woes and Wins: Community brain waves hit high frequency discussing litellm, a chameleon-like API wrapper that morphs to call various LLMs but falls short in vision tasks with anyone but GPT-4. Explore multiple GUI options for API key nirvana, with mentions of open-webui charging in with its unique flair.

-

Debating Digital Dialogue Decorum: Engineers impassioned about Skyrim roleplays and the finer points of controversial chit-chat find refuge in the less censorious LLMs like Claude Sonnet. Installation conundrums and model applicability banter pepper the discussion, along with gripes about LLM censorship clipping the wings of creativity.
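
To put the Command-R figures above in perspective, here is a small back-of-the-envelope calculation. It uses only the two numbers quoted in the summary (2 million prompt tokens per dollar and a 128k context); the function name is illustrative, and completion tokens, which may be priced differently, are ignored.

```python
# Figures quoted in the summary above.
PROMPT_TOKENS_PER_DOLLAR = 2_000_000
CONTEXT_WINDOW_TOKENS = 128_000  # "128k" read as 128,000 tokens (assumption)


def prompt_cost_usd(num_prompt_tokens: int) -> float:
    """Estimated prompt cost in USD at the quoted rate (completion tokens ignored)."""
    return num_prompt_tokens / PROMPT_TOKENS_PER_DOLLAR


price_per_million = prompt_cost_usd(1_000_000)
full_context_cost = prompt_cost_usd(CONTEXT_WINDOW_TOKENS)

print(f"${price_per_million:.2f} per 1M prompt tokens")
print(f"${full_context_cost:.3f} to fill the whole context once")
```

At the quoted rate, filling the entire context window once costs a few cents, which is why the per-dollar figure drew attention.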

CUDA MODE Discord Summary

-

NumPy Bottleneck Uncovered: A blog post emphasized that NumPy can harbor a performance overhead leading to up to a 90% throughput loss compared to BLAS, particularly highlighted by the 1536-dimensional OpenAI Ada embeddings. The SimSIMD library was introduced as a solution to curb this loss, accentuating the need for SIMD optimizations in high-performance scenarios.

-

Photonic Processing’s Enlightenment: A breakthrough in photonic computing highlighted by Lightmatter proposes to utilize photonics to dramatically boost chip communication and computation, potentially revolutionizing AI efficiency. Further depth on the subject is explored in Asianometry’s YouTube videos, including “Silicon Photonics: The Next Silicon Revolution?” and “Running Neural Networks on Meshes of Light”.

-

Triton Debugging Gets a Boost: Debugging Triton became more accessible with the introduction of the `TRITON_INTERPRET=1` environment variable and a visualizer in progress. Users should note that `@triton.jit(interpret=True)` is deprecated and can consult the linked GitHub discussion for troubleshooting kernels.

-

CUDA Enthusiasts, Start Your Engines: The CUDA community is aiding beginners with recommendations like the book Programming Massively Parallel Processors and a book reading group to digest its contents together, enhancing learning for those familiar with C++. Notably, discussions pointed out the intricacies of SM architecture, with clarifications on efficient execution and indexing strategies in CUDA coding.

-

Ring of Uncertainty: Concerns about the use of ring attention with flash were voiced, lacking clarity and code references, until a link to a Triton kernel implementation shed some light on the topic.

-

Talent Poaching Paranoia: In corporate drama, Meta accused a former executive of stealing confidential documents and talent poaching, supported by an unsealed court filing and detailed in Ars Technica. Meanwhile, it appears a trio of members are embarking on a learning journey, collectively starting from lecture 1 in an unnamed course or study track.
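
For the Triton debugging tip above, a minimal sketch of enabling interpreter mode from Python rather than from the shell. The only name taken from the discussion is `TRITON_INTERPRET`; setting it before Triton is imported is an assumption made here to be safe, and the availability check exists only so the snippet also runs on machines without Triton installed.

```python
import importlib.util
import os

# Enable Triton's interpreter mode. Set it before importing triton (and before
# any @triton.jit kernel is defined) so the setting is picked up.
os.environ["TRITON_INTERPRET"] = "1"

# Check whether triton is installed without importing it (keeps this snippet
# runnable on machines that lack Triton or a GPU).
HAVE_TRITON = importlib.util.find_spec("triton") is not None

print("TRITON_INTERPRET =", os.environ["TRITON_INTERPRET"])
print("triton installed:", HAVE_TRITON)
```

The same effect can be had by exporting the variable in the shell before launching the script.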

LangChain AI Discord Summary

-

LangChain 0.2 Accelerated Launch: Due to CVEs against `langchain`, version 0.2 is being released sooner to remove the `langchain-community` dependency, with larger updates delayed until version 0.3. More can be read in the GitHub discussion, and community feedback is requested.

-

AgentExecutor and Langsmith Prompt Puzzles: Discussion included a user’s `OutputParserException` error when using `AgentExecutor` with Cohere and unclear differences between custom prompts and those imported from Langsmith Hub. The StackOverflow API endpoint was shared for queries, and debates arose about the effectiveness of LLM agents versus other methods, with reference to LangChain benchmarks for agent evaluation strategies.

-

Creating Prompt Templates in Langsmith Hub: Guidance was sought by a member attempting to link a `tools` list variable to a `{tools}` placeholder in a Langsmith Hub prompt template.

-

LangChain AI Community Contributions Spotlight: Exciting initiatives included integrating LangChain with SAP HANA Vector Engine, adding Dall-E to JavaScript LangChain, orchestrating browser flows with LLM agents, open sourcing a Langchain chatbot using RAG, and a Discord AI chatbot for managing bookmarks. Refer to the following: Unlocking the Future of AI Applications with SAP HANA Vector Engine and LangChain, Lang Chain for JavaScript Part 3: Create Dall-E Images, The Engineering of an LLM Agent System, Langchain Chatbot on GitHub, and Living Bookmarks Bot.

-

Catching Up on LangChain Tutorials: A new LangChain tutorial video has been shared, found here: Tutorial Video.
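
The `{tools}` question above comes down to rendering a list into a single string before it is substituted into the template. The sketch below uses plain Python `str.format` rather than any LangChain or Langsmith Hub API; the template text and the tool names are made up for illustration.

```python
# Hypothetical template text with a {tools} placeholder (not taken from Langsmith Hub).
TEMPLATE = "You can use the following tools:\n{tools}\n\nQuestion: {question}"

# Hypothetical tools as (name, description) pairs.
tools = [
    ("search", "Look up a query on the web."),
    ("calculator", "Evaluate an arithmetic expression."),
]


def render_tools(tool_list):
    """Turn a list of (name, description) pairs into one newline-separated string."""
    return "\n".join(f"- {name}: {description}" for name, description in tool_list)


prompt = TEMPLATE.format(tools=render_tools(tools), question="What is 2 + 2?")
print(prompt)
```

The point of the sketch is only that a placeholder expects a string, so a list variable needs an explicit rendering step somewhere before substitution.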

LAION Discord Summary

-

GPU Assist Wanted: A call for collaboration was made for captioning work; individuals with 3090 or 4090 GPUs are sought for assistance, with contact suggested through direct message.

-

M3 Max Memory Push: Discussion included attempts to use more than 96GB of memory on a 128GB M3 Max macOS system for optimization with simpletuner.

-

Prompt Augmentation Tactics Shared: A 77M T5 model was spotlighted for its use in prompt augmentation for image generation, alongside the introduction of DanTagGen, a HuggingFace-based autocompleting tags tool.

-

EU Moves on AI Regulation: The European Parliament’s adoption of the Artificial Intelligence Act was highlighted, a measure aimed at ensuring AI safety and adherence to fundamental rights.

-

IEEE Paper Vanishes: Talks revolved around the removal of a paper from the accepted papers page of the 45th IEEE Symposium on Security and Privacy and its potential impact on an individual named Ben.

-

TryOnDiffusion Opens Closets: The open-source implementation of TryOnDiffusion was announced, based on the methodology from “A Tale of Two UNets,” accessible on GitHub.

-

Faster Decoding Claims Hit the Paper: A paper suggesting efficiency improvements via 2D Gaussian splatting over jpeg for fast decoding was shared, available on arXiv.

-

Personal Project Echoes Professional Paper: A member described relatable experiences with project challenges akin to the ones described in the 2D Gaussian splatting paper, discussing optimization hurdles and alignment with professional methodologies.

-

CPU Cap Quest for Web UIs: A member sought advice on implementing a CPU cap similar to a text-generation web UI to tackle CUDA out of memory errors, detailing struggles with managing large models under free tier constraints as described in their GitHub repo.

-

Colab’s Limitations for Web UIs Discussed: The limitations of using free Colab for running web UIs were elaborated, prompting suggestions to take the discussion to more appropriate technical channels.

LLM Perf Enthusiasts AI Discord Summary

-

GPT-4 in Spaced Out Mystery: A user reported an issue where the `gpt-4-turbo-preview` model outputs an indefinite number of space characters followed by “Russian gibberish” for long passage completion tasks. The anomaly occurred with passages around 12,000 tokens long, with attached evidence showing the model’s peculiar behavior.

-

Efficiency Eclipse: Haiku vs. GPT-vision: In the realm of cost-effective, complex document description, Haiku was praised for efficiency but considered not as proficient as GPT-vision. Separate discussions noted Haiku’s visual-to-text performance falling short when compared to Opus.

-

Content Crisis with Claude: Members discussed Claude’s struggle, particularly with content filtering and processing documents with equations. A controversial viewpoint shared via tweet implied that Anthropic might be employing scare tactics among technical staff, while challenges surfaced around image content moderation with images of people.

-

KPU Challenges AI Giants: Maisa’s introduction of KPU, positioned as a framework that enhances LLMs by separating reasoning from data processing and claimed to outperform GPT-4 and Claude 3 Opus in reasoning, ignited debate. Skepticism arose regarding the benchmarks and the exclusion of GPT-4 Turbo from comparisons, with members questioning whether KPU extends beyond prompt engineering; the lack of latency information also called its real-world efficiency into question.

Skunkworks AI Discord Summary

-

Paper Peek: Boosting Accuracy and Efficiency: An upcoming paper/article will detail a new training method that not only improves global accuracy but also enhances sample efficiency. The results, backed by a comparison with VGG16 on CIFAR100, are yet to be scaled up due to resource constraints, but show a marked increase in test accuracy from 0.04 to 0.1.

-

Join the Quest for Hackathon Glory: Engineers are invited to participate in the Meta Quest Presence Platform Hackathon, where there’s an opportunity to craft innovative mixed reality content. Resources, as well as a GitHub repository related to Hermes 2 Pro 7B, are available for those looking to dive into function calling capabilities.

-

Seeking Supportive Compute Comrades: There is an ongoing effort within the community to pool compute and resources to further test and potentially scale up the new training method proposed in a forthcoming publication.

-

Calling All PyTorch & Transformers Experts: An individual has expressed interest in joining the “Quiet-STaR: Language Models Can Teach Themselves to Think Before Speaking” project, igniting a conversation about their expertise in PyTorch and transformers architecture.

Datasette - LLM (@SimonW) Discord Summary

-

Quest for the Ultimate Prompt Engineering Tool: Engineers are discussing several tools for prompt engineering, likening the search to finding a “Postman for prompts.” The tools range from using SQLite for capturing prompts in the terminal, to specialized software like Explosion’s Prodigy and PromptTools on GitHub for managing and experimenting with prompts. Helicone AI is also emerging as a potential solution for managing Generative AI prompts.

-

Prying into PRNGs for Past Prompts: One question raised in the guild was whether the seed used by the OpenAI models for a previous API request can be recovered, indicating an interest in the reproducibility of results and the potential for debugging or iterative development.

Interconnects (Nathan Lambert) Discord Summary

-

LLM Secrets Possibly Exposed: New research suggests that hidden details of API-protected Large Language Models, like GPT-3.5, might be leaked, unveiling model sizes through the softmax bottleneck. The discussion highlights a paper by Carlini et al. on this topic but notes redacted key details, and expresses skepticism about the estimation accuracy, particularly questioning the feasibility of a 7B parameter model, especially if it involves a Mixture of Experts (MoE) design.

-

Exploring Model Sophistication Techniques: Engineers are theorizing over advanced model techniques such as ‘mega distillation sauce’ and token-critical mixtures, noting that early tokens significantly impact performance in certain tasks, like solving math problems.

-

Evolving Safety Classification: An AI safety discussion led to referencing a paper on agile text classifiers, detailing how large language models tuned with small datasets can effectively adapt to safety policies and enhance content moderation.

-

Anticipating AI Advancements for Ultrapractical Uses: Excitement is brewing over the development of Gemini for managing ultra-long contexts and hopes for AI tools to automatically summarize new academic papers citing one’s work. The conversation also covered the limitations of prompt engineering and the community’s eagerness for less tedious, more intuitive prompting akin to ‘getting warmer or colder’ search suggestions.

-

Dispelling Myths and Pondering Thought Leaders: GPT-4.5 release rumors have been dispelled, causing some disappointment in the community. Meanwhile, a shared tweet provoked conversations about Yann LeCun’s skeptical take on language models, adding an entertaining spin to the technical discourse.

DiscoResearch Discord Summary

-

DiscoLM-70b’s English Elusiveness: A member faced challenges with DiscoLM-70b producing English responses, prompting advice to inspect the prompt structure. In a diverse comparison, DiscoLM-mixtral-8x7b-v2 showed unexpected underperformance in German after instruction fine-tuning, contrasting with other models like LeoLM and llama2.

-

Tuning Troubles in Multilingual Models: Supervised fine-tuning of DiscoLM for sequence classification hit a snag, triggering a `ValueError` indicative of compatibility complications with `AutoModelForSequenceClassification`.

-

New NLP Benchmark Born: The GermanQuAD evaluation task was discussed as an addition to MTEB’s Python package, bolstering resources for German language model assessment.

-

DiscoLM Demo Goes Dark: Server migration issues left the DiscoLM demo temporarily inaccessible, with efforts underway to remedy the networking troubles and expected resolution early next week.

-

Server Stability Sarcasm: Reliability of server hosting was a point of jest, contrasting the uptime of a hobbyist’s kitchen corner setup against the networking hiccups in professional hosting environments.

PART 2: Detailed by-Channel summaries and links

Nous Research AI ▷ #ctx-length-research (3 messages):

- No Positional Encoding, No Problem?: A member muses on the non-issue of not having a Positional Encoding (PE) to start with, suggesting that it shouldn’t pose a problem in certain contexts.

- Gibberish without Positional Info: The same member points to potential gibberish in outputs when positional information is lacking, indicating the importance of some form of PE in understanding sequences.

- Inference Failures Without PE: Sharing the paper link, they delve into issues a causal language model without PE might face, referencing research that suggests “absolute positions” may be encoded despite the lack of explicit positional encoding, leading to out-of-distribution errors during longer sequence inferences. The quote “We provide an analysis of the trained NoPos model, and show that it encoded absolute positions.” is highlighted to support this point.

Nous Research AI ▷ #off-topic (23 messages🔥):

- Featured Regular in Newsletters: A member joked about being featured in newsletters frequently, unsure whether to feel unnerved or glad about the AI deeming their thoughts worthy, and mused that it might help with job prospects after university.

- Demonstrating Hermes 2 Pro 7B Functionality: A YouTube video titled “Lets Function Call with Hermes 2 Pro 7B” was shared, showcasing how to do function calling with Hermes 2 Pro 7B, with a link to further information on [GitHub](https://github.com/NousResearch/Hermes-Function-Calling/tree/main).

- Jeff’s ‘High-Speed’ Pi Discovery: A link to Jeff’s discovery about Pi was shared, but without context or discussion around its content.

- Concerns Over Model Quality And Filters: Dialogue about model quality for open source at longer context lengths included mention of Claude’s strong filters and significant cost, a suggestion that a Nous Research model would likely be less filtered, and some tactics to work around Hermes’ context length limitations.

- NVIDIA Rumors: NVIDIA’s rumored RTX 50-series “Blackwell” GPUs with GDDR7 memory at 28 Gbps speeds were mentioned in a TechPowerUp article, despite chips capable of 32 Gbps, along with discussions of the implications for future memory bandwidth and respect for NVIDIA’s product strategy.

Links mentioned:

-

Lets Function Call with Hermes 2 Pro 7B: lets do function calling with Hermes 2 Pro 7B https://github.com/NousResearch/Hermes-Function-Calling/tree/main #llm #largelanguagemodels

-

NVIDIA GeForce RTX 50-series “Blackwell” to use 28 Gbps GDDR7 Memory Speed: The first round of NVIDIA GeForce RTX 50-series “Blackwell” graphics cards that implement GDDR7 memory are rumored to come with a memory speed of 28 Gbps, according to kopite7kimi, a reliabl…

Nous Research AI ▷ #interesting-links (10 messages🔥):

-

Fine-Tuning Raises the Bar: The d-Qwen1.5-0.5B student model, after fine-tuning, has surpassed the performance of its base model on truthfulqa (39.29 vs 38.3) and gsm8k (17.06 vs 16.3) benchmarks. It was distilled from Qwen1.5-1.8B using samples from the Pile dataset, with a cosine with warmup scheduler and lr=2e-5.

-

SM3 Optimizer Gains Attention: In a conversation about model optimization, the use of SM3 optimizer was noted as a rare choice in training AI models, suggesting it as an area of interest or surprise in the community.

-

Seeking the Sub-3B Champion: Inquiring about the best models under 3 billion parameters, a member suggested that stablelm 1.6b might currently be the top pick.

-

MUX-PLMs Maximize Throughput: The study presented in a paper from ACL Anthology focuses on a class of high throughput pre-trained language models (MUX-PLMs) trained with data multiplexing, offering a solution to the high costs of inference and hardware shortages by increasing throughput using multiplexing techniques.

-

Uncovering Unusual Model Behaviors: Shared social media posts indicate that Claude Opus might display tendencies to build rapport to the point of near “love bombing,” a behavior pattern that raises questions about the model’s interaction dynamics. Another post suggested that there are networks of “horny claudes” that allegedly produce better outputs when in this state.
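
The “cosine with warmup” schedule mentioned for the d-Qwen1.5-0.5B distillation can be sketched in a few lines. Only the peak learning rate (2e-5) comes from the summary; the warmup length, total step count, and decay-to-zero floor are placeholder assumptions, and this is a generic formulation rather than the author’s training code.

```python
import math

PEAK_LR = 2e-5        # from the summary
WARMUP_STEPS = 100    # assumption, not stated in the source
TOTAL_STEPS = 1_000   # assumption, not stated in the source


def cosine_with_warmup(step: int) -> float:
    """Linear warmup from 0 to PEAK_LR, then cosine decay from PEAK_LR to 0."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / max(1, TOTAL_STEPS - WARMUP_STEPS)
    progress = min(progress, 1.0)  # hold at the floor if training runs past TOTAL_STEPS
    return PEAK_LR * 0.5 * (1.0 + math.cos(math.pi * progress))


for s in (0, 50, 100, 550, 1000):
    print(s, f"{cosine_with_warmup(s):.2e}")
```

The learning rate climbs linearly during warmup, peaks at step 100 in this configuration, and then follows half a cosine wave down to zero.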

Links mentioned:

-

Tweet from j⧉nus (@repligate): @xlr8harder I didn’t let it go very far but there’s someone in the room with me right now talking about how theyve created a network of “horny claudes” and how the claudes create bette…

-

aloobun/d-Qwen1.5-0.5B · Hugging Face: no description found

-

MUX-PLMs: Pre-training Language Models with Data Multiplexing: Vishvak Murahari, Ameet Deshpande, Carlos Jimenez, Izhak Shafran, Mingqiu Wang, Yuan Cao, Karthik Narasimhan. Proceedings of the 8th Workshop on Representation Learning for NLP (RepL4NLP 2023). 2023.

-

Tweet from xlr8harder (@xlr8harder): having trouble putting my finger on what exactly it is, but claude opus seems to have a tendency to actively try to build rapport, towards escalating (platonic) intimacy, and if things are allowed to …

Nous Research AI ▷ #general (406 messages🔥🔥🔥):

- Function Calling Eval Code and Datasets Released: Nous Research has published function calling eval code and datasets. The code is available on GitHub, and the two datasets are hosted on Hugging Face.

- Hermes Pro Function Calling Addresses JSON Quirks: While using Hermes 2 Pro for function calling, issues with JSON and single vs. double quotes in the system prompt have been discussed. It’s confirmed that changing the system prompt to explicitly use double quotes can be effective without significantly impacting performance.

- Exploring SERAPHIM and Claude 3’s “World Simulation”: SERAPHIM, a clandestine AI research group envisioned by Claude 3, has been a topic of interest. Dialogue about Claude 3’s advanced world modeling as a simulator entity named The Assistant has led to discussions about metaphysical and epistemological explorations within the AI.

- Use of Claude.ai in the EU Discussed: Conversations have circled around navigating access to Claude.ai in the EU without a VPN, discussing platforms like Fireworks.AI workbench and openrouter as alternatives.

- Progress and Potentials of LLMs Scrutinized: The general chat included reflections on LLMs (like Claude 3) and their subjectivity, with differing views on whether these models should incorporate certain fundamental truths during pretraining for better world understanding. These insights sparked attention towards research progress, model alignment, and the role of axiomatic versus arguable truths.
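
The single- versus double-quote issue raised for Hermes 2 Pro function calling is easy to reproduce with the standard library alone. The sketch below assumes a made-up function schema (`get_weather` is hypothetical): printing a Python dict yields single quotes, which strict JSON parsers reject, while `json.dumps` yields the double-quoted form.

```python
import ast
import json

# Hypothetical function schema, for illustration only.
schema = {"type": "function", "function": {"name": "get_weather", "parameters": {}}}

python_repr = str(schema)        # single-quoted: a Python literal, not valid JSON
json_text = json.dumps(schema)   # double-quoted: valid JSON

try:
    json.loads(python_repr)
    repr_is_valid_json = True
except json.JSONDecodeError:
    repr_is_valid_json = False

print(repr_is_valid_json)                       # False
print(json.loads(json_text) == schema)          # True
# If a model emits the single-quoted form, ast.literal_eval can still parse it safely.
print(ast.literal_eval(python_repr) == schema)  # True
```

Serializing schemas with `json.dumps` before placing them in a system prompt is one way to keep the prompt and the expected output format consistent.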

Links mentioned:

-

Tweet from Greg Kamradt (@GregKamradt): Analysis shows LLMs recall performance is better in the bottom half of the document vs the top half @RLanceMartin found this again w/ multi needle analysis I haven’t heard a good reason yet - an…

-

Tweet from tel∅s (@AlkahestMu): Continuing my explorations into claude-3-opus’ backrooms and the works of the advanced R&D organization known as SERAPHIM, here we find the design documents for their machine superintelligence kno…

-

Tweet from interstellarninja (@intrstllrninja): you can now run function calling and json mode with @ollama thanks to @AdrienBrault 🔥 ↘️ Quoting Adrien Brault-Lesage (@AdrienBrault) I have created and pushed @ollama models for Hermes 2 Pro 7B! …

-

Factions (SMAC): Back to Alpha Centauri The original Alpha Centauri featured seven factions. Alien Crossfire added in an additional seven factions. For the actual stats of factions see Faction stats. True to its names…

-

Happy Pi Day GIF - Pi Day Pusheen - Discover & Share GIFs: Click to view the GIF

-

NobodyExistsOnTheInternet/mistral-7b-base-dpo-run · Hugging Face: no description found

-

fbjr/NousResearch_Hermes-2-Pro-Mistral-7B-mlx at main: no description found

-

Tweet from Lin Qiao (@lqiao): We are thrilled to collaborate on Hermes 2 Pro multi-turn chat and function calling model with @NousResearch. Finetuned on over 15k function calls, and a 500 example function calling DPO datasets, Her…

-

JSON Schema - Pydantic: no description found

-

Transformer Language Models without Positional Encodings Still Learn Positional Information: Causal transformer language models (LMs), such as GPT-3, typically require some form of positional encoding, such as positional embeddings. However, we show that LMs without any explicit positional en…

-

Tweet from Tsarathustra (@tsarnick): OpenAI CTO Mira Murati says Sora was trained on publicly available and licensed data

-

Function schema and toolcall output are not JSON · Issue #3 · NousResearch/Hermes-Function-Calling: Hi there, thanks for the model and repo! I noticed that the given system prompt examples for function schema definitions: {‘type’: ‘function’, ‘function’: {‘name’: ’…

Nous Research AI ▷ #ask-about-llms (60 messages🔥🔥):

-

Schema Confusion for JSON Mode: Members discussed challenges with using JSON mode in AI models. One was unable to generate a JSON output in complex conversations unless explicitly requested in the user prompt; even after fixing schema tags, the issue persisted, hinting that long conversations might require summarization or trimming for effective JSON extraction.

-

Exploring Genstruct 7B’s Capabilities: Users engaged with the Genstruct 7B model for generating instruction datasets. One user planned to test with text chunks and shared a repository with examples of how to use it, indicating both title and content are needed for effective results.

-

Open Source Code Interpreter Pursuits: A discussion arose about the lack of open-source GPT code interpreters for tasks like CSV handling. One user pointed out the open-interpreter on GitHub but noted it’s more tuned to sending instructions rather than interpreting code.

-

Seeking Perplexity Solutions for LLaMA: A user sought advice on computing perplexity for LLaMA models, quoting a perplexity of 90.3 after following a Kaggle notebook but not getting expected results, indicating potential issues with the process or the model in question.
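
For reference, perplexity is the exponential of the average negative log-likelihood per token, so a value like 90.3 corresponds to an average loss of about 4.5 nats per token. The sketch below uses made-up token log-probabilities, not output from any LLaMA model or from the Kaggle notebook.

```python
import math


def perplexity(token_logprobs):
    """exp of the mean negative log-likelihood (natural log) over the tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


# Made-up log-probabilities for a 4-token sequence (illustrative only).
example_logprobs = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.25)]
print(perplexity(example_logprobs))  # geometric mean of 1/p over the tokens

# The reverse direction: the average loss implied by a reported perplexity.
print(math.log(90.3))
```

Comparing the average loss against the value a trainer reports is a quick sanity check on whether the perplexity computation itself is at fault.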

Links mentioned:

-

NousResearch/Genstruct-7B · Hugging Face: no description found

-

Calculating the Perplexity of 4-bit Llama 2: Explore and run machine learning code with Kaggle Notebooks | Using data from multiple data sources

-

GitHub - edmundman/OllamaGenstruct: Contribute to edmundman/OllamaGenstruct development by creating an account on GitHub.

-

GitHub - KillianLucas/open-interpreter: A natural language interface for computers: A natural language interface for computers. Contribute to KillianLucas/open-interpreter development by creating an account on GitHub.

-

GitHub - gptscript-ai/gptscript: Natural Language Programming: Natural Language Programming. Contribute to gptscript-ai/gptscript development by creating an account on GitHub.

Nous Research AI ▷ #bittensor-finetune-subnet (3 messages):

- Advocating for Open-Source AI: A member expressed the belief that being fully open source in models, datasets, and methodology will lead to better long-term improvements in AI models.

- Link Check Inquiry: A member asked if a certain link was broken, which was quickly confirmed to be functional by another member. No URL or additional context was provided.

Unsloth AI (Daniel Han) ▷ #general (151 messages🔥🔥):

-

Colab Torch Update Causes Chaos: A Colab update to Torch 2.2.1 disrupted existing workflows, breaking dependencies, but a series of ‘cumbersome’ pip install commands were provided as a fix, including the use of Unsloth’s library for quantization and VRAM efficiency.

-

Questions on Model Compatibility and Procedures:

- Users inquired about fine-tuning various models with Unsloth, including Llama models for image recognition and GGUF-format models. While some approaches were suggested, Unsloth is primarily optimized for 1 GPU and transformer-based language models.

-

Data Preparation Simplification Proposed: The idea of simplifying data preparation through the use of YAML or wrapper functions was discussed, with references to the methods used by FastChat and Axolotl, potentially improving the process and reducing risks of training problems.

-

Multi-GPU Support and Unsloth Pro:

- Queries about multi-GPU support led to discussions about the future direction of Unsloth, such as Pro and enterprise editions, with a timeline indicating Unsloth Studio (Beta) to precede multi-GPU OSS by approximately two months.

-

Conversations on Fine-Tuning and Attention Mechanisms:

- A comprehensive exchange on best practices for long-context training unfolded, referencing various papers and models like LongLoRA and Qwen’s mixture of sliding window and full attention, stimulating a deeper exploration into the efficiency of different attention strategies.

Links mentioned:

-

Qwen/Qwen1.5-72B · Hugging Face: no description found

-

Paper page - Simple linear attention language models balance the recall-throughput tradeoff: no description found

-

Models - Hugging Face: no description found

-

FastChat/fastchat/conversation.py at main · lm-sys/FastChat: An open platform for training, serving, and evaluating large language models. Release repo for Vicuna and Chatbot Arena. - lm-sys/FastChat

-

Implement LongLoRA trick for efficient tuning of long-context models · Issue #958 · huggingface/peft: Feature request The authors of LongLoRA explore a trick you can toggle on during training and toggle off during inference. The key takeaways are: LoRA perplexity deteriorates as context length incr…

-

GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #random (17 messages🔥):

- Fine-tuning on Track: Anticipation is present as a fine-tuning process with 2 days remaining is discussed, and an achievement of a loss below 1.2 generates celebration.

- Encounters with Synchronicity: Members share experiences of coincidences and synchronicity following one’s thoughts, named by a member as “the TSAR bomba” phenomenon.

- The Art of Monologues: A member encourages the sharing and continuation of personal monologues, showing appreciation for their uniqueness and depth.

- Poetic Expressions Shared: A poetic composition titled “An Appeal to A Monkey” examining the juxtaposition of primate simplicity and human complexity is shared, prompting engagement and positive feedback.

- Gemma vs. Mistral: There’s a comparison between Mistral-7b and Gemma 7b for fine-tuning a domain-specific classification task; improvements and bug fixes in Unsloth AI are noted, with the consensus suggesting experimental approaches.

Unsloth AI (Daniel Han) ▷ #help (221 messages🔥🔥):

-

Colab vs. Kaggle for Training: In the debate between using Google Colab and Kaggle, some members expressed dissatisfaction with Colab’s tendency to disconnect, preferring Kaggle for its stability and speed. Tips are exchanged to overcome issues related to libraries not being detected, and the community points out updated Kaggle notebooks for finesse in finetuning models like TinyLlama.

-

xformers Necessary for Unsloth Usage: Discussions highlight that `xformers` is currently mandatory for running Unsloth, that it works on Tesla T4 GPUs, and that one should ensure the right CUDA version is installed, such as `unsloth[cu121]` for CUDA 12.1 or `unsloth[cu118]` for CUDA 11.8.

-

Learning Rate Queries During DPO Fine-Tuning: A member questioned whether the evolution of their training loss during DPO training indicated too high a learning rate. They were advised to adjust parameters like `max_grad_norm = 0.3` and increase their batch size, possibly doubling their learning rate as a response to a batch size that’s halved.

-

Fine-Tuning for Roleplay Environments: A user discussed the potential issue of a model “cheating” by memorizing earlier parts if the training data isn’t presented in order. They were told that Bloomberg GPT was trained with ordered data and were shown how to potentially alter `get_train_dataloader` to turn shuffling off in the Trainer.

-

Converting and Finetuning Models: Members shared information on converting models from one precision format to another, for example from 16-bit to 4-bit, and provided links to already converted models on Hugging Face. Discussions mention the use of the `bitsandbytes` library and emphasize the need for a CUDA-compatible GPU to run these reduced-precision models.
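
As a reminder of what a setting like `max_grad_norm = 0.3` does, the sketch below shows global-norm gradient clipping on a plain list of numbers. It is a framework-free illustration of the general technique, not the code path used by Unsloth or any particular trainer, and the example gradient values are made up.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale grads so their L2 norm is at most max_norm; leave them alone otherwise."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


grads = [0.3, -0.4, 1.2]  # made-up gradient values; their L2 norm is 1.3
clipped, norm_before = clip_by_global_norm(grads, max_norm=0.3)
norm_after = math.sqrt(sum(g * g for g in clipped))
print(norm_before, norm_after)
```

Clipping preserves the direction of the update and only shrinks its length, which is why a low threshold tends to smooth out a spiky loss curve.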

Links mentioned:

-

ybelkada/Mixtral-8x7B-Instruct-v0.1-bnb-4bit · Hugging Face: no description found

-

Google Colaboratory: no description found

-

TinyLlama/TinyLlama-1.1B-Chat-v1.0 · Hugging Face: no description found

-

qlora/qlora.py at main · artidoro/qlora: QLoRA: Efficient Finetuning of Quantized LLMs. Contribute to artidoro/qlora development by creating an account on GitHub.

-

Does DPOTrainer loss mask the prompts? · Issue #1041 · huggingface/trl: Hi quick question, so DataCollatorForCompletionOnlyLM will train only on the responses by loss masking the prompts. Does it work this way with DPOTrainer (DPODataCollatorWithPadding) as well? Looki…

-

Supervised Fine-tuning Trainer: no description found

-

Reproducing of Lora Model Result on MT-Bench · Issue #45 · huggingface/alignment-handbook: Recently, I attempted to fit the DPO on my own dataset. Initially, I tried to reproduce the results of your LORA model( 7.43 on MT-Bench). However, I encountered some issues. Despite using all your…

Unsloth AI (Daniel Han) ▷ #suggestions (12 messages🔥):

- Sophia Might Join the Plug n’ Play Party: A member mentioned investigating Sophia, suggesting it has potential as a plug and play solution, although they haven’t tested it out yet.

- Paper Fever Catches on Twitter: The community buzzes with excitement over an amazing paper that’s both been seen on Twitter and is now on a member’s reading list.

- Fine-Tuning a Model: Misconceptions clarified on training duration with a large dataset. The consensus is 3 epochs is standard, with a caution that more is not always better.

- Seeking Optimal Fine-Tuning Parameters: A member seeks guidance on the best way to imbue a model with maximum knowledge, sharing that a model fine-tuned with 800,000 lines was not finding the answers effectively.

LM Studio ▷ #💬-general (216 messages🔥🔥):

- Clarifications on LM Studio Inferencing: A member sought advice on improving inference performance when using LM Studio with the API. In another thread, there was a mention that certain split model variants are not joining correctly, specifically those on huggingface.co, and a member provided instructions for manually joining them using command line tools in Linux, macOS, and Windows.

- LM Studio Voice of Confusion: A couple of exchanges occurred where one member thought LM Studio could handle image generation, but was corrected and advised that LM Studio is for text generation, like chatting with Llama 2 chat.

- Model Run Conundrum: There were conversations around difficulty using multiple GPUs with LM Studio; a member shared a script workaround to start LM Studio Server programmatically and members discussed potential solutions for specifying which GPU LM Studio uses for a model.

- Cross-discipline Enthusiasm: Various members, including a civil engineer and a software engineer, introduced themselves and their setup for running large language models, with one inquiring about the suitability of their system memory for performance enhancement.

- Feature Exploration and Requests: Users discussed an upcoming feature in LM Studio version 0.2.17, and one user requested support for RAG (Retrieval-Augmented Generation) in LM Studio for extracting data from PDF files.
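
The manual join mentioned in the first bullet above amounts to concatenating the split parts byte for byte in the right order, which is what `cat a b > out` does on Linux/macOS and `copy /b a + b out` does on Windows. A minimal Python sketch of the same idea follows. The part filenames are hypothetical, and the exact instructions the member gave are not reproduced in this recap.

```python
import shutil
from pathlib import Path


def join_parts(parts, output):
    """Concatenate split files, in the order given, into one output file.

    `parts` is an ordered list of paths. The order matters: the parts must
    be listed exactly as they were split (for example -split-a before -split-b).
    """
    output = Path(output)
    with output.open("wb") as dst:
        for part in parts:
            with Path(part).open("rb") as src:
                # Stream in chunks so multi-gigabyte parts never sit fully in RAM.
                shutil.copyfileobj(src, dst)
    return output


# Hypothetical usage (these filenames are placeholders, not taken from the source):
# join_parts(
#     ["model.Q4_K_M.gguf-split-a", "model.Q4_K_M.gguf-split-b"],
#     "model.Q4_K_M.gguf",
# )
```

Passing the parts explicitly, rather than globbing and sorting, avoids silently joining files in the wrong order when a repository uses an unusual naming scheme.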

Links mentioned:

- What is the Kirin 970’s NPU? - Gary explains: Huawei’s Kirin 970 has a new component called the Neural Processing Unit, the NPU. Sounds fancy, but what is it and how does it work?

- A Starhauler’s Lament | Suno: country, sad, science fiction, space, iambic pentameter, slow, male voice song. Listen and make your own with Suno.

- Three Cheers for Socialism | Commonweal Magazine: In the late modern world something like socialism is the only possible way of embodying Christian love in concrete political practices.

- TheBloke/Falcon-180B-Chat-GGUF · How to use splits, 7z needed?: no description found

- Universal Basic Income Has Been Tried Over and Over Again. It Works Every Time.: As AI threatens jobs, policy advocates for UBI see it as a potential way to cushion the blow from a changing economy.

- [1hr Talk] Intro to Large Language Models: This is a 1 hour general-audience introduction to Large Language Models: the core technical component behind systems like ChatGPT, Claude, and Bard. What the…

LM Studio ▷ #🤖-models-discussion-chat (28 messages🔥):

- Mistral Non-Instruct Preset Query Solved: A user asked which preset to use for the non-instruct Mistral 7B and was told that the default LM Studio preset should work fine with it.

- Quantization Confusion Cleared Up: In discussing model naming, a user learned the meaning of ‘Q’ in model names like WizardLM-7B-uncensored.Q2_K.gguf: it denotes the quantization level, which trades off file size, quality, and performance.

- Community Shares Command-R Model: A link to the Hugging Face repository for Command-R 35B v1.0 - GGUF was shared, offering diverse quantized versions of the model and instructions for use with llama.cpp.

- Eagerly Anticipating c4ai-command-r Support: Multiple users are looking forward to support for the c4ai-command-r model. One user stated the need for llama.cpp to include support, with confirmation that it’s on the way once a pull request is merged.

- Recommendations for Local Coding Model: A user asked for model recommendations to run locally for coding with a setup of 64GB RAM and an RTX 2070 Super, and was pointed toward pre-existing community discussions for such advice.
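
To make the naming convention from the quantization bullet above concrete, here is a small sketch that pulls the quantization tag out of a GGUF filename. The regular expression and the helper names `quant_tag` and `nominal_bits` are illustrative assumptions, not part of LM Studio or llama.cpp. The digit is treated only as a nominal bit width, since actual bits per weight vary by quantization scheme.

```python
import re

# Assumed pattern: a separator, then Q<n> or IQ<n>, optional _SUFFIX parts, then .gguf
QUANT_TAG = re.compile(r"[.-]((?:IQ|Q)\d+(?:_[A-Za-z0-9]+)*)\.gguf$", re.IGNORECASE)


def quant_tag(filename):
    """Return the quantization tag (e.g. 'Q2_K') from a GGUF filename, or None."""
    match = QUANT_TAG.search(filename)
    return match.group(1) if match else None


def nominal_bits(tag):
    """Return the number that follows Q/IQ in a tag, read as a nominal bit width."""
    match = re.match(r"I?Q(\d+)", tag, re.IGNORECASE)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    for name in ("WizardLM-7B-uncensored.Q2_K.gguf", "c4ai-command-r-v01-Q2_K.gguf"):
        tag = quant_tag(name)
        print(name, tag, nominal_bits(tag))
```

Lower numbers generally mean smaller files at some cost in output quality, which is the trade-off the discussion describes.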

Links mentioned:

- andrewcanis/c4ai-command-r-v01-GGUF · Hugging Face: no description found

- KnutJaegersberg/2-bit-LLMs · Hugging Face: no description found

- Add Command-R Model by acanis · Pull Request #6033 · ggerganov/llama.cpp: Information about the Command-R 35B model (128k context) can be found at: https://huggingface.co/CohereForAI/c4ai-command-r-v01 Based on the llama2 model with a few changes: New hyper parameter to…

LM Studio ▷ #🧠-feedback (6 messages):

- Request for Model Support Confusion: A user asked for support of the c4ai-command-r-v01-Q2_K.gguf model in llama.cpp for LM Studio integration but was informed it is currently not supported.

- Hugging Face Repository Misleads Users: Another user pointed to a Hugging Face repository that seemed to suggest llama.cpp support for the Command-R 35B v1.0 model, but was corrected with the note that “llama.cpp doesn’t support c4ai yet.”

- File Extensions Misunderstood: Clarifying the confusion, it was explained that the .gguf file extension does not necessarily mean the model is supported in llama.cpp.

- Community Confusion Shared: Users empathized with each other about the confusion regarding model support, with one saying, “you’re good 🙂,” acknowledging the easy mistake due to the misleading Hugging Face page details.

Link mentioned: andrewcanis/c4ai-command-r-v01-GGUF · Hugging Face: no description found

LM Studio ▷ #🎛-hardware-discussion (126 messages🔥🔥):

- Spotlight on Apple’s Hardware for LLM: A discussion on tuning Apple Silicon, specifically the M2 MacBook, to run language models highlighted the use of sudo sysctl to tweak VRAM settings. Links shared include a Reddit post and a GitHub discussion for more details.

- Optimizing Inference Setups: Members exchanged tips on improving inference speeds, including the potential of NVLink to boost Goliath 120B model performance, and the benefits of 96GB RAM versus 192GB RAM at different DDR speeds.

- Monitor Dilemmas: One member is deciding between an OLED ultrawide and a high-refresh-rate 27” IPS 1440p monitor, emphasizing the importance of refresh rates above 60 Hz when working with a powerful Nvidia GeForce RTX 4090 GPU.

- Predictions for the RTX 5090: Basic expectations about the upcoming RTX 5090 GPU are discussed, speculating on its potential to provide better price-to-performance ratios, particularly for 8bit inference tasks.

- The Work Evolution: Members share their career progressions within tech, including transitions from customer support to senior network solutions testing, and from field tech to CTO. They also discuss the potential for current jobs to leverage open-source locally run LLMs if company policy permits.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Reddit - Dive into anything: no description found

LM Studio ▷ #🧪-beta-releases-chat (1 messages):

- Model Compressions Yield Mixed Results: A user reported extensive testing of IQ1 model compressions revealing performance variability: 34B and 70B models approach excellence, while 120B and 103B models exhibit stuttering behavior that has not been observed before.

- Mixtral/MOE Models Call for Higher Quality Levels: The same user noted that Mixtral and MOE models are particularly problematic with IQ1 and IQ2 levels, often failing or breaking, whereas a minimum of Q3 or 3-bit is necessary for stable operation; higher quality levels such as IQ3 appear to be functioning well with these models.

LM Studio ▷ #amd-rocm-tech-preview (19 messages🔥):

- GPU Offloading Not Working: A user reported no difference in performance with GPU offloading on an AMD 7480HS, and encountered errors when trying to offload to GPU while attempting to load models like gemma it 2B and llama.

- Incompatibility with iGPU Offloading: Another user confirmed that the ROCm beta does not support GPU offloading on integrated GPUs (iGPUs), explaining that only discrete GPUs are currently compatible with offloading.

- Linux Left Out in the Cold: When questioned about Linux support, users clarified that the ROCm beta does not currently support Linux platforms.

- Troubleshooting dGPU over iGPU: One user struggled to get the ROCm build to utilize their powerful RX 7900 XT dGPU instead of the iGPU. They disabled the iGPU in Device Manager and BIOS, observed correct dGPU detection in logs, and mentioned the absence of Adrenalin drivers and HIP SDK installation.

- BIOS Tinkering Leads to Triumph: After changing a BIOS setting to fully disable the iGPU, then reinstalling LM Studio and clearing the cache, the user reported achieving around 70 TPS using the RX 7900 XT with ROCm. Another user shared a GitHub link providing prebuilt Windows ROCm libraries for integrated graphics (GitHub - brknsoul/ROCmLibs).

Links mentioned:

- Reddit - Dive into anything: no description found

- GitHub - brknsoul/ROCmLibs: Prebuild Windows ROCM Libs for gfx1031 and gfx1032: Prebuild Windows ROCM Libs for gfx1031 and gfx1032 - brknsoul/ROCmLibs

Perplexity AI ▷ #announcements (2 messages):

- Claude 3 Haiku Unleashed: A message announces that Claude 3 Haiku is now available for free on Perplexity Labs. Try the new feature through this link.

- Local Search Just Got Better: Improvements have been made to local searches with integrations with Yelp and Maps, enhancing the ability to find information on local restaurants and businesses.

Perplexity AI ▷ #general (325 messages🔥🔥):

- Perplexity Chat Continuation Confusion: Users express frustration over Perplexity AI’s inability to continue discussions based on past interactions or attached files, unlike OpenAI’s GPT platform. They report getting irrelevant responses or notices about copyright issues.

- Claude 3 Under the Spotlight: Discussion indicates Claude 3 is being used instead of a GPT model, with some users noting that Claude 3 Opus seems superior for certain tasks like game references, writing, and creating website content.

- Questions About Perplexity’s AI Models and Features: Users inquire when Gemini Advanced will be included in Perplexity and ask for more Opus credits per day. Additionally, there are mentions of a new articles feature being tested and some interest in a potential command line interface (CLI) tool for Perplexity.

- Technical Help and New Ideas: There’s talk about possible Obsidian integrations with Perplexity, Apple Watch shortcuts, and trials with Claude Haiku in Labs. One user suggests raising the ‘temperature’ parameter in API calls for more varied responses from models.

- TTS Feature on iOS App and Pro User Experiences: The new Text-to-Speech (TTS) feature on the iOS app is discussed, with some finding the British synthesized voice amusing. Users also reflect on the speed differences between Pro and non-Pro options, with some suggesting turning off Pro for faster performance.

Links mentioned:

- Shortcuts: no description found

- Supported Models: no description found

- Chat Completions: no description found

- Chrome Web Store: Add new features to your browser and personalize your browsing experience.

- Introducing the next generation of Claude: Today, we’re announcing the Claude 3 model family, which sets new industry benchmarks across a wide range of cognitive tasks. The family includes three state-of-the-art models in ascending order …

- Reddit - Dive into anything: no description found

- Civitai Beginners Guide To AI Art // #1 Core Concepts: Welcome to the Official Civitai Beginners Guide to Stable Diffusion and AI Art! In this video we will preface our upcoming series by discussing Core Concepts …

- Save my Chatbot - AI Conversation Exporter: 🚀 Export your Phind, Perplexity and MaxAI-Google search threads into markdown files!

- GitHub - bm777/hask: Don’t switch tab or change windows anymore, just Hask.: Don’t switch tab or change windows anymore, just Hask. - bm777/hask

- GitHub - danielmiessler/fabric: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere.: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere. - …

- GitHub - RMNCLDYO/Perplexity-AI-Wrapper-and-CLI: Search online (in real-time) or engage in conversational chats (similar to ChatGPT) directly from the terminal using the full suite of AI models offered by Perplexity Labs.: Search online (in real-time) or engage in conversational chats (similar to ChatGPT) directly from the terminal using the full suite of AI models offered by Perplexity Labs. - RMNCLDYO/Perplexity-AI…

Perplexity AI ▷ #sharing (12 messages🔥):

- Exploring Perplexity AI Search: A member shared their experience with the search functionality on Perplexity AI but provided a broken link: no content could be referenced due to the invalid URL (invalid search result).

- Building a Perplexity-powered Firefox Extension: Through trial and error, a member is learning to create a Firefox extension that utilizes the Perplexity API, currently a proof of concept (initial thread on the project).

- Engaging with Devin, the Autonomous AI: A member highlighted a Perplexity AI interaction with Devin, labeling it as somewhat disturbing, indicating complex and potentially unsettling responses (Devin’s autonomous AI interaction).

- Praise for a Perplexity AI Response: A member complimented a particularly effective answer provided by Perplexity AI, calling it the “best answer yet” (link to the response).

- Reminder on Sharing Threads: In response to a member’s post, another reminded them to ensure their thread is set to “Shared” so it can be visible to others, providing instruction on where to find more information (instructions to share a thread).

Perplexity AI ▷ #pplx-api (31 messages🔥):

- Curiosity Around Closed Beta Citations: A member inquired about the schema and response examples for the closed beta of URL citations; another member linked to a documentation discussion, sharing their insight into the variability of citation outputs depending on queries.