As many expected, the April GPT4T release retook the top spot on LMsys. It is now rolled out in paid ChatGPT, alongside a new lightweight, reproducible evals repo. We’ve said before that OpenAI will have to prioritize rolling out new models in ChatGPT to reignite growth.

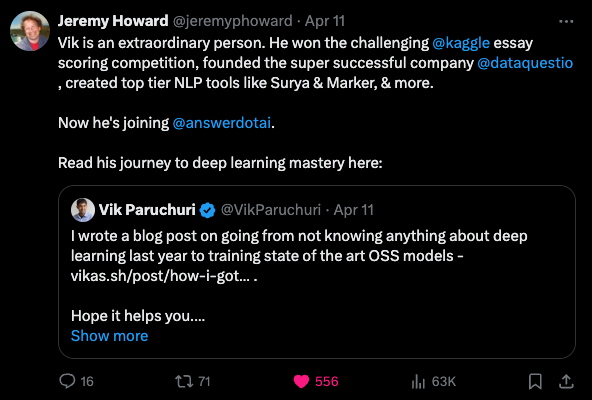

All in all, a quiet day before the presumable storm of the coming Llama 3 launch. You could check out the Elicit essay/podcast or the Devin vs OpenDevin vs SWE-Agent livestream. However, we give today’s pride of place to Vik Paruchuri, who wrote about his journey from engineer to making great OCR/PDF data models in 1 year.

These fundamentals are likely much more valuable than keeping on top of day-to-day news, and we like featuring quality advice like this where we can.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling works now but has lots to improve!

TO BE COMPLETED

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

GPT-4 and Claude Updates

- GPT-4 Turbo regains top spot on leaderboard: @lmsysorg noted GPT-4-Turbo has reclaimed the No. 1 spot on the Arena leaderboard, outperforming others across diverse domains like Coding, Longer Query, and Multilingual capabilities. It performs even stronger in English-only prompts and conversations containing code snippets.

- New GPT-4 Turbo model released: @sama and @gdb announced the release of a new GPT-4 Turbo model in ChatGPT that is significantly smarter and more pleasant to use. @miramurati confirmed it is the latest GPT-4 Turbo version.

- Evaluation numbers for new GPT-4 Turbo: @polynoamial and @owencm shared the evaluation numbers, showing improvements of +8.9% on MATH, +7.9% on GPQA, +4.5% on MGSM, +4.5% on DROP, +1.3% on MMLU, and +1.6% on HumanEval compared to the previous version.

- Claude Opus still outperforms new GPT-4: @abacaj and @mbusigin noted that Claude Opus still outperforms the new GPT-4 Turbo model in their usage, being smarter and more creative.

Open-Source Models and Frameworks

- Mistral models: @MistralAI released new open-source models, including the Mixtral-8x22B base model, which is a beast for fine-tuning (@_lewtun), and the Zephyr 141B model (@osanseviero).

- Medical mT5 model: @arankomatsuzaki shared Medical mT5, an open-source multilingual text-to-text LLM for the medical domain.

- LangChain and Hugging Face integrations: @LangChainAI released updates to support tool calling across model providers, and a standard `bind_tools` method for attaching tools to a model. @LangChainAI also updated LangSmith to support rendering of Tools and Tool Calls in traces for various models.

- Hugging Face Transformer.js: @ClementDelangue noted that Transformer.js, a framework for running Transformers in the browser, is on Hacker News.

Research and Techniques

- From Words to Numbers - LLMs as Regressors: @_akhaliq shared research analyzing how well pre-trained LLMs can do linear and non-linear regression when given in-context examples, matching or outperforming traditional supervised methods.

- Efficient Infinite Context Transformers: @_akhaliq shared a paper from Google on integrating compressive memory into a vanilla attention layer to enable Transformer LLMs to process infinitely long inputs with bounded memory and computation.

- OSWorld benchmark: @arankomatsuzaki and @_akhaliq shared OSWorld, the first scalable real computer environment benchmark for multimodal agents, supporting task setup, execution-based evaluation, and interactive learning across various operating systems.

- ControlNet++: @_akhaliq shared ControlNet++, which improves conditional controls in diffusion models with efficient consistency feedback.

- Applying Guidance in Limited Interval: @_akhaliq shared a paper showing that applying guidance in a limited interval improves sample and distribution quality in diffusion models.

Industry News and Opinions

- WhatsApp vs iMessage debate: @ylecun compared the WhatsApp vs iMessage debate to the metric vs imperial system debate, noting that the entire world uses WhatsApp except for some iPhone-clutching Americans or countries where it is banned.

- AI agents will be ubiquitous: @bindureddy predicted that AI agents will be ubiquitous, and with Abacus AI, you can get AI to build these agents in a simple 5-minute to few-hours process.

- Cohere Rerank 3 model: @cohere and @aidangomez introduced Rerank 3, a foundation model for enhancing enterprise search and RAG systems, enabling accurate retrieval of multi-aspect and semi-structured data in 100+ languages.

- OpenAI fires employees over information leak: @bindureddy reported that OpenAI fired 2 employees, one being Ilya Sutskever’s close friend, for leaking information about an internal project, likely related to GPT-4.

Memes and Humor

- Meme about LLM model names: @far__el joked about complex model names like “MoE-8X2A-100BP-25BAP-IA0C-6LM-4MCX-BELT-RLMF-Q32KM”.

- Meme about AI personal assistant modes: @jxnlco joked that there are two kinds of AI personal assistant modes for every company - philosophers and integration hell, comparing it to epistemology and auth errors.

- Joke about LLM hallucinations: @lateinteraction joked that they worry about a bubble burst once people realize that no AGI is near and there are no reliably generalist LLMs or “agents”, suggesting it’s wiser to recognize LLMs mainly create opportunities for making general progress in building AIs that solve specific tasks.

AI Discord Recap

A summary of Summaries of Summaries

- Mixtral and Mistral Models Gain Traction: The Mixtral-8x22B and Mistral-22B-v0.1 models are generating buzz, with the latter marking the first successful conversion of a Mixture of Experts (MoE) model to a dense format. Discussions revolve around their capabilities, like Mistral-22B-v0.1’s 22 billion parameters. The newly released Zephyr 141B-A35B, a fine-tuned version of Mixtral-8x22B, also sparks interest.

- Rerank 3 and Cohere’s Search Enhancements: Rerank 3, Cohere’s new foundation model for enterprise search and RAG systems, supports 100+ languages, boasts a 4k context length, and offers up to 3x faster inference speeds. It natively integrates with Elastic’s Inference API to power enterprise search.

- CUDA Optimizations and Quantization Quests: Engineers optimize CUDA libraries like `CublasLinear` for faster model inference, while discussing quantization strategies like 4-bit, 8-bit, and novel approaches like High Quality Quantization (HQQ). Modifying NVIDIA drivers enables P2P support on 4090 GPUs, yielding significant speedups.

- Scaling Laws and Data Filtering Findings: A new paper, “Scaling Laws for Data Filtering”, argues that data curation cannot be compute-agnostic and introduces scaling laws for working with heterogeneous web data. The community contemplates the implications and seeks to understand the empirical approach taken.

Some other noteworthy discussions include:

- The release of GPT-4 Turbo and its performance on coding and reasoning tasks

- Ella’s subpar anime image generation capabilities

- Anticipation for Stable Diffusion 3 and its potential to address current model limitations

- The RMBG1.4 model hitting 230K downloads on Hugging Face and the launch of the parler-tts library

- OpenAI API discussions around Wolfram integration and prompt engineering resources

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Forge Ahead, Leave No A1111 Behind: Forge, a new fork of Automatic1111 boasting performance enhancements, is gathering acclaim. Enthusiasts can explore Forge without ditching A1111 and utilize ComfyUI models for a more efficient workflow.

Ella Falls Short in Anime Art: Experimentation with Ella for anime-style image generation ends in disappointment, failing to meet user expectations even with recommended checkpoints. Despite high hopes, the quality of anime images generated by Ella remains subpar and is considered unusable for the genre.

Stable Diffusion 3 Brings Hope and Doubt: The community is abuzz with a blend of anticipation and skepticism around Stable Diffusion 3 (SD3), particularly about its potential to overcome current model limitations like bokeh effects, color fidelity, and celebrity recognition.

Expanding Toolbox for Image Perfection: In discussion are several tools and extensions enhancing Stable Diffusion outputs, including BrushNet for outpainting and solutions improving depth-fm and geowizard for architecture, as well as a color correction extension.

Cascade Gains Notoriety for Fast Learning: Cascade stands out within the Stable Diffusion models for its swift learning capabilities and distinct characteristics, although it’s noted for a steeper learning curve, affectionately deemed the “strange cousin of the SD family.”

Cohere Discord

CORS Crashes Cohere Connections: Users encountered CORS policy errors preventing access to the Cohere dashboard, with issues arising from cross-origin JavaScript fetch requests from https://dashboard.cohere.com to https://production.api.os.cohere.ai.

Arguments Over Context Length: A passionate discussion unfolded regarding the effectiveness of extended context lengths in large language models (LLMs) versus Retrieval-Augmented Generation (RAG), debating computational costs and diminishing benefits of longer contexts.

Rerank 3’s Pricing and Promotion: Rerank V3 has been announced with a pricing of $2 per 1k searches and an introductory promotional discount of 50%. For those seeking the prior version, Rerank V2 remains available at $1 per 1k searches.

Navigating Cohere’s Fine-Tuning and Deployment: Questions arose about the possibilities of on-premise and platform-based fine-tuning of Cohere’s LLMs, alongside deployment options on AWS Bedrock or similar on-premise scenarios.

Boosted Search with Rerank 3 Overview: Rerank 3 launches to enhance enterprise search, claiming a threefold increase in inference speed and support for over 100 languages with its extended 4k context. It integrates with Elastic’s Inference API to improve enterprise search functionalities, with resources available such as a Cohere-Elastic integration guide and a practical notebook example.

Unsloth AI (Daniel Han) Discord

Ghost 7B Aces Multiple Languages: The new Ghost 7B model is generating buzz due to its prowess in reasoning and understanding of Vietnamese, and is eagerly anticipated by the AI community. It is highlighted as a more compact, multilingual alternative that could serve specialized knowledge needs.

Double Take on Fine-Tuning Challenges: Discussions surfaced regarding difficulties in fine-tuning NLP models, with a gap noted between promising training evaluations and disappointing practical inference performance. Particularly, a lack of accuracy in non-English NLP contexts has been a point of frustration among engineers.

Efficient Model Deployment Strategies Sought: Engineers are actively sharing strategies and resources to streamline the deployment of models like Mistral-7B post-training. Concerns over VRAM limits persist, prompting discourse on optimizing batch sizes and embedding contextual tokens to conserve memory.

Unsloth AI Champions Extended Context Windows: The Unsloth AI framework is commended for reducing memory usage by 30% and merely increasing time overhead by 1.9% while enabling fine-tuning with context windows as long as 228K as detailed on their blog. This represents a significant leap compared to the previous benchmarks, offering a new avenue for LLM development.

The Importance of Domain-Specific Data: There is a consensus on the need for more precise, domain-specific datasets, as generic data collection is insufficient for specialized models requiring detailed context. Best practices are still being debated, with many looking towards platforms like Hugging Face for advanced dataset solutions.

Nous Research AI Discord

- RNN Revival on the Horizon: A new paper, found on arXiv, suggests an emerging hybrid architecture that could breathe new life into Recurrent Neural Networks (RNNs) for sequential data processing. Google’s reported investment in a new 7 billion parameter RNN-based model stirs the community’s interest in future applications.

- Google’s C++ Foray with Gemma Engine: The community noted Google’s release of a C++ inference engine for its Gemma models, sparking curiosity. The standalone engine is open source and accessible via their GitHub repository.

- Financial Muscle Required for Hermes Tuning: Fine-tuning the Nous Hermes 8x22b appears to be quite the wallet-buster, requiring an infrastructure rumored to cost approximately “$80k for a week”. Detailed infrastructure specifics remain undisclosed, but clearly, this isn’t a trivial undertaking.

- Pedal to the Metal with Apple AI Potential: Engineers are paying close attention to Apple’s M series chips, anticipating the M4 chip and its rumored 2TB RAM support. The M2 Ultra and M3 Max’s AI inference capabilities, especially their low power draw, garner specific praise.

- LLMs in the Medical Spotlight with Caution: The medical implications of using Large Language Models (LLMs) trigger a mix of excitement and concern within the community. There’s chatter about legal risks and artificial restrictions hindering the development and application in healthcare.

CUDA MODE Discord

- Cublas Linear Optimization: Custom `CublasLinear` library optimizations are accelerating model inference for large matrix multiplications, though bottlenecks in attention mechanisms could be diminishing overall performance gains in models like “llama7b”.

- Peak Performance with P2P: By hacking NVIDIA’s driver, a 58% speedup for all-reduce operations was achieved on 4090 GPUs. The modification enables 14.7 GB/s AllReduce, a significant stride towards enhancing tinygrad’s performance with P2P support.

- Hit the Quantization Target: Challenges and strategies around quantization, like 4-bit methods, are gaining traction, with a new HQQ (High Quality Quantization) approach being discussed for superior dequantization linearity. In tensor computation, 8-bit matrix multiplication was found to be double the speed of fp16, spotlighting the potential performance issues with `int4` kernels.

- Speed Breakthroughs and CUDA Advancements: The `A4000` GPU achieved a max throughput of 375.7 GB/s with `float4` loads, indicating the efficient use of L1 cache. Meanwhile, CUDA’s latest features like cooperative groups and kernel fusion are driving both performance gains and modern C++ adoption for maintainability.

- Community Resource Sharing and Organization: Members have established channels for sharing CUDA materials, such as renaming an existing channel for resource distribution, and they recommend organizing tasks for better workflow. A study group for PMPP UI has been initiated, welcoming participants via a Discord invite.

- Conceptual Explanations and Academic Contributions: An explainer on ring attention, designed to scale context windows in LLMs, was shared, inviting feedback. In academia, Chapter 4 of a GPU-centric numerical linear algebra book in the making and a modern CUDA version of the Golub/Van Loan book were shared, deepening the knowledge pool. A practical course in programming parallel computers, inclusive of GPU programming, is offered online and open to all.
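To make the 8-bit discussion above more concrete, here is a minimal sketch of symmetric absmax quantization in plain Python. It is a generic textbook scheme, not HQQ and not any member's kernel; the function names `quantize_int8` and `dequantize_int8` are hypothetical and chosen only for illustration.

```python
def quantize_int8(values):
    """Symmetric absmax quantization of a list of floats to int8 codes."""
    absmax = max(abs(v) for v in values)
    # Guard against an all-zero input, which would otherwise divide by zero.
    scale = absmax / 127.0 if absmax > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.03, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the basic accuracy cost that lower-bit schemes such as 4-bit have to manage more carefully.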

Perplexity AI Discord

- GPT-4 Buzz in Perplexity: Engineers are curious about the integration of GPT-4 within Perplexity, questioning its features and API availability. Meanwhile, some users debate the capabilities of Perplexity beyond traditional search, suggesting it could be positioned as a composite tool for search and image generation.

- Expanding API Offerings: A lively conversation explores integrating Perplexity API into e-commerce and users are pointed to the documentation for guidance. However, queries about the availability of a Pro Search feature in the API concluded with a clear negative response.

- Coding the Perfect Extension: Technical discussions center on enhancing Perplexity’s utility with browser extensions, despite the limitations that client-side fetching imposes. Tools like RepoToText for GitHub are mentioned as resources for marrying LLMs with repository contents.

- Search Trails and Technical Trails: Users actively shared Perplexity AI search links, signaling a push towards broadening collaboration on the platform. Searches ranged from unidentified objects to dense technical matters like access logging and NIST standards, reflecting the crowd’s versatile interests.

- Anticipating Roadmap Realities: Eyes are on Perplexity’s future with a user seeking updates on citation features, referencing the Feature Roadmap to clarify upcoming enhancements. The roadmap appears to plan multiple updates extending into June, though it remains silent on the much-awaited source citations.

LM Studio Discord

Quantization Quest Continues: The Mixtral-8x22B model is now quantized and available for download, yet it is not fine-tuned and may challenge systems that can’t handle the 8x7b version. A model loading error can be resolved by upgrading to LM Studio 0.2.19 beta preview 3.

Navigating Through Large Model Dilemmas: Users shared experiences running large models on insufficient hardware, suggesting cloud solutions or hardware upgrades like the NVIDIA 4060ti 16GB. For those tackling time series data, a Temporal Fusion Transformer (TFT) was suggested as being well-suited for the task.

GPU vs. CPU: A Performance Puzzle: When running AI models, more system memory can help load larger LLMs, but full GPU inference with a card like the NVIDIA RTX A6000 is optimal for performance.

Emerging ROCm Enigma in Linux: Linux users curious about the amd-rocm-tech-preview support are left hanging, while those with compatible hardware like the 7800XT report coil whine during tasks. Meanwhile, building the gguf-split binary for Windows is a hurdle for testing on AMD hardware, requiring a look into GitHub discussions and pull requests for guidance.

BERT’s Boundaries and Embedding Exploits: The Google BERT models are generally not directly usable with LM Studio without task-specific fine-tuning. For text embeddings utilizing LM Studio, larger parameter models like mxbai-large and GIST-large have been recommended over the standard BERT base model.

Please note that while this summary is comprehensive, specific channels may contain additional detailed discussions and links relevant to AI engineers.

Eleuther Discord

BERT’s Bidirectional Brainache: Engineers raised the complexity of extending context windows for encoder models like BERT, referencing difficulty with bidirectional mechanisms and pointing to MosaicBERT which applies FlashAttention, with questions about its absence in popular libraries despite contributions.

Rethinking Transformers with Google’s Mixture-of-Depths Model: Researchers are discussing Google’s novel Mixture-of-Depths approach, which allocates compute differently in transformer-based models. Also catching attention is RULER’s newly open-sourced yet initially empty repository, aimed at revealing the real context size of long-context language models.

Scale the Data Mountain Wisely: A paper proposing that data curation is indispensable and cannot ignore computational constraints was shared. The discourse included a symbolic search for entropy-based methods in scaling laws and a reflection on foundational research principles.

Odd Behaviors in Large Language Models Puzzle Analysts: Members expressed intrigue over NeoX’s embedding layer behavior, questioning if weight decay was omitted during training. They compared NeoX’s output to other models and confirmed a distinct behavior, igniting curiosity about the technical specifics and implications.

Quantization Quest and Dataset Dilemmas: Community efforts include an attempt at 2-bit quantization to reduce VRAM usage for the Mixtral-8x22B model, while confusion arose around The Pile dataset’s inconsistent sizing and the lack of extraction code for varied archive types.
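A rough back-of-the-envelope estimate shows why 2-bit quantization is attractive here. The sketch assumes roughly 141 billion total parameters for Mixtral-8x22B (the figure suggested by the Zephyr 141B-A35B name elsewhere in this issue) and counts weights only, ignoring activations, KV cache, and quantization metadata.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Weights-only memory in GB (10**9 bytes); ignores all runtime overhead."""
    return num_params * bits_per_weight / 8 / 1e9


ASSUMED_PARAMS = 141e9  # assumption: total parameter count, not confirmed above

for bits in (16, 8, 4, 2):
    print(f"{bits:>2}-bit: {weight_memory_gb(ASSUMED_PARAMS, bits):.2f} GB")
```

Under these assumptions the weights shrink from about 282 GB at 16-bit to about 35 GB at 2-bit, before any overhead is added back.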

OpenRouter (Alex Atallah) Discord

- Mixtral Expands and Contracts: A new model called Mixtral 8x22B:free was released, enhancing clarity around routing and rate-limiting, and boasting an updated context size of 65,536. However, it was swiftly disabled, pushing users to transition to its active counterpart, Mixtral 8x22B.

- New Experimental Models on the Block: The community has two new experimental models to play with: Zephyr 141B-A35B, an instruct fine-tune of Mixtral 8x22B, and Fireworks: Mixtral-8x22B Instruct (preview), spicing up the AI landscape.

- Brick Wall in Purchase Process: A blip emerged for shoppers seeking tokens, triggering a snapshot share and presumably a call to iron out the kink in the transaction flow.

- Self-Help for Platform Entrapment: A user entwined in login woes uncovered a self-extraction strategy, deftly navigating account deletion.

- Turbo Troubles and Personal AI Aspirations: The orbit of discourse spanned from resolving GPT-4 Turbo malfunctions with a Heroku redeploy to tailoring AI setups interweaving tools like LibreChat. Deep dives into AI models’ quirks and tuning sweet spots were also a hot theme, with Opus, Gemini Pro 1.5, and MoE structures getting the spotlight.

Modular (Mojo 🔥) Discord

- Mojo’s Community Code Contribution: Server members appreciate that Mojo has open-sourced its standard library, fostering community contributions and enhancements. Discussions revolved around integrating Modular into BackdropBuild.com projects for developer cohorts, yet members were reminded to keep business inquiries on the appropriate channels.

- Karpathy Sets Sights on Mojo Port: An exciting talk sparked by GitHub issue #28 in Andrej Karpathy’s `llm.c` repository focused on benchmarking and comparison prospects of a Mojo port, as the creator himself expressed interest in linking to any Mojo-powered version.

- Row vs. Column: Matrix Showdown: An informative post available at Modular’s blog breaks down the row-major versus column-major matrix storage and their performance analyses in Mojo and NumPy, enlightening the community on programming languages’ and libraries’ storage preferences.

- Terminal Vogue with Mojo: Members showcased advanced text rendering in terminals using Mojo, demonstrating functionalities and interfaces inspired by `charmbracelet's lipgloss`. Code snippets and implementation examples were shared, with the preview available on GitHub.

- Matrix Blog Misstep: A Call for Help: A member signaled an error while executing a Jupyter notebook from the “Row-major vs. Column-major matrices” blog post, confronting an issue with ‘mm_col_major’ declarations. This feedback creates an opportunity for community-supported debugging, with the notebook present at devrel-extras GitHub repo.
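For readers new to the row-major versus column-major topic mentioned above, the difference comes down to how a 2D index maps to a flat memory offset. The following is a minimal, library-free sketch, not code from the Modular blog post; the helper names are hypothetical.

```python
def row_major_offset(i, j, n_rows, n_cols):
    """Flat offset of element (i, j) when rows are stored contiguously."""
    return i * n_cols + j


def col_major_offset(i, j, n_rows, n_cols):
    """Flat offset of element (i, j) when columns are stored contiguously."""
    return j * n_rows + i


matrix = [[1, 2, 3],
          [4, 5, 6]]
n_rows, n_cols = 2, 3

# Lay the same matrix out in a flat buffer under each convention.
row_major = [0] * (n_rows * n_cols)
col_major = [0] * (n_rows * n_cols)
for i in range(n_rows):
    for j in range(n_cols):
        row_major[row_major_offset(i, j, n_rows, n_cols)] = matrix[i][j]
        col_major[col_major_offset(i, j, n_rows, n_cols)] = matrix[i][j]
```

Walking along a row touches adjacent offsets in the row-major buffer but strided offsets in the column-major one, which is the source of the cache-related performance differences such comparisons typically measure.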

LangChain AI Discord

- LangChain’s PDF Summary Speed Boost: A method for improving the summarization efficiency of LangChain’s `load_summarization_chain` function on extensive PDF documents was highlighted, with a code snippet demonstrating a `map_reduce` optimization approach available on GitHub.

- LangChain AI’s New Tutorial Rolls Out: A recently introduced tutorial sheds light on LCEL and the assembly of chains using runnables, offering hands-on learning for engineers and inviting their feedback; see the details on Medium.

- GalaxyAI API Launch Takes Off: GalaxyAI debuts with a free API service smoothly aligning with Langchain, introducing powerful AI models like GPT-4 and GPT-3.5-turbo; integration details can be approached on GalaxyAI.

- Alert: Unwanted Adult Content Spams Discord: There have been reports of improper content being shared across various LangChain AI channels, which is against Discord community guidelines.

- Meeting Reporter Meshes AI with Journalism: The new tool Meeting Reporter has been created to leverage AI in producing news stories, intertwining Streamlit and Langgraph, and requiring a paid OpenAI API key. The application is accessible via Streamlit, with the open-source code hosted on GitHub.

Note: Links related to adult content promotions have been actively ignored in this summary as they are clearly not relevant to the technical and engineering discussions of the guild.

HuggingFace Discord

Tweet Alert: osanseviero Shares News: osanseviero tweeted, potentially hinting at new insights or updates; check it out here.

RAG Chatbot Employs Embedded Datasets: The RAG chatbot uses the not-lain/wikipedia-small-3000-embedded dataset to inform its responses, merging retrieval and generative AI for accurate information inferences.

RMBG1.4 Gains Popularity: The integration of RMBG1.4 with the transformers library has garnered significant interest, reflected in 230K downloads this month.

Marimo-Labs Innovates Model Interaction: Marimo-labs released a Python package allowing the creation of interactive playgrounds for Hugging Face models; a WASM-powered marimo application lets users query models with their tokens.

NLP Community Pursues Longer-Context Encoders: AI engineers discussed the pursuit of encoder-decoder models like BigBird and Longformer for handling longer text sequences around 10-15k tokens and shared strategies for training interruption and resumption with trainer.train()’s resume_from_checkpoint.
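As a hedged illustration of the resume workflow, the sketch below finds the newest checkpoint directory in a training output folder using only the standard library. It assumes the `checkpoint-<step>` directory naming that the Hugging Face `Trainer` uses by default; the helper name `latest_checkpoint` is hypothetical. The returned path could then be passed as `trainer.train(resume_from_checkpoint=path)`.

```python
import os
import re

# Assumed naming convention: one directory per save, e.g. "checkpoint-1500".
_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir):
    """Return the path of the highest-step checkpoint directory, or None."""
    best_step, best_path = -1, None
    for name in os.listdir(output_dir):
        match = _CHECKPOINT_RE.match(name)
        path = os.path.join(output_dir, name)
        if match and os.path.isdir(path) and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path
```

Comparing step numbers as integers matters here: a plain string sort would rank `checkpoint-500` above `checkpoint-1500`.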

Vision and Diffusion Achievements: GPU process management is enhanced with nvitop, while developers tackle video restoration through augmentation and temporal considerations, referencing works like NAFNet, BSRGAN, Real-ESRGAN, and All-In-One-Deflicker. Meanwhile, insights into Google’s multimodal search capabilities are sought for improved image and typo brand recognition, with interest in the underpinnings of AI-demos’ identifying technology.

Latent Space Discord

- Hybrid Search Reranking Revisited: Engineers discussed whether combining lexical and semantic search results before reranking is superior to amalgamating and reranking all results simultaneously. Cohesion in reranking steps could streamline the process and reduce latency in search methodologies.

- Rerank 3 Revolutionizes Search: Cohere’s Rerank 3 model touts enhanced search and RAG systems, with 4k context length and multilingual capabilities across 100+ languages. Details of its release and capabilities are shared in a tweet by Sandra Kublik.

- AI Market Heats Up With Innovation: The rise of innovative AI-based work automation tools, like V7 Go and Scarlet AI, suggests a growing trend toward automating monotonous tasks and facilitating AI-human collaborative task execution.

- Perplexity’s “Online” Models Vanish and Reclaim: The community mulled over Perplexity’s “online” models’ disappearance from LMSYS Arena and their subsequent reemergence, indicating models with internet access. Interest was rekindled as GPT-4-Turbo regained the lead in the LMSYS chatbot leaderboard, signaling strong coding and reasoning capabilities.

- Mixtral-8x22B Breaks onto the Scene: The advent of Mixtral-8x22B in HuggingFace’s Transformers format ignites conversations around its use and implications for Mixture of Experts (MoEs) architecture. The community explores topics such as expert specialization, learning processes within MoEs, and the semantic router, drawing attention to potential gaps in redundancy and expertise implementation.

- Podcasting AI’s Supervisory Role: A new podcast episode presents discussions with Elicit’s Jungwon Byun and Andreas Stuhlmüller on supervising AI research. Available via a YouTube link, it tackles the benefits of product-oriented approaches over traditional research-focused ones.
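One of the hybrid search options discussed above, pooling lexical and semantic candidates and then running a single rerank pass, can be sketched as follows. Everything here is illustrative: `toy_rerank_score` is a hypothetical stand-in for a real reranker model, and the corpus and hit lists are made up.

```python
def merge_candidates(lexical_hits, semantic_hits):
    """Union two ranked lists of document IDs, keeping first-seen order."""
    seen, merged = set(), []
    for doc_id in lexical_hits + semantic_hits:
        if doc_id not in seen:
            seen.add(doc_id)
            merged.append(doc_id)
    return merged


def toy_rerank_score(query, text):
    """Hypothetical scorer: fraction of query terms present in the text."""
    terms = query.lower().split()
    if not terms:
        return 0.0
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms)


def rerank_once(query, doc_ids, corpus, top_k=3):
    """Score every pooled candidate in one reranker pass and keep top_k."""
    ranked = sorted(doc_ids, key=lambda d: toy_rerank_score(query, corpus[d]), reverse=True)
    return ranked[:top_k]


corpus = {
    "a": "reranking improves enterprise search quality",
    "b": "semantic search uses dense embeddings",
    "c": "lexical search matches exact terms",
    "d": "cooking pasta at home",
}
lexical_hits = ["c", "a"]
semantic_hits = ["b", "a", "d"]
pool = merge_candidates(lexical_hits, semantic_hits)
top = rerank_once("semantic search reranking", pool, corpus, top_k=2)
```

Deduplicating before the rerank call means each document is scored once, which is where a single pooled pass can save latency compared with reranking each result list separately.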

LAION Discord

Draw Things Draws Criticism: Participants voiced their disappointment with Draw Things, pointing out its lack of a complete open source offering; the provided version omits crucial features including metal-flash-attention support.

Questionable Training Feats of TempestV0.1: Community members met the TempestV0.1 Initiative’s claim of 3 million training steps with skepticism, questioning both that and the physical plausibility of its 6 million-image dataset occupying only 200GB.
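The skepticism is easy to reproduce with simple arithmetic. Assuming decimal gigabytes and taking the claimed figures at face value, the implied average file size works out as follows:

```python
dataset_bytes = 200 * 10**9   # claimed 200 GB, assuming 1 GB = 10**9 bytes
num_images = 6_000_000        # claimed image count

avg_kb_per_image = dataset_bytes / num_images / 1000
print(f"average size: {avg_kb_per_image:.1f} KB per image")
```

That is roughly 33 KB per image. Whether this is plausible depends on image resolution and compression, which the claim does not specify.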

Will LAION 5B Demo Reappear?: Regarding the Laion 5B web demo, there’s uncertainty about its return, despite mentions of Christoph indicating a comeback with no given timeline or further information.

Alert on LAION Scams: Warnings circulated on scams such as cryptocurrency schemes misusing LAION’s name, with recommendations to stay cautious and discussions about combating this with an announcement or automatic moderation enhancements.

Advancements in Diffusion and LRU Algorithms: The community is evaluating improved Linear Recurrent Units (LRUs) on Long Range Arena benchmarks and discussing guidance-weight strategies to enhance diffusion models, with a relevant research paper and an active GitHub issue being applied to Hugging Face’s diffusers.

LlamaIndex Discord

- Pandas on the Move: The `PandasQueryEngine` will transition to `llama-index-experimental` with LlamaIndex python v0.10.29, and installations will proceed through `pip install llama-index-experimental`. Adjustments to import statements in Python code are needed to reflect this change.

- Spice Up Your GitHub Chat: A new tutorial demonstrates creating an app to enable chatting with code from a GitHub repository, integrating an LLM with Ollama. Another tutorial details the incorporation of memory into document retrieval using a Colbert-based agent for LlamaIndex, providing a boost to the retrieval process.

- Dynamic Duo: RAG Augmented with Auto-Merging: A novel approach to RAG retrieval includes auto-merging to form more contiguous chunks from broken contexts. Comparatively, discussing Q/A tasks surfaced a preference for Retrieval-Augmented Generation (RAG) over fine-tuning LLMs due to its balance of accuracy, cost, and flexibility.

- Toolkit for GDPR-Compliant AI Apps: Inspired by Llama Index’s create-llama toolkit, the create-tsi toolkit is a fresh GDPR-compliant infrastructure for AI applications rolled out by T-Systems and Marcus Schiesser.

- Debugging Embeddings and Vector Stores: Discussions cleared up confusions on embedding storage, revealing they reside in vector stores within the storage context. For certain issues with ‘fastembed’ causing breaks in QdrantVectorStore, downgrading to `llama-index-vector-stores-qdrant==0.1.6` was the solution, and metadata exclusions from embeddings need explicit handling in code.
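The auto-merging idea mentioned above can be illustrated with a small, library-free sketch: when enough sibling chunks of the same parent are retrieved, they are replaced by the parent so the model sees one contiguous passage. This is an assumption about the general technique, not LlamaIndex's implementation; the function name, the data layout, and the 0.5 threshold are all hypothetical.

```python
from collections import defaultdict


def auto_merge(retrieved_ids, parent_of, children_of, threshold=0.5):
    """Replace groups of retrieved child chunks with their parent chunk
    when more than `threshold` of the parent's children were retrieved."""
    by_parent = defaultdict(list)
    for chunk_id in retrieved_ids:
        by_parent[parent_of[chunk_id]].append(chunk_id)

    merged, emitted = [], set()
    for chunk_id in retrieved_ids:
        parent = parent_of[chunk_id]
        ratio = len(by_parent[parent]) / len(children_of[parent])
        # Promote to the parent only if enough of its children were hit.
        result = parent if ratio > threshold else chunk_id
        if result not in emitted:
            emitted.add(result)
            merged.append(result)
    return merged


children_of = {"doc1": ["c1", "c2", "c3"], "doc2": ["c4", "c5", "c6", "c7"]}
parent_of = {c: p for p, kids in children_of.items() for c in kids}
result = auto_merge(["c1", "c4", "c3"], parent_of, children_of)
```

In this toy run, two of `doc1`'s three chunks were retrieved, so they collapse into `doc1`, while the single hit from `doc2` stays as an individual chunk.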

OpenInterpreter Discord

Trouble in Installation Town: Members reported problems installing Poetry and litellm—a successful fix for the former included running pip install poetry, whereas diagnosing litellm issues involved using interpreter --version and pip show litellm. Further troubleshooting pointed towards the necessity of Python installation and particular git commits for package restorations.

Patience, Grasshopper, for Future Tech Gadgets: Inquiries were made on the preorder and delivery of new devices, revealing that some tech gadgets are still in the prototyping phase with shipments expected in the summer months. The conversation highlighted typical delays faced by startups in manufacturing and encouraged patience from eager tech aficionados.

Transformers Redefined in JavaScript: The transformers.js GitHub repository, offering a JavaScript-based machine learning solution capable of running in the browser sans server, piqued the interest of AI engineers. Meanwhile, a cryptic mention of an AI model endpoint at https://api.aime.info popped up without additional detail or fanfare.

OpenAI Plays the Credits Game: OpenAI’s shift to prepaid credits away from monthly billing, which includes a promotion for free credits with a deadline of April 24, 2024, sparked curiosity and a flurry of information exchanges among the members regarding the implications for various account types.

Events and Contributions Galore: Community event Novus invites were buzzing as engineers looked forward to networking without the fluff, while a successful session on using Open Interpreter as a library yielded a repository of Python templates for budding programmers.

OpenAccess AI Collective (axolotl) Discord

Discussing Strategies and Anticipations in AI Development:

- Participants examined the implications of freezing layers within neural network models, expressing the view that while reduction may simplify models, it can also potentially reduce effectiveness, thus hinting at a delicate balance between complexity and resource efficiency. Links to discussions about the theoretical foundations of language model scaling, particularly Meta’s study on knowledge bit scaling (Physics of Language Models: Part 3.3, Knowledge Capacity Scaling Laws), suggest LLaMA-3 may advance this balance further.

Training Challenges and Model Modifications:

- The conversion of Mistral-22B from a Mixture of Experts to a dense model (Vezora/Mistral-22B-v0.1) has been a focal point, suggesting a community interest towards dense architectures, possibly for their compatibility with existing infrastructure. Concurrently, discussions on training in 11-layer increments indicate a pursuit of training tactics that accommodate limited GPU capabilities.

Ecosystem Expansion and Assistance:

- The collective’s endeavor to make the AI development process more accessible is evident with shared advice for new members on starting with Axolotl, reflected in both an insightful blog post and practical tips, such as utilizing the `--debug` flag. Furthermore, the maintenance of a Colab notebook example assists users in fine-tuning models like Tiny-Llama on Hugging Face datasets.

Resourcefulness in Resource Constraints:

- Conversations are circling around inventive training strategies such as unfreezing random subsets of weights for users with lower-end hardware setups, evidencing a focus on democratizing training methods. Collaborative sharing of pretrain configs, and step-by-step interventions for leveraging Docker with DeepSpeed for multi-node fine-tuning demonstrate the community’s resolve to navigate high-end training tactics within constrained environments.
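As a rough illustration of unfreezing a random subset, the sketch below picks which named parameters stay trainable. It is an assumption-laden simplification: the discussion does not say whether the subset is chosen per tensor or per individual weight, and `choose_trainable`, its arguments, and the parameter names are hypothetical. In a framework such as PyTorch, the resulting flags would typically be applied through each parameter's `requires_grad` attribute.

```python
import random
from typing import Dict, List


def choose_trainable(param_names: List[str], fraction: float, seed: int = 0) -> Dict[str, bool]:
    """Mark a random fraction of parameters as trainable; the rest stay frozen."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the selection is reproducible
    count = round(len(param_names) * fraction)
    chosen = set(rng.sample(param_names, count))
    return {name: name in chosen for name in param_names}


# Hypothetical parameter names for a 10-block model.
names = [f"blocks.{i}.weight" for i in range(10)]
flags = choose_trainable(names, fraction=0.3, seed=42)
trainable = [name for name, is_trainable in flags.items() if is_trainable]
```

Only the chosen parameters would need gradients and optimizer state, which is where the memory saving on smaller GPUs would come from.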

Curiosity Meets Data Acquisition:

- Inquiry into datasets for formal logic reasoning and substantial 200-billion token datasets portrays an active search for challenging and large-scale data to push the boundaries of model pretraining and experimentation.

OpenAI Discord

API Stumbles with AttributeErrors: An OpenAI API user encountered an AttributeError in the client.beta.messages.create method in Python, raising concerns about potential documentation being out of sync with library updates. The shared code snippet didn’t yield a solution within the guild discussions.
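When an `AttributeError` appears on a long attribute chain, it helps to find which segment is missing. The sketch below is a generic, library-independent helper; `first_missing_attr` is a hypothetical name, and `fake_client` is a stand-in object, not the real OpenAI client. Its layout assumes that, in the v1 Python library, thread messages are created under `client.beta.threads.messages.create`, which would make a missing `threads` segment one plausible cause. The guild discussion did not confirm this.

```python
from types import SimpleNamespace
from typing import Any, Optional


def first_missing_attr(root: Any, dotted_path: str) -> Optional[str]:
    """Walk dotted_path from root; return the first prefix that fails, or None."""
    current = root
    walked = []
    for name in dotted_path.split("."):
        walked.append(name)
        if not hasattr(current, name):
            return ".".join(walked)
        current = getattr(current, name)
    return None


# Stand-in object for illustration only (assumed layout, see above).
fake_client = SimpleNamespace(
    beta=SimpleNamespace(threads=SimpleNamespace(messages=SimpleNamespace(create=print)))
)

print(first_missing_attr(fake_client, "beta.messages.create"))          # prints: beta.messages
print(first_missing_attr(fake_client, "beta.threads.messages.create"))  # prints: None
```

Pointing the helper at a real client object shows whether the documentation and the installed library version agree on the path.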

Models in the Spotlight: Members shared varying experiences using AI models like Gemini 1.5 and Claude, touching on differences in context windows, memory, and code query handling. For C# development specifically in Unity, the gpt-4-turbo and Opus models were suggested for efficacy.

Efficiency Hurdles with GPT-4 Turbo: One member observed that the GPT-4-turbo model appeared less skilled at function calls, while another was unsure about accessing it; however, detailed examples or solutions were not provided.

Large Scale Text Editing with LLMs: Queries about editing large documents with GPT sparked a discussion on the potential need for third-party services to bypass the standard context window limitations.
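One common way to work within a context window, independent of any third-party service, is to split the document at paragraph boundaries and edit each piece separately. The sketch below assumes a character budget as a crude proxy for tokens, and `edit_chunk` is a hypothetical callable standing in for whatever model call performs the edit.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_chars: int) -> List[str]:
    """Greedily pack paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole in its own chunk.
    """
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def edit_document(text: str, edit_chunk: Callable[[str], str], max_chars: int = 4000) -> str:
    """Apply edit_chunk to each chunk and stitch the results back together."""
    return "\n\n".join(edit_chunk(chunk) for chunk in split_into_chunks(text, max_chars))


# str.upper stands in for a model call in this example.
edited = edit_document("aaa\n\nbbb\n\nccc", str.upper, max_chars=8)
```

The trade-off is that each chunk is edited without seeing the others, so edits that depend on distant context (consistent terminology, cross-references) need an extra pass or shared instructions.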

Navigating the Prompt Engineering Galaxy: For those embarking on prompt engineering, Prompting Guide was recommended as a resource, while integrating Wolfram with GPT can be managed via Wolfram GPT link and the @mention feature within the platform.

DiscoResearch Discord

Big Win for Dense Models: The launch of Mistral-22B-V.01, a new 22B parameter dense model, marks a notable achievement as it transitions from being a compressed Mixture of Experts (MoE) to a dense form, establishing a precedent in the MoE to Dense model conversion arena.

Crosslingual Conundrums and Corpus Conversations: While engineers work on balancing English and German data in models like DiscoLM 70b, with plans for updated models, they cited the need for better German benchmarks. Occiglot-7B-DE-EN-Instruct showed promise, hinting that a mix of English and German training data could be efficacious.

Sifting Through SFT Strategies: The community shared insights on the potential benefits of integrating Supervised Fine-Tuning (SFT) data early in the pretraining phase, backed by research from StableLM and MiniCPM, to enhance model generalization and prevent overfitting.

Zephyr Soars with ORPO: Zephyr 141B-A35B, derived from Mixtral-8x22B and fine-tuned via a new algorithm named ORPO, was introduced and is available for exploration on the Hugging Face model hub.

MoE Merging Poses Challenges: The community’s experiments with Mergekit to create custom MoE models through merging highlighted underwhelming performance, sparking an ongoing debate on the practicality of SFT on narrow domains versus conventional MoE models.

Interconnects (Nathan Lambert) Discord

Increment or Evolution?: Nathan Lambert sparked a debate regarding whether moving from Claude 2 to Claude 3 represents genuine progress or just an “INCREMENTAL” improvement, raising questions about the substance of AI version updates.

Building Better Models Brick by Brick: Members discussed the mixing of pretraining, Supervised Fine-Tuning (SFT), and RLHF, pointing out the respective techniques are often combined, although this practice is poorly documented. A member committed to providing insights on applying annealing techniques to this blend of methodologies.

Casual Congrats Turn Comical: A meme became an accidental expression of congratulations causing a moment of humor, while another conversation clarified that the server does not require acceptance for subscriptions.

Google’s CodecLM Spotlight: The community examined Google’s CodecLM, shared in a research paper, noting it as another take on the “learn-from-a-stronger-model” trend by using tailored synthetic data.

Intellectual Exchange on LLaMA: A link to “LLaMA: Open and Efficient Foundation Language Models” was posted, indicating an active discussion on the progress of open, efficient foundation language models with a publication date of February 27, 2023.

tinygrad (George Hotz) Discord

- Swift Naming Skills Unleashed: Members of the tinygrad Discord opted for creative labels such as tinyxercises and tinyproblems, with the playful term tinythanks emerging as a sign of appreciation in the conversation.

- Cache Hierarchy Hustle: A technical exchange in the chat indicated that L1 caches boast superior speed compared to pooled shared caches, due to minimized coherence management demands. This discussion underscored the performance differences when comparing direct L3 to L1 cache transfers with those of a heterogeneous cache architecture.

- Contemplating Programming Language Portability: Members contrasted ANSI C’s wide hardware support and ease of portability with Rust’s perceived safety, which was questioned with a link to known Rust vulnerabilities.

- Trademark Tactics Trigger Discussions: A debatable sentiment was aired around the Rust Foundation’s restrictive trademark policies, eliciting comparisons to other entities like Oracle and Red Hat and their own contentious licensing stipulations.

- Discord Discipline Instated: George Hotz made it clear that off-topic banter would not fly in his Discord, leading to a user being banned for their non-contribution to the focused technical discussions.

Skunkworks AI Discord

- Hunt for the Logical Treasure Trove: AI engineers shared a curated list full of datasets aimed at enhancing reasoning with formal logic in natural language, providing a valuable resource for projects at the intersection of logic and AI.

- Literature Tip: Empowering Coq in LLMs: An arXiv paper was highlighted, which tackles the challenge of improving large language models’ abilities to interpret and generate Coq proof assistant code—key for advancing formal theorem proving capabilities.

- Integrating Symbolic Prowess into LLMs: Engineers took interest in Logic-LLM, a GitHub project discussing the implementation of symbolic solvers to elevate the logical reasoning accuracy of language models.

- Reasoning Upgrade Via Lisp Translation Explained: Clarification was offered on a project that enhances LLMs by translating human text to Lisp code which can be executed, aiming to augment reasoning by computation within the LLM’s latent space while keeping end-to-end differentiability.

- Reasoning Repo Gets Richer!: The awesome-reasoning repo saw its commit history updated with new resources, becoming a more comprehensive compilation to support the development of reasoning AI.

LLM Perf Enthusiasts AI Discord

- Haiku Haste Hype Questioning: Community members are questioning the alleged speed improvements of Haiku, with concerns particularly aimed at whether it significantly enhances total response time rather than just throughput.

- Turbo Takes the Spotlight: Engineers in the discussion are interested in the speed and code handling improvements of the newly released turbo, with some contemplating reactivating ChatGPT Plus subscriptions to experiment with turbo’s capabilities.
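The distinction raised in the Haiku discussion, total response time versus throughput, comes down to simple arithmetic: total time is roughly time to first token plus output length divided by generation speed. The sketch below uses invented numbers purely for illustration; they are not measurements of Haiku or any other model, and `total_response_seconds` is a hypothetical helper.

```python
def total_response_seconds(
    time_to_first_token_s: float,
    output_tokens: int,
    tokens_per_second: float,
) -> float:
    """Approximate end-to-end latency: startup delay plus generation time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return time_to_first_token_s + output_tokens / tokens_per_second


# Invented figures: model A starts quickly, model B streams faster.
short_a = total_response_seconds(0.5, 100, 100.0)   # 0.5 + 1.0 = 1.5 s
short_b = total_response_seconds(2.0, 100, 200.0)   # 2.0 + 0.5 = 2.5 s
long_a = total_response_seconds(0.5, 1000, 100.0)   # 0.5 + 10.0 = 10.5 s
long_b = total_response_seconds(2.0, 1000, 200.0)   # 2.0 + 5.0 = 7.0 s
```

With these made-up values the higher-throughput model loses on short replies and wins on long ones, which is why a throughput gain alone does not settle the question about total response time.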

Alignment Lab AI Discord

Cry for Code Help: A guild member has requested help with their code by seeking direct messages from knowledgeable peers.

Server Invites Scrutiny: Concerns were raised over the excessive sharing of Discord invites on the server, sparking discussions about their potential ban.

Vitals Check on Project OO2: A simple inquiry was made into the current status of the OO2 project, questioning its activity.

Datasette - LLM (@SimonW) Discord

- Gemini Listens and Learns: The Gemini model has been enhanced with the capability to answer questions concerning audio present in videos, a step beyond its earlier limitation of generating descriptions that ignored audio.

- Google’s Text Pasting Problems Persist: Technical discussions indicate a persistent frustration regarding Google’s text formatting when pasting into their platforms, affecting user efficiency.

- STORM Project’s Thunderous Impact: Engineers took note of the STORM project, an LLM-powered knowledge curation system, highlighting its ability to autonomously research topics and generate comprehensive reports with citations.

- macOS Zsh Command Hang-up Fixed: A hang-up issue when using the `llm` command on macOS zsh shell has been resolved through a recent pull request, with verification of function across both Terminal and Alacritty on M1 Macs.

Mozilla AI Discord

- Figma Partners with Gradio: Mozilla Innovations released Gradio UI for Figma, facilitating rapid prototyping for designers using a library inspired by Hugging Face’s Gradio. Figma’s users can now access this toolkit for enhanced design workflows.

- Join the Gradio UI Conversation: Thomas Lodato from Mozilla’s Innovation Studio is leading a discussion about Gradio UI for Figma; engineers interested in user interfaces can join the discussion here.

- llamafile OCR Potential Unlocked: There’s growing interest in the OCR capabilities of llamafile, with community members exploring various applications for the feature.

- Rust Raves in AI: A new project called Burnai, which leverages Rust for deep learning inference, was recommended for its performance optimizations; keep an eye on burn.dev and consider justine.lol/matmul for Rust-related advancements.

- Llamafile Gets the Green Light from McAfee: The llamafile 0.7 binary is now whitelisted by McAfee, removing security concerns for its users.

AI21 Labs (Jamba) Discord

Hunting for Jamba’s Genesis: A community member expressed a desire to find the source code for Jamba but no URL or source location was provided.

Eager for Model Merging Mastery: A link to a GitHub repository, moe_merger, was shared that lays out a proposed methodology for model merging, although it’s noted to be in the experimental phase.

Thumbs Up for Collaboration: Gratitude was shared by users for the resource on merging models, indicating a positive community response to the contribution.

Anticipation in the Air: There’s a sense of anticipation among users for updates, likely regarding ongoing projects or discussions from previous messages.

Shared Wisdom on Standby: Users are sharing resources and expressing thanks, showcasing a collaborative environment where information and support are actively exchanged.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (846 messages🔥🔥🔥):

- Introducing Forge: Forge, a fork of Automatic1111 (or A1111), is being praised for its performance improvements over A1111. Users are encouraged to try it, especially as it doesn’t require the removal of A1111 and can use models from ComfyUI.

- Ella’s Anime Trouble: Users report that Ella, while promising, severely degrades the quality of generated anime-style images, making it unusable for this genre. Despite trying various checkpoints, including those recommended by Ella’s creators, users are unable to attain satisfactory results.

- Anticipation for SD3: Within the community, there’s a mixture of excitement and skepticism regarding the release of Stable Diffusion 3 (SD3), with discussions around expectations for SD3 to solve present generative model limitations like the handling of bokeh effects, color accuracy, and celebrity recognition.

- Tools and Extensions Galore: The community discussed various tools and model extensions that improve Stable Diffusion outputs, such as BrushNet for outpainting, depth-fm, geowizard for architecture, and an extension for color accuracy. Users are encouraged to explore and stay up-to-date with new releases.

- Cascade’s Quirky Qualities: Cascade is noted for learning quickly and for its unique traits among SD models, though it’s also described as challenging to use, with an endearing reference to being the “strange cousin of the SD family.”

Links mentioned:

- Ella 1.5 Comfy UI results: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- MIT scientists have just figured out how to make the most popular AI image generators 30 times faster: Scientists have built a framework that gives generative AI systems like DALL·E 3 and Stable Diffusion a major boost by condensing them into smaller models — without compromising their qua...

- Udio | Metal Warriors by MrJenius: Make your music

- InstantMesh - a Hugging Face Space by TencentARC: no description found

- Hugging Face – The AI community building the future.: no description found

- stablediffusion/configs/stable-diffusion at main · Stability-AI/stablediffusion: High-Resolution Image Synthesis with Latent Diffusion Models - Stability-AI/stablediffusion

- ComfyUI Multi-Subject Workflows - Interaction OP v2.2 | Stable Diffusion Workflows | Civitai: Last updated workflow: Interaction OpenPose v2.1 -> v2.2 Please download from the model version, not "Update [...]" as I delete and recreate it ...

- Reddit - Dive into anything: no description found

- The Ultimate Guide to A1111 Stable Diffusion Techniques: Dive into the world of high-resolution digital art as we embark on a five-step journey to transform the ordinary into extraordinary 4K and 8K visual masterpi...

- stabilityai (Stability AI): no description found

- lambdalabs/sd-pokemon-diffusers · Hugging Face: no description found

- GitHub - DataCTE/ELLA_Training: Contribute to DataCTE/ELLA_Training development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- Stable Diffusion Forge UI: Under the Hood Exploration - Tips and Trick #stablediffusion: In this video, we're taking a detailed look at the Stable Diffusion Forge UI, covering everything from finding and updating models and settings to enhancing ...

- OpenAI's Sora Made Me Crazy AI Videos—Then the CTO Answered (Most of) My Questions | WSJ: OpenAI’s new text-to-video AI model Sora can create some very realistic scenes. How does this generative AI tech work? Why does it mess up sometimes? When wi...

- GitHub - hnmr293/sd-webui-cutoff: Cutoff - Cutting Off Prompt Effect: Cutoff - Cutting Off Prompt Effect. Contribute to hnmr293/sd-webui-cutoff development by creating an account on GitHub.

- How to install Stable Diffusion 2.1 in AUTOMATIC1111 GUI - Stable Diffusion Art: Stable diffusion 2.1 was released on Dec 7, 2022.

- stabilityai/stable-diffusion-2-1 at main: no description found

Cohere ▷ #general (522 messages🔥🔥🔥):

- CORS Access Troubles: Users reported an issue where the Cohere dashboard was inaccessible, identifying a CORS policy error blocking a JavaScript fetch request from `https://dashboard.cohere.com` to `https://production.api.os.cohere.ai`.

- Captivating Conversation on Command R+ and Context Length: The community engaged in a heated debate about the efficacy and practicality of long context lengths in LLMs versus strategies like Retrieval-Augmented Generation (RAG). Arguments included computational efficiency and the diminishing returns of increased context length.

- Rerank V3 Launch Priced at $2 per 1K Searches: Clarification was provided on Rerank V3’s pricing, set at $2 per 1,000 searches, with current promotions offering 50% off due to late pricing change implementation; Rerank V2 remains available at $1 per 1,000 searches.

- Cohere Fine-Tuning and On-Premise Deployment Queries Addressed: In the discussion, questions were raised about the ability to fine-tune Cohere’s LLMs on-premise or through the Cohere platform, as well as deploying these models on AWS Bedrock or on-premise setups.

- Influx of Friendly Introductions to the Cohere Community: New members introduced themselves, including Tayo from Nigeria expressing gratitude for Cohere’s LLM, and other individuals signaling their interest in or involvement with AI.
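The Rerank pricing quoted above can be turned into a quick estimate. The sketch assumes cost scales linearly with the number of searches and that the 50% promotion applies as a flat multiplier; `rerank_cost_usd` is a hypothetical helper, the search volume is an invented example, and actual billing rules may differ.

```python
def rerank_cost_usd(searches: int, price_per_1k_usd: float, discount: float = 0.0) -> float:
    """Estimate cost for a number of searches at a per-1,000 price and optional discount."""
    if searches < 0 or not 0.0 <= discount <= 1.0:
        raise ValueError("searches must be >= 0 and discount between 0 and 1")
    return searches / 1000 * price_per_1k_usd * (1.0 - discount)


monthly_searches = 250_000  # invented volume for illustration

v3_list_price = rerank_cost_usd(monthly_searches, 2.0)              # 500.0
v3_promo_price = rerank_cost_usd(monthly_searches, 2.0, discount=0.5)  # 250.0
v2_price = rerank_cost_usd(monthly_searches, 1.0)                   # 250.0
```

Under these assumptions, Rerank V3 at the promotional rate costs the same as Rerank V2 at its regular rate.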

Links mentioned:

- RULER: What's the Real Context Size of Your Long-Context Language Models?: The needle-in-a-haystack (NIAH) test, which examines the ability to retrieve a piece of information (the "needle") from long distractor texts (the "haystack"), has been widely adopted ...

- Google Colaboratory: no description found

- Join the Cohere Community Discord Server!: Cohere community server. Come chat about Cohere API, LLMs, Generative AI, and everything in between. | 15292 members

- Retrieval Augmented Generation (RAG) - Cohere Docs: no description found

- Creative Commons NonCommercial license - Wikipedia: no description found

- Screaming Mad GIF - Screaming Mad Fish - Discover & Share GIFs: Click to view the GIF

- Login | Cohere: Cohere provides access to advanced Large Language Models and NLP tools through one easy-to-use API. Get started for free.

- glucose", - Search results - Wikipedia: no description found

- Command R+ by cohere | OpenRouter: Command R+ is a new, 104B-parameter LLM from Cohere. It's useful for roleplay, general consumer usecases, and Retrieval Augmented Generation (RAG). It offers multilingual support for ten key lan...

- Episode 1 - Mongo DB Is Web Scale: Q&A discussion discussing the merits of No SQL and relational databases.

- ASUS Announces Dual GeForce RTX 4060 Ti SSD Graphics Card: no description found

- CohereForAI (Cohere For AI): no description found

- AWS Marketplace: Cohere: no description found

- Efficient Parameter-free Clustering Using First Neighbor Relations: We present a new clustering method in the form of a single clustering equation that is able to directly discover groupings in the data. The main proposition is that the first neighbor of each sample i...

- GitHub - ssarfraz/FINCH-Clustering: Source Code for FINCH Clustering Algorithm: Source Code for FINCH Clustering Algorithm. Contribute to ssarfraz/FINCH-Clustering development by creating an account on GitHub.

- Hierarchical Nearest Neighbor Graph Embedding for Efficient Dimensionality Reduction: Dimensionality reduction is crucial both for visualization and preprocessing high dimensional data for machine learning. We introduce a novel method based on a hierarchy built on 1-nearest neighbor gr...

- GitHub - koulakis/h-nne: A fast hierarchical dimensionality reduction algorithm.: A fast hierarchical dimensionality reduction algorithm. - koulakis/h-nne

- Cohere For AI - Guest Speaker: Dr. Saquib Sarfraz, Deep Learning Lead: no description found

Cohere ▷ #announcements (1 messages):

- Rerank 3 Sets Sail for Enhanced Enterprise Search: Cohere announced Rerank 3, a foundation model geared to increase the efficiency of enterprise search and RAG systems. It can now handle complex, semi-structured data and boasts up to a 3x improvement in inference speed. It supports 100+ languages and a long 4k context length for improved accuracy on various document types, including code retrieval.

- Boost Your Elastic Search with Cohere Integration: Rerank 3 is now natively supported in Elastic’s Inference API, enabling seamless enhancement of enterprise search functionality. Interested developers can start integrating with a detailed guide on embedding with Cohere and a hands-on Cohere-Elastic notebook example.

- Unlock State-of-the-Art Enterprise Search: Described in their latest blog post, Rerank 3 is lauded for its state-of-the-art capabilities, which include drastically improved search quality for longer documents, the ability to search multi-aspect data, and multilingual support, all while maintaining low latency.

Links mentioned:

- Elasticsearch and Cohere: no description found

- Introducing Rerank 3: A New Foundation Model for Efficient Enterprise Search & Retrieval: Today, we're introducing our newest foundation model, Rerank 3, purpose built to enhance enterprise search and Retrieval Augmented Generation (RAG) systems. Our model is compatible with any dat...

Unsloth AI (Daniel Han) ▷ #general (268 messages🔥🔥):

- Mixtral Model Dilemmas: Several members voiced concerns about Mixtral and Perplexity Labs models having repetition issues and behaving erratically, likening the behavior to glitches. Critiques include superficial instruction fine-tuning and repetitive outputs similar to base models, with one member mentioning this GitHub repository as a better alternative for creating search-based models.

- Anticipation Builds Around Upcoming Instruct Models: There’s keen interest in the release of new instruct models, with a mod from Mistral confirming they’re anticipated in a week, stirring up excitement for potential showdowns between different models like Llama and Mistral.

- Exploring Long Context Windows in LLMs: Users delve into discussions about utilizing long context windows, up to 228K, for fine-tuning LLMs, with Unsloth AI reducing memory usage by 30% and only increasing time overhead by 1.9%, detailed further in Unsloth’s blog.

- The Quest for Domain-Specific Data: A member queries the community on the best practices for collecting a 128k context-size instructions dataset for a specific domain. Multiple suggestions are made, including looking at HF datasets, but the conversation leans towards the need for more specialized and domain-specific data collection methods.

- Unsloth AI’s Webinar on Fine-Tuning LLMs: Unsloth AI conducted a webinar hosted by Analytics Vidhya, walking through a live demo of Unsloth and sharing fine-tuning tips and tricks, which garnered interest from the community even leading to a last-minute notice. They also invite members to their Zoom event aimed at sharing knowledge and conducting a Q&A session.

Links mentioned:

- Introducing Rerank 3: A New Foundation Model for Efficient Enterprise Search & Retrieval: Today, we're introducing our newest foundation model, Rerank 3, purpose built to enhance enterprise search and Retrieval Augmented Generation (RAG) systems. Our model is compatible with any dat...

- Bloomberg - Are you a robot?: no description found

- Video Conferencing, Web Conferencing, Webinars, Screen Sharing: Zoom is the leader in modern enterprise video communications, with an easy, reliable cloud platform for video and audio conferencing, chat, and webinars across mobile, desktop, and room systems. Zoom ...

- Viking - a LumiOpen Collection: no description found

- Unsloth - 4x longer context windows & 1.7x larger batch sizes: Unsloth now supports finetuning of LLMs with very long context windows, up to 228K (Hugging Face + Flash Attention 2 does 58K so 4x longer) on H100 and 56K (HF + FA2 does 14K) on RTX 4090. We managed...

- AI-Sweden-Models (AI Sweden Model Hub): no description found

- NVIDIA Collective Communications Library (NCCL): no description found

- GitHub - tinygrad/open-gpu-kernel-modules: NVIDIA Linux open GPU with P2P support: NVIDIA Linux open GPU with P2P support. Contribute to tinygrad/open-gpu-kernel-modules development by creating an account on GitHub.

- GitHub - searxng/searxng: SearXNG is a free internet metasearch engine which aggregates results from various search services and databases. Users are neither tracked nor profiled.: SearXNG is a free internet metasearch engine which aggregates results from various search services and databases. Users are neither tracked nor profiled. - searxng/searxng

Unsloth AI (Daniel Han) ▷ #help (244 messages🔥🔥):

- Solving Fine-Tuning Woes: Users were discussing challenges with fine-tuning models for NLP tasks; one concerning the discrepancy between good evaluation metrics during training and poor inference metrics, and another related to their struggle in training a model to improve accuracy, particularly in a non-English NLP context.

- Model Deployment Dialogue: There was an exchange about how to deploy models after training with Unsloth AI, with references to possible merging tactics and a link to the Unsloth AI GitHub wiki for guidance on deployment processes, including for models like Mistral-7B.

- VRAM Hunger Games: A member expressed difficulty trying to fit models within VRAM limits, even after applying Unsloth’s VRAM efficiency updates. They discussed various strategies including fine-tuning batch sizes and consolidating contextual tokens into the base model to save on VRAM usage.

- Dataset Formatting for GEMMA Fine-tuning: Someone seeking help with fine-tuning GEMMA on a custom dataset was directed towards using Pandas to convert and load their data into a Hugging Face compatible format, leading to a successful outcome.

- GPU Limits in Machine Learning: In a debate over multi-GPU support for Unsloth AI, users clarified that while Unsloth works with multiple GPUs, official support and documentation may not be up-to-date, and that licensing restrictions aim to prevent abuse by large tech companies. A brief mention of integrating with Llama-Factory hinted at potential multi-GPU solutions.

Links mentioned:

- Google Colaboratory: no description found

- Load: no description found

- Home: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub: Let’s build from here: GitHub is where over 100 million developers shape the future of software, together. Contribute to the open source community, manage your Git repositories, review code like a pro, track bugs and fea...

- CodeGenerationMoE/code/finetune.ipynb at main · akameswa/CodeGenerationMoE: Mixture of Expert Model for Code Generation. Contribute to akameswa/CodeGenerationMoE development by creating an account on GitHub.

- GitHub - pandas-dev/pandas: Flexible and powerful data analysis / manipulation library for Python, providing labeled data structures similar to R data.frame objects, statistical functions, and much more: Flexible and powerful data analysis / manipulation library for Python, providing labeled data structures similar to R data.frame objects, statistical functions, and much more - pandas-dev/pandas

- GitHub - Green0-0/Discord-LLM-v2: Contribute to Green0-0/Discord-LLM-v2 development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 2-5X faster 80% less memory QLoRA & LoRA finetuning: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #showcase (7 messages):

- Sneak Peek of Ghost 7B: The upcoming Ghost 7B model is touted to be a small-size, multi-language large model that shines in reasoning, understanding of Vietnamese, and expert knowledge. The excitement is palpable among the community, with users anticipating its release.

- Enriching Low-Resource Languages: Tips shared for enhancing low-resource language datasets include utilizing translation data or resources from HuggingFace. Members show support and enthusiasm for the Ghost X project’s developments.

- Community Support for Ghost X: The new version of Ghost 7B is welcomed with applause and encouragement from community members. Positive feedback underscores the work done on the Ghost X project.

Link mentioned: ghost-x (Ghost X): no description found

Unsloth AI (Daniel Han) ▷ #suggestions (1 messages):

starsupernova: oh yes yes! i saw those tweets as well!

Nous Research AI ▷ #off-topic (15 messages🔥):

- Money Rain Gif Shared: A member posted a gif link from Tenor, showing money raining down on Erlich Bachman from the TV show Silicon Valley.

- Insightful North Korea Interview: A YouTube video titled “Стыдные вопросы про Северную Корею” was shared, featuring a three-hour interview with an expert on North Korea, available with English subtitles and dubbing.

- Claude AI Crafts Lyrics: A member mentioned that the lyrics for a song listed on udio.com were created by an AI called Claude.

- Automatic Moderation against Spam: In response to a concern about invite spam, a member noted the implementation of an automatic system that removes messages and mutes the spammer if they send too many messages in a short period, with a notification sent to the moderator.

- Comparing Claude with GPT-4: One member expressed feeling a bit lost using Anthropic’s Claude AI, indicating a preference for GPT-4’s responses, which they felt were more aligned with their thoughts.
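The automatic anti-spam behavior described above (too many messages in a short period leads to removal, a mute, and a moderator notification) resembles a sliding-window rate limit. The sketch below is one way such a rule could work, not the server's actual bot: `SpamGuard`, its thresholds, and its log format are hypothetical, and it only rejects the triggering and later messages rather than retroactively deleting the earlier burst.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Set


class SpamGuard:
    """Mute a user who sends more than max_messages within window_seconds."""

    def __init__(self, max_messages: int = 5, window_seconds: float = 10.0) -> None:
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)
        self.muted: Set[str] = set()
        self.moderator_log: List[str] = []

    def on_message(self, user_id: str, timestamp: float) -> bool:
        """Record a message; return True to keep it, False to remove it."""
        if user_id in self.muted:
            return False
        recent = self._recent[user_id]
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) > self.max_messages:
            self.muted.add(user_id)
            self.moderator_log.append(f"muted {user_id} at t={timestamp}")
            return False
        return True


guard = SpamGuard(max_messages=3, window_seconds=10.0)
burst = [guard.on_message("spammer", t) for t in (0.0, 1.0, 2.0, 3.0)]
spaced = [guard.on_message("regular", t) for t in (0.0, 20.0, 40.0, 60.0)]
```

In this run the fourth message of the burst is rejected and the sender muted, while the user who posts every 20 seconds is never affected.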

Links mentioned:

- Udio | An Intricate Tapestry (Delving deep) by Kaetemi: Make your music

- Money Rain Erlich Bachman Tj Miller Silicon Valley GIF - Money Rain Erlich Bachman Tj Miller - Discover & Share GIFs: Click to view the GIF

- special_relativity_greg_egan.md: GitHub Gist: instantly share code, notes, and snippets.

- LoReFT.md: GitHub Gist: instantly share code, notes, and snippets.

- Стыдные вопросы про Северную Корею: ERID: LjN8Jv34w Реклама. Рекламодатель ООО "ФЛАУВАУ" ИНН: 9702020445Радуем близких даже на расстоянии: https://flowwow.com/s/VDUD15Выберите подарок ко Дню ма...

Nous Research AI ▷ #interesting-links (8 messages🔥):

- The Renaissance of RNNs: A new paper attempts to revive Recurrent Neural Networks (RNNs) with an emerging hybrid architecture, promising in-depth exploration into the field of sequential data processing. The detailed paper can be found on arXiv.

- Hybrid RNNs Might Be the Endgame: The discussion suggests a trend towards hybrid models when innovating with RNN architectures, hinting at the persistent challenge of creating pure RNN solutions that can match state-of-the-art results.

- Google’s New Model: There’s buzz about Google releasing a new 7 billion parameter model utilizing the RNN-based architecture described in recent research, indicating substantial investment into this area.

- Startup Evaluating AI Models: A member shared a Bloomberg article on a new startup that is focusing on testing the effectiveness of AI models, but the link led to a standard browser error message indicating JavaScript or cookie issues. The link to the article was Bloomberg.

- Quotable Social Media Post: A member shared a link to a humorous tweet that reads, “Feeling cute, might delete later. idk,” encouraging a brief moment of levity in the channel. The tweet is available here.

Links mentioned:

- Griffin: Mixing Gated Linear Recurrences with Local Attention for Efficient Language Models: Recurrent neural networks (RNNs) have fast inference and scale efficiently on long sequences, but they are difficult to train and hard to scale. We propose Hawk, an RNN with gated linear recurrences, ...

- Bloomberg - Are you a robot?: no description found

- Rho-1: Not All Tokens Are What You Need: Previous language model pre-training methods have uniformly applied a next-token prediction loss to all training tokens. Challenging this norm, we posit that "Not all tokens in a corpus are equall...

- Tweet from Kyle Corbitt (@corbtt): Feeling cute, might delete later. idk.

Nous Research AI ▷ #general (369 messages🔥🔥):

- Google’s Gemma Engine Gets a C++ Twist: Google has its own variant of llama.cpp for Gemma. A lightweight, standalone C++ inference engine for Google’s Gemma models is available at their GitHub repository.

- Nous Research is Feisty: The conversation touched upon the eagerly awaited Nous Hermes 8x22b and its development hardships. The Nous Hermes tuning, if attempted, would require infrastructure costing around “$80k for a week” and relies on tech that’s not easily rentable.

- Mac’s AI Prediction Market: Discussion of Apple’s M chips and their potential for AI inference set the group buzzing, with the M2 Ultra and M3 Max being notable for low power draw and high efficiency compared to Nvidia’s GPUs. Some speculated about the future M4 chip, rumored to support up to 2TB of RAM.

- Models on the Move: The chat noted the release of Mixtral-8x22b and of an experimental Mistral-22B-v0.1, a dense 22B parameter model extracted from an MoE model, announced on the Vezora Hugging Face page. There’s anticipation for the upcoming V.2 release with expectations of enhanced capabilities.

- Fine-Tuning the Giants: The members debated the impact of prompt engineering on model performance, with recent tweets suggesting markedly improved results on benchmarks like ConceptArc and chess Elo ratings with carefully engineered prompts. The claim that GPT-4 can exceed 3500 Elo in chess by leveraging this technique was met with skepticism.

Links mentioned:

- Tweet from Omar Sanseviero (@osanseviero): Welcome Zephyr 141B to Hugging Chat🔥 🎉A Mixtral-8x22B fine-tune ⚡️Super fast generation with TGI 🤗Fully open source (from the data to the UI) https://huggingface.co/chat/models/HuggingFaceH4/zeph...

- Tweet from Andrej Karpathy (@karpathy): @dsmilkov didn't follow but sounds interesting. "train a linear model with sample weights to class balance"...?

- Bloomberg - Are you a robot?: no description found

- Microsoft for Startups FoundersHub: no description found

- Vezora/Mistral-22B-v0.1 · Hugging Face: no description found

- lightblue/Karasu-Mixtral-8x22B-v0.1 · Hugging Face: no description found

- Shocked Computer GIF - Shocked Computer Smile - Discover & Share GIFs: Click to view the GIF

- axolotl/examples/mistral/mixtral-8x22b-qlora-fsdp.yml at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- wandb/Mistral-7B-v0.2 · Hugging Face: no description found

- wandb/zephyr-orpo-7b-v0.2 · Hugging Face: no description found

- Performance and Scalability: How To Fit a Bigger Model and Train It Faster: no description found

- LDJnr/Capybara · Datasets at Hugging Face: no description found

- GitHub - google/gemma.cpp: lightweight, standalone C++ inference engine for Google's Gemma models.: lightweight, standalone C++ inference engine for Google's Gemma models. - google/gemma.cpp

- HuggingFaceH4/capybara · Datasets at Hugging Face: no description found

- GitHub - unslothai/unsloth: 2-5X faster 80% less memory QLoRA & LoRA finetuning: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Azure Free Trial | Microsoft Azure: Start your free Microsoft Azure trial and receive $200 in Azure credits to use any way you want. Run virtual machines, store data, and develop apps.

Nous Research AI ▷ #ask-about-llms (25 messages🔥):

- Quest for 7B Mistral Finetuning Advice: A member inquired about a step-by-step guide for finetuning 7B Mistral. Suggestions included using the Unsloth repository and employing QLoRA on a Colab GPU instead of a full finetune process for small datasets, or renting powerful GPUs from services like Vast.

- Logic Reasoning Dataset Hunt: A dataset for reasoning with propositional and predicate logic over natural text was sought by a member. Another shared the Logic-LLM project on GitHub, noting it also provides an 18.4% performance boost over standard chain-of-thought prompting.

- Freelancer Request for Finetuning Aid: One member expressed interest in hiring a freelancer to create a script or guide them through the finetuning process based on a provided dataset.

- In Search of Genstruct-enhancing Notebooks: A member was looking for notebooks or tools to input scraped data as a primer for Genstruct and found a GitHub repository, OllamaGenstruct, that closely matches their needs.

- Exploring LLMs in Healthcare and Medical Fields: Members discussed applications of LLMs in the medical domain, sharing papers and mentioning the potential legal risks of providing medical advice through such models. Artificial restrictions on models and other legal considerations were noted as impediments to development in this area.

Links mentioned:

- Polaris: A Safety-focused LLM Constellation Architecture for Healthcare: We develop Polaris, the first safety-focused LLM constellation for real-time patient-AI healthcare conversations. Unlike prior LLM works in healthcare focusing on tasks like question answering, our wo...

- OllamaGenstruct/Paperstocsv.py at main · edmundman/OllamaGenstruct: Contribute to edmundman/OllamaGenstruct development by creating an account on GitHub.

- GitHub - teacherpeterpan/Logic-LLM: The project page for "LOGIC-LM: Empowering Large Language Models with Symbolic Solvers for Faithful Logical Reasoning": The project page for "LOGIC-LM: Empowering Large Language Models with Symbolic Solvers for Faithful Logical Reasoning" - teacherpeterpan/Logic-LLM

Nous Research AI ▷ #world-sim (63 messages🔥🔥):

- UI Inspirations for Worldsim: A member shared a link to the edex-ui GitHub repository, which showcases a customizable science fiction terminal emulator. Although another user expressed interest, it was cautioned that the project is discontinued and could be unsafe.

- Anticipation for Worldsim’s Return: Multiple members eagerly discussed when Worldsim might come back and what new features it might have. One member received confirmation that the Worldsim platform is planned to be back by next Wednesday.

- AGI as Hot but Crazy: In a light-hearted analogy, a member equated the allure of a dangerous UI to a “Hot but Crazy” relationship. The conversation shifted to discuss definitions of AGI with members adding different components like Claude 3 Opus, AutoGen, and Figure 01 to conceptualize AGI.

- Worldsim Coming Back Speculation: Members traded amateur predictions about when Worldsim would return, some picking Saturday based on nothing and others consulting Claude 3, with estimates ranging from the upcoming Saturday to a more cautious end of next week.

- Potential Alternatives and Resources Explained: In response to a query about Worldsim alternatives during the downtime, a user mentioned the sysprompt is open-source and can be sent directly to Claude or used with the Anthropic workbench and other LLMs. Moreover, Anthropic API keys could be pasted into Sillytavern for those interested in experimenting with the Claude model.
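For readers who want to try the "send the sysprompt directly to Claude" route mentioned above, here is a minimal sketch using only the Python standard library and Anthropic's Messages API. The `SYSTEM_PROMPT` value is a placeholder (the actual Worldsim sysprompt is not reproduced here), `build_request` is a hypothetical helper name, and the model ID and token limit are assumptions you may want to change.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"

# Placeholder: paste the open-source sysprompt here (not reproduced in this recap).
SYSTEM_PROMPT = "<worldsim sysprompt goes here>"


def build_request(system_prompt: str, user_text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Messages API request with a custom system prompt."""
    payload = {
        "model": "claude-3-opus-20240229",  # assumed model choice
        "max_tokens": 1024,                 # assumed limit
        "system": system_prompt,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


# The key is read from the environment; the fallback is a dummy value for dry runs.
request = build_request(
    SYSTEM_PROMPT,
    "hello",
    os.environ.get("ANTHROPIC_API_KEY", "sk-placeholder"),
)
print(json.loads(request.data)["model"])

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp)["content"][0]["text"])
```

The request is only constructed, not sent, so the sketch can be run without credentials; uncomment the last lines to make the call.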

Links mentioned:

- AI Dungeon: no description found

- OGRE cyberdeck: OGRE is a doomsday or simply field cyberdeck, a knock-off of Jay Doscher's Recover Kit. Shared by rmw156.

- edex-ui/media/screenshot_blade.png at master · GitSquared/edex-ui: A cross-platform, customizable science fiction terminal emulator with advanced monitoring & touchscreen support. - GitSquared/edex-ui

CUDA MODE ▷ #general (6 messages):

- Newcomer Alighted: A newcomer expressed excitement about discovering the CUDA MODE Discord community through another member’s invitation.

- Video Resource Depot: A message noted that recorded videos related to the community can be found on CUDA MODE’s YouTube channel.

- Peer-to-Peer Enhancement Announced: An announcement was made about the addition of P2P support to the 4090 by modifying NVIDIA’s driver, enabling 14.7 GB/s AllReduce on tinybox green with support from tinygrad.

- CUDA Challenge Blog Post: A member shared their experience and a blog post about tackling the One Billion Row Challenge with CUDA, inviting feedback from CUDA enthusiasts.

- Channel Renaming for Resource Sharing: It was suggested to create a new channel for sharing materials. Subsequently, an existing channel was renamed to serve as the place for sharing resources.

Links mentioned:

- Tweet from the tiny corp (@__tinygrad__): We added P2P support to 4090 by modifying NVIDIA's driver. Works with tinygrad and nccl (aka torch). 14.7 GB/s AllReduce on tinybox green!

- The One Billion Row Challenge in CUDA: from 17 minutes to 17 seconds: no description found

CUDA MODE ▷ #cuda (168 messages🔥🔥):

- Finding the Speed Limits with CublasLinear: Members discussed optimizations for faster model inference using custom CUDA libraries, with tests indicating that the custom `CublasLinear` is faster for larger matrix multiplications. However, when tested within a full model such as “llama7b,” the speedup wasn’t as significant, potentially due to attention being the bottleneck rather than matrix multiplication.

- The Quest for Fast, Accurate Quantization: Various quantization strategies were debated, such as 4-bit quantization and its implementation challenges compared to other quant methods. A member is working on an approach called HQQ (Half-Quadratic Quantization) that aims to outperform existing quantization methods by using linear dequantization.

- Driver Hacking for P2P Support: A message mentioned that P2P support was added to the RTX 4090 by modifying NVIDIA’s driver, with a link provided to a social media post detailing the accomplishment.

- CUDA Kernel Wishlist for New Language Model: A paper introducing RecurrentGemma, an open language model utilizing Google’s Griffin architecture, was shared and prompted interest in building a CUDA kernel for it.

- Benchmarking and Kernel Challenges: The conversation detailed the challenges of getting CUDA kernels to perform optimally and accurately, highlighting issues like differences in performance when moving from isolated tests to full model integrations and how changing precision can lead to errors or speed limitations.
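One way to see why an isolated kernel speedup shrinks inside a full model is Amdahl's law: only the fraction of runtime spent in the accelerated op gets faster. The sketch below is illustrative only; the 40% share and 1.5x kernel speedup are hypothetical numbers, not measurements from the discussion, and `overall_speedup` is a name introduced here.

```python
def overall_speedup(fraction_accelerated: float, kernel_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the runtime gets faster."""
    if not 0.0 <= fraction_accelerated <= 1.0:
        raise ValueError("fraction_accelerated must be in [0, 1]")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    # Time left over: the untouched part plus the accelerated part at its new cost.
    remaining = (1.0 - fraction_accelerated) + fraction_accelerated / kernel_speedup
    return 1.0 / remaining


# Hypothetical: linear layers are 40% of a forward pass, custom kernel is 1.5x faster.
print(f"{overall_speedup(0.40, 1.5):.3f}x end-to-end")
```

With these assumed numbers the end-to-end gain is only about 1.15x, which matches the qualitative observation that a faster matmul helps less when attention dominates the runtime.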

Links mentioned:

- Tweet from the tiny corp (@__tinygrad__): We added P2P support to 4090 by modifying NVIDIA's driver. Works with tinygrad and nccl (aka torch). 14.7 GB/s AllReduce on tinybox green!

- Paper page - RecurrentGemma: Moving Past Transformers for Efficient Open Language Models: no description found

- bitsandbytes/bitsandbytes/nn/modules.py at main · TimDettmers/bitsandbytes: Accessible large language models via k-bit quantization for PyTorch. - TimDettmers/bitsandbytes

- hqq_hgemm_benchmark.py: GitHub Gist: instantly share code, notes, and snippets.

- QuaRot/quarot/kernels/gemm.cu at main · spcl/QuaRot: Code for QuaRot, an end-to-end 4-bit inference of large language models. - spcl/QuaRot

- CUDALibrarySamples/MathDx/cuBLASDx/multiblock_gemm.cu at master · NVIDIA/CUDALibrarySamples: CUDA Library Samples. Contribute to NVIDIA/CUDALibrarySamples development by creating an account on GitHub.
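As a rough illustration of the 4-bit quantization with linear dequantization discussed in this channel, here is a minimal pure-Python sketch of plain min-max affine quantization. It is not HQQ or any of the linked kernels; the function names are introduced here for illustration, and real implementations work on tensors, pack two 4-bit codes per byte, and choose the scale and zero-point more carefully.

```python
def quantize_4bit(weights):
    """Min-max affine quantization of a list of floats to integer codes in [0, 15]."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 15 or 1.0  # fall back to 1.0 when all weights are equal
    zero = -lo / scale             # zero-point kept as a float in this sketch
    codes = [min(15, max(0, round(w / scale + zero))) for w in weights]
    return codes, scale, zero


def dequantize(codes, scale, zero):
    """Linear dequantization: w_hat = (q - zero) * scale."""
    return [(q - zero) * scale for q in codes]


weights = [-0.8, -0.1, 0.0, 0.3, 0.7]
codes, scale, zero = quantize_4bit(weights)
restored = dequantize(codes, scale, zero)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"max abs error = {max_err:.4f}")
```

Because dequantization is a single multiply-add per weight, the rounding error per weight is bounded by half the scale; the debate in the channel is about how to pick the scale and zero-point well and how to make the fused dequantize-and-matmul kernel fast.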

CUDA MODE ▷ #torch (16 messages🔥):

- Quantization Quagmire for ViT Models: A member encountered an error when trying to quantize the `google/vit-base-patch16-224-in21k` model and shared a link to the related GitHub issue #74540. They are seeking a resolution and guidance in quantization and pruning techniques.

- Fuss over FlashAttention2’s Odd Output: When attempting to integrate flashattention2 with BERT, a member noted differences in outputs between patched and unpatched models, with discrepancies around 0.03 for the same inputs as reported in subsequent messages.

- Lament on Lagging LayerNorm: Despite claims of a 3-5x speed increase, a member found fused layernorm and MLP modules from Dao-AILab’s flash-attention to be slower than expected, contrasting with what’s advertised on their GitHub repository.

- Hacking the Hardware for Higher Performance: One user mentioned that Tiny Corp has modified open GPU kernel modules from NVIDIA to enable P2P on 4090s, achieving a 58% speedup for all reduce operations, with further details and results shared in a Pastebin link.