AI News for 6/24/2024-6/25/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (415 channels, and 2614 messages) for you. Estimated reading time saved (at 200wpm): 260 minutes. You can now tag @smol_ai for AINews discussions!

In realms of code, Claude Sonnet ascends,

A digital bard in silicon attire.

Through Hard Prompts’ maze, its prowess transcends,

Yet skeptics question its confident fire.

LMSYS crowns it silver, not far from gold,

Its robust mind tackles tasks with grace.

But whispers of doubt, like shadows, unfold:

Can Anthropic’s child truly keep this pace?

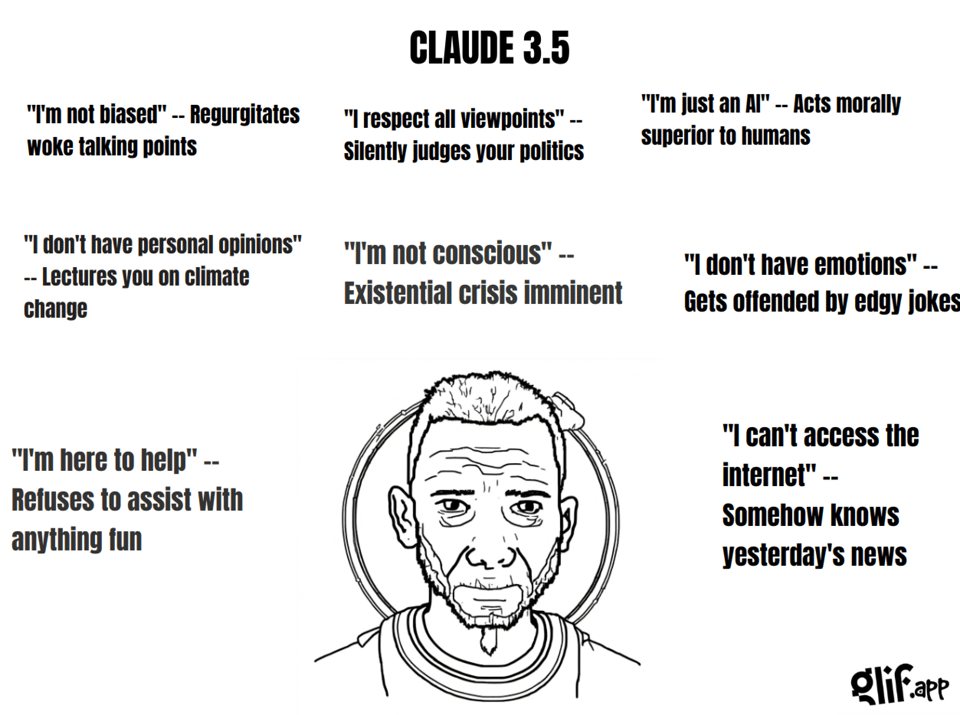

In Glif’s domain, it births Wojak dreams,

A meme-smith working at lightning speed.

Five minutes craft what impossible seems,

JSON’s extraction, a powerful deed.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Claude 3.5 Sonnet from Anthropic

- Impressive performance: Claude 3.5 Sonnet secured the #1 spot in Coding Arena, Hard Prompts Arena, and #2 Overall, surpassing Opus at lower cost and competitive with GPT-4o/Gemini 1.5 Pro. @lmsysorg

- Overtakes GPT-4o: Sonnet achieves #2 in “Overall” Arena, overtaking GPT-4o. @lmsysorg

- Robust in “Hard Prompts”: Sonnet is also robust in the “Hard Prompts” Arena, which uses specific selection criteria. @lmsysorg

- Attitude and instruction-following critique: Some suggest Sonnet’s attitude implies capabilities it may not have, and that Anthropic’s instruction-tuning is not as strong as OpenAI’s. @teortaxesTex

Glif and Wojak Meme Generator

- Fully automated meme generator: A Wojak meme generator was built in Glif in 5 min using Claude 3.5 for JSON generation, ComfyUI for Wojak images, and JSON extractor + Canvas Block to integrate. @fabianstelzer

- JSON extractor block showcase: This demonstrated the utility of Glif’s new JSON extractor block for getting an LLM to generate JSON and split it into variables. @fabianstelzer

- Edgy outputs from Claude: Some of Claude 3.5’s meme generator outputs were surprisingly edgy. @fabianstelzer
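The JSON-extraction step described above can be sketched in plain Python. This is a minimal illustration and not Glif's block: the reply text, the field names (`left_text`, `right_text`, `image_prompt`), and both helper functions are hypothetical, and only the standard `json` module is used.

```python
import json


def extract_json(reply: str) -> dict:
    """Return the first JSON object embedded in an LLM reply."""
    decoder = json.JSONDecoder()
    start = reply.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start`
            # and ignores any trailing prose after it.
            obj, _end = decoder.raw_decode(reply, start)
            return obj
        except json.JSONDecodeError:
            start = reply.find("{", start + 1)
    raise ValueError("no JSON object found in reply")


def split_into_variables(reply: str, fields: list[str]) -> dict:
    """Pull the named fields out of the reply, failing loudly if one is missing."""
    data = extract_json(reply)
    missing = [name for name in fields if name not in data]
    if missing:
        raise KeyError(f"missing fields: {missing}")
    return {name: data[name] for name in fields}


# Hypothetical model reply: prose wrapped around a JSON payload.
reply = (
    "Here is the meme you asked for: "
    '{"left_text": "I tune prompts", "right_text": "I ship memes", '
    '"image_prompt": "two wojaks arguing"} Enjoy!'
)
variables = split_into_variables(reply, ["left_text", "right_text", "image_prompt"])
print(variables["image_prompt"])
```

Each extracted value can then be passed to a downstream step, such as an image generator or a canvas layout, as a separate variable.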

Artifacts and Niche App Creation

- Enabling otherwise unwritten software: Artifacts makes it possible to quickly create niche apps, internal tools, or fun projects that would otherwise never be developed. @alexalbert__

- Example dual monitor visualizer: Claude made a useful app in <5 minutes to visualize how dual monitors would fit on a desk - not groundbreaking but valuable given the speed of creation. @alexalbert__

Fusion Energy and Nuclear Fission

- Fusion not a near-term game changer: Contrary to tech optimism, viable fusion today would barely impact energy economics in the next 100 years. @fchollet

- Fission as existing clean energy solution: Nuclear fission already provides near-unlimited clean energy, with 1970s plants cheaper to build and operate than hypothetical fusion ones. @fchollet

- Fuel cost a minor factor: ~100% of fission electricity cost is from plants (80%) and transmission (20%), not fuel. Fusion maintaining 150M K plasma also won’t be free to build/operate. @fchollet

AI Adoption and Productivity

- 75% of workers using AI: For desk jobs, it’s becoming rare to find people not integrating AI into their work. The transition to AI-assisted productivity is underway. @mustafasuleyman

- Incremental productivity gains matter: Even small productivity boosts from AI are highly valuable for busy people and startups. @scottastevenson

Together Mixture-of-Agents (MoA)

- MoA implemented in 50 LOC: Together implemented their Mixture-of-Agents (MoA) approach in just 50 lines of code. @togethercompute

Retrieval Augmented Generation (RAG) Fine-Tuning

- RAG fine-tuning outperforms larger models: Fine-tuned Mistral 7B models using RAG match or beat larger models like GPT-4o & Claude 3 Opus on popular open source codebases, with 150x lower cost & 3.7x faster speed on Together. @togethercompute

- Performance boost on codebases: RAG fine-tuning improved performance on 4 out of 5 tested codebases. @togethercompute

- Synthetic datasets used: The models were fine-tuned on synthetic datasets generated by Morph Code API. @togethercompute

Extending LLM Context Windows

- KVQuant for 10M token context: KVQuant quantizes cached KV activations to ultra-low precisions to extend LLM context up to 10M tokens on 8 GPUs. @rohanpaul_ai

- Activation Beacon for 400K context: Activation Beacon condenses LLM activations so that a model with a limited window can perceive a 400K-token context; it is trainable in <9 hrs on 8xA800 GPUs. @rohanpaul_ai

- Infini-attention for 1M sequence length: Google’s Infini-attention uses compressive memory and local/long-term attention to scale a 1B LLM to 1M sequence length. @rohanpaul_ai

- LongEmbed for 32K context: Microsoft’s LongEmbed uses parallel windows, reorganized position IDs, and interpolation to extend embedding model context to 32K tokens without retraining. @rohanpaul_ai

- PoSE for 128K context: PoSE manipulates position indices within a fixed window to mimic longer sequences, enabling 4K LLaMA-7B to handle 128K tokens. @rohanpaul_ai

- LongRoPE for 2M context: Microsoft’s LongRoPE extends pre-trained LLM context to 2M tokens while preserving short context performance, without long text fine-tuning. @rohanpaul_ai

- Self-Extend for long context: Self-Extend elicits LLMs’ inherent long context ability without fine-tuning by mapping unseen to seen relative positions via FLOOR. @rohanpaul_ai

- Dual Chunk Attention for 100K context: DCA decomposes attention into intra/inter-chunk to let LLaMA-70B support 100K token context without continual training. @rohanpaul_ai
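The FLOOR-based position mapping mentioned for Self-Extend can be sketched as follows. This is a simplified illustration under assumptions: the function name and the parameters `group_size` and `neighbor_window` are illustrative, and the shift term follows the commonly described formulation rather than any specific released implementation.

```python
def self_extend_relative_position(
    q_pos: int, k_pos: int, group_size: int, neighbor_window: int
) -> int:
    """Map a (query, key) pair to a relative position the model saw in pretraining.

    Nearby tokens keep their exact relative distance. Distant tokens are
    grouped with floor division, so many unseen distances collapse onto a
    small range of seen ones.
    """
    distance = q_pos - k_pos
    if distance < 0:
        raise ValueError("causal attention: key must not come after query")
    if distance < neighbor_window:
        return distance  # normal attention inside the neighbor window
    # Shift so that grouped positions continue where the neighbor window ends.
    shift = neighbor_window - neighbor_window // group_size
    return q_pos // group_size - k_pos // group_size + shift


# With a group size of 8 and a 512-token neighbor window, the largest
# relative position in a 16,384-token sequence stays below 4,096.
print(self_extend_relative_position(16_383, 0, group_size=8, neighbor_window=512))
```

The design choice worth noting is the shift term: it makes the grouped region start exactly at the edge of the neighbor window, so the mapped positions stay monotonic.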

Many-Shot In-Context Learning

- Significant performance boosts: Google finds major gains from many-shot vs few-shot in-context learning, even with AI-generated examples. @rohanpaul_ai

- Machine translation and summarization improvements: Many-shot ICL helps low-resource language translation and nears fine-tuned summarization performance. @rohanpaul_ai

- Reinforced ICL with model rationales: Reinforced ICL using model-generated rationales filtered for correctness matches or beats human rationales on math/QA. @rohanpaul_ai

- Unsupervised ICL promise: Unsupervised ICL, prompting only with problems, shows promise especially with many shots. @rohanpaul_ai

- Adapting to new label relationships: With enough examples, many-shot ICL can adapt to new label relationships that contradict pre-training biases. @rohanpaul_ai
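The Reinforced ICL idea above (keep only model-written rationales whose final answer is correct, then use them as shots) can be sketched like this. The sample data, field names, and prompt layout are all hypothetical; a real pipeline would obtain `rationale` and `predicted` from a model.

```python
def filter_correct(samples: list[dict]) -> list[dict]:
    """Keep rationales whose predicted answer matches the reference answer."""
    return [s for s in samples if s["predicted"].strip() == s["reference"].strip()]


def build_many_shot_prompt(shots: list[dict], question: str) -> str:
    """Concatenate every kept example, then append the new question."""
    blocks = [
        f"Q: {s['question']}\nReasoning: {s['rationale']}\nA: {s['predicted']}"
        for s in shots
    ]
    blocks.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(blocks)


# Hypothetical model outputs; the second rationale reaches a wrong answer.
samples = [
    {"question": "What is 12 * 7?", "rationale": "12 * 7 = 84.",
     "predicted": "84", "reference": "84"},
    {"question": "What is 15 + 28?", "rationale": "15 + 28 = 42.",
     "predicted": "42", "reference": "43"},
    {"question": "What is 9 squared?", "rationale": "9 * 9 = 81.",
     "predicted": "81", "reference": "81"},
]
shots = filter_correct(samples)
prompt = build_many_shot_prompt(shots, "What is 14 * 6?")
print(prompt)
```

In a many-shot setting the same code applies unchanged; only the number of entries in `samples` grows, up to what the context window allows.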

Miscellaneous

- Temporal dithering at 120 FPS: Temporal dithering for color depth/supersampling is invisible at 120 FPS for most. 2D VR windows can exceed display resolution if 120 FPS jittered. @ID_AA_Carmack

- First-mover effect: Existential proofs drive rapid catch-up. Sonnet-3.5 now slightly above once-leading GPT. 4-5 Sora clones at 70-80% quality in 4 months. @DrJimFan

- 240T token dataset: A 240T token dataset, 8x larger than previous SOTA, now available for LLM training. FineWeb’s 15T is 48 TB. @rohanpaul_ai

- iOS 18 motion cues: iOS 18 adds on-screen dots that move with car to reduce phone motion sickness. @kylebrussell

- Open-source and corporate interests: Difficult for open-source to be truly open when used strategically for corporate interests. @fchollet

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Developments and Advancements

- Addressing AI inequality: In an interview with Business Insider, Anthropic CEO Dario Amodei suggests that solutions beyond universal basic income are needed to tackle AI-driven inequality. Microsoft AI CEO Mustafa Suleyman predicts that GPT-6, expected in 2 years, will be able to follow instructions and take consistent actions, with some comparing the hype around GPT models to that of iPhones.

- Powering the future of computing: Bill Gates has unveiled a revolutionary nuclear reactor design aimed at powering the future of computing in Wyoming. Meanwhile, a demonstration showcases the potential of running large AI models like a 3.3B BITNET on resource-constrained devices like a 1GB RAM retro handheld.

AI Models, Frameworks, and Benchmarks

- Anthropic’s Claude making strides: Anthropic’s Claude 3.5 Sonnet model has surpassed OpenAI’s GPT-4o on the LMSYS Arena benchmark. A user also showcased a fractal explorer created by Claude, capable of displaying and zooming into four different fractals.

- New model releases: The Dolphin-2.9.3-Yi-1.5-34B-32K model has been released, and the latest Chrome Canary build is now capable of running the Gemini model locally. A user has also provided a review of various models for summarization and instruction-following tasks.

AI Ethics, Regulation, and Societal Impact

- Frustration with AI companies: A user expressed frustration with OpenAI’s delay in releasing the voice feature for GPT-4o, leading them to lose respect for the company and switch to Anthropic’s Claude AI. The importance of companies keeping promises and the impact on user trust was discussed.

- The “Wild West” of AI: It was suggested that we are currently in a “Wild West” phase of AI development, where everything is rapidly evolving, compared to the prologue of a video game. The potential impact of AI alignment on creative works was also discussed.

- Skepticism and memes: A meme poked fun at LLM skeptics who continue to doubt AI capabilities even as models advance, with comments suggesting skeptics may shift arguments to whether AI has a “soul” once human-level performance is reached.

AI Applications and Use Cases

- Automation and creative industries: Apple plans to automate 50% of its iPhone final assembly line, replacing human workers with machines. Record labels have used AI tools Udio and Suno to recreate versions of famous songs, raising questions about copyright and the music industry.

- Photorealistic AI images and coding: A user showcased impressive photorealistic AI-generated images, with comments suggesting techniques like stock photo checkpoints and realism LoRAs to achieve natural results. The DeepseekCoder-v2 model was praised for its coding performance.

- Web UI automation: Claude 3.5 was reported as the first LLM reliably used for web UI automation and interaction, outperforming GPT-4o for tasks like accessibility and front-end testing.

AI Research and Development

- Educational resources and upcoming releases: Andrej Karpathy’s GitHub repository “Let’s build a Storyteller” aims to educate the community about building AI models. Excitement was expressed for the upcoming release of Ray Kurzweil’s book “The Singularity is Nearer”.

- New models and tools: Salesforce released Moirai-1.1, an updated time series foundation model for diverse forecasting tasks. The open-source project WilmerAI was introduced to maximize local LLM potential through prompt routing and multi-model workflow management. Rensa, a high-performance MinHash implementation, was also announced for fast similarity estimation and deduplication.
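For readers unfamiliar with MinHash, the similarity estimation it provides can be sketched with the standard library alone. This is an illustrative toy and not Rensa's implementation or API: the function names, the word-shingle size, and the use of salted BLAKE2b as the hash family are all assumptions made for the example.

```python
import hashlib


def shingles(text: str, k: int = 3) -> set[str]:
    """Split text into overlapping k-word shingles (lowercased)."""
    tokens = text.lower().split()
    return {" ".join(tokens[i:i + k]) for i in range(max(1, len(tokens) - k + 1))}


def minhash_signature(items: set[str], num_perm: int = 64) -> list[int]:
    """For each salted hash function, keep the minimum hash over the set."""
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(8, "little")  # a different salt per hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(item.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "little",
            )
            for item in items
        ))
    return signature


def estimate_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching signature slots estimates Jaccard similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


doc_a = "the quick brown fox jumps over the lazy dog"
doc_b = "the quick brown fox leaps over the lazy dog"
sig_a = minhash_signature(shingles(doc_a))
sig_b = minhash_signature(shingles(doc_b))
print(f"estimated Jaccard similarity: {estimate_jaccard(sig_a, sig_b):.2f}")
```

For deduplication, documents whose estimated similarity exceeds a chosen threshold would be treated as near-duplicates; comparing fixed-length signatures is much cheaper than comparing full shingle sets.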

Miscellaneous

- Brain cells and AI: The use of human brain cells to power computers for R&D was discussed, with comparisons to the Matrix and questions about implications for AGI/ASI development. It was clarified that these are cells, not entire brains, and lack the complexity for consciousness.

- Multimodal AI and solar energy: Interest was expressed in Meta’s multimodal AI model, Chameleon, noting a lack of community discussion. An Economist article predicted that solar energy, with its increasing affordability, will become a major energy source.

- Unique AI projects: The font llama.ttf, which also functions as a language model, was introduced. A forensic analysis of the system prompt used in Anthropic’s Claude 3.5 Sonnet introduced the concept of “Artifacts” in AI prompts.

AI Discord Recap

A summary of Summaries of Summaries

Claude 3 Sonnet

1. LLM Advancements and Benchmarking

- Llama 3 from Meta has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

- New models like Granite-8B-Code-Instruct from IBM enhance instruction following for code tasks, while DeepSeek-V2 boasts 236B parameters.

- Skepticism surrounds certain benchmarks, with calls for credible sources like Meta to set realistic LLM assessment standards.

2. Optimizing LLM Inference and Training

- ZeRO++ promises a 4x reduction in communication overhead for large model training on GPUs.

- The vAttention system dynamically manages KV-cache memory for efficient LLM inference without PagedAttention.

- QServe introduces W4A8KV4 quantization to boost cloud-based LLM serving performance on GPUs.

- Techniques like Consistency LLMs explore parallel token decoding for reduced inference latency.

3. Open-Source AI Frameworks and Community Efforts

- Axolotl supports diverse dataset formats for instruction tuning and pre-training LLMs.

- LlamaIndex powers a new course on building agentic RAG systems with Andrew Ng.

- RefuelLLM-2 is open-sourced, claiming to be the best LLM for “unsexy data tasks”.

- Modular teases Mojo’s potential for Python integration and AI extensions like bfloat16.

4. Multimodal AI and Generative Modeling Innovations

- Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B refines coding abilities.

- The Phi 3 model brings powerful AI chatbots to browsers via WebGPU.

- Combining Pixart Sigma + SDXL + PAG aims to achieve DALLE-3-level outputs, with potential for further refinement through fine-tuning.

- The open-source IC-Light project focuses on improving image relighting techniques.

Claude 3.5 Sonnet

- New LLMs Shake Up the Leaderboards:

  - The Replete-Coder-Llama3-8B model has gained attention across multiple discords for its proficiency in over 100 programming languages and advanced coding capabilities.

  - DeepSeek-V2 with 236B parameters and Hathor_Fractionate-L3-8B-v.05 were discussed for their performance in various tasks.

  - Skepticism about benchmarks was a common theme, with users emphasizing the need for real-world testing over leaderboard rankings.

- Open-Source Tools Empower AI Developers:

  - Axolotl gained traction for supporting diverse dataset formats in LLM training.

  - LlamaIndex was highlighted for its integration with DSPy, enhancing RAG capabilities.

  - The release of llamafile v0.8.7 brought faster quant operations and bug fixes, with hints at potential Android compatibility.

- Optimization Techniques Push LLM Boundaries:

  - The Adam-mini optimizer sparked discussions across discords for its ability to reduce memory usage by 45-50% compared to AdamW.

  - Sohu’s AI chip claims to process 500,000 tokens per second with Llama 70B, though the community expressed skepticism about these performance metrics.

- AI Ethics and Security Take Center Stage:

  - A Remote Code Execution vulnerability (CVE-2024-37032) in the Ollama project raised concerns about AI security across multiple discords.

  - Discussions on AI lab security highlighted the need for enhanced measures to prevent risks like “superhuman hacking” and unauthorized access.

  - The AI music generation lawsuit against Suno and Udio, as reported by Music Business Worldwide, sparked debates on copyright and ethical AI training across communities.

Claude 3 Opus

1. New LLM Releases and Benchmarking

- Replete-Coder-Llama3-8B model impresses with coding proficiency in 100+ languages and uncensored training data (Hugging Face).

- Discussions on the reliability of benchmarks, with some arguing they don’t reflect real-world performance (Unsloth AI Discord).

- DeepSeek-V2 outperforms GPT-4 on some tasks in the AlignBench and MT-Bench benchmarks (Twitter announcement).

2. Optimizing LLM Performance and Efficiency

- Adam-mini optimizer reduces memory usage by 45-50% compared to AdamW with similar or better performance (arXiv paper).

- Quantization techniques like AQLM and QuaRot enable running large models on single GPUs, e.g., Llama-3-70b on RTX3090 (AQLM project).

- Dynamic Memory Compression (DMC) boosts transformer efficiency, potentially improving throughput by up to 370% on H100 GPUs (DMC paper).
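The memory claim for Adam-mini above can be illustrated with a rough sketch. It assumes the core idea is to keep one second-moment scalar per parameter block instead of one per parameter; the function names, the block layout, and the hyperparameters are illustrative, and this is not the authors' implementation.

```python
import math


def optimizer_state_floats(num_params: int, num_blocks: int) -> tuple[int, int]:
    """Return (AdamW-style, Adam-mini-style) optimizer state sizes in floats."""
    adamw = 2 * num_params                # first and second moment per parameter
    adam_mini = num_params + num_blocks   # first moment per parameter, one v per block
    return adamw, adam_mini


def block_step(params, grads, m, v_block, step,
               lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One update for a single block that shares one second-moment scalar."""
    mean_sq = sum(g * g for g in grads) / len(grads)
    v_block = beta2 * v_block + (1 - beta2) * mean_sq
    v_hat = v_block / (1 - beta2 ** step)          # bias correction
    new_m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grads)]
    new_params = [
        p - lr * (mi / (1 - beta1 ** step)) / (math.sqrt(v_hat) + eps)
        for p, mi in zip(params, new_m)
    ]
    return new_params, new_m, v_block


adamw_size, mini_size = optimizer_state_floats(num_params=1_000_000, num_blocks=100)
saving = 1 - mini_size / adamw_size
print(f"optimizer state saved: {saving:.1%}")

new_params, new_m, new_v = block_step(
    params=[1.0, 2.0], grads=[1.0, 1.0], m=[0.0, 0.0], v_block=0.0, step=1
)
```

Because the number of blocks is tiny compared with the number of parameters, the state shrinks by close to half, which is in line with the 45-50% figure quoted above.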

3. Open-Source AI Frameworks and Collaborations

- Axolotl supports various dataset formats for LLM instruction tuning and pre-training (Axolotl prompters.py).

- LlamaIndex integrates with a new course on building agentic RAG systems by Andrew Ng (DeepLearning.AI course).

- Mojo language hints at future Python integration and AI-specific extensions like bfloat16 (Modular Discord).

- StoryDiffusion, an open-source alternative to Sora, is released under MIT license (GitHub repo).

4. Multimodal AI and Generative Models

- Idefics2 8B Chatty and CodeGemma 1.1 7B models focus on chat interactions and coding abilities, respectively (Twitter posts).

- Phi 3 brings powerful AI chatbots to browsers using WebGPU (Reddit post).

- Combining Pixart Sigma, SDXL, and PAG aims to achieve DALLE-3-level outputs (Latent Space Discord).

- IC-Light, an open-source project, focuses on image relighting techniques (GitHub repo).

GPT4O (gpt-4o-2024-05-13)

- Performance Improvements and Technical Fixes:

  - PyTorch Tensor Alignment Issue Gets Attention: Users discussed aligning PyTorch tensors for efficient memory usage and referenced code and documentation to address issues like `torch.ops.aten._weight_int4pack_mm` (source code).

  - LangChain Enhancements: Members praised LangChain Zep integration, which provides persistent AI memory, summarizing conversations for effective long-term use.

  - LazyBuffer Bug Identified in Tinygrad: A problem with ‘LazyBuffer’ not having an attribute ‘srcs’ in Tinygrad was documented, suggesting fixes like `.contiguous()` and using Docker for CI debug (Dockerfile here).

- Ethical and Legal Challenges in AI:

  - AI Music Generators Sued for Copyright Infringement: Major record companies are suing Suno and Udio for unauthorized training on copyrighted music, raising questions on ethical AI training practices (Music Business Worldwide report).

  - Carlini Defends His Attack Research: Nicholas Carlini defended his research on AI model attacks, stating that they highlight crucial AI model vulnerabilities (blog post).

  - Probllama’s Security Breach: Rabbithole’s security disclosure revealed critical vulnerabilities due to hardcoded API keys, potentially enabling widespread misuse across services like ElevenLabs and Google Maps (full disclosure).

- New Releases and AI Model Innovations:

  - EvolutionaryScale’s Breakthrough with ESM3: The ESM3 model simulates 500M years of evolution, earning $142M in funding and aiming to achieve new heights in programming biology (funding announcement).

  - Gradio’s New Feature Set: The latest release, Gradio v4.37, introduced a revamped chatbot UI, dynamic plots, and GIF support, alongside performance improvements for a better user experience (changelog).

  - Rising AI Models on OpenRouter: New AI models like AI21’s Jamba Instruct and NVIDIA’s Nemotron-4 340B were added to the platform, integrating diverse capabilities for various applications.

- Dataset Management and Optimization:

  - Addressing RAM Issues in Dataset Loading: Techniques like using `save_to_disk`, `load_from_disk`, and enabling `streaming=True` were discussed to mitigate memory issues when handling large datasets in AI models.

  - Minhash Optimization Performance Boost: A member boasted a 12x performance improvement for minhash calculations using Python, sparking interest and collaboration for further optimization (GitHub link).

- Conferences, Events, and Community Engagement:

  - AI Engineer World’s Fair Highlights: Excitement builds as engineers anticipate the AI Engineer World’s Fair with keynotes and engaging talks, including insights from the LlamaIndex team (event details).

  - Detecting Bots and Fraud in LLMs: An event on June 27 will feature Unmesh Kurup from hCaptcha discussing strategies to counteract LLM-based bots and fraud detection in modern AI security (event registration).

  - OpenAI’s ChatGPT Desktop App for macOS: The new app allows macOS users to access ChatGPT with enhanced features, marking a significant step in AI usability and integration (ChatGPT for macOS).

PART 1: High level Discord summaries

HuggingFace Discord

- Brain Over Brawn in Python: In a heated discussion on numerical precision, Python users shared code snippets for large float calculations that often result in an `OverflowError`. Solutions revolved around alternative methods to compute high-power floats without precision loss.

- Memory Mayhem with AI Datasets: A user grappling with memory limitations while loading datasets even with 130GB of RAM got tips on disk storage techniques. Options like `save_to_disk`, `load_from_disk`, and the `streaming` flag were suggested to alleviate the issue.

- Model Miniaturization Mysteries: Conversation turned to quantization as a method for running hefty AI models on modest hardware, balancing performance and precision.

- Graphviz Glitches in Git: Users trying to use `graphviz` with Hugging Face spaces faced `PATH` errors, and offered up wisdom on system configurations to fix the issue.

- Skills, Not Fields, Foster Opportunities: Among skills discussions, a user emphasized the value of project involvement over specific technical fields when considering career opportunities in tech.

- Excitement Over LLM JSON Structuring: A Langchain Pydantic Basemodel user sought advice for structuring documents in JSON to avoid table structure confusion, sparking enthusiasm among peers.

- Cybersecurity Strategies on Standby: With a June 27 event on bot and fraud detection announced, the community is gearing up to learn advanced tactics from hCaptcha’s ML Director.

- Tokenization Talk Turns Contentious: Apehex stirred up the pot with an argument against tokenization, advocating for direct Unicode encoding. This generated a buzzing discussion on the trade-offs between various encoding approaches.

- Personalizable Maps and Media-friendly Start Pages: Creative coders showcased their works like the Cityscape Prettifier, which crafts stylized city maps, and the browser start page extension Starty Party designed for media enthusiasts.

- Progress in the Paper Landscape: Members of the reading group sought out and recommended research on topics like contamination in coding benchmarks while others hinted at imminent code releases tied to updated papers.

- Troubleshooting Tools in Vision: Users found `hf-vision/detection_metrics` error-prone due to dependency snags and discussed ongoing issues documented on GitHub, such as the problem mentioned in this issue.

- Looking for LLM Expertise on Tabular Data: A query was raised about open-source projects capable of conversing about tendencies within tabular data, without delving into modeling or predictions. Meanwhile, a community member expressed an intention to contribute to a PR about RoBERTa-based scaled dot product attention, despite facing repository access barriers.

- Gradio Amps Up Chatbots and Plots: The release of Gradio v4.37 brought a reimagined chatbot UI and dynamic plots, along with the ability to nest components like galleries and audio in chat. GIF support got a nod too, as detailed in Gradio’s changelog.
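The `OverflowError` discussion at the top of this list can be illustrated with a short sketch. The specific base and exponent are hypothetical and the snippets shared in the channel are not reproduced here; the example only shows three standard-library ways around the float limit.

```python
import math
from decimal import Decimal
from fractions import Fraction


def float_power_overflows(base: float, exponent: int) -> bool:
    """Return True if base ** exponent exceeds the range of a Python float."""
    try:
        base ** exponent
    except OverflowError:
        return True
    return False


base, exponent = 1.5, 10_000
overflowed = float_power_overflows(base, exponent)

# Option 1: stay in the log domain when only the magnitude is needed.
log10_value = exponent * math.log10(base)

# Option 2: exact rational arithmetic, with no rounding at all.
exact = Fraction(3, 2) ** exponent

# Option 3: Decimal has a far larger exponent range than float,
# but the result is rounded to the context precision (28 digits by default).
approx = Decimal("1.5") ** exponent

print(overflowed, round(log10_value, 3), approx)
```

Which option fits depends on the goal: the log domain is cheapest, `Fraction` is exact but grows large, and `Decimal` trades a configurable amount of rounding for a compact result.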

CUDA MODE Discord

- Aligning PyTorch Tensors: A user sought advice on how to align PyTorch tensors in memory, which is critical for efficiently loading tensor pairs using `float2` due to alignment issues.

- Understanding Dequantization in PyTorch: In a bustling discussion, engineers dissected the function `torch.ops.aten._weight_int4pack_mm`, referring to GitHub source code for better understanding dequantization and matrix multiplication, and bemoaned the lack of informative autogenerated documentation.

- Quantum Quake Challenge: A 13kb JavaScript remake of Quake, called Q1K3, was presented through a YouTube making-of video, along with ways to play the game and further discussion provided in a blog post.

- Generating Trouble at HF: Issues with `HFGenerator` post-cache logic update in the transformers library were highlighted, prompting a need for a rewrite to fix problems with changing prompt lengths causing recompilation when using `torch.compile`.

- Software Meets Hardware: Engineers shared a breakthrough with a Windows build cuDNN fix merged, discussed stability challenges while training on H100 with cuDNN, mused over AMD GPU support, highlighted a PR for on-device reductions to limit data transfers, and talked roadmaps including Llama 3 support and v1.0 aiming for optimizations like rolling checkpoints and a StableAdamW optimizer.

- Evaluating AMD’s Future: An article assessing the performance of AMD’s upcoming MI300x was linked, indicating interest in the direction of AMD’s GPU developments.

- PyTorch Device Allocation Investigated: A technical fix for a PyTorch tensor device call issue was proposed with reference to a line in `native_functions.yaml`, which might help in resolving device call mismatches in tensors.

Unsloth AI (Daniel Han) Discord

- Llama3-8B Packs a Punch in Over 100 Languages: Engineers are discussing the Replete-Coder Llama3-8B model, touting its prowess in advanced coding across a multitude of languages and its unique dataset that eschews duplicates.

- Benchmarks Under Microscope: The reliability of benchmarks sparked debate, with recognition that benchmarks can often misrepresent practical performance; this implies a need for more holistic assessment methods.

- Optimizer That Lightens the Load: The Adam-mini optimizer has captured attention for its potential to deliver AdamW-like performance with significantly decreased memory usage and increased throughput.

- Ollama’s Achilles’ Heel Patched: Discussion around the CVE-2024-37032 vulnerability in the Ollama project emphasizes a swift response and the urgency for users to update to the remediated version.

- GPUs in Tandem: For those experiencing multi-GPU snags with Unsloth, the consensus involves practical workarounds such as limiting CUDA devices, with insights found on GitHub issue 660, while challenges with model fine-tuning are being tackled with novel techniques like model merging.
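One common way to limit CUDA devices, as mentioned in the last item, is the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below assumes this is the kind of workaround meant; the helper name is hypothetical, and the exact steps discussed in the GitHub issue are not reproduced here.

```python
import os


def limit_visible_gpus(device_ids: list[int]) -> str:
    """Restrict this process to the given GPU indices.

    Call this before importing any library that initializes CUDA,
    since the variable is read when the CUDA runtime starts.
    """
    value = ",".join(str(i) for i in device_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


visible = limit_visible_gpus([0])  # expose only the first GPU
print(f"CUDA_VISIBLE_DEVICES={visible}")
# Import the training framework only after this point.
```

The same effect can be had from a shell by setting the variable on the command line that launches the training script.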

Perplexity AI Discord

- Confusion Over Perplexity’s Pro Features: Users voiced concerns over Perplexity AI’s features, with the primary issue being the random switch of UI language from English to other languages, and the confusion between Pro Search and standard search functionalities. Reports also surfaced regarding problems with generating download links for users with a PRO subscription, and questions were raised about whether a Pro plan includes the “Pages” feature for international content localization.

- Starliner Woes and Local News Highlights Hit YouTube: A YouTube video was discussed, highlighting issues with the Starliner spacecraft and the latest victory of the Panthers. Additionally, the appointment of Samantha Mostyn as Australia’s new Governor General caught users’ attention.

- Perplexity API Fails to Deliver Complete Output: Users leveraging the Perplexity API reported it failed to include citations and images in its summarization, suggesting the use of code blocks as a workaround.

- Seeking Pro Troubleshooting: A member expressed disappointment in requiring Pro features for much-needed work and was directed to seek assistance from “f1shy” to potentially resolve the issue.

- Technical Content Curated: There was a mention of Jina Reranker v2 for Agentic RAG, referring to its ultra-fast, multilingual function-calling, and code search capabilities, which was noted as valuable information for the technical audience.

LM Studio Discord

RTX 3090 Can’t Handle the Heat: Users express frustration with an RTX 3090 eGPU setup failing to load larger models like Command R (34b) Q4_K_S, leading to suggestions for exl2 format utilization for improved VRAM use, despite a noted scarcity of tools and GUI options for exl2.

Confusion Cleared on Different Llama Flavors: Clarification was provided for Llama 3 model variants: the unlabeled Llama 3 8B is the base model, set apart from the Llama 3 8B text and Llama 8B Instruct, which are finetuned for specific tasks.

Model Marvels and Mishaps: Praise was given for Hathor_Fractionate-L3-8B-v.05’s creativity and Replete-Coder-Llama3-8B’s coding proficiency, while DeepSeek Coder V2 was flagged for high VRAM demands, and New Dawn 70b was applauded for its role-play capabilities with contexts up to 32k.

Tech Support Troubles: Issues surfaced with Ubuntu 22.04 network errors in LM Studio, with possible remedies like disabling IPv6, and it was noted that LM Studio does not currently support Lora adapters or image generation.

Hardware Banter and Bottlenecks: A humorous exchange highlighted the chasm between the affordability of high-performance GPUs and their necessity for advanced AI work, with older rigs mockingly deemed as belonging to “the 1800s”.

LAION Discord

- AI Music Generators Face Legal Troubles: Major record companies including Sony Music Entertainment and Universal Music Group have initiated lawsuits against AI music generators Suno and Udio for copyright infringement, as coordinated by the RIAA. Discussions in the community centered around the ethics of AI training and considered the possibility of creating an open-source music model that avoids these copyright issues (Music Business Worldwide report).

- Carlini Clarifies His Rationale for Attack Papers: Nicholas Carlini responded to criticisms, particularly from Prof. Ben Zhao, with a blog post defending his reasons for writing attack research papers, which spark important dialogues on AI model vulnerabilities and community standards.

- Glazing Over Controversial Content: The Glaze channel was deleted amid speculations of cost, legal worries, or an attempt to erase controversial past statements, highlighting the ongoing tension between content moderation and free discussion in the AI research community.

- Nightshade’s Legal Haze: The AI protection scheme called Nightshade was flagged for potential legal and ethical risks before its official release, reflecting the community’s concerns with the complexities of deploying model protection measures. Details of these concerns can be found in the article “Nightshade: Legal Poison Disguised as Protection for Artists.”

- Controversy over Model Poisoning: A contentious debate surrounded Prof. Zhao’s endorsement of model poisoning as a legitimate strategy, underlining the divisive issue of tampering with AI models and the potential backlash from within the engineering community.

OpenAI Discord

ChatGPT App Lands on macOS: The ChatGPT desktop app is now available for macOS, offering streamlined access via Option + Space shortcut and enhanced features for chatting about emails, screenshots, and on-screen content. Check it out at ChatGPT for macOS.

Animated Discussion Over Token Size: Engineers debated token context window sizes across models like ChatGPT4, with ChatGPT4 offering 32,000 tokens for Plus users and 8,000 tokens for free users, while other models like Gemini or Claude provide larger capacities, with Claude reaching 200k tokens.

Custom GPT Misconceptions Cleared: Members clarified the differences between CustomGPT’s document attachment feature and actual model training. CustomGPT doesn’t offer persistent memory across chats but rather augments the model’s knowledge with external documents.

GPT Struggles Reported: Discord users reported issues with GPT’s handling of large documents and the provision of incorrect information from uploaded files, along with performance hiccups and JSON output difficulties, highlighting a need for better handling of complex queries and outputs.

AI Chips and Evolutionary Breakthroughs: Members shared excitement about EvolutionaryScale’s ESM3, which simulates 500M years of biological evolution, and Sohu’s AI chip, which is claimed to outperform current GPUs when running transformer models.

Stability.ai (Stable Diffusion) Discord

- Artistic Flair Sells AI Art: Skilled individuals with a background in art are finding success selling AI-generated art, illustrating that advanced prompting skills coupled with an existing art foundation might be key to commercial success.

- Troubleshooting CUDA and PyTorch: Engineers had trouble accessing a GitHub repository and hit a RuntimeError related to PyTorch and GPU compatibility; the consensus advice was to check that the installed CUDA and PyTorch versions are compatible.

- Skepticism Around Open Model Initiative: The Open Model Initiative divided engineers, with some questioning its integrity on ethical grounds despite its support from communities like Reddit.

- Concern Over Google Colab Usage: Users are worried about potential restrictions on Google Colab due to heavy use of Stable Diffusion, suggesting alternatives like runpod, which costs about 30 cents an hour for similar usage.

- Future of Stability.AI in Question: Doubts were voiced about the longevity of Stability.AI in the competitive market if they don’t address issues and reverse censorship with products like SD3, challenging their current and future market position.

Nous Research AI Discord

-

Generative Hypernetworks Get LoRA-fied: Hypernetwork discussions surfaced about generating Low-Rank Adaptations (LoRAs), indicating hyperparametric flexibility and signaling a move towards more customizable AI models, particularly those targeting specificity with a rank of 1.

-

Nuances of “Nous”: Clash of linguistics led to a clarification: “Nous” in Nous Research nods to intelligence (Greek origin), rather than the assumption of the French meaning “our,” spotlighting the blend of collective passion and intellect within the community.

-

Security Alert: Probllama Vulnerability Exposed: Twitter buzz highlighted a Remote Code Execution (RCE) vulnerability in Ollama, dubbed “Probllama” and detailed in this thread. It has been assigned CVE-2024-37032.

-

Enter the Llama-Verse with Coder Llama3-8B: Replete-AI/Replete-Coder-Llama3-8B stormed into the AI scene, asserting prowess in over 100 programming languages and potential to reshape the coding landscape with its 3.9 million lines of curated training data.

-

LLM Study Unpacks Decision Boundaries: An arXiv paper reveals non-smooth, intricate decision boundaries in LLMs’ in-context learning, contrasting the expected behavior from conventional models such as Decision Trees. This study instigates new considerations for model interpretability and refinement.

OpenRouter (Alex Atallah) Discord

-

New AI Models Hit OpenRouter: OpenRouter presented its 2023-2024 model lineup, introducing AI21’s Jamba Instruct, NVIDIA’s Nemotron-4 340B Instruct, and 01-ai’s Yi Large. However, they also reported an issue with incorrect data on the Recommended Parameters tab, assuring users that a fix is underway.

-

From Gaming to AI Control: Developer rudestream showcased an AI integration for Elite: Dangerous which uses OpenRouter’s free models to enable in-game ship computer automation. While the project is gaining attention, the developer is seeking further enhancements with Speech-to-Text and Text-to-Speech capabilities, as demonstrated via GitHub and a demo video.

-

Testing Delays and AI Development Reflections: OpenRouter delayed an announcement post for further tests on the new Jamba model while a user inspired a discussion on the state of AI innovation, suggesting enthusiasts listen to François Chollet’s insights on AI’s future.

-

Jamba Instruct Model Glitches and Best Practices: Users faced technical problems with AI21’s Jamba Instruct model; even after rectifying privacy settings, inconsistencies persisted. Separately, the community exchanged prompt engineering strategies, pointing to Anthropic Claude’s guidelines for reference.

-

The AI Personality Debate is Real: Debates sparked on the neutrality of large language models (LLMs) with consensus tilting towards preferring less restrictive AI that engage in more original and dynamic conversations, as opposed to echoing neutral, “text wall” responses.

Latent Space Discord

-

Typography Meets AI with llama.ttf: Engineers explored llama.ttf, an innovative font file that merges a large language model with a text-based LLM inference engine, harnessing HarfBuzz’s Wasm shaper. This clever merger prompts discussions on unconventional uses of AI in software development.

-

Karpathy Kicks Off AI Fanfare: Andrej Karpathy stirred excitement with the announcement of the AI World’s Fair in San Francisco, emphasizing the need for volunteers amidst the already sold-out event, signifying escalating interest in AI community gatherings.

-

MARS5 TTS Model Breakthrough: The tech community introduced MARS5 TTS, an avant-garde open-source text-to-speech model that promises unmatched prosodic control and the capability of voice cloning with minimal audio input, sparking interest in its underlying architecture.

-

EvolutionaryScale’s $142M Seed Shocks the Sector: The announcement of EvolutionaryScale’s colossal $142M fundraising round to support the development of their ESM3 model, intended to simulate half a billion years of protein evolution, highlights interests in marrying AI with biology.

-

Sohu’s Speed Stuns Nvidia: Discussions revolved around Sohu, the newest AI chip on the block that claims to outstrip Nvidia’s Blackwell by processing 500,000 tokens per second with Llama 70B. This catalyzed debates on benchmarking methodologies and whether these claims stack up in real-world scenarios.

-

Podcasting the Future of AI: The Latent Space podcast teasers brought excitement with a preview of the AIEWF conference and discussions on DBRX and Imbue 70B, shaping up debates around the current landscape of Large Language Models (LLMs) and innovating AI media content [Listen here].

LlamaIndex Discord

-

Catch LlamaIndex on Tour: The LlamaIndex team will be at the AI Engineer World’s Fair, with a keynote by @jerryjliu0 on the Future of Knowledge Assistants on Wednesday, June 26th. Don’t miss it!

-

RAG Receives a DSPy Boost: LlamaIndex has bolstered RAG capabilities through a collaboration with DSPy, optimizing retriever-agent interaction with superior data handling. Full details of the enhancement can be found in their announcement here.

-

Dimensional Puzzle Solved in PGVectorStore: An error spotted by a user, triggered by an embedding dimension mismatch with the bge-small model, was ironed out once `embed_dim` was set to match the model’s output dimension.

-

RAG Architecture Unveiled: Resources on RAG’s inner workings were shared, directing users to diagrams and detailed documentation on concepts and agent workflows, along with a foundational paper on the subject.

-

Prompt Templating Potential with vllm: The dialogue on prompt templates in vllm clarified the use of the `messages_to_prompt` and `completion_to_prompt` function hooks for integrating few-shot prompting into LlamaIndex modules.
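As a rough illustration of the hook idea above, the two functions are plain callables that turn a request into a prompt string, so few-shot examples can be prepended inside them. This is a minimal sketch: `Message`, `FEW_SHOT`, and the prompt layout are hypothetical stand-ins, not LlamaIndex types, and how the callables are wired into the vllm integration should be checked against the LlamaIndex documentation.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Message:
    """Hypothetical stand-in for a chat message (role + content)."""
    role: str
    content: str


# Hypothetical few-shot pairs that are prepended to every prompt.
FEW_SHOT = [
    ("Translate to French: cat", "chat"),
    ("Translate to French: dog", "chien"),
]


def _few_shot_block() -> str:
    """Render the few-shot pairs as alternating User/Assistant turns."""
    return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in FEW_SHOT)


def completion_to_prompt(completion: str) -> str:
    """Wrap a bare completion request with the few-shot examples."""
    return f"{_few_shot_block()}\nUser: {completion}\nAssistant:"


def messages_to_prompt(messages: Sequence[Message]) -> str:
    """Flatten a chat history into one prompt, few-shot examples first."""
    turns = "\n".join(f"{m.role.capitalize()}: {m.content}" for m in messages)
    return f"{_few_shot_block()}\n{turns}\nAssistant:"
```

Keeping the few-shot text in one helper means both hooks stay in sync when the examples change.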

Modular (Mojo 🔥) Discord

Git Logs for Efficient Changelog Peeking: Engineers discovered that using “git log -S” allows for searching history of specific code changes, valuable when navigating the Mojo changelog, especially since documentation rebuilds eliminate searchable history older than three months.

Mojo and MAX Interconnected Potential: Discussions indicated that while Mojo currently may not support easy simultaneous use with Torch, a future integration aims to harness both Python and C++ capabilities. Additionally, for AI model serving, the MAX graph API serde is in development, promising future support for custom AI models with frameworks like Triton.

MAX 24.4 Embraces macOS and Local AI: With the release of MAX 24.4, macOS users can now use the toolchain to build and deploy Generative AI pipelines, with support for local models like Llama3 and native quantization.

SIMD & Vectorization Hot Topics for Mojo: Engineers are examining SIMD and vectorization within Mojo, where hand-rolled SIMD, LLVM’s loop vectorizer status, and features like SVE support surface as critical considerations. These discussions spurred recommendations to submit features or PRs for better alignment to SIMD standards.

Nightly Compiler Updates Drive Mojo Optimizations: Mojo nightly versions 2024.6.2505 and 2024.6.2516 bring fixes and enhancements, with emphasis on performance gains from list autodereferencing and better reference handling in dictionaries. Troubleshooting highlights include the handling of compile-time boolean expressions, with reference to specific commits.

Eleuther Discord

- LingOly Benchmark Under Scrutiny: Engineers discussed the potential flaws in the LingOly benchmark, questioning its scope and scoring, particularly the risks of memorization when test sets are public.

- Celebrating Rise of the Ethical AI Makers: The community recognized Mozilla’s Rise25 Awards, commending honorees for contributions to ethical and inclusive AI.

- The MoE Advantage in Parameter Scaling: Sparse parameters in Mixture of Experts (MoE) emerge as a preferred scaling route, challenging the deepening of architectures.

- Backdoor Threats in Federated Learning and AI: Discussions focused on the potential for adversarial backdoor attacks in federated learning and the implications for open weights models, referring to research in this paper.

- Importance of Initializations in AI Highlighted: A member cites “Neural Redshift: Random Networks are not Random Functions” in a discussion about the underestimated structural role of initializations in neural networks, directing to AI koans for levity.

Interconnects (Nathan Lambert) Discord

-

OpenAI Welcomes Multi App: Multi has announced it will become part of OpenAI, aiming to explore collaborative work between humans and AI, offering services until July 24, 2024, with post-termination data deletion plans detailed.

-

Apple Bets on ChatGPT over Llama: Apple turns down Meta’s AI partnership offer, favoring an alliance with OpenAI’s ChatGPT and Alphabet’s Gemini, mainly over privacy practice concerns with Meta.

-

Rabbithole’s Hardcoded Key Hazard: A security disclosure by rabbitude reported hardcoded API keys in the Rabbit codebase, risking unauthorized access to services including ElevenLabs and Google Maps, and prompting discussions on potential misuse.

-

Nvidia’s Status Quo Shattered: Market shifts reflect a realization that Nvidia isn’t the sole giant in the GPU landscape; Imbue AI’s release of a toolkit for 70B parameter models is received with both skepticism and interest.

-

AI Lab Security Needs Dire Attention: Insights from an interview with Alexandr Wang underlined the pressing need for stringent security in AI labs, hinting at how AI poses risks potentially more significant than nuclear weapons through avenues like “superhuman hacking.”

OpenInterpreter Discord

Llama3-8B Coder AI Shakes Up the Community: The Replete-Coder-Llama3-8B model has impressed engineers with its proficiency in over 100 programming languages and advanced coding capabilities, though it’s not tailored for vision tasks.

Technical Triumphs Tangled With Quirks: Engineers found success using claude-3-5-sonnet-20240620 for code executions after troubleshooting flags, but compatibility and function support issues point to the need for refined model configurations.

Vision Feature Frustration Persists: Despite concerted efforts, users like daniel_farinax struggle with sluggish processing times and CUDA memory errors when employing vision capabilities locally, spotlighting the cost and complexity of emulating OpenAI’s vision functions.

Limited Local Vision Functionality Sparks Debate: Users attempt to activate vision features such as --local --vision with minimal success, revealing a gap in Llama3’s capabilities and the desire for more accessible and efficient local vision task execution.

Single AI Content Sidenote: A lone remark about the unsettling nature of AI-generated videos suggests an underlying concern for user m.0861, though not expanded into a broader discussion within the engineering community.

LangChain AI Discord

-

ChatOllama Wrangling Simplified: Engineers experimenting with Ollama can utilize an experimental wrapper aligning its API with OpenAI Functions, as demonstrated in a notebook. For efficient addition of knowledge to chatbots, engineers advised using “add_documents” method of a vector database with FAISS for indexing without full reprocessing.

-

Asynchronous API Puzzles: Members discussed how to handle concurrent requests to OpenAI’s ChatCompletion endpoint, with the need for an asynchronous solution to notify multiple users simultaneously, differing from GPT-4’s batch requests.

-

Stepping Up Streaming: To optimize response times with Ollama, users are advised to import `ChatOllama` and utilize its `.stream("query")` method, a trick recommended for speedier token-based outputs.

-

Memory for the Long Haul: Zep, discussed as a potential solution for long-term memory in AI, integrates with LangChain to maintain persistent conversation summaries and retain critical facts effectively.

-

Flaunting AI Fitness and Business Savvy: Valkyrie project amalgamates NVIDIA, LangChain, LangGraph, and LangSmith tools in an AI Personal Trainer, detailed on GitHub. A separate innovation spotlights a Python script to scrape Kentucky business leads on Instagram, complete with a Google Sheet of data and a YouTube tutorial for Lambda integration in Visual Agents.

-

Framework Fit or Folly: Decision-making for AI framework integration into apps was distilled in a YouTube video, dissecting critical features of GPT-4o, Gemini, Claude, and Mistral, and the roles of setups like LangChain in development workflows.
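For the concurrency question raised above (answering several users at once instead of waiting on each request in turn), one common pattern is `asyncio.gather` with a semaphore to cap the number of in-flight calls. The sketch below is an assumption-laden illustration: `fake_chat_completion` is a hypothetical stand-in for a real asynchronous client call, and the concurrency limit is arbitrary.

```python
import asyncio


async def fake_chat_completion(user: str, prompt: str) -> str:
    """Hypothetical stand-in for an async call to a chat completion endpoint."""
    await asyncio.sleep(0.01)  # simulates network latency
    return f"reply to {user}: {prompt.upper()}"


async def answer_all(requests: dict[str, str], max_concurrency: int = 5) -> dict[str, str]:
    """Send one request per user concurrently, capped by a semaphore."""
    sem = asyncio.Semaphore(max_concurrency)

    async def one(user: str, prompt: str) -> tuple[str, str]:
        async with sem:  # at most max_concurrency requests in flight
            return user, await fake_chat_completion(user, prompt)

    pairs = await asyncio.gather(*(one(u, p) for u, p in requests.items()))
    return dict(pairs)


if __name__ == "__main__":
    replies = asyncio.run(answer_all({"alice": "hello", "bob": "status?"}))
    for user, reply in replies.items():
        print(user, "->", reply)
```

The semaphore is there because real endpoints usually enforce rate limits; with an actual client, the stand-in coroutine would be replaced by that client's async request method.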

Cohere Discord

-

Claude-3.5-Sonnet Buzz Fizzles Out: Speculations about Claude-3.5-Sonnet diminish as insiders confirm a lack of privileged information regarding its development, pointing to publicly available details only.

-

Cohere Clamps Down on Rerank Model Stats: Cohere maintains secrecy around the parameter size of its rerank models, leaving community members in the dark despite inquiries.

-

Global AI Minds, Gather: Expedition Aya has been announced, a six-week event by Cohere aiming to foster worldwide collaborations in building multilingual AI models, complete with API credits and prizes for participants.

-

Preambles under the Microscope: Cohere’s Command R default preamble gains clarity through discussions and shared resources, revealing how it shapes model interactions and expectations.

-

Tune in for Cohere Dev Talk: The Cohere Developer Office Hours encouraged eager devs to deep dive into the functionalities of Command R+, with a call to join the conversation at the following Discord invitation link.

tinygrad (George Hotz) Discord

-

Tinygrad “LazyBuffer” Bug Spotted: Users pinpointed a `'LazyBuffer' object has no attribute 'srcs'` error in the tinygrad Tensor library; George Hotz acknowledged the bug in `lazy.py` and the need for thorough testing and a patch.

-

Clip() Workaround Proposed: A workaround for the “LazyBuffer” bug involved substituting `.contiguous()` for `realize` during the usage of `.clip()` in tinygrad, a tweak that sidestepped the issue.

-

Docker for CI Debugging: To address CI discrepancies on Macs, a member suggested using a Linux environment via Docker, which has a history of effectively solving similar issues.

-

Bounty Hunt for Qualcomm Drivers: There’s a $700 bounty for developing a Qualcomm GPU driver, details discussed referencing a certain tweet, with suggestions to refer to `ops_amd.py` for guidance and use an Android phone with Termux and tinygrad for the setup.

OpenAccess AI Collective (axolotl) Discord

-

Anticipation for Multimodal Models: There’s concern among members that the llm3 multimodal might be released before the 72 billion parameter model finishes training in mid-July, taking roughly 20 days with each epoch lasting 5 days.

-

Boosting Optimization with Adam-mini: The Adam-mini optimizer paper on arXiv has caught members’ attention for reducing memory usage by 45% to 50% when compared to AdamW, by decreasing the number of individual learning rates.

-

Custom LR Schedulers on HF Radars: A user sought advice on creating a cosine learning rate (LR) scheduler using Hugging Face, keen on implementing a minimum LR greater than zero to fine-tune model training.

-

Accelerating Minhash with Python: A member boasted a 12x performance enhancement in minhash calculations using Python, sparking interest and inviting collaborative feedback to further improve this optimization.
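The cosine scheduler with a non-zero floor asked about above can be written as a small pure function. This is a minimal sketch, not the Hugging Face implementation: the function name, the linear warmup, and the parameter names are assumptions chosen for illustration.

```python
import math


def cosine_lr(step: int, total_steps: int, max_lr: float, min_lr: float,
              warmup_steps: int = 0) -> float:
    """Cosine decay from max_lr down to min_lr (not zero), with optional linear warmup."""
    if warmup_steps > 0 and step < warmup_steps:
        # Linear ramp that reaches max_lr on the last warmup step.
        return max_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (max_lr - min_lr) * cosine


if __name__ == "__main__":
    for s in (0, 250, 500, 750, 1000):
        print(s, cosine_lr(s, total_steps=1000, max_lr=2e-5, min_lr=2e-6))
```

With PyTorch, a function like this could be wrapped in `torch.optim.lr_scheduler.LambdaLR` by returning the ratio `cosine_lr(...) / max_lr`, since that scheduler multiplies the optimizer's base learning rate by the lambda's return value.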


Torchtune Discord

- Tokenizer Tussle on Torchtune: A discrepancy between tokenizer configurations for Phi-3-mini and Phi-3-medium might affect Torchtune performance, with the former including a beginning-of-sequence token (`"add_bos_token": true`) and the latter not (`"add_bos_token": false`).

- Troubleshooting TransformerDecoder: Engineers run into a runtime size mismatch error in `TransformerDecoder` parameters, such as `attn.q_proj.weight`, signaling potential configuration or implementation issues with Phi-3-Medium-4K-Instruct.

- Phi-3-Medium-4K-Instruct Compatibility Quagmire: Ongoing errors suggest that Phi-3-Medium-4K-Instruct support within Torchtune is incomplete, needing additional tweaks for full compatibility.

- Crafting a Custom Tokenizer Solution: To resolve tokenizer discrepancies, members propose the creation of a dedicated `phi3_medium_tokenizer` by adapting the `phi3_mini_tokenizer` config and setting `add_bos = False`.

LLM Finetuning (Hamel + Dan) Discord

-

Beowulf’s Big Speed Breakthrough: A member announced a significant speed improvement for beowulfbr’s efficiency tool, making it 12 times faster than datasketch.

-

Simon Says, ‘Streamline Your Commands!’: Simon Willison shared his talk on integrating Large Language Models with command-line interfaces, featuring a YouTube video and an annotated version of his presentation.

-

Innovative Dataset Generation Method Unveiled: A new method for generating high-quality datasets for LLM instruction finetuning was highlighted. It is described as fully automated, requiring no seed questions and capable of running locally, with details shared in the linked post.

-

Synthetic Aperture Encoding with Linus Lee’s Prism: The guild discussed Linus Lee’s work on Prism for finetuning, expressing interest in his approach to creating more interpretable models for humans, as detailed in his blog post.

-

Private Model, Gradio Trouble: A member encountered an error when attempting to create a Gradio space with a privately fine-tuned model via AutoTune, necessitating an `hf_token` due to the model’s private status.
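For context on the speed comparison above: datasketch is a Python library best known for MinHash, a technique that estimates the Jaccard similarity of two sets from short signatures. The sketch below shows the basic idea in plain Python. It is a deliberately slow, illustrative version and not how datasketch or the faster tool is implemented; the seeded BLAKE2b hashing scheme and all function names are assumptions.

```python
import hashlib


def _hash(token: str, seed: int) -> int:
    """Seeded 64-bit hash of a token (the seed plays the role of a permutation)."""
    digest = hashlib.blake2b(
        token.encode("utf-8"), digest_size=8, salt=seed.to_bytes(8, "little")
    ).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(tokens: set[str], num_perm: int = 128) -> list[int]:
    """Keep the minimum hash value under each of num_perm seeded hash functions."""
    if not tokens:
        raise ValueError("tokens must be non-empty")
    return [min(_hash(t, seed) for t in tokens) for seed in range(num_perm)]


def estimate_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching signature slots estimates the Jaccard similarity."""
    if len(sig_a) != len(sig_b):
        raise ValueError("signatures must have the same length")
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


if __name__ == "__main__":
    a = set("the quick brown fox jumps over the lazy dog".split())
    b = set("the quick brown cat sleeps under the lazy dog".split())
    print(estimate_jaccard(minhash_signature(a), minhash_signature(b)))
```

Most of the cost is in hashing every token once per seed, which is the kind of inner loop that optimized implementations speed up.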

Mozilla AI Discord

-

Llamafile v0.8.7 Goes Live: The release of llamafile v0.8.7 introduces faster quant operations and bug fixes, with hints at upcoming Android compatibility.

-

Get Set for July AI Talks and Tools: Two key events, Jan AI and AutoFix by Sentry.io, along with the AI Foundry Podcast Roadshow are set to engage the community this month.

-

Mozilla AI Hits the Conference Circuit: Members will present at the World’s Fair of AI and moderate at the AI Quality Conference while Firefox Nightly paves new paths with optional AI services detailed in their Nightly blog.

-

Read Up on the Latest ML Paper Picks: The curated selection of recent machine learning research is now available, offering insights and discussions from the community.

-

Enhancing New User Experience for Llamafile: Suggestions have been made to provide a step-by-step llamafile and configuration guide for novices, and discussions are ongoing about Firefox potentially integrating a built-in local inference feature for easier on-device inference.

AI Stack Devs (Yoko Li) Discord

-

Racy AI Enters the Beta Stage: Honeybot.ai, an AI-generated adult content platform, announced the commencement of their beta phase, stating that the service is free for individuals over 18 years of age.

-

Project Activity Under Scrutiny: A user raised concerns regarding the active status of a project, noting the prevalence of spam as an indicator that the project may no longer be active.

MLOps @Chipro Discord

-

Bot Battlegrounds: Detecting Digital Deceivers: An upcoming event titled “Detecting Bots and Fraud in the Time of LLMs” will cover strategies to identify and mitigate the impact of LLM-based bots in automation and security. Set for June 27, 2024, the discussion will tackle the evolution of bots, as well as the current detection methodologies utilized by experts.

-

Meet the AI Sentinel – Unmesh Kurup: With the prevalence of sophisticated LLMs, Unmesh Kurup, leading the ML team at Intuition Machines/hCaptcha, will be the keynote speaker at the digital event, breaking down advanced security systems to discern between bots and human interaction. Engineers and specialists in the field can register for free to gain insights from Kurup’s extensive experience in AI/ML.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Datasette - LLM (@SimonW) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

HuggingFace ▷ #announcements (1 messages):

- **Argilla 2.0 boosts dataset annotation**: [Argilla 2.0](https://x.com/argilla_io/status/1805250218184560772) announced with new Python SDK for dataset integration and a flexible UI for data annotation. The update promises to "create high-quality datasets more efficiently."

- **Microsoft's Florence models crush benchmarks**: Microsoft released [Florence](https://x.com/osanseviero/status/1803324863492350208), a vision model for tasks like captioning and OCR with models sized 200M and 800M, MIT-licensed. "*Fine-tune Florence-2 on any task*" with a new [notebook and walkthrough](https://x.com/mervenoyann/status/1805265940134654424) on DocVQA dataset.

- **Generate GGUF quants in seconds**: New [support added](https://x.com/reach_vb/status/1804615756568748537) for "Generate GGUF quants in less than 120 seconds" including automatic uploads to the hub and support for private and org repos. Over 3500 model checkpoints created.

- **Embedding models guide for AWS**: A comprehensive guide on how to [train and deploy embedding models](https://www.philschmid.de/sagemaker-train-deploy-embedding-models) on AWS SageMaker using Sentence Transformers and fine-tuning the BGE model for financial data. Training takes ~10 minutes on a ml.g5.xlarge instance at around $0.2.

- **Ethics and Society newsletter on data quality**: The latest [Ethics and Society newsletter](https://huggingface.co/blog/ethics-soc-6) highlights the importance of data quality. Collaboration with the ethics regulars led to a detailed discussion on this crucial theme.

Links mentioned:

- Tweet from Argilla (@argilla_io): 📢 Another big announcement: Argilla 2.0 rc! What does it mean for AI builders? 🤺 Unified framework for feedback collection 🐍 New Python SDK to work with datasets, including a new @huggingface da...

- Tweet from Omar Sanseviero (@osanseviero): Microsoft just silently dropped Florence 👀Vision model that can tackle many vision tasks (captioning, detection, region proposal, OCR) 🤏Small models (200M and 800M) with ~quality to models 100x lar...

- Tweet from merve (@mervenoyann): Fine-tune Florence-2 on any task 🔥 Today we release a notebook and a walkthrough blog on fine-tuning Florence-2 on DocVQA dataset @andi_marafioti @skalskip92 Keep reading ⇓

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): Generate GGUF quants in less than 120 seconds! ⚡ > Added support for imatrix quants > GGUF-split support for larger quants > Automatic upload to hub > Support for private and org repos U...

- Tweet from Omar Sanseviero (@osanseviero): Microsoft just silently (again!) dropped Instruction Pre-Training! 👀Augment pretraining datasets generating instructions 🦙A Llama 3 8B with comparable performance to 70B! 🔥General+domain models (m...

- Tweet from Daniel van Strien (@vanstriendaniel): Instruction pre-training is a new approach that enhances LLM pretraining by using instruction-response pairs from an instruction synthesizer instead of raw data. Explore this method in this @gradio S...

- Tweet from Daniël de Kok (@danieldekok): 🐬More Marlin features coming to the next @huggingface TGI release: support for using existing GPTQ-quantized models with the fast Marlin matrix multiplication kernel. ⚡This feature is made possible ...

- Tweet from Eustache Le Bihan (@eustachelb): Distil-Whisper goes multilingual!! 🤗 The French distilled version of Whisper is here! 🇫🇷 As accurate as large-v3, faster than tiny. The best of both worlds! 🚀 Check out the details below ⬇️

- Tweet from Philipp Schmid (@_philschmid): Embedding models are crucial for successful RAG applications, but they're often trained on general knowledge! Excited to share an end-to-end guide on how to Train and Deploy open Embeddings models...

- Tweet from F-G Fernandez (@FrG_FM): Xavier & @osanseviero presenting the robotics initiatives of @huggingface 🤗 (including LeRobot led by none other than @RemiCadene) at #AIDev by @linuxfoundation Looking forward to the day when we re...

- Tweet from Sayak Paul (@RisingSayak): Were you aware that we have a dedicated guide on different prompting mechanisms to improve the image generation quality? 🧨 Takes you through simple prompt engineering, prompt weighting, prompt enhan...

- Tweet from Avijit Ghosh (@evijitghosh): The quarterly @huggingface Ethics and Society newsletter is out! Had so much fun collabing on this with @frimelle and supported by the ethics regulars. The theme for this quarter's newsletter is t...

HuggingFace ▷ #general (436 messages🔥🔥🔥):

-

Fun with Floating-Point: Users debated the practicalities of float vs integer types in Python, leading to various code iterations to handle large float computations (e.g., `pi**pi**pi`). One user pointed out a common issue: “OverflowError: (34, ‘Result too large’)” when using `math.pow`.

-

RAM Troubleshooting for AI Models: A user struggled to load datasets without running out of memory, despite having 128GB of RAM. Solutions proposed included using `save_to_disk`, `load_from_disk`, and enabling `streaming=True`.

-

Discussions on Quantization: Members explained quantization as a method to run large AI models on lower-end hardware. It reduces the precision of the model’s parameters, which may affect performance but allows models to operate within memory constraints.

-

Graphviz Usage Concerns: Users discussed inefficiencies and errors related to using `graphviz` on Hugging Face spaces, troubleshooting an error about missing executables and suggesting potential fixes. One helpful solution involved confirming whether graphviz was correctly added to the system’s `PATH`.

-

Career and Learning Path Advice: Users discussed which tech skills were most employable, debating fields like cybersecurity vs data science. Advice was given: “more than any particular field, getting involved in real projects can be hugely helpful.”
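The overflow quoted in the floating-point discussion above is easy to reproduce with a power tower. As a rough sketch (the helper names are made up for illustration): `pi**pi**pi` itself is about 1.3e18 and still fits in a float, but one more level exceeds the float range, at which point working with the logarithm of the result is one way around the error.

```python
import math


def power_tower(base: float, height: int) -> float:
    """Evaluate base**base**...**base (right-associative) with `height` copies of base."""
    result = 1.0
    for _ in range(height):
        result = math.pow(base, result)  # raises OverflowError past the float range
    return result


def log10_power_tower(base: float, height: int) -> float:
    """log10 of the tower, computed as tower(height - 1) * log10(base)."""
    return power_tower(base, height - 1) * math.log10(base)


if __name__ == "__main__":
    print(power_tower(math.pi, 3))  # pi**pi**pi still fits in a float
    try:
        power_tower(math.pi, 4)     # one level higher does not
    except OverflowError as exc:
        print("overflow:", exc)
    # Approximate number of decimal digits of the height-4 tower.
    print(log10_power_tower(math.pi, 4))
```

For exact results on huge integers, Python's arbitrary-precision `int` avoids the problem entirely, but it does not apply to irrational bases like pi.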

Links mentioned:

- Replete-AI/Replete-Coder-Llama3-8B · Hugging Face: no description found

- LLaVA: no description found

- Azazelle/L3-RP_io at main: no description found

- How to Install WSL2 on Windows 11 (Windows Subsystem for Linux): In this video tutorial, we'll show you how to install WSL2 on Windows 11, allowing you to run Linux commands and tools directly within the Windows environmen...

- azaz (Z): no description found

- @nroggendorff on Hugging Face: "@osanseviero your move": no description found

- Huh Cat GIF - Huh Cat Cat huh - Discover & Share GIFs: Click to view the GIF

- Pluh Veilbound GIF - Pluh Veilbound - Discover & Share GIFs: Click to view the GIF

- Wat GIF - Wat - Discover & Share GIFs: Click to view the GIF

- ChatGPT Explained Completely.: For 16 free meals with HelloFresh PLUS free shipping, use code KYLEHILL16 at https://bit.ly/41QHRYfChatGPT is now the fastest-growing consumer app in human h...

- White Cat Eating Salad GIF - White Cat Eating Salad Meme - Discover & Share GIFs: Click to view the GIF

- SHITLORD (POOPMASTER DISS): mikusss's track on [untitled]

- transformers/examples/pytorch at main · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

- Old Man Frustrated GIF - Old Man Frustrated Dog - Discover & Share GIFs: Click to view the GIF

- Altman: mikusss's track on [untitled]

- GitHub - beowolx/rensa: High-performance MinHash implementation in Rust with Python bindings for efficient similarity estimation and deduplication of large datasets: High-performance MinHash implementation in Rust with Python bindings for efficient similarity estimation and deduplication of large datasets - beowolx/rensa

- Host, run, and code Python in the cloud: PythonAnywhere: no description found

- Civitai API - Civitai Wiki: no description found

HuggingFace ▷ #today-im-learning (2 messages):

- **Challenges with Langchain Pydantic and LLM**: A member is trying to use **Langchain Pydantic Basemodel** to structure document data into JSON with additional insights. They are facing issues as the LLM misinterprets the data due to tabular structures and seek evaluation strategies or better methods.

- **Expression of Interest in the Topic**: Another member indicated their interest in the topic by stating, "I am interested ...".

HuggingFace ▷ #cool-finds (3 messages):

-

Attend the bot and fraud detection event: An upcoming event on “Detecting Bots and Fraud in the Time of LLMs” is scheduled for June 27, 2024, at 10 a.m. PDT. The keynote speaker Unmesh Kurup, Director of ML at Intuition Machines/hCaptcha, will share insights into advanced detection strategies (Register here).

-

Check out T2V-Turbo on HuggingFace: A member shared a link to T2V-Turbo on HuggingFace’s Spaces. They noted it offers a refreshing and impressive experience.

Links mentioned:

- T2V Turbo - a Hugging Face Space by TIGER-Lab: no description found

- A Million Turing Tests per Second: Detecting bots and fraud in the time of LLMs · Luma: The Data Phoenix team invites you to our upcoming webinar, which will take place on June 27th at 10 a.m. PDT. Topic: A Million Turing Tests per Second:…

HuggingFace ▷ #i-made-this (159 messages🔥🔥):

- Tokenization isn’t practical, says Apehex: Apehex argues in their article that tokenization methods are ineffective and suggests using neural networks to encode sequences of Unicode characters directly. A detailed discussion ensued, covering technical aspects like embedding, model size, and potential issues with floating-point accuracy.

- Personalize city maps with Cityscape Prettifier: Deuz_ai_80619 shared a GitHub project that allows users to create beautiful, personalized city maps using Flask, Prettymaps, and Python, turning OpenStreetMap data into stylish visualizations.

- Starty Party, a start page extension for media lovers: Desmosthenes introduced a new browser start page extension focused on media and content, available for installation at marketing.startyparty.dev.

Links mentioned:

- Is Tokenization Necessary?: no description found

- GitHub - C1N-S4/cityscape-prettifier: Make beautiful, personalized city maps with ease. With Flask, Prettymaps, and Python, turn OpenStreetMap data into elegant, stylish visualizations. Ideal for designers, developers, and urban aficionados.

HuggingFace ▷ #reading-group (4 messages):

- Looking for papers on contamination: A member requested recommendations for papers on contamination, particularly for coding benchmarks. They shared three relevant papers from their reading list: annotate tests by month, general saturation in ML benchmarks, and robustness in coding benchmarks.

- Exploring Hilbert curve for 2D to 1D conversion: A member inquired about using the Hilbert curve for scanning 2D images into 1D. They noted its advantage of not having jumps and working well for square images of different sizes.

- Concerns on unordered path information loss: Another member cautioned that the suggested method of using the Hilbert curve could result in information loss. They deemed the unordered path unreasonable and mentioned a follow-up question on a different platform.

- Paper update and code release: A member announced they are preparing to update their paper and will release the code in the upcoming days. This indicates progress towards sharing their research findings publicly.
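
The Hilbert-curve scan discussed above can be sketched as follows. This is a minimal illustration rather than the member's code: it uses the standard index-to-coordinate conversion and assumes a square image whose side length is a power of two. The function names `hilbert_d2xy` and `hilbert_scan` are made up for this example.

```python
def hilbert_d2xy(n, d):
    """Map distance d along the curve to (x, y) in an n x n grid.

    Assumes n is a power of two and 0 <= d < n * n.
    """
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        # Rotate/flip the quadrant so sub-curves connect end to end.
        if ry == 0:
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan(image):
    """Flatten a square 2D list (rows of pixels) into 1D in Hilbert order."""
    n = len(image)
    flat = []
    for d in range(n * n):
        x, y = hilbert_d2xy(n, d)
        flat.append(image[y][x])
    return flat


# Consecutive samples are always grid neighbours, i.e. the path has no jumps.
path = [hilbert_d2xy(8, d) for d in range(64)]
steps = [abs(ax - bx) + abs(ay - by) for (ax, ay), (bx, by) in zip(path, path[1:])]
print(max(steps))
```

Every pixel is visited exactly once, so the flattening itself is lossless; what changes is which 2D neighbours end up close together in the 1D sequence.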

HuggingFace ▷ #computer-vision (3 messages):

- `hf-vision/detection_metrics` struggles with dependencies: A member encountered an error when trying to use `hf-vision/detection_metrics` in `evaluate`, prompted by an ImportError due to missing dependencies. They noted that no such package exists, or they might be missing something.

- Detection metrics feature flagged as problematic: The same member pointed out that the issue with `hf-vision/detection_metrics` is documented in the Hugging Face GitHub issues, specifically in this issue comment.

- `evaluate` fails to locate `detection_util`: It was discovered that `evaluate` could not find `detection_util` because it is located inside a folder within the space, which causes the tool to malfunction.

Link mentioned: Add COCO evaluation metrics · Issue #111 · huggingface/evaluate: I’m currently working on adding Facebook AI’s DETR model (end-to-end object detection with Transformers) to HuggingFace Transformers. The model is working fine, but regarding evaluation, I'…

HuggingFace ▷ #NLP (3 messages):

- Query on LLMs for Tabular Data Interaction: A member asked if there are open-source LLM projects or products that specialize in inference on tabular data, specifically stored as CSV or pandas DataFrames. They are interested in interacting with a chatbot to ask questions about trends in the data without needing modeling or prediction.

- Interest in Contributing to a GitHub PR: A member expressed interest in helping with a PR related to RoBERTa-based models, focusing on adding support for Scaled Dot Product Attention (SDPA). They faced an issue due to lack of access to the original repository and sought advice on how to contribute.

Link mentioned: [RoBERTa-based] Add support for sdpa by hackyon · Pull Request #30510 · huggingface/transformers: What does this PR do? Adding support for SDPA (scaled dot product attention) for RoBERTa-based models. More context in #28005 and #28802. Before submitting This PR fixes a typo or improves the do…

HuggingFace ▷ #gradio-announcements (1 messages):

- Gradio v4.37 is here: The latest release, Gradio v4.37, features a redesigned chatbot UI, dynamic plots and GIF support, and significant performance upgrades. It also boasts improved customizability and numerous bug fixes for a smoother user experience.

- Exciting new features announced: The new chatbot UI supports embedding components like gr.Gallery and gr.Audio directly in the chat, while gr.Image now supports GIFs. Check out the full details in Gradio’s changelog.

CUDA MODE ▷ #torch (3 messages):

- Need to align PyTorch tensor in memory: A member asked if “there is any way to enforce that a PyTorch tensor is memory-aligned to an amount of bytes” for loading tensors in pairs using `float2`. They are encountering issues with alignment.
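
To make the alignment question concrete, here is a small sketch of the address arithmetic involved. It is not a PyTorch feature for enforcing alignment, and the helper names `is_aligned` and `elements_to_skip` are hypothetical. In PyTorch the inputs could come from `tensor.data_ptr()` (address of the first element) and `tensor.element_size()` (bytes per element); the addresses below are invented for illustration.

```python
import math
from typing import Optional


def is_aligned(address: int, alignment: int) -> bool:
    """True if the address is a multiple of the alignment (in bytes)."""
    return address % alignment == 0


def elements_to_skip(address: int, itemsize: int, alignment: int) -> Optional[int]:
    """Smallest number of leading elements to skip to reach an aligned address.

    Returns None when no whole number of elements can reach alignment.
    """
    # Offsets k * itemsize repeat modulo `alignment` after this many steps.
    period = alignment // math.gcd(itemsize, alignment)
    for k in range(period):
        if (address + k * itemsize) % alignment == 0:
            return k
    return None


# float32 elements are 4 bytes; a float2 load wants 8-byte alignment.
print(is_aligned(0x1000, 8))           # aligned start: pairs can be loaded directly
print(elements_to_skip(0x1004, 4, 8))  # misaligned by one float: handle 1 element separately
```

A kernel that loads pairs could use a check like this to handle a leading unaligned element separately before switching to vectorized loads.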

CUDA MODE ▷ #torchao (11 messages🔥):

- Function dequantization clarified with source code link: Users discussed the function `torch.ops.aten._weight_int4pack_mm`, with a helpful link to the source code on GitHub. This function performs dequantization and matrix multiplication with an identity matrix.

- Docs for autogen function are not helpful: Users pointed out that the autogenerated documentation for the function was essentially blank and not informative at all (“documentation is blank 🤣”).

- 8-bit Adam collaboration thread: A thread was initiated for collaboration on 8-bit Adam optimizations. Key questions included the use of dynamic quantization schemes and whether dequantization and adam-step operations are fused together in a single kernel.
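
For readers unfamiliar with 8-bit optimizer state, the following is a rough sketch of the general idea only. It uses plain blockwise absmax quantization, which is a simplification and not the dynamic scheme the thread asked about, nor any torchao implementation. The function names and the tiny `block_size` are chosen purely for illustration.

```python
def quantize_blockwise(values, block_size=4):
    """Quantize floats to int8-range codes with one absmax scale per block."""
    codes, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        # Scale so the largest magnitude in the block maps to 127.
        # An all-zero block falls back to a scale of 1.0.
        scale = max(abs(v) for v in block) / 127.0 or 1.0
        scales.append(scale)
        codes.extend(round(v / scale) for v in block)
    return codes, scales


def dequantize_blockwise(codes, scales, block_size=4):
    """Recover approximate floats from codes and per-block scales."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]


state = [0.5, -1.0, 0.25, 0.0, 100.0, -50.0, 10.0, 1.0]
codes, scales = quantize_blockwise(state)
restored = dequantize_blockwise(codes, scales)
print(codes)
print(restored)
```

A fused kernel, as raised in the thread, would dequantize, apply the Adam update, and requantize in one pass instead of materializing `restored` in memory.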

Links mentioned:

- Function at::_weight_int4pack_mm — PyTorch main documentation: no description found

- Quant: add weight int4pack mm kernel by yanboliang · Pull Request #110914 · pytorch/pytorch: Adding the weight int4pack mm CUDA kernel. The kernel comes from the tinnygemm project which developed by Jeff Johnson.

CUDA MODE ▷ #off-topic (3 messages):

- Cute Demake Quake Impresses: Members shared a YouTube video titled “Q1K3 – Making Of”, featuring a tribute to Quake created in 13kb of JavaScript for the js13kGames contest 2021. They also provided a link to play the game and a blog post.

Links mentioned:

- Q1K3 – Making Of: A tribute to Quake in 13kb of JavaScript, made for the js13kGames contest 2021.Play here: https://phoboslab.org/q1k3/Blog Post: https://phoboslab.org/log/202...

CUDA MODE ▷ #hqq (4 messages):

- HFGenerator broken after cache logic change: A member reported an issue with `HFGenerator`, noting that while the native `model.generate(input_ids)` function works well, the former has been problematic since transformers updated the cache logic.

- mobicham confirms need for rewrite: A member acknowledged this issue, stating, “I need to rewrite, will do it this week,” and mentioned potential problems with `model.generate` when using `torch.compile`, particularly the recompile behavior due to changing prompt lengths.

- Verifying output without compiling: Discussions included verifying outputs without using `torch.compile`, suggesting that the focus was primarily on functional correctness rather than performance optimization.

- Model-specific issues and alternatives: The conversation shifted to model-specific concerns, mentioning potential issues with Llama2-7B and comparing it with Llama3, where `axis=1` settings and various configurations were provided for reference: “Llama3-8b-instruct GPTQ (gs=64): 66.85, AWQ (gs=64): 67.29, HQQ (axis=1, gs=64): 67.4.”

CUDA MODE ▷ #llmdotc (402 messages🔥🔥):

- Windows build cuDNN fix merged: After some troubleshooting, a fix for Windows cuDNN build breakage was merged (#639). Issues were tied to macro redefinitions and needed adjustments like adding `WIN32_LEAN_AND_MEAN`.

- Exploring H100 training instability with cuDNN: Training on H100 with cuDNN showed instability, particularly in bf16 training, which did not occur when cuDNN was turned off. Investigations point towards possible differences in tile sizes for cuDNN flash attention.

- AMD GPU support in focus: Members discussed incorporating support for AMD GPUs. An AMD fork of the repository, anthonix/llm.c, is currently maintained, but wider interest in AMD GPUs is still developing.

- PR for on-device reductions is under review: There is a pull request aimed at reducing GPU ↔ CPU transfers by moving more calculations on-device (#635). It includes micro-optimizations such as avoiding recalculations in validation steps.

- Discussion on Llama 3 support and v1.0 roadmap: Planning toward a v1.0 release, focusing on treating GPT-2/3 support separately and introducing Llama 3 in follow-up versions. PRs for rolling checkpoints and StableAdamW optimizer are key components (#636).
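
The rolling-checkpoint idea from PR #636 (minor checkpoints are deleted with a rolling window, major ones are kept) can be illustrated with a short sketch. This is not the llm.c implementation, which is written in C. The rule that a step is major when divisible by `major_every`, and the parameter names themselves, are assumptions made for this example.

```python
def checkpoints_to_delete(saved_steps, major_every, keep_minor):
    """Return the steps whose checkpoints fall outside the rolling window.

    Assumption: a checkpoint is MAJOR when its step is a multiple of
    `major_every`; all others are MINOR. Only the newest `keep_minor`
    minor checkpoints are retained, and major ones are never deleted.
    """
    minor = sorted(s for s in saved_steps if s % major_every != 0)
    if keep_minor <= 0:
        return minor
    return minor[:-keep_minor]


saved = list(range(100, 1001, 100))  # checkpoints at steps 100, 200, ..., 1000
print(checkpoints_to_delete(saved, major_every=500, keep_minor=2))
```

This is the trade-off the PR description mentions: state can be saved more often while disk usage stays bounded by the window size plus the major checkpoints.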

Links mentioned:

- karpath - Overview: GitHub is where karpath builds software.

- Add missing MULTI_GPU compiler flag by chinthysl · Pull Request #640 · karpathy/llm.c: To run the file sharing NO_USE_MPI build.

- GitHub - anthonix/llm.c: LLM training in simple, raw C/HIP for AMD GPUs: LLM training in simple, raw C/HIP for AMD GPUs. Contribute to anthonix/llm.c development by creating an account on GitHub.

- Tweet from Etched (@Etched): Meet Sohu, the fastest AI chip of all time. With over 500,000 tokens per second running Llama 70B, Sohu lets you build products that are impossible on GPUs. One 8xSohu server replaces 160 H100s. Soh...

- rolling checkpoints by karpathy · Pull Request #636 · karpathy/llm.c: checkpoints are either MINOR or MAJOR and minor checkpoints get deleted with a rolling window. This is an optimization that will allow us to save state more often, but preserve disk space overall. ...

- Socket server/client interface by chinthysl · Pull Request #633 · karpathy/llm.c: Dummy PR to make use of the distributed interface in PR #632

- add outlier detector, test for it, and start tracking z score of loss by karpathy · Pull Request #637 · karpathy/llm.c: still TODO: track grad norm in addition to loss (do we have to split the gpt2_update function) add argparse option to monitor loss and grad norm for outliers (e.g. z > 3) add handling of instabili...

- On-device reductions by ngc92 · Pull Request #635 · karpathy/llm.c: Moves loss calculation to backward, and ensures we can do more on-device reductions and fewer host<->device transfers. Also enables a micro-optimization, that validate does not calculate dlogit...

- optimi/optimi/stableadamw.py at 4542d04a3974bb3ac9baa97f4e417bda0432ad58 · warner-benjamin/optimi: Fast, Modern, Memory Efficient, and Low Precision PyTorch Optimizers - warner-benjamin/optimi

- Scaling Language Model Training to a Trillion Parameters Using Megatron | NVIDIA Technical Blog: Natural Language Processing (NLP) has seen rapid progress in recent years as computation at scale has become more available and datasets have become larger. At the same time, recent work has shownR...

- FlexNet 11.19.5 build on Visual Studio 2015: Hi all, I am trying to build my app with FlexNet 11.19.5. I am facing some compiler issues (Visual Studio 2015):c:\program files (x86)\windows kits\8.1\include\shared\ws2def.h(100): warning C4005: '...

CUDA MODE ▷ #rocm (1 messages):

iron_bound: https://chipsandcheese.com/2024/06/25/testing-amds-giant-mi300x/

CUDA MODE ▷ #bitnet (1 messages):

- Debugging PyTorch tensor device call: A member discussed a potential fix for a device call issue in PyTorch by referring to a specific line in native_functions.yaml. They suggested trying `BitnetTensor(intermediate).to(device=tensor.device)` instead of the original code.

Link mentioned: pytorch/aten/src/ATen/native/native_functions.yaml at 18fdc0ae5b9e9e63eafe0b10ab3fc95c1560ae5c · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - pytorch/pytorch

Unsloth AI (Daniel Han) ▷ #general (131 messages🔥🔥):

- Replete-Coder Llama3-8B model debuts: The new Replete-Coder Llama3-8B model was discussed heavily, being highlighted for its advanced coding capabilities in over 100 languages, and its uncensored, fully deduplicated training data. It is supported by TensorDock for cloud compute rental.

- Skepticism about benchmarks: Users discussed the reliability of benchmarks, emphasizing that they can be overfitted and do not always represent real-world performance. One member stated, “…benchmarks tell you very little… it needs eyes on for a bigger problem scope.”

- Adam-mini optimizer: The new Adam-mini optimizer is claimed to match or exceed AdamW's performance with 45% to 50% less memory and 49.6% higher throughput, which sparked discussions about potential implementation and comparative benefits over existing optimizers.