AI News for 6/21/2024-6/24/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (415 channels, and 5896 messages) for you. Estimated reading time saved (at 200wpm): 660 minutes. You can now tag @smol_ai for AINews discussions!

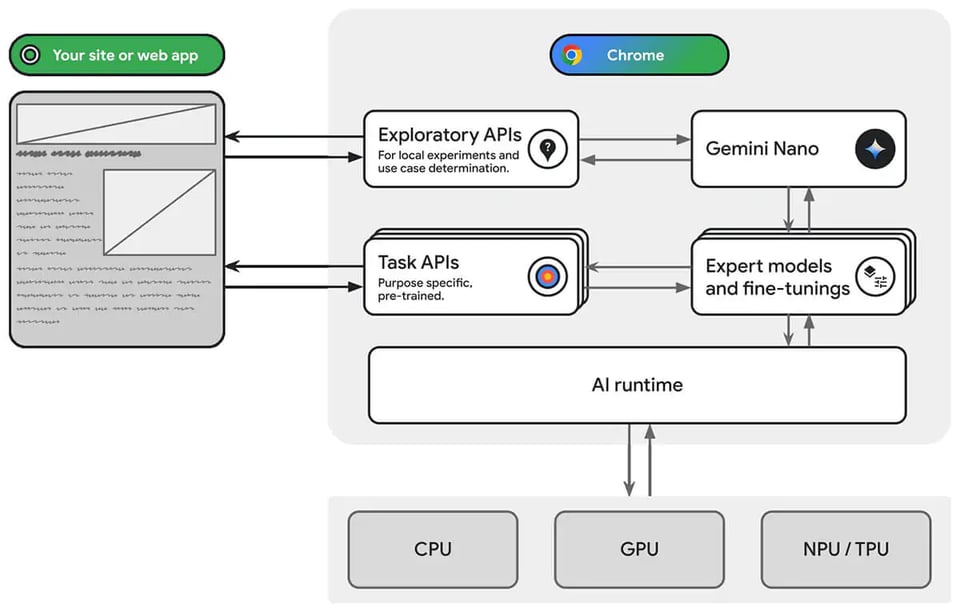

The latest Chrome Canary now ships Gemini Nano behind feature flags:

- Prompt API for Gemini Nano: chrome://flags/#prompt-api-for-gemini-nano

- Optimization guide on device: chrome://flags/#optimization-guide-on-device-model

- Navigate to chrome://components/, look for Optimization Guide On Device Model, and click “Check for update” to start the download

You’ll now have access to the model via the console: `window.ai.createTextSession()`

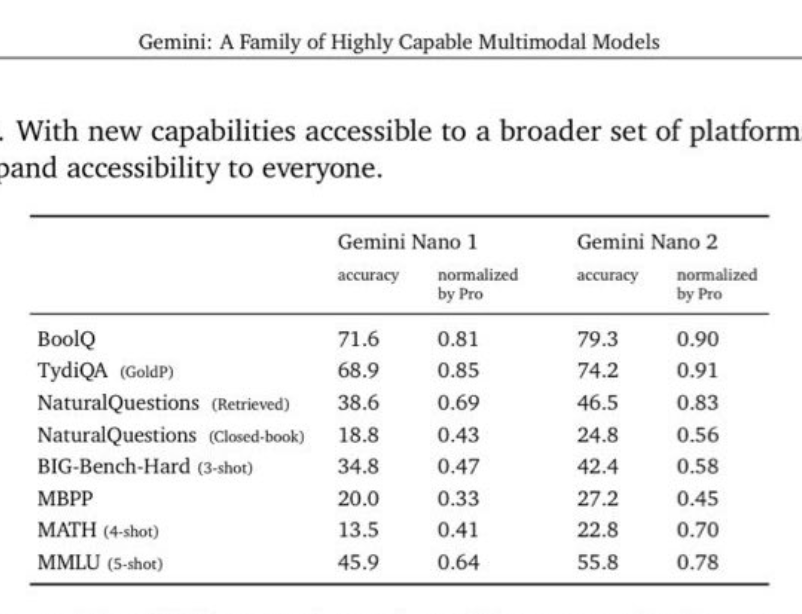

Nano 1 and Nano 2, 4-bit quantized models with 1.8B and 3.25B parameters respectively, have decent performance relative to Gemini Pro:

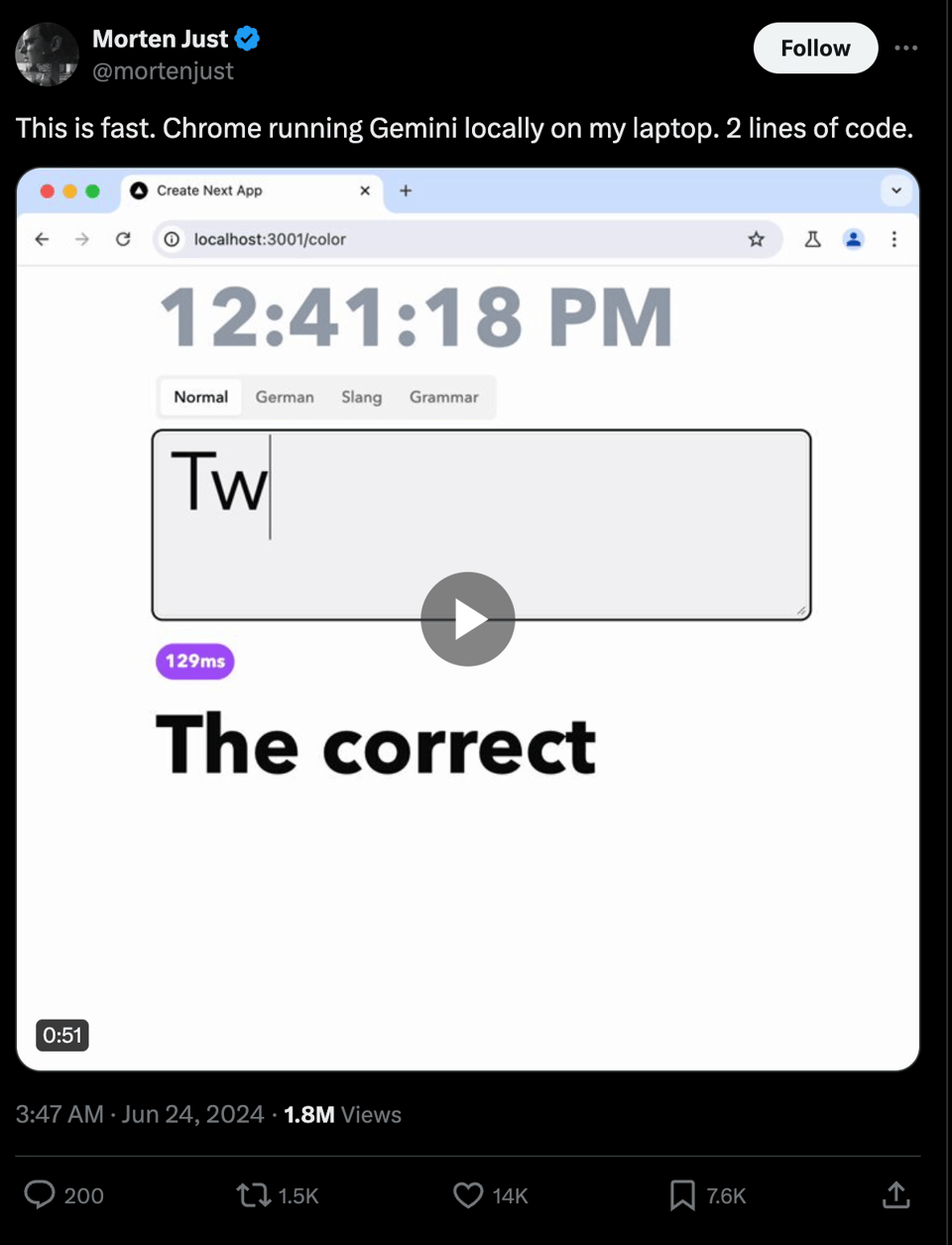

You should also see this live demo of how fast it runs.

Lastly, the base model and instruct-tuned model weights have already been extracted and posted to HuggingFace.
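For a rough sense of why these sizes fit on a laptop, here is a back-of-the-envelope sketch of weight storage alone. It assumes exactly 4 bits per weight and ignores quantization scales, activations, and the KV cache, so actual memory use in Chrome will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts as quoted above; 16-bit shown for comparison.
for name, params in [("Nano 1", 1.8e9), ("Nano 2", 3.25e9)]:
    print(f"{name}: ~{weight_memory_gb(params, 4):.2f} GB at 4-bit, "
          f"~{weight_memory_gb(params, 16):.2f} GB at 16-bit")
```

Going from 16-bit to 4-bit cuts weight storage by 4x, which is what brings a 3.25B model under 2 GB.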

{% if medium == 'web' %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Model Releases and Benchmarks

- Anthropic Claude 3.5 Sonnet: @adcock_brett noted Anthropic launched Claude 3.5 Sonnet, an upgraded model that bests GPT-4o across some benchmarks. For devs, it’s 2x the speed of Opus, while pricing comes in at 1/5 the cost of Anthropic’s previous top model. For consumers, it’s completely free to try.

- DeepSeek-Coder-V2: @dair_ai noted DeepSeek-Coder-V2 competes with closed-source models on code and math generation tasks. It achieves 90.2% on HumanEval and 75.7% on MATH, higher than GPT-4-Turbo-0409 according to their report. It comes in 16B and 236B parameter versions, both with a 128K context length. @lmsysorg reported DeepSeek-Coder-V2 has climbed to #4 in Coding Arena, nearing GPT-4-Turbo levels, making it the top open model for coding. It also ranks #11 in Hard Prompts and #20 in Overall generic questions.

- GLM-0520: @lmsysorg reported GLM-0520 from Zhipu AI/Tsinghua impresses at #9 in Coding and #11 Overall. Chinese LLMs are getting more competitive than ever!

- Nemotron 340B: @dl_weekly reported NVIDIA announced Nemotron-4 340B, a family of open models that developers can use to generate synthetic data for training large language models.

AI Research Papers

- TextGrad: @dair_ai noted TextGrad is a new framework that performs automatic differentiation by backpropagating textual feedback provided by an LLM. The feedback improves individual components, and natural language is used to optimize the computation graph.

- PlanRAG: @dair_ai reported PlanRAG enhances decision making with a new RAG technique called iterative plan-then-RAG. It works in three steps: 1) an LLM generates a plan for decision making by examining the data schema and questions; 2) the retriever generates the queries for data analysis; and 3) a final step checks whether a new plan is needed for further analysis, either iterating on the previous steps or making a decision on the data.

- Mitigating Memorization in LLMs: @dair_ai noted this paper presents a modification of the next-token prediction objective called goldfish loss to help mitigate the verbatim generation of memorized training data.

- Tree Search for Language Model Agents: @dair_ai reported this paper proposes an inference-time tree search algorithm for LM agents to perform exploration and enable multi-step reasoning. It’s tested on interactive web environments and applied to GPT-4o to significantly improve performance.
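To make the goldfish loss concrete, here is a minimal pure-Python sketch that assumes per-token negative log-likelihoods are already computed. The hashed mask (drop a position when a hash of its preceding h tokens falls into 1 of k buckets) follows the spirit of the paper, but the function names, SHA-256 hashing, and the defaults k=4 and h=13 are illustrative assumptions, not the authors’ code.

```python
import hashlib
from typing import Sequence


def goldfish_mask(token_ids: Sequence[int], k: int = 4, h: int = 13) -> list[bool]:
    """True where a position contributes to the loss, False where it is dropped.

    Hypothetical hashed variant: a position is dropped when a hash of the h
    tokens before it lands in bucket 0 of k buckets, so roughly 1/k of
    positions are dropped and a repeated passage is masked the same way.
    """
    keep = []
    for i in range(len(token_ids)):
        context = list(token_ids[max(0, i - h):i])
        digest = hashlib.sha256(repr(context).encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "little") % k
        keep.append(bucket != 0)
    return keep


def goldfish_loss(token_nll: Sequence[float], token_ids: Sequence[int],
                  k: int = 4, h: int = 13) -> float:
    """Mean next-token NLL over kept positions only (0.0 if none are kept)."""
    mask = goldfish_mask(token_ids, k, h)
    kept = [nll for nll, keep in zip(token_nll, mask) if keep]
    return sum(kept) / len(kept) if kept else 0.0


# Hypothetical token IDs and per-token losses, just to exercise the functions.
ids = [5, 17, 17, 42, 8, 99, 3, 17, 64, 2]
nll = [2.1, 0.4, 1.3, 0.9, 3.0, 0.2, 1.1, 0.7, 2.5, 0.6]
print(goldfish_mask(ids))
print(goldfish_loss(nll, ids))
```

Hashing the local context rather than masking random positions means a passage that appears many times in the training set is always missing the same tokens, so the model never gets a gradient signal for the complete sequence.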

AI Applications and Demos

- Wayve PRISM-1: @adcock_brett reported Wayve AI introduced PRISM-1, a model that reconstructs 4D scenes (3D space plus time) from video data. Breakthroughs like this will be crucial in the development of autonomous driving.

- Runway Gen-3 Alpha: @adcock_brett noted Runway demoed Gen-3 Alpha, a new AI model that can generate 10-second videos from text prompts and images. These human characters are 100% AI-generated.

- Hedra Character-1: @adcock_brett reported Hedra launched Character-1, a new foundation model that can turn images into singing portrait videos. The public preview web app can generate up to 30 seconds of expressive talking, singing, or rapping characters.

- ElevenLabs Text/Video-to-Sound: @adcock_brett noted ElevenLabs launched a new open-source text and video-to-sound effects app and API. Devs can now build apps that generate sound effects based on text prompts or add sound to silent videos.

Memes and Humor

- Gilded Frogs: @c_valenzuelab defined “Gilded Frogs” as frogs that have amassed great wealth and adorn themselves with luxurious jewelry, including gold chains, gem-encrusted bracelets, and rings, covering their skin with diamonds, rubies, and sapphires.

- Llama.ttf: @osanseviero noted Llama.ttf is a font which is also an LLM. TinyStories (15M) as a font 🤯 The font engine runs inference of the LLM. Local LLMs taken to an extreme.

- VCs Funding GPT Wrapper Startups: @abacaj posted a meme image joking about VCs funding GPT wrapper startups.

- Philosophers vs ML Researchers: @AmandaAskell posted a meme image comparing the number of papers published by philosophers vs ML researchers.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Stable Diffusion / AI Image Generation

- Pony Diffusion model impresses users: In /r/StableDiffusion, users are discovering the capabilities and creative potential of the Pony Diffusion model, finding it fun and refreshing to use. Some admit to underestimating Pony’s responsibility and prompt adherence. There are requests for in-depth Pony tutorials to help produce desired family-friendly anime/manga style images while avoiding unintended NSFW generations.

- New techniques and model updates: Users are sharing background replacement, re-lighting and compositing workflows in ComfyUI and demonstrating the use of the [SEP] token for multiple prompts in adetailer models. The SD.Next release announcement highlights 10+ improvements like quantized T5 encoder support, PixArt-Sigma variants, HunyuanDiT 1.1, and efficiency upgrades for low VRAM GPUs. sd-scripts now supports training Stable Diffusion 3 models.

- Creative applications and model comparisons: An exhibition at the Nikola Tesla Museum features 118 AI-assisted artworks created with Stable Diffusion, highlighting adoption outside the AI community. New LoRA models like Aether Illustration for Nordic-style portraits and a black-and-white illustration style for SDXL are being released. A comparison of various models on a “woman lying on grass” prompt sparks discussion on their relative performance.

- Licensing discussions: Users discovered the initial Stable Cascade weights were released under an MIT license for about 4 days before changing to a more restrictive one, suggesting potential for commercial use of the MIT-licensed version. This has led to people downloading that specific version.

ChatGPT / AI Assistants

- AI-generated games impress users: In /r/ChatGPT, Claude, an AI assistant, created a playable 3D first-person shooter game within the chat interface. The game, which involves shooting happy emojis at sad monsters, was Claude’s own idea. This is seen as a groundbreaking moment, with AI now competing with beginner human game developers. Users appreciate Claude’s cute and hopeful approach.

- Model performance and benchmarks: Claude 3.5 Sonnet outperforms other models on various benchmarks like MMLU-Pro, according to recently released results.

- Improving chatbots with knowledge integration: In /r/singularity, a user is surprised large AI companies haven’t connected their chatbots to knowledge bases like Wikipedia or tools like WolframAlpha for improved accuracy on facts, math, physics, etc. They believe the underlying technology exists but needs integration, though language models may still face fundamental limitations.

AI Discord Recap

SPECIAL NOTE: As we do for fresh frontier model launches, we are displaying the differences in output from running the same prompts across Claude 3, Claude 3.5, GPT-4T, and GPT-4o.

Claude 3 Sonnet

1. LLM Performance Benchmarking and Advancements

- Meta’s Llama 3 model has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus as mentioned in this discussion.

- New models like IBM’s Granite-8B-Code-Instruct and DeepSeek’s DeepSeek-V2 236B model were discussed, with the latter outperforming GPT-4 in some benchmarks.

- However, there was skepticism around certain benchmarks and calls for credible sources to set realistic evaluation standards.

2. Techniques for Efficient LLM Training and Inference

- DeepSpeed’s ZeRO++ was mentioned as promising a 4x reduction in communication overhead for large model training on GPUs.

- The vAttention system was discussed for dynamically managing KV-cache for efficient inference without PagedAttention.

- QServe’s W4A8KV4 quantization was highlighted as a technique to boost cloud LLM serving performance on GPUs.

- Techniques like Consistency LLMs were mentioned for exploring parallel token decoding to reduce inference latency.
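KV-cache memory is why systems like vAttention and the KV4 part of QServe’s W4A8KV4 matter. The sketch below estimates cache size from model shape; the Llama-2-7B-like configuration (32 layers, 32 KV heads, head dimension 128) and the 4,096-token sequence are assumptions, and the 4-bit figure ignores the scales a real KV4 scheme must also store.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bits_per_value: int = 16) -> float:
    """Bytes needed to cache keys and values for every layer and token."""
    values_per_token = 2 * num_layers * num_kv_heads * head_dim  # 2 = K and V
    return values_per_token * seq_len * batch_size * bits_per_value / 8


# Assumed Llama-2-7B-like shape and a single 4,096-token sequence.
cfg = dict(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=4096)
fp16 = kv_cache_bytes(**cfg, bits_per_value=16)
kv4 = kv_cache_bytes(**cfg, bits_per_value=4)
print(f"FP16 KV cache: {fp16 / 2**30:.2f} GiB per sequence")
print(f"4-bit KV cache: {kv4 / 2**30:.2f} GiB per sequence")
```

The cache grows linearly with both sequence length and batch size, which is why managing it dynamically and shrinking it to 4 bits both pay off at serving time.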

3. Open-Source AI Frameworks and Community Efforts

- The Axolotl project was discussed for supporting diverse dataset formats for instruction tuning and LLM pre-training.

- LlamaIndex was mentioned as powering a new course on building agentic RAG systems with Andrew Ng.

- The open-source RefuelLLM-2 model was introduced, claiming to be the best for “unsexy data tasks”.

- Discussions around Modular’s Mojo highlighted its potential for Python integration and AI extensions like bfloat16 as mentioned here.

4. Multimodal AI and Generative Modeling

- The Idefics2 8B Chatty and CodeGemma 1.1 7B models were mentioned for their focus on chat interactions and coding abilities respectively, as discussed here.

- The Phi 3 model was highlighted for bringing powerful AI chatbots to browsers via WebGPU, according to this Reddit link.

- Combining Pixart Sigma, SDXL and PAG was mentioned as aiming to achieve DALLE-3 level outputs, with potential for further refinement through fine-tuning, as per this discussion.

- The open-source IC-Light project focused on improving image relighting techniques was also brought up in this conversation.

Claude 3.5 Sonnet

- LLM Performance and Benchmarking Debates:

  - New models like DeepSeek-V2 and Hermes 2 Theta Llama-3 70B are generating buzz for their performance. However, there’s growing skepticism across communities about AI benchmarks and leaderboards, with calls for more credible evaluation methods.

  - Discussions in multiple discords touched on the challenges of comparing models like GPT-4, Claude, and newer open-source alternatives, highlighting the complexity of real-world performance versus benchmark scores.

- Optimizing LLM Training and Inference:

  - Communities are sharing techniques for improving LLM efficiency, such as quantization methods and optimizing for specific hardware like AMD GPUs.

  - There’s significant interest in reducing computational costs, with discussions ranging from VRAM optimization to novel architectures for more efficient inference.

- Open-Source AI Development and Collaboration:

  - Projects like Axolotl and LlamaIndex are fostering community-driven development of AI tools and frameworks.

  - There’s a trend towards open-sourcing models and tools, as seen with RefuelLLM-2 and Llamafile, encouraging wider participation in AI development.

- Multimodal AI and Creative Applications:

  - Discussions across discords highlight the growing interest in multimodal models that can handle text, image, and potentially video, with projects like Stable Artisan bringing these capabilities to wider audiences.

  - There’s ongoing experimentation with combining different models and techniques to achieve DALL-E 3-level outputs, showing a community-driven approach to advancing generative AI capabilities.

- AI Integration and Practical Applications:

  - Multiple communities are exploring ways to integrate AI into everyday tools, from browser-based models to Discord bots for media creation.

  - There’s a growing focus on making AI more accessible and useful for specific tasks, as seen in discussions about code generation, data analysis, and creative applications across various discord channels.

Claude 3 Opus

- LLM Performance and Benchmarking:

  - Discussions on the performance of models like Llama 3, DeepSeek-V2, and Claude 3.5 Sonnet across various benchmarks and leaderboards.

  - Skepticism surrounding certain benchmarks, such as AlpacaEval, with calls for more credible assessment standards.

- Optimizing LLM Training and Inference:

  - Techniques for efficient training, such as ZeRO++ and Consistency LLMs, and optimized inference with vAttention and QServe.

  - Discussions on quantization methods, like W4A8KV4, and their impact on model performance and resource requirements.

- Open-Source AI Frameworks and Collaborations:

  - Updates and collaborations involving open-source frameworks like Axolotl, LlamaIndex, and Modular.

  - Introduction of new open-source models, such as RefuelLLM-2 and Granite-8B-Code-Instruct.

- Multimodal AI and Generative Models:

  - Advancements in multimodal AI with models like Idefics2 8B Chatty and CodeGemma 1.1 7B.

  - Innovations in generative modeling, such as Phi 3 for browser-based chatbots and combining techniques to achieve DALL-E 3-level outputs.

  - Open-source efforts in image relighting with projects like IC-Light.

- AI Ethics, Legality, and Accountability:

  - Discussions on the ethical implications of AI-generated content, as seen with Perplexity AI’s alleged plagiarism.

  - Concerns about the legal risks associated with AI models making inaccurate or defamatory statements, as highlighted in the Perplexity AI case.

  - Debates on the accountability of tech companies using open datasets and the practice of “AI data laundering”.

GPT4T (gpt-4-turbo-2024-04-09)

**1. AI Hardware Evolves but Costs Spiral:**

- VRAM requirements for AI models like Command R (34b) Q4_K_S lead to discussions about switching to EXL2, a more VRAM-efficient format. The NVIDIA DGX GH200 remains out of reach due to high costs.

**2. Optimization Takes Center Stage in AI Tools:**

- Quantization techniques are leveraged to optimize model performance, with ROCm’s versions of xformers and flash-attention mentioned for efficiency. Implementation of PyTorch enhancements in the Llama-2 model results in significant performance boosts.

**3. AI Breaks New Ground in Multifaceted Applications:**

- Pebble, an AI-powered reading aid developed with OpenRouter, illustrates novel uses of AI in enhancing reading comprehension. Cohere’s APIs enable multi-step tool use, significantly broadening functional reach, aided by detailed documentation on multi-step tool use.

**4. AI-centric Education and Jobs Expanding:**

- MJCET launches the first AWS Cloud Club in Telangana to educate students on AWS technologies, celebrating with an event featuring AWS Community Hero Mr. Faizal Khan. RSVP through the event link. AI educational content is also on offer through Andrej Karpathy’s LLM101n course, which teaches LLM building from scratch.

**5. Miscellaneous AI Developments Reflect Wide Impact:**

- Intel is discontinuing an AWS instance used by the gpt-neox team, prompting questions in the AI community about resource allocation. Claude 3.5 Sonnet’s prowess in coding tasks garners praise, showcasing AI’s advancement in technical applications. Meanwhile, Mozilla’s Llamafile is venturing into Android territory, promising innovative mobile solutions.

GPT4O (gpt-4o-2024-05-13)

Model Optimization and LLM Innovations

- DeepSeek and Sonnet 3.5 Dominate Benchmarks: The DeepSeek model impressed the community with its quick performance and coding abilities, surpassing GPT-4 in some cases (DeepSeek announcement). Similarly, Claude 3.5 Sonnet outperformed GPT-4o in coding tasks, validated through LMSYS leaderboard positions and hands-on usage (Claude thread).

- ZeRO++ and PyTorch Accelerate LLM Training: ZeRO++ reduces communication overhead in large model training by 4x, while new PyTorch techniques, packaged in GPTFast, accelerate Llama-2 inference by up to 10x on A100 or H100 GPUs (ZeRO++ tutorial).

Open-Source Developments and Community Efforts

- Axolotl and Modular Encourage Community Contributions: Axolotl announced the integration of ROCm fork versions of xformers for AMD GPU support, and Modular users discussed contributing to learning materials for LLVM and CUTLASS (related guide).

- Featherless.ai and LlamaIndex Expand Capabilities: Featherless.ai, a new platform to run public models serverlessly, was launched to wide curiosity (Featherless). LlamaIndex now supports image generation via StabilityAI, enhancing its toolkit for AI developers (LlamaIndex-StabilityAI).

AI in Production and Real-World Applications

- MJCET’s AWS Cloud Club Takes Off: The inauguration of the AWS Cloud Club at MJCET promoted hands-on AWS training and career-building initiatives (AWS event).

- Use of OpenRouter in Practical Applications: JojoAI was highlighted for its proactive assistant capabilities, using integrations like DigiCord to outshine competing models like ChatGPT and Claude (JojoAI site).

Operational Challenges and Support Queries

- Installation and Compatibility Issues Plague Users: Difficulties in setting up libraries like xformers on Windows raised compatibility discussions, with suggestions converging on Linux for more stable operations (Unsloth troubleshooting).

- Credit and Support Issues: Numerous members of the Hugging Face and Predibase communities faced issues with missing service credits and billing inquiries, showcasing the need for improved customer support systems (Predibase).

Upcoming Technologies and Future Directions

- Announcing New AI Models and Clusters: AI21’s Jamba-Instruct with a 256K context window and NVIDIA’s Nemotron 4 highlighted breakthroughs in handling large-scale enterprise documents (Jamba-Instruct, Nemotron-4).

- Multi Fusion and Quantization Techniques: Discussions on the merits of early versus later fusion in multimodal models and advancements in quantization highlighted ongoing research in reducing AI model inference cost and boosting efficiency (Multi Fusion).

PART 1: High level Discord summaries

HuggingFace Discord

Juggernaut or SD3 Turbo for Virtual Realities?: While Juggernaut Lightning is favored for its realism in non-coding creative scenarios, SD3 Turbo wasn’t discussed as favorably, suggesting that choices between models are influenced by specific context and goals.

Quantum Leap for PyTorch Users: Members recommended investing in libraries like PyTorch and HuggingFace over dated ones like sklearn, and noted that bitsandbytes and reduced-precision loading such as 4-bit quantization can help load models on constrained hardware.
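As a rough illustration of what 4-bit quantization does to weights, here is a minimal sketch of symmetric absmax quantization with one scale per group. This is a generic scheme chosen for clarity, not the NF4 format that bitsandbytes uses, and the group size of 4 and the sample weights are arbitrary so the output stays readable.

```python
from typing import Sequence


def quantize_int4(weights: Sequence[float], group_size: int = 4):
    """Symmetric absmax quantization to signed 4-bit codes in [-7, 7].

    Each group of `group_size` weights shares one float scale.
    """
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        amax = max(abs(w) for w in group)
        scale = amax / 7 if amax > 0 else 1.0  # largest magnitude maps to +/-7
        scales.append(scale)
        codes.extend(max(-7, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes, scales, group_size: int = 4) -> list[float]:
    """Multiply each code by its group's scale to recover approximate weights."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


# Made-up weights; the second group holds larger values, so it gets a larger scale.
weights = [0.12, -0.5, 0.33, 0.07, 1.9, -0.02, 0.4, -1.1]
codes, scales = quantize_int4(weights)
restored = dequantize_int4(codes, scales)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(codes)
print(f"max reconstruction error: {max_err:.4f}")
```

Each weight is off by at most half its group’s scale, so smaller groups track outliers better at the cost of storing more scales.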

Meta-Model Mergers and Empathic Evolutions: The Open Empathic project is expanding with contributed movie scene categories via YouTube, while merging tactics for UltraChat and Mistral-Yarn elicited debate, with references to mergekit and frankenMoE finetuning as noteworthy techniques for improving AI models.

Souped-Up Software and Services: A suite of contributions surfaced, including Mistroll 7B v2.2’s release, simple finetuning utilities for Stable Diffusion, a media-to-text conversion GUI using PyQt and Whisper, and the new AI platform Featherless.ai for serverless model usage.

In Pursuit of AI Reasoning Revelations: Plans to unravel recent works on reasoning with LLMs are brewing, with the arXiv paper Understanding the Current State of Reasoning with LLMs and repositories such as Awesome-LLM-Reasoning (plus a second repository of the same name) earmarked for examination.

Unsloth AI (Daniel Han) Discord

- Unsloth AI Previews Generate Buzz: A member’s anticipation for Unsloth AI’s release led to the sharing of a temporary recording while they waited for early access following a video filming announcement. Thumbnail updates, such as changing “csv -> unsloth + ollama” to “csv -> unsloth -> ollama”, were suggested for clarity, alongside adding explainer text for newcomers.

- Big VRAM Brings Bigger Conversations: A YouTube video showcased the PCIe-NVMe card by Phison as an astonishing 1Tb VRAM solution, sparking discussions about its impact on performance. Meanwhile, Fimbulvntr’s success in extending Llama-3-70b to a 64k context and the debate on VRAM expansion highlighted the ongoing exploration of large model capacities.

- Upgrades and Emotions in LLMs: An Ollama update promising CSV file support was earmarked for Monday or Tuesday, while Sebastien’s emotional llama model, aimed at fostering a better understanding of emotions in AI, became available on Ollama and YouTube.

- Solving Setups & Compatibility: From struggles to install xformers on Windows with Unsloth via conda to ensuring correct execution of initial setup cells in Google Colab notebooks, members swapped tips for overcoming software challenges. NVIDIA GPU Cloud (NGC) container setup discussions, as well as CUDA and PyTorch version constraints, featured solutions like using different containers and sharing Dockerfile configurations.

- Pondering on Partnerships & AI Integration: A blog titled “Apple and Meta Partnership: The Future of Generative AI in iPhones” stirred the guild’s interest, with discussions focused on the strategic implications and potential integration challenges of generative AI in mobile devices.

Stability.ai (Stable Diffusion) Discord

- Bot Beware: A Discord bot was shared for integrating Gemini and StabilityAI services, but members raised safety and context concerns regarding the link.

- Civitai Pulls SD3 Amidst License Concerns: The removal of SD3 resources by Civitai sparked intense discussions, suggesting the step was taken to preempt legal issues.

- Running Stable with Less: Techniques for operating Stable Diffusion on lower specification GPUs, like utilizing automatic1111, were debated, weighing the efficiency of older GPUs against newer models like the RTX 4080.

- Training Troubles and Tips: Community members sought advice for training models and overcoming errors such as VRAM limits and problematic metadata, with some suggesting specialized tools like ComfyUI and OneTrainer for enhanced management.

- Model Compatibility Confusion: Discussions highlighted the necessity for alignment between models like SD 1.5 and SDXL with add-ons such as ControlNet; mismatched types can lead to performance degradation and errors.

CUDA MODE Discord

- CUTLASS and CUDA Collaboration Call: Users expressed interest in forming a CUTLASS working group, encouraged by a shared YouTube talk on Tensor Cores. Additionally, insights on the CPU cache were amplified with a shared primer on cache functionality, highlighting its significance for programmers.

- Floating Points and Precision Perils: Precision loss in FP8 conversion drew attention, prompting a shared resource for understanding rounding per IEEE convention and the use of tensor scaling to counteract loss. For those exploring quantization, a compilation of papers and educational content was recommended, including Quantization explained and Advanced Quantization.

- Enthusiasts of INT4 and QLoRA Weigh In: In a discussion contrasting INT4 LoRA fine-tuning with QLoRA, it was noted that QLoRA’s CUDA dequant kernel (axis=0) sustains both quality and speed, especially compared to solutions using tinygemm for large sequences.

- Networks Need Nurturing: The integration of Bitnet tensors with AffineQuantizedTensor sparked debate, considering special layouts for specifying packed dimensions. For assistance with debugging Bitnet tensor issues, CoffeeVampire3’s GitHub and the PyTorch ao library tutorials were spotlighted as go-to resources.

- Strategies to Scale System Stability: Strategies for multi-node setup optimizations and integrating FP8 matmuls were at the forefront of conversations, addressing performance challenges and training stability, especially on H100 GPUs which showed issues compared to A100. Upcoming large language model training on a Lambda cluster was also prepped for, with an eye on efficiency and stability.
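Back to the FP8 precision thread above: a rough pure-Python simulation of E4M3 rounding shows why tensor scaling helps. This sketch assumes the OCP E4M3 layout (3 mantissa bits, largest finite value 448, smallest subnormal 2**-9), saturates instead of producing NaN, and is not the conversion code of PyTorch or any other library; the sample values are made up.

```python
import math

E4M3_MAX = 448.0           # largest finite E4M3 magnitude (assumed OCP layout)
E4M3_MIN_NORMAL_EXP = -6   # smallest normal number is 2**-6
MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Simulated round-to-nearest-even into E4M3, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(abs(x))                  # abs(x) = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_NORMAL_EXP)      # subnormals share the 2**-6 binade spacing
    step = 2.0 ** (exp - MANTISSA_BITS)        # gap between neighbouring FP8 values
    q = round(x / step) * step                 # round() is round-half-to-even
    return max(-E4M3_MAX, min(E4M3_MAX, q))


def fake_quantize(values: list[float], use_scale: bool) -> list[float]:
    """Cast to simulated FP8 and back, optionally with a per-tensor amax scale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if (use_scale and amax > 0) else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values]


def max_rel_error(orig: list[float], approx: list[float]) -> float:
    return max(abs(a - b) / abs(a) for a, b in zip(orig, approx) if a != 0)


small = [0.0011, -0.0023, 0.0007, 0.0031]   # hypothetical tiny activations
print("unscaled:", max_rel_error(small, fake_quantize(small, use_scale=False)))
print("scaled:  ", max_rel_error(small, fake_quantize(small, use_scale=True)))
```

Without scaling, these tiny values round to zero or to a single subnormal step; stretching the tensor so its largest magnitude lands at 448 keeps them in the normal range, where only rounding to 3 mantissa bits applies.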

LM Studio Discord

VRAM Crunch and Hefty Price Tags: Engineers highlighted the VRAM bottleneck when handling colossal models like Command R (34b) Q4_K_S, suggesting EXL2 as a more VRAM-efficient format. For heavy-duty AI work, the NVIDIA DGX GH200, touted for its mammoth memory, remains out of reach financially for most, hinting at thousands of dollars in investment.

Quantum Leaps in LLM Reasoning: Users were impressed with the Hermes 2 Theta Llama-3 70B model, known for its large token context limit and creative strengths. Conversations about LLMs’ lack of temporal awareness spurred mention of Hathor Fractionate-L3-8B, which performs well when its output tensors and embeddings remain unquantized.

Cool Rigs and Hot Chips: On the hardware battlefield, running Codestral on P40 GPUs drove up power utilization and reached 12 tokens/second. Meanwhile, the iPad Pro’s 16GB of RAM was debated for its ability to handle AI models, and the idea of using DirectX or Vulkan for multi-GPU support in AI was floated in response to the absence of NVLink on 4000-series GPUs.

Patchwork and Plugins: The LLaMa library vexed users with errors stemming from a model’s expected tensor count mismatch, whereas deepseekV2 faced loading woes, potentially fixable by updating to V0.2.25. Enthusiasm bubbled for a hypothetical all-in-one model runner that could handle a gamut of Huggingface models including text-to-speech and text-to-image.

Model Engineering and Enigmas: The quaintly named Llama 3 CursedStock V1.8-8B model piqued curiosity for its unique performance, especially in creative content generation. There was chatter about a Multi-model sequence map allowing data flow among several models, and the latest quantized Qwen2 500M model made waves for its ability to operate on less capable rigs, even a Raspberry Pi.

OpenAI Discord

- Siri and ChatGPT’s Odd Couple: There’s confusion among users about Siri’s integration with ChatGPT, with the consensus being that ChatGPT acts as an enhancement to Siri rather than a core integration. Elon Musk’s critical comments fueled further discussion on the topic.

- Claude’s Coding Coup Over GPT-4o: Claude 3.5 Sonnet is praised for its superior performance in coding tasks compared to GPT-4o, with users highlighting Claude’s success in areas where GPT-4o stumbled. Effectiveness is gauged by both practical usage and positions on the LMSYS leaderboard rather than just benchmark scores.

- Persistent LLM Personal Assistant Dreaming: Enthusiasm is noted regarding the possibility of tailoring and maintaining language models, like Sonnet 3.5 or Gemini 1.5 Pro, to serve as personalized work-bots trained on an individual’s documents, prompting discussions about long-term and specialized applications of LLMs.

- GPT-4o’s Context Window Woes: Users struggle with limitations in GPT-4o’s ability to adhere to complex prompt instructions and handle lengthy documents. Alternatives such as Gemini and Claude are suggested for better performance with larger token windows.

- DALL-E Vs. Midjourney Artistic Showdown: A debate is unfolding on the server over DALL-E 3 and Midjourney’s capacities for generating AI images, particularly in the realm of paint-like artworks, with some showing a preference for the former’s distinct artistic styles.

Perplexity AI Discord

- Perplexity AI Caught in Plagiarism Uproar: Wired reported that Perplexity AI allegedly violated policies by scraping websites and that its chatbot misattributed a crime to a police officer, prompting debate on the legal implications of inaccurate AI summaries.

- Mixed Reactions to Claude 3.5 Sonnet: The release of Claude 3.5 Sonnet was met with both applause for its capabilities and frustration for seeming overcautious, as reported by Forbes, while users experienced inconsistencies with Pro search results leading to dissatisfaction with Perplexity’s service.

- Exclusives on Apple and Boeing’s Struggles: Apple’s AI faced limitations in Europe while Boeing’s Starliner confronted significant challenges, information disseminated on Perplexity with direct links to articles on these issues (Apple Intelligence Isn’t, Boeing’s Starliner Stuck).

- Perplexity API Quandaries: The Perplexity API community discussed issues like potential moderation triggers or technical errors with Llama-3-70B when handling long token sequences, and members asked about restricting link summarization and filtering citations by time via the API, as documented in the API reference.

- Community Convergence for Better Engagement: An OpenAI community message highlighted the need for shareable threads to foster greater collaboration, while a Perplexity AI-authored YouTube video previews diverse topics like Starliner dilemmas and OpenAI’s latest moves for educational consumption.

Nous Research AI Discord

Boost in Dataset Deduplication: Rensa outperforms datasketch with a 2.5-3x speed boost, leveraging Rust’s FxHash, LSH index, and on-the-fly permutations for dataset deduplication.
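Rensa’s own API isn’t shown here. As a generic illustration of the MinHash-plus-LSH approach this kind of deduplication is typically built on (an assumption about Rensa’s internals beyond the LSH index named above), the pure-Python sketch below uses seeded BLAKE2 hashes in place of true permutations; the shingle size, 64-hash signatures, 16 bands, and sample documents are arbitrary choices.

```python
import hashlib


def shingles(text: str, n: int = 3) -> set[str]:
    """Character n-grams used as the set representation of a document."""
    text = " ".join(text.lower().split())
    return {text[i:i + n] for i in range(max(1, len(text) - n + 1))}


def minhash_signature(items: set[str], num_perm: int = 64) -> list[int]:
    """Keep the minimum of each seeded hash; each seed stands in for one permutation."""
    sig = []
    for seed in range(num_perm):
        sig.append(min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{s}".encode(), digest_size=8).digest(), "little")
            for s in items
        ))
    return sig


def lsh_candidates(signatures: dict[str, list[int]], bands: int = 16) -> set[tuple[str, str]]:
    """Documents whose signatures agree on every row of at least one band collide."""
    rows = len(next(iter(signatures.values()))) // bands
    buckets: dict[tuple, list[str]] = {}
    for doc_id, sig in signatures.items():
        for b in range(bands):
            key = (b, tuple(sig[b * rows:(b + 1) * rows]))
            buckets.setdefault(key, []).append(doc_id)
    pairs = set()
    for ids in buckets.values():
        for i in range(len(ids)):
            for j in range(i + 1, len(ids)):
                pairs.add(tuple(sorted((ids[i], ids[j]))))
    return pairs


docs = {
    "a": "The quick brown fox jumps over the lazy dog",
    "b": "The quick brown fox jumped over the lazy dog",
    "c": "Completely unrelated sentence about GPUs",
}
sigs = {k: minhash_signature(shingles(v)) for k, v in docs.items()}
print(lsh_candidates(sigs))
```

Only pairs that collide in some band go on to a closer comparison, which is what lets LSH skip the all-pairs comparison on large datasets.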

Model Jailbreak Exposed: A Financial Times article highlights hackers “jailbreaking” AI models to reveal flaws, while contributors on GitHub share a “smol q* implementation” and innovative projects like llama.ttf, an LLM inference engine disguised as a font file.

Lively Debate on Model Parameters: In the ask-about-llms channel, discussions ranged from the surprisingly capable story generation of TinyStories-656K to assertions that general-purpose performance soars with models of 70B+ parameters.

Dataset Synthesis and Classification Enhanced: Members share a Google Sheet for collaborative dataset tracking, explore improvements using the Hermes RAG format, and delve into datasets like SciRIFF and ft-instruction-synthesizer-collection for scientific and instructional purposes.

AI Safety Models Scrutiny and Coursework: #general sees a mix, from Gemini and OpenAI’s redaction-capable safety models to the launch of Karpathy’s LLM101n course, encouraging engineers to build a storytelling LLM.

Eleuther Discord

- SLURM Hiccups with Jupyter: Engineers are facing issues connecting to SLURM-managed nodes via Jupyter Notebook, citing errors potentially caused by SLURM restrictions. One user saw a ‘kill’ message on the console before training started, even with correct GPU specifications.

- PyTorch Boosts Llama-2 Performance: PyTorch’s team has implemented techniques to accelerate the Llama-2 inference speed by up to a factor of ten; the enhancements are encapsulated in the GPTFast package, which requires A100 or H100 GPUs.

- Ethics and Sharing of AI Models: A serious conversation about the ethical and practical considerations of distributing proprietary AI models such as Mistral outside official sources highlighted concerns for legalities and the importance of transparency.

- Understanding AI Model Variants: Users debate methods to determine if an AI model is GPT-4 or a different variant, including examining knowledge cutoffs, latency disparities, and network traffic analysis.

- LingOly Challenge Introduced: A new LingOly benchmark evaluates LLMs on advanced reasoning with linguistic puzzles. Across more than a thousand problems, top models achieve below 50% accuracy, indicating a robust challenge for current architectures.

- Text-to-Speech Innovation with ARDiT: A podcast episode explores the usage of SAEs for model editing, inspired by the approach detailed in the MEMIT paper and its source code, suggesting wide applications for this technology.

- Pondering the Optimality of Multimodal Architectures: Members discussed whether early-fusion models like Chameleon are superior to late-fusion approaches for multimodal tasks, focusing on the trade-off between generalizability and the loss of visual acuity when images are tokenized for early fusion.

- Intel Retreats from AWS Instance: Intel is discontinuing the AWS instance used by the gpt-neox development team, prompting discussion of cost-effective alternatives, including manual solutions, for compute resources.

- Execution Error: NCCL Backend: Engineers report persistent NCCL backend errors when training models with gpt-neox on A100 GPUs; the problem persists across various NCCL and CUDA versions, with or without Docker.

Latent Space Discord

- Character.AI Cracks Inference at Scale: Noam Shazeer of Character.AI explains how the company pursues AGI by optimizing inference, emphasizing its ability to handle upwards of 20,000 inference queries per second.

- Acquisition News: OpenAI Welcomes Rockset: OpenAI has acquired Rockset, a company specializing in hybrid search architectures that combine vector (FAISS) and keyword search, strengthening OpenAI’s RAG suite.

- AI Education Boost by Karpathy: Andrej Karpathy plants the seeds of an ambitious new course, “LLM101n,” which will dive deep into building ChatGPT-like models from the ground up, following in the footsteps of the legendary CS231n.

- LangChain Clears the Air on Funds: Harrison Chase addresses scrutiny regarding LangChain’s expenditure of venture capital on product development instead of promotions, with a response detailed in a tweet.

- Murati Teases GPT’s Next Leap: Mira Murati of OpenAI teases enthusiasts with a timeline hinting at a possible release of the next GPT model in about 1.5 years, while discussing the sweeping changes AI is bringing to creative and productive industries in a YouTube video.

- Latent Space Scholarship on Hiring AI Pros: A new “Latent Space Podcast” episode breaks down the art and science of hiring AI engineers, guiding listeners through hiring processes and defensive AI engineering strategies, with insights from @james_elicit and @adamwiggins available on this page and gathering buzz on Hacker News.

- Embarking on New YAML Frontiers: Conversations covered developing a YAML-based DSL for Twitter management to enhance post analytics, with a nod to Zoho Social’s comprehensive features; for similar ventures, Anthropic suggests using XML tags, and a GitHub repo showcases a YAML templating language in Go designed with the help of LLMs.
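Hybrid search, as in the Rockset item above, typically runs a vector retriever and a keyword retriever and then merges their rankings. The sketch below uses reciprocal rank fusion (RRF), one common merging method; it is not a description of Rockset’s or OpenAI’s implementation. It assumes each retriever already returns document IDs ordered best-first, and k=60 is the constant conventionally used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several best-first lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with 1-based ranks. Higher fused scores rank first.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a vector (e.g. FAISS) retriever and a keyword retriever.
vector_hits = ["doc3", "doc1", "doc7"]
keyword_hits = ["doc1", "doc4", "doc3"]
print(reciprocal_rank_fusion([vector_hits, keyword_hits]))  # ['doc1', 'doc3', 'doc4', 'doc7']
```

RRF only needs ranks, not raw scores, so it sidesteps the problem that cosine similarities and keyword scores such as BM25 live on different scales.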

Modular (Mojo 🔥) Discord

- LLVM’s Price Tag: A shared article estimates the cost of the LLVM project: 1.2k developers produced a 6.9M-line codebase at an estimated cost of $530 million. The analysis starts by cloning and checking out the LLVM repository.

- Installation Troubles and Request for Help: Issues installing Mojo on Ubuntu 22.04 were highlighted, with all devrel-extras tests failing; the problem led to a pause for troubleshooting. Separately, frustrated by segmentation faults during Mojo development, a user offered a $10 OpenAI API key in exchange for help with their critical issue.

- Discussions on Caching and Prefetching Performance: Deep dives into caching and prefetching, with emphasis on correct application and pitfalls, were a significant conversation topic. Insights included the potential for adverse performance effects when prefetching is misused, and recommendations to use profiling tools such as vtune for Intel caches, even though Mojo does not support compile-time cache size retrieval.

- Improvement Proposals and Nightly Mojo Builds: Suggested improvements for Mojo’s documentation and a proposal for controlled implicit conversion in Mojo were noted. Updates on new nightly Mojo compiler releases as well as MAX repo updates sparked discussions on developmental workflow and productivity.

- Data Labeling and Integration Insights: A new data labeling platform initiative received feedback about common pain points and successes in automation with tools like Haystack. The potential for ERP integration (prompted by manual data entry challenges and PDF processing) was also a focal point, indicating a push towards streamlining workflows in data management.

LAION Discord

- New Gates Open at Weta & Stability AI: A wave of discussion followed news of leadership changes at Weta Digital and Stability AI, focusing on the implications of these shake-ups and questioning the motives behind the appointments. Some talks pointed to Sean Parker, and members shared a Reuters article on Stability AI.

- Llama 3 on the Prowl: There was palpable excitement about Llama 3 hardware specifications suggesting impressive performance, potentially outclassing rival models like GPT-4o and Claude 3. Participants shared projected throughputs of “1 to 2 tokens per second” on advanced setups.

- The Protection Paradox with Glaze & Nightshade: A sobering conversation unfolded over the limited ability of programs like Glaze and Nightshade to protect artists’ rights. Skeptics noted that second movers often find ways around such protections, thus providing artists with potentially false hope.

- Multimodal Models – A Repetitive Breakthrough?: The guild examined a new paper on multimodal models, raising the question of whether the purported advancements were meaningful. The paper promotes training on a variety of modalities to enhance versatility, yet participants critiqued the repeated ‘breakthrough’ narrative with little substantial novelty.

- Testing Limits: Promises and Limitations of Diffusion Models: A GitHub repository shared by lucidrains on EMA (Exponential Moving Average) model updates (Diffusion Models on GitHub) prompted a deeper dive into diffusion models and their use in image restoration, amid evidence that protections like Glaze are consistently bypassed.
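The EMA update discussed in the lucidrains item keeps a slowly moving copy of the weights, which is commonly used for sampling. Below is a minimal sketch of the standard update rule, with plain Python floats standing in for tensors; the decay value is an assumption, not a setting taken from the repository.

```python
def ema_update(
    ema_params: dict[str, float],
    model_params: dict[str, float],
    decay: float = 0.999,
) -> dict[str, float]:
    """Return new EMA weights: ema <- decay * ema + (1 - decay) * current."""
    return {
        name: decay * ema_params[name] + (1.0 - decay) * value
        for name, value in model_params.items()
    }


# Toy run: the "trained" weight jumps to 1.0 and the EMA copy drifts toward it.
ema = {"w": 0.0}
for step in range(3):
    current = {"w": 1.0}
    ema = ema_update(ema, current, decay=0.9)
print(ema["w"])  # approximately 0.271, i.e. 1 - 0.9**3
```

A decay close to 1 averages over many steps, which smooths out noisy late-training updates at the cost of lagging behind the live weights.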

Cohere Discord

- Welcome Wagon for Newcomers: New members joined the Cohere-focused Discord, guided by shared insights and tool use documentation that helps connect Cohere models to external applications.

- Skepticism Surrounding BitNet Practicality: Amid debates on BitNet’s future, members noted that it requires training from scratch and is not optimized for existing hardware, leading Mr. Dragonfox to express concerns about its commercial impracticality.

- Cohere Capacities and Contributions: Following the integration of a Cohere client into Microsoft’s AutoGen framework, community members called on the Cohere team to further support the project.

- AI Enthusiasts Eager for Multilingual Expansions: Members confirmed that Cohere’s models can understand and respond in multiple languages, including Chinese, and pointed interested parties to documentation and a GitHub notebook example to learn more.

- Developer Office Hours and Multi-Step Innovations: Cohere announced upcoming developer office hours emphasizing the Command R family’s tool use capabilities, providing resources on multi-step tool use for leveraging models to execute complex sequences of tasks.
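For context on the BitNet discussion: the BitNet b1.58 variant constrains weights to {-1, 0, +1}, scaling each weight matrix by its mean absolute value before rounding, and the model is trained with this quantizer in the loop, which helps explain the “training from scratch” requirement. Below is a minimal NumPy sketch of that absmean quantizer only, not a training recipe; the epsilon value is an assumption.

```python
import numpy as np


def absmean_ternary(weights: np.ndarray, eps: float = 1e-5) -> tuple[np.ndarray, float]:
    """Quantize a weight matrix to {-1, 0, +1} with a per-tensor absmean scale.

    Returns the ternary matrix and the scale gamma, so that
    weights is approximately gamma * ternary.
    """
    gamma = float(np.mean(np.abs(weights)))
    # Divide by the scale, round to the nearest integer, then clip into [-1, 1].
    ternary = np.clip(np.round(weights / (gamma + eps)), -1, 1)
    return ternary.astype(np.int8), gamma


w = np.array([[0.4, -0.05, -1.2], [0.9, 0.1, -0.3]])
q, scale = absmean_ternary(w)
print(q)
print(scale)
```

Because a matrix multiply against ternary weights needs only additions and subtractions, the speed benefit depends on kernels and hardware built for it, which ties back to the concern that current hardware is not optimized for BitNet.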

LangChain AI Discord

- Confusion Over Context and Tokens: Users reported confusion about how max tokens and context windows interact in agents, specifically with LangChain not adhering to Pydantic models’ validations. It was noted that the context window must accommodate both the input tokens and the generated tokens.

- LangChain Learning and Implementation Queries: There was a spirited discussion about the learning curve with LangChain, with members sharing resources like Grecil’s personal journey that includes tutorials and documentation. Meanwhile, debate about ChatOpenAI versus Huggingface models highlighted performance differences and adaptation in various scenarios.

- Enhancing PDF Interrogation with LangChain: A detailed guide was shared for generating Q&A pairs from PDFs using LangChain, referring to issues like #17008 on GitHub for further guidance. Adjustments for using Llama2 as the LLM were also discussed, emphasizing customization of the QAGenerationChain.

- From Zero to RAG Hero: Members showcased their experience building no-code RAG workflows for financial documents, and an article detailing the process was shared. Discussion also centered on a custom Corrective RAG app and on Edimate, an AI-driven video creation tool (demoed here) that signals a future direction for e-learning.

- AI Framework Evaluation Video: For engineers evaluating AI frameworks for app integration including models like GPT-4o, a YouTube video was shared, urging developers to consider critical questions regarding the necessity and choice of the AI framework for specific applications.
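The point that prompt and output share one context window can be made concrete with a small budgeting helper. This is a sketch, not a LangChain API; the window size and token counts are illustrative, and real prompt token counts must come from the model’s own tokenizer (not shown).

```python
def max_new_tokens(context_window: int, prompt_tokens: int, requested: int) -> int:
    """Return how many tokens can actually be generated.

    The prompt and the generated tokens share one context window, so the
    generation budget is whatever the prompt leaves free, capped by the
    caller's requested maximum.
    """
    if prompt_tokens >= context_window:
        raise ValueError("prompt alone fills or exceeds the context window")
    return min(requested, context_window - prompt_tokens)


print(max_new_tokens(context_window=8192, prompt_tokens=7000, requested=2048))  # 1192
```

In agent loops the prompt grows with every tool call and observation, so a budget that was generous on the first turn can silently shrink to almost nothing a few turns later.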

OpenRouter (Alex Atallah) Discord

- Jamba Instruct Boasts Big Context Window: AI21’s Jamba-Instruct model has been introduced, showcasing a gigantic 256K context window, ideal for handling extensive documents in enterprise settings.

- Nemotron 4 Makes Waves with Synthetic Data Generation: NVIDIA’s release of Nemotron-4-340B-Instruct focuses on synthetic data generation for English-language applications with its new chat model.

- JojoAI Levels Up to Proactive Assistant: JojoAI sets itself apart from competitors like ChatGPT or Claude as a proactive assistant that can set reminders through DigiCord integrations. Experience it on the JojoAI site.

- Pebble’s Pioneering Reading Aid Tool: The newly unveiled Pebble tool, powered by OpenRouter with Mixtral 8x7B and Gemini, helps readers improve comprehension and retention of web content. Kudos to the OpenRouter team for their support, as acknowledged at Pebble.

- Tech Community Tackles Environmental and Technical Issues: Discussions pointed to concerns about the environmental footprint of using models like Nemotron 340b, with smaller models being recommended for efficiency and eco-friendliness. The community also dealt with practical affairs, such as resolving the disappearance of Claude self-moderated endpoints, praising Sonnet 3.5 for coding capabilities, addressing OpenRouter rate limits, and advising on best practices for handling exposed API keys.

OpenInterpreter Discord

- Local LLMs Enter OS Mode: The OpenInterpreter community has been discussing using local LLMs in OS mode with the command interpreter --local --os, though there are concerns about their performance.

- Desktop Delights and GitHub Glory: The OpenInterpreter team is promoting a forthcoming desktop app with a unique experience compared to the GitHub version, encouraging users to join the waitlist. Meanwhile, the project has celebrated 50,000 GitHub stars, hinting at a major upcoming announcement.

- Model Benchmarking Banter: The Codestral and Deepseek models have drawn attention, with Codestral surpassing internal benchmarks and Deepseek impressing users with its speed. There’s buzz about a future optimized interpreter --deepseek command.

- Cross-Platform Poetry Performance: Using Poetry instead of requirements.txt for dependency management has been contentious, with some engineers pointing to its shortcomings on various operating systems and advocating alternatives like conda.

- Community Kudos and Concerns: While there’s enthusiasm and appreciation for the community’s support, particularly for beginners, there’s also frustration over shipping delays for the 01 device, highlighting the tension between community sentiment and product delivery expectations.

LLM Finetuning (Hamel + Dan) Discord

Instruction Synthesizing for the Win: A newly shared Hugging Face repository highlights the potential of Instruction Pre-Training, providing 200M synthesized pairs across 40+ tasks, likely offering a robust approach to multi-task learning for AI practitioners looking to push the envelope in supervised multitask pre-training.

Bringing DeBERTa and Flash Together?: Curiosity is brewing over whether DeBERTa can be combined with Flash Attention 2, with members asking whether any existing implementation leverages both.

Fixes and Workarounds: From a Maven course platform blank page issue solved using mobile devices to the resolution of permission errors after a kernel restart within braintrust, practical troubleshooting remains a staple of community discourse.

Credits Saga Continues: Persistent reports of missing service credits on platforms like Huggingface and Predibase sparked member-to-member support and referrals to respective billing supports. This included a tip that Predibase credits expire after 30 days, suggesting that engineers keep a keen eye on expiry dates to maximize credit use.

Training Errors and Overfitting Queries: Errors running Axolotl’s training command (Modal FTJ) and concerns about LoRA overfitting (‘significantly lower training loss compared to validation loss’) were significant pain points, underscoring the need for vigilant model monitoring among AI engineers.
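The overfitting symptom quoted above (training loss well below validation loss) can be turned into a simple monitoring check. This is a heuristic sketch, not an Axolotl or Modal feature; the function name, the gap ratio of 1.5, and the window of 3 evaluations are arbitrary assumptions to tune per run.

```python
def looks_overfit(
    train_losses: list[float],
    val_losses: list[float],
    gap_ratio: float = 1.5,
    window: int = 3,
) -> bool:
    """Flag likely overfitting when recent validation loss is much higher
    than recent training loss AND validation loss has risen off its minimum."""
    if len(train_losses) < window or len(val_losses) < window:
        raise ValueError("need at least `window` evaluations of each loss")
    recent_train = sum(train_losses[-window:]) / window
    recent_val = sum(val_losses[-window:]) / window
    val_rising = val_losses[-1] > min(val_losses)
    return recent_val > gap_ratio * recent_train and val_rising


# Hypothetical loss curves logged at each evaluation step.
print(looks_overfit([2.0, 1.2, 0.8, 0.5, 0.3, 0.2], [2.1, 1.5, 1.3, 1.35, 1.5, 1.7]))  # True
print(looks_overfit([2.0, 1.5, 1.2, 1.1, 1.0, 0.95], [2.1, 1.6, 1.3, 1.2, 1.1, 1.05]))  # False
```

Requiring both a large gap and a rising validation curve avoids false alarms early in training, when a modest train/validation gap is normal.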

LlamaIndex Discord

- LightningAI and LlamaIndex Join Forces: LightningAI’s RAG template offers an easy setup for multi-document agentic RAGs, promoting efficiency in AI development. Additionally, LlamaIndex’s integration with StabilityAI now allows for image generation, broadening AI developer capabilities.

- Customizing Complexity with LlamaIndex: Those developing with LlamaIndex can customize text-to-SQL pipelines using Directed Acyclic Graphs (DAGs), as explained in this feature overview. Meanwhile, for better financial analysis, the CRAG technique can be leveraged using Hanane Dupouy’s tutorial slides for improved retrieval quality.

- Fine-Tuning RAGs with MLflow: To enhance answer accuracy in RAG pipelines, integrating LlamaIndex with MLflow provides a systematic way to manage critical parameters and evaluation methods.

- In-Depth Query Formatting and Parallel Execution in LlamaIndex: Members discussed LlamaIndex’s query response modes like Refine and Accumulate, and the utilization of OLLAMA_NUM_PARALLEL for concurrent model execution; document parsing and embedding mismatches were also topics of technical advice.

- Streamlining ML Workflows with MLflow and LLMs: A Medium article by Ankush K Singal highlights the practical integration of MLflow and LLMs through LlamaIndex to streamline ML workflows.

Interconnects (Nathan Lambert) Discord

- Gemini vs. LLAMA Parameter Showdown: A source from Meta indicated that Gemini 1.5 Pro has fewer parameters than LLAMA 3 70B, inciting discussions about the impact of MoE architectures on parameter count during inference.

- GPT-4’s Secret Sauce or Distilled Power: The community debated whether GPT-4T/o are early fusion models or distilled versions of larger predecessors, showing divergence in understanding of their fundamental architectures.

- Multimodal Training Dilemmas: Members highlighted the difficulties in post-training multimodal models, citing the challenges of transferring knowledge across different data modalities. The struggles suggest a general consensus on the complexity of enhancing native multimodal systems.

- Nosing Into Nous and Sony’s Stir: A tongue-in-cheek enquiry by a Nous Research member to @sonymusic sparked a blend of confusion and interest, touching upon AI’s role in legal and innovation spaces.

- Sketchy Metrics on AI Leaderboards: The legitimacy of the AlpacaEval leaderboard came under fire with engineers questioning biased metrics after a model claimed to have beaten GPT-4 while being more cost-effective. This led to discussions on the reliability of performance leaderboards in the field.

OpenAccess AI Collective (axolotl) Discord

- ROCm Forks Entering the Fray: To utilize certain functionalities, engineers are advised to use the ROCm fork versions of xformers and flash-attention, with a note on hardware support specifically for MI200 & MI300 GPUs and requirement of ROCm 5.4+ and PyTorch 1.12.1+.

- Reward Models Dubbed Subpar for Data Gen: The consensus is that the reward model isn’t efficient for generating data, as it is designed mainly for classifying the quality of data, not producing it.

- Synthesizing Standardized Test Questions: An idea was shared to improve AGI evaluations for smaller models by synthesizing SAT, GRE, and MCAT questions, with an additional proposal to include LSAT questions.

- Enigmatic Epoch Saving Quirks: Training epochs are saving at seemingly random intervals, a behavior recognized as unusual but familiar to the community. This may be linked to the steps counter during the training process.

- Dataset Formatting 101 and MinHash Acceleration: A member sought advice on dataset formatting for llama2-13b, while another discussed formatting for the Alpaca dataset using JSONL. Moreover, a fast MinHash implementation named Rensa is shared for dataset deduplication, boasting a 2.5-3x speed increase over similar libraries, with its GitHub repository available for community inputs (Rensa on GitHub).

- Prompt Structures Dissected and Mirrored: A clarification of prompt_style in the Axolotl codebase laid out different prompt formatting strategies, highlighting INSTRUCT, CHAT, and CHATML for contrasting interactive uses. The use of ReflectAlpacaPrompter to automate prompt structuring with the designated style was also demonstrated (More on Phorm AI Code Search).
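Rensa’s own API is not shown here. For readers new to MinHash, the pure-Python sketch below illustrates the underlying idea used for deduplication: estimate the Jaccard similarity of two documents’ shingle sets by comparing per-hash minima. The shingle size, the 128 hash functions, and the use of seeded BLAKE2b as the hash family are illustrative assumptions.

```python
import hashlib


def shingles(text: str, n: int = 3) -> set[str]:
    """Character n-grams of a lowercased string."""
    t = text.lower()
    return {t[i:i + n] for i in range(max(len(t) - n + 1, 1))}


def _hash(seed: int, item: str) -> int:
    # Prefixing the seed turns one hash function into a family of them.
    digest = hashlib.blake2b(f"{seed}:{item}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash_signature(items: set[str], num_perm: int = 128) -> list[int]:
    """For each seeded hash function, keep the minimum hash over the set."""
    return [min(_hash(seed, x) for x in items) for seed in range(num_perm)]


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Fraction of positions where two signatures agree."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def exact_jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b)


doc_a = "the quick brown fox jumps over the lazy dog"
doc_b = "the quick brown fox jumped over the lazy dog"
a, b = shingles(doc_a), shingles(doc_b)
sig_a, sig_b = minhash_signature(a), minhash_signature(b)
print(f"exact={exact_jaccard(a, b):.2f} estimated={estimated_jaccard(sig_a, sig_b):.2f}")
```

Signatures have a fixed length regardless of document size, so near-duplicate detection over large datasets compares short integer lists (usually bucketed with locality-sensitive hashing) instead of full shingle sets.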

Mozilla AI Discord

- Llamafile Leveled Up: Llamafile v0.8.7 has been released, boasting faster quant operations and bug fixes, with whispers of an upcoming Android adaptation.

- Globetrotting AI Events on the Horizon: SF gears up for the World’s Fair of AI and the AI Quality Conference with community leaders in attendance, while the Mozilla Nightly Blog hints at potential llamafile integration offering AI services.

- Mozilla Nightly Blog Talks Llamafile: The Nightly blog details experimentation with local AI chat services powered by llamafile, signaling potential for wider adoption and user accessibility.

- Llamafile Execution on Colab Achieved: A member demonstrated running a llamafile on Google Colab, providing a template for others to follow.

- Memory Manager Facelift Connects Cosmos with Android: A significant GitHub commit for the Cosmopolitan project revamps the memory manager, enabling support for Android and stirring interest in running llamafile through Termux.

Torchtune Discord

- ORPO’s Missing Piece: Torchtune does not support ORPO training, though DPO training is available through a documented recipe, as guild members noted while citing an ORPO/DPO mix dataset.

- Epochs Stuck on Single Setting: Training on multiple datasets with Torchtune does not currently allow for different epoch settings for each—users should utilize ConcatDataset for combining datasets, but the same number of epochs applies to all.

- To ChatML or Not to ChatML: Engineers debated the efficacy of utilizing ChatML templates with the Llama3 model, contrasting approaches using instruct tokenizer and special tokens against base models without these elements, referencing models like Mahou-1.2-llama3-8B and Olethros-8B.

- Tuning Phi-3 Takes Tweaks: The task of fine-tuning Phi-3 models (like Phi-3-Medium-4K-Instruct) was addressed, with suggestions to modify the tokenizer and add a custom build function within Torchtune to enable compatibility.

- System Prompts: Hack It With Phi-3: Despite Phi-3 not being optimized for system prompts, users can work around this by prepending system prompts to user messages and adjusting the tokenizer configuration with a specific flag discussed to facilitate fine-tuning.
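The prepend workaround for Phi-3 can be sketched as a preprocessing step on chat messages before tokenization. The function name, the role/content message schema, and the blank-line separator are assumptions for illustration; the torchtune tokenizer flag mentioned above is not reproduced here.

```python
def fold_system_prompt(messages: list[dict[str, str]]) -> list[dict[str, str]]:
    """Merge a leading system message into the first user message.

    Intended for chat templates without a dedicated system role.
    Returns new dicts and leaves the input list untouched.
    """
    if not messages or messages[0]["role"] != "system":
        return [dict(m) for m in messages]
    system_text = messages[0]["content"]
    folded = [dict(m) for m in messages[1:]]
    for m in folded:
        if m["role"] == "user":
            m["content"] = f"{system_text}\n\n{m['content']}"
            break
    else:
        # No user turn to attach to: keep the system text as a user turn.
        folded.insert(0, {"role": "user", "content": system_text})
    return folded


msgs = [
    {"role": "system", "content": "Answer in one sentence."},
    {"role": "user", "content": "What is LoRA?"},
]
print(fold_system_prompt(msgs))
```

Applying the same folding at fine-tuning time and at inference time keeps the prompt format the model sees consistent.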

tinygrad (George Hotz) Discord

- Conditional Coding Conundrum: In discussions about tinygrad, using an arithmetic expression like condition * a + !condition * b as a simplification of the WHERE function was met with caution because of potential issues with NaNs.

- Intel Adventures in Tinygrad: Queries about Intel support in tinygrad revealed that while OpenCL is an available option, the framework has not yet integrated XMX support.

- Monday Meeting Must-Knows: The 0.9.1 release of tinygrad is on the agenda for the upcoming Monday meeting, along with tinybox updates, a new profiler, runtime improvements, Tensor._tri, a llama cast speedup, and bounties for uop matcher speed and unet3d improvements.

- Buffer View Toggle Added to Tinygrad: A commit in tinygrad introduced a new flag to toggle the buffer view, a change validated by a GitHub Actions run.

- Lazy.py Logic in the Limelight: An engineer sought clarification after their edits to lazy.py in tinygrad produced a mix of positive and negative process replay outcomes, suggesting a need for further investigation or peer review.
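The NaN concern is easy to reproduce with plain Python floats: in the arithmetic form, the unselected branch is still multiplied by zero, and 0 * NaN (or 0 * inf) is NaN, which then poisons the sum. This is a language-agnostic illustration, not tinygrad code; the function names are hypothetical.

```python
import math


def where_arith(cond: bool, a: float, b: float) -> float:
    """Arithmetic 'select': cond * a + (not cond) * b."""
    c = float(cond)
    return c * a + (1.0 - c) * b


def where_select(cond: bool, a: float, b: float) -> float:
    """True select: only the chosen branch contributes to the result."""
    return a if cond else b


nan = float("nan")
print(where_select(True, 1.0, nan))  # 1.0
print(where_arith(True, 1.0, nan))   # nan, because 0.0 * nan is nan
print(where_arith(True, 1.0, math.inf))  # nan, because 0.0 * inf is nan
```

Masked-out values such as the padded entries fed to a log or a division are exactly where NaN and inf tend to appear, so a dedicated WHERE op is safer than the multiply-and-add rewrite.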

LLM Perf Enthusiasts AI Discord

- Claude Sonnet 3.5 Stuns with Performance: An engineer shared their experience using Claude Sonnet 3.5 in Websim, praising its speed, creativity, and intelligence. They were particularly taken with the “generate in new tab” feature and experimented with sensory engagement by toying with color schemes from iconic fashion brands, as shown in a shared tweet.

MLOps @Chipro Discord

- AWS Cloud Club Lifts Off at MJCET: MJCET has launched the first AWS Cloud Club in Telangana, a community aimed at providing students with resources and experience in Amazon Web Services to prepare for tech industry careers.

- Cloud Mastery Event with an AWS Expert: An inaugural event will celebrate the AWS Cloud Club’s launch on June 28th, 2024, featuring AWS Community Hero Mr. Faizal Khan. Interested parties can RSVP via an event link.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Datasette - LLM (@SimonW) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

HuggingFace ▷ #general (715 messages🔥🔥🔥):

- Juggernaut Lightning vs SD3 Turbo: A member recommended using Juggernaut Lightning as it is “way more realistic” compared to SD3 Turbo due to it being a base model. Another member mentioned Juggernaut being more suited for role-playing and creativity rather than coding and intelligence.

- Help for Beginners: An ML beginner sought advice on which libraries to use for their project and received suggestions to use PyTorch for its extensive neural network support and HuggingFace for loading pre-trained models. Another member recommended avoiding outdated libraries like sklearn.

- Model Loading Issues: A member faced challenges loading large AI models on limited hardware and received guidance on using quantization techniques to improve performance. Recommendations included installing the bitsandbytes library and instructions for modifying model load configurations to utilize 4-bit precision.

- AI Content Creation Tools: There was a discussion on the complexities of generating videos like Vidalgo’s, with members noting that while generating text and audio is straightforward, creating short moving clips is challenging. Tools like RunwayML and CapCut were suggested for video edits and stock images.

- Collaborative Projects and Model Updates: Members shared their experiences and projects related to various AI models, including a model trained to play games using Xbox controller inputs and a toolkit for preprocessing large image datasets. Additionally, ongoing work and upcoming updates on several models and their potential applications were discussed.
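Why 4-bit loading helps on limited hardware comes down to simple arithmetic: weight memory scales with bits per parameter. The sketch below counts weights only and ignores activations, the KV cache, and quantization overhead (bitsandbytes’ 4-bit formats also store per-block scale values), so real usage is higher; the 7B parameter count is illustrative.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


for bits in (32, 16, 8, 4):
    print(f"7B params at {bits:>2}-bit: {weight_memory_gib(7e9, bits):5.1f} GiB")
```

Going from 16-bit to 4-bit cuts weight memory by 4x (roughly 13.0 GiB down to 3.3 GiB for 7B parameters), which is often the difference between a model fitting on a consumer GPU or not.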

Links mentioned:

- 🧑🎓 How to use Continue | Continue: Using LLMs as you code with Continue

- Chess notation - Wikipedia: no description found

- mm ref: no description found

- Datasets: no description found

- Anthropic's SHOCKING New Model BREAKS the Software Industry! Claude 3.5 Sonnet Insane Coding Ability: Learn AI With Me:https://www.skool.com/natural20/aboutJoin my community and classroom to learn AI and get ready for the new world.#ai #openai #llm

- SWE-bench: no description found

- briaai/RMBG-1.4 · Hugging Face: no description found

- alignment-handbook/recipes/zephyr-141b-A35b at main · huggingface/alignment-handbook: Robust recipes to align language models with human and AI preferences - huggingface/alignment-handbook

- Apple M1 - Wikipedia: no description found

- alignment-handbook/recipes/zephyr-7b-beta at main · huggingface/alignment-handbook: Robust recipes to align language models with human and AI preferences - huggingface/alignment-handbook

- Paper page - Zephyr: Direct Distillation of LM Alignment: no description found

- HuggingChat: Making the community's best AI chat models available to everyone.

- GitHub - abi/screenshot-to-code: Drop in a screenshot and convert it to clean code (HTML/Tailwind/React/Vue): Drop in a screenshot and convert it to clean code (HTML/Tailwind/React/Vue) - abi/screenshot-to-code

- GitHub - simpler-env/SimplerEnv: Evaluating and reproducing real-world robot manipulation policies (e.g., RT-1, RT-1-X, Octo) in simulation under common setups (e.g., Google Robot, WidowX+Bridge): Evaluating and reproducing real-world robot manipulation policies (e.g., RT-1, RT-1-X, Octo) in simulation under common setups (e.g., Google Robot, WidowX+Bridge) - simpler-env/SimplerEnv

- Hugging Face – Blog: no description found

- @nroggendorff on Hugging Face: "@osanseviero your move": no description found

- Playing a Neural Network's version of GTA V: GAN Theft Auto: GAN Theft Auto is a Generative Adversarial Network that recreates the Grand Theft Auto 5 environment. It is created using a GameGAN fork based on NVIDIA's Ga...

- Huh Cat GIF - Huh Cat Cat huh - Discover & Share GIFs: Click to view the GIF

- Hand Gesture Drawing App Demo | Python OpenCV & Mediapipe: In this video, I demonstrate my Hand Gesture Drawing App using Python with OpenCV and Mediapipe. This app allows you to draw on screen using hand gestures de...

- stabilityai/stable-video-diffusion-img2vid-xt-1-1 · Hugging Face: no description found

- microsoft/Phi-3-mini-4k-instruct-gguf at main: no description found

- RAG chatbot using llama3: no description found

- Azazelle/L3-RP_io at main: no description found

- Vidalgo - One-Click Vertical Video Creation: Experience effortless video creation with Vidalgo! Our platform empowers you to produce stunning vertical videos for TikTok, YouTube Shorts, and Instagram Reels in just one click. Start creating today...

- stabilityai/stablelm-zephyr-3b · Hugging Face: no description found

- Hugging Face: The AI community building the future. Hugging Face has 227 repositories available. Follow their code on GitHub.

- Toy Story Woody GIF - Toy Story Woody Buzz Lightyear - Discover & Share GIFs: Click to view the GIF

- azaz (Z): no description found

- GitHub - huggingface/alignment-handbook: Robust recipes to align language models with human and AI preferences: Robust recipes to align language models with human and AI preferences - huggingface/alignment-handbook

- Agents & Tools: no description found

- GitHub - beowolx/rensa: High-performance MinHash implementation in Rust with Python bindings for efficient similarity estimation and deduplication of large datasets: High-performance MinHash implementation in Rust with Python bindings for efficient similarity estimation and deduplication of large datasets - beowolx/rensa

- How to write code to autocomplete words and sentences?: I'd like to write code that does autocompletion in the Linux terminal. The code should work as follows. It has a list of strings (e.g. "hello, "hi", "how a...

- GitHub - minimaxir/textgenrnn: Easily train your own text-generating neural network of any size and complexity on any text dataset with a few lines of code.: Easily train your own text-generating neural network of any size and complexity on any text dataset with a few lines of code. - minimaxir/textgenrnn

- GitHub - huggingface/datatrove: Freeing data processing from scripting madness by providing a set of platform-agnostic customizable pipeline processing blocks.: Freeing data processing from scripting madness by providing a set of platform-agnostic customizable pipeline processing blocks. - huggingface/datatrove

- GitHub - not-lain/loadimg: a python package for loading images: a python package for loading images. Contribute to not-lain/loadimg development by creating an account on GitHub.

- Vaas Far Cry3 GIF - Vaas Far Cry3 That Is Crazy - Discover & Share GIFs: Click to view the GIF

- Tweet from vik (@vikhyatk): asked claude to make me a cool new vaporwave style home page... should i switch to it?

- Tweet from vik (@vikhyatk): "make it better"

- sonnet_shooter.zip: 1 file sent via WeTransfer, the simplest way to send your files around the world

- huggingface_hub/src/huggingface_hub/hub_mixin.py at main · huggingface/huggingface_hub: The official Python client for the Huggingface Hub. - huggingface/huggingface_hub

- Reddit - Dive into anything: no description found

- Can Apple’s M1 Help You Train Models Faster & Cheaper Than NVIDIA’s V100?: In this article, we analyze the runtime, energy usage, and performance of Tensorflow training on an M1 Mac Mini and Nvidia V100. .

- GitHub - maxmelichov/Text-To-speech: Roboshaul: Roboshaul. Contribute to maxmelichov/Text-To-speech development by creating an account on GitHub.

- Robo-Shaul project: The Robo-Shaul Competition was a 2023 competition to clone the voice of Shaul Amsterdamski. The results are all here.

- Introducing Accelerated PyTorch Training on Mac: In collaboration with the Metal engineering team at Apple, we are excited to announce support for GPU-accelerated PyTorch training on Mac. Until now, PyTorch training on Mac only leveraged the CPU, bu...

- Alignment of brain embeddings and artificial contextual embeddings in natural language points to common geometric patterns - Nature Communications: Here, using neural activity patterns in the inferior frontal gyrus and large language modeling embeddings, the authors provide evidence for a common neural code for language processing.

HuggingFace ▷ #today-im-learning (3 messages):

- Coding Self-Attention and Multi-Head Attention: A member shared a link to their blog post detailing the implementation of self-attention and multi-head attention from scratch. The blog post explains the importance of attention in Transformer architecture for understanding word relationships in a sentence to make accurate predictions. Read the full post here.

- Interest in Blog Post: Another member expressed interest in the blog post on attention mechanisms. They affirmed their engagement with a simple “Yes I am interested.”

- Tree-Sitter S-expression Challenges: A member mentioned the challenges they are facing with Tree-Sitter S-expressions, referring to them as “a pain.” This suggests difficulties in parsing or handling these expressions in their current work.
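As a companion to the attention blog post, here is a compact NumPy sketch of multi-head scaled dot-product self-attention. It is not the post’s code; the unmasked (bidirectional) attention, the random weights, and the toy shapes are assumptions chosen for illustration.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); each weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    def split(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    heads = weights @ v  # (heads, seq, d_head)
    # Concatenate heads back to (seq_len, d_model) and apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights


rng = np.random.default_rng(0)
d_model, seq_len, n_heads = 8, 4, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, n_heads)
print(out.shape, attn.shape)  # (4, 8) (2, 4, 4)
```

Each row of the attention weights says how much one token draws on every other token, which is the “word relationships” intuition the blog post describes; splitting into heads lets different heads learn different relationships.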

Link mentioned: Ashvanth.S Blog - Wrapping your head around Self-Attention, Multi-head Attention: no description found

HuggingFace ▷ #cool-finds (5 messages):

- Implementing RMSNorm Layer in SD3: A member mentioned implementing an optional RMSNorm layer for the Q and K inputs, referencing the SD3 paper. No further details were provided on this implementation.

- LLMs and Refusal Mechanisms: A blog post was shared about LLM refusal/safety highlighting that refusal is mediated by a single direction in the residual stream. The full explanation and more insights can be found in the paper now available on arXiv.

- Florence-2 Vision Foundation Model: The abstract for Florence-2, a vision foundation model, was posted on arXiv. Florence-2 uses a unified prompt-based representation across various computer vision and vision-language tasks, leveraging a large dataset with 5.4 billion annotations.

- Facebook AI Twitter Link: A Twitter link related to Facebook AI was shared without any additional context.

- wllama Test Page: A link was shared to a wllama basic example page demonstrating model completions and embeddings. Users can test models, load local files, and calculate cosine distances between text embeddings.
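The RMSNorm item above came with no further details. The following is a minimal sketch of what optional QK-normalization with RMSNorm generally looks like, written in plain Python with one vector per attention head. Each head's query and key vectors are rescaled to unit root-mean-square and multiplied by a learnable gain before the attention dot product. The SD3 paper motivates this as a way to keep attention logits from growing and destabilizing training. The `use_qk_norm` flag and the `maybe_qk_norm` helper are hypothetical and not the member's code.

```python
import math


class RMSNorm:
    """RMSNorm over one vector: weight * x / sqrt(mean(x^2) + eps)."""

    def __init__(self, dim: int, eps: float = 1e-6):
        self.eps = eps
        self.weight = [1.0] * dim  # learnable per-channel gain, initialized to 1

    def __call__(self, x: list[float]) -> list[float]:
        rms = math.sqrt(sum(v * v for v in x) / len(x) + self.eps)
        return [w * v / rms for w, v in zip(self.weight, x)]


def maybe_qk_norm(q_heads, k_heads, use_qk_norm: bool, q_norm: RMSNorm, k_norm: RMSNorm):
    """Hypothetical helper: optionally normalize each head's query and key vectors
    before the Q.K dot product; values (V) are left untouched."""
    if not use_qk_norm:
        return q_heads, k_heads
    return [q_norm(q) for q in q_heads], [k_norm(k) for k in k_heads]


head_dim = 4
q_norm, k_norm = RMSNorm(head_dim), RMSNorm(head_dim)
q, k = maybe_qk_norm([[2.0, 2.0, 2.0, 2.0]], [[10.0, 0.0, 0.0, 0.0]], True, q_norm, k_norm)
print(q[0])  # each element ≈ 1.0
```

Unlike LayerNorm, RMSNorm does not subtract the mean. Separate norms for Q and K let each learn its own gain.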

Links mentioned:

- wllama.cpp demo: no description found

- Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks: We introduce Florence-2, a novel vision foundation model with a unified, prompt-based representation for a variety of computer vision and vision-language tasks. While existing large vision models exce…

- Refusal in LLMs is mediated by a single direction — AI Alignment Forum: This work was produced as part of Neel Nanda’s stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from…

- Refusal in Language Models Is Mediated by a Single Direction: Conversational large language models are fine-tuned for both instruction-following and safety, resulting in models that obey benign requests but refuse harmful ones. While this refusal behavior is wid…
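As a toy illustration of the refusal-direction idea (our reading of the linked work, not the authors' code): a refusal direction can be estimated as the difference between mean residual-stream activations on harmful and harmless prompts, normalized to unit length. That direction can then be "ablated" by projecting it out of activations. The made-up 2-D vectors below stand in for real activations, which in practice would be collected from a model at a chosen layer and token position.

```python
import math


def mean_vector(vectors: list[list[float]]) -> list[float]:
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def refusal_direction(harmful_acts, harmless_acts) -> list[float]:
    """Difference of mean activations (harmful minus harmless), scaled to unit length."""
    diff = [a - b for a, b in zip(mean_vector(harmful_acts), mean_vector(harmless_acts))]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]


def ablate_direction(x: list[float], r_hat: list[float]) -> list[float]:
    """Project out the unit direction r_hat: x - (r_hat . x) * r_hat."""
    proj = sum(a * b for a, b in zip(x, r_hat))
    return [a - proj * b for a, b in zip(x, r_hat)]


# Toy 2-D "activations" standing in for real residual-stream vectors.
harmful = [[2.0, 1.0], [4.0, 1.0]]
harmless = [[0.0, 1.0], [0.0, 1.0]]
r_hat = refusal_direction(harmful, harmless)
print(r_hat)                                # [1.0, 0.0]
print(ablate_direction([5.0, 2.0], r_hat))  # [0.0, 2.0]
```

After ablation the activation has no component along the refusal direction, while the orthogonal component (2.0 here) is unchanged.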

HuggingFace ▷ #i-made-this (12 messages🔥):

- Mistroll 7B Version 2.2 Released: A member shared the Mistroll-7B-v2.2 model trained 2x faster with Unsloth and Huggingface’s TRL library. This experiment aims to fix incorrect behaviors in models and refine training pipelines focusing on data engineering and evaluation performance.

- Stable Diffusion Trainer Code Shared: A simple Stable Diffusion 1.5 Finetuner for experimentation was shared on GitHub. The author describes the Diffusers-based code as “very janky” and shared it to help users explore finetuning.

- Media to Text Conversion Software Release: A member developed software that converts media files into text, using PyQt for the GUI and OpenAI Whisper for speech-to-text (STT). It supports transcribing both local files and YouTube videos and is available on GitHub.

- Enhancements to SimpleTuner: Refactored and enhanced EMA support for SimpleTuner was shared, now compatible with SD3 and PixArt training, supporting CPU offload and step-skipping. The changes can be reviewed on GitHub.

- Featherless.ai - New AI Platform: A member introduced Featherless.ai, a platform for running public models from Huggingface serverlessly and instantly. The team is onboarding 100+ models weekly, aims to cover all public HF models, and invited users to try the service and give feedback.
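For readers new to EMA training: a shadow copy of the weights is blended toward the live weights after optimizer steps, and the shadow copy is often the one used for sampling. The minimal sketch below uses plain Python floats in place of tensors. The `EMA` class and its `update_every` parameter are hypothetical and not SimpleTuner's API. The sketch shows how step-skipping amounts to updating the average only on every N-th step. CPU offload would mean keeping `shadow` in host memory, which the sketch only notes in a comment.

```python
class EMA:
    """Hypothetical EMA helper: shadow = decay * shadow + (1 - decay) * param,
    applied only on every `update_every`-th call (step-skipping)."""

    def __init__(self, params: dict[str, float], decay: float = 0.999, update_every: int = 1):
        self.decay = decay
        self.update_every = update_every
        # Copy of the weights. In a real trainer this copy could be kept in CPU memory
        # (CPU offload) so it does not consume GPU VRAM; plain floats here.
        self.shadow = dict(params)
        self.calls = 0

    def update(self, params: dict[str, float]) -> bool:
        """Call after each optimizer step; returns True if the EMA was actually updated."""
        self.calls += 1
        if self.calls % self.update_every != 0:
            return False  # skipped step: no EMA work this time
        for name, value in params.items():
            self.shadow[name] = self.decay * self.shadow[name] + (1.0 - self.decay) * value
        return True


params = {"w": 0.0}
ema = EMA(params, decay=0.5, update_every=2)
params["w"] = 1.0          # pretend an optimizer step moved the weight to 1.0
print(ema.update(params))  # False (step 1 skipped)
print(ema.update(params))  # True
print(ema.shadow["w"])     # 0.5
```

Skipped steps are simply not averaged, so the EMA's horizon measured in optimizer steps grows with `update_every`. One way an implementation might compensate is to raise the per-step decay to the power `update_every`.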

Links mentioned:

- BarraHome/Mistroll-7B-v2.2 · Hugging Face: no description found

- Linear Regression From Scratch In Python: Learn the implementation of linear regression from scratch in pure Python. Cost function, gradient descent algorithm, training the model…

- GitHub - CodeExplode/MyTrainer: A simple Stable Diffusion 1.5 Finetuner for experimentation

- GitHub - yjg30737/pyqt-assistant-v2-example: OpenAI Assistant V2 Manager created with PyQt (focused on File Search functionality)

- GitHub - yjg30737/whisper_transcribe_youtube_video_example_gui: GUI Showcase of using Whisper to transcribe and analyze Youtube video

- EMA: refactor to support CPU offload, step-skipping, and DiT models | pixart: reduce max grad norm by default, forcibly by bghira · Pull Request #521 · bghira/SimpleTuner: no description found

- CaptionEmporium/coyo-hd-11m-llavanext · Datasets at Hugging Face: no description found

- Featherless - Serverless LLM: Featherless - The latest LLM models, serverless and ready to use at your request.

- Featherless AI - Run every 🦙 AI model & more from 🤗 huggingface | Product Hunt: Featherless is a platform to use the very latest open source AI models from Hugging Face. With hundreds of new models daily, you need dedicated tools to keep with the hype. No matter your use-case, fi…
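The linked "Linear Regression From Scratch In Python" tutorial is not summarized in the channel. As a minimal pure-Python sketch of the technique its description names (an MSE cost function minimized with batch gradient descent), the following fits y ≈ w·x + b to a tiny synthetic dataset. The learning rate, epoch count, and data are assumptions chosen for illustration. This is not the tutorial's code.

```python
def train_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b with batch gradient descent on mean squared error (MSE)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]  # prediction minus target
        # Partial derivatives of MSE = (1/n) * sum(error^2) with respect to w and b
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear_regression(xs, ys)
print(round(w, 3), round(b, 3), mse(xs, ys, w, b))
```

If the learning rate is too large for the data's scale, the updates diverge instead of converging. Standardizing the inputs makes the choice of learning rate less sensitive.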

HuggingFace ▷ #reading-group (5 messages):

- Chad plans a reasoning-with-LLMs discussion: Chad announced plans to discuss “reasoning with LLMs” next Saturday and received enthusiastic support. He chose this topic over Triton because it was the one he felt most confident about.

- Readying for “Understanding the Current State of Reasoning with LLMs”: Chad said he would start with the paper Understanding the Current State of Reasoning with LLMs (arXiv) and pointed to a Medium article that elaborates on it.

- Exploring Awesome-LLM-Reasoning repositories: He mentioned diving into two repositories that share the name Awesome-LLM-Reasoning to explore the current state of LLMs for logic.

- Survey Paper Mentioned: Chad plans to go through the beginning of Natural Language Reasoning, A Survey, and to reference papers published after the GPT-4 launch.

- Seeking long-term planning papers: He also asked for recommendations of good long-term planning papers for LLMs, particularly ones focused on pentesting.

Links mentioned:

- Emergent Abilities of Large Language Models: Scaling up language models has been shown to predictably improve performance and sample efficiency on a wide range of downstream tasks. This paper instead discusses an unpredictable phenomenon that we…

- Understanding the Current State of Reasoning with LLMs: The goal of this article is to go through the repos of Awesome-LLM-Reasoning and Awesome-LLM-reasoning for an understanding of the current…

HuggingFace ▷ #computer-vision (9 messages🔥):

- Price-to-Performance for OCR Models: Members are seeking recommendations for an OCR model with a good price-to-performance ratio that outputs JSON.

- Stable Faces, Changing Hairstyles Video: A video showing a model where “faces almost remained constant but the hairstyle kept changing” sparked curiosity about which model achieved this. The video can be found here.

- Unsupported Image Type RuntimeError: A user hit “RuntimeError: Unsupported image type, must be 8bit gray or RGB image.” while encoding images for face recognition and shared their code for debugging.

Link mentioned: Tweet from Science girl (@gunsnrosesgirl3): The evolution of fashion using AI

HuggingFace ▷ #NLP (1 messages):

capetownbali: Let us all know how your fine tuning on LLama goes!

HuggingFace ▷ #diffusion-discussions (2 messages):

- Redirect to diffusion-discussions channel: A user advised, “Your best bet is to ask here” for further discussions on the related topic.

- Inquiry about audio conversion models: A member inquired about the availability of models for audio-to-audio conversion, specifically from Urdu/Hindi to English, indicating a need for multilingual processing capabilities.

Unsloth AI (Daniel Han) ▷ #general (376 messages🔥🔥):

- Cossale eagerly awaits Unsloth’s release: Cossale requested early access and was told by theyruinedelise that the video would be filmed the next day. A temporary recording is available to watch in the meantime.

- Feedback on Thumbnails and Flowcharts: Cossale suggested changes to the thumbnail for clarity, prompting theyruinedelise to update it from “csv -> unsloth + ollama” to “csv -> unsloth -> ollama”. Cossale also advised adding descriptive text below the logos for beginners.

- Gigantic VRAM discussions impress: Members discussed Phison’s PCIe-NVMe card, which presents itself as 1TB of VRAM, and its performance implications. Fimbulvntr shared a YouTube video explaining the technology.

- Excitement around extended LLMs: Fimbulvntr succeeded in extending Llama-3-70b’s context to 64k, and iron_bound debated performance implications of VRAM expansion. The conversation touched on various large model updates and their potential impacts.

- Upcoming releases and resources in the community: Theyruinedelise announced that the Ollama update, including CSV file support, is set for Monday or Tuesday. Additionally, Sebastien’s fine-tuned emotional llama model and its supporting resources are now available on Ollama and YouTube.
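Long contexts strain VRAM largely because the key/value (KV) cache grows linearly with sequence length. The back-of-envelope sketch below illustrates this. It assumes Llama-3-70B's published shape (80 layers, 8 KV heads via grouped-query attention, head dimension 128), a 16-bit cache, batch size 1, and no cache quantization. The resulting figures are estimates, not numbers reported in the discussion. They exclude the model weights, which alone take roughly 140 GB at 16-bit (70B parameters × 2 bytes).

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int, seq_len: int,
                   bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Keys and values (the factor of 2) for every layer, KV head, and cached position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem * batch


GiB = 2**30
# Assumed Llama-3-70B shape: 80 layers, 8 KV heads (grouped-query attention), head_dim 128.
for ctx in (8 * 1024, 64 * 1024):
    size = kv_cache_bytes(n_layers=80, n_kv_heads=8, head_dim=128, seq_len=ctx)
    print(f"{ctx:>6} tokens: {size / GiB:.1f} GiB")
# prints:
#   8192 tokens: 2.5 GiB
#  65536 tokens: 20.0 GiB
```

Going from an 8k to a 64k context multiplies the cache by 8, which is why extended-context runs quickly outgrow a single GPU's memory.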

Links mentioned:

- Introducing Lamini Memory Tuning: 95% LLM Accuracy, 10x Fewer Hallucinations | Lamini - Enterprise LLM Platform: no description found

- Get DOLPHIN on Uniswap: no description found

- Tweet from Unsloth AI (@UnslothAI): Tomorrow we will be handing out our new stickers for the @aiDotEngineer World's Fair! 🦥 Join us at 9AM, June 25 where we will be doing workshops on LLM analysis + technicals, @Ollama support & m...

- MoRA: High-Rank Updating for Parameter-Efficient Fine-Tuning: Low-rank adaptation is a popular parameter-efficient fine-tuning method for large language models. In this paper, we analyze the impact of low-rank updating, as implemented in LoRA. Our findings sugge...

- Tweet from Kearm (@Nottlespike): http://x.com/i/article/1805030133478350848

- Tweet from RomboDawg (@dudeman6790): Announcing Replete-Coder-Qwen2-1.5b An uncensored, 1.5b model with good coding performance across over 100 coding languages, open source data, weights, training code, and fully usable on mobile platfo...

- Emotions in AI: Fine-Tuning, Classifying, and Reinforcement Learning: In this video we are exploring the creation of fine-tuning dataset for LLM's using Unsloth and Ollama to train a specialized model for emotions detection.You...

- Tell 'im 'e's dreamin': Some clips from the movie The Castle.

- AI and Unified Memory Architecture: Is it in the Hopper? Is it Long on Promise, Short on Delivery?: Sit back, relax and enjoy the soothing sounds of Wendell's rambling. This episode focuses on the MI 300a/x and Nvidia Grace Hopper. Enjoy!

- LlamaCloud: no description found

- Noice Nice GIF - Noice Nice Click - Discover & Share GIFs: Click to view the GIF

- SebLLama-Notebooks/Emotions at main · sebdg/SebLLama-Notebooks

- GitHub - Unstructured-IO/unstructured: Open source libraries and APIs to build custom preprocessing pipelines for labeling, training, or production machine learning pipelines.

- GitHub - datamllab/LongLM: [ICML'24 Spotlight] LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning

- Google Colab: no description found

- sebdg/emotional_llama: Introducing Emotional Llama, the model fine-tuned as an exercise for the live event on the Ollama Discord channel. Designed to understand and respond to a wide range of emotions.

- Replete-AI/Replete-Coder-Qwen2-1.5b · Hugging Face: no description found

- Replete-AI/Adapter_For_Replete-Coder-Qwen2-1.5b · Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #random (108 messages🔥🔥):

- Logitech mouse and ChatGPT wrapper: A member described using a Logitech mouse with a “cool” ChatGPT wrapper that can be programmed with basic queries such as summarizing and rewriting text, and shared a link showing the setup’s UI.

Links mentioned:

- Hallucination is Inevitable: An Innate Limitation of Large Language Models: Hallucination has been widely recognized to be a significant drawback for large language models (LLMs). There have been many works that attempt to reduce the extent of hallucination. These efforts hav...

- Reddit - Dive into anything: no description found

- GitHub - PygmalionAI/aphrodite-engine: PygmalionAI's large-scale inference engine

- ChatGPT is bullshit - Ethics and Information Technology: Recently, there has been considerable interest in large language models: machine learning systems which produce human-like text and dialogue. Applications of these systems have been plagued by persist...

Unsloth AI (Daniel Han) ▷ #help (228 messages🔥🔥):

- Installation Woes with Xformers on Windows: One user struggled to install xformers on Windows when setting up Unsloth via conda, encountering a “PackagesNotFoundError.” Another suggested that the challenges may be due to platform compatibility, prompting discussions about whether Unsloth works better on Linux.

- Trouble Importing FastLanguageModel in Colab: Users reported issues with importing FastLanguageModel in Unsloth’s Google Colab notebooks. A suggested workaround was to make sure all initial cells, particularly those installing Unsloth, run successfully.

- Results Varying Based on Token Expiration: One user solved their issues by switching Google accounts after identifying that an expired token in Colab secrets was causing problems, particularly with accessing datasets and downloading models.

- Using Huggingface Tokens: A user discovered that adding a Huggingface token fixed their access issues, which caused confusion because the models were meant to be public. The general sentiment was that inconsistencies in Huggingface access could be at play.