AI News for 7/17/2024-7/18/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (467 channels, and 2324 messages) for you. Estimated reading time saved (at 200wpm): 279 minutes. You can now tag @smol_ai for AINews discussions!

Like with public buses and startup ideas/asteroid apocalypse movies, many days you spend waiting for something to happen, and other days many things happen on the same day. This happens with puzzling quasi-astrological regularity in the ides of months - Feb 15, Apr 15, May 13, and now Jul 17:

- GPT-4o-mini (HN):

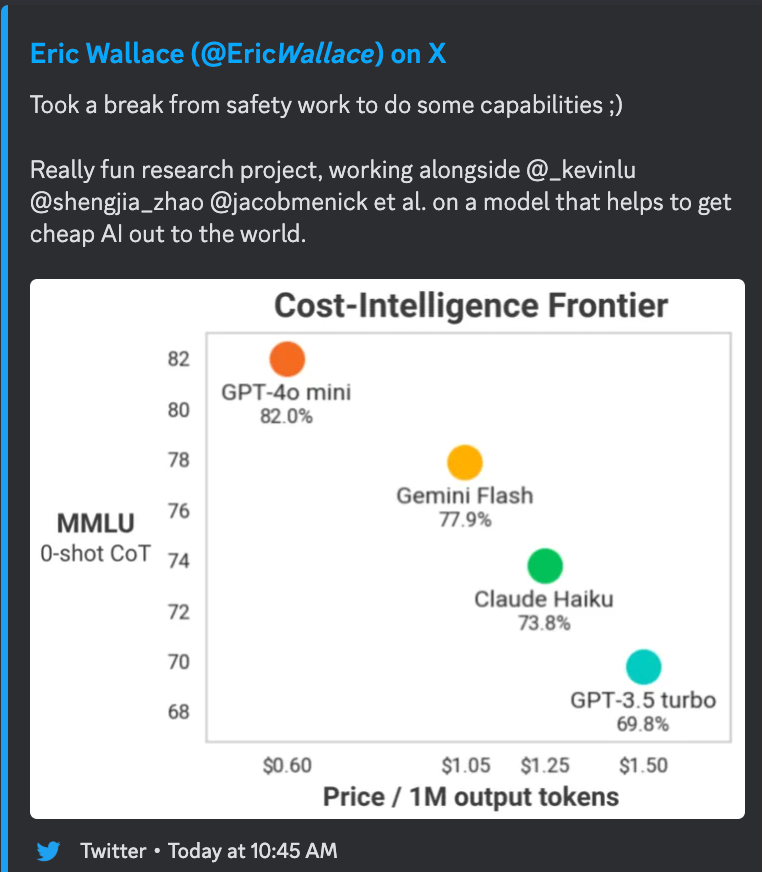

- Pricing: $0.15/$0.60 per mtok (on a 3:1 input:output token blend price basis, HALF the price of Haiku, yet with Opus-level benchmarks (including on BigCodeBench-Hard), and 3.5% the price of GPT4o, yet ties with GPT4T on Lmsys )

- calculations: gpt 4omini (3 * 0.15 + 0.6)/4 = 0.26, claude haiku (3 * 0.25 + 1.25)/4 = 0.5, gpt 4o (5 * 3 + 15)/4 = 7.5, gpt4t was 2x the price of gpt4o

- sama is selling this as a 99% price reduction vs text-davinci-003

- much better utilization of long context than gpt3.5

- with 16k output tokens! (4x more than 4T/4o)

- “order of magnitude faster” - (~100tok/s, a bit slower than Haiku)

- “with support for text, image, video and audio inputs AND OUTPUTS coming in the future”

- first model trained on new instruction hierarchy framework (our coverage here)… but already jailbroken

- @gdb says it is due to demand from developers

- ChatGPT Voice mode alpha promised this month

- Criticized vs Claude 3.5 Sonnet

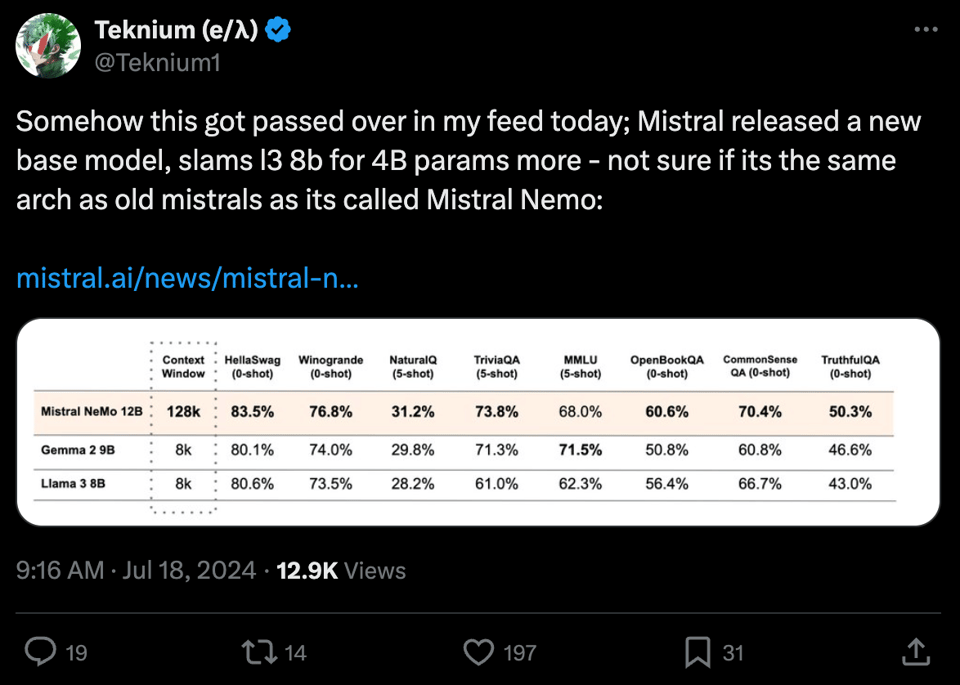

- Mistral Nemo (HN): a 12B model trained in collaboration with Nvidia (Nemotron, our coverage here). Mistral NeMo supports a context window of 128k tokens (the highest natively trained at this level, with new code/multilingual-friendly tokenizer), comes with a FP8 aligned checkpoint, and performs extremely well on all benchmarks (“slams llama 3 8b for 4B more params”).

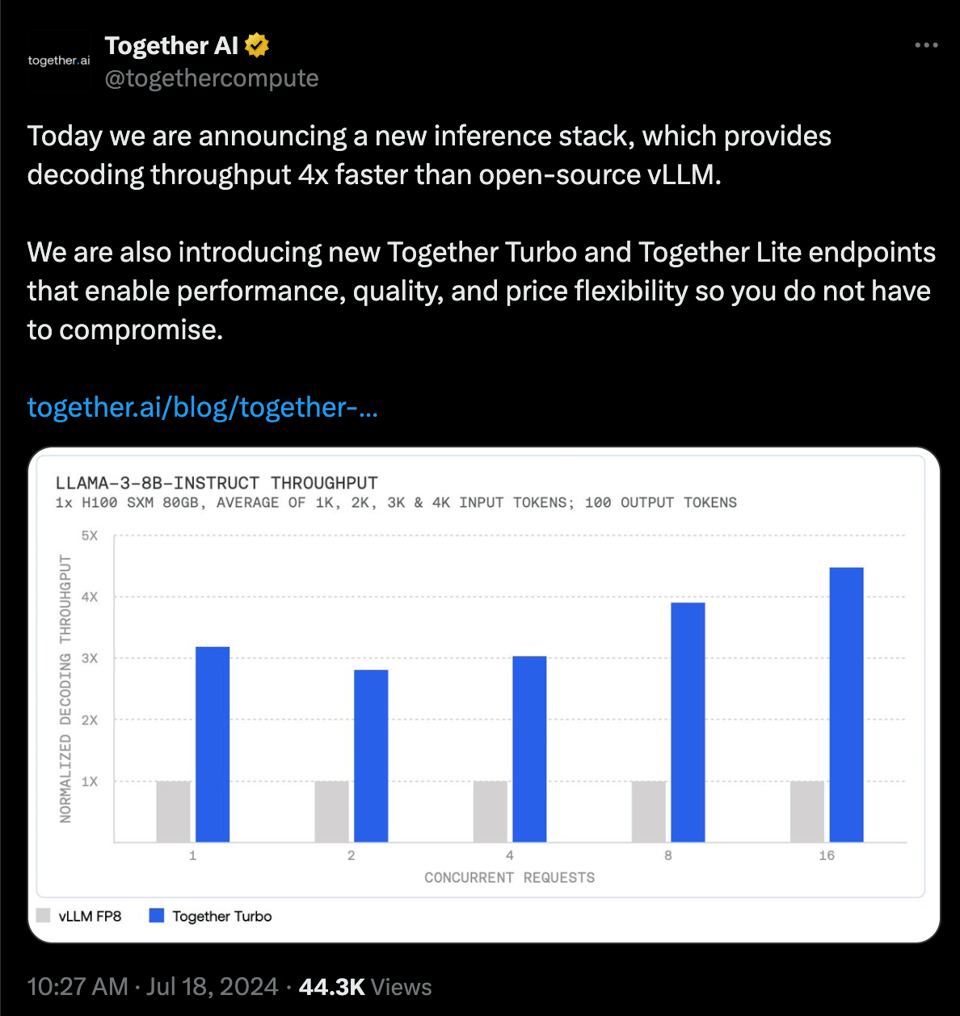

- Together Lite and Turbo (fp8/int4 quantizations of Llama 3), 4x throughput over vLLM

- turbo (fp8) - messaging is speed: 400 tok/s

- lite (int4) - messaging is cost: $0.1/mtok. “the lowest cost for Llama 3”, “6x lower cost than GPT-4o-mini.”

- DeepSeek V2 open sourced (our coverage of the paper when it was released API-only)

- note that at least 5 more unreleased models are codenamed on Lmsys

- with some leaks on Llama 4

As for why things like these bunch up - either Mercury is in retrograde, or ICML is happening next week, with many of these companies presenting/hiring, with Llama 3 400b expected to be released on the 23rd.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Architectures

- Llama 3 and Mistral models: @main_horse noted Deepseek founder Liang Wenfeng stated they will not go closed-source, believing a strong technical ecosystem is more important. @swyx mentioned on /r/LocalLlamas, Gemma 2 has evicted Llama/Mistral/Phi from the top spot, matching @lmsysorg results after filtering for larger/closed models.

- Anthropic’s approach: @abacaj noted while OpenAI releases papers on dumbing down smart model outputs, Anthropic releases models to use, with bigger ones expected later this year.

- Deepseek Coder V2 and MLX LM: @awnihannun shared the latest MLX LM supports DeepSeek Coder V2, with pre-quantized models in the MLX Hugging Face community. A 16B model runs fast on an M2 Ultra.

- Mistral AI’s Mathstral: @rasbt was positively surprised by Mistral AI’s Mathstral release, porting it to LitGPT with good first impressions as a case study for small to medium-sized specialized LLMs.

- Gemini model from Google: @GoogleDeepMind shared latest research on how multimodal models like Gemini are helping robots become more useful.

- Yi-Large: @01AI_Yi noted Yi-Large continues to rank in the top 10 models overall on the #LMSYS leaderboard.

Open Source and Closed Source Debate

- Arguments for open source: @RichardMCNgo argued for a strong prior favoring open source, given its success driving tech progress over decades. It’s been key for alignment progress on interpretability.

- Concerns about open source: @RichardMCNgo noted a central concern is allowing terrorists to build bioweapons, but believes it’s easy to be disproportionately scared of terrorism compared to risks like “North Korea becomes capable of killing billions.”

- Ideal scenario and responsible disclosure: @RichardMCNgo stated in an ideal world, open source would lag a year or two behind the frontier, giving the world a chance to evaluate and prepare for big risks. If open-source seems likely to catch up to or surpass closed source models, he’d favor mandating a “responsible disclosure” period.

AI Agents and Frameworks

- Rakis data analysis: @hrishioa provided a primer on how an inference request is processed in Rakis to enable trustless distributed inference, using techniques like hashing, quorums, and embeddings clustering.

- Multi-agent concierge system: @llama_index shared an open-source repo showing how to build a complex, multi-agent tree system to handle customer interactions, with regular sub-agents plus meta agents for concierge, orchestration and continuation functions.

- LangChain Improvements: @LangChainAI introduced a universal chat model initializer to interface with any model, setting params at init or runtime. They also added ability to dispatch custom events and edit graph state in agents.

- Guardrails Server: @ShreyaR announced Guardrails Server for easier cloud deployment of Guardrails, with OpenAI SDK compatibility, cross language support, and other enhancements like Guardrails Watch and JSON Generation for Open Source LLMs.

Prompting Techniques and Data

- Prompt Report survey: @labenz shared a 3 minute video on the top 6 recommendations for few-shot prompting best practices from The Prompt Report, a 76-page survey of 1,500+ prompting papers.

- Evol-Instruct: @_philschmid detailed how Auto Evol-Instruct from @Microsoft and @WizardLM_AI automatically evolves synthetic data to improve quality and diversity without human expertise, using an Evol LLM to create instructions and an Optimizer LLM to critique and optimize the process.

- Interleaved data for Llava-NeXT: @mervenoyann shared that training Llava-NeXT-Interleave, a new vision language model, on interleaved image, video and 3D data increases results across all benchmarks and enables task transfer.

Memes and Humor

- @swyx joked “Build picks and shovels in a gold rush, they said” in response to many trying to sell AI shovels vs mine gold.

- @vikhyatk quipped “too many people trying to sell shovels, not enough people trying to actually mine gold”.

- @karpathy was amused to learn FFmpeg is not just a multimedia toolkit but a movement.

- @francoisfleuret shared a humorous exchange with GPT about solving a puzzle involving coloring objects.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. EU Regulations Limiting AI Model Availability

-

Andrej Karpathy is launching new AI Education Company called Eureka Labs (Score: 239, Comments: 54): Andrej Karpathy has announced the launch of Eureka Labs, a new AI education company. The company’s inaugural product is LLM101n, touted as the “world’s best AI course,” with course materials available on GitHub. Eureka Labs can be found at www.eurekalabs.ai.

-

Thanks to regulators, upcoming Multimodal Llama models won’t be available to EU businesses (Score: 341, Comments: 138): Meta’s multimodal Llama models will not be available to EU businesses due to regulatory challenges. The issue stems from GDPR compliance for training models using data from European customers, not the upcoming AI Act. Meta claims it notified 2 billion EU users about data usage for training, offering opt-outs, but was ordered to pause training on EU data in June after receiving minimal feedback from regulators.

Theme 2. Advancements in LLM Quantization Techniques

-

New LLMs Quantization Algorithm EfficientQAT, which makes 2-bit INT llama-2-70B outperforms FP llama-2-13B with less memory. (Score: 130, Comments: 51): EfficientQAT, a new quantization algorithm, successfully pushes the limits of uniform (INT) quantization for LLMs. The algorithm produces a 2-bit Llama-2-70B model on a single A100-80GB GPU in 41 hours, achieving less than 3% accuracy degradation compared to full precision (69.48 vs. 72.41). Notably, this INT2 quantized 70B model outperforms the Llama-2-13B model in accuracy (69.48 vs. 67.81) while using less memory (19.2GB vs. 24.2GB), with the code available on GitHub.

-

Introducing Spectra: A Comprehensive Study of Ternary and FP16 Language Models (Score: 102, Comments: 14): Spectra LLM suite introduces 54 language models, including TriLMs (Ternary) and FloatLMs (FP16), ranging from 99M to 3.9B parameters and trained on 300B tokens. The study reveals that TriLMs at 1B+ parameters consistently outperform FloatLMs and their quantized versions for their size, with the 3.9B TriLM matching the performance of a 3.9B FloatLM in commonsense reasoning and knowledge benchmarks despite being smaller in bit size than an 830M FloatLM. However, the research also notes that TriLMs exhibit similar levels of toxicity and stereotyping as their larger FloatLM counterparts, and lag behind in perplexity on validation splits and web-based corpora.

- Llama.cpp Integration Explored: TriLM models on Hugging Face are currently unpacked. Developers discuss potential support for BitnetForCausalLM in llama.cpp and a guide for packing and speeding up TriLMs is available in the SpectraSuite GitHub repository.

- Training Costs and Optimization: The cost of training models like the 3.9B TriLM on 300B tokens is discussed. Training on V100 GPUs with 16GB RAM required horizontal scaling, leading to higher communication overhead compared to using H100s. The potential benefits of using FP8 ops on Hopper/MI300Series GPUs are mentioned.

- Community Reception and Future Prospects: Developers express enthusiasm for the TriLM results and anticipate more mature models. Interest in scaling up to a 12GB model is mentioned, and there are inquiries about finetuning these models on other languages, potentially using platforms like Colab.

Theme 3. Comparative Analysis of LLMs for Specific Tasks

-

Best Story Writing LLMs: SFW and NSFW Options (Score: 61, Comments: 24): Best Story Writing LLMs: SFW and NSFW Compared The post compares various Large Language Models (LLMs) for story writing, categorizing them into SFW and NSFW options. For SFW content, Claude 3.5 Sonnet is recommended as the best option, while Command-R+ is highlighted as the top choice for NSFW writing, with the author noting it performs well for both explicit and non-explicit content. The comparison includes details on context handling, instruction following, and writing quality for models such as GPT-4.0, Gemini 1.5 pro, Wizard LM 8x22B, and Midnight Miqu, among others.

-

Cake: A Rust Distributed LLM inference for mobile, desktop and server. (Score: 55, Comments: 16): Cake is a Rust-based distributed LLM inference framework designed for mobile, desktop, and server platforms. The project aims to provide a high-performance, cross-platform solution for running large language models, leveraging Rust’s safety and efficiency features. While still in development, Cake promises to offer a versatile tool for deploying LLMs across various devices and environments.

- Post-apocalyptic LLM gatherings imagined:• Homeschooled316 envisions communities connecting old iPhone 21s to a Cake host for distributed LLM inference in a wasteland. Users ask about marriage and plagues, risking irrelevant responses praising defunct economic policies.

- Key_Researcher2598 expresses excitement about Cake, noting Rust’s expansion from web dev (WASM) to game dev (Bevy) and now machine learning. They plan to compare Cake with Ray Serve for Python.

Theme 4. Innovative AI Education and Development Platforms

- Andrej Karpathy is launching new AI Education Company called Eureka Labs (Score: 239, Comments: 54): Andrej Karpathy has announced the launch of Eureka Labs, a new AI education company. Their inaugural product, LLM101n, is touted as the “world’s best AI course” and is available on their website www.eurekalabs.ai with the course repository hosted on GitHub.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. AI in Comic and Art Creation

-

[/r/OpenAI] My friend made an AI generated music video for my ogre album, did he nail it? (Score: 286, Comments: 71): AI-generated music video showcases a surreal visual interpretation of an “ogre album”. The video features a blend of fantastical and grotesque imagery, including ogre-like creatures, mystical landscapes, and otherworldly scenes that appear to sync with the music. While the specific AI tools used are not mentioned, the result demonstrates the creative potential of AI in producing unique and thematically coherent music videos.

-

[/r/singularity] What are they cooking? (Score: 328, Comments: 188): AI-generated art controversy: A recent image shared on social media depicts an AI-generated artwork featuring a distorted human figure cooking in a kitchen. The image has sparked discussions about the ethical implications and artistic value of AI-created art, with some viewers finding it unsettling while others appreciate its unique aesthetic.

-

[/r/StableDiffusion] Me, Myself, and AI (Score: 276, Comments: 57): “Me, Myself, and AI”: This post describes an artist’s workflow integrating AI tools for comic creation. The process involves using Midjourney for initial character designs and backgrounds, ChatGPT for story development and dialogue, and Photoshop for final touches and panel layouts, resulting in a seamless blend of AI-assisted and traditional artistic techniques.

- Artist’s AI Integration Sparks Debate: The artist’s workflow incorporating Stable Diffusion since October 2022 has led to mixed reactions. Some appreciate the innovative approach, while others accuse the artist of “laziness and immorality”. The artist plans to continue creating comics to let the work speak for itself.

- AI as a Tool in Art: Many commenters draw parallels between AI-assisted art and other technological advancements in creative fields. One user compares it to the transition from film to digital photography, suggesting that AI-assisted workflows will become industry standard in the coming years.

- Workflow Insights: The artist reveals using a pony model as base and fine-tuning B&W and color Loras based on their older work. They advise “collaborating” on character design with the models to create more intuitive designs that work well with AI tools.

- Artistic Merit Debate: Several comments challenge the notion that “art requires effort”, arguing that the end result should be the main focus. Some suggest that AI tools are similar to photo editing in professional photography, where the real work happens after the initial creation.

Theme 2. Real-Time AI Video Generation with Kling AI

- [/r/OpenAI] GPT-4o in your webcam (Score: 312, Comments: 45): GPT-4o in your webcam integrates GPT-4 Vision with a webcam for real-time interaction. This setup allows users to show objects to their computer camera and receive immediate responses from GPT-4, enabling a more interactive and dynamic AI experience. The integration demonstrates the potential for AI to process and respond to visual inputs in real-time, expanding the possibilities for human-AI interaction beyond text-based interfaces.

- [/r/StableDiffusion] Hiw to do this? (Score: 648, Comments: 109): Chinese Android app reportedly allows users to create AI-generated videos of celebrities meeting their younger selves. The process is described as simple, requiring users to input two photos and press a button, though specific details about the app’s name or functionality are not provided in the post.

- “Kling AI” reportedly powers this Chinese app, allowing users to create videos of celebrities meeting their younger selves. Many commenters noted the intimate body language between the pairs, with one user joking, “Why do half of them look like they’re 10 seconds away from making out.”

- Stallone’s Self-Infatuation Steals the Show - Several users highlighted Sylvester Stallone’s interaction with his younger self as particularly intense, with comments like “Damn Stallone’s horny af” and “Stallone gonna fuck himself”. This sparked a philosophical debate about whether intimate acts with oneself across time would be considered gay or masturbation.

- Missed Opportunities and Emotional Impact - Some users suggested improvements, like having Harrison Ford look suspiciously at his younger self. One commenter expressed surprise at feeling emotionally moved by the concept, particularly appreciating the “cultivate kindness for yourself” message conveyed through celebrity pairings.

- Technical Speculation and Ethical Concerns - Discussion touched on the app’s potential hardware requirements, with one user suggesting it would need “like a thousand GPUs”. Others debated the implications of such technology, with some praising Chinese

- [/r/StableDiffusion] Really nice usage of GPU power, any idea how this is made? (Score: 279, Comments: 42): Real-time AI video generation showcases impressive utilization of GPU power. The video demonstrates fluid, dynamic content creation, likely leveraging advanced machine learning models and parallel processing capabilities of modern GPUs. While the specific implementation details are not provided, this technology potentially combines generative AI techniques with high-performance computing to achieve real-time video synthesis.

- SDXL turbo with a 1-step scheduler can achieve real-time performance on a 4090 GPU at 512x512 resolution. Even a 3090 GPU can handle this in real-time, according to user tests.

- Tech Stack Breakdown: Commenters suggest the setup likely includes TouchDesigner, Intel Realsense or Kinect, OpenPose, and ControlNet feeding into SDXL. Some speculate it’s a straightforward img2img process without ControlNet.

- Streamlined Workflow: The process likely involves filming the actor, generating an OpenPose skeleton, and then forming images based on this skeleton. StreamDiffusion integration with TouchDesigner is mentioned as a “magical” solution. - Credit Where It’s Due: The original video is credited to mans_o on Instagram, available at https://www.instagram.com/p/C9KQyeTK2oN/?img_index=1.

Theme 3. OpenAI’s Sora Video Generation Model

- [/r/singularity] New Sora video (Score: 367, Comments: 128): OpenAI’s Sora has released a new video demonstrating its advanced AI video generation capabilities. The video showcases Sora’s ability to create highly detailed and realistic scenes, including complex environments, multiple characters, and dynamic actions. This latest demonstration highlights the rapid progress in AI-generated video technology and its potential applications in various fields.

- Commenters predict Sora’s technology will be very good within a year and indistinguishable from reality in 2-5 years. Some argue it’s already market-ready for certain applications, while others point out remaining flaws.

- Uncanny Valley Challenges: Users note inconsistencies in physics, movement, and continuity in the demo video. Issues include strange foot placement, dreamlike motion, and rapid changes in character appearance. Some argue these problems may be harder to solve than expected.

- Potential Applications and Limitations: Discussion focuses on AI’s role in CGI and low-budget productions. While not ready for live-action replacement, it could revolutionize social media content and background elements in films. However, concerns about OpenAI’s limited public access were raised. - Rapid Progress Astonishes: Many express amazement at the speed of AI video advancement, comparing it to earlier milestones like thispersondoesnotexist.com. The leap from early demos to Sora’s capabilities is seen as remarkable, despite ongoing imperfections.

Theme 4. AI Regulation and Deployment Challenges

-

[/r/singularity] Meta won’t bring future multimodal AI models to EU (Score: 341, Comments: 161): Meta has announced it will not release future multimodal AI models in the European Union due to regulatory uncertainty. The company is concerned about the EU AI Act, which is still being finalized and could impose strict rules on AI systems. This decision affects Meta’s upcoming large language models and generative AI features, potentially leaving EU users without access to these advanced AI technologies.

-

[/r/OpenAI] Sam Altman says $27 million San Francisco mansion is a complete and utter ‘lemon’ (Score: 244, Comments: 155): Sam Altman, CEO of OpenAI, expressed frustration with his $27 million San Francisco mansion, calling it a complete “lemon” in a recent interview. Despite the property’s high price tag and prestigious location in Russian Hill, Altman revealed that the house has been plagued with numerous issues, including problems with the pool, heating system, and electrical wiring. This situation highlights the potential pitfalls of high-end real estate purchases, even for tech industry leaders with significant resources.

-

[/r/singularity] Marc Andreessen and Ben Horowitz say that when they met White House officials to discuss AI, the officials said they could classify any area of math they think is leading in a bad direction to make it a state secret and “it will end” (Score: 353, Comments: 210): Marc Andreessen and Ben Horowitz report that White House officials claimed they could classify any area of mathematics as a state secret if they believe it’s leading in an undesirable direction for AI development. The officials allegedly stated that by doing so, they could effectively end progress in that mathematical field. This revelation suggests a potential government strategy to control AI advancement through mathematical censorship.

AI Discord Recap

As we do on frontier model release days, there are two versions of today’s Discord summaries. You are reading the one where channel summaries are generated by GPT-4o, then the channel summaries are rolled up in to {4o/mini/sonnet/opus} summaries of summaries. See archives for the GPT-4o-mini pairing for your own channel-by-channel summary comparison.

Claude 3 Sonnet

1. New AI Model Launches and Capabilities

- GPT-4o Mini: OpenAI’s Cost-Effective Powerhouse: OpenAI unveiled GPT-4o mini, a cheaper and smarter model than GPT-3.5 Turbo, scoring 82% on MMLU with a 128k context window and priced at $0.15/M input, $0.60/M output.

@AndrewCurran_confirmed GPT-4o mini is replacing GPT-3.5 Turbo for free and paid users, being significantly cheaper while improving on GPT-3.5’s capabilities, though lacking some functionalities like image support initially.

- Mistral NeMo: NVIDIA Collaboration Unleashes Power: Mistral AI and NVIDIA released Mistral NeMo, a 12B model with 128k context window, offering state-of-the-art reasoning, world knowledge, and coding accuracy under the Apache 2.0 license.

- Mistral NeMo supports FP8 inference without performance loss and outperforms models like Gemma 2 9B and Llama 3 8B, with pre-trained base and instruction-tuned checkpoints available.

- DeepSeek V2 Ignites Pricing War in China: DeepSeek’s V2 model has slashed inference costs to just 1 yuan per million tokens, sparking a competitive pricing frenzy among Chinese AI companies with its revolutionary MLA architecture and significantly reduced memory usage.

- Earning the moniker of China’s AI Pinduoduo, DeepSeek V2 has been praised for its cost-cutting innovations, potentially disrupting the global AI landscape with its affordability.

2. Advancements in Large Language Model Techniques

- Codestral Mamba Slithers with Linear Inference: The newly introduced Codestral Mamba promises a leap in code generation capabilities with its linear time inference and ability to handle infinitely long sequences, co-developed by Albert Gu and Tri Dao.

- Aiming to enhance coding productivity, Mamba aims to outperform existing SOTA transformer-based models while providing rapid responses regardless of input length.

- Prover-Verifier Games Boost LLM Legibility: A new technique called Prover-Verifier Games has been shown to improve the legibility and interpretability of language model outputs, as detailed in an associated paper.

- By enhancing the explainability of LLM reasoning, this approach aims to address a key challenge in developing more transparent and trustworthy AI systems.

3. Hardware Optimization and AI Performance

- Resizable BAR’s Minimal Impact on LLMs: Discussions revealed that the Resizable BAR feature, aimed at enhancing GPU performance, has negligible effects on LLM operations which rely more heavily on tensor cores and VRAM bandwidth.

- While model loading and multi-GPU setups were speculated to potentially benefit, the community consensus leaned towards Resizable BAR having minimal impact on core LLM workloads.

- Lubeck Outpaces MKL with LLVM Efficiency: The Lubeck numerical library has demonstrated superior performance over MKL (Math Kernel Library), attributed to its differential LLVM IR generation potentially aided by Mir’s LLVM-Accelerated Generic Numerical Library.

- A benchmark comparison highlighted Lubeck’s speed advantages, sparking discussions on leveraging LLVM for optimized numerical computing.

4. AI Coding Assistants and Integrations

- Codestral 22B Request for OpenRouter: A user requested the addition of Codestral 22B, an open code model performing on par with state-of-the-art transformer-based models, to OpenRouter, sharing the model card for context.

- The Codestral Mamba showcases competitive performance in code generation tasks, fueling interest in its integration with popular AI platforms like OpenRouter.

- Groq Models Dominate Function Calling Leaderboard: Groq’s new tool use models, including Llama-3-Groq-8B and Llama-3-Groq-70B, have achieved top scores on the Berkeley Function Calling Leaderboard with 89.06% and 90.76% respectively.

- Optimized for tool use and function calling capabilities, these models demonstrate Groq’s proficiency in developing AI assistants adept at executing complex multi-step tasks.

Claude 3.5 Sonnet

1. AI Model Launches and Upgrades

- DeepSeek’s V2 Sparks Price War: DeepSeek launched DeepSeek V2, dramatically lowering inference costs to 1 yuan per million tokens, igniting a price war among Chinese AI companies. The model introduces a new MLA architecture that significantly reduces memory usage.

- Described as China’s AI Pinduoduo, DeepSeek V2’s cost-effectiveness and architectural innovations position it as a disruptive force in the AI market. This launch highlights the intensifying competition in AI model development and deployment.

- Mistral NeMo’s 12B Powerhouse: Mistral AI and NVIDIA unveiled Mistral NeMo, a 12B parameter model boasting a 128k token context window and state-of-the-art reasoning capabilities. The model is available under the Apache 2.0 license with pre-trained and instruction-tuned checkpoints.

- Mistral NeMo supports FP8 inference without performance loss and is positioned as a drop-in replacement for Mistral 7B. This collaboration between Mistral AI and NVIDIA showcases the rapid advancements in model architecture and industry partnerships.

- OpenAI’s GPT-4o Mini Debut: OpenAI introduced GPT-4o mini, touted as their most intelligent and cost-efficient small model, scoring 82% on MMLU. It’s priced at $0.15 per million input tokens and $0.60 per million output tokens, making it significantly cheaper than GPT-3.5 Turbo.

- The model supports both text and image inputs with a 128k context window, available to free ChatGPT users and various tiers of paid users. This release demonstrates OpenAI’s focus on making advanced AI more accessible and affordable for developers and businesses.

2. Open-Source AI Advancements

- LLaMA 3’s Turbo-Charged Variants: Together AI launched Turbo and Lite versions of LLaMA 3, offering faster inference and cheaper costs. The LLaMA-3-8B Lite is priced at $0.10 per million tokens, while the Turbo version delivers up to 400 tokens/s.

- These new variants aim to make LLaMA 3 more accessible and efficient for various applications. The community is also buzzing with speculation about a potential LLaMA 3 400B release, which could significantly impact the open-source AI landscape.

- DeepSeek-V2 Tops Open-Source Charts: DeepSeek announced that their DeepSeek-V2-0628 model is now open-sourced, ranking No.1 in the open-source category on the LMSYS Chatbot Arena Leaderboard. The model excels in various benchmarks, including overall performance and hard prompts.

- This release highlights the growing competitiveness of open-source models against proprietary ones. It also underscores the community’s efforts to create high-performance, freely accessible AI models for research and development.

3. AI Safety and Ethical Challenges

- GPT-4’s Past Tense Vulnerability: A new paper revealed a significant vulnerability in GPT-4, where reformulating harmful requests in the past tense increased the jailbreak success rate from 1% to 88% with 20 reformulation attempts.

- This finding highlights potential weaknesses in current AI safety measures like SFT, RLHF, and adversarial training. It raises concerns about the robustness of alignment techniques and the need for more comprehensive safety strategies in AI development.

- Meta’s EU Multimodal Model Exclusion: Meta announced plans to release a multimodal Llama model in the coming months, but it won’t be available in the EU due to regulatory uncertainties, as reported by Axios.

- This decision highlights the growing tension between AI development and regulatory compliance, particularly in the EU. It also raises questions about the potential for a technological divide and the impact of regional regulations on global AI advancement.

Claude 3 Opus

1. Mistral NeMo 12B Model Release

- Mistral & NVIDIA Collab on 128k Context Model: Mistral NeMo, a 12B model with a 128k token context window, was released in collaboration with NVIDIA under the Apache 2.0 license, as detailed in the official release notes.

- The model promises state-of-the-art reasoning, world knowledge, and coding accuracy in its size category, being a drop-in replacement for Mistral 7B with support for FP8 inference without performance loss.

- Mistral NeMo Outperforms Similar-Sized Models: Mistral NeMo’s performance has been compared to other models, with some discrepancies noted in benchmarks like 5-shot MMLU scores against Llama 3 8B as reported by Meta.

- Despite these inconsistencies, Mistral NeMo is still seen as a strong contender, outperforming models like Gemma 2 9B and Llama 3 8B in various metrics according to the release announcement.

2. GPT-4o Mini Launch & Jailbreak

- GPT-4o Mini: Smarter & Cheaper Than GPT-3.5: OpenAI released GPT-4o mini, touted as the most capable and cost-efficient small model, scoring 82% on MMLU and available for free and paid users as announced by Andrew Curran.

- The model is priced at 15¢ per M token input and 60¢ per M token output with a 128k context window, making it significantly cheaper than GPT-3.5 Turbo while lacking some capabilities of the full GPT-4o like image support.

- GPT-4o Mini’s Safety Mechanism Jailbroken: A newly implemented safety mechanism in GPT-4o mini named ‘instruction hierarchy’ has been jailbroken according to Elder Plinius, allowing it to output restricted content.

- This jailbreak reveals vulnerabilities in OpenAI’s latest defense approach, raising concerns about the robustness of their safety measures and potential for misuse.

3. Advances in AI Training & Deployment

- Tekken Tokenizer Tops Llama 3 in Efficiency: The Tekken tokenizer model has demonstrated superior performance compared to the Llama 3 tokenizer, with 30-300% better compression across various languages including Chinese, Korean, Arabic, and source code.

- This improved efficiency positions Tekken as a strong contender for NLP tasks, offering significant advantages in reducing computational costs and enabling more compact model representations.

- CUDA on AMD GPUs via New Compiler: A new compiler for AMD GPUs has enabled CUDA support on RDNA 2 and RDNA 3 architectures, with the RX 7800 confirmed to be working, as shared in a Reddit post.

- Community members expressed interest in testing this setup in llama.cpp to compare performance against the ROCm implementation, with tools like ZLUDA and SCALE mentioned as alternatives for running CUDA on AMD hardware.

GPT4O (gpt-4o-2024-05-13)

1. Mistral NeMo Release

- Mistral NeMo’s Impressive Entrance: The Mistral NeMo model, a 12B parameter model developed in collaboration with NVIDIA, boasts a 128k token context window and superior reasoning capabilities.

- Pre-trained and instruction-tuned checkpoints are available under the Apache 2.0 license, promising state-of-the-art performance in reasoning, coding accuracy, and world knowledge.

- Mistral NeMo Performance Comparison: A discrepancy in the 5-shot MMLU score for Llama 3 8B was noted, with Mistral reporting 62.3% versus Meta’s 66.6%, raising questions about the reported performance.

- This discrepancy, along with potential issues in the TriviaQA benchmark, sparked discussions on the reliability of these metrics.

2. AI Hardware Optimization

- Kernel Quest: Navigating Parameter Limits: AI Engineers tackled the CUDA 4k kernel parameter size limit by favoring pointers to pass extensive data structures, with the migration from CPU to GPU global memory made compulsory for such scenarios.

- The dialogue transitioned to pointer intricacies, illuminating the necessity for pointers in kernel arguments to address GPU memory, and scrapping confusion over ** vs. * usage in CUDA memory allocation.

- Muscle For Models: Exploring AI Training with Beefy Hardware: Conversations on optimal AI training setups praised the prowess of A6000 GPUs for both price and performance, with one user highlighting a 64-core threadripper with dual A6000s setup.

- This setup, originally for fluid dynamics simulations, sparked interest and discussions on the versatility of high-end hardware for AI training.

3. Multimodal AI Advancements

- Meta’s Multimodal Llama Model Release: Meta plans to debut a multimodal Llama model, yet EU users are left in the lurch due to regulatory restraints, as highlighted in an Axios report.

- Workarounds like using a VPN or non-EU compliance checkboxes are already being whispered about, foreshadowing a possible rise in access hacks.

- Turbo Charged LLaMA 3 Versions Leap into Action: The introduction of LLaMA-3-8B Lite by Together AI promises a cost-effective $0.10 per million tokens, ensuring affordability meets velocity.

- Enhancing the deployment landscape, LLaMA-3-8B Turbo soars to 400 tokens/s, tailored for applications demanding brisk efficiency.

4. Model Training Issues

- Model Training Troubles: Clouds Gathering: The community discusses the trials of deploying and training models, specifically on platforms like AWS and Google Colab, highlighting long durations and low-resource complexities.

- Special mention was made regarding

text2text-generationerrors and GPU resource dilemmas on Hugging Face Spaces, with contributors seeking and sharing troubleshooting tactics.

- Special mention was made regarding

- Fine-tuning Challenges and Prompt Engineering Strategies: Importance of prompt design and usage of correct templates, including end-of-text tokens, for influencing model performance during fine-tuning and evaluation.

- Example: Axolotl prompters.py.

GPT4OMini (gpt-4o-mini-2024-07-18)

1. Mistral NeMo Model Launch

- Mistral NeMo’s Impressive Context Window: Mistral NeMo, a new 12B model, has been released in partnership with NVIDIA, featuring a remarkable 128k token context window and high performance in reasoning and coding tasks.

- The model is available under the Apache 2.0 license, and discussions have already begun on its effectiveness compared to other models like Llama 3.

- Community Reactions to Mistral NeMo: The model is available under the Apache 2.0 license, and discussions have already begun on its effectiveness compared to other models like Llama 3.

- Initial impressions indicate a positive reception, with users eager to test its capabilities.

2. GPT-4o Mini Release

- Cost-Effective GPT-4o Mini Launch: OpenAI introduced GPT-4o mini, a new model priced at $0.15 per million input tokens and $0.60 per million output tokens, making it significantly cheaper than its predecessors.

- This pricing structure aims to make advanced AI more accessible to a wider audience.

- Performance Expectations of GPT-4o Mini: Despite its affordability, some users expressed disappointment in its performance compared to GPT-4 and GPT-3.5 Turbo, indicating that it does not fully meet the high expectations set by OpenAI.

- Community feedback suggests that while it is cost-effective, it may not outperform existing models in all scenarios.

3. Deep Learning Hardware Optimization

- Optimizing with A6000 GPUs: Community discussions highlighted the advantages of utilizing A6000 GPUs for deep learning tasks, particularly in training models with high performance at a reasonable cost.

- Users reported successful configurations that leverage the A6000’s capabilities for various AI applications.

- User Configurations for AI Training: Members shared their configurations, including setups with dual A6000s, which were repurposed from fluid dynamics simulations to AI training.

- These setups demonstrate the versatility of A6000 GPUs in handling complex computational tasks.

4. RAG Implementation Challenges

- Skepticism Around RAG Techniques: Several community members expressed their doubts about the effectiveness of Retrieval Augmented Generation (RAG), stating that it often leads to subpar results without extensive fine-tuning.

- The consensus is that while RAG has potential, it requires significant investment in customization.

- Community Feedback on RAG: Comments like ‘RAG is easy if you want a bad outcome’ emphasize the need for significant effort to achieve desirable outcomes.

- Members highlighted the importance of careful implementation to unlock RAG’s full potential.

5. Multimodal AI Advancements

- Meta’s Multimodal Llama Model Plans: Meta is gearing up to launch a multimodal Llama model, but plans to restrict access in the EU due to regulatory challenges, as per a recent Axios report.

- This decision has raised concerns about accessibility for EU users.

- Workarounds for EU Users: Workarounds like using a VPN are already being discussed among users to bypass these restrictions.

- These discussions highlight the community’s resourcefulness in navigating regulatory barriers.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Retrieval’s Rocky Road: AI Enthusiasts Skirmish over RAG**: Several members voiced skepticism over RAG, noting its propensity for subpar results without extensive fine-tuning.

- ‘RAG is easy if you want a bad outcome’ hinted at the significant efforts required to harness its potential.

- NeMo’s New Narrative: Mistral Launches 12B Powerhouse**: The Mistral NeMo 12B model, released in tandem with NVIDIA, boasts an impressive context window and the promise of leading reasoning capabilities.

- Adopters of Mistral 7B can now upgrade to NeMo under the Apache 2.0 license according to an announcement linked from Mistral News.

- Delayed Debut: Unsloth Studio’s Beta Bash Bumped **: Unsloth Studio has announced a delay in their Beta launch, shifting gears to a later date.

- The community echoed support for quality with replies like ‘take your time - takes as long to troubleshoot as it takes’.

- Muscle For Models: Exploring AI Training with Beefy Hardware**: Conversations on optimal AI training setups praised the prowess of A6000 GPUs for both price and performance.

- One user’s setup – a 64-core threadripper with dual A6000s – sparked interest, highlighting the hardware’s versatility beyond its initial purpose of fluid dynamics simulations.

- STORM’s Success: Shaping Structured Summaries**: STORM set a new standard for AI-powered pre-writing, structuring elaborate articles with 25% enhanced organization and 10% more coverage.

- Integrating expert-level multi-perspective questioning, this model can assemble comprehensive articles akin to full-length reports as described in GitHub - stanford-oval/storm.

HuggingFace Discord

- AI Comics Bubble Up with Defaults!: An update to AI Comic Factory stated it would now include default speech bubbles, with the feature still in its developmental stage.

- The tool AI Comic Factory, although in progress, is set to enhance comic creation by streamlining conversational elements.

- Huggingchat Hits a Snag: Speed Dial Turns Slow: Huggingchat’s commandR+ is reported to crawl at a pace, causing frustration among users, some of whom experienced up to 5 minutes processing time for tasks that typically take seconds.

- Remarks in the community such as ‘they posted virtually the same message three times’ highlighted the slowing speeds affecting user experience.

- Florence 2 Flouts Watermarks Effortlessly: A new watermark remover built with Florence 2 and Lama Cleaner demonstrates its prowess, giving users an efficient removal tool shared on Hugging Face Spaces.

- Designed for ease-of-use, the watermark remover tool joins the suite of Florence 2 capabilities, promising to solve another practical problem.

- Mistral NeMo: NVIDIA’s 12B Child: Mistral NeMo, a 12B model now sports a whopping 128k context length, debuted under Apache 2.0 license with a focus on high performance, as stated in the official announcement.

- This new release by MistralAI, in collaboration with NVIDIA and shared on Mistral AI, bolsters the library of tools available for tackling immense context lengths.

- Model Training Troubles: Clouds Gathering: The community discusses the trials of deploying and training models, specifically on platforms like AWS and Google Colab, highlighting long durations and low-resource complexities.

- Special mention was made regarding

text2text-generationerrors and GPU resource dilemmas on Hugging Face Spaces, with contributors seeking and sharing troubleshooting tactics.

- Special mention was made regarding

CUDA MODE Discord

- Kernel Quest: Navigating Parameter Limits: AI Engineers tackled the CUDA 4k kernel parameter size limit by favoring pointers to pass extensive data structures, with the migration from CPU to GPU global memory made compulsory for such scenarios.

- The dialogue transitioned to pointer intricacies, illuminating the necessity for pointers in kernel arguments to address GPU memory, and scrapping confusion over ** vs. * usage in CUDA memory allocation.

- Google Gemma 2 Prevails, Then Eclipsed: Gemma 2 models magnetized the community by overshadowing the former front-runner, Llama 2, sparking a deep dive on Google’s reveal at Google I/O.

- Yet, the relentless progress in AI saw Gemma 2 swiftly outmoded by the likes of LlaMa 3 and Qwen 2, stirring acknowledgement of the industry’s high-velocity advancements.

- GPT-3 125M Model: Groundbreaking Lift-Off: The launch of the first GPT-3 model (125M) training gripped the community, with notable mentions of a 12-hour expected completion time and eagerness for resulting performance metrics.

- Modifications in FP8 training settings and the integration of new Quantization mechanics were foregrounded, pointing to future explorations in model efficiency.

- CUDA Conundrums: Shared Memory Shenanigans: Dynamic Shared Memory utility became a hot topic with strategies like

extern __shared__ float shared_mem[];being thrown into the tech melting pot, aiming to enhance kernel performance.- A move towards dynamic shared memory pointed to a future of cleaner CUDA operations and the shared vision for denser computational processes.

- Triton’s Compiler Choreography: Excitement brewed as the Triton compiler showed off its flair by automatically fine-tuning GPU code, turning Python into the more manageable Triton IR.

- Community contributions amplified this effect with the sharing of personal solutions to Triton Puzzles, nudging others to attempt the challenges.

Stability.ai (Stable Diffusion) Discord

- RTX 4060 Ti Tips & Tricks: Queries surrounding RTX 4060 Ti adequacy for automatic 1111 were alleviated; editing

webui-user.batwith--xformers --medvram-sdxlflagged for optimal AI performance.- Configurations hint at the community’s quest for maximized utility of hardware in AI operations.

- Adobe Stock Clips Artist Name Crackdown: Adobe Stock revised its policy to scrub artist names from titles, keywords, or prompts, showing Adobe’s strict direction on AI content generation.

- The community’s worry about the sweeping breadth of the policy mirrors concerns for creative boundaries in AI art.

- The AI Artistic Triad Trouble: Discourse pinpointed hands, text, and women lying on grass as repetitive AI rendering pitfalls likened to an artist’s Achilles’ heel.

- Enthusiasm brews for a refined model that could rectify these common AI errors, revealed as frequent conversation starters among techies.

- Unraveling the ‘Ultra’ Feature Mystery: ‘Ultra’ feature technical speculation included hopeful musings on a second sample stage with techniques like latent upscale or noise application.

- Clarifications disclosed Ultra’s availability in beta via the website and API, separating fact from monetization myths.

- Divergent Views on Troll IP Bans: The efficacy of IP bans to deter trolls sparked a fiery debate on their practicality, illustrating different community management philosophies.

- Discussion revealed varied expertise, stressing the need for a toolkit of strategies for maintaining a positive community ethos.

Eleuther Discord

- GoldFinch Soars with Linear Attention: The GoldFinch model has been a hot topic, with its hybrid structure of Linear Attention and Transformers, enabling large context lengths and reducing VRAM usage.

- Performance discussions highlighted its superiority over similarly classed models due to efficient KV-Cache mechanics, with links to the GitHub repository and Hugging Face model.

- Drama Around Data Scraping Ignites Debate: Convos heated over AI models scraping YouTube subtitles, sparking a gamut of economic and ethical considerations.

- Discussions delved into nuances of fair use and public permissions, with some users reflecting on the legality and comparative outrage.

- Scaling LLMs Gets a Patchwork Solution: In the realm of large language models, Patch-Level Training emerged as a potential game-changer, aiming to enhance sequence training efficiency.

- The PatchTrain GitHub was a chief point of reference, discussing token compression and its impact on training dynamics.

- Interpretability through Token-Free Lenses: Tokenization-free models took the stage with curiosity surrounding their impact on AI interpretability.

- The conversations spanned theory and potential implementations, not reaching consensus but stirring a meaningful exchange.

- ICML 2024 Anticipation Electrifies Researchers: ICML 2024 chatter was abound, with grimsqueaker’s insights on ‘Protein Language Models Expose Viral Mimicry and Immune Escape’ drawing particular attention.

LM Studio Discord

- Codestral Mamba Slithers into LM Studio: Integration of Codestral Mamba in LM Studio is dependent on its support in llama.cpp, as discussed by members eagerly awaiting its addition.

- The Codestral Mamba enhancements for LM Studio will unlock after its incorporation into the underlying llama.cpp framework.

- Resizable BAR’s Minimal Boost: Resizable BAR, a feature to enhance GPU performance, reportedly has negligible effects on LLM operations which rely more on tensor cores.

- Discussions centered on the efficiency of hardware features, concluding that factors like VRAM bandwidth hold more weight for LLM performance optimization.

- GTX 1050’s AI Aspirations Dashed: The GTX 1050’s 4GB VRAM struggles with model execution, compelling users to consider downsizing to less demanding AI models.

- Community members discussed the model compatibility, suggesting the limited VRAM of the GTX 1050 isn’t suited for 7B+ parameter models, which are more intensive.

- Groq’s Models Clinch Function Calling Crown: Groq’s Llama-3 Groq-8B and 70B models impress with their performance on the Berkeley Function Calling Leaderboard.

- A focus on tool use and function calls enables these models to score competitively, showcasing their efficiency in practical AI scenarios.

- CUDA Finds New Ally in AMD RDNA: Prospects for CUDA on AMD’s RDNA architecture piqued interest following a share of a Reddit discussion on the new compiler allowing RX 7800 execution.

- Skepticism persists around the full compatibility of CUDA on AMD via tools like ZLUDA, although SCALE and portable installations herald promising alternatives.

Nous Research AI Discord

- Textual Gradients Take Center Stage: Excitement builds around a new method for ‘differentiation’ via textual feedback, using TextGrad for guiding neural network optimization.

- ProTeGi steps into the spotlight with a similar approach, stimulating conversation on the potential of this technique for machine learning applications.

- STORM System’s Article Mastery: Stanford’s STORM system leverages LLMs to create comprehensive outlines, boosting long-form article quality significantly.

- Authors are now tackling the issue of source bias transfer, a new challenge introduced by the system’s methodology.

- First Impressions: Synthetic Dataset & Knowledge Base: Synthetic dataset and knowledge base drops, targeted to bolster business application-centric AI systems.

- RAG systems could find a valuable resource in this Mill-Pond-Research/AI-Knowledge-Base, with its extensive business-related data.

- When Agents Evolve: Beyond Language Models: A study calls for an evolution in LLM-driven agents, recommending more sophisticated processing for improved reasoning, detailed in an insightful position paper.

- The Mistral-NeMo-12Instruct-12B arrives with a bang, touting a multi-language and code data-training and a 128k context window.

- GPT-4’s Past Tense Puzzle: GPT-4’s robustness to harmful request reformulations gets a shock with a new paper finding 88% success in eliciting forbidden knowledge via past tense prompts.

- The study urges a review of current alignment techniques against this unexpected gap as highlighted in the findings.

Latent Space Discord

- DeepSeek Engineering Elegance: DeepSeek’s DeepSeek V2 slashes inference costs to 1 yuan per million tokens, inducing a competitive pricing frenzy among AI companies.

- DeepSeek V2 boasts a revolutionary MLA architecture, cutting down memory use, earning it the moniker of China’s AI Pinduoduo.

- GPT-4o Mini: Quality Meets Cost Efficiency: OpenAI’s GPT-4o mini emerges with a cost-effectiveness punch: $0.15 per million input tokens and $0.60 per million output tokens.

- Achieving an 82% MMLU score and supporting a 128k context window, it outperforms Claude 3 Haiku’s 75% and sets a new performance cost benchmark.

- Mistral NeMo’s Performance Surge: Mistral AI and NVIDIA join forces, releasing Mistral NeMo, a potent 12B model sporting a huge 128k tokens context window.

- Efficiency is front and center with NeMo, thanks to its FP8 inference for faster performance, positioning it as a Mistral 7B enhancement.

- Turbo Charged LLaMA 3 Versions Leap into Action: The introduction of LLaMA-3-8B Lite by Together AI promises a cost-effective $0.10 per million tokens ensuring affordability meets velocity.

- Enhancing the deployment landscape, LLaMA-3-8B Turbo soars to 400 tokens/s, tailored for applications demanding brisk efficiency.

- LLaMA 3 Anticipation Peaks with Impending 400B Launch: Speculation is high about LLaMA 3 400B’s impending reveal, coinciding with Meta executives’ scheduled sessions, poised to shake the AI status quo.

- Community senses a strategic clear-out of existing products, setting the stage for the LLaMA 3 400B groundbreaking entry.

OpenAI Discord

- GPT-4o Mini: Intelligence on a Budget: OpenAI introduces the GPT-4o mini, touted as smarter and more cost-effective than GPT-3.5 Turbo and is rolling out in the API and ChatGPT, poised to revolutionize accessibility.

- Amid excitement and queries, it’s clarified that GPT-4o mini enhances over GPT-3.5 without surpassing GPT-4o; conversely, it lacks GPT-4o’s full suite of capabilities like image support, but upgrades from GPT-3.5 for good.

- Eleven Labs Unmutes Voice Extraction: The unveiling of Eleven Labs’ voice extraction model has initiated vibrant discussions, leveraging its potential to extract clear voice audio from noisy backgrounds.

- Participants are weighing in on ethical considerations and potential applications, aligning with novel creations in synthetic media.

- Nvidia’s Meta Package Puzzle: Nvidia’s installer’s integration with Facebook, Instagram, and Meta’s version of Twitter has stirred a blend of confusion and humor among users.

- Casual reactions such as ‘Yes sir’ confirmed reflect the community’s light-hearted response to this unexpected bundling.

- Discourse on EWAC’s Efficiency: The new EWAC command framework gains traction as an effective zero-shot, system prompting solution, optimizing model command execution.

- A shared discussion link encourages collaborative exploration and fine-tuning of this command apparatus.

- OpenAI API Quota Quandaries: Conversations circulate around managing OpenAI API quota troubles, promoting vigilance in monitoring plan limits and suggesting credit purchases for continued usage.

- Community members exchange strategies to avert the API hallucinations and maximize its usage, while also pinpointing inconsistencies in token counting for images.

Interconnects (Nathan Lambert) Discord

- Meta and the Looming Llama: Meta plans to debut a multimodal Llama model, yet EU users are left in the lurch due to regulatory restraints, an issue spotlighted by an Axios report.

- Workarounds like using a VPN or non-EU compliance checkboxes are already being whispered about, foreshadowing a possible rise in access hacks.

- Mistral NeMo’s Grand Entrance: Mistral NeMo makes a splash with its 12B model designed for a substantial 128k token context window, a NVIDIA partnership open under Apache 2.0, found in the official release notes.

- Outshining its predecessors, it boasts enhancements in reasoning, world knowledge, and coding precision, fueling anticipation in technical circles.

- GPT-4o mini Steals the Spotlight: OpenAI introduces the lean and mean GPT-4o mini, heralded for its brilliance and transparency in cost, garnering nods for its free availability and strong MMLU performance according to Andrew Curran.

- Priced aggressively at 15¢ per M token input and 60¢ per M token output, it’s scaled down yet competitive, with a promise of wider accessibility.

- Tekken Tokenizer Tackles Tongues: The Tekken tokenizer has become the talk of the town, dripping with efficiency and multilingual agility, outpacing Llama 3 tokenizer substantially.

- Its adeptness in compressing text and source code is turning heads, making coders consider their next move.

- OpenAI’s Perimeter Breached: OpenAI’s newest safety mechanism has been decoded, with

Elder Pliniusshedding light on a GPT-4o-mini jailbreak via a bold declaration.- This reveals cracks in their ‘instruction hierarchy’ defense, raising eyebrows and questions around security.

OpenRouter (Alex Atallah) Discord

- GPT-4o Mini’s Frugal Finesse: OpenAI’s GPT-4o Mini shines with both text and image inputs, while flaunting a cost-effective rate at $0.15/M input and $0.60/M output.

- This model trounces GPT-3.5 Turbo in affordability, being over 60% more economical, attractive for free and subscription-based users alike.

- OpenRouter’s Regional Resilience Riddle: Users faced a patchwork of OpenRouter outages, with reports of API request lags and site timeouts, though some regions like Northern Europe remained unaffected.

- The sporadic service status left engineers checking for live updates on OpenRouter’s Status to navigate through the disruptions.

- Mistral NeMo Uncaps Context Capacity: Mistral NeMo’s Release makes waves with a prodigious 12B model boasting a 128k token context window, paving the way for extensive applications in AI.

- Provided under the Apache 2.0, Mistral NeMo is now available with pre-trained and instruction-tuned checkpoints, ensuring broad access and use.

- Codestral 22B: Code Model Contender: A community-led clarion call pushes for the addition of Codestral 22B, spotlighting the capabilities of Mamba-Codestral-7B to join the existing AI model cadre.

- With its competitive edge in Transformer-based frameworks for coding, Codestral 22B solicits enthusiastic discussion amongst developers and model curators.

- Image Token Turbulence in GPT-4o Mini: Chatter arises as the AI engineering community scrutinizes image token pricing discrepancies in the newly released GPT-4o Mini, as opposed to previous models.

- Debate ensues with some expressing concerns over the unexpected counts affecting usage cost, necessitating a closer examination of model efficiency and economics.

Modular (Mojo 🔥) Discord

- Nightly Mojo Compiler Enhancements Unveiled: A fresh Mojo compiler update (2024.7.1805) includes

stdlibenhancements for nested Python objects using list literals, upgradeable viamodular update [nightly/mojo](https://github.com/modularml/mojo/compare/e2a35871255aa87799f240bfc7271ed3898306c8...bb7db5ef55df0c48b6b07850c7566d1ec2282891)command.- Debating stdlib’s future, a proposal suggests

stdlib-extensionsto engage community feedback prior to full integration, aiming for consensus before adding niche features such as allocator awareness.

- Debating stdlib’s future, a proposal suggests

- Lubeck Outstrips MKL with Acclaimed LLVM Efficiency: Lubeck’s stellar performance over MKL is attributed to differential LLVM IR generation, possibly aided by Mir’s LLVM-Accelerated Generic Numerical Library.

- SPIRAL distinguishes itself by automating numerical kernel optimization, though its generated code’s complexity limits its use outside prime domains like BLAS.

- Max/Mojo Embraces GPU’s Parallel Prowess: Excitement builds around Max/Mojo’s new GPU support, expanding capabilities for tensor manipulation and parallel operations, suggested by a Max platform talk highlighting NVIDIA GPU integration.

- Discussion threads recommend leveraging MLIR dialects and CUDA/NVIDIA for parallel computing, putting a spotlight on the potential for AI advancements.

- Keras 3.0 Breaks Ground with Multi-Framework Support: Amid community chatter, the latest Keras 3.0 update flaunts compatibility across JAX, TensorFlow, and PyTorch, positioning itself as a frontrunner for flexible, efficient model training and deployment.

- The milestone was shared during a Mojo Community Meeting, further hinting at broader horizons for Keras’s integration and utility.

- Interactive Chatbot Designs Spark Conversation in Max: MAX version 24.4 innovates with the

--promptflag, facilitating the creation of interactive chatbots by maintaining context and establishing system prompts, as revealed in the Max Community Livestream.- Queries about command-line prompt usage and model weight URIs lead to illuminating discussions about UI dynamics and the plausibility of using alternative repositories over Hugging Face for weight sourcing.

Cohere Discord

- Tools Take the Stage at Cohere: A member’s inquiry about creating API tools revealed that tools are API-only, with insights accessible through the Cohere dashboard.

- Details from Cohere’s documentation clarified that tools can be single or multi-step and are client-defined.

- GIFs Await Green Signal in Permissions Debate: Discussions arose over the ability to send images and GIFs in chat, facing restricted permissions due to potential misuse.

- Admins mentioned possible changes allowing makers and regular users to share visuals, seeking a balance between expression and moderation.

- DuckDuckGo: Navigating the Sea of Integration: Members considered integrating DuckDuckGo search tools into projects, highlighting the DuckDuckGo Python package for its potential.

- A suggestion was made to use the package for a custom tool to enhance project capabilities.

- Python & Firecrawl Forge Scraper Synergy: The use of Python for scraping, along with Firecrawl, was debated, with prospects of combining them for effective content scraping.

- Community peers recommended the use of the duckduckgo-search library for collecting URLs as part of the scraping process.

- GPT-4o Joins the API Integration Guild: Integration of GPT-4o API with scraping tools was a hot topic, where personal API keys are used to bolster Firecrawl’s capabilities.

- Technical set-up methods, such as configuring a .env file for API key inclusion, were shared to facilitate this integration with LLMs.

Perplexity AI Discord

- NextCloud Navigates Perplexity Pitfalls: Users reported difficulty setting up Perplexity API on NextCloud, specifically with choosing the right model.

- A solution involving specifying the

modelparameter in the request was shared, directing users to a comprehensive list of models.

- A solution involving specifying the

- Google Grapples with Sheets Snafus: Community members troubleshoot a perplexing issue with Google Sheets, encountering a Google Drive logo error.

- Despite efforts, they faced a persistent ‘page can’t be reached’ problem, leaving some unable to login.

- PDF Probe: Leverage Perplexity Without Limits: The guild discussed Perplexity’s current limitation with handling multiple PDFs, seeking strategies to overcome this challenge.

- A community member recommended converting PDF and web search content into text files for optimal Perplexity performance.

- AI Discrepancy Debate: GPT-4 vs GPT-4 Omni: Discussions arose around Perplexity yielding different results when integrating GPT-4 Omni, sparking curiosity and speculation.

- Members debated the possible reasons, with insights hinting at variations in underlying models.

- DALL-E Dilemmas and Logitech Legit Checks: Questions about DALL-E’s update coinciding with Perplexity Pro search resets were raised, alongside suspicions about a Logitech offer for Perplexity Pro.

- Subsequent confirmation of Logitech’s partnership with Perplexity squashed concerns of phishing, backed by a supporting tweet.

LangChain AI Discord

- LangChain Leverages Openrouter: Discussions revolved around integrating Openrouter with LangChain, but the conversation lacked concrete examples or detailed guidelines.

- A query for code-based RAG examples to power a Q&A chatbot was raised, suggesting a demand for comprehensive, hands-on demonstrations in the LangChain community.

- Langserve Launches Debugger Compartment: A user inquired about the Langserve Debugger container, seeking clarity on its role and application, highlighted by a Docker registry link.

- Curiosity peaked regarding the distinctions between the Langserve Debugger and standard Langserve container, impacting development workflows and deployment strategies.

- Template Tribulations Tackled on GitHub: A KeyError issue related to incorporating JSON into LangChain’s ChatPromptTemplate spurred discussions, with reference to a GitHub issue for potential fixes.

- Despite some community members finding a workaround for JSON integration challenges, others continue to struggle with the nuances of the template system.

- Product Hunt Premieres Easy Folders: Easy Folders made its debut on Product Hunt with features to tidy up chat histories and manage prompts, as detailed in the Product Hunt post.

- A promotion for a 30-day Superuser membership to Easy Folders was announced, banking on community endorsement and feedback to amplify engagement.

- LangGraph Laminates Corrective RAG: The integration of LangGraph with Corrective RAG to combat chatbot hallucinations was showcased in a YouTube tutorial, shedding light on improving AI chatbots.

- This novel approach hints at the community’s drive to enhance AI chatbot credibility and reliability through innovative combinations like RAG Fusion, addressing fundamental concerns with existing chatbot technologies.

LlamaIndex Discord

- Jerry’s AI World Wisdom: Catching up on AI World’s Fair? Watch @jerryjliu0 deliver insights on knowledge assistants in his keynote speech, a highlight of last year’s event.

- He delves into the evolution and future of these assistants, sparking continued technical discussions within the community.

- RAGapp’s Clever Companions: The RAGapp’s latest version now interfaces with MistralAI and GroqInc, enhancing computational creativity for developers.

- The addition of @cohere’s reranker aims to refine and amplify app results, introducing new dynamics to the integration of large language models.

- RAG Evaluators Debate: A dialogue unfolded around the selection of frameworks for RAG pipeline evaluation, with alternatives to the limited Ragas tool under review.

- Contributors discussed whether crafting a custom evaluation tool would fit into a tight two-week span, without reaching a consensus.

- Mask Quest for Data Safety: Our community dissected strategies for safeguarding sensitive data, recommending the PIINodePostprocessor from LlamaIndex for data masking pre-OpenAI processing.

- This beta feature represents a proactive step to ensure user privacy and the secure handling of data in AI interactions.

- Multimodal RAG’s Fine-Tuned Futurism: Enthusiasm peaked as a member shared their success using multimodal RAG with GPT4o and Sonnet3.5, highlighting the unexpected high-quality responses from LlamaIndex when challenged with complex files.

- Their findings incited interest in LlamaIndex’s broader capabilities, with a look towards its potential to streamline RAG deployment and efficiency.

OpenInterpreter Discord

- OpenInterpreter’s Commendable Community Crescendo: OpenInterpreter Discord celebrates a significant milestone, reaching 10,000 members in the community.

- The guild’s enthusiasm is palpable with reactions like “Yupp” and “Awesome!” marking the achievement.

- Economical AI Edges Out GPT-4: One member praised a cost-effective AI claiming superior performance over GPT-4, particularly noted for its use in AI agents.

- Highlighting its accessibility, the community remarked on its affordability, with one member saying, “It’s basically for free.”

- Multimodal AI: Swift and Versatile: Discussions highlighted the Impressive speed of a new multimodal AI, which supports diverse functionalities and can be utilized via an API.

- Community members are excited about its varied applications, with remarks emphasizing its “crazy fast latency” and multimodal nature.

OpenAccess AI Collective (axolotl) Discord

- Mistral NeMo’s Impressive Entrance: Mistral NeMo, wielding a 12B parameter size and a 128k token context, takes the stage with superior reasoning and coding capabilities. Pre-trained flavors are ready under the Apache 2.0 license, detailed on Mistral’s website.

- Meta’s reporting of the 5-shot MMLU score for Llama 3 8B faces scrutiny due to mismatched figures with Mistral, shedding doubt on the claimed 62.3% versus 66.6%.

- Transformers in Reasoning Roundup: A debate sparked around transformers’ potential in implicit reasoning, with new research advocating their capabilities post-extensive training.

- Despite successes in intra-domain inferences, transformers are yet to conquer out-of-domain challenges without more iterative layer interactions.

- Rank Reduction, Eval Loss Connection: A curious observation showed that lowering model rank correlates with a notable dip in evaluation loss. However, the data remain inconclusive as to whether this trend will persist in subsequent training steps.

- Participants exchanged insights on this phenomenon, contemplating its implications on model accuracy and computational efficiency.

- GEM-A’s Overfitting Dilemma: Concerns surfaced regarding the GEM-A model potentially overfitting during its training period.

- The dialogue continued without concrete solutions but with an air of caution and a need for further experimentation.

LLM Finetuning (Hamel + Dan) Discord

- GPT-3.5-turbo Takes the Lead: Discussion emerged showing GPT-3.5-turbo outpacing both Mistral 7B and Llama3 in fine-tuning tasks, despite OpenAI’s stance on not utilizing user-submitted data for fine-tuning purposes.

- A sentiment was expressed preferring against the use of GPT models for fine-tuning due to concerns over transmitting sensitive information to a third party.

- Mac M1’s Model Latency Lag: Users faced latency issues with Hugging Face models on Mac M1 chips due to model loading times when starting a preprocessing pipeline.

- The delay was compounded when experimenting with multiple models, as each required individual downloads and loads, contributing to the initial latency.

LAION Discord

- Meta’s Multimodal Mission: An eye on the future, Meta shifts its spotlight to multimodal AI models, as hinted by a sparse discussion following an article share.

- Lack of substantial EU community debate leaves implications on innovation and accessibility in suspense.

- Llama Leaves the EU: Meta’s decision to pull Llama models from the EU market stirs a quiet response among users.

- The impact on regional AI tool accessibility has yet to be intensively scrutinized or debated.

- Codestral Mamba’s Coding Coup: The freshly introduced Codestral Mamba promises a leap forward in code generation with its linear time inference.

- Co-created by Albert Gu and Tri Dao, this model aims to enhance coding efficiency while handling infinitely long sequences.

- Prover-Verifier’s Legibility Leap: The introduction of Prover-Verifier Games sparks interest for improving the legibility of language models, supported by a few technical references.

- With details provided in the full documentation, the community shows restrained enthusiasm pending practical applications.

- NuminaMath-7B’s Top Rank with Rankling Flaws: Despite NuminaMath-7B’s triumph at AIMO, highlighted flaws in basic reasoning capabilities pose as cautionary tales.

- AI veterans contemplate the gravity and aftershocks of strong claims not standing up to elementary reasoning under AIW problem scrutiny.

Torchtune Discord

- Template Turmoil Turnaround: Confusion arose over using

torchtune.data.InstructTemplatefor custom template formatting, specifically regarding column mapping.- Clarification followed that column mapping renames dataset columns, with a query if the alpaca cleaned dataset was being utilized.

- CI Conundrums in Code Contributions: Discourse on CI behavior highlighted automatic runs when updating PRs leading to befuddlement among contributors.

- The consensus advice was to disregard CI results until a PR transitions from draft to a state ready for peer review.

- Laugh-Out-Loud LLMs - Reality or Fiction?: Trial to program an LLM to consistently output ‘HAHAHA’ met with noncompliance, despite being fed a specific dataset.

- The experiment served as precursor to the more serious application using the alpaca dataset for a project.

tinygrad (George Hotz) Discord

- GTX1080 Stumbles on tinygrad: A user faced a nvrtc: error when trying to run tinygrad with CUDA=1 on a GTX1080, suspecting incompatibility with the older GPU architecture.

- The workaround involved modifying the ops_cuda for the GTX1080 and turning off tensor cores; however, a GTX2080 or newer might be necessary for optimal performance.

- Compile Conundrums Lead to Newer Tech Testing: The member encountered obstacles while setting up tinygrad on their GTX1080, indicating potential compatibility issues.

- They acknowledged community advice and moved to experiment with tinygrad using a more current system.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Unsloth AI (Daniel Han) ▷ #general (245 messages🔥🔥):

RAGMistral NeMo releaseUnsloth StudioMistral-Nemo integrationFlash Attention support

- Debate on RAG and its implementation: Multiple members expressed that RAG (Retrieval Augmented Generation) is often oversold and overhyped despite being difficult and requiring extensive custom tuning for effective results.

- One member noted, ‘RAG is easy if you want a shitty outcome - same goes for finetuning but if you want a good outcome… then that will require lots of work’.

- Mistral NeMo 12B model launched: Mistral NeMo, a 12B model with a context window up to 128k tokens, was released in collaboration with NVIDIA under the Apache 2.0 license.

- The model promises state-of-the-art reasoning, world knowledge, and coding accuracy in its size category, being a drop-in replacement for Mistral 7B.

- Unsloth Studio release delayed: The release of Unsloth Studio (Beta) was postponed from the planned date to the following Monday.

- A member advised to ‘take your time - takes as long as it takes’.

- Introduction and compatibility of Mistral-Nemo: Discussions on whether Unsloth will support the newly released Mistral-Nemo are in progress.

- The integration is still being worked on, and Flash Attention 2.6 appears to be required for larger context lengths.

- Hardware choices for training models: Members discussed various hardware setups, noting the benefits of using A6000 GPUs and the cost-effectiveness of renting hardware for AI training.

- One member mentioned using 64-core threadripper with dual A6000 GPUs primarily for fluid dynamics and finite state simulations, now repurposed for AI.

Links mentioned:

- Mistral NeMo: Mistral NeMo: our new best small model. A state-of-the-art 12B model with 128k context length, built in collaboration with NVIDIA, and released under the Apache 2.0 license.

- FACTS About Building Retrieval Augmented Generation-based Chatbots: no description found

- mistralai/Mistral-Nemo-Instruct-2407 · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Gigabyte releases AI software to help train your own AI — AI TOP utility taps Gigabyte motherboards, GPUs, SSDs, and power supplies to fine-tune local AI model training: The tool can locally train AI models with up to 236B parameters.

- GitHub - bclavie/RAGatouille: Easily use and train state of the art late-interaction retrieval methods (ColBERT) in any RAG pipeline. Designed for modularity and ease-of-use, backed by research.: Easily use and train state of the art late-interaction retrieval methods (ColBERT) in any RAG pipeline. Designed for modularity and ease-of-use, backed by research. - bclavie/RAGatouille

- AIUnplugged 15: Gemma 2, Flash Attention 3, QGaLoRE, MathΣtral and Codestral Mamba: Insights over Information

Unsloth AI (Daniel Han) ▷ #off-topic (11 messages🔥):

GPU recommendationsRunpodBinary messageShylily fans

- GPU Debate: 3090 vs 4090: Members discussed the merits of getting a used RTX 3090 over the 3090 TI, suggesting even crypto-mined cards are acceptable.

- A user mentioned owning two 4090 GPUs and highlighted Runpod as a superior alternative.

- Binary Message Fun: Members had a light-hearted moment decoding the binary message ‘01110111 01101111 01101101 01110000 00100000 01110111 01101111 01101101 01110000’ into ‘womp womp’.

- This sparked a series of playful ‘womp womp’ exchanges among the members.

- Shylily Fan Moments: A member pointed out the presence of many Shylily fans in the chat, calling out fun moments.

- The discussion brought attention to the many ‘womp womp’ moments in the chat logs, prompting laughter and comments.

Unsloth AI (Daniel Han) ▷ #help (84 messages🔥🔥):

disabling pad_tokenfinetuning and saving modelsmodel sizes and memory consumptionfine-tuning locallyhandling new errors with GPU and dtype

- Ignore pad_token if model doesn’t need one: Members discussed that if the model doesn’t use

pad_token, it can simply be ignored without any effect. - Best practices for saving finetuned models in 4-bit vs. 16-bit for VLLM: Members debated whether to save a finetuned 4-bit model as 16-bit or to just use LoRA adapters in a production setting.

- Theyruinedelise suggested trying the LoRA approach to maintain accuracy.

- High VRAM requirements for Llama 3 models: Members discussed the extensive VRAM requirements of Llama 3 models, noting it takes 48GB VRAM for 70B model quantization at 4-bit.

- Running fine-tuning locally vs. using Colab: Efficio sought advice on running fine-tuning locally and received suggestions on using WSL on Windows for local training.

- Theyruinedelise recommended following detailed guides available on GitHub for setting up the local environment.

- Handling new torch.autocast errors on RTX A4000: Kiingz3440 faced an error with

torch.autocastrelated to unsupportedbfloat16on an RTX A4000 GPU.- Edd0302 suggested explicitly setting the model to use

torch.float16, which resolved the error.

- Edd0302 suggested explicitly setting the model to use

Links mentioned: