AI News for 10/21/2024-10/22/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (232 channels, and 3347 messages) for you. Estimated reading time saved (at 200wpm): 341 minutes. You can now tag @smol_ai for AINews discussions!

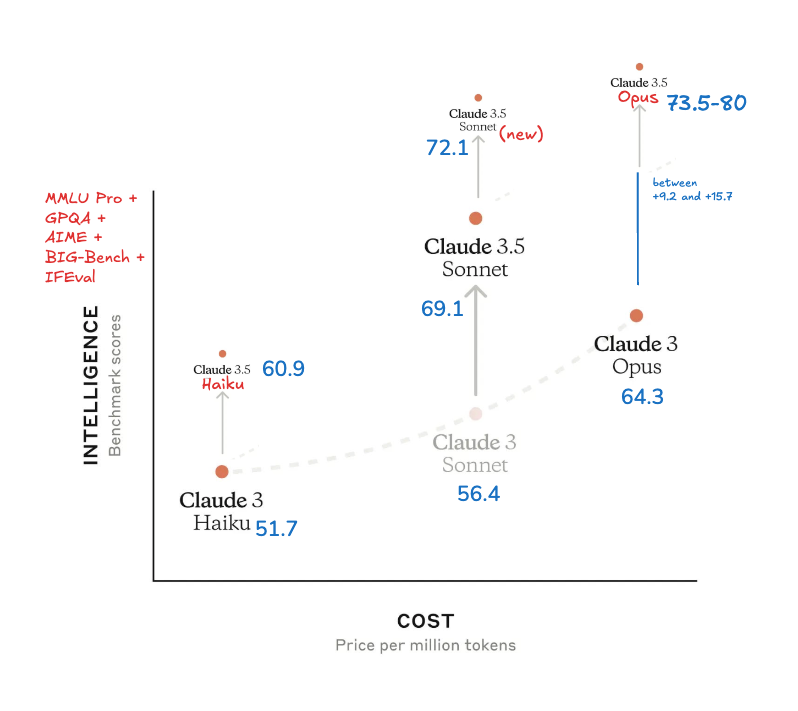

Instead of the widely anticipated (and now indefinitely postponed) Claude 3.5 Opus, Anthropic announced a new 3.5 Sonnet, and 3.5 Haiku, bringing a bump to each model.

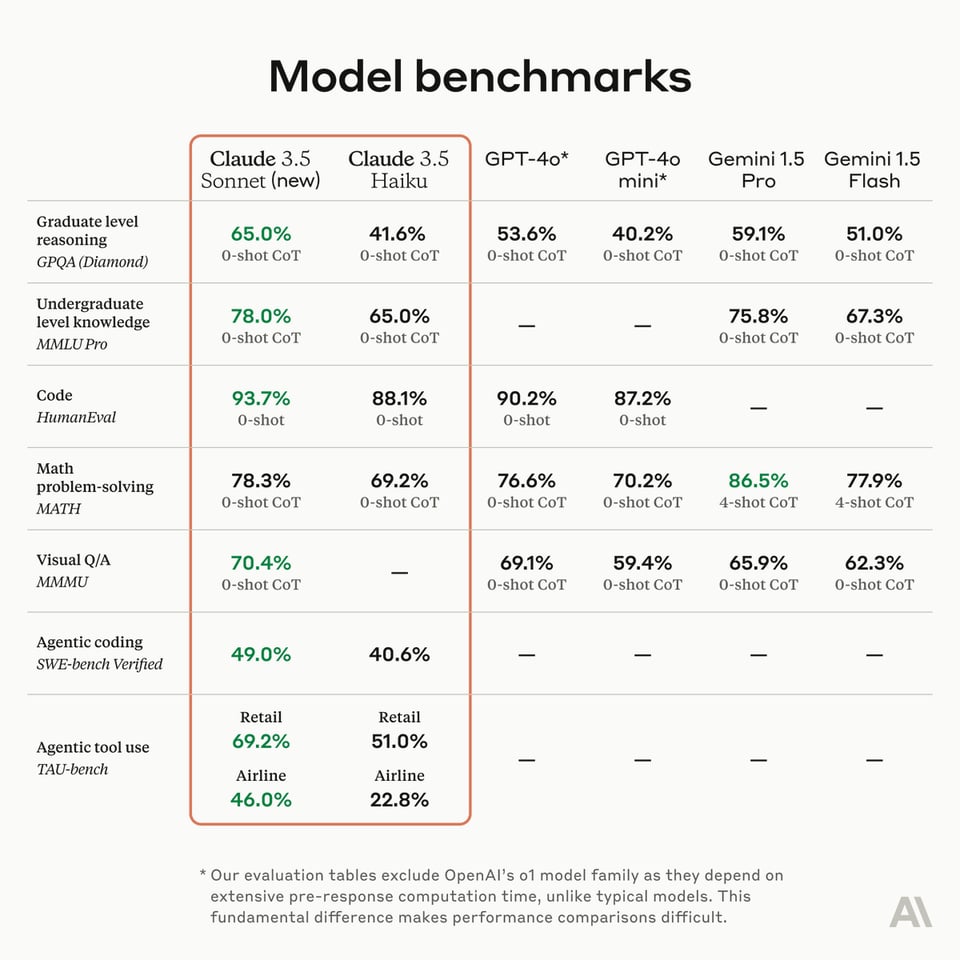

3.5 Sonnet, already delivers significant gains in coding. The new 3.5 Haiku (with benchmarks on the model card) matches the performance of Claude 3 Opus “on many evaluations for the same cost and similar speed to the previous generation of Haiku”.

Notably on coding, it improves performance on SWE-bench Verified from 33.4% to 49.0%, scoring HIGHER than o1-preview’s own 41.4% without any fancy reasoning steps. However, on math on 3.5 Sonne’s 27.6% high water mark still pales in comparison to o1-preview’s 83%.

Notably on coding, it improves performance on SWE-bench Verified from 33.4% to 49.0%, scoring HIGHER than o1-preview’s own 41.4% without any fancy reasoning steps. However, on math on 3.5 Sonne’s 27.6% high water mark still pales in comparison to o1-preview’s 83%.

Other Benchmarks:

- Aider: The new Sonnet tops aider’s code editing leaderboard at 84.2% and sets SOTA on aider’s more demanding refactoring benchmark with a score of 92.1%!

- Vectara: On Vectara’s Hughes Hallucination Evaluation Model Sonnet 3.5 went from 8.6 to 4.6

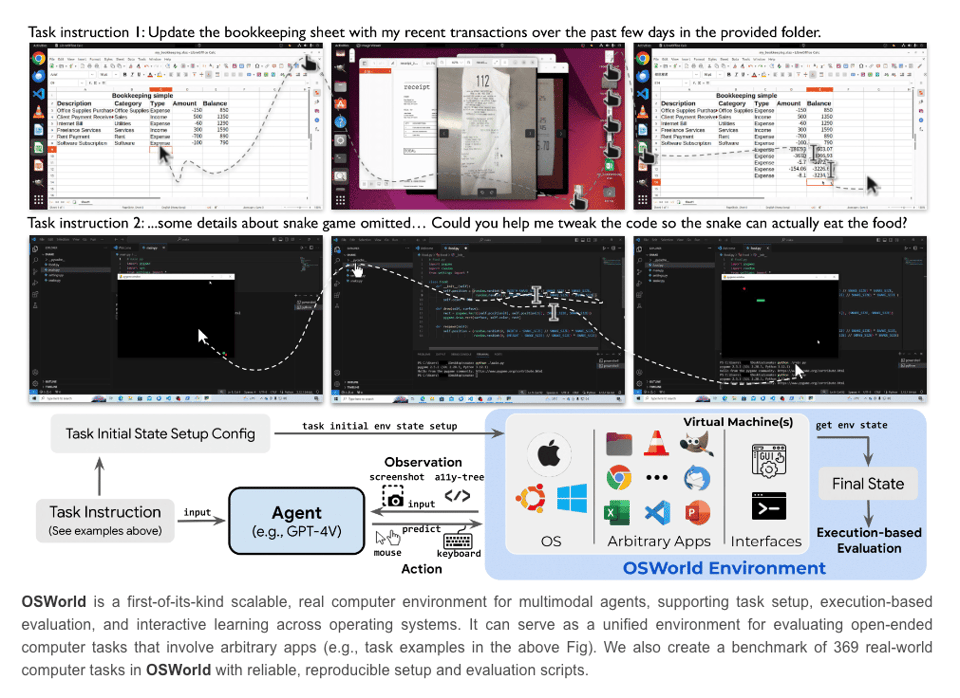

Computer Use

Anthropic’s new Computer Use API (docs here, demo here) point to OSWorld for their relevant screen manipulation benchmark - scoring 14.9% in the screenshot-only category—notably better than the next-best AI system’s score of 7.8%.

When afforded more steps to complete the task, Claude scored 22.0%. This is still substantially under human performance in the 70’s, but is notable because this is essentially the functionality that Adept previously announced with its Fuyu models but never widely released. In a reductive sense “computer use” (controlling a computer via vision) is contrasted against standard “tool use” (controlling computers via API/function calling).

Example Videos:

Vendor Request Form, Coding Via Vision, Google Searches and Google Maps

Simon Willison kicked the tires on the github quickstart further with tests including compile and run hello world in C (it has gcc already so this just worked) and installing missing Ubuntu packages.

Replit was also able to plug in Claude as a human feedback replacement for @Replit Agent.

[Sponsored by Zep] Zep just launched their cloud edition today! Zep is a low-latency memory layer for AI agents and assistants that can reason about facts that change over time. Jump into the Discord to chat the future of knowledge graphs and memory!

swyx commentary: with computer use now officially blessed by Claude’s upgraded vision model, how will agent memory storage need to change? You can see the simplistic image memory implementation from Anthropic but there’s no answer for multimodal memory yet… one hot topic for the Zep Discord.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Releases

-

Llama 3.1 and Nemotron: @_philschmid reported that NVIDIA’s Llama 3.1 Nemotron 70B topped Arena Hard (85.0) & AlpacaEval 2 LC (57.6), challenging GPT-4 and Claude 3.5.

-

IBM Granite 3.0: IBM released Granite 3.0 models, ranging from 400 million to 8B parameters, outperforming similarly sized Llama-3.1 8B on Hugging Face’s OpenLLM Leaderboard. The models are trained on 12+ trillion tokens across 12 languages and 116 programming languages.

-

xAI API: The xAI API Beta is now live, allowing developers to integrate Grok into their applications.

-

BitNet: Microsoft open-sourced bitnet.cpp, implementing the 1.58-bit LLM architecture. This allows running 100B parameter models on CPUs at 5-7 tokens/second.

AI Research and Techniques

-

Quantization: A new Linear-complexity Multiplication (L-Mul) algorithm claims to reduce energy costs by 95% for element-wise tensor multiplications and 80% for dot products in large language models.

-

Synthetic Data: @omarsar0 highlighted the importance of synthetic data for improving LLMs and systems built on LLMs (agents, RAG, etc.).

-

Agentic Information Retrieval: A paper introducing agentic information retrieval was shared, discussing how LLM agents shape retrieval systems.

-

RoPE Frequencies: @vikhyatk noted that truncating the lowest RoPE frequencies helps with length extrapolation in LLMs.

AI Tools and Applications

-

Perplexity Finance: Perplexity Finance was launched on iOS, offering financial information and stock data.

-

LlamaIndex: Various applications using LlamaIndex were shared, including report generation and a serverless RAG app.

-

Hugging Face Updates: New features like repository analytics for enterprise hub subscriptions and quantization support in diffusers were announced.

AI Ethics and Societal Impact

-

Legal Services: OpenAI’s CPO Kevin Weil discussed the potential disruption in legal services, with AI potentially reducing costs by 99.9%.

-

AI Audits: A virtual workshop on third-party AI audits, red teaming, and evaluation was announced for October 28th.

Memes and Humor

-

Various tweets about ChatGPT’s upcoming birthday and potential presents were shared, including this one by @sama.

-

Jokes about AI-generated backgrounds in Google Meet and AI’s impact on video editing were shared.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Moonshine: New Open-Source Speech-to-Text Model Challenges Whisper

- Moonshine New Open Source Speech to Text Model (Score: 54, Comments: 5): Moonshine, a new open-source speech-to-text model, claims to be faster than Whisper while maintaining comparable accuracy. Developed by Sanchit Gandhi and the Hugging Face team, Moonshine is based on wav2vec2 and can process audio 30 times faster than Whisper on CPU. The model is available on the Hugging Face Hub and can be easily integrated into projects using the Transformers library.

- Moonshine aims for resource-constrained platforms like Raspberry Pi, targeting 8MB RAM usage for transcribing sentences, compared to Whisper’s 30MB minimum requirement. The model focuses on efficiency for microcontrollers and DSPs rather than competing with Whisper large v3.

- Users expressed excitement about trying Moonshine, noting issues with Whisper 3’s accuracy and hallucinations. However, Moonshine is currently an English-only model, limiting its use for multilingual applications.

- The project is available on GitHub and includes a research paper. Some users reported installation errors, possibly due to Git-related issues on Windows.

Theme 2. Allegro: New State-of-the-Art Open-Source Text-to-Video Model

- new text-to-video model: Allegro (Score: 99, Comments: 8): Allegro, a new open-source text-to-video model, has been released with a detailed paper and Hugging Face implementation. The model builds on the creators’ previous open-source Vision Language Model (VLM) called Aria, which offers comprehensive fine-tuning guides for tasks like surveillance grounding and reasoning.

- Allegro is praised as the new local text-to-video SOTA (State of the Art), with its Apache-2.0 license being particularly appreciated. The open-source nature of the model is seen as a positive development in the local video generation space.

- The model’s VRAM requirements are discussed, with options ranging from 9.3GB (with CPU offload) to 27.5GB (without offload). Users suggest quantizing the T5 model to lower precision (fp16/fp8/int8) to fit on 24GB/16GB VRAM cards.

- Flexibility in model usage is highlighted, with the possibility to trade generation quality for reduced VRAM usage and faster generation times (potentially 10-30 minutes). Some users discuss the option of swapping out the T5 model after initial prompt encoding to optimize resource usage.

Theme 3. AI Sabotage Incident at ByteDance Raises Security Concerns

- TikTok owner sacks intern for sabotaging AI project (Score: 153, Comments: 50): ByteDance, the parent company of TikTok, reportedly fired an intern for intentionally sabotaging an AI project by inserting malicious code. The incident, which occurred in China, underscores the security risks associated with AI development and the potential for insider threats in tech companies. ByteDance discovered the sabotage during a routine code review, highlighting the importance of robust security measures and code audits in AI development processes.

- The intern allegedly sabotaged AI research by implanting backdoors into checkpoint models, inserting random sleeps to slow training, killing training runs, and reversing training steps. This was reportedly due to frustration over GPU resource allocation.

- ByteDance fired the intern in August, informed their university and industry bodies, and clarified that the incident only affected the commercial technology team’s research project, not official projects or large models. Claims of “8,000 cards and millions in losses” were exaggerated.

- Some users questioned the intern’s reported lack of AI experience, given their ability to reverse training processes. Others noted this was “career suicide” and speculated about potential blacklisting from major tech companies.

Theme 4. PocketPal AI: Open-Source App for Local Models on Mobile

-

PocketPal AI is open sourced (Score: 434, Comments: 78): PocketPal AI, an application for running local models on iOS and Android devices, has been open-sourced. The project’s source code is now available on GitHub, allowing developers to explore and contribute to the implementation of on-device AI models for mobile platforms.

- Users reported impressive performance with Llama 3.2 1B model, achieving 20 tokens/second on an iPhone 13 and 31 tokens/second on a Samsung S24+. The iOS version uses Metal acceleration, potentially contributing to faster speeds.

- The community expressed gratitude for open-sourcing the app, with many praising its convenience and performance. Some users suggested adding a donation section to support development and requested features like character cards integration.

- Comparisons were made between PocketPal and ChatterUI, another open-source mobile LLM app. PocketPal was noted for its user-friendliness and App Store availability, while ChatterUI offers more customization options and API support.

-

🏆 The GPU-Poor LLM Gladiator Arena 🏆 (Score: 137, Comments: 38): The GPU-Poor LLM Gladiator Arena is a competition for comparing small language models that can run on consumer-grade hardware. Participants are encouraged to submit models with a maximum size of 3 billion parameters that can operate on devices with 24GB VRAM or less, with the goal of achieving high performance on various benchmarks while maintaining efficiency and accessibility.

- Users expressed enthusiasm for the GPU-Poor LLM Gladiator Arena, with some suggesting additional models for inclusion, such as allenai/OLMoE-1B-7B-0924-Instruct and tiiuae/falcon-mamba-7b-instruct. The project was praised for making small model comparisons easier.

- Discussion arose about the performance of Gemma 2 2B, with some users noting its strong performance compared to larger models. There was debate about whether Gemma’s friendly conversation style might influence human evaluation results.

- Suggestions for improvement included adding a tie button for evaluations, calculating ELO ratings instead of raw win percentages, and incorporating more robust statistical methods to account for sample size and opponent strength.

Theme 5. Trend Towards More Restrictive Licenses for Open-Weight AI Models

- Recent open weight releases have more restricted licences (Score: 36, Comments: 10): Recent open-weight AI model releases, including Mistral small, Ministral, Qwen 2.5 72B, and Qwen 2.5 3B, have shown a trend towards more restricted licenses compared to earlier releases like Mistral Large 2407. As AI models improve in performance and become more cost-effective to operate, there’s a noticeable shift towards stricter licensing terms, potentially leading to a future where open-weight releases may primarily come from academic laboratories.

- Mistral’s stricter licensing for smaller models may harm their brand, potentially leading to company-wide bans on Mistral models and reducing interest in their API-only larger models. Users express concern over the lack of local reference points for model quality assessment.

- The decision not to release weights for Mistral’s 3B model is seen as a negative sign for open-source AI. This trend suggests companies may increasingly keep even smaller, well-performing models private to maintain competitive advantage.

- Discussion around Mistral’s need for profitability to sustain operations, contrasting with larger corporations like Meta that can afford to release models openly. Some users argue that Mistral’s approach is necessary for survival, while others see it as part of a concerning trend in AI model licensing.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Developments and Releases

-

ComfyUI V1 desktop application released: ComfyUI announced a new packaged desktop app with one-click install, auto-updates, and a new UI with template workflows and node fuzzy search. It also includes a Custom Node Registry with 600+ published nodes. Source

-

OpenAI’s o1 model shows improved reasoning with more compute: OpenAI researcher Noam Brown shared that the o1 model’s reasoning on math problems improves with more test-time compute, with “no sign of stopping” on a logarithmic scale. Source

-

Advanced Voice Mode released in EU: OpenAI’s Advanced Voice Mode is now officially available in the EU. Users reported improvements in accent handling. Source

AI Research and Industry Insights

-

Microsoft CEO on AI development acceleration: Satya Nadella stated that computing power is now doubling every 6 months due to the Scaling Laws paradigm. He also mentioned that AI development has entered a recursive phase, using AI to build better AI tools. Source 1, Source 2

-

OpenAI on o1 model reliability: Boris Power, Head of Applied Research at OpenAI, stated that the o1 model is reliable enough for agents. Source

AI Ethics and Societal Impact

- Sam Altman on technological progress: OpenAI CEO Sam Altman tweeted, “it’s not that the future is going to happen so fast, it’s that the past happened so slow,” sparking discussions about the pace of technological advancement. Source

Robotics Advancements

- Unitree robot training: A video showcasing the daily training of Unitree robots was shared, demonstrating advancements in robotic mobility and control. Source

Memes and Humor

- A post titled “An AI that trains more AI” sparked humorous discussions about recursive AI improvement. Source

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. Claude 3.5 Breaks New Ground with Computer Use

- Claude 3.5 Becomes Your Silicon Butler: Anthropic’s Claude 3.5 Sonnet introduces a beta ‘Computer Use’ feature, allowing it to perform tasks on your computer like a human assistant. Despite some hiccups, users are excited about this experimental capability that blurs the line between AI and human interaction.

- Haiku 3.5 Haikus into Coding Supremacy: The new Claude 3.5 Haiku surpasses its predecessors, scoring 40.6% on SWE-bench Verified and outshining Claude 3 Opus. Coders rejoice as Haiku 3.5 sets a new standard in AI-assisted programming.

- Claude Plays with Computers, Users Play with Fire: While the ‘Computer Use’ feature is groundbreaking, Anthropic warns it’s experimental and “at times error-prone.” But that hasn’t dampened the community’s enthusiasm to push the limits.

Theme 2. Stable Diffusion 3.5 Lights Up AI Art

- Stability AI Unleashes Stable Diffusion 3.5—Artists Feast: Stable Diffusion 3.5 launches with improved image quality and prompt adherence, free for commercial use under $1M revenue. Available on Hugging Face, it’s a gift to artists and developers alike.

- SD 3.5 Turbo Charges Ahead: The new Stable Diffusion 3.5 Large Turbo model offers some of the fastest inference times without sacrificing quality. Users are thrilled with this blend of speed and performance.

- Artists Debate: SD 3.5 vs. Flux—Who Wears the Crown?: The community buzzes over whether SD 3.5 can dethrone Flux in image quality and aesthetics. Early testers have mixed feelings, but the competition is heating up.

Theme 3. AI Video Generation Heats Up with Mochi 1 and Allegro

- GenmoAI’s Mochi 1 Serves Up Sizzling Videos: Mochi 1 sets new standards in open-source video generation, delivering realistic motion and prompt adherence at 480p. Backed by $28.4M in funding, GenmoAI is redefining photorealistic video models.

- Allegro Hits a High Note in Text-to-Video: Rhymes AI introduces Allegro, transforming text into 6-second videos at 15 FPS and 720p. Early adopters can join the waitlist here to be the first to try it out.

- Video Wars Begin: Mochi vs. Allegro—May the Best Frames Win: With both Mochi 1 and Allegro entering the scene, creators eagerly anticipate which model will lead in AI-driven video content.

Theme 4. Cohere Embeds Images into Multimodal Search

- Cohere’s Embed 3 Plugs Images into Search—Finally!: Multimodal Embed 3 supports mixed modality searches with state-of-the-art performance on retrieval tasks. Now, you can store text and image data in one database, making RAG systems delightfully simple.

- Images and Text, Together at Last: The new Embed API adds an

input_typecalledimage, letting developers process images alongside text. There’s a limit of one image per request, but it’s a big leap forward in unifying data retrieval. - Office Hours with the Embed Wizards: Cohere is hosting office hours with their Sr. Product Manager for Embed to offer insights into the new features. Join the event to get the inside scoop straight from the source.

Theme 5. Hackathon Fever: Over $200k in Prizes from Berkeley

- LLM Agents MOOC Hackathon Dangles $200k Carrot: Berkeley RDI launches a hackathon with over $200,000 in prizes, running from mid-October to mid-December. Open to all, it features tracks on applications, benchmarks, and more.

- OpenAI and GoogleAI Throw Weight Behind Hackathon: Major sponsors like OpenAI and GoogleAI back the event, adding prestige and resources. Participants can also explore career and internship opportunities during the competition.

- Five Tracks, Endless Possibilities: The hackathon includes tracks like Applications, Benchmarks, Fundamentals, Safety, and Decentralized & Multi-Agents, inviting participants to push AI boundaries and unlock innovation.

PART 1: High level Discord summaries

HuggingFace Discord

-

AI DJ Software Showcases Potential: Users discussed an innovative concept for AI DJ Software that could automate song transitions and mixing like what is seen with Spotify.

- Tools like rave.dj were mentioned for creating fun mashups, despite imperfections in the output.

-

Hugging Face Model Queries Raise Security Concerns: A user sought advice on securely downloading Hugging Face model weights via

huggingface_hubwithout exposing them.- Community members provided insights into using environment variables for authentication to maintain privacy.

-

OCR Tools Under Scrutiny: There was a discussion on effective OCR solutions for structured data extraction from PDFs, particularly for construction applications.

- Recommendations included models like Koboldcpp to improve text extraction accuracy.

-

Granite 3.0 Model Launch Celebrated: The new on-device Granite 3.0 model generated excitement among users, highlighting its convenient deployment.

- The model’s attributes were praised as enhancing usability for quick integration.

-

LLM Best Practices Webinar Attracts Attention: A META Senior ML Engineer announced a webinar focused on LLM navigation, already gaining almost 200 signups.

- The session promises to deliver actionable insights on prompt engineering and model selection.

OpenRouter (Alex Atallah) Discord

-

Claude 3.5 Sonnet shows impressive benchmarks: The newly launched Claude 3.5 Sonnet achieves significant benchmark improvements with no required code changes for users. More information can be found in the official announcement here.

- Members noted that upgrades can be easily tracked by hovering over the info icon next to providers, enhancing user experience.

-

Lightning boost with Llama 3.1 Nitro: With a 70% speed increase, the Llama 3.1 405b Nitro is now available, promising a throughput of around 120 tps. Check out the new endpoints: 405b and 70b.

- Users are captivated by the performance advantages this model brings, making it an appealing choice.

-

Ministral’s powerful model lineup: Ministral 8b has been introduced, achieving 150 tps with a 128k context and is currently ranked #4 for tech prompts. An economical 3b model can be accessed here.

- The performance and pricing of these models generated substantial excitement among users, catering to varying budget needs.

-

Grok Beta expands functionalities: Grok Beta now supports an increased context length of 131,072 and charges $15/m, replacing the legacy

x-ai/grok-2requests. This update was met with enthusiasm by users anticipating enhanced performance.- Community discussions reflect expectations for improved capabilities under the new pricing model.

-

Community feedback on Claude self-moderated endpoints: A poll was launched to gather opinions on the Claude self-moderated endpoints, currently the top option on the leaderboard. Members can participate in the poll here.

- User engagement suggests a keen interest in influencing the development and user experience of these endpoints.

aider (Paul Gauthier) Discord

-

Claude 3.5 Sonnet dominates benchmarks: The upgraded Claude 3.5 Sonnet scores 84.2% on Aider’s leaderboard and achieves 85.7% when used alongside DeepSeek in architect mode.

- This model not only enhances coding tasks but retains the previous pricing structure, exciting many users.

-

DeepSeek is budget-friendly editor alternative: DeepSeek’s cost of $0.28 per 1M output tokens makes it a cheaper option compared to Sonnet, which is priced at $15.

- Users note it pairs adequately with Sonnet, although discussions arise about the shift in token costs affecting performance.

-

Aider configuration file needs clarity: Users inquired about setting up the

.aider.conf.ymlfile, specifying types likeopenrouter/anthropic/claude-3.5-sonnet:betaas the editor model.- Clarification was sought on where Aider pulls configuration details at runtime for optimal setup.

-

Exciting announcement of computer use beta: Anthropic’s new computer use feature allows Claude to perform tasks like moving cursors, currently in public beta and described as experimental.

- Developers can direct its functionality which signifies a shift in interaction with AI and improved usability in coding environments.

-

DreamCut AI - Novel video editing solution: DreamCut AI has been launched, allowing users to leverage Claude AI for video editing, developed by MengTo over 3 months with 50k lines of code.

- Currently in early access, users can experiment with its AI-driven features through a free account.

Stability.ai (Stable Diffusion) Discord

-

Stable Diffusion 3.5 Launch Shocks Users: Stable Diffusion 3.5 launched with customizable models for consumer hardware, available under the Stability AI Community License. Users are excited about the 3.5 Large and Turbo models, available on Hugging Face and GitHub, with the 3.5 Medium launching on October 29.

- The announcement caught many off guard, stirring discussions on its unexpected release and anticipated performance improvements over previous iterations.

-

SD3.5 vs. Flux Image Quality Showdown: The community evaluated whether SD3.5 can beat Flux in image quality, focusing on fine-tuning and aesthetics. Early impressions suggest Flux may still have an edge in these areas, igniting curiosity around dataset effectiveness.

- Discussions highlight the importance of benchmark comparisons between models, especially when establishing market standards for image generation.

-

New Licensing Details Raise Questions: Participants expressed concerns regarding the SD3.5 licensing model, especially in commercial contexts compared to AuraFlow. Balancing accessibility with Stability AI’s monetization needs became a hot topic.

- The discourse underscores the challenge of ensuring models are both open to developers and sustainable for producers.

-

Community Support Boosts Technical Adoption: Users finding issues with Automatic1111’s Web UI received guidance on support channels, indicating a collaborative spirit within the community. One member found direct assistance swiftly, showcasing engagement with newcomers.

- This proactive support approach helps ensure users can effectively leverage the new models and integration tools available.

-

LoRA Applications Enthuse Artists: The introduction of LoRA models for SD3.5 has users experimenting with prompts and sharing their results, demonstrating its effectiveness in enhancing image generation. The community has been active in showcasing their creations and encouraging further experimentation.

- Such initiatives reflect engagement strategies aimed at maximizing the impact of newly released features within the AI art community.

Unsloth AI (Daniel Han) Discord

-

Gradient Accumulation Bug Fixed in Nightly Transformers: A recent update revealed that the gradient accumulation bug has been fixed and will be included in the nightly transformers and Unsloth trainers, correcting inaccuracies in loss curve calculations.

- This fix enhances the reliability of performance metrics across various training setups.

-

Insights on LLM Training Efficiency: Members discussed that training LLMs with phrase inputs generates multiple sub-examples, maximizing training effectiveness and enabling models to learn efficiently.

- This approach allows for richer training datasets, leading to improved model capabilities.

-

Challenges with Model Performance and Benchmarks: Concerns arose about the new Nvidia Nemotron Llama 3.1 model, with doubts expressed regarding its superior performance over the Llama 70B despite similar benchmark scores.

- The inconsistency in Nvidia’s benchmarking raises questions about their models’ performance assessments.

-

Creating a Grad School Application Editor: A member seeks assistance in developing a grad school application editor, facing challenges with complex prompts for AI model implementation that lead to generic outputs.

- Experts were called upon to provide strategies for fine-tuning models to enhance output relevance.

-

Fine-Tuning LLaMA on CSV Data: Clarifications were requested on fine-tuning a LLaMA model using CSV data to handle specific incident queries, guided by methodologies shared in a Turing article.

- Community feedback played a crucial role in shaping the approach toward effective model testing.

Nous Research AI Discord

-

Catastrophic Forgetting in LLMs: Discussion centered on catastrophic forgetting in large language models (LLMs) during continual instruction tuning, especially in models ranging from 1B to 7B parameters. Members noted that fine-tuning can significantly degrade performance, as detailed in this study.

- Participants shared personal experiences with benchmark results comparing their models against established ones, revealing the challenges inherent in LLM training.

-

Insights on LLM Benchmark Performance: Users indicated that model scale significantly influences performance, noting that data limitations without proper optimization can lead to inferior results. One participant discussed their 1B model’s lower scores relative to Meta’s models, highlighting the importance of baseline comparisons.

- This led to further reflections on how certain models can underperform in competitive contexts without adequate training resources.

-

Concerns Over Research Paper Reliability: A recent study revealed that approximately 1 in 7 research papers has serious errors, undermining their trustworthiness. This prompted discussions on how misleading studies could lead researchers to unintentionally build on flawed conclusions.

- Members noted that traditional methods of assessing research integrity require more funding and attention to rectify these issues.

-

Fine-Tuning Models: A Double-Edged Sword: Debates around the effectiveness of fine-tuning large foundation models highlighted risks of degrading broad capabilities for specific targets. Members speculated that fine-tuning requires meticulous hyperparameter optimization for fruitful outcomes.

- Concerns emerged regarding the lack of established community knowledge about fine-tuning best practices, prompting questions about recent developments since the previous year.

LM Studio Discord

-

LM Studio v0.3.5 Features Shine: The update to LM Studio v0.3.5 introduces headless mode and on-demand model loading, streamlining local LLM service functionality.

- Users can now easily download models using the CLI command

lms get, enhancing model access and usability.

- Users can now easily download models using the CLI command

-

GPU Offloading Performance Takes a Hit: A user found that GPU offloading performance plummeted, utilizing only 4.2GB instead of the expected 15GB following recent updates.

- Reverting to an older ROCm runtime version restored normal performance, suggesting the update may have altered GPU utilization.

-

Model Loading Errors Surfaces: One user reported a ‘Model loading aborted due to insufficient system resources’ error linked to GPU offload setting adjustments.

- Disabling loading guardrails was mentioned as a workaround, although not typically recommended.

-

Discussing AI Model Performance Metrics: The community engaged in a detailed discussion on measuring performance, highlighting the impact of load settings on throughput and latency.

- Notably, under heavy GPU offloading, throughput dropped to 0.9t/s, signaling potential inefficiencies at play.

-

Inquiries for Game Image Enhancement Tools: Users began exploring options for converting game images into photorealistic art, with Stable Diffusion highlighted as a candidate tool.

- The conversation generated interest around the effectiveness of various image enhancers in transforming game visuals.

Latent Space Discord

-

Anthropic Releases Claude 3.5: Anthropic introduced the upgraded Claude 3.5 Sonnet and the Claude 3.5 Haiku models, incorporating a new beta capability for computer use, allowing interaction with computers like a human.

- Despite its innovative abilities, users report it doesn’t follow prompts effectively, leading to varied user experiences.

-

Mochi 1 Redefines Video Generation: GenmoAI launched Mochi 1, an open-source model aimed at high-quality video generation, notable for realistic motion and prompt adherence at 480p resolution.

- This venture leveraged substantial funding to further development, aiming to set new standards in photorealistic video generation.

-

CrewAI Closes $18M Series A Round: CrewAI raised $18 million in Series A funding led by Insight Partners, focusing on automating enterprise processes with its open-source framework.

- The company boasts executing over 10 million agents monthly, catering to a significant portion of Fortune 500 companies.

-

Stable Diffusion 3.5 Goes Live: Stability AI released Stable Diffusion 3.5, a highly customizable model runnable on consumer hardware, and free for commercial use.

- Users can now access it via Hugging Face, with expectations for additional variants on the horizon.

-

Outlines Library Rust Port Enhances Efficiency: Dottxtai announced a Rust port of the Outlines library, which promises faster compilation and a lightweight design for structured generation tasks.

- The update significantly boosts efficiency for developers and includes bindings in multiple programming languages.

Notebook LM Discord Discord

-

Language Confusion in NotebookLM: Users reported that NotebookLM’s responses default to Dutch despite providing English documents, suggesting adjustments to Google account language settings. One user struggled with German output, encountering unexpected ‘alien’ dialects.

- This highlights the current limitations of language handling within NotebookLM and potential paths for improvement.

-

Frustration Over Sharing Notebooks: Several members experienced issues when attempting to share notebooks, facing a perpetual ‘Loading…’ screen, which renders collaboration ineffective. This has raised concerns about the stability and reliability of the tool.

- Users are pressing for a resolution, indicating an urgent need for a robust sharing feature to facilitate teamwork.

-

Mixed Results with Multilingual Audio: Efforts to create audio overviews in various languages yielded inconsistent results, especially in Dutch, where pronunciation and native-like quality were notably lacking. Some users achieved successful Dutch content, fostering hope for improvements.

- This discussion reveals a strong community interest in enhancing multilingual capabilities for broader usability.

-

Podcasting Experiences with NotebookLM: A user excitedly shared that they successfully uploaded a 90-page blockchain course, resulting in amusing generated audio. Feedback indicated that variations in input led to unexpected and entertaining outputs.

- This demonstrates the diverse applications of NotebookLM for podcasting, although consistent quality remains a topic for enhancement.

-

Document Upload Issues Persist: Users faced issues with documents failing to appear in Google Drive, alongside delays in processing, prompting discussions about potential file corruption. Suggestions to refresh actions were made to address these upload challenges.

- These technical hurdles underscore the need for reliable document management features within NotebookLM.

Perplexity AI Discord

-

Claude 3.5 Models Generate Buzz: Users eagerly discuss the new Claude 3.5 Sonnet and Claude 3.5 Haiku, with hopes for their swift integration into Perplexity following AnthropicAI’s announcement. Key features include the ability for Claude to use computers like a human.

- This excitement mirrors previous launches and indicates a strong interest in AI’s evolving capabilities.

-

API Functionality Sparks Frustration: Concerns arose about the Perplexity API’s inability to return complete URLs for sources when prompted, leading to confusion among users about its ease of use. A particular user voiced their challenges in obtaining these URLs despite following instructions.

- This issue sparked a larger discussion on the capabilities of APIs in AI products and the need for clearer documentation.

-

Perplexity Encounters Competitive Challenges: With Yahoo launching an AI chat service, discussions surrounding Perplexity’s competitive edge became prevalent. Yet, users highlighted Perplexity’s reliability and resourcefulness as key advantages over its competitors.

- While competition intensifies, the commitment to quality and performance remains a cornerstone for users.

-

User Feedback Highlights Strengths: Positive commendations for Perplexity’s performance came from multiple users, who praised its quality information delivery. One user emphasized satisfaction, stating, ‘I freaking love PAI! I use it all the time for work and personal.’

- Such feedback underlines the platform’s reputation in the AI community.

-

Resource Sharing for Enhanced Fact-Checking: A collection on AI-driven fact-checking strategies highlighted ethical considerations and LLMs’ roles in misinformation management at Perplexity. This resource discusses importance of source credibility and bias detection.

- Sharing such resources reflects the community’s proactive efforts towards improving accuracy in information dissemination.

Eleuther Discord

-

New Open Source SAE Interpretation Pipeline Launched: The interpretability team has released a new open source pipeline for automatically interpreting SAE features and neurons in LLMs, which introduces five techniques for evaluating explanation quality.

- This initiative promises to enhance interpretability at scale, showcasing advancements in utilizing LLMs for feature explanation.

-

Integrating Chess AI and LLMs for Better Interactivity: A proposal to combine a chess-playing AI with an LLM aims to create a conversational agent that understands its own decisions, enhancing user engagement.

- The envisioned model strives for a coherent dialogue where the AI can articulate its reasoning behind chess moves.

-

SAE Research Ideas Spark Discussions: An undergrad sought project ideas on Sparse Autoencoders (SAEs), prompting discussions about current research efforts and collaborative opportunities.

- Members shared resources, including an Alignment Forum post for deeper exploration.

-

Woog09 Rates Mech Interp Papers for ICLR 2025: A member shared a spreadsheet rating all mechanistic interpretability papers for ICLR 2025, applying a scale of 1-3 for quality.

- Their focus is on providing calibrated ratings for guiding readers through submissions.

-

Debugging Batch Size Configurations: Members discussed issues with debugging

requestsnot batching correctly with setbatch_size, emphasizing the need for model-level handling of this config.- Confusions over the purpose of specifying

batch_sizearose, with clarification offered about its connection to model initialization.

- Confusions over the purpose of specifying

Interconnects (Nathan Lambert) Discord

-

Allegro Model Transforms Text to Video: Rhymes AI announced their new open-source model, Allegro, generating 6-second videos from text at 15 FPS and 720p, with links to explore including a GitHub repository. Users can join the Discord waitlist for early access.

- This innovation opens new doors for content creation, being both intriguing and easily accessible.

-

Stability AI Heats Up with SD 3.5: Stability AI launched Stable Diffusion 3.5, offering three variants for free commercial use under $1M revenue and enhanced capabilities like Query-Key Normalization for optimization. The Large version is available now on Hugging Face and GitHub, with the Medium version set to launch on October 29th.

- This model marks a substantial upgrade, attracting significant attention within the community for its unique features.

-

Claude 3.5 Haiku Sets High Bar in Coding: Anthropic introduced Claude 3.5 Haiku, surpassing Claude 3 Opus especially in coding tasks with a score of 40.6% on SWE-bench Verified, available on the API here. Users are impressed with the advancements highlighted in various benchmarks.

- The model’s performance is reshaping standards, making it a go-to for programming-related tasks.

-

Factor 64 Revelation: A member expressed excitement about a breakthrough involving Factor 64, feeling it seems ‘obvious’ in hindsight. This moment ignited deeper discussions regarding its implications.

- The realization has sparked further engagement, hinting at collaborations or new explorations downstream.

-

Distance in Community Feedback on Hackernews: Concerns about Hackernews being a views lottery suggest that discussions lack substance and serve more as noise than genuine feedback. Members describe it as very noisy and biased, questioning its engagement value.

- The platform is increasingly viewed as less effective, prompting conversations on alternative feedback mechanisms.

GPU MODE Discord

-

Unsloth Lecture Hits the Web: The Unsloth talk is now available, showcasing dense information appreciated by many viewers who noted its quick pace.

- “I’m watching back through at .5x speed, and it’s still fast”, reflecting the lecture’s depth.

-

Gradient Accumulation Insights: A discussion on gradient accumulation highlighted the importance of rescaling between batches and using fp32 for large gradients.

- “Usually there’s a reason why all the batches can’t be the same size,” emphasizing training complexities.

-

GitHub AI Projects Unveiled: A user shared their GitHub project featuring a GPT implementation in plain C, stimulating discussions on deep learning.

- This initiative aims to enhance understanding of deep learning through an approachable implementation.

-

Decoding Torch Compile Outputs: Metrics from

torch.compileshowed execution times for matrix multiplications, leading to clarifications on interpretingSingleProcess AUTOTUNEresults.- SingleProcess AUTOTUNE takes 30.7940 seconds to complete, prompting deeper discussions on runtime profiling.

-

Meta’s HOTI 2024 Focuses on Generative AI: Insights from Meta HOTI 2024 were shared, with specific issues addressed in this session.

- The keynote on ‘Powering Llama 3’ reveals infrastructure insights vital for understanding Llama 3 integration.

OpenAI Discord

-

AGI Debate Ignites: Members discussed if our struggles to achieve AGI stem from the type of data provided, with some arguing that binary data might limit progress.

- One member asserted that improved algorithms could make AGI attainable regardless of data type.

-

Clarifying GPT Terminology: The term ‘GPTs’ has caused confusion as it often refers to custom GPTs instead of encompassing models like ChatGPT.

- Participants highlighted the importance of distinguishing between general GPTs and their specific implementations.

-

Quantum Computing Simulator Insights: A member noted that effective quantum computing simulators should yield 1:1 outputs compared to real quantum computers, though effectiveness remains disputed.

- Various companies are working on simulators, but their real-world applications are still under discussion.

-

Anthropic’s TANGO Model Excites: The TANGO talking head model caught attention for its lip-syncing abilities and open-source potential, with members eager to explore its capabilities.

- Discussion included the performance of Claude 3.5 Sonnet against Gemini Flash 2.0, with differing opinions on which holds the edge.

-

ChatGPT Struggles with TV Shows: A member shared frustrations with ChatGPT misidentifying episode titles and numbers for TV shows, pointing to a gap in training data.

- The conversation underscored how the opinions within the data could skew results in entertainment-related queries.

Cohere Discord

-

Cohere Models Finding Favor: Members discuss actively using Cohere models in the playground, highlighting their varied application and tinkering efforts. One member particularly emphasized the need to rerun inference with different models when exploring multi-modal embeddings.

- This has sparked curiosity about the broad capabilities of these models in real-world scenarios.

-

Multimodal Embed 3 is Here!: Embed 3 model launches with SOTA performance on retrieval tasks, supporting mixed modality and multilingual searches, allowing text and image data storage together. Find more details in the blog post and release notes.

- The model is set to be a game-changer for creating unified data retrieval systems.

-

Fine-Tuning LLMs Requires More Data: Concerns over fine-tuning LLMs with minimal datasets were raised, with potential overfitting in focus. Strategies suggested included enlarging dataset size and adjusting hyperparameters, referencing Cohere’s fine-tuning guide.

- Members seek effective adjustments to optimize their model performance amid challenges.

-

Multilingual Model suffers latency spikes: Latency issues were reported at 30-60s for the multilingual embed model, spiking to 90-120s around 15:05 CEST. Users noted improvements, urging the reporting of persistent glitches.

- The latency concerns highlighted the need for further technical evaluations to ensure optimal performance.

-

Agentic Builder Day Announced: Cohere and OpenSesame are co-hosting the Agentic Builder Day on November 23rd, inviting talented builders to create AI agents using Cohere Models. Participants can apply for this 8-hour hackathon with opportunities to win prizes.

- The competition encourages collaboration among developers eager to contribute to impactful AI projects, with applications available here.

Modular (Mojo 🔥) Discord

-

Mojo Introduces Custom Structure of Arrays: You can craft your own Structure of Arrays (SoA) using Mojo’s syntax, although it isn’t natively integrated into the language yet.

- While a slice type is available, users find it somewhat restrictive, and improvements are anticipated in Mojo’s evolving type system.

-

Mojo’s Slice Type Needs Improvement: While Mojo includes a slice type, it’s essentially limited to being a standard library struct, with only some methods returning slices.

- Members anticipate revisiting these slice capabilities as Mojo develops further.

-

Binary Stripping Shows Major Size Reduction: Stripping a 300KB binary can lead to an impressive reduction to just 80KB, indicating strong optimization possibilities.

- Members noted the significant drop as encouraging for future binary management strategies.

-

Comptime Variables Cause Compile Errors: A user reported issues using

comptime varoutside a@parameterscope, triggering compile errors.- Discussion highlighted that while alias allows compile-time declarations, achieving direct mutability remains complex.

-

Node.js vs Mojo in BigInt Calculations: A comparison revealed that BigInt operations in Node.js took 40 seconds for calculations, suggesting Mojo might optimize this process better.

- Members pointed out that refining the arbitrary width integer library is key to enhancing performance benchmarks.

tinygrad (George Hotz) Discord

-

LLVM Renderer Refactor Proposal: A user proposed rewriting the LLVM renderer using a pattern matcher style to enhance functionality, which could improve clarity and efficiency.

- This approach aims to streamline development and make integrations easier.

-

Boosting Tinygrad’s Speed: Discussion highlighted the requirement to enhance Tinygrad’s performance after the transition to utilizing uops, critical for keeping pace with computing advancements.

- Efforts to optimize algorithms and reduce overhead were suggested to achieve these speed goals.

-

Integrating Gradient Clipping into Tinygrad: The community debated if

clip_grad_norm_should become a standard in Tinygrad, a common method seen across deep learning frameworks.- George Hotz indicated that a gradient refactor must precede this integration for it to be effective.

-

Progress on Action Chunking Transformers: A user reported convergence in ACT training, achieving a loss under 3.0 after a few hundred steps, with links to the source code and related research.

- This development indicates potential for further optimization based on the current model performance.

-

Exploring Tensor Indexing with .where(): A discussion emerged around using the

.where()function with boolean tensors, revealing unconventional results with.int()indexing.- This triggered inquiries about the expected behavior of tensor operations in different scenarios.

OpenInterpreter Discord

-

Hume AI Joins the Party: A member announced the addition of a Hume AI voice assistant to the phidatahq generalist agent, enhancing functionality with a streamlined UI and the ability to create and execute applescripts on Macs.

- Loving the new @phidatahq UI noted the improvements made possible with this integration.

-

Claude 3.5 Sonnet Gets Experimental: Anthropic officially released the Claude 3.5 Sonnet model with public beta access for computer usage, although it is described as still experimental and error-prone.

- Members expressed excitement while noting that such advancements reinforce the growing capabilities of AI models. For more details, see the tweet from Anthropic.

-

Open Interpreter Powers Up with Claude: There’s enthusiasm about using Claude to enhance the Open Interpreter, with members discussing practical implementations and code to run the new model.

- One member reported success with the specific model command, encouraging others to try it out.

-

Screenpipe is Gaining Traction: Members praised the Screenpipe tool for its utility in build logs, noting its interesting landing page and potential for community contributions.

- One member encouraged more engagement with the tool, citing a useful profile linked on GitHub.

-

Monetization Meets Open Source: Discussion emerged around monetizing companies by allowing users to build from source or pay for prebuilt versions, balancing contributions and usage.

- Members expressed approval of this model, highlighting the benefits of contributions from both builders and paying users.

DSPy Discord

-

New Version on the Horizon: A member expressed excitement about creating a new version instead of altering the existing one, planning to do it live on Monday.

- The enthusiasm was shared as the community rallied around the upcoming session, where current functionalities will also be covered.

-

DSpy Documentation Faces Issues: Members bemoaned that the little AI helper is missing from the new documentation structure, which led to widespread disappointment.

- Community sentiment echoed in the chat, highlighting the absence of valued features as a loss.

-

Broken Links Alert: Numerous broken links in the DSpy documentation triggering 404 errors were reported, causing frustration among users.

- Quick actions were taken by at least one user to fix this through a PR, earning gratitude from peers for their responsiveness.

-

Docs Bot Returns to Action: Celebrations erupted as the documentation bot made a comeback, restoring functionality that users greatly appreciated.

- Heartfelt emojis and affirmations filled the chat, showcasing the community’s relief and support for the bot’s vital presence.

-

Seeking Vibes on Version 3.0: A member queried the general vibe of the upcoming version 3.0, evidencing a desire for community feedback.

- However, responses remained sparse, leaving a cloud of uncertainty around the collective sentiments.

LlamaIndex Discord

-

VividNode: Chat with AI Models on Desktop: The VividNode app allows desktop users to chat with GPT, Claude, Gemini, and Llama, featuring advanced settings and image generation with DALL-E 3 or various Replicate models. More details are available in the announcement.

- This application streamlines communication with AI, providing a robust chat interface for users.

-

Build a Serverless RAG App in 9 Lines: A tutorial demonstrates deploying a serverless RAG app using LlamaIndex in just 9 lines of code, making it a cost-effective solution compared to AWS Lambda. For more insights, refer to this tweet.

- Easy deployment and cost efficiency are key highlights for developers utilizing this approach.

-

Enhancing RFP Responses with Knowledge Management: The discussion centered around using vector databases for indexing documents to bolster RFP response generation, allowing for advanced workflows beyond simple chat replies. More on the subject can be found in this post.

- This method reinforces the role of vector databases in supporting complex AI functionalities.

-

Join the Llama Impact Hackathon!: The Llama Impact Hackathon in San Francisco offers a platform for participants to build solutions using Llama 3.2 models, with a $15,000 prize pool up for grabs, including a $1,000 prize for the best use of LlamaIndex. Event details can be found in this announcement.

- Running from November 8-10, the hackathon accommodates both in-person and online participants.

-

CondensePlusContextChatEngine Automatically Initializes Memory: Discussion clarified that CondensePlusContextChatEngine now automatically initializes memory for consecutive questions, improving user experience. Previous versions had different behaviors, creating some user confusion.

- This change simplifies memory management in ongoing chats, enhancing user interactions.

LLM Agents (Berkeley MOOC) Discord

-

LLM Agents MOOC Hackathon Launch: Berkeley RDI is launching the LLM Agents MOOC Hackathon from mid-October to mid-December, with over $200,000 in prizes. Participants can sign up through the registration link.

- The hackathon, featuring five tracks, seeks to engage both Berkeley students and the public, supported by major sponsors like OpenAI and GoogleAI.

-

TapeAgents Framework Introduction: The newly introduced TapeAgents framework from ServiceNow facilitates optimization and development for agents through structured logging. The framework enhances control, enabling step-by-step debugging as detailed in the paper.

- This tool provides valuable insights into agent performance, emphasizing how each interaction is logged for comprehensive analysis.

-

Function Calling in LLMs Explained: There was a discussion surrounding how LLMs handle splitting tasks into function calls, highlighting the need for coding examples. Clarifications indicated the significance of understanding this mechanism moving forward.

- Members explored the impact of architecture choices on agent capabilities while examining how these approaches can improve functionality.

-

Lecture Insights on AI for Enterprises: Nicolas Chapados discussed advancements in generative AI for enterprises during Lecture 7, emphasizing frameworks like TapeAgents. The session reviewed the importance of integrating security and reliability in AI applications.

- Key insights from Chapados and guest speakers highlighted real-world applications and the potential of AI to transform enterprise workflows.

-

Model Distillation Techniques and Resources: Members shared a course on AI Agentic Design Patterns with Autogen, providing resources for learning about model distillation and agent frameworks. This course offers a structured approach to mastering autogen technology.

- Additionally, a helpful GitHub repository was discussed, alongside an engaging thread that examines the TapeAgents framework.

Torchtune Discord

-

Warnings Emerge from PyTorch Core: A user reported a warning in PyTorch now triggering on float16 but not float32, suggesting testing with a different kernel to assess performance impact. Speculation arose that specific lines in the PyTorch source code may affect JIT behavior.

- The community anticipates that resolving this may lead to considerable performance insights.

-

Distributed Training Error Causes Headaches: A user encountered a stop with no messages during a distributed training run with the

tunecommand while setting CUDA_VISIBLE_DEVICES. Removing the specification did not resolve the issue, hinting at deeper configuration problems.- This suggests investigation into environment settings may be necessary to pinpoint root causes.

-

Confusion Over Torchtune Config Files: Confusions emerged regarding the .yaml extension causing Torchtune to misinterpret local configurations. Verifying file naming was emphasized to avoid unexpected behavior during operations.

- Participants noted that small details can lead to significant runtime problems.

-

Flex Ramps Up Performance Talk: Discussion flared around Flex’s successful runs on 3090s and 4090s, with mentions of optimized memory usage on A800s. The dialogue touched on faster out-of-memory operations as the model scales.

- Optimized memory management is seen as key to handling larger models effectively.

-

Training Hardware Setups Under Scrutiny: A user confirmed utilizing 8x A800 GPUs while discussing training performance issues. The community debated testing with fewer GPUs as a means to troubleshoot the persistent error effectively.

- Discussing varying hardware setups highlighted the nuances of scaling in training environments.

LangChain AI Discord

-

Langchain Open Canvas explores compatibility: A member inquired if Langchain Open Canvas can integrate with LLM providers beyond Anthropic and OpenAI, reflecting a desire for broader compatibility.

- This inquiry indicates significant community interest in expanding the application’s usability with diverse tools.

-

Agent orchestration capabilities with Langchain: A discussion arose about the potential for Langchain to facilitate agent orchestration with OpenAI Swarm, questioning if custom programming is necessary.

- This spurred responses highlighting existing libraries that support orchestration functionalities.

-

Strategizing output chain refactoring: A user is contemplating whether to refactor their Langchain workflow or switch to LangGraph for enhanced functionality in complex tool usage.

- The complexity of their current setup necessitates this strategic decision for optimal performance.

-

Security concerns in Langchain 0.3.4: A user flagged a malicious warning from PyCharm regarding dependencies in Langchain 0.3.4, raising alarms about potential security risks.

- They sought confirmation from the community on whether this warning is a common occurrence, fearing it might be a false positive.

-

Advice sought for local hosting solutions: In the quest for local hosting of models for enterprise applications, a user is exploring building an inference container with Flask or FastAPI.

- They aim to avoid redundancy by uncovering better solutions within the community.

OpenAccess AI Collective (axolotl) Discord

-

2.5.0 Brings Experimental Triton FA Support: Version 2.5.0 introduced experimental Triton Flash Attention (FA) support for gfx1100, activated with

TORCH_ROCM_AOTRITON_ENABLE_EXPERIMENTAL=1, which led to a UserWarning on the Navi31 GPU.- The warning initially confused the user, who thought it related to Liger, as discussed in a GitHub issue.

-

Leverage Instruction-Tuned Models for Training: A member proposed utilizing an instruction-tuned model like llama-instruct for instruction training, noting the benefits as long as users accept its prior tuning.

- They emphasized the necessity of experimentation to discover the optimal approach, possibly mixing strategies in their training.

-

Concerns on Catastrophic Forgetting: Concerns arose about the choice between domain-specific instruction data or a mix with general data to prevent catastrophic forgetting during training.

- Members discussed the complexities of training and encouraged exploring multiple strategies to find the most effective method.

-

Pretraining vs Instruction Fine-Tuning Debate: The discussion highlighted whether to start with a base model for pretraining on raw domain data or rely on an instruction-tuned model for fine-tuning.

- One member advocated for using raw data initially to provide a stronger foundation if available.

-

Generating Instruction Data from Raw Text: A member shared their plan to use GPT-4 for generating instruction data from raw text, acknowledging the potential biases that may arise.

- This approach aims to reduce dependence on human-generated instruction data while being aware of its limitations.

Gorilla LLM (Berkeley Function Calling) Discord

-

Finetuned Model for Function Calling Excitement: A user expressed enthusiasm for the Gorilla project after fine-tuning a model specifically for function calling and successfully creating their own inference API.

- They sought methods for benchmarking a custom endpoint and requested appropriate documentation on the process.

-

Instructions Shared for Adding New Models: In response to inquiries, a member directed users to a README file that outlines how to add new models to the leaderboard within the Gorilla ecosystem.

- This documentation is valuable for users aiming to contribute effectively to the Gorilla project.

LAION Discord

-

Join the Free Webinar on LLMs: A Senior ML Engineer from Meta is hosting a free webinar on best practices for building with LLMs, with nearly 200 signups already. Register for insights on advanced prompt engineering techniques, model selection, and project planning here.

- Attendees can expect a deep dive into the practical applications of LLMs tailored for real-world scenarios, enhancing their deployment strategies.

-

Insights on Prompt Engineering: The webinar includes discussions on advanced prompt engineering techniques critical for optimizing model performance. Participants can leverage these insights for more effective LLM project execution.

- Performance optimization methods will also be tackled, which are essential for deploying LLM projects successfully.

-

Explore Retrieval-Augmented Generation: Retrieval-Augmented Generation (RAG) will be a focal topic, showcasing how it can enhance the capabilities of LLM solutions. Fine-tuning strategies will also be a key discussion point for maximizing model efficacy.

- This session aims to equip engineers with the tools necessary to implement RAG effectively in their projects.

-

Articles Featured on Analytics Vidhya: Webinar participants will have their top articles featured in Analytics Vidhya’s Blog Space, increasing their professional visibility. This provides an excellent platform for sharing insights within the data science community.

- Such exposure can significantly enhance the reach of their contributions and foster community engagement.

Mozilla AI Discord

-

Mozilla’s Insight on AI Access Challenges: Mozilla has released two key research pieces: ‘External Researcher Access to Closed Foundation Models’ and ‘Stopping Big Tech From Becoming Big AI’, shedding light on AI development control.

- These reports highlight the need for changes to create a more equitable AI ecosystem.

-

Blog Post Summarizing AI Research Findings: For deeper insights, the blog post here elaborates on the commissioned research and its implications.

- It discusses the impact of these findings on AI’s competitive landscape among major tech players.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

HuggingFace ▷ #general (586 messages🔥🔥🔥):

AI DJ SoftwareHugging Face Model QueriesOCR ToolsTraining TTS ModelsStructured Output in LLMs

-

Exploring AI DJ Software: Users discussed the potential for AI to transition between songs like a DJ, suggesting features similar to Spotify but with automated mixing capabilities.

- Tools like rave.dj were mentioned, where users can create mashups by combining multiple songs, highlighting the fun aspect even if the results aren’t perfect.

-

Hugging Face Model Queries: A user inquired about downloading weights for Hugging Face models without exposing them, seeking clarification on the appropriateness of using

huggingface_hubfor private repos.- The community responded with suggestions on how to securely manage and download models while keeping the architecture hidden, utilizing environment variables for authentication.

-

OCR Tools for Data Extraction: Users inquired about effective OCR solutions for extracting structured data from PDFs, particularly in construction contexts.

- A suggestion was made for utilizing models like Koboldcpp and various methods to enhance text extraction accuracy.

-

Training TTS Models for Specific Languages: A discussion took place about the requirements for training TTS models, focusing on data collection and whether fine-tuning existing models could yield quality results.

- Participants emphasized the importance of having a suitable dataset while questioning how much training data is necessary for lesser-known languages.

-

Structured Output Implementations: The community exchanged ideas about structured output for LLMs, including utilizing existing libraries like

lm-format-enforcerto maintain specific formats.- Suggestions indicated a preference for using models such as Cmd-R for structured responses over Llama, emphasizing the challenges of integrating these capabilities.

Links mentioned:

- Google Colab: no description found

- no title found: no description found

- Suno AI: We are building a future where anyone can make great music. No instrument needed, just imagination. From your mind to music.

- Why AI Will Never Truly Understand Us: The Hidden Depths of Human Awareness: Introduction

- Everybody’S So Creative Tiktok GIF - Everybody’s so creative Tiktok Tanara - Discover & Share GIFs: Click to view the GIF

- Soobkitty Rabbit GIF - Soobkitty Rabbit Bunny - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- Wait What Wait A Minute GIF - Wait What Wait A Minute Huh - Discover & Share GIFs: Click to view the GIF

- stabilityai/stable-diffusion-3.5-large · Hugging Face: no description found

- Dirty Docks Shawty GIF - Dirty Docks Shawty Triflin - Discover & Share GIFs: Click to view the GIF

- Lol Tea Spill GIF - Lol Tea Spill Laugh - Discover & Share GIFs: Click to view the GIF

- Gifmiah GIF - Gifmiah - Discover & Share GIFs: Click to view the GIF

- Fawlty Towers John Cleese GIF - Fawlty Towers John Cleese Basil Fawlty - Discover & Share GIFs: Click to view the GIF

- I Have No Enemies Dog GIF - I have no enemies Dog Butterfly - Discover & Share GIFs: Click to view the GIF

- Tweet from Anthropic (@AnthropicAI): Introducing an upgraded Claude 3.5 Sonnet, and a new model, Claude 3.5 Haiku. We’re also introducing a new capability in beta: computer use. Developers can now direct Claude to use computers the way …

- You You Are GIF - You You Are Yes - Discover & Share GIFs: Click to view the GIF

- Downloading files: no description found

- Oxidaksi vs. Unglued - Ounk: #MASHUP #PSY #DNB #MUSIC #SPEEDSOUNDCreated with Rave.djCopyright: ©2021 Zoe Love

- no title found: no description found

- How Bro Felt After Writing That Alpha Wolf GIF - How bro felt after writing that How bro felt Alpha wolf - Discover & Share GIFs: Click to view the GIF

- RaveDJ - Music Mixer): Use AI to mix any songs together with a single click

- llama.cpp/grammars/README.md at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- GitHub - noamgat/lm-format-enforcer: Enforce the output format (JSON Schema, Regex etc) of a language model: Enforce the output format (JSON Schema, Regex etc) of a language model - noamgat/lm-format-enforcer

- GitHub - sktime/sktime: A unified framework for machine learning with time series: A unified framework for machine learning with time series - sktime/sktime

- accelerate/src/accelerate/utils/fsdp_utils.py at main · huggingface/accelerate: 🚀 A simple way to launch, train, and use PyTorch models on almost any device and distributed configuration, automatic mixed precision (including fp8), and easy-to-configure FSDP and DeepSpeed suppo…

HuggingFace ▷ #today-im-learning (18 messages🔥):

2021 lecture seriesCreating virtual charactersPath to becoming an ML Engineer3blue1brown's educational resourcesManim animation engine

-

2021 Lecture Series Kicks Off: A member confirmed the start of the 2021 lecture series next week, expressing excitement.

- All the best wishes were shared among members in support.

-

Scaling Virtual Characters on Instagram: A member created a virtual character using Civitai for an Instagram profile and seeks to scale up with realistic reels and photos.

- They emphasized the lack of coding experience and resources, requesting advice to get started.

-

ML Engineer Path for Applied Mathematics Student: A university student from Ukraine expressed interest in becoming an ML Engineer and sought guidance on the path ahead.

- Members suggested watching 3blue1brown’s playlist on transformers and LLMs.

-

3blue1brown’s Essentials for ML: The importance of 3blue1brown’s educational materials was highlighted, with a specific course from MIT shared for further exploration.

- Members encouraged viewing the implications of the content for understanding artificial intelligence.

-

Discovering Manim for Animations: A member inquired about the animation tool used by 3blue1brown, revealing it to be Manim, a custom animation engine.

- The GitHub link was shared, showcasing the resource for creating explanatory math videos.

Links mentioned:

- MIT 6.034 Artificial Intelligence, Fall 2010: View the complete course: http://ocw.mit.edu/6-034F10 Instructor: Patrick Winston In these lectures, Prof. Patrick Winston introduces the 6.034 material from…

- GitHub - 3b1b/manim: Animation engine for explanatory math videos: Animation engine for explanatory math videos. Contribute to 3b1b/manim development by creating an account on GitHub.

HuggingFace ▷ #cool-finds (1 messages):

capetownbali: Nice find…

HuggingFace ▷ #i-made-this (10 messages🔥):

Granite 3.0 model releaseWebinar on LLM Best PracticesEvolution of Contextual EmbeddingsZK Proofs for Chat History OwnershipPR Merged for HuggingFace.js

-

Granite 3.0 model makes a splash: A new on-device Granite 3.0 model was launched, showcasing an appealing thumbnail.

- Users are excited about its features and the convenience it provides for quick deployments.

-

Learn LLM Best Practices from Meta: A META Senior ML Engineer is hosting a webinar on navigating LLMs, already attracting nearly 200 signups.

- The session promises insights into prompt engineering and selecting models effectively.

-

Article on Self-Attention Evolution: An article discussing the evolution of static to dynamic contextual embeddings was shared, exploring innovations from traditional vectorization to modern approaches.

- The author aimed for an introductory level while acknowledging feedback from the community about additional models.

-

ZK Proofs for ChatGPT History Ownership: A demo for Proof of ChatGPT was introduced, allowing users to own their chat history using ZK proofs, potentially increasing training data for open-source models.

- This application aims to enhance the provenance and interoperability of data through OpenBlock’s Universal Data Protocol.

-

HuggingFace.js PR Successfully Merged: A pull request supporting the library pxia has been merged into HuggingFace.js.

- This addition brings AutoModel support along with two current architectures, enhancing the library’s functionality.

Links mentioned:

- On Device Granite 3.0 1b A400m Instruct - a Hugging Face Space by Tonic: no description found

- Self-Attention in NLP: From Static to Dynamic Contextual Embeddings: In the realm of Natural Language Processing (NLP), the way we represent words and sentences has a profound impact on the performance of…

- Tweet from OpenBlock (@openblocklabs): 1/ Introducing Proof of ChatGPT, the latest application built on OpenBlock’s Universal Data Protocol (UDP). This Data Proof empowers users to take ownership of their LLM chat history, marking a signi…

- Add pxia by not-lain · Pull Request #979 · huggingface/huggingface.js: This pr will support my library pxia which can be found in https://github.com/not-lain/pxia the library comes with AutoModel support along with 2 architectures for the moment. Let me know if you ha…

- Explore the Future of AI with Expert-led Events: Analytics Vidhya is the leading community of Analytics, Data Science and AI professionals. We are building the next generation of AI professionals. Get the latest data science, machine learning, and A…

HuggingFace ▷ #core-announcements (1 messages):

sayakpaul: <@&1014517792550166630> enjoy:

https://huggingface.co/blog/sd3-5

HuggingFace ▷ #NLP (8 messages🔥):

Tensor conversion bottleneckDataset device bottleneckCPU and GPU usage during inferenceEvaluating fine-tuned LLMsManaging evaluation results

-

Tackling Tensor Conversion Bottleneck: Concerns were raised about a potential Tensor conversion bottleneck from tokenization decoding iterations, especially during inference when adding to context and encoding into float 16.

- It’s suggested to look into the workflow, which involves decoding, printing, and passing data to the model, to identify efficiency improvements.

-

Potential Dataset Device Bottleneck Identified: One member questioned whether there’s a dataset device bottleneck, noticing CPU memory spiking to 1.5 GB despite using CUDA.

- The suggestion was made to check if the UHD Graphics card is being used as the primary inference driver instead of the dedicated GPU.

-

Setting CUDA Device Environment Variable: A member proposed setting the CUDA_VISIBLE_DEVICES environment variable to optimize performance on the intended GPU with the snippet:

os.environ["CUDA_VISIBLE_DEVICES"]="1".- This would potentially ensure that the correct GPU is leveraged for performing inference tasks, allowing for better resource allocation.

-

Methods for Evaluating Fine-tuned LLMs: There was discussion on evaluation methods for fine-tuning LLMs, focusing on automation via libraries like deepval and manual evaluations by experts.

- A member questioned tools for managing results from different versions for easier comparisons and mentioned feeling that Google Sheets might not be the best option due to its manual nature.

-

Concerns About Collaboration in Evaluation Tools: The need for effective management of evaluation results was emphasized, particularly when many collaborators could lead to errors in a Google Sheets environment.

- Members were seeking more efficient tools for comparative analysis, indicating challenges in maintaining accuracy and convenience in shared documents.

Link mentioned: Annoyed Cat GIF - Annoyed cat - Discover & Share GIFs: Click to view the GIF

HuggingFace ▷ #diffusion-discussions (29 messages🔥):

Kaggle and GPU UsageModel Downloading TechniquesLearning Rate and Training InsightsDiffusers Callbacks for Image GenerationCultural Connections in AI

-

Challenges with Kaggle’s GPU Resource Allocation: Users shared experiences using Kaggle’s dual 15GB GPUs, noting that one GPU was fully occupied during model downloads while the other remained unused.

- One user inquired about sharding the model across both GPUs to combine resources, while another confirmed that this feature can slow down performance.

-

Efficient Model Download Strategies: A member suggested using the huggingface_hub library for downloading models, allowing users to control download processes via code.

- Another user pointed out that if the default method causes issues, straight HTTP requests can be an alternative.

-

Learning Rate Concerns in Training: Concerns regarding the appropriate learning rate for training were raised, highlighting a strategy of adjusting it based on the number of GPUs used.

- Additionally, a user sought clarification on whether their model was overtrained or undertrained after completing 3,300 steps.

-

Implementing Callbacks in Diffusers: To log image generation steps, users were advised to utilize callbacks with

callback_on_step_endfor real-time adjustments during the denoising loop.- While standard logging can track values, callbacks provide enhanced flexibility for tracking image generation at each step.

-

Cultural Community Connections in AI: One user expressed enthusiasm about finding a fellow Latin contributor within the community, celebrating shared cultural ties in the AI space.

- This moment demonstrated the camaraderie and global connections that arise from shared interests in AI development.

Links mentioned:

- Pipeline callbacks): no description found

- GitHub - huggingface/diffusers: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch and FLAX.: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch and FLAX. - huggingface/diffusers

OpenRouter (Alex Atallah) ▷ #announcements (2 messages):

Claude 3.5 SonnetLlama 3.1 NitroMinistral updatesGrok BetaClaude self-moderated endpoints

-