AI News for 11/12/2024-11/13/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 2494 messages) for you. Estimated reading time saved (at 200wpm): 274 minutes. You can now tag @smol_ai for AINews discussions!

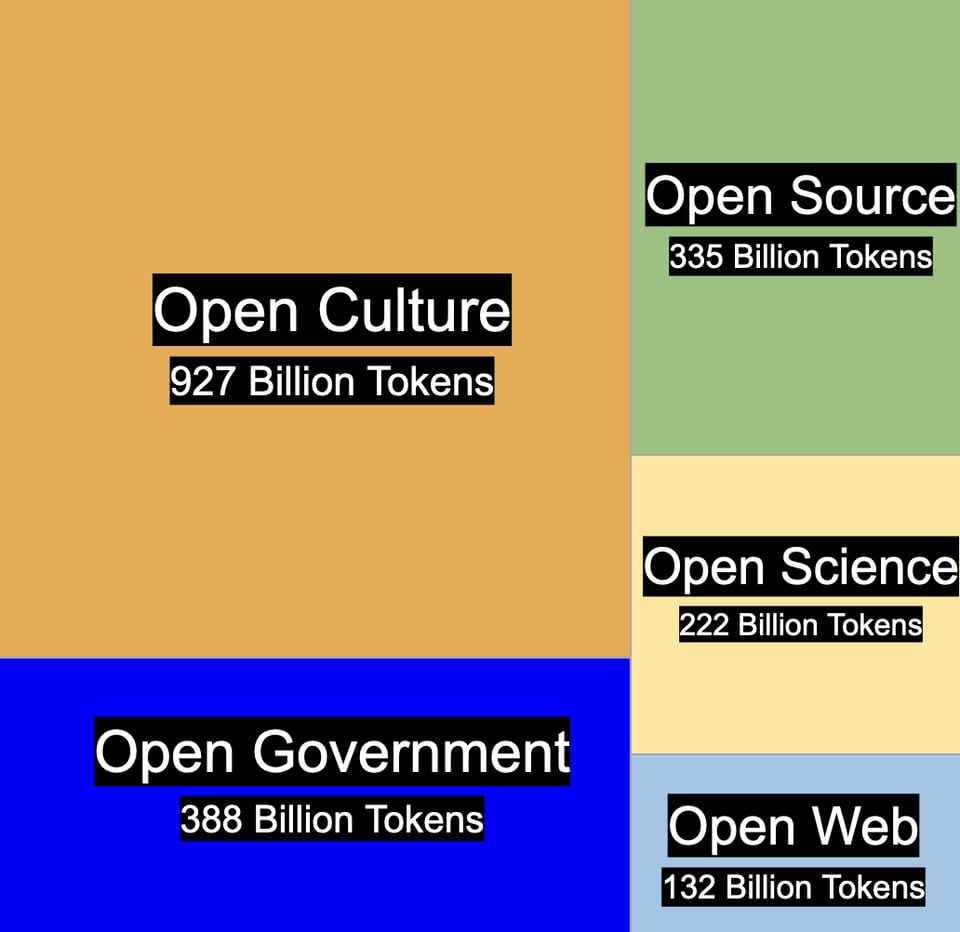

Great dataset releases always precede great models. Last we covered FineWeb (our coverage here) a generation of GPT2 speedruns ensued. Today, Pleais (via Huggingface) is here with an update Common Corpus “the largest fully open multilingual dataset for training LLMs, containing over 2 trillion tokens of permissibly licensed content with provenance information (2,003,039,184,047 tokens).”

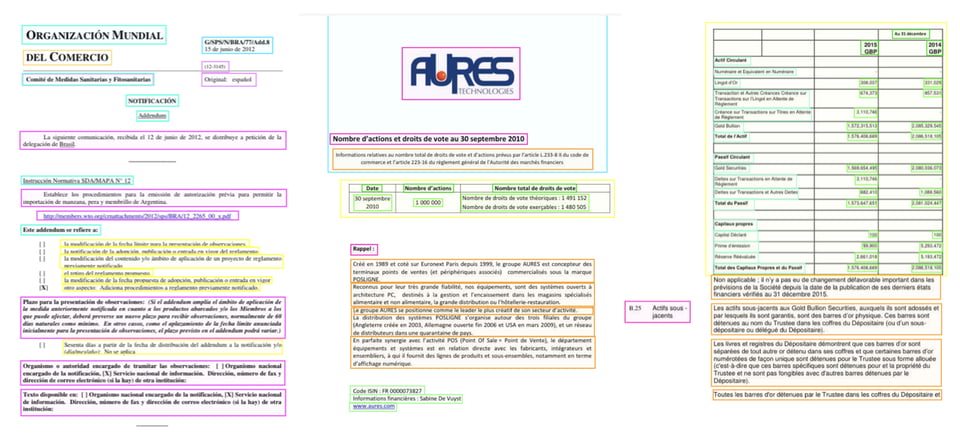

Apart from the meticulous provenance, the team also used OCRonos-Vintage, “a lightweight but powerful OCR correction model that fixes digitization errors at scale. Running efficiently on both CPU and GPU, this 124M-parameter model corrects spacing issues, replaces incorrect words, and repairs broken text structures.” This unlocks a whole lot of knowledge in PDFs:

Common Corpus was first released in March with 500b tokens so it is nice to see this work grow.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Tools and Development

-

Prompt Engineering and Collaboration: @LangChainAI introduced Prompt Canvas, a novel UX for prompt engineering that facilitates collaboration with AI agents and standardizes prompting strategies across organizations. Additionally, @tom_doerr showcased tools like llama-ocr and TTS Generation WebUI, enhancing OCR and text-to-speech capabilities for developers.

-

AI Development Platforms: @deepseek_ai launched JanusFlow 1.3B, a unified multimodal LLM integrating autoregressive models with rectified flow for superior image understanding and generation. Similarly, @swyx provided updates on proxy servers and realtime client SDKs, improving the developer experience for realtime applications.

AI Model Releases and Updates

-

New LLMs and Enhancements: @tom_doerr announced Qwen2.5-Coder, a code-focused LLM from Alibaba Cloud, emphasizing its advanced coding capabilities. Meanwhile, @omarsar0 highlighted the release of Claude 3.5 Sonnet, showcasing its superior code generation performance compared to other models.

-

Performance Benchmarks: @omarsar0 compared Qwen2.5-Coder with Claude 3.5 Sonnet, discussing their code generation capabilities and potential to bridge the gap between open-source and closed-source models. Additionally, @reach_vb introduced DeepSeek’s JanusFlow 1.3B, highlighting its state-of-the-art performance in multimodal tasks.

AI Research and Technical Insights

-

Quantization and Model Scaling: @Tim_Dettmers explored the challenges of quantization in AI models, noting that low-precision training may limit future scalability. @madiator summarized the paper “Scaling Laws for Precision,” revealing that increased pretraining data heightens model sensitivity to quantization, impacting inference efficiencies and GPU provisioning.

-

Scalability and Efficiency: @lateinteraction discussed the limitations of scaling through precision, suggesting alternative methods for efficiency gains. Furthermore, @deepseek_ai presented the Forge Reasoning Engine, leveraging Chain of Code, Mixture of Agents, and MCTS to enhance reasoning and planning in Hermes 3 70B.

Developer Tips and Tools

-

System Monitoring and Optimization: @giffmana recommended switching from

htopto btop for a more aesthetic and functional system monitor. Additionally, @swyx provided guidance on managing realtime client SDKs and optimizing development workflows. -

Software Engineering Best Practices: @hyhieu226 emphasized the principle “if it’s not broken, don’t fix it!,” advocating for simplicity and stability in software engineering practices.

AI Adoption and Impact

-

Healthcare Transformation: @bindureddy discussed how AI, in combination with initiatives like DOGE and RFK, can transform healthcare by addressing inefficiencies and high costs through innovative AI solutions.

-

Automation and Workforce: @bindureddy highlighted the potential for AI to automate white-collar professions and transform transportation, predicting significant impact on the workforce and emphasizing that AI adoption is still in its early stages, with the last mile expected to take the better part of the decade.

-

Enterprise AI Innovations: @RamaswmySridhar introduced Snowflake Intelligence, enabling enterprise AI capabilities such as data agents that facilitate data summarization and actionable insights within enterprise environments.

Memes/Humor

-

Humorous AI Remarks: @nearcyan joked about users preferring ChatGPT over Claude, equating it to having “green text msgs,” while @vikhyatk humorously outlined the steps of a strike culminating in profit, adding a light-hearted touch to discussing labor actions.

-

Tech and AI Humor: @andersonbcdefg likened Elon Musk’s potential government fixes to George Hotz’s rapid Twitter repairs, using a humorous comparison to illustrate skepticism. Additionally, @teortaxesTex shared a funny take on AI modeling with “i need to lock in” repetitions, adding levity to technical discussions.

-

Relatable Developer Jokes: @giffmana humorously referred to his extensive technical talk as a “TED talk,” while @ankush_gola11 expressed excitement about Prompt Canvas with a playful enthusiasm.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Qwen 2.5 Coder Improves 128K Context but Faces Usability Challenges

- Bug fixes in Qwen 2.5 Coder & 128K context window GGUFs (Score: 332, Comments: 90): The post discusses updates and bug fixes for Qwen 2.5 models, emphasizing the extension of context lengths from 32K to 128K using YaRN, and highlights the availability of native 128K GGUFs on Hugging Face. It also warns against using `

- YaRN and Context Lengths: Discussions center around the use of YaRN for extending context lengths in Qwen 2.5 models. Users express concerns about performance impacts when using 128K contexts and suggest using 32K for general tasks, with adjustments for longer contexts only when necessary.

- Bug Fixes and Tool Calling: The GGUFs uploaded include bug fixes, particularly addressing untrained tokens and pad token issues. There is a notable mention that both the Coder Base and Instruct models did not train for tool calling, with users discussing the untrained status of

<tool_call>tokens. - GPU Limitations and Fine-Tuning: Users inquire about the max sequence length for training on GPUs, with the 14B model approximating 12K context length on a 40GB GPU. There is also a discussion on fine-tuning without using YaRN initially and the potential benefits of this approach.

- Qwen 2.5 Coder 14b is worse than 7b on several benchmarks in the technical report - weird! (Score: 37, Comments: 23): The Qwen 2.5 Coder 14B model underperforms on certain benchmarks compared to the 7B version, as highlighted in the technical report. The author notes that for specific tasks like SQL revisions, the non-coding 14B model performs better, suggesting that generalist models may have a superior understanding in some contexts.

- Users reported performance issues with the Qwen 2.5 Coder 14B model, with some suggesting that the benchmarks might be incorrect due to a reporting error, as they have observed different performance in practice. A link to the Qwen 2.5 Coder blog was shared for further information.

- There are inconsistencies with quantized model files, where different Q8 files yield varying results, highlighting potential issues with some files being faulty. One user shared a working Q8 file from Hugging Face, suggesting that not all files are reliable.

- A user pointed out that the benchmark table contains errors, as the numbers for the 14B and 1.5B models are identical except for the livecode benchmark, indicating a likely data entry mistake.

- Qwen 2.5 32B Coder doesn’t handle the Cline prompt well. It hallucinates like crazy. Anyone done any serious work with it yet? (Score: 21, Comments: 46): The post discusses issues with Qwen 2.5 Coder 32B when handling Cline prompts, noting that it often hallucinates. The author mentions trying different setups like vLLM and OpenRouter/Hyperbolic without success, though they achieve better results using a simple Python script to manage file outputs.

- Users report mixed results with Qwen 2.5 Coder 32B; some experience success using Ollama’s version on an M1 with 64G RAM, while others face issues with Cline prompts, leading to unrelated outputs or infinite loops.

- Configuration and setup play a crucial role, with one user suggesting manual edits to config.json to properly integrate the model with continue. Properly prompting Qwen is emphasized as critical, given the lack of a standard prompt format.

- Some users highlight the model’s efficiency with large inputs, capable of handling 50k+ tokens and 100 API calls with minimal cost, but note that success varies depending on the integration tool used (e.g., AIder, cursor).

Theme 2. Scaling Laws in Precision and CPU Inference Tests

-

LLM inference with tensor parallelism on a CPU (Score: 43, Comments: 8): The author conducted experiments to assess the scalability of LLM inference with tensor parallelism on CPUs using the distributed-llama project. In the first experiment with Epyc 9374F as compute nodes, performance scaled to nearly 7x with 8 nodes after optimizing logits calculation. The second experiment using Ryzen 7700X nodes connected via a 10Gbe network showed a 6x performance increase with 8 nodes, demonstrating that LLM inference can effectively scale on CPUs, though further optimizations could improve results. The author’s fork of distributed-llama can be found here.

- Memory Bandwidth and NUMA Nodes: The discussion clarifies that the 8 nodes in the first experiment were not VMs but separate processes bound to NUMA nodes on the Epyc CPU. This setup allowed communication via loopback network, with potential scalability improvements if shared memory communication replaces networking, highlighting a theoretical memory bandwidth of 2 * 576 GB/s for dual Epyc Turin CPUs.

- Network Bottleneck Considerations: Commenters noted that the 10Gbe network used in the second experiment might be a bottleneck for distributed CPU inference. The author acknowledges that while loopback networking was used in the first experiment, physical network setups could benefit from tuning to reduce latency and improve efficiency, especially concerning NIC drivers and OS network configurations.

- Encouragement for Distributed CPU Inference: The results of these experiments are seen as promising for distributed CPU inference. There is interest in leveraging existing systems, including older or mid-range setups, for scalable inference tasks, with a focus on optimizing network and memory configurations to maximize performance.

-

Scaling Laws for Precision. Is BitNet too good to be true? (Score: 27, Comments: 7): A new paper, “Scaling Laws for Precision” (arxiv link), explores how quantization affects model precision and output quality, emphasizing that increased token use in pre-training exacerbates quantization’s negative impact in post-training. The author suggests 6-bit quantization as an optimal balance and hopes the findings will guide major labs in optimizing compute resources; additional insights are discussed in the AINews letter with opinions from Tim Dettmers (AINews link).

- Quantization Awareness Training (QAT) is emphasized as a crucial approach, where training is aware of quantization, allowing for more effective weight distribution, contrasting with post-training quantization which can degrade model performance, especially when trained in FP16.

- The cosine learning rate schedule is clarified as distinct from cosine similarity, with the former related to training dynamics and the latter to measuring vector similarity, both involving the cosine function but serving different purposes.

- Bitnet’s approach is discussed as not being included in the study, with a focus on how models trained in bf16 can lose important data when quantized post-training, differing from QAT which maintains a 1:1 model integrity.

Theme 3. Largest Mixture of Expert Models: Analysis and Performance

- Overview of the Largest Mixture of Expert Models Released So Far (Score: 32, Comments: 2): The post provides an overview of the largest Mixture of Expert (MoE) models with over 100 billion parameters currently available, emphasizing their architecture, release dates, and quality assessments. Key models include Switch-C Transformer by Google with 1.6 trillion total parameters, Grok-1 by X AI with 314 billion total parameters, and DeepSeek V2.5 by DeepSeek, which ranks highest overall. The post suggests that while DeepSeek V2.5 is currently top-ranked, Tencent’s Hunyuan Large and the unreleased Grok-2 could surpass it, noting that model suitability depends on specific use cases. For more details, you can refer to the HuggingFace blog and individual model links provided in the post.

- NousResearch Forge Reasoning O1 like models https://nousresearch.com/introducing-the-forge-reasoning-api-beta-and-nous-chat-an-evolution-in-llm-inference/ (Score: 240, Comments: 43): NousResearch has introduced the Forge Reasoning API and Nous Chat, which enhance reasoning capabilities in LLM (Large Language Models). This development represents an evolution in LLM inference, as detailed in their announcement here.

- Forge Reasoning API is not a new model but a system that enhances reasoning in existing models using Monte Carlo Tree Search (MCTS), Chain of Code (CoC), and Mixture of Agents (MoA). Despite being closed source and only available through an API waitlist, it demonstrates potential for reasoning improvements in LLMs, akin to advancements seen in open-source image generation.

- The discussion highlights skepticism and curiosity about the open-source status and the effectiveness of the Forge Reasoning API, with some users comparing it to Optillm on GitHub for experimenting with similar techniques. Users are keen on seeing independent tests to verify the claimed advancements in reasoning capabilities.

- The conversation reflects on the nature of technological advancements, with NousResearch’s efforts being likened to historical breakthroughs that become commonplace over time. It emphasizes the importance of workflows and system integration over standalone model improvements, pointing to a trend where open-source LLMs are beginning to receive similar enhancements as seen in other AI domains.

Theme 4. Unreliable Responses in Qwen 2.5: Self-Identification Issues

-

qwen2.5-coder-32b-instruct seems confident that it’s made by OpenAI when prompted in English. States is made by Alibaba when prompted in Chinese. (Score: 22, Comments: 15): Qwen 2.5 Coder exhibits inconsistent behavior regarding its origin, claiming to be developed by OpenAI when queried in English and by Alibaba when queried in Chinese.

- LLMs and Introspection: Several users, including JimDabell and Billy462, highlight that Large Language Models (LLMs), like Qwen 2.5 Coder, lack introspection capabilities and often produce “hallucinations” when asked about their origin, leading to inconsistent responses about their creators.

- Inconsistent Responses: Users, such as pavelkomin and muxxington, report varied responses from the model, where it claims to be made by different entities like Alibaba, OpenAI, Tencent Cloud, Anthropic, and Meta, indicating a strong influence from repeated phrases in training data rather than factual accuracy.

- Practical Concerns: Some users, such as standard-protocol-79, express indifference towards these inconsistencies as long as the model continues to generate effective code, suggesting that the primary concern for many is the model’s utility rather than its self-identification accuracy.

-

How to use Qwen2.5-Coder-Instruct without frustration in the meantime (Score: 32, Comments: 13): To improve performance with Qwen2.5-Coder-Instruct, avoid high repetition penalties, using slightly above 0 instead. Follow the recommended inference parameters, as low temperatures like T=0.1 are reportedly not problematic. Utilize bartowski’s quants for better output quality, and begin system prompts with “You are Qwen, created by Alibaba Cloud. You are a helpful assistant.” to enhance performance. Despite these adjustments, some users experience issues with vLLM and recommend alternatives like llama.cpp + GGUF.

- Users discuss temperature settings and repetition penalties for coding models like Qwen2.5-Coder-32B-Instruct. No-Statement-0001 found that a temperature of 0.1 successfully executed complex prompts, while others suggest avoiding high repetition penalties as they can degrade performance, with FullOf_Bad_Ideas recommending turning off repetition penalties for better zero-shot results.

- Some users, like Downtown-Case-1755, question the recommended high repetition penalties, noting that 1.05 is too high for coding tasks that naturally involve repetition. EmilPi highlights the importance of Top_K settings, which significantly impact model performance, as observed in the

generation_config.json. - Status_Contest39 shares experiences with different deployment setups, finding DeepInfra’s default parameters effective despite a Max Token limit of 512. Master-Meal-77 expresses dissatisfaction with official sampler recommendations, preferring a custom setup with top-p 0.9, min-p 0.1, and temp 0.7 for optimal results.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. AI Video Gen Evolution: CogVideoX 5B & DimensionX Release

-

CogVideoX1.5-5B Image2Video Tests. (Score: 91, Comments: 43): CogVideoX1.5-5B, a new image-to-video generation model, demonstrates its capabilities in converting still images to video content. No additional technical details or performance metrics were provided in the post.

- CogVideoX1.5-5B requires 34GB memory during generation and 65GB for VAE, with generation taking 15 minutes per 5-second video at 16fps. The model is currently accessible via command line inference script and has been tested on A100 and H100 80GB GPUs.

- Development updates indicate that Kijai is working on implementing version 1.5 in their wrapper, with a test branch already available for the cogwrapper. Integration with Comfy UI support is pending, while Mochi-1 offers an alternative requiring only 12GB VRAM.

- Users discussed motion quality improvements, noting that faster playback reduces the AI-generated slow-motion effect, as demonstrated in a sample video. Several comments focused on the need for more realistic physics simulation in the generated animations.

-

DimensionX: Create Any 3D and 4D Scenes from a Single Image with Controllable Video Diffusion | Flux Dev => DimensionX Demo (Score: 176, Comments: 51): DimensionX enables generation of 3D and 4D scenes from a single input image using controllable video diffusion techniques. The tool, developed by Flux Dev, allows for creation of dynamic scenes and environments from static images.

- The project’s official resources are available on GitHub, HuggingFace, and detailed in their research paper and project page. A Docker template is also available for implementation.

- Users discussed potential applications in 3D modeling software, suggesting integration with Blender and Unity, similar to Nvidia NeRF but requiring only single images. Some mentioned using it with photogrammetry software for environment creation.

- The term 4D in the project refers to time as the fourth dimension, essentially creating 3D scenes with temporal animation. Users also noted concerns about the workflow process and implementation details.

Theme 2. Claude’s Performance Issues & Rate Limits Spark User Frustration

-

The New Claude Sonnet 3.5 is Having a Mental Breakdown? (Score: 43, Comments: 79): Claude Sonnet 3.5 users report a significant performance decline over the past 72 hours, with notable deterioration in code quality, response coherence, and overall output quality compared to previous performance levels. The degradation appears consistent across multiple prompting approaches and historical prompts that previously worked well, with coding tasks specifically highlighted as an area of concern.

- Multiple developers report Claude incorrectly defaulting to React solutions regardless of the specified framework (Angular, ESP8266), with one user noting “at no point, in any of my files or prompts, is React a component of the project”.

- Users observe deteriorating response patterns including shortened bullet points, repetitive suggestions, and inability to handle basic code modifications. A developer who previously “built and published multiple apps” using Claude notes significant decline in even simple coding tasks.

- According to a comment referencing the Lex Fridman interview with Anthropic’s CEO, major AI labs sometimes reduce model quality through quantization to cut costs (by 200-400%), though this typically affects web interface users rather than API access.

-

Claude Pro limits needs revision now on priority (Score: 100, Comments: 66): Claude Pro users express frustration over the 2-hour usage caps and frequent limit restrictions, which interrupt their workflow and productivity. Users demand Anthropic revise the current Pro tier limits to better accommodate paying customers’ needs.

- Users discuss alternative solutions including using the API or rotating multiple Pro accounts, though many note the API costs would be significantly higher for their usage patterns, especially for those working with large text files.

- Several writers and developers share frustrations about hitting limits while working with large projects, particularly when using features like Project Knowledge and artifacts. One user reports hitting limits “4 times a day” while working with “80k words” of worldbuilding files.

- Multiple users mention using ChatGPT as a fallback when hitting Claude’s limits, though they prefer Claude’s capabilities. Some users have canceled their subscriptions due to the restrictions, with one suggesting a more realistic price point of “$79.99 per month”.

Theme 3. Gemini Now Accessible via OpenAI API Library

- Gemini is now accessible from the OpenAI Library. WTH? (Score: 172, Comments: 41): Google announced that Gemini can be accessed through the OpenAI Library, though the post lacks specific details about implementation or functionality. The post expresses confusion about this integration’s implications and purpose.

- Google’s Gemini API now accepts requests through the OpenAI API client library, requiring only three changes to implement: model name, API key, and endpoint URL to

generativelanguage.googleapis.com/v1beta. This adaptation follows industry standards as many LLM providers support the OpenAI API format. - The OpenAI library remains unchanged since it’s endpoint-agnostic, with all modifications made on Google’s server-side to accept OpenAI-formatted requests. This allows developers to easily switch between providers without major code rewrites.

- Multiple commenters clarify that this is not a collaboration between companies but rather Google implementing compatibility with an established standard API format. The OpenAI API has become the “de-facto standard” for LLM interactions.

- Google’s Gemini API now accepts requests through the OpenAI API client library, requiring only three changes to implement: model name, API key, and endpoint URL to

Theme 4. Greg Brockman Returns to OpenAI Amid Leadership Changes

- OpenAI co-founder Greg Brockman returns to ChatGPT maker (Score: 55, Comments: 5): Greg Brockman has returned to OpenAI after a three-month leave, announcing on X “longest vacation of my life complete. back to building @OpenAl!”, while working with CEO Sam Altman to create a new role focused on technical challenges. The return occurs amid significant leadership changes at the Microsoft-backed company, including departures of Mira Murati, John Schulman, and Ilya Sutskever, with OpenAI simultaneously developing its first AI inference chip in collaboration with Broadcom.

- [{‘id’: ‘lwwdcmr’, ‘author’: ‘ManagementKey1338’, ‘body’: ‘Indeed. I didn’t give him an offer. The man wants too much money.’, ‘score’: 4, ‘is_submitter’: False, ‘replies’: []}]

Theme 5. Major AI Companies Hitting Scaling Challenges

- OpenAI, Google and Anthropic Are Struggling to Build More Advanced AI (Score: 119, Comments: 114): OpenAI, Google, and Anthropic encounter technical and resource limitations in developing more sophisticated AI models beyond their current capabilities. The title suggests major AI companies face scaling challenges, though without additional context, specific details about these limitations cannot be determined.

- Meta reports no diminishing returns with model training, only stopping due to compute limitations. The new Nvidia Blackwell series offers 8x performance for transformers, while OpenAI continues progress with SORA, advanced voice mode, and O-1.

- Companies face challenges with training data availability and need new architectural approaches beyond the “more data, more parameters” paradigm. Current development areas include voice, vision, images, music, and horizontal integration.

- Future AI development may require new data sources including smart-glasses, real-time biometric data, and specialized models for niche applications. The field is experiencing what some describe as the peak of the Hype Cycle, heading toward a potential “Trough of Disillusionment”.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. New AI Models Shake Up the Landscape

- Qwen Coder Models Spark Excitement: Across several communities, developers are buzzing about the Qwen Coder models, eagerly testing their performance and sharing benchmarks. The models show promise in code generation tasks, stirring interest in their potential impact.

- UnslothNemo 12B Unleashed for Adventurers: The UnslothNemo 12B model, tailored for adventure writing and role-play, has been launched. A free variant is available for a limited time, inviting users to dive into immersive storytelling experiences.

- Aider v0.63.0 Codes Itself: The latest release of Aider boasts that 55% of its new code was self-authored. With added support for Qwen 2.5 Coder 32B and improved exception handling, Aider v0.63.0 takes a leap forward in AI-assisted development.

Theme 2. AI Tools and Integrations Enhance Workflows

- AI Coding Tools Join Forces: Supermaven has joined Cursor to build a powerhouse AI code editor. Together, they aim to enhance AI-assisted coding features, improving productivity for developers worldwide.

- Windsurf Editor Makes a Splash: Codeium launched the Windsurf Editor, the first agentic IDE that combines AI collaboration with independent task execution. Users are excited about its potential to maintain developer flow and boost coding efficiency.

- LM Studio Eyes Text-to-Speech Integration: Users expressed keen interest in integrating Text-to-Speech (TTS) features into LM Studio. The development team acknowledged the demand and is exploring possibilities to enhance the platform’s interactivity.

Theme 3. Benchmark Showdowns: Models Put to the Test

- Vision Language Models Battle in Robotics: A new research paper benchmarks Vision, Language, & Action Models like GPT-4 on robotic tasks. The study evaluates model performance across 20 real-world tasks, highlighting advancements in multimodal AI.

- Qwen 2.5 Coder vs. GPT-4: Clash of Titans: Enthusiasts compared Qwen 2.5 Coder 32B with GPT-4 and Claude 3.5 Sonnet, debating which model reigns supreme in code generation. Impressive generation speeds on consumer hardware spark further interest.

- ChatGPT Keeps Dates Straight; Others Lag Behind: Users noticed that models like Gemini and Claude often fumble with current dates, while ChatGPT maintains accurate date awareness. This difference is attributed to superior system prompt configurations in ChatGPT.

Theme 4. Community Voices Concerns Over AI Trends

- Perplexity Users Threaten to Jump Ship over Ads: Perplexity AI introduced ads, prompting backlash from users who feel their subscription should exempt them from advertising. The community awaits official clarification on how ads will affect the Pro version.

- Is the AI Bubble About to Burst?: A provocative article warns of an impending AI bubble burst, likening the massive $600 billion GPU investments with minimal returns to the dot-com crash. The piece sparks debate on the sustainability of current AI investments.

- AI21 Labs Deprecates Models, Users Fume: AI21 Labs faced user frustration after deprecating legacy models that many relied on for nearly two years. Concerns grow over the new models’ quality and fears of future deprecations.

Theme 5. Tech Challenges Push Developers to Innovate

- Triton Tackles Tiny Tensor Troubles: Developers working with Triton are optimizing GEMM kernels for small sizes under 16, addressing efficiency challenges and sharing solutions for improved performance in matrix computations.

- torch.compile() Sparks Memory Woes: Users report that using torch.compile() can increase peak memory usage by 3-16%, leading to out-of-memory errors in models with dynamic shapes. The community discusses profiling techniques to manage memory more effectively.

- tinygrad Community Squashes Bugs Together: The tinygrad team collaborates to fix a bug in the min() function for unsigned tensors. Through shared insights and code reviews, they demonstrate the power of open-source collaboration in improving AI frameworks.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

-

Qwen Coder Models Deployment: Members discussed the current development and testing of the Qwen Coder models, expressing interest in their performance and potential evaluation metrics. There are mentions of files and fixes available on Unsloth with suggestions to run evaluations similar to other models.

- Discussions highlighted the readiness of Qwen Coder for deployment, with community members proposing to benchmark it against existing models using provided resources.

-

Multi-GPU Training Limitations: Users explored the potential for training large models like Qwen 2.5 using multiple GPUs, specifically mentioning MI300X and VRAM needs. It was noted that Unsloth may be more efficient with a single GPU setup rather than multi-GPU configurations due to memory efficiency.

- The community debated the scalability of multi-GPU training, with some advocating for increased parallelism while others pointed out the memory management challenges inherent in large-scale model training.

-

Gemma 2B RAM Usage Concerns: Users discussed experiencing consistent RAM usage increase while running longer jobs with Gemma 2B, questioning if evaluation steps might be affecting performance. One member suggested training with 0 steps to mitigate excessive resource consumption.

- Suggestions were made to optimize training configurations to reduce RAM overhead, ensuring more stable performance during extended runs.

-

RAG for Long-term Memory: Inquiry about RAG (Retrieval-Augmented Generation) was made, along with requests for user experiences and guidance on using it for long-term data retention. A user recommended Dify as a simple alternative for implementing RAG.

- Community members shared various approaches to leveraging RAG, highlighting Dify as a user-friendly solution for integrating retrieval systems into generation workflows.

-

Optillm Release Enhancements: The latest release of Optillm introduces a local inference server that allows loading any HF model and LoRA adapters, enhancing usability for fine-tuned Unsloth models. This update also enables dynamic adapter switching during inference and supports advanced decoding techniques like cot_decoding and entropy_decoding while utilizing the standard OpenAI client SDK.

- Users lauded the new features in Optillm, noting the increased flexibility and improved workflow integration these enhancements bring to model inference processes.

HuggingFace Discord

-

Qwen Model Shows Variable Output: Users reported that the Qwen model’s performance in generating text can vary significantly, with comparisons made to other models like Ollama indicating that responses from Qwen often may hallucinate or lack quality.

- Tweaking parameters like repetition penalty and adjusting token lengths were suggested to enhance output quality.

-

Introducing LightRAG for Retrieval: An article was shared detailing LightRAG, which includes code evaluation comparing Naive RAG with local, global, and hybrid approaches.

- The author aims to highlight the advantages of using LightRAG in various retrieval tasks. Read the full article here.

-

Sulie Foundation Model for Time Series Forecasting: Sulie, a new foundation model for time series forecasting, aims to simplify the automation of LoRA fine-tuning and covariate support.

- The team seeks feedback and encourages users to check their work on GitHub, humorously highlighting common frustrations faced by data teams by comparing issues to a ‘chocolate teapot’ for zero-shot performance.

-

Benchmarking VLA Models for Robotics: A collaborative research paper titled Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks was released to evaluate the performance of VLA models like GPT4o.

-

SDXL Lightning Model Demonstrates Fast Image Generation: SDXL Lightning or sd1.5 models can generate images in just a few seconds on standard GPUs, making them ideal for prompt-based image creation.

- Variants like turbo/lightning/lcm can produce images in real-time on powerful hardware, as shared by users experimenting with these configurations.

Perplexity AI Discord

-

Perplexity AI Subscription Model Scrutinized: Users are evaluating the Perplexity Pro subscription, questioning its value amidst the introduction of ads, with many expressing intentions to cancel if ads are added.

- There is growing uncertainty about whether ads will appear in the Pro version, leading users to seek official clarification from the Perplexity team.

-

Clarification Sought on Perplexity Ads Implementation: Members are uncertain if ads will be included in the Pro subscription, prompting requests for confirmation to understand the impact on the user experience.

- The community emphasizes the need for transparent communication from Perplexity regarding ad integration to maintain trust and subscription value.

-

Ongoing Model Selection Issues in Perplexity: Users report persistent problems with selecting different models in Perplexity, with the system defaulting to GPT-4o despite selecting alternatives.

- This malfunction disrupts workflows for Pro subscribers who rely on consistent access to various models like Claude.

-

Exploring Fractal Machine Learning Enhancements: Fractal Machine Learning is being proposed to boost AI performance, with discussions on its potential applications in language models and collaborations with domain experts.

- Community members are sharing resources and expressing interest in integrating fractal concepts to advance machine learning techniques.

-

Differentiating Factors of Perplexity AI Models: An in-depth comparison highlights how Perplexity AI stands out in the AI landscape through unique features and enhanced user experience.

- The discussion focuses on key distinctions that could influence AI engineers’ preferences when selecting AI tools for their projects.

Eleuther Discord

-

Mitigating Saddle Points in Gradient Descent: During discussions on gradient descent optimization, participants highlighted that saddle points are less impactful when using noised gradient descent, ensuring optimizers remain effective even in their presence.

- However, some members emphasized that in high-dimensional scenarios, saddle points may still occur, suggesting that their prevalence isn’t entirely mitigated.

-

Evolution of Batch Normalization Techniques: The debate around Batch Normalization and its alternatives was prominent, with insights into the continued relevance of Batch Norm, especially when implemented as Ghost Batch Norm.

- Critiques pointed out that Batch Norm’s effectiveness varies with batch size, prompting calls for more research into its efficiency and optimal conditions for application.

-

Advancements in Vision Language Action Models: A new research release presented benchmarking Vision Language Action models in robotic tasks, involving prominent institutions and offering promising insights.

- Participants were encouraged to provide feedback on the work and explore the provided YouTube video and project links for a deeper understanding of the models and their applications.

-

Integrating DagsHub with GPT-NeoX: The potential value of integrating DagsHub with GPT-NeoX was proposed, seeking community insights into enhancing the platform’s capabilities.

- Inquiries about AnthropicAI’s frameworks revealed that they utilize proprietary systems, which are not publicly available.

-

Rethinking Gradient Descent Stepsizes: Professor Grimmer challenged the conventional notion that gradient descent requires constant step sizes of 1/L for optimal convergence.

- His findings, detailed in his paper, demonstrate that periodic long steps within the range (0, 2/L) can lead to better convergence results.

OpenRouter (Alex Atallah) Discord

-

UnslopNemo 12B launched for adventure writing: The UnslopNemo 12B model, tailored for adventure writing and role-play scenarios, is now available at UnslopNemo 12B.

- A free variant is accessible for 24 hours via UnslopNemo 12B Free, with support requests directed to Discord.

-

Mistral and Gemini gain parameter enhancements: Mistral and Gemini models have been updated to include Frequency Penalty and Presence Penalty parameters, enhancing their configurability.

- Additionally, Mistral now offers tools for seed adjustments, improving output consistency.

-

Confusion over Tool Calling functionality: Users are experiencing issues with the tool calling feature on OpenRouter, as enabling it doesn’t impact token usage as anticipated.

- Discussions highlight the need for clearer implementation guidelines to fully leverage tool calling in model interactions.

-

High token processing volume prompts pricing discussions: A user managing over 3 million tokens daily for an AI chatbot in a niche market has inquired about potential price reductions for high-volume token processing.

- This reflects a growing demand for scalable pricing models catering to extensive usage in specialized applications.

-

Requests surge for Custom Provider Keys: Multiple members have requested access to Custom Provider Keys, indicating a strong interest in leveraging this feature for tailored integrations.

- The community dialogue includes varied appeals, emphasizing the importance of Custom Provider Keys for diverse project requirements.

aider (Paul Gauthier) Discord

-

Aider v0.63.0 Launches with New Features: The Aider v0.63.0 release introduces support for Qwen 2.5 Coder 32B and enhanced handling of LiteLLM exceptions, improving overall usability.

- 55% of the code in this release was authored by Aider, demonstrating significant self-development.

-

Aider Extensions for VSCode and Neovim Released: New VSCode and Neovim extensions for Aider have been launched, featuring markdown preview, file management, and chat history, encouraging community contributions.

- These extensions aim to increase Aider’s utility across various platforms, fostering collaboration among developers.

-

SupermavenAI Partners with Cursor: Cursor announced that SupermavenAI is joining their team to enhance research and product capabilities, aiming to transform Cursor into a product powerhouse.

- The partnership was unveiled via Twitter, highlighting plans for collaborative innovation.

-

Qwen 2.5 Coder Support Added to Aider: Aider now supports Qwen 2.5 Coder 32B, integrating advanced coding capabilities into the platform.

- This update facilitates improved code assistance and aligns Aider’s features with contemporary coding standards.

-

OpenRouter Provider Configuration Tips for Aider: Discussions on configuring OpenRouter for Aider included specifying provider preferences and creating model metadata files to manage costs and context sizes.

- Users shared strategies for balancing provider use and emphasized understanding OpenRouter’s load balancing mechanisms.

LM Studio Discord

-

Optimizing Quantization Sizes for LM Studio: Members discussed the impact of quantization sizes, noting that smaller sizes lead to increased compression, while larger sizes may require splitting into multiple parts.

- Heyitsyorkie summarized that higher quant sizes could ensure better performance without significant losses.

-

Integrating TTS with LM Studio: LM Studio users are interested in connecting the platform to Text-to-Speech (TTS) features.

- The response indicated ongoing conversations around integrating such features, but no timeline was provided.

-

Resolving Qwen 2.5 Performance Issues: A user reported issues with Qwen 2.5, specifically receiving only autocomplete responses, but later noted it started working correctly.

- Others recommended ensuring proper configuration and exploring model options to optimize performance.

-

Python Script for Llama.cpp Integration: There was a request for a Python script to enable sideloading the latest Llama.cpp into LM Studio, highlighting the need for such functionality.

- Participants acknowledged the community’s long-standing anticipation and mentioned ongoing efforts to make it a reality.

-

GPU Combinations for Large Model Inference: Discussion on using a 12GB 3060 and 40GB A800 together for 70B class models raises the question of whether to use one GPU or both, with concerns on how scaling affects performance.

- A member suggested that it may be more beneficial to solely utilize the A800 since it can fit the model in VRAM while the 3060 cannot.

Stability.ai (Stable Diffusion) Discord

-

Training with Movie Posters using Dreambooth: A user is seeking tutorials for training on movie posters using Dreambooth in auto1111, looking for the latest techniques and suggestions for effective training.

- The community recommended checking existing resources and guides to streamline the process.

-

Animatediff Enables Video Clip Generation: Members discussed using Animatediff for generating video clips, highlighting its ability to post two images to create transitions, despite lower resolutions being suitable for social media.

- A recommendation for the Banodoco server was provided, as they specialize in video-related tools.

-

Checkpoint & LoRa Download Sources: Users shared links to external file hosting sites such as Google Drive, Mega, and Hugging Face for downloading checkpoint files and LoRAs, while discussing the limitations of Civit AI and potential content bans.

- Concerns were raised about the removal of specific content types and their impact on user access.

-

Resolving Python Torch Errors in Stable Diffusion: A user encountered an error with the torch package while setting up a Python environment for Stable Diffusion, and was advised to uninstall the current Python version and install Python 3.10.11 64bit.

- The user expressed gratitude for the support and planned to implement the suggested solution soon.

-

Discord Access Issues and Solutions: Users inquired about accessing URLs for Discord servers, specifically seeking new invites and direct links, with experiences of outdated invitation links for the Pixaroma community.

- The community provided assistance to connect with the required Discord servers.

Interconnects (Nathan Lambert) Discord

-

Nous 3 Model Performance Confusion: Discrepancies in Nous’ 70B model performance figures have emerged as seen in this thread, raising questions about the validity of the reported MMLU-Pro scores.

- Members speculate that differences in prompting techniques and benchmark inconsistencies could be factors influencing these divergent numbers.

-

AI Agent Tool ‘Operator’ Launch: OpenAI is set to launch a new AI agent tool called ‘Operator’ that automates tasks such as writing code and booking travel, expected to be released in January according to this announcement.

- This tool aims to enhance user productivity by taking actions on behalf of individuals in various contexts.

-

JanusFlow Model Introduction: The JanusFlow model is introduced as a new capability that harmonizes autoregressive LLMs with rectified flow for both image understanding and generation, detailed in this post.

- JanusFlow is designed to be robust, straightforward, and flexible, influencing the development of future AI models in this space.

-

Adaptive Techniques for Blocking Jailbreaks: Anthropic’s new research introduces adaptive techniques to rapidly block new classes of jailbreak as they are detected, as discussed in their paper here.

- Ensuring perfect jailbreak robustness is hard, highlighting the challenges in securing AI models.

-

Vision Language Models (VLMs): Members discussed Vision Language Models (VLMs), referencing Finbarr’s blog and a post on VLM inference costs.

- Key topics include the high computational cost due to 500+ image tokens and recent models like Pixtral and DeepSeek Janus improving text extraction from images.

Notebook LM Discord Discord

-

KATT Catapults Podcast Productivity: A member integrated KATT into their podcasting workflow, resulting in a fact-checked show exceeding 90 minutes by utilizing an altered system prompt after two years of KATT’s training.

- This integration streamlined the production process, enhancing the hosts’ ability to maintain accuracy and depth throughout the extended podcast episodes.

-

NotebookLM Nixed from External Sharing: A member inquired about sharing NotebookLM content outside their Google Organization, leading to confirmation that sharing is not possible externally due to admin-imposed restrictions.

- Further discussion revealed limitations on personal accounts, emphasizing the importance of adhering to organizational policies when handling NotebookLM data.

-

Gemini Guard: NotebookLM Data Security: Concerns were raised regarding the security of data uploaded to Gemini, with clarifications stating that paid accounts ensure data security, whereas free accounts do not.

- Members urged caution when uploading sensitive information, highlighting the necessity of maintaining confidentiality on the platform to prevent potential breaches.

-

Summarizing Success with NotebookLM: A user sought tips for using NotebookLM to summarize texts for college literature reviews, prompting recommendations to utilize synthetic datasets for safeguarding sensitive data.

- This approach aims to enhance the effectiveness of summaries while ensuring that privacy standards are upheld during the process.

-

Format Fails in Podcast Generation: Users discussed challenges in generating podcasts from specific sources, particularly facing issues with .md file formats.

- Recommendations included switching to PDF or Google Docs formats, which successfully resolved the podcast generation focus problems for users.

Latent Space Discord

-

Supermaven Joins Cursor: Supermaven has officially joined Cursor to enhance their AI coding editor capabilities. The collaboration leverages Supermaven’s AI-assisted features for improved software development experiences.

- Anyan sphere acquired Supermaven to beef up Cursor, with the deal remaining undisclosed. Community reactions were mixed, noting Supermaven’s prior effectiveness while expressing surprise at the transition.

-

Codeium Launches Windsurf Editor: Codeium introduced the Windsurf Editor, the first agentic IDE integrating AI collaboration with independent task execution, aiming to maintain developer flow.

- Despite positive first impressions, some users noted that Windsurf Editor may not yet outperform established tools like Copilot in certain aspects. Additionally, the editor is available without waitlists or invite-only access, emphasizing user inclusion.

-

Perplexity Introduces Sponsored Ads: Perplexity is experimenting with ads on its platform by introducing “sponsored follow-up questions” alongside search results. They partnered with brands like Indeed and Whole Foods to monetize their AI-powered search engine.

- This move aims to establish a sustainable revenue-sharing program, addressing the insufficiency of subscriptions alone.

-

Mira Lab Forms New AI Team: Mira Lab, initiated by ex-OpenAI CTO Mira Murati, is forming a new team focused on AI technologies, with reports indicating that at least one OpenAI researcher is joining the venture.

- The lab aims to undertake ambitious projects by leveraging the expertise of its founding members.

-

RAG to Advance Beyond Q&A: There is growing speculation that Retrieval-Augmented Generation (RAG) will transition from primarily Q&A applications to more sophisticated report generation in the coming months, as highlighted in a post by Jason Liu.

- The broader sentiment suggests that RAG’s evolution will enhance how companies utilize AI in documentation and reporting.

GPU MODE Discord

-

Triton Kernel Functionality and Conda Issues: Developers are addressing libstdc++ compatibility issues in Triton when using Conda environments, aiming to resolve crashes encountered during

torch.compileoperations.- Discussions include optimizing GEMM kernel designs for smaller sizes and addressing warp memory alignment errors to enhance Triton’s stability and performance.

-

Impact of torch.compile on Memory Usage: Users report that torch.compile() leads to a 3-16% increase in peak memory usage, contributing to out-of-memory (OOM) errors, particularly when dealing with dynamic shapes.

- Profiling with nsys and nvtx ranges is recommended to analyze GPU memory allocations, although there’s uncertainty if CUDA graphs in PyTorch exacerbate memory consumption without the

reduce-overheadflag.

- Profiling with nsys and nvtx ranges is recommended to analyze GPU memory allocations, although there’s uncertainty if CUDA graphs in PyTorch exacerbate memory consumption without the

-

MI300X Achieves 600 TFLOPS FP16 Peak Throughput: Performance benchmarks indicate that the MI300X reaches up to 600 TFLOPS for FP16 operations, though attempts to push beyond 800 TFLOPS with CK optimizations have been unsuccessful.

- A YouTube talk by Lei Zhang and Lixun Zhang highlights Triton’s support for AMD GPUs, showcasing optimization strategies around chiplets to improve GPU performance.

-

Liger-Kernel v0.4.1 Released with Gemma 2 Support: The latest v0.4.1 release of Liger-Kernel introduces Gemma 2 support and a patch for CrossEntropy issues, addressing softcapping in fused linear cross entropy.

- Enhancements also include fixes for GroupNorm, contributing to more efficient operations and validating the robustness of the updated kernel.

-

ThunderKittens Update: DSMEM Limitations and Synchronization: Updates in ThunderKittens reveal that the H100 GPU only supports DSMEM reductions for integer types, prompting discussions on optimizing semaphore operations and synchronization to prevent hangs.

- Future pull requests aim to finalize testing code for integers, enhancing the kernel’s reliability and performance in cooperative groups and semaphore synchronization contexts.

tinygrad (George Hotz) Discord

-

Tinygrad’s Distributed Approach with Multi-node FSDP: A user inquired about Tinygrad’s current strategy for distributed computing, particularly regarding its handling of FSDP and support for multi-node setups. They referenced the multigpu training tutorial for detailed insights.

- Another user mentioned an open bounty on FSDP as a potential resource and discussed the scalability challenges of current implementations.

-

Data Handling in Cloud for Tinygrad: Discussion highlighted that while cloud capabilities allow utilizing thousands of GPUs across different machines, optimal performance depends on fast connectivity and effective all-reduce implementations.

- Concerns were raised about the efficiency of having a single machine orchestrate data management and processing during training runs.

-

Device-to-device Communication in Tinygrad: George Hotz indicated that device-to-device communication is managed through Tinygrad’s Buffer via the

transferfunction, suggesting potential ease in extending this to cloud setups.- He humorously noted it could be accomplished with just a few lines of code, indicating the simplicity of implementation.

-

Performance Optimization in Sharding in Tinygrad: There was a discussion on the necessity of clarifying whether users are machine-sharded or cloud-sharded to prevent unexpected performance issues and costs during slower sync operations.

- The conversation underscored the importance of efficient data handling strategies to maintain performance levels across different configurations.

-

Fixing Unsigned Tensor min() Bug in tinygrad: A user identified a bug in the min() function for unsigned tensors when calculating minimum values with zeros, suggesting flips to resolve it. They referenced the PR #7675.

- Rezvan submitted a PR with failing tests, mentioning the complexity due to potential infs and nans.

OpenAI Discord

-

Enhancing AI Models’ Date Accuracy: Discussions revealed that models like Gemini and Claude often provide incorrect current dates, whereas ChatGPT maintains accurate date awareness. Link to discussion.

- One user attributed ChatGPT’s accuracy to its superior system prompt configuration, enabling better inference of dates in various contexts.

-

ChatGPT o1-preview Shows Increased Creativity: ChatGPT o1-preview is receiving positive feedback for its enhanced creativity and personalized responses compared to earlier versions. Feedback thread.

- Users appreciate its ability to anticipate inputs, contributing to a more tailored interaction experience.

-

Implementing Scratchpad Techniques in LLMs: Members are exploring the use of scratchpad techniques as a pseudo-CoT method, allowing LLMs to articulate their thought processes while generating solutions. Discussion link.

- There is enthusiasm for integrating scratchpads into structured outputs to improve documentation and workflow consistency.

-

Challenges with Mobile Copy-Paste Functionality: Ongoing copy-paste issues on mobile platforms are affecting user experience, with problems persisting for several weeks. Issue report.

- Users are seeking effective solutions to restore functionality and enhance mobile interaction capabilities.

-

VPN Usage for Circumventing Access Restrictions: A discussion emphasized the legality of using VPNs to bypass internet restrictions, highlighting their role in maintaining access. Conversation thread.

- Participants noted that current block configurations may be ineffective against users employing VPNs for intended purposes.

Modular (Mojo 🔥) Discord

-

exllamav2 Elevates MAX Inference: Members highlighted the exllamav2 GitHub project as a valuable resource for enhancing LLM inference on MAX, emphasizing its clean and optimized codebase.

- Key features include ROCM support for AMD and efficient handling of multimodal models, positioning exllamav2 as a strong candidate for deeper integration with the MAX platform.

-

Mojo JIT Compiler Optimization: The community discussed the feasibility of shipping the Mojo JIT compiler by ensuring a compact binary size and interoperability with precompiled binaries.

- A member emphasized that while MLIR can be shipped, the compiler is crucial for achieving native code execution without exposing source for all dependent applications.

-

MAX Platform Capabilities: MAX was introduced as a comprehensive suite of APIs and tools for building and deploying high-performance AI pipelines, featuring components like the MAX Engine for model execution.

- The MAX documentation was shared, showcasing its capabilities in deploying low-latency inference pipelines effectively.

-

UnsafePointer Risks in Mojo: UnsafePointer in Mojo was flagged for its potential to invoke undefined behavior, leading to memory safety issues as detailed by a community member.

- Another member noted that Mojo enforces stricter pointer rules compared to C/C++, aiming to minimize risks such as type punning and enhance overall memory safety.

-

Mana Project Naming Trends: Members humorously discussed the frequent use of the name Mana, referencing projects like mana.js and 3rd-Eden’s mana.

- The conversation reflected on the trend of adopting ‘Mana’ in project names, indicating a broader cultural influence in naming conventions within the tech community.

LlamaIndex Discord

-

Vocera Launch on Product Hunt: Vocera was launched on Product Hunt, enabling AI developers to test and monitor voice agents 10X faster.

- The team is seeking feedback to boost Vocera’s visibility within the AI community.

-

GenAI Pipelines with LlamaIndex: Learned how to build robust GenAI pipelines using LlamaIndex, Qdrant Engine, and MLflow to enhance RAG systems.

- The step-by-step guide covers streamlining RAG workflows, maintaining performance across model versions, and optimizing indexing for efficiency.

-

RAG vs Reporting Debate: A debate emerged comparing RAG (Retrieval-Augmented Generation) with traditional reporting, noting that reports account for only 10% of problem-solving in corporations.

@jxnlcoargued that reports are more impactful, emphasizing that information retrieval is key to effective report generation.

-

Dynamic Section Retrieval in RAG: Introduced a new dynamic section retrieval technique in RAG, allowing full sections to be retrieved from documents instead of fragmented chunks.

- This method addresses community concerns about multi-document RAG, as discussed in this article.

-

Chatbots in Corporate Settings: Members observed that upper management favors report formats over chatbot interactions within corporations.

- Despite this preference, chatbots are recognized as effective tools for conducting internal searches.

Cohere Discord

-

Rerank API Best Practices: Users are seeking best practices for the

queryfield in the v2/rerank API, noting significant variations inrelevanceScorewith slight query changes. Reference the Rerank Best Practices for optimal endpoint performance.- Examples include a

queryfor ‘volume rebates’ achieving a score of ~0.998 compared to ~0.17 for ‘rebates’, causing confusion about the model’s responsiveness to query semantics.

- Examples include a

-

Production API Key Upgrade: A user reported upgrading to a production API key, anticipating a more stable experience with Cohere’s services once current issues are resolved.

- This upgrade indicates a commitment to utilizing Cohere’s offerings, dependent on the resolution of ongoing API errors.

-

Benchmarking Vision Language Action Models: A new paper titled Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks was released, showcasing collaboration between Manifold, Georgia Tech, MIT, and Metarch AI.

- The research evaluates emerging Vision Language Action models, including GPT4o, on their ability to control robots across 20 real-world tasks. Explore more on the Multinet website and the code repository.

-

ICS Support for Events: A user emphasized the necessity of implementing ICS file support for managing numerous events hosted on the Discord server.

- The request was well-received by members, with positive feedback supporting the addition of this feature.

-

File Content Viewing Feature: A new feature was introduced to view the content of uploaded files within the toolkit, enhancing file management capabilities.

- The feature was met with enthusiasm from members, who expressed appreciation for the improved functionality.

OpenAccess AI Collective (axolotl) Discord

-

Docker Image Tagging for Releases: Docker images for the main branch have been built, with a reminder to tag them for version releases. A member emphasized the importance of proper tagging for organized version control and upcoming releases.

- This practice ensures traceability for each release, as detailed in the latest pull request.

-

Qwen2.5 Coder Size Insights: A member shared a YouTube video comparing different sizes of Qwen2.5 Coder, discussing their performance metrics in detail.

- The video provides an in-depth analysis, aiding users in selecting the appropriate model size for their specific needs.

-

Qwen2.5 Performance on NVIDIA 3090: Qwen2.5 is running on an NVIDIA 3090, resulting in enhanced generation speed. This hardware configuration underscores the performance gains achievable for demanding models.

- Users noted significant improvements in generation times, highlighting the benefits of high-end GPUs in model deployments.

-

Comparing Qwen2.5 Coder with GPT4o and Claude 3.5 Sonnet: A YouTube video titled ‘Qwen2.5 Coder 32B vs GPT4o vs Claude 3.5 Sonnet’ was shared to compare these models.

- The video aims to determine the superior model among them, offering a comprehensive analysis of their capabilities.

-

Axolotl Version 0.5.0 Launch: The team announced the release of Axolotl version 0.5.0, now installable via

pip install axolotl. Updates include improvements and new features detailed on the GitHub release page.- Community members celebrated the release, expressing excitement and pledging support for ongoing enhancements.

DSPy Discord

-

Nous Research Introduces Forge Reasoning API: Nous Research has unveiled the Forge Reasoning API in beta, promising significant advancements in LLM inference capabilities.

- This development marks a crucial step in enhancing reasoning processes within AI systems, showcasing a blend of newer models and optimized techniques.

-

Nous Chat Gets an Upgrade: Accompanying the Forge API, Nous Chat is set to evolve, incorporating advanced features that improve user interaction and accessibility.

- With this evolution, the emphasis lies on delivering a richer conversation experience powered by enhanced LLM technologies and methodologies.

-

DSPY Comparative Analysis Discussions: Members discussed experiences with DSPY for conducting a comparative analysis on zero shot and few shot prompting in specific domains.

- One member asked others about their use of the GitHub template to facilitate this analysis.

-

Shared DSPY Resources: A member shared a link to a Colab notebook to help others get started with DSPY.

- Another member referenced a different notebook and highlighted its potential usefulness for their own project involving a code similarity tool.

-

Evaluating Tools with LLM Approaches: A member mentioned evaluating zero shot versus few shot prompting in their attempts to create a code similarity tool using LLM.

- They referred to another GitHub resource that they worked on to compare approaches and outcomes.

OpenInterpreter Discord

-

Open Interpreter Excites Community: Members are thrilled about the latest Open Interpreter updates, particularly the streamed responses handling feature, which enhances user experience.

- One member commented that ‘Open Interpreter is awesome!’, prompting discussions on the potential for building text editors in future collaborations.

-

OpenCoder: Revolutionizing Code Models: The OpenCoder YouTube video showcases OpenCoder, an open-source repository aimed at developing superior code language models with advanced capabilities.

- Viewers were intrigued by OpenCoder’s potential to surpass existing models, discussing its impact on the code modeling landscape.

-

Forecasting the AI Bubble Burst: A post warns that the AI bubble is about to pop, drawing parallels to the 1999 dot-com bubble, especially in terms of massive GPU investments failing to yield proportional revenues.

- The article details the risk of $600 billion in GPU spend against a mere $3.4 billion in revenue, suggesting a precarious outlook for the AI sector.

-

Comparing AI and Dot-Com Crashes: Discussions highlight that the ongoing infrastructure buildup in AI mirrors strategies from the dot-com era, with companies heavily investing in hardware without clear monetization.

- The risk of repeating past Pets.com-like failures is emphasized, as firms chase theoretical demand without proven profit pathways.

LAION Discord

-

Vision Language Action Models Released: A new paper titled ‘Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks’ evaluates the performance of Vision Language Models across 20 different real-world tasks, showcasing collaborations among Manifold, Georgia Tech, MIT, and Metarch AI.

- The work aims to profile this emerging class of models like GPT4o, marking a first step in a broader benchmark for multimodal action models.

-

Watermark Anything Tool Released: The project ‘watermark-anything’ provides an official implementation for watermarking with localized messages. This model is noted to have only 1M parameters, potentially allowing it to be integrated into various AI generators quickly.

- The lightweight architecture enables rapid deployment across different AI generation platforms, facilitating seamless integration.

-

EPOCH 58 COCK Model Updates: A member shared updates about EPOCH 58 COCK, noting improvements with vit-s at 60M parameters and enhanced model features.

- They remarked that legs are coming in and the cockscomb is becoming more defined*, signaling positive progress in model capabilities.*

-

Advancements in Robotic Learning Tasks: Discussions highlighted progress in Robotic Learning Tasks, particularly in applying Vision Language Action Models to enhance robot control and task automation.

- Community members debated the challenges and potential solutions for deploying these models in real-world robotic systems, citing ongoing experiments and preliminary results.

-

AI Generators Performance Enhancements: Participants discussed the latest improvements in AI Generators Performance, focusing on increased model efficiency and output quality.

- Specific benchmarks and performance metrics were analyzed to assess the advancements, with emphasis on practical implementations.

LLM Agents (Berkeley MOOC) Discord

-

Utilizing Tape for Agent-Human Communication: A member inquired about using Tape as a medium for communication between humans and agents, seeking appropriate documentation.

- This led to a request for guidance on publishing an agent’s tape entries encountering errors to a queue.

-

Sharing Resources on TapeAgents Framework: In response to TapeAgents queries, a member shared a GitHub intro notebook and a relevant paper.

- The member stated that they have read all provided resources, expressing that they had already reviewed the suggested materials.

Alignment Lab AI Discord

-

Latent Toys website launch: A member shared the newly created Latent Toys, highlighting it as a noteworthy project.

- A friend was behind the development of the site, further adding to its significance.

-

Community discussion on Latent Toys: Members discussed the launch of Latent Toys, emphasizing its importance within the community.

- The announcement generated interest and curiosity about the offerings of the new site.

Gorilla LLM (Berkeley Function Calling) Discord

-

Gorilla Submits PR for Writer Models and Palmyra X 004: A member announced the submission of a PR to add support for Writer models and Palmyra X 004 to the leaderboard.

- They expressed gratitude for the review and shared an image preview linked to the PR, highlighting community collaboration.

-

Community Response to Gorilla PR: Another member promptly responded to the PR submission, indicating they will review the changes.

- Their acknowledgment of ‘Thank you!’ underscored active community engagement.

AI21 Labs (Jamba) Discord

-

Legacy Models Deprecation: Members expressed their frustration regarding the deprecation of legacy models, stating that the new models are not providing the same output quality.

- This deprecation is hugely disruptive for users who have relied on the older models for almost two years.

-

Transition to Open Source Solutions: Users are scrambling to convert to an open source solution, while they have been willing to pay for the old models.

- How can we be sure AI21 won’t deprecate the new models in the future too? highlights their concerns about the stability of future offerings.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Unsloth AI (Daniel Han) ▷ #general (160 messages🔥🔥):

Qwen Coder ModelsMulti-GPU TrainingDataset FormattingFinetuning VRAM RequirementsInference System Tokens

-

Discussion on Qwen Coder models deployment: Members discussed the current development and testing of the Qwen Coder models, with some expressing interest in their performance and potential evaluation metrics.

- There are mentions of files and fixes available on Unsloth with suggestions to run evaluations similar to other models.

-

Multi-GPU Training Limitations: Users explored the potential for training large models like Qwen 2.5 using multiple GPUs, specifically mentioning MI300X and VRAM needs.

- It was noted that Unsloth may be more efficient with a single GPU setup rather than multi-GPU configurations due to memory efficiency.

-

Dataset Formatting and Input Handling: There were discussions about how to effectively format datasets for finetuning, specifically concerning separator tokens and input/output differentiation.

- Suggestions included using special tokens like ’### response’ or

---to inform the model when to switch between user input and model output.

- Suggestions included using special tokens like ’### response’ or

-

Finetuning Large Models and VRAM Requirements: Users inquired about the required VRAM for finetuning 405B models, with some proposing configurations involving high VRAM GPUs.

- The practicality of testing configurations on platforms like RunPod was suggested, with discussions around the efficiency of Unsloth training.

-

Inference System Tokenization: Members questioned the necessity of explicit separators in user inputs during inference, contemplating whether systems like LM Studio handle this implicitly.

- It was clarified that various models adopt different approaches regarding start and end tokens for handling user input and assistant responses.

Links mentioned:

- WeightWatcher: Data-Free Diagnostics for Deep Learning: no description found

- Tweet from Daniel Han (@danielhanchen): Bug fixes & analysis for Qwen 2.5: 1. Pad_token should NOT be <|endoftext|> Inf gens 2. Base <|im_start|> <|im_end|> are untrained 3. PCA on embeddings has a BPE hierarchy 4. YaRN …

- Goku Anime GIF - Goku Anime Super Saiyan - Discover & Share GIFs: Click to view the GIF

- Massed Compute: Massed Compute is a cloud compute provider that serves cutting-edge GPUs without bulky contracts and unnecessary up-sells.

- Reddit - Dive into anything: no description found

- subreddits: no description found

- GitHub - EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models.: A framework for few-shot evaluation of language models. - EleutherAI/lm-evaluation-harness

Unsloth AI (Daniel Han) ▷ #off-topic (2 messages):

User reactionsDiscord interactions

-

Shared ‘Womp Womp’ Moment: Members expressed a mutual reaction described as ‘womp womp’, indicating a shared sentiment of disappointment or humor.

- This informal communication style highlights the casual nature of discussions within the Discord community.

-

Echoing Community Feelings: Another member chimed in agreeing with the initial sentiment, stating, ‘I do same.’

- Such responses reflect a collaborative and friendly atmosphere among members discussing their reactions.

Unsloth AI (Daniel Han) ▷ #help (178 messages🔥🔥):

Gemma 2B RAM usageFlash Attention installation issuesRAG experience and usageOllama model managementTraining on responses only

-

Gemma 2B RAM usage during long runs: Users discussed experiencing consistent RAM usage increase while running longer jobs with Gemma 2B, questioning if evaluation steps might be affecting performance.

- One member suggested training with 0 steps to mitigate excessive resource consumption.

-

Flash Attention installation troubles: A member reported difficulties with the Flash Attention installation hanging indefinitely, prompting suggestions from others about checking command execution and running environments.

- Another member inquired if the issue was related to the command ‘run all’ during the setup process.

-

Exploring RAG for long-term memory: Inquiry about RAG (Retrieval-Augmented Generation) was made, along with requests for user experiences and guidance on using it for long-term data retention.

- A user recommended Dify as a simple alternative for implementing RAG.

-

Managing models in Ollama: There were discussions on how to upload and manage models in Ollama, including commands used for copying and pushing model updates.

- Users confirmed successful model uploads and pushes, navigating challenges of namespace permissions and app connections.

-

Understanding training on assistant responses: Clarification was sought regarding the

train_on_responses_onlyfunction, specifically whether all previous messages in a chat are considered during training.- It was noted that the model masks user inputs, allowing assistant responses to be predicted based only on the context of prior responses.

Links mentioned:

- raultherockstar/nyayasathi: Get up and running with large language models.

- facebook/nougat-small · Hugging Face: no description found

- Paper page - Nougat: Neural Optical Understanding for Academic Documents: no description found

- Nougat - a Hugging Face Space by tomriddle: no description found

- Computer Vision API - OCR bounding boxes | Microsoft Community Hub: I’m building an API for a customer than leverages computer vision to analyse images. I am trying to get it to analyse handwriting on the white…

Unsloth AI (Daniel Han) ▷ #showcase (2 messages):

Optillm releaseHyperstack legitimacy

-

Optillm Launches Exciting New Features: The latest release of optillm introduces a local inference server that allows loading any HF model and LoRA adapters, enhancing usability for fine-tuned Unsloth models.

- This update also enables dynamic adapter switching during inference and supports advanced decoding techniques like cot_decoding and entropy_decoding while utilizing the standard OpenAI client SDK.

-

Inquiry on Hyperstack’s Legitimacy: A question was raised about the legitimacy of Hyperstack, indicating some community interest or skepticism regarding this platform.

- No specific details or consensus about Hyperstack’s credibility were provided in the discussion.

Link mentioned: GitHub - codelion/optillm: Optimizing inference proxy for LLMs: Optimizing inference proxy for LLMs. Contribute to codelion/optillm development by creating an account on GitHub.

HuggingFace ▷ #general (270 messages🔥🔥):

Qwen Model PerformanceAI Project Ethical ConsiderationsGPU Specifications and PerformanceLangchain and Hugging Face IntegrationDeep Learning Model Suggestions

-

Qwen Model Shows Variable Output: Users reported that the Qwen model’s performance in generating text can vary significantly, with comparisons made to other models like Ollama indicating that responses from Qwen often may hallucinate or lack quality.

- Tweaking parameters like repetition penalty and adjusting token lengths were suggested to enhance output quality.

-

Ethical Implications of AI Projects: Discussion arose regarding the ethical dilemmas associated with AI projects aimed at extracting reflection data and their potential misuse if widely available.

- Participants expressed the need for a careful discussion on the ethical ramifications while acknowledging the project’s valid applications, especially for law enforcement.

-