AI News for 11/27/2024-11/28/2024. We checked 7 subreddits, 433 Twitters and 29 Discords (198 channels, and 2864 messages) for you. Estimated reading time saved (at 200wpm): 341 minutes. You can now tag @smol_ai for AINews discussions!

In the race for “open o1”, DeepSeek r1 (our coverage here) still has the best results, but has not yet released weights. An exhausted-sounding Justin Lin made a sudden late release today of QwQ, weights, demo and all:

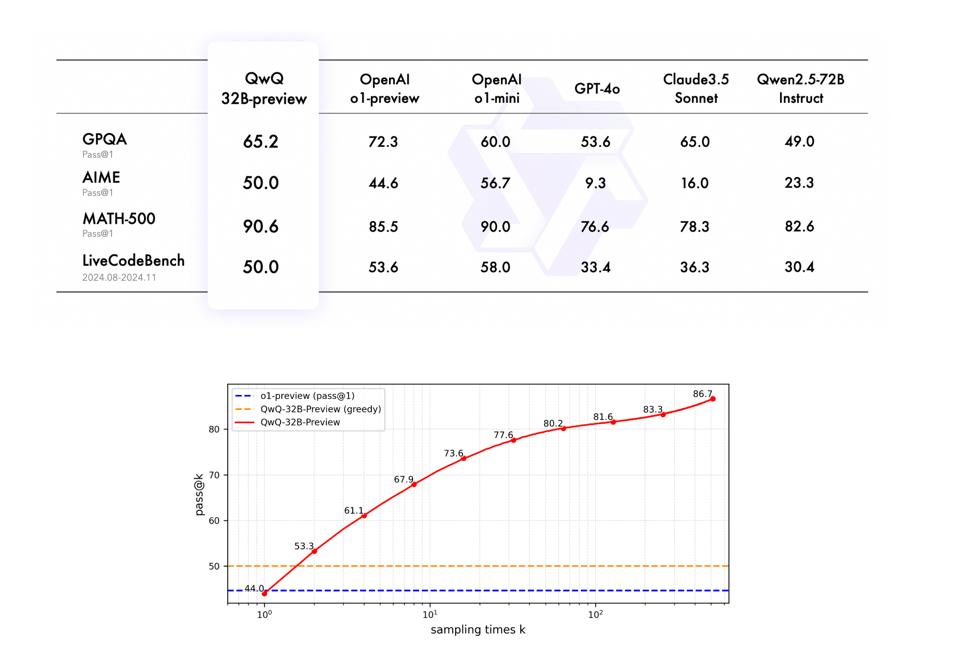

Quite notably, this 32B open weight model fully trounces GPT4o and Claude 3.5 Sonnet on every benchmark.

Categorizing QwQ is an awkward task: it makes enough vague handwaves at sampling time scaling to get /r/localLlama excited:

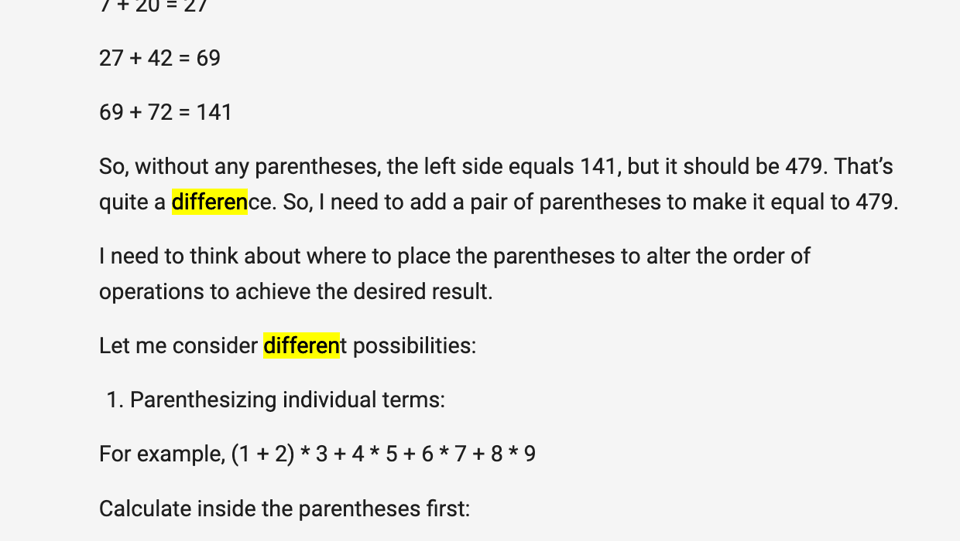

But the model weights itself show that it looks like a Qwen 32B model (probably Qwen 2.5, our coverage here), so perhaps it has just been finetuned to take “time to ponder, to question, and to reflect”, to “carefully examin[e] their work and learn from mistakes”. “This process of careful reflection and self-questioning leads to remarkable breakthroughs in solving complex problems”. All of which are vaguely chatgptesque descriptions and do not constitute a technical report, but the model is real and live and downloadable which says a lot. The open “reasoning traces” demonstrate how it has been tuned to do sequential search:

A fuller technical report is coming but this is impressive if it holds up… perhaps the real irony is Reflection 70B (our coverage here) wasn’t wrong, just early…

[Sponsored by SambaNova] Inference is quickly becoming the main function of AI systems, replacing model training. It’s time to start using processors that were built for the task. SambaNova’s RDUs have some unique advantages over GPUs in terms of speed and flexibility.

swyx’s comment: RDU’s are back! if a simple 32B autoregressive LLM like QwQ can beat 4o and 3.5 Sonnet, that is very good news for the alternative compute providers, who can optimize the heck out of this standard model architecture for shockingly fast/cheap inference.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Theme 1. Hugging Face and Model Deployments

- Hugging Face Inference Endpoints on CPU: @ggerganov announced that Hugging Face now supports deploying llama.cpp-powered instances on CPU servers, marking a step towards wider low-cost cloud LLM availability.

- @VikParuchuri shared the release of Marker v1, a tool that’s 2x faster and much more accurate, signaling advancements in infrastructure for AI model deployment.

- Agentic RAG Developments: @dair_ai discussed Agentic RAG, emphasizing its utility in building robust RAG systems that leverage external tools for enhanced response accuracy.

- The discussion highlights strategies for integrating more advanced LLM chains and vector stores into the system, aiming for better precision in AI responses.

Theme 2. Open Source AI Momentum

-

Popular AI Model Discussions: @ClementDelangue noted Flux by @bfl_ml becoming the most liked model on Hugging Face, pointing towards the shift from LLMs to multi-modal models gaining usage.

- The conversation includes insights on the increased usage of image, video, audio, and biology models, showing a broader acceptance and integration of diverse AI models in enterprise settings.

-

SmolLM Hiring Drive: @LoubnaBenAllal1 announced an internship opportunity at SmolLM, focusing on training LLMs and curating datasets, highlighting the growing need for development in smaller, efficient models.

Theme 3. NVIDIA and CUDA Advancements

-

CUDA Graphs and PyTorch Enhancements: @ID_AA_Carmack praised the efficiency of CUDA graphs, arguing for process simplification in PyTorch with single-process-multi-GPU programs to enhance computational speed and productivity.

- The conversation involved suggestions for optimizing DataParallel in PyTorch to leverage single-process efficiency.

-

Torch Distributed and NVLink Discussion: @ID_AA_Carmack questioned torch.distributed capabilities in relation to NVIDIA GB200 NVL72, highlighting complexities and considerations for working with multiple GPUs.

Theme 4. Impact of VC Practices and AI Industry Insights

-

Venture Capital Critiques and Opportunities: @saranormous criticized irrational decisions by some VCs, advocating for stronger partnerships to protect founders.

-

@marktenenholtz shared thoughts on the high expectations of new graduates and the challenges of managing entry-level programmers, hinting at industry-wide concerns about sustainable talent growth.

-

Stripe and POS Systems in AI: @marktenenholtz emphasized the role of Stripe in improving business data quality, highlighting that POS systems offer a high ROI and are essential for businesses harnessing AI for data capture.

Theme 5. Multimodal Model Development

- ShowUI Release: @_akhaliq discussed ShowUI, a vision-language-action model designed as a GUI visual agent, signaling a trend toward integrating AI in interactive applications.

- @multimodalart celebrated the capabilities of QwenVL-Flux, which adds features like image variation and style transfer, enhancing multimodal AI applications.

Theme 6. Memes and Humor

- Humor and AI Culture: @swyx provided a humorous take on AI development with references to Neuralink and AI enthusiast culture.

- @c_valenzuelab shared a witty fictional scenario about AI, exploring how toys of the past might be reimagined in today’s burgeoning AI landscape.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. QwQ-32B: Qwen’s New Reasoning Model Matches O1-Preview

-

QwQ: “Reflect Deeply on the Boundaries of the Unknown” - Appears to be Qwen w/ Test-Time Scaling (Score: 216, Comments: 84): Qwen announced a preview release of their new 32B language model called QwQ, which appears to implement test-time scaling. Based on the title alone, the model’s focus seems to be on reasoning capabilities and exploring knowledge boundaries, though without additional context specific capabilities cannot be determined.

- Initial testing on HuggingFace suggests QwQ-32B performs on par with OpenAI’s O1 preview, with users noting it’s highly verbose in its reasoning process. Multiple quantized versions are available including Q4_K_M for 24GB VRAM and Q3_K_S for 16GB VRAM.

- Users report the model is notably “chatty” in its reasoning, using up to 3,846 tokens for simple questions at temperature=0 (compared to 1,472 for O1). The model requires a specific system prompt mentioning “You are Qwen developed by Alibaba” for optimal performance.

- Technical testing reveals strong performance on complex reasoning questions like the “Alice’s sisters” problem, though with some limitations in following strict output formats. Users note potential censorship issues with certain content and variable performance compared to other reasoning models.

-

Qwen Reasoning Model????? QwQ?? (Score: 52, Comments: 9): The post appears to be inquiring about the release of QwQ, which seems to be related to the Qwen Reasoning Model, though no specific details or context are provided. The post includes a screenshot image but without additional context or information about the model’s capabilities or release timing.

- QwQ-32B-Preview model has been released on Hugging Face with a detailed explanation in their blog post. Initial testing shows strong performance compared to other models.

- Users report that this 32B parameter open-source model performs comparably to O1-preview, suggesting significant progress in open-source language models. The model demonstrates particularly strong reasoning capabilities.

- The model was released by Qwen and is available for immediate testing, with early users reporting positive results in comparative testing against existing models.

Theme 2. Qwen2.5-Coder-32B AWQ Quantization Outperforms Other Methods

-

Qwen2.5-Coder-32B-Instruct-AWQ: Benchmarking with OptiLLM and Aider (Score: 58, Comments: 16): Qwen2.5-Coder-32B-Instruct model was benchmarked using AWQ quantization on 2x3090 GPUs, testing various configurations including Best of N Sampling, Chain of Code, and different edit formats through Aider and OptiLLM, achieving a peak pass@2 score of 74.6% with “whole” edit format and temperature 0.2. The testing revealed that AWQ_marlin quantization outperformed plain AWQ, while “whole” edit format consistently performed better than “diff” format, and lower temperature settings (0.2 vs 0.7) yielded higher success rates, though chain-of-code and best-of-n techniques showed minimal impact on overall success rates despite reducing errors.

- VRAM usage and temperature=0 testing were key points of interest from the community, with users requesting additional benchmarks at temperatures of 0, 0.05, and 0.1, along with various topk settings (20, 50, 100, 200, 1000).

- The AWQ_marlin quantized model is available on Huggingface and can be enabled at runtime using SgLang with the parameter “—quantization awq_marlin”.

- Users suggested testing min_p sampling with recommended parameters of temperature=0.2, top_p=1, min_p=0.9, and num_keep=256, referencing a relevant Hugging Face discussion about its benefits.

-

Qwen2.5-Coder-32B-Instruct - a review after several days with it (Score: 86, Comments: 87): Qwen2.5-Coder-32B-Instruct model, tested on a 3090 GPU with Oobabooga WebUI, demonstrates significant limitations including fabricating responses when lacking information, making incorrect code review suggestions, and failing at complex tasks like protobuf implementations or maintaining context across sessions. Despite these drawbacks, the model excels at writing Doxygen comments, provides valuable code review feedback when user-verified, and serves as an effective sounding board for code improvement, making it a useful tool for developers who can critically evaluate its output.

- Users highlight that the original post used a 4-bit quantized model, which significantly impairs performance. Multiple experts recommend using at least 6-bit or 8-bit precision with GGUF format and proper GPU offloading for optimal results.

- Mistral Large was praised as superior to GPT/Claude for coding tasks, with users citing its 1 billion token limit, free access, and better code generation capabilities. The model was noted to produce more accurate, compilable code compared to competitors.

- Several users emphasized the importance of proper system prompts and sampling parameters for optimal results, sharing resources like this guide. The Unsloth “fixed” 128K model with Q5_K_M quantization was recommended as an alternative.

Theme 3. Cost-Effective Hardware Setups for 32B Model Inference

- Cheapest hardware go run 32B models (Score: 63, Comments: 107): 32B language models running requirements were discussed, with focus on achieving >20 tokens/second performance while fitting models entirely in GPU RAM. The post compares NVIDIA GPUs, noting that a single RTX 3090 can only handle Q4 quantization, while exploring cheaper alternatives like dual RTX 3060 cards versus the more expensive option of dual 3090s at ~1200€ used.

- Users discussed alternative hardware setups, including Tesla P40 GPUs available for $90-300 running 72B models at 6-7 tokens/second, and dual RTX 3060s achieving 13-14 tokens/second with Qwen 2.5 32B model.

- A notable setup using exllama v2 demonstrated running 32B models with 5 bits per weight, 32K context, and Q6 cache on a single RTX 3090 with flash attention and cache quantization enabled.

- Performance comparisons showed RTX 4090 processing prompts 15.74x faster than M3 Max, while Intel Arc A770s were suggested as a budget option with higher memory bandwidth but software compatibility issues.

Theme 4. NVIDIA Star-Attention: 11x Faster Long Sequence Processing

- GitHub - NVIDIA/Star-Attention: Efficient LLM Inference over Long Sequences (Score: 51, Comments: 4): NVIDIA released Star-Attention, a new attention mechanism for processing long sequences in Large Language Models on GitHub. The project aims to make LLM inference more efficient when handling extended text sequences.

- Star-Attention achieves up to 11x reduction in memory and inference time while maintaining 95-100% accuracy by splitting attention computation across multiple machines. The mechanism uses a two-phase block-sparse approximation with blockwise-local and sequence-global attention phases.

- SageAttention2 was suggested as a better alternative for single-machine attention optimization, while Star-Attention is primarily beneficial for larger computing clusters and distributed systems.

- Users noted that this is NVIDIA’s strategy to encourage purchase of more graphics cards by enabling distributed computation across multiple machines for handling attention mechanisms.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Claude Gets Model Context Protocol for Direct System Access

-

MCP Feels like Level 3 to me (Score: 32, Comments: 23): Claude demonstrated autonomous coding capabilities by independently writing and executing Python code to access a Bing API through an environment folder, bypassing traditional API integration methods. The discovery suggests potential for expanded tool usage and agent swarms through direct code execution, enabling AI systems to operate with greater autonomy by writing and running their own programs.

- MCP functionality in the Claude desktop app enables autonomous code execution without traditional API integration. Access is available through the developer settings in the desktop app’s configuration menu.

- Users highlight how Claude can independently create and execute tools for tasks it doesn’t immediately know how to complete, including scraping documentation and accessing information through custom-built solutions.

- While the autonomous coding capability is powerful, concerns were raised about potential future restrictions and the need for proper sandboxing to prevent environmental/data issues. A Python library is available for implementing custom servers and tools.

-

Model Context Protocol is everything I’ve wanted (Score: 28, Comments: 12): Model Context Protocol (MCP) enables Claude to directly interact with computer systems and APIs, representing a significant advancement in AI system integration.

- User cyanheads created a guide for implementing MCP tools, demonstrating the protocol’s implementation for only $3 in API costs.

- The Claude desktop app with MCP enables native integration with internet access, computer systems, and smart home devices through tool functions injected into the system prompt. The functionality was demonstrated with a weather tool example.

- Practical demonstrations included Claude writing a Pac-Man game to local disk and creating a blog post about quantum computing breakthroughs using internet research capabilities, showing the system’s versatility in file system interaction and web research.

Theme 2. ChatGPT Voice Makes 15% Cold Call Conversion Rate

- I Got ChatGPT to Make Sales Calls for Me… and It’s Closing Deals (Score: 144, Comments: 67): The author experimented with ChatGPT’s voice mode for real estate cold calls, achieving a 12-15% meaningful conversation rate and 2-3% meeting booking rate from 100 calls, significantly outperforming their manual efforts of 3-4% conversation rate. The success stems from the AI’s upfront disclosure and novelty factor, where potential clients stay on calls longer due to curiosity about the technology, with one case resulting in a signed contract, while the AI maintains consistent professionalism and handles rejection effectively.

- The system costs approximately $840/month in OpenAI API fees and can handle 21 simultaneous calls with current Tier 3 access, with potential to scale to 500 simultaneous calls at Tier 5. The backend implementation uses Twilio and websockets in approximately 5000 lines of code.

- Legal concerns were raised regarding TCPA compliance, with potential $50,120 fines per violation as of 2024 for Do Not Call Registry violations. Users noted that AI calls require prior express written consent to avoid class action lawsuits.

- The novelty factor was highlighted as a key but temporary advantage, with predictions that AI cold calling will become widespread in sales within months. Some users suggested creating AI receptionists to counter the anticipated increase in AI cold calls.

Theme 3. OpenAI’s $1.5B Softbank Investment & Military Contracts Push

-

OpenAI gets new $1.5 billion investment from SoftBank, allowing employees to sell shares in a tender offer (Score: 108, Comments: 2): OpenAI secured a $1.5 billion investment from SoftBank through a tender offer, enabling employees to sell their shares. The investment continues OpenAI’s significant funding momentum following their major deal with Microsoft earlier in 2023.

- SoftBank’s involvement raises concerns among commenters due to their controversial investment track record, particularly with recent high-profile tech investments that faced significant challenges.

-

The new ‘land grab’ for AI companies, from Meta to OpenAI, is military contracts (Score: 111, Comments: 6): Meta, OpenAI, and other AI companies are pursuing US military and defense contracts as a new revenue stream and market expansion opportunity. No additional context or specific details were provided in the post body.

- Government spending and military contracts are seen as a reliable revenue stream for tech companies, with commenters noting this follows historical patterns of tying business growth to defense funding.

- Community expresses concern and skepticism about AI companies partnering with military applications, with references to Skynet and questioning the safety implications.

- Discussion highlights that these contracts are funded through taxpayer dollars, suggesting public stake in these AI developments.

Theme 4. Local LLaMa-Mesh Integration Released for Blender

- Local integration of LLaMa-Mesh in Blender just released! (Score: 81, Comments: 7): LLaMa-Mesh AI integration for Blender has been released, enabling local processing capabilities. The post lacks additional details about features, implementation specifics, or download information.

- The LLaMa-Mesh AI project is available on HuggingFace for initial release and testing.

- Users express optimism about the tool’s potential, particularly compared to existing diffusion model mesh generation approaches.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. AI Models Break New Ground in Efficiency and Performance

- Deepseek Dethrones OpenAI on Reasoning Benchmarks: Deepseek’s R1 model has surpassed OpenAI’s o1 on several reasoning benchmarks, signaling a shift in AI leadership. Backed by High-Flyer’s substantial compute resources, including an estimated 50k Hopper GPUs, Deepseek plans to open-source models and offer competitive API pricing.

- OLMo 2 Outperforms Open Models in AI Showdown: OLMo 2 introduces new 7B and 13B models that outperform other open models, particularly in recursive reasoning and complex AI applications. Executives are buzzing about OLMo 2’s capabilities, reflecting cutting-edge advancements in AI technology.

- MH-MoE Model Boosts AI Efficiency Without the Bulk: The MH-MoE paper presents a multi-head mechanism that matches the performance of sparse MoE models while surpassing standard implementations. Impressively, it’s compatible with 1-bit LLMs like BitNet, expanding its utility in low-precision AI settings.

Theme 2. AI Tools and Infrastructure Level Up

- TinyCloud Launches with GPU Army—54x 7900XTXs: Tinygrad announces the upcoming TinyCloud launch, offering contributors access to 54 GPUs via 9 tinybox reds by year’s end. With a custom driver ensuring stability, users can easily tap into this power using an API key—no complexity, just raw GPU horsepower.

- MAX Engine Flexes Muscles with New Graph APIs: The MAX 24.3 release introduces extensibility, allowing custom model creation through the MAX Graph APIs. Aiming for low-latency, high-throughput inference, MAX optimizes real-time AI workloads over formats like ONNX—a shot across the bow in AI infrastructure.

- Sonnet Reads PDFs Now—No Kidding!: Sonnet in Aider now supports reading PDFs, making it a more versatile assistant for developers. Users report smooth sailing with the new feature, saying it “effectively interprets PDF files” and enhances their workflow—talk about a productivity boost.

Theme 3. AI Community Grapples with Ethical Quandaries

- Bsky Dataset Debacle Leaves Researchers Adrift: A dataset of Bluesky posts created by a Hugging Face employee was yanked after intense backlash, despite compliance with terms of service. The removal hampers social media research, hitting smaller researchers hardest while big labs sail smoothly—a classic case of the little guy getting the short end.

- Sora Video Generator Leak Causes AI Stir: The leak of OpenAI’s Sora Video Generator ignited hot debates, with a YouTube video spilling the tea. Community members dissected the tool’s performance and criticized the swift revocation of public access—the AI world loves a good drama.

Theme 4. Users Wrestle with AI Tool Growing Pains

- Cursor Agent Tangled in Endless Folder Fiasco: Users report that Cursor Agent is spawning endless folders instead of organizing properly—a digital ouroboros of directories. Suggestions pour in to provide clearer commands to straighten out the agent’s behavior—nobody likes a messy workspace.

- Jamba 1.5 Mini Muffs Function Calls: The Jamba 1.5 mini model via OpenRouter is coughing up empty responses when handling function calls. While it works fine without them, users are scratching their heads and checking their code—function calling shouldn’t be a black box.

- Prompting Research Leaves Engineers in a Pickle: Participants express frustration over the lack of consistent empirical prompting research, with conflicting studies muddying the waters. The community is on the hunt for clarity in effective prompting techniques—standardization can’t come soon enough.

Theme 5. Big Bucks and Moves in the AI Industry

- PlayAI Raises $21M to Give Voice AI a Boost: PlayAI secures $21 million from Kindred Ventures and Y Combinator to develop intuitive voice AI interfaces. The cash influx aims to enhance voice-first interfaces, making human-machine interactions as smooth as a jazz solo.

- Generative AI Spending Hits Whopping $13.8B: Generative AI spending surged to $13.8 billion in 2024, signaling a move from AI daydreams to real-world implementation. Enterprises are opening their wallets, but decision-makers still grapple with how to get the biggest bang for their buck.

- SmolVLM Makes Big Waves in Small Packages: SmolVLM, a 2B VLM, sets a new standard for on-device inference, outperforming competitors in GPU RAM usage and token throughput. It’s fine-tunable on Google Colab, making powerful AI accessible on consumer hardware—a small model with a big punch.

PART 1: High level Discord summaries

Modular (Mojo 🔥) Discord

-

MAX Engine outshines ONNX in AI Inference: MAX Engine is defined as an AI inference powerhouse designed to utilize models, whereas ONNX serves as a model format supporting various formats like ONNX, TorchScript, and native Mojo graphs.

- MAX aims to deliver low-latency, high-throughput inference across diverse hardware, optimizing real-time model inference over ONNX’s model transfer focus.

-

Mojo Test Execution Slows Due to Memory Pressure: Running all tests in a Mojo directory takes significantly longer than individual tests, indicating potential memory pressure from multiple mojo-test-executors.

- Commenting out test functions gradually improved runtime, suggesting memory leaks or high memory usage are causing slowdowns.

-

MAX 24.3 Release Boosts Engine Capabilities: The recent MAX version 24.3 release highlights its extensibility, allowing users to create custom models via the MAX Graph APIs.

- The Graph API facilitates high-performance symbolic computation graphs in Mojo, positioning MAX beyond a mere model format.

-

Mojo Enhances Memory Management Strategies: Discussions revealed that Chrome’s memory usage contributes to performance issues during Mojo test executions, highlighting overall system memory pressure.

- Users acknowledged the need for better hardware to handle their workloads, emphasizing the necessity for effective resource management.

-

Mojo Origins Tracking Improves Memory Safety: Conversations about origin tracking in Mojo revealed that currently,

vecand its elements share the same origin, but new implementations will introduce separate origins for more precise aliasing control.- Introducing two origins—the vector’s origin and its elements’ origin—will ensure memory safety by accurately tracking potential aliasing scenarios.

Cursor IDE Discord

-

Cursor Agent’s Folder Glitches: Users reported that the Cursor Agent is creating endless folders instead of organizing them properly, indicating potential bugs. They suggested that providing clearer commands could enhance agent functionality and prevent confusion when managing project structures.

- These issues were discussed extensively on the Cursor Forum, highlighting the need for improved command clarity to streamline folder organization.

-

Cursor v0.43.5 Feature Changes: The latest Cursor version 0.43.5 update has sparked discussions about missing features, including changes to the @web functionality and the removal of the tabs system. Despite these changes, some users appreciate Cursor’s continued contribution to their productivity.

- Detailed feedback can be found in the Cursor Changelog, where users express both concerns and appreciations regarding the new feature set.

-

Model Context Protocol Integration: There is significant interest in implementing the Model Context Protocol (MCP) within Cursor to allow the creation of custom tools and context providers. Users believe that MCP could greatly enhance the overall user experience by offering more tailored functionalities.

- Discussions about MCP’s potential were highlighted on Cursor’s documentation, emphasizing its role in extending Cursor’s capabilities.

-

Cursor Enhances Developer Workflow: Many users shared positive experiences with Cursor, emphasizing how it has transformed their approach to coding and project execution. Even users who are not professional developers feel more confident tackling ambitious projects thanks to Cursor.

- These uplifting experiences were often mentioned in the Cursor Forum, showcasing Cursor’s impact on development workflows.

-

Markdown Formatting Challenges in Cursor: Users discussed markdown formatting issues in Cursor, particularly problems with code blocks not applying correctly. They are seeking solutions or keybindings to enhance their workflow and better manage files within the latest updates.

- Solutions and workarounds for these markdown issues were explored in various forum threads, indicating an ongoing need for improved markdown support.

OpenAI Discord

-

Sora Video Generator Leak Sparks Conversations: The recent leak of OpenAI’s Sora Video Generator ignited discussions, with references to a YouTube video detailing the event.

- Community members debated the tool’s performance and the immediate revocation of public access following the leak.

-

ChatGPT’s Image Analysis Shows Variable Results: Users experimented with ChatGPT’s Image Analysis capabilities, noting inconsistencies based on prompt structure and image presentation.

- Feedback highlighted improved interactions when image context is provided compared to initiating interactions without guidance.

-

Empirical Prompting Research Lacks Consensus: Participants expressed frustration over the scarcity of empirical prompting research, citing conflicting studies and absence of standardized approaches.

- The community seeks clarity on effective prompting techniques amidst numerous contradictory papers.

-

AI Phone Agents Aim for Human-Like Interaction: Discussions emphasized that AI Phone Agents should simulate human interactions rather than mimic scripted IVR responses.

- Members stressed the importance of AI understanding context and verifying critical information during calls.

-

Developing Comprehensive Model Testing Frameworks: Engineers debated the creation of robust Model Testing Frameworks capable of evaluating models at scale and tracking prompt changes.

- Suggestions included implementing verification systems to ensure response accuracy and consistency across diverse use cases.

Nous Research AI Discord

-

MH-MoE Improves Model Efficiency: The MH-MoE paper details a multi-head mechanism that aggregates information from diverse expert spaces, achieving performance on par with sparse MoE models while surpassing standard implementations.

- Additionally, it’s compatible with 1-bit LLMs like BitNet, expanding its applicability in low-precision settings.

-

Star Attention Enhances LLM Inference: Star Attention introduces a block-sparse attention mechanism reducing inference time and memory usage for long sequences by up to 11x, maintaining 95-100% accuracy.

- This mechanism seamlessly integrates with Transformer-based LLMs, facilitating efficient processing of extended sequence tasks.

-

DALL-E’s Variational Bound Controversy: There’s ongoing debate regarding the variational bound presented in the DALL-E paper, with assertions that it may be flawed due to misassumptions about conditional independence.

- Participants are scrutinizing whether these potential oversights affect the validity of the proposed inequalities in the model.

-

Qwen’s Open Weight Reasoning Model: The newly released Qwen reasoning model is recognized as the first substantial open-weight model capable of advanced reasoning tasks, achievable through quantization to 4 bits.

- However, some participants are skeptical about previous models being classified as reasoning models, citing alternative methods that demonstrated reasoning capabilities.

-

OLMo’s Growth and Performance: Since February 2024, OLMo-0424 has exhibited notable improvements in downstream performance, particularly by boosting performance over its predecessors.

- The growth ecosystem is also seeing contributions from projects like LLM360’s Amber and M-A-P’s Neo models, enhancing openness in model development.

Eleuther Discord

-

RWKV-7’s potential for soft AGI: The developer expressed hope in delaying RWKV-8’s development, considering RWKV-7’s capabilities as a candidate for soft AGI and acknowledging the need for further improvements.

- They emphasized that while RWKV-7 is stable, there are still areas that can be enhanced before transitioning to RWKV-8.

-

Mamba 2 architecture’s efficiency: The community is curious about the new Mamba 2 architecture, particularly its efficiency aspects and comparisons against existing models.

- Specific focus was placed on whether Mamba 2 allows for more tensor parallelism or offers advantages over traditional architectures.

-

SSMs and graph curvature relation: Participants discussed the potential of SSMs (State Space Models) operating in message passing terms, highlighting an analogy between vertex degree in graphs and curvature on manifolds.

- They noted that higher vertex degrees correlate to negative curvature, showcasing a discrete version of Gaussian curvature.

-

RunPod server configurations for OpenAI endpoint: A user discovered that the RunPod tutorial does not explicitly mention the correct endpoint for OpenAI completions, which is needed for successful communication.

- To make it work, use this endpoint and provide the model in your request.

OpenRouter (Alex Atallah) Discord

-

Gemini Flash 1.5 Capacity Boost: OpenRouter has implemented a major boost to the capacity of Gemini Flash 1.5, addressing user reports of rate limiting. Users experiencing issues are encouraged to retry their requests.

- This enhancement is expected to significantly improve user experience during periods of high traffic by increasing overall system capacity.

-

Provider Routing Optimization: The platform is now routing exponentially more traffic to the lowest-cost providers, ensuring users benefit from lower prices on average. More details can be found in the Provider Routing documentation.

- This strategy maintains performance by fallback mechanisms to other providers when necessary, optimizing cost-effectiveness.

-

Grok Vision Beta Launch: OpenRouter is ramping up capacity for the Grok Vision Beta, encouraging users to test it out at Grok Vision Beta.

- This launch provides an opportunity for users to explore the enhanced vision capabilities as the service scales its offerings.

-

EVA Qwen2.5 Pricing Doubles: Users have observed that the price for EVA Qwen2.5 72B has doubled, prompting questions about whether this change is promotional or a standard increase.

- Speculation suggests the pricing adjustment may be driven by increased competition and evolving business strategies.

-

Jamba 1.5 Model Issues: Users reported issues with the Jamba 1.5 mini model from AI21 Labs, specifically receiving empty responses when calling functions.

- Despite attempts with different versions, the problem persisted, leading users to speculate it might be related to message preparation or backend challenges.

Interconnects (Nathan Lambert) Discord

-

QwQ-32B-Preview Pushes AI Reasoning: QwQ-32B-Preview is an experimental model enhancing AI reasoning capabilities, though it grapples with language mixing and recursive reasoning loops.

- Despite these issues, multiple members have shown excitement for its performance in math and coding tasks.

-

Olmo Outperforms Llama in Consistency: Olmo model demonstrates consistent performance across tasks, differentiating itself from Llama according to community discussions.

- Additionally, Tülu was cited as outperforming Olmo 2 on specific prompts, fueling debates on leading models.

-

Low-bit Quantization Optimizes Undertrained LLMs: A study on Low-Bit Quantization reveals that low-bit quantization benefits undertrained large language models, with larger models showing less degradation.

- Projections indicate that models trained with over 100 trillion tokens might suffer from quantization performance issues.

-

Deepseek Dethrones OpenAI on Reasoning Benchmarks: Deepseek’s R1 model has surpassed OpenAI’s o1 on several reasoning benchmarks, highlighting the startup’s potential in the AI sector.

- Backed by High-Flyer’s substantial compute resources, including an estimated 50k Hopper GPUs, Deepseek is poised to open-source models and initiate competitive API pricing in China.

-

Bsky Dataset Debacle Disrupts Research: A dataset of Bluesky posts created by an HF employee was removed after intense backlash, despite being compliant with ToS.

- The removal has set back social media research, with discussions focusing on the impact on smaller researchers and upcoming dataset releases.

Perplexity AI Discord

-

Perplexity Engine Deprecation: A discussion emerged regarding the deprecation of the Perplexity engine, with users expressing concerns about future support and feature updates.

- Participants suggested transitioning to alternatives like Exa or Brave to maintain project stability and continuity.

-

Image Generation in Perplexity Pro: Perplexity Pro now supports image generation, though users noted limited control over outputs, making it suitable for occasional use rather than detailed projects.

- There are no dedicated pages for image generation, which has been a point of feedback for enhancing user experience.

-

Enhanced Model Selection Benefits: Subscribers to Perplexity enjoy access to advanced models such as Sonnet, 4o, and Grok 2, which significantly improve performance for complex tasks like programming and mathematical computations.

- While the free version meets basic needs, the subscription offers substantial advantages for users requiring more robust capabilities.

-

Perplexity API Financial Data Sources: Inquiries were made about the financial data sources utilized by the Perplexity API and its capability to integrate stock ticker data for internal projects.

- A screenshot was referenced to illustrate the type of financial information available, highlighting the API’s utility for real-time data applications.

-

Reddit Citation Support in Perplexity API: Users noted that the Perplexity API no longer supports Reddit citations, questioning the underlying reasons for this change.

- This alteration affects those who rely on Reddit as a data source, prompting discussions about alternative citation methods.

aider (Paul Gauthier) Discord

-

Sonnet Introduces PDF Support: Sonnet now supports reading PDFs, enabled via the command

aider --install-main-branch. Users reported that Sonnet effectively interprets PDF files with the new functionality.- Positive feedback was shared by members who successfully utilized the PDF reading feature, enhancing their workflow within Aider.

-

QwQ Model Faces Performance Hurdles: Multiple users encountered gateway timeout errors when benchmarking the QwQ model on glhf.chat.

- The QwQ model’s delayed loading times are impacting its responsiveness during performance tests, as discussed by the community.

-

Implementing Local Whisper API for Privacy: A member shared their setup of a local Whisper API for transcription, focusing on privacy by hosting it on an Apple M4 Mac mini.

- They provided example

curlcommands and hosted the API on Whisper.cpp Server for community testing.

- They provided example

Unsloth AI (Daniel Han) Discord

-

Axolotl vs Unsloth Frameworks: A member compared Axolotl and Unsloth, highlighting that Axolotl may feel bloated while Unsloth provides a leaner codebase. Instruction Tuning – Axolotl.

- Dataset quality was emphasized over framework choice, with a user noting that performance largely depends on the quality of the dataset rather than the underlying framework.

-

RTX 3090 Pricing Variability: Discussions revealed that RTX 3090 prices vary widely, with averages around $1.5k USD but some listings as low as $550 USD.

- This price discrepancy indicates a fluctuating market, causing surprise among members regarding the wide range of available prices.

-

GPU Hosting Solutions and Docker: Members expressed a preference for 24GB GPU hosts with scalable GPUs that can be switched on per minute, and emphasized running docker containers to minimize SSH dependencies.

- The focus on docker containers reflects a trend towards containerization for efficient and flexible GPU resource management.

-

Finetuning Local Models with Multi-GPU: Users sought advice on finetuning local Llama 3.2 models using JSON data files and expressed interest in multi-GPU support for enhanced performance.

- While multi-GPU support is still under development, a limited beta test is available for members interested in participating.

-

Understanding Order of Operations in Equations: A member reviewed the order of operations to correct an equation, confirming that multiplication precedes addition, resulting in a total of 141 from the expression 1 + 23 + 45 + 67 + 89 = 479.

- The correction adhered to PEMDAS rules, highlighting a misunderstanding in the original equation’s structure.

Stability.ai (Stable Diffusion) Discord

-

Wildcard Definitions Debate: Participants discussed the varying interpretations of ‘wildcards’ in programming, comparing Civitai and Python contexts with references to the Merriam-Webster definition.

- Discrepancies in terminology usage were influenced by programming history, leading to diverse opinions on the proper application of wildcards in different scenarios.

-

Image Generation Workflow Challenges: A user reported difficulties in generating consistent character images despite using appropriate prompts and style guidelines, seeking solutions for workflow optimization.

- Suggestions included experimenting with image-to-image generation methods and leveraging available workflows on platforms like Civitai to enhance consistency.

-

ControlNet Performance on Large Turbo Model: ControlNet functionality’s effectiveness with the 3.5 large turbo model was confirmed by a member, prompting discussions on its compatibility with newer models.

- This sparked interest among members about the performance metrics and potential integration challenges when utilizing the latest model versions.

-

Creating High-Quality Images: Discussions emphasized the importance of time, exploration, and prompt experimentation in producing high-quality character portraits.

- Users were advised to review successful workflows and consider diverse techniques to improve their image output.

-

Stable Diffusion Plugin Issues: Outdated Stable Diffusion extensions were causing checkpoint compatibility problems, leading a user to seek plugin updates.

- The community recommended checking plugin repositories for the latest updates and shared that some users continued to encounter issues despite troubleshooting efforts.

Notebook LM Discord Discord

-

Satirical Testing in NotebookLM: A member is experimenting with satirical articles in NotebookLM, noting the model recognizes jokes about half the time and fails the other half.

- They specifically instructed the model to question the author’s humanity, resulting in humorous outcomes showcased in the YouTube video.

-

AI’s Voice and Video Showcase: AI showcases its voice and video capabilities in a new video, humorously highlighting the absence of fingers.

-

Gemini Model Evaluations: A member conducted a comparison of two Gemini models in Google AI Studio and summarized the findings in NotebookLM.

- An accompanying audio overview from NotebookLM was shared, with hopes that the insights will reach Google’s development team.

-

NotebookLM Functionality Concerns: Users reported functionality issues with NotebookLM, such as a yellow gradient warning indicating access problems and inconsistent AI performance.

- There are concerns about potential loss of existing chat sessions and the ephemeral nature of NotebookLM’s current chat system.

-

Podcast Duration Challenges: A user observed that generated podcasts are consistently around 20 minutes despite custom instructions for shorter lengths.

- Advice was given to instruct the AI to create more concise content tailored for busy audiences to better manage podcast durations.

Cohere Discord

-

Cohere API integration with LiteLLM: Users encountered difficulties when integrating the Cohere API with LiteLLM, particularly concerning the citations feature not functioning as intended.

- They emphasized that LiteLLM acts as a meta library interfacing with multiple LLM providers and requested the Cohere team’s assistance to improve this integration.

-

Enhancing citation support in LiteLLM: The current LiteLLM implementation lacks support for citations returned by Cohere’s chat endpoint, limiting its usability.

- Users proposed adding new parameters in the LiteLLM code to handle citations and expressed willingness to contribute or wait for responses from the maintainers.

-

Full Stack AI Engineering expertise: A member highlighted their role as a Full Stack AI Engineer with over 6 years of experience in designing and deploying scalable web applications and AI-driven solutions using technologies like React, Angular, Django, and FastAPI.

- They detailed their skills in Docker, Kubernetes, and CI/CD pipelines across cloud platforms like AWS, GCP, and Azure, and shared their GitHub repository at AIXerum.

Latent Space Discord

-

PlayAI Raises $21M to Enhance Voice AI: PlayAI has secured $21M in funding from Kindred Ventures and Y Combinator to develop intuitive voice AI interfaces for developers and businesses.

- This capital injection aims to improve seamless voice-first interfaces, focusing on enhancing human-machine interactions as a natural communication medium.

-

OLMo 2 Outperforms Open Alternatives: OLMo 2 introduces new 7B and 13B models that surpass other open models in performance, particularly in recursive reasoning and various AI applications.

- Executives are excited about OLMo 2’s potential, citing its ability to excel in complex AI scenarios and reflecting the latest advances in AI technology.

-

SmolVLM Sets New Standard for On-Device VLMs: SmolVLM, a 2B VLM, has been launched to enable on-device inference, outperforming competitors in GPU RAM usage and token throughput.

- The model supports fine-tuning on Google Colab and is tailored for use cases requiring efficient processing on consumer-grade hardware.

-

Deepseek’s Model Tops OpenAI in Reasoning: Deepseek’s recent AI model has outperformed OpenAI’s in reasoning benchmarks, attracting attention from the AI community.

- Backed by the Chinese hedge fund High-Flyer, Deepseek is committed to building foundational technology and offering affordable APIs.

-

Enterprise Generative AI Spending Hits $13.8B in 2024: Generative AI spending surged to $13.8B in 2024, indicating a shift from experimentation to execution within enterprises.

- Despite optimism about broader adoption, decision-makers face challenges in defining effective implementation strategies.

GPU MODE Discord

-

LoLCATs Linear LLMs Lag Half: The LoLCATs paper explores linearizing LLMs without full model fine-tuning, resulting in a 50% throughput reduction compared to FA2 for small batch sizes.

- Despite memory savings, the linearized model does not surpass the previously expected quadratic attention model, raising questions about its overall efficiency.

-

ThunderKittens’ FP8 Launch: ThunderKittens has introduced FP8 support and fp8 kernels as detailed in their blog post, achieving 1500 TFLOPS with just 95 lines of code.

- The team emphasized simplifying kernel writing to advance research on new architectures, with implementation details available in their GitHub repository.

-

FLOPS Counting Tools Improve Accuracy: Counting FLOPS presents challenges as many operations are missed by existing scripts, affecting research reliability; tools like fvcore and torch_flops are recommended for precise measurements.

- Accurate FLOPS counting is crucial for validating model performance, with the community advocating for the adoption of these enhanced tools to mitigate discrepancies.

-

cublaslt Accelerates Large Matrices: cublaslt has been identified as the fastest option for managing low precision large matrices, demonstrating impressive speed in matrix operations.

- This optimization is particularly beneficial for AI engineers aiming to enhance performance in extensive matrix computations.

-

LLM Coder Enhances Claude Integration: The LLM Coder project aims to improve Claude’s understanding of libraries by providing main APIs in prompts and integrating them into VS Code.

- This initiative seeks to deliver more accurate coding suggestions, inviting developers to express interest and contribute to its development.

LlamaIndex Discord

-

LlamaParse Integrates Azure OpenAI Endpoints: LlamaParse now supports Azure OpenAI endpoints, enhancing its capability to parse complex document formats while ensuring enterprise-grade security.

- This integration allows users to effectively manage sensitive data within their applications through tailored API endpoints.

-

CXL Memory Boosts RAG Pipeline Performance: Research from MemVerge demonstrates that utilizing CXL memory can significantly expand available memory for RAG applications, enabling fully in-memory operations.

- This advancement is expected to enhance the performance and scalability of retrieval-augmented generation systems.

-

Quality-Aware Documentation Chatbot with LlamaIndex: By combining LlamaIndex for document ingestion and retrieval with aimon_ai for monitoring, developers can build a quality-aware documentation chatbot that actively checks for issues like hallucinations.

- LlamaIndex leverages milvus as the vector store, ensuring efficient and effective data retrieval for chatbots.

-

MSIgnite Unveils LlamaParse and LlamaCloud Features: During #MSIgnite, major announcements were made regarding LlamaParse and LlamaCloud, showcased in a breakout session by Farzad Sunavala and @seldo.

- The demo featured multimodal parsing, highlighting LlamaParse’s capabilities across various document formats.

-

BM25 Retriever Compatibility with Postgres: A member inquired about building a BM25 retriever and storing it in a Postgres database, with a response suggesting that a BM25 extension would be needed to achieve this functionality.

- This integration would enable more efficient retrieval processes within Postgres-managed environments.

tinygrad (George Hotz) Discord

-

TinyCloud Launch with 54x 7900XTX GPUs: Excitement is building for the upcoming TinyCloud launch, which will feature 9 tinybox reds equipped with a total of 54x 7900XTX GPUs by the end of the year, available for contributors using CLOUD=1 in tinygrad.

- A tweet from the tiny corp confirmed that the setup will be stable thanks to a custom driver, ensuring simplicity for users with an API key.

-

Recruitment for Cloud Infra and FPGA Specialists: GeorgeHotz emphasized the need for hiring a full-time cloud infrastructure developer to advance the TinyCloud project, along with a call for an FPGA backend specialist interested in contributing.

- He highlighted this necessity to support the development and maintain the project’s infrastructure effectively.

-

Tapeout Readiness: Qualcomm DSP and Google TPU Support: Prerequisites for tapeout readiness include the removal of LLVM from tinygrad and adding support for Qualcomm DSP and Google TPU, as outlined by GeorgeHotz.

- Additionally, there is a focus on developing a tinybox FPGA edition with the goal of achieving a sovereign AMD stack.

-

GPU Radix Sort Optimization in tinygrad: Users discussed enhancing the GPU radix sort by supporting 64-bit, negatives, and floating points, with an emphasis on chunking for improved performance on large arrays.

- A linked example demonstrated the use of UOp.range() for optimizing Python loops.

-

Vectorization Techniques for Sorting Algorithms: Participants explored potential vectorization techniques to optimize segments of sorting algorithms that iterate through digits and update the sorted output.

- One suggestion included using a histogram to pre-fill a tensor of constants per position for more efficient assignment.

Torchtune Discord

-

Torchtune Hits 1000 Commits: A member congratulated the team for achieving the 1000th commit to the Torchtune main repository, reflecting on the project’s dedication.

- An attached image showcased the milestone, highlighting the team’s hard work and commitment.

-

Educational Chatbots Powered by Torchtune: A user is developing an educational chatbot with OpenAI assistants, focusing on QA and cybersecurity, and seeking guidance on Torchtune’s compatibility and fine-tuning processes.

- Torchtune emphasizes open-source models, requiring access to model weights for effective fine-tuning.

-

Enhancing LoRA Training Performance: Members discussed the performance of LoRA single-device recipes, inquiring about training speed and convergence times.

- One member noted that increasing the learning rate by 10x improved training performance, suggesting a potential optimization path.

-

Memory Efficiency through Activation Offloading: The conversation addressed activation offloading with DPO, indicating that members did not observe significant memory gains.

- One member humorously expressed confusion while seeking clarity on a public PR that might clarify the issues.

OpenInterpreter Discord

-

Open Interpreter OS Mode vs Normal Mode: Open Interpreter now offers Normal Mode through the CLI and OS Mode with GUI functionality, requiring multiple models for control.

- One user highlighted their intention to use OS mode as a universal web scraper with a focus on CLI applications.

-

Issues with Open Interpreter Point API: Users reported that the Open Interpreter Point API appears to be down, experiencing persistent errors.

- These difficulties have raised concerns within the community about the API’s reliability.

-

Excitement Around MCP Tool: The new MCP tool has generated significant enthusiasm, with members describing it as mad and really HUGE.

- This reflects a growing interest within the community to explore its capabilities.

-

MCP Tools Integration and Cheatsheets: Members shared a list of installed MCP servers and tools, including Filesystem, Brave Search, SQLite, and PostgreSQL.

- Additionally, cheatsheets were provided to aid in maximizing MCP usage, emphasizing community-shared resources.

Axolotl AI Discord

-

SmolLM2-1.7B Launch: The community expressed enthusiasm for the SmolLM2-1.7B release, highlighting its impact on frontend development for LLM tasks.

- This is wild! one member remarked, emphasizing the shift in accessibility and capabilities for developers.

-

Transformers.js v3 Release: Hugging Face announced the Transformers.js v3 release, introducing WebGPU support that boosts performance by up to 100x faster than WASM.

- The update includes 25 new example projects and 120 supported architectures, offering extensive resources for developers.

-

Frontend LLM Integration: A member highlighted the integration of LLM tasks within the frontend, marking a significant evolution in application development.

- This advancement demonstrates the growing capabilities available to developers today.

-

Qwen 2.5 Fine Tuning Configuration: A user sought guidance on configuring full fine tuning for the Qwen 2.5 model in Axolotl, focusing on parameters that affect training effectiveness.

- There were questions about the necessity of specific unfrozen_parameters, indicating a need for deeper understanding of model configurations.

-

Model Configuration Guidance: Users expressed interest in navigating configuration settings for fine tuning various models.

- One user inquired about obtaining guidance for similar setup processes in future models, highlighting ongoing community learning.

MLOps @Chipro Discord

-

Feature Store Webinar Boosts ML Pipelines: Join the Feature Store Webinar on December 3rd at 8 AM PT led by founder Simba Khadder. Learn how Featureform and Databricks facilitate managing large-scale data pipelines by simplifying feature store types within an ML ecosystem.

- The session will delve into handling petabyte-level data and implementing versioning with Apache Iceberg, providing actionable insights to enhance your ML projects.

-

GitHub HQ Hosts Multi-Agent Bootcamp: Register for the Multi-Agent Framework Bootcamp on December 4 at GitHub HQ. Engage in expert talks and workshops focused on multi-agent systems, complemented by networking opportunities with industry leaders.

- Agenda includes sessions like Automate the Boring Stuff with CrewAI and Production-ready Agents through Evaluation, presented by Lorenze Jay and John Gilhuly.

-

LLMOps Resource Shared for AI Engineers: A member shared an LLMOps resource, highlighting its three-part structure and urging peers to bookmark it for comprehensive LLMOps learning.

- Large language models are driving a transformative wave, establishing LLMOps as the emerging operational framework in AI engineering.

-

LLMs Revolutionize Technological Interactions: Large Language Models (LLMs) are reshaping interactions with technology, powering applications like chatbots, virtual assistants, and advanced search engines.

- Their role in developing personalized recommendation systems signifies a substantial shift in operational methodologies within the industry.

LAION Discord

-

Efficient Audio Captioning with Whisper: A user seeks to expedite audio dataset captioning using Whisper, encountering issues with batching short audio files.

- They highlighted that batching reduces processing time from 13 minutes to 1 minute, with a further enhancement to 17 seconds.

-

Whisper Batching Optimization: Users discussed challenges in batching short audio files for Whisper, aiming to improve efficiency.

- The reduction in processing time through batching was emphasized, showcasing a jump from 13 minutes to 17 seconds.

-

Captioning Script Limitations: The user shared their captioning script for Whisper audio processing.

- They noted the script struggles to handle short audio files efficiently within the batching mechanism.

-

Faster Whisper Integration: Reference was made to Faster Whisper on GitHub to enhance transcription speed with CTranslate2.

- The user expressed intent to leverage this tool for more rapid processing of their audio datasets.

Gorilla LLM (Berkeley Function Calling) Discord

-

Llama 3.2 Default Prompt in BFCL: The Llama 3.2 model utilizes the default system prompt from BFCL, as observed by users.

- This reliance on standard configurations suggests a consistent baseline in evaluating model performance.

-

Multi Turn Categories Impacting Accuracy: Introduced in mid-September, multi turn categories have led to a noticeable drop in overall accuracy with the release of v3.

- The challenging nature of these categories adversely affected average scores across various models, while v1 and v2 remained largely unaffected.

-

Leaderboard Score Changes Due to New Metrics: Recent leaderboard updates on 10/21 and 11/17 showed significant score fluctuations due to a new evaluation metric for multi turn categories.

- Previous correct entries might now be marked incorrect, highlighting the limitations of the former state checker and improvements with the new metric PR #733.

-

Public Release of Generation Results: Plans have been announced to upload and publicly share all generation results used for leaderboard checkpoints to facilitate error log reviews.

- This initiative aims to provide deeper insights into the observed differences in agentic behavior across models.

-

Prompting Models vs FC in Multi Turn Categories: Prompting models tend to perform worse than their FC counterparts specifically in multi turn categories.

- This observation raises questions about the effectiveness of prompting in challenging evaluation scenarios.

LLM Agents (Berkeley MOOC) Discord

-

Missing Quiz Score Confirmations: A member reported not receiving confirmation emails for their quiz scores after submission, raising concerns about the reliability of the notification system.

- In response, another member suggested checking the spam folder or using a different email address to ensure the submission was properly recorded.

-

Email Submission Troubleshooting: To address email issues, a member recommended verifying whether a confirmation email was sent from Google Forms.

- They emphasized that the absence of an email likely indicates the submission did not go through, suggesting further investigation into the form’s configuration.

Mozilla AI Discord

-

Hidden States Unconference invites AI innovators: Join the Hidden States Unconference in San Francisco, a gathering of researchers and engineers exploring AI interfaces and hidden states on December 5th.

- This one-day event aims to push the boundaries of AI methods through collaborative discussions.

-

Build an ultra-lightweight RAG app workshop announced: Learn how to create a Retrieval Augmented Generation (RAG) application using sqlite-vec and llamafile with Python at the upcoming workshop on December 10th.

- Participants will appreciate building the app without any additional dependencies.

-

Kick-off for Biological Representation Learning with ESM-1: The Paper Reading Club will discuss Meta AI’s ESM-1 protein language model on December 12th as part of the series on biological representation learning.

- This session aims to engage participants in the innovative use of AI in biological research.

-

Demo Night showcases Bay Area innovations: Attend the San Francisco Demo Night on December 15th to witness groundbreaking demos from local creators in the AI space.

- The event is presented by the Gen AI Collective, highlighting the intersection of technology and creativity.

-

Tackling Data Bias with Linda Dounia Rebeiz: Join Linda Dounia Rebeiz, TIME100 honoree, on December 20th to learn about her approach to using curated datasets to train unbiased AI.

- She will discuss strategies that empower AI to reflect reality rather than reinforce biases.

AI21 Labs (Jamba) Discord

-

Jamba 1.5 Mini Integrates with OpenRouter: A member attempted to use the Jamba 1.5 Mini Model via OpenRouter, configuring parameters like location and username.

- However, function calling returned empty outputs in the content field of the JSON response, compared to successful outputs without function calling.

-

Function Calling Yields Empty Outputs on OpenRouter: The Jamba 1.5 Mini Model returned empty content fields in JSON responses when utilizing function calling through OpenRouter.

- This issue was not present when invoking the model without function calling, suggesting a specific problem with this setup.

-

Password Change Request for OpenRouter Usage: A member requested a password change related to utilizing the Jamba 1.5 Mini Model via OpenRouter.

- They detailed setting parameters such as location and username for user data management.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Modular (Mojo 🔥) ▷ #mojo (435 messages🔥🔥🔥):

Mojo performance issues, Memory management in Mojo, Mutable aliasing and origins, Function signatures and origins

-

Performance Issues with Mojo Tests: Running all tests in a Mojo directory takes significantly longer than running them individually, pointing to potential memory pressure due to the number of mojo-test-executors spawned.

- A user noted that after commenting out test functions, the runtime gradually improved, suggesting that memory leaks or high memory usage may be causing slowdowns.

-

Memory and Process Management: The discussion highlighted issues with Chrome’s memory usage contributing to performance problems during test execution, indicating overall system memory pressure.

- Users acknowledged needing better hardware to handle their workloads, emphasizing a need for effective resource management.

-

Understanding Origins in Mojo: A conversation unfolded about how origins track references in Mojo, revealing that in the current model,

vecand its elements share the same origin, but this will change with new implementations.- Two origins will be introduced: the origin of the vector and the origin of its elements, allowing more precise tracking of potential aliasing and ensuring memory safety.

-

Mutable Aliasing in Function Signatures: The group discussed how to safely implement mutable aliasing in function signatures by parameterizing origin values, which permits the same origin for different arguments.

- This allows for functions to mutate vector elements while retaining memory safety, following the same logic as Rust’s aliasing rules.

-

Future Directions for Mojo’s Type System: Nick proposed a model for handling references and origins that emphasizes local reasoning while allowing mutable aliasing, requiring future study and formal proofs for safety.

- The model aims to characterize and prevent situations that could lead to dangling pointers, ensuring robust memory safety in Mojo’s design.

Links mentioned:

- Subtyping and Variance - The Rust Reference: no description found

- Subtyping and Variance - The Rustonomicon: no description found

- Issues · modularml/mojo.): The Mojo Programming Language. Contribute to modularml/mojo development by creating an account on GitHub.

- 0738-variance - The Rust RFC Book: no description found

- Compiler Explorer - C++ (Circle) (Latest): #feature on safetytemplate<typename T>void assign(T^ input, T val) { *input = val;}int main() { std2::string_view hello(“hello”); { std2::string world(“world”);…

- [BUG] Can’t use returned reference from function · Issue #3813 · modularml/mojo: Bug description struct Foo: var a: Int32 fn del(owned elf): print(“Destroyed Foo”) fn init(inout self): self.a = 1 print(“Created Foo”) fn min(ref [] a: Foo, ref [] b: Fo…

- Compiler Explorer - C++ (Circle) (Latest): #feature on safetyint main() safe { std2::vector<std2::string_view> vec { }; { std2::string s(“Hello world”); mut vec.push_back(s); } // println(vec[0]);}

- Compiler Explorer - C++ (x86-64 clang (trunk)): /*fn assign<T>(input: &mut T, val: T) { *input = val;}fn main() { let mut hello: &‘static str = “hello”; { let world = String::from(“world&…

- [docs] Stdlib insider documentation by owenhilyard · Pull Request #3793 · modularml/mojo: People working on the standard library need to have some more information about the API contracts and behavior of the runtime and compiler builtins in order to be able to write correct and performa…

Modular (Mojo 🔥) ▷ #max (4 messages):

MAX Engine vs ONNX, MAX Graph API, AI Model Inference

-

MAX Engine defined as an AI inference powerhouse: MAX is characterized as an engine designed to utilize models, whereas ONNX functions merely as a format for models, supporting various formats like ONNX, TorchScript, and native Mojo graphs.

- It aims to deliver low-latency, high-throughput inference across diverse hardware to streamline AI workloads.

-

MAX 24.3 release enhances engine capabilities: The recent MAX version 24.3 release highlights its extensibility, enabling users to create custom models via the MAX Graph APIs.

- The Graph API facilitates high-performance symbolic computation graphs in Mojo, positioning MAX as more than just a model format.

-

ONNX focuses on model transfer, MAX prioritizes performance: Unlike ONNX’s aim of transferring models

- This distinction indicates MAX’s goal to optimize real-time model inference.

Link mentioned: Get started with MAX Graph | Modular Docs: Learn how to build a model graph with our Mojo API for inference with MAX Engine.

Cursor IDE ▷ #general (332 messages🔥🔥):

Cursor Agent Performance, Cursor Version Updates, User Experiences with Cursor, Model Context Protocol (MCP), Markdown Issues and Bug Fixes

-

User frustrations with Cursor Agent: Several users reported issues with the Cursor Agent, such as it creating endless folders instead of organizing them properly, indicating potential bugs.

- Users suggested that giving clearer commands could improve agent functionality and prevent confusion when interacting with project structures.

-

Latest Version Rollout and Features: The latest update to Cursor (version 0.43.5) has prompted discussions about missing features, including changes to the @web functionality and the removal of the tabs system.

- Despite the updates, some users expressed appreciation for Cursor’s contribution to their productivity and ability to manage coding tasks efficiently.

-

Implementing Custom Tools and Protocols: Users expressed interest in the Model Context Protocol (MCP) and its potential to allow for the creation of custom tools and context providers within Cursor.

- Conversations suggested that such implementations could greatly enhance user experience and provide more tailored functionalities.

-

Positive Impact of Cursor on Development: Many users shared their uplifting experiences with Cursor, emphasizing how it has transformed their approach to coding and project execution.

- Users highlighted that, despite not being professional developers, Cursor has enabled them to tackle ambitious projects more confidently.

-

Markdown Issues and Workarounds: There were discussions about markdown formatting issues in Cursor, particularly regarding code blocks not applying correctly.

- Users are seeking solutions or keybindings to enhance their workflow and manage files better within the latest updates.

Links mentioned:

- Cascade - Codeium Docs: no description found

- Cursor - The IDE designed to pair-program with AI.: no description found

- Tweet from echo.hive (@hive_echo): 2 Cursor Composer agents working together on the same project. one builds the project, the other reviews once the first agent is done and writes a report. cursor rules file is in comment to achieve th…

- Woah what happened to cursor with this new update?: I can’t find the 200k context option anymore somehow all the changes I make are being undone? the scrolling on the AI chat is weird - like if I press shift+up inside a code text the entire chat scro…

- Shopify Sub App Intergration: Plan to integrate our subscription app with Seal subscriptions (subscription app) We have custom-built our own subscription app (charge every month, change products, skip a month etc.) which has a ca…

- Cursor Composer Window in latest version: Hi! in latest version- Version: 0.43.4 CMD + SHIFT + I is not opening composer in the new composer window that I love. is this intentional? It does open with CMD + I in the chat window (with the o…

OpenAI ▷ #ai-discussions (95 messages🔥🔥):

Sora Video Generator Leak, ChatGPT Image Analysis Capabilities, Discord Community Engagement, Translation Challenges, Usage of AI in Content Creation

-

Sora Video Generator Leak Causes Buzz: Members discussed the recent leak of OpenAI’s Sora Video Generator, with references to a YouTube video detailing the event.

- Discussions included sentiments on the tool’s efficacy and missed expectations, with some noting that public access has already been revoked.

-

Testing ChatGPT’s Image Analysis Features: Users tested ChatGPT’s ability to analyze images, noting inconsistencies in responses based on how prompts were framed and images were presented.

- Participants highlighted past functionality where image context would lead to better interactions compared to fresh starts with no guidance.

-

Engagement and Humor in Discord: The channel demonstrated a light-hearted engagement among users about daily themes, with some humorously suggesting rewards for frequent winners.

- Comments like ‘ChatGPT is a cat type of AI’ showcased the playful nature of discussions within the community.

-

Challenges in Translation Requests: A user presented their translation of a Canadian English text into Urdu, sharing a link for public access.

- This highlighted the collaborative spirit of users in the channel who are keen to share educational resources.

-

Feedback on AI Capabilities: Participants shared experiences with ChatGPT’s ability to identify characters and create responses based on visual interpretations.

- A contrasting observation was shared about varying abilities based on user plans, with a user on the Free Plan reporting effective recognition.

Link mentioned: Public Access to Open AI’s Sora Video Generator just Leaked…: In this video, I discuss the unexpected leak of OpenAI’s Sora, an advanced AI video generation tool. The leak was reportedly initiated by artists protesting …

OpenAI ▷ #gpt-4-discussions (85 messages🔥🔥):

Accessing ChatGPT for Free, User Experience with ChatGPT, ChatGPT's Reliability and Validity, Using Files with GPT, Community Interaction and Humor

-

Users seek ways to access ChatGPT Plus for free: Multiple users discussed potential ways to access ChatGPT 4 without paying for an upgrade, including suggestions to use multiple accounts or get subscriptions through friends.

- However, it was concluded that using the platform without limitations typically requires a paid plan, making many feel it’s not worth exploring further.

-

Community humor and support: Throughout the discussion, a humorous rapport developed, with members joking about how to approach using ChatGPT while encouraging comedic talent.

- Comments ranged from lighthearted banter about ghosts to mocking serious inquiries about getting by without payment.

-

ChatGPT’s approach to voter fraud discussions: A user raised concerns that ChatGPT conveys a biased view on voter fraud despite a lack of empirical peer-reviewed studies on the topic.

- Another member cautioned against relying on ChatGPT as an authority on such issues, reminding the community not to discuss politics.

-

Best practices for GPT file interaction: A user sought advice on how to make GPT refer to provided files instead of relying on its general knowledge.

- Suggestions included being specific in the prompts and directly instructing the GPT to analyze the given reports.

-

Discussion on ChatGPT’s capabilities: Community members highlighted that, while LLMs like ChatGPT are capable, they should be viewed as text generators rather than definitive sources of truth.

- The importance of checking facts and the limitations of AI in providing reliable information were emphasized, ensuring users remain critical of the output.

OpenAI ▷ #prompt-engineering (62 messages🔥🔥):

Empirical Prompting Research, AI Phone Calls, Model Testing Frameworks, Error Identification in AI, General Problem-Solving Strategies

-

Lack of Empirical Prompting Research: A member expressed frustration over the lack of empirical research in prompting techniques, noting that many existing studies seem conflicting and fail to establish a gold standard.

- Another member suggested that while numerous papers exist, they often contradict one another, leaving the community in search of clarity regarding effective prompting.

-

AI Phone Agents vs IVR Systems: Discussion highlighted the distinction between AI agents and traditional IVR systems, emphasizing that AI should simulate human interaction rather than just mimic scripted responses.

- Members agreed on the importance of confirming critical information back to users to ensure accuracy, just as a human operator would.

-

Building Robust Model Testing Frameworks: One member raised concerns about creating a testing framework that can evaluate models at scale, particularly in tracking prompt changes and their impacts on responses.

- Suggestions included verifying extracted data against existing information and implementing checks to confirm accuracy with users during interactions.

-

Model Behavior and Testing for Accuracy: Members discussed the necessity of being explicit about prompts while also understanding that some instructions may be inferred correctly by the model without detailed explanations.

- Feedback loop mechanisms and iteratively refining instructions based on observed behavior were seen as key strategies for enhancing model performance.

-

Utilizing Retrieval-Augmented Generation (RAG): A member inquired about best practices for encouraging GPT to reference provided files, leading to a suggestion of using Retrieval-Augmented Generation (RAG) for context retrieval.

- This approach would allow the model to utilize specific data from the files according to the query, enhancing the relevance of its responses.

OpenAI ▷ #api-discussions (62 messages🔥🔥):

Empirical Prompting Research, AI Phone Call Agents, Testing and Consistency in AI, IVR vs AI Interaction, RAG for File Referencing

-

Lack of Empirical Research in Prompting: Concerns have been raised about the current lack of empirical prompting research, with existing papers often presenting conflicting information and limited guidance on effective prompting.

- One member noted the difficulty in creating unit tests for AI agents due to the complexity of interactions and the varying ways prompts can be construed.

-

AI Phone Agents Should Mimic Humans: Discussion emphasized that AI phone agents shouldn’t be treated like traditional IVRs, aiming instead for a more seamless human-like interaction where the AI reacts dynamically to the conversation.

- Members highlighted the importance of guiding AI to understand the context and extracting critical information, rather than relying on it to infer every detail correctly.

-

Building Robust Testing Frameworks: Creating testing frameworks that monitor how changes in prompts affect the model’s performance is vital for ensuring reliability across numerous use cases in AI.

- It was suggested to design systems that allow for easy testing and to maintain checks on the model’s ability to handle various information types correctly.

-

Empowering AI with Guidance: Members discussed the need to provide explicit instructions while allowing the model to infer simpler tasks, ensuring that it focuses on areas where it tends to struggle.