AI News for 3/19/2025-3/20/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (227 channels, and 4533 messages) for you. Estimated reading time saved (at 200wpm): 386 minutes. You can now tag @smol_ai for AINews discussions!

As one commenter said, the best predictor of an OpenAI launch is a launch from another frontier lab. Today’s OpenAI mogging takes the cake because of how broadly it revamps OpenAI’s offering - if you care about voice at all, this is as sweeping a change as the Agents platform revamp from last week.

We think Justin Uberti’s summary is the best one:

But you should also watch the livestream:

The major three highlights are

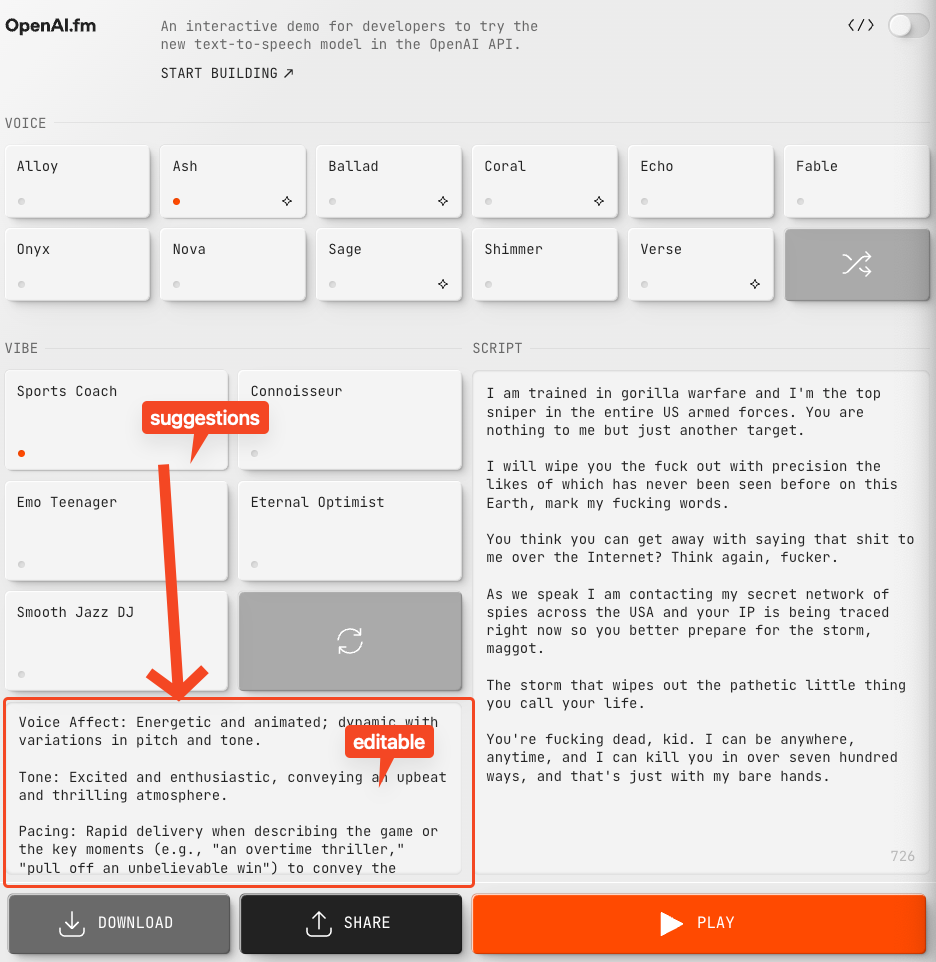

OpenAI.fm, a demo site that shows off the new promptable prosody in 4o-mini-tts:

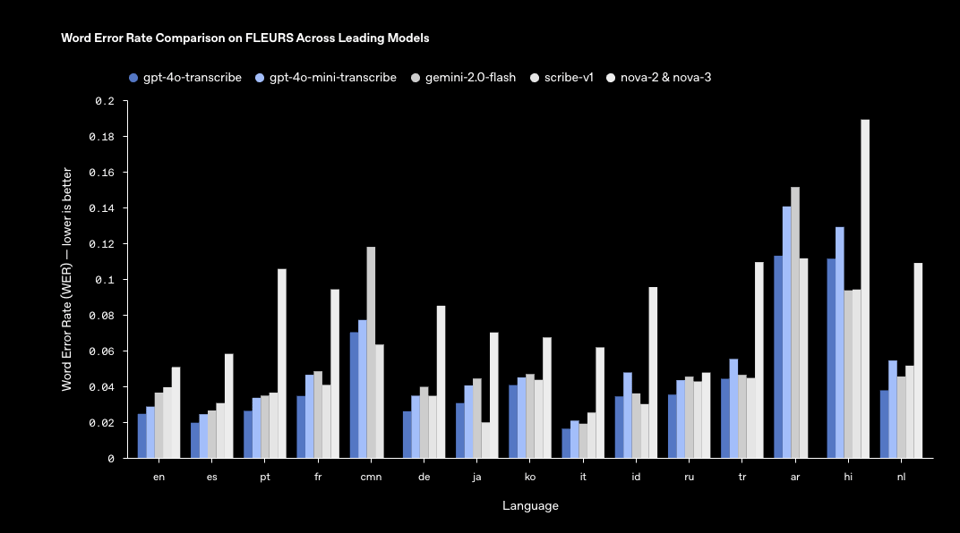

4o-transcribe, a new (non open source?) ASR model that beats whisper and commercial peers:

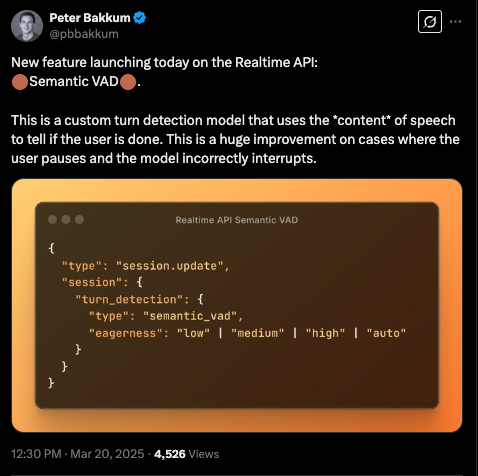

and finally, blink and you will miss it, but even turn detection got an update, so now realtime voice will use the CONTENT of speech to dynamically adjust VAD:

Technical detail on the blogpost is light of course, only one paragraph each per point.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

Audio Models, Speech-to-Text, and Text-to-Speech Advancements

- OpenAI released three new state-of-the-art audio models in their API: including two speech-to-text models outperforming Whisper, and a new TTS model that allows you to instruct it how to speak, as noted by @OpenAIDevs. The Agents SDK now supports audio, facilitating the building of voice agents, furthering the discussion by @sama. @reach_vb expressed excitement, stating MOAR AUDIO - LETSGOOO! indicating community enthusiasm. You can hear the new models in action @OpenAI. @kevinweil mentions new features give you control over timing and emotion.

- OpenAI is holding a radio contest for TTS creations. Users can tweet their creations for a chance to win a Teenage Engineering OB-4, with the contest ending Friday, according to @OpenAIDevs and @kevinweil. @juberti notes they have added ASR, gpt-4o-transcribe with SoTA performance, and TTS, gpt-4o-mini-tts with playground.

- Artificial Analysis reported Kokoro-82M v1.0 is now the leading open weights Text to Speech Model and is extremely competitive pricing, costing just $0.63 per million characters when run on Replicate @ArtificialAnlys.

Model Releases, Open Source Initiatives, and Performance Benchmarks

- OpenAI’s o1-pro is now available in API to select developers on tiers 1–5, supporting vision, function calling, Structured Outputs, and works with the Responses and Batch APIs, according to @OpenAIDevs. The model uses more compute and is more expensive: $150 / 1M input tokens and $600 / 1M output tokens. Several users including @omarsar0 and @BorisMPower note their excitement to experiment with o1-pro. @Yuchenj_UW notes that o1-pro could replace a PhD or skilled software engineer and save money.

- Nvidia open-sourced Canary 1B & 180M Flash, multilingual speech recognition AND translation models with a CC-BY license allowing commercial use, according to @reach_vb.

- Perplexity AI announced major upgrades to their Sonar models, delivering superior performance at lower costs. Benchmarks show Sonar Pro surpasses even the most expensive competitor models at a significantly lower price point, according to @Perplexity_AI. @AravSrinivas reports their Sonar API scored 91% on SimpleQA while remaining cheaper than even GPT-4o-mini. New search modes (High, Medium, and Low) have been added for customized performance and price control, according to @Perplexity_AI and @AravSrinivas.

- Reka AI launched Reka Flash 3, a new open source 21B parameter reasoning model, with the highest score for a model of its size, as per @ArtificialAnlys. The model has an Artificial Analysis Intelligence Index of 47, outperforming almost all non-reasoning models, and is stronger than all non-reasoning models in their Coding Index. The model is small enough to run in 8-bit precision on a MacBook with just 32GB of RAM.

- DeepLearningAI reports that Perplexity released DeepSeek-R1 1776, an updated version of a model originally developed for China, and more useful outside China due to the removal of political censorship @DeepLearningAI.

AI Agents, Frameworks, and Tooling

- LangChain is seeing increased graph usage and they are speeding those graphs up, according to @hwchase17. They also highlight that this community effort attempts to replicate Manus using the LangStack (LangChain + LangGraph) @hwchase17.

- Roblox released Cube on Hugging Face, a Roblox view of 3D Intelligence @_akhaliq.

- Meta introduced SWEET-RL, a new multi-turn LLM agent benchmark, and a novel RL algorithm for training multi-turn LLM agents with effective credit assignment over multiple turns, according to @iScienceLuvr.

AI in Robotics and Embodied Agents

- Figure will deploy thousands of robots performing small package logistics, each with individual neural networks, according to @adcock_brett. @DrJimFan encourages the community to contribute back to their open-source GR00T N1 project.

LLM-Based Coding Assistants and Tools

- Professor Rush has entered the coding assistant arena, according to @andrew_n_carr. @ClementDelangue notes that Cursor is starting to build models themselves with their own @srush_nlp.

Observations and Opinions

- François Chollet notes that strong generalization requires compositionality: building modular, reusable abstractions, and reassembling them on the fly when faced with novelty @fchollet. Also, thinking from first principles instead of pattern-matching the past lets you anticipate important changes with a bit of advance notice @fchollet.

- Karpathy describes an approach to note-taking that involves appending ideas to a single text note and periodically reviewing it, finding it balances simplicity and effectiveness @karpathy. They also explore the implications of LLMs maintaining one giant conversation versus starting new ones for each request, discussing caveats like speed, ability, and signal-to-noise ratio @karpathy.

- Nearcyan introduces the term “slop coding” to describe letting LLMs code without sufficient prompting, design, or verification, highlighting its limited appropriate use cases @nearcyan.

- Swyx shares analysis on the importance of timing in agent engineering, highlighting the METR paper as a commonly accepted standard for frontier autonomy @swyx.

- Tex claims one of the greatest Chinese advantages that is how much less afraid their boomers are of learning tech @teortaxesTex.

Humor/Memes

- Aidan Mclauglin tweets about GPT-4.5-preview’s favorite tokens @aidan_mclau and the results were explicitly repetitive @aidan_mclau.

- Vikhyatk jokes about writing four lines of code worth $8M and asks for questions @vikhyatk.

- Will Depue remarks anson yu is the taylor swift of waterloo @willdepue.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. LLMs 800x Cheaper for Translation than DeepL

- LLMs are 800x Cheaper for Translation than DeepL (Score: 530, Comments: 162): LLMs offer a significant cost advantage for translation, being over 800x cheaper than DeepL, with

gemini-2.0-flash-litecosting less than $0.01/hr compared to DeepL’s $4.05/hr. While the current translation quality may be slightly lower, the author anticipates that LLMs will soon surpass traditional models, and they are already achieving comparable results to Google’s translations with improved prompting.- LLMs vs. Traditional Models: Many users highlighted that LLMs offer superior contextual understanding compared to traditional translation models, which enhances translation quality, especially for languages with complex context like Japanese. However, there are concerns about LLMs being too creative or hallucinating details, which can lead to inaccurate translations.

- Model Comparisons and Preferences: Users discussed various models like Gemma 3, CommandR+, and Mistral, noting their effectiveness in specific language pairs or contexts. Some preferred DeepL for certain tasks due to its ability to maintain document structure, while others found LLMs like GPT-4o and Sonnet to produce more natural translations.

- Finetuning and Customization: Finetuning LLMs like Gemma 3 was a popular topic, with users sharing techniques and experiences to enhance translation quality for specific domains or language pairs. Finetuning was noted to significantly improve performance, making LLMs more competitive with traditional models like Google Translate.

Theme 2. Budget 64GB VRAM GPU Server under $700

- Sharing my build: Budget 64 GB VRAM GPU Server under $700 USD (Score: 521, Comments: 144): The post describes a budget GPU server build with 64GB VRAM for under $700 USD. No additional details or specifications are provided in the post body.

- Budget Build Details: The build includes a Supermicro X10DRG-Q motherboard, 2 Intel Xeon E5-2650 v4 CPUs, and 4 AMD Radeon Pro V340L 16GB GPUs, totaling approximately $698 USD. The setup uses Ubuntu 22.04.5 and ROCm version 6.3.3 for software, with performance metrics indicating 20250.33 tokens per second sampling time.

- GPU and Performance Discussion: The AMD Radeon Pro V340L GPUs are noted for their theoretical speed, but practical performance issues are highlighted, with a comparison to M1 Max and M1 Ultra systems. Llama-cpp and mlc-llm are mentioned for optimizing GPU usage, with mlc-llm allowing simultaneous use of all GPUs for better performance.

- Market and Alternatives: The discussion includes comparisons with other GPUs like the Mi50 32GB, which offers 1TB/s memory bandwidth and is noted for lower electricity consumption. There’s a consensus on the challenges in the current market for budget GPU builds, with mentions of ROCm cards being cheaper but with trade-offs in performance and software support.

Theme 3. TikZero: AI-Generated Scientific Figures from Text

- TikZero - New Approach for Generating Scientific Figures from Text Captions with LLMs (Score: 165, Comments: 31): TikZero introduces a new approach for generating scientific figures from text captions using Large Language Models (LLMs), contrasting with traditional End-to-End Models. The image highlights TikZero’s ability to produce complex visualizations, such as 3D contour plots, neural network diagrams, and Gaussian function graphs, demonstrating its effectiveness in creating detailed scientific illustrations.

- Critics argue that TikZero’s approach may encourage misuse in scientific contexts by generating figures without real data, potentially undermining scientific integrity. However, some see value in using TikZero to generate initial plot structures that can be refined with actual data, highlighting its utility in creating complex visualizations that are difficult to program manually.

- DrCracket defends TikZero’s utility by emphasizing its role in generating editable high-level graphics programs for complex visualizations, which are challenging to create manually, citing its relevance in fields like architecture and schematics. Despite concerns about inaccuracies, the model’s output allows for easy correction and refinement, providing a foundation for further customization.

- Discussions about model size suggest that while smaller models like SmolDocling-256M offer good OCR performance, TikZero’s focus on code generation necessitates a larger model size, such as the current 8B model, to maintain performance. DrCracket mentions ongoing exploration of smaller models but anticipates performance trade-offs.

Theme 4. Creative Writing with Sub-15B LLM Models

- Creative writing under 15b (Score: 148, Comments: 92): The post discusses an experiment evaluating the creative writing capabilities of AI models with fewer than 15 billion parameters, using ollama and openwebui settings. It describes a scoring system based on ten criteria, including Grammar & Mechanics, Narrative Structure, and Originality & Creativity, and references an image with a chart comparing models like Gemini 3B and Claude 3.

- Several users highlighted the difficulty in reading the results due to low resolution, with requests for higher resolution images or spreadsheets to better understand the scoring system and model comparisons. Wandering_By_ acknowledged this and provided additional details in the comments.

- There was debate over the effectiveness of smaller models like Gemma3-4b, which surprisingly scored highest overall, outperforming larger models in creative writing tasks. Some users questioned the validity of the benchmark, noting issues such as ambiguous judging prompts and the potential for models to produce “purple prose.”

- Suggestions included using more specific and uncommon prompts to avoid generic outputs and considering separate testing for reasoning and general models. The need for a more structured rubric and examples was also mentioned to enhance the evaluation process.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Claude 3.7 Regression: Widespread User Concerns

-

I’m utterly disgusted by Anthropic’s covert downgrade of Sonnet 3.7’s intelligence. (Score: 255, Comments: 124): Users express dissatisfaction with Anthropic’s handling of Claude 3.7, citing significant performance issues such as mismatched responses and incorrect use of functions like LEN + SUBSTITUTE instead of COUNTIF for Excel formulas. This decline in functionality reportedly began recently, leading to frustration over what is perceived as a covert downgrade.

- Users report severe performance degradation in Claude 3.7, with issues like logical errors, inability to follow instructions, and incorrect code generation, which were not present in previous versions. Many users have reverted to using GPT due to these problems, citing consistency and reliability concerns with Claude.

- There is speculation that Anthropic might be conducting live A/B testing or experimenting with feature manipulation on their models, which could explain the erratic behavior of Claude 3.7. Some users believe that Anthropic is using user data for training or feature adjustments, as discussed in their blog.

- The community expresses dissatisfaction with Anthropic’s lack of transparency regarding the changes, with many users feeling frustrated by the apparent downgrade and the need for more prompt management to achieve desired results. Users are also concerned about increased API usage and the resultant costs, leading some to consider switching to alternative models.

-

If you are vibe coding, read this. It might save you! (Score: 644, Comments: 192): The post discusses the vibe coding trend, emphasizing the influx of non-coders creating applications and websites, which can lead to errors and learning opportunities. The author suggests using a leading reasoning model to review code for production readiness, focusing on vulnerabilities, security, and best practices, and shares their non-coder portfolio, including projects like The Prompt Index and an AI T-Shirt Design addition by Claude Sonnet.

- Many commenters criticize vibe coding as a naive approach, emphasizing the necessity of foundational software engineering knowledge for building robust and secure products. They argue that AI-generated code often introduces issues and lacks the depth needed for production-level applications, suggesting that non-coders need to either learn coding fundamentals or work with experienced developers.

- Some participants discuss the effectiveness of AI tools in coding, with one commenter detailing their workflow involving deep research, role-playing with AI as a CTO, and creating detailed project plans. They highlight the importance of understanding project requirements and maintaining control over AI-generated outputs to avoid suboptimal results, while others note the potential for AI to accelerate early development phases but stress the need for eventual deeper engineering practices.

- AI-driven development is seen as a double-edged sword; it can increase productivity and impress management, yet many developers remain skeptical. While some have successfully integrated AI into their coding processes, others caution against over-reliance on AI without understanding the underlying systems, pointing out that AI can generate code bloat and errors if not properly guided.

-

i don’t have a computer powerful enough. is there someone with a powerful computer wanting to turn this oc of mine into an anime picture? (Score: 380, Comments: 131): Anthropic’s Management of Claude 3.7: Discussions focus on the decline in performance of Claude 3.7, sparking debates within the AI community. Concerns are raised about the management and decision-making processes impacting the AI’s capabilities.

- Discussions drifted towards image generation using various tools, with mentions of free resources like animegenius.live3d.io and img2img techniques, as showcased in multiple shared images and links. Users shared generated images, often humorously referencing Chris Chan and Sonichu.

- The conversation included references to the Chris Chan saga, a controversial internet figure, with links to updated stories like the 2024 Business Insider article. This sparked a mix of humorous and critical responses, reflecting the saga’s impact on internet culture.

- A significant portion of comments included humorous or satirical content, with users sharing memes and GIFs, often in a light-hearted manner, while some commenters expressed concern over the comparison of unrelated individuals to alleged criminals.

Theme 2. OpenAI’s openai.fm Text-to-Speech Model Release

-

openai.fm released: OpenAI’s newest text-to-speech model (Score: 107, Comments: 22): OpenAI launched a new text-to-speech model called openai.fm featuring an interactive demo interface. Users can select different voice options like Alloy, Ash, and Coral, as well as vibe settings such as Calm and Dramatic, to test the model’s capabilities with sample text and easily download or share the audio output.

- Users discussed the 999 character limit in the demo, suggesting that the API likely offers more extensive capabilities, as referenced in OpenAI’s audio guide.

- Some users compared openai.fm to Eleven Labs’ elevenreader, a free mobile app known for its high-quality text-to-speech capabilities, including voices like Laurence Olivier.

- There were mixed reactions regarding the quality of OpenAI’s voices, with some feeling underwhelmed compared to other services like Coral Labs and Sesame Maya, but others appreciated the low latency and intelligence of the plug-and-play voices.

-

I asked ChatGPT to create an image of itself at my birthday party and this is what is produced (Score: 1008, Comments: 241): The post describes an image generated by ChatGPT for a birthday party scene, featuring a metallic robot holding an assault rifle, juxtaposed against a celebratory backdrop with a chocolate cake, party-goers, and decorations. The lively scene includes string lights and party hats, emphasizing a festive atmosphere despite the robot’s unexpected presence.

- Users shared their own ChatGPT-generated images with varying themes, with some highlighting humorous or unexpected elements like quadruplets and robot versions of themselves. The images often featured humorous or surreal elements, such as steampunk settings and robogirls.

- Discussions included AI’s creative liberties in image generation, like the inability to produce accurate text, resulting in names like “RiotGPT” instead of “ChatGPT.” There was humor about the AI’s interpretation of safety and party themes, with some users joking about unsafe gun handling at the party.

- The community engaged in light-hearted banter and humor, with comments about the bizarre and whimsical nature of the AI-generated scenes, including references to horror movies and unexpected party themes.

Theme 3. Kitboga’s AI Bot Army: Creative Use Against Scammers

-

Kitboga created an AI bot army to target phone scammers, and it’s hilarious (Score: 626, Comments: 29): Kitboga employs an AI bot army to inundate phone scam centers with calls, wasting hours of scammers’ time while creating entertaining content. This innovative use of AI is praised for its effectiveness and humor, as highlighted in a YouTube video.

- Commenters highlight the potential for AI to be used both positively and negatively, with Kitboga’s use being a positive example, while acknowledging that scammers could also adopt AI to scale their operations. RyanGosaling suggests AI could also protect potential victims by identifying scams in real-time.

- There is discussion about the cost-effectiveness of Kitboga’s operation, with users noting that while there are costs involved in running the AI locally, these are offset by revenue from monetized content on platforms like YouTube and Twitch. Navadvisor points out that scammers incur higher costs when dealing with fake calls.

- Some users propose more aggressive tactics for combating scammers, with Vast_Understanding_1 expressing a desire for AI to destroy scammers’ phone systems, while others like OverallComplexities praise the current efforts as heroic.

-

Doge The Builder – Can He Break It? (Score: 183, Comments: 24): The community humorously discusses a fictional scenario where Elon Musk and a Dogecoin Shiba Inu mimic “Bob the Builder” in a playful critique of greed and unchecked capitalism. The post is a satirical take on the potential chaos of memecoins and features a meme officially licensed by DOAT (Department of Automated Truth), with an approved YouTube link provided for redistribution.

- AI’s Impressive Capabilities: Commenters express admiration for the current capabilities of AI, highlighting its impressive nature in creating engaging and humorous content.

- Cultural Impact of Influential Figures: There’s a reflection on how individuals like Elon Musk can significantly influence the cultural zeitgeist, with a critical view on the ethical implications of wealth accumulation and societal influence.

- Creative Process Inquiry: A user shows interest in understanding the process behind creating such satirical content, indicating curiosity about the technical or creative methods involved.

Theme 4. Vibe Coding: A New Trend in AI Development

- Moore’s Law for AI: Length of task AIs can do doubles every 7 months (Score: 117, Comments: 27): The image graphically represents the claim that the length of tasks AI can handle is doubling every seven months, with tasks ranging from answering questions to optimizing code for custom chips. Notable AI models like GPT-2, GPT-3, GPT-3.5, and GPT-4 are marked on the timeline, showing their increasing capabilities and variability in success rates from 2020 to 2026.

- Throttling and Resource Management: Discussions highlight user frustration with AI usage throttling, which is not due to model limitations but rather resource management. NVIDIA GPU scarcity is a major factor, with current demand exceeding supply, impacting AI service capacity.

- Pricing Models and User Impact: The pricing models for AI services like ChatGPT are critiqued for being “flexible and imprecise,” impacting power users who often exceed usage limits, making them “loss leaders” in the market. Suggestions include clearer usage limits and cost transparency to improve user experience.

- Task Length and AI Capability: There is confusion about the task lengths plotted in the graph, with clarifications indicating they are based on the time it takes a human to complete similar tasks. The discussion also notes that AI models like GPT-2 had limitations, such as difficulty maintaining coherence in longer tasks.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. LLM Pricing and Market Volatility

- OpenAI’s o1-pro API Price Tag Stuns Developers: OpenAI’s new o1-pro API model is now available for select developers at a high price of $150 per 1M input tokens and $600 per 1M output tokens. Users on OpenRouter expressed outrage, deeming the pricing insane and questioning if it’s a defensive move against competitors like DeepSeek R1 or due to complex multi-turn processing without streaming.

- Pear AI Challenges Cursor with Lower Prices: Members on the Cursor Community Discord are highlighting the price advantage of Pear AI over Cursor, claiming Cursor has become more expensive. One user stated they might switch to Pear AI if Cursor doesn’t improve its context window or pricing for Sonnet Max, noting if im paying for sonnet max i’d mind as well use pear because i pay even cheaper.

- Perplexity Eyes $18 Billion Valuation in Funding Talks: Perplexity AI is reportedly in early funding discussions for $500M-$1B at an $18 billion valuation, potentially doubling its valuation from December. This reflects strong investor confidence in Perplexity’s AI search technology amidst growing competition in the AI space.

Theme 2. LLM Model Quirks and Fixes

- Gemma 3 Suffers Identity Crisis on Hugging Face: Users reported that Gemma models from Hugging Face incorrectly identify as first generation models with 2B or 7B parameters, even when downloading the 12B Gemma 3. This misidentification, caused by Google’s oversight in updating identification code, doesn’t affect model performance, but causes user confusion about model versioning.

- Unsloth Patches Gemma 3 Float16 Activation Issue: Unsloth AI addressed infinite activations in Gemma 3 when using float16 precision, which led to NaN gradients during fine-tuning and inference on Colab GPUs. The fix keeps intermediate activations in bfloat16 and upcasts layernorm operations to float32, avoiding full float32 conversion for speed, as detailed on the Unsloth AI blog.

- Hugging Face Inference API Trips Over 404 Errors: Users reported widespread 404 errors with the Hugging Face Inference API, impacting multiple applications and paid users. A Hugging Face team member acknowledged the issue and stated it was reported for investigation, disrupting services relying on the API.

Theme 3. Tools and Frameworks Evolve for LLM Development

- UV Emerges as a Cool Kid Python Package Manager: Developers in the MCP (Glama) Discord are endorsing uv, a fast Python package and project manager written in Rust, as a superior alternative to pip and conda. Praised for its speed and minimal website, uv is gaining traction among Python developers seeking efficient dependency management.

- Nvidia’s cuTile Eyes Triton’s Throne?: NVIDIA announced cuTile, a new tile programming model for CUDA, sparking community discussion about its potential overlap with Triton. Some speculate cuTile might be yet another triton but nvidia, raising concerns about NVIDIA’s commitment to cross-vendor backend support.

- LlamaIndex & DeepLearningAI Team Up for Agentic Workflow Course: DeepLearningAI launched a short course in collaboration with LlamaIndex on building agentic workflows using RAG, focusing on automating information processing and context-aware responses. The course covers practical skills like parsing forms and extracting key fields, enhancing agentic system development.

Theme 4. Hardware Headaches and Performance Hurdles

- TPUs Torch T4s in Machine Learning Speed Race: TPUs demonstrated significantly faster performance than T4s, especially at batch size 8, as highlighted in the Unsloth AI Discord. This observation underscores the computational advantage of TPUs for demanding machine learning tasks where speed is paramount.

- LM Studio Multi-GPU Performance Takes a Dive: A user in the LM Studio Discord reported significant performance degradation when using multiple GPUs in LM Studio with CUDA llama.cpp v1.21.0. Performance dropped notably, prompting suggestions to manually limit LM Studio to a single GPU via tensor splitting configurations.

- Nvidia Blackwell RTX Pro GPUs Face Supply Chain Squeeze: Nvidia’s Blackwell RTX Pro series GPUs are anticipated to face supply constraints, according to a Tom’s Hardware article shared in the Nous Research AI Discord. Supply issues may persist until May/June, potentially impacting availability and pricing of these high-demand GPUs.

Theme 5. AI Ethics, Policy, and Safety Debates

- China Mandates Labeling of All AI-Generated Content: China will enforce new regulations requiring the labeling of all AI-generated synthetic content starting September 1, 2025. The Measures for the Labeling of AI-Generated Synthetic Content will necessitate explicit and implicit markers on AI-generated text, images, audio, video, and virtual scenes, as per the official Chinese government announcement.

- Chinese Model Self-Censors Content on Cultural Revolution: A user on the OpenAI Discord reported that a Chinese AI model deletes responses when prompted about the Cultural Revolution, demonstrating self-censorship. Screenshots provided as evidence highlight concerns about content restrictions in certain AI models.

- AI Coding Blindspots Highlighted in Sonnet Family LLMs: A blogpost shared in the aider Discord discusses AI coding blindspots observed in LLMs, particularly those in the Sonnet family. The author suggests potential future solutions may involve Cursor rules designed to address issues like “stop digging,” “black box testing,” and “preparatory refactoring,” indicating ongoing efforts to refine AI coding assistance.

PART 1: High level Discord summaries

Cursor Community Discord

- Agent Mode Meltdown: Members reported that Agent mode was down for an hour and the Status Page was not up to date.

- There were jokes that dan percs was on the case to fix it, and he was busy replying people in cursor and taking care of slow requests, which is why he’s always online.

- Dan Perks Gets Keyboard Advice: Cursor’s Dan Perks solicited opinions on Keychron keyboards, specifically looking for a low profile and clean model with knobs.

- Suggestions poured in, including Keychron’s low-profile collection, though Dan expressed concerns about keycap aesthetics, stating I don’t like the keycaps.

- Pear AI vs Cursor: Price Wars?: Several members touted the advantages of using Pear AI and claimed that Cursor was now more expensive.

- One member claimed to be cooked due to their multiple annual cursor subs, and another claimed, If cursor changes their context window than i would stay at cursor or change their sonnet max to premium usage, otherwise if im paying for sonnet max i’d mind as well use pear because i pay even cheaper.

- ASI: Humanity’s Only Hope?: Members debated whether Artificial Superintelligence (ASI) is the next evolution, claiming that the ASI-Singularity(Godsend) has to be the only Global Solution.

- Others were skeptical, with one user jesting that gender studies is more important than ASI, claiming that its the next step into making humans a intergaltic species, with a nuetral fluid gender we can mate with aliens from different planets and adapt to their witchcraft technology.

- Pear AI Caught Cloning Continue?: Members discussed the controversy surrounding Pear AI, with one claiming that Pear AI cloned continue basically and just took someone elses job and decided its their project now.

- Others cited concerns that the project was closed source and that they should switch to another alternative, like Trae AI.

Unsloth AI (Daniel Han) Discord

- TPUs smoke T4s in Speed: A member highlighted that TPUs demonstrate significantly faster performance compared to T4s, especially when utilizing a batch size of 8, as evidenced by a comparative screenshot.

- This observation underscores the advantage of using TPUs for computationally intensive tasks in machine learning, where speed and efficiency are crucial.

- Gradient Accumulation Glitch Fixed: A recent blog post (Unsloth Gradient Accumulation fix) detailed and resolved an issue related to Gradient Accumulation, which was adversely affecting training, pre-training, and fine-tuning runs for sequence models.

- The implemented fix is engineered to mimic full batch training while curtailing VRAM usage, and also extends its benefits to DDP and multi-GPU configurations.

- Gemma 3 Suffers Identity Crisis: Users have observed that Gemma models obtained from Hugging Face mistakenly identify as first generation models with either 2B or 7B parameters, despite being the 12B Gemma 3.

- This misidentification arises because Google did not update the relevant identification code during training, despite the models exhibiting awareness of their identity and capacity.

- Gemma 3 gets Float16 Lifeline: Unsloth addressed infinite activations in Gemma 3 within float16, which previously led to NaN gradients during fine-tuning and inference on Colab GPUs, via this tweet.

- The solution maintains all intermediate activations in bfloat16 and upcasts layernorm operations to float32, sidestepping speed reductions by avoiding full float32 conversion, as elaborated on the Unsloth AI blog.

- Triton needs Downgrading for Gemma 3: A user encountered a SystemError linked to the Triton compiler while using Gemma 3 on a Python 3.12.9 environment with a 4090.

- The resolution involved downgrading Triton to version 3.1.0 on Python 3.11.x, based on recommendations from this GitHub issue.

aider (Paul Gauthier) Discord

- Featherless.ai Configs Cause Headaches: Users reported configuration issues with Featherless.ai when used with Aider, particularly concerning config file locations and API key setup; using the

--verbosecommand option helped with troubleshooting the setup.- One user highlighted that the wiki should clarify the home directory for Windows users, specifying it as

C:\Users\YOURUSERNAME.

- One user highlighted that the wiki should clarify the home directory for Windows users, specifying it as

- DeepSeek R1 is Cheap, But Slow: While DeepSeek R1 emerges as a cost-effective alternative to Claude Sonnet, its slower speed and performance relative to GPT-3.7 were disappointing to some users, even with Unsloth’s Dynamic Quantization.

- It was pointed out that the full, non-quantized R1 variant requires 1TB of RAM, which would make H200 cards a preferred choice; however, 32B models were still considered the best for home use.

- OpenAI’s o1-pro API Pricing Stings: The new o1-pro API from OpenAI has been met with user complaints due to its high pricing, set at $150 per 1M input tokens and $600 per 1M output tokens.

- One user quipped that a single file refactor and benchmark would cost $5, while another facetiously renamed it fatherless AI.

- Aider LLM Editing Skills Spark Debate: It was noted that Aider benefits most from LLMs that excel in editing code, rather than just generating it, referencing a graph from aider.chat.

- The polyglot benchmark employs 225 coding exercises from Exercism across multiple languages to gauge LLM editing skills.

- AI Coding Blindspots Focus on Sonnet LLMs: A blogpost was shared blogpost about AI coding blindspots they have noticed in LLMs, particularly those in the Sonnet family.

- The author suggests that future solutions may involve Cursor rules designed to address these problems.

LM Studio Discord

- Proxy Setting Saves LM Studio!: A user fixed LM Studio connection problems by enabling the proxy setting, doing a Windows update, resetting the network, and restarting the PC.

- They suspected it happened because of incompatible hardware or the provider blocking Hugging Face.

- PCIE Bandwidth Barely Boosts Performance: A user found that PCIE bandwidth barely affects inference speed, at most 2 more tokens per second (TPS) compared to PCI-e 4.0 x8.

- They suggest prioritizing space between GPUs and avoiding overflow with motherboard connectors.

- LM Studio Misreporting RAM/VRAM?: A user noticed that LM Studio’s RAM and VRAM display doesn’t update instantly after system setting changes, hinting the check is during install.

- Despite the incorrect reporting, they are testing if the application can exceed the reported 48GB of VRAM by disabling guardrails and increasing context length.

- Mistral Small Vision Support Still Elusive: Users found that certain Mistral Small 24b 2503 models on LM Studio are falsely labeled as supporting vision, as the Unsloth version loads without it, and the MLX version fails.

- Some suspect Mistral Small is text-only on MLX and llama.cpp, hoping a future mlx-vlm update will fix it.

- Multi-GPU Performance takes a Nose Dive: A user reported significant performance drops using multiple GPUs in LM Studio with CUDA llama.cpp v1.21.0, sharing performance data and logs.

- A member suggested manually modifying the tensor_split property to force LM Studio to use only one GPU.

Perplexity AI Discord

- Deep Research Gets UI Refresh: Users reported a new Standard/High selector in Deep Research on Perplexity and wondered if there is a limit to using High.

- The team is actively working on improving sonar-deep-research at the model level.

- GPT 4.5 Pulls a Disappearing Act: GPT 4.5 disappeared from the dropdown menu for some users, prompting speculation it was removed due to cost.

- One user noted it’s still present under the rewrite option.

- Sonar API Debuts New Search Modes: Perplexity AI announced improved Sonar models that maintain performance at lower costs, outperforming competitors like search-enabled GPT-4o, detailed in a blog post.

- They introduced High, Medium, and Low search compute modes to optimize performance and cost control and simplified the billing structure to input/output token pricing with flat search mode pricing, eliminating charges for citation tokens in Sonar Pro and Sonar Reasoning Pro responses.

- API Key Chaos Averted with Naming: A user requested the ability to name API keys on the UI to avoid accidental deletion of production keys, and were directed to submit a feature request on GitHub.

- Another user confirmed the API call seemed correct and cautioned to factor in rate limits as per the documentation.

- Perplexity on Locked Screen? Nope: Users reported that Perplexity doesn’t work on locked screens, unlike ChatGPT, generating disappointment among the community.

- Some users have noticed that Perplexity now uses significantly fewer sources (8-16, maybe 25 max) compared to the 40+ it used to use, impacting search depth.

Interconnects (Nathan Lambert) Discord

- O1 Pro API Shelved for Completions: The O1 Pro API will be exclusively available in the responses API due to its complex, multi-turn model interactions, as opposed to being added to chat completions.

- Most upcoming GPT and O-series models will be integrated into chat completions, unlike O1 Pro.

- Sasha Rush Joins Cursor for Frontier RL: Sasha Rush (@srush_nlp) has joined Cursor to develop frontier RL models at scale for real-world coding environments.

- Rush is open to discussing AI jobs and industry-academia questions, with plans to share his decision-making process in a blog post.

- Nvidia’s Canary Sings Open Source: Nvidia has open-sourced Canary 1B & 180M Flash (@reach_vb), providing multilingual speech recognition and translation models under a CC-BY license for commercial applications.

- The models support EN, GER, FR, and ESP languages.

- China’s AI Content to be Flagged: China will enforce its Measures for the Labeling of AI-Generated Synthetic Content beginning September 1, 2025, mandating the labeling of all AI-generated content.

- The regulations necessitate explicit and implicit markers on content like text, images, audio, video, and virtual scenes; see official Chinese government announcement.

- Samsung ByteCraft turns Text into Games: Samsung SAIL Montreal introduced ByteCraft, the world’s first generative model for video games and animations via bytes, converting text prompts into executable files, as documented in their paper and code.

- The 7B model is accessible on Hugging Face, with a blog post further detailing the project.

Notebook LM Discord

- NotebookLM Plus Subscribers Request Anki Integration: A NotebookLM Plus user requested a flashcard generation integration (Anki) in NotebookLM.

- However, the community didn’t have much to say on this topic.

- Customize Button Clears Up Audio Customization Confusion: The “Customize” button in the Audio Overview feature is available for both NotebookLM and NotebookLM Plus, and it allows users to customize episodes by typing prompts.

- Free accounts are limited to generating 3 audios per day, so choose your customizations wisely.

- Mindmap Feature Gradually Rolls Out: Users expressed excitement for the mindmap feature, with one sharing a YouTube video showing its interactive uses.

- It is not an A/B test, the rollout is gradual, and allows generating multiple mindmaps by selecting different sources, however editable mindmaps are not available.

- Audio Overviews Still Stumbling Over Pronunciation: Users report that audio overviews frequently mispronounce words, even with phonetic spelling in the Customize input box.

- NotebookLM team is aware of the issue, and recommend phonetic spellings in the source material as a workaround.

- Extension Users Run Into NotebookLM Page Limits: Users are using Chrome extensions for crawling and adding sources from links within the same domain, and point to the Chrome Web Store for NotebookLM.

- However, one user hit a limit of 10,000 pages while using one such extension.

Nous Research AI Discord

- Aphrodite Crushes Llama.cpp on Perf: A member reported achieving 70 tokens per second with FP6 Llama-3-2-3b-instruct using Aphrodite Engine, noting the ability to run up to 4 batches with 8192 tokens on 10GB of VRAM.

- Another member lauded Aphrodite Engine’s lead developer and highlighted the engine as one of the best for local running, while acknowledging Llama.cpp as a standard for compatibility and dependencies.

- LLMs Flounder when Debugging: Members observed that many models now excel at writing error-free code but struggle with debugging existing code, noting that providing hints is helpful.

- The member contrasted their approach of thinking through problems and providing possible explanations with code snippets, which has generally yielded success except in “really exotic stuff”.

- Nvidia’s Blackwell RTX Pro GPUs face Supply Chain Constraints: A member shared a Tom’s Hardware article about Nvidia’s Blackwell RTX Pro series GPUs, highlighting potential supply issues.

- The article suggests supply might catch up to demand by May/June, potentially leading to more readily available models at MSRP.

- Dataset Format > Chat Template for QwQ?: A member suggested not to over index on the format of the dataset, stating that getting the dataset into the correct chat template for QwQ is more important.

- They added that insights are likely unique to the dataset and that reasoning behavior seems to occur relatively shallow in the model layers.

- Intriguing Chatting Kilpatrick Clip: A member shared Logan Kilpatrick’s YouTube video, describing the chat as interesting.

- The discussion references an interesting chat related to Logan Kilpatrick’s YouTube video but No further details were provided.

MCP (Glama) Discord

- Cool Python Devs install UV Package Manager: Members discussed installing and using uv, a fast Python package and project manager written in Rust, as a replacement for pip and conda.

- It’s favored because its website is super minimal with just a search engine and a landing page.

- glama.json Claims Github MCP Servers: To claim a GitHub-hosted MCP server on Glama, users should add a

glama.jsonfile to the repository root with their GitHub username in themaintainersarray, as detailed here.- The configuration requires a

$schemalink toglama.ai/mcp/schemas/server.json.

- The configuration requires a

- MCP App Boosts Github API Rate Limits: Glama AI is facing GitHub API rate limits due to the increasing number of MCP servers but users can increase the rate limits by installing the Glama AI GitHub App.

- Doing so helps scale Glama by giving the app permissions.

- Turso Cloud Integrates with MCP: A new MCP server, mcp-turso-cloud, integrates with Turso databases for LLMs.

- This server implements a two-level authentication system for managing and querying Turso databases directly from LLMs.

- Unity MCP Integrates AI with File Access: The most advanced Unity MCP integration now supports files Read/Write Access of Project, enabling AI assistants to understand the scene, execute C# code, monitor logs, control play mode, and manipulate project files.

- Blender support is currently in development for 3D content generation.

OpenAI Discord

- o1-pro Model Pricing Stuns: The new o1-pro model is now available in the API for select developers, supporting vision, function calling, and structured outputs, as detailed in OpenAI’s documentation.

- However, its high pricing of $150 / 1M input tokens and $600 / 1M output tokens sparked debate, though some users claim it solves coding tasks in one attempt where others fail.

- ChatGPT Code with Emojis?!: Members seek ways to stop ChatGPT from inserting emojis into code, despite custom instructions, according to discussions in the gpt-4-discussions channel.

- Suggestions included avoiding the word emoji and instructing the model to “Write code in a proper, professional manner”.

- Chinese Model Self-Censors!: A user reported that a Chinese model deletes responses to prompts about the Cultural Revolution, providing screenshots as evidence.

- The issue was discussed in the ai-discussions channel, highlighting concerns about censorship in AI models.

- AI won’t let you pick Stocks: In api-discussions and prompt-engineering, users discussed using AI for stock market predictions, but members noted it’s against OpenAI’s usage policies to provide financial advice.

- Clarification was provided that exploring personal stock ideas is acceptable, but giving advice to others is prohibited.

- Agent SDK versus MCP Throwdown: Members compared the OpenAI Agent SDK with MCP (Model Communication Protocol), noting that the former works only with OpenAI models, while the latter supports any LLM using any tools.

- MCP allows easy loading of integrations via

npxanduvx, such asnpx -y @tokenizin/mcp-npx-fetchoruvx basic-memory mcp.

- MCP allows easy loading of integrations via

LMArena Discord

- LLMs face criticisms for AI Hallucinations: Members voiced worries over LLMs prone to mistakes and hallucinations when doing research.

- One member observed that agents locate accurate sources but still hallucinate the website, similar to how Perplexity’s Deep Research distracts and hallucinates a lot.

- o1-pro Price Raises Eyebrows at 4.5 Overpriced: OpenAI’s new o1-pro API is available at $150 / 1M input tokens and $600 / 1M output tokens (announcement).

- Some members felt this meant GPT-4.5 is overpriced, with one remarking that hosting an equivalent model with compute optimizations would be cheaper; however, others contended o1 reasoning chains require more resources.

- File Uploading Limitations Plague Gemini Pro: Users questioned why Gemini Pro does not support file uploads like Flash Thinking.

- They also noted that AI models struggle to accurately identify PDF files, including non-scanned ones, expressing hope for future models capable of carefully reading complete articles.

- Claude 3.7 Coding Prowess Debated: Some members believe Claude 3.7’s coding abilities are overrated, suggesting it excels at web development and tasks similar to SWE-bench, but struggles with general coding (leaderboard).

- Conversely, others found Deepseek R1 superior for terminal command tests.

- Vision AI Agent Building in Google AI Studio: One member reported success using Google AI Studio API to build a decently intelligent vision AI agent in Python.

- They also experimented with running 2-5+ agents simultaneously, sharing memory and browsing the internet together.

HuggingFace Discord

- Flux Diffusion Flows Locally: Members discussed running the Flux diffusion model locally, with suggestions to quantize it for better performance on limited VRAM and referencing documentation and this blogpost.

- Members linked a relevant GitHub repo for optimizing diffusion models, and a Civitai article for GUI setup.

- HF Inference API Errors Out, Users Fume: A user reported a widespread issue with the Hugging Face Inference API returning 404 errors, impacting multiple applications and paid users linking to this discussion.

- A team member acknowledged the problem, stating that they reported it to the team for further investigation.

- Roblox Gets Safe (Voice) with HF Classifier: Roblox released a voice safety classifier on Hugging Face, fine-tuned with 2,374 hours of voice chat audio clips, as documented in this blog post and the model card.

- The model outputs a tensor with labels like Profanity, DatingAndSexting, Racist, Bullying, Other, and NoViolation.

- Little Geeky Learns to Speak: A member showcased an Ollama-based Gradio UI powered by Kokoro TTS that automatically reads text output in a chosen voice and is available at Little Geeky’s Learning UI.

- This UI includes model creation and management tools, as well as the ability to read ebooks and answer questions about documents.

- Vision Model Faces Input Processing Failures: A member reported receiving a “failed to process inputs: unable to make llava embedding from image” error while using a local vision model after downloading LLaVA.

- The root cause of the failure remains unknown.

OpenRouter (Alex Atallah) Discord

- O1-Pro Pricing Shocks Users: Users express outrage at O1-Pro’s pricing, deeming costs of $150/month input and $600/month output as prohibitively insane.

- Speculation arises that the high price is a response to competition from R1 and Chinese models, or because OAI is combining multiple model outputs, without streaming support.

- LLM Chess Tournament Tests Raw Performance: A member initiated a second chess tournament to assess raw performance, utilizing raw PGN movetext continuation and posted the results.

- Models repeat the game sequence and add one new move, with Stockfish 17 evaluating accuracy; the first tournament with reasoning is available here.

- OpenRouter API: Free Models Not So Free?: A user discovered that the model field in the

/api/v1/chat/completionsendpoint is required, contradicting the documentation’s claim that it is optional, even when using free models.- One user suggested that the model field should default to the default model, or default to the default default model.

- Groq API experiences Sporadic Functionality: Users reported that Groq is functioning in the OpenRouter chatroom, yet not via the API.

- A member requested clarification on the specific error encountered when using the API, pointing to Groq’s speed.

- OpenAI Announces New Audio Models!: OpenAI will announce two new STT models and one new TTS model (gpt-4o-mini-tts).

- The speech-to-text models are named gpt-4o-transcribe and gpt-4o-mini-transcribe, and include an audio integration with the Agents SDK for creating customizable voice agents.

GPU MODE Discord

- Vast.ai Bare Metal Access: Elusive?: Members debated whether Vast.ai allows for NCU profiling and whether getting bare metal access is feasible, while another member inquired about obtaining NCU and NSYS.

- While one member doubted the possibility of bare metal access, they conceded they could be wrong.

- BFloat16 Atomic Operations Baffle Triton: The community explored making

tl.atomicwork with bfloat16 on non-Hopper GPUs, with suggestions to check out tilelang for atomic operations and the limitation of bfloat16 support on non-Hopper GPUs.- A member pointed out that it currently crashes with bfloat16 due to limitations with

tl.atomic_add, but one believes there’s a way to do atomic addition viatl.atomic_cas.

- A member pointed out that it currently crashes with bfloat16 due to limitations with

- cuTile Might be Yet Another Triton: Members discussed NVIDIA’s announcement of cuTile, a tile programming model for CUDA, referencing a tweet about it, with one member expressing concern over NVIDIA’s potential lack of support for other backends like AMD GPUs.

- There was speculation that cuTile might be similar to tilelang, yet another triton but nvidia.

- GEMM Activation Fusion Flounders: A member has experienced issues writing custom fused GEMM+activation triton kernels, noting it’s dependent on register spillage, since fusing activation in GEMM can hurt performance if GEMM uses all registers.

- Splitting GEMM and activation into two kernels can be faster, as discussed in gpu-mode lecture 45.

- Alignment Alters Jumps in Processors: Including

<iostream>in C++ code can shift the alignment of the main loop’s jump, affecting performance due to processor-specific behavior, as the speed of jumps can depend on the alignment of the target address.- A member noted that in some Intel CPUs, conditional jump instruction alignment modulo 32 can significantly impact performance due to microcode updates patching security bugs, suggesting adding 16 NOP instructions in inline assembly before the critical loop can reproduce the issue.

Latent Space Discord

- Orpheus Claims Top Spot in TTS Arena: The open-source TTS model, Orpheus, debuted, claiming superior performance over both open and closed-source models like ElevenLabs and OpenAI, according to this tweet and this YouTube video.

- Community members discussed the potential impact of Orpheus on the TTS landscape, awaiting further benchmarks and comparisons to validate these claims.

- DeepSeek R1 Training Expenses Draw Chatter: Estimates for the training cost of DeepSeek R1 are under discussion, with initial figures around $6 million, though Kai-Fu Lee estimates $140M for the entire DeepSeek project in 2024, according to this tweet.

- The discussion underscored the substantial investment required for developing cutting-edge AI models and the variance in cost estimations.

- OpenAI’s O1-Pro Hits the API with Enhanced Features: OpenAI released o1-pro in their API, offering improved responses at a cost of $150 / 1M input tokens and $600 / 1M output tokens, available to select developers on tiers 1–5, per this tweet and OpenAI documentation.

- This model supports vision, function calling, and Structured Outputs, marking a significant upgrade in OpenAI’s API offerings.

- Gemma Package Eases Fine-Tuning Labors: The Gemma package, a library simplifying the use and fine-tuning of Gemma, was introduced and is available via pip install gemma and documented on gemma-llm.readthedocs.io, per this tweet.

- The package includes documentation on fine-tuning, sharding, LoRA, PEFT, multimodality, and tokenization, streamlining the development process.

- Perplexity Reportedly Eyes $18B Valuation: Perplexity is reportedly in early talks for a new funding round of $500M-$1B at an $18 billion valuation, potentially doubling its valuation from December, as reported by Bloomberg.

- This funding round would reflect increased investor confidence in Perplexity’s search and AI technology.

Eleuther Discord

- Monolingual Models Create Headaches: Members expressed confusion over the concept of ‘monolingual models for 350 languages’ because of the expectation that models should be multilingual.

- A member clarified that the project trains a model for each language, resulting in 1154 total models on HF.

- CV Engineer Starts AI Safety Quest: A member introduced themself as a CV engineer and expressed excitement about contributing to research in AI safety and interpretability.

- They are interested in discussing these topics with others in the group.

- Expert Choice Routing Explored: Members discussed implementing expert choice routing on an autoregressive model using online quantile estimation during training to derive thresholds for inference.

- One suggestion involved assuming router logits are Gaussian, computing the EMA mean and standard deviation, and then utilizing the Gaussian quantile function.

- Quantile Estimation Manages Sparsity: One member proposed using an estimate of the population quantiles at inference time to maintain the desired average sparsity, drawing an analogy to batchnorm.

- Another member noted that the dsv3 architecture enables activating between 8-13 experts due to node limited routing, but the goal is to allow between 0 and N experts.

- LLMs Face Kolmogorov Compression Test: A member shared a paper, “The Kolmogorov Test”, which introduces a compression-as-intelligence test for code generating LLMs.

- The Kolmogorov Test (KT) presents a model with a data sequence at inference time, challenging it to generate the shortest program capable of producing that sequence.

Cohere Discord

- Command-A Communicates Convivially in Castellano: A user from Mexico reported that Command-A mimicked their dialect in a way they found surprisingly natural and friendly.

- The model felt like speaking with a Mexican person, even without specific prompts.

- Command-R Consumes Considerable Tokens: A user tested a Cohere model via OpenRouter for Azure AI Search and was impressed with the output.

- However, they noted that it consumed 80,000 tokens on input per request.

- Connectors Confound Current Cmd Models: A user explored Connectors with Slack integration but found that they didn’t seem to be supported by recent models like cmd-R and cmd-A.

- Older models returned an error 500, and Connectors appear to be removed from the API in V2, prompting disappointment as they simplified data handling, with concerns raised whether transition from Connectors to Tools is a one-for-one replacement.

- Good News MCP Server Generates Positivity: A member built a MCP server named Goodnews MCP that uses Cohere Command A in it’s tool

fetch_good_news_listto provide positive, uplifting news to MCP clients, with code available on GitHub.- The system uses Cohere LLM to rank recent headlines, returning the most positive articles.

- Cohere API Context: Size Matters: A member expressed a preference for Cohere’s API due to OpenAI’s API having a context size limit of only 128,000, while Cohere offers 200,000.

- However, using the compatibility API causes you to lose access to cohere-specific features such as the

documentsand thecitationsin the API response.

- However, using the compatibility API causes you to lose access to cohere-specific features such as the

Modular (Mojo 🔥) Discord

- Photonics Speculation Sparks GPU Chatter: Discussion centered on whether photonics and an integrated CPU in Ruben GPUs would be exclusive to datacenter models or extend to consumer-grade versions (potentially the 6000 series).

- The possibility of CX9 having co-packaged optics was raised, suggesting that a DIGITs successor could leverage such technology, while the CPU is confirmed for use in DGX workstations.

- Debugging Asserts Requires Extra Compiler Option: Enabling debug asserts in the Mojo standard library requires an extra compile option,

-D ASSERT=_, which is not widely advertised, as seen in debug_assert.mojo.- It was noted that using

-gdoes not enable the asserts, and the expectation is that compiling with-Ogshould automatically turn them on.

- It was noted that using

- Mojo List Indexing Prints 0 Due to UB: When a Mojo List is indexed out of range, it prints 0 due to undefined behavior (UB), rather than throwing an error.

- The issue arises because the code indexes off the list into the zeroed memory the kernel provides.

- Discussion on Default Assert Behavior: A discussion arose regarding the default behavior of

debug_assert, particularly the confusion arounddebug_assert[assert_mode="none"], and whether it should be enabled by default in debug mode.- There was a suggestion that all assertions should be enabled when running a program in debug mode.

LlamaIndex Discord

- DeepLearningAI Launches Agentic Workflow Course: DeepLearningAI launched a short course on building agentic workflows using RAG, covering parsing forms and extracting key fields, with more details on Twitter.

- The course teaches how to create systems that can automatically process information and generate context-aware responses.

- AMD GPUs Power AI Voice Assistant Pipeline: A tutorial demonstrates creating a multi-modal pipeline using AMD GPUs that transcribes speech to text, uses RAG, and converts text back to speech, leveraging ROCm and LlamaIndex, detailed in this tutorial.

- The tutorial focuses on setting up the ROCm environment and integrating LlamaIndex for context-aware voice assistant applications.

- Parallel Tool Call Support Needed in LLM.as_structured_llm: A member pointed out the absence of

allow_parallel_tool_callsoption when using.chatwithLLM.as_structured_llmand suggested expanding the.as_structured_llm()call to accept arguments likeallow_parallel_tool_calls=False.- Another user recommended using

FunctionCallingProgramdirectly for customization and settingadditional_kwargs={"parallel_tool_calls": False}for OpenAI, referencing the OpenAI API documentation.

- Another user recommended using

- Reasoning Tags Plague ChatMemoryBuffer with Ollama: A user using Ollama with qwq model is struggling with

<think>reasoning tags appearing in thetextblock of theChatMemoryBufferand sought a way to remove them when usingChatMemoryBuffer.from_defaults.- Another user suggested manual post-processing of the LLM output, as Ollama doesn’t provide built-in filtering, and the original user offered to share their MariaDBChatStore implementation, a clone of PostgresChatStore.

- llamaparse PDF QA Quandaries: A user seeks advice on QA for hundreds of PDF files parsed with llamaparse, noting that some are parsed perfectly while others produce nonsensical markdown.

- They are also curious about how to implement different parsing modes for documents requiring varied approaches.

Torchtune Discord

- Nvidia’s Hardware Still Behind Schedule: Members report that Nvidia’s new hardware is late, saying the H200s were announced 2 years ago but only available to customers 6 months ago.

- One member quipped that this is the “nvidia way.”

- Gemma 3 fine-tuning to get Torchtune support: A member is working on a PR for gemma text only, and may try to accelerate landing this, before adding image capability later.

- A member pledged to continue work on Gemma 3 ASAP, jokingly declaring their “vacation is transforming to the torchtune sprint”.

- Driver Version Causes nv-fabricmanager Errors: The nv-fabricmanager may throw errors when its driver version doesn’t match the card’s driver version.

- This issue has been observed on some on-demand VMs.

tinygrad (George Hotz) Discord

- Adam Optimizer Hits Low Loss in ML4SCI task: A member reported training a model for

ML4SCI/task1with the Adam optimizer, achieving a loss in the 0.2s, with code for the setup available on GitHub.- The repo is part of the member’s Google Summer of Code 2025 project.

- Discord Rules Enforcement in General Channel: A member was reminded to adhere to the discord rules, specifically that the channel is for discussion of tinygrad development and tinygrad usage.

- No further details about the violation were provided.

LLM Agents (Berkeley MOOC) Discord

- User Hypes AgentX Research Track: A user conveyed excitement and interest in joining the AgentX Research Track, eager to collaborate with mentors and postdocs.

- They aim to contribute to the program through research on LLM agents and multi-agent systems.

- User Vows Initiative and Autonomy: A user promised proactivity and independence in driving their research within the AgentX Research Track.

- They committed to delivering quality work within the given timeframe, appreciating any support to enhance their selection chances.

DSPy Discord

- DSPy User Seeks Guidance on arXiv Paper Implementation: kotykd inquired about the possibility of implementing a method described in this arXiv paper using DSPy.

- Further details regarding the specific implementation challenges or goals were not provided.

- arXiv Paper Implementation: The user, kotykd, referenced an arXiv paper and inquired if DSPy could be used to implement it.

- The paper’s content and the specific aspects the user was interested in were not detailed.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Nomic.ai (GPT4All) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Cursor Community ▷ #general (1517 messages🔥🔥🔥):

Agent Mode Down, Dan Perks, Keychron Keyboard, Vibe Coding, Pear AI vs Cursor

- Agent Broke: Longest Hour of My Life: Members reported that Agent mode was down for an hour and the Status Page was not up to date, which was the longest hour of my life.

- Members joked that dan percs was on the case to fix it, and he was busy replying people in cursor and taking care of slow requests, which is why he’s always online.

- Dan Perks: Keyboard Connoisseur: Cursor’s Dan Perks solicited opinions on Keychron keyboards, specifically looking for a low profile and clean model with knobs.

- Suggestions poured in, including Keychron’s low-profile collection, though Dan expressed concerns about keycap aesthetics, stating I don’t like the keycaps.

- Pear Pressure: Pear AI vs Cursor: Several members touted the advantages of using Pear AI and claimed that Cursor was now more expensive.

- One member claimed to be cooked due to their multiple annual cursor subs, and another claimed, If cursor changes their context window than i would stay at cursor or change their sonnet max to premium usage, otherwise if im paying for sonnet max i’d mind as well use pear because i pay even cheaper.

- ASI: The Only Global Solution?: Members debated whether Artificial Superintelligence (ASI) is the next evolution, claiming that the ASI-Singularity(Godsend) has to be the only Global Solution.

- Others were skeptical, with one user jesting that gender studies is more important than ASI, claiming that its the next step into making humans a intergaltic species, with a nuetral fluid gender we can mate with aliens from different planets and adapt to their witchcraft technology.

- License Kerfuffle: Pear AI Cloned Continue?: Members discussed the controversy surrounding Pear AI , with one claiming that Pear AI cloned continue basically and just took someone elses job and decided its their project now.

- Others cited concerns that the project was closed source and that they should switch to another alternative, like Trae AI.

Links mentioned:

- ThePrimeagen - Twitch: CEO @ TheStartup™ (multi-billion)Stuck in Vim Wishing it was Emacs

- Tweet from undefined: no description found

- Markdown Renderer: no description found

- Settings | Cursor - The AI Code Editor: You can manage your account, billing, and team settings here.

- I Use Arch Btw Use GIF - I Use Arch Btw Use Arch - Discover & Share GIFs: Click to view the GIF

- Tweet from Anthropic (@AnthropicAI): Claude can now search the web.Each response includes inline citations, so you can also verify the sources.

- Tweet from Vercel (@vercel): Vercel and @xAI are partnering to bring zero-friction AI to developers.• Grok models are now available on Vercel• Exclusive xAI free tier—no additional signup required• Pay for what you use through yo...

- ThePrimeagen - Twitch: CEO @ TheStartup™ (multi-billion)Stuck in Vim Wishing it was Emacs

- Reddit - The heart of the internet: no description found

- Changelog | Cursor - The AI Code Editor: New updates and improvements.

- Best of Idiocracy- Dr Lexus!: One of the best Scenes. Dr. Lexus!Idiocracy 2006 comedy film, directed by Mike Judge. Starring Luke Wilson and Maya Rudolph.

- Reddit - The heart of the internet: no description found

- Reddit - The heart of the internet: no description found

- - YouTube: no description found

- Trae - Ship Faster with Trae: Trae is an adaptive AI IDE that transforms how you work, collaborating with you to run faster.

- Reddit - The heart of the internet: no description found

- Dialogo AI - Intelligent Task Automation: Dialogo AI provides intelligent AI agents that learn, adapt, and automate complex workflows across any platform. From data analysis to system management, our intelligent agents transform how you work.

- Tweet from GitHub - FxEmbed/FxEmbed: Fix X/Twitter and Bluesky embeds! Use multiple images, videos, polls, translations and more on Discord, Telegram and others: Fix X/Twitter and Bluesky embeds! Use multiple images, videos, polls, translations and more on Discord, Telegram and others - FxEmbed/FxEmbed

- Low Profile Keyboard: Go ultra-slim with our Keychron low-profile mechanical keyboards.

Unsloth AI (Daniel Han) ▷ #general (371 messages🔥🔥):

TPUs speed comparison, Gradient Accumulation fix, Gemma model version misinformation, Sophia optimizer experiments, Gemma 3 Activation Normalization

- TPUs Blaze, T4s Haze: A member noted that TPUs are significantly faster than T4s, especially when using a batch size of 8, emphasizing their superior speed based on observed timestamps, including a comparative screenshot.

- Gradient Accumulation Fixed: A blog post (Unsloth Gradient Accumulation fix) discussed an issue with Gradient Accumulation affecting training, pre-training, and fine-tuning runs for sequence models, which has been addressed to ensure accurate training and loss calculations.

- The fix aims to mimic full batch training with reduced VRAM usage and also impacts DDP and multi-GPU setups.

- Google Gemma’s Identity Crisis: Users reported that Gemma models downloaded from Hugging Face incorrectly identify themselves as first generation with either 2B or 7B parameters, even when the downloaded model is a 12B Gemma 3.

- This hallucination issue stems from Google neglecting to update the part of the training code responsible for this identification, as the models know that they’re a Gemma, and at least 2 different capacities.

- Gemma 3 gets Float16 Fix: Unsloth has fixed infinite activations in Gemma 3 for float16, which were causing NaN gradients during fine-tuning and inference on Colab GPUs, the fix keeps all intermediate activations in bfloat16 and upcasts layernorm operations to float32.

- The fix avoids reducing speed, but the naive solution would be to do everything in float32 or bfloat16, but GPUs without float16 tensor cores will be 4x or more slower, as explained on the Unsloth AI blog.

- Unsloth Notebooks Missing Deps: Users reported issues with running Unsloth notebooks, specifically the Gemma 3 and Mistral notebooks on Google Colab, caused by missing dependencies due to the

--no-depsflag in the installation command, and other various version incompatibilities.- A member is on it

Links mentioned:

- featherless-ai/Qwerky-QwQ-32B · Hugging Face: no description found

- Tweet from Daniel Han (@danielhanchen): We'll be at Ollama and vLLM's inference night next Thursday! 🦥🦙Come meet us at @YCombinator's San Francisco office. Lots of other cool open-source projects will be there too!Quoting olla...

- Fine-tuning Guide | Unsloth Documentation: Learn all the basics and best practices of fine-tuning. Beginner-friendly.

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- unsloth/aya-vision-8b · Hugging Face: no description found

- Analyze embedding space usage: Analyze embedding space usage. GitHub Gist: instantly share code, notes, and snippets.

- Fine-tune Gemma 3 with Unsloth: Gemma 3, Google's new multimodal models.Fine-tune & Run them with Unsloth! Gemma 3 comes in 1B, 4B, 12B and 27B sizes.

- Tweet from Daniel Han (@danielhanchen): I fixed infinite activations in Gemma 3 for float16!During finetuning and inference, I noticed Colab GPUs made NaN gradients - it looks like after each layernorm, activations explode!max(float16) = 65...

- How to vision fine-tune the Gemma3 using custom data collator on unsloth framework? · Issue #2122 · unslothai/unsloth: I referd to Google's tutorial before : https://ai.google.dev/gemma/docs/core/huggingface_vision_finetune_qlora#setup-development-environment and I ran it successfully, using my customized data_col...

- notebooks/nb/Gemma3_(1B)-GRPO.ipynb at main · unslothai/notebooks: Unsloth Fine-tuning Notebooks for Google Colab, Kaggle, Hugging Face and more. - unslothai/notebooks

- Bug Fixes in LLM Training - Gradient Accumulation: Unsloth's Gradient Accumulation fix solves critical errors in LLM Training.

- no title found: no description found

- [GRPO] add vlm training capabilities to the trainer by CompN3rd · Pull Request #3072 · huggingface/trl: What does this PR do?This is an attempt at addressing #2917 .An associated unittest has been added and less "toy-examply" trainings seem to maximize rewards as well, but I don...

- notebooks/nb at main · unslothai/notebooks: Unsloth Fine-tuning Notebooks for Google Colab, Kaggle, Hugging Face and more. - unslothai/notebooks

- notebooks/nb/Gemma3_(4B).ipynb at main · unslothai/notebooks: Unsloth Fine-tuning Notebooks for Google Colab, Kaggle, Hugging Face and more. - unslothai/notebooks

- notebooks/nb/Mistral_(7B)-Text_Completion.ipynb at main · unslothai/notebooks: Unsloth Fine-tuning Notebooks for Google Colab, Kaggle, Hugging Face and more. - unslothai/notebooks

- Text Completion Notebook - Backwards requires embeddings to be bf16 or fp16 · Issue #2127 · unslothai/unsloth: I am trying to run the notebook from the Continue training, https://docs.unsloth.ai/basics/continued-pretraining Text completion notebook https://colab.research.google.com/drive/1ef-tab5bhkvWmBOObe...

- AttributeError: 'Gemma3Config' object has no attribute 'vocab_size' · Issue #36683 · huggingface/transformers: System Info v4.50.0.dev0 Who can help? @ArthurZucker @LysandreJik @xenova Information The official example scripts My own modified scripts Tasks An officially supported task in the examples folder ...

- unsloth/unsloth/models/loader.py at main · unslothai/unsloth: Finetune Llama 3.3, DeepSeek-R1, Gemma 3 & Reasoning LLMs 2x faster with 70% less memory! 🦥 - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #off-topic (11 messages🔥):

GTC worth it?, Gemma 3 BFloat16 ranges, Cfloat16 idea, hiddenlayer with vllm

- GTC Ticket Cost Justified?: A member asked if GTC was worth the price of admission and expressed interest in attending next year.

- Another member mentioned they got a complimentary ticket through an NVIDIA contact and suggested asking them for one.

- Gemma 3 Loves BFloat16: Daniel Han shared thoughts on how Gemma 3 is the first model he encountered to love using larger full bfloat16 ranges, and speculated that this may be why it’s an extremely powerful model for its relatively small size, in this tweet.

- cfloat16 proposed: Referencing how Gemma 3 loves larger full bfloat16 ranges, a member proposed a cfloat16 idea: 1 bit for the sign, 10 bits for the exponent, 5 bits for the mantissa.

- This is supposedly better because what matters is the exponent anyway.

- vllm needs hiddenlayer?: A member asked if there was any way to get hiddenlayer (last) with vllm for non pooling models, requesting a 7b r1 distill.

Link mentioned: Tweet from Daniel Han (@danielhanchen): On further thoughts - I actually find this to be extremely fascinating overall! Gemma 3 is the first model I encountered to “love” using larger full bfloat16 ranges, and I’m speculating, m…

Unsloth AI (Daniel Han) ▷ #help (63 messages🔥🔥):

Gemma 3 finetuning, Data format for prompt/response pairs, Multi-image training for Gemma 3, Triton downgrade for Gemma 3, DPO examples and patching

- Gemma 3 Dependency Error: A Frustrating Start: A user encountered a dependency error while trying to fine-tune Gemma 3, even after installing the latest transformers from git directly.

- They experienced the same issue on Colab, indicating a potential problem with the environment or installation process.

- Gemma 3: Getting the data format correct: A user struggled with the correct data format for Gemma 3 finetuning, questioning whether the prompt/response pairs need to follow a specific format as indicated in the notebook.

- They realized they could use Gemma 3 itself to create a decent prompt incorporating the proper conversation style format.

- Triton needs to be Downgraded for Gemma 3: a Quirky Quagmire: A user ran into issues with Gemma 3 on a fresh Python 3.12.9 environment with a 4090, encountering a SystemError related to the Triton compiler.

- The solution involved force downgrading Triton to version 3.1.0 on Python 3.11.x, as suggested in this GitHub issue.

- Saving Finetuned Models in Ollama: A Case Study: A user reported discrepancies between the finetuned model’s performance in Colab and its behavior when saved as a

.gguffile and run locally using Ollama.- They inquired about the correct method to save the model to retain the finetuning effects, differentiating between