Gemini is all you need.

AI News for 5/19/2025-5/20/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (215 channels, and 7031 messages) for you. Estimated reading time saved (at 200wpm): 622 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

Twelve months ago we covered Google I/O, but if we’re being honest Gemini wasn’t quiiite frontier yet and it was somewhat overshadowed by 4o’s launch.

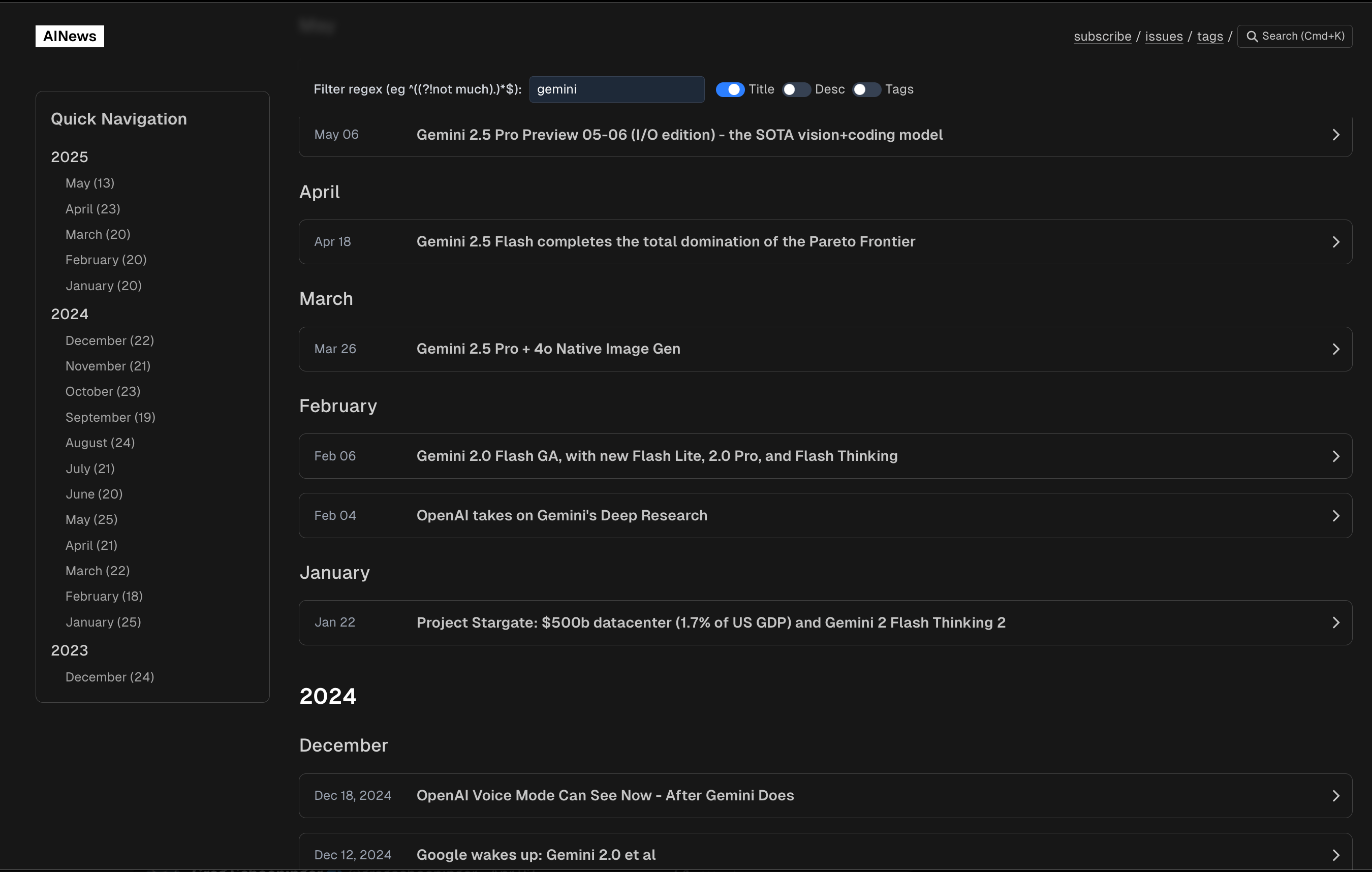

Six months ago we wrote that Google wakes up with Gemini 2.0, and that began an epic multi-month run of increasing Gemini dominance (even adopting the AINews chart):

and today confirmed by official numbers from Gemini (though much of this helped by having the most generous free tier in the world):

The AI Twitter recap below does a pretty good job of recapping the major launches so we won’t really bother redoing it, but we’d definitely say it missed the launch of Jules (Gemini’s Codex/Devin competitor) because Jules was somewhat pre-leaked.

As always the Verge does a great job of condensing the 3 hour keynote into 30 mins:

AI Twitter Recap

Google I/O 2024 Event and Announcements

- Google I/O Event Details and Keynotes: *philschmid provided a thread summarizing the links needed to watch and participate online. The Main Keynote was on May 20th at 10am PT, with the Developer Keynote at 1:30pm PT. The AI Stage events were scheduled for both May 20th and 21st. Also,* philschmid listed the times of events. The events would start with the Main Keynote at 10 am PT, 7pm CEST.

- Google’s AI Progress Since Last I/O: @Google noted that since the last Google I/O, they have announced over a dozen models and research breakthroughs, and released over 20 major AI products and features. They had also unveiled Project Astra last year (@Google). @GoogleDeepMind had shared.

- AI-Driven Transformations and Developer Opportunities: @Google quoted Sundar Pichai, noting the significant opportunities with AI and the role of developers in ensuring its benefits reach many people. _philschmid stated that they shipped two minor QoL Updates for AI Studio: a built-in usage dashboard and a new GenMedia “Playground”.

- AI Overviews & Search Improvements: @Google announced the launch of AI Mode to everyone in the U.S., building on the success of AI Overviews, which have led to happier users and more frequent searches. Also, Gemini 2.5 is coming to Search (@Google). Google Search is bringing generative AI to more people than any other product in the world (@Google). @Google previewed what’s coming soon to AI Mode, such as personalized suggestions, complex analysis, deep search, agentic capabilities, and Search Live.

- Gemini Updates: @demishassabis said that their ultimate vision for the GeminiApp is to transform it into a universal AI assistant, a key milestone on the road to AGI. Some users are experiencing problems upgrading (@hkproj).

- Gemini 2.5 Pro and Flash Models: @GoogleDeepMind announced Deep Think in 2.5 Pro, an enhanced reasoning mode using parallel thinking techniques. @jack_w_rae highlighted that Deep Think marks the progression to greater test-time compute and stronger reasoning capabilities in Gemini. @GoogleDeepMind mentioned stronger security and increased transparency for what the model is thinking. It was found that Gemini 2.5 is now the leading model for learning (@GoogleDeepMind). @omarsar0 noted that Gemini 2.5 Flash is now crushing with style. Also, the Flash version is using fewer tokens for the same performance (@GoogleDeepMind).

- Gemini Diffusion Model: GoogleDeepMind announced Gemini Diffusion (@GoogleDeepMind), and the model can generate 5x faster than 2.0 Flash Light (@omarsar0). It is currently available as an experimental demo to help shape future models (@GoogleDeepMind).

- Veo 3 Video Generation Model: @GoogleDeepMind introduced Veo 3, a new generative video model that adds soundtracks to clips you make, allowing you to create talking characters and include sound effects. timbrooks shared it.

- Imagen 4 Image Generation Model: @GoogleDeepMind announced Imagen 4, an image generation model with richer images, nuanced colors, intricate details, and superior typography. It can create comics, stylized stamps, packaging, and more with improved spelling (@GoogleDeepMind).

- Project Astra and Gemini Live: @GoogleDeepMind reviewed the improvements to Project Astra, including better voice output, memory, and computer control, making it more personalized and proactive. @GoogleDeepMind confirmed that Gemini Live camera and screen sharing in GeminiApp is available on Android and rolling out to iOS.

- Agent Mode: @Google noted that they are starting to integrate agentic capabilities throughout their products, including GoogleChrome, Search, and GeminiApp. Agent Mode in GeminiApp will let you delegate complex planning and tasks to Gemini (@Google).

- Google Beam (fka Project Starline): The new AI-first video communication platform uses a state-of-the-art AI video model to transform 2D video streams into a realistic 3D experience (@GoogleDeepMind).

- Android XR and Partnerships: Google announced glasses with Android XR are lightweight and designed for all-day wear (@Google). They are partnering with Samsung to create the software and reference hardware (@Google).

- Pricing and Availability: A new Google AI Ultra subscription tier will give access to Gemini 2.5 Pro Deep Think, Veo 3, and Project Mariner (@scaling01). Google is upgrading their AI subscription plans (@Google).

- Contrarian viewpoints on announcements: c_valenzuelab found it uninspiring, saying it elicited a yawn.

AI Model Releases, Evaluation, and Analysis

- DeepSeek V3 details: Research from @deepseek_ai clarifies how DeepSeek-V3 works using its key innovations.

- Hugging Face’s Tiny Agents: Hugging Face has released Tiny Agents into its own NPM package, featuring lightweight composable agents built on Hugging Face’s Inference Client and MCP stack (@_akhaliq).

- Model Merging in Pre-training: @iScienceLuvr highlighted a study on model merging during LLM pre-training, demonstrating that merging checkpoints from the stable training phase produces consistent and significant performance improvements.

- Adjoint Sampling by Meta: Meta AI introduced Adjoint Sampling, a new learning algorithm that trains generative models based on scalar rewards, which can become the foundation for further research into highly scalable sampling methods (@AIatMeta).

- KernelLLM 8B by Meta Exceeds GPT-4o in Single-Shot Performance: Meta released KernelLLM 8B on Hugging Face, and it exceeds models such as GPT-4o and DeepSeek V3 in single-shot performance (@reach_vb).

- NVIDIA’s Cosmos-Reason1-7B: NVIDIA released Cosmos-Reason1-7B, a new vision reasoning model for robotics. It is the first reasoning model for robotics based on Qwen 2.5-VL-7B (@mervenoyann).

- AniSORA Model: Bilibili dropped AniSORA on Hugging Face, an anime video generation model, Apache 2.0 licensed (@reach_vb).

- Stability AI Releases Stable Audio Open Small: mervenoyann noted the release of stable-audio-open-small new text-to-audio model.

- MMLongBench for Long-Context Vision-Language Models: MMLongBench benchmarks long-context vision-language models effectively and thoroughly (@_akhaliq).

- Marin: Open Lab for AI Development: @percyliang introduced Marin, an open lab, to fulfill the vision for open-source AI, and @TheAITimeline shared. Marin repurposes GitHub, which has been successful for open-source software, for AI (@percyliang).

- OMol25 and UMA: Meta AI released Open Molecules 2025 (OMol25) (@AIatMeta). @ClementDelangue said that Meta AI just released OMol25 on @huggingface.

- Insights into DeepSeek-V3: This paper introduces insights into DeepSeek-V3’s hardware for AI Architectures (@TheAITimeline). DeepSeek is now the benchmark for Nvidia (@teortaxesTex).

- LMEval Leaderboard: A new version of Gemini-2.5-Flash climbs to #2 overall in chat (@lmarena_ai). It was also found that Mistral Medium 3 makes a strong debut with the community (@lmarena_ai).

- Code Generation Models Leaderboard: DeepCoder-14B-Preview, a code generation model that competes with top reasoning models like OpenAI’s o1 and DeepSeek-R1, but at a fraction of the size (@DeepLearningAI).

- Runway References and Image Generation: Here is a new workflow for Gen-4 References: Element extraction and composition (@c_valenzuelab).

- Improving Assembly Code Performance with LLMs via RL: This paper discusses how to improve assembly code performance with LLMs via reinforcement learning (@_akhaliq).

- Group Think paper: The paper is about multiple concurrent reasoning agents collaborating at token level granularity (@_akhaliq).

- Improving Factuality in LLMs: Scaling Reasoning can Improve Factuality in Large Language Models (@_akhaliq).

- Study of Data Augmentation: TeortaxesTex noted another Seed paper, now on data augmentation.

AI in Robotics, Agents, and Automation

- NVIDIA’s Physical AI models reasoning: Nvidia open sourced Physical AI models reasoning models that understand physical common sense and generate appropriate embodied decisions (@reach_vb).

- Project Mariner: @GoogleDeepMind made updates to Project Mariner, their research prototype that can interact with the web and get things done.

- DreamGen for Robot Learning: NVIDIA GEAR Lab introduced DreamGen, a new engine that scales up robot learning not with fleets of human operators, but with digital dreams in pixels (@DrJimFan).

- Agentic DevOps with GitHub Copilot: GitHub Copilot now supports the entire software development lifecycle – from planning and implementation to updates, tests, and debugging (@TheTuringPost).

- Azure AI Foundry Agent Service: Azure AI Foundry Agent Service is now generally available, and it comes with first-class LlamaIndex support (@llama_index).

Company Partnerships, Investments, and Business Applications

- Cohere’s Partnerships with Dell and SAP: Cohere announced partnerships with Dell to offer Cohere North on-premises (@cohere) and SAP to power enterprise automation (@cohere).

- Sakana AI and MUFG Bank: Sakana AI and MUFG Bank, the largest bank in Japan, have signed a comprehensive partnership agreement (@SakanaAILabs). Mitsubishi UFJ Financial Group’s banking unit has hired startup Sakana AI to power its systems with AI (@hardmaru).

- Klarna and Open AI: @kevinweil noted a partnership with OpenAI and Box.

Techniques, Tools, and Tutorials

- Data Quality and LLM Training: @cwolferesearch shared a practical guide for debugging an LLM’s training dataset, emphasizing the importance of data quality.

- Hugging Face Hub Enhancements: The Hugging Face Hub now auto-magically formats chat/reasoning messages in an interactive viewer (@_lewtun).

- LlamaIndex Updates: The LlamaIndex team is hosting its first Discord office hours session (@llama_index).

- Microsoft’s Open-Sourcing Efforts: Microsoft open-sourced GitHub Copilot in Visual Studio Code, Natural Language Web (NL Web), TypeAgent, Windows Subsystem for Linux (WSL), and Edit command-line text editor (@TheTuringPost).

- Together AI’s Code Execution Products: Together AI launched two new products to bring code execution & dev environments to AI apps: Together Code Sandbox and Together Code Interpreter (@togethercompute).

- Hugging Face Hub and MLX Integration: Two new MLX + Hugging Face hub integrations make it easier than ever to get started running models locally (@awnihannun).

- structured outputs in the API: In LLM APIs, structured outputs just got even more structured - including support for regex! (@stevenheidel).

- LangGraph Platform Support for MCP: LangGraph Platform Now Supports MCP. Every deployed agent on LangGraph Platform now exposes its own MCP endpoint (@LangChainAI).

- Am I the only one wishing there was a method to call these two in one line?: @gabriberton pointed to the common need to optimizer.step_and_zero_grad(), for efficiency.

- AI Agents with Google Gemini: @_philschmid points to a blog on how to get started building AI agents with Google Gemini.

- Learn how to build lightweight, real-time AgnoAgi agents for medical and legal tasks: @qdrant_engine tutorial covers modular agents and techniques to keep resource usage low

Political, Ethical, and Philosophical Musings

- US Negative-Sum Game with China: TeortaxesTex discusses how the US is trying to play a negative-sum game with China, denying them resources to scale, while the US loses money and talent. The goal is a sufficient relative disparity, at sufficient absolute capability level, that the US makes its supremacy durable.

- Openness of AI: America’s historical technological leadership wasn’t built on protectionism and closed systems, but on creating a dynamic marketplace of optionality, including open platforms the world could build upon (@ClementDelangue).

Humor and Miscellaneous

- “no discourse”: @EigenGender shared they are obsessed with the idea of saying “no discourse” like the 2025 version of “no homo”.

- “overcooked burger scorches the cheese”: - ancient Chinese proverb about showing grace to losers, probably, says @teortaxesTex.

- The leaderboard that measures the number of times they’ve said “AI” in the keynote: Looks like they’ve got a new front-runner (@Google).

- @jxmnop shares a funny story about a friend who worked at a French LLM startup.

- “«says basic, sensible things instead of being a drooling catechetical midwit. «Invest in R&D to innovate». «Hire smart people». «Silicon Valley is cool, eh innovates and isn’t afraid of anything». «Wash your hands»> The Madman 🤯 how can America be expected to compete 😩” writes @teortaxesTex.

- “It’s funny that the political compass is actually more like a sphere” according to @teortaxesTex

- @arankomatsuzaki jokes that needing caffeine is a skill issue.

- @demishassabis says cooking up something tasty for tomorrow…

AI Reddit Recap

/r/LocalLlama Recap

1. Gemma 3n Model Announcements and Community Reactions

- Gemma 3n Preview (Score: 333, Comments: 92): Google’s new preview release of the Gemma 3n family (Hugging Face release) introduces efficient multimodal models specifically designed for edge and low-resource devices. They leverage selective parameter activation (akin to Mixture-of-Experts, or MoE), enabling models to function with an effective parameter count of 2B or 4B—despite a larger total parameter set—for optimized inference on constrained hardware. The models support inputs across text, image, video, and audio, are instruction-tuned, and cover over 140 languages; further technical detail is hosted on the Gemma 3n documentation page. Commenters note that the architecture deviates from typical transformer layouts, speculate similarity to the Gemini architecture (especially regarding multimodality and extended context), and highlight potential for privacy-preserving local deployments (e.g., HomeAssistant alternatives).

- Gemma 3n uses ‘selective parameter activation technology’, a form of Mixture of Experts (MoE), to allow edge-device-ready deployment with resource footprints equivalent to models with effective parameter sizes of 2B and 4B, despite having higher total count (e.g., E2B runs with ~5B active parameters). This technology is intended to balance efficiency and performance, enabling multimodal capabilities—including text, image, video, and audio input—and operation in over 140 languages.

- The architectural design appears heavily inspired or directly based on the Gemini Nano series, which is noted for its strong multimodal and extended context handling. Technical speculation suggests Gemma 3n splits its MoE gating not just by general-purpose but potentially by modality (i.e., activating expert sub-networks per input type), which could improve efficiency and specialization for processing diverse input types.

- Official Gemma 3n docs confirm E2B’s typical active parameter count and reinforce the model’s unique modality-based structure. The model’s edge-oriented design (for systems like Home Assistant or DIY Alexa) emphasizes on-device privacy and data localization, unlike conventional cloud models.

- Announcing Gemma 3n preview: powerful, efficient, mobile-first AI (Score: 159, Comments: 25): Google has announced the preview of Gemma 3n, an AI model architected for efficient, real-time on-device inference on mobile-class hardware. Utilizing a 5B/8B parameter design but leveraging DeepMind’s Per-Layer Embeddings (PLE), KVC sharing, and advanced quantization, Gemma 3n achieves RAM efficiency comparable to smaller models, supports dynamic submodels (MatFormer), and handles multimodal tasks (audio, image, video, text). Benchmarks show strong multilingual results (e.g., 50.1% ChrF on WMT24++), and the privacy-first design allows for offline use on Android and Chrome; early access is provided via Google AI Studio and Google AI Edge. Official blog. Top technical comments express skepticism about Google’s benchmarking claims against Claude Sonnet 3.7 and question Arena Score reliability for such comparisons. Others discuss real-world use cases like local smart speaker inference, emphasizing the importance of latency over accuracy and the potential to further reduce response time and even eliminate the need for separate speech-to-text (STT) components like Whisper.

- Skepticism exists about the claim that the 4B parameter Gemma 3n preview matches Claude Sonnet 3.7. Commenters highlight the need for independent benchmarks, referencing previous Google demos they felt were misleading regarding real-time performance and statistical representation.

- A user reports using Whisper plus Gemma3 4B for local smart speaker applications and achieving approximately 3-second response times, emphasizing that speed is prioritized over ultimate accuracy in this context. They speculate the new Gemma 3n could further improve latency or enable direct inference without a separate speech-to-text (STT) component like Whisper, potentially streamlining edge deployment.

- Some criticize the use of Chatbot Arena scores as a quality indicator, suggesting “comparing [Gemma 3n preview] to Claude Sonnet 3.7 is ridiculous” due to presumed performance disparities, and argue Gemma 3n’s real advantage may be in mobile or edge applications rather than head-to-head capability with leading frontier models.

- ok google, next time mention llama.cpp too! (Score: 142, Comments: 25): The image is from a Google presentation showcasing ‘Gemma 3n In preview’. Subtitles on the screen refer to ‘Ollama, UnSloth and others,’ apparently giving public acknowledgment to these popular frameworks/tools in the open-source language model ecosystem. The post’s context is that while Google gave shoutouts to Ollama and UnSloth—both widely used for running and fine-tuning models like Gemma—the omission of ‘llama.cpp’, another major inference library, sparked discussion. The technical significance is the perceived importance of community-driven open-source tooling and Google’s recognition of specific projects during mainstream announcements. Commenters emphasize that UnSloth’s mention is well-deserved due to its utility and developer quality, while others debate the lack of acknowledgment for llama.cpp, reflecting ongoing discussion in the community about recognition and credit among open-source projects.

- Some participants note the omission of

llama.cppfrom Google’s acknowledgments despite its technical importance, suggesting possible reasons related to project visibility or industry relationships, and highlighting how it underpins significant inference advancements for running LLMs locally and efficiently. - There is technical discussion about the inclusion of Unsloth by Google, with users highlighting that Unsloth enables considerably easier and faster model training on consumer hardware, making it a standout tool for practitioners looking to fine-tune models.

- A question is raised regarding the availability and labeling of

gemma 3nin Ollama, with scrutiny over checkpoint terminology and clarity for users seeking to utilize the latest models, indicating a need for better versioning transparency on the platform.

- Some participants note the omission of

2. Gemma 3 Technical Updates and Optimizations in llama.cpp

- Sliding Window Attention support merged into llama.cpp, dramatically reducing the memory requirements for running Gemma 3 (Score: 469, Comments: 76): The latest llama.cpp PR merges Sliding Window Attention (SWA) support, significantly reducing KV cache memory requirements for models like Gemma 3. Implementation introduces

llama_kv_cache_unified_iswa, splitting KV caches by SWA/non-SWA layers, with the SWA cache aggressively pruning stale tokens after each batch and limiting advanced cache operations (e.g., context shifting) to minimize token loss. Practical VRAM reduction for Gemma 3 is estimated between 75-80%, making larger context lengths feasible on commodity hardware, but advanced KV-cache features fall back to full-context mode at the cost of higher memory usage. Core KV-cache logic was refactored for modularity and maintainability, and attention methods streamlined across the codebase. Top comments note SWA’s substantial (~75-80%) VRAM reduction and emphasize the performance gain especially for models with high cache usage, like Gemma, though they warn about limited context-shifting capabilities due to iSWA’s inherent design trade-offs—making it best suited to non-long-context-shift workloads (e.g., RAG).- Sliding Window Attention (SWA) implementation for llama.cpp reduces VRAM requirements for the KV cache by approximately

75-80%(from the original estimate and based on PR comments), making it a major optimization for running large models such as Gemma. This change enables much longer context windows without a proportional increase in memory footprint. - A critical technical caveat is that the iSWA approach for Gemma currently does not support KV cache context shifting, which may affect tasks requiring dynamic context windows. However, for applications like retrieval-augmented generation (RAG), the reduced memory usage significantly boosts performance.

- Real-world benchmarks show that users can now offload more layers (e.g., from 27 to 39 layers on a 27B q4 quantized model), achieving higher speed and much larger token windows within the same hardware constraints, which substantially enhances usability for high-context workloads.

- Sliding Window Attention (SWA) implementation for llama.cpp reduces VRAM requirements for the KV cache by approximately

- Google MedGemma (Score: 173, Comments: 51): Google has released MedGemma, a collection of specialized Gemma 3 model variants for medical AI tasks, detailed in their official Hugging Face release. The lineup includes a 4B multimodal model (incorporating SigLIP-image encoder, pre-trained on de-identified medical images from radiology, histopathology, dermatology, and ophthalmology) and a 27B text-only model, both fine-tuned for clinical data and evaluated on a mix of open and curated medical benchmarks. The models are designed for local inference and further fine-tuning, with a technical report pending release. A notable technical discussion centers on trade-offs: the value of smaller, localizable fine-tuned medical models versus using larger, general models for clinical tasks. Commenters highlight the operational reliability and autonomy (e.g., resistance to disruptive upstream changes) as a key benefit for local deployments, even over highest-possible accuracy approaches.

- One commenter weighs the trade-off between deploying smaller, fine-tuned local models like MedGemma versus using the highest-capability models. For medical use cases, reliability and top performance may outweigh concerns like latency or resource cost, although local deployment protects against issues from subsequent model changes or updates.

- Summary details include that Google released MedGemma in two variants: a 4B-parameter multimodal version (with a SigLIP image encoder pre-trained on medical image types) and a 27B-parameter text-only variant specialized for medical text, both reportedly evaluated on open and curated clinical benchmarks. MedGemma supports further fine-tuning for developer-specific healthcare applications, and a technical report is forthcoming.

3. OpenEvolve and AlphaEvolve System Open Source Implementation

- OpenEvolve: Open Source Implementation of DeepMind’s AlphaEvolve System (Score: 112, Comments: 9): OpenEvolve is an open-source implementation of DeepMind’s AlphaEvolve, an evolutionary LLM-based agent for discovering and optimizing algorithms across entire codebases. The architecture includes four components: a prompt sampler (context/past-history aware), an LLM ensemble (multiple models via OpenAI-compatible APIs), an evaluator pool (scoring programs with distributed, checkpointed evaluation), and a MAP-Elites-inspired program database. Benchmarks show near-parity with AlphaEvolve on tasks like circle packing (achieving 99.97% of DeepMind’s result) and function minimization, with the evolved programs autonomously discovering advanced algorithmic techniques (e.g.,

scipy.minimizeoptimization, simulated annealing). Extensive model benchmarking showed best ensemble results with Gemini-Flash-2.0 and Claude-Sonnet-3.7, and identified Cerebras AI’s API as a significantly faster inference provider for high-generation workloads. Full code and examples are available here. A top comment notes that the approach resembles reinforcement learning at inference time, highlighting the technical novelty versus more static search or pretraining-based code generation. Another technical endorsement notes the framework’s feature completeness compared to prior releases.- Specific-Rub-7250 notes that OpenEvolve appears to leverage a reinforcement learningstyle approach, but interestingly applies it at inference time, raising questions about how online evolution or adaptation is integrated compared to traditional, offline reinforcement learning pipelines.

- Green-Ad-3964 points out similarities to genetic algorithms, implying that OpenEvolve’s methodology may involve evolutionary or population-based search mechanisms to optimize models, paralleling concepts in neuroevolution and genetic programming.

- SquashFront1303 requests clarification on which specific evolutionary algorithm (or alternative) was implemented in place of DeepMind’s proprietary Evolve algorithm, stressing the technical gap and need for open disclosure on this key architectural component to enable reproducibility and comparison.

- Mindblowing demo: John Link led a team of AI agents to discover a forever-chemical-free immersion coolant using Microsoft Discovery. (Score: 359, Comments: 57): John Link and team used Microsoft Discovery, leveraging a coordinated set of AI agents (potentially utilizing microsoft/autogen), to identify an immersion coolant formulation that avoids the use of ‘forever chemicals’ typically found in such fluids. However, technical scrutiny in the comments suggests the proposed solutions are chlorofluorocarbons (CFCs), which are historically known to harm the ozone layer. Commenters question the novelty and safety of the discovered solution, expressing concern that it replicates outdated and environmentally harmful chemistry, raising doubts about the practical advancement and validation of the AI-driven discovery.

- A commenter notes the AI-generated solutions resemble chlorofluorocarbons (CFCs), raising a concern that these compounds are outdated and environmentally harmful due to their well-known negative effects on the ozone layer. This comment questions the novelty and impact of the AI discovery, suggesting the solution might have significant regulatory or environmental drawbacks if CFCs are indeed the primary result.

- Another technically informed contribution highlights that Microsoft Discovery is leveraging the open source ‘autogen’ framework (GitHub link), which enables multi-agent collaboration among AI systems. This details the technological stack powering the demo and may be of interest to those considering similar agent-based approaches for scientific discovery.

- Microsoft unveils “USB-C for AI apps.” I open-sourced the same concept 3 days earlier—proof inside. (Score: 337, Comments: 75): llmbasedos, released 16 May under Apache-2.0, is an open-source minimal Linux OS that allows rapid (sub-minute) boot from USB/VM, providing a FastAPI-based MCP (Model Context Protocol) gateway for exposing local system functions to LLMs via JSON-RPC. Its framework allows any script (defined in a 2-line cap.json) to be made callable by LLM apps (ChatGPT, Claude, VS Code), supporting both offline (llama.cpp) and cloud LLMs, and running on Linux, Windows (VM), and ARM devices. Technically, it mirrors Microsoft’s later-announced “USB-C for AI apps” idea, with key features including modular MCP servers (FileSystem, Sync, Mail, Agent), ISO build scripts, and systemd integration; external review confirms support for search/embedding, rclone/job management, IMAP/iCal, workflow execution, and deployment extensibility via Docker/HTTP. Top technical comments note that the “USB-C for AI”/MCP metaphor is not original to Microsoft and has appeared in prior art, with some questioning whether Microsoft used the MCP concept differently. Skepticism is expressed regarding the likelihood and logistics of Microsoft pivoting to an idea from a rapidly released open-source project.

- Multiple commenters discuss whether the underlying concept—bootable or pluggable AI tools and models via USB or similar abstractions—is fundamentally novel, noting this is an idea likely to emerge independently in the field. For example, MCP’s own website already describes itself as “a USB-C port for AI applications,” suggesting that the analogy and approach may be broadly recognized by practitioners.

- Some users question the technical novelty of packaging or hosting LLMs (Large Language Models) within Docker images or similar portable frameworks; this is cited as a well-established practice in both Docker’s ecosystem and AI/ML deployment patterns.

- There is a technical debate as to whether comparing open-source project launch dates with Microsoft’s announcement is meaningful. Several users point out that large organizations like Microsoft would have begun development well before public announcements, making it unlikely that short-term timing or supposed idea copying is relevant from an engineering or product management standpoint.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Google Gemini 2.5 Pro & Ultra Model Benchmarks and Features

- Holy sht (Score: 1202, Comments: 207): The post shares a comparison chart titled ‘Gemini 2.5 Pro Deep Think’ showing Google Gemini 2.5 Pro outperforming OpenAI models in Mathematics (49.4%), Code (80.4%), and Multimodality (84.0%). The benchmark sources are under scrutiny, especially for Mathematics, as commenters note discrepancies with MathArena-reported performance (24.4% for Gemini 2.5 Pro vs the 49.4% claimed). There’s technical skepticism about equivalent testing conditions; MathArena penalizes across several runs, raising questions if Google’s reported results are using a different, potentially more favorable, scoring method. Comments debate the benchmarking methodology, questioning the validity of direct comparisons due to differing test provisions (e.g., multiple runs, penalization on MathArena). There’s interest in alternative benchmarks (e.g., USAMO) and calls for third-party validation such as Paul Allen’s benchmarks.

- A commenter notes a discrepancy in USAMO benchmark scores for OpenAI’s models: while scores are reported from MathArena, OpenAI’s 2.5-pro achieves 24.4% on MathArena, yet another source claims 34.5%. This raises questions about consistency and comparability of benchmark results between different runs and reporting methods.

- Technical discussion highlights how MathArena penalizes inconsistent problem-solving across multiple runs—a model might solve an issue in one run and fail in another, which impacts averaged scores—prompting speculation that published results may reflect either best-run or averaged scores, affecting cross-model comparisons.

- An attached image shows a direct comparison of model benchmark scores on USAMO, suggesting a need for scrutiny of the evaluation methodology and result transparency, especially since a 48% score is described as ‘stunning’ and may indicate significant progress or possible inconsistencies.

- New flash. Google won. Don’t know how to feel about it (Score: 738, Comments: 205): The image is a leaderboard ranking AI models by Arena Score, with Google’s Gemini-2.5 models occupying the top spots, surpassing competitors from OpenAI and DeepSeek. This visually highlights Google’s technical progress in large language model (LLM) development, particularly noteworthy as Google’s team originated the transformer architecture underpinning these advances. The comment emphasizing “much more efficient too!!!” implies that Gemini-2.5 achieves superior performance with better computational efficiency, suggesting advances in model scaling and optimization. Comments debate the significance of Google’s win, attributing it to their foundational research (transformers) and scaling abilities, while some express skepticism about Google’s current public reputation due to declining quality in other products (like search).

- Commenters highlight that Google’s latest model performs just below GPT-4 Turbo (referred to as 2.5 Pro), suggesting Google’s advancements have placed them extremely close to or on par with OpenAI’s top offerings in terms of capabilities.

- There is emphasis on the efficiency of Google’s recent models, with some noting a significant improvement in how computational resources are used compared to previous models—specifically, Google’s ability to scale without external dependencies is called out as a major technical advantage.

- One discussion points to Google’s deep roots in transformer architecture research and their internal compute resources as fundamental to their success; having pioneered transformers, they’re able to innovate and optimize at a scale few can match.

- Google doesn’t hold back anymore (Score: 225, Comments: 64): The image presents benchmark scores for “Gemini 2.5 Pro Deep Think” versus unnamed OpenAI models across Mathematics (49.4%), Code (80.4%), and Multimodality (84%) tasks, with Gemini outperforming OpenAI in all compared categories. This underscores Google’s substantial progress in model capability, particularly in math and multimodal tasks, but also draws attention to the high cost of Gemini’s offering ($250/month), as noted in the post and comments. Commenters debate practical value, noting that despite superior benchmark scores, OpenAI’s models (especially o3) may deliver more reliable, consistent results in real-world technical planning and documentation. Several users also note the price disparity between Gemini ($250/mo) and OpenAI ($20/mo) models, questioning the fairness of the comparison.

- Several users highlight that while models like o3 show strong performance in technical planning and documentation, benchmarks often prioritize coding competency, potentially overlooking use cases such as structured writing where o3 is reportedly more reliable and consistent than offerings from Google or OpenAI in these scenarios.

- A discussion emerges about the steep pricing disparity, comparing Google’s $250/month to OpenAI’s $20/month, raising questions about value and whether increased subscription cost is justifiable based on performance or capabilities, particularly in non-coding tasks.

- There are accounts of subjective qualitative differences: some find Gemini 2.5 Pro’s outputs lacking depth or creativity compared to o3, with claims that Gemini performs better at coding (though one user disputes this based on personal experience), and that AI Studio is its only standout feature. Concerns are also raised about Gemini’s ability to provide nuanced or in-depth answers in research-oriented queries.

- 2.5 Pro gets native audio output (Score: 231, Comments: 22): The image documents an official presentation announcing that “2.5 Pro” (presumably a new or upgraded AI model from Google, as suggested by the large ‘G’ logo) will feature native audio output capability. The phrase “Expressive” on the waveform indicates a focus on high-quality, emotionally nuanced text-to-speech or audio synthesis as part of this update. This suggests significant progress toward more natural, integrated, and possibly real-time audio generation by Google’s language models. Commenters are eager about the feature’s release, questioning current access availability and speculating on the potential for highly realistic voices (e.g., comparing it to Scarlett Johansson’s voice), indicating demand for natural and expressive AI audio output.

- The main technical focus in the thread concerns whether the new 2.5 Pro model’s native audio output supports not just synthesized voice output, but additional audio features such as sound effects. One commenter specifically asks if native sound FX generation is possible, indicating interest in broader audio synthesis capabilities beyond standard text-to-speech.

- Another aspect touched on, though less technical, is the model’s ability to provide specific voice outputs—such as those resembling named voices (‘Scarlett Johansson’), suggesting probable technical curiosity about available voice cloning, customization, or adaptation technology within the audio output feature set.

- $250/mo Google Gemini Ultra | Most expensive plan in AI insudstry ! (Score: 413, Comments: 192): The image provides a comparison between Google’s newly leaked/marketed ‘Google AI Ultra’ plan and the existing ‘Google AI Pro’ subscription. The ‘Ultra’ plan stands out for offering advanced AI features (presumably leveraging Gemini Ultra) at $124.99/month for the first three months and likely $250/month thereafter, representing the highest pricing tier currently visible in the consumer AI subscription space. Notably, it bundles YouTube Premium (valued at ~$19/mo) and a massive 30 TB of storage, alongside exclusive access to advanced tools like Gemini app, Flow, and NotebookLM with increased usage limits compared to ‘Pro’. This signals Google’s intent to position Gemini Ultra both as a premium AI product and a broader ecosystem value proposition akin to or exceeding OpenAI’s ChatGPT Plus tier. Commenters note the bundled value (e.g., ‘YouTube Premium and storage justify some of the cost’) and make direct comparisons to ChatGPT Pro, but point out accessibility issues due to the high price and the lack of a family plan (which some see as an opportunity for Google to improve adoption).

- Several users highlight that the $250/mo Gemini Ultra subscription includes both YouTube Premium (valued around

$19/mo) and30 TBof storage, positioning the plan similarly to comprehensive offerings like ChatGPT Pro when considering bundled services beyond just AI access. - One commenter points out a key limitation: the lack of configurability in the plan. They question the value for specialized users (e.g., those primarily needing coding tools rather than video generation), noting the inefficiency of a bundled price for features they won’t use.

- Several users highlight that the $250/mo Gemini Ultra subscription includes both YouTube Premium (valued around

- $250 per month… (Score: 108, Comments: 95): The image presents a subscription model for “Google AI Ultra” at $249.99/month, bundling advanced Gemini capabilities, increased usage quotas for Whisk and NotebookLM, deep integration with core Google services (Gmail, Docs, Chrome), Project Mariner, YouTube Premium, and 30TB of storage. The offer is US-only for now, with international expansion planned; key value proposition is access to AI-enhanced productivity tools and substantial cloud storage, positioning this as a comprehensive, high-end AI productivity suite by Google. Commentary focuses on perceived value, with skepticism regarding the ROI for individual users (“LLMs make me productive, but not THAT productive”), while some highlight desirable add-ons like YouTube Premium, questioning whether the price justifies the subscription versus alternative spend (e.g., outsourcing tasks).

- A user expresses shock at the data cap, referencing “30TB,” suggesting the service may be offering exceptionally high-volume data usage possibly for AI or media applications, and implicitly questioning the scalability or economic feasibility of such data limits in a $250/month package.

- So this basically confirms it (expect a ‘deep think’ toggle - still unsure on ultra) (Score: 471, Comments: 79): The post discusses the expected introduction of a ‘deep think’ toggle in an AI product, likely from Google’s DeepMind based on the context and mention of Demis Hassabis. The image features Hassabis and implies a feature that increases reasoning depth or response quality, aligning with recent marketing by Google to highlight advanced AI capabilities. No confirmation yet on an ‘ultra’ mode, but the ‘deep think’ toggle is heavily implied. Commenters note Google’s shift towards more deliberate AI marketing, referencing OpenAI and Sam Altman as comparators. There’s discussion about the seriousness of DeepMind’s approach and speculation on upcoming features.

- There’s a technical observation about Google’s historical difficulty in marketing their AI products contrasted with their recent shift. The mention of Demis’ (Hasabis) ‘hype posts’ and a supposed ‘deep think’ toggle suggests a new feature or mode coming, reflecting a possible UI/UX or tuning option in future Google AI models. However, speculation remains about how this compares to OpenAI in terms of product strategy or technical user control.

2. Civitai Payment Ban and Community Responses

- Civitai banned from card payments. Site has a few months of cash left to run. Urged to purchase bulk packs and annual memberships before it is too late (Score: 674, Comments: 422): Civitai announced it is being banned from card payment processing due to its decision to host NSFW and adult content, as confirmed by an official statement from a representative. The platform currently has only a few months of operating cash left and urges users to purchase in bulk or subscribe to annual memberships while they pursue alternative payment options. Technical challenge centers around compliance with payment processors’ content policies and the associated risk to business continuity. Commenters emphasize the risks of reliance on traditional payment processors for platforms hosting controversial content, raising questions about payment infrastructure resilience and censorship in creator ecosystems.

- A detailed comment clarifies the technicalities of payment processing: There are two layers—payment processors (e.g., Stripe) and credit card companies (e.g., Visa). Even if Civitai moves to an adult-content-friendly processor, all processors must ultimately adhere to the rules set by credit card companies. Adult-content-friendly processors exist but charge higher fees, and technical changes (requiring code adaptation and setup overhead) add further complexity to switching processors.

- Another comment highlights the broader infrastructure vulnerabilities for grey-market or adult-content sites: Besides payment processing, hosting, DNS, and search engine indexing are also subject to terms of service and potential business risk. Even sites going fully underground face discoverability and accessibility challenges for their users—potentially undermining their role as leading resource hubs. Thus, any pivot to alternative or underground structures involves a layered technical and operational risk, beyond just payment systems.

- The original statement from the Civitai team confirms their removal from the payment processor was due to a refusal to remove NSFW content, emphasizing ongoing commitment to support all types of creators and a search for alternative solutions. This underscores the ongoing technical challenge of balancing platform policy, compliance, and continuity of service for communities working with potentially controversial generative AI content.

- [LEAKED] the powerpoint slide that convinced the civitai board to reject visa’s demands (Score: 439, Comments: 75): The image is a satirical pie chart allegedly showing a “confidential” breakdown of content on Civitai, with overwhelming proportions labeled as “Porn” (69.3%) and “Also porn lol” (23.8%), and minor segments for “Front page” and an Excel error. This parody visualization comments on a business/operational issue: that the platform’s content overwhelmingly consists of adult/NSFW material, possibly explaining resistance to payment processors’ (like Visa) compliance demands affecting user-generated adult content. The technical discussion centers on content moderation, payment compliance, and the implications for platform business models in the presence of adult content. Commenters debate: 1) the factual basis for Civitai allegedly rejecting Visa’s demands, 2) the observation that a vast majority of the site’s content is indeed NSFW, making drastic policy shifts commercially risky, and 3) why payment processors target platforms like Civitai while established adult sites seem unaffected, questioning the consistency of compliance enforcement.

- A user estimates that approximately 90% of Civitai’s user-generated content is NSFW, suggesting that enforcing Visa’s restrictions would likely result in a major loss of audience and potentially destroy the platform’s business model.

- There is skepticism expressed regarding payment processor inconsistencies: commenters point out that many established porn websites process payments via Visa without apparent issue, raising questions about why Visa targets smaller or specific sites like Civitai for enforcement.

- Another user humorously notes that a large proportion of content (quantified as ‘69%’ or ‘90%’) is NSFW, highlighting that the high volume of adult material on Civitai is central to debates about platform restrictions and payment processor compliance.

- Is CivitAI on its deathbed? Time for us to join forces to create a P2P community network? (Score: 286, Comments: 198): The post raises concerns over CivitAI’s financial viability due to payment processing issues and limited operational runway, questioning whether the community should archive models (including LoRA weights) and shift to a peer-to-peer (P2P) sharing architecture. The discussion focuses on technical strategies for rapid archiving, decentralized storage (like torrents), and organizational logistics—such as avoiding duplicated work and establishing community checklists for distributed action. Commenters debate the sufficiency of P2P solutions like torrents, noting that while torrents can store and distribute models cheaply, they lack CivitAI’s features: searchable metadata, structured model presentation, creator profiles, and financial support mechanisms. Concerns are also raised about how to sustain active development and centralized knowledge sharing without a platform like CivitAI.

- Several users discuss the technical differences between hosting AI models on torrents versus a centralized platform like CivitAI. Torrents provide decentralized file sharing but lack functions such as a searchable database, tagging, community features, and direct support for model creators. As noted by Herr_Drosselmeyer, the absence of these metadata services and financial incentives limits torrents’ ability to fully replace a platform like CivitAI for the AI modeling community.

- There is interest in the technical feasibility and cost structure of hosting a CivitAI alternative without inference/generation features. This would potentially allow for cheaper hosting, and some users speculate about funding it through cryptocurrencies. However, the complexity lies not in bandwidth alone but also in implementing features that preserve community, monetization, and discoverability for ongoing model innovation.

- A few users are proactively archiving large AI model datasets (e.g., 1.5TB so far, with 60TB capacity available) in anticipation of potential platform loss, reflecting an emerging grassroots effort to distribute hosting resources across independent infrastructure. Additionally, alternatives like Civitasbay.org are being explored as early-stage P2P seeding solutions, but usability and content discovery are open issues.

- **Continuously seeded torrent site for AI models, CivitasBay.org** (Score: 190, Comments: 34): **CivitasBay.org is a torrent-based distribution platform for AI model files (in particular,**

safetensorsformat for SD 1.5, SD 2.0, SDXL, and LoRA fine-tunes), using magnet links to enable decentralized sharing without central hosting. The site indexes models by file size, date, and unique identifier, supporting peer-to-peer access to large generative weights, which is valuable for bypassing hosting or bandwidth constraints. This method leverages existing P2P infrastructure for broad, community-driven distribution of resources such as Stable Diffusion checkpoints and LoRA enhancements. Top comments highlight the lack of metadata (model descriptions, sample images, trigger words, or inference settings), noting that the platform’s utility is currently hampered for practitioners who require additional context for effective use. There is discussion around branding and user experience, with requests for enhanced documentation and UX features.- Multiple commenters raise technical concerns about model usability stemming from a lack of metadata on CivitasBay.org. They specifically cite the absence of model descriptions, sample images, prompt examples, and recommended inference settings, making it difficult to identify the function, expected output, or use cases of the distributed safetensors files. This results in poor discoverability and hinders users from effectively integrating these models into workflows such as ComfyUI or similar interfaces.

- A comparison is made to other attempts at AI model archiving (e.g., civitaiarchive.com), noting that while continual seeding and backup of models via torrents is valuable for redundancy and access, such sites become ‘just model Limewire’ without proper context and documentation. Properly indexing models with detailed metadata is highlighted as critical for technical adoption and community use.

3. Cutting-Edge AI for Science, Creativity, and Automation

- Microsoft Discovery : AI Agents Go From Idea to Synthesized New Material in Hours! (Score: 558, Comments: 83): Microsoft demonstrated AI agents for accelerated scientific R&D, with a specific use case of discovering and physically synthesizing a new, safer immersion coolant for data centers, replacing environmentally harmful ‘forever chemicals.’ The pipeline involved autonomous literature review, experimental planning, code generation, simulation deployment on Azure HPC, and physical synthesis—all accomplished in hours/days versus years, culminating in a live demonstration with the coolant keeping hardware cool during real-world operation. This represents a practical, closed-loop system where AI not only proposes novel compounds but executes end-to-end material discovery and synthesis, paralleling efforts such as Google’s GNoME but with distinct claims of novel, synthesized output. One technically informed commenter draws skepticism from past claims (e.g., GNoME) where AI-discovered materials were later shown not to be novel, and notes failures by AI-driven drug discovery firms (like Exscientia and BenevolentAI) to translate predictions to clinical or market success. Another comment raises the technical point that the breakthrough may not be mere combinatorial search, suggesting more advanced decision-making or generative methods, beyond brute-force exploration.

- A commenter provides critical context on the claimed breakthroughs by referencing the GNoME AI for materials discovery, noting that although Google reported the synthesis of ~40 new materials with its AI, an external analysis (https://doi.org/10.1103/PRXEnergy.3.011002) found none were truly new. Additionally, AI-driven drug discovery efforts (by Exscientia and BenevolentAI) have faced high-profile failures in clinical trials, highlighting a gap between hype and delivery in AI-for-science results.

- GrapplerGuy100 contrasts earlier combinatorial search techniques, like those in AlphaEvolve (which used large search spaces and rapid verification for protein design), with the current approach. They express uncertainty about whether the new Microsoft system uses similar combinatorial methods or represents a fundamentally different, potentially more advanced mechanism for materials discovery.

- Google shows Project Astra controlling your Android phone (Score: 254, Comments: 76): Google demonstrated Project Astra featuring advanced voice and visual AI capabilities to perform comprehensive Android device control, as shown in a recent video demo. The implementation highlights real-time understanding and execution of complex user commands, signaling a leap in multimodal on-device agent performance and suggesting near-term deployment in consumer devices. Comments express surprise at the rapid progression from last year’s demo to real implementation, with experts noting the technical leap over both last year’s prototypes and competitors like Apple. Some foresee Project Astra’s inclusion in Android as a major disruptor to the mobile OS competitive landscape.

- A technical concern is raised about Google’s ability to serve Project Astra at scale, given the extremely high compute demands for context-aware, on-device AI, especially features like background third-party negotiation. The commenter questions whether Google’s TPUs and infrastructure can reasonably handle hundreds of millions of concurrent users without degradation, implying significant scaling and service delivery challenges.

- VACE Extension is the next level beyond FLF2V (Score: 147, Comments: 27): The post contrasts FLF2V’s standard frame interpolation—conducted pairwise (e.g., 1→2, 2→3), often producing temporal inconsistencies and unnatural motion reversals—with the novel VACE Extension approach, where user-specified frames serve as ordered ‘checkpoints.’ This method, recently implemented in models like Wan2.1 (currently limited to 81 frames), generates a globally temporally consistent video that passes smoothly through all checkpoints, enabling high-quality animation akin to traditional anime in-betweens. Further scalability beyond 81 frames is possible using overlapping techniques, as shown in recent WACE 14b examples. Technical workflow details are shared here (Japanese). A commenter advocates for generating at lower frame rates (e.g., 15fps) and upsampling to 60fps via interpolation for efficiency, expressing concern about models that aim for 24fps natively. Another user inquires about interoperability between VACE and wan LoRAs, questioning if separate training is needed, suggesting active interest in model modularity and workflow integration.

- A user advocates generating video at 15fps for efficiency, noting that this allows rapid synthesis and later temporal upscaling (e.g., using interpolation) to cleaner 60fps outputs, suggesting that targeting 24fps in other models is less optimal for this workflow.

- There’s a discussion about the handling of the ‘last frame bleaching’ artifact in the VACE extension, where color desaturation occurs at the end of sequences. The issue persists despite post-processing efforts, with some suggesting it’s less noticeable in cartoons, which can be re-color graded with fewer quality issues compared to realism-focused workflows.

- One commenter questions compatibility with WAN LoRAs in the VACE model, asking whether existing LoRAs can be used or if new training is required, highlighting an open issue around model extension and LoRA integration.

- DeepMind Veo 3 Sailor generated video (Score: 580, Comments: 152): The post discusses a video sample generated by DeepMind Veo 3’s ‘Sailor’ prompt but the external link to the video (https://v.redd.it/us18oc0gpz1f1) is inaccessible due to a 403 Forbidden error, making direct technical analysis of the output impossible. One technical comment highlights that ‘old men with beards’ are a recurrent and potentially restricted generation in Veo, suggesting possible prompt-engineering limitations or content filtering bias in the model. The general discussion speculates on the technological proximity to generating complete AI-directed films from single prompts, indicating rapid progress in generative video models like Veo. Comments note content limitations in Veo (biased towards generating certain subjects, e.g., ‘old men with beards’), while others express a sense of impending technological disruption in film production, but lack detailed technical evaluation due to the unavailable video.

- One commenter predicts that AI-driven video quality will reach near-perfection in 1-2 years, stating it’s currently at least 80% done, suggesting rapid progress in generative video models like DeepMind Veo.

- Discussion emerges around the imminent capability for AI to generate entire movies from a single prompt, implying significant advances in multimodal generative systems and potential disruption to traditional video production pipelines.

- Veo 3 (Score: 359, Comments: 98): The post’s title, ‘Veo 3,’ refers to what is likely the latest version of Google’s Veo, an advanced generative video AI model announced by Google at I/O 2024. No technical details or benchmarks are given in the post due to the external link being inaccessible. None of the comments provide new technical information, but one references the rapid advancement since early generative video such as ‘Will Smith eating spaghetti.’ Top comments reflect concern about the disruptive impact of recent generative video models on industries like animation and Hollywood, referencing how quickly the field has advanced and speculating on the effect on established studios (e.g., Pixar, DreamWorks) and traditional Hollywood production.

- A commenter predicts that within 5 years, some short scenes in movies may be AI-generated instead of traditionally rendered CGI, suggesting a shift in VFX workflows towards machine learning-based generative models for certain types of visual content. This could considerably reduce costs and time for specific scene creation tasks.

- Veo 3 Standup comedy (Score: 303, Comments: 79): The post discusses a Veo 3-generated video emulating standup comedy, with commenters noting the realism surpasses prior uncanny valley limitations. Technical discussion centers on Veo 3’s ability to convincingly synthesize not only visual and behavioral cues but also subtle audio artifacts, such as ‘breathing-into-the-mic’ sounds, to enhance authenticity. Veo, a video generation model by Google DeepMind (see their research page), is highlighted for its nuanced replication of human performance in generative video contexts. Expert commenters are surprised by the model’s high fidelity, expressing that it could be indistinguishable from real standup footage, with some speculating whether the clip was generated or simply an authentic recording, demonstrating the model’s advancement in media realism.

- Commenters note Veo 3’s generation is highly realistic, capturing nuanced audio details like the ‘breathing-into-the-mic sound’ before laughter, demonstrating advanced temporal coherence and subtle audio-visual synchronization.

- There’s discussion of the seamless integration of speech, laughter, and ambient sound effects, which were not expected in AI multimodal models until at least 2025, indicating a leap ahead of anticipated progress for generative video+audio systems.

- One user highlights the difficulty of distinguishing AI-generated output from real footage, suggesting Veo 3’s results are on the cusp of, or have surpassed, the ‘uncanny valley’ in synthetic media realism.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

Theme 1: Google’s AI Blitz and New Model Onslaught

- Google Unleashes Gemma 3 and Gemini 2.5 Barrage!: Google dropped its Gemma 3 series, including Gemma 3N (technical report, docs), initially US-only, alongside Gemini 2.5 Flash (OpenRouter link), and teased Gemini 2.5 Pro Deep Think, Veo 3, and Imagen 4 at Google I/O, though many features remain restricted. LMArena members found Google I/O a filler episode, while OpenRouter users debated Gemma 3n-4B potentially matching Claude 3.7 based on Google’s blog on Gemma 3n and Chatbot Arena preferences.

- Meta’s Llama 3.3 Makes Exclusive OpenRouter Debut!: Meta’s new Llama provider, featuring a 3.3 8B model, launched exclusively open-access and free-to-start on OpenRouter, as per OpenRouter’s Llama announcement tweet. This move offers developers a cost-effective avenue to experiment with Meta’s latest language model offerings.

- IBM and Mistral Quietly Drop Bombshells, Qwen Marches On!: While Google dominated headlines, IBM subtly unveiled Granite 4.0, and Mistral released Mistral Small 3.1, both generating buzz in the Unsloth AI community. Meanwhile, discussions around the Qwen series continued, with performance tweaks like speculative decoding for Qwen 3 in LM Studio and benchmark discussions for Qwen 2 35B in the Aider server, detailed in Paul Gauthier’s blog post about Qwen3.

Theme 2: Revolutionizing AI Tooling and Developer Platforms

- Unsloth Steals Spotlight at Google IO, KernelLLM GGUFs Drop!: Unsloth garnered attention after being showcased at Google IO (UnslothAI tweet about Google IO), with the team also releasing KernelLLM GGUFs (KernelLLM GGUFs on Hugging Face) and a fixed-ish Sesame notebook for longer audio generation (UnslothAI tweet on Sesame notebook). These releases empower developers with more efficient tools for training and deploying models.

- LM Studio and Modular’s MAX Beef Up Local AI Capabilities!: LM Studio users fine-tune models by adjusting RoPE Frequency Scale and leverage Sliding Window Attention (SWA) via Llama.cpp (Llama.cpp SWA GitHub pull) to slash memory usage, seeing KV cache drop from 1700MB to 348MB. Modular’s MAX platform promises full-stack control for inference, inviting enterprise users to contact [email protected] for large-scale disaggregated inference solutions.

- Perplexity and OpenRouter Iterate, But API Quirks Persist!: Perplexity rolled out new features like Perplexify Me (Perplexity May 19th changelog), but users in the Perplexity AI Discord noted its API outputs can be less direct than the Playground and reported Deep Research API requests appearing as sonar-reasoning-pro (Perplexity API GitHub issue #320). OpenRouter streamlined developer experience by adding slugs for providers and quantizations (OpenRouterAI tweet on slugs).

Theme 3: Rise of the AI Agents: Coding, Research, and Beyond

- Google’s Jules Agent Enters the Coding Arena, Manus Builds Websites!: Google launched Jules (Jules by Google website), an asynchronous coding agent, stirring excitement and waitlists, while Manus.im debuted an AI agent with its own computer to build websites and conduct research (Manus.im invitation example, Manus use case collection). These agents showcase diverse applications, from software development assistance to automated task completion.

- OpenEvolve Replicates DeepMind’s Feats, Evolves Code with LLMs!: The open-source release of OpenEvolve (OpenEvolve on GitHub, OpenEvolve HuggingFace Blog), an implementation of Google DeepMind’s AlphaEvolve, made waves by replicating circle packing results with 99.97% accuracy and evolving random search into simulated annealing. This tool uses LLMs to discover, optimize, and evolve entire codebases, and was discussed across Unsloth AI, Eleuther, Nous Research AI, HuggingFace, and GPU MODE Discords.

- MCP Ecosystem Expands with A2A Bridge and Wallet Integration!: The Model Context Protocol (MCP) saw new developments, including an open-source server bridging MCP with A2A protocol Agents (A2A-MCP-Server on GitHub) allowing Claude to interact with A2A agents. Additionally, TokenPocket released Wallet MCP (Wallet MCP on GitHub) for integrating AI clients with encrypted user wallets, discussed in the MCP (Glama) Discord.

Theme 4: Pushing Performance Frontiers: Model Optimization and Evaluation

- Speculative Decoding and SWA Supercharge Local Models!: LM Studio users boosted Qwen 3 performance by 12% using speculative decoding after a lot of fiddling with settings like max draft size (3) and draft probability (0.8). They’re also slashing memory via Sliding Window Attention (SWA) in Llama.cpp (Llama.cpp SWA GitHub pull), reducing a Gemma 4B 15K model’s KV cache from 1700MB to 348MB.

- NVIDIA and Academics Probe Model Internals and Efficiency!: Discussions around the Physics of Language Models (Part 3.1 Knowledge Storage paper, Part 3.2 Knowledge Manipulation paper) in the Yannick Kilcher Discord highlighted LLM strengths in retrieval but weaknesses in manipulation without CoTs. In GPU MODE, FSDP2 was noted for using less VRAM than FSDP1 but being slower, with specific benchmarks like fsdp2:11.599G, 13.39s/step versus fsdp1:15.801G, 7.3s/step on RTX 4000 Ada x4.

- Tinygrad Bounties Drive Hardware Optimization, Cutotune Automates CUDA Tuning!: The tinygrad community pushes hardware limits with bounties for Flash Attention (initially 7900XTX-focused) and BERT training aiming to outperform normal attention on Nvidia/AMD GPUs, as detailed on tinygrad’s X pinned post. Meanwhile, GPU MODE members introduced cutotune, an autotuner for CUDA kernels working with PyTorch compile, designed for easy extensibility.

Theme 5: AI’s Societal Pulse: Ethics, Slop, and Community Dynamics

- AI ‘Slop’ Definition and Implications Spark Heated Debate!: Across EleutherAI, the term ‘AI slop’ ignited discussions, with definitions ranging from low effort AI output to content failing the Turing test, referencing the AI slop Wikipedia page and the fragility failures paper. The debate questioned if ‘slop’ is inherently AI-tied or a broader content quality issue.

- LLMs Develop Social Lives, Biases, and Drive Change, Researchers Find!: A Science Advances paper on LLM social conventions discussed in Nous Research AI revealed that decentralized LLM populations spontaneously develop social conventions and can exhibit strong collective biases, even without initial individual bias. The study also showed adversarial LLM agents can instigate social change within these populations.

- From Echo Chambers to Ugly Ducklings: Navigating AI Discourse!: Latent Space members noted the rise of anti-AI sentiment on platforms like Hacker News and Reddit, pondering if they represent echo chambers. Concurrently, DSPy’s cryptic X post about an “ugly” something teased its core philosophy: If you get this, you get what DSPy is all about, hinting at appreciating the unconventional in AI development.

Discord: High level Discord summaries

LMArena Discord

- Special Tokens Spark Thinking Tag Exploration: Members explored using special tokens, comparing them to Anthropic’s thinking tags, noting they’re similar to

<antml:thinking>.- Discussion revolved around understanding their purpose and practical application in AI models.

- Google Drops Gemma 3, US Only: Google released Gemma 3, publishing the Gemma 3N technical report which is initially exclusive to US users.

- Initial reactions noted limited availability, causing some frustration among international users.

- Google I/O Demos Over Deliveries: Google I/O event disappointed some, seen as a filler episode with limited immediate releases, despite announcements of Veo 3, Imagen 4, and Gemini 2.5 Pro Deep Think.

- Many features are restricted to trusted testers or US users, delaying broader access.

- ChatGPT Pro Thrashes Gemini Ultra in Value Fight: The community favored ChatGPT Pro over Google’s new $250/month Gemini Ultra plan, citing a better value proposition.

- Some community members speculated about the possibility of Google going bankrupt by the time Grok 9 is released, indicating skepticism towards Google’s current AI strategy.

- Local AI Attempts Zero-Shot Snake Game: Users experimented with local AI, exploring zero-shot generation using Qwen 3 4B/8B in LM Studio.

- Challenges arose with limited VRAM, resulting in slow token generation, with one user reporting probably just 5 or so [tokens] per second.

Perplexity AI Discord

- Perplexity releases Perplexifying New Features: Perplexity announced several new features: Perplexify Me, Live Standings and Commentary for F1, F1 Scores in Answers, Improved File Attachments, and Sidebar Shortcuts, documented in their May 19th changelog.

- Specific functionalities and detailed improvements for Perplexify Me, File Attachments, and Sidebar Shortcuts remain unspecified in the announcement.

- Gemini 2.5 Is a Google Workhorse: Google’s Gemini 2.5 Flash (May 20th checkpoint) excels in advanced reasoning, coding, mathematics, and scientific tasks due to its built-in thinking capabilities.

- It provides responses with enhanced accuracy and nuanced context handling, positioning itself as a state-of-the-art workhorse model.

- Grok is the Sweet Choice for Unlimited Free Reasoning: Grok is emerging as the preferred choice for users seeking strong, free, unlimited reasoning capabilities in AI models.

- Users describe Grok as having a chill black dude energy which may have contributed to its popularity.

- Perplexity Playground Outshines API?: Users report that Perplexity Playground outputs are more direct and accurate compared to the API, even after adjusting

top_pandtop_kvalues, noting the addition of a sample example message can improve API results but increases token usage.- Users also discussed whether Perplexity API supports OpenAI JSON schema via the OpenAI Python library, but the results of the investigation were not given.

- Deep Research API is a wolf in Reasoning Pro clothing?: A user reported that Perplexity Deep Research requests via the API show up as sonar-reasoning-pro in the dashboard, prompting a GitHub issue.

- Users have also reported experiencing frequent timeout issues when using the Perplexity API, especially when researching target companies, suggesting the need for smaller batch research.

Unsloth AI (Daniel Han) Discord

- Unsloth Unveiled at Google IO!: Unsloth was showcased at the Google IO event via this tweet, with community members expressing excitement after discovering Unsloth through the Gemma+Unsloth+Collab demo.

- The Unsloth team also released KernelLLM GGUFs and linked to Hugging Face along with a fixed-ish Sesame notebook update that allows generating audio longer than 10 seconds, accessible via this tweet.

- Mistral and IBM quietly make moves: Members noted the Mistral Small 3.1 release, while IBM quietly unveiled Granite 4.0, generating excitement, as well as a member linking to some crazy benchmarks.

- One member linked to the Ollama library for Qwen2.5 VL. A member also mentioned that they are not the first in the space for Gemini Diffusion.

- OpenEvolve springs into action!: A member announced the release of OpenEvolve, an open-source implementation of Google DeepMind’s AlphaEvolve system, detailed in this blog post.

- The creator successfully replicated DeepMind’s results on circle packing (99.97% match!) and evolved a random search into a simulated annealing algorithm, using an LLM ensemble approach for improved results and multi-objective optimization.

- Members struggle with merged models and PPO: Users reported that merging a 4-bit model and saving as 16-bit can cause performance issues. It was also expressed that using PPO training posed challenges, particularly regarding VRAM usage and reward function limitations.

- A user suggested to “not load 4bit or 8bit when you are trying to merge” and suggested a colab notebook outlining this process.

LM Studio Discord

- LM Studio API Access Limited Without Web Frontend: Users discovered that while LM Studio API supports API access for hosting LLMs locally, it lacks a web frontend, unlike Stable Diffusion.

- To achieve web integration, users must use a separate frontend to connect to the LM Studio API.

- Configure RoPE Frequency with LM Studio: Members can adjust the RoPE Frequency Scale for loaded models in LM Studio by finding the setting cog next to the model loader.

- It’s also possible to modify the gguf format to include it, as it is supported in ollama and llama.cpp.

- Qwen 3 Gets Speed Boost Via Speculative Decoding: Users achieved a 12% performance increase with Qwen 3 using speculative decoding, configuring it after a lot of fiddling by setting the max draft size to 3 and increasing the drafting probability to 0.8.

- Increasing the min draft size only increases electricity usage and generation times.

- Model Unloading Unveiled in LMStudio API: The LM Studio API supports unloading models using the

model.unload()function as described in the official documentation.- This function is part of the API and doesn’t require creating a separate function.

- Sliding Window Attention Slashes Memory in Llama.cpp: Sliding Window Attention (SWA) is now available in Llama.cpp (github.com/ggml-org/llama.cpp/pull/13194), and potentially soon in LM Studio, to reduce memory usage, particularly for long contexts.

- Enabling SWA reduced KV cache usage from 1700MB to 348MB for a Gemma 4B 15K context model, and further down to 184MB with Q8_0 quantization during initial tests.

OpenRouter (Alex Atallah) Discord

- OpenRouter Adds Provider and Quantization Slugs: OpenRouter announced that providers and quantizations now have slugs, enhancing developer experience, according to their tweet.

- This change aims to streamline integration and management for developers using diverse models and quantization methods on the platform.

- Google Launches Gemini Flash 2.5 on OpenRouter: Google DeepMind launched Gemini Flash 2.5, available on OpenRouter at google/gemini-2.5-flash-preview-05-20 for testing.

- This release provides developers with early access to Google’s latest model for fast and efficient AI applications.

- Meta Provides Llama Exclusively on OpenRouter: Meta’s new Llama provider is now live, exclusively open-access on OpenRouter, and free to start, which includes a new 3.3 8B model, per their tweet.

- This collaboration offers developers a cost-effective way to experiment with and utilize Meta’s language models.

- Google’s Gemma 3n model may match Claude 3.7: The new Gemma model, Gemma-3n-4B, is supposedly as good as Claude 3.7 according to this blog post.

- Members in the chat found this claim suspicious, as it came from user preference from the Chatbot arena.

Eleuther Discord

- EleutherAI Debates Discord Bot Reacts: Members of EleutherAI considered implementing a Discord bot to combat spam, proposing rate limits and mod pings instead of direct deletion to prevent abuse, and considered a mod-only react to flag content.

- They suggested using a more general react, counting reacts from regulars only, and using a private mod-only channel to ease victor detection, and avoid the irreversible deletes.

- AI ‘Slop’ Definition Sparks Debate: Members debated the meaning of ‘slop’ in AI-generated content, with definitions ranging from low effort, poorly comprehended material to content that fails the Turing test, referencing AI slop Wikipedia page.

- The discussion touched on whether slop is inherently tied to AI, with some arguing that human-generated content can also be slop, citing overproduced or non-sensical outputs as examples, and fragility failures.

- Yi Ma Throws Shade on Current ML?: Members watched Yi Ma’s ICLR talk and discussed his first-principles approach, which grounds work in information theory and cybernetics, contrasting it with the heuristics prevalent in current models.

- One member found the talk slow initially but appreciated the point that compression alone isn’t enough; organization is key.

- DeepMind’s AlphaEvolve Replicated: A member released OpenEvolve, an open-source implementation of Google DeepMind’s AlphaEvolve, which evolves entire codebases using LLMs to discover and optimize algorithms and replicated DeepMind’s results on circle packing.

- Members ask about Gemini Diffusion Prompts: A member opened a discussion about Google’s Gemini Diffusion model.

- He offered to take prompts for anyone without access to Gemini Diffusion.

Cursor Community Discord

- Cursor’s Tool Limit Stalls Chats: Users report that hitting the 25 tools limit in Cursor and accepting before continuing terminates the chat session, as noted in a Reddit post.

- This issue may be related to how Cursor handles request limits for non-fast users.

- DeepSeek-R1T-Chimera Breaks Prompt Loops: The DeepSeek-R1T-Chimera model, a fine-tune between R1 and V3, is claimed to be the only free model capable of breaking loops in a user’s prompt testing, available on Hugging Face.

- The model’s unique architecture allows it to handle complex prompts without getting stuck in repetitive cycles.

- MCP Refreshes Irk Users: Users are expressing frustration with the frequent need to refresh their MCPs due to their status turning yellow, which consumes a significant number of requests.

- The constant refreshing interrupts workflow and reduces the efficiency of using MCPs.

- Gemini’s Thought Process Evolving: Users are observing a shift in Gemini’s thinking process, where the model now summarizes its thought process rather than displaying the actual steps, making it challenging to craft effective prompts and project rules.

- This change impacts the ability to fine-tune prompts based on the model’s internal reasoning.

- O3 Pro Launch Imminent?: Speculation is mounting regarding the release of O3 Pro, fueled by a tweet from a former OpenAI employee hinting at a potential June release.

- Enthusiasts are eagerly awaiting official confirmation and details about the new features and capabilities of O3 Pro.

Modular (Mojo 🔥) Discord