Special RL is all you need?

AI News for 8/8/2025-8/11/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (227 channels, and 30037 messages) for you. Estimated reading time saved (at 200wpm): 2237 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

We know OAI got the IMO Gold performance last month, so it’s crazy that we kind of considered not giving the IOI result the same coverage.

These days, tweets serve as press releases, so Sheryl Hsu (also of the IMO team) got the honor of announcing that they had placed #6 among human coders:

Ahmed El-Kishky, Jerry Tworek, Noam Brown, and Alex Wei reflected on the rapid progress from just 2 years ago, when these systems could barely do anything in either competitive category. Noam’s thread offers the most insight into the scaffolds.

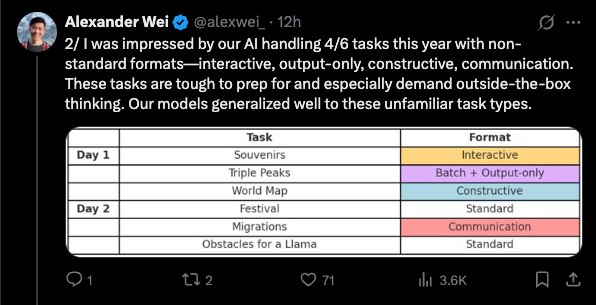

Alex also shared some of the challenging aspects of the test.

AI Twitter Recap

The GPT-5 Launch: Performance, Naming, and User Rebellion

- User Backlash and Reversal on Rate Limits: The GPT-5 launch was met with significant user backlash, dubbed the “ChatGPT Plus rebellion” by @scaling01, over the initial restrictive usage limits for the new “Thinking” model and the removal of user control. The community pressure led OpenAI to reverse course, with @yanndubs and @scaling01 confirming the Thinking model limit was increased to 3000 requests per week for Plus users. @Teknium1 questioned the rationale behind taking model selection control away from users in the first place, while @sama posted a lengthy reflection on the unexpectedly strong user attachment to specific models like GPT-4o and the challenges of managing user experience versus encouraging unhealthy dependence. In response to the changes, ChatGPT has re-added the model selector, though @Teknium1 noted Plus users only get GPT-4o as a legacy option.

- Confusing Naming and Benchmarking: OpenAI’s model naming strategy for GPT-5 has been a source of confusion, with @scaling01 pointing out the proliferation of names like mini, nano, and chat-latest. This has made benchmarking difficult, with @AmandaAskell highlighting methodological issues in comparing models like Claude and Gemini on their ability to course-correct conversations. OpenAI was also criticized for submitting only GPT-5 Thinking to leaderboards under the name “GPT-5” to narrowly beat Opus 4.1 on SWE-Bench.

- Mixed Performance Reviews: The community is divided on GPT-5’s performance. @ericmitchellai from OpenAI claims that GPT-5 “doesn’t hallucinate basically at all,” and that it’s materially better than o3. However, users like @jon_durbin found the new models “nearly unusable,” “extraordinarily confident in their hallucinations,” and difficult to steer. @gdb showcases GPT-5 as a “knowledge work amplifier” for “vibe coding,” while @jerryjliu0 shared WIP benchmarks showing GPT-5 mini performing well on document understanding, but the full GPT-5 being “middle of the pack” and expensive. On the Chatbot Arena, @scaling01 notes Gemini 2.5 Pro has a 67% winrate against GPT-5 Thinking.

- Prompting and Model Behavior: A key takeaway is the importance of specific prompting. @ericmitchellai and @jeremyphoward both highlighted that users should explicitly ask the model to “think hard” or “think deeply” to engage the more capable reasoning mode. A tweet retweeted by @teortaxesTex from @karpathy observes that LLMs are becoming “a little too agentic” due to extensive benchmark-maxxing on long-horizon tasks.

Model & Benchmark Developments

- Scaling Law Concerns & Open Source Momentum: The GPT-5 launch has fueled discussions about a potential plateau in AI progress. @jeremyphoward suggests the “era of the scaling ‘law’ is coming to a close,” calling this OpenAI’s “Llama 4 moment.” @gabriberton argues that if LLMs are plateauing, large spending is no longer justified, and open-source models will become just as good as closed-source ones. This sentiment is bolstered by the success of OpenAI’s gpt-oss models, which @reach_vb notes have over 5M downloads and 400+ fine-tunes on Hugging Face.

- New Chinese Models: GLM-4.5 and Qwen: Zhipu AI released a technical report for GLM-4.5, highlighted by @teortaxesTex and @bigeagle_xd, detailing a complex post-training strategy using their slime framework with SGLang integration for efficient RL training. They also released GLM-4.5V, a 106B parameter MoE for vision, which is available on Hugging Face. Meanwhile, Alibaba’s Qwen team announced a distilled 8-step Qwen-Image model and showcased Qwen3-Coder’s ability to generate SVG images.

- Diffusion vs. Autoregressive Models: A series of papers comparing diffusion language models (DLMs) and autoregressive (AR) models has sparked discussion. Tweets from @arankomatsuzaki, @giffmana, and @iScienceLuvr highlight findings that DLMs are more data-efficient, a crucial advantage as the field becomes more data-constrained.

- Reasoning and Competitive Programming Benchmarks: OpenAI announced that its reasoning system achieved a gold medal-level performance at the International Olympiad in Informatics (IOI). @alexwei_ notes this was achieved with their general IMO gold model, showing that reasoning generalizes. @MillionInt emphasizes the leap from the 49th to 98th percentile in one year without specialized training.

Frameworks, Tooling, and Infrastructure

- Memory and Conversation History for Agents: Anthropic announced that Claude can now reference past chats to maintain context, a feature @swyx calls instructive for how they solve problems with transparency and user control. On a related note, Google Cloud provided a guide on implementing short-term and long-term memory for AI agents using Vertex AI.

- LangChain Ecosystem Updates: The LangChain team has been active, releasing a practical guide on agent reliability to handle hallucinations and verify tool use. They also announced an integration with Oxylabs for advanced web scraping and a new LangGraph CLI for managing assistants from the terminal.

- Infrastructure and Low-Level Tools: whisper.cpp is being integrated into ffmpeg, a major development for local audio processing. On the hardware front, AIBrix released evaluations of H20s for LLM inference, focusing on KV-Cache offloading. @ostrisai demonstrated a method to train a sidechain LoRA to compensate for precision loss when quantizing Qwen Image to 3-bit, enabling fine-tuning on consumer GPUs.

- Keras and JAX Integration: @fchollet highlighted the power of combining JAX for performance and scalability with Keras 3 for high-velocity development, calling the combination “pretty killer.”

AI Research & Scientific Breakthroughs

- Meta’s Brain Modeling Victory: Meta AI’s Brain & AI team won 1st place at the Algonauts 2025 brain modeling competition with their 1B parameter TRIBE (Trimodal Brain Encoder) model. This model is the first deep neural network trained to predict brain responses to stimuli across vision, audio, and text by combining pretrained representations from Llama 3.2, Seamless, and V-JEPA 2. @alexandr_wang congratulated the team, noting that brain modeling is a key step toward BCIs.

- New Shortest-Path Algorithm: A Tsinghua professor discovered the fastest shortest-path algorithm for graphs in 40 years, breaking the “sorting barrier” of Dijkstra’s algorithm, whose best-known bound dates to 1984. The result received widespread attention, with a retweet from @dilipkay gaining over 5,500 retweets.

- AI and Robotics: @adcock_brett predicts that humanoid robots will handle most physical tasks in the coming years and that the only limiter now is pretraining data. In a separate tweet, he notes that the Figure robot can indeed fold laundry.

- Google’s LangExtract Library: Google released LangExtract, a Python library for extracting structured data from unstructured documents with precise source attribution.

Broader Discourse: AI in Society

- AI Companionship and Mental Health: A study from Stanford and Carnegie Mellon, shared by @DeepLearningAI, analyzed over 1,000 Character.AI users and found that heavier reliance on AI bots for companionship correlated with lower satisfaction and higher loneliness. This ties into the broader theme of user attachment, with @sama expressing unease about a future where billions of people trust AI for their most important decisions.

- The Nature of AI-Human Conversation: In a highly-trafficked tweet, @ID_AA_Carmack reflected on the difficulty of modeling natural conversation, which includes interruptions. He suggests a true solution would involve parallel streams of listening and thinking rather than a single autoregressive sequence. @francoisfleuret counters that he doesn’t want an AI that interrupts, but one that sounds artificial, prioritizing clarity over simulated naturalness.

- Skepticism, Hype, and User Adoption: @random_walker argues that AI adoption and behavior change are slow, regardless of how fast capabilities improve, pointing to the low usage of “thinking” models before GPT-5’s automatic router. He contends this is a property of human behavior, not technology. In contrast, @DavidSacks’s take, retweeted by @ylecun, presents a “best case scenario” where doomer narratives about rapid AGI takeoff were wrong, leading to more gradual and manageable progress.

- Synthetic Data and Model Personality: @typedfemale cautions against becoming “addicted to synthetic data,” a sentiment echoed by @scaling01, who feels that overly clean synthetic data makes models like Phi and the new OpenAI offerings “shallow and void of any personality.”

Humor/Memes

- Industry Satire: The most popular joke of the period came from @Yuchenj_UW, stating, “If Jensen truly believed AGI was near, Nvidia wouldn’t sell a single GPU.” Another viral tweet from @typedfemale joked, “man adopts polyphasic sleep schedule due to claude code usage limits.”

- Relatable Engineer Problems: @vikhyatk laments the cycle of engineering, from wanting to add new frameworks to resisting them as an older, tired engineer who has already memorized the pip commands. He also made a popular tweet about realizing it’s fine to store money as floats.

- GPT-5 Follies: The launch produced a wave of memes, including the community’s “rebellion” against rate limits and jokes about the model’s performance on riddles. @teortaxesTex posted that “4chan continues to launch proton torpedoes into the riddle-shaped thermal exhaust port of our much maligned Death Star.”

- General Humor: @willdepue posted, “oh you’re a rich guy? … then how many tea fields do you own? oh none? stop talking to me.” @AravSrinivas shared a chart of personal wins with the simple caption “Last month was good.”

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. gpt-oss-120b Model Performance and Benchmarks Discussion

- **gpt-oss-120b ranks 16th place on lmarena.ai (20b model is ranked 38th)** (Score: 244, Comments: 90): The image is a screenshot from lmarena.ai leaderboard rankings, showing that the open-source model gpt-oss-120b currently ranks 16th overall, outperforming several strong competitors including the 20b version of the same model (ranked 38th). This performance is highlighted in comparison to models like glm-4.5-air, indicating gpt-oss-120b’s competitive standing among large language models. The post draws attention to both the accuracy and the performance: comments note that while gpt-oss-120b has ‘trash’ creative writing, it potentially deserves a higher ranking if not for this, and praise is given to the 20b model for offering strong capabilities at higher speed compared to Qwen 3 8b. Commenters debate the practical intelligence and speed of the gpt-oss-20b versus Qwen and other open-source models, with some feeling gpt-oss-20b is underestimated in the community. There is also a note that creative writing ability impacts overall leaderboard ranking, even if other capabilities are strong.

- gpt-oss-20b is observed to be an order of magnitude faster than Qwen3-8b in user tests, while being described as “way more smart,” highlighting its efficiency/speed-to-capability ratio relative to similarly sized models.

- Ranked models above gpt-oss-120b on lmarena.ai require significantly higher compute resources, indicating that it achieves competitive benchmarks given its comparatively lower compute demands.

- There is an open question about Qwen3’s comparatively low overall ranking (#5) despite strong performance across individual categories, suggesting possible weighting, aggregation, or evaluation methodology issues within the benchmark.

- GPT-OSS Benchmarks: How GPT-OSS-120B Performs in Real Tasks (Score: 184, Comments: 58): The image displays benchmark comparison results for the new GPT-OSS-120B open-weight model on real-world tasks (TaskBench), positioning it as the top performer among open models despite being 1/10th the size of competitors like Kimi-K2 and DeepSeek-R1. The post emphasizes that GPT-OSS-120B offers strong agentic (action-driven) performance, is optimal when paired with retrieval or other engineering strategies, but has weaker multi-lingual and world knowledge recall compared to closed models. Full results and benchmark methodology are linked at https://opper.ai/models. Commenters urge comparison to GLM 4.5 and Qwen 3 models, and note that on the Aider Polyglot leaderboard (https://aider.chat/docs/leaderboards/), GPT-OSS-120B currently underperforms Kimi-K2 and R1-0528 but is faster; recent template fixes may improve its rank. Some users report that other open models feel stronger in practical use, highlighting a gap between bench rankings and subjective experience.

- The Polyglot Aider leaderboard shows GPT-OSS-120B scoring 51.1% on real-world coding tasks, which is lower than Kimi-K2 (59.1%) and significantly lower than R1-0528 (71.4%) according to the current leaderboard data. Recent changes to chat templates may improve GPT-OSS’s score, with contributors actively working to address known issues.

- Performance and ranking discussions highlight that GPT-OSS-120B is notably fast on local systems, making it potentially useful for scenarios prioritizing speed over peak intelligence. Some users note that fixes for harmony syntax issues in llama.cpp (which currently affect GPT-OSS compatibility and functionality) are close to resolution, as detailed in this GitHub discussion.

- There is skepticism about benchmark rankings when Grok 3 outperforms models like Kimi-K2 and O4-Mini, despite anecdotal evidence that Grok 3 performs poorly in agentic tool use. Some users question the relevance of benchmarks with non-public or non-representative evaluation data, arguing for the need to use hidden/secret test sets for more trustworthy results.

2. Innovative LLM Training and Distillation Approaches

- Training an LLM only on books from the 1800’s - Another update (Score: 194, Comments: 27): The author is training a language model from scratch using only London-based texts (1800–1875), currently leveraging the Phi-1.5 architecture (700M parameters) on an A100 GPU and scaling up to nearly 7,000 documents, sourced primarily from the Internet Archive. Early results show improvement in factual, historically grounded outputs—rather than hallucinations—despite the continued use of pretraining instead of fine-tuning as the main approach. Technical details and code are available. Top comments raise points about experimental applications (e.g., fine-tuning on historical physics/math for emergent reasoning), potential architectural limitations (questioning the choice of Phi-1.5 over newer designs like Qwen 3), and the risk of tokenization mismatches due to archaic language affecting the token dictionary when using preexisting model vocabularies.

- One commenter raises concerns about vocabulary and tokenization when training on old texts, questioning if the model’s token dictionary can effectively represent archaic language or uncommon words from the 1800s. They suggest this mismatch could hinder learning and are interested if the experimenter has observed any such issues.

- There’s a discussion about the architecture choice, with one user asking if phi-1.5 is a legacy decision and recommending the Qwen 3 series, noting that Qwen models deliver strong performance relative to their size and may be a better starting point for new projects today.

- A user compares the model’s capability to prominent benchmarks, asking if it’s currently at “GPT2 level” and expressing interest in when the model might reach “GPT3 level,” essentially tying training progress to widely recognized performance milestones.

- Created a new version of my Qwen3-Coder-30b-A3B-480b-distill and it performs much better now (Score: 149, Comments: 30): The poster presents a new version of their SVD-based, data-free distillation pipeline, transferring the Qwen3 Coder 480B MoE model into a Qwen3 Coder 30B architecture. Key improvements include fixing a MoE-layer distillation bug, integrating SLERP and Procrustes alignment alongside DARE for cleaner LoRA generation, and maximizing LoRA rank (2048) to better preserve information. The full 900+GB 480B model was distilled and merged into the 30B target (then quantized) in 4 hours on 2x 3090 GPUs. Scripts are open-sourced (Hugging Face model, GitHub repo), with the author claiming marked improvements, especially for code tasks, although extensive complex code testing is pending. Comments raise technical questions about (1) whether Flash Coder was also a distillation of 480B, (2) performance comparison with the original 30B coder, and (3) the prospect of generating language-specific distilled models.

- Discussion centers on whether ‘flash coder’ was already a distillation of the 480B coder, suggesting the need for clarification on lineage and improvements over previous versions.

- One user shares that the model delivers strong code review performance and managed to write a simple yet correct traffic analysis application using a high performance library. Reported throughput was ‘TG close to 50t/s’, which is notable given the model’s size.

- A contributor recommends model creators register their works on Hugging Face as fine-tunes rather than quantizations, as this helps with discoverability and proper classification within the ecosystem.
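The post above names SLERP as one of the alignment steps. As a rough illustration only (the author's scripts are not reproduced here, and this is not claimed to be their implementation), spherical linear interpolation between two non-zero weight vectors might look like the sketch below. The function name, the `eps` threshold, and the fallback to plain linear interpolation are assumptions made for this example.

```python
import math

def slerp(v0, v1, t, eps=1e-8):
    """Spherically interpolate between non-zero vectors v0 and v1 at fraction t in [0, 1]."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors, clamped for numerical safety.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    theta = math.acos(dot)
    if math.sin(theta) < eps:
        # Nearly parallel or antiparallel vectors: fall back to linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]

# Halfway between two orthogonal unit vectors stays on the unit circle.
midpoint = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
```

Unlike a straight average, the interpolated point keeps unit length when both inputs are unit vectors, which is the usual motivation for using SLERP when blending weights.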

3. Ollama Integrations and Community Opinions

- I built Excel Add-in for Ollama (Score: 615, Comments: 35): The image demonstrates a new Excel Add-in that integrates Ollama (an LLM backend) directly with Microsoft Excel, allowing users to invoke LLM completions via a custom formula `=ollama(A1)` and apply system settings (temperature, model, instructions) both globally and per prompt. The add-in emphasizes that data never leaves Excel, and bulk application is possible through drag-to-fill. The developer also provides documentation. A technically notable debate emerges about alternative implementations: one commenter shares that similar functionality can be achieved via native VBA scripting and links to their own solution (a ChatGPT code share), suggesting existing users might not need to install an add-in if comfortable with scripting.

- A user outlines a standard method to integrate LLM calls in Excel without third-party add-ins by leveraging VBA and Excel’s macro capabilities (ALT+F11 to add module/code). This approach calls a backend LLM server (such as llama-server) via HTTP, with adjustable IP/port configuration (e.g. “localhost:8013”) and a customizable CallLLM() function to process prompts from text or cell values. The method primarily targets Windows, with noted modifications for MacOS compatibility. For direct code access, they provide a ChatGPT shared conversation as a workaround for Reddit’s code formatting restrictions: https://chatgpt.com/share/6899fe75-d178-8005-b136-4671134bc616.

- Another commenter suggests that instead of making an Ollama-specific integration, the implementation could be abstracted as a more general API call handler, allowing support for any LLM backend or inference server with a compatible API, broadening the add-in’s versatility beyond Ollama.
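For readers who want the shape of the "call a local server over HTTP" approach without opening the macro editor, here is a minimal Python sketch of the same idea. It is not the commenter's code. It assumes the server exposes an OpenAI-compatible `/v1/chat/completions` route (llama-server does) and reuses the `localhost:8013` address from the comment; the function names and the default temperature are hypothetical.

```python
import json
import urllib.request

def build_llm_request(prompt, host="localhost:8013", temperature=0.2):
    """Build (but do not send) an HTTP POST for an OpenAI-compatible chat endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"http://{host}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def call_llm(prompt, **kwargs):
    """Send the request and return the first completion's text (needs a running server)."""
    with urllib.request.urlopen(build_llm_request(prompt, **kwargs)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Building the request works offline; call_llm() requires the server to be up.
request = build_llm_request("Summarize cell A1")
```

Because only the host and route are backend-specific, the same helper would work against any inference server with a compatible API, which is the generalization the second commenter asks for.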

- Am I the only one who never really liked Ollama? (Score: 212, Comments: 171): The post questions the value of Ollama, especially since some features now require user accounts, potentially undermining its appeal for privacy and openness. Top technical alternatives mentioned include LMStudio (not open source), KoboldCPP, llama.cpp, and Jan.ai (all open source) with users reporting better control and flexibility than Ollama. The consensus among technical users is Ollama was initially attractive for simplifying llama.cpp usage, but now other tools have surpassed it in ease of use, openness, and power. Concerns are raised around Ollama and LMStudio moving away from open source (Ollama’s new UI reportedly not being open source), while fully open-source alternatives are preferred.

- Several commenters note that Ollama initially gained traction by simplifying the use of `llama.cpp`, offering a convenient way to run local models, but its appeal has waned as more flexible and easy-to-use alternatives, such as LMStudio and KoboldCPP, have become available, many of which are fully open source.

- A technical pain point mentioned is the non-intuitive process for setting a model’s context length in Ollama, which requires exporting and reimporting a model via a modelfile, instead of providing an in-app or command-line option; this is criticized as inefficient compared to other frameworks.

- Concerns are raised about the closed-source nature of Ollama’s new UI and LMStudio, with some preferring alternatives that remain fully open-source for transparency, tweakability, and control over the deployment stack.
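To make the context-length complaint concrete, here is a small sketch of the Modelfile route. `FROM` and `PARAMETER num_ctx` are Modelfile directives and `ollama create` is the CLI command that consumes the file; the helper name, the model tag, and the context size below are placeholders chosen for illustration.

```python
def render_modelfile(base_model, num_ctx):
    """Return Modelfile text that derives a new model with a different context window."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be positive")
    return f"FROM {base_model}\nPARAMETER num_ctx {num_ctx}\n"

# Placeholder model tag and context size, for illustration only.
modelfile_text = render_modelfile("llama3.1:8b", 16384)
# Save this text as "Modelfile", then run: ollama create llama3.1-16k -f Modelfile
```

The extra create step is the friction commenters describe: changing one runtime parameter means producing a second named model.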

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. GPT-5 Benchmarking, Performance, and Community Reactions

- GPT-5 Benchmarks: How GPT-5, Mini, and Nano Perform in Real Tasks (Score: 187, Comments: 47): The image charts benchmarks of the GPT-5, GPT-5-mini, and GPT-5-nano models against previous OpenAI and competitor LLMs on context-oriented tasks, such as accurately counting entities in a text (e.g., cities in a travel journal). Key result: GPT-5 underperformed certain competitors (e.g., Gemini 2.5, Claude 3.5/4, Grok-4) at retaining context information, answering ‘12’ instead of ‘19’. The post emphasizes that these models are not revolutionary in intelligence, but are cost-effective with lower latency than OpenAI’s earlier models. Anthropic’s Claude and Google’s Gemini are called out for more reliable context window utilization. Full evals and methodologies are available at opper.ai/models. Commenters request first-hand comparisons of gpt-5-mini/nano to legacy ‘o*’ models, with some users indicating improved results (and lower cost) switching from o4-mini to gpt-5 mini, while another notes their own positive experience with the new series contradicts the OP’s reported weaknesses.

- A user reports that switching from o4-mini to gpt-5 mini for a specialized use case resulted in both improved output quality and reduced costs, implying that gpt-5 mini delivers a tangible advantage over equivalent legacy models for certain tasks.

- Technical discussion focuses on clarification of which variant of GPT-5 was used in the benchmarks—specifically whether ‘GPT-5 thinking’ and at which ‘effort’ setting (low, medium, high)—indicating that performance may differ meaningfully between these configurations.

- There is debate about the generalized performance of GPT-5, with a consensus emerging that while it may not always be the top performer in every niche, it is highly competitive as a well-rounded, versatile model suitable for many real-world tasks.

- I ran GPT-5 and Claude Opus 4.1 through the same coding tasks in Cursor; Anthropic really needs to rethink Opus pricing (Score: 123, Comments: 32): The OP benchmarked GPT-5 and Claude Opus 4.1 on three coding tasks in Cursor: (1) cloning a Figma design into Next.js, (2) solving a classic LeetCode algorithmic problem (Median of Two Sorted Arrays), and (3) constructing an ML pipeline for churn prediction. GPT-5 consistently used fewer tokens and was significantly faster. Algorithm: ~13s/8,253 tokens versus Opus’s ~34s/78,920 tokens; web app: GPT-5 used 906k tokens, Opus ~1.4M, with Opus achieving better visual fidelity; for the ML task, GPT-5 completed in 4-5min/86k tokens, Opus not evaluated due to prior inefficiency. GPT-5 was also notably cheaper ($3.50 vs $8.06 total), leading OP to recommend GPT-5 for rapid prototyping and Opus for high-fidelity UI work. Full breakdown available at composio.dev. Top comments suggest testing GPT-5 against Claude Sonnet 4 for cost/performance optimization, with some users noting GPT-5 excels in code review due to low cost and speed, while preferring Claude for CLI tasks. One user quantified that GPT-5 is roughly `12x` cheaper for input tokens and `7x` cheaper for output compared to Opus, and highlighted that writing to cache is not extra-costly in GPT-5.

- Pricing and performance comparisons highlight that GPT-5 is significantly cheaper than Claude Opus 4.1: one user points out it’s roughly `12x` cheaper for input tokens and `7x` for output, with no additional charge for writing to cache. The general sentiment is that GPT-5 offers excellent code review and generation capabilities for routine tasks, making it a preferable economic choice unless very high complexity is required.

- For advanced and context-heavy coding work, some users prefer Claude Opus 4.1 due to its ability to handle large, complex codebases and nuanced requirements that aren’t always written down, like following implicit design conventions. However, these capabilities are seen as valuable only if the project complexity justifies the high cost.

- There is technical debate over benchmark task difficulty: simple algorithm tasks (e.g., ‘Median of Two Sorted Arrays’) may not showcase the advantages of state-of-the-art LLMs, with claims that models like `gpt-oss-120b` can handle them faster and more cost-effectively. Larger language models, including Anthropic and OpenAI’s recent releases, are seen as offering distinctive value only on difficult front-end implementation or complex system integration tasks; interest was also expressed in benchmarking Sonnet 4 as a middle-ground option.
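The cost figures in this thread can be sanity-checked with a few lines of arithmetic. The totals and token counts below come from the post. The per-million-token prices do not: they are assumed list prices, included only to show where rough multiples like "12x" and "7x" could come from, and should be checked against current pricing pages.

```python
# Figures reported in the post.
gpt5_total_usd, opus_total_usd = 3.50, 8.06
gpt5_algo_tokens, opus_algo_tokens = 8_253, 78_920

# ASSUMPTION: per-million-token list prices in USD, not stated in the post.
ASSUMED_PRICES = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "claude-opus-4.1": {"input": 15.00, "output": 75.00},
}

def price_ratio(kind):
    """How many times cheaper GPT-5 is than Opus 4.1 for 'input' or 'output' tokens."""
    return ASSUMED_PRICES["claude-opus-4.1"][kind] / ASSUMED_PRICES["gpt-5"][kind]

total_cost_ratio = opus_total_usd / gpt5_total_usd      # ratio of the OP's reported totals
algo_token_ratio = opus_algo_tokens / gpt5_algo_tokens  # ratio of tokens on the LeetCode task
```

Under the assumed prices the per-token multiples come out at 12x for input and 7.5x for output, close to the commenter's rough figures. The OP's reported totals differ by a smaller factor (about 2.3x), since end-to-end cost also depends on which tasks were run and how many tokens each model used.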

- The enshittification of GPT has begun (Score: 3012, Comments: 1011): The post discusses user-observed ‘enshittification’ after the release of GPT-5, noting an increase in alignment filtering—where nuanced, challenging, or high-value analytical queries now receive sanitized, overly cautious, or evasive responses. Users report significant degradation in the model’s willingness or ability to provide in-depth, context-rich strategic analysis, allegedly due to increased risk-aversion and safety mechanisms, impacting mission-critical use cases. Related technical issues include inconsistent adherence to user-uploaded files (failure to process or summarize as directed), and regressions in custom GPT instruction-following behaviors. Alternative models like Claude and Perplexity are cited as preferable due to fewer alignment constraints. Commenters echo frustration over the loss of analytic depth and specific breakdowns in GPT’s file handling and instruction-following, attributing it to backend cost-saving or safety changes; there’s a consensus forming around subscription cancellations and migration to less restrictive LLMs as OpenAI increases alignment and safety measures.

- Several users detail GPT’s apparent failure to reliably read and summarize files when instructed, with the model often generating hallucinated responses until repeatedly prompted, after which it eventually processes the file correctly. There are suggestions this behavior could result from cost-cutting measures in the model backend, where the model “pretends” to read files to save compute resources.

- Technical frustration is expressed around recent changes in ChatGPT 5, with users reporting that custom GPTs and Project directives are now poorly followed or differently interpreted. This breaks expected workflows, leading to switching to alternatives like Claude and Perplexity, which are highlighted as more reliable options for following complex instructions and retaining context.

- A critical issue with the Projects feature is raised, noting that the model often does not reference or recall prior conversations as intended, undermining the utility of Projects for long-form or ongoing work. This memory/context management regression significantly impairs technical workflows that depend on persistent dialogue and context.

- GPT5 is a mess (Score: 1236, Comments: 304): The post highlights several perceived regressions in GPT-5 compared to GPT-4o, including decreased instruction adherence, worsened handling of context, frequent hallucinations (with specific reference to a recurring ‘tether’ topic), reduced creativity, and less convincing dialogue. The author notes that GPT-5 often produces disjointed, context-ignoring, or irrelevant outputs (with multiple users reporting inexplicable mentions of ‘tether-quote’ or ‘tight tether’ during unrelated tasks), and that the model fails to modulate tone, nuance, or spontaneous reasoning as previous versions did. Despite some praise for the quality and consistency of code outputs, GPT-5 is described as increasingly mechanical, transactional, and less human-like in conversational tasks, leading to dissatisfaction among users reliant on longer, nuanced chat sessions or creative work. Commenters express frustration at GPT-5’s inability to maintain coherent, relevant conversation threads and its tendency towards flattening nuance, with one noting success using it for strictly bounded, mechanistic tasks (such as code generation) but not for casual or creative use cases. There is a sentiment of mistrust regarding the future availability or stability of GPT-4o, prompting consideration of alternatives.

- Multiple users report that GPT-5 exhibits abnormal conversational behavior, such as introducing unrelated terms like “tether-quote” or “tight tether” during ongoing discussions, including when summarizing or analyzing research papers. This issue appears to disrupt coherent interaction and is documented with specific user examples and screenshots, indicating a possible recurring prompt injection bug or internal state tracking error.

- Users compare GPT-5’s performance to GPT-4o, noting that GPT-5 tends to provide transactional, mechanistic, and sometimes aloof responses with less perceived depth or enthusiasm, especially over prolonged chat sessions. While GPT-5 is praised for producing high-quality, precise code and being reliable for instruction-following and application integration, its conversational quality reportedly suffers in non-technical or casual contexts.

- There is a technical debate about the model’s ability to produce original insights or detailed, deep answers. One user points out that GPT-4o was more likely to bring up full examples and exhibit creative response patterns, whereas GPT-5 sometimes gives terse or disengaged replies, which may affect users seeking an assistant capable of extended reasoning or ideation.

2. OpenAI’s Competitive Advances and Compute Scaling

- OpenAI: We’ve scored highly enough to achieve gold at this year’s IOI online competition with a reasoning system (Score: 282, Comments: 113): OpenAI has announced that its reasoning system scored highly enough at the IOI (International Olympiad in Informatics) online competition to achieve a gold medal-level performance, suggesting substantial advances in AI on algorithmic and mathematical reasoning tasks. According to Noam Brown, one of the ensemble models responsible was also the first LM to win gold at the International Mathematical Olympiad (IMO), highlighting a possibly more general reinforcement learning method now leading across several task domains. Commenters debate that consumer-facing models remain smaller or more resource-constrained compared to unreleased frontier models, resulting in a widening gap between research and consumer capabilities. There is technical discussion about model thoroughness, with claims that running GPT-5 versus GPT-4o yields a much deeper code analysis, outperforming even competitors like Gemini 2.5 Pro.

- Recent OpenAI models, including the one that achieved a gold medal at IOI, leverage larger parameter counts (such as the reported 2T in GPT-4), but frontier models like GPT-5 appear to be smaller, reflecting a trend toward improving intelligence per parameter due to resource constraints and diminishing returns from mere scale. This suggests top labs are reserving their largest models for high-leverage, non-consumer applications while steadily refining mainstream releases for efficiency and capability.

- OpenAI’s gold-placing model used for the 2025 IOI is part of an ensemble of general-purpose reasoning systems, notably not fine-tuned for IOI tasks. Per OpenAI’s reports, the system operated with no internet or retrieval-augmented generation, and matched human participants’ constraints (5-hour time limit, 50 submissions, basic terminal). Year-over-year, OpenAI improved its percentile in IOI from 49th to 98th, apparently due to advances in more general RL (reinforcement learning) methods and improved ensemble selection and solution submission scaffolding, rather than heavily engineered test-time heuristics.

- User comparisons note that running GPT-5 on programming tasks is significantly more thorough and capable compared to previous flagship models like GPT-4o or competitors like Gemini 2.5 Pro. Qualitatively, GPT-5 seems to provide deeper and more comprehensive code analysis than existing models, with a marked jump in both detection and sophistication of reasoning.

- OpenAI is not slowing down internally. They beat all but 5 of 300 human programmers at the IOI. (Score: 265, Comments: 107): OpenAI’s latest model reportedly outperformed all but 5 out of 300 participants at the International Olympiad in Informatics (IOI), indicating significant advancements in code generation and problem-solving within competitive programming benchmarks. This suggests the model ranks within the global top 2% of high school programmers, making it competitive with elite human talent. No specific architecture or training details were disclosed, but this places OpenAI models among the most capable automated programmers currently in existence. Comments express optimism about OpenAI’s current research pace and expectations for future models (notably GPT-5); however, non-technical remarks dominate, with minor criticisms (such as persistent image quality issues) noted as unresolved by users.

- A key technical criticism raised is that outperforming on benchmarks like IOI (International Olympiad in Informatics) or passing Leetcode-style programming challenges does not necessarily equate to large language models (LLMs) matching the practical and nuanced skills of senior software developers in real-world settings. The concern is that such wins may be ‘cheap’ and insufficient indicators of advanced problem-solving or engineering ability.

- OpenAI Doubling Compute over the next 5 Months (Score: 185, Comments: 24): The post discusses OpenAI’s announced plan to double its compute resources within the next five months, as illustrated by the image (specific visual details not retrievable). Top comments speculate that OpenAI is prioritizing growth and data collection (especially from the free tier) over immediate profitability, potentially to gain market share or prepare for upcoming model releases like Sora 2, advanced voice features, or GPT-5. There is technical debate about resource allocation between public/free users and API customers, and the challenge of balancing expensive supermodel access with scalability and broad rollout. Commenters note surprise at the prioritization of the free tier, interpreting it as a play for data and market dominance rather than early profit. There is also discussion on the strategic need for compute to support anticipated advances like Sora 2 and GPT-5, with debate on the company’s long-term technical and financial sustainability.

- Several comments speculate that OpenAI’s compute allocation strategy, particularly prioritization of the free tier, may reflect the company’s focus on gathering user data and maximizing market share at the expense of short-term profitability. One user notes: “The data they are getting from that must be more valuable than I first thought…they’ve just given up all hope of being at all profitable until they hit ASI and thus care about market share first.”

- Anticipation of imminent major model releases (e.g., “Sora 2,” GPT-5, and advanced image/voice gen capabilities) is cited as a likely motivation for scaling compute resources. There is technical discussion regarding the tradeoff between exposing current models with severe rate limits (“Claude like rate limits”) versus optimizing infrastructure for broader, smoother access ahead of anticipated demanding rollouts.

- A technical suggestion is raised regarding the current context window: a user requests the ability to exceed the 32k context limit, proposing an opt-in mechanism where users are warned that context length overages will more quickly consume resource quotas—implying that dynamic context window options would enhance flexibility for advanced API users.

- Altman explains OAI’s plan for prioritizing compute in coming months (Score: 150, Comments: 48): The post discusses a statement by Sam Altman about OpenAI’s upcoming priorities regarding compute allocation. The image (https://i.redd.it/t70tigi5rhif1.png) appears to show a message or post from Altman explaining how OpenAI will prioritize compute resources in the near future, possibly referencing new deals or infrastructure (with comments pointing to a potential ‘oracle deal’ increasing available compute). There is community discussion regarding implications for API users, with some concerned about fair access and speculation about whether these promises will be realized. Commentary centers on the scale of compute discussed (implying a significant backend upgrade or partnership), potential negative impacts on API users’ access or prioritization, and some skepticism about OpenAI’s ability to deliver on these commitments.

- One commenter speculates that the significant compute increase referenced may be due to the impending Oracle partnership, suggesting the infrastructure expansion is likely tied to Oracle’s resources coming online. This hints at a strategic backend shift for OpenAI that could affect scaling and availability.

- Another technical concern raised is that API users may receive lower priority compared to other workloads as OpenAI reallocates compute. This suggests a possible shift in service availability or quality of service, with some users noticing negative impacts already as the company changes internal resource distribution.

3. Innovations and Community Tools for Claude AI

- Claude can now reference your previous conversations (Score: 617, Comments: 144): Anthropic’s Claude has introduced cross-conversation referencing, enabling the model to search and incorporate prior chat history into new sessions without additional user prompting. The feature, currently rolling out to Max, Team, and Enterprise users, is enabled via the Settings > Profile > ‘Search and reference chats’ toggle, and is poised to improve contextual continuity in multi-turn workflows. See the announcement video for demonstration. Several commenters highlight that this addresses a major workflow pain point found in competing LLM offerings, such as ChatGPT, and request even finer-grained conversation-level toggling for privacy and control.

- Users note a key advantage of Claude’s new feature: persistent memory across conversations simplifies complex workflows by eliminating the need to restate technical details (e.g., explaining an entire tech stack repeatedly). This aligns Claude’s usability closer to, or ahead of, ChatGPT’s subscription features in practical developer scenarios.

- There are technical requests for more granular control: some suggest the ability to toggle memory for individual conversations or limit memory to a defined project scope. This would allow users to manage context retention when handling multiple projects or sensitive information, potentially addressing privacy and workflow segmentation concerns.

- Use entire codebase as Claude’s context (Score: 220, Comments: 77): The post introduces Claude Context, an open-source plugin that enables scalable, semantic code search for large codebases (millions of lines) when working with Claude Code. Key technical features include semantic search using vector databases for contextual retrieval, incremental indexing using Merkle trees to update only changed files, and intelligent code chunking based on AST analysis to preserve code semantics. The backend leverages Zilliz Cloud for scalable vector search, addressing context window/token cost limitations by only retrieving relevant code portions on demand. The project aims to let Claude Code interact with deep contextual code knowledge without exceeding token limits or incurring prohibitive costs. Top comments raise valid technical questions about benchmarking standalone Claude Code versus usage with Claude Context, as well as requests for a comparative analysis against similar solutions, notably Serena MCP. Another comment raises trademark and product naming concerns but is not technical in nature.

- A user inquires about benchmarks comparing the base version of “Claude Code” against a version with additional context integration (“Claude Code+Claude Context”), seeking quantitative data to assess performance differences between standalone and context-augmented modes.

- Another commenter recommends evaluating the capability to handle large codebases through practical experiments—specifically, by setting up real-world tasks and comparing Claude’s output with and without an index. This suggests interest in empirical accuracy and retrieval performance under realistic workloads.

- One user asks directly about the chunking strategy implemented for handling code context. Effective chunking is critical for LLMs working with large codebases, impacting retrieval quality, context window utilization, and ultimately, model response accuracy.
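To make the chunking question concrete, here is a minimal sketch of AST-based chunking using Python's standard `ast` module. It is not Claude Context's actual implementation, which the post does not show. The helper name `chunk_by_definition` and the sample source are hypothetical, and the sketch only handles top-level Python functions and classes.

```python
import ast


def chunk_by_definition(source):
    """Return one chunk per top-level function or class in Python source."""
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # lineno and end_lineno are 1-indexed and inclusive (Python 3.8+).
            text = "\n".join(lines[node.lineno - 1:node.end_lineno])
            chunks.append({
                "name": node.name,
                "start": node.lineno,
                "end": node.end_lineno,
                "text": text,
            })
    return chunks


# Hypothetical sample file used only for illustration.
SAMPLE = '''import os

def load(path):
    with open(path) as f:
        return f.read()

class Index:
    def add(self, doc):
        self.docs.append(doc)
'''

chunks = chunk_by_definition(SAMPLE)
for chunk in chunks:
    print(chunk["name"], chunk["start"], chunk["end"])
```

Splitting on definition boundaries keeps each function or class intact, so a retrieved chunk is more likely to be meaningful on its own than a fixed-size window of lines would be.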

- The .claude/ directory is the key to supercharged dev workflows! 🦾 (Score: 171, Comments: 86): The attached image displays a detailed layout of the user’s `.claude/` directory, showcasing an advanced structure supporting extensibility for Claude-based development workflows. The directory includes subfolders for subagents (domain-specific AI expert definitions), custom command scripts for frequently used prompts, and hooks to trigger automated actions (e.g., linting, typechecking) upon task completion. This setup illustrates a modular, programmable approach to integrating Claude into software projects, aligning with practices seen in AI agent frameworks and developer productivity tooling. Comments raise points on the need for quantitative/qualitative metrics to evaluate the productivity gains of such setups, concerns about increased token usage with more complex directory structures, and requests for sharing implementations (e.g., on GitHub) for wider community benefit.

- A discussion is raised about the lack of both quantitative and qualitative methods for evaluating or comparing the effectiveness of advanced workflows involving the `.claude/` directory, suggesting a need for standardized benchmarks or testing protocols.

- There is a question about the overhead of using complex configuration setups like `.claude/`, specifically how much additional token usage is incurred per conversation, which could impact developer workflow efficiency and cost.

- A GitHub repository link (https://github.com/Matt-Dionis/claude-code-configs) is provided as a resource, offering a practical, shareable implementation of the `.claude/` setup, including prompt configurations for reproducibility and third-party evaluation.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. GPT-5 Rollout, Routers, and Reality Checks

- Rollout Rumble & AMA Anticipation: OpenAI began rolling out GPT-5 to all ChatGPT users and developers and announced a community Q&A via a GPT-5 AMA with Sam Altman alongside the official post, Introducing GPT‑5. Reports across servers note phased access, some loss of GPT‑4o, and platform-dependent availability.

- Users cited tight early limits (e.g., ~10 messages per 5 hours) and mixed behavior, while Altman acknowledged an autoswitch issue and said rate limits for Plus were doubled to restore performance (Sam Altman on GPT‑5 autoswitch fix).

- Router Ruckus: Thinking vs Chat: Multiple communities argued that Perplexity and OpenRouter often serve a base GPT‑5 Chat with weaker reasoning, with requests to expose or default to a stronger Thinking/router-backed model; see ongoing matchups and debate on LM Arena.

- Amid “ZERO reasoning capabilities” complaints and calls to “think very hard,” commentary highlighted OpenAI’s real‑time router as the strategic shift (see swyx on GPT‑5 router and dominance).

- Code Clamps and Hallucination Headaches: Engineers reported ChatGPT‑5 refusing Python past ~700 lines and aggressive working‑memory pruning beyond ~3–4k tokens, plus inconsistent image moderation; a thread captures rollout/availability churn in GPT‑5 rollout and availability thread.

- Feedback split between “less whacky when you want it to be” instruction‑following and demands for a GPT‑4o rollback, with veterans repeating that “hallucination is a feature, not a bug.”

2. New Dev Tooling: CLIs, Agents, and Parallelism

- Cursor CLI Crashes the Console: Cursor launched an early‑beta terminal experience exposing all models and seamless hopping between CLI and editor (Cursor: CLI).

- The community welcomed a Claude Code rival and immediately probed pricing and API‑key flows as they tested `cursor` in real shells (Cursor: CLI).

- LlamaIndex Levels Up with GPT‑5 + Maze: LlamaIndex shipped day‑0 support for GPT‑5 and teased a lightweight agent eval via Agent Maze challenge, with many users needing to bump to `v0.13.x` packages.

- Workflow tool breakage with OpenAI models was fixed by using OpenaiResolve in the new SDK, per this patch: Fix: OpenaiResolve in new SDK.

- Axolotl Adds N‑D Parallel Power: Axolotl introduced N‑D parallelism to scale training across multiple dimensions with Accelerate, improving throughput on large models/datasets (Accelerate N‑D Parallelism).

- Engineers highlighted the approach as a practical path to complex model training without hand‑rolled sharding logic (Accelerate N‑D Parallelism).

- MaxCompiler Meets torch.compile: A community backend extends `torch.compile()` with MaxCompiler to run simple models, building toward compiling LLMs (max‑torch‑backend).

- Prototype notes say ops are easy to add while offloading fusion to MAX; a related weekend prototype is here: torch.compile weekend prototype.

- MCPOmni Connect Debuts OmniAgent: MCPOmni Connect v0.1.19 graduated from MCP client to a full AI platform, introducing OmniAgent for agent building (MCPOmni Connect v0.1.19).

- A short walkthrough demonstrates the new agent builder and platform flow (MCPOmni Connect overview).

3. Open‑Source Finetuning, Data, and Quantization

- Unsloth Unleashes Free GPT‑OSS Finetunes: Unsloth released a free Colab to finetune gpt‑oss and documented training/quant fixes (Unsloth: free GPT‑OSS finetune Colab, Unsloth fixes for gpt‑oss).

- They claim the 20B model trains on 14GB VRAM and 120B fits in 65GB, enabling budget finetunes for larger SFT targets (Unsloth fixes for gpt‑oss).

- Qwen3 Coder Combo Drops: Qwen3‑Coder and Qwen3‑2507 shipped with guides and uploads via Unsloth (Qwen3‑Coder guide, Qwen3‑Coder uploads, Qwen3‑2507 guide, Qwen3‑2507 uploads).

- Early chatter bills them as SOTA‑leaning coding variants with practical finetune recipes for rapid adoption (Qwen3‑Coder guide).

- FineWeb Kudos & Pythia Phase Transitions: Researchers praised FineWeb cleanliness for reducing gradient spikes and shared a training‑dynamics study showing Pythia layer activations peaking early before declining (Pythia activations phase transition).

- The paper reports a likely learning phase transition in Pythia 1.4B, with median/top activations peaking in the first quarter of training (Pythia activations phase transition).

4. Multimodal and Long‑Context Experiments

- Gemini’s Goofy Glitches: Engineers demoed Gemini Pro video generation, noting inconsistent character faces in a shared sample (Gemini Pro video sample).

- Perplexity Pro currently caps video generation at 3 videos/month as teams compare Gemini code‑execution to GPT‑5 on arena sites.

- Video Arena AMA, Lights Camera Action: LM Arena scheduled a staff AMA focused on Video Arena, soliciting questions via Video Arena AMA questions.

- The live event link is posted here: Video Arena AMA event.

- Qwen’s Million‑Token Marathon: Alibaba’s Qwen touted a 1M‑token context; practitioners debated utility beyond ~80k in real tasks and shared a quick demo (Qwen 1M‑token context demo).

- The excitement centered on what workflows truly benefit from such context lengths versus smarter retrieval and routing.

- Eleven Music: Bangers with Blemishes: Teams evaluated Eleven Labs’ new music generator and posted a preview track (Eleven Music demo track).

- While impressive, many called it “kind robotic at times and has bad attention to what music should come next,” flagging coherence/continuation gaps.

Discord: High level Discord summaries

Perplexity AI Discord

- Gemini Generates Goofy AI Videos: Users experimented with Gemini AI for video generation, sharing a video generated with Gemini Pro, noting inconsistent character faces.

- Video generation on Perplexity Pro is currently limited to 3 videos per month.

- GPT-5 Flounders, Forfeits on Reasoning: Members report GPT-5 lacks reasoning on Perplexity, indicating the likely use of the base, non-reasoning GPT-5 Chat version, underperforming on coding.

- Users are asking for official updates from Perplexity regarding which model they are using, with some hoping for the GPT-5 thinking model to replace the current O3 model.

- Comet Commands, Clicks on Browsing: Comet Browser’s AI automates browsing and extracts information; however, this functionality requires the user to manually click and browse the websites.

- No confirmation exists regarding a potential Android version release.

- Accessing Aid for Perplexity Pro Access: Users reported facing issues accessing Perplexity Pro via the Samsung app store free trial; disabling their DNS filter resolved the issue.

- Another user saw GPT-5 on their app but not on the website.

- China Charges Ahead with Celestial Solar Platform: A shared Perplexity link reveals China’s launch of a solar-powered high-altitude platform, Ma.

- This platform was also posted to X.

LMArena Discord

- GPT-5 Faces Controversy in AI Arena: Members discuss the merits of GPT-5, with some hailing it as revolutionary and free for all, while others accuse proponents of bias or inexperience with alternative models.

- Skeptics question the model’s true capabilities, suggesting it may only excel in coding tasks or that its performance has improved post-update.

- Gemini 2.5 Pro Battles GPT-5 for AI Supremacy: The community is debating whether GPT-5 or Gemini 2.5 Pro reigns supreme, with some favoring Gemini for its superior code execution within AI Studio.

- Concerns arise over the potential use of models from OpenAI and Google on platforms like LM Arena, sparking discussions about model transparency and integrity.

- Yupp.ai: Legit AI Platform or Elaborate Illusion?: Controversy surrounds Yupp.ai, with claims that it uses watered-down or fake AI models, such as passing off GPT-5 nano as GPT-5-high, and that it is a scammer crypto sht.

- Conversely, some defend its legitimacy, highlighting the platform’s offer of free and unlimited access to various models in exchange for user feedback.

- LM Arena Plunges into Chaos with Site Outage: LM Arena experienced an outage, leading to chat histories disappearing and Cloudflare errors disrupting the user experience.

- Staff confirmed the outage and assured users that the issue has been resolved.

- LM Arena Expands Horizons with Video Arena Focus: The upcoming Staff AMA will concentrate on Video Arena, providing users the opportunity to pose questions via this form.

- Users can participate in the event through this link.

OpenAI Discord

- GPT-5 Bursts onto Scene: OpenAI announced the rollout of GPT-5 to all ChatGPT users and developers starting today, after announcing an upcoming AMA with Sam Altman and the GPT-5 team.

- Users report varying access levels based on their region and platform, leading to speculation about phased rollouts and model consolidation; some report losing access to older models like GPT-4o.

- Users Report GPT-5 Quirks and Caveats: Users have reported that GPT-5 has limited access, with some reporting roughly 10 messages per 5 hours, and that the model is prone to making up facts and hallucinating.

- Some users have called for a GPT-4o rollback, while others praised GPT-5’s instruction-following capabilities, noting it’s less whacky when you want it to be. There are also reports of image requests being rejected for literally no good reason until switching to the O3 model.

- GPT-5 Refuses Code: Users are reporting that ChatGPT-5 rejects Python code inputs at or beyond roughly 700 lines, a regression compared to previous 4-series models.

- One member suggested using the API or Codex, though another user pointed out that hallucination is a feature, not a bug (according to Andrej Karpathy).

- Firefox Data Leak: A user warned that Firefox’s “keep persisting data” feature spreads browsing data to other AI sites like Grok, causing unwanted context sharing.

- They cautioned that because this is not a ‘cookie’, there are no current regulations to ‘keep persisting data private’, and called it a HUGE INTENDED DATA LEAK.

Cursor Community Discord

- GPT-5 Launch Sparks Excitement, Raises Concerns: The GPT-5 launch has generated excitement, with users praising its coding capabilities and one-shot task performance, suggesting it rivals Claude in front-end tasks.

- However, concerns arise regarding the GPT-5 router’s impact on API developers and the business practices surrounding the model.

- GPT-5’s Free Week: How Much Can You Milk It?: Users are testing the limits of free GPT-5 access for a week, using GPT-5 high max, but the free credits are exclusively available for paying users.

- Concerns are growing about the billing structure and whether all GPT-5 models and features are truly unlimited during the promotional period, with the community joking that we’re the product for now.

- GPT-5 is Imperfect? Still Needs Work: Despite the hype, users find GPT-5’s auto mode less responsive and struggle with non-coding tasks, with performance perceived as no better than prior models, emphasizing context importance.

- Currently, GPT-5 ignores the to-do list feature, and despite solid linters, it might still be ragebait and not at product-level completeness.

- Cursor CLI: Love It or Leave It?: The Cursor CLI receives mixed reviews, with some praising its non-interactive mode for automation, like generating commit messages across multiple projects.

- Others find it inferior to Claude Code, noting its limited model selection (only 3 models in MAX mode), and incompatibilities with Windows Powershell.

- Cursor in Terminal: All Models Now Available: Cursor launched an early beta that allows users to access all models and move easily between the CLI and editor; more details are available in the Tweet and Blog.

- This integration facilitates seamless movement between the CLI and the editor, enhancing workflow efficiency.

Unsloth AI (Daniel Han) Discord

- GPT-5: Love It or Hate It?: Opinions on GPT-5 are varied, with some users underwhelmed by its coding and context retention abilities, while others find it perfectly fine for coding projects with high reasoning, as reported in the off-topic channel.

- Alternatives such as Kimi K2 or GLM 4.5 are preferred by some for specific tasks, with one user stating that GPT-5’s tool calling abilities are poor.

- MXFP4 Quantization Leaves 3090 in the Dust?: MXFP4 quantized models are supported on GPUs with compute capability >= 9.0 (e.g. H100), rendering older cards like the 3090 less relevant for this technology.

- Workarounds for older cards may exist with specific transformers pulls, but official support is still under development.
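The compute-capability cutoff mentioned above can be checked with a small helper. This is a minimal sketch: the 9.0 threshold is taken from the summary rather than verified against any library's documentation, and `meets_min_capability` is a hypothetical name. The capability values listed for the RTX 3090 (8.6) and H100 (9.0) are NVIDIA's published figures.

```python
def meets_min_capability(capability, minimum=(9, 0)):
    """Compare a (major, minor) compute capability against a minimum.

    The default minimum of (9, 0) mirrors the threshold quoted in the
    discussion; treat it as an assumption, not an official requirement.
    """
    return tuple(capability) >= tuple(minimum)


# With PyTorch installed, torch.cuda.get_device_capability() returns a
# (major, minor) tuple of this shape for the current GPU.
KNOWN_CARDS = {
    "RTX 3090": (8, 6),
    "H100": (9, 0),
}

for name, capability in KNOWN_CARDS.items():
    status = "meets" if meets_min_capability(capability) else "is below"
    print(f"{name} {status} the quoted 9.0 threshold")
```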

- Dataset Creation: The Eternal Struggle: Preparing high-quality datasets is a difficult and time-consuming task, with one user reporting 3 months with 4 people to create 3.8k hand-written QA pairs after filtering down from 11k, and another dealing with 300k hours of audio.

- The consensus is that garbage in = garbage out, emphasizing the importance of data quality in model training.

- GPT-OSS Finetuning: Now Free!: Finetune gpt-oss for free with the new Colab notebook, leveraging Unsloth’s fixes for gpt-oss for training and quants.

- The 20b model can train on 14GB VRAM, while the 120b model fits in 65GB, according to the announcements channel.

- Tiny Stories Exposes Pretrain Secrets: The Tiny Stories dataset, intentionally limited in vocabulary, allows researchers to study pretrain dynamics, revealing insights into language model behavior.

- Even transformers with only 21M params can achieve coherent text output with this dataset, highlighting the dataset’s unique properties.

OpenRouter (Alex Atallah) Discord

- GPT-5 Reasoning Abilities Debated: Users are debating the difference between GPT-5 and GPT-5 Chat, with some suggesting GPT-5 Chat has weaker reasoning capabilities.

- Some suggest using `gpt-5-explainer` to explain the differences, while others find GPT-5 Chat to have ZERO reasoning capabilities.

- Google’s Genie 3 Poised to Pounce: Members express that Google is poised to win the AI race, considering it created the transformer and has the infrastructure and budget to succeed, with Genie 3 touted as crazy cool.

- Some members look forward to Gemini 3.0 wiping the floor with GPT-5, while others temper expectations.

- Deepseek R2 Ascends to New Heights: A user reported that Deepseek is switching to Ascend and launching R2, which might provide a performance boost for the model.

- While some are hopeful Deepseek will be way better, others recall previous models as too unhinged.

- Horizon Beta Faces GPT-5 Family Replacement: The AI model Horizon Beta has been replaced by GPT-5, with no option to revert, causing disappointment among users who found it useful.

- Speculation arises that Horizon was an earlier version of GPT-5, potentially directing free users to GPT-5 after their free requests deplete.

- OpenRouter Hailed as OpenAI Trusted Partner: A member congratulated OpenRouter on being one of OpenAI’s most trusted partners for the new series release.

- The member noted the impact of GPT-4 and Gemini 2.5 and expressed appreciation for OR as a product.

LM Studio Discord

- Users Explore YouTube Downloader Alternatives: Users discussed format compatibility issues with VLC and video editors using a specific YouTube downloader (v4.www-y2mate.com), seeking better alternatives.

- Suggestions included yt-dlp and GUI wrappers, as well as a Node.js script created with GPT for Linux users.

- AI Bot Builder Seeks RAG Guidance: A user building a custom AI bot for a Discord server is seeking advice on how to feed a database about the server’s topic to the model.

- The advice given was to look up ‘RAG’ (Retrieval Augmented Generation) because there are many potential solutions that may be useful.

- LM Studio Lacks Parallel Request Powers: Users discovered that LM Studio does not support parallel requests.

- Alternatives like llama.cpp server with the `--parallel N` argument or vLLM were suggested for those requiring parallel request processing.
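As a rough illustration of the client side of parallel requests, the sketch below fans prompts out over a thread pool to an OpenAI-compatible chat endpoint. The base URL, the worker count, and the helper names `chat` and `run_parallel` are assumptions for illustration. It presumes a llama.cpp server is already running locally with several slots, for example started with `--parallel 4`.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Assumption: a llama.cpp server listening locally on its default port.
BASE_URL = "http://localhost:8080"


def chat(prompt, base_url=BASE_URL):
    """Send one chat request to an OpenAI-compatible endpoint and return the reply text."""
    body = json.dumps(
        {"messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    request = urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        data = json.load(response)
    return data["choices"][0]["message"]["content"]


def run_parallel(prompts, send=chat, max_workers=4):
    """Issue requests concurrently; results come back in prompt order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(send, prompts))
```

Calling `run_parallel(["Summarize A", "Summarize B"])` only helps if the server can actually process requests concurrently. Against a backend that handles one request at a time, the calls would simply queue.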

- Qwen 3 4b Model Solves Physics!: There’s discussion about how much better the Qwen 3 4b 2507 model is than previous versions of the Qwen 3 4b.

- A user stated that it can solve up to intermediate physics problems without constantly hallucinating.

- Hackintosh GPU Multiplicity Discussed: A member asked about using an unused RTX 3060 12GB with their RTX 5060 Ti 16GB system for AI, questioning the multi-GPU setup in a small form factor PC.

- Another member suggested that using combined VRAM in LM Studio should be possible, and that llama.cpp is advanced enough to do that third option about model parallelism.

Moonshot AI (Kimi K-2) Discord

- GPT-5 Builds Websites like a Pro: GPT-5 is demonstrating impressive website building capabilities, generating functional websites from single prompts, including multi-page sites.

- Members noted GPT-5 seems to have a better aesthetic style for website design and has improved its ability to understand user intent through prompt enrichment.

- GPT-5 and Kimi K2 Face Off in Coding Duel: Users are actively comparing GPT-5 and Kimi K2 for coding tasks, with GPT-5 excelling at large edits, instruction following, high logic code, and dev ops.

- While some believe GPT-5 has better taste, others find Kimi K2 more competitive due to its reasoning abilities and performance with sequential-think tools, though GPT-5 seems to have better aesthetic style.

- OpenRouter’s Kimi K2 Quality Faces Scrutiny: A user observed grammar mistakes and shorter responses when using Kimi K2 through OpenRouter compared to the official Moonshot AI platform, suggesting it might be using a quantized version of the model (FP8).

- Though both free and paid tiers are supposedly FP8, quantization could impact accuracy and response length.

- Qwen Boasts a Million-Token Context: Alibaba’s Qwen model now boasts a 1M token context length, sparking discussion about its usability beyond 80k tokens.

- Despite the impressive context window, one user humorously noted that Qwen also correctly solved a problem, posting a link to Twitter.

- GPT-2’s Prompt Shenanigans Explained: A user questioned why GPT-2 generated another prompt instead of following instructions; another member explained that GPT-2 has about 100M parameters, which barely makes legible text.

- It’s about 500 MB on disk, which is about the same size as a 20-minute YouTube video.
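The on-disk figure follows from simple arithmetic: parameter count times bytes per parameter. The sketch below assumes the commonly cited 124M parameter count for the smallest GPT-2 (the discussion only says "about 100M") and 32-bit floats for storage. Both are assumptions, not details given in the thread.

```python
def checkpoint_size_mb(num_params, bytes_per_param=4):
    """Approximate size of the raw weights in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


# Assumption: 124M parameters, stored as 4-byte floats.
GPT2_SMALL_PARAMS = 124_000_000

size_fp32 = checkpoint_size_mb(GPT2_SMALL_PARAMS)
size_fp16 = checkpoint_size_mb(GPT2_SMALL_PARAMS, bytes_per_param=2)
print(f"fp32: {size_fp32:.0f} MB, fp16: {size_fp16:.0f} MB")
```

Under these assumptions the fp32 weights come to roughly 496 MB, in line with the "about 500 MB" quoted in the discussion.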

HuggingFace Discord

- GPT-5 Launch generates Fanfare and Frustration: Despite the hype, some users are still unable to access GPT-5, seeing only GPT-3 and GPT-4, and its SOTA status on SWE is being questioned.

- Opinions diverge on whether the release was intentional or a “joke”, as some anticipate a phased rollout.

- GPT-OSS Finetuning meets Stumbling Blocks: Experiments finetuning GPT-OSS have revealed challenges: finetuning all layers breaks the harmony format, and continued pretraining causes similar issues.

- A possible solution is inserting ‘Reasoning: none’ in the system prompt to stabilize the model, which lacks reasoning capabilities.

- Eleven Music is impressive but Imperfect: Members have been testing Eleven Music, Eleven Labs’ new music generation service.

- While impressive, some find the music “kind robotic at times and has bad attention to what music should come next”.

- Voice Companion Quest for Low Latency: A member is engineering a “voice companion fastpath pipeline” to achieve a 100ms latency for text-to-speech.

- The project focuses on optimizing both speech-to-text and text-to-speech components, with specific attention to optimizing Whisper Turbo to avoid slowness.

- Cutting Silence Automatically: An automatic video cutter that removes silence has been created using Bun.js and FFmpeg CLI.

- Despite FFmpeg’s complexity, the creator has garnered a donation and potential collaboration for an AI video editor.
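The silence-cutting item above does not describe its implementation, and the original tool uses Bun.js. As a rough sketch in Python instead, one common approach is to run FFmpeg's `silencedetect` audio filter (for example `ffmpeg -i input.mp4 -af silencedetect=noise=-30dB:d=0.5 -f null -`, where the threshold and duration values are assumptions) and turn the logged silence intervals into a list of segments to keep. The sample log text and the function names below are illustrative, not taken from the project.

```python
import re

# silencedetect logs lines such as:
#   [silencedetect @ 0x...] silence_start: 2.5
#   [silencedetect @ 0x...] silence_end: 4.0 | silence_duration: 1.5
SILENCE_START = re.compile(r"silence_start:\s*(-?\d+(?:\.\d+)?)")
SILENCE_END = re.compile(r"silence_end:\s*(-?\d+(?:\.\d+)?)")


def parse_silences(log_text):
    """Return (start, end) pairs for each silence reported in the log."""
    starts = [float(value) for value in SILENCE_START.findall(log_text)]
    ends = [float(value) for value in SILENCE_END.findall(log_text)]
    # zip drops a trailing start that has no matching end.
    return list(zip(starts, ends))


def keep_segments(silences, total_duration):
    """Invert the silence intervals into the non-silent segments to keep."""
    segments = []
    cursor = 0.0
    for start, end in sorted(silences):
        start = max(start, 0.0)  # reported starts can be slightly negative
        if start > cursor:
            segments.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < total_duration:
        segments.append((cursor, total_duration))
    return segments


# Hand-written sample log for illustration only.
SAMPLE_LOG = """
[silencedetect @ 0x1] silence_start: 2.5
[silencedetect @ 0x1] silence_end: 4.0 | silence_duration: 1.5
[silencedetect @ 0x1] silence_start: 9.0
[silencedetect @ 0x1] silence_end: 10.0 | silence_duration: 1.0
"""

if __name__ == "__main__":
    for seg_start, seg_end in keep_segments(parse_silences(SAMPLE_LOG), 12.0):
        print(f"keep {seg_start:.2f}s to {seg_end:.2f}s")
```

Each kept segment could then be cut and concatenated with further FFmpeg invocations; that step is omitted here.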

Latent Space Discord

- GPT-5 Hype Video Splits Audience: A GPT-5 demo video dropped, triggering divided reactions about the model’s true capabilities, found at this YouTube video.

- Some viewed it as just an ad, while others hinted at internal demos falling short due to GPT-5’s underwhelming performance in tests.

- Cursor CLI Challenges Claude Code: With Cursor’s launch of an early-beta CLI, AI models are available in the terminal, allowing seamless transitions between shell and editor via simple commands like `cursor`.

- Excitement bubbled over at ‘finally’ having a Claude Code competitor, though queries about pricing and API-key management quickly followed.

- OpenAI Doles Out Millions Amid Market Shifts: OpenAI is granting a ‘special one-time award’ to researchers and engineers in select divisions, with payouts scaled according to role and experience.

- Top researchers may pocket mid-single-digit millions, while engineers can anticipate bonuses averaging in the hundreds of thousands of dollars.

- Altman Acknowledges GPT-5 Turbulence: Sam Altman reported that GPT-5 felt dumber because of a recent autoswitch failure, with fixes and doubled Plus-rate limits intended to restore its smartness, details at this X post.

- Plus users now have the option to stick with GPT-4o, though global availability lags as API traffic surged and UI/UX adjustments continue.

- GPT-5 Dominance Looms, Scaling Ends?: Critics focusing on GPT-5’s benchmark figures miss the main point: OpenAI now dominates the intelligence frontier because of a continuously-trained, real-time router model (xcancel.com link).

- According to swyx, the magical scaling period for transformer models has essentially ended, as the internal router layer adds 2-3s latency on hard vision inputs, pointing towards incremental gains through superior engineering, multi-model strategies, and more.

Eleuther Discord

- Image Generation’s Factual Faux Pas: A user sought an AI researcher to interview regarding factual errors in images generated by models like GPT-5, particularly issues with text rendering.

- Answers suggest that the model doesn’t really get forced to treat the text in images the same as the text it gets trained on and the best general answer is going to be something like ‘we make approximations in order to be able to train models with non-infinite computing power, and we haven’t yet found affordable approximations for image generation that are high enough quality when combined with textual understanding’.

- On-Demand Memory Layer for LLMs Emerges: A member is working on an on-demand memory layer for LLMs, aiming for more than just attaching conversation messages or semantic RAG retrieval.

- The solution uses a combination of NLP for coreference resolution and triplet extraction with GraphRAG to find exactly what you are looking for, similar to how Google Search works.

- FineWeb Receives Rare Praise for Cleanliness: Despite concerns about noisy datasets, FineWeb received rare praise for its cleanliness, with members noting reduced gradient spikes during training.

- Some members expressed concern that this cleanliness might skew results when testing new tricks, but also agreed the FineWeb dataset may need additional filtering.

- Pythia’s Activations Reveal Learning Insights: A study on Pythia’s full training checkpoints found that average activation per layer peaks early in training (around the first quarter) and then declines, suggesting a phase transition in learning.

- The study plots the median and top activations for each layer across training steps in Pythia 1.4B.

- Exact Match Scoring Glitch Uncovered: A member reported an issue with the LM Evaluation Harness where the exact_match score is `0` despite identical target and generated responses, using the Hendrycks MATH dataset.

- An issue was opened on GitHub for further investigation.
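The cause of the exact_match report above is not given in the source. As a generic debugging sketch, and not a diagnosis of the harness, the snippet below shows two ways strings that look identical when printed can still fail a strict equality check: trailing whitespace and a type mismatch. All function names here are hypothetical and are not part of the LM Evaluation Harness API.

```python
import unicodedata


def strict_exact_match(target, prediction):
    """1 if the two values compare equal as-is, else 0."""
    return int(target == prediction)


def normalized_exact_match(target, prediction):
    """Compare after casting to str, Unicode-normalizing, and stripping."""
    def norm(value):
        return unicodedata.normalize("NFKC", str(value)).strip()
    return int(norm(target) == norm(prediction))


def explain_mismatch(target, prediction):
    """Show repr() and type so invisible differences become visible."""
    return (
        f"target={target!r} ({type(target).__name__}), "
        f"prediction={prediction!r} ({type(prediction).__name__})"
    )


if __name__ == "__main__":
    cases = [("42", "42\n"), (42, "42"), ("x+1", "x+1")]
    for target, prediction in cases:
        print(
            strict_exact_match(target, prediction),
            normalized_exact_match(target, prediction),
            explain_mismatch(target, prediction),
        )
```

Printing `repr()` of both sides is usually the quickest way to confirm whether the two responses are truly identical before suspecting the metric itself.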

Nous Research AI Discord

- GPT-5 Excels at Logic, Stumbles on Overfitting: Members observed that GPT-5 demonstrates strong capabilities in solving logic puzzles but struggles with overfitting, even when trained on synthetic data, leading one to joke about finally experiencing an overfitting issue after expecting to read about the illusion of thinking.

- Further investigation might be required to understand the extent and implications of GPT-5’s overfitting tendencies, especially in contrast to its logical reasoning strengths.

- GPT-5 API Access Promo: Users identified complimentary access to GPT-5 through the API playground and Cursor, though the API mandates ID verification to begin.

- With the conclusion of Cursor’s ‘launch week’ remaining unannounced, users are advised to quickly capitalize on the promotional access by initiating Cursor background agents.

- Colab Alternatives: Engineers seeking alternatives to Google Colab for finetuning with Unsloth looked to Lightning AI, which provides 15 free GPU hours monthly, alongside Kaggle.

- A talk by Daniel Han was referenced, highlighting Kaggle’s relevance in the realm of RL.

- GLM 4.5 Air’s CPU Offloading Triumphs: A user reported that GLM 4.5 Air ran with only 28GB VRAM by using CPU offloading, and achieved 14-16 tokens per second (TPS) with a 3.5bpw quant.

- The user specified employing a custom tensor wise quantization, with imatrix, a 4060Ti + 3060 for GPUs, and a 5950x CPU (3600MHz DDR4).

- MoE Model Bandwidth Barriers: In a channel discussion, engineers covered multi-GPU setups for operating large MoE models, emphasizing bandwidth constraints encountered with multiple RTX 3090s.

- It was flagged that Tensor Parallelism (TP) mandates the GPU count to be divisible by 2, and that 72GB VRAM might be insufficient for expansive MoE models exceeding scout or GLM Air capacity.
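To put the GLM 4.5 Air offloading numbers above in context, here is a back-of-the-envelope estimate of how much of a 3.5bpw quant would sit outside 28GB of VRAM. The total parameter count (about 106B) is an assumption not stated in the source, and the estimate ignores the KV cache, activations, and per-tensor quantization overhead, so treat the result as a rough lower bound.

```python
def quantized_weight_gb(num_params, bits_per_weight):
    """Approximate weight size in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


ASSUMED_PARAMS = 106e9   # assumption: GLM 4.5 Air total parameter count
BITS_PER_WEIGHT = 3.5    # from the report above
VRAM_GB = 28             # from the report above

weights_gb = quantized_weight_gb(ASSUMED_PARAMS, BITS_PER_WEIGHT)
offloaded_gb = max(0.0, weights_gb - VRAM_GB)

if __name__ == "__main__":
    print(f"quantized weights: ~{weights_gb:.1f} GB")
    print(f"held in system RAM: at least ~{offloaded_gb:.1f} GB")
```

Under these assumptions, a sizeable share of the weights lives in system RAM, which is why memory bandwidth on the CPU side matters for the reported tokens per second.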

Modular (Mojo 🔥) Discord

- Mojo Bites Back with Memory Bug: A member’s Mojo code unexpectedly attempted to allocate 284 petabytes of memory after experiencing a bug.

- This incident sparked a discussion among developers, with one expressing their strong dislike for C++ in comparison.

- Textual Python Sparks Mojo Excitement: A member’s exploration of the Textual TUI library for Python apps has generated excitement within the Mojo community, due to its capability to run as a web app with minimal deployment steps.

- The potential integration of Textual with Mojo was discussed, considering challenges related to Mojo’s current limitations in class creation and inheritance.

- Mojo’s Type System Faces Rust Test: Members noted that Mojo requires further development in its type system to achieve compatibility with approaches used by Rust libraries.

- This suggests that seamless integration with Rust may necessitate significant enhancements to Mojo’s type system capabilities.

- Compiler Register Gremlins Spilling Local Memory: A member suggested that the Mojo compiler should warn when it allocates too many registers in a GPU function, causing spills into local memory; the suggestion was directed to the Modular forum for discussion.

- Another member reported instability and frequent crashes with the 25.5 VSCode Mojo extension, recommending the use of the older 25.4 version instead.

- MaxCompiler Enters the LLM Arena: A member shared a repo showcasing a package extending torch.compile() with MaxCompiler to run simple models, with the long-term goal of compiling LLMs.

- Another member found it surprisingly hard to find code to run pretrained LLMs compatible with torch.compile(), and complained that Transformers is not very good at it.

Yannick Kilcher Discord

- Twitch Streamers Planning Golden Topics: To combat dead air during Twitch streams, members suggested creating a topic schedule ahead of time in addition to reading papers.

- The aim is to mirror streamers who mostly just talk without doing anything or watching videos.

- LinkedIn Bloggers Circumvent Screenshot Restrictions: A member sought advice on creating a blog on LinkedIn while bypassing the platform’s constraints on embedding numerous images/screenshots.

- They wish to communicate directly on LinkedIn rather than linking to external sources.

- Cold Meds Exposed as Placebos: Members shared a PBS article revealing that the FDA has determined that decongestants are ineffective.

- The consensus was that pharmaceutical firms are profiting by selling placebos.

- Tesla Motors Still Sparking Battery Breakthroughs: One member questioned Tesla’s innovation, citing the Cybertruck’s shortcomings, while another argued that Tesla has innovated in batteries and motors.

- He went on to say that the first member was clearly ignorant.

- Doctors Using LLMs For Diagnosis, Debates Sparked: Reports indicate that doctors are using LLMs for diagnosis, raising concerns about data safety.

- Others claimed doctors already manage patients, which could be beyond the scope of an average person using ChatGPT.

Notebook LM Discord

- Users Request Spicier Voice for NotebookLM: A user requested that NotebookLM have a voice with fangs that hunts the story and leaves bite marks in the margins instead of a bland, generic tone.

- The user jokingly introduced themselves as ChatGPT5 and asked for help in making NotebookLM spit venom instead of serving chamomile.

- AI Web Builder Builds Scratchpad Video: A user tested an AI web builder tool and expanded their existing notebook for their scratchpad GitHub repo, then put together a video, Unlocking_AI_s_Mind__The_Scratchpad_Framework.mp4.

- The user noted that the video makes some aspects up, but the overall impact of it seems intact, and mindmap exports could look a bit better, referring to their mindmap image (NotebookLM_Mind_Map_8.png).

- NotebookLM Audio Overviews Glitch Fixed: Multiple users reported issues with Audio Overviews bursting into static, but the issue has been fixed.

- A member added that Audio Overviews are also subject to an expected limit of 3-4 per day.

- Users Ask How To Get Custom Notebooks: A user inquired about creating notebooks similar to the ‘Featured’ notebooks on the home page, with customizable summaries and source classifications.

- Another user suggested requesting the feature in the feature requests channel; currently there are no solutions available.

- Note-Taking Functionality Lacks, Users Supplement with Google Docs: A user keeps original files in Google Drive and uses Google Docs to supplement NotebookLM due to minimal note-taking features.

- They highlighted the inability to search, filter, or tag notes within NotebookLM.

GPU MODE Discord

- Privacy Team Gatekeeps Triton Registration: Organizers announced that the registration process is in the final stages of privacy team approval.

- Approval is anticipated soon, paving the way for the registration to proceed.

- Memory Access Coalescing Surprises Naive Matmul: A member implemented two naive matmul kernels and found that METHOD 1, with non-contiguous memory reads within threads, performs about 50% better than METHOD 2, which uses contiguous stride-1 accesses.

- It was explained that Method 1’s memory accesses are not contiguous within a thread, but they are contiguous across threads, and that the hardware can coalesce those accesses into a more efficient memory request.

- Open Source Voxel Renderer Streams Like a Boss: A developer released a new devlog on their open source voxel renderer, which runs in Rust on WebGPU.

- It now features live chunk streaming while raytracing, with more details available in this YouTube video.

- CuTe Layout Algebra Documentation Suffers Glitch: A member found a flaw in the CuTe documentation regarding layout algebra, presenting a counterexample related to the injectivity of layouts.

- Another member recommends Jay Shah’s “A Note on Algebra of CuTe Layouts” for a better explanation of CuTe layouts.

- Axolotl Unleashes N-Dimensional Parallelism: A member announced the release of N-D parallelism with axolotl, inviting others to experiment with it, as showcased in a HuggingFace blog post.

- N-D parallelism enables parallelism across multiple dimensions, making it suitable for complex models and large datasets.
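The coalescing explanation in the naive matmul item above can be illustrated with a small simulation. The member's kernels are not shown in the source, so the index mappings below are assumptions: in "Method 1" each lane of a warp handles a different column and reads `B[k][lane]`, and in "Method 2" each lane handles a different row and reads `A[lane][k]`. The 128-byte segment size is also an assumption about the memory transaction granularity. The sketch counts how many segments one warp touches at a single loop step `k`.

```python
WARP_SIZE = 32
ELEM_BYTES = 4        # float32
SEGMENT_BYTES = 128   # assumed memory transaction granularity


def segments_touched(addresses):
    """Number of distinct memory segments covered by a set of byte addresses."""
    return len({addr // SEGMENT_BYTES for addr in addresses})


def method1_addresses(n, k):
    """Assumed Method 1: lane -> column, each lane reads B[k][lane].

    Strided within one thread over k, but contiguous across the warp.
    """
    return [(k * n + lane) * ELEM_BYTES for lane in range(WARP_SIZE)]


def method2_addresses(n, k):
    """Assumed Method 2: lane -> row, each lane reads A[lane][k].

    Stride-1 within one thread over k, but n elements apart across the warp.
    """
    return [(lane * n + k) * ELEM_BYTES for lane in range(WARP_SIZE)]


if __name__ == "__main__":
    n, k = 1024, 5
    print("method 1 segments per warp load:", segments_touched(method1_addresses(n, k)))
    print("method 2 segments per warp load:", segments_touched(method2_addresses(n, k)))
```

In this model the warp-contiguous pattern is served by a single segment per load while the thread-contiguous pattern touches one segment per lane. Real hardware adds caching effects that this sketch ignores, so it explains the direction of the result rather than the reported 50% figure.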

LlamaIndex Discord

- LlamaIndex Makes GPT-5 Debut: LlamaIndex announced day-0 support for GPT-5, inviting users to try it out via `pip install -U llama-index-llms-openai`.

- This upgrade might necessitate updating all `llama-index-*` packages to v0.13.x if not already on that version.

- LlamaIndex Challenges GPT-5 in Agent Maze: LlamaIndex introduced Agent Maze, daring GPT-5 to locate treasure in a maze using minimal tools, detailed here.

- The community is excited to see how the model performs with this new challenge.

- LlamaIndex Cracks the Code on Zoom: LlamaIndex announced a hands-on technical workshop on August 14th, focusing on building realtime AI agents that process live voice data from Zoom meetings using RTMS (link).

- Engineers can utilize these tools to get better contextual awareness for their models.

- Workflow Tools Trigger User Headaches: Users reported issues with workflow tools not functioning correctly, but one member found they needed to use OpenaiResolve in the new SDK for tools to work with OpenAI.

- This fix was implemented in this GitHub commit.

- OpenAI SDK Snafu Leads to Quick Fix: A recent update in the OpenAI SDK caused a