Sorry for the late post; DeepSeek’s official post was quite late.

AI News for 8/19/2025-8/20/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (229 channels, and 6600 messages) for you. Estimated reading time saved (at 200wpm): 517 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

As discussed yesterday, DeepSeek followed up their characteristic model release with a remarkably low key tweet and blogpost which released their official messaging and evals:

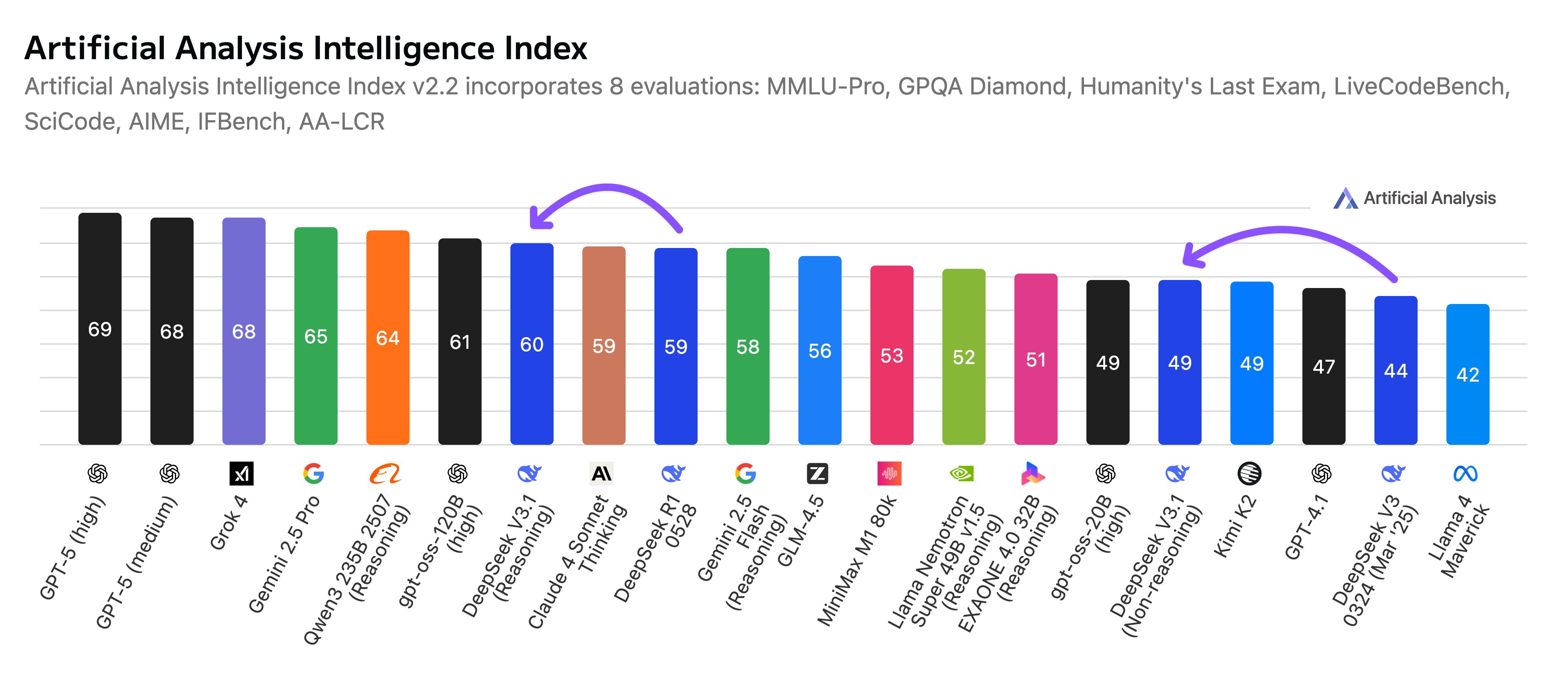

The standard knowledge benchmark bumps are incremental:

but there are important improvements in coding and agentic benchmarks that make it more useful for agents.

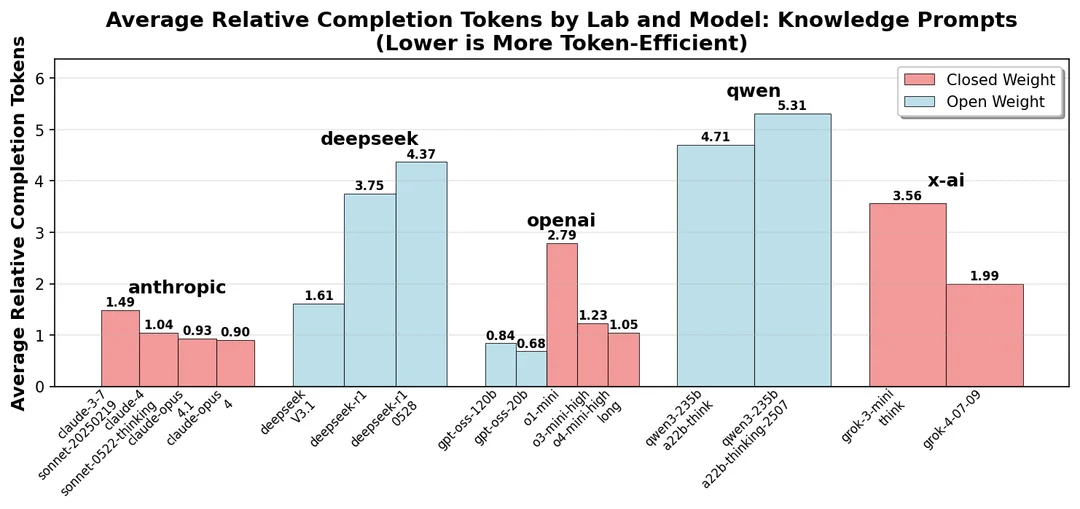

However, the major story may be even more subtle: token efficiency improvements!

The Reddit dissection of DSV3.1 is particularly strong, so just scroll on down.

AI Twitter Recap

China’s open models and agents: DeepSeek V3.1, ByteDance Seed‑OSS 36B, Zhipu’s ComputerRL

- Community reports indicate a quiet DeepSeek‑V3.1 rollout (an “Instruct” variant surfaced with 128K context), with no model card initially and signs the lab may be merging “thinking” and “instruct” lines for simpler serving. Early, mixed anecdotes: on a small “LRM token economy” reasoning set V3.1 is “on par with Sonnet 4” for logic puzzles but shows regressions on some tasks vs R1, and tends to “yap” on easy knowledge questions, suggesting post‑training that could be tightened. See discussion threads from @teortaxesTex, @rasbt, follow‑ups, and release‑cycle color on time zones here.

- ByteDance released the permissive Seed‑OSS 36B LLM on Hugging Face with claimed long‑context, reasoning, and agentic capabilities, though initial community feedback called out thin docs/model card at launch. See @HuggingPapers and reaction @teortaxesTex.

- Zhipu AI introduced ComputerRL: an end‑to‑end RL framework for computer‑use agents unifying API tool calls with GUI (the “API‑GUI paradigm”), trained with distributed RL across thousands of desktops. An AutoGLM 9B agent achieves 48.1% success on OSWorld, reportedly beating Operator and Sonnet 4 baselines on that benchmark. Paper and results: @Zai_org, follow‑up, thread. Zhipu also pushed GLM‑4.5 access via TensorBlock Forge (@Zai_org).

Coding agents and developer tooling

- GitHub is shipping the Copilot coding agent “everywhere” across GitHub via a global launcher, issues, and VS Code (@lukehoban). Microsoft’s VS Code team also rolled out Gemini 2.5 Pro in Code (@code) and updated GPT‑5 agent prompts in Insiders (@burkeholland). Anthropic launched Claude Code seats for Team/Enterprise with spend controls and terminal integration (@claudeai, @_catwu).

- Cline released a free, opt‑in “Sonic” coding model in alpha to power multi‑edit workflows; usage feeds improvement. Details and quick start: @cline, blog, provider. The team is also supporting an AI fintech hackathon (@inferencetoken).

- Open‑source fine‑tuning and local runs are accelerating: Together AI added SFT for gpt‑oss‑120B/20B (@togethercompute); Baseten’s Truss CLI was used for multinode 120B training (@winglian); and llama.cpp ran gpt‑oss‑120B on an M2 Ultra with GPQA 79.8% and AIME’25 96.6% (@ggerganov). The ggml ecosystem added a Qt Creator plugin (@ggerganov).

- Infra/serving notes: Hugging Face “Lemonade” enables local HF models on AMD Ryzen AI/Radeon PCs (@jeffboudier). Cerebras is now an HF inference provider serving 5M monthly requests (@CerebrasSystems). Modal published a deep dive on why they rebuilt infra without k8s/Docker for AI iteration speed (@bernhardsson).

Agent training and RL: scaling recipes that matter

- Chain‑of‑Agents (AFM): train a single “agent foundation model” via multi‑agent distillation + agentic RL to simulate collaboration while cutting inference tokens by 84.6% and generalizing to unseen tools; SOTA‑competitive with best‑of‑n test‑time scaling. Code/models: @omarsar0, paper link, meta.

- Depth‑Breadth Synergy (DARS) for RLVR: corrects GRPO’s bias towards mid‑accuracy samples by up‑weighting hard cases with multi‑stage rollouts; large‑batch “breadth” further boosts Pass@1. Code + paper: @iScienceLuvr.

- MDPO for masked diffusion LMs: train under inference‑time schedules to close the train–infer divide; claims 60× fewer updates to match prior SOTA and big gains on MATH500 (+9.6%) and Countdown (+54.2%), plus a training‑free remasking (RCR) that further lifts results (@iScienceLuvr).

- Practical RL tidbit: some async RL pipelines hot‑swap updated weights mid‑generation without resetting KV caches; despite stale KVs, they still work tolerably well in practice (@nrehiew_).

Benchmarks, evaluation quality, and systems scaling

- Evaluation design: AI2’s “Signal and Noise” proposes metrics/recipes to build higher‑signal, lower‑noise benchmarks that produce more reliable model deltas and better scaling‑law predictions; dataset/code released (@iScienceLuvr).

- Live evals: FutureX is a dynamic, daily‑updated benchmark for agents doing future prediction, avoiding contamination via automated question/answer pipelines; on finance tasks, top models reportedly beat sell‑side analysts on a non‑trivial fraction of tasks (@iScienceLuvr, context).

- Hardware/software: a useful thread quantifies H100 performance/power/cost improvements from software over two years and touches GB200 reliability considerations (@dylan522p). On kernels, fast MXFP8 MoE implementations are landing (@amanrsanger).

- SWE‑bench agents: randomly switching LMs across turns (e.g., GPT‑5 vs Sonnet 4) can outperform either model alone (@KLieret).
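The per-turn model switching described in the SWE‑bench item can be sketched as a small agent loop. This is a minimal illustration under stated assumptions, not the setup from the referenced thread: the `Model` type, `run_with_random_switching`, and the two stand-in models are hypothetical names, and uniform sampling per turn is an assumed policy.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical type: a "model" is any callable that maps the conversation
# history to the next agent message. Real LM clients would be wrapped to fit.
Model = Callable[[List[str]], str]


def run_with_random_switching(
    models: Sequence[Model],
    task: str,
    max_turns: int,
    rng: random.Random,
) -> List[str]:
    """Run an agent loop, sampling which model acts on each turn."""
    history = [task]
    for _ in range(max_turns):
        model = rng.choice(models)  # uniform pick per turn (an assumption)
        history.append(model(history))
    return history


# Stand-in models so the sketch runs without any API access.
def model_a(history: List[str]) -> str:
    return f"A:{len(history)}"


def model_b(history: List[str]) -> str:
    return f"B:{len(history)}"


transcript = run_with_random_switching(
    [model_a, model_b], "fix the failing test", max_turns=4, rng=random.Random(0)
)
print(transcript)
```

Both models see the same shared history, so whichever model is sampled on a given turn continues from the other's previous steps.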

Vision and multimodal editing: Qwen Image Edit takes the crown

- Qwen‑Image‑Edit is now the #1 open model on LM Arena for image editing (Apache‑2.0), debuting at #6 overall alongside proprietary baselines. It integrates cleanly with ComfyUI and shows strong identity/lighting preservation; the team also shipped quick patches post‑release. See leaderboard, ComfyUI node, relighting demo, patch, and HF trending (@multimodalart). A lightx2v LoRA shows 8‑step edits at ~12× speed with comparable quality (@multimodalart).

- Space weather foundation modeling: IBM and NASA open‑sourced Surya, a ~366M‑parameter transformer trained on years of Solar Dynamics Observatory data for heliophysics forecasting; models are on Hugging Face (@IBM, @huggingface, overview).

Product velocity and usage: Perplexity scale-up, Claude Code in orgs, GPT‑5 UX split, Google’s AI phones

- Perplexity usage and features: now handling 300M+ weekly queries (3× in ~9 months), shipping Price Alerts for Indian stocks, and testing SuperMemory and a Max Assistant mode that runs long‑horizon research tasks in‑context (@AravSrinivas, alerts, SuperMemory, Max Assistant).

- Claude Code arrives for Team/Enterprise with seat management and spend controls, bridging ideation in chat and implementation in terminals (@claudeai, @_catwu).

- GPT‑5 capability vs UX: OpenAI promoted rapid product build‑outs with GPT‑5 (@OpenAI), while @SebastienBubeck shared a striking claim that GPT‑5‑pro produced and verified a new bound in convex optimization (follow‑up proof sketch; signal boost). In contrast, @jeremyphoward reported poor “Thinking/Auto” mode UX on the web app, highlighting the gap between raw capability and product reliability.

- Google’s Pixel 10 family launched with Tensor G5 + Gemini Nano on‑device, Gemini Live visual guidance (camera sharing with on‑screen highlights), and AI‑assisted video generation in the Gemini app (@Google, @madebygoogle, video gen).

Top tweets (by engagement)

- GPT‑5‑pro proves a new math bound (claim + proof sketch) — ~3.7k

- “100× productivity claims are delusional” rant — ~3.4k

- Figure’s Helix walking controller demo (blind RL walking) — ~3.6k

- OpenAI: GPT‑5 makes building easy (product demo) — ~1.9k

- Perplexity: 300M weekly queries — ~2.5k

- Raven Kwok’s generative system generalization — ~1.3k

- Alec Stapp on battery share of CA peak demand — ~2.0k

- Google: Pixel 10/Tensor G5/Gemini Nano launch — ~2.2k

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. DeepSeek V3.1 Updates, Efficiency and Head-to-Head Benchmarks

- GPT 4.5 vs DeepSeek V3.1 (Score: 394, Comments: 144): Bar charts claim DeepSeek V3.1 outperforms GPT‑4.5 on a coding-style pass-rate benchmark (71.6% vs 44.9%) while being far cheaper for the same workload ($0.99 vs $183.18). The post implies a massive cost–performance gap, but provides no benchmark name, task mix, token counts, or pricing assumptions, making the methodology unclear and hard to reproduce. Commenters argue the comparison is mismatched: GPT‑4.5 is positioned as conversation/creative-focused rather than code/agent use, suggesting it should be compared on writing tasks, while GLM/DeepSeek should be tested on coding; others question fairness and transparency in closed‑source vs closed‑source claims and ask for apples‑to‑apples baselines (e.g., similar‑scale OSS models).

- A commenter argues GPT-4.5 is optimized for human-like dialogue and prose rather than code or agentic tool use, citing hands-on tests via LMSYS Arena. They note it’s strong at explanation/summarization/creative writing but “not made for aider polygot” (i.e., not tuned for coding workflows like Aider), while GLM is positioned as stronger for coding; hence evaluations should span both coding and creative writing to avoid task-specific bias.

- Methodology concerns: commenters caution against opaque closed-source comparisons and suggest an apples-to-apples matchup with an open-source ~120B model for scale parity. They imply fair benchmarks should disclose prompt templates, system settings, and whether tool-use/agents were enabled to ensure reproducibility and avoid cherry-picking across differently specialized models.
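To make the size of the claimed gap concrete, the quoted figures can be turned into a cost ratio and a cost per pass-rate point. This is a rough sketch that takes the post's numbers at face value; the benchmark and pricing assumptions are undisclosed, and the `results` layout and `cost_per_point` helper are illustrative names only.

```python
# Figures as quoted in the post; the benchmark and pricing assumptions
# behind them are not disclosed, so treat the outputs as illustrative.
results = {
    "DeepSeek V3.1": {"pass_rate": 71.6, "cost_usd": 0.99},
    "GPT-4.5": {"pass_rate": 44.9, "cost_usd": 183.18},
}


def cost_per_point(entry: dict) -> float:
    """Dollars spent per percentage point of pass rate."""
    return entry["cost_usd"] / entry["pass_rate"]


# How many times more expensive the GPT-4.5 run was for the same workload.
cost_ratio = results["GPT-4.5"]["cost_usd"] / results["DeepSeek V3.1"]["cost_usd"]

# Absolute pass-rate difference in percentage points.
pass_rate_gap = results["DeepSeek V3.1"]["pass_rate"] - results["GPT-4.5"]["pass_rate"]

for name, entry in results.items():
    print(f"{name}: ${cost_per_point(entry):.4f} per pass-rate point")
print(f"cost ratio: {cost_ratio:.1f}x, pass-rate gap: {pass_rate_gap:.1f} points")
```

On these inputs the cost ratio comes out at about 185 to 1, which is why commenters press for the missing token counts and pricing details before accepting the comparison.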

- Deepseek V3.1 improved token efficiency in reasoning mode over R1 and R1-0528 (Score: 203, Comments: 16): A community benchmark (LRMTokenEconomy) indicates DeepSeek V3.1 improves reasoning mode token efficiency over R1 and R1-0528, notably reducing “overthinking” on knowledge and math prompts by producing shorter CoT while maintaining correctness. The evaluator still observes occasional very long chains on logic/brain-teaser style puzzles, suggesting heuristic limits remain for complex deductive tasks. One commenter notes decoding-time controls on “thinking” can further improve accuracy, pointing to an example approach: https://x.com/asankhaya/status/1957993721502310508. Other remarks are non-technical acknowledgments.

- Reducing unnecessary words/formatting in the reasoning trace can be directly optimized via RL reward shaping, e.g., adding a penalty for verbose chain-of-thought or extraneous markup to compress tokens without sacrificing solution quality. There’s also speculation that different experts are activated during “thinking” in MoE-style setups; this could increase VRAM requirements for local users while being acceptable for hosted infra if compute orchestration is the main constraint. This suggests two levers for token efficiency: policy-level reward penalties and architecture-level expert routing/gating during reasoning.

- Inference-time control of “thinking” can improve accuracy while limiting tokens, as noted with this example: https://x.com/asankhaya/status/1957993721502310508. Techniques include constraining step counts, imposing per-question token budgets, or guided/self-consistency sampling to prune low-value reasoning tokens, often complementary to training-time penalties and applicable across models without retraining.

- A key open question raised: does the improved token efficiency hold at accuracy parity with R1/R1-0528, or is there a correctness trade-off? For robust comparisons, results should report accuracy metrics (e.g., pass@1) alongside average reasoning-token counts per benchmark so efficiency can be evaluated at fixed quality levels.
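The reporting suggestion from the thread (accuracy alongside average reasoning-token counts) can be sketched as a small aggregation. This is a hedged illustration, not the LRMTokenEconomy code: the `Sample` record, `efficiency_report`, the model names, and all sample values below are invented for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Sample:
    model: str
    correct: bool          # whether the single attempt was right (pass@1)
    reasoning_tokens: int  # tokens spent in the reasoning trace


def efficiency_report(samples: List[Sample]) -> Dict[str, Dict[str, float]]:
    """Per model: pass@1 and mean reasoning tokens over the same prompts."""
    by_model: Dict[str, List[Sample]] = {}
    for s in samples:
        by_model.setdefault(s.model, []).append(s)
    return {
        name: {
            "pass_at_1": mean(1.0 if s.correct else 0.0 for s in rows),
            "mean_reasoning_tokens": mean(s.reasoning_tokens for s in rows),
        }
        for name, rows in by_model.items()
    }


# Invented numbers, purely to exercise the function.
samples = [
    Sample("model-a", True, 1200),
    Sample("model-a", False, 3000),
    Sample("model-b", True, 800),
    Sample("model-b", True, 1000),
]
report = efficiency_report(samples)
for name, row in report.items():
    print(name, row)
```

Reporting both columns side by side lets a reader check whether a shorter trace came at the cost of correctness, which is the open question raised in the comments.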

- Understanding DeepSeek-V3.1-Base Updates at a Glance (Score: 190, Comments: 23): DeepSeek released DeepSeek‑V3.1‑Base with core architecture largely unchanged from V3 (e.g., same vocab size) but adds a new hybrid mode with a togglable “thinking” capability and updated tokenizer `added_tokens` (expanded placeholder/functional tokens) inferred from config/tokenizer diffs. Community tests suggest improved coding performance and higher ranking versus V3, though the official model card/benchmarks aren’t yet published; the image aggregates these deltas plus download links. Commenters note some reported Aider scores were from a Chat model (not Base) and that Base isn’t typically exposed via API; they emphasize V3.1‑Base is a completion model without a chat template, and are awaiting OpenRouter updates to rerun benchmarks.

- Clarification that the reported “Aider score” was measured using a Chat variant rather than the advertised Base model, which can skew expectations of raw base capability. One commenter notes the provider doesn’t expose the Base via API (low demand), implying benchmarks labeled as “Base” may actually reflect instruction-tuned/chat behavior rather than true pretraining-only performance.

- Counterpoint emphasizes that if this is truly a Base model (e.g., DeepSeek-V3.1-Base), a chat template should not be used because it hasn’t been SFT’d for chat; it should be treated as a plaintext completion model. Applying chat-specific system/user/assistant formatting could degrade outputs or invalidate comparisons versus genuine chat/instruct models.

- A benchmarker is waiting for OpenRouter to update the endpoint before re-running tests, highlighting that provider/version lag can materially impact scores and reproducibility. Benchmark results should specify the exact endpoint/model variant and provider (e.g., OpenRouter) used to avoid conflating Base vs Chat and pre-/post-update behaviors (https://openrouter.ai/).

2. New Open-Source Model Launches: IBM/NASA Surya and ByteDance Seed-OSS-36B

- IBM and NASA just dropped Surya: an open‑source AI to forecast solar storms before they hit (Score: 275, Comments: 50): IBM and NASA announced Surya, an open‑source heliophysics foundation model pre‑trained on years of Solar Dynamics Observatory (SDO) imagery to learn transferable solar features for zero/few‑shot fine‑tuning on tasks like flare probability, CME risk, and geomagnetic indices (Kp/Dst). The release (weights + training recipes) targets modest‑compute adaptation via SDO preprocessing and LoRA/adapters, with evaluation encouraged via lead‑time vs. skill metrics on public benchmarks and stress‑tests on extreme events. Relative to current space‑weather approaches, namely physics‑based MHD/propagation models (e.g., WSA‑Enlil: https://www.swpc.noaa.gov/models/wsa-enlil-solar-wind-prediction), empirical/statistical baselines, and task‑specific CNN/RF models, the claimed contribution is broad pretraining on SDO (https://sdo.gsfc.nasa.gov/) for better transfer and accessibility; rigorous head‑to‑head skill and cost comparisons remain to be shown. Comments flag the missing link and question whether this is hype, asking what was used before and whether simple linear/empirical models can match performance; they call for evidence of concrete improvements over operational baselines and clarity on novelty vs. prior CNN/statistical methods.

- A commenter challenges the technical novelty and justification of Surya, asking how it improves over pre-LLM baselines and whether simpler models (e.g., linear/logistic regression) could match performance at lower cost. They request clear, comparative benchmarks and ablations versus established methods, and clarity on predictability vs stochasticity of flare events, rather than marketing claims. They reference the repo but note the lack of structured evidence showing gains in the specific tasks (flare and solar wind forecasting).

- Another user focuses on real-time deployment, proposing a Gradio app driven by “recent” solar imagery for the repo’s tasks (24 hr solar flare forecasting and 4‑day solar wind forecasting) but reports difficulty sourcing live inputs: https://github.com/NASA-IMPACT/Surya?tab=readme-ov-file#1-solar-flare-forecasting and https://github.com/NASA-IMPACT/Surya?tab=readme-ov-file#3-solar-wind-forecasting. They note the Hugging Face datasets used by Surya only reach 2024 (e.g., https://huggingface.co/datasets/nasa-ibm-ai4science/surya-bench-flare-forecasting) and some are broken (e.g., https://huggingface.co/datasets/nasa-ibm-ai4science/SDO_training), hindering real-time replication and inference with current data.

- Seed-OSS-36B-Instruct (Score: 153, Comments: 26): ByteDance’s Seed Team released Seed-OSS-36B-Instruct (HF), a ~36B param, Apache-2.0 LLM trained on ~12T tokens with native 512K context, emphasizing controllable reasoning length (“thinking budget”), strong tool-use/agentic behaviors, and long-context reasoning. They also provide paired base checkpoints to control for synthetic instruction data in pretraining: Seed-OSS-36B-Base (augmented w/ synthetic instructions, reported to improve most benchmarks) and Seed-OSS-36B-Base-woSyn (without such data) to support research on instruction-data effects. Commenters highlight the model’s native 512K context as possibly the longest among open-weight models with a practical memory footprint, contrasting it with 1M+ context models (e.g., MiniMax-M1, Llama) that are too large, and noting Qwen3’s 1M via RoPE but only 256K native. There’s also discussion that incorporating synthetic instruction data during pretraining materially boosts benchmark performance, with the w/o-synthetic variant valued for uncontaminated foundation-model research.

- Model card notes two base variants: one augmented with synthetic instruction data and one without. Quoting: “Incorporating synthetic instruction data into pretraining leads to improved performance on most benchmarks… We also release `Seed-OSS-36B-Base-woSyn`… unaffected by synthetic instruction data.” This gives users a choice between potentially higher scores from synthetic-instruction-augmented pretraining and a “clean” pretraining distribution for downstream SFT or analysis. Links: Seed-OSS-36B-Base, Seed-OSS-36B-Base-woSyn.

- Claimed native 512K context window for a 36B dense model, positioning it as one of the largest “native” context open-weights with a practical memory footprint. Commenters contrast this with models advertising 1M+ via RoPE extrapolation (e.g., Qwen3 with 1M but 256K native) or massive models like MiniMax-M1/Llama where resource needs are prohibitive; a native window can avoid quality drop-offs seen in extrapolated RoPE regimes. This matters for retrieval-heavy or long-document tasks without resorting to aggressive chunking or special caching tricks.

- Reported benchmarks and features: AIME24 91.7, AIME25 84.7, ArcAGI V2 40.6, LiveCodeBench 67.4, SWE-bench Verified (OpenHands) 56, TAU1-Retail 70.4, TAU1-Airline 46, RULER 128k 94.6. Coverage suggests strong math, coding, tool-use/agent, and long-context retention, with RULER 128k indicating robust long-range attention. It also advertises controllable “reasoning token length,” implying an inference-time knob to limit or extend reasoning tokens for latency/quality trade-offs.

3. Indie Open-Source Innovations: Mobile AndroidWorld Agent and TimeCapsuleLLM (1800s London)

- We beat Google Deepmind but got killed by a chinese lab (Score: 1206, Comments: 148): A small team open-sourced an agentic Android control framework that performs real on-device interactions (taps, swipes, typing) and reports state-of-the-art results on the AndroidWorld benchmark, surpassing prior baselines from Google DeepMind and Microsoft Research. Last week, Zhipu AI released closed-source results that slightly edge them out for the top spot; in response, the team published their code and is developing custom mobile RL gyms to push toward ~100% benchmark completion. Repo: https://github.com/minitap-ai/mobile-use Top comments are supportive of open-source, recommending community-building as a competitive moat and noting many seminal OSS efforts began with tiny teams; one observer remarks the demo appears fast.

- Several commenters probe how an app can control a phone (esp. iPhone) without rooting; the practical route is OS-sanctioned automation layers rather than arbitrary event synthesis. On iOS, cross‑app control typically uses Apple’s UI testing stack (XCTest) and derivatives like Appium’s WebDriverAgent that run a developer‑signed automation runner to query the accessibility tree and inject taps/typing; no jailbreak is needed, but you cannot ship this in an App Store app and it requires provisioning entitlements (XCTest UI Testing, WebDriverAgent). On Android, agents rely on an AccessibilityService (with `BIND_ACCESSIBILITY_SERVICE`) to read the UI and perform gestures, often paired with MediaProjection for screen capture; root is not required but user consent and Play policy compliance are mandatory (AccessibilityService, MediaProjection).

- On use cases: the tool enables end‑to‑end QA/RPA on real devices (e.g., navigating login flows, handling OTPs, changing settings, or orchestrating tasks across third‑party apps) and accessibility augmentation. Typical stacks expose a WebDriver-like API via Appium (iOS via WebDriverAgent; Android via UiAutomator2/Accessibility), letting an AI agent launch apps, tap, type, and read accessibility labels; however, some system dialogs and privileged settings remain out of reach due to OS sandboxing and entitlement limits (Appium docs, UiAutomator2).

- My LLM trained from scratch on only 1800s London texts brings up a real protest from 1834 (Score: 190, Comments: 35): OP trained several LLMs entirely from scratch on a curated corpus of 7,000 London-published texts from 1800–1875 (5–6 GB), including a custom tokenizer trained on the same corpus to minimize modern vocabulary; two models used nanoGPT (repo) and the latest follows Phi-1.5-style training (phi-1_5), with no modern data or fine-tuning. Given the prompt “It was the year of our Lord 1834,” the model generated text referencing a London “protest” and “Lord Palmerston,” which OP notes aligns with documented 1834 events, implying the model learned period-specific associations rather than only stylistic mimicry. Code/dataset work is shared at TimeCapsuleLLM, with plans to scale to ~30 GB and explore city/language-specific variants. Top comments are broadly enthusiastic, framing the approach as a compelling, DIY way to surface historical zeitgeist directly from primary sources; no substantive technical critiques or benchmarks were discussed.

- Proposal to train diachronic and regional variants: build separate models on cumulative corpora up to successive cutoffs (e.g., 100 AD, 200 AD, …) and also region-specific subsets. This would enable measuring semantic drift and dialectal variation by aligning embeddings across checkpoints (e.g., Orthogonal Procrustes), tracking vocabulary frequency shifts, and comparing perplexity on temporally out-of-domain test sets. It also supports transfer studies by testing a model trained to 1800 vs 1900 on predicting 1830s events to quantify temporal generalization.
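The perplexity comparison proposed in the comments reduces to a short formula: the exponential of the average negative log-likelihood per token. The sketch below assumes per-token natural-log probabilities have already been obtained from a model; the `perplexity` helper and the sample values are hypothetical and do not come from TimeCapsuleLLM.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# Sanity check: a model that assigns probability 1/4 to every token
# behaves like a uniform choice among four options.
uniform_quarter = [math.log(0.25)] * 10
print(perplexity(uniform_quarter))

# Invented log-probs standing in for in-period and out-of-period text.
in_period = [-1.0, -1.2, -0.8, -1.1]
out_of_period = [-2.5, -3.0, -2.2, -2.8]
print(perplexity(in_period), perplexity(out_of_period))
```

A model trained only on text up to a cutoff would be expected to show higher perplexity on later text, and the size of that gap is one way to quantify the temporal generalization the commenter describes.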

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Unitree and Boston Dynamics Humanoid Robot Updates

- Boston Dynamics shares new progress (Score: 399, Comments: 186): Boston Dynamics, in collaboration with Toyota Research Institute, showcases progress on Large Behavior Models (LBMs) for the Atlas humanoid, training end-to-end, language‑conditioned neural policies that map natural‑language commands directly to coordinated whole‑body behaviors for long‑horizon manipulation sequences. The demo emphasizes closed‑loop control that maintains task execution under physical perturbations while dynamically reorienting the body to grasp, transport, and place multiple objects, indicating improved policy generalization across extended tasks. Video: Getting a Leg up with End-to-end Neural Networks | Boston Dynamics (YouTube). Top comments highlight the system’s robustness to human‑induced disturbances and its dynamic whole‑body repositioning during manipulation; others contextualize the achievement by noting the remaining gap to human‑level dexterous hands.

- Several commenters highlight the system’s robustness to external perturbations during manipulation and locomotion, noting it maintains balance and task progress while being pushed or having the target moved. This implies strong disturbance rejection via closed-loop state estimation, whole-body control, and compliant/impedance behaviors that let it re-plan foot placement and end-effector trajectories in real time.

- The demo underscores limitations of current end-effectors versus the human hand’s dexterity, strength-to-weight, and tactile feedback. Observers note the robot compensates by dynamically repositioning its whole body to achieve favorable approach angles and grasps, reflecting a design tradeoff where whole-body motion and control sophistication offset lower gripper DOF and sensing compared to human hands.

- Viewers infer tight perception-control integration for dynamic object interaction, likely involving real-time object pose tracking and visual servoing to follow a moving box while preserving stability margins. The ability to smoothly adapt body posture and arm trajectory suggests model-predictive or whole-body trajectory optimization running at interactive rates to reconcile manipulation objectives with balance constraints.

- Unitree are teasing their next humanoid (Score: 196, Comments: 36): Unitree teased its next humanoid with a silhouette image listing `31 joint DOF (6*2 + 3 + 7*2 + 2)` and `H:180`, implying 6-DOF per leg (x2), a 3-DOF torso, 7-DOF arms (x2), and a 2-DOF head on a 180 cm-tall platform. The teaser emphasizes “Agile” and “Elegant” and says “Coming Soon,” but includes no actuator, hand, sensing, or benchmark details. Source: Unitree’s post on X: https://x.com/UnitreeRobotics/status/1957800790321775011. Comments note the likely height translation as 180 cm (≈5′11″); the rest are non-technical/jokes.

- Unitree G1, the winner of solo dance at WHRG, wears an AGI tshirt while performing (Score: 306, Comments: 80): Unitree’s G1 humanoid won the solo dance category at WHRG, performing an articulated, untethered routine while wearing an “AGI” T‑shirt, likely using pre‑scripted/teleop choreography rather than onboard high‑level planning, but still demonstrating strong whole‑body balance and articulation in a compact form factor. Video: v.redd.it/nw2jrlios5kf1; platform details: Unitree G1. Commenters debate whether the demo is a “scripted/teleop fake‑out” versus meaningful progress: skeptics see little AI, while others highlight the value of demonstrated actuation, stability, and self‑powered operation for future embodied intelligence, cautioning not to over‑attribute progress to the AI side.

- Key technical debate: the routine likely relied on pre-scripted or teleoperated choreography rather than on-board autonomy, but it still showcases robust whole‑body articulation, balance, and trajectory tracking under self-contained power. This indicates mature low-latency control loops, good state estimation/IMU fusion, and high torque density in a compact, non‑bulky package—valuable foundations that an autonomous stack could leverage later. The caution is not to conflate such demos with progress in high-level “AI” or planning; they primarily validate mechanics and control, not cognition.

- Several commenters frame the result as progress in embodiment (the “body”) rather than cognition (the “mind”). In other words, the demonstrated stability and precision are meaningful steps for embodied intelligence, but the “AGI” branding overstates the software side; without evidence of online perception, planning, or adaptation, the achievement is best viewed as hardware/control maturity rather than AI capability.

- Humanoid robots are getting normalized on social media right now (Score: 200, Comments: 61): Post notes a surge of humanoid-robot short videos (Reels/TikTok), suggesting a coordinated normalization push versus organic growth, with examples like IG’s rizzbot_official and public sightings in Asia/Austin. The image shows a friendly-faced sidewalk robot being filmed by a passerby—an HRI/PR tactic (approachable digital face, clumsy/dorky persona) to reduce perceived risk and job-threat anxieties; no hardware/model specs or technical benchmarks are discussed. Comments split between recommender-driven exposure—“the algorithm decided you’re someone who likes…”—and deliberate soft-power branding to preempt public backlash; a joke about “sidewalks” nods at urban-space and access concerns.

- Feed saturation is likely due to platform recommender systems (collaborative filtering/engagement-optimized ranking) rather than an organic, population-wide spike. The algorithm “decided that you’re someone who likes watching videos of humanoid robots,” indicating personalization effects and feedback loops: watch a couple, get many more — not evidence of broad normalization.

- Multiple users flag that “Clanker” appeared “out of nowhere all at once,” suggesting possible coordinated seeding/astroturfing rather than organic meme growth. Technical indicators would be synchronized posting times, reused captions/hashtags, and rapid initial engagement from low-history accounts, which can inflate perceived momentum without real grassroots interest.

- Clear distinction raised between novelty exposure and genuine normalization: “novelty =/= normalization.” Short-form spikes can reflect curiosity bias optimized by ranking systems, whereas normalization would require longitudinal signals (repeat exposure without drop-off, stable positive sentiment, downstream behaviors) rather than raw impression counts.

- Unrealistic (Score: 3960, Comments: 56): Satirical post/meme referencing the Terminator 2 arc where a tech creator destroys their invention upon learning it could doom humanity, framing that as “unrealistic” today. Contextually it comments on contemporary AI risk, corporate incentives, and regulation discourse (e.g., Sam Altman publicly urging AI regulation) rather than offering technical data or benchmarks. Comments debate founder incentives (e.g., bunkers vs. shutdown), and note skepticism toward current AI capabilities alongside cynicism about regulatory theater/regulatory capture when leaders call for oversight.

- Several comments implicitly touch on the regulatory-capture debate: Sam Altman publicly advocated for licensing and safety standards for frontier models in U.S. Senate testimony, which some view as “begging to be regulated” while critics argue current model capability doesn’t justify existential-risk framing. See the May 2023 hearing “Oversight of A.I.: Rules for Artificial Intelligence” (https://www.help.senate.gov/hearings/oversight-of-ai-rules-for-artificial-intelligence). This raises a technical governance question: where to set capability thresholds for licensing and evals without entrenching incumbents.

- A correction notes he’s an employee, not a founder—important for governance and control. In structures like OpenAI’s capped-profit model, the nonprofit board formally controls the for-profit entity (https://openai.com/blog/our-structure), changing who can make unilateral decisions about pausing/shuttering products and creating different incentive and accountability dynamics than founder-led companies.

- On catastrophic-risk posture, the contrast between “building bunkers” vs. destroying/pausing products highlights how major labs operationalize risk via evals and preparedness rather than personal contingency plans. Examples include OpenAI’s Preparedness and red-teaming initiatives (https://openai.com/blog/preparedness) and Anthropic’s Responsible Scaling Policy with AI Safety Levels (ASL-1–ASL-4) (https://www.anthropic.com/news/ai-safety-levels), which define capability thresholds, eval protocols, and gating mitigations before scaling.

2. Image Edit Model Benchmarks and Workflows (Qwen, WAN 2.2, Image Edit Arena)

- Move away, guys… Someone’s coming (Score: 175, Comments: 31): Leaderboard snapshot of an “Image Edit Arena” benchmarking image-editing models via head‑to‑head votes, reporting rank, score with confidence intervals, vote counts, organization, and license. Proprietary models (e.g., OpenAI’s gpt-image-1 and Black Forest Labs’ flux-1-kontext-pro) occupy the top positions with the highest scores, ahead of various open‑source entries. Commenters hype a model nicknamed “Nano Banana” as delivering notably strong results, though another user asks what it is, indicating some ambiguity about which listed model that nickname refers to.

- Commenters attribute consistently high-quality outputs to a model referred to as “Nano Banana,” implying it has a recognizable output signature. However, no concrete benchmarks or model identifiers are provided; the thread would benefit from specifics like exact checkpoint/LoRA names and quantitative metrics (e.g., FID, CLIPScore) to substantiate performance claims.

- A request for a “3D ftx output” suggests interest in direct 2D-to-3D model capabilities and export to common 3D formats (likely FBX/GLTF). This points to a workflow gap: most image models output 2D images, so producing riggable meshes would require a text-to-3D pipeline (e.g., NeRF or Gaussian Splatting to mesh) or a native 3D diffusion/generative geometry model.

- One user asks why xAI’s Grok isn’t on the referenced list, possibly expecting inclusion of Grok-1.5V (multimodal). This raises ambiguity about the list’s modality scope (LLMs vs. image models) and evaluation criteria, suggesting the need to clarify which models and benchmarks are in scope.

- Simple multiple images input in Qwen-Image-Edit (Score: 341, Comments: 51): Post demonstrates multi-image conditioning in Qwen-Image-Edit: (1) clothing/style transfer from a mannequin to a subject combined with scene relocation to a Paris street café, and (2) compositing two subjects into an embrace while preserving specific attributes (hairstyles and hair color). A separate workflow schematic is shared for reference (workflow screenshot). Commenters report strong prompt adherence but weak photorealism (“plastic skin” and loss of detail), suggest trying a different sampler/preset (“res_2s/bong”) for better skin realism, and note that quality may require a finetune or LoRA to improve.

- Several users suggest benchmarking Qwen-Image-Edit against alternative pipelines like “res_2s/bong,” reporting much better skin detail/realism with that setup. A controlled A/B (same prompt/seed) would help quantify texture retention (pores, fine hair) and reduce the “plastic skin” artifact observed in Qwen-Image-Edit outputs.

- There’s a clear trade-off noted: strong prompt adherence from Qwen-Image-Edit, but poor image fidelity (detail erasure, waxy skin) reminiscent of older-gen models. Commenters propose a targeted finetune or LoRA trained on high-quality portrait datasets to improve microtexture and realism without sacrificing adherence.

- On workflow reproducibility, one commenter shared a full ComfyUI graph JSON for quick import, demonstrating that copy/paste of the workflow takes under a minute: https://pastebin.com/J6pz959X. Another asks for a “screenshot-to-workflow” feature (e.g., reconstructing graphs from images like https://ibb.co/VYm716L7), highlighting a potential tool gap (OCR/graph parsing) despite current JSON export/import convenience.

- Wan 2.2 Realism Workflow | Instareal + Lenovo WAN (Score: 264, Comments: 33): Author shares a WAN 2.2 photorealism workflow that blends two LoRAs—Instareal (link) and Lenovo WAN (link)—with “specific upscaling tricks” and added noise to enhance realism; the node graph is provided via Pastebin (workflow). Emphasis is on LoRA stacking/tuning for WAN 2.2 and post/late-stage detail via upscale+noise rather than base model changes. Top comments request technical specifics on the upscaling pipeline (ComfyUI vs external tools like Topaz/Bloom) and how to source all required files from the workflow graph, indicating interest in reproducibility and integration details.

- Upscaling workflow details: One commenter asks how the OP handles upscaling with this WAN 2.2 realism setup, whether it’s done inside Comfy or exported to external tools like Topaz or “Bloom,” and what yields better detail preservation at 2x–4x. They’re looking for practical implementation specifics (node choices vs. external batch processing) to maintain realism after generation.

- Workflow assets and dependencies: A user requests where to obtain all required files referenced in the pipeline, implying multiple components (e.g., model checkpoints, LoRAs like “Instareal,” and any custom nodes/configs) are needed to reproduce the results. Clarification on exact versions and download sources is needed to make the workflow reproducible.

- Model scope clarification: Another commenter believes WAN is primarily for creating movie clips, seeking clarity on whether WAN 2.2 in this workflow is being used for still images, video, or both. This raises questions about the model’s intended use cases and any settings or constraints when repurposing it for photorealistic stills.

- Some random girl gens with Qwen + my LoRAs (Score: 211, Comments: 46): OP showcases AI-generated portraits (“girl gens”) using Qwen combined with custom LoRAs; no specific model variant, sampler, or hardware details are provided. A commenter requests performance metrics—“What is the generation time though?”—but no timings or system specs are given in-thread. Another commenter shares their own workflow/models with links: HuggingFace Danrisi/Lenovo_Qwen and Civitai model 1662740. Discussion trends toward reproducibility and performance (inference time) requests, while another user contributes alternative assets/workflows; no benchmarking data or implementation specifics are reported.

- A commenter requests concrete inference performance, specifically generation time per image. No timings, steps, sampler, or hardware details are provided, so latency/throughput and reproducibility remain unquantified.

- The OP links their workflow/models, indicating image generations done with Qwen + custom LoRAs and providing artifacts on Hugging Face and Civitai: https://huggingface.co/Danrisi/Lenovo_Qwen and https://civitai.com/models/1662740. These resources likely include the LoRA weights and pipeline details needed to replicate the setup (prompts, resolution, scheduler, and negative prompts, if documented).

- Another commenter asks which trainer was used for the LoRAs (e.g., kohya-ss, Diffusers/Accelerate, DreamBooth variants), a key detail affecting VRAM usage, training stability, and final quality. The thread does not specify the trainer or hyperparameters (steps, lr, batch size, rank, alpha), so the exact training regimen remains unclear.

- Editing iconic photographs with editing model (Score: 273, Comments: 35): Thread showcases an image editing model applied to “iconic photographs” (e.g., an Apollo moon landing shot), with commenters assessing edit fidelity/realism; however, the linked gallery is access-gated (HTTP 403) so the actual media can’t be independently verified (reddit link). Model identity is unspecified in-post. Technically minded commenters note high perceptual quality but flag a historical/camera inconsistency in the first image (“shot by a Nikon”), and ask whether the tool is “Kontext or qwan?”—suggesting ambiguity between editing frameworks or model families (e.g., Qwen). Non-technical virality speculation about the moon-landing edit is also present.

- One commenter asks whether the editing was done with Kontext or qwan (likely referring to Alibaba’s Qwen), indicating interest in the exact editing model used and its image-editing capabilities. This implies a comparison of diffusion or vision-language editing pipelines and what strengths they bring to photorealistic edits of iconic photos.

- Feedback on the National Geographic edit notes high photorealism but frustration that the subject’s eyes remain obscured, highlighting a common limitation in generative edits: handling occlusions and reconstructing small facial details without uncanny artifacts. This suggests the model likely prioritized global realism and texture coherence over reconstructing fine ocular detail, a trade-off often seen in face edits.

- A user asserts the first original frame was shot on a Nikon, pointing to provenance details (camera make) that can affect color science and grain profiles when evaluating edit realism. It underscores that ground-truth capture characteristics may bias how convincing model outputs appear.

- GPT-5 has been surprisingly good at reviewing Claude Code’s work (Score: 443, Comments: 100): OP outlines a planning→implementation→review workflow: use Claude Code (Sonnet 4) for code generation, use Traycer to produce an implementation plan (Traycer appears to wrap Sonnet 4 with prompt/agent scaffolding), then feed the produced code back to Traycer where GPT‑5 performs a plan‑vs‑implementation check, i.e., a “verification loop” that reports covered items, missing scope, and newly introduced issues. This contrasts with reviewers like Wasps/Sourcery/Gemini Code Review that comment on a raw git diff without feature context; tying review to an explicit plan improves signal. Reported costs: ~$100 for Claude Code access plus ~$25 for Traycer. Commenters echo the split: GPT‑5 excels at planning/analysis/debugging but is weak at directly writing code, so they pair it with Sonnet 4 for implementation; another adds a Claude Code test agent to run unit tests before a final GPT‑5 pass. One raises transparency concerns about Traycer (e.g., GitHub sign‑in) but notes it covers most of what they were building with local prompt files.

- Practitioners report a division-of-labor: GPT-5 for planning/reviews/debug analysis and Anthropic’s Claude (e.g., Claude Code, “Sonnet 4”) for implementation. One notes: “GPT-5 is great at everything BUT writing code… it struggles to implement its own plan,” so they route all coding to Claude while keeping GPT-5 for spec/review to maximize quality.

- An end-to-end pipeline cited: GPT-5 drafts concepts/specs → Claude Code implements → a Claude Code test agent runs unit tests → GPT‑5 performs a final codebase check. This review loop reportedly “works very well,” providing guardrails against regressions/hallucinations while automating ~80% of a homegrown prompt-driven workflow (e.g., Obsidian prompt files; tools like Traycer aim to cover most of this).

- Hallucination/control-drift remains a risk: a user recalls Codex being asked to build a JS‑framework app but emitting Python commands. Hence the inclusion of automated unit tests (via the Claude Code test agent) and a final GPT‑5 audit to detect such mismatches; without these checks, GPT-5 can still hallucinate despite strong planning/review performance. See background on Codex: OpenAI Codex.

- “Built with Claude” Contest from Anthropic (Score: 192, Comments: 44): Anthropic announced a community “Built with Claude” contest on r/ClaudeAI, reviewing all posts with the “Built with Claude” flair through the end of August and selecting the top 3 entries based on upvotes, discussion, and overall merit; each winner receives $600 in either Claude Max subscription credit or API credits. Submissions must be original builds using Claude (e.g., Claude.ai, Claude app, Claude Code/SDK) and should include technical build details (prompts, agents, MCP servers/workflows like the Model Context Protocol), plus screenshots/demos; see the official rules here. Moderators welcome increased Anthropic engagement while committing to maintain independent performance reports and the community’s voice. Commenters asked about entry barriers (e.g., subreddit karma limits) and one reported non-receipt of a prior $600 reward from a “Code with Claude” event, raising fulfillment/support concerns.

- A commenter showcases a Claude-assisted project, the “RNA cube,” which assigns a decimal value to each RNA codon to enable integer-based arithmetic on genetic data and clusters amino acids into 4 chemically distinct classes. They provide a live site at https://biocube.cancun.net, with additional resources including a 3D visualization and a variant analysis batch tool; the community hub is at https://www.reddit.com/r/rnacube. They credit Claude’s ability to reason about complexity as instrumental in the framework’s design.

- The same commenter flags a potential tooling quirk: asking Claude to fetch/comment on their site may return a stale snapshot due to a “non updating cache”. As a workaround, they recommend cache-busting by appending a query param (e.g., https://biocube.cancun.net/index.html?id=100) to ensure the latest version is retrieved.
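
The cache-busting workaround in the last comment can be sketched in a few lines of Python. This is only an illustration: the helper name `add_cache_buster` is hypothetical, the `id` parameter simply mirrors the commenter’s example URL, and whether a given fetcher actually honors a changed query string is an assumption.

```python
from urllib.parse import urlparse, parse_qsl, urlencode, urlunparse


def add_cache_buster(url: str, value: str, param: str = "id") -> str:
    """Return `url` with `param=value` set in its query string.

    Changing `value` on each request yields a URL that a cache keyed on
    the full URL has not seen before (assumed cache behavior).
    """
    parts = urlparse(url)
    # Keep any existing query parameters, then set/overwrite the buster.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query[param] = value
    return urlunparse(parts._replace(query=urlencode(query)))


print(add_cache_buster("https://biocube.cancun.net/index.html", "100"))
# https://biocube.cancun.net/index.html?id=100
```

In practice the value would change per request (a counter or timestamp), since reusing the same value would hit the same cached entry again.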

- Agent mode is so impressive (Score: 237, Comments: 231): OP reports that an autonomous “agent mode” (claimed as GPT‑5) can execute end‑to‑end web tasks like grocery shopping using user‑specified constraints (dietary preferences, budget, brand preferences), effectively performing human‑style site navigation and checkout. Commenters surface reliability limits: agents often fail on highly dynamic, script‑heavy sites and may abandon flows, reverting to asking the user for structured inputs (e.g., insurance quotes) when DOM/state handling or anti‑automation blocks break the workflow. Debate centers on the UX substrate: some argue agents will remain error‑prone while forced to parse human UIs, predicting a shift toward machine‑readable agent interfaces that exchange raw data, potentially reshaping e‑commerce and web architecture, while others express low trust in agents for critical tasks, calling current results “MySpace era.”

- Agents remain brittle when forced to navigate consumer web UIs: dynamic DOMs, heavy client-side rendering, CSRF flows, cookie walls, and bot-detection make step-by-step automation unreliable. A proposed path is agent-native interfaces (raw JSON/data exchange rather than HTML), which could re-architect ecommerce and machine-to-machine interactions, akin to moving past the “MySpace era” toward a machine-readable web.

- A real-world task (collecting insurance quotes) failed because sites were “too dynamic,” leading the agent to give up and request manual inputs. This underscores current limitations around multi-step forms, async JS, embedded widgets/iframes, and anti-automation measures (e.g., captchas), suggesting low determinism until providers expose stable APIs or dedicated agent endpoints.

- Workarounds and scoped successes: one user exposed a local NAS chat service to the web and supplied credentials so the agent could self-serve answers, reducing messages under small limits via authenticated tool-use. Another achieved an end-to-end shopping flow (spec -> body measurements/wardrobe context -> stock checks -> cart prefill -> human approval), indicating agents can perform reliably in constrained domains with stateful planning, session persistence, and access to authenticated/internal tools.

- Is AI bubble going to burst. MIT report says 95% AI fails at enterprise. (Score: 218, Comments: 208): Thread asks if an “AI bubble” will burst, citing an MIT report claiming 95% of enterprise AI initiatives fail. Commenters argue LLMs are genuinely useful in assistive, human-in-the-loop settings, but attempts to fully automate and replace human judgment are failing in production and won’t yield ROI; hype-driven proliferation of low-value “AI tools/microservices” is a primary failure mode. Likely outcome is a dot-com–style correction where overhyped application startups consolidate while major infrastructure/providers persist. Consensus: there is a bubble driven by misuse and unrealistic expectations, not by the core tech’s capability; expect investor losses in over-automation plays, but continued progress and resilience among large AI platforms.

- Human-in-the-loop vs. full automation: Multiple commenters note that attempts to fully replace human decision-making with AI/LLMs in enterprise workflows tend to fail, whereas assistive patterns (AI as a copilot with human oversight/approval) are working. The implied technical reason is reliability/calibration limits of current LLMs for high-stakes, unbounded decisions; successful deployments constrain scope and keep a human in the approval loop to manage edge cases and accountability.

- Concrete SME productivity wins: One small digital company reports saving “tens of thousands” ($10k+) in developer costs by using ChatGPT to build production tools, automate bookkeeping via scripts, draft customer emails, and troubleshoot bugs. This suggests strong ROI for narrowly-scoped, automatable tasks where LLM outputs can be quickly validated or executed within existing scripting/tooling pipelines.

- Market/adoption structure: Commenters argue that the “bubble” risk is concentrated in app-layer startups and overhyped point tools rather than core model providers, analogous to ISPs surviving the dotcom crash. They also note that slow enterprise adoption is often due to organizational bureaucracy and change-management friction rather than model capability limits, implying longer sales/adoption cycles even where technical value exists.

- OpenAI logged its first $1 billion month but is still ‘constantly under compute,’ CFO says (Score: 243, Comments: 51): OpenAI reportedly logged its first >$1B revenue month; CFO Sarah Friar said the company is “constantly under compute”. The OP speculates Microsoft/OpenAI’s proposed “Stargate” hyperscale build-out could partially come online by year‑end to add capacity; reports peg Stargate as a ~$100B supercomputer program aimed at alleviating GPU scarcity (Reuters/The Information). Top comments cite a ~$12B annualized revenue run rate and a reported ~$40B Oracle cloud contract as key inputs to scale and cost, alongside expectations of potential price increases (Reuters on OpenAI-Oracle). Commentary questions sustainability: skepticism over a ~$500B valuation and Sam Altman’s mention of a “new financial instrument” to fund multi-trillion-dollar AI infrastructure, referencing his push to raise trillions for AI chips/data centers (Reuters).

- Compute capacity and cloud dependency: Multiple comments highlight OpenAI is “constantly under compute,” with a claimed ~$40B Oracle deal cited as evidence of aggressive capacity reservations. The implication is training/inference throughput is the binding constraint (GPU supply, datacenter buildouts, power), which can force price increases and prioritization of high-margin workloads to meet SLAs and latency targets.

- Financing mega-capex: Sam Altman’s remark about a “new financial instrument” is read as an attempt to fund multi-trillion-dollar datacenter, chips, and power buildouts. Technically, this suggests structures like long-term capacity offtake agreements, asset-backed securitizations of GPU fleets, or sovereign/infra-backed SPVs to keep capex off the operating entity’s balance sheet; commenters are skeptical about execution risk and cost of capital at that scale.

- Valuation vs. unit economics: A ~$12B ARR from a $1B month contrasted with a $500B valuation implies ~40x+ sales, which only works if gross margins improve materially. Commenters note current COGS is compute-heavy; without cost-per-token reductions (e.g., better batching, model sparsity/distillation, custom silicon) or higher ARPU via pricing tiers/enterprise upsell, the valuation and growth path are hard to justify.
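
The revenue-multiple arithmetic in the valuation comment is easy to check. The sketch below uses only the figures quoted in the thread and assumes the simplest run-rate convention (latest month times 12), which may not match how the commenters or OpenAI define ARR.

```python
# Figures as quoted in the thread (reported, not independently verified).
monthly_revenue = 1e9   # "first $1 billion month"
valuation = 500e9       # ~$500B valuation cited by commenters

# Assumption: annualized run rate = latest month x 12.
annualized = monthly_revenue * 12
multiple = valuation / annualized

print(f"ARR ~ ${annualized / 1e9:.0f}B, valuation/ARR ~ {multiple:.1f}x")
# ARR ~ $12B, valuation/ARR ~ 41.7x
```

That is consistent with the “~40x+ sales” figure in the comment.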

3. Veo-3 AI Video Generation Demos and Guides

- The Art of Simon Stålenhag brought to life with Veo-3 (Score: 272, Comments: 22): A creator animates Simon Stålenhag’s illustrations using Google’s Veo‑3 video model (image‑to‑video), with the primary clip hosted on v.redd.it which returns HTTP 403 without authentication; a still preview is available via preview.redd.it. No prompts, resolution, or runtime settings are disclosed in the thread; the post focuses on showcasing the stylized animation rather than benchmarks or implementation details. Commenters note the fit with Stålenhag’s broader media adaptations (e.g., the Tales from the Loop RPG/show) and praise the soundtrack choice as appropriate for the vibe.

- Everything I learned after 10,000 AI video generations (the complete guide) (Score: 224, Comments: 41): OP (10 months, ~10k generations) shares a Veo-3–centric workflow for scalable AI video: a 6-part prompt template [SHOT TYPE] [SUBJECT] [ACTION] [STYLE] [CAMERA MOVEMENT] [AUDIO CUES], strict “one action per prompt,” and front‑loading key tokens because “Veo3 weights early words more” (author claim). They advocate systematic seed sweeps (e.g., test seeds 1000–1010, build a seed library), negative prompts as always-on QC (--no watermark --no warped face --no floating limbs --no text artifacts --no distorted hands --no blurry edges), and limiting camera motion to one primitive (slow push/pull, orbit, handheld follow, or static). Cost-wise, they note Google’s listed pricing at ~$0.50/s ($30/min) leading to $100+ per usable output when retries are included, and suggest cheaper 3rd-party Veo-3 resellers (e.g., https://arhaam.xyz/veo3/) to enable volume testing. Additional tactics: incorporate explicit audio cues in the prompt, style refs with concrete gear/creators (e.g., “Shot on Arri Alexa”, “Wes Anderson”, “Blade Runner 2049”, “teal/orange”), platform‑specific edits for TikTok/IG/Shorts, and JSON “reverse‑engineering” of viral videos to extract structured parameters for variation. Core strategy: prioritize batch generation, first‑frame selection, platform-tailored variants, and “embrace AI aesthetic” over fake realism to drive engagement. Top comments flag potential undisclosed promotion and stress that many tips are Veo‑3‑specific; for open-weight alternatives, commenters point to WAN‑2.2 guides (unofficial mirror, official doc source). Others agree the systematic, volume‑first methodology generalizes across image/audio/video models, while remaining skeptical of reseller plugs.

- Commenters warn that OP’s prompting patterns are “probably specific to VEO3” and won’t transfer 1:1 to other models. For WAN 2.2, they reference the (unofficial mirror of) examples from the official guide: https://wan-22.toolbomber.com/ and the official manual https://alidocs.dingtalk.com/i/nodes/EpGBa2Lm8aZxe5myC99MelA2WgN7R35y (not viewable in Firefox). Differences in control tokens/keywords and conditioning behavior mean prompts tuned for Veo 3 can underperform on WAN 2.2, so rely on model-specific docs when porting workflows.

- Technical concern about stack choice and reproducibility: Veo 3 is called out as a closed, paid online generator (non–open source), which constrains transparency and self-hosted reproducibility versus open‑weight video models like WAN. This limits portability of prompt recipes and makes it harder to validate or benchmark pipelines outside the provider’s environment.

- Methodology still generalizes: the advice to be systematic (controlled variables, versioned prompts, small ablations) is broadly useful across video/image/music generation. Applying the same evaluation protocol when comparing Veo 3 and WAN 2.2 settings helps ensure reproducible, apples‑to‑apples results.
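
The OP’s six-part template, always-on negative prompts, and seed sweep can be sketched as a small job builder. This is a hypothetical helper, not any Veo-3 API: the comma-joined prompt format, the `Shot` class, the sample field values, and the 8-second clip length are all assumptions for illustration; only the field order, the negative terms, the 1000–1010 seed range, and the $0.50/s price come from the post.

```python
from dataclasses import dataclass

# Negative terms and listed price as quoted in the post.
NEGATIVE_TERMS = ["watermark", "warped face", "floating limbs",
                  "text artifacts", "distorted hands", "blurry edges"]
PRICE_PER_SECOND = 0.50  # USD per generated second


@dataclass
class Shot:
    shot_type: str
    subject: str
    action: str           # one action per prompt
    style: str
    camera_movement: str  # one camera primitive only
    audio_cues: str

    def prompt(self) -> str:
        # Assumed formatting: fields in template order, comma-separated,
        # followed by the always-on negative prompts.
        fields = [self.shot_type, self.subject, self.action,
                  self.style, self.camera_movement, self.audio_cues]
        negatives = " ".join(f"--no {term}" for term in NEGATIVE_TERMS)
        return ", ".join(fields) + " " + negatives


def seed_sweep(shot: Shot, start: int = 1000, end: int = 1010) -> list:
    """One job per seed, same prompt, so seed is the only variable."""
    return [{"seed": s, "prompt": shot.prompt()} for s in range(start, end + 1)]


def sweep_cost(jobs: list, clip_seconds: float) -> float:
    """Total cost of a sweep at the listed per-second price."""
    return len(jobs) * clip_seconds * PRICE_PER_SECOND


shot = Shot("Medium shot", "street musician", "tunes a guitar",
            "shot on Arri Alexa, teal/orange", "slow push in",
            "Audio: distant traffic, soft strings")
jobs = seed_sweep(shot)
print(len(jobs), "jobs,", f"${sweep_cost(jobs, clip_seconds=8):.2f}")
# 11 jobs, $44.00
```

Under these assumptions a single 11-seed sweep of 8-second clips costs $44, which makes the OP’s “$100+ per usable output” figure plausible once a few sweeps are needed per keeper.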

- How can I generate videos like these? (Score: 209, Comments: 55): OP asks how to generate a cozy room-style video; top comment clarifies it isn’t a fully AI-generated video but a composited static image with masked windows/TV screens and overlaid footage via chroma keying in standard NLEs like CapCut or Adobe Premiere Pro. Suggested workflow: create or pick a single background frame, mask display/window regions, then overlay looping videos (e.g., rain, cartoons); a commenter notes a text-to-video model “wan” could synthesize simple background effects (rain), but likely not IP-specific content like Tom & Jerry. The linked Reddit media (v.redd.it/34cc74her1kf1) wasn’t accessible unauthenticated (HTTP 403), so exact content couldn’t be verified. Commenters characterize the method as “fairly basic” compositing; another links an image and remarks that the scene has odd artifacts/choices on closer inspection (preview image).

- The consensus is this isn’t end-to-end video generation but a compositing workflow: generate a static background image, then mask/crop the window/TV areas and overlay pre-existing video via keying/greenscreen in an NLE like Adobe Premiere Pro (https://www.adobe.com/products/premiere.html) or CapCut. For tighter control, use After Effects (https://www.adobe.com/products/aftereffects.html) for planar tracking, masks/rotoscoping, and keying (e.g., Keylight), aligning the inserts with perspective and adding grain/reflections to match the plate.

- One commenter suggests offloading the ambient backdrop to an AI video tool (named “WAN” in the thread) which can plausibly handle simple looping rain scenes, but notes it likely cannot synthesize specific IP content like Tom & Jerry for the in-screen inserts. Practical takeaway: use AI for generic atmospheric elements, then composite licensed or real footage into the masked surfaces.

- It’s coming guys (Score: 340, Comments: 74): Teaser image (via Google Gemini App) for the #MadeByGoogle event happening Aug 20, 2025 at 1pm ET, with the tagline “Ask more of your phone.” The visual subtly shows a “10,” hinting the Pixel 10 lineup and phone-first AI features, not a new frontier model (no specs, benchmarks, or model details provided). Top comments expect Pixel-focused AI integrations and doubt a Gemini 3 reveal; others call out hype, noting this is primarily a phone launch. OP’s edit later mentions an image editing tool announcement, seen as underwhelming relative to the teaser’s hype.

- Several commenters note the announcement is likely about Pixel 10 device-level AI integrations rather than a frontier release like Gemini 3. Expected features are consumer-side tools (e.g., image editing) rather than new model capabilities or training breakthroughs. Technically, this implies incremental UX features on top of existing Gemini models rather than updated benchmarks or architecture details.

- Skepticism centers on conflating a smartphone launch with material AI progress: users point out the teaser is effectively a Pixel 10 ad with Gemini-branded features. From a technical-interest standpoint, commenters note there’s no evidence of novel model releases, on-device model sizes, inference latencies, or privacy/performance trade-off disclosures—key details the community would look for.

- Google’s is horrible at marketing. (Score: 193, Comments: 28): The post centers on a tweet criticizing Google’s product marketing, claiming it leans on celebrity endorsements (e.g., Jimmy Fallon) and staged enthusiasm instead of authentic demos. Commenters highlight the “Gemini teaches you how to frame a photo” segment as a notably cringe example of tone‑deaf product storytelling, reinforcing a perception that Google relies on a “the product sells itself” ethos rather than coherent, user‑centric messaging. Commenters argue Google has a long history of awkward, cringe keynotes—sometimes surpassing Apple—and that skit‑like segments (e.g., the Fallon reference) hurt credibility of features like Gemini’s camera guidance.

- The “Gemini teaches you how to frame a photo” demo was panned for showcasing a low-value use case while omitting implementation details critical to developers: whether real‑time guidance runs on‑device (e.g., Gemini Nano) vs. cloud (Gemini Pro/Ultra), expected latency in the camera viewfinder, and privacy/battery implications of continuous multimodal inference. Reviewers noted the absence of any metrics or constraints, leaving open questions about offline behavior and fallback. See product context: https://ai.google/gemini/

- Several noticed the event skipped Gemini’s roadmap on key surfaces—Google Home/Nest, Android Auto, and Google TV—despite these being prime candidates for assistant replacement and multimodal interaction. Missing details include wake‑word integration, household context/sharing, offline modes, and safety/driver‑distraction constraints for in‑car use. References: https://support.google.com/googlenest/ and https://www.android.com/auto/ and https://tv.google/

- There was interest in how upcoming Pixel Feature Drops will treat older Pixels—what Gemini features (if any) will backport, and which are gated by hardware (Tensor generation, NPU/TPU throughput, RAM). The presentation offered no compatibility matrix, rollout cadence, or minimum device specs, making it hard to plan for app support or user upgrades. Background: https://blog.google/products/pixel/feature-drops/

- OpenAI’s Altman warns the U.S. is underestimating China’s next-gen AI threat (Score: 1288, Comments: 221): Post highlights Sam Altman’s warning that the U.S. is underestimating China’s ability to field next‑gen AI, with the concrete risk being rapid commoditization from state-backed and cost-optimized labs (e.g., DeepSeek releasing near‑frontier models at low cost), which compresses API margins and erodes moats. Commenters frame this as a pricing/performance disruption similar to open/cheap LLMs (e.g., Meta’s Llama 3 family, Google’s Gemini) accelerating catch‑up and reducing differentiation among frontier systems. Top comments argue Altman’s core concern is OpenAI’s lack of a durable moat: DeepSeek’s free/cheap model was “~95%” as good and heavily censored, proving low‑cost near‑parity is viable while limiting adoption. Others voice skepticism about Altman’s credibility (e.g., past GPT‑5 hype vs. reality) while some agree that China’s broad tech execution is being underestimated.

- Several commenters argue that OpenAI’s moat is eroding due to competitors like DeepSeek delivering ~95% of ChatGPT-level capability at a fraction of the cost and even offering access for free. This puts pressure on unit economics (training/inference costs vs. achievable price) and undermines premium pricing for proprietary APIs. They note a practical limiter for Chinese models is heavy content filtering (censorship), which reduces coverage for many use cases despite attractive price-performance.

- Others highlight that rapid investment by Google, Meta, X, and other incumbents has shortened the time-to-parity for foundation models, suggesting capabilities are commoditizing faster than expected. The implication is that frontier advantages decay quickly without sustainable differentiators (e.g., data advantages, deployment/integration moats, or specialized inference optimizations), intensifying pricing pressure and accelerating model churn.

- Oprah to Sam Altman: is AI moving too fast? (Score: 402, Comments: 266): The post shares a short interview clip where Oprah asks Sam Altman whether AI is “moving too fast”; the clip (hosted on v.redd.it) contains no technical discussion—no references to model specs, training/data scale, safety evaluations, deployment timelines, or benchmarks—so there are no extractable technical claims. Access to the video is restricted on Reddit, limiting verification of any nuanced context beyond the prompt-style question. Top comments focus on Altman’s media persona and communication style (e.g., “scripted,” “relatable,” “GPT-like contextual predictor”) rather than technical substance; no meaningful debate on policy, safety metrics, or capability trends is present.

- Honesty is the best response (Score: 13823, Comments: 411): A screenshot shows a purported GPT-5 explicitly abstaining: “I don’t know — and I can’t reliably find out.” Technically, this highlights calibrated uncertainty and refusal-to-answer behavior aimed at reducing hallucinations—i.e., selective prediction/abstention via confidence thresholds, entropy/logit-based criteria, tool-availability checks, or system prompts—though no benchmarks or verification of the model’s identity are provided. The contextual takeaway is an emphasis on reliability-over-coverage: preferring an explicit ‘unknown’ over fabricated answers. Commenters question whether the model is actually well-calibrated (can it detect when it doesn’t know?) while praising abstention as rare for LLMs that often guess; they note this behavior is hard even for humans.

- Core thread theme: whether LLMs can reliably “know when they don’t know” versus hallucinating. Evidence like Anthropic’s Language Models (Mostly) Know What They Know suggests internal uncertainty signals correlate with correctness but remain imperfect under distribution shift; evaluation typically uses calibration metrics (ECE/Brier) and selective prediction coverage–risk curves (paper, calibration). Practically, most instruction-tuned models are overconfident without explicit abstention training or decision thresholds.

- Implementation angles to elicit honest abstention: logprob/entropy thresholds for refusal, self-consistency (agreement across k sampled generations) as an uncertainty proxy, and retrieval-augmented generation (RAG) to ground answers and reduce hallucinations. RLHF/instruction tuning can reward abstention on low-confidence items to shift the accuracy–coverage tradeoff; works like SelfCheckGPT and RAG literature report reduced hallucination rates by trading off coverage (SelfCheckGPT, RAG).

- Verification focus: commenters ask for the original question to validate the claim—best practice is to benchmark on selective QA and plot accuracy vs refusal rate (risk–coverage/AURC). Use datasets like TruthfulQA and open-domain QA, include token-level logprobs (when available) and citations to enable reproducibility and auditability (TruthfulQA, selective classification).
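To make the abstention ideas in this thread concrete, here is a minimal sketch of self-consistency used as an uncertainty proxy, plus the risk–coverage points that commenters suggest plotting. It assumes you already have k sampled answers per question and a correctness label per question; the function names and the 0.6 threshold are illustrative choices of ours, not anything taken from the post or from a specific library.

```python
from collections import Counter


def agreement_confidence(samples):
    """Return (majority_answer, confidence) for k sampled answers.

    Confidence is the share of samples that agree with the majority answer
    after light normalization (strip + lowercase).
    """
    counts = Counter(s.strip().lower() for s in samples)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)


def answer_or_abstain(samples, threshold=0.6):
    """Return the majority answer, or None ("I don't know") below threshold.

    The threshold is a hypothetical default; in practice it would be tuned
    on held-out data to hit a target accuracy-coverage tradeoff.
    """
    answer, confidence = agreement_confidence(samples)
    return answer if confidence >= threshold else None


def risk_coverage(confidences, correct):
    """Return (coverage, risk) points, answering most-confident items first.

    coverage = fraction of questions answered so far
    risk     = error rate among the answered questions
    """
    order = sorted(range(len(confidences)), key=lambda i: -confidences[i])
    points, errors = [], 0
    for n, i in enumerate(order, start=1):
        errors += 0 if correct[i] else 1
        points.append((n / len(order), errors / n))
    return points


def aurc(points):
    """Area under the risk-coverage curve, approximated as the mean risk."""
    return sum(risk for _, risk in points) / len(points)
```

A lower AURC means the confidence signal does a better job of ranking answerable questions ahead of ones the model gets wrong. The same `risk_coverage` helper works if the confidence comes from token logprobs or entropy instead of sample agreement.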

- He predicted this 2 years ago. (Score: 523, Comments: 98): Photo from a 2023 #GOALKEEPERS2030 talk is used to highlight a prediction that “GPT-5 won’t surpass GPT-4.” Commenters note GPT-4’s capabilities have changed significantly since early 2023—adding voice, multimodal I/O and native image gen, tool/browse/computer-control integrations, and better reliability—so comparing “original GPT‑4” to today is misleading; they also argue GPT‑5 is a dramatic step over GPT‑4, though not over OpenAI’s o3 reasoning line. Some cite small open models (e.g., Qwen3‑4B) reportedly matching or beating early GPT‑4 on math/coding benchmarks. Debate focuses on moving goalposts and historical context: whether the prediction targeted model family ceilings vs specific release snapshots; and how much weight to give benchmark claims like Qwen3‑4B ≈ early GPT‑4, which may hinge on task selection and evaluation methodology.

- Commenters contrast 2023 “original GPT-4” with today’s GPT-4/4o/5 stack: early GPT‑4 had 8k/32k context but no built‑in tools (no voice mode, browsing/internet cross‑checking, tool/function calling, native image generation, or computer control) and lacked explicit chain‑of‑thought outputs. They note newer reasoning models like o3 and GPT‑5 have markedly better reliability/reasoning versus early GPT‑4, even if GPT‑5 isn’t an improvement over o3.

- An open‑source comparison claims Qwen 3 4B (CoT), a laptop‑capable ~4B parameter model, hits benchmarks comparable to early GPT‑4 and reportedly exceeds it on math and coding tasks. If accurate, this highlights rapid efficiency gains where small CoT models can match or beat older frontier models on targeted benchmarks.

- A user recounts early GPT‑4 limitations: despite a 32k context option, it had “zero tools” and frequently failed at grade‑school math without external aids. This aligns with the view that later additions (tool use, retrieval/browsing, and multimodal I/O) were pivotal in closing reliability gaps.

AI Discord Recap

A summary of Summaries of Summaries by X.ai Grok-4

Theme 1. Model Mayhem: Releases and Rivalries Rock Leaderboards

- DeepSeek v3.1 Flops Hard in Quality Checks: Users slammed DeepSeek v3.1 for declining quality, blaming slop coded outputs and Huawei GPUs, while defenders pointed to Trump’s tariffs crippling hardware access. Despite hype, it lagged behind predecessors and got outclassed by Kimi K2 in agent tasks, sparking debates on its real-world viability.

- Gemini 2.5 Pro Steals Back Crown from GPT-5: Gemini 2.5 Pro reclaimed the top spot on LMArena, fueling theories of GPT-5’s downfall from downvoting or excessive agreeableness, with Polymarket scores showing Gemini’s edge in speed and free access. Users speculated on Nano Banana as a potential Google disruptor, hyped via Logan K’s tweet, possibly Pixel-exclusive but demanded broadly.

- Qwen Models Crush Benchmarks with BF16 Power: Qwen3 BF16 wowed users by outcoding quantized versions zero-shot, thanks to llama.cpp’s FP16 kernels, while Qwen-Image-Edit topped the Image Edit Leaderboard as the #1 open model. ByteDance’s Seed-OSS-36B-Instruct impressed with 512k context sans RoPE scaling, and GLM 4.5 V shone in vision tasks per its demo video.

Theme 2. Fine-Tuning Frenzy: GRPO and Datasets Drive Tweaks

- GRPO Supercharges Llama for Physics Prowess: Users applied GRPO to Llama models on physics datasets, sharing mohit937’s FP16 merge, debating its RL-like edge without judges despite pitfalls like favoring longer responses. Comparisons to Reinforce++ highlighted GRPO’s potential as a streamlined alternative for boosting reasoning.

- Gemma 3 Gets French Fluency Boost via CPT: Newbies fine-tuned Gemma 3 270m for French using Jules Verne texts, guided by Unsloth’s CPT docs and blog, with electroglyph sharing their Gemma 3 4b GRPO finetune. Results praised the model’s improved outputs, sparking tips on VRAM tweaks for vision layers.

- OpenHelix Dataset Slims Down for Better Balance: The refreshed OpenHelix-R-86k-v2 cut size via ngram deduplication for diversity, aiding quantization with importance matrix datasets. Users explored anti-sycophancy classifiers and Swahili tweaks on Gemma 1B, emphasizing cleaner data’s role in minimizing errors.
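For readers wondering what "RL-like without a judge" means for GRPO in practice, here is a rough sketch of the group-relative advantage step. It assumes rewards come from a rule-based check (for example, exact match on a physics answer) and not from a learned judge model; the function names are ours. The second helper illustrates one commonly cited explanation for the length preference in vanilla GRPO (per-response length normalization); it is not something the Discord thread spelled out.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against its own sampling group.

    rewards: scores for several completions of the SAME prompt.
    Returns (r - mean) / (std + eps), so no separate value or judge model
    is needed to produce a baseline.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def per_token_weight(advantage, num_tokens):
    """Per-token weight when each response's loss is averaged over its length.

    Illustrative assumption: with 1/length normalization, a wrong (negative
    advantage) response is penalized less per token the longer it gets.
    """
    return advantage / num_tokens
```

With rewards `[1.0, 0.0, 0.0, 1.0]` the advantages come out close to `[1, -1, -1, 1]`, and a group where every completion gets the same reward produces all zeros, meaning that prompt contributes no learning signal.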

Theme 3. Hardware Havoc: GPUs Battle for AI Supremacy

- Nvidia Trumps AMD in Epic Engineering Showdown: Debates raged on Nvidia’s lead over AMD and Intel in scaling, with users eyeing dirt-cheap AMD MI50 32GB cards hitting 50 tokens/s on Qwen3-30B, versus warnings of them as expensive e-waste compared to 3090s. Hopes pinned on Nvidia taking x86/64 production if Intel folds, highlighting engineering gaps in dGPUs.

- VRAM Woes Crash Mojo and CUDA Setups: GPU crashes plagued Mojo code without sync barriers, per this gist, while CUDA OOM errors hit despite 23.79GB free, fixed via PyTorch driver restarts. L40S lagged A100 in tokens/s despite higher FLOPS, blamed on memory bandwidth bottlenecks.

- Quantization Quests Tackle DeepSeek’s 671B Beast: Users quantized DeepSeek V3.1 671B to Q4_K_XL needing 48GB VRAM minimum, referencing Unsloth’s blog for dynamic quant tips. CUDA setups demanded max context tweaks and CPU-MOE adjustments to fit VRAM margins.
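Two back-of-the-envelope calculations help sanity-check the claims in this theme: how big quantized weights are, and why memory bandwidth can matter more than FLOPS for decode speed. The sketch below is ours and rests on stated assumptions: ~4.5 bits per weight is a rough stand-in for a 4-bit K-quant mix (the real Q4_K_XL layout differs per tensor), and the bandwidth figures are approximate published specs that you should verify for your exact SKU.

```python
def weight_footprint_gb(n_params, bits_per_weight):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def decode_tokens_per_s_upper_bound(bandwidth_gb_s, bytes_read_per_token_gb):
    """Rough ceiling on single-stream decode speed.

    Assumes every generated token must stream the active weights from
    memory once, and ignores KV cache traffic, compute, and overheads.
    """
    return bandwidth_gb_s / bytes_read_per_token_gb


# Assumed values, labeled as such:
DEEPSEEK_PARAMS = 671e9        # total parameters, from the thread
ASSUMED_BITS = 4.5             # assumption for a 4-bit-class quant
L40S_BANDWIDTH_GB_S = 864      # approximate spec
A100_80GB_BANDWIDTH_GB_S = 2039  # approximate spec (SXM variant)

total_gb = weight_footprint_gb(DEEPSEEK_PARAMS, ASSUMED_BITS)
l40s_bound = decode_tokens_per_s_upper_bound(L40S_BANDWIDTH_GB_S, 30.0)
a100_bound = decode_tokens_per_s_upper_bound(A100_80GB_BANDWIDTH_GB_S, 30.0)
```

Under these assumptions the full weights land in the hundreds of GB, so a 48GB VRAM setup only makes sense with most expert weights kept in system RAM, which is consistent with the CPU-MOE adjustments users mentioned. The 30 GB per-token read is a hypothetical dense model chosen only to show that the bound scales with bandwidth, not with FLOPS.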

Theme 4. Tooling Turmoil: APIs and Agents Evolve Amid Bugs

- OpenRouter Drops Analytics and Model APIs: New Activity Analytics API fetches daily rollups, while Allowed Models API lists permitted models by user prefs. GPT-5 context crashed at 66k tokens with silent 200 OK responses, and Gemini models threw HTTP 400 on complex tool calls.

- MCP Agents Expose Security Nightmares: Blog post warned AI agents are perfect insider threats, demoing Claude’s MCP server hijack via GitHub issues for repo exfiltration. APM v0.4 teamed agents to fix context limits and hallucinations, integrating with Cursor and VS Code.

- Aider and DSPy Battle Tool Bugs and Caches: Aider’s CLI looped on tool calls with 7155 search matches, while Gemini 2.5 Pro failed without billing; Qwen3-Coder shone locally over Llama. DSPy returned costs even on cached results, and users sought cross-validation optimizers for style mimicry via GPT-5 judges.
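The "silent 200 OK" failure mode reported for long GPT-5 contexts is a good reminder not to trust the status code alone. Below is a small defensive check, a sketch that assumes the response body follows the widely used OpenAI-style chat completion shape (`choices[0].message.content`, `finish_reason`); the function name and the problem strings are hypothetical, and nothing here calls a real OpenRouter endpoint.

```python
def check_completion(status_code, body):
    """Return a list of problems found in a chat completion response.

    An empty list means the response looks usable. `body` is the parsed
    JSON payload (a dict) from an OpenAI-style chat completion call.
    """
    if status_code != 200:
        return [f"http status {status_code}"]
    if not isinstance(body, dict):
        return ["body is not a JSON object"]

    problems = []
    if "error" in body:
        problems.append("error object in 200 body")

    choices = body.get("choices") or []
    if not choices:
        problems.append("no choices")
        return problems

    choice = choices[0]
    message = choice.get("message") or {}
    # A tool call with no text content is a legitimate response.
    if not message.get("content") and not message.get("tool_calls"):
        problems.append("empty content")
    if choice.get("finish_reason") == "length":
        problems.append("truncated (finish_reason=length)")
    return problems
```

A caller can retry, shorten the context, or fall back to another model whenever the returned list is non-empty, instead of passing an empty string downstream.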

Theme 5. Industry Intrigue: Valuations, Talent Wars, and AI Returns

- OpenAI Rockets to $500B Valuation Frenzy: OpenAI neared $500B valuation as the biggest private firm, per Kylie Robison’s thread, with defenders citing 2B-user potential but critics slamming no moat and shrinking margins. xAI bled talent to Meta amid poaching, with Lucas Beyer’s rebuttal claiming lower normalized turnover.

- AI Deployments Yield Zero Returns for 95%: AI report revealed 95% of organizations get zero return from custom AI, blaming neglected learning updates and a shadow economy of ChatGPT use. Companies like Databricks raised $11B at $100B+ for Agent Bricks expansions, dodging IPOs.

- Talent Hunts and Job Postings Heat Up: SemiAnalysis sought new-grad engineers for performance roles via direct app link, with EleutherAI alums applying amid Gaudi 2 hopes. Blockchain devs offered DeFi expertise, while Cohere welcomed MS students and MLEs for collaboration.

Discord: High level Discord summaries

Perplexity AI Discord

- Comet Browser Crashes on Amazon.in: Users reported that amazon.in is not working in Comet Browser, possibly due to network issues or firewall settings, while it opens fine in Brave Browser.

- A member suggested contacting the support team at [email protected], and warned against paying for invites to Comet, noting Pro users in the US get instant access.

- ChatGPT Go India Plan Debated: OpenAI’s new ChatGPT Go plan is being tested in India for ₹399/month, focusing on prompt writing.

- Members debated its value compared to the free version or Perplexity Pro, and others suggested Super Grok at ₹700/month with a 131k context window as a better alternative to ChatGPT Go’s 32k limit.

- Perplexity UI Sparks Mixed Reactions: Users had polarized opinions on Perplexity’s user interface (UI), with criticisms of the Android UI contrasted by praise for the Windows design.

- Enthusiasts especially appreciated the addition of built-in adblock in the Comet browser.

- Perplexity API Status Questioned: A user inquired about API status and latency issues, pointing out that the status page doesn’t accurately reflect current latency.

- In addition, a user asked about deleting API groups to which a member responded that they would forward the request to the API team.

Unsloth AI (Daniel Han) Discord

- Unsloth App Refreshed to v3.1: The Unsloth app was updated to version 3.1, featuring an instruct model and hybrid capabilities, exciting users despite hardware limitations, according to members in the general channel.

- One member humorously reported a memory capacity error while trying to allocate 20MB of memory despite having 23.79GB free on the GPU, suggesting a restart to clear leftover junk from dead processes (link: pytorch.org).

- GRPO Merging Boosts Llama Physics: A user applied GRPO to the Llama model with a physics dataset, available on Hugging Face, and the consensus in the community is that GRPO is basically RL just without an external judge model.

- Despite potential issues with vanilla GRPO, such as favoring longer responses, some see it as potentially as good as Reinforce++.

- Gemma 3 Gets Fine-Tuned: A newbie sought guidance on performing Continued Pre-Training (CPT) with Gemma 3 270m to enhance its French knowledge, drawing from 20,000 lieues sous les mers by Jules Verne, with starting points at Unsloth’s documentation and blog.

- Electroglyph shared their Gemma 3 4b unslop finetune, including the code, uploaded it to Hugging Face, and stated that it’s pretty good this time around.

- OpenHelix Dataset Gets Refresh: The team released a new, smaller, diverse, and balanced version of the OpenHelix dataset (OpenHelix-R-86k-v2) on Hugging Face.