A new SOTA open model.

AI News for 8/20/2025-8/21/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (229 channels, and 7429 messages) for you. Estimated reading time saved (at 200wpm): 605 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

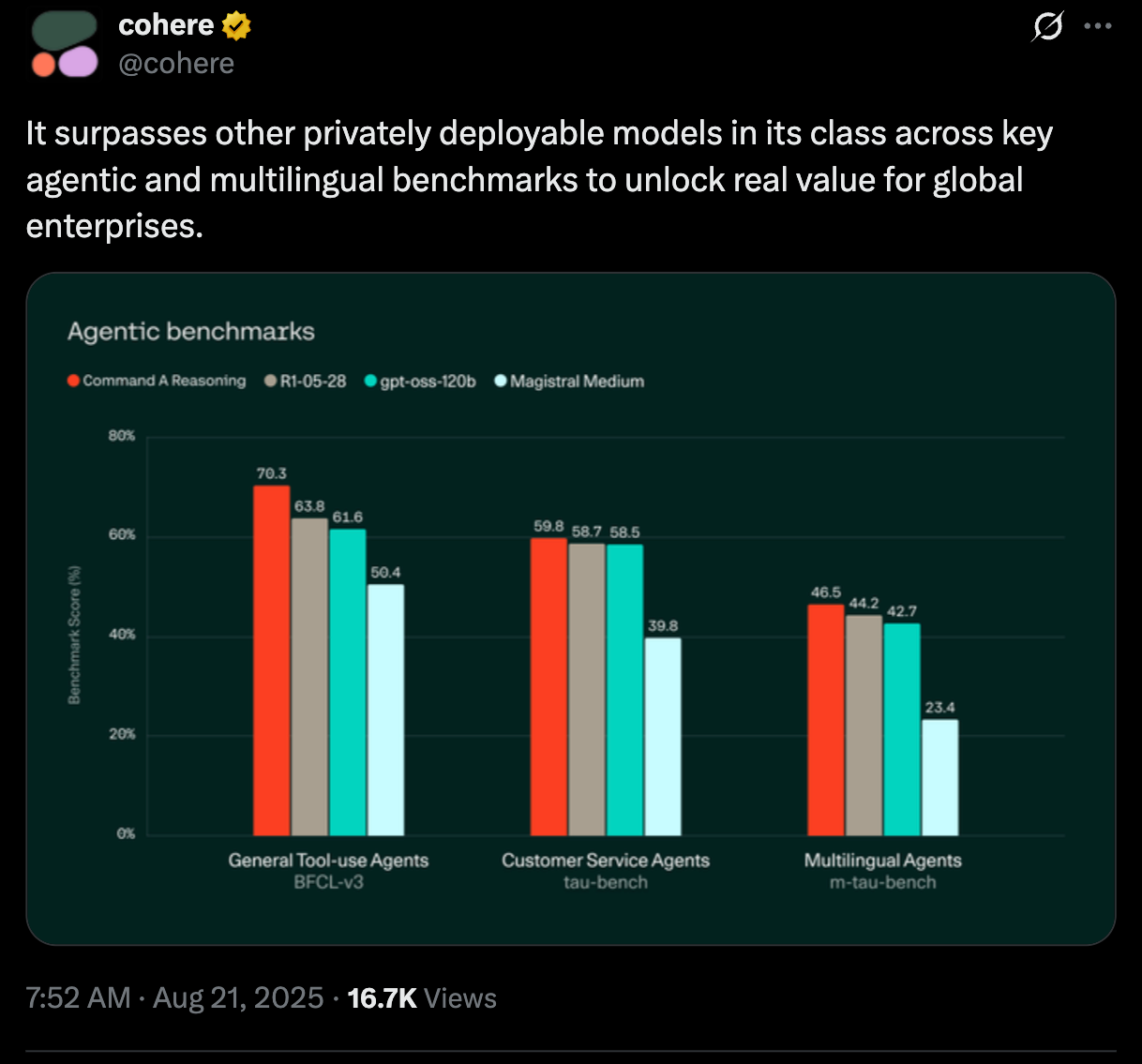

We last checked in on Cohere’s Command A in March and then again last week with their $7B series D, but we didn’t think we’d be talking about Cohere again so soon - Command A Reasoning puts GPT-OSS to shame according to Cohere’s own evals:

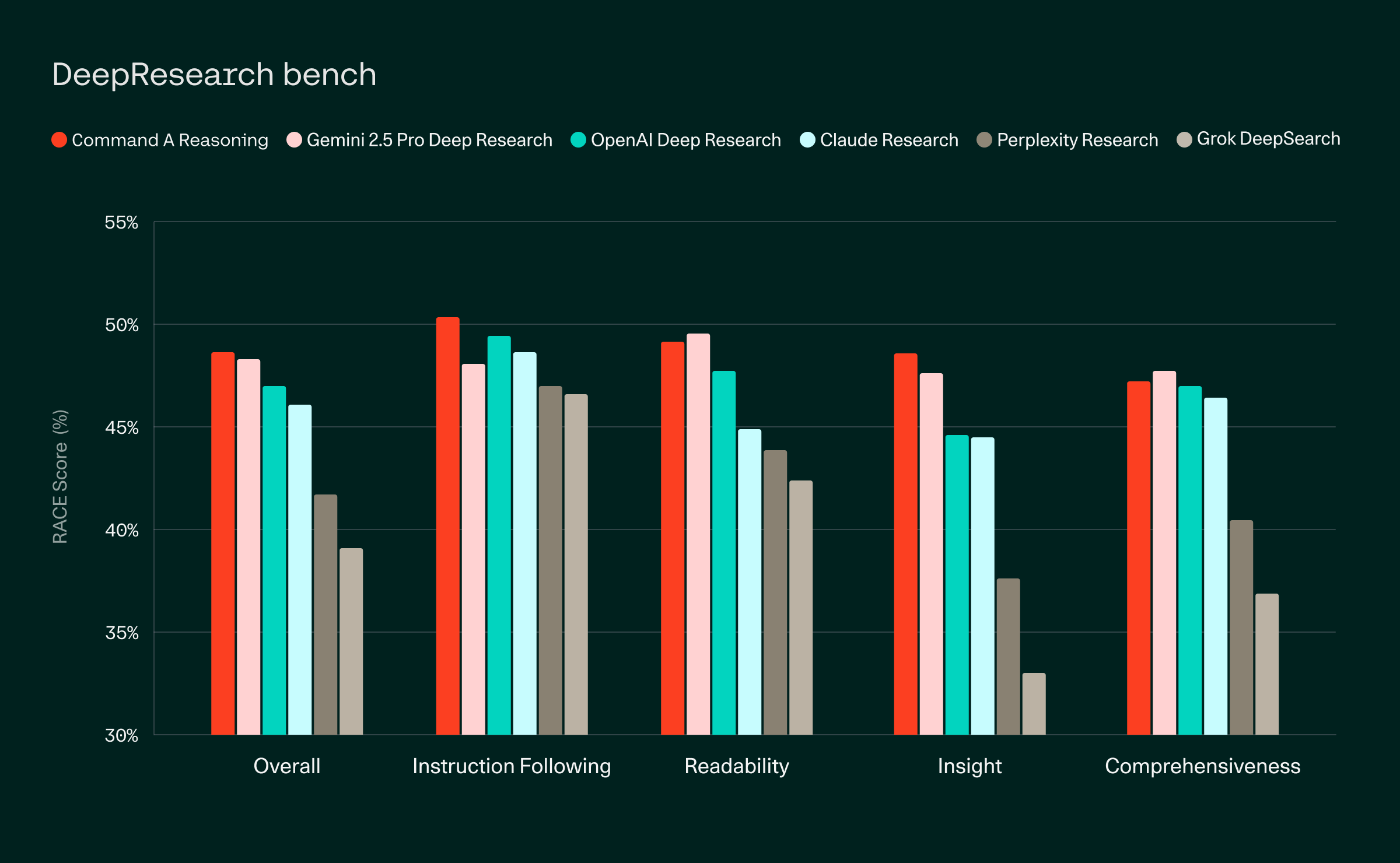

Importantly for the killer agentic use case of 2025, it is a very decent open deep research model:

AI Twitter Recap

DeepSeek V3.1: hybrid reasoning release, agent focus, and early results

- DeepSeek-V3.1 (Think/Non-Think hybrid): DeepSeek introduced a unified model that can toggle between “reasoning” and “non-reasoning” via tokens, with an explicit push toward agentic use cases and coding workflows. Official announcement and demo links: @deepseek_ai. Notable details aggregated by the community:

- Training/post-training: extended long-context pretraining (reported ~630B tokens for 32k and ~209B tokens for 128k), FP8 training (“UE8M0 FP8”) tuned for next-gen accelerators. Architecture remains an MoE with 671B total parameters and ~37B active per token (@ArtificialAnlys, @Anonyous_FPS, @teortaxesTex).

- Capabilities/limits: reasoning mode disables tool/function-calling; tool use is supported in non-thinking mode (@ArtificialAnlys). New “search agent” ability highlighted (@reach_vb).

- Benchmarks (selected): GPQA 80.1; AIME 2024 93.1; LiveCodeBench 74.8; Aider Polyglot 76.3% with thinking; SWE-Bench Verified rises from 44.6%→66% (@reach_vb, @scaling01, @scaling01, @cline). Artificial Analysis’ composite “AAI Index” places V3.1(reasoning) at 60 vs R1’s 59, still behind Qwen3 235B 2507(reasoning) (@ArtificialAnlys).

- Pricing/context: $0.56/M in, $1.68/M out on DeepSeek API; 164k context window for deepseek-chat-v3.1 (@scaling01, @cline).

- Ecosystem support landed rapidly: HF weights and inference providers (@ben_burtenshaw), INT4 quant by Intel (@HaihaoShen), vLLM reasoning toggle (@vllm_project), SGLang tool-calling + thinking flag parser (@jon_durbin), Chutes hosting/pricing (@chutes_ai), Baseten latency track (@basetenco), anycoder integration (@_akhaliq).

- Community takeaways: strong agentic/coding uplift and efficiency; minor overall capability delta vs R1/V3 in some composite indices; hybrid design aligns with pragmatic agent and SWE workflows; concern about missing tool-use in reasoning mode; perception split between “minor version bump” and “meaningful agent step” (@willccbb, @teortaxesTex, @reach_vb, @ArtificialAnlys).
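To make the quoted DeepSeek API prices concrete, here is a minimal cost sketch. The two rates come from the pricing bullet above; the function name and the token counts in the usage example are hypothetical, and the sketch ignores any cache discounts a provider may apply.

```python
# Rates quoted in the recap above for the DeepSeek API (USD per million tokens).
INPUT_USD_PER_M = 0.56
OUTPUT_USD_PER_M = 1.68


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request at the quoted per-million-token rates."""
    total = input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical agentic coding call: 100k tokens of context in, 4k tokens out.
    print(f"${request_cost_usd(100_000, 4_000):.4f}")
```

Because agentic coding workloads are dominated by input tokens, the input rate tends to matter more than the output rate for this kind of estimate.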

Cohere’s Command A Reasoning and other new reasoning models

- Cohere – Command A Reasoning (open weights): Cohere announced its enterprise-focused reasoning model with open weights for research/private deployment; commercial use requires a Cohere license. Emphasis on safety/usefulness balance, reduced over-refusals, and strong tool-use/agentic benchmarks. Available on Cohere’s platform and Hugging Face; day-0 integration in anycoder and Inference Providers (@cohere, @Cohere_Labs, @reach_vb, @_akhaliq, discussion on license: @scaling01).

- NVIDIA Nemotron Nano 2: Announced as a hybrid Mamba-Transformer reasoning model; limited public detail in the thread, but worth tracking as NVIDIA’s small-footprint reasoning line evolves (@_akhaliq).

Google AI: Gemini efficiency paper, agentic Search, Veo access, and Gov platform

- Measured Gemini inference efficiency: Google shared a detailed methodology and results: median Gemini Apps text prompt uses ~0.24 Wh and ~0.26 ml of water; from May 2024→May 2025, energy per median prompt dropped 33× and carbon footprint 44×, due to model/system efficiency and cleaner energy. Technical paper and blog linked in the thread (@JeffDean). Separate commentary notes Gemini’s hybrid-reasoning architecture mention in the materials (@eliebakouch).

- AI Mode gets agentic: AI Mode in Search can plan and execute multi-step tasks (e.g., dinner reservations across sites with real-time availability), personalize results, and share session context; rolling out to 180+ countries/territories in English (@Google, @GoogleAI, @rmstein).

- Veo 3 access and demos: Google teased broader Veo 3 access via the Gemini app and spun up TPU capacity in preparation (@GeminiApp, @joshwoodward). Google Devs shipped a Next.js template to build an in-browser AI video studio using Veo 3 and Imagen 4 (@googleaidevs).

- Gemini for Government: Expanded secure AI platform for U.S. federal use (includes NotebookLM and Veo) with @USGSA; pitched as “virtually no cost” to eligible federal employees (@sundarpichai).

Reasoning, RL, and evals: new methods and benchmarks

- RL for LLM reasoning (survey): Empirical roundup “Part I: Tricks or Traps?” systematically probes RL algorithm improvements for reasoning, highlighting scale/compute constraints and varying gains even up to 8B models (@nrehiew_).

- DuPO – Dual Preference Optimization: Self-supervised feedback generation via duality to enable reliable self-verification without external annotations; frame invertibility examples (e.g., reverse math solution to recover hidden variables). Paper + discussion: @iScienceLuvr, @teortaxesTex.

- PRM unification with RL/Search: “Your Reward Function for RL is Your Best PRM for Search-Based TTS” posits learning a dense dynamic PRM via AIRL+GRPO from correct traces, usable both as RL critic and search heuristic (@iScienceLuvr).

- KV cache debate: A widely-circulated reminder that recomputing KV can be preferable to storing it for certain regimes sparked discussion comparing this to MLA-like tradeoffs rather than “no KV” (@dylan522p, response: @giffmana).

- New eval assets:

- MM-BrowseComp: 224 multimodal web tasks (text+image+video) for agents; code+HF dataset+arXiv (@GeZhang86038849).

- Kaggle Game Arena (text-only Chess) Elo-like model ranking (@kaggle).

- ARC-AGI-3 Preview: +3 public holdout games for agent evals (@arcprize).

Systems and tooling: APIs, serving, and dev infra

- OpenAI Responses API updates: New “Connectors” pull context from Gmail/Calendar/Dropbox etc. in one call; “Conversations” adds persistent thread storage (messages, tool calls, outputs) so you don’t need to run your own chat DB; demo app and docs included (@OpenAIDevs, @OpenAIDevs, @OpenAIDevs; overview: @gdb).

- Agent/dev tools:

- Cursor + Linear: launch agents directly from issues/comments (@cursor_ai).

- vLLM and SGLang: first-class DeepSeek-V3.1 Think/Non-Think support (@vllm_project, @jon_durbin).

- MLX ecosystem: mlx-vlm 0.3.3 adds GLM-4.5V, Command-A-Vision; JinaAI mlx-retrieval enables local Gemma3-270m embeddings/rerankers at ~4000 tok/s on M3 Ultra (@Prince_Canuma, @JinaAI_).

- Parsing/RAG: LlamaParse adds citations + modes (cost-effective/Agentic/Agentic+); Weaviate’s Elysia ships decision-tree agentic RAG with real-time reasoning visualization (@VikParuchuri, @weaviate_io).

- LlamaIndex: “vibe-llama” CLI scaffolds context-aware rules for many coding agents (@llama_index).

- Hosting: W&B Inference adds DeepSeek V3.1 ($0.55/$1.65/M tok), Chutes priced hosting, Baseten library entry (@weave_wb, @chutes_ai, @basetenco).

- Applied products: Perplexity Finance rolls out NL stock screening for Indian equities across surfaces (@AravSrinivas, @jeffgrimes9).

Research highlights (vision, multimodal, 3D, embodied)

- MeshCoder: LLM-powered structured mesh code generation from point clouds (@_akhaliq).

- RynnEC: Bridging multimodal LLMs and embodied control (@_akhaliq).

- Tinker Diffusion: multi-view-consistent 3D editing without per-scene optimization (@_akhaliq).

- RotBench: diagnosing MLLMs on image rotation identification (@_akhaliq).

- Additional community notes: Goodfire AI on efficiently surfacing rare, undesirable post-training behaviors for mitigation via behavior steering/interpretability (@GoodfireAI).

Top tweets (by engagement)

- DeepSeek-V3.1 announcement: hybrid reasoning, agent focus, public demo (@deepseek_ai, 16.3k+)

- Google’s Gemini inference efficiency paper: 33× energy, 44× carbon per-prompt reductions year-over-year, 0.24 Wh and 0.26 ml water per median prompt (@JeffDean, 3.8k+)

- Ernest Ryu on recent convex optimization-related results (nuanced math take thread) (@ErnestRyu, 3.1k+)

- Perplexity Finance India stock screening shipping to all users (@AravSrinivas, 2.3k+)

- Alex Wang on Meta Superintelligence Labs’ investment trajectory (@alexandr_wang, 2.6k+)

- François Chollet clarifies his long-held stance: bullish on deep learning scaling for usefulness, not AGI via scaling alone (@fchollet, 900–1000+)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. DeepSeek V3.1: Anthropic API compatibility + thinking-mode benchmarks

- DeepSeek-V3.1 implements Anthropic API compatibility (Score: 265, Comments: 31): DeepSeek-V3.1 adds drop‑in compatibility with the Anthropic API, per the official guide (https://api-docs.deepseek.com/guides/anthropic_api). The screenshot shows installing the Anthropic/Claude client via npm, setting environment variables (API key/base URL) to point at DeepSeek, and then invoking DeepSeek models through Anthropic’s messages interface—enabling existing Anthropic-integrated apps to switch backends with minimal code changes. Comments note a typo (“Anthoripic”) and ask whether this lets them leverage Claude-compatible tooling while using DeepSeek as the model backend, potentially as a cost-saving alternative to recent price changes.

- Anthropic-API compatibility means you can point existing Claude clients (messages + tool-use) at DeepSeek-V3.1 and keep the same tools JSON schema and invocation flow; the only swap is the base URL/model name. This enables drop-in use of Claude Tools with DeepSeek as the backend, a lever for cost/perf optimization if the app can tolerate model differences. Docs: Messages API, Tool use.

- One user reports a 404, `{"error_msg":"Not Found. Please check the configuration."}`, which typically indicates a mismatched endpoint or headers in an Anthropic-compatible shim. Check the exact base URL/path (e.g., `/v1/messages`), required headers (`anthropic-version`, `x-api-key`, `content-type`), and a valid `model` id; some providers also require an `anthropic-beta` tools header when using tools. Using an Anthropic SDK against a non-default base URL without overriding the host commonly produces this 404.

- Several note this mirrors a broader trend (e.g., kimi-k2, GLM-4.5) of vendors exposing compatibility layers to reduce switching costs. Compatibility with Anthropic/OpenAI APIs lets teams reuse clients, prompt tooling, and function-calling specs with minimal code changes, enabling rapid A/B of backends.
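The 404 checklist above (path, headers, model id) can be sketched as a small request builder. This is a minimal illustration using only the standard library, not DeepSeek's or Anthropic's official client: the base URL is an assumption taken from DeepSeek's Anthropic-compatibility guide, `deepseek-chat` is an assumed model id, and `build_messages_request` is a hypothetical helper. Nothing is sent over the network.

```python
import json

# Assumption: base URL as given in DeepSeek's Anthropic-compatibility guide; verify before use.
DEEPSEEK_ANTHROPIC_BASE_URL = "https://api.deepseek.com/anthropic"


def build_messages_request(base_url: str, api_key: str, model: str, prompt: str):
    """Return (url, headers, body) for an Anthropic-style Messages API call."""
    # A wrong or missing path is a common cause of 404s on compatibility endpoints.
    url = base_url.rstrip("/") + "/v1/messages"
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    body = json.dumps({
        "model": model,  # must be an id the backend actually serves
        "max_tokens": 256,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return url, headers, body


if __name__ == "__main__":
    url, headers, _ = build_messages_request(
        DEEPSEEK_ANTHROPIC_BASE_URL, "YOUR_API_KEY", "deepseek-chat", "Hello"
    )
    print(url)
    print(sorted(headers))
```

Printing the URL and header names before sending makes it easy to compare against the provider's docs when a 404 shows up.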

- DeepSeek V3.1 (Thinking) aggregated benchmarks (vs. gpt-oss-120b) (Score: 150, Comments: 61): Post compares DeepSeek V3.1 (Thinking) vs. gpt-oss-120b (High): both offer ~128–131K context, but DeepSeek is a large MoE (671B total, 37B active) while gpt-oss-120b is smaller (120B total, 5.1B active). Reported composite scores are similar for “Intelligence Index” (60 vs 61), with DeepSeek ahead on “Coding Index” (59 vs 50), math unspecified; however, latency/throughput and cost differ substantially: DeepSeek needs ~127.8s for “500 tokens + thinking” at ~20 tok/s, priced at $0.32 / $1.15 (input/output), versus gpt-oss-120b at ~11.5s, ~228 tok/s, and $0.072 / $0.28. Commenters question the benchmark validity: the cited “Artificial Analysis Coding Index” has gpt-oss-20b scoring 54, beating gpt-oss-120b (50) and Claude Sonnet 4 thinking (53), prompting “Something must be off here…”, and one concludes that benchmarks are barely useful now. Another anecdotal view is that gpt-oss isn’t competitive with the ~700B-parameter DeepSeek despite good performance-for-size.

- Several note an anomaly in the “Artificial Analysis Coding Index”: gpt-oss 20B (high) scores 54, edging Claude Sonnet 4 Thinking at 53 and beating gpt-oss 120B (high) at 50. A smaller 20B model surpassing a 120B and Claude’s thinking mode suggests possible aggregation bias, task selection skew, or poor normalization in the meta-benchmark methodology.

- Practitioners report divergent real-world results: DeepSeek (V3.1/“Whale” ~700B class) is said to outperform gpt-oss on SWE-style tasks, with “almost Sonnet-level” solution quality, strong instruction-following, and reliable tool-calling. This contrasts with the aggregate index and implies that interactive, tool-augmented SWE evaluations may reveal strengths not captured by the benchmark mix.

- Critique centers on the benchmark being a meta-aggregation (“zero independent thinking”), i.e., a weighted blend of existing tests without fresh task design or transparent calibration. Commenters argue such rollups can be unreliable—overfitting to included benchmarks, masking variance across task types, and producing counterintuitive rank inversions (e.g., 20B > 120B).


- Deepseek V3.1 is not so bad after all.. (Score: 154, Comments: 24): OP argues DeepSeek V3.1 was misjudged because it targets different priorities—namely speed and agentic/task-execution use cases—where it performs well. A notable practical update mentioned is that DeepSeek now supports the Anthropic-compatible API format, enabling drop‑in integration with clients built for Claude/Claude Code (see Anthropic API docs: https://docs.anthropic.com/en/api/), potentially offering a lower‑cost alternative to other providers. Comments largely note there wasn’t broad consensus it was “bad,” and urge waiting a few weeks for workflows, prompting, and integrations to mature before judging model quality.

- DeepSeek’s new support for the Anthropic API format means it can act as a near drop‑in for clients/tools expecting Claude-style requests (messages, tool use/function calling semantics), potentially letting teams wire it into Claude Code with minimal glue. This lowers integration friction and could offer a cheaper swap for workloads currently pinned to Anthropic/OpenAI, depending on latency/throughput/cost tradeoffs; see Anthropic’s Messages API spec for reference: https://docs.anthropic.com/claude/reference/messages_post and Claude Code: https://www.anthropic.com/news/claude-code.

- A commenter flags the proliferation of benchmarks, implicitly calling for a “benchmark for benchmarks”—i.e., standardizing how we assess reliability, variance, and contamination across eval suites. Practically, this highlights the need to report methodology (prompting, context windows, temperature, seed control) and to prefer robust, multi-axis evaluations over cherry-picked leaderboards, especially as models like DeepSeek V3.1 get judged early on fragmented metrics.

- Love small but mighty team of DeepSeek (Score: 801, Comments: 39): The post is a meme pointing out a typo in the DeepSeek API docs where “Anthropic API” is misspelled as “Anthoripic API” (see the highlighted screenshot: https://i.redd.it/38d427vmpdkf1.png). The title/selftext frame this as a small, fast-moving team shipping docs quickly, implicitly highlighting documentation QA/polish trade-offs while referencing an Anthropic-related section in the docs. Top comments argue the typo signals the docs were written by humans (a sign of authenticity) rather than generated by an LLM, joking that an LLM “would never put that typo,” and calling it the mark of a “true software engineer.”

2. Model releases/ports: DeepSeek-V3.1 HF card and Kimi-VL-A3B-Thinking GGUF (llama.cpp PR #15458)

- deepseek-ai/DeepSeek-V3.1 · Hugging Face (Score: 508, Comments: 78): DeepSeek‑V3.1 is a 671B‑param MoE (~37B activated) hybrid model supporting both “thinking” and “non‑thinking” modes via chat templates, with post‑training focused on tool/agent use and faster reasoning than R1‑0528 at similar quality. Long‑context was extended on top of the V3 base via two phases: 32K (expanded 10× to 630B tokens) and 128K (3.3× to 209B tokens), trained with UE8M0 FP8 microscaling; MIT‑licensed weights (Base/Instruct) are on HF. Reported benchmarks include HLE with search+Python 29.8% (vs R1‑0528 24.8, GPT‑5 Thinking 35.2, o3 24.3, Grok 4 38.6, Gemini Deep Research 26.9), SWE‑Bench Verified without thinking 66.0% (vs R1‑0528 44.6, GPT‑5 Thinking 74.9, o3 69.1, Claude 4.1 Opus 74.5, Kimi K2 65.8), and Terminal Bench (Terminus‑1) 31.3% (vs o3 30.2, GPT‑5 30.0, Gemini 2.5 Pro 25.3). Model card highlights improved tool calling, hybrid thinking, and defined prompt/tool/search‑agent formats; broader evals cite strong scores (e.g., MMLU‑Redux 93.7, LiveCodeBench 74.8, AIME’24 93.1). Top comment notes agentic‑deployment caveats: prompts/frameworks drive UX, DeepSeek lacks branded search/code agents (unlike OpenAI/Anthropic/Google), and serverless hosts may degrade quality via lower precision, expert pruning, or poor sampling. Observers infer the R1+V3 merge and default 128K context optimize token TCO for agentic coding; some regressions (GPQA, offline HLE) suggest V3 family is near its limit, prompting calls for V4. Also clarified: this release is the post‑trained model on top of the base, not just the base checkpoint.

- Agentic/tool-use performance saw clear gains: 29.8% on HLE with search+Python (vs R1-0528 24.8%, GPT-5 Thinking 35.2%, o3 24.3%, Grok 4 38.6%, Gemini Deep Research 26.9%), 66.0% on SWE-bench Verified without thinking (vs R1-0528 44.6%, GPT-5 Thinking 74.9%, o3 69.1%, Claude 4.1 Opus 74.5%, Kimi K2 65.8%), and 31.3% on TerminalBench (Terminus 1) (vs o3 30.2%, GPT-5 30.0%, Gemini 2.5 Pro 25.3%). Caveats: DeepSeek HLE runs use a text-only subset; OpenAI SWE-bench used 477/500 problems; Grok 4 may lack webpage filtering (possible contamination). A broader table places V3.1-Thinking at MMLU-Pro 84.8, GPQA Diamond 80.1 (cf. dataset: https://huggingface.co/datasets/Idavidrein/gpqa), AIME 2025 88.4, LiveCodeBench 74.8 (https://livecodebench.github.io/), and Aider Polyglot 76.3 (https://github.com/Aider-AI/aider), generally trailing top closed models on several axes.

- Training/architecture updates: V3.1 is a hybrid that switches thinking vs non-thinking via the chat template and claims smarter tool-calling from post-training. Long-context extension was scaled up (32K phase ~10× to 630B tokens, 128K phase 3.3× to 209B), with training in UE8M0 FP8 scale to support microscaling formats; V3.1-Think targets R1-0528-level answer quality with faster responses.

- Deployment/agent considerations: merging R1 into V3 with a 128K default window aims to cut TCO for agentic coding (token-heavy, not long-CoT-heavy). Real-world UX can degrade if providers run lower precision, prune experts, or mis-tune sampling; plus prompts/agent frameworks (e.g., branded search/code agents from OpenAI/Anthropic/Google) critically affect outcomes, while DeepSeek’s own frameworks aren’t public yet. Some regressions were noted on GPQA and offline HLE, and several public benches emphasized agentic use without thinking traces.


- Finally Kimi-VL-A3B-Thinking-2506-GGUF is available (Score: 174, Comments: 11): GGUF-format builds of the 16B-parameter Kimi-VL-A3B-Thinking-2506 VLM are now hosted on Hugging Face (original model, GGUF quantizations), with llama.cpp PR #15458 adding backend support. The release spans 4/8/16-bit (12) variants; however, the PR’s latest note reports an unresolved issue where the “number of output tokens is still not correct,” indicating current inference may be unstable or incomplete. Commenters celebrate that “VL finally gets some love” and report good early tests, while others argue it’s “not available/working yet” due to the token-count bug; another highlights Kimi’s lower sycophancy and hopes this behavior carries over.

- Availability/status: A commenter points to the latest PR note stating: “Hmm turns out the number of output tokens is still not correct. But on the flip side, I didn’t break other models”, implying the GGUF build isn’t fully working yet due to an output-token counting bug and may not be ready for reliable use until the fix lands.

- Modality gap: Another user reports the model cannot access the audio track in videos. This aligns with many open VLM/MLLMs being vision+text only; true audiovisual understanding would require an ASR front-end or end-to-end AV training, which Kimi-VL-A3B apparently lacks at present.

- Comparative interest: Users asked how it stacks up against Qwen3-30B-A3B, but no benchmarks or head-to-head results (e.g., MMBench/MMMU accuracy, A3B decoding throughput/latency) were provided in-thread. A proper comparison would need standardized vision QA and multi-image/video evals under identical inference settings.

3. Efficiency & scaling: 1–8 bit quantization guide, 100k H100 under-scaling, and 160GB VRAM local build

- Why low-bit models aren’t totally braindead: A guide from 1-bit meme to FP16 research (Score: 299, Comments: 36): Meme-as-analogy post equates lossy image compression (JPEG) to LLM quantization: reduce precision of weights to shrink models while preserving salient behavior via mixed precision, calibration-driven rounding, and architectures designed for ultra-low precision. It highlights low-bit training/inference like Microsoft’s BitNet 1-bit and ternary (~1.58-bit) Transformers (BitNet 1-bit, 1.58-bit follow-up), and practical schemes such as Unsloth Dynamic 2.0 GGUFs, noting larger models (e.g., ~70B) tolerate more aggressive quantization. Resources include a visual explainer and a video on quantization-aware training, underscoring that quantization is not uniform and can be targeted per layer/block to retain grammar/reasoning while compressing less-crucial knowledge. Comments suggest task-specific calibration datasets (e.g., Q4-coding vs Q4-writing) to tailor quantization, and an anecdote that JPEG can outperform SD 1.5’s VAE compression; another links a tongue-in-cheek “0.5-bit” meme.

- Stable Diffusion 1.5’s original VAE is called out for poor reconstruction fidelity: one commenter claims plain JPEG can outperform it for some images, highlighting that the SD1.5 KL-f8 VAE can introduce noticeable artifacts and information loss relative to traditional codecs. Practical implication: in image-generation pipelines, VAE quality can be a larger bottleneck than model quantization, so swapping VAEs or using higher-fidelity decoders may yield bigger gains than tweaking bit-width alone.

- Quantization relies on a calibration dataset, so domain-specific calibration (e.g., Q4-coding vs Q4-writing) can materially affect accuracy by tailoring activation/statistics to task distributions. This mirrors outlier-aware PTQ methods like AWQ and GPTQ, where representative samples drive per-channel/per-group scales, improving retention of key features at the same bit-width without retraining.

- Resources for implementation and formats: official GGUF spec in the ggml project (docs) and Hugging Face’s technical intro to ggml (article) for low-bit inference details; a concise explainer of 1-bit LLMs/BitNet (video); and a practical llama.cpp setup/usage guide (blog).
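For readers who want to see what "reducing precision" means mechanically, here is a minimal sketch of symmetric absmax round-to-nearest quantization on one group of weights. It is the plainest textbook scheme, not AWQ, GPTQ, BitNet, or Unsloth's dynamic quants, and it uses no calibration data; the function names and the sample weights are hypothetical.

```python
def quantize_group(weights, bits):
    """Symmetric absmax quantization of one group of floats to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0  # one scale shared by the whole group
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_group(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


def max_abs_error(weights, bits):
    """Worst-case reconstruction error for this group at the given bit-width."""
    codes, scale = quantize_group(weights, bits)
    restored = dequantize_group(codes, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


if __name__ == "__main__":
    group = [0.9, -0.35, 0.02, 0.5]  # hypothetical weights
    for bits in (8, 4, 2):
        print(bits, quantize_group(group, bits)[0], max_abs_error(group, bits))
```

The error per weight is bounded by half the group's scale, which is why smaller groups, outlier handling, and calibration (the levers the thread discusses) matter more as the bit-width shrinks.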

- Frontier AI labs’ publicized 100k-H100 training runs under-deliver because software and systems don’t scale efficiently, wasting massive GPU fleets (Score: 335, Comments: 81): Post argues that frontier labs’ much-hyped ~100k–H100 training runs achieve poor cluster-level scaling efficiency, so effective throughput (e.g., MFU/step-time) under-delivers despite massive GPU counts, due to software and systems bottlenecks across the distributed stack (data/tensor/pipeline parallel orchestration, optimizer sharding like ZeRO/FSDP, NCCL collectives, storage I/O, and scheduler topology). The claim is that without careful overlap of compute/communication and tuned high-throughput interconnects (NVLink/InfiniBand) and NCCL parameters, utilization collapses at scale—wasting large fractions of the fleet—meaning “more GPUs” ≠ linear speedups; the linked source (Reddit gallery) is access-restricted, so details aren’t directly verifiable here. Commenters contrast this with projects like DeepSeek that report strong results on ~2k GPUs via aggressive low-level optimizations, implying software maturity, not fleet size, drives throughput; others note multi-GPU/multi-node inference remains hard in open source, and that true hyperscale performance typically demands bespoke, internally tuned frameworks rather than “hobby” or cargo-cult architectures.

- Commenters emphasize that 100k H100 training runs won’t scale on an off‑the‑shelf PyTorch stack; at that node count you need topology‑aware sharding/parallelism, high‑performance collectives, careful comm/compute overlap, and custom schedulers to avoid all‑reduce and fabric bottlenecks. Without bespoke systems engineering, synchronization and interconnect overheads dominate and GPU utilization collapses, so fleets appear “wasted.” They note stacks that behave on tens to low hundreds of GPUs often fall apart beyond thousands of nodes due to latency and coordination costs.

- DeepSeek’s reported 2k‑GPU training run is cited as a counterexample where software efficiency beat brute‑force scaling; the team described extensive optimizations to maximize per‑GPU throughput. The takeaway is that kernel fusion, memory/layout tuning, reduced synchronization, and disciplined parallelism strategies can let a smaller cluster outperform a poorly engineered 100k‑GPU job. Initial skepticism around DeepSeek underscored how easily systems work is underestimated relative to headline GPU counts.

- The open‑source inference ecosystem illustrates how hard distributed systems remain: few projects do solid multi‑GPU inference on a single host, and even fewer handle multi‑node well. Commenters argue that scalable software typically requires purpose‑built frameworks aligned to concrete requirements, not cargo‑cult patterns, and that the lack of access to truly massive deployments limits opportunities to learn and validate scaling approaches. This skills and tooling gap contributes to under‑delivery when organizations attempt 100k‑GPU scale without specialized infrastructure.
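The thread's core metrics can be written down in a few lines. This is a rough sketch under stated assumptions: it uses the common approximation of about 6 FLOPs per parameter per training token for dense models (attention FLOPs ignored), and every number in the usage example is hypothetical rather than taken from the post, including the per-GPU peak figure.

```python
def model_flops_utilization(params, tokens_per_second, num_gpus, peak_flops_per_gpu):
    """MFU: achieved training FLOP/s divided by the fleet's theoretical peak FLOP/s."""
    achieved = 6 * params * tokens_per_second  # ~6 FLOPs per parameter per token
    return achieved / (num_gpus * peak_flops_per_gpu)


def scaling_efficiency(throughput_small, gpus_small, throughput_large, gpus_large):
    """Per-GPU throughput at large scale relative to per-GPU throughput at small scale."""
    return (throughput_large / gpus_large) / (throughput_small / gpus_small)


if __name__ == "__main__":
    # Hypothetical run: 70B dense model, 1M tokens/s on 1,000 GPUs,
    # with an assumed peak of 1e15 FLOP/s per GPU.
    print(model_flops_utilization(70e9, 1e6, 1_000, 1e15))
    # Hypothetical scale-up: 100x the GPUs but only 50x the throughput.
    print(scaling_efficiency(100.0, 10, 5_000.0, 1_000))
```

A scaling efficiency well below 1.0 is the "more GPUs ≠ linear speedups" situation the commenters describe.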

- Pewdiepie’s monstrous 160GB Vram build (Score: 165, Comments: 38): PewDiePie showcased an 8× NVIDIA “RTX 4000”-class GPU workstation (20 GB each) totaling ~160 GB VRAM with 192 GB system RAM, stating he can run Llama 3 70B on half the GPUs. On 4×20 GB (~80 GB), 70B inference is plausible only with low‑bit quantization (≤8‑bit, typically 4‑bit) plus tensor parallelism, and likely some CPU offload for KV cache/activations; full‑precision weights are ~140+ GB (FP16) before overhead and won’t fit. Video: “Accidentally Built a Nuclear Supercomputer”. Commenters call the 8×20 GB setup unorthodox and note it still cannot host very‑large MLLMs (e.g., DeepSeek/Kimi variants) except at extreme quantization (Q1) or with heavy offload; despite 192 GB RAM, memory may bottleneck. Others see this as evidence of local LLMs going mainstream and suggest power‑efficiency may have driven the part choices.

- Build details: an unorthodox 8× RTX 4000 Ada setup (20 GB each) for a total of 160 GB VRAM, paired with 192 GB system RAM (corrected from 96 GB). Commenters infer the choice targets power efficiency while relying on CPU offload; with more memory channels available, RAM could scale higher if needed, improving offload headroom for larger contexts.

- Model fit constraints: despite 160 GB VRAM, commenters note it likely can’t host frontier models like “Kimi-K2” or DeepSeek end-to-end without extreme quantization (e.g., “Q1”). One user reports that even ~300 GB combined VRAM+RAM still struggles to fit Kimi, underscoring that many recent SOTA models’ memory footprints exceed what this build can accommodate without heavy sharding/offload and the resulting bandwidth bottlenecks.
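The fit arithmetic behind the 70B claim is easy to reproduce. The sketch below counts weight memory only (parameters × bits ÷ 8) and treats KV cache, activations, and runtime overhead as a single hypothetical `overhead_gb` knob, so it is a lower bound rather than a sizing tool.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion, bits_per_weight, vram_gb, overhead_gb=0.0):
    """True if weights plus an assumed overhead fit in the given VRAM budget."""
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    budget_gb = 4 * 20  # half of the 8x20 GB build
    for bits in (16, 8, 4):
        size = weight_memory_gb(70, bits)
        print(f"70B @ {bits}-bit: {size:.0f} GB, fits in {budget_gb} GB: {fits(70, bits, budget_gb)}")
```

At 8-bit the weights alone leave only about 10 GB of the 80 GB budget for everything else, which is consistent with the summary's "typically 4‑bit" remark.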


Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

AI Discord Recap

A summary of Summaries of Summaries by X.ai Grok-4

Theme 1. DeepSeek V3.1 Drops with Mixed Vibes

- DeepSeek V3.1 Debuts, Sparks Hype and Gripes: Engineers hail DeepSeek V3.1 for scoring 66 on SWE-bench in non-thinking mode, but slam its creative writing and roleplay flops, calling it a slightly worse version of Gemini 2.5 Pro despite coding promise. Users in Cursor report solid TypeScript/JavaScript performance at lower costs than Sonnet, though some distrust Chinese LLMs and face connection glitches.

- DeepSeek V3.1 Pricing Jumps, API Integrations Expand: DeepSeek hikes input prices to $0.25-$0.27 starting September 5, 2025, matching reasoner costs, while adding Anthropic API support for broader ecosystem use, as announced on DeepSeek’s X post. Communities eagerly await free public access in September, noting paid OpenRouter versions deliver faster responses than free ones.

- DeepSeek V3.1 Enters Arenas, Faces Bans: LMArena adds DeepSeek V3.1 and deepseek-v3.1-thinking for battles, but Gemini’s mass bans push users to alternatives, with one quipping we’re being sent back to 2023. Cursor devs mix excitement with skepticism, testing it amid reports of incremental improvements and regressions per DeepSeek’s Hugging Face page.

Theme 2. ByteDance’s Seed-OSS Models Stir Buzz

- ByteDance Unleashes Seed-OSS-36B Beast: ByteDance drops Seed-OSS-36B-Base-woSyn, a 36B dense model with 512K context trained on 12T tokens sans synthetic data, thrilling tuners eager for GPT-ASS experiments despite its vanilla architecture lacking MHLA or MoE. Latent Space and Nous Research users invite community tests on ByteDance’s GitHub repos and Hugging Face, praising its long-context prowess.

- Seed-OSS GGUF Delay Fuels ASIC Drama: Nous Research debates Seed-OSS-36B’s missing GGUF quant, blaming custom vLLM and unsupported SeedOssForCausalLM architecture in llama.cpp, with one linking to Aditya Tomar’s X post questioning ASIC impacts. Engineers note dropout, bias terms in qkv heads, and custom MLP akin to Llama, speculating on regularization but ruling out simple renames to Llama.

- ByteDance’s SEED Prover Bags IMO Silver: Eleuther celebrates ByteDance’s SEED Prover nabbing silver in IMO 2025, but questions real-world math chops per ByteDance’s blog. Unsloth AI users geek out over its 512K context and no QK norm, hoping for a deep-dive paper.

Theme 3. Hardware Hurdles and Benchmarks Heat Up

- RTX 5090 Sparks Wallet Wars: Unsloth AI debates RTX 5090’s $2000 price for VRAM upgrades, griping over NVIDIA’s no P2P/NVLink while eyeing distributed training with Infiniband on 4090-5090 rigs. GPU MODE users build custom PyTorch libraries and mini-NCCL backends for home Infiniband, calling it a killer distributed computing study hack.

- Apple M4 Max Crushes GGUF in Benchmarks: LM Studio benchmarks GPT-OSS-20b on M4 Max, with MLX GPU hitting 76.6 t/s at 32W versus GGUF CPU’s 26.2 t/s at 43W using 4-bit quants and 4K context. Users tweak CUDA on 4070 TI Super via ctrl+shift+r for flash attention and q8_0 KV quant at batch 2048, fixing 0 GPUs detected errors.

- AMD Debugger Alpha Drops with Wave Stepping: GPU MODE unveils alpha AMD GPU debugger sans amdkfd dependency, boasting disassembly and wave stepping in this demo video. MI300 dominates trimul leaderboard at 3.50 ms, while B200 hits 2.15 ms and H100 grabs second at 3.80 ms.
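The M4 Max figures above combine speed and power, so a quick way to compare them is tokens per joule. This sketch only divides the reported throughput by the reported wattage; it assumes both readings are steady-state averages for the same workload, which the summary does not confirm, and note that the comparison is MLX on GPU against GGUF on CPU.

```python
def tokens_per_joule(tokens_per_second: float, watts: float) -> float:
    """Tokens generated per joule of energy (1 W = 1 J/s)."""
    return tokens_per_second / watts


# Figures as reported in the LM Studio discussion above.
mlx_gpu = tokens_per_joule(76.6, 32)
gguf_cpu = tokens_per_joule(26.2, 43)

if __name__ == "__main__":
    print(f"MLX GPU:  {mlx_gpu:.2f} tok/J")
    print(f"GGUF CPU: {gguf_cpu:.2f} tok/J")
    print(f"ratio:    {mlx_gpu / gguf_cpu:.2f}x")
```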

Theme 4. Training Tricks and Datasets Dominate

- GRPO Demands Dataset Smarts for Games: Unsloth AI advises splitting multi-step game datasets into separate prompts for GRPO, warning full PPO suits games better since GRPO shines when LLMs roughly know what to do. Members push diversified imatrix calibration like Ed Addario’s dataset over WikiText-raw for multilingual quant preservation.

- WildChat Dataset Dedupes English Prompts: Unsloth AI releases WildChat-4M-English-Semantic-Deduplicated with prompts under 2000 tokens, using Qwen-4B-Embedding and HNSW for semantic dedup, per Hugging Face details. Qwen3-30B-A3B hits 10 t/s on CPU via llama-bench, with RoPE scaling enabling 512K context in 30B/235B models.

- R-Zero Evolves LLMs Sans Humans: Moonshot AI shares R-Zero PDF study on self-evolving LLM training from zero human data, slashing dataset reliance. Eleuther’s CoMET models, pretrained on 115B medical events from 118M patients, outperform supervised ones per scaling paper.
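
The GRPO item above says the method works best when the model already roughly knows what to do. A minimal sketch of why, assuming the commonly described group-relative advantage (each sampled completion's reward normalized by its group's mean and standard deviation); the function name and the toy rewards are illustrative, not taken from the Unsloth discussion:

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each completion's reward against the other samples in its group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]

# The model sometimes succeeds: successes get a positive advantage, failures a negative one.
mixed = group_relative_advantages([1.0, 0.0, 0.0, 1.0])

# The model never succeeds: every advantage is zero, so the group gives no learning signal.
hopeless = group_relative_advantages([0.0, 0.0, 0.0, 0.0])

print(mixed)
print(hopeless)
```

When every rollout in a group fails, as can happen early on in a multi-step game, the advantages collapse to zero, which is consistent with the advice to split games into per-step prompts or to reach for full PPO.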

Theme 5. Industry Shifts and Safety Snags

- Meta Reorgs AI Under Wang’s Reign: Latent Space reports Meta splitting AI into four teams under Alexandr Wang, disbanding AGI Foundations with Nat Friedman and Yann LeCun reporting to him, eyeing an “omni” model per Business Insider. Yannick Kilcher speculates Yann LeCun’s FAIR demotion signals Meta’s open-source retreat.

- Generative AI Yields Zero ROI for 95%: OpenRouter cites AFR Chanticleer report showing 95% of orgs get zero return from customized AI due to poor business nuance learning. Moonshot AI contrasts China’s energy as given for data centers with U.S. grid debates per Fortune article.

- API Leaks and Bans Bite Users: OpenRouter user loses $300 to leaked API key, with proxies masking IPs; Gemini bans trigger AI Dungeon purge flashbacks. LlamaIndex shares AI safety survey for community takes on key questions.

Discord: High level Discord summaries

LMArena Discord

- Nano-Banana Falls Prey to McLau’s Law: Members joked that the Nano-Banana model often underperforms expectations, humorously dubbing this phenomenon “McLau’s Law,” referencing an OpenAI researcher, prompting discussion about AI’s current capabilities as depicted in an attached image.

- One user suggested Nano-Banana often yields results far below nano-banana.

- Video Arena Plagued by Bot Brain-Freeze: Users reported the Video Arena Bot being down, causing command failures and an inability to generate videos, effectively locking access to the three prompt channels.

- Moderators confirmed the downtime and ongoing fixes, directing users to the announcements channel for updates and also stating that a login feature will be available soon to prevent future outages.

- DeepSeek V3.1 Enters the Ring: DeepSeek V3.1 and deepseek-v3.1-thinking models have been added to the LMArena and are now available for use.

- The consensus is that V3.1 is a slightly worse version of Gemini 2.5 Pro: it holds promise as a coding model but needs improvement in general abilities.

- LMArena Users Suffer Data Loss: A site outage caused widespread data loss, including missing chat histories and inability to accept terms of service.

- Moderators acknowledged the issue and assured users that a fix is underway.

Unsloth AI (Daniel Han) Discord

- ByteDance Drops Seed-OSS 36B Base Model: ByteDance has released the Seed-OSS-36B-Base-woSyn model on Hugging Face, a 36B dense model with 512K context window, trained on 12T tokens.

- Members are eager to try tuning GPT-ASS with the model, finding the lack of synthetic data compelling.

- GRPO Requires Smart Dataset Design: To use GRPO for multi-step game actions, members advised designing datasets with separate prompts for each step.

- Full PPO might be better suited for games, as GRPO is primarily effective for LLMs because they roughly know what to do to begin with.

- DeepSeek V3.1’s Thinking Skills: The DeepSeek V3.1 model achieved a 66 on SWE-bench verified in non-thinking mode, sparking hype among members.

- However, concerns were later raised about its creative writing and roleplay performance, with some noting hybrid models lack the instruction following and creativity in the non-think mode.

- RTX 5090 Price Sparks Upgrade Debate: The RTX 5090 is priced around $2000, prompting discussions on whether to upgrade, especially for training, given its VRAM capabilities.

- Some members expressed frustration with NVIDIA’s limitations, particularly the lack of P2P or NVLink.

- WildChat-4M-English Released: The WildChat-4M-English-Semantic-Deduplicated dataset is available on Hugging Face, consisting of English prompts from the WildChat-4M dataset, deduplicated using multiple methods.

- The current release includes prompts <= ~2000 tokens, with larger prompts to be added later; more information can be found here.
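
For readers unfamiliar with semantic deduplication as mentioned in the WildChat item, here is a minimal sketch of the idea. It is not the dataset's actual pipeline: the toy three-dimensional vectors stand in for real embeddings, a brute-force scan stands in for an HNSW index, and the 0.95 threshold is an arbitrary assumption.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_dedup(items, threshold=0.95):
    """Keep the first prompt of every near-duplicate cluster.

    items: list of (text, embedding) pairs. Brute force is O(n^2); an
    approximate nearest-neighbor index would replace the inner scan at scale.
    """
    kept = []
    for text, emb in items:
        if all(cosine(emb, kept_emb) < threshold for _, kept_emb in kept):
            kept.append((text, emb))
    return [text for text, _ in kept]

# Hypothetical embeddings: the first two prompts point in almost the same direction.
toy = [
    ("How do I sort a list in Python?", [0.90, 0.10, 0.00]),
    ("How can I sort a Python list?", [0.88, 0.12, 0.01]),
    ("Write a poem about autumn.", [0.00, 0.20, 0.95]),
]
unique_prompts = semantic_dedup(toy)
print(unique_prompts)
```

The paraphrased second prompt is dropped even though it shares no exact string with the first, which is the difference from plain exact-match deduplication.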

OpenRouter (Alex Atallah) Discord

- DeepSeek V3.1 Craze Awaits!: Users are eagerly awaiting the public release of DeepSeek V3.1, anticipating it will be free starting in September.

- Users confirm that paying for DeepSeek models on OpenRouter results in faster response times compared to the free models.

- OpenRouter API Keys Risk Exposure!: A user reported a loss of $300 due to a leaked OpenRouter API key and sought advice on identifying the source of the unauthorized usage.

- Users are responsible for any leaked keys and threat actors can use proxies to mask their origin IPs.

- Gemini faces massive banning outbreak!: Users report massive banning occurring on Gemini, leading many to seek alternatives and reminisce about the AI Dungeon purge caused by OpenAI.

- Users are saying we’re being sent back to 2023.

- Gemini Input Tokens Trigger Weird Counts!: A dashboard developer noted that OpenRouter’s calculation of input tokens for Gemini’s models produces unusual counts when images are included in the input, referencing a related discussion on the Google AI Developers forum.

- The developer is considering seeking clarification from the OpenRouter team regarding this issue.

- Most Orgs see ZERO return on Generative AI!: According to an AFR Chanticleer report focused on companies that have deployed customized AI models, 95% of organizations are getting zero return from their generative AI deployments.

- The report notes that the key problem is companies and their tech vendors are not spending enough time ensuring that their customized AI models keep learning about the nuances of their businesses.

Cursor Community Discord

- Claude’s Cache Capriciousness Causes Costly Conundrums: Users are reporting that Claude is experiencing issues with cache reads, leading to increased expenses compared to Auto, which benefits from sustainable caching.

- Speculation arose around whether Auto and Claude are secretly the same model, attributing reduced token usage to a placebo effect.

- Sonic Speedster Steals the Show in Cursor: The community is currently testing the new Sonic model within Cursor, with initial impressions being quite favorable due to its speed.

- While it was praised for fresh projects, some users cautioned that its effectiveness might diminish with larger codebases and confirmed that Sonic is not a Grok model; its origin remains a stealth company.

- Agentwise Awakens as Open Source Offering: Agentwise has been open-sourced, enabling website replicas, image/document uploads, and support for over 100 agents, with promises of Cursor CLI support.

- Users are invited to contribute feedback in the project’s dedicated Discord channel to help further development.

- Cursor’s Costs Confirmed: Clarity on API Charges: Confusion around the cost of the Auto agent was cleared up: a Pro subscription includes the cost of API usage across the different providers.

- Several users confirmed the cost clarification, and one stated a preference for the Auto agent over the Sonic agent.

- DeepSeek Debuts, Divides Developers: The new DeepSeek V3.1 model appeared in Cursor’s options, eliciting mixed reactions; some users encountered connection issues, while others expressed distrust towards Chinese LLMs.

- Despite concerns, some reported that DeepSeek V3.1 functions well with TypeScript and JavaScript, offering performance that is great and cheaper than Sonnet.

LM Studio Discord

- CUDA Fix Drives 4070 Detection: Users discovered that changing the runtime to CUDA llama.cpp via ctrl+shift+r might resolve the “0 GPUs detected with CUDA” error in LM Studio for 4070 TI Super cards.

- They discussed various configurations to enable flash attention, quantization of the KV cache, and a batch size of 2048 with flags like `-fa -ub 2048 -ctv q8_0 -ctk q8_0`.

- GPT-OSS Smokes Qwen on Prompt Eval: Members observed GPT-OSS reaching 2k tokens/s on prompt eval with a 3080ti, outperforming Qwen’s 1000 tokens/s in LM Studio.

- A user reported LM Studio API calls were significantly slower (30x) than the chat interface, but the issue resolved itself for unknown reasons when using the curl command `curl.exe http://localhost:11434/v1/chat/completions -d {"model":"gpt-oss:20b","messages":[{"role":"system","content":"Why is the sun hot?\n"}]}`.

- Qwen3-30B CPU Configuration Surprises: Using llama-bench, a user achieved 10 tokens per second on a CPU-only configuration with Qwen3-30B-A3B-Instruct-2507-Q4_K_M.gguf.

- They noted that the performance varied based on thread count, with diminishing returns beyond a certain threshold because of scaling and overhead.

- MLX’s M4 Max Melts GGUF: Benchmarking GPT-OSS-20b on an Apple M4 Max revealed that MLX (GPU) hit 76.6 t/s at 32W (2.39 t/W) compared to GGUF (CPU) which only achieved 26.2 t/s at 43W (0.61 t/W).

- With 4-bit quants and 4k context, MLX proved faster and more power-efficient than GGUF, although members were still impressed by the GGUF performance.
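
The efficiency figures in the M4 Max item can be reproduced from the reported throughput and power draw. A small sketch using only the numbers quoted above; the function and variable names are ours:

```python
def tokens_per_watt(tokens_per_second, watts):
    """Throughput divided by power draw (t/s per W, i.e. tokens per joule)."""
    return tokens_per_second / watts

mlx = tokens_per_watt(76.6, 32)    # MLX on GPU
gguf = tokens_per_watt(26.2, 43)   # GGUF on CPU

speedup = 76.6 / 26.2              # raw throughput ratio
efficiency_ratio = mlx / gguf      # tokens-per-watt ratio

print(f"MLX:  {mlx:.2f} t/W")
print(f"GGUF: {gguf:.2f} t/W")
print(f"throughput ratio: {speedup:.1f}x, efficiency ratio: {efficiency_ratio:.1f}x")
```

On these figures MLX is roughly 2.9x faster and roughly 3.9x more efficient per watt than the GGUF CPU run.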

OpenAI Discord

- AI Agents Dive into M2M Economies: Members explored machine-to-machine (M2M) economies, where AI agents autonomously exchange value, focusing on challenges like identity & trust, smart contract logic, and autonomy.

- Safeguards such as spending caps, audit logs, and insurance could accelerate AI adoption in transactions, but real trust will still take time.

- Decentralized AI Project’s BOINC Bounty: A member sought a decentralized AI project like BOINC, noting challenges with the Petals network related to contributions and model updates.

- Contributors suggested financial or campaign-driven incentives could bolster decentralized AI development.

- Few-Shot Fitness Prompts Flexed: Members dissected optimal strategies for using few-shot examples within a 29,000 token prompt for a fitness studio, emphasizing prompt engineering.

- Recommendations included providing direct examples within the prompt and iteratively testing smaller chunks to enhance performance.

- GPT-5’s Thinking Mode Dumbs Down: A user reported that GPT-5’s thinking mode yields direct, low-quality responses, similar to an older model version, causing frustration.

- Another member speculated that the user may have exceeded a thinking quota, with the system set to fall back instead of greying out the option.

- AI Quiz Generates Trivial Pursuit: A member highlighted issues with an AI quiz generator producing obviously wrong answer choices in quizzes.

- Another member suggested requiring that all answer options be plausible, to improve the AI’s output and produce more realistic quizzes.

Eleuther Discord

- PileT5-XL Speaks: An embedding tensor from PileT5-XL works both as an instruction for pile-t5-xl-flan (which generates text) and as a prompt for AuraFlow (which generates images), suggesting these embeddings hold meaning like words in a language.

- A member wants to try textual inversion on a picture of a black dog with AuraFlow, then apply the result to pile-t5-xl-flan to see whether the generated text describes the dog as black.

- Cosmos Med Models Scale!: The Cosmos Medical Event Transformer (CoMET) models, a family of decoder-only transformer models pretrained on 118 million patients representing 115 billion discrete medical events (151 billion tokens), generally outperformed or matched task-specific supervised models.

- The study, discussed in Generative Medical Event Models Improve with Scale, used Epic Cosmos, a dataset with medical events from de-identified longitudinal health records for 16.3 billion encounters over 300 million unique patient records from 310 health systems.

- ByteDance Prover Gets Medal: ByteDance’s SEED Prover achieved a silver medal score in IMO 2025.

- However, it is unclear how this translates to real world math problem solving performance.

- Isolating a Llama3.2 Head: A member isolated a particular kind of head, discovering that decoded result vectors between Llama 3.2-1b instruct and Qwen3-4B-Instruct-2507 were remarkably similar across different outputs.

- The member stated that what the two heads seem to promote is quite similar.

- Muon Kernel Support Sought: A member expressed interest in adding muon support, citing potential kernel optimization opportunities.

- They believe that once basic support is implemented, there’s room for collaborative work on these optimizations.

Latent Space Discord

- Meta Splits After Wang Promotion: Meta is reorganizing its AI efforts into four teams (TBD Lab, FAIR, Product/Applied Research, Infra) under new MSL leader Alexandr Wang, with the AGI Foundations group being disbanded, according to Business Insider.

- Nat Friedman and Yann LeCun now report to Wang, FAIR will directly support model training, and an “omni” model is under consideration.

- GPT-5-pro Silently Eats Prompts: GPT-5-pro is silently truncating prompts greater than 60k tokens without any warning or error messages, which makes large-codebase prompts unreliable, according to this report.

- Some users are also reporting that GPT-5 in Cursor is acting a lot dumber than usual, with some suspecting load shedding is occurring.

- Dropout Inspired by Bank Tellers: A viral tweet claims Geoffrey Hinton conceived dropout after noticing rotating bank tellers deterred collusion (source).

- Reactions range from admiration for the serendipitous insight to skepticism and jokes about attention mechanisms emerging from house parties.

- ByteDance Sows Seed-OSS Models: ByteDance’s Seed team has announced Seed-OSS, a new open-source large-language-model family available on GitHub and Hugging Face.

- The team is inviting the community to test and provide feedback on the models, code, and weights.

- Wonda Promises Video Revolution: Dimi Nikolaou introduced Wonda, an AI agent aiming to revolutionize video/audio creation, pitching it as “what Lovable did for websites, Wonda does for content” (tweet link).

- Early access will be granted via a waitlist, with invites going out in approximately 3 weeks.
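
Given the report earlier in this section that prompts above roughly 60k tokens are truncated without warning, one defensive pattern is to check prompt size before sending. A rough sketch, assuming about 4 characters per token as a crude English-text heuristic (a real tokenizer would be more accurate); the function names and the default limit are illustrative:

```python
def estimate_tokens(text, chars_per_token=4.0):
    """Crude token estimate; chars_per_token is an assumed average, not a measured one."""
    return int(len(text) / chars_per_token) + 1

def check_prompt(text, limit=60_000):
    """Raise instead of letting an oversized prompt be silently cut off downstream."""
    estimated = estimate_tokens(text)
    if estimated > limit:
        raise ValueError(
            f"prompt is ~{estimated} tokens, above the {limit}-token limit; "
            "split it or trim the context"
        )
    return estimated

print(check_prompt("hello world"))
```

Failing loudly on the client side turns a silent quality drop into an explicit error that a large-codebase workflow can handle.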

GPU MODE Discord

- CUDA Confounds ChatGPT: A member found that ChatGPT gave confidently incorrect answers regarding CUDA float3 alignment and size, and then attributed the difficulty of this topic to the complexities of OpenCL and OpenGL implementations.

- The member verified that CUDA’s float3 has no padding.

- Hackathon Starts Saturday AM: The GPU Hackathon will likely kick off around 9:30 AM on Saturday, and it was hinted that participants will be working with newer Nvidia chips.

- There was a question about the hackathon prerequisites, but it went unanswered in the channel.

- AMD GPU debugger has first alpha: An engineer showed off the alpha version of their new AMD GPU debugger now with disassembly and wave stepping in this video.

- This debugger doesn’t depend on the amdkfd KMD, using a mini UMD driver and the linux kernel debugfs interface and aiming for a rocdbgapi equivalent.

- DIY Distributed Training Framework Emerges: One member is in the process of building their own pytorch distributed training library and mini NCCL as a backend to be used with infiniband at home between a 4090 and 5090.

- Another member expressed interest, considering it to be a good way to study the finer points of distributed computing.

- MI300 dominates Trimul Leaderboard: The `trimul` leaderboard now features a submission score of 3.50 ms on MI300, and another submission on MI300 achieved second place with a score of 5.83 ms.

- A member achieved 6th place on B200 with a time of 8.86 ms and later improved to 4th place with 7.29 ms on the `trimul` leaderboard, and another achieved second place on H100 with a time of 3.80 ms.
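
On the float3 item at the top of this section: CUDA’s built-in float3 is three packed 32-bit floats with 4-byte alignment, so it occupies 12 bytes with no padding (float4 is the 16-byte-aligned type). The sketch below is only a host-side analog using Python’s ctypes, not CUDA code; the `Float3` class is our own stand-in with the same field layout.

```python
import ctypes

class Float3(ctypes.Structure):
    """Host-side stand-in for a struct of three 32-bit floats."""
    _fields_ = [
        ("x", ctypes.c_float),
        ("y", ctypes.c_float),
        ("z", ctypes.c_float),
    ]

size3 = ctypes.sizeof(Float3)            # bytes per element
align3 = ctypes.alignment(Float3)        # required alignment in bytes
array_bytes = ctypes.sizeof(Float3 * 4)  # four elements packed back to back

print(size3, align3, array_bytes)
```

Because the alignment equals the size of a single float, an array of these structs is tightly packed, which matches what the member verified on the CUDA side.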

Yannick Kilcher Discord

- Forbes Finds Flaws, Frames Fracas!: Forbes revealed that Elon Musk’s xAI published hundreds of thousands of Grok chatbot conversations.

- When asked whether this was true, @grok responded evasively, leading to further speculation.

- LeCun Leaving, Losing, or Loitering?!: A user speculated about Yann LeCun’s potential departure from FAIR based on a post by Zuckerberg.

- Another member suggested LeCun may have been demoted and that Meta is retreating from the open source model space.

- Infinite Memory Mandates Machine Mightiness!: A member argues that Turing completeness requires infinite memory, thus the universe cannot create a Turing complete machine due to insufficient memory.

- Another member jokingly suggests that making a computer sufficiently slow could allow the expansion of the universe to account for the space problem.

- New Names, New Nuisance: AI Slurs Surface!: A user shared a Rolling Stone article discussing the emergence of new AI slurs like clanker and cogsucker.

- Responses in the channel were muted, but all seemed to agree that such words are very naughty indeed.

HuggingFace Discord

- Payment Issues Plague Hugging Face Pro Users: A user reported being charged twice for the Pro version without receiving the service, advising others to email [email protected] and seek assistance in the designated MCP channel.

- The user was unable to get the Pro service despite repeated charges to their account.

- AgentX Promises Smarter AI Trading: The new AgentX platform aims to provide a trading table with the smartest AI minds—ChatGPT, Gemini, LLaMA, Grok—working together to debate until they agree on the best move.

- The platform seeks to offer traders a system they can fully trust by having LLMs debate the best move.

- Members Debate SFT versus DPO: Members discussed the effectiveness of DPO (Direct Preference Optimization) versus SFT (Supervised Fine-Tuning), where one member noted that DPO has no relationship to reasoning, but DPO after SFT improves results over just SFT.

- There was discussion on leveraging DPO to boost performance; however, its relationship to reasoning was debated among members.

- HF Learn Course Plagued by 422 Errors: A member reported that a page from the Hugging Face LLM course is down and showing a 422 error.

- Users are currently unable to access the broken page within the Learn course.

Notebook LM Discord

- Users Discover Gems to Streamline Podcast Generation: Users are developing workflows, like this example, to create deeper research frameworks to generate podcasts with Gems, Gemini, PPLX, or ChatGPT.

- The key is to set prompts to plan the entire transcript section by section, generating podcasts from longer YouTube videos.

- Customize screen lets Users Configure Podcast Length: Users can adjust podcast length in NotebookLM by using the Customize option (three dots), extending podcast length to 45-60 minutes.

- Specifying topics allows the bot to concentrate on topics instead of relying on it to fit all the important stuff into a single podcast.

- Privacy Policy Paranoia Prevails: Users are analyzing healthcare companies’ privacy policies and terms of use using Gemini and NotebookLM.

- One user was surprised by how much you give away to these companies and by how useful this method is for understanding Terms of Use and Privacy policies.

- Android App Feature Parity Delayed: Users are requesting more feature parity between the NotebookLM web app and the Android app, especially for study guides.

- One user stated the current native app is borderline useless because study guides depend on the notes feature, which is missing from the native app.

- NotebookLM API Remains Elusive: While an official API for NotebookLM is not available, users suggest using the Gemini API as a workaround.

- Another user shared their strategy of combining GPT4-Vision and NotebookLM to quickly digest complex PDF schematics with callouts.

Nous Research AI Discord

- ByteDance Unleashes Long Context Model: ByteDance released a base model with extremely long context, featuring no MHLA, no MoE, and not even QK norm, according to this image.

- The model’s architecture was described as vanilla, prompting hopes for a forthcoming paper to provide further insights.

- Seed-OSS-36B’s GGUF Absence Sparks Speculation: Users asked why no GGUF exists yet for Seed-OSS-36B, noting that GGUFs typically appear quickly, and referenced this link questioning the implications for ASICs.

- It was suggested the delay could stem from a custom vLLM implementation, with the architecture currently unsupported by llama.cpp due to `architectures: ["SeedOssForCausalLM"]`.

- Seed Model Sports Dropout and Bias: The Seed model incorporates a custom MLP and attention mechanism akin to LLaMA, yet features dropout, an output bias term, and a bias term for the qkv heads.

- These additions are speculated to serve as regularization techniques; however, the number of epochs the model underwent remains unknown, with confirmations that simply renaming it to LLaMA will not yield functionality.

- Qwen Scales to 512k Context with RoPE: The 30B and 235B Qwen 2507 models can achieve 512k context using RoPE scaling, according to this Hugging Face dataset.

- These datasets are used to generate importance matrices (imatrix), which help minimize errors during quantization.

- Cursor’s Kernel Blog Draws Applause: Members shared a link to Cursor’s kernel blog.

- Many agreed that cursor cooked on that one.

Moonshot AI (Kimi K-2) Discord

- DeepSeek V3.1 Debuts with Mild Improvements: The new DeepSeek V3.1 model was released, with some members noting that it is like an incremental improvement with some regressions, referencing DeepSeek’s official page.

- Its performance is being closely watched in the community for subtle gains and potential drawbacks.

- DeepSeek Courts Anthropic API Integration: DeepSeek now supports the Anthropic API, expanding its capabilities and reach, as announced on X.

- This integration enables users to use DeepSeek with Anthropic’s ecosystem, promising versatility in AI solution development.

- R-Zero LLM Evolves Sans Human Data: A comprehensive study of R-Zero, a self-evolving LLM training method that starts from zero human data and improves independently, was shared in a PDF.

- The approach marks a departure from traditional LLM training, potentially reducing reliance on human-labeled datasets.

- China Sidesteps Data Center Energy Dilemma: A member noted that in China, energy availability is treated as a given, contrasting with U.S. debates over data center power consumption and grid limits, referencing this Fortune article.

- The difference in approach could give Chinese AI firms a competitive advantage in scaling energy-intensive models.

- Kimi K2 Eyes Better Image Generation: A member noted that Kimi K2 would be more OP if it got combined with Better image gen than gpt 5, with this reddit link shared.

- Integrating enhanced image generation capabilities would position Kimi K2 as a more versatile and competitive AI assistant.

aider (Paul Gauthier) Discord

- Gemini 2.5 Pro Stumbles While Flash Soars: A user reports that Gemini 2.5 Flash is functional, whereas Gemini 2.5 Pro consistently fails; however, `gemini/gemini-2.5-pro-preview-06-05` operates when billing is configured.

- Another reported a $25 charge for a qwen-cli process and is requesting a refund, highlighting potential inconsistencies in model performance and billing.

- User Hit With Unexpected Qwen CLI Charges: A user incurred a $25 charge for using qwen-cli after Google OAuth authentication, expecting free credit from Alibaba Cloud.

- Opening a support ticket, they cited a console usage of one call of $23 with no output to dispute the unexpected charge.

- Community Benchmarks GPT-5 Mini Models: Community members are actively benchmarking gpt-5-mini and gpt-5-nano because of rate limits on the full gpt-5, and one user claims gpt-5-mini is very good and cheap.

- Benchmark results and a PR for gpt-5-mini are available, reflecting the community’s interest in evaluating smaller, more accessible models.

- DeepSeek v3.1 Pricing Sees a Bump: Starting Sept 5th, 2025, DeepSeek will increase pricing to $0.25 vs $0.27 for input on both models to match the reasoner model price.

- The price increase to match the deepseek 3.1 model reflects changes in pricing strategy.

- OpenRouter Needs a “Think” Mode: Users noted that OpenRouter lacks a native “think” mode for enhanced reasoning, but it can be enabled via command line using `aider --model openrouter/deepseek/deepseek-chat-v3.1 --reasoning-effort high`.

- Community members suggested updating the model configurations to address this functionality gap.

DSPy Discord

- Marimo Notebooks Rise as Jupyter Alternative: A member published tutorials on marimo notebooks, highlighting its use in iterating through ideas on Graph RAG with DSPy, as a notebook, script and app, all at once.

- Upcoming videos will explore DSPy modules optimization, building on the current tutorial that introduces marimo to new users.

- Readability Debate: DSPy Code Assailed then Upheld: After a member dismissed IBM’s AutoPDL claims about unreadability, they defended DSPy’s code and prompts as extremely human-readable and clear.

- The defense emphasized the accessibility of the code, making it easy to understand and work with.

- GEPA Arrives in DSPy v3.0.1: Members confirmed that GEPA is available in dspy version 3.0.1, as shown in the attached screenshot.

- During fine-tuning, a member inquired about whether it is common to use “vanilla descriptions” for dspy.InputField() and dspy.OutputField() to allow the optimizer to think freely.

- Pickle Problem: DSPy Program Not Saved: A user reported issues with saving an optimized program, noting that the metadata only contained dependency versions but not the program itself, even when using `optimized_agent.save("./optimized_2", save_program=True)`.

- When another user set the maximum context length to 32k for GEPA but still received cut-off responses, members discussed the complexities of long reasoning and potential issues with multi-modal setups.

- RAG vs Concatenation: Million-Document Debate: Members debated whether RAG (Retrieval-Augmented Generation) or simple concatenation would be more appropriate for tasks like processing tax codes or crop insurance documents.

- The debate acknowledged that while RAG is often seen as overkill, the scale of millions of documents can sometimes justify its use.

Cohere Discord

- Command A Reasoning Unleashed: Cohere launched Command A Reasoning, designed for enterprise, outperforming other models in agentic and multilingual benchmarks; available via Cohere Platform and Hugging Face.

- It runs on a single H100 or A100 with a context length of 128k, scaling to 256k on multiple GPUs, according to the Cohere blog.

- Command’s Token Budget Saves the Day: Command A Reasoning features a token budget setting, enabling direct management of compute usage and cost control, making separate reasoning and non-reasoning models unnecessary.

- It is also the core generative model powering North, Cohere’s secure agentic AI platform, enabling custom AI agents and on-prem automations.

- Command-a-03-2025 Gives Intermittent Citations: `command-a-03-2025` is returning citations only intermittently, even with maxTokens set to 8K, causing trust issues in production.

- A Cohere member clarified that it uses “fast” mode for citations (as per the API reference) and citations aren’t guaranteed; use command-a-reasoning instead.

- Langchain RAG in the Works: A member is learning Langchain to build a RAG (Retrieval-Augmented Generation) application, with the intention to use command-a-reasoning.

- They anticipate the release of command-a-omni, and expressed hype for a future model called Command Raz.

MCP (Glama) Discord

- MCP Clients Flout Instructions Field: Members are reporting that MCP clients, specifically Claude, are ignoring the instructions field and only considering tool descriptions.

- One member suggested that adding the instruction, context and then repeating the instruction would yield better results but this is not possible with integrated APIs, while another suggested the MCP server should prioritize processing tool descriptions.

- Diverse MCP Servers in Action: Members are sharing their preferred MCP server setups and tools including GitHub for version control, Python with FastAPI for backend development, and PyTorch for machine learning.

- One user sought advice on how to make an agent follow a specific generate_test_prompt.md file, linking to a screenshot of their configuration.

- Web-curl Unleashes LLM Agent Prowess: Web-curl, an open-source MCP server built with Node.js and TypeScript, empowers LLM agents to fetch, explore, and interact with the web & APIs with source code available on GitHub.

- Functionally, Web-curl enables LLM agents to fetch, explore, and interact with the web & APIs in a structured way.

- MCP-Boss Centralizes Key Management: A member introduced MCP Boss to centralize key management, providing a single URL to gateway all services, featuring multi-user authentication and MCP authorization via OAuth2.1 or static HTTP header.

- More information available at mcp-boss.com.

- AI Routing Power in MCP Gateway: A member introduced a lightweight gateway with AI-powered routing to solve the problem of agents needing to know which specific server has the right tool, with code available on GitHub.

- With the gateway in place, an AI handles the routing to the right MCP server.

Modular (Mojo 🔥) Discord

- Modular Celebrates Modverse Milestone: Modular released Modverse #50 and announced a custom server tag, as seen in an attached screenshot.

- The custom server tag has been deployed.

- Documentation drought plagues kgen and pop: Members report a lack of documentation for kgen and pop, particularly regarding operations and parameters, with one stating there’s no comprehensive documentation of the internal MLIR dialects.

- A link to the pop_dialect.md on Github was shared, clarifying that these are part of the contract between the stdlib and the compiler, so use them outside of the stdlib at your own risk.

- POP Union Faces Alignment Allegations: Suspicions have arisen regarding an alignment bug in pop.union, as indicated by unexpected size discrepancies when employing `sizeof`.

- A member created issue 5202 on GitHub to investigate the suspected alignment bug in pop.union, also observing that pop.union doesn’t appear to be used anywhere.

- TextGenerationPipeline Execute Hides In Plain Sight: A member located the `execute` method on `TextGenerationPipeline` and linked to the relevant line in the Modular repo.

- They suggested checking the MAX version.

- Memory Allocators Loom Large: One member suggested that robust allocator support might be necessary before memory allocators are integrated into the language, as most users don’t want to manually handle out-of-memory (OOM) errors.

- These comments were made in the context of other struggles: one member reported difficulty retrieving the logits along with the next token while writing a custom inference loop, and linked to a Google Docs document for context.

LlamaIndex Discord

- LlamaIndex Debuts Enterprise Document AI: LlamaIndex’s VP of Product previews enterprise learnings about parsing, extracting, and indexing documents on September 30th at 9 AM PST.

- The focus is on how LlamaIndex addresses real-world document challenges.

- vibe-llama CLI Tool Configures Coding Agents: LlamaIndex launched vibe-llama, a CLI tool that automatically configures coding agents with context and best practices for the LlamaIndex framework and LlamaCloud, detailed here.

- The goal is to streamline development workflows.

- CrossEncoder Class: Core vs Integrations: A member inquired about the duplicated CrossEncoder class implementations in `llama-index`, specifically under `.core` and `.integrations` (code link).

- It was clarified that the `.core` version is a leftover from the v0.10.x migration, with the recommendation to use `llama_index.postprocessor.sbert_rerank` with `pip install llama-index-postprocessor-sbert-rerank`.

- Quest for Agent Creation Gateway: A member sought existing projects serving as a gateway that ties together model, memory, and tools, exposing an OpenAI-compatible endpoint.

- They wanted to avoid reinventing the wheel in agent explorations.

- AI Safety Survey Gathers Community Opinions: A member shared an AI safety survey to collect community opinions on important AI safety questions.

- The survey aims to understand what the AI safety community finds most interesting.

Manus.im Discord Discord

- Users Report Missing Credit Purchase Option: Members have reported that the option to buy extra credits is missing, with users only seeing the upgrade package option.

- It was confirmed that the option is currently down.

- Support Tickets Go Unanswered: A user reported an issue with a task and created ticket #1318, but has not received a response or access to the ticket.

- They requested assistance from the team, tagging a specific member.

- Contest Winner Draws Rigging Allegations: A user alleges that the second-place winner in a contest didn’t deserve to win and claims the contest seems rigged.

- No further evidence or details were provided to support this claim.

- Free Daily Credits Discontinued?: A returning user noticed they didn’t receive the usual 300 free credits daily.

- They inquired whether Manus had stopped providing these credits.

- Referral Credits Code Confusion: A user asked how to claim referral credits, noting that the system asks for a code.

- The user stated they didn’t know where to find the required code.

tinygrad (George Hotz) Discord

- Overworld Const Folding Explored: A member explored overworld const folding and a potential view(const) refactor, redefining `UPat.cvar` and `UPat.const_like` to match `CONST` and `VIEW(CONST)` in this discord thread.

- The aim is to fold expressions like `x * 0`; however, concerns were raised about validity and `.base` proliferation in symbolic computations.

- ALU View Pushing as Alternative: An alternative approach was suggested involving adding a upat in kernelize that pushes views directly onto ALUs, mirroring S-Lykles’s method.

- This method and a special rule for `x * 0` would allow unmodified symbolic matching, given the computational irrelevance of `* 0`.

- base Removal Advocated: A member strongly advised against the proposed approach, deeming it “super ugly” and advocating for the removal of `.base`.

- The discussion also questioned the handling of PAD operations within this context.

- RANGEIFY=1 Simplifies Implementation: It was suggested that setting RANGEIFY=1 could lead to a cleaner implementation.

- However, the project is currently in a transition phase where both the old engine and rangeify are coexisting, creating a state of limbo.
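The `x * 0` folding goal discussed in this channel can be illustrated with a toy rewrite pass. This is only a minimal sketch over a made-up expression tree: `Const`, `Var`, `Mul`, and `fold` are hypothetical names, it does not use tinygrad's `UPat` machinery, and it ignores views, validity, and `.base` entirely. Integer semantics are assumed.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Const:
    value: int


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Mul:
    lhs: "Expr"
    rhs: "Expr"


Expr = Union[Const, Var, Mul]


def fold(expr: Expr) -> Expr:
    """Recursively fold multiplications whose result is known at rewrite time."""
    if isinstance(expr, Mul):
        lhs, rhs = fold(expr.lhs), fold(expr.rhs)
        # x * 0 and 0 * x collapse to the constant 0 (integer semantics assumed).
        if Const(0) in (lhs, rhs):
            return Const(0)
        # Two constants multiply into a single constant.
        if isinstance(lhs, Const) and isinstance(rhs, Const):
            return Const(lhs.value * rhs.value)
        return Mul(lhs, rhs)
    return expr


# x * (3 * 0): the inner product folds to 0, then the outer product does too.
folded = fold(Mul(Var("x"), Mul(Const(3), Const(0))))
```

In this toy the special case for zero sits next to ordinary constant folding, which is roughly the shape of the "special rule" idea raised in the thread.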

Nomic.ai (GPT4All) Discord

- GPT4ALL Free Tier Enables Private AI: A user inquired about using GPT4ALL for companies that wanted to use their AI model privately and securely.

- Another member clarified that the free version suffices if the company already has its own AI model ready.

- User Asks for LocalDocs Model: A user seeks a model recommendation for building a personal knowledge base from hundreds of scientific papers in PDF format using GPT4All’s LocalDocs feature.

- The user specified they have an Nvidia RTX 5090 with 24 GB VRAM and 64 GB RAM and would appreciate reasoning capabilities in the chosen model.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (951 messages🔥🔥🔥):

nano-banana model, Video Arena problems, DeepSeek V3.1, Gemini 3

- Nano-Banana’s McLau’s Law unveiled: A member joked that Nano-Banana often yields results far below nano-banana, terming this phenomenon “McLau’s Law” in a humorous nod to one of OpenAI’s researchers.

- Attached was a humorous image prompting discussion about AI’s current capabilities.

- Video Arena struggles with Bot Downtime: Members reported issues with the Video Arena, citing inability to use commands or generate videos, with moderators confirming the bot’s downtime and ongoing fixes.

- Repeated queries about video creation access were met with explanations about the bot’s temporary unavailability, directing users to the announcements channel for updates.

- DeepSeek V3.1 enters the Arena: Users discussed the introduction of DeepSeek V3.1 to the platform, with one user describing the new model as a slightly worse version of Gemini 2.5 Pro.

- However, the consensus is that it has potential as a coding model but needs stronger general abilities.

- Gemini 3 is Coming, claims user: While not confirmed, a user hinted at the impending release of Gemini 3, speculating a launch date mirroring the Google Pixel event, generating anticipation among members.

- The user did not cite any source and the claim was quickly dismissed by other community members.

- Site Outage Wipes Chats: Users reported widespread data loss following a site outage, including missing chat histories and inability to accept terms of service, prompting moderator acknowledgement and assurances of a fix.

- The moderator also said that a login feature will be available soon to prevent this sort of thing from happening again.

LMArena ▷ #announcements (2 messages):

Video Arena Bot, Deepseek v3.1, LMArena Models

- Video Arena Bot down, channels locked: The Video Arena Bot is currently not working, locking access to the prompt channels <#1397655695150682194>, <#1400148557427904664>, and <#1400148597768720384>.

- The bot must be online to prompt in those specific channels.

- DeepSeek v3.1 Added to LMArena: Two new models have been added to LMArena: deepseek-v3.1 and deepseek-v3.1-thinking.

- These models are now available for use in the arena.

Unsloth AI (Daniel Han) ▷ #general (887 messages🔥🔥🔥):

ByteDance Seed Model, GRPO Training, DeepSeek V3.1 Quants, Nvidia's GPUs and Pricing, GLM-4.5 Cline Integration

- ByteDance Releases Seed-OSS 36B Base Model: ByteDance released the Seed-OSS-36B-Base-woSyn model on Hugging Face, a 36B dense model with a 512K context window that explicitly claims no synthetic instruct data, making it an interesting base for further tunes.

- Members expressed excitement, noting it differs from models like Qwen3, and some are eager to try tuning GPT-ASS with it after their datasets are complete, despite the model being trained on only 12T tokens.

- GRPO Training Requires Smart Dataset Design: To use GRPO for multi-step game actions, members advised designing datasets with separate prompts for each step, such as `[['step1 instruct'], ['step1 instruct', 'step1 output', 'step2 instruct']]`, and implementing a reward function to match the outputs.

- It was noted that Full PPO might be better suited for games, as GRPO is primarily effective for LLMs because they roughly know what to do to begin with.

- DeepSeek V3.1 Sweeps Leaderboard in Thinking and Non-Thinking Modes: The DeepSeek V3.1 model has shown competitive results, achieving a 66 on SWE-bench verified in non-thinking mode, with members expressing hype and comparing it to GPT5 medium reasoning.

- Although initially hyped, discussions later mentioned concerns about its performance in creative writing and roleplay, with some noting hybrid models lack the instruction following and creativity in the non-think mode.

- Nvidia’s RTX 5090 Prices Settle, Sparking Upgrade Debates: The RTX 5090 is now priced around $2000, prompting discussions on whether to upgrade, especially for training purposes given its VRAM capabilities, while others suggested sticking with 3090s or waiting for the RTX 6000.

- Some members expressed frustration with NVIDIA’s limitations, particularly the lack of P2P or NVLink, with one member joking, “if you sit on a 5090 you will game on it.”

- High Quality Imatrix Calibration Data is Key: Members noted that WikiText-raw is considered a bad dataset for calibrating imatrices, because the imatrix needs to be well diversified and trained on examples in the model’s native chat-template format.

- Instead, Ed Addorio’s latest calibration data, with Math, Code, and Language prompts, can improve and help preserve the model’s understanding of multiple languages if done correctly.
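The per-step GRPO dataset layout and matching reward function described earlier in this channel summary could look roughly like the following. This is a minimal sketch in plain Python and is not tied to any particular trainer API. The names `build_step_prompts` and `match_reward`, along with the exact-match scoring rule, are illustrative assumptions rather than anything the members specified.

```python
from typing import List, Tuple


def build_step_prompts(episode: List[Tuple[str, str]]) -> List[List[str]]:
    """Turn one multi-step episode into one prompt per step.

    `episode` is a list of (instruction, expected_output) pairs. The prompt for
    step k holds every earlier instruction and output, then the step-k
    instruction, mirroring the layout suggested in the discussion.
    """
    prompts: List[List[str]] = []
    history: List[str] = []
    for instruction, expected in episode:
        prompts.append(history + [instruction])
        history = history + [instruction, expected]
    return prompts


def match_reward(completion: str, expected: str) -> float:
    """Hypothetical reward: 1.0 on a case- and whitespace-insensitive exact match, else 0.0."""
    return 1.0 if completion.strip().lower() == expected.strip().lower() else 0.0


episode = [("step1 instruct", "step1 output"), ("step2 instruct", "step2 output")]
prompts = build_step_prompts(episode)
```

A real game would likely need a softer reward than exact match (for example, partial credit for legal but suboptimal moves), which is a design choice left open here.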

Unsloth AI (Daniel Han) ▷ #introduce-yourself (1 messages):

.zackmorris: Hello

Unsloth AI (Daniel Han) ▷ #off-topic (27 messages🔥):

GRPO 20mb alloc fail, ChatGPT's deep research, Grok-4, Repetition penalty, RAG

- GRPO 20MB Alloc Fails Plague Gemma Model!: A user reported frequent 20MB allocation failures with GRPO while working on gemma-3-4b-it-unslop-GRPO-v3.

- ChatGPT’s Deep Thought Mode Boosts Performance!: A user suggested enhancing ChatGPT’s performance by enabling web search and adding “use deep thought if possible” to prompts, even without full deep research.

- Grok-4 Puts in the WORK!: A user was impressed by Grok-4, suggesting they might have secretly been using Grok-4-Heavy.

- Repetition Penalty Hilarity Ensues: A user shared an image to demonstrate the importance of the repetition penalty parameter.

- RAG assistance: A user asked for help working with RAG.
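For readers unfamiliar with the repetition penalty parameter mentioned above, one widely used formulation rescales the logits of tokens that have already been generated: positive logits are divided by the penalty and negative logits are multiplied by it. The sketch below assumes that formulation; `apply_repetition_penalty` and the dictionary-based logits are hypothetical simplifications, not any library's API.

```python
from typing import Dict, Iterable


def apply_repetition_penalty(
    logits: Dict[int, float], generated: Iterable[int], penalty: float = 1.2
) -> Dict[int, float]:
    """Return a copy of `logits` with already-generated tokens made less likely."""
    out = dict(logits)
    for tok in set(generated):
        if tok in out:
            score = out[tok]
            # Dividing a positive score or multiplying a negative one both lower it
            # when penalty > 1.
            out[tok] = score / penalty if score > 0 else score * penalty
    return out


penalized = apply_repetition_penalty({0: 2.0, 1: -1.0, 2: 0.5}, generated=[0, 1, 0], penalty=2.0)
```

A penalty of 1.0 leaves the logits unchanged, and values above 1.0 make repeats progressively less likely.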

Unsloth AI (Daniel Han) ▷ #help (101 messages🔥🔥):

Retinal Photo Training Strategies, GPT-OSS 20B Deployment on Sagemaker, Unsloth Zoo Issues, GGUF Loading with Unsloth, Gemma 3 Vision Encoder Training Loss

- Tuning Vision-Text Encoders for Retinal Photos: A user questioned whether it’s better to train a custom vision-text encoder for retinal photos or use mainstream models with Unsloth, noting that retinal photos aren’t well-represented in training datasets.

- It was suggested to experiment with computer vision models, transfer learning on similar datasets, and multimodal approaches, with synthetic clinical note generation using prompt engineering and personas.

- Troubleshooting GPT-OSS 20B Sagemaker Deployment: A user encountered a `ModelError` when deploying unsloth/gpt-oss-20b-unsloth-bnb-4bit on Sagemaker, receiving a 400 error and InternalServerException with the message `\u0027gpt_oss\u0027` (the JSON-escaped form of `'gpt_oss'`).

- It was mentioned that the model doesn’t work on AWS Sagemaker; members suggested deploying GGUFs or normal versions using LMI Containers, and pointed the user to AWS Documentation.

- Unsloth Zoo installation issues: A user experienced issues with unsloth-zoo even after installation in a Sagemaker instance, encountering import errors.

- The user resolved it by removing all packages and then reinstalling Unsloth and Unsloth Zoo alongside JupyterLab; they also needed to update Unsloth and refresh the notebook.

- Quantization Concerns for Apple Silicon Macs: A user sought guidance on which GGUF quantization is best for M series Apple Silicon, noting Macs are optimized for 4-bit and 8-bit computation.

- It was suggested that users go for Q3_K_XL, or IQ3_XXS if context doesn’t fit in memory, and that Q3-4 quants can be performant, but if using GGUFs it doesn’t matter as much.

- GPT-OSS Gains Multimodal with LLaVA: A user asked why the vision llama13b notebook does not work for gpt-oss-20b and wondered if anyone was able to do it.

- It was clarified that GPT-OSS is text-only, not a vision model, so the notebook won’t work; to add vision support, users would have to attach their own ViT module, as is done in LLaVA (see the LLaVA Guides).

Unsloth AI (Daniel Han) ▷ #showcase (11 messages🔥):

WildChat-4M-English-Semantic-Deduplicated dataset, Behemoth-R1-123B-v2 model, GPU Rich Flex

- Dataset of English prompts from WildChat-4M Released: The WildChat-4M-English-Semantic-Deduplicated dataset is available on Hugging Face, consisting of English prompts from the WildChat-4M dataset, deduplicated using multiple methods including semantic deduplication with Qwen-4B-Embedding and HNSW.

- The current release includes prompts <= ~2000 tokens, with larger prompts to be added later. More information can be found here.

- TheDrummer Releases Behemoth-R1-123B-v2: The Behemoth-R1-123B-v2 model, created by TheDrummer, has been released and can be found here.

- A member noted that it’s wild to be able to set up your hardware in HF.

- GPU Rich is the New Flex: A member shared an image about being shamed for being poor while flexing as GPU rich.

- It’s a flex to see GPU in TFLOPS.
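The semantic deduplication step mentioned for the WildChat dataset above can be sketched as a greedy filter over embedding vectors. This is an illustration under stated assumptions, not the dataset author's pipeline: it uses brute-force cosine similarity in place of an HNSW index, hand-written toy vectors in place of real embeddings, and a hypothetical `threshold` of 0.95.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_dedup(embeddings: List[Sequence[float]], threshold: float = 0.95) -> List[int]:
    """Return indices to keep, dropping items too similar to an earlier kept item."""
    kept: List[int] = []
    for i, emb in enumerate(embeddings):
        # Brute-force scan over kept items; an ANN index such as HNSW would replace this.
        if all(cosine(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


# Toy embeddings: the second vector is nearly parallel to the first.
toy = [[1.0, 0.0], [0.999, 0.01], [0.0, 1.0]]
kept = semantic_dedup(toy)
```

The brute-force scan is quadratic in the number of kept items, which is why an approximate nearest-neighbor index becomes attractive at the scale of millions of prompts.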