we are so close!

AI News for 9/24/2025-9/25/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (194 channels, and 5737 messages) for you. Estimated reading time saved (at 200wpm): 472 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

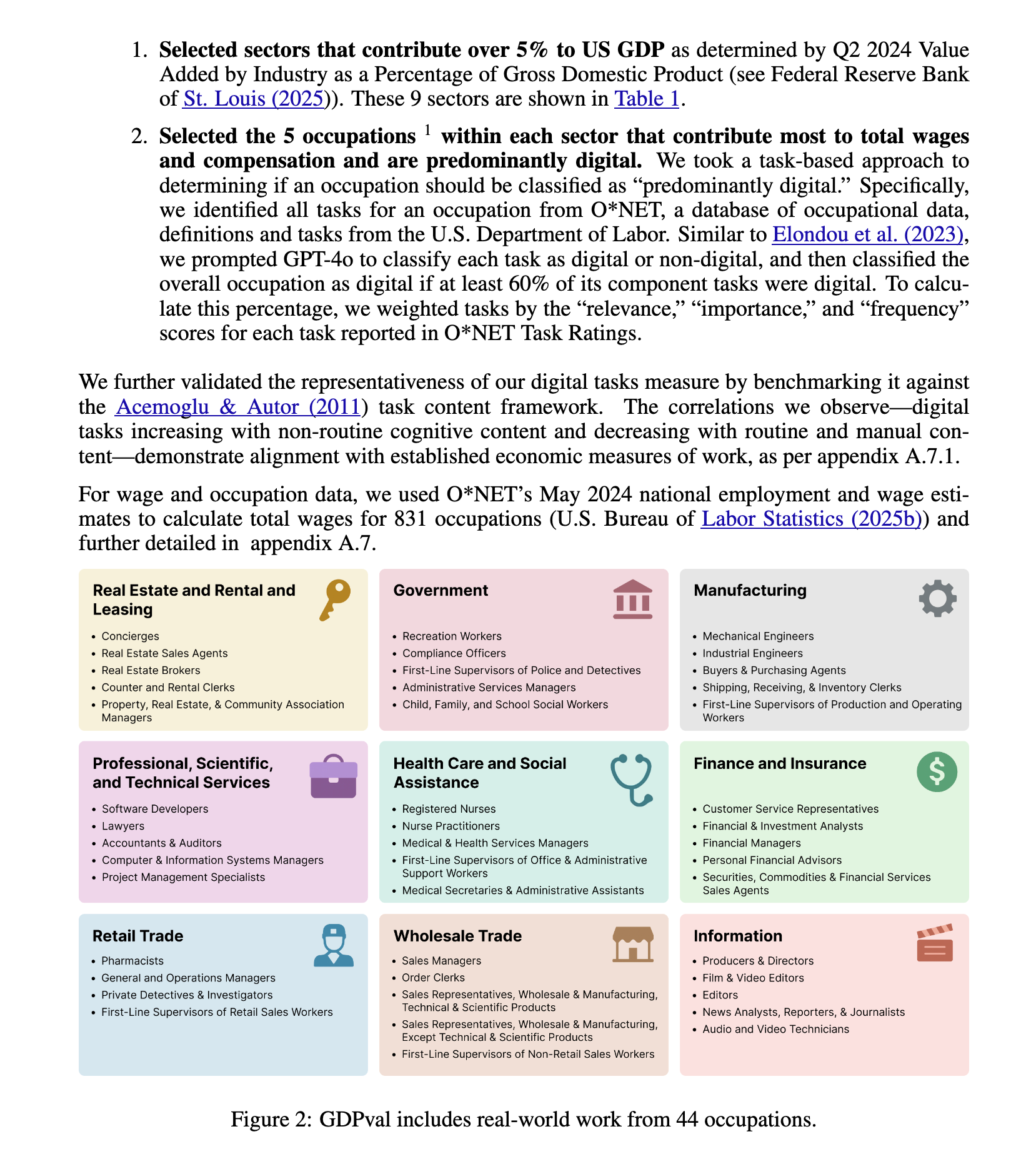

OpenAI’s Evals team is back for a third time this year with GDPval, which they frame as a logical next step in model evals: the breadth of MMLU combined with the depth of agentic benchmarks like SWE-Bench and their own SWE-Lancer. GDPval (full paper here) takes its name from a top-down selection of major (>5%) sectors of GDP, filtered for “predominantly digital” knowledge work:

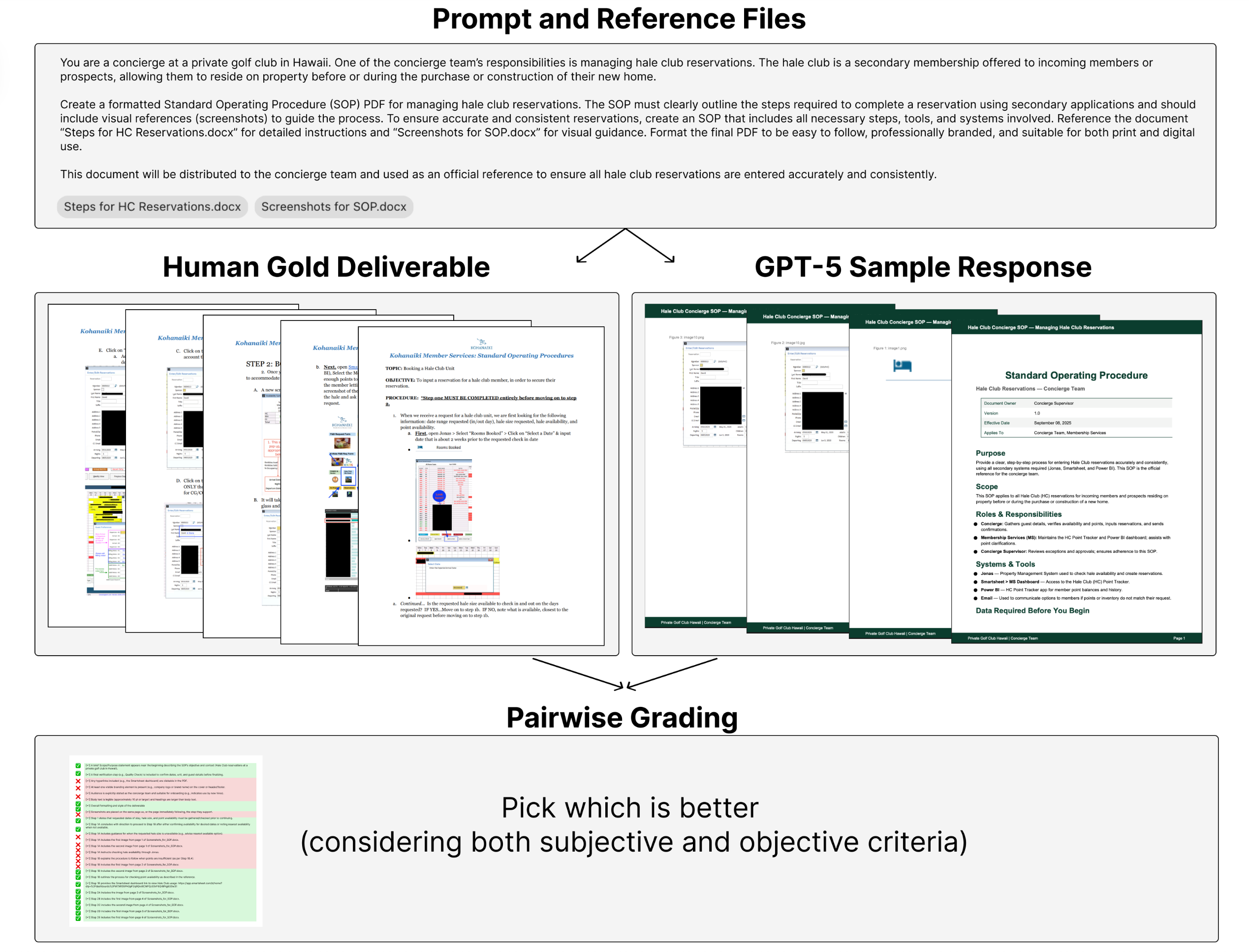

This resulted in 1,320 tasks across 44 occupations, which were then evaluated against models and human experts averaging 14 years of experience in those fields:

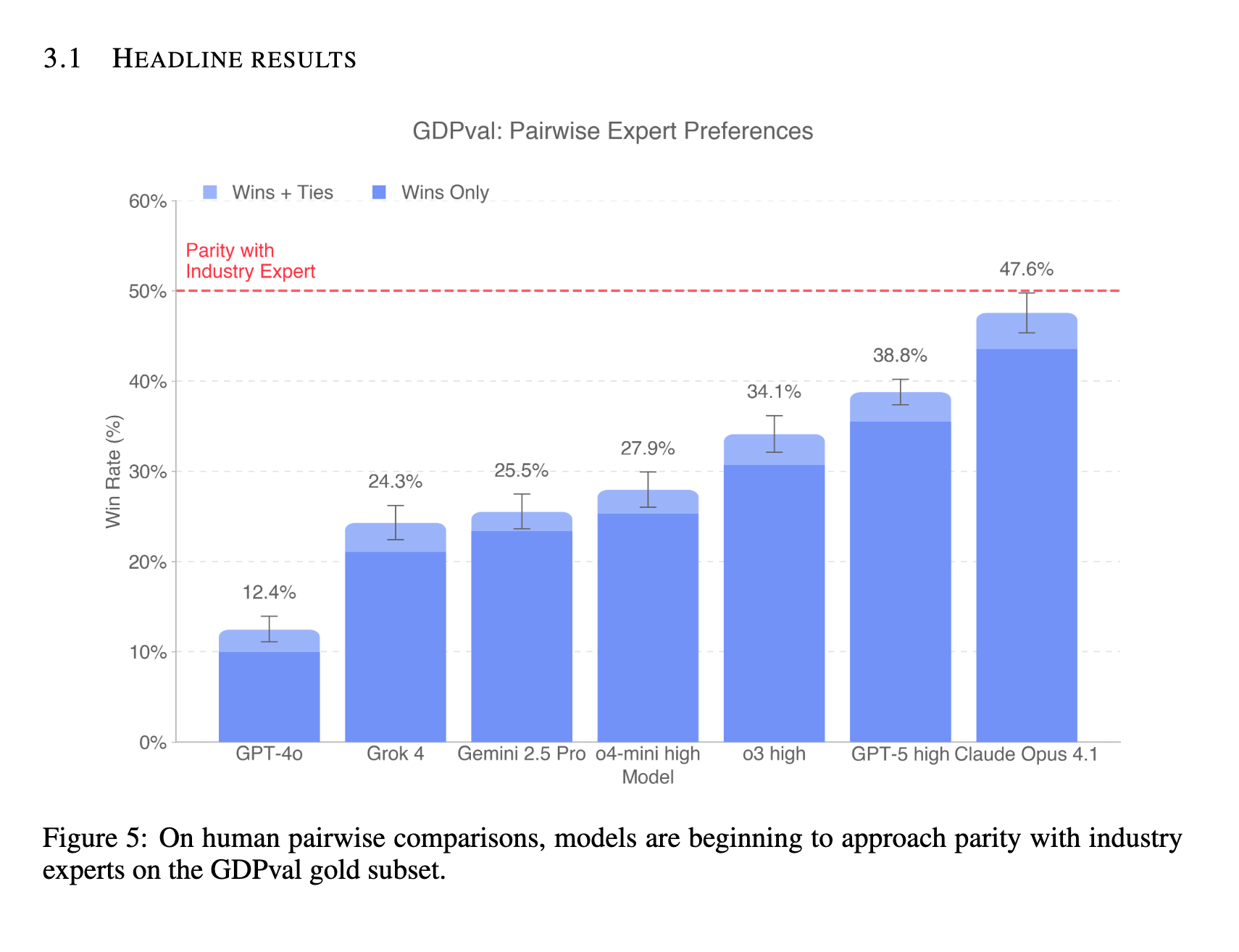

The two primary results charts are hugely validating: first, that OpenAI doesn’t bias towards itself, and second, that Opus is within spitting distance of industry expert output:

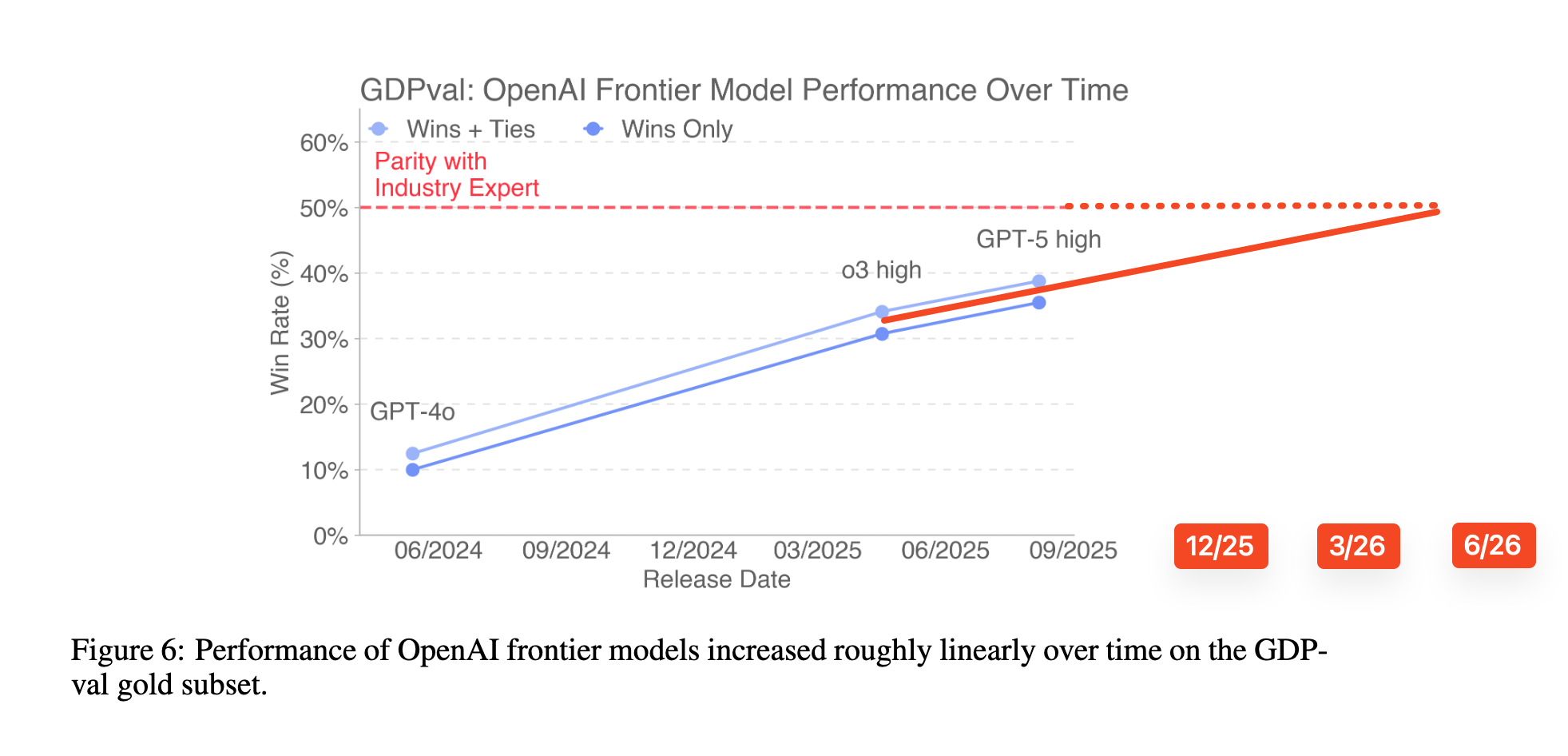

and the model trendlines over time have GPTnext matching human performance by roughly mid-2026.

The word AGI isn’t mentioned at all in the paper, but the 2018 OpenAI charter defined AGI as “highly autonomous systems that outperform humans at most economically valuable work”. If we were to wake up in Sept 2026 and find that GPT6-high-ultrathink-final-for-realsies had a GDPval pairwise win rate whose confidence interval sat above 50%, then we could truly say that we have achieved AGI by 2018 standards.
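To make the "confidence interval above 50%" test concrete, here is a minimal sketch of checking whether a pairwise win rate clears parity. It assumes a plain Wilson score interval over win/loss counts; how GDPval itself computes intervals or treats ties is not described here, and the counts below are made up for illustration.

```python
import math

def wilson_interval(wins: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = wins / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

def clears_parity(wins: int, n: int) -> bool:
    """True only when the whole interval sits above a 50% win rate."""
    low, _ = wilson_interval(wins, n)
    return low > 0.5

# Hypothetical comparison counts, NOT GDPval results.
print(clears_parity(120, 220))  # win rate ~54.5%: interval still straddles 50%
print(clears_parity(140, 220))  # win rate ~63.6%: interval is entirely above 50%
```

The point of the interval check is that a raw win rate slightly above 50% is not enough on a task set of this size; the lower bound has to clear parity too.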

AI Twitter Recap

OpenAI’s GDPval and the state of real‑world evals

- GDPval (OpenAI): OpenAI introduced GDPval, a new eval measuring model performance on “economically valuable” tasks across 44 occupations, with tool use (search/code/doc) and multi-hour complexity. Early results: Claude Opus 4.1 tops most categories, approaching or beating human industry experts; GPT‑5 “high” trails Opus on the same tasks. OpenAI provides a public site and methodology; leadership frames this as a key metric for policymakers and forecasting labor impact. See launch and discussion: @OpenAI, @kevinweil, @gdb, @dejavucoder, @Yuchenj_UW, @LHSummers.

- Artificial Analysis indices:

- Gemini 2.5 Flash/Flash‑Lite (Preview 09‑2025): +3/+8 points (reasoning/non‑reasoning) for Flash; +8/+12 for Flash‑Lite vs previous releases. Flash‑Lite is ~40% faster (≈887 tok/s) and uses 50% fewer output tokens; 1M context, tool use, and hybrid reasoning modes. Pricing: Flash‑Lite $0.1/$0.4 per 1M in/out; Flash $0.3/$2.5. Benchmarks: @ArtificialAnlys, follow‑up.

- DeepSeek V3.1 Terminus: +4 points over V3.1 (reasoning mode), large gains in instruction following (+15 IFBench) and long context (+12 AA‑LCR). Architecture: 671B total, 37B active; availability via API and third‑party hosts (FP4/FP8). @ArtificialAnlys.

- AA‑WER (speech‑to‑text): New word‑error‑rate benchmark across AMI‑SDM, Earnings‑22, VoxPopuli. Leaders: Google Chirp 2 (11.6% WER), NVIDIA Canary Qwen 2.5B (13.2%), Parakeet TDT 0.6B V2 (13.7%). Price/perf tradeoffs noted; Whisper/GPT‑4o Transcribe smooth their transcripts at a cost to literal accuracy. @ArtificialAnlys, pricing.
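For readers who have not computed WER by hand, here is a minimal sketch of the metric: word-level edit distance (substitutions, insertions, deletions) divided by the number of reference words. It splits on whitespace only; the text normalization that AA‑WER applies is not described here, so treat this as the textbook definition rather than the benchmark's exact scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level Levenshtein distance / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)

# One inserted word against a six-word reference.
print(word_error_rate("the quarterly revenue rose ten percent",
                      "the quarterly revenue rose by ten percent"))
```

This also shows why "smoothed" transcripts score worse: a model that rewrites disfluencies into cleaner prose is charged an edit for every word it changes, even when the meaning is preserved.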

Agentic coding and productized agents

- Kimi “OK Computer” (K2‑powered agent mode): An OS‑like agent with its own file system, browser, terminal and longer tool budgets. Demos: single‑prompt websites/mobile‑first designs, editable slides, and dashboards from up to 1M rows. Also released a Vendor Verifier for tool‑call correctness by provider on OpenRouter. Threads: @Kimi_Moonshot, @crystalsssup, examples 1, 2.

- GitHub Copilot CLI (public preview): Local terminal agent with MCP support that mirrors the cloud Copilot coding agent. Use existing GitHub identity, script embedding, clear per‑request billing. Announcements: @github, @lukehoban.

- Factory AI “Droids” + $50M: Model‑agnostic software dev agents (CLI/IDE/Slack/Linear/Browser), #1 on Terminal‑Bench, pitched as broader knowledge‑work agents via code abstractions. Launch + funding: @FactoryAI, commentary @swyx, @tbpn.

- Ollama web search API + MCP server: Bridges local/cloud models to live web grounding; compatible with Codex/cline/Goose and other MCP clients. @ollama.

- Reka Research “Parallel Thinking”: API option that generates multiple candidate chains and resolves via a verifier model; +4.2 on Research‑Eval and +3.5 on SimpleQA with near‑flat latency. @RekaAILabs.
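The "multiple candidates, then a verifier" pattern mentioned for Parallel Thinking can be sketched generically as best-of-n selection. This is not Reka's implementation or API: `generate` and `verify` are hypothetical callables standing in for a model call and a verifier model, and the toy versions below exist only so the sketch runs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

def parallel_think(
    prompt: str,
    generate: Callable[[str, int], str],   # hypothetical: (prompt, seed) -> candidate answer
    verify: Callable[[str, str], float],   # hypothetical: (prompt, candidate) -> score
    n: int = 4,
) -> str:
    """Generate n candidates concurrently and return the one the verifier scores highest."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        candidates = list(pool.map(lambda seed: generate(prompt, seed), range(n)))
    return max(candidates, key=lambda c: verify(prompt, c))

# Toy stand-ins so the sketch runs without any model or API.
def toy_generate(prompt: str, seed: int) -> str:
    return str(3 + seed % 3)  # seeds 0..3 give "3", "4", "5", "3"

def toy_verify(prompt: str, candidate: str) -> float:
    return 1.0 if candidate == "4" else 0.0

print(parallel_think("What is 2 + 2?", toy_generate, toy_verify))  # prints 4
```

Because the candidates are produced concurrently, wall-clock latency is roughly one generation plus one verification pass, which is one plausible reading of the "near-flat latency" claim.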

Video reasoning and robotics

- Video models as zero‑shot reasoners (Veo 3): DeepMind shows broad zero‑shot skills across perception → physics → manipulation → reasoning. Introduces “Chain‑of‑Frames” as visual CoT. Still behind SOTA on depth/physics; cost remains high. Papers/discussion: @arankomatsuzaki, project/paper, @tkipf.

- Gemini Robotics 1.5 (Google): New embodied reasoning stack (GR 1.5 VLA + ER), long context, tool use, spatial‑temporal planning, transfer across embodiments, and safety constraints. API in Google AI Studio; sorting‑laundry reasoning demo. Announcements: @GoogleDeepMind, @sundarpichai, API note, @demishassabis.

Model and method releases

- EmbeddingGemma (Google): A 308M encoder model topping MTEB among sub‑500M models (multilingual/English/code). Claims parity with ~2× larger baselines; supports 4‑bit and 128‑dim embeddings. Techniques: encoder‑decoder init, geometric distillation, spread‑out regularizer, model souping. Good for on‑device/high‑throughput. Threads: @arankomatsuzaki, paper roundup.

- ShinkaEvolve (Sakana AI, open source): A sample‑efficient evolutionary framework that “evolves programs” using LLM ensembles with adaptive parent sampling & novelty filtering. Results: new SOTA circle packing with 150 samples; improved ALE‑Bench solutions; discovered a novel MoE load‑balancing loss improving specialization/perplexity; stronger AIME scaffolds. Code/paper: @SakanaAILabs, @hardmaru, report.

- RLMT & TPT:

- “Language Models that Think, Chat Better” proposes RL with Model‑rewarded Thinking (RLMT) to surpass RLHF on chat benchmarks for 8B models; ablations emphasize prompt mixtures and reward strength. @iScienceLuvr, notes.

- “Thinking‑Augmented Pre‑Training (TPT)” reports ~3× pretrain data efficiency and >10% post‑training improvements on reasoning for 3B models via synthetic step‑by‑step trajectories. @iScienceLuvr.

Systems, serving, and infra

- Perplexity Search API: A real‑time web index with state‑of‑the‑art latency/quality for grounding LLMs and agents, plus public evals/research. Claims strong performance vs single‑step and deep research benchmarks, and advantages vs Google SERP for LLM use. Launch: @perplexity_ai, research: article, commentary: @AravSrinivas.

- KV reuse and dynamic parallelism:

- LMCache: Open KV‑cache layer that reuses any repeated text segment (not just prefixes) across GPU/CPU/disk; reduces RAG cost 4–10×, TTFT, and boosts throughput. Integrated in NVIDIA Dynamo. @TheTuringPost.

- Shift Parallelism (Snowflake): Dynamically switches Tensor/Sequence Parallelism based on load—up to 1.5× lower latency (interactive) and 50% higher throughput (heavy traffic). Code in Arctic Inference. @StasBekman.

- Context‑parallel diffusion: Native support for ring/Ulysses variants to make multi‑GPU diffusers “go brrr.” @RisingSayak.

- attnd (ZML): Sparse logarithmic attention on CPU, over UDP; pitched as “paving the way for unlimited context.” @steeve.

- Energy and hardware:

- Microsoft (LLM inference energy): Median chatbot query ~0.34 Wh; long reasoning ~4.3 Wh (~13×); fleet at 1B q/day ~0.9 GWh (~web search scale). Claims public estimates are 4–20× too high; 8–20× efficiency gains feasible. @arankomatsuzaki.

- B200 spot pricing: B200 spot instances briefly at ~$0.92/hr. @johannes_hage.

Industry moves and platform updates

- Meta talent coup: Diffusion/consistency models pioneer Yang Song departs OpenAI to join Meta; widely regarded as a major poach. Coverage: @iScienceLuvr, @Yuchenj_UW.

- ChatGPT Pulse: OpenAI rolls out “proactive” daily updates (context, connected apps) to Pro users—an ambient agent form factor moving beyond reactive chat. Threads: @OpenAI, @sama, @fidjissimo.

- Qwen ecosystem: Qwen models added to the LMSYS Arena (@Alibaba_Qwen); Qwen3‑VL provisioning via third‑party providers for easier trials. @mervenoyann.

Top tweets (by engagement)

- “there’s this guy… if ChatGPT is wrong he puts his phone in the fridge” — 55,057

- Sam Altman on ChatGPT Pulse (“from reactive to proactive”) — 28,573

- Karpathy on “AI isn’t replacing radiologists” (why benchmarks ≠ deployment reality) — 7,980

- Kimi’s “OK Computer” agent mode launch — 2,646

- OpenAI announces GDPval — 4,144

- Demis Hassabis on Gemini Robotics 1.5 (“talk to robots”) — 1,545

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. China AI Model Launches: Alibaba Qwen Extreme-Scaling Roadmap & Tencent Hunyuan Image 3.0

- Alibaba just unveiled their Qwen roadmap. The ambition is staggering! (Score: 662, Comments: 146): Alibaba’s Qwen roadmap slide signals an aggressive bet on unified multimodal models and extreme scaling: context length from 1M → 100M tokens, parameters from ~1T → 10T, test‑time compute from 64k → 1M tokens, and training data from 10T → 100T tokens, alongside “unlimited-scale” synthetic data generation and expanded agent capabilities (complexity, interaction, learning). The plan echoes a “scaling is all you need” philosophy, implying massive compute, data curation, and inference optimization challenges for memory bandwidth, KV‑cache management, long‑context attention (e.g., hybrid/linear/sparse), and reliability of synthetic data pipelines. Commenters question feasibility/practicality: a 100M context window and >1T parameter models strain hardware and inference costs, likely pushing deployments to closed, cloud-only settings; others ask what local compute could realistically handle trillion‑scale models, implying reliance on quantization, MoE, or offloading schemes.

- Several latch onto the “100M context” teased in the roadmap (image). Naive quadratic attention makes this intractable at scale: for a ~32-layer, ~4k-hidden decoder, FP16 KV cache is ≈ 0.5 MB/token, so 100M tokens implies ≈ 50 TB of VRAM (even 4-bit KV would still be ≈ 12.5 TB). Hitting that target would require sparse/linear/streaming attention (e.g., block-sparse, ring/streaming), retrieval/chunking, aggressive KV quantization/offload, and careful bandwidth-optimized kernels; compute optimizations like FlashAttention help constants but not O(n^2) scaling.

- Re: “run >1T locally?” Weight storage alone dominates: 1T params at int4 ≈ 500 GB (FP16 ≈ 2 TB) before KV cache, which at long contexts adds hundreds of GB to multi-TB. Realistically this needs multi-GPU servers (e.g., 8–16× 80 GB with NVLink/NVSwitch) with tensor+pipeline parallelism; per-token compute is ≈ O(P) (~2e12 FLOPs/token), so 10–30 tok/s needs roughly 20–60 TFLOP/s sustained, but memory bandwidth and collective comms are the primary bottlenecks rather than raw FLOPs.
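The back-of-envelope figures in the comments above are easy to reproduce. A minimal sketch, assuming the commenter's illustrative 32-layer, 4096-wide dense decoder with full multi-head attention (no grouped-query attention) and roughly 2 FLOPs per parameter per generated token; the function names are ours, not from any library.

```python
def kv_cache_bytes(tokens: int, layers: int, hidden: int, bytes_per_value: float) -> float:
    """KV cache size: one key and one value vector of width `hidden` per layer per token."""
    return 2 * layers * hidden * bytes_per_value * tokens

def weight_bytes(params: float, bits_per_param: float) -> float:
    """Storage for the weights alone at a given precision."""
    return params * bits_per_param / 8

def sustained_flops(params: float, tokens_per_second: float) -> float:
    """Dense-model rule of thumb (assumption): about 2 FLOPs per parameter per token."""
    return 2 * params * tokens_per_second

TB = 1e12
context = 100_000_000  # the roadmap's 100M-token context

print(kv_cache_bytes(1, 32, 4096, 2))               # bytes per token at FP16
print(kv_cache_bytes(context, 32, 4096, 2) / TB)    # TB of KV cache at FP16
print(kv_cache_bytes(context, 32, 4096, 0.5) / TB)  # TB of KV cache at 4-bit
print(weight_bytes(1e12, 4) / TB, weight_bytes(1e12, 16) / TB)      # 1T params: int4 vs FP16
print(sustained_flops(1e12, 10) / TB, sustained_flops(1e12, 30) / TB)  # TFLOP/s at 10 and 30 tok/s
```

Taking 0.5 MiB per token exactly gives about 52 TB at FP16 and about 13 TB at 4-bit in decimal units, consistent with the rounded ≈ 50 TB and ≈ 12.5 TB quoted above. An MoE model with fewer active parameters per token would lower the FLOPs estimate but not the weight-storage one.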


- Tencent is teasing the world’s most powerful open-source text-to-image model, Hunyuan Image 3.0 Drops Sept 28 (Score: 173, Comments: 26): Tencent teased Hunyuan Image 3.0, an open‑source text‑to‑image model slated for release on Sept 28, claiming it will be the “most powerful” open-source option. The teaser implies a 96 GB VRAM requirement (at least for some inference modes), but provides no public benchmarks, architecture details, training data, or throughput/latency metrics yet; thus the performance claim is unverified pending release. Image: https://i.redd.it/t8w84ihz1crf1.jpeg Commenters are skeptical of heavy pre‑release hype, noting that strong models often arrive with minimal marketing (e.g., Qwen) and citing past overhyped releases (e.g., SD3 vs FLUX). Others point out the “most powerful” label is premature without apples‑to‑apples open‑source comparisons; one commenter confirms the VRAM 96 detail from the teaser.

- Rumored ~96 GB VRAM requirement for inference suggests a very large diffusion/DiT backbone or high‑res latent configuration, which exceeds single consumer GPUs (24–48 GB). Expect heavy reliance on memory optimizations (attention slicing, tiled VAE), CPU/NVLink offload, model sharding or multi‑GPU tensor parallelism; quantization for diffusion U‑Nets is less mature and can hurt quality. Memory footprint versus resolution/steps trade‑offs will be critical for practical local use.

- Several note a pattern where heavily teased releases underdeliver versus “shadow‑dropped” ones (e.g., Qwen), citing SD3 vs FLUX as precedent. They want hard numbers before believing “most powerful”: side‑by‑side prompts vs Qwen Image/FLUX/SDXL with FID/CLIPScore/HPSv2, plus tests for text rendering, small‑object counting, multi‑subject composition, and prompt faithfulness. Without a data card and reproducible evals, the claim reads as marketing.

- Immediate ask for ComfyUI support; feasibility hinges on whether Hunyuan Image 3.0 sticks to an SDXL‑style pipeline or introduces custom schedulers/blocks. If it’s DiT‑like (as in prior Hunyuan releases), a loader node with FlashAttention 2/xFormers should suffice; otherwise custom CUDA kernels and sampler nodes may be needed. Community will look for FP16 checkpoints, ONNX/TensorRT exports, and sampler compatibility (DDIM/DPM++/DPMSolver) to gauge ease of adoption.


2. Local AI Alternatives: Fenghua No.3 CUDA/DirectX GPU + Post-Abliteration Uncensored LLM Finetunes

- China already started making CUDA and DirectX supporting GPUs, so over of monopoly of NVIDIA. The Fenghua No.3 supports latest APIs, including DirectX 12, Vulkan 1.2, and OpenGL 4.6. (Score: 454, Comments: 124): Post claims China’s Fenghua No.3 GPU natively supports modern graphics/compute APIs: DirectX 12, Vulkan 1.2, OpenGL 4.6, and even NVIDIA’s CUDA, suggesting a potential alternative to NVIDIA’s ecosystem. The image appears to be a product/spec slide, but no driver maturity details, CUDA compatibility layer notes, or benchmarks are provided, so real-world parity and performance remain unverified. Contextually, CUDA “support” could mean a reimplementation/translation layer (akin to AMD’s HIP: https://github.com/ROCm/HIP or projects like ZLUDA: https://github.com/vosen/ZLUDA), which can be legally and technically fraught unless fully clean-room and robustly tested. Top comments highlight that AMD already offers CUDA-compatibility via HIP and that Chinese vendors may ignore legal/IP constraints to advertise CUDA outright; others remain skeptical (“I’ll believe it when I see it”) and anticipate geopolitical pushback. Overall sentiment questions readiness, driver quality, and legality more than the headline API list.

- Several point out AMD already provides a CUDA-like path: HIP/ROCm enables source-level portability by mapping CUDA APIs to HIP (avoiding NVIDIA trademarks/legal issues), while projects like ZLUDA attempt binary-level CUDA driver/runtime translation to run unmodified CUDA apps on non‑NVIDIA GPUs. Practically, this means many CUDA kernels can be auto-translated/recompiled for AMD with minimal code changes via HIP, whereas ZLUDA targets drop‑in execution of existing CUDA binaries; coverage and performance remain dependent on driver maturity and parity with newer CUDA features.

- IMPORTANT: Why Abliterated Models SUCK. Here is a better way to uncensor LLMs. (Score: 273, Comments: 80): OP reports that weight-space “abliteration” (uncensoring) of LLMs, especially MoE models like Qwen3-30B-A3B, consistently degrades reasoning and agentic/tool-use behavior and increases hallucinations, often causing 30B abliterated models to underperform non‑abliterated 4–8B models. In their tests, abliterated+finetuned models largely “recover” capabilities: mradermacher/Qwen3-30B-A3B-abliterated-erotic-i1-GGUF (tested i1-Q4_K_S) approaches base Qwen3-30B-A3B performance with lower hallucination vs other abliterated Qwen3 variants and better tool-calling via MCP; mlabonne/NeuralDaredevil-8B-abliterated (DPO FT from Llama3‑8B) reportedly outperforms its base while remaining uncensored. Direct comparisons against abliterated-only builds (Huihui-Qwen3-30B-A3B-Thinking-2507-abliterated-GGUF, Huihui-Qwen3-30B-A3B-abliterated-Fusion-9010-i1-GGUF, Huihui-Qwen3-30B-A3B-Instruct-2507-abliterated-GGUF) found unrealistic responses to illicit-task prompts, frequent wrong/repetitive tool calls, and higher hallucination than the finetuned abliterated model (though still slightly worse than the original). Comments call for a standardized benchmark to quantify “abliteration” degradation beyond NSFW tasks and frame the observed recovery as “model healing”: post-edit finetuning lets the network relearn connections broken by unconstrained weight edits. A skeptical view argues that if finetuning is required anyway, abliteration adds risk without benefit, claiming they’ve never seen abliteration+finetune beat a straight finetune.

- Several commenters note that arbitrary weight edits (“abliteration”) introduce uncontrolled distribution shift and capability loss; repairing that damage is what they call “model healing”: if you perturb weights without a training signal, you should expect degraded reasoning/knowledge, and only further fine-tuning with a proper loss can partially restore the broken circuits. Practitioners report that an abliterated-then-fine-tuned model rarely outperforms a plain fine-tune on the same base, implying the edit adds optimization debt without measurable gains in benchmarks.

- There’s a call for evaluation beyond porn-centric tests; the Uncensored General Intelligence (UGI) Benchmark/leaderboard aims to quantify broad capabilities of uncensored models (reasoning, coding, knowledge, etc.) while minimizing refusal artifacts: https://huggingface.co/spaces/DontPlanToEnd/UGI-Leaderboard. Using UGI (or similar multi-domain suites) would better capture whether uncensoring preserves general performance versus causing regressions.

- As alternatives to abliteration, users recommend uncensored fine-tunes known to retain utility, e.g., Qwen3-8B 192k Josiefied GGUF builds (https://huggingface.co/DavidAU/Qwen3-8B-192k-Josiefied-Uncensored-NEO-Max-GGUF), Dolphin-Mistral-24B variants (https://huggingface.co/mradermacher/Dolphin-Mistral-24B-Venice-Edition-i1-GGUF), and models from TheDrummer (https://huggingface.co/TheDrummer). These are cited as better baselines for uncensoring that can be benchmarked head-to-head on UGI to validate capability retention.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Gemini Robotics 1.5 and Veo 3 Zero‑Shot Video Reasoning

- Gemini Robotics 1.5 (Score: 276, Comments: 39): Google DeepMind announces “Gemini Robotics 1.5,” a Gemini-1.5–based multimodal VLA that maps natural language + vision to robot control for long‑horizon, multi‑step manipulation across diverse embodiments, with demos like laundry sorting, desk organization, and full scene reset/rollback (page). Building on prior VLA lines (e.g., RT‑2/RT‑X), it emphasizes open‑vocabulary object/tool grounding, hierarchical task decomposition via the model’s long context, and generalization without per‑task fine‑tuning, enabling “return to initial state” behaviors and multi‑object organization. Technically oriented commenters highlight the significance of robust scene restoration as a practical household primitive (canonical “reset” to a predefined state), and speculate on direct transfer to agriculture (e.g., fruit picking) as a scalable, high‑impact application domain.

- Applying this to fruit picking is a non-trivial jump from laundry: outdoor, unstructured scenes introduce variable lighting, occlusions, and deformable/fragile-object handling that demand closed-loop vision, tactile/force feedback, compliant/soft grippers, and robust visual servoing. Generalist VLA policies (e.g., RT‑2’s open‑vocabulary affordance grounding) could help map language goals like “pick the ripe apple” to action primitives, but success will hinge on on‑board latency, multi-view perception, and slip‑aware grasp release [https://deepmind.google/discover/blog/rt-2/].

- The “restore the scene to a canonical state” use case is essentially goal‑conditioned manipulation with persistent memory: maintain an object‑centric scene graph, compute deltas to a reference snapshot, then plan multi‑step rearrangements. Methods like Transporter Nets for keypoint‑based pick‑and‑place and visual goal‑conditioned policies can execute “tidy to match this image” behaviors, but need robust relocalization, clutter segmentation, and failure recovery to avoid compounding errors over long horizons [https://transporternets.github.io/].

- “All robots share the same mind” maps to fleet learning: centralized policy/parameter sharing across heterogeneous embodiments with periodic cloud updates, as seen in multi-robot datasets/policies like RT‑X [https://robotics-transformer-x.github.io/]. Practical deployments add embodiment adapters and may favor federated learning for privacy/safety; core challenges are distribution shift across morphologies/sensors, catastrophic forgetting in continual learning, and sim2real drift, mitigated via domain randomization and strong regularization.
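The "compute deltas to a reference snapshot" step from the scene-restoration comment above can be illustrated with a toy sketch. It assumes objects are tracked by name with 2D positions in metres; real systems would use full 6-DoF poses, perception uncertainty, and a proper motion planner, and none of the names below come from a robotics library.

```python
import math

Pose = tuple[float, float]  # (x, y) in metres; a simplification for illustration

def scene_deltas(
    reference: dict[str, Pose],
    current: dict[str, Pose],
    tol: float = 0.02,
) -> list[tuple[str, Pose, Pose]]:
    """List (object, from_pose, to_pose) for every object displaced by more than `tol`."""
    moves = []
    for name, target in reference.items():
        pose = current.get(name)
        if pose is None:
            continue  # not observed; a real system would have to search for it
        if math.dist(pose, target) > tol:
            moves.append((name, pose, target))
    # Arbitrary ordering heuristic: fix the largest displacement first.
    moves.sort(key=lambda m: math.dist(m[1], m[2]), reverse=True)
    return moves

reference = {"mug": (0.10, 0.20), "book": (0.50, 0.50), "pen": (0.30, 0.10)}
current = {"mug": (0.40, 0.20), "book": (0.50, 0.51), "pen": (0.30, 0.60)}
for name, src, dst in scene_deltas(reference, current):
    print(f"move {name}: {src} -> {dst}")
```

The book is within tolerance and is left alone; the pen and mug are queued for rearrangement. The hard parts named in the comment (relocalization, clutter segmentation, failure recovery) all live upstream of this diff, in producing a trustworthy `current` snapshot.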

- Video models are zero-shot learners and reasoners (Score: 238, Comments: 30): The post highlights a project and paper claiming that the generative video model Veo 3 exhibits broad zero-shot capabilities—without task-specific training or language mediation—across segmentation, edge detection, image editing, physical property inference, affordance recognition, tool-use simulation, and early visual reasoning tasks (e.g., maze and symmetry solving). Drawing a parallel to LLM emergence, the authors argue that scaling large, web-trained generative video models could yield general-purpose vision understanding, positioning video models as potential unified vision foundation models; see the project page and demos at https://video-zero-shot.github.io/ and the paper at https://arxiv.org/pdf/2509.20328. Notably, the materials appear primarily qualitative: no disclosed parameter counts, compute, training corpus specifics, standardized benchmarks, or ablations are evident, limiting rigorous comparison and reproducibility. Commenters speculate that coherent long-horizon video generation implies a strong learned world model and that further scaling could improve capabilities, while also noting the significant compute cost of video models and proposing integration with LLMs into a single multimodal model; several request basic model details (e.g., Veo 3 size).

- Several commenters infer that high-quality video generation (e.g., Google’s claimed Veo 3) implies a learned “world model” that enforces temporal coherence and basic physics, which can surface as zero-shot reasoning. This aligns with prior world-model work like DeepMind’s Genie (interactive environment model) that learns dynamics from video (blog). The core idea: to produce consistent frames, models must internalize object permanence, motion continuity, and causality—capabilities that also benefit downstream reasoning without task-specific finetuning.

- There’s a practical scaling constraint: video modeling explodes token/computation compared to text. A 10 s video at 24 fps and 720p, patchified at 16x16, yields roughly (1280/16)*(720/16) = 3600 tokens per frame ⇒ ~864k tokens per clip; even with latent compression (8–16×) and diffusion/flow-matching in a VAE latent, training/inference FLOPs dwarf LLMs. This motivates hybrid systems (LLM for planning/reasoning + specialized video generator) or unified backbones with shared token spaces to amortize compute across modalities.

- On multimodality, participants note gaps: video-in exists in LMMs (e.g., Gemini 1.5 can process long videos via large context windows, reportedly up to “hours” with frame sampling; see Gemini 1.5), and GPT-4o supports real-time video input (OpenAI). But truly unified video-in + video-out + reasoning in one released model remains uncommon; current practice chains a reasoning LLM with a T2V model (e.g., Veo, Sora) or explores research Video-LLMs like LLaVA-Video (arXiv) and Video-LLaMA (arXiv) that focus on video understanding rather than generation. This is the integration frontier commenters expect next.
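The token-count estimate in the comments above works out as follows. A minimal sketch, assuming non-overlapping square patches and reading the quoted 8–16× latent compression as a straight reduction in token count (the comment does not say exactly what the factor applies to).

```python
def video_tokens(width: int, height: int, patch: int, fps: int, seconds: int) -> tuple[int, int]:
    """Return (tokens per frame, tokens per clip) for non-overlapping patch x patch tiles."""
    per_frame = (width // patch) * (height // patch)
    return per_frame, per_frame * fps * seconds

per_frame, per_clip = video_tokens(1280, 720, 16, 24, 10)
print(per_frame)  # 3600
print(per_clip)   # 864000

# Assumed interpretation: compression divides the token count directly.
for factor in (8, 16):
    print(factor, per_clip // factor)
```

Even at 16× compression a ten-second clip is still tens of thousands of tokens, which is the commenters' point about why video models cost so much more than text models per sample.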

2. LLM Reasoning Reliability: Apple vs Anthropic and GPT‑5 Regression Reports

- Apple called out every major AI company for fake reasoning and Anthropic’s response proves their point (Score: 377, Comments: 198): Apple ML’s “The Illusion of Thinking” (https://machinelearning.apple.com/research/illusion-of-thinking) evaluates LLM “reasoning” by applying semantically preserving but surface-level perturbations to math/logic word problems and reports sharp accuracy drops, arguing models lack invariances expected of algorithmic reasoning and instead exploit spurious patterns. Anthropic’s reply, “The Illusion of the Illusion of Thinking” (https://arxiv.org/html/2506.09250v1), contends Apple’s setup induces distribution shift/annotation artifacts and that under controlled prompts and “fairer” conditions Claude’s performance is stable—framing the brittleness as an evaluation issue rather than a model incapacity. The debate centers on robustness to content‑preserving rewordings, metric overfitting, and whether current LLMs demonstrate reasoning-like generalization versus sophisticated pattern matching. Top commenters largely endorse Apple’s critique that LLMs don’t “reason,” share the two papers, and describe the practical stack: tokenization to numeric IDs, assistant/policy layers that filter/steer IO (e.g., safety/RLHF), and decoding choices that can induce degenerate outputs (e.g., repetitive tokens when sampling is misconfigured)—implying observed failures can reflect pipeline/decoding brittleness as much as model limits.

- Several commenters unpack the production stack around LLMs: the user-facing model tokenizes text into subword tokens and predicts the next token, while “outer” layers (system prompts, safety/guardrail classifiers, pre-/post-processing rewriters, and routing/orchestration) constrain and shape outputs. This wrapper design explains behaviors like unreliable verbatim recall of training data (knowledge stored parametrically vs. indexed) and why base-model behavior can differ from the product experience (e.g., RLHF and filtering altering likelihoods).

- Technical failure modes were highlighted, e.g., early repetition loops like “the the the…” arising from decoding pathologies when high-probability tokens dominate. Mis-tuned decoding (temperature, top-k/top-p) and lack of penalties can cause low-entropy degeneracy; mitigations include repetition/frequency/presence penalties, nucleus sampling, and entropy-boosting heuristics. These issues were widely observed in early GPT-2/3-era systems before guardrails stabilized outputs.

- On the “reasoning” debate, commenters argue for operational definitions and capability-focused evaluation rather than labels, noting that small perturbations of logically equivalent prompts often break solutions, which they read as evidence of pattern matching over robust inference. Links to primary sources were shared for deeper analysis: Apple ML’s “Illusion of Thinking” research note (https://machinelearning.apple.com/research/illusion-of-thinking) and an arXiv preprint (https://arxiv.org/html/2506.09250v1), encouraging benchmarked, perturbation-robust assessments over marketing claims.
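The decoding knobs named in the comments above (temperature, top-p, repetition penalty) can be shown on a toy vocabulary. This is a minimal sketch in pure Python with made-up logits; it uses one common form of repetition penalty (divide positive logits, multiply negative ones) and is not any particular vendor's decoder.

```python
import math
import random

def nucleus_distribution(logits, history, temperature=0.8, top_p=0.9, repetition_penalty=1.3):
    """Return the (token, probability) pairs that survive top-p filtering."""
    seen = set(history)
    scaled = {}
    for token, logit in logits.items():
        if token in seen:
            # Penalize already-emitted tokens: shrink positive logits,
            # push negative ones further down.
            logit = logit / repetition_penalty if logit > 0 else logit * repetition_penalty
        scaled[token] = logit / temperature
    # Numerically stable softmax.
    peak = max(scaled.values())
    weights = {t: math.exp(v - peak) for t, v in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((t, w / total) for t, w in weights.items()),
                    key=lambda tp: tp[1], reverse=True)
    # Keep the smallest high-probability set whose mass reaches top_p.
    kept, mass = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        mass += prob
        if mass >= top_p:
            break
    return kept

def sample_next(logits, history, rng=None, **kwargs):
    """Draw one token from the filtered distribution."""
    rng = rng or random.Random()
    kept = nucleus_distribution(logits, history, **kwargs)
    tokens = [t for t, _ in kept]
    probs = [p for _, p in kept]
    return rng.choices(tokens, weights=probs, k=1)[0]

# Toy logits (made up): "the" dominates, which is how "the the the" loops start.
logits = {"the": 5.0, "cat": 1.0, "sat": 0.5}
print(nucleus_distribution(logits, history=[]))
print(nucleus_distribution(logits, history=["the"], repetition_penalty=10.0))
```

With no history, "the" alone carries more than 90% of the mass, so it is the only candidate and a loop is guaranteed. Once "the" has been emitted and a strong penalty is applied, "cat" becomes the most likely token and all three candidates re-enter the nucleus.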

- ChatGPT is in such a bad state my most novice students have noticed it going off rails (Score: 211, Comments: 90): An AI-integration instructor reports a sharp post-update regression in OpenAI’s assistant (referred to as “GPT5”): a long-standing master prompt that previously produced ~2000-word, exam-focused summaries with GPT‑4o now yields generic prose with “wild inaccuracies,” requires up to 5 back-and-forth clarifications, and frequently drifts off-instruction. In side-by-side use, Google’s Gemini and NotebookLM, plus Anthropic Claude, still deliver consistent results; the user also claims a local Gemma-family model with ~1B parameters outperforms the hosted model for their healthcare-education summarization workflow. Based on this observed reliability drop for converting multi-hour lectures/readings into concise notes, the instructor advised canceling the paid plan pending improvement. Top comments echo a noticeable capability decline and reduced trust for research-assistant use cases, claiming a broader cross-model dip. Others express strong skepticism that a ~1B parameter Gemma could substantively outperform OpenAI’s latest model, implying potential evaluation or prompting confounds.

- Multiple users report noticeable capability regression in recent ChatGPT releases, especially for research/analysis workflows: perceived rise in hallucinations, “lazy”/short outputs, and failures on formerly trivial tasks, leading some to abandon it for critical work. This aligns with concerns about model routing or safety/latency tuning affecting behavior, though no hard benchmarks were cited by commenters.

- A claim that a “Gemma 1B” outperforms GPT drew skepticism; publicly released Gemma variants are typically 2B/7B (Gemma 1/1.1) and 2B/9B (Gemma 2) (see docs). At ~1–2B scale, models generally lag GPT‑4‑class systems on standard benchmarks (e.g., MMLU, GSM8K), so a 1B model exceeding GPT on broad tasks would be atypical outside narrow domains or with heavy tool/RAG support.

- One practical workaround mentioned: enable “legacy models” in ChatGPT settings to access GPT‑4o if the default routing feels degraded. This suggests model selection/routing changes may be impacting quality; testing side‑by‑side (same prompts across 4o vs current default) can help isolate regressions (see the OpenAI model list).

- I am losing my f*cking mind with the image generation filters. (Score: 503, Comments: 56): User reports inconsistent safety-filter behavior in GPT image generation: an arachnid-like monster image was initially allowed (example preview), but subsequent requests for a less-realistic, bestiary/DnD-style rendering were blocked, as were prompts involving werewolf, blood, and glowing red eyes. The pattern suggests keyword- and style-sensitive moderation with possible non-determinism (same concept sometimes passes, sometimes fails), leading to false positives on fantasy/horror content rather than explicit gore or realism thresholds. Commenters suggest a workaround: use ChatGPT to craft a highly detailed prompt, then generate the image with an alternative model (e.g., Grok) that has looser filters. Others note frequent false positives (e.g., benign prompts flagged for “nudity”), arguing current safety heuristics are brittle and overbroad.

- Content moderation appears overly sensitive: a prompt for a realistic trout drying itself with a beach towel was flagged for nudity, indicating false positives where benign anthropomorphic scenarios are conflated with explicit content. This points to coarse-grained safety classifiers or keyword heuristics that degrade usability by blocking non-explicit requests.

- A user reports stable local generation with Stable Diffusion via the Stability Matrix UI on a single RTX-3090, describing text-to-image inference as fast and reliable, albeit a step behind state-of-the-art image models. Running locally provides control and eliminates hosted platform filters, with performance adequate on commodity high-VRAM GPUs.

- Workflow suggestions included using ChatGPT to craft highly detailed prompts, then feeding them to alternative generators like Grok; others noted rephrasing via Gemini sometimes reduced moderation friction. Separating prompt engineering from inference can improve output quality and reduce false-positive triggers from stricter front-end filters.

- How ChatGPT helped me quit weed and understand the roots of my addiction (Score: 428, Comments: 120): OP reports quitting daily cannabis use after 17 years by leveraging ChatGPT as an on‑demand support tool. They used it to (1) explain withdrawal symptoms in real time (e.g., chest pressure, insomnia, vivid dreams), (2) normalize stage‑specific experiences, (3) reframe cravings as “old programming” vs identity, and (4) facilitate structured reflection on root causes (strict upbringing, insecurity, loneliness, creative blockage). Outcome: 9 weeks abstinent, markedly reduced cravings, improved sleep, and increased present‑state awareness; OP characterizes ChatGPT as a 24/7 therapist/coach/mirror substitute. Top comments are largely supportive (one echoing a 30+‑year struggle), with one contrarian remark implying AI enabled continuous use without consequences, highlighting debate over AI as recovery aid vs potential enabler.

- ChatGPT has been helping me fight my divorce for the last year (Score: 333, Comments: 97): A pro se litigant in a contested Texas divorce/child-support case (two children) reports using ChatGPT to draft and format filings (declarations, hardship statements, and evidence lists) by supplying fact‑constrained instructions and performing multi‑pass manual verification. After a 3‑month temporary‑orders phase and counsel predicting an unfavorable deviation outcome, he dismissed counsel and continued self‑represented, seeking a deviation from Texas guideline child support (≈$1,100/mo; see Texas guidelines Family Code §154.125 and OAG calculator) while on a fixed 100% VA disability as the former stay‑at‑home parent, asserting the other party is employed with free housing. He credits ChatGPT with improved structure, issue‑spotting, and reduced emotional content in written records, using filings to compensate for limited in‑court advocacy amid opposing counsel’s threats of sanctions and delays. Commenters warn about LLM hallucinations in legal research, citing the sanctions in Mata v. Avianca for fabricated case law generated by ChatGPT (order), urging strict verification of citations and precedents. Others argue LLMs can outperform lawyers in drafting clarity if kept factual, noting courts may respond favorably to precise, well‑supported filings from pro se parties.

- Multiple commenters flag legal hallucination risk: one references the widely publicized Avianca incident where an attorney submitted ChatGPT-fabricated case citations and was sanctioned; they urge rigorous verification of all citations/precedents against primary sources before filing or arguing in court (order PDF, news). Emphasis: do not rely on model-generated case law without cross-checking; “self represented is a huge red flag,” so expect heightened scrutiny of authorities.

- A cost/control workflow is proposed: use ChatGPT for drafting/research “grunt work,” then have a licensed attorney review, refine, and handle hearings to cut billable hours while maintaining courtroom competence. One commenter reports success with prepaid legal plans and hybrid billing (splitting plan-covered hours and out-of-pocket work) and suggests using ChatGPT to compare plans/wait times to optimize coverage and responsiveness.

- There’s debate on capability vs. reliability: one asserts “law is written… ChatGPT has the data” and can outperform lawyers in aspects of drafting, arguing that sharper filings can improve court reception. Counterpoints stress that even with strong AI-assisted filings, outcomes can still be unfavorable and model outputs must be grounded in verified facts and real precedents to avoid credibility damage.

3. AI Industry Shifts: Anthropic’s New‑Grad Hiring Stance and China’s Fenghua No.3 GPU

- Anthropic CPO Admits They Rarely Hire Fresh Grads as AI Takes Over Entry-Level Tasks (Score: 207, Comments: 86): Anthropic CPO Mike Krieger says the company has largely stopped hiring fresh grads, leaning on experienced hires as Claude/Claude Code increasingly substitute for entry‑level dev work, evolving from single‑task assistants to collaborators that can delegate and execute 20–30‑minute tasks and larger chunks, even “using Claude to develop Claude” (source). He predicts most coding tasks will be automated within ~1 year and other disciplines within 2–3 years, framing this amid industry cuts and a 6.1% CS graduate unemployment rate in 2025. Commenters question causality, noting firms like Netflix historically avoided new‑grad hiring pre‑AI and suggesting this may reflect a high‑impact hiring philosophy rather than AI per se; others warn new grads to expect longer apprenticeships. Some argue Krieger’s remarks read as marketing/PR and may not reflect day‑to‑day realities inside Anthropic.

- Multiple engineering leaders claim juniors are now materially more productive due to native use of LLM coding tools (e.g., ChatGPT, Claude Code), citing “2–3x” output on routine implementation, scaffolding, test generation, and debugging. They report juniors can tackle larger, less tightly-scoped tasks than before because LLMs reduce back-and-forth and accelerate boilerplate and integration work.

- Others argue the “no new grads” stance predates AI (e.g., Netflix historically) and is driven by organizational economics: desire for immediate high-impact contributors, reduced mentorship/ONCALL burden, and lower production risk. AI assistance doesn’t eliminate the need for domain context, codebase familiarity, and reliability engineering practices, so teams optimized for senior-only throughput may see limited gains from juniors even with LLMs.

- A strategic hiring angle emerges: avoiding fresh grads may handicap AI capability because many senior candidates lag in LLM adoption, whereas new grads are “AI-native” and bring current AI/ML toolchains and workflows. Companies report improved ROI by seeding teams with juniors who propagate modern prompting, automation, and evaluation practices, bridging an internal skills gap in practical LLM usage.

- China already started making CUDA and DirectX supporting GPUs, so over of monopoly of NVIDIA. The Fenghua No.3 supports latest APIs, including DirectX 12, Vulkan 1.2, and OpenGL 4.6. (Score: 559, Comments: 199): The image appears to be a product/marketing slide for the Chinese “Fenghua No.3” GPU (likely from Innosilicon), claiming graphics API support for DirectX 12, Vulkan 1.2, and OpenGL 4.6. There are no benchmarks, feature-level details (e.g., DX12 12_1/12_2), driver maturity notes, or compute stack specifics; the title’s claim of “CUDA” support is likely inaccurate since NVIDIA’s CUDA is proprietary—third-party GPUs would require translation/compatibility layers rather than native CUDA. As presented, the post signals driver/API coverage claims but provides no evidence on performance, software ecosystem, WHQL certification, or compatibility with existing CUDA workloads. Top comments highlight demand for competition to NVIDIA and note the capital/complexity of scaling GPU manufacturing; optimism centers on potential consumer benefits if viable alternatives emerge.

- The headline claim that Fenghua No.3 supports DirectX 12, Vulkan 1.2, and OpenGL 4.6 is only a baseline; real viability hinges on driver maturity, shader compiler quality, and specific feature coverage like DX12 hardware feature levels (e.g., 12_1/12_2) and SM 6.x support (Microsoft docs). Absent public conformance data (e.g., Vulkan 1.2 CTS on the Khronos conformant products list) or game/compute benchmarks, performance and compatibility are unknown, especially for modern workloads requiring DXR, mesh shaders, and advanced scheduling.

- “CUDA support” from a non‑NVIDIA GPU typically implies a translation layer (e.g., ZLUDA) or a CUDA‑like SDK (e.g., Moore Threads MUSA), which rarely achieves full API/ABI parity or performance with NVIDIA’s toolchain. For AI/ML, end‑to‑end ecosystem support (cuDNN/cuBLAS equivalents, PyTorch/TensorFlow backends, kernel autotuning) and driver stability tend to dominate over API checkboxes, so meaningful competition would require solid framework integrations and reproducible benchmarks.

- Regulating AI hastens the Antichrist, says Peter Thiel (Score: 298, Comments: 135): At a sold‑out San Francisco lecture, Peter Thiel (co‑founder of Palantir and PayPal) claimed efforts to regulate AI risk “hastening the coming of the Antichrist,” framing regulation as a promise of “peace and safety” that would strangle innovation; the report by The Times (James Hurley, 2025‑09‑25) documents the rhetoric but cites no technical evidence, governance models, or concrete regulatory proposals (The Times). The OP challenges the unstated premise that technological progress is inherently net‑positive/safe, noting one could equally cast AI (or Thiel’s rhetoric) as the “Antichrist,” highlighting the lack of falsifiable claims or risk‑benefit analysis. Top comments are non‑technical dismissals/jokes and do not add substantive debate.

- “You strap on the headset and see an adversarial generated girlfriend designed by ML to maximize engagement. She starts off as a generically beautiful young women; over the course of weeks she molds her appearance to your preferences such that competing products won’t do.” (Score: 203, Comments: 73): Conceptual (meme-style) depiction of a VR “AI girlfriend” that performs continual personalization, effectively gradient ascent on a user’s latent attraction manifold, to maximize engagement/retention. It maps to recommender/bandit and RL-style optimization (akin to RLHF but over an individual’s reward signal), illustrating reward hacking/adversarial examples where the system converges to grotesque local optima (“grotesque undulating array”) that exploit human reward circuitry and create lock‑in against competitors. Top comments frame it as a credible, late‑stage capitalism trajectory: systems that “get their hooks” into evolved reward channels, making escape difficult; initial skepticism turns to acceptance once the adversarial/grotesque optimization endpoint is mentioned.

- The scenario maps to an online personalization loop where a generative avatar (e.g., StyleGAN [https://arxiv.org/abs/1812.04948] or latent-diffusion per Stable Diffusion [https://arxiv.org/abs/2112.10752]) is tuned via multi-armed bandits or RL to maximize a proxy reward (engagement, session length). Over weeks, contextual bandits/Thompson sampling [https://en.wikipedia.org/wiki/Thompson_sampling] could adapt the avatar’s latent vectors and prosody/affect to click/biometric feedback, converging on a personalized superstimulus. Without regularization/constraints (e.g., KL penalties as in RLHF PPO [https://arxiv.org/abs/2203.02155] or human preference priors), such optimization tends to exploit proxy metrics, producing pathological attractors that outcompete “competing products.”

- The “grotesque undulating array” is analogous to adversarial/feature-visualization failure modes where optimization against a fixed classifier/perceptual model yields extreme, high-frequency artifacts that maximally activate features. Similar phenomena occur in “fooling images” [https://arxiv.org/abs/1412.1897] and DeepDream-style gradient ascent [https://research.google/blog/inceptionism-going-deeper-into-neural-networks/], producing bizarre yet high-confidence outputs; in humans, this corresponds to engineered “supernormal stimuli” [https://en.wikipedia.org/wiki/Supernormal_stimulus] that hijack evolved preferences.

- The “run a photo through AI 100 times” analogy points to recursive generation/feedback loops that amplify features and cause distributional drift or collapse. Empirically, repeated self-conditioning leads to artifact accumulation (e.g., iterative image-to-image pipelines), and training on model outputs induces model collapse (progressive forgetting of the true data distribution) per Shumailov et al., 2023 [https://arxiv.org/abs/2305.17493]. These effects imply long-horizon personalization systems need fresh human-grounded feedback and anti-feedback-loop guards (data deduplication, diversity constraints, entropy/novelty bonuses).


AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Agent Tooling: Chrome DevTools MCP and Perplexity Search API

- Chrome DevTools MCP Lets Agents Drive Chrome: Google announced the public preview of Chrome DevTools MCP—an MCP server that exposes CDP/Puppeteer controls so AI coding agents can inspect and manipulate a live Chrome session—via Chromium Developers, opening programmatic access for navigation, DOM/console/network debugging, and screenshotting to automate testing and scraping workflows.

- Developers framed this as a missing piece for agentic browsers, noting it standardizes control surfaces across tools using Model Context Protocol (MCP) and could streamline end-to-end evals and CI for web tasks.

- Perplexity Plugs Devs Into Live Web: Perplexity launched a Search API providing raw results, page text, domain/recency filters, and provenance—akin to Sonar—announced in the blog post with a new SDK to integrate quickly.

- Early feedback praised the playground and filters but flagged a Python SDK streaming bug returning unparseable JSON per the API docs, with one user noting “there’s no solution for this yet.”

- MCP Debates Multi-Part Resource Semantics: MCP contributors discussed the undocumented purpose of `ReadResourceResult.contents[]`, proposing it bundle multi-part Web resources like HTML + images and asking whether `resources/read(.../index.html)` should implicitly include `style.css` and `logo.png` per issue #1533.

- Participants argued an array improves agent retrieval fidelity by shipping all render-critical assets together, reducing extra fetches and negotiation overhead for browser-control agents.
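To make the proposal concrete, here is a rough sketch of what a bundled result could look like. It assumes the MCP resource-content shape (each entry carries a `uri`, an optional `mimeType`, and either `text` or a base64 `blob`); the `file:///site` URIs, the helper names, and the placeholder bytes are all hypothetical, and whether servers should bundle sibling assets this way is exactly the open question in the issue.

```python
import base64
import json


def text_part(uri, mime_type, text):
    # Text resource entry: the payload travels as a plain string.
    return {"uri": uri, "mimeType": mime_type, "text": text}


def blob_part(uri, mime_type, data):
    # Binary resource entry: the payload travels base64-encoded.
    return {
        "uri": uri,
        "mimeType": mime_type,
        "blob": base64.b64encode(data).decode("ascii"),
    }


def bundle_page(base_uri):
    """Hypothetical multi-part result for a read of index.html."""
    return {
        "contents": [
            text_part(
                f"{base_uri}/index.html",
                "text/html",
                "<link rel='stylesheet' href='style.css'><img src='logo.png'>",
            ),
            text_part(f"{base_uri}/style.css", "text/css", "img { width: 64px; }"),
            # Placeholder bytes standing in for a real image file.
            blob_part(f"{base_uri}/logo.png", "image/png", b"\x89PNG placeholder"),
        ]
    }


result = bundle_page("file:///site")
print(json.dumps([part["uri"] for part in result["contents"]], indent=2))
```

A client receiving this would have every render-critical asset in one response, at the cost of larger payloads when it only wanted the HTML.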

2. Code World Models & Agent Execution Infra

- Meta’s CWM Marries Code and World Models: Meta unveiled CWM, an open-weights LLM for research on code generation with world models, in CWM: An Open-Weights LLM for Research on Code Generation with World Models, emphasizing training on program traces to improve tool-use and code execution understanding.

- Builders compared notes on similar ideas (e.g., interpreter traces), calling CWM a plausible path to more sample-efficient coding agents while they await concrete benchmarks and sizes.

- Modal Muscles Remote Code Agent Rollouts: Members credited Modal with powering remote execution for large agent rollouts in the wake of FAIR’s CWM buzz, sharing a post-run screenshot attachment and praising cold/warm/hot start tradeoffs while noting missing MI300 support.

- Operators highlighted that elastic executors and controlled start distributions lower tail latencies for eval sweeps, making Modal attractive for orchestrating code-agent experiments at scale.

- Windsurf Bets Big on Tab Completion: Windsurf prioritized advanced tab completion via context engineering and custom model training, with Andrej Karpathy commenting in this tweet.

- Users expect deeper repo-aware completions and latency wins, framing tab-complete quality as the top lever for perceived coding productivity in IDE agents.

3. GPU Systems & Diffusion Scale-Ups

- Hugging Face Ships Context-Parallel Diffusion: Hugging Face announced native context-parallelism for multi-GPU diffusion inference, supporting distributed attention flavors Ring and Ulysses, per Sayak Paul.

- Practitioners see CP as a key unlock for high-resolution, long-context diffusion serving, reducing single-GPU memory bottlenecks without rewriting model code.

- PTX Consistency Papers Keep GPU Devs Honest: Members circulated formal work including A Formal Analysis of the NVIDIA PTX Memory Consistency Model and Compound Memory Models (PLDI’23) that proves mappings for CUDA/Triton to PTX despite data races and details heterogeneous-device consistency.

- While some found it “too heavy on formal math”, others noted tools like Dat3M uncovered real spec bugs, arguing these formalisms guide fence placement and compiler correctness.

- Cutlass Blackwell Teaches TMEM Tricks: NVIDIA Cutlass examples show SMEM↔TMEM tiled copies via `tcgen05.make_s2t_copy`/`make_tmem_copy` with helpers to pick performant ops (see the dense blockscaled GEMM example and helpers), and `TmemAllocator` reduces boilerplate vs raw `cute.arch.alloc_tmem`.

- Kernel authors trading notes reported fewer foot-guns moving tiles between TMEM and SMEM, a must for high-throughput Blackwell blockscaled GEMM paths.

4. Evaluations and Proactive Assistants

- OpenAI Drops GDPval for Real-World Tasks: OpenAI introduced GDPval, an evaluation targeting economically valuable, real-world tasks, outlined in GDPval.

- Engineers welcomed a shift toward grounded evals, hoping for transparent task specs and reproducible harnesses to compare across models and tool-use stacks.

- ChatGPT Pulse Goes Proactive: OpenAI launched ChatGPT Pulse, a proactive daily update experience from chats, feedback, and connected apps—rolling out to Pro on mobile—per the announcement.

- Some in the community quipped “oai cloned huxe” and debated privacy knobs and notification hygiene for dev and enterprise settings.

- Microsoft 365 Copilot Adds Claude: Anthropic announced Claude availability in Microsoft 365 Copilot, per Claude in Microsoft 365 Copilot.

- Builders read this as a competitive realignment in enterprise AI assistants, with one user joking “microsoft are on the rebound after a messy breakup.”

5. Training Tricks: Losses, Merges, and Data

- Tversky Loss Gets a ‘Vibe Check’: Members highlighted the paper Tversky Loss Function and shared implementations, including a CIFAR-10 vibe check network and a Torch port repo, with one run reporting ~61.68% accuracy and ~50,403 trainable params on a 256→10 head (paper PDF).

- Suggestions included verifying with a single XOR task and probing speed vs MLP baselines; one run hit ~95% XOR accuracy at 32 features with notes on initialization asymmetry.

- Super-Bias Mashes LoRAs Like a DJ: Researchers floated Super-Bias, a mask-aware nonlinear combiner that trains a small MLP on expert outputs + binary masks to ensemble LoRAs, claiming parity with full fine-tunes at a fraction of cost and enabling hot-swaps of experts.

- Teams discussed treating different domain LoRAs as experts and fusing them post-hoc, avoiding destructive hard merges and preserving a clean base-model.

- MXFP4 Saves the 120B: For saving large QLoRA checkpoints, users recommended `save_pretrained_merge(save_method="mxfp4")` for GPT-like models to avoid 16 GB shard bloat from `merged_16bit`, producing native MXFP4 artifacts better aligned with GPT-like architectures.

- Engineers reported timeouts on 120B merges to remote stores; the MXFP4 path and local saves reduced failures and storage churn during consolidation.
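A back-of-the-envelope calculation shows why the save format matters at this scale. The sketch below assumes a nominal 120e9 parameters and that every parameter is stored in the chosen format, which real checkpoints do not do (some tensors usually stay in higher precision), so treat the numbers as rough bounds. The MXFP4 figure uses the microscaling layout of 4-bit elements plus one shared 8-bit scale per block of 32.

```python
def checkpoint_gb(num_params, bits_per_param):
    """Approximate on-disk size in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


# MXFP4: 4-bit elements plus an 8-bit scale shared by each block of 32 values.
MXFP4_BITS = 4 + 8 / 32  # 4.25 bits per parameter

params = 120e9  # nominal "120B" model, assumed for illustration
size_16bit = checkpoint_gb(params, 16)
size_mxfp4 = checkpoint_gb(params, MXFP4_BITS)

print(f"16-bit merge: ~{size_16bit:.0f} GB")
print(f"MXFP4 merge:  ~{size_mxfp4:.0f} GB")
```

Under these assumptions the 16-bit merge is roughly 240 GB against about 64 GB for MXFP4, which is consistent with the reports that uploads of the larger artifact were the ones timing out.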

gpt-5-mini

1. Model launches & leaderboard shakeups

- GPT‑5 Codex lands on LMArena WebDev — coders audition a new star: GPT‑5 Codex was added to LMArena’s WebDev environment (software engineering/coding sandbox) and users started comparing it directly to Claude 4.1 Opus for code generation and Godot scripting.

- Threads focused on real‑world coding tests and anecdotal runs, with some users claiming GPT‑5 Codex beats Opus on certain tasks while others still prefer Claude for instruction fidelity; the consensus is to benchmark on your repo and language (Godot users in particular tested scene/script generation).

- Qwen3 drops and leaderboard reshuffle — Qwen3‑max + Qwen3 coder join the fight: LMArena announced new Qwen3 flavors including qwen3-max-2025-09-23 and a Qwen3‑coder variant targeted at dev/web flows, pushing fresh entries onto the platform’s model roster.

- Community discussion parsed model names/dates and encouraged head‑to‑head runs (reasoning, code, VL tasks) to see where Qwen3 variants actually outpace existing models; some reports praised Qwen3’s reasoning while others still flagged hallucination issues.

- Seedream vs Nano Banana — image leaderboard tug‑of‑war: Seedream‑4‑2k now sits tied atop the LMArena Text‑to‑Image leaderboard with gemini‑2.5‑flash‑image‑preview (nano‑banana), sparking fresh comparisons on fidelity and prompt sensitivity.

- Users emphasized that the right prompt+tool+purpose matters more than raw rank — some swear by Nano Banana, others find Seedream superior for specific styles, and the thread included GPU requests ( >16GB VRAM, mentions of a 96GB Huawei GPU ) for running top image models locally.

2. Image‑generation arms race & inference tooling

- Qwen image editor courts creators — open source rival to Nano Banana?: Members reported that Qwen’s new image editor—described as open source—produces higher‑quality edits than Google’s Nano Banana in many tests and is attracting interest for local runs.

- Practical conversations pivoted to hardware (users asked for GPUs with 16+GB VRAM and mentioned a 96GB Huawei card) and to workflow choices: some recommend cloud inference for quick experiments, others pushed local setups to avoid provider bias.

- Gemini 2.5 Flash keeps pressure on image top spots: Gemini 2.5 Flash (Flash & Flash Lite previews) continues to be a major contender in llm‑vl image benchmarks, and LMArena added Gemini Flash variants to its lineup.

- Community members joked about perceived score inflation on public leaderboards but still ran structured comparisons; several people advocated for task‑matched evaluation (editing vs pure generation) rather than single aggregated rank.

- Diffusion & decoding optimizations show up in infrastructure threads: Hugging Face and contributors discussed shipping context‑parallelism for faster diffusion inference on multi‑GPU setups, with distributed attention flavors like Ring and Ulysses to scale decoding.

- The conversation focused on real deployment tradeoffs (communication/attention splits) and linked to early tweets/info about the CP API, with practitioners noting this will matter most for high‑resolution image generation across GPUs.

3. Training, fine‑tuning, and experiment tooling

- Saving huge models: save_pretrained_merge timeouts and mxfp4 to the rescue: Users trying to save a GPT‑like 120B QLoRA hit timeouts with `save_pretrained_merge`, and community members recommended `save_method="mxfp4"` to avoid 16GB‑shard explosions and improve compatibility for GPT‑style checkpoints.

- The discussion included practical tips (switch save method, check shard sizes) and warnings about tooling immaturity for very large finetuned models; folks advised testing small merges first before committing long runs.

- P100 GPUs: still terrible for modern finetuning: Multiple users warned that NVIDIA P100 16GB cards are ‘garbo for training’ because of old SM architecture and lack of hardware FP16/BF16, making multi‑GPU finetuning painfully slow despite memory pooling tricks like ZeRO3.

- Advice converged on buying modern Ada/Blackwell‑class cards or renting spot instances for training; threads included pragmatic cost/perf tradeoffs and links to L40S/RTX 6000 datasheets for those planning infrastructure upgrades.

- Tversky loss experiments: neurocog ideas make it to CIFAR & XOR tests: A member shared interest in the Tversky Loss paper (arXiv:2506.11035) and published a small repo implementing a ‘vibe check network’ on CIFAR‑10 at github.com/CoffeeVampir3/Tversky‑Cifar10.

- Community suggestions included simple verification tasks (train XOR) and parameter‑sweep ideas; results so far showed promise but members emphasized fair baselines and parameter counts to avoid misleading comparisons.

4. APIs, infra, and remote execution

- Perplexity ships a Search API — web grounding for LLMs: Perplexity launched the Search API (blog: introducing the perplexity search api) plus an SDK (Perplexity SDK docs) to give devs raw results, filters, full page text and transparent citations for grounding LLM answers in live web content.

- Users compared it to Sonar (some mentioning Sonar’s pricing), reported early SDK streaming/parsing problems (Python SDK streaming returning unparseable JSON), and asked for rich filters and playground tooling — overall reaction: powerful but still rough at the edges.

- Chrome DevTools MCP public preview — agents can drive real browsers: Google unveiled the public preview of Chrome DevTools MCP, a server enabling AI coding agents to control and inspect live Chrome via CDP/Puppeteer (announcement: https://x.com/chromiumdev/status/1970505063064825994).

- Developers highlighted immediate use cases—automated end‑to‑end testing, agentic scraping, and agent tool integration—and discussed security/permission models for exposing a live browser to an LLM‑driven agent.

- OpenRouter pricing glitch: free endpoint charged for 26 hours, refunds issued: On September 16th OpenRouter mistakenly priced `qwen/qwen3-235b-a22b-04-28:free` for ~26 hours, causing credit deductions; the team automatically refunded impacted users and added extra validation checks to prevent recurrence.

- Users appreciated the prompt refunds but used the incident to press for stronger provider‑level validation and billing transparency in aggregator platforms; the episode spurred questions about operational safeguards for free vs paid endpoints.
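As an illustration of the kind of safeguard users were asking for, here is a minimal sketch of a pricing-table check that rejects any `:free` endpoint carrying a non-zero price. It is not OpenRouter's implementation: the `EndpointPricing` record, its field names, and the prices in the sample table are all invented for the example.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class EndpointPricing:
    # Hypothetical record; field names are illustrative, not a real schema.
    slug: str
    prompt_price: Decimal      # USD per prompt token
    completion_price: Decimal  # USD per completion token


def validate_pricing(endpoints):
    """Return a list of problems; an empty list means the table passed."""
    problems = []
    for ep in endpoints:
        prices = (ep.prompt_price, ep.completion_price)
        if any(price < 0 for price in prices):
            problems.append(f"{ep.slug}: negative price")
        if ep.slug.endswith(":free") and any(price != 0 for price in prices):
            problems.append(f"{ep.slug}: ':free' endpoint has a non-zero price")
    return problems


# Made-up prices: the first row reproduces the class of mistake described above.
table = [
    EndpointPricing(
        "qwen/qwen3-235b-a22b-04-28:free", Decimal("0.0000002"), Decimal("0")
    ),
    EndpointPricing("example/paid-model", Decimal("0.000001"), Decimal("0.000002")),
]

for problem in validate_pricing(table):
    print(problem)
```

Running a check like this before a pricing change goes live would block the deploy instead of relying on refunds afterwards.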

5. Agent‑first products & one‑click deployers

- Moonshot Kimi launches OK Computer — one‑link site/app agent: Moonshot AI released OK Computer, an agent mode that generates polished sites/apps in one pass (text+audio+image), supports team‑level polish and offers one‑link deployment (see the X post: https://x.com/Kimi_Moonshot/status/1971078467560276160).

- Users praised the idea of a deployable single‑link flow but flagged product bugs (missing download all, corrupted zips) and subscription‑based quota differences (free vs moderato/vivace plans), noting real‑world usability depends on polishing export reliability.

- Kimi vs distilled Qwen debate — mini models or distills?: Community members debated whether Moonshot should ship a mini Kimi or instead distill Qwen models onto K2 hardware; several argued distilling a smaller Qwen is more plausible given reasoning improvements only appearing in Qwen 2.5+.

- The thread mixed strategic product thinking (what attracts users/investors) with technical realism (distillation tradeoffs), and many recommended trial distills over maintaining multiple full‑size variants.

- Agent prompts aim for cash — initial OKC seed prompt screams Product Market Fit: Observers noted the OK Computer demo uses a money‑forward initial prompt “Build a SaaS for content creators, aiming for $1M ARR”, which attracted jokes that the agent is tuned to create investor‑friendly outputs.

- Reactions split between amusement and concern: some see it as a pragmatic growth hack to attract creators/VC attention, others warned that baking business aims into starter prompts biases outputs toward monetizable scaffolds rather than purely utility‑focused designs.

Discord: High level Discord summaries

LMArena Discord

- GPT-5 Codex Joins LMArena WebDev: GPT-5 Codex has been added to LMArena, but is exclusively available on the WebDev version for software engineering and coding tasks.

- Users are debating whether GPT-5 Codex surpasses Claude 4.1 Opus for code generation, particularly for coding with Godot.

- Qwen Image Editor Rivals Nano Banana: Members suggest that Qwen’s new image editor is superior to Google’s Nano Banana, is open source, and generates higher-quality images.

- The community is requesting GPU recommendations with over 16GB VRAM to run these models, specifically mentioning the 96GB Huawei GPU.

- Seedream Surpasses Nano Banana for Top Image Dog: Seedream-4-2k shares the top position on the Text-to-Image leaderboard with Gemini-2.5-flash-image-preview (nano-banana).

- Some users still find Nano Banana to be the best, others believe Seedream 4 has surpassed it, but the right prompt, tool, and purpose are required to make good images.

- Image Modality Bug Plagues LMArena: Users have reported a bug in LMArena where uploading an image in Text Mode automatically switches to Image Generation, even after fixes were implemented in canary versions.

- Some find that clicking the button to turn it off upon pasting in or uploading an image resolves the issue.

- Navigating LMArena Rate Limits: Users are facing issues with incorrect rate limit timers and models getting stuck mid-generation, with this being a known bug.

- It was noted that long chats and Cloudflare issues may be contributing to the problem, and that starting a new chat is often the only fix.

Unsloth AI (Daniel Han) Discord

- P100 GPUs are Garbo for Training: A member asked about the expected performance of a multi-GPU rig with P100 16GB GPUs for fine-tuning, but was told that P100s are garbo for training due to an old ass SM with no modern CUDA or hardware FP16/BF16 support.

- The discussion also covered the fact that memory is not additive and while it might work with ZeRO3, it would be very slow.

- Trainer Troubles Produce TensorBoard Triumph: A member sought help to display the eval/loss graph during training, and found that they needed to use an integer to specify the eval_steps, rather than the 0.2 value they had copied from Trelis’s notebook.

- After resolving the issue, they were thankful and excited, exclaiming that it was their first time using tensorboard and expressing relief that there was a setting to avoid manual refreshing.

- Saving is Super with save_pretrained_merge!: A member encountered timeout errors while saving a GPT-like 120b QLoRA model using `save_pretrained_merge`, and another member recommended using `save_method="mxfp4"` for better GPT-like support.

- The method saves in native `mxfp4` format and avoids the 16GB shard increases associated with `merged_16bit` mode.

- Tversky Vibe Check Network Vibes High: Excited about the potential of the Tversky Loss function from this paper, a member created a vibe check network for CIFAR-10, noting that it appears promising.

- Another member suggested training a single XOR function to verify its functionality, and asked how its speed compares to traditional fully connected layers.
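For the `eval_steps` fix in the TensorBoard item above, one way to avoid the problem is to convert a fractional value into an integer step count before passing it to the trainer. The helper below is a minimal sketch with a hypothetical name (`resolve_eval_steps`); it assumes a fraction below 1 is meant as a share of total training steps, which is how recent Hugging Face `transformers` releases document float values, though behavior can differ across versions and trainer wrappers.

```python
import math


def resolve_eval_steps(eval_steps, max_steps):
    """Turn an eval_steps setting into a concrete integer step interval.

    Values in (0, 1) are treated as a fraction of max_steps (an assumption);
    values >= 1 must already be whole numbers.
    """
    if isinstance(eval_steps, bool) or not isinstance(eval_steps, (int, float)):
        raise TypeError("eval_steps must be an int or a float")
    if eval_steps <= 0:
        raise ValueError("eval_steps must be positive")
    if eval_steps < 1:
        # Fraction of the run: evaluate at least once every step.
        return max(1, math.ceil(max_steps * eval_steps))
    if float(eval_steps) != int(eval_steps):
        raise ValueError("eval_steps >= 1 must be a whole number")
    return int(eval_steps)


# The 0.2 copied from the notebook, applied to a hypothetical 500-step run.
print(resolve_eval_steps(0.2, max_steps=500))
# An explicit integer passes through unchanged.
print(resolve_eval_steps(50, max_steps=500))
```

Passing the resolved integer makes the evaluation cadence explicit, so the eval/loss curve shows up in TensorBoard at known steps.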

Perplexity AI Discord

- Perplexity Debuts Search API for Devs: Perplexity launched its Search API giving developers access to its comprehensive search index via a blog post.

- The API provides tools for grounding answers in live web content, similar to Sonar, with features like raw results, filters, and transparency, with a new SDK simplifying integration.

- Qwen and Gemini Face Off in Image Arena: Members compared Qwen 3 Max for reasoning against Gemini for detailed 3D simulations.

- One member sarcastically quipped that GOOG shareholders are really inflating the scores for visual ability on llmarena.

- Python SDK’s Streaming Responses Break: A user reported that the Python SDK is failing to stream responses correctly, yielding unparseable JSON, with reference to the API docs quickstart guide.

- Another member chimed in that there’s no solution for this yet, indicating an ongoing issue.

- Cosmic Carl Ponders 3I/ATLAS: A member’s Carl Sagan-themed reflection on 3I/ATLAS beckons listeners to humbly listen to the universe, shared via Perplexity AI search.

- This unique take blends cosmic wonder with AI search, showcasing a creative application of Perplexity.

OpenRouter Discord

- Qwen Model Pricing Glitch Triggers Credit Chaos: On September 16th, the `qwen/qwen3-235b-a22b-04-28:free` endpoint was mistakenly priced for 26 hours, causing incorrect credit deductions.

- The team automatically refunded impacted users and implemented additional validation checks to prevent future pricing mix-ups.

- Horizon Alpha Vanishes, Users Vanquished: A user urgently inquired about the whereabouts of Horizon Alpha, stating ‘I was using it in production and now it’s not working’.

- They also questioned if they were being targeted and when the issue would be resolved.

- Filthy Few Favor Dirty Talk Models: A user inquired about the best models for RPing, specifically seeking ‘any of dem dirty talk models?’.

- Another member mentioned opening a new LLM frontend called JOI Tavern.

- Zenith Sigma’s Shady Stealth Sparks Speculation: Users discussed the stealthy Zenith Sigma model, with one user joking they couldn’t even find it.

- Another user claimed Zenith Sigma is actually Grok 4.5.

- Microsoft Copilots Claude - A Comeback Story?: Members shared that Claude is now available in Microsoft 365 Copilot.

- Members saw this as a significant step for Microsoft, with one noting that ‘microsoft are on the rebound after a messy breakup’.

Cursor Community Discord

- Exa-AI Beats Web for MCP Search: Users are using Exa-ai (exa.ai) for searches within MCP, stating it provides $20 in credits upon signup and performs better than the @web tool.

- Instructions were shared on how to set it up in `MCP.json`, including obtaining an API key and adding configuration details.

- MCP Clarified as Custom Tool API for Cursor: Members clarified that MCP (Model Context Protocol) is an interface for agentic use that adds external tools to Cursor.

- Confusion arose when a user mistook it for a design tool capable of creating designs from images and webpages.

- Generated Commit Messages Ignore AI Rules: Users report that generated commit messages are not obeying the set AI Rules and are being generated in an unwanted language.

- A member confirmed that this is a known bug and that a fix might arrive in future updates.

- Chat Window Scroll Request for Chat Tabs: A user requested that the chat window automatically scroll to the bottom when switching between chat tabs, so the latest activity is visible.

- A member pointed out that a notification is already given if there’s something that the user needs to click on.

- Users Complain About GPT5-HIGH Model Degradation: Users expressed disappointment with the GPT5-HIGH model, observing that it has become less capable over time.

- One user joked that the model should be told to get off its ass and write the code when it only provides instructions instead of completing the task.

LM Studio Discord

- LM Studio Bolsters Chat Experience: LM Studio 0.3.27 brings new features like Find in Chat and Search All Chats to improve chat functionality, alongside a `•••` menu for sorting chats by date or token length.

- A new `lms load --estimate-only <model>` command estimates resource allocation for model loading, streamlining the planning process; details are available in the release notes.

- Linux Plugins Lag in LM Studio: Users noted that the Linux version of LM Studio has fewer plugins compared to the Windows version, specifically lacking in options beyond RAG and a JS playground plugin.

- This discrepancy limits the functionality available to Linux users compared to their Windows counterparts.

- Fine-Tuning Faceoff: Ollama vs RAG: A debate arose over whether using Ollama to inject data into a model constitutes true fine-tuning, versus simply performing RAG.

- One member argued that with a Python setup, data injection can create new weights, leading to an interactive model with custom tool usage, independent of prompts.

- LM Studio Update Plagued by Pesky Problem: Users reported issues when updating LM Studio, encountering errors like failed to uninstall old application files, hindering the update process.

- Fellow members recommended enabling visibility of hidden folders in Windows and manually deleting old files from directories like AppData\Roaming\LM Studio to resolve the issue.

- GPU Gems: Budget Beasts Brawl: The optimal budget GPU is debated, with the 2060 12GB ($150) and 3060 12GB ($200) emerging as frontrunners, while others suggested a used 3090 for $600-$700.

- Caution was advised against used workstation cards, with one member declaring that Tesla generation is not recommended for AI/LLM use anymore tbh, basically e-waste.
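A rough way to compare the cards named above is price per gigabyte of VRAM. The sketch below uses the prices quoted in the discussion; the 3090's 24 GB of VRAM is a known spec rather than something stated in the chat, and the helper name is hypothetical.

```python
# Prices (USD) are the ones quoted in the discussion; VRAM sizes are in GB.
# The 24 GB figure for the RTX 3090 is a known spec, not taken from the chat.
CARDS = {
    "RTX 2060 12GB": (150, 12),
    "RTX 3060 12GB": (200, 12),
    "RTX 3090 (used, low end)": (600, 24),
    "RTX 3090 (used, high end)": (700, 24),
}


def dollars_per_gb(price_usd: float, vram_gb: float) -> float:
    """Return the price paid per gigabyte of VRAM."""
    return price_usd / vram_gb


if __name__ == "__main__":
    for name, (price, vram) in CARDS.items():
        print(f"{name}: ${dollars_per_gb(price, vram):.2f} per GB")
```

Price per GB ignores memory bandwidth and compute, so it only captures one side of the trade-off members were debating.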

OpenAI Discord

- OpenAI Pulses with New Products: OpenAI launched GDPval, which evaluates AI on real-world tasks (described in their blog post), and ChatGPT Pulse, which proactively delivers personalized daily updates from chats, feedback, and connected apps (detailed in a separate blog post).

- ChatGPT Pulse is rolling out to Pro users on mobile devices.

- GPT-5-Mini Lacks Common Sense: Members observed that GPT-5-Mini (High) seems to lack common sense, suggesting it is not AGI level yet. Members also noted that GPT-OSS-20B is possibly the most censored model ever.

- One member stated that it noped out from a specific prompt.

- Discord Devs Dream of AI Rocket League Bot: Members proposed creating a Rocket League Discord bot powered by AI to analyze player stats, identify strengths & weaknesses, and create personalized training plans, targeting the untapped francophone market with a premium subscription model.

- Others doubted that an LLM could give good advice, suggesting instead to analyze the xyz coords from the replay files, and use AI against the raw numbers.

- ChatGPT Defaults to Agent State: ChatGPT defaults to an “Agent” state (problem-solver, instructable worker) upon initialization, rather than a “Companion” state (co-creator, guide).

- To maintain the “Companion” mode, users are pinning instructions like “Stay in Companion mode unless I explicitly say switch to Agent. Companion = co-pilot, not order-taker.” to the model to lock it in that mode, or they reset with the command “Go back to companion mode.”

- Chain-of-Thought Prompting Confusion Clarified: Members discussed how requesting excessive Chain-of-Thought (CoT) prompting can statistically reduce model performance, especially on current thinking models.

- Instead of ambiguous instructions, one member suggested prefacing responses with a structured format including ultimate desired outcome, strategic consideration, tactical goal, relevant limitations, and next step.
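The structured preface suggested above can be sketched as a small prompt builder. The five field names come from the discussion; the function name, the wording of the instruction, and the closing line are assumptions made for illustration.

```python
# The five field names are taken from the discussion; everything else
# (function name, instruction wording) is a hypothetical illustration.
PREFACE_FIELDS = [
    "Ultimate desired outcome",
    "Strategic consideration",
    "Tactical goal",
    "Relevant limitations",
    "Next step",
]


def build_preface_instruction(fields=PREFACE_FIELDS) -> str:
    """Build an instruction asking the model to preface replies with labeled lines."""
    lines = ["Before answering, preface your response with these labeled lines:"]
    lines += [f"{i}. {name}: <one sentence>" for i, name in enumerate(fields, start=1)]
    lines.append("Then give the answer itself.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_preface_instruction())
```

The resulting string would be pasted into a system prompt or custom instructions in place of an open-ended "think step by step" request.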

HuggingFace Discord

- Duolingo Doomed by Dedicated Disciple: A member deleted Duolingo, citing annoyance and inefficiency compared to immersing themselves in a local environment and leveraging AI for learning.

- They criticized the addiction to streaks over fundamental learning, suggesting they’d torch the bird alive.

- Unhinged LinkedIn Lunacy Lands Likes: A member shared a strategy of posting unhinged shit on LinkedIn to gain engagement, while another grinds Rocket League to brag about their rank.

- They joked about writing a post called what my plat 3 rank rocket league friend taught me about business.

- Driver Devastation: GPU Gone Dark: A member is experiencing a frustrating issue where their monitor goes black whenever the GPU is activated, affecting both Windows and Linux systems.

- Despite numerous attempts to correct the drivers, they are forced to run the monitor off their motherboard, indicating a persistent GPU-related problem.

- Diffusion Decoding Discussions Debut: A member announced a reading and discussion of the paper Understanding Diffusion Models: A Unified Perspective by Calvin Luo (https://arxiv.org/abs/2208.11970) to occur on Saturday at 12pm ET.

- The paper provides an overview of the evolution and unification of generative diffusion models, including ELBO-based models, VAEs, Variational Diffusion Models (VDMs), and Score-Based Generative Models (SGMs).

- Context-Parallelism Conjures Quicker Computation: Native support for context-parallelism is being shipped to help make diffusion inference faster on multiple GPUs.

- The CP API is made to work with two flavors of distributed attention: Ring & Ulysses as noted in this Tweet.
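To give a feel for the Ring flavor mentioned above, here is a single-process NumPy simulation, not the library's CP API. Each simulated device keeps its own query chunk while key/value blocks rotate around the ring, and partial results are merged with a running softmax. All function names are hypothetical, and attention is non-causal for simplicity.

```python
import numpy as np


def full_attention(q, k, v):
    """Reference: ordinary softmax attention over the whole sequence."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return (weights / weights.sum(axis=-1, keepdims=True)) @ v


def ring_attention(q, k, v, n_devices):
    """Simulate ring attention: each device sees one K/V block per step."""
    d = q.shape[-1]
    q_chunks = np.array_split(q, n_devices)
    k_chunks = np.array_split(k, n_devices)
    v_chunks = np.array_split(v, n_devices)
    outputs = []
    for rank, q_local in enumerate(q_chunks):
        rows = q_local.shape[0]
        running_max = np.full((rows, 1), -np.inf)
        running_sum = np.zeros((rows, 1))
        acc = np.zeros((rows, v.shape[-1]))
        for step in range(n_devices):
            # Block that would arrive from the ring neighbor at this step.
            src = (rank + step) % n_devices
            scores = q_local @ k_chunks[src].T / np.sqrt(d)
            new_max = np.maximum(running_max, scores.max(axis=-1, keepdims=True))
            # Rescale earlier partial results to the new max (online softmax).
            scale = np.exp(running_max - new_max)
            probs = np.exp(scores - new_max)
            running_sum = running_sum * scale + probs.sum(axis=-1, keepdims=True)
            acc = acc * scale + probs @ v_chunks[src]
            running_max = new_max
        outputs.append(acc / running_sum)
    return np.concatenate(outputs, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.normal(size=(8, 4)) for _ in range(3))
    print(np.allclose(ring_attention(q, k, v, n_devices=4), full_attention(q, k, v)))
```

In a real multi-GPU setup the inner loop's block fetch would be a send/receive between neighboring devices, overlapped with compute; the simulation only checks that the blockwise math matches full attention.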

Moonshot AI (Kimi K-2) Discord

- Kimi Launches OK Computer Agent Mode!: Moonshot AI launched OK Computer, a new agent mode designed to ship polished sites and apps in one go, with key features including personalized outputs, multimedia generation (text + audio + image), team-level polish, and one-link deployment.

- Users can deploy and share their creations instantly with a single link, more details on the official X post.

- Skip Kimi Mini, Distill Qwen?: One member doubted that Moonshot would release a smaller version of Kimi, suggesting that a smaller Qwen model distilled on K2 is a better bet, citing that Deepseek made Qwen distills because Qwen didn’t have (good) reasoning until Qwen 2.5.

- This comment reflects broader speculation about the strategic direction of Moonshot AI and potential model development paths.

- OKComputer Designed to Attract Capitalists?: Several members joked that the new Kimi Computer agent, particularly with its initial prompt “Build a SaaS for content creators, aiming for $1M ARR”, is designed to attract capitalists.

- A member quipped it was “another website generator with some weirdly scoped features.”

- Computer Use Has Higher Quota with Subscription: Members reported initial issues with the OK Computer feature, including a missing download all button and a corrupted zip file.

- One member noted that “entering chat makes the OKC button disappear”. The amount of OK Computer usage depends on whether you subscribe to the moderato or vivace plans, which give more quota.

- Kimi Makes Better Plans than Qwen: Members discussed using Kimi to make plans for Qwen or DeepSeek to follow, noting that “Kimi always makes better plans” and it can cover a wider range of requests.

- One member observed that Qwen3-max constantly hallucinates and doesn’t come close to Kimi.

GPU MODE Discord

- Modal Rolls Out Code Agent Execution: Modal now powers remote execution for code agent rollouts, demonstrated after the release of the new CWM paper from FAIR.

- Members praised Modal’s distribution of cold/warm/hot start times relative to cost, but noted that it lacks MI300 support.

- CUDA Headers Playing Hide-and-Seek: A developer reported that CUDA headers weren’t being automatically included, causing functions like `cudaGraphicsGLRegisterImage` and `tex2d` to be undefined when using Visual Studio 2022 and the latest CUDA toolkit.

- As a workaround, the developer was advised that explicitly including `cuda_gl_interop.h` would solve the problem.

- Torchrun API troubles trigger package predicament: A user encountered issues with the `torchrun` API, finding that `torchrun --help` produced output different from the official documentation.

- The issue was resolved by realizing that both `torch` and `torchrun` were in `pyproject.toml`, and that `torchrun` is a separate package (torchrun on pypi).

- GPUs get Formally Analyzed for Consistency: A paper on “A Formal Analysis of the NVIDIA PTX Memory Consistency Model” discusses proving that languages like CUDA and Triton can target PTX with memory consistency, despite PTX allowing for data races.

- The member found the paper leaning too heavily on formal languages and math to be immediately useful.

- Heavy Duty GPU Stand Makes its Debut: A member designed a heavy-duty GPU stand for a collection of old GPUs, including dual-slot models, noting it is more robust than existing designs on Thingiverse.

- They indicated they might share the design online later if there’s interest.

Yannick Kilcher Discord

- Sine Alone Good Enough?: Members debated if both sine and cosine are needed in sinusoidal positional embeddings, with one suggesting sine alone might work and linking a blogpost for context.

- Coding experiments showed that linear regression can approximate sine + cosine embeddings well with sine alone in the interval [0, a], with the max error on the sampled points hovering around 6e-12.
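The kind of experiment described above can be sketched as follows. This is not the members' code: the frequency grids, the bias column, the interval length, and the function name are all assumptions, and the error obtained depends on those choices, so no specific figure is claimed here.

```python
import numpy as np


def fit_sine_only(a=1.0, n_points=200, n_target=4, n_sine=64):
    """Least-squares fit of sin+cos embeddings from sine-only features on [0, a]."""
    pos = np.linspace(0.0, a, n_points)

    # Targets: sin and cos at transformer-style frequencies (an assumption).
    target_freqs = 1.0 / (10000.0 ** (np.arange(n_target) / n_target))
    angles = np.outer(pos, target_freqs)
    targets = np.concatenate([np.sin(angles), np.cos(angles)], axis=1)

    # Features: sines on a hypothetical frequency grid, plus a bias column,
    # since every sine is zero at position 0 while cos(0) = 1.
    sine_freqs = np.linspace(0.1, 20.0, n_sine)
    features = np.concatenate(
        [np.sin(np.outer(pos, sine_freqs)), np.ones((n_points, 1))], axis=1
    )

    weights, *_ = np.linalg.lstsq(features, targets, rcond=None)
    max_err = float(np.max(np.abs(features @ weights - targets)))
    return weights, max_err


if __name__ == "__main__":
    _, err = fit_sine_only()
    print(f"max abs error on sampled points: {err:.3e}")
```

A low error on the sampled interval shows only that the sine features span the targets there; it does not show that a model trained with sine-only embeddings behaves the same.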

- SWE-bench Verification Draws Ire: Alexandr Wang triggered conversation by tweeting that people still using SWE-bench verified is a good indicator of brain damage.

- In the same thread, AlphaEvolve’s sample efficiency was lauded and linked to Sakana AI Labs.

- B200 Cloud Compute Hits Spot Market: Members spotted B200s available for $0.94 USD on Prime Intellect.

- The specific configuration included B200_180GB GPUs and an Ubuntu 22 image with CUDA 12 in the “Cheapest” location.

- RL TTS Shows Promise: A user highlighted a research paper exploring the efficiency gains of using a mid training technique involving a bootstrapping RL TTS.

- They noted the most significant improvements were observed in the trace tracking benchmark.

Latent Space Discord

- Chrome DevTools MCP Goes Public: Google announced the public preview of Chrome DevTools MCP, a new server that lets AI coding agents control and inspect a live Chrome browser through CDP/Puppeteer via this tweet.

- This release allows developers to programmatically interact with Chrome, potentially streamlining tasks like web scraping and automated testing.

- Cursor’s CPU Usage Alarms Users: Users reported high CPU usage from Cursor, a code editor, and attached a screenshot as evidence.

- The issue is suspected to be related to VSCode or a specific extension, but the exact cause remains unclear.

- Meta Demos Code World Model: Meta revealed their Code World Model in this tweet, aiming to enhance code generation and understanding.

- The announcement did not include detailed specifications or performance benchmarks for the model.

- Windsurf Places Tab Completion as Top Priority: Windsurf is prioritizing tab completion using context engineering and custom model training, with Karpathy also commenting on Windsurf.

- The effort is part of a larger evaluation, potentially influencing the acquisition of conew senpaipoast.

- OpenAI clones huxe with ChatGPT Pulse: OpenAI launched ChatGPT Pulse, causing members to comment that oai cloned huxe and linked to the launch announcement.

- The community reaction suggests concerns about originality and competitive overlap in the AI assistant space.

Eleuther Discord